
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.



Joel Maher: Alert Manager has a more documentation and a roadmap |

I have been using alert manager for a few months to help me track performance regressions. It is time to take it to the next level and increase productivity with it.

Yesterday I created a wiki page outlining the project. Today I filed a bug of bugs to outline my roadmap.

Basically we have:

* a bunch of little UI polish bugs

* some optimizations

* addition of reporting

* more work flow for easier management and investigations

In the near future we will work on making this work for high resolution alerts (i.e. each page that we load in talos collects numbers and we need to figure out how to track regressions on those instead of the highly summarized version of a collection).

Thanks for looking at this. Improving tools always allows for higher quality work.

http://elvis314.wordpress.com/2014/07/03/alert-manager-has-a-more-documentation-and-a-roadmap/

|

|

Joel Maher: More thoughts on Auto-land and try server |

Last week I wrote a post with some thoughts on AutoLand and Try Server. It drew some wonderful comments, and because of that I have continued to think about the same problem space a bit more.

In chatting with Vaibhav1994 (who is really doing an awesome GSoC project this summer for Mozilla), we started brainstorming another way to resolve our intermittent orange problem.

What if we reran the test case that caused the job to go orange (yes, for a crash, leak, or shutdown timeout we would rerun the entire job), and if it was green we deemed the failure intermittent and ignored it?

With some of the work being done in bug 1014125, we could achieve this outside of buildbot and the job could repeat itself inside the single job instance yielding a true green.

One thought: if it is a test that is failing, we might want to run it 5 times and require that it fail only once; otherwise it is too intermittent.

A second thought: we would do this on try by default for autoland, but still show the intermittents on integration branches.
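To make the rerun idea concrete, here is a minimal sketch in Python. It is only one reading of the proposal, not how buildbot or any harness actually does it: `run_test` is a hypothetical callable that returns True when the test passes, and the three labels are invented for illustration.

```python
from typing import Callable


def classify_failure(run_test: Callable[[], bool],
                     reruns: int = 5,
                     max_failures: int = 1) -> str:
    """Rerun a test that just failed and classify the original failure.

    run_test is a hypothetical callable returning True when the test passes.
    """
    failures = sum(1 for _ in range(reruns) if not run_test())
    if failures == reruns:
        return "permanent"          # never passed on rerun: a real failure
    if failures <= max_failures:
        return "intermittent"       # rare enough to ignore, per the 5-run idea
    return "too-intermittent"       # fails too often to be waved through


# Usage: a fake test that fails only on its second rerun.
outcomes = iter([True, False, True, True, True])
print(classify_failure(lambda: next(outcomes)))  # intermittent
```

The thresholds (5 reruns, at most 1 failure) come from the post; everything else is an assumption.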

I will eventually get to my thoughts on massive chunking, but for now, let's hear more pros and cons of this idea/topic.

http://elvis314.wordpress.com/2014/07/03/more-thoughts-on-auto-land-and-try-server/

|

|

Michael Verdi: Videoblogging 10 years later |

Yesterday I was on another episode of The Web Ahead. This time, talking about the videoblogging movement that I was involved in starting back in 2004. It was then that my friend Ryanne and I created Freevlog to teach people how to get video on the web (we later turned that into a book). Firefox was a really big thing for us back then and it was the beginning of my love of the Mozilla mission and eventually led to me working at Mozilla.

https://blog.mozilla.org/verdi/437/videoblogging-10-years-later/

|

|

Henrik Skupin: Firefox Automation report – week 19/20 2014 |

In this post you can find an overview of the work that happened in the Firefox Automation team during weeks 19 and 20.

Highlights

When we noticed that our Mozmill-CI production instance was quickly filling up the /data partition, leaving nearly no space to actually run Jenkins, Henrik did a quick check and saw that the problem was the update jobs. Instead of producing log files of about 7MB in size, they were producing files of more than 100MB each. Inspecting those files revealed that the problem was all the SPDY log entries. As a fix, Henrik reduced the amount of logging information, so it is still helpful but no longer exploding.

In the past days we have also seen a lot of JSBridge disconnects while running our Mozmill tests. Andrei investigated this problem, and it turned out that the reduced delay for add-on installations was the cause. Something is most likely interfering with Mozmill and our SOCKS server. Increasing the delay for that dialog fixed the problem for now.

We have been using Bugsahoy for a long time now, but we never actually noticed that the GitHub implementation was somewhat broken when it comes to filtering for languages. To fix that, Henrik added all the necessary language mappings. After updating the GitHub labels for all of our projects, we saw a good spike of new contributors interested in working with us.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 19 and week 20.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda, the video recording, and notes from the Firefox Automation meetings of week 19 and week 20.

http://www.hskupin.info/2014/07/03/firefox-automation-report-week-19-20-2014/

|

|

Roberto A. Vitillo: Most popular GPUs on Nightly and Release |

We have a long-standing bug about Firefox not scrolling smoothly with Intel GPUs. It turns out that recently, thanks to a driver update, the performance has drastically improved for some of our users. While trying to confirm this hypothesis I found some interesting data about Firefox users on Nightly 33 and Release 30 that might come in handy in deciding which hardware to run our benchmarks on in the future.

First things first, let’s have a look at the popularity of the various GPU vendors:

That doesn’t really come as a surprise considering how ubiquitous Intel chips are nowadays. This also means we should concentrate our optimization efforts on Intel GPUs, since that is where we can have the biggest impact.

But how well does Firefox perform with GPUs from the above mentioned vendors? It turns out that one of our telemetry metrics, that measures the average time to render a frame during a tab animation, comes in pretty handy to answer this question:

Ideally we would like to reach 60 frames per second for any vendor. Considering that practically every second user has an Intel GPU, the fact that our performance on those chips is not splendid weighs even more on our shoulders. Though, as one might suspect, Intel chips are usually not as performant as Nvidia’s or AMD’s. There is also a suspicious difference in performance between Nightly and Release; could this be related to Bug 1013262?
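Since the metric is an average frame time while the target is stated in frames per second, a tiny conversion sketch may help when reading the numbers. The function names are made up for this example and the 25 ms sample is hypothetical, not a value from the telemetry data.

```python
def frame_budget_ms(target_fps: float = 60.0) -> float:
    """Time available to render one frame at the target frame rate."""
    return 1000.0 / target_fps


def frames_per_second(frame_time_ms: float) -> float:
    """Frame rate implied by an average per-frame render time."""
    return 1000.0 / frame_time_ms


print(round(frame_budget_ms(), 2))     # 16.67
print(round(frames_per_second(25.0)))  # 40 (25 ms is a hypothetical sample)
```

In other words, any average frame time above roughly 16.7 ms means the animation falls short of 60 frames per second.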

The next question is which specific models our users have. In order to answer it, let’s have a look at the most popular GPUs that account for 25% of our user base on Nightly:

and on Release:

As you can notice there are only Intel GPUs in the top 25% of the population. The HD 4000 and 3000 are dominating on Nightly while the distribution is much more spread out on Release with older models, like the GMA 4500, being quite popular. Unsurprisingly though the most popular chips are mobile ones.

Let’s now take the most popular chips on Release as a reference and see how their performance compares to Nightly:

We can immediately spot that newer chips perform generally better than older ones, unsurprisingly. But more importantly, there is a marked performance difference between the same models on Nightly and Release which will require further investigation. Also noteworthy is that older desktop models outperform newer mobile ones.

And finally we can try to answer the original question by correlating the tab animation performance with the various driver versions. In order to do that, let’s take the Intel HD 4000 and plot the performance for its most popular drivers on the release channel and compare it to the nightly one:

We can notice that there is a clear difference in performance between the older 9.X drivers and the newer 10.X which answers our original question. Unfortunately though only about 25% of our users on the release channel have updated their driver to a recent 10.X version. Also, the difference between the older and newer drivers is more marked on the nightly channel than on the release one.

We are currently working on an alerting system for Telemetry that will notify us when a relevant change appears in our metrics. This should allow us to pre-emptively catch regressions, like Bug 1032185, or improvements, like the one mentioned in this blog post, without accidentally stumbling on them.

http://ravitillo.wordpress.com/2014/07/03/most-popular-gpus-on-nightly/

|

|

Armen Zambrano: Tbpl's blobber uploads are now discoverable |

This is useful since it allows uploads of screenshots, crashdumps and any other file needed to debug what failed on a test job.

Up until now, if you wanted your scripts to determine the files uploaded in a job, you had to download the log and parse it to find the TinderboxPrint lines for Blobber uploads, e.g.

15:21:18 INFO - (blobuploader) - INFO - TinderboxPrint: Uploaded 70485077-b08a-4530-8d4b-c85b0d6f9bc7.dmp to http://mozilla-releng-blobs.s3.amazonaws.com/blobs/mozilla-inbound/sha512/5778e0be8288fe8c91ab69dd9c2b4fbcc00d0ccad4d3a8bd78d3abe681af13c664bd7c57705822a5585655e96ebd999b0649d7b5049fee1bd75a410ae6ee55af

Now, you can find the set of files uploaded by looking at the uploaded_files.json that we upload at the end of all uploads. This can be discovered by inspecting the buildjson files or by listening to the pulse events. The key used is called "blobber_manifest_url", e.g.

"blobber_manifest_url": "http://mozilla-releng-blobs.s3.amazonaws.com/blobs/try/sha512/39e400b6b94ac838b4e271ed61a893426371990f1d0cc45a7a5312d495cfdb485a1866d7b8012266621b4ee4df0cf9aa7d0f6d0e947ff63785543d80962aaf9b",

In the future, this feature will be useful when we start uploading structured logs. It will help us not to download logs to extract meta-data about the jobs!

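As a rough illustration of the two approaches, here is a Python sketch. The regular expression is based only on the TinderboxPrint line quoted above. The assumption that job properties arrive as a flat dictionary containing "blobber_manifest_url" is mine, and the layout of uploaded_files.json is not described in the post, so it is not parsed here. The URLs in the usage lines are placeholders.

```python
import re
from typing import List, Optional, Tuple

# Matches lines such as:
#   ... TinderboxPrint: Uploaded <filename> to <url>
UPLOAD_RE = re.compile(r"TinderboxPrint: Uploaded (\S+) to (\S+)")


def uploads_from_log(log_text: str) -> List[Tuple[str, str]]:
    """Old way: scan a downloaded log for Blobber upload lines."""
    return UPLOAD_RE.findall(log_text)


def manifest_url(properties: dict) -> Optional[str]:
    """New way: read the manifest location from the job properties.

    Assumes (hypothetically) a flat dict of properties.
    """
    return properties.get("blobber_manifest_url")


log = ("15:21:18 INFO - (blobuploader) - INFO - TinderboxPrint: "
       "Uploaded crash.dmp to http://example.invalid/blobs/abc\n")
print(uploads_from_log(log))
print(manifest_url({"blobber_manifest_url": "http://example.invalid/manifest"}))
```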
|

| No, your uploads are not this ugly |

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/qBxRfcHstnc/tbpls-blobber-uploads-are-now.html

|

|

Robert O'Callahan: Implementing Scroll Animations Using Web Animations |

It's fashionable for apps to perform fancy animations during scrolling. Some examples:

- Parallax scrolling

- Sticky headers that scroll normally until they would scroll out of view and then stop moving

- Panels that slide laterally as you scroll vertically

- Elements that shrink as their available space decreases instead of scrolling out of view

- Scrollable panels that resist scrolling as you get near the end

Obviously we need to support these behaviors well on the Web. Also obviously, we don't want to create a CSS property for each of them. Normally we'd handle this diversity by exposing a DOM API which lets developers implement their desired behavior in arbitrary JavaScript. That's tricky in this case because script normally runs on the HTML5 event loop, which is shared with a lot of other page activities, but for smooth touch tracking these scrolling animation calculations need to be performed reliably at the screen refresh rate, typically 60Hz. Even for skilled developers, it's easy to have a bug where once in a while some page activity (e.g. an event handler working through some unexpectedly large data set) blows the 16ms budget and makes touch dragging less than perfect, especially on low-end mobile devices.

There are a few possible approaches to fixing this. One is to not provide any new API, hope that skilled developers can avoid blowing the latency budget, and carefully engineer the browser to minimize its overhead. We took this approach to implementing homescreen panning in FirefoxOS. This approach sounds fragile to me. We could make it less fragile by changing event dispatch policy during a touch-drag, e.g. to suppress the firing of "non-essential" event handlers such as setTimeouts, but that would add platform complexity and possibly create compatibility issues.

Another approach would be to move scroll animation calculations to a Worker script, per an old high-level proposal from Google (which AFAIK they are not currently pursuing). This would be more robust than main-thread calculations. It would probably be a bit clumsy.

Another suggestion is to leverage the Web's existing and proposed animation support. Basically we would allow an animation on an element to use another element's scroll position instead of time as the input to the animation function. Tab Atkins proposed this with declarative CSS syntax a while ago, though it now seems to make more sense as part of Web Animations. This approach is appealing because this animation data can be processed off the main thread, so these animations can happen at 60Hz regardless of what the main thread is doing. It's also very flexible; versions of all of the above examples can be implemented using it.
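To illustrate what "scroll position instead of time as the input" means, here is a language-neutral sketch written in Python. It is not the Web Animations API; the function names and the header sizes are invented for the example. The point is only that the animated value becomes a pure function of scroll position, which is what would let a browser evaluate it off the main thread.

```python
def scroll_progress(scroll_pos: float, start: float, end: float) -> float:
    """Map a scroll position to animation progress in [0, 1]."""
    if end == start:
        raise ValueError("start and end must differ")
    t = (scroll_pos - start) / (end - start)
    return max(0.0, min(1.0, t))


def interpolate(from_value: float, to_value: float, progress: float) -> float:
    """Linear interpolation between two keyframe values."""
    return from_value + (to_value - from_value) * progress


def header_height(scroll_pos: float) -> float:
    """Hypothetical header shrinking from 120px to 48px over 200px of scroll."""
    return interpolate(120.0, 48.0, scroll_progress(scroll_pos, 0.0, 200.0))


print(header_height(0.0), header_height(100.0), header_height(500.0))
# 120.0 84.0 48.0
```

Effects that depend on scroll velocity or direction cannot be written this way, which is the first sub-issue raised in the post.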

One important question is how much of the problem space is covered by the Web Animations approach. There are two sub-issues:

- What scrolling effects depend on more than just the scroll position, e.g. scroll velocity? (There certainly are some, such as headers that appear when you scroll down but disappear when you scroll up.)

- For effects that depend on just the scroll position, which ones can't be expressed just by animating CSS transforms and/or opacity as a function of the scroll position?

http://robert.ocallahan.org/2014/07/implementing-scroll-animations-using.html

|

|

Benjamin Kerensa: Release Management Work Week |

Last week in Portland, Oregon, we had our second release management team work week of the year focusing on our goals and work ahead in Q3 of 2014. I was really excited to meet the new manager of the team, our new intern and two other team members I had not yet met.

It was quite awesome to have the face-to-face time with the team to knock out some discussions and work that required the kind of collaboration that a work week offers. One thing I liked working on the most was discussing the current success of the Early Feedback Community Release Manager role I have had on the team (I’m the only non-employee on the team currently) and discussing ideas for improving the pathways for future contributors in the team while also creating new opportunities and a new pathway for me to continue to grow.

One thing unique about this work week is that we also took some time to participate in Open Source Bridge, a local conference that Mozilla happened to be sponsoring at The Eliot Center and that Lukas Blakk from our team was speaking at. Lukas used her keynote talk to introduce an awesome project she is working on called the Ascend Project, which she will be piloting soon in Portland.

While this was a great work week and I think we accomplished a lot, I hope that future work weeks are either out of town or that I can block off other life obligations to spend more time on-site, as I did have to drop off a few times for things that came up or run off to the occasional meeting or Vidyo call.

Thanks to Lawrence Mandel for being such an awesome leader of our team and seeing the value in operating open by default. Thanks to Lukas for being a great mentor and awesome person to contribute alongside. Thanks to Sylvestre for bringing us French Biscuits and fresh ideas. Thanks to Bhavana for being so friendly and always offering new ideas and thanks to Pranav for working so hard on picking up where Willie left off and giving us a new tool that will help our release continue to be even more awesome.

|

|

Frédéric Harper: Microsoft nominated me as a Most Valuable Professional |

You read that right: Microsoft nominated me, and I’m now an MVP, a Most Valuable Professional for Internet Explorer. For those of you who don’t know, it’s an important recognition in the Microsoft ecosystem: it’s given to professionals with a certain area of expertise, around Microsoft technology or technology Microsoft uses, for their work in the community.

So why did Microsoft give me recognition for Internet Explorer? After all, I’m working at Mozilla. Well, I see this as good news for everything I was doing while I was at Microsoft (like Make Web Not War), and everything I’m doing right now: it’s further proof that Microsoft is more and more open. As I’ve always said, it’s not perfect, but it’s going in the right direction (MS Open Tech is a great example). In my case, this award is less about Internet Explorer itself than about the Web and the lovely technology that is HTML5. They recognize the work I’m doing in that sense, whether with Open Source, with communities in Montreal or with the talks I give about the Web. At first it may seem weird to you, but I think it makes a lot of sense that Microsoft recognizes someone like me, even if I’m working at a company making a competing product. Microsoft has been doing a better job since Internet Explorer 9, and the browser is getting better and better. Even if you don’t use it, other people do, and by making a better browser that respects the standards more, they are helping the web move forward. It’s also a good thing for us developers, as we can more easily build great experiences for all users, no matter which browser they use. On that note, I was happy to see a bit more transparency about IE with the platform status website.

So, I salute the openness of Microsoft to nominate someone like me (they even hired me in the past). By being part of that MVP program, I’m looking forward to seeing how I can continue to work on the Mozilla mission while helping Microsoft to be more open with web technologies.

--

Microsoft nominated me as a Most Valuable Professional is a post on Out of Comfort Zone from Frédéric Harper


|

|

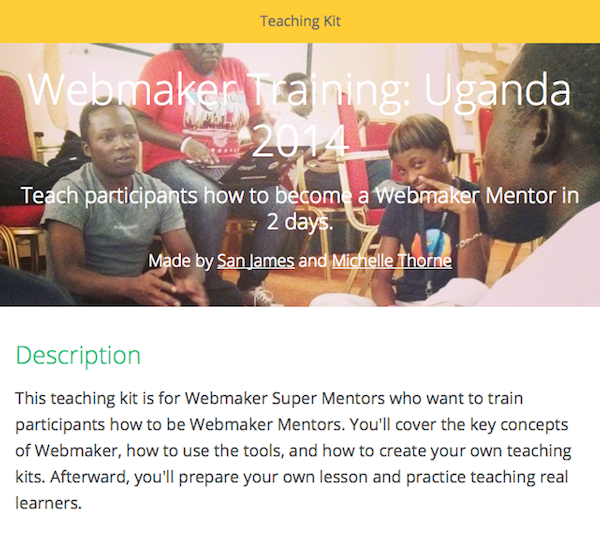

Michelle Thorne: Webmaker Training in Uganda |

60 newly trained Webmaker Mentors. 12 amazing Webmaker Super Mentors. 200 students taught how to participate on the web. 1 epic weekend in Kampala!

Mozillians from Uganda, Kenya and Rwanda gathered together for the first time to run a train-the-trainer event for East Africa. The goal was to teach the local community–a lovely mix of educators, techies and university students in Kampala–how to teach the web.

The training in Uganda builds on Webmaker’s free online professional development. Our theory is that by blending online and in-person professional development, participants get the most out of the experience and better retain the skills they learned. Not to mention staying connected to a local community as well as a global one.

Training Agenda

Together with the amazing event hosts, we crafted a modular training agenda.

It covered two days of training and a half-day practice event. Participants had little to no experience teaching the web before the event. But after the training, they would go on to teach 200 secondary school students!

The training helped the participants get ready for the practice event and to teach the web to the communities they care about. We covered these four main learning objectives:

- understanding the value of the open web, making as learning and participatory learning.

- using the Webmaker tools to teach web literacy

- how to create your own teaching kit and be a good facilitator

- how to participate in the global & local Webmaker community

Not to mention lots of fun games and interstitial activities. I learned, for example, how to play a Ugandan schoolyard game called “Ali Baba and the 40 Thieves.”

Community Leaders

For me, the most exciting part of this event was meeting and supporting the emerging community leaders.

Some of the Webmaker Super Mentors were part of our first training a year ago in Athens. Others were quite experienced event organizers, mentors and facilitators who stepped up to the role of teaching others how to teach.

The training facilitators had a beautiful blend of experiences, and each facilitator, in addition to each participant, got to level up their skills as part of the training.

Lessons Learned

Every event is a learning experience, no matter which role you have. I learned a lot by helping San James teach people to teach people how to teach the web.

Practice events are invaluable. The highlight of the training was bringing the 60 freshly trained Webmaker Mentors to a live event, where they taught 200 secondary school students and put their new skills to practice. They prepared their own agendas, rehearsed them, and then split into small groups to teach these students. Floating around, it was amazing to hear the mentors sharing the knowledge they just learned the day before. And from the smiles on everyone’s faces, you could tell it was a fun and memorable event.

Prepare low-fi / no-fi activities. We missed a big opportunity to test the amazing new “low-fi/no-fi” teaching kit, for when you want to teach the web without internet or computers. Given our connectivity issues, this would have been perfect.

Make time for participants to take immediate next steps. I was proud of how well we worked debriefs and reflections into the training agenda. However, it would have been better if participants had had time to make an action plan and even take the first step in it. For example, they could pledge to host a small Maker Party, log the event and draft an agenda.

Thank you!

This was one of the most inspiring and fun events I’ve been to with Mozilla.

The hugest of thank yous to all the Super Mentors–from Uganda, Kenya and Rwanda–for making the event possible. A special thanks to San James and Lawrence for believing in this event for a long time. Your upcoming Mozilla Festival East Africa will be a success thanks to your wonderful team and the people you trained. This is only the beginning!

http://michellethorne.cc/2014/07/webmaker-training-in-uganda/

|

|

Pete Moore: Weekly review 2014-07-02 |

The focus of this week has been finishing off the Q1 goals.

L10N vcs sync

Regarding l10n, I have this now running in staging, and have to clean up the several workarounds I put in place to manage particular problems, such as:

- locales being defined for a branch, but there being no hg repository for it

- not being allowed to update passed-in configs on-the-fly in mozharness, but l10n configs being different to other vcs sync configs

- not completely failing when one repo fails to exist, but others do exist (“do as much as possible but report on failures”)

- cleaning up error handling
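The "do as much as possible but report on failures" behaviour can be sketched as below. This is a generic Python pattern, not mozharness or vcs-sync code; `sync_all`, `sync_one` and the locale names are hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Tuple


def sync_all(repos: Iterable[str],
             sync_one: Callable[[str], None]) -> Tuple[List[str], Dict[str, str]]:
    """Try every repo, remembering failures instead of stopping at the first."""
    synced: List[str] = []
    failures: Dict[str, str] = {}
    for repo in repos:
        try:
            sync_one(repo)
        except Exception as exc:      # keep going, but record what went wrong
            failures[repo] = str(exc)
        else:
            synced.append(repo)
    return synced, failures


def fake_sync(repo: str) -> None:
    """Stand-in for a real sync step; pretends one locale has no hg repo."""
    if repo == "xx":
        raise LookupError("no hg repository for locale xx")


done, failed = sync_all(["de", "xx", "fr"], fake_sync)
print(done)    # ['de', 'fr']
print(failed)  # {'xx': 'no hg repository for locale xx'}
```

A caller can then decide whether a non-empty failure report should mark the whole run as failed or only as a warning.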

gecko-dev and gecko-projects

This piece is done, but I am quite busy setting up full end-to-end staging tests for it. However, the change is enabling features that are already enabled for other repos (namely, generation of git notes, and publishing mappings to mapper). Therefore I would propose that a simpler and more pragmatic approach might be to enable these features in production and, if they fail, to disable them again. These are just config settings, and no harm should be done if they fail (they do not affect other parts of the vcs sync process, such as altering SHAs or changing URLs of repos to pull from / push to).

gecko-git

This piece is the last main one, but I will be doing that when the ones above are ready.

Other things I’ve touched since last review:

Bug 1023843 – Possible bug in end_to_end_reconfig.sh when using -p option?

Bug 1019438 – end_to_end_reconfig.sh should store logs from manage_foopies.py

Closed!

Bug 1019434 – update_maintenance_wiki.sh is truncating text content

Closed!

Bug 1018975 – buildfarm/maintenance/manage_foopies.py not executable

Closed!

Bug 1018248 – End-to-end reconfig should also update tools version on foopies

Closed!

Bug 1018118 – Growing pending queues for tegras and time between jobs per tegra > 6 hours

Bug 1013511 – (byebyebuildduty) [tracking] Eliminate buildduty

Bug 978928 – Reconfigs should be automatic, and scheduled via a cron job

Bug 976106 – tegra/panda health checks (verify.py) should not swallow exceptions

Bug 976100 – Slave Health should link to the watcher log files of the tegras and pandas

Bug 962853 – vcs-sync needs to be able to publish git-hg mappings to mapper

Closed!

Bug 946019 – cut over gecko.git to the new vcs-sync system

Bug 939817 – more vcs-sync email tweaks

Bug 929336 – permanent location for vcs-sync mapfiles, status json, logs

Bug 904176 – reconfigs as a buildbot/jenkins job

Bug 892691 – Add HD Panda chassis to android production pandas

Closed!

Bug 876715 – Determine how to update watcher

Closed!

Bug 869051 – Race condition between builders that push updates to in-tree files

Bug 862910 – cache MAR + installer downloads in update verify

Bug 850743 – Clean up / redesign of configuration in buildbot-configs/mozilla

Bug 770428 – Cleanup org.mozilla.f3nn3c.PasswordsProvider in verify.py

Bug 742479 – Tegra Cleanup should delete some additional files on the sdcard

|

|

David Rajchenbach Teller: Would you like to help the fox speed up? |

I regularly receive offers from volunteers who would like to contribute to Firefox. Usually, I point them to Bugs Ahoy. If you don’t know Bugs Ahoy, go and take a look: this search engine dedicated to tasks accessible to beginners is fabulous. Today, a change of program: if you would like to contribute to Firefox, and more specifically to improving the performance of Firefox, here are a few ways to take part in the Performance team’s effort.

Session Restore

Session Restore is the Firefox component in charge of continuously saving the state of the browser, so that you can recover from a crash of the browser, the system or the hardware, or from an untimely restart, without losing data. I am currently rewriting parts of Session Restore to improve its responsiveness (by making it parallel) and its contribution to startup time.

To get you started, here are a few introductory bugs, e-mentored by me:

- https://bugzilla.mozilla.org/show_bug.cgi?id=1030765

- https://bugzilla.mozilla.org/show_bug.cgi?id=1031298

- https://bugzilla.mozilla.org/show_bug.cgi?id=1029471

All these bugs are in JavaScript.

File I/O

OS.File is the Firefox component that gives JavaScript high-performance access to the disk. I am currently rewriting parts of OS.File to make it more extensible and to improve the responsiveness of some critical functions. A few introductory bugs, e-mentored by me:

|

|

Soledad Penades: Invest in the future: build for the web! |

I spoke at GOTO Amsterdam a few weeks ago. I was really thrilled to be back in the Netherlands after so many years! So thanks to Sergi Mansilla, who curated the HTML5 track, and the organisation in general for bringing me there!

The talk wasn’t recorded, but I made a screencast just in case you really want to listen to me. I am also posting the outline/notes I wrote, and they differ in places because I don’t read them during the talk (I don’t even have them handy) and I sometimes went a bit off topic, but that’s the beauty of improvisation!

Here are the slides, and the slides source code just in case you wanted it too.

On to the notes: expect some MASSIVE GIFs and amazingly clever photomanipulation! tee hee hee!

Invest in the future: build for the web!

Hey all and thanks for coming! My name is Soledad Penadés (@supersole on Twitter) and I am an Apps Engineer at Mozilla. But some time before that… I worked on Android apps.

They sold us this “dream” that you could earn a living with Android apps. And of course, who wouldn’t want to earn enough money building things they loved? So I did this sort of market research and tried to figure out what I wanted to do. The first thing I tried to build was an audio tool, but it was too slow and too glitchy. I kept wanting to do something which had to do with graphics or audio but I didn’t want to build games; they need things such as a story, audio, artwork, etc, and I didn’t think I could do all of them, but I still didn’t know what to build.

One day I was walking around in London and I saw something really cool. I wanted to take a picture but I realised I had forgotten my camera. I thought: well, maybe I could take the picture with the phone instead! But it was really freezing, so I was wearing gloves. And although you can buy gloves with conductive tips nowadays, I didn’t know that back then, so I had to take off a glove in order to touch the screen and take the picture. Also, the interface for selecting different options was really slow to use, and it was especially painful when standing there in the cold!

Photography apps

So I had this idea: what if I built a camera app that was better than the stock camera app?

It would…

- be less clunky than stock – it would be faster to navigate the options

- use the hardware buttons – so you wouldn’t need to touch the screen to take pictures

- be silent – I was so tired of the shutter sound because it would scare my sister’s cats away each time I tried to take a picture of them

And that was the first photography app I built. Shortly afterwards I decided I wanted to play a little bit more with the Camera API and thought that I could maybe build an app that would apply filters to images. At that time Instagram didn’t exist for Android yet, and the existing filter apps were about taking a picture and applying postprocessing afterwards, so the process wasn’t as fluid and playful as I would have liked it to be.

I then built this realtime effects app that would take the live stream off the camera and process the image in real time.

Good feedback, but…

The apps were generally well received and the feedback was mostly positive, but there were still lots of hardware issues that I couldn’t test because I either didn’t have the phones or couldn’t buy them. Some people were using weird Chinese-built rebranded phones and it was really difficult to find out where the error actually was: was it the OS version, was it the extra rebranded stuff, was it the actual phone? And even if I wanted to buy the phones, sometimes it was impossible because they were exclusive to a certain geography, so my only solution would have been buying them from eBay.

On the other hand, people liked the filters from my app and they started to ask for ports to their favourite operating system. iOS? Blackberry? (yes, Blackberry was still a thing back then). Even desktop platforms: Windows? Mac OS? That was driving me crazy because I really liked being liked and appreciated the positive feedback, but there was just no way I could please them. I was running Linux, for whatever it’s worth!

Interactive picture books start-up

At some point I decided to start working for others again, so I ended up joining a start-up that wanted to enable authors to make great interactive picture books. There was work going on on two fronts: first the Mac OS authoring environment, which was the tool for the authors and where they could assemble their text, images, and possibly sounds and animations. This would then be saved into an XML based format that could in turn be read and ‘executed’ by the second ‘front’, which was the engine. There were two versions of the engine: iOS and Android.

Layout was DIFFICULT

I was just working on the Android side, and there we probably spent like 70% of our time fighting the operating system to make things look and behave the way we wanted them to. The worst part was that there was no preview (especially when using Custom Views) or that the preview differed from the actual results. You then had to push the app to the device, and because it was really rich in graphics it would take so long that when it finished, one minute or so later, you had totally forgotten what you wanted to look at. You’d ask yourself: what was that thing I was working on? I can’t remember. Oh well, I guess I’ll just have another coffee, or something. Ironically, for complex layouts, especially layouts with lots of text, we actually had to use an embedded WebView because it was just faster to lay out using HTML and CSS than styling “the Android way”.

Another big issue was that native animations were quite limited. The books had transitions between pages, and often within each page too, and we wanted those to be smooth. But the native animations didn’t offer many parameters, and felt really mechanical. Plus the elements we tried to animate often ended up being really janky! We weren’t sure where the problem was. Was it our code? Was it the way we were animating? If we were using Android’s native animations, should we be having garbage collection issues? And since we weren’t sure at all, we had to spend inordinate amounts of time in the profiler, trying to trace where those slowdowns came from. Still, the jank continued to be there! Plus weird graphical glitches, and we kept finding cargo cult tricks on Stack Overflow and applying them out of desperation, but of course, the jank was still there!

And what about testing… LOL about testing, to be quite honest. I would just say that at some point we half joked about creating a Kickstarter to try and get some Google engineer to make it possible to test asynchronous code for real, because no matter how many testing frameworks and solutions we tried, none would work.

Bad habits, deeply ingrained

There were other issues which were less about the tech and more about the process and the mindset. The biggest issue was that “different output sizes” was treated as an exception and not as the norm. So when a project was initially released for the iPad 1, the designs only considered those dimensions and aspect ratios, which made it really hard to port to variable sized environments such as Android.

For example, the graphic design would call for an overlay on top of the menu that would be sized exactly for the iPad 1, so using that same overlay on an Android tablet, which had a wider aspect ratio, ended up stretching the image in a bad way.

Imagine when the retina iPads were introduced. It was fun fun fun.

One day I woke up and wondered…

why is this not HTML+JS+CSS?

So I asked my boss, who politely replied that he had been experimenting a lot with it, but…

… the web is not ready yet…

… you can’t have smooth animations and audio in the browser…

I hadn’t actually tried to build the engine in HTML5, so what could I argue against that? So I just said “OK” and continued work.

Contracting for local newspaper

We built this app for a local newspaper which was, pretty much, a glorified RSS reader.

With the exception that the layout kept getting more and more complex, and even then we had lots of embedded WebViews for more complicated things and for rendering the articles (which were, basically, the HTML pulled raw from the RSS feeds) and embedded JavaScript for things such as adding favourites and bookmarks.

This app kept getting new features and a more complex layout until we hit the maximum limit of nested views. But, and here’s the funny thing, NOT ALL the devices exhibited that error.

We ended up reaching that point in which the only solution we could suggest was:

hey, so… what about a total rewrite of this less-than-two-years-old app?

One day I woke up and wondered again…

Why is this not HTML+JS+CSS?

And again I asked my boss. And again I got a similar answer:

… because the web is not ready yet…

… you can’t store offline data…

… you can’t have push notifications…

which only mildly convinced me, so I muttered a meh “A-ha” and kept working on the thing.

One day I was updating the company website

And opened DevTools to live edit it. And I had an epiphany.

We were recreating browsers again and again because “the web is not ready”, but I had had enough.

I said to myself:

I’m out of this madness, and back to the web.

Back with a vengeance!

Two sides of the fence

But I’d be back after having been to the two sides of the fence. I knew about the upsides and the downsides, and I decided that I wanted to teach people about the upsides and help them take the maximum advantage of them, and work in fixing the downsides.

So…

I joined Mozilla

We want YOU to build for the web

Why build for the web?

Of course you will be asking: why should I bother writing for the Web?

Well, the first reason is that it is the only non-proprietary platform. No one owns it, and no one controls it. Compare that to the Windows or Apple platforms, where sometimes you’re not even allowed to discuss the issues you’re having when developing because you’re under an NDA.

And it’s also the closest to Write Once Run Everywhere you’ll ever get. As a sort of secondary bonus, writing for the web, using standards, gives you a high chance that whatever you build will still work in the future. Compare that to trying to get some old binaries to work on newer platforms, especially when you don’t have access to the source code or to the toolchain that generated those binaries in the past.

Also, fragmentation is not an issue: it’s business as usual. And encouraged. The web is about enabling people to access and work on their data, and if they prefer to use a cheaper phone with dithered screens because they can’t afford to get a super high end device, then we shouldn’t be adding artificial barriers. Working this way, taking into account that your apps might be running on a variety of devices widens your base of customers, and at the same time cuts down on development costs–because you don’t need to rewrite the same app for each of the native platforms that customers might use.

And finally, because it is everywhere–e-books, TV set top boxes, GPS trackers, mobile native via WebKit views, native via PhoneGap, Ludei, AppCelerator, desktop environments (GNOME 3), even Mac OS scripting… so maybe all these people cannot be wrong.

We helped unlock desktop browsers from monopoly

Just as Mozilla helped unlock desktop browsers from monopoly with Firefox…

We’re doing the same in mobile with Firefox OS

And doing “the same” means giving power back to developers and enabling them to build awesome cool stuff, which means defining and implementing new JavaScript APIs for accessing features that so far have only been accessible to native code.

New APIs

These are some of the new APIs that are accessible for code running in Firefox OS:

- Network Information

- Bluetooth

- Mobile Connection

- Network Stats

- TCP Socket

- Telephony

- WebSMS

- WiFi Information

- Ambient Light Sensor

- Battery Status

- Proximity

- Device Orientation

- Screen Orientation

- Vibration

- WebFM

- Camera

- Power Management

- FileHandle

- Contacts

- Device Storage

- Settings

- Alarm

- Simple Push

- Web Notifications

- Web Activities

- WebPayment

- Browser

- Idle

- Permissions

- Time/Clock

Most of these APIs are prefixed for now, but that’s because Firefox OS is the testing ground–the place where APIs are not only designed but also put in practice and battle tested with real users, apps and needs. And these APIs are also submitted to the appropriate standards tracks, so that in the future not only Firefox OS, but all browsers can use this specification to implement those features, which in turn gives more power to developers to do more amazing stuff that works everywhere.

Or in other words: our guiding goal is to help shape standards, not to build a proprietary OS in JS.
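To make the point about prefixes concrete, here is a minimal sketch of how app code might cope with an API that is prefixed today and standard tomorrow. The helper name `resolveApi` and the prefix list are made up for illustration; they are not part of Firefox OS or of any library.

```javascript
// Hypothetical helper: return the first available variant of an API,
// trying the standard name before any vendor-prefixed ones.
function resolveApi(host, name, prefixes) {
  if (name in host) {
    return host[name];
  }
  // "vibrate" becomes "Vibrate" so that "moz" + "Vibrate" gives "mozVibrate"
  var capitalised = name.charAt(0).toUpperCase() + name.slice(1);
  for (var i = 0; i < prefixes.length; i++) {
    var prefixed = prefixes[i] + capitalised;
    if (prefixed in host) {
      return host[prefixed];
    }
  }
  return undefined;
}

// In a browser this might be used as follows (assumed usage, not run here):
// var vibrate = resolveApi(navigator, 'vibrate', ['moz', 'webkit']);
// if (vibrate) { vibrate.call(navigator, 200); }
```

Code written this way keeps working once the unprefixed version ships, because the standard name is always tried first.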

Existing APIs

Existing Web APIs which are implemented in most of the browsers do need some love for mobile implementations too. They have to be efficient.

That might mean that the C/C++ code that does something in Firefox Desktop has to be rewritten to take advantage of the ARM processor or the hardware decoders that come with the phone and can do that way faster than a software decoder would. Or maybe reads and writes to storage need to be optimised for battery usage.

These are some of those APIs:

The conclusion to this is that spending developer efforts in making APIs available and code runnable anywhere has a multiplier effect where way more people benefit than if those developers simply focused their efforts on building native apps.

But there’s still more work to be done…

Over two billion people still don’t have access to the Internet

That’s right, 2,000,000,000+ people still can’t “browse” just like you and me can.

At Mozilla we believe the Internet must be open and accessible, so we are working on fixing this too. We partnered with some manufacturers to make a phone that would run Firefox OS and also be affordable.

$25 phone

This phone is in the same price bracket as the feature phones that are sold in many countries, yet it runs entirely on Firefox OS and has access to 2.5G EDGE networks. It might look underpowered and “crappy” compared to whatever you have in your pocket right now, but compared to those feature phones this is a massive step forward: it is updatable, and a wider range of apps and services can be accessed because it is, basically, a portable browser rather than a J2ME device.

Better tooling

We are also aware that the web is so much more than just documents today, and we need to have better tools.

These are some examples of the tools that have been added to Firefox:

Responsive design mode

This allows you to quickly see how your app looks and behaves in differently sized viewports, and you can also store presets to go back to those specific values.

Network + cache inspector

Not only lets you inspect the requests that happen when you load a page in your app, but it also allows you to see the difference in requests when you load the page for the first time compared to when it’s loaded a second time. So you can make sure that static content is correctly cached, and your app is as fast as it can be.

Scratchpad

This is a sort of notepad where you can both write code with autocompletion and suggestions and also execute it in the context of the page you’re on. This will help you debug and experiment without having to go to an external editor to write your code and then reload the page.

Canvas inspector

This lets you capture all the drawing requests that go to a canvas context between two requestAnimationFrame calls, and then also lets you replay them so you can see what is being done and can both understand how the drawing is made and if there are any extra or redundant calls that could be optimised.
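To give an idea of what capturing and replaying drawing calls means, here is a toy sketch. It is not how the Firefox Canvas inspector is implemented; `recordCalls` and `replay` are hypothetical names, and the wrapper only records the methods you list.

```javascript
// Wrap selected methods of a target (e.g. a 2D canvas context) so that
// every call is logged before being forwarded to the real method.
function recordCalls(target, methodNames) {
  var log = [];
  var proxy = {};
  methodNames.forEach(function (name) {
    proxy[name] = function () {
      var args = Array.prototype.slice.call(arguments);
      log.push({ name: name, args: args });
      return target[name].apply(target, args);
    };
  });
  return { proxy: proxy, log: log };
}

// Re-issue the logged calls, in order, against a target.
function replay(target, log) {
  log.forEach(function (call) {
    target[call.name].apply(target, call.args);
  });
}
```

With a log like this you can step through a frame call by call, or spot the same `fillRect` being issued twice with identical arguments.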

Shader editor

Just as the JavaScript debugger lets you inspect the code in the current page, the shader editor lets you inspect the code in the current WebGL context. And also allows you to pick any program and edit it with instant results. This is really a great tool for developers using WebGL since being able to test your shaders in the right context makes a huge difference compared to editing them and seeing the results being applied to a cube rotating over a white background.

Web Audio Editor

This is the latest addition to the tools, so it’s still early days for this inspector! It will let you inspect the Web Audio context node graph, and if you click on a node it will let you change its parameters on the fly, so again you can get instant feedback.

The graph is also very helpful when trying to debug complex hierarchies that might have been built programmatically and thus aren’t easily understandable just by looking at the code; the graph provides much better visual insight.

Polyfills and libraries

Despite all the work in implementing APIs and pushing them to be standards, this process moves slowly and sometimes the needs of developers are way ahead of the needs of implementors. Luckily JavaScript is flexible enough that it’s often possible to write polyfills and libraries that will either fill the gap or provide some proof of concept API that could maybe become a native API later on if enough people adopt it.
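As a minimal sketch of the polyfill idea: check whether the feature exists, and only fill the gap when it doesn't. The `polyfill` helper below is a made-up name for illustration, and the `startsWith` fallback is deliberately simplified.

```javascript
// Install `fallback` as target[name] only if no implementation exists yet.
// Returns true when the fallback was installed, false otherwise.
function polyfill(target, name, fallback) {
  if (typeof target[name] !== 'function') {
    target[name] = fallback;
    return true;
  }
  return false;
}

// Example: a simplified String.prototype.startsWith fallback that ignores
// the optional position argument. On engines that already implement
// startsWith natively, this call leaves the native version untouched.
polyfill(String.prototype, 'startsWith', function (prefix) {
  return this.indexOf(prefix) === 0;
});
```

The important design choice is that the native implementation always wins, so the polyfill quietly becomes a no-op as browsers catch up.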

So there is also a number of libraries that we develop to make writing apps more enjoyable.

Brick

Brick is a carefully curated selection of web components to build the UI of a modern web app. You can write your own elements using web components, and this in turn generates a sort of lightweight DSL where you have things such as the header of the app, the main content with a deck element and several cards on it, and a footer with a tabbar in it. The tabbar is linked to the deck, and so when you click on a given tab, the corresponding card in the deck will become active and be brought to the foreground.

Phonegap + Firefox OS

Despite our efforts to make the web the best platform, some features are still only available to native code, unless you use something like PhoneGap; then you can keep writing your app in HTML+JS+CSS.

We want to make it easy for PhoneGap developers and we are working on plugins for Firefox OS, so the apps can be exported to Firefox OS too.

I know this all sounds ironic: building native apps using HTML? But I’d like to quote Brian Leroux, one of the stewards of PhoneGap, who gave a talk here last year:

our ultimate goal is to cease to exist

and I really liked the spirit in that sentence. For some things to change, we sometimes need transition solutions, and PhoneGap is one of those.

localForage

While new APIs such as ServiceWorkers get implemented, developers still need to store content for offline access, and the existing set of APIs was very inconsistent and unpleasant to use. So we built this library-mega-polyfill, which provides a unified, simple interface while trying to use the most efficient solution under the hood, without bothering the developer with decisions. For example, if IndexedDB is available it will try to use that, while exposing an API that is way less hostile than IndexedDB. If IndexedDB is not available, as was the case on most iOS platforms until recently, it will try to use WebSQL. And if that doesn’t work either, it will fall back to good old localStorage, which is the slowest of them all because it’s synchronous: the whole script blocks until localStorage operations have finished.
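The fallback order described above can be sketched roughly like this. This is not localForage's actual source; `pickStorageDriver` is a hypothetical function, and the checks are simple feature detection on whatever global object you pass in.

```javascript
// Pick the best available storage backend, in order of preference:
// IndexedDB, then WebSQL, then localStorage. `env` would normally be
// `window`; it is a parameter here so the logic is easy to try out.
function pickStorageDriver(env) {
  if (env.indexedDB) {
    return 'indexedDB';
  }
  if (typeof env.openDatabase === 'function') {
    return 'webSQL'; // WebSQL is exposed through window.openDatabase
  }
  if (env.localStorage) {
    return 'localStorage';
  }
  return null; // nothing usable was found
}
```

Whatever the function picks, the calling code stays the same, as the snippet below shows.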

// In localStorage, we would do:

localStorage.setItem('key', JSON.stringify('value'));

doSomethingElse();

// With localForage, we use callbacks:

localforage.setItem('key', 'value', doSomethingElse);

// Or we can use Promises:

localforage.setItem('key', 'value').then(doSomethingElse);

Animated_GIF

This is another example of an API that maybe should be available in the browser. Until it is, JavaScript is nowadays powerful enough that a library can do this decently fast, even when performing lots of arithmetic operations.

var imgs = document.querySelectorAll('img');

var ag = new Animated_GIF();

var animatedImage = document.createElement('img');

ag.setSize(320, 240);

for(var i = 0; i < imgs.length; i++) {

ag.addFrame(imgs[i]);

}

ag.getBase64GIF(function(image) {

animatedImage.src = image;

document.body.appendChild(animatedImage);

});

This is an example of the output you can generate: a GIF built from several frames, using dithering and a specific color palette.

Maybe in the future some spec writer will look at this and say oh, this is disgusting, what a poor API design! I will design something so much better and submit it to a standards track!

And I’ll be super happy to hear that.

Yeah, but…

Of course not everything is perfect. Of course there’s still lots of work to do.

Are you missing a feature? Don’t just complain, get involved!

This is not the 90s anymore

You can do so much more than complain because your browser vendor hasn’t updated the browser in years and bugs still plague you left, right and center.

The Web is YOURS, so shape it! Get involved!

Getting involved means you make informed decisions. What works? What doesn’t? Why? And… is there a work around?

Getting involved means your needs are taken into account. You can say “We need this feature for our use case…”, or also “This feature can’t work for this reason…”, and it will be listened to.

Ultimately it boils down to this:

A W3C editor sitting in their chair in a lonely room will never know about your needs unless you tell them.

Ways to get involved

- Always assume good intent, and be respectful. You might have had a bad day trying to build your app, and it isn’t working and you can’t figure out why, but it’s not the job of other people to listen to a parade of hateful swearing. Also, if your plea for help apparently goes unnoticed, maybe wait a bit more until someone has the time to pick it up and answer you. It’s not that people are unresponsive; they might just be busy!

- Find your channel: mailing lists, GitHub repos, IRC, meet ups, the Extensible web summit…. Find the channel that makes you feel most comfortable, and study it for a while; you don’t need to get active from day 1. It’s OK to lurk for a while until you get familiarised with the dynamics of the place. Then…

- Ask questions. Questions are incredibly valuable for everyone. Phrasing your question in a way that is clear is a skill that requires development, but will help you actually understand what it is that you do not understand. And being asked a question will provide useful feedback to an implementor: it is a way of letting them know that something in the API is not as clear as they think it is, and that it needs improvement.

- Try your code in different browsers. Often developers try their code in a more permissive browser and when they release their app to the world they start getting reports that it doesn’t work as well as they expected in other, more restrictive browsers. It’s not that the other browsers are wrong but maybe you didn’t test enough. Or maybe they are, so this is a fantastic opportunity to read the spec and ensure you got it right. Maybe this will highlight other errors in your code?

- Try nightlies for preview features. This will allow you to make sure your code keeps working even when new versions of an API are introduced. Maybe the API breaks in some edge cases that only your code uses. Is this in the spec? If it is and it shouldn’t behave this way, you should bring that up. If it’s not, maybe that should be in the spec to make things clearer. You can help here!

- For the braver souls, maybe you could even learn to compile your favourite browser! And maybe you can even help fix issues that bother you.

- File bugs. If you don’t think you can fix anything yet, but keep seeing things that don’t work as they should, you should file bugs. Often some issues only arise when certain factors fall in place at the same time. There are so many configurations and there’s no way to reproduce them all.

- Build things. It’s the only way to put APIs to a test, and because each developer is unique, it’s the only way to try APIs in unique ways.

- Break things! It’s OK if things break! It either highlights that you didn’t understand something correctly, because it’s unclear or because you need to give another go to reading the specs, or maybe it’s that you found something that truly didn’t work correctly. Congratulations!

- File more bugs! You should not keep those extra bugs to yourself. Share them with implementors and browser vendors and help make the web even better!

For the Web to be ours, it needs everyone’s input.

Let’s build this together.

http://soledadpenades.com/2014/07/02/invest-in-the-future-build-for-the-web/

|

|

Hal Wine: 2014-06 try server update |

2014-06 try server update

Chatting with Aki the other day, I realized that word of all the wonderful improvements to the try server issue has not been publicized. A lot of folks have done a lot of work to make things better - here’s a brief summary of the good news.

- Before:

- Try server pushes could appear to take up to 4 hours, during which time others would be locked out.

- Now:

- The major time taker has been found and eliminated: ancestor processing. And we understand that the remaining occasional slowdowns are related to caching. Fortunately, there are some steps that developers can take now to minimize delays.

What folks can do to help

The biggest remaining slowdown is caused by rebuilding the cache. The cache is only invalidated if the push is interrupted. If you can avoid causing a disconnect until your push is complete, that helps everyone! So, please, no Ctrl-C during the push! The other changes should address the long wait times you used to see.

What has been done to infrastructure

There has long been a belief that many of our hg problems, especially on try, came from the fact that we had r/w NFS mounts of the repositories across multiple machines (both hgssh servers & hgweb servers). For various historical reasons, a large part of this was due to the way pushlog was implemented.

Ben did a lot of work to get sqlite off NFS, and much of the work to synchronize the repositories without NFS has been completed.

What has been done to our hooks

All along, folks have been discussing our try server performance issues with the hg developers. A key confusing issue was that we saw processes “hang” for VERY long times (45 min or more) without making a system call. Kendall managed to observe an hg process in such an infinite-looking-loop-that-eventually-terminated a few times. A stack trace would show it was looking up an hg ancestor without making system calls or library accesses. In discussions, this confused the hg team as they did not know of any reason that ancestor code should be invoked during a push.

Thanks to lots of debugging help from glandium one evening, we found and disabled a local hook that invoked the ancestor function on every commit to try. \o/ team work!

Caching – the remaining problem

With the ancestor-invoking-hook disabled, we still saw some longish periods of time where we couldn’t explain why pushes to try appeared hung. Granted it was a much shorter time, and always self corrected, but it was still puzzling.

A number of our old theories, such as “too many heads” were discounted by hg developers as both (a) we didn’t have that many heads, and (b) lots of heads shouldn’t be a significant issue – hg wants to support even more heads than we have on try.

Greg did a wonderful bit of sleuthing to find the impact of ^C during push. Our current belief is once the caching is fixed upstream, we’ll be in a pretty good spot. (Especially with the inclusion of some performance optimizations also possible with the new cache-fixed version.)

What is coming next

To take advantage of all the good stuff upstream Hg versions have, including the bug fixes we want, we’re going to be moving towards removing roadblocks to staying closer to the tip. Historically, we had some issues due to http header sizes and load balancers; ancient python or hg client versions; and similar. The client issues have been addressed, and a proper testing/staging environment is on the horizon.

There are a few competing priorities, so I’m not going to predict a completion date. But I’m positive the future is coming. I hope you have a glimpse into that as well.

http://dtor.com/halfire/2014/07/02/2014_06_try_server_update.html

|

|

Mike Hommey: Firefox and Gtk+ 3 |

Folks from Collabora and Red Hat have been working on making Firefox on Gtk+ 3 a thing. See Emilio’s blog post for a recent update. But getting Firefox to build and run locally is unfortunately not the whole story.

I’ve been working on getting Gtk+ 3 Firefox builds going on Mozilla build infrastructure, and I’m proud to announce today that those builds are now going through Mozilla continuous integration on a project branch: Elm, and receive the same automated testing as mozilla-central.

And when I said getting Firefox to build and run was unfortunately not the whole story, I meant it: if you click on the Elm link above, you’ll notice that there’s a lot of orange, when it should be all green.

So, yes, Firefox on Gtk+ 3 is a thing, and it now has continuous integration. But there’s still a whole bunch of things to fix. So if you’re interested in making those builds work better, you can hop in, there are many things you can do:

- check the Gtk+ 3 tracking bug and its dependencies for a list of known issues or improvements to be made.

- download one of the builds from the elm branch, test it, and file bugs if you find some that aren’t currently tracked. There aren’t nightlies, but you can get the latest builds for 32-bit and 64-bit systems.

- and if you have level 1 commit access, you can test patches on the Try server, provided you pull from the elm branch or apply this patch on top of the tree you push there.

|

|

James Long: Compiling JSX with Sweet.js using Readtables |

JSX is a Facebook project that embeds an XML-like language in JavaScript, and is typically used with React. Many people love it and find it highly useful. Unfortunately it requires its own compiler and doesn't mix with other language extensions. I have implemented a JSX "compiler" with sweet.js macros, so you can use it alongside any other language extensions implemented as macros.

I have a vision. A vision where somebody can add a feature as complicated as pattern matching to JavaScript, and all I have to do is install the module to use it. With how far-reaching JavaScript is today, I think this kind of language extensibility is important.

It's not just important for you or me to be able to have something like native goroutines or native syntax for persistent data structures. It's incredibly important that we use features in the wild that could potentially be part of a future ES spec, and become standardized in JavaScript itself. Future JavaScript will be better because of your feedback. We need a modular way to extend the language, one that works seamlessly with any number of extensions.

I'm not going to explain why sweet.js macros are the answer to this. If you'd like to hear more, watch my JSConf 2014 talk about it. If you are already writing a negative comment about this, please read this first.

So why did I implement JSX in sweet.js? If you use JSX, now you can use it alongside any other macros available with jsx-reader. Want native syntax for dealing with persistent data structures? Read on...

The Problem

JSX works like this: XML elements are expressions that are transformed to simple JavaScript objects.

var div = <div>

<h1>{ header }</h1>

</div>;

This is transformed into:

var div = React.DOM.div(null, React.DOM.h1(null, header));

JSX could not be implemented in sweet.js until this week's release. What made it work? Readtables.

I will briefly explain a few things about sweet.js for some technical context. Sweet.js mainly works with tokens, not an AST, which is the only way to get real composable language extensions *. The algorithm is heavily grounded in decades of work done by the Lisp and Scheme communities, particularly Racket.

The idea is that you work on a lightweight tree of tokens, and provide a language for defining macros that expand these tokens. Specialized pattern matching and automatic hygiene make it easy to do really complex expansion, and you get a lot for free like sourcemaps.

The general pipeline looks like this:

- read - Takes a string of code and produces a tree of tokens. It disambiguates regular expression syntax, divisions, and all kinds of other fun stuff. You get back a nice tree of tokens that represent atomic pieces of syntax. It's a tree because anything inside a delimiter (one of {}()[]) exists as children of the delimiter token.

- expand - Takes the token tree and walks through it, expanding any macros that it finds. It looks for macros by name and invokes them with the rest of the syntax. This phase also does quite a bit of light parsing, like figuring out if something is a valid expression.

- parse - Takes the final expanded tree and generates a real JavaScript AST. Currently sweet.js uses a patched version of esprima for this.

- generate - Generate the final JavaScript code from the AST. sweet.js uses escodegen for this.
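To illustrate what "a tree of tokens" means in the read step, here is a toy reader. It is a sketch for illustration only and is far simpler than the real sweet.js reader (no strings, regular expressions or comments); the function name `readTokens` and the token shapes are made up.

```javascript
// Toy "read" step: split source into identifiers, numbers and punctuation,
// and nest everything found between matching delimiters as children.
function readTokens(src) {
  var open = { '(': ')', '[': ']', '{': '}' };
  var close = { ')': true, ']': true, '}': true };
  var parts = src.match(/[A-Za-z_$][\w$]*|\d+|[^\s\w]/g) || [];
  var root = { type: 'root', children: [] };
  var stack = [root];
  parts.forEach(function (p) {
    var top = stack[stack.length - 1];
    if (open[p]) {
      // A delimiter token such as "()" owns the tokens inside it
      var node = { type: 'delimiter', value: p + open[p], children: [] };
      top.children.push(node);
      stack.push(node);
    } else if (close[p]) {
      if (stack.length === 1 || top.value[1] !== p) {
        throw new SyntaxError('Unbalanced ' + p);
      }
      stack.pop();
    } else {
      top.children.push({ type: 'token', value: p });
    }
  });
  if (stack.length !== 1) {
    throw new SyntaxError('Unclosed delimiter');
  }
  return root.children;
}
```

Reading `f(a, [1, 2])` with this gives two top-level tokens: `f` and a `()` delimiter whose children include a nested `[]` delimiter. A macro expander can then pattern match on that shape without needing a full AST.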

The expand phase essentially adds extensibility to the language parser. This is good for a whole lot of features. There are a few features, like types and modules, that require knowledge of the whole program, and those are better off with an AST somewhere in between the parse and generate phase. Currently we don't have extensibility there yet.

There are very rare features that require extensibility to the read phase, and JSX is one of them. Lisp has had something called readtables for a long time, and I recently realized that we needed something like that in sweet.js in order to support JSX. So I implemented it!

There are a few reasons JSX needs to work as a reader and not a macro:

- Most importantly, the closing tag (such as </div>) is completely invalid JavaScript syntax. The default reader thinks it's starting a regular expression but it can't find the end of it, so it just errors in the read phase.

- JSX has very specific rules about whitespace handling. Whitespace inside elements needs to be preserved, and a space is added between sibling expressions, for example. Macros don't know anything about whitespace.

Reader extensions allow you to install a custom reader that is invoked when a specific character is encountered in the source. You can read as much of the source as you need, and return a list of tokens. Reader extensions can only be invoked on punctuators (symbols like < and #), so you can't do awful things like change how quotes are handled.

A readtable is a mapping of characters to reader extensions. Read more about how this works in the docs.
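Here is a toy sketch of the readtable idea: a plain object maps a punctuator to a function that either consumes some source and returns a token, or declines. This is not the sweet.js readtable API; `readWithTable`, the token shapes and the `#[...]` extension are all hypothetical.

```javascript
// Walk the source one character at a time. If the readtable has an
// extension for the current character, let it try first; if it returns
// null (it "bails"), fall back to emitting the plain character.
function readWithTable(src, readtable) {
  var tokens = [];
  var i = 0;
  while (i < src.length) {
    var ch = src[i];
    var ext = readtable[ch];
    var result = ext ? ext(src, i) : null;
    if (result) {
      tokens.push(result.token);
      i = result.next;
    } else {
      tokens.push({ type: 'char', value: ch });
      i += 1;
    }
  }
  return tokens;
}

// Hypothetical extension: read "#[...]" as a single vector-literal token.
var table = {
  '#': function (src, i) {
    if (src[i + 1] !== '[') {
      return null; // not our syntax, let the default reader handle "#"
    }
    var end = src.indexOf(']', i);
    if (end === -1) {
      return null;
    }
    return {
      token: { type: 'vector', value: src.slice(i + 2, end) },
      next: end + 1
    };
  }
};
```

The key property is that an extension can always bail, so source that merely contains the trigger character keeps being read the default way.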

Available on npm: jsx-reader

Now that we have readtables, we can implement JSX! I have done exactly that with jsx-reader. It is a literal port of the JSX compiler, with all the whitespace rules and other edge cases kept intact (hopefully).

To load a reader extension, pass the module name to the sweet.js compiler sjs with the -l flag. Here are all the steps you need to expand a file with JSX:

$ npm install sweet.js

$ npm install jsx-reader

$ sjs -l jsx-reader file.js

Of course, you can load any other macro with sjs as well and use it within your file. That's the beauty of composable language extensions. (Try out es6-macros.)

I have also created an up-to-date webpack loader and gulp loader that support readtable loading.

This is beta software. I have tested it across the small test cases that the original JSX compiler includes, in addition to many large files, and it works well. However, there are likely small bugs and edge cases which need to be fixed as people discover them.

Not only do you get reliable sourcemaps (pass -c to sjs), but you also get better error messages than the original JSX compiler. For example, if you forget to close a tag:

var div = <div>

<h1>Title</h1>

<p>

</div>

You get a nice, clean error message that directly points to the problem in your code:

SyntaxError: [JSX] Expected corresponding closing tag for p

4:

One downside is that this will be slower than the original JSX compiler. A large file with 2000 lines of code will take ~.7s to compile (excluding warmup time, since you'll be using a watcher in almost all projects), while the original compiler takes ~.4s. In reality, it's barely noticeable, since most files are a lot smaller and things compile in a matter of a couple hundred milliseconds most of the time. Also, sweet.js will only get more optimized with time.

Example: Persistent Data Structures

React works even better when using persistent data structures. JavaScript doesn't have them natively, but luckily libraries like mori are available. The catch is that you can't use array and object literals anymore; you have to do things like mori.vector(1,2,3) instead of [1,2,3].

What if we implement literal syntax for mori data structures? Potentially you could use #[1, 2, 3] to create persistent vectors, and #{x: 1, y: 2} to create persistent maps. That would be awesome! (Unfortunately, I haven't actually done this yet, but I want to.)

Now everyone using JSX will be able to use my literal syntax for persistent data structures with React. That is truly a powerful toolkit.

The JSX Read Algorithm

Adding new syntax to JavaScript, especially a feature with a large surface area like JSX, must be done with great care. It has to be 100% backwards-compatible, and done with future ES.next features in mind.

Both jsx-reader and the original JSX compiler look for the < token and trigger a JSX expression parse. There's a key difference though. You may have noticed that jsx-reader, as a reader, is invoked from the raw source and has no context. The original JSX compiler monkeypatches esprima to invoke < only when parsing an expression, so it is easier to guarantee correct parsing. < is never valid in expression position in JavaScript, so it can get away with it.

jsx-reader is invoked on < whenever it occurs in the source, even if it's a comparison operator. That sounds scary, and it is; we need to be very careful. But I have figured out a read algorithm that works. You don't always need a full AST.

jsx-reader begins parsing < and anything after it as a JSX expression, and if it finds something unexpected at certain points, it bails. For the most part, it's able to figure out extremely early whether or not < is really a JSX expression or not. Here's the algorithm:

1. Read <

2. Read an identifier. If that fails, bail.

3. If a > is not next:

   1. Skip whitespace

   2. Read an identifier. If that fails, bail.

   3. If a > is next, go to 4.1

   4. Read =

   5. If a { is next, read a JS expression similar to 4.3.1

   6. If a < is next, go to 1

   7. Otherwise, read a string literal

   8. If a > is next, go to 4.1

   9. Otherwise, go to 3.1

4. Otherwise:

   1. Read >

   2. Read any raw text up until { or <

   3. If a { is next:

      1. Read {

      2. Read all JS tokens up until }

      3. Read }

      4. Go to 4.2

   4. If a < is next:

      1. If a < and / is next, go to 5

      2. Otherwise, go to 1

   5. If at end of file, bail.

5. Read <

6. Attempt to read a regular expression. If that succeeds, bail.

7. Read /

8. Read an identifier. Make sure it matches opening identifier.

9. Read >

I typed that out pretty fast, and there's probably a better way to format it, but you get the idea. The gist is that it's easy to disambiguate the common cases, but all the edge cases should work too. The edge cases aren't very performant, since our reader could do a bunch of work and then trash it, but that should never happen in 99.9% of code.

In our algorithm, if we say read without a corresponding "if it fails, bail", it throws an error. We can still give the user good errors while disambiguating the edge cases of JavaScript.

Here are a few cases where our reader bails:

- if(x < y) {} - it bails because it looks for an attribute after y, and ) is not a valid identifier character

- if(x < y > z) {} - it bails because it reads all the way to the end of the file and doesn't find a closing block. This only happens with top-level elements, and is the worst case performance-wise, but x < y > z doesn't do what you think it does and nobody ever does that.

- if(x < div > y < /foo>/) {} - this is the most complicated case, and is completely valid JavaScript. It bails because it reads a valid regex at the end.
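To make the last two cases concrete, here is a small plain-JavaScript check (no JSX or sweet.js involved). The variable values are made up for illustration; the point is only that both expressions are legal JavaScript and that chained comparisons evaluate left to right.

```javascript
var x = 1, y = 5, z = 3, div = 2;

// Parsed as (x < y) > z. The first comparison yields true, which is
// coerced to 1, so the second comparison is really 1 > 3.
var chained = x < y > z;

// What people usually mean when they write a chained comparison.
var intended = x < y && y > z;

// Parsed as ((x < div) > y) < /foo>/. The trailing /foo>/ is a regular
// expression literal; it coerces to NaN, so the comparison is false.
var withRegex = x < div > y < /foo>/;

console.log(chained, intended, withRegex);
```

Both expressions run without a syntax error, which is why the reader has to bail on them instead of reporting a JSX error.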

We take advantage of the fact that expressions like x < y foo don't make sense in JavaScript. Here, it looks for either a = to parse an attribute or a > to close the element, and errors if it doesn't find it.
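The early part of the algorithm can be sketched roughly as follows. This is not the jsx-reader source: the function names (skipWhitespace, readIdentifier, classifyLessThan) are hypothetical, the identifier pattern is simplified to ASCII, and I assume whitespace is skipped before each identifier. It only covers steps 1 through 3.4, enough to show where the reader bails and where it throws.

```javascript
// Advance past any whitespace starting at pos.
function skipWhitespace(src, pos) {
  while (pos < src.length && /\s/.test(src[pos])) pos++;
  return pos;
}

// Return the identifier starting at pos, or null if there is none.
// Simplified: ASCII letters, digits, _ and $ only.
function readIdentifier(src, pos) {
  var match = /^[A-Za-z_$][A-Za-z0-9_$]*/.exec(src.slice(pos));
  return match ? match[0] : null;
}

// Classify the < at pos:
//   'bail'      - treat it as an ordinary operator
//   'maybe-jsx' - looks like an opening tag so far; later steps may still bail
// Throws when a tag name and attribute name were read but neither = nor > follows.
function classifyLessThan(src, pos) {
  if (src[pos] !== '<') throw new Error('expected <');
  pos = skipWhitespace(src, pos + 1);

  var tag = readIdentifier(src, pos);
  if (tag === null) return 'bail';            // step 2
  pos = skipWhitespace(src, pos + tag.length);

  if (src[pos] === '>') return 'maybe-jsx';   // step 3 is skipped, on to step 4

  var attr = readIdentifier(src, pos);
  if (attr === null) return 'bail';           // step 3.2
  pos = skipWhitespace(src, pos + attr.length);

  if (src[pos] === '>' || src[pos] === '=') return 'maybe-jsx'; // steps 3.3 and 3.4
  throw new SyntaxError('[JSX] Expected = or > after attribute ' + attr);
}

console.log(classifyLessThan('if(x < y) {}', 5));
console.log(classifyLessThan('<a href="x">', 0));
```

Note that if(x < y > z) {} is classified as 'maybe-jsx' here; in the full algorithm it only bails later, when the reader hits the end of the file without finding a closing tag.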

Are You Concerned?

Macros evoke mixed feelings in some people, and many are hesitant to think they are a good thing to use. You may think this, and argue that things like readtables are signs that we have gone off the deep end.

I ask that you think hard about sweet.js. Give it 5 minutes. Maybe give it a couple of hours. Play around with it: set up a gulp watcher, install some macros from npm, and use it. Don't push back against it unless you actually understand the problem we are trying to solve. Many arguments that people give don't make sense (but some of them do!).

Regardless, even if you think this isn't the right approach, it's certainly a valid one. One of the most troubling things about the software industry to me is how vicious we can be to one another, so please be constructive.

Give jsx-reader a try today, and please file bugs if you find any!

http://jlongster.com/Compiling-JSX-with-Sweet.js-using-Readtables

|

|

Armen Zambrano: Down Memory Lane |

Here's an excerpt:

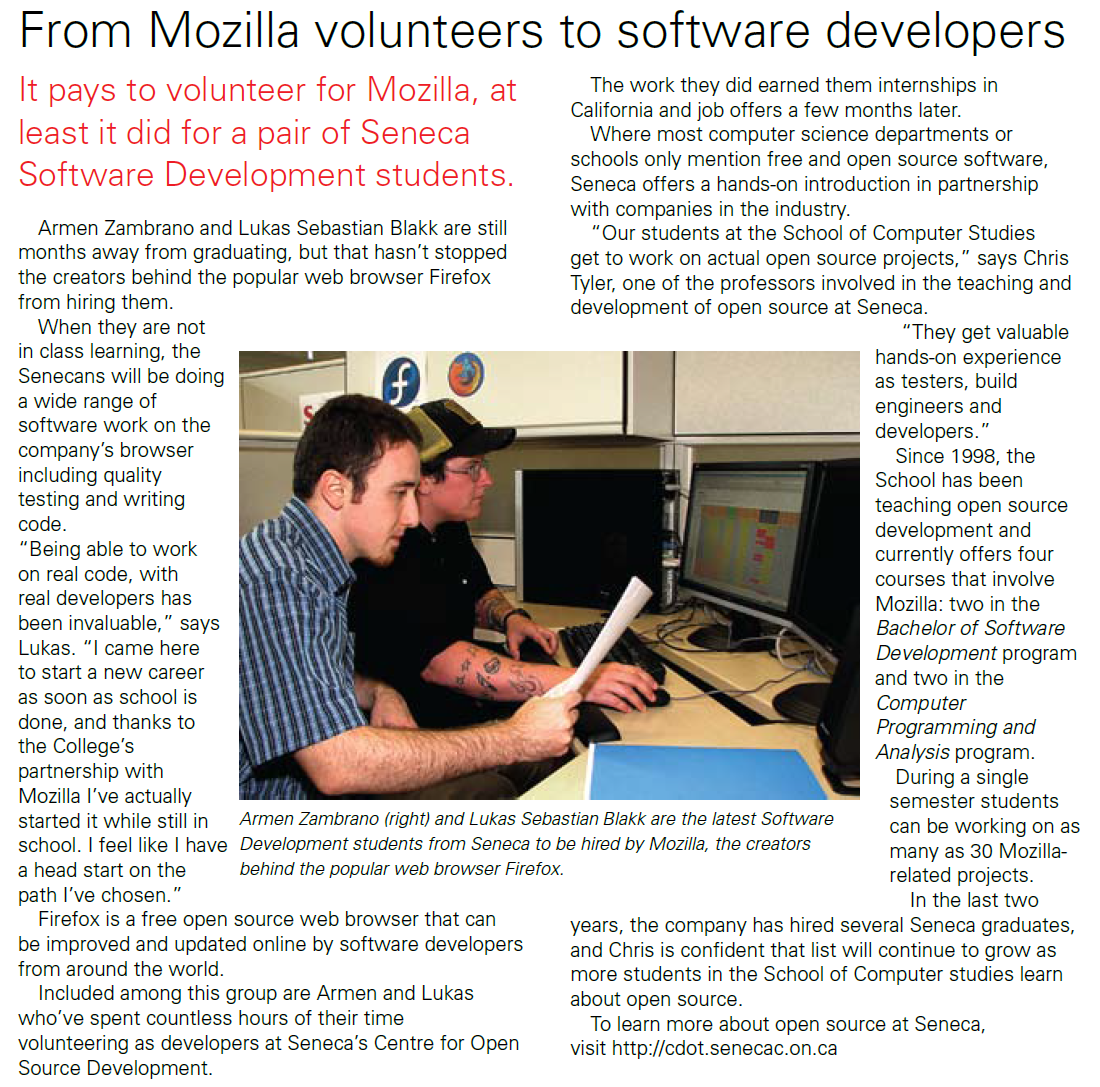

From Mozilla volunteers to software developers

It pays to volunteer for Mozilla, at least it did for a pair of Seneca Software Development students.

Armen Zambrano and Lukas Sebastian Blakk are still months away from graduating, but that hasn't stopped the creators behind the popular web browser Firefox from hiring them.

When they are not in class learning, the Senecans will be doing a wide range of software work on the company’s browser including quality testing and writing code. “Being able to work on real code, with real developers has been invaluable,” says Lukas. “I came here to start a new career as soon as school is done, and thanks to the College’s partnership with Mozilla I've actually started it while still in school. I feel like I have a head start on the path I've chosen.”

Firefox is a free open source web browser that can...

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/14H1yP-5BVg/down-memory-lane.html

|

|

Christian Heilmann: Google IOU – where was the web? |

I’ve always been a fan of Google IO. It is a huge event, full of great announcements. Google goes all in organising a great show and it is tricky to get tickets. You always walked home with the newest gadgets and were the first to learn about new products coming out. The first years I got invites as an expert. This year I got a VIP ticket which meant I paid for it but didn’t have to wait or be part of a lottery or find an easter egg or whatever else people had to do. I was off to the races and excited to go.

IO, a kick-ass mobile and web show

I liked the two day keynote format of the last years: on the first day you learned all about Android and new phones and tablets and on the second it was all about Chrome and Google Web Services like Google+. This wasn’t the case this year; the second day keynote didn’t happen. Sadly enough the content format seems to have stayed the same.

I liked Google IO as it meant lots of great announcements for the web. As someone working for a browser maker I had a sense of dread each year to see what amazing things Chrome would get and what web based product would draw more people to using Chrome as their main browser. Google did well getting the hearts and minds of the web (and web developer) community.

This is good: competition keeps us strong and the Chrome team has always been fair announcing standards support in their browser as a shared effort between browser makers instead of pretending to have invented it all. The Chrome Summit last year was a great example how that can work. This hasn’t changed. I have quite a few friends in the Chrome team and can rely on them moving the web forward for all instead of building bespoke APIs.

Google, a web company

Google is a company that grew on the web. Google is a company that innovated with simplicity where others overwhelmed their users. We used their search engine because it was a simple search box, not a search box in a huge web site full of news, weather, chat systems, sports news and all kind of other media provided by partners. We used GMail because it made us independent of the computer we read our mails on and it had amazingly simple keyboard shortcuts. Google understood the web and its needs – where others only allowed you to make money by plastering huge banners all over your blog, Google was OK with simple text links.

With this in mind I was super happy to go and get my fix of Google IO for this year.

Where is the web?

Suffice to say, in this respect I was disappointed by this year’s Google IO. Not the whole event, but the keynote. My main worry is that there was hardly any mention of the web. It was all about the redesign of Android. It was about wearables Google doesn’t create or have much of a say in. It was about “introducing” Android TV (which has been done before and then scrapped it seems). It was about Android Auto. It was about Android’s new design and the ideas behind it. Mentions of Chrome were scarce and misguided.

What do I mean by that? I was sure that Google would announce HTML5 Chrome Apps to run on Android and be available in the Play store. This would’ve been a huge win for the web and HTML5 developers. Instead we got an announcement that Android Apps will now run on Chromebooks. There was no detailed technical explanation how that would happen but I learned later that this means the Android Apps run in Native Client. This is as backwards as it can get from a web perspective. Chromebooks were meant to make the web the platform to work on and HTML5 as the technology. Heck, Firefox on Android creates dynamic APKs from Open Web Apps. I’d have expected Google to pull the same trick.

There was no announcement about Chromebooks either (other than them being the best-seller on Amazon for laptops) and when you followed Google+ in the last months, there were some massive advancements in their APIs and standards support.

The other mention was that the new look and feel of Android will also be implemented for the web using Polymer.

Android’s new face looks beautiful and I love the concept of drop shadows and lighting effects being handled by the OS for you. It felt like Google finally made a stand about their design guidelines. It also felt very much like Google tries to beat Apple in their own game. The copious use of “delightful experiences” in every mention of the new “material design” refresh became tiring really fast.

Chrome and the web didn’t get any love in the IO keynote. The promise that the new design is implemented “running at 60FPS” using Polymer in Chrome was an aside and can’t have been a simple feat. It also must have meant a lot of great work of the Android team together with the Polymer and the Chrome team. Lots of good stories and learning could have been shown and explained. Other work by the Chrome and Polymer team that must have been a lot of effort like the search result page integration of app links or the Chromebook demos were just side notes; easy to miss. Most of the focus was on what messages you could get on your watch or that there might be an amazing feature like in-car navigation coming soon later this year if you can afford a brand new car.

Chromecast was mentioned to get a few updates including working without sharing a wireless network. This could be huge, but again this was mostly about sending streaming content from Google Play to a TV, not about the web.

Google+ apparently doesn’t exist and the fact that Hangouts no longer needs a plugin and uses WebRTC instead wasn’t noteworthy. Google Glass was also suspiciously missing from the keynote.

Props to the Chrome Devrel team

I got my web fix from the Google Devrel team and their talks. That is, when I arrived early enough and didn’t get stuck in a queue outside the room as the speaker beforehand went 10 minutes over.

I have lots of respect for the team and a few of the talks are really worth watching:

- Paul Bakaus – Developing across Devices – DevTools in 2014: Paul did a great job showing the newest and upcoming features in DevTools. I especially liked the feature allowing you to simulate different connection speeds on different tabs.

- Pierre Far and Ilya Grigorik – HTTPS Everywhere: a nice reminder that encryption of data traffic is essential and HTTP/2 is around the corner.

- Alex Russell and Jake Archibald – Bridging the gap between the web and apps: a very entertaining and interesting talk about ServiceWorker in Chrome and Firefox. We need this, go and have a look.

- Paul Lewis – Mobile Web performance auditing: insights into how Paul reviews web products in terms of performance. Paul is a super thorough guy, and delivers very no-nonsense content.

- Lara Swanson and Paul Lewis – Perf culture: Paul is joined by Lara from Etsy to explain how you can make your company understand the importance of performance.