Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

David Rajchenbach Teller: Q2 2014 Report

Q2 2014 was a difficult quarter at Mozilla, with all the agitation around Brendan Eich, Australis, Media Extensions, etc. Still, I have the feeling that we managed to get a lot done despite the intense pressure. Here is a quick highlight of my main accomplishments for Q2 2014.

Session Restore

A considerable amount of my time was spent working on Session Restore. The main objective is to decrease the jank caused by Session Restore taking snapshots of the session and to decrease the time Session Restore takes to restore the state of Firefox. Much of the activity this quarter dealt with measuring performance, so as to best optimize it, and with improving safety.

Reworking Session Restore backups

With Firefox 33, the backups of Session Restore state have been completely redesigned. The new system should prove orders of magnitude safer, in addition to now being fully transparent.

Next steps: We are still lacking measurements to confirm that this is as successful as the mathematics suggest. If you are interested, there is a mentored bug open.

Talos tests and Telemetry on Session Restore startup

Optimizing startup is difficult, and generally impossible if you do not know what to optimize. With Firefox 32 and 33, we have new benchmarks and real world measurements to help us determine immediately the influence of patches on Session Restore startup.

Next steps: Using these benchmarks to experiment with possible optimizations. This is in progress.

Cleaning up Session Restore file

One of our objectives is to decrease the size of the Session Restore file, to reduce the amount of I/O (hence battery use and hardware wear and tear) and memory usage. As a first step, we have introduced a mechanism that progressively removes from the “Undo Close” feature tabs and windows that were closed at least 2 weeks ago. Interestingly, Telemetry indicates that this clean-up has no effect on the size of the Session Restore file. Experiments run later during the quarter, using the Talos tests, also strongly suggest that the data that we could clean up but do not clean up yet has essentially no influence on startup duration.
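The age-based clean-up described above can be illustrated with a minimal Python sketch. It is not the Session Restore code: the `closed_at` field and the `prune_closed` helper are hypothetical names, and the sketch drops all stale entries in one pass rather than progressively.

```python
from datetime import datetime, timedelta

# Entries closed at least this long ago are dropped (the 2 weeks mentioned above).
MAX_AGE = timedelta(weeks=2)


def prune_closed(entries, now):
    """Return only the entries closed less than MAX_AGE before `now`.

    `entries` is a list of dicts with a hypothetical "closed_at" datetime field.
    """
    return [entry for entry in entries if now - entry["closed_at"] < MAX_AGE]


now = datetime(2014, 7, 1)
closed_tabs = [
    {"title": "recent tab", "closed_at": now - timedelta(days=3)},
    {"title": "old tab", "closed_at": now - timedelta(days=20)},
]
kept = prune_closed(closed_tabs, now)
print([entry["title"] for entry in kept])
```

Passing `now` explicitly instead of reading the clock inside the function keeps the sketch easy to test.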

Next steps: I believe that this strategy will therefore not be pursued during the next quarters.

Preserving compatibility with Tor Browser

While refactoring Session Restore, we have hit a number of obstacles in the form of add-ons using private or semi-private APIs that we wished to remove. We have managed to work along with add-on authors and, as far as I know, we have not broken any add-on yet. In particular, we have maintained compatibility with the Tor Browser, which is a heavily customized distribution of Firefox targeted towards privacy.

Next steps: Providing a clean API for add-ons. This will require discussing with add-on authors to find out what they need.

Async tooling

I am in charge of the Async Project, which is all about giving front-end and add-on developers tools to develop asynchronous code that does not jank. As usual, this involved plenty of activity in a number of different directions.

Auto-closing Sqlite.jsm databases (mentoring Michael Brennan)

Sqlite.jsm databases can now be closed automatically during garbage-collection. On users’ computers, this will increase safety, as failing to close a database causes shutdown-time assertion failures. However, to use resources effectively, pragmatism dictates that databases should be closed manually, so failing to close a database in the Mozilla codebase will still cause test failures.

Reworking OS.File shutdown

On devices with little memory (typically Firefox Phones), one of the techniques used to save memory is to shut down the OS.File worker as early as possible, re-launching it later if necessary. As it turns out, the task is more complicated than it seems, due to possibilities of race conditions. Unfortunately, this meant that in some extreme cases, Firefox OS applications could lock up and fail to shut down properly without being killed by the OS. This is now fixed. Somewhere along the way, this helped us to make the PromiseWorker used by OS.File more resilient to low-level errors.

Next steps: Making the PromiseWorker usable by modules other than OS.File, including testing and add-ons.

OS.File for Android and Firefox OS

OS.File was initially designed for desktop devices. Now that it is used in a number of places on mobile devices, I have mercilessly hunted down all compatibility issues between OS.File and our two mobile platforms. Compatibility tests are now activated on all platforms and should avoid any regression.

AsyncShutdown Barrier mechanism

The shutdown process of Firefox has always been a dark and scary place, full of unspecified dependencies. As a result, any refactoring or addition of a new dependency could break many things in new and interesting ways. I have introduced the AsyncShutdown Barrier mechanism that lets us specify clear, explicit and extensible dependencies, handles ordering of shutdowns, as well as error reporting if a dependency is unmet. This Barrier is now used by Sqlite.jsm, OS.File, Firefox Health Report, Session Restore and Page Thumbnails, and fixes a number of major issues.
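To give a rough idea of what a shutdown barrier does, here is a minimal Python sketch built on asyncio. It is not the AsyncShutdown API: the `Barrier` class, its `add_blocker` and `wait` methods, and the blocker names are all hypothetical, and the sketch only covers waiting for registered dependencies and reporting the ones that are still unmet after a timeout, not the ordering of shutdown phases.

```python
import asyncio


class Barrier:
    """Hypothetical shutdown barrier: waits for every registered blocker."""

    def __init__(self, name):
        self.name = name
        self._blockers = {}  # blocker name -> asyncio.Task

    def add_blocker(self, name, coroutine):
        """Register work that must finish before this phase may complete.

        Must be called while an event loop is running.
        """
        self._blockers[name] = asyncio.create_task(coroutine)

    async def wait(self, timeout):
        """Wait for all blockers; return the names of those still pending."""
        if not self._blockers:
            return []
        tasks = list(self._blockers.values())
        _, pending = await asyncio.wait(tasks, timeout=timeout)
        return sorted(
            name for name, task in self._blockers.items() if task in pending
        )


async def main():
    barrier = Barrier("example-phase")
    # Two illustrative dependencies: one completes quickly, one does not.
    barrier.add_blocker("fast: flush database", asyncio.sleep(0.01))
    barrier.add_blocker("slow: write thumbnails", asyncio.sleep(10))
    return await barrier.wait(timeout=0.2)


unmet = asyncio.run(main())
print("Unmet dependencies:", unmet)
```

Naming each blocker is what makes the error report useful: when shutdown stalls, the barrier can say which dependency was not met instead of simply hanging.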

Next steps: Porting AsyncShutdown Barrier to allow native components to register with it.

Fixing Firefox 30 shutdown freezes (with Tim Taubert)

Many users of Firefox 30 encountered issues that caused Firefox to freeze during shutdown. We found out that the issue was triggered by Page Thumbnails and caused by a bug in ChromeWorkers, which did not handle an error case gracefully. I applied AsyncShutdown Barrier to ensure that Page Thumbnails always completed without triggering the error case, while Tim Taubert ensured that ChromeWorkers handled the error robustly.

Making Firefox Health Report shutdown more robust

While porting Firefox Health Report to AsyncShutdown, we encountered an elusive bug that manifested itself by causing rare shutdown crashes. After months of experimenting, instrumenting and attempting to fix the issue, we eventually traced it back to a more serious bug in shutdown, which apparently does not always send the proper notifications. Using the AsyncShutdown Barrier, we managed to work around the issue and make FHR’s shutdown both more robust and better instrumented in case of crash. This later helped us locate another issue that prevents a proper shutdown when some databases have been corrupted.

Next steps: Fix the upstream shutdown bug, make our shutdown more resilient in case of database corruption.

Async testing

The other aspect of writing asynchronous code is making sure that developers can debug it. Now that we have hit a critical mass of developers writing async code, it was high time to help them work with it.

Rewriting Task stack traces to be meaningful

Now that we know how to handle uncaught errors, the main remaining weakness of Promise-based and Task-based code is that their stack traces lose much information. Since Firefox 33, Task-based stack traces are transparently rewritten into something developer-readable. Somewhere along the way, I have also patched xpcshell and mochitests to ensure that they take advantage of this rewriting. Experience shows that this is very useful and that the runtime cost is negligible.

Next steps: Evaluate the runtime cost of doing the same thing for Promise-based code.

Making xpcshell tests fail in case of uncaught promise error

Uncaught promise errors were treated by the test suites as warnings, TBPL did not report them, and consequently they were more often than not ignored (or even unseen) by developers. I have reworked the xpcshell test harness to consider all uncaught promise errors as oranges and fixed all offenders.

Next steps: Doing the same for mochitests. Code is ready, but a few offenders remain.

Community

Dealing with political feedback around the nomination and departure of Brendan Eich

Along with many others, I did my best to engage people who voiced negative feedback at either the nomination or the departure of Brendan Eich. Unfortunately, this took time and effort, but I believe that staying in touch with our users is part of what makes the difference between Mozilla and other browser vendors.

Working with new contributors

I estimate that I have worked with ~30 potential new contributors during the quarter. Many have unfortunately decided to postpone or abandon their efforts towards contributing, but a few have stayed, to work either with me or with other teams. At the moment, I am following 5 promising contributors. In particular, I am quite happy to welcome Dexter (who is working on a very sophisticated patch to let code watch for file modifications) and Kushagra (who has landed several test suite bugs).

Next steps: More of it!

Working with universities

A group from École Centrale de Lyon successfully completed an online tool to help grassroots projects find volunteers. It was nice mentoring them.

Zedge

I was invited to deliver a presentation on performance at Zedge, in Trondheim, Norway. That was fun :)

Next steps: Publish the slides.

And now?

Let’s get started with Q3!

http://dutherenverseauborddelatable.wordpress.com/2014/07/01/q2-2014-report/

Byron Jones: happy bmo push day!

the following changes have been pushed to bugzilla.mozilla.org:

- [1029991] “bug_comments” hook should run after comment tags are pre-loaded

- [1023865] New product (Tree Management) & components for Treeherder

- [669535] User pref for “Possible Duplicates”

- [1030617] editing a product’s groups clears the suggested reviewer list

- [1030747] Recent changes to secure bugmail subject lines break GMail threading

- [1028027] cloning a bug pre-fills mentors with “—” instead of an empty value

- [1031428] Move the “Mentors” field under “QA Contact” field

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/07/01/happy-bmo-push-day-101/

Gregory Szorc: Update Bugzilla Automatically on Push

Do you manually create Bugzilla comments when you push changes to a Firefox source repository? Yeah, I do too.

That's always annoyed me.

It is screaming to be automated.

So I automated it.

You can too. From a Firefox source checkout:

$ ./mach mercurial-setup

That should clone the version-control-tools repository into ~/.mozbuild/version-control-tools.

Then, add the following to your ~/.hgrc file:

[extensions]
bzpost = ~/.mozbuild/version-control-tools/hgext/bzpost

[bugzilla]
username = me@example.com
password = password

Now, when you hg push to a Firefox repository, the commit URLs will get posted to referenced bugs automatically.

Please note that pushing to release trees such as mozilla-central is not yet supported. In due time.

Please let me know if you run into any issues.

Estimated Cost Savings

Assuming the following:

- It costs Mozilla $200,000 per year per full-time engineer working on Firefox (a general rule of thumb for non-senior positions is that your true employee cost is 2x your base salary).

- Each full-time engineer works 40 hours per week for 46 weeks out of the year.

- It takes 15 seconds to manually update Bugzilla for each push.

- There are 20,000 pushes requiring Bugzilla attention per year.

We arrive at the following:

- Cost per employee per hour worked: $108.70

- Total person-time to manually update Bugzilla: ~83 hours

- Total cost to manually update Bugzilla after push: $9,058.
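The arithmetic behind these figures can be reproduced with a short Python sketch. The inputs are the assumptions listed above; the variable and function names are only illustrative.

```python
# Inputs taken from the assumptions listed above.
ANNUAL_COST_PER_ENGINEER = 200_000  # dollars per year
HOURS_PER_WEEK = 40
WEEKS_PER_YEAR = 46
SECONDS_PER_PUSH = 15
PUSHES_PER_YEAR = 20_000


def hourly_cost(annual_cost, hours_per_week, weeks_per_year):
    """Cost of one engineer-hour in dollars."""
    return annual_cost / (hours_per_week * weeks_per_year)


def hours_spent(seconds_per_push, pushes_per_year):
    """Total person-hours spent updating Bugzilla by hand each year."""
    return seconds_per_push * pushes_per_year / 3600


cost_per_hour = hourly_cost(ANNUAL_COST_PER_ENGINEER, HOURS_PER_WEEK, WEEKS_PER_YEAR)
total_hours = hours_spent(SECONDS_PER_PUSH, PUSHES_PER_YEAR)
total_cost = cost_per_hour * total_hours

print(f"Cost per hour: ${cost_per_hour:.2f}")
print(f"Hours per year: {total_hours:.1f}")
print(f"Total cost: ${total_cost:,.0f}")
```

Changing any of the constants at the top is an easy way to run the same estimate for another task.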

I was intentionally conservative with all the inputs except time worked (I think many of us work more than 40 hour weeks). My estimates also don't take into account the lost productivity associated with getting mentally derailed by interacting with Bugzilla. With this in mind, I could very easily justify a total cost at least 2x-3x higher.

It took me maybe 3 hours to crank this out. I could spend another few weeks on it full time and Mozilla would still save money (assuming 100% adoption).

I encourage people to run their own cost calculations on other tasks that can be automated. Inefficiencies multiplied by millions of dollars (your collective employee cost) result in large piles of money. Not having tools (even simple ones like this) is equivalent to setting loads of cash on fire.

http://gregoryszorc.com/blog/2014/06/30/update-bugzilla-automatically-on-push

Pascal Chevrel: My Q2-2014 report

Australis release

At the end of April, we shipped Firefox 29 which was our first major redesign of the Firefox user interface since Firefox 4 (released in 2011). The code name for that was Australis and that meant replacing a lot of content on mozilla.org to introduce this new UI and the new features that go with it. That also meant that we were able to delete a lot of old content that had become really obsolete or that was now duplicated on our support site.

Since this was a major UI change, we decided to show an interactive tour of the new UI to both new users and existing users upgrading to the new version. That tour was fully localized in a few weeks' time in close to 70 languages, which represents 97.5% of our user base. For the last locales not ready on time, we either decided to show them a partially translated site (some locales had translated almost everything or some of the non-translated strings were not very visible to most users, such as alternative content to images for screen readers) or to let the page fall back to the best language available (like Occitan falling back to French for example).

Mozilla.org was also updated with 6 new product pages replacing a lot of old content as well as updates to several existing pages. The whole site was fully ready for the launch with 60 languages 100% ready and 20 partially ready, all that done in a bit less than 4 weeks, parallel to the webdev integration work.

I am happy to say that thanks to our webdev team, our amazing l10n community and with the help of my colleague Francesco Lodolo (also Italian localizer) and my intern Théo Chevalier (also French localizer), we were able not only to offer a great upgrading experience for nearly all of our user base, but also to clean up a lot of old content, fix many bugs and prepare the site from an l10n perspective for the upcoming releases of our products.

Today, for a big locale spanning all of our products and activities, mozilla.org is about 2,000 strings to translate and maintain (+500 since Q1); for a smaller locale, this is about 800 strings (+200 since Q1). This quarter was a significant bump in terms of strings added across all locales, but this was closely related to the Australis launch; we shouldn't have such a rise in strings impacting all locales in the next quarters.

Transvision releases

Last quarter we did 2 releases of Transvision with several features targeting our 3 audiences: localizers, localization tools, and current and potential Transvision developers.

For our localizers, I worked on a couple of features. One is quick filtering of search results per component for Desktop repositories (you search for 'home' and with one click, you can filter the results for the browser, for mail or for calendar for example). The other one is providing search suggestions with the best similar matches when your search yields no results ("your search for 'lookmark' yielded no result, maybe you were searching for 'Bookmark'?").

For the localization tools community (software or web apps like Pontoon, Mozilla translator, Babelzilla, OmegaT plugins...), I entirely rewrote our old JSON API and extended it to provide more services. Our old API was initially created for our own purposes and basically just gave the possibility to get our search results as a JSON feed on our most popular views. Tools started using it a couple of years ago and we also got requests for API changes from those tool makers, therefore it was time to rewrite it entirely to make it scalable. Since we don't want to break anybody's workflow, we now redirect all the old API calls to the new API ones. One of the significant new services in the API is a translation memory query that gives you results and a quality index based on the Levenshtein distance with the searched terms. You can get more information on the new API in our documentation.
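As an illustration of how a quality index can be derived from the Levenshtein distance, here is a small Python sketch. The formula in `quality` is an assumption made for the example (distance normalized by the longer string); the exact index computed by the Transvision API is not described here, and both function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions or
    substitutions needed to turn `a` into `b`."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            substitution = previous[j - 1] + (char_a != char_b)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1]


def quality(query: str, candidate: str) -> float:
    """Hypothetical quality index in [0, 100]; 100 means identical strings."""
    longest = max(len(query), len(candidate))
    if longest == 0:
        return 100.0
    return 100.0 * (1 - levenshtein(query, candidate) / longest)


print(levenshtein("lookmark", "Bookmark"))
print(quality("lookmark", "Bookmark"))
```

The same distance can also rank candidates for search suggestions, since a small distance between a mistyped query and an existing string points to a likely intended match.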

I also worked on improving our internal workflow and making it easier for potential developers wanting to hack on Transvision to install and run it locally. That means that we now do continuous integration with Travis CI (all of our unit tests are run on each commit and pull request on PHP 5.4 and 5.5 environments), we have made a lot of improvements to our unit test suite and coverage, we expose to developers peak memory usage and time per request on all views so as to catch performance problems early, and we also now have a "dev" mode that allows getting Transvision installed and running on the PHP development server in a matter of minutes instead of hours for a real production mode. One of the blockers for new developers was the time required to install Transvision locally. Since it is a spidering tool looking for localized strings in Mozilla source repositories, it needed to first clone all the repositories it indexes (mercurial/git/svn) which is about 20GB of data and takes hours even with a fast connection. We are now providing a snapshot of the final extracted data (still 400MB ;)) every 6 hours that is used by the dev install mode.

Check the release notes for 3.3 and 3.4 to see what other features were added by the team (e.g., on-demand TMX generation or the dynamic Gaia comparison view added by Théo, my intern).

Web dashboard / Langchecker

The main improvement I brought to the web dashboard this quarter is probably the deadline field added to all of our .lang files, which allows us to better communicate the urgency of projects and gives localizers an extra parameter for prioritizing their work.

Théo's first project for his internship was to build a 'project' view on the web dashboard that we can use to get an overview of the translation of a set of pages/files. This was used for the Australis release (ex: http://l10n.mozilla-community.org/webdashboard/?project=australis_all) but can be used for any other project we want to define; here is an example for the localization of two Android Add-ons I did for the World Cup, which we tracked with .lang files.

We brought other improvements to our maintenance scripts for example to be able to "bulk activate" a page for all the locales ready, we improved our locamotion import scripts, started adding unit tests etc. Generally speaking, the Web dashboard keeps improving regularly since I rewrote it last quarter and we regularly experiment using it for more projects, especially for projects which don't fit in the usual web/product categories and that also need tracking. I am pretty happy too that now I co-own the dashboard with Francesco who brings his own ideas and code to streamline our processes.

Théo's internship

I mentioned it before: our main French localizer, Théo Chevalier, is doing an internship with me and Delphine Lebédel as mentors. This is the internship that ends his 3rd year of engineering (in a 5-year curriculum). He is based in Mountain View, started in early April and will be with us until late July. He is basically working on almost all of the projects Delphine, Flod and I work on.

So far, apart from regular work as an l10n-driver, he has worked for me on 3 projects: the Web Dashboard projects view, building TMX files on demand on Transvision, and the Firefox Nightly localized page on mozilla.org. I haven't talked about this last project yet, and he blogged about it recently. In short, the first page that is shown to users of localized builds of Firefox Nightly can now be localized, and by localized we don't just mean translated: we mean that we have a community block managed by the local community inviting Nightly users to join their local team "on the ground". So far, we have this page in French, Italian, German and Czech. If your locale workflow is to translate mozilla-central first, this is a good tool for you to reach a potential technical audience to grow your community.

Community

This quarter, I found 7 new localizers (2 French, 1 Marathi, 2 Portuguese/Portugal, 1 Greek, 1 Albanian) to work with me essentially on mozilla.org content. One of them, Nicolas Delebeque, took the lead on the Australis launch and coordinated the French l10n team since Théo, our locale leader for French, was starting his internship at Mozilla.

For Transvision, 4 people in the French community (after all, Transvision was created initially by them ;)) expressed interest or contributed small patches to the project. Maybe all the efforts we made to make the application easy to install and hack are starting to pay off; we'll probably see in Q3/Q4.

I spent some time trying to help rebuild the Portugal community which is now 5 people (instead of 2 before), we recently resurrected the mozilla.pt domain name to actually point to a server, the MozFR one already hosting the French community and WoMoz (having the French community help the Portuguese one is cool BTW). A mailing list for Portugal was created (accessible also as nntp and via google groups) and the #mozilla-portugal IRC channel was created. This is a start, I hope to have time in Q3 to help launch a real Portugal site and help them grow beyond localization because I think that communities focused on only one activity have no room to grow or renew themselves (you also need coding, QA, events, marketing...).

I also started looking at the Babelzilla platform rewrite project meant to replace the current aging platform (https://github.com/BabelZilla/WTS/) to see if I can help Jürgen, the only Babelzilla dev, with building a community around his project. Maybe some of the experience I gained through Transvision will be transferable to Babelzilla (it was a one-man effort, now 4 people commit regularly out of 10 committers). We'll see in the next quarters if I can help somehow; so far I have only had time to install the app locally.

In terms of events, this was a quiet quarter. Apart from our l10n-drivers work week, the only localization event I was in was the localization sprint over a whole weekend in the Paris office. Clarista, the main organizer, blogged about it in French. Many thanks to her and the whole community that came over; it was very productive, and we will definitely do it again and maybe make it a recurring event.

Summary

This quarter was a good balance between shipping, tooling and community building. The beginning of the quarter was really focused on shipping Australis and, as usual with big releases, we created scripts and tools that will help us ship better and faster in the future. Tooling, and in particular Transvision work, which is probably now my main project, took most of my time in the second part of the quarter.

Community building was as usual a constant in my work, the one thing that I find more difficult now in this area is finding time for it in the evening/week end (when most potential volunteers are available for synchronous communication) basically because it conflicts with my family life a bit. I am trying to be more efficient recruiting using asynchronous communication tools (email, forums…) but as long as I can get 5 to 10 additional people per quarter to work with me, it should be fine with scaling our projects.

http://www.chevrel.org/carnet/?post/2014/06/30/My-Q2-2014-report

Adam Lofting: The Power of Webmaker Landing Pages

We just started using our first webmaker.org landing page, and I thought I’d write about why this is so important and how it’s working out so far.

Who’s getting involved?

Every day people visit the webmaker.org website. They come from many places, for many reasons. Sometimes they know about Webmaker, but most of the time it’s new to them. Some of those people take an action; they sign-up to find out more, to make something with our tools, or even to throw a Maker Party. But, most of the people who visit webmaker.org don’t.

The percentage of people who do take action is our conversion rate. Our conversion rate is an important number that can help us to be more effective. And being more effective is key to winning.

If you’re new to thinking about our conversion rate, it can seem complex at first, but it is something we can influence. And I choose the word influence deliberately, as a conversion rate is not typically something you can control.

The good thing about a conversion rate is that you can monitor what happens to it when you change your website, or your marketing, or your product. In all product design, marketing and copy-writing we’re communicating with busy human beings. And human beings are brilliant and irrational (despite our best objections). The things that inspire us to take action are often hard to believe.

For the Webmaker story to cut through and resonate with someone as they’re skimming links on their phone while eating breakfast and trying to convince a toddler to eat breakfast too is really difficult.

How we present Webmaker, the words we use to ask people to get involved, and how easy we make it for them to sign-up, all combine to determine what percentage of people who visit webmaker.org today will sign-up and get involved.

- Conversion rate is a number that matters.

- It’s a number we can accurately track.

- And it’s a number we can improve.

It gets more complex though

The people who visit webmaker.org today are not all equally likely to take an action.

How people hear about Webmaker, and their existing level of knowledge affects their ‘predisposition to convert’.

- If my friend is hosting a Maker Party and I’ve volunteered to help and I’m checking out the site before the event, odds are I’ll sign-up.

- If I clicked a link shared on twitter that sounded funny but didn’t really explain what webmaker was, I’m much less likely to sign-up.

Often, the traffic sources that drive the biggest number of visitors bring people with less existing knowledge about Webmaker, who are less likely to convert. This is true of most ‘markets’, where an increase in traffic often results in a decrease in overall conversion rate.

Enter, The Snippet

Mozilla will be promoting Maker Party on The Snippet, and the snippet reaches such a vast audience that we just ran an early test to make sure everything is working OK and to establish some baseline metrics. The expectation of the snippet is high visits, low conversion rate, and overall a load of new people who hear about Webmaker.

By all accounts, a large volume of traffic from a hugely diverse audience whose only knowledge of Webmaker is a line of text and a small flashing icon should result in a very low conversion rate. And when you add this traffic into the overall webmaker.org mix, our overall average conversion rate should plummet (though this would be an artifact of the stats rather than any decline in effectiveness elsewhere).
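The statistical effect described here, where a large low-converting source drags down the overall average without anything getting worse elsewhere, can be shown with a small Python sketch. The visit and conversion counts are hypothetical figures chosen only to illustrate the effect; they are not Webmaker data.

```python
def overall_conversion_rate(sources):
    """Overall rate for a list of (visits, conversions) pairs, one per source."""
    total_visits = sum(visits for visits, _ in sources)
    total_conversions = sum(conversions for _, conversions in sources)
    return total_conversions / total_visits


# Hypothetical figures, chosen only to illustrate the effect.
existing_traffic = (10_000, 300)   # 3% of these visits convert
new_high_volume = (100_000, 500)   # 0.5% of these visits convert

before = overall_conversion_rate([existing_traffic])
after = overall_conversion_rate([existing_traffic, new_high_volume])

print(f"Before adding the new source: {before:.2%}")
print(f"After adding the new source: {after:.2%}")
```

In this example the existing traffic still converts at exactly the same rate, yet the overall average falls because the new source dominates the mix.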

However, after a few days of testing the snippet, our conversion rate overall is up. This is quite frankly astounding, and a great endorsement for the work that is going into our new landing pages. This is really something to celebrate.

So, how did this happen?

Well, mostly by design. Though the actual results are even better than I was personally expecting and hoping for.

You could say we’re cheating, because we chose a new type of conversion for this audience. Rather than ‘creating a webmaker.org account‘, we only asked them to ‘join a mailing list‘. It’s a lower-bar call to action. But, while it’s a lower-bar call to action, what really matters is that it’s an appropriate call to action for the level of existing knowledge we expect this audience to have. Appropriate is a really important part of the design.

Traffic from the snippet goes to a really simple introduction to webmaker.org page with an immediate call to action to join the mailing list, then it ‘routes’ you into the Maker Party website to explore more. That way, even if you’re busy now, you can tell us you’re interested and we can keep in touch and remind you in a week’s time (when you have a quiet moment, perhaps) about “that awesome Mozilla educational programme you saw last week but didn’t have time to look at properly”.

It’s built on the idea that many of the people who currently visit webmaker.org, but who don’t take action are genuinely interested but didn’t make it in the door. We just have to give them an easy enough way to let us know they’re interested. And then, we have to hold their hands and welcome them into this crazy world of remixed education.

A good landing page is like a good concierge.

The results so far:

Even with this lower bar call to action, I was expecting lower conversion rates for visitors who come from the snippet. Our usual ‘new account’ conversion rate for Webmaker.org is in the region of 3% depending on traffic source. The snippet landing page is currently converting around 8%, for significant volumes of traffic. And this is before any testing of alternative page designs, content tweaking, and other optimization that can usually uncover even higher conversion rates.

Our very first audience specific landing page is already having a significant impact.

So, here’s to many more webmaker.org landing pages that welcome users into our world, and in turn make that world even bigger.

On keeping the balance

Measuring conversion rate is a game of statistics, but improving conversion rate is about serving individual human beings well by meeting them where they are. Our challenge is to jump back and forth between these two ways of thinking without turning people into numbers, or forgetting that numbers help us work with people at scale.

Learning more about conversion rates?

- This talk I gave a little while ago covers most of the theory: Things they don’t tell you about conversion rates

http://feedproxy.google.com/~r/adamlofting/blog/~3/p5Pro0yGIoU/

Gregory Szorc: Track Firefox Repositories with Local-Only Mercurial Tags

After reading my recent Please Stop Using MQ post, a number of people asked me about my development workflow. While I still owe a full answer to that question, one of the cornerstones is a unified Mercurial repository. Instead of having separate clones for mozilla-central, mozilla-inbound, aurora, beta, etc, I have a single clone with the changesets from all the repositories.

I feel having a unified repository has made me more productive. I no longer have to waste time shuffling changesets between local clones. This introduced all kinds of extra cognitive load and manual processes that slowed me down. I highly encourage others to adopt unified repositories.

Because the various Firefox repositories don't have unique branches or bookmarks tracking the various heads, aggregating multiple repositories introduces a client-side problem of identifying which head is which. If you merely do a hg pull, you'll get a bunch of anonymous heads.

To solve this problem, you'll need to employ minor client-side magic. Previously, I recommended my heavyweight mozext extension.

Today, I'm proud to announce a new, lighter extension: firefoxtree. When pulling from a known Firefox repository, this extension will add a local-only Mercurial tag for that repository. For example, when pulling mozilla-central, the central tag will be created.

Local-only tags are a Mercurial feature only available to extensions. A local-only tag is effectively an overlay of tags that don't get transferred as part of push and pull operations. They behave like normal tags: you can hg up to them and reference them anywhere changeset identifiers are used. They are also read only: if you update to a tag and then commit, the tag will not move forward (contrast with branches or bookmarks).

Example Usage

Clone https://hg.mozilla.org/hgcustom/version-control-tools and add the following to your .hg/hgrc:

[extensions]

firefoxtree = /path/to/version-control-tools/hgext/firefoxtree

To use, simply pull from a Firefox repo:

    $ hg pull https://hg.mozilla.org/mozilla-central
    $ hg up central
    # Do your development work.
    # Time to land.
    $ hg pull https://hg.mozilla.org/integration/mozilla-inbound
    $ hg rebase -d inbound
    $ hg out -r . https://hg.mozilla.org/integration/mozilla-inbound
    $ hg push -r . https://hg.mozilla.org/integration/mozilla-inbound

Please note that hg push tries to push all local changes on all heads to a remote by default. When operating a unified repo, you'll need to use the -r argument to hg push and hg out to limit what changesets are considered. I most frequently use -r . to limit changes to the current checked out changeset.

Also note that this extension conflicts with my mozext extension. I hope to update mozext to make it behave in the same manner. (It was easier to write a new extension than to update mozext.)

http://gregoryszorc.com/blog/2014/06/30/track-firefox-repositories-with-local-only-mercurial-tags

|

|

Wladimir Palant: Please don't use externally hosted JavaScript libraries |

A few days ago I outlined that the Reuters website relies on 40 external parties with its security. What particularly struck me was the use of external code hosting services, e.g. loading the jQuery library directly from the jQuery website and GSAP library from cdnjs. It seems that in this particular case Reuters isn’t the one to blame — they don’t seem to include these scripts directly, it’s rather some of the other scripts they are using that are doing this.

Why would one use externally hosted libraries as opposed to just uploading them to your own server? I can imagine three possible reasons:

- Simplicity: No need to upload the script, you simply add a

https://palant.de/2014/06/30/please-don-t-use-externally-hosted-javascript-libraries

|

|

Raluca Podiuc: Like all magnificent things, it's very simple. |

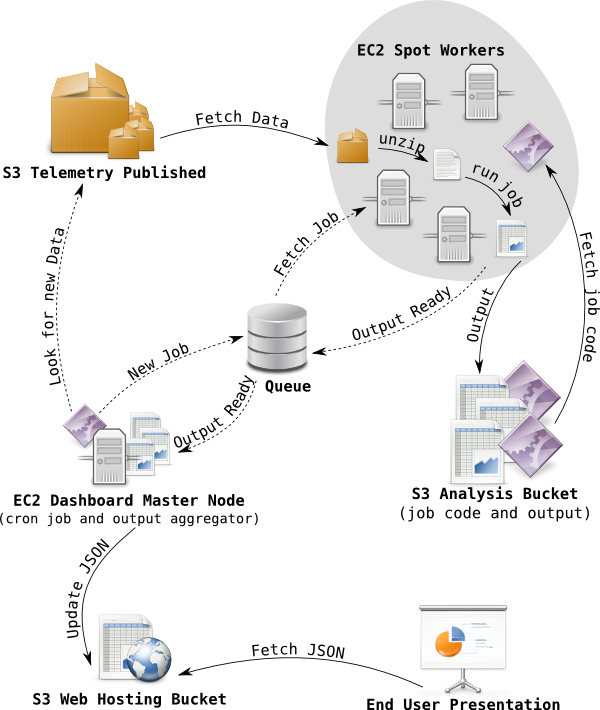

Two weeks ago I started working on Telemetry Analysis Framework.

We are simplifying the MapReduce workflow to be as flexible yet as easy to use and debug as possible. Jonas has been developing TaskCluster for a while and he came up with the idea of porting the analysis to it.

What is TaskCluster

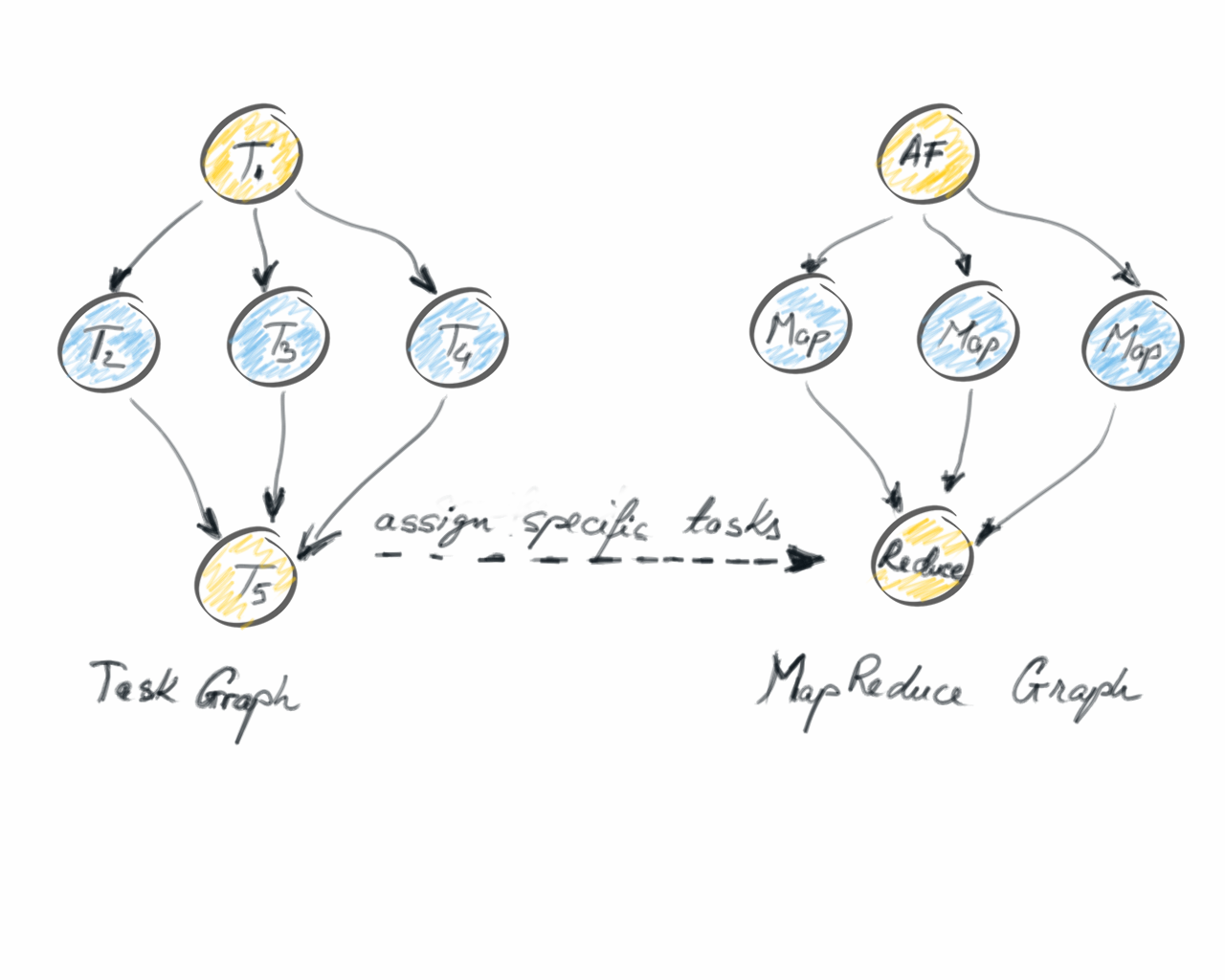

TaskCluster is a set of components that manages task queuing, scheduling, execution and provisioning of resources. It is designed to run automated builds and tests at Mozilla. You can imagine it like the diagram below:

TaskCluster for our MapReduce Analysis

When you talk about MapReduce, you are usually referring to a directed graph workflow like the one below. TaskCluster provides task graphs that can easily be used as a MapReduce workflow.

As mentioned above we want this framework to be:

- simple to use

- flexible

- easy to debug

Simple to use

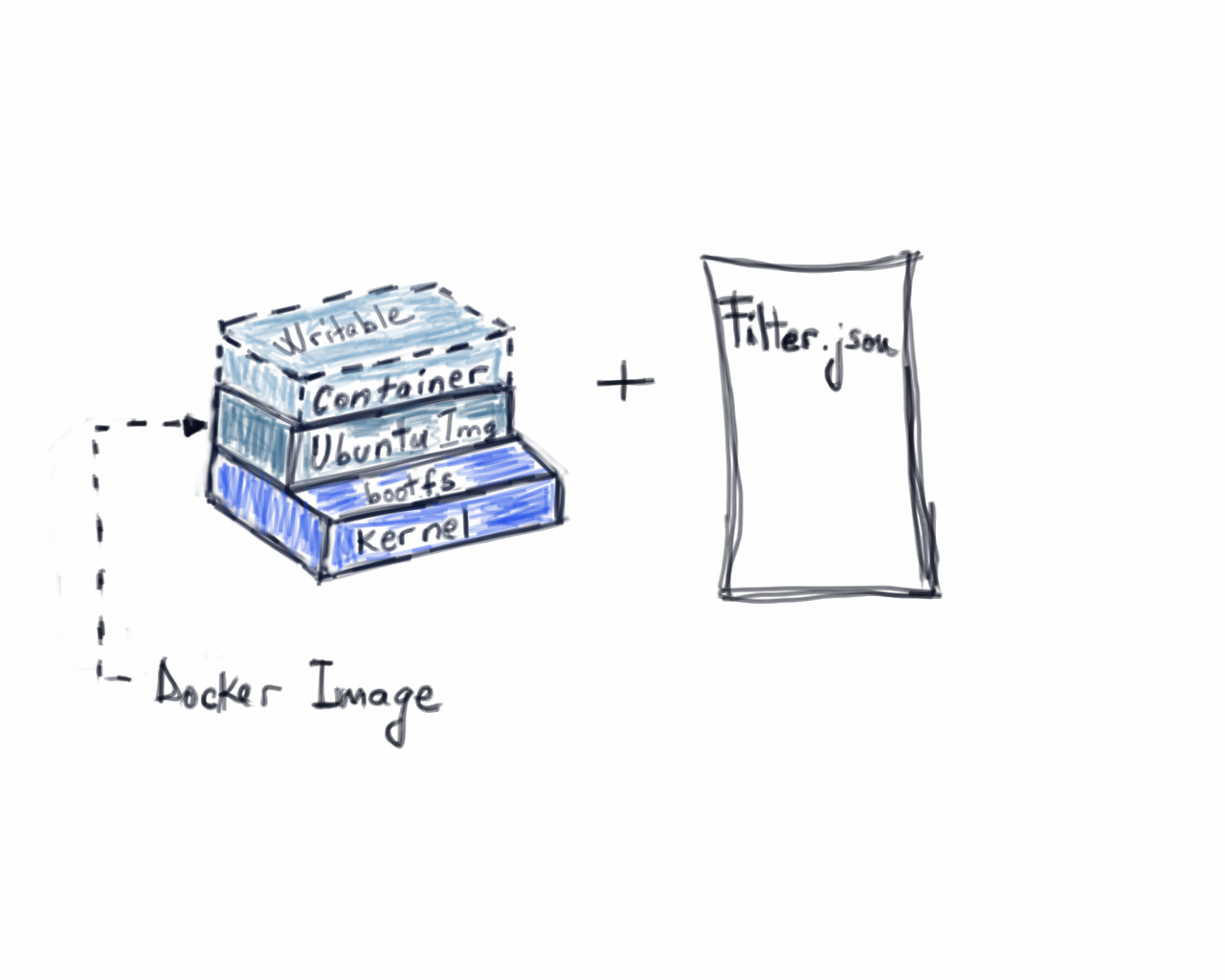

Simplicity in this case means the programmer has to specify as little as possible:

Because we know that starting with something new can be annoying we provide a base image where you only need to write your custom code. Also we provide dead easy documentation for each step. More information about Docker can be found here.

Filter.json

Filter.json is the file that tells the framework which files to extract from the S3 bucket for the analysis.

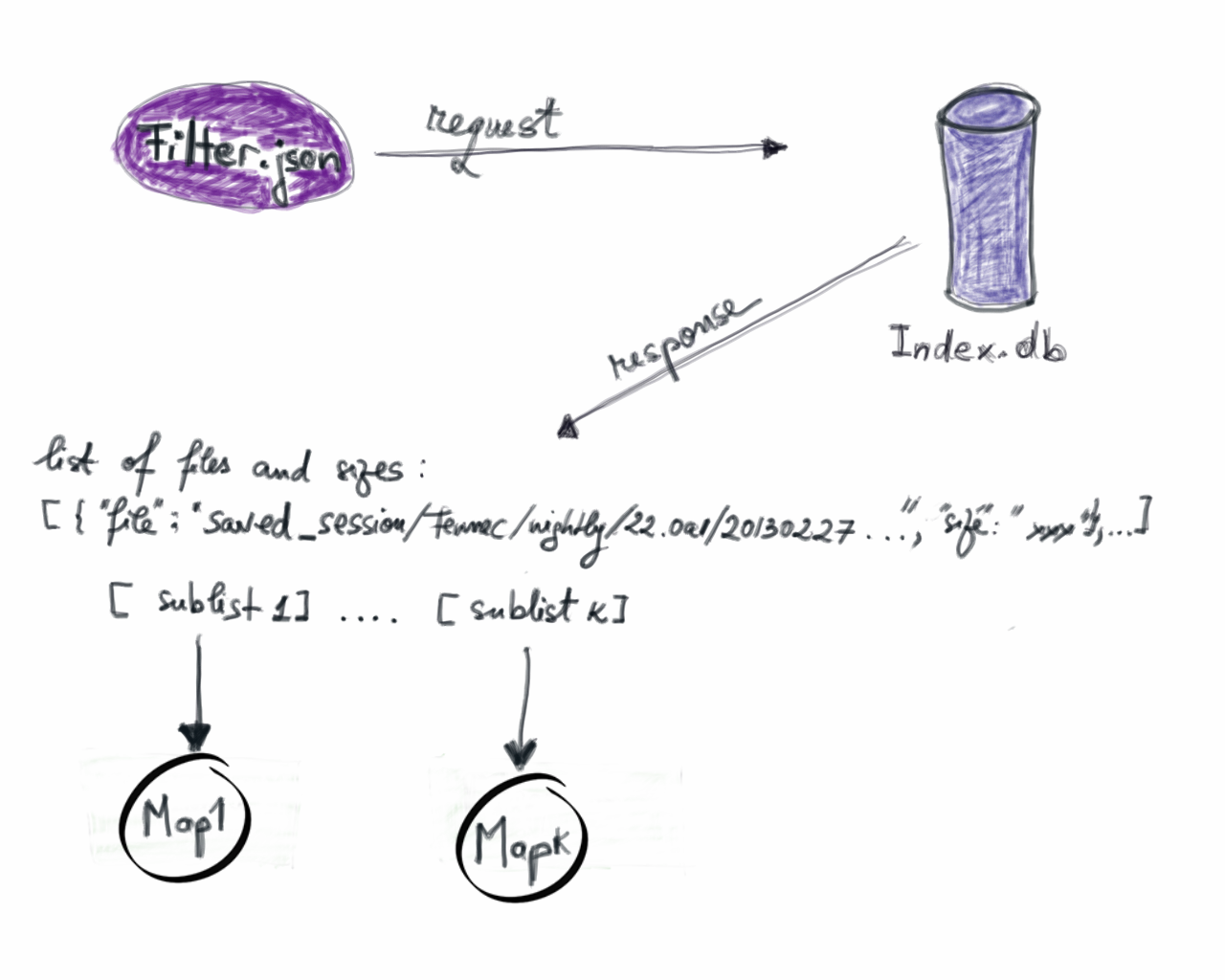

By providing these two elements the framework will proceed as follows:

The Analysis Framework will parse the Filter.json, make a request to the index database, split the response in sublists of files and start a map task for each batch of files.
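The splitting step can be pictured with a small Python sketch. This is only an illustration of the idea, not the framework's code: the function name `split_into_batches`, the batch size and the file names are all made up for the example.

```python
def split_into_batches(files, batch_size):
    """Split a list of file names into consecutive sublists of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [files[i:i + batch_size] for i in range(0, len(files), batch_size)]


# Hypothetical file names standing in for the response of the index database.
files = ["file-%d.lzma" % n for n in range(7)]
batches = split_into_batches(files, 3)

for number, batch in enumerate(batches):
    # In the framework described above, each batch would become one map task.
    print("map task %d: %s" % (number, batch))
```

The last batch is simply shorter when the number of files is not a multiple of the batch size.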

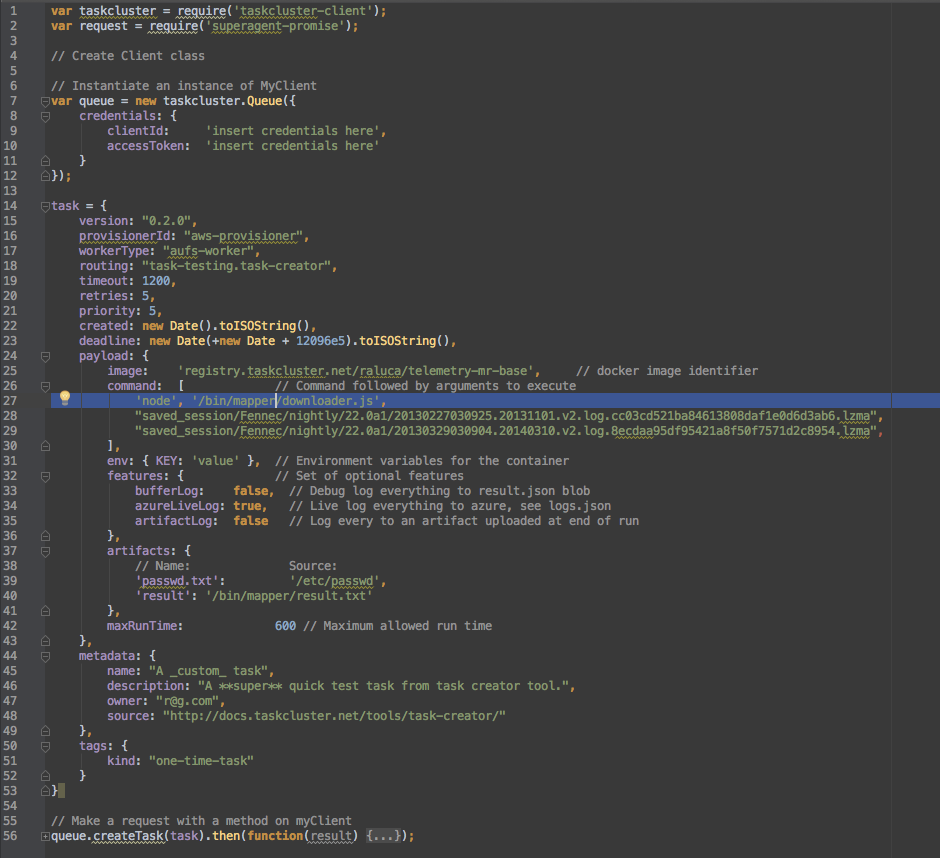

A map task would look as follows:

For a mapper task we would need to specify the Docker image (line 25), a command (line 27) and the files that need to be processed (lines 28 and 29).

The output of this task will be an artifact /bin/mapper/result.txt and will be uploaded to an intermediate bucket on Amazon.

Flexible

We call this framework flexible because you can customize all the layers provided.

The framework comes with a downloader utility and several mapper helpers (python, javascript) that can decompress the files and feed the result to the loaded custom map function.

If you would like a different Docker image you can customize that too. The framework includes a default image and also the Docker file out of which you can build the image. By modifying that file you can easily get another Docker image that suits your needs.

If you are on MacOS you probably need some way to work with Docker. By installing Vagrant and adding the Vagrantfile to your directory you can work easily with Docker on your machine.

Easy to debug

Until now the analysis that we performed had close to zero logging. This had to change so we could get a robust and easy way to debug the workflow. With the approach we are developing right now we have logging at each step. The developer can see how many rows were processed, which files were downloaded successfully, which ones were decompressed successfully, and whether an error occurred. With this information some retry policies could be implemented.

Last but not least

I will be blogging about this next week and also provide you with the link to the official repo. Right now a lot of changes happen in my playground :) ..

http://amozillastory.blogspot.com/2014/06/like-all-magnificent-things-its-very.html

|

|

Nick Thomas: Keeping track of buildbot usage |

Mozilla Release Engineering provides some simple trending of the Buildbot continuous integration system, which can be useful to check how many jobs are currently running versus pending. There are graphs of the last 24 hours broken out in various ways – for example compilation separate from tests, compilation on try and everything else. This data also feeds into the pending queue on trychooser.

Until recently the mapping of job name to machine pool was out of date, due to our rapid growth for b2g and into Amazon’s AWS, so the graphs were more misleading than useful. This has now been corrected and I’m working on making sure it stays up to date automatically.

http://ftangftang.wordpress.com/2014/06/30/keeping-track-of-buildbot-usage/

|

|

Michael Kelly: What is an about:home snippet? |

I was reading Adam Lofting's post, The Power of Webmaker Landing Pages, and it referred to something called "The Snippet", and linked to a post on fundraising.mozilla.org called What is the "snippet"?.

The page the fundraising blog refers to is actually called about:home; the icon and bit of text below the search bar is the "snippet". Every time you view about:home, a snippet is randomly chosen from a set of snippets and shown. Once every 24 hours, the set of snippets you might see is updated. Snippets can execute JavaScript code, which allows things like snippets that replace the Firefox logo or snippets that show videos.

To understand how about:home snippets really work, you have to understand the two main players: Firefox and the Snippets Service.

Firefox Fetches Snippet Code

From Firefox's perspective, snippets are just one big chunk of HTML, CSS, and JavaScript that it injects into about:home when you view it. It fetches this code from the Snippets Service, a web service running at https://snippets.mozilla.com.

Whenever you view about:home, Firefox checks to see if it has fetched snippet code within the last 24 hours. If it has, it loads the snippet code. If it hasn't, it makes a request and fetches the snippet code, and saves it. The URL used for this request is long and contains several pieces of info useful for deciding which snippets to send you. On one of my test copies of Firefox 30, about:home fetches snippets with this URL:

The most useful parts of the URL are the version (30.0), the locale (en-US), and the release channel (release). These let us do things like localize snippets for different locales or create snippets that promote new features to the release channels that already have them.

Once it has the snippet code, Firefox injects it into about:home. After that, Firefox is done; it has no idea that the snippet code may contain multiple snippets or that only one should be displayed. Instead, the snippet code that is injected contains its own logic for choosing a snippet to display.
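The "fetch at most once every 24 hours" behaviour described above can be sketched in a few lines of Python. This is not Firefox's implementation, only a minimal model of the logic: the class name `SnippetCache`, the `fetch` callable and the injectable `clock` are assumptions made for the example.

```python
import time

SNIPPET_MAX_AGE = 24 * 60 * 60  # seconds; the 24-hour window described above


class SnippetCache:
    """Hypothetical stand-in for the place about:home keeps its snippet code."""

    def __init__(self, fetch, clock=time.time):
        self._fetch = fetch        # callable that returns fresh snippet code
        self._clock = clock        # injectable so the logic is easy to test
        self._code = None
        self._fetched_at = None

    def get(self):
        """Return the saved snippet code, fetching it first if it is missing or too old."""
        now = self._clock()
        stale = (self._fetched_at is None
                 or now - self._fetched_at >= SNIPPET_MAX_AGE)
        if stale:
            self._code = self._fetch()
            self._fetched_at = now
        return self._code


cache = SnippetCache(fetch=lambda: "<div>snippet code</div>")
print(cache.get())  # first call fetches
print(cache.get())  # second call reuses the saved copy
```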

Snippets Show Themselves

The Snippets Service stores a large set of snippets and chooses which ones to send to you based on the URL shown above. For example, a snippet that is in French will be configured to be shown only to users with "fr" as their locale.

Along with the snippets themselves, the snippet code sent by the service contains JavaScript code that randomly chooses one of the included snippets and displays it. This code also performs a few other important functions, such as measuring impressions on snippets so that we can measure the conversion rate.
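The real selection code is JavaScript shipped by the Snippets Service. As a rough model of what it does, here is a Python 3 sketch that picks one snippet at random and counts an impression for it. The snippet ids, the texts and both function names are invented for illustration.

```python
import random
from collections import Counter


def show_random_snippet(snippets, impressions, rng=random):
    """Pick one snippet uniformly at random and count an impression for it."""
    snippet = rng.choice(snippets)
    impressions[snippet["id"]] += 1
    return snippet


def conversion_rate(conversions, shown):
    """Fraction of impressions that led to a conversion (0.0 if never shown)."""
    return conversions / shown if shown else 0.0


# Hypothetical snippets; the ids and text are made up for illustration.
snippets = [
    {"id": "webmaker", "text": "Learn to make the web"},
    {"id": "fundraising", "text": "Support Mozilla"},
]
impressions = Counter()
shown = show_random_snippet(snippets, impressions)
print(shown["text"], dict(impressions))
```

Counting impressions per snippet is what makes a conversion rate computable later: conversions divided by the number of times the snippet was shown.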

Because snippets can contain JavaScript, they aren't limited to showing an icon and text. In the past, we've run snippets that have:

- Swapped out the Firefox logo with another image

- Loaded a video player within the homepage

- Blacked out the entire homepage in protest

You Can Too!

An about:home snippet is a powerful way of reaching a huge number of people. If you're interested in running a snippet to spread the word about something, file a bug under the Snippets :: Campaign Bugzilla component. The snippets content team will review your request and determine if your snippet should be accepted.

Have any more questions about how snippets work? Let me know, I'm happy to answer them!

|

|

Mike Conley: Alice in DocShell Land |

I’ve been reading a book called The Annotated Alice. In this book, the late and great Martin Gardner shows us the stories of Alice’s Adventures in Wonderland and Through the Looking-Glass but supplies copious footnotes to illustrate the puns, wordplay, allusions, logic problems and satire going on beneath the text. Some of these footnotes delve into pure conjecture (there are still people to this day who theorize about various aspects of the stories), and other footnotes show quite clearly that Carroll wrote these stories with a sophisticated wit and whimsy that isn’t immediately obvious at first glance.

And it’s clear that Gardner (and others like him) have spent hours upon hours thinking and theorizing about these stories. A purposeful misspelling gets awarded a two page footnote here, and a mention of a mirror sends us off talking about matter and anti-matter and other matters (ha) of quantum physics.

So much thinking and effort to interpret these stories, and what you get out of it is a fascinating tapestry of ideas and history.

Needless to say, I’ve been finding the whole thing fascinating. It’s a hell of a read.

While reading it, I’ve wondered what it’d be like to apply the same practice to source code. Take some relatively mysterious piece of source code that only a few people feel comfortable with, and explode it out. Go through the source control history, and all of the old bugs, and see where this code came from. What was its purpose to begin with? What is its purpose now? What are the battle scars?

After much thinking, I’ve decided to try this, and I’m going to try it on a piece of Gecko called “DocShell”.

I think I just heard Ms2ger laughing somewhere.

It’s become pretty clear having talked to a few seasoned Mozilla hackers that DocShell is not well understood. The wiki page on it makes that even more clear – it starts:

The goal of this page is to serve as a dumping/organization ground for docshell docs. When someone finds out something, it should be added here in a reasonable way. By the time this gets unwieldy, hopefully we will have enough material for several actual docs on what docshell does and why.

So, I’m going to attempt to figure out what DocShell was supposed to do, and figure out what it currently does. I’m going to dig through source code, old bugs, and old CVS commits, back to the point where Netscape first open-sourced the Mozilla code-base.

It’s not going to be easy. It’s definitely going to be a multiple month, multiple post effort. I’m likely to get things wrong, or only partially correct. I’ll need help in those cases, so please comment.

And I might not succeed in figuring out what DocShell was supposed to do. But I’m pretty confident I can get a grasp on what it currently does.

So in the end, if I’m lucky, we’ll end up with a few things:

- A greater shared understanding of DocShell

- Materials that can be used to flesh out the DocShell wiki

- Better inline documentation for DocShell maybe?

I’ve also asked bz to forward me feedback requests for DocShell patches, so that way I get another angle of attack on understanding the code.

So, deep breath. Here goes. Watch this space.

http://mikeconley.ca/blog/2014/06/29/alice-in-docshell-land/

|

|

Doug Belshaw: Live Session: The Essential Elements of Digital Literacies |

I usually write about things related to Web Literacy and my role at Mozilla here. However, this evening (UK time) I’m running a live session to celebrate the launch of my new e-book, The Essential Elements of Digital Literacies*.

Update: The recording can be found below, or here.

Date: Sunday 29th June 2014

Time: 8pm BST (what time is that for me?)

URL: http://goo.gl/hJOA7K

I’ll post the video recording after the session!

|

|

Kaustav Das Modak: Proposing an event format for Firefox OS in India |

|

|

Tarek Ziadé: NGinxTest |

I've been playing with Lua and Nginx lately, using the OpenResty bundle.

This bundle is an Nginx distribution on steroids that includes some extensions, in particular the HTTPLuaModule, which lets you script Nginx using the Lua programming language.

Coming from a Python background, I was quite pleased with the Lua syntax, which feels like a cleaner Javascript inspired from Pascal and Python - if that even makes any sense :)

Here's what a Lua function looks like:

    -- rate limiting
    function rate_limit(remote_ip, stats)
        local hits = stats:get(remote_ip)
        if hits == nil then
            stats:set(remote_ip, 1, throttle_time)
            return false
        end

        hits = hits + 1
        stats:set(remote_ip, hits, throttle_time)
        return hits >= max_hits
    end
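For readers more at home in Python, here is a rough sketch of the same logic. It is not part of the Lua script: the `ExpiringCounter` class is a made-up stand-in for the Nginx shared dict (values vanish after a time-to-live), and `max_hits` and `throttle_time` are passed as arguments here, whereas the Lua function reads them from its environment.

```python
import time


class ExpiringCounter:
    """Tiny stand-in for an Nginx shared dict: values expire after a TTL."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._data = {}  # key -> (value, expiry time)

    def get(self, key):
        entry = self._data.get(key)
        if entry is None or entry[1] <= self._clock():
            # Missing or expired: behave as if the key was never set.
            self._data.pop(key, None)
            return None
        return entry[0]

    def set(self, key, value, ttl):
        self._data[key] = (value, self._clock() + ttl)


def rate_limit(remote_ip, stats, max_hits, throttle_time):
    """Return True when remote_ip has reached max_hits within the throttle window."""
    hits = stats.get(remote_ip)
    if hits is None:
        stats.set(remote_ip, 1, throttle_time)
        return False

    hits += 1
    # Writing the new count also restarts the expiry window, as in the Lua version.
    stats.set(remote_ip, hits, throttle_time)
    return hits >= max_hits


stats = ExpiringCounter()
for attempt in range(1, 5):
    limited = rate_limit("203.0.113.7", stats, max_hits=3, throttle_time=0.3)
    print(attempt, limited)
```

Note that every hit refreshes the expiry, so a client that keeps hammering the server stays throttled until it pauses for a full `throttle_time`.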

Performance-wise, interacting with incoming web requests in Lua co-routines in Nginx is blazing fast. And there is a lot of work that can be done there to spare your proxied Python/Node.js/Go/Whatever application some cycles and complexity.

It can also help you standardize and reuse good practices across all your web apps no matter what language/framework they use.

Some things that can be done there:

- web application firewalling

- caching

- dynamic routing

- logging, load balancing

- a ton of other pre-work...

To put it simply:

Nginx becomes very easy to extend with Lua scripting without having to re-compile it all the time, and Lua lowers the barrier for ops and developers to implement new server behaviors.

Testing with Test::Nginx

When you start to add some Lua scripting in your Nginx environment, testing soon becomes mandatory. Pure unit testing of Lua scripts in that context is quite hard because you are interacting with Nginx variables and functions.

The other approach is doing only pure functional tests by launching Nginx with the Lua script loaded, and interacting with the server using an HTTP client.

OpenResty offers a Perl tool to do this, called Test::Nginx, where you can describe an interaction with the Nginx server in a light DSL.

Example from the documentation:

    # foo.t
    use Test::Nginx::Socket;

    repeat_each(1);
    plan tests => 2 * repeat_each() * blocks();

    $ENV{TEST_NGINX_MEMCACHED_PORT} ||= 11211;  # make this env take a default value

    run_tests();

    __DATA__

    === TEST 1: sanity
    --- config
    location /foo {
        set $memc_cmd set;
        set $memc_key foo;
        set $memc_value bar;
        memc_pass 127.0.0.1:$TEST_NGINX_MEMCACHED_PORT;
    }
    --- request
    GET /foo
    --- response_body_like: STORED
    --- error_code: 201

The data section of the Perl script describes the Nginx configuration, the request made and the expected response body and status code.

For simple tests it's quite handy, but as soon as you want to do more complex tests it becomes hard to use the DSL. In my case I needed to run a series of requests in a precise timing to test my rate limiting script.

I missed my usual tools like WebTest, where you can write plain Python to interact with a web server.

Testing with NginxTest

Starting and stopping an Nginx server with a specific configuration loaded is not hard, so I started a small project in Python in order to be able to write my tests using WebTest.

It's called NGinxTest and has no ambitions to provide all the features the Perl tool provides, but is good enough to write complex scenarios in WebTest or whatever Python HTTP client you want in a Python unit test class.

The project provides a NginxServer class that takes care of driving an Nginx server.

Here's a full example of a test using it:

    import os
    import unittest
    import time

    from webtest import TestApp
    from nginxtest.server import NginxServer

    LIBDIR = os.path.normpath(os.path.join(os.path.dirname(__file__),
                              '..', 'lib'))
    LUA_SCRIPT = os.path.join(LIBDIR, 'rate_limit.lua')

    _HTTP_OPTIONS = """\
    lua_package_path "%s/?.lua;;";
    lua_shared_dict stats 100k;
    """ % LIBDIR

    _SERVER_OPTIONS = """\
    set $max_hits 4;
    set $throttle_time 0.3;
    access_by_lua_file '%s/rate_limit.lua';
    """ % LIBDIR


    class TestMyNginx(unittest.TestCase):

        def setUp(self):
            hello = {'path': '/hello', 'definition': 'echo "hello";'}
            self.nginx = NginxServer(locations=[hello],
                                     http_options=_HTTP_OPTIONS,
                                     server_options=_SERVER_OPTIONS)
            self.nginx.start()
            self.app = TestApp(self.nginx.root_url, lint=True)

        def tearDown(self):
            self.nginx.stop()

        def test_rate(self):
            # the 3rd call should be returning a 429
            self.app.get('/hello', status=200)
            self.app.get('/hello', status=200)
            self.app.get('/hello', status=404)

        def test_rate2(self):
            # the 3rd call should be returning a 200
            # because the blacklist is ttled
            self.app.get('/hello', status=200)
            self.app.get('/hello', status=200)
            time.sleep(.4)
            self.app.get('/hello', status=200)

Like the Perl script, you provide bits of configuration for your Nginx server -- in this case pointing the Lua script to test and some general configuration.

Then I test my rate limiting feature using Nose:

    $ bin/nosetests -sv tests/test_rate_limit.py
    test_rate (test_rate_limit.TestMyNginx) ... ok
    test_rate2 (test_rate_limit.TestMyNginx) ... ok

    ----------------------------------------------------------------------
    Ran 2 tests in 1.196s

    OK

Out of the 1.2 seconds, the test sleeps for 0.4 seconds, and the class starts and stops a full Nginx server twice. Not too bad!

I have not released that project at PyPI - but if you think it's useful to you and if you want some more features in it, let me know!

|

|

Rizky Ariestiyansyah: Fixing Slow Touchpad Speed on Fedora 20 |

http://oonlab.com/fixing-slow-touchpad-speed-on-fedora-20.onto

|

|

Mike Conley: Australis Performance Post-mortem Summary |

Over the last few months, I’ve been talking about all of the work we put into making Australis feel fast when it shipped in Firefox 29.

I talked about where we started with our performance work, and how we grappled with the ts_paint and tpaint performance (“talos”) tests. After that, I talked a bit about the excellent tools we have (and ones we developed ourselves) to make finding our performance bottlenecks easier.

After a brief delay, I rounded out the series by talking about our tab animation performance work, and the customization transition performance work.

I think over the course of working on these things, I’ve learned quite a bit about performance work in general. If I had to distill it down to a few tidbits, it’d be:

- Measure first to get a baseline, then try to improve. (Alternatively, “you can’t improve what you can’t measure”)

- Finding the solutions to performance problems is usually the easy part. The hard part is finding and isolating the problems to begin with.

- While performance work can be a bit of a grind, users do feel and appreciate the efforts. It’s totally worth it.

So that’s it on the series. Enjoy your zippy Firefox!

http://mikeconley.ca/blog/2014/06/28/australis-performance-post-mortem-summary/

|

|

Robert Helmer: Deploying Socorro quickly |

I've been seeing a lot more people looking for help and information about installing and running Socorro (the software that powers crash-stats.mozilla.com).

We've done a lot of work the past few years on making the system more flexible and are constantly working on improving the documentation, especially the installation instructions - and the more people that are able to get the system going, the more contributions we've seen.

Still, the docs have been mostly focused on getting a developer install for hacking on the system, and less so on installing and upgrading the software without having to configure and understand every component.

In response to some specific questions on the mailing list about how to install and then upgrade Socorro, we've released the deploy script that Mozilla uses internally (with some modifications to work in a more vanilla environment).

The easiest way to get a system going is to spin up a Vagrant VM and then follow the "Installing from binary package" instructions.

We also run a Jenkins bot to ensure that the Vagrant and deploy script don't regress.

This is easy enough that it's making our "Installing from source" instructions look quite baroque, so expect those to see some improvements soon too!

I'd like to give a particular shout-out to Jorgen P. Tjerno who has been doing quite a bit of work to make sure deploys are smooth - thanks Jorgen!

|

|

Daniel Stenberg: improving the curl docs, step 1 |

As I mentioned before, the curl documentation needs improvement. As a first step I converted the man page for curl_easy_setopt into no less than 210(!) individual man pages, one new page for each option the function supports.

The man page was originally (a few days ago) almost 3000 lines, and now with them all split up we end up with a lot more text. This is because the new format encourages more text per option and each page now has to detail itself more. This should also make each option much easier to google/search and to link to when we help users understand the options.

I’ve made some server-side scripts to generate HTML versions of them all. I also generate a list of all the options we have, and the examples we host on the web site now have all mentions of the options linked directly to these new pages.

The curl_easy_setopt man page will then get most explanations cut out and mainly be used as an index with the options grouped into logical sections to help users find the options they want to use. I could cut out almost 2500 lines.

The new man pages add about 7500 lines of documentation (excluding the headers in each file)…

http://daniel.haxx.se/blog/2014/06/21/improving-the-curl-docs-step-1/

|

|

Will Kahn-Greene: Using the bug_mentor field with the Bugzilla REST API to get mentored bugs |

Bugzilla grew a mentor field recently. This is really fantastic as it solves some interesting problems and makes it easier to track various aspects of mentoring which have been previously difficult to track. Yay to everyone involved in making that happen!

Migrating from the old way (sticking [mentor=xxx] in the whiteboard field) to the new way caused a problem that I spent a while working on today. I heard reports of other people having the same problem, hence this blog post.

There are a bunch of Bugzilla-symbiotic systems which would show a list of mentored bugs by checking to see if the string mentor= was in the whiteboard field. That no longer works. Instead we have to check to see if the bug_mentor field is non-empty. However, this is difficult to express with the old Bugzilla REST API (BzAPI).

The bug_mentor field is unique in that it holds email addresses which have the @ in them. So we can (ab)use this property by seeing if the bug_mentor field contains the @ character.

I did this with the GetInvolved/input.mozilla.org page. Here is the diff in case that's helpful.

Here's some Python that shows this with the old BzAPI and the new BMO API which pulls mentored bugs for Input:

    import requests

    # Using the old BzAPI: https://wiki.mozilla.org/Bugzilla:REST_API
    r = requests.get(
        'https://api-dev.bugzilla.mozilla.org/latest' +
        '/bug?product=Input&bug_mentor_type=contains&bug_mentor=@'
    )
    data = r.json()
    print(len(data['bugs']))
    # Prints 9

    # Using the new BMO API. https://wiki.mozilla.org/BMO/REST
    r = requests.get(
        'https://bugzilla.mozilla.org/rest' +
        '/bug?product=Input&bug_mentor_type=substring&bug_mentor=@'
    )
    data = r.json()
    print(len(data['bugs']))
    # Prints 9
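If you query more than one product, it may be handy to build the URL from its parts instead of pasting the query string. Here is a small Python 3 sketch for the new BMO API that uses only the standard library and makes no network request. The helper name `mentored_bugs_url` is made up; the endpoint and parameter names are the ones from the snippet above.

```python
from urllib.parse import urlencode

# Endpoint taken from the example above.
BMO_REST_BUG_URL = "https://bugzilla.mozilla.org/rest/bug"


def mentored_bugs_url(product):
    """Build a BMO REST query for bugs whose bug_mentor field contains '@'."""
    params = [
        ("product", product),
        ("bug_mentor_type", "substring"),
        ("bug_mentor", "@"),
    ]
    # urlencode percent-encodes the '@', which is equivalent in a query string.
    return BMO_REST_BUG_URL + "?" + urlencode(params)


print(mentored_bugs_url("Input"))
```

The resulting URL can be passed to requests.get exactly like the hand-written one.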

|

|

Brad Lassey: New Android Widget Peer |

I’m happy to announce that James Willcox, better known to many as snorp, is now an Android Widget peer. James has been doing reviews and acting as a de-facto peer for a while now so it is about time to make it official.

In reviewing this module's ownership and speaking to the current peers, Vlad, Doug and Michael Wu have been removed from the peers list. While each of them has made fundamental and foundational contributions to the porting of Gecko to Android, all three have moved on to other projects over the last couple of years and the code base has changed significantly since. I would, however, like to thank them for their contributions and say that I’m looking forward to any patches they may want to throw our way in the future.

|

|
