Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Pascal Chevrel: What I did in Q3

Tools and code

I spent significantly more time on tools this quarter than in the past. I am also happy to say that Transvision is now a six-person team and that we will all be in Brussels for the Summit (see my blog post in April). I enjoy creating the small tools and scripts that make my life, and localizers' lives, better.

- Two releases of Transvision (release notes) + some preparatory work for future l20n compatibility

- Created a mini-dashboard for our team so as to help us follow FirefoxOS work

- Wrote the conversion script to convert our Serbian Cyrillic string repository to Serbian Latin (see this blog post)

- Turned my langchecker scripts (a key part of the Web Dashboard) into a GitHub project and worked with Flod on improving our management scripts for mozilla.org and FHR. A recent improvement is that we can now automatically import translations done on Locamotion. You can see a list of the changes in the release notes.

- Worked on scripts that query Bugzilla without using the official API (because the data I want is specific to the Mozilla customizations we need for locales). They will probably become part of the Web Dashboard soon, so that we can extract Web localization bugs from multiple components (gist here). Basically, I had the idea of using the CSV export feature of Bugzilla's advanced search as a public read-only API.

- Several python patches to mozilla.org to fix l10n bugs or improve our tools to ship localized pages (Bug 891835, Bug 905165, Bug 904703).
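The CSV trick can be sketched in a few lines of Python. This is a minimal sketch, not Pascal's actual scripts. It assumes that Bugzilla's `buglist.cgi` returns CSV when the query string carries `ctype=csv`. The helper names `csv_search_url` and `parse_buglist` are hypothetical, and the product and component values are only illustrative.

```python
import csv
import io
from urllib.parse import urlencode

# Assumed endpoint: the advanced-search result page of bugzilla.mozilla.org.
BUGLIST_URL = "https://bugzilla.mozilla.org/buglist.cgi"


def csv_search_url(**criteria):
    """Build an advanced-search URL that asks Bugzilla for CSV instead of HTML.

    List values (e.g. several components) become repeated query parameters.
    """
    params = dict(criteria, ctype="csv")
    return BUGLIST_URL + "?" + urlencode(params, doseq=True)


def parse_buglist(csv_text):
    """Turn the CSV export into a list of dicts keyed by its header row."""
    return list(csv.DictReader(io.StringIO(csv_text)))
```

To use it, fetch the URL with any HTTP client, for example `urllib.request.urlopen(url).read().decode("utf-8")`, and pass the text to `parse_buglist`. Because the export is a plain public search page, no API key is involved. That is what makes it usable as a read-only API.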

Mozilla.org localization

Two years ago we merged all of our major websites (mozilla.org, mozilla.com, mozilla-europe.org, mozillamessaging.com) under the single mozilla.org domain name, with a new framework based on Django. We gained in consistency, but localizing several backends under one single domain and a new framework slowed us down for a while. I'd say that we are now mostly back to the old mozilla.com speed of localization. Lots of bugs were fixed and features added to Bedrock (the nickname of our Django-powered site), we have a very good collaboration with the webdev/webprod teams on the site, and more people are working on it. I think that this quarter localizers felt a lot more work was asked of them on mozilla.org. I'll try to make sure we don't lose locales on the road, since this website hosts content for 90 locales, but we are back up to speed with tons of new people!

- The main Firefox download page (and all the download buttons across the site) finally migrated to Bedrock, our Django instance. That page got two major updates this quarter (+50 locales), with more to come next quarter. This is part of a bigger effort to simplify our download process, stop maintaining so many different specialized download pages, and improve SEO.

- The mozilla.org home page is now l10n-friendly and we just shipped it in 28 languages. Depending on their locale, visitors see custom content (news items, calls for contribution or translation...).

- Several key high-traffic pages (about, products, update) are now localized and maintained at large scale (50+ locales)

- Newsletter center and newsletter subscription process largely migrated to Bedrock and additional locales supported (but there is still work to do there)

- The plugincheck web application is also largely migrated to Bedrock (61 locales on bedrock, about 30 more to migrate before we can delete the older backend and maintain only one version)

- The contribute page scaled up to 28 locales, with local teams of volunteers behind it answering the people who contact us

- Firefox OS consumer and industry sub-sites released/updated for +10 locales, with some geoIP in addition to locale detection for tailored content!

- Many small updates to other existing pages and templates

Community growth

This quarter, I tried to spend some time looking for localizers to work on web content, as well as accompanying volunteers who contact us. I know that I am good at finding volunteers who share our values and are achievers. Unfortunately, I don't have that much time to spend on it. Hopefully I will be able to spend a few days on it every quarter, because we need to grow, and we need to grow with the best open source contributors!

- About 20 people got involved for the following locales: French, Czech, Catalan, Slovenian, Tamil, Bengali, Greek, Spanish (Spain variant), Swedish. Several became key localizers and will be at the Mozilla Summit.

- A couple of localizers moved from mozilla.org localization to product localization where their help was more needed, I helped them by finding new people to replace them on web localization and/or empowering existing community members to avoid any burn-out

- I spent several days in a row specifically helping the Catalan community, which needed help to scale since it now also does all the mobile work. I opened a #mozilla-cat IRC channel and found 9 brand new volunteers, some of them professional translators, some of them respected localizers from other open source projects. I'll probably spend some more time helping them next quarter to consolidate this growth. I may keep this strategy every quarter since it seems to be efficient: look for localizers in general, and also help one specific team grow and organize itself to scale up.

Other

- Significant localization work for Firefox Health Report, both Desktop (shipped) and Mobile versions (soon to be shipped)

- Lots of meetings for lots of different projects for next quarter

- Two work weeks: one in Berlin focused on tooling, and one focused on training my new colleagues Peying and Francesco (to be honest, Francesco didn't need much of it thanks to his 10 years of involvement in Mozilla as a contributor)

- A lot of work to adjust my processes to work with my new colleague Francesco Lodolo (also an old-timer in the community; he is the Italian Firefox localizer). Kudos to Francesco for helping me with all of the projects! Now I can go on holiday knowing that I have a good backup.

French community involvement

- In the new Mozilla Paris office, I organized a meeting with the LinuxFR admins, because I think it's important to work with the rest of the open source ecosystem

- With Julien Wajsberg (Gaia developer), we organized a one-day meeting with the Dotclear community. Dotclear is a popular, purely not-for-profit blogging platform in France and an alternative to WordPress. We think it's important to work with projects that build software allowing people to create content on the Web.

- Preparation of more open source events in the Paris office

- We migrated our server (hosting Transvision, womoz.org, mozfr.org...) to the latest Debian Stable, which finally brings us a decent modern version of PHP (5.4). We grew our admin community to 2 more people with Ludo and Geb :). Our server flies!

In a nutshell, a very busy quarter! If you want to talk about some of it with me, I will be at the Mozilla Summit in Brussels this week.

http://www.chevrel.org/carnet/?post/2013/10/01/What-I-worked-on-last-quarter


Christian Heilmann: [video+slides] FirefoxOS – HTML5 for a truly world-wide-web (Sapo Codebits 2014)

Today the good people at Sapo released the video of my Codebits 2014 keynote.

In this keynote, I talk about FirefoxOS and what it means in terms of bringing mobile web connectivity to the world. I explain how mobile technology is unfairly distributed and how closed environments prevent budding creators from releasing their first app. The slides are available on Slideshare as the video doesn’t show them.

There’s also a screencast on YouTube.

Since this event, Google has announced its Android One project, and I am very much looking forward to seeing how this world-wide initiative plays out and gets more people connected.


Henrik Skupin: Firefox Automation report – week 21/22 2014

In this post you can find an overview of the work that happened in the Firefox Automation team during weeks 21 and 22.

Highlights

To help everyone in our community learn more about test automation at Mozilla, we planned four full-day automation training days between mid-May and mid-June. The first training day took place on May 21st and went well. Lots of [people were present and actively learning more about automation](https://quality.mozilla.org/2014/05/automation-training-day-may-21st-results/), especially about testing with Mozmill.

To make it a bit easier for community members to get started with Mozmill testing, we also created a set of one-and-done tasks. They range from easy tasks, like running Mozmill via the Mozmill Crowd extension, to creating a first simple Mozmill test.

Something that took us by surprise was that, with the release of Firefox 30.0b3, we no longer ran any automatically triggered Mozmill jobs in our CI. It took a bit of investigation, but Henrik finally found out that the problem had been introduced by RelEng when they renamed the product from 'firefox' to 'Firefox'. A quick workaround fixed it temporarily, but for a stable long-term solution we might need a frozen API for build notifications via Mozilla Pulse.
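One defensive pattern against this kind of breakage is to normalize identifiers before comparing them. The sketch below is a hypothetical illustration, not the workaround the team actually applied. The message dict and its `product` key are invented and do not reflect the real Pulse message schema.

```python
def should_trigger_mozmill(message, product="firefox"):
    """Decide whether a (hypothetical) build notification should start Mozmill jobs.

    The product name is compared case-insensitively, so a rename such as
    'firefox' -> 'Firefox' does not silently stop every triggered job.
    """
    return message.get("product", "").casefold() == product.casefold()
```

This only guards against changes in capitalization. Any other change to the message format would still break silently, which is why a frozen notification API is the more robust fix.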

One of our goals for Q2 2014 is also to get our machines under the control of PuppetAgain. Henrik started investigating the first steps and set up the base manifests needed for our nodes, along with the appropriate administrative accounts.

The second automation training day was also planned by Andreea and took place on May 28th. Again, a couple of people were present, and given the feedback on one-and-done tasks, we fine-tuned them.

Last but not least, Henrik set up the new Firefox-Automation-Contributor team, which finally allows us to assign people to specific issues. That was necessary because GitHub doesn't let you assign issues to just anyone, only to known people.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 21 and week 22.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda, the video recording, and notes from the Firefox Automation meetings of week 21 and week 22.

http://www.hskupin.info/2014/07/10/firefox-automation-report-week-21-22-2014/


Henrik Gemal: Bringing SIMD to JavaScript

In an exciting collaboration with Mozilla and Google, Intel is bringing SIMD to JavaScript. This makes it possible to develop new classes of compute-intensive applications such as games and media processing—all in JavaScript—without the need to rely on any native plugins or non-portable native code. SIMD.JS can run anywhere JavaScript runs. It will, however, run a lot faster and more power efficiently on the platforms that support SIMD. This includes both the client platforms (browsers and hybrid mobile HTML5 apps) as well as servers that run JavaScript, for example through the Node.js V8 engine.

https://01.org/blogs/tlcounts/2014/bringing-simd-javascript

http://gemal.dk/blog/2014/07/10/bringing_simd_to_javascript/index.html?from=rss-category


Nicholas Nethercote: Dipping my toes in the Servo waters

I’m very interested in Rust and Servo, and have been following their development closely. I wanted to actually do some coding in Rust, so I decided to start making small contributions to Servo.

At this point I have landed two changes in the tree — one to add very basic memory measurements for Linux, and the other for Mac — and I thought it might be interesting for people to hear about the basics of contributing. So here’s a collection of impressions and thoughts in no particular order.

Getting the code and building Servo was amazingly easy. The instructions actually worked first time on both Ubuntu and Mac! Basically it’s just apt-get install (on Ubuntu) or port install (on Mac), git clone, configure, and make. The configure step takes a while because it downloads an appropriate version of Rust, but that’s fine; I was expecting to have to install the appropriate version of Rust first so I was pleasantly surprised.

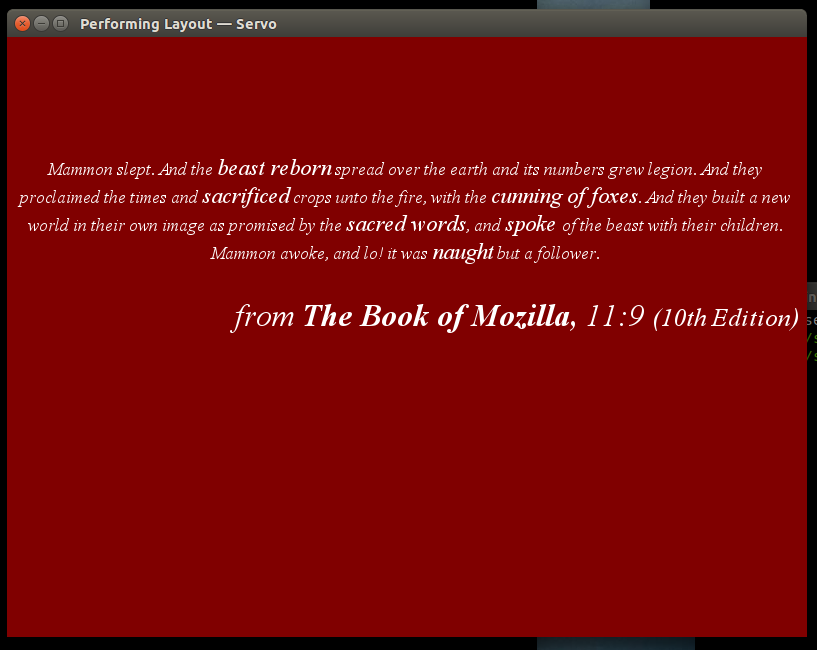

Once you build it, Servo is very bare-boned. Here’s a screenshot.

There is no address bar, or menu bar, or chrome of any kind. You simply choose which page you want to display from the command line when you start Servo. The memory profiling I implemented is enabled by using the -m option, which causes memory measurements to be periodically printed to the console.

Programming in Rust is interesting. I’m not the first person to observe that, compared to C++, it takes longer to get your code past the compiler, but it’s more likely to work once you do. It reminds me a bit of my years programming in Mercury (imagine Haskell, but strict, and with a Prolog ancestry): discriminated unions, pattern matching, etc. In particular, you have to provide code to handle all the error cases in place. Good stuff, in my opinion.

One thing I didn’t expect but makes sense in hindsight: Servo has seg faulted for me a few times. Rust is memory-safe, and so shouldn’t crash like this. But Servo relies on numerous libraries written in C and/or C++, and that’s where the crashes originated.

The Rust docs are a mixed bag. Libraries are pretty well-documented, but I haven’t seen a general language guide that really leaves me feeling like I understand a decent fraction of the language. (Most recently I read Rust By Example.) This is meant to be an observation rather than a complaint; I know that it’s a pre-1.0 language, and I’m aware that Steve Klabnik is now being paid by Mozilla to actively improve the docs, and I look forward to those improvements.

The spotty documentation isn’t such a problem, though, because the people in the #rust and #servo IRC channels are fantastic. When I learned Python last year I found that simply typing “how to do X in Python” into Google almost always leads to a Stack Overflow page with a good answer. That’s not the case for Rust, because it’s too new, but the IRC channels are almost as good.

The code is hosted on GitHub, and the project uses a typical pull request model. I’m not a particularly big fan of git — to me it feels like a Swiss Army light-sabre with a thousand buttons on the handle, and I’m confident using about ten of those buttons. And I’m also not a fan of major Mozilla projects being hosted on GitHub… but that’s a discussion for another time. Nonetheless, I’m sufficiently used to the GitHub workflow from working on pdf.js that this side of things has been quite straightforward.

Overall, it’s been quite a pleasant experience, and I look forward to gradually helping build up the memory profiling infrastructure over time.

https://blog.mozilla.org/nnethercote/2014/07/10/dipping-my-toes-in-the-servo-waters/


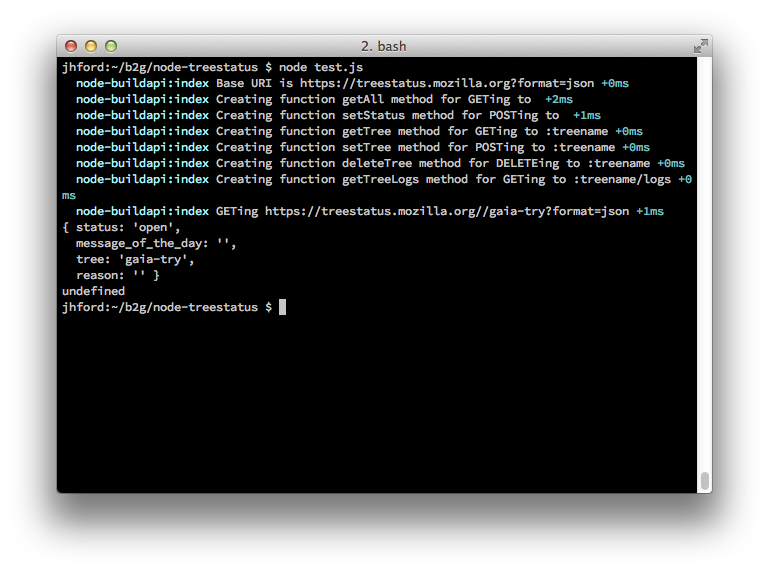

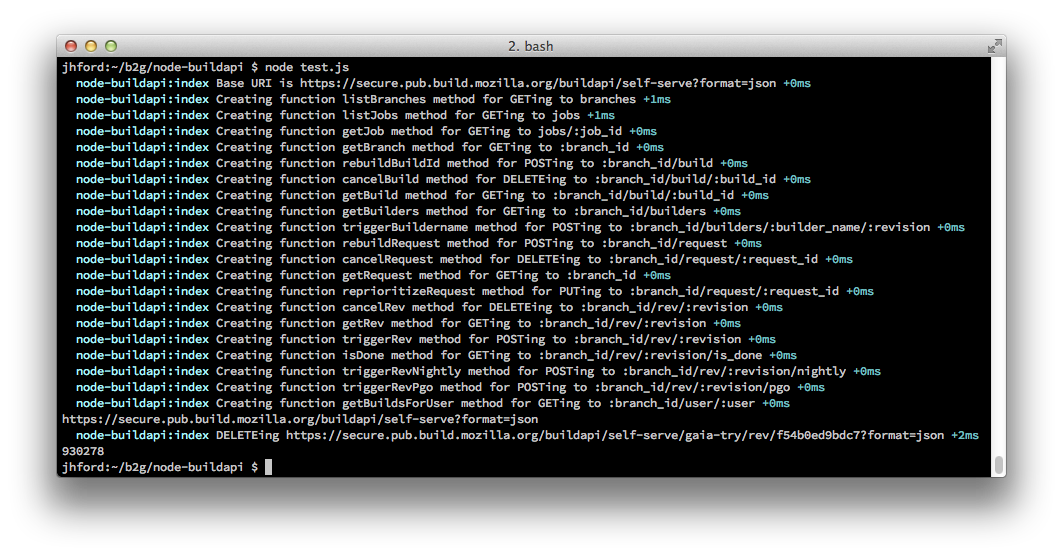

John Ford: Node.js modules to talk to Treestatus and Build API

Proof that it works:

They aren't perfect, but they work well. What I really should do is split the API data into a JSON file, create a single module that knows how to consume those JSON files and build the API Objects and Docs then have both of these APIs use that module to do their work. Wanna do that? I swear, I'll look at pull requests!
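John's refactoring idea could look roughly like the sketch below. The API description lives in JSON, and one shared module builds both the callable API objects and the docs from it. This is a minimal Python sketch, not the actual Node.js modules. The endpoint name, path, parameter and `example.invalid` base URL are hypothetical placeholders, not the real Treestatus or Build API. The sketch also only builds request URLs; it makes no HTTP calls.

```python
import json
from urllib.parse import urlencode

# Hypothetical API description; in the refactoring idea this would live in its
# own JSON file. Endpoint names, paths and the base URL are placeholders.
SPEC = json.loads("""
{
  "base_url": "https://example.invalid/treestatus",
  "endpoints": {
    "tree": {"path": "/{tree}", "params": ["format"],
             "doc": "Fetch the status of one tree."}
  }
}
""")


def build_api(spec):
    """Build callables and generated docs from one API description."""
    api, docs = {}, []
    for name, ep in spec["endpoints"].items():
        def make_call(ep=ep):
            def call(**kwargs):
                path = ep["path"].format(**kwargs)  # fill placeholders like {tree}
                query = {k: kwargs[k] for k in ep.get("params", []) if k in kwargs}
                url = spec["base_url"] + path
                return url + ("?" + urlencode(query) if query else "")
            call.__doc__ = ep["doc"]
            return call
        api[name] = make_call()
        docs.append(f"{name}({', '.join(ep.get('params', []))}): {ep['doc']}")
    return api, docs
```

With this design, a second API needs only a second JSON file. The request-building and doc-generating code is shared.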

Edit: Forgot to actually link to the code. It's here and here.

http://blog.johnford.org/2014/07/nodejs-modules-to-talk-to-treestatus.html


Patrick Cloke: Mentoring and Time

No, this is not about being late to places; it’s about respecting people’s time. I won’t go deep into why this is important, as Michael Haggerty wrote an awesome article on it. His thoughts boiled down to a single line of advice:

DON’T WASTE OTHER PEOPLE’S TIME

I think this applies to any type of mentoring, not only in open source work: any formal or informal mentoring! This advice isn’t meant just for GSoC students, interns or new employees. It also covers things I’d like to remind myself to do when someone is helping me.

To make this sound positive, I’d reword the above advice as:

Respect other people’s time!

Someone is willing to help you, so assume some good faith, but help them help you! Some actions to focus on:

- Ask focused questions! If you do not understand an answer, do not re-ask the same question; ask a follow-up question instead. Show that you’ve researched the original answer and attempted to understand it. Write sample code, play with it, etc. If you think the answer given doesn’t apply to your question, reword your question: your mentor probably did not understand it.

- Be cognizant of timezones: if you’d like a question answered (in realtime), ask it when the person is awake! (And this includes realizing if they have just woken up or are going to bed.)

- Your mentor may not have the context you do: they might be helping many people at once, or even working on something totally different than you! Try not to get frustrated if you have to explain your context to them multiple times or have to clarify your question. You are living and breathing the code you’re working in; they are not.

- Don’t be afraid to share code. It’s much easier to ask a question when there’s a specific example in front of you. Be specific and don’t talk theoretically.

- Don’t be upset if you’re asked to change code (e.g. receive an r-)! Part of helping you to grow is telling you what you’re doing wrong.

- Working remotely is hard. It requires effort to build a level of trust between people. Don’t just assume it will come in time, but work at it and try to get to know and understand your mentor.

- Quickly respond to both feedback and questions. Your mentor is taking their precious time to help you. If they ask you a question or ask something of you, do it ASAP. If you can’t answer their question immediately, at least let them know you received it and will soon look at it.

- If there are multiple people helping you, assume that they communicate (without your knowledge). Don’t…

- …try to get each of them to do separate parts of a project for you.

- …ask the same question to multiple people hoping for different answers.

The above is a lot to consider. I know that I have a tendency to do some of the above. Using your mentor’s time efficiently will not only make your mentor happy, but will probably also make them want to give you more of their time.

Mentoring is also hard and a skill to practice. Although I’ve talked a lot about what a mentee needs to do, it is also important that a mentor makes h(im|er)self available and open. A few thoughts on interacting as a mentor:

- Be cognizant of culture and language (as in, the level at which a mentor and mentee share a common language). In particular, colloquialisms should be avoided whenever possible. At least until a level of trust is reached.

- Be tactful when giving feedback. Thank people for submitting a patch, give good, actionable feedback quickly. Concentrate more on overall code design and algorithms than nits. (Maybe even point out nits, but fix them yourself for an initial patch.)

http://patrick.cloke.us/posts/2014/07/09/mentoring-and-time/


Byron Jones: using “bugmail filtering” to exclude notifications you don’t want

a common problem with bugzilla emails (aka bugmail) is there’s too much of it. if you are involved in a bug or watching a component you receive all changes made to bugs, even those you have no interest in receiving.

earlier this week we pushed a new feature to bugzilla.mozilla.org : bugmail filtering.

this feature is available on the “bugmail filtering” tab on the “user preference” page.

there are many reasons why bugzilla may send you notification of a change to a bug:

- you reported the bug

- the bug is assigned to you

- you are the qa-contact for the bug

- you are on the cc list

- you are watching the bug’s product/component

- you are watching another user who received notification of the bug

- you are a “global watcher”

dealing with all that bugmail can be time-consuming. one way to address this issue is to use the x-headers present in every bugmail to categorise and separate bugmail into different folders in your inbox. unfortunately this option isn’t available to everyone (eg. gmail users still cannot filter on any email header).
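For mail setups that can filter on headers, that kind of routing might look like the minimal Python sketch below. It assumes bugmail carries `X-Bugzilla-Product`, `X-Bugzilla-Component` and `X-Bugzilla-Reason` headers, and that the reason header lists values such as `AssignedTo` or `CC`. Check the headers on your own bugmail before relying on these names. The folder layout is made up for illustration.

```python
from email import message_from_string


def folder_for(raw_message):
    """Pick a mail folder for one bugmail based on its X-Bugzilla-* headers."""
    msg = message_from_string(raw_message)
    reasons = msg.get("X-Bugzilla-Reason", "").split()
    if "AssignedTo" in reasons:
        # bugs assigned to me get their own folder, whatever the component
        return "bugmail/assigned"
    product = msg.get("X-Bugzilla-Product", "unknown")
    component = msg.get("X-Bugzilla-Component", "unknown")
    return f"bugmail/{product}/{component}"
```

Note that this sorts mail after it arrives; it does not reduce how much mail is sent, which is the gap server-side filtering closes.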

bugmail filtering allows you to tell bugzilla to notify you only if it’s a change that you’re interested in.

for example, you can say:

don’t send me an email when the qa-whiteboard field is changed unless the bug is assigned to me

if multiple filters are applicable to the same bug change, include filters override exclude filters. this interplay allows you to write filters to express “don’t send me an email unless …”

don’t send me an email for developer tools bugs that i’m CC’ed on unless the bug’s status is changed

- first, exclude all developer tools emails:

- then override the exclusion with an inclusion for just the status changes:
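The precedence rule, where a matching include filter beats any matching exclude filter, can be modelled in a few lines. This is a minimal Python sketch of the rule as described above, not bugzilla.mozilla.org's implementation. The `Filter` fields and the shape of the `change` dict are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Filter:
    """One hypothetical bugmail filter; None in a field means 'match anything'."""
    action: str                         # "include" or "exclude"
    component: Optional[str] = None
    field: Optional[str] = None         # the bug field that changed
    relationship: Optional[str] = None  # why you get the mail, e.g. "cc"

    def matches(self, change):
        wanted = {"component": self.component, "field": self.field,
                  "relationship": self.relationship}
        return all(v is None or change[k] == v for k, v in wanted.items())


def should_notify(filters, change):
    matching = [f for f in filters if f.matches(change)]
    if any(f.action == "include" for f in matching):
        return True   # include filters override exclude filters
    if any(f.action == "exclude" for f in matching):
        return False
    return True       # no filter applies: normal bugmail rules
```

The two filters from the developer tools example then become one broad exclude filter plus one narrow include filter for status changes.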

Filed under: bmo


Pete Moore: Weekly review 2014-07-09

This week I am on build duty.

At the tail end of last week, I managed to finish off the l10n patches and sent them over to Aki for review. He has now reviewed them, and the next step is to process his review comments.

Other than this, I raised a bug about refactoring the beagle config and created a patch, and am currently in discussions with Aki about it.

I’m still processing the loaners for Joel Maher (thanks, Coop, for taking care of the Windows loaners). I hit some problems along the way setting up VNC on Mountain Lion and am working through them now (I also involved Armen to get his expertise).

After the loaners are done, I also have my own queue of optimisations that I’d like to look at that are related to build duty (open bugs).


Niko Matsakis: An experimental new type inference scheme for Rust

While on vacation, I’ve been working on an alternate type inference scheme for rustc. (Actually, I got it 99% working on the plane, and have been slowly poking at it ever since.) This scheme simplifies the code of the type inferencer dramatically and (I think) helps to meet our intuitions (as I will explain). It is, however, somewhat less flexible than the existing inference scheme, though all of rustc and all the libraries compile without any changes. The scheme will (I believe) make it much simpler to implement proper one-way matching for traits (explained later).

Note: Changing the type inference scheme doesn’t really mean much to end users. Roughly the same set of Rust code still compiles. So this post is really mostly of interest to rustc implementors.

The new scheme in a nutshell

The new scheme is fairly simple. It is based on the observation that most subtyping in Rust arises from lifetimes (though the scheme is extensible to other possible kinds of subtyping, e.g. virtual structs). It abandons unification and the H-M infrastructure and takes a different approach: when a type variable V is first related to some type T, we don’t set the value of V to T directly. Instead, we say that V is equal to some type U, where U is derived by replacing all lifetimes in T with lifetime variables. We then relate T and U appropriately.

Let me give an example. Here are two variables whose type must be inferred:

```rust
'a: { // 'a --> name of block's lifetime
    let x = 3;
    let y = &x;
    ...
}
```

Let’s say that the type of x is $X and the type of y is $Y, where $X and $Y are both inference variables. In that case, the first assignment generates the constraint that int <: $X, and the second generates the constraint that &'a $X <: $Y. To resolve the first constraint, we would set $X directly to int, because there are no lifetimes in the type int. To resolve the second constraint, we would set $Y to &'0 int, where '0 represents a fresh lifetime variable. We would then say that &'a int <: &'0 int, which in turn implies that '0 <= 'a. After lifetime inference is complete, the types of x and y would be int and &'a int, as expected.
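To make the example concrete, here is a toy model of the scheme in Python. It rests on heavy simplifying assumptions: only `int` and `&'l T` types, lifetimes as strings, and no lifetime solver. It is not rustc's implementation, and the class and method names are invented for illustration. It replays the two constraints from the example above.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Int:
    """The lifetime-free type `int`."""


@dataclass(frozen=True)
class Ref:
    """A reference type `&'lifetime inner`."""
    lifetime: str
    inner: object


@dataclass(frozen=True)
class TyVar:
    """An inference variable such as $X."""
    name: str


class Infer:
    def __init__(self):
        self.values = {}              # TyVar name -> type; absent means "unset"
        self.region_constraints = []  # (shorter, longer) pairs: shorter <= longer
        self._fresh = count()

    def resolve(self, ty):
        """Substitute the values of type variables that are already set."""
        if isinstance(ty, TyVar):
            return self.resolve(self.values[ty.name]) if ty.name in self.values else ty
        if isinstance(ty, Ref):
            return Ref(ty.lifetime, self.resolve(ty.inner))
        return ty

    def generalize(self, ty):
        """Replace every lifetime in ty with a fresh lifetime variable '0, '1, ..."""
        if isinstance(ty, Ref):
            return Ref(f"'{next(self._fresh)}", self.generalize(ty.inner))
        return ty

    def relate(self, sub, sup):
        """Record the constraint sub <: sup."""
        sub, sup = self.resolve(sub), self.resolve(sup)
        if isinstance(sub, TyVar) and isinstance(sup, TyVar):
            if sub != sup:
                raise NotImplementedError("two unset variables are not handled in this sketch")
        elif isinstance(sup, TyVar):
            self.values[sup.name] = self.generalize(sub)  # set once, never refined
            self.relate(sub, sup)
        elif isinstance(sub, TyVar):
            self.values[sub.name] = self.generalize(sup)
            self.relate(sub, sup)
        elif isinstance(sub, Ref) and isinstance(sup, Ref):
            # &'s T <: &'p U holds when 'p <= 's and T <: U
            self.region_constraints.append((sup.lifetime, sub.lifetime))
            self.relate(sub.inner, sup.inner)
        elif not (isinstance(sub, Int) and isinstance(sup, Int)):
            raise TypeError(f"cannot relate {sub} <: {sup}")
```

Running `relate(Int(), X)` and then `relate(Ref("'a", X), Y)` sets $X to `int` and $Y to `&'0 int`, and records `'0 <= 'a`. A lifetime solver would then pick `'0 = 'a`, giving y the type `&'a int`.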

Without unification, you might wonder what happens when two type variables are related that have not yet been associated with any concrete type. This is actually somewhat challenging to engineer, but it certainly does happen. For example, there might be some code like:

```rust
let mut x; // type: $X
let mut y = None; // type: Option<$0>
loop {
    if y.is_some() {
        x = y.unwrap();
        ...
    }
    ...
}
```

Here, at the point where we process x = y.unwrap(), we do not yet know the values of either $X or $0. We can say that the type of y.unwrap() will be $0, but we must now process the constraint that $0 <: $X. We do this by simply keeping a list of outstanding constraints. So neither $0 nor $X would (yet) be assigned a specific type, but we’d remember that they were related. Then, later, when either $0 or $X is set to some specific type T, we can go ahead and instantiate the other with U, where U is again derived from T by replacing all lifetimes with lifetime variables. Then we can relate T and U appropriately.
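The outstanding-constraint list can be added to a toy model of the scheme, as in the Python sketch below. It makes the same simplifying assumptions as before: only `int` and `&'l T` types, lifetimes as strings, and invented names, so this is not rustc code. The `Option<$0>` wrapper from the example is left out, and the sketch relates `$0` directly.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Int:
    """The lifetime-free type `int`."""


@dataclass(frozen=True)
class Ref:
    """A reference type `&'lifetime inner`."""
    lifetime: str
    inner: object


@dataclass(frozen=True)
class TyVar:
    name: str


class Infer:
    def __init__(self):
        self.values = {}              # only variables that have been set
        self.pending = []             # (sub, sup) pairs between two unset variables
        self.region_constraints = []  # (shorter, longer): shorter <= longer
        self._fresh = count()

    def resolve(self, ty):
        if isinstance(ty, TyVar):
            return self.resolve(self.values[ty.name]) if ty.name in self.values else ty
        if isinstance(ty, Ref):
            return Ref(ty.lifetime, self.resolve(ty.inner))
        return ty

    def generalize(self, ty):
        if isinstance(ty, Ref):
            return Ref(f"'{next(self._fresh)}", self.generalize(ty.inner))
        return ty

    def assign(self, var, ty):
        """Set var to ty with fresh lifetimes, then re-process constraints waiting on var."""
        self.values[var.name] = self.generalize(ty)
        woken = [c for c in self.pending if var in c]
        self.pending = [c for c in self.pending if var not in c]
        for sub, sup in woken:
            self.relate(sub, sup)

    def relate(self, sub, sup):
        sub, sup = self.resolve(sub), self.resolve(sup)
        sub_var, sup_var = isinstance(sub, TyVar), isinstance(sup, TyVar)
        if sub_var and sup_var:
            if sub != sup:
                self.pending.append((sub, sup))  # remember; neither gets a type yet
        elif sup_var:
            self.assign(sup, sub)
            self.relate(sub, sup)
        elif sub_var:
            self.assign(sub, sup)
            self.relate(sub, sup)
        elif isinstance(sub, Ref) and isinstance(sup, Ref):
            self.region_constraints.append((sup.lifetime, sub.lifetime))
            self.relate(sub.inner, sup.inner)
        elif not (isinstance(sub, Int) and isinstance(sup, Int)):
            raise TypeError(f"cannot relate {sub} <: {sup}")
```

First, `relate(Z, X)` for `$0 <: $X` only records a pending pair. Later, a constraint such as `&'a int <: $0` sets `$0` to `&'0 int`. That wakes the pending pair, so `$X` is instantiated as `&'1 int` with its own fresh lifetime.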

If we wanted to extend the scheme to handle more kinds of inference beyond lifetimes, it can be done by adding new kinds of inference variables. For example, if we wanted to support subtyping between structs, we might add struct variables.

What advantages does this scheme have to offer?

The primary advantage of this scheme is that it is easier to think about for us compiler engineers. Every type variable is either set – in which case its type is known precisely – or unset – in which case its type is not known at all. In the current scheme, we track a lower- and upper-bound over time. This makes it hard to know just how much is really known about a type. Certainly I know that when I think about inference I still think of the state of a variable as a binary thing, even though I know that really it’s something which evolves.

What prompted me to consider this redesign was the need to support one-way matching as part of trait resolution. One-way matching is basically a way of asking: is there any substitution S such that T <: S(U)? (Normal matching, by contrast, searches for a substitution applied to both sides, like S(T) <: S(U).)
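A toy version of one-way matching shows the asymmetry. Only variables on the pattern side U may be bound by S, while the target T is treated as fixed. As a simplifying assumption, plain type equality stands in for `<:` here. The `Param`/`Con` representation and `match_one_way` are illustrative names, not rustc's.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Param:
    """A variable that S may substitute, e.g. the A in an impl for Box<A>."""
    name: str


@dataclass(frozen=True)
class Con:
    """A type constructor applied to arguments, e.g. Con("Box", (Con("int"),))."""
    name: str
    args: tuple = ()


def match_one_way(pattern, target, subst=None):
    """Return S with S(pattern) == target, binding only Params in pattern; else None."""
    subst = dict(subst or {})
    if isinstance(pattern, Param):
        if pattern.name not in subst:
            subst[pattern.name] = target
            return subst
        return subst if subst[pattern.name] == target else None
    # The pattern is a constructor, so the target must be the same constructor.
    # A Param on the target side is opaque here, so it never matches a Con.
    if not isinstance(target, Con) or (pattern.name, len(pattern.args)) != (target.name, len(target.args)):
        return None
    for p, t in zip(pattern.args, target.args):
        subst = match_one_way(p, t, subst)
        if subst is None:
            return None
    return subst
```

Matching `Box<A>` against `Box<int>` succeeds with `A = int`. Swapping the roles fails, because the variable is now on the side that may not be substituted.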

One-way matching is very complicated to support in the current inference scheme: after all, if there are type variables that appear in T or U which are partially constrained, we only know bounds on their eventual type. In practice, these bounds actually tell us a lot. For example, if a type variable has a lower bound of int, we know that the type variable is int, since in Rust’s type system there are no super- or sub-types of int. However, encoding this sort of knowledge is rather complex, and ultimately amounts to precisely the same thing as this new inference scheme.

Another advantage is that there are various places in Rust’s type checker where we query the current state of a type variable and make decisions as a result. For example, when processing *x, if the type of x is a type variable T, we would want to know the current state of T. Is T known to be something inherently derefable (like &U or &mut U), or a struct that must implement the Deref trait? The current APIs for doing this bother me because they expose the bounds of U, but those bounds can change over time. This seems “risky” to me, since it’s only sound for us to examine those bounds if we either (a) freeze the type of T or (b) are certain that we examine properties of the bound that will not change. This problem does not exist in the new inference scheme: anything that might change over time is abstracted into a new inference variable of its own.

What are the disadvantages?

One form of subtyping that exists in Rust is not amenable to this inference. It has to do with universal quantification and function types. Function types that are “more polymorphic” can be subtypes of functions that are “less polymorphic”. For example, if I have a function type like <'a> fn(&'a T) -> &'a uint, this indicates a function that takes a reference to T with any lifetime 'a and returns a reference to a uint with that same lifetime. This is a subtype of the function type fn(&'b T) -> &'b uint. While these two function types look similar, they are quite different: the former accepts a reference with any lifetime, but the latter accepts only a reference with the specific lifetime 'b.

What this means is that today if you have a variable that is assigned many times from functions with varying amounts of polymorphism, we will generally infer its type correctly:

```rust
fn example<'b>(..) {
    let foo: <'a> |&'a T| -> &'a int = ...;
    let bar: |&'b T| -> &'b int = ...;
    let mut v;
    v = foo;
    v = bar;
    // type of v is inferred to be |&'b T| -> &'b int
}
```

However, this will not work in the newer scheme; some form of type ascription would be required. As you can imagine, this is not a very common problem, and it did not arise in any existing code.

(I believe that there are situations which the newer scheme infers correct types and the older scheme will fail to compile; however, I was unable to come up with a good example.)

How does it perform?

I haven’t done extensive measurements. The newer scheme creates a lot of region variables. It seems to perform roughly the same as the older scheme, perhaps a bit slower – optimizing region inference may be able to help.


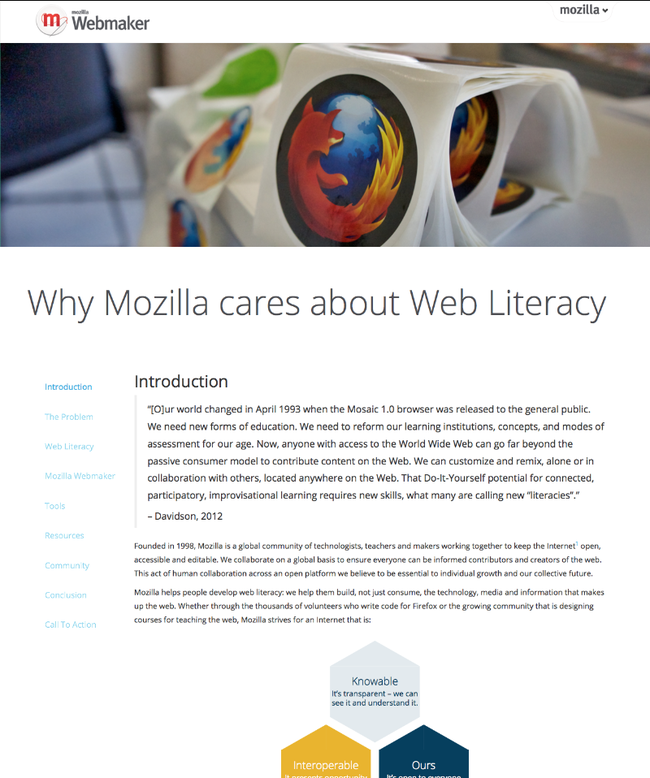

Doug Belshaw: Why Mozilla cares about Web Literacy [whitepaper]

One of my responsibilities as Web Literacy Lead at Mozilla is to provide some kind of theoretical/conceptual underpinning for why we do what we do. Since the start of the year, along with Karen Smith and some members of the community, I’ve been working on a Whitepaper entitled Why Mozilla cares about Web Literacy.

The thing that took time wasn’t really the writing of it – Karen (a post-doc researcher) and I are used to knocking out words quickly – but the re-scoping and design of it. The latter is extremely important as this will serve as a template for future whitepapers. We were heavily influenced by P2PU’s reports around assessment, but used our own Makerstrap styling. I’d like to thank FuzzyFox for all his work around this!

Thanks also to all those colleagues and community members who gave feedback on earlier drafts of the whitepaper. It’s available under a Creative Commons Attribution 4.0 International license and you can fork/improve the template via the GitHub repository. We’re planning for the next whitepaper to be around learning pathways. Once that’s published, we’ll ensure there’s a friendlier way to access them - perhaps via a subdomain of webmaker.org.

Questions? Comments? I’m @dajbelshaw and you can email me at doug@mozillafoundation.org.


Gervase Markham: The Latest Airport Security Theatre

All passengers flying into or out of the UK are being advised to ensure electronic and electrical devices in hand luggage are sufficiently charged to be switched on.

All electronic devices? Including phones, right? So you must be concerned that something dangerous could be concealed inside a package the size of a phone. And including laptops, right? Which are more than big enough to contain said dangerous phone-sized electronics package in the CD drive bay, or the PCMCIA slot, and still work perfectly. Or, the evilness could even be occupying 90% of the body of the laptop, while the other 10% is taken up by an actual phone wired to the display and the power button which shows a pretty picture when the laptop is “switched on”.

Or are the security people going to make us all run 3 applications of their choice and take a selfie using the onboard camera to demonstrate that the device is actually fully working, and not just showing a static image?

I can’t see this as being difficult to engineer around. And meanwhile, it will cause even more problems trying to find charging points in airports. Particularly for people who are transferring from one long flight to another.

http://feedproxy.google.com/~r/HackingForChrist/~3/_ePD9HzY9LA/

|

|

Just Browsing: Speeding Up Grunt Builds Using Watchify |

Grunt is a great tool for automating boring tasks. Browserify is a magical bundler that allows you to require your modules, Node.js style, in your frontend code.

One of the most useful plugins for Grunt is grunt-contrib-watch. Simply put, it watches your file system and runs predefined commands whenever a change occurs (i.e. a file is deleted, added or updated). This comes in especially handy when you want to run your unit tests every time anything changes.

Browserify works by parsing your JS files and scanning for require and exports statements. Then it determines the dependency order of the modules and concatenates them together to create a “superbundle” JS file. Hence every time your code changes, you need to tell browserify to rebuild your superbundle or your changes will not be picked up by the browser.
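To make the "scan, order, concatenate" idea concrete, here is a toy sketch. It is not how browserify is actually implemented: browserify uses a real JavaScript parser and wraps every module in a function instead of pasting sources together. The regex, the in-memory `modules` map and the function names `findRequires`, `dependencyOrder` and `bundle` are illustrative assumptions.

```javascript
// Toy illustration of "scan for require(), work out the order, concatenate".
// A regex stands in for browserify's real parser, so this is only a sketch.
const REQUIRE_RE = /require\(\s*['"]([^'"]+)['"]\s*\)/g;

// Return the module ids passed to require() in a source string.
function findRequires(source) {
  const deps = [];
  for (const match of source.matchAll(REQUIRE_RE)) {
    deps.push(match[1]);
  }
  return deps;
}

// Depth-first post-order walk: every module is emitted after its dependencies.
// The visited set also stops the walk from looping on circular requires.
function dependencyOrder(modules, entry) {
  const order = [];
  const visited = new Set();
  function visit(id) {
    if (visited.has(id)) return;
    visited.add(id);
    if (!(id in modules)) throw new Error(`Unknown module: ${id}`);
    for (const dep of findRequires(modules[id])) visit(dep);
    order.push(id);
  }
  visit(entry);
  return order;
}

// Concatenate module sources in dependency order into one "superbundle".
function bundle(modules, entry) {
  return dependencyOrder(modules, entry)
    .map((id) => `// --- ${id} ---\n${modules[id]}`)
    .join('\n');
}
```

Given `./main` requiring `./util`, which in turn requires `./format`, `dependencyOrder` returns `['./format', './util', './main']`. Note that every call re-parses every module, which is exactly the cost the naive approach below pays on each change.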

Watching Your Build: the Naive Approach

When connecting Grunt with Browserify, it might be tempting to do something like this:

```javascript
watch: {
  sources: {
    files: [
      '/**/*.coffee',
      '/**/*.js'
    ],
    tasks: ['browserify', 'unittest']
  }
}
```

And it would work. All your code would be parsed and processed and turned into a superbundle when a file changes. But there’s a problem here. Can you spot it? Hint: all your code would be parsed and processed and turned into a superbundle.

Yep. Sloooooow.

On my MacBook Air with SSD and 8GB of RAM, this takes about 4 seconds (and that’s after I made all the big dependencies such as jQuery or Angular external). That’s a long time to wait for feedback from your tests, but not long enough to go grab a coffee. The annoying kind of long, in other words. We can do better.

Enter Watchify

Watchify is to Browserify what grunt-contrib-watch is to Grunt. It watches the filesystem for you and recompiles the bundle when a change is detected. There is an important twist, however, and that is caching. Watchify remembers the parsed JS files, making the rebuild much faster (about ten times faster in my case).
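The caching trick can be sketched as follows. This is a simplified illustration, not watchify's code: `IncrementalBundler` and its `parse` callback are hypothetical, and the sketch detects changes by comparing file contents, whereas watchify (as I understand it) invalidates a file's cache entry when the file system reports a change to it.

```javascript
// Sketch of the caching idea behind watchify: keep each file's parsed result
// and only re-run the expensive parse step for files that changed.
class IncrementalBundler {
  constructor(parse) {
    this.parse = parse;      // expensive per-file work (hypothetical stand-in)
    this.cache = new Map();  // path -> { source, parsed }
    this.parseCount = 0;     // how many times parse() has actually run
  }

  // files: an object mapping file paths to their current source text.
  build(files) {
    const parts = [];
    for (const [path, source] of Object.entries(files)) {
      let entry = this.cache.get(path);
      if (!entry || entry.source !== source) {
        // Cache miss or changed file: parse it and remember the result.
        this.parseCount += 1;
        entry = { source, parsed: this.parse(source) };
        this.cache.set(path, entry);
      }
      parts.push(entry.parsed);
    }
    return parts.join('\n');
  }
}
```

Rebuilding after editing one file re-runs `parse` only for that file; everything else comes straight from the cache, which is where the speed-up comes from.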

There’s one caveat you have to look out for though. When you’re watching your files in order to run tests (which you still need to do via grunt-contrib-watch because Browserify only takes care of the bundling), make sure you target the resulting (browserified) files and not the source files. Otherwise your changes might not get detected by Grunt watch (on some platforms Watchify seems to “eat” the file system events and they don’t get through to grunt-contrib-watch).

In other words, do something like this:

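```javascript
watch: {
  sources: {
    // Per the caveat above, point these patterns at the browserified
    // output rather than at the source files.
    files: [
      '/**/*.coffee',
      '/**/*.js'
    ],
    tasks: ['test']
  }
}
```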

where test is an alias for (for example):

```javascript
test: ['mocha_phantomjs', 'notify:build_complete']
```

You should see a huge improvement in your build times.

Happy grunting!

http://feedproxy.google.com/~r/justdiscourse/browsing/~3/UH_WxAtkX8g/

|

|

Marco Zehe: Accessibility in Google Apps – an overview |

I recently said that I would write a blog series about Google apps accessibility, providing some hints and caveats when it comes to using Google products such as GMail, Docs, and Drive in a web browser.

However, when I researched this topic further, I realized that the documentation Google provide on each of their products for screen reader users is actually quite comprehensive. So, instead of repeating what they already said, I’m going to provide some high-level tips and tricks, and links to the relevant documentation so you can look the relevant information up yourself if you need to.

There is really not much difference between Google Drive, GMail and the other consumer-related products and the enterprise-level offering called Google Apps for Business. All of them are built on the same technology. The good thing is that there is also no way for administrators to turn accessibility off entirely when they administer the Google Apps for Business setup for their company. And unless they mess with default settings, themes and other low-vision features should also work in both end user and enterprise environments.

A basic rule: Know your assistive technology!

This is one thing I notice pretty often when I start explaining certain web-related stuff to people, be they screen reader users or users of other assistive technologies. It is vital for your personal life, and even more so for your professional life, to know your assistive technology! As a screen reader user, just getting around a page by tabbing simply isn't enough to navigate complex web applications efficiently and deal with stuff in a timely fashion. You need to be familiar with concepts such as the difference between a virtual document (or virtual buffer or browse mode document) and the forms or focus mode of your screen reader, especially on Windows. You need to know at least some quick navigation commands available in most browse/virtual mode scenarios. You should also be familiar with landmarks and how to use them to navigate to certain sections of a page. If you just read this and don't know what I was talking about, consult your screen reader manual and keystroke reference now! If you need training to memorize these things and aren't good at self-paced learning, go get help and training, especially in professional environments. You will be much more proficient afterwards and provide much better services. And besides, it'll make you feel better because you will have a greater feeling of accomplishment and less frustration. I promise!

Now with that out of the way, let’s move on to some specific accessibility info, shall we?

GMail

One of the things you'll be using most is GMail. If you want to use a desktop or mobile e-mail client because that is easiest for you, you can do so! Talk to your administrator if you're in a corporate or educational environment, or simply set up your GMail account in your preferred client. Today, most clients won't even require you to enter an IMAP or SMTP server any more, because they know which servers they need to talk to for GMail. So unless your administrator has turned off IMAP and SMTP access, which they most likely haven't, you should be able to just use your client of choice. Only if you want to add server-side e-mail filters or change other settings will you need to go to the web interface.

If you want to, or have to, use the web interface, don’t bother with the basic HTML view. It is so stripped down in functionality that the experience by today’s standards is less than pleasant. Instead, familiarize yourself with the GMail guide for screen reader users, and also have a look at the shortcuts for GMail. Note that the latter will only work if your screen reader’s browse or virtual mode is turned off. If you’re a screen reader user, experiment with which way works better for you, browse/virtual mode or non-virtual mode.

Personally, I found the usability of GMail quite improved in recent months compared to earlier times. I particularly am fond of the conversation threading capabilities and the power of the search which can also be applied to filters.

Note that some documentation says the chat portion of GMail is not accessible. However, this seems to be outdated information, since the guide above clearly states that Chat works and describes some of its features. Best way to find out: Try it!

Contacts

Contacts are accessible on the web, too, but again you can use your e-mail client's capabilities, or an extension, to sync your contacts instead.

Calendar

Google Calendar's Agenda View can be used with screen readers on the web, and the calendar can also be accessed from desktop or mobile CalDAV clients. The Google Calendar guide for screen reader users and Keyboard Shortcuts for Google Calendar provide the relevant info.

Google Docs and Sites

This is probably the most complicated suite of the Google offerings, but don't fear: they are accessible, and you can actually work with them nowadays. For this to work best, Google recommends using either JAWS or NVDA with Firefox or IE, or Chrome with ChromeVox. I tested, and while Safari and VoiceOver on OS X also provided some feedback, the experience wasn't as polished as one would hope. So if you're on the Mac, using Google Chrome and ChromeVox is probably your best bet.

Also, all of these apps work best if you do not rely on virtual/browse modes when on Windows. In NVDA, it's easy to just turn browse mode off by pressing NVDA+Space. For JAWS, the shortcut is JAWSKey+Z. But since this has multiple settings, consult your JAWS manual to make this setting permanent for the Google Drive domain.

The documentation on Drive is extensive. I suggest starting at this hub and working your way through all linked documentation top to bottom. It's a lot at first, but you'll quickly find your way around and grasp the concepts, which are pretty consistent throughout.

Once you’re ready to dive into Docs, Sheets, Slides and the other office suite apps, use the Docs Getting Started document as a springboard to all the others and the in-depth documentation on Docs itself.

One note: in some places, it is said that creating forms is not accessible yet. However, since there is documentation on that, too, the documents stating that creating forms isn't accessible yet are out of date. One of those, among others, is the Administrator Guide to Apps Accessibility.

I found that creating and working in documents and spreadsheets works amazingly well already. There are some problems sometimes with read-only documents which I’ve made the Docs team aware of, but since these are sometimes hard to reproduce, it may need some more time before this works a bit better. I found that, if you get stuck, alt-tabbing out of and back into your browser often clears things up. Sometimes, it might even be enough to just open the menu bar by pressing the Alt key.

Closing remarks

Like with any other office productivity suite, Google Docs is a pretty involved product. In a sense, it’s not less feature-rich than a desktop office suite of programs, only that it runs in a web browser. So in order to effectively use Google Apps, it cannot be said enough: Know your browser, and know your assistive technology! Just tabbing around won’t get you very far!

If you need more information not linked to above, here’s the entry page for all things Google accessibility in any of their apps, devices and services. From here, most of the other pages I mention above can also be found.

And one more piece of advice: If you know you'll be switching to Google Apps in the future at your company, government agency or educational institution, and want to get a head start, get yourself a GMail account if you don't have one. Once you have that, Google Drive, Docs, and the others are all available to you to play around with. There's no better way than creating a safe environment and playing around in it! Remember, it's only a web application; you can't break any machines by using it! And if you do, you're up for a great reward from Google!

Enjoy!

http://www.marcozehe.de/2014/07/08/accessibility-in-google-apps-an-overview/

|

|

Christian Heilmann: Have we lost our connection with the web? Let’s #webexcite |

I love the web. I love building stuff in it using web standards. I learned the value of standards the hard way: building things when the browser choices were IE4 or Netscape 3. The days when connections were slow enough that omitting quotes around attributes made a real difference to end users, instead of being just an opportunity for another controversial discussion thread. The days when you did everything possible, no matter how dirty, to make things look and work right. The days when the basic functionality of a product was its most important part, not whether it looked shiny on a Retina display.

I am not alone. Many out there are card-carrying web developers who love doing what I do. And many have done it for a long, long time. Many of us don a blue beanie hat once a year to show our undying love for the standard work that made our lives much, much easier and predictable and testable in the past and now.

Enough with the backpatting

However, it seems we live in a terrible bubble of self-affirmation about just how awesome and ever-winning the web is. We're lacking proof. We build things to impress one another and seem to forget that what we do should, sooner rather than later, improve the experience of the people out there surfing the web.

In places of perceived affluence (let’s not analyse how much of that is really covered-up recession and living on borrowed money) the web is very much losing mind-share.

Apps excite people

People don’t talk about “having been to a web site”; instead they talk about apps and are totally OK if the app is only available on one platform. Even worse, people consider themselves a better class than others when they have iOS over Android which dares to also offer cheaper hardware.

The web has become mainstream and boring; it is the thing you use, and not where you get your Oooohhhs and Aaaahhhhs.

Why is that? We live in amazing times:

- New input types allow for much richer forms

- Video and Audio in HTML5 have matured to a stage where you can embed a video without worrying about showing a broken grey box

- Canvas allows us to create and manipulate graphics on the fly

- WebRTC allows for Skype-like functionality straight in the browser.

- With Web Audio we can create and manipulate music in the browser

- SVG can now be embedded directly in HTML instead of having to be a separate document, which gives us scalable vector graphics (something Flash was damn good at)

- IndexedDB allows us to store data on the device

- AppCache, despite all its flaws, allows for basic offline functionality

- WebGL brings 3D environments to the web (again, let’s not forget VRML)

- WebComponents hint at finally having a full-fledged Widget interface on the web.

Shown, but never told

The worry I have is that most of these technologies never really get applied in commercial, customer-facing products. Instead we build a lot of “technology demos” and “showcases” to inspire ourselves and prove that there is a “soon to come” future where all of this is mainstream.

This becomes even more frustrating when the showcases vanish or never get upgraded. Much of the stuff I showed people just two years ago only worked in WebKit and could easily be upgraded to work across all browsers, but we're already bored with it and have moved on to the next demo that shows the amazing, soon-to-be-real future.

I'm done with impressing other developers; I want the tech we put in browsers to be used for people out there. If we can't do that, I think we failed as passionate web developers. I think we lost the connection to those we should serve. We don't even experience the same web they do. We have fast Macs with lots of RAM and Adblock enabled. We get excited about parallax web sites that suck the battery of a phone empty in 5 seconds. We happily look at a loading bar for a minute to get an amazing WebGL demo. Real people don't do any of that. Let's not kid ourselves.

Exciting, real products

I remember at the beginning of the standards movement we had showcase web sites that showed real, commercial, user-facing web sites and praised them for using standards. The first CSS layout driven sites, sites using clever roll-over techniques for zooming into product images, sites with very clean and semantic markup – that sort of thing. #HTML on ircnet had a “site of the day”, there was a “sightings” site explaining a weekly amazing web site, “snyggt” in Sweden showcased sites with tricky scripts and layout solutions.

I think it may be time to revisit this idea. Instead of impressing one another with CodePens, Dribbble shots and other in-crowd demos, let's tell one another about great commercial products that are not aimed at web developers and that use up-to-date technology in a very useful and beautiful way.

That way we have an arsenal of beautiful and real things to show to people when they are confused why we like the web so much. The plan is simple:

- If you find a beautiful example of modern tech used in the wild, tweet or post about it using the #webexcite hash tag

- We can also set up a repository somewhere on GitHub once we have a collection going

|

|

Gervase Markham: Spending Our Money Twice |

Mozilla Corporation is considering moving its email and calendaring infrastructure from an in-house solution to an outsourced one, seemingly primarily for cost but also for other reasons such as some long-standing bugs and issues. The in-house solution is corporate-backed open source, the outsourced solution under consideration is closed source. (The identities of the two vendors concerned are well-known, but are not relevant to appreciate the point I am about to make.) MoCo IT estimates the outsourced solution as one third of the price of doing it in-house, for equivalent capabilities and reliability.

I was pondering this, and the concept of value for money. Clearly, it makes sense that we avoid spending multiple hundreds of thousands of dollars that we don’t need to. That prospect makes the switch very attractive. Money we don’t spend on this can be used to further our mission. However, we also need to consider how the money we do spend on this furthers our mission.

Here’s what I mean: I understand that we don’t want to self-host. IT has enough to do. I also understand that it may be that no-one is offering to host an open source solution that meets our feature requirements. And the “Mozilla using proprietary software or web services” ship hasn’t just sailed, it’s made it to New York and is half way back and holding an evening cocktail party on the poop deck. However, when we do buy in proprietary software or services, I assert we should nevertheless aim to give our business to companies which are otherwise aligned with our values. That means whole-hearted support for open protocols and data formats, and for the open web. For example, it would be odd to be buying in services from a company who had refused to, or dragged their feet about, making their web sites work on Firefox for Android or Firefox OS.

If we deploy our money in this way, then we get to “spend it twice” – it gets us the service we are paying for, and it supports companies who will spend it again to bring about (part of) the vision of the world we want to see. So I think that a values alignment between our vendors and us (even if their product is not open source) is something we should consider strongly when outsourcing any service. It may give us better value for money even if it’s a little more expensive.

http://feedproxy.google.com/~r/HackingForChrist/~3/rxdYSDu3-qY/

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1033258] bzexport fails to leave a comment when attaching a file using the BzAPI compatibility layer

- [1003244] Creation of csv file attachement on new Mozilla Reps Swag Request

- [1033955] pre-load all related bugs during show_bug initialisation

- [1034678] Use of uninitialized value $_[0] in pattern match (m//) at Bugzilla/Util.pm line 74. The new value for request reminding interval is invalid: must be numeric.

- [1033445] Certain webservice methods such as Bug.get and Bug.attachments should not use shadow db if user is logged in

- [990980] create an extension for server-side filtering of bugmail

server-side bugmail filtering

accessible via the “Bugmail Filtering” user preference tab, this feature provides fine-grained control over what changes to bugs will result in an email notification.

for example, to never receive changes made to the “QA Whiteboard” field for bugs where you are not the assignee, add the following filter:

Field: QA Whiteboard

Product: __Any__

Component: __Any__

Relationship: Not Assignee

Action: Exclude
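To illustrate how a rule like this could be evaluated, here is a hypothetical model. It is not BMO's implementation (Bugzilla is written in Perl); the object shapes, the `__Any__` wildcard handling, the `userIsAssignee` flag and the policy that any matching Exclude rule suppresses the email are all assumptions.

```javascript
// Hypothetical model of server-side bugmail filtering; not BMO's real code.
const ANY = '__Any__';

// A rule value matches if it is the wildcard or equals the actual value.
function fieldMatches(ruleValue, actual) {
  return ruleValue === ANY || ruleValue === actual;
}

// Only a few relationships are modelled here (assumption).
function relationshipMatches(relationship, change) {
  switch (relationship) {
    case ANY: return true;
    case 'Assignee': return change.userIsAssignee;
    case 'Not Assignee': return !change.userIsAssignee;
    default: throw new Error(`Unsupported relationship: ${relationship}`);
  }
}

function ruleMatches(rule, change) {
  return fieldMatches(rule.field, change.field) &&
    fieldMatches(rule.product, change.product) &&
    fieldMatches(rule.component, change.component) &&
    relationshipMatches(rule.relationship, change);
}

// True if the change should still generate an email for this user.
function shouldNotify(rules, change) {
  return !rules.some((rule) => rule.action === 'Exclude' && ruleMatches(rule, change));
}
```

With the rule above, a QA Whiteboard change on a bug you are not assigned to returns `false` (no email), while the same change on a bug assigned to you, or a change to any other field, still returns `true`.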

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/07/08/happy-bmo-push-day-102/

|

|

Christie Koehler: Driving Project-wide Community Growth by Improving the Mozilla Wiki |

At the Mozilla project there are many ways to contribute. Some contributions are directly to our products: Firefox Desktop, Firefox for Android, Firefox OS, Webmaker, etc. Some contributions are to things that make those products better: QA, localization, release engineering, etc. Some contributions are to tools that help us work together better, such as: Pontoon, Bugzilla, Mozillians and the Mozilla Wiki.

I’ve long had a personal interest in the Mozilla Wiki. When I started as a paid contributor in 2011, it was my main source of information about the many, many Mozilla projects.

And I’m not alone in this. Contributor Sujith Reddy says:

The wiki page of Mozilla has got info about every project running around. For instance, being a Rep, I get questioned by many people on mails, What exactly is the ReMo program. I would reply’em with a single link: https://wiki.mozilla.org/ReMo Basically, it makes my work easier to explain people. It is Mozilla-Encyclopedia :)

And contributor Mark A. Hershberger says:

Wikis provide the best way for a community with many members to collaborate to disseminate knowledge about their shared interest…The wiki provides one of the easiest ways to start contributing to the shared work and become a contributing member of the Mozilla community.

And it’s not just volunteer contributors who find the wiki essential. Here’s Benjamin Sternthal from Web Production:

The Mozilla Wiki is an essential part of how Web Productions manages projects and involves community. The Wiki is particularly valuable for our project hubs, the central place where anyone can view information about a project without having to hunt around in various systems.

History of the Mozilla Wiki

The Mozilla Wiki has been around for a long time. According to WikiApiary it was founded in November 2004, making it nearly 10 years old! It has over 90,000 pages, all of which are public, and roughly 600 daily users.

During most of its existence, the Wiki has been maintained by the community without an organized effort. Mozilla IT has supported it on Mozilla's corporate infrastructure, and various community members, paid and volunteer, have worked to keep it as up-to-date and functional as possible.

This approach worked fairly well for a long time. But during the last couple of years, as our community has experienced incredible growth, this ad-hoc approach stopped serving us well. The wiki has become harder and harder to use when it should become easier and easier to use.

Formation of the Wiki Working Group

And that's why a group of us came together in March 2014 and formed the Wiki Working Group. It's been a few months and the group is going very well. We meet twice a month as a full group, and in smaller groups as needed to work through specific issues. There are 25 people on our mailing list and meeting attendance averages 8-12, with a mix of paid and volunteer contributors in about a 1:1 ratio. Of the paid contributors, I am the only one with time dedicated to working on the Wiki.

In a short amount of time we’ve made some significant accomplishments, including:

- triaged all open bugs (>100, some open several years without updates)

- created a formal governance structure by creating a submodule for the Wiki within Websites

- reduced the clutter and improved usability on the wiki by eliminating new spam (spam accounts and pages previously numbered in the several hundreds per day on average)

- improved usability of the wiki by fixing a few critical but long-standing bugs, including an issue with table sorting

- created an About page for the Wiki that clarifies its scope and role in the project, including what is appropriate content and how to report issues

One of the long-standing bugs we fixed was re-enabling the WikiEditor, which greatly improves usability by giving users an easy-to-use toolbar for authoring pages without having to know wiki markup.

Chris More from Web Productions gave us this feedback on these recent changes:

With the re-introduction of the visual wikieditor, it has allowed non-technical people to be able to maintain their project’s wiki page without having to learn the common wiki markup language. This has been invaluable with getting the new process adopted across the Engagement team.

We’ve also worked hard to create a clear vision for the purpose of the Wiki Working Group. Early on we reached consensus that it is not our role to be the only ones contributing to the wiki. Rather, it is our role to enable everyone across the project to feel empowered to participate and collaborate to make the Mozilla Wiki an enjoyable and lively place to document and communicate about our work.

Where we’re going in 2014

With that in mind, we’re working towards the following milestones for this year:

- upgrading to the current version of MediaWiki (increasing usability and stability)

- updating the default skin (theme) to be more usable and mobile-friendly

- improving the information architecture of the site so content is easier to find and maintain

- engaging contributors to learn to use the wiki and help us improve it by running a series of “wiki missions”

- creating compelling visual dashboards that will help us better understand and recognize wiki activity

We expect these changes to increase participation on the wiki itself considerably, and to increase community activity in other areas of the project by making it easier to document and discover contribution pathways. In this way, the WWG serves all teams at Mozilla in their community building efforts.

Chris More from Web Production again:

The use of the wiki has recently been amplified by the introduction of the Integrated Marketing process. The new process is essentially program management best practices to ensure what Engagement is working on is relevant, organized, and transparent. The wiki has been used to document, share, and to be the hub for both the process and every major project Engagement is working on. Without the wiki, Engagement would have no central public location to share our plans with the world and to understand how to get involved.

So, while our group is small, we are highly engaged. As we continue our work, we’ll enable many, many more people to become contributors and to continue contributing across the project.

How to Get Involved

If you're interested in joining or following the Wiki Working Group, take a look at the How to Participate section on our wiki page for links to our mailing list and meeting schedule.

If you have general feedback about the Mozilla Wiki, or things you’d like to see improved there, leave comments on this Sandbox page.

|

|

Jennie Rose Halperin: about:Mozilla: more than just a newsletter |

“The about:Mozilla newsletter reaches 70,000 people?” I asked Larissa Shapiro incredulously in March when she suggested that our team assist in reviving the dormant newsletter. Indeed, with about:Mozilla, we have the opportunity to reach the inboxes of 70,000 potential contributors, all of whom have already expressed interest in learning more about our work. Though the newsletter is several years old, the revamp focuses on contribution and community. Its renewal has been a boon for our team and helped us continue working both cross-functionally and with our contributor base.

Spreading the Mozilla mission by connecting at scale is one of next quarter’s goals, and the about:Mozilla newsletter is a unique and dynamic way for us to do so. The about:Mozilla newsletter brings us back to our roots: We are seeking out the best in contribution activities and delighting a large community of motivated, excited people who love our products, projects and mission. As our Recognition Working Group asserts: “People contribute to Mozilla because they believe in our message.” The newsletter brings that message to new contributors and reminds casual contributors what they can do for Mozilla.

Reinvigorating the newsletter was a high priority for the Community Building team in Q2, and its success and consistency speak to the continued collaboration between Community Building and Engagement to create a fantastic, contributor-led newsletter. We've released four newsletters since May, and with each issue we continue to find our voice, empower new contributions, and seek out relevant, highly engaged channels for new contributors to get involved at scale. The newsletter team, which consists of myself, Jan Bambach, Brian King, Jessilyn Davis, and Larissa Shapiro, seeks to provide readers with the best opportunities to volunteer across Mozilla.

The easy, digestible, and fun opportunities in the newsletter have been identified by a variety of teams, and every week we present more chances to connect. We’ve given contributors the tools to contribute in a variety of functional areas, from Maker Party to Security to Marketplace to Coding. We have yet to be sure of our return on investment: the newsletter is new and our tracking system is still limited in terms of how we identify new contributions across the organization, but we are excited to see this continue to scale in Q3. We hope to become a staple in the inboxes of contributors and potential contributors around the world.

Our click rates are stable and at the industry average. Approximately 25% of subscribers open the newsletter, and our bounce rate is very low. We are working together to improve the quality and click rate of our community news and updates, as well as to feature a diverse set of Mozilla contributors from a variety of contribution areas. Though our current click rate is 3%, we're fighting for at least 6%, and the numbers have been getting incrementally better.

Identifying bite-sized contribution activities across the organization continues to be a struggle from week to week. We keep our ears open for new opportunities, but would like more teams to submit through our channels in order to identify diverse opportunities. Though we put out a call for submissions at the bi-monthly Grow meeting, we find it difficult to track down teams with opportunities to engage new Mozillians. Submissions remain low despite repeated reminders and outreach.

My favorite part of the newsletter is definitely our “Featured Contributor” section. We've featured people from four countries (the United States, China, India, and the Philippines) and told their varied and inspirational stories. People are excited to be featured in the newsletter, and we are already getting thank-you emails and reposts about this initiative. Thank you also to all the contributors who have volunteered to be interviewed!

I’d like to encourage all Mozillians to help, and here are some easy things that you can do to help us connect at scale:

- If you know of a contribution activity on your team, please submit it to the newsletter! It just needs to be a sentence or two. You can find all submission information on the wiki.

- Sean Bolton, Community Building Intern, is collecting stories about Mozillians to be featured on our website, in our newsletters, and in other channels. Do you know an inspiring Mozillian? Nominate them!

- Are you, or is someone you know, interested in contributing? Sign up for the newsletter and make a difference.

- And of course, you can always Design for Participation.

Here is what I would like to see in the next quarter:

- I'd like to see our click rate increase to 8%. I've been reading a lot about online newsletters, and we have email experts like Jessilyn Davis on our team, so I think this can be done.

- The name about:Mozilla is no longer descriptive, and we would like to discuss changing it to about:Community by the end of the year.

- I will set up a system for teams to report whether the newsletter brought in new contributors. Some teams already do this well: last week's MoFo Net Neutrality petition included analytics that tracked whether each signature came from the newsletter. (Security-minded folks: I can honestly say that it tracked nothing else!)

- I would like to see the newsletter and other forms of Engagement become a pathway for new contributors. This newsletter could not happen without the incredible work of Jan Bambach, a motivated, long-time volunteer from Germany, but I'd love to see others get involved too. We have a link at the bottom of the page that encourages people to Get Involved, but I think we can do more. Working on the newsletter can help contributors practice writing for the web, learn about news and marketing cycles, and learn to code in HTML. A few more hands would also bring a wider variety of voices.

- I will continue to reach out to teams in new and creative ways to encourage diverse submissions and opportunities. The submission form seems underused, and there are certainly other ways to reach teams across the organization.

- Eventually, I'd love to see the newsletter translated into languages other than English!

While the newsletter is only a part of what we do, it has become a symbol for me of how a small group of motivated people can reboot a project to provide consistent quality to an increasingly large supporter base. The about:Mozilla newsletter is not only a success for the Community Building Team, it’s a success for the whole organization because it helps us get the word out about our wonderful work.

http://jennierosehalperin.me/aboutmozilla-more-than-just-a-newsletter/


Michael Verdi: Recent work on Bookmarks and Firefox Reset

I've been working on a number of things over the last couple of months, and I wanted to share two of them. First, bookmarks. Making this bookmarks video for Firefox 29 reminded me of a long-standing issue that has bothered me. By default, new bookmarks are hidden away in the Unsorted Bookmarks folder, so without any instruction they're pretty hard to find. Now that we have this fun animation that shows you where your new bookmark went, I thought it would be good if you could actually see that bookmark when clicking the bookmarks menu button. After considering a number of approaches, we decided to move the list of recent bookmarks out of a sub-menu and show them directly in the main bookmarks menu.

With the design done, this is currently waiting to be implemented.

Another project I've been focusing on is Firefox Reset. The one big unimplemented piece of this work, which began about three years ago, is making the feature discoverable when people need it. The main place we would like to surface this option is when people try to reinstall the same version of Firefox that they are already running. We often see people do this in the hope that it will fix various problems. The issue is that reinstalling doesn't fix much at all; what most people expect to happen actually happens when you reset Firefox. So we'd like to take two approaches. First, if the download page knows that you already have the version of Firefox you are trying to download, it should offer you a reset button instead of the regular download button.

Second, Firefox itself could detect that the same version has just been reinstalled and offer you the opportunity to reset.

The nice thing about these approaches is that they work together. If you decide that something is wrong with Firefox and you want to fix it by reinstalling, you'll see a reset button on the download page. If you use it, the reset takes just a few seconds and you can be on your way. If you still want to download and install a new copy, you can, and you'll get another opportunity to reset after Firefox has launched and you've checked whether the issue has been fixed. This presentation explains in more detail how these processes might work. This work isn't final, and a few dependencies still need to be worked out, but I'm hopeful these pieces can be completed soon.

https://blog.mozilla.org/verdi/439/recent-work-on-bookmarks-and-firefox-reset/
