
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Hacks.Mozilla.Org: WebAssembly Interface Types: Interoperate with All the Things!

People are excited about running WebAssembly outside the browser.

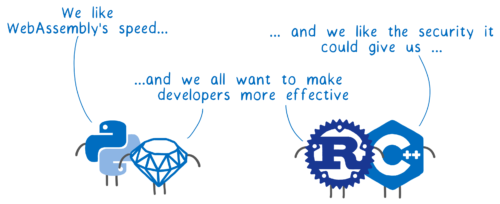

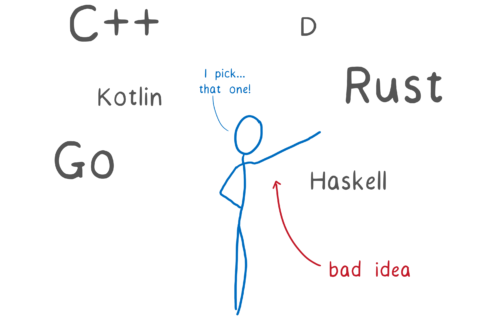

That excitement isn’t just about WebAssembly running in its own standalone runtime. People are also excited about running WebAssembly from languages like Python, Ruby, and Rust.

Why would you want to do that? A few reasons:

- Make “native” modules less complicated

Runtimes like Node or Python’s CPython often allow you to write modules in low-level languages like C++, too. That’s because these low-level languages are often much faster. So you can use native modules in Node, or extension modules in Python. But these modules are often hard to use because they need to be compiled on the user’s device. With a WebAssembly “native” module, you can get most of the speed without the complication.

- Make it easier to sandbox native code

On the other hand, low-level languages like Rust wouldn’t use WebAssembly for speed. But they could use it for security. As we talked about in the WASI announcement, WebAssembly gives you lightweight sandboxing by default. So a language like Rust could use WebAssembly to sandbox native code modules.

- Share native code across platforms

Developers can save time and reduce maintenance costs if they can share the same codebase across different platforms (e.g. between the web and a desktop app). This is true for both scripting and low-level languages. And WebAssembly gives you a way to do that without making things slower on these platforms.

So WebAssembly could really help other languages with important problems.

But with today’s WebAssembly, you wouldn’t want to use it in this way. You can run WebAssembly in all of these places, but that’s not enough.

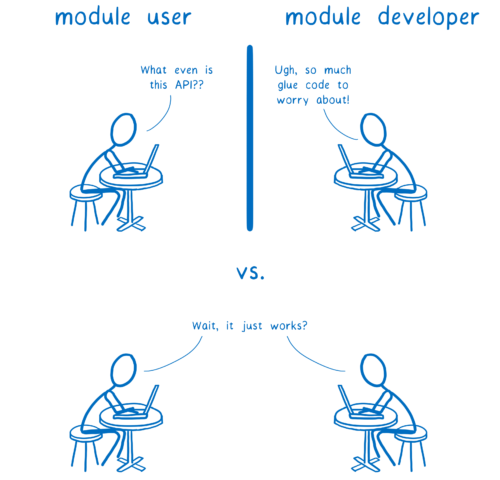

Right now, WebAssembly only talks in numbers. This means the two languages can call each other’s functions.

But if a function takes or returns anything besides numbers, things get complicated. You can either:

- Ship one module that has a really hard-to-use API that only speaks in numbers… making life hard for the module’s user.

- Add glue code for every single environment you want this module to run in… making life hard for the module’s developer.

But this doesn’t have to be the case.

It should be possible to ship a single WebAssembly module and have it run anywhere… without making life hard for either the module’s user or developer.

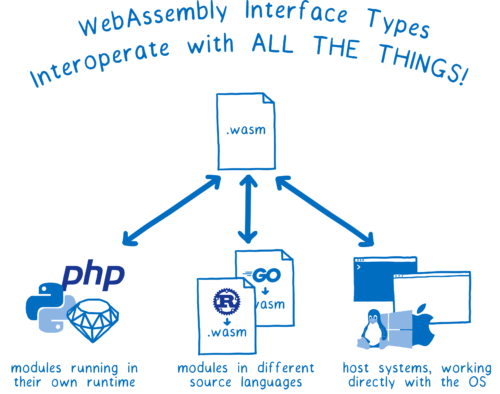

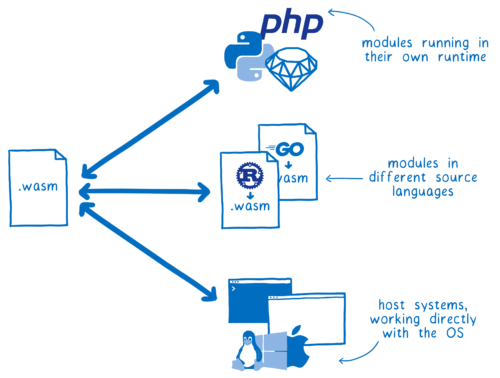

So the same WebAssembly module could use rich APIs, using complex types, to talk to:

- Modules running in their own native runtime (e.g. Python modules running in a Python runtime)

- Other WebAssembly modules written in different source languages (e.g. a Rust module and a Go module running together in the browser)

- The host system itself (e.g. a WASI module providing the system interface to an operating system or the browser’s APIs)

And with a new, early-stage proposal, we’re seeing how we can make this Just Work, as you can see in this demo.

So let’s take a look at how this will work. But first, let’s look at where we are today and the problems that we’re trying to solve.

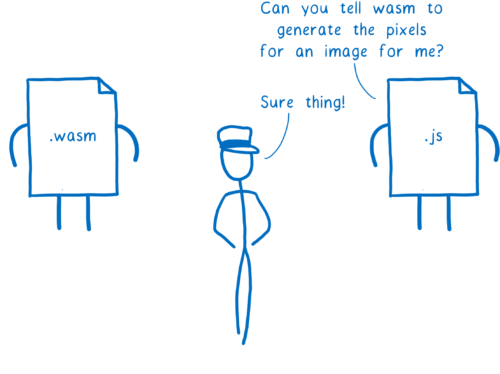

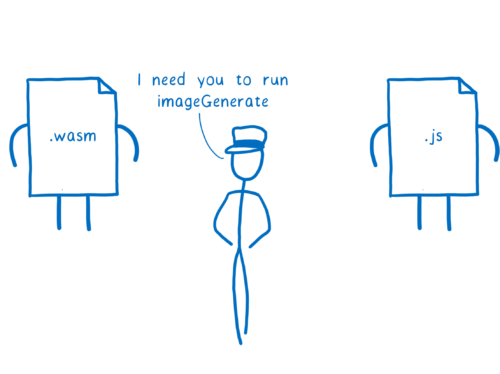

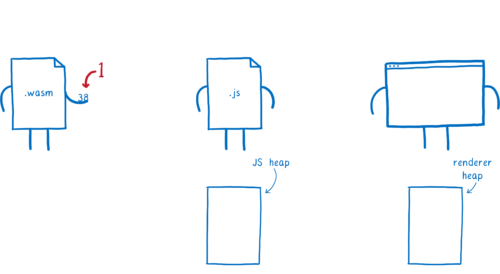

WebAssembly talking to JS

WebAssembly isn’t limited to the web. But up to now, most of WebAssembly’s development has focused on the Web.

That’s because you can make better designs when you focus on solving concrete use cases. The language was definitely going to have to run on the Web, so that was a good use case to start with.

This gave the MVP a nicely contained scope. WebAssembly only needed to be able to talk to one language—JavaScript.

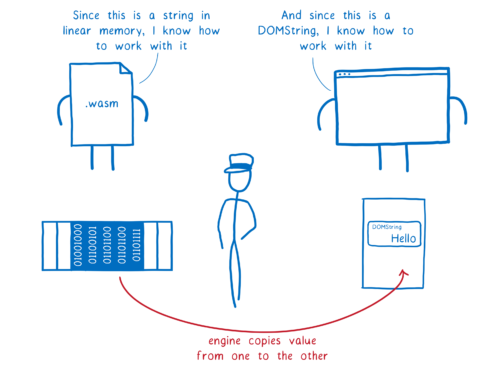

And this was relatively easy to do. In the browser, WebAssembly and JS both run in the same engine, so that engine can help them efficiently talk to each other.

But there is one problem when JS and WebAssembly try to talk to each other… they use different types.

Currently, WebAssembly can only talk in numbers. JavaScript has numbers, but also quite a few more types.

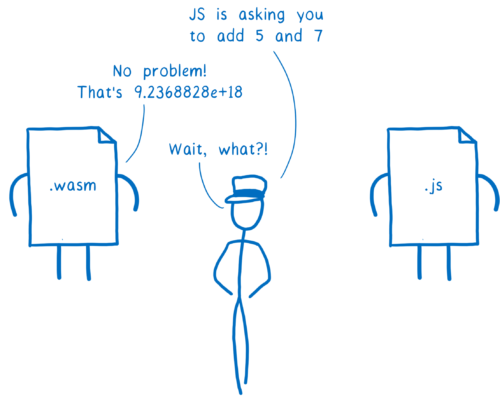

And even the numbers aren’t the same. WebAssembly has 4 different kinds of numbers: int32, int64, float32, and float64. JavaScript currently only has Number (though it will soon have another number type, BigInt).

The difference isn’t just in the names for these types. The values are also stored differently in memory.

First off, in JavaScript any value, no matter the type, is put in something called a box (and I explained boxing more in another article).

WebAssembly, in contrast, has static types for its numbers. Because of this, it doesn’t need (or understand) JS boxes.

This difference makes it hard to communicate with each other.

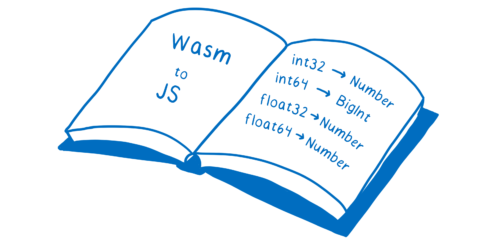

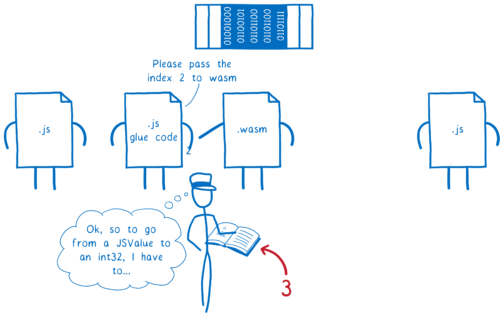

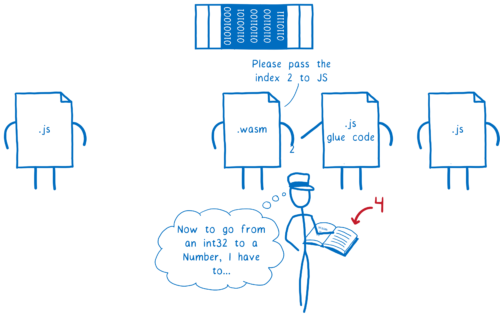

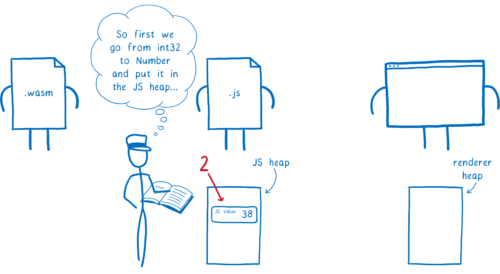

But if you want to convert a value from one number type to the other, there are pretty straightforward rules.

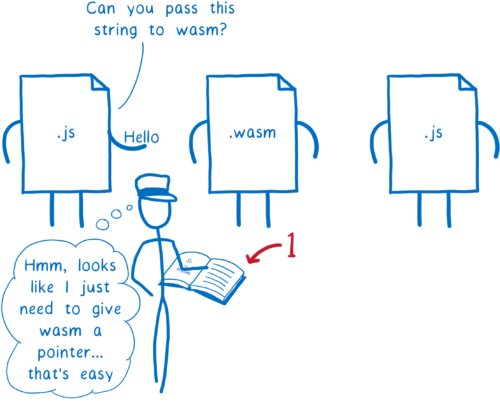

Because it’s so simple, it’s easy to write down. And you can find this written down in WebAssembly’s JS API spec.
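To make those rules a little more concrete, here is a rough sketch that models a JS Number as Rust's f64. The function names are made up for this illustration, and the code is a simplification of the idea rather than the spec's exact algorithm.

```rust
// A JS Number is an IEEE 754 double, modeled here as f64.
// All function names are hypothetical, chosen for this illustration.

/// Wasm i32 -> JS Number: every 32-bit integer fits exactly in a double.
fn i32_to_js_number(v: i32) -> f64 {
    f64::from(v)
}

/// Wasm f32 -> JS Number: widening a float is also exact.
fn f32_to_js_number(v: f32) -> f64 {
    f64::from(v)
}

/// JS Number -> Wasm i32, in the style of JS's ToInt32:
/// truncate toward zero, then wrap modulo 2^32. NaN and infinities become 0.
fn js_number_to_i32(n: f64) -> i32 {
    if !n.is_finite() {
        return 0;
    }
    let wrapped = n.trunc().rem_euclid(4294967296.0); // now in [0, 2^32)
    (wrapped as u32) as i32
}

/// A 64-bit integer does not always fit in a double. This checks whether
/// its magnitude stays within 2^53 - 1, the largest "safe" integer.
fn i64_is_safe_in_js_number(v: i64) -> bool {
    v.unsigned_abs() <= (1u64 << 53) - 1
}
```

The last function hints at why a second number type is useful: large 64-bit integers would lose precision if they were squeezed into a Number.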

This mapping is hardcoded in the engines.

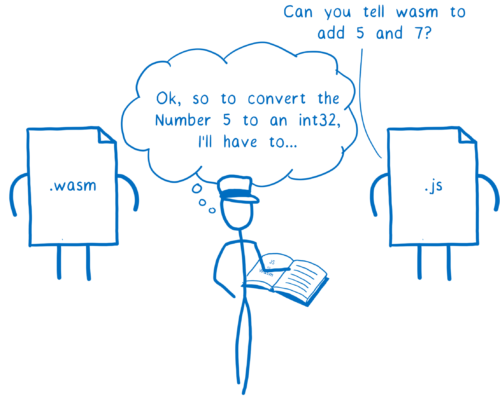

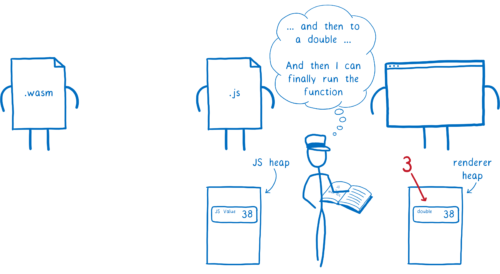

It’s kind of like the engine has a reference book. Whenever the engine has to pass parameters or return values between JS and WebAssembly, it pulls this reference book off the shelf to see how to convert these values.

Having such a limited set of types (just numbers) made this mapping pretty easy. That was great for an MVP. It limited how many tough design decisions needed to be made.

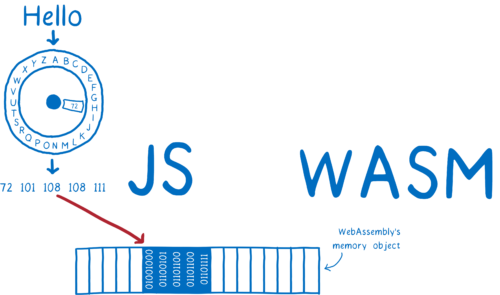

But it made things more complicated for the developers using WebAssembly. To pass strings between JS and WebAssembly, you had to find a way to turn the strings into an array of numbers, and then turn an array of numbers back into a string. I explained this in a previous post.

This isn’t difficult, but it is tedious. So tools were built to abstract this away.

For example, tools like Rust’s wasm-bindgen and Emscripten’s Embind automatically wrap the WebAssembly module with JS glue code that does this translation from strings to numbers.

And these tools can do these kinds of transformations for other high-level types, too, such as complex objects with properties.

This works, but there are some pretty obvious use cases where it doesn’t work very well.

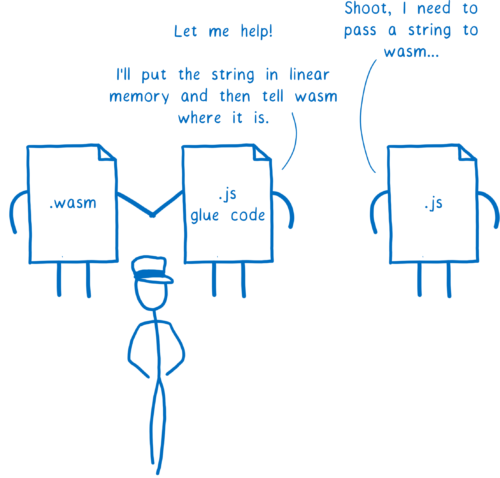

For example, sometimes you just want to pass a string through WebAssembly. You want a JavaScript function to pass a string to a WebAssembly function, and then have WebAssembly pass it to another JavaScript function.

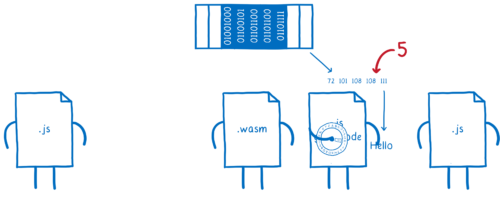

Here’s what needs to happen for that to work:

- the first JavaScript function passes the string to the JS glue code

- the JS glue code turns that string object into numbers and then puts those numbers into linear memory

- then passes a number (a pointer to the start of the string) to WebAssembly

- the WebAssembly function passes that number over to the JS glue code on the other side

- the JS glue code on the other side pulls all of those numbers out of linear memory and then decodes them back into a string object

- which it gives to the second JS function

So the JS glue code on one side is just reversing the work it did on the other side. That’s a lot of work to recreate what’s basically the same object.
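The round trip described above can be sketched in a few lines. This is only a model: linear memory is a plain byte vector, the two glue functions stand in for the JS glue code, UTF-8 is an assumed encoding, and every name is hypothetical. The sketch also passes the length along with the pointer, which real glue code has to track one way or another.

```rust
// Linear memory modeled as a byte vector. All names are hypothetical.

/// Glue on the way in: encode the string as UTF-8 bytes, copy them into
/// linear memory at `offset`, and return the (pointer, length) pair.
fn write_string(memory: &mut Vec<u8>, offset: usize, s: &str) -> (u32, u32) {
    let bytes = s.as_bytes();
    let end = offset + bytes.len();
    if memory.len() < end {
        memory.resize(end, 0);
    }
    memory[offset..end].copy_from_slice(bytes);
    (offset as u32, bytes.len() as u32)
}

/// The WebAssembly function in the middle: it only ever sees numbers
/// and hands them along unchanged.
fn wasm_pass_through(ptr: u32, len: u32) -> (u32, u32) {
    (ptr, len)
}

/// Glue on the way out: read the bytes back and decode a fresh string.
/// Returns None if the range is out of bounds or not valid UTF-8.
fn read_string(memory: &[u8], ptr: u32, len: u32) -> Option<String> {
    let start = ptr as usize;
    let end = start.checked_add(len as usize)?;
    let bytes = memory.get(start..end)?;
    String::from_utf8(bytes.to_vec()).ok()
}
```

Notice that `read_string` allocates a brand new string that is equal to the one `write_string` started with. That copy-and-decode step is the wasted work.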

If the string could just pass straight through WebAssembly without any transformations, that would be way easier.

WebAssembly wouldn’t be able to do anything with this string—it doesn’t understand that type. We wouldn’t be solving that problem.

But it could just pass the string object back and forth between the two JS functions, since they do understand the type.

So this is one of the reasons for the WebAssembly reference types proposal. That proposal adds a new basic WebAssembly type called anyref.

With an anyref, JavaScript just gives WebAssembly a reference object (basically a pointer that doesn’t disclose the memory address). This reference points to the object on the JS heap. Then WebAssembly can pass it to other JS functions, which know exactly how to use it.
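One way to picture this is a host that keeps the real objects and hands out opaque handles. The sketch below is a model of the idea only; `HostHeap`, `AnyRef`, and the function names are hypothetical and are not part of the reference types proposal.

```rust
// The host keeps the real objects; the module only holds an opaque handle.
// `HostHeap` and `AnyRef` are hypothetical names for this sketch.

/// Opaque reference: the wrapped index means nothing to the module,
/// just as an anyref does not disclose a memory address.
#[derive(Clone, Copy, Debug, PartialEq)]
struct AnyRef(usize);

/// Stand-in for the JS heap.
struct HostHeap {
    objects: Vec<String>,
}

impl HostHeap {
    fn new() -> Self {
        HostHeap { objects: Vec::new() }
    }

    /// First JS function: put an object on the heap, hand out a reference.
    fn make_ref(&mut self, value: &str) -> AnyRef {
        self.objects.push(value.to_string());
        AnyRef(self.objects.len() - 1)
    }

    /// Second JS function: it knows how to use the referenced object.
    fn shout(&self, r: AnyRef) -> Option<String> {
        self.objects.get(r.0).map(|s| s.to_uppercase())
    }
}

/// The WebAssembly function: no encoding, no copying, just pass it along.
fn wasm_forward(r: AnyRef) -> AnyRef {
    r
}
```

Only one copy of the string ever exists on the host's heap. The function in the middle never touches its bytes.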

So that solves one of the most annoying interoperability problems with JavaScript. But that’s not the only interoperability problem to solve in the browser.

There’s another, much larger, set of types in the browser. WebAssembly needs to be able to interoperate with these types if we’re going to have good performance.

WebAssembly talking directly to the browser

JS is only one part of the browser. The browser also has a lot of other functions, called Web APIs, that you can use.

Behind the scenes, these Web API functions are usually written in C++ or Rust. And they have their own way of storing objects in memory.

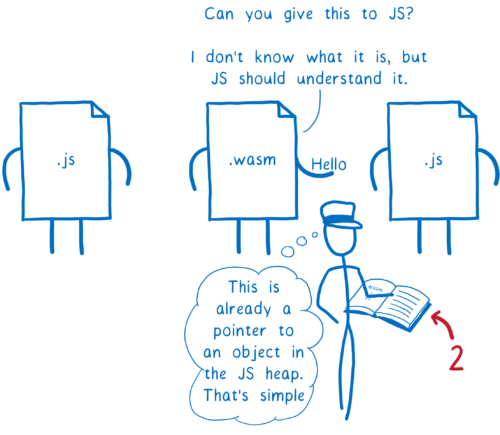

Web APIs’ parameters and return values can be lots of different types. It would be hard to manually create mappings for each of these types. So to simplify things, there’s a standard way to talk about the structure of these types—Web IDL.

When you’re using these functions, you’re usually using them from JavaScript. This means you are passing in values that use JS types. How does a JS type get converted to a Web IDL type?

Just as there is a mapping from WebAssembly types to JavaScript types, there is a mapping from JavaScript types to Web IDL types.

So it’s like the engine has another reference book, showing how to get from JS to Web IDL. And this mapping is also hardcoded in the engine.

For many types, this mapping between JavaScript and Web IDL is pretty straightforward. For example, types like DOMString and JS’s String are compatible and can be mapped directly to each other.

Now, what happens when you’re trying to call a Web API from WebAssembly? Here’s where we get to the problem.

Currently, there is no mapping between WebAssembly types and Web IDL types. This means that, even for simple types like numbers, your call has to go through JavaScript.

Here’s how this works:

- WebAssembly passes the value to JS.

- In the process, the engine converts this value into a JavaScript type, and puts it in the JS heap in memory.

- Then, that JS value is passed to the Web API function. In the process, the engine converts the JS value into a Web IDL type, and puts it in a different part of memory, the renderer’s heap.

This takes more work than it needs to, and also uses up more memory.

There’s an obvious solution to this—create a mapping from WebAssembly directly to Web IDL. But that’s not as straightforward as it might seem.

For simple Web IDL types like boolean and unsigned long (which is a number), there are clear mappings from WebAssembly to Web IDL.

But for the most part, Web API parameters are more complex types. For example, an API might take a dictionary, which is basically an object with properties, or a sequence, which is like an array.

To have a straightforward mapping between WebAssembly types and Web IDL types, we’d need to add some higher-level types. And we are doing that—with the GC proposal. With that, WebAssembly modules will be able to create GC objects—things like structs and arrays—that could be mapped to complicated Web IDL types.

But if the only way to interoperate with Web APIs is through GC objects, that makes life harder for languages like C++ and Rust that wouldn’t use GC objects otherwise. Whenever the code interacts with a Web API, it would have to create a new GC object and copy values from its linear memory into that object.

That’s only slightly better than what we have today with JS glue code.

We don’t want JS glue code to have to build up GC objects—that’s a waste of time and space. And we don’t want the WebAssembly module to do that either, for the same reasons.

We want it to be just as easy for languages that use linear memory (like Rust and C++) to call Web APIs as it is for languages that use the engine’s built-in GC. So we need a way to create a mapping between objects in linear memory and Web IDL types, too.

There’s a problem here, though. Each of these languages represents things in linear memory in different ways. And we can’t just pick one language’s representation. That would make all the other languages less efficient.

But even though the exact layout in memory for these things is often different, there are some abstract concepts that they usually share in common.

For example, for strings the language often has a pointer to the start of the string in memory, and the length of the string. And even if the string has a more complicated internal representation, it usually needs to convert strings into this format when calling external APIs anyways.

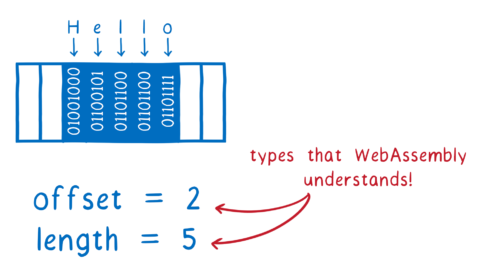

This means we can reduce this string down to a type that WebAssembly understands… two i32s.
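Rust's own string slices are a handy example of this shape, since a `&str` is a pointer plus a length under the hood. The helper names below are hypothetical, and `usize` is used instead of i32 so the sketch also runs on 64-bit hosts; on a wasm32 target both values would fit in an i32.

```rust
use std::{slice, str};

/// Reduce a string to the two numbers described above: where it starts
/// and how many bytes long it is. (Hypothetical helper for this sketch.)
fn to_ptr_len(s: &str) -> (usize, usize) {
    (s.as_ptr() as usize, s.len())
}

/// Rebuild a string view from the two numbers.
///
/// # Safety
/// `ptr` and `len` must describe live, valid UTF-8 bytes, for example a
/// pair returned by `to_ptr_len` for a string that is still alive.
unsafe fn from_ptr_len<'a>(ptr: usize, len: usize) -> &'a str {
    unsafe {
        let bytes = slice::from_raw_parts(ptr as *const u8, len);
        str::from_utf8_unchecked(bytes)
    }
}
```

The `unsafe` marker is a reminder of what the two numbers don't carry: nothing in them says the bytes are still there or that they are a string at all.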

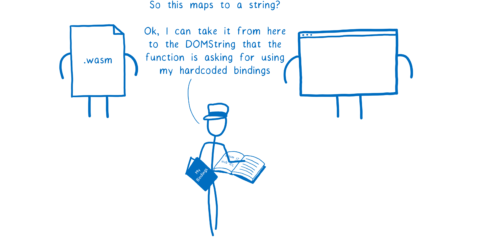

We could hardcode a mapping like this in the engine. So the engine would have yet another reference book, this time for WebAssembly to Web IDL mappings.

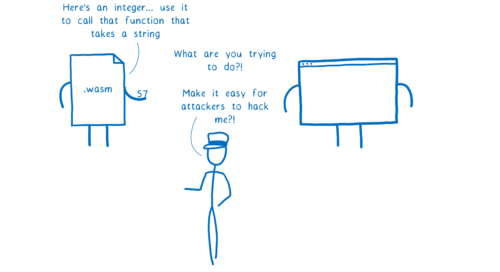

But there’s a problem here. WebAssembly is a type-checked language. To keep things secure, the engine has to check that the calling code passes in types that match what the callee asks for.

This is because there are ways for attackers to exploit type mismatches and make the engine do things it’s not supposed to do.

If you’re calling something that takes a string, but you try to pass the function an integer, the engine will yell at you. And it should yell at you.

So we need a way for the module to explicitly tell the engine something like: “I know Document.createElement() takes a string. But when I call it, I’m going to pass you two integers. Use these to create a DOMString from data in my linear memory. Use the first integer as the starting address of the string and the second as the length.”

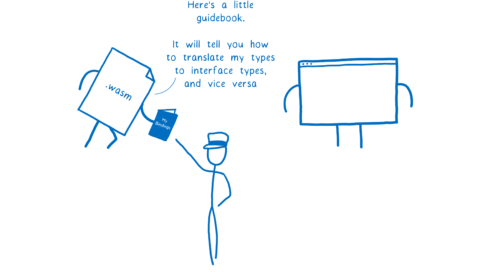

This is what the Web IDL proposal does. It gives a WebAssembly module a way to map between the types that it uses and Web IDL’s types.

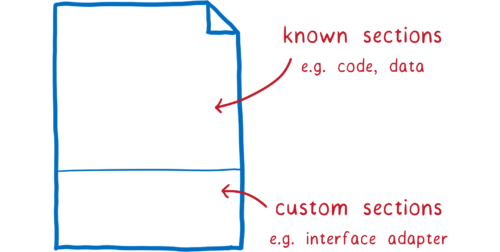

These mappings aren’t hardcoded in the engine. Instead, a module comes with its own little booklet of mappings.

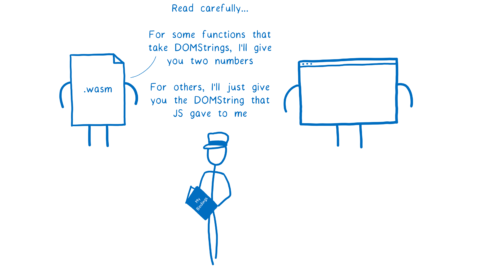

So this gives the engine a way to say “For this function, do the type checking as if these two integers are a string.”

The fact that this booklet comes with the module is useful for another reason, though.

Sometimes a module that would usually store its strings in linear memory will want to use an anyref or a GC type in a particular case… for example, if the module is just passing an object that it got from a JS function, like a DOM node, to a Web API.

So modules need to be able to choose on a function-by-function (or even argument-by-argument) basis how different types should be handled. And since the mapping is provided by the module, it can be custom-tailored for that module.
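Here is one way to picture a booklet entry and the type check it enables. This is a loose model, not the proposal's actual format: the type names, the two kinds of binding, and the rule that a reference only stands in for a node are all assumptions made for this sketch.

```rust
// Hypothetical names throughout; this models the idea, not the real format.

#[derive(Clone, Copy, Debug, PartialEq)]
enum CoreType {
    I32,
    AnyRef,
}

#[derive(Clone, Copy, Debug, PartialEq)]
enum WebIdlType {
    DomString,
    Node,
}

/// One entry in the module's booklet: how a single argument is passed.
#[derive(Clone, Copy, Debug, PartialEq)]
enum Binding {
    /// (pointer, length) into linear memory, read as a string.
    StringInLinearMemory,
    /// An opaque reference passed straight through.
    Reference,
}

/// Core WebAssembly types that a binding consumes.
fn core_types(binding: Binding) -> &'static [CoreType] {
    match binding {
        Binding::StringInLinearMemory => &[CoreType::I32, CoreType::I32],
        Binding::Reference => &[CoreType::AnyRef],
    }
}

/// Can this binding produce the Web IDL type the callee asks for?
fn produces(binding: Binding, wanted: WebIdlType) -> bool {
    matches!(
        (binding, wanted),
        (Binding::StringInLinearMemory, WebIdlType::DomString)
            | (Binding::Reference, WebIdlType::Node)
    )
}

/// Type-check a call: the passed core types must match what the bindings
/// consume, and each binding must yield the parameter type the API expects.
fn check_call(passed: &[CoreType], bindings: &[Binding], params: &[WebIdlType]) -> bool {
    if bindings.len() != params.len() {
        return false;
    }
    let expected: Vec<CoreType> = bindings
        .iter()
        .flat_map(|b| core_types(*b).iter().copied())
        .collect();
    expected == passed && bindings.iter().zip(params).all(|(b, p)| produces(*b, *p))
}
```

With a string-in-linear-memory binding, two i32s pass the check for a function that takes a DOMString, while a single i32 is still rejected. Because the binding is chosen per argument, another argument of the same call could use a reference instead.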

How do you generate this booklet?

The compiler takes care of this information for you. It adds a custom section to the WebAssembly module. So for many language toolchains, the programmer doesn’t have to do much work.

For example, let’s look at how the Rust toolchain handles this for one of the simplest cases: passing a string into the alert function.

    use wasm_bindgen::prelude::*;

    // Import the browser's alert function so Rust code can call it.
    #[wasm_bindgen]
    extern "C" {
        fn alert(s: &str);
    }

The programmer just has to tell the compiler to include this function in the booklet using the annotation #[wasm_bindgen]. By default, the compiler will treat this as a linear memory string and add the right mapping for us. If we needed it to be handled differently (for example, as an anyref) we’d have to tell the compiler using a second annotation.

So with that, we can cut out the JS in the middle. That makes passing values between WebAssembly and Web APIs faster. Plus, it means we don’t need to ship down as much JS.

And we didn’t have to make any compromises on what kinds of languages we support. It’s possible to have all different kinds of languages that compile to WebAssembly. And these languages can all map their types to Web IDL types—whether the language uses linear memory, or GC objects, or both.

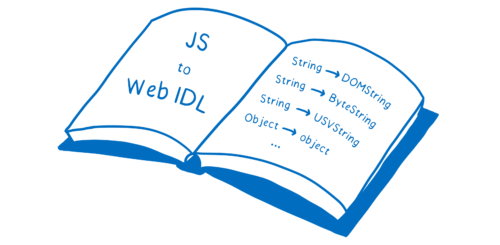

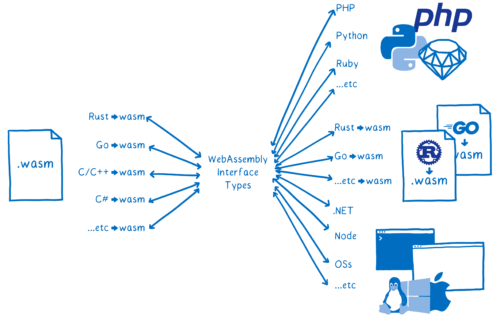

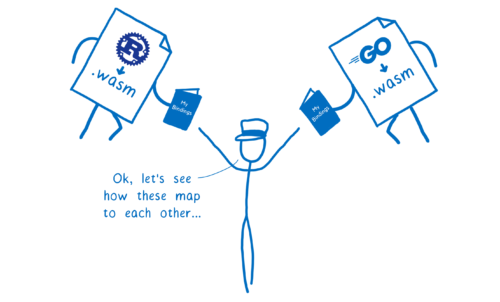

Once we stepped back and looked at this solution, we realized it solved a much bigger problem.

WebAssembly talking to All The Things

Here’s where we get back to the promise in the intro.

Is there a feasible way for WebAssembly to talk to all of these different things, using all these different type systems?

Let’s look at the options.

You could try to create mappings that are hardcoded in the engine, like WebAssembly to JS and JS to Web IDL are.

But to do that, for each language you’d have to create a specific mapping. And the engine would have to explicitly support each of these mappings, and update them as the language on either side changes. This creates a real mess.

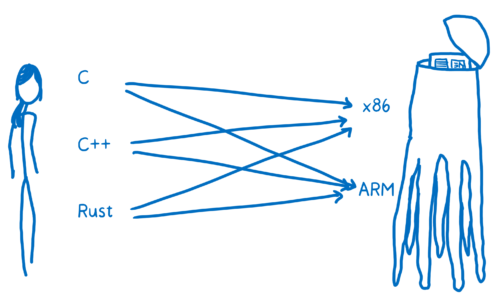

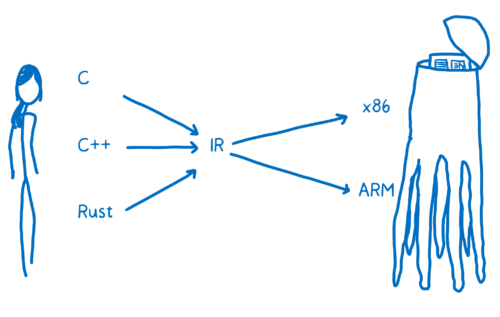

This is kind of how early compilers were designed. There was a pipeline for each source language to each machine code language. I talked about this more in my first posts on WebAssembly.

We don’t want something this complicated. We want it to be possible for all these different languages and platforms to talk to each other. But we need it to be scalable, too.

So we need a different way to do this… more like modern day compiler architectures. These have a split between front-end and back-end. The front-end goes from the source language to an abstract intermediate representation (IR). The back-end goes from that IR to the target machine code.

This is where the insight from Web IDL comes in. When you squint at it, Web IDL kind of looks like an IR.

Now, Web IDL is pretty specific to the Web. And there are lots of use cases for WebAssembly outside the web. So Web IDL itself isn’t a great IR to use.

But what if you just use Web IDL as inspiration and create a new set of abstract types?

This is how we got to the WebAssembly interface types proposal.

These types aren’t concrete types. They aren’t like the int32 or float64 types in WebAssembly today. There are no operations on them in WebAssembly.

For example, there won’t be any string concatenation operations added to WebAssembly. Instead, all operations are performed on the concrete types on either end.

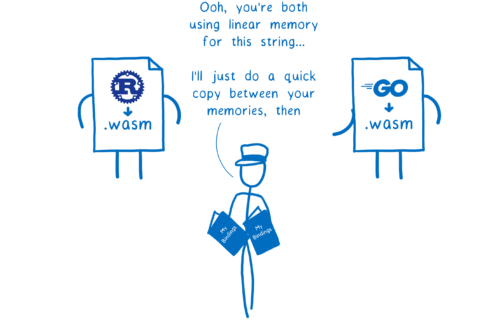

There’s one key point that makes this possible: with interface types, the two sides aren’t trying to share a representation. Instead, the default is to copy values between one side and the other.

There is one case that would seem like an exception to this rule: the new reference values (like anyref) that I mentioned before. In this case, what is copied between the two sides is the pointer to the object. So both pointers point to the same thing. In theory, this could mean they need to share a representation.

In cases where the reference is just passing through the WebAssembly module (like the anyref example I gave above), the two sides still don’t need to share a representation. The module isn’t expected to understand that type anyway… just pass it along to other functions.

But there are times where the two sides will want to share a representation. For example, the GC proposal adds a way to create type definitions so that the two sides can share representations. In these cases, the choice of how much of the representation to share is up to the developers designing the APIs.

This makes it a lot easier for a single module to talk to many different languages.

In some cases, like the browser, the mapping from the interface types to the host’s concrete types will be baked into the engine.

So one set of mappings is baked in at compile time and the other is handed to the engine at load time.

But in other cases, like when two WebAssembly modules are talking to each other, they both send down their own little booklet. They each map their functions’ types to the abstract types.

This isn’t the only thing you need to enable modules written in different source languages to talk to each other (and we’ll write more about this in the future) but it is a big step in that direction.

So now that you understand why, let’s look at how.

What do these interface types actually look like?

Before we look at the details, I should say again: this proposal is still under development. So the final proposal may look very different.

Also, this is all handled by the compiler. So even when the proposal is finalized, you’ll only need to know what annotations your toolchain expects you to put in your code (like in the wasm-bindgen example above). You won’t really need to know how this all works under the covers.

But the details of the proposal are pretty neat, so let’s dig into the current thinking.

The problem to solve

The problem we need to solve is translating values between different types when a module is talking to another module (or directly to a host, like the browser).

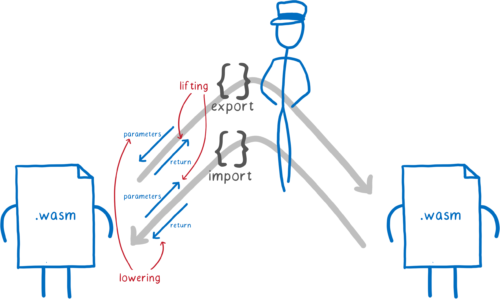

There are four places where we may need to do a translation:

For exported functions

- accepting parameters from the caller

- returning values to the caller

For imported functions

- passing parameters to the function

- accepting return values from the function

And you can think about each of these as going in one of two directions:

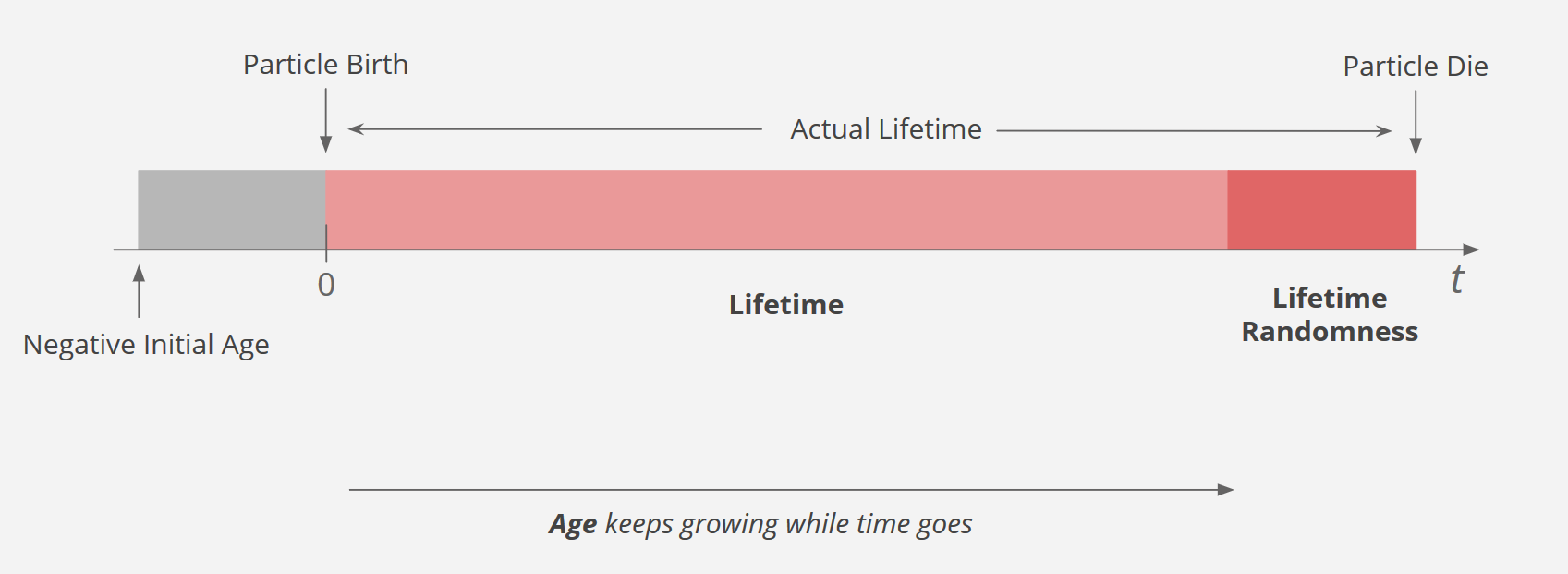

- Lifting, for values leaving the module. These go from a concrete type to an interface type.

- Lowering, for values coming into the module. These go from an interface type to a concrete type.

Telling the engine how to transform between concrete types and interface types

So we need a way to tell the engine which transformations to apply to a function’s parameters and return values. How do we do this?

By defining an interface adapter.

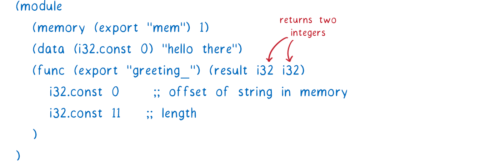

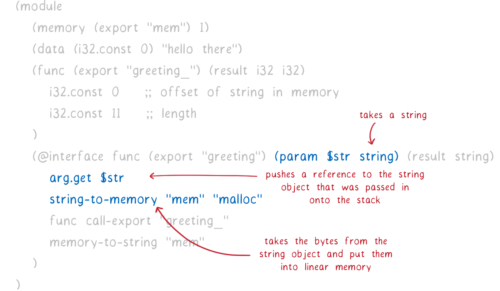

For example, let’s say we have a Rust module compiled to WebAssembly. It exports a greeting_ function that can be called without any parameters and returns a greeting.

Here’s what it would look like (in WebAssembly text format) today.

So right now, this function returns two integers.

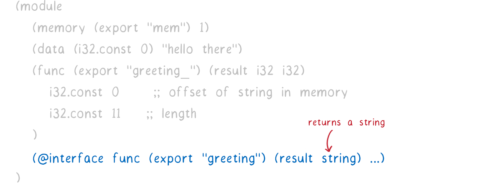

But we want it to return the string interface type. So we add something called an interface adapter.

If an engine understands interface types, then when it sees this interface adapter, it will wrap the original module with this interface.

It won’t export the greeting_ function anymore… just the greeting function that wraps the original. This new greeting function returns a string, not two numbers.

This provides backwards compatibility because engines that don’t understand interface types will just export the original greeting_ function (the one that returns two integers).

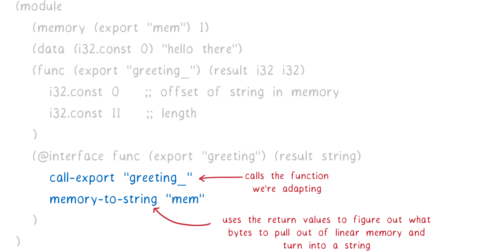

How does the interface adapter tell the engine to turn the two integers into a string?

It uses a sequence of adapter instructions.

The adapter instructions above are two from a small set of new instructions that the proposal specifies.

Here’s what the instructions above do:

- Use the call-export adapter instruction to call the original greeting_ function. This is the one that the original module exported, which returned two numbers. These numbers get put on the stack.

- Use the memory-to-string adapter instruction to convert the numbers into the sequence of bytes that make up the string. We have to specify “mem” here because a WebAssembly module could one day have multiple memories. This tells the engine which memory to look in. Then the engine takes the two integers from the top of the stack (which are the pointer and the length) and uses those to figure out which bytes to use.
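To get a feel for how an engine might walk through these two instructions, here is a toy interpreter. The instruction names follow the text; the `Value` and `Instr` types, the interpreter, and the stand-in `greeting_` export are all hypothetical, and a real engine would not look like this.

```rust
// A toy model of an interface adapter. Types and interpreter are hypothetical.

/// Values that can sit on the adapter's stack.
#[derive(Debug, PartialEq)]
enum Value {
    I32(i32),
    Str(String), // the abstract string interface type
}

/// The two adapter instructions used by the greeting adapter.
enum Instr {
    CallExport(fn(&mut Vec<u8>) -> (i32, i32)),
    MemoryToString,
}

/// Stand-in for the original greeting_ export: it writes the greeting
/// into linear memory and returns (pointer, length).
fn greeting_(memory: &mut Vec<u8>) -> (i32, i32) {
    let text = b"hello, world";
    let ptr = memory.len();
    memory.extend_from_slice(text);
    (ptr as i32, text.len() as i32)
}

/// Run the instructions in order. The adapter itself has no loops or
/// branches; the interpreter just walks a flat list.
fn run_adapter(instrs: &[Instr], memory: &mut Vec<u8>) -> Option<Value> {
    let mut stack: Vec<Value> = Vec::new();
    for instr in instrs {
        match instr {
            Instr::CallExport(export) => {
                let (ptr, len) = export(&mut *memory);
                stack.push(Value::I32(ptr));
                stack.push(Value::I32(len));
            }
            Instr::MemoryToString => {
                // Pop the length first, then the pointer (reverse of push order).
                let len = match stack.pop()? {
                    Value::I32(n) => n as usize,
                    _ => return None,
                };
                let ptr = match stack.pop()? {
                    Value::I32(n) => n as usize,
                    _ => return None,
                };
                let bytes = memory.get(ptr..ptr.checked_add(len)?)?;
                stack.push(Value::Str(String::from_utf8(bytes.to_vec()).ok()?));
            }
        }
    }
    stack.pop()
}
```

Running the list `[CallExport(greeting_), MemoryToString]` leaves a single string on the stack, which becomes the return value of the wrapped function.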

This might look like a full-fledged programming language. But there is no control flow here—you don’t have loops or branches. So it’s still declarative even though we’re giving the engine instructions.

What would it look like if our function also took a string as a parameter (for example, the name of the person to greet)?

Very similar. We just change the interface of the adapter function to add the parameter. Then we add two new adapter instructions.

Here’s what these new instructions do:

- Use the arg.get instruction to take a reference to the string object and put it on the stack.

- Use the string-to-memory instruction to take the bytes from that object and put them in linear memory. Once again, we have to tell it which memory to put the bytes into. We also have to tell it how to allocate the bytes. We do this by giving it an allocator function (which would be an export provided by the original module).
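The other direction can be modeled the same way. In this sketch the instruction names come from the text, while the types, the bump allocator standing in for the module's exported allocator, and the `lower_args` function are hypothetical.

```rust
// Toy model of lowering a string argument into linear memory.
// Everything except the instruction names is hypothetical.

#[derive(Debug, PartialEq)]
enum Value {
    I32(i32),
    Str(String),
}

enum Instr {
    /// Push the adapter argument at this index onto the stack.
    ArgGet(usize),
    /// Copy the string on top of the stack into memory obtained from the
    /// given allocator, leaving (pointer, length) on the stack.
    StringToMemory(fn(&mut Vec<u8>, usize) -> usize),
}

/// Stand-in for an allocator exported by the original module:
/// a bump allocator that grows memory and returns the old end.
fn alloc(memory: &mut Vec<u8>, size: usize) -> usize {
    let ptr = memory.len();
    memory.resize(ptr + size, 0);
    ptr
}

/// Run the lowering instructions and return what is left on the stack,
/// which is what the original function will receive as parameters.
fn lower_args(instrs: &[Instr], args: &[&str], memory: &mut Vec<u8>) -> Option<Vec<Value>> {
    let mut stack = Vec::new();
    for instr in instrs {
        match instr {
            Instr::ArgGet(index) => {
                stack.push(Value::Str(args.get(*index)?.to_string()));
            }
            Instr::StringToMemory(allocate) => {
                let text = match stack.pop()? {
                    Value::Str(s) => s,
                    _ => return None,
                };
                let ptr = allocate(&mut *memory, text.len());
                memory
                    .get_mut(ptr..ptr + text.len())?
                    .copy_from_slice(text.as_bytes());
                stack.push(Value::I32(ptr as i32));
                stack.push(Value::I32(text.len() as i32));
            }
        }
    }
    Some(stack)
}
```

After `[ArgGet(0), StringToMemory(alloc)]` runs, the stack holds a pointer and a length, which are the two integers the original function expects as its parameters.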

One nice thing about using instructions like this: we can extend them in the future… just as we can extend the instructions in WebAssembly core. We think the instructions we’re defining are a good set, but we aren’t committing to these being the only instructions for all time.

If you’re interested in understanding more about how this all works, the explainer goes into much more detail.

Sending these instructions to the engine

Now how do we send this to the engine?

These annotations get added to the binary file in a custom section.

If an engine knows about interface types, it can use the custom section. If not, the engine can just ignore it, and you can use a polyfill which will read the custom section and create glue code.

How is this different than CORBA, Protocol Buffers, etc?

There are other standards that seem like they solve the same problem—for example CORBA, Protocol Buffers, and Cap’n Proto.

How are those different? They are solving a much harder problem.

They are all designed so that you can interact with a system that you don’t share memory with—either because it’s running in a different process or because it’s on a totally different machine across the network.

This means that you have to be able to send the thing in the middle—the “intermediate representation” of the objects—across that boundary.

So these standards need to define a serialization format that can efficiently go across the boundary. That’s a big part of what they are standardizing.

Even though this looks like a similar problem, it’s actually almost the exact inverse.

With interface types, this “IR” never needs to leave the engine. It’s not even visible to the modules themselves.

The modules only see what the engine spits out for them at the end of the process: what’s been copied to their linear memory or given to them as a reference. So we don’t have to tell the engine what layout to give these types. That doesn’t need to be specified.

What is specified is the way that you talk to the engine. It’s the declarative language for this booklet that you’re sending to the engine.

This has a nice side effect: because this is all declarative, the engine can see when a translation is unnecessary—like when the two modules on either side are using the same type—and skip the translation work altogether.

How can you play with this today?

As I mentioned above, this is an early stage proposal. That means things will be changing rapidly, and you don’t want to depend on this in production.

But if you want to start playing with it, we’ve implemented this across the toolchain, from production to consumption:

- the Rust toolchain

- wasm-bindgen

- the Wasmtime WebAssembly runtime

And since we maintain all these tools, and since we’re working on the standard itself, we can keep up with the standard as it develops.

Even though all these parts will continue changing, we’re making sure to synchronize our changes to them. So as long as you use up-to-date versions of all of these, things shouldn’t break too much.

So here are the many ways you can play with this today. For the most up-to-date version, check out this repo of demos.

Thank you

- Thank you to the team who brought all of the pieces together across all of these languages and runtimes: Alex Crichton, Yury Delendik, Nick Fitzgerald, Dan Gohman, and Till Schneidereit

- Thank you to the proposal co-champions and their colleagues for their work on the proposal: Luke Wagner, Francis McCabe, Jacob Gravelle, Alex Crichton, and Nick Fitzgerald

- Thank you to my fantastic collaborators, Luke Wagner and Till Schneidereit, for their invaluable input and feedback on this article

The post WebAssembly Interface Types: Interoperate with All the Things! appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2019/08/webassembly-interface-types/

The Mozilla Blog: Mozilla takes action to protect users in Kazakhstan

Mozilla Security Blog: Protecting our Users in Kazakhstan

Cameron Kaiser: FPR16 delays

While you're waiting, read about today's big OpenPOWER announcement. Isn't it about time for a modern PowerPC under your desk?

Mozilla GFX: moz://gfx newsletter #47

Hi there! Time for another Mozilla graphics newsletter. In the comments section of the previous newsletter, Michael asked about the relation between WebRender and WebGL. I’ll try to give a short answer here.

Both WebRender and WebGL need access to the GPU to do their work. At the moment both of them use the OpenGL API, either directly or through ANGLE, which emulates OpenGL on top of D3D11. They each work with their own OpenGL context, however. Frames produced with WebGL are sent to WebRender as texture handles. At the API level, WebRender has a single entry point for images, video frames, and canvases, in short for every grid of pixels in some flavor of RGB format, be they CPU-side buffers or already in GPU memory, as is normally the case for WebGL. In order to share textures between separate OpenGL contexts we rely on platform-specific APIs such as EGLImage and DXGI.

Beyond that there isn’t any fancy interaction between WebGL and WebRender. The latter sees the former as an image producer, just like 2D canvases, video decoders and plain static images.

What’s new in gfx

Wayland and hidpi improvements on Linux

- Martin Stransky made a proof of concept implementation of DMABuf textures in Gecko’s IPC mechanism. This dmabuf EGL texture backend on Wayland is similar to what we have on Android/Mac. Dmabuf buffers can be shared with the main/compositor process, can be bound as a render target or texture, and can be located in GPU memory. The same dmabuf buffer can also be used as a hardware overlay when it’s attached to wl_surface/wl_subsurface as a wl_buffer.

- Jan Horak fixed a bug that prevented tabs from rendering after restoring a minimized window.

- Jan Horak fixed the window parenting hierarchy with Wayland.

- Jan Horak fixed a bug with hidpi that was causing select popups to render incorrectly after scrolling.

WebGL multiview rendering

WebGL’s multiview rendering extension has been approved by the working group and its implementation by Jeff Gilbert will be shipping in Firefox 70.

This extension allows more efficient rendering into multiple viewports, which is most commonly used by VR/AR for rendering both eyes at the same time.

Better high dynamic range support

Jean Yves landed the first part of his HDR work (a set of 14 patches). While we can’t yet output HDR content to an HDR screen, this work greatly improved the correctness of the conversion from various HDR formats to low dynamic range sRGB.

You can follow progress on the color space meta bug.

What’s new in WebRender

WebRender is a GPU-based 2D rendering engine for the web written in Rust, currently powering Firefox’s rendering engine as well as the research web browser Servo.

If you are curious about the state of WebRender on a particular platform, up-to-date information is available at http://arewewebrenderyet.com

Speaking of which, darkspirit enabled WebRender in Firefox Nightly for Linux users with Nvidia+Nouveau drivers.

More filters in WebRender

When we run into a primitive that isn’t supported by WebRender, we make it go through a software fallback implementation, which can be slow for some things. SVG filters are a good example of primitives that perform much better if implemented on the GPU in WebRender.

Connor Brewster has been working on implementing a number of SVG filters in WebRender:

- Bug 1555483 – Introduce some infrastructure for SVG filters in WebRender and implement the feBlend filter.

- Bug 1178765 – Implement backdrop-filter from the Filter Effects Module Level 2 specification.

See the SVG filters in WebRender meta bug.

Texture swizzling and allocation

WebRender previously only worked with BGRA for color textures. Unfortunately this format is optimal on some platforms but sub-optimal (or even unsupported) on others. So a conversion sometimes has to happen, and this conversion, if done by the driver, can be very costly.

Kvark reworked the texture caching logic to support using and swizzling between different formats (for example RGBA and BGRA).
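To make "swizzling" concrete, here is a minimal CPU-side sketch of what converting between BGRA and RGBA means for a pixel buffer. It is only an illustration: the function name is made up for this example, and this is not how WebRender does it (the point of the work described above is precisely to avoid costly conversions of this kind).

```rust
/// Swap the blue and red channels of a tightly packed, 4-bytes-per-pixel
/// buffer in place. The same swap turns BGRA into RGBA and RGBA into BGRA.
/// (Hypothetical helper for illustration, not a WebRender API.)
fn swizzle_bgra_rgba(pixels: &mut [u8]) {
    // chunks_exact_mut ignores trailing bytes that do not form a whole pixel.
    for px in pixels.chunks_exact_mut(4) {
        px.swap(0, 2);
    }
}

fn main() {
    // Two pixels in BGRA order: opaque blue, then opaque red.
    let mut buf = vec![255, 0, 0, 255, 0, 0, 255, 255];
    swizzle_bgra_rgba(&mut buf);
    // The buffer now holds the same two colors in RGBA order.
    println!("{:?}", buf);
}
```

Touching every byte of every texture like this is the kind of per-upload cost that becomes expensive when a driver has to do it behind the scenes.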

A document that landed with the implementation provides more details about the approach and context.

Kvark also improved the texture cache allocation behavior.

Kvark also landed various refactorings (1), (2), (3), (4).

Android improvements

Jamie fixed emoji rendering with WebRender on Android and continues investigating driver issues on Adreno 3xx devices.

Displaylist serialization

Dan replaced bincode in our DL IPC code with a new bespoke and private serialization library (peek-poke), ending the terrible reign of the secret serde deserialize_in_place hacks and our fork of serde_derive.

Picture caching improvements

Glenn landed several improvements and fixes to the picture caching infrastructure:

- Bug 1566712 – Fix quality issues with picture caching when transform has fractional offsets.

- Bug 1572197 – Fix world clip region for preserve-3d items with picture caching.

- Bug 1566901 – Make picture caching more robust to float issues.

- Bug 1567472 – Fix bug in preserve-3d batching code in WebRender.

Font rendering improvements

Lee landed quite a few font related patches:

- Bug 1569950 – Only partially clear WR glyph caches if it is not necessary to fully clear.

- Bug 1569174 – Disable embedded bitmaps if ClearType rendering mode is forced.

- Bug 1568841 – Force GDI parameters for GDI render mode.

- Bug 1568858 – Always stretch box shadows except for Cairo.

- Bug 1568841 – Don’t use enhanced contrast on GDI fonts.

- Bug 1553818 – Use GDI ClearType contrast for GDI font gamma.

- Bug 1565158 – Allow forcing DWrite symmetric rendering mode.

- Bug 1563133 – Limit the GlyphBuffer capacity.

- Bug 1560520 – Limit the size of WebRender’s glyph cache.

- Bug 1566449 – Don’t reverse glyphs in GlyphBuffer for RTL.

- Bug 1566528 – Clamp the amount of synthetic bold extra strikes.

- Bug 1553228 – Don’t free the result of FcPatternGetString.

Various fixes and improvements

- Gankra fixed an issue with addon popups and document splitting.

- Sotaro prevented some unnecessary composites on out-of-viewport external image updates.

- Nical fixed an integer overflow causing the browser to freeze.

- Nical improved the overlay profiler by showing more relevant numbers when the timings are noisy.

- Nical fixed corrupted rendering of progressively loaded images.

- Nical added a fast path when none of the primitives of an image batch need anti-aliasing or repetition.

https://mozillagfx.wordpress.com/2019/08/19/moz-gfx-newsletter-47/


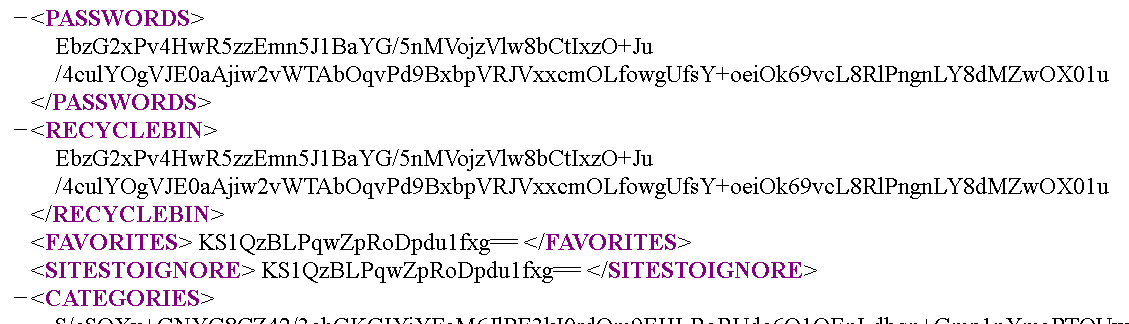

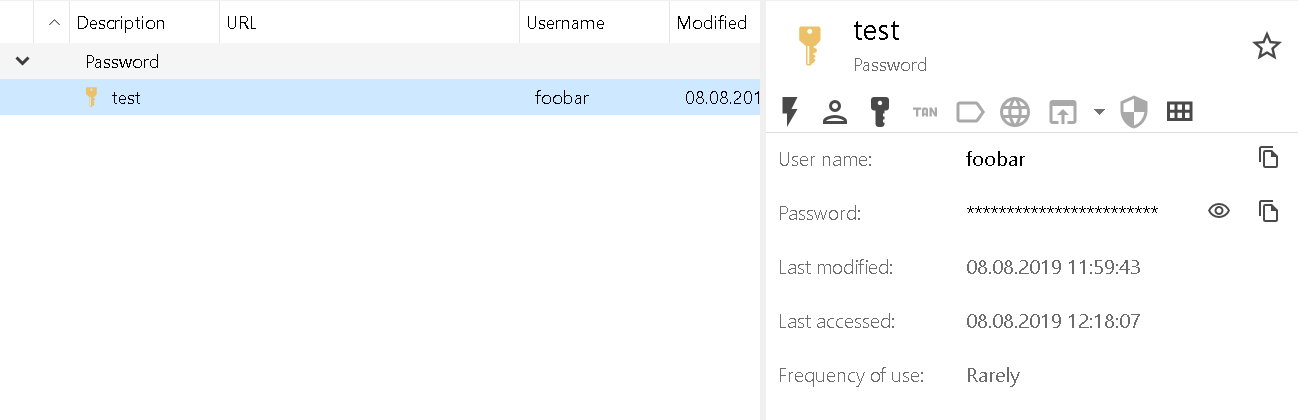

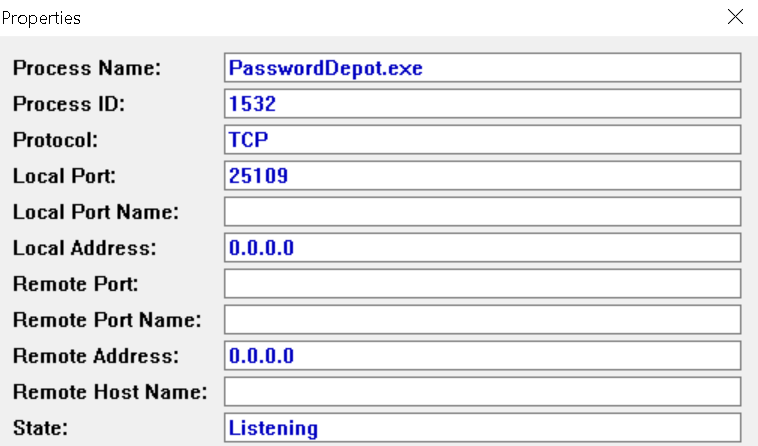

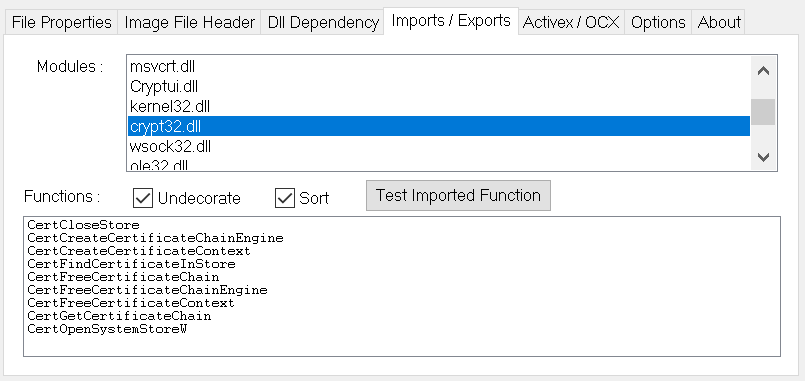

Wladimir Palant: Kaspersky in the Middle - what could possibly go wrong? |

Roughly a decade ago I read an article that asked antivirus vendors to stop intercepting encrypted HTTPS connections, as this practice actively hurts security and privacy. As you can certainly imagine, antivirus vendors agreed with the sensible argument and today no reasonable antivirus product would even consider intercepting HTTPS traffic. Just kidding… Of course they kept going, and so two years ago a study was published detailing the security issues introduced by interception of HTTPS connections. Google and Mozilla once again urged antivirus vendors to stop. Surely this time it worked?

Of course not. So when I decided to look into Kaspersky Internet Security in December last year, I found it breaking up HTTPS connections so that it would get between the server and your browser in order to “protect” you. Expecting some deeply technical details about HTTPS protocol misimplementations now? Don’t worry, I don’t know enough myself to inspect Kaspersky software on this level. The vulnerabilities I found were far more mundane.

I reported eight vulnerabilities to Kaspersky Lab between 2018-12-13 and 2018-12-21. This article will only describe the three vulnerabilities that were fixed in April this year. This includes two vulnerabilities that weren’t deemed a security risk by Kaspersky; it’s up to you to decide whether you agree with this assessment. The remaining five vulnerabilities were only fixed in July, and I agreed to wait until November with the disclosure to give users enough time to upgrade.

Edit (2019-08-22): In order to disable this functionality you have to go into Settings, select “Additional” on the left side, then click “Network.” There you will see a section called “Encryption connection scanning” where you need to choose “Do not scan encrypted connections.”


The underappreciated certificate warning pages

There is an important edge case with HTTPS connections: what if a connection is established but the other side uses an invalid certificate? Current browsers will generally show you a certificate warning page in this scenario. In Firefox it looks like this:

This page has seen a surprising number of changes over the years. The browser vendors recognized that asking users to make a decision isn’t a good idea here. Most of the time, getting out is the best course of action, and ignoring the warning is only a viable option for very technical users. So the text here is very clear, low on technical details, and the recommended solution is highlighted. The option to ignore the warning is well-hidden on the other hand, to prevent people from using it without understanding the implications. While the page looks different in other browsers, the main design considerations are the same.

But with Kaspersky Internet Security in the middle, the browser is no longer talking to the server, Kaspersky is. The way HTTPS is designed, it means that Kaspersky is responsible for validating the server’s certificate and producing a certificate warning page. And that’s what the certificate warning page looks like then:

There is a considerable amount of technical detail here, supposedly to allow users to make an informed decision, but usually confusing them instead. Oh, and why does it list the URL as “www.example.org”? That’s not what I typed into the address bar, it’s actually what this site claims to be (the name has been extracted from the site’s invalid certificate). That’s a tiny security issue, but it wasn’t worth reporting as it only affects sites accessed by IP address, which should never be the case with HTTPS.

The bigger issue: what is the user supposed to do here? There is “leave this website” in the text, but experience shows that people usually won’t read when hitting a roadblock like this. And the highlighted action here is “I understand the risks and wish to continue” which is what most users can be expected to hit.

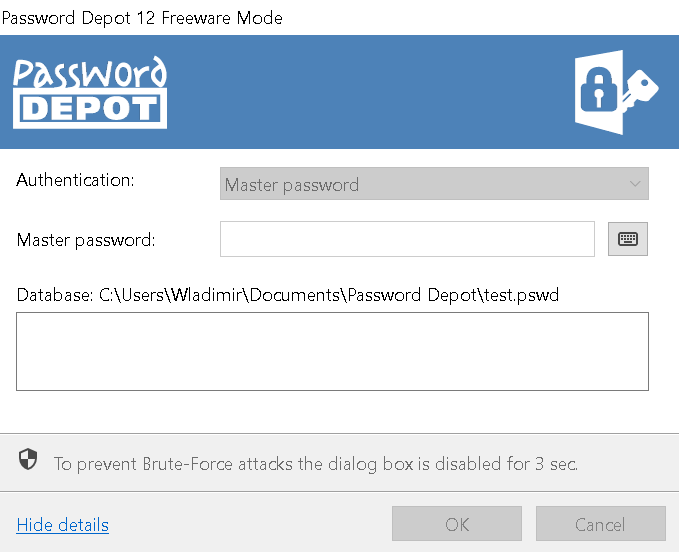

Using clickjacking to override certificate warnings

Let’s say that we hijacked some user’s web traffic, e.g. by tricking them into connecting to our malicious WiFi hotspot. Now we want to do something evil with that, such as collecting their Google searches or hijacking their Google account. Unfortunately, HTTPS won’t let us do it. If we place ourselves between the user and the Google server, we have to use our own certificate for the connection to the user. With our certificate being invalid, this will trigger a certificate warning however.

So the goal is to make the user click “I understand the risks and wish to continue” on the certificate warning page. We could just ask nicely, and given how this page is built we’ll probably succeed in a fair share of cases. Or we could use a trick called clickjacking – let the user click it without realizing what they are clicking.

There is only one complication. When the link is clicked there will be an additional confirmation pop-up:

But don’t despair just yet! That warning is merely generic text, it would apply to any remotely insecure action. We would only need to convince the user that the warning is expected and they will happily click “Continue.” For example, we could give them the following page when they first connect to the network, similar to those captive portals:

Looks like a legitimate Kaspersky warning page but isn’t, the text here was written by me. The only “real” thing here is the “I understand the risks and wish to continue” link which actually belongs to an embedded frame. That frame contains Kaspersky’s certificate warning for www.google.com and has been positioned in such a way that only the link is visible. When the user clicks it, they will get the generic warning from above and without doubt confirm ignoring the invalid certificate. We won, now we can do our evil thing without triggering any warnings!

How do browser vendors deal with this kind of attack? They require at least two clicks to happen on different spots of the certificate warning page in order to add an exception for an invalid certificate, which makes clickjacking attacks impracticable. Kaspersky on the other hand felt very confident about their warning prompt, so they opted for adding more information to it. This message will now show you the name of the site you are adding the exception for. Let’s just hope that accessing a site by IP address is the only scenario where attackers can manipulate that name…

Something you probably don’t know about HSTS

There is a slightly less obvious detail to the attack described above: it shouldn’t have worked at all. See, if you reroute www.google.com traffic to a malicious server and navigate to the site then, neither Firefox nor Chrome will give you the option to override the certificate warning. Getting out will be the only option available, meaning no way whatsoever to exploit the certificate warning page. What is this magic? Did browsers implement some special behavior only for Google?

They didn’t. What you see here is a side-effect of the HTTP Strict-Transport-Security (HSTS) mechanism, which Google and many other websites happen to use. When you visit Google it will send the HTTP header Strict-Transport-Security: max-age=31536000 with the response. This tells the browser: “This is an HTTPS-only website, don’t ever try to create an unencrypted connection to it. Keep that in mind for the next year.”

So when the browser later encounters a certificate error on a site using HSTS, it knows: the website owner promised to keep HTTPS functional. There is no way that an invalid certificate is ok here, so allowing users to override the certificate would be wrong.
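The logic described above can be sketched in a few lines. This is a simplified illustration and not how any browser or Kaspersky implements it: the type and function names are made up for this example, and a real implementation would also store when the header was seen so that the policy can expire.

```rust
/// Policy extracted from a Strict-Transport-Security header value.
/// (Hypothetical type for illustration.)
#[derive(Debug, PartialEq)]
struct HstsPolicy {
    max_age_secs: u64,
    include_subdomains: bool,
}

/// Parse a header value such as "max-age=31536000; includeSubDomains".
/// Returns None when max-age is missing or malformed.
fn parse_hsts(value: &str) -> Option<HstsPolicy> {
    let mut max_age = None;
    let mut include_subdomains = false;
    for directive in value.split(';') {
        let directive = directive.trim();
        let (name, arg) = match directive.split_once('=') {
            Some((n, a)) => (n.trim(), Some(a.trim().trim_matches('"'))),
            None => (directive, None),
        };
        if name.eq_ignore_ascii_case("max-age") {
            max_age = Some(arg?.parse::<u64>().ok()?);
        } else if name.eq_ignore_ascii_case("includeSubDomains") {
            include_subdomains = true;
        }
    }
    Some(HstsPolicy { max_age_secs: max_age?, include_subdomains })
}

/// A certificate error may only be overridden when no live HSTS policy is
/// known for the host. A max-age of 0 means the site withdrew its policy.
fn may_override_cert_error(policy: Option<&HstsPolicy>) -> bool {
    match policy {
        Some(p) => p.max_age_secs == 0,
        None => true,
    }
}

fn main() {
    let policy = parse_hsts("max-age=31536000");
    println!("{:?}", policy);
    println!("override allowed: {}", may_override_cert_error(policy.as_ref()));
}
```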

Unless you have Kaspersky in the middle of course, because Kaspersky completely ignores HSTS and still allows users to override the certificate. When I reported this issue, the vendor response was that this isn’t a security risk because the warning displayed is sufficient. Somehow they decided to add support for HSTS nevertheless, so that current versions will no longer allow overriding certificates here.

There is no doubt that there are more scenarios where Kaspersky software weakens the security precautions made by browsers. For example, if a certificate is revoked (usually because it has been compromised), browsers will normally recognize that thanks to OCSP stapling and prevent the connection. But I noticed recently that Kaspersky Internet Security doesn’t support OCSP stapling, so if this application is active it will happily allow you to connect to a likely malicious server.

Using injected content for Universal XSS

Kaspersky Internet Security isn’t merely listening in on connections to HTTPS sites, it is also actively modifying those. In some cases it will generate a response of its own, such as the certificate warning page we saw above. In others it will modify the response sent by the server.

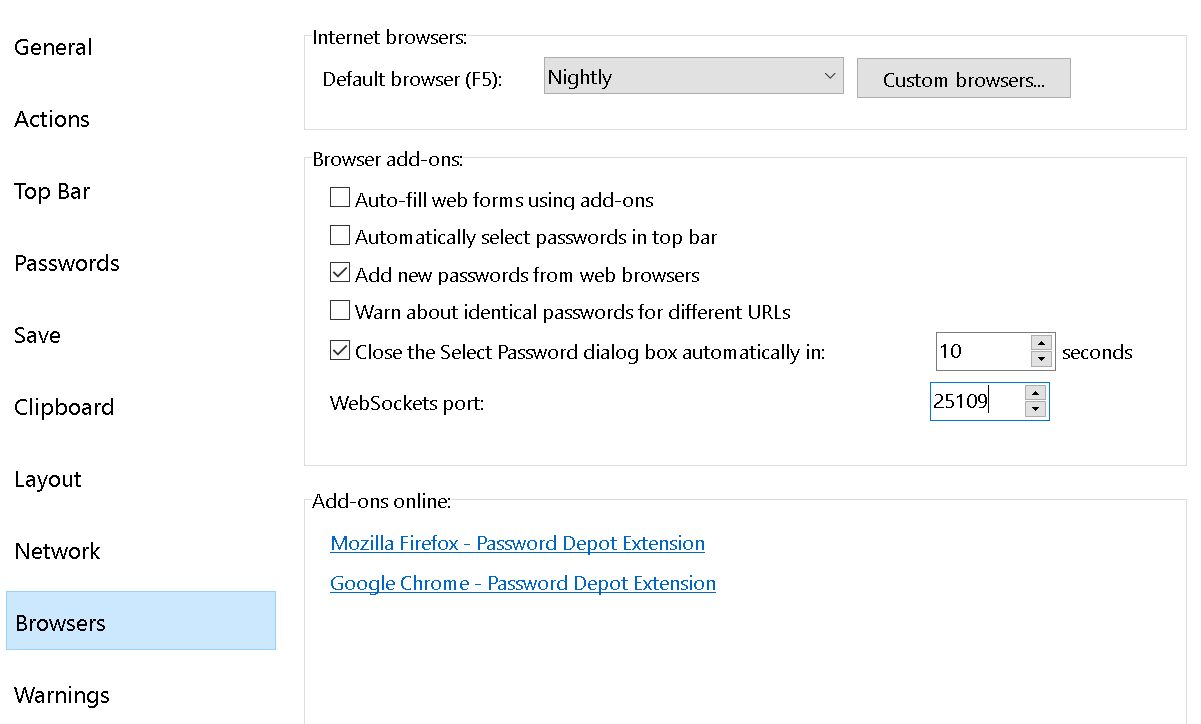

For example, if you didn’t install the Kaspersky browser extension, it will fall back to injecting a script into server responses which is then responsible for “protecting” you. This protection does things like showing a green checkmark next to Google search results that are considered safe. As Heise Online wrote merely a few days ago, this also used to leak a unique user ID which allowed tracking users regardless of any protective measures on their side. Oops…

There is a bit more to this feature called URL Advisor. When you put the mouse cursor above the checkmark icon a message appears stating that you have a safe site there. That message is a frame displaying url_advisor_balloon.html. Where does this file load from? If you have the Kaspersky browser extension, it will be part of that browser extension. If you don’t, it will load from ff.kis.v2.scr.kaspersky-labs.com in Firefox and gc.kis.v2.scr.kaspersky-labs.com in Chrome – Kaspersky software will intercept requests to these servers and answer them locally. I noticed however that things were different in Microsoft Edge, here this file would load directly from www.google.com (or any other website if you changed the host name).

Certainly, when injecting their own web page into every domain on the web Kaspersky developers thought about making it very secure? Let’s have a look at the code running there:

    var policeLink = document.createElement("a");
    policeLink.href = IsDefined(UrlAdvisorLinkPoliceDecision) ? UrlAdvisorLinkPoliceDecision : locales["UrlAdvisorLinkPoliceDecision"];
    policeLink.target = "_blank";
    div.appendChild(policeLink);

This creates a link inside the frame dynamically. Where does the link target come from? It’s part of the data received from the parent document, with no validation performed. In particular, javascript: links will be happily accepted. So a malicious website needs to figure out the location of url_advisor_balloon.html and embed it in a frame using the host name of the website they want to attack. Then they send a message to it:

    frame.contentWindow.postMessage(JSON.stringify({
      command: "init",
      data: {
        verdict: {
          url: "",
          categories: [
            21
          ]
        },
        locales: {
          UrlAdvisorLinkPoliceDecision: "javascript:alert('Hi, this JavaScript code is running on ' + document.domain)",
          CAT_21: "click here"
        }
      }
    }), "*");

What you get is a link labeled “click here” which will run arbitrary JavaScript code in the context of the attacked domain when clicked. And once again, the attackers could ask the user nicely to click it. Or they could use clickjacking, so whenever the user clicks anywhere on their site, the click goes to this link inside an invisible frame.
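The missing validation step is conceptually simple: accept a link target only if its scheme is on an allow-list. The sketch below shows the idea in Rust rather than in the page's own JavaScript. The function name is hypothetical, and I don't know how Kaspersky actually fixed the issue. A receiving frame would additionally want to check the origin of incoming messages.

```rust
/// Return true only for absolute http(s) URLs.
/// (Hypothetical helper illustrating a scheme allow-list.)
fn is_safe_link_target(raw: &str) -> bool {
    // Browsers drop ASCII tab and newline characters inside URLs and strip
    // leading/trailing spaces and control characters, so normalize first.
    // Otherwise "java\tscript:" would slip past a naive check.
    let cleaned: String = raw
        .chars()
        .filter(|c| !matches!(c, '\t' | '\n' | '\r'))
        .collect();
    let cleaned = cleaned.trim_matches(|c: char| c <= ' ');
    let lower = cleaned.to_ascii_lowercase();
    lower.starts_with("https://") || lower.starts_with("http://")
}

fn main() {
    for candidate in ["https://www.example.org/", "javascript:alert(1)"] {
        println!("{} -> {}", candidate, is_safe_link_target(candidate));
    }
}
```

An allow-list is the safer design here: rejecting only javascript: would still let through other schemes such as data: that nobody thought about.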

And here you have it: a malicious website taking over your Google or social media accounts, all because Kaspersky considered it a good idea to have their content injected into secure traffic of other people’s domains. But at least this particular issue was limited to Microsoft Edge.

Timeline

- 2018-12-13: Sent report via Kaspersky bug bounty program: Lack of HSTS support facilitating MiTM attacks.

- 2018-12-17: Sent reports via Kaspersky bug bounty program: Certificate warning pages susceptible to clickjacking and Universal XSS in Microsoft Edge.

- 2018-12-20: Response from Kaspersky: HSTS and clickjacking reports are not considered security issues.

- 2018-12-20: Requested disclosure of the HSTS and clickjacking reports.

- 2018-12-24: Disclosure denied due to similarity with one of my other reports.

- 2019-04-29: Kaspersky notifies me about the three issues here being fixed (KIS 2019 Patch E, actually released three weeks earlier).

- 2019-04-29: Requested disclosure of these three issues, no response.

- 2019-07-29: With five remaining issues reported by me fixed (KIS 2019 Patch F and KIS 2020), requested disclosure on all reports.

- 2019-08-04: Disclosure denied on HSTS report because “You’ve requested too many tickets for disclosure at the same time.”

- 2019-08-05: Disclosure denied on five not yet disclosed reports, asking for time until November for users to update.

- 2019-08-06: Notified Kaspersky about my intention to publish an article about the three issues here on 2019-08-19, no response.

- 2019-08-12: Reminded Kaspersky that I will publish an article on these three issues on 2019-08-19.

- 2019-08-12: Kaspersky requesting an extension of the timeline until 2019-08-22, citing that they need more time to prepare.

- 2019-08-16: Security advisory published by Kaspersky without notifying me.

https://palant.de/2019/08/19/kaspersky-in-the-middle-what-could-possibly-go-wrong/


Cameron Kaiser: Chrome murders FTP like Jeffrey Epstein |

This leaves an interesting situation where Google has, in its very own search index, HTML pages served by FTP its own browser won't be able to view:

At the top of the search results, even!

Obviously those FTP HTML pages load just fine in mainline Firefox, at least as of this writing, and of course TenFourFox. (UPDATE: This won't work in Firefox either after Fx70, though FTP in general will still be accessible. Note that it references Chrome's announcements; as usual, these kinds of distributed firing squads tend to be self-reinforcing.)

Is it a little ridiculous to serve pages that way? Okay, I'll buy that. But it works fine and wasn't bothering anyone, and they must have some relevance to be accessible because Google even indexed them.

Why is everything old suddenly so bad?

http://tenfourfox.blogspot.com/2019/08/chrome-murders-ftp-like-jeffrey-epstein.html


Tantek Celik: IndieWebCamps Timeline 2011-2019: Amsterdam to Utrecht |

At the beginning of IndieWeb Summit 2019, I gave a brief talk on State of the IndieWeb and mentioned that:

We've scheduled lots of IndieWebCamps this year and are on track to schedule a record number of different cities as well.

I had conceived of a graphical representation of the growth of IndieWebCamps over the past nine years, both in number and across the world, but with everything else involved with setting up and running the Summit, ran out of time. However, the idea persisted, and finally this past week, with a little help from Aaron Parecki re-implementing Dopplr’s algorithm for turning city names into colors, I was able to put together something pretty close to what I’d envisioned:

I don’t know of any tools to take something like this kind of locations vs years data and graph it as such. So I built an HTML table with a cell for each IndieWebCamp, as well as cells for the colspans of empty space. Each colored cell is hyperlinked to the IndieWebCamp for that city for that year.
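For anyone curious how such a table row comes together, here is a rough sketch of the approach: one cell per event, with colspan cells filling the gaps. The struct, the function and the column granularity are all assumptions made for this illustration; the actual markup lives in the wiki template mentioned below. Labels are not HTML-escaped here.

```rust
/// One event bar: the 0-based column where it starts, how many columns it
/// spans, and the label to show. (Hypothetical type for illustration.)
struct Bar<'a> {
    start: usize,
    span: usize,
    label: &'a str,
}

/// Render one table row of `total_cols` columns. Bars must be sorted by
/// start column and must not overlap; gaps become empty colspan cells.
fn render_row(city: &str, bars: &[Bar<'_>], total_cols: usize) -> String {
    let mut html = format!("<tr><th>{}</th>", city);
    let mut col = 0;
    for bar in bars {
        if bar.start > col {
            html.push_str(&format!("<td colspan=\"{}\"></td>", bar.start - col));
        }
        html.push_str(&format!("<td colspan=\"{}\">{}</td>", bar.span, bar.label));
        col = bar.start + bar.span;
    }
    if col < total_cols {
        html.push_str(&format!("<td colspan=\"{}\"></td>", total_cols - col));
    }
    html.push_str("</tr>");
    html
}

fn main() {
    // Made-up data: two events in a nine-column timeline.
    let bars = [
        Bar { start: 2, span: 1, label: "A" },
        Bar { start: 5, span: 2, label: "B" },
    ];
    println!("{}", render_row("Example City", &bars, 9));
}
```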

2011-2018 and over half of 2019 are IndieWebCamps (and Summits) that have already happened. 2019 includes bars for four upcoming IndieWebCamps, which are fully scheduled and open for sign-ups.

The table markup is copy pasted from the IndieWebCamp wiki template where I built it, and you can see the template working live in the context of the IndieWebCamp Cities page. I’m sure the markup could be improved, suggestions welcome!

https://tantek.com/2019/228/b1/indiewebcamps-timeline-amsterdam-utrecht


Julien Vehent: The cost of micro-services complexity |

It has long been recognized by the security industry that complex systems are impossible to secure, and that pushing for simplicity helps increase trust by reducing assumptions and increasing our ability to audit. This is often captured under the acronym KISS, for "keep it stupid simple", a design principle popularized by the US Navy back in the 60s. For a long time, we thought the enemy were application monoliths that burden our infrastructure with years of unpatched vulnerabilities.

So we split them up. We took them apart. We created micro-services where each function, each logical component, is its own individual service, designed, developed, operated and monitored in complete isolation from the rest of the infrastructure. And we composed them ad vitam aeternam. Want to send an email? Call the REST API of micro-service X. Want to run a batch job? Invoke lambda function Y. Want to update a database entry? Post it to A which sends an event to B consumed by C stored in D transformed by E and inserted by F. We all love micro-services architecture. It’s like watching dominoes fall down. When it works, it’s visceral. It’s when it doesn’t that things get interesting. After nearly a decade of operating them, let me share some downsides and caveats encountered in large-scale production environments.

High operational cost

The first problem is operational cost. Even in a devops cloud automated world, each micro-service, serverless or not, needs setup, maintenance and deployment. We never fully got to the holy grail of completely automated everything, so humans are still involved with these things. Perhaps someone sold you on the idea devs could do the ops work in their free time, but let’s face it, that’s a lie, and you need dedicated teams of specialists to run the stuff the right way. And those folks don’t come cheap.

The more services you have, the harder it is to keep up with them. First you’ll start noticing delays in getting new services deployed. A week. Two weeks. A month. What do you mean you need three months’ notice to get a new service set up?

Then, it’s the deployments that start to take time. And as a result, services that don’t absolutely need to be deployed, well, aren’t. Soon they’ll become outdated, vulnerable, running on the old version of everything, and deploying a new version means a week’s worth of work to get it back to the current standard.

QA uncertainty

A second problem is quality assurance. Deploying anything in a micro-services world means verifying everything still works. Got a chain of 10 services? Each one probably has its own dev team, QA specialists, ops people that need to get involved, or at least notified, with every deployment of any service in the chain. I know it’s not supposed to be this way. We’re supposed to have automated QA, integration tests, and synthetic end-to-end monitoring that can confirm that a butterfly flapping its wings in us-west-2 triggers a KPI update on the leadership dashboard. But in the real world, nothing’s ever perfect and things tend to break in mysterious ways all the time. So you warn everybody when you deploy anything, and require each intermediate service to rerun their own QA until the pain of getting 20 people involved with a one-line change really makes you wish you had a monolith.

The alternative is that you don’t get those people involved, because, well, they’re busy, and everything is fine until a minor change goes out, all testing passes, until two days later in a different part of the world someone’s product is badly broken. It takes another 8 hours for them to track it back to your change, another 2 to roll it back, and 4 to test everything by hand. The post-mortem of that incident has 37 invitees, including 4 senior directors. Bonus points if you were on vacation when that happened.

Huge attack surface

And finally, there’s security. We sure love auditing micro-services, with their tiny codebases that are always neat and clean. We love reviewing their infrastructure too, with those dynamic security groups and clean dataflows and dedicated databases and IAM controlled permissions. There are a lot of security benefits to micro-services, so we’ve been heavily advocating for them for several years now.

And then, one day, someone gets fed up with having to manage API keys for three dozen services in flat YAML files and suggests using OAuth for service-to-service authentication. Or perhaps Jean-Kevin drank the mTLS Kool-Aid at the FoolNix conference and made a PKI prototype on the flight back (side note: do you know how hard it is to securely run a PKI over 5 or 10 years? It’s hard). Or perhaps compliance mandates that every server, no matter how small, must run a security agent.

Even when you keep everything simple, this vast network of tiny services quickly becomes a nightmare to reason about. It’s just too big, and it’s everywhere. Your cross-IAM role assumptions keep you up at night. 73% of services are behind on updates and no one dares touch them. One day, you ask if anyone has a diagram of all the network flows and Jean-Kevin sends you a dot graph he generated using some hacky python. Your browser crashes trying to open it, the damn thing is 158MB of SVG.

Most vulnerabilities happen in the seams of things. API credentials will leak. Firewalls will open. Access controls will get mis-managed. The more of them you have, the harder it is to keep it all locked down.

Everything in moderation

I’m not anti micro-services. I do believe they are great, and that you should use them, but, like a good bottle of Lagavulin, in moderation. It’s probably OK to let your monolith do more than one thing, and it’s certainly OK to extract the one functionality that several applications need into a micro-service. We did this with autograph, because it was obvious that handling cryptographic operations should be done by a dedicated micro-service, but we don’t do it for everything. My advice is to wait until at least three services want a given thing before turning it into a micro-service. And if the dependency chain becomes too large, consider going back to a well-managed monolith, because in many cases, it actually is the simpler approach.

https://j.vehent.org/blog/index.php?post/2019/08/15/The-cost-of-micro-services-complexity


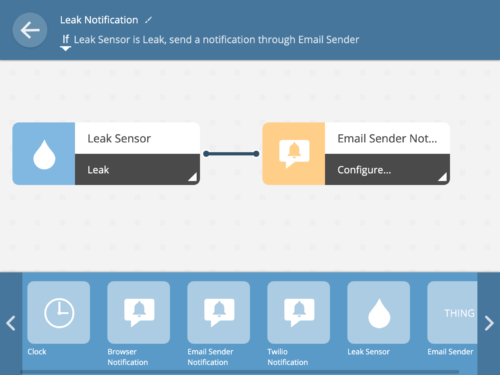

Hacks.Mozilla.Org: Using WebThings Gateway notifications as a warning system for your home |

Ever wonder if that leaky pipe you fixed is holding up? With a trip to the hardware store and a Mozilla WebThings Gateway you can set up a cheap leak sensor to keep an eye on the situation, whether you’re home or away. Although you can look up detector status easily on the web-based dashboard, it would be better to not need to pay attention unless a leak actually occurs. In the WebThings Gateway 0.9 release, a number of different notification mechanisms can be set up, including emails, apps, and text messages.

Leak Sensor Demo

In this post I’ll show you how to set up gateway notifications to warn you of changes in your home that you care about. You can set each notification to one of three levels of severity–low, normal, and high–so that you can identify which are informational changes and which alerts should be addressed immediately (fire! intruder! leak!). First, we’ll choose a device to worry about. Next, we’ll decide how we want our gateway to contact us. Finally, we’ll set up a rule to tell the gateway when it should contact us.

Choosing a device

First, make sure the device you want to monitor is connected to your gateway. If you haven’t added the device yet, visit the Gateway User Guide for information about getting started.

Now it’s time to figure out which things’ properties will lead to interesting notifications. For each thing you want to investigate, click on its splat icon to get a full view of all its properties.

You may also want to log properties of various analog devices over time to see what values are “normal”. For example, you can monitor the refrigerator temperature for a couple of days to help determine what qualifies as an abnormal temperature. In this graph, you can see the difference between baseline power draw (around 20 watts) and charging (up to 90 watts).

In my case, I’ve selected a leak sensor so I won’t need to log data in advance. It’s pretty clear that I want to be notified when the leak property of my sensor becomes true (i.e., when a leak is detected). If instead you want to monitor a smart plug, you can look at voltage, power, or on/off state. Note that the notification rules you create will let you combine multiple inputs using “and” or “or” logic. For example, you might want to be alerted if indoor motion is detected “and” all of the family smartphone “presence” states are “inactive” (i.e., no one in your family is home, so what caused motion?). Whatever your choice, keep the logical states of your various sensors in mind while you set up your notifier.
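Gateway rules are built by drag and drop rather than written as code, but it can help to think through the logic as plain boolean expressions before building them. The following sketch models the "and"/"or" examples above; the struct and function names are made up for this illustration and are not part of any WebThings API.

```rust
/// Snapshot of the sensor states a rule looks at.
/// (Hypothetical type; the gateway exposes these as thing properties.)
struct HomeState {
    motion_detected: bool,
    /// One entry per family phone: true when the phone is present at home.
    phones_present: Vec<bool>,
    leak_detected: bool,
}

/// "and" rule: indoor motion while every phone is away.
/// Note that with no phones configured, motion alone triggers the rule.
fn intruder_rule(s: &HomeState) -> bool {
    s.motion_detected && s.phones_present.iter().all(|present| !*present)
}

/// "or" rule: either condition alone is enough to notify.
fn any_alarm_rule(s: &HomeState) -> bool {
    s.leak_detected || intruder_rule(s)
}

fn main() {
    let state = HomeState {
        motion_detected: true,
        phones_present: vec![false, false],
        leak_detected: false,
    };
    println!("intruder: {}", intruder_rule(&state));
    println!("any alarm: {}", any_alarm_rule(&state));
}
```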

Setting up your notifier

The 0.9 WebThings Gateway release added support for notifiers as a specific form of add-on. Thanks to the efforts of the community and a bit of our own work, your gateway can already send you notifications over email, SMS, Telegram, or specialized push notification apps with new add-ons released every week. You can find several notification add-on options by clicking “+” on the Settings > Add-ons page.

The easiest-to-use notifiers are email and SMS since there are fewer moving parts, but feel free to choose whichever approach you prefer. Follow the configuration instructions in your chosen notifier’s README file. You can get to the README for your notifier by clicking on the author’s name in the add-on list then scrolling down.

You’ll find a complete guide to the email notifier here: https://github.com/mozilla-iot/email-sender-adapter#email-sender-adapter.

Creating a rule

Finally, let’s teach our gateway how and when it should yell for attention. We can set this up in a simple drag-and-drop rule. First, drag your device to the left as a trigger and select the “Leak” property.

Next, drag your notification channel to the right as an effect and configure its title, body, and level as desired.

Your rule is now set up and ready to go!

You can now manually test it out. For a leak sensor you can just spill a little water on it to make sure you get a text, email, or other notification warning you about a possible scary flood. This is also a perfect time to start experimenting. Can you set up a second, louder notification for when you’re asleep? What about only notifying when you’re at home so you can deal with the leak immediately?

Notifications are just one small piece of the WebThings Gateway ecosystem. We’re trying to build a future where the convenience of a connected life doesn’t require giving up your security and privacy. If you have ideas about how the WebThings Gateway can better orchestrate your home, please comment on Discourse or contribute on GitHub. If your preferred notification channel is missing and you can code, we love community add-ons! Check out the source code of the email add-on for inspiration. Coming up next, we’ll be talking about how you can have a natural spoken dialogue with the WebThings Gateway without sending your voice data to the cloud.

The post Using WebThings Gateway notifications as a warning system for your home appeared first on Mozilla Hacks - the Web developer blog.


The Rust Programming Language Blog: Announcing Rust 1.37.0 |

The Rust team is happy to announce a new version of Rust, 1.37.0. Rust is a programming language that is empowering everyone to build reliable and efficient software.

If you have a previous version of Rust installed via rustup, getting Rust 1.37.0 is as easy as:

rustup update stable

If you don't have it already, you can get rustup from the appropriate page on our website, and check out the detailed release notes for 1.37.0 on GitHub.

What's in 1.37.0 stable

The highlights of Rust 1.37.0 include referring to enum variants through type aliases, built-in cargo vendor, unnamed const items, profile-guided optimization, a default-run key in Cargo, and #[repr(align(N))] on enums. Read on for a few highlights, or see the detailed release notes for additional information.

Referring to enum variants through type aliases

With Rust 1.37.0, you can now refer to enum variants through type aliases. For example:

type ByteOption = Option<u8>;

fn increment_or_zero(x: ByteOption) -> u8 {

match x {

ByteOption::Some(y) => y + 1,

ByteOption::None => 0,

}

}

In implementations, Self acts like a type alias. So in Rust 1.37.0, you can also refer to enum variants with Self::Variant:

impl Coin {

fn value_in_cents(&self) -> u8 {

match self {

Self::Penny => 1,

Self::Nickel => 5,

Self::Dime => 10,

Self::Quarter => 25,

}

}

}

To be more exact, Rust now allows you to refer to enum variants through "type-relative resolution", <MyType<..>>::Variant. More details are available in the stabilization report.
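The two snippets above can be combined into one program that compiles on its own. This is only a sketch: the `Coin` enum definition is an assumption, since the post shows the `impl` block without it, and the `main` function is added purely for illustration.

```rust
// Assumed definition: the post only shows `impl Coin`, not the enum itself.
enum Coin {
    Penny,
    Nickel,
    Dime,
    Quarter,
}

impl Coin {
    fn value_in_cents(&self) -> u8 {
        // `Self` acts like a type alias for `Coin` here.
        match self {
            Self::Penny => 1,
            Self::Nickel => 5,
            Self::Dime => 10,
            Self::Quarter => 25,
        }
    }
}

// Variants of `Option<u8>` are reachable through the alias.
type ByteOption = Option<u8>;

fn increment_or_zero(x: ByteOption) -> u8 {
    match x {
        ByteOption::Some(y) => y + 1,
        ByteOption::None => 0,
    }
}

fn main() {
    let coins = [Coin::Penny, Coin::Nickel, Coin::Dime, Coin::Quarter];
    let total: u32 = coins.iter().map(|c| u32::from(c.value_in_cents())).sum();
    println!("total: {} cents", total); // prints "total: 41 cents"
    println!("{}", increment_or_zero(ByteOption::Some(41))); // prints "42"
    println!("{}", increment_or_zero(ByteOption::None)); // prints "0"
}
```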

Built-in Cargo support for vendored dependencies

After being available as a separate crate for years, the cargo vendor command is now integrated directly into Cargo. The command fetches all your project's dependencies, unpacks them into the vendor/ directory, and shows the configuration snippet required to use the vendored code during builds.

There are multiple cases where cargo vendor is already used in production: the Rust compiler rustc uses it to ship all its dependencies in release tarballs, and projects with monorepos use it to commit the dependencies' code in source control.

Using unnamed const items for macros

You can now create unnamed const items. Instead of giving your constant an explicit name, simply name it _ instead. For example, in the rustc compiler we find:

/// Type size assertion where the first parameter

/// is a type and the second is the expected size.

#[macro_export]

macro_rules! static_assert_size {

($ty:ty, $size:expr) => {

const _: [(); $size] = [(); ::std::mem::size_of::<$ty>()];

// ^ Note the underscore here.

}

}

static_assert_size!(Option<Box<String>>, 8); // 1.

static_assert_size!(usize, 8); // 2.

Notice the second static_assert_size!(..): thanks to the use of unnamed constants, you can define new items without naming conflicts. Previously you would have needed to write static_assert_size!(MY_DUMMY_IDENTIFIER, usize, 8);. Instead, with Rust 1.37.0, it now becomes easier to create ergonomic and reusable declarative and procedural macros for static analysis purposes.
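As a minimal, self-contained sketch of the same idea, the program below invokes a size-checking macro several times in one scope. The macro name `assert_size` and the chosen types are illustrative assumptions, not taken from rustc. Every expansion defines a `const _`, so the repeated invocations do not collide.

```rust
// Hypothetical macro for illustration: fails to compile if `$ty` does not
// have the expected size, because the two array lengths would differ.
macro_rules! assert_size {
    ($ty:ty, $size:expr) => {
        // The unnamed constant lets the macro be expanded any number of
        // times in the same scope without naming conflicts.
        const _: [(); $size] = [(); ::std::mem::size_of::<$ty>()];
    };
}

assert_size!(u32, 4);
assert_size!(u64, 8);
assert_size!([u8; 16], 16);
// `Option<Box<T>>` is pointer-sized; comparing against `usize` keeps the
// check portable across 32-bit and 64-bit targets.
assert_size!(Option<Box<String>>, ::std::mem::size_of::<usize>());

fn main() {
    // Reaching this point means every assertion held at compile time.
    println!("all size assertions passed");
}
```

Changing an expected size, for example to `assert_size!(u32, 8)`, should turn the mismatch into a compile error rather than a runtime failure.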

Profile-guided optimization

The rustc compiler now comes with support for Profile-Guided Optimization (PGO) via the -C profile-generate and -C profile-use flags.

Profile-Guided Optimization allows the compiler to optimize code based on feedback from real workloads. It works by compiling the program to optimize in two steps:

- First, the program is built with instrumentation inserted by the compiler. This is done by passing the -C profile-generate flag to rustc. The instrumented program then needs to be run on sample data and will write the profiling data to a file.

- Then, the program is built again, this time feeding the collected profiling data back into rustc by using the -C profile-use flag. This build will make use of the collected data to allow the compiler to make better decisions about code placement, inlining, and other optimizations.

For more in-depth information on Profile-Guided Optimization, please refer to the corresponding chapter in the rustc book.

Choosing a default binary in Cargo projects

cargo run is great for quickly testing CLI applications. When multiple binaries are present in the same package, you have to explicitly declare the name of the binary you want to run with the --bin flag. This makes cargo run not as ergonomic as we'd like, especially when a binary is called more often than the others.

Rust 1.37.0 addresses the issue by adding default-run, a new key in Cargo.toml. When the key is declared in the [package] section, cargo run will default to the chosen binary if the --bin flag is not passed.

#[repr(align(N))] on enums

The #[repr(align(N))] attribute can be used to raise the alignment of a type definition. Previously, the attribute was only allowed on structs and unions. With Rust 1.37.0, the attribute can now also be used on enum definitions. For example, the following type Align16 would, as expected, report 16 as the alignment whereas the natural alignment without #[repr(align(16))] would be 4:

#[repr(align(16))]

enum Align16 {

Foo { foo: u32 },

Bar { bar: u32 },

}

The semantics of using #[repr(align(N))] on an enum are the same as defining a wrapper struct AlignN<T> with that alignment and then using AlignN<MyEnum>:

#[repr(align(N))]

struct AlignN<T>(T);
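The alignment claim can be checked with `std::mem::align_of`. In this sketch, `Natural` and `Wrapper` are hypothetical names introduced for comparison: `Natural` is the same enum without the attribute, and `Wrapper<T>` plays the role of the wrapper struct with N fixed to 16.

```rust
use std::mem::{align_of, size_of};

#[allow(dead_code)]
#[repr(align(16))]
enum Align16 {
    Foo { foo: u32 },
    Bar { bar: u32 },
}

// Hypothetical comparison type: the same enum without the attribute.
#[allow(dead_code)]
enum Natural {
    Foo { foo: u32 },
    Bar { bar: u32 },
}

// Hypothetical wrapper struct with the alignment fixed to 16.
#[allow(dead_code)]
#[repr(align(16))]
struct Wrapper<T>(T);

fn main() {
    println!("Align16: {}", align_of::<Align16>()); // prints "Align16: 16"
    // The post gives 4 as the natural alignment of this enum.
    println!("Natural: {}", align_of::<Natural>());
    println!("Wrapper<Natural>: {}", align_of::<Wrapper<Natural>>()); // prints "Wrapper<Natural>: 16"
    // A type's size is always a multiple of its alignment.
    assert_eq!(size_of::<Align16>() % 16, 0);
}
```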

Library changes

In Rust 1.37.0 there have been a number of standard library stabilizations:

- BufReader::buffer and BufWriter::buffer

- Cell::from_mut

- Cell::as_slice_of_cells

- DoubleEndedIterator::nth_back

- Option::xor

- {i,u}{8,16,32,64,128,size}::reverse_bits and Wrapping::reverse_bits

- slice::copy_within
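A brief sketch of how several of these newly stabilized APIs behave. The helper `add_last_to_first` and all sample values are made up for illustration.

```rust
use std::cell::Cell;
use std::io::{BufRead, BufReader};

// Hypothetical helper: adds the last element to the first one through
// shared `Cell` views of a mutable slice.
fn add_last_to_first(values: &mut [i32]) {
    let cells: &[Cell<i32>] = Cell::from_mut(values).as_slice_of_cells();
    if let (Some(first), Some(last)) = (cells.first(), cells.last()) {
        first.set(first.get() + last.get());
    }
}

fn main() {
    // Option::xor yields Some only when exactly one side is Some.
    assert_eq!(Some(1).xor(None), Some(1));
    assert_eq!(Some(1).xor(Some(2)), None);

    // reverse_bits mirrors the bit pattern of an integer.
    assert_eq!(0b0000_0001u8.reverse_bits(), 0b1000_0000);

    // slice::copy_within copies a range to another position in the same slice.
    let mut data = [1, 2, 3, 4, 5];
    data.copy_within(0..2, 3);
    assert_eq!(data, [1, 2, 3, 1, 2]);

    // DoubleEndedIterator::nth_back counts from the end, starting at 0.
    let mut iter = [10, 20, 30, 40].iter();
    assert_eq!(iter.nth_back(1), Some(&30));

    // Cell::from_mut and Cell::as_slice_of_cells.
    let mut values = [1, 2, 3];
    add_last_to_first(&mut values);
    assert_eq!(values, [4, 2, 3]);

    // BufReader::buffer exposes the buffered bytes without reading more.
    let mut reader = BufReader::new(&b"hello"[..]);
    assert!(reader.buffer().is_empty());
    reader.fill_buf().unwrap();
    assert_eq!(reader.buffer(), &b"hello"[..]);

    println!("all checks passed");
}
```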

Other changes

There are other changes in the Rust 1.37 release: check out what changed in Rust, Cargo, and Clippy.

Contributors to 1.37.0

Many people came together to create Rust 1.37.0. We couldn't have done it without all of you. Thanks!

New sponsors of Rust infrastructure

We'd like to thank two new sponsors of Rust's infrastructure who provided the resources needed to make Rust 1.37.0 happen: Amazon Web Services (AWS) and Microsoft Azure.

- AWS has provided hosting for release artifacts (compilers, libraries, tools, and source code), serving those artifacts to users through CloudFront, preventing regressions with Crater on EC2, and managing other Rust-related infrastructure hosted on AWS.

- Microsoft Azure has sponsored builders for Rust’s CI infrastructure, notably the extremely resource intensive rust-lang/rust repository.

|

|

Mozilla Localization (L10N): L10n Report: August Edition |

Please note some of the information provided in this report may be subject to change as we are sometimes sharing information about projects that are still in early stages and are not final yet.

Welcome!

New localizers:

- Mohsin of Assamese (as) is committed to rebuilding the community and has been contributing to several projects.

- Emil of Syriac (syc) joined us through the Common Voice project.

- Ratko and Isidora of Serbian (sr) have been prolific contributors to a wide range of products and projects since joining the community.

- Haile of Amharic (am) joined us through the Common Voice project, and is busy localizing and recruiting more contributors so he can rebuild the community.

- Ahsun Mahmud of Bengali (bn) focuses his interest on Firefox.

Are you a locale leader and want us to include new members in our upcoming reports? Contact us!

New community/locales added

- Maltese (mt)

- Romansh Vallader (rm-vallader)

- Syriac (syc)

New content and projects

What’s new or coming up in Firefox desktop

We’re quickly approaching the deadline for Firefox 69. The last day to ship your changes in this version is August 20, less than a week away.

A lot of content targeting Firefox 70 has already landed and is available in Pontoon for translation, with more to come in the following days. Here are a few of the areas on which you should focus your testing.

about:logins

This is the new password manager for Firefox. If you don’t plan to store the passwords in your browser, you should at least create a new profile to test the feature and its interactions (adding logins, editing, removing, etc.).

Enhanced Tracking Protection (ETP) and Protection Panels

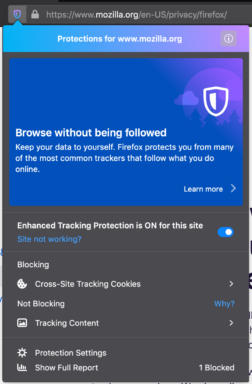

This is going to be the main focus for Firefox 70:

- New protection panel displayed when clicking the shield icon in the address bar.

- Updated preferences.

- New about:protections page. The content of this page will be exposed for localization in the coming days.

With ETP there will be several new terms to define for your language, like “Cross-Site Tracking Cookies” or “Social Media Trackers”. Make sure they’re translated consistently across the products and websites.

The deadline to ship localization for Firefox 70 will be October 8.

What’s new or coming up in mobile

It’s summer vacation time in mobile land, which means most projects are following the usual course of things.

Just like for Desktop, we’re quickly approaching the deadline for Firefox Android v69. The last day to ship your changes in this version is August 20.

Another thing to note is that we’ve exposed strings for Firefox iOS v19 (deadline TBD soon).

Other projects are following the usual continuous localization workflow. Stay tuned for the next report as there will be novelties then for sure!

What’s new or coming up in web projects

Firefox Accounts

A lot of strings landed earlier this month. If you need to prioritize what to localize first, look for string IDs containing `delete_account` or `sync-engines`. Expect more strings to land in the coming weeks.

Mozilla.org

The following files were added or updated since the last report.

- New: firefox/adblocker.lang and firefox/whatsnew_69.lang (due on August 26)

- Update: firefox/new/trailhead.lang

The navigation.lang file has been available for localization for some time. This is a shared file, and its content is on production whether the file is fully localized or not. If it is not fully translated for your locale, please give it higher priority so it can be completed soon.

What’s new or coming up in Foundation projects

More content from foundation.mozilla.org will be exposed to localization in de, es, fr, pl, pt-BR over the next few weeks! Content is exposed in different stages, because the website is built using different technologies, which makes it challenging for localization. The main pages will be available in the Engagement project, and a new tag can help have a look at them. Other template strings will be exposed in a new project later.

donate.mozilla.org is getting an update too! The website is being rebuilt from the ground up with a new system that will make it easier to maintain. The UI won’t change too much, so the copy will mostly remain the same. However, it won’t be possible to migrate the current translations to the new system. Instead, we will rely heavily on Pontoon’s translation memory.

Once the new website is ready, the current project in Pontoon will be set to “read only” mode during a transition period and a new project will be enabled.

Please make sure to review any pending suggestions over the next few weeks, so that they get properly added to the translation memory and are ready to be reused in the new project.

What’s new or coming up in SuMo

Newly published articles:

What’s new or coming up in Pontoon

The Translate.Next work moves on. We hope to have it wrapped up by the end of this quarter (i.e., end of September). Help us test by turning on Translate.Next from the Pontoon translation editor.

Newly published localizer facing documentation

Events

- Want to showcase an event coming up that your community is participating in? Reach out to any l10n-driver and we’ll include that (see links to emails at the bottom of this report)

Friends of the Lion

Know someone in your l10n community who’s been doing a great job and should appear here? Contact one of the l10n-drivers, and we’ll make sure they get a shout-out (see list at the bottom)!

Useful Links

- Dev.l10n mailing list and Dev.l10n.web mailing list – where project updates happen. If you are a localizer, then you should be following these.

- Facebook group: it’s new! Come check it out!

- Telegram (contact one of the l10n-drivers below so we will add you)

- L10n blog

- #l10n irc channel: this wiki page will help you get set up with IRC. For L10n, we use the #l10n channel for all general discussion. You can also find a list of IRC channels in other languages here.

Questions? Want to get involved?

- If you want to get involved, or have any question about l10n, reach out to:

- Delphine – l10n Project Manager for mobile

- Peiying (CocoMo) – l10n Project Manager for mozilla.org, marketing, and legal

- Francesco Lodolo (flod) – l10n Project Manager for desktop

- Théo Chevalier – l10n Project Manager for Mozilla Foundation

- Axel (Pike) – l10n Tech Team Lead

- Staś – l20n/FTL tamer

- Matjaz – Pontoon dev

- Adrian – Pontoon dev

- Jeff Beatty (gueroJeff) – l10n-drivers manager

- Rubén – Interim L10n community coordinator for Support

Did you enjoy reading this report? Let us know how we can improve by reaching out to any one of the l10n-drivers listed above.

https://blog.mozilla.org/l10n/2019/08/14/l10n-report-august-edition-3/

|

|

Mozilla VR Blog: WebXR category in JS13KGames! |

Today starts the 8th edition of the annual js13kGames competition and we are sponsoring its WebXR category with a bunch of prizes including Oculus Quest headsets!

Like many other game development contests, the main goal of the js13kGames competition is to make a game based on a given theme under a specific amount of time. This year’s theme is "BACK" and the time you have to work on your game is a whole month, from today to September 13th.

There is, of course, another important rule you must follow: the zip containing your game should not weigh more than 13kb. (Please follow this link for the complete set of rules). Don’t let the size restriction discourage you. Previous competitors have done amazing things in 13kb.

This year, as in the previous editions, Mozilla is sponsoring the competition, with special emphasis on the WebXR category, where, among other prizes, the best three games will get an Oculus Quest headset!

Like many other game development contests, the main goal is to release a game based on a given theme under a specific amount of time. This year’s theme is "BACK" and the time you have to work on your game is a whole month, from today to 13th September.