
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Will Kahn-Greene: crashstats-tools v1.0.1 released! CLI for Crash Stats.

What is it?

crashstats-tools is a set of command-line tools for working with Crash Stats (https://crash-stats.mozilla.org/).

crashstats-tools comes with two commands:

- supersearch: for performing Crash Stats Super Search queries

- fetch-data: for fetching raw crash, dumps, and processed crash data for specified crash ids

v1.0.1 released!

I extracted two commands we have in the Socorro local dev environment as a separate Python project. This allows anyone to use those two commands without having to set up a Socorro local dev environment.

The audience for this is pretty limited, but I think it'll help significantly for testing analysis tools.

Say I'm working on an analysis tool that looks at crash report minidump files and does some additional analysis on them. I could use the supersearch command to get a list of crash ids to download data for, and the fetch-data command to download the requisite data.

    $ export CRASHSTATS_API_TOKEN=foo
    $ mkdir crashdata
    $ supersearch --product=Firefox --num=10 | \
        fetch-data --raw --dumps --no-processed crashdata

Then I can run my tools on the dumps in crashdata/upload_file_minidump/.

Be thoughtful about using data

Make sure to use these tools in compliance with our data policy:

https://crash-stats.mozilla.org/documentation/memory_dump_access/

Where to go for more

The project lives on GitHub, where you'll find the source code, the issue tracker, and a README that covers everything about the project, including examples of usage:

https://github.com/willkg/crashstats-tools

Let me know whether this helps you!

https://bluesock.org/~willkg/blog/mozilla/crashstats_tools_v1_0_1.html

Dustin J. Mitchell: CODEOWNERS syntax

The GitHub docs page for CODEOWNERS is not very helpful in terms of how the file is interpreted. I’ve done a little experimentation to figure out how it works, and here are the results.

Rules

For each modified file in a PR, GitHub examines the codeowners file and selects the last matching entry. It then combines the set of mentions for all files in the PR and assigns them as reviewers.

An entry can specify no reviewers by containing only a pattern and no mentions.

Test

Consider this CODEOWNERS:

* @org/reviewers

*.js @org/js-reviewers

*.go @org/go-reviewers

security/** @org/sec-reviewers

generated/**

Then a change to:

- README.md would get review from @org/reviewers

- src/foo.js would get review from @org/js-reviewers

- bar.go would get review from @org/go-reviewers

- security/crypto.go would get review from @org/sec-reviewers (but not @org/go-reviewers!)

- generated/reference.go would get review from nobody

And thus a PR with, for example:

M src/foo.js

M security/crypto.go

M generated/reference.go

would get reviewed by @org/js-reviewers and @org/sec-reviewers.

If I wanted per-language reviews even under security/, then I’d use

security/** @org/sec-reviewers

security/**/*.js @org/sec-reviewers @org/js-reviewers

security/**/*.go @org/sec-reviewers @org/go-reviewers
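The last-match rule described in this post can be sketched in a few lines of Python. This is only an illustration of the observed behavior, not GitHub's implementation. The function names (`pattern_to_regex`, `parse_codeowners`, `owners_for`, `reviewers_for`) are hypothetical, and the pattern translation assumes gitignore-style semantics and handles only the `*` and `**` forms used in the examples above.

```python
import re


def pattern_to_regex(pattern):
    """Translate a simplified gitignore-style pattern into a compiled regex.

    Assumption: only the `*`, `**` and `**/` forms from the examples are handled.
    """
    # A pattern containing a slash is matched from the repository root;
    # otherwise it may match a file name at any depth.
    anchored = "/" in pattern.rstrip("/")
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            parts.append("(?:.*/)?")  # zero or more directories
            i += 3
        elif pattern.startswith("**", i):
            parts.append(".*")  # anything, including slashes
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")  # anything within one path segment
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    prefix = "" if anchored else "(?:.*/)?"
    return re.compile("^" + prefix + "".join(parts) + "$")


def parse_codeowners(text):
    """Return a list of (regex, owners) entries in file order."""
    entries = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        pattern, *owners = line.split()
        entries.append((pattern_to_regex(pattern), owners))
    return entries


def owners_for(path, entries):
    """Owners of one file: the mentions of the LAST matching entry."""
    owners = []
    for regex, entry_owners in entries:
        if regex.match(path):
            owners = entry_owners  # later entries override earlier ones
    return owners


def reviewers_for(paths, entries):
    """Reviewers of a PR: the union of the owners of every modified file."""
    reviewers = set()
    for path in paths:
        reviewers.update(owners_for(path, entries))
    return reviewers


EXAMPLE = """\
* @org/reviewers
*.js @org/js-reviewers
*.go @org/go-reviewers
security/** @org/sec-reviewers
generated/**
"""

if __name__ == "__main__":
    entries = parse_codeowners(EXAMPLE)
    changed = ["src/foo.js", "security/crypto.go", "generated/reference.go"]
    print(sorted(reviewers_for(changed, entries)))
```

Because an entry with no mentions still counts as a match, `generated/**` overrides the earlier `*.go` entry and leaves generated files with no reviewers.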

Hacks.Mozilla.Org: New CSS Features in Firefox 68

Firefox 68 landed earlier this month with a bunch of CSS additions and changes. In this blog post we will take a look at some of the things you can expect to find that might have been missed in earlier announcements.

CSS Scroll Snapping

The headline CSS feature this time round is CSS Scroll Snapping. I won’t spend much time on it here as you can read the blog post for more details. The update in Firefox 68 brings the Firefox implementation in line with Scroll Snap as implemented in Chrome and Safari. In addition, it removes the old properties which were part of the earlier Scroll Snap Points Specification.

The ::marker pseudo-element

The ::marker pseudo-element lets you select the marker box of a list item. This will typically contain the list bullet, or a number. If you have ever used an image as a list bullet, or wrapped the text of a list item in a span in order to have different bullet and text colors, this pseudo-element is for you!

With the marker pseudo-element, you can target the bullet itself. The following code will turn the bullet on unordered lists to hot pink, and make the number on an ordered list item larger and blue.

ul ::marker {

color: hotpink;

}

ol ::marker {

color: blue;

font-size: 200%;

}

With ::marker we can style our list markers

There are only a few CSS properties that may be used on ::marker. These include all font properties. Therefore you can change the font-size or family to be something different to the text. You can also color the bullets as shown above, and insert generated content.

Using ::marker on non-list items

A marker can only be shown on list items, however you can turn any element into a list-item by using display: list-item. In the example below I use ::marker, along with generated content and a CSS counter. This code outputs the step number before each h2 heading in my page, preceded by the word “step”. You can see the full example on CodePen.

h2 {

display: list-item;

counter-increment: h2-counter;

}

h2::marker {

content: "Step: " counter(h2-counter) ". ";

}

If you take a look at the bug for the implementation of ::marker you will discover that it is 16 years old! You might wonder why a browser has 16-year-old implementation bugs and feature requests sitting around. To find out more read through the issue, where you can discover that it wasn’t clear originally if the ::marker pseudo-element would make it into the spec.

There were some Mozilla-specific pseudo-elements that achieved the result developers were looking for with something like ::marker. The pseudo-elements ::-moz-list-bullet and ::-moz-list-number allowed for the styling of bullets and numbers respectively, using the -moz- vendor prefix.

The ::marker pseudo-element is standardized in CSS Lists Level 3 and CSS Pseudo-elements Level 4, and is currently implemented in Firefox 68 and in Safari. Chrome has yet to implement ::marker. However, in most cases you should be able to use ::marker as an enhancement for those browsers which support it. You can allow the markers to fall back to the same color and size as the rest of the list text where it is not available.

CSS Fixes

It makes web developers sad when we run into a feature which is supported but works differently in different browsers. These interoperability issues are often caused by the sheer age of the web platform. In fact, some things were never fully specified in terms of how they should work. Many changes to our CSS specifications are made due to these interoperability issues. Developers depend on the browsers to update their implementations to match the clarified spec.

Most browser releases contain fixes for these issues, making the web platform incrementally better as there are fewer issues for you to run into when working with CSS. The latest Firefox release is no different – it ships fixes for the ch unit and for list numbering.

Developer Tools

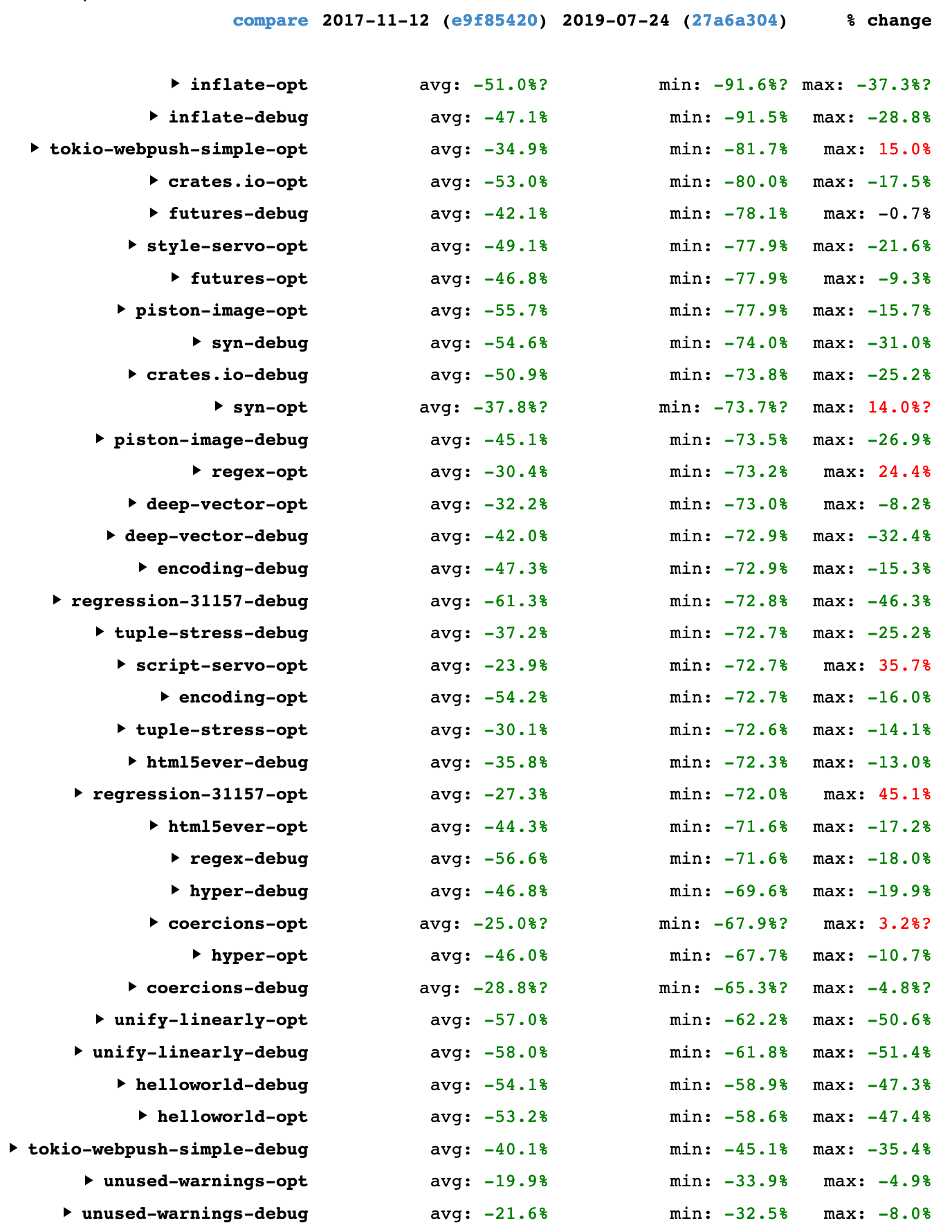

In addition to changes to the implementation of CSS in Firefox, Firefox 68 brings you some great new additions to Developer Tools to help you work with CSS.

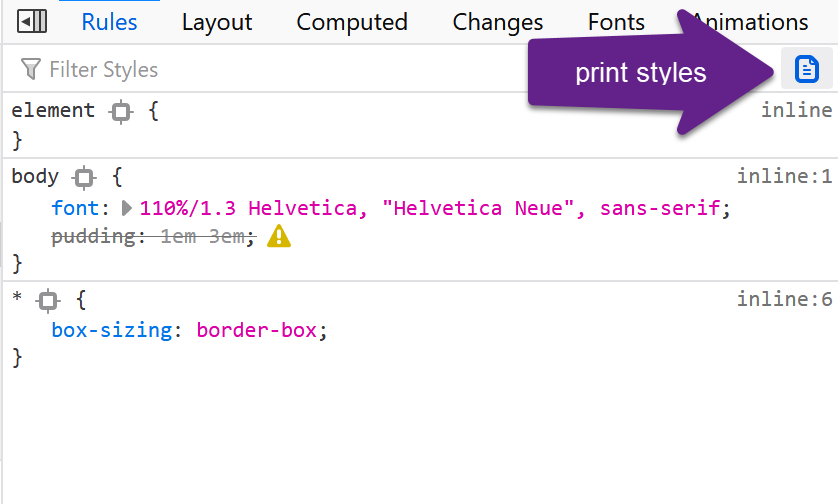

In the Rules Panel, look for the new print styles button. This button allows you to toggle to the print styles for your document, making it easier to test a print stylesheet that you are working on.

Staying with the Rules Panel, Firefox 68 shows an icon next to any invalid or unsupported CSS. If you have ever spent a lot of time puzzling over why something isn’t working, only to realise you made a typo in the property name, this will really help!

In this example I have spelled padding as “pudding”. There is (sadly) no pudding property so it is highlighted as an error.

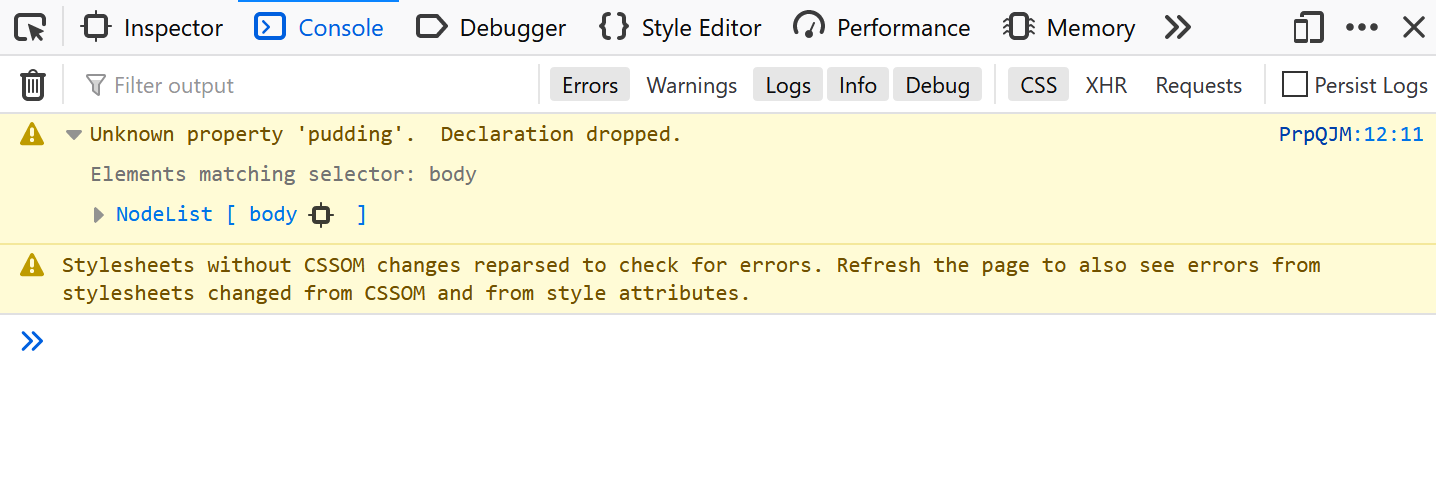

The console now shows more information about CSS errors and warnings. This includes a nodelist of places the property is used. You will need to click CSS in the filter bar to turn this on.

So that’s my short roundup of the features you can start to use in Firefox 68. Take a look at the Firefox 68 release notes to get a full overview of all the changes and additions that Firefox 68 brings you.

The post New CSS Features in Firefox 68 appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2019/07/new-css-features-in-firefox-68/

Mozilla Future Releases Blog: DNS-over-HTTPS (DoH) Update – Detecting Managed Networks and User Choice

At Mozilla, we are continuing to experiment with DNS-over-HTTPS (DoH), a new network protocol that encrypts Domain Name System (DNS) requests and responses. This post outlines a new study we will be conducting to gauge how many Firefox users in the United States are using parental controls or enterprise DNS configurations.

With previous studies, we have tried to understand the performance impacts of DoH, and the results have been very promising. We found that DoH queries are typically the same speed or slightly slower than DNS queries, and in some cases can be significantly faster. Furthermore, we found that web pages that are hosted by Akamai (a content distribution network, or “CDN”) have similar performance when DoH is enabled. As such, DoH has the potential to improve user privacy on the internet without impeding user experience.

Now that we’re satisfied with the performance of DoH, we are shifting our attention to how we will interact with existing DNS configurations that users have chosen. For example, network operators often want to filter out various kinds of content. Parents and schools in particular may use “parental controls”, which block access to websites that are considered unsuitable for children. These controls may also block access to malware and phishing websites. DNS is commonly used to implement this kind of content filtering.

Similarly, some enterprises set up their own DNS resolvers that behave in special ways. For example, these resolvers may return a different IP address for a domain name depending on whether the user that initiated the request is on a corporate network or a public network. This behavior is known as “split-horizon”, and it is often used to host a production and a development version of a website. Enabling DoH in this scenario could unintentionally prevent access to internal enterprise websites when using Firefox.

We want to understand how often users of Firefox are subject to these network configurations. To do that, we are performing a study within Firefox for United States-based users to collect metrics that will help answer this question. These metrics are based on common approaches to implementing filters and enterprise DNS resolvers.

Detecting DNS-based parental controls

This study will generate DNS lookups from participants’ browsers to detect DNS-based parental controls. First, we will resolve test domains operated by popular parental control providers to determine if parental controls are enabled on a network. For example, OpenDNS operates exampleadultsite.com. It is not actually an adult website, but it is present on the blocklists for several parental control providers. These providers often block access to such websites by returning an incorrect IP address for DNS lookups.

As part of this test, we will resolve exampleadultsite.com. According to OpenDNS, this domain name should only resolve to the address 146.112.255.155. Thus, if a different address is returned, we will infer that DNS-based parental controls have been configured. The browser will not connect to, or download any content from, the website.
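The comparison described above can be sketched in Python. This is an illustration only and not the code used in the Firefox study. The function names are hypothetical, and the expected address is the one quoted in this post, which may change over time.

```python
import socket

# Address this post attributes to OpenDNS for the test domain.
EXPECTED_ADDRESS = "146.112.255.155"


def parental_controls_inferred(resolved_addresses, expected=EXPECTED_ADDRESS):
    """Return True if any resolved address differs from the expected one."""
    return any(address != expected for address in resolved_addresses)


def resolve_ipv4(hostname):
    """Resolve a hostname to a set of IPv4 addresses with the system resolver.

    Only a DNS lookup is performed; no connection is made to the host.
    """
    infos = socket.getaddrinfo(hostname, None, family=socket.AF_INET)
    return {info[4][0] for info in infos}


if __name__ == "__main__":
    addresses = resolve_ipv4("exampleadultsite.com")
    print("parental controls inferred:", parental_controls_inferred(addresses))
```

Keeping the comparison separate from the lookup makes the inference easy to check without touching the network.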

We will also attempt to detect when a network has forced “safe search” versions of Google and YouTube for its users. The way that safe search works is that the network administrator configures their resolver to redirect DNS requests for a search provider to a “safe” version of the website. For example, a network administrator may force all users that look up www.google.com to instead look up forcesafesearch.google.com. When the browser connects to the IP address for forcesafesearch.google.com, the search provider knows that safe search is enabled and returns filtered search results.

We will resolve the unrestricted domain names provided by Google and YouTube from the add-on, and then resolve the safe search domain names. Importantly, the safe search domain names for Google and YouTube are hosted on fixed IP addresses. Thus, if the IP addresses for an unrestricted domain name and a safe search domain name match, we will infer that parental controls are enabled. The tables below show the domain names we will resolve to detect safe search.

| YouTube | Google |

| www.youtube.com | www.google.com |

| m.youtube.com | google.com |

| youtubeapi.googleapis.com | |

| youtube.googleapis.com | |

| www.youtube-nocookie.com | |

Table 1: The unrestricted domain names provided by YouTube and Google

| YouTube | Google |

| restrict.youtube.com | forcesafesearch.google.com |

| restrictmoderate.youtube.com | |

Table 2: The safe search domain names provided by YouTube and Google
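The safe search check can be sketched in Python using the domain names from the two tables. This is a rough illustration under stated assumptions, not the study's implementation. `detect_safe_search` and its `resolve` parameter are hypothetical names, and `resolve` stands for any function that maps a domain name to a collection of IP addresses.

```python
# Domain names taken from Table 1 (unrestricted) and Table 2 (safe search).
SAFE_SEARCH_DOMAINS = {
    "Google": (
        ["www.google.com", "google.com"],
        ["forcesafesearch.google.com"],
    ),
    "YouTube": (
        [
            "www.youtube.com",
            "m.youtube.com",
            "youtubeapi.googleapis.com",
            "youtube.googleapis.com",
            "www.youtube-nocookie.com",
        ],
        ["restrict.youtube.com", "restrictmoderate.youtube.com"],
    ),
}


def detect_safe_search(resolve):
    """Return {provider: bool}, True when safe search appears to be forced.

    Safe search is inferred when an unrestricted domain name resolves to an
    address that also belongs to one of the provider's safe search names.
    """
    results = {}
    for provider, (unrestricted, safe) in SAFE_SEARCH_DOMAINS.items():
        safe_addresses = set()
        for name in safe:
            safe_addresses |= set(resolve(name))
        results[provider] = any(
            set(resolve(name)) & safe_addresses for name in unrestricted
        )
    return results
```

Passing the resolver in as a parameter keeps the comparison independent of how the lookups are actually performed.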

Detecting split-horizon DNS resolvers

We also want to understand how many Firefox users are behind networks that use split-horizon DNS resolvers, which are commonly configured by enterprises. We will perform two checks locally in the browser on DNS answers for websites that users visit during the study. First, we will check if the domain name does not contain a TLD that can be resolved by publicly-available DNS resolvers (such as .com). Second, if the domain name does contain such a TLD, we will check if the domain name resolves to a private IP address.

If either of these checks returns true, we will infer that the user’s DNS resolver is configured with split-horizon behavior. This is because the public DNS can only resolve domain names with particular TLDs, and it must resolve domain names to addresses that can be accessed over the public internet.

To be clear, we will not collect any DNS requests or responses. All checks will occur locally. We will count how many unique domain names appear to be resolved by a split-horizon resolver and then send only these counts to us.
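The two local checks and the final count can be sketched in Python as follows. This is a simplified illustration, not the study's code. The names `looks_split_horizon` and `count_split_horizon` are hypothetical, and `PUBLIC_TLDS` is a tiny stand-in for the real list of publicly resolvable TLDs.

```python
import ipaddress

# Assumption: a very small sample of public TLDs, for illustration only.
PUBLIC_TLDS = {"com", "org", "net"}


def looks_split_horizon(domain, addresses, public_tlds=PUBLIC_TLDS):
    """Apply the two checks to one DNS answer.

    Check 1: the name does not end in a publicly resolvable TLD.
    Check 2: the name has a public TLD but resolves to a private address.
    """
    tld = domain.rstrip(".").rsplit(".", 1)[-1].lower()
    if tld not in public_tlds:
        return True
    return any(ipaddress.ip_address(a).is_private for a in addresses)


def count_split_horizon(answers):
    """Count unique domain names that appear to come from a split-horizon resolver.

    `answers` maps each domain name to the addresses it resolved to. Only the
    resulting count would be reported, never the names or the addresses.
    """
    return sum(
        1 for domain, addresses in answers.items()
        if looks_split_horizon(domain, addresses)
    )
```

Using a dictionary keyed by domain name means each name is counted at most once, which matches the idea of reporting unique domain names.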

Study participation

Users that do not wish to participate in this study can opt out by typing “about:studies” in the navigation bar, looking for an active study titled “Detection Logic for DNS-over-HTTPS”, and disabling it. (Not all users will receive this study, so don’t be alarmed if you can’t find it.) Users may also opt out of participating in any future studies from this page.

As always, we are committed to maintaining a transparent relationship with our users. We believe that DoH significantly improves the privacy of our users. As we move toward a rollout of DoH to all United States-based Firefox users, we intend to provide explicit mechanisms allowing users and local DNS administrators to opt out.

The post DNS-over-HTTPS (DoH) Update – Detecting Managed Networks and User Choice appeared first on Future Releases.

This Week In Rust: This Week in Rust 297

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

News & Blog Posts

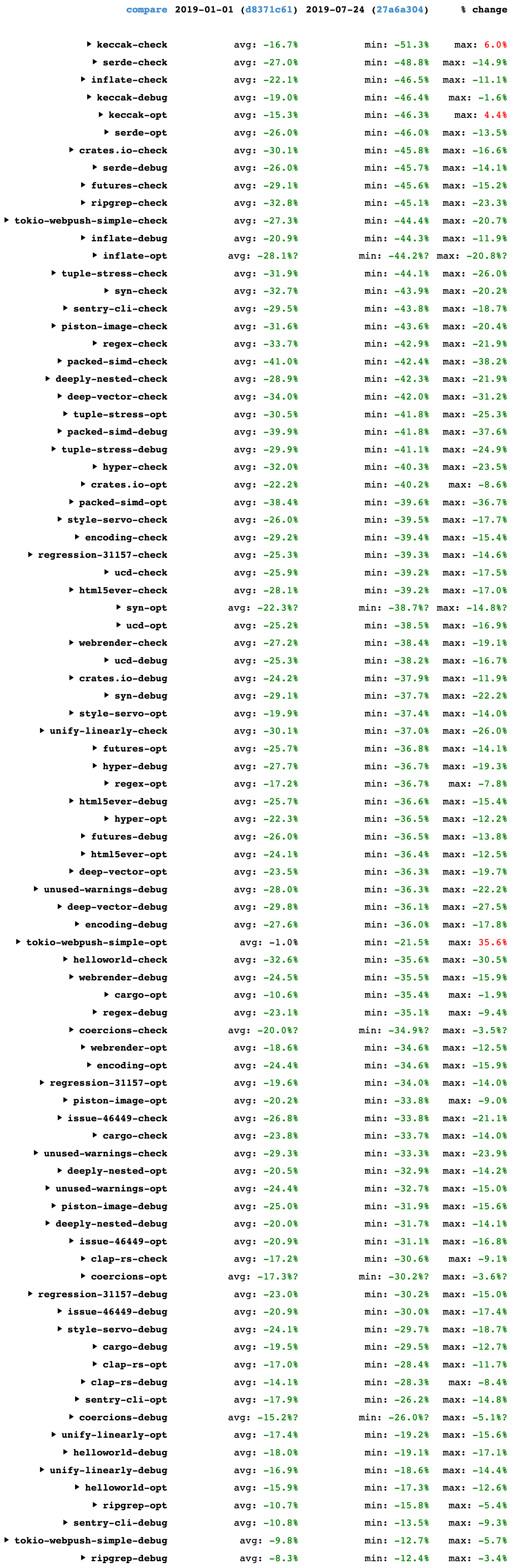

- The Rust compiler is still getting faster.

- Unsafe as a human-assisted type system.

- Why does the Rust compiler not optimize code assuming that two mutable references cannot alias?

- Python vs Rust for neural networks.

- Dependency management and trust scaling.

Crate of the Week

This week's crate is async-trait, a procedural macro to allow async fns in trait methods.

Thanks to Ehsan M. Kermani for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

324 pull requests were merged in the last week

- Add support for UWP targets

- Add riscv32i-unknown-none-elf target

- Update wasm32 support for LLVM 9

- Move unescape module to rustc_lexer

- Make the parser TokenStream more resilient after mismatched delimiter recovery

- Improve diagnostics for _ const/static declarations

- Avoid ICE when referencing desugared local binding in borrow error

- Suggest trait bound on type parameter when it is unconstrained

- Allow lifetime elision in Pin<&(mut) Self>

- Stop bare trait lint applying to macro call sites

- Add note suggesting to borrow a String argument to find

- Add method disambiguation help for trait implementation

- miri: Enable Intrptrcast by default

- Don't access a static just for its size and alignment

- Use const array repeat expressions for uninit_array

- Stabilize the type_name intrinsic in core::any

- Constantly improve the Vec(Deque) array PartialEq impls

- hashbrown: Do not grow the container if an insertion is on a tombstone

- rust-bindgen: Cleanup wchar_t layout computation to happen later

- rustdoc: Make #[doc(include)] relative to the containing file

- docs.rs: Fix weird layout workflow issues on firefox

- Force clippy to run every time (finally!)

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

No new RFCs were proposed this week.

Tracking Issues & PRs

- [disposition: merge] Stabilize checked_duration_since for 1.38.0.

- [disposition: merge] Stabilize duration_float.

- [disposition: merge] Implement DoubleEndedIterator for iter::{StepBy, Peekable, Take}.

- [disposition: merge] Give built-in macros stable addresses in the standard library.

- [disposition: merge] Add a few trait impls for AccessError.

New RFCs

Upcoming Events

Africa

Asia Pacific

- Aug 5. Auckland, NZ - Rust AKL August - Rust usage in Firefox.

- Aug 10. Singapore, SG - Rust Meetup.

- Aug 17. Taipei, TW - "Everything in Rust" at COSCUP 2019.

Europe

- Aug 4. St. Petersburg, RU - St. Petersburg Rust Meetup.

- Aug 7. Erlangen, DE - Rust Franken Meetup #1.

- Aug 7. Berlin, DE - OpenTechSchool Berlin - Rust Hack and Learn.

North America

- Aug 7. Portland, OR, US - PDXRust - Trees = Boxes + Enums + Iterators.

- Aug 7. Vancouver, BC, CA - Vancouver Rust meetup.

- Aug 7. Atlanta, GA, US - Grab a beer with fellow Rustaceans.

- Aug 7. Indianapolis, IN, US - Indy.rs.

- Aug 8. San Diego, CA, US - San Diego Rust August Meetup.

- Aug 8. Columbus, OH, US - Columbus Rust Society - Monthly Meeting.

- Aug 8. Arlington, VA, US - Rust DC — Mid-month Rustful.

- Aug 13. Toronto, ON, CA - Rust Toronto - Toronto Rustaceans Tech and Talk.

- Aug 13. Denver, CO, US - Rust Boulder/Denver - Hack 'N Snack.

- Aug 13. Seattle, WA, US - Seattle Rust Meetup - Monthly meetup.

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

- Senior Platform Engineer - Layout at Mozilla, Portland, US.

- Senior Software Engineer at ConsenSys R&D, Remote.

- Rust Developer at Finhaven, Vancouver, CA.

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

Rust clearly popularized the ownership model, with similar implementations being considered in D, Swift and other languages. This is great news for both performance and memory safety in general.

Also let's not forget that Rust is not the endgame. Someone may at one point find or invent a language that will offer an even better position in the safety-performance-ergonomics space. We should be careful not to get too attached to Rust, lest we stand in progress' way.

Thanks to Vikrant for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nasa42, llogiq, and Flavsditz.

https://this-week-in-rust.org/blog/2019/07/30/this-week-in-rust-297/

Eitan Isaacson: HTML Text Snippet Extension

I often need to quickly test a snippet of HTML, mostly to see how it interacts with our accessibility APIs.

Instead of creating some throwaway HTML file each time, I find it easier to paste in the HTML in devtools, or even make a data URI.

Last week I spent an hour creating an extension that allows you to just paste some HTML into the address bar and have it rendered immediately.

You just need to prefix it with the html keyword, and you’re good to go. Like this:

html Hello, World!

You can download it from GitHub.

There might be other extensions or ways of doing this, but it was a quick little project.

https://blog.monotonous.org/2019/07/30/html-snippet-extension/

IRL (podcast): The Tech Worker Resistance

There's a movement building within tech. Workers are demanding higher standards from their companies — and because of their unique skills and talent, they have the leverage to get attention. Walkouts and sit-ins. Picket protests and petitions. Shareholder resolutions, and open letters. These are the new tools of tech workers, increasingly emboldened to speak out. And, as they do that, they expose the underbellies of their companies' ethics and values or perceived lack of them.

In this episode of IRL, host Manoush Zomorodi meets with Rebecca Stack-Martinez, an Uber driver fed up with being treated like an extension of the app; Jack Poulson, who left Google over ethical concerns with a secret search engine being built for China; and Rebecca Sheppard, who works at Amazon and pushes for innovation on climate change from within. EFF Executive Director Cindy Cohn explains why this movement is happening now, and why it matters for all of us.

IRL is an original podcast from Firefox. For more on the series go to irlpodcast.org

Rebecca Stack-Martinez is a committee member for Gig Workers Rising.

Here is Jack Poulson's resignation letter to Google. For more, read Google employees' open letter against Project Dragonfly.

Check out Amazon employees' open letter to Jeff Bezos and Board of Directors asking for a better plan to address climate change.

Cindy Cohn is the Executive Director of the Electronic Frontier Foundation. EFF is a nonprofit that defends civil liberties in the digital world. They champion user privacy, free expression, and innovation through impact litigation, policy analysis, grassroots activism, and technology development.


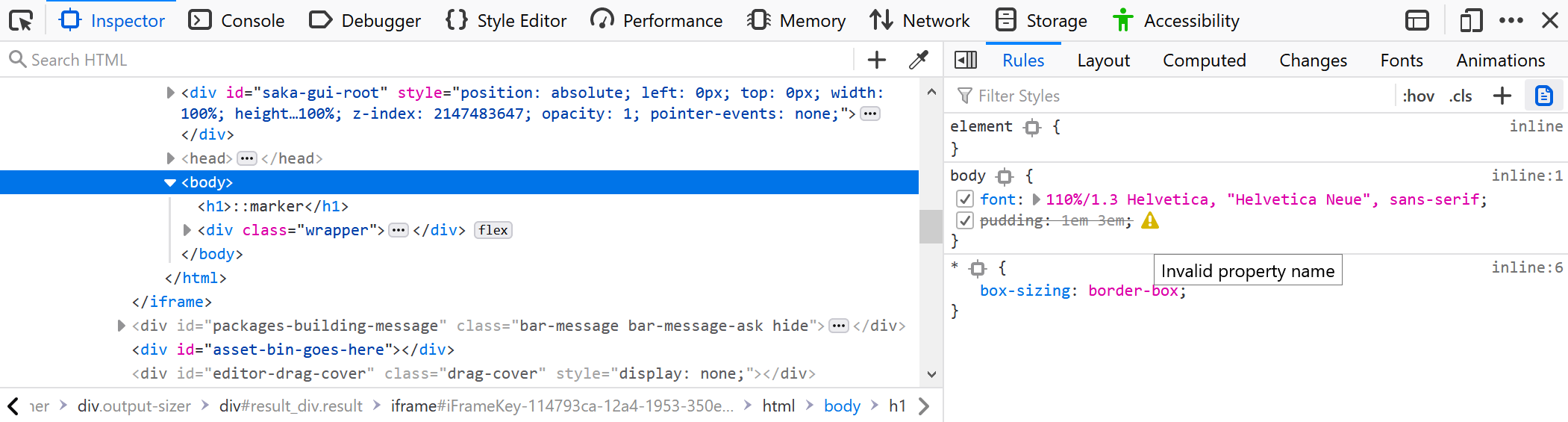

Mozilla VR Blog: MrEd, an Experiment in Mixed Reality Editing

We are excited to tell you about our experimental Mixed Reality editor, an experiment we did in the spring to explore online editing in MR stories. What’s that? You haven’t heard of MrEd? Well please allow us to explain.

For the past several months Blair, Anselm and I have been working on a visual editor for WebXR called the Mixed Reality Editor, or MrEd. We started with this simple premise: non-programmers should be able to create interactive stories and experiences in Mixed Reality without having to embrace the complexity of game engines and other general purpose tools. We are not the first people to tackle this challenge; from visual programming tools to simplified authoring environments, researchers and hobbyists have grappled with this problem for decades.

Looking beyond Mixed Reality, there have been notable successes in other media. In the late 1980s Apple created a ground breaking tool for the Macintosh called Hypercard. It let people visually build applications at a time when programming the Mac required Pascal or assembly. It did this by using the concrete metaphor of a stack of cards. Anything could be turned into a button that would jump the user to another card. Within this simple framework people were able to create eBooks, simple games, art, and other interactive applications. Hypercard’s reliance on declaring possibly large numbers of “visual moments” (cards) and using simple “programming” to move between them is one of the inspirations for MrEd.

We also took inspiration from Twine, a web-based tool for building interactive hypertext novels. In Twine, each moment in the story (seen on the screen) is defined as a passage in the editor as a mix of HTML content and very simple programming expressions executed when a passage is displayed, or when the reader follows a link. Like Hypercard, the author directly builds what the user sees, annotating it with small bits of code to manage the state of the story.

No matter what the medium — text, pictures, film, or MR — people want to tell stories. Mixed Reality needs tools to let people easily tell stories by focusing on the story, not by writing a simulation. It needs content focused tools for authors, not programmers. This is what MrEd tries to be.

Scenes Linked Together

At first glance, MrEd looks a lot like other 3D editors, such as Unity3D or Amazon Sumerian. There is a scene graph on the left, letting authors create scenes, add anchors and attach content elements under them. Select an item in the graph or in the 3D windows, and a property pane appears on the right. Scripts can be attached to objects. And so on. You can position your objects in absolute space (good for VR) or relative to other objects using anchors. An anchor lets you do something like “look for this poster in the real world, then position this text next to it”, or “look for this GPS location and put this model on it”. Anchors aren’t limited to these basic cases; they can also express more semantically meaningful concepts like “find the floor and put this on it” (we’ll dig into this in another article).

Dig into the scene graph on the left, and differences appear. Instead of editing a single world or game level, MrEd uses the metaphor of a series of scenes (inspired by Twine’s passages and Hypercard’s cards). All scenes in the project are listed, with each scene defining what you see at any given point: shapes, 3D models, images, 2D text and sounds. You can add interactivity by attaching behaviors to objects for things like ‘click to navigate’ and ‘spin around’. The story advances by moving from scene to scene; code to keep track of story state is typically executed on these scene transitions, like Hypercard and Twine. Where most 3D editors force users to build simulations for their experiences, MrEd lets authors create stories that feel more like “3D flip-books”. Within a scene, the individual elements can be animated, move around, and react to the user (via scripts), but the story progresses by moving from scene to scene. While it is possible to create complex individual scenes that begin to feel like a Unity scene, simple stories can be told through sequences of simple scenes.

We built MrEd on Glitch.com, a free web-based code editing and hosting service. With a little hacking we were able to put an entire IDE and document server into a glitch. This means anyone can share and remix their creations with others.

One key feature of MrEd is that it is built on top of a CRDT data structure to enable editing the same project on multiple devices simultaneously. This feature is critical for Mixed Reality tools because you are often switching between devices during development; the networked CRDT underpinnings also mean that logging messages from any device appear in any open editor console viewing that project, simplifying distributed development. We will tell you more details about the CRDT and Glitch in future posts.

We ran a two week class with a group of younger students in Atlanta using MrEd. The students were very interested in telling stories about their school, situating content in space around the buildings, and often using memes and ideas that were popular for them. We collected feedback on features, bugs and improvements and learned a lot from how the students wanted to use our tool.

Lessons Learned

As I said, this was an experiment, and no experiment is complete without reporting on what we learned. So what did we learn? A lot! And we are going to share it with you over the next couple of blog posts.

First, we learned that the idea of building a 3D story from a sequence of simple scenes worked for novice MR authors: direct manipulation with concrete metaphors, navigation between scenes as a way of telling stories, and the ability to easily import images and media from other places. The students were able to figure it out. Even more complex AR concepts like image targets and geospatial anchors were understandable when turned into concrete objects.

MrEd’s behavior scripts are each a separate JavaScript file, and MrEd generates the property sheet from the definition of the behavior in the file, much like Unity’s behaviors. Compartmentalizing them in separate files means they are easy to update and share, and (like Unity) simple scripts are a great way to add interactivity without requiring complex coding. We leveraged JavaScript’s runtime code parsing and execution to support scripts with simple code snippets as parameters (e.g., when the user finds a clue by getting close to it, a proximity behavior can set a global state flag to true, without requiring a new script to be written), while still giving authors the option to drop down to JavaScript when necessary.

Second, we learned a lot about building such a tool. We really pushed Glitch to the limit, including using undocumented APIs, to create an IDE and doc server that is entirely remixable. We also built a custom CRDT to enable shared editing. Being able to jump back and forth between a full 2D browser with a keyboard and XR Viewer running on an ARKit-enabled iPhone is really powerful. The CRDT implementation makes this type of real-time shared editing possible.

Why we are done

MrEd was an experiment in whether XR metaphors can map cleanly to a Hypercard-like visual tool. We are very happy to report that the answer is yes. Now that our experiment is over we are releasing it as open source, and have designed it to run in perpetuity on Glitch. While we plan to do some code updates for bug fixes and supporting the final WebXR 1.0 spec, we have no current plans to add new features.

Building a community around a new platform is difficult and takes a long time. We realized that our charter isn’t to create platforms and communities. Our charter is to help more people make Mixed Reality experiences on the web. It would be far better for us to help existing platforms add WebXR than for us to build a new community around a new tool.

Of course the source is open and freely usable on Github. And of course anyone can continue to use it on Glitch, or host their own copy. Open projects never truly end, but our work on it is complete. We will continue to do updates as the WebXR spec approaches 1.0, but there won’t be any major changes.

Next Steps

We are going to polish up the UI and fix some remaining bugs. MrEd will remain fully usable on Glitch, and hackable on GitHub. We also want to pull some of the more useful chunks into separate components, such as the editing framework and the CRDT implementation. And most importantly, we are going to document everything we learned over the next few weeks in a series of blogs.

If you are interested in integrating WebXR into your own rapid prototyping / educational programming platform, then please let us know. We are very happy to help you.

You can try MrEd live on Glitch today. You can get the source from the main MrEd GitHub repo.

https://blog.mozvr.com/mred-an-experiment-in-mixed-reality-editing/


Botond Ballo: Trip Report: C++ Standards Meeting in Cologne, July 2019 |

Summary / TL;DR (new developments since last meeting in bold)

| Project | What’s in it? | Status |

| C++20 | See below | On track |

| Library Fundamentals TS v3 | See below | Under development |

| Concepts | Constrained templates | In C++20 |

| Parallelism TS v2 | Task blocks, library vector types and algorithms, and more | Published! |

| Executors | Abstraction for where/how code runs in a concurrent context | Targeting C++23 |

| Concurrency TS v2 | See below | Under active development |

| Networking TS | Sockets library based on Boost.ASIO | Published! Not in C++20. |

| Ranges | Range-based algorithms and views | In C++20 |

| Coroutines | Resumable functions (generators, tasks, etc.) | In C++20 |

| Modules | A component system to supersede the textual header file inclusion model | In C++20 |

| Numerics TS | Various numerical facilities | Under active development |

| C++ Ecosystem TR | Guidance for build systems and other tools for dealing with Modules | Under active development |

| Contracts | Preconditions, postconditions, and assertions | Pulled from C++20, now targeting C++23 |

| Pattern matching | A match-like facility for C++ | Under active development, targeting C++23 |

| Reflection TS | Static code reflection mechanisms | Publication imminent |

| Reflection v2 | A value-based constexpr formulation of the Reflection TS facilities | Under active development, targeting C++23 |

| Metaclasses | Next-generation reflection facilities | Early development |

A few links in this blog post may not resolve until the committee’s post-meeting mailing is published (expected within a few days of August 5, 2019). If you encounter such a link, please check back in a few days.

Introduction

Last week I attended a meeting of the ISO C++ Standards Committee (also known as WG21) in Cologne, Germany. This was the second committee meeting in 2019; you can find my reports on preceding meetings here (February 2019, Kona) and here (November 2018, San Diego), and previous ones linked from those. These reports, particularly the Kona one, provide useful context for this post.

This week the committee reached a very important milestone in the C++20 publication schedule: we approved the C++20 Committee Draft (CD), a feature-complete draft of the C++20 standard which includes wording for all of the new features we plan to ship in C++20.

The next step procedurally is to send out the C++20 CD to national standards bodies for a formal ISO ballot, where they have the opportunity to comment on it. The ballot period is a few months, and the results will be in by the next meeting, which will be in November in Belfast, Northern Ireland. We will then spend that meeting and the next one addressing the comments, and then publish a revised draft standard. Importantly, as this is a feature-complete draft, new features cannot be added in response to comments; only bugfixes to existing features can be made, and in rare cases where a serious problem is discovered, a feature can be removed.

Attendance at this meeting once again broke previous records, with over 200 people present for the first time ever. It was observed that one of the likely reasons for the continued upward trend in attendance is the proliferation of domain-specific study groups such as SG 14 (Games and Low-Latency Programming) and SG 19 (Machine Learning), which are attracting new experts from those fields.

Note that the committee now tracks its proposals in GitHub. If you’re interested in the status of a proposal, you can find its issue on GitHub by searching for its title or paper number, and see its status there (such as which subgroups it has been reviewed by and what the outcomes of the reviews were).

C++20

Here are the new changes voted into the C++20 Working Draft at this meeting. For a list of changes voted in at previous meetings, see my Kona report. (As a quick refresher, major features voted in at previous meetings include modules, coroutines, default comparisons (<=>), concepts, and ranges.)

- Language:

- Removal of Contracts. The most notable change at this meeting was not an addition but a removal: Contracts were pulled from C++20 because the consensus for their current design has disappeared. I talk more about the reasons for this below.

- Class template argument deduction improvements:

- constexpr improvements:

- More constexpr containers. This is the language change that allows the library changes mentioned below to make std::vector and std::string constexpr.

- Adding the constinit keyword

- Permitting trivial default initialization in constexpr contexts

- Enabling constexpr intrinsics by permitting unevaluated inline assembly in constexpr functions

- Modules bugfixes:

- Mitigating minor modules maladies. The changes in this paper concerning default arguments, and classes having typedef-names for linkage purposes, were also adopted as a Defect Report against previous standard versions.

- Relaxing redefinition restrictions for re-exportation robustness

- Recognizing header unit imports requires full preprocessing. This was a change motivated by tooling concerns: with this in place, it’s possible to scan a source file for module dependencies in a way that’s both fast (doesn’t require full preprocessing) and reliable.

- Concepts bugfixes:

- Bugfixes for default comparisons / operator spaceship (<=>):

- using enum. This allows introducing the enumerators of a scoped enumeration into a local scope, and thereby avoiding the repetition of the enumeration name in that scope.

- Additional contexts for implicit move construction. This is a merger of two papers on this topic.

- Conditionally trivial special member functions

- [[nodiscard("should have a reason")]]

- [[nodiscard]] for constructors. This is also a Defect Report against C++17.

- Deprecate uses of the comma operator in subscripting expressions. This paves the way for multi-dimensional array subscripting (matrix[row, col]) in a future standard.

- Deprecating volatile (only the core language portions; the library portions are still under review)

- Interaction of memory_order_consume with release sequences

- Library:

- Text formatting. A long time in the making, this is one of the most significant library features to be added in C++20! There are also a few related papers:

- The C++20 Synchronization Library, along with two related proposals, add wait/notify to atomic_ref, and add wait/notify to atomic

- Input range adaptors

- Making std::vector constexpr

- Making std::string constexpr

- Stop token and joining thread

- Adopt source_location for C++20. This provides a modern replacement for macros like __FILE__ and __LINE__.

- Rename concepts to standard_case for C++20, while we still can. This paper renames all standard library concepts from PascalCase to snake_case, for consistency with other standard library names, and tweaks the names further in cases where the mechanical rename results in a conflict or other issue.

- The mothership has landed (this adds operator <=> to standard library types)

- Standard library header units for C++20

- to_array from LFTS with updates

- Bit operations

- Math constants

- Efficient access to basic_stringbuf’s buffer

- constexpr INVOKE

- Movability of single-pass iterators

- basic_istream_view::iterator should not be copyable

- Layout-compatibility and pointer-interconvertibility traits

- Remove dedicated precalculated hash lookup interface. This was removed because people discovered that you can precalculate hashes using the existing interface, making the complexity of the new interface unnecessary.

- Miscellaneous minor fixes for chrono

- char8_t backward compatibility remediation

- bind_front should not unwrap reference_wrapper

- Iterator difference type and integer overflow

- Helpful pointers for ContiguousIterator

- Views and size types

- Exposing a narrow contract for ceil2

- constexpr feature macro concerns

Technical Specifications

In addition to the C++ International Standard (IS), the committee publishes Technical Specifications (TS) which can be thought of as experimental “feature branches”, where provisional specifications for new language or library features are published and the C++ community is invited to try them out and provide feedback before final standardization.

At this meeting, the focus was on the C++20 CD, and not so much on TSes. In particular, there was no discussion of merging TSes into the C++ IS, because the deadline for doing so for C++20 was the last meeting (where Modules and Coroutines were merged, joining the ranks of Concepts which was merged a few meetings prior), and it’s too early to be discussing mergers into C++23.

Nonetheless, the committee does have a few TSes in progress, and I’ll mention their status:

Reflection TS

The Reflection TS was approved for publication at the last meeting. The publication process for this TS is a little more involved than usual: due to the dependency on the Concepts TS, the Reflection TS needs to be rebased on top of C++14 (the Concepts TS’ base document) for publication. As a result, the official publication has not happened yet, but it’s imminent.

As mentioned before, the facilities in the Reflection TS are not planned to be merged into the IS in their current form. Rather, a formulation based on constexpr values (rather than types) is being worked on. This is a work in progress, but recent developments have been encouraging (see the SG7 (Reflection) section) and I’m hopeful about them making C++23.

Library Fundamentals TS v3

This third iteration (v3) of the Library Fundamentals TS continues to be open for new features. It hasn’t received much attention in recent meetings, as the focus has been on libraries targeted at C++20, but I expect it will continue to pick up material in the coming meetings.

Concurrency TS v2

A concrete plan for Concurrency TS v2 is starting to take shape.

The following features are definitely planned for inclusion:

- Executors

- Hazard pointers

- Read-Copy-Update (RCU)

- fiber_context. This is the latest formulation of stackful coroutines (fibers). It’s a very long time in the making, and has been bounced around various study groups for years; I’m glad to see it finally have a concrete ship vehicle!

The following additional features might tag along if they’re ready in time:

I don’t think there’s a timeline for publication yet; it’s more “when the features in the first list are ready”.

Networking TS

As mentioned before, the Networking TS did not make C++20. As it’s now targeting C++23, we’ll likely see some proposal for design changes between now and its merger into C++23.

One such potential proposal is one that would see the Networking TS support TLS out of the box. JF Bastien from Apple has been trying to get browser implementers on board with such a proposal, which might materialize for the upcoming Belfast meeting.

Evolution Working Group

As usual, I spent most of the week in EWG. Here I will list the papers that EWG reviewed, categorized by topic, and also indicate whether each proposal was approved, had further work on it encouraged, or was rejected. Approved proposals are targeting C++20 unless otherwise mentioned; “further work” proposals are not.

Concepts

- (Approved) Rename concepts to standard_case for C++20, while we still can. Concepts have been part of the C++ literature long before the C++20 language feature that allows them to be expressed in code; for example, they are discussed in Stepanov’s Elements of Programming, and existing versions of the IS document describe the notional concepts that form the requirements for various algorithms. In this literature, concepts are conventionally named in PascalCase. As a result, the actual language-feature concepts added to the standard library in C++20 were named in PascalCase as well. However, it was observed that essentially every other name in the standard library uses snake_case, and remaining consistent with that might be more important than respecting naming conventions from non-code literature. This was contentious, for various reasons: (1) it was late in the cycle to make this change; (2) a pure mechanical rename resulted in some conflicts with existing names, necessitating additional changes that went beyond case; and (3) some people liked the visual distinction that PascalCase conferred onto concept names. Nonetheless, EWG approved the change.

- (Approved) On the non-uniform semantics of return-type-requirements. This proposal axes concept requirements of the form expression -> Type, because their semantics are not consistent with trailing return types which share the same syntax.

- (Approved) Using unconstrained template template parameters with constrained templates. This paper allows unconstrained template template parameters to match constrained templates; without this change, it would have been impossible to write a template template parameter that matches any template regardless of constraints, which is an important use case.

Contracts

Contracts were easily the most contentious and most heavily discussed topic of the week. In the weeks leading up to the meeting, there were probably 500+ emails on the committee mailing lists about them.

The crux of the problem is that contracts can have a range of associated behaviours / semantics: whether they are checked, what happens if they are checked and fail, whether the compiler can assume them to be true in various scenarios, etc. The different behaviours lend themselves to different use cases, different programming models, different domains, and different stages of the software lifecycle. Given the diversity of all of the above represented at the committee, people are having a really hard time agreeing on what set of possible behaviours the standard should allow for, what the defaults should be, and what mechanisms should be available to control the behaviour in case you want something other than the defaults.

A prominent source of disagreement is around the possibility for contracts to introduce undefined behaviour (UB) if we allow compilers to assume their truth, particularly in cases where they are not checked, or where control flow is allowed to continue past a contract failure.

Contracts were voted into the C++20 working draft in June 2018; the design that was voted in was referred to as the “status quo design” during this week’s discussions (since being in the working draft made it the status quo). In a nutshell, in the status quo design, the programmer could annotate contracts as having one of three levels (default, audit, or axiom), and the contract levels were mapped to behaviours using two global switches (controlled via an implementation-defined mechanism, such as a compiler flag): a “build level” and a “continuation mode”.

The status quo design clearly had consensus at the time it was voted in, but since then that consensus had begun to increasingly break down, leading to a slew of Contracts-related proposals submitted for the previous meeting and this one.

I’ll summarize the discussions that took place this week, but as mentioned above, the final outcome was that Contracts was removed from C++20 and is now targeting C++23.

EWG discussed Contracts on two occasions during the week, Monday and Wednesday. On Monday, we started with a scoping discussion, where we went through the list of proposals, and decided which of them we were even willing to discuss. Note that, as per the committee’s schedule for C++20, the deadline for making design changes to a C++20 feature had passed, and EWG was only supposed to consider bugfixes to the existing design, though as always that’s a blurry line.

Anyways, the following proposals were rejected with minimal discussion on the basis of being a design change:

- Contracts that work

- Avoiding undefined behaviour in contracts

- Local contract restrictions

- Simplifying contract syntax

- Allowing contract predicates on non-first declarations

That left the following proposals to be considered. I list them here in the order of discussion. Please note that the “approvals” from this discussion were effectively overturned by the subsequent removal of Contracts from C++20.

- (Rejected) What to do about contracts? This proposed two alternative minimal changes to the status quo design, with the primary aim of addressing the UB concerns, but neither had consensus. (Another paper was essentially a repeat of one of the alternatives and was not polled separately.)

- (Rejected) Axioms should be assumable. This had a different aim (allowing the compiler to assume contracts in more cases, not less) and also did not have consensus.

- (Approved) Minimizing contracts. This was a two-part proposal. The first part removed the three existing contract levels (default, audit, and axiom), as well as the build level and continuation mode, and made the way the behaviour of a contract checking statement is determined completely implementation-defined. The second part essentially layered on top the “Contracts that work” proposal, which introduces literal semantics: rather than annotating contracts with “levels” which are somehow mapped onto behaviours, contracts are annotated with their desired behaviour directly; if the programmer wants different behaviours in different build modes, they can arrange for that themselves, using e.g. macros that expand to different semantics in different build modes. EWG approved both parts, which was somewhat surprising because “Contracts that work” was previously voted as not even being in scope for discussion. I think the sentiment was that, while this is a design change, it has more consensus than the status quo, and so it’s worth trying to sneak it in even though we’re past the design change deadline. Notably, while this proposal did pass, it was far from unanimous, and the dissenting minority was very vocal about their opposition, which ultimately led to the topic being revisited and Contracts being axed from C++20 on Wednesday.

- (Approved) The “default” contract build-level and continuation-mode should be implementation-defined. This was also approved, which is also somewhat surprising given that it was mooted by the previous proposal. Hey, we’re not always a completely rational bunch!
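The idea behind literal semantics can be approximated in today’s C++ with plain macros. The sketch below is not the syntax of any contracts proposal; every macro name and the MY_AUDIT_BUILD flag are hypothetical. Each low-level macro names one behaviour directly, and the project itself decides how its categories map onto those behaviours in each build mode.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdio>
#include <cstdlib>
#include <vector>

// Hypothetical "literal" behaviours: each macro states exactly what happens.
#define CONTRACT_IGNORE(cond) ((void)0)
#define CONTRACT_CHECK_ABORT(cond)                                    \
    ((cond) ? (void)0                                                 \
            : (std::fprintf(stderr, "contract failed: %s\n", #cond),  \
               std::abort()))

// Project-level mapping from categories to behaviours, chosen per build mode.
#define EXPECTS_CHEAP(cond) CONTRACT_CHECK_ABORT(cond)
#ifdef MY_AUDIT_BUILD  // hypothetical build flag
#  define EXPECTS_EXPENSIVE(cond) CONTRACT_CHECK_ABORT(cond)
#else
#  define EXPECTS_EXPENSIVE(cond) CONTRACT_IGNORE(cond)
#endif

int checked_divide(int num, int den) {
    EXPECTS_CHEAP(den != 0);  // always checked
    return num / den;
}

bool contains_sorted(const std::vector<int>& v, int x) {
    // O(n) precondition, only checked when MY_AUDIT_BUILD is defined.
    EXPECTS_EXPENSIVE(std::is_sorted(v.begin(), v.end()));
    return std::binary_search(v.begin(), v.end(), x);
}
```

The point of the design is that the mapping lives in user code, so a codebase can pick different behaviours for different build modes without the language defining levels or global switches.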

To sum up what happened on Monday: EWG made a design change to Contracts, and that design change had consensus among the people in the room at the time. Unfortunately, subsequent discussions with people not in the room, including heads of delegations from national standards bodies, made it clear that the design change was very unlikely to have the consensus of the committee at large in plenary session, largely for timing reasons (i.e. it being too late in the schedule to make such a nontrivial design change).

As people were unhappy with the status quo, but there wasn’t consensus for a design change either, that left removing contracts from C++20 and continuing to work on it in the C++23 cycle. A proposal to do so was drafted and discussed in EWG on Wednesday, with a larger group of people in attendance this time, and ultimately garnered consensus.

To help organize further work on Contracts in the C++23 timeframe, a new Study Group, SG 21 (Contracts) was formed, which would incubate and refine an updated proposal before it comes back to EWG. It’s too early to say what the shape of that proposal might be.

I personally like literal semantics, though I agree it probably wouldn’t have been prudent to make a significant change like that for C++20. I would welcome a future proposal from SG 21 that includes literal semantics.

Modules

A notable procedural development in the area of Modules is that the Modules Study Group (SG 2) was resurrected at the last meeting, and met during this meeting to look at all Modules-related proposals and make recommendations about them. EWG then looked at the ones SG 2 recommended for approval for C++20:

- (Approved) Mitigating minor Modules maladies. EWG affirmed SG2’s recommendation to accept the first and third parts (concerning typedef names and default arguments, respectively) for C++20.

- (Approved) Relaxing redefinition restrictions for re-exportation robustness. This proposal makes “include translation” (the automatic translation of some #include directives into module imports) optional, because it is problematic for some use cases, and solves the problems that motivated mandatory include translation in another way. (Aside: Richard Smith, the esteemed author of this paper and the previous one, clearly has too much time on his hands if he can come up with alliterations like this for paper titles. We should give him some more work to do. Perhaps we could ask him to be the editor of the C++ IS document? Oh, we already did that… Something else then. Finish implementing Concepts in clang perhaps?)

- (Approved) Standard library header units for C++20. This allows users to consume C++ standard library headers (but not headers inherited from C) using import rather than #include (without imposing any requirements (yet) that their contents actually be modularized). It also reserves module names whose first component is std, or std followed by a number, for use by the standard library.

- (Approved) Recognizing header unit imports requires full preprocessing. This tweaks the context sensitivity rules for the import keyword in such a way that tools can quickly scan source files and gather their module dependencies without having to do too much processing (and in particular without having to do a full run of the preprocessor).

There were also some Modules-related proposals that SG2 looked at and decided not to advance for C++20, but instead continue iterating for C++23:

- (Further work) The inline keyword is not in line with the design of modules. This proposal will be revised before EWG looks at it.

- (Further work) ABI isolation for member functions. EWG did look at this, towards the end of the week when it ran out of C++20 material. The idea here is that people like to define class methods inline for brevity (to avoid repeating the function header in an out-of-line definition), but the effects this has on linkage are sometimes undesirable. In module interfaces in particular, the recently adopted rule changes concerning internal linkage mean that users can run into hard-to-understand errors as a result of giving methods internal linkage. The proposal therefore aims to dissociate whether a method is defined inline or out of line, from semantic effects on linkage (which could still be achieved by using the inline keyword explicitly). Reactions were somewhat mixed, with some concerns about impacts on compile-time and runtime performance. Some felt that if we do this at all, we should do it in C++20, so our guidance to authors of modular code can be consistent from the get-go; while it seems to be too late to make this change in C++20 itself, the idea of a possible future C++20 Defect Report was raised.

Finally, EWG favourably reviewed the Tooling Study Group’s plans for a C++ Ecosystem Technical Report. One suggestion made was to give the TR a more narrowly scoped name to reflect its focus on Modules-related tooling (lest people be misled into expecting that it addresses every “C++ Ecosystem” concern).

Coroutines

EWG considered several proposed improvements to coroutines. All of them were rejected for C++20 due to being too big of a change at this late stage.

- (Rejected) Allowing both co_return; and co_return value; in the same coroutine

- (Rejected) Coroutine changes for C++20 and beyond, and its two companion papers which outline motivations, “Adding async RAII support to coroutines” and “Supporting return-value-optimisation in coroutines”

- (Rejected) Better keywords for coroutines, which tried to do away with the co_await / co_yield / co_return ugliness by making yield etc. context-sensitive keywords.

Coroutines will undoubtedly see improvements in the C++23 timeframe, including possibly having some of the above topics revisited, but of course we’ll now be limited to making changes that are backwards-compatible with the current design.

constexpr

- (Approved) Enabling constexpr intrinsics by permitting unevaluated inline assembly in constexpr functions. With std::is_constant_evaluated(), you can already give an operation different implementations for runtime and compile-time evaluation. This proposal just allows the runtime implementations of such functions to use inline assembly.

- (Approved) A tweak to constinit: EWG was asked to clarify the intended rules for non-initializing declarations. The Core Working Group’s recommendation (that a non-initializing declaration of a variable be permitted to contain constinit, and if it does, the initializing declaration must be constinit as well) was accepted.

Comparisons

- (Approved) Spaceship needs a tune-up. This fixes some relatively minor fallout from recent spaceship-related bugfixes.

- (Rejected) The spaceship needs to be grounded: pull spaceship from C++20. Concerns about the fact that we keep finding edge cases where we need to tweak spaceship’s behaviour, and that the rules have become rather complicated as a result of successive bug fixes, prompted this proposal to remove spaceship from C++20. EWG disagreed, feeling that the value this feature delivers for common use cases outweighs the downside of having complex rules to deal with uncommon edge cases.

Lightweight Exceptions

In one of the meeting’s more exciting developments, Herb Sutter’s lightweight exceptions proposal (affectionately dubbed “Herbceptions” in casual conversation) was finally discussed in EWG. I view this proposal as being particularly important, because it aims to heal the current fracture of the C++ user community into those who use exceptions and those who do not.

The proposal has four largely independent parts:

- The first and arguably most interesting part (section 4.1 in the paper) provides a lightweight exception handling mechanism that avoids the overhead that today’s dynamic exceptions have, namely that of dynamic memory allocation and runtime type information (RTTI). The new mechanism is opt-in on a per-function basis, and designed to allow a codebase to transition incrementally from the old style of exceptions to the new one.

- The next two parts have to do with using exceptions in fewer scenarios:

- The second part (section 4.2) is about transitioning the standard library to handle logic errors not via exceptions like std::logic_error, but rather via a contract violation.

- The third part (section 4.3) is about handling allocation failure via termination rather than an exception. Earlier versions of the proposal were more aggressive on this front, and aimed to make functions that today only throw exceptions related to allocation failure noexcept. However, that’s unlikely to fly, as there are good use cases for recovering from allocation failure, so more recent versions leave the choice of behaviour up to the allocator, and aim to make such functions conditionally noexcept.

- The fourth part (section 4.5), made more realistic by the previous two, aims to make the remaining uses of exceptions more visible by allowing expressions that propagate exceptions to be annotated with the try keyword, not unlike Rust’s try! macro. Of course, unlike Rust, use of the annotation would have to be optional for backwards compatibility, though one can envision enforcing its use locally in a codebase (or part of a codebase) via static analysis.
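The proposed mechanism has no implementation to show here, but the cost model behind the first part (errors travelling by value, with no dynamic allocation and no RTTI) can be loosely imitated in C++17. The sketch below is only an analogy and not the proposal’s syntax; ParseResult, parse_digit, and add_digits are hypothetical names. The hand-written propagation in add_digits is the kind of step that the proposal would have the language perform, and that the fourth part would make visible with a try annotation.

```cpp
#include <cassert>
#include <string_view>
#include <system_error>
#include <variant>

// Hypothetical result type: either a value or a small error code,
// returned by value through the normal return channel.
using ParseResult = std::variant<int, std::errc>;

ParseResult parse_digit(std::string_view s) {
    if (s.size() != 1 || s[0] < '0' || s[0] > '9')
        return std::errc::invalid_argument;  // the "throw" path
    return s[0] - '0';                       // the normal path
}

ParseResult add_digits(std::string_view a, std::string_view b) {
    ParseResult x = parse_digit(a);
    if (auto* err = std::get_if<std::errc>(&x)) return *err;  // propagate
    ParseResult y = parse_digit(b);
    if (auto* err = std::get_if<std::errc>(&y)) return *err;  // propagate
    return *std::get_if<int>(&x) + *std::get_if<int>(&y);
}
```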

As can be expected from such an ambitious proposal, this prompted a lot of discussion in EWG. A brief summary of the outcome for each part:

- There was a lot of discussion both about how performant we can make the proposed lightweight exceptions, and about the ergonomics of the two mechanisms coexisting in the same program. (For the latter, a particular point of contention was that functions that opt into the new exceptions require a modified calling convention, which necessitates encoding the exception mode into the function type (for e.g. correct calling via function pointers), which fractures the type system). EWG cautiously encouraged further exploration, with the understanding that further experiments and especially implementation experience are needed to be able to provide more informed directional guidance.

- Will be discussed jointly by Evolution and Library Evolution in the future.

- EWG was somewhat skeptical about this one. In particular, the feeling in the room was that, while Library Evolution may allow writing allocators that don’t throw and library APIs may be revised to take advantage of this and make some functions conditionally noexcept, there was no consensus to move in the direction of making the default allocator non-throwing.

- EWG was not a fan of this one. The feeling was that the annotations would have limited utility unless they’re required, and we can’t realistically ever make them required.

I expect the proposal will return in revised form (and this will likely repeat for several iterations). The road towards achieving consensus on a significant change like this is a long one!

I’ll mention one interesting comment that was made during the proposal’s presentation: it was observed that since we need to revise the calling convention as part of this proposal anyways, perhaps we could take the opportunity to make other improvements to it as well, such as allowing small objects to be passed in registers, the lack of which is a pretty unfortunate performance problem today (certainly one we’ve run into at Mozilla multiple times). That seems intriguing.

Other new features

- (Approved*) Changes to expansion statements. EWG previously approved a “for ...” construct which could be used to iterate at compile time over tuple-like objects and parameter packs. Prior to this meeting, it was discovered that the parameter pack formulation has an ambiguity problem. We couldn’t find a fix in time, so the support for parameter packs was dropped, leaving only tuple-like objects. However, “for ...” no longer seemed like an appropriate syntax if parameter packs are not supported, so the syntax was changed to “template for”. Unfortunately, while EWG approved “template for”, the Core Working Group ran out of time to review its wording, so (*) the feature didn’t make C++20. It will likely be revisited for C++23, possibly including ways to resolve the parameter pack issue.

- (Further work) Pattern matching. EWG looked at a revised version of this proposal which features a refined pattern syntax among other improvements. The review was generally favourable, and the proposal, which is targeting C++23, is getting close to the stage where standard wording can be written and implementation experience gathered.
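Since expansion statements did not make C++20, visiting every element of a tuple-like object still relies on library techniques. One common C++17 idiom, sketched here with a hypothetical helper name, combines std::apply with a fold expression.

```cpp
#include <cassert>
#include <sstream>
#include <string>
#include <tuple>

// Hypothetical helper: streams each tuple element followed by a space.
template <typename... Ts>
std::string join_elements(const std::tuple<Ts...>& t) {
    std::ostringstream out;
    std::apply(
        [&out](const auto&... elems) {
            // Fold over the comma operator: visits the elements in order,
            // each with its own static type.
            ((out << elems << ' '), ...);
        },
        t);
    return out.str();
}
```

For example, `join_elements(std::make_tuple(1, 'x', std::string("hi")))` yields `"1 x hi "`.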

Bug / Consistency Fixes

(Disclaimer: don’t read too much into the categorization here. One person’s bug fix is another’s feature.)

For C++20:

- (Approved*) Non-type template parameters are incomplete without floating-point types. This paper observes that a floating-point value can be encoded into a struct that meets the current requirements for a non-type template parameter, and argues that floating-point types should thus be supported out of the box. This was approved by EWG, but (*) subsequently voted down in plenary over implementer concerns, and so is not part of C++20.

- (Approved) rethrow_exception must be allowed to copy. This is a small bugfix to an exception handling support utility.

- (Approved) [[nodiscard]] for constructors. This fixes what was largely a wording oversight.

- (CWG to handle as a DR) Inline namespaces: fragility bites. This concerns some code breakage caused by the resolution of core issue #2601. EWG instructed the Core Working Group to decide on a solution and handle it as a Defect Report.

For C++23:

- (Approved) Size feedback in operator new. This allows operator new to communicate to its caller how many bytes it actually allocated, which can sometimes be larger than the requested amount.

- (Approved) A type trait to detect scoped enumerations. This adds a type trait to tell apart enum classes from plain enums, which is not necessarily possible to do in pure library code.

- (Approved in part) Literal suffixes for size_t and ptrdiff_t. The suffixes uz for size_t and z for ssize_t were approved. The suffixes t for ptrdiff_t and ut for a corresponding unsigned type had no consensus.

- (Further work) Callsite based inlining hints: [[always_inline]] and [[never_inline]]. EWG was generally supportive, but requested the author provide additional motivation, and also clarify if they are orders to the compiler (usable in cases where inlining or not actually has a semantic effect), or just strong optimization hints.

- (Further work) Defaultable default constructors and destructors for all unions. The motivation here is to allow having unions which are trivial but have nontrivial members. EWG felt this was a valid usecase, but the formulation in the paper erased important safeguards, and requested a different formulation.
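As a rough illustration of the scoped enumeration trait mentioned in the list above, here is a sketch of a library-only approximation that can be written today. It relies on the fact that unscoped enums convert implicitly to their underlying type while enum classes do not. The name `is_scoped_enum_approx` is hypothetical, and this is only an approximation, not the approved trait or its specification.

```cpp
#include <type_traits>

// Primary template: non-enum types are never scoped enumerations.
template <typename T, bool IsEnum = std::is_enum<T>::value>
struct is_scoped_enum_approx : std::false_type {};

// Enum types: treat the enum as scoped if it does NOT convert implicitly
// to its underlying integer type.
template <typename T>
struct is_scoped_enum_approx<T, true>
    : std::integral_constant<
          bool,
          !std::is_convertible<T, typename std::underlying_type<T>::type>::value> {};

// Two small enums to try the trait on (hypothetical names).
enum Plain { kPlainValue };
enum class Scoped { kScopedValue };
```

With these definitions, `is_scoped_enum_approx<Scoped>::value` is true, while it is false for both `Plain` and `int`.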

- (Further work) Name lookup should “find the first thing of that name”. EWG liked the proposed simplification, but requested that research be done to quantify the scope of potential breakage, as well as archaeology to better understand the motivation for the current rule (which no one in the room could recall.)

Proposals Not Discussed

As usual, there were papers EWG did not get to discussing at this meeting; see the committee website for a complete list. At the next meeting, after addressing any national body comments on the C++20 CD which are Evolutionary in nature, EWG expects to spend the majority of the meeting reviewing C++23-track proposals.

Evolution Working Group Incubator

Evolution Incubator, which acts as a filter for new proposals incoming to EWG, met for two days, and reviewed numerous proposals, approving the following ones to advance to EWG at the next meeting:

- auto(x): decay-copy in the language. This is a construct for performing explicitly the “decay” that values undergo implicitly today when being passed as a function argument by value.

- Homogeneous variadic function parameters. This allows parameter packs like int... (more generally, A... where A is not a template parameter), where all elements of the pack have the same type.

- Extended floating-point types, and its companion paper fixed-layout floating-point type aliases. These are the successors to the short float proposal. They will be seen by Library Evolution as well.
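To give a feel for what the auto(x) item above is about, here is a sketch of how a decay-copy can be spelled as a library helper today. The helper name `decay_copy_sketch` is made up for illustration; the proposal would provide this as a language construct rather than a function.

```cpp
#include <type_traits>
#include <utility>

// Returns a copy of `value` with the same type adjustments that by-value
// argument passing applies: references and top-level cv-qualifiers are
// dropped, arrays become pointers, and functions become function pointers.
template <typename T>
constexpr typename std::decay<T>::type decay_copy_sketch(T&& value) {
    return std::forward<T>(value);
}
```

For example, passing a `const int&` yields an `int`, and passing a reference to `int[3]` yields an `int*`.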

Other Working Groups

Library Groups

Having sat in the Evolution group, I haven’t been able to follow the Library groups in any amount of detail, but I’ll call out some of the library proposals that have gained design approval at this meeting:

- std::to_underlying()

- Remove return type deduction in std::apply()

- A more constexpr bitset

- Support for C atomics in C++

- basic_string::resize_default_init

- starts_with() and ends_with()

- An executor property for occupancy of execution agents

- Printing volatile pointers

- snapshot_source()

- contains() for string

(This list is incomplete. Please see the post-meeting mailing for an “LEWG Summary” paper with a complete list.)
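As an example of how small some of these library additions are, here is a sketch of what a to_underlying() helper does: it converts an enumerator to the enum's underlying integer type without the caller having to spell that type out. The name `to_underlying_sketch` and the `Channel` enum are made up for illustration; the wording of the actual proposal may differ.

```cpp
#include <type_traits>

// Convert an enumerator to its underlying integer type.
template <typename Enum>
constexpr typename std::underlying_type<Enum>::type
to_underlying_sketch(Enum e) noexcept {
    return static_cast<typename std::underlying_type<Enum>::type>(e);
}

// A hypothetical enum with an explicit underlying type.
enum class Channel : unsigned char { Red = 1, Green = 2 };
```

Here `to_underlying_sketch(Channel::Green)` has type `unsigned char` and value 2.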

Note that the above is all C++23 material; I listed library proposals which made C++20 at this meeting above.

There are also efforts in place to consolidate general design guidance that the Library Evolution group would like to apply to all proposals into a policy paper.

While still at the Incubator stage, I’d like to call attention to web_view, a proposal for embedding a view powered by a web browser engine into a C++ application, for the purpose of allowing C++ applications to leverage the wealth of web technologies for purposes such as graphical output, interaction, and so on. As mentioned in previous reports, I gathered feedback about this proposal from Mozilla engineers, and conveyed this feedback (which was a general discouragement for adding this type of facility to C++) both at previous meetings and this one. However, this was very much a minority view, and as a whole the groups which looked at this proposal (which included SG13 (I/O) and Library Evolution Incubator) largely viewed it favourably, as a promising way of allowing C++ applications to do things like graphical output without having to standardize a graphics API ourselves, as previously attempted.

Study Groups

SG 1 (Concurrency)

SG 1 had a busy week, approving numerous proposals that made it into C++20 (listed above), as well as reviewing material targeted for the Concurrency TS v2 (whose outline I gave above).

Another notable topic for SG 1 was Executors, where a consensus design was reviewed and approved. Error handling remains a contentious issue; out of two different proposed mechanics, the first one seems to have the greater consensus.

Progress was also made on memory model issues, aided by the presence of several memory model experts who are not regular attendees. It seems the group may have an approach for resolving the “out of thin air” (OOTA) problem (see relevant papers); according to SG 1 chair Olivier Giroux, this is the most optimistic the group has been about the OOTA problem in ~20 years!

SG 7 (Compile-Time Programming)

The Compile-Time Programming Study Group (SG 7) met for half a day to discuss two main topics.

First on the agenda was introspection. As mentioned in previous reports, the committee put out a Reflection TS containing compile-time introspection facilities, but has since agreed that in the C++ IS, we’d like facilities with comparable expressive power but a different formulation (constexpr value-based metaprogramming rather than template metaprogramming). Up until recently, the nature of the new formulation was in dispute, with some favouring a monotype approach and others a richer type hierarchy. I’m pleased to report that at this meeting, a compromise approach was presented and favourably reviewed. With this newfound consensus, SG 7 is optimistic about being able to get these facilities into C++23. The compromise proposal does require a new language feature, parameter constraints, which will be presented to EWG at the next meeting.
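To illustrate the difference in style (not the reflection facilities themselves, whose syntax is still being designed), here is a sketch that answers the same compile-time question twice: once with template metaprogramming and once with constexpr value-based code. Both names, `count_pointers` and `count_pointers_constexpr`, are made up for this example.

```cpp
#include <cstddef>
#include <type_traits>

// Template-metaprogramming style: the result lives in a type, and the
// "loop" is expressed as recursion over partial specializations.
template <typename... Ts>
struct count_pointers;

template <>
struct count_pointers<> : std::integral_constant<std::size_t, 0> {};

template <typename T, typename... Rest>
struct count_pointers<T, Rest...>
    : std::integral_constant<std::size_t,
                             (std::is_pointer<T>::value ? 1 : 0) +
                                 count_pointers<Rest...>::value> {};

// constexpr value-based style: ordinary values, an ordinary loop.
template <typename... Ts>
constexpr std::size_t count_pointers_constexpr() {
    // The trailing `false` keeps the array non-empty for an empty pack.
    const bool flags[] = {std::is_pointer<Ts>::value..., false};
    std::size_t n = 0;
    for (bool f : flags) {
        if (f) ++n;
    }
    return n;
}
```

Both `count_pointers<int, int*, char*>::value` and `count_pointers_constexpr<int, int*, char*>()` evaluate to 2; the second reads like regular code, which is the kind of formulation the committee now prefers for reflection.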

(SG 7 also looked at a paper asking to revisit some of the previous design choices made regarding parameter names and access control in reflection. The group reaffirmed its previous decisions in these areas.)

The second main topic was reification, which can be thought of as the “next generation” of compile-time programming facilities, where you can not only introspect code at compile time, but perform processing on its representation and generate (“reify”) new code. A popular proposal in this area is Herb Sutter’s metaclasses, which allow you to “decorate” classes with metaprograms that transform the class definition in interesting ways. Metaclasses is intended to be built on a suite of underlying facilities such as code injection; there is now a concrete proposal for what those facilities could look like, and how metaclasses could be built on top of them. SG 7 looked at an overview of this proposal, although there wasn’t time for an in-depth design review at this stage.

SG 15 (Tooling)

The Tooling Study Group (SG 15) met for a full day, focusing on issues related to tooling around modules, and in particular proposals targeting the C++ Modules Ecosystem Technical Report mentioned above.

I couldn’t be in the room for this session as it ran concurrently with Reflection and then Herbceptions in EWG, but my understanding is that the main outcomes were:

- The Ecosystem TR should contain guidelines for module naming conventions. There was no consensus to include conventions for other things such as project structure, file names, or namespace names.

- The Ecosystem TR should recommend that implementations provide a way to implicitly build modules (that is, to be able to build them even in the absence of separate metadata specifying what modules are to be built and how), without requiring a particular project layout or file naming scheme. It was observed that implementing this in a performant way will likely require fast dependency scanning tools to extract module dependencies from source files. Such tools are actively being worked on (see e.g. clang-scan-deps), and the committee has made efforts to make them tractable (see e.g. the tweak to the context-sensitivity rules for import which EWG approved this week).

A proposal for a file format for describing dependencies of source files was also reviewed, and will continue to be iterated on.

One observation that was made during informal discussion was that SG 15’s recent focus on modules-related tooling has meant less time available for other topics such as package management. It remains to be seen if this is a temporary state of affairs, or if we could use two different study groups working in parallel.

Other Study Groups

Other Study Groups that met at this meeting include:

- SG 2 (Modules), covered in the Modules section above.

- SG 6 (Numerics) reviewed a dozen or so proposals, related to topics such as fixed-point numbers, type interactions, limits and overflow, rational numbers, and extended floating-point types. There was also a joint session with SG 14 (Games & Low Latency) and SG 19 (Machine Learning) to discuss linear algebra libraries and multi-dimensional data structures.

- SG 12 (Undefined and Unspecified Behaviour). Topics discussed include pointer provenance, the C++ memory object model, and various other low-level topics. There was also the usual joint session with WG23 – Software Vulnerabilities; there is now a document describing the two groups’ relationship.

- SG 13 (I/O), which reviewed proposals related to audio (proposal, feedback paper), web_view, 2D graphics (which continues to be iterated on in the hopes of a revised version gaining consensus), as well as a few proposals related to callbacks which are relevant to the design of I/O facilities.

- SG 14 (Games & Low Latency), whose focus at this meeting was on linear algebra proposals considered in joint session with SG 19.

- SG 16 (Unicode). Topics discussed include guidelines for where we want to impose requirements regarding character encodings, and filenames and the complexities they involve. The group also provided consults for relevant parts of other groups’ papers.

- SG 19 (Machine Learning). In addition to linear algebra, the group considered proposals for adding statistical mathematical functions to C++ (simple stuff like mean, median, and standard deviation — somewhat surprising we don’t have them already!), as well as graph data structures.

- SG 20 (Education), whose focus was on iterating on a document setting out proposed educational guidelines.

In addition, as mentioned, a new Contracts Study Group (SG 21) was formed at this meeting; I expect it will have its inaugural meeting in Belfast.

Most Study Groups hold regular teleconferences in between meetings, which is a great low-barrier-to-entry way to get involved. Check out their mailing lists here or here for telecon scheduling information.

Next Meeting

The next meeting of the Committee will be in Belfast, Northern Ireland, the week of November 4th, 2019.

Conclusion

My highlights for this meeting included:

- Keeping the C++ release train schedule on track by approving the C++20 Committee Draft

- Forming a Contracts Study Group to craft a high-quality, consensus-bearing Contracts design in C++23

- Approving constexpr dynamic allocation, including constexpr vector and string for C++20

- The standard library gaining a modern text formatting facility for C++20

- Broaching the topic of bringing the -fno-exceptions segment of C++ users back into the fold

- Breaking record attendance levels as we continue to gain representation of different parts of the community on the committee

Due to the sheer number of proposals, there is a lot I didn’t cover in this post; if you’re curious about a specific proposal that I didn’t mention, please feel free to ask about it in the comments.

Other Trip Reports

Other trip reports about this meeting include Herb Sutter’s and the collaborative Reddit trip report — I encourage you to check them out as well!

https://botondballo.wordpress.com/2019/07/26/trip-report-c-standards-meeting-in-cologne-july-2019/


Mozilla VR Blog: Firefox Reality for Oculus Quest |

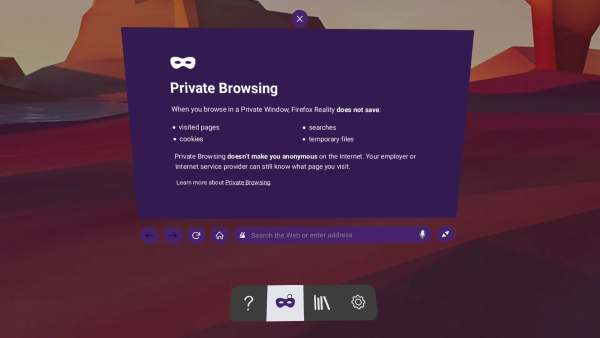

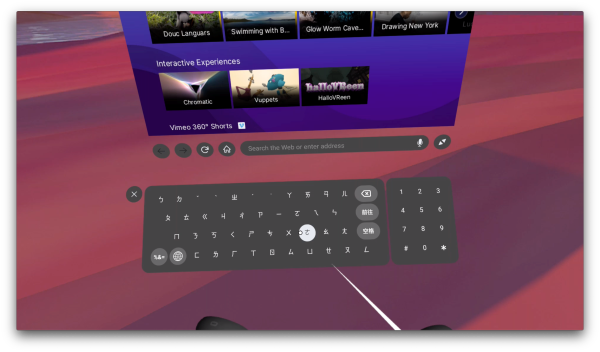

We are excited to announce that Firefox Reality is now available for the Oculus Quest!

Following our releases for other 6DoF headsets including the HTC Vive Focus Plus and Lenovo Mirage, we are delighted to bring the Firefox Reality VR web browsing experience to Oculus' newest headset.