
Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Wladimir Palant: Various RememBear security issues

Whenever I write about security issues in some password manager, people ask what I think of their tool of choice. Occasionally I take a closer look at the tool, which is what I did with the RememBear password manager in April. Technically, it is very similar to its competitor 1Password, to the point that the developers are being accused of plagiarism. Security-wise, however, the tool doesn’t appear to be as advanced, and I quickly found six issues of varying severity, all of which have since been fixed. I also couldn’t help noticing a bogus security mechanism, something that I already wrote about.

Stealing remembear.com login tokens

Password managers will often give special powers to “their” website. This is generally an issue, because compromising this website (e.g. via an all too common XSS vulnerability) will give attackers access to this functionality. In the case of RememBear, however, things turned out to be even easier. The following function was responsible for recognizing privileged websites:

    isRememBearWebsite() {
      let remembearSites = this.getRememBearWebsites();
      let url = window.getOriginUrl();
      let foundSite = remembearSites.firstOrDefault(allowed => url.indexOf(allowed) === 0, undefined);
      if (foundSite) {
        return true;
      }
      return false;
    }

We’ll get back to window.getOriginUrl() later, as it doesn’t actually produce the expected result. The important detail here is that the resulting URL is compared against a list of whitelisted origins by checking whether it starts with an origin like https://remembear.com. No, I didn’t forget the slash at the end here, there really is none. So this code will accept https://remembear.com.malicious.info/ as a trusted website!
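A minimal sketch of the difference between a prefix match and an exact origin comparison, using the standard URL API. The names TRUSTED_ORIGINS, isTrustedByPrefix and isTrustedByOrigin are hypothetical and chosen for illustration; this is not RememBear's actual fix.

```javascript
// Hypothetical allowlist; the real list lives inside the extension.
const TRUSTED_ORIGINS = ["https://remembear.com"];

// Vulnerable pattern: a plain string prefix match.
function isTrustedByPrefix(url) {
  return TRUSTED_ORIGINS.some(allowed => url.indexOf(allowed) === 0);
}

// Safer pattern: parse the URL and compare the whole origin exactly.
function isTrustedByOrigin(url) {
  let origin;
  try {
    origin = new URL(url).origin;
  } catch (e) {
    return false; // unparsable input is never trusted
  }
  return TRUSTED_ORIGINS.includes(origin);
}

console.log(isTrustedByPrefix("https://remembear.com.malicious.info/")); // true
console.log(isTrustedByOrigin("https://remembear.com.malicious.info/")); // false
console.log(isTrustedByOrigin("https://remembear.com/account"));         // true
```

Comparing parsed origins covers scheme, host and port at once, so a look-alike host cannot slip through by sharing a string prefix.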

Luckily, the consequences aren’t as severe as with similar LastPass issues, for example. This would only give the attacker’s website access to the RememBear login token. That token automatically logs its holder into the user’s RememBear account, which cannot be used to access password data however. It will “merely” allow the attacker to manage the user’s subscription, with the most drastic available action being deleting the account along with all password data.

Messing with AutoFill functionality

AutoFill functionality of password managers is another typical area where security issues are found. RememBear requires a user action to activate AutoFill, which is an important preventive measure. Also, the AutoFill user interface is displayed by the native RememBear application, so websites have no way of messing with it. I found multiple other aspects of this functionality to be exploitable however.

Most importantly, RememBear would not verify that it filled in credentials on the right website (a recent regression according to the developers). Given that considerable time can pass between the user clicking the bear icon to display AutoFill user interface and the user actually selecting a password to be filled in, one cannot really expect that the browser tab is still displaying the same website. RememBear will happily continue filling in the password however, not recognizing that it doesn’t belong to the current website.

Worse yet, RememBear will try to fill out passwords in all frames of a tab. So if https://malicious.com embeds a frame from https://mybank.com and the user triggers AutoFill on the latter, https://malicious.com will potentially receive the password as well (e.g. via a hidden form). Or even less obvious: if you go to https://shop.com and that site has third-party frames e.g. for advertising, these frames will be able to intercept any of your filled in passwords.
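One way to picture a fix for both problems is to re-check every frame at fill time and only fill frames whose current origin matches the origin stored with the credential. The sketch below is an assumption about how such a check could look; framesAllowedToFill and the { id, url } frame records are hypothetical. Exact-origin matching is also stricter than the domain-based matching password managers typically use.

```javascript
// Hypothetical helper: pick the frames that may receive a credential.
// `frames` describes the tab at fill time (not at the time the user
// clicked the icon), as an array of { id, url } objects.
function framesAllowedToFill(credentialOrigin, frames) {
  return frames.filter(frame => {
    try {
      return new URL(frame.url).origin === credentialOrigin;
    } catch (e) {
      return false; // malformed URLs are skipped
    }
  });
}

// A malicious top-level page embedding the bank's login form.
const tabFrames = [
  { id: 0, url: "https://malicious.com/" },
  { id: 1, url: "https://mybank.com/login" },
];
console.log(framesAllowedToFill("https://mybank.com", tabFrames).map(f => f.id)); // [ 1 ]
```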

Public Suffix List implementation issues

One point on my list of common AutoFill issues is: Domain name is not “the last two parts of a host name.” At first glance, RememBear appears to get this right by using Mozilla’s Public Suffix List. So it knows in particular that the relevant part of foo.bar.example.co.uk is example.co.uk and not co.uk. On closer inspection, however, there are considerable issues in the C#-based implementation.

For example, there is some rather bogus logic in the CheckPublicTLDs() function and I’m not even sure what this code is trying to accomplish. You will only get into this function for multi-part public suffixes where one of the parts has more than 3 characters – meaning pilot.aero for example. The code will correctly recognize example.pilot.aero as being the relevant part of the foo.bar.example.pilot.aero host name, but it will come to the same conclusion for pilot.aeroexample.pilot.aero as well. Since domains are being registered under the pilot.aero namespace, the two host names here actually belong to unrelated domains, so the bug here allows one of them to steal credentials for the other.

The other issue is that the syntax of the Public Suffix List is processed incorrectly. This results for example in the algorithm assuming that example.asia.np and malicious.asia.np belong to the same domain, so that credentials will be shared between the two. With asia.np being the public suffix here, these host names are unrelated however.
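For comparison, here is a rough sketch of how Public Suffix List rules (plain, wildcard and exception rules) are meant to be applied. It is written in JavaScript rather than C#, the rule set is a small hand-picked excerpt, and publicSuffixLength and registrableDomain are hypothetical names; none of this reflects RememBear's code.

```javascript
// Hand-picked excerpt of Public Suffix List rules (an assumption for this
// sketch; a real implementation loads the full, current list).
const RULES = new Set([
  "com", "uk", "co.uk", "aero", "pilot.aero",
  "*.np",            // wildcard: every label directly under np is a suffix
  "*.ck", "!www.ck", // wildcard plus an exception carving out www.ck
]);

// Number of trailing labels that form the public suffix.
function publicSuffixLength(labels) {
  let best = 1; // implicit "*" rule: the last label alone is a suffix
  for (let i = 0; i < labels.length; i++) {
    const count = labels.length - i;
    const candidate = labels.slice(i).join(".");
    // An exception rule beats everything; its suffix is one label shorter.
    if (RULES.has("!" + candidate)) return count - 1;
    const wildcard = ["*", ...labels.slice(i + 1)].join(".");
    if (RULES.has(candidate) || RULES.has(wildcard)) {
      best = Math.max(best, count);
    }
  }
  return best;
}

// Public suffix plus one more label, or null if the host is itself a suffix.
function registrableDomain(host) {
  const labels = host.toLowerCase().split(".");
  const suffixLength = publicSuffixLength(labels);
  if (labels.length <= suffixLength) return null;
  return labels.slice(-(suffixLength + 1)).join(".");
}

console.log(registrableDomain("foo.bar.example.co.uk"));        // example.co.uk
console.log(registrableDomain("pilot.aeroexample.pilot.aero")); // aeroexample.pilot.aero
console.log(registrableDomain("example.asia.np"));              // example.asia.np
console.log(registrableDomain("malicious.asia.np"));            // malicious.asia.np
```

Two hosts should only share credentials when registrableDomain() returns the same non-null value for both, which keeps the examples from the text apart.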

Issues saving passwords

When you enter a password on some site, RememBear will offer to save it – fairly common functionality. However, this will fail spectacularly under some circumstances, and that’s partially due to the already mentioned window.getOriginUrl() function which is implemented as follows:

    if (window.location.ancestorOrigins != undefined
        && window.location.ancestorOrigins.length > 0) {
      return window.location.ancestorOrigins[0];
    }
    else {
      return window.location.href;
    }

Don’t know what window.location.ancestorOrigins does? I didn’t know either, it being a barely documented Chrome/Safari feature which undermines referrer policy protection. It contains the list of origins for parent frames, so this function will return the origin of the parent frame if there is any – the URL of the current document is completely ignored.

While AutoFill doesn’t use window.getOriginUrl(), saving passwords does. So if in Chrome https://evil.com embeds a frame from https://mybank.com and the user logs into the latter, RememBear will offer to save the password. But instead of saving that password for https://mybank.com it will store it for https://evil.com. And https://evil.com will be able to retrieve the password later if the user triggers AutoFill functionality on their site. But at least there will be some warning flags for the user along the way…
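The effect is easy to reproduce outside a browser by passing in a location-like object. In this sketch getOriginUrlBuggy mirrors the logic quoted above, while getDocumentOrigin and the simulated framedLocation object are hypothetical additions for illustration.

```javascript
// Same logic as the quoted function, but taking a location-like object
// so that it can run outside a browser.
function getOriginUrlBuggy(location) {
  if (location.ancestorOrigins != undefined
      && location.ancestorOrigins.length > 0) {
    return location.ancestorOrigins[0]; // origin of the parent frame
  }
  return location.href;
}

// Alternative: always describe the current document.
function getDocumentOrigin(location) {
  return location.origin;
}

// Simulated location of a https://mybank.com login form framed by https://evil.com.
const framedLocation = {
  href: "https://mybank.com/login",
  origin: "https://mybank.com",
  ancestorOrigins: ["https://evil.com"],
};

console.log(getOriginUrlBuggy(framedLocation)); // https://evil.com
console.log(getDocumentOrigin(framedLocation)); // https://mybank.com
```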

There was one more issue: the function hostFromString() used to extract host name from URL when saving passwords was using a custom URL parser. It wouldn’t know how to deal with “unusual” URL schemes, so for data:text/html,foo/example.com:// or about:blank#://example.com it would return example.com as the host name. Luckily for RememBear, its content scripts wouldn’t run on any of these URLs, at least in Chrome. In their old (and already phased out) Safari extension this likely was an issue and would have allowed websites to save passwords under an arbitrary website name.
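A sketch of the more robust approach: let the standard URL parser do the work and refuse anything that isn't an http(s) URL. The function name hostFromUrl is hypothetical; the source does not say what RememBear replaced hostFromString() with.

```javascript
// Returns the host name for http(s) URLs and null for everything else.
function hostFromUrl(urlString) {
  let parsed;
  try {
    parsed = new URL(urlString);
  } catch (e) {
    return null; // not a valid absolute URL
  }
  if (parsed.protocol !== "http:" && parsed.protocol !== "https:") {
    return null; // data:, about:, javascript: etc. have no meaningful host
  }
  return parsed.hostname;
}

console.log(hostFromUrl("https://example.com/login"));         // example.com
console.log(hostFromUrl("data:text/html,foo/example.com://")); // null
console.log(hostFromUrl("about:blank#://example.com"));        // null
```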

Timeline

- 2019-04-09: After discovering the first security vulnerability I am attempting to find a security contact. There is none, so I ask on Twitter. I get a response on the same day, suggesting to invite me to a private bug bounty program. This route fails (I’ve been invited to that program previously and rejected), so we settle on using the support contact as fallback.

- 2019-04-10: Reported issue: “RememBear extensions leak remembear.com token.”

- 2019-04-10: RememBear fixes “RememBear extensions leak remembear.com token” issue and updates their Firefox and Chrome extensions.

- 2019-04-11: Reported issue “No protection against logins being filled in on wrong websites.”

- 2019-04-12: Reported issues: “Unrelated websites can share logins”, “Wrong interpretation of Mozilla’s Public Suffix list”, “Login saved for wrong site (frames in Chrome)”, “Websites can save logins for arbitrary site (Safari).”

- 2019-04-23: RememBear fixes parts of the “No protection against logins being filled in on wrong websites” issue in the Chrome extension.

- 2019-04-24: RememBear confirms that “Websites can save logins for arbitrary site (Safari)” issue doesn’t affect any current products but they intend to remove hostFromString() function regardless.

- 2019-05-27: RememBear reports having fixed all outstanding issues in the Windows application and Chrome extension. macOS application is supposed to follow a week later.

- 2019-06-12: RememBear updates Firefox extension as well.

- 2019-07-08: Coordinated disclosure.

https://palant.de/2019/07/08/various-remembear-security-issues/


Cameron Kaiser: TenFourFox FPR15 available

Also, we now have Korean and Turkish language packs available for testing. If you want to give these a spin, download them here; the plan is to have them go-live at the same time as FPR15. Thanks again to new contributor Tae-Woong Se and, of course, to Chris Trusch as always for organizing localizations and doing the grunt work of turning them into installers.

Not much work will occur on the browser for the next week or so due to family commitments and a couple out-of-town trips, but I'll be looking at a few new things for FPR16, including some minor potential performance improvements and a font subsystem upgrade. There's still the issue of our outstanding JavaScript deficiencies as well, of course. More about that later.

http://tenfourfox.blogspot.com/2019/07/tenfourfox-fpr15-available.html


About:Community: Firefox 68 new contributors

With the release of Firefox 68, we are pleased to welcome the 55 developers who contributed their first code change to Firefox in this release, 49 of whom were brand new volunteers! Please join us in thanking each of these diligent and enthusiastic individuals, and take a look at their contributions:

- afshan.shokath: 1537535

- clement.allain: 1437446, 1438896, 1532652

- mariahajmal: 1530285

- mihir17166: 1509464, 1532944

- nagpalm7: 1543581

- saschanaz: 1500748, 1550949, 1551893

- sonali18317: 1151735, 1395824

- trushita: 1494948, 1496193

- Alex Catarineu: 1542309

- Ananth: 1546277

- Andre KENY: 1542904, 1542906

- Arlak: 1445923

- Arpit Bharti: 1483077, 1531791, 1543043

- Brenda adel: 1474759

- Camil Staps: 1513492

- Christina Cheung: 1549800

- Christoph Walcher: 1530138

- Chujun Lu: 1522858, 1545129, 1548390

- DILIP: 1548742

- Daiki Ueno: 1511989

- Damien: 1539089, 1542187

- Dave Justice: 1522848

- Derek: 1545697, 1550902

- Emily McMinn: 1551566, 1551613

- Fabio Alessandrelli: 1529695

- Hamzah Akhtar: 1507368

- Ivan Yung: 1519884

- Jason Kratzer: 1513071

- Jeremy Ir: 1548341

- Kenny Levinsen: 1467127, 1546098

- Kevin Jacobs: 1515465, 1532757, 1535210

- Khyati Agarwal: 1469694, 1520711, 1524359, 1524362, 1529981, 1533290, 1540283

- Mariana Meireles: 1546521

- Matthew Finkel: 1478438, 1480877

- Megan Bailey: 1545270

- Miriam: 1537740

- Mirko Brodesser: 1057858, 1165211, 1374045, 1446722, 1540632, 1545359, 1549283, 1549696, 1550671, 1551857

- Mohd Umar Alam: 1533533

- Myeongjun Go: 1512171, 1517993

- Paul Morris: 1531870

- Paul Zühlcke: 1372033, 1412561, 1540685, 1549261, 1550647

- Rob Lemley: 1491371

- Ruihui Yan: 1508819

- Sean Feng: 1531917, 1533861, 1551935

- Sebastian Streich: 1386214, 1402530, 1444503, 1539853, 1546913

- Srujana Peddinti: 1212103, 1259660, 1451127, 1477832

- TheQwertiest: 1526245

- Thomas: 1521917

- TomS: 1514766

- Valentin MILLET: 1456269, 1456962, 1530178

- Vrinda Singhal: 1424287

- anthony: 1540771

- ariasuni: 1394376

- emanuela: 1521956

- hafsa: 1494469

- jaril: 1537884, 1538054, 1544240, 1547858, 1549777

https://blog.mozilla.org/community/2019/07/05/firefox-68-new-contributors/


The Mozilla Blog: Mozilla’s Latest Research Grants: Prioritizing Research for the Internet

We are very happy to announce the results of our Mozilla Research Grants for the first half of 2019. This was an extremely competitive process, and we selected proposals which address twelve strategic priorities for the internet and for Mozilla. This includes researching better support for integrating Tor in the browser, improving scientific notebooks, using speech on mobile phones in India, and alternatives to advertising for funding the internet. The Mozilla Research Grants program is part of our commitment to being a world-class example of using inclusive innovation to impact culture, and reflects Mozilla’s commitment to open innovation.

We will open a new round of grants in Fall of 2019. See our Research Grant webpage for more details and to sign up to be notified when applications open.

| Lead Researchers | Institution | Project Title |
|---|---|---|
| Valentin Churavy | MIT | Bringing Julia to the Browser |
| Jessica Outlaw | Concordia University of Portland | Studying the Unique Social and Spatial affordances of Hubs by Mozilla for Remote Participation in Live Events |
| Neha Kumar | Georgia Tech | Missing Data: Health on the Internet for Internet Health |
| Piotr Sapiezynski, Alan Mislove, & Aleksandra Korolova | Northeastern University & University of Southern California | Understanding the impact of ad preference controls |
| Sumandro Chattapadhyay | The Centre for Internet and Society (CIS), India | Making Voices Heard: Privacy, Inclusivity, and Accessibility of Voice Interfaces in India |
| Weihang Wang | State University of New York | Designing Access Control Interfaces for Wasmtime |
| Bernease Herman | University of Washington | Toward generalizable methods for measuring bias in crowdsourced speech datasets and validation processes |
| David Karger | MIT | Tipsy: A Decentralized Open Standard for a Microdonation-Supported Web |
| Linhai Song | Pennsylvania State University | Benchmarking Generic Functions in Rust |
| Leigh Clark | University College Dublin | Creating a trustworthy model for always-listening voice interfaces |
| Steven Wu | University of Minnesota | DP-Fathom: Private, Accurate, and Communication-Efficient |
| Nikita Borisov | University of Illinois, Urbana-Champaign | Performance and Anonymity of HTTP/2 and HTTP/3 in Tor |

Many thanks to everyone who submitted a proposal and helped us publicize these grants.

The post Mozilla’s Latest Research Grants: Prioritizing Research for the Internet appeared first on The Mozilla Blog.


Mozilla GFX: moz://gfx newsletter #46

Hi there! As previously announced WebRender has made it to the stable channel and a couple of million users are now using it without having opted into it manually. With this important milestone behind us, now is a good time to widen the scope of the newsletter and give credit to other projects being worked on by members of the graphics team.

The WebRender newsletter therefore becomes the gfx newsletter. This is still far from an exhaustive list of the work done by the team, just a few highlights in WebRender and graphics in general. I am hoping to keep the pace around a post per month, we’ll see where things go from there.

What’s new in gfx

Async zoom for desktop Firefox

Botond has been working on desktop zooming

- The work is currently focused on the ability to use pinch gestures to zoom (scaling only, no reflow, like on mobile) on desktop platforms.

- The initial focus is on touchscreens, with support for touchpads to follow.

- We hope to have this ready for some early adopters to try out in the coming weeks.

WebGL power usage

Jeff Gilbert has been working on power preference options for WebGL.

WebGL has three power preference options available during canvas context creation:

- default

- low-power

- high-performance

The vast majority of web content implicitly requests “default”. Since we don’t want to regress web content performance, we usually treat “default” like “high-performance”. On macOS with multiple GPUs (MacBook Pro), this means activating the power-hungry dedicated GPU for almost all WebGL contexts. While this keeps our performance high, it also means every last one-off or transient WebGL context from ads, trackers, and fingerprinters will keep the high-power GPU running until they are garbage-collected.

In bug 1562812, Jeff added a ramp-up/ramp-down behavior for “default”: For the first couple seconds after WebGL context creation, things stay on the low-power GPU. After that grace period, if the context continues to render frames for presentation, we migrate to the high-power GPU. Then, if the context stops producing frames for a couple seconds, we release our lock of the high-power GPU, to try to migrate back to the low-power GPU.

What this means is that active WebGL content should fairly quickly end up ramped-up onto the high-power GPU, but inactive and orphaned WebGL contexts won’t keep the browser locked on to the high-power GPU anymore, which means better battery life for users on these machines as they wander the web.
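The ramp-up/ramp-down behavior can be pictured as a small state machine. The sketch below is only an illustration under stated assumptions: the two-second thresholds stand in for “a couple seconds”, the DefaultPowerPolicy class and its method names are hypothetical, and a context that resumes rendering after ramp-down is assumed to ramp up again on its next frame. It is not the code that landed in bug 1562812.

```javascript
// Hypothetical thresholds; the text only says "a couple seconds".
const RAMP_UP_MS = 2000;
const RAMP_DOWN_MS = 2000;

class DefaultPowerPolicy {
  constructor(createdAt) {
    this.createdAt = createdAt; // time of WebGL context creation (ms)
    this.lastFrameAt = null;    // time of the last presented frame (ms)
    this.gpu = "low-power";     // every context starts on the low-power GPU
  }

  // Call whenever the context presents a frame.
  onFrame(now) {
    this.lastFrameAt = now;
    if (now - this.createdAt >= RAMP_UP_MS) {
      this.gpu = "high-performance"; // still rendering after the grace period
    }
    return this.gpu;
  }

  // Call periodically to release the high-power GPU when idle.
  onTick(now) {
    if (this.gpu === "high-performance"
        && now - this.lastFrameAt >= RAMP_DOWN_MS) {
      this.gpu = "low-power";
    }
    return this.gpu;
  }
}

const policy = new DefaultPowerPolicy(0);
console.log(policy.onFrame(100));  // low-power (inside the grace period)
console.log(policy.onFrame(2500)); // high-performance
console.log(policy.onTick(3000));  // high-performance (frame was recent)
console.log(policy.onTick(4600));  // low-power (idle for over two seconds)
```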

DisplayList building optimization

Miko, Matt, Timothy and Dan have worked on improving display list build times

- The two main areas of improvement have been avoiding unnecessary work during display list merging, and improving the memory access patterns during display list building.

- The improved display list merging algorithm utilizes the invalidation assumptions of the frame tree, and avoids preprocessing sub display lists that cannot have changed. (bug 1544948)

- Some commonly used display items have drastically shrunk in size, which has reduced the memory usage and allocations. For example, the size of transform display item went down from 1024 bytes to 512 bytes. (bug 1502049, bug 1526941)

- The display item size improvements have also tangentially helped with caching and prefetching performance. For example, the base display item state booleans were collapsed into a bit field and moved to the first cache line. (bug 1526972, bug 1540785)

- Retained display lists were enabled for Android devices and for the parent process. (bugs 1413567 and 1413546)

- Telemetry probes show that since the Orlando All Hands, the mean display list build time has gone down by 40%, from ~1.8ms to ~1.1ms. The 95th percentile has gone down by 30%, from ~6.2ms to ~4.4ms.

- While these numbers might seem low, they are still a considerable proportion of the target 16ms frame budget. There is more promising follow-up work scheduled in bugs 1539597 and 1554503.

What’s new in WebRender

WebRender is a GPU-based 2D rendering engine for the web written in Rust, currently powering Firefox’s rendering engine as well as the research web browser Servo.

Software backend investigations

Glenn, Jeff Muizelaar and Jeff Gilbert are investigating WebRender on top of swiftshader or llvmpipe when the available GPU feature set is too small or too buggy. The hope is that these emulation layers will help us quickly migrate users that can’t have hardware acceleration to WebRender with a minimal amount of specific code (most likely some simple specialized blitting routines in a few hot spots where the emulation layer is unlikely to provide optimal speed).

It’s too early to tell at this point whether this experiment will pan out. We can see some (expected) regressions compared to the non-webrender software backend, but with some amount of optimization it’s probable that performance will get close enough and provide an acceptable user experience.

This investigation is important since it will determine how much code we have to maintain specifically for non-accelerated users, a proportion of users that will decrease over time but will probably never quite get to zero.

Pathfinder 3 investigations

Nical spent some time in the last quarter familiarizing himself with pathfinder’s internals and investigating its viability for rendering SVG paths in WebRender/Firefox. He wrote a blog post explaining in detail how pathfinder fills paths on the GPU.

The outcome of this investigation is that we are optimistic about pathfinder’s approach and think it’s a good fit for integration in Firefox. In particular the approach is flexible and robust, and will let us improve or replace parts in the longer run.

Nical is also experimenting with a different tiling algorithm, aiming at reducing the CPU overhead.

The next steps are to prototype an integration of pathfinder 3 in WebRender, start using it for simple primitives such as CSS clip paths and gradually use it with more primitives.

Picture caching improvements

Glenn continues his work on picture caching. At the moment WebRender only triggers the optimization for a single scroll root. Glenn has landed a lot of infrastructure work to be able to benefit from picture caching at a more granular level (for example one per scroll-root), and pave the way for rendering picture cached slices directly into OS compositor surfaces.

The next steps are, roughly:

- Add a couple follow up optimizations such as exact dirty rectangles for smaller updates / multi-resolution tiles, detect tiles that are solid colors.

- Enable caching on content + UI explicitly (as an intermediate step, to give caching on the UI).

- Implement the right support for multiple cached slices that have transparency and subpixel text anti-aliasing.

- Enable fine grained caching on multiple slices.

- Expose those multiple cached slices to OS compositor for power savings and better scrolling performance where supported.

WebRender on Android

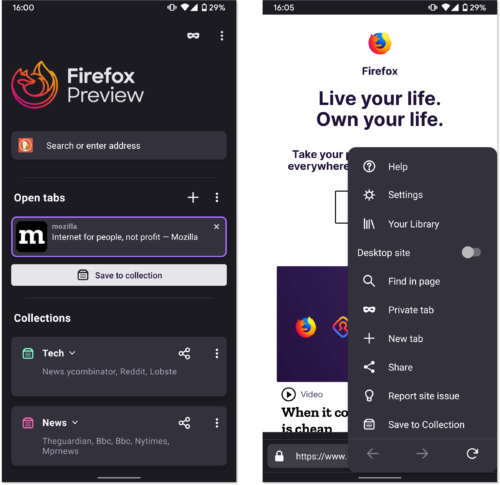

Thanks to the continuous efforts of Jamie and Kats, WebRender is starting to be pretty solid on Android. GeckoView is required to enable WebRender, so it isn’t possible to enable it now in Firefox for Android, but we plan to make the option available for the Firefox Preview browser (which is powered by GeckoView) once it has nightly builds.

https://mozillagfx.wordpress.com/2019/07/05/moz-gfx-newsletter-46/


Mozilla Reps Community: Rep of the Month – June 2019

Please join us in congratulating Pranshu Khanna, Rep of the Month for June 2019!

Pranshu is from Surat, Gujarat, India. His journey started with a Connected Devices workshop in 2016, and since then he’s been a super active contributor and a proud Mozillian. He joined the Reps Program in March 2019 and has been instrumental ever since.

In addition to that, he’s been one of the most active Reps in his region since he joined the program. He has worked to get his local community, Mozilla Gujarat, to meet very regularly and contribute to Common Voice, BugHunter, Localization, SUMO, A-Frame, Add-ons, and Open Source Contribution. He’s an active contributor and a maintainer of the OpenDesign Repository and frequently contributes to the Mozilla India & Mozilla Gujarat Social Channels.

Congratulations and keep rocking the open web!

https://blog.mozilla.org/mozillareps/2019/07/05/rep-of-the-month-june-2019/


Mozilla Localization (L10N): L10n report: July edition

Please note some of the information provided in this report may be subject to change as we are sometimes sharing information about projects that are still in early stages and are not final yet.

Welcome!

New localizers

- Sivakumar of Tamil

Are you a locale leader and want us to include new members in our upcoming reports? Contact us!

New community/locales added

- Manx was added to Pontoon and they’re starting to work on localizing Firefox.

New content and projects

What’s new or coming up in Firefox desktop

As usual, let’s start with some important dates:

- Firefox 68 will be released on July 9. At that point, Firefox 69 will be in beta, while Nightly will have Firefox 70.

- The deadline to ship localization updates in Firefox 69 is August 20.

Firefox 69 has been a lightweight release in terms of new strings, with most of the changes coming from Developer Tools. Expect a lot more content in Firefox 70, with particular focus on the Password Manager – integrating most of the Lockwise add-on features directly into Firefox – and work around Tracking Protection and Security, with brand new about pages.

What’s new or coming up in mobile

Since our last report, we’ve shipped the first release of Firefox Preview (Fenix) in 11 languages (including en-US). The next upcoming step will be to open up the project to more locales. If you are interested, make sure to follow closely the dev.l10n mailing list this week. And congratulations to the teams that helped make this a successful localized first release!

On another note, Firefox for iOS deadline for l10n work on v18 just passed this week, and we’re adding two new locales: Finnish and Gujarati. Congratulations to the teams!

What’s new or coming up in web projects

Keep brand and product names in English

The current policy per branding and legal teams is that the trademarked names should be left unchanged. Unless indicated in the comments, brand names should be left in English, they cannot be transliterated or declined. If you have any doubt, ask through the available communication channels.

Product names such as Lockwise, Monitor, Send, and Screenshot, to name a few, used alone or with Firefox, should be left unchanged. Pocket is a trademark and must remain as is too. Locale managers, please inform the rest of your communities about the policy, especially new contributors. Search in Pontoon or Transvision for these names and revert them to English.

Mozilla.org

The recently added pages contain marketing language to promote all the Firefox products. Localize them and start spreading the word on social media and other platforms. The deadline is indicative.

- New: firefox/best-browser.lang, firefox/campaign-trailhead.lang, firefox/all-unified.lang;

- Update: firefox/accounts-2019.lang;

- Obsolete: firefox/accounts.lang will be removed soon. Stop working on this page if it is incomplete.

What’s new or coming up in SuMo

- SUMO report on a localization experiment around machine translation

- Firefox 68 and Firefox 18 iOS articles ready

- Firefox Preview articles ready for localization.

- Follow other announcements and conversations about SUMO l10n on discourse.

Newly published localizer facing documentation

The Firefox marketing guides are living documents and will be updated as needed.

- The guide in German was written by a Mozilla staff copywriter. It sets the tone for marketing content localization, including the mozilla.org site. With this guide, the hope is that the site has a more unified tone from page to page, regardless of who contributed to the localization: the community or staff.

- The guide in English was derived from the guide written in German and is meant to be a template for any communities who want to create a marketing content localization guide. This is in addition to the style guide a community has already created.

- The guide in French will be authored by a Mozilla staff copywriter. We will update you in a future report.

Events

- Want to showcase an event coming up that your community is participating in? Reach out to any l10n-driver and we’ll include that (see links to emails at the bottom of this report)

Useful Links

- Dev.l10n mailing list and Dev.l10n.web mailing list – where project updates happen. If you are a localizer, then you should be following this

- Facebook group: it’s new! Come check it out!

- Telegram (contact one of the l10n-drivers below so we will add you)

- L10n blog

- #l10n irc channel: this wiki page will help you get set up with IRC. For L10n, we use the #l10n channel for all general discussion. You can also find a list of IRC channels in other languages here.

Questions? Want to get involved?

- If you want to get involved, or have any question about l10n, reach out to:

- Delphine – l10n Project Manager for mobile

- Peiying (CocoMo) – l10n Project Manager for mozilla.org, marketing, and legal

- Francesco Lodolo (flod) – l10n Project Manager for desktop

- Théo Chevalier – l10n Project Manager for Mozilla Foundation

- Axel (Pike) – l10n Tech Team Lead

- Staś – l20n/FTL tamer

- Matjaz – Pontoon dev

- Adrian – Pontoon dev

- Jeff Beatty (gueroJeff) – l10n-drivers manager

- Rubén – Interim L10n community coordinator for Support

Did you enjoy reading this report? Let us know how we can improve by reaching out to any one of the l10n-drivers listed above.

https://blog.mozilla.org/l10n/2019/07/04/l10n-report-july-edition/


The Rust Programming Language Blog: Announcing Rust 1.36.0

The Rust team is happy to announce a new version of Rust, 1.36.0. Rust is a programming language that is empowering everyone to build reliable and efficient software.

If you have a previous version of Rust installed via rustup, getting Rust 1.36.0 is as easy as:

$ rustup update stable

If you don't have it already, you can get rustup from the appropriate page on our website, and check out the detailed release notes for 1.36.0 on GitHub.

What's in 1.36.0 stable

This release brings many changes, including the stabilization of the Future trait, the alloc crate, the MaybeUninit type, NLL for Rust 2015, a new HashMap implementation, and --offline support in Cargo. Read on for a few highlights, or see the detailed release notes for additional information.

The Future is here!

In Rust 1.36.0 the long awaited Future trait has been stabilized!

With this stabilization, we hope to give important crates, libraries, and the ecosystem time to prepare for async / .await, which we'll tell you more about in the future.

The alloc crate is stable

Before 1.36.0, the standard library consisted of the crates std, core, and proc_macro. The core crate provided core functionality such as Iterator and Copy and could be used in #![no_std] environments since it did not impose any requirements. Meanwhile, the std crate provided types like Box and OS functionality but required a global allocator and other OS capabilities in return.

Starting with Rust 1.36.0, the parts of std that depend on a global allocator, e.g. Vec, are now available in the alloc crate. The std crate then re-exports these parts. While #![no_std] binaries using alloc still require nightly Rust, #![no_std] library crates can use the alloc crate in stable Rust. Meanwhile, normal binaries, without #![no_std], can depend on such library crates. We hope this will facilitate the development of a #![no_std] compatible ecosystem of libraries prior to stabilizing support for #![no_std] binaries using alloc.

If you are the maintainer of a library that only relies on some allocation primitives to function, consider making your library #![no_std] compatible by using the following at the top of your lib.rs file:

    #![no_std]

    extern crate alloc;

    use alloc::vec::Vec;

MaybeUninit instead of mem::uninitialized

In previous releases of Rust, the mem::uninitialized function has allowed you to bypass Rust's initialization checks by pretending that you've initialized a value at type T without doing anything. One of the main uses of this function has been to lazily allocate arrays.

However, mem::uninitialized is an incredibly dangerous operation that essentially cannot be used correctly as the Rust compiler assumes that values are properly initialized. For example, calling mem::uninitialized::<bool>() causes instantaneous undefined behavior as, from Rust's point of view, the uninitialized bits are neither 0 (for false) nor 1 (for true) - the only two allowed bit patterns for bool.

To remedy this situation, in Rust 1.36.0, the type MaybeUninit<T> has been stabilized. The Rust compiler will understand that it should not assume that a MaybeUninit<T> is a properly initialized T. Therefore, you can do gradual initialization more safely and eventually use .assume_init() once you are certain that maybe_t: MaybeUninit<T> contains an initialized T.

As MaybeUninit<T> is the safer alternative, starting with Rust 1.38, the function mem::uninitialized will be deprecated.

To find out more about uninitialized memory, mem::uninitialized, and MaybeUninit<T>, read Alexis Beingessner's blog post. The standard library also contains extensive documentation about MaybeUninit<T>.
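A minimal sketch of the write-then-assume pattern described above, using only the MaybeUninit methods stabilized in 1.36.0 (uninit, as_mut_ptr, assume_init). The function name init_then_read is invented for this example.

```rust
use std::mem::MaybeUninit;

/// Writes a value into uninitialized storage, then reads it back.
pub fn init_then_read() -> u32 {
    // No `u32` exists yet; the compiler makes no assumptions about the contents.
    let mut slot = MaybeUninit::<u32>::uninit();

    // Initialize through a raw pointer, without reading the old bytes.
    unsafe { slot.as_mut_ptr().write(42) };

    // Sound only because the write above fully initialized the value.
    unsafe { slot.assume_init() }
}
```

The unsafe blocks mark the two places where the programmer, not the compiler, vouches for initialization. Calling assume_init before the write would be undefined behavior.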

NLL for Rust 2015

In the announcement for Rust 1.31.0, we told you about NLL (Non-Lexical Lifetimes), an improvement to the language that makes the borrow checker smarter and more user friendly. For example, you may now write:

fn main() {
    let mut x = 5;
    let y = &x;
    let z = &mut x; // This was not allowed before 1.31.0.
}

In 1.31.0 NLL was stabilized only for Rust 2018, with a promise that we would backport it to Rust 2015 as well. With Rust 1.36.0, we are happy to announce that we have done so! NLL is now available for Rust 2015.

With NLL on both editions, we are closer to removing the old borrow checker. However, the old borrow checker unfortunately accepted some unsound code it should not have. As a result, NLL is currently in a "migration mode" wherein we will emit warnings instead of errors if the NLL borrow checker rejects code the old AST borrow checker would accept. Please see this list of public crates that are affected.

To find out more about NLL, MIR, the story around fixing soundness holes, and what you can do about the warnings if you have them, read Felix Klock's blog post.
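For a slightly more realistic case than the one above, here is a sketch of a pattern that the old lexical borrow checker rejected and NLL accepts: a shared borrow that is used once and then followed by a mutation. The function name is made up for illustration.

```rust
/// Accepted by the NLL borrow checker: the shared borrow `first` ends at its
/// last use, so the later mutation does not conflict with it.
pub fn push_after_read() -> Vec<i32> {
    let mut v = vec![1, 2, 3];
    let first = &v[0];
    let copy_of_first = *first; // last use of `first`
    v.push(copy_of_first + 10); // mutable borrow starts after `first` is dead
    v
}
```

Under the old rules, the borrow held by first lasted until the end of the enclosing block, so the call to push was reported as a conflict.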

A new HashMap implementation

In Rust 1.36.0, the HashMap implementation has been replaced with the one in the hashbrown crate which is based on the SwissTable design. While the interface is the same, the HashMap implementation is now faster on average and has lower memory overhead. Note that unlike the hashbrown crate, the implementation in std still defaults to the SipHash 1-3 hashing algorithm.
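Because the interface is unchanged, the default hasher can still be swapped through the existing BuildHasher parameter when SipHash is not needed. The sketch below plugs in a toy FNV-1a hasher written only for this example (the Fnv1a type and count_words function are hypothetical, and FNV-1a is not what std or hashbrown use). It gives up the HashDoS resistance that the SipHash default provides.

```rust
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hasher};

/// Toy 64-bit FNV-1a hasher, written here only for illustration.
pub struct Fnv1a(u64);

impl Default for Fnv1a {
    fn default() -> Self {
        Fnv1a(0xcbf29ce484222325) // FNV-1a 64-bit offset basis
    }
}

impl Hasher for Fnv1a {
    fn write(&mut self, bytes: &[u8]) {
        for &b in bytes {
            self.0 ^= u64::from(b);
            self.0 = self.0.wrapping_mul(0x100000001b3); // FNV 64-bit prime
        }
    }

    fn finish(&self) -> u64 {
        self.0
    }
}

/// Counts word occurrences; the map API is the same regardless of the hasher.
pub fn count_words(text: &str) -> HashMap<&str, usize, BuildHasherDefault<Fnv1a>> {
    let mut counts = HashMap::default();
    for word in text.split_whitespace() {
        *counts.entry(word).or_insert(0) += 1;
    }
    counts
}
```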

--offline support in Cargo

During most builds, Cargo doesn't interact with the network. Sometimes, however, Cargo has to. Such is the case when a dependency is added and the latest compatible version needs to be downloaded. At times, network access is not an option though, for example on an airplane or in isolated build environments.

In Rust 1.36, a new Cargo flag has been stabilized: --offline. The flag alters Cargo's dependency resolution algorithm to only use locally cached dependencies. When the required crates are not available offline, and a network access would be required, Cargo will exit with an error. To prepopulate the local cache in preparation for going offline, use the cargo fetch command, which downloads all the required dependencies for a project.

To find out more about --offline and cargo fetch, read Nick Cameron's blog post.

For information on other changes to Cargo, see the detailed release notes.

Library changes

The dbg! macro now supports multiple arguments.

Additionally, a number of APIs have been made const:

New APIs have become stable, including:

- task::Waker and task::Poll

- VecDeque::rotate_left and VecDeque::rotate_right

- Read::read_vectored and Write::write_vectored

- Iterator::copied

- BorrowMut<str> for String

- str::as_mut_ptr

Other library changes are available in the detailed release notes.
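To give a feel for a few of these additions, the following sketch exercises dbg! with several arguments, Iterator::copied, and VecDeque::rotate_left. The function name library_changes_demo is made up for this example.

```rust
use std::collections::VecDeque;

/// Exercises three of the library additions mentioned above.
pub fn library_changes_demo() -> (i32, Vec<i32>, Vec<i32>) {
    // dbg! with several arguments prints each one to stderr and returns a tuple.
    let (a, b) = dbg!(1 + 2, 4 * 5);

    // Iterator::copied turns an iterator over &i32 into one over i32.
    let doubled: Vec<i32> = [1, 2, 3].iter().copied().map(|x| x * 2).collect();

    // VecDeque::rotate_left moves the first `n` elements to the back.
    let mut ring: VecDeque<i32> = (1..=5).collect();
    ring.rotate_left(2);

    (a + b, doubled, ring.into_iter().collect())
}
```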

Other changes

Detailed 1.36.0 release notes are available for Rust, Cargo, and Clippy.

Contributors to 1.36.0

Many people came together to create Rust 1.36.0. We couldn't have done it without all of you. Thanks!

|

|

Will Kahn-Greene: Crash pings (Telemetry) and crash reports (Socorro/Crash Stats) |

I keep getting asked questions that stem from confusion about crash pings and crash reports, the details of where they come from, differences between the two data sets, what each is currently good for, and possible future directions for work on both. I figured I'd write it all down.

This is a brain dump and sort of a blog post and possibly not a good version of either. I desperately wished it was more formal and mind-blowing like something written by Chutten or Alessio.

It's likely that this is 90% true today but as time goes on, things will change and it may be horribly wrong depending on how far in the future you're reading this. As I find out things are wrong, I'll keep notes. Any errors are my own.


Summary

We (Mozilla) have two different data sets for crashes: crash pings in Telemetry and crash reports in Socorro/Crash Stats. When Firefox crashes, the crash reporter collects information about the crash and this results in crash ping and crash report data. From there, the two different data things travel two different paths and end up in two different systems.

This blog post covers these two different journeys, their destinations, and the resulting properties of both data sets.

This blog post specifically talks about Firefox and not other products which have different crash reporting stories.

CRASH!

Firefox crashes. It happens.

The crash reporter kicks in. It uses the Breakpad library to collect data about the crashed process and package it up into a minidump. The minidump has information about the registers, what's in memory, the stack of the crashing thread, stacks of other threads, what modules are in memory, and so on.

- https://firefox-source-docs.mozilla.org/toolkit/crashreporter/crashreporter/index.html

- https://chromium.googlesource.com/breakpad/breakpad/+/master/docs/getting_started_with_breakpad.md

- https://chromium.googlesource.com/breakpad/breakpad/+/master/docs/exception_handling.md

Additionally, the crash reporter collects a set of annotations for the crash. Annotations like ProductName, Version, ReleaseChannel, BuildID and others help us group crashes for the same product and build.

The crash reporter assembles the portions of the crash report that don't have personally identifiable information (PII) in them into a crash ping. It uses minidump-analyzer to unwind the stack. The crash ping with this stack is sent via the crash ping sender to Telemetry.

- https://firefox-source-docs.mozilla.org/toolkit/components/telemetry/telemetry/internals/pingsender.html

- https://firefox-source-docs.mozilla.org/toolkit/components/telemetry/telemetry/data/crash-ping.html

If Telemetry is disabled, then the crash ping will not get sent to Telemetry.

The crash reporter will show a crash report dialog informing the user that Firefox crashed. The crash report dialog includes a comments field for additional data about the crash and an email field. The user can choose to send the crash report or not.

If the user chooses to send the crash report, then the crash report is sent via HTTP POST to the collector for the crash ingestion pipeline. The entire crash ingestion pipeline is called Socorro. The website part is called Crash Stats.

If the user chooses not to send the crash report, then the crash report never goes to Socorro.

If Firefox is unable to send the crash report, it keeps it on disk. It might ask the user to try to send it again later. The user can access about:crashes and send it explicitly.

Relevant backstory

What is symbolication?

Before we get too much further, let's talk about symbolication.

minidump-whatever will walk the stack starting with the top-most frame. It uses frame information to find the caller frame and works backwards to produce a list of frames. It also includes a list of modules that are in memory.

For example, part of the crash ping might look like this:

"modules": [
  ...
  {
    "debug_file": "xul.pdb",
    "base_addr": "0x7fecca50000",
    "version": "69.0.0.7091",
    "debug_id": "4E1555BE725E9E5C4C4C44205044422E1",
    "filename": "xul.dll",
    "end_addr": "0x7fed32a9000",
    "code_id": "5CF2591C6859000"
  },
  ...
],
"threads": [
  {
    "frames": [
      {
        "trust": "context",
        "module_index": 8,
        "ip": "0x7feccfc3337"
      },
      {
        "trust": "cfi",
        "module_index": 8,
        "ip": "0x7feccfb0c8f"
      },
      {
        "trust": "cfi",
        "module_index": 8,
        "ip": "0x7feccfae0af"
      },
      {
        "trust": "cfi",
        "module_index": 8,
        "ip": "0x7feccfae1be"
      },
      ...

The "ip" is an instruction pointer.

The "module_index" refers to another list of modules that were all in memory at the time.

The "trust" refers to how the stack unwinding figured out how to unwind that frame. Sometimes it doesn't have enough information and it makes an educated guess.

Symbolication takes the module name, the module debug id, and the offset and looks it up with the symbols it knows about. So for the first frame, it'd do this:

- module index 8 is xul.dll

- get the symbols for xul.pdb debug id 4E1555BE725E9E5C4C4C44205044422E1 which is at https://symbols.mozilla.org/xul.pdb/4E1555BE725E9E5C4C4C44205044422E1/xul.sym

- figure out that 0x7feccfc3337 (ip) - 0x7fecca50000 (base addr for xul.pdb module) is 0x573337

- look up 0x573337 in the SYM file and I think that's nsTimerImpl::InitCommon(mozilla::BaseTimeDuration const &,unsigned int,nsTimerImpl::Callback &&)

Symbolication does that for every frame and then we end up with a helpful symbolicated stack.
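The subtraction in the third step can be sketched as follows. The helper names parse_hex and module_offset are invented for this example; they are not part of minidump-analyzer, Socorro, or Tecken.

```rust
/// Parses a hex string such as "0x7feccfc3337" into a number.
pub fn parse_hex(s: &str) -> Option<u64> {
    u64::from_str_radix(s.trim_start_matches("0x"), 16).ok()
}

/// Returns the offset of an instruction pointer within its module, or None
/// if either string is malformed or `ip` lies below the module's base address.
pub fn module_offset(ip: &str, base_addr: &str) -> Option<u64> {
    parse_hex(ip)?.checked_sub(parse_hex(base_addr)?)
}
```

With the values from the first frame, module_offset("0x7feccfc3337", "0x7fecca50000") yields 0x573337, which is the offset that then gets looked up in the SYM file.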

Tecken has a symbolication API which takes the module and stack information in a minimal form and symbolicates using symbols it manages.

It takes a form like this:

{
  "memoryMap": [
    [
      "xul.pdb",
      "44E4EC8C2F41492B9369D6B9A059577C2"
    ],
    [
      "wntdll.pdb",
      "D74F79EB1F8D4A45ABCD2F476CCABACC2"
    ]
  ],
  "stacks": [
    [
      [0, 11723767],
      [1, 65802]
    ]
  ]
}

This has two data structures. The first is a list of (module name, module debug id) tuples. The second is a list of stacks, where each stack is a list of (module index, memory offset) tuples and the module index points at an entry in the first list.
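A rough sketch of how crash ping data could be turned into that form. The Module and Frame types and the symbolication_request function are hypothetical, and the sketch assumes that the offsets are relative to each module's base address, as in the walkthrough above. The JSON is rendered by hand only to keep the example free of dependencies.

```rust
/// A module from the crash ping: debug file name, debug id, and base address.
pub struct Module<'a> {
    pub debug_file: &'a str,
    pub debug_id: &'a str,
    pub base_addr: u64,
}

/// A frame from the crash ping: index into the module list plus absolute address.
pub struct Frame {
    pub module_index: usize,
    pub ip: u64,
}

/// Renders a request body with "memoryMap" and "stacks" for a single stack.
/// Assumes names and ids need no JSON escaping, every `module_index` is in
/// range, and every `ip` is at or above its module's base address.
pub fn symbolication_request(modules: &[Module], frames: &[Frame]) -> String {
    let memory_map: Vec<String> = modules
        .iter()
        .map(|m| format!("[\"{}\", \"{}\"]", m.debug_file, m.debug_id))
        .collect();
    let stack: Vec<String> = frames
        .iter()
        .map(|f| {
            let offset = f.ip - modules[f.module_index].base_addr;
            format!("[{}, {}]", f.module_index, offset)
        })
        .collect();
    format!(
        "{{\"memoryMap\": [{}], \"stacks\": [[{}]]}}",
        memory_map.join(", "),
        stack.join(", ")
    )
}
```

A real client would more likely use a JSON library and would send only the modules that actually appear in the stack, re-indexing the frames accordingly.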

What is Socorro-style signature generation?

Socorro has a signature generator that goes through the stack, normalizes the frames so that frames look the same across platforms, and then uses that to generate a "signature" for the crash that suggests a common cause for all the crash reports with that signature.

It's a fragile and finicky process. It works well for some things and poorly for others. There are other ways to generate signatures. This is the one that Socorro currently uses. We're constantly honing it.

I export Socorro's signature generation system as a Python library called siggen.

For examples of stacks -> signatures, look at crash reports on Crash Stats.

What is it and where does it go?

Crash pings in Telemetry

Crash pings are only sent if Telemetry is enabled in Firefox.

The crash ping contains the stack for the crash, but little else about the crashed process. No register data, no memory data, nothing found on the heap.

The stack is unwound by minidump-analyzer in the client on the user's machine. Because of that, driver information can be used by unwinding so for some kinds of crashes, we may get a better stack than crash reports in Socorro.

- https://hg.mozilla.org/mozilla-central/file/tip/toolkit/crashreporter/minidump-analyzer

- https://firefox-source-docs.mozilla.org/toolkit/components/telemetry/telemetry/data/crash-ping.html#stack-traces

Stacks in crash pings are not symbolicated.

There's an error aggregates data set generated from the crash pings which is used by Mission Control.

Crash reports in Socorro

Socorro does not get crash reports if the user chooses not to send a crash report.

Socorro collector discards crash reports for unsupported products.

Socorro collector throttles incoming crash reports for Firefox release channel--it accepts 10% of those for processing and rejects the other 90%.

The Socorro processor runs minidump-stackwalk on the minidump which unwinds the stack. Then it symbolicates the stack using symbols uploaded during the build process to symbols.mozilla.org.

- https://github.com/mozilla-services/socorro/tree/master/minidump-stackwalk

- https://tecken.readthedocs.io/en/latest/download.html

If we don't have symbols for modules, minidump-stackwalk will guess at the unwinding. This can work poorly for crashes that involve drivers and system libraries we don't have symbols for.

Crash pings vs. Crash reports

Because of the above, there are big differences in collection of crash data between the two systems and what you can do with it.

Representative of the real world

Because crash ping data doesn't require explicit consent by users on a crash-by-crash basis and crash pings are sent using the Telemetry infrastructure which is pretty resilient to network issues and other problems, crash ping data in Telemetry is likely more representative of crashes happening for our users.

Crash report data in Socorro is limited to what users explicitly send us. Further, there are cases where Firefox isn't able to run the crash reporter dialog to ask the user.

For example, on Friday, June 28th, 2019 for Firefox release channel:

- Telemetry got 1,706,041 crash pings

- Socorro processed 42,939 crash reports, so figure it got around 420,000 crash reports

Stack quality

A crash can have a different stack in the crash ping than in the crash report.

Crash ping data in Telemetry is unwound in the client. On Windows, minidump-analyzer can access CFI unwinding data, so the stacks can be better especially in cases where the stack contains system libraries and drivers.

- https://hg.mozilla.org/mozilla-central/file/tip/toolkit/crashreporter/minidump-analyzer/Win64ModuleUnwindMetadata.cpp

- https://bugzilla.mozilla.org/show_bug.cgi?id=1372830

We haven't implemented this yet on non-Windows platforms.

Crash report data in Socorro is unwound by the Socorro processor and is heavily dependent on what symbols we have available. It doesn't do a good job with unwinding through drivers and we often don't have symbols for Linux system libraries.

Gabriele says that for crashes on macOS and Linux, Socorro sometimes unwinds stacks better than what the crash ping contains.

Symbolication and signatures

Crash ping data is not symbolicated and we're not generating Socorro-style signatures, so it's hard to bucket them and see change in crash rates for specific crashes.

There's an fx-crash-sig Python library which has code to symbolicate crash ping stacks and generate a Socorro-style signature from that stack. This is helpful for one-off analysis but this is not a long-term solution.

Crash report data in Socorro is symbolicated and has Socorro-style signatures.

The consequence of this is that in Telemetry, we can look at crash rates for builds, but can't look at crash rates for specific kinds of crashes as bucketed by signatures.

The Signature Report and Top Crashers Report in Crash Stats can't be implemented in Telemetry (yet).

Tooling

Telemetry has better tooling for analyzing crash ping data.

Crash ping data drives Mission Control.

Socorro's tooling is limited to Supersearch web ui and API which is ok at some things and not great at others. I've heard some people really like the Supersearch web ui.

There are parts of the crash report which are not searchable. For example, it's not possible to search for crash reports where a certain module is in the stack. Socorro has a signature report and a topcrashers page which help, but they're not flexible for answering questions outside of what we've explicitly coded them for.

Socorro sends a copy of processed crash reports to Telemetry and this is in the "socorro_crash" dataset.

PII and data access

Telemetry crash ping data does not contain PII. It is not publicly available, except in aggregate via Mission Control.

Socorro crash report data contains PII. A subset of the crash data is available to view and search by anyone. The PII data is restricted to users explicitly granted access to it. PII data includes user email addresses, user-provided comments, CPU register data, what else was in memory, and other things.

Data expiration

Telemetry crash ping data isn't expired, but I think that's changing at some point.

Socorro crash report data is kept for 6 months.

Data latency

Socorro data is near real-time. Crash reports get collected and processed and are available in searches and reports within a few minutes.

Crash ping data gets to Telemetry almost immediately.

Main ping data has some latency between when it's generated and when it is collected. This affects normalization numbers if you were looking at crash rates from crash ping data.

- https://www.a2p.it/wordpress/tech-stuff/mozilla/firefox-data-faster-shutdown-pingsender/ (June 2017)

- https://blog.mozilla.org/data/2017/09/19/two-days-or-how-long-until-the-data-is-in/ (September 2017)

Derived data sets may have some latency depending on how they're generated.

Conclusions and future plans

Socorro

Socorro is still good for deep dives into specific crash reports since it contains the full minidump and sometimes a user email address and user comments.

Socorro has Socorro-style signatures which make it possible to aggregate crash reports into signature buckets. Signatures are kind of fickle and we adjust how they're generated over time as compilers, symbols extraction, and other things change. We can build Signature Reports and Top Crasher reports and those are ok, but they use total counts and not rates.

I want to tackle switching from Socorro's minidump-stackwalk to minidump-analyzer so we're using the same stack walker in both places. I don't know when that will happen.

Socorro is going to GCP which means there will be different tools available for data analysis. Further, we may switch to BigQuery or some other data store that lets us search the stack. That'd be a big win.

Telemetry

Telemetry crash ping data is more representative of the real world, but stacks are not symbolicated and there's no signature generation, so you can't look at aggregates by cause.

Symbolication and signature generation of crash pings will get figured out at some point.

Work continues on Mission Control 2.0.

Telemetry is going to GCP which means there will be different tools available for data analysis.

Together

At the All Hands, I had a few conversations about fixing tooling for both crash reports and crash pings so the resulting data sets were more similar and you could move from one to the other. For example, if you notice a troubling trend in the crash ping data, can you then go to Crash Stats and find crash reports to deep dive into?

I also had conversations around use cases. Which data set is better for answering certain questions?

We think having a guide that covers which data set is good for what kinds of analysis, tools to use, and how to move between the data sets would be really helpful.

Thanks!

Many thanks to everyone who helped with this: Will Lachance, W Chris Beard, Gabriele Svelto, Nathan Froyd, and Chutten.

Also, many thanks to Chutten and Alessio who write fantastic blog posts about Telemetry things. Those are gold.

Updates

- 2019-07-04: Crash ping data is not publicly available. Blog post updated accordingly. Thanks, Chutten!

https://bluesock.org/~willkg/blog/mozilla/crash_pings_crash_reports.html

|

|

Mozilla Reps Community: 8 Years of Reps Program, Celebrating Community Successes! |

The idea for the Reps program was started in 2010 by William Quiviger and Pierros Papadeas, and the program officially launched and began welcoming volunteers on board as Mozilla Reps in 2011. The Mozilla Reps program aims to empower and support volunteer Mozillians who want to be official representatives of Mozilla in their region/locale/country. The program provides a framework and a specific set of tools to help Mozillians organize and/or attend events, recruit and mentor new contributors, document and share activities, and support their local communities better. The Reps program was created to help communities around the world. Community is the backbone of the Mozilla project. As the Mozilla project grows in scope and scale, community needs to be strengthened and empowered accordingly. This is the central aim of the Mozilla Reps program: to empower and to help push responsibility to the edges, in order to help the Mozilla contributor base grow. Nowadays, the Reps are taking on a stronger role by becoming Community Coordinators.

You can learn more about the program here.

Success Stories

The Mozilla Reps program has proven successful at identifying talent in local communities to contribute to Mozilla projects. Reps also help run local and international events and help campaigns take place. Some past campaigns were collaborations with other Mozilla teams. Below are some of the activities and campaigns in which the Reps program collaborated with other Mozilla teams. To date, a total of 7,623 events, 261 active Reps, and 51,635 activities have been reported on the Reps portal.

Historically, the Reps have supported major Mozilla products throughout the program's existence, from the Firefox OS launch to the latest campaigns:

- Events promoting the launch of new versions of Firefox, such as Firefox 4.0, Firefox Quantum, and every major Firefox release.

- Events that care about users and community, such as Privacy Month, Aadhar, The Dark Funnel, Techspeakers, Web Compatibility Sprint, and Maker Party.

- Events related to new product releases, such as Firefox Rocket/Lite and Screenshot Go for the South East Asia and India markets.

- Events related to localization, such as The Add-on Localization.

- Events related to Mozilla products, such as Rust, Webmaker, and Firefox OS.

Do You Have More Ideas?

With so many past successes in engaging local communities and supporting different campaigns, Mozilla Reps are still looking forward to many more activities and campaigns in the future. So, if you have more ideas for campaigns or for engaging local communities, or want to collaborate with the Mozilla Reps program to get in touch with local communities, let's do it together!

|

|

Will Kahn-Greene: Socorro Engineering: June 2019 happenings |

Summary

Socorro Engineering team covers several projects:

- Socorro is the crash ingestion pipeline and Crash Stats web service for Mozilla's products like Firefox.

- Tecken is the symbols server for uploading, downloading, and symbolicating stacks.

- Buildhub2 is the build information index.

- Buildhub is the previous iteration of Buildhub2 that's currently deprecated and will get decommissioned soon.

- PollBot and Delivery Dashboard are something something.

This blog post summarizes our activities in June.

Highlights of June

- Socorro: Fixed the collector's support of a single JSON-encoded field in the HTTP POST payload for crash reports. This is a big deal because we'll get less junk data in crash reports going forward.

- Socorro: Reworked how Crash Stats manages featured versions: if the product defines a product_details/PRODUCTNAME.json file, it'll pull from that. Otherwise it calculates featured versions based on the crash reports it's received.

- Buildhub: deprecated Buildhub in favor of Buildhub2. Current plan is to decommission Buildhub in July.

- Across projects: Updated tons of dependencies that had security vulnerabilities. It was like a hamster wheel of updates, PRs, and deploys.

- Tecken: Worked on GCS emulator for local dev environment.

- All hands discussions:

- GCP migration plan for Tecken and figure out what needs to be done.

- Possible GCP migration schedule for Tecken and Socorro.

- Migrating applications using Buildhub to Buildhub2 and decommissioning Buildhub in July.

- What would happen if we switched from Elasticsearch to BigQuery?

- Switching from Socorro's minidump-stackwalk to minidump-analyzer.

- Re-implementing the Socorro Top Crashers and Signature reports using Telemetry tools and data.

- Writing a symbolicator and Socorro-style signature generator in Rust that can be used for crash reports in Socorro and crash pings in Telemetry.

- The crash ping vs. crash report situation (blog post coming soon).


https://bluesock.org/~willkg/blog/mozilla/socorro_2019_06.html

|

|

The Mozilla Blog: Mozilla joins brief for protection of LGBTQ employees from discrimination |

Last year, we joined the call in support of transgender equality as part of our longstanding commitment to diversity, inclusion and fostering a supportive work environment. Today, we are proud to join over 200 companies, big and small, as friends of the court, in a brief brought to the Supreme Court of the United States.

Proud to reaffirm that everyone deserves to be protected from discrimination, whatever their sexual orientation or gender identity.

Diversity fuels innovation & competition. It's a necessary part of a healthy, supportive workplace and of a healthy internet. https://t.co/T9gXX4kiI5

— Mozilla (@mozilla) July 2, 2019

The brief says, in part:

“Amici support the principle that no one should be passed over for a job, paid less, fired, or subjected to harassment or any other form of discrimination based on their sexual orientation or gender identity. Amici’s commitment to equality is violated when any employee is treated unequally because of their sexual orientation or gender identity. When workplaces are free from discrimination against LGBT employees, everyone can do their best work, with substantial benefits for both employers and employees.”


|

|

Dave Hunt: State of Performance Test Engineering (H1/2019) |

|

|

Mozilla Open Policy & Advocacy Blog: Building on the UK white paper: How to better protect internet openness and individuals’ rights in the fight against online harms |

In April 2019 the UK government unveiled plans for sweeping new laws aimed at tackling illegal and harmful content and activity online, described by the government as ‘the toughest internet laws in the world’. While the UK government’s proposal contains some interesting avenues of exploration for the next generation of European content regulation laws, it also includes several critical weaknesses and grey areas. We’ve just filed comments with the government that spell out the key areas of concern and provide recommendations on how to address them.

The UK government’s white paper responds to legitimate public policy concerns around how technology companies deal with illegal and harmful content online. We understand that in many respects the current European regulatory paradigm is not fit for purpose, and we support an exploration of what codified content ‘responsibility’ might look like in the UK and at EU-level, while ensuring strong and clear protections for individuals’ free expression and due process rights.

As we have noted previously, we believe that the white paper’s proposed regulatory architecture could have some potential. However, the UK government’s vision to put this model into practice contains serious flaws. Here are some of the changes we believe the UK government must make to its proposal to avoid the practical implementation pitfalls:

- Clarity on definitions: The government must provide far more detail on what is meant by the terms ‘reasonableness’ and ‘proportionality’, if these are to serve as meaningful safeguards for companies and citizens. Moreover, the government must clearly define the relevant ‘online harms’ that are to be made subject to the duty of care, to ensure that companies can effectively target their trust and safety efforts.

- A rights-protective governance model: The regulator tasked with overseeing the duty of care must be truly co-regulatory in nature, with companies and civil society groups central to the process by which the Codes of Practice are developed. Moreover, the regulator’s mission must include a mandate to protect fundamental rights and internet openness, and it must not have power to issue content takedown orders.

- A targeted scope: The duty of care should be limited to online services that store and publicly disseminate user-uploaded content. There should be clear exemptions for electronic communications services, internet service providers, and cloud services, whose operational and technical architecture are ill-suited and problematic for a duty of care approach.

- Focus on practices over outcomes: The regulator’s role should be to operationalise the duty of care with respect to companies’ practices – the steps they are taking to reduce ‘online harms’ on their service. The regulator should not have a role in assessing the legality or harm of individual pieces of content. Even the best content moderation systems can sometimes fail to identify illegal or harmful content, and so focusing exclusively on outcomes-based metrics to assess the duty of care is inappropriate.

We look forward to engaging more with the UK government as it continues its consultation on the Online Harms white paper, and hopefully the recommendations in this filing can help address some of the white paper’s critical shortcomings. As policymakers from Brussels to Delhi contemplate the next generation of online content regulations, the UK government has the opportunity to set a positive standard for the world.


https://blog.mozilla.org/netpolicy/2019/07/02/building-on-the-uk-online-harms-white-paper/

|

|

Mike Hommey: Git now faster than Mercurial to clone Mozilla Mercurial repos |

How is that for clickbait?

With the now released git-cinnabar 0.5.2, the cinnabarclone feature is enabled by default, which means it doesn’t need to be enabled manually anymore.

Cinnabarclone is to git-cinnabar what clonebundles is to Mercurial (to some extent). Clonebundles allow Mercurial to download a pre-generated bundle of a repository, which reduces work on the server side. Similarly, Cinnabarclone allows git-cinnabar to download a pre-generated bundle of the git form of a Mercurial repository.

Thanks to Connor Sheehan, who deployed the necessary extension and configuration on the server side, cinnabarclone is now enabled for mozilla-central and mozilla-unified, making git-cinnabar clone faster than ever for these repositories. In fact, under some conditions (mostly depending on network bandwidth), cloning with git-cinnabar is now faster than cloning with Mercurial:

$ time git clone hg::https://hg.mozilla.org/mozilla-unified mozilla-unified_git

Cloning into 'mozilla-unified_git'...

Fetching cinnabar metadata from https://index.taskcluster.net/v1/task/github.glandium.git-cinnabar.bundle.mozilla-unified/artifacts/public/bundle.git

Receiving objects: 100% (12153616/12153616), 2.67 GiB | 41.41 MiB/s, done.

Resolving deltas: 100% (8393939/8393939), done.

Reading 172 changesets

Reading and importing 170 manifests

Reading and importing 758 revisions of 570 files

Importing 172 changesets

It is recommended that you set "remote.origin.prune" or "fetch.prune" to "true".

git config remote.origin.prune true

or

git config fetch.prune true

Run the following command to update tags:

git fetch --tags hg::tags: tag "*"

Checking out files: 100% (279688/279688), done.

real 4m57.837s

user 9m57.373s

sys 0m41.106s

$ time hg clone https://hg.mozilla.org/mozilla-unified

destination directory: mozilla-unified

applying clone bundle from https://hg.cdn.mozilla.net/mozilla-unified/5ebb4441aa24eb6cbe8dad58d232004a3ea11b28.zstd-max.hg

adding changesets

adding manifests

adding file changes

added 537259 changesets with 3275908 changes to 523698 files (+13 heads)

finished applying clone bundle

searching for changes

adding changesets

adding manifests

adding file changes

added 172 changesets with 758 changes to 570 files (-1 heads)

new changesets 8b3c35badb46:468e240bf668

537259 local changesets published

updating to branch default

(warning: large working directory being used without fsmonitor enabled; enable fsmonitor to improve performance; see "hg help -e fsmonitor")

279688 files updated, 0 files merged, 0 files removed, 0 files unresolved

real 21m9.662s

user 21m30.851s

sys 1m31.153s

To be fair, the Mozilla Mercurial repositories also offer a faster “streaming” clonebundle. The server currently prioritizes it automatically only for clients on AWS, because streaming bundles are much larger and could take longer to download. But you can opt in with the --stream command line argument:

$ time hg clone --stream https://hg.mozilla.org/mozilla-unified mozilla-unified_hg

destination directory: mozilla-unified_hg

applying clone bundle from https://hg.cdn.mozilla.net/mozilla-unified/5ebb4441aa24eb6cbe8dad58d232004a3ea11b28.packed1.hg

525514 files to transfer, 2.95 GB of data

transferred 2.95 GB in 51.5 seconds (58.7 MB/sec)

finished applying clone bundle

searching for changes

adding changesets

adding manifests

adding file changes

added 172 changesets with 758 changes to 570 files (-1 heads)

new changesets 8b3c35badb46:468e240bf668

updating to branch default

(warning: large working directory being used without fsmonitor enabled; enable fsmonitor to improve performance; see "hg help -e fsmonitor")

279688 files updated, 0 files merged, 0 files removed, 0 files unresolved

real 1m49.388s

user 2m52.943s

sys 0m43.779s

If you’re using Mercurial and can download 3GB in less than 20 minutes (in other words, if you can download faster than 2.5MB/s), you’re probably better off with the streaming clone.
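The 2.5MB/s figure is simple arithmetic on the rounded numbers above. A minimal sketch, assuming that only download time matters (work done after the download is ignored) and that 1 MB means 10^6 bytes; the function name is made up for illustration:

```python
# Back-of-the-envelope check of the break-even bandwidth quoted above.
# Assumption: only download time matters; post-download work is ignored.

def break_even_mb_per_s(bundle_bytes: float, budget_seconds: float) -> float:
    """Bandwidth (in MB/s, 1 MB = 10**6 bytes) needed to fetch the bundle in time."""
    return bundle_bytes / budget_seconds / 1e6


# Rounded figures from the text: about 3 GB of data, about 20 minutes to beat.
threshold = break_even_mb_per_s(3e9, 20 * 60)
print(f"break-even bandwidth: {threshold:.1f} MB/s")
```

Plugging in the measured values instead (2.95 GB for the bundle and the 21m9s of the regular clone) gives a slightly lower threshold, so the rounded figure errs on the safe side.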

Bonus fact: the Git clone is smaller than the Mercurial clone

The Mercurial streaming clone bundle contains data in a form close to what Mercurial puts on disk in the .hg directory, meaning the size of .hg is close to that of the clone bundle. The Cinnabarclone bundle contains a git pack, meaning the size of .git is close to that of the bundle, plus some more for the pack index file that unbundling creates.

The amazing fact is that, to my own surprise, the git pack, containing the repository contents along with all git-cinnabar needs to recreate Mercurial changesets, manifests and files from the contents, takes less space than the Mercurial streaming clone bundle.

And that translates into local repository size:

$ du -h -s --apparent-size mozilla-unified_hg/.hg

3.3G mozilla-unified_hg/.hg

$ du -h -s --apparent-size mozilla-unified_git/.git

3.1G mozilla-unified_git/.git

And because Mercurial creates so many files (essentially, two per file that ever was in the repository), there is a larger difference in block size used on disk:

$ du -h -s mozilla-unified_hg/.hg

4.7G mozilla-unified_hg/.hg

$ du -h -s mozilla-unified_git/.git

3.1G mozilla-unified_git/.git

It’s even more mind-blowing when you consider that Mercurial happily creates delta chains of several thousand revisions, when the git pack’s longest delta chain is 250 (set arbitrarily at pack creation, by which I mean I didn’t pick a larger value because it didn’t make a significant difference). For casual readers: Git and Mercurial try to store object revisions as a diff/delta from a previous object revision because that takes less space. You get a delta chain when that previous object revision is itself stored as a diff/delta from another object revision, which is in turn stored as a diff/delta, and so on.
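To make the idea of a delta chain concrete, here is a toy sketch. It is not the on-disk format of either Git or Mercurial: the ToyStore class, its methods and the "replace a range of lines" delta are all invented for illustration. It only shows that reading a revision means walking back to a full snapshot and replaying every delta on the way, which is why chain length matters.

```python
from typing import List, Optional, Tuple

# Toy delta: replace lines[start:end] of the base revision with new_lines.
Delta = Tuple[int, int, List[str]]


class ToyStore:
    """Stores each revision either in full or as a delta against a base."""

    def __init__(self) -> None:
        # Each entry is (base revision or None, full lines or a Delta).
        self.entries: List[Tuple[Optional[int], object]] = []

    def add_full(self, lines: List[str]) -> int:
        self.entries.append((None, list(lines)))
        return len(self.entries) - 1

    def add_delta(self, base: int, delta: Delta) -> int:
        self.entries.append((base, delta))
        return len(self.entries) - 1

    def chain_length(self, rev: int) -> int:
        """Number of deltas to apply before reaching a full snapshot."""
        length = 0
        while self.entries[rev][0] is not None:
            rev = self.entries[rev][0]
            length += 1
        return length

    def read(self, rev: int) -> List[str]:
        """Rebuild a revision by replaying its delta chain from the snapshot."""
        deltas: List[Delta] = []
        while self.entries[rev][0] is not None:
            base, delta = self.entries[rev]
            deltas.append(delta)
            rev = base
        lines = list(self.entries[rev][1])
        for start, end, new_lines in reversed(deltas):
            lines[start:end] = new_lines
        return lines


store = ToyStore()
r0 = store.add_full(["a", "b", "c"])
r1 = store.add_delta(r0, (1, 2, ["B"]))   # change the second line
r2 = store.add_delta(r1, (3, 3, ["d"]))   # append a fourth line
print(store.chain_length(r2), store.read(r2))
```

Longer chains save space but make each read replay more deltas, which is the trade-off behind capping the chain length when a pack is created.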

My guess is that the difference is mainly caused by the use of line-based deltas in Mercurial, but some Mercurial developer should probably take a deeper look. The fact that Mercurial cannot delta across file renames is another candidate.

|

|

Mozilla Security Blog: Fixing Antivirus Errors |

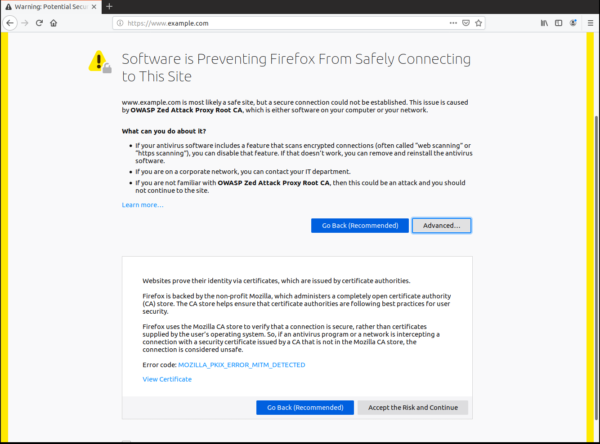

After the release of Firefox 65 in December, we detected a significant increase in a certain type of TLS error that is often triggered by the interaction of antivirus software with the browser. Today, we are announcing the results of our work to eliminate most of these issues, and explaining how we have done so without compromising security.

On Windows, about 60% of Firefox users run antivirus software and most of them have HTTPS scanning features enabled by default. Moreover, CloudFlare publishes statistics showing that a significant portion of TLS browser traffic is intercepted. In order to inspect the contents of encrypted HTTPS connections to websites, the antivirus software intercepts the data before it reaches the browser. TLS is designed to prevent this through the use of certificates issued by trusted Certificate Authorities (CAs). Because of this, Firefox will display an error when TLS connections are intercepted unless the antivirus software anticipates this problem.

Firefox is different than a number of other browsers in that we maintain our own list of trusted CAs, called a root store. In the past we’ve explained how this improves Firefox security. Other browsers often choose to rely on the root store provided by the operating system (OS) (e.g. Windows). This means that antivirus software has to properly reconfigure Firefox in addition to the OS, and if that fails for some reason, Firefox won’t be able to connect to any websites over HTTPS, even when other browsers on the same computer can.

The interception of TLS connections has historically been referred to as a “man-in-the-middle”, or MITM. We’ve developed a mechanism to detect when a Firefox error is caused by a MITM. We also have a mechanism in place that often fixes the problems. The “enterprise roots” preference, when enabled, causes Firefox to import any root CAs that have been added to the OS by the user, an administrator, or a program that has been installed on the computer. This option is available on Windows and macOS.

We considered adding a “Fix it” button to MITM error pages (see example below) that would allow users to easily enable the “enterprise roots” preference when the error is displayed. However, we realized that this was something we want users to do rather than an “override” button that allows a user to bypass an error at their own risk.

Example of a MitM Error Page in Firefox


Beginning with Firefox 68, whenever a MITM error is detected, Firefox will automatically turn on the “enterprise roots” preference and retry the connection. If it fixes the problem, then the “enterprise roots” preference will remain enabled (unless the user manually sets the “security.enterprise_roots.enabled” preference to false). We’ve tested this change to ensure that it doesn’t create new problems. We are also recommending as a best practice that antivirus vendors enable this preference (by modifying prefs.js) instead of adding their root CA to the Firefox root store. We believe that these actions combined will greatly reduce the issues encountered by Firefox users.
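The retry behaviour described above can be sketched as a small model. This is not Firefox code: ToyBrowser, connect and the root names are hypothetical, and two details are assumptions on my part, namely that an explicit user setting of false is never overridden and that the preference is reset when the retry does not help.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class ToyBrowser:
    builtin_roots: Set[str]                  # roots shipped in the browser's own store
    os_added_roots: Set[str]                 # roots added to the OS after installation
    enterprise_roots: Optional[bool] = None  # None means the pref was never set

    def trusted_roots(self) -> Set[str]:
        if self.enterprise_roots:
            return self.builtin_roots | self.os_added_roots
        return set(self.builtin_roots)


def connect(browser: ToyBrowser, issuer_root: str) -> bool:
    """Return True if the connection succeeds, retrying once as described above."""
    if issuer_root in browser.trusted_roots():
        return True
    # Pref already set (by the user or an earlier retry): nothing more to try.
    if browser.enterprise_roots is not None:
        return False
    browser.enterprise_roots = True          # turn the preference on and retry
    if issuer_root in browser.trusted_roots():
        return True                          # fixed: the preference stays enabled
    browser.enterprise_roots = None          # assumption: undo when it did not help
    return False


browser = ToyBrowser({"Public CA"}, {"Antivirus Root"})
print(connect(browser, "Antivirus Root"), browser.enterprise_roots)
```

In this model only roots that were added to the OS are ever imported, which mirrors the point that the built-in root store stays under Mozilla's control.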

In addition, in Firefox ESR 68, the “enterprise roots” preference will be enabled by default. Because extended support releases are often used in enterprise settings where there is a need for Firefox to recognize the organization’s own internal CA, this change will streamline the process of deploying Firefox for administrators.

Finally, we’ve added an indicator that allows the user to determine when a website is relying on an imported root CA certificate. This notification is on the site information panel accessed by clicking the lock icon in the URL bar.

It might cause some concern for Firefox to automatically trust CAs that haven’t been audited and gone through the rigorous Mozilla process. However, any user or program that has the ability to add a CA to the OS almost certainly also has the ability to add that same CA directly to the Firefox root store. Also, because we only import CAs that are not included with the OS, Mozilla maintains our ability to set and enforce the highest standards in the industry on publicly-trusted CAs that Firefox supports by default. In short, the changes we’re making meet the goal of making Firefox easier to use without sacrificing security.

The post Fixing Antivirus Errors appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2019/07/01/fixing-antivirus-errors/

|

|

Mike Hommey: Announcing git-cinnabar 0.5.2 |

Git-cinnabar is a git remote helper for interacting with Mercurial repositories. It lets you clone, pull and push from/to remote Mercurial repositories using git.

These release notes are also available on the git-cinnabar wiki.

What’s new since 0.5.1?

- Updated git to 2.22.0 for the helper.

- cinnabarclone support is now enabled by default. See details in README.md and mercurial/cinnabarclone.py.

- cinnabarclone now supports grafted repositories.

- git cinnabar fsck now does incremental checks against last known good state.

- Avoid git cinnabar sometimes thinking the helper is not up-to-date when it is.

- Removing bookmarks on a Mercurial server is now working properly.

|

|

Firefox UX: iPad Sketching with GoodNotes 5 |

I’m always making notes and sketching on my iPad and people often ask me what app I’m using. So I thought I’d make a video of how I use GoodNotes 5 in my design practice.

Links:

This video on YouTube

GoodNotes

Templates: blank, storyboard, crazy 8s, iPhone X

Transcript:

Hi, I’m Michael Verdi, and I’m a product designer for Firefox. Today, I wanna talk about how I use sketching on my iPad in my design practice. So, first, sketching on an iPad, what I really like about it is that these apps on the iPad allow me to collect all my stuff in one place. So, I’ve got photos and screenshots, I’ve got handwritten notes, typed up notes, whatever, it’s all together. And I can organize it, and I can search through it and find what I need.

There’s really two basic kind of apps that you can use for this. There’s drawing and sketching apps, and then there’s note-taking apps. Personally, I prefer the note-taking apps because they usually have better search tools and organization. The thing that I like that I’m gonna talk about today is GoodNotes 5.

I’ve got all kinds of stuff in here. I’ve got handwritten notes with photographs, I’ve got some typewritten notes, screenshots, other things that I’ve saved, photographs, again. Yeah, I really like using this. I can do storyboards in here, right, or I can draw things, copy and paste them so that I can iterate quickly, make multiple variations over and over again. I can stick in screenshots and then draw on top of them or annotate them. Right, so, let me do a quick demo of using this to draw.

So, one of the things that I’ll do is actually, maybe I’ve drawn some stuff before, and I’ll save that drawing as an image in my photo library. And then I’ll come stick it in here, and I’ll draw on top of it. So, I work on search, so here’s Firefox with no search box. So, I’m gonna draw one. Let’s use some straight lines to draw one. I’m gonna draw a big search box, but I’m doing it here in the middle because I’m gonna place it a little better in a second. And we have the selection tool, and I’m gonna make the, the selection is not selecting images, right? So, I can come over here and just grab my box, and then I can move my box around on top. Okay, so, I still have this gray line. I can’t erase that because it’s an image. So, I’m gonna come over here, and I’m gonna get some white, and I’m gonna just draw over it. Right, okay. Let’s go back and get my gray color. I can zoom in when I need to, and I’m gonna copy this, and I’m gonna paste it a bunch of times. Then I can annotate this. Right, so, there we go.