Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

The Mozilla Blog: State of Mozilla 2017: Annual Report |

The State of Mozilla annual report for 2017 is now available here.

The new report outlines how Mozilla operates, provides key information on the ways in which we’ve made an impact, and includes details from our financial reports for 2017. The State of Mozilla report release is timed to coincide with when we submit the Mozilla non-profit tax filing for the previous calendar year.

Mozilla is unique. We were founded nearly 20 years ago with the mission to ensure the internet is a global public resource that is open and accessible to all. That mission is as important now as it has ever been.

The demand to keep the internet transparent and accessible to all, while preserving user control of their data, has always been an imperative for us and it’s increasingly become one for consumers. From internet health to digital rights to open source — the movement of people working on internet issues is growing — and Mozilla is at the forefront of the fight.

We measure our success not only by the adoption of our products, but also by our ability to increase the control people have in their online lives, our impact on the internet, our contribution to standards, and how we work to protect the overall health of the web.

As we look ahead, we know that leading the charge in changing the culture of the internet will mean doing more to develop the direct relationships that will make the Firefox and Mozilla experiences fully responsive to the needs of consumers today.

We’re glad not to be at this alone. None of the work we do or the impact we have would be possible without the dedication of our global community of contributors and loyal Firefox users. We are incredibly grateful for the support and we will continue to fight for the open internet.

We encourage you to get involved and help protect the future of the internet: join Mozilla.

The post State of Mozilla 2017: Annual Report appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/11/27/state-of-mozilla-2017-annual-report/

|

|

Mozilla Open Policy & Advocacy Blog: Brussels Mozilla Mornings: Critically assessing the EU Terrorist Content regulation |

On the morning of 12 December, Mozilla will host the first of our Brussels Mozilla Mornings series – regular breakfast meetings where we bring together policy experts, policy-makers and practitioners for insight and discussion on the latest EU digital policy developments. This first session will focus on the recently proposed EU Terrorist Content regulation.

The panel discussion will seek to unpack the Commission’s legislative proposal – what it means for the internet, users’ rights, and the fight against terrorism. The discussions will be substantive in nature, and will deal with some of the most contentious issues in the proposal, including the 60-minute takedown procedure and upload filtering obligations.

Speakers

Fanny Hidvégi, Access Now

Joris van Hoboken, Free University of Brussels

Owen Bennett, Mozilla

Moderated by Jen Baker

Logistical details

08:30-10:00

Wednesday 12 December

Radisson RED hotel, rue d’Idalie 35, Brussels

Register your attendance here.

The post Brussels Mozilla Mornings: Critically assessing the EU Terrorist Content regulation appeared first on Open Policy & Advocacy.

|

|

William Lachance: Making contribution work for Firefox tooling and data projects |

One of my favorite parts about Mozilla is mentoring and working alongside third party contributors. Somewhat surprisingly since I work on internal tools, I’ve had a fair amount of luck finding people to help work on projects within my purview: mozregression, perfherder, metrics graphics, and others have all benefited from the contributions of people outside of Mozilla.

In most cases (a notable exception being metrics graphics), these have been internal-tooling projects used by others to debug, develop, or otherwise understand the behaviour of Firefox. On the face of it, none of the things I work on are exactly “high profile cutting edge stuff” in the way, say, Firefox or the Rust Programming Language are. So why do contributors bother? The exact formula varies depending on the contributor, but I think it usually comes down to some combination of these two things:

- A desire to learn and demonstrate competence with industry standard tooling (the python programming language, frontend web development, backend databases, “big data” technologies like Parquet, …).

- A desire to work with and gain recognition inside of a community of like-minded people.

Pretty basic, obvious stuff — there is an appeal here to basic human desires like the need for security and a sense of belonging. Once someone’s “in the loop”, so to speak, generally things take care of themselves. The real challenge, I’ve found, is getting people from the “I am potentially interested in doing something with Mozilla internal tools” to the stage that they are confident and competent enough to work in a reasonably self-directed way. When I was on the A-Team, we classified this transition in terms of a commitment curve:

prototype commitment curve graphic by Steven Brown

The hardest part, in my experience, is the initial part of that curve. At this point, people are just dipping their toe in the water. Some may not have a ton of experience with software development yet. In other cases, my projects may just not be the right fit for them. But of course, sometimes there is a fit, or at least one could be developed! What I’ve found most helpful is “clearing a viable path” forward for the right kind of contributor. That is, some kind of initial hypothesis of what a successful contribution experience would look like as a new person transitions from “explorer” stage in the chart above to “associate”.

I don’t exactly have a perfect template for what “clearing a path” looks like in every case. It depends quite a bit on the nature of the contributor. But there are some common themes that I’ve found effective:

First, provide good, concise documentation on both the project’s purpose and vision and how to get started easily, and keep it up to date. For projects with a front-end web component, I try to decouple the front-end parts from the backend services so that people can yarn install && yarn start their way to success. Being able to see the project in action quickly (and not getting stuck on some mundane getting-started step) is key in maintaining initial interest.

Second, provide a set of good starter issues (sometimes called “good first bugs”) for people to work on. Generally these would be non-critical-path type issues that have straightforward instructions to resolve and fix. Again, the idea here is to give people a sense of quick progress and resolution, a “yes I can actually do this” sort of feeling. But be careful not to let a contributor get stuck here! These bugs take a disproportionate amount of effort to file and mentor compared to their actual value — the key is to progress the contributor to the next level once it’s clear they can handle the basics involved in solving such an issue (checking out the source code, applying a fix, submitting a patch, etc). Otherwise you’re going to feel frustrated and wonder why you’re on an endless treadmill of writing up trivial bugs.

Third, once a contributor has established themselves by fixing a few of these simple issues, I try to get to know them a little better. Send them an email, learn where they’re from, invite them to chat on the project channel if they can. At the same time, this is an opportunity to craft a somewhat larger piece of work (a sort of mini-project) that they can do, tailored to their interests. For example, a new contributor to Mission Control has recently been working on adding Jest tests to the project: I provided some basic guidance on things to look at, but did not dictate exactly how to perform the task. They figured that out for themselves.

As time goes by, you just continue this process. Depending on the contributor, they may start coming up with their own ideas for how a project might be improved or they might still want to follow your lead (or that of the team), but at the least I generally see an improvement in their self-directedness and confidence after a period of sustained contribution. In either case, the key to success remains the same: sustained and positive communication and sharing of goals and aspirations, making sure that both parties are getting something positive out of the experience. Where possible, I try to include contributors in team meetings. Where there’s an especially close working relationship (e.g. Google Summer of Code), I try to set up a weekly one-on-one. Regardless, I make reviewing code, answering questions, and providing suggestions on how to move forward a top priority (i.e. not something I’ll leave for a few days). It’s the least I can do if someone is willing to take time out to contribute to my project.

If this seems similar to the best practices for how members of a team should onboard each other and work together, that’s not really a coincidence. Obviously the relationship is a little different because we’re not operating with a formal managerial structure and usually the work is unpaid: I try to bear that in mind and make doubly sure that contributors are really getting some useful skills and habits that they can take with them to future jobs and other opportunities, while also emphasizing that their code contributions are their own, not Mozilla’s. So far it seems to have worked out pretty well for all concerned (me, Mozilla, and the contributors).

|

|

Daniel Stenberg: HTTP/3 Explained |

I'm happy to announce that the booklet HTTP/3 Explained is now ready for the world. It is entirely free and open and is available in several different formats to fit your reading habits. (It is not available on dead trees.)

The book describes what HTTP/3 and its underlying transport protocol QUIC are, why they exist, what features they have and how they work. The book is meant to be readable and understandable for most people with a rudimentary level of network knowledge or better.

These protocols are not done yet; there aren't even any implementations of them in the main browsers yet! The book will be updated and extended along the way as things change, implementations mature and the protocols settle.

If you find bugs, mistakes, something that needs to be explained better/deeper or otherwise want to help out with the contents, file a bug!

It was just a short while ago that I mentioned the decision to change the name of the protocol to HTTP/3. That prompted me to refresh my document in progress, and there are now over 8,000 words there to help.

The entire HTTP/3 Explained contents are available on github.

If you haven't caught up with HTTP/2 quite yet, don't worry. We have you covered for that as well, with the http2 explained book.

|

|

Daniel Pocock: UN Forum on Business and Human Rights |

This week I'm at the UN Forum on Business and Human Rights in Geneva.

What is the level of influence that businesses exert in the free software community? Do we need to be more transparent about it? Does it pose a risk to our volunteers and contributors?

https://danielpocock.com/un-forum-business-human-rights-2018

|

|

The Servo Blog: This Week In Servo 119 |

In the past 3 weeks, we merged 243 PRs in the Servo organization’s repositories.

Planning and Status

Our roadmap is available online, including the overall plans for 2018.

This week’s status updates are here.

Notable Additions

- avadacatavra created the infrastructure for implementing the Resource Timing and Navigation Timing web standards.

- mandreyel added support for tracking focused documents in unique top-level browsing contexts.

- SimonSapin updated many crates to the Rust 2018 edition.

- ajeffrey improved the Magic Leap port in several ways.

- nox implemented some missing WebGL extensions.

- eijebong fixed a bug with fetching large HTTP responses.

- cybai added support for firing the rejectionhandled DOM event.

- Avanthikaa and others implemented additional oscillator node types.

- jdm made scrolling work more naturally on backgrounds of scrollable content.

- SimonSapin added support for macOS builds to the Taskcluster CI setup.

- paulrouget made rust-mozjs support long-term reuse without reinitializing the library.

- paulrouget fixed a build issue breaking WebVR support on android devices.

- nox cleaned up a lot of texture-related WebGL code.

- ajeffrey added a laser pointer in the Magic Leap port for interacting with web content.

- pyfisch made webdriver composition events trigger corresponding DOM events.

- nox reduced the amount of copying required when using HTML images for WebGL textures.

- vn-ki implemented the missing JS constructor for HTML audio elements.

- Manishearth added support for touch events on Android devices.

- SimonSapin improved the treeherder output for the new Taskcluster CI.

- jdm fixed a WebGL regression breaking non-three.js content on Android devices.

- paulrouget made Servo shut down synchronously to avoid crashes on Oculus devices.

- Darkspirit regenerated several important files used for HSTS and network requests.

New Contributors

- Jan Andre Ikenmeyer

- Josh Abraham

- Seiichi Uchida

- Timothy Guan-tin Chien

- Vishnunarayan K I

- Yaw Boakye

- fabrizio8

Interested in helping build a web browser? Take a look at our curated list of issues that are good for new contributors!

|

|

Mozilla GFX: WebRender newsletter #31 |

Greetings! I’ll introduce WebRender’s 31st newsletter with a few words about batching.

Efficiently submitting work to GPUs isn’t as straightforward as one might think. It is not unusual for a CPU renderer to go through each graphic primitive (a blue filled circle, a purple stroked path, an image, etc.) in z-order to produce the final rendered image. While this isn’t the most efficient way, greater care needs to be taken in optimizing the inner loop of the algorithm that renders each individual object than in optimizing the overhead of alternating between various types of primitives. GPUs, however, work quite differently, and the cost of submitting small workloads is often higher than the time spent executing them.

I won’t go into the details of why GPUs work this way here, but the big takeaway is that it is best to not think of a GPU API draw call as a way to draw one thing, but rather as a way to submit as many items of the same type as possible. If we implement a shader to draw images, we get much better performance out of drawing many images in a single draw call than submitting a draw call for each image. I’ll call a “batch” any group of items that is rendered with a single drawing command.

So the solution is simply to render all images in a draw call, and then all of the text, then all gradients, right? Well, it’s a tad more complicated because the submission order affects the result. We don’t want a gradient to overwrite text that is supposed to be rendered on top of it, so we have to maintain some guarantees about the order of the submissions for overlapping items.

In the 29th newsletter intro I talked about culling and the way we used to split the screen into tiles to accelerate discarding hidden primitives. This tiling system was also good at simplifying the problem of batching. In order to batch two primitives together we need to make sure that there is no primitive of a different type in between. Comparing all primitives on screen against every other primitive would be too expensive but the tiling scheme reduced this complexity a lot (we then only needed to compare primitives assigned to the same tile).

In the culling episode I also wrote that we removed the screen space tiling in favor of using the depth buffer for culling. This might sound like a regression for the performance of the batching code, but the depth buffer also introduced a very nice property: opaque elements can be drawn in any order without affecting correctness! This is because we store the z-index of each pixel in the depth buffer, so if some text is hidden by an opaque image we can still render the image before the text and the GPU will be configured to automatically discard the pixels of the text that are covered by the image.
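The order-independence property described above can be illustrated with a tiny software depth buffer. This is only a sketch of the general depth-test idea, not WebRender's code: the `render` function, the rectangle tuples and the convention that a larger `z` is closer to the viewer are all assumptions made for the example.

```python
import itertools


def render(rects, width, height):
    """Draw opaque rectangles using a per-pixel depth test.

    Each rect is (name, x0, y0, x1, y1, z). Larger z means closer to the
    viewer (a convention assumed for this sketch).
    """
    color = [[None] * width for _ in range(height)]
    depth = [[float("-inf")] * width for _ in range(height)]
    for name, x0, y0, x1, y1, z in rects:
        for y in range(y0, y1):
            for x in range(x0, x1):
                # Depth test: keep the pixel only if it is closer than
                # whatever has already been written at this position.
                if z > depth[y][x]:
                    depth[y][x] = z
                    color[y][x] = name
    return color


rects = [
    ("image", 0, 0, 4, 4, 2),
    ("text", 2, 2, 6, 6, 1),
    ("background", 0, 0, 8, 8, 0),
]

# Submit the same opaque rectangles in every possible order.
frames = [render(list(order), 8, 8) for order in itertools.permutations(rects)]
all_orders_agree = all(frame == frames[0] for frame in frames)
print(all_orders_agree)
```

Because the depth test decides per pixel which primitive wins, the text hidden under the image is discarded whether it is submitted before or after the image. This only holds for opaque content; blended primitives still depend on submission order.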

In WebRender this means we were able to separate primitives in two groups: the opaque ones, and the ones that need to perform some blending. Batching opaque items is trivial since we are free to just put all opaque items of the same type in their own batch regardless of their painting order. For blended primitives we still need to check for overlaps but we have fewer primitives to consider. Currently WebRender simply iterates over the last 10 blended primitives to see if there is a suitable batch with no other type of primitive overlapping in between and defaults to starting a new batch. We could go for a more elaborate strategy but this has turned out to work well so far since we put a lot more effort into moving as many primitives as possible into the opaque passes.
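A rough sketch of that two-group strategy follows. It is an illustration under stated assumptions, not WebRender's implementation: the `Prim` and `Batch` types, the rectangle format and the `build_batches` function are hypothetical, and the look-back here walks the most recent blended batches rather than individual primitives.

```python
from dataclasses import dataclass, field

LOOKBACK = 10  # how far back to search for a compatible blended batch


@dataclass
class Prim:
    kind: str     # e.g. "image", "text", "gradient"
    rect: tuple   # (x0, y0, x1, y1)
    opaque: bool


@dataclass
class Batch:
    kind: str
    prims: list = field(default_factory=list)


def overlaps(a, b):
    """Return True if two axis-aligned rectangles intersect."""
    return a[0] < b[2] and b[0] < a[2] and a[1] < b[3] and b[1] < a[3]


def build_batches(prims):
    """Split primitives (given in paint order) into opaque and blended batches."""
    opaque = {}
    blended = []
    for prim in prims:
        if prim.opaque:
            # Opaque items: one batch per type, paint order does not matter.
            opaque.setdefault(prim.kind, Batch(prim.kind)).prims.append(prim)
            continue
        target = None
        for batch in reversed(blended[-LOOKBACK:]):
            if batch.kind == prim.kind:
                target = batch
                break
            # A different type that overlaps must stay underneath, so the
            # search cannot go back past this batch.
            if any(overlaps(prim.rect, other.rect) for other in batch.prims):
                break
        if target is None:
            target = Batch(prim.kind)
            blended.append(target)
        target.prims.append(prim)
    return list(opaque.values()), blended


prims = [
    Prim("image", (0, 0, 10, 10), True),
    Prim("text", (0, 0, 5, 5), False),
    Prim("gradient", (20, 20, 30, 30), False),
    Prim("text", (6, 6, 9, 9), False),       # joins the first text batch
    Prim("image", (40, 40, 50, 50), True),
    Prim("text", (25, 25, 28, 28), False),   # overlaps the gradient: new batch
]
opaque_batches, blended_batches = build_batches(prims)
print([(b.kind, len(b.prims)) for b in opaque_batches])
print([(b.kind, len(b.prims)) for b in blended_batches])
```

In this example the two opaque images share one batch despite being far apart in paint order, while the last text item has to start a new blended batch because it sits on top of the gradient.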

In another episode I’ll describe how we pushed this one step further and made it possible to segment primitives into the opaque and non-opaque parts and further reduce the amount of blended pixels.

Notable WebRender and Gecko changes

- Henrik added reftests for the ImageRendering property: (1), (2) and (3).

- Bobby changed the way pref changes propagate through WebRender.

- Bobby improved the texture cache debug view.

- Bobby improved the texture cache eviction heuristics.

- Chris fixed the way WebRender activation interacts with the progressive feature rollout system.

- Chris added a marionette test running on a VM with a GPU.

- Kats and Jamie experimented with various solutions to a driver bug on some Adreno GPUs.

- Kvark removed some smuggling of clipIds for clip and reference frame items in Gecko’s displaylist building code. This fixed a few existing bugs: (1), (2) and (3).

- Matt and Jeff added some new telemetry and analyzed the results.

- Matt added a debugging indicator that moves when a frame takes too long to help with catching performance issues.

- Andrew landed his work on surface sharing for animated images which fixed most of the outstanding performance issues related to animated images.

- Andrew completed animated image frame recycling.

- Lee fixed a bug with sub-pixel glyph positioning.

- Glenn fixed a crash.

- Glenn fixed another crash.

- Glenn reduced the need for full world rect calculation during culling to make it easier to do clustered culling.

- Nical switched device coordinates to signed integers in order to be able to meet some of the needs of blob image recoordination and simplify some code.

- Nical added some debug checks to avoid global OpenGL states from the embedder causing issues in WebRender.

- Sotaro fixed an intermittent timeout.

- Sotaro fixed a crash on Android related to SurfaceTexture.

- Sotaro improved the frame synchronization code.

- Sotaro cleaned up the frame synchronization code some more.

- Timothy ported img AltFeedback items to WebRender.

Ongoing work

- Glenn is about to land a major piece of his tiled picture caching work which should solve a lot of the remaining issues with pages that generate too much GPU work.

- Matt, Dan and Jeff keep investigating CPU performance, a lot of which revolves around the many memory copies generated by rustc when moving structures on the stack.

- Doug is investigating talos performance issues with document splitting.

- Nical is making progress on improving the invalidation of tiled blob images during scrolling.

- Kvark keeps catching smugglers in gecko’s displaylist building code.

- Kats and Jamie are hunting driver bugs on Android.

Enabling WebRender in Firefox Nightly

In about:config, set the pref “gfx.webrender.all” to true and restart the browser.

Reporting bugs

The best place to report bugs related to WebRender in Firefox is the Graphics :: WebRender component in bugzilla.

Note that it is possible to log in with a github account.

https://mozillagfx.wordpress.com/2018/11/21/webrender-newsletter-31/

|

|

The Firefox Frontier: Translate the Web |

Browser extensions make Google Translate even easier to use. With 100+ languages at the ready, Google Translate is the go-to translation tool for millions of people around the world. But … Read more

The post Translate the Web appeared first on The Firefox Frontier.

|

|

Mozilla Open Policy & Advocacy Blog: The EU Terrorist Content Regulation – a threat to the ecosystem and our users’ rights |

In September the European Commission proposed a new regulation that seeks to tackle the spread of ‘terrorist’ content on the internet. As we’ve noted already, the Commission’s proposal would seriously undermine internet health in Europe, by forcing companies to aggressively suppress user speech with limited due process and user rights safeguards. Here we unpack the proposal’s shortfalls, and explain how we’ll be engaging on it to protect our users and the internet ecosystem.

As we’ve highlighted before, illegal content is symptomatic of an unhealthy internet ecosystem, and addressing it is something that we care deeply about. To that end, we recently adopted an addendum to our Manifesto, in which we affirmed our commitment to an internet that promotes civil discourse, human dignity, and individual expression. The issue is also at the heart of our recently published Internet Health Report, through its dedicated section on digital inclusion.

At the same time lawmakers in Europe have made online safety a major political priority, and the Terrorist Content regulation is the latest legislative initiative designed to tackle illegal and harmful content on the internet. Yet, while terrorist acts and terrorist content are serious issues, the response that the European Commission is putting forward with this legislative proposal is unfortunately ill-conceived, and will have many unintended consequences. Rather than creating a safer internet for European citizens and combating the serious threat of terrorism in all its guises, this proposal would undermine due process online; compel the use of ineffective content filters; strengthen the position of a few dominant platforms while hampering European competitors; and, ultimately, violate the EU’s commitment to protecting fundamental rights.

Many elements from the proposal are worrying, including:

- The definition of ‘terrorist’ content is extremely broad, opening the door for a huge amount of over-removal (including the potential for discriminatory effect) and the resulting risk that much lawful and public interest speech will be indiscriminately taken down;

- Government-appointed bodies, rather than independent courts, hold the ultimate authority to determine illegality, with few safeguards in place to ensure these authorities act in a rights-protective manner;

- The aggressive one hour timetable for removal of content upon notification is barely feasible for the largest platforms, let alone the many thousands of micro, small and medium-sized online services whom the proposal threatens;

- Companies could be forced to implement ‘proactive measures’ including upload filters, which, as we’ve argued before, are neither effective nor appropriate for the task at hand; and finally,

- The proposal risks making content removal an end in itself, simply pushing terrorist content off the open internet rather than tackling the underlying serious crimes.

As the European Commission acknowledges in its impact assessment, the severity of the measures that it proposes could only ever be justified by the serious nature of terrorism and terrorist content. On its face, this is a plausible assertion. However, the evidence base underlying the proposal does not support the Commission’s approach. For as the Commission’s own impact assessment concedes, the volume of ‘terrorist’ content on the internet is on a downward trend, and only 6% of Europeans have reported seeing terrorist content online, realities which heighten the need for proportionality to be at the core of the proposal. Linked to this, the impact assessment predicts that an estimated 10,000 European companies are likely to fall within this aggressive new regime, even though data from the EU’s police cooperation agency suggests terrorist content is confined to circa 150 online services.

Moreover, the proposal conflates online speech with offline acts, despite the reality that the causal link between terrorist content online, radicalisation, and terrorist acts is far more nuanced. Within the academic research around terrorism and radicalisation, no clear and direct causal link between terrorist speech and terrorist acts has been established (see in particular, research from UNESCO and RAND). With respect to radicalisation in particular, the broad research suggests exposure to radical political leaders and socio-economic factors are key components of the radicalisation process, and online speech is not a determinant. On this basis, the high evidential bar that is required to justify such a serious interference with fundamental rights and the health of the internet ecosystem is not met by the Commission. And in addition, the shaky evidence base demands that the proposal be subject to far greater scrutiny than it has been afforded thus far.

Besides these concerns, it is saddening that this new legislation is likely to create a legal environment that will entrench the position of the largest commercial services that have the resources to comply, undermining the openness on which a healthy internet thrives. By setting a scope that covers virtually every service that hosts user content, and a compliance bar that only a handful of companies are capable of reaching, the new rules are likely to engender a ‘retreat from the edge’, as smaller, agile services are unable to bear the cost of competing with the established players. In addition, the imposition of aggressive take-down timeframes and automated filtering obligations is likely to further diminish Europe’s standing as a bastion for free expression and due process.

Ultimately, the challenge of building sustainable and rights-protective frameworks for tackling terrorism is a formidable one, and one that is exacerbated when the internet ecosystem is implicated. With that in mind, we’ll continue to highlight how the nuanced interplay between hosting services, terrorist content, and terrorist acts means this proposal requires far more scrutiny, deliberation, and clarification. At the very least, any legislation in this space must include far greater rights protection, measures to ensure that suppression of online content doesn’t become an end in itself, and a compliance framework that doesn’t make the whole internet march to the beat of a handful of large companies.

Stay tuned.

The post The EU Terrorist Content Regulation – a threat to the ecosystem and our users’ rights appeared first on Open Policy & Advocacy.

|

|

Chris AtLee: PyCon Canada 2018 |

I'm very happy to have had the opportunity to attend and speak at PyCon Canada here in Toronto last week.

PyCon has always been a very well organized conference. There is a wide range of talks available, even on topics not directly related to Python. I've attended PyCon events in the past, but never the Canadian one!

My talk was titled How Mozilla uses Python to Build and Ship Firefox. The slides are available here if you're interested. I believe the sessions were recorded, but they're not yet available online. I was happy with the attendance at the session, and the questions during and after the talk.

As part of the talk, I mentioned how Release Engineering is a very distributed team. Afterwards, many people had followup questions about how to work effectively with remote teams, which gave me a great opportunity to recommend John O'Duinn's new book, Distributed Teams.

Some other highlights from the conference:

- CircuitPython: Python on hardware. I really enjoyed learning about CircuitPython, and the work that Adafruit is doing to make programming and electronics more accessible.

- Using Python to find Russian Twitter troll tweets aimed at Canada. A really interesting dive into 3 million tweets that FiveThirtyEight made available for analysis.

- PEP 572: The Walrus Operator. My favourite quote from the talk: "Dictators are people too!" If you haven't followed Python governance, Guido stepped down as BDFL (Benevolent Dictator for Life) after the PEP was resolved. Dustin focused much of his talk on how we in the Python community, and more generally in tech, need to treat each other better.

- Who's There? Building a home security system with Pi & Slack. A great example of how you can get started hacking on home automation with really simple tools.

- Froilán Irzarry's Keynote talk on the second day was really impressive.

- You Don't Need That! Design patterns in Python. My main takeaway from this was that you shouldn't try and write Python code as if it were Java or C++ :) Python has plenty of language features built-in that make many classic design patterns unnecessary or trivial to implement.

- Numpy to PyTorch. Really neat to learn about PyTorch, and leveraging the GPU to accelerate computation.

- Flying Python - A reverse engineering dive into Python performance. Made me want to investigate Balrog performance, and also look at ways we can improve Python startup time. Some neat tips about examining disassembled Python bytecode.

- Working with Useless Machines. Hilarious talk about (ab)using IoT devices.

- Gathering Related Functionality: Patterns for Clean API Design. I really liked his approach for creating clean APIs for things like class constructors. He introduced a module called variants which lets you write variants of a function / class initializer to support varying types of parameters. For example, a common pattern is to have a function that takes either a string path to a file, or a file object. Instead of having one function that supports both types of arguments, variants allows you to make distinct functions for each type, but in a way that makes it easy to share underlying functionality and also not clutter your namespace.
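To make the path-versus-file-object example from the last talk concrete, here is a hand-rolled sketch of the pattern using only the standard library. It deliberately does not reproduce the variants module's actual API; the `primary` class, its `variant` method and the `count_lines` function are hypothetical names chosen for illustration.

```python
import io
import os
import tempfile


class primary:
    """Wrap a function so that related variants hang off it as attributes."""

    def __init__(self, func):
        self._func = func
        self.__doc__ = func.__doc__

    def __call__(self, *args, **kwargs):
        return self._func(*args, **kwargs)

    def variant(self, name):
        def register(func):
            setattr(self, name, func)
            # Return the primary so the module-level name stays bound to it.
            return self
        return register


@primary
def count_lines(fobj):
    """Count the lines in an open text file object."""
    return sum(1 for _ in fobj)


@count_lines.variant("from_path")
def count_lines(path):
    """Variant that takes a string path instead of a file object."""
    with open(path, encoding="utf-8") as fobj:
        return count_lines(fobj)


in_memory = count_lines(io.StringIO("a\nb\n"))

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.txt")
    with open(path, "w", encoding="utf-8") as fobj:
        fobj.write("a\nb\nc\n")
    on_disk = count_lines.from_path(path)

print(in_memory, on_disk)
```

Each entry point accepts exactly one kind of argument, the shared logic lives in the primary function, and only a single name, `count_lines`, is added to the module namespace.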

|

|

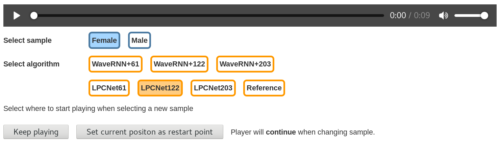

Hacks.Mozilla.Org: LPCNet: DSP-Boosted Neural Speech Synthesis |

LPCNet is a new project out of Mozilla’s Emerging Technologies group — an efficient neural speech synthesiser with reduced complexity over some of its predecessors. Neural speech synthesis models like WaveNet have already demonstrated impressive speech synthesis quality, but their computational complexity has made them hard to use in real-time, especially on phones. In a similar fashion to the RNNoise project, our solution with LPCNet is to use a combination of deep learning and digital signal processing (DSP) techniques.

Figure 1: Screenshot of a demo player that demonstrates the quality of LPCNet-synthesized speech.

LPCNet can help improve the quality of text-to-speech (TTS), low bitrate speech coding, time stretching, and more. You can hear the difference for yourself in our LPCNet demo page, where LPCNet and WaveNet speech are generated with the same complexity. The demo also explains the motivations for LPCNet, shows what it can achieve, and explores its possible applications.

You can find an in-depth explanation of the algorithm used in LPCNet in this paper.

The post LPCNet: DSP-Boosted Neural Speech Synthesis appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2018/11/lpcnet-dsp-boosted-neural-speech-synthesis/

|

|

Mozilla Open Policy & Advocacy Blog: Mozilla files comments on NTIA’s proposed American privacy framework |

Countries around the world are considering how to protect their citizens’ data – but there continues to be a lack of comprehensive privacy protections for American internet users. That could change. The National Telecommunications and Information Administration (NTIA) recently proposed an outcome-based framework for consumer data privacy, reflecting internationally accepted principles for privacy and data protection. Mozilla believes that the NTIA framework represents a good start to address many of these challenges, and we offered our thoughts to help Americans realize the same protections enjoyed by users in other countries around the world (you can see all the comments that were received at the NTIA’s website).

Mozilla has always been committed to strong privacy protections, user controls, and security tools in our policies and in the open source code of our products. We are pleased that the NTIA has embraced similar considerations in its framework, including the need for user control over the collection and use of information; minimization in the collection, storage, and the use of data; and security safeguards for personal information. While we generally support these principles, we also encourage the NTIA to pursue a more granular set of outcomes to provide more guidance for covered entities.

To supplement the proposed framework, our submission encourages the NTIA to adopt the following additional recommendations to protect user privacy:

- Include the explicit right to object to the processing of personal data as a core component of reasonable user control.

- Mandate the use of security and role-based access controls and protections against unlawful disclosures.

- Close the current gap in FTC oversight to cover telecommunications carriers and major non-profits that handle significant amounts of personal information.

- Expand FTC authority to provide the agency with the ability to make rules and impose civil penalties to deter future violations of consumer privacy.

- Provide the FTC with more resources and staff to better address threats in a rapidly evolving field.

In its request for comment, the NTIA stated that it believes that the United States should lead on privacy. The framework outlined by the agency represents a promising start to those efforts, and we are encouraged that the NTIA has sought the input of a broad variety of stakeholders at this pivotal juncture. But if the U.S. plans to lead on privacy, it must invest accordingly and provide the FTC with the legal tools and resources to demonstrate that commitment. Ultimately, this will lead to long-term benefits for users and internet-based businesses, providing greater certainty for data-driven entities and flexibility to address future threats.

The post Mozilla files comments on NTIA’s proposed American privacy framework appeared first on Open Policy & Advocacy.

|

|

Hacks.Mozilla.Org: Decentralizing Social Interactions with ActivityPub |

In the Dweb series, we are covering projects that explore what is possible when the web becomes decentralized or distributed. These projects aren’t affiliated with Mozilla, and some of them rewrite the rules of how we think about a web browser. What they have in common: These projects are open source and open for participation, and they share Mozilla’s mission to keep the web open and accessible for all.

Social websites first got us talking and sharing with our friends online, then turned into echo-chamber content silos, and finally emerged in their mature state as surveillance capitalist juggernauts, powered by the effluent of our daily lives online. The tail isn’t just wagging the dog, it’s strangling it. However, there just might be a way forward that puts users back in the driver’s seat: A new set of specifications for decentralizing social activity on the web. Today you’ll get a helping hand into that world from Darius Kazemi, renowned bot-smith and Mozilla Fellow.

– Dietrich Ayala

Introducing ActivityPub

Hi, I’m Darius Kazemi. I’m a Mozilla Fellow and decentralized web enthusiast. In the last year I’ve become really excited about ActivityPub, a W3C standard protocol that describes ways for different social network sites (loosely defined) to talk to and interact with one another. You might remember the heyday of RSS, when a user could subscribe to almost any content feed in the world from any number of independently developed feed readers. ActivityPub aims to do for social network interactions what RSS did for content.

Architecture

ActivityPub enables a decentralized social web, where a network of servers interacts with each other on behalf of individual users/clients, very much like email operates at a macro level. On an ActivityPub compliant server, individual user accounts have an inbox and an outbox that accept HTTP GET and POST requests via API endpoints. They usually live somewhere like https://social.example/users/dariusk/inbox and https://social.example/users/dariusk/outbox, but they can really be anywhere as long as they are at a valid URI. Individual users are represented by an Actor object, which is just a JSON-LD file that gives information like username and where the inbox and outbox are located so you can talk to the Actor. Every message sent on behalf of an Actor has the link to the Actor’s JSON-LD file so anyone receiving the message can look up all the relevant information and start interacting with them.
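As a rough illustration of what such an Actor document might contain, here is a minimal sketch in Python that builds one as a dict and prints it as JSON. The helper name build_actor and the /users/ URL layout are assumptions that mirror the example URLs above. The property names (id, type, preferredUsername, inbox, outbox) come from the ActivityPub and Activity Streams specs, but a real server such as Mastodon typically expects more fields than this, for example a public key.

```python
import json


def build_actor(base_url, username):
    """Return a minimal ActivityPub Actor document as a Python dict.

    The /users/<name> layout is an assumption taken from the example URLs;
    the spec only requires that inbox and outbox are valid URIs.
    """
    actor_id = f"{base_url}/users/{username}"
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "id": actor_id,
        "type": "Person",
        "preferredUsername": username,
        "inbox": f"{actor_id}/inbox",
        "outbox": f"{actor_id}/outbox",
    }


actor = build_actor("https://social.example", "dariusk")
print(json.dumps(actor, indent=2))
```

Anyone who receives a message from this Actor can fetch the document at its id and read the inbox URL to know where replies should be delivered.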

A simple server to send ActivityPub messages

One of the most popular social network sites that uses ActivityPub is Mastodon, an open source community-owned and ad-free alternative to social network services like Twitter. But Mastodon is a huge, complex project and not the best introduction to the ActivityPub spec as a developer. So I started with a tutorial written by Eugen Rochko (the principal developer of Mastodon) and created a partial reference implementation written in Node.js and Express.js called the Express ActivityPub server.

The purpose of the software is to serve as the simplest possible starting point for developers who want to build their own ActivityPub applications. I picked what seemed to me like the smallest useful subset of ActivityPub features: the ability to publish an ActivityPub-compliant feed of posts that any ActivityPub user can subscribe to. Specifically, this is useful for non-interactive bots that publish feeds of information.

To get started with Express ActivityPub server in a local development environment, clone the repository and install its dependencies:

git clone https://github.com/dariusk/express-activitypub.git

cd express-activitypub/

npm i

In order to truly test the server it needs to be associated with a valid, https-enabled domain or subdomain. For local testing I like to use ngrok to test things out on one of the temporary domains that they provide. First, install ngrok using their instructions (you have to sign in but there is a free tier that is sufficient for local debugging). Next run:

ngrok http 3000

This will show a screen on your console that includes a domain like abcdef.ngrok.io. Make sure to note that down, as it will serve as your temporary test domain as long as ngrok is running. Keep this running in its own terminal session while you do everything else.

Then go to your config.json in the express-activitypub directory and update the DOMAIN field to the abcdef.ngrok.io domain that ngrok gave you (don’t include the http://), and update USER to some username and PASS to some password. These will be the administrative credentials required for creating new users on the server. When testing locally via ngrok you don’t need to specify the PRIVKEY_PATH or CERT_PATH.
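If you would rather script that edit than do it by hand, the following is a small sketch of one way to do it in Python. The helper update_config is hypothetical and not part of the express-activitypub project; it assumes config.json is a flat JSON object and only touches the DOMAIN, USER and PASS keys named above, leaving everything else in the file alone.

```python
import json


def update_config(path, domain, user, password):
    """Set DOMAIN, USER and PASS in an existing config.json.

    Other keys already in the file (such as PRIVKEY_PATH or CERT_PATH)
    are preserved unchanged.
    """
    # DOMAIN must be a bare host name, so drop any scheme or trailing slash.
    for scheme in ("https://", "http://"):
        if domain.startswith(scheme):
            domain = domain[len(scheme):]
    domain = domain.rstrip("/")

    with open(path, "r", encoding="utf-8") as fobj:
        config = json.load(fobj)
    config.update({"DOMAIN": domain, "USER": user, "PASS": password})
    with open(path, "w", encoding="utf-8") as fobj:
        json.dump(config, fobj, indent=2)
    return config
```

A call such as update_config("config.json", "https://abcdef.ngrok.io", "admin", "some-password") would store the domain without its scheme, which is the form the server expects.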

Next run your server:

node index.js

Go to https://abcdef.ngrok.io/admin (again, replace the subdomain) and you should see an admin page. You can create an account here by giving it a name and then entering the admin user/pass when prompted. Try making an account called “test” — it will give you a long API key that you should save somewhere. Then open an ActivityPub client like Mastodon’s web interface and try following @test@abcdef.ngrok.io. It should find the account and let you follow!
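For the curious, here is a sketch of how a client can turn a handle like @test@abcdef.ngrok.io into a URL it can query. The text above does not describe this step; the sketch assumes the client resolves accounts through WebFinger, which is how Mastodon looks up remote accounts. The function name webfinger_url is hypothetical.

```python
from urllib.parse import urlencode


def webfinger_url(handle):
    """Build the WebFinger lookup URL for a handle like '@user@domain'."""
    user, _, domain = handle.lstrip("@").partition("@")
    if not user or not domain:
        raise ValueError(f"expected a handle like @user@domain, got {handle!r}")
    # The account is passed as an 'acct:' URI in the 'resource' query parameter.
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"


print(webfinger_url("@test@abcdef.ngrok.io"))
```

The response to that request is expected to point at the account's Actor document, which is how the client finds the inbox it needs in order to send a follow request.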

Back on the admin page, you’ll notice another section called “Send message to followers” — fill this in with “test” as the username, the hex key you just noted down as the password, and then enter a message. It should look like this:

Screenshot of form

Hit “Send Message” and then check your ActivityPub client. In the home timeline you should see your message from your account, like so:

Post in Mastodon mobile web view

And that’s it! It’s not incredibly useful on its own but you can fork the repository and use it as a starting point to build your own services. For example, I used it as the foundation of an RSS-to-ActivityPub conversion service that I wrote (source code here). There are of course other services that could be built using this. For example, imagine a replacement for something like MailChimp where you can subscribe to updates for your favorite band, but instead of getting an email, everyone who follows an ActivityPub account will get a direct message with album release info. Also it’s worth browsing the predefined Activity Streams Vocabulary to see what kind of events the spec supports by default.

Learn More

There is a whole lot more to ActivityPub than what I’ve laid out here, and unfortunately there aren’t a lot of learning resources beyond the specs themselves and conversations on various issue trackers.

If you’d like to know more about ActivityPub, you can of course read the ActivityPub spec. It’s important to know that while the ActivityPub spec lays out how messages are sent and received, the different types of messages are specified in the Activity Streams 2.0 spec, and the actual formatting of the messages that are sent is specified in the Activity Streams Vocabulary spec. It’s important to familiarize yourself with all three.
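To give a feel for how the specs fit together, here is a minimal sketch in Python of a message that announces a new post: a Create activity wrapping a Note object. The type and property names come from the Activity Streams specs, while the helper build_create_note, the example URLs and the "/activity" suffix used for the activity id are assumptions made for illustration. It is not taken from the Express ActivityPub server's code.

```python
import json

# Special collection URI that Activity Streams uses to address public posts.
PUBLIC = "https://www.w3.org/ns/activitystreams#Public"


def build_create_note(actor_id, note_id, content):
    """Return a Create activity that wraps a publicly addressed Note."""
    return {
        "@context": "https://www.w3.org/ns/activitystreams",
        "id": f"{note_id}/activity",  # hypothetical id scheme
        "type": "Create",
        "actor": actor_id,
        "object": {
            "id": note_id,
            "type": "Note",
            "attributedTo": actor_id,
            "content": content,
            "to": [PUBLIC],
        },
    }


activity = build_create_note(
    "https://social.example/users/dariusk",
    "https://social.example/notes/1",
    "Hello, fediverse!",
)
print(json.dumps(activity, indent=2))
```

ActivityPub itself only describes how a document like this is delivered to followers' inboxes; the shape of the JSON and the meaning of Create and Note are defined by the two Activity Streams specs.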

You can join the Social Web Incubator Community Group, a W3C Community Group, to participate in discussions around ActivityPub and other social web tech standards. They have monthly meetings that you can dial into that are listed on the wiki page.

And of course if you’re on an ActivityPub social network service like Mastodon or Pleroma, the #ActivityPub hashtag there is always active.

The post Decentralizing Social Interactions with ActivityPub appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2018/11/decentralizing-social-interactions-with-activitypub/

|

|

Ludovic Hirlimann: Capitole du Libre 2018 report |

This year, as in previous years, I "organized" Mozilla's presence at Capitole du Libre. I put "organized" in quotation marks because at the last minute our friend Rzr joined us to help staff the booth and present what Mozilla is doing in IoT these days. That meant we could staff the booth and still go give our talks without leaving the booth empty.

The many goodies on the booth, plus the plant and the IoT lights, drew a crowd that, although I did not count, was close to that of previous years, except during Tristant's talk, when everyone was listening to him.

At the booth we presented Common Voice, and to our great surprise many visitors already knew about it. We also presented Focus and Firefox on mobile. Two questions kept coming up: how to find Firefox on F-Droid, and why there was no F-Droid download link on our official pages.

- Rzr presented Web of things using iot.js; the video is here.

- Genma presented a talk on making money with free software.

- I presented Open data - Qui [Video | Slides] and more or less organized the key signing party.

I would like to thank El doctor for the Common Voice stickers, teoli for the MDN t-shirts, and Sylvestre and Pascal for the many Firefox and Firefox Nightly stickers. Thanks also to the organizers and their sponsors for trusting us, both in the village du libre and for the talks, and to the volunteers, without whom nothing would have happened. Finally, a big thank you to Linkid, who played with my children at the LAN party booth all weekend.

On a more personal note, I attended a very interesting talk by Vincent on importing a photographic collection into the Wiki galaxy. I also saw a friend from university again whom I had lost touch with for 20 years; it was really good to see you again, Christian. Finally, I am very happy to have been able to take part in the nos-oignons fundraising campaign.

https://www.hirlimann.net/Ludovic/carnet/?post/2018/11/20/CR-Capitole-du-Libre-2018

|

|

Chris Ilias: How to fix Firefox Sync issues by resetting your data |

Firefox has a refresh feature, which resets some non-essential data to help fix and prevent issues you have using Firefox. Your Firefox Sync data does not get reset, but there may be cases when resetting that data will help fix issues. It will erase data on the server, but don’t worry; your local data will stay intact.

- Open the Firefox Settings page (about:preferences), and select the Firefox Account panel.

- Click Disconnect, then click Just Disconnect to make sure you’re not signed in.

- Click Sign in.

- Enter your Firefox Account email address, then click Continue.

- Click the Forgot password link.

- Enter your Firefox Account email address, and click Begin Reset to receive a password reset email.

- Follow the instructions in your reset email to change your password.

After you’ve reset your password and reconnected Firefox to your account, your local data will be uploaded to the server.

http://ilias.ca/blog/2018/11/how-to-fix-firefox-sync-issues-by-resetting-your-data/

|

|

Mike Hoye: Faint Signal |

It’s been a little over a decade since I first saw Clay Shirky lay out his argument about what he called the “cognitive surplus”, but it’s been on my mind recently as I start to see more and more people curtail or sever their investments in always-on social media, and turn their attentions to… something.

Something Else.

I was recently reminded of some reading I did in college, way back in the last century, by a British historian arguing that the critical technology, for the early phase of the industrial revolution, was gin.

The transformation from rural to urban life was so sudden, and so wrenching, that the only thing society could do to manage was to drink itself into a stupor for a generation. The stories from that era are amazing– there were gin pushcarts working their way through the streets of London.

And it wasn’t until society woke up from that collective bender that we actually started to get the institutional structures that we associate with the industrial revolution today. Things like public libraries and museums, increasingly broad education for children, elected leaders–a lot of things we like–didn’t happen until having all of those people together stopped seeming like a crisis and started seeming like an asset.

It wasn’t until people started thinking of this as a vast civic surplus, one they could design for rather than just dissipate, that we started to get what we think of now as an industrial society.

– Clay Shirky, “Gin, Television and the Cognitive Surplus“, 2008.

I couldn’t figure out what it was at first – people I’d thought were far enough ahead of the curve to bend its arc popping up less often or getting harder to find; I’m not going to say who, of course, because who it is for me won’t be who it is for you. But you feel it too, don’t you? That quiet, empty space that’s left as people start dropping away from hyperconnected. The sense of getting gently reacquainted with loneliness and boredom as you step away from the full-court vanity press and stop synchronizing your panic attacks with the rest of the network. The moment of clarity, maybe, as you wake up from that engagement bender and remember the better parts of your relationship with absence and distance.

How, on a good day, the loneliness set your foot on the path, how the boredom could push you to push yourself.

I was reading the excellent book MARS BY 1980 in bed last night and this term just popped into my head as I was circling sleep. I had to do that thing where you repeat it in your head twenty times so that I’d remember it in the morning. I have no idea what refuture or refuturing really means, except that “refuturing” connects it in my mind with “rewilding.” The sense of creating new immediate futures and repopulating the futures space with something entirely divorced from the previous consensus futures.

Refuture. Refuturing. I don’t know. I wanted to write it down before it went away.

Which I guess is what we do with ideas about the future anyway.

– Warren Ellis, August 21, 2018.

Maybe it’s just me. I can’t quite see the shape of it yet, but I can hear it in the distance, like a radio tuned to a distant station; signal in the static, a song I can’t quite hear but I can tell you can dance to. We still have a shot, despite everything; whatever’s next is coming.

I think it’s going to be interesting.

|

|

The Firefox Frontier: 6 Essential Tips for Safe Online Shopping |

The turkey sandwiches are in the fridge, and you didn’t argue with your uncle. It’s time to knock out that gift list, and if you’re like millions of Americans, you’re … Read more

The post 6 Essential Tips for Safe Online Shopping appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/6-tips-for-safe-online-shopping/

|

|