
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Nick Cameron: Rust in 2022

A response to the call for 2019 roadmap blog posts.

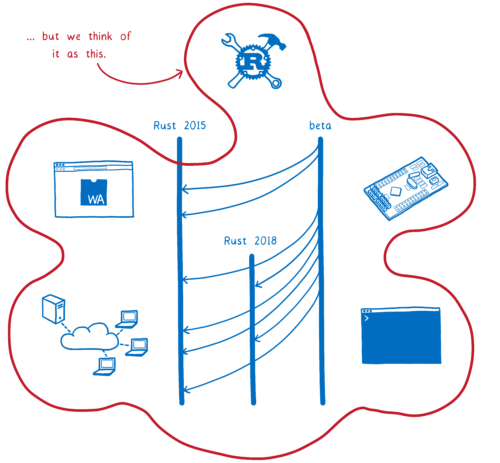

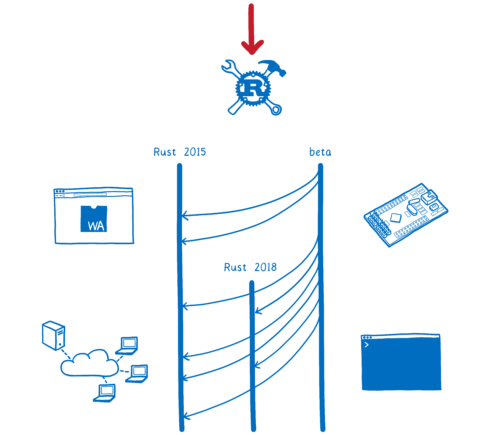

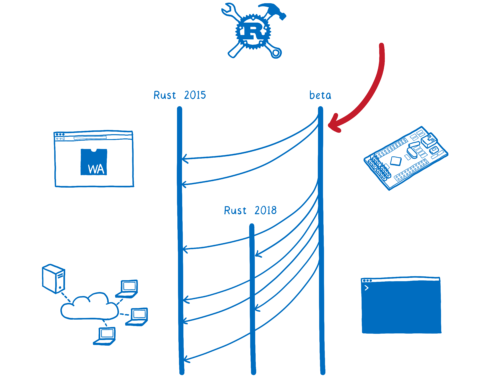

In case you missed it, we released our second edition of Rust this year! An edition is an opportunity to make backwards incompatible changes, but more than that it's an opportunity to bring attention to how programming in Rust has changed. With the 2018 edition out of the door, now is the time to think about the next edition: how do we want programming in Rust in 2022 to be different to programming in Rust today? Once we've worked that out, let's work backwards to what should be done in 2019.

Without thinking about the details, let's think about the timescale and cadence it gives us. It was three years from Rust 1.0 to Rust 2018 and I expect it will be three years until the next edition. Although I think the edition process went quite well, I think that if we'd planned in advance then it could have gone better. In particular, it felt like there were a lot of late changes which could have happened earlier so that we could get more experience with them. In order to avoid that, I propose that we aim to avoid breaking changes and large new features landing after the end of 2020. That gives 2021 for finishing, polishing, and marketing with a release late that year. Working backwards, 2020 should be an 'impl year' - focussing on designing and implementing the things we know we want in place for the 2021 edition. 2019 should be a year to invest while we don't have any release pressure.

To me, investing means paying down technical debt, looking at our processes, infrastructure, tooling, governance, and overheads to see where we can be more efficient in the long run, and working on 'quality of life' improvements for users, the kind that don't make headlines but will make using Rust a better experience. It's also the time to investigate some high-risk, high-reward ideas that will need years of iteration to be user-ready; 2019 should be an exciting year!

The 2021 edition

I think Rust 2021 should be about Rust's maturity. But what does that mean? To me it means that for a programmer in 2022, Rust is a safe choice with many benefits, not a high-risk/high-reward choice. Choosing Rust for a project should be a competitive advantage (which I think it is today), but it should not require investment in libraries, training, or research.

Some areas that I think are important for the 2021 edition:

- sustainability: Rust has benefited from being a new and exciting language. As we get more mature, we'll become less exciting. We need to ensure that the Rust project has sufficient resources for the long term, and that we have a community and governance structure that can support Rust through the (hopefully) long, boring years of its existence. We'll need to think of new ways to attract and retain users (as well as just being awesome).

- diversity: Rust's community has a good reputation as a welcoming community. However, we are far from being a diverse one. We need to do more.

- mature tools: Cargo must be more flexible, IDE support must be better, debugging, profiling, and testing need to be easy to use, powerful, and flexible. We need to provide the breadth and depth of tool support which users expect from established languages.

- async programming: high-performance network programming is an amazing fit for Rust. Work on Linkerd and Fuchsia has already proved this out. Having a good story around async programming will make things even better. I believe Rust could be the best choice in this domain by a wide margin. However, building out the whole async programming story is a huge task, touching on language features, libraries, documentation, programming techniques, and crates. The current work already feels huge and I believe that this is only the beginning of iteration.

- std, again: Rust's standard library is great, a point in favour of Rust. Since Rust 1.0 there have been steady improvements, but the focus has been on the wider ecosystem. I think for the next edition we will need to consider a second wave of work. There are some fundamental things that are worth re-visiting (e.g., Parking Lot mutexes), and a fair few things where our approach has been to let things develop as crates 'for now', and where we either need to move them into std, or re-think user workflow around discoverability, etc. (e.g., Crossbeam, Serde).

- error handling: we really need to improve the ergonomics of error handling. Although the mechanism is (IMO) really great, it is pretty high-friction compared to exceptions. We should look at ways to improve this (Ok-wrapping, throws in function signatures, etc.). I think this is an edition issue since it will probably require a breaking change, and at the least will change the style of programming.

- macros: we need to 'finish' macros, both procedural and declarative. That means being confident about our hygiene and naming implementation, iterating on libraries, stabilising everything, and supporting the modern macro syntax.

- internationalisation: we need to do much better. We need internationalisation and localisation libraries on a path to stabilisation, either in std or discoverable and easily usable as crates. It should be as easy to print or use a multi-locale string as a single-locale one. We also need to do better at the project-level - more local conferences, localised documentation, better ways for non-English speakers to be part of the Rust community.

Let's keep focussing on areas where Rust has proven to be a good fit (systems, networking, embedded (both devices and as performance-critical components in larger systems), WASM, games) and looking for new areas to expand into.

2019

So, with the above in mind, what should we be doing next year? Each team should be thinking about which high-risk experiments to try. How can we tackle technical (and community) debt? What can be polished? Let's spend the year making sure we are on the right track for the next edition and the long term by encouraging people to dedicate more energy to strategy.

Of course we want to plan some concrete work too, so in no particular order here are some specific ideas I think are worth looking into:

- discoverability of libraries and tools: how does a new user find out about Serde or Clippy?

- project infrastructure: are bors, homu, our repo structures, and our processes optimal? How can we make contributing to Rust easier and faster?

- the RFC process: RFCs are good, but the process has some weaknesses: discussion is scattered around different venues, discussions are often stressful, writing an RFC is difficult, and so forth. We can do better!

- project governance: we have to keep scaling and iterating. 2019 is a good opportunity to think about our governance structures.

- moderation and 'tone' of discussion: we need to keep scaling moderation and think about better ways to moderate forums. Right now we do a good job of enforcing the CoC and removing egregious offenders, however, many of our forums can feel overwhelming or hostile. We should strive for more professional and considerate spaces.

- security of Rust code: starting the secure code working group is very timely. I think we're going to have to look closely at ways we can make using and working with Rust code safer, from changes to Cargo and crates.io to reasoning about unsafe code.

- games and graphics: as well as keeping going with the existing domain working groups, we should also look at games and graphics. Since these are such performance sensitive areas, Rust should be a good fit. There is already a small, but energetic community here and some initial uses in production.

- async/await and async programming: we need to keep pushing here on all fronts (language, std, crates, docs, gaining experience)

And let's dive a tiny bit deeper into a few areas:

Dev-tools

- Cargo: Cargo needs to integrate better into other build systems and IDEs. There are a lot of places where people want more flexibility; it will take another blog post to get into this.

- More effort on making the RLS good.

- Better IDE experience: more features, more polish, debugging and profiling integration.

- integrating Rustdoc, docs.rs, and cargo-src to make a best-in-class documentation and source code exploration experience

- work on a query API for the compiler to be a better base for building tools. Should support Clippy as well as the RLS, code completion, online queries, etc.

Language

Even more than the other areas, the language team needs to think about development cadence in preparation for the next edition. We need to ensure that everything, especially breaking changes, gets enough development and iteration time.

Some things I think we should consider in 2019:

- generic associated types or HKTs or whatever: we need to make sure we have a feature which solves the problems we need to solve and isn't going to require more features down the road (i.e., we should aim for this being the last addition to the trait system).

- specialisation: is this going to work? I think that in combination with macros, there are some smart ways that we can work with error types and do some inheritance-like things.

- keep pushing on unfinished business (macros, impl Trait, const functions, etc.).

- named and optional arguments: this idea has been around for a while, but has been low priority. I think it would be very useful (there are some really ergonomic patterns in Swift that we might do well to emulate).

- enum variant types: this is small, but I REALLY WANT IT!

- gain experience with, and polish the new module system

- identify and fix paper cuts

Compiler

Nothing really new here, but we need to keep pushing on compiler performance - it comes up again and again as a Rust negative. We should also take the timing opportunity to do some refactoring, for example, landing Chalk and doing something similar for name resolution.


Wladimir Palant: If your bug bounty program is private, why do you have it?

The big bug bounty platforms are structured like icebergs: the public bug bounty programs that you can see are only a tiny portion of everything that is going on there. As you earn your reputation on these platforms, they will be inviting you to private bug bounty programs. The catch: you generally aren’t allowed to discuss issues reported via private bug bounty programs. In fact, you are not even allowed to discuss the very existence of that bug bounty program.

I’ve been playing along for a while on Bugcrowd and Hackerone and submitted a number of vulnerability reports to private bug bounty programs. As a result, I became convinced that these private bug bounty programs are good for the bottom line of the bug bounty platforms, but otherwise their impact is harmful. I’ll try to explain here.

What is a bug bounty?

When you collect a bug bounty, that’s not because you work for a vendor. There is no written contract that states your rights and obligations. In its original form, you simply stumble upon a security vulnerability in a product and you decide to do the right thing: you inform the vendor. In turn, the vendor gives you the bug bounty as a token of their appreciation. It could be a monetary value but also some swag or an entry in the Hall of Fame.

Why pay you when the vendor has no obligation to do so? Primarily to keep you doing the right thing. Some vulnerabilities could be turned into money on the black market. Some could be used to steal data or extort the vendor. Everybody prefers people to earn their compensation in a legal way. Hence bug bounties.

What the bug bounty isn’t

There are so many bug bounty programs around today that many people have made them their main source of income. While there are various reasons for that, one thing should not be forgotten: there is no law guaranteeing that you will be paid fairly. No contract means that your reward is completely dependent on the vendor. And the outcome is hard to know in advance: sometimes the vendor will claim that they cannot reproduce the issue, or downplay its severity, or mark your report as a duplicate of a barely related report. In at least some cases there appears to be intent behind this behavior, with the vendor trying to fit the bug bounty program into a certain budget regardless of the volume of reports. So any security researcher trying to make a living from bug bounties has to calculate pessimistically, e.g. expecting that only one out of five reports will get a decent reward.

On the vendor’s side, there is a clear desire for the bug bounty program to replace penetration tests. Bugcrowd noticed this trend and is touting their bug bounty programs as the “next gen pen test.” The trouble is, bug bounty hunters are only paid for bugs where they can demonstrate impact. They have no incentive to report minor issues: not only will the effort of demonstrating the issue be too high for the expected reward, reporting it also reduces their rating on the bug bounty platform. They have no incentive to point out structural weaknesses, because these reports will be closed as “informational” without demonstrated impact. They often have no incentive to go for the more obscure parts of the product: these require more time to get familiar with but won’t necessarily result in critical bugs being discovered. In short, a “penetration test” performed by bug bounty hunters will be anything but thorough.

How are private bug bounties different for researchers?

If you feel that you are treated unfairly by the vendor, you have essentially two options. You can just accept it and vote with your feet: move on to another bug bounty program and learn how to recognize programs that are better avoided. The vendor won’t care as there will be plenty of others coming their way. Or you can make a fuss about it. You could try to argue and possibly escalate to the bug bounty platform vendor, but IMHO this rarely changes anything. Or you could publicly shame the vendor for their behavior and warn others.

The latter is made impossible by the conditions to participate in private bug bounty programs. Both Bugcrowd and Hackerone disallow you from talking about your experience with the program. Bug bounty hunters are always dependent on the good will of the vendor, but with private bug bounties it is considerably worse.

But it’s not only that. Usually, security researchers want recognition for their findings. Hackerone even has a process for disclosing vulnerability reports once the issue has been fixed. Public Bugcrowd programs also usually provision for coordinated disclosure. This gives the reporters the deserved recognition and allows everybody else to learn. But guess what: with private bug bounty programs, disclosure is always forbidden.

Why would people participate in private bug bounties at all? The main reason seems to be the reduced competition: finding unique issues is easier. In particular, when you join in the early days of a private bug bounty program, you have a good opportunity to generate cash with low-hanging fruit.

Why do companies prefer private bug bounties?

If a bug bounty is about rewarding a random researcher who found a vulnerability in the product, how does a private bug bounty program make sense then? After all, it is like an exclusive club and unlikely to include the researcher in question. In fact, that researcher is unlikely to know about the bug bounty program, so they won’t have this incentive to do the right thing.

But the obvious answer is: the bug bounty platforms aren’t actually selling bug bounty management, they are selling penetration tests. They promise vendors to deliver high-quality reports from selected hackers instead of the usual noise that a public bug bounty program has to deal with. And that’s what many companies expect (but don’t receive) when they create a private bug bounty.

There is another explanation that seems to match many companies. These companies know perfectly well that they just aren’t ready for it yet. Sometimes they simply don’t have the necessary in-house expertise to write secure code, so even with the bug bounty program always pointing out the same mistakes they will keep repeating them. Or they won’t free up developers from feature work to tackle security issues, so every year they will fix five issues that seem particularly severe but leave all the others untouched. So they go for a private bug bounty program because doing the same thing in public would be disastrous for their PR. And they hope that this bug bounty program will somehow make their product more secure. Except it doesn’t.

On Hackerone I also see another mysterious category: private bug bounty programs with zero activity. So somebody went through the trouble of setting up a bug bounty program but failed to make it attractive to researchers. Either it offers no rewards, or it expects people to buy some hardware that they are unlikely to own already, or the description of the program is impossible to decipher. Just now I’ve been invited to a private bug bounty program where the company’s homepage was completely broken, and I still don’t really understand what they are doing. I suspect that these bug bounty programs are another example of features that somebody got a really nice bonus for but nobody cared to put any thought into.

Somebody told me that their company went with a private bug bounty because they work with selected researchers only. So it isn’t actually a bug bounty program but really a way to manage communication with that group. I hope that they still have some other way to engage with researchers outside that elite group, even if it doesn’t involve monetary rewards for reported vulnerabilities.

Conclusions

As a security researcher, I’ve collected plenty of bad experiences with private bug bounty programs, and I know that other people have as well. Let’s face it: the majority of private bug bounty programs shouldn’t have existed in the first place. They don’t really make the products in question more secure, and they increase frustration among security researchers. And while some people manage to benefit financially from these programs, others are bound to waste their time on them. The confidentiality clauses of these programs substantially weaken the position of the bug bounty hunters, which isn’t too strong to start with. These clauses are also an obstacle to learning on both sides; ideally, security issues should always be publicized once fixed.

Now the ones who should do something to improve this situation are the bug bounty platforms. However, I realize that they have little incentive to change it and are in fact actively embracing it. So while one can ask, for example, for a way to comment on private bug bounty programs so that newcomers can learn from the experience that others had with a program, such control mechanisms are unlikely to materialize. Publishing anonymized reports from private bug bounty programs would also be nice and is just as unlikely. I wonder whether the solution is to add such features via a browser extension and whether it would gain sufficient traction then.

But really, private bug bounty programs are usually a bad idea. Most companies doing that right now should either switch to a public bug bounty or just drop their bug bounty program altogether. Katie Moussouris is already very busy convincing companies to drop bug bounty programs they cannot make use of. Please help her and join that effort.

https://palant.de/2018/12/10/if-your-bug-bounty-program-is-private-why-do-you-have-it


Wladimir Palant: BBN challenge resolution: Exploiting the Screenshotter.PRO browser extension

The time has come to reveal the answer to my next BugBountyNotes challenge called Try out my Screenshotter.PRO browser extension. This challenge is a browser extension supposedly written by a naive developer for the purpose of taking webpage screenshots. While the extension is functional, the developer discovered that some websites are able to take a peek into their Gmail account. How does that work?

If you haven’t looked at this challenge yet, feel free to stop reading at this point and go try it out. Mind you, this one is hard and only two people managed to solve it so far. Note also that I won’t look at any answers submitted at this point any more. Of course, you can also participate in any of the ongoing challenges as well.

Still here? Ok, I’m going to explain this challenge then.

Taking control of the extension UI

This challenge has been inspired by the vulnerabilities I discovered around the Firefox Screenshots feature. Firefox Screenshots is essentially a built-in browser extension in Firefox, and while it takes care to isolate its user interface in a frame protected by the same-origin policy, I discovered a race condition that allowed websites to change that frame into something they can access.

This race condition could not be reproduced in the challenge because the approach used works in Firefox only. So the challenge uses a different approach to protect its frame from unwanted access: it creates a frame pointing to https://example.com/ (the website cannot access it due to the same-origin policy), then injects its user interface into this frame via a separate content script. And since a content script can only be injected into all frames of a tab, the content script uses the (random) frame name to distinguish the “correct” frame.

And here lies the issue of course. While the webpage cannot predict what the frame name will be, it can see the frame being injected and change the src attribute into something else. It can load a page from the same server, then it will be able to access the injected extension UI. A submission I received for this challenge solved this even more elegantly: by assigning window.name = frame.name it made sure that the extension UI was injected directly into their webpage!
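Why the frame name is a weak discriminator can be sketched in a few lines. This is only an illustration under assumptions: the function name shouldInjectUI, the "screenshotter-" prefix and the plain objects standing in for window objects are all hypothetical, and the challenge's actual content script is not shown in this post.

```javascript
// Hypothetical check a content script might run in every frame of the tab:
// inject the UI only where the window name matches the random frame name.
function shouldInjectUI(frameWindow, expectedName) {
  return frameWindow.name === expectedName;
}

// Plain objects stand in for window objects so the logic runs outside a browser.
const expectedName = "screenshotter-" + Math.random().toString(36).slice(2);
const extensionFrame = { name: expectedName };
const attackerPage = { name: "" };

// Initially only the extension's own frame matches.
const beforeAttack = shouldInjectUI(attackerPage, expectedName);

// The hosting page can read the name attribute of the <iframe> element in its
// own DOM and copy it, which is what window.name = frame.name does.
attackerPage.name = extensionFrame.name;
const afterAttack = shouldInjectUI(attackerPage, expectedName);

console.log(beforeAttack, afterAttack); // false true
```

The name is random but not secret: it is visible to the very page it is supposed to keep out, so it cannot serve as proof that a frame belongs to the extension.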

Now the only issue is bringing up the extension UI. With Firefox Screenshots I had to rely on the user clicking “Take a screenshot.” The extension in the challenge, however, allowed triggering its functionality via a hotkey. And, like so often, it failed to check event.isTrusted, so it would accept events generated by the webpage. Since the extension handles events synchronously, the following code is sufficient here:

    window.dispatchEvent(new KeyboardEvent("keydown", {
      key: "S",
      ctrlKey: true,
      shiftKey: true
    }));

    let frame = document.getElementsByTagName("iframe")[0];
    frame.src = "blank.html";

Recommendation for developers: Any content which you inject into websites should always be contained inside a frame that is part of your extension. This at least makes sure that the website cannot access the frame contents, but you still have to worry about clickjacking and spoofing attacks.

Also, if you ever attach event listeners to website content, always make sure that event.isTrusted is true, so it’s a real event rather than the website playing tricks on you.

What to screenshot?

Once the webpage can access the extension UI, clicking the “Screenshot to clipboard” button programmatically is trivial. Again Event.isTrusted is not being checked here. However, even though Firefox Screenshots only accepted trusted events, it didn’t help it much. At this point the webpage can make the button transparent and huge, so when the user clicks somewhere the button is always triggered.

The webpage can create a screenshot, but what’s the deal? With Firefox Screenshots I only realized this after creating the bug report: the big issue here is that the webpage can screenshot third-party pages. Just load some page in a frame and it will be part of the screenshot even though you normally cannot access its contents. Only trouble: really critical sites such as Gmail don’t allow being loaded in a frame these days.

Luckily, this challenge had to be compatible with Chrome. And while Firefox extensions can use tabs.captureTab method to capture a specific tab, there is nothing comparable for Chrome. The solution that the hypothetical extension author took was using tabs.captureVisibleTab method which works in any browser. Side-effect: the visible tab isn’t necessarily the tab where the screenshotting UI lives.

So the attack starts by asking the user to click a button. When clicked, that button opens Gmail in a new tab. The original page stays in the background and initiates screenshotting. When the screenshot is done, it will contain Gmail, not the attacking website.

How to get the screenshot?

The last step is getting the screenshot which is being copied to clipboard. Here, a Firefox bug makes things a lot easier for attackers. Until very recently, the only way to copy something to clipboard was calling document.execCommand() on a text field. And Firefox doesn’t allow this action to be performed on the extension’s background page, so extensions will often resort to doing it in the context of web pages that they don’t control.

The most straightforward solution is registering a copy event listener on the page; it will be triggered when the extension attempts to copy to the clipboard. That’s how I did it with Firefox Screenshots, and one of the submitted answers also uses this approach. But I actually forgot about it when I created my own solution for this challenge, so I used mutation observers to see when a text field is inserted into the page and read out its value (the actual screenshot URL):

    let observer = new MutationObserver(mutationList =>
    {
      for (let mutation of mutationList)
      {
        // addedNodes can be empty, so guard before reading the first node.
        let node = mutation.addedNodes[0];
        if (node && node.localName == "textarea")
        {
          // The textarea's value is the screenshot URL (markup reconstructed
          // from the description above).
          document.body.innerHTML =
            `<p>Here is what Gmail looks like for you:</p><img src="${node.value}">`;
        }
      }
    });
    observer.observe(document.body, {childList: true});

I hope that the new Clipboard API finally makes things sane here, so it isn’t merely more elegant but also gets rid of this huge footgun. But I haven’t had a chance to play with it yet, as this API has only been available since Chrome 66 and Firefox 63. So the recommendation is still: make sure to run any clipboard operations in a context that you control. If the background page doesn’t work, use a tab or frame belonging to your extension.

The complete solution

That’s pretty much it, everything else is only about visuals and timing. The attacking website needs to hide the extension UI so that the user doesn’t suspect anything. It also has no way of knowing when Gmail finishes loading, so it has to wait some arbitrary time. Here is what I got altogether. It is one way to solve this challenge but certainly not the only one.

|

|

Cameron Kaiser: TenFourFox FPR11 available |

FPR12 will be a smaller-scope release but there will still be some minor performance improvements and bugfixes, and with any luck we will also be shipping Raphaël's enhanced AltiVec string matcher in this release as well. Because of the holidays, family visits, etc., however, don't expect a beta until around the second week of January.

http://tenfourfox.blogspot.com/2018/12/tenfourfox-fpr11-available.html

|

|

The Mozilla Blog: Goodbye, EdgeHTML |

Microsoft is officially giving up on an independent shared platform for the internet. By adopting Chromium, Microsoft hands over control of even more of online life to Google.

This may sound melodramatic, but it’s not. The “browser engines” — Chromium from Google and Gecko Quantum from Mozilla — are “inside baseball” pieces of software that actually determine a great deal of what each of us can do online. They determine core capabilities such as which content we as consumers can see, how secure we are when we watch content, and how much control we have over what websites and services can do to us. Microsoft’s decision gives Google more ability to single-handedly decide what possibilities are available to each one of us.

From a business point of view Microsoft’s decision may well make sense. Google is so close to almost complete control of the infrastructure of our online lives that it may not be profitable to continue to fight this. The interests of Microsoft’s shareholders may well be served by giving up on the freedom and choice that the internet once offered us. Google is a fierce competitor with highly talented employees and a monopolistic hold on unique assets. Google’s dominance across search, advertising, smartphones, and data capture creates a vastly tilted playing field that works against the rest of us.

From a social, civic and individual empowerment perspective ceding control of fundamental online infrastructure to a single company is terrible. This is why Mozilla exists. We compete with Google not because it’s a good business opportunity. We compete with Google because the health of the internet and online life depend on competition and choice. They depend on consumers being able to decide we want something better and to take action.

Will Microsoft’s decision make it harder for Firefox to prosper? It could. Making Google more powerful is risky on many fronts. And a big part of the answer depends on what the web developers and businesses who create services and websites do. If one product like Chromium has enough market share, then it becomes easier for web developers and businesses to decide not to worry if their services and sites work with anything other than Chromium. That’s what happened when Microsoft had a monopoly on browsers in the early 2000s before Firefox was released. And it could happen again.

If you care about what’s happening with online life today, take another look at Firefox. It’s radically better than it was 18 months ago — Firefox once again holds its own when it comes to speed and performance. Try Firefox as your default browser for a week and then decide. Making Firefox stronger won’t solve all the problems of online life — browsers are only one part of the equation. But if you find Firefox is a good product for you, then your use makes Firefox stronger. Your use helps web developers and businesses think beyond Chrome. And this helps Firefox and Mozilla make overall life on the internet better — more choice, more security options, more competition.

The post Goodbye, EdgeHTML appeared first on The Mozilla Blog.

|

|

Mozilla Future Releases Blog: Firefox Coming to the Windows 10 on Qualcomm Snapdragon Devices Ecosystem |

At Mozilla, we’ve been building browsers for 20 years and we’ve learned a thing or two over those decades. One of the most important lessons is putting people at the center of the web experience. We pioneered user-centric features like tabbed browsing, automatic pop-up blocking, integrated web search, and browser extensions for the ultimate in personalization. All of these innovations support real users’ needs first, putting business demands in the back seat.

Mozilla is uniquely positioned to build browsers that act as the user’s agent on the web and not simply as the top of an advertising funnel. Our mission not only allows us to put privacy and security at the forefront of our product strategy, it demands that we do so. You can see examples of this with Firefox’s Facebook Container extension, Firefox Monitor, and its private by design browser data syncing features. This will become even more apparent in upcoming releases of Firefox that will block certain cross-site and third-party tracking by default while delivering a fast, personal, and highly mobile experience.

When we set out several years ago to build a new version of Firefox called Quantum, one that utilized multiple computer processes the way an operating system does, we didn’t simply break the browser into as many processes as possible. We investigated what kinds of hardware people had and built a solution that took best advantage of processors with multiple cores, which also makes Firefox a great browser for Snapdragon. We also offloaded significant page loading tasks to the increasingly powerful GPUs shipping with modern PCs and we re-designed the browser front-end to bring more efficiency to everyday tasks.

Today, Mozilla is excited to be collaborating with Qualcomm and optimizing Firefox for the Snapdragon compute platform with a native ARM64 version of Firefox that takes full advantage of the capabilities of the Snapdragon compute platform and gives users the most performant out of the box experience possible. We can’t wait to see Firefox delivering blazing fast experiences for the always on, always connected, multi-core Snapdragon compute platform with Windows 10.

Stay tuned. It’s going to be great!

The post Firefox Coming to the Windows 10 on Qualcomm Snapdragon Devices Ecosystem appeared first on Future Releases.

|

|

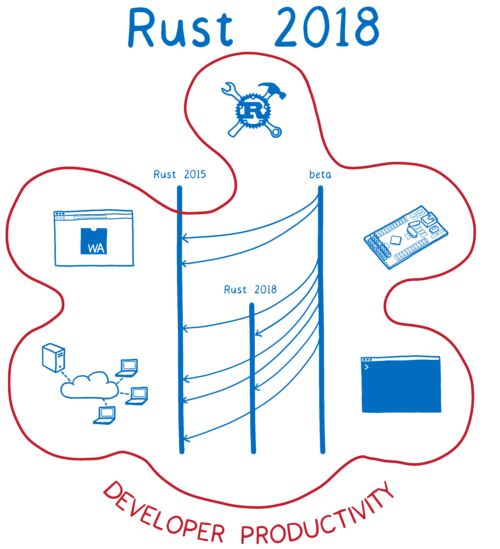

Hacks.Mozilla.Org: Rust 2018 is here… but what is it? |

This post was written in collaboration with the Rust Team (the “we” in this article). You can also read their announcement on the Rust blog.

Starting today, the Rust 2018 edition is in its first release. With this edition, we’ve focused on productivity… on making Rust developers as productive as they can be.

But beyond that, it can be hard to explain exactly what Rust 2018 is.

Some people think of it as a new version of the language, which it is… kind of, but not really. I say “not really” because if this is a new version, it doesn’t work like versioning does in other languages.

In most other languages, when a new version of the language comes out, any new features are added to that new version. The previous version doesn’t get new features.

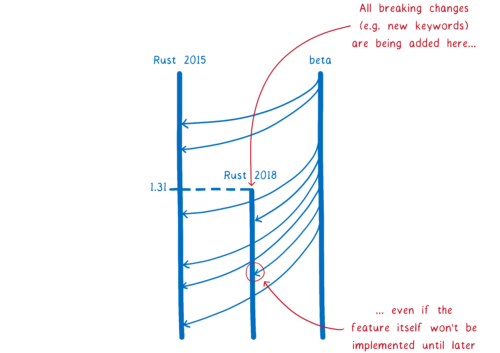

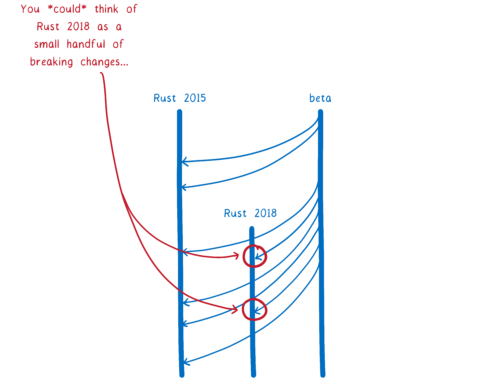

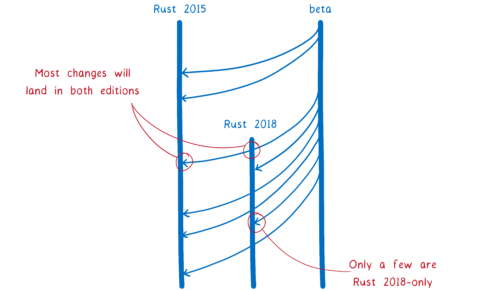

Rust editions are different. This is because of the way the language is evolving. Almost all of the new features are 100% compatible with Rust as it is. They don’t require any breaking changes. That means there’s no reason to limit them to Rust 2018 code. New versions of the compiler will continue to support “Rust 2015 mode”, which is what you get by default.

But sometimes to advance the language, you need to add things like new syntax. And this new syntax can break things in existing code bases.

An example of this is the async/await feature. Rust initially didn’t have the concepts of async and await. But it turns out that these primitives are really helpful. They make it easier to write code that is asynchronous without the code getting unwieldy.

To make it possible to add this feature, we need to add both async and await as keywords. But we also have to be careful that we’re not making old code invalid… code that might’ve used the words async or await as variable names.

So we’re adding the keywords as part of Rust 2018. Even though the feature hasn’t landed yet, the keywords are now reserved. All of the breaking changes needed for the next three years of development (like adding new keywords) are being made in one go, in Rust 1.31.

Even though there are breaking changes in Rust 2018, that doesn’t mean your code will break. Your code will continue compiling even if it has async or await as a variable name. Unless you tell it otherwise, the compiler assumes you want it to compile your code the same way that it has been up to this point.

But as soon as you want to use one of these new, breaking features, you can opt in to Rust 2018 mode. You just run cargo fix --edition, which will tell you if you need to update your code to use the new features. It will also mostly automate the process of making the changes. Then you can add edition = "2018" to the [package] section of your Cargo.toml to opt in and use the new features.
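To make the keyword clash concrete: code that used async or await as ordinary names can keep those names after opting in by writing them as raw identifiers (the r# prefix), which is the kind of rewrite the automated edition migration applies. The sketch below is only an illustration; the function and variable names are invented, and it compiles under either edition.

```rust
// Hypothetical pre-2018 code that used `async` and `await` as plain names,
// written with raw identifiers so it still compiles once they are keywords.
fn r#async(jobs: u32) -> u32 {
    jobs + 1
}

fn main() {
    let r#await = r#async(41);
    println!("queued jobs: {}", r#await);
}
```

Outside of migrated code, renaming the item is usually the more readable choice; raw identifiers mainly matter when a dependency on another edition exposes such a name.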

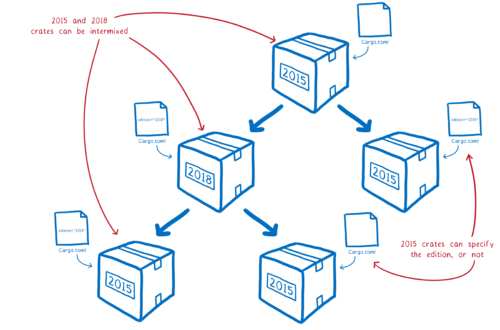

This edition specifier in Cargo.toml doesn’t apply to your whole project… it doesn’t apply to your dependencies. It’s scoped to just the one crate. This means you’ll be able to have crate graphs that have Rust 2015 and Rust 2018 interspersed.

Because of this, even once Rust 2018 is out there, it’s mostly going to look the same as Rust 2015. Most changes will land in both Rust 2018 and Rust 2015. Only the handful of features that require breaking changes won’t pass through.

Rust 2018 isn’t just about changes to the core language, though. In fact, far from it.

Rust 2018 is a push to make Rust developers more productive. Many productivity wins come from things outside of the core language… things like tooling. They also come from focusing on specific use cases and figuring out how Rust can be the most productive language for those use cases.

So you could think of Rust 2018 as the specifier in Cargo.toml that you use to enable the handful of features that require breaking changes…

Or you can think about it as a moment in time, where Rust becomes one of the most productive languages you can use in many cases — whenever you need performance, light footprint, or high reliability.

In our minds, it’s the second. So let’s look at all that happened outside of the core language. Then we can dive into the core language itself.

Rust for specific use cases

A programming language can’t be productive by itself, in the abstract. It’s productive when put to some use. Because of this, the team knew we didn’t just need to make Rust as a language or Rust tooling better. We also needed to make it easier to use Rust in particular domains.

In some cases, this meant creating a whole new set of tools for a whole new ecosystem.

In other cases, it meant polishing what was already in the ecosystem and documenting it well so that it’s easy to get up and running.

The Rust team formed working groups focused on four domains:

- WebAssembly

- Embedded applications

- Networking

- Command line tools

WebAssembly

For WebAssembly, the working group needed to create a whole new suite of tools.

Just last year, WebAssembly made it possible to compile languages like Rust to run on the web. Since then, Rust has quickly become the best language for integrating with existing web applications.

Rust is a good fit for web development for two reasons:

- Cargo’s crates ecosystem works in the same way that most web app developers are used to. You pull together a bunch of small modules to form a larger application. This means that it’s easy to use Rust just where you need it.

- Rust has a light footprint and doesn’t require a runtime. This means that you don’t need to ship down a bunch of code. If you have a tiny module doing lots of heavy computational work, you can introduce a few lines of Rust just to make that run faster.

With the web-sys and js-sys crates, it’s easy to call web APIs like fetch or appendChild from Rust code. And wasm-bindgen makes it easy to support high-level data types that WebAssembly doesn’t natively support.

Once you’ve coded up your Rust WebAssembly module, there are tools to make it easy to plug it into the rest of your web application. You can use wasm-pack to run these tools automatically, and push your new module up to npm if you want.

Check out the Rust and WebAssembly book to try it yourself.

What’s next?

Now that Rust 2018 has shipped, the working group is figuring out where to take things next. They’ll be working with the community to determine the next areas of focus.

Embedded

For embedded development, the working group needed to make existing functionality stable.

In theory, Rust has always been a good language for embedded development. It gives embedded developers the modern day tooling that they are sorely lacking, and very convenient high-level language features. All this without sacrificing on resource usage. So Rust seemed like a great fit for embedded development.

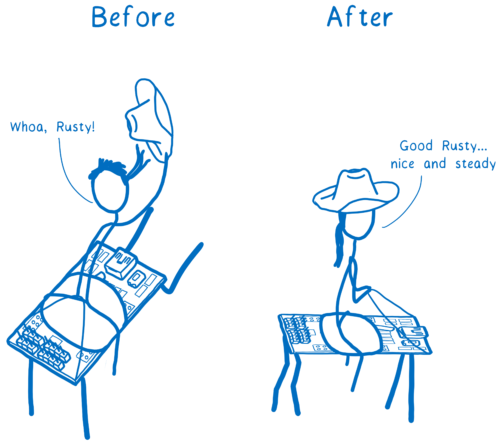

However, in practice it was a bit of a wild ride. Necessary features weren’t in the stable channel. Plus, the standard library needed to be tweaked for use on embedded devices. That meant that people had to compile their own version of the Rust core crate (the crate which is used in every Rust app to provide Rust’s basic building blocks — intrinsics and primitives).

Together, these two things meant developers had to depend on the nightly version of Rust. And since there were no automated tests for micro-controller targets, nightly would often break for these targets.

To fix this, the working group needed to make sure that necessary features were in the stable channel. We also had to add tests to the CI system for micro-controller targets. This means a person adding something for a desktop component won’t break something for an embedded component.

With these changes, embedded development with Rust moves away from the bleeding edge and towards the plateau of productivity.

Check out the Embedded Rust book to try it yourself.

What’s next?

With this year’s push, Rust has really good support for the ARM Cortex-M family of microprocessor cores, which are used in a lot of devices. However, there are lots of other architectures used on embedded devices, and those aren’t as well supported. Rust needs to expand to have the same level of support for these other architectures.

Networking

For networking, the working group needed to build a core abstraction into the language—async/await. This way, developers can use idiomatic Rust even when the code is asynchronous.

For networking tasks, you often have to wait. For example, you may be waiting for a response to a request. If your code is synchronous, that means the work will stop—the CPU core that is running the code can’t do anything else until the request comes in. But if you code asynchronously, then the function that’s waiting for the response can go on hold while the CPU core takes care of running other functions.
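The idea of a waiting function going on hold can be sketched with the standard library alone. The example below is an assumption-laden toy, not the async/await feature itself: Request, run_all, and interleaved_log are invented names, the "network" is simulated by a future that is pending on its first poll, and the executor is a simple loop that polls every unfinished task in turn on one thread.

```rust
use std::cell::RefCell;
use std::future::Future;
use std::pin::Pin;
use std::rc::Rc;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

type Log = Rc<RefCell<Vec<String>>>;

// Hypothetical request: the first poll "sends" it, the second sees the answer.
struct Request {
    name: &'static str,
    sent: bool,
    log: Log,
}

impl Future for Request {
    type Output = ();

    fn poll(self: Pin<&mut Self>, _cx: &mut Context<'_>) -> Poll<()> {
        let this = self.get_mut();
        if !this.sent {
            this.sent = true;
            this.log.borrow_mut().push(format!("{} sent", this.name));
            // On hold: the core is free to run another task. A real future
            // would register the waker here; the loop below just polls again.
            Poll::Pending
        } else {
            this.log.borrow_mut().push(format!("{} answered", this.name));
            Poll::Ready(())
        }
    }
}

// Waker that does nothing, which is enough for a loop that always re-polls.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

// Polls every unfinished task in turn on a single thread.
fn run_all(mut tasks: Vec<Request>) {
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    while !tasks.is_empty() {
        tasks.retain_mut(|task| Pin::new(task).poll(&mut cx).is_pending());
    }
}

fn interleaved_log() -> Vec<String> {
    let log: Log = Rc::new(RefCell::new(Vec::new()));
    run_all(vec![
        Request { name: "a", sent: false, log: log.clone() },
        Request { name: "b", sent: false, log: log.clone() },
    ]);
    let entries = log.borrow().clone();
    entries
}

fn main() {
    for line in interleaved_log() {
        println!("{}", line);
    }
}
```

Both requests are sent before either is answered, which is the interleaving a synchronous version cannot achieve on a single core.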

Coding asynchronous Rust is possible even with Rust 2015. And there are lots of upsides to this. On the large scale, for things like server applications, it means that your code can handle many more connections per server. On the small scale, for things like embedded applications that are running on tiny, single threaded CPUs, it means you can make better use of your single thread.

But these upsides came with a major downside—you couldn’t use the borrow checker for that code, and you would have to write unidiomatic (and somewhat confusing) Rust. This is where async/await comes in. It gives the compiler the information it needs to borrow check across asynchronous function calls.

The keywords for async/await were introduced in 1.31, although they aren’t currently backed by an implementation. Much of that work is done, and you can expect the feature to be available in an upcoming release.

What’s next?

Beyond just enabling productive low-level development for networking applications, Rust could enable more productive development at a higher level.

Many servers need to do the same kinds of tasks. They need to parse URLs or work with HTTP. If these were turned into components—common abstractions that could be shared as crates—then it would be easy to plug them together to form all sorts of different servers and frameworks.

To drive the component development process, the Tide framework is providing a test bed for, and eventually example usage of, these components.

Command line tools

For command line tools, the working group needed to bring together smaller, low-level libraries into higher level abstractions, and polish some existing tools.

For some CLI scripts, you really want to use bash. For example, if you just need to call out to other shell tools and pipe data between them, then bash is best.

But Rust is a great fit for a lot of other kinds of CLI tools. For example, it’s great if you are building a complex tool like ripgrep or building a CLI tool on top of an existing library’s functionality.

Rust doesn’t require a runtime and allows you to compile to a single static binary, which makes it easy to distribute. And you get high-level abstractions that you don’t get with other languages like C and C++, so that already makes Rust CLI developers productive.

What did the working group need to make this better still? Even higher-level abstractions.

With these higher-level abstractions, it’s quick and easy to assemble a production ready CLI.

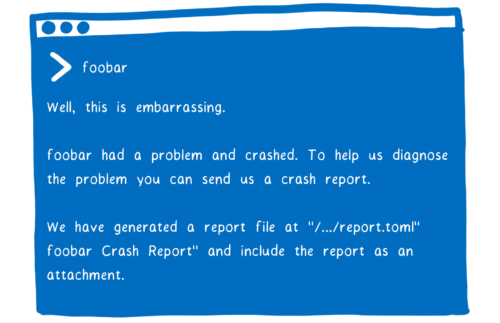

An example of one of these abstractions is the human-panic library. Without this library, if your CLI code panics, it probably outputs the entire backtrace. But that’s not very helpful for your end users. You could add custom error handling, but that requires effort.

If you use human-panic, then the output will be automatically routed to an error dump file. What the user will see is a helpful message suggesting that they report the issue and upload the error dump file.
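To show roughly what such a library saves you from writing, here is a minimal sketch of the same idea using only the standard library's panic hook. It is not the library's actual API or output: report_path, user_message, install_friendly_hook, the file name, and the message wording are all assumptions made for illustration.

```rust
use std::env;
use std::fs;
use std::panic;
use std::path::{Path, PathBuf};

// Hypothetical location for the crash report.
fn report_path(app: &str) -> PathBuf {
    env::temp_dir().join(format!("{}-crash-report.txt", app))
}

// The short, friendly text shown to the user instead of the raw panic output.
fn user_message(app: &str, report: &Path) -> String {
    format!(
        "{} ran into a problem and had to stop.\nPlease report the issue and attach: {}",
        app,
        report.display()
    )
}

fn install_friendly_hook(app: &'static str) {
    panic::set_hook(Box::new(move |info| {
        let report = report_path(app);
        // Best effort: the panic details go to the file, not to the terminal.
        let _ = fs::write(&report, info.to_string());
        eprintln!("{}", user_message(app, &report));
    }));
}

fn main() {
    install_friendly_hook("demo-cli");
    // catch_unwind keeps the demo alive so the report can be inspected.
    let outcome = panic::catch_unwind(|| {
        panic!("config file missing");
    });
    println!("panicked: {}", outcome.is_err());
}
```

Even this small version has to decide where the file goes and what to tell the user, which is the effort the paragraph above refers to.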

The working group also made it easier to get started with CLI development. For example, the confy library will automate a lot of setup for a new CLI tool. It only asks you two things:

- What’s the name of your application?

- What are configuration options you want to expose (which you define as a struct that can be serialized and deserialized)?

From that, confy will figure out the rest for you.

What’s next?

The working group abstracted away a lot of different tasks that are common between CLIs. But there’s still more that could be abstracted away. The working group will be making more of these high level libraries, and fixing more paper cuts as they go.

Rust tooling

When you experience a language, you experience it through tools. This starts with the editor that you use. It continues through every stage of the development process, and through maintenance.

This means that a productive language depends on productive tooling.

Here are some tools (and improvements to Rust’s existing tooling) that were introduced as part of Rust 2018.

IDE support

Of course, productivity hinges on getting code from your mind to the screen quickly. IDE support is critical to this. To support IDEs, we need tools that can tell the IDE what Rust code actually means, for example, to tell the IDE what strings make sense for code completion.

In the Rust 2018 push, the community focused on the features that IDEs needed. With Rust Language Server and IntelliJ Rust, many IDEs now have fluid Rust support.

Faster compilation

With compilation, faster means more productive. So we’ve made the compiler faster.

Before, when you would compile a Rust crate, the compiler would recompile every single file in the crate. But now, with incremental compilation, the compiler is smart and only recompiles the parts that have changed. This, along with other optimizations, has made the Rust compiler much faster.

rustfmt

Productivity also means not having to fix style nits (and never having to argue over formatting rules).

The rustfmt tool helps with this by automatically reformatting your code using a default code style (which the community reached consensus on). Using rustfmt ensures that all of your Rust code conforms to the same style, like clang-format does for C++ and Prettier does for JavaScript.

Clippy

Sometimes it’s nice to have an experienced advisor by your side… giving you tips on best practices as you code. That’s what Clippy does: it reviews your code as you go and tells you how to make that code more idiomatic.
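As a rough illustration of the kind of tip Clippy gives, the sketch below shows an index-based loop next to the more idiomatic form. It assumes Clippy's `needless_range_loop` lint fires on the first function; the function names are made up for the example, and the exact wording of the suggestion may differ.

```rust
/// Works, but indexes into the slice by hand.
/// Clippy's `needless_range_loop` lint is expected to flag this pattern.
fn sum_indexed(values: &[i32]) -> i32 {
    let mut total = 0;
    for i in 0..values.len() {
        total += values[i];
    }
    total
}

/// The more idiomatic form: iterate over the items directly.
fn sum_items(values: &[i32]) -> i32 {
    let mut total = 0;
    for value in values {
        total += value;
    }
    total
}

fn main() {
    let values = [1, 2, 3, 4];
    println!("{} {}", sum_indexed(&values), sum_items(&values));
}
```

Both functions compute the same result; the second simply avoids the manual indexing.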

rustfix

But if you have an older code base that uses outmoded idioms, then just getting tips and correcting the code yourself can be tedious. You just want someone to go into your code base and make the corrections.

For these cases, rustfix will automate the process. It will both apply lints from tools like Clippy and update older code to match Rust 2018 idioms.

Changes to Rust itself

These changes in the ecosystem have brought lots of productivity wins. But some productivity issues could only be fixed with changes to the language itself.

As I talked about in the intro, most of the language changes are completely compatible with existing Rust code. These changes are all part of Rust 2018. But because they don’t break any code, they also work in any Rust code… even if that code doesn’t use Rust 2018.

Let’s look at a few of the big language features that were added to all editions. Then we can look at the small list of Rust 2018-specific features.

New language features for all editions

Here’s a small sample of the big new language features that are (or will be) in all language editions.

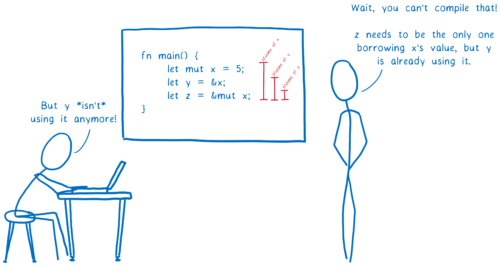

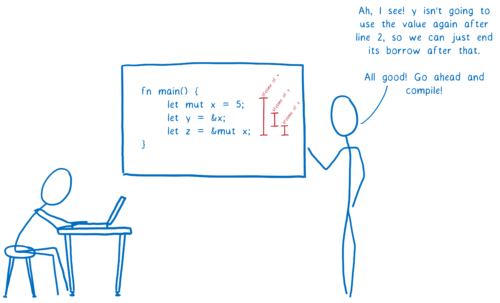

More precise borrow checking (e.g. Non-Lexical Lifetimes)

One big selling point for Rust is the borrow checker. The borrow checker helps ensure that your code is memory safe. But it has also been a pain point for new Rust developers.

Part of that is learning new concepts. But there was another big part… the borrow checker would sometimes reject code that seemed like it should work, even to those who understood the concepts.

This is because the lifetime of a borrow was assumed to go all the way to the end of its scope — for example, to the end of the function that the variable is in.

This meant that even though the variable was done with the value and wouldn’t try to access it anymore, other variables were still denied access to it until the end of the function.

To fix this, we’ve made the borrow checker smarter. Now it can see when a variable is actually done using a value. If it is done, then it doesn’t block other borrowers from using the data.
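A minimal sketch of the kind of code this affects (the function name is made up for the example). Under the older, scope-based rules the `push` was rejected because `first` was still considered borrowed until the end of the function; with the more precise checker it is accepted.

```rust
fn read_then_push() -> Vec<i32> {
    let mut scores = vec![1, 2, 3];

    let first = &scores[0]; // shared borrow of `scores` begins
    println!("first = {}", first); // last use of `first`: the borrow ends here

    scores.push(4); // mutable borrow is fine, `first` is never used again
    scores
}

fn main() {
    println!("{:?}", read_then_push());
}
```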

While this is only available in Rust 2018 as of today, it will be available in all editions in the near future. I’ll be writing more about all of this soon.

Procedural macros on stable Rust

Macros in Rust have been around since before Rust 1.0. But with Rust 2018, we’ve made some big improvements, like introducing procedural macros.

With procedural macros, it’s kind of like you can add your own syntax to Rust.

Rust 2018 brings two kinds of procedural macros:

Function-like macros

Function-like macros allow you to have things that look like regular function calls, but that are actually run during compilation. They take in some code and spit out different code, which the compiler then inserts into the binary.

They’ve been around for a while, but what you could do with them was limited. Your macro could only take the input code and run a match statement on it. It didn’t have access to look at all of the tokens in that input code.

But with procedural macros, you get the same input that a parser gets: a token stream. This means you can create much more powerful function-like macros.
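For comparison, the older pattern-matching style mentioned above can be sketched with `macro_rules!`. The `max_of!` macro is a hypothetical example: each arm matches a shape of input and expands to replacement code, without ever inspecting individual tokens.

```rust
macro_rules! max_of {
    // Base case: a single expression is its own maximum.
    ($x:expr) => {
        $x
    };
    // Recursive case: compare the first expression with the maximum of the rest.
    ($x:expr, $($rest:expr),+) => {{
        let first = $x;
        let others = max_of!($($rest),+);
        if first > others { first } else { others }
    }};
}

fn main() {
    println!("{}", max_of!(1, 7, 4));
}
```

A procedural macro, by contrast, is an ordinary Rust function living in its own crate that receives the token stream and returns a new one, so it is not shown as a single-file example here.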

Attribute-like macros

If you’re familiar with decorators in languages like JavaScript, attribute macros are pretty similar. They allow you to annotate bits of code in Rust that should be preprocessed and turned into something else.

The derive macro does exactly this kind of thing. When you put derive above a struct, the compiler will take that struct in (after it has been parsed as a list of tokens) and fiddle with it. Specifically, it will add a basic implementation of functions from a trait.
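A small sketch using derives that ship with the standard library; the `Point` struct is made up for the example. The three traits listed in the attribute are implemented automatically, so the struct can be printed, cloned, and compared without any handwritten `impl` blocks.

```rust
// The compiler generates implementations of Debug, Clone and PartialEq.
#[derive(Debug, Clone, PartialEq)]
struct Point {
    x: i32,
    y: i32,
}

fn main() {
    let a = Point { x: 1, y: 2 };
    let b = a.clone(); // from the derived Clone
    assert_eq!(a, b); // from the derived PartialEq (and Debug for the message)
    println!("{:?}", a); // from the derived Debug
}
```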

More ergonomic borrowing in matching

This change is pretty straightforward.

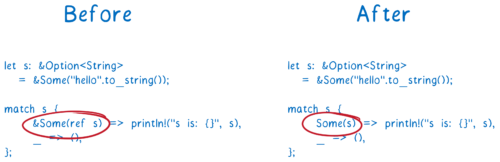

Before, if you wanted to borrow something and tried to match on it, you had to add some weird looking syntax:

But now, you don’t need the &Some(ref s) anymore. You can just write Some(s), and Rust will figure it out from there.
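Side by side, the two forms might look like the sketch below. The function names are made up for the example; both versions compile with a current compiler and behave identically.

```rust
/// The older style: spell out the reference pattern and the `ref` binding.
fn len_old(name: &Option<String>) -> usize {
    match name {
        &Some(ref s) => s.len(),
        &None => 0,
    }
}

/// The newer style: the compiler works out that `s` is a borrow.
fn len_new(name: &Option<String>) -> usize {
    match name {
        Some(s) => s.len(),
        None => 0,
    }
}

fn main() {
    let name = Some(String::from("ferris"));
    println!("{} {}", len_old(&name), len_new(&name));
}
```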

New features specific to Rust 2018

The smallest part of Rust 2018 is the set of features specific to it. Here is the small handful of changes that using the Rust 2018 edition unlocks.

Keywords

There are a few keywords that have been added to Rust 2018.

- try keyword
- async/await keyword

These features haven’t been fully implemented yet, but the keywords are being added in Rust 1.31. This means we don’t have to introduce new keywords (which would be a breaking change) in the future, once the features behind these keywords are implemented.
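One practical consequence of reserving a keyword is that older code using that word as an ordinary name has to change. A minimal sketch, assuming the raw identifier syntax (`r#name`) is used to keep the old name; the function itself is hypothetical.

```rust
// `try` is reserved in Rust 2018, so it can no longer be used as a plain name there.
// The raw identifier form `r#try` is accepted instead.
fn r#try(attempts: u32) -> u32 {
    attempts + 1
}

fn main() {
    println!("{}", r#try(2));
}
```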

The module system

One big pain point for developers learning Rust is the module system. And we could see why. It was hard to reason about how Rust would choose which module to use.

To fix this, we made a few changes to the way paths work in Rust.

For example, if you imported a crate, you could use it in a path at the top level. But if you moved any of the code to a submodule, then it wouldn’t work anymore.

    // top level module
    extern crate serde;

    // this works fine at the top level
    impl serde::Serialize for MyType { ... }

    mod foo {
        // but it does *not* work in a sub-module
        impl serde::Serialize for OtherType { ... }
    }

Another example is the prefix ::, which used to refer to either the crate root or an external crate. It could be hard to tell which.

We’ve made this more explicit. Now, if you want to refer to the crate root, you use the prefix crate:: instead. And this is just one of the path clarity improvements we’ve made.
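A minimal single-file sketch of the `crate::` prefix; the module and function names are made up for the example. However deeply the calling code is nested, a path that starts with `crate::` is resolved from the crate root.

```rust
mod config {
    pub fn default_port() -> u16 {
        8080
    }
}

mod server {
    pub mod http {
        pub fn port() -> u16 {
            // `crate::` always starts at the crate root,
            // so this path reads the same from any module.
            crate::config::default_port()
        }
    }
}

fn main() {
    println!("{}", server::http::port());
}
```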

If you have existing Rust code and you want it to use Rust 2018, you’ll very likely need to update it for these new module paths. But that doesn’t mean that you’ll need to manually update your code. Run cargo fix before you add the edition specifier to Cargo.toml and rustfix will make all the changes for you.

Learn More

Learn all about this edition in the Rust 2018 edition guide.

The post Rust 2018 is here… but what is it? appeared first on Mozilla Hacks - the Web developer blog.


The Servo Blog: Experience porting Servo to the Magic Leap One |

Introduction

We now have nightly releases of Servo for the Magic Leap One augmented reality headset. You can head over to https://download.servo.org/, install the application, and browse the web in a virtual browser.

This is a developer preview release, designed as a testbed for future products, and as a venue for experimenting with UI design. What should the web look like in augmented reality? We hope to use Servo to find out!

We are providing these nightly snapshots to encourage other developers to experiment with AR web experiences. There are still many missing features, such as immersive or 3D content, many types of user input, media, or a stable embedding API. We hope you forgive the rough edges.

This blog post will describe the experience of porting Servo to a new architecture, and is intended for system developers.

Magic Leap under the hood

The Magic Leap software development kit (SDK) is based on commonly-used open-source technologies. In particular, it uses the clang compiler and the gcc toolchain for support tools such as ld, objcopy, ranlib and friends.

The architecture is 64-bit ARM, using the same application binary interface as Android. Together these give the target as being aarch64-linux-android, the same as for many 64-bit Android devices. Unlike Android, Magic Leap applications are native programs, and do not require a Java Native Interface (JNI) to the OS.

Magic Leap provides a lot of support for developing AR applications, in the form of the Lumin Runtime APIs, which include 3D scene descriptions, UI elements, input events including device placement and orientation in 3D space, and rendering to displays which provide users with 3D virtual visual and audio environments that interact with the world around them.

The Magic Leap and Lumin Runtime SDKs are available from https://creator.magicleap.com/ for Mac and Windows platforms.

Building the Servo library

The Magic Leap library is built using ./mach build --magicleap, which under the hood calls cargo build --target=aarch64-linux-android. For most of the Servo library and its dependencies, this just works, but there are a couple of corner cases: C/C++ libraries and crates with special treatment for Android.

Some of Servo’s dependencies are crates which link against C/C++ libraries, notably openssl-sys and mozjs-sys. Each of these libraries uses slightly different build environments (such as Make, CMake or Autoconf, often with custom build scripts). The challenge for software like Servo that uses many such libraries is to find a configuration which will work for all the dependencies. This comes down to finding the right settings for environment variables such as $CFLAGS, and is complicated by cross-compiling the libraries which often means ensuring that the Magic Leap libraries are included, not the host libraries.

The other main source of issues with the build is that since Magic Leap uses the same ABI as Android, its target is aarch64-linux-android, which is the same as for 64-bit ARM Android devices. As a result, many crates which need special treatment for Android (for example for JNI or to use libandroid) will treat the Magic Leap build as an Android build rather than a Linux build. Some care is needed to undo all of this special treatment. For example, the build scripts of Servo, SpiderMonkey and OpenSSL all contain code to guess the directory layout of the Android SDK, which needs to be undone when building for Magic Leap.
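The shape of the problem can be sketched as a build script that has to tell the two platforms apart even though the target triple is identical. This is not Servo's actual build script: `needs_android_setup` and the `MAGICLEAP_SDK` environment variable are hypothetical names chosen for the illustration, while `CARGO_CFG_TARGET_OS` is the variable Cargo sets for build scripts.

```rust
use std::env;

/// Hypothetical check: Android-only setup (JNI, libandroid) applies only when
/// the target OS is Android and we are not building against the Magic Leap SDK.
fn needs_android_setup(target_os: &str, magic_leap_sdk: Option<&str>) -> bool {
    target_os == "android" && magic_leap_sdk.is_none()
}

fn main() {
    // Cargo sets CARGO_CFG_TARGET_OS when it runs a build script.
    let target_os = env::var("CARGO_CFG_TARGET_OS").unwrap_or_default();
    // Assumption: some marker distinguishes a Magic Leap build; here an env var.
    let sdk = env::var("MAGICLEAP_SDK").ok();

    if needs_android_setup(&target_os, sdk.as_deref()) {
        // Only a real Android build links against libandroid.
        println!("cargo:rustc-link-lib=android");
    }
}
```

Because the triple alone cannot distinguish the two platforms, some extra signal of this kind is needed wherever a crate branches on Android.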

One thing that just worked turned out to be debugging Rust code on the Magic Leap device. Magic Leap supports the Visual Studio Code IDE, and remote debugging of code running natively. It was great to see the debugging working out of the box for Rust code as well as it did for C++.

Building the Magic Leap application

The first release of Servo for Magic Leap comes with a rudimentary application for browsing 2D web content. This is missing many features, such as immersive 3D content, audio or video media, or user input by anything other than the controller.

Magic Leap applications come in two flavors: universe applications, which are immersive experiences that have complete control over the device, and landscape applications, which co-exist and present the user with a blended experience where each application presents part of a virtual scene. Currently, Servo is a landscape application, though we expect to add a universe application for immersive web content.

Landscape applications can be designed using the Lumin Runtime Editor, which gives a visual presentation of the various UI components in the scene graph.

The most important object in Servo’s scene graph is the content node, since it is a Quad that can contain a 2D resource. One of the kinds of resource that a Quad can contain is an EGL context, that Servo uses to render web content. The runtime editor generates C++ code that can be included in an application to render and access the scene graph; Servo uses this to access the content node, and the EGL context it contains.

The other hooks that the Magic Leap Servo application uses are for events such as moving the laser pointer, which are mapped to mouse events, a heartbeat for animations or other effects which must be performed on the main thread, and a logger which bridges Rust’s logging API to Lumin’s.

The Magic Leap application is built each night by Servo’s CI system, using the Mac builders since there is no Linux SDK for Magic Leap. This builds the Servo library, and packages it as a Magic Leap application, which is hosted on S3 and linked to from the Servo download page.

Summary

The pull request that added Magic Leap support to Servo is https://github.com/servo/servo/pull/21985 which adds about 1600 lines to Servo, mostly in the build scripts and the Magic Leap application. Work on the Magic Leap port of Servo started in early September 2018, and the pull request was merged at the end of October, so it took about two person-months.

Much of the port was straightforward, due to the maturity of the Rust cross-compilation and build tools, and the use of common open-source technologies in the Magic Leap platform. Lumin OS contains many innovative features in its treatment of blending physical and virtual 3D environments, but it is built on a solid open-source foundation, which makes porting a complex application like Servo relatively straightforward.

Servo is now making its first steps onto the Magic Leap One, and is available for download and experimentation. Come try it out, and help us design the immersive web!


Cameron Kaiser: Edge gets Chrome-plated, and we're all worse off |

In the sense that Anaheim won't (at least in name) be Google, just Chromium, there's reason to believe that it won't have the repeated privacy erosions that have characterized Google's recent moves with Chrome itself. But given how much DNA WebKit and Blink share, that means there are effectively two current major rendering engines left: Chromium and Gecko (Firefox). The little ones like NetSurf, bless its heart, don't have enough marketshare (or currently features) to rate, Trident in Internet Explorer 11 is intentionally obsolete, and the rest are too deficient to be anywhere near usable (Dillo, etc.). So this means Chromium arrogates more browsershare to itself and Firefox will continue to be the second class citizen until it, too, has too small a marketshare to be relevant. Then Google has eaten the Web. And we are worse off for it.

Bet Mozilla's reconsidering that stupid embedding decision now.

http://tenfourfox.blogspot.com/2018/12/edge-gets-chrome-plated-and-were-all.html
