Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Dustin J. Mitchell: Elementary Bugs |

Mozilla is a well-known open-source organization, and thus draws a lot of interested contributors. But Mozilla is huge, and even the more limited scope of Firefox development is a wilderness to a newcomer. We have developed various tools to address this, one of which was an Outreachy project by Fienny Angelina called Codetribute.

The site aggregates bugs that experienced developers have identified as good for new contributors (“good first bugs”, although often they are features or tasks) across Bugzilla and GitHub. It’s useful both for self-motivated contributors and for those looking for a starting point for a deeper engagement with Mozilla (an internship or even a full-time job).

However, it’s been tricky to help developers identify good-first-bugs.

Elementary

I watched a YouTube video, “Feynman’s Lost Lecture”. It is not the lecture itself (which is lost), but Sanderson covers the content of the lecture: “an elementary demonstration of why planets orbit in ellipses”.

He quotes Feynman himself defining “elementary”:

I am going to give what I will call an elementary demonstration. But elementary does not mean easy to understand. Elementary means that very little is required to know ahead of time to understand it, except to have an infinite amount of intelligence.

I propose that this definition captures the perfect good-first-bug, too.

Elementary Bugs

An elementary bug is not an easy bug. An elementary bug is one that requires very little knowledge of the software ahead of time, except to have the skills to figure things out.

A newcomer does not have the breadth of knowledge that an experienced developer does. For example, members of the Taskcluster team understand how all of the microservices and workers fit together, how they are deployed, and how Firefox CI utilizes the functionality. A task that requires understanding all of these things would take a long time, even for a highly skilled newcomer to the project. But a task limited to, say, a single microservice or library provides a much more manageable scope.

Bug 1455130, “Add pagination to auth.listRoles”, is a good example. Solving this bug requires that the contributor understand the API method definitions in the Auth service, and how Taskcluster handles pagination. It does not involve understanding how Taskcluster, as a whole, functions. So: a little bit of knowledge (of JS, of Git, of HTTP APIs), and the skills to jump into a codebase, find similar implementations, adopt a coding style, and so on.

Having completed a bug like this, a budding contributor can use their newfound understanding as a home base to start exploring related topics. Having understood that the Auth service is concerned with Clients and Roles, what are those? How do they relate to permissions to do things, and what sort of things? What scopes are required to create a task? What can a task do? Before long, the intrepid contributor is a Taskcluster pro.

Other forms of elementary bugs might include:

- Refactoring an existing, well-tested function (so the contributor only needs to understand what the function does and how to do it better)

- Adding unit tests for well-factored functions (but not integration tests, which by their nature require too much knowledge of how things fit together)

- Implementing a new component from a well-defined specification (such as a new React component)

By contrast, some things do not make good first bugs:

- Repetitive tasks such as renaming or fixing lint (but note that these can be good for practicing version-control processes!)

- Open-ended debugging, which often involves a lot of intuition (born of experience) and digging through layers a newcomer will not be familiar with

- Anything requiring design, as a newcomer lacks the perspective to evaluate designs’ suitability to the situation

I have found the term “elementary” to be a helpful yardstick in evaluating whether to tag something as a good-first-bug. Hopefully it can help others as well!

|

|

Daniel Stenberg: I’m leaving Mozilla |

It's been five great years, but now it is time for me to move on and try something else.

During these five years I've met and interacted with a large number of awesome people at Mozilla, lots of new friends! I got the chance to work from home and yet work with a global team on a widely used product, all done with open source. I have worked on internet protocols during work hours (in addition to my regular spare-time work with them) and it's been great! Heck, lots of the HTTP/2 development and the publication of that was made while I was employed by Mozilla and I fondly participated in that. I shall forever have this time ingrained in my memory as a very good period of my life.

Even before I joined Firefox development, I understood some of the challenges of making a browser in the modern era, but that understanding has now been properly enriched with lots of hands-on experience and code-digging in sometimes decades-old messy C++, a spaghetti armada of threads and the wild wild west of users on the Internet.

A very big thank you and a warm bye bye go to every one of my friends at Mozilla. I won't be far off and I'm sure I will have reasons to see many of you again.

My last day as officially employed by Mozilla is December 11, 2018, but I plan to spend some of my remaining saved-up vacation days before then, so I'll hand over most of my responsibilities well before that.

The future is bright but unknown!

I don't yet know what to do next.

I have some ideas and communications with friends and companies, but nothing is firmly decided yet. I will certainly entertain you with a totally separate post on this blog once I have that figured out! Don't worry.

Will it affect curl or other open source I do?

I had worked on curl for a very long time already before joining Mozilla and I expect to keep doing curl and other open source things even going forward. I don't think my choice of future employer should have to affect that negatively too much, except of course in periods.

With me leaving Mozilla, we're also losing Mozilla as a primary sponsor of the curl project, since that was made up of them allowing me to spend some of my work days on curl and that's now over.

Short-term at least, this move might increase my curl activities since I don't have any new job yet and I need to fill my days with something...

What about toying with HTTP?

I was involved in the IETF HTTPbis working group for many years before I joined Mozilla (for over ten years now!) and I hope to be involved for many years still. I still have a lot of things I want to do with curl and to keep curl the champion of its class I need to stay on top of the game.

I will continue to follow and work with HTTP and other internet protocols very closely. After all curl remains the world's most widely used HTTP client.

Can I enter the US now?

No. That's unfortunately not related, and I'm not leaving Mozilla because of this problem and I unfortunately don't expect my visa situation to change because of this change. My visa counter is now showing more than 214 days since I applied.

|

|

Shing Lyu: New Rust Course - Building Reuseable Code with Rust |

My first ever video course is now live on Udemy, Safari Books and Packt. It really took me a long time and I’d love to share with you what I’ve prepared for you.

What’s this course about?

This course is about the Rust programming language, but it’s not one of those general introductory courses on basic Rust syntax. This course focuses on the code reuse aspect of the Rust language. So we won’t touch on every language feature, but we’ll help you understand how a selected set of features helps you achieve code reuse.

What’s so special about it?

Since this course is not a general introductory course, it is structured bottom-up and helps you learn how the features are actually used out in the wild.

A bottom up approach

We start from the most basic programming language constructs: loops, iterators and functions. Then we see how we can further generalize functions and data structures (structs and enums) using generics. With these tools, we can avoid copy-pasting and stick to the DRY (Don’t Repeat Yourself) principle.
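As a rough illustration of the idea (not taken from the course itself), here is a minimal sketch of how generics can replace copy-pasted code. The names `largest`, `Point` and `swapped` are hypothetical and chosen only for this example.

```rust
/// Returns the largest item in a slice, or `None` if the slice is empty.
/// One generic function replaces separate copies for i32, f64, char, ...
fn largest<T: PartialOrd + Copy>(items: &[T]) -> Option<T> {
    let mut iter = items.iter().copied();
    // An empty slice has no largest element.
    let first = iter.next()?;
    Some(iter.fold(first, |max, x| if x > max { x } else { max }))
}

/// A generic data structure: one definition works for any coordinate type.
#[derive(Debug, PartialEq)]
struct Point<T> {
    x: T,
    y: T,
}

impl<T: Copy> Point<T> {
    /// Swaps the two coordinates, whatever their type is.
    fn swapped(&self) -> Point<T> {
        Point { x: self.y, y: self.x }
    }
}
```

Calling `largest(&[1, 5, 3])` and `largest(&[0.5, -2.0])` uses the same source code for integers and floats; the compiler generates a specialized version for each type.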

But simply avoiding repeated code snippets is not enough. What comes next naturally is to define a clear interface, or internal API, between the modules (in a general sense, not the Rust mod). This is when traits come in handy. Traits help you define and enforce interfaces. We’ll also discuss the performance impact of static dispatch vs. dynamic dispatch, using generics and trait objects.
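To make the difference between the two dispatch styles concrete, here is a small sketch. The trait `Describe` and the types `Cat` and `Robot` are invented for this example and do not come from the course.

```rust
/// A hypothetical interface between modules: anything that can describe itself.
trait Describe {
    fn describe(&self) -> String;
}

struct Cat;
struct Robot {
    id: u32,
}

impl Describe for Cat {
    fn describe(&self) -> String {
        String::from("a cat")
    }
}

impl Describe for Robot {
    fn describe(&self) -> String {
        format!("robot #{}", self.id)
    }
}

/// Static dispatch: the compiler generates one copy of this function per
/// concrete `T` (monomorphization), so the call can be resolved at compile time.
fn greet_static<T: Describe>(item: &T) -> String {
    format!("Hello, {}!", item.describe())
}

/// Dynamic dispatch: a single copy of the function exists, and the method
/// is looked up through the trait object's vtable at run time.
fn greet_dynamic(item: &dyn Describe) -> String {
    format!("Hello, {}!", item.describe())
}

/// Trait objects also allow a collection that mixes different concrete types.
fn describe_all(items: &[Box<dyn Describe>]) -> Vec<String> {
    items.iter().map(|item| item.describe()).collect()
}
```

Roughly speaking, the generic version trades larger binaries for calls that can be inlined, while the trait-object version keeps one copy of the code at the cost of an indirect call.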

Next we talk about more advanced tools (i.e. ones you shouldn’t use unless necessary) like macros, which help you do crazier things by tapping directly into the compiler. You can write function-like macros that help you reuse code that needs lower-level access. You can also create custom derives with macros.
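A function-like macro can be sketched with `macro_rules!`. The macro name `max_of` is hypothetical and only serves as an illustration; a custom derive is not shown here because it requires a separate procedural-macro crate.

```rust
/// A hypothetical function-like macro: `max_of!(a, b, c, ...)` accepts any
/// number of arguments, which an ordinary function cannot do, and expands
/// into nested comparisons at compile time.
macro_rules! max_of {
    // Base case: a single expression is its own maximum.
    ($x:expr) => { $x };
    // Recursive case: compare the first expression with the max of the rest.
    ($x:expr, $($rest:expr),+) => {{
        let a = $x;
        let b = max_of!($($rest),+);
        if a > b { a } else { b }
    }};
}
```

For example, `max_of!(1, 9, 4)` evaluates to `9`, and the same macro works for any type that supports `>`.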

Finally, with these tools at hand, we can package our code into modules (Rust mod), which help you define a hierarchical namespace. We can then organize these modules into crates, which are software packages or libraries that can contain multiple files. When you reach this level, you can already consume and produce libraries and frameworks and work with a team of Rust developers.
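The hierarchical namespace can be sketched in a single file with inline modules. The module and item names below (`geometry`, `shapes`, `Rect`, `rounded_area`) are made up for this example; in a real crate each module would typically live in its own file.

```rust
/// A hypothetical `geometry` module with a nested `shapes` module.
mod geometry {
    pub mod shapes {
        /// Public items are reachable as `geometry::shapes::Rect`.
        pub struct Rect {
            pub width: f64,
            pub height: f64,
        }

        impl Rect {
            pub fn area(&self) -> f64 {
                self.width * self.height
            }
        }
    }

    /// Private helper: visible inside `geometry` only, not to outside callers.
    fn round2(value: f64) -> f64 {
        (value * 100.0).round() / 100.0
    }

    /// Public function that uses the private helper internally.
    pub fn rounded_area(rect: &shapes::Rect) -> f64 {
        round2(rect.area())
    }
}
```

Callers refer to items by path, such as `geometry::shapes::Rect`, or shorten the path with a `use` declaration. Only items marked `pub` form the module's interface.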

A guided tour through the std

It’s very easy to learn a lot of syntax but never understand how it is used in real life. In each section, we’ll guide you through how these programming tools are used in std, the Rust standard library. Standard libraries are the extreme form of code reuse: you are reusing code that is produced by the core language team. You’ll be able to see how these features are put to real use in std to solve its code reuse needs.
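One example of this kind of reuse in std, chosen here as an illustration rather than taken from the course, is the `Iterator` trait: a type implements the single required method `next`, and the standard library supplies adapters such as `filter` and `sum` for free. The `Countdown` type and `sum_of_even` function are hypothetical.

```rust
/// A hypothetical iterator that counts down from `remaining` to 1.
struct Countdown {
    remaining: u32,
}

impl Iterator for Countdown {
    type Item = u32;

    /// The only method we have to write ourselves.
    fn next(&mut self) -> Option<u32> {
        if self.remaining == 0 {
            None
        } else {
            let current = self.remaining;
            self.remaining -= 1;
            Some(current)
        }
    }
}

/// `filter` and `sum` are provided by std's `Iterator` trait, not by us.
fn sum_of_even(start: u32) -> u32 {
    Countdown { remaining: start }
        .filter(|n| n % 2 == 0)
        .sum()
}
```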

We’ll also show you how you can publish your code onto crates.io, Rust’s package registry. Therefore you’ll not only be comfortable reusing other people’s crates, but also be a valuable contributor to the wider Rust community.

Conclusion

So this summarizes the highlights of this course. If you’ve already learned the basics of Rust and would like to take your Rust skills to the next level, please check this course out. You can find this course on the following platforms: Udemy, Safari Books and Packt.

https://shinglyu.github.io/web/2018/11/16/new-rust-course-building-reuseable-code-with-rust.html

|

|

The Mozilla Blog: Mozilla Fights On For Net Neutrality |

Mozilla took the next step today in the fight to defend the web and consumers from the FCC’s attack on an open internet. Together with other petitioners, Mozilla filed our reply brief in our case challenging the FCC’s elimination of critical net neutrality protections that require internet providers to treat all online traffic equally.

The fight for net neutrality, while not a new one, is an important one. We filed this case because we believe that the internet works best when people control for themselves what they see and do online.

The FCC’s removal of net neutrality rules is not only bad for consumers, it is also unlawful. The protections in place were the product of years of deliberation and careful fact-finding that proved the need to protect consumers, who often have little or no choice of internet provider. The FCC is simply not permitted to arbitrarily change its mind about those protections based on little or no evidence. It is also not permitted to ignore its duty to promote competition and protect the public interest. And yet, the FCC’s dismantling of the net neutrality rules unlawfully removes long-standing rules that have ensured the internet provides a voice for everyone.

Meanwhile, the FCC’s defenses of its actions and the supporting arguments of large cable and telco company ISPs, who have come to the FCC’s aid, are misguided at best. They mischaracterize the internet’s technical structure as well as the FCC’s mandate to advance internet access, and they ignore clear evidence that there is little competition among ISPs. They repeatedly contradict themselves and have even introduced new justifications not outlined in the FCC’s original decision to repeal net neutrality protections.

Nothing we have seen from the FCC since this case began has changed our mind. Our belief in this action remains as strong as it was when its plan to undo net neutrality protections last year was first met with outcry from consumers, small businesses and advocates across the country.

We will continue to do all that we can to support an open and vibrant internet that is a resource accessible to all. We look forward to making our arguments directly before the D.C. Court of Appeals and the public. FCC, we’ll see you in court on February 1.

Mozilla v FCC – Joint Reply Brief

The post Mozilla Fights On For Net Neutrality appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/11/16/mozilla-fights-on-for-net-neutrality/

|

|

Mozilla Open Innovation Team: Virtual meeting rooms don’t have to be boring. We challenge you to design better ones! |

We are excited to announce the launch of the VR Design Challenge: Mozilla Hubs Clubhouse, a competition co-sponsored by Mozilla and Sketchfab, the world’s largest 3D-content platform. The goal of the competition is to create stunning 3D assets for Hubs by Mozilla.

What’s Hubs by Mozilla?

Mozilla’s mission is to make the Internet a global public resource, open and accessible to all, including innovators, content creators, and builders on the web. VR is changing the very future of web interaction, so advancing it is crucial to Mozilla’s mission. That was the initial idea behind Hubs by Mozilla, a VR interaction platform launched in April 2018 that lets you meet and talk to your friends, colleagues, partners, and customers in a shared 360-environment using just a browser, on any device from head-mounted displays like HTC Vive to 2D devices like laptops and mobile phones.

Since then, the Mozilla VR team has kept integrating new and exciting features into the Hubs experience: the ability to bring videos, images, documents, and even 3D models into Hubs by simply pasting a link. In early October, two more useful features were added: drawing and photo uploads.

A challenge for Sketchfab’s content-creating community

The work to make the Hubs more comfortable to its denizens has only begun, and to spur creativity in this new area of Mozilla’s Mixed Reality program, Mozilla is partnering (again) with Sketchfab, a one-million-strong community of creators of 3D content, for a new VR Design Challenge: Mozilla Hubs Clubhouse.

We chose “Clubhouse” as the competition theme because we want you to imagine and build the places where you like to hang out and meet with your pals, family or colleagues. This can be any VR space from a treehouse to a luxury penthouse suite, a hobbit hole, the garage you used to jam in back in the day or a super-secret gathering space at the bottom of the ocean. But it doesn’t stop with just the rooms. We challenge you to design all the props to be placed in these: decorations on the wall, furniture, artwork, machinery, or even objects that users could move around, such as coffee cups and food bins. You totally own the place!

How to participate

The competition will run until Tuesday, November 27th (23:59 New York time, EDT), and the submitted assets will be evaluated by a four-judge panel composed of members of the Mozilla Hubs and Sketchfab Community teams. Prizes will include Amazon Gift Cards, Oculus Go headsets, and Sketchfab PRO.

In order to participate in the competition you should have a Sketchfab account. If you don’t already have a Sketchfab account, you can sign up for free here.

Dreaming of hosting a virtual holiday party with your buddies in your own Clubhouse? Thinking of having a poster of your favorite band on the wall? An entire aquarium wall? Create them!

Virtual meeting rooms don’t have to be boring. We challenge you to design better ones! was originally published in Mozilla Open Innovation on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

Firefox Test Pilot: Thoughts on the Firefox Email Tabs experiment |

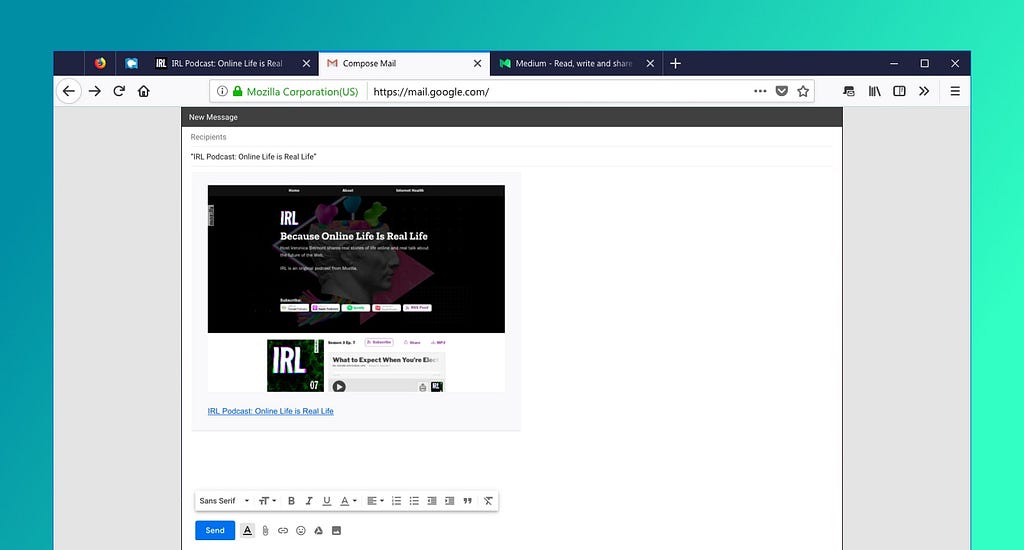

We recently released Email Tabs in Firefox Test Pilot. This was a project I championed, and I wanted to offer some context on it.

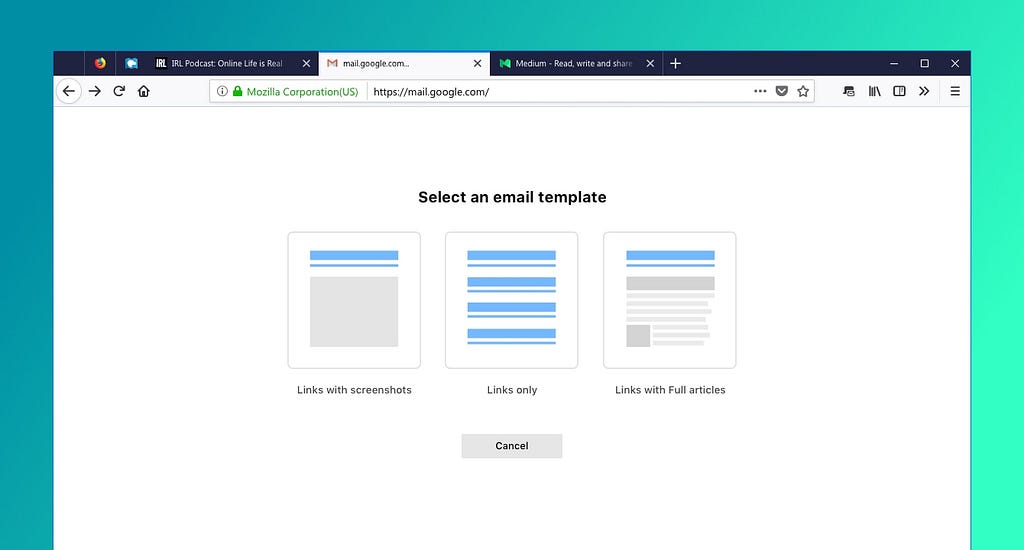

Email Tabs is a browser add-on that makes it easier to compose an email message from your tabs/pages. The experiment page describes how it works, but to summarize it from a technical point of view:

- You choose some tabs

- The add-on gets the best title and URL from the tab(s), makes a screenshot, and uses reader view to get simplified content from the tab

- It opens an email compose page

- It asks you what you want your email to look like (links, entire articles, screenshots)

- It injects the HTML (somewhat brutally) into the email composition window

- When the email is sent, it offers to close the compose window or all the tabs you’ve sent

It’s not fancy engineering. It’s just-make-it-work engineering. It does not propose a new standard for composing HTML emails. It doesn’t pay attention to your existing mail settings. It does not push forward the state of the art. It has no mobile component. Notably, no one in Mozilla ever asked us to make this thing. And yet I really like this add-on, and so I feel a need to explain why.

User Research origins

A long time ago Mozilla did research into Save/Share/Revisit. The research was based on interviews, journals, and directly watching people do work on their computers.

The results should not be surprising, but it was important to actually have them documented and backed up by research:

- People use simple techniques

- Everyone claims to be happy with their current processes

- Everyone used multiple tools that typically fed into each other

- Those processes might seem complicated and inefficient to me, but didn’t to them

- People said they might be open to improvements in specific steps

- People were not interested in revamping their overall processes

- Some techniques were particularly popular:

  - Screenshots

  - URLs

  - Texting

  - Bookmarks

Not everyone used all of these, but they were all popular.

The research made me change a page-freezing/saving tool (Page Shot) into what is now Firefox Screenshots (I didn’t intend to lose the freezing functionality along the way, but things happen when you ship).

Even if the research was fairly clear, it wasn’t prescriptive, and life moved on. But it sat in the back of my head, both email and the general question of workflows. And once I was doing less work on Screenshots I felt compelled to come back to it. Email stuck out, both because of how ubiquitous it was, and how little anyone cared about it. It seemed easy to improve on.

Email is also multipurpose. People will apologetically talk about emailing themselves something in order to save it, even though everyone does it. It can be a note for the future, something to archive for later, a message, a question, an FYI. One of the features of Email Tabs that I’m fond of is the ability to send a set of tabs, and then close those same tabs. Have a set of tabs that represent something you don’t want to forget, but don’t want to use right now? Send it to yourself. And unlike structured storage (like a bookmark folder), you can describe as much as you want about your next steps in the email itself.

Choosing to integrate with webmail

The obvious solution is to make something that emails out a page. A little web service perhaps, and you give it a URL and it fetches it, and then something that forwards the current URL to that service…

What seems simple becomes hard pretty quickly. Of course you have to fetch the page, and render it, and worry about JavaScript, and so on. But even if you skip that, then what email address will it come from? Do you integrate with a contact list? Make your own? How do you let people add a little note? A note per tab? Do you save a record?

Prepopulating an email composition answers a ton of questions:

- All the mail infrastructure

- From addresses, email verification, selecting your address, etc

- To field, CC, address book

- The editable body gives the user control and the ability to explain why they are sending something

- It’s very transparent to the user what they are sending, and they confirm it by hitting Send

Since then I’ve come to appreciate the power of editable documents. Again it should be obvious, but there’s this draw any programmer will feel towards structured data and read-only representations of that data. The open world of an email body is refreshingly unconstrained.

Email providers & broken browsers

One downside to this integration is that we are shipping with only Gmail support. That covers most people, but it feels wrong.

This is an experiment, so keep it simple, right? And that was the plan until internal stakeholders kept asking over and over for us to support other clients and we thought: if they care so much maybe they are right.

We didn’t get support for other providers ready for launch, but we did get a good start on it. Along the way I saw that Yahoo Mail is broken and Outlook isn’t supported at all in Firefox (and the instructions point to a pretty unhelpful addons.mozilla.org search). That’s millions of users who aren’t getting a good experience.

Growing Firefox is a really hard problem. Of course Mozilla has also studied this quite a bit, and one of the strongest conclusions is that people change their browser when their browser breaks. People aren’t out there looking for something better; everyone has better things to do than think about their web browser. But if things don’t work, people go searching.

But what does broken really mean? I suspect if we looked more closely we might be surprised. The simple answer: something is broken if it doesn’t act the way it should. But what “should” something do? If you click a link and Mail.app opens up, and you don’t use Mail.app, that’s broken. To Mozilla developers, if Mail.app is your registered default mail provider, then it “should” open up. Who’s right?

Email Tabs doesn’t offer particular insight into this, but I do like that we’ve created something with the purpose of enabling a successful workflow. Nothing is built to a spec, or to a standard, it’s only built to work. I think that process is itself revealing.

Listening to the research

Research requires interpretation. We asked questions about saving, sharing, and revisiting content, and we got back answers on those topics. Were those the right questions to ask? They seem pretty good, but there’s other pretty good questions that will lead to very different answers. What makes you worried when you use the web? What do you want from a browser? What do you think about Firefox? What do you use a browser for? What are you doing when you switch from a phone to a desktop browser? We’ve asked all these questions, and they point to different answers.

And somewhere in there you have to find an intersection between what you hear in the research and what you know how to do. There’s no right answer. But there is something different when you start from the user research, instead of using the user research. There’s a different sort of honesty to the conclusions, and I hope that comes through in the product.

I know what Email Tabs isn’t: it’s not part of any strategy. It’s not part of any kind of play for anything. There’s no ulterior motives. There’s no income potential. This makes its future very hazy. And it won’t be a blow-up success. It is merely useful. But I like it, and I hope we do more.

Originally published at www.ianbicking.org.

Thoughts on the Firefox Email Tabs experiment was originally published in Firefox Test Pilot on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

Mike Hommey: PSA: Firefox Nightly now with experimental Wayland support |

As of last nightly (20181115100051), Firefox now supports Wayland on Linux, thanks to the work from Martin Stransky and Jan Horak, mostly.

Before that, it was possible to build your own Firefox with Wayland support (and Fedora does it), but now the downloads from mozilla.org come with Wayland support out of the box for the first time.

However, being experimental and all, the Wayland support is not enabled by default, meaning by default, you’ll still be using XWayland. To enable Wayland support, set the GDK_BACKEND environment variable to wayland before starting Firefox.

To verify whether Wayland support is enabled, go to about:support, and check “WebGL 1 Driver WSI Info” and/or “WebGL 2 Driver WSI Info”. If they say something about GLX, Wayland support is not enabled. If they say something about EGL, it is. I filed a bug to make it more obvious what is being used.
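
As a rough sketch of the two steps above, the following shows one way to launch Firefox with GDK_BACKEND set and to interpret a WSI info string copied from about:support. The function names, the `firefox` binary name, and the sample strings are assumptions for illustration; the only facts taken from the post are the GDK_BACKEND variable and the GLX/EGL rule of thumb.

```python
import os
import subprocess


def wayland_env(base_env=None):
    """Return a copy of the environment with GDK_BACKEND set to wayland."""
    env = dict(os.environ if base_env is None else base_env)
    env["GDK_BACKEND"] = "wayland"
    return env


def launch_firefox(binary="firefox"):
    """Start Firefox with the Wayland backend requested.

    `binary` is an assumed name; point it at your Nightly executable.
    """
    return subprocess.Popen([binary], env=wayland_env())


def classify_wsi_info(wsi_info):
    """Apply the rule from the post to a 'WebGL Driver WSI Info' string.

    EGL mentioned -> Wayland support is enabled; GLX -> it is not.
    """
    text = wsi_info.upper()
    if "EGL" in text:
        return "wayland"
    if "GLX" in text:
        return "not-wayland"
    return "unknown"
```

Calling `launch_firefox()` from a Wayland session and then pasting the WSI line from about:support into `classify_wsi_info` gives a quick check of which path is in use.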

It’s probably still a long way before Firefox enables Wayland support on Wayland by default, but we reached a major milestone here. Please test and report any bug you encounter.

Update: I should mention that should you build your own Firefox, as long as your Gtk+ headers come with Wayland support, you’ll end up with the same Wayland support as the one shipped by Mozilla.

|

|

Cameron Kaiser: And now for something completely different: SCSI2SD is not a panacea |

The first bit of "advice" drives me up the wall because while it's true for many Macs, it's now uttered in almost a knee-jerk fashion anywhere almost any vintage computer is mentioned (this kind of mindlessness infests pinball machine restoration as well). To be sure, in my experience 68030-based Macs and some of the surrounding years will almost invariably need a recap. All of my Color Classics, IIcis, IIsis and SE/30s eventually suffered capacitor failures and had to be refurbished, and if you have one of these machines that has not been recapped yet it's only a matter of time.

But this is probably not true for many other systems. Many capacitors have finite lifetimes but good quality components can function for rather long periods especially with regular periodic use; in particular, early microcomputers from the pre-surface mount era (including early Macs) only infrequently need capacitor replacement. My 1987-vintage Mac Plus and my Commodore microcomputers, for example, including my blue foil PET 2001 and a Revision A KIM-1 older than I am, function well and have never needed their capacitors to be serviced. Similarly, newer Macs, even many beige Power Macs, still have long enough residual capacitor lifespan that caps are (at least presently) very unlikely to be the problem, and trying to replace them runs you a greater risk of damaging the logic board than actually fixing anything (the capacitor plague of the early 2000s notwithstanding).

That brings us to the second invariant suggestion, a solid state disk replacement, of which at least for SCSI-based systems the SCSI2SD is probably the best known. Unlike capacitors, this suggestion has much better evidence to support it as a general recommendation as indicated by the SCSI2SD's rather long list of reported working hardware (V5 device compatibility, V6 device compatibility). However, one thing that isn't listed (much) on those pages is whether the functionality is still the same for other OSes these computers might run. That will become relevant in a moment.

My own history with SCSI2SD devices has been generally positive, but there have been issues. My very uncommon IBM ThinkPad "800" (the predecessor of the ThinkPad 850, one of the famous PowerPC IBM ThinkPads) opened its SCSI controller fuse after a SCSI2SD was installed, and so far has refused to boot from any device since then. The jury is still out on whether the ThinkPad's components were iffy to begin with or the SCSI2SD was defective, but the card went to the recycler regardless since I wasn't willing to test it on anything else. On the other hand, my beautiful bright orange plasma screen Solbourne S3000 has used a V5 SCSI2SD since the day I got it without any issues whatsoever, and I installed a 2.5" version in a Blackbird PowerBook 540c which runs OS 7.6.1 more or less stably.

You have already met thule, the long-suffering Macintosh IIci which runs the internal DNS and DHCP and speaks old-school EtherTalk, i.e., AppleTalk over DDP, to my antediluvian Mac (and one MS-DOS PC) clients for file and print services on the internal non-routable network. AFAIK NetBSD is the only maintained OS left that still supports DDP. Although current versions of the OS apparently continue to support it, I had problems even as early as 1.6 with some of my clients, so I keep it running NetBSD 1.5.2 with my custom kernel. However, none of the essential additional software packages such as netatalk are available anywhere obvious for this version, so rebuilding from scratch would be impossible; instead, a full-system network backup runs at regular intervals to archive the entire contents of the OS, which I can restore to the system from one of the other machines (see below). It has a Farallon EtherWave NuBus Ethernet card, 128MB of RAM (the maximum for the IIci) and the IIci 32K L2 cache card. It has run nearly non-stop since 1999. Here it is in the server room:

In 2014 it finally blew its caps and needed a logic board refurbishment (the cache card was recapped at the same time). Its hard disk has long been marginal and the spare identical drive had nearly as many hours of service time. Rotating between the two drives and blacklisting the bad sectors as they appeared was obviously only a temporary solution. Though my intent was to run the drive(s) completely into the ground before replacing them, bizarre errors like corrupt symbols not being found in libc.so told me the end was near. Last week half of the house wasn't working because nothing could get a DHCP lease. I looked on the console and saw this:

The backup server had a mangled full system tar backup on it that was only half-completed and timestamped around the time the machine had kernel-panicked, implying the machine freaked out while streaming the backup to the network. This strongly suggested the hard disk had seized up at some point during the process. After I did some hardware confidence testing with Snooper and Farallon's diagnostics suite, I concluded that the RAM, cache, CPU, network card and logic board were fine and the hard disk was the only problem.

I have three NetBSD machines right now: this IIci, a Quadra 605 with a full 68040 which I use for disk cloning and system rescue (1.6.2), and a MIPS-based Cobalt RaQ 2 which acts as an NFS server. I briefly considered moving services to the RaQ, but pretty much nothing could replace this machine for offering AppleShare services and I still have many old Mac clients in the house that can only talk to thule, so I chose to reconstruct it. While it still came up in the System 7.1 booter partition (NetBSD/mac68k requires Mac OS to boot), I simply assumed that everything was corrupt and the hard disk was about to die, and that I should rebuild to a brand new device from the last successfully completed backup rather than try to get anything out of the mangled most recent one.

Some people had success with using SCSI2SDs on other NetBSD systems, so I figured this was a good time to try it myself. I had a SCSI2SD v5 and v6 I had recently ordered and a few 8GB microSD cards which would be more than ample space for the entire filesystem and lots of wear-leveling. I tried all of these steps on the v5 and most of them on the v6, both with up-to-date firmware, and at least a couple iterations with different SD media. Here goes.

First attempt: I set up the Q605 to run a block-level restore from an earlier block-level image. Assuming this worked, the next step would have been overlaying the image with changed files from the last good tar backup. After the block-level image restore was complete I put the SCSI2SD into the IIci to make sure it had worked so far. It booted the Mac OS partition, started the NetBSD booter and immediately panicked.

Second attempt: I manually partitioned the SCSI2SD device into the Mac and NetBSD root and swap partitions. That seemed to work, so next I attempted to use the Mac-side Mkfs utility to build a clean UFS filesystem on the NetBSD partition, but Mkfs didn't like the disk geometry and refused to run. After suppressing the urge to find my ball-peen hammer, I decided to reconfigure the SCSI2SD with the geometry from the Quantum Fireball drive it was replacing and it accepted that. I then connected it to the Q605 and restored the files from the last good tar backup to the NetBSD partition. It mounted, unmounted and fscked fine. Triumphantly, I then moved it over to the IIci, where it booted the Mac OS partition, started the NetBSD booter and immediately panicked.

Third attempt: theorizing maybe something about the 1.6 system was making the 1.5.2 system unstable, I decided to create a 1.5.2 miniroot from an old install CD I still had around, boot that on the IIci, and then install on the IIci (and eliminate the Q605 as a factor). I decided to do this as a separate SCSI target, so I configured the SCSI2SD to look like two 2GB drives. Other than an odd SCSI error #5, Mkfs seemed to successfully create a new UFS filesystem on the second target. I then ran the Mac-side Installer to install a clean 1.5.2. The minishell started and seemed to show a nice clean UFS volume, so I attempted to install the package sets (copied to the disk earlier). After a few files from the first install set were written, however, the entire system locked up.

Fourth attempt: maybe it didn't like the second target, and maybe I had to take a break to calm down at this point, maybe. I removed the second target and repartitioned the now single 2GB SCSI target into a Mac, miniroot, and full root and swap partitions. This time, Mkfs refused to newfs the new miniroot no matter what the geometry was set to and no matter how I formatted the partition, and I wasn't in any mood to mess around with block and fragment sizes.

Fifth attempt: screw the miniroot and screw Apple and screw the horse Jean-Louis Gassee rode in on and all his children with a rusty razorblade sideways. I might also have scared the cat around this time. I repartitioned again back to a MacOS, swap and root partition, and Mkfs on the IIci grudgingly newfsed that. I was able to mount this partition on the Q605 and restore from the last good backup. It mounted, unmounted and fscked fine. I then moved it over to the IIci, where it booted the Mac OS partition, started the NetBSD booter and immediately panicked.

At this point I had exceeded my patience and blood pressure, and there was also a non-zero probability the backup itself was bad. There was really only one way to find out. As it happens, I had bought a stack of new-old-stock Silicon Graphics-branded Quantum Atlas II drives to rebuild my SGI Indigo2, so I pulled one of those out of the sealed anti-static wrap, jumpered it to spin up immediately, low-level formatted, partitioned and Mkfsed it on the IIci, connected it to the Q605 and restored from the tar backup. It mounted, unmounted and fscked fine. I then moved it over to the IIci, where it booted the Mac OS partition, started the NetBSD booter and ... the OS came up and, after I was satisfied with some cursory checks in single-user, uneventfully booted into multi-user. The backup was fine and the system was restored.

Since the Atlas II is a 7200rpm drive (the Fireball SE it replaced was 5400rpm) and runs a little hot, I took the lid off and placed it sideways to let it vent a bit better.

As a nice bonus the Atlas II is a noticeably faster drive, not only due to the faster rotation but also the larger cache. There's been no more weird errors, the full system backup overnight yesterday terminated completely and in record time, most system functions are faster overall and even some of the other weird glitchiness of the machine has disappeared. (The device on top of the case is the GSM terminal I use for sending text message commands to the main server.) I've allocated another one of the NOS Atlas drives to the IIci, and I'll find something else for rebuilding the Indigo2. I think I've got some spare SCSI2SDs sitting around ...

So what's the moral of this story?

The moral of the story is that SCSI is awfully hard to get right and compatibility varies greatly on the controller, operating system and device. I've heard of NetBSD/mac68k running fine with SCSI2SD devices on other Quadras, and it appeared to work on my Quadra 605 as well (the infamous "LC" Quadra), but under no circumstances would NetBSD on my IIci want any truck with any hardware revision of it. Despite that, however, the IIci worked fine with it in MacOS except when the NetBSD installer took over (and presumably started using its own SCSI routines instead of the SCSI Manager) or tried to boot the actual operating system. It's possible later versions of NetBSD would have corrected this, but it's unlikely they would be corrected in the Mac-side Mkfs and Installer because of their standalone codebase, and the whole point of a solid-state disk emulator is to run the old OS your system has to run anyway. Of course, all of this assumes my IIci doesn't have some other hardware flaw that only manifests in this particular fashion, but this seems unlikely.

Thus, if you've got a mission-critical or otherwise irreplaceable machine with a dying hard drive, maybe your preservation strategy should simply be to find it another spinning disk. SCSI2SD is perfectly fine for hobbyist applications, and probably works well enough in typical environments, but it's not a panacea for every situation and the further you get away from frequent usage cases the less likely it's been tested in those circumstances. If it doesn't work or you don't want to risk it, you're going to need that spare drive after all.

There's also the issue of how insane it is to use almost 30-year-old hardware for essential services (the IIci was introduced in 1989), but anyone who brings that up in the comments on this particular blog gets to stand in the corner and think about what they just said.

http://tenfourfox.blogspot.com/2018/11/and-now-for-something-completely.html

|

|

Cameron Kaiser: And now for something completely different: SCSI2SD is not a panacea |

The first bit of "advice" drives me up the wall because while it's true for many Macs, it's now uttered in almost a knee-jerk fashion anywhere almost any vintage computer is mentioned (this kind of mindlessness infests pinball machine restoration as well). To be sure, in my experience 68030-based Macs and some of the surrounding years will almost invariably need a recap. All of my Color Classics, IIcis, IIsis and SE/30s eventually suffered capacitor failures and had to be refurbished, and if you have one of these machines that has not been recapped yet it's only a matter of time.

But this is probably not true for many other systems. Many capacitors have finite lifetimes but good quality components can function for rather long periods especially with regular periodic use; in particular, early microcomputers from the pre-surface mount era (including early Macs) only infrequently need capacitor replacement. My 1987-vintage Mac Plus and my Commodore microcomputers, for example, including my blue foil PET 2001 and a Revision A KIM-1 older than I am, function well and have never needed their capacitors to be serviced. Similarly, newer Macs, even many beige Power Macs, still have long enough residual capacitor lifespan that caps are (at least presently) very unlikely to be the problem, and trying to replace them runs you a greater risk of damaging the logic board than actually fixing anything (the capacitor plague of the early 2000s notwithstanding).

That brings us to the second invariant suggestion, a solid state disk replacement, of which at least for SCSI-based systems the SCSI2SD is probably the best known. Unlike capacitors, this suggestion has much better evidence to support it as a general recommendation as indicated by the SCSI2SD's rather long list of reported working hardware (V5 device compatibility, V6 device compatibility). However, one thing that isn't listed (much) on those pages is whether the functionality is still the same for other OSes these computers might run. That will become relevant in a moment.

My own history with SCSI2SD devices has been generally positive, but there have been issues. My very uncommon IBM ThinkPad "800" (the predecessor of the ThinkPad 850, one of the famous PowerPC IBM ThinkPads) opened its SCSI controller fuse after a SCSI2SD was installed, and so far has refused to boot from any device since then. The jury is still out on whether the ThinkPad's components were iffy to begin with or the SCSI2SD was defective, but the card went to the recycler regardless since I wasn't willing to test it on anything else. On the other hand, my beautiful bright orange plasma screen Solbourne S3000 has used a V5 SCSI2SD since the day I got it without any issues whatsoever, and I installed a 2.5" version in a Blackbird PowerBook 540c which runs OS 7.6.1 more or less stably.

You have already met thule, the long-suffering Macintosh IIci which runs the internal DNS and DHCP and speaks old-school EtherTalk, i.e., AppleTalk over DDP, to my antediluvian Mac (and one MS-DOS PC) clients for file and print services on the internal non-routable network. AFAIK NetBSD is the only maintained OS left that still supports DDP. Although current versions of the OS apparently continue to support it, I had problems even as early as 1.6 with some of my clients, so I keep it running NetBSD 1.5.2 with my custom kernel. However, none of the essential additional software packages such as netatalk are available anywhere obvious for this version, so rebuilding from scratch would be impossible; instead, a full-system network backup runs at regular intervals to archive the entire contents of the OS, which I can restore to the system from one of the other machines (see below). It has a Farallon EtherWave NuBus Ethernet card, 128MB of RAM (the maximum for the IIci) and the IIci 32K L2 cache card. It has run nearly non-stop since 1999. Here it is in the server room:

In 2014 it finally blew its caps and needed a logic board refurbishment (the L1 card was recapped at the same time). Its hard disk has long been marginal and the spare identical drive had nearly as many hours of service time. Rotating between the two drives and blacklisting the bad sectors as they appeared was obviously only a temporary solution. Though my intent was to run the drive(s) completely into the ground before replacing them, bizarre errors like corrupt symbols not being found in libc.so told me the end was near. Last week half of the house wasn't working because nothing could get a DHCP lease. I looked on the console and saw this:

The backup server had a mangled full system tar backup on it that was only half-completed and timestamped around the time the machine had kernel-panicked, implying the machine freaked out while streaming the backup to the network. This strongly suggested the hard disk had seized up at some point during the process. After I did some hardware confidence testing with Snooper and Farallon's diagnostics suite, I concluded that the RAM, cache, CPU, network card and logic board were fine and the hard disk was the only problem.

I have three NetBSD machines right now: this IIci, a Quadra 605 with a full 68040 which I use for disk cloning and system rescue (1.6.2), and a MIPS-based Cobalt RaQ 2 which acts as an NFS server. I briefly considered moving services to the RaQ, but pretty much nothing could replace this machine for offering AppleShare services and I still have many old Mac clients in the house that can only talk to thule, so I chose to reconstruct it. While it still came up in the System 7.1 booter partition (NetBSD/mac68k requires Mac OS to boot), I simply assumed that everything was corrupt and the hard disk was about to die, and that I should rebuild to a brand new device from the last successfully completed backup rather than try to get anything out of the mangled most recent one.

Some people had success with using SCSI2SDs on other NetBSD systems, so I figured this was a good time to try it myself. I had a SCSI2SD v5 and v6 I had recently ordered and a few 8GB microSD cards which would be more than ample space for the entire filesystem and lots of wear-leveling. I tried all of these steps on the v5 and most of them on the v6, both with up-to-date firmware, and at least a couple iterations with different SD media. Here goes.

First attempt: I set up the Q605 to run a block-level restore from an earlier block-level image. Assuming this worked, the next step would have been overlaying the image with changed files from the last good tar backup. After the block-level image restore was complete I put the SCSI2SD into the IIci to make sure it had worked so far. It booted the Mac OS partition, started the NetBSD booter and immediately panicked.

Second attempt: I manually partitioned the SCSI2SD device into the Mac and NetBSD root and swap partitions. That seemed to work, so next I attempted to use the Mac-side Mkfs utility to build a clean UFS filesystem on the NetBSD partition, but Mkfs didn't like the disk geometry and refused to run. After suppressing the urge to find my ball-peen hammer, I decided to reconfigure the SCSI2SD with the geometry from the Quantum Fireball drive it was replacing and it accepted that. I then connected it to the Q605 and restored the files from the last good tar backup to the NetBSD partition. It mounted, unmounted and fscked fine. Triumphantly, I then moved it over to the IIci, where it booted the Mac OS partition, started the NetBSD booter and immediately panicked.

Third attempt: theorizing maybe something about the 1.6 system was making the 1.5.2 system unstable, I decided to create a 1.5.2 miniroot from an old install CD I still had around, boot that on the IIci, and then install on the IIci (and eliminate the Q605 as a factor). I decided to do this as a separate SCSI target, so I configured the SCSI2SD to look like two 2GB drives. Other than an odd SCSI error #5, Mkfs seemed to successfully create a new UFS filesystem on the second target. I then ran the Mac-side Installer to install a clean 1.5.2. The minishell started and seemed to show a nice clean UFS volume, so I attempted to install the package sets (copied to the disk earlier). After a few files from the first install set were written, however, the entire system locked up.

Fourth attempt: maybe it didn't like the second target, and maybe I had to take a break to calm down at this point, maybe. I removed the second target and repartitioned the now single 2GB SCSI target into a Mac, miniroot, and full root and swap partitions. This time, Mkfs refused to newfs the new miniroot no matter what the geometry was set to and no matter how I formatted the partition, and I wasn't in any mood to mess around with block and fragment sizes.

Fifth attempt: screw the miniroot and screw Apple and screw the horse Jean-Louis Gassee rode in on and all his children with a rusty razorblade sideways. I might also have scared the cat around this time. I repartitioned again back to a MacOS, swap and root partition, and Mkfs on the IIci grudgingly newfsed that. I was able to mount this partition on the Q605 and restore from the last good backup. It mounted, unmounted and fscked fine. I then moved it over to the IIci, where it booted the Mac OS partition, started the NetBSD booter and immediately panicked.

At this point I had exceeded my patience and blood pressure, and there was also a non-zero probability the backup itself was bad. There was really only one way to find out. As it happens, I had bought a stack of new-old-stock Silicon Graphics-branded Quantum Atlas II drives to rebuild my SGI Indigo2, so I pulled one of those out of the sealed anti-static wrap, jumpered it to spin up immediately, low-level formatted, partitioned and Mkfsed it on the IIci, connected it to the Q605 and restored from the tar backup. It mounted, unmounted and fscked fine. I then moved it over to the IIci, where it booted the Mac OS partition, started the NetBSD booter and ... the OS came up and, after I was satisfied with some cursory checks in single-user, uneventfully booted into multi-user. The backup was fine and the system was restored.

Since the Atlas II is a 7200rpm drive (the Fireball SE it replaced was 5400rpm) and runs a little hot, I took the lid off and placed it sideways to let it vent a bit better.

As a nice bonus the Atlas II is a noticeably faster drive, not only due to the faster rotation but also the larger cache. There's been no more weird errors, the full system backup overnight yesterday terminated completely and in record time, most system functions are faster overall and even some of the other weird glitchiness of the machine has disappeared. (The device on top of the case is the GSM terminal I use for sending text message commands to the main server.) I've allocated another one of the NOS Atlas drives to the IIci, and I'll find something else for rebuilding the Indigo2. I think I've got some spare SCSI2SDs sitting around ...

So what's the moral of this story?

The moral of the story is that SCSI is awfully hard to get right and compatibility varies greatly on the controller, operating system and device. I've heard of NetBSD/mac68k running fine with SCSI2SD devices on other Quadras, and it appeared to work on my Quadra 605 as well (the infamous "LC" Quadra), but under no circumstances would NetBSD on my IIci want any truck with any hardware revision of it. Despite that, however, the IIci worked fine with it in MacOS except when the NetBSD installer took over (and presumably started using its own SCSI routines instead of the SCSI Manager) or tried to boot the actual operating system. It's possible later versions of NetBSD would have corrected this, but it's unlikely they would be corrected in the Mac-side Mkfs and Installer because of their standalone codebase, and the whole point of a solid-state disk emulator is to run the old OS your system has to run anyway. Of course, all of this assumes my IIci doesn't have some other hardware flaw that only manifests in this particular fashion, but that seems unlikely.

Thus, if you've got a mission-critical or otherwise irreplaceable machine with a dying hard drive, maybe your preservation strategy should simply be to find it another spinning disk. SCSI2SD is perfectly fine for hobbyist applications, and probably works well enough in typical environments, but it's not a panacea for every situation and the further you get away from frequent usage cases the less likely it's been tested in those circumstances. If it doesn't work or you don't want to risk it, you're going to need that spare drive after all.

There's also the issue of how insane it is to use almost 30-year-old hardware for essential services (the IIci was introduced in 1989), but anyone who brings that up in the comments on this particular blog gets to stand in the corner and think about what they just said.

http://tenfourfox.blogspot.com/2018/11/and-now-for-something-completely.html

|

|

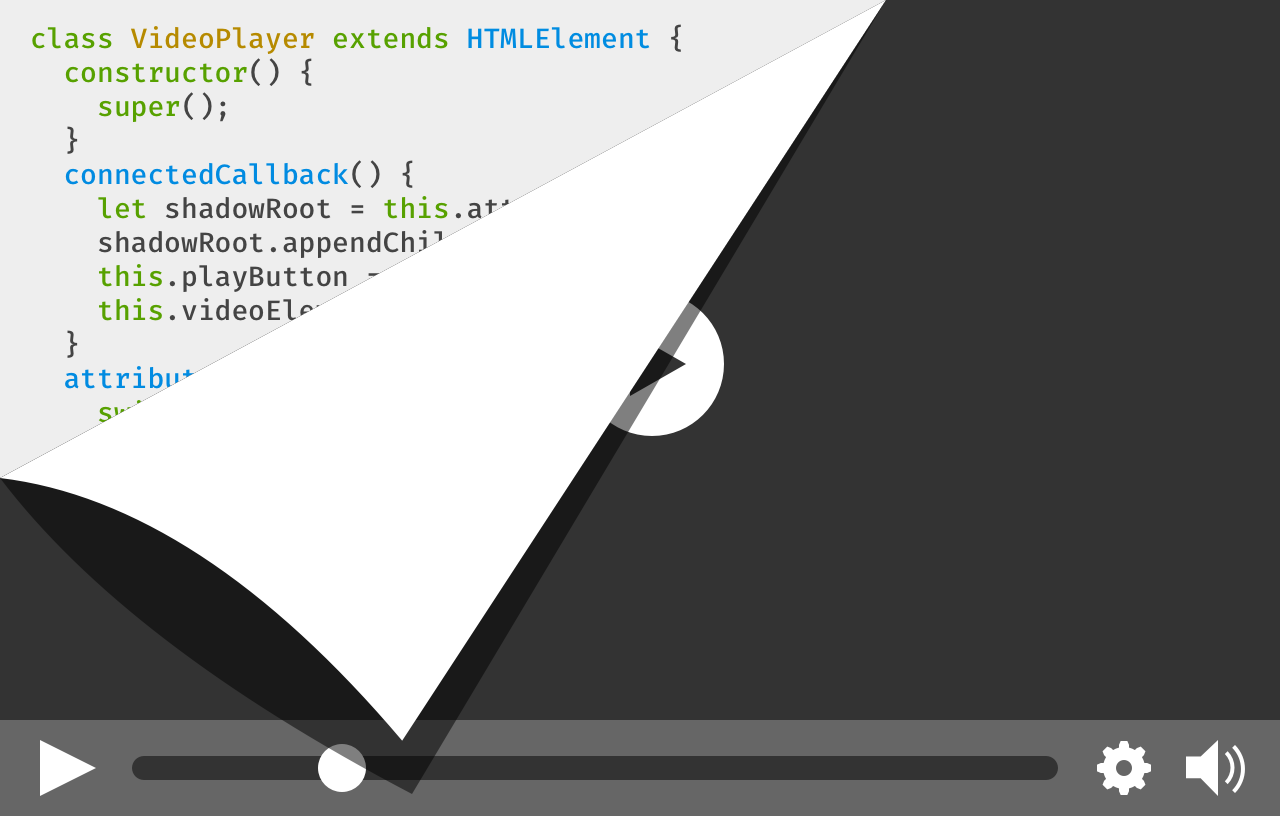

Hacks.Mozilla.Org: The Power of Web Components |

Background

Ever since the first animated DHTML cursor trails and “Site of the Week” badges graced the web, re-usable code has been a temptation for web developers. And ever since those heady days, integrating third-party UI into your site has been, well, a semi-brittle headache.

Using other people’s clever code has required buckets of boilerplate JavaScript or CSS conflicts involving the dreaded !important. Things are a bit better in the world of React and other modern frameworks, but it’s a bit of a tall order to require the overhead of a full framework just to re-use a widget. HTML5 introduced a few new elements like <video> and <input type="date">, which added some much-needed common UI widgets to the web platform. But adding new standard elements for every sufficiently common web UI pattern isn’t a sustainable option.

In response, a handful of web standards were drafted. Each standard has some independent utility, but when used together, they enable something that was previously impossible to do natively, and tremendously difficult to fake: the capability to create user-defined HTML elements that can go in all the same places as traditional HTML. These elements can even hide their inner complexity from the site where they are used, much like a rich form control or video player.

The standards evolve

As a group, the standards are known as Web Components. In the year 2018 it’s easy to think of Web Components as old news. Indeed, early versions of the standards have been around in one form or another in Chrome since 2014, and polyfills have been clumsily filling the gaps in other browsers.

After some quality time in the standards committees, the Web Components standards were refined from their early form, now called version 0, to a more mature version 1 that is seeing implementation across all the major browsers. Firefox 63 added support for two of the tent pole standards, Custom Elements and Shadow DOM, so I figured it’s time to take a closer look at how you can play HTML inventor!

Given that Web Components have been around for a while, there are lots of other resources available. This article is meant as a primer, introducing a range of new capabilities and resources. If you’d like to go deeper (and you definitely should), you’d do well to read more about Web Components on MDN Web Docs and the Google Developers site.

Defining your own working HTML elements requires new powers the browser didn’t previously give developers. I’ll be calling out these previously-impossible bits in each section, as well as what other newer web technologies they draw upon.

The <template> element

This first element isn’t quite as new as the others, as the need it addresses predates the Web Components effort. Sometimes you just need to store some HTML. Maybe it’s some markup you’ll need to duplicate multiple times, maybe it’s some UI you don’t need to create quite yet. The <template> element takes HTML and parses it without adding the parsed DOM to the current document.

<template>

<h1>This won't display!</h1>

</template>

Where does that parsed HTML go, if not to the document? It’s added to a “document fragment”, which is best understood as a thin wrapper that contains a portion of an HTML document. Document fragments dissolve when appended to other DOM, so they’re useful for holding a bunch of elements you want later, in a container you don’t need to keep.

“Well okay, now I have some DOM in a dissolving container, how do I use it when I need it?”

You could simply insert the template’s document fragment into the current document:

let template = document.querySelector('template');

document.body.appendChild(template.content);

This works just fine, except you just dissolved the document fragment! If you run the above code twice, the second call inserts nothing, because template.content is now empty. Instead, we want to make a copy of the fragment prior to inserting it:

document.body.appendChild(template.content.cloneNode(true));

The cloneNode method does what it sounds like, and it takes an argument specifying whether to copy just the node itself (false) or to include all its descendants (true).

The template tag is ideal for any situation where you need to repeat an HTML structure. It particularly comes in handy when defining the inner structure of a component, and thus is inducted into the Web Components club.
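As a rough sketch of the repeat-a-structure case, the helper below clones a template’s content once per item and fills in some text before inserting the copy. The name stampTemplate, its parameters, and the choice to write the text into the template’s first element are assumptions made for illustration, not part of any standard API.

```javascript
// Hypothetical helper: stamp out one copy of a template per item.
// `template` is expected to be a <template> element, `parent` any DOM node.
function stampTemplate(template, parent, items) {
  const stamped = [];
  for (const text of items) {
    // Deep-clone so template.content itself stays intact for the next pass.
    const copy = template.content.cloneNode(true);
    // Assumption: the first element in the template receives the item text.
    const first = copy.firstElementChild;
    if (first) {
      first.textContent = text;
    }
    stamped.push(first);
    // Appending the fragment moves its children into `parent`.
    parent.appendChild(copy);
  }
  return stamped;
}
```

In a page this might be called as stampTemplate(document.querySelector('template'), document.body, ['one', 'two']), which leaves the original template untouched and ready to be stamped again.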

New Powers:

- An element that holds HTML but doesn’t add it to the current document.

Review Topics:

- Document Fragments

- Duplicating DOM nodes using cloneNode

Custom Elements

Custom Elements is the poster child for the Web Components standards. It does what it says on the tin – allowing developers to define their own custom HTML elements. Making this possible and pleasant builds fairly heavily on top of ES6’s class syntax, where the v0 syntax was much more cumbersome. If you’re familiar with classes in JavaScript or other languages, you can define classes that inherit from or “extend” other classes:

class MyClass extends BaseClass {

// class definition goes here

}

Well, what if we were to try this?

class MyElement extends HTMLElement {}

Until recently that would have been an error. Browsers didn’t allow the built-in HTMLElement class or its subclasses to be extended. Custom Elements unlocks this restriction.

The browser knows that a <p> tag maps to the HTMLParagraphElement class, but how does it know what tag to map to a custom element class? In addition to extending built-in classes, there’s now a “Custom Element Registry” for declaring this mapping:

customElements.define('my-element', MyElement);

Now every <my-element> on the page is associated with a new instance of MyElement. The constructor for MyElement will be run whenever the browser parses a <my-element> tag.

What’s with that dash in the tag name? Well, the standards bodies want the freedom to create new HTML tags in the future, and that means that developers can’t just go creating an arbitrary new tag name. To avoid future conflicts, custom element names must contain a dash.
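The naming rule can be checked mechanically. The function below is a simplified, ASCII-only sketch: the function name is made up for this example, and the real grammar in the HTML standard admits more characters than this pattern does. The reserved names are existing hyphenated element names that the standard excludes.

```javascript
// Names the HTML standard reserves even though they contain a dash.
const RESERVED_NAMES = new Set([
  'annotation-xml', 'color-profile', 'font-face', 'font-face-src',
  'font-face-uri', 'font-face-format', 'font-face-name', 'missing-glyph',
]);

// Simplified check (ASCII only): the name starts with a lowercase letter,
// contains at least one dash, and has no uppercase letters.
function looksLikeCustomElementName(name) {
  const pattern = /^[a-z][a-z0-9._]*-[a-z0-9._-]*$/;
  return pattern.test(name) && !RESERVED_NAMES.has(name);
}
```

With this sketch, 'my-element' and 'hey-there' pass, while 'myelement', 'My-Element' and 'font-face' are rejected.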

In addition to having your constructor called whenever your custom element is created, there are a number of additional “lifecycle” methods that are called on a custom element at various moments:

- connectedCallback is called when an element is appended to a document. This can happen more than once, e.g. if the element is moved or removed and re-added.

- disconnectedCallback is the counterpart to connectedCallback.

- attributeChangedCallback fires when attributes from a whitelist are modified on the element.

A slightly richer example looks like this:

class GreetingElement extends HTMLElement {

constructor() {

super();

this._name = 'Stranger';

}

connectedCallback() {

this.addEventListener('click', e => alert(`Hello, ${this._name}!`));

}

attributeChangedCallback(attrName, oldValue, newValue) {

if (attrName === 'name') {

if (newValue) {

this._name = newValue;

} else {

this._name = 'Stranger';

}

}

}

}

GreetingElement.observedAttributes = ['name'];

customElements.define('hey-there', GreetingElement);

Using this on a page will look like the following:

<hey-there>Greeting</hey-there>

<hey-there name="…">Personalized Greeting</hey-there>
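For comparison, here is a compact variation that declares the attribute whitelist with a static getter instead of assigning it after the class body. The class name NamedGreeting, the tag name named-greeting and the greeting() method are invented for this sketch. The Base fallback is there only so the class can be loaded outside a browser; in a page it is simply HTMLElement.

```javascript
// Fall back to a plain class outside the browser so the logic can be
// exercised in isolation. In a page, Base is simply HTMLElement.
const Base = typeof HTMLElement !== 'undefined' ? HTMLElement : class {};

class NamedGreeting extends Base {
  // Whitelist of attributes that trigger attributeChangedCallback.
  static get observedAttributes() {
    return ['name'];
  }

  constructor() {
    super();
    this._name = 'Stranger';
  }

  // The browser passes the attribute name plus its old and new values.
  // newValue is null when the attribute is removed.
  attributeChangedCallback(attrName, oldValue, newValue) {
    if (attrName === 'name') {
      this._name = newValue || 'Stranger';
    }
  }

  greeting() {
    return `Hello, ${this._name}!`;
  }
}

// Register only where a custom element registry exists.
if (typeof customElements !== 'undefined') {
  customElements.define('named-greeting', NamedGreeting);
}
```

Keeping the greeting text in a plain method makes the attribute handling easy to check by calling attributeChangedCallback directly, without involving the DOM.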

But what if you want to extend an existing HTML element? You definitely can and should, but using the extended element within markup looks fairly different. Let’s say we want our greeting to be a button:

class GreetingElement extends HTMLButtonElement

We’ll also need to tell the registry we’re extending an existing tag:

customElements.define('hey-there', GreetingElement, { extends: 'button' });

Because we’re extending an existing tag, we actually use the existing tag instead of our custom tag name. We use the new special is attribute to tell the browser what kind of button we’re using:

<button is="hey-there">…</button>

It may seem a bit clunky at first, but assistive technologies and other scripts wouldn’t know our custom element is a kind of button without this special markup.

From here, all the classic web widget techniques apply. We can set up a bunch of event handlers, add custom styling, and even stamp out an inner structure using <template>. People can use your custom element alongside their own code, via HTML templating, DOM calls, or even new-fangled frameworks, several of which support custom tag names in their virtual DOM implementations. Because the interface is the standard DOM interface, Custom Elements allows for truly portable widgets.

New Powers

- The ability to extend the built-in ‘HTMLElement’ class and its subclasses

- A custom element registry, available via customElements.define()

- Special lifecycle callbacks for detecting element creation, insertion to the DOM, attribute changes, and more.

Review Topics

- ES6 Classes, particularly subclassing and the extends keyword

Shadow DOM

We’ve made our friendly custom element, we’ve even thrown on some snazzy styling. We want to use it on all our sites, and share the code with others so they can use it on theirs. How do we prevent the nightmare of conflicts when our customized element runs face-first into the CSS of other sites? Shadow DOM provides a solution.

The Shadow DOM standard introduces the concept of a shadow root. Superficially, a shadow root has standard DOM methods, and can be appended to as if it was any other DOM node. Shadow roots shine in that their contents don’t appear to the document that contains their parent node:

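// Assumes the page contains a <div> to act as the shadow host.

let div = document.querySelector('div');

// attachShadow creates a shadow root.

let shadow = div.attachShadow({ mode: 'open' });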

let inner = document.createElement('b');

inner.appendChild(document.createTextNode('Hiding in the shadows'));

// shadow root supports the normal appendChild method.

shadow.appendChild(inner);

div.querySelector('b'); // null: the <b> is not visible from outside

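In the above example, the <div> contains the <b>, and the <b> is rendered to the page, but traditional DOM methods such as querySelector can’t see it.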

The contents of a shadow root are styled by adding a <style> element to the root.
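A minimal sketch of styling inside a shadow root might look like the following. The helper name attachStyledShadow and its parameters are invented for this example. The document is passed in as doc rather than read from the global, which is purely a choice of this sketch.

```javascript
// Hypothetical helper: give `host` a shadow root with its own styles.
// `doc` is the document used to create nodes (normally `document`).
function attachStyledShadow(host, doc, cssText, labelText) {
  const shadow = host.attachShadow({ mode: 'open' });

  // Rules in this <style> apply only inside the shadow root.
  const style = doc.createElement('style');
  style.textContent = cssText;

  const label = doc.createElement('b');
  label.textContent = labelText;

  shadow.appendChild(style);
  shadow.appendChild(label);
  return shadow;
}
```

Called as attachStyledShadow(document.querySelector('div'), document, 'b { color: rebeccapurple; }', 'Hiding in the shadows'), this colors only the <b> inside the shadow root, while any <b> elsewhere on the page keeps its normal styling.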

https://hacks.mozilla.org/2018/11/the-power-of-web-components/


Mozilla GFX: WebRender newsletter #30 |

Hi! This is the 30th issue of WebRender’s most famous newsletter. At the top of each newsletter I try to dedicate a few paragraphs to some historical/technical details of the project. Today I’ll write about blob images.

WebRender currently doesn’t support the full set of graphics primitives required to render all web pages. The focus so far has been on doing a good job of rendering the most common elements and providing a fall-back for the rest. We call this fall-back mechanism “blob images”.

The general idea is that when we encounter unsupported primitives during displaylist building we create an image object and instead of backing it with pixel data or a texture handle, we assign it a serialized list of drawing commands (the blob). For WebRender, blobs are just opaque buffers of bytes and a handler object is provided by the embedder (Gecko in our case) to turn this opaque buffer into actual pixels that can be used as regular images by the rest of the rendering pipeline.

This opaque blob representation and an external handler lets us implement these missing features using Skia without adding large and messy dependencies to WebRender itself. While a big part of this mechanism operates as a black box, WebRender remains responsible for scheduling the blob rasterization work at the appropriate time and synchronizing it with other updates. Our long term goal is to incrementally implement missing primitives directly in WebRender.
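To make the division of labor concrete, here is a toy model of the idea. WebRender itself is written in Rust and its real API differs, so every name here (ImageStore, addBlob, resolve, the handler signature) is hypothetical. The sketch also caches the rasterized pixels, which is a simplification of its own rather than a description of WebRender’s scheduling.

```javascript
// Hypothetical image store: an image is backed either by pixels or by an
// opaque blob that only the embedder's handler knows how to rasterize.
class ImageStore {
  constructor(blobHandler) {
    this.blobHandler = blobHandler; // supplied by the embedder
    this.images = new Map();
    this.nextKey = 1;
  }

  addPixels(width, height, pixels) {
    const key = this.nextKey++;
    this.images.set(key, { kind: 'pixels', width, height, pixels });
    return key;
  }

  // `bytes` is never inspected here; it is just carried along.
  addBlob(width, height, bytes) {
    const key = this.nextKey++;
    this.images.set(key, { kind: 'blob', width, height, bytes });
    return key;
  }

  // Resolve any image to pixels, rasterizing blobs on first use.
  resolve(key) {
    const image = this.images.get(key);
    if (!image) {
      throw new Error(`unknown image key ${key}`);
    }
    if (image.kind === 'blob') {
      const { width, height } = image;
      const pixels = this.blobHandler(image.bytes, width, height);
      this.images.set(key, { kind: 'pixels', width, height, pixels });
      return pixels;
    }
    return image.pixels;
  }
}
```

The store never looks inside the blob bytes. Only the handler passed to the constructor interprets them, which mirrors how the embedder, not the renderer, owns the drawing-command format.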

Notable WebRender and Gecko changes

- Bobby keeps improving the texture cache heuristics to reduce peak GPU memory usage.

- Bobby improved the texture cache’s debug display.

- Jeff fixed a pair of blob image related crashes.

- Jeff investigated content frame time telemetry data.

- Dan improved our score on the dl_mutate talos benchmark by 20%.

- Kats landed the async zooming changes for WebRender on Android.

- Kats fixed a crash.

- Kvark simplified some of the displaylist building code.

- Matt fixed a crash with tiled blob images.

- Lee fixed a bug causing fonts to use the wrong DirectWrite render mode on Windows.

- Emilio fixed a box-shadow regression.

- Emilio switched the CI to an osmesa version that works with clang 7.

- Glenn landed the picture caching work for text shadows.

- Glenn refactored some of the plane splitting code to move it into the picture traversal pass.

- Glenn removed some dead code.

- Glenn separated brush segment descriptors from clip mask instances.

- Glenn refactored the nine-patch segment generation code.

- Glenn removed the local_rect from PictureState.

- Sotaro improved the frame synchronization yielding a nice performance improvement on the glterrain talos benchmark.

- Sotaro recycled the D3D11 Query object, which improved the glterrain, tart and tscrollx talos scores on Windows.

- Sotaro simplified some of the image sharing code.

- The gfx team has a new manager, welcome Jessie!

Ongoing work

- Matt and Dan are investigating performance.

- Doug is investigating the effects of document splitting on talos scores.

- Lee is fixing font rendering issues.

- Kvark is making progress on the clip/scroll API refactoring.

- Kats keeps investigating WebRender on Android.

- Glenn is incrementally working towards picture caching for scrolled surfaces.

Enabling WebRender in Firefox Nightly

In about:config

- set “gfx.webrender.all” to true,

- restart Firefox.

https://mozillagfx.wordpress.com/2018/11/15/webrender-newsletter-30/

|

|

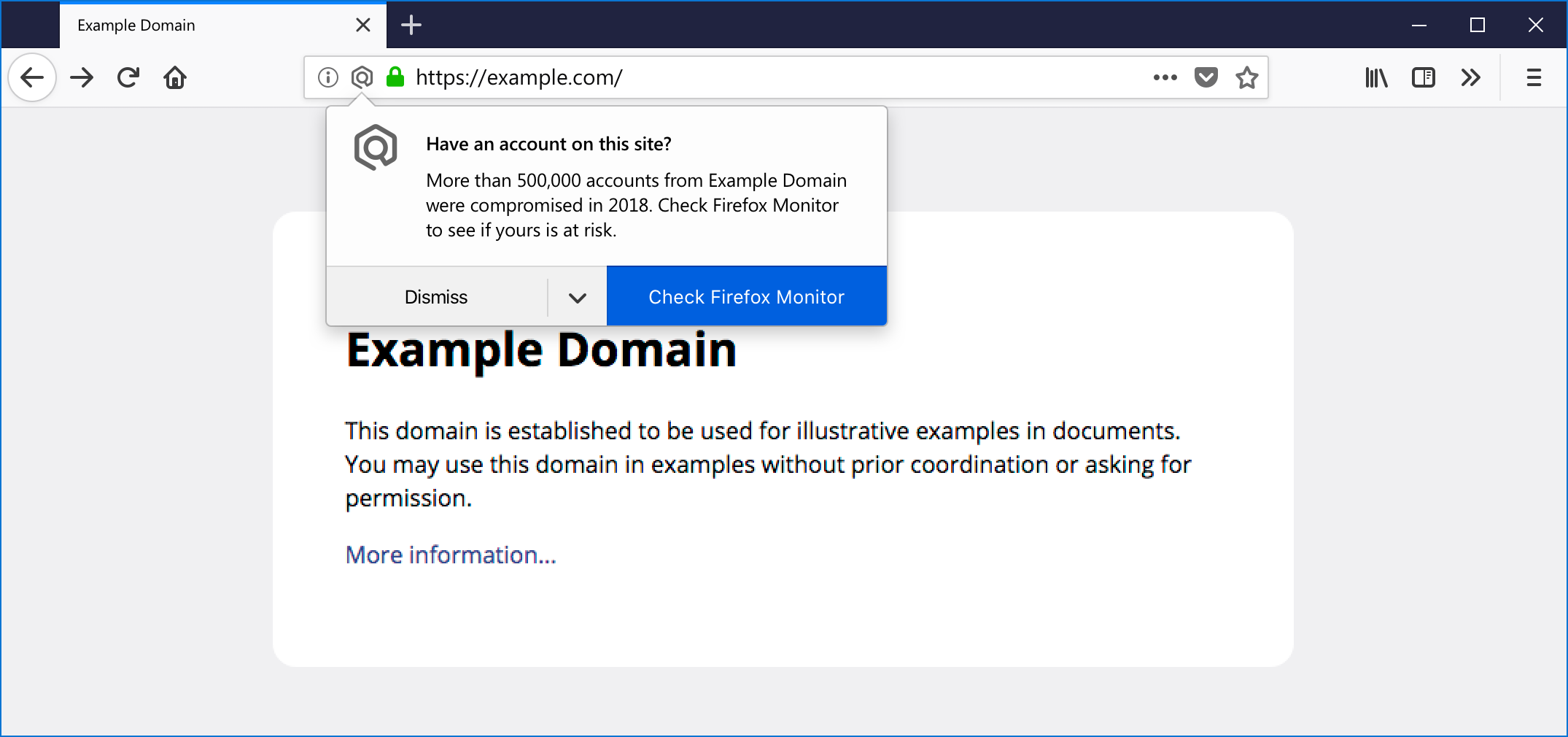

The Mozilla Blog: Firefox Monitor Launches in 26 Languages and Adds New Desktop Browser Feature |