You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The feed was set up automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.

The Mozilla Blog: Your Privacy Focused Holiday Shopping Guide |

|

|

Hacks.Mozilla.Org: Private by Design: How we built Firefox Sync |

|

|

Robert O'Callahan: Comparing The Quality Of Debug Information Produced By Clang And Gcc |

I've had an intuition that clang produces generally worse debuginfo than gcc for optimized C++ code. It seems that clang builds have more variables "optimized out", i.e. when stopped inside a function where a variable is in scope, the compiler's generated debuginfo does not describe the value of the variable. This makes debuggers less effective, so I've attempted some quantitative analysis of the issue.

I chose to measure, for each parameter and local variable, the range of instruction bytes within its function over which the debuginfo can produce a value for this variable, and also the range of instruction bytes over which the debuginfo says the variable is in scope (i.e. the number of instruction bytes in the enclosing lexical block or function). I add those up over all variables, and compute the ratio of variable-defined-bytes to variable-in-scope-bytes. The higher this "definition coverage" ratio, the better.
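The Python sketch below makes the definition concrete. It assumes each variable's scope and definitions are already available as half-open instruction-byte ranges; extracting them from DWARF is omitted. The Variable type, the helper names, and the choice to ignore definitions that fall outside a variable's scope are illustrative assumptions. This is not how debuginfo-quality (a Rust tool) is written.

```python
from dataclasses import dataclass

Range = tuple[int, int]  # half-open [start, end) instruction-byte offsets


def byte_set(ranges: list[Range]) -> set[int]:
    """Expand ranges into a set of byte offsets (fine for illustration only)."""
    return {b for start, end in ranges for b in range(start, end)}


@dataclass
class Variable:
    name: str
    scope: list[Range]    # bytes of the enclosing lexical block or function
    defined: list[Range]  # bytes where the debuginfo can produce a value


def definition_coverage(variables: list[Variable]) -> float:
    """Sum of defined bytes over sum of in-scope bytes, across all variables."""
    defined_total = 0
    in_scope_total = 0
    for v in variables:
        scope = byte_set(v.scope)
        # Assumption: definitions outside the variable's scope are not counted.
        defined_total += len(byte_set(v.defined) & scope)
        in_scope_total += len(scope)
    return defined_total / in_scope_total if in_scope_total else 0.0


# Hypothetical variables: a parameter covered everywhere, a local covered half the time.
param = Variable("aFoo", scope=[(0, 100)], defined=[(0, 100)])
local = Variable("i", scope=[(20, 80)], defined=[(50, 80)])
print(definition_coverage([param, local]))  # 0.8125
```

The hypothetical parameter is fully covered. The local is defined for only 30 of its 60 in-scope bytes, so the overall ratio is 130 / 160 = 0.8125. Expanding ranges into byte sets keeps the sketch easy to check, but it would be far too slow for a binary the size of libxul. Interval arithmetic is the natural choice there.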

This metric has some weaknesses. DWARF debuginfo doesn't give us accurate scopes for local variables. The defined-bytes for a variable defined halfway through its lexical scope will be about half of its in-scope-bytes, even if the debuginfo is perfect, so the ideal ratio is less than 1 (and unfortunately we can't compute it). In debug builds, and sometimes in optimized builds, compilers may give a single definition for the variable value that applies to the entire scope; this improves our metric even though the results are arguably worse. Sometimes compilers produce debuginfo that is simply incorrect, and our metric doesn't account for that. Not all variables and functions are equally interesting for debugging, but this metric weights them all equally. The metric also assumes that the points of interest for a debugger are equally distributed over instruction bytes.

On the other hand, the metric is relatively simple. It focuses on what we care about. It depends only on the debuginfo, not on the generated code or actual program executions. It's robust to constant scaling of code size. We can calculate the metric for any function or variable, which makes it easy to drill down into the results and lets us rank all functions by the quality of their debuginfo. We can compare the quality of debuginfo between different builds of the same binary at function granularity. The metric is sensitive to optimization decisions such as inlining; that's OK.
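Here is a worked illustration of the first two weaknesses listed above. The numbers are made up for illustration, not measurements from this analysis. Take a 100-byte function with one parameter live throughout, and one local whose lexical block covers the whole body but which is first assigned at byte 50. Write $d_v$ for a variable's defined bytes and $s_v$ for its in-scope bytes. Perfect debuginfo then scores

$$
\text{coverage} = \frac{\sum_v d_v}{\sum_v s_v} = \frac{100 + 50}{100 + 100} = 0.75
$$

A compiler that gave the local a single whole-scope location would instead score $\frac{100 + 100}{100 + 100} = 1$, even though the value it reports before byte 50 is not yet meaningful.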

I built a debuginfo-quality tool in Rust to calculate this metric for an arbitrary ELF binary containing DWARF debuginfo. I applied it to the main Firefox binary libxul.so built with clang 8 (8.0.0-svn346538-1~exp1+0~20181109191347.1890~1.gbp6afd8e) and gcc 8 (8.2.1 20181105 (Red Hat 8.2.1-5)) using the default Mozilla build settings plus ac_add_options --enable-debug; for both compilers that sets the most relevant options to -g -Os -fno-omit-frame-pointer. I ignored the Rust compilation units in libxul since they use LLVM in both builds.

By our somewhat arbitrary metric, gcc is significantly ahead of clang for both parameters and local variables. "Parameters" includes the parameters of inlined functions. As mentioned above, the ideal ratio for local variables is actually less than 1, which explains at least part of the difference between parameters and local variables here.

gcc uses some debuginfo features that clang doesn't know about yet. An important one is DW_OP_GNU_entry_value (standardized as DW_OP_entry_value in DWARF 5). This defines a variable (usually a parameter) in terms of an expression to be evaluated at the moment the function was entered. A traditional debugger can often evaluate such expressions after entering the function, by inspecting the caller's stack frame; our Pernosco debugger has easy access to all program states, so such expressions are no problem at all. I evaluated the impact of DW_OP_GNU_entry_value and the related DW_OP_GNU_parameter_ref by configuring debuginfo-quality to treat definitions using those features as missing. (I'm assuming that gcc only uses those features when a variable value is not otherwise available.)
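This ablation can be modeled by dropping any definition whose DWARF expression uses one of the excluded operations, then counting bytes. The sketch below is a simplified model. It assumes each location-list entry has already been decoded into a byte range plus a flat list of operation names. The entry layout, the hypothetical register assignment, and the inclusion of the DWARF 5 spelling DW_OP_entry_value are assumptions, not taken from debuginfo-quality.

```python
# Operations treated as "missing" when measuring their impact. The DWARF 5
# spelling DW_OP_entry_value is included as an assumption; the post names the
# GNU extensions.
EXCLUDED_OPS = {"DW_OP_GNU_entry_value", "DW_OP_entry_value", "DW_OP_GNU_parameter_ref"}


def defined_bytes(entries: list[tuple[int, int, list[str]]], exclude_entry_values: bool) -> int:
    """Count instruction bytes where some location-list entry defines the value.

    Each entry is (start, end, ops): a half-open byte range plus the names of the
    DWARF expression operations its location description uses.
    """
    covered: set[int] = set()
    for start, end, ops in entries:
        if exclude_entry_values and EXCLUDED_OPS.intersection(ops):
            continue  # pretend this definition does not exist
        covered.update(range(start, end))
    return len(covered)


# Hypothetical parameter: in register rdi (DWARF register 5 on x86-64) for the
# first 16 bytes, then only recoverable as "the value rdi had on function entry".
entries = [
    (0, 16, ["DW_OP_reg5"]),
    (16, 64, ["DW_OP_GNU_entry_value", "DW_OP_reg5", "DW_OP_stack_value"]),
]
print(defined_bytes(entries, exclude_entry_values=False))  # 64
print(defined_bytes(entries, exclude_entry_values=True))   # 16
```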

DW_OP_GNU_entry_value has a big impact on parameters but almost no impact on local variables. It accounts for the majority, but not all, of gcc's advantage over clang for parameters. DW_OP_GNU_parameter_ref has almost no impact at all. However, in most cases where DW_OP_GNU_entry_value would be useful, users can work around its absence by manually inspecting earlier stack frames, especially when time-travel is available. Therefore implementing DW_OP_GNU_entry_value may not be as high a priority as these numbers would suggest.

Improving the local variable numbers may be more useful. I used debuginfo-quality to compare two binaries (clang-built and gcc-built), computing, for each function, the difference in the function's definition coverage ratios, looking only at local variables and sorting functions according to that difference:

    debuginfo-quality --language cpp --functions --only-locals ~/tmp/clang-8-libxul.so ~/tmp/gcc-8-libxul.so

This gives us a list of functions starting with those where clang is generating the worst local variable information compared to gcc (and ending with the reverse). There are a lot of functions where clang failed to generate any variable definitions at all while gcc managed to generate definitions covering the whole function. I wonder if anyone is interested in looking at these functions and figuring out what needs to be fixed in clang.
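The per-function comparison behind that command can be sketched as follows. The sketch assumes per-function totals of (defined bytes, in-scope bytes) over local variables have already been computed for each build. Several details are assumptions for illustration:

* matching functions by name across the two binaries;
* skipping functions with no local variables in scope;
* the function names in the example.

This does not reproduce debuginfo-quality's actual output format.

```python
def coverage_ratio(defined: int, in_scope: int) -> float:
    return defined / in_scope


def rank_by_local_coverage_gap(
    clang: dict[str, tuple[int, int]], gcc: dict[str, tuple[int, int]]
) -> list[tuple[float, str]]:
    """Return (clang_ratio - gcc_ratio, function) pairs, most negative first.

    Each dict maps a function name to (defined_bytes, in_scope_bytes), summed
    over that function's local variables only.
    """
    rows = []
    for name in sorted(clang.keys() & gcc.keys()):  # functions present in both builds
        (c_def, c_scope), (g_def, g_scope) = clang[name], gcc[name]
        if c_scope == 0 or g_scope == 0:
            continue  # no locals in scope in one build: ratio undefined
        gap = coverage_ratio(c_def, c_scope) - coverage_ratio(g_def, g_scope)
        rows.append((gap, name))
    rows.sort()
    return rows


# Hypothetical per-function totals for the two builds.
clang = {"func_a": (0, 200), "func_b": (90, 100), "func_c": (50, 100)}
gcc = {"func_a": (200, 200), "func_b": (45, 100), "func_c": (50, 100), "func_d": (10, 10)}
for gap, name in rank_by_local_coverage_gap(clang, gcc):
    print(f"{gap:+.2f} {name}")
# -1.00 func_a
# +0.00 func_c
# +0.45 func_b
```

A negative gap means clang covers less of that function's locals than gcc. The functions most worth investigating in clang therefore come first, and func_a (no definitions from clang, full coverage from gcc) is the pattern described above.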

Designing and implementing this kind of analysis is error-prone. I've made my analysis tool source code available, so feel free to point out any improvements that could be made.

Update: Helpful people on Twitter pointed me to some excellent other work in this area. Dexter is another tool for measuring debuginfo quality. It's much more thorough than my tool, but less scalable, and it depends on a particular program execution. I think it complements my work nicely. It has led to ongoing work to improve LLVM debuginfo. There is also CheckDebugify infrastructure in LLVM to detect loss of debuginfo, which is also driving improvements. Alexandre Oliva has an excellent writeup of what gcc does to preserve debuginfo through optimization passes.

Update #2: It turns out llvm-dwarfdump has a --statistics option which measures something very similar to what I'm measuring. One difference is that if a variable has any definitions at all, llvm-dwarfdump treats the program point where it's first defined as the start of its scope. That's an assumption I didn't want to make. There is a graph of this metric over the last 5.5 years of clang, using clang 3.4 as a benchmark. It shows that things got really bad a couple of years ago but have since been improving.
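The simplified single-variable model below shows how that difference in scope convention changes the number. It is not llvm-dwarfdump's implementation, and the byte ranges are hypothetical.

```python
Range = tuple[int, int]  # half-open [start, end) instruction-byte offsets


def byte_set(ranges: list[Range]) -> set[int]:
    return {b for start, end in ranges for b in range(start, end)}


def coverage(scope: list[Range], defined: list[Range], scope_from_first_def: bool) -> float:
    """Definition coverage for one variable under two scope conventions."""
    scope_bytes = byte_set(scope)
    defined_bytes = byte_set(defined) & scope_bytes
    if scope_from_first_def and defined_bytes:
        # Convention the post attributes to llvm-dwarfdump --statistics:
        # the scope effectively begins where the variable is first defined.
        first = min(defined_bytes)
        scope_bytes = {b for b in scope_bytes if b >= first}
    return len(defined_bytes) / len(scope_bytes) if scope_bytes else 0.0


# Hypothetical local: lexical block spans bytes 0..99; value known for 50..69 and 90..99.
scope, defined = [(0, 100)], [(50, 70), (90, 100)]
print(coverage(scope, defined, scope_from_first_def=False))  # 0.3
print(coverage(scope, defined, scope_from_first_def=True))   # 0.6
```

Starting the scope at byte 50 removes the 50 bytes before the first definition from the denominator, which doubles the ratio. For a variable with no definitions at all, the two conventions agree.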

http://robert.ocallahan.org/2018/11/comparing-quality-of-debug-information.html

|

|

Firefox Test Pilot: Shop intelligently with Price Wise |

Just in time for the holiday shopping season, we’re excited to announce the launch of Price Wise in Firefox Test Pilot.

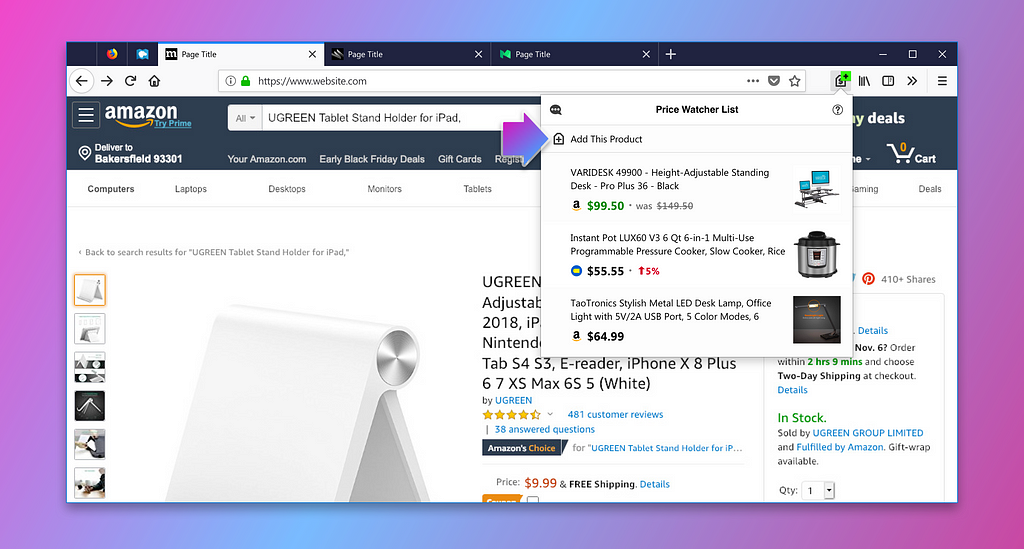

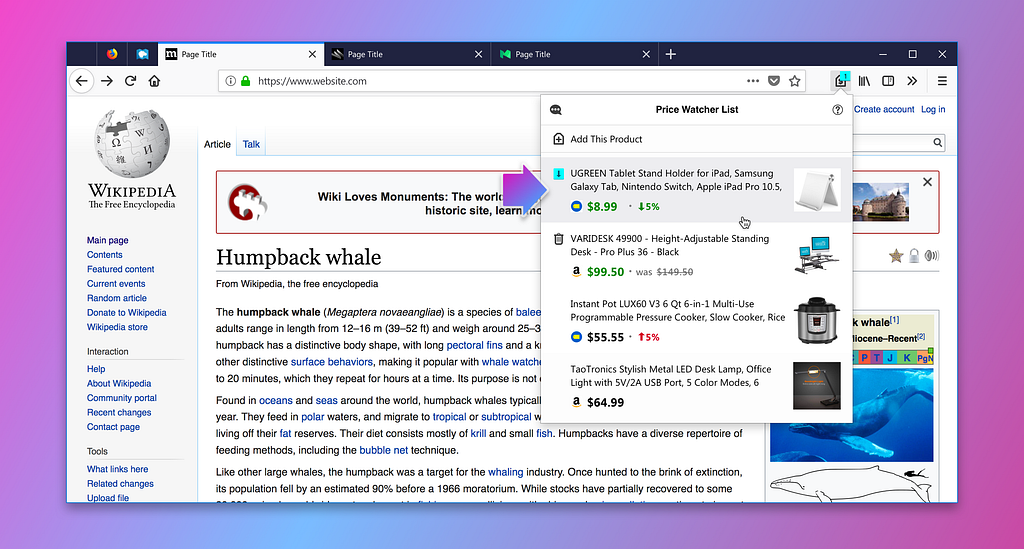

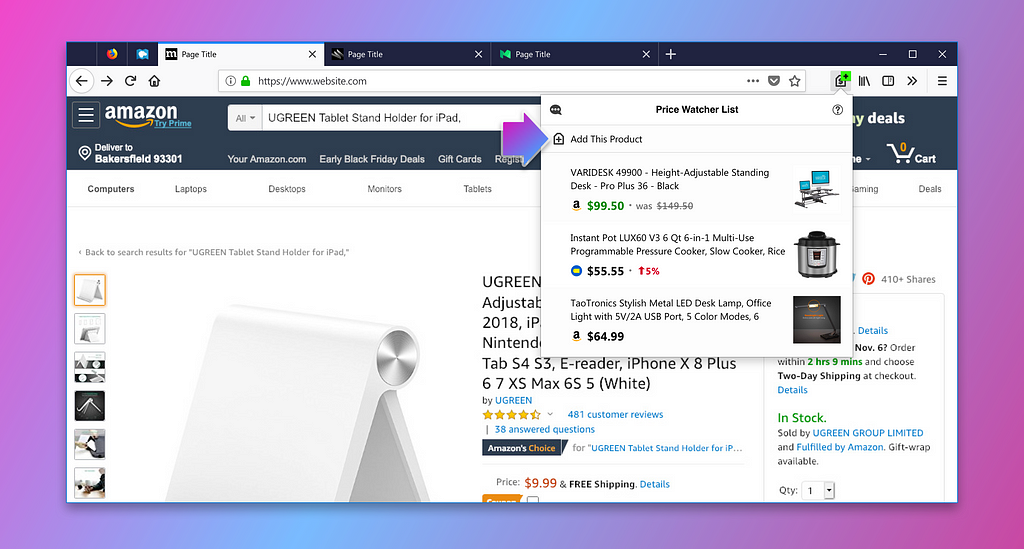

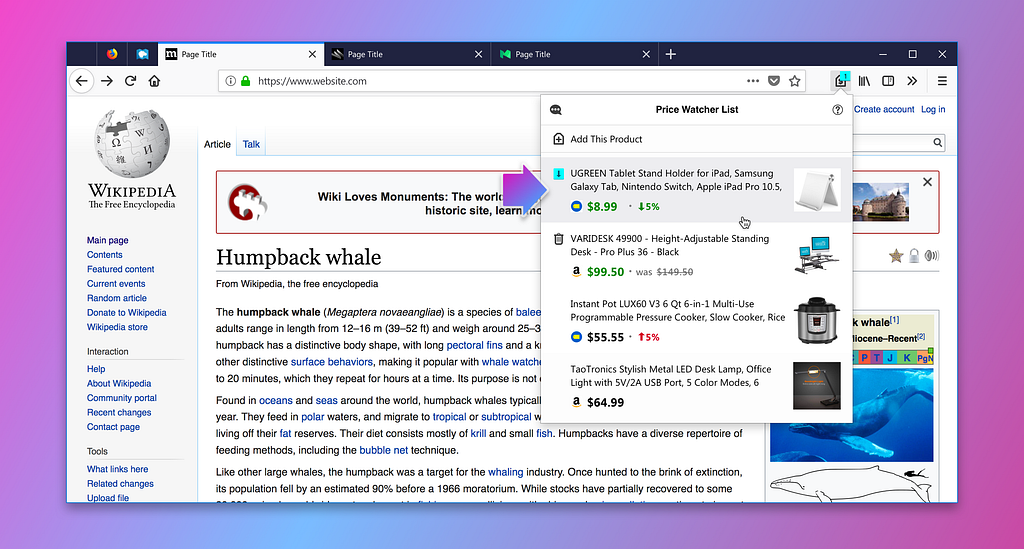

Price Wise is a new, smart tool to help you save money online. While browsing a product of interest, add it to your watch list:

Price Wise will automatically monitor the prices of products on your watch list. When they drop, we’ll let you know:

Price checks are done locally, so your shopping data never leaves Firefox. We’re particularly excited about that; Price Wise is the first Firefox feature designed around Fathom, a toolkit for understanding the content of webpages you browse.

Existing software like this works by tracking you across the web, and it’s often run by advertisers and social networks seeking to learn more about you. Your browser can do these checks for you, while making sure the gathered information never leaves your computer. We know it’s possible to deliver great utility while protecting your privacy, and want you to get a great deal without getting a raw deal.

https://medium.com/media/d4c43d59a67397f159f15c1798de5053/href

Price Wise is launching in the U.S. (English only), and we’ll support the top 5 U.S. shopping sites: Amazon, eBay, Walmart, Best Buy, and Home Depot. We’re launching a narrow pilot to understand usage and site compatibility but plan to expand coverage to other sites, countries, and currencies.

Give Price Wise a try today on Firefox Test Pilot, and let us know what you think!

Shop intelligently with Price Wise was originally published in Firefox Test Pilot on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

Mozilla Reps Community: New Council Members – Fall 2018 Elections |

We are very happy to announce that our two new Council members, Monica Bonilla and Yofie Setiawan, are fully onboarded and already working on moving the Mozilla Reps program forward. A warm welcome from all of us! We are very excited to have you and can’t wait to build the program together.

Of course, we would also like to thank the two outgoing members, Mayur Patil and Prathamesh Chavan. You have worked extremely hard to move the program forward, and your input and strategic thinking have inspired the rest of the Reps.

The Mozilla Reps Council is the governing body of the Mozilla Reps Program. It provides the general vision of the program and oversees day-to-day operations globally. Currently, 7 volunteers and 2 paid staff sit on the council. Find out more on the Reps wiki.

https://blog.mozilla.org/mozillareps/2018/11/12/new-council-members-fall-2018-elections/

|

|

The Firefox Frontier: Let Price Wise track prices for you this holiday shopping season |

The online shopping experience is really geared towards purchases that are made immediately. Countless hours have been spent to get you checked out as soon as possible. If you know …

The post Let Price Wise track prices for you this holiday shopping season appeared first on The Firefox Frontier.

|

|

The Firefox Frontier: Sharing links via email just got easier thanks to Email Tabs |

If your family is anything like ours, the moment the calendar flips to October, you’re getting texts and emails asking for holiday wish lists. Email remains one of the top …

The post Sharing links via email just got easier thanks to Email Tabs appeared first on The Firefox Frontier.

|

|

The Mozilla Blog: Firefox Ups the Ante with Latest Test Pilot Experiment: Price Wise and Email Tabs |

|

|

Wladimir Palant: As far as I'm concerned, email signing/encryption is dead |

It’s that time of year again. Sending emails from Thunderbird fails with an error message:

The certificates I use to sign my emails have expired. So I once again need to go through the process of getting replacements. Or I could just give up on email signing and encryption. Right now, I am leaning towards the latter.

Why did I do it in the first place?

A while back, I used to communicate a lot with users of my popular open source project, so it made sense to sign emails and let people verify that it’s really me writing. It also gave people a way to encrypt their communication with me.

The decision in favor of S/MIME rather than PGP wasn’t based on any technical advantage. Support for S/MIME is simply built into many email clients by default, so the chances were higher that the other side would be able to recognize the signature.

How did this work out?

In reality, I had a number of confused users asking about that “attachment” I sent them. What were they supposed to do with this smime.p7s file?

Over the years, I received mails from more than 7000 email addresses. Only 72 of them signed their emails with S/MIME, and 52 used PGP to sign. I exchanged encrypted mails with only one person.

What’s the point of email signing?

The trouble is, signing mails is barely worth it. If somebody receives an unsigned mail, they won’t go out of their way to verify the sender. Most likely, they won’t even notice, because humans are notoriously bad at recognizing the absence of something. But even if they do, unsigned is what mails usually look like.

Add to this that the majority of mail users now use webmail, so their email clients support neither S/MIME nor PGP. Nor is it realistic to add this support without introducing a trusted component such as a browser extension. And if people didn’t want to install a dedicated email client, how likely are they to install such a browser extension, even if a trustworthy solution existed?

Expecting end users to take care of sender verification just isn’t realistic. Instead, approaches like SPF and DKIM emerged. While these aren’t perfect and require you to trust your mail provider, fake sender addresses are largely a solved issue now.

Wouldn’t end-to-end encryption be great?

We now know, of course, about state-level actors spying on internet traffic; at least since 2013, there is no denying it. And there has been tremendous success in deprecating unencrypted HTTP traffic. Shouldn’t the same be done for emails?

Sure, but I just don’t see it happening by means of individual certificates. Even the tech crowd struggles when it comes to mobile email usage. As for the rest of the world, good luck explaining to them why they need to jump through so many hoops, starting with why webmail is a bad choice. In fact, we considered rolling out email encryption throughout a single company and had to give up: the setup was simply too complicated and limited the possible use cases too much.

So encrypting email traffic is now done by enabling SSL on all those mail relays. That isn’t really end-to-end encryption, since the mail text is visible on each of those relays. It isn’t entirely safe either, as long as the unencrypted fallback still exists: an attacker sitting in the middle can always force the mail servers to fall back to an unencrypted connection. But at least passive eavesdroppers are dealt with.
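The fallback problem comes from how opportunistic encryption between mail servers is negotiated. In SMTP, the receiving server advertises STARTTLS in its EHLO reply. A sender that does not insist on encryption simply proceeds in plaintext when that offer is missing. The toy model below shows why an active attacker who deletes that one line from the reply defeats the encryption. The function names, the capability list, and the "require TLS" switch are illustrative assumptions, not any real mail server's API.

```python
def choose_transport(ehlo_capabilities: list[str], require_tls: bool) -> str:
    """Decide how a sending server proceeds after reading the EHLO reply."""
    if any(c.upper() == "STARTTLS" for c in ehlo_capabilities):
        return "tls"
    if require_tls:
        return "abort"  # strict policy: refuse to send in plaintext
    return "plaintext"  # opportunistic policy: silently fall back


def strip_starttls(capabilities: list[str]) -> list[str]:
    """What an active man-in-the-middle can do: delete the STARTTLS offer."""
    return [c for c in capabilities if c.upper() != "STARTTLS"]


server_offer = ["SIZE 35882577", "8BITMIME", "STARTTLS", "SMTPUTF8"]
print(choose_transport(server_offer, require_tls=False))                  # tls
print(choose_transport(strip_starttls(server_offer), require_tls=False))  # plaintext
print(choose_transport(strip_starttls(server_offer), require_tls=True))   # abort
```

A passive eavesdropper cannot alter the reply, so it only ever sees the encrypted session. The attack works only because the opportunistic sender treats a missing offer as "this server cannot do TLS".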

But what if S/MIME or PGP adoption increases to 90% of the population?

Good luck with that. As much as I would love to live in that perfect world, I just don’t see it happening. It’s all a symptom of the fact that security is bolted on top of email. I’m afraid that if we really want end-to-end encryption, we’ll need an entirely different protocol. Most importantly, secure transmission should be the default rather than an individual choice. And then we’ll only have to validate the approach and make sure it’s not a complete failure.

https://palant.de/2018/11/12/as-far-as-i-m-concerned-email-signing-encryption-is-dead

|

|

Mozilla Reps Community: Rep of the Month – October 2018 |

Please join us in congratulating Tim Maks van den Broek, our Rep of the Month for October 2018!

Tim is one of our most active members in the Dutch community. During his 15+ years as a Mozilla volunteer he has touched many parts of the Project. More recently, his focus has been on user support, and he is active in our Reps Onboarding team.

On the Onboarding Team, he dedicates time to new Reps joining the project to ensure they have a smooth start in getting to know our processes and work… He is also helping the Participation Systems team operationalize (i.e. bug-fix) identity and access management at Mozilla (known for short as the IAM login system).

To congratulate him, please head over to the Discourse topic!

https://blog.mozilla.org/mozillareps/2018/11/12/rep-of-the-month-october-2018/

|

|
