
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Nathan Froyd: when an implementation monoculture might be the right thing

It’s looking increasingly likely that Firefox will, in the not-too-distant future, build with a single C++ compiler across the four major platforms we support. I’m uneasy with this, but I think I’ve made my peace with it, partly as a result of writing the piece below.

Firefox currently builds with three major C++ compilers across four platforms: Microsoft’s Visual C++ compiler (MSVC), GCC, and Clang. A fair amount of work has been done to deal with peculiar bugs in all three compilers: you can go search the source code and/or Bugzilla to find hacks that were needed for one reason or another. A fair amount of work has also been stalled or shelved because one or two compilers don’t quite measure up in some required area (e.g. standards support). As you might imagine, many a Firefox engineer has bemoaned the need for cross-compiler compatibility.

Cross-implementation compatibility is something that Mozilla expends a lot of effort on in a different context. We have a Tech Evangelism Bugzilla component for outreach to sites that use techniques that don’t translate across browsers. When new sites appear that deliberately block Firefox (whether because the launch team took the time to test with Firefox and determine the user experience wouldn’t be acceptable, or because cross-browser compatibility was an explicit non-goal), Firefox engineers go find the performance cliffs and fix them. Mozilla has a long history of promoting the benefits of multiple implementations of the web platform; some of the old guard might remember “Works best in all browsers” campaigns and the like. If you squint properly, you can even see this promotion in the manifesto (principles 2, 5, 6, 7, and 9, by my reckoning).

So as nice as a single implementation might be, dealing with multiple implementations was a fact of life in building a high-quality open-source browser. We dealt with it, because it seemed like we would always need to support MSVC; who would invest the time to create an open-source, MSVC-compatible compiler?

Well, Google, mostly, and a host of other people, because the past several releases of Clang have included an MSVC-compatible frontend, clang-cl. (Indeed, Firefox has been using clang-cl for Windows static analysis builds for some time.) And now that we have a usable non-MSVC compiler on Windows, we can contemplate using an open-source compiler to create our release Windows builds. And once we have that, we can consider using (and potentially only supporting) a single compiler (Clang) for all of the major platforms we support; Linux would be the remaining holdout. (Chrome already ships on Windows with clang and requires clang everywhere, FWIW.)

We might continue to require that things build with MSVC and GCC on relevant platforms, even if we’re not shipping these builds; even if this happened, such builds seem unlikely to last for very long, for all the reasons that we wanted them dropped in the first place. I imagine we’d probably continue to accept patches to make things build with non-Clang compilers, as long as the patches were not intrusive, just like we accept patches for non-tier 1 platforms.

Supporting a single compiler has a number of advantages:

- Cross-language LTO (i.e. inlining) between Rust and C++ (we could, of course, do this today, but we wouldn’t get the win on all platforms);

- Mozilla engineers can fix bugs in Clang/LLVM if need be;

- Fixes can be more easily backported from the Clang/LLVM development tree;

- Contributors have fewer compiler quirks to hold up their patches;

- Integrating and/or upgrading local copies of upstream projects becomes easier;

- Performance tuning becomes somewhat more straightforward when you have a single compiler to worry about.

I am probably forgetting some along the way. (I don’t think it’s true that we’ll be able to entirely eliminate hacks to pacify the compiler; you push on C++ hard enough and long enough, and you find yourself doing all manner of unusual things. We might even find ourselves doing more hacks, since we can justify it via, “Since we can/can’t rely on the compiler to do X…”)

I can see all the advantages. I can even admire the sheer coolness of some of them; cross-language inlining sounds fantastic! But the analogy between the Web situation and the C++ compiler situation makes me uneasy: we ask web developers to write cross-browser compatible websites, with all the time and energy that requires. We tout the goodness of supporting multiple implementations of the web platform. However, in the implementation of that web platform, we are in the process of deciding that the benefits of supporting a single C++ implementation are greater than whatever benefits (engineering, philosophical, etc.) might accrue from supporting multiple implementations.

To be explicit: we are making the exact style of decision that we ask web development teams not to make.

After having proposed this and thought about it for a while, I think the analogy is a bit strained. We make the argument that websites should be cross-browser compatible because we support the freedom of users to access those sites with whatever browser they like. By contrast, Firefox engineering is the only “consumer” of the compiler(s), and so we should optimize for that single consumer. Indeed, we don’t really concern ourselves with cross-engine compatibility for the JavaScript that lies behind our UI. Firefox users (generally) don’t care too much what compiler gets used to build Firefox, and they’d probably support a switch to a compiler monoculture if that meant the browser got faster!

(I’m not completely at ease with calling the two situations dissimilar; it’d be all too easy for a website to say they only care about a single “user”, viz. users of $BROWSER, and dispense with cross-browser support. I want to have a stronger argument for this case, but I don’t at the moment…)

At the end of the day, I think I’m mostly in support (0.6 on the Apache voting scale?). I think it will be cool when it’s done, and I will probably wind up doing some work in support of the project. But I can’t completely shake my uneasiness. What do you think?

https://blog.mozilla.org/nfroyd/2018/05/29/when-implementation-monoculture-right-thing/

Mozilla Open Policy & Advocacy Blog: ARCEP report: “Device neutrality” and the open internet

What happened?

A French version of this blog post is also available.

In February 2018, the French regulator, ARCEP, published a report on how device, browser, and OS level restrictions (under the broad label of “devices”) could be the ‘missing link’ towards achieving an open internet. In March 2018, the Body of European Regulators for Electronic Communications (BEREC) also published a report on how devices can impact user choice, where it noted the possible incentives for providers with sufficient market power to allow for a “less open use of the internet.”

It should not be possible for device, OS, and app store providers to leverage their gatekeeping power to distort the level playing field for content, to unfairly favour their own content, or to demote that of competitors. This could be done in a variety of ways, and the report highlights some of these: restricting device and API functions, unfairly discouraging the use of alternative app stores, or keeping app store rankings opaque. In this post, we put forth a principles-based response to these concerns and to the potential policy solutions proposed by ARCEP.

Overall, we think that:

- Applications should generally have the opportunity to become full replacements of default applications.

- Users should be informed of privacy risks associated with downloading content from alternative app stores. However, to enable meaningful choice, specific warnings are preferable to blanket warnings.

- Some degree of transparency in app store rankings is critical to ensuring accountability, although “algorithmic transparency” might be an unhelpful framing.

- Access to device or API functions should not be restricted to distort the level playing field for content services, for example, by unfairly limiting access to non-affiliated content.

Is this a net-neutrality concern?

The ARCEP report notes that issues of device-level restrictions have been “overlooked” in Europe’s net neutrality regulation (2015/2120). While we welcome this discussion as taking a holistic view of internet policy and the multiple levers that influence user choice and innovation, we think these issues fall outside the scope of the net neutrality legal framework. Net neutrality rules are directed at the behaviour of internet service providers (ISPs) on the premise that they are uniquely situated to act as gatekeepers of access to content and information. More bluntly, the traffic shaping and management practices of ISPs are wholly outside the control of users, and therefore protections are needed to ensure ISPs do not abuse their gatekeeper position. Foundational principles of net neutrality like the end-to-end principle and the best efforts principle translate into practical requirements on ISPs to treat all data on the internet without discrimination, restriction, or interference no matter the sender, receiver, content, website, platform, application, feature, attached equipment, or means of communication, or any types thereof.

Where this discussion does resonate with net neutrality, however, is in its commitment to the principle of “innovation without permission.” This is the principle that everyone and anyone should be able to innovate on the internet without seeking permission from anyone, any entity, or other gatekeepers. This includes device, OS, and app store providers. In this spirit, we review and respond to the four courses of action that ARCEP proposes to put into effect at the national level.

ARCEP’s policy proposals

1. Allow users to delete pre-installed apps

ARCEP’s Proposal: The report proposes that users must be allowed to delete pre-installed applications. Pre-installed apps (whether configured by the device manufacturer or the OS provider), the report notes, can “lure users away from certain content and services, particularly when these apps are displayed on the device home screen.”

Our view: Applications should have the opportunity to become full replacements of default applications, which includes the ability to delete the default option. For certain categories of applications, this value is amplified: for example, the ability to toggle between or change the default maps, calendar, or email apps. However, device manufacturers may reasonably restrict the deletion of pre-installed applications when doing so would risk the core functionality or security of the device (e.g., system preferences and settings). Following this principle, ARCEP refers to the South Korean example, where guidelines allow users to delete any pre-installed app from their device, provided it is not vital to the device’s operation or security.

2. Enable alternative rankings for the online content and services available in app stores / More transparency in app store rankings

ARCEP’s Proposal: The ARCEP report recommends enabling alternative rankings as a possible solution for the app store. More accountability in app store rankings is valuable; however, we think there may be less interventionist (but equally impactful) ways to achieve this, such as transparency.

ARCEP does highlight transparency in indexing and ranking as a key proposal, noting with concern that app store providers remain opaque about the rules they use to approve and index these same apps, whether in terms of how long it takes to review an app or rules of a more editorial nature. ARCEP notes that transparency “would encourage app stores to treat developers more fairly, in a way that would foster internet openness.”

Our view: Some degree of transparency in how app stores rank and index content is critical to accountability. Without it, it is possible for app stores to leverage their gatekeeping power to unfairly favour affiliated content, demote the content of competitors, or to distort the level playing field.

The two dominant app stores do publicly disclose broad criteria for ranking. The Google Play Store states that “apps are ranked based on a combination of ratings, reviews, downloads, and other factors” but states that the relative weights and values of these criteria are “a proprietary part of the Google search algorithm.” On the other hand, the Apple App Store discloses that ranking is based on two main criteria: “text relevance,” such as the app’s title, keywords, and primary category (along with tips on how to optimize for this), as well as “user behaviour,” such as downloads and the number and quality of ratings and reviews.

These disclosures give developers and the public a standard with which to hold app stores accountable. They provide a basis on which to call out preferential treatment of apps that is not explained by these criteria.

Beyond these broad criteria, the ARCEP report is also concerned about proprietary search and ranking algorithms being “black boxes.” We believe, however, that “accountability” rather than “transparency” is the best frame in which to consider algorithmic decision-making. While asking to “show me how it’s done” is an appealing idea in principle, in practice it is often very difficult, and ultimately not as helpful as it may seem in understanding and addressing problems that arise.

This also opens up a larger debate on how to optimize discoverability to best serve user interest. In Mozilla’s work on Equal Rating, we urged app stores to give users more control over how information was displayed in order to better surface locally relevant content, which is seen as an important driver for getting people to see the value in using and paying for the internet. This is fertile ground for further research.

3. Allow users to easily access applications offered by alternative app stores, once they have been deemed reliable

ARCEP’s Proposal: The report recommends that users should be able to easily access applications offered by alternative app stores. They make the case for conditions that are conducive to the “emergence of effective competition between stores.” While they acknowledge that “app stores undeniably play a crucial role in terms of security,” this should not mean that “other stores could not guarantee the same prerogatives.” As an example of a perceived barrier to entry, ARCEP refers to the F-Droid open-source app store, which can only be downloaded after activating an advanced setting and acknowledging a warning that apps downloaded from third-party stores can be harmful.

Our view: As a matter of principle, users should be informed of risks and given the opportunity to exercise meaningful choices. Users do indeed often face significant barriers to using alternative app stores. We generally believe that users own their devices and should be able to put whatever apps and services they desire on those devices. However, we also note that many alternative app stores do contain significant amounts of malware, which OS and device manufacturers are rightly concerned with protecting users from. To that end, we would caution against restricting security warnings. Users should be informed of security risks, although greater granularity and specificity in warnings may be useful. For example, there may be a less stringent warning attached to an app in an alternative app store that has otherwise verified its security bona fides. Giving warnings about an app store itself (e.g., “all apps on the F-Droid store may be insecure”) doesn’t provide the user with the most helpful information.

Finally, we note that the need to empower an entity to determine an app or an app store’s reliability risks creating another gatekeeper with complex incentives in the internet ecosystem.

4. Allow all content and service developers to access the same device functions

ARCEP’s Proposal: ARCEP’s recommendation is that device and OS providers should not prevent app providers from accessing the functions they need to fully operate their services “merely for business reasons.” In addition to device functions like calling or access to the phonebook or messages, they recommend that it should not be possible to “confine access to one or several APIs to only certain content and service providers, and particularly to apply different pricing terms depending on the content and service provider, for no reason other than commercial ones.” As an example, they state that Google should no longer be the only entity able to use Android’s APIs for accessing ‘physical geolocation components’; a company such as OpenStreetMap should also be able to use them.

Our view: In principle, device and OS providers should not exercise their gatekeeping powers to unfairly restrict access to device and API functions. We would agree with ARCEP’s proposed limitation on commercially motivated restrictions, such as preferences for affiliated content or shutting out competitor services. Core functions like access to the kernel, however, may justifiably be restricted, since such access raises potential security and privacy concerns.

The principle here, as it is in data protection, should be collection limitation, purpose limitation, data minimization, and privacy by design. The direction to collect what you need, and to use data and permissions for the purposes that relate to the service offered to the user, is a useful rule of thumb for the kinds of access that should be made available to third party app developers.

Conclusion

Although these issues fall outside the scope of traditional net neutrality regulation, regulators should be vigilant about the potential for device and OS providers to abuse their roles as gatekeepers to online content and services. ARCEP’s report, which demonstrates a strong commitment to the open internet, is a good first step.

The post ARCEP report: “Device neutrality” and the open internet appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2018/05/29/arcep-report-device-neutrality/

Patrick McManus: The Benefits of HTTPS for DNS

DoH (DNS over HTTPS) builds on the great foundation of DoT (DNS over TLS). The most important part of each protocol is that they provide encrypted and authenticated communication between clients and arbitrary DNS resolvers. DNS transport does get regularly attacked, and using either one of these protocols allows clients to protect against such shenanigans. What DoH and DoT have in common is far more important than their differences, and for some use cases they will work equally well.

However, I think that by integrating itself into the large-scale, established HTTP ecosystem, DoH will likely gain more traction and solve a broader set of problems than a DNS-specific solution will.

DoH implementations, by virtue of also being HTTP applications, have easy access to a tremendous amount of commodity infrastructure with which to jump-start deployments. Examples are CDNs, hundreds of programming libraries, authorization libraries, proxies, sophisticated load balancers, super high volume servers, and more than a billion deployed JavaScript engines that already have HTTP interfaces (they also come with a reasonable security model, CORS, for accessing resources behind firewalls). DoH natively includes HTTP content negotiation as well, letting new expressions of DNS data (JSON, XML, etc.) blossom in non-traditional programming environments.
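To make "DoH is just HTTP" concrete, here is a minimal sketch (not from the original post) of how a client might build a DoH GET request as defined in RFC 8484: the query is ordinary DNS wire format, base64url-encoded without padding and passed in a `dns` query parameter, while the `Accept` header negotiates the `application/dns-message` media type. The helper names are hypothetical and the resolver URL is a placeholder; the sketch only builds the request, using nothing but Python's standard library.

```python
import base64
import struct
import urllib.request


def build_dns_query(name: str, qtype: int = 1, msg_id: int = 0) -> bytes:
    """Encode a minimal DNS query (one question, recursion desired) in wire format."""
    # Header: ID, flags (0x0100 = RD bit), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", msg_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label prefixed by its length, terminated by a zero-length label
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in name.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE (1 = A) and QCLASS (1 = IN)
    return header + qname + struct.pack("!HH", qtype, 1)


def doh_get_request(resolver_url: str, name: str) -> urllib.request.Request:
    """Build (but do not send) an RFC 8484-style GET request for an A record."""
    # RFC 8484 says clients SHOULD use ID 0 so identical queries are cache friendly.
    wire = build_dns_query(name, msg_id=0)
    # base64url encoding with the trailing "=" padding stripped
    encoded = base64.urlsafe_b64encode(wire).rstrip(b"=").decode("ascii")
    return urllib.request.Request(
        f"{resolver_url}?dns={encoded}",
        headers={"Accept": "application/dns-message"},
    )


# Hypothetical resolver endpoint (the example host name used in RFC 8484).
req = doh_get_request("https://dnsserver.example.net/dns-query", "www.example.com")
print(req.full_url)
# prints https://dnsserver.example.net/dns-query?dns=AAABAAABAAAAAAAAA3d3dwdleGFtcGxlA2NvbQAAAQAB
```

Passing `req` to `urllib.request.urlopen` against a real DoH resolver would return a DNS wire-format response body; the point is that a stock HTTP library is all the transport a client needs.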

Using HTTP also has advantages at the protocol level. Both DoH and DoT use TCP and therefore need to implement a multiplexing layer to deal with head-of-line blocking. DoT uses the Message-ID as a key (inherited from DNS over TCP). DoH addresses this via HTTP/2's multiplexed stream approach. The latter is a richer set of functionality that deals with a wider set of edge cases. For instance, HTTP/2 includes prioritization between streams, the ability to interleave different responses on the wire (e.g. to pre-empt an AXFR transfer), and flow control to limit the number of requests in flight without resorting to head-of-line blocking.
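For comparison, here is a minimal sketch (my own illustration, not taken from any DoT implementation) of what keying on the Message ID looks like on the DoT side: DNS over TCP prefixes each message with a 2-byte length, and the client routes responses, which may arrive in any order, back to outstanding queries by their 16-bit ID. The helper names and the header-only fake responses are hypothetical stand-ins. Anything richer, such as prioritization or flow control, would have to be built on top, and that is the functionality HTTP/2 streams already provide.

```python
import struct


def frame(message: bytes) -> bytes:
    """Prefix a DNS message with its 2-byte length, as DNS over TCP (and DoT) requires."""
    return struct.pack("!H", len(message)) + message


def split_frames(stream: bytes) -> list[bytes]:
    """Split a received byte stream back into complete DNS messages."""
    messages, offset = [], 0
    while offset + 2 <= len(stream):
        (length,) = struct.unpack_from("!H", stream, offset)
        end = offset + 2 + length
        if end > len(stream):
            break  # incomplete message; a real client would wait for more bytes
        messages.append(stream[offset + 2 : end])
        offset = end
    return messages


def match_responses(pending: dict[int, str], stream: bytes) -> dict[str, bytes]:
    """Route responses, in whatever order they arrive, to queries by Message ID."""
    answers = {}
    for msg in split_frames(stream):
        (msg_id,) = struct.unpack_from("!H", msg, 0)  # ID is the first header field
        name = pending.pop(msg_id)  # a KeyError here would mean an unexpected ID
        answers[name] = msg
    return answers


def fake_response(msg_id: int) -> bytes:
    """Hypothetical stand-in: a bare 12-byte DNS header with QR, RD and RA set."""
    return struct.pack("!HHHHHH", msg_id, 0x8180, 0, 0, 0, 0)


# Two queries are outstanding; the server answers ID 2 before ID 1.
pending = {1: "a.example", 2: "b.example"}
stream = frame(fake_response(2)) + frame(fake_response(1))
answers = match_responses(pending, stream)
print(sorted(answers), pending)  # prints ['a.example', 'b.example'] {}
```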

As HTTP/2 evolves into QUIC, DoH will benefit from being on the QUIC train without any further standardization. Likely all it will take to deploy DoH over QUIC will be a library upgrade. This will be a very important performance improvement for the protocol. Both DoH and DoT are essentially tunneling datagrams over TCP. Anybody who has run a VPN over TCP knows that's not optimal because packet loss in TCP delays the delivery of many other packets within the connection. This creates an unnecessary slowdown when unrelated things, such as different DNS responses, are carried in the same TCP flow. QUIC addresses that issue and DoH will definitely benefit from the free upgrade.
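The head-of-line blocking argument can be made concrete with a deliberately simplified timing model (my own sketch, not from the post). It assumes each DNS response fits in one packet, packets are sent one time unit apart, and a lost packet's retransmission arrives after a fixed extra delay; the function name and delay values are arbitrary. Over a single TCP byte stream, one loss stalls every later response, while with QUIC-style independent streams only the response that lost a packet waits.

```python
def delivery_times(streams, lost, one_way=10, retransmit_delay=30, single_byte_stream=True):
    """Toy model of when each packet's data becomes readable by the application.

    streams[i] names the logical stream (e.g. one DNS response) that packet i carries.
    Packet i is sent at time i and arrives one_way later; a lost packet's
    retransmission arrives retransmit_delay after that.
    """
    arrivals = [
        i + one_way + (retransmit_delay if i in lost else 0) for i in range(len(streams))
    ]
    delivered = []
    latest_overall = 0
    latest_per_stream = {}
    for i, stream in enumerate(streams):
        if single_byte_stream:
            # TCP (DoT, or DoH over HTTP/2): in-order delivery across the whole
            # connection, so packet i waits for every earlier packet.
            latest_overall = max(latest_overall, arrivals[i])
            delivered.append(latest_overall)
        else:
            # QUIC-style: ordering is only enforced within each stream.
            latest_per_stream[stream] = max(latest_per_stream.get(stream, 0), arrivals[i])
            delivered.append(latest_per_stream[stream])
    return delivered


# Four unrelated DNS responses, one packet each; the first packet is lost.
responses = ["a", "b", "c", "d"]
print(delivery_times(responses, lost={0}))                            # [40, 40, 40, 40]
print(delivery_times(responses, lost={0}, single_byte_stream=False))  # [40, 11, 12, 13]
```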

The big picture potential of DoH emerges only when you zoom out from the DNS context. When you integrate DNS into HTTP you don't just use HTTP as a substrate for DNS transfers but you also integrate DNS into the gigantic pool of pre-existing HTTP traffic.

HTTP has been working for the last few years to reduce the number of connections necessary to transport HTTP exchanges. Every time this happens the transport gets cheaper due to fewer handshakes, faster due to better congestion management, more private due to reduced use of SNI, and more robust to traffic analysis because the pool of mixed up encrypted data becomes more diverse.

Roughly speaking, this is represented by the evolution of HTTP/2 and its extensions. Highlights include HTTP/2 itself (mixing slices of different HTTP exchanges together in real time on the wire), Connection Coalescing (allowing some classes of different origins to share the same connection), Alternative Services (allowing HTTP to control routing and therefore increase Connection Coalescing), the HTTP/2 Origin Frame (making it easier to fine-tune Connection Coalescing), and the upcoming Secondary Certificates (allowing a connection to add identities post-handshake and therefore, you guessed it, increase Connection Coalescing). This system forms a positive feedback loop: new data enjoys the benefits, but it also increases the benefits to all of the existing data. Adding DNS to the mix is a win-win.
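Connection Coalescing is where much of this benefit comes from. Below is a simplified sketch of the kind of check a client might run before reusing an HTTP/2 connection for another host, assuming the commonly described browser policy: the connection's certificate must cover the new hostname, and either the new hostname's DNS answers must include the connection's IP address or the server must have listed that origin in an ORIGIN frame (RFC 8336). The function names and the hostname-only model are hypothetical; real implementations also consider schemes, ports, full certificate validation and more.

```python
def hostname_matches(pattern: str, host: str) -> bool:
    """Simplified certificate name check: exact match or a single-label wildcard."""
    pattern, host = pattern.lower(), host.lower()
    if pattern.startswith("*."):
        suffix = pattern[1:]  # "*.example.com" -> ".example.com"
        if not host.endswith(suffix):
            return False
        label = host[: -len(suffix)]
        # The wildcard covers exactly one non-empty label.
        return label != "" and "." not in label
    return pattern == host


def can_coalesce(cert_names, conn_ip, host, host_ips, origin_set=None):
    """Decide whether an existing HTTP/2 connection may also carry requests for host."""
    if not any(hostname_matches(name, host) for name in cert_names):
        return False  # the connection's certificate must be valid for the new host
    if origin_set is not None:
        return host in origin_set  # assumed: server listed its origins in an ORIGIN frame
    return conn_ip in host_ips  # otherwise require overlapping DNS answers


cert = ["example.com", "*.example.com"]
print(can_coalesce(cert, "192.0.2.1", "img.example.com", {"192.0.2.1", "192.0.2.9"}))  # True
print(can_coalesce(cert, "192.0.2.1", "img.example.com", {"198.51.100.7"}))  # False
print(can_coalesce(cert, "192.0.2.1", "img.example.com", {"198.51.100.7"},
                   origin_set={"img.example.com"}))  # True
```

In this simplified model a DoH resolver is just another hostname: if it is covered by the same certificate and addresses as sites the browser is already talking to, its DNS traffic can ride on an existing connection.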

The last important bit to touch on is more speculative. One of the newer HTTP semantics is push. Push allows a server to send unsolicited HTTP exchanges along an existing connection. By defining an HTTP transport for DNS, DNS records can logically be pushed by servers that have reason to believe you will soon be interested in a record you haven't even requested yet. This is an exciting concept, but it also comes with some tricky issues around security and tracking that need to be fully explored and standardized before it can be deployed. Nonetheless, architecting a secure DNS around HTTP enables that possibility down the road. The HTTP and DNS communities are just starting to look at the issues of "Resolverless DNS".

http://bitsup.blogspot.com/2018/05/the-benefits-of-https-for-dns.html

Cameron Kaiser: A weekend on the new computer (or, introducing "TenNineFox")

First was to grab all the updates. This fixed amdgpu for X11 and now I'm running a fully accelerated GNOME desktop on the AMD Radeon Pro WX 7100. I got Sabrent Bluetooth and USB audio dongles, which "just worked" with Linux, and even got VLC to play some Blu-ray movies (as well as VLC can play them, given that BD+ is still not a solved problem). The T2 firmware update to 1.04 also diminished some of the fan hunting I was hearing and while it's still louder than the G5, it's definitely getting better and better. I'm thinking of getting one of the Supermicro "superquiet" PSUs next, since I notice its higher-pitch fan sound more than the case fans. The only hardware glitch still left over is that I can't figure out why Linux won't recognize the Sonnet FireWire/USB PCIe combo card. It should work, the chipsets should be supported. More on that later.

Next was to get working on builds. After most of Saturday spent hacking on it, I'm pleased to note that Firefox 60 will compile on the T2 with a minimal .mozconfig if you apply this patch, this patch and this patch, and chmod -x /usr/bin/ld.gold because the Firefox build system insists, nay, demands to use the (useless on PowerPC) gold linker; I don't even know why Fedora bothers installing it. You also need to turn jemalloc off because it barfs on the default PPC64 page size of 64K. The official Fedora 28 build of Firefox 60, which actually does work, apparently cheats a little by disabling tests and WebRTC, part of what those patches address, though I'm uncertain how they got around the jemalloc or WASM signal handlers issue. It runs fully multiprocess, and I'm looking at enabling WebGL next. Even though JavaScript in Firefox 60 on the T2 is about twice as slow as the G5 in TenFourFox FPR7 (remember, no JIT), everything else is tremendously faster due to the 32 threads (8 cores, SMT-4 each), the monstrous cache and the 3+ GHz clock speed, so you really only notice it's not quite as fast as it ought to be on pages with a lot of scripts. So imagine what it will be like when I get the POWER9 JIT, I mean, nothing! I said nothing! Pay no attention to the man behind the curtain! If you build Firefox with -O3 -mcpu=power9, you get about a 3-5% speed boost over the -O2 -mcpu=power8 Fedora build, which is worth it because it only takes the Talos 20 minutes to build Firefox at -j24 (compared to 3.5 hours with the Quad G5 in Highest performance mode roaring away at -j3). For posterity, here is the .mozconfig I'm currently using, which I intend to refine further:

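mk_add_options MOZ_MAKE_FLAGS="-j24"
ac_add_options --enable-application=browser
# POWER9 tuning: about 3-5% faster than Fedora's -O2 -mcpu=power8 build
ac_add_options --enable-optimize="-O3 -mcpu=power9"
# jemalloc fails with the default PPC64 page size of 64K
ac_add_options --disable-jemalloc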

You may call this the first build of "TenNineFox" if you like. :) Some mochitests fail which I'm investigating, but the test suite can run. By the way, Firefox Containers is awesome. I like to segregate my higher-security items like billpay and banking from the browser, which I use a TenFourFoxBox for on the G5, but with a Container it's integrated into the same browser instance and still keeps the cookies and data separate. Cool stuff.

On to QEMU. QEMU will build relatively uneventfully from source, or you can pre-install the Fedora package if you're lazy. Using the generic Power Mac profile mac99, both Mac OS 9.1 and 10.4 start up largely happily under qemu-system-ppc, though there is an odd glitch with 9.1 where I have to quadruple-click on anything to get it detected as a double-click. However, while it was certainly usable, it didn't feel very fast. The System Profiler within the emulated Tiger instance said it was a "1GHz G4" with a "400MHz FSB." This seemed low, and the reason was ... drumroll please ... it was running with CPU emulation.

After some checking, I confirmed KVM was indeed installed on this system, so I tried running a 64-bit guest with qemu-system-ppc64 emulating an IBM pSeries machine with KVM-HV. That started up and ran at a nice clip, noticeably faster when I turned on KVM, so I tried to run the 32-bit guest with KVM-PR (which ought to emulate the proper CPU) and got an error message. Even the 64-bit guest that ran just dandy with KVM-HV wouldn't run with KVM-PR. Some digging determined that the KVM-PR kernel module existed, but did not load. Some more digging turned up that KVM-PR wouldn't load with modprobe. Even more digging turned up that ... KVM-PR doesn't run on bare-metal POWER9 yet, and unfortunately all PowerNV machines like the T2 are bare-metal.

This is a bummer, but it sounds like an eventually solvable problem. In the meantime, QEMU's performance as a Power Mac emulator is currently acceptable on the T2, just unspectacular. I'll be setting up an install of OS 9 to start with and getting some of my old software loaded into a workspace, and possibly hacking QEMU to autorelease the mouse and switch workspaces with a key combination so I can just jump back and forth easily. When the issues with KVM-PR are ironed out, then everything should "just work," just faster.

For yuks, I tried installing a couple of earlier emulation efforts. SheepShaver is the one most people know, and it will compile (if you update config.{guess,sub} and tell configure to use the PowerPC emulator; it will not run natively), but it will not start. Even with sudo sysctl vm.mmap_min_addr=0 and sudo setsebool -P mmap_low_allowed 1 to get the kernel and SELinux to allow its unusual memory mapping requirements, it threw an error message saying it could not allocate enough memory and unceremoniously aborted. On a 32GB system trying to emulate a 256MB Mac, a low-memory state seemed unlikely, so I'm guessing this may be a 64-bit bug. I then tried the other well-known Power Mac emulator, PearPC. This also required a new autoconf and a number of hacks to get it to build with current releases of gcc, but it does work, and it does start, and it's even worse, at about 20% of the speed of QEMU. The reason for this is that QEMU actually has a trivial JIT (TCG), while PearPC is a strict interpreter on systems that don't have JIT support, so while you could do stuff, it felt like a 601 was running it instead.

The rest of the weekend was spent figuring out what I needed to port over, and how to make the Talos happy on my highly Mac-centric network. Installing gvfs-afp and hfsplus-tools was easy and got the T2 talking to the G4 file server running 10.4.11. I don't like the Linux font set much, so I'll be copying my font folder from the G5 over and converting things with Fondu as necessary. VLC will play CDs, but I will probably try to port my command-line player since it's easier for me to manipulate. I also need to move my Quake PAKs and Doom WADs over, because everyone needs a coffee break now and then, and finally get my Pixel XL to back up its photos to it. I also added even more Mac key combinations to AutoKey to maintain my Mac command-key muscle memory.

Anyway, after I've submitted this post I'll power down the Talos tonight and wake the G5 back up again tomorrow to continue work on TenFourFox FPR8, having slept peacefully and properly over the entire holiday weekend. Now that same-site cookies are working, it's time to get some sort of basic CSS grid support operational (or at least whitelisted for those sites that need it), and I still want to finish idle callback support and date-time picker support. After all, even though the T2 is getting closer and closer to being suitable as my main computer, there's still a lot I'll need to keep the G5 around for, so I'm certainly not planning to get rid of it. Or, you know, "put it to sleep" in the veterinary sense. Just because it's old doesn't mean it's useless.

http://tenfourfox.blogspot.com/2018/05/a-weekend-on-new-computer-or.html

Armen Zambrano: Splitting the Firefox Health dashboard

Back in January I had to make a critical decision: whether to separate the Firefox health dashboard (formerly known as Platform health) into backend and frontend projects or to keep it together.

The intent was to make the project easier to maintain by separating presentational code from processing code. I also wanted to remove the boilerplate needed for webpack and babel. It was also beneficial to have the liberty of changing packages without worrying about regressing the frontend or the backend. The only disadvantages were having to do the work and the possibility that we might need coordinated changes (or versioned APIs) in the future. We did not see duplicated code as a disadvantage, since there wasn’t any (or much; I can’t recall now) shared between the two apps.

This all started when we hit a very odd production-specific issue. I thought it was caused by the project’s complex webpack configuration. Because we were not making progress on finding the root cause, I decided to switch to Neutrino. Switching to Neutrino took away the webpack headaches, since it provides good default configuration options for the project; however, it was unclear how to make it work with the original project’s design, in which the frontend files were served as static assets of the Koa app.

Keeping both the frontend and backend apps within the same repository complicated the deployment story because of some Heroku restrictions. I tried using subtrees; however, that still required manual intervention (see explanation). I didn’t know at the time that we could have deployed the backend to Heroku and the frontend to Netlify, which would have allowed us to keep both projects within the same repository. Alas! We now have two repositories.

If you want to look at the code changes you can see them here.

|

|

Armen Zambrano: Additions to Firefox’s health dashboard |

At the beginning of the month I came back from my last few weeks of parental leave (thanks Mozilla!). While I was away Sarah Clements took over some Firefox Quantum release criteria work and I’m pleased to see that she managed to tackle everything well by herself.

One of the major changes she made was to split the Quantum criteria page into separate 32-bit and 64-bit pages. This simplifies the graphs and lets release stakeholders see more clearly how each architecture is doing.

She also added the new release criteria for Firefox’s GeckoView efforts.

To learn more you can visit https://health.graphics to see the changes.

If you would like to contribute visit https://github.com/mozilla/firefox-health-dashboard.

|

|

Andy McKay: Mt. Seymour |

When I do a long ride, I usually get graphs that are kind of messy. The North Shore is notoriously hilly, so you get crazy graphs that show my heart rate and speed all over the place.

However, I live in Deep Cove and occasionally just go straight up Mount Seymour, back down and then collapse in a heap at my house. I'm not fast or fit, but I'm trying to get better.

This produces some satisfyingly nice graphs.

Elevation:

Then there's speed (yeah, I'm not fast):

And then the inverse, my heart rate (yeah, I'm not fit):

Most satisfying.

|

|

Firefox Test Pilot: Welcome Punam to the Test pilot team! |

A couple months ago Punam transferred from another team at Mozilla to join the Test Pilot team. Below she answers some questions about her experience and what she’s looking forward to. Welcome, Punam!

How would you describe your role on the Test Pilot team?

I joined the Test Pilot team as a Front-End Engineer on Project Screenshots. As a front-end developer, I am responsible for implementing new features that improve the user experience and make Firefox awesome. My role involves scoping problems, then designing, implementing, testing and releasing code to solve them!

What does a typical day at Mozilla look like for you?

As I am transitioning from the Partner Engineering team inside Business Affairs, my day is split between supporting and wrapping up experiments in the Partner Engineering project and ramping up on Project Screenshots. This involves attending Screenshots standups, absorbing project details, debugging the codebase, collaborating with my peers via code reviews and fixing my first bugs.

Where were you before the Test Pilot team?

I have been a Mozillian for over 5 years, working in Firefox OS Media, Connected Devices and, most recently, Partner Engineering. Over that period, I have gained a wealth of experience developing an HTML5-based mobile OS from the ground up, building prototypes in the IoT space, and now working on core Firefox.

Before Mozilla, I worked at SonicWall, eBay and Symantec doing web development.

What’s coming up that you’re excited about?

I am excited to expand the Screenshots feature set to bring the best experiences to our users. I am looking forward to exploring opportunities and testing new ideas and experiments aimed at taking Screenshots beyond the desktop browser.

What is something most people at Mozilla don’t know about you?

I have two boys who are Cub Scouts, and I am an active scout volunteer. We just wrapped up Scout-O-Rama, where our pack hosted the water rockets booth. I enjoy nature and love hiking and camping with friends and family, my favorite spots being Yosemite and Big Sur.

Any fun side projects you’re working on (outside of work)?

As far as side projects go, my time is currently taken up mostly by my kids, so I have been dabbling with educational projects for kids. Most recently I’m working with them on a doorbell IoT project using a Raspberry Pi and a camera. Will share a picture once it’s ready!

Welcome Punam to the Test pilot team! was originally published in Firefox Test Pilot on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

Mozilla VR Blog: This week in Mixed Reality: Issue 7 |

Missed us last week? Our team met in Chicago for a work week. If you had the chance to come and meet us at the CHIVR / AR Chicago meetup, thanks for swinging by. We strategized our short- and long-term plans, and we're really excited to share what we'll be rolling out in the coming weeks.

Browsers

Week by week we are adding more features towards an MVP:

- Implemented and tested native Android permission requests on all platforms

- Implemented webview permission prompts

- Improved URL detection on the URLBar

- Ensured MotionEvent consistency to maintain correct button state

- Started work on immersive mode

Here is a video of the webview permission prompts!

Social

We are continuously making performance improvements and adding new features to the Hubs by Mozilla product:

- Shipped second pass of UX improvements for Oculus Go which will improve performance

- New, direct manipulation phone locomotion controls

- R&D spikes on in-VR UI with react-canvas and react-360

- Space bubble usability improvements

- Wrapping up the overall polish/bug-fixing pass and the Oculus Go UX and performance push, then pivoting back to new feature development next week

Join our public WebVR Slack #social channel to participate in the discussion!

Content ecosystem

We are improving the Unity Editor's VR preview mode for rapid development, including fixing controller bugs and improving the documentation for the Unity WebVR exporter tool.

We'd like to invite Unity game designers and developers to try it out and reach out to us on the public WebVR Slack #unity channel to participate in the discussion!

Stay tuned for new features and improvements across our three areas next week!

|

|

The Firefox Frontier: What’s the 411 on 404 messages: Internet error messages explained |

Nothing’s worse than a broken website. Well, maybe an asteroid strike. Or a plague. So maybe a broken website isn’t the end of the world, but it’s still annoying. And …

The post What’s the 411 on 404 messages: Internet error messages explained appeared first on The Firefox Frontier.

|

|

Robert O'Callahan: Intel CPU Bug Affecting rr Watchpoints |

I investigated an rr bug report and discovered an annoying Intel CPU bug that affects rr replay using data watchpoints. It doesn't seem to be hit very often in practice, which is good because I don't know any way to work around it. It turns out that the bug is probably covered by an existing Intel erratum for Skylake and Kaby Lake (and probably later generations, but I'm not sure), which I even blogged about previously! However, the erratum does not mention watchpoints and the bug I've found definitely depends on data watchpoints being set.

I was able to write a stand-alone testcase to characterize the bug. The issue seems to be that if a rep stos (and probably rep movs) instruction writes between 1 and 64 bytes (inclusive), and you have a read or write watchpoint in the range [64, 128) bytes from the start of the writes (i.e., not triggered by the instruction), then one spurious retired conditional branch is (usually) counted. The alignment of the writes does not matter, and it's not related to speculative execution.
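The trigger conditions above can be restated as a small predicate, which makes the inclusive and half-open ranges explicit. This is a hypothetical sketch, not code from rr. `mayCountSpuriousBranch` and its parameters are names invented here, addresses are plain numbers, and the watchpoint is treated as a single address, although real debug-register watchpoints cover 1 to 8 bytes.

```javascript
// Hypothetical helper (not part of rr): restates the conditions described
// for the Skylake/Kaby Lake rep stos / rep movs bug.
function mayCountSpuriousBranch(writeStart, writeLength, watchpointAddr) {
  // Only string instructions that write between 1 and 64 bytes (inclusive).
  if (writeLength < 1 || writeLength > 64) {
    return false;
  }
  // Distance of the read/write watchpoint from the start of the writes.
  const offset = watchpointAddr - writeStart;
  // [64, 128): beyond the written bytes, so the watchpoint never fires,
  // yet one extra retired conditional branch is (usually) counted.
  return offset >= 64 && offset < 128;
}
```

For example, a 16-byte `rep stos` at `0x1000` with a watchpoint at `0x1040` falls inside the affected window. Moving the watchpoint to `0x1080` takes it outside.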

If you find rr failing during replay with watchpoints set, and the failures go away if you remove the watchpoints, it could well be this bug. Broadwell and earlier don't seem to have the bug.

A possible workaround would be to disable "fast-string optimization" in the kernel at boot time. I don't think there's any way for users to force this to happen in Linux, currently, but someone could write a kernel patch adding a command-line option for that and send it upstream. It would be great if they did!

Fortunately this sort of bug does not affect Pernosco.

http://robert.ocallahan.org/2018/05/intel-cpu-bug-affecting-rr-watchpoints.html

|

|

Air Mozilla: Reps Weekly Meeting, 24 May 2018 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

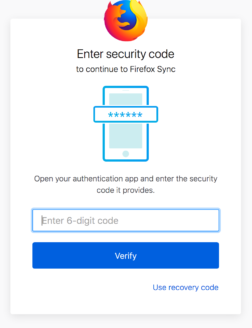

Mozilla Cloud Services Blog: Two-step authentication in Firefox Accounts |

Starting on 5/23/2018, we are beginning a phased rollout to allow Firefox Accounts users to opt into two-step authentication. If you enable this feature, then in addition to your password, an additional security code will be required to log in.

We chose to implement this feature using the well-known authentication standard TOTP (Time-based One-Time Password). TOTP codes can be generated using a variety of authenticator applications. For example, Google Authenticator, Duo and Authy all support generating TOTP codes.
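TOTP is small enough to sketch in full. The following is a generic RFC 6238 implementation for Node.js using its built-in `crypto` module. It is not the Firefox Accounts code. It takes the raw shared secret as a string or Buffer rather than the Base32 text that authenticator apps exchange, and it uses the common defaults of HMAC-SHA1, 30-second steps and 6 digits.

```javascript
const crypto = require('crypto');

// HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter,
// followed by "dynamic truncation" to a short decimal code.
function hotp(secret, counter, digits = 6) {
  const msg = Buffer.alloc(8);
  msg.writeUInt32BE(Math.floor(counter / 0x100000000), 0); // high 32 bits
  msg.writeUInt32BE(counter >>> 0, 4);                       // low 32 bits
  const mac = crypto.createHmac('sha1', secret).update(msg).digest();
  // The low nibble of the last byte selects where to read 4 bytes from.
  const offset = mac[mac.length - 1] & 0x0f;
  const binary =
    ((mac[offset] & 0x7f) << 24) |
    (mac[offset + 1] << 16) |
    (mac[offset + 2] << 8) |
    mac[offset + 3];
  return String(binary % 10 ** digits).padStart(digits, '0');
}

// TOTP (RFC 6238): the counter is the number of time steps since the Unix epoch.
function totp(secret, unixSeconds = Math.floor(Date.now() / 1000), step = 30, digits = 6) {
  return hotp(secret, Math.floor(unixSeconds / step), digits);
}
```

Because the server and the authenticator app share the secret and the clock, both compute the same code independently. With the RFC 6238 test secret `"12345678901234567890"`, `totp(secret, 59, 30, 8)` yields `"94287082"`, matching the RFC's published test vector.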

Additionally, we added support for single-use recovery codes in case you lose access to your TOTP application. We recommend saving your recovery codes in a safe place, since they can be used to bypass TOTP.
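One common way to make recovery codes single-use is to store only a hash of each code and delete the hash when the code is redeemed. This is an illustrative assumption, not a description of how Firefox Accounts stores them. The code count, the 16-hex-character format and the function names are all hypothetical.

```javascript
const crypto = require('crypto');

// Store only a SHA-256 digest of each code, never the code itself.
function digest(code) {
  return crypto.createHash('sha256').update(code).digest('hex');
}

// Generate `count` random codes (hypothetical format: 8 random bytes as hex).
// The plain codes are shown to the user once; only the digests are kept.
function generateRecoveryCodes(count = 8) {
  const codes = [];
  for (let i = 0; i < count; i++) {
    codes.push(crypto.randomBytes(8).toString('hex'));
  }
  return { codes, stored: new Set(codes.map(digest)) };
}

// Accept a code at most once: Set#delete returns true only if the digest
// was present, and removing it makes any second use fail.
function consumeRecoveryCode(stored, code) {
  return stored.delete(digest(code));
}
```

Because the codes are random and high-entropy, a plain hash is enough for this illustration. Generating new codes simply replaces the stored set, which matches the post's note that old codes cannot be viewed again.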

To enable two-step authentication, go to your Firefox Accounts preferences and click “Enable” on the “Two-step authentication” panel.

Note: If you do not see the Two-step authentication panel, you can manually enable it by following these instructions.

Using one of the authenticator applications, scan the QR code and then enter the security code it displays. Doing this will confirm your device, enable TOTP and show your recovery codes.
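The QR code that authenticator apps scan typically encodes an `otpauth://totp/...` URI in the "Key Uri Format" documented for Google Authenticator, with the shared secret in RFC 4648 Base32. The sketch below builds such a URI. The issuer and account strings are placeholders, since the post does not say which label Firefox Accounts uses.

```javascript
// RFC 4648 Base32 (alphabet A-Z, 2-7) without "=" padding, as used in otpauth URIs.
function base32Encode(buf) {
  const alphabet = 'ABCDEFGHIJKLMNOPQRSTUVWXYZ234567';
  let bits = 0;   // number of not-yet-emitted bits held in `value`
  let value = 0;
  let out = '';
  for (const byte of buf) {
    value = ((value << 8) | byte) & 0xffff; // keep only the low bits we still need
    bits += 8;
    while (bits >= 5) {
      out += alphabet[(value >>> (bits - 5)) & 31];
      bits -= 5;
    }
  }
  if (bits > 0) {
    // Pad the final partial group with zero bits.
    out += alphabet[(value << (5 - bits)) & 31];
  }
  return out;
}

// Build a provisioning URI: otpauth://totp/Issuer:account?secret=...&issuer=...
function otpauthUri(issuer, account, secret) {
  const label = encodeURIComponent(issuer) + ':' + encodeURIComponent(account);
  return 'otpauth://totp/' + label +
    '?secret=' + base32Encode(secret) +
    '&issuer=' + encodeURIComponent(issuer);
}
```

For example, `otpauthUri('Example', 'alice@example.com', Buffer.from('12345678901234567890'))` produces `otpauth://totp/Example:alice%40example.com?secret=GEZDGNBVGY3TQOJQGEZDGNBVGY3TQOJQ&issuer=Example`. Any QR library can then render that string.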

Note: After setup, make sure you download and save your recovery codes in a safe location! You will not be able to see them again, unless you generate new ones.

Once two-step authentication is enabled, every login will require a security code from your TOTP device.

Thanks to everyone who helped work on this feature, including the UX designers, engineers, quality assurance and security teams!

https://blog.mozilla.org/services/2018/05/22/two-step-authentication-in-firefox-accounts/

|

|

Hacks.Mozilla.Org: Progressive Web Games |

With the recent release of the Progressive Web Apps core guides on MDN, it’s easier than ever to make your website look and feel as responsive as native on mobile devices. But how about games?

In this article, we’ll explore the concept of Progressive Web Games to see whether it is practical and viable in a modern web development environment, using PWA features built with Web APIs.

Let’s look at the Enclave Phaser Template (EPT), a free, open source mobile boilerplate for HTML5 games that I created using the Phaser game engine. I use it myself to build all my Enclave Games projects.

The template was recently upgraded with some PWA features: Service Workers provide the ability to cache and serve the game when offline, and a manifest file allows it to be installed on the home screen. We also provide access to notifications, and much more. These PWA features are already built into the template, so you can focus on developing the game itself.

We will see how those features can solve problems developers have today: adding to home screen and working offline. The third part of this article will introduce the concept of progressive loading.

Add to Home screen

Web games can show their full potential on mobile, especially if we create some features and shortcuts for developers. The Add to Home screen feature makes it easier to build games that can compete with native games for screen placement and act as first-class citizens on mobile devices.

Progressive Web Apps can be installed on modern devices with the help of this feature. You enable it by including a manifest file; the icon, modals and install banners are created based on the information in ept.webmanifest:

```json
{
  "name": "Enclave Phaser Template",
  "short_name": "EPT",
  "description": "Mobile template for HTML5 games created using the Phaser game engine.",
  "icons": [
    {
      "src": "img/icons/icon-32.png",
      "sizes": "32x32",
      "type": "image/png"
    },
    // ...
    {
      "src": "img/icons/icon-512.png",
      "sizes": "512x512",
      "type": "image/png"
    }
  ],
  "start_url": "/index.html",
  "display": "fullscreen",
  "theme_color": "#DECCCC",
  "background_color": "#CCCCCC"
}
```

It’s not the only requirement, though: be sure to check the Add to Home Screen article for all the details.
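The manifest lists icons at several sizes, and the browser picks one for the home screen and splash screen. Each browser applies its own selection rules. The sketch below shows one plausible rule in plain JavaScript: take the smallest icon at least as large as needed, otherwise fall back to the largest. `pickIcon` and `parseSizes` are hypothetical helpers, not part of the template or any browser API.

```javascript
// Parse a manifest "sizes" value such as "32x32" or "48x48 96x96" into
// pixel sizes (the smaller dimension of each entry); ignores "any".
function parseSizes(sizes) {
  return String(sizes || '')
    .split(/\s+/)
    .map(function (s) { return /^(\d+)x(\d+)$/i.exec(s); })
    .filter(Boolean)
    .map(function (m) { return Math.min(Number(m[1]), Number(m[2])); });
}

// Smallest icon that is at least targetPx, else the largest available, else null.
function pickIcon(manifest, targetPx) {
  var best = null, bestSize = 0;
  var largest = null, largestSize = 0;
  (manifest.icons || []).forEach(function (icon) {
    parseSizes(icon.sizes).forEach(function (size) {
      if (size >= targetPx && (best === null || size < bestSize)) {
        best = icon;
        bestSize = size;
      }
      if (size > largestSize) {
        largest = icon;
        largestSize = size;
      }
    });
  });
  return best || largest;
}
```

With the two icons shown in ept.webmanifest, a 192 px request resolves to `icon-512.png` and a 16 px request to `icon-32.png`. This is why providing both small and large icons helps.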

Offline capabilities

Developers often have issues getting desktop games (or mobile-friendly games showcased on a PC with a monitor) to work offline. This is especially challenging when demoing a game at a conference with unreliable wifi! Best practice is to plan ahead and have all the files of the game available locally, so that you can launch them offline.

Offline builds can be tricky: you have to manage the files yourself, keep track of versions, remember whether you’ve applied the latest patch or fixed that bug from a previous conference, work out the hardware setup, and so on. This takes time and extra preparation.

Web games are easier to handle online when you have reliable connectivity: You point the browser to a URL and you have the latest version of your game running in no time. The network connection is the problem. It would be nice to have an offline solution.

The good news is that Progressive Web Apps can help — Service Workers cache and serve assets offline, so an unstable network connection is not the problem it used to be.

The Service Worker file in the Enclave Phaser Template contains everything we need. It starts with the list of files to be cached:

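```javascript
// Name of the Cache Storage entry used by this service worker.
var cacheName = 'EPT-v1';

// Files cached at install time so the game can start without a network.
var appShellFiles = [
  './',
  './index.html',
  // ...
  './img/overlay.png',
  './img/particle.png',
  './img/title.png'
];
```

Then the install event is handled, which adds all the files to the cache: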

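```javascript
// On install, download every app-shell file into the cache.
// waitUntil() keeps the worker in the installing phase until caching finishes.
self.addEventListener('install', function(e) {
  e.waitUntil(
    caches.open(cacheName).then(function(cache) {
      return cache.addAll(appShellFiles);
    })
  );
});
```

Next comes the fetch event, which serves content from the cache and adds new content to it when needed: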

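```javascript
// Cache-first strategy: answer from the cache when possible, otherwise fetch
// from the network and keep a copy of the response for next time.
self.addEventListener('fetch', function(e) {
  // Cache.put() only accepts GET requests, so let the browser handle the rest.
  if (e.request.method !== 'GET') {
    return;
  }
  e.respondWith(
    caches.match(e.request).then(function(r) {
      return r || fetch(e.request).then(function(response) {
        return caches.open(cacheName).then(function(cache) {
          // A response body can be read only once: cache a clone, return the original.
          cache.put(e.request, response.clone());
          return response;
        });
      });
    })
  );
});
```

Be sure to check the Service Worker article for a detailed explanation.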
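The versioned cache name (`EPT-v1`) suggests a companion step that the snippets above do not show: deleting caches left behind by older versions once a new service worker activates. The sketch below follows the widely used `activate` pattern. It is an assumption, not part of the template. `staleCacheNames` is a hypothetical helper, and the `EPT-` prefix filter avoids touching unrelated caches on the same origin. In the template you would reuse the existing `cacheName` variable; it is redeclared here only so the snippet stands alone.

```javascript
// Redeclared only so this snippet is self-contained; bumped from 'EPT-v1'
// to illustrate a new release with changed files.
var cacheName = 'EPT-v2';

// Pure helper: caches created by other versions of this game.
function staleCacheNames(keys, current) {
  return keys.filter(function (key) {
    return key.indexOf('EPT-') === 0 && key !== current;
  });
}

// Register the handler only when running inside a service worker.
if (typeof self !== 'undefined' && typeof caches !== 'undefined') {
  self.addEventListener('activate', function (e) {
    e.waitUntil(
      caches.keys().then(function (keys) {
        return Promise.all(staleCacheNames(keys, cacheName).map(function (key) {
          return caches.delete(key);
        }));
      })
    );
  });
}
```

Bumping the version suffix then gives players a fresh copy of the game after the next visit, instead of serving outdated assets from an old cache forever.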

Progressive loading

Progressive loading is an interesting concept that can provide many benefits for web game development. Progressive loading is basically “lazy loading” in the background. It’s not dependent on a specific API, but it follows the PWA approach and uses several of the key features we’ve just described, focused on games, and their specific requirements.

Games are heavier than apps in terms of resources: even small and casual ones usually need 5-15 MB of assets, from images to sounds. Loading is supposed to feel instantaneous, but you still have to wait through the loading screen while everything downloads. It can also be a problem if the player has a poor connection: the longer the download time, the bigger the chance that the game will be abandoned and the tab closed.

But what if instead of downloading everything, you loaded only what’s really needed first, and then downloaded the rest in the background? This way the player would see the main menu of your game way faster than with the traditional approach. They would spend at least a few seconds looking around while the files for the gameplay are retrieved in the background invisibly. And even if they clicked the play button really quickly, we could show a loading animation while everything else is loaded.

Instant Games are gaining in popularity, and a game developer building casual mobile HTML5 games should probably consider putting them on the Facebook or Google platforms. There are some requirements to meet, especially concerning the initial file size and download time, as the games are supposed to be instantly available for play.

Using the lazy loading technique, the game will feel faster than it would otherwise, given the amount of data required for it to be playable. You can achieve these results using the Phaser framework, which is pre-built to load most of the assets after the main menu resources arrive. You can also implement this yourself in pure JavaScript, using the link prefetch/defer mechanism. There’s more than one way to achieve progressive loading – it’s a powerful idea that’s independent of the specific approach or tools you choose, following the principles of Progressive Web Apps.
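One way to express progressive loading in plain JavaScript is to split the asset list by stage and start the gameplay downloads only after the menu assets have arrived. This is a minimal sketch, not the template's or Phaser's loader. `loadProgressively`, the `stage` field and the injected `loadAsset` function are hypothetical; in a browser, `loadAsset` might wrap `fetch()` or an `Image` element.

```javascript
// Load what the main menu needs first, then everything else in the background.
// `loadAsset(url)` must return a Promise (hypothetical, injected by the caller).
function loadProgressively(assets, loadAsset) {
  var menuAssets = assets.filter(function (a) { return a.stage === 'menu'; });
  var gameAssets = assets.filter(function (a) { return a.stage !== 'menu'; });

  // Resolves once the menu can be shown.
  var menuReady = Promise.all(menuAssets.map(function (a) {
    return loadAsset(a.url);
  }));

  // Gameplay assets start downloading only after the menu assets are in.
  var gameReady = menuReady.then(function () {
    return Promise.all(gameAssets.map(function (a) {
      return loadAsset(a.url);
    }));
  });

  return { menuReady: menuReady, gameReady: gameReady };
}
```

Showing the main menu when `menuReady` resolves, and enabling the play button (or showing a loading animation until `gameReady` resolves), mirrors the flow described above.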

Conclusion

Do you have any more ideas on how to enhance the gaming experience? Feel free to play and experiment, and shape the future of web games. Drop a comment here if you’ve got ideas to share.

|

|

Air Mozilla: Mozilla Open Leaders - Round 5 Final Demos (Open Internet Ninja Foxes) |

http://public.etherpad-mozilla.org/p/ol5-demos-a Meet our open leadership grads: https://medium.com/read-write-participate/meet-our-open-leadership-grads-232800db1e21 Timestamps: 3:20 - Observed City // Fiona @observedcity 7:18 - MBac-Taking a closer look on how bacteria move!...

https://air.mozilla.org/mozilla-open-leaders-round-5-final-demos-open-internet-ninja-foxes/

|

|

Cameron Kaiser: Spectre Number 4, STEP RIGHT UP! |

The latest chapter in the continuing saga of Meltdown and Spectre (tl;dr: G4/7400, G3 and likely earlier 60x PowerPCs don't seem vulnerable at all; G4/7450 and G5 are so far affected by Spectre while Meltdown has not been confirmed, but IBM documentation implies "big" POWER4 and up are vulnerable to both) is Spectre variant 4. In this variant, the fundamental issue of getting the CPU to speculatively execute code it mistakenly predicts will be executed and observing the effects on cache timing is still present, but here the trick has to do with executing a downstream memory load operation speculatively before other store operations that the CPU (wrongly) believes the load does not depend on. The processor will faithfully revert the stores and the register load when the mispredict is discovered, but the loaded address will remain in the L1 cache and be observable through means similar to those in other Spectre-type attacks.

The G5, POWER4 and up are so aggressively out of order with memory accesses that they are almost certainly vulnerable. In an earlier version of this post, I didn't think the G3 and 7400 were vulnerable (as they don't appear to be to other Spectre variants), but after some poring over IBM's technical documentation I now believe with some careful coding it could be possible -- just not very probable. The details have to do with the G3 (and 7400)'s Load-Store Unit, or LSU, which is responsible for reading and writing memory. Unless a synchronizing instruction intervenes, up to one load instruction can execute ahead of a store, which makes the attack theoretically possible. However, the G3 and 7400 cannot reorder multiple stores in this fashion, and because only a maximum of two instructions may be dispatched to the LSU at any time (in practice fewer, since those two instructions are spread across all of the processor's execution units), the victim load and the confounding store must be located immediately together or have no LSU-issued instructions between them. Even then, reliably ensuring that both instructions get dispatched in such a way that the CPU will reorder them in the (attacker-)desired order wouldn't be trivial.

The 7450, as with other Spectre variants, makes the attack a bit easier. It can dispatch up to four instructions to its execution units, which makes the attack more feasible because there is more theoretical flexibility on where the victim load can be located downstream (especially if all four instructions go to its LSU). However, it too can execute at most just one load instruction ahead of a store, and it cannot reorder stores either.

That said, as a practical matter, Spectre in any variant (including this one) is only a viable attack vector on Power Macs through native applications, which have far more effective methods of pwning your Power Mac at their disposal than an intermittently successful attempt to read memory. Although TenFourFox has a JavaScript JIT, no 7450 and probably not even the Quad is fast enough to obtain enough of a memory timing delta to make the attack functional (let alone reliable), and we disabled the high-resolution timers necessary for the exploit "way back" in FPR5 anyway. The new variant 4 is a bigger issue for Talos II owners like myself because such an attack is possible and feasible on the POWER9, but we can confidently expect that there will be patches from IBM and Raptor to address it soon.

http://tenfourfox.blogspot.com/2018/05/spectre-number-4-step-right-up.html

|

|

Daniel Pocock: OSCAL'18 Debian, Ham, SDR and GSoC activities |

Over the weekend I've been in Tirana, Albania for OSCAL 2018.

Crowdfunding report

The crowdfunding campaign to buy hardware for the radio demo was successful. The gross sum received was GBP 110.00; PayPal fees were GBP 6.48, and the net amount after currency conversion was EUR 118.29. For transparency, here is a complete list of transaction IDs, so that if you donated, you can see that your contribution was included in the total I have reported in this blog. Thank you to everybody who made this a success.
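As a quick check of the reported figures, the net sterling amount and the effective exchange rate can be worked out directly. The rate below is derived here from the reported totals, including any conversion spread; it is not stated in the report.

$$
\text{GBP } 110.00 - \text{GBP } 6.48 = \text{GBP } 103.52,
\qquad
\frac{\text{EUR } 118.29}{\text{GBP } 103.52} \approx 1.1427 \ \text{EUR per GBP}
$$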

The funds were used to purchase an Ultracell UCG45-12 sealed lead-acid battery from Tashi in Tirana; here is the receipt. After OSCAL, the battery will be used at a joint meeting of the Prishtina hackerspace and SHRAK, the amateur radio club of Kosovo, on 24 May. The battery will remain in the region to support any members of the ham community who want to visit the hackerspaces and events.

Debian and Ham radio booth

Local volunteers from Albania and Kosovo helped run a Debian and ham radio/SDR booth on Saturday, 19 May.

The antenna was erected as a folded dipole with one end joined to the Tirana Pyramid and the other end attached to the marquee sheltering the booths. We operated on the twenty meter band using an RTL-SDR dongle and upconverter for reception and a Yaesu FT-857D for transmission. An MFJ-1708 RF Sense Switch was used for automatically switching between the SDR and transceiver on PTT and an MFJ-971 ATU for tuning the antenna.

I successfully made contact with 9A1D, a station in Croatia. Enkelena Haxhiu, one of our GSoC students, made contact with Z68AA in her own country, Kosovo.

Anybody hoping that Albania was a suitably remote place to hide from media coverage of the British royal wedding would have been disappointed as we tuned in to GR9RW from London and tried unsuccessfully to make contact with them. Communism and royalty mix like oil and water: if a deceased dictator was already feeling bruised about an antenna on his pyramid, he would probably enjoy water torture more than a radio transmission celebrating one of the world's most successful hereditary monarchies.

A versatile venue and the dictator's revenge

It isn't hard to imagine communist dictator Enver Hoxha turning in his grave at the thought of his pyramid being used for an antenna for communication that would have attracted severe punishment under his totalitarian regime. Perhaps Hoxha had imagined the possibility that people may gather freely in the streets: as the sun moved overhead, the glass facade above the entrance to the pyramid reflected the sun under the shelter of the marquees, giving everybody a tan, a low-key version of a solar death ray from a sci-fi movie. Must remember to wear sunscreen for my next showdown with a dictator.

The security guard stationed at the pyramid for the day was kept busy chasing away children and more than a few adults who kept arriving to climb the pyramid and slide down the side.

Meeting with Debian's Google Summer of Code students

Debian has three Google Summer of Code students in Kosovo this year. Two of them, Enkelena and Diellza, were able to attend OSCAL. Albania is one of the few countries they can visit easily and OSCAL deserves special commendation for the fact that it brings otherwise isolated citizens of Kosovo into contact with an increasingly large delegation of foreign visitors who come back year after year.

We had some brief discussions about how their projects are starting and things we can do together during my visit to Kosovo.

Workshops and talks

On Sunday, 20 May, I ran a workshop Introduction to Debian and a workshop on Free and open source accounting. At the end of the day Enkelena Haxhiu and I presented the final talk in the Pyramid, Death by a thousand chats, looking at how free software gives us a unique opportunity to disable a lot of unhealthy notifications by default.

|

|