
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Gervase Markham: A Case for the Total Abolition of Software Patents |

A little while back, I wrote a piece outlining the case for the total abolition (or non-introduction) of software patents, as seen through the lens of “promoting innovation”. Few of the arguments are new, but the “Narrow Road to Patent Goodness” presentation of the information is quite novel as far as I know, and may form a good basis for anyone trying to explain all the possible problems with software (or other) patents.

You can find it on my website.

http://feedproxy.google.com/~r/HackingForChrist/~3/l_llzOl9qGo/

|

|

William Lachance: Mission Control 1.0 |

Just a quick announcement that the first “production-ready” version of Mission Control just went live yesterday, at this easy-to-remember URL:

https://missioncontrol.telemetry.mozilla.org

For those not yet familiar with the project, Mission Control aims to track release stability and quality across Firefox releases. It is similar in spirit to arewestableyet and other crash dashboards, with the following new and exciting properties:

- Uses the full set of crash counts gathered via telemetry, rather than the arbitrary sample that users decide to submit to crash-stats

- Results are available within minutes of ingestion by telemetry (although be warned initial results for a release always look bad)

- The denominator in our crash rate is usage hours, rather than the probably-incorrect calculation of active-daily-installs used by arewestableyet (not a knock on the people who wrote that tool, there was nothing better available at the time)

- We have a detailed breakdown of the results by platform (rather than letting Windows results dominate the overall rates due to its high volume of usage)
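The usage-hours denominator and the per-platform breakdown described above can be sketched as follows. This is a minimal illustration, not Mission Control's actual code: the `PlatformStats` type, the scaling to "per 1,000 usage hours", and all the numbers are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PlatformStats:
    """Hypothetical container for one platform's telemetry totals."""
    crashes: int
    usage_hours: float


def crash_rate_per_1k_hours(stats: PlatformStats) -> float:
    """Crashes per 1,000 usage hours; 0.0 when no usage was recorded."""
    if stats.usage_hours <= 0:
        return 0.0
    return stats.crashes / stats.usage_hours * 1000


# Made-up numbers, for illustration only.
by_platform = {
    "windows": PlatformStats(crashes=9000, usage_hours=3_000_000),
    "mac": PlatformStats(crashes=150, usage_hours=100_000),
    "linux": PlatformStats(crashes=40, usage_hours=20_000),
}

# Per-platform rates keep low-volume platforms visible.
rates = {name: crash_rate_per_1k_hours(s) for name, s in by_platform.items()}

# The pooled rate is dominated by the platform with the most usage hours.
total = PlatformStats(
    crashes=sum(s.crashes for s in by_platform.values()),
    usage_hours=sum(s.usage_hours for s in by_platform.values()),
)
overall = crash_rate_per_1k_hours(total)
```

With these made-up inputs the pooled rate sits very close to the Windows rate, which is why a per-platform view is useful.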

In general, my hope is that this tool will provide a more scientific and accurate idea of release stability and quality over time. There’s lots more to do, but I think this is a promising start. Much gratitude to kairo, calixte, chutten and others who helped build my understanding of this area.

The dashboard itself is easier to show than to talk about, so I recorded a quick demonstration of some of its capabilities and published it on Air Mozilla:

https://wlach.github.io/blog/2018/06/mission-control-1-0?utm_source=Mozilla&utm_medium=RSS

|

|

Daniel Pocock: Public Money Public Code: a good policy for FSFE and other non-profits? |

FSFE has been running the Public Money Public Code (PMPC) campaign for some time now, requesting that software produced with public money be licensed for public use under a free software license. You can request a free box of stickers and posters here (donation optional).

Many non-profits and charitable organizations receive public money directly from public grants and indirectly from the tax deductions given to their supporters. If the PMPC argument is valid for other forms of government expenditure, should it also apply to the expenditures of these organizations?

Where do we start?

A good place to start could be FSFE itself. Donations to FSFE are tax deductible in Germany, the Netherlands and Switzerland. Therefore, the organization is partially supported by public money.

Personally, I feel that for an organization like FSFE to be true to its principles and its affiliation with the FSF, it should be run without any non-free software or cloud services.

However, in my role as one of FSFE's fellowship representatives, I proposed a compromise: rather than my preferred option, an immediate and outright ban on non-free software in FSFE, I simply asked the organization to keep a register of dependencies on non-free software and services, by way of a motion at the 2017 general assembly:

The GA recognizes the wide range of opinions in the discussion about non-free software and services. As a first step to resolve this, FSFE will maintain a public inventory on the wiki listing the non-free software and services in use, including details of which people/teams are using them, the extent to which FSFE depends on them, a list of any perceived obstacles within FSFE for replacing/abolishing each of them, and for each of them a link to a community-maintained page or discussion with more details and alternatives. FSFE also asks the community for ideas about how to be more pro-active in spotting any other non-free software or services creeping into our organization in future, such as a bounty program or browser plugins that volunteers and staff can use to monitor their own exposure.

Unfortunately, it failed to receive enough votes (minutes: item 24, votes: 0 for, 21 against, 2 abstentions).

In a blog post on the topic of using proprietary software to promote freedom, FSFE's Executive Director Jonas Öberg used the metaphor of taking a journey. Isn't a journey more likely to succeed if you know your starting point? Wouldn't it be even better having a map that shows which roads are a dead end?

In any IT project, it is vital to understand your starting point before changes can be made. A register like this would also serve as a good model for other organizations hoping to secure their own freedoms.

For a community organization like FSFE, there is significant goodwill from volunteers and other free software communities. A register of exposure to proprietary software would allow FSFE to crowdsource solutions from the community.

Back in 2018

I'll be proposing the same motion again for the 2018 general assembly meeting in October.

If you can see something wrong with the text of the motion, please help me improve it so it may be more likely to be accepted.

Offering a reward for best practice

I've observed several discussions recently where people have questioned the impact of FSFE's campaigns. How can we measure whether the campaigns are having an impact?

One idea may be to offer an annual award for other non-profit organizations, outside the IT domain, who demonstrate exemplary use of free software in their own organization. An award could also be offered for some of the individuals who have championed free software solutions in the non-profit sector.

An award program like this would help to showcase best practice and provide proof that organizations can run successfully using free software. Seeing compelling examples of success makes it easier for other organizations to believe freedom is not just a pipe dream.

Therefore, I hope to propose an additional motion at the FSFE general assembly this year, calling for an award program to commence in 2019 as a new phase of the PMPC campaign.

Please share your feedback

Any feedback on this topic is welcome through the FSFE discussion list. You don't have to be a member to share your thoughts.

|

|

The Mozilla Blog: Facebook Must Do Better |

The recent New York Times report alleging expansive data sharing between Facebook and device makers shows that Facebook has a lot of work to do to come clean with its users and to provide transparency into who has their data. We raised these transparency issues with Facebook in March and those concerns drove our decision to pause our advertising on the platform. Despite congressional testimony and major PR campaigns to the contrary, Facebook apparently has yet to fundamentally address these issues.

In its response, Facebook has argued that device partnerships are somehow special and that the company has strong contracts in place to prevent abuse. While those contracts are important, they don’t remove the need to be transparent with users and to give them control. Suggesting otherwise, as Facebook has done here, indicates the company still has a lot to learn.

The post Facebook Must Do Better appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/06/05/facebook-must-do-better/

|

|

Henrik Skupin: My 15th Bugzilla account anniversary |

Exactly 15 years ago at “2003-06-05 09:51:47 PDT” my journey in Bugzilla started. When I created my account, I would never have imagined where all these endless hours of community work would end up. And even now I cannot predict what it will look like in another 15 years…

Here are some stats from my activities on Bugzilla:

| Activity | Count |
|---|---|
| Bugs filed | 4690 |
| Comments made | 63947 |
| Assigned to | 1787 |
| Commented on | 18579 |
| QA-Contact | 2767 |
| Patches submitted | 2629 |
| Patches reviewed | 3652 |

https://www.hskupin.info/2018/06/05/my-15th-bugzilla-account-anniversary/

|

|

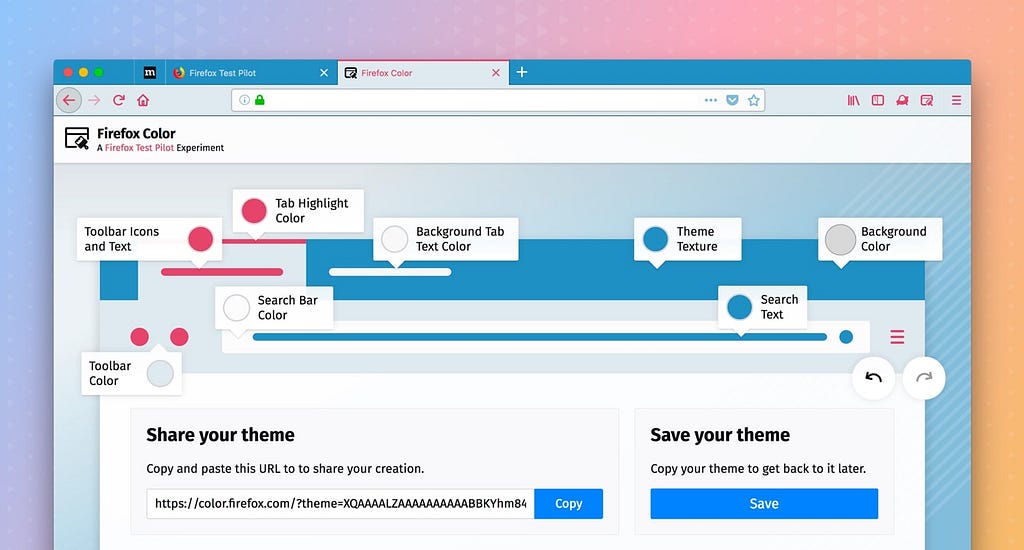

Firefox Test Pilot: Introducing Firefox Color and Side View |

We’re excited to launch two new Test Pilot experiments that add power and style to Firefox.

https://medium.com/media/32dc65c89184767b96c26896efb78323/href

Side View enables you to multitask with Firefox like never before by letting you keep two websites open side by side in the same window.

https://medium.com/media/66965c47993ec6ef8783ef525951c67d/href

Firefox Color makes it easy to customize the look and feel of your Firefox browser. With just a few clicks you can create beautiful Firefox themes all your own.

Both experiments are available today from Firefox Test Pilot. Try them out, and don’t forget to give us feedback. You’re helping to shape the future of Firefox!

Introducing Firefox Color and Side View was originally published in Firefox Test Pilot on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

The Firefox Frontier: Get All the Color, New Firefox Extension Announced |

Remember when you were a kid and wanted to paint your room your favorite color? Or the first time you dyed your hair a different color and couldn’t wait to … Read more

The post Get All the Color, New Firefox Extension Announced appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/get-all-the-color-new-firefox-extension-announced/

|

|

The Firefox Frontier: It’s A New Firefox Multi-tasking Extension: Side View |

Introducing Side View! Side View is a Firefox extension that allows you to view two different browser tabs simultaneously in the same tab, within the same browser window. Firefox Extensions … Read more

The post It’s A New Firefox Multi-tasking Extension: Side View appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/its-a-new-firefox-multi-tasking-extension-side-view/

|

|

The Mozilla Blog: Latest Firefox Test Pilot Experiments: Custom Color and Side View |

Before we bring new features to Firefox, we give them a test run to make sure they’re right for our users. To help determine which features we add and how exactly they should work, we created the Test Pilot program.

Since the launch of Test Pilot, we have experimented with 16 different features, and three have graduated to live in Firefox full time: Activity Stream, Containers and Screenshots. Recently, Screenshots surpassed 100 million screenshots taken since it launched. Thanks go to the active Firefox users who opt to take part in Test Pilot experiments.

This week, the Test Pilot team is continuing to evolve Firefox features with two new extensions that will offer users a more customizable and productive browsing experience.

Introducing Firefox Color

One of the greatest things about the Test Pilot program is being the first to try new things. Check out this video from one of our favorite extensions we’re hoping you’ll try out:

The new Firefox Color extension allows you to customize several different elements of your browser, including background texture, text, icons, the toolbar and highlights.

Color changes update automatically to the browser as you go, so you can mix and match until you find the perfect combo to suit you. Whether you like to update with the seasons, or rep your favorite sports team during playoffs, the new color extension makes it simple to customize your Firefox experience to match anything from your mood to your wardrobe.

You can also save and share your creation with others, or choose from a variety of pre-installed themes.

Introducing Side View

Side View is a great tool for comparison shopping, allowing you to easily scope the best deals on flights or home appliances, and eliminating the need to switch between two separate web pages. It also allows you to easily compare news stories or informational material–perfect for writing a research paper or conducting side-by-side revisions of documents all in one window.

The Side View extension allows you to view two different browser tabs in the same tab, within the same browser window.

You can also opt for Side View to log your recent comparisons, so you can easily access frequently viewed pages.

Join our Test Pilot Program

The Test Pilot program is open to all Firefox users. Your feedback helps us test and evaluate a variety of potential Firefox features. We have more than 100k daily participants, and if you’re interested in helping us build the future of Firefox, visit testpilot.firefox.com to learn more. We’ve made it easy for anyone around the world to join with experiments available in 48 languages.

If you’re familiar with Test Pilot then you know all our projects are experimental, so we’ve made it easy to give us feedback or disable features at any time from testpilot.firefox.com.

Check out the new Color and Side View extensions. We’d love to hear your feedback!

The post Latest Firefox Test Pilot Experiments: Custom Color and Side View appeared first on The Mozilla Blog.

|

|

Kim Moir: Management books in review |

I became a manager of a fantastic team in February. My standard response to a new role is to read many books and talk to a lot of people who have experience in this area so I have the background to be successful. Nothing can prepare you like doing the actual day to day work of being a manager, but these are some concepts I found helpful from my favourite books on this topic:

- Accelerate: Building and Scaling High Performing Technology Operations by Nicole Forsgren, PhD, Jez Humble and Gene Kim. The main topic of this book is not leadership, but many of the chapters deal with how to lead effective teams.

- Lean management practices entail:

- Limiting work in process (WIP) and using these limits to drive process improvement and increase throughput

- Creating and maintaining visual displays showing key productivity metrics and the current status of work that are available to both engineers and managers

- Using data from application performance and infrastructure monitoring to make business decisions on a daily basis

- Definition of burnout:

- Complex and painful deployment strategies that must be performed out of business hours lead to stress and lack of control, which can increase burnout

- Tips to improve culture and support your teams

- Build trust with counterparts on other teams

- Encourage people to move between departments

- Actively seek, encourage and reward work that facilitates collaboration

- Create a training budget and advocate for using it

- Ensure your team has the resources and space to engage in informal learning and explore new ideas

- Make it safe to fail. Many projects fail. Learning from them and holding blameless post-mortems allows people to understand that failure is okay and allows them to feel safe to take risks

- Share information with others

- Lightning talks, lunch and learns and demo days allow teams to share their work and celebrate what they have accomplished

- Allow teams to choose their tools

- Make monitoring a priority

- Radical Focus by Christina Wodtke is a book about OKRs, written as a fable in much the same way that The Phoenix Project is written about DevOps. It’s the story of a struggling startup and how they had to focus on priorities.

- If you were hired as a new CEO, what would you do? Is this a different direction than the current strategy the company is pursuing?

- How to specify OKRs with measurable results

- “You don’t need people to work more, you need people to work on the right things” Look at the work you are doing every week and see how it will impact reaching OKRs.

- OKR fundamentals

- The sentence that describes the Objective should be

- Able to inspire a sense of meaning and purpose. Use the language of your team to write the objective

- Bound by time – able to be completed in a set amount of time, like a month or a quarter

- Accountable by the team independently. Completing it shouldn’t be blocked by the work of another team.

- The Key results answer the question – “How do we know we met our results?”

- Measurable – You can measure opposing forces like growth and performance or revenue and quality. For instance, a growth metric doesn’t matter if your customers don’t continue to use the product after using it once

- Be the Best Boss Everyone Wants to Work for by William Gentry.

I read this book as part of the management book club we have at Mozilla where we discuss a chapter of a book on management every two weeks.

- The overarching theme of this book is to “flip your mindset”. As an individual contributor, your role was to do your individual work to the best of your abilities.

- As a manager, it’s not about you, it’s about your team. Your role is to make your team the most effective it can be, and help the individual team members learn new skills and develop their careers, all while meeting the company’s business objectives.

- An effective team has

- Direction

- Team members should agree on the same goals and that this work is worth pursuing

- Alignment

- Everyone on the team knows their role on the team and what others are doing. Everyone knows what “meets expectations” means and what “excellent” performance looks like. If people feel isolated in their work and don’t know what is happening, or have different opinions on what constitutes excellent performance, the team is lacking alignment.

- Commitment

- Each person is committed to the team and dedicated to the work.

- Turn the Ship Around! by David Marquet is an account of managing the under-performing crew of a nuclear submarine and their path to becoming one of the most improved ships in the fleet.

- The main theme of this book is to push decision making down to lower and lower levels of the organization.

- The mechanisms to strengthen technical competence are:

- Take deliberate action

- We learn all the time and from everywhere

- Don’t just brief people on what to do, certify that it has been done

- The person in charge of the team asks questions. At the end of the certification, the decision is made whether the team is ready to perform the operation.

- Continually and consistently repeat the message

- Specify goals, not methods

- I liked the chapter on clarity a lot.

- Achieve excellence, don’t just avoid errors

- Build trust and take care of your people

- Use guiding principles for decision criteria

- Immediate recognition to reinforce desired behaviours

- Begin with the end in mind

- For instance, if you wanted to reduce the number of operational errors, quantify the end metric you want to reach

- Encourage asking questions instead of blind obedience

- The First 90 Days by Michael D. Watkins. It describes the skills you need to learn to transition to a new job in management, whether it be as a line manager of a team or as a new CTO.

- The purpose of the book is to describe how to successfully accelerate through leadership and career transitions

- Traps during career transitions

- Using skills from your previous job that don’t apply to your new one

- Changing things before gaining trust of your team or understanding the scope of work

- Neglecting relationships with peers

- Keys to a successful transition

- Figure out what you need to learn, learn it quickly, and prioritize

- Match your strategy to the situation

- Secure early wins

- expands your credibility and creates momentum

- Negotiate success

- You have a new job with new responsibilities, how is success defined, how will you be evaluated in your new role?

- Being hired as an external leader is more difficult than being promoted from within.

- Lack of familiarity with internal networks, corporate culture, lack of credibility

- To overcome this take a business orientation to understand the business, develop the right relationships and align expectations. Understand the latitude you have to make changes.

- Businesses are in different states and this impacts the strategy you employ (STARS strategy)

- Startup

- Turnaround

- Accelerated Growth

- Realignment

- Sustaining Success

- It also includes a checklist on managing yourself as a leader

- The Progress Principle by Teresa Amabile and Steven Kramer.

The central thesis of this book is that “the best way to motivate people, day in and day out, is by facilitating progress—even small wins.”

- “People are more creative and productive when they are deeply engaged in the work, when they feel happy, and when they think highly of their projects, coworkers, managers and organizations.”

- This translates into performance benefits for the company.

- Ongoing progress makes people more motivated

- Days with setbacks lead to apathy and lack of motivation

- As an aside, I always think of this when onboarding new employees or interns. If you can get them to land a small change in the code base in the first few days they will feel like they are making progress. If you hand them a giant project that hasn’t been broken down into smaller projects, it can become overwhelming and lead to a lack of progress and frustration.

- How to nourish a team

- I have written previously about Camille Fournier’s The Manager’s Path. I really enjoy the structure of the book, as it describes the path from individual contributor to CTO. It also addresses specific issues related to engineering management. I have recommended this book to many people and refer to it often.

- I also wrote about How F*cked Up Is Your Management? by Johnathan Nightingale and Melissa Nightingale in this blog post. Delightful yet raw stories of management in the trenches of various tech companies.

What books on management have you found insightful?

|

|

Hacks.Mozilla.Org: Overscripted! Digging into JavaScript execution at scale |

This research was conducted in partnership with the UCOSP (Undergraduate Capstone Open Source Projects) initiative. UCOSP facilitates open source software development by connecting Canadian undergraduate students with industry mentors to practice distributed development and data projects.

The team consisted of the following Mozilla staff: Martin Lopatka, David Zeber, Sarah Bird, Luke Crouch, Jason Thomas

2017 student interns — crawler implementation and data collection: Ruizhi You, Louis Belleville, Calvin Luo, Zejun (Thomas) Yu

2018 student interns — exploratory data analysis projects: Vivian Jin, Tyler Rubenuik, Kyle Kung, Alex McCallum

As champions of a healthy Internet, we at Mozilla have been increasingly concerned about the current advertisement-centric web content ecosystem. Web-based ad technologies continue to evolve increasingly sophisticated programmatic models for targeting individuals based on their demographic characteristics and interests. The financial underpinnings of the current system incentivise optimizing on engagement above all else. This, in turn, has evolved an insatiable appetite for data among advertisers aggressively iterating on models to drive human clicks.

Most of the content, products, and services we use online, whether provided by media organisations or by technology companies, are funded in whole or in part by advertising and various forms of marketing.

–Timothy Libert and Rasmus Kleis Nielsen [link]

We’ve talked about the potentially adverse effects on the Web’s morphology and how content silos can impede a diversity of viewpoints. Now, the Mozilla Systems Research Group is raising a call to action. Help us search for patterns that describe, expose, and illuminate the complex interactions between people and pages!

Inspired by the Web Census recently published by Steven Englehardt and Arvind Narayanan of Princeton University, we adapted the OpenWPM crawler framework to perform a comparable crawl gathering a rich set of information about the JavaScript execution on various websites. This enables us to delve into further analysis of web tracking, as well as a general exploration of client-page interactions and a survey of different APIs employed on the modern Web.

In short, we set out to explore the unseen or otherwise not obvious series of JavaScript execution events that are triggered once a user visits a webpage, and all the first- and third-party events that are set in motion when people retrieve content. To help enable more exploration and analysis, we are releasing our full set of data about JavaScript executions as open source.

The following sections will introduce the data set, how it was collected and the decisions made along the way. We’ll share examples of insights we’ve discovered and we’ll provide information on how to participate in the associated “Overscripted Web: A Mozilla Data Analysis Challenge”, which we’ve launched today with Mozilla’s Open Innovation Team.

The Dataset

In October 2017, several Mozilla staff and a group of Canadian undergraduate students forked the OpenWPM crawler repository to begin tinkering, in order to collect a plethora of information about the unseen interactions between modern websites and the Firefox web browser.

Preparing the seed list

The master list of pages we crawled in preparing the dataset was itself generated from a preliminary shallow crawl we performed in November 2017. We ran a depth-1 crawl, seeded by Alexa’s top 10,000 site list, using 4 different machines at 4 different IP addresses (all in residential non-Amazon IP addresses served by Canadian internet service providers). The crawl was implemented using the Requests Python library and collected no information except for an indication of successful page loads.

Of the 2,150,251 pages represented in the union of the 4 parallel shallow crawls, we opted to use the intersection of the four lists in order to prune out dynamically generated (e.g. personalized) outbound links that varied between them. This meant a reduction to 981,545 URLs, which formed the seed list for our main OpenWPM crawl.
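The pruning step above is plain set arithmetic: the union shows everything any replicate saw, and the intersection keeps only links that every replicate saw. A minimal sketch, assuming each shallow crawl is available as an iterable of URL strings (the function name and sample URLs are hypothetical):

```python
def union_and_intersection(crawls):
    """Return (union, intersection) of the link sets from several crawls."""
    link_sets = [set(crawl) for crawl in crawls]
    if not link_sets:
        return set(), set()
    union = set().union(*link_sets)
    intersection = set.intersection(*link_sets)
    return union, intersection


# Four hypothetical replicates; the "?session=" links stand in for
# dynamically generated outbound links that differ between machines.
crawl_a = ["http://site.example/", "http://site.example/about", "http://ads.example/?session=1"]
crawl_b = ["http://site.example/", "http://site.example/about", "http://ads.example/?session=2"]
crawl_c = ["http://site.example/", "http://site.example/about", "http://ads.example/?session=3"]
crawl_d = ["http://site.example/", "http://site.example/about"]

all_links, seed_list = union_and_intersection([crawl_a, crawl_b, crawl_c, crawl_d])
```

Here `all_links` holds five URLs, while `seed_list` keeps only the two that appeared in all four replicates.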

The Main Collection

The following workflow describes (at a high level) the collection of page information contained in this dataset.

- Alexa top 10k (10,000 high traffic pages as of November 1st, 2017)

- A precrawl using the Python Requests library visits each one of those pages

- The Requests library requests that page

- That page sends a response

- All href tags in the response are captured to a depth of 1 (away from Alexa page)

- For each of those href tags all valid pages (starts with “http”) are added to the link set.

- The link set union (2,150,251) was examined using the Requests library in parallel, which gives us the intersection list of 981,545.

- The set of 981,545 URLs in the list is passed to the deeper crawl for JavaScript analysis in a parallelized form.

- Each of these pages was sent to our adapted version of OpenWPM to have the execution of JavaScript recorded for 10 seconds.

- The window.location was hashed as the unique identifier of the location where the JavaScript was executed (to ensure unique source attribution).

- When OpenWPM hits content that is inside an iFrame, the location of the content is reported.

- Since we use the window.location to determine the location element of the content, each time an iFrame is encountered, that location can be split into the parent location of the page and the iFrame location.

- Data collection and aggregation performed through a websocket associates all the activity linked to a location hash for compilation of the crawl dataset.
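Two steps of the workflow above, collecting depth-1 links that start with “http” and hashing a location string into an identifier, can be sketched with the Python standard library alone. This is not the project's crawler code: restricting collection to `<a>` tags and using SHA-256 are assumptions for the example (the source names neither), and no network request is made here.

```python
import hashlib
from html.parser import HTMLParser


class HrefCollector(HTMLParser):
    """Collect href values from <a> tags that start with "http"."""

    def __init__(self):
        super().__init__()
        self.links = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            # Relative links, mailto: links and empty hrefs are skipped.
            if name == "href" and value and value.startswith("http"):
                self.links.add(value)


def extract_links(html):
    """Return the set of absolute http(s) links found in an HTML string."""
    collector = HrefCollector()
    collector.feed(html)
    return collector.links


def location_hash(location):
    """Hash a window.location string (SHA-256 is an assumed choice)."""
    return hashlib.sha256(location.encode("utf-8")).hexdigest()


sample_html = (
    '<a href="https://a.example/x">x</a>'
    '<a href="/relative">r</a>'
    '<a href="mailto:me@example.com">m</a>'
    '<link href="https://cdn.example/s.css">'
    '<a href="http://b.example/">b</a>'
)
links = extract_links(sample_html)
page_id = location_hash("https://a.example/x")
```

Because the hash is deterministic, every record produced at the same location carries the same identifier, which is what makes later aggregation by location possible.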

Interestingly, for the Alexa top 10,000 sites, our depth-1 crawl yielded properties hosted on 41,166 TLDs across the union of our 4 replicates, whereas only 34,809 unique TLDs remain among the 981,545 pages belonging to their intersection.

A modified version of OpenWPM was used to record JavaScript calls potentially used for browsers tracking data from these pages. The collected JavaScript execution trace was written into an s3 bucket for later aggregation and analysis. Several additional parameters were defined based on cursory ad hoc analyses.

For example, the minimum dwell time per page required to capture the majority of JavaScript activity was set as 10 seconds per page. This was based on a random sampling of the seed list URLs and showed a large variation in time until no new JavaScript was being executed (from no JavaScript, to what appeared to be an infinite loop of self-referential JavaScript calls). This dwell time was chosen to balance between capturing the majority of JavaScript activity on a majority of the pages and minimizing the time required to complete the full crawl.

Several of the probes instrumented in the Data Leak repo were ported over to our hybrid crawler, including instrumentation to monitor JavaScript execution occurring inside an iFrame element (potentially hosted on a third-party domain). This would prove to provide much insight into the relationships between pages in the crawl data.

Exploratory work

In January 2018, we got to work analyzing the dataset we had created. After substantial data cleaning to work through the messiness of real world variation, we were left with a gigantic Parquet dataset (around 70GB) containing an immense diversity of potential insights. Three example analyses are summarized below. The most important finding is that we have only just scratched the surface of the insights this data may hold.

Examining session replay activity

Session replay is a service that lets websites track users’ interactions with the page—from how they navigate the site, to their searches, to the input they provide. Think of it as a “video replay” of a user’s entire session on a webpage. Since some session replay providers may record personal information such as personal addresses, credit card information and passwords, this can present a significant risk to both privacy and security.

We explored the incidence of session replay usage, and a few associated features, across the pages in our crawl dataset. To identify potential session replay, we obtained the Princeton WebTAP project list, containing 14 Alexa top-10,000 session replay providers, and checked for calls to script URLs belonging to the list.

Out of 6,064,923 distinct script references among page loads in our dataset, we found 95,570 (1.6%) were to session replay providers. This translated to 4,857 distinct domain names (netloc) making such calls, out of a total of 87,325, or 5.6%. Note that even if scripts belonging to session replay providers are being accessed, this does not necessarily mean that session replay functionality is being used on the site.
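The counting described above can be sketched as a match of script hosts against a provider list, followed by two ratios. The provider domains below are placeholders, not the WebTAP list, and treating a "script reference" as a distinct (page URL, script URL) pair is an assumption about the dataset's shape.

```python
from urllib.parse import urlparse

# Hypothetical provider domains for illustration; not the actual WebTAP list.
REPLAY_PROVIDERS = frozenset({"replay-provider.example", "sessions.example"})


def percent(part, whole):
    """Return part/whole as a percentage, or 0.0 for an empty denominator."""
    return 100.0 * part / whole if whole else 0.0


def is_replay_script(script_url, providers=REPLAY_PROVIDERS):
    """True when the script host is a provider domain or one of its subdomains."""
    host = (urlparse(script_url).hostname or "").lower()
    return any(host == p or host.endswith("." + p) for p in providers)


def replay_incidence(records, providers=REPLAY_PROVIDERS):
    """records: iterable of (page_url, script_url) pairs.

    Returns (percent of distinct script references that hit a provider,
             percent of distinct page netlocs making such a call).
    """
    references = set(records)
    replay_refs = {ref for ref in references if is_replay_script(ref[1], providers)}
    all_hosts = {urlparse(page).netloc for page, _ in references}
    replay_hosts = {urlparse(page).netloc for page, _ in replay_refs}
    return (
        percent(len(replay_refs), len(references)),
        percent(len(replay_hosts), len(all_hosts)),
    )


sample = [
    ("https://shop.example/cart", "https://cdn.replay-provider.example/r.js"),
    ("https://shop.example/cart", "https://shop.example/app.js"),
    ("https://news.example/", "https://news.example/main.js"),
    ("https://blog.example/", "https://static.blog.example/b.js"),
]
script_pct, host_pct = replay_incidence(sample)
```

Applied to the counts quoted above, `percent(95570, 6064923)` and `percent(4857, 87325)` round to the reported 1.6% and 5.6%.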

Given the set of pages making calls to session replay providers, we also looked into the consistency of SSL usage across these calls. Interestingly, the majority of such calls were made over HTTPS (75.7%), and 49.9% of the pages making these calls were accessed over HTTPS. Additionally, we found no pages accessed over HTTPS making calls to session replay scripts over HTTP, which was surprising but encouraging.
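A consistency check of this kind comes down to comparing URL schemes. The sketch below assumes the same hypothetical (page URL, script URL) record shape and flags the one combination the analysis looked for: a page loaded over HTTPS that calls a script over plain HTTP.

```python
from urllib.parse import urlparse


def scheme(url):
    """Lower-cased URL scheme, e.g. "http" or "https"."""
    return urlparse(url).scheme.lower()


def https_share(urls):
    """Fraction of the given URLs that use HTTPS (0.0 for an empty input)."""
    urls = list(urls)
    if not urls:
        return 0.0
    return sum(scheme(u) == "https" for u in urls) / len(urls)


def insecure_calls_from_secure_pages(records):
    """Return (page, script) pairs where an HTTPS page loads an HTTP script."""
    return [
        (page, script)
        for page, script in records
        if scheme(page) == "https" and scheme(script) == "http"
    ]


# Hypothetical records for illustration.
records = [
    ("https://a.example/", "https://r.example/s.js"),
    ("http://b.example/", "http://r.example/s.js"),
    ("http://c.example/", "https://r.example/s.js"),
    ("https://d.example/", "https://r.example/s.js"),
]
script_https = https_share(script for _, script in records)
page_https = https_share(page for page, _ in records)
mixed = insecure_calls_from_secure_pages(records)
```

An empty `mixed` list corresponds to the encouraging result reported above.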

Finally, we examined the distribution of TLDs across sites making calls to session replay providers, and compared this to TLDs over the full dataset. We found that, along with .com, .ru accounted for a surprising proportion of sites accessing such scripts (around 33%), whereas .ru domain names made up only 3% of all pages crawled. This implies that 65.6% of .ru sites in our dataset were making calls to potential session replay provider scripts. However, this may be explained by the fact that Yandex is one of the primary session replay providers, and it offers a range of other analytics services of interest to Russian-language websites.

Eval and dynamically created function calls

JavaScript allows a function call to be dynamically created from a string with the eval() function or by creating a new Function() object. For example, this code will print hello twice:

eval("console.log('hello')")

var my_func = new Function("console.log('hello')")

my_func()

While dynamic function creation has its uses, it also opens up users to injection attacks, such as cross-site scripting, and can potentially be used to hide malicious code.

In order to understand how dynamic function creation is being used on the Web, we analyzed its prevalence, location, and distribution in our dataset. The analysis was initially performed on 10,000 randomly selected pages and validated against the entire dataset. In terms of prevalence, we found that 3.72% of overall function calls were created dynamically, and these originated from across 8.76% of the websites crawled in our dataset.

These results suggest that, while dynamic function creation is not used heavily, it is still common enough on the Web to be a potential concern. Looking at call frequency per page showed that, while some Web pages create all their function calls dynamically, the majority tend to have only 1 or 2 dynamically generated calls (which is generally 1-5% of all calls made by a page).

We also examined the extent of this practice among the scripts that are being called. We discovered that they belong to a relatively small subset of script hosts (at an average ratio of about 33 calls per URL), indicating that the same JavaScript files are being used by multiple webpages. Furthermore, around 40% of these are known trackers (identified using the disconnectme entity list), although only 33% are hosted on a different domain from the webpage that uses them. This suggests that web developers may not even know that they are using dynamically generated functions.

Cryptojacking

Cryptojacking refers to the unauthorized use of a user’s computer or mobile device to mine cryptocurrency. More and more websites are using browser-based cryptojacking scripts as cryptocurrencies rise in popularity. It is an easy way to generate revenue and a viable alternative to bloating a website with ads. An excellent contextualization of crypto-mining via client-side JavaScript execution can be found in the unabridged cryptojacking analysis prepared by Vivian Jin.

We investigated the prevalence of cryptojacking among the websites represented in our dataset. A list of potential cryptojacking hosts (212 sites total) was obtained from the adblock-nocoin-list GitHub repo. For each script call initiated on a page visit event, we checked whether the script host belonged to the list. Among 6,069,243 distinct script references on page loads in our dataset, only 945 (0.015%) were identified as cryptojacking hosts. Over half of these belonged to CoinHive, the original script developer. Only one use of AuthedMine was found. Viewed in terms of domains reached in the crawl, we found calls to cryptojacking scripts being made from 49 out of 29,483 distinct domains (0.16%).

However, it is important to note that cryptojacking code can be executed in other ways than by including the host script in a script tag. It can be disguised, stealthily executed in an iframe, or directly used in a function of a first-party script. Users may also face redirect loops that eventually lead to a page with a mining script. The low detection rate could also be due to the popularity of the sites covered by the crawl, which might dissuade site owners from implementing obvious cryptojacking scripts. It is likely that the actual rate of cryptojacking is higher.

The majority of the domains we found using cryptojacking are streaming sites. This is unsurprising, as users have streaming sites open for longer while they watch video content, and mining scripts can be executed longer. A Chinese variety site called 52pk.com accounted for 207 out of the overall 945 cryptojacking script calls we found in our analysis, by far the largest domain we observed for cryptojacking calls.

Another interesting fact: although our cryptojacking host list contained 212 candidates, we found only 11 of them to be active in our dataset, or about 5%.

Limitations and future directions

While this is a rich dataset allowing for a number of interesting analyses, it is limited in visibility mainly to behaviours that occur via JS API calls.

Another feature we investigated using our dataset is the presence of Evercookies. Evercookies is a tracking tool used by websites to ensure that user data, such as a user ID, remains permanently stored on a computer. Evercookies persist in the browser by leveraging a series of tricks including Web API calls to a variety of available storage mechanisms. An initial attempt was made to search for evercookies in this data by searching for consistent values being passed to suspect Web API calls.

Acar et al., “The Web Never Forgets: Persistent Tracking Mechanisms in the Wild”, (2014) developed techniques for looking at evercookies at scale. First, they proposed a mechanism to detect identifiers. They applied this mechanism to HTTP cookies but noted that it could also be applied to other storage mechanisms, although some modification would be required. For example, they look at cookie expiration, which would not be applicable in the case of localStorage. For this dataset we could try replicating their methodology for set calls to window.document.cookie and window.localStorage.

They also looked at Flash cookies respawning HTTP cookies and HTTP respawning Flash cookies. Our dataset contains no information on the presence of Flash cookies, so additional crawls would be required to obtain this information. In addition, they used multiple crawls to study Flash respawning, so we would have to replicate that procedure.

In addition to our lack of information on Flash cookies, we have no information about HTTP cookies, the first mechanism by which cookies are set. Knowing which HTTP cookies are initially set can serve as an important complement and validation for investigating other storage techniques then used for respawning and evercookies.

Beyond HTTP and Flash, Samy Kamkar’s evercookie library documents over a dozen mechanisms for storing an id to be used as an evercookie. Many of these are not detectable by our current dataset, e.g. HTTP Cookies, HSTS Pinning, Flash Cookies, Silverlight Storage, ETags, Web cache, Internet Explorer userData storage, etc. An evaluation of the prevalence of each technique would be a useful contribution to the literature. We also see the value of an ongoing repeated crawl to identify changes in prevalence and to account for new techniques as they are discovered.

However, it is possible to continue analyzing the current dataset for some of the techniques described by Samy. For example, window.name caching is listed as a technique. We can look at this property in our dataset, perhaps by applying the same ID technique outlined by Acar et al., or perhaps by looking at sequences of calls.

Conclusions

Throughout our preliminary exploration of this data it quickly became apparent that the amount of superficial JavaScript execution on a Web page only tells part of the story. We have observed several examples of scripts running parallel to the content-serving functionality of webpages; these appear to fulfill a diversity of other functions. The analyses performed so far have led to some exciting discoveries, but so much more information remains hidden in the immense dataset available.

We are calling on any interested individuals to be part of the exploration. You’re invited to participate in the Overscripted Web: A Mozilla Data Analysis Challenge and help us better understand some of the hidden workings of the modern Web!

Note: In the interest of being responsive to all interested contest participants and curious readers in one centralized location, we’ve closed comments on this post. We encourage you to bring relevant questions and discussion to the contest repo at: https://github.com/mozilla/Overscripted-Data-Analysis-Challenge

Acknowledgements

Extra special thanks to Steven Englehardt for his contributions to the OpenWPM tool and advice throughout this project. We also thank Havi Hoffman for valuable editorial contributions to earlier versions of this post. Finally, thanks to Karen Reid of University of Toronto for coordinating the UCOSP program.

https://hacks.mozilla.org/2018/06/overscripted-digging-into-javascript-execution-at-scale/

|

|

Mozilla Open Innovation Team: Overscripted Web: a Mozilla Data Analysis Challenge |

Help us explore the unseen JavaScript and what this means for the Web

What happens while you are browsing the Web? Mozilla wants to invite data and computer scientists, students and interested communities to join the “Overscripted Web: a Data Analysis Challenge”, and help explore JavaScript running in browsers and what this means for users. We gathered a rich dataset and we are looking for exciting new observations, patterns and research findings that help to better understand the Web. We want to bring the winners to speak at MozFest, our annual festival for the open Internet held in London.

The Dataset

Two cohorts of Canadian Undergraduate interns worked on data collection and subsequent analysis. The Mozilla Systems Research Group is now open sourcing a dataset of publicly available information that was collected by a Web crawl in November 2017. This dataset is currently being used to help inform product teams at Mozilla. The primary analysis from the students focused on:

- Session replay analysis: when do websites replay your behavior on the site

- Eval and dynamically created function calls

- Cryptojacking: websites using users’ computers to mine cryptocurrencies are mainly video streaming sites

Take a look at Mozilla’s Hacks blog for a longer description of the analysis.

The Data Analysis Challenge

We see great potential in this dataset and believe that our analysis has only scratched the surface of the insights it can offer. We want to empower the community to use this data to better understand what is happening on the Web today, which is why Mozilla’s Systems Research Group and Open Innovation team partnered together to launch this challenge.

We have looked at how other organizations enable and speed up scientific discoveries through collaboratively analyzing large datasets. We’d love to follow this exploratory path: We want to encourage the crowd to think outside the proverbial box, get creative, get under the surface. We hope participants get excited to dig into the JavaScript executions data and come up with new observations, patterns, research findings.

To guide thinking, we’re dividing the Challenge into three categories:

- Tracking and Privacy

- Web Technologies and the Shape of the Modern Web

- Equality, Neutrality, and Law

You will find all of the necessary information to join on the Challenge website. The submissions will close on August 31st and the winners will be announced on September 14th. We will bring the winners of the best three analyses (one per category) to MozFest, the world’s leading festival for the open internet movement, taking place in London from October 26th to the 28th 2018. We will cover their airfare, hotel, admission/registration, and if necessary visa fees in accordance with the official rules. We may also invite the winners to do 15-minute presentations of their findings.

We are looking forward to the diverse and innovative approaches from the data science community and we want to specifically encourage young data scientists and students to take a stab at this dataset. It could be the basis for your final university project, and analyzing it can grow your data science skills and build your résumé (and GitHub profile!). The Web gets more complex by the minute, and keeping it safe and open can only happen if we work together. Join us!

Overscripted Web: a Mozilla Data Analysis Challenge was originally published in Mozilla Open Innovation on Medium, where people are continuing the conversation by highlighting and responding to this story.

https://medium.com/mozilla-open-innovation/overscripted-91881d8662c3?source=rss----410b8dc3986d---4

|

|

Cameron Kaiser: Just call it macOS Death Valley and get it over with |

In other news, besides the 32-bit apocalypse, they just deprecated OpenGL and OpenCL in order to make way for all the Metal apps that people have just been lining up to write. Not that this is any surprise, mind you, given how long Apple's implementation of OpenGL has rotted on the vine. It's a good thing they're talking about allowing iOS apps to run, because there may not be any legacy Mac apps compatible when macOS 10.15 "Zzyzx" rolls around.

Yes, looking forward to that Linux ARM laptop when the MacBook Air "Sierra Forever" wears out. I remember when I was excited about Apple's new stuff. Now it's just wondering what they're going to break next.

http://tenfourfox.blogspot.com/2018/06/just-call-it-macos-death-valley-and-get.html

|

|

Nicholas Nethercote: How to speed up the Rust compiler some more in 2018 |

I recently wrote about some work I’ve done to speed up the Rust compiler. Since then I’ve done some more.

rustc-perf improvements

Since my last post, rustc-perf — the benchmark suite, harness and visualizer — has seen some improvements. First, some new benchmarks were added: cargo, ripgrep, sentry-cli, and webrender. Also, the parser benchmark has been removed because it was a toy program and thus not a good benchmark.

Second, I added support for several new profilers: Callgrind, Massif, rustc’s own -Ztime-passes, and the use of ad hoc eprintln! statements added to rustc. (This latter case is more useful than it might sound; in combination with post-processing it can be very helpful, as we will see below.)

Finally, the graphs shown on the website now have better y-axis scaling, which makes many of them easier to read. Also, there is a new dashboard view that shows performance across rustc releases.

Failures and incompletes

After my last post, multiple people said they would be interested to hear about optimization attempts of mine that failed. So here is an incomplete selection. I suggest that rustc experts read through these, because there is a chance they will be able to identify alternative approaches that I have overlooked.

nearest_common_ancestors 1: I managed to speed up this hot function in a couple of ways, but a third attempt failed. The representation of the scope tree is not done via a typical tree data structure; instead there is a HashMap of child/parent pairs. This means that moving from a child to its parent node requires a HashMap lookup, which is expensive. I spent some time designing and implementing an alternative data structure that stored nodes in a vector and the child-to-parent links were represented as indices to other elements in the vector. This meant that child-to-parent moves only required stepping through the vector. It worked, but the speed-up turned out to be very small, and the new code was significantly more complicated, so I abandoned it.
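To make the alternative representation concrete, here is a minimal sketch of a tree stored as a flat vector of parent indices. It is an assumption-laden illustration, not rustc's scope tree: the `ScopeTree` type, its field, and both methods are hypothetical names, and the lookup strategy is a plain walk to the root rather than whatever the abandoned patch did.

```rust
/// A tree stored as a flat vector: `parent[i]` is the index of node `i`'s
/// parent, or `None` for the root. (Hypothetical type for illustration.)
struct ScopeTree {
    parent: Vec<Option<usize>>,
}

impl ScopeTree {
    /// Walk from `node` up to the root, collecting every node visited.
    /// Each step is a vector index rather than a HashMap lookup.
    fn ancestors(&self, node: usize) -> Vec<usize> {
        let mut path = vec![node];
        let mut current = node;
        while let Some(p) = self.parent[current] {
            path.push(p);
            current = p;
        }
        path
    }

    /// Nearest common ancestor of `a` and `b`, assuming both nodes belong
    /// to the same tree and therefore share a root.
    fn nearest_common_ancestor(&self, a: usize, b: usize) -> usize {
        let path_a = self.ancestors(a);
        let path_b = self.ancestors(b);
        // Both paths end at the root; compare them from the root downwards
        // and remember the last node they have in common.
        let mut nca = *path_a.last().unwrap();
        for (x, y) in path_a.iter().rev().zip(path_b.iter().rev()) {
            if x == y {
                nca = *x;
            } else {
                break;
            }
        }
        nca
    }
}
```

For a tree where node 0 is the root, nodes 1 and 2 are its children, and nodes 3 and 4 are children of node 1, the nearest common ancestor of 3 and 4 is 1, and that of 3 and 2 is 0.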

nearest_common_ancestors 2: A different part of the same function involves storing seen nodes in a vector. Searching this unsorted vector is O(n), so I tried instead keeping it in sorted order and using binary search, which gives O(log n) search. However, this change meant that node insertion changed from amortized O(1) to O(n): instead of a simple push onto the end of the vector, insertion could be at any point, which required shifting all subsequent elements along. Overall this change made things slightly worse.
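The trade-off can be seen in a small sketch of a sorted vector. This is a generic illustration using the standard library, with hypothetical function names and `u32` standing in for whatever node type the real code uses.

```rust
/// Insert `value` into an already-sorted vector, keeping it sorted.
/// The binary search is O(log n), but `Vec::insert` must shift every later
/// element, so the insertion as a whole is O(n).
/// Returns `false` if the value was already present.
fn insert_sorted(seen: &mut Vec<u32>, value: u32) -> bool {
    match seen.binary_search(&value) {
        Ok(_) => false,
        Err(pos) => {
            seen.insert(pos, value);
            true
        }
    }
}

/// O(log n) membership test on the sorted vector.
fn contains_sorted(seen: &[u32], value: u32) -> bool {
    seen.binary_search(&value).is_ok()
}
```

When the vectors are short, a linear scan plus a plain push is likely to be cheaper than the shifting done here, which fits the result reported above.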

PredicateObligation SmallVec: There is a type Vec<PredicateObligation> that is instantiated frequently, and the vectors often have few elements. I tried using a SmallVec instead, which avoids the heap allocations when the number of elements is below a threshold. (A trick I’ve used multiple times.) But this made things significantly slower! It turns out that these Vecs are copied around quite a bit, and a SmallVec is larger than a Vec because the elements are inline. Furthermore PredicateObligation is a large type, over 100 bytes. So what happened was that memcpy calls were inserted to copy these SmallVecs around. The slowdown from the extra function calls and memory traffic easily outweighed the speedup from avoiding the Vec heap allocations.
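The size difference is easy to check with `std::mem::size_of`. The sketch below uses hypothetical stand-in types, not the real PredicateObligation or SmallVec: a 104-byte element, and a struct that keeps room for four such elements inline the way a small-vector type would.

```rust
use std::mem::size_of;

/// Hypothetical stand-in for a large element type (over 100 bytes).
#[allow(dead_code)]
struct BigObligation {
    payload: [u8; 104],
}

/// Hypothetical inline-storage container: a length plus room for four
/// elements stored directly inside the struct, as a small vector would.
#[allow(dead_code)]
struct InlineVec4 {
    len: usize,
    items: [BigObligation; 4],
}

/// Size of the `Vec` handle itself; the elements live on the heap, so this
/// is typically just a pointer, a capacity and a length.
fn vec_handle_size() -> usize {
    size_of::<Vec<BigObligation>>()
}

/// Size of the inline container; it must hold all four elements in place.
fn inline_size() -> usize {
    size_of::<InlineVec4>()
}
```

Moving a `Vec` copies only the small handle, whereas moving the inline container copies more than 400 bytes here, which is the kind of extra memory traffic described above.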

SipHasher128: Incremental compilation does a lot of hashing of data structures in order to determine what has changed from previous compilation runs. As a result, the hash function used for this is extremely hot. I tried various things to speed up the hash function, including LEB128-encoding of usize inputs (a trick that worked in the past) but I failed to speed it up.

LEB128 encoding: Speaking of LEB128 encoding, it is used a lot when writing metadata to file. I tried optimizing the LEB128 functions by special-casing the common case where the value is less than 128 and so can be encoded in a single byte. It worked, but gave a negligible improvement, so I decided it wasn’t worth the extra complication.
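For readers unfamiliar with the encoding, here is a minimal sketch of unsigned LEB128 with the single-byte fast path described above. The function names are hypothetical and this is not rustc's implementation; it only illustrates the technique.

```rust
/// Append `value` to `out` in unsigned LEB128 form: seven payload bits per
/// byte, with the high bit set on every byte except the last.
fn write_unsigned_leb128(out: &mut Vec<u8>, mut value: u64) {
    // Fast path: values below 128 fit in a single byte.
    if value < 0x80 {
        out.push(value as u8);
        return;
    }
    loop {
        let byte = (value & 0x7f) as u8;
        value >>= 7;
        if value == 0 {
            // Last byte: high bit clear.
            out.push(byte);
            break;
        }
        // More bytes follow: high bit set.
        out.push(byte | 0x80);
    }
}

/// Decode one unsigned LEB128 value from the start of `bytes`.
/// Returns the value and the number of bytes consumed, or `None` if the
/// input is truncated or too long for a `u64`.
fn read_unsigned_leb128(bytes: &[u8]) -> Option<(u64, usize)> {
    let mut result = 0u64;
    let mut shift = 0;
    for (i, &b) in bytes.iter().enumerate() {
        if shift >= 64 {
            return None;
        }
        result |= u64::from(b & 0x7f) << shift;
        if b & 0x80 == 0 {
            return Some((result, i + 1));
        }
        shift += 7;
    }
    None
}
```

The general loop already handles small values correctly, so the fast path only saves a few instructions per call, which may be why the measured gain was negligible.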

Token shrinking: A previous PR shrunk the Token type from 32 to 24 bytes, giving a small win. I tried also replacing the Option<ast::Name> in Literal with just ast::Name and using an empty name to represent “no name”. That change reduced it to 16 bytes, but produced a negligible speed-up and made the code uglier, so I abandoned it.

#50549: rustc’s string interner was structured in such a way that each interned string was duplicated twice. This PR changed it to use a single Rc’d allocation, speeding up numerous benchmark runs, the best by 4%. But just after I posted the PR, @Zoxc posted #50607, which allocated the strings out of an arena, as an alternative. This gave better speed-ups and was landed instead of my PR.

#50491: This PR introduced additional uses of LazyBTreeMap (a type I had previously introduced to reduce allocations) speeding up runs of multiple benchmarks, the best by 3%. But at around the same time, @porglezomp completed a PR that changed BTreeMap to have the same lazy-allocation behaviour as LazyBTreeMap, rendering my PR moot. I subsequently removed the LazyBTreeMap type because it was no longer necessary.

#51281: This PR, by @Mark-Simulacrum, removed an unnecessary heap allocation from the RcSlice type. I had looked at this code because DHAT’s output showed it was hot in some cases, but I erroneously concluded that the extra heap allocation was unavoidable, and moved on! I should have asked about it on IRC.

Wins

#50339: rustc’s pretty-printer has a buffer that can contain up to 55 entries, and each entry is 48 bytes on 64-bit platforms. (The 55 somehow comes from the algorithm being used; I won’t pretend to understand how or why.) Cachegrind’s output showed that the pretty printer is invoked frequently (when writing metadata?) and that the zero-initialization of this buffer was expensive. I inserted some eprintln! statements and found that in the vast majority of cases only the first element of the buffer was ever touched. So this PR changed the buffer to default to length 1 and extend when necessary, speeding up runs for numerous benchmarks, the best by 3%.

#50365: I had previously optimized the nearest_common_ancestors function. Github user @kirillkh kindly suggested a tweak to the code from that PR which reduced the number of comparisons required. This PR implemented that tweak, speeding up runs of a couple of benchmarks by another 1–2%.

#50391: When compiling certain annotations, rustc needs to convert strings from unescaped form to escaped form. It was using the escape_unicode function to do this, which unnecessarily converts every char to \u{1234} form, bloating the resulting strings greatly. This PR changed the code to use the escape_default function — which only escapes chars that genuinely need escaping — speeding up runs of most benchmarks, the best by 13%. It also slightly reduced the on-disk size of produced rlibs, in the best case by 15%.
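The difference between the two standard library methods is easy to demonstrate. The wrapper function names below are hypothetical; `str::escape_unicode` and `str::escape_default` are the standard library methods, used here purely for illustration rather than as a copy of the rustc change.

```rust
/// Escape every character as a `\u{...}` sequence, needed or not.
fn escape_everything(s: &str) -> String {
    s.escape_unicode().collect()
}

/// Escape only characters that need it (quotes, backslashes, control
/// characters, non-ASCII), leaving printable ASCII untouched.
fn escape_only_needed(s: &str) -> String {
    s.escape_default().collect()
}
```

For a two-character input such as ab, the first function produces the twelve-character string \u{61}\u{62}, while the second returns ab unchanged.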

#50525: Cachegrind showed that even after the previous PR, the above string code was still hot, because string interning was happening on the resulting string, which was unnecessary in the common case where escaping didn’t change the string. This PR added a scan to determine if escaping is necessary, thus avoiding the re-interning in the common case, speeding up a few benchmark runs, the best by 3%.
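One way to express the "scan first, only build a new string when needed" idea is with `Cow`. This is a sketch under assumptions, not the code from the PR: the function names are hypothetical, and the interner itself is left out, so the borrowed case simply stands for "reuse the existing string".

```rust
use std::borrow::Cow;

/// Scan for any character that `escape_default` would change.
/// An unchanged character escapes to exactly one character (itself).
fn needs_escaping(s: &str) -> bool {
    s.chars().any(|c| c.escape_default().count() != 1)
}

/// Return the input unchanged (and unallocated) in the common case, and
/// build an escaped copy only when the scan finds something to escape.
fn escape_if_needed(s: &str) -> Cow<'_, str> {
    if needs_escaping(s) {
        Cow::Owned(s.escape_default().collect::<String>())
    } else {
        Cow::Borrowed(s)
    }
}
```

A caller can then skip any follow-up work, such as interning a new string, whenever the result is the borrowed variant.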

#50407: Cachegrind’s output showed that the trivial methods for the simple BytePos and CharPos types in the parser are (a) extremely hot and (b) not being inlined. This PR annotated them so they are inlined, speeding up most benchmarks, the best by 5%.
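As a rough illustration of what such an annotation looks like, here is a simplified position type with `#[inline]` on its trivial methods. The type below is a hypothetical stand-in, not the definition from rustc's source; the attribute matters mostly when the callers live in a different crate, since it makes the function body available for inlining there.

```rust
use std::ops::Add;

/// Simplified stand-in for a byte-offset newtype.
#[derive(Clone, Copy, Debug, PartialEq)]
struct BytePos(u32);

impl BytePos {
    /// Trivial conversion; `#[inline]` lets other crates inline it.
    #[inline]
    fn from_usize(n: usize) -> BytePos {
        BytePos(n as u32)
    }

    /// Trivial conversion back to `usize`.
    #[inline]
    fn to_usize(self) -> usize {
        self.0 as usize
    }
}

impl Add for BytePos {
    type Output = BytePos;

    /// Adding two positions is a single integer addition.
    #[inline]
    fn add(self, rhs: BytePos) -> BytePos {
        BytePos(self.0 + rhs.0)
    }
}
```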

#50564: This PR did the same thing for the methods of the Span type, speeding up incremental runs of a few benchmarks, the best by 3%.

#50931: This PR did the same thing for the try_get function, speeding up runs of many benchmarks, the best by 1%.

#50418: DHAT’s output showed that there were many heap allocations of the cmt type, which is refcounted. Some code inspection and ad hoc instrumentation with eprintln! showed that many of these allocated cmt instances were very short-lived. However, some of them ended up in longer-lived chains, in which the refcounting was necessary. This PR changed the code so that cmt instances were mostly created on the stack by default, and then promoted to the heap only when necessary, speeding up runs of three benchmarks by 1–2%. This PR was a reasonably large change that took some time, largely because it took me five(!) attempts (the final git branch was initially called cmt5) to find the right dividing line between where to use stack allocation and where to use refcounted heap allocation.

#50565: DHAT’s output showed that the dep_graph structure, which is an IndexVec whose elements are Vec<DepNodeIndex>, caused many allocations, and some eprintln! instrumentation showed that the inner Vecs mostly had only a few elements. This PR changed the Vec to SmallVec<[DepNodeIndex;8]>, which avoids heap allocations when the number of elements is less than 8, speeding up incremental runs of many benchmarks, the best by 2%.

#50566: Cachegrind’s output shows that the hottest part of rustc’s lexer is the bump function, which is responsible for advancing the lexer onto the next input character. This PR streamlined it slightly, speeding up most runs of a couple of benchmarks by 1–3%.

#50818: Both Cachegrind and DHAT’s output showed that the opt_normalize_projection_type function was hot and did a lot of heap allocations. Some eprintln! instrumentation showed that there was a hot path involving this function that could be explicitly extracted that would avoid unnecessary HashMap lookups and the creation of short-lived Vecs. This PR did just that, speeding up most runs of serde and futures by 2–4%.

#50855: DHAT’s output showed that the macro parser performed a lot of heap allocations, particularly on the html5ever benchmark. This PR implemented ways to avoid three of them: (a) by storing a slice of a Vec in a struct instead of a clone of the Vec; (b) by introducing a “ref or box” type that allowed stack allocation of the MatcherPos type in the common case, but heap allocation when necessary; and (c) by using Cow to avoid cloning a PathBuf that is rarely modified. These changes sped up runs of html5ever by up to 10%, and crates.io by up to 3%. I was particularly pleased with these changes because they all involved non-trivial changes to memory management that required the introduction of additional explicit lifetimes. I’m starting to get the hang of that stuff… explicit lifetimes no longer scare me the way they used to. It certainly helps that rustc’s error messages do an excellent job of explaining where explicit lifetimes need to be added.

#50932: DHAT’s output showed that a lot of HashSet instances were being created in order to de-duplicate the contents of a commonly used vector type. Some eprintln! instrumentation showed that most of these vectors only had 1 or 2 elements, in which case the de-duplication can be done trivially without involving a HashSet. (Note that the order of elements within this vector is important, so de-duplication via sorting wasn’t an option.) This PR added special handling of these trivial cases, speeding up runs of a few benchmarks, the best by 2%.
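The special-casing can be sketched as follows. This is an illustration of the idea with a hypothetical function name and `u32` elements, not the code from the PR.

```rust
use std::collections::HashSet;

/// Remove duplicates while keeping the first occurrence of each element
/// in its original position.
fn dedup_preserving_order(items: &mut Vec<u32>) {
    match items.len() {
        // Nothing can be duplicated.
        0 | 1 => {}
        // Two elements: a single comparison is enough.
        2 => {
            if items[0] == items[1] {
                items.pop();
            }
        }
        // General case: fall back to a HashSet of already-seen values.
        _ => {
            let mut seen = HashSet::new();
            // `insert` returns false for a value that was seen before,
            // so `retain` drops every later duplicate.
            items.retain(|x| seen.insert(*x));
        }
    }
}
```

Only the last arm allocates, so vectors of one or two elements are handled without touching the heap at all.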

#50981: The compiler does a liveness analysis that involves vectors of indices that represent variables and program points. In rare cases these vectors can be enormous; compilation of the inflate benchmark involved one that has almost 6 million 24-byte elements, resulting in 143MB of data. This PR changed the type used for the indices from usize to u32, which is still more than large enough, speeding up “clean incremental” builds of inflate by about 10% on 64-bit platforms, as well as reducing their peak memory usage by 71MB.
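The arithmetic behind those numbers can be checked with a small sketch. The two structs are hypothetical: the post only says the elements were 24 bytes, so three index fields per element is an assumption made here for illustration.

```rust
use std::mem::size_of;

/// Hypothetical element made of three `usize` indices
/// (24 bytes on 64-bit platforms).
#[allow(dead_code)]
struct WideEntry {
    a: usize,
    b: usize,
    c: usize,
}

/// The same element with `u32` indices: 12 bytes on every platform.
#[allow(dead_code)]
struct NarrowEntry {
    a: u32,
    b: u32,
    c: u32,
}

/// Total bytes needed for `count` elements of `element_size` bytes each.
fn bytes_for(count: usize, element_size: usize) -> usize {
    count * element_size
}
```

At roughly 6 million elements, 24 bytes each comes to about 144MB and 12 bytes each to about 72MB, which lines up with the 143MB total and 71MB saving reported above.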

What’s next?

These improvements, along with those recently done by others, have significantly sped up the compiler over the past month or so: many workloads are 10–30% faster, and some even more than that. I have also seen some anecdotal reports from users about the improvements over recent versions, and I would be interested to hear more data points, especially those involving rustc nightly.

The profiles produced by Cachegrind, Callgrind, and DHAT are now all looking very “flat”, i.e. with very little in the way of hot functions that stick out as obvious optimization candidates. (The main exceptions are the SipHasher128 functions I mentioned above, which I haven’t been able to improve.) As a result, it has become difficult for me to make further improvements via “bottom-up” profiling and optimization, i.e. optimizing hot leaf and near-leaf functions in the call graph.

Therefore, future improvement will likely come from “top-down” profiling and optimization, i.e. observations such as “rustc spends 20% of its time in phase X, how can that be improved”? The obvious place to start is the part of compilation taken up by LLVM. In many debug and opt builds LLVM accounts for up to 70–80% of instructions executed. It doesn’t necessarily account for that much time, because the LLVM parts of execution are parallelized more than the rustc parts, but it still is the obvious place to focus next. I have looked at a small amount of generated MIR and LLVM IR, but there’s a lot to take in. Making progress will likely require a much broader understanding of things than many of the optimizations described above, most of which require only a small amount of local knowledge about a particular part of the compiler’s code.

If anybody reading this is interested in trying to help speed up rustc, I’m happy to answer questions and provide assistance as much as I can. The #rustc IRC channel is also a good place to ask for help.

https://blog.mozilla.org/nnethercote/2018/06/05/how-to-speed-up-the-rust-compiler-some-more-in-2018/

|

|

The Rust Programming Language Blog: Announcing Rust 1.26.2 |

The Rust team is happy to announce a new version of Rust, 1.26.2. Rust is a systems programming language focused on safety, speed, and concurrency.

If you have a previous version of Rust installed via rustup, getting Rust 1.26.2 is as easy as:

$ rustup update stable

If you don’t have it already, you can get rustup from the appropriate page on our website, and check out the detailed release notes for 1.26.2 on GitHub.

What’s in 1.26.2 stable

This patch release fixes a bug in the borrow checker verification of match expressions. This bug was introduced in 1.26.0 with the stabilization of match ergonomics. Specifically, it permitted code which took two mutable borrows of the bar path at the same time.

    let mut foo = Some("foo".to_string());
    let bar = &mut foo;
    match bar {
        Some(baz) => {
            bar.take(); // Should not be permitted, as baz has a unique reference to the bar pointer.
        },
        None => unreachable!(),
    }

1.26.2 will reject the above code with this error message:

    error[E0499]: cannot borrow `*bar` as mutable more than once at a time
     --> src/main.rs:6:9
      |
    5 |     Some(baz) => {
      |          --- first mutable borrow occurs here
    6 |         bar.take(); // Should not be permitted, as baz has a ...
      |         ^^^ second mutable borrow occurs here
    ...
    9 | }
      | - first borrow ends here

    error: aborting due to previous error

The Core team decided to issue a point release to minimize the window of time in which this bug in the Rust compiler was present in stable compilers.
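For contrast with the rejected snippet, here is a sketch of a shape the borrow checker is expected to accept on current compilers: the borrow through baz is finished before bar is used mutably again. The function name and the length check are hypothetical and exist only to give baz something to do.

```rust
/// Take the string out of `foo` if it is at least `min_len` bytes long.
fn take_if_long(foo: &mut Option<String>, min_len: usize) -> Option<String> {
    let bar = &mut *foo;
    // `baz` borrows from `*bar`, but only for the duration of the match.
    let long_enough = match bar {
        Some(baz) => baz.len() >= min_len,
        None => false,
    };
    // The borrow through `baz` has ended, so `bar` can be used again.
    if long_enough {
        bar.take()
    } else {
        None
    }
}
```

The key difference from the buggy case is that `bar.take()` runs after the match arm that bound baz, so the two mutable borrows never overlap.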

|

|

Armen Zambrano: Some webdev knowledge gained |

Earlier this year I had to split a Koa/SPA app into two separate apps. As part of that I switched from webpack to Neutrino.

Through this work I learned a lot about full stack development (frontend, backend and deployments for both). I could write a blog post per item; however, listing it all here is better than never getting around to writing a post for any of them.

Note, I’m pointing to commits that I believe have enough information to understand what I learned.

Npm packages to the rescue:

Node/backend notes:

- How to better configure HMR

- Make HMR work by closing the previous server

- Add source-map-support to help development

- Start project on debugging mode (use --inspect rather than inspect)

- How to properly start Neutrino in production

Neutrino has been a great ally to me and here’s some knowledge on how to use it:

- Add PostCss support

- Specify custom env variables to be passed down into the app

- Add a favicon.ico to your app

- Configure Travis to use --inspect with Neutrino after any failures

- Include a custom font to your SPA

- How to properly call Neutrino with Istanbul

Heroku was my tool for deployment and here you have some specific notes:

- Add a postbuild script for Heroku as well as adding a static.json to configure how to serve the frontend code

- Tell the server to send all routes from the root (since we use dynamic routing via React-router)

- Same change but for Netlify

- Changes to enable Heroku review apps and configure for SPAs

|

|

The Mozilla Blog: Mozilla Announces $225,000 for Art and Advocacy Exploring Artificial Intelligence |

Mozilla’s latest awards will support people and projects that examine the effects of AI on society

At Mozilla, one way we support a healthy internet is by fueling the people and projects on the front lines — from grants for community technologists in Detroit, to fellowships for online privacy activists in Rio.

Today, we are opening applications for a new round of Mozilla awards. We’re awarding $225,000 to technologists and media makers who help the public understand how threats to a healthy internet affect their everyday lives.

Specifically, we’re seeking projects that explore artificial intelligence and machine learning. In a world where biased algorithms, skewed data sets, and broken recommendation engines can radicalize YouTube users, promote racism, and spread fake news, it’s more important than ever to support artwork and advocacy work that educates and engages internet users.

These awards are part of the NetGain Partnership, a collaboration between Mozilla, Ford Foundation, Knight Foundation, MacArthur Foundation, and the Open Society Foundation. The goal of this philanthropic collaboration is to advance the public interest in the digital age.

What we’re seeking

We’re seeking projects that are accessible to broad audiences and native to the internet, from videos and games to browser extensions and data visualizations.

We will consider projects that are at either the conceptual or prototype phases. All projects must be freely available online and suitable for a non-expert audience. Projects must also respect users’ privacy.

In the past, Mozilla has supported creative media projects like:

Data Selfie, an open-source browser add-on that simulates how Facebook interprets users’ data.

Chupadados, a mix of art and journalism that examines how women and non-binary individuals are tracked and surveilled online.

Paperstorm, a web-based advocacy game that lets users drop digital leaflets on cities — and, in the process, tweet messages to lawmakers.

Codemoji, a tool for encoding messages with emoji and teaching the basics of encryption.

*Privacy Not Included, a holiday shopping guide that assesses the latest gadgets’ privacy and security features.

Details and how to apply

Mozilla is awarding a total of $225,000, with individual awards ranging up to $50,000. Final award amounts are at the discretion of award reviewers and Mozilla staff, but it is currently anticipated that the following awards will be made:

Two $50,000 total prize packages ($47,500 award + $2,500 MozFest travel stipend)

Five $25,000 total prize packages ($22,500 award + $2,500 MozFest travel stipend)

Awardees are selected based on quantitative scoring of their applications by a review committee and a qualitative discussion at a review committee meeting. Committee members include Mozilla staff, current and alumni Mozilla Fellows, and, as available, outside experts. Selection criteria are designed to evaluate the merits of the proposed approach. Diversity in applicant background, past work, and medium is also considered.

Mozilla will accept applications through August 1, 2018, and notify winners by September 15, 2018. Winners will be publicly announced at or around MozFest, which is held October 26-28, 2018.

APPLY. Applicants should attend the informational webinar on Monday, June 18 (4pm EDT, 3pm CDT, 1pm PDT). Sign up to be notified here.

Brett Gaylor is Commissioning Editor at Mozilla

The post Mozilla Announces $225,000 for Art and Advocacy Exploring Artificial Intelligence appeared first on The Mozilla Blog.

|

|

Daniel Pocock: Free software, GSoC and ham radio in Kosovo |

After the excitement of OSCAL in Tirana, I travelled up to Prishtina, Kosovo, with some of Debian's new GSoC students. We don't always have so many students participating in the same location. Being able to meet with all of them for a coffee each morning gave some interesting insights into the challenges people face in these projects and things that communities can do to help new contributors.

On the evening of 23 May, I attended a meeting at the Prishtina hackerspace where a wide range of topics, including future events, were discussed. There are many people who would like to repeat the successful Mini DebConf and Fedora Women's Day events from 2017. A wiki page has been created for planning but no date has been confirmed yet.

On the following evening, 24 May, we had a joint meeting with SHRAK, the ham radio society of Kosovo, at the hackerspace. Acting director Vjollca Caka gave an introduction to the state of ham radio in the country and then we set up a joint demonstration using the equipment I brought for OSCAL.

On my final night in Prishtina, we had a small gathering for dinner: Debian's three GSoC students, Elena, Enkelena and Diellza; Renata Gegaj, who completed Outreachy with the GNOME community; and Qendresa Hoti, one of the organizers of last year's very successful hackathon for women in Prizren.

Promoting free software at Doku:tech, Prishtina, 9-10 June 2018

One of the largest technology events in Kosovo, Doku:tech, will take place on 9-10 June. It is not too late for people from other free software communities to get involved. Please contact the FLOSSK or Open Labs communities in the region if you have questions about how you can participate. A number of budget airlines, including WizzAir and Easyjet, now have regular flights to Kosovo and many larger free software organizations will consider requests for a travel grant.

https://danielpocock.com/free-software-ham-radio-gsoc-in-kosovo-2018-05

|

|

Cameron Kaiser: Another weekend on the new computer (or, making the Talos II into the world's biggest Power Mac) |

Your eyes do not deceive you -- this is QEMU running Tiger with full virtualization on the Talos II. For proof, look at my QEMU command line in the Terminal window. I've just turned my POWER9 into a G4.

Recall from the last entry that there was a problem using virtualization to run Power Mac operating systems on the T2 because the necessary KVM module, KVM-PR, doesn't load on bare-metal POWER9 systems (the T2 is PowerNV, so it's bare-metal). That means you'd have to run your Mac operating systems under pure emulation, which eked out something equivalent to a 1GHz G4 in System Profiler but was still a drag. With some minimal tweaks to KVM-PR, I was able to coax Tiger to start up under virtualization, increasing the apparent CPU speed to over 2GHz. Hardly a Quad G5, but that's the fastest Power Mac G4 you'll ever see with the fastest front-side bus on a G4 you'll ever see. Ever. Maximum effort.

The issue on POWER9 is actually a little more complex than I described it (thanks to Paul Mackerras at IBM OzLabs for pointing me in the right direction), so let me give you a little background first. To turn a virtual address into an actual real address, PowerPC and POWER processors prior to POWER9 exclusively used a hash table of page table entries (PTEs or HPTEs, depending on who's writing) to find the correct location in memory. The process in a simplified fashion is thus: given a virtual address, the processor translates it into a key for that block of memory using the segment lookaside buffer (SLB), and then hashes that key and part of the address to narrow it down to two page table entry groups (PTEGs), each containing eight PTEs. The processor then checks those 16 entries for a match. If it's there, it continues, or else it sends a page fault to the operating system to map the memory.
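To make the hashed lookup above concrete, here is a toy Python model of it. It is only a sketch under stated assumptions: the table size, the 4 KiB page and 256 MiB segment sizes, the dictionary standing in for the SLB, and the hash itself (key XOR page index, with the second group taken from its complement, loosely modeled on the classic PowerPC scheme) are all illustrative, and none of the names come from real hardware or kernel code.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

PAGE_SHIFT = 12        # 4 KiB pages (assumption for this sketch)
SEGMENT_SHIFT = 28     # 256 MiB segments (assumption for this sketch)
PTES_PER_PTEG = 8      # eight PTEs per group, as described above
NUM_PTEGS = 1024       # toy table size; must be a power of two


@dataclass
class PTE:
    valid: bool = False
    key: int = 0         # segment key obtained from the SLB
    page_index: int = 0  # page number inside the segment
    real_page: int = 0   # real page number this entry maps to


class HashedPageTable:
    def __init__(self) -> None:
        self.ptegs: List[List[PTE]] = [
            [PTE() for _ in range(PTES_PER_PTEG)] for _ in range(NUM_PTEGS)
        ]

    @staticmethod
    def candidate_groups(key: int, page_index: int) -> Tuple[int, int]:
        """Hash the key and page index down to two candidate PTEGs."""
        mask = NUM_PTEGS - 1
        primary = (key ^ page_index) & mask
        secondary = ~primary & mask
        return primary, secondary

    def insert(self, key: int, page_index: int, real_page: int) -> bool:
        """Place a mapping in the first free slot of either candidate group."""
        for group in self.candidate_groups(key, page_index):
            for pte in self.ptegs[group]:
                if not pte.valid:
                    pte.valid = True
                    pte.key = key
                    pte.page_index = page_index
                    pte.real_page = real_page
                    return True
        return False  # both groups are full; a real OS would evict an entry

    def lookup(self, key: int, page_index: int) -> Optional[int]:
        """Check the 16 candidate entries; None stands in for a page fault."""
        for group in self.candidate_groups(key, page_index):
            for pte in self.ptegs[group]:
                if pte.valid and pte.key == key and pte.page_index == page_index:
                    return pte.real_page
        return None


def translate(hpt: HashedPageTable, slb: Dict[int, int], address: int) -> Optional[int]:
    """Turn a virtual address into a real address, or None on a miss."""
    key = slb.get(address >> SEGMENT_SHIFT)  # the dict plays the role of the SLB
    if key is None:
        return None
    page_index = (address & ((1 << SEGMENT_SHIFT) - 1)) >> PAGE_SHIFT
    real_page = hpt.lookup(key, page_index)
    if real_page is None:
        return None
    return (real_page << PAGE_SHIFT) | (address & ((1 << PAGE_SHIFT) - 1))
```

With a segment entry of `{0xC: 0x1234}` and a single mapping inserted for page 5 of that segment, `translate` finds the real address for `0xC0005ABC` and returns `None` for any address whose page has not been mapped.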

The first problem is that the format of HPTEs changed slightly in POWER9, so this needs to be accommodated if the host CPU does lookups of its own (it does in KVM-HV, but this was already converted for the new POWER9 and thus works already).

The bigger problem, though, is that hash tables can be complex to manage and in the worst case could require a lot of searches to map a page. POWER8 and earlier reduce this cost with the translation lookaside buffer (TLB), used to cache a PTE once it's found. However, the POWER9 has another option called the radix MMU. In this scheme (read the patent if you're bored), the SLB entry for that block of memory now has a radix page table pointer, or RPTP. The RPTP in turn points to a chain of hierarchical translation tables ("radix tree") that through a series of cascading lookups build the real address for that page of RAM. This is fast and flexible, and particularly well-suited to discontinuous tracts of addressing space. However, as an implementational detail, a guest operating system running in user mode (i.e., KVM-PR) on a radix host has limitations on so-called quadrant 3 (memory in the 0xc... range). This isn't a problem for a VM that can execute supervisor instructions (i.e., KVM-HV) because it can just remap as necessary, but KVM-HV can't emulate a G3 or G4 on a POWER9; only KVM-PR can do that.
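For contrast with the hash table, the cascading lookups of a radix tree can be sketched in a few lines of Python. This is a toy illustration, not the POWER9 layout: the three 9-bit levels, the 4 KiB page size and the nested dictionaries standing in for translation tables are all assumptions chosen to keep the example short, and address bits above the covered range are simply ignored.

```python
from typing import Dict, List, Optional

PAGE_SHIFT = 12          # 4 KiB pages (assumption for this sketch)
LEVEL_BITS = (9, 9, 9)   # hypothetical index width at each level of the tree


def level_indices(address: int) -> List[int]:
    """Split the virtual page number into one index per tree level."""
    vpn = address >> PAGE_SHIFT
    shift = sum(LEVEL_BITS)
    indices = []
    for bits in LEVEL_BITS:
        shift -= bits
        indices.append((vpn >> shift) & ((1 << bits) - 1))
    return indices


def map_page(root: Dict, address: int, real_page: int) -> None:
    """Create any missing intermediate tables and record the leaf mapping."""
    *upper, leaf = level_indices(address)
    node = root
    for index in upper:
        node = node.setdefault(index, {})
    node[leaf] = real_page


def walk(root: Dict, address: int) -> Optional[int]:
    """Follow the tree level by level; None stands in for a page fault."""
    *upper, leaf = level_indices(address)
    node = root
    for index in upper:
        node = node.get(index)
        if node is None:
            return None
    real_page = node.get(leaf)
    if real_page is None:
        return None
    return (real_page << PAGE_SHIFT) | (address & ((1 << PAGE_SHIFT) - 1))
```

Only the tables along paths that are actually mapped ever get created, which is one way to see why this structure copes well with sparse, discontinuous address ranges.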

Fortunately, the POWER9 still can support the HPT and turn the radix MMU off by booting the kernel with disable_radix. That gets around the second problem. As it turns out, the first problem actually isn't a problem for booting OS X on KVM once radix mode is off, assuming you hack the KVM-PR kernel module to handle a couple extra interrupt types and remove the lockout on POWER9. And here we are.(*)
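If you want to confirm that the running kernel was really booted with that option, a small helper along these lines may be handy. It is a minimal sketch: it only looks for the bare `disable_radix` token exactly as written above, the helper names are made up for this example, and `/proc/cmdline` is the usual Linux location for the boot command line.

```python
def radix_disabled(cmdline: str) -> bool:
    """Return True if the bare disable_radix option appears on the command line."""
    return "disable_radix" in cmdline.split()


def current_cmdline(path: str = "/proc/cmdline") -> str:
    """Read the command line the running kernel was booted with."""
    with open(path, encoding="ascii", errors="replace") as handle:
        return handle.read().strip()
```

On the host, `radix_disabled(current_cmdline())` should return `True` after rebooting with the option in place.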

Anyway, you lot will be wanting the Geekbench numbers, won't you? Such a competitive bunch, always demanding to know the score. Let's set two baselines. First, my trusty backup workstation, the 1GHz iMac G4: It's not very fast and it has no L3 cache, which makes it worse, but the arm is great, the form-factor has never been equaled, I love the screen and it fits very well on a desk. That gets a fairly weak 580 Geekbench (Geekbench 2.2 on 10.4, integer 693, floating point 581, memory 500, stream 347). For the second baseline, I'll use my trusty Quad G5, but I left it in Reduced power mode since that's how I normally run it. In Reduced, it gets a decent 1700 Geekbench (1907/2040/1002/1190).

First up, Geekbench with pure emulation (using the TCG JIT):

... aah, forget it. I wasn't going to wait all night for that. How about hacked KVM-PR?

Well, damn, son: 1733 (1849/2343/976/536). That's into the G5's range, at least with math performance, and the G5 did it with four threads while this poor thing only has one (QEMU's Power Mac emulation does not yet support SMP, even with KVM). Again, do remember that the G5 was intentionally being run gimped here: if it were going full blast, it would have blown the World's Baddest Power Mac G4 out of the water. But still, this is a decent showing for the T2 in "Mac mode" given all the other overhead that's going on, and the T2 is doing that while running Firefox with a buttload of tabs and lots of Terminal sessions and I think I was playing a movie or something in the background. I will note for the record that some of the numbers seem a bit suspect; although there may well be a performance delta between image compression and decompression, it shouldn't be this different and it would more likely be in the other direction. Likewise, the poor showing for the standard library memory work might be syscall overhead, which is plausible, but that doesn't explain why a copy is faster than a simple write. Regardless, that's heaps better than the emulated CPU which wouldn't have finished even by the time I went to dinner.
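For anyone who wants the comparison as ratios rather than raw scores, here is a quick Python sketch using only the numbers quoted in this post. One assumption to flag: the sub-scores for the G5 and the T2 are taken to be in the same order as the ones spelled out for the iMac (integer, floating point, memory, stream).

```python
# Geekbench 2.2 scores as quoted above: overall, then the four sub-scores.
# The sub-score order for the last two rows is assumed to match the iMac row.
LABELS = ("overall", "integer", "floating point", "memory", "stream")
SCORES = {
    "iMac G4 1GHz": (580, 693, 581, 500, 347),
    "Quad G5 (Reduced)": (1700, 1907, 2040, 1002, 1190),
    "Talos II KVM-PR": (1733, 1849, 2343, 976, 536),
}


def ratios(subject: str, baseline: str) -> dict:
    """Return subject/baseline for each score, rounded to two decimals."""
    return {
        label: round(s / b, 2)
        for label, s, b in zip(LABELS, SCORES[subject], SCORES[baseline])
    }


if __name__ == "__main__":
    for baseline in ("iMac G4 1GHz", "Quad G5 (Reduced)"):
        print(baseline, ratios("Talos II KVM-PR", baseline))
```

Against the G5 in Reduced mode, the overall ratio comes out at about 1.02 and floating point at about 1.15, while stream is only about 0.45, which lines up with the suspicious memory numbers mentioned above.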

The other nice thing is that KVM-PR-Hacky-McHackface doesn't require any changes to QEMU to work, though the hack is pretty hacky. It is not sufficient to boot Mac OS 9; that causes the kernel module to err out with a failure in memory mapped I/O, which is probably because it actually does need the first problem to be fixed, and similarly I would expect Linux and NetBSD won't be happy either for the same reason (let alone nesting KVM-PR within them, which is allowed and even supported). Also, I/O performance in QEMU regardless of KVM is dismal. Even with my hacked KVM-PR, a raw disk image and rebuilding a stripped down QEMU with -O3 -mcpu=power9, disk and network throughput are quite slow and it's even worse if there are lots of graphics updates occurring simultaneously, such as installing Mac OS X with the on-screen Aqua progress bar. Minimizing such windows helps, but only when you're able to do so, of course. More ominously I'll get occasional soft lockouts in the kernel (though everything keeps running), usually if it's doing heavy disk access, and it acts very strangely with stuff that messes with the hardware such as system updates. For that reason I let Software Update run in emulated mode so that if a bug occurred during the installation, it wouldn't completely hose everything and make the disk image unbootable (which did, in fact, happen the first time I tried to upgrade to 10.4.11). Another unrelated annoyance is that QEMU's emulated video card doesn't offer 16:9 resolutions, which is inconvenient on this 1920x1080 display. I could probably hack that in later.

QEMU also has its own bugs, of course; support for running OS 9/OS X is very much still a work in progress. For example, you'll notice there are no screenshots of the T2 running TenFourFox. That's because it can't. I installed the G3 version and tried running it in QEMU+KVM-PR, and TenFourFox crashed with an illegal instruction fault. So I tried starting it in safe mode on the assumption the JIT was making it unsteady, which seemed to work when it gave me the safe mode window, but then when I tried to start the full browser it still crashed with an illegal instruction fault (in a different place). At that point I assumed it was a bug in KVM-PR and tried starting it in pure emulation. This time, TenFourFox crashed the entire emulator (which exited with an illegal instruction fault). I think we can safely conclude that this is a bug in QEMU. I haven't even tried running Classic on it yet; I'm almost afraid to.

Still, this means my T2 is a lot further along at being able to run my Power Mac software. It also means I need to go through and reprogram all my AutoKey remappings to not remap the Command-key combinations when I'm actually in QEMU. That's a pain, but worth it. If enough people are interested in playing with this, I'll go post the diff in a gist on new Microsoft Visual GitHub, but remember it will rock your socks, taint your kernel, (possibly) crash your computer and (definitely) slap yo mama. You'll also need to apply it as a patch to the source code for your current kernel, whatever it is, as I will not post binaries to make you do it your own bad and irresponsible self, but you won't have to wait long as the T2 will build the Linux kernel from scratch and all its relevant modules in about 20 minutes at -j24. Now we're playing with POWER!

What else did I learn this weekend?