You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. This syndicated feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.

Hacks.Mozilla.Org: What Makes a Great Extension?

We’re in the middle of our Firefox Quantum Extensions Challenge and we’ve been asking ourselves: What makes a great extension?

Great extensions add functionality and fun to Firefox, but there’s more to it than that. They’re easy to use, easy to understand, and easy to find. If you’re building one, here are some simple steps to help it shine.

Make It Dynamic

Firefox 57 added dynamic themes. What does that mean? They’re just like standard themes that change the look and feel of Firefox, but they can change over time. Create new themes for daytime, nighttime, private browsing, and all your favorite things.
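As a rough illustration of the idea, here is a minimal TypeScript sketch of a day/night theme switcher. It assumes the theme object shape `{ colors: { frame, tab_background_text } }` used by current Firefox theme keys (the key names available in Firefox 57 may differ), and the hour boundaries and colour values are arbitrary. In a real extension the `apply` callback would be `browser.theme.update`; here it is a parameter so the selection logic can run on its own.

```typescript
// Minimal day/night theme switcher sketch. Colour values and hour
// boundaries are arbitrary assumptions, not taken from the original post.
interface Theme {
  colors: { frame: string; tab_background_text: string };
}

const DAY_THEME: Theme = { colors: { frame: "#f9f9fa", tab_background_text: "#0c0c0d" } };
const NIGHT_THEME: Theme = { colors: { frame: "#0c0c0d", tab_background_text: "#f9f9fa" } };

// Use the light theme from 07:00 up to (but not including) 19:00 local time.
function themeForHour(hour: number): Theme {
  return hour >= 7 && hour < 19 ? DAY_THEME : NIGHT_THEME;
}

// `apply` stands in for browser.theme.update inside a real extension.
function applyThemeFor(now: Date, apply: (theme: Theme) => void): void {
  apply(themeForHour(now.getHours()));
}
```

Inside an extension with the "theme" permission, you might call `applyThemeFor(new Date(), (t) => browser.theme.update(t))` at startup and again from a periodic timer so the theme follows the clock.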

Mozilla developer advocate Potch created a wonderful video explaining how dynamic themes work in Firefox:

Make It Fun

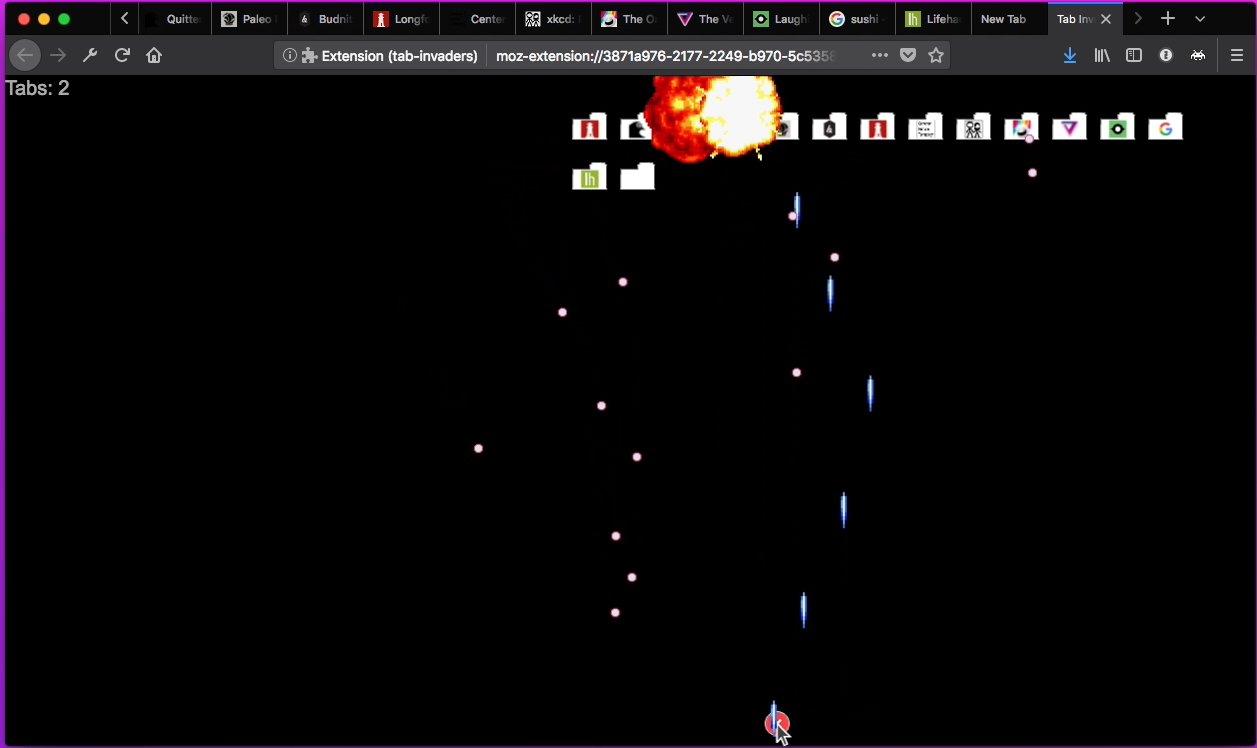

Browsing the web is fun, but it can be downright hilarious with an awesome extension. Firefox extensions support JavaScript, which means you can create and integrate full-featured games into the browser. Tab Invaders is a fun example. This remake of the arcade classic Space Invaders lets users blast open tabs into oblivion. It’s a cathartic way to clear your browsing history and start anew.

But you don’t have to build a full-fledged game to have fun. Tabby Cat adds an interactive cartoon cat to every new tab. The cats nap, meow, and even let you pet them. Oh, and the cats can wear hats.

Make It Functional

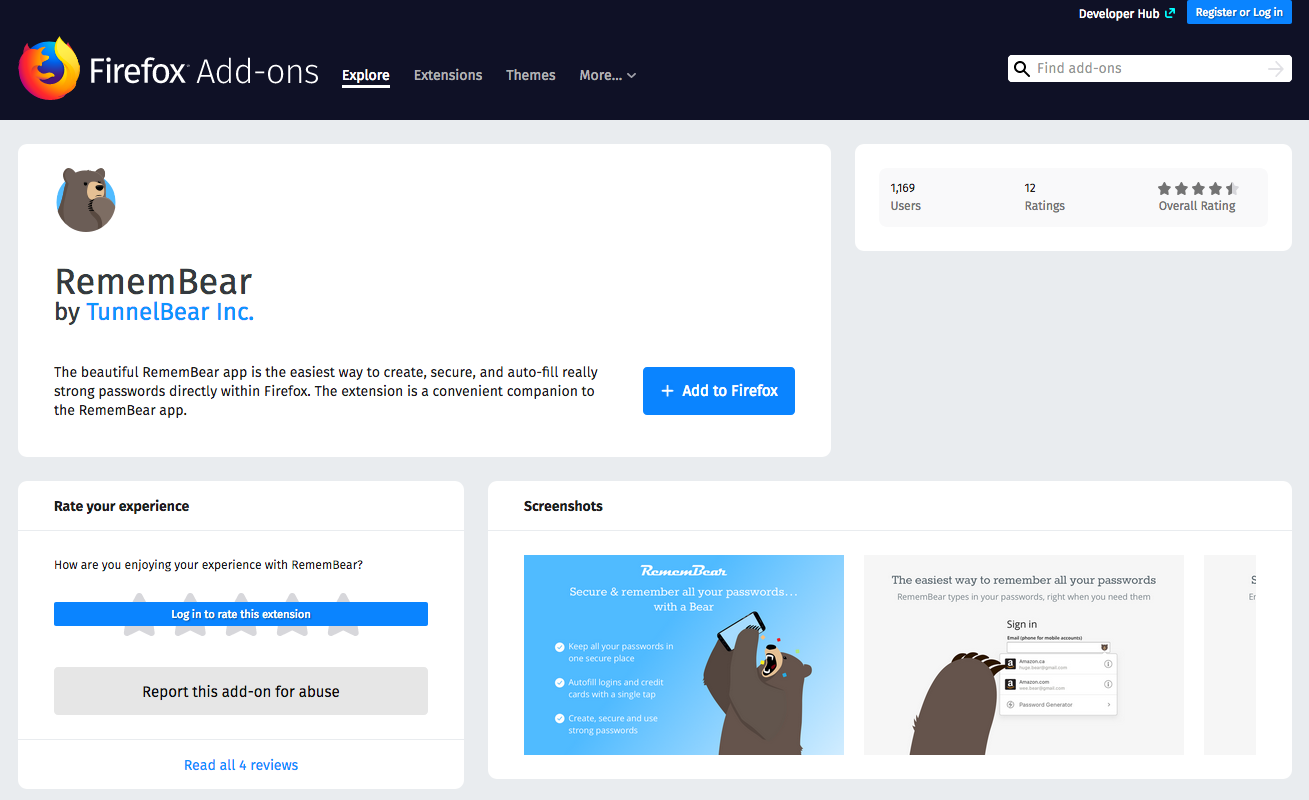

A fantastic extension helps users do everyday tasks faster and more easily. RememBear, from the makers of TunnelBear, remembers usernames and passwords (securely) and can generate new strong passwords. Tree Style Tab lets users order tabs in a collapsible tree structure instead of the traditional tab structure. The Grammarly extension integrates the entire Grammarly suite of writing and editing tools in any browser window. Excellent extensions deliver functionality. Think about ways to make browsing the web faster, easier, and more secure when you’re building your extension.

Make It Firefox

The Firefox UI is built on the Photon Design System. A good extension will fit seamlessly into the UI design language and seem to be a native part of the browser. Guidelines for typography, color, layout, and iconography are available to help you integrate your extension with the Firefox browser. Try to keep edgy or unique design elements apart from the main Firefox UI elements and stick to the Photon system when possible.

Make It Clear

When you upload an extension to addons.mozilla.org (the Firefox add-ons site), pay close attention to its listing information. A clear, easy-to-read description and well-designed screenshots are key. The Notebook Web Clipper extension is a good example of an easy-to-read page with detailed descriptions and clear screenshots. Users know exactly what the extension does and how to use it. Make it easy for users to get started with your extension.

Make It Fresh

Firefox 60, now available in Firefox Beta, includes a host of brand-new APIs that let you do even more with your extensions. We’ve cracked open a cask of theme properties that let you control more parts of the Firefox browser than ever before, including tab color, toolbar icon color, frame color, and button colors.

The tabs API now supports a tabs.captureTab method that can be passed a tabId to capture the visible area of the specified tab. There are also new or improved APIs for proxies, network extensions, keyboard shortcuts, and messages.
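A minimal sketch of calling the new method, assuming `tabs.captureTab(tabId)` resolves to a `data:` URL of the tab's visible area, as the Firefox WebExtension documentation describes. The `TabsSubset` interface is a hand-written stand-in for the few `browser.tabs` members used here, not the official type definitions, so the function can be exercised with a fake outside the browser.

```typescript
// Hand-written subset of the WebExtension tabs API used below (assumption:
// captureTab resolves to a data: URL of the tab's visible area).
interface TabsSubset {
  query(queryInfo: { active: boolean; currentWindow: boolean }): Promise<Array<{ id?: number }>>;
  captureTab(tabId?: number): Promise<string>;
}

// Capture the active tab of the current window by passing its tabId explicitly.
async function captureActiveTab(tabs: TabsSubset): Promise<string> {
  const [activeTab] = await tabs.query({ active: true, currentWindow: true });
  if (activeTab === undefined || activeTab.id === undefined) {
    throw new Error("no active tab with an id");
  }
  return tabs.captureTab(activeTab.id);
}
```

In an extension with the required permissions, `captureActiveTab(browser.tabs)` would yield an image URL that could be assigned to an `<img>` element's `src`.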

For a full breakdown of all the new improvements to extension APIs in Firefox 60, check out Firefox engineer Mike Conca’s excellent post on the Mozilla Add-ons Blog.

Submit Your Extension Today

The Quantum Extensions Challenge is running until April 15, 2018. Visit the Challenge homepage for rules, requirements, tips, tricks, and more. Prizes will be awarded to the top extensions in three categories: Games & Entertainment, Dynamic Themes, and Tab Manager/Organizer. Winners will be awarded a 10.5-inch Apple iPad Pro (Wi-Fi, 256GB) and be featured on addons.mozilla.org. Runners-up in each category will receive a $250 USD Amazon gift card. Enter today and keep making awesome extensions!

https://hacks.mozilla.org/2018/04/what-makes-a-great-extension/

Eric Shepherd: Results of the MDN “Internal Link Optimization” SEO experiment

Our fourth and final SEO experiment for MDN, to optimize internal links within the open web documentation, is now finished. Optimizing internal links involves ensuring that each page (in particular, the ones whose search engine results page (SERP) positions we want to improve) is easy to find.

This is done by ensuring that each page is linked to from as many topically relevant pages as possible. In addition, it should in turn link outward to other relevant pages. The more quality links we have among related pages, the better our position is likely to be. The objective, from a user’s perspective, is to ensure that even if the first page they find doesn’t answer their question, it will link to a page that does (or at least might help them find the right one).

Creating links on MDN is technically pretty easy. There are several ways to do it, including:

- Selecting the text to turn into a link and using the toolbar’s “add link” button

- Using the “add link” hotkey (Ctrl-K or Cmd-K)

- Any one of a large number of macros that generate properly formatted links automatically, such as the domxref macro, which creates a link to a page within the MDN API reference. For example, {{domxref("RTCPeerConnection.createOffer()")}} creates a link to https://developer.mozilla.org/en-US/docs/Web/API/RTCPeerConnection/createOffer, which looks like this: RTCPeerConnection.createOffer(). Many of the macros offer customization options, but the default is usually acceptable and is almost always better than trying to hand-make the links.
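To make the mapping in that example concrete, here is a rough TypeScript approximation of how a domxref-style argument turns into a reference URL. It is not the actual KumaScript macro, which supports extra parameters such as custom link text and anchors; the function name `domxrefUrl` and the default `en-US` locale are assumptions for illustration.

```typescript
// Hypothetical approximation of the URL a domxref-style macro produces.
function domxrefUrl(apiName: string, locale = "en-US"): string {
  const path = apiName
    .replace(/\(\)$/, "") // drop a trailing "()" on method names
    .replace(/\./g, "/"); // "Interface.member" -> "Interface/member"
  return `https://developer.mozilla.org/${locale}/docs/Web/API/${path}`;
}
```

Generating the URL from the API name in one place is what makes macros safer than hand-made links: a typo in the path cannot creep in per page.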

Our style guide talks about where links should be used. We even have a guide to creating links on MDN that covers the most common ways to do so. Start with these guidelines.

The content updates

10 pages were selected for the internal link optimization experiment.

Changes made to the selected pages

In general, the changes made were only to add links to pages; sometimes content had to be added to accomplish this, but ideally the changes were relatively small.

- Existing text that should have been a link but was not, such as mentions of terms that need definition or of concepts that have their own pages, was turned into links.

- API names, element names, attributes, CSS properties, SVG element names, and so forth were turned into links when first used in a section, or when several paragraphs had passed since they last appeared as a link. While repeated links to the same page don’t count toward SEO, this is good practice for usability.

- Any phrase for which a more in-depth explanation is available was turned into a link.

- Links to related concepts or topics were added where appropriate; for example, on the article about HTMLFormElement.elements, a note is provided with a link to the related Document.forms property.

- Links to related functions, HTML elements, and the like were added.

- The “See also” section was reviewed and updated to include appropriate related content.

Changes made to targeted pages

Targeted pages—pages to which links were added—in some cases got smaller changes made, such as the addition of a link back to the original page, and in some cases new links were added to other relevant content if the pages were particularly in need of help.

Pages to be updated

The pages selected to be updated for this experiment:

- https://developer.mozilla.org/en-US/docs/Web/CSS/object-position

- https://developer.mozilla.org/en-US/docs/Web/API/XMLHttpRequest/HTML_in_XMLHttpRequest

- https://developer.mozilla.org/en-US/docs/Web/API/Document_object_model/Locating_DOM_elements_using_selectors

- https://developer.mozilla.org/en-US/docs/Web/API/MediaDevices/enumerateDevices

- https://developer.mozilla.org/en-US/docs/Web/API/HTMLFormElement/elements

- https://developer.mozilla.org/en-US/docs/Web/API/XMLSerializer

- https://developer.mozilla.org/en-US/docs/Web/CSS/all

- https://developer.mozilla.org/en-US/docs/Web/API/HTMLCollection/item

- https://developer.mozilla.org/en-US/docs/Web/CSS/inherit

- https://developer.mozilla.org/en-US/docs/Web/CSS/unset

The results

The initial data was taken during the four weeks from December 29, 2017 through January 25, 2018. The “after” data was taken just over a month after the work was completed, covering March 6 through April 2, 2018.

The results from this experiment were fascinating. Of all of the SEO experiments we’ve done, the results of this one were the most consistently positive.

| Landing Page | Unique Pageviews | Organic Searches | Entrances | Hits |
|---|---|---|---|---|
| /en-us/docs/web/api/document_object_model/locating_dom_elements_using_selectors | +51.44% | +27.89% | +34.08% | +65.62% |
| /en-us/docs/web/api/htmlcollection/item | +62.29% | +48.44% | +64.81% | +46.97% |
| /en-us/docs/web/api/htmlformelement/elements | +77.59% | +63.75% | +76.83% | +78.34% |
| /en-us/docs/web/api/mediadevices/enumeratedevices | +27.84% | +15.88% | +21.05% | +20.48% |
| /en-us/docs/web/api/xmlhttprequest/html_in_xmlhttprequest | +53.52% | -1.43% | +27.79% | +63.51% |
| /en-us/docs/web/api/xmlserializer | +40.08% | +53.19% | +35.94% | +42.64% |
| /en-us/docs/web/css/all | +53.92% | +27.62% | +31.11% | +54.90% |
| /en-us/docs/web/css/inherit | +26.05% | +30.79% | +24.71% | +19.94% |
| /en-us/docs/web/css/object-position | +111.77% | +128.45% | +116.12% | +426.94% |
| /en-us/docs/web/css/unset | +30.09% | +23.03% | +23.93% | +41.01% |

These results are amazing. Every single value is up, with the sole exception of a small decline in organic search views (that is, appearances in Google search result lists) for the article “HTML in XMLHttpRequest.” Most values are up substantially, with many being impressively improved.

Uncertainties

Due to the implementation of the experiment and certain timing issues, there are uncertainties surrounding these results. Those include:

- Ideally, much more time would have elapsed between completing the changes and collecting final data.

- The experiment began during the winter holiday season, when overall site usage is at a low point.

- MDN’s overall site traffic grew while this experiment was underway.
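The post doesn't say exactly how the table's percentages were computed. Assuming they are plain relative changes between the two windows (both span 28 days), the calculation is sketched below, along with one hypothetical way to discount the site-wide growth just mentioned: divide each page's growth factor by the site's. `percentChange` and `adjustForSiteGrowth` are illustrative names, and no site-wide figure from the experiment is assumed here.

```typescript
// Relative change, in percent, between a baseline and a later measurement
// taken over windows of equal length.
function percentChange(before: number, after: number): number {
  if (before === 0) throw new RangeError("baseline must be non-zero");
  return ((after - before) / before) * 100;
}

// Hypothetical adjustment: express a page's change relative to site-wide
// change, using growth factors (1 + pct/100). Inputs are percentages,
// e.g. 53.92 for +53.92%.
function adjustForSiteGrowth(pagePct: number, sitePct: number): number {
  return ((1 + pagePct / 100) / (1 + sitePct / 100) - 1) * 100;
}
```

For instance, a hypothetical page that grew 21% while the whole site grew 10% would show about a 10% gain after adjustment, not 11%.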

Decisions

Certain conclusions can be reached:

- The degree to which internal link improvements benefited traffic to these pages can’t be ignored, even after factoring in the uncertainties. This is easily the most benefit we got from any experiment, and on top of that, the amount of work required was often much lower. This should be a high-priority part of our SEO plans.

- The MDN meta-documentation will be further improved to enhance recommendations around linking among pages on MDN.

- We should consider enhancements to macros used to build links to make them easier to use, especially in cases where we commonly have to override default behavior to get the desired output. Simplifying the use of macros to create links will make linking faster and easier and therefore more common.

- We’ll re-evaluate the data after more time has passed to ensure the results are correct.

Discussion?

If you’d like to comment on this, or ask questions about the results or the work involved in making these changes, please feel free to follow up or comment on the thread I’ve created on our Discourse forum.

https://www.bitstampede.com/2018/04/05/mdn-internal-link-experiment/

Francesco Lodolo: Why Fluent Matters for Localization

In case you don’t know what Fluent is, it’s a localization system designed and developed by Mozilla to overcome the limitations of the existing localization technologies. If you have been around Mozilla Localization for a while, and you’re wondering what happened to L20n, you can read this explanation about the relation between these two projects.

With Firefox 58 we started moving Firefox Preferences to Fluent, and today we’re migrating the last pane (Firefox Account – Sync) in Firefox Nightly (61). The work is not done yet (there are still edge cases to migrate in the existing panes and subdialogs), but we’re on track. If you’re interested in the details, you can read the full journey in two blog posts from Zibi (2017 and 2018), covering not only Fluent, but also the huge amount of work done on the Gecko platform to improve multilingual support.

At this point, you might be wondering: do we really need another localization system? What’s wrong with what we have?

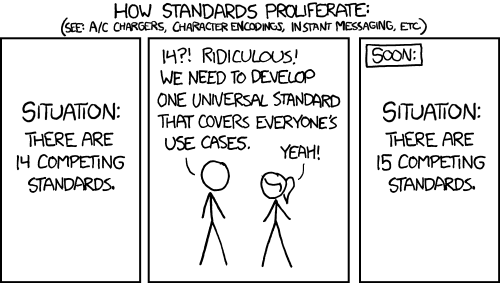

The truth is that there is a lot wrong with the current systems. In Gecko alone, we support 4 different file formats to localize content: .dtd, .properties, .ini, .inc. And since none of them support plural forms, we built hacks on top of .properties to support pluralization.

Here are a few practical examples of why Fluent is a huge improvement over existing technologies, and will allow us to improve the quality of the localizations we ship.

DTDs and Concatenations

You want to localize this simple fragment of XUL code without using JavaScript.

Please sign in to reconnect

This turns into 2 separate strings in a DTD file, and a long localization comment:

Why the empty string at the end? Because, while English doesn’t need it, other languages might need to change the structure of the sentence, adding content after the email address. On top of that, some localization tools don’t support empty strings correctly, not allowing localizers to mark an empty translation as a “translated” string.

In Fluent, this is simply:

sync-signedin-login-failure = Please sign in to reconnect { $email }

One single string, full visibility on the context, flexibility to move around the email address.

Plural Forms

Plural forms are supported in Gecko only for .properties files. Fluent supports plural forms natively, and with a lot of additional flexibility.

First of all, if you’re not familiar with the complexity of plurals across languages (limiting the discussion to cardinal integer numbers):

- English, like many other European languages, has only 2 plural forms: n=1 uses one form (“1 page”), and all other numbers (n!=1) use a different form (“2 pages”). Sadly, this makes a lot of people think about plurals in terms of “1 vs many”, while that’s not really the case for most languages.

- French still has 2 plural forms, but uses the same form for both 0 and 1.

- Other languages can have only one form (e.g. Chinese), or up to 6 different plural forms (e.g. Arabic). Fluent uses the CLDR categories (zero, one, two, few, many, other) to match a number to the correct plural form. For example, in Russian 1 and 21 will use the form “one”, but 11 will use “many”.

- The behavior might change depending on whether the actual number is present. For example, Turkic languages don’t need to pluralize a noun after a number (“1 page”), but need plural forms in sentences referring to one or more elements (“this” vs “them”).
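The CLDR categories mentioned above are also exposed in JavaScript through the standard `Intl.PluralRules` API, so the rules can be checked directly. A short TypeScript sketch, assuming a runtime with full ICU locale data (current Node.js and browsers ship it); the `categories` helper is just for illustration:

```typescript
// Map each number to its CLDR plural category for a given locale.
function categories(locale: string, numbers: number[]): Record<number, string> {
  const rules = new Intl.PluralRules(locale);
  const result: Record<number, string> = {};
  for (const n of numbers) result[n] = rules.select(n);
  return result;
}

console.log(categories("en", [0, 1, 2]));             // English: only "one" and "other"
console.log(categories("fr", [0, 1, 2]));             // French: 0 and 1 share "one"
console.log(categories("ru", [1, 2, 5, 11, 21]));     // Russian: 21 is "one", 11 is "many"
console.log(categories("ar", [0, 1, 2, 3, 11, 100])); // Arabic: all six categories
console.log(categories("zh", [1, 2]));                // Chinese: everything is "other"
```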

Consider for example this use case: in Firefox, the button to set the home page changes from “Use Current Page” to “Use Current Pages”, depending on the number of open tabs.

If you want to use a proper plural form, you need to add the number of tabs to the string. In .properties, it would look like this (plural forms are separated by a semicolon):

use-current-pages = Use Current Page;Use Current #1 Pages

This will force languages to create all plural forms for their locales, even if they might not be needed. If your language has 6 forms, you need to provide all 6, even if they’re all identical. Fun, isn’t it? Note that this is not just a limitation of the plural system used in .properties: the same happens in gettext (.po files).

Here’s how Fluent improves things. First, you don’t need to add all plural forms: you can rely on the fallback to the default variant (indicated by *), without raising any error:

use-current-pages =
    .label =
        { $tabCount ->
           *[other] Использовать текущие страницы
        }

More importantly, you can match a specific number (1 in this case):

use-current-pages =
    .label =
        { $tabCount ->
            [1] Использовать текущую страницу
           *[other] Использовать текущие страницы
        }

In Russian, the “[one]” form would also be used for 21 tabs, while here the variant is used only for exactly 1 tab.

Let’s assume the English message looks like this:

use-current-pages =
    .label =
        { $tabCount ->
            [1] Use Current Page
           *[other] Use Current Pages
        }

Do you need to expose the number in your message and treat it like a standard plural form? You can:

use-current-pages =
    .label =
        { $tabCount ->
            [one] Use { $tabCount } Page
           *[other] Use { $tabCount } Pages
        }
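To see why `[1]` and `[one]` behave differently, here is a simplified, hypothetical model of how a Fluent runtime could pick a variant for a numeric selector: numeric keys must match the number exactly, named keys are compared with the CLDR plural category from `Intl.PluralRules`, and the `*` default is used when nothing matches. It sketches the behavior described in this post and is not the code of any Fluent library; `Variant` and `selectVariant` are illustrative names.

```typescript
// Simplified, hypothetical model of Fluent variant selection for a number.
interface Variant {
  key: string;         // "1", "one", "other", ...
  value: string;
  isDefault?: boolean; // marks the *[...] variant
}

function selectVariant(locale: string, n: number, variants: Variant[]): string {
  const category = new Intl.PluralRules(locale).select(n);
  for (const variant of variants) {
    const numericKey = Number(variant.key);
    // Numeric keys match exactly; named keys match the plural category.
    const matches = Number.isNaN(numericKey) ? variant.key === category : numericKey === n;
    if (matches) return variant.value;
  }
  const fallback = variants.find((v) => v.isDefault);
  if (fallback === undefined) throw new Error("select expressions need a default variant");
  return fallback.value;
}
```

With the Russian `[1]` message, 21 tabs falls through to the default variant, while a `[one]` key would also catch 21.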

Do you only need one form? Again, you can simplify it into:

use-current-pages =
    .label = Use { $tabCount } Pages

or even:

use-current-pages =
    .label = Use Current Pages

Variants

This is one of the most exciting changes introduced to the localization paradigm.

Consider this example: “Firefox Account” is a special brand within Firefox. While “Firefox” itself should not be localized or declined, “account” can be localized and moved. In Italian it’s “Account Firefox”, “Cuenta de Firefox” in Spanish.

A special entity is defined in order to be reused in other strings:

For example:

In Italian this results in “Connetti &syncBrand.fxAccount.label;”. It’s not natural, and it looks wrong, because we don’t capitalize nouns in the middle of a sentence.

My only option to improve the translation, and make it sound more natural, would have been to drop the entity and just add the translated name. That defies the entire concept of having a central definition for the brand.

Here’s what I can do in Fluent. The brand is defined as a term, a special type of message that can only be referenced from other strings (not code), and can have additional attributes.

-fxaccount-brand-name = Firefox Account

sync-signedout-account-title = Connect with a { -fxaccount-brand-name }

And now in Italian I can do:

-fxaccount-brand-name =
    {
        [lowercase] account Firefox
       *[uppercase] Account Firefox
    }

sync-signedout-account-title = Connetti il tuo { -fxaccount-brand-name[lowercase] }

While uppercase vs lowercase is a trivial example, variants can have a much deeper impact on localization quality for complex languages that use declensions, where the word “account” changes based on its role within the sentence (nominative, accusative, etc.).

This is only the tip of the iceberg: there’s more you can do with Fluent, and the new localization API will allow us to drastically improve the experience for non-English users in Firefox. Here are some additional links if you want to learn more about Fluent:

https://www.yetanothertechblog.com/2018/04/05/why-fluent-matters-for-localization/

Daniel Stenberg: curl another host

Sometimes you want to issue a curl command against a server, but you don't really want curl to resolve the host name in the given URL and use that, you want to tell it to go elsewhere. To the "wrong" host, which in this case of course happens to be the right host. Because you know better.

Don't worry. curl covers this as well, in several different ways...

Fake the host header

The classic and easy-to-understand way to send a request to the wrong HTTP host is to simply send a different Host: header, so that the server will provide a response for that given host.

If you run your "example.com" HTTP test site on localhost and want to verify that it works:

curl --header "Host: example.com" http://127.0.0.1/

curl will also make cookies work for example.com in this case, but it will fail miserably if the page redirects to another host and you enable redirect-following (--location) since curl will send the fake Host: header in all further requests too.

The --header option cleverly replaces the built-in Host: header when a custom one is provided, so only the one passed in by the user gets sent in the request.
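The replace-rather-than-duplicate rule fits in a few lines. This is a hypothetical TypeScript model of the behavior described above, not curl's source; `requestHeaders` and its arguments are illustrative.

```typescript
// Build the header list for one request: the URL's host is used for the
// Host: header unless the user supplied their own, which then replaces it.
function requestHeaders(urlHost: string, userHeaders: string[]): string[] {
  const userSetsHost = userHeaders.some((h) => /^host\s*:/i.test(h));
  return userSetsHost ? [...userHeaders] : [`Host: ${urlHost}`, ...userHeaders];
}
```

Because the same user-supplied list is reused for every request, a redirect to another host would still carry `Host: example.com`, which is the --location pitfall described earlier.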

Fake the host header better

We're using HTTPS everywhere these days, and just faking the Host: header is not enough then. An HTTPS server also needs to get the server name already in the TLS handshake, so that it knows which certificate to use. The name is provided in the SNI field. curl also needs to know the correct host name to verify the server certificate against (server certificates are rarely registered for an IP address). curl extracts the name to use in both those cases from the provided URL.

As we can't just put the IP address in the URL for this to work, we reverse the approach and instead give curl the proper URL but with a custom IP address to use for the host name we set. The --resolve command line option is our friend:

curl --resolve example.com:443:127.0.0.1 https://example.com/

Under the hood this option populates curl's DNS cache with a custom entry for "example.com" port 443 with the address 127.0.0.1, so when curl wants to connect to this host name, it finds your crafted address and connects to that instead of the IP address a "real" name resolve would otherwise return.

This method also works perfectly when following redirects since any further use of the same host name will still resolve to the same IP address and redirecting to another host name will then resolve properly. You can even use this option multiple times on the command line to add custom addresses for several names. You can also add multiple IP addresses for each name if you want to.

Connect to another host by name

As shown above, --resolve is awesome if you want to point curl to a specific known IP address. But sometimes that's not exactly what you want either.

Imagine you have a host name that resolves to a number of different host names, possibly a number of front end servers for the same site/service. Not completely unheard of. Now imagine you want to issue your curl command to one specific server out of the front end servers. It's a server that serves "example.com" but the individual server is called "host-47.example.com".

You could resolve the host name in a first step before curl is used and use --resolve as shown above.

Or you can use --connect-to, which instead works on a host name basis. Using this, you can make curl replace a specific host name + port number pair with another host name + port number pair before the name is resolved!

curl --connect-to example.com:443:host-47.example.com:443 https://example.com/
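The order in which --connect-to and --resolve apply can be modeled roughly as below. This TypeScript sketch is hypothetical, not curl's implementation: it accepts only the simple `HOST:PORT:ADDRESS[,ADDRESS]` and `HOST1:PORT1:HOST2:PORT2` forms used in the commands in this post (curl accepts more forms, such as empty fields), and `systemLookup` stands in for a real DNS query. It shows --connect-to rewriting where to connect before any name resolution, --resolve acting as a pre-filled DNS cache entry, and the name from the URL still being the one used for SNI and certificate checks.

```typescript
interface Endpoint {
  host: string;
  port: number;
}

// "HOST:PORT:ADDR[,ADDR...]" -> ["host:port", [addresses]]
function parseResolve(entry: string): [string, string[]] {
  const first = entry.indexOf(":");
  const second = entry.indexOf(":", first + 1);
  if (first <= 0 || second < 0) throw new Error(`unsupported --resolve entry: ${entry}`);
  return [entry.slice(0, second).toLowerCase(), entry.slice(second + 1).split(",")];
}

// "HOST1:PORT1:HOST2:PORT2" -> ["host1:port1", { host: HOST2, port: PORT2 }]
function parseConnectTo(entry: string): [string, Endpoint] {
  const parts = entry.split(":");
  if (parts.length !== 4) throw new Error(`unsupported --connect-to entry: ${entry}`);
  const [fromHost, fromPort, toHost, toPort] = parts;
  return [`${fromHost.toLowerCase()}:${fromPort}`, { host: toHost, port: Number(toPort) }];
}

function plan(
  url: Endpoint,
  connectTo: Map<string, Endpoint>,
  resolveCache: Map<string, string[]>,
  systemLookup: (host: string) => string[],
): { tlsName: string; connect: Endpoint; addresses: string[] } {
  // 1. --connect-to swaps the host/port pair used for the TCP connection.
  const target = connectTo.get(`${url.host.toLowerCase()}:${url.port}`) ?? url;
  // 2. --resolve entries are consulted like pre-populated DNS cache entries.
  const cached = resolveCache.get(`${target.host.toLowerCase()}:${target.port}`);
  // 3. Otherwise the target name goes through normal resolution.
  const addresses = cached ?? systemLookup(target.host);
  // SNI and certificate verification keep using the host name from the URL.
  return { tlsName: url.host, connect: target, addresses };
}
```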

Crazy combos

Most options in curl are individually controlled which means that there's rarely logic that prevents you from using them in the awesome combinations that you can think of.

--resolve, --connect-to and --header can all be used in the same command line!

Connect to an HTTPS host running on localhost, use the correct name for SNI and certificate verification, but then still ask for a separate host in the Host: header? Sure, no problem:

curl --resolve example.com:443:127.0.0.1 https://example.com/ --header "Host: diff.example.com"

All the above with libcurl?

When you're done playing with the curl options as described above and want to convert your command lines to libcurl code instead, your best friend is called --libcurl.

Just append --libcurl example.c to your command line, and curl will generate the C code template for you in that given file name. Based on that template, making use of that code correctly is usually straightforward, and you'll get all the options listed in a convenient way to read up on.

Good luck!

Update: thanks to @Manawyrm, I fixed the ndash issues this post originally had.

Mozilla Addons Blog: April’s Featured Extensions

Pick of the Month: Passbolt

by Passbolt

A password manager made for secure team collaboration. Great for safekeeping wifi and admin passwords, or login credentials for your company’s social media accounts.

“The interface is user-friendly, installation is explained step by step.”

Featured: FoxClocks

by Andy McDonald

Display times from locations all over the world in a tidy spot at the bottom of your browser.

“This is one of the best extensions for people who work with globally distributed teams.”

Featured: Video DownloadHelper

by mig

A simple but powerful tool for downloading video. Works well with the web’s most popular video sharing sites, like YouTube, Vimeo, Facebook, and others.

“Without a doubt the best completely trouble-free add-on which does exactly what it claims to do without fuss, complicated settings, or preferences. It just works.”

Nominate your favorite add-ons

Featured extensions are selected by a community board made up of developers, users, and fans. Board members change every six months. Here’s further information on AMO’s featured content policies.

If you’d like to nominate an add-on for featuring, please send it to amo-featured [at] mozilla [dot] org for the board’s consideration. We welcome you to submit your own add-on!

The post April’s Featured Extensions appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2018/04/04/aprils-featured-extensions/

Air Mozilla: Bugzilla Project Meeting, 04 Apr 2018

The Bugzilla Project Developers meeting.

Air Mozilla: Weekly SUMO Community Meeting, 04 Apr 2018

This is the SUMO weekly call

https://air.mozilla.org/weekly-sumo-community-meeting-20180404/

Will Kahn-Greene: Socorro Smooth Mega-Migration 2018

Summary

Socorro is the crash ingestion pipeline for Mozilla's products like Firefox. When Firefox crashes, the Breakpad crash reporter asks the user if the user would like to send a crash report. If the user answers "yes!", then the Breakpad crash reporter collects data related to the crash, generates a crash report, and submits that crash report as an HTTP POST to Socorro. Socorro collects and saves the crash report, processes it, and provides an interface for aggregating, searching, and looking at crash reports.

Over the last year and a half, we've been working on a new infrastructure for Socorro and migrating the project to it. It was a massive undertaking and involved changing a lot of code and some architecture and then redoing all the infrastructure scripts and deploy pipelines.

On Thursday, March 28th, we pushed the button and switched to the new infrastructure. The transition was super smooth. Now we're on new infra!

This blog post talks a little about the old and new infrastructures and the work we did to migrate.

http://bluesock.org/~willkg/blog/mozilla/socorro_migration_2018.html

Gervase Markham: Top 50 DOS Problems Solved: Can I Have A Single Drive?

Q: I intend to upgrade from MS-DOS v3.3 to either DR DOS 6 or MS-DOS 5, both of which will allow me to have my 40MB hard disk configured as a single drive instead of being partitioned into twin 20MB drives. Am I right in thinking that to do this I will re-format my hard disk, and that I must first back up all the data? I dread doing this since I have almost 30MB on there.

A: First the bad news: yes, you will need to re-format your disk to take advantage of the ability to work with partitions greater than 32MB. However, backing up needn’t be as nasty a job as you think. But your question does beg another[0]: since backing up is going to be such a large job, it sounds as though you haven’t done it before.

… The most basic approach … would be to copy important data to a floppy disk, perhaps with the aid of a file compression utility such as LHA. If the worst happens, you simply reinstall applications from their original disks (or, much better still, back-ups of them) and copy your data back from floppy. [Or, you could use] a dedicated backup utility. My current favourite is Fastbak Plus (£110)[1].

32MB ought to be enough for everyone? How did that work out – 512-byte sectors and a 16-bit index?
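The arithmetic behind the joke, assuming the limit came from DOS addressing a partition's sectors with a 16-bit number:

$$
2^{16}\ \text{sectors} \times 512\ \frac{\text{bytes}}{\text{sector}} = 33{,}554{,}432\ \text{bytes} = 32 \times 2^{20}\ \text{bytes} = 32\ \text{MiB}
$$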

[0] No, it doesn’t – Ed.

[1] £210 in today’s money. For a backup program!

http://feedproxy.google.com/~r/HackingForChrist/~3/jXWMvnNDGuM/

Cameron Kaiser: 45.6.0 final available

45.7 will not have substantial changes and I don't anticipate doing a beta. However, one change I do intend to make is to mirror Mozilla's work on updating default settings, starting with layout paint delay. The rationale for delaying layout painting specifically was to wait for sufficient data to come through rather than guessing an incorrect layout with incomplete data that then has to be invalidated: without the delay, although the screen would be busier, the browser often would end up taking more total wall-clock time on wasted work. Now that data arrives faster on most people's systems today than in the days of dialup and low-speed DSL, it's time for these older settings from another age to be re-examined, and paint delay is probably the most visible one of those settings.

Stuff like that has long been part of the various unofficial Firefox "optimization guides" that circulate, including Erik's set for TenFourFox. I have generally avoided comment on his recommendations (except for a couple that I knew would be net negative for most users) because as far as I'm concerned, it's your computer and you can tune it as you like -- just don't file bug reports if you muck it up because some of those settings have undesirable side effects in edge cases. For that reason I have declined to move too far from the Firefox base settings because the browser out-of-the-box has to work for as many systems as possible in as many situations as possible, and one thing unique to us is we still do have a substantial minority of users using Power Macs on dialup networks. One user sticks out in my mind who is a missionary in the mountains north of Myanmar and completely reliant on the modem in his G4 mini. We don't want to unnecessarily tank these users with settings that are overly optimistic about bandwidth availability, so whatever setting Mozilla determines for Firefox users at large may not be the best fit for our legacy population.

In bug 1283302, Mozilla settled on 5ms for desktop users (which is actually smaller than a refresh tick, so near as I can determine it might as well be zero) and left Firefox Android at 250ms. Since we're not in the same processing class as current machines by a long shot and we do need to still support users with limited bandwidth, I think a safer setting will be 100ms, which as an otherwise arbitrary number seemed not to regress anything on the local machines. If you want to try this, go into about:config, create a numeric pref nglayout.initialpaint.delay if it does not already exist, and enter a value of 100. Optimally it might be nice to have such settings specific to each architecture build and tuned accordingly, but that's something to consider at a later time. If you have other reasonable recommendations for this setting, do post them in the comments, along with the specifications of the system and network you tried it on. I will consider other changes in future versions as Mozilla re-examines them internally.

Meanwhile, I'll be on a plane to Australia next week on what may be my last Spaceseat flight on Air New Zealand, which I loved. Before I do, however, I'll be stopping by my parents' house to look at the dual 2.5GHz G5 they use for uploading their church videos. My suspicion is the liquid cooling system blew. Currently I am sad.

http://tenfourfox.blogspot.com/2016/12/4560-final-available.html


Frédéric Wang: STIX Two in Gecko and WebKit |

On the 1st of December, the STIX Fonts project announced the release of STIX 2. If you have never heard of this project, it is described as follows:

The mission of the Scientific and Technical Information Exchange (STIX) font creation project is the preparation of a comprehensive set of fonts that serve the scientific and engineering community in the process from manuscript creation through final publication, both in electronic and print formats.

This sounds like a very exciting goal, but the way it has been pursued has made the STIX project infamous for its numerous delays, for its poor or confusing packaging, for delivering math fonts with too many bugs to be usable, for its lack of openness & communication, for its bad handling of third-party feedback & contribution…

Because of these laborious travels towards unsatisfactory releases, some snarky people claim that the project was actually named after Styx (


Andy McKay: Cycling around Hawaii |

Just because.

Day One: Waikoloa to Ocean View

I couldn't figure out a ride for the first day that was a decent distance (not too short and not too long) without making the other days harder. In the end I decided to just go for a long ride. That was probably a bad idea.

It started out wet and rainy with flood warnings, but as I got to Kona it stopped raining. I had a chance to stop at a wonderful beach for a snack.

By the afternoon, as I was getting near Captain Cook, it got hot and I hit the steep hill, and that was horrible. It floored me and I struggled at the end. I had to seriously recuperate at the top.

The views past Captain Cook, with the island stretching out, were fabulous, and then the next 30km cruised by.

But after 90km I started to hit a wall and slowed down and took frequent breaks. Arriving at Ocean View at 4pm, I was absolutely exhausted and dehydrated. Add in jet lag and it was quite a day.

Day Two: Ocean View to Volcano

I figured out I'd strained my Achilles the day before. It was raining. Really raining. There were flash flood warnings and the road was about to be closed. But my plan was to get to Volcano. So I did.

The first hour or two was the worst: heavy, pounding rain that formed rivers on the road. The brakes basically became useless and visibility was terrible. And my ankle hurt.

After about 2 hours I got to a police roadblock; they were about to shut the road due to flooding. They said "well, you can probably make it" through a deep puddle stretching across the road. So I did, while cars were turning back.

It let up a bit, but it did not stop for 6 hours, and as I climbed above 2,500ft in elevation it started to get colder. This was a long day.

Day Three: Volcano to Hilo

Short ride downhill into Hilo and then a rest day. I needed that to get some drugs, a support strap for my ankle and some relaxation. Oh, and it rained insanely hard for my whole ride, so this was a chance to dry out my clothes.

Day Four: Hilo to Waimea

Hands down the best day. After my rest I was feeling better and the support strap was helping my ankle. I left Hilo early in the morning and rode along the sea front. The road winds over gulches and past waterfalls, and has fabulous views. After 60km+ it turns left and climbs up the volcano towards Waimea.

What was already a fabulous ride got even better as it turned onto a winding back road past tree-lined farms. After a climb you break out of the trees into wide green fields with the snowcapped volcanoes towering in the distance. Just amazing.

As it turns out, about 90km is just about the right distance for me right now.

Day Five: Waimea to Waikoloa

You could do this straight downhill to Waikoloa (25km), but that would be boring. So instead I looped up to Hawi. Boy, was I glad I did; this was another highlight. A nice climb up a hill with fabulous views across Hawaii, followed by a wonderful roller coaster of slow downhill past lovely tree-lined roads, with views of Maui in the distance. A wonderful last day.

The remaining 40km back around to Waikoloa was boring and tiring.

And that's it. Five days, over 427km, around two volcanoes, one really sore achilles tendon and one flash flood. Would do again.

Thanks to Mozilla for being crazy enough to run a company meeting in Hawaii.


Mitchell Baker: Helen Turvey Joins the Mozilla Foundation Board of Directors |

This post was originally posted on the Mozilla.org website.

Today, we’re welcoming Helen Turvey as a new member of the Mozilla Foundation Board of Directors. Helen is the CEO of the Shuttleworth Foundation. Her focus on philanthropy and openness throughout her career makes her a great addition to our Board.

Throughout 2016, we have been focused on board development for both the Mozilla Foundation and the Mozilla Corporation boards of directors. Our recruiting efforts for board members have been geared towards building a diverse group of people who embody the values and mission that bring Mozilla to life. After extensive conversations, it is clear that Helen brings the experience, expertise and approach that we seek for the Mozilla Foundation Board.

Helen has spent the past two decades working to make philanthropy better, over half of that time working with the Shuttleworth Foundation, an organization that provides funding for people engaged in social change and helping them have a sustained impact. During her time with the Shuttleworth Foundation, Helen has driven the evolution from traditional funder to the current co-investment Fellowship model.

Helen was educated in Europe, South America and the Middle East and has 15 years of experience working with international NGOs and agencies. She is driven by the belief that openness has benefits beyond the obvious: that it offers huge value to education, economies and communities in both the developed and developing worlds.

Helen’s contribution to Mozilla has a long history: Helen chaired the digital literacy alliance that we ran in the UK in 2013 and 2014; she’s played a key role in re-imagining MozFest; and she’s been an active advisor to the Mozilla Foundation executive team during the development of the Mozilla Foundation ‘Fuel the Movement’ three-year plan.

Please join me in welcoming Helen Turvey to the Mozilla Foundation Board of Directors.

Mitchell

You can read Helen’s message about why she’s joining Mozilla here.

Background:

Twitter: @helenturvey


This Week In Rust: This Week in Rust 159 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

News & Blog Posts

- Redox released its first ISO image.

- Starting a new Rust project right, with error-chain.

- Reflections on rusting trust.

- Zero-cost abstractions in Rust.

- Building native macOS applications with Rust.

- ripgrep code review with focus on its design decisions and interesting implementation solutions.

- Taking TRust-DNS IntoFuture.

- WebVR coming to Servo: Architecture and latency optimizations.

- Three bytes and a space: or, Rust bugs, non-compliance, and how I learned to love IRC.

- I used to use pointers - now what? Common C pointer patterns, and what to do in Rust instead.

- Russian dolls and clean Rust code. Replacing nested pattern matching with and_then.

- 24 days of Rust - cargo subcommands.

- 24 days of Rust - structured logging.

- 24 days of Rust - environment variables.

- [survey] Crate evaluation user research survey.

Other Weeklies from Rust Community

- This week in Rust docs 33. Updates from the Rust documentation team.

- This week in TiKV 2016-12-05. TiKV is a distributed Key-Value database.

- This week in Ruma 2016-12-04. Ruma is a Matrix homeserver written in Rust.

Crate of the Week

This week's Crate of the Week is seahash, a statistically well-tested fast hash. Thanks to Vikrant Chaudhary for the suggestion! Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- [less easy] unicode-reverse: Fuzz testing. unicode-reverse is a Unicode-aware in-place string reverse function in Rust.

- [easy] tera: Use 64 bits for int/float. Tera is a template engine for Rust based on Jinja2/Django.

- [easy] tera: Fix include whitespace.

- [easy] tera: Adding tests (not unit test, the tester feature).

- [hard] tera: Add not to mean !.

- [hard] tera: Add a magical variable that dumps the context.

- [less easy] rayon: Parity with the Iterator trait. Rayon is a data parallelism library for Rust.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

93 pull requests were merged in the last week. This contains a good number of plugin-breaking changes.

- desugar UFCS in HIR

- AdtDef and TraitDef no longer carry type information

- Preparing for LLVM 4.0: Use string length instead of 0 terminator, Handle new DIFlag enum

- Rustc now emits a DWARF flag to help debuggers find the main entry point

- Avoid loading needless procedural macro dependencies

- HashMap uses displacement instead of initial bucket

- save-analysis redirects a module declaration to the start of the defining file

- More output with -Z incremental-info

- -Z incremental-dump-hash flag

- -Z mir-stats

- new option to dump target spec as JSON

- Refactor trait object representation

- Fuchsia support for std::process

- Caching of build script output

- impl items no longer wind up with multiple parents

- HIR: Separate signatures from function bodies

- Obligations are now evaluated in LIFO order

- Support ?Sized in where clauses

- New type_size_limit crate attribute

- target_feature attribute

- unmarked_api feature removed

- copy_from_slice(_) got faster for small slices

- String::split_off(..)

- show short multiline spans in full

- Support macro invocation paths (e.g. foo::bar!(..))

- Cargo will now correctly retry downloading in case of network error

- Cargo has release branches now

- Cargo: Fixed SSL paths (this broke downloading for some days in nightly)

New Contributors

- Clar Charr

- Theodore DeRego

- Xidorn Quan

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Allow intrinsics to be marked as safe, overriding the implicit unsafe from being in an extern block.

- Procedural macros.

New RFCs

- Default struct field values.

- Alloca for Rust. Add a builtin fn core::mem::reserve<'a, T>(elements: usize) -> StackSlice<'a, T> that reserves space for the given number of elements on the stack and returns a StackSlice<'a, T> to it which derefs to &'a [T].

Style RFCs

Style RFCs are part of the process for deciding on style guidelines for the Rust community and defaults for Rustfmt. The process is similar to the RFC process, but we try to reach rough consensus on issues (including a final comment period) before progressing to PRs. Just like the RFC process, all users are welcome to comment and submit RFCs. If you want to help decide what Rust code should look like, come get involved!

PRs:

Final comment period:

- boolean and arithmetic expressions.

- struct and union declarations.

- type aliases.

- match.

- #[macro_use].

- To indent empty lines or not?

Other notable issues:

Upcoming Events

- 12/8. Columbus Rust Society.

- 12/8. San Diego Rust.

- 12/12. Seattle Rust Meetup.

- 12/14. South Florida Rust: Intro to Rust.

- 12/14. Rust Community Team Meeting at #rust-community on irc.mozilla.org.

- 12/14. Rust Documentation Team Meeting at #rust-docs on irc.mozilla.org.

- 12/15. Rust Bay Area: Syn/Macros 1.1, Helix, and Binding C in OpenSSL.

- 12/17. South Florida Rust: Intro to Rust.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email the Rust Community Team for access.

fn work(on: RustProject) -> Money

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

Such large. Very 128. Much bits.

— @nagisa introducing 128-bit integers in Rust.

Thanks to leodasvacas for the suggestion.

Submit your quotes for next week!

This Week in Rust is edited by: nasa42, llogiq, and brson.

https://this-week-in-rust.org/blog/2016/12/06/this-week-in-rust-159/


Seif Lotfy: Playing with .NET (dotnet) and IronFunctions |

Again, in case you missed it, IronFunctions is an open-source, Lambda-compatible, on-premise, language-agnostic, serverless compute service.

While AWS Lambda only supports Java, Python and Node.js, IronFunctions allows you to use any language you desire by running your code in containers.

With Microsoft being one of the biggest players in open source and .NET going cross-platform, it was only right to add support for it in IronFunctions' fn tool.

TL;DR:

The following demonstrates a .NET function that takes in a URL for an image and generates an MD5 checksum hash for it:

Using dotnet with functions

Make sure you have downloaded and installed dotnet. Now create an empty dotnet project in the directory of your function:

dotnet new

By default, dotnet creates a Program.cs file with a Main method. To make it work with IronFunctions' fn tool, please rename it to func.cs.

mv Program.cs func.cs

Now change the code to do whatever magic you need it to do. In our case the code takes in a URL for an image and generates an MD5 checksum hash for it. The code is the following:

    using System;
    using System.IO;
    using System.Security.Cryptography;
    using System.Text;

    namespace ConsoleApplication
    {
        public class Program
        {
            public static void Main(string[] args)
            {
                // if nothing is being piped in, then exit
                if (!IsPipedInput())
                    return;

                // The request body (the image URL) arrives on stdin;
                // Trim() drops the trailing newline that echo adds.
                var input = Console.In.ReadToEnd().Trim();
                var bytes = DownloadRemoteImageFile(input);
                var hash = CreateChecksum(bytes);

                // Whatever is written to stdout becomes the function's response.
                Console.WriteLine(hash);
            }

            private static bool IsPipedInput()
            {
                try
                {
                    // Console.KeyAvailable throws when stdin is redirected,
                    // so getting past this line means input is interactive.
                    bool isKey = Console.KeyAvailable;
                    return false;
                }
                catch
                {
                    return true;
                }
            }

            private static byte[] DownloadRemoteImageFile(string uri)
            {
                var request = System.Net.WebRequest.CreateHttp(uri);
                using (var response = request.GetResponseAsync().Result)
                using (var stream = response.GetResponseStream())
                using (var ms = new MemoryStream())
                {
                    stream.CopyTo(ms);
                    return ms.ToArray();
                }
            }

            private static string CreateChecksum(byte[] data)
            {
                using (var md5 = MD5.Create())
                {
                    var hash = md5.ComputeHash(data);
                    var sBuilder = new StringBuilder();

                    // Loop through each byte of the hashed data
                    // and format each one as a hexadecimal string.
                    for (int i = 0; i < hash.Length; i++)
                    {
                        sBuilder.Append(hash[i].ToString("x2"));
                    }

                    // Return the hexadecimal string.
                    return sBuilder.ToString();
                }
            }
        }
    }

Note: IO with an IronFunction is done via stdin and stdout. This code

Using with IronFunctions

Let's first initialize our code so that it can be deployed with IronFunctions:

fn init /

Since IronFunctions relies on Docker to work (we will add rkt support soon) the

In our case we will use dotnethash as the

fn init seiflotfy/dotnethash

When running the command it will create the func.yaml file required by functions, which can be built by running:

Push to Docker

fn push

This will create a Docker image and push it to Docker.

Publishing to IronFunctions

To publish to IronFunctions run ...

fn routes create

where is (no surprise here) the name of the app, which can encompass many functions.

This creates a full path in the form of http://

In my case, I will call the app myapp:

fn routes create myapp

Calling

Now you can

fn call

or

curl http://:/r//

So in my case

echo http://lorempixel.com/1920/1920/ | fn call myapp /dotnethash

or

curl -X POST -d 'http://lorempixel.com/1920/1920/' http://localhost:8080/r/myapp/dotnethash

What now?

You can find the whole code in the examples on GitHub. Feel free to join the Iron.io Team on Slack.

Feel free to write your own examples in any of your favourite programming languages such as Lua or Elixir and create a PR :)
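Taking up that invitation, here is a rough sketch of the same function in Python, assuming the same contract the .NET version relies on: the image URL arrives on stdin, and whatever is printed to stdout becomes the response. It uses only the standard library; the names md5_hex, fetch and main are illustrative, and packaging it for fn (Dockerfile, func.yaml) is not shown.

```python
import hashlib
import sys
import urllib.request


def md5_hex(data: bytes) -> str:
    """Lowercase hex MD5 digest, equivalent to the C# ToString("x2") loop."""
    return hashlib.md5(data).hexdigest()


def fetch(url: str, timeout: float = 30.0) -> bytes:
    """Download the raw bytes at url (counterpart of DownloadRemoteImageFile)."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def main() -> None:
    """Read a URL from stdin and print the MD5 of the content it points to."""
    if sys.stdin.isatty():
        # Nothing is being piped in, so exit (like IsPipedInput in the C# code).
        return
    url = sys.stdin.read().strip()  # strip the newline that echo appends
    if url:
        print(md5_hex(fetch(url)))
```

To use it as the function's entry point, the container could run something like python -c "import func; func.main()", assuming the file is saved as func.py (a hypothetical name). hashlib.md5(...).hexdigest() already yields the two-hex-digits-per-byte string that the C# version builds by hand.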

http://seif.codes/playing-with-net-dotnet-and-ironfunctions/


The Mozilla Blog: Why I’m joining Mozilla’s Board, by Helen Turvey |

Today, I’m very honored to join Mozilla’s Board.

Firefox is how I first got in contact with Mozilla. The browser was my first interaction with free and open source software. I downloaded it in 2004, not with any principled stance in mind, but because it was better, faster, more secure and allowed me to determine how I used it, with add-ons and so forth.

Helen Turvey joins the Mozilla Foundation Board

My love of openness began as I saw its direct implications for philanthropy and for diversity: a move from a scarcity model to an abundance model for the information and data we need to make decisions in our lives. The web as a public resource is precious, and we need to fight to keep it an open platform: decentralised, interoperable, secure and accessible to everyone.

Mozilla is community driven, and it is my belief that this makes it a more robust organisation, one that bends and evolves instead of crumbling when facing the challenges set before it. Whilst we need to keep working towards a healthy internet, we also need to learn to behave in a responsible manner. Bringing a culture of creating, not just consuming; of questioning, not just believing; of respecting and learning, to the citizens of the web remains front and centre.

I am passionate about people, and about creating spaces for them to evolve, grow and lead in the roles in which they feel driven to effect change. I am interested in all aspects of Mozilla’s work, but in my new role as a Mozilla Foundation Board member I will focus on helping to think through how Mozilla can strategically and tactically support leaders, and what value we can bring to the community that is working to protect and evolve the web.

For the last decade I have run the Shuttleworth Foundation, a philanthropic organisation that looks to drive change through open models. The FOSS movement has created widely used software and million-dollar businesses, using collaborative development approaches and open licences. This model is well established for software, but not for education, philanthropy, hardware or social development.

We try to understand whether, and how, applying the ethos, processes and licences of the free and open source software world to areas outside of software can add value. Can openness help provide key building blocks for further innovation? Can it encourage more collaboration, or help good ideas spread faster? It is by asking these questions that I have learnt about effectiveness and change and hope to bring that along to the Mozilla Foundation Board.

https://blog.mozilla.org/blog/2016/12/05/why-im-joining-mozillas-board-by-helen-turvey/


The Mozilla Blog: Helen Turvey Joins the Mozilla Foundation Board of Directors |



Dustin J. Mitchell: Connecting Bugzilla to TaskWarrior |

I’ve mentioned before that I use TaskWarrior to organize my life. Mostly for work, but for personal stuff too (buy this, fix that thing around the house, etc.)

At Mozilla, at least in the circles I run in, the central work queue is Bugzilla. I have bugs assigned to me, suggesting I should be working on them. And I have reviews or “NEEDINFO” requests that I should respond to. Ideally, instead of serving two masters, I could just find all of these tasks represented in TaskWarrior.

Fortunately, there is an integration called BugWarrior that can do just this! It can be a little tricky to set up, though. So in hopes of helping the next person, here’s my configuration:

    [general]
    targets = bugzilla_mozilla, bugzilla_mozilla_respond
    annotation_links = True
    log.level = WARNING
    legacy_matching = False

    [bugzilla_mozilla]
    service = bugzilla
    bugzilla.base_uri = bugzilla.mozilla.org
    bugzilla.ignore_cc = True
    # assigned
    bugzilla.query_url = https://bugzilla.mozilla.org/query.cgi?list_id=13320987&resolution=---&emailtype1=exact&query_format=advanced&emailassigned_to1=1&email1=dustin%40mozilla.com&product=Taskcluster
    add_tags = bugzilla
    project_template = moz
    description_template = http://bugzil.la/
    bugzilla.username = USERNAME
    bugzilla.password = PASSWORD

    [bugzilla_mozilla_respond]
    service = bugzilla
    bugzilla.base_uri = bugzilla.mozilla.org
    bugzilla.ignore_cc = True
    # ni?, f?, r?, not assigned
    bugzilla.query_url = https://bugzilla.mozilla.org/query.cgi?j_top=OR&list_id=13320900&emailtype1=notequals&emailassigned_to1=1&o4=equals&email1=dustin%40mozilla.com&v4=dustin%40mozilla.com&o7=equals&v6=review%3F&f8=flagtypes.name&j5=OR&o6=equals&v7=needinfo%3F&f4=requestees.login_name&query_format=advanced&f3=OP&bug_status=UNCONFIRMED&bug_status=NEW&bug_status=ASSIGNED&bug_status=REOPENED&f5=OP&v8=feedback%3F&f6=flagtypes.name&f7=flagtypes.name&o8=equals
    add_tags = bugzilla, respond
    project_template = moz
    description_template = http://bugzil.la/
    bugzilla.username = USERNAME
    bugzilla.password = PASSWORD

Out of the box, BugWarrior's Bugzilla service pulls in bugs using its own default criteria, but they are not very fine-grained.

The bugzilla.query_url option (along with bugzilla.ignore_cc) overrides those defaults so that only the bugs matching the query are synced.

Sadly, this does, indeed, require me to include my Bugzilla password in the configuration file. API token support would be nice but it’s not there yet – and anyway, that token allows everything the password does, so not a great benefit.

The query URLs are easy to build if you follow this one simple trick:

- Use the Bugzilla search form to create the query you want.

- You will end up with a URL containing buglist.cgi.

- Change that to query.cgi and put the whole URL in BugWarrior's bugzilla.query_url parameter.
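The buglist.cgi to query.cgi swap in those steps is mechanical, so it can be scripted. Below is a small hypothetical Python helper (to_query_url is not part of BugWarrior) that keeps the query string intact and only changes the last path segment.

```python
from urllib.parse import urlsplit, urlunsplit


def to_query_url(search_url: str) -> str:
    """Turn a Bugzilla search-results URL into a value for bugzilla.query_url.

    Swaps buglist.cgi for query.cgi and leaves the query string untouched.
    """
    parts = urlsplit(search_url)
    if not parts.path.endswith("/buglist.cgi"):
        raise ValueError("expected a .../buglist.cgi URL from the search form")
    path = parts.path[: -len("buglist.cgi")] + "query.cgi"
    return urlunsplit(parts._replace(path=path))
```

Raising on anything other than buglist.cgi is a deliberate choice: pasting the wrong URL (a single bug page, say) fails loudly instead of silently producing a query BugWarrior cannot use.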

I have two stanzas so that I can assign the respond tag to bugs for which I am being asked for review or needinfo.

When I first set this up, I got a lot of errors about duplicate tasks from BugWarrior, because there were bugs matching both stanzas.

Write your queries carefully so that no two stanzas will match the same bug.

In this case, I’ve excluded bugs assigned to me from the second stanza – why would I be reviewing my own bug, anyway?
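One way to catch that problem before BugWarrior does is to compare the bug IDs that each stanza's query returns. The sketch below assumes you have already collected those IDs per stanza (for example, by running each query in the browser); the function name and the input shape are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def overlapping_bugs(stanzas: Dict[str, Iterable[int]]) -> Dict[int, List[str]]:
    """Return {bug_id: [stanza names]} for bugs matched by more than one stanza."""
    matched_by: Dict[int, List[str]] = defaultdict(list)
    for stanza, bug_ids in stanzas.items():
        # set() so a query that lists the same bug twice is not a false alarm
        for bug_id in set(bug_ids):
            matched_by[bug_id].append(stanza)
    return {bug: names for bug, names in matched_by.items() if len(names) > 1}
```

With made-up IDs, overlapping_bugs({"bugzilla_mozilla": [101, 102], "bugzilla_mozilla_respond": [102, 103]}) flags bug 102 as matched by both stanzas. An empty result means every bug lands in exactly one stanza, which is the condition that avoids the duplicate-task errors.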

I have a nice little moz report that I use in TaskWarrior.

Its output looks like this:

    ID Pri Urg Due Description
    98 M 7.09 2016-12-04 add a docs page or blog post
    58 H 18.2 http://bugzil.la/1309716 Create a framework for displaying team dashboards
    96 H 7.95 http://bugzil.la/1252948 cron.yml for periodic in-tree tasks
    91 M 6.87 blog about bugwarrior config
    111 M 6.71 guide to microservices, to help folks find the services they need to read th
    59 M 6.08 update label-matching in taskcluster/taskgraph/transforms/signing.py to use
    78 M 6.02 http://bugzil.la/1316877 Allow `test-sets` in `test-platforms.yml`
    92 M 5.97 http://bugzil.la/1302192 Merge android-test and desktop-test into a "test" k
    94 M 5.96 http://bugzil.la/1302804 Ensure that tasks in a taskgraph do not have duplic

http://code.v.igoro.us/posts/2016/12/taskwarrior-bugwarrior.html


Cameron Kaiser: 45.6.0b1 available, plus sampling processes for fun and profit |

There is now apparently a potential workaround for those of you still having trouble getting the default search engine to stick. I still don't have a good theory for what's going on, however, so if you want to try the workaround please read my information request and post the requested information about your profile before and after to see if the suggested workaround affects that.

I will be in Australia for Christmas and New Year's visiting my wife's family, so additional development is likely to slow over the holidays. Higher-priority items coming up will be implementing user agent support in the TenFourFox prefpane, adding some additional HTML5 features and possibly excising telemetry from garbage and cycle collection, but probably for 45.8 instead of 45.7. I'm also looking at adding some PowerPC-specialized code sections to the platform-independent Ion code generator to see if I can crank up JavaScript performance some more, and possibly some additional work on the AltiVec VP9 codec for VMX-accelerated intraframe prediction. I'm also considering adding AltiVec support to the Theora (VP3) decoder; even though its much lighter processing requirements yield adequate performance on most supported systems, it could be a way to get higher resolution video workable on lower-spec G4s.

One of the problems with our use of a substantially later toolchain is that (in particular) debugging symbols from later compilers are often gibberish to older profiling and analysis tools. This is why, for example, we have a customized gdb, or debugging at even a basic level wouldn't be possible. If you're really a masochist, go ahead and compile TenFourFox with the debug profile and then try to use a tool like sample or vmmap, or even Shark, to analyze it. If you're lucky, the tool will just freeze. If you're unlucky, your entire computer will freeze or go haywire. I can do performance analysis on a stripped release build, but this yields sample backtraces which are too general to be of any use. We need some way of getting samples off a debug build but not converting the addresses in the backtrace to function names until we can transfer the samples to our own tools that do understand these later debugging symbols.
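Recording raw addresses now and naming them later is essentially offline symbolication. Here is a minimal sketch of the "later" half. It assumes a table of (start address, function name) pairs has been extracted by a tool that does understand the newer debug information. The table, the function name and the addresses are all made up, and this is a generic technique, not how sample or Shark work internally.

```python
import bisect
from typing import List, Sequence, Tuple

# Hypothetical symbol table entry: (start_address, function_name).
Symbol = Tuple[int, str]


def symbolicate(backtrace: Sequence[int], symbols: List[Symbol]) -> List[str]:
    """Resolve raw sampled addresses to 'name+0xoffset' strings, offline.

    `symbols` must be sorted by start address. Each address is attributed to
    the nearest symbol starting at or below it; symbol sizes are not known,
    so an address past the end of the last function is still attributed to it.
    """
    starts = [start for start, _ in symbols]
    out = []
    for addr in backtrace:
        i = bisect.bisect_right(starts, addr) - 1
        if i < 0:
            out.append(hex(addr))  # below the first known symbol: leave raw
        else:
            start, name = symbols[i]
            out.append(f"{name}+{addr - start:#x}")
    return out
```

With symbols [(0x1000, "main"), (0x1200, "interpret"), (0x2000, "process_event")], the made-up backtrace [0x1010, 0x2004, 0x800] resolves to ["main+0x10", "process_event+0x4", "0x800"].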

Apple's open source policy is problematic -- they'll open source the stuff they have to, and you can get at some components like the kernel this way, but many deep dark corners are not documented and one of those is how tools like /usr/bin/sample and Shark get backtraces from other processes. I suspect this is so that they can keep the interfaces unstable and avoid abetting the development of applications that depend on any one particular implementation. But no one said I couldn't disassemble the damn thing. So let's go.

(NB: the below analysis is based on Tiger 10.4.11. It is possible, and even likely, the interface changed in Leopard 10.5.)

With Depeche Mode blaring on the G5, because Dave Gahan is good for debugging, let's look at /usr/bin/sample since it's a much smaller nut to crack than Shark.

    % otool -L /usr/bin/sample
    /usr/bin/sample:
    /System/Library/Frameworks/Foundation.framework/Versions/C/Foundation (compatibility version 300.0.0, current version 567.29.0)
    /System/Library/PrivateFrameworks/vmutils.framework/Versions/A/vmutils (compatibility version 1.0.0, current version 93.1.0)
    /usr/lib/libgcc_s.1.dylib (compatibility version 1.0.0, current version 1.0.0)
    /usr/lib/libSystem.B.dylib (compatibility version 1.0.0, current version 88.3.4)

Interesting! A private framework! Let's see what Objective-C calls we might get (which are conveniently text strings).

    % strings /usr/bin/sample |& more
    __dyld_make_delayed_module_initializer_calls
    __dyld_image_count
    __dyld_get_image_name
    __dyld_get_image_header
    __dyld_NSLookupSymbolInImage
    __dyld_NSAddressOfSymbol
    libobjc
    __objcInit
    __dyld_mod_term_funcs
    release
    printStatistics
    writeOutput:append:
    stopSampling
    sampleForDuration:interval:
    preloadSymbols
    initWithPid:symbolRichBinaries:
    alloc
    intValue
    UTF8String
    removeObjectAtIndex:
    objectAtIndex:
    count
    indexOfObject:
    arrayWithArray:
    arguments
    processInfo
    forceStop
    NSSampler
    NSMutableArray
    NSProcessInfo
    NSAutoreleasePool
    Interrupted
    Not currently sampling -- exiting immediately.
    -wait
    -mayDie
    -file
    Waiting for '%s' to appear...
    %s appeared.
    %s cannot find a process you have access to which has a name like '%s'
    sample
    Sampling process %d each %u msecs %u times
    syntax: sample
    options: {-mayDie} {-wait} {-subst
    -file filename specifies where results should be written
    -mayDie reads symbol information right away
    -wait wait until the process named (usually by partial name) exists, then start sampling
    -subst can be used to replace a stripped executable by another
    Note that the program must have been started using a full path, rather than a relative path, for analysis to work, or that the -subst option must be specified
    setObject:forKey:
    dictionary
    autorelease
    mutableCopy
    NSMutableDictionary
    %s cannot examine process %d for unknown reasons, even though it appears to exist.
    %s cannot examine process %d because the process does not exist.
    %s cannot examine process %d (with name like %s) because it no longer appears to be running.
    %s cannot examine process %d because you do not have appropriate privileges to examine it.
    %s cannot examine process %d for unknown reasons.
    -subst

Most of that looks fairly straightforward Objective-C stuff, but what's NSSampler? That's not documented anywhere. Magic Hat can't find it either with the default libraries, but it does if we add those private frameworks. If I use class-dump (3.1.2 works with 10.4), I can get a header file with its methods and object layout. (The header file it generates is usually better than Magic Hat's since Magic Hat sorts things in alphabetical rather than memory order, which will be problematic shortly.) Edited down, it looks like this. (I added the byte offsets, which are only valid for the 32-bit PowerPC OS X ABI.)

@interface NSSampler : NSObject

/*

{

00 BOOL _stop;

04 BOOL _stopped;

08 unsigned int _task;

12 int _pid;

16 double _duration;

24 double _interval;

32 NSMutableArray *_sampleData;

36 NSMutableArray *_sampleTimes;

40 double _previousTime;

48 unsigned int _numberOfDataPoints;

52 double _sigma;

60 double _max;

68 unsigned int _sampleNumberForMax;

72 ImageSymbols *_imageSymbols;

76 NSDictionary *_symbolRichBinaryMappings;

80 BOOL _writeBadAddresses;

84 TaskMemoryCache *_tmc;

88 BOOL _stacksFixed;

92 BOOL _sampleSelf;

96 struct backtraceMagicNumbers _magicNumbers;

}

*/

- (void) _cleanupStacks;

- (void) _initStatistics;

- (void) _makeHighPriority;

- (void) _makeTimeshare;

- (void) _runSampleThread: (id) parameter1;

- (void) dealloc;

- (void) finalize;

- (void) forceStop;

- (void) getStatistics: (void*) parameter1;

- (id) imageSymbols;

- (id) initWithPid: (int) parameter1;

- (id) initWithPid: (int) parameter1 symbolRichBinaries: (id) parameter2;

- (id) initWithSelf;

- (void) preloadSymbols;

- (void) printStatistics;

- (id) rawBacktraces;

- (void) sampleForDuration2: (double) parameter1 interval: (double) parameter2;

- (void) sampleForDuration: (unsigned int) parameter1 interval: (unsigned int) parameter2;

- (int) sampleTask;

- (void) setImageSymbols: (id) parameter1;

- (void) startSamplingWithInterval: (unsigned int) parameter1;

- (void) stopSampling;

- (id) stopSamplingAndReturnCallNode;

- (void) writeBozo;

- (void) writeOutput: (id) parameter1 append: (char) parameter2;

@end

Okay, so now we know what methods are there. How does one call this thing? Let's move to the disassembler. I'll save you my initial trudging through the machine code and get right to the good stuff. I've annotated critical parts below from stepping through the code in the debugger.

% otool -tV /usr/bin/sample

/usr/bin/sample:

(__TEXT,__text) section

00002aa4 or r26,r1,r1 << enter

00002aa8 addi r1,r1,0xfffc

00002aac rlwinm r1,r1,0,0,26

00002ab0 li r0,0x0

00002ab4 stw r0,0x0(r1)

:

:

:

00003260 b 0x3310

00003264 bl 0x3840 ; symbol stub for: _getgid

00003268 bl 0x37d0 ; symbol stub for: _setgid

This looks like something that's trying to get at a process. Let's see what's here.

0000326c lis r3,0x0

00003270 or r4,r30,r30

00003274 addi r3,r3,0x3b9c

00003278 or r5,r29,r29

0000327c or r6,r26,r26

00003280 bl 0x37c0 ; symbol stub for: _printf$LDBL128 // "Sampling process ..."

00003284 lbz r0,0x39(r1)

00003288 cmpwi cr7,r0,0x1

0000328c bne+ cr7,0x32a0 // jumps to 32a0

:

:

:

000032a0 lis r4,0x0

000032a4 lwz r3,0x0(r31)

000032a8 or r5,r25,r25

000032ac lwz r4,0x5010(r4) // 0x399c "sampleForDuration:..."

000032b0 or r6,r23,r23

000032b4 bl 0x3800 ; symbol stub for: _objc_msgSend

000032b8 lis r4,0x0

000032bc lwz r3,0x0(r31)

000032c0 lwz r4,0x500c(r4) // 0x946ba288 "stopSampling"

000032c4 bl 0x3800 ; symbol stub for: _objc_msgSend

000032c8 lis r4,0x0

000032cc lwz r3,0x0(r31)

000032d0 lwz r4,0x5008(r4) // 0x3978 "writeOutput:..."

000032d4 or r5,r22,r22

000032d8 li r6,0x0

000032dc bl 0x3800 ; symbol stub for: _objc_msgSend

That seems simple enough. The program allocates an NSSampler object, (we assume) initializes it with [sampler initWithPid:], calls [sampler sampleForDuration:interval:], calls [sampler stopSampling], and then calls [sampler writeOutput:append:] to write out the result.

This is not what we want to do, however. What I didn't see in either the disassembly or the class description was an explicit step that converts addresses to symbols, which is the step we want to avoid. We might well suspect that -writeOutput:append: is doing that, and indeed, if we put together a simple-minded program that makes these calls as sample does, it freezes when we try to write the output. We want to get at the raw addresses instead, but Apple doesn't provide any getter for those tantalizing NSMutableArrays containing the sample data.

Unfortunately for Apple, class-dump gave us the structure of the NSSampler object (recall that Objective-C objects are really just structs with delusions of grandeur), and conveniently those object pointers are right there, so we can pull them out directly! Since they're just NSMutableArrays, hopefully they're smart enough to display themselves. Let's see. (In the code below, replace XXX with the PID of the process you wish to spy on.)

Drumroll please.

/* gcc -g -o samplemini samplemini.m \

-F/System/Library/PrivateFrameworks \

-framework Cocoa -framework CHUD \

-framework vmutils -lobjc */

#include <Cocoa/Cocoa.h>

#include "NSSampler.h"

int main(int argc, char **argv) {

NSSampler *sampler;

NSMutableArray *sampleData;

NSMutableArray *sampleTimes;

unsigned char *base; // raw byte view of the NSSampler object

unsigned int count;

NSAutoreleasePool *shutup = [[NSAutoreleasePool alloc] init];

sampler = [[NSSampler alloc] initWithPid:XXX]; // you provide the PID

[sampler sampleForDuration:10 interval:10]; // 10 seconds, 10 msec

[sampler stopSampling];

// break into the NSSampler struct (offsets valid only for 32-bit PowerPC)

base = (unsigned char *)sampler;

count = *(unsigned int *)(base + 48); // _numberOfDataPoints

fprintf(stdout, "count = %u\n", count);

sampleData = *(NSMutableArray **)(base + 32); // _sampleData

sampleTimes = *(NSMutableArray **)(base + 36); // _sampleTimes

fprintf(stdout, "%s", [[sampleData description] UTF8String]);

fprintf(stdout, "%s", [[sampleTimes description] UTF8String]);

[sampler release]; // never call -dealloc directly

[shutup release]; // drain the autorelease pool

return 0;

}

count = 519

(

,

,

,

:

:

:

)(

0.01796096563339233,

0.01785099506378174,

0.01814299821853638,

0.01780200004577637,

:

:

:

)

We now have the raw backtraces and the timings, in fractions of a second. There is obviously much more we can do with this, and since my first experiment I've improved the process further, but this suffices for explaining the basic notion. In a future post we'll look at how to turn those addresses into actually useful function names, mostly because I have a very hacky setup for that right now and I want to refine it a bit more. :) The basic notion is to get the map of where dyld loaded each library in memory, then work out which function was running from the sampled address's offset relative to the start of the library that contains it. /usr/bin/vmmap would normally be the tool we'd employ to do this, but it barfs on TenFourFox too. Fortunately our custom gdb7 can get such a map, at least on a running process. More on that later.

One limitation is that NSSampler doesn't seem able to take samples more often than every 15ms or so from a running TenFourFox process, even if you ask. I'm not sure yet why, since other processes show substantially less overhead, though it could be thread-related. Also, even though NSSampler accepts an interval argument, it grabs samples as fast as it can no matter what that interval is. When run against Magic Hat as a test, it grabbed them as often as every 0.1ms, so stand by for lots of data!

Incidentally, this process is not apparently what Shark does; Shark uses the later PerfTool framework and an object called PTSampler to do its work instead of vmutils. Although it has analogous methods, the structure of PTSampler is rather more complex than NSSampler and I haven't fully explored its depths. Nevertheless, when it works, Shark can get much more granular samples of processor activity than NSSampler, so it might be worth looking into for a future iteration of this tool. For now, I can finally get backtraces I can work with, and as a result, hopefully some very tricky problems in TenFourFox might get actually solved in the near future.

http://tenfourfox.blogspot.com/2016/12/4560b1-available-plus-sampling.html


Christian Heilmann: Taking a look behind the scenes before publicly dismissing something |

Lately I’ve started a new thing: watching “behind the scenes” features of movies I didn’t like. At first this happened by chance (YouTube autoplay, to be precise), but now I do it deliberately and it is fascinating.

Van Helsing to me bordered on the unwatchable, but as you can see there are a lot of reasons for that.

When doing that, one thing becomes clear: even if you don’t like something — *it was done by people*. People who had fun doing it. People who put a lot of work into it. People who — for a short period of time at least — thought they were part of something great.

That the end product is flawed or lamentable might not even be their fault. Many a good movie was ruined in the cutting room or hindered by censorship.

Hitchcock’s masterpiece Psycho almost didn’t make it to the screen because it showed a toilet being flushed. Other movies are watered down to get a rating that is more suitable for those who spend the most in cinemas: teenagers. Sometimes it is about keeping the running time of the movie to one that allows for just the right amount of ads to be shown when aired on television.

Take for example Halle Berry as Storm in X-Men. Her “What happens to a toad when it gets struck by lightning? The same thing that happens to everything else.” in her battle with Toad is generally seen as one of the cheesiest and most pointless lines:

This was a problem with cutting. Originally, the line was a comeback to Toad, who used a version of it as his tagline throughout the movie:

However, as it turns out, that was meant to be the punch line to a running joke in the movie. Apparently, Toad had this thing that multiple times throughout the movie, he would use the line ‘Do you know what happens when a Toad…’ and whatever was relevant at the time. It was meant to happen several times throughout the movie and Storm using the line against him would have actually seemed really witty. If only we had been granted the context.

In many cases this extra knowledge doesn’t change the fact that I don’t like the movie. But it makes me feel differently about it. It makes my criticism more nuanced. It makes me realise that a final product is the result of many changes and voices and power being wielded, and that it isn’t the fault of the actors or sometimes even the director.

And it is arrogant and hurtful of me to viciously criticise a product without knowing what went into it. It is easy to do. It is sometimes fun to do. It makes you look like someone who knows their stuff and is berating bad quality products. But it is prudent to remember that people are behind things you criticise.

Let’s take this back to the web for a moment. Yesterday I had a quick exchange on Twitter that reminded me of this approach of mine.

- Somebody said people write native apps because a certain part of the web stack is broken.

- Somebody else answered that if you want to write apps that work you shouldn’t even bother with JavaScript in the first place.

- I replied that this makes no sense and is not helping the conversation about the broken technology, and that it is overly dismissive and hurtful.

- The person then admitted knowing nothing about app creation, but was pretty taken aback by me saying what he did was hurtful instead of just dismissive.

But it was. And it is hurtful. Right now JavaScript is hot. JavaScript is relatively easy to learn, and the development environment you need for it is free; in many cases a browser is enough. This makes it a great opportunity for someone new to enter our market. As a matter of fact, I know people who are doing exactly that right now, with JavaScript courses paid for by the unemployment office to raise their value in the market and help them find a job.

Now imagine this person seeing this exchange. Hearing a developer relations person who worked for the largest and coolest companies flat out stating that what you’re trying to get your head around right now is shit. Do you think you’ll feel empowered? I wouldn’t.