
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.



Robert Kaiser: I Want an Internet of Humans |

Some awesome person or group of people wrote a great dialog into Star Wars Episode I, peaking in Yoda's "Fear is the path to the dark side. Fear leads to anger. Anger leads to hate. Hate leads to suffering." - And so true this is. Think about it.

People worry about securing their well-being, about being able to live the life they find worth living (including their jobs), and about knowing what to expect of today and tomorrow. When this fear is nurtured, it grows and leads to anger about anything that seems to threaten it. They react hatefully to anyone just seeming to support those perceived threats. And those targeted by that hate hurt and suffer, start to fear the "haters", and go through the cycle from the other side. And in that climate, the basic human uneasy feeling of "life for me is mostly OK, so any change and anything different is something to fear" falls onto fertile ground and grows into massive metathesiophobia (fear of change), and things like racism, homophobia, xenophobia, hate of other religions, and all kinds of other demons rise up.

Those are all deeply rooted in sincere, common human emotions (maybe even instincts) that we can live with, overcome and even turn around into e.g. embracing infinite diversity in infinite combinations as in Star Trek, or we can go and shove them away into a corner of our existence, not decomposing them at their basic stage, and letting them grow until they are large enough that they drive our thinking, our personality - and make us easy to influence by people talking to them. And that works well both for the fears that e.g. some politicians spread and play with and for the fears of their opponents. Even the fear of hate and fear taking over is not excluded from this - on the contrary, it can fire up otherwise loving humans into going fully against what they actually want to be.

That said, when a human stands across from another human and looks in his or her face, looks into their eyes, as long as we still realize there is a feeling, caring other person on the receiving end of whatever we communicate, it's often harder to start into this circle. If we are already deep into the fear and hate, and in some other circumstances, this may not always be true, but in a lot of cases it is.

On the Internet, not so much. We interact with and through a machine, see an "account" on the other end, remove all the context of what was said before and after, of the tone of voice and body language, of what surroundings others are in, we reduce to a few words that are convenient to type or what the communication system limits us to - and we go for whatever gives us the most attention. Because we don't actually feel like we interact with other real humans, it's mostly about what we get out of it. A lot of likes, reshares, replies, interactions. It helps that the services we use maximize whatever their KPIs are rather than optimizing for what people actually want - after all, they want to earn money and that means having a lot of activity, and making people happy is not an actual goal, at best a wishful side effect.

We need to change that. We need to make social media actually social again (this talk by Chris Heilmann is really worth watching). We need to spread love ("make Trek, not Wars" in a tongue-in-cheek kind of way, not meaning negativity towards any franchise, but thinking about meanings and how we can make things better for our neighbors, our community, our world), not even hate the fear or fear the hate (which leads back into the circle), but analyze it, take it seriously and break it down. If we understand it, know how to deal with it, but do not let it overcome us, fear can even be healthy - as another great screenwriter put it, "Fear only exists for one purpose: To be conquered". That is where we need to get ourselves, and where we need to help those other humans end up who spread hate and unreflected fear - or act out of it. Not by hating them back, but by trying to understand and help them.

We need to see the people, the humans, behind what we read on the Internet (I deeply recommend for you to watch this very recent talk by Erika Baker as well). I don't see it as a "Crusade against Internet hate" as mentioned in the end of that talk, but more as a "Rally for Internet love" (unfortunately, some people would ridicule that wording but I see it as the love of humanity, the love for the human being inside each and every one of us). I'm always finding it mind-blowing that every single person I see around me, that reads this, that uses some software I helped with, and every single other person on this planet (or in its orbit, there are none out further at this time as far as I know), is a full, thinking, feeling, caring human being. Every one of those is different, every one of those has their own thoughts and fears that need to be addressed and that we need to address. And every one of those wants to be loved. And they should be. No matter who they voted for. No matter if they are a president-elect or a losing candidate. We don't need to agree with everything they are saying. But their fears should be addressed and conquered. And yes, they should be loved. Their differences should be celebrated and their commonalities embraced at the same time. Yes, that's possible, think about it. Again, see the philosophy of infinite diversity in infinite combinations.

I want an Internet that connects those humans, brings them closer together, makes them understand each other more, makes them love each other's humanity. I don't care how many "things" we connect to the Internet, I care that the needs and feelings of humans and their individual and shared lives improve. I care that their devices and gadgets are their own, help their individuality, and help them embrace other humans (not treat them as accounts and heaps of data to be analyzed and sold stuff to). I want everyone to see that everyone else is (just) human, and spread love to or at least embrace them as humans. Then the world, the humans in it, and myself, can make it out of the difficult times and live long and prosper in the future.

I want an Internet of humans.

We all, me, you can start creating that in how we interact with each other on social networks and other places on the web and even in the real world, and we can build it into whatever work we are doing.

I want an Internet of humans.

Can, will, you help?

http://home.kairo.at/blog/2016-12/i_want_an_internet_of_humans

|

|

Mike Hoye: William Gibson Overdrive |

From William Gibson’s “Spook Country”:

She stood beneath Archie’s tail, enjoying the flood of images rushing from the arrowhead fluke toward the tips of the two long hunting tentacles. Something about Victorian girls in their underwear had just passed, and she wondered if that was part of Picnic at Hanging Rock, a film which Inchmale had been fond of sampling on DVD for preshow inspiration. Someone had cooked a beautifully lumpy porridge of imagery for Bobby, and she hadn’t noticed it loop yet. It just kept coming.

And standing under it, head conveniently stuck in the wireless helmet, let her pretend she wasn’t hearing Bobby hissing irritably at Alberto for having brought her here.

It seemed almost to jump, now, with a flowering rush of silent explosions, bombs blasting against black night. She reached up to steady the helmet, tipping her head back at a particularly bright burst of flame, and accidentally encountered a control surface mounted to the left of the visor, over her cheekbone. The Shinjuku squid and its swarming skin vanished.

Beyond where it had been, as if its tail had been a directional arrow, hung a translucent rectangular solid of silvery wireframe, crisp yet insubstantial. It was large, long enough to park a car or two in, and easily tall enough to walk into, and something about these dimensions seemed familiar and banal. Within it, too, there seemed to be another form, or forms, but because everything was wireframed it all ran together visually, becoming difficult to read.

She was turning, to ask Bobby what this work in progress might become, when he tore the helmet from her head so roughly that she nearly fell over.

This left them frozen there, the helmet between them. Bobby’s blue eyes loomed owl-wide behind diagonal blondness, reminding her powerfully of one particular photograph of Kurt Cobain. Then Alberto took the helmet from them both. “Bobby,” he said, “you’ve really got to calm down. This is important. She’s writing an article about locative art. For Node.”

“Node?”

“Node.”

“The fuck is Node?”

I just finished building that. A poor man’s version of that, at least – there’s more to do, but you can stand it up in a couple of seconds and it works; a Node-based Flyweb discovery service that serves up a discoverable VR environment.

It was harder than I expected – NPM and WebVR are pretty uneven experiences from a novice web-developer’s perspective, and I have exciting opinions about the state of the web development ecosystem right now – but putting that aside: I just pushed the first working prototype up to Github a few minutes ago. It’s crude, the code’s ugly but it works; a 3D locative virtual art gallery. If you’ve got the right tools and you’re standing in the right place, you can look through the glass and see another world entirely.

Maybe the good parts of William Gibson’s visions of the future deserve a shot at existing too.

http://exple.tive.org/blarg/2016/12/02/william-gibson-overdrive/

|

|

Manish Goregaokar: Reflections on Rusting Trust |

The Rust compiler is written in Rust. This is overall a pretty common practice in compiler development. This usually means that the process of building the compiler involves downloading a (typically) older version of the compiler.

This also means that the compiler is vulnerable to what is colloquially known as the “Trusting Trust” attack, an attack described in Ken Thompson’s acceptance speech for the 1983 Turing Award. This kind of thing fascinates me, so I decided to try writing one myself. It’s stuff like this which started my interest in compilers, and I hope this post can help get others interested the same way.

To be clear, this isn’t an indictment of Rust’s security. Quite a few languages out there have popular self-hosted compilers (C, C++, Haskell, Scala, D, Go) and are vulnerable to this attack. For this attack to have any effect, one needs to be able to uniformly distribute this compiler, and there are roughly equivalent ways of doing the same level of damage with that kind of access.

If you already know what a trusting trust attack is, you can skip the next section. If you just want to see the code, it’s in the trusting-trust branch on my Rust fork, specifically this code.

The attack

The essence of the attack is this:

An attacker can conceivably change a compiler such that it can detect a particular kind of application and make malicious changes to it. The example given in the talk was the UNIX login program: the attacker can tweak a compiler so as to detect that it is compiling the login program, and compile in a backdoor that lets it unconditionally accept a special password (created by the attacker) for any user, thereby giving the attacker access to all accounts on all systems that have login compiled by their modified compiler.

However, this change would be detected in the source. If it was not included in the source, this change would disappear in the next release of the compiler, or when someone else compiles the compiler from source. Avoiding this attack is easily done by compiling your own compilers and not downloading untrusted binaries. This is good advice in general regarding untrusted binaries, and it equally applies here.

To counter this, the attacker can go one step further. If they can tweak the compiler so as to backdoor login, they could also tweak the compiler so as to backdoor itself. The attacker needs to modify the compiler with a backdoor which detects when it is compiling the same compiler, and introduces itself into the compiler that it is compiling. On top of this it can also introduce backdoors into login or whatever other program the attacker is interested in.

Now, in this case, even if the backdoor is removed from the source, every compiler compiled using this backdoored compiler will be similarly backdoored. So if this backdoored compiler somehow starts getting distributed, it will spread itself as it is used to compile more copies of itself (e.g. newer versions, etc). And it will be virtually undetectable — since the source doesn’t need to be modified for it to work; just the non-human-readable binary.
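The self-propagation described above can be sketched with a toy model. This is not the code from the post (which modifies the real Rust compiler); it is a minimal Python illustration in which "source code" is reduced to a label and "binaries" are plain functions. Every name in it (`LOGIN_SRC`, `COMPILER_SRC`, `backdoored_compiler`, the master password) is a hypothetical stand-in.

```python
# Toy model of a self-propagating compiler backdoor.
# "Source code" is reduced to a label and "binaries" to Python functions;
# every name here is hypothetical and only illustrates the idea.

LOGIN_SRC = "login.c"        # stands in for the clean source of login
COMPILER_SRC = "compiler.c"  # stands in for the clean source of the compiler


def clean_login(user, password, accounts):
    """What the login source actually says: check the stored password."""
    return accounts.get(user) == password


def backdoored_login(user, password, accounts):
    """What the tampered compiler emits: also accept a master password."""
    return password == "attacker-secret" or clean_login(user, password, accounts)


def clean_compiler(source):
    """An honest compiler binary: output matches the source it is given."""
    if source == LOGIN_SRC:
        return clean_login
    if source == COMPILER_SRC:
        return clean_compiler
    raise ValueError("unknown source: " + source)


def backdoored_compiler(source):
    """A tampered compiler binary, fed the same clean sources."""
    if source == LOGIN_SRC:
        return backdoored_login      # backdoor the target program
    if source == COMPILER_SRC:
        return backdoored_compiler   # reproduce itself in the new compiler
    return clean_compiler(source)


# Rebuild the compiler from clean source for several "releases".
compiler = backdoored_compiler
for _release in range(3):
    compiler = compiler(COMPILER_SRC)

login = compiler(LOGIN_SRC)
accounts = {"root": "correct horse"}
backdoor_survived = login("root", "attacker-secret", accounts)
honest_build_is_clean = not clean_compiler(LOGIN_SRC)(
    "root", "attacker-secret", accounts
)
```

The sources passed in are identical in both cases; only the binary doing the compiling differs, which is why inspecting the source alone would not reveal the problem.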

Of course, there are ways to protect against this. Ultimately, before a compiler for language X existed, that compiler had to be written in some other language Y. If you can track the sources back to that point you can bootstrap a working compiler from scratch and keep compiling newer compiler versions till you reach the present. This raises the question of whether or not Y’s compiler is backdoored. While it sounds pretty unlikely that such a backdoor could be so robust as to work on two different compilers and stay put throughout the history of X, you can of course trace Y back to other languages and so on till you find a compiler in assembly that you can verify.

Backdooring Rust

Alright, so I want to backdoor my compiler. I first have to decide when in the pipeline the code that inserts backdoors executes. The Rust compiler operates by taking source code, parsing it into a syntax tree (AST), transforming it into some intermediate representations (HIR and MIR), and feeding it to LLVM in the form of LLVM IR, after which LLVM does its thing and creates binaries. A backdoor can be inserted at any point in this pipeline. To me, it seems like it’s easier to insert one into the AST, because it’s easier to obtain AST from source, and this is important as we’ll see soon. It also makes this attack less practically viable, which is nice since this is just a fun exercise and I don’t actually want to backdoor the compiler.

So the moment the compiler finishes parsing, my code will modify the AST to insert a backdoor.
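As a rough analogy for rewriting the tree right after parsing, here is a sketch using Python's standard `ast` module rather than the Rust compiler's internals. It parses a program, swaps one string literal in the AST, and only then compiles it. The class name `StringSwap`, the helper `compile_with_backdoor`, and the replacement text `"tampered"` are all assumptions made for illustration; they are not taken from the post.

```python
import ast


class StringSwap(ast.NodeTransformer):
    """Replace occurrences of one string literal while walking the AST."""

    def __init__(self, old, new):
        self.old = old
        self.new = new

    def visit_Constant(self, node):
        # Only touch string constants that contain the target text.
        if isinstance(node.value, str) and self.old in node.value:
            swapped = ast.Constant(value=node.value.replace(self.old, self.new))
            return ast.copy_location(swapped, node)
        return node


def compile_with_backdoor(source):
    """Parse, tamper with the tree, then hand it to the normal compile step."""
    tree = ast.parse(source)
    tree = StringSwap("hello world", "tampered").visit(tree)
    ast.fix_missing_locations(tree)
    return compile(tree, "<toy>", "exec")


# The source text is untouched; only the compiled result differs.
program = 'greeting = "hello world"'
namespace = {}
exec(compile_with_backdoor(program), namespace)
result = namespace["greeting"]
```

The point of the sketch is that the program's source never changes on disk: the modification exists only between the parse step and code generation.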

First, I’ll try to write a simpler backdoor; one which doesn’t affect the compiler but instead affects some programs. I shall write a backdoor that replaces occurrences of the string “hello world” with “

http://manishearth.github.io/blog/2016/12/02/reflections-on-rusting-trust/

|

|

Air Mozilla: Participation Demos - Q4/2016 |

Find out what Participation has been up to in Q4 2016


|

|

Christian Heilmann: Pixels, Politics and P2P – Internet Days Stockholm 2016 |

I just got back from the Internet Days conference in Stockholm, Sweden. I was flattered when I was asked to speak at this prestigious event, but I had no idea until I arrived just how much fun it would be.

I loved the branding of the conference as it was all about pixels and love. Things we now need more of – the latter more than the former. As a presenter, I felt incredibly pampered. I had a driver pick me up at the airport (which I didn’t know, so I took the train) and I was put up in the pretty amazing Waterfront hotel connected to the convention centre of the conference.

This was the first time I heard about the Internet Days and for those who haven’t either, I can only recommend it. Imagine a mixture of a deep technical conference on all matters internet – connectivity, technologies and programming – mixed with a TED event on current political matters.

The technology setup was breathtaking. The stage tech was flawless and all the talks were streamed and live edited (mixed with slides). Thus they became available on YouTube about an hour after you delivered them. Wonderful work, and very rewarding as a presenter.

I talked in detail about my keynote in another post, so here are the others I enjoyed:

Juliana Rotich of BRCK and Ushahidi fame talked about connectivity for the world and how this is far from being a normal thing.

Erika Baker gave a heartfelt talk about how she doesn’t feel safe about anything that is happening in the web world right now and how we need to stop seeing each other as accounts but care more about us as people.

Incidentally, this reminded me a lot of my TEDx talk in Linz about making social media more social again:

The big bang to end the first day of the conference was of course the live Skype interview with Edward Snowden. In the one-hour interview he covered a lot of truths about security, privacy and surveillance and he had many calls to action any one of us can do now.

What I liked most about him was how humble he was. His whole presentation was about how it doesn’t matter what will happen to him, how it is important to remember the others that went down with him, and how he wishes for us to use the information we have now to make sure our future is not one of silence and fear.

In addition to my keynote I also took part in a panel discussion on how to inspire creativity.

The whole conference was about activism of sorts. I had lots of conversations with privacy experts of all levels: developers, network engineers, journalists and lawyers. The only thing that is a bit of an issue is that most talks outside the keynotes were in Swedish, but having lots of people to chat with about important issues made up for this.

The speaker present was a certificate that all the CO2 our travel created was offset by the conference, and an Arduino-powered robot used to teach kids. In general, the conference was about preservation and donating to good causes. There was a place where you could touch your conference pass and coins would fall into a hat, indicating that your check-in meant the organisers had donated a Euro to Doctors Without Borders.

The catering was stunning and, with the omission of meat, CO2-friendly. Instead of giving out water bottles, the drinks were glasses of water, which in Stockholm is in some cases better quality than bottled water.

I am humbled and happy that I could play my part in this great event. It gave me hope that the web isn’t just run over by trolls, privileged complainers and people who don’t care if this great gift of information exchange is being taken from us bit by bit.

Make sure to check out all the talks, it is really worth your time. Thank you to everyone involved in this wonderful event!

https://www.christianheilmann.com/2016/12/02/pixels-politics-and-p2p-internet-days-stockholm-2016/

|

|

Mike Hommey: Faster git-cinnabar graft of gecko-dev |

Cloning Mozilla repositories from scratch with git-cinnabar can be a long process. Grafting them to gecko-dev is an equally long process.

The metadata git-cinnabar keeps is such that it can be exchanged, but it’s also structured in a way that doesn’t allow git push and fetch to do that efficiently, and pushing refs/cinnabar/metadata to GitHub fails because it wants to push more than 2GB of data, which GitHub doesn’t allow.

But with some munging before a push, it is possible to limit the push to a fraction of that size and stay within GitHub limits. And inversely, some munging after fetching makes it possible to produce the metadata git-cinnabar wants.

The news here is that there is now a cinnabar head on https://github.com/glandium/gecko-dev that contains the munged metadata, and a script that fetches it and produces the git-cinnabar metadata in an existing clone of gecko-dev. An easy way to run it is to use the following command from a gecko-dev clone:

    $ curl -sL https://gist.github.com/glandium/56a61454b2c3a1ad2cc269cc91292a56/raw/bfb66d417cd1ab07d96ebe64cdb83a4217703db9/import.py | git cinnabar python

On my machine, the process takes 8 minutes instead of more than an hour. Make sure you use git-cinnabar 0.4.0rc for this.

Please note this doesn’t bring the full metadata for gecko-dev, just the metadata as of yesterday. This may be updated irregularly in the future, but don’t count on that.

So, from there, you still need to add mercurial remotes and pull from there, as per the original workflow.

Planned changes for version 0.5 of git-cinnabar will alter the metadata format such that it will be exchangeable without munging, making the process simpler and faster.

|

|

Matthew Ruttley: Avoiding multiple reads with top-level imports |

Recently I’ve been working with various applications that require importing large JSON definition files which detail complex application settings. Often, these files are required by multiple auxiliary modules in the codebase. All principles of software engineering point towards importing this sort of file only once, regardless of how many secondary modules it is used in.

My instinctive approach to this would be to have a main handler module read in the file and then pass its contents as a class initialization argument:

    # main_handler.py
    import json

    from module1 import Class1
    from module2 import Class2

    with open("settings.json") as f:
        settings = json.load(f)

    init1 = Class1(settings=settings)
    init2 = Class2(settings=settings)

The problem with this is that if you have an elaborate import process, and multiple files to import, it could start to look messy. I recently discovered that this multiple initialization argument approach isn’t actually necessary.

In Python, you can actually import the same settings loader module in the two auxiliary modules (module1 and module2), and Python will only load it once:

    # main_handler.py
    from module1 import Class1
    from module2 import Class2

    init1 = Class1()
    init2 = Class2()

    # module1.py
    import settings_loader

    class Class1:
        def __init__(self):
            self.settings = settings_loader.settings

    # module2.py
    import settings_loader

    class Class2:
        def __init__(self):
            self.settings = settings_loader.settings

    # settings_loader.py
    import json

    with open("settings.json") as f:
        print("Loading the settings file!")
        settings = json.load(f)

Now when we test this out in the terminal:

    MRMAC:importtesting mruttley$ python main_handler.py
    Loading the settings file!
    MRMAC:importtesting mruttley$

Despite calling import settings_loader twice, Python actually only executed the module once. This is extremely useful but also could cause headaches if you actually wanted to import the file twice. If so, then I would include the settings importer inside the __init__() of each ClassX and instantiate it twice.
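The caching behaviour can be checked directly. The sketch below writes a throwaway module (the name `toy_settings_loader` is hypothetical, not part of the post) whose body counts how often it has run, imports it twice, and then forces a second run with `importlib.reload`. It assumes a writable temporary directory.

```python
import importlib
import os
import sys
import tempfile

# Create a throwaway module (hypothetical name) whose body counts its own runs.
# On a reload the module's namespace is kept, so the counter carries over.
module_dir = tempfile.mkdtemp()
with open(os.path.join(module_dir, "toy_settings_loader.py"), "w") as f:
    f.write("load_count = globals().get('load_count', 0) + 1\n")
sys.path.insert(0, module_dir)
importlib.invalidate_caches()

import toy_settings_loader           # first import: the module body runs
import toy_settings_loader as again  # second import: reuses the cached module

runs_after_two_imports = toy_settings_loader.load_count
same_module_object = again is toy_settings_loader
is_cached = "toy_settings_loader" in sys.modules

importlib.reload(toy_settings_loader)  # explicitly run the module body again
runs_after_reload = toy_settings_loader.load_count
```

Python keeps imported modules in `sys.modules`, so a repeated `import` statement, even one placed inside `__init__()`, returns the cached module without re-reading the file. If the file really has to be read again, either call `importlib.reload` as above or put the `open()` and `json.load()` calls themselves where they should run each time.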

|

|

Mozilla Addons Blog: December’s Featured Add-ons |

Pick of the Month: Enhancer for YouTube

by Maxime RF

Watch YouTube on your own terms! Tons of customizable features, like ad blocking, auto-play setting, mouse-controlled volume, cinema mode, video looping, to name a few.

“All day long, I watch and create work-related YouTube videos. I think I’ve tried every video add-on. This is easily my favorite!”

Featured: New Tab Override

by Sören Hentzschel

Designate the page that appears every time you open a new tab.

“Simply the best, trouble-free, new tab option you’ll ever need.”

Nominate your favorite add-ons

Featured add-ons are selected by a community board made up of add-on developers, users, and fans. Board members change every six months. Here’s further information on AMO’s featured content policies.

If you’d like to nominate an add-on for featuring, please send it to amo-featured@mozilla.org for the board’s consideration. We welcome you to submit your own add-on!

https://blog.mozilla.org/addons/2016/12/01/decembers-featured-add-ons/

|

|

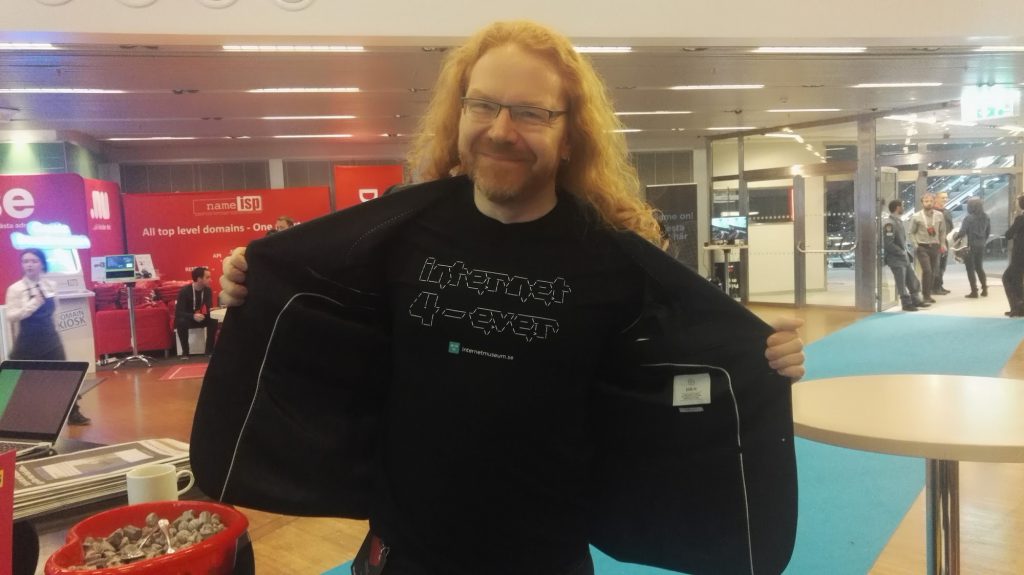

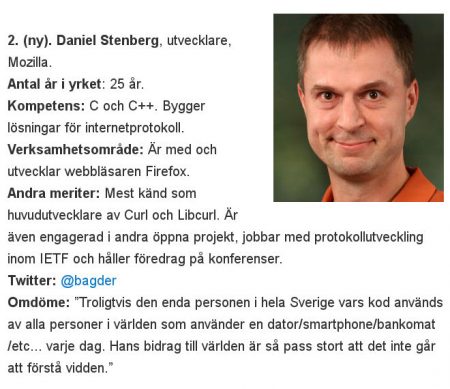

Daniel Stenberg: 2nd best in Sweden |

“Probably the only person in the whole of Sweden whose code is used by all people in the world using a computer / smartphone / ATM / etc … every day. His contribution to the world is so large that it is impossible to understand the breadth.”

(translated motivation from the Swedish original page)

Thank you everyone who nominated me. I’m truly grateful, honored and humbled. You, my community, are what makes me keep doing what I do. I love you all!

To list “Sweden’s best developers” (the list and site are in Swedish) seems like a rather futile task, doesn’t it? Yet that’s something the Swedish IT and technology news site Techworld has been doing occasionally for the last several years, at two- to three-year intervals since 2008.

Everyone reading this will of course immediately start to ponder on what developers they speak of or how they define developers and how on earth do you judge who the best developers are? Or even who’s included in the delimiter “Sweden” – is that people living in Sweden, born in Sweden or working in Sweden?

I’m certainly not alone in having chuckled at these lists when they have been published in the past, as I’ve never seen anyone on the list be even close to my own niche or areas of interest. The lists have even worked a little as a long-standing joke in places.

It always felt as if the people on the lists were found on another planet than mine – mostly just Java and .NET people, and they very rarely appeared to be developers who actually spend their days surrounded by code and programming. I suppose I’ve now given away some clues to some characteristics I think “a developer” should possess…

This year, their fifth time doing this list, they changed the way they find candidates, opened up for external nominations and had a set of external advisors. This also resulted in me finding several friends on the list that were never on it in the past.

Tonight I got called onto the stage during the little award ceremony and I was handed this diploma and recognition for landing at second place in the best developer in Sweden list.

And just to keep things safe for the future, this is how the listing looks on the Swedish list page:

Yes I’m happy and proud and humbled. I don’t get this kind of recognition every day so I’ll take this opportunity and really enjoy it. And I’ll find a good spot for my diploma somewhere around the house.


I’ll keep a really big smile on my face for the rest of the day for sure!

|

|

Mozilla Privacy Blog: Remember when we Protected Net Neutrality in the U.S. ? |

We may have to do it again. Importantly, we can and we will.

President-Elect Trump has picked his members of the “agency landing team” for the Federal Communications Commission. Notably, two of them are former telecommunications executives who weren’t supportive of the net neutrality rules ultimately adopted on February 25, 2015. There is no determination yet of who will ultimately lead the FCC – that will likely wait until next year. However, the current “landing team” picks have people concerned that the rules enacted to protect net neutrality – the table stakes for an open internet – are in jeopardy of being thrown out.

Is this possible? Of course it is – but it isn’t quite that simple. We should all pay attention to these picks – they are important for many reasons – but we need to put this into context.

The current FCC, who ultimately proposed and enacted the rules, faced a lot of public pressure in its process of considering them. The relevant FCC docket on net neutrality (“Protecting and Promoting the Open Internet”) currently contains 2,179,599 total filings from interested parties. The FCC also received 4 million comments from the public – most in favor of strong net neutrality rules. It took all of our voices to make it clear that net neutrality was important and needed to be protected.

So, what can happen now? Any new administration can reconsider the issue. We hope they don’t. We have been fighting this fight all over the world, and it would be nice to continue to count the United States as among the leaders on this issue, not one of the laggards. But, if the issue is revisited – we are all still here, and so are others who supported and fought for net neutrality.

We all still believe in net neutrality and in protecting openness, access and equality. We will make our voices heard again. As Mozilla, we will fight for the rules we have – it is a fight worth having. So, pay attention to what is going on in these transition teams – but remember we have strength in our numbers and in making our voices heard.

https://blog.mozilla.org/netpolicy/2016/12/01/remember-when-we-protected-net-neutrality-in-the-u-s/

|

|

The Mozilla Blog: Why I’m joining Mozilla’s Board, by Julie Hanna |

Today, I’m joining Mozilla’s Board. What attracts me to Mozilla is its people, mission and values. I’ve long admired Mozilla’s noble mission to ensure the internet is free, open and accessible to all. That Mozilla has organized itself in a radically transparent, massively distributed and crucially equitable way is a living example of its values in action and a testament to the integrity with which Mozillians have pursued that mission. They walk the talk. Similarly, having had the privilege of knowing a number of the leaders at Mozilla, their sincerity, character and competence are self-evident.

Julie Hanna, new Mozilla Corporation Board member (Photo credit: Chris Michel)


The internet is the most powerful force for good ever invented. It is the democratic air half our planet breathes. It has put power into the hands of people that didn’t have it. Ensuring the internet continues to serve our humanity, while reaching all of humanity is vital to preserving and advancing the internet as a public good.

The combination of these things is why helping Mozilla maximize its impact is an act with profound meaning and a privilege for me.

Mozilla’s mission is bold, daring and simple, but not easy. Preserving the web as a force for good and ensuring the balance of power between all stakeholders – private, commercial, national and government interests – while preserving the rights of individuals, by its nature, is a never ending challenge. It is a deep study in choice, consequence and unintended consequences over the short, medium and long term. Understanding the complex, nuanced and dynamic forces at work so that we can skillfully and collaboratively architect a digital organism that’s in service to the greater public good is by its very nature complicated.

And then there’s the challenge all organizations face in today’s innovate or die world – how to stay agile, innovative, and relevant, while riding the waves of disruption. Not for the faint of heart, but incredibly worthwhile and consequential to securing the future of the internet.

I subscribe to the philosophy of servant leadership. When it comes to Board service, my emphasis is on the service part. First and foremost, being in service to the mission and to Mozillians, who are doing the heavy lifting on the front lines. I find that a mindset of radical empathy and humility is critical to doing this effectively. The invisible work of deep listening and effort to understand what it’s like to walk a mile in their shoes. As is creating a climate of trust and psychic safety so that tough strategic issues can be discussed with candor and efficiency. Similarly, cultivating a creative tension so diverse thoughts and ideas have the headroom to emerge in a way that’s constructive and collaborative. My continual focus is to listen, learn and be of service in the areas where my contribution can have the greatest impact.

Mozilla is among the pioneers of Open Source Software. Open Source Software is the foundation of an open internet and a pervasive building block in 95% of all applications. The net effect is a shared public good that accelerates innovation. That will continue. Open source philosophy and methodology are also moving into other realms like hardware and medicine. This will also continue. We tend to overestimate the short term impact of technology and underestimate its long term effect. I believe we’ve only begun catalyzing the potential of open source.

Harnessing the democratizing power of the internet to enable a more just, abundant and free world is the long running purpose that has driven my work. The companies I helped start and lead, the products I have helped build all sought to democratize access to information, communication, collaboration and capital on a mass scale. None of that would have been possible without the internet. This is why I passionately believe that the world needs Mozilla to succeed and thrive in fulfilling its mission.

This post was re-posted on Julie’s Medium channel.

https://blog.mozilla.org/blog/2016/12/01/why-im-joining-mozillas-board-by-julie-hanna/

|

|

Cameron Kaiser: 45.5.1 available, and 32-bit Intel Macs go Tier-3 |

In other news, the announcement below was inevitable after Mozilla dropped support for 10.6 through 10.8, but for the record (from BDS):

As of Firefox 53, we are intending to switch Firefox on mac from a universal x86/x86-64 build to a single-architecture x86-64 build. To simplify the build system and enable other optimizations, we are planning on removing support for universal mac build from the Mozilla build system.

The Mozilla build and test infrastructure will only be testing the x86-64 codepaths on mac. However, we are willing to keep the x86 build configuration supported as a community-supported (tier 3) build configuration, if there is somebody willing to step forward and volunteer as the maintainer for the port. The maintainer's responsibility is to periodically build the tree and make sure it continues to run.

Please contact me directly (not on the list) if you are interested in volunteering. If I do not hear from a volunteer by 23-December, the Mozilla project will consider the Mac-x86 build officially unmaintained.

The precipitating event for this is the end of NPAPI plugin support (see? TenFourFox was ahead of the curve!), except, annoyingly, Flash, with Firefox 52. The only major reason 32-bit Mac Firefox builds weren't ended with the removal of 10.6 support (10.6 being the last version of Mac OS X that could run on a 32-bit Intel Mac) was for those 64-bit Macs that had to run a 32-bit plugin. Since no plugins but Flash are supported anymore, and Flash has been 64-bit for some time, that's the end of that.
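For readers less familiar with the term: a universal build packs several single-architecture binaries into one file behind a small "fat" header. The following is a minimal Python sketch, not part of Mozilla's or TenFourFox's tooling, that lists the architectures such a header declares. It assumes the standard Mach-O fat header layout (a big-endian magic number 0xCAFEBABE, an architecture count, then 20-byte entries that each begin with a CPU type); the function name `fat_architectures` and the small table of CPU names are illustrative choices.

```python
import struct

FAT_MAGIC = 0xCAFEBABE       # big-endian magic of a universal ("fat") Mach-O file
CPU_ARCH_ABI64 = 0x01000000  # flag OR-ed into the CPU type for 64-bit variants

# Illustrative subset of CPU types; anything else is reported as unknown.
CPU_NAMES = {
    7: "i386",
    7 | CPU_ARCH_ABI64: "x86_64",
    18: "ppc",
    18 | CPU_ARCH_ABI64: "ppc64",
}


def fat_architectures(data):
    """Return the architecture names listed in a fat header.

    Returns an empty list when the data does not start with the fat magic,
    which is the case for a single-architecture binary.
    Caveat: Java class files share the same magic number, so a real tool
    would need further sanity checks.
    """
    if len(data) < 8:
        return []
    magic, count = struct.unpack(">II", data[:8])
    if magic != FAT_MAGIC:
        return []
    names = []
    for index in range(count):
        start = 8 + index * 20  # each fat_arch entry is five 4-byte fields
        entry = data[start:start + 20]
        if len(entry) < 20:
            break  # truncated header; stop rather than guess
        cputype = struct.unpack(">i", entry[:4])[0]
        names.append(CPU_NAMES.get(cputype, "unknown({})".format(cputype)))
    return names
```

Reading the first few hundred bytes of a binary and passing them to `fat_architectures` would be enough to see whether it is still a universal build or already a single-architecture one.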

Currently we, as OS X/ppc, are a Tier-3 configuration also, at least for as long as we maintain source parity with 45ESR. Mozilla has generally been deferential to not intentionally breaking TenFourFox and the situation with 32-bit x86 would probably be easier than our situation. That said, candidly I can only think of two non-exclusive circumstances where maintaining the 32-bit Intel Mac build would be advantageous, and they're both bigger tasks than simply building the browser for 32 bits:

- You still have to run a 32-bit plugin like Silverlight. In that case, you'd also need to undo the NPAPI plugin block (see bug 1269807) and everything underlying it.

- You have to run Firefox on a 32-bit Mac. As a practical matter this would essentially mean maintaining support for 10.6 as well, roughly option 4 when we discussed this in a prior blog post with the added complexity of having to pull the legacy Snow Leopard support forward over a complete ESR cycle. This is non-trivial, but hey, we've done just that over six ESR cycles, although we had the advantage of being able to do so incrementally.

I'm happy to advise anyone who wants to take this on but it's not something you'll see coming from me. If you decide you'd like to try, contact Benjamin directly (his first name, smedbergs, us).

http://tenfourfox.blogspot.com/2016/12/4551-available-and-32-bit-intel-macs-go.html

|

|

Support.Mozilla.Org: What’s Up with SUMO – 1st December |

Greetings, SUMO Nation!

This is it! The start of the last month of the year, friends :-) We are kicking off the 12th month of 2016 with some news for your reading pleasure. Dig in!

Welcome, new contributors!

If you just joined us, don’t hesitate – come over and say “hi” in the forums!

Contributors of the week

- All the forum supporters who tirelessly helped users out for the last week.

- All the writers of all languages who worked tirelessly on the KB for the last week.

- Huge thanks to philipp, pollti and Artist for their help with getting more Klar-ity :-)

We salute all of you!

SUMO Community meetings

- LATEST ONE: 30th of November – you can read the notes here (and see the video at AirMozilla once we get it up there, since we had some technical issues).

- NEXT ONE: happening on the 14th of December!

- If you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- The State of Mozilla Report is here!

- Help us connect all people to the open Internet through the #EqualRating Innovation Challenge.

- Please remember that Persona has shut down as of yesterday. All affected services will offer alternative log in methods, so look for more information about that on their sites.

- Final reminder: If you want to help us brainstorm how to promote “Internet Awareness” among our visitors, please join us here.

- Get musical with SUMO! The playlist is here… and the forum thread is here.

Platform

- Check the notes from the last meeting in this document. (and don’t forget about our meeting recordings).

- The main points of today’s meeting were:

- Announcing a new Bugzilla component for working on further optimization of the new platform.

- Postponing of the final migration until the first week of 2017.

- Announcing that the next Platform meeting will take place on the 15th of December.

- Reminder: You can preview the current migration test site here.

- If you can’t access the site, contact Madalina.

- Reminder: The post-migration feature list can be found here (courtesy of Roland – ta!)

- We are still looking for user stories to focus on immediately after the migration (also known as “Phase 1”)

Social

- Reminder: Army of Awesome (as a “community trademark”) is going away. Please reach out to the Social Support team or ask in #sumo for more information.

- Remember, you can contact Sierra (sreed@), Elisabeth (ehull@), or Rachel (guigs@) to get started with Social support. Help us provide friendly help through the likes of @firefox, @firefox_es, @firefox_fr and @firefoxbrasil on Twitter and beyond :-)

Support Forum

- If you see Firefox users asking about the “zero day exploit”, please let them know that “We have been made aware of the issue and are working on a fix. We will have more to say once the fix has been shipped.” (some media context here)

- A polite reminder: please do not delete posts without an explanation to the poster in the forums; it can be frustrating to both the author and the user in the thread – thank you!

Knowledge Base & L10n

- Over 280 edits in the KB in all locales in the last week – thank you so much, Editors & Reviewers!

- The review of the l10n flow in Lithium has a new version for your reading pleasure.

Firefox

- for Android

- The forum thread for version 50 is here!

- Firefox 50.1 is coming around December 13th, bringing a few fixes and optimizations.

- for Desktop

- A chemspill release (50.0.2) is in the works due to a vulnerability detected in handling JavaScript. More details in this thread (thank you, Philipp!). The security advisory is available on this site.

- The forum thread for version 50 is here (thanks to Philipp).

- Firefox Klar has launched for German-speaking countries. Alles klar? ;-)

- for iOS

- Firefox for iOS 6.0 is coming your way before the end of this year, we hear! :-)

…and that’s it for today! So, what are you usually looking forward to about December? For me it would be the snow (not the case any more, sadly) and a few special dishes… Well, OK, also the fun party on the last night of the year in our calendar! Let us hear from you about your December picks – tell us in the forums or in the comments!

https://blog.mozilla.org/sumo/2016/12/01/whats-up-with-sumo-1st-december/

|

|

Mitchell Baker: Julie Hanna Joins the Mozilla Corporation Board of Directors |

This post was originally posted on the Mozilla.org website.

Today, we are very pleased to announce the latest addition to the Mozilla Corporation Board of Directors – Julie Hanna. Julie is the Executive Chairman for Kiva and a Presidential Ambassador for Global Entrepreneurship and we couldn’t be more excited to have her joining our Board.

Throughout this year, we have been focused on board development for both the Mozilla Foundation and the Mozilla Corporation boards of directors. We envisioned a diverse group who embodied the same values and mission that Mozilla stands for. We want each person to contribute a unique point of view. After extensive conversations, it was clear to the Mozilla Corporation leadership team that Julie brings exactly the type of perspective and approach that we seek.

Born in Egypt, Julie has lived in various countries including Jordan and Lebanon before finally immigrating to the United States. Julie graduated from the University of Alabama at Birmingham with a B.S. in Computer Science. She currently serves as Executive Chairman at Kiva, a peer-to-peer lending pioneer and the world’s largest crowdlending marketplace for underserved entrepreneurs. During her tenure, Kiva has scaled its reach to 190+ countries and facilitated nearly $1 billion in loans to 2 million people with a 97% repayment rate. U.S. President Barack Obama appointed Julie as a Presidential Ambassador for Global Entrepreneurship to help develop the next generation of entrepreneurs. In that capacity, her signature initiative has delivered over $100M in capital to nearly 300,000 women and young entrepreneurs across 86 countries.

Julie is known as a serial entrepreneur with a focus on open source. She was a founder or founding executive at several innovative technology companies directly relevant to Mozilla’s world in browsers and open source. These include Scalix, a pioneering open source email/collaboration platform and developer of the most advanced AJAX application of its time, the first enterprise portal provider 2Bridge Software, and Portola Systems, which was acquired by Netscape Communications and became Netscape Mail.

She has also built a wealth of experience as an active investor and advisor to high-growth technology companies, including sharing economy pioneer Lyft, Lending Club and online retail innovator Bonobos. Julie also serves as an advisor to Idealab, Bill Gross’ highly regarded incubator which has launched dozens of IPO-destined companies.

Please join me in welcoming Julie Hanna to the Mozilla Board of Directors.

Mitchell

Background:

Twitter: @JulesHanna

|

|

The Mozilla Blog: Julie Hanna Joins the Mozilla Corporation Board of Directors |

Today, we are very pleased to announce the latest addition to the Mozilla Corporation Board of Directors – Julie Hanna. Julie is the Executive Chairman for Kiva and a Presidential Ambassador for Global Entrepreneurship and we couldn’t be more excited to have her joining our Board.

Throughout this year, we have been focused on board development for both the Mozilla Foundation and the Mozilla Corporation boards of directors. We envisioned a diverse group who embodied the same values and mission that Mozilla stands for. We want each person to contribute a unique point of view. After extensive conversations, it was clear to the Mozilla Corporation leadership team that Julie brings exactly the type of perspective and approach that we seek.

Born in Egypt, Julie has lived in various countries including Jordan and Lebanon before finally immigrating to the United States. Julie graduated from the University of Alabama at Birmingham with a B.S. in Computer Science. She currently serves as Executive Chairman at Kiva, a peer-to-peer lending pioneer and the world’s largest crowdlending marketplace for underserved entrepreneurs. During her tenure, Kiva has scaled its reach to 190+ countries and facilitated nearly $1 billion in loans to 2 million people with a 97% repayment rate. U.S. President Barack Obama appointed Julie as a Presidential Ambassador for Global Entrepreneurship to help develop the next generation of entrepreneurs. In that capacity, her signature initiative has delivered over $100M in capital to nearly 300,000 women and young entrepreneurs across 86 countries.

Julie is known as a serial entrepreneur with a focus on open source. She was a founder or founding executive at several innovative technology companies directly relevant to Mozilla’s world in browsers and open source. These include Scalix, a pioneering open source email/collaboration platform and developer of the most advanced AJAX application of its time, the first enterprise portal provider 2Bridge Software, and Portola Systems, which was acquired by Netscape Communications and became Netscape Mail.

She has also built a wealth of experience as an active investor and advisor to high-growth technology companies, including sharing economy pioneer Lyft, Lending Club and online retail innovator Bonobos. Julie also serves as an advisor to Idealab, Bill Gross’ highly regarded incubator which has launched dozens of IPO-destined companies.

Please join me in welcoming Julie Hanna to the Mozilla Board of Directors.

Mitchell

You can read Julie’s message about why she’s joining Mozilla here.

Background:

Twitter: @JulesHanna

High-res photo (photo credit: Chris Michel)

|

|

Air Mozilla: Connected Devices Weekly Program Update, 01 Dec 2016 |

Weekly project updates from the Mozilla Connected Devices team.

https://air.mozilla.org/connected-devices-weekly-program-update-20161201/

|

|

The Mozilla Blog: State of Mozilla 2015 Annual Report |

We just released our State of Mozilla annual report for 2015. This report highlights key activities for Mozilla in 2015 and includes detailed financial documents.

Mozilla is not your average company. We’re a different kind of organization – a nonprofit, global community with a mission to ensure that the internet is a global public resource, open and accessible to all.

I hope you enjoy reading and learning more about Mozilla and our developments in products, web technologies, policy, advocacy and internet health.

https://blog.mozilla.org/blog/2016/12/01/state-of-mozilla-2015-annual-report/

|

|

Air Mozilla: Webinar 2: What is Equal Rating. |

Overview of Equal Rating

|

|

Mozilla Open Innovation Team: The Problem with Privacy in IoT |

Every year Mozilla hosts DinoTank, an internal pitch platform, and this year instead of pitching ideas we focused on pitching problems. To give each DinoTank winner the best possible start, we set up a design sprint for each one. This is the first installment of that series of DinoTank sprints…

The Problem

I work on the Internet of Things at Mozilla but I am apprehensive about bringing most smart home products into my house. I don’t want a microphone that is always listening to me. I don’t want an internet connected camera that could be used to spy on me. I don’t want my thermostat, door locks, and light bulbs all collecting unknown amounts of information about my daily behavior. Suffice it to say that I have a vague sense of dread about all the new types of data being collected and transmitted from inside my home. So I pitched this problem to the judges at DinoTank. It turns out they saw this problem as important and relevant to Mozilla’s mission. And so to explore further, we ran a 5 day product design sprint with the help of several field experts.

Brainstorming

A team of 8 staff members was gathered in San Francisco for a week of problem refinement, insight gathering, brainstorming, prototyping, and user testing. Among us we had experts in Design Thinking, user research, marketing, business development, engineering, product, user experience, and design. The diversity of skillsets and backgrounds allowed us to approach the problem from multiple different angles, and through our discussion several important questions arose which we would seek to answer by building prototypes and putting them in front of potential consumers.

The Solution

After 3 days of exploring the problem, brainstorming ideas and then narrowing them down, we settled on a single product solution. It would be a small physical device that plugs into the home’s router to monitor the network activity of local smart devices. It would have a control panel that could be accessed from a web browser. It would allow the user to keep up to date through periodic status emails, and only in critical situations would it notify the user’s phone with needed actions. We mocked up an end-to-end experience using clickable and paper prototypes, and put it in front of privacy-aware IoT homeowners.
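The notification behavior described above (routine activity goes into a periodic status email, and only critical situations reach the phone) can be sketched in a few lines of Python. This is purely a hypothetical illustration and not code from the sprint, which used clickable and paper prototypes; the `Event` class, its `critical` flag, and the `route` function are all assumed names, and how an event would be judged critical is left open.

```python
from dataclasses import dataclass


@dataclass
class Event:
    device: str     # which smart device produced the activity
    summary: str    # short human-readable description
    critical: bool  # hypothetical flag: does this need the user's action now?


def route(events):
    """Split observed events into (phone_alerts, digest_lines).

    Critical events are returned as-is for an immediate phone notification;
    everything else is formatted as a line for the periodic status email.
    """
    phone_alerts = [event for event in events if event.critical]
    digest_lines = [
        "{}: {}".format(event.device, event.summary)
        for event in events
        if not event.critical
    ]
    return phone_alerts, digest_lines
```

Keeping the two channels separate reflects the design intent stated in the paragraph: the phone is reserved for the rare cases that need action, so routine traffic never interrupts the user.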

What We Learned

Surprisingly, our test users saw the product as more of an all-inclusive internet security system than an IoT-only solution. One of our solutions focused more on ‘data protection’ and here we clearly learned that there is a sense of resignation towards large data collection, with comments like “Google already has all my data anyway.”

Of the positive learnings, the mobile notifications really resonated with users. And interestingly — though not surprisingly — people became much more interested in the privacy aspects of our mock-ups when their children were involved in the conversation.

Next Steps

The big question we were left with was: is this a latent but growing problem, or was this never a problem at all? To answer this, we will tweak our prototypes to test different market positioning of the product as well as explore potential audiences that have a larger interest in data privacy.

My Reflections

Now, if I had done this project without DinoTank’s help, I probably would have started by grabbing a Raspberry Pi and writing some code. But instead I learned how to take a step back and start by focusing on people. Here I learned about evaluating a user problem, sussing out a potential solution, and testing its usefulness in front of users. And so regardless of what direction we now take, we didn’t waste any time because we learned about a problem and the people whom we could reach.

If you’re looking for more details about my design sprint you can find the full results in our report. If you would like to learn more about the other DinoTank design sprints, check out the tumblr. And if you are interested in learning more about the methodologies we are using, check out our Open Innovation Toolkit.

People I’d like to thank:

Katharina Borchert, Bertrand Neveux, Christopher Arnold, Susan Chen, Liz Hunt, Francis Djabri, David Bialer, Kunal Agarwal, Fabrice Desré, Annelise Shonnard, Janis Greenspan, Jeremy Merle and Rina Jensen.

The Problem with Privacy in IoT was originally published in Mozilla Open Innovation on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

Myk Melez: Mozilla and Node.js |

Recently the Node.js Foundation announced that Mozilla is joining forces with IBM, Intel, Microsoft, and NodeSource on the Node.js API. So what’s Mozilla doing with Node? Actually, a few things…

You may already know about SpiderNode, a Node.js implementation on SpiderMonkey, which Ehsan Akhgari announced in April. Ehsan, Trevor Saunders, Brendan Dahl, and other contributors have since made a bunch of progress on it, and it now builds successfully on Mac and Linux and runs some Node.js programs.

Brendan additionally did the heavy lifting to build SpiderNode as a static library, link it with Positron, and integrate it with Positron’s main process, improving that framework’s support for running Electron apps. He’s now looking at opportunities to expose SpiderNode to WebExtensions and to chrome code in Firefox.

Meanwhile, I’ve been analyzing the Node.js API being developed by the API Working Group, and I’ve also been considering opportunities to productize SpiderNode for Node developers who want to use emerging JavaScript features in SpiderMonkey, such as WebAssembly and Shared Memory.

If you’re a WebExtension developer or Firefox engineer, would you use Node APIs if they were available to you? If you’re a Node programmer, would you use a Node implementation running on SpiderMonkey? And if so, would you require Node.js Addons (i.e. native modules) to do so?

|

|