
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.



Chris AtLee: Taskcluster migration update: we're finished! |

We're done!

Over the past few weeks we've hit a few major milestones in our project to migrate all of Firefox's CI and release automation to taskcluster.

Firefox 60 and higher are now 100% on taskcluster!

Tests

At the end of March, our Release Operations and Project Integrity teams finished migrating Windows tests onto new hardware machines, all running taskcluster. That work was later uplifted to beta so that CI automation on beta would also be completely done using taskcluster.

This marked the last usage of buildbot for Firefox CI.

Periodic updates of blocklist and pinning data

Last week we switched off the buildbot versions of the periodic update jobs. These jobs keep the in-tree versions of blocklist, HSTS and HPKP lists up to date.

These were the last buildbot jobs running on trunk branches.

Partner repacks

And to wrap things up, yesterday the final patches landed to migrate partner repacks to taskcluster. Firefox 60.0b14 was built yesterday and shipped today 100% using taskcluster.

A massive amount of work went into migrating partner repacks from buildbot to taskcluster, and I'm really proud of the whole team for pulling this off.

So, starting today, Firefox 60 and higher will be completely on taskcluster and not rely on buildbot.

It feels really good to write that :)

We've been working on migrating Firefox to taskcluster for over three years! Code archaeology is hard, but I think the first Firefox jobs to start running in Taskcluster were the Linux64 builds, done by Morgan in bug 1155749.

Into the glorious future

It's great to have migrated everything off of buildbot and onto taskcluster, and we have endless ideas for how to improve things now that we're there. First we need to spend some time cleaning up after ourselves and paying down some technical debt we've accumulated. It's a good time to start ripping out buildbot code from the tree as well.

We've got other plans to make release automation easier for other people to work with, including doing staging releases on try(!!), making the nightly release process more similar to the beta/release process, and exposing different parts of the release process to release management so that releng doesn't have to be directly involved with the day-to-day release mechanics.

|

|

The Mozilla Blog: Building Bold New Worlds With Virtual Reality |

“I wanted people to feel the whole story with their bodies, not just with their minds. Once I discovered virtual reality was the place to do that, it was transformative.”

– Nonny de la Peña, CEO of Emblematic

Great creators can do more than just tell a story. They can build entirely new worlds for audiences to experience and enjoy.

From rich text to video to podcasts, the Internet era offers an array of new ways for creators to build worlds. Here at Mozilla, we are particularly excited about virtual reality. Imagine moving beyond watching or listening to a story; imagine also feeling that story. Imagine being inside it with your entire mind and body. Now imagine sharing and entering that experience with something as simple as a web URL. That’s the potential before us.

To fully realize that potential, we need people who think big. We need artists and developers and engineers who are driven to push the boundaries of the imagination. We need visionaries who can translate that imagination into virtual reality.

The sky is the limit with virtual reality, and we’re driven to serve as the bridge that connects artists and developers. We are also committed to providing those communities with the tools and resources they need to begin building their own worlds. Love working with JavaScript? Check out the A-Frame framework. Do you prefer building with Unity? We have created a toolkit to bring your VR Unity experience to the web with WebVR.

We believe browsers are the future of virtual and augmented reality. The ability to click on a link and enter into an immersive, virtual world is a game-changer. This is why we held our ‘VR the People’ panel at the Sundance Film Festival, and why we will be at the Tribeca Film Festival in New York next week. We want to connect storytellers with this amazing technology. If you’re at Tribeca (or just in the area), please reach out. We’d love to chat.

This concludes our four-part series about virtual reality, storytelling, and the open web. It’s our mission to empower creators, and we hope these posts have left you inspired. If you’d like to watch our entire VR the People panel, check out the video below.

Be sure to visit https://mixedreality.mozilla.org/ to learn more about the tools and resources Mozilla offers to help you build new worlds from your imagination.

Read more on VR the People

- An Open Call to Filmmakers: Make Something Amazing With Virtual Reality and the Open Web

- Virtual Reality at the Intersection of Art & Technology

- Nonny de la Peña & the Power of Immersive Storytelling

The post Building Bold New Worlds With Virtual Reality appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/04/20/building-bold-new-worlds-with-virtual-reality/

|

|

Mozilla VR Blog: This Week in Mixed Reality: Issue 3 |

This week we’re heads down focusing on adding features in the three broad areas of Browsers, Social and the Content Ecosystem.

Browsers

This week we focused on building Firefox Reality and we’re excited to announce additional features:

- Implemented private tabs

- Tab overflow popup list

- Added contextual menu for “more options” in the header

- Improvements for SVR based devices:

- Update SDK to v2.1.2 for tracking improvements

- Fall back to head-tracking-based input when no controllers are available

- Implement scrolling using wheel and trackpad input buttons in ODG devices

- Working on the virtual keyboard across Android platform

- We are designing the transitions for WebVR immersive mode

Check out the video clip of the contextual menu and private tabs we added this week:

Firefox Reality private browsing from Imanol Fernández Gorostizaga on Vimeo.

Social

We're working on a web-based social experience for Mixed Reality.

In the last week, we have:

- Landed next 2D UX pass which cleans up a bunch of CSS and design inconsistencies, and prompts users for avatar and name customization before entry until they customize their name.

- Ongoing work for final push of in-VR UX: unified 3D cursor, “pause/play” mode for blocking UX, finalized HUD design and positioning, less error-prone teleporting component should all land this week.

- Worked through remaining issues with deployments, cleaned up bugs and restart issues with Habitat (as well as filed a number of bugs.)

- Set-up room member capping and room closing.

Join our public WebVR Slack #social channel to join in the discussion!

Content ecosystem

This week, Blair MacIntyre released a new version of the iOS WebXR Viewer app that includes support for experimenting with Computer Vision.

Check out the video below:

Stay tuned next week for some exciting news!

|

|

Chris Cooper: New to me: the Taskcluster team |

All entities move and nothing remains still.

At this time last year, I had just moved on from Release Engineering to start managing the Sheriffs and the Developer Workflow teams. Shortly after the release of Firefox Quantum, I also inherited the Taskcluster team. The next few months were *ridiculously* busy as I tried to juggle the management responsibilities of three largely disparate groups.

By mid-January, it became clear that I could not, in fact, do it all. The Taskcluster group had the biggest ongoing need for management support, so that’s where I chose to land. This sanity-preserving move also gave a colleague, Kim Moir, the chance to step into management of the Developer Workflow team.

Meet the Team

Let me start by introducing the Taskcluster team. We are:

- Hassan Ali

- Wander Lairson Costa

- John Ford

- Jonas Finnemann Jensen

- Dustin Mitchell

- Pete Moore

- Eli Perelman

- Brian Stack

We are an eclectic mix of curlers, snooker players, pinball enthusiasts, and much else besides. We also write and run continuous integration (CI) software at scale.

What are we doing?

The part I understand is excellent, and so too is, I dare say, the part I do not understand…

One of the reasons why I love the Taskcluster team so much is that they have a real penchant for documentation. That includes their design and post-mortem processes. Previously, I had only managed others who were using Taskcluster…consumers of their services. The Taskcluster documentation made it really easy for me to plug in quickly and help provide direction.

If you’re curious about what Taskcluster is at a foundational level, you should start with the tutorial.

The Taskcluster team currently has three big efforts in progress.

1. Redeployability

Many Taskcluster team members initially joined the team with the dream of building a true, open source CI solution. Dustin has a great post explaining the impetus behind redeployability. Here’s the intro:

Taskcluster has always been open source: all of our code is on Github, and we get lots of contributions to the various repositories. Some of our libraries and other packages have seen some use outside of a Taskcluster context, too.

But today, Taskcluster is not a project that could practically be used outside of its single incarnation at Mozilla. For example, we hard-code the name taskcluster.net in a number of places, and we include our config in the source-code repositories. There’s no legal or contractual reason someone else could not run their own Taskcluster, but it would be difficult and almost certainly break next time we made a change.

The Mozilla incarnation is open to use by any Mozilla project, although our focus is obviously Firefox and Firefox-related products like Fennec. This was a practical decision: our priority is to migrate Firefox to Taskcluster, and that is an enormous project. Maintaining an abstract ability to deploy additional instances while working on this project was just too much work for a small team.

The good news is, the focus is now shifting. The migration from Buildbot to Taskcluster is nearly complete, and the remaining pieces are related to hardware deployment, largely by other teams. We are returning to work on something we’ve wanted to do for a long time: support redeployability.

We’re a little further down that path than when he first wrote about it in January, but you can read more about our efforts to make Taskcluster more widely deployable in Dustin’s blog.

2. Support for packet.net

packet.net provides some interesting services, like baremetal servers and access to ARM hardware, that other cloud providers are only starting to offer. Experiments with our existing emulator tests on the baremetal servers have shown incredible speed-ups in some cases. The promise of ARM hardware is particularly appealing for future mobile testing efforts.

Over the next few months, we plan to add support for packet.net to the Mozilla instance of Taskcluster. This lines up well with the efforts around redeployability, i.e. we need to be able to support different and/or multiple cloud providers anyway.

3. Keeping the lights on (KTLO)

While not particularly glamorous, maintenance is a fact of life for software engineers supporting code that is running in production. That said, we should actively work to minimize the amount of maintenance work we need to do.

One of the first things I did when I took over the Taskcluster team full-time was halt *all* new and ongoing work to focus on stability for the entire month of February. This was precipitated by a series of prolonged outages in January. We didn’t have an established error budget at the time, but if we had, we would have completely blown through it.

Our focus on stability had many payoffs, including more robust deployment stories for many of our services, and a new IRC channel (#taskcluster-bots) full of deployment notices and monitoring alerts. We needed to put in this stability work to buy ourselves the time to work on redeployability.

What are we *not* doing?

With all the current work on redeployability, it’s tempting to look ahead to when we can incorporate some of these improvements into the current Firefox CI setup. While we do plan to redeploy Firefox CI at some point this year to take advantage of these systemic improvements, it is not our focus…yet.

One of the other things I love about the Taskcluster team is that they are really good at supporting community contribution. If you’re interested in learning more about Taskcluster or even getting your feet wet with some bugs, please drop by the #taskcluster channel on IRC and say Hi!

|

|

Nick Cameron: Dev-tools in 2018 |

This is a bit late (how is it the middle of April already?!), but the dev-tools team has lots of exciting plans for 2018 and I want to talk about them!

Our goals for 2018

Here's a summary of our goals for the year.

Ship it!

We want to ship high quality, mature, 1.0 tools in 2018, including:

- Rustfmt (1.0)

- Rust Language Server (RLS, 1.0)

- Rust extension for Visual Studio Code using the RLS (non-preview, 1.0)

- Clippy (1.0, though possibly not labeled that, including addressing distribution issues)

Support the epoch transition

2018 will bring a step change in Rust with the transition from 2015 to 2018 epochs. For this to be a smooth transition it will need excellent tool support. Exactly what tool support will be required will emerge during the year, but at the least we will need to provide a tool to convert crates to the new epoch.

We also need to ensure that all the currently existing tools continue to work through the transition. For example, that Rustfmt and IntelliJ can handle new syntax such as dyn Trait, and the RLS copes with changes to the compiler internals.

Cargo

The Cargo team have their own goals. Some things on the radar from a more general dev-tools perspective are integrating parts of Xargo and Rustup into Cargo to reduce the number of tools needed to manage most Rust projects.

Custom test frameworks

Testing in Rust is currently very easy and natural, but also very limited. We intend to broaden the scope of testing in Rust by permitting users to opt in to custom testing frameworks. This year we expect the design to be complete (and an RFC accepted) and for a solid and usable implementation to exist (though stabilisation may not happen until 2019). The current benchmarking facilities will be reimplemented as a custom test framework. The framework should support testing for WASM and embedded software.

Doxidize

Doxidize is a successor to Rustdoc. It adds support for guide-like documentation as well as API docs. This year there should be an initial release and it should be practical to use for real projects.

Maintain and improve existing tools

Maintenance and consistent improvement is essential to avoid bit-rot. Existing mature tools should continue to be well-maintained and improved as necessary. This includes

- debugging support,

- Rustdoc,

- Rustup,

- Bindgen,

- editor integration.

Good tools info on the Rust website

The Rust website is planned to be revamped this year. The dev-tools team should be involved to ensure that there is clear and accurate information about key tools in the Rust ecosystem and that high quality tools are discoverable by new users.

Organising the team

The dev-tools team should be reorganised to continue to scale and to support the goals in this roadmap. I'll outline the concrete changes next.

Re-organising the dev-tools team

The dev-tools team has always been large and somewhat broad - there are a lot of different tools at different levels of maturity with different people working on them. There has always been a tension between having a global, strategic view vs having a detailed, focused view. The peers system was one way to tackle that. This year we're trying something new - the dev-tools team will become something of an umbrella team, coordinating work across multiple teams and working groups.

We're creating two new teams - Rustdoc, and IDEs and editors - and going to work more closely with the Cargo team. We're also spinning up a bunch of working groups. These are more focused, less formal teams, they are dedicated to a single tool or task, rather than to strategy and decision making. Primarily they are a way to let people working on a tool work more effectively. The dev-tools team will continue to coordinate work and keep track of the big picture.

We're always keen to work with more people on Rust tooling. If you'd like to get involved, come chat to us on Gitter in the following rooms:

- dev-tools

- cargo

- Rustdoc

- IDEs and editors

- Bindgen working group

- Debugging working group

- Clippy working group

- Doxidize working group

- Rustfmt working group

- Rustup working group

- 2018 edition tools working group

The teams

Dev-tools

Manish Goregaokar, Steve Klabnik, and Without Boats will be joining the dev-tools team. This will ensure the dev-tools team covers all the sub-teams and working groups.

IDEs and editors

The new IDEs and editors team will be responsible for delivering great support for Rust in IDEs and editors of every kind. That includes the foundations of IDE support such as Racer and the Rust Language Server. The team is Nick Cameron (lead), Igor Matuszewski, Vlad Beskrovnyy, Alex Butler, Jason Williams, Junfeng Li, Lucas Bullen, and Aleksey Kladov.

Rustdoc

The new Rustdoc team is responsible for the Rustdoc software, docs.rs, and related tech. The docs team will continue to focus on the documentation itself, while the Rustdoc team focuses on the software. The team is QuietMisdreavus (lead), Steve Klabnik, Guillaume Gomez, Oliver Middleton, and Onur Aslan.

Cargo

No change to the Cargo team.

Working groups

- Bindgen

  - Bindgen and C Bindgen

  - Nick Fitzgerald and Emilio Álvarez

- Debugging

  - Debugger support for Rust - from compiler support, through LLVM and debuggers like GDB and LLDB, to the IDE integration.

  - Tom Tromey, Manish Goregaokar, and Michael Woerister

- Clippy

  - Oliver Schneider, Manish Goregaokar, llogiq, and Pascal Hertleif

- Doxidize

  - Steve Klabnik, Alex Russel, Michael Gatozzi, QuietMisdreavus, and Corey Farwell

- Rustfmt

  - Nick Cameron and Seiichi Uchida

- Rustup

  - Nick Cameron, Alex Crichton, Without Boats, and Diggory Blake

- Testing

  - Focused on designing and implementing custom test frameworks.

  - Manish Goregaokar, Jon Gjengset, and Pascal Hertleif

- 2018 edition tooling

  - Using Rustfix to ease the edition transition; ensure a smooth transition for all tools.

  - Pascal Hertleif, Manish Goregaokar, Oliver Schneider, and Nick Cameron

Thank you to everyone for the fantastic work they've been doing on tools, and for stepping up to be part of the new teams!

|

|

Air Mozilla: Emerging Tech Speaker Series Talk with Rian Wanstreet |

Precision Agriculture, or high tech farming, is being heralded as a panacea solution to the ever-growing demands of an increasing global population - but the...


https://air.mozilla.org/emerging-tech-speaker-series-talk-with-rian-wanstreet/

|

|

Air Mozilla: Reps Weekly Meeting, 19 Apr 2018 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.


|

|

The Mozilla Blog: Nonny de la Peña & the Power of Immersive Storytelling |

“I want you to think: if she can walk into that room and change her entire life and help create this whole energy and buzz, you can do it too.”

– Nonny de la Peña

This week, we’re highlighting VR’s groundbreaking potential to take audiences inside stories with a four-part video series. There aren’t many examples of creators doing that more effectively and powerfully than Nonny de la Peña.

Nonny de la Peña is a former correspondent for Newsweek, the New York Times and other major outlets. For more than a decade now, de la Peña has been focused on merging her passion for documentary filmmaking with a deep-seated expertise in VR. She essentially invented the field of “immersive journalism” through her company, Emblematic Group.

What makes de la Peña’s work particularly noteworthy (and a primary reason we’ve been driven to collaborate with her), is that her journalism often uses virtual reality to bring attention to under-served and overlooked groups.

To that end, our panel at this year’s Sundance Festival doubled as another installation in Nonny’s latest project, Mother Nature.

Mother Nature is an open and collaborative project that amplifies the voices of women and creators working in tech. It rebukes the concept that women are underrepresented in positions of power in tech and in engineering roles because of anything inherent in their gender.

It’s a clear demonstration of how journalists and all storytellers can use VR to create experiences that can change minds and hearts, and help move our culture towards a more open and human direction.

For more on Nonny de la Peña and her immersive projects, visit Emblematic Group. I’d also encourage you to access our resources and open tools at https://mixedreality.mozilla.org/ and learn how you can use virtual reality and the web to tell your own stories.

Read more on VR the People

- An Open Call to Filmmakers: Make Something Amazing With Virtual Reality and the Open Web

- Virtual Reality at the Intersection of Art & Technology

The post Nonny de la Peña & the Power of Immersive Storytelling appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/04/19/nonny-de-la-pena-the-power-of-immersive-storytelling/

|

|

Nick Cameron: Announcing cargo src (beta) |

cargo src is a new tool for exploring your Rust code. It is a cargo plugin which runs locally and lets you navigate your project in a web browser. It has syntax highlighting, jump to definition, type on hover, semantic search, find uses, find impls, and more.

Today I'm announcing version 0.1, our first beta; you should try it out! (But be warned, it is definitely beta quality - it's pretty rough around the edges).

To install: cargo install cargo-src, to run: cargo src --open in your project directory. You will need a nightly Rust toolchain. See below for more directions.

When cargo src starts up it will need to check and index your project. If it is a large project, that can take a while. You can see the status in the bottom left of the web page (this is currently not live, it'll update when you load a file). Build information from Cargo is displayed on the console where you ran cargo src. While indexing, you'll be able to see your code with syntax highlighting, but won't get any semantic information or be able to search.

Actionable identifiers are underlined. Click on a reference to jump to the definition. Click on a definition to search for all references to that definition. Right click on a link to see more options (such as 'find impls').

Hover over an identifier to see its type, documentation, and fields (or similar info).

On the left-hand side there are tabs for searching, and for browsing files and symbols (to be honest, that last one is not working that well yet). Searching is for identifiers only and is case-sensitive. I hope to support text search and fuzzy search in the future.

A big thank you to Nicole Anderson and Zahra Traboulsi for their work - they've helped tremendously with the frontend, making it look and function much better than my attempts. Thanks to everyone who has contributed by testing or coding!

Cargo src is powered by the same tech as the Rust Language Server, taking its data straight from the compiler. The backend is a Rust web server using Hyper. The frontend uses React and is written in JavaScript with a little TypeScript. I think it's a fun project to work on because it's at the intersection of so many interesting technologies. It grew out of an old project - rustw - which was a web-based frontend for the Rust compiler.

Contributions are welcome! It's really useful to file issues if you encounter problems. If you want to get more involved, the code is on GitHub; come chat on Gitter.

|

|

K Lars Lohn: Things Gateway - Series 2, Episode 1 |

With the release of version 0.4, the Things Gateway introduces something entirely new that the other products in the field don't yet do. Mozilla is thinking about the Internet of Things in a different way: a way that plays directly to the company's strengths. What if all these home automation devices (switches, plugs, bulbs) spoke a protocol that already exists and is cross platform, cross language and fully open: the Web protocols? Imagine if each plug or bulb responded to HTTP requests as if it were a Web app. You could just use a browser to control them: no need for proprietary software stacks and phone apps. This could be revolutionary.
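To make the idea of a bulb answering HTTP requests concrete, here is a minimal sketch of reading one value from a Web thing using only the Python standard library. The `/properties/<name>` URL layout, the `{"<name>": value}` JSON body, and the host `lamp.local:8888` are assumptions made for illustration, not details confirmed by this post.

```python
import json
from urllib.request import urlopen


def property_url(thing_url: str, name: str) -> str:
    """Build the URL of one property resource (assumed layout)."""
    return f"{thing_url.rstrip('/')}/properties/{name}"


def parse_property(body: str, name: str):
    """Pull the value out of a JSON body shaped like {"on": true} (assumed shape)."""
    return json.loads(body)[name]


def read_property(thing_url: str, name: str, timeout: float = 5.0):
    """Fetch the current value of a property with a plain HTTP GET."""
    with urlopen(property_url(thing_url, name), timeout=timeout) as response:
        return parse_property(response.read().decode("utf-8"), name)


# Hypothetical usage against a thing on the local network:
# read_property("http://lamp.local:8888", "on")
```

Because the device is just another HTTP resource, the same request could be made from a browser address bar, which is the point of the approach described above.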

In this, the beginning of Series Two of my blog posts about the Things Gateway, I'm going to show how to use the Things Framework to create virtual Web things.

Right now, the Mozilla team on this project is focused intensely on making the Web Things Framework easy to implement by hardware manufacturers. Targeting the Maker Movement, the team is pushing to make it easy to enable small Arduino and similar tiny computers to speak the Web of Things (WoT) protocol. They've created libraries and modules in various languages that implement the Things Framework: JavaScript, Java, and Python 3 implementations have been written, with C++ and Rust on the horizon.

I'm going to focus on the Python implementation of the Things Framework. It is pip installable with this command on a Linux machine:

$ sudo pip3 install webthing

The webthing-python GitHub repo provides some programming examples on how to use the module.

One of the first things that a Python programmer is going to notice about this module is that it closely tracks the structure of a reference implementation. That reference implementation is written in JavaScript. As such, it imposes a rather JavaScript style and structure onto the Python API. For some who can roll with the punches, this is not a problem; others, like myself, would rather have a more Pythonic API to deal with. So I've wrapped the webthing module with my own pywot (python Web of Things) module.

pywot paves over some of the awkward syntax exposed in the Python webthing implementation and offers some services that further reduce the amount of code it takes to create a Web thing.

For example, I don't have one of those fancy home weather stations in my yard. However, I can make a virtual weather station that fetches data from Weather Underground with the complete set of current conditions for my community. Since I can access a RESTful API from Weather Underground in a Python program, I can wrap that API as a Web Thing. The Things Gateway then sees it as a device on the network and integrates it into the UI as a sensor for multiple values.

Weather Underground offers a software developer's license that allows up to 500 API calls per day at no cost. All you have to do is sign up and they'll give you an API key. Embed that key in a URL and you can fetch data from just about any weather station on their network. The license agreement says that if you publicly post data from their network, you must provide attribution. However, this application of their data is totally private. Of course, it could be argued that turning your porch light blue when Weather Underground says the temperature is cold may be considered a public display of WU data.
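As a rough sketch of the "embed that key in a URL" step, the helpers below build a conditions URL and pull out the two values the weather station exposes. The URL pattern, the key `YOUR_KEY`, the location, and the sample payload are assumptions for illustration; only the `current_observation`, `temp_f` and `wind_mph` field names come from the weather station code in this post.

```python
import json


def conditions_url(api_key: str, state: str, city: str) -> str:
    """Build a current-conditions URL with the API key embedded (assumed pattern)."""
    return (
        "http://api.wunderground.com/api/"
        f"{api_key}/conditions/q/{state}/{city}.json"
    )


def extract_observation(payload: str) -> dict:
    """Reduce a conditions response to the temperature and wind speed."""
    observation = json.loads(payload)["current_observation"]
    return {
        "temperature": observation["temp_f"],
        "wind_speed": observation["wind_mph"],
    }


# Hypothetical response body, trimmed to the fields used above.
sample = '{"current_observation": {"temp_f": 54.3, "wind_mph": 7.0}}'
reading = extract_observation(sample)
```

Keeping the URL building and the parsing separate from the network call makes both easy to check without spending any of the daily API calls.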

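    import json

    import aiohttp
    import async_timeout
    from pywot import WoTThing  # assumed import path for the pywot wrapper

    # `config` is assumed to be supplied by the surrounding application; it
    # carries `seconds_for_timeout` and `target_url` (the URL with the API key).


    class WeatherStation(WoTThing):

        async def get_weather_data(self):
            # fetch the current conditions, giving up after the configured timeout
            async with aiohttp.ClientSession() as session:
                async with async_timeout.timeout(config.seconds_for_timeout):
                    async with session.get(config.target_url) as response:
                        self.weather_data = json.loads(await response.text())
            # publish the two values exposed as Web thing properties
            self.temperature = self.weather_data['current_observation']['temp_f']
            self.wind_speed = self.weather_data['current_observation']['wind_mph']

        temperature = WoTThing.wot_property(
            'temperature',
            initial_value=0.0,
            description='the temperature in °F',
            value_source_fn=get_weather_data,
            metadata={
                'units': '°F'
            }
        )

        wind_speed = WoTThing.wot_property(
            'wind speed',
            initial_value=0.0,
            description='the wind speed in MPH',
            value_source_fn=get_weather_data,
            metadata={
                'units': 'MPH'
            }
        )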

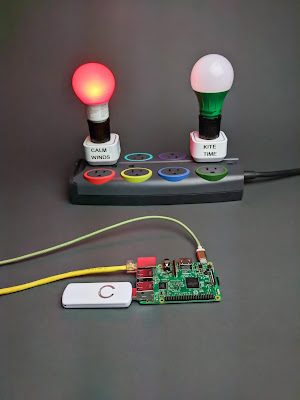

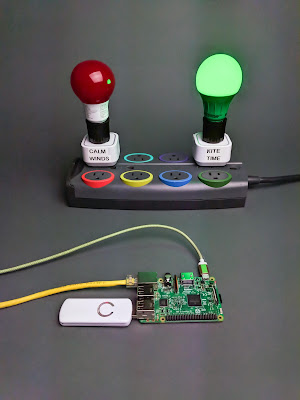

The ability to change the status of devices in my home based on the weather is very useful. I could turn off the irrigation system if there's been enough rain. I could have a light give warning if frost is going to endanger my garden. I could have a light tell me that it is windy enough to go fly a kite.

Is the wind calm or is it perfect kite flying weather?
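A rule like the kite-flying light boils down to comparing a property value against thresholds. The sketch below shows one way to classify the wind speed; the function name and the 5 and 20 MPH cut-offs are hypothetical values picked for illustration, not settings from the Things Gateway or from this post.

```python
def wind_status(wind_mph: float,
                calm_below: float = 5.0,
                too_windy_from: float = 20.0) -> str:
    """Classify a wind speed reading (thresholds are illustrative only)."""
    if wind_mph < calm_below:
        return "calm"
    if wind_mph < too_windy_from:
        return "kite weather"
    return "too windy"


# A rule could switch a light on only when the status is "kite weather".
light_on = wind_status(12.0) == "kite weather"
```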

If you want to jump right in, you can see the full code in my pywot GitHub repo. The demo directory has several examples. However, in my next posting, I'm going to explain the virtual weather station in detail.

From Ben Francis of the Mozilla ET IoT team: ...there is currently no authentication and while HTTPS support is provided, it can only really be used with self-signed certificates on a local network. We're not satisfied with that level of security and are exploring ways to provide authentication (in discussions with the W3C WoT Interest Group) and a solution for HTTPS on local networks (via the HTTPS in Local Network Community Group https://www.w3.org/community/httpslocal/). This means that for the time being we would strongly recommend against exposing native web things directly to the Internet using the direct integration pattern unless some form of authentication is used.

http://www.twobraids.com/2018/04/things-gateway-series-2-episode-1.html

|

|

Wladimir Palant: The ticking time bomb: Fake ad blockers in Chrome Web Store |

People searching for a Google Chrome ad blocking extension have to choose from dozens of similarly named extensions. Only a few of these are legitimate; most are forks of open source ad blockers trying to attract users with misleading extension names and descriptions. What are these up to? Thanks to Andrey Meshkov we now know what many people already suspected: these extensions are malicious. He found obfuscated code hidden carefully within a manipulated jQuery library that accepted commands from a remote server.

As it happens, I checked out some fake ad blockers only in February. Quite remarkably, all of these turned up clean: the differences to their respective open source counterparts were all minor, mostly limited to renaming and adding Google Analytics tracking. One of these was the uBlock Plus extension which now showed up on Andrey’s list of malicious extensions and has been taken down by Google. So at some point in the past two months this extension was updated in order to add malicious code.

And that appears to be the point here: somebody creates these extensions and carefully measures user counts. Once the user count gets high enough, the extension gets an “update” that attempts to monetize the user base by spying on them. Stealing browsing history was at least the malicious functionality that Andrey could see; additional code could be pushed out by the server at will. That’s what I suspected all along, but this is the first time there is actual proof.

Chrome Web Store has traditionally been very permissive as far as the uploaded content goes. Even taking down extensions infringing trademarks took forever; extensions with misleading names and descriptions, on the other hand, were always considered “fine.” You have to consider that updating extensions on Chrome Web Store is a fully automatic process; there is no human review like with Mozilla or Opera. So nobody stops you from turning an originally harmless extension bad.

On the bright side, I doubt that Andrey’s assumption of 20 million compromised Chrome users is correct. There are strong indicators that the user numbers of these fake ad blockers have been inflated by bots, simply because the user count is a contributing factor to the search ranking. I assume that this is also the main reason behind the Google Analytics tracking: whoever is behind these extensions, they know exactly that their Chrome Web Store user numbers are bogus.

For reference, the real ad blocking extensions are:

https://palant.de/2018/04/18/the-ticking-time-bomb-fake-ad-blockers-in-chrome-web-store

|

|

Georg Fritzsche: Firefox Data engineering newsletter Q1 / 2018 |

As the Firefox data engineering teams we provide core tools for using data to other teams. This spans from collection through Firefox Telemetry, storage & processing in our Data Platform to making data available in Data Tools.

To make new developments more visible we aim to publish a quarterly newsletter. As we skipped one, some important items from Q4 are also highlighted this time.

This year our teams are putting their main focus on:

- Making experimentation easy & powerful.

- Providing a low-latency view into product release health.

- Making it easy to work with events end-to-end.

- Addressing important user issues with our tools.

Usage improvements

Last year we started to investigate how our various tools are used by people working on Firefox in different roles. From that we started addressing some of the main issues users have.

Most centrally, the Telemetry portal is now the main entry point to our tools, documentation and other resources. When working with Firefox data you will find all the important tools linked from there.

We added the probe dictionary to make it easy to find what data we have about Firefox usage.

For STMO, our Redash instance, we deployed a major UI refresh from the upstream project.

There is new documentation on prototyping and optimizing STMO queries.

Our data documentation saw many other updates, from cookbooks on how to see your own pings and sending new pings to adding more datasets. We also added documentation on how our data pipeline works.

Enabling experimentation

For experimentation, we have focused on improving tooling. Test Tube will soon be our main experiment dashboard, replacing experiments viewer. It displays the results of multivariant experiments that are being conducted within Firefox.

We now have St. Moab as a toolkit for automatically generating experiment dashboards.

Working with event data

To make working with events easier, we improved multiple stages in the pipeline. Our documentation has an overview of the data flow.

On the Firefox side, events can now be recorded through the events API, from add-ons, and whitelisted Firefox content. From Firefox 61, all recorded events are automatically counted into scalars, to easily get summary statistics.
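To make the idea of counting events into scalars concrete, here is a minimal sketch in Python. It is not Firefox's implementation: the `count_events` helper, the `category#method#object` key format and the sample events are all assumptions chosen for illustration.

```python
from collections import Counter


def count_events(events):
    """Count recorded events per (category, method, object) combination.

    The 'category#method#object' key format is an assumption made for
    this sketch, not a statement about how Firefox names its scalars.
    """
    counts = Counter()
    for category, method, obj in events:
        counts[f"{category}#{method}#{obj}"] += 1
    return counts


# Hypothetical events, for illustration only.
sample_events = [
    ("ui", "click", "reload-button"),
    ("ui", "click", "reload-button"),
    ("ui", "click", "home-button"),
]
event_counts = count_events(sample_events)
```

With counts like these, summary statistics such as "how often was this event recorded" can be read directly without processing every individual event record.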

Event data is available for analysis in Redash in different datasets. We can now also connect more event data to Amplitude, a product analytics tool. A connection for some mobile events to Amplitude is live; for Firefox Desktop events it will be available soon.

Low-latency release health data

To enable low-latency views into release health data, we are working on improving Mission Control, which will soon replace arewestableyet.com.

It has new features that enable comparing quality measures like crashes release-over-release across channels.

Firefox Telemetry tools

For Firefox instrumentation we expanded on the event recording APIs. To make build turnaround times faster, we now support adding scalars in artifact builds and will soon extend this to events.

Following the recent Firefox data preferences changes, we adapted Telemetry to only differentiate between “release” and “prerelease” data.

This also impacted the measurement dashboard and telemetry.js users as the current approach to publishing this data from the release channel does not work anymore.

The measurement dashboard got some smaller usability improvements thanks to a group of contributors. We also prototyped a use counter dashboard for easier analysis.

Datasets & analysis tools

To power LetsEncrypt stats, we publish a public Firefox SSL usage dataset.

The following datasets are newly available in Redash or through Spark:

- client_counts_daily — This is useful for estimating user counts over a few dimensions and a long history with daily precision.

- first_shutdown_summary — A summary of the first main ping of a client’s lifetime. This accounts for clients that do not otherwise appear in main_summary.

- churn — A pre-aggregated dataset for calculating the 7-day churn for Firefox Desktop.

- retention — A pre-aggregated dataset for calculating retention for Firefox Desktop. The primary use-case is 1-day retention.
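As a rough illustration of what a 1-day retention figure measures, the following Python sketch computes it from raw per-client activity dates. The definition used here (the share of clients active on a given day that are also active the next day), the `one_day_retention` helper and the sample data are assumptions for this example; the pre-aggregated retention dataset may define and compute it differently.

```python
from datetime import date, timedelta


def one_day_retention(activity, cohort_day):
    """Share of clients active on cohort_day that were also active the next day.

    `activity` maps a client id to the set of dates on which that client
    was active. Returns None when no client was active on cohort_day.
    """
    next_day = cohort_day + timedelta(days=1)
    cohort = [client for client, days in activity.items() if cohort_day in days]
    if not cohort:
        return None
    retained = sum(1 for client in cohort if next_day in activity[client])
    return retained / len(cohort)


# Hypothetical activity data, for illustration only.
activity = {
    "client-a": {date(2018, 4, 1), date(2018, 4, 2)},
    "client-b": {date(2018, 4, 1)},
    "client-c": {date(2018, 4, 2)},
}
retention = one_day_retention(activity, date(2018, 4, 1))
```

In this sample, two clients are active on the cohort day and one of them returns the next day, so `retention` is 0.5. A pre-aggregated dataset avoids rescanning raw activity for every such question.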

For analysis tooling we now have Databricks available. This offers instant-on notebooks with no more waiting for clusters to spin up and supports Scala, SQL and R. If you’re interested, sign up to the databricks-discuss mailing list.

We also got the probe info service into production, which scrapes the probe data in Firefox code and makes a history of it available to consumers. This is what powers the probe dictionary, but can also be used to power other data tooling.

Getting in touch

Please reach out to us with any questions or concerns.

- You can find us on IRC in #telemetry and #datapipeline.

- We are available on slack in #fx-metrics.

- The main mailing list for data topics is fx-data-dev.

- Bugs can be filed in one of these components.

- We are on Twitter as @MozTelemetry.

Cheers from

- The data engineering team (Katie Parlante), consisting of

- The Firefox Telemetry team (Georg Fritzsche)

- The Data Platform team (Mark Reid)

- The Data Tools team (Rob Miller)

Firefox Data engineering newsletter Q1 / 2018 was originally published in Georg Fritzsche on Medium, where people are continuing the conversation by highlighting and responding to this story.

|

|

Air Mozilla: The Joy of Coding - Episode 136 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

The Firefox Frontier: Working for Good: Metalwood Salvage of Portland |

The web should be open to everyone, a place for unbridled innovation, education, and creative expression. That’s why Firefox fights for Net Neutrality, promotes online privacy rights, and supports open-source …

The post Working for Good: Metalwood Salvage of Portland appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/working-for-good-metalwood-salvage/

|

|

Air Mozilla: Weekly SUMO Community Meeting, 18 Apr 2018 |

This is the SUMO weekly call

https://air.mozilla.org/weekly-sumo-community-meeting-20180418/

|

|

Mozilla Addons Blog: Friend of Add-ons: Viswaprasath Ks |

Please meet our newest Friend of Add-ons, Viswaprasath Ks! Viswa began contributing to Mozilla in January 2013, when he met regional community members while participating in a Firefox OS hackathon in Bangalore, India. Since then, he has been a member of the Firefox Student Ambassador Board, a Sr. Firefox OS app reviewer, and a Mozilla Rep and Tech Speaker.

In early 2017, Viswa began developing extensions for Firefox using the WebExtensions API. From the start, Viswa wanted to invite his community to learn this framework and create extensions with him. At community events, he would speak about extension development and help participants build their first extensions. These presentations served as a starting point for creating the Activate campaign “Build Your Own Extension.” Viswa quickly became a leader in developing the campaign and testing iterations with a variety of different audiences. In late 2017, he collaborated with community members Santosh Viswanatham and Trishul Goel to re-launch the campaign with a new event flow and more learning resources for new developers.

Viswa continues to give talks about extension development and help new developers become confident working with WebExtensions APIs. He is currently creating a series of videos about the WebExtensions API to be released this summer. When he isn’t speaking about extensions, he mentors students in the Tamilnadu region in Rust and Quality Assurance.

These experiences have translated into skills Viswa uses in everyday life. “I learned about code review when I became a Sr. Firefox OS app reviewer,” he says. “This skill helps me a lot at my office. I am able to easily point out errors in the product I am working on. The second important thing I learned by contributing to Mozilla is how to build and work with a diverse team. The Mozilla community has a lot of amazing people all around the world, and there are unique things to learn from each and every one.”

In his free time, Viswa watches tech-related talks on YouTube, plays chess online, and explores new Mozilla-related projects like Lockbox.

He’s also quick to add, “I feel each and every one who cares about the internet should become Mozilla contributors so the journey will be awesome in future.”

If that describes you and you would like to get more involved with the add-ons community, please take a look at our wiki for some opportunities to contribute to the project.

Thank you so much for all of your contributions, Viswa! We’re proud to name you Friend of Add-ons.

The post Friend of Add-ons: Viswaprasath Ks appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2018/04/18/friend-of-add-ons-viswaprasath-ks/

|

|

The Mozilla Blog: Virtual Reality at the Intersection of Art & Technology |

“If someone can imagine a world…they can create an experience.”

– Reggie Watts

This is the second video in our four part series around creators, virtual reality, and the open web. As we laid out in the opening post of this series, virtual reality is more than a technology, and it is far more than mere eye-candy. VR is an immensely powerful tool that is honed and developed every day. In the hands of a creator, that tool has the potential to transport audiences into new worlds and provide new perspectives.

It’s one thing to read about the crisis in Sudan, but being transported inside that crisis is deeply affecting in a way we haven’t seen before.

The hard truth is that all the technological capabilities in the world won’t matter if creators don’t have the proper tools to shape that technology into experiences. To make a true impact, technology and art can’t live parallel lives. They must intersect. Bringing together those worlds was the thrust for our VR the People panel at the Sundance Festival.

“You’re gonna end up finding someone who’s a 16-year-old in the basement with an open-source VR headset and some crappy computer and they download free software so they can build [an experience].”

– Brooks Brown, Global Director of Virtual Reality, Starbreeze Studios

That quote above is exactly why Mozilla spent years working to build WebVR, and why we held our panel at Sundance. It’s why we are writing these posts. We’re hoping they reach someone out there – anyone, anywhere – who has a world in their head and a story to tell. We’re hoping they pick up the tools our engineers built and use them in ways that inspire and force those same engineers to build new tools that keep pace with the evolving creative force.

So go ahead, check out our resources and tools at https://mixedreality.mozilla.org/. We promise you won’t be creating alone. You bring the art, we’ll bring the technology, and together we can make something special.

Read more on VR the People

- An Open Call to Filmmakers: Make Something Amazing With Virtual Reality and the Open Web

The post Virtual Reality at the Intersection of Art & Technology appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2018/04/18/virtual-reality-at-the-intersection-of-art-technology/

|

|

The Firefox Frontier: No-Judgment Digital Definitions: App vs Web App |

Just when you think you’ve got a handle on this web stuff, things change. The latest mixup? Apps vs Web Apps. An app should be an app no matter what, …

The post No-Judgment Digital Definitions: App vs Web App appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/no-judgment-digital-definitions-app-vs-web-app/

|

|