Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Karl Dubost: UA Detection and code libs legacy

A Web site is a mix of technologies with different lifetimes. The HTML markup is mostly rock-solid for years. CSS is not bad either, apart from the vendor prefixes. JavaScript, PHP, name-your-language are also not that bad. And then there's the full social and business infrastructure of these pieces put together. How do we create Web sites which are resilient and robust over time?

Cyclic Release of Web sites

The business infrastructure of Web agencies is set up for the temporary. They receive a request from a client. They create the Web site with the mood of the moment. After 2 or 3 years, the client thinks the Web site is not up to the new fashion, the new trends. The new Web agency (because it's often a new one) promises that they will do a better job. They throw away the old Web site, breaking old URIs at the same time. They create a new Web site with the technologies of the day. Best case scenario, they understand that keeping URIs is good for branding and karma. They release the site for the next 2-3 years.

Basically the full Web site has changed in terms of content and technologies and is working fine in current browsers. It's a 2-3 year release cycle of maintenance.

Understanding Legacy and Maintenance

Web browsers are being updated every 6 weeks or so. This is a very fast cycle. They are released with a lot of unstable technologies. Sometimes, vendors release entirely new browsers with new version numbers and new technologies. Device makers are also releasing new devices very often. It triggers both a consumerism habit and a difficulty for these to exist.

Web developers design and focus their code on what is most popular at the moment. Most of the time it's not their fault. These are the requirements from the business team in the Web agency or from the clients. A lot of small Web agencies do not have the resources to invest in automated testing for the Web site. So they focus on two browsers: the one they develop with (the most popular of the moment) and the one the client said was the absolute minimum bar (the most popular of the past).

Code libraries rely on User Agent detection to cope with bugs or unstable features of each browser. These libraries know only the past, never the future, not even the now. Code libraries are often piles of legacy by design. Some are open source, some have license fees attached to them. In both cases, they require a lot of maintenance and testing which are not planned into the budget of a Web site (which has already been blown by the development of the new shiny Web site).

UA detection and Legacy

The Web site will break for some users at a point in time. They chose to use a Web browser which didn't fit in the box of the current Web site. Last week, I went through WPTouch lib Web Compatibility bugs. Basically Firefox OS was not recognized by the User Agent detection code and in return WordPress Web sites didn't send the mobile version to the mobile devices. We opened that bug in August 2013. We contacted the BraveNewCode company which fixed the bug in March 2014. As of today, December 2014, there are still 7 sites in our list of 12 sites which have not switched to the new version of the library.

These were bugs reported by users of these sites. It means people can't use their favorite browsers for accessing a particular Web site. I'm pretty sure that there are more sites with the old version of WPTouch. Users either think their browser is broken or just don't understand what is happening.

Eventually these bugs will go away. It's one of my axioms in Web Compatibility: Wait long enough and the bug goes away. Usually the Web site doesn't exist anymore, or has been redesigned from the ground up. In the meantime some users had a very bad experience.

We need a better story for code legacy, one with fallback, one which doesn't rely only on the past for making it work.
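To make the idea of a fallback concrete, here is a minimal Python sketch. It is not WPTouch's actual code: the token list, the function names, and the sample User Agent strings are assumptions chosen for illustration. It contrasts a detection routine that only knows browsers from the past with one that also falls back on the generic "Mobile" or "Tablet" token.

```python
import re

# Hypothetical allowlist of the kind a legacy library might ship with.
KNOWN_MOBILE_TOKENS = ("iPhone", "Android", "BlackBerry", "Windows Phone")


def is_mobile_allowlist_only(user_agent: str) -> bool:
    """Brittle check: only browsers known when the list was written match."""
    return any(token in user_agent for token in KNOWN_MOBILE_TOKENS)


def is_mobile_with_fallback(user_agent: str) -> bool:
    """Same allowlist, plus a generic fallback for browsers it has never seen."""
    if is_mobile_allowlist_only(user_agent):
        return True
    return re.search(r"\b(Mobile|Tablet)\b", user_agent) is not None


# Sample strings for illustration only.
FIREFOX_OS_UA = "Mozilla/5.0 (Mobile; rv:26.0) Gecko/26.0 Firefox/26.0"
DESKTOP_UA = "Mozilla/5.0 (X11; Linux x86_64; rv:34.0) Gecko/20100101 Firefox/34.0"
```

With these definitions the allowlist-only check misses the Firefox OS string, while the fallback version classifies it as mobile and still leaves the desktop string alone.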

Otsukare.

Francois Marier: Mercurial and Bitbucket workflow for Gecko development

While it sounds like I should really switch to a bookmark-based Mercurial workflow for my Gecko development, I figured that before I do that, I should document how I currently use patch queues and Bitbucket.

Starting work on a new bug

After creating a new bug in Bugzilla, I do the following:

- Create a new mozilla-central-mq-BUGNUMBER repo on Bitbucket using the web interface and use https://bugzilla.mozilla.org/show_bug.cgi?id=BUGNUMBER as the description.

- Create a new patch queue: hg qqueue -c BUGNUMBER

- Initialize the patch queue: hg init --mq

- Make some changes.

- Create a new patch: hg qnew -Ue bugBUGNUMBER.patch

- Commit the patch to the mq repo: hg commit --mq -m "Initial version"

- Push the mq repo to Bitbucket: hg push ssh://hg@bitbucket.org/fmarier/mozilla-central-mq-BUGNUMBER

Make the above URL the default for pull/push by putting this in .hg/patches-BUGNUMBER/.hg/hgrc:

    [paths]
    default = https://bitbucket.org/fmarier/mozilla-central-mq-BUGNUMBER
    default-push = ssh://hg@bitbucket.org/fmarier/mozilla-central-mq-BUGNUMBER
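Since the hgrc contents differ only by bug number, they can be generated. The Python sketch below is an illustration only: the function name `mq_hgrc` and its parameters are hypothetical, and it simply reproduces the `[paths]` section described above using the standard `configparser` module.

```python
import configparser
import io


def mq_hgrc(bug_number, bitbucket_user="fmarier"):
    """Return hgrc text with the default pull and push paths for one bug's mq repo."""
    repo = f"mozilla-central-mq-{bug_number}"
    config = configparser.ConfigParser()
    config["paths"] = {
        "default": f"https://bitbucket.org/{bitbucket_user}/{repo}",
        "default-push": f"ssh://hg@bitbucket.org/{bitbucket_user}/{repo}",
    }
    buffer = io.StringIO()
    config.write(buffer)  # writes "[paths]" followed by "key = value" lines
    return buffer.getvalue()
```

The returned text would then be written to .hg/patches-BUGNUMBER/.hg/hgrc by hand or by a small wrapper script.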

Working on a bug

I like to preserve the history of the work I did on a patch. So once I've got some meaningful changes to commit to my patch queue repo, I do the following:

- Add the changes to the current patch: hg qref

- Check that everything looks fine: hg diff --mq

- Commit the changes to the mq repo: hg commit --mq

- Push the changes to Bitbucket: hg push --mq

Switching between bugs

Since I have one patch queue per bug, I can easily work on more than one bug at a time without having to clone the repository again and work from a different directory.

Here's how I switch between patch queues:

- Unapply the current queue's patches: hg qpop -a

- Switch to the new queue: hg qqueue BUGNUMBER

- Apply all of the new queue's patches: hg qpush -a
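The three switching steps always run in the same order, so they are easy to wrap. The Python sketch below is a hypothetical helper, not part of the workflow described here: the function names are made up, and it only runs the hg commands listed above through `subprocess`.

```python
import subprocess


def switch_queue_commands(bug_number):
    """Return the three hg invocations for switching to another bug's queue."""
    return [
        ["hg", "qpop", "-a"],               # unapply the current queue's patches
        ["hg", "qqueue", str(bug_number)],  # switch to the other queue
        ["hg", "qpush", "-a"],              # apply all of its patches
    ]


def switch_queue(bug_number, repo_dir="."):
    """Run the commands in order, stopping at the first one that fails."""
    for command in switch_queue_commands(bug_number):
        subprocess.run(command, cwd=repo_dir, check=True)
```

Passing `check=True` makes a failed step stop the sequence, so the later steps do not run after an error.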

Rebasing a patch queue

To rebase my patch onto the latest mozilla-central tip, I do the following:

1. Unapply patches using hg qpop -a

2. Update the branch: hg pull -u

3. Reapply the first patch: hg qpush and resolve any conflicts

4. Update the patch file in the queue: hg qref

5. Repeat steps 3 and 4 for each patch.

6. Commit the changes: hg commit --mq -m "Rebase patch"

Credits

Thanks to Thinker Lee for telling me about qqueue and Chris Pearce for explaining to me how he uses mq repos on Bitbucket.

Of course, feel free to leave a comment if I missed anything useful or if there's an easier way to do any of the above.

http://feeding.cloud.geek.nz/posts/mercurial-bitbucket-workflow-for-gecko-development/

Daniel Glazman: Bloomberg

Welcoming Bloomberg as a new customer of Disruptive Innovations. Just implemented the proposed caret-color property for them in Gecko.

http://www.glazman.org/weblog/dotclear/index.php?post/2014/12/21/Bloomberg

Patrick Cloke: The so-called IRC "specifications"

In a previous post I had briefly gone over the "history of IRC" as I know it. I’m going to expand on this a bit as I’ve come to understand it a bit more while reading through documentation. (Hopefully it won’t sound too much like a rant, as it is all driving me crazy!)

IRC Specifications

So there’s the original specification (RFC 1459) in May 1993; this was expanded and replaced by four different specifications (RFC 2810, 2811, 2812, 2813) in April 2000. Seems pretty straightforward, right?

DCC/CTCP

Well, kind of…there’s also the DCC/CTCP specifications, which describe a separate protocol embedded/hidden within the IRC protocol (i.e. they’re sent as IRC messages and parsed specially by clients; the server sees them as normal messages). DCC/CTCP is used to send files as well as other particular messages (ACTION commands for roleplaying, SED for encrypting conversations, VERSION to get client information, etc.). Anyway, this gets a bit more complicated: it starts with the DCC specification. This was replaced/updated by the CTCP specification (which fully includes the DCC specification) in 1994. An "updated" CTCP specification was released in February 1997. There’s also a CTCP/2 specification from October 1998, which was meant to reformulate a lot of the previous three versions. And finally, there’s the DCC2 specification (two parts: connection negotiation and file transfers) from April 2004.
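To show what "embedded within the IRC protocol" means in practice: a CTCP message is ordinary message text wrapped in \x01 bytes, which clients pick out and interpret. The Python sketch below is a simplified illustration under that one assumption. The function names are hypothetical, and it ignores the quoting rules found in the CTCP specifications.

```python
CTCP_DELIM = "\x01"


def parse_ctcp(message_body):
    """Return (command, params) if the body is a CTCP message, else None."""
    if len(message_body) < 2 or not message_body.startswith(CTCP_DELIM):
        return None
    # Tolerate a missing trailing delimiter, which some clients omit.
    inner = message_body[1:].rstrip(CTCP_DELIM)
    if not inner:
        return None
    command, _, params = inner.partition(" ")
    return command.upper(), params


def make_ctcp(command, params=""):
    """Wrap a command (and optional parameters) in CTCP delimiters."""
    inner = f"{command} {params}" if params else command
    return f"{CTCP_DELIM}{inner}{CTCP_DELIM}"
```

The server relays the wrapped text like any other message; only the receiving client treats ACTION or VERSION specially.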

But wait! I lied…that’s not really the end of DCC/CTCP, there’s also a bunch of extensions to it: Turbo DCC, XDCC (eXtended DCC) in 1993, DCC Whiteboard, and a few other variations of this: RDCC (Reverse DCC), SDCC (Secure DCC), DCC Voice, etc. Wikipedia has a good summary.

Something else to note about the whole DCC/CTCP mess…parts of it just don’t have any documentation. There’s none at all for SED (at least that I’ve found, I’d love to be proved wrong) and very little (really just a mention) for DCC Voice.

So, we’re about halfway through now. There’s a bunch of extensions to the IRC protocol specifications that add new commands to the actual protocol.

Authentication

Originally IRC had no authentication ability except the PASS command, which very few servers seem to use. A variety of mechanisms have replaced this, including SASL authentication (both PLAIN and BLOWFISH methods, although BLOWFISH isn’t documented); SASL itself is covered by at least four RFCs in this situation. There also seems to be a method called "Auth" which I haven’t been able to pin down, as well as Ident (which is a more general protocol authentication method I haven’t looked into yet).

Extension Support

A few specifications add a way for servers to tell their clients exactly what the server supports. The first of these was RPL_ISUPPORT, which was defined as a draft specification in January 2004, and updated in January of 2005.

A similar concept was defined as IRC Capabilities in March 2005.
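For the RPL_ISUPPORT reply (numeric 005) mentioned above, the server sends a list of tokens, each either a bare name or NAME=value. The Python sketch below shows one way a client might turn those tokens into a lookup table. The function name is hypothetical, and it covers only the basic token forms, not everything in the draft.

```python
def parse_isupport(tokens):
    """Parse the tokens of one 005 reply into a dict.

    `tokens` is the list of parameters between the client's nick and the
    trailing human-readable text. Bare tokens map to True.
    """
    supported = {}
    for token in tokens:
        key, separator, value = token.partition("=")
        supported[key] = value if separator else True
    return supported
```

A client could then consult, for example, the CHANTYPES entry to decide which prefixes mark a channel name instead of hard-coding them.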

Protocol Extensions

IRCX, a Microsoft extension to IRC used (at one point) for some of its instant messaging products, exists as a draft from June 1998.

There’s also:

Services

To fill in some of the missing features of IRC, services were created (Wikipedia has a good summary again). These commonly include ChanServ, NickServ, OperServ, and MemoServ. Not too hard, but different server packages include different services (or even the same services that behave differently). One of the more common ones is Anope (plus they have awesome documentation, so they get a link).

There was an attempt to standardize how to interact with services called IRC+, which included three specifications: conference control protocol, identity protocol and subscriptions protocol. I don’t believe these are widely supported (if at all).

IRC URL Scheme

Finally this brings us to the IRC URL scheme of which there are a few versions. A draft from August 1996 defines the original irc: URL scheme. This was updated/replaced by another draft which defines irc: and ircs: URL schemes.
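As a rough illustration of the two schemes, the Python sketch below splits an irc: or ircs: URL into its parts with the standard library. It does not implement either draft: the function name and the returned keys are hypothetical, treating ircs: as the secure variant is an assumption, and no default port is filled in when the URL omits one.

```python
from urllib.parse import urlsplit


def parse_irc_url(url):
    """Split an irc:// or ircs:// URL into scheme flag, host, port and target."""
    parts = urlsplit(url)
    if parts.scheme not in ("irc", "ircs"):
        raise ValueError(f"not an IRC URL: {url!r}")
    return {
        "secure": parts.scheme == "ircs",  # assumption: ircs marks the secure variant
        "host": parts.hostname,
        "port": parts.port,                # None when the URL does not give one
        "target": parts.path.lstrip("/"),  # channel or nick, as written in the URL
    }
```

A target written with a literal # would be treated as a URL fragment by `urlsplit`, which is one of the details a real implementation would have to handle.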

As of right now that’s all that I’ve found…an awful lot. Plus the specifications are not all compatible with each other (and sometimes outright contradict each other). Often newer specifications say not to support older specifications, but who knows what servers/clients you’ll end up talking to! It’s difficult to know what’s used in practice, especially since there are an awful lot of IRC servers out there. Anyway, if someone does know of another specification, etc. that I missed please let me know!

- Updated [2014-12-20]

- Fixed some dead links. Unfortunately some links now point to the Wayback Machine. There are also copies of most, if not all, of these links in my irc-docs repository. Thanks Ultra Rocks for the heads up!

http://patrick.cloke.us/posts/2011/03/08/so-called-irc-specifications/

Laura Thomson: 2014: Engineering Operations Year in Review

On the first day of Mozlandia, Johnny Stenback and Doug Turner presented a list of key accomplishments in Platform Engineering/Engineering Operations in 2014.

I have been told a few times recently that people don’t know what my teams do, so in the interest of addressing that, I thought I’d share our part of the list. It was a pretty damn good year for us, all things considered, and especially given the level of organizational churn and other distractions.

We had a bit of organizational churn ourselves. I started the year managing Web Engineering, and between March and September ended up also managing the Release Engineering teams, Release Operations, SUMO and Input Development, and Developer Services. It’s been a challenging but very productive year.

Here’s the list of what we got done.

Web Engineering

- Migrate crash-stats storage off HBase and into S3

- Launch Crash-stats “hacker” API (access to search, raw data, reports)

- Ship fully-localized Firefox Health Report on Android

- Many new crash-stats reports including GC-related crashes, JS crashes, graphics adapter summary, and modern correlation reports

- Crash-stats reporting for B2G

- Pluggable processing architecture for crash-stats, and alternate crash classifiers

- Symbol upload system for partners

- Migrate l10n.mozilla.org to modern, flexible backend

- Prototype services for checking health of the browser and a support API

- Solve scaling problems in Moztrap to reduce pain for QA

- New admin UI for Balrog (new update server)

- Bouncer: correctness testing, continuous integration, a staging environment, and multi-homing for high availability

- Grew Air Mozilla community contributions from 0 to 6 non-staff committers

- Many new features for Air Mozilla including: direct download for offline viewing of public events, tear out video player, WebRTC self publishing prototype, Roku Channel, multi-rate HLS streams for auto switching to optimal bitrate, search over transcripts, integration with Mozilla Popcorn functionality, and access control based on Mozillians groups (e.g. “nda”)

DXR

- Modeless, explorable UI with all-new JS

- Case-insensitive searching

- Proof-of-concept Rust analysis

- Improved C++ analysis, with lots of new search types

- Multi-tree support

- Multi-line selection (linkable!)

- HTTP API for search

- Line-based searching

- Multi-language support (Python already implemented, Rust and JS in progress)

- Elasticsearch backend, bringing speed and features

- Completely new plugin API, enabling binary file support and request-time analysis

SUMO

- Offline SUMO app in Marketplace

- SUMO Community Hub

- Improved SUMO search with Synonyms

- Instant search for SUMO

- Redesigned and improved SUMO support forums

- Improved support for more products in SUMO (Thunderbird, Webmaker, Open Badges, etc.)

- BuddyUP app (live support for FirefoxOS) (in progress, TBC Q1 2015)

Input

- Dashboards for everyone infrastructure: allowing anyone to build charts/dashboards using Input data

- Backend for heartbeat v1 and v2

- Overhauled the feedback form to support multiple products, streamline user experience and prepare for future changes

- Support for Loop/Hello, Firefox Developer Edition, Firefox 64-bit for Windows

- Infrastructure for automated machine and human translations

- Massive infrastructure overhaul to improve overall quality

Release Engineering

- Cut AWS costs by over 70% during 2014 by switching builds to spot instances and using intelligent bidding algorithms

- Migrated all hardware out of SCL1 and closed datacenter to save $1 million per year (with Relops)

- Optimized network transfers for build/test automation between datacenters, decreasing bandwidth usage by 50%

- Halved build time on b2g-inbound

- Parallelized verification steps in release automation, saving over an hour off the end-to-end time required for each release

- Decommissioned legacy systems (e.g. tegras, tinderbox) (with Relops)

- Enabled build slave reboots via API

- Self-serve arbitrary builds via API

- b2g FOTA updates

- Builds for open H.264

- Built flexible new update service (Balrog) to replace legacy system (will ship first week of January)

- Support for Windows 64 as a first class platform

- Supported FX10 builds and releases

- Release support for switch to Yahoo! search

- Update server support for OpenH264 plugins and Adobe’s CDM

- Implement signing of EME sandbox

- Per-checkin and nightly Flame builds

- Moved desktop Firefox builds to mach+mozharness, improving reproducibility and hackability for devs

- Helped mobile team ship different APKs targeted by device capabilities rather than a single, monolithic APK

Release Operations

- Decreased operating costs by $1 million per year by consolidating infrastructure from one datacenter into another (with Releng)

- Decreased operating costs and improved reliability by decommissioning legacy systems (kvm, redis, r3 mac minis, tegras) (with Releng)

- Decreased operating costs for physical Android test infrastructure by 30% reduction in hardware

- Decreased MTTR by developing a simplified releng self-serve reimaging process for each supported build and test hardware platform

- Increased security for all releng infrastructure

- Increased stability and reliability by consolidating single point of failure releng web tools onto a highly available cluster

- Increased network reliability by developing a tool for continuous validation of firewall flows

- Increased developer productivity by updating Windows platform developer tools

- Increased fault and anomaly detection by auditing and augmenting releng monitoring and metrics gathering

- Simplified the build/test architecture by creating a unified releng API service for new tools

- Developed a disaster recovery and business continuation plan for 2015 (with RelEng)

- Researched bare-metal private cloud deployment and produced a POC

Developer Services

- Ship Mozreview, a new review architecture integrated with Bugzilla (with A-team)

- Massive improvements in hg stability and performance

- Analytics and dashboards for version control systems

- New architecture for try to make it stable and fast

- Deployed treeherder (tbpl replacement) to production

- Assisted A-team with Bugzilla performance improvements

I’d like to thank the team for their hard work. You are amazing, and I look forward to working with you next year.

At the start of 2015, I’ll share our vision for the coming year. Watch this space!

http://www.laurathomson.com/2014/12/2014-engineering-operations-year-in-review/

Mozilla Fundraising: Thanks to Our Amazing Supporters: A New Goal

https://fundraising.mozilla.org/thanks-to-our-amazing-supporters-a-new-goal/

Chris Pearce: Firefox video playback's skip-to-next-keyframe behavior

The fundamental question that skip-to-next-keyframe answers is, "what do we do when the video stream decode can't keep up with the playback speed?"

Video playback is a classic producer/consumer problem. You need to ensure that your audio and video stream decoders produce decoded samples at a rate no less than the rate at which the audio/video streams need to be rendered. You also don't want to produce decoded samples at a rate too much greater than the consumption rate, else you'll waste memory.

For example, if we're running on a low end PC, playing a 30 frames per second video, and the CPU is so slow that it can only decode an average of 10 frames per second, we're not going to be able to display all video frames.

This is also complicated by our video stack's legacy threading model. Our first video decoding implementation did the decoding of video and audio streams in the same thread. We assumed that we were using software decoding, because we were supporting Ogg/Theora/Vorbis, and later WebM/VP8/Vorbis, which are only commonly available in software.

The pseudo code for our "decode thread" used to go something like this:

    while (!AudioDecodeFinished() || !VideoDecodeFinished()) {
      if (!HaveEnoughAudioDecoded()) {
        DecodeSomeAudio();
      }
      if (!HaveEnoughVideoDecoded()) {
        DecodeSomeVideo();
      }
      if (HaveLotsOfAudioDecoded() && HaveLotsOfVideoDecoded()) {
        SleepUntilRunningLowOnDecodedData();
      }
    }

This was an unfortunate design, but it certainly made some parts of our code much simpler and easier to write.

We've recently refactored our code, so it no longer looks like this, but for some of the older backends that we support (Ogg, WebM, and MP4 using GStreamer on Linux), the pseudocode is still effectively (but not explicitly or obviously) this. MP4 on Windows, MacOSX, and Android in Firefox 36 and later now decode asynchronously, so we are not limited to decoding only on one thread.

The consequence of decoding audio and video on the same thread only really bites on low end hardware. I have an old Lenovo x131e netbook, which on some videos can take 400ms to decode a Theora keyframe. Since we use the same thread to decode audio as video, if we don't have at least 400ms of audio already decoded while we're decoding such a frame, we'll get an "audio underrun". This is where we don't have enough audio decoded to keep up with playback, and so we end up glitching the audio stream. This sounds very jarring to the listener.

Humans are very sensitive to sound; the audio stream glitching is much more jarring to a human observer than dropping a few video frames. The tradeoff we made was to sacrifice the video stream playback in order to not glitch the audio stream playback. This is where skip-to-next-keyframe comes in.

With skip-to-next-keyframe, our pseudo code becomes:

    while (!AudioDecodeFinished() || !VideoDecodeFinished()) {
      if (!HaveEnoughAudioDecoded()) {
        DecodeSomeAudio();
      }
      if (!HaveEnoughVideoDecoded()) {
        bool skipToNextKeyframe =
          (AmountOfDecodedAudio < LowAudioThreshold()) ||
          HaveRunOutOfDecodedVideoFrames();
        DecodeSomeVideo(skipToNextKeyframe);
      }
      if (HaveLotsOfAudioDecoded() && HaveLotsOfVideoDecoded()) {
        SleepUntilRunningLowOnDecodedData();
      }
    }

We also monitor how long a video frame decode takes, and if a decode takes longer than the low-audio-threshold, we increase the low-audio-threshold.
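The skip decision and the adapting threshold can be sketched together in a few lines of Python. This is an illustration, not Firefox's code: the class name, the initial threshold of 300 ms, and the choice to raise the threshold to the observed decode time are all assumptions, since the text only says that the threshold is increased.

```python
class SkipPolicy:
    """Decides when to skip to the next keyframe, with an adaptive threshold."""

    def __init__(self, low_audio_threshold_ms=300.0):
        # Hypothetical starting value, not taken from Firefox.
        self.low_audio_threshold_ms = low_audio_threshold_ms

    def should_skip(self, decoded_audio_ms, decoded_video_frames):
        """Skip when audio is running low or no decoded video frames remain."""
        return (decoded_audio_ms < self.low_audio_threshold_ms
                or decoded_video_frames == 0)

    def record_video_decode(self, decode_time_ms):
        """Raise the threshold if a single frame took longer than it allows."""
        if decode_time_ms > self.low_audio_threshold_ms:
            # Assumption: grow the threshold to the slowest decode seen so far.
            self.low_audio_threshold_ms = decode_time_ms
```

After one slow 400 ms decode, the policy treats anything under 400 ms of buffered audio as low, which is the adaptation described above.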

If we pass a true value for skipToNextKeyframe to the decoder, it is supposed to give up and skip its decode up to the next keyframe. That is, don't try to decode anything between now and the next keyframe.

Video frames are typically encoded as a sequence of full images (called "key frames", "reference frames", or I-frames in H.264) and then some number of frames which are "diffs" from the key frame (P-frames in H.264 speak). (H.264 also has B-frames, which are a combination of diffs of frames both before and after the current frame, which can lead the encoded stream to be muxed out of order.)
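Because a diff frame cannot be decoded without the frames it depends on, a skip has to land on a keyframe. The Python sketch below illustrates that on a plain list of frame types. The function name and the "I"/"P" labels are assumptions for illustration, and B-frames are ignored.

```python
def skip_to_next_keyframe(frame_types, position):
    """Return the index of the first keyframe at or after `position`.

    `frame_types` is a list such as ["I", "P", "P", "I"]. Frames between
    `position` and the returned index are dropped rather than decoded.
    Returns len(frame_types) if no keyframe remains.
    """
    for index in range(position, len(frame_types)):
        if frame_types[index] == "I":
            return index
    return len(frame_types)
```

The longer the gap between keyframes, the more video is lost on each skip, which is why a skip can drop a couple of seconds at a time.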

The idea here is that we deliberately drop video frames in the hope that we give time back to the audio decode, so we are less likely to get audio glitches.

Our implementation of this idea is not particularly good.

Often on low end Windows machines playing HD videos without hardware accelerated video decoding, you'll get a run of say half a second of video decoded, and then we'll skip everything up to the next keyframe (a couple of seconds), before playing another half a second, and then skipping again, ad nauseam, giving a slightly weird experience. Or in the extreme, you can end up with only getting the keyframes decoded, or even no frames if we can't get the keyframes decoded in time. Or if it works well enough, you can still get a couple of audio glitches at the start of playback until the low-audio-threshold adapts to a large enough value, and then playback is smooth.

The FirefoxOS MediaOmxReader also never implemented skip-to-next-keyframe correctly, so our behavior there is particularly bad. This is compounded by the fact that FirefoxOS typically runs on lower end hardware anyway. The MediaOmxReader doesn't actually skip decode to the next keyframe, it decodes to the next keyframe. This will cause the video decode to hog the decode thread for even longer; this will give the audio decode even less time, which is the exact opposite of what you want to do. What it should do is skip the demux of video up to the next keyframe, but if I recall correctly there were bugs in the Android platform's video decoder library that FirefoxOS is based on that caused this to be unreliable.

All these issues occur because we share the same thread for audio and video decoding. This year we invested some time refactoring our video playback stack to be asynchronous. This enables backends that support it to do their decoding asynchronously, on their own thread. Since audio then decodes on a separate thread from video, we should have glitch-free audio even when the video decode can't keep up, even without engaging skip-to-next-keyframe. We still need to do something like skipping the video decode when the video decode is falling behind, but it can probably engage less aggressively.

I did a quick test the other day on a low end Windows 8.0 tablet with an Atom Z2760 CPU with skip-to-next-keyframe disabled and async decoding enabled, and although the video decode falls behind and gets out of sync with audio (frames are rendered late) we never glitched audio.

So I think it's time to revisit our skip-to-next-keyframe logic, since we don't need to sacrifice video decode to ensure that audio playback doesn't glitch.

When using async decoding we still need some mechanism like skip-to-next-keyframe to ensure that when the video decode falls behind it can catch up. The existing logic to engage skip-to-next-keyframe also performs that role, but often we enter skip-to-next-keyframe and start dropping frames when video decode actually could keep up if we just gave it a chance. This often happens when switching streams during MSE playback.

Now that we have async decoding, we should experiment with modifying the HaveRunOutOfDecodedVideoFrames() logic to be more lenient, to avoid unnecessary frame drops during MSE playback. One idea would be to only engage skip-to-next-keyframe if we've missed several frames. We need to experiment on low end hardware.
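One possible shape for the "only engage if we've missed several frames" idea is a counter of consecutive late frames. The Python sketch below is only a starting point for such experiments: the class name and the default limit of 3 are assumptions, not values from Firefox.

```python
class LateFrameCounter:
    """Engages skip-to-next-keyframe only after several consecutive late frames."""

    def __init__(self, max_consecutive_late=3):
        # Hypothetical default; the right value would come from testing.
        self.max_consecutive_late = max_consecutive_late
        self.consecutive_late = 0

    def observe(self, frame_was_late):
        """Record one rendered frame and return True if skipping should engage."""
        if frame_was_late:
            self.consecutive_late += 1
        else:
            self.consecutive_late = 0
        return self.consecutive_late >= self.max_consecutive_late
```

A single late frame, such as one caused by a stream switch, resets as soon as the decoder catches up, so it does not trigger a skip on its own.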

http://blog.pearce.org.nz/2014/12/firefox-video-playbacks-skip-to-next.html

Gervase Markham: Global Posting Privileges on the Mozilla Discussion Forums

Have you ever tried to post a message to a Mozilla discussion forum, particularly one you haven’t posted to before, and received back a “your message is held in a queue for the moderator” message?

Turns out, if you are subscribed to at least one forum in its mailing list form, you get global posting privileges to all forums via all mechanisms (mail, news or Google Groups). If you aren’t so subscribed, you have to be whitelisted by the moderator on a per-forum basis.

If this sounds good, and you are looking for a nice low-traffic list to use to get this privilege, try mozilla.announce.

http://feedproxy.google.com/~r/HackingForChrist/~3/lXB2PTfkqhk/

Wladimir Palant: Can Mozilla be trusted with privacy?

A year ago I would have certainly answered the question in the title with “yes.” After all, who else if not Mozilla? Mozilla has been living the privacy principles which we took for the Adblock Plus project and called our own. “Limited data” is particularly something that is very hard to implement and defend against the argument of making informed decisions.

But maybe I’ve simply been a Mozilla contributor way too long and don’t see the obvious signs any more. My colleague Felix Dahlke brought my attention to the fact that Mozilla is using Google Analytics and Optimizely (trusted third parties?) on most of their web properties. I cannot really find a good argument why Mozilla couldn’t process this data in-house; insufficient resources certainly isn’t it.

And then there is Firefox Health Report and Telemetry. Maybe I should have been following the discussions, but I simply accepted the prompt when Firefox asked me. My assumption was that it’s anonymous data collection and cannot be used to track the behavior of individual users. So I was all the more surprised to read this blog post explaining how useful unique client IDs are to analyze data. Mind you, not the slightest sign of concern about the privacy invasion here.

Maybe somebody else actually cared? I opened the bug but the only statement on privacy is far from being conclusive — yes, you can opt out and the user ID will be removed then. However, if you don’t opt out (e.g. because you trust Mozilla) then you will continue sending data that can be connected to a single user (and ultimately you). And then there is this old discussion about the privacy aspects of Firefox Health Reporting, a long and fruitless one it seems.

Am I missing something? Should I be disabling all feedback functionality in Firefox and recommending that everybody else do the same?

Side-note: Am I the only one who is annoyed by the many Mozilla bugs lately which are created without a description and provide zero context information? Are there so many decisions being made behind closed doors or are people simply too lazy to add a link?

https://palant.de/2014/12/19/can-mozilla-be-trusted-with-privacy

Laura Hilliger: Web Literacy Lensing: Identity

Theory

First things first: there’s a media education theory (in this book) suggesting that technology has complicated our “identity”. It’s worth mentioning because it’s interesting, and I think it’s worth noting that I didn’t consider all the nuances of these various identities in thinking about how the Web Literacy Map becomes the Web Literacy Map for Identity. We as human beings have multiple, distinct identities we have to deal with in life. We have to deal with who we are with family vs with friends vs alone vs professionally regardless of whether or not we are online, but with the development of the virtual space, the theory suggests that identity has become even more complicated. Additionally, we now have to deal with:

- The Real Virtual: an anonymous online identity that you try on. Pretending to be a particular identity online because you are curious as to how people react to it? That’s not really pretending; it’s a part of your identity that needs answers to its curiosities.

- The Real IN Virtual: an online identity that is affiliated with an offline identity. My name is Laura offline as well. Certain aspects of my offline personality are mirrored in the online space. My everyday identity is (partially) manifested online.

- The Virtual IN Real: a kind of hybrid identity that you adopt when you interact first in an online environment and then in the physical world. People make assumptions about you when they meet you for the first time. Technology partially strips us of certain communication mannerisms (e.g. Body language, tone, etc), so those assumptions are quite different if you met through technology and then in real life.

- The Virtual Real: an offline identity built from a compilation of data about a particular individual. In short: identity theft.

Exploring Identity (and the web)

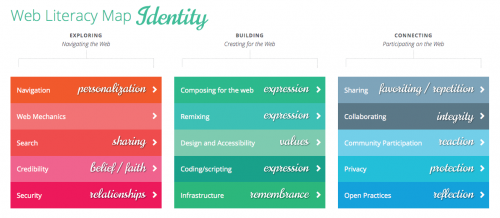

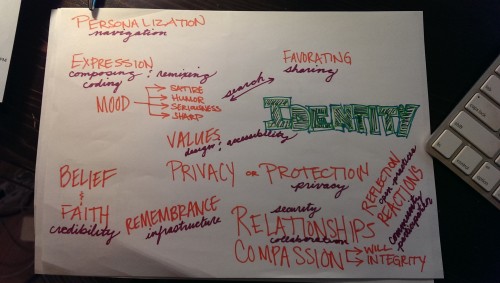

Navigation – Identity is personal, so maybe part of web literacy is about personalizing your experience. Perhaps skills become more granular when we talk about putting a lens on the Map? Example granularity: common features of the browser skill might break down into “setting your own homepage” and “pinning apps and bookmarks”.

Web Mechanics - I didn’t find a way to lens this competency. It’s the only one I couldn’t. Very frustrating to have ONE that doesn’t fit. What does that say about Web Mechanics or the Web Literacy Map writ large?

Search – Identity is manifested, so your tone and mood might dictate what you search for and how you share it. Are you a satirist? Are you funny? Are you serious or terse? Search is a connective competency under this lens because it connects your mood/tone to your manifestation of identity. Example skill modification/addition: Locating or finding desired information within search results -> using specialized search machines to find desired emotional expression (e.g. GIPHY!).

Credibility – Identity is formed through beliefs and faith, and I wouldn’t have a hard time arguing that those things influence your understanding of credible information. If you believe something and someone confirms your belief, you’ll likely find that person more credible than someone who rejects your belief. Example skill modification/addition: Comparing information from a number of sources to judge the trustworthiness of content -> Comparing information from a number of sources to judge the trustworthiness of people.

Security - Identity is influenced heavily by relationships. Keeping other people’s data secure seems like part of the puzzle, and there’s something about the innate need to keep people who have influenced your identity positively secure. I don’t have an example for this one off the top of my head, but it’s percolating.

Building Identity (and the web)

Composing for the Web, Remixing, and Coding/Scripting allow us to be expressive about our identities. The expression is the WHY of any of this, so directly connected to your own identity. It connects into your personality, motivations, and a mess of thinking skills we need to function in our world. Skills underneath these competencies could be modified to incorporate those emotional and psychological traits of that expression.

Design and Accessibility – Values are inseparable from our identities. I think design and accessibility is a competency that radiates a person’s values. It’s ok to back burner this if you’re being expressive for the sake of being expressive, but if you have a message, if you are being expressive in an effort to connect with other people (which, let’s face it, is part of the human condition), design and accessibility is a value. Not sure how I would modify the skills…

Infrastructure – I was thinking that this one pulled in remembrance as a part of identity. Exporting data, moving data, understanding the internet stack and how to adequately use it so that you can keep a record of your or someone else’s online identity has lots of implications for remembrance, which I think influences who we are as much as anything else. Example skill modification/addition: “Exporting and backing up your data from web services” might lead to “Analyzing historical data to determine identity shifts”

That's all for now. I've thought a little about the final strand, but I'm going to save it for next year. I would like to hear what you all think. Is this a useful experiment for the Web Literacy Map? Does this kind of thinking help home in on ways to structure learning activities that use the web? Can you help me figure out what my brain is doing? Happy holidays everyone ;)

http://www.zythepsary.com/psycho/web-literacy-lensing-identity/

|

|

Gregory Szorc: Why hg.mozilla.org is Slow |

At Mozilla, I often hear statements like "Mercurial is slow." That's a very general statement. Depending on the context, it can mean one or more of several things:

- My Mercurial workflow is not very efficient

- hg commands I execute are slow to run

- hg commands I execute appear to stall

- The Mercurial server I'm interfacing with is slow

I want to spend time talking about a specific problem: why hg.mozilla.org (the server) is slow.

What Isn't Slow

If you are talking to hg.mozilla.org over HTTP or HTTPS (https://hg.mozilla.org/), there should not currently be any server performance issues. Our Mercurial HTTP servers are pretty beefy and are able to absorb a lot of load.

If https://hg.mozilla.org/ is slow, chances are:

- You are doing something like cloning a 1+ GB repository.

- You are asking the server to do something really expensive (like generate JSON for 100,000 changesets via the pushlog query interface).

- You don't have a high bandwidth connection to the server.

- There is a network event.

Previous Network Events

There have historically been network capacity issues in the datacenter where hg.mozilla.org is hosted (SCL3).

During Mozlandia, excessive traffic to ftp.mozilla.org essentially saturated the SCL3 network. During this time, requests to hg.mozilla.org were timing out: Mercurial traffic just couldn't traverse the network. Fortunately, events like this are quite rare.

Up until recently, Firefox release automation was effectively overwhelming the network by doing some clownshoesy things.

For example, gaia-central was being cloned all the time. We had a ~1.6 GB repository being cloned over a thousand times per day. We were transferring close to 2 TB of gaia-central data out of Mercurial servers per day.

We also found issues with pushlogs sending 100+ MB responses.

And the build/tools repo was getting cloned for every job. Ditto for mozharness.

In all, we identified a few terabytes of excessive Mercurial traffic that didn't need to exist. This excessive traffic was saturating the SCL3 network and slowing down not only Mercurial traffic, but other traffic in SCL3 as well.
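As a rough sanity check on the gaia-central figures above, the arithmetic can be written out. This is only a back-of-the-envelope sketch: the repository size and clone count come from the post, "over a thousand" is treated as a lower bound, and the variable names are made up for illustration.

```python
# Figures quoted in the post. The clone count is a lower bound
# ("over a thousand times per day").
REPO_SIZE_GB = 1.6
CLONES_PER_DAY = 1000

# Daily transfer if every clone moves the full repository.
daily_gb = REPO_SIZE_GB * CLONES_PER_DAY
daily_tb = daily_gb / 1000  # decimal terabytes

# Number of daily clones that would add up to the quoted ~2 TB.
clones_for_2_tb = round(2000 / REPO_SIZE_GB)

print(f"At least {daily_tb:.1f} TB/day at {CLONES_PER_DAY} clones")
print(f"About {clones_for_2_tb} clones/day would reach 2 TB")
```

Both numbers are consistent with the post: a thousand clones already accounts for roughly 1.6 TB, and a count somewhat above a thousand gets close to 2 TB.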

Fortunately, people from Release Engineering were quick to respond to and fix the problems once they were identified. The problem is now firmly under control. That said, given the scale of Firefox's release automation, any new system that comes online and talks to version control is capable of causing server outages. I've already raised this concern when reviewing some TaskCluster code. The thundering herd of automation will be an ongoing concern. But I have plans to further mitigate risk in 2015. Stay tuned.

Looking back at our historical data, it appears that we hit these network saturation limits a few times before we reached a tipping point in early November 2014. Unfortunately, we didn't realize this because up until recently, we didn't have a good source of data coming from the servers. We lacked the tooling to analyze what we had. We lacked the experience to know what to look for. Outages are effective flashlights. We learned a lot and know what we need to do with the data moving forward.

Available Network Bandwidth

One person pinged me on IRC with the comment "Git is cloning much faster than Mercurial." I asked for timings and the Mercurial clone wall time for Firefox was much higher than I expected.

The reason was network bandwidth. This person was performing a Git clone between 2 hosts in EC2 but was performing the Mercurial clone between hg.mozilla.org and a host in EC2. In other words, they were partially comparing the performance of a 1 Gbps network against a link over the public internet! When they did a fair comparison by removing the network connection as a variable, the clone times rebounded to what I expected.

The single-homed nature of hg.mozilla.org in a single datacenter in northern California is not only bad for disaster recovery reasons, it also means that machines far away from SCL3 or connecting to SCL3 over a slow network aren't getting optimal performance.

In 2015, expect us to build out a geo-distributed hg.mozilla.org so that connections are hitting a server that is closer and thus faster. This will probably be targeted at Firefox release automation in AWS first. We want those machines to have a fast connection to the server and we want their traffic isolated from the servers developers use so that hiccups in automation don't impact the ability for humans to access and interface with source code.

NFS on SSH Master Server

If you connect to http://hg.mozilla.org/ or https://hg.mozilla.org/, you are hitting a pool of servers behind a load balancer. These servers have repository data stored on local disk, where I/O is fast. In reality, most I/O is serviced by the page cache, so local disks don't come into play.

If you connect to ssh://hg.mozilla.org/, you are hitting a single, master server. Its repository data is hosted on an NFS mount. I/O on the NFS mount is horribly slow. Any I/O intensive operation performed on the master is much, much slower than it should be. Such is the nature of NFS.

We'll be exploring ways to mitigate this performance issue in 2015. But it isn't the biggest source of performance pain, so don't expect anything immediately.

Synchronous Replication During Pushes

When you hg push to hg.mozilla.org, the changes are first made on the SSH/NFS master server. They are subsequently mirrored out to the HTTP read-only slaves.

As is currently implemented, the mirroring process is performed synchronously during the push operation. The server waits for the mirrors to complete (to a reasonable state) before it tells the client the push has completed.

Depending on the repository, the size of the push, and server and network load, mirroring commonly adds 1 to 7 seconds to push times. This is time when a developer is sitting at a terminal, waiting for hg push to complete. The time for Try pushes can be larger: 10 to 20 seconds is not uncommon (but fortunately not the norm).

The current mirroring mechanism is overly simple and prone to many failures and sub-optimal behavior. I plan to work on fixing mirroring in 2015. When I'm done, there should be no user-visible mirroring delay.
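The difference between synchronous and asynchronous mirroring described in this section can be sketched in a few lines. This is a toy model, not the hg.mozilla.org implementation: `Mirror`, `push`, and the in-memory lists are hypothetical stand-ins, and the only detail taken from the post is that the server waits for the mirrors before acknowledging the push.

```python
from concurrent.futures import ThreadPoolExecutor, wait


class Mirror:
    """Hypothetical stand-in for a read-only HTTP mirror."""

    def __init__(self, name):
        self.name = name
        self.changesets = []

    def replicate(self, changeset):
        # A real mirror would pull from the master over the network here.
        self.changesets.append(changeset)


def push(master_log, mirrors, changeset, pool, synchronous=True):
    """Record a push on the master, then mirror it out.

    Returns the replication futures so callers can inspect them.
    """
    master_log.append(changeset)  # the write on the master
    futures = [pool.submit(m.replicate, changeset) for m in mirrors]
    if synchronous:
        # The client is not told the push is complete until
        # every mirror has finished replicating.
        wait(futures)
    return futures


mirrors = [Mirror(f"mirror{i}") for i in range(10)]
master_log = []
with ThreadPoolExecutor(max_workers=4) as pool:
    push(master_log, mirrors, "abc123", pool, synchronous=True)
    # Counted at the moment the push is acknowledged.
    replicated_at_ack = sum(len(m.changesets) for m in mirrors)

print(f"{replicated_at_ack} of {len(mirrors)} mirrors had the push at ack time")
```

With `synchronous=False` the function would return before replication is guaranteed to have finished, which removes the wait from the client's point of view but means readers may briefly see stale mirrors.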

Pushlog Replication Inefficiency

Up until yesterday (when we deployed a rewritten pushlog extension), the replication of pushlog data from master to server was very inefficient. Instead of transferring a delta of pushes since last pull, we were literally copying the underlying SQLite file across the network!

Try's pushlog is ~30 MB. mozilla-central and mozilla-inbound are in the same ballpark. 30 MB x 10 slaves is a lot of data to transfer. These operations were capable of periodically saturating the network, slowing everyone down.

The rewritten pushlog extension performs a delta transfer automatically as part of hg pull. Pushlog synchronization now completes in milliseconds while commonly only consuming a few kilobytes of network traffic.
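To illustrate the idea of a delta transfer, here is a minimal sketch using Python's built-in `sqlite3` module. The table layout, column names, and helper functions are assumptions made for this example and are not taken from the actual pushlog extension; the point is only that a mirror asks for rows newer than the last push it already has instead of copying the whole database file.

```python
import sqlite3


def make_pushlog(pushes):
    """Build an in-memory pushlog. The schema is hypothetical."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE pushlog (id INTEGER PRIMARY KEY, user TEXT, node TEXT)"
    )
    db.executemany(
        "INSERT INTO pushlog (id, user, node) VALUES (?, ?, ?)", pushes
    )
    db.commit()
    return db


def pushes_since(db, last_id):
    """Return only the pushes with an id greater than last_id."""
    return db.execute(
        "SELECT id, user, node FROM pushlog WHERE id > ? ORDER BY id",
        (last_id,),
    ).fetchall()


def sync_delta(master, mirror):
    """Copy pushes the mirror is missing; return how many rows moved."""
    last_id = mirror.execute(
        "SELECT COALESCE(MAX(id), 0) FROM pushlog"
    ).fetchone()[0]
    delta = pushes_since(master, last_id)
    mirror.executemany(
        "INSERT INTO pushlog (id, user, node) VALUES (?, ?, ?)", delta
    )
    mirror.commit()
    return len(delta)


master = make_pushlog([(1, "alice", "a1"), (2, "bob", "b2"), (3, "carol", "c3")])
mirror = make_pushlog([(1, "alice", "a1")])

transferred = sync_delta(master, mirror)
print(f"Transferred {transferred} new pushes")
```

A second call to `sync_delta` transfers nothing, which is why the steady-state cost is a few kilobytes rather than a full copy of the file.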

Early indications reveal that deploying this change yesterday decreased the push times to repositories with long push history by 1-3s.

Try

Pretty much any interaction with the Try repository is guaranteed to have poor performance. The Try repository is doing things that distributed version control systems weren't designed to do. This includes Git.

If you are using Try, all bets are off. Performance will be problematic until we roll out the headless try repository.

That being said, we've made changes recently to make Try perform better. The median time for pushing to Try has decreased significantly in the past few weeks. The first dip in mid-November was due to upgrading the server from Mercurial 2.5 to Mercurial 3.1 and from converting Try to use generaldelta encoding. The dip this week has been from merging all heads and from deploying the aforementioned pushlog changes. Pushing to Try is now significantly faster than 3 months ago.

Conclusion

Many of the reasons for hg.mozilla.org slowness are known. More often than not, they are due to clownshoes or inefficiencies on Mozilla's part rather than fundamental issues with Mercurial.

We have made significant progress at making hg.mozilla.org faster. But we are not done. We are continuing to invest in fixing the sub-optimal parts and making hg.mozilla.org faster yet. I'm confident that within a few months, nobody will be able to say that the servers are a source of pain like they have been for years.

Furthermore, Mercurial is investing in features to make the wire protocol faster, more efficient, and more powerful. When deployed, these should make pushes faster on any server. They will also enable workflow enhancements, such as Facebook's experimental extension to perform rebases as part of push (eliminating push races and having to manually rebase when you lose the push race).

http://gregoryszorc.com/blog/2014/12/19/why-hg.mozilla.org-is-slow

|

|

Roberto A. Vitillo: ClientID in Telemetry submissions |

A new feature landed recently that makes it possible to group Telemetry sessions by profile ID. Being able to group sessions by profile turns out to be extremely useful for several reasons. For instance, as some users tend to generate an enormous number of sessions daily, analyses tend to be skewed towards those users.

Take uptime duration; if we just consider the uptime distribution of all sessions collected in a certain timeframe on Nightly we would get a distribution with a median duration of about 15 minutes. But that number isn’t really representative of the median uptime for our users. If we group the submissions by Client ID and compute the median uptime duration for each group, we can build a new distribution that is more representative of the general population:

And we can repeat the exercise for the startup duration, which is expressed in ms:

Our dashboards are still based on the session distributions but it’s likely that we will provide both session and user based distributions in our next-gen telemetry dash.
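The difference between a session-based and a user-based distribution can be shown with a small example. The session data below is invented purely for illustration (it is not Telemetry data), and the client names are placeholders; the sketch only demonstrates the grouping step described above, where the median is computed per Client ID first.

```python
from collections import defaultdict
from statistics import median

# (client_id, uptime in minutes). Made-up data: one client submits
# many short sessions, two others submit a single long session each.
sessions = [
    ("client-a", 5), ("client-a", 6), ("client-a", 4),
    ("client-a", 5), ("client-a", 7),
    ("client-b", 60),
    ("client-c", 120),
]

# Session-based view: every session counts equally.
session_median = median(uptime for _, uptime in sessions)

# User-based view: one median per client, then the median of those.
by_client = defaultdict(list)
for client_id, uptime in sessions:
    by_client[client_id].append(uptime)

per_client_medians = [median(uptimes) for uptimes in by_client.values()]
user_median = median(per_client_medians)

print(f"Median over sessions: {session_median} minutes")
print(f"Median over clients:  {user_median} minutes")
```

In this toy data the heavy submitter pulls the session median down to 6 minutes, while the per-client median is 60 minutes, which mirrors the skew the post describes.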

edit:

Please keep in mind that:

- Telemetry does not collect privacy-sensitive data.

- You do not have to trust us; you can verify what data Telemetry is collecting in the about:telemetry page in your browser and in aggregate form in our Telemetry dashboards.

- Telemetry is an opt-in feature on release channels and a feature you can easily disable on other channels.

- The new Telemetry Client ID does not track users, it tracks Telemetry performance & feature-usage metrics across sessions.

http://robertovitillo.com/2014/12/19/clientid-in-telemetry-submissions/

|

|

Will Kahn-Greene: Input status: December 18th, 2014 |

Preface

It's been 3 months since the last status report. Crimey! That's not great, but it's even worse because it makes this report crazy long.

First off, lots of great work done by Adam Okoye, L. Guruprasad, Bhargav Kowshik, and Deshraj Yadav! w00t!

Second, we did a ton of stuff and broke 1,000 commits! w00t!

Third, I've promised to do more frequent status reports. I'll go back to one every two weeks.

Onward!

Development

High-level summary:

- Lots of code quality work.

- Updated ElasticUtils to 0.10.1 so we can upgrade our Elasticsearch cluster.

- Heartbeat v2.

- Overhauled the generic feedback form.

- remote-troubleshooting data capture.

- contribute.json file.

- Upgrade to Django 1.6.

- Upgrade to Python 2.7!!!!!

- Improved and added to pre-commit and commit-msg linters.

Landed and deployed:

- ce95161 Clarify source and campaign parameters in API

- 286869e bug 788281 Add update_product_details to deploy

- bbedc86 bug 788281 Implement basic events API

- 1c0ff9f bug 1071567 Update ElasticUtils to 0.10.1

- 7fd52cd bug 1072575 Rework smart_timedelta

- ce80c56 bug 1074276 Remove abuse classification prototype

- 7540394 bug 1075563 Fix missing flake8 issue

- 11e4855 bug 1025925 Change file names (Adam Okoye)

- 23af92a bug 1025925 Change all instances of fjord.analytics.tools to fjord.analytics.utils (Adam Okoye)

- ae28c60 bug 1025925 Change instances of of util relating to fjord/base/util.py (Adam Okoye)

- bc77280 Add Adam Okoye to CONTRIBUTORS

- 545dc52 bug 1025925 Change test module file names

- fc24371 bug 1041703 Drop prodchan column

- 9097b8f bug 1079376 Add error-email -> response admin view

- d3cfdfe bug 1066618 Tweak Gengo account balance warning

- a49f1eb bug 1020303 Add rating column

- 55fede0 bug 1061798 Reset page number Resets page number when filter checkbox is checked (Adam Okoye)

- 1d4fd00 bug 854479 Fix ui-lightness 404 problems

- a9bf3b1 bug 940361 Change size on facet calls

- c2b2c2b bug 1081413 Move url validation code into fjord_utils.js Rewrote url validation code that was in generic_feedback.js and added it to fjord_utils.js (Adam Okoye)

- 4181b5e bug 1081413 Change code for url validation (Adam Okoye)

- 2cd62ad bug 1081413 Add test for url validation (Adam Okoye)

- f72652a bug 1081413 Correct operator in test_fjord_utils.js (aokoye)

- c9b83df bug 1081997 Fix unicode in smoketest

- cba9a2d bug 1086643 bug 1086650 Redo infrastructure for product picker version

- e8a9cc7 bug 1084387 Add on_picker field to Product

- 2af4fca bug 1084387 Add on_picker to management forms

- 1ced64a bug 1081411 Create format test (Adam Okoye)

- 00f8a72 Add template for mentored bugs

- e95d0f1 Cosmetic: Move footnote

- d0cb705 Tweak triaging docs

- d5b35a2 bug 1080816 Add A/B for ditching chart

- fa1a47f Add notes about running tests with additional warnings

- ddde83c Fix mimetype -> content_type and int division issue

- 2edb3b3 bug 1089650 Add a contribute.json file (Bhargav Kowshik)

- d341977 bug 1089650 Add test to verify that the JSON is valid (Bhargav Kowshik)

- dcb9380 Add Bhargav Kowshik to CONTRIBUTORS

- 7442513 Fix throttle test

- f27e31c bug 1072285 Update Django, django-nose and django-cache-machine

- dd74a3c bug 1072285 Update django-adminplus

- ececdf7 bug 1072285 Update requirements.txt file

- 6669479 bug 1093341 Tweak Gengo account balance warning

- f233aab bug 1094197 Fix JSONObjectField default default

- 11193d7 Tweak chart display

- 9d311ca Make journal.Record not derive from ModelBase

- f778c9d Remove all Heartbeat v1 stuff

- e5f6f4d Switch test__utils.py to test_utils.py

- cab7050 bug 1092296 Implement heartbeat v2 data model

- 5480c42 bug 1097352 Response view is viewable by all

- 46b5897 bug 1077423 Overhaul generic feedback form dev

- da31b47 bug 1077423 Update smoke tests for generic feedback form dev

- e84094b Fix l10n email template

- d6c8ea9 Remove gettext calls for product dashboards

- e1a0f74 bug 1098486 Remove under construction page

- 032a660 Fix l10n_status.py script history table

- 19cec37 Fix JSONObjectField

- 430c462 Improve display_history for l10n_status

- d6c18c6 Windows NT 6.4 -> Windows 10

- 73a4225 bug 1097847 Update django-grappelli to 2.5.6

- 4f3b9c7 bug 1097847 Fix custom views in admin

- 3218ea3 Fix JSONObjectfield.value_to_string

- 67c6bf9 Fix RecordManager.log regarding instances

- a5e8610 bug 1092299 Implement Heartbeat v2 API

- 17226db bug 1092300 Add Heartbeat v2 debugging views

- 11681c4 Rework env view to show python and django version

- 9153802 bug 1087387 Add feedback_response.browser_platform

- f5d8b56 bug 1087387 bug 1096541 Clean up feedback view code

- c9c7a81 bug 1087391 Fix POST API user-agent inference code

- 4e93fc7 bug 1103024 Gengo kill switch

- de9d1c7 Capture the user-agent

- 4796e4e bug 1096541 Backfill browser_platform for Firefox dev

- f5fe5cf bug 1103141 Add experiment_version to HB db and api

- 98c40f6 bug 1103141 Add experiment_version to views

- 0996148 bug 1103045 Create a menial hb survey view

- 965b3ee bug 1097204 Rework product picker

- 6907e6f bug 1097203 Add link to SUMO

- e8f3075 bug 1093843 Increase length of product fields

- 2c6d24b bug 1103167 Raise GET API throttle

- d527071 bug 1093832 Move feedback url documentation

- 6f4eb86 Abstract out python2.6 in deploy script

- f843286 Fix compile-linted-mo.sh to take pythonbin as arg

- 966da77 Add celery health check

- 1422263 Add space before subject of celery health email

- 5e07dbd [heartbeat] Add experiment1 static page placeholders

- 615ccf1 [heartbeat] Add experiment1 static files

- d8822df [heartbeat] Add SUMO links to sad page

- 3ee924c [heartbeat] Add twitter thing to happy page

- d87a815 [heartbeat] Change thank you text

- 06e73e6 [heartbeat] Remove cruft, fix links

- 8208a72 [heartbeat] Fix "addons"

- 2eca74c [heartbeat] Show profile_age always because 0 is valid

- 4c4598b bug 1099138 Fix "back to picker" url

- b2e9445 Add note about "Commit to VCS" in l10n docs

- 9c22705 Heartbeat: instrument email signup, feedback, NOT Twitter (Gregg Lind)

- 340adf9 [heartbeat] Fix DOCTYPE and ispell pass

- 486bf65 [heartbeat] Change Thank you text

- d52c739 [heartbeat] Switch to use Input GA account

- f07716b [heartbeat] Fix favicons

- eff9d0b [heartbeat] Fixed page titles

- 969c4a0 [heartbeat] Nix newsletter form for a link

- dce6f86 [heartbeat] Reindent code to make it legible

- dad6d82 bug 1099138 Remove [DEV] from title

- 4204b43 fixed typo in getting_started.rst (Deshraj Yadav)

- 7042ead bug 1107161 Fix hb answers view

- a024758 bug 1107809 Fix Gengo language guesser

- 808fa83 bug 1107803 Rewrite Response inference code

- d9e8ffd bug 1108604 Tweak paging links in hb data view

- 00e8628 bug 1108604 Add sort and display ts better in hb data view

- 17b908a bug 1108604 Change paging to 100 in hb data view

- 39dc943 bug 1107083 Backfill versions

- fee0653 bug 1105512 Rip out old generic form

- b5bb54c Update grappelli in requirements.txt file

- f984935 bug 1104934 Add ResponseTroubleshootingInfo model

- c2e7fd3 bug 950913 Move 'TRUNCATE_LENGTH' and make accessable to other files (Adam Okoye)

- b6f30e1 bug 1074315 Ignore deleted files for linting in pre-commit hook (L. Guruprasad)

- 4009a59 Get list of .py files to lint using just git diff (L. Guruprasad)

- c81da0b bug 950913 Access TRUNCATE_LENGTH from generic_feedback template (Adam Okoye)

- 9e3cec6 bug 1111026 Fix hb error page paging

- b89daa6 Dennis update to master tip

- 61e3e18 Add django-sslserver

- 93d317b bug 1104935 Add remote.js

- ad3a5cb bug 1104935 Add browser data section to generic form

- cc54daf bug 1104935 Add browserdata metrics

- 31c2f74 Add jshint to pre-commit hook (L. Guruprasad)

- 68eae85 Pretty-print JSON blobs in hb errorlog view

- 8588b42 bug 1111265 Restrict remote-troubleshooting to Firefox

- b0af9f5 Fix sorby error in hb data view

- 8f622cf bug 1087394 Add browser, browser_version, and browser_platform to response view (Adam Okoye)

- c4b6f85 bug 1087394 Change Browser Platform label (Adam Okoye)

- eb1d5c2 Disable expansion of $PATH in the provisioning script (L. Guruprasad)

- 59eebda Cosmetic test changes

- aac733b bug 1112210 Tweak remote-troubleshooting capture

- 6f24ce7 bug 1112210 Hide browser-ask by default

- 278095d bug 1112210 Note if we have browser data in response view

- 869a37c bug 1087395 Add fields to CSV output (Adam Okoye)

Landed, but not deployed:

- 4ee7fd6 Update the name of the pre-commit hook script in docs (L. Guruprasad)

- d4c5a09 bug 1112084 create requirements/dev.txt (L. Guruprasad)

- 4f03c48 bug 1112084 Update provisioning script to install dev requirements (L. Guruprasad)

- 03c5710 Remove instructions for manual installation of flake8 (L. Guruprasad)

- a36a231 bug 1108755 Add a git commit message linter (L. Guruprasad)

Current head: f0ec99d

Rough plan for the next two weeks

- PTO. It's been a really intense quarter (as you can see) and I need some rest. Maybe a nap. Plus we have a deploy freeze through to January, so we can't push anything out anyhow. I hope everyone else gets some rest, too.

That's it!

http://bluesock.org/~willkg/blog/mozilla/input_status_20141218

|

|

Schalk Neethling: Getting “Cannot read property ‘contents’ of undefined” with grunt-contrib-less? Read this… |

|

|

Matej Cepl: Third Wave and Telecommuting |

I have been reading Tim Bray’s blogpost on how he started to work at Amazon, and I was struck by the comment by len, particularly by this (he starts by quoting Tim):

“First, I am totally sick of working remotely. I want to go and work in rooms with other people working on the same things that I am.”

And that says a lot. Whatever the web has enabled in terms of access, it has proven to be isolating where human emotions matter and exposing where business affairs matter. I can’t articulate that succinctly yet, but there are lessons to be learned worthy of articulation. A virtual glass of wine doesn’t afford the pleasure of wine. Lessons learned.

Although I generally agree with your sentiment (these are really not your Friends, except if they already are), I believe the situation with telecommuting is more complex. I have been telecommuting for the past eight years (or so, yikes, how time flies!) and I do like it most of the time. However, it really requires a special type of personality, a special type of environment, a special type of family, and a special type of work to be able to do it well. I know plenty of people who do well working from home (with an occasional stay in a coworking office) and some who just don’t. It has nothing to do with IQ or anything like that. It simply works for some people and not for others, and I have some colleagues who left Red Hat just because they could not work from home and the nearest Red Hat office was too far from them.

However, this trivial statement makes me think again about stuff which is much more profound in my opinion. I am a firm believer in the coming of what Alvin and Heidi Toffler called “The Third Wave”: that after the mainly agricultural and mainly industrial societies the world is changing, so that “much that once was is lost” and we don’t know exactly what is coming. One part of this change is a substantial change in the way we organize our work. It really sounds weird, but there were times when there were no factories, no offices, and most people were working from their homes. I am not saying that the future will be like the distant past, it never is, but the difference makes it clear to me that what is now is not the only possible world we could live in.

I believe that the standard of people leaving their home in the morning to work will be very much diminished in the future. Probably some parts of the industrial world will remain around us (after all, there are still big parts of the agricultural world around us), but I think it might have the same impact (or as little impact) as the agricultural world has on the current world. If the trend of the dissolution of offices continues (and I don’t see a reason why it wouldn’t; in the end all those office buildings and all that commuting are a terrible waste of money), we can expect a really massive change in almost everything: the ways we build homes (suddenly your home is not just a bedroom to survive the night between two work shifts), transportation, the ways we organize our communities (suddenly it does matter who your neighbor is), and of course a lot of social rules will have to change. I think we are absolutely not prepared for this, and we are also not talking about it enough. But we should.

https://luther.ceplovi.cz/blog/2014/12/19/third-wave-and-telecommuting/

|

|

Mozilla Reps Community: Reps Weekly Call – December 18th 2014 |

Last Thursday we had our regular weekly call about the Reps program, where we talk about what’s going on in the program and what Reps have been doing during the last week.

Summary

- Privacy Day & Hello Plan.

- End of the year! What should we do?

- Mozlandia videos.

Note: Due to the holidays, the next weekly call will be on January 8.

AirMozilla video

Don’t forget to comment about this call on Discourse and we hope to see you next year!

https://blog.mozilla.org/mozillareps/2014/12/19/reps-weekly-call-december-18th-2014/

|

|

Henrik Skupin: Firefox Automation report – week 45/46 2014 |

In this post you can find an overview of the work that happened in the Firefox Automation team during weeks 45 and 46.

Highlights

In our Mozmill-CI environment we had a couple of frozen Windows machines, which were running with 100% CPU load and 0MB of memory used. Those values came from the vSphere client, and didn’t give us much information. Henrik checked the affected machines after a reboot, and none of them had any suspicious entries in the event viewer either. But he noticed that most of our VMs were running a very outdated version of the VMware tools. So he upgraded all of them, and activated the automatic install during a reboot. Since then the problem has been gone. If you see something similar on your virtual machines, make sure to check which version is in use!

Further work has been done for Mozmill CI. We were finally able to get rid of all the traces of Firefox 24.0ESR since it is no longer supported. We also set up our new Ubuntu 14.04 (LTS) machines in staging and production, which will soon replace the old Ubuntu 12.04 (LTS) machines. A list of the changes can be found here.

Besides all that, Henrik has started to work on the next version bump to Jenkins v1.580.1 (LTS), the new and more stable release of Jenkins. Lots of work might be necessary here.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 45 and week 46.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda, the video recording, and notes from the Firefox Automation meetings of week 45 and week 46.

http://www.hskupin.info/2014/12/19/firefox-automation-report-week-45-46-2014/

|

|

Adam Okoye: OPW Week Two – Coming to a Close |

Ok so technically it’s the first full week because OPW started last Tuesday December 9th, but either way – my first almost two weeks of OPW are coming to a close. My experience has been really good so far and I think I’m starting to find my groove in terms of how and where I work best location-wise. I also think I’ve been pretty productive considering this is the first internship or job I’ve had (not counting the Ascend Project) in over 5 years.

So far I’ve resolved three bugs (some of which were actually feature requests) and have a fourth pull request waiting. I’ve been working entirely in Django so far, primarily editing model and template files. One of the really nice things that I’ve gotten out of my project is learning while, and by, working on bugs and features.

Four weeks ago I hadn’t done any work with Django and realized that it would likely behoove me to dive into some tutorials (originally my OPW project with Input was going to be primarily in JavaScript, but in reality doing things in Python and Django makes a lot more sense, and thus the switch, despite the fact that I’m still working with Input). I started three or four basic tutorials and completed one and a half of them – in short, I didn’t have a whole lot of experience with Django ten days ago. Despite that, all of the looking through and editing of files that I’ve done has really improved my skills, both in terms of syntax and in terms of being able to find information – both where to take information from and where to put it. I look forward to all of the new things that I will learn and put into practice.

http://www.adamokoye.com/2014/12/opw-week-two-coming-to-a-close/

|

|

Mozilla Fundraising: A/B Testing: ‘Sequential form’ vs ‘Simple PayPal’ |

https://fundraising.mozilla.org/ab-testing-sequential-form-vs-simple-paypal/

|

|

Dietrich Ayala: Remixable Quilts for the Web |

Atul Varma set up a quilt for MozCamp Asia 2012 which I thought was a fantastic tool for that type of event. It provided an engaging visualization, was collaboratively created, and allowed a quick and easy way to dive further into the details about the participating groups.

I wanted to use it for a couple of projects, but the code was tied pretty closely to that specific content and layout.

This week I finally got around to moving the code over to Mozilla Webmaker, so it could be easily copied and remixed. I made a couple of changes:

- Update font to Open Sans

- Make it easy and clear how to re-theme the colors

- Allow arbitrary content in squares

The JS code is still a bit too complex for what’s needed, but it works on Webmaker now!

View my demo quilt. Hit the “remix” button to clone it and make your own.

The source for the core JS and CSS is at https://github.com/autonome/quilt.

https://autonome.wordpress.com/2014/12/18/remixable-quilts-for-the-web/

|

|