Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

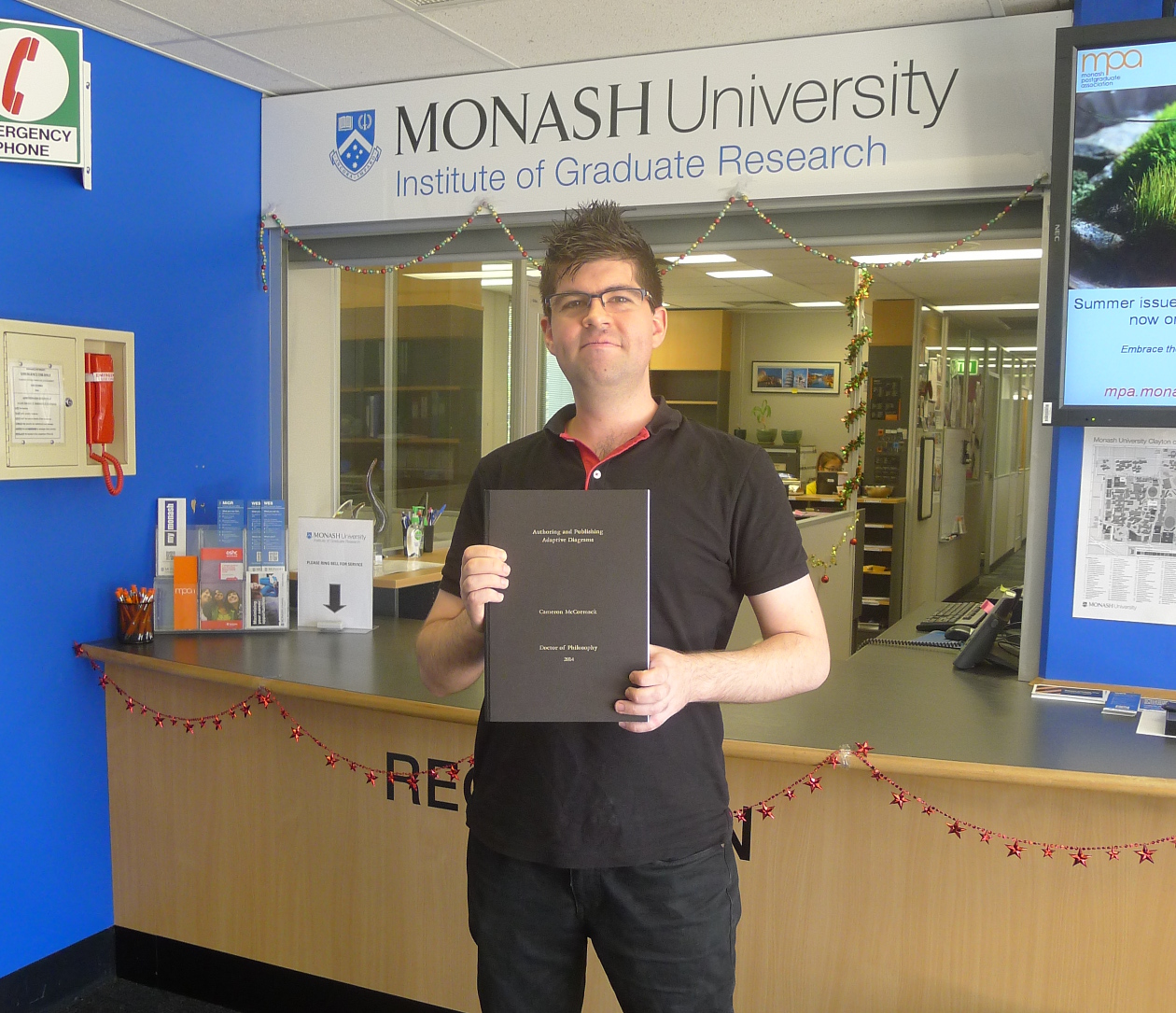

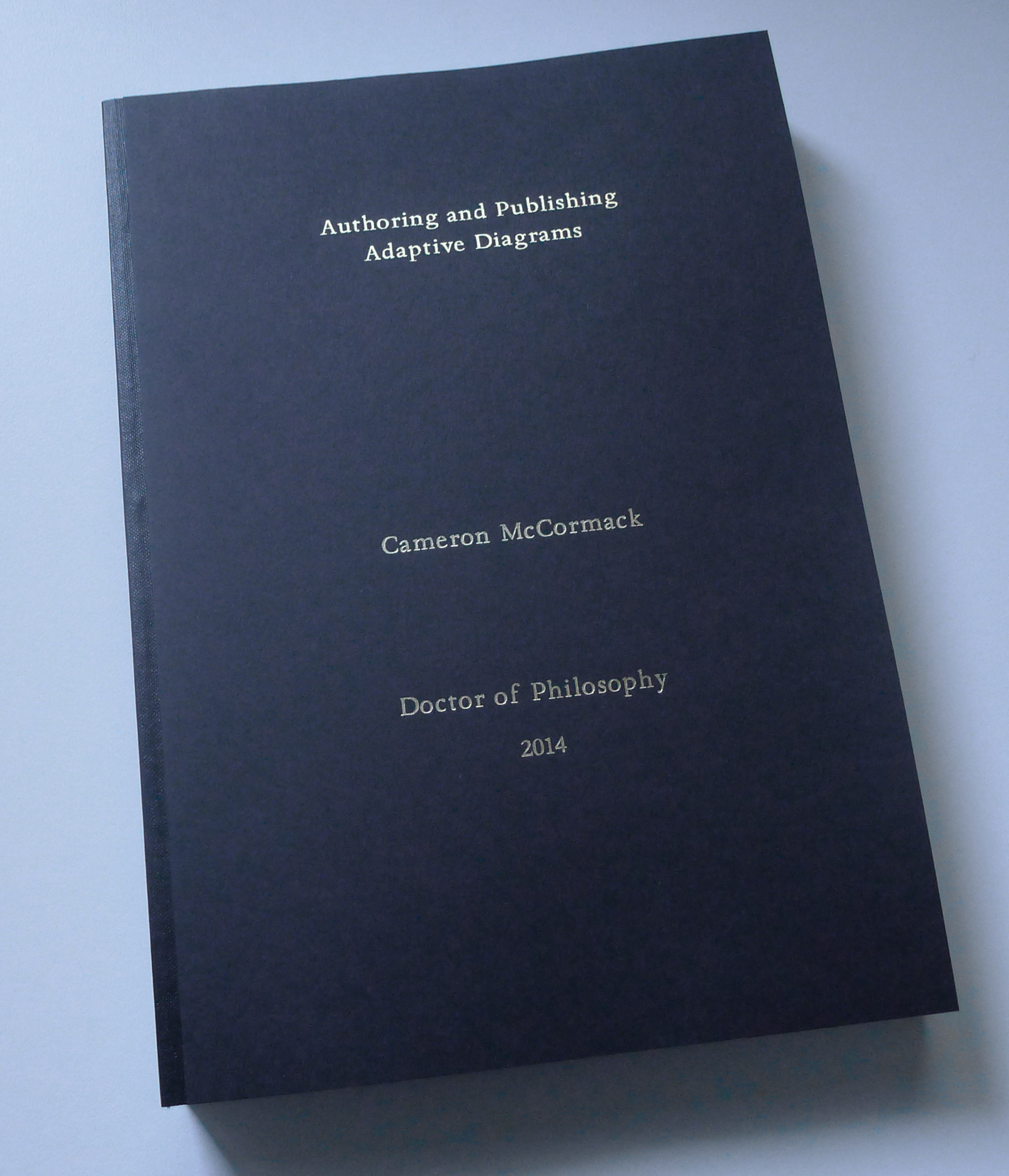

Cameron McCormack: Submission |

254 pages, eleven and a half years of my life.

Now for the months-long wait for the examiners to review it.

|

|

Paul Rouget: Firefox.html screencast |

Firefox.html screencast. Contribute: http://github.com/paulrouget/firefox.html.

YouTube video: https://www.youtube.com/watch?v=IBzrCmGVDkA

|

David Humphrey: Video killed the radio star |

One of the personal experiments I'm considering in 2015 is a conscious movement away from video-based participation in open source communities. There are a number of reasons, but the main one is that I have found the preference for "realtime," video-based communication media inevitably leads to ever narrowing circles of interaction, and eventually, exclusion.

I'll speak about Mozilla, since that's the community I know best, but I suspect a version of this is happening in other places as well. At some point in the past few years, Mozilla (the company) introduced a video conferencing system called Vidyo. It's pretty amazing. Vidyo makes it trivial to set up a virtual meeting with many people simultaneously or do a 1:1 call with just one person. I've spent hundreds of hours on Vidyo calls with Mozilla, and other than the usual complaints one could level against meetings in general, I've found them very productive and useful, especially being able to see and hear colleagues on the other side of the country or planet.

Vidyo is so effective that for many parts of the project, it has become the default way people interact. If I need to talk to you about a piece of code, for example, it would be faster if we both just hopped into Vidyo and spent 10 minutes hashing things out. And so we do. I'm guilty of this.

I'm talking about Vidyo above, but substitute Skype or Google Hangouts or appear.in or some cool WebRTC thing your friend is building on Github. Video conferencing isn't a negative technology, and provides some incredible benefits. I believe it's part of what allows Mozilla to be such a successful remote-friendly workplace (vs. project). I don't believe, however, that it strengthens open source communities in the same way.

It's possible on Vidyo to send an invitation URL to someone without an account (you need an account to use it, by the way). You have to be invited, though. Unlike irc, for example, there is no potential for lurking (I spent years learning about Mozilla code by lurking on irc in #developers). You're in or you're out, and people need to decide which it will be. Some people work around this by recording the calls and posting them online. The difficulty here is that doing so converts what was participation into performance--one can watch what happened, but not engage it, not join the conversation and therefore the decision making. And the more we use video, the more likely we are to have that be where we make decisions, further making it difficult for those not in the meeting to be part of the discussion.

Even knowing that decisions have been made becomes difficult in a world where those decisions aren't sticky, and go un-indexed. If we decided in a mailing list, bug, irc discussion, Github issue, etc. we could at least hope to go back and search for it. So too could interested members of the community, who may wish to follow along with what's happening, or look back later when the details around how the decision came to be become important.

I'll go further and suggest that in global, open projects, the idea that we can schedule a "call" with interested and affected parties is necessarily flawed. There is no time we can pick that has us all, in all timezones, able to participate. We shouldn't fool ourselves: such a communication paradigm is necessarily geographically rooted; it includes people here, even though it gives the impression that everyone and anyone could be here. They aren't. They can't be. The internet has already solved this problem by privileging asynchronous communication. Video is synchronous.

Not everything can or should be open and public. I've found that certain types of communication work really well over video, and we get into problems when we do too much over email, mailing lists, or bugs. For example, a conversation with a person that requires some degree of personal nuance. We waste a lot of time, and cause unnecessary hurt, when we always choose open, asynchronous, public communication media. Often scheduling an in person meeting, getting on the phone, or using video chat would allow us to break through a difficult impasse with another person.

But when all we're doing is meeting as a group to discuss something public, I think it's worth asking the question: why aren't we engaging in a more open way? Why aren't we making it possible for new and unexpected people to observe, join, and challenge us? It turns out it's a lot easier and faster to make decisions in a small group of people you've pre-chosen and invited; but we should consider what we give up in the name of efficiency, especially in terms of diversity and the possibility of community engagement.

When I first started bringing students into open source communities like Mozilla, I liked to tell them that what we were doing would be impossible with other large products and companies. Imagine showing up at the offices of Corp X and asking to be allowed to sit quietly in the back of the conference room while the engineers all met. Being able to take them right into the heart of a global project, uninvited, and armed only with a web browser, was a powerful statement; it says: "You don't need permission to be one of us."

I don't think that's as true as it used to be. You do need permission to be involved with video-only communities, where you literally have to be invited before taking part. Where most companies need to guard against leaks and breaches of many kinds, an open project/company needs to regularly audit to ensure that its process is porous enough for new things to get in from the outside, and for those on the inside to regularly encounter the public.

I don't know what the right balance is exactly, and as with most aspects of my life where I become unbalanced, the solution is to try swinging back in the other direction until I can find equilibrium. In 2015 I'm going to prefer modes of participation in Mozilla that aren't video-based. Maybe it will mean that those who want to work with me will be encouraged to consider doing the same, or maybe it will mean that I increasingly find myself on the outside. Knowing what I do of Mozilla, and its expressed commitment to working open, I'm hopeful that it will be the former. We'll see.

|

|

Daniel Stenberg: Can curl avoid to be in a future funnily named exploit that shakes the world? |

During this year we’ve seen heartbleed and shellshock strike (and a few more big flaws that I’ll skip for now). Two really eye opening recent vulnerabilities in projects with many similarities:

- Popular cornerstones of open source stacks and internet servers

- Mostly run and maintained by volunteers

- Mature projects that have been around since “forever”

- Projects believed to be fairly stable and relatively trustworthy by now

- A myriad of features, switches and code that build on many platforms, with some parts of code only running on a rare few

- Written in C in a portable style

Does it sound like the curl project to you too? It does to me. Sure, this description also matches a slew of other projects but I lead the curl development so let me stay here and focus on this project.

Are we in jeopardy? I honestly don’t know, but I want to explain what we do in our project in order to minimize the risk and maximize our ability to find problems on our own before they become serious attack vectors somewhere!

previous flaws

It’s no secret that we have let security problems slip through at times. We’re currently working toward our 143rd release in roughly 16 years of existence. We have found and announced 28 security problems over the years. Looking at these, it is clear that very few security problems are discovered quickly after introduction. Most of them linger for several years until found and fixed. So, realistically speaking and based on history: there are security bugs still in the code, and they have probably been present for a while already.

code reviews and code standards

We try to review all patches from people without push rights in the project. It would probably be a good idea to review all patches before they go in for real, but that just wouldn’t work with the (lack of) manpower we have in the project while we at the same time want to develop curl, move it forward and introduce new things and features.

We maintain code standards and formatting to keep code easy to understand and follow. We keep individual commits smallish for easier review now or in the future.

test cases

As simple as it is, we test that the basic stuff works. We don’t and can’t test everything, but having test cases for most things gives us the confidence to change code when we see problems, as we then remain fairly sure things keep working the same way as long as the tests pass. In projects with much less test coverage, you become much more conservative with what you dare to change, and that also makes you more vulnerable.

We always want more test cases, and we want to get better at adding test cases whenever we add new features. Ideally we should also add new test cases when we fix bugs, so that we know we don’t introduce the same bug again in the future.

static code analysis

We regularly scan our code base using static code analyzers. Both clang-analyzer and coverity are good tools, and they help us by pointing out code that looks wrong or suspicious. By making sure we have very few or no such flaws left in the code, we minimize the risk. A static code analyzer is better than run-time tools in cases where it can check code flows that are hard to reproduce in my local environment.

valgrind

Valgrind is an awesome tool to detect memory problems in run-time. Leaks or just stupid uses of memory or related functions. We have our test suite automatically use valgrind when it runs tests in case it is present and it helps us make sure that all situations we test for are also error-free from valgrind’s point of view.

autobuilds

Building and testing curl on a plethora of platforms non-stop is also useful to make sure we don’t depend on behaviors of particular library implementations or non-standard features and more. Testing it all is basically the only way to make sure everything keeps working over the years while we continue to develop and fix bugs. We would of course be even better off with more platforms that would test automatically and with more developers keeping an eye on problems that show up there…

code complexity

Arguably, one of the best ways to avoid security flaws and bugs in general is to keep the source code as simple as possible. Complex functions need to be broken down into smaller functions that are possible to read and understand. A good way to identify functions suitable for fixing is pmccabe.

essential third parties

curl and libcurl are usually built to use a whole bunch of third party libraries in order to perform all the functionality. In order to not have any of those uses turn into a source for trouble we must of course also participate in those projects and help them stay strong and make sure that we use them the proper way that doesn’t lead to any bad side-effects.

You can help!

All this takes time, energy and system resources. Your contributions and help will be appreciated wherever among these tasks you can offer any. We could do more of all this, more often and more thoroughly, if only more people were involved!

|

|

Julien Vehent: Stripe's AWS-Go and uploading to S3 |

Yesterday, I discovered Stripe's AWS-Go library, and the magic of auto-generated API clients (which is one fascinating topic that I'll have to investigate for MIG).

I took on the exercise of writing a simple file upload tool using aws-go. It was fairly easy to achieve, considering the complexity of AWS's APIs. I would have to evaluate aws-go further before recommending it as a comprehensive AWS interface, but so far it seems complete. Check out http://godoc.org/github.com/stripe/aws-go/gen for a detailed doc.

The source code is below. It reads credentials from ~/.awsgo:

```
$ cat ~/.awsgo
[credentials]
accesskey = "AKI...."
secretkey = "mw0...."
```

It takes a file to upload as the only argument, and returns the URL where it is posted.

https://s3.amazonaws.com/testawsgo/s3up

```go
package main

import (
	"code.google.com/p/gcfg"
	"fmt"
	"github.com/stripe/aws-go/aws"
	"github.com/stripe/aws-go/gen/s3"
	"os"
)

// conf takes an AWS configuration from a file in ~/.awsgo
// example:
//
// [credentials]
// accesskey = "AKI...."
// secretkey = "mw0...."
//
type conf struct {
	Credentials struct {
		AccessKey string
		SecretKey string
	}
}

func main() {
	var (
		err         error
		conf        conf
		bucket      string = "testawsgo" // change to your convenience
		fd          *os.File
		contenttype string = "binary/octet-stream"
	)

	// obtain credentials from ~/.awsgo
	credfile := os.Getenv("HOME") + "/.awsgo"
	_, err = os.Stat(credfile)
	if err != nil {
		fmt.Println("Error: missing credentials file in ~/.awsgo")
		os.Exit(1)
	}
	err = gcfg.ReadFileInto(&conf, credfile)
	if err != nil {
		panic(err)
	}

	// create a new client to S3 api
	creds := aws.Creds(conf.Credentials.AccessKey, conf.Credentials.SecretKey, "")
	cli := s3.New(creds, "us-east-1", nil)

	// open the file to upload
	if len(os.Args) != 2 {
		fmt.Printf("Usage: %s \n", os.Args[0])
		os.Exit(1)
	}
	fi, err := os.Stat(os.Args[1])
	if err != nil {
		fmt.Printf("Error: no input file found in '%s'\n", os.Args[1])
		os.Exit(1)
	}
	fd, err = os.Open(os.Args[1])
	if err != nil {
		panic(err)
	}
	defer fd.Close()

	// create a bucket upload request and send
	objectreq := s3.PutObjectRequest{
		ACL:           aws.String("public-read"),
		Bucket:        aws.String(bucket),
		Body:          fd,
		ContentLength: aws.Integer(int(fi.Size())),
		ContentType:   aws.String(contenttype),
		Key:           aws.String(fi.Name()),
	}
	_, err = cli.PutObject(&objectreq)
	if err != nil {
		fmt.Printf("Error: %v\n", err)
	} else {
		fmt.Printf("%s\n", "https://s3.amazonaws.com/"+bucket+"/"+fi.Name())
	}

	// list the content of the bucket
	listreq := s3.ListObjectsRequest{
		Bucket: aws.StringValue(&bucket),
	}
	listresp, err := cli.ListObjects(&listreq)
	if err != nil {
		panic(err)
	}
	if err != nil {
		fmt.Printf("Error: %v\n", err)
	} else {
		fmt.Printf("Content of bucket '%s': %d files\n", bucket, len(listresp.Contents))
		for _, obj := range listresp.Contents {
			fmt.Println("-", *obj.Key)
		}
	}
}
```
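The program above prints the object URL by concatenating the bucket and file name, which works for simple names such as `s3up` but would produce an invalid URL for a file name containing spaces or other reserved characters. A minimal sketch of one way to handle that with the standard library follows. `objectURL` is a hypothetical helper written for this example; it is not part of aws-go, and it assumes the same path-style `s3.amazonaws.com` address used above.

```go
package main

import (
	"fmt"
	"net/url"
)

// objectURL builds a path-style URL for an object in a bucket and lets
// net/url percent-encode characters that are not allowed in a path.
// Hypothetical helper for illustration; not part of aws-go.
func objectURL(bucket, key string) string {
	u := url.URL{
		Scheme: "https",
		Host:   "s3.amazonaws.com",
		Path:   "/" + bucket + "/" + key,
	}
	return u.String()
}

func main() {
	fmt.Println(objectURL("testawsgo", "s3up"))
	fmt.Println(objectURL("testawsgo", "my report.txt"))
}
```

In the upload tool, the final `fmt.Printf` could call such a helper with `bucket` and `fi.Name()` instead of joining the strings by hand.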

https://jve.linuxwall.info/blog/index.php?post/2014/12/15/Stripe-s-AWS-Go-and-uploading-to-S3

|

|

Mozilla Open Policy & Advocacy Blog: Spotlight on Public Knowledge: A Ford-Mozilla Open Web Fellow Host |

(This is the fourth in our series spotlighting host organizations for the 2015 Ford-Mozilla Open Web Fellowship. For years, Public Knowledge has been at the forefront of fighting for citizens and informing complex telecommunications policy to protect people. Working at Public Knowledge, the Fellow will be at the center of emerging policy that will shape the Internet as we know it. Apply to be a Ford-Mozilla Open Web Fellow and use your tech skills at Public Knowledge to protect the Web.)

Spotlight on Public Knowledge: A Ford-Mozilla Open Web Fellow Host

by Shiva Stella, Communications Manager of Public Knowledge

This year has been especially intense for policy advocates passionate about protecting a free and open internet, user protections, and our digital rights. Make no mistake: From net neutrality to the Comcast/Time Warner Cable merger, policy makers will continue to have an outsized influence over the web.

In order to enhance our advocacy efforts, Public Knowledge is hosting a Ford-Mozilla Open Web Fellow. We are looking for a leader with technical skills and drive to defend the internet, focusing on fair-use copyright and consumer protections. There’s a lot of important work to be done, and we know the public could use your help.

Public Knowledge works steadfastly in the telecommunications and digital rights space. Our goal is to inform the public of key policies that impact and limit a wide range of technology and telecom users. Whether you’re the child first responders fail to locate accurately because you dial 911 from a cell phone or the small business owner who can’t afford to “buy into” the internet “fast lane,” these policies affect your digital rights – including the ability to access, use and own communications tools like your set-top box (which you currently lease forever from your cable company, by the way) and your cell phone (which your carrier might argue can’t be used on a competing network due to copyright law).

There is no doubt that public policy impacts people’s lives, and Public Knowledge is advocating for the public interest at a critical time when special interests are attempting to shape policy that benefits them at our cost or that overlooks an issue’s complexity.

Indeed, in this interconnected world, the right policy outcome isn’t always immediately clear. Location tracking, for example, can impact people’s sense of privacy; and yet, when deployed in the right way, can lead to first responders swiftly locating someone calling 911 from a mobile device. Public Knowledge sifts through the research and makes sure consumers have a seat at the table when these issues are decided.

Public policy in this area can also impact the broader economy, and raises larger questions: Should we have an internet with a “fast lane“ for the relatively few companies that can afford it, and a slow lane for the rest of us? What would be the impact on innovation and small business if we erase net neutrality as we know it?

The answers to these questions require a community of leaders to advocate for policies that serve the public interest. We need to state in clear language the impact of ill-informed policies and how they affect people’s digital rights —including the ability to access, use and own communications tools, as well as the ability to create and innovate.

Even as the U.S. Federal Communications Commission reviews millions of net neutrality comments and considers approving huge mergers that put consumers at risk, the cable industry is busy hijacking satellite bills (STAVRA), stealthily slipping “pro-cable” provisions into legislation that must be passed so 1.5 million satellite subscribers may continue receiving their (non-cable!) service. Public Knowledge shines light on these policies to prevent them from harming innovation or jeopardizing our creative and connected future. To this end we advocate for an open internet and public access to affordable technologies and creative works, engaging policy makers and the public in key policy decisions that affect us all.

Let us be clear: private interests are hoping you won’t notice or just don’t care about these issues. We’re betting that’s not the case. Please apply today to join the Public Knowledge team as a Ford-Mozilla Open Web Fellow to defend the internet we love.

Apply to be a Ford-Mozilla Open Web Fellow. Application deadline for the 2015 Fellowship is December 31, 2014.

|

|

Gervase Markham: FirefoxOS 3 Ideas: Hack The Phone Call |

People are brainstorming ideas for FirefoxOS 3, and how it can be more user-centred. Here’s one:

There should be ways for apps to transparently be hooked into the voice call creation and reception process. I want to use the standard dialer and address book that I’m used to (and not have to use replacements written by particular companies or services), and still e.g.:

- My phone company can write a Firefox OS extension (like TU Go on O2) such that when I’m on Wifi, all calls transparently use that

- SIP or WebRTC contacts appear in the standard contacts app, but when I press “Call”, it uses the right technology to reach them

- Incoming calls can come over VoIP, the phone network or any other way and they all look the same when ringing

- When I dial, I can configure rules such that calls to certain prefixes/countries/numbers transparently use a dial-through operator, or VoIP, or a particular SIM

- If a person has 3 possible contact methods, it tries them in a defined order, or all simultaneously, or best quality first, or whatever I want

These functions don’t have to be there by default; what I’m arguing for is the necessary hooks so that apps can add them – an app from your carrier, an app from your SIP provider, an app from a dial-through provider, or just a generic app someone writes to define call routing rules. But the key point is, you don’t have to use a new dialer or address book to use these features – they can be UI-less (at least when not explicitly configuring them.)
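To make the idea of UI-less call routing rules a little more concrete, here is a minimal sketch of how such rules might be represented and evaluated. Everything in it is hypothetical: the `Route` and `Rule` types, the `pickRoute` function, the longest-prefix policy and the example numbers are assumptions for illustration, and none of it corresponds to an existing Firefox OS API.

```go
package main

import (
	"fmt"
	"strings"
)

// Route names a hypothetical way of placing a call.
type Route string

const (
	RouteCellular    Route = "cellular"
	RouteVoIP        Route = "voip"
	RouteDialThrough Route = "dial-through"
)

// Rule sends numbers that start with Prefix over Route.
type Rule struct {
	Prefix string
	Route  Route
}

// exampleRules is a made-up user configuration.
var exampleRules = []Rule{
	{Prefix: "+", Route: RouteDialThrough}, // any international-format number
	{Prefix: "+44", Route: RouteCellular},  // UK numbers stay on the SIM
	{Prefix: "+447700", Route: RouteVoIP},  // one block of numbers goes over VoIP
}

// pickRoute returns the route of the longest matching prefix,
// or def when no rule matches the dialed number.
func pickRoute(rules []Rule, number string, def Route) Route {
	best, bestLen := def, -1
	for _, r := range rules {
		if strings.HasPrefix(number, r.Prefix) && len(r.Prefix) > bestLen {
			best, bestLen = r.Route, len(r.Prefix)
		}
	}
	return best
}

func main() {
	for _, n := range []string{"+15550100", "+442079460000", "+447700900123", "0123"} {
		fmt.Println(n, "->", pickRoute(exampleRules, n, RouteCellular))
	}
}
```

The standard dialer would only need to ask such a rule set which route to use; the user never sees a different dialer or address book.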

In other words, I want to give control over the phone call back to the user. At the moment, doing SIP on Android requires a new app. TU Go requires a new app. There’s no way to say “for all international calls, when I’m in the UK, use this dial-through operator”. I don’t have a dual-SIM Android phone, so I’m not sure if it’s possible on Android to say “all calls to this person use SIM X” or “all calls to this network (defined by certain number prefixes) use SIM Y”. But anyway, all these things should be possible on FirefoxOS 3. They may not be popular with carriers, because they will all save the user money. But if we are being user-centric, we should do them.

http://feedproxy.google.com/~r/HackingForChrist/~3/1YAPnSIAOXw/

|

|

Benjamin Kerensa: Give a little |

Give by Time Green (CC-BY-SA)

The year is coming to an end and I would encourage you all to consider making a tax-deductible donation (if you live in the U.S.) to one of the following great non-profits:

The Mozilla Foundation is a non-profit organization that promotes openness, innovation and participation on the Internet. We promote the values of an open Internet to the broader world. Mozilla is best known for the Firefox browser, but we advance our mission through other software projects, grants and engagement and education efforts.

The Electronic Frontier Foundation is the leading nonprofit organization defending civil liberties in the digital world. Founded in 1990, EFF champions user privacy, free expression, and innovation through impact litigation, policy analysis, grassroots activism, and technology development.

The ACLU is our nation’s guardian of liberty, working daily in courts, legislatures and communities to defend and preserve the individual rights and liberties that the Constitution and laws of the United States guarantee everyone in this country.

The Wikimedia Foundation, Inc. is a nonprofit charitable organization dedicated to encouraging the growth, development and distribution of free, multilingual, educational content, and to providing the full content of these wiki-based projects to the public free of charge. The Wikimedia Foundation operates some of the largest collaboratively edited reference projects in the world, including Wikipedia, a top-ten internet property.

Feeding America is committed to helping people in need, but we can’t do it without you. If you believe that no one should go hungry in America, take the pledge to help solve hunger.

ACF International, a global humanitarian organization committed to ending world hunger, works to save the lives of malnourished children while providing communities with access to safe water and sustainable solutions to hunger.

These six non-profits are just a few of the many causes worth supporting, but these in particular are playing a pivotal role in protecting the internet, protecting liberties, educating people around the globe or helping reduce hunger.

Even if you cannot support one of these causes, consider sharing this post to raise its visibility among your friends and family and help support these causes in the new year!

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/JQ6GpO6rON0/give-a-little

|

|

Nigel Babu: Mozlandia - Arrival |

Portland. The three words that come to mind are overwhelmed, cold, and exhilarating. Getting there was a right pain, I’d have to admit. Though, flying around the US the weekend after Black Friday isn’t the best idea anyway. According to my rough calculations, it took about 25 hours from take off in Delhi to wheels down in Portland. That’s a heck of a lot of time on planes and at airports. But hey, I’ve been doing this for weeks in a row at this point.

At the airport, I ran into people holding up the Mozilla board. As I waited for the shuttle, I was very happy to run into Luke, from the MDN team. We met at the summit and he was a familiar face. We were chatting all the way to the hotel about civic hacking.

This work week is the most exciting Mozilla event that I’ve attended. I’m finally getting to meet a lot of people I know and renewing friendships from the last few events. I started contributing to Mozilla by contributing to the Webdev team. My secret plan at this work week was to meet all the folks from the old Webdev team in person. I’ve known them for more than 3 years and never quite managed to meet everyone in person.

After a quick shower, I decided to step out to the Mozilla PDX. According to Google Maps, it was a quick walk away and I was trying not to sleep all day despite my body trying to convince me it was a good idea. At the office, I met Fred’s team and we sat around talking for a while. It was good to meet Christie again too! That’s when a wave of exhaustion hit. I didn’t see it coming. Suddenly, I felt sluggish and a warm bed seemed very tempting. I quickly retired to bed after lunch with Jen, Sole, and Matt.

When I got down after the nap, there was a small group headed to the opening event. This was good, because I got very confused with Google Maps (paper maps were much more helpful).

Whoa, people overload. I walked around a few rounds meeting lots of people. It was fun running into a lot of people from IRC in the flesh. I enjoyed meeting the folks from the Auckland office (I often back them out :P). And I finally met Laura and her team. For a change, I’m visiting bkero’s town this time instead of him visiting mine ;)

The rest of the evening is a bit of a blur. Eventually, I was exhausted and walked back to the hotel for a good night’s sleep before the fun really started!

|

|

Andy McKay: Self Examination |

A few weeks ago we had the Mozilla Mozlandia meetup in Portland. I had a few things on my agenda going into that meeting. My biggest was to critically examine the project my team and I have been working on for almost two years.

That project is Marketplace Payments, which we provide through the Firefox Marketplace for developers. We don't limit what kind of payment system you use in Web Apps, unlike Google or Apple.

In Mozlandia, I was arguing (along with some colleagues) that there really is little point in working on this much anymore. There are many reasons for this, but here's the high level:

- Providing a payments service that competes against every other web based payment service in existence is outside of our core goals.

- We can't actually compete against every other web based payment service without significant investment.

- Developer uptake doesn't support further investment in the project.

There was mostly agreement on this, so we've agreed to complete our existing work on it and then leave it as it is for a while. We'll watch the metrics, see what happens and make some decisions based on that.

But really the details of this are not that important. What I believe is really, really important is the ability to critically examine your job and projects and examine their worth.

What normally happens is that you get a group of people and tell them to work on project X. They will iterate through features and complete features. And repeat and keep going. And if you don't stop at some point and critically examine what is going on, it will keep repeating. People will find new features, new enhancements, new areas to add to the project. Just as they have been trained to do. And the project will keep growing.

That's a perfectly normal thing for a team to do. It's harder to call a project done, the features complete and realize that there might be an end.

Normally that happens externally. Sometimes it's done in a positive way, sometimes it's done negatively. In the latter case people get upset, and recriminations and accusations fly. It's not a fun time.

But being able to step aside and declare the project done internally can be hard for one main reason: people fear for their job.

That's what some people said to me in Mozlandia: "Andy you've just talked yourself out of a job" or "You've just thrown yourself under a bus".

Maybe, but so be it. I have no fear that there's important stuff to be doing at Mozilla and that my awesome team will have plenty to do.

Right, next project.

Update: Marketplace Payments are still there and we are completing the last projects we have for them. But we aren't going to be doing development beyond that on them for a while. Let's see what the data shows.

|

|

Doug Belshaw: Bittorrent's Project Maelstrom is 'Firecloud' on steroids |

Earlier this week, BitTorrent, Inc. announced Project Maelstrom. The idea is to apply the bittorrent technologies and approaches to more of the web.

Note: if you can’t read the text in the image, it says: “This is a webpage powered by 397 people + You. Not a central server.” So. Much. Win.

The blog post announcing the project doesn’t have lots of details, but a follow-up PC World article includes an interview with a couple of the people behind it.

I think the key thing comes in this response from product manager Rob Velasquez:

We support normal web browsing via HTTP/S. We only add the additional support of being able to browse the distributed web via torrents

This excites me for a couple of reasons. First, I’ve thought on-and-off for years about how to build a website that’s untakedownable. I’ve explored DNS based on the technology powering Bitcoin, experimented with the PirateBay’s now-defunct blogging platform Baywords, and explored the dark underbelly of the web with sites available only through Tor.

Second, Vinay Gupta and I almost managed to get a project off the ground called Firecloud. This would have used a combination of interesting technologies such as WebRTC, HTML5 local storage and DHT to provide distributed website hosting through a Firefox add-on.

I really, really hope that BitTorrent turn this into a reality. I’d love to be able to host my website as a torrent. :-D

Update: People pay more attention to products than technologies, but I’d love to see Webtorrent get more love/attention/exposure.

Comments? Questions? Email me: doug@mozillafoundation.org

http://literaci.es/bittorrents-project-maelstrom-is-firecloud-on-steroids

|

|

Mozilla Fundraising: Privacy-Forward Fundraising |

https://fundraising.mozilla.org/privacy-forward-fundraising/

|

|

Fabien Cazenave: "pip install" & "gem install" without sudo |

Following yesterday’s post about using “npm install -g” without root privileges, here are the Python and Ruby counterparts for your beloved OSX or Linux box.

By default, pip install and gem install try to install stuff in /usr/, which requires root privileges. Hence, most users will “naturally” do a sudo to perform the install — which is, in my opinion at least, a very bad idea (do you really want to give root privileges to packages that haven’t been reviewed?). Fortunately, there’s more than the default setting.

Python: pip install --user

With Python 2.6 and later you can avoid “sudoing” your pip install by using the --user argument (thanks @cmdevienne for the tip!). Let’s test this with html-linter:

$ pip install --user html-linter

By default on Linux and OSX (non-framework builds) this will install your package into ~/.local, which is just fine for me. All executables are in ~/.local/bin/, which is included in my $PATH, and all Python libraries are in ~/.local/lib/python2.7/. The world couldn’t be any better.

You can specify a custom destination by setting the PYTHONUSERBASE environment variable:

$ export PYTHONUSERBASE=/myappenv

$ pip install --user html-linter

Of course, you’ll have to add that to your $PATH to make it work. You can add the following lines to your ~/.profile:

export PYTHONUSERBASE=/myappenv

PATH="$PYTHONUSERBASE/bin:${PATH}"

The only downside (compared to npm) is that you’ll have to remember to use the --user argument when installing Python packages. If there’s a way to make it the default mode, please let me know.

EDIT: a good workaround is to define a custom pip function in your ~/.bash_aliases (or bashrc, zshrc, whatever), as suggested in comment #1.

Ruby: gem install --user-install

gem’s --user-install argument is quite similar. One good thing is that you can easily make it the default mode:

$ echo "gem: --user-install" >> ~/.gemrc

Now let’s try that with the most valuable gem I know:

$ gem install vimgolf

Fetching: vimgolf-0.4.6.gem (100%)

WARNING: You don't have /home/kaze/.gem/ruby/1.8/bin in your PATH,

gem executables will not run.

As you can see, gem installs everything in ~/.gem by default; unfortunately, the file structure does not allow putting executables in the same ~/.local/bin/ directory. Never mind, we’ll add those ~/.gem/ruby/*/bin/ directories to the $PATH manually by adding these lines to the ~/.profile:

for dir in $HOME/.gem/ruby/*; do

[ -d "$dir/bin" ] && PATH="${dir}/bin:${PATH}"

done

Source your ~/.profile, you’re done.

http://kazhack.org/?post/2014/12/12/pip-gem-install-without-sudo

|

|

Joel Maher: Tracking Firefox performance as we uplift – the volume of alerts we get |

For the last year, I have been focused on ensuring we look at the alerts generated by Talos. For the last 6 months I have also looked a bit more carefully at the uplifts we do every 6 weeks. In fact we wouldn’t generate alerts when we uplifted to beta because we didn’t run enough tests to verify a sustained regression in a given time window.

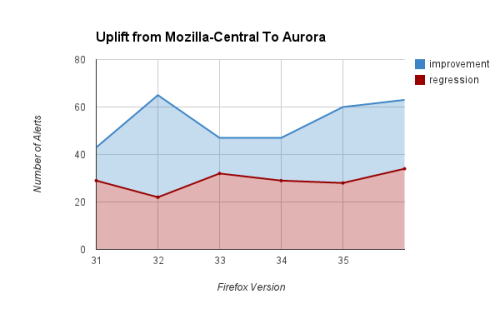

Let’s look at the data, specifically the volume of alerts:

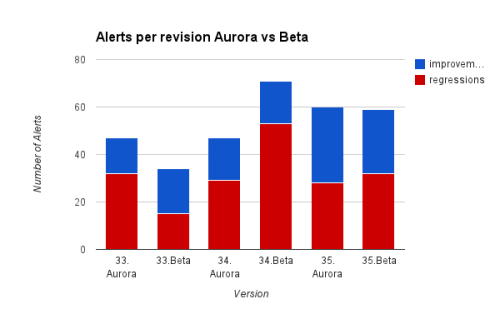

This is a stacked graph: you can interpret it as showing that Firefox 32 had a lot of improvements and Firefox 33 had a lot of regressions. I think what is more interesting is how many performance regressions are fixed or added when we go from Aurora to Beta. There is minimal data available for Beta. This next image will compare alert volume for the same release on Aurora then on Beta:

One way to interpret this above graph is to see that we fixed a lot of regressions on Aurora while Firefox 33 was on there, but for Firefox 34, we introduced a lot of regressions.

The above is just my interpretation of the data. Here are links to a more fine-grained view of the data:

As always, if you have questions, concerns, praise, or other great ideas, feel free to chat via this blog or via irc (:jmaher).

|

|

Mozilla Reps Community: Reps Weekly Call – December 11th 2014 |

Last Thursday we had our regular weekly call about the Reps program, where we talk about what’s going on in the program and what Reps have been doing during the last week.

Summary

- FOSDEM update.

- Portland Work Week.

- ReMo/Mozillians websites testing.

- End of year receipts campaign.

- Remo challenges.

- Stumbling in a box events.

- Reps Monthly newsletter.

AirMozilla video

Don’t forget to comment about this call on Discourse and we hope to see you next week!

https://blog.mozilla.org/mozillareps/2014/12/12/reps-weekly-call-december-11st-2014/

|

|

Blair McBride: UX Design Day, Dunedin 2014 |

Things I’ve been saying for a long time: I need to blog more. I haven’t been very good at achieving that.

So, recently I was at UX Design Day – a one-day conference focused on UX and design. It’s the only conference of its kind in NZ, and it started here in Dunedin. Working remotely and not really part of the design community, I don’t often get a chance to sit down and talk UX/design in-person with people. This year the conference was back in Dunedin, so I jumped at the chance to attend.

I was impressed by the diverse turnout this year. Interaction design, visual design, content strategy, marketing, education, user research, and software development were all represented. I had tried to drum up support from the local developer community to attend, and that seemed to have worked well. Too often do I see developers ignoring UX/design issues – either being very dismissive, or claiming it’s another person’s job; so this felt like a good sign.

Along those lines, one of the things that stuck with me was the talk around not having UX teams separate from everything else. The largest example talked about was UX and content strategy, but I think it applies equally to software development teams too. Having these two groups work closely together, not segregated, helps bring so much context to both teams.

The other important take-away for me was the importance of not accepting crap. That is, experiences or systems that are, intentionally or not, lacking in design forethought and therefore lead to unnecessarily difficult experiences, or a design that by default leads to harm. The primary concrete example here was physical safety in various workplaces, where people were put at needless risk due to the lack of safety-by-default design. I think this is a very relevant point for those of us building software, given that we so often experience design in software that feels broken, but too often don’t do anything constructive to help fix it.

Obligatory wall of Post-It notes

On the whole, I enjoyed the conference. However, since the talks covered such a wide corpus, I feel it didn’t provide enough time for any one area. Diversity is an asset, but I would have liked time for more in-depth explorations of topics.

http://theunfocused.net/2014/12/12/ux-deisgn-day-dunedin-2014/

|

|

Jeff Walden: Introducing the JavaScript Internationalization API |

(also cross-posted on the Hacks blog — comment over there if you have anything to say)

Firefox 29 was released half a year ago, so this post is long overdue. Nevertheless I wanted to pause for a second to discuss the Internationalization API first shipped on desktop in that release (and passing all tests!). Norbert Lindenberg wrote most of the implementation, and I reviewed it and now maintain it. (Work by Makoto Kato should bring this to Android soon; b2g may take longer due to some b2g-specific hurdles. Stay tuned.)

What’s internationalization?

Internationalization (i18n for short — i, eighteen characters, n) is the process of writing applications in a way that allows them to be easily adapted for audiences from varied places, using varied languages. It’s easy to get this wrong by inadvertently assuming one’s users come from one place and speak one language, especially if you don’t even know you’ve made an assumption.

function formatDate(d)

{

// Everyone uses month/date/year...right?

var month = d.getMonth() + 1;

var date = d.getDate();

var year = d.getFullYear();

return month + "/" + date + "/" + year;

}

function formatMoney(amount)

{

// All money is dollars with two fractional digits...right?

return "$" + amount.toFixed(2);

}

function sortNames(names)

{

function sortAlphabetically(a, b)

{

var left = a.toLowerCase(), right = b.toLowerCase();

if (left > right)

return 1;

if (left === right)

return 0;

return -1;

}

// Names always sort alphabetically...right?

names.sort(sortAlphabetically);

}

JavaScript’s historical i18n support is poor

i18n-aware formatting in traditional JS uses the various toLocaleString() methods. The resulting strings contained whatever details the implementation chose to provide: no way to pick and choose (did you need a weekday in that formatted date? is the year irrelevant?). Even if the proper details were included, the format might be wrong e.g. decimal when percentage was desired. And you couldn’t choose a locale.

As for sorting, JS provided almost no useful locale-sensitive text-comparison (collation) functions. localeCompare() existed but with a very awkward interface unsuited for use with sort. And it too didn’t permit choosing a locale or specific sort order.

These limitations are bad enough that — this surprised me greatly when I learned it! — serious web applications that need i18n capabilities (most commonly, financial sites displaying currencies) will box up the data, send it to a server, have the server perform the operation, and send it back to the client. Server roundtrips just to format amounts of money. Yeesh.

A new JS Internationalization API

The new ECMAScript Internationalization API greatly improves JavaScript’s i18n capabilities. It provides all the flourishes one could want for formatting dates and numbers and sorting text. The locale is selectable, with fallback if the requested locale is unsupported. Formatting requests can specify the particular components to include. Custom formats for percentages, significant digits, and currencies are supported. Numerous collation options are exposed for use in sorting text. And if you care about performance, the up-front work to select a locale and process options can now be done once, instead of once every time a locale-dependent operation is performed.
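As a rough illustration of what this buys over the hand-rolled formatMoney() shown earlier, here is a minimal sketch using Intl.NumberFormat, one of the constructors described in the next section. The amount, locales, and currencies are arbitrary choices made for this example, and console.log stands in for whatever output function your environment provides. Exact output strings may differ between implementations.

```javascript
// Arbitrary sample amount, chosen only for illustration.
var amount = 1234.5;

// US dollars for an American English audience.
var usd = new Intl.NumberFormat("en-US",
                                { style: "currency", currency: "USD" });

// Japanese yen: the formatter knows that yen has no fractional digits.
var jpy = new Intl.NumberFormat("ja-JP",
                                { style: "currency", currency: "JPY" });

// Percent style multiplies the input by 100 and adds a percent sign.
var percent = new Intl.NumberFormat("en-US", { style: "percent" });

var usdText = usd.format(amount);
var jpyText = jpy.format(amount);
var percentText = percent.format(0.256);

console.log(usdText);
console.log(jpyText);
console.log(percentText);
```

Note that the caller never hard-codes a currency symbol, a grouping separator, or the number of fractional digits; those all come from the locale and currency data.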

That said, the API is not a panacea. The API is “best effort” only. Precise outputs are almost always deliberately unspecified. An implementation could legally support only the oj locale, or it could ignore (almost all) provided formatting options. Most implementations will have high-quality support for many locales, but it’s not guaranteed (particularly on resource-constrained systems such as mobile).

Under the hood, Firefox’s implementation depends upon the International Components for Unicode library (ICU), which in turn depends upon the Unicode Common Locale Data Repository (CLDR) locale data set. Our implementation is self-hosted: most of the implementation atop ICU is written in JavaScript itself. We hit a few bumps along the way (we haven’t self-hosted anything this large before), but nothing major.

The Intl interface

The i18n API lives on the global Intl object. Intl contains three constructors: Intl.Collator, Intl.DateTimeFormat, and Intl.NumberFormat. Each constructor creates an object exposing the relevant operation, efficiently caching locale and options for the operation. Creating such an object follows this pattern:

var ctor = "Collator"; // or the others

var instance = new Intl[ctor](locales, options);

locales is a string specifying a single language tag or an arraylike object containing multiple language tags. Language tags are strings like en (English generally), de-AT (German as used in Austria), or zh-Hant-TW (Chinese as used in Taiwan, using the traditional Chinese script). Language tags can also include a “Unicode extension”, of the form -u-key1-value1-key2-value2..., where each key is an “extension key”. The various constructors interpret these specially.

options is an object whose properties (or their absence, by evaluating to undefined) determine how the formatter or collator behaves. Its exact interpretation is determined by the individual constructor.
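To make the pattern concrete, here is a small sketch that builds a Collator from a locale list and an options object, then uses it to sort. The names are made-up sample data, the locale list is an assumption for the example, and usage: "sort" is spelled out only to show where options go (it is already the default).

```javascript
// Hypothetical sample data. The escapes spell "Zoë" and "Émile".
var people = ["Zo\u00EB", "\u00C9mile", "adam", "Bob"];

// The default sort compares UTF-16 code units, so capitals come before
// lowercase letters and accented letters come last.
var byCodeUnit = people.slice().sort();

// locales: a list of language tags in order of preference.
// options: plain object; absent properties take their defaults.
var collator = new Intl.Collator(["en-US", "en"], { usage: "sort" });

// compare is a bound function, so it can be handed straight to sort().
var byLocale = people.slice().sort(collator.compare);

console.log(byCodeUnit);
console.log(byLocale);
```

Constructing the collator once and reusing its compare function is what lets the locale and option processing happen a single time rather than on every comparison.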

Given locale information and options, the implementation will try to produce the closest behavior it can to the “ideal” behavior. Firefox supports 400+ locales for collation and 600+ locales for date/time and number formatting, so it’s very likely (but not guaranteed) the locales you might care about are supported.

Intl generally provides no guarantee of particular behavior. If the requested locale is unsupported, Intl allows best-effort behavior. Even if the locale is supported, behavior is not rigidly specified. Never assume that a particular set of options corresponds to a particular format. The phrasing of the overall format (encompassing all requested components) might vary across browsers, or even across browser versions. Individual components’ formats are unspecified: a short-format weekday might be “S”, “Sa”, or “Sat”. The Intl API isn’t intended to expose exactly specified behavior.
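Since support is best-effort, it can help to ask the implementation what it actually picked rather than assume. The sketch below uses supportedLocalesOf() and resolvedOptions(), both part of the API; the requested tags are arbitrary, with "tlh" (Klingon) included only as a tag assumed unlikely to be supported.

```javascript
// Requested locales, in order of preference. "tlh" is an assumption:
// a tag that most implementations probably do not support.
var requested = ["tlh", "de-AT", "en"];

// The subset of the requested tags that this implementation can handle.
var supported = Intl.DateTimeFormat.supportedLocalesOf(requested);

// The locale that was actually selected for this particular formatter.
var chosen = new Intl.DateTimeFormat(requested).resolvedOptions().locale;

console.log(supported);
console.log(chosen);
```

The results depend on the browser or runtime and its locale data, which is exactly the point: inspect them at run time instead of hard-coding expectations.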

Date/time formatting

Options

The primary options properties for date/time formatting are as follows:

- weekday, era: "narrow", "short", or "long". (era refers to typically longer-than-year divisions in a calendar system: BC/AD, the current Japanese emperor’s reign, or others.)

- month: "2-digit", "numeric", "narrow", "short", or "long"

- year, day, hour, minute, second: "2-digit" or "numeric"

- timeZoneName: "short" or "long"

- timeZone: Case-insensitive "UTC" will format with respect to UTC. Values like "CEST" and "America/New_York" don’t have to be supported, and they don’t currently work in Firefox.

The values don’t map to particular formats: remember, the Intl API almost never specifies exact behavior. But the intent is that "narrow", "short", and "long" produce output of corresponding size — “S” or “Sa”, “Sat”, and “Saturday”, for example. (Output may be ambiguous: Saturday and Sunday both could produce “S”.) "2-digit" and "numeric" map to two-digit number strings or full-length numeric strings: “70” and “1970”, for example.

The final used options are largely the requested options. However, if you don’t specifically request any weekday/year/month/day/hour/minute/second, then year/month/day will be added to your provided options.
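This defaulting rule can be observed through resolvedOptions(). The following sketch assumes the en-US locale purely for illustration and compares a formatter created with no component options against one that requests only an hour.

```javascript
// No date/time components requested: year, month, and day get filled in.
var defaults = new Intl.DateTimeFormat("en-US").resolvedOptions();

// Only an hour requested: no date components are added.
var hourOnly =
  new Intl.DateTimeFormat("en-US", { hour: "numeric" }).resolvedOptions();

console.log(defaults.year, defaults.month, defaults.day);
console.log(hourOnly.year, hourOnly.hour);
```

The second line of output should show undefined for the year, because asking for any component at all suppresses the year/month/day default.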

Beyond these basic options are a few special options:

- hour12: Specifies whether hours will be in 12-hour or 24-hour format. The default is typically locale-dependent. (Details such as whether midnight is zero-based or twelve-based and whether leading zeroes are present are also locale-dependent.)

There are also two special properties, localeMatcher (taking either "lookup" or "best fit") and formatMatcher (taking either "basic" or "best fit"), each defaulting to "best fit". These affect how the right locale and format are selected. The use cases for these are somewhat esoteric, so you should probably ignore them.
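As a quick sketch of hour12 in action, the code below formats the same instant with both settings. The en-US locale, the sample time, and the helper name clock are assumptions made for this example; the exact strings produced may vary by implementation.

```javascript
// 17:05 UTC on an arbitrary day.
var lateAfternoon = new Date(Date.UTC(2014, 6, 17, 17, 5));

// Hypothetical helper: builds a formatter differing only in hour12.
function clock(use12Hour) {
  return new Intl.DateTimeFormat("en-US",
                                 { hour: "numeric", minute: "2-digit",
                                   hour12: use12Hour, timeZone: "UTC" });
}

var twelveHour = clock(true).format(lateAfternoon);
var twentyFourHour = clock(false).format(lateAfternoon);

console.log(twelveHour);
console.log(twentyFourHour);
```

Leaving hour12 out entirely lets the locale decide, which is usually what you want unless the design calls for one style everywhere.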

Locale-centric options

DateTimeFormat also allows formatting using customized calendaring and numbering systems. These details are effectively part of the locale, so they’re specified in the Unicode extension in the language tag.

For example, Thai as spoken in Thailand has the language tag th-TH. Recall that a Unicode extension has the format -u-key1-value1-key2-value2.... The calendaring system key is ca, and the numbering system key is nu. The Thai numbering system has the value thai, and the Chinese calendaring system has the value chinese. Thus to format dates in this overall manner, we tack a Unicode extension containing both these key/value pairs onto the end of the language tag: th-TH-u-ca-chinese-nu-thai.

For more information on the various calendaring and numbering systems, see the full DateTimeFormat documentation.
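A minimal sketch of the tag built above: pass it to the constructor and check, via resolvedOptions(), whether the implementation honored the extension keys. Whether the Thai locale, the Chinese calendar, and Thai digits are all available is an assumption about the implementation's locale data, so the resolved values are worth inspecting rather than taking for granted.

```javascript
// Thai (Thailand), Chinese calendar, Thai digits.
var tag = "th-TH-u-ca-chinese-nu-thai";

var resolvedThai = new Intl.DateTimeFormat(tag).resolvedOptions();

// If the extension was honored, these report "chinese" and "thai".
console.log(resolvedThai.locale);
console.log(resolvedThai.calendar);
console.log(resolvedThai.numberingSystem);
```

If a key or value is unsupported, the implementation falls back to the locale's defaults and the resolved locale omits that part of the extension.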

Examples

After creating a DateTimeFormat object, the next step is to use it to format dates via the handy format() function. Conveniently, this function is a bound function: you don’t have to call it on the DateTimeFormat directly. Then provide it a timestamp or Date object.
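Because format is bound, it can be passed directly to functions such as Array.prototype.map without wrapping it. Here is a small sketch; the dates, locale, and options are arbitrary choices for illustration.

```javascript
// Two arbitrary sample dates, both at midnight UTC.
var sampleDates = [new Date(Date.UTC(2014, 6, 17)),
                   new Date(Date.UTC(2014, 11, 11))];

var longDate =
  new Intl.DateTimeFormat("en-US",
                          { year: "numeric", month: "long", day: "numeric",
                            timeZone: "UTC" });

// format is already bound to longDate, so no .bind() or wrapper is needed.
var labels = sampleDates.map(longDate.format);

console.log(labels);
```

An ordinary method extracted this way would lose its this value; the API was designed so that this common usage just works.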

Putting it all together, here are some examples of how to create DateTimeFormat options for particular uses, with current behavior in Firefox.

var msPerDay = 24 * 60 * 60 * 1000;

// July 17, 2014 00:00:00 UTC.

var july172014 = new Date(msPerDay * (44 * 365 + 11 + 197));

Let’s format a date for English as used in the United States. Let’s include two-digit month/day/year, plus two-digit hours/minutes, and a short time zone to clarify that time. (The result would obviously be different in another time zone.)

var options =

{ year: "2-digit", month: "2-digit", day: "2-digit",

hour: "2-digit", minute: "2-digit",

timeZoneName: "short" };

var americanDateTime =

new Intl.DateTimeFormat("en-US", options).format;

print(americanDateTime(july172014)); // 07/16/14, 5:00 PM PDT

Or let’s do something similar for Portuguese — ideally as used in Brazil, but in a pinch Portugal works. Let’s go for a little longer format, with full year and spelled-out month, but make it UTC for portability.

var options =

{ year: "numeric", month: "long", day: "numeric",

hour: "2-digit", minute: "2-digit",

timeZoneName: "short", timeZone: "UTC" };

var portugueseTime =

new Intl.DateTimeFormat(["pt-BR", "pt-PT"], options);

// 17 de julho de 2014 00:00 GMT

print(portugueseTime.format(july172014));

How about a compact, UTC-formatted weekly Swiss train schedule? We’ll try the official languages from most to least popular to choose the one that’s most likely to be readable.

var swissLocales = ["de-CH", "fr-CH", "it-CH", "rm-CH"];

var options =

{ weekday: "short",

hour: "numeric", minute: "numeric",

timeZone: "UTC", timeZoneName: "short" };

var swissTime =

new Intl.DateTimeFormat(swissLocales, options).format;

print(swissTime(july172014)); // Do. 00:00 GMT

Or let’s try a date in descriptive text by a painting in a Japanese museum, using the Japanese calendar with year and era:

var jpYearEra =

new Intl.DateTimeFormat("ja-JP-u-ca-japanese",

{ year: "numeric", era: "long" });

print(jpYearEra.format(july172014));

http://whereswalden.com/2014/12/11/introducing-the-javascript-internationalization-api/

|

|

Sriram Ramasubramanian: Centered Buttons |

How can we apply the same hack as Multiple Text Layout to UI we need most of the time? Let’s take buttons, for example. If we want the glyph in the button to be centered along with the text, we cannot use compound drawables, as they are always drawn along the edges of the container.

We could use our getCompoundPaddingLeft() to pack the glyph with the text.

@Override

public int getCompoundPaddingLeft() {

// Ideally we should be overriding getTotalPaddingLeft().

// However, android doesn't make use of that method,

// instead uses this method for calculations.

int paddingLeft = super.getCompoundPaddingLeft();

paddingLeft += mDrawableWidth + getCompoundDrawablePadding();

return paddingLeft;

}

This offsets the space on the left and Android will take care of placing the text accordingly. Now we can place the Drawable in the space we created.

@Override

protected void onMeasure(int widthMeasureSpec, int heightMeasureSpec) {

super.onMeasure(widthMeasureSpec, heightMeasureSpec);

int paddingLeft = getPaddingLeft();

int paddingRight = getPaddingRight();

int drawableVerticalHeight = mDrawableHeight + getPaddingTop() + getPaddingBottom();

int width = getMeasuredWidth();

int height = Math.max(drawableVerticalHeight, getMeasuredHeight());

setMeasuredDimension(width, height);

int compoundPadding = getCompoundDrawablePadding();

float totalWidth = mDrawableWidth + compoundPadding + getLayout().getLineWidth(0);

float offsetX = (width - totalWidth - paddingLeft - paddingRight)/2.0f;

mTranslateX = offsetX + paddingLeft;

mTranslateY = (height - mDrawableHeight)/2.0f;

}

mTranslateX and mTranslateY hold how far to translate before drawing the drawable. Either the Drawable’s bounds can be shifted inside onMeasure() to reflect the translation, or the Canvas can be translated inside onDraw(). Either way, this lets us draw the glyph centered along with the text as part of a Button!

http://sriramramani.wordpress.com/2014/12/11/centered-buttons/

|

|

Kim Moir: Releng 2015 CFP now open |

|

| Il Duomo di Firenze by ©runner310, Creative Commons by-nc-sa 2.0 |

Delicious food and drink.

|

| Panzanella by © Pete Carpenter, Creative Commons by-nc-sa 2.0 |

|

| Caffè ristretto by © Marcelo César Augusto Romeo, Creative Commons by-nc-sa 2.0 |

And next May, release engineering :-)

The CFP for Releng 2015 is now open. The deadline for submissions is January 23, 2015. It will be held on May 19, 2015 in Florence Italy and co-located with ICSE 2015. We look forward to seeing your proposals about the exciting work you're doing in release engineering!

If you have questions about the submission process or anything else, please contact any of the program committee members. My email is kmoir and I work at mozilla.com.

http://relengofthenerds.blogspot.com/2014/12/releng-2015-cfp-now-open.html

|

|

Naoki Hirata: Einstein Quote for Mozillians |

“Out of clutter, find simplicity. From discord find harmony. In the middle of difficulty lies opportunity.” – Albert Einstein

From : http://www.folderarchy.com/albert-einstein/

http://shizen008.wordpress.com/2014/12/11/einstein-quote-for-mozillians/

|

|