Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Adam Okoye: OPW Internship |

Tomorrow, December 9th (which is only about 45 minutes away at this point) is the start of my OPW internship with Mozilla. I’ll be working on the SUMO/Input Web Designer/Developer project and, from my understanding, primarily working on the “thank you” page that people see after they leave feedback. The goal is for people who leave feedback, especially negative feedback, to not feel brushed off, but rather to feel like their feedback was well received. We also want to be able to (a) point them to knowledge base articles that might mitigate the issue(s) they are having with Firefox, based on what they wrote in the feedback text field, and (b) point them towards additional ways that they can become involved with Mozilla.

Like I said above, the internship starts on December 9th and it ends on March 9th. The internship, like all OPW projects, is remote but, because there is a Portland Mozilla office, I will be able to work in one of their conference rooms. Most of the programming I will be doing will be in Python and I will also be doing a lot of work with Django. That said, I will also likely be doing some work in HTML, CSS, and JavaScript. In addition to the thank you page, I’m also going to be working on other assorted Input bugs.

As part of the agreements of my internship I will be posting at least one internship-related post every two weeks. In practice I hope to post at least one internship-related post a week, as it will get me back into the practice of blogging and will also give me a backup plan if there is a week when I can’t post for whatever reason.

Here’s to a productive three months!

|

|

Paul Rouget: Firefox.html |

Firefox.html

I just posted on the firefox-dev mailing list about Firefox.html, an experimental re-implementation of the Firefox UI in HTML. If you have comments, please post on the mailing list.

Code, builds, screenshots: https://github.com/paulrouget/firefox.html.

|

|

Lukas Blakk: Ascend New Orleans: We need a space! |

I’m trying to bring the second pilot of the Ascend Project http://ascendproject.org to New Orleans in February and am looking for a space to hold the program. We have a small budget to rent space but would prefer to find a partnership and/or sponsor if possible to help keep costs low.

The program takes 20 adults who are typically marginalized in technology/open source and offers them a 6 week accelerated learning environment where they build technical skills by contributing to open source – specifically, Mozilla. Ascend provides the laptops, breakfast, lunch, transit & childcare reimbursement, and a daily stipend in order to lift many of the barriers to participation.

Our first pilot completed 6 weeks ago in Portland, OR and it was a great success with 18 participants completing the 6 week course and fixing many bugs in a wide range of Mozilla projects. They have now continued on to internships both inside and outside of Mozilla as well as seeking job opportunities in the tech industry.

To do this again, in New Orleans, Ascend needs a space to hold the classes!

Space requirements are simple:

* Room for 25 people to comfortably work on laptops

* Strong & reliable internet connectivity

* Ability to bring in our own food & beverages

Bonus if the space helps network participants with other tech workers, has projector/whiteboards (though we can bring our own in), or video capability.

Please let me know if you have a connection who can help with getting a space booked for this project and if you have any other leads I can look into, I’d love to hear about them.

|

|

Armen Zambrano: Test mozharness changes on Try |

NOTE: This currently only works for desktop, mobile and b2g test jobs. More to come.

NOTE: We only support named branches, tags or specific revisions. Do not use bookmarks, as they do not work.

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/_B3LTVbxLj0/test-mozharness-changes-on-try.html

|

|

Sean Martell: Thank You, Mozlandia |

Well, that was a week.

Sitting here on the Monday after, coffee in hand and reading all of the fresh new posts detailing our recent Coincidental Work Week, I’ve decided to share a few quick thoughts while they’re still fresh in my mind.

For me, last week was a particularly emotionally overwhelming one. There was high energy around once again gathering as a whole, sadness around friends/family moving on, fear in what’s next, excitement in what’s next, and a fine juggling act of trying to manage all those feels as they kicked in all at once.

The work week itself (the actual work part) was just amazing and I’m pretty sure it was the most productive travel week I’ve ever had in any job setting. Things were laid out, solutions discussed, alliances forged. Good stuff.

Then Friday hit.

So did all the emotions. All the feels. All of them.

The night started with me traveling through the swarms of Mozillians getting folks to sign a farewell card for Johnny Slates, my partner in crime for the majority of my Mozilla experience. A tough start. Tears were shed, but really they were thank you tears, in thanks for an awesome time shared at Mozilla.

Later, as I stood in a sea of Mozillians dancing, cheering and smiles all around, I was standing once again in tears. I was watching Mozillians letting loose. I was watching Mozillians get pumped for the future of the Internetz and our role in it. Even though I was listening to lyrics on topics that have brought Mozilla together and torn us apart all at the same time, we were dancing together and having fun.

I felt like I was watching my work family heal.

It was a very, very happy cry.

Thank you to my past, current and future Mozilla family members. To me, there is no old or new guard, just an ever evolving extended family.

<3

|

|

Priyanka Nag: Portland coincidental work-week |

On the first day, when I walked into the Portland Art Museum in the morning, I was overwhelmed to see so many familiar faces and to be able to match a few new faces to their IRC nicks (or Twitter handles), people I was meeting for the first time outside of the virtual world.

|

| What's your slingshot? |

During this one week, I heard a lot of amazing people, from David Slater to Chris Beard, from Mark Surman to Mitchell Baker. Too much awesomeness on the stage! The guest speaker on the first day was Brian Muirhead from NASA, who made us realize that even though we were not NASA engineers, and our work was limited to the Earth's atmosphere, sometimes the criticality of projects or the way of handling them didn't need to differ much. The second day's guest speaker, Michael Lopp (@rands), was a person I had been following on Twitter, but I never knew his real name or what he looked like until the morning of the 3rd of December. The talk about old guards vs new guards was not only something I could relate to but also had a few very interesting points we could all learn from.

After the opening addresses on both days, I found a comfortable spot with the MDN folks. I knew that under all possible circumstances, these would be the people I would mostly hang around with for the rest of the week. Well, MDN is undoubtedly my favorite project among all the contribution pathways that I have tried (or still contribute to).

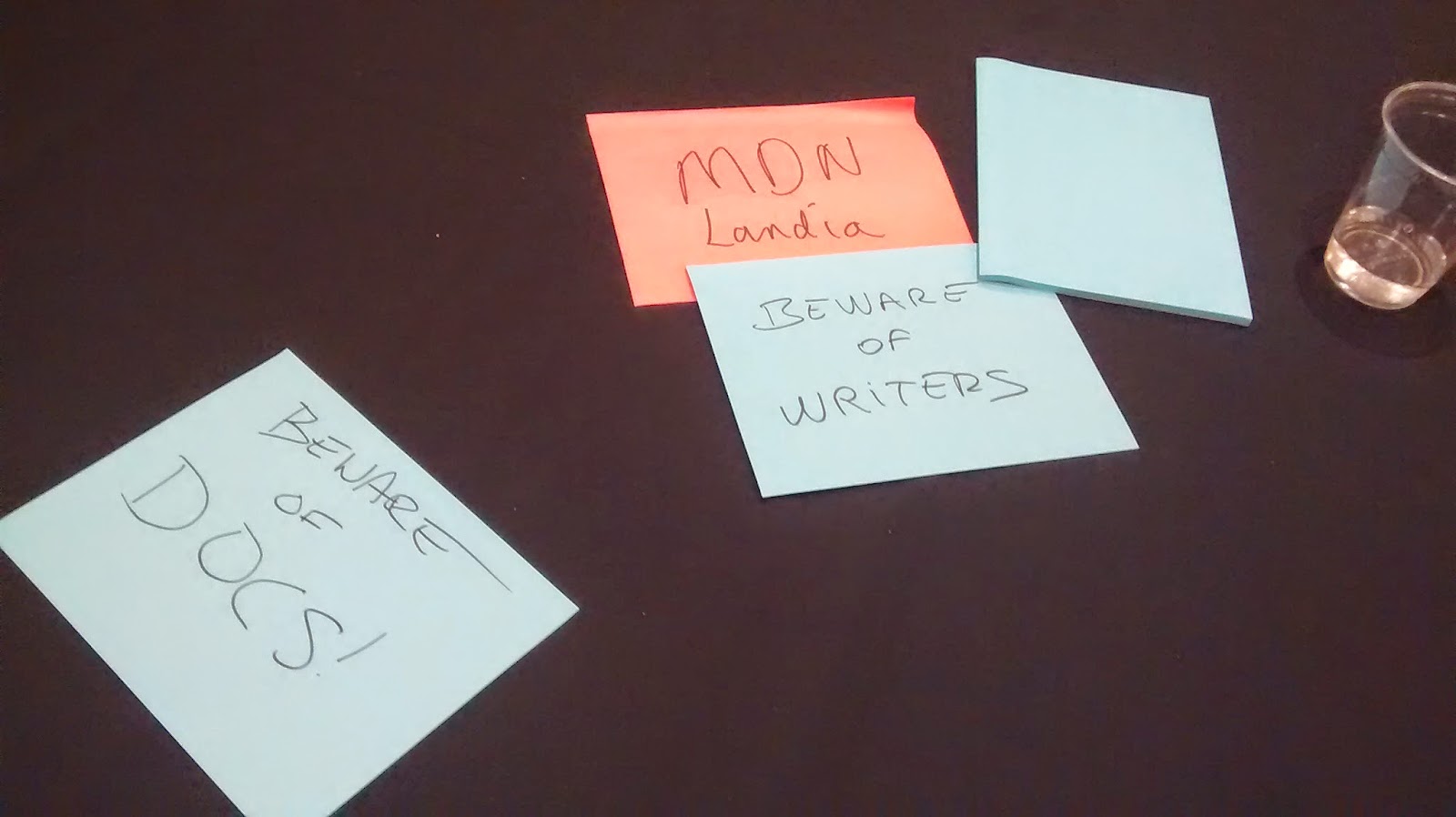

|

| We do know how to mark our territory! |

Just like most Mozilla work-weeks, this week also had a lot of sticky notes all around, many etherpads, a master etherpad to link all the etherpads, and a lot of wiki pages! When you know that you are going to be haunted by sticky notes for at least the next week, you can be sure that you had a great workweek and a lot of planning. There was planning around the different contribution metrics for MDN, contribution recognition, MDN events for 2015 and growing the community, as well as a few technical changes and a few responsibilities which I have committed to and will try to complete before the calendar changes to 2015. It was a crazy, crazy fun week. One important initiative that I am not only interested in seeing executed but am also willing to jump into in any possible manner is the linking of Webmaker and MDN. To me, it's like my two superheroes planning to work together to save this world!

I didn't spend much time with the community building team this week, other than the last day when I could finally join the group. First and foremost, Allen Gunner is undoubtedly one of the best facilitators I have seen in my life. For half of the session, my focus was on his skills and how I could learn a few of them. I am happy to have been able to join the community building team on their concluding day, as I got a summary of the week's discussion, could help with the concluding plans, and also made a few new commitments to a few interesting things that are being planned for 2015.

Well, I am not sure if I have done a good job of thanking Dietrich personally for inviting me and hosting me at his place for the fun-filled get-together, but I sincerely confess that I had way more fun at his party than I had expected to. I met so many new people there, mostly amazing engineers who are building the new mobile operating system which I not only use extensively but also brag about to my friends, family and colleagues.

A few wow moments -

[1] Seeing @rage outside the Twitter world, live in front of me!

[2] Mitchell's talk on how Mozilla acknowledges the tensions created around the last few decisions that went out, and her explanation of why and how they were made and why they were important.

[3] Macklemore & Ryan Lewis' live performance at the mega party.

[4] My first ever experience of trying to 'dance' with other Mozillians. Yes, I had successfully avoided them during the Summit, MozFest and all other previous events in the last 2 years.

[5] The proudest moment for me was probably the meeting of the MDN and the webmaker team. When neither of the teams knew every other member of the other team, I was probably the one person who knew every person in that circle. Having worked very closely with both the teams, it was my cloud nine (++) moment of the workweek to be sitting with all my rock-stars together!

A lot of people met, a lot of planning done, a lot of things learnt and, most importantly, a lot of commitments made which I am looking forward to executing in 2015.

http://priyankaivy.blogspot.com/2014/12/portland-coincidental-work-week.html

|

|

Christian Heilmann: The next UX challenge on the web: gaining offline trust |

A few weeks ago, I released http://removephotodata.com as a tool. It is a simple web app (well, a page) that allows you to remove the EXIF data of an image before sharing it online. I created it as a companion to my “Put social back in social media” talk at TEDx Linz. During this talk I pointed out the excellent exiftool, a command line tool to remove extra information embedded in images that people might not want to share. As a command line tool, it is too hard to use for most users. So I thought a web page would be a good solution.

It had some success and people – including the press in Spain – talked about it. Without fail though, every thread of comments or Twitter conversation will have one person pointing out the “seemingly obvious”:

So you create a tool to remove personal data from images and to do that I need to send the photo to your server! FAIL! LOLZ0RZ (and similar)

Which is not true at all. The only server interaction needed is the first load of the page. All the JavaScript analysis and removal of EXIF data happens on your computer. I even added an appcache to ensure that the tool itself works offline. In essence, everything happens on your computer or smartphone. This makes a lot of sense: it would be nonsense to use a service on some machine to remove personal data for you.

I did explain this in the page:

Your photo does not get uploaded anywhere, all of this happens on your device, in your browser. It even works offline.

Nobody seems to read that, though, and it is quicker to complain about a seemingly nonsensical security tool.

The web needs a connection, apps do not?

This is not the user’s fault, it is conditioning. We have so far done a bad job advocating the need for offline functionality. The web is an online medium. It’s understandable that people don’t expect a browser to work without an internet connection.

Apps, on the other hand, are expected to work offline. This, of course, is nonsense. The sad state of affairs is that most apps do not work offline. Look around on a train when people are not connected. You see almost everyone on their phone either listening to local music, reading books or playing games. Games are the only things that work offline. All other apps are just sitting there until you connect. You can’t even write your posts as drafts in most of them – something any email client was able to do a long time ago.

The web is unsafe, apps are secure?

People also seem to trust native apps more as they are on your device. You have to go through an install and uninstall process to get them. You see them downloading and installing. Web Apps arrive by magic. This is less re-assuring.

This is security by obscurity and thus to me more dangerous. Of course it is good to know when something gets to your computer. But an install process gives the app more rights to do things, it doesn’t necessarily mean that software is more secure.

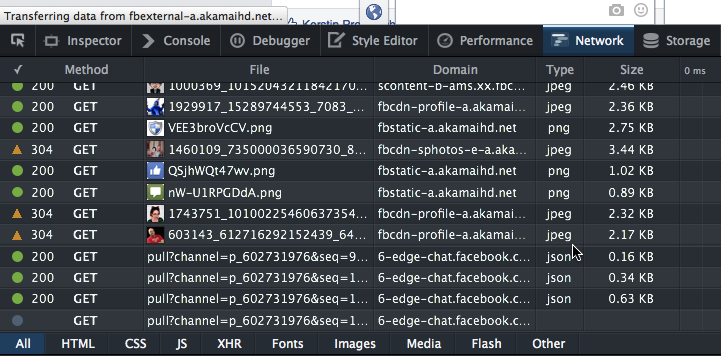

Native apps don’t give us more security or insight into what is going on – on the contrary. A packaged format with no indicator when the app is sending or receiving data from the web allows me to hide a lot more nasties than a web site could. It is pretty simple with developer tools in a browser to see what is going on:

On my mobile, I have to hope that the Android game doesn’t call home in the background. And I should read the terms and conditions and understand the access the game has to my device. But, no, I didn’t read that and just skimmed through the access rights and ticked “yes” as I wanted to play that game.

There is no doubt that JavaScript in browsers has massive security issues. But it isn’t worse or better than any other of the newer languages. When Richard Stallman demonised JavaScript as a trap, because you run code on your computer that might not be open, he was right. He was also naive in thinking that people cared about that. We live in a world where we give away privacy and security for convenience. That’s the issue we need to address. Not whether you could read all the code that is on your device. Only a small number of people in this world can make sense of that anyway.

Geek mode on: offline web work in the making

There is great work in the making towards an offline web. Google’s and Mozilla’s ServiceWorker implementations are going places. The latest changes in Chrome give the browser on the device much more power to store things offline. IndexedDB, WebSQL and other local storage solutions are available across browsers. Web Cryptography is coming. Tim Taubert gave an interesting talk about this at JSConf called “Keeping secrets with JavaScript: An Introduction to the WebCrypto API“.

The problem is that we also need to create a craving in our users to have that kind of functionality. And that’s where we don’t do well.

Offline first needs UX love

There is no indicator in the browser that something works offline. We need to tell the user in our copy or with non-standardised icons. That’s not good. We assume a lot from our users when we do that.

When we started offering offline functionality with appcache we did an even worse job. We warned users that the site is trying to store information on their device. In essence we conditioned our users to not trust things that come from the web – even if they requested that data.

Offline functionality is a must. The wonderful world of constant, free and fast connectivity only exists in movies and advertisements for mobiles and smart devices. This is not going to happen any time soon as physics is not likely to change and replacing a lot of copper cable in the ground is quite a job.

We also need to advocate better that users have a right to use their devices offline. Mobile phones are multi-processor machines with a lot of RAM and storage. Why not use that? Why would I have to store my information in the cloud for everything I do? Can I trust the cloud? What is the cloud? To me, it is “someone else’s computer” and they have the right to analyse my data, read it and even cut me off from it once their first few rounds of funding money runs out. My phone I got, why can’t I do more with it when I am offline? Why can’t I sync data with a USB cable?

Of course, all of this is about convenience. It is easier to have my data synced across devices with a cloud service. That way I never lose anything – if the cloud provider is OK with me getting to my data.

Our devices are powerful machines and we should be able to create, encrypt and store information without someone online snooping on us doing it. For this to happen, we need to create users that are aware of these options and see them as a value-add. It is not an easy job – the marketing around the simplicity of closed systems with their own cloud services is excellent. But so are we, aren’t we?

http://christianheilmann.com/2014/12/08/the-next-ux-challenge-on-the-web-gaining-offline-trust/

|

|

Doug Belshaw: Feedback on the Web Literacy Map from the LRA conference |

Last week, leaving midway through the Mozilla coincidental workweek, I headed to Florida for the Literacy Research Association conference. Mozilla was invited by contributor Ian O'Byrne to lead a session on Web Literacy Map v1.1 and our plans for v2.0.

You can find what I talked about in this post: Toward The Development of a Web Literacy Map: Exploring, Building, and Connecting Online.

We received some great feedback from the following discussants:

- Richard Beach, University of Minnesota

- Amy Stornaiuolo, University of Pennsylvania

- Bridget Dalton, University of Colorado Boulder

- Colin Harrison, University of Nottingham

It was difficult to capture it all, so I’m just going to list my takeaways. Special thanks to Amy who sent me her notes!

What’s the theory of learning driving the Web Literacy Map?

We talk about Mozilla’s approach to learning in the Webmaker whitepaper, but this isn’t tied closely enough to the Web Literacy Map as it currently stands.

We can do a better job around recontextualisation

According to Wikipedia:

Recontextualisation is a process that extracts text, signs or meaning from its original context (decontextualisation) in order to introduce it into another context. Since the meaning of texts and signs depend on their context, recontextualisation implies a change of meaning, and often of the communicative purpose too.

That’s a pretty academic way to say that we can do a better job of explaining how memes and other (what Henry Jenkins would call) spreadable media work.

We’re doing well at practising what we’re preaching

Discussants liked the openness and transparency of the Web Literacy Map work, leading to multiple diverse perspectives and voices. They appreciated the way it was fed back for anyone to be able to read and then jump in on. They also liked the apprenticeship model, with ‘mentoring’ explicitly called out through things like Webmaker Mentors.

'Reading, Writing and Participating’ is a problematic approach

This approach along with the 'grid’ approach of the Web Literacy Map’s competency layer is outdated from a new literacies point of view. Talking of 'web literacy’ in a singular way is also reflective of a traditional understanding of the field and invokes a 'deficit’ model. As Mozilla has influence we should think carefully about what we’re amplifying and what we’re foregrounding. This has implications for how people are measured, ranked and sorted.

How and where does criticality emerge in the Web Literacy Map?

There should be a recognition in the map that, as we make the web, the web makes us. The Web Literacy Map implies a neutral process of acquiring skills that lead unproblematically to particular outcomes. Instead, we should move to a practice-oriented approach. Using this approach, practices are situated in activities in relation to other people and things.

Where does the notion of 'critical internet literacy’ fit into the Web Literacy Map?

It’s not good enough just to say that learning pathways are not linear

There’s a particular logic in the Web Literacy Map as it currently stands – in the way that it’s represented and is framed – that there is a 'correct’ way to become an expert. This constrains the way people in fact learn and make sense of the web (despite what we say by way of contextualisation).

It may be called a 'map’ but it looks like a standard

The three columns imply a linearity and separation for progressing in particular skills. Where are the relationships and connections between skills and competencies? There are plans to focus on cross-cutting themes, but how can we go further? Reading, writing and participating are not separate activities in practice so it makes little sense for them to be separate on the Web Literacy Map.

Having each individually articulated is helpful for teaching and learning but, as a whole, it militates against fluency. A 'competency grid’ fits an older, outdated model of learning that doesn’t recognise multiple pathways to learning and participating.

Why can’t we have multiple views of the Web Literacy Map?

Having just one representation of the skills and competencies of the Web Literacy Map limits creativity and leads to a 'recipes’ based approach. A 'stories’ based approach might be better, perhaps using a Universal Design for Learning approach. This would lead to greater learner agency and freedom. We should design for difference.

Using the web is an aesthetic expression

I didn’t make good enough notes here, but a couple of discussants talked about how using the web is an aesthetic expression. As a result we should do a better job of expressing this in the Web Literacy Map.

Producing/consuming as an alternative frame

Web 1.0 was monologic, whereas Web 2.0 onwards is dialogic:

The dialogic work carries on a continual dialogue with other works of literature and other authors. It does not merely answer, correct, silence, or extend a previous work, but informs and is continually informed by the previous work. Dialogic literature is in communication with multiple works. This is not merely a matter of influence, for the dialogue extends in both directions, and the previous work of literature is as altered by the dialogue as the present one is. (Wikipedia)

We should connect to other groups doing similar work

There are people like Media Smarts (“Canada’s Centre for Digital and Media Literacy”) doing similar work here. To what extent are they aware of and involved with our work?

Where does user/consumer protection fit into the Web Literacy Map?

We include production and navigation in the map, but to what extent are we educating people about organisations that want to use their data for their own purposes?

Conclusion

I really enjoyed the session and, later, it made me think about some research I’d re-read recently about the importance of building up in the learner a 'three-dimensional’ model of the focus area:

Cognitive flexibility theory (Jacobson & Spiro, 1995; Spiro & Jehng, 1990), for instance, suggests that learning about a complex, ill-structured domain requires numerous carefully designed traversals (i.e., paths) across the terrain that defines that domain, and that different traversals yield different insights and understandings. Flexibility is thought to arise from the appreciation learners acquire for variability within the domain and their capacity to use this understanding to reconceptualize knowledge. (McEneaney, J. E. (2000). Learning on the Web: A Content Literacy Perspective.)

We’ve got lots to think about on the upcoming community calls. As well as thanking the discussants, I’d like to thank Ian O'Byrne and Greg McVerry for making me feel so welcome. They introduced me to lots of fascinating and inspiring people with whom I look forward to following-up. :-)

- Canonical link for Web Literacy Map v2.0 work: http://bit.ly/weblitmapv2

Questions? Comments? Email me: doug@mozillafoundation.org or add your thoughts to this thread on the #TeachTheWeb discussion forum.

|

|

Patrick Finch: Thanking all bus drivers on behalf of Mozilla |

I’m just back from Mozlandia, our informal all hands coincidental work week in Portland, Oregon. In terms of what I got out of the event, I think this may be the best of its kind that I have attended.

On Friday evening, a curious thing happened. I was sitting with Pierros and Dietrich in the salubrious Hilton hotel lobby. We were accosted by a man who seemed in something of a rush, and who, by his appearance (specifically, the uniform he was wearing), was a bus driver. He asked if we were from Mozilla. We confirmed that we were. He then thanked us for the work we were doing for net neutrality.

I often read people describing themselves as “proud and humbled” in our industry. I have to confess, I have every bit as hard a time getting my head around how pride can be humble as Yngwie Malmsteen does with the idea that “less is more”. I can, however, relate to the idea of taking pride in being humble. And this was such a moment for me.

There are many people who know much, much more about net neutrality and its implications for the future of the internet than I do. But still, I expect myself to know more about the topic than our friendly, Oregonian bus driver. And so I find myself asking the question, “What is he expecting from net neutrality?”. I believe that his expectations will amount to the internet progressing much as it has to date. He probably expects there to be no overall controller, no balkanisation of access and content, and maybe he is even optimistic enough to hope that the internet will not give rise to the acceptance of widespread surveillance.

But I am guessing. Guessing because I didn’t have time to ask him (he pretty much flew through that lobby), and also because I am not entirely sure myself of what we can expect from net neutrality. What troubles me is that “net neutrality” might be a placeholder for some, meaning not just net neutrality, but also a lot of the other aspects of a more – how do we put it – equitable internet. At the moment, net neutrality is both disrupting the old order and giving rise to new empires, vaster and more powerful than those they are replacing. Now, all empires fall: the ancient Romans, the British, Bell Telecommunications, even the seemingly invincible Liverpool FC of the 1970s and 1980s. All empires fall. The question is, “what will be their legacy?”. It isn’t an easy question to answer, and the parallels with the carve-up of the unindustrialised world and the formation and destruction of nation states in the preceding centuries are a gloomy place to look for metaphors, some of which (balkanisation) have already entered our everyday language.

What I do know is that the bus driver expects Mozilla to do the right thing. We have his trust.

I believe all of the paid staff at Mozilla are aware of our good fortune to be able to work with ideas and inventions that can shape the future of the internet in ways that we identify with, in ways that we want to believe in. And as Ogden Nash put it, “People who work sitting down get paid more than people who work standing up.” Working full-time in the tech industry has its hardships, but I can think of tougher places. We have much to be grateful for.

And so, when a bus driver thanks us for our service, I feel compelled to offer my gratitude in return. We trust bus drivers to know where we want to go, and to get us there safely. What do they trust us to do?

http://patrickfinch.com/2014/12/08/thanking-all-bus-drivers-on-behalf-of-mozilla/

|

|

Wil Clouser: Goodwill Updates - A Firefox OS Feature Idea |

A common aspect amongst the regions Firefox OS targets is a lack of dependable bandwidth. Mobile data (if available) can be slow and expensive, wi-fi connections are rare, and in-home internet completely absent. With the lack of regular or affordable connectivity, it’s easy for people to ignore device and app updates and instead opt to focus on downloading their content.

In the current model, Firefox OS pings daily for system and app updates and downloads the updates when available. Once the update has been installed, the download is deleted from the device storage.

What if there was an alternative way to handle these numerous updates? Rather than being deleted, the downloads would be saved on the device. Instead of each Firefox OS device being required to download updates, the updates could be shared with other Firefox OS devices. This Goodwill Update would make it easier for people to get new features and important security fixes without having to rely on internet connectivity.

Goodwill Update could either run in the background (assuming there is disk space and battery life) or could be more user-facing presenting people with notifications about the presence of updates or even showing how much money has been saved by avoiding bandwidth charges. Perhaps they could even offer to buy Bob a beer!
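To make the idea a bit more tangible, here is a rough sketch of the decision logic such a feature might follow. It is purely hypothetical and written in Python for readability: nothing here is an actual Firefox OS API, and the `Device` class, the `goodwill_update` function, the integer version numbers and the 30% battery threshold are all assumptions invented for illustration. It only models the two conditions mentioned above (disk space to keep a download, battery life to share it) and tallies the data a receiving device did not have to download.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    """Toy model of a phone; every field is a made-up simplification."""
    name: str
    version: int                 # currently installed update version
    free_mb: int                 # free storage in megabytes
    battery_pct: int             # remaining battery, 0-100
    cached: dict = field(default_factory=dict)  # version -> size in MB

    def install(self, version: int, size_mb: int) -> None:
        """Install an update and keep the download if there is room for it."""
        self.version = version
        if self.free_mb >= size_mb:
            self.cached[version] = size_mb
            self.free_mb -= size_mb

    def can_share(self, min_battery_pct: int = 30) -> bool:
        """Only share when something is cached and the battery is healthy."""
        return bool(self.cached) and self.battery_pct >= min_battery_pct


def goodwill_update(donor: Device, receiver: Device) -> int:
    """Hand the donor's newest cached update to the receiver.

    Returns the megabytes of mobile data the receiver avoided,
    or 0 if nothing was transferred.
    """
    if not donor.can_share():
        return 0
    newest = max(donor.cached)
    if newest <= receiver.version:
        return 0  # the receiver is already up to date
    size_mb = donor.cached[newest]
    receiver.install(newest, size_mb)
    return size_mb


alice = Device("alice", version=1, free_mb=500, battery_pct=80)
alice.install(2, size_mb=100)    # downloaded over the network, then kept
bob = Device("bob", version=1, free_mb=50, battery_pct=50)
saved = goodwill_update(alice, bob)
print(f"{bob.name} is now on version {bob.version}, {saved} MB of data avoided")
```

A real design would also have to answer questions this sketch skips entirely, such as how the devices discover each other and how a receiver verifies that a shared update is authentic.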

Would this be worth doing to help emerging markets stay up to date?

PS. Hat tip to Katie and Tiffanie for the image and idea help.

|

|

Mozilla Fundraising: Bitcoin Donations to Mozilla: 17 Days In |

https://fundraising.mozilla.org/bitcoin-donations-to-mozilla-17-days-in/

|

|

Robert O'Callahan: We Aren't Really Going To Have "Firefox On iOS" |

Whatever we decide to do, we won't be porting Firefox as we know it to iOS, unless Apple makes major changes to their App Store policies. The principal issue is that on iOS, the only software Apple allows to download content from the Internet and execute it is their built-in iOS Webkit component. Under that policy, every browser --- including iOS Chrome, for example --- must be some kind of front-end to Apple's Webkit. Thus, from the point of view of Web authors --- and users encountering Web compatibility issues --- all iOS browsers behave like Safari, even when they are named after other browsers. There is some ability to extend the Webkit component but in most areas, engine performance and features are restricted to whatever Safari has.

I certainly support having a product on iOS and I don't necessarily object to calling it Firefox as long as we're very clear in our messaging. To some extent users and Web developers have already acclimatised to a similar confusing situation with iOS Chrome. It's not exactly the same situation: the difference between iOS Chrome and real Chrome is smaller than the difference between iOS Firefox and real Firefox because Blink shares heritage, and still much code, with Webkit. But both differences are rapidly growing since there's a ton of new Web features that Chrome and Firefox have that Webkit doesn't (e.g. WebRTC, Web Components, ES6 features, Web Animations).

In the meantime I think we need to avoid making pithy statements like "we're bringing Firefox to iOS".

http://robert.ocallahan.org/2014/12/we-arent-really-going-to-have-firefox.html

|

|

Robert O'Callahan: Portland |

Portland was one of the best Mozilla events I've ever attended --- possibly the very best. I say this despite the fact I had a cough the whole week (starting before I arrived), I had inadequate amounts of poor sleep, my social skills for large-group settings are meagre, and I fled the party when the music started.

I feel great about Portland because I spent almost all of each workday talking to people and almost every discussion felt productive. In most work weeks I run out of interesting things to talk about and fall back to the laptop, and/or we have lengthy frustrating discussions where we can't solve a problem or can't reach an agreement, but that didn't really happen this time. Some of my conversations had disagreements, but either we had a constructive and efficient exchange of views or we actually reached consensus.

A good example of the latter is a discussion I led about the future of painting in Gecko, in which I outlined a nebulous plan to fix the issues we currently have in painting and layer construction on the layout side. Bas brought up ideas about GPU-based painting which at first didn't seem to fit well with my plans, but later we were able to sketch a combined design that satisfies everything. I learned a lot in the process.

Another discussion where I learned a lot was with Jason about using rr for record-and-replay JS debugging. Before last week I wasn't sure if it was feasible, but after brainstorming with Jason I think we've figured out how to do it in a straightforward (but clever) way.

Portland also reemphasized to me just how excellent are the people in the Platform team, and other teams too. Just wandering around randomly, I'd almost immediately run into someone I think is amazing. We are outnumbered, but I find it hard to believe that anyone outguns us per capita.

There were lots of great events and people that I missed and wish I hadn't (sorry Doug!), but I feel I made good use of the time so I have few regrets. For the same reason I wasn't bothered by the scheduling chaos. I hear some people felt sad that they missed out on activities, but as often in life, it's a mistake to focus on what you didn't do.

During the week I reflected on my role in the project, finding the right ways to use the power I have, and getting older. I plan to blog about those a bit.

I played board games every night, mostly Bang! and Catan. It was great fun but I probably should cut back a bit next time. Then again, for me it was a more effective way to meet interesting strangers than the organized mixer party event we had.

|

|

Richard Newman: On soft martial arts and software engineers |

I recently began studying tàijíquán (“tai chi”), the Chinese martial art.

It always helps to have someone correct your form.

Many years ago I spent a year or two pursuing shotokan karate. Shotokan, by most standards, is a “hard” martial art: it opposes force with force, using low, stable stances to deliver direct strikes.

Tàijíquán is an internal art, mixing hard with soft. To most observers (and most practitioners!) it’s entirely a soft, slow-moving exercise form. To quote Wikipedia:

The ability to use t’ai chi ch’uan as a form of self-defense in combat is the test of a student’s understanding of the art. T’ai chi ch’uan is the study of appropriate change in response to outside forces, the study of yielding and “sticking” to an incoming attack rather than attempting to meet it with opposing force. The use of t’ai chi ch’uan as a martial art is quite challenging and requires a great deal of training.

(Other martial arts are soft, but more immediately applicable: jujutsu, judo, and wing chun, for example.)

I see some parallels between the hard/soft characterization of martial arts and the ‘lifecycle’, if you will, of software engineers.

You might find it hard to believe (HTML needs a sarcasm tag, no?), but I was once a young, arrogant developer. I’d been hired at a startup in the US on the strength of a phone call, I was good at what I did, and there was an endless list of problems to solve. I like solving problems, and I liked that I could impress by doing so. And so I did.

I routinely worked 14-hour days. I’d get up at 7, shower, and head to the office. After work I’d go out for dinner with coworkers, then work until bed. I had no real hobbies apart from drinking with my coworkers, so my time was spent writing code. It’s so easy to solve problems when you can solve them yourself.

Eventually, after one too many solo victories over seemingly impossible deadlines, I was burned out.

Hard martial arts are very tempting, particularly to the young and able-bodied: they yield direct results. The better you get, the harder and faster you hit.

The problem with hard martial arts is that the world keeps making newer, tougher opponents, while time and each engagement are conspiring to strip away your own vigor. It takes a toll on your knees, your shoulders. Bruises take longer and longer to go away.

The software industry is like this, too. It will happily take as much time as you give it. Beating that last hard problem by burning a weekend will only win you a pat on the back and a new, bigger task to accomplish. Meanwhile your shoulders hunch, RSI kicks in, your vision worsens. You take your first week off work because the painkillers aren’t enough to let you type any more. You find out what an EKG is, what a sit-stand desk is, what physical therapy is like.

And while it looks like you’re winning — after all, you’re producing software that works — you’re accruing costs, too. You’re spending your future. Not only are you personally losing your motivation, your vitality, and a large part of your self, but you’re also building more software. Either you have to own it, or nobody really does. Maybe someone else should. Maybe it shouldn’t have been built at all. You think you’re winning, but you won’t know until later. And all along, your aggressive approach to building a solution alienates those around you.

A soft martial art tries to use your opponent’s strength and momentum against them. It yields and redirects. Ultimately, it asks whether you need to engage at all.

Hard martial arts eventually force you to confront your own fragility: “I can’t keep doing this”. So does software development, if you’re paying attention. You need to learn to ask the right questions, to draw on the rest of your team, to invest your time in learning and tools, in communication, and above all to invest in other people.

As the quote above suggests, this takes practice. But it works out best in the long run.

http://160.twinql.com/on-soft-martial-arts-and-software-engineers/

|

|

Soledad Penades: Meanwhile, in Mozlandia… |

Almost every employee and a good number of volunteers flew into Portland last week for a sort of “coincidental work week” which also included a few common events, the “All hands”. Since it was held in Portland, home to “Portlandia”, someone started calling this week “Mozlandia” and the name stuck.

I knew it was going to be chaotic and busy and so I not only didn’t make any effort to meet with non-Mozilla-related Portlanders, but actively avoided that. When the day has been all about socialising from breakfast to afternoon, the last thing you want is to speak to more people. Also, I am not sure how to put this, but the fact that I visit some acquaintance’s town doesn’t mean that I am under any obligation to meet them. Sometimes people get angry that I didn’t tell them I was visiting and that’s not cool.

Speaking about not-coolness: my trip started with two “incidents”. First, I got mansplained at Heathrow Airport by an Air Canada employee who decided to take over my self check-in machine, trying to press buttons on the screen and answering security questions for me instead of just, maybe, allowing me to operate it as I was doing until he came and interrupted me, out of the blue. There was no one else in the area and I have no idea why he did that, but he made me angry.

Then the rest of the trip went pretty much as usual, with no incident. It was fun to spend layover time at the Vancouver Airport with Guillaume and Zac from the London office, and then share the experience of the Desolate Pod of Gates that is home to the mighty Propeller Planes.

I was really tired by the time I made it to my hotel–it was well past 6 AM in London time and I had been up for almost 24 hours with no sleep except for the short nap on the Vancouver-Portland flight, so the only thing I wanted was to make it to my room and sleeeeep. I got into one of the hotel lifts, and just as the doors were almost closed, someone waved their arm in and the doors opened again. Three massively tall and bulky men entered the lift and pressed some buttons for their floor, while I kept looking down and wondering what the room would look like and whether the pillows would be soft. And then I noticed something… something being repeated several times. I started paying attention and it turned out that one of the men was talking to me. He was asking me:

How are you? How are you?

But I hadn’t replied because I was in my own world. So he repeated it again:

How are you?

So here’s the thing. When you’re that tired you have zero room for any sort of bullshit, and I was really, really tired. But those men were also really, really huge, compared to me. So I looked at him and I was really willing to give him a piece of my mind, but the only thing I said was

Maybe that is none of your business.

And luckily the doors for my floor opened and I didn’t have to stand their looks of “disappointment because I hadn’t been nice to them” any longer.

But…

I suddenly felt very unsafe because I hadn’t been nice to them.

Were they following me? Should I request my room to be changed to a different floor? Was there anything I was wearing that would be distinctive and would they be able to identify me the following days?

It took me a while to get to sleep because I kept thinking about this, but eventually I got some sound slumber, hoping for an incident-free Sunday.

And it was a great, sunny and very COLD Sunday in Portland. Temperatures were about 0 degrees, which compared to London’s 12 degrees felt even colder. I kept going to warm closed places (cafes! shops! malls!) and then back to the glacial streets, so by Monday morning my body had decided it hated me and was going to show me how much with a number of demonstrations. First came the throat pain, then tummy ache, sneezing, the full list of winter horrors.

This made me not really enjoy the whole “Mozlandia” week. I was in a state of confusion most of the time, either by virtue of my sinuses pressuring my brain, or just because of the medicine I took. It was hard to both follow conversations and articulate thoughts. I hope I didn’t disappoint anyone that wanted to meet me this week for SERIOUS BSNSS, but I was generally a shambles. Sorry about that!

And yet, despite that, I still had some interesting discussions with various people at Mozilla, both intentionally and accidentally, so that was cool. Some topics included:

- how can we work better with the Platform team (the ones implementing browser APIs, for those not in the Moz-know) so we know for certain which features are planned/implemented and with which degree of completion, and so we can give better advice to interested devs, and how can we improve the way we provide the feedback we get from developers at events, blog posts, etc. By the way: there’s a huge number of cool new APIs coming up! This is neat.

- future plans for the Web Audio API and the Web Audio Editor in Firefox DevTools, and also a general discussion on the API architecture and how it often takes developers by surprise, and whether we can do anything about that from a tooling point of view or not. Also, games, performance, and mixing other APIs together such as MediaRecorder.

- the Web Animations API and support for visualising that in the devtools, with keyframes and time lines and all that good, exciting stuff! It got me thinking about whether it would be possible to make another build of tween.js or some sort of util/wrapper that uses the Animations API internally. Food for thought!

- future Air Mozilla plans, including making it easier to upload content both from a moz-space and from an offline recording, and support for subtitles in various languages. I liked that they stress the fact that content does not need to be in English–after all, the Mozilla community speaks many languages!

- Rachel Nabors told us about her animation/authoring process to create interactive experiences/comics using just HTML+JS+CSS. This was really enlightening and while I don’t have all the answers to the issues yet, it got me thinking about how we can make this easier and more enjoyable for non-super-tech-savvy audiences. There were cries for a Firefox Designer Edition too–we joked that it would come with some extra colorpickers because why not?

I had to skip a couple of evenings because my immune system was just too excited to be on call, and so I stayed in my room. I didn’t want to go to sleep too early or the jetlag would be horrible, so I stayed awake by building a little silly thing: spoems, or spam poems (sources). I want to use it as a playground to try CSS stuff since it’s mostly text, but so far it’s super basic and that’s OK.

It was funny that this… morning? yesterday afternoon…? other Mozillians who were flying back to London on the same plane as me were talking about the best of the closing party and internally I was like “well, I just drank some coffee and listened to Boards of Canada and then had ramen and watched random things on the Internet, and that was exactly what I needed”.

And that was my “Mozlandia”. What about yours?

http://soledadpenades.com/2014/12/07/meanwhile-in-mozlandia/

|

|

Benjamin Kerensa: Mozilla All Hands: They can’t hold us! |

Macklemore & Ryan Lewis perform for Mozilla

What a wonderful all hands we had this past week. The entire week was full of meetings and planning and I must say I was exhausted by Thursday having been up each day working by 6:00am and going to bed by midnight.

I’m very happy to report that I made a lot of progress on meeting with more people to discuss the future of Firefox Extended Support Release and how to make it a much better offering to organizations.

I also spent some time talking to folks about Firefox in Ubuntu and rebranding Iceweasel to Firefox in Debian (fingers crossed something will happen here in 2015). Also it was great to participate in discussions around making all of the Firefox channels offer more stability and quality to our users.

It was great to hear that we will be doing some work to bring Firefox to iOS, which I think will fill a gap that has existed for our users of OS X who have an iPhone. Anyways, what I can say about this all hands is that there were lots of opportunities for discussions on quality and the future is looking very bright.

Also a big thanks to Lukas Blakk who put together an early morning excursion to Sherwood Ice Arena where Mozillians played some matches of hockey which I took photos of here.

In closing, I have to say it was a great treat for Macklemore & Ryan Lewis to come and perform for us in a private show and help us celebrate Mozilla.

|

|

Tarek Ziadé: 5 work week tips |

Our Mozilla work week just ended with an amazing evening. We had a private Macklemore concert. Just check out Twitter with the #mozlandia tag and feel the vibe. example.

When they got on stage I must admit I did not know who Macklemore was. Yeah, sorry. I live in a Spotify-curated music world and I have no TV.

At some point they played a song that got me thinking: ooohhh yeah that song, ok.

Anyways.

During some conversations a lot of folks told me that they were overwhelmed by the work week and had a very hard time keeping up with all the events. Some of them were very frustrated and felt like they were completely disconnected.

I went through this a lot in the past but things improved throughout the years. This blog post collects a few tips.

1. List the folks you want to meet

This one is a given. Before you arrive, make a list of the folks you want to meet and the topics you want to talk about with them.

Make that list short. 10, no more.

2. Do not code

This is the worst thing to do: dive into your laptop and code. It's easy to do and time will fly by once you've started to code. People that don't know you well will be afraid of disturbing you.

Coding is not something to do during your work weeks. If you need a break from the crowd that's the next tip.

3. Listen to your body

A work week is intense for your body. By the end of the week you will look like a zombie and you will not be able to fully enjoy what's happening. If you are coming from a faraway place, the jetlag is going to make the problem worse. If you're a partygoer that's not going to help either. All the food and drinks do not really help.

I've seen numerous folks getting really sick on day 3 or 4 because they had intensive days at the beginning of the event. It's hard not to burn out.

Some (young) folks are doing fine on this. I know I am not. What I did for the Portland work week was to skip everything on day 2 starting at 5pm, eat some soup and go to sleep at 8pm. Skipping all the cookies and beers and goodies gives your body a bit of rest :)

That gave me the energy I needed for day 3.

4. Don't hang with your team all the time

You talk to those folks all the time. Meet other folks, check out other sessions, etc.

This is especially important if your native language is not English. I got trapped many times by this problem: just hanging with a few French guys.

5. Walk away from meetings

Don't be shy about walking away from meetings that don't bring you any value. Walk out discreetly and politely. You are not in a meeting to read Hacker News on your laptop. You can do this at home.

People won't get offended in the context of a work week - unless this is a vital team meeting or something.

What are your tips?

|

|

Tarek Ziadé: DNS-Based soft releases |

Firefox Hello is this cool WebRTC app we've landed in Firefox to let you video chat with friends. You should try it, it's amazing.

My team was in charge of the server side of this project - which consists of a few APIs that keep track of some session information like the list of the rooms and such things.

The project was not hard to scale since the real work is done in the background by Tokbox - who provide all the firewall traversal infrastructure. If you are curious about the reasons we need all those server-side bits for a peer-to-peer technology, this article is great to get the whole picture: http://www.html5rocks.com/en/tutorials/webrtc/infrastructure/

One thing we wanted to avoid is a huge peak of load on our servers on Firefox release day. While we've done a lot of load testing, there are so many interacting services that it's quite hard to be 100% confident. Potentially going from 0 to millions of users in a single day is... scary? :)

So right now only 10% of our user base sees the Hello button. You can bypass this by tweaking a few prefs, as explained in many places on the web.

This percent is going to be gradually increased so our whole user base can use Hello.

How does it work?

When you start Firefox, a random number is generated. Then Firefox asks our service for another number. If the generated number is lower than the number sent by the server, the Hello button is displayed. If it is higher, the button is hidden.
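The comparison above fits in a few lines. This is only a sketch of the logic, not the code that ships in Firefox: the function names, the 0-99 range and the choice to hide the button when the two numbers are equal are all assumptions made for illustration.

```python
import random


def generate_ticket(rng=random):
    """Draw the client-side random number (assumed range: 0 to 99)."""
    return rng.randrange(100)


def should_show_hello(ticket, server_value):
    """Show the button only when the client's number is below the server's.

    The post does not say what happens when the two numbers are equal;
    hiding the button in that case is an assumption.
    """
    return ticket < server_value


# With a server value of 10, ten of the hundred possible tickets
# (0 through 9) fall below it, which gives the 10% rollout.
ticket = generate_ticket()
print(ticket, should_show_hello(ticket, 10))
```

Raising the server value is then all it takes to widen the rollout; clients do not need to change.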

Adam Roach proposed setting up an HTTP endpoint on our server to send back the number and after a team meeting I suggested using a DNS lookup instead.

The reason I wanted to use a DNS server was to rely on a system that's highly available and freaking fast. On the server side all we had to do was add a new DNS entry and let Firefox do a DNS lookup - yeah, you can do DNS lookups in JavaScript as long as you are within Gecko.

Due to a DNS limitation we had to move from a TXT record to an A record - which returns an IP address. But converting an IP address to an integer value is not a problem, so that worked out.

See https://wiki.mozilla.org/Loop/Load_Handling#Service_Soft_Start for all the details.
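Turning the address from an A record into a number is short work with Python's standard ipaddress module. A minimal sketch: the helper name and the sample addresses are made up, and how Firefox actually maps the address to the threshold is described on the wiki page rather than here.

```python
import ipaddress


def ip_to_int(address):
    """Convert a dotted-quad IPv4 string to its 32-bit integer value."""
    return int(ipaddress.IPv4Address(address))


print(ip_to_int("0.0.0.10"))   # 10
print(ip_to_int("127.0.0.1"))  # 2130706433
```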

Generalizing the idea

I think using DNS as a distributed database for simple values like this is an awesome idea. I am happy I thought of this one :)

Based on the same technique, you can also set up some A/B testing based on the DNS server's ability to send back a different value depending on things like a user's location, for example.

For example, we could activate a feature in Firefox only for people in Connecticut, or France or Europe.

We had a work week in Portland and we started to brainstorm on what such a service could look like, and if it would be practical from a client-side point of view.

The general feedback I had so far on this is: Hell yeah we want this!

To be continued...

|

|

David Burns: Bugsy 0.4.0 - More search! |

I have just released the latest version of Bugsy. It lets you search bugs via change history fields and within a certain timeframe, so you can find things like bugs created within the last week, as in the example below.

I have updated the documentation to get you started.

>>> import bugsy
>>> bugzilla = bugsy.Bugsy()
>>> bugs = bugzilla.search_for\
...     .keywords('intermittent-failure')\
...     .change_history_fields(["[Bug creation]"])\
...     .timeframe("2014-12-01", "2014-12-05")\
...     .search()

You can see the Changelog for more details.

Please raise issues on GitHub.
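To search a rolling window instead of fixed dates, the two date strings can be computed first. A minimal sketch, assuming timeframe() takes ISO-formatted date strings as in the example above; the helper name last_week_timeframe is made up for illustration and is not part of Bugsy.

```python
from datetime import date, timedelta


def last_week_timeframe(today=None):
    """Return (start, end) ISO date strings covering the last seven days."""
    if today is None:
        today = date.today()
    start = today - timedelta(days=7)
    return start.isoformat(), today.isoformat()


start, end = last_week_timeframe(date(2014, 12, 8))
print(start, end)  # 2014-12-01 2014-12-08
```

The two strings can then be passed as .timeframe(start, end) in the search chain.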

http://www.theautomatedtester.co.uk/blog/2014/bugsy-0.4.0-more-search.html

|

|

Mike Hommey: Using git to interact with mercurial repositories |

I was planning to publish this later, but after talking about this project to a few people yesterday and seeing the amount of excitement in response, I took some time this morning to tie a few loose ends and publish this now. Mozillians, here comes the git revolution.

Let me start with a bit of history. I am an early git user. I’ve been using git almost since its first release. I like it. A lot. I’ve contributed dozens of patches to git.

I started using mercurial when I got commit access to Mozilla repositories, much later. I don’t enjoy using mercurial much.

There are many tools to make git talk to mercurial. Most are called git-remote-hg because they use the git remote helpers infrastructure. All of them rely on having a local mercurial clone. When dealing with repositories like mozilla-central, it means storing more than 1.5GB of data just to talk to mercurial, on top of the git database.

So a few years ago, I started to toy with the idea to make git talk to mercurial directly. I got as far as being able to do a full clone of mozilla-central back then, in a reasonable amount of time. But I left it at that because I needed to figure out how to efficiently store all the metadata required to handle incremental updates/pulling, and didn’t have enough incentive to go forward: working with mercurial was not painful enough.

Fast forward to the beginning of this year. The mozilla-central repository is now much bigger than it used to be, and mercurial handles it much less smoothly than it used to when Mozilla switched to using it. That was enough to get me started again, but not enough to dedicate enough time to it.

Fast forward to a few weeks ago. Gregory Szorc poked dev-platform to find out what kind of workflows people were using with git to work on Mozilla code. And I was really not satisfied with the answers. First, I was wondering why no-one was mentioning the existing tools. So I picked one, and tried.

Cloning mozilla-central took 12 hours and left me with a ~10GB .git directory. Running git gc --aggressive for another 10 hours (my settings may have made gc take more time than it would have with the default configuration) brought it down to about 2.6GB, only 700MB of which is actual git data, the remainder being the associated mercurial repository. And as far as I understand it, the tool doesn’t really support our use of mercurial repositories, especially try (but I could be wrong, I didn’t really look too much).

That was the straw that broke the camel’s back. So after a couple of weeks of hacking, I now have something that can clone mozilla-central within 30 minutes on my machine (network transfer excluded). The resulting .git directory is around 1.5GB with the default git config, without running git gc. If you tweak the compression level in your git config, cloning takes a bit longer, and the repo takes about 1.1GB. And you can subsequently pull from mozilla-central, as well as pull from other branches without having to clone them from scratch. Push support is not there yet because it’s an early prototype, but I should be able to get that to work in the next couple of weeks.

At this point, you may be wondering how you can use that thing. Here it comes:

$ git clone https://github.com/glandium/git-remote-hg
$ export PATH=$PATH:$(pwd)/git-remote-hg

Note it requires having the mercurial code available to python, because git-remote-hg uses the mercurial code to talk the mercurial wire protocol. Usually, having mercurial installed is enough.

You can now clone a mercurial repository:

$ git clone hg::http://hg.mozilla.org/mozilla-central

If, like me, you had a local mercurial clone, you can do the following instead:

$ git clone hg::/path/to/mozilla-central-clone
$ git remote set-url origin hg::http://hg.mozilla.org/mozilla-central

You can then use git fetch/pull like with git repositories:

$ git pull

Now, you can add other repositories:

$ git remote add inbound hg::http://hg.mozilla.org/integration/mozilla-inbound
$ git remote update inbound

There are a few caveats, like the fact that it currently creates new remote branches essentially any time you pull something. But it shouldn’t disrupt anything.

It should be noted that while the contents are identical to the gecko-dev git repositories (the git tree object SHA1s are identical, I checked), the commit SHA1s are different, for two reasons: gecko-dev also contains the CVS history, and hg-git, which is used to fill it, adds some mercurial metadata to commit messages that git-remote-hg doesn’t add.

It is, however, possible to graft the CVS history from gecko-dev to a clone created with git-remote-hg. Assuming you have a remote for gecko-dev and fetched from it, you can do the following:

$ echo eabda6aae98d14c71d7e7b95a66896868ff9500b 3ec464b55782fb94dbbb9b5784aac141f3e3ac01 >> .git/info/grafts

Last note: please read the README file when you update your git clone of the git-remote-hg repository. As the prototype evolves, there might be things that you need to do to your existing clones, and it will be written there.

|

|