
Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.



Yunier José Sosa Vázquez: [Solved] Where is the Firefox Hello button? |

Yesterday, when we presented the new version of Firefox and its new features, one of its main features seemed to be missing. In reality, it was there all along. Apparently it was a configuration problem that will not occur in other versions of Firefox. In this article you will find the solution.

If the Firefox Hello button does not appear in your toolbar or menu, it may still be in the Additional Tools and Features panel, waiting for you to drag it wherever is most convenient for you.

Follow these steps:

- In the top-right corner of the browser, click the menu button.

- Click Customize to see Firefox's additional tools and features.

- Drag the Hello icon from the Additional Tools and Features window to your toolbar or menu.

- Finally, click Exit Customize.

If the Hello button does not appear in the Additional Tools and Features panel, go to about:config and set the loop.throttled preference to false. Then restart Firefox. When you open it again, the icon will be in the toolbar. In the meantime, you can still receive calls from other users.

Source: Firefox Help

http://firefoxmania.uci.cu/donde-esta-el-boton-firefox-hello-solucion/

|

|

Pascal Finette: Funny Facebook Messages Exchange |

I just had an amazing Facebook Messages exchange with someone who asked me to write for his startup newsletter/website. Guess he’s not quite as empathetic about entrepreneurs as I and many others are. But read for yourself:

Mr X:

Great to meet you Pascal

I am founder at —REDACTED—

I would love to learn about your work

Pascal Finette:

How can I help?

Mr X:

I would like to invite you to write for —REDACTED—

Pascal Finette:

That’s a very kind invitation. But truth be told - between The Heretic, writing a book and all the other work I do, I just have no time to write for another outlet.

Mr X:

What is the book about?

Pascal Finette:

Entrepreneurship.

Mr X:

What is new in the topic?

Pascal Finette:

You tell me.

Mr X:

You just need to do it

Books will not help

Pascal Finette:

Why publish a website about this topic then? Just need to do it. Your website doesn’t help.

Mr X:

I know

It is for wannabees

Do you write about methodology or best practices?

Pascal Finette:

Just so I get this straight - you asked me to write for your website which you consider ‘for wannabees’? So you effectively tell me that you consider my writing ‘for wannabees’? Man, I would consider my approach to this whole thing… That surely is not the way you win partners.

After that last message I didn’t hear back from him. I wonder why?! :)

|

|

Michael Kaply: Sunsetting the Original CCK Wizard |

In the next few weeks, I'll be sunsetting the original CCK Wizard and removing it from AMO. It really doesn't work well with current Firefox versions anyway, so I'm surprised it still has so many users.

If for some reason you're still using the old CCK Wizard, please let me know why so I can make sure what you need is integrated into the CCK2.

I'm also looking for ideas for new posts for my blog, so if there is some subject around deploying or customizing Firefox that you want to know more about, please let me know.

http://mike.kaply.com/2014/12/02/sunsetting-the-original-cck-wizard/

|

|

Luis Villa: Free-riding and copyleft in cultural commons like Flickr |

Flickr recently started selling prints of Creative Commons Attribution-Share Alike photos without sharing any of the revenue with the original photographers. When people were surprised, Flickr said “if you don’t want commercial use, switch the photo to CC non-commercial”.

This seems to have mostly caused two reactions:

- “This is horrible! Creative Commons is horrible!”

- “Commercial reuse is explicitly part of the license; I don’t understand the anger.”

I think it makes sense to examine some of the assumptions those users (and many license authors) may have had, and what that tells us about license choice and design going forward.

Free ride!!, by Dhinakaran Gajavarathan, under CC BY 2.0

Free riding is why we share-alike…

As I’ve explained before here, a major reason why people choose copyleft/share-alike licenses is to prevent free rider problems: they are OK with you using their thing, but they want the license to nudge (or push) you in the direction of sharing back/collaborating with them in the future. To quote Elinor Ostrom, who won a Nobel for her research on how commons are managed in the wild, “[i]n all recorded, long surviving, self-organized resource governance regimes, participants invest resources in monitoring the actions of each other so as to reduce the probability of free riding.” (emphasis added)

… but share-alike is not always enough

Copyleft is one of our mechanisms for this in our commons, but it isn’t enough. I think experience in free/open/libre software shows that free rider problems are best prevented when three conditions are present:

- The work being created is genuinely collaborative — i.e., many authors who contribute similarly to the work. This reduces the cost of free riding to any one author. It also makes it more understandable/tolerable when a re-user fails to compensate specific authors, since there is so much practical difficulty for even a good-faith reuser to evaluate who should get paid and contact them.

- There is a long-term cost to not contributing back to the parent project. In the case of Linux and many large software projects, this long-term cost is about maintenance and security: if you’re not working with upstream, you’re not going to get the benefit of new fixes, and will pay a cost in backporting security fixes.

- The license triggers share-alike obligations for common use cases. The copyleft doesn’t need to perfectly capture all use cases. But if at least some high-profile use cases require sharing back, that helps discipline other users by making them think more carefully about their obligations (both legal and social/organizational).

Alternately, you may be able to avoid damage from free rider problems by taking the Apache/BSD approach: genuinely, deeply educating contributors, before they contribute, that they should only contribute if they are OK with a high level of free riding. It is hard to see how this can work in a situation like Flickr’s, because contributors don’t have extensive community contact.[1]

The most important takeaway from this list is that if you want to prevent free riding in a community-production project, the license can’t do all the work itself — other frictions that somewhat slow reuse should be present. (In fact, my first draft of this list didn’t mention the license at all — just the first two points.)

Flickr is practically designed for free riding

Flickr fails on all the points I’ve listed above — it has no frictions that might discourage free riding.

- The community doesn’t collaborate on the works. This makes the selling a deeply personal, “expensive” thing for any author who sees their photo for sale. It is very easy for each of them to find their specific materials being reused, and see a specific price being charged by Yahoo that they’d like to see a slice of.

- There is no cost to re-users who don’t contribute back to the author—the photo will never develop security problems, or get less useful with time.

- The share-alike doesn’t kick in for virtually any reuses, encouraging Yahoo to look at the relationship as a purely legal one, and encouraging them to forget about the other relationships they have with Flickr users.

- There is no community education about the expectations for commercial use, so many people don’t fully understand the licenses they’re using.

So what does this mean?

This has already gone on too long, but a quick thought: what this suggests is that if you have a community dedicated to creating a cultural commons, it needs some features that discourage free riding — and critically, mere copyleft licensing might not be good enough, because of the nature of most production of commons of cultural works. In Flickr’s case, maybe this should simply have included not doing this, or making some sort of financial arrangement despite what was legally permissible; for other communities and other circumstances other solutions to the free-rider problem may make sense too.

And I think this argues for consideration of non-commercial licenses in some circumstances as well. This doesn’t make non-commercial licenses more palatable, but since commercial free riding is typically people’s biggest concern, and other tools may not be available, it is entirely possible it should be considered more seriously than free and open source software dogma might have you believe.

[1] It is open to discussion, I think, whether this works in Wikimedia Commons, and how it can be scaled as Commons grows.

http://lu.is/blog/2014/12/02/free-riding-and-copyleft-in-cultural-commons-like-flickr/

|

|

Gervase Markham: An Invitation |

Ben Smedberg boldly writes:

I’d like to invite my blog readers and Mozilla coworkers to Jesus Christ.

…

Making a religious invitation to coworkers and friends at Mozilla is difficult. We spend our time and build our deepest relationships in a setting of email, video, and online chat, where off-topic discussions are typically out of place. I want to share my experience of Christ with those who may be interested, but I don’t want to offend or upset those who aren’t.

This year, however, presents me with a unique opportunity. Most Mozilla employees will be together for a shared planning week. If you will be there, please feel free to find me during our down time and ask me about my experience of Christ.

Amen to all of that. Online collaboration is great, but as Ben says, it’s hard to find opportunities to discuss things which are important outside of a Mozilla context. There are several Christians at Mozilla attending the work week in Portland (roc is another, for example) and any of us would be happy to talk.

I hope everyone has a great week!

http://feedproxy.google.com/~r/HackingForChrist/~3/CmBM4yLAt74/

|

|

Tantek Celik: Raising The Bar On Open Web Standards: Supporting More Openness |

Yesterday was the 8th annual Blue Beanie Day celebrating web standards. Jeffrey Zeldman called for celebrating community diversity and pledging “to keep things moving in a positive, humanist direction”. In addition to fighting bad behaviors, we should also push for more good behaviors, more openness, and more access across a more broadly diverse community.

I've written about the open web as well as best practices for open web standards development before. It's time to update those and raise the bar on what we mean and want as "open".

In summary we should support open web standards that are:

- Free (of cost) to read (as opposed to "pay to download" as noted)

- Free(dom) to implement (royalty free, CC0)

- Free(dom and of cost) to discuss

- Free(dom) to update (e.g. by republishing with suggested changes)

- Published on the open web itself

- Published with open web formats

While some of those criteria have an obvious explanation like no cost to download, others have more subtle and lengthier explanations, like supporting standards licensed with CC0 to allow a more diverse set of communities to make suggestions, e.g. via republishing with changes, as well as direct incorporation of (pseudo)code in those standards into a more diverse set of (e.g. open source) implementations.

Not all web standards, even "open" web standards, are created equal, nor are they equally "open". We should (must) support ever more openness in web standards development, as that benefits more contributors and enables a more rapid evolution of those standards.

http://tantek.com/2014/335/b1/raising-bar-open-web-standards

|

|

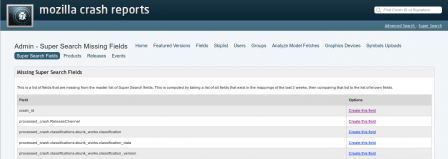

Adrian Gaudebert: Socorro: the Super Search Fields guide |

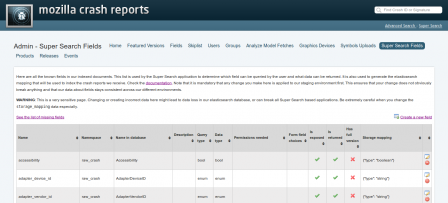

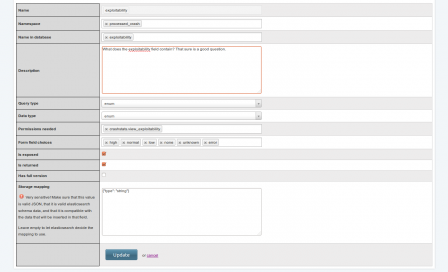

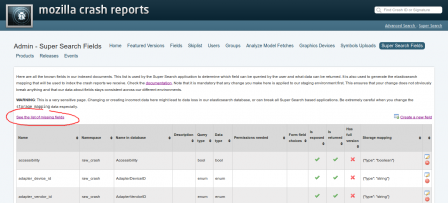

Socorro has a master list of fields, called the Super Search Fields, that controls several parts of the application: Super Search and its derivatives (Signature report, Your crash reports...), available columns in report/list/, and exposed fields in the public API. Fields contained in that list are known to the application, and have a set of attributes that define the behavior of the app regarding each of those fields. An explanation of those attributes can be found in our documentation.

In this guide, I will show you how to use the administration tool we built to manage that list.

You need to be a superuser to be able to use this administration tool.

Understanding the effects of this list

It is important to fully understand the effects of adding, removing or editing a field in this Super Search Fields tool.

A field needs to have a unique Name, and a unique combination of Namespace and Name in database. Those are the only mandatory values for a field. Thus, if a field does not define any other attribute and keeps its default values, it won't have any impact on the application. It will merely be "known", that's all.
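To illustrate that uniqueness rule, here is a minimal Python sketch that checks a list of field definitions for duplicate Names and duplicate Namespace / Name-in-database pairs. The dictionary keys (`name`, `namespace`, `in_database_name`), the example values, and the `find_conflicts` helper are all hypothetical. This is not Socorro's actual code or schema.

```python
from collections import Counter


def find_conflicts(fields):
    """Return (duplicate Names, duplicate (namespace, in_database_name) pairs).

    `fields` is a list of dicts with hypothetical keys mirroring the guide's
    mandatory values: Name, Namespace and Name in database.
    """
    name_counts = Counter(f["name"] for f in fields)
    location_counts = Counter((f["namespace"], f["in_database_name"]) for f in fields)
    duplicate_names = sorted(n for n, c in name_counts.items() if c > 1)
    duplicate_locations = sorted(loc for loc, c in location_counts.items() if c > 1)
    return duplicate_names, duplicate_locations


fields = [
    {"name": "product", "namespace": "processed_crash", "in_database_name": "product"},
    {"name": "version", "namespace": "processed_crash", "in_database_name": "version"},
    # Unique Name, but it points at the same namespace/database name as "product".
    {"name": "product_copy", "namespace": "processed_crash", "in_database_name": "product"},
]

print(find_conflicts(fields))  # ([], [('processed_crash', 'product')])
```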

Now, here are the important attributes and their effects:

- Is exposed - if this value is checked, the field will be accessible in Super Search as a filter.

- Is returned - if this value is checked, the field will be accessible in Super Search as a facet / aggregation. It will also be available as a column in Super Search and report/list/, and it will be returned in the public API.

- Permissions needed - permissions listed in this attribute will be required for a user to be able to use or see this field.

- Storage mapping - this value will be used when creating the mapping to use in Elasticsearch. It changes the way the field is stored. You can use this value to define some special rules for a field, for example if it needs a specific analyzer. This is a sensitive attribute: if you don't know what to do with it, leave it empty and Elasticsearch will guess what the best mapping is for that field.
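The following is a rough Python model of how these attributes combine, written only from the behavior described above. The `SuperSearchField` class, the `where_usable` helper, the example fields, and the `view_pii` permission name are hypothetical illustrations, not Socorro's real implementation. Storage mapping is kept in the model but does not affect visibility, since it only changes how Elasticsearch stores the field.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SuperSearchField:
    # Mandatory values: the Name plus the Namespace / Name-in-database pair.
    name: str
    namespace: str
    in_database_name: str
    # Optional attributes. With these defaults the field is merely "known".
    is_exposed: bool = False
    is_returned: bool = False
    permissions_needed: List[str] = field(default_factory=list)
    storage_mapping: Optional[dict] = None  # None: let Elasticsearch guess


def where_usable(f: SuperSearchField, user_permissions: List[str]) -> List[str]:
    """Return the places where a given user could use or see field `f`."""
    if not set(f.permissions_needed) <= set(user_permissions):
        return []  # a required permission is missing, so the field stays hidden
    places = []
    if f.is_exposed:
        places.append("filter")
    if f.is_returned:
        places += ["facet", "column", "public API"]
    return places


plain = SuperSearchField("build_id", "processed_crash", "build")
print(where_usable(plain, []))  # []

email = SuperSearchField(
    "email", "processed_crash", "email",
    is_exposed=True, is_returned=True,
    permissions_needed=["view_pii"],  # hypothetical permission name
)
print(where_usable(email, []))  # []
print(where_usable(email, ["view_pii"]))  # ['filter', 'facet', 'column', 'public API']
```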

It is, as always, a rule of thumb to apply changes to the dev/staging environments before doing so in production. And to my Mozilla colleagues: this is mandatory! Please always apply any change to stage first, verify it works as you want (using Super Search for example), then apply it to production and verify there.

Getting there

To get to the Super Search Fields admin tool, you first need to be logged in as a superuser. Once that's done, you will see a link to the administration in the bottom-right corner of the page.

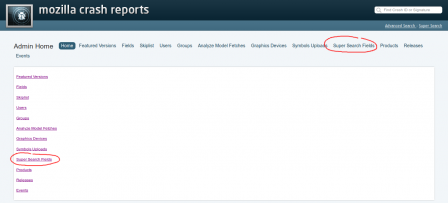

Clicking that link will get you to the admin home page, where you will find a link to the Super Search Fields page.

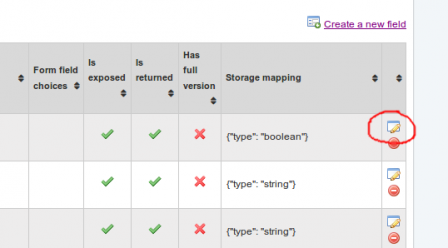

The Super Search Fields page lists all the currently known fields with their attributes.

Adding a new field

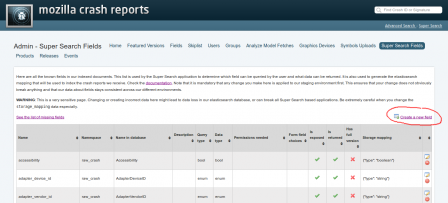

On the Super Search Fields page, click the Create a new field link in the top-right corner. That leads you to a form.

Fill in all the inputs with the values you need. Note that Name is a unique identifier for this field, but also the name that will be displayed in Super Search. It doesn't have to be the same as Name in database. The current convention is to use the database name but in lower case and with underscores. So for example if your field is named DOMIPCEnabled in the database, we would make the Name something like dom_ipc_enabled.
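The convention involves some judgment. A purely mechanical CamelCase split would turn `DOMIPCEnabled` into `domipc_enabled`, because nothing marks the boundary between "DOM" and "IPC". The minimal Python sketch below suggests a Name by splitting on case changes and then breaking all-caps runs apart using a list of known acronyms. `suggest_name`, `split_acronym_run`, and the `KNOWN_ACRONYMS` list are hypothetical helpers, not part of Socorro. Always review the suggestion before using it.

```python
import re

# Hypothetical list of acronyms to keep as separate words. Extend as needed.
KNOWN_ACRONYMS = ("DOM", "IPC", "GPU", "URL")


def split_acronym_run(run):
    """Split an all-caps run like 'DOMIPC' into known acronyms: ['DOM', 'IPC']."""
    parts = []
    while run:
        for acronym in KNOWN_ACRONYMS:
            if run.startswith(acronym):
                parts.append(acronym)
                run = run[len(acronym):]
                break
        else:  # no known acronym at the start: keep the rest as one word
            parts.append(run)
            break
    return parts


def suggest_name(database_name):
    """Suggest a lower-case, underscore-separated Name for a database field name."""
    # Tokens: capital runs not followed by a lower-case letter, Capitalized words, digits.
    tokens = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", database_name)
    words = []
    for token in tokens:
        words.extend(split_acronym_run(token) if token.isupper() else [token])
    return "_".join(word.lower() for word in words)


print(suggest_name("DOMIPCEnabled"))  # dom_ipc_enabled
print(suggest_name("AdapterVendorID"))  # adapter_vendor_id
```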

Use the documentation about our attributes to understand how to fill that form.

Clicking the Create button might take some time, especially if you filled in the Storage mapping attribute. If you did, in the back-end the application will perform a few things to verify that this change does not break Elasticsearch indexing. If you get redirected to the Super Search Fields page, that means the operation was successful. Otherwise, an error will be displayed and you will need to press the Back button of your browser and fix the form data.

Note that the cache is refreshed whenever you make a change to the list, so you can verify your changes right away by looking at the list.

Editing a field

Find the field you want to edit in the Super Search Fields list, and click the edit icon to the right of that field's row. That will lead you to a form much like the New field one, but prefilled with that field's current attribute values. Make the changes you need, and press the Update button. What applies to the New field form applies here as well (mapping checks, cache refreshing, etc.).

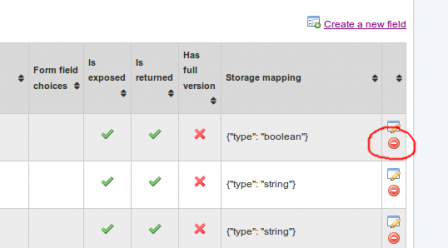

Deleting a field

Find the field you want to delete in the Super Search Fields list, and click the delete icon to the right of that field's row. You will be prompted to confirm your intention. If you are sure about what you're doing, confirm and you will be done.

The missing fields tool

We have a tool that looks at all the fields known by Elasticsearch (meaning that Elasticsearch has received at least one document containing that field) and all the fields known in the Super Search Fields, and shows a diff of those. It is a good way to see if you did not forget some key fields that you could use in the app.

To access that list, click the See the list of missing fields link just above the Super Search Fields list.

The list of missing fields provides a direct link to create the field for each row. It will take you to the New field form with some prefilled values.
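The guide describes the missing fields tool only as a diff between Elasticsearch's known fields and the Super Search Fields list. Here is a minimal Python sketch of such a diff. It is not Socorro's implementation, and it makes three assumptions:

- You have already pulled the nested `properties` dict out of the Elasticsearch mapping.
- Nested object fields are named with dots.
- A known field's full name is its namespace joined to its Name in database with a dot.

The example mapping and field values are hypothetical.

```python
def flatten_mapping(properties, prefix=""):
    """Yield dotted field names from a nested Elasticsearch-style 'properties' dict."""
    for key, spec in properties.items():
        full_name = f"{prefix}.{key}" if prefix else key
        if "properties" in spec:  # object field: recurse into its sub-fields
            yield from flatten_mapping(spec["properties"], full_name)
        else:
            yield full_name


def missing_fields(es_properties, known_fields):
    """Fields Elasticsearch has seen that the Super Search Fields list lacks."""
    in_es = set(flatten_mapping(es_properties))
    known = {f"{f['namespace']}.{f['in_database_name']}" for f in known_fields}
    return sorted(in_es - known)


es_properties = {
    "processed_crash": {
        "properties": {
            "product": {"type": "string"},
            "version": {"type": "string"},
            "json_dump": {
                "properties": {"crash_info": {"properties": {"type": {"type": "string"}}}}
            },
        }
    }
}
known_fields = [
    {"name": "product", "namespace": "processed_crash", "in_database_name": "product"},
]

print(missing_fields(es_properties, known_fields))
# ['processed_crash.json_dump.crash_info.type', 'processed_crash.version']
```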

Conclusion

I think I have covered it all. If not, let me know and I'll adjust this guide. Same goes if you think some things are unclear or poorly explained.

If you find bugs in this Super Search Fields tool, please use Bugzilla to report them. And remember, Socorro is free / "libre" software, so you can also go ahead and fix the bugs yourself! :-)

http://adrian.gaudebert.fr/blog/post/2014/12/02/socorro-super-search-fields-guide

|

|

Doug Belshaw: A Brief History of Web Literacy and its Future Potential [DMLcentral] |

Further to an earlier post on the history of web literacy, I’ve just had an article published at DMLcentral. Entitled A Brief History of Web Literacy and its Future Potential, it weighs in at over 3,000 words – so you might want to sit down with a cup of coffee before starting to read it!

After doing some additional research on top of what I did for my thesis, I’ve identified five ‘eras’ of web literacy:

- 1993-1997: The Information Superhighway

- 1999-2002: The Wild West

- 2003-2007: The Web 2.0 era

- 2008-2012: The Era of the App

- 2013+: The Post-Snowden era

As I say in the article:

It’s worth noting that what follows is partial, incomplete, focused on the developed, western world, and only a first attempt. I’d be very grateful for comment, pushback, and pointers to other work in this area.

Click here to read the article in full at DMLcentral

Questions? Comments? Please add them to the original article at the link above. Alternatively, email me: doug@mozillafoundation.org

|

|

Liz Henry: Thoughts on working at Mozilla and the Firefox release process |

Some thoughts on my life at Mozilla as I head into our company-wide work week here in Portland! My first year at Mozilla I spent managing the huge volume of bugs, updating docs on how to triage incoming bugs and helping out with Bugzilla itself. For my second year I’ve been more closely tied into the Firefox release process, and I switched from being on the A-Team (Automation and Tools) to desktop Firefox QA, following Firefox 31 and then Firefox 34 from beginning to release.

I also spent countless hours fooling around with Socorro, filing bugs on the highest volume crash signatures for Firefox, and then updating the bugs and verifying fixes, especially for startup crashes or crashes associated with “my” releases.

Over this process I’ve really enjoyed working with everyone I’ve met online and in person. The constant change of Mozilla environments and the somewhat anarchic processes are completely fascinating. Though sometimes unnerving. I have spent these two years becoming more of a generalist. I have to talk with end users, developers on every Mozilla engineering team, project or product managers if they exist, other QA teams, my own Firefox/Desktop QA team, the people maintaining all the tools that all of us use, release management, and release engineering. Much of the actual QA has been done by our Romanian team so I have coordinated a lot with them and hope to meet them all some day. When I need to dig into a bug or feature and figure out how it works, it means poking around in documentation or in the code or talking with people, then documenting whatever it is.

This is a job (and a workplace) suited for people who can cope with rapid change and shifting ground. Without a very specific focus, expertise is difficult. People complain a lot about this. But I kind of like it! And I’m constantly impressed by how well our processes work and what we produce. There are specific people who I think of as total rock stars of knowing things. Long time experts like bz or dbaron. Or dmajor who does amazing crash investigations. I am in their secret fan club. If there were Bugzilla “likes” I would be liking up a storm on there! It’s really amazing how well the various engineering teams work together. As we scramble around to improve things and as we nitpick I want to keep that firmly in mind. And we are all a bit burned out from dealing with the issues from the Firefox 33 release and its many point releases, then the 10th anniversary release, then last-minute scrambling to incorporate surprise/secret changes to Firefox 34 because of our new deal with Yahoo.

I was in a position to see some of the hard work of the execs, product and design people, and engineers, as well as going through some rounds of iterating very quickly with them these last 2 weeks (and seeing gavin and lmandel keep track of that rapid pace). Then I saw just part of what the release team needed to do, knowing that from their perspective they could also see what IT and infrastructure teams had to do in response. So many ripples of teamwork and people thinking things through, discussing, building an ever-changing consensus reality.

I find that for every moment I feel low for not knowing something, or making a mistake (in public no less) there are more moments when I know something no one else knows in a particular context or am able to add something productive because I’m bringing a generalist perspective that includes my years of background as a developer.

As an author and editor I am reminded of what it takes to edit an anthology. My usual role is as a general editor with a vision: putting out a call, inviting people to contribute, working back and forth with authors, tracking all the things necessary (quite a lot to track, more than you’d imagine!) and shifting back and forth from the details of different versions of a story or author bios, to how the different pieces of the book fit together. And in that equation you also, if you’re lucky, have a brilliant and meticulous copy editor. At Aqueduct Press I worked twice with Kath Wilham. I would have gone 10 rounds of nitpick and Kath would still find things wrong. My personal feeling for my past year’s work is that my job as test plan lead on a release has been 75% “editor” and 25% copy editor.

If I had a choice I would always go with the editor and “glue” work rather than the final gatekeeper work. That last moment of signing off on any of the stages of a release freaks me out. I’m not enough of a nitpicker and, in fact, have zero background in QA. Instead, I have 20 years as a lone wolf (or nearly so) developer, a noisy, somewhat half-assed one who has never *had* QA to work with, much less *done* QA. It’s been extremely interesting, and it has also been part of my goal for working in big teams.

The other thing I note from these stressful last 6 weeks is that, consistent with other situations for me, in a temporary intense “crisis” mode my skills shine out, as they did in the shifting-every-30-minutes disaster relief landscape, where I had great tactical and logistical skills and ended up as a good leader. My problem is that I can’t sustain that level of awareness, productivity, or activeness, both physically and mentally, for all that long. I go till I drop, crash & burn. The trick is knowing that’s going to happen, communicating it beforehand, and having other people to back you up. The real trick, which I hope to improve at, is knowing my limits and not crashing and burning at all. On the other hand, I like knowing that in a hard situation I have this ability to tap into. It just isn’t something I can expect to do all the time, and it isn’t sustainable.

One thing I miss about my “old” life is building actual tools. These last 2 years have been times of building human and institutional infrastructure for me much more than making something more obvious and concrete. I would like to write another book and to build a useful open source tool of some sort, either for Mozilla, for an anti-harassment tool suite, or for Ingress…

Alternatively I have a long term idea about an open source hardware project for mobility scooters and powerchairs. That may never happen, so at the least, I’m resolving to write up my outline of what should happen, in case someone else has the energy and time to do it.

In 2013 and 2014 I mentored three interns for the GNOME-OPW project at Mozilla. Thanks so much to Tiziana Selitto, Maja Frydrychowicz, and Francesca Ciceri for being awesome to work with! As well as the entire GNOME-OPW team at Mozilla and beyond. I spoke about the OPW projects this summer at Open Source Bridge and look forward to more work as a mentor and guide in the future.

Meanwhile! I started a nonprofit in my “spare time”! It’s Double Union, a feminist, women-only hacker and maker space in San Francisco and it has around 150 members. I’m so proud of everything that DU has become. And I continue my work on the advisory boards of two other feminist organizations, Ada Initiative and GimpGirl as well as work in the backchannels of Geekfeminism.org. I wrote several articles for Model View Culture this year and advised WisCon on security issues and threat modeling. I read hundreds of books, mostly science fiction, fantasy, and history. I followed the awesome work my partner Danny does with EFF, as well. These things are not just an important part of my life, they also make my work at Mozilla more valuable because I am bringing perspectives from these communities to the table in all my work.

The other day I thought of another analogy for my last year’s work at Mozilla that made me laugh pretty hard. I feel personally like the messy “glue” Perl scripts it used to be my job to write to connect tools and data. Part of that coding landscape was because we didn’t have very good practices or design patterns but part of it I see as inevitable in our field. We need human judgement and routing and communication to make complicated systems work, as well as good processes.

I think for 2015 I will be working more closely with the e10s team as well as keeping on with crash analysis and keeping an eye on the release train.

Mad respect and appreciation to everyone at Mozilla!!

http://bookmaniac.org/thoughts-on-working-at-mozilla-and-the-firefox-release-process/

|

|

Yunier José Sosa Vázquez: Firefox Hello, WebIDE and more in the new version of Firefox |

We are already in the last month of the year. Before we say goodbye to this great 2014, Mozilla is giving us a new version of our favorite browser. It is packed with new features for the general public, and especially for those who like to make video calls and develop for the Web. Without further ado, here are the new features of this release, first-hand.

Firefox Hello is a real-time communication client that lets you talk with family and friends who may not have the same video chat service, software, or hardware as you. Video and voice calls are free, and you won't need to download anything because everything is built into the browser. By clicking the “chat bubble” button in the Firefox toolbar, you'll be ready to connect with anyone who has WebRTC enabled in their browser (Firefox, Chrome, or Opera).

Hello also lets you use the service without having to create an account: by sharing the call link, the other person can start the conversation. Contact integration is included, so you can manage your contacts and even import them from your Google account. If your contacts have a Firefox Account and are online, you can “call” them directly from Firefox.

Changing themes/personas is now easier, because you can do it directly in Firefox's Customize mode. You can open it from the Menu -> Customize or by typing about:customizing in the address bar.

WebIDE is a new tool in this version for developing apps inside the browser. WebIDE lets you create a new Firefox OS app (which is just a Web App) from a template, or open the code of an app you created previously. From there you can edit the app's files, run it in the simulator, and debug it with the developer tools. To open it, select WebIDE from Firefox's “Web Developer” menu. (Read more in the documentation.)

To improve your security, all Wikipedia searches you make from Firefox now use a secure protocol (HTTPS), so third parties cannot “see” what you search for (English version only).

Meanwhile, the US English version debuts an improved search bar that shows suggestions and lets you switch search engines quickly. If this change is well received, it may come to the other Firefox localizations. In Russia, Belarus, and Kazakhstan the default search engine is now Yandex, and in North America it is Yahoo. This follows the agreements Mozilla signed with Yandex and Yahoo a few days ago.

If you used to see the “Firefox is already running” message, you won't anymore: Firefox now recovers transparently from a hung Firefox process. HTTP/2 (draft 14) and ALPN have also been implemented.
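ALPN (Application-Layer Protocol Negotiation) lets the client list, inside the TLS handshake, the application protocols it can speak, so the server can pick HTTP/2 without an extra round trip. A minimal sketch of the server-side choice, assuming the usual RFC 7301 behaviour of walking the server's own preference list and taking the first protocol the client also offered. `selectAlpn` is a hypothetical helper, not a real TLS library API, and the protocol strings are illustrative (drafts of HTTP/2 used versioned identifiers such as `h2-14`):

```typescript
// Hypothetical helper, not the API of any real TLS library.
// Server-side ALPN choice: walk the server's protocols in its own order of
// preference and return the first one the client also advertised.
function selectAlpn(clientOffers: string[], serverPrefs: string[]): string | undefined {
  for (const proto of serverPrefs) {
    if (clientOffers.indexOf(proto) !== -1) {
      return proto;
    }
  }
  // No overlap: a real server would abort the handshake with a fatal
  // no_application_protocol alert (RFC 7301).
  return undefined;
}

// A client offering draft-14 HTTP/2 and HTTP/1.1 to a server that prefers HTTP/2.
const negotiated = selectAlpn(["h2-14", "http/1.1"], ["h2-14", "spdy/3.1", "http/1.1"]); // "h2-14"
// A legacy client that only speaks HTTP/1.1 falls back cleanly.
const legacy = selectAlpn(["http/1.1"], ["h2-14", "spdy/3.1", "http/1.1"]); // "http/1.1"
// No protocol in common.
const none = selectAlpn(["spdy/2"], ["h2-14", "http/1.1"]); // undefined
```

Letting the server's order win keeps the decision in one place: a server can stop preferring a draft token simply by reordering its list, without any client changes.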

For Android

- The browser theme has been updated.

- Support for Chromecast tab mirroring.

- The first-run experience has been redesigned.

- Public key pinning support has been enabled.

- You can switch to Wi-Fi when a web page fails to load.

- Added support for the Prefer:Safe HTTP header.

Other new features

- WebCrypto: support for RSA-OAEP, PBKDF2 and AES-KW.

- WebCrypto: wrapKey and unwrapKey implemented.

- WebCrypto: keys can be imported/exported in JWK format.

- Added support for the ECMAScript 6 Symbol data type.

- WebCrypto: support for ECDH.

- Implemented template strings in JavaScript.

- Device Storage API enabled for privileged apps.

- Performance.now() implemented for workers.

- Ability to highlight all nodes matching a selector in the Style Editor and the Inspector's Rules panel.

- Improved Profiler user interface.

- The DOM matches() API has been implemented.

- console.table has been added to the Web Console.
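Two of the ECMAScript 6 items above, Symbol and template strings, can be tried with nothing but the language itself. A small sketch in TypeScript (which compiles to the same JavaScript): a `Symbol` used as a property key that stays out of `Object.keys` and `JSON.stringify`, a plain template string, and a tagged one. The variable names and the `upperValues` tag are illustrative only, not part of any Firefox API:

```typescript
// Symbols: unique property keys that cannot collide with string keys.
const secret = Symbol("secret");
const record = { name: "demo", [secret]: 42 };

// Symbol-keyed properties are skipped by Object.keys and JSON.stringify.
const visibleKeys = Object.keys(record); // ["name"]
const json = JSON.stringify(record); // '{"name":"demo"}'

// Template strings: ${...} interpolates any expression into the literal.
const algorithms = ["RSA-OAEP", "PBKDF2", "AES-KW"];
const summary = `WebCrypto: ${algorithms.join(", ")} (${algorithms.length} algorithms)`;

// Tagged templates: the tag function receives the literal parts and the
// interpolated values separately; this one upper-cases only the values.
function upperValues(parts: TemplateStringsArray, ...values: unknown[]): string {
  return parts.reduce(
    (out, part, i) => out + part + (i < values.length ? String(values[i]).toUpperCase() : ""),
    ""
  );
}
const tagged = upperValues`engine: ${"yandex"}, region: ${"ru"}`; // "engine: YANDEX, region: RU"
```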

If you want to learn more, you can read the release notes (in English).

You can get this release from our Downloads area, in Spanish and English, for Linux, Mac, Windows and Android. Remember that to browse through proxy servers you must set the preference network.negotiate-auth.allow-insecure-ntlm-v1 to true in about:config.

Note: The Mac and Android builds are still downloading; we will publish them as soon as they finish.

http://firefoxmania.uci.cu/firefox-hello-webide-y-mas-en-la-nueva-version-de-firefox/

|

|

Fr'ed'eric Harper: Firefox OS – HTML for the mobile web at All Things Open |

Copyright: Jonathan LeBlanc

Every time I speak at a conference, I feel blessed to be able to do so. For me, it’s a great opportunity to share my passion and expertise about technology. For All Things Open, I was happy to be part of the amazing list of speakers who love Open Source as much as I do. As with many of the talks I’ve given in the last year and a half, I was talking about Firefox OS. I think there is still a lot of awareness to raise about this new operating system: so many people don’t know about the power behind Firefox OS and HTML5. That is even truer in North America, where you cannot go to your local store and buy a device as you can in some places in Europe and LATAM.

Since I was in the main room, my talk was recorded. Also, because of that, and because five tracks were running at the same time, my room looks a bit empty (I had about 100 people). In any case, I got interesting feedback about Firefox OS and my talk.

As usual, I also made my own recording, so you have access to another version. The sound is not as good as the professional recording, but you get a better view of the screen (I should mix the two to make the ultimate recording).

Looking forward to speaking at the 2016 edition of ATO!

--

Firefox OS – HTML for the mobile web at All Things Open is a post on Out of Comfort Zone from Fr'ed'eric Harper

Related posts:

- HTML for the Mobile Web at All Things Open In about a month, I’ll speak at All Things Open...

- Fixing the mobile web with Firefox OS at FITC Toronto This morning I was presenting at FITC Toronto about Firefox...

http://outofcomfortzone.net/2014/12/01/firefox-os-html-for-the-mobile-web-at-all-things-open/

|

|

Patrick McManus: Firefox gecko API for HTTP/2 Push |

However, as part of Gecko 36 we added a new Gecko (i.e. internal Firefox and add-on) API called nsIHttpPushListener that allows direct consumption of pushes without waiting for a cache hit. This opens up programming models other than browsing.

A single HTTP/2 stream, likely formed as a long-lasting transaction from an XHR, can receive multiple pushed events correlated to it without having to form an individual hanging poll for each event. Each event is both an HTTP request and an HTTP response, and is as arbitrarily expressive as those can be.
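To make the programming model concrete, here is a toy, in-memory sketch in TypeScript. It is not the Gecko API: nsIHttpPushListener is an internal XPCOM interface and its methods are not reproduced here. The names `PushedExchange`, `AssociatedStream`, `onPush` and `deliver` are hypothetical, and no networking happens; the sketch only shows the shape of the idea, one long-lived stream to which many pushed request/response pairs are correlated, consumed by a single listener instead of one hanging poll per event.

```typescript
// Hypothetical types for illustration only; this is NOT the Gecko
// nsIHttpPushListener interface.
interface PushedExchange {
  // The request the server promised (method and path are enough for the sketch).
  request: { method: string; path: string };
  // The response that arrived with the push.
  response: { status: number; body: string };
}

type PushHandler = (push: PushedExchange) => void;

// One long-lived stream (e.g. opened by an XHR) that pushes are correlated to.
class AssociatedStream {
  readonly path: string;
  private handlers: PushHandler[] = [];

  constructor(path: string) {
    this.path = path;
  }

  // Subscribe once; every later push on this stream reaches the handler.
  onPush(handler: PushHandler): void {
    this.handlers.push(handler);
  }

  // Simulates the server pushing one request/response pair on this stream.
  deliver(push: PushedExchange): void {
    for (const handler of this.handlers) {
      handler(push);
    }
  }
}

// Usage: a single subscription receives every event; no per-event poll is opened.
const stream = new AssociatedStream("/events");
const received: string[] = [];
stream.onPush(({ request, response }) => {
  received.push(`${request.path} -> ${response.status}: ${response.body}`);
});
stream.deliver({ request: { method: "GET", path: "/events/1" }, response: { status: 200, body: "first" } });
stream.deliver({ request: { method: "GET", path: "/events/2" }, response: { status: 200, body: "second" } });
// received now holds ["/events/1 -> 200: first", "/events/2 -> 200: second"]
```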

It seems likely that any implementation of a new Web-based push notification protocol would be built around HTTP/2 pushes, and this interface would provide the basis for subscribing to and consuming those events.

nsIHttpPushListener is only implemented for HTTP/2. SPDY has a compatible feature set, but we've begun transitioning to the open standard and will likely not evolve SPDY's feature set any further at this point.

There is no WebIDL DOM access to the feature set yet; that is something that should be standardized across browsers before being made available.

http://bitsup.blogspot.com/2014/12/firefox-gecko-api-for-http2-push.html

|

|

Gervase Markham: Search Bugzilla with Yahoo! |

The Bugzilla team is aware that there are currently 5 different methods of searching Bugzilla (as explained in yesterday’s presentation) – Instant Search, Simple Search, Advanced Search, Google Search and QuickSearch. It has been argued that this is too many, and that we should simplify the options available – perhaps building a search which is all three of Instant, Simple and Quick, instead of just one of them. Some Bugzilla developers have sympathy with that view.

I, however, having caught the mood of the times, feel that Mozilla is all about choice, and there is still not enough choice in Bugzilla search. Therefore, I have decided to add a sixth option for those who want it. As of today, December 1st, by installing this GreaseMonkey script, you can now search Bugzilla with Yahoo! Search. (To do this, obviously, you will need a copy of GreaseMonkey.) It looks like this:

In the future, I may create a Bugzilla extension which allows users to fill the fourth tab on the search page with the search engine of their choice, perhaps leveraging the OpenSearch standard. Then, you will be able to search Bugzilla using the search engine which provides the best experience in your locale.

Viva choice!

http://feedproxy.google.com/~r/HackingForChrist/~3/YCi432rg3ec/

|

|

John O'Duinn: “APE – How to Publish a Book” by Guy Kawasaki |

A few years ago, I first put my toes into the book publishing world by co-writing a portion of AOSAv2 about Mozilla’s RelEng infrastructure. Having never written part of a published book before, I had no idea what I was really getting into. It was a lot of work, in the midst of an already-busy-day-time-job and yet, I found it was strangely quite rewarding. Not financially rewarding – all proceeds from the book went to Amnesty – but rewarding in terms of getting us all to organize our thoughts to write down in a clear, easy to read way, explaining the million-and-one details that “we just knew instinctively”, and hopefully helping spread the word to other software companies on what we did when changing Mozilla’s release cadence.

While working on AOSAv2, clearly explaining the technology was hard work, as expected, but I was surprised by how much work went into “simple” mechanics – merging back reviewer feedback, tracking revisions, dealing with formatting of tables and diagrams, publishing in different formats… and remember, this was a situation where book publisher contracts, revenue and other “messy stuff” were already taken care of by others. I “just” had to write. I was super happy to have the great guidance and support of Greg Wilson and Amy Brown who had been-there-done-that, helped work through all those details, and kept us all on track.

Ever since then, I’ve been considering more writing, but daunted by all the various details above and beyond “just writing”. These blog posts help scratch that itch, in between my own real-life-work-deadlines, but the idea of writing a full book, by myself, still lingered. A while ago, I grabbed “APE: Author, Publisher, Entrepreneur-How to Publish a Book” by Guy Kawasaki. It looked like a good HowTo manual, I’ve enjoyed some of his other books, and he’s always a great presenter, so I was looking forward to some quiet time to read this book cover-to-cover.

This was well worth the time, and I re-read it a few times!

For me, some of the highlights were:

- “why are you writing a book?” I really like how Guy turned this question around to “why would someone else want to read your book”. Excellent mind-flip. I’ve met a few people who want to write a book, and even a few published authors, and I’ve talked with them about my own ideas about writing. But no-one, not one, ever reversed the question like this. It was instantly self-evident to me – it takes time to read a book, and we’re all busy. So, even if someone gave me a book for free, why would I want to skip work and/or social plans to read a book by someone I don’t know? Making it clear, immediately, why someone would find it worthwhile reading your book is a crucial step that I think many people skip past. As the author, keeping this in mind at all times while writing will help keep you focused on the straight-and-narrow path to writing a book that people would actually want to read.

- Money: Most publishers are super-secret about their contracts/terms/conditions, which can make a first-time author feel like they’re going to be taken advantage of. (The only exception I know of is Apress, who publish all their terms on their website, with a “no haggling” clause.) To help educate potential authors, Guy & Shawn give full detail, in small, easy-to-follow words, and I respect that.

- “Tell the world you’re writing a book – not that you’re thinking of writing a book.” Again, an excellent mind-flip to help keep you motivated and writing, every single day, whether you want to or not. Also, they provided many links to writer’s clubs (writer support groups!?) who would help you keep motivated.

- print-on-demand vs print-big-batch: This reminded me of how software release cycles are changing the software industry from old monolith release cadence to rapid-release cadence. “Old way”: a big-bang release on an unpredictable 18-month cycle, with a costly big print run, and lots of ways to handle the financial risk of under/over-selling; any corrections are postponed until the next big-bang release, if it looks like there is enough interest. “New way”: build infrastructure to enable print-on-demand. Do smaller, more frequent releases, each with small print runs, (almost) no risk of under/over-selling, and corrections handled frequently and easily. Yes, at first glance, each printed book might seem more expensive this way, but when you factor in the lack of under/over-selling, the removed financial risk, and the benefits of frequent updates to the almost-free electronic readers, it actually feels cheaper, more efficient and more appealing to me.

- In addition to printed books, there’s a good description of pros/cons of the different popular electronic formats (PDF, MOBI, EPUB, DAISY, APK…) as well as related DRM.

- The differences between ebook publishers (Amazon, Apple, Barnes & Noble, Google, Kobo, …), Author Publisher Services (Lulu, Blurb, Author Solutions, …) and Print-on-Demand (Walkerville Publishing, Lightning Source, …) were detailed and very helpful. A complex chapter, with lots of data, ending with the reassuring “Don’t obsess about making the wrong choice, however, because most distribution decisions are changeable.”!

- translations, audiobooks: normally, these are handled as edge cases. Guy & Shawn walk through some of the options (Audible/Amazon, Books-on-Tape/RandomHouse), as well as financial & legal realities.

- Some fun examples of rejection responses by agents/publishers. My personal favorite was a rejection sent to George Orwell about Animal Farm “It is impossible to sell animal stories in the USA”.

All in all, I found the writing style personal, helpful, direct and super honest. Even the way they ended the book… “Thank you. Now go write a book! —Guy and Shawn”

Thank you both.

http://oduinn.com/blog/2014/11/30/ape-how-to-publish-a-book-by-guy-kawasaki/

|

|

Benjamin Smedberg: An Invitation |

I’d like to invite my blog readers and Mozilla coworkers to Jesus Christ.

For most Christians, today marks the beginning of Advent, the season of preparation before Christmas. Not only is this a time for personal preparation and prayer while remembering the first coming of Christ as a child, but also a time to prepare the entire world for Christ’s second coming. Christians invite their friends, coworkers, and neighbors to experience Christ’s love and saving power.

I began my journey to Christ through music and choirs. Through these I discovered beauty in the teachings of Christ. There is a unique beauty that comes from combining faith and reason: belief in Christ does not require superstition nor ignorance of history or science. Rather, belief in Christ’s teachings brought me to a wholeness of understanding the truth in all its forms, and our own place within it.

Although Jesus is known to Christians as priest, prophet, and king, I have a special and personal devotion to Jesus as king of heaven and earth. The feast of Christ the King at the end of the church year is my personal favorite, and it is a particular focus when I perform and compose music for the Church. I discovered this passion during college; every time I tried to plan my own life, I ended up in confusion or failure, while every time I handed my life over to Christ, I ended up being successful. My friends even got me a rubber stamp which said “How to make God laugh: tell him your plans!” This understanding of Jesus as ruler of my life has led to a profound trust in divine providence and personal guidance in my life. It even led to my becoming involved with Mozilla and eventually becoming a Mozilla employee: I was a church organist and switching careers to become a computer programmer was a leap of faith, given my lack of education.

Making a religious invitation to coworkers and friends at Mozilla is difficult. We spend our time and build our deepest relationships in a setting of email, video, and online chat, where off-topic discussions are typically out of place. I want to share my experience of Christ with those who may be interested, but I don’t want to offend or upset those who aren’t.

This year, however, presents me with a unique opportunity. Most Mozilla employees will be together for a shared planning week. If you will be there, please feel free to find me during our down time and ask me about my experience of Christ. If you aren’t at the work week, but you still want to talk, I will try to make that work as well! Email me.

1. On Jordan’s bank, the Baptist’s cry
Announces that the Lord is nigh;
Awake, and hearken, for he brings
Glad tidings of the King of kings!

2. Then cleansed be every breast from sin;
Make straight the way for God within;
Prepare we in our hearts a home
Where such a mighty Guest may come.

3. For Thou art our Salvation, Lord,
Our Refuge, and our great Reward.
Without Thy grace we waste away,
Like flowers that wither and decay.

4. To heal the sick stretch out Thine hand,
And bid the fallen sinner stand;
Shine forth, and let Thy light restore
Earth’s own true loveliness once more.

5. Stretch forth thine hand, to heal our sore,
And make us rise to fall no more;
Once more upon thy people shine,
And fill the world with love divine.

6. All praise, eternal Son, to Thee
Whose advent sets Thy people free,
Whom, with the Father, we adore,
And Holy Ghost, forevermore.

Charles Coffin, Jordanis oras praevia (1736), translated from Latin into English by John Chandler, 1837

|

|

Christian Heilmann: It is Blue Beanie Day – let’s reflect #bbd14 |

Today we celebrate Blue Beanie Day once again. People who build things online don their blue hats and show their support for standards-based web development. It all goes back to Jeffrey Zeldman’s book, which outlined the idea and caused a massive change in the field of web design.

Let’s celebrate – once again

It feels good to be part of this; it is a tradition, and it reminds us of how far we’ve come as a community and as a profession. To me, though, it is starting to feel a bit stale. I get the feeling we are losing touch with what happens these days and celebrating the same old successes over and over again.

This could be the normal disillusionment of having worked in the same field for a long time. It could also come from having heard the same messages over and over. I am starting to wonder whether the message of “use web standards” still has an impact in today’s world.

The web is a commodity

I am not saying they are unnecessary – far from it. I am saying that we lose a lot of new developers to other causes and that web development as a craft is becoming less important than it used to be.

The web is a thing that people use. It is there, it does things. Much like opening a tap gives you water in most places we live in. We don’t think about how the tap works, we just expect it to do so. And we don’t want to listen to anyone who tells us that we need to use a tap in a certain way or we’re “doing it wrong”. We just call someone in when the water doesn’t run.

Standards mattered most when browsers worked against them

When standards-based web development became a thing, it was an absolute necessity. Browser support was all over the shop and we had to find something we could rely on. That is a standard. You can dismantle and assemble things because there is a standard for screws and screwdrivers. You can also use a knife or a key for that and thus damage the screw and the knife. But who cares as long as the job’s done, right? You do – as soon as you need to disassemble the same thing again.

Far beyond view source

Nowadays our world has changed a lot. Browser support is excellent. Browsers are pretty amazing at displaying complex HTML, CSS and JavaScript. On top of that, browsers are development tools giving us insights into what is happening. This goes beyond the view-source of old which made the web what it is. You can now inspect JavaScript-generated code. You can see browser-internal structures. You see what loaded when and how the browser performs. You can inspect canvas, WebGL and WebAudio. You can inspect browsers on connected devices and simulate devices and various connectivity scenarios.

All this and the fact that the HTML5 parser is forgiving and fixes minor markup glitches makes our chant for web standards support seem redundant. We’ve won. The enemies of old – Flash and other non-standard technologies seem to be forgotten. What’s there to celebrate?

Our standards, right or wrong?

Well, the struggle for a standards based web is far from over and at times we need to do things we don’t like doing. An open source browser like Firefox having to support DRM in video playback is not good. But it is better than punishing its users by preventing them from using massively successful services like Netflix. Or is it? Should our goal to only support open and standardised technology be the final decision? Or is it still up to us to show that open and standardised means the solutions are better in the long run and let that one slip for now? I’m not sure, but I know that it is easier to influence something when you don’t condemn it.

A new, self-made struggle

All in all there is a new target for those of us who count themselves in the blue beanie camp: complexity and “de-facto standards”.

The web grew to what it is now as it was simple to create for it. Take a text editor, write some code, open it in a browser and you’re done. These days professional web development looks much different. We rely on package managers. We rely on resource managers. We use task runners and pre-processing to create HTML, CSS and JavaScript solutions. All these tools are useful and can make a massive difference in a big and complex site. They should not be a necessity and are often overkill for the final product though. Web standards based development means one thing: you know what you’re doing and what your code should do in a supported browser. Adding these layers adds a layer of dark magic to that. Instead of teaching newcomers how to create, we teach them to rely on things they don’t understand. This is a perfectly OK way to deliver products, but it sets a strange tone for those learning our craft. We don’t empower builders, we empower users of solutions to build bigger solutions. And with that, we create a lot of extra code that goes on the web.

A “de-facto standard” is nonsense. The argument that something becomes good and sensible because a lot of people use it assumes a lot. Do these people use it because they need it? Or because they like it? Or because it is fashionable to use? Or because it yields quick results? Results that in a few months time are “considered dangerous” but stick around for eternity as the product has been shipped.

Framing the new world of web development

We who don the blue hats live in a huge echo chamber. It is time to stop repeating the same messages and concentrate on educating again. The web is obese, solutions become formulaic (parallax scrollers, huge hero headers…). There is a whole new range of frameworks to replace HTML, CSS and JavaScript out there that people use. Our job as the fans of standards is to influence those. We should make sure we don’t go towards a web that is dependent on the decisions of a few companies. Promises of evergreen support for those frameworks ring hollow. It happened with YUI - a very important player in making web standards based work scale to huge company size. And it can happen to anything we now promote as “the easier way to apply standards”.

http://christianheilmann.com/2014/11/30/it-is-blue-beanie-day-lets-reflect-bbd14/

|

|

David Rajchenbach Teller: Want to learn how to develop Free Software? |

This year, the Mozilla Community is offering, in Paris, a series of lectures and hands-on tutorials on Free Software development.

On the programme:

- how to join an existing project;

- how to communicate in a distributed team;

- how to fund a free software project;

- code quality;

- code!

- (and much more).

For more details, and to sign up, everything is here.

Note: classes start on December 8!

|

|

Doug Belshaw: Toward The Development of a Web Literacy Map: Exploring, Building, and Connecting Online |

I’m presenting at the Literacy Research Association conference next Friday. I got some useful feedback after my previous post so this is pretty much the version I’m going to present. The slides are above (modern web browser with fast JavaScript performance required!)

Introduction

Hi everyone, and thanks to Ian for the introduction. I’m really glad to be here - it’s my first time in Florida and, although I’ll only be here for about 46 hours, I plan to make full use of the amount of sunshine. I come from the frozen wastelands of northern England where most of us have skin like ‘Gollum’ from Lord of the Rings. Portland, Oregon - where I’ve just come from a Mozilla work week - was actually colder than where I live!

But, seriously, I really appreciate the opportunity to talk to you about something that’s really important to the Mozilla community - web literacy. It’s a topic I don’t think has been given enough thought and attention, and I’d like to use the brief time I’ve got here to convince you to help us rectify that. I’ll show you this quickly - the competency grid from v1.1 of the Web Literacy Map but I want to give some background before diving too much into that.

I’m a big fan of Howard Rheingold’s work, and he talks about ‘literacies of attention’. It seems appropriate, therefore, to tell you what I’m going to cover and to front-load this presentation with the conclusions I’m going to make. That way you can process what I’m trying to get across while the caffeine’s still coursing through your veins.

I was always taught to say what you’re going to say, say it, and then say what you’ve said. So my conclusions, the things you should pay attention to, are the following:

- Web literacy is a useful focus / research area

- We should work together instead of building endless competing frameworks

- There’s a need to balance rigour and grokkability

Given the looks on some people’s faces, I should probably just say quickly that ‘grok’ is a real word! The Oxford English Dictionary defines it as ‘to understand intuitively or by empathy; to establish rapport with.’ The Urban Dictionary, meanwhile, defines it as ‘literally meaning “to drink” but taken to mean “understanding.” Often used by programmers and other assorted geeks.’ You should probably grok Mozilla first, as it seems a bit odd to have some corporate shill from a browser company at the Literacy Research Association conference, no?

Well, that’s the thing. Every part of that sentence is incorrect. First, Mozilla isn’t a company, it’s a global non-profit. Second, Mozilla is not just about the half a billion people who use Firefox, but about a mission to promote openness, innovation & opportunity on the Web. And third, no-one’s selling anything here. Instead, it’s an invitation to do the work you were going to do anyway, but share and build with others for the benefit of mankind. Ian is a Mozillian who works in academia, as was I before I became a paid contributor. We have Mozillians in all walks of life, from engineers to teachers, and in every country of the world. It’s a global community that also makes products instantiating our mission and values.

Also, we work in the open and make everything we do available under open licenses. You’re free to rip and remix.

OK, so I think it was important to say that up front.

Web literacy is a useful focus / research area

Let’s start with web literacy as a useful focus / research area. I wrote my thesis on digital literacies and if there’s one thing that I learned it’s that there are as many definitions of ‘digital literacy’ as there are researchers in the field! Why on earth, then, would we need another term to endlessly redefine and argue about? Well, I’d argue that the good thing about the web is that it’s easier to agree what we’re actually talking about. Yes, there may be some people who use the term ‘web’ when they actually mean ‘internet’ but, by and large, we all know what we’re talking about.

As well as being something most people know about, it’s also ubiquitous. If you have access to the internet, then you almost always also have access to the web. That’s not true of other digital spaces where walled gardens are the norm. I’m sure there are very specific skills, competencies and habits of mind you need to use locked-down, proprietary products. And that’s great. But I think a better use of our time is thinking about the skills, competencies and habits of mind required to use a public good. To use an imperfect analogy, we don’t teach people to drive specific cars but give them a license to drive pretty much any car.

Web literacy is also an important research area because it’s political. Take the live issue of ‘net neutrality’. To recap, this is “the principle that Internet service providers and governments should treat all data on the Internet equally, not discriminating or charging differentially by user, content, site, platform, application, type of attached equipment, or mode of communication” (Wikipedia). While this may seem somewhat esoteric and distant, it’s a core part of web literacy. Just as Paulo Freire and others have seen literacy as a hugely emancipatory and liberating force for social change, so too web literacy is a force for good.

One response I get when I talk about web literacy is, “isn’t that covered by information literacy?” or “I’m sure what you describe is just digital media literacy.” And maybe it is. Let’s have a discussion. But before we do, I will note how fond researchers are of what I call ‘umbrella terms’. So one conceives digital literacy as including media literacy and information literacy. Another thinks media literacy includes information literacy and digital literacy. And a third believes information literacy to include digital literacy and media literacy. And so on.

Perhaps the clearest thinking in recent times around new literacies has been provided by Colin Lankshear and Michele Knobel. They write in a clear, lucid way that makes sense to researchers and practitioners alike. They’re a good example of what I want to talk about later in terms of balancing rigour and grokkability. I’m particularly fond of this quotation from the introductory chapter to their New Literacies Sampler. Apologies for the lengthy quotation, but I think it’s important:

Briefly, then, we would argue that the more a literacy practice can be seen to reflect the characteristics of the insider mindset and, in particular, those qualities addressed here currently being associated with the concept of Web 2.0, the more it is entitled to be regarded as a new literacy. That is to say, the more a literacy practice privileges participation over publishing, distributed expertise over centralized expertise, collective intelligence over individual possessive intelligence, collaboration over individuated authorship, dispersion over scarcity, sharing over ownership, experimentation over “normalization,” innovation and evolution over stability and fixity, creative-innovative rule breaking over generic purity and policing, relationship over information broadcast, and so on, the more we should regard it as a “new” literacy. New technologies enable and enhance these practices, often in ways that are stunning in their sophistication and breathtaking in their scale. Paradigm cases of new literacies are constituted by “new technical stuff ” as well as “new ethos stuff.”

Now if what they describe in this quotation doesn’t describe the web and web literacy, then I don’t know what does!

I’ve been working recently on a brief history of web literacy. That was published earlier this week over at DMLcentral, so do go and have a read. I’d appreciate your insights, comments and pushback. In the article I loosely identified five 'eras’ of web literacy:

- 1993-1997: The Information Superhighway

- 1999-2002: The Wild West

- 2003-2007: The Web 2.0 era

- 2008-2012: The Era of the App

- 2013+: The Post-Snowden era

I haven’t got time to dive into this here, but it’s worth noting a couple of things. One, not everyone gives the name 'web literacy’ to the skills required to use the web. And, two, this isn’t a linear progression. For example, I’d argue that we’re entering a time when popular opinion realises that these skills need to be taught; they’re not innate nor just a result of immersion and use.

We should work together instead of building endless competing frameworks

So far, I haven’t defined web literacy. I’ve hinted at it by talking about skills, competencies and habits of mind but I haven’t introduced one definition to rule them all. Why is that? Well, as I mentioned before, there are a lot of definitions out there. And definitions are powerful things. They can constrain what is in and out of scope. They can give some people a voice while silencing others. They can privilege certain ways of being above others. Given that digital skills are currency in the jobs market, definitions can have economic effects too.

Here’s how the Mozilla community currently defines web literacy:

the skills and competencies needed for reading, writing and participating on the web.

We hope that this definition is broad enough to be inclusive but specific enough to do the work required of it. But if you want to change it, you’re welcome to: come along to one of the community calls, file a ‘bug’ in Bugzilla, or start a conversation thread in the Teach The Web discussion forum. We work open.

Let me explain what that means and what it looks like.

If I make something (say, a framework) and write a paper about it, then you could adopt it wholesale. You could. But what’s more likely is that you’d want to put your own stamp on it. You’d want to ‘remix’ it to include things that might have been missed or neglected. In software development terms these are known as ‘unmerged forks’. In other words, you’ve taken something, changed it, and then started promoting that new thing. Meanwhile, the original is still kicking around somewhere. Multiply this many times and you’ve got a recipe for confusion and chaos.

Instead, what if we merged those changes? What if we discussed them in a democratic and open way? And what if there was a global non-profit as a steward for the process? What I’ve described applies to the World Wide Web Consortium (usually abbreviated to W3C) which is the main international standards organization for the web. But it also describes something that we’ve defined and continue to evolve within the Mozilla community: the Web Literacy Map.

The Web Literacy Map v1.1 is currently localised in full or in part in 22 languages. This is done by an army of volunteers, some of whom have been part of the discussions leading to the map, some not. It forms the core of the Boys and Girls Clubs of America’s new digital strategy. The University of British Columbia use it for student onboarding. And there are many organisations using it as a ‘sense check’ for their curricula, schemes of work, and rubrics. (Quite how many, we’re not entirely sure, as it’s an openly-licensed project.)

Interestingly, the shift from calling it a Web Literacy ‘Standard’ in 2013 to calling it a Web Literacy ‘Map’ in 2014 seems to have slightly decreased its popularity in formal education, but increased its popularity elsewhere. The decision to do this came after we had feedback that, particularly in the US, ‘standard’ was a problematic term that came with baggage. These cultural differences are interesting: for example, ‘standard’ doesn’t particularly have positive or negative connotations in most of Europe, as far as I can tell. Another example would be from Alvar Maciel, an Argentinian teacher and technology integrator. He informed us on one community call that while the translation of ‘competence’ makes literal sense in Argentinian Spanish, because of the association and baggage it comes with, educators would avoid it.

At the same time, the Web Literacy Map exists for a particular purpose: to underpin Mozilla’s Webmaker program. Webmaker is an attempt to give people the knowledge, skills and confidence to ‘teach the web’. In other words, to train the trainers helping others with web literacy. The community building the Web Literacy Map has these people in mind and, because Webmaker is a global program, difference, diversity and nuance are welcome.

So far, this all sounds very edifying and unproblematic. Like any project, however, it’s not without issues. Perhaps the biggest, especially now that version 1.1 is out the door, is participation and contribution. Although there are core contributors, like Ian and Greg and a few others, a good number of people are episodic volunteers. By and large these are people who know the domain: researchers, teachers, consultants, industry experts. Their occasional contributions are great, but it can be difficult when we have to explain why decisions were taken, sometimes quite a while ago. At the same time, new blood can mix things up and force us to question what went before.

Let’s take as an example the current development of what, for the moment, we’re calling Web Literacy Map v2.0. Here’s how we’ve proceeded so far. First off, I decided that if we were going to fulfil our promise to update the map as the web evolves, we should probably review it on a yearly basis. Back in August I approached people, mainly in my networks and mainly people who know the space, to ask if they’d like to be interviewed. I can’t think of anyone who said no. The questions I used to loosely structure the recorded half-hour conversations were:

- Are you currently using the Web Literacy Map (v1.1)? In what kind of context?

- What kinds of contexts would you like to use an updated (v2.0) version of the Web Literacy Map?

- What does the Web Literacy Map do well?

- What’s missing from the Web Literacy Map?

- Who would you like to see use/adopt the Web Literacy Map?

I also gave them a chance to say things that didn’t seem to fit in elsewhere. Sometimes I asked the questions in a slightly different order. Sometimes I fed in ideas from previous interviewees.

From those interviews I identified around 21 emerging themes for things that people would like to see from a version 2.0 of the Web Literacy Map. I boiled these down to five that would help us define the scope of our work. I formed them into proposals for a web-based community survey. This, following demand from the community, was translated from English into five other languages. The five proposals were:

- Proposal 1: “I believe the Web Literacy Map should explicitly reference the Mozilla manifesto.”

- Proposal 2: “I believe the three strands should be renamed ‘Reading’, ‘Writing’ and ‘Participating’.”

- Proposal 3: “I believe the Web Literacy Map should look more like a ‘map’.”

- Proposal 4: “I believe that concepts such as ‘Mobile’, ‘Identity’, and ‘Protecting’ should be represented as cross-cutting themes in the Web Literacy Map.”

- Proposal 5: “I believe a ‘remix’ button should allow me to remix the Web Literacy Map for my community and context.”

Every question on the survey was optional. Respondents could indicate their agreement with each proposal on a five-point scale and add a comment if they wished. We received 177 responses altogether. Some respondents chose to remain anonymous, which is fine. The important thing is that almost every respondent completed the whole survey.

From that, I proposed a series of seven community calls: an introductory call, a separate call on each of the five proposals (we’re now towards the end of these), and a concluding call just before Christmas. This will help decide what’s in and out of scope so we can hit the ground running in 2015.

This is a microcosm of how we developed what was then called the Web Literacy Standard from 2012 onwards. Back then, we did some preliminary work and then published a whitepaper. We invited lots of people to a kick-off call and decided how to proceed. Once we decided what was in and out of scope, we dug into some of the complexity. The Mozilla Festival seemed like a good place to launch the first version, so we set ourselves September 2013 as the deadline. This involved some ‘half-hour hackfests’ where a few of us focused on getting certain sections finished and ready for review.

It’s important to note that we all have skills in different areas. For example, Carla Casilli, who’s now at the Badge Alliance and who worked closely with me on this, has a real gift for naming things. Ian’s particularly good at practicalities and bringing us back down to earth. If it takes a village to raise a child, it takes a community to create a Web Literacy Map!

There’s a need to balance rigour and grokkability

So my third and final point is that we need to balance rigour and grokkability. Just as I’d happily argue until the cows come home about the necessity for that ‘u’ in ‘rigour’, so I’d be happy to get stuck into philosophical discussions about literacy. Seriously, grab me later today if you want a conversation about Peirce’s theory of signs or Empson on ambiguity. I’m definitely the person at Mozilla who’s most likely to say:

That’s all very well in practice, but how does it work in theory? (Garret FitzGerald)

However, that’s not always a great approach. I’ve learned that perfect is the enemy of good. We need to balance theory and practice, because:

Theory without practice is empty; practice without theory is blind. (Immanuel Kant)

If you like your thinkers more revolutionary than conservative, I’m also fond of the quotation on Karl Marx’s tomb:

The philosophers have only interpreted the world, in various ways. The point, however, is to change it.

So how do we do that? We invite everyone in. We care about the outcome more than about individual contributions. After all, given enough eyes, all bugs are shallow.

We also need to think about what ‘rigour’ means. Grokkability is easy enough: we put what we’ve produced in front of people and see how they respond. But rigour is trickier. It depends not on the start of the journey but on the end of it. Does what we’ve produced lead to the outcomes we want?

With the Web Literacy Map, the outcomes we want are that people improve in their ability to read, write and participate on the web. We’ve intentionally used verbs with the skills we’ve listed, as we don’t want this to be ‘head’ knowledge. It’s not much use just being able to pass a pencil-and-paper test. Applicability is everything. Literacy means a change in identity.

So we come to the dreaded problem of measurement. I said that grokkability is about understanding things at the start of the journey, and rigour is about getting the right outcomes at the end. Here’s the point at which it gets very interesting. The easy thing would be to throw our hands up in the air and say that we’re only providing the raw materials from which others can build activities, learning pathways and assessments. And to some extent that is the scope of the Web Literacy Map. It’s infused with Mozilla’s mission, but anyone can use it and contribute to it.

It’s outside the scope of this talk, really, but I thought I’d just point to some things my team plans to do in the future. First, we want to build clear learning pathways that lead to meaningful credentials. People should be able to show what they know and can do with the web. That’s likely to start with Web Literacy Basics 101 and will probably use Open Badges. Second, we want to encourage mentors and leaders within the community. We’re going to do this through what we’re currently calling ‘Webmaker Clubs’. These are best understood as people coming together to learn and teach the web. Third, we want to focus on mobile, both in terms of devices and the mobility of the learner. This is particularly important in areas of the world where people are experiencing the web for the first time, and doing so on a mobile device. Finally, and tentatively, we want to use ‘learning analytics’ to find out the best ways in which we can teach these skills.

If you’d like to help us with any of that, you can.

Get involved!

I’m really looking forward to finding out what you all think about what I’ve discussed here. If you’d like to get involved, that’s great. There’s a canonical URL to bookmark that will take you to the correct place on the Mozilla wiki: http://bit.ly/weblitmapv2.

We’ve got a couple more community calls before Christmas and then there’ll be some in the new year. I also invite you to contribute even if you can’t make the calls. I’m happy to begin that process by email, but after a couple of exchanges I’ll probably invite you to work openly by posting to the Teach The Web discussion forum.

So, that’s pretty much it from me. Please do ask me hard questions and push back as hard as you can. It helps all of us sharpen our thinking and means we put the best stuff out there that we can!

Comments? Questions? Email me: doug@mozillafoundation.org


Roberto A. Vitillo: A Telemetry API for Spark

Check out my previous post about Spark and Telemetry data if you want to find out what all the fuss about Spark is. This post is a step-by-step guide on how to run a Spark job on AWS and use our simple Scala API to load Telemetry data.

The first step is to start a machine on AWS using Mark Reid’s nifty dashboard. In his own words:

- Visit the analysis provisioning dashboard at telemetry-dash.mozilla.org and sign in using Persona (with an @mozilla.com email address as mentioned above).

- Click “Launch an ad-hoc analysis worker”.

- Enter some details. The “Server Name” field should be a short descriptive name, something like “mreid chromehangs analysis” is good. Upload your SSH public key (this allows you to log in to the server once it’s started up).

- Click “Submit”.

- An Ubuntu machine will be started up on Amazon’s EC2 infrastructure. Once it’s ready, you can SSH in and run your analysis job. Reload the webpage after a couple of minutes to get the full SSH command you’ll use to log in.

Now connect to the machine and clone my starter project template:

git clone https://github.com/vitillo/mozilla-telemetry-spark.git
cd mozilla-telemetry-spark && source aws/setup.sh

The setup script will install Oracle’s JDK, among other bits. Now we are finally ready to give Spark a spin by launching:

sbt run

The command will run a simple job that computes the operating system distribution for a small number of pings. The first run will take a while to complete, as sbt, an interactive build tool for Scala, has to download all the dependencies Spark requires.
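To make the idea of an “operating system distribution” concrete, here is a minimal sketch using plain Scala collections rather than Spark. It assumes a hypothetical `Ping` record holding only a client ID and an OS name; real Telemetry pings are much richer JSON documents, and the field names and sample values below are made up for illustration. The sketch counts pings per OS and divides each count by the total. In a Spark job the same computation would typically be written as a `map` to `(os, 1)` pairs followed by `reduceByKey(_ + _)` on an RDD; the starter project’s own code, which follows, remains the reference.

```scala
// Hypothetical, simplified ping record: only the OS name is modelled here.
final case class Ping(clientId: String, os: String)

object OsDistribution {
  // Group pings by OS and turn each group's size into a fraction of the total.
  def distribution(pings: Seq[Ping]): Map[String, Double] =
    if (pings.isEmpty) Map.empty
    else {
      val total = pings.size.toDouble
      pings.groupBy(_.os).map { case (os, group) => os -> group.size / total }
    }

  def main(args: Array[String]): Unit = {
    // Made-up sample data, for illustration only.
    val sample = Seq(
      Ping("a", "Windows_NT"),
      Ping("b", "Windows_NT"),
      Ping("c", "Darwin"),
      Ping("d", "Linux")
    )
    // Print each OS with its share of the sample, most common first.
    distribution(sample).toSeq.sortBy(-_._2).foreach { case (os, share) =>
      println(f"$os%-12s $share%.2f")
    }
  }
}
```

Working on an in-memory `Seq` keeps the sketch runnable without a cluster; swapping it for an RDD changes where the data lives, not the shape of the computation.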

import org.apache.spark.SparkContext
import org.apache.spark.SparkContext._
import org.apache.spark.SparkConf