You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please see the contact information page.


Sean McArthur: hyper

Rust is a shiny new systems language that the lovely folks at Mozilla are building. It focuses on complete memory safety and on being very fast. Its speed is on par with C++, but you don't have to manage pointers and the like; the language does that for you. It also catches a lot of irritating runtime errors at compile time, thanks to its fantastic type system. That should mean fewer crashes.

All of this sounds fantastic, so let's use it to make server software! It will be faster and crash less. One speed bump: there's no real Rust HTTP library.

rust-http and Teepee

There were two prior attempts at HTTP libraries, but the former (rust-http) has been ditched by its creator and isn't very “rust-like”. The latter, Teepee, started in an excellent direction, but life has gotten in the way for its author.[1]

For the client side only, there is curl-rust, which is just a set of bindings to libcurl. Ideally, we'd like to have all of the code written in Rust, so we don't have to trust that the curl developers have written perfectly memory-safe code.

So I started a new one. I called it hyper, 'cause, y'know, hyper-text transfer protocol.

embracing types

The type system in Rust is quite phenomenal. Wait, what? Did I just say that? Huh, I guess I did. I know, I know, we hate wrestling with type systems. I can’t touch any Java code without cursing the type system. Thanks to Rust’s type inference, though, it’s not irritating at all.

In contrast, I’ve gotten tired of stringly-typed languages; chief among them is JavaScript. Everything is a string. Even property lookups. document.onlood = onload; is perfectly valid, since it just treats onlood as a string. You know a big problem with strings? Typos. If you write JavaScript, you will write typos that aren’t caught until your code is in production, and you see that an event handler is never triggered, or undefined is not a function.

I’m done with that. But if you still want to be able to use strings in your Rust code, you certainly can. Just use something other than hyper.

Now then, how about some examples. It’s most noticeable when using headers. In JavaScript, you’d likely do something like:

req.headers['content-type'] = 'application/json';

Here’s how to do the same using hyper:

req.headers.set(ContentType(Mime(Application, Json, vec![])));

Huh, interesting. Looks like more code. Yes, yes it is. But it's also code that has been checked by the compiler. It has made sure there are no typos. It has also made sure you didn't try to set the wrong format for a header. To get the header back out:

match req.headers.get() {
    Some(&ContentType(Mime(Application, Json, _))) => "its json!",
    Some(&ContentType(Mime(top, sub, _))) => "we can handle top and sub",
    None => "le sad"
}

Here’s an example that makes sure the format is correct:

req.headers.set(Date(time::now_utc()));
// ...
match req.headers.get() {
    Some(&Date(ref tm)) => {
        // tm is a Tm instance, without you dealing with
        // the various allowed formats in the HTTP spec.
    }
    // ...
}

Yeah, yeah, there is a stringly-typed API for those rare cases you might need it, but it's purposefully not easy to use. You shouldn't use it. Maybe you think you have a good reason; no, you don't. Don't use it. Let the compiler check for errors before you hit production.
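The header examples rely on get() working out which header you want from its type alone. One way to get that behaviour is a map keyed by the header's Rust type. The sketch below is std-only and is not hyper's implementation; Header, Headers, ContentType (holding a plain String rather than a parsed Mime) and ContentLength are hypothetical stand-ins.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

// Marker trait for typed headers (hypothetical; hyper's real trait differs).
trait Header: Any {}

// Hypothetical header types, each wrapping an already-parsed value.
#[derive(Debug, PartialEq)]
struct ContentType(String);
#[derive(Debug, PartialEq)]
struct ContentLength(u64);

impl Header for ContentType {}
impl Header for ContentLength {}

// Header map keyed by the Rust type of each header instead of a string name.
#[derive(Default)]
struct Headers {
    map: HashMap<TypeId, Box<dyn Any>>,
}

impl Headers {
    fn set<H: Header>(&mut self, value: H) {
        self.map.insert(TypeId::of::<H>(), Box::new(value));
    }

    // H can be inferred from how the result is used, e.g. a match pattern.
    fn get<H: Header>(&self) -> Option<&H> {
        self.map.get(&TypeId::of::<H>()).and_then(|b| b.downcast_ref::<H>())
    }
}

// Mirrors the match in the post: the pattern alone picks the header type.
fn describe(headers: &Headers) -> &'static str {
    match headers.get() {
        Some(&ContentType(ref mime)) if mime == "application/json" => "its json!",
        Some(&ContentType(_)) => "some other content type",
        None => "le sad",
    }
}
```

Because the key is a type, a misspelled ContentTyp is a compile error rather than a lookup that silently returns nothing.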

Let’s look at status codes. Can you tell me what exactly this response means, without looking it up?

res.status = 307;

How about this instead:

res.status = StatusCode::TemporaryRedirect;

Clearly better. You’ve seen code like this:

if res.status / 100 == 4 {}

What if we could make it better:

if res.status.is_client_error() {}
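Both improvements follow from making the status an enum instead of a bare integer. Here is a minimal std-only sketch with a hypothetical StatusCode type that lists only a handful of codes; hyper's real type is more complete. Note that the HTTP spec registers 307 as "Temporary Redirect" (302 is "Found", historically "Moved Temporarily"), exactly the kind of detail a named variant saves you from looking up.

```rust
// Hypothetical, std-only typed status code. Only a few codes are named;
// anything else is kept as Unregistered(code).
#[derive(Debug, Clone, Copy, PartialEq)]
enum StatusCode {
    Ok,
    MovedPermanently,
    Found,
    TemporaryRedirect,
    NotFound,
    InternalServerError,
    Unregistered(u16),
}

impl StatusCode {
    fn from_u16(n: u16) -> StatusCode {
        match n {
            200 => StatusCode::Ok,
            301 => StatusCode::MovedPermanently,
            302 => StatusCode::Found,
            307 => StatusCode::TemporaryRedirect,
            404 => StatusCode::NotFound,
            500 => StatusCode::InternalServerError,
            other => StatusCode::Unregistered(other),
        }
    }

    fn to_u16(self) -> u16 {
        match self {
            StatusCode::Ok => 200,
            StatusCode::MovedPermanently => 301,
            StatusCode::Found => 302,
            StatusCode::TemporaryRedirect => 307,
            StatusCode::NotFound => 404,
            StatusCode::InternalServerError => 500,
            StatusCode::Unregistered(n) => n,
        }
    }

    // The "/ 100 == 4" arithmetic lives in one place, behind a readable name.
    fn is_client_error(self) -> bool {
        (400..500).contains(&self.to_u16())
    }

    fn is_redirection(self) -> bool {
        (300..400).contains(&self.to_u16())
    }
}
```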

Message<WriteStatus>

I’ve been bitten by this before, and I bet you have too: trying to write headers after they’ve already been sent. Hyper makes this a compile-time check. If you have a Request<Fresh>, there is a headers_mut() method to get a mutable reference to the headers, so you can add some. You can’t accidentally write to a Request<Fresh>, since it doesn’t implement Writer. When you are ready to start writing the body, you must explicitly convert it to a Request<Streaming> using req.start().

Likewise, a Request<Streaming> does not have a headers_mut() accessor. You cannot change the headers once streaming has started. You can still inspect them if needed, but no setting! The compiler will make sure you don’t make that mistake in your code.
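This compile-time check is an instance of the typestate pattern: the write state is a type parameter, and each method exists only for the state where it is valid. Below is a minimal std-only sketch; the Fresh and Streaming marker names, the BTreeMap of string headers, and the in-memory wire buffer are assumptions for illustration, not hyper's actual definitions.

```rust
use std::collections::BTreeMap;
use std::io::{self, Write};
use std::marker::PhantomData;

// Marker types for the two write states (assumed names).
struct Fresh;
struct Streaming;

// Hypothetical request; W records at compile time whether the head was sent.
struct Request<W> {
    headers: BTreeMap<String, String>,
    wire: Vec<u8>, // stands in for the network connection
    _state: PhantomData<W>,
}

impl<W> Request<W> {
    // Reading headers is allowed in either state.
    fn headers(&self) -> &BTreeMap<String, String> {
        &self.headers
    }
}

impl Request<Fresh> {
    fn new() -> Request<Fresh> {
        Request { headers: BTreeMap::new(), wire: Vec::new(), _state: PhantomData }
    }

    // Only a Fresh request hands out mutable access to its headers.
    fn headers_mut(&mut self) -> &mut BTreeMap<String, String> {
        &mut self.headers
    }

    // Consumes the Fresh request, writes the header block, and returns a
    // Streaming request; the old value can no longer be used.
    fn start(mut self) -> Request<Streaming> {
        for (name, value) in &self.headers {
            self.wire.extend_from_slice(format!("{}: {}\r\n", name, value).as_bytes());
        }
        self.wire.extend_from_slice(b"\r\n");
        Request { headers: self.headers, wire: self.wire, _state: PhantomData }
    }
}

// Only a Streaming request can write body bytes.
impl Write for Request<Streaming> {
    fn write(&mut self, buf: &[u8]) -> io::Result<usize> {
        self.wire.extend_from_slice(buf);
        Ok(buf.len())
    }

    fn flush(&mut self) -> io::Result<()> {
        Ok(())
    }
}
```

In this sketch, calling headers_mut() on the value returned by start() does not compile, since Request<Streaming> has no such method, and writing body bytes to a Request<Fresh> fails the same way because only Request<Streaming> implements Write.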

NetworkStreams

Both the Server and the Client are generic over NetworkStreams. The default is to use HttpStream, which can handle HTTP over TCP, and HTTPS using OpenSSL. This design also allows something like Servo to implement a ServoStream or something, which could handle HTTPS using NSS instead.
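Being generic over the stream means the HTTP logic only needs something it can read from and write to. A minimal sketch under that assumption: NetworkStream, Client and MockStream here are hypothetical and much simpler than hyper's types, and the request is hand-written for illustration.

```rust
use std::io::{self, Cursor, Read, Write};

// Hypothetical trait: any readable and writable byte stream can carry HTTP.
trait NetworkStream: Read + Write {}
impl<T: Read + Write> NetworkStream for T {}

// Hypothetical client that does not care which transport it runs over.
struct Client<S: NetworkStream> {
    stream: S,
}

impl<S: NetworkStream> Client<S> {
    fn get(&mut self, host: &str, path: &str) -> io::Result<String> {
        write!(self.stream, "GET {} HTTP/1.1\r\nHost: {}\r\nConnection: close\r\n\r\n", path, host)?;
        self.stream.flush()?;
        // Read until the peer closes the connection.
        let mut response = String::new();
        self.stream.read_to_string(&mut response)?;
        Ok(response)
    }
}

// In-memory transport: records what was sent and replays a canned reply.
struct MockStream {
    sent: Vec<u8>,
    reply: Cursor<Vec<u8>>,
}

impl Read for MockStream {
    fn read(&mut self, buf: &mut [u8]) -> io::Result<usize> {
        self.reply.read(buf)
    }
}

impl Write for MockStream {
    fn write(&mut self, buf: &[u8]) -> io::Result<usize> {
        self.sent.extend_from_slice(buf);
        Ok(buf.len())
    }

    fn flush(&mut self) -> io::Result<()> {
        Ok(())
    }
}
```

Any Read + Write type satisfies the blanket impl, so std::net::TcpStream would work unchanged, and a TLS stream type (OpenSSL- or NSS-backed) could be dropped in the same way.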

Goals

These are some high level goals for the library, so you can see the direction:

- Be fast!
  - The benchmarks preach that we’re already faster than both rust-http and libcurl. And we all know science doesn’t lie.
- Embrace types.
  - See the above post for how we’re doing this.
- Provide an excellent HTTP server library for Rust web development.
  - Currently used by Iron, Rustless, Sserve, and others …
- Provide an excellent HTTP client that can be used in place of curl.
  - It’s landed in Servo!

The first step for hyper was to get the streams and types working correctly and quickly. With the factory working underneath, others can write specific implementations without redoing all of HTTP, such as implementing the XHR2 spec in Servo. Work is now focused on providing ergonomic Client and Server implementations.

It looks increasingly likely that hyper will be available to use on Rust 1.0 day.[3] There will be an HTTP library for Rust 1.0!


Gervase Markham: Google Concedes Google Code Not Good Enough?

Google recently released an update to End-to-End, their communications security tool. As part of the announcement, they said:

We’re migrating End-To-End to GitHub. We’ve always believed strongly that End-To-End must be an open source project, and we think that using GitHub will allow us to work together even better with the community.

They didn’t specifically say how it was hosted before, but a look at the original announcement tells us it was here – on Google Code. And indeed, when you visit that link now, it says “Project “end-to-end” has moved to another location on the Internet”, and offers a link to the GitHub repo.

Is Google admitting that Google Code just doesn’t cut it any more? It certainly doesn’t have anything like the feature set of GitHub. Will we see it in the next round of Google spring-cleaning in 2015?

http://feedproxy.google.com/~r/HackingForChrist/~3/rWGFtV8T2BU/


Mozilla Open Policy & Advocacy Blog: The Benefits of Fellowship

In just a few weeks, the application window to be a 2015 Ford-Mozilla Open Web Fellow will close. In its first year, the Fellows program will place emerging tech leaders at five of the world’s leading nonprofits fighting to keep the Internet as a shared, open and global resource.

We’ve already seen hundreds of applicants from more than 70 countries apply, and we wanted to answer one of the primary questions we’ve heard: why should I be a Fellow?

Fellowships offer unique opportunities to learn, innovate and gain credentials.

Fellowships offer unique opportunities to learn. Representing the notion that ‘the community is the classroom’, Ford-Mozilla Open Web Fellows will have a set of experiences in which they can learn and have an impact while working in the field. They will be at the epicenter of informing how public policy shapes the Internet. They will be working and collaborating together with a collection of people with diverse skills and experiences. They will be learning from other fellows, from the host organizations, and from the broader policy and advocacy ecosystem.

Fellowships offer the ability to innovate in policy and technology. The Fellowship offers the ability to innovate, using technology and policy as your toolset. We believe that the phrase ‘Move fast. Break things.’ is not reserved for technology companies – it is a way of being that Fellows will get to experience first-hand at our host organizations and working with Mozilla.

The Ford-Mozilla Fellowship offers a unique and differentiating credential. Our Fellows will be able to reference this experience as they continue in their career. As they advance in their chosen fields, alums of the program will be able to draw upon their experience leading in the community and working in the open. This experience will also enable them to expand their professional network as they continue to practice at the intersection of technology and policy.

We’ve also structured the program to remove barriers and assemble a Fellowship class that reflects the diversity of the entire community.

This is a paid fellowship with benefits to allow Fellows to focus on the challenging work of protecting the open Web through policy and technology work. Fellows will receive a $60,000 stipend for the 10-month program. In addition, we’ve created a series of supplements including support for housing, relocation, childcare, healthcare, continuing education and technology. We’re also offering visa assistance in order to ensure global diversity in participants.

In short, the Ford-Mozilla Open Web Fellowship is a unique opportunity to learn, innovate and gain credentials. It’s designed to enable Fellows to focus on the hard job of protecting the Internet.

More information on the Fellowship benefits can be found at https://advocacy.mozilla.org/. Good luck to the applicants of the 2015 Fellowship class.

The Ford-Mozilla Open Web Fellows application deadline is December 31, 2014. Apply at https://advocacy.mozilla.org/.

https://blog.mozilla.org/netpolicy/2014/12/18/the-benefits-of-fellowship/


Daniel Glazman: Bulgaria Web Summit

I will be speaking at the Bulgaria Web Summit 2015 in Sofia, Bulgaria, on 18 May.

http://www.glazman.org/weblog/dotclear/index.php?post/2014/12/18/Bulgaria-Web-Summit


Henrik Skupin: Firefox Automation report – week 43/44 2014

In this post you can find an overview of the work that happened in the Firefox Automation team during weeks 43 and 44.

Highlights

In preparation for the QA-wide demonstration of Mozmill-CI, Henrik reorganized our documentation so that everyone can easily set up the tool locally. Along with that, we completed the remaining deployment of the latest code to our production instance.

Henrik also worked on upgrading Jenkins to the latest LTS version, 1.565.3, and we were able to push this upgrade to our staging instance for observation. He also implemented Pulse Guardian support.

Mozmill 2.0.9 and Mozmill-Automation 2.0.9 have been released; if you are curious about what is included, check this post.

One of our major goals over the next two quarters is to replace Mozmill with Marionette as the test framework for our functional Firefox tests. Together with the A-Team, Henrik started on the initial work, which currently lives in the firefox-greenlight-tests repository. More to come later…

Besides all that work, we have to say goodbye to one of our SoftVision team members. October 29th was Daniel's last day on the project. Thanks for all your work!

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 43 and week 44.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda, the video recording, and notes from the Firefox Automation meetings of week 43 and week 44.

http://www.hskupin.info/2014/12/18/firefox-automation-report-week-43-44-2014/


Mike Hommey: Initial support for git pushes to mercurial, early testers needed

This push to try was not created by mercurial.

I just landed initial support for pushing to mercurial from git. Considering the scary fact that it’s possible to screw up a repository with bundles with missing content (and, guess what, I found that out the hard way), I have restricted it to local mercurial repositories until I am more confident.

As such, I would need volunteers to use and test it on local mercurial repositories. On top of being limited to local mercurial repositories, it doesn’t support pushing merges that would have been created by git, nor does it support pushing a root commit (one with no parent).

Here’s how you can use it:

$ git clone https://github.com/glandium/git-remote-hg
$ export PATH=$PATH:$(pwd)/git-remote-hg
$ git clone hg::/path/to/mercurial-repository
$ # work work, commit, commit
$ git push

[ Note: you can still pull from remote mercurial repositories ]

This will push to your local repository, where it would be useful if you could check the push didn’t fuck things up.

$ cd /path/to/mercurial-repository
$ hg verify

That’s the long, thorough version. You may just want to simply do this:

$ cd /path/to/mercurial-repository
$ hg log --stat

Hopefully, you won’t see messages like:

abort: data/build/mozconfig.common.override.i@56d6fdb13666: no match found!

Update: You can also add the following to /path/to/mercurial-repository/.hg/hgrc, which should prevent corruptions from entering the mercurial repository at all:

[server]
validate = True

Update 2: The above setting is now unnecessary, git-remote-hg will set it itself for its push session.

Then you can push with mercurial.

$ hg push

Please note that this is integrated in git in such a way that it’s possible to pass refspecs to git push and do other fancy stuff. Be aware that there are still rough edges on that part, but that your commits will be pushed, even if the resulting state under refs/remotes/ is not very consistent.

I’m planning a replay of several repositories to fully validate pushes don’t send broken bundles, but it’s going to take some time before I can set things up. I figured I’d rather crowdsource until then.


Gregory Szorc: mach sub-commands

mach - the generic command line dispatching tool that powers the mach command to aid Firefox development - now has support for sub-commands.

You can now create simple and intuitive user interfaces involving sub-actions, e.g.:

mach device sync

mach device run

mach device delete

Before, doing something like this required either a universal argument parser or separate mach commands. Both make for a poor user experience (a confusing array of available arguments or a proliferation of top-level commands), and both make mach help difficult to comprehend. That's not good for usability and approachability.

Nothing in Firefox currently uses this feature, although there is an in-progress patch in bug 1108293 that provides a mach command to analyze C/C++ build dependencies. I hope others will write useful commands and functionality on top of this feature.
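The user-facing idea is a two-level dispatch: the first word picks a command, the second picks a sub-command, and help for one command only needs to list its own sub-commands. mach itself is Python and registers commands its own way; the sketch below only illustrates the dispatch shape, in Rust, with a hypothetical Dispatcher and hypothetical device handlers.

```rust
use std::collections::HashMap;

// Hypothetical handler signature: receives the arguments after the sub-command.
type Handler = fn(&[&str]) -> String;

// Two-level table: command -> sub-command -> handler.
struct Dispatcher {
    commands: HashMap<String, HashMap<String, Handler>>,
}

impl Dispatcher {
    fn new() -> Self {
        Dispatcher { commands: HashMap::new() }
    }

    fn register(&mut self, command: &str, sub: &str, handler: Handler) {
        self.commands
            .entry(command.to_string())
            .or_default()
            .insert(sub.to_string(), handler);
    }

    fn dispatch(&self, argv: &[&str]) -> Result<String, String> {
        let (command, rest) = argv.split_first().ok_or("no command given")?;
        let subs = self
            .commands
            .get(*command)
            .ok_or_else(|| format!("unknown command: {}", command))?;
        // Help for one command lists only that command's sub-commands.
        let (sub, args) = rest.split_first().ok_or_else(|| {
            let mut names: Vec<&str> = subs.keys().map(String::as_str).collect();
            names.sort();
            format!("{} needs a sub-command: {}", command, names.join(", "))
        })?;
        let handler = subs
            .get(*sub)
            .ok_or_else(|| format!("unknown sub-command: {} {}", command, sub))?;
        Ok(handler(args))
    }
}

// Example handlers for a hypothetical "device" command.
fn device_sync(args: &[&str]) -> String {
    format!("syncing {}", args.join(" "))
}

fn device_run(_args: &[&str]) -> String {
    "running".to_string()
}
```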

The documentation for mach has also been rewritten. It is now exposed as part of the in-tree Sphinx documentation.

Everyone should thank Andrew Halberstadt for promptly reviewing the changes!


Advancing Content: Getting Tiles Data From Firefox

Following the launch of Tiles in November, I wanted to provide more information on how data is transmitted into and from Firefox. Last week, I described how we get Tiles data into Firefox differently from the usual cookie-identified requests. In this post, I will describe how we report on users’ interactions with Tiles.

As a reminder, we have three kinds of Tiles: the History Tiles, which were implemented in Firefox in 2012, Enhanced Tiles, where we have a custom creative design for a Tile for a site that a user has an existing relationship with, and Directory Tiles, where we place a Tile in a new tab page for users with no browsing history in their profile. Enhanced and Directory Tiles may both be sponsored, involving a commercial relationship, or they may be Mozilla projects or causes, such as our Webmaker initiative.

We need to be able to report data on users’ interactions with Tiles for two main reasons:

- to determine if the experience is a good one

- to report to our commercial partners on volumes of interactions by Firefox users

And we do these things in accordance with our data principles both to set the standards we would like the industry to follow and, crucially, to maintain the trust of our users.

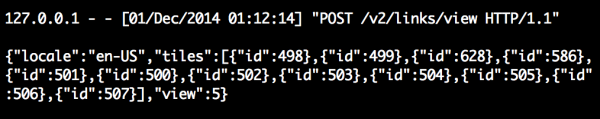

Unless a user has opted out by switching to Classic or Blank, Firefox currently sends a list of the Tiles on a user’s new tab page to Mozilla’s servers, along with data about the user’s interaction with the Tiles, e.g., view, click, or pin.

Directory and Enhanced Tiles are identified by a Tile id (e.g., the “Firefox for Android” Tile has an id of 499 for American English-speaking users, while “Firefox pour Android” has an id of 510 for French-speaking users). History Tiles do not have an id, so we only know that the user saw a history screenshot, not which page. Except for Telemetry-related experiments on early release channels, we do not currently send URL information for Tiles, although we can of course infer it for the Directory and Enhanced Tiles that we have sent to Firefox.

Our implementation of Tiles uses the minimal actionable dataset, and we protect that data with multiple layers of security. This means:

- cookie-less requests

- encrypted transmission

- aggressive cleaning of data

We also break up the data into smaller pieces that cannot be reconstructed to the original data. When our server receives a list of seen Tiles from an IP address, we record that the specific individual Tiles were seen and not the whole list.

With the data aggregated across many users, we can now calculate how many total times a given Tile has been seen and visited. By dividing the number of clicks by the number of views, we get a click-through-rate (CTR) that represents how valuable users find a particular tile, as well as a pin-rate and a block-rate. This is sufficient for us to determine both if we think a Tile is useful for a user and also for us to report to a commercial partner.
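The aggregation described above can be sketched as follows. This is a minimal illustration under assumptions, not Mozilla's server code: each report is modelled as a list of (tile id, action) pairs, the TileStats fields are hypothetical, and only per-tile counters survive, so neither the original list nor its sender can be reconstructed from the totals. Tile ids 499 and 510 are taken from the examples in the text.

```rust
use std::collections::HashMap;

// Hypothetical interaction kinds reported for a Tile.
enum Action {
    View,
    Click,
    Pin,
    Block,
}

// Per-tile counters: the only thing kept after aggregation.
#[derive(Default, Debug)]
struct TileStats {
    views: u64,
    clicks: u64,
    pins: u64,
    blocks: u64,
}

fn ratio(n: u64, d: u64) -> f64 {
    if d == 0 { 0.0 } else { n as f64 / d as f64 }
}

impl TileStats {
    fn ctr(&self) -> f64 { ratio(self.clicks, self.views) }
    fn pin_rate(&self) -> f64 { ratio(self.pins, self.views) }
    fn block_rate(&self) -> f64 { ratio(self.blocks, self.views) }
}

// Each report is split into individual per-tile events; no report-level
// or sender-level information is retained in the result.
fn aggregate(reports: &[Vec<(u32, Action)>]) -> HashMap<u32, TileStats> {
    let mut totals: HashMap<u32, TileStats> = HashMap::new();
    for report in reports {
        for (tile_id, action) in report {
            let s = totals.entry(*tile_id).or_default();
            match action {
                Action::View => s.views += 1,
                Action::Click => s.clicks += 1,
                Action::Pin => s.pins += 1,
                Action::Block => s.blocks += 1,
            }
        }
    }
    totals
}
```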

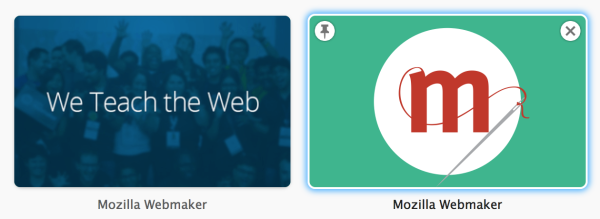

Calculating the CTR for each tile and comparing them helps us decide if a Tile is useful to many users. We can already see that the most popular tiles are “Customize Firefox” and “Firefox for Android” (Tile 499, remember) both in terms of clicks and pins.

For an advertiser, we create reports from our aggregated data, and they in turn can see the traffic for their URLs and are able to measure goal conversions on their back end. Since the Firefox 10th anniversary announcement, which included Tiles and the Firefox Developer Edition, we ran a Directory Tile for the Webmaker initiative. After 25 days, it had generated nearly 1 billion views, 183 thousand clicks, and 14 thousand pins.
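As a rough check of what these Webmaker numbers mean as rates, approximating "nearly 1 billion" views by 10^9:

```latex
\text{CTR} = \frac{\text{clicks}}{\text{views}} \approx \frac{1.83 \times 10^{5}}{10^{9}} = 1.83 \times 10^{-4} \approx 0.018\%,
\qquad
\text{pin rate} = \frac{\text{pins}}{\text{views}} \approx \frac{1.4 \times 10^{4}}{10^{9}} = 1.4 \times 10^{-5} \approx 0.0014\%.
```

Since the actual view count is a little under 10^9, the true rates are slightly above these figures.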

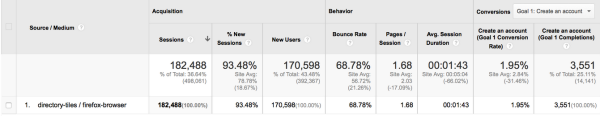

The Webmaker team, meanwhile, are able to see the traffic coming in (as the Tile directs traffic to a distinct URL), and they are able to give attribution to the Tile and track conversions from there:

We started with a relatively straightforward implementation to be able to measure how users are interacting with Tiles. But we’ve already gotten some good ideas on how to make things even better for improved accuracy with less data. For example, we currently cannot accurately measure how many unique users have seen a given Tile, and traditionally unique identifiers are used to measure that, but HyperLogLog has been suggested as a privacy-protecting technique to get us that data. A separate idea is that we can use statistical random sampling that doesn’t require all Firefox users to send data while still getting the numbers we need. We’ll test sampling through Telemetry experiments to measure site popularity, and we’ll share more when we get those results.
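For the unique-users question, here is a minimal sketch of a textbook HyperLogLog estimator, the technique mentioned above. It is generic, not what Mozilla deployed: the register count (p = 12, so 4,096 registers), the use of Rust's DefaultHasher, and what would actually be hashed are all assumptions. The privacy-relevant property is that only one small number per register is kept, never the identifiers themselves.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Textbook HyperLogLog: 2^p one-byte registers, no stored identifiers.
struct HyperLogLog {
    p: u32,
    registers: Vec<u8>,
}

impl HyperLogLog {
    fn new(p: u32) -> Self {
        assert!((4..=16).contains(&p), "p outside the usual range");
        HyperLogLog { p, registers: vec![0; 1 << p] }
    }

    fn add<T: Hash>(&mut self, item: &T) {
        let mut hasher = DefaultHasher::new();
        item.hash(&mut hasher);
        let x = hasher.finish();
        // The top p bits choose a register...
        let idx = (x >> (64 - self.p)) as usize;
        // ...and the 1-based position of the first 1-bit in the rest is the rank.
        let rest = x << self.p;
        let rank = (rest.leading_zeros() + 1).min(64 - self.p + 1) as u8;
        if rank > self.registers[idx] {
            self.registers[idx] = rank;
        }
    }

    fn estimate(&self) -> f64 {
        let m = self.registers.len() as f64;
        let alpha = 0.7213 / (1.0 + 1.079 / m);
        let sum: f64 = self.registers.iter().map(|&r| 2f64.powi(-(r as i32))).sum();
        let raw = alpha * m * m / sum;
        let zeros = self.registers.iter().filter(|&&r| r == 0).count();
        // Small-range correction (linear counting) for low cardinalities.
        if raw <= 2.5 * m && zeros > 0 {
            m * (m / zeros as f64).ln()
        } else {
            raw
        }
    }
}
```

With 4,096 registers the expected relative error is about 1.04 / sqrt(4096), roughly 1.6%, and adding the same item twice never changes the estimate.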

We would love to hear your thoughts on how we treat users’ data to find the Tiles that users want. And if you have ideas on how we can improve our data collection, please send them over as well!

– Ed Lee on behalf of the Tiles team.

https://blog.mozilla.org/advancingcontent/2014/12/18/getting-tiles-data-from-firefox/


Mark Surman: David, Goliath and empires of the web

People in Mozilla have been talking a lot about radical participation lately. As Mitchell said recently, participation will be key to our success as we move into ‘the third era of Mozilla’: the era where we find ways to be successful beyond the desktop browser.

This whole conversation has prompted me to reflect on how I think about radical participation today. And about what drew me to Mozilla in the first place more than five years ago.

For me, a big part of that draw was an image in my mind of Mozilla as the David who had knocked over Microsoft’s Goliath. Mozilla was the successful underdog in a fight I really cared about. Against all odds, Mozilla shook the foundation of a huge empire and changed what was possible with the web. This was magnetic. I wanted to be a part of that.

I started to think about this more the other day: what does it really mean for Mozilla to be David? And how do we win against future Goliaths?

Malcolm Gladwell wrote a book last year that provides an interesting angle on this. He says we often take the wrong lesson from the David and Goliath story, thinking that it’s surprising that such a small challenger could fell such a large opponent.

Gladwell argues that Goliath was much more vulnerable than we think. He was large. But he was also slow, lumbering and had bad eyesight. Moreover, he used the most traditional fighting techniques of his time: the armour and brute force of infantry.

David, on the other hand, actually had a significant set of strategic advantages. He was nimble and good with a sling. A sling used properly, by the way, is a real weapon: it can project a rock at the speed of a .45 caliber pistol. Instead of confronting Goliath with brute force, he used a different and surprising technique to knock over his opponent. He wasn’t just courageous and lucky, he was smart.

Most other warriors would have seen Goliath as invincible. Not David: he was playing the game by his own rules.

In many ways, the same thing happened when we took on Microsoft and Internet Explorer. They didn’t expect the citizens of the web to rally against them: to build — and then choose by the millions — an unknown browser. Microsoft didn’t expect the citizens of the web to sling a rock at their weak spot, right between their eyes.

As a community, radical participation was our sling and our rock. It was our strategic advantage and our element of surprise. And it is what shook the web loose from Microsoft’s imperial grip.

Of course, participation still is our sling. It is still part of who we are as an organization and a global community. And, as the chart above shows, it is still what makes us different.

But, as we know, the setting has changed dramatically since Mozilla first released Firefox. It’s not just — or even primarily — the browser that shapes the web today. It’s not just the three companies in this chart that are vying for territorial claim. With the internet growing at breakneck speed, there are many Goliaths on many fronts. And these Goliaths are expanding their scope around the world. They are building empires.

This has me thinking a lot about empire recently: about how the places that were once the subjects of the great European empires are by and large the same places we call “emerging markets”. These are the places where billions of people will be coming online for the first time in coming years. They are also the places where the new economic empires of the digital age are most aggressively consolidating their power.

Consider this: In North America, Android has about 68% of smartphone market share. In most parts of Asia and Africa, Android market share is in the 90% range – give or take a few points by country. That means Google has a near monopoly not only on the operating system on these markets, but also on the distribution of apps and how they are paid for. Android is becoming the Windows 98 of emerging economies, the monopoly and the control point; the arbiter of what is possible.

Also consider that Facebook and WhatsApp together control 80% of the messaging market globally, and are owned by one company. Scarier still: we do market research with new smartphone users in countries like Bangladesh and Kenya. We usually ask people: do you use the internet? Do you use the internet on your phone? The response is often: “What’s the Internet?” “What do you use your phone for?”, we ask. The response: “Oh, Facebook and WhatsApp.” Facebook’s internet is the only internet these people know of or can imagine.

It’s not the Facebooks and Googles of the world that concern me, per se. I use their products and in many cases, I love them. And I also believe they have done good in the world.

What concerns me is that, like the European powers in the 18th and 19th centuries, these companies are becoming empires that control both what is possible and what is imaginable. They are becoming monopolies that exert immense control over what people can do and experience on the web. And over what the web – and human society as a whole – may become.

One thing is clear to me: I don’t want this sort of future for the web. I want a future where anything is possible. I want a future where anything is imaginable. The web can be about these kinds of unlimited possibilities. That’s the web that I want everyone to be able to experience, including the billions of people coming online for the first time.

This is the future we want as Mozilla. And, as a community, we are going to need to take on some of these Goliaths. We are going to need to reach down into our pocket and pull out that rock. And we are going to need to get some practice with our sling.

The truth is: Mozilla has become a bit rusty with it. Yes, participation is still a key part of who we are. But, if we’re honest, we haven’t relied on it as much of late.

If we want to shake the foundations of today’s digital empires, we need to regain that practice and proficiency. And find new and surprising ways to use that power. We need to aim at new weak spots in the giant.

We may not know what those new and surprising tactics are yet. But there is an increasing consensus that we need them. Chris Beard has talked recently about thinking differently about participation and product, building participation into the actual features and experience of our software. And we have been talking for the last couple of years about the importance of web literacy, and the power of community and participation to get people teaching each other how to wield the web. These are the kinds of directions we need to take, and the strategies we need to figure out.

It’s not only about strategy, of course. Standing up to Goliaths and using participation to win are also about how we show up in the world. The attitude each of us embodies every day.

Think about this. Think about the image of David. The image of the underdog. Think about the idea of independence. And, then think of the task at hand: for all of us to bring more people into the Mozilla community and activate them.

If we as individuals and as an organization show up again as a challenger, like David, we will naturally draw people into what we’re doing. It’s a part of who we are as Mozillians, and it’s magnetic when we get it right.

Filed under: mozilla, poetry, webmakers

https://commonspace.wordpress.com/2014/12/18/davidgoliathempire/


Pomax: Let's make a Firefox Extension, the painless way

Ever had a thing you really wanted to customise about Firefox, but you couldn't because it wasn't in any regular menu, advanced menu, or about:config?

For instance, you want to be able to delete elements on a page for peace of mind from the context menu. How the heck do you do that? Well, with the publication of the new node-based jpm, the answer to that question is "pretty dang simply"...

Let's make our own Firefox extension with a "Delete element" option added to the context menu:

We're going to make that happen in five steps.

1. Install jpm. In your terminal, simply run:
   npm install -g jpm
   (make sure you have node.js installed) and you're done. This is mostly a prerequisite for developing extensions, so you only have to do it once; for future extensions, you start at step 2!
2. Create a directory for working on your extension wherever you like, navigate to it in the terminal, and run:
   jpm init
   to set up the standard files necessary to build your extension. Good news: it's very few files!
3. Edit the index.js file that command generated, writing whatever code you need to do what you want to get done.
4. Turn your code into an .xpi extension by running:
   jpm xpi
5. Install the extension by opening the generated .xpi file with Firefox.

Of course, step (3) is the part that requires some effort, but let's run through this together. We're going to pretty much copy/paste the code straight from the context menu API documentation:

// the add-on's own "self" module (id, data files); not actually needed below,
// kept from the documentation example
var self = require("sdk/self");
// we'll be using the context menu, so let's make sure we can:
var contextMenu = require("sdk/context-menu");
// let's add a menu item!
var menuItem = contextMenu.Item({
  // the label is pretty obvious...
  label: "Delete Element",
  // the context tells Firefox which things should have this in their context
  // menu, as there are quite a few elements that get "their own" menu,
  // like "the page" vs "an image" vs "a link". We pretty much want
  // everything on a page, so we make that happen:
  context: contextMenu.PredicateContext(function(data) { return true; }),
  // and finally the script that runs when we select the option. Delete!
  contentScript: 'self.on("click", function (node, data) { node.outerHTML = ""; });'
});

The only changes here are that we want "delete" for everything, so the context is simply "for anything that the context menu opens up on, consider that a valid context for our custom script" (which we do by using the widest context possible, in the context property), and of course the script itself is different because we want to delete nodes (the contentScript property).

The contentScript property is a string, so we're a little restricted in what we can do without all manner of fancy postMessages, but thankfully we don't need them: the add-on mechanism will always call the click handler in the contentScript with two arguments, "node" and "data", and the "node" argument is simply the HTML element you clicked on, which is what we want to delete. So we do! We don't even try to be clever here: we simply set the element's .outerHTML property to an empty string, and that makes it vanish from the page.

If you expected more work, then good news: there isn't any, we're already done! Seriously: run jpm run yourself to test your extension, and after verifying that it indeed gives you the new "Delete element" option in the context menu and deletes nodes when used, move on to steps (4) and (5) for the ultimate control of your browser.

Because here's the most important part: the freedom to control your online experience, and Firefox, go hand in hand.


Mark Côté: Searching Bugzilla

BMO currently supports five—count ‘em, five—ways to search for bugs. Whenever you have five different ways to perform a similar function, you can be pretty sure the core problem is not well understood. Search has been rated, for good reason, one of the least compelling features of Bugzilla, so the BMO team want to dig in there and make some serious improvements.

At our Portland get-together a couple weeks ago, we talked about putting together a vision for BMO. It’s a tough problem, since BMO is used for so many different things. We did, however, manage to get some clarity around search. Gerv, who has been involved in the Bugzilla project for quite some time, neatly summarized the use cases. People search Bugzilla for only two reasons:

- to find a set of bugs, or

- to find a specific bug.

That’s it. The fact that BMO has five different searches, though, means either we didn’t know that, or we just couldn’t find a good way to do one, or the other, or both.

We’ve got the functionality of the first use case down pretty well, via Advanced Search: it helps you assemble a set of criteria of almost limitless specificity that will result in a list of bugs. It can be used to determine what bugs are blocking a particular release, what bugs a particular person has assigned to them, or what bugs in a particular Product have been fixed recently. Its interface is, admittedly, not great. Quick Search was developed as a different, text-based approach to Advanced Search; it can be quicker to use but definitely isn’t any more intuitive. Regardless, Advanced Search fulfills its role fairly well.

The second use of Search is how you’d answer the question, “what was that bug I was looking at a couple weeks ago?” You have some hazy recollection of a bug. You have a good idea of a few words in the summary, although you might be slightly off, and you might know the Product or the Assignee, but probably not much else. Advanced Search will give you a huge, useless result set, but you really just want one specific bug.

This kind of search isn’t easy; it needs some intelligence, like natural-language processing, in order to give useful results. Bugzilla’s solutions are the Instant and Simple searches, which eschew the standard Bugzilla::Search module that powers Advanced and Quick searches. Instead, they do full-text searches on the Summary field (and optionally in Comments as well, which is super slow). The results still aren’t very good, so BMO developers tried outsourcing the feature by adding a Google Search option. But despite Google being a great search engine for the web, it doesn’t know enough about BMO data to be much more useful, and it doesn’t know about new or confidential bugs at all.

Since Bugzilla’s search engines were originally written, however, there have been many advances in the field, especially in FLOSS. This is another place where we need to bring Bugzilla into the modern world; MySQL full-text searches are just not good enough. In the upcoming year, we’re going to look into new approaches to search, such as running different databases in tandem to exploit their particular abilities. We plan to start with experiments using Elasticsearch, which, as the name implies, is very good at searching. By standing up an instance beside the main MySQL db and mirroring bug data over, we can refer specific-bug searches to it; even though we’ll then have to filter based on standard bug-visibility rules, we should have a net win in search times, especially when searching comments.
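The mirror-then-filter idea can be sketched in a few lines. This is a minimal illustration under stated assumptions: a toy in-memory inverted index stands in for Elasticsearch (this is not Elasticsearch's API). The names `MirrorIndex`, `can_see` and `find_specific_bug` are hypothetical. The visibility rule is also simplified: a restricted bug is shown only to users in every group it is restricted to.

```python
from dataclasses import dataclass, field
from typing import Dict, FrozenSet, List, Set


@dataclass
class Bug:
    bug_id: int
    summary: str
    comments: List[str] = field(default_factory=list)
    groups: FrozenSet[str] = frozenset()  # empty = public bug


def tokenize(text: str) -> Set[str]:
    # Lowercase words with surrounding punctuation stripped.
    return {w.lower().strip(".,:;!?()\"'") for w in text.split()} - {""}


class MirrorIndex:
    """Toy full-text index fed with bug data mirrored from the main database."""

    def __init__(self) -> None:
        self.postings: Dict[str, Set[int]] = {}
        self.bugs: Dict[int, Bug] = {}

    def mirror(self, bug: Bug) -> None:
        self.bugs[bug.bug_id] = bug
        for term in tokenize(" ".join([bug.summary, *bug.comments])):
            self.postings.setdefault(term, set()).add(bug.bug_id)

    def search(self, query: str) -> List[int]:
        scores: Dict[int, int] = {}
        for term in tokenize(query):
            for bug_id in self.postings.get(term, ()):
                scores[bug_id] = scores.get(bug_id, 0) + 1
        # Most matching terms first; ties broken by bug id for a stable order.
        return sorted(scores, key=lambda b: (-scores[b], b))


def can_see(bug: Bug, user_groups: Set[str]) -> bool:
    # Public bugs have no groups, and the empty set is a subset of anything.
    return bug.groups.issubset(user_groups)


def find_specific_bug(index: MirrorIndex, query: str, user_groups: Set[str]) -> List[int]:
    # Search the fast index first, then apply visibility rules to the hits.
    return [b for b in index.search(query) if can_see(index.bugs[b], user_groups)]
```

The visibility check runs after the search, so confidential bugs can live in the mirror without leaking into results for users who should not see them.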

In sum, Mozilla developers, we understand your tribulations with Bugzilla search, and we’re on it. After all, we all have a reputation to maintain as the Godzilla of Search Engines!


Henrik Skupin: Firefox Automation report – week 41/42 2014 |

In this post you can find an overview of the work that happened in the Firefox Automation team during weeks 41 and 42.

With the beginning of October there were also some minor changes in task responsibilities. Our team members from SoftVision now mainly handle Mozmill test requests and the related CI failures, while Henrik takes care of everything else, including the framework and the maintenance of Mozmill CI.

Highlights

Now that Mozmill-CI supports testing all locales for every Firefox beta and final release, Andreea finished her blacklist patch. With it we can easily mark locales that should not be tested, and get rid of the long whitelist entries.
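A minimal sketch of why a blacklist is easier to maintain than a whitelist once every locale is tested by default: only the exceptions need listing, and newly added locales are picked up automatically. The locale codes and the `locales_to_test` helper are illustrative assumptions, not Mozmill-CI's actual configuration.

```python
from typing import Iterable, List


def locales_to_test(all_locales: Iterable[str], blacklist: Iterable[str]) -> List[str]:
    """Every shipped locale except the blacklisted ones, in the original order."""
    skip = set(blacklist)
    return [loc for loc in all_locales if loc not in skip]
```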

We spun up our first OS X 10.10 machine in the staging environment of Mozmill CI to test the new OS version. We hit a couple of issues, especially some incompatibilities with mozrunner, which need to be fixed before we can start running our tests on 10.10.

In the second week of October, Teodor Druta joined the SoftVision team; he will help the others work on Mozmill tests.

But we also had to fight a lot with Flash crashes on our testing machines: we saw about 23 crashes per day on Windows machines. That was with the regular release version of Flash, which we had reinstalled because a crash we had seen before was fixed. But the healthy period did not last long, and we had to revert to the debug version without protected mode. Let's see how long we have to keep the debug version active.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 41 and week 42.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda, the video recording, and notes from the Firefox Automation meetings of week 41 and week 42.

http://www.hskupin.info/2014/12/17/firefox-automation-report-week-41-42-2014/


Nicholas Nethercote: Using Gmail filters to identify important Bugzilla mail in 2014 |

Many email filtering systems are designed to siphon each email into a single destination folder. Usually you have a list of rules which get applied in order, and as soon as one matches an email the matching process ends.

Gmail’s filtering system is different; it’s designed to add any number of labels to each email, and the rules don’t get applied in any particular order. Sometimes it’s really useful to be able to apply multiple labels to an email, but if you just want to apply one in a fashion that emulates folders, it can be tricky.
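The difference between the two models can be sketched with a list of (label, predicate) rules. This is a minimal illustration, not Gmail's implementation. The function names and the sample rules are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# A rule pairs a label (or folder) name with a predicate over the raw email text.
Rule = Tuple[str, Callable[[str], bool]]


def first_match_folder(rules: List[Rule], email: str) -> Optional[str]:
    """Folder-style filtering: rules are tried in order and the first hit wins."""
    for label, matches in rules:
        if matches(email):
            return label
    return None


def gmail_style_labels(rules: List[Rule], email: str) -> List[str]:
    """Label-style filtering: every matching rule applies, independently of the others."""
    return [label for label, matches in rules if matches(email)]
```

With label-style rules, getting exactly one "folder" per email means the rules themselves must be mutually exclusive, because ordering cannot do that job.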

So here’s a non-trivial example of how I filter bugmail into two “folders”. The first “folder” contains high-priority bugmail.

- Review/feedback/needinfo notifications.

- Comments in bugs that I filed or am assigned to or am CC’d to.

- Comments in secure bugs.

- Comments in bugs in the DMD and about:memory components.

For the high priority bugmail, on Gmail’s “Create a Filter” screen, in the “From:” field I put:

bugzilla-daemon@mozilla.org

and in the “Has the words:” field I put:

"you are the assignee" OR "you reported" OR "you are on the CC list" OR subject:"granted:" OR subject:"requested:" OR subject:"canceled:" OR subject:"Secure bug" OR "Product/Component: Core :: DMD" OR "Product/Component: Toolkit :: about:memory" OR "Your Outstanding Requests"

For the low priority bugmail, on Gmail’s “Create a Filter” screen, in the “From:” field put:

bugzilla-daemon@mozilla.org

and in the “Doesn’t have:” field put:

("you are the assignee" OR "you reported" OR "you are on the CC list" OR subject:"granted:" OR subject:"requested:" OR subject:"canceled:" OR subject:"Secure bug" OR "Product/Component: Core :: DMD" OR "Product/Component: Toolkit :: about:memory" OR "Your Outstanding Requests")

(I’m not certain if the parentheses are needed here. It’s otherwise identical to the contents in the previous case.)
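Because both filters use the same query, one with "Has the words" and one with "Doesn't have", every bugmail gets exactly one of the two labels. Gmail applies both filters independently, so no rule ordering is involved. Below is a minimal model of that idea. It is a simplification: matching is plain case-insensitive substring search, which only approximates Gmail's search semantics. The label names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

BUGZILLA = "bugzilla-daemon@mozilla.org"
# Quoted phrases that may appear anywhere in the message.
ANYWHERE = [
    "you are the assignee", "you reported", "you are on the cc list",
    "product/component: core :: dmd", "product/component: toolkit :: about:memory",
    "your outstanding requests",
]
# The subject:"..." terms, which only match the subject line.
IN_SUBJECT = ["granted:", "requested:", "canceled:", "secure bug"]


@dataclass
class Email:
    sender: str
    subject: str
    body: str


def high_priority_query(mail: Email) -> bool:
    """Models the shared OR-query used by both filters."""
    subject = mail.subject.lower()
    text = subject + "\n" + mail.body.lower()
    return any(p in text for p in ANYWHERE) or any(p in subject for p in IN_SUBJECT)


def apply_filters(mail: Email) -> List[str]:
    labels = []
    # Filter 1: From bugzilla-daemon AND "Has the words" <query>.
    if mail.sender == BUGZILLA and high_priority_query(mail):
        labels.append("bugmail-high")
    # Filter 2: From bugzilla-daemon AND "Doesn't have" <query>.
    if mail.sender == BUGZILLA and not high_priority_query(mail):
        labels.append("bugmail-low")
    return labels
```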

I’ve modified them a few times and they work very well for me. Everyone else will have different needs, but this might be a useful starting point.

This is just one way to do it. See here for an alternative way. (Update: Byron Jones pointed out that my approach assumes that the wording used in email bodies won’t change, and so the alternative is more robust.)

Finally, if you’re wondering about the “in 2014” in the title of this post, it’s because I wrote a very similar post four years ago, and my filters have evolved slightly since then.


Will Kahn-Greene: Dennis v0.6 released! Line numbers, double vowels, better cli-fu, and better output! |

What is it?

Dennis is a Python command line utility (and library) for working with localization. It includes:

- a linter for finding problems in strings in .po files like invalid Python variable syntax which leads to exceptions

- a template linter for finding problems in strings in .pot files that make translators' lives difficult

- a statuser for seeing the high-level translation/error status of your .po files

- a translator for strings in your .po files to make development easier
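To give a feel for the first kind of check, here is a minimal sketch that compares the Python placeholders in a msgid/msgstr pair. It is written in the spirit of such a linter and is not Dennis's actual rule set or API. The `lint_pair` function and its messages are hypothetical, and it recognizes only a few common conversion types.

```python
import re
from typing import List

# printf-style "%(name)s" (a few common conversion types) or str.format-style "{name}".
VAR_RE = re.compile(r"%\([^)]+\)[sdif]|\{[^{}]*\}")
# "%(name)" not followed by one of those conversion types (ValueError at format time).
MALFORMED_RE = re.compile(r"%\([^)]*\)(?![sdif])")


def lint_pair(msgid: str, msgstr: str) -> List[str]:
    """Return human-readable problems found in one msgid/msgstr pair."""
    problems = [f"malformed variable {m!r} in translation" for m in MALFORMED_RE.findall(msgstr)]
    source_vars = set(VAR_RE.findall(msgid))
    target_vars = set(VAR_RE.findall(msgstr))
    # Variables the code never supplies raise KeyError when the string is formatted.
    problems += [f"unknown variable {v!r} in translation" for v in sorted(target_vars - source_vars)]
    # Dropped variables do not crash, but the user loses information.
    problems += [f"missing variable {v!r} in translation" for v in sorted(source_vars - target_vars)]
    return problems
```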

v0.6 released!

Since v0.5, I've done the following:

- Rewrote the command line handling using click and added an exception handler.

- Merged the lint and linttemplate commands. Why should you care which file you're linting when the linter can figure it out for you?

- Added the whimsical double vowel transform.

- Added line numbers in the lint output. This will make it possible to find those pesky problematic strings in your .po/.pot files.

- Added a line reporter to the linter.
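The post only names the double vowel transform, so what follows is a guess at the idea, for illustration only. It produces an obviously fake "translation" by doubling each vowel, and it leaves Python placeholders intact so the string still formats correctly. `double_vowels` is a hypothetical helper, not Dennis's API, and Dennis's real transform may behave differently.

```python
import re

VOWELS = "aeiouAEIOU"
# Placeholders must survive untouched, otherwise formatting the string would break.
PLACEHOLDER_RE = re.compile(r"%\([^)]+\)[sdif]|\{[^{}]*\}")


def _double(chunk: str) -> str:
    return "".join(c * 2 if c in VOWELS else c for c in chunk)


def double_vowels(text: str) -> str:
    """Double every vowel outside of placeholders."""
    out, pos = [], 0
    for m in PLACEHOLDER_RE.finditer(text):
        out.append(_double(text[pos:m.start()]))
        out.append(m.group(0))
        pos = m.end()
    out.append(_double(text[pos:]))
    return "".join(out)
```

For example, `double_vowels("Hello %(name)s")` gives `"Heelloo %(name)s"`. Any string that still looks normal in the UI was never passed through the translation machinery.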

Getting pretty close to what I want for a 1.0, so I'm pretty excited about this version.

Denise update

I've updated Denise with the latest Dennis and moved it to a better url. Lint your .po/.pot files via web service using http://denise.paas.allizom.org/.

Where to go for more

For more specifics on this release, see here: http://dennis.readthedocs.org/en/latest/changelog.html#version-0-6-december-16th-2014

Documentation and quickstart here: http://dennis.readthedocs.org/en/v0.6/

Source code and issue tracker here: https://github.com/willkg/dennis

Source code and issue tracker for Denise (Dennis-as-a-service): https://github.com/willkg/denise

6 out of 8 employees said Dennis helps them complete 1.5 more deliverables per quarter.


Nathan Froyd: what’s new in xpcom |

I was talking to somebody at Mozilla’s recent all-hands meeting in Portland, and in the course of attempting to provide a reasonable answer for “What have you been doing lately?”, I said that I had been doing a lot of reviews, mostly because of my newfound duties as XPCOM module owner. My conversational partner responded with some surprise that people were still modifying code in XPCOM at such a clip that reviews would be a burden. I responded that while the code was not rapidly changing, people were still finding reasons to do significant modifications to XPCOM code, and I mentioned a few recent examples.

But in light of that conversation, it’s good to broadcast some of the work I’ve had the privilege of reviewing this year. I apologize in advance for not citing everybody; in particular, my reviews email folder only goes back to August, so I have a very incomplete record of what I’ve reviewed in the past year. In no particular order:

- Randall Barker has been working on building a standalone WebRTC library, which necessitates building a standalone XPCOM, as our WebRTC code depends on things like XPCOM timers and threads.

- Brian Birtles wrote a series of patches that implemented a version of TimeDuration that uses saturating-esque arithmetic, which is useful for animation code.

- Birunthan Mohanathas wrote a rather large series of patches for completely converting xpcom/ to Gecko style, not all of which are featured in the linked bug. He submitted numerous patches, re-indenting code, renaming arguments, and fixing other style violations, all easily reviewable. xpcom/ is a better place for his work.

- Eric Rahm made some significant changes to how the deadlock detector works, improving its memory usage and redoing how we store callstacks. The upshot here is that the deadlock detector is significantly faster, and no longer requires trace-malloc to provide callstacks.

- Ben Kelly implemented XPCOM streams that perform snappy compression on the data being written to or from them, as part of his ongoing work on Service Workers. We did think about using the stream converter service for this one, but the APIs didn’t match up very nicely with how the streams were actually getting used.

- Mike Hommey wrote a tool to record and replay memory allocations made by Firefox. In the course of doing so, he discovered a nasty infinite loop made possible by using locking primitives that always had to be dynamically allocated. Working around this required writing statically-allocated mutexes and carefully integrating with our file descriptor poisoning that happens during shutdown.

- Ehsan Akhgari discovered that the ReplaceSubstring method of XPCOM’s strings was O(n^2), which caused hangs when using the reftest analyzer. And motivated by wanting to have automated testing for this, he also ported the string tests to run under the GTest unit test framework (along with other tests that have lain dormant for far too long.)

- People also continue to make various tweaks to XPCOM data structures: Kyle Huey and Peter Van der Beken added rvalue reference support to nsTArray. Seth Fowler modified various Auto helpers so they’d work properly with mozilla::Maybe. Nicholas Nethercote fixed nsTArray to use exponential growth when appending to an array.

- Finally, reviewing wouldn’t be reviewing if you didn’t get to review fixes to code that you already reviewed and approved some time ago. Benjamin Smedberg wrote a patch a year ago to annotate out-of-memory callsites in XPCOM with the actual amount of bytes that were being allocated. This worked well enough, but David Major noticed that we sometimes passed the sentinel size of -1 as the actual allocation size, and we didn’t compute the correct sizes for XPCOM string allocations.
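Nicholas Nethercote's nsTArray change addresses a classic cost. If an array grows by a fixed amount, appending n elements copies O(n^2) elements in total across reallocations, whereas growing geometrically keeps total copying at O(n). This minimal sketch just counts element copies under two hypothetical growth policies. It does not reproduce nsTArray's real growth strategy.

```python
from typing import Callable


def copies_for_appends(n: int, grow: Callable[[int], int]) -> int:
    """Count element copies made while appending n items under a growth policy."""
    capacity = length = copies = 0
    for _ in range(n):
        if length == capacity:
            capacity = grow(capacity)
            copies += length  # reallocation moves every existing element
        length += 1
    return copies


def grow_by_constant(capacity: int) -> int:
    return capacity + 8


def grow_doubling(capacity: int) -> int:
    return max(1, capacity * 2)
```

For 1,024 appends, the constant policy copies 65,024 elements, while doubling copies 1,023.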

http://blog.mozilla.org/nfroyd/2014/12/16/whats-new-in-xpcom/


Michael Kaply: Managing Firefox with Group Policy and PolicyPak |

A lot of people ask me how to manage Firefox using Windows Group Policy. To that end, I have been working with a company called PolicyPak to help enhance their product to have more of the features that people are asking for (not just controlling preferences.) It's taken about a year, but the results are available for download now.

You can now manage the following things (and more) using PolicyPak, Group Policy and Firefox:

- Set and lock almost all preference settings (homepage, security, etc) plus most settings in about:config

- Set site specific permissions for pop-ups, cookies, camera and microphone

- Add or remove bookmarks on the toolbar or in the bookmarks folder

- Blacklist or whitelist any type of add-on

- Add or remove certificates

- Disable private browsing

- Turn off crash reporting

- Prevent access to local files

- Always clear saved passwords

- Disable safe mode

- Remove Firefox Sync

- Remove various buttons from Options

If you want to see it in action, you can check out these videos.

And if you've never heard of PolicyPak, you might have heard of the guy who runs it - Jeremy Moskowitz. He's a Group Policy MVP and literally wrote the book on Group Policy.

On a final note, if you decide to purchase, please let them know you heard about it from me.

http://mike.kaply.com/2014/12/16/managing-firefox-with-group-policy-and-policypak/


Jennie Rose Halperin: Leaving Mozilla as staff |

December 31 will be my last day as paid staff on the Community Building Team at Mozilla.

One year ago, I settled into a non-stop flight from Raleigh, NC to San Francisco and immediately fell asleep. I was exhausted; it was the end of my semester and I had spent the week finishing a difficult databases final, which I emailed to my professor as soon as I reached the hotel, marking the completion of my coursework in Library Science and the beginning of my commitment to Mozilla.

The next week was one of the best of my life. While working, hacking, and having fun, I started on the journey that has carried me through the past exhilarating months. I met more friendly faces than I could count and felt myself becoming part of the Mozilla community, which has embraced me. I’ve been proud to call myself a Mozillian this year, and I will continue to work for the free and open Web, though currently in a different capacity as a Rep and contributor.

I’ve met many people through my work and have been universally impressed with your intelligence, drive, and talent. To David, Pierros, William, and particularly Larissa, Christie, Michelle, and Emma, you have been my champions and mentors. Getting to know you all has been a blessing.

I’m not sure what’s next, but I am happy to start on the next step of my career as a Mozillian, a community mentor, and an open Web advocate. Thank you again for this magical time, and I hope to see you all again soon. Let me know if you find yourself in Boston! I will be happy to hear from you and pleased to show you around my hometown.

If you want to reach out, find me on IRC: jennierose. All the best wishes for a happy, restful, and healthy holiday season.


Mike Hommey: One step closer to git push to mercurial |

In case you missed it, I’m working on a new tool to use mercurial remotes in git. Since my previous post, I landed several fixes making clone and pull more reliable:

- Of 247316 unique changesets in the various mozilla-* repositories, only two are now “corrupted” (and both actually come from the same patch, one of the changesets being a backport to aurora of the other), because their mercurial date has a timezone offset that includes seconds.

- Of 23542 unique changesets in the canonical mercurial repository, only three are “corrupted” because their raw mercurial data contains, for an unknown reason, a whitespace after the timezone.

By corrupted, here, I mean that the round-trip hg->git->hg doesn’t reproduce their sha1. They will be fixed eventually, but I haven’t decided how yet, because they’re really edge cases. They’re old enough that they don’t really matter for push anyway.
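This is a sketch of why a timezone with seconds cannot survive the round-trip. It assumes Mercurial's convention of storing a commit's timezone as an integer offset in seconds west of UTC, while a git commit header can only express ±HHMM. Both conversion helpers are hypothetical and are not part of the tool described here.

```python
def hg_offset_to_git(offset_seconds_west: int) -> str:
    """Convert a Mercurial-style offset (seconds west of UTC) to git's +HHMM form."""
    east = -offset_seconds_west
    sign = "+" if east >= 0 else "-"
    minutes = abs(east) // 60  # git has no field for leftover seconds: they are dropped
    return f"{sign}{minutes // 60:02d}{minutes % 60:02d}"


def git_tz_to_hg_offset(tz: str) -> int:
    """Convert git's +HHMM / -HHMM back to seconds west of UTC."""
    sign = 1 if tz[0] == "+" else -1
    hours, minutes = int(tz[1:3]), int(tz[3:5])
    return -sign * (hours * 3600 + minutes * 60)


def round_trips(offset_seconds_west: int) -> bool:
    return git_tz_to_hg_offset(hg_offset_to_git(offset_seconds_west)) == offset_seconds_west
```

Offsets that are whole minutes survive. An offset such as -3601 comes back as -3600, so the regenerated changeset no longer matches the original sha1.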

Pushing to mercurial, however, is still not there, but it’s getting closer. It involves several operations:

- Negotiating with the mercurial server to find out what we have that it doesn’t.

- Creating mercurial changesets, manifests and files for local git commits that were not imported from mercurial.

- Creating a bundle of the mercurial changesets, manifests and files that we have that the server doesn’t.

- Pushing that bundle to the server.
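At its core, the first step is a set difference over the changeset graph. Once both sides agree on some common heads, the outgoing changesets are everything reachable from our local heads minus everything reachable from those common heads. Below is a minimal sketch using a plain parent map with hypothetical node names. Mercurial's actual wire-protocol discovery, which figures out the common heads in the first place, is more involved.

```python
from typing import Dict, Iterable, List, Set


def ancestors(parents: Dict[str, List[str]], heads: Iterable[str]) -> Set[str]:
    """All changesets reachable from `heads` (inclusive) by following parent links."""
    seen: Set[str] = set()
    stack = list(heads)
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        stack.extend(parents.get(node, []))
    return seen


def outgoing(parents: Dict[str, List[str]], local_heads: Iterable[str],
             common_heads: Iterable[str]) -> Set[str]:
    """Changesets we have that the server lacks, given the heads both sides share."""
    return ancestors(parents, local_heads) - ancestors(parents, common_heads)
```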

The first step is mostly covered by the pull code, that does a similar negotiation. I now have the third step covered (although I cheated around the “corruptions” mentioned above):

$ git clone hg::http://selenic.com/hg
Cloning into 'hg'...
(...)
Checking connectivity... done.
$ cd hg
$ git hgbundle > ../hg.hg
$ mkdir ../hg2
$ cd ../hg2
$ hg init
$ hg unbundle ../hg.hg
adding changesets
adding manifests
adding file changes
added 23542 changesets with 44305 changes to 2272 files
(run 'hg update' to get a working copy)
$ hg verify
checking changesets
checking manifests
crosschecking files in changesets and manifests
checking files
2272 files, 23542 changesets, 44305 total revisions

Note: that hgbundle command won’t actually exist. It’s just an intermediate step allowing me to work incrementally.

In case you wonder what happens when the bundle contains bad data, mercurial fortunately rejects it:

$ cd ../hg
$ git hgbundle-corrupt > ../hg.hg
$ mkdir ../hg3
$ cd ../hg3
$ hg unbundle ../hg.hg
adding changesets
transaction abort!
rollback completed
abort: integrity check failed on 00changelog.i:3180!
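Mercurial can catch the bad bundle because, as we understand the revlog format, every node ID is the SHA-1 of the two parent node IDs followed by the raw revision text. The parents are sorted, and 20 zero bytes stand in for a missing parent. The minimal sketch below works under that assumption, and `hg_node_id` is a hypothetical helper. It shows why even one stray whitespace byte changes the ID, which is also why the hg->git->hg round-trip has to reproduce the original bytes exactly.

```python
import hashlib

NULL_ID = b"\0" * 20  # stand-in for a missing parent


def hg_node_id(text: bytes, p1: bytes = NULL_ID, p2: bytes = NULL_ID) -> str:
    """SHA-1 over the sorted parent IDs followed by the raw revision text."""
    first, second = sorted((p1, p2))
    return hashlib.sha1(first + second + text).hexdigest()
```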


Andrea Marchesini: Priv8 is out! |

Download page: click here

What is priv8? It is a Firefox addon that uses part of the security model of Firefox OS to create sandboxed tabs. Each sandbox is a completely separate world: it doesn’t share cookies, storage, and a lot of other stuff with the rest of Firefox, only with other tabs from the same sandbox.

Each sandbox has a name and a color, so it is always easy to identify which tab is sandboxed.

Also, these sandboxes are permanent! When you open one of them a second time, perhaps after a restart, it will still have the same cookies, storage, etc. as when you left it.

You can also switch between sandboxes using the context menu for the tab.

Here is an example: with priv8 you can read one Gmail account in one tab and another Gmail account in another tab at the same time. Likewise, you can be logged in to Facebook in one tab and not in the others. This is nice!

Moreover, if you are a web developer and you want to test a website using multiple accounts, priv8 gives you the opportunity to have each account in a sandboxed tab. Much easier than having multiple profiles or logging in and out manually every time!
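The isolation model can be pictured as one persistent cookie jar per named sandbox, shared by every tab in that sandbox. This is a toy sketch of that idea only. priv8 itself builds on the Firefox OS security model, and the `SandboxedBrowser` class and its methods are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Optional


class SandboxedBrowser:
    """Toy model: each named sandbox owns a cookie jar that its tabs share."""

    def __init__(self) -> None:
        self.jars: Dict[str, Dict[str, str]] = defaultdict(dict)  # sandbox -> {domain: cookie}
        self.tabs: Dict[int, str] = {}  # tab id -> sandbox name

    def open_tab(self, tab_id: int, sandbox: str) -> None:
        self.tabs[tab_id] = sandbox

    def set_cookie(self, tab_id: int, domain: str, value: str) -> None:
        self.jars[self.tabs[tab_id]][domain] = value

    def get_cookie(self, tab_id: int, domain: str) -> Optional[str]:
        return self.jars[self.tabs[tab_id]].get(domain)

    def switch_sandbox(self, tab_id: int, sandbox: str) -> None:
        # priv8 reloads the tab after a switch; here the tab simply sees another jar.
        self.tabs[tab_id] = sandbox
```

Two tabs on the same mail domain in different sandboxes each keep their own login. A third tab opened in an existing sandbox picks up that sandbox's session.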

Is it stable? I don’t know :) It works, but more testing must be done. Help needed!

Known issues?

- window.open() doesn’t work from a sandbox

- e10s is not supported yet.

- The UI must be improved.

Screenshots:

This is the manager, where you can “manage” your sandboxes.

The panel is always accessible from the Firefox toolbar.

The context menu allows you to switch between sandboxes for the current tab. This will reload the tab after the switch.

3 separate instances of Gmail at the same time.

License: Priv8 is released under the Mozilla Public License.

Source code: bakulf :: priv8


Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1063818] Updates to form.dev-engagement-event

- [1111954] Updates to Spreadsheet Data in form.dev-engagement-event

- [1092578] Decide if an email needs to be encrypted at the time it is generated, not at the time it is sent

- [1107275] Include Build.PL file for bmo/4.2 to install Perl dependencies (useful for Travis CI, etc.)

- [829358] Changing the name of a private attachment in an unhidden bug results in the name change being sent unencrypted

- [1104291] The form.web.bounty page does not say it’s a bounty form

- [1105585] Fix bug bounty form to validate its input more and relax the restriction on the paid field to include -+? suffix

- [1105155] Indicate that an existing comment has been modified for tracking flags with prefill text

- [1105745] changes made via the bounty form are not emailed immediately

- [1111862] HTML code injection in review history page

discuss these changes on mozilla.tools.bmo.


https://globau.wordpress.com/2014/12/16/happy-bmo-push-day-121/
