
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Gervase Markham: Ctrl-Shift-T Not Working in Ubuntu |

I just spent ages debugging the fact that my Ctrl-Shift-T key stopped working in Ubuntu. This is my attempt to write a blog post that search engines will find if anyone else has the same problem. Ctrl-Shift-T is “new tab” in Terminal, and “reopen last closed tab” in Firefox, so it’s rather useful, and annoying when not working.

After lots of research and debugging, it turned out to be the following. I recently installed a bunch of VoIP apps, including Jitsi. Jitsi decided to run on startup, in a way which was invisible in the UI, and override a bunch of my keybindings, in a way which didn’t make it clear that it was the app capturing them. :-( So if anyone ever finds Ctrl-Shift keybindings stop working (seems like Jitsi takes A, H, P, L, M and T), and sees output like this in xev:

    FocusOut event, serial 37, synthetic NO, window 0x5400001,
        mode NotifyGrab, detail NotifyAncestor

    FocusIn event, serial 37, synthetic NO, window 0x5400001,
        mode NotifyUngrab, detail NotifyAncestor

    KeymapNotify event, serial 37, synthetic NO, window 0x0,
        keys: 34 0 0 0 32 0 4 0 0 0 0 0 0 0 0 0
              0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

instead of the expected KeyPress / KeyRelease events, then look for some app which has pinched your keys. I spent ages looking through the Compiz config settings, finding nothing, but then started killing processes until I found my keys were working again.
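The pattern described above (a grab/ungrab focus pair with no key event) can be checked mechanically once the xev output is saved to a file. This is only a rough sketch: the function name is made up for illustration, and it assumes xev labels key presses as "KeyPress event" and grabs as "NotifyGrab", as in the output quoted above.

```shell
# Hypothetical helper: reads xev output on stdin and succeeds (exit 0) when
# the log contains a NotifyGrab focus event but no KeyPress event at all.
looks_like_key_grab() {
    xev_log=$(cat)
    printf '%s\n' "$xev_log" | grep -q 'NotifyGrab' &&
        ! printf '%s\n' "$xev_log" | grep -q 'KeyPress event'
}

# Usage sketch: record a session, press the dead shortcut, close the xev window
#   xev > xev.log
#   looks_like_key_grab < xev.log && echo "some other app is grabbing that key"
```

A positive result only says that something is grabbing the key; finding out which process still means stopping candidates one by one.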

http://feedproxy.google.com/~r/HackingForChrist/~3/wosFzjcLPfE/

|

|

Mozilla Fundraising: When Testing Meets Localization |

https://fundraising.mozilla.org/when-testing-meets-localization/

|

|

Benjamin Kerensa: What is your Mozilla Resolution? |

We punch way above our weight

Happy new year, friends!

2014 was a phenomenal year for Mozilla and also a wild ride: we waded through what seemed like hit after hit from the tech press, but we fared well. We have much to be excited about. This year we announced Firefox for iOS, launched a refresh of Firefox’s UI and a Firefox Dev Edition, improved search options, and launched phones with partners in more countries than I can count on two hands, which makes us one of the most successful open source mobile platforms out there.

We also participated in 3000+ events worldwide, which is a pretty amazing feat in itself; I honestly do not know of many open source projects or companies that could come close to that figure. The fact is we had a really awesome year, and our work is not done: some of the things we started in 2014 will continue into 2015, and as exciting as 2014 was for us, I’m betting we can make 2015 even bigger.

Remember, Mozillians: we punch way above our weight and really are that David fighting Goliath. Considering we will have a relentless focus on product next year, I think the future for Mozilla is very bright.

Some of the things I look forward to working on next year:

- Continued improvement of Firefox ESR for our Enterprise, Academic and Government users

- Expand our presence at events that focus on Learning, Libraries (as in books) and serving underrepresented groups

- Have a Mozilla presence at events we would not usually be at (we need to reach new people!)

- Support Thunderbird in what will hopefully be a comeback year for the project

- Growing the North America Community by 10%

What are your Mozilla New Year’s resolutions? Aim high!

|

|

Soledad Penades: Assorted bits and pieces |

As we wrap the year and my brain is kind of hazy with the extra food, and the total shock to the system caused by staying in Spain these days, I thought it would be a splendid moment to collect a few things that I haven’t blogged about yet. So there we go:

Talks

In Hacks

We were brainstorming what to close the year with at the Mozilla Hacks blog, and we said: let’s make a best of 2014 post!

For some reason I ended up building a giant list of videos from talks that had an impact on me, whether technical or emotional, or both, and that I thought would be great to share with fellow developers. And then the planets aligned and there was a call to action to help test video playing in Firefox, so we ended up with You can’t go wrong watching JavaScript talks, inviting you to watch these videos AND help test video playing. Two birds with one stone! (but figuratively, we do not want to harm birds, okay? okay!).

Since it is a list I curated, it is full of cool things such as realtime graphics, emoji, Animated GIFs, Web Components, accessibility, healthy community building, web audio and other new and upcoming Web APIs, Firefox OS hardware hacking, and of course, some satire. Go watch them!

Mine

And then the videos for some talks I’ve given recently have been published also.

Here’s the one from CMD+R conf, a new conference in London for Mac/iOS developers which was really nice even though I don’t work in that field. The organiser watched my CascadiaJS 2014 talk and liked it, and asked me to repeat it.

I’m quite happy with how it turned out, and I’m even a tad sad that they cut out a bit of the silly chatter from when I jumped on the stage and was sort of adjusting my laptop. I think it was funny. Or maybe it wasn’t and that’s why they cut it out.

Then I also spoke at Full Frontal in Brighton, which is not a new conference but has a bit of a legendary aura already, so I was really proud to have been invited to speak there. I gave an introduction to Web Audio which was sort of similar to the Web Audio Hack Day introduction, but better. Everything gets better when you practice and repeat.

Podcasts

Potch and I were guests on episode 20 of The Web Platform, hosted by Erik Isaksen. We discussed Web Components, solving problems for other developers with Brick, the quests you have to go through when you want to use them today, proper component/code design, and some more topics such as accessibility or using components for fun with Audio Tags.

And finally… meet ups and upcoming talks!

I’m going to be hosting the first Ladies Who Code London meetup of the year. The date is the 6th of January, and here’s the event/sign up page. Come join us at Mozilla London and hack on stuff with fellow ladies who code!

And then on the 13th of January I’ll also be giving an overview talk about Web Components at the first ever London Web Components meetup. Exciting! Here’s the event page, although I think there is a waiting list already.

Finally for-reals I’ll be speaking at the Mozilla room at FOSDEM about Firefox OS app development with node-firefox, a project that Nicola started when he interned at Mozilla last summer, and which I took over once he left because it was too awesome to let it rust.

Of course “app development with node-firefox” is too bland, so the title of the talk is actually Superturbocharging Firefox OS app development with node-firefox. In my defense I came up with that title while I was jetlagged and incubating a severe cold, so I feel zero guilt about this superhyperbolic title.

Merry belated whatevers!

http://soledadpenades.com/2014/12/26/assorted-bits-and-pieces/

|

|

Mozilla Fundraising: Localized Fundraising: After $1.75 Million, How Are We Doing? |

https://fundraising.mozilla.org/localized-fundraising-after-1-75-million-how-are-we-doing/

|

|

Gervase Markham: Is Christianity a “Life Hack”? |

This post discusses why people might be motivated to share Christianity with others, and was prompted by a comment on one of the Mozilla Yammer instances which is part of an ongoing discussion within Mozilla about this general subject.

[It is also me deciding to try out Mike Hoye’s proposed new Planet Mozilla content policy, which suggests that people posting content to Planet regarding “contentious or personal topics outside of Mozilla’s mission” may do so if they begin with a sentence advising people of that fact. Hence the above. I don’t intend to be contentious, but you could call this personal, and it’s outside Mozilla’s mission. I assume the intent is that the uninterested or potentially offended can just press “Next” in their feed reader. You can join the discussion on the proposed new policy in mozilla.governance.]

I won’t quote the comment directly because it was on a non-public Yammer instance, but the original commenter’s argument went something like: “If you knew something awesome and life-changing, wouldn’t you want to share it with others?”. A follow-up comment from another participant was in general agreement, and compared religion to a life hack – which I understand to mean something that someone has done which has improved their life and so they want to share so that other people’s lives can be improved too. (The original website posting such things was a Gawker site called Lifehacker, but there have been many imitations since.)

I want to engage with that idea, although I’ll talk about “Christianity” rather than “religion” because I don’t believe anyone’s life can be significantly improved by believing falsehoods, and the law of non-contradiction means that if Christianity is true (as I believe it is), all other religions are false.

There is a kernel of truth in the idea that Christianity is a life hack, but there’s a lot misleading about it too. Following Jesus does secure your eternal salvation, which is clearly a long-term improvement, and the confidence that comes from knowing what will happen to you, and having an ongoing relationship with your creator, is something that all Christians find encouraging every day. Who wouldn’t want others to have that? But in the short term, there are many people for whom becoming a Christian makes their life significantly more difficult. Publicly turning to Jesus in Afghanistan, or Saudi Arabia, or Eritrea, or Myanmar, will definitely lead to persecution and can even lead to death. Jesus said it would be so:

Then you will be handed over to be persecuted and put to death, and you will be hated by all nations because of me. — Jesus (Matthew 24:9)

In addition, the Bible teaches that Christians may encounter troubles in order to help them grow in faith and rely on God more. So the idea that becoming a Christian will definitely make your life better is not borne out by what Jesus said about it.

The other part of the life hack idea that is misleading is the “take it or leave it” aspect. One of the things about a life hack is that if it works for you in your circumstances, great. If not, no big deal. In today’s relativistic world many ideas, including Christianity, are presented this way because it’s far less offensive to people. “Take it or leave it” makes no demands; it does not require change; it does not present itself as the exclusive truth. And, in fact, that was the point of the person making the comparison – paraphrasing, “what’s wrong with offering people life hacks? You don’t have to accept. How can making an offer be offensive?”

But that’s not how Jesus presented his message to people.

I am the way and the truth and the life. No one comes to the Father except through me. (Jesus — John 14:6)

Whoever believes in him is not condemned, but whoever does not believe stands condemned already because they have not believed in the name of God’s one and only Son. (John 3:18, just 2 verses after the Really Famous Verse.)

This is not a “take it or leave it” position. Jesus was pretty clear about the consequences of rejecting him. And so that’s the other misleading thing about the idea that Christianity is a life hack – the suggestion that if you think it doesn’t work for you, you can just move on. No biggie, and no need to be offended.

Christianity offends and upsets people. (Unsurprisingly, and you may be detecting a pattern here, Jesus said that would happen too.) It does so in different ways in different ages through history; in our current age, one which particularly gets people’s backs up is the idea that it’s an exclusive truth claim rather than an optional “life hack”. Which is why the idea that it is a life hack is actually rather dangerous.

It may seem odd that I am arguing against someone who was arguing for the social acceptability of talking about Jesus in public places. And I don’t doubt their good intentions. But the opportunity to talk about him should not be bought at the cost of denying the difficulty of his path or the exclusivity and urgency of his message.

Merry Christmas and a Happy New Year to all! :-)

http://feedproxy.google.com/~r/HackingForChrist/~3/ZdVcmqQdxsM/

|

|

Francois Marier: Making Firefox Hello work with NoScript and RequestPolicy |

Firefox Hello is a new beta feature in Firefox 34 which gives users the ability to do plugin-free video-conferencing without leaving the browser (using WebRTC technology).

If you cannot get it to work after adding the Hello button to the toolbar, this post may help.

Preferences to check

There are a few preferences to check in about:config:

- media.peerconnection.enabled should be true

- network.websocket.enabled should be true

- loop.enabled should be true

- loop.throttled should be false
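If you would rather not flip these by hand in about:config, the same four values can be written to a user.js file in the Firefox profile directory. This is a sketch, not something the steps above require: the function name is invented here, and the profile path in the usage comment is a placeholder you would have to fill in yourself.

```shell
# Hypothetical helper: print user.js lines for the four preferences listed above.
# printf repeats its format string for each (name, value) pair of arguments.
hello_prefs() {
    printf 'user_pref("%s", %s);\n' \
        media.peerconnection.enabled true \
        network.websocket.enabled true \
        loop.enabled true \
        loop.throttled false
}

# Usage sketch (the profile directory is a placeholder):
#   hello_prefs >> /path/to/your/firefox/profile/user.js
```

Values in user.js are reapplied at every startup, so remove the lines again if you later want to change these preferences from about:config.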

NoScript

If you use the popular NoScript add-on, you will need to whitelist the following hosts:

- about:loopconversation

- hello.firefox.com

- loop.services.mozilla.com

- opentok.com

- tokbox.com

RequestPolicy

If you use the less popular but equally annoying RequestPolicy add-on, then you will need to whitelist the following destination host:

tokbox.com

as well as the following origin to destination mappings:

- about:loopconversation -> firefox.com

- about:loopconversation -> mozilla.com

- about:loopconversation -> opentok.com

- firefox.com -> mozilla.com

- firefox.com -> mozilla.org

- firefox.com -> opentok.com

- mozilla.org -> firefox.com

I have unfortunately not been able to find a way to restrict tokbox.com to a set of (source, destination) pairs. I suspect that the use of websockets confuses RequestPolicy.

If you find a more restrictive policy that works, please leave a comment!

http://feeding.cloud.geek.nz/posts/making-firefox-hello-work-with-noscript-request-policy/

|

|

Christian Heilmann: Going IRL during the holidays can teach us to talk more, let’s do that. |

I am currently with my family celebrating Christmas in the middle of Germany. Just like the last years, I planned to do some reflection and write some deeper thinking posts, but now I keep getting challenged. Mostly to duels like these:

I find the visits to my family cleansing. Not because of a bonding thing or needing to re-visit my past, but as a reality check. I hear about work troubles. I hear about bills to keep the house in a state of non-repair. I hear about health issues. I hear about relationship problems.

What I don’t hear about is people with a lot of money, amazing freedoms at work and a challenging and creative job complaining. I also don’t hear hollow messages of having to save the world in 140 characters. I also don’t hear promises of amazing things being just around the corner.

I hear a lot of pessimism, I hear a lot of worry about a political shift to the right in every country. I hear worries about the future. I hear about issues I forgot existed, but are insurmountable by people outside our bubble. Not only technical issues, but communication ones and rigid levels of hierarchy.

And that makes me annoyed, so much that I wish for the coming time and year to be different. Working on the web, working for international companies, we should feel grateful for what we have. I count international companies as those with a different language in the office than the one of the country. And those who practice outreach further than the country.

Let’s tackle communication in the coming year after we’ve come out refreshed and confused by the holidays. Let’s listen more, feel more, communicate more, forgive more and assume less. It is hard to fathom that in a world that connected and that communicative, human interaction is terrible. We love to complain about big issues publicly to show that we care. We love to point fingers at who is to blame for a certain problem. We are concerned that people feel worried or unhappy but we fail to reach out and listen when they need us. We are too busy complaining that their problems exist. Not everybody who shares a lot online is happy and open. Sometimes there is a massive disconnect between that online person and the one doing the sharing. Talk more to one another, be honest in your feedback. Forget likes, forget emoticons, forget stickers. Use your words. Use a simple “How are you”. We have a freedom not many people enjoy. We work in a communication medium where chatting with others and being online is seen as work. And we squander it away by being seclusive on one hand and overly sharing on the other.

The corporate rat race of the 80s has been the topic of many a movie about burn-out and a lot of Christmas movies shown right now. A lot is about the seemingly successful business person finding that love and feeling and having friends matters. The 80s are over. The broken model of having to be successful and fast-moving in anything you do is still alive. And now, it is us. Let’s show that we can not only disrupt old and rigid business models. Let’s show that we can also be good people who talk to one another and have careers without walking over others.

Have a happy few days off. I hope to talk to you soon and hear what you have to say.

|

|

Priyanka Nag: My first unconference format conference - AdaCamp Bangalore |

I met the two AdaCamp organizers, Alex and Suki, for the first time at the reception dinner sponsored by 'Web We Want' on the evening of Friday, 21st November 2014. This was the same place where I also met a lot of other amazing ladies. The most interesting part was meeting people I was already connected with virtually but was meeting in person for the first time.

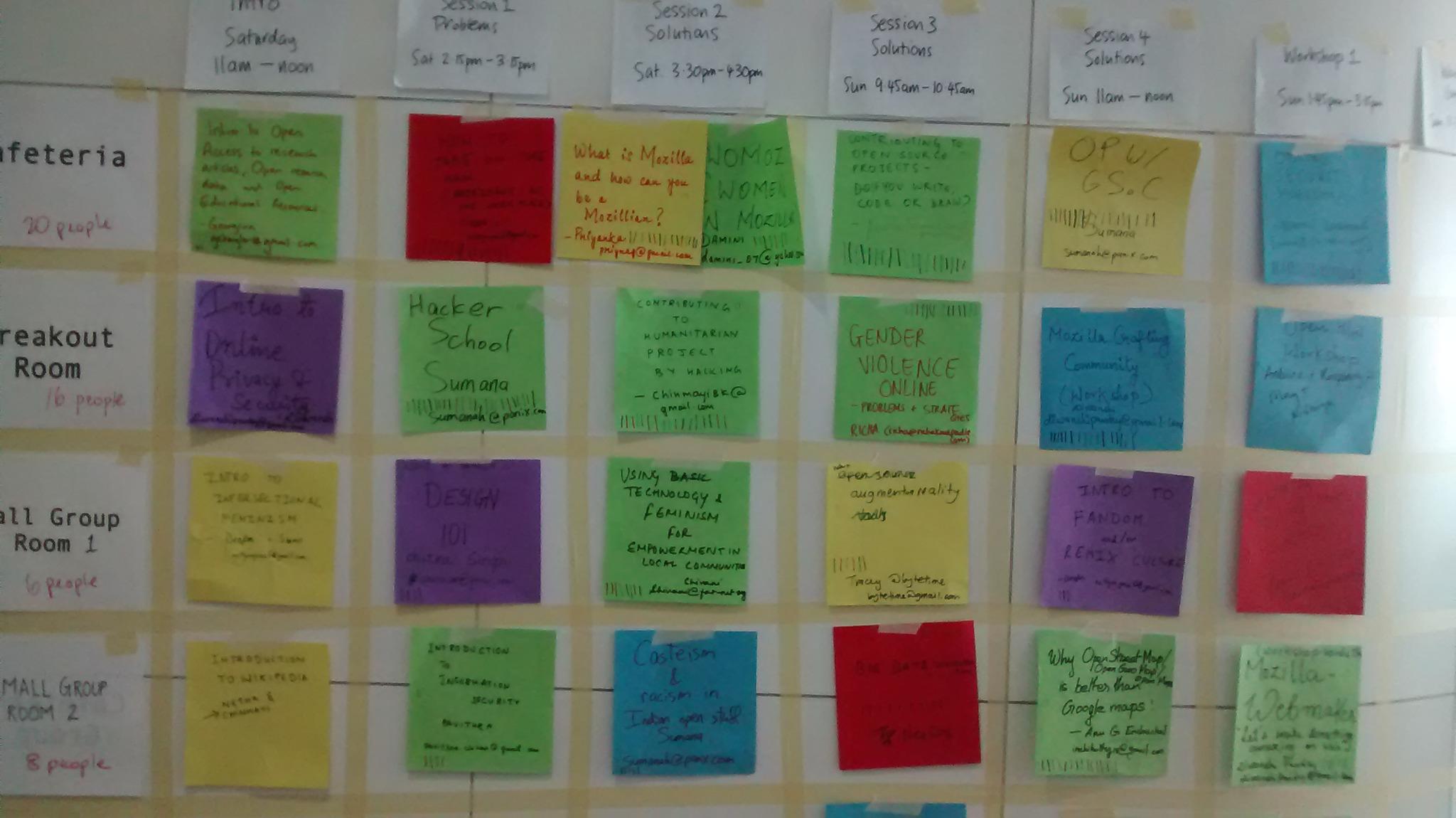

The next two days were one of the most amazing learning experiences of my life! This was my first unconference format conference. When I had initially heard about this concept, I was really worried that this might be a very messy process! Not deciding the tracks of a conference beforehand, and deciding them on the day of those sessions, in a very democratic way, involving everyone in the decision making process...really? To my surprise, this was one of the most organized ways of making the schedule of a conference I have ever witnessed. I have been to several meetings where organizers and expert panels would spend hours deciding and arguing over the structure of an event and its agenda. Giving participants the power to decide, choose and finalize what they want to both teach and learn at a conference was not only an amazing idea; at AdaCamp Bangalore, it was also an amazingly executed idea!

|

| The schedule of AdaCamp Bangalore, decided by participants |

Another woooow moment for me at the AdaCamp was Sumana Harihareswara's session on 'Imposter Syndrome'. I was surprised to see that every woman sitting in that room, attending AdaCamp, agreed at some point that they do suffer from imposter syndrome in some way or the other. The handouts given for this session are something I have preserved to be able to share with my other female colleagues and friends.

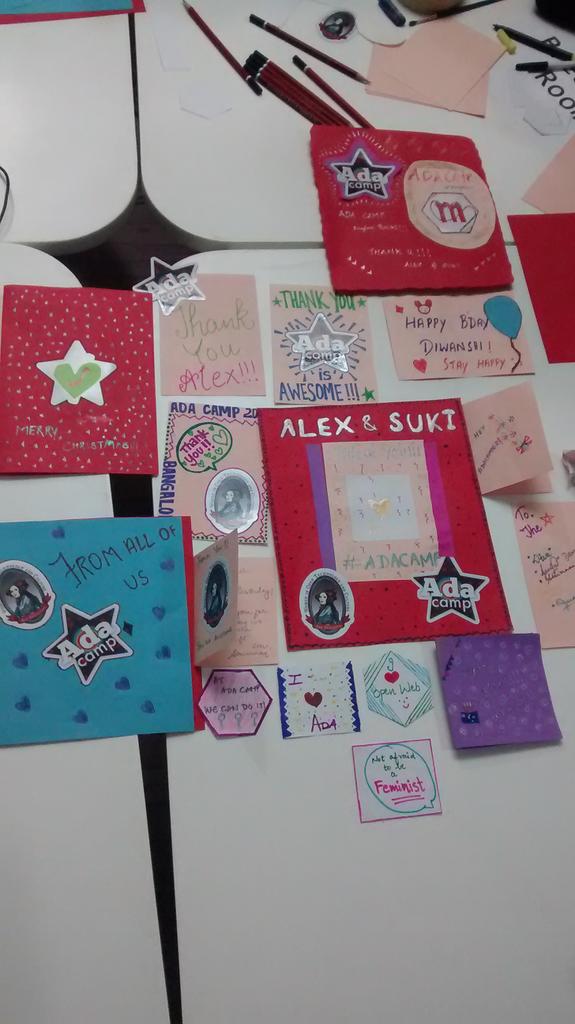

Mozilla had a good presence at AdaCamp. All the participating Mozillians actively proposed several sessions for the two days of the conference and, to my surprise, almost all of our sessions got sufficient appreciation and made it to the final schedule. From a generic introduction session to Mozilla and its community to sessions on Firefox OS as well as Webmaker, we did it all. Diwanshi's session on the Art and Craft community of Mozilla was probably the most colorful session of the event, where all creative hands got together to create some amazing things.

|

| Some of the makes of the Art and Craft session at AdaCamp |

At AdaCamp, I also learnt the skill to organize and handle 'Lightning talks' better. There were so many other sessions, workshops and lunch discussions where I have not only learnt a lot of new things, but have also found so many like-minded people, together in one room.

Among all the great things that this event has taught me, a few which I would definitely like to list down are:

[1] While organizing events, we often don't take care of a lot of things. Since we like our beautiful faces to be clicked and published, we ignore the fact that there might be someone who might not enjoy it the same way. At AdaCamp, they take care of everyone's privacy. You get to choose from three different colored lanyards. Based on your preference on being photographed, you could choose to wear a particular colored lanyard. I totally admired this!

[2] Many of us like to blog, tweet or post about events and learning on different social media platforms, without realizing how much we are supposed to say and where the limit should be drawn to not hamper someone else's privacy. At AdaCamp, we were reminded of these factors. I have never been to another conference where everyone's privacy, their freedom was given such importance.

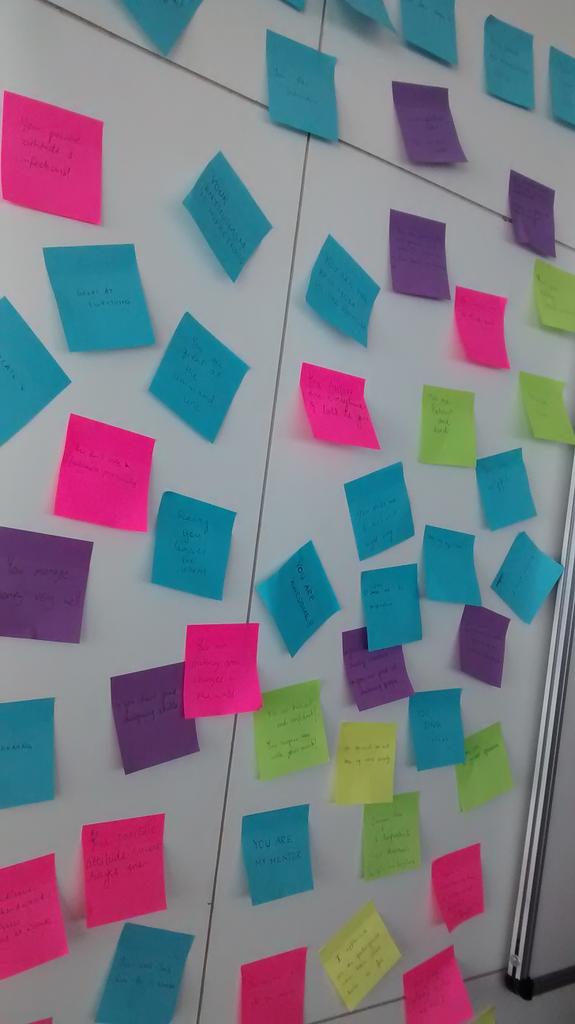

[3] The compliment wall. We all like to be appreciated and during the AdaCamp, we kept being appreciated for two full days. We had a wall where we had initially pinned up a lot of compliments, which we could think of, and later for the next two days, those compliments got down from the wall and reached the deserving person.

|

| The wall of compliments |

I have learnt a lot from AdaCamp and honestly, if I organize events in the future, reflections of those lessons will surely show!

|

| The AdaCampers of Bangalore |

http://priyankaivy.blogspot.com/2014/12/my-first-unconference-format-conference.html

|

|

Michael Kaply: Keyword Search for Christmas |

I just wanted to say thanks to Mozilla for selecting Keyword Search as one of the best add-ons of 2014.

To celebrate, I've finally updated Keyword Search to work better with the search changes in Firefox 34. You can specify separate search engines for about:home and the new tab page and you get images that match the search you are using.

I also added a new feature for international users that allows google.com to be used regardless of the country you are in. This became an issue recently when Google started forcing all searches to country searches even if you start them on google.com.

You can download the latest version here.

Happy holidays!

http://mike.kaply.com/2014/12/24/keyword-search-for-christmas/

|

|

Tantek Celik: What Is Your 2015-01-01 #IndieWeb Personal Site Launch Commitment? |

At last week's Homebrew Website Club Meetup we made #indieweb commitments to each other, to launch a new feature on our personal sites and start using it as of 2015-001 (2015-01-01 for those who prefer Gregorian).
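For anyone unsure how the two notations line up: 2015-001 is the year followed by the day of the year. A minimal sketch of the conversion, assuming GNU date (the -d option is not available in BSD/macOS date); the function name is made up for illustration.

```shell
# Hypothetical helper: print a Gregorian date (YYYY-MM-DD) in ordinal form (YYYY-DDD).
# Assumes GNU date; %j is the zero-padded day of the year.
ordinal_date() {
    date -u -d "$1" +%Y-%j
}

ordinal_date 2015-01-01   # prints 2015-001
```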

Join us. Blog (or tweet if you don't blog) your own personal site launch commitment for the start of 2015. No declaration too small or large. From getting started to fully owning various types of content instead of posting to silos, here's what we've committed to so far:

Getting Started

There are many simple things you can do to get yourself or a friend started on the indieweb, beginning with getting your own domain name, setting up your own online identity, setting up something to post content some place you control, and improving the designs and storage of your posts and archives.

Kartik Prabhu - get @DivyaTaks’s new portfolio site up and running

Katie Johnson - add a blog to katiejohnson.me

Colin Tedford - fix single post display


Bret Comnes - get photo hosting to S3 via micropub working

New Content Types

The best place to start experimenting with new content types, perhaps even those that don't exist in any popular silo, is your own website. The following have committed to posting new content types on their personal site starting 2015-01-01.

Pius Uzamere - publish some VR content

Nick Doty - publish HTML citations with microformats generated by citeproc; at least one h-cite post on my site.

Ari Bader-Natal - moving my projects to my own site

Raise Your IndieMark: Improve Your Independence

There are numerous things you can do to improve the independence of your personal site. The IndieWebCamp community has been documenting many aspects of a personal site, and paths to increased independence for each aspect, or axis, and demarcating them in levels. Together these axes and levels are aggregated into an overall IndieMark metric that you can use to measure the independence of your site.

The following have committed to improving their IndieMark score on one or more axes.

Charles Stanhope - IndieMark Level 1

David Shanske - improved reply-context including author icon and author name, as well as making his WordPress plugin for "kinds do everything I want instead of also having post form".

Brett Slatkin - serve my pages with https

Jon Pierce - static site generator on my site and serve my pages with https

Kyle Mahan - remove http(s) mixed-content warnings to achieve https level 4.

Bear - micropub endpoint setup on personal site to post notes and articles without current 7 manual steps

Own Your Content

Owning your content at stable permalinks that you control is the key building block of the independent web. Every time you create the first and primary version of a post on your own site, and encourage others to reference it instead of a silo post, you are growing the indieweb.

Everyone on this list has committed to owning at least one more type of content completely at stable permalinks on their own personal site rather than a silo. Starting on 2015-001 (or sooner), they have committed to posting a specific type of content directly to their site, never first to a silo, and optionally copying it to a silo.

Tantek Celik - owning favorites instead of Twitter.


Aaron Parecki - owning likes in general.

Fred de Villamil - add favorites to Publify

David Peach - retweeting and favourites / likes working from site, posseing them to Twitter

Ben Roberts - owning checkins

Ryan Barrett - better owning his checkins by improving his UX for creating checkin posts, enabling himself to more easily checkin more often

More IndieWeb Ideas

Want more indieweb ideas to ship? See the following IndieWebCamp guides:

- Getting Started - get on the indieweb

- IndieMark - level up your independence

- Own Your Data - take control of what you create, own your notes, posts etc. rather than sharecropping for the silos.

Whatever you choose, blog it, tweet it, and tag it #indieweb. I'll add more commitments to the above list as they're posted.

Eight days left til we collectively take back a small piece of our web.

http://tantek.com/2014/357/b1/2015-indieweb-site-launch-commitment

|

|

Mozilla Fundraising: Trimming load times worldwide |

https://fundraising.mozilla.org/trimming-load-times-worldwide/

|

|

Monty Montgomery: A Fabulous Daala Holiday Update |

Before we get into the update itself, yes, the level of magenta in that banner image got away from me just a bit. Then it was just begging for inappropriate abuse of a font...

Ahem.

Hey everyone! I just posted a Daala update that mostly has to do with still image performance improvements (yes, still image in a video codec. Go read it to find out why!). The update includes metric plots showing our improvement on objective metrics over the past year and relative to other codecs. Since objective metrics are only of limited use, there's also side-by-side interactive image comparisons against jpeg, vp8, vp9, x264 and x265.

The update text (and demo code) was originally for a July update, as still image work was mostly in the beginning of the year. That update got held up and hadn't been released officially, though it had been discovered by and discussed at forums like doom9. I regenerated the metrics and image runs to use latest versions of all the codecs involved (only Daala and x265 improved) for this official better-late-than-never progress report!

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1111994] HTML 24 hour nag emails do not include invisible header text

- [1108631] Add the due_date field to the “Mozilla PR” product and update the mozpr form to use it

- [860297] “see also” links shown by inline history which link to the current bugzilla installation should show tooltips

- [1112311] Changes to Brand Engagement Initiation form

- [836713] Make group membership reports publicly-available

- [1098291] OPTION response for CORS requests to REST doesn’t allow X-Requested-With

- [1113286] Bugzilla login field should be

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

https://globau.wordpress.com/2014/12/23/happy-bmo-push-day-122/

|

|

Mike Hommey: A new git workflow for Gecko development |

If you’ve been following this blog, you know I’ve been working on a new tool that allows using git with mercurial repositories. See the previous blog posts for some detail if you don’t know what I’m talking about.

Today, a new milestone has been reached. After performing tens of thousands of pushes[1] and having had no server corruption as a result, I am now confident enough with the code to remove the limitation that prevented pushing to remote mercurial servers (by an interesting coincidence, it’s exactly the hundredth commit). Those tens of thousands of pushes allowed me to find and fix a few corner-cases, but they were only affecting the client side.

So here is my recommended setup for Gecko development with git:

- Install mercurial (only needed for its libraries). You probably already have it installed. Eventually, this dependency will go away, because the use of mercurial libraries is pretty limited.

- If you can, rebuild git with this patch applied: https://github.com/git/git/commit/61e704e38a4c3e181403a766c5cf28814e4102e4. It is not yet in a released version of git, but it will make small fetches and pushes much faster.

- Install this git-remote-hg. Just clone it somewhere, and put that directory in your PATH.

- Create a git repository for Mozilla code:

    $ git init gecko
    $ cd gecko

- Set fetch.prune for git-remote-hg to be happier:

    $ git config fetch.prune true

- Set push.default to “upstream”, which I think allows a better workflow to use topic branches and push them more easily:

    $ git config push.default upstream

- Add remotes for the mercurial repositories you pull from:

$ git remote add central hg::http://hg.mozilla.org/mozilla-central -t tip $ git remote add inbound hg::http://hg.mozilla.org/integration/mozilla-inbound -t tip $ git remote set-url --push inbound hg::ssh://hg.mozilla.org/integration/mozilla-inbound (...)

-t tipis there to reduce the amount of churn from the old branches on these repositories. Please be very cautious if you use this on beta, release or esr repositories, because their tip can switch mercurial branches, and can be very confusing. I’m sure I’m not alone having pushed something on a release branch, when actually intending to push on the default branch, and that was with mercurial… Mercurial branches and their multiple heads are confusing, and git-remote-hg, while it supports them, probably makes the confusion worse at the moment. I’m still investigating how to make things better. For Mozilla integration branches, though, it works fine because their tip doesn’t switch heads. - Setup a remote for the try server:

$ git remote add try hg::http://hg.mozilla.org/try

$ git config remote.try.skipDefaultUpdate true

$ git remote set-url --push try hg::ssh://hg.mozilla.org/try

$ git config remote.try.push +HEAD:refs/heads/tip

- Update all the remotes:

$ git remote update

This essentially does git fetch on all remotes, except try.

With this setup, you can e.g. create new topic branches based on the remote branches:

$ git checkout -b bugxxxxxxx inbound/tip

When you’re ready to test your code on try, create a commit with the try syntax, then just do:

$ git push try

This will push whatever your checked-out branch is, to the try server (thanks to the refspec in remote.try.push).

When you’re ready to push to an integration branch, remove any try commit. Assuming you were using the topic branch from above, and did set push.default to “upstream”, push with:

$ git checkout topic-branch

$ git pull --rebase

$ git push

But, this is only one possibility. You’re essentially free to pick your own preferred workflow. Just keep in mind that we generally prefer linear history on integration branches, so prefer rebase to merge (and, git-remote-hg doesn’t support pushing merges yet). I’d recommend setting the pull.ff configuration to “only”, by the way.

Please note that rebasing something you pushed to e.g. try will leave dangling mercurial metadata in your git clone. git hgdebug fsck will tell you about them, but won’t do anything about them, at least currently. Eventually, there will be a git-gc-like command. [Update: actually, rebasing won’t leave dangling mercurial metadata because pushing creates head references in the metadata. There will be a command to clean that up, though.]

Please report any issue you encounter in the comments, or, if they are git-remote-hg related, on github.

1. In case you wonder what kind of heavy testing I did, this is roughly how it went:

- Add support to push a root changeset (one with no parent).

- Clone the mercurial repository and the mozilla-central repository with git-remote-hg.

- Since pushing merges is not supported yet, flatten the history with git filter-branch --parent-filter "awk '{print \$1,\$2}'" HEAD, effectively replacing merges with “simple” commits with the entire merged content as a single patch (by the way, I should have written a fast-import script instead: it took almost a day (24 hours) to apply it to the mozilla-central clone; filter-branch is a not-so-smart shell script, and it doesn’t scale well).

- Reclone those filtered clones, such that no git-remote-hg metadata is left.

- Tag all the commits with numbered tags, starting from 0 for the root commit, with the following command:

git rev-list --reverse HEAD | awk '{print "reset refs/tags/HEAD-" NR - 1; print "from", $1}' | git fast-import

- Create empty local mercurial repositories and push random tags with increasing number, with variations of the following command:

python -c 'import random; print "\n".join(str(i) for i in sorted(random.sample(xrange(n), m)) + [n])' | while read i; do git push -f hg-remote HEAD-$i || break; done

[ Note: push -f is only really necessary for the push of the root commit ]

- Repeat and rinse, with different values of n, m and hg-remote.

- Also arrange the above test to push to multiple mercurial repositories, such that pushes are performed with both commits that have already been pushed and commits that haven’t. (i.e. push A to repo-X, push B to repo-Y (which doesn’t have A), push C to repo-X (which doesn’t have B), etc.)

- Check that all attempts create the same mercurial changesets.

- Check that recloning those mercurial repositories creates the same git commits.

|

|

Nick Cameron: rustaceans.org |

Some of the technologies I was interested in were the modern, client-side, JS frameworks (Ember, Angular, React, etc.), node.js, and RESTful APIs. I ended up using Ember, node.js, and the GitHub API. I had fun learning about these technologies and learnt a lot, although I don't think I did more than scratch the surface, especially with Ember, which is HUGE.

What made the project a little bit more interesting is that I have absolutely no interest in getting involved with user credentials - there is simply too much that can go wrong, security-wise, and no one wants to remember another username and password. To deal with this, I observed that pretty much everyone in the Rust community already has a GitHub account, so why not let GitHub do the hard work with security and logins, etc. It is possible to use GitHub authentication on your own website, but I thought it would be fun to use pull requests to maintain user data in the phone book, rather than having to develop a UI for adding and editing user data.

The design of rustaceans.org follows from the idea of making it pull request based: there is a repository, hosted on GitHub, which contains a JSON file for each user. Users can add or update their information by sending a pull request. When a PR is submitted, a GitHub hook sends a request to the rustaceans.org backend (the node.js bit). The backend does a little sanity checking (most importantly that the user has only updated their own data), then merges the PR, then updates the backing database with the user's new data (the db could be considered a cache for the user data repository, it can be completely rebuilt from the repo when necessary).
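The most important sanity check described above (a user may only update their own data) can be sketched as a small function. This is a hypothetical illustration, not the actual rustaceans.org backend: the function name and the "data/<username>.json" layout are assumptions made for the example.

```javascript
// Hypothetical layout: each user's data lives in "data/<github-username>.json".
// Returns true only when the pull request changes exactly one file and that
// file belongs to the pull request's author.
function onlyTouchesOwnFile(prAuthor, changedFiles) {
  const expected = "data/" + prAuthor.toLowerCase() + ".json";
  return changedFiles.length === 1 &&
         changedFiles[0].toLowerCase() === expected;
}

// A PR from nick29581 that edits only his own file passes the check...
const ownFileOnly = onlyTouchesOwnFile("nick29581", ["data/nick29581.json"]);
// ...while one that also edits somebody else's file does not.
const touchesOthers = onlyTouchesOwnFile("nick29581",
  ["data/nick29581.json", "data/someone-else.json"]);
```

A PR that fails a check like this would simply be left unmerged for a human to look at.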

The backend exposes a web service to access the db. This provides two functions as an http API (I would say it is RESTful, but I'm not 100% sure that it is) - search for a user with some string, and get a specific user by GitHub username. These just pull data out of the database and return it as JSON (not quite the same JSON as users submit, the data has been processed a little bit, for example, parsing the 'notes' field as markdown).
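The two functions of that API can be sketched over an in-memory list standing in for the database. Everything here is an assumption for illustration: the field names, the function names and the sample records are made up, and the real service answers over http rather than through direct calls.

```javascript
// In-memory stand-in for the backing database; records and fields are made up.
const users = [
  { username: "nick29581", name: "Nick Cameron", notes: "works on Rust" },
  { username: "example-user", name: "Example User", notes: "learning Rust" }
];

// Get a specific user by GitHub username, or null when there is no such user.
function getUser(db, username) {
  return db.find(function (u) { return u.username === username; }) || null;
}

// Search for users whose username, name or notes contain the query string,
// ignoring case.
function searchUsers(db, query) {
  const q = query.toLowerCase();
  return db.filter(function (u) {
    return [u.username, u.name, u.notes].some(function (field) {
      return field.toLowerCase().includes(q);
    });
  });
}

const found = getUser(users, "nick29581");
const matches = searchUsers(users, "rust");
```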

The frontend is the actual rustaceans.org webpage, which is just a small Ember app, and is a pretty simple UI wrapper around the backend web service. There is a front page with some info and a search box, and you can use direct links to users, e.g., http://www.rustaceans.org/nick29581.

All the implementation is pretty straightforward, which I think verifies the design to some extent. The hardest part was learning the new technologies. While using the site is certainly different from a regular setup where you would modify your own details on the site, it seems to be pretty successful. I've had no complaints, and we have a fair number of rustaceans in the db. Importantly, it has needed very little manual intervention - users presumably understand the procedures, and automation is working well.

Please take a look! And if you know any of those technologies, have a look at the source code and let me know where I could have done better (also, patches and issues are very welcome). And of course, if you are part of the Rust community, please add yourself!

http://featherweightmusings.blogspot.com/2014/12/rustaceansorg.html

|

|

Pomax: So you're thinking about using React |

I was in the same boat: at the Mozilla Foundation we're considering using React for the next few months as client-side framework of choice for new apps, and that means learning a new technology, because you can't make a decision on something you don't understand. So I took an app I was unhappy with due to framework choice, and rewrote the whole thing in React, taking notes on everything that felt weird, or didn't click.

I ended up writing about 6000 words worth of thoughts on React by the time I was done, and it took another 3000 or so to figure out what I had actually learned, and why React seemed so foreign at first, but so right once I got familiar with it. It's been great learning a new thing, and even better discovering that it's a thing I can love, but there's a reason for that, and I'd like to distill my close to 10k words into something that you can read, so that you might understand where React's coming from.

So: if you're used to other frameworks (whether they're MVC, MVVM, some other paradigm or even just plain JS and HTML templating engines), and you feel like React is doing something very weird, don't worry: if you treat it as HTML+JS, React is really, really weird. However, the reason for it is actually you: React is not about doing things as HTML+JS, it's about writing applications using plain old object oriented programming... The objects just happen to be able to render themselves as UI elements, and the UI happens to be the browser. The logic behind what you're programming, as such, depends on knowing that you're doing OOP, and making sure you're thinking about modelling your elements and interactions based on object interactions as you would in any other OOP design setting.

The bottom line: React has almost nothing to do with HTML. And for a web framework, that's weird. Although only a little, as we'll see in the rest of this post.

The best way I can think of to get to business is with a table that compares the various aspects of programming to how you express those things when you're using HTML, versus how you express those things when you're using React's object model. I'm calling it JSX because that's what React calls it, but really it's "React's object model".

So, here goes:

| concepts | HTML | JSX |
|---|---|---|
| "thing" to think in terms of | DOM elements | components (React XML elements) |
| Is this MVC? | there is no explicit model | components wrap MVC |
| internal structure | DOM tree | components tree |
| scope | global (window) | local component and this.refs only |
| contextual id | id attribute | ref attribute |
| hashcode | n/a | key attribute |
| mutable properties | HTML attributes | internal object properties |
| bootstrap properties | n/a | component props |
| property types | strings | any legal JS construct |
| internal state | ... | component state |
| style assignment | class attribute | className attribute |
| hierarchy accessors | DOM child/parent access | local access only |
| content comes from... | any mix of DOM + JS | purely the component state |
| content manipulation | DOM API | setState |

I'm going to be running through each of these points in order (mostly), and I'd strongly advise you not to skip to just the step "you care about". Unless you gave up on the notion that you're writing a webpage rather than an object oriented application that happens to render its UI elements as inaccessible HTML, you're going to want to read all of them. In fact, humour me: even if you have, read every point anyway. They build on each other.

The "thing" that you're working on

In a traditional web setting, you have the data, the markup around that data, the styling of that markup, and the interaction logic that lets you bridge the gap between the data and the user. These things are typically your data, your HTML, your CSS and your JavaScript, respectively.

That is not how you model in React. React is far more like a traditional programming language, with objects that represent your functional blocks. Each object has an initial construction configuration, a running state, and because we're working on the web, each object has a .render() function that will produce a snapshot of the object, serialized into HTML that is "done" as far as React is concerned. Nothing you can do to it will be meaningful for the object that generated it. React could, essentially, be anything. If it rendered to native UI or to GTK or Java Swing, you'd never know, since React's written in a way that everything your components might conceivably want to do is contained in your object's code.

It's also much more like a traditional OOP environment in that objects don't "take things from other objects". Where in HTML+JS you might do a document.querySelector("#maincontainer div.secondary ol li.current") and then manipulate what comes rolling out of that, in React, you delegate. There is no "global" context to speak of, so you'd have a Main component, containing a Secondary component, and that's all Main can see. If it wants to initiate things on "the current selected list item", it tells the contained Secondary element to take care of it, without caring how it takes care of it. The code for doing things lives inside the things that do the doing.

This is pitfall number one if you're starting with React: you're not writing HTML, you're doing object oriented programming with a to-HTML-render pipeline step at the end.

Is this MVC?

If you think in terms of Model/View/Controller separation (and let's be clear here: that's just one of many possible ways to model data visualisation and interaction), you might be wondering if React is an MVC framework, to which the only real answer is "that question has assumptions that don't really apply here" (which is why comparing React to Ember or Angular or the like doesn't really make all that much sense). React is an object oriented modeling framework, whereas MVC frameworks are MVC frameworks.

If we absolutely have to express React in terms of MVC: each component is responsible for housing all MVC aspects, in isolation of every other component:

- the model aspect is captured as the component properties (its config) and state (its current data),

- the view aspect is captured as the render function, generating new views every time either the configuration or state updates, and

- the control aspect is covered by all the functions defined on the object that you (or React) intend to be called either through interaction with the component, or as public API, to be called by other components.

The internal structure of "things"

In HTML+JS, the structure of your functional blocks is just "more HTML". In React, you're using a completely different thing. It's a little bit different in terms of what it looks like (you should be entirely forgiven for thinking you're working with HTML except using XML syntax) but it's completely different in what it is: React uses a syntax that lets you define what look like XML structures, which are transformed into key/value maps. It's these key/value maps that React uses internally as the canonical representation of your object. That's a lot of words for the summary "it's not HTML, just a sort of familiar syntax to make development easy".

Let's unpack that a little more: the JSX syntax uses tags that map to HTML elements during the final render pipeline step. They are, however, most emphatically not real HTML elements, and certainly not elements that end up being used in the browser. The reason here is that React uses an internal snapshot representation that lets it perform diffs between successive render calls. Each call generates a snapshot, a new snapshot is structurally compared to the previous one, and differences between them are translated into transforms that the browser can selectively apply to the snapshot's associated active DOM. It's super fast, but also means that the JSX you write has almost nothing to do with what the browser will end up using as DOM.
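To make the snapshot-and-diff idea concrete, here is a deliberately tiny sketch that compares two flat key/value snapshots and lists the changes that would need applying. It is not React's actual algorithm (which works on trees of elements); the function name and the patch format are invented for this illustration.

```javascript
// Compare two flat key/value snapshots and return the list of changes needed
// to turn the previous snapshot into the next one.
function diffSnapshots(prev, next) {
  const patches = [];
  for (const key of Object.keys(next)) {
    if (!(key in prev)) {
      patches.push({ op: "add", key: key, value: next[key] });
    } else if (prev[key] !== next[key]) {
      patches.push({ op: "update", key: key, value: next[key] });
    }
  }
  for (const key of Object.keys(prev)) {
    if (!(key in next)) {
      patches.push({ op: "remove", key: key });
    }
  }
  return patches;
}

// Two successive "render" results of the same object.
const before = { title: "Hello", className: "plain" };
const after = { title: "Hello", className: "highlighted", tabIndex: 0 };

// Only the differences come out; the unchanged title is never touched.
const patches = diffSnapshots(before, after);
```

The point of the sketch is the shape of the process: render output is data, and only the difference between two outputs is pushed to the browser.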

This is pitfall number two, and really is just a rephrasing of pitfall number one: React objects aren't "backed by HTML", nor is the browser DOM "backed by a React object". What the browser shows you is just snapshots of React objects throughout their life cycle.

HTML is unscoped, React objects are scoped as per OOP rules.

Since we have no DOM, and we're doing proper OOP, React components know about their own state, and only their own state. To get around the complete isolation, the normal OOP approaches apply for making components aware of things outside of their scope: they can be passed outside references during creation (i.e. these are constructor arguments; the constructor syntax just happens to look like, but conceptually is not, markup), or they can be passed in later by calling functions on the object that let you pass data in, and get data back as function return or via callbacks.

Which brings us to pitfall number three: If you're using window or some other designated master global context in React objects, you're probably doing something wrong.

Everything you do in React you can achieve without the need for a global context. A component should only need to care about the things it was given when it was born, and the immediately visible content it has as described in its render() function. Which brings us to the next section.

How do we find the right nodes?

In HTML the answer is simple: querySelector("#all.the[things]"). In React the answer is equally simple, as long as we obey OOP rules: We don't know anything about "higher up" elements and we only know about our immediate children. That last bit is important: we should not care about our children's children in the slightest. Children are black boxes and if we need something done to our children's children, we needSomethingDone(), so we call our children's API functions and trust that they do the right thing, without caring in the slightest how they get them done.

That's not to say you might not want to "highlight the input text field when the main dialog gains focus" but that's the HTML way of thinking. In React, when the dialog gains focus you want to tell all children that focus was gained, and then they can do whatever that means to them. If one of those children houses the input, then it should know to update its state to one where the input renders as highlighted. React's diffing updates will then take care of making sure it's still the same DOM element that gets highlighted despite the render() call outputting a new snapshot.

So, how do we get our own children? In HTML we can use the globally unique id attribute; the same concept, scoped locally to React objects, is the ref attribute: an element given ref="abcd" in our JSX is available as this.refs.abcd in our code. Again: there is no HTML. Similarly, we can select any other React XML element to work with through this.refs, so if we have a React element with ref="md" we can reach it as this.refs.md and call its functions so that it does what we need, such as this.refs.md.setTitle("Enhanced modal titles are a go");.

The secret sauce in React's diffing: elements have hashcodes, and sometimes that's on you.

Because React generates new XML tree snapshots every time render() gets called, it needs a way to order elements reliably, for which it uses hashcodes. These are unique identifiers local to the component they're used in that let React check two successive snapshots for elements with the same code, so that it can determine whether they moved around, whether any attributes changed, etc. etc.

For statically defined JSX, React can add these keys automatically, but it can't do that for dynamic content. When you're creating renderable content dynamically (and the only place you'll do this is in render(); if you do it anywhere else, you're doing React wrong) you need to make sure you add key attributes to your JSX. An example:

    render: function() {
      // assumes each obj carries a unique id to use as its key
      var list = this.state.mylist.map(function(obj) {
        return <li key={obj.id}>{obj.text}</li>;
      });
      return <ul>{list}</ul>;
    }

If we don't add key attributes in this JSX, React will see successive snapshots with unmarked elements, and will simply key them based on their position. This is fairly inefficient, and can lead to situations where lists are generated without a fixed order, making React think multiple elements changed when in fact elements merely moved around. To prevent this needlessly expensive kind of updating, we explicitly add the key attribute for each obj in our list mylist so that they're transformed into XML elements that will eventually end up being rendered as an HTML list item in the browser, while letting React see actual differences in successive snapshots.

If, for instance, we remove the first element in mylist because we no longer need it, none of the other elements change, but without key attributes React will see every element as having changed, because the position for each element has changed. With key attributes, it'll see that the elements merely moved and one of them disappeared.
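The difference between positional and explicit keys can be shown with a small comparison function. This is a simplified sketch rather than React's real reconciliation code: the function name is invented, and "changed" here just means "the element found under the same key has different text, or there was no element under that key before".

```javascript
// Compare two list snapshots. keyOf decides how an item is identified:
// by its position, or by an explicit key.
function compareSnapshots(prev, next, keyOf) {
  const prevByKey = new Map(prev.map(function (item, idx) {
    return [keyOf(item, idx), item];
  }));
  const nextKeys = new Set(next.map(function (item, idx) {
    return keyOf(item, idx);
  }));
  let changed = 0;
  next.forEach(function (item, idx) {
    const old = prevByKey.get(keyOf(item, idx));
    if (old === undefined || old.text !== item.text) changed++;
  });
  let removed = 0;
  for (const key of prevByKey.keys()) {
    if (!nextKeys.has(key)) removed++;
  }
  return { changed: changed, removed: removed };
}

const mylist = [
  { id: "a", text: "one" },
  { id: "b", text: "two" },
  { id: "c", text: "three" }
];
const withoutFirst = mylist.slice(1); // remove the first element

// Keyed by position: every remaining element looks like it changed.
const byPosition = compareSnapshots(mylist, withoutFirst,
  function (item, idx) { return idx; });
// Keyed explicitly: nothing changed, one element disappeared.
const byKey = compareSnapshots(mylist, withoutFirst,
  function (item) { return item.id; });
```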

The simplest key when generating content, if you know your list stays ordered, is to make it explicit that you really do just want the position that React makes use of when you forget to add keys:

    render: function() {
      var list = this.state.mylist.map(function(obj, idx) {
        return <li key={idx}>{obj.text}</li>;
      });
      return <ul>{list}</ul>;
    }

However, while this will make React "stop complaining" about missing keys, this will also almost always be the "wrong" way to do keys, due to what React will see once you modify the list, rather than only ever appending to it. Generally, if you have dynamic content, spend a little time thinking about what can uniquely identify elements in it. Even if there's nothing you can think of, you can always use an id generator dedicated to just those elements:

    render: function() {
      var list = this.state.mylist.map(function(obj) {
        return <li key={obj.key}>{obj.text}</li>;
      });
      return <ul>{list}</ul>;
    },
    // immediately invoked, so generateKey is the inner counter function
    generateKey: (function() {
      var id = 1;
      return function() { return id++; };
    }()),
    addEntry: function(obj) {
      obj.key = this.generateKey();
      this.setState({
        mylist: this.state.mylist.concat([obj])
      });
    }

Now, every time we add something that needs to go in our list, we make sure that it has a properly unique key even before the object makes it into the list. If we now modify the list in any way, the objects retain their keys and React will be able to use its optimized diffing and stay nice and fast.

Before we move on, a warning: keys uniquely identify React XML elements, which also means that if you use key attributes, but some elements share the same value, React will treat these "clearly different" elements as "the same element", and the last element in a list of same-key elements will make it into the final browser DOM. The reason here is that keys are literally JavaScript object keys, and are used by React to build an internal element object of the standard JavaScript kind, forming

    {
      key1: element,
      key2: element,
      ...
    }

React runs through the elements one by one and adds key:element bindings to this object, so when it sees a second (or third, or fourth, etc) element with key1 as key value, then the result of React's obj[key]=element is that the previously bound element simply gets clobbered.
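The clobbering is ordinary JavaScript object behaviour, which a few lines of plain code can demonstrate. The function and the sample elements below are made up for the illustration; only the obj[key]=element assignment mirrors what is described above.

```javascript
// Build a key -> element object the way the text describes: one assignment
// per element, in order.
function buildElementMap(elements) {
  const byKey = {};
  for (const el of elements) {
    byKey[el.key] = el; // a later element with the same key overwrites the earlier one
  }
  return byKey;
}

// Three "clearly different" elements that all share the same key.
const items = [
  { key: 1, text: "first" },
  { key: 1, text: "second" },
  { key: 1, text: "third" }
];

const survivors = buildElementMap(items);
const survivorCount = Object.keys(survivors).length; // only one binding is left
const survivorText = survivors[1].text;              // and it is the last element
```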

This, for instance, is a list with only a single item, no matter how many items are actually in mylist:

    render: function() {
      var key = 1;
      var list = this.state.mylist.map(function(obj) {
        return <li key={key}>{obj.text}</li>;
      });
      return <ul>{list}</ul>;
    }

Mutable properties

We can be brief here: in HTML, all element attributes are mutable properties. In React, the only true mutable properties you have are the normal JavaScript object property kind of properties.

Immutable properties

This one's stranger, if you're used to HTML, because there aren't really any immutable properties when it comes to HTML elements.

In React, however, there are two kinds of immutable properties: the construction properties and the object's internal state. The first is used to "bootstrap" a component, and you can think of it as the constructor arguments you pass into a creation call, or the config or options object that you pass along during construction. These values are "set once, never touch". Your component uses them to set its initial state, and then after that the properties are kind of done. Except to reference the initial state (like during a reset call), they don't get used again. Instead, you constantly update the object's "state". This is the collection that defines everything that makes your object uniquely that object in time. An example:

    ParentElement = {
      ...
      render: function() {
        var spectype = 3;
        return <ChildElement name="..." type={spectype} />;
      },
      ...
    }

and

    ChildElement = {
      ...
      getInitialState: function() {
        return {
          name: '',
          type: -1
        };
      },
      componentDidMount: function() {
        this.setState({
          name: this.props.name,
          type: this.props.type
        });
      },
      render: function() {
        return (
          <div>
            {this.state.name}
            ({this.state.type})
          </div>
        );
      },
      ...
    }

We see the child element, with an initial "I have no idea what's going on" state defined in the getInitialState function. Once the element's been properly created and is ready to be rendered (at which point componentDidMount is called) it copies its creation properties into its state, setting up its "true" form, and after that the role of this.props is mostly over. Rendering relies on tapping into this.state to get the to-actually-show values, and we can modify the element's state through its lifecycle by using the setState() function:

    ChildElement = {
      ...
      render: function() {
        return <span onClick={this.rename}>{this.state.name}</span>;
      },
      rename: function(event) {
        var newname = prompt("Please enter a new name");
        this.setState({ name: newname });
      },
      ...
    }

The chain of events here is: render() -> the user clicks on the name -> rename() is called, which prompts for a new name -> the element's state is updated so that name is this new name -> render() is called because the element's state was updated -> React compares the new render output to the old output and sees a string diff in the rendered name, which it then applies to the browser DOM.
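The same props-then-state life cycle can be mimicked in plain JavaScript, without React, to show how the pieces relate. MiniComponent is a made-up stand-in, not a React API: its render() returns a string instead of elements, and its setState() re-renders immediately.

```javascript
// A made-up, minimal stand-in for a component: props are fixed at creation,
// state is what render() reads, and setState() triggers a new render.
class MiniComponent {
  constructor(props) {
    this.props = Object.freeze(Object.assign({}, props)); // "set once, never touch"
    this.state = { name: this.props.name, type: this.props.type };
    this.renderCount = 0;
    this.output = this.render();
  }

  setState(partial) {
    // Merge the update into a fresh state object, then take a new snapshot.
    this.state = Object.assign({}, this.state, partial);
    this.output = this.render();
  }

  render() {
    this.renderCount++;
    return this.state.name + " (" + this.state.type + ")";
  }

  rename(newName) {
    this.setState({ name: newName });
  }
}

const child = new MiniComponent({ name: "first", type: 3 });
child.rename("second");
// child.output now reflects the new state, while child.props.name still
// holds the creation-time value.
```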

What can I pass as property values?

In HTML the answer is "strings" - it doesn't matter what you pass in, if you use it as HTML attribute content, it becomes a string.

In React, the answer is "everything". If you pass in a number, it stays a number. If you pass in a string, it stays a string. But, more importantly, if you pass in an object or function reference, it stays an object or function reference and that's how owning elements can set up meaningful deep bindings with child or even descendant elements.
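A quick way to see the difference is to push the same values through a string conversion, which is what ends up happening to HTML attribute content, and compare the result with the untouched object. The helper name and the sample values are invented for the illustration, and String() is only a stand-in for what the browser does when an attribute is set.

```javascript
// Simulate "everything becomes a string" for HTML attribute content.
function asHtmlAttributes(props) {
  const attrs = {};
  for (const name of Object.keys(props)) {
    attrs[name] = String(props[name]);
  }
  return attrs;
}

const props = {
  count: 3,
  config: { deep: true },
  onDone: function () { return "done"; }
};

const attrs = asHtmlAttributes(props);
// As attributes: the number is now the string "3" and the object has
// collapsed into "[object Object]".
// As plain props: count is still a number and onDone is still callable.
const stillCallable = props.onDone();
```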

If there is no HTML, how do I style my stuff??

React elements can use the className property so that you can use regular CSS styling on them. Anything passed into the className property gets transformed to the HTML class attribute at the final step of rendering.

What if I need ids?

You don't. Even if you have a single element that will always be the only single instance of that React object, ever, you could still be wrong. id attributes cannot be guaranteed in an environment where every component only knows about itself, and its immediate children. There is no global scope, so setting a global scope identifier makes absolutely no sense.

But what if I need to tie functionality to what-I-need-an-id-on element?

Add it:

    Element = {
      ...
      render: function() {
        return <div className="top-level-element">........</div>;
      },
      ...
    }

Surprise: you can querySelect your way to this element just fine with a .top-level-element query selector.

But that's slower than ids!

Not in modern browsers, no. Let's move on.

How do I access elements hierarchically?

As should be obvious by now, we don't have querySelector available, but we do have a full OOP environment at our fingertips, so the answer is delegate. If you need something done to a descendant, tell your child between you and that descendant to "make it happen" and rely on their API function to do the right thing. How things are done is controlled by the things that do the actual doing, but that doesn't mean they can't offer an API that can be reached: X:Y.doIt() -> Y.doIt() { this.refs.Z.doIt(); } -> Z.doIt() { this.refs.actualthing.doIt(); }
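Written out as plain JavaScript classes, the X -> Y -> Z chain looks like the sketch below. The classes are stand-ins for components (none of this is React API), and each one only ever talks to its own immediate child through a refs object, as in the chain above.

```javascript
// The thing that finally does the work.
class ActualThing {
  constructor() { this.done = false; }
  doIt() { this.done = true; }
}

// Z only knows about its own child, the actual thing.
class Z {
  constructor() { this.refs = { actualthing: new ActualThing() }; }
  doIt() { this.refs.actualthing.doIt(); }
}

// Y only knows about Z, not about what Z contains.
class Y {
  constructor() { this.refs = { Z: new Z() }; }
  doIt() { this.refs.Z.doIt(); }
}

// X only knows about Y and asks it to "make it happen".
class X {
  constructor() { this.refs = { Y: new Y() }; }
  makeItHappen() { this.refs.Y.doIt(); }
}

const x = new X();
x.makeItHappen();
```

X never reaches past Y in its own code; the request travels down one level at a time.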

Similarly, if you need parents to do things, then you need to make sure those parents construct the element with a knowledge of what to call:

    ParentElement = {
      ...
      render: function() {
        return <ChildElement doit={this.doit} />;
      },
      doit: function() {
        ...
      },
      ...
    }

and

    ChildElement = {
      ...
      render: function() {
        return <button onClick={this.props.doit}>do it</button>;
      },
      ...
    }

What else is there?

This kind of covers everything in a way that hopefully makes you realise that React is a proper object oriented programming approach to page and app modelling, and that it has almost nothing to do with HTML. Its ultimate goal of course is to generate HTML that the browser knows how to show and work with, but that view and React's operations are extremely loosely coupled, and mostly work as a destructive rendering of the object: the DOM that you see rendered in the browser and the React objects that lead to that DOM are not tied together except with extremely opaque React hooks that you have no business with.

More posts will follow because this is hardly an exhausted topic, but in the mean time: treating React as a proper OOP environment actually makes it a delight to work with. Treating it as an HTML framework is going to leave you fighting it for control nonstop.

So remember:

- you’re not writing HTML, you’re doing object oriented programming with a to-HTML-render pipeline step at the end.

- React objects aren’t “backed by HTML”, nor is the browser DOM “backed by a React object”. What the browser shows you is just snapshots of React objects throughout their life cycle.

- Your objects have creation properties, but you should always render off of the "current state". If you need to copy those properties into your state right after creation, do. Then forget they're even there.


|

|

Armen Zambrano: Run mozharness talos as a developer (Community contribution) |

All you have to add is the following:

--cfg developer_config.py

--installer-url http://ftp.mozilla.org/pub/mozilla.org/firefox/nightly/latest-trunk/firefox-37.0a1.en-US.linux-x86_64.tar.bz2

To read more about running Mozharness locally go here.

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/lhOZ0fEK6mk/run-mozharness-talos-as-developer.html

|

|

Mozilla Open Policy & Advocacy Blog: Spotlight on Free Press: A Ford-Mozilla Open Web Fellow Host Organization |

{This is the final in a series of posts highlighting the Ford-Mozilla Open Web Fellows program host organizations. Free Press has been at the forefront of informing tech policy and mobilizing millions to take action to protect the Internet. This year, Free Press has been an instrumental catalyst in the fight to protect net neutrality. We are thrilled to have Free Press as a host organization, and eager to see the impact from their Fellow.}

Spotlight on Free Press: A Ford-Mozilla Open Web Fellow Host Organization

By Amy Kroin, editor, Free Press

In the next few months, the Federal Communications Commission will decide whether to surrender the Internet to a handful of corporations — or protect it as a space that’s shared and shaped by millions of users.

At Free Press, we believe that protecting everyone’s rights to connect and communicate is fundamental to advancing social change. We believe that people should have the opportunities to tell their own stories, hold leaders accountable and participate in policy making. And we know that the freedom to access and share information is essential to this.

But these freedoms are under constant attack.

Take Net Neutrality. In May, FCC Chairman Tom Wheeler released rules that would have allowed discrimination online and destroyed the Internet as we know it. Since then, Free Press has helped lead the movement to push Wheeler to ditch his rules — and safeguard Net Neutrality over the long term. Our nationwide mobilization efforts and our advocacy within the Beltway have prompted the president, leaders in Congress and millions of people to speak out for strong open Internet protections. Wheeler’s had to go back to the drawing board — and plans to release new rules in 2015.

Though we’ve built amazing momentum in our campaign, our opposition — AT&T, Comcast, Verizon and their hundreds of lobbyists — is not backing down. Neither are we. With the help of people like you, we can ensure the FCC enacts strong open Internet protections. And if the agency goes this route, we will do everything we can to defend those rules and fight any legal challenges.

But preserving Net Neutrality is only part of the puzzle. In addition to maintaining open networks for Internet users, we also need to curb government surveillance and protect press freedom.

In the aftermath of the Edward Snowden revelations, we helped launch the StopWatching.Us coalition, which organized the Rally Against Mass Surveillance and is pushing Congress to pass meaningful reforms. In 2015, we’re ramping up our advocacy and will cultivate more champions in Congress.

The widespread spying has had a particular impact on journalists, especially those who cover national security issues. Surveillance, crackdowns on whistleblowers and pressure to reveal confidential sources have made it difficult for many of these reporters to do their jobs.

Free Press has worked with leading press freedom groups to push the government to protect the rights of journalists. We will step up that work in the coming months with the hiring of a new journalism and press freedom program director.

This is just a snapshot of the kind of work we do every day at Free Press. We’re seeking a Ford-Mozilla Open Web Fellow with proven digital skills who can hit the ground running. Applicants should be up to speed on the latest trends in online organizing and should have experience using social media tools to advance policy goals. Candidates should also be accustomed to working within a collaborative workplace.

To join our team of Internet freedom fighters, apply to become a Ford-Mozilla Open Web Fellow at Free Press. We value excellence and diversity in our team. We strongly encourage applications from women, people of color, persons with disabilities, and lesbian, gay, bisexual and transgender individuals.

Be a Ford-Mozilla Open Web Fellow. Application deadline is December 31, 2014. Apply at https://advocacy.mozilla.org/


Tantek Celik: Happy Winter Solstice 2014! Ready For More Daylight Hours. |

The sun has set here in the Pacific Time Zone on the shortest northern hemisphere day of 2014.

I spent it at home, starting with an eight mile run at an even pace through Golden Gate Park to Ocean Beach and back with a couple of friends, then cooking and sharing brunch with them and a few more.

It was a good way to spend the minimal daylight hours we had: doing positive things, sharing genuinely with positive people who themselves shared genuinely.

Of all the choices we make day to day, I think these may be the most important we have to make:

- What we choose to do with our time

- Who we choose to spend our time with

These choices are particularly difficult because:

- There are so many possibilities.

- So many people will tell you what you should do and who you should spend time with, often only repeating what they’ve been told, or speaking to their advantage rather than yours.

- You have to explicitly choose, or others will choose for you.

When you find those who have explicitly chosen to spend time with you, doing positive things, and who appreciate that you have explicitly chosen (instead of being pressured by obligation, guilt, entitlement etc.) to spend time with them, hug them and tell them you’re glad they are there.

I’m glad you’re here.

Happy Winter Solstice and may you spend more of your hours doing positive things, and genuinely sharing (without pressures of obligation, guilt, or entitlement) with those who similarly genuinely share with you.

Here’s to more daylight hours, both physically and metaphorically.

http://tantek.com/2014/355/b1/happy-winter-solstice-more-daylight
