You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Original source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the contents of the original page. This feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.


Mozilla Open Policy & Advocacy Blog: Thank you, Mr. President.

Today, Mozilla joined with dozens of advocacy organizations and companies to urge President Obama to take action on net neutrality in response to his recent vocal support for fair and meaningful net neutrality rules. Expressing views echoed by millions of Americans, the groups urged the FCC to stand against fast lanes for those who can afford it and slow lanes for the rest of us. Below find the full text of the letter.

***

Mr. President:

Earlier this week, you made a strong statement in support of net neutrality by saying:

“One of the issues around net neutrality is whether you are creating different rates or charges for different content providers. That’s the big controversy here. So you have big, wealthy media companies who might be willing to pay more and also charge more for spectrum, more bandwidth on the Internet so they can stream movies faster.

I personally, the position of my administration, as well as a lot of the companies here, is that you don’t want to start getting a differentiation in how accessible the Internet is to different users. You want to leave it open so the next Google and the next Facebook can succeed.”

We want to thank you for making your support for net neutrality clear and we are counting on you to take action to ensure equality on the Internet. A level playing field has been vital for innovation, opportunity and freedom of expression, and we agree that the next big thing will not succeed without it. We need to avoid a future with Internet slow lanes for everybody except for a few large corporations who can pay for faster service.

Like you, we believe in preserving an open Internet, where Internet service providers treat data equally, regardless of who is creating it and who is receiving it. Your vision of net neutrality is fundamentally incompatible with the FCC’s plan, which would explicitly allow for paid prioritization. The only way for the FCC to truly protect an open Internet is by using its clear Title II authority. Over the next few months, we need your continued and vocal support for fair and meaningful net neutrality rules. Our organizations will continue to pressure the FCC to put forth solidly based rules, and will continue to encourage you and other elected officials to join us in doing so.

Thank you again for standing up for the open Internet so that small businesses and people everywhere have a fair shot.

Signed,

ACLU, 18 Million Rising, Center for Media Justice, Center for Rural Strategies, ColorOfChange, Common Cause, Consumers Union, CREDO, Daily Kos, Demand Progress, Democracy for America, EFF, Engine, Enjambre Digital, Etsy, EveryLibrary, Fandor, Fight for the Future, Free Press, Future of Music Coalition, Greenpeace, Kickstarter, Louder, Media Action Grassroots Network, Media Alliance, Media Literacy Project, Media Mobilizing Project, MoveOn.org, Mozilla, Museums and the Web, National Alliance for Media Arts and Culture, National Hispanic Media Coalition, Open Technology Institute, OpenMedia International, Presente.org, Progressive Change Campaign Committee, Progressives United, Public Knowledge, Reddit, Rural Broadband Policy Group, SumOfUs, The Student Net Alliance, ThoughtWorks, United Church of Christ, OC Inc., Women’s Institute for Freedom of the Press, Women’s Media Center, Y Combinator

https://blog.mozilla.org/netpolicy/2014/08/08/thank-you-mr-president/


Matt Brubeck: Let's build a browser engine!

I’m building a toy HTML rendering engine, and I think you should too. This is the first in a series of articles describing my project and how you can make your own. But first, let me explain why.

You’re building a what?

Let’s talk terminology. A browser engine is the portion of a web browser that works “under the hood” to fetch a web page from the internet and translate its contents into forms you can read, watch, hear, etc. Blink, Gecko, WebKit, and Trident are browser engines. In contrast, the browser’s own UI (tabs, toolbar, menu and such) is called the chrome. Firefox and SeaMonkey are two browsers with different chrome but the same Gecko engine.

A browser engine includes many sub-components: an HTTP client, an HTML parser, a CSS parser, a JavaScript engine (itself composed of parsers, interpreters, and compilers), and much more. The many components involved in parsing web formats like HTML and CSS and translating them into what you see on-screen are sometimes called the layout engine or rendering engine.

Why a “toy” rendering engine?

A full-featured browser engine is hugely complex. Blink, Gecko, WebKit—these are millions of lines of code each. Even younger, simpler rendering engines like Servo and WeasyPrint are each tens of thousands of lines. Not the easiest thing for a newcomer to comprehend!

Speaking of hugely complex software: If you take a class on compilers or operating systems, at some point you will probably create or modify a “toy” compiler or kernel. This is a simple model designed for learning; it may never be run by anyone besides the person who wrote it. But making a toy system is a useful tool for learning how the real thing works. Even if you never build a real-world compiler or kernel, understanding how they work can help you make better use of them when writing your own programs.

So, if you want to become a browser developer, or just to understand what happens inside a browser engine, why not build a toy one? Like a toy compiler that implements a subset of a “real” programming language, a toy rendering engine could implement a small subset of HTML and CSS. It won’t replace the engine in your everyday browser, but should nonetheless illustrate the basic steps needed for rendering a simple HTML document.

Try this at home.

I hope I’ve convinced you to give it a try. This series will be easiest to follow if you already have some solid programming experience and know some high-level HTML and CSS concepts. However, if you’re just getting started with this stuff, or run into things you don’t understand, feel free to ask questions and I’ll try to make it clearer.

Before you start, a few remarks on some choices you can make:

On Programming Languages

You can build a toy layout engine in any programming language. Really! Go ahead and use a language you know and love. Or use this as an excuse to learn a new language if that sounds like fun.

If you want to start contributing to major browser engines like Gecko or WebKit, you might want to work in C++ because it’s the main language used in those engines, and using it will make it easier to compare your code to theirs. My own toy project, robinson, is written in Rust. I’m part of the Servo team at Mozilla, so I’ve become very fond of Rust programming. Plus, one of my goals with this project is to understand more of Servo’s implementation. (I’ve written a lot of browser chrome code, and a few small patches for Gecko, but before joining the Servo project I knew nothing about many areas of the browser engine.) Robinson sometimes uses simplified versions of Servo’s data structures and code. If you too want to start contributing to Servo, try some of the exercises in Rust!

On Libraries and Shortcuts

In a learning exercise like this, you have to decide whether it’s “cheating” to use someone else’s code instead of writing your own from scratch. My advice is to write your own code for the parts that you really want to understand, but don’t be shy about using libraries for everything else. Learning how to use a particular library can be a worthwhile exercise in itself.

I’m writing robinson not just for myself, but also to serve as example code for these articles and exercises. For this and other reasons, I want it to be as tiny and self-contained as possible. So far I’ve used no external code except for the Rust standard library. (This also side-steps the minor hassle of getting multiple dependencies to build with the same version of Rust while the language is still in development.) This rule isn’t set in stone, though. For example, I may decide later to use a graphics library rather than write my own low-level drawing code.

Another way to avoid writing code is to just leave things out. For example, robinson has no networking code yet; it can only read local files. In a toy program, it’s fine to just skip things if you feel like it. I’ll point out potential shortcuts like this as I go along, so you can bypass steps that don’t interest you and jump straight to the good stuff. You can always fill in the gaps later if you change your mind.

First Step: The DOM

Are you ready to write some code? We’ll start with something small: data structures for the DOM. Let’s look at robinson’s dom module.

The DOM is a tree of nodes. A node has zero or more children. (It also has various other attributes and methods, but we can ignore most of those for now.)

```rust
struct Node {
    // data common to all nodes:
    children: Vec<Node>,

    // data specific to each node type:
    node_type: NodeType,
}
```

There are several node types, but for now we will ignore most of them and say that a node is either an Element or a Text node. In a language with inheritance these would be subtypes of Node. In Rust they can be an enum (Rust’s keyword for a “tagged union” or “sum type”):

```rust
enum NodeType {
    Text(String),
    Element(ElementData),
}
```

An element includes a tag name and any number of attributes, which can be stored as a map from names to values. Robinson doesn’t support namespaces, so it just stores tag and attribute names as simple strings.

```rust
use std::collections::HashMap;

struct ElementData {
    tag_name: String,
    attributes: AttrMap,
}

// Attribute names mapped to their values.
type AttrMap = HashMap<String, String>;
```

Finally, some constructor functions to make it easy to create new nodes:

```rust
// In current Rust, enum variants are written with their type name (NodeType::Text).
fn text(data: String) -> Node {
    Node { children: vec![], node_type: NodeType::Text(data) }
}

fn elem(name: String, attrs: AttrMap, children: Vec<Node>) -> Node {
    Node {
        children,
        node_type: NodeType::Element(ElementData {
            tag_name: name,
            attributes: attrs,
        }),
    }
}
```
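Here is a small self-contained program that puts the definitions together and builds a three-node tree with the constructor functions. It repeats the types so that it compiles on its own. The sample document and the count_nodes helper are hypothetical additions for illustration and are not part of robinson.

```rust
use std::collections::HashMap;

// Minimal copies of the DOM types defined above.
struct Node {
    children: Vec<Node>,
    node_type: NodeType,
}

enum NodeType {
    Text(String),
    Element(ElementData),
}

struct ElementData {
    tag_name: String,
    attributes: AttrMap,
}

type AttrMap = HashMap<String, String>;

fn text(data: String) -> Node {
    Node { children: vec![], node_type: NodeType::Text(data) }
}

fn elem(name: String, attrs: AttrMap, children: Vec<Node>) -> Node {
    Node {
        children,
        node_type: NodeType::Element(ElementData { tag_name: name, attributes: attrs }),
    }
}

// Hypothetical helper: counts every node in the tree, including the root.
fn count_nodes(node: &Node) -> usize {
    1 + node.children.iter().map(count_nodes).sum::<usize>()
}

// Hypothetical sample document: <html><body id="main">Hello, world!</body></html>
fn sample_document() -> Node {
    let mut body_attrs = AttrMap::new();
    body_attrs.insert("id".to_string(), "main".to_string());
    let body = elem("body".to_string(), body_attrs, vec![text("Hello, world!".to_string())]);
    elem("html".to_string(), AttrMap::new(), vec![body])
}

fn main() {
    let root = sample_document();
    println!("{} nodes", count_nodes(&root)); // prints "3 nodes"
}
```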

And that’s it! A full-blown DOM implementation would include a lot more data and dozens of methods, but this is all we need to get started. In the next article, we’ll add a parser that turns HTML source code into a tree of these DOM nodes.

Exercises

These are just a few suggested ways to follow along at home. Do the exercises that interest you and skip any that don’t.

- Start a new program in the language of your choice, and write code to represent a tree of DOM text nodes and elements.
- Install the latest version of Rust, then download and build robinson. Open up dom.rs and extend NodeType to include additional types like comment nodes.
- Write code to pretty-print a tree of DOM nodes.
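Here is one possible answer to the last two exercises, sketched in Rust. NodeType gains a Comment variant, and a recursive function prints the tree with two spaces of indentation per level. The function names, the output format and the attribute sorting are choices made for this sketch, not robinson's code.

```rust
use std::collections::HashMap;

type AttrMap = HashMap<String, String>;

struct ElementData {
    tag_name: String,
    attributes: AttrMap,
}

// NodeType extended with a Comment variant, as the exercise suggests.
enum NodeType {
    Text(String),
    Comment(String),
    Element(ElementData),
}

struct Node {
    children: Vec<Node>,
    node_type: NodeType,
}

// Appends an indented rendering of `node` and its descendants to `out`.
// Attributes are sorted by name because HashMap iteration order is unspecified.
fn pretty_print(node: &Node, depth: usize, out: &mut String) {
    let indent = "  ".repeat(depth);
    match &node.node_type {
        NodeType::Text(s) => out.push_str(&format!("{}\"{}\"\n", indent, s)),
        NodeType::Comment(s) => out.push_str(&format!("{}<!--{}-->\n", indent, s)),
        NodeType::Element(e) => {
            let mut attrs: Vec<_> = e.attributes.iter().collect();
            attrs.sort();
            let attr_text: String = attrs
                .iter()
                .map(|(k, v)| format!(" {}=\"{}\"", k, v))
                .collect();
            out.push_str(&format!("{}<{}{}>\n", indent, e.tag_name, attr_text));
        }
    }
    for child in &node.children {
        pretty_print(child, depth + 1, out);
    }
}

// Hypothetical sample tree: a <p class="note"> with a comment and a text child.
fn sample_tree() -> Node {
    let mut attrs = AttrMap::new();
    attrs.insert("class".to_string(), "note".to_string());
    Node {
        children: vec![
            Node { children: vec![], node_type: NodeType::Comment(" greeting ".to_string()) },
            Node { children: vec![], node_type: NodeType::Text("Hi".to_string()) },
        ],
        node_type: NodeType::Element(ElementData { tag_name: "p".to_string(), attributes: attrs }),
    }
}

fn main() {
    let mut out = String::new();
    pretty_print(&sample_tree(), 0, &mut out);
    print!("{}", out);
}
```

Passing a `&mut String` around keeps the printer easy to test. Writing straight to standard output would work just as well.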

References

Here’s a short list of “small” open source web rendering engines. Most of them are many times bigger than robinson, but still way smaller than Gecko or WebKit. WebWhirr, at 2000 lines of code, is the only other one I would call a “toy” engine.

- CSSBox (Java)

- Cocktail (Haxe)

- litehtml (C++)

- LURE (Lua)

- NetSurf (C)

- Servo (Rust)

- WeasyPrint (Python)

- WebWhirr (C++)

You may find these useful for inspiration or reference. If you know of any other similar projects—or if you start your own—please let me know!

To be continued

- Part 1: Getting started

- Part 2: Parsing HTML

http://limpet.net/mbrubeck/2014/08/08/toy-layout-engine-1.html


Irving Reid: Telemetry Results for Add-on Compatibility Check

Earlier this year (in Firefox 32), we landed a fix for bug 760356 to reduce how often we delay browser startup in order to check whether all your add-ons are compatible. We landed the related bug 1010449 in Firefox 31 to gather telemetry about the compatibility check, so that we could do a before/after analysis.

Background

When you upgrade to a new version of Firefox, changes to the core browser can break add-ons. For this reason, every add-on comes with metadata that says which versions of Firefox it works with. There are a couple of straightforward cases, and quite a few tricky corners…

- The add-on is compatible with the new Firefox, and everything works just fine.
- The add-on is incompatible and must be disabled.
  - But maybe there’s an updated version of the add-on available, so we should upgrade it.
  - Or maybe the add-on was installed in a system directory by a third party (e.g. an antivirus toolbar) and Firefox can’t upgrade it.
- The add-on says it’s compatible, but it’s not – this could break your browser!
  - The add-on author could discover this in advance and publish updated metadata to mark the add-on incompatible.
  - Mozilla could discover the incompatibility and publish a metadata override at addons.mozilla.org to protect our users.
- The add-on says it’s not compatible, but it actually is.
  - Again, either the add-on author or Mozilla can publish a compatibility override.
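The cases above can be read as a small decision procedure. The Rust sketch below is a hypothetical model, not the Add-on Manager's actual logic. It makes two assumptions: a published override always wins over the add-on's own claim, and an incompatible add-on can be upgraded only if a compatible update exists and the add-on was not installed in a system directory.

```rust
// Hypothetical outcome of the compatibility check for one add-on.
#[derive(Debug, PartialEq)]
enum Action {
    KeepEnabled,
    // Disabled for now, but the user is offered a compatible update.
    OfferUpgrade,
    Disable,
}

// Hypothetical per-add-on facts; the field names are illustrative only.
#[derive(Clone, Copy)]
struct AddonInfo {
    // What the add-on's own metadata says about the new Firefox version.
    declares_compatible: bool,
    // An override published by the author or on addons.mozilla.org, if any.
    override_compatible: Option<bool>,
    // Whether a newer version compatible with the new Firefox exists.
    update_available: bool,
    // Installed in a system directory by a third party, so Firefox can't upgrade it.
    in_system_directory: bool,
}

fn decide(addon: &AddonInfo) -> Action {
    // Assumption: an override, when present, takes precedence over the add-on's claim.
    let compatible = addon.override_compatible.unwrap_or(addon.declares_compatible);
    if compatible {
        Action::KeepEnabled
    } else if addon.update_available && !addon.in_system_directory {
        Action::OfferUpgrade
    } else {
        Action::Disable
    }
}

fn main() {
    // Says it is compatible, but an override marks it incompatible.
    let overridden = AddonInfo {
        declares_compatible: true,
        override_compatible: Some(false),
        update_available: false,
        in_system_directory: false,
    };
    println!("{:?}", decide(&overridden)); // prints "Disable"
}
```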

We want to keep as many add-ons as possible enabled, because our users love (most of) their add-ons, while protecting users from incompatible add-ons that break Firefox. To do this, we implemented a very conservative check every time you update to a new version. On the first run with a new Firefox version, before we load any add-ons we ask addons.mozilla.org *and* each add-on’s update server whether there is a metadata update available, and whether there is a newer version of the add-on compatible with the new Firefox version. We then enable/disable based on that updated metadata, and offer the user the chance to upgrade those add-ons that have new versions available. Once this is done, we can load up the add-ons and finish starting up the browser.

This check involves multiple network requests, so it can be rather slow. Not surprisingly, our users would rather not have to wait for these checks, so in bug 760356 we implemented a less conservative approach:

- Keep track of when we last did a background add-on update check, so we know how out of date our metadata is.

- On the first run of a new Firefox version, only interrupt startup if the metadata is too out of date (two days, in the current implementation) *or* if some add-ons were disabled by this Firefox upgrade but are allowed to be upgraded by the user.
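The relaxed rule in the list above comes down to a single predicate. Here is a Rust sketch of it. It assumes a simplified state with two hypothetical fields, metadata_age and newly_disabled_user_upgradeable. The real Add-on Manager tracks this state differently, and its code is not shown in the post.

```rust
use std::time::Duration;

// Hypothetical summary of what the browser knows on the first run of a new version.
struct UpgradeState {
    // Time since the last background add-on update check refreshed the metadata.
    metadata_age: Duration,
    // Add-ons disabled by this upgrade that the user is allowed to upgrade.
    newly_disabled_user_upgradeable: usize,
}

// Two days, the threshold quoted in the post; using ">" rather than ">=" is an assumption.
const MAX_METADATA_AGE: Duration = Duration::from_secs(2 * 24 * 60 * 60);

// Interrupt startup only when the metadata is stale or the user has something to upgrade.
fn should_interrupt_startup(state: &UpgradeState) -> bool {
    state.metadata_age > MAX_METADATA_AGE || state.newly_disabled_user_upgradeable > 0
}

fn main() {
    let fresh = UpgradeState {
        metadata_age: Duration::from_secs(6 * 60 * 60),
        newly_disabled_user_upgradeable: 0,
    };
    println!("interrupt: {}", should_interrupt_startup(&fresh)); // prints "interrupt: false"
}
```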

Did it work?

Yes! On the Aurora channel, we went from interrupting 92.7% of the time on the 30 -> 31 upgrade (378091 out of 407710 first runs reported to telemetry) to 74.8% of the time (84930 out of 113488) on the 31 -> 32 upgrade, to only interrupting 16.4% (10158 out of 61946) so far on the 32 -> 33 upgrade.
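The interruption rates follow directly from the counts. This short Rust sketch recomputes them from the figures quoted above. The UPGRADES table and the interrupt_rate helper are illustrative names.

```rust
// (upgrade, interrupted first runs, first runs reported), Aurora figures quoted above.
const UPGRADES: [(&str, u32, u32); 3] = [
    ("30 -> 31", 378_091, 407_710),
    ("31 -> 32", 84_930, 113_488),
    ("32 -> 33", 10_158, 61_946),
];

// Percentage of first runs on which the compatibility check interrupted startup.
fn interrupt_rate(interrupted: u32, total: u32) -> f64 {
    100.0 * f64::from(interrupted) / f64::from(total)
}

fn main() {
    for &(upgrade, interrupted, total) in UPGRADES.iter() {
        println!("{}: {:.1}%", upgrade, interrupt_rate(interrupted, total));
    }
}
```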

The change took effect over two release cycles; the new implementation was in 32, so the change from “interrupt if there are *any* add-ons the user could possibly update” to “interrupt if there is a *newly disabled* add-on the user could update” is in effect for the 31 -> 32 upgrade. However, since we didn’t start tracking the metadata update time until 32, the “don’t interrupt if the metadata is fresh” change wasn’t effective until the 32 -> 33 upgrade. I wish I had thought of that at the time; I would have added the code to remember the update time into the telemetry patch that landed in 31.

Cool, what else did we learn?

On Aurora 33, the distribution of metadata age was:

| Age (days) | Sessions |
|---|---|
| < 1 | 37207 |
| 1 | 9656 |
| 2 | 2538 |
| 3 | 997 |
| 4 | 535 |
| 5 | 319 |
| 6 – 10 | 565 |
| 11 – 15 | 163 |
| 16 – 20 | 94 |
| 21 – 25 | 69 |
| 26 – 30 | 82 |
| 31 – 35 | 50 |
| 36 – 40 | 48 |
| 41 – 45 | 53 |
| 46 – 50 | 6 |

so about 88% of profiles had fresh metadata when they upgraded. The tail is longer than I expected, though it’s not too thick. We could improve this by forcing a metadata ping (or a full add-on background update) when we download a new Firefox version, but we may need to be careful to do it in a way that doesn’t affect usage statistics on the AMO side.

What about add-on upgrades?

We also started gathering detailed information about how many add-ons are enabled or disabled during various parts of the upgrade process. The measures are all shown as histograms in the telemetry dashboard at http://telemetry.mozilla.org:

- SIMPLE_MEASURES_ADDONMANAGER_XPIDB_STARTUP_DISABLED
  - The number of add-ons (both user-upgradeable and non-upgradeable) disabled during the upgrade because they are not compatible with the new version.
- SIMPLE_MEASURES_ADDONMANAGER_APPUPDATE_DISABLED
  - The number of user-upgradeable add-ons disabled during the upgrade.
- SIMPLE_MEASURES_ADDONMANAGER_APPUPDATE_METADATA_ENABLED
  - The number of add-ons that changed from disabled to enabled because of metadata updates during the compatibility check.
- SIMPLE_MEASURES_ADDONMANAGER_APPUPDATE_METADATA_DISABLED
  - The number of add-ons that changed from enabled to disabled because of metadata updates during the compatibility check.
- SIMPLE_MEASURES_ADDONMANAGER_APPUPDATE_UPGRADED
  - The number of add-ons upgraded to a new compatible version during the add-on compatibility check.
- SIMPLE_MEASURES_ADDONMANAGER_APPUPDATE_UPGRADE_DECLINED
  - The number of add-ons that had upgrades available during the compatibility check, but the user chose not to upgrade.
- SIMPLE_MEASURES_ADDONMANAGER_APPUPDATE_UPGRADE_FAILED
  - The number of add-ons that appeared to have upgrades available, but the attempt to install the upgrade failed.

For these values, we got good telemetry data from the Beta 32 upgrade. The counts represent the number of Firefox sessions that reported that number of affected add-ons (e.g. 3170 Telemetry session reports said that 2 add-ons were XPIDB_DISABLED by the upgrade):

| Add-ons affected | XPIDB DISABLED | APPUPDATE DISABLED | METADATA ENABLED | METADATA DISABLED | UPGRADED | DECLINED | FAILED |
|---|---|---|---|---|---|---|---|
| 0 | 2.6M | 2.6M | 2.6M | 2.6M | 2.6M | 2.6M | 2.6M |
| 1 | 36230 | 7360 | 59240 | 14780 | 824 | 121 | 98 |
| 2 | 3170 | 1570 | 2 | 703 | 5 | 1 | 0 |
| 3 | 648 | 35 | 0 | 43 | 1 | 0 | 0 |
| 4 | 1070 | 14 | 1 | 6 | 0 | 0 | 0 |
| 5 | 53 | 20 | 0 | 0 | 0 | 0 | 0 |
| 6 | 157 | 194 | 0 | 0 | 0 | 0 | 0 |
| 7+ | 55 | 9 | 0 | 1 | 0 | 0 | 0 |

The things I find interesting here are:

- The difference between XPIDB disabled and APPUPDATE disabled is (roughly) the add-ons installed in system directories by third party installers. This implies that 80%-ish of add-ons made incompatible by the upgrade are 3rd party installs.

- upgraded + declined + failed is (roughly) the add-ons a user *could* update during the browser upgrade, which works out to fewer than one in 2000 browser upgrades having a useful add-on update available. I suspect this is because most add-on updates have already been performed by our regular background update. In any case, to me this implies that further work on updating add-ons during browser upgrade won’t improve our user experience much.
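The table can be summed per column as a rough cross-check of these two observations. This Rust sketch sums session counts for one or more affected add-ons and ignores how many add-ons each session reported. It also uses the rounded 2.6M figure as the session total. Under those assumptions the results are only approximations.

```rust
// Sessions reporting 1, 2, 3, 4, 5, 6 and "7+" affected add-ons, copied from the table above.
const XPIDB_DISABLED: [u32; 7] = [36230, 3170, 648, 1070, 53, 157, 55];
const APPUPDATE_DISABLED: [u32; 7] = [7360, 1570, 35, 14, 20, 194, 9];
const UPGRADED: [u32; 7] = [824, 5, 1, 0, 0, 0, 0];
const DECLINED: [u32; 7] = [121, 1, 0, 0, 0, 0, 0];
const FAILED: [u32; 7] = [98, 0, 0, 0, 0, 0, 0];
// The table rounds the "0" row to 2.6M; used here as an approximate session total.
const APPROX_SESSIONS: f64 = 2_600_000.0;

fn total(row: &[u32]) -> u32 {
    row.iter().sum()
}

// Fraction of sessions with XPIDB-disabled add-ons not matched by APPUPDATE-disabled
// (user-upgradeable) ones: a rough proxy for third-party installs.
fn non_upgradeable_share() -> f64 {
    let xpidb = total(&XPIDB_DISABLED);
    let appupdate = total(&APPUPDATE_DISABLED);
    f64::from(xpidb - appupdate) / f64::from(xpidb)
}

// Sessions reporting at least one upgraded, declined or failed add-on update.
// A session appearing in more than one column is counted more than once.
fn sessions_with_available_update() -> u32 {
    total(&UPGRADED) + total(&DECLINED) + total(&FAILED)
}

fn main() {
    println!("non-upgradeable share: {:.0}%", 100.0 * non_upgradeable_share());
    println!(
        "roughly 1 in {:.0} upgrades had a useful add-on update",
        APPROX_SESSIONS / f64::from(sessions_with_available_update())
    );
}
```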

http://www.controlledflight.ca/2014/08/08/telemetry-results-for-add-on-compatibility-check/


Mozilla WebDev Community: Webdev Extravaganza – August 2014

Once a month, web developers from across Mozilla gather to summon cyber phantoms and techno-ghouls in order to learn their secrets. It’s also a good opportunity for us to talk about what we’ve shipped, share what libraries we’re working on, meet newcomers, and just chill. It’s the Webdev Extravaganza! Despite the danger of being possessed, the meeting is open to the public; you should stop by!

You can check out the wiki page that we use to organize the meeting, check out the Air Mozilla recording, or amuse yourself with the wild ramblings that constitute the meeting notes. Or, even better, read on for a more PG-13 account of the meeting.

Shipping Celebration

The shipping celebration is for anything we finished and deployed in the past month, whether it be a brand new site, an upgrade to an existing one, or even a release of a library.

Peep 1.3 is out!

There’s a new release of ErikRose’s peep out! Peep is essentially pip, which installs Python packages, but with the ability to check downloaded packages against cryptographic hashes to ensure you’re receiving the same code each time you install. The latest version now passes through most arguments for pip install, supports Python 3.4, and installs a secondary script tied to the active Python version.

Open-source Citizenship

Here we talk about libraries we’re maintaining and what, if anything, we need help with for them.

Contribute.json

pmac and peterbe, with feedback from the rest of Mozilla Webdev, have created contribute.json, a JSON schema for open-source project contribution data. The idea is to make contribute.json available at the root of every Mozilla site to make it easier for potential contributors and for third-party services to find details on how to contribute to that site. The schema is still a proposal, and feedback or suggestions are very welcome!

New Hires / Interns / Volunteers / Contributors

Here we introduce any newcomers to the Webdev group, including new employees, interns, volunteers, or any other form of contributor.

| Name | IRC Nick | Project |
|---|---|---|
| John Whitlock | jwhitlock | Web Platform Compatibility API |
| Mark Lavin | mlavin | Mobile Partners |

Roundtable

The Roundtable is the home for discussions that don’t fit anywhere else.

How do you feel about Playdoh?

peterbe brought up the question of what to do about Playdoh, Mozilla’s Django-based project template for new sites. Many sites that used to be based on Playdoh are removing the components that tie them to the semi-out-of-date library, such as depending on playdoh-lib for library updates. The general conclusion was that many people want Playdoh to be rewritten or updated to address long-standing issues, such as:

- Libraries are currently either installed in the repo or included via git submodules. A requirements.txt-based approach would be easier for users.

- Many libraries included with Playdoh were made to implement features that Django has since included, making them redundant.

- Django now supports project templates, making the current install method of using funfactory to clone Playdoh obsolete.

pmac has taken responsibility as a peer on the Playdoh module to spend some time extracting improvements from Bedrock into Playdoh.

Helping contributors via Cloud9

jgmize shared his experience making Bedrock run on the Cloud9 platform. The goal is to make it easy for contributors to spin up an instance of Bedrock using a free Cloud9 account, allowing them to edit and submit pull requests without having to go through the complex setup instructions for the site. jgmize has been dogfooding using Cloud9 as his main development environment for a few weeks and has had positive results using it.

If you’re interested in this approach, check out Cloud9 or ask jgmize for more information.

Unfortunately, we were unable to learn any mystic secrets from the ghosts that we were able to summon, but hey: there’s always next month!

If you’re interested in web development at Mozilla, or want to attend next month’s Extravaganza, subscribe to the dev-webdev@lists.mozilla.org mailing list to be notified of the next meeting, and maybe send a message introducing yourself. We’d love to meet you!

See you next month!

https://blog.mozilla.org/webdev/2014/08/08/webdev-extravaganza-august-2014/


Frederic Plourde: Gecko on Wayland

At Collabora, we’re always on the lookout for cool opportunities involving Wayland, and we noticed recently that Mozilla had started to show some interest in porting Firefox to Wayland. In short, the Wayland display server is becoming very popular for being lightweight and versatile yet powerful, and it is designed to be a replacement for X11. Chrome and WebKit already have Wayland ports, and we think that Firefox should have one too.


Some months ago, we wrote a simple proof of concept, starting from Gecko’s current GTK3 code paths and stripping all the MOZ_X11 ifdefs out of the way. We did a bunch of quick hacks to fix broken stuff, but rather easily and quickly (a couple of days) we got Firefox running on Weston (the official Wayland reference compositor). OK, because of hard X11 dependencies, keyboard input was broken and decorations suffered a little, but that’s a very good start! Take a look at the screenshot below :)

http://fredinfinite23.wordpress.com/2014/08/08/gecko-on-wayland/


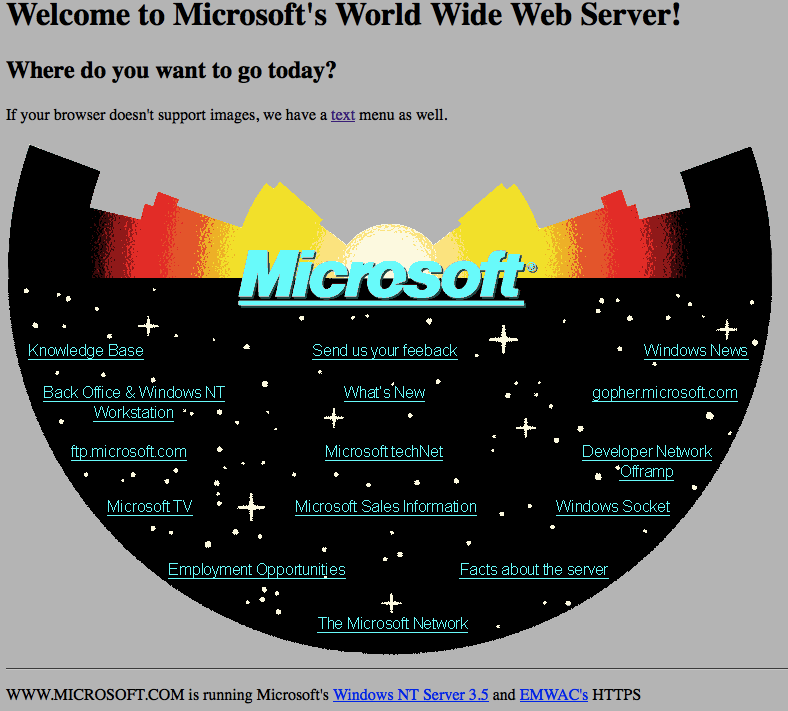

Christian Heilmann: Microsoft’s first web page and what it can teach us

Today Microsoft released a re-creation of their first web site from 20 years ago, complete with a readme.html explaining how it was done and why some things are the way they are.

I found this very interesting. First of all because it took me back to my beginnings – I built my first page in 1996 or so. Secondly this is an interesting reminder how creating things for the web changed over time whilst our mistakes or misconceptions stayed the same.

There are a few details worth mentioning in this page:

- Notice that whilst it uses an image for the whole navigation, the text in the image is underlined. Back then the concept of “text with underscore = clickable and taking you somewhere” was not quite ingrained in people. We needed to remind people of this new concept, which meant consistency was king – even in images.

- The site is using the ISMAP attribute and a server-side CGI program to turn the x and y coordinates of the click into a redirect. I remember writing these in Perl, and it is still quite a cool feature if you think about it. You get the same mouse tracking for free if you use input type=image, as that tells you where the image was clicked as form submission parameters.

- Client-side image maps came later and were a pain to create. I remember first using Coffeecup’s Image Mapper (and being super excited to meet Jay Cornelius, the creator, later at the Webmaster Jam session when I was speaking there) and afterwards Adobe’s ImageReady (which turned each layer into an AREA element).

- Table layouts came afterwards and boy this kind of layout would have been one hell of a complex table to create with spacer GIFs and colspan and rowspan.
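Server-side image maps work because the browser appends the click position to the link URL as a query string of the form ?x,y, and the CGI program maps that point to a destination. The Rust sketch below shows that lookup. The region coordinates, target URLs and function names are hypothetical. A real CGI program would read the query from the QUERY_STRING environment variable and respond with a Location header.

```rust
// Hypothetical navigation regions: (left, top, right, bottom, target URL).
// The coordinates and URLs are made up for illustration.
const REGIONS: [(u32, u32, u32, u32, &str); 2] = [
    (0, 0, 383, 256, "/products.html"),
    (384, 0, 767, 256, "/support.html"),
];

// Parses the "x,y" query string the browser sends for an ISMAP image.
fn parse_coords(query: &str) -> Option<(u32, u32)> {
    let (x, y) = query.split_once(',')?;
    Some((x.trim().parse().ok()?, y.trim().parse().ok()?))
}

// Returns the redirect target for a click, or None if it hit no region.
fn redirect_target(query: &str) -> Option<&'static str> {
    let (x, y) = parse_coords(query)?;
    REGIONS
        .iter()
        .find(|&&(l, t, r, b, _)| x >= l && x <= r && y >= t && y <= b)
        .map(|&(_, _, _, _, url)| url)
}

fn main() {
    // Stand-in for reading QUERY_STRING; prints the header a CGI program would send.
    match redirect_target("100,40") {
        Some(url) => println!("Location: {}", url),
        None => println!("no region"),
    }
}
```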

And this, to me, is the most interesting part here: one of the first web sites created by a large corporation makes the most basic mistake in web design – starting with a fixed design created in a graphical tool and trying to create the HTML to make it work. In other words: putting print on the web.

The web was meant to be consumed on any device capable of HTTP and text display (or voice, or whatever you want to turn the incoming text into). Text browsers like Lynx were not uncommon back then. And here is Microsoft creating a web page that is a big image with no text alternative. Also interesting to mention is that the image is 767px x 513px big. Back then I had a computer capable of 640 x 480 pixels resolution and browsers didn’t scale pictures automatically. This means that I would have had quite horrible scrollbars.

If you had a text browser, of course there is something for you:

If your browser doesn’t support images, we have a text menu as well.

This means that this page is also the first example of graceful degradation – years before JavaScript, Flash or DHTML. It means that the navigation menu of the page had to be maintained in two places (or with a CGI script on the server). Granted, the concept of progressive enhancement wasn’t even spoken of and with the technology of the day almost impossible (could you detect if images are supported and then load the image version and hide the text menu? Probably with a beacon…).

And this haunts us to this day: the first demos of web technology already tried to be pretty and shiny instead of embracing the unknown that is the web. Fixed layouts were a problem then and still are. Trying to make them work meant a lot of effort and maintainability debt. This gets worse the more technologies you rely on and the more steps you put in between what you code and what the browser is meant to display for you.

It is the right of the user to resize a font. It is completely impossible to make assumptions about the ability, screen size, connection speed or technical setup of the people we serve our content to. As Brad Frost put it, we have to Embrace the Squishiness of the web and leave breathing room in our designs.

One thing, however, is very cool: this page is 20 years old, the technology it is recreated in is the same age. Yet I can consume the page right now on the newest technology, in browsers Microsoft never dreamed of existing (not that they didn’t try to prevent that, mind you) and even on my shiny mobile device or TV set.

Let’s see if we can do the same with Apps created right now for iOS or Android.

This is the power of the web: what you build now in a sensible, thought-out and progressively enhanced manner will always stay consumable. Things you force into a more defined and controlled format will not. Something to think about. Nobody stops you from building an amazing app for one platform only. But don’t pretend what you did there is better or even comparable to a product based on open web technologies. They are different beasts with different goals. And they can exist together.

http://christianheilmann.com/2014/08/08/microsofts-first-web-page-and-what-it-can-teach-us/


Robert O'Callahan: Choose Firefox Now, Or Later You Won't Get A Choice

I know it's not the greatest marketing pitch, but it's the truth.

Google is bent on establishing platform domination unlike anything we've ever seen, even from late-1990s Microsoft. Google controls Android, which is winning; Chrome, which is winning; and key Web properties in Search, Youtube, Gmail and Docs, which are all winning. The potential for lock-in is vast and they're already exploiting it, for example by restricting certain Google Docs features (e.g. offline support) to Chrome users, and by writing contracts with Android OEMs forcing them to make Chrome the default browser. Other bad things are happening that I can't even talk about. Individual people and groups want to do the right thing but the corporation routes around them. (E.g. PNaCl and Chromecast avoided Blink's Web standards commitments by declaring themselves not part of Blink.) If Google achieves a state where the Internet is really only accessible through Chrome (or Android apps), that situation will be very difficult to escape from, and it will give Google more power than any company has ever had.

Microsoft and Apple will try to stop Google but even if they were to succeed, their goal is only to replace one victor with another.

So if you want an Internet --- which means, in many ways, a world --- that isn't controlled by Google, you must stop using Chrome now and encourage others to do the same. If you don't, and Google wins, then in years to come you'll wish you had a choice and have only yourself to blame for spurning it now.

Of course, Firefox is the best alternative :-). We have a good browser, and lots of dedicated and brilliant people improving it. Unlike Apple and Microsoft, Mozilla is totally committed to the standards-based Web platform as a long-term strategy against lock-in. And one thing I can say for certain is that of all the contenders, Mozilla is least likely to establish world domination :-).

http://robert.ocallahan.org/2014/08/choose-firefox-now-or-later-you-wont.html

|

|

Michael Verdi: Lightspeed – a browser experiment |

Planet Mozilla viewers – you can watch this video on YouTube.

This is a presentation that Philipp Sackl and I put together for the Firefox UX team. It’s a browser experiment called Lightspeed. If you want the short version, you can download this PDF version of the presentation. Let me know what you think!

Notes:

- I mention the “Busy Bee” and “Evergreen” user types. These are from the North American user type study we did in 2012 and 2013.

- This is a proposal for an experiment that I would love to see us build. At the very least I think there are some good ideas in here that might work as experiments on their own. But to be clear, we’re not removing add-ons, customization or anything else from Firefox.

https://blog.mozilla.org/verdi/463/lightspeed-a-browser-experiment/

|

|

Mozilla Open Policy & Advocacy Blog: MozFest 2014 – Calling All Policy & Advocacy Session Proposals |

Planning for MozFest 2014 (October 24-26 in London) is in full swing! This year, ‘Policy & Advocacy’ has its own track and we invite you to submit session proposals by the August 22nd deadline.

If you’ve never been to MozFest, here’s a bit about the conference: MozFest is a hands-on festival dedicated to forging the future of this open, global Web. Thousands of passionate makers, builders, educators, thinkers and creators from across the world come together as a community to dream at scale: exploring the Web, learning together, and making things that can change the world.

The Policy & Advocacy Track

MozFest is built on 11 core tracks, which are clusters of topics and sessions that have the greatest potential for innovation and empowerment with the Web. The Policy & Advocacy track will explore the current state of global Internet public policy and how to mobilize people to hold their leaders accountable to stronger privacy, security, net neutrality, free expression, digital citizenship, and access to the Web. Successful proposals are participatory, purposeful, productive, and flexible.

This is a critical time for the Internet, and you are all the heroes fighting every day to protect and advance the free and open Web. The Policy & Advocacy community is engaged in groundbreaking, vital efforts throughout the world. Let’s bring the community together to share ideas, passion, and expertise at MozFest.

We hope that you submit a session proposal today. We would love to hear from you.

|

|

Karl Dubost: Lightspeed and Blackhole |

It's 5AM. And I just watched the short talk "Lightspeed, a browser experiment" by Michael Verdi, who does UX at Mozilla. The proposal is about improving the user experience for people who don't want to have to configure anything. This topic is close to my heart for two reasons: I think browsers are not helping me manage information (I'm a very advanced user), and they are difficult to use for some people close to me (users with no technical knowledge).

While watching the talk, I often found myself thinking "yes, but we could push this thing further". I left a comment on Michael Verdi's blog with the following content. I'm putting it here too so I can keep a record of it and be sure it will not disappear in the future. Here we go:

Search: The AwesomeBar matches keywords in the title and the URI. Enlarge the scope by indexing the full content of the page instead. Rationale: we often remember the things we have read better than the page title, which is invisible.
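A minimal sketch of the idea, assuming each visited page is available as plain text: an inverted index over the title, the URI and the whole body lets a query for something you remember reading find the page even when the title says nothing about it. `PageIndex` and its methods are hypothetical illustrations, not anything that exists in Firefox.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase word tokens; a deliberately simple tokenizer."""
    return re.findall(r"\w+", text.lower())


class PageIndex:
    """Hypothetical inverted index: word -> set of page URIs."""

    def __init__(self):
        self.postings = defaultdict(set)
        self.titles = {}

    def add(self, uri, title, body):
        self.titles[uri] = title
        # Index the title, the URI *and* the full body text, not just the title.
        for word in tokenize(title) + tokenize(uri) + tokenize(body):
            self.postings[word].add(uri)

    def search(self, query):
        """Return URIs containing every query word (AND semantics), sorted."""
        words = tokenize(query)
        if not words:
            return []
        hits = set.intersection(*(self.postings.get(w, set()) for w in words))
        return sorted(hits)
```

With a page titled "Untitled" whose body mentions Bayesian spam filtering, `search("bayesian spam")` finds it, which a title-only match could not.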

Heart: There are things I want to remember (the current heart), and there are things I want to put on an ignore list because I think they are useless, disgusting, etc. Putting things on an ignore list will help make the results better. Hearts need to be page-related, not domain-related.

Bayesian: Now that you have indexed the full page and chosen good and bad (heart/yirk), you can apply Bayesian filtering to the search results as soon as you start typing, showing the things that matter the most. Bayesian filtering is often used for removing spam; you can use the same technique to promote indexed content.
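Here is one way the suggestion could look, as a sketch only: a naive Bayes classifier trained on the words of hearted pages versus ignored pages, whose log-odds score is then used to reorder search hits. The `HeartFilter` class, the two labels and the add-one smoothing choice are all assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"\w+", text.lower())


class HeartFilter:
    """Hypothetical naive Bayes scorer trained on hearted vs. ignored pages."""

    def __init__(self):
        self.counts = {"heart": Counter(), "ignore": Counter()}
        self.docs = {"heart": 0, "ignore": 0}

    def train(self, label, text):
        self.counts[label].update(tokenize(text))
        self.docs[label] += 1

    def score(self, text):
        """Log-odds that a page belongs with the hearted ones (> 0 means yes)."""
        vocab_size = len(set(self.counts["heart"]) | set(self.counts["ignore"]))
        total_docs = self.docs["heart"] + self.docs["ignore"]
        # Class priors, add-one smoothed so an empty class never gives log(0).
        log_odds = math.log((self.docs["heart"] + 1) / (total_docs + 2))
        log_odds -= math.log((self.docs["ignore"] + 1) / (total_docs + 2))
        for label, sign in (("heart", 1), ("ignore", -1)):
            total = sum(self.counts[label].values())
            for word in tokenize(text):
                # Laplace smoothing, with one extra slot for unseen words.
                p = (self.counts[label][word] + 1) / (total + vocab_size + 1)
                log_odds += sign * math.log(p)
        return log_odds

    def rank(self, pages):
        """Order (uri, text) search hits by descending score."""
        return sorted(pages, key=lambda page: self.score(page[1]), reverse=True)
```

Spam filters use the same arithmetic to demote messages; here the sign is simply read the other way, so pages resembling hearted ones rise to the top.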

BrowserTimeMachine: OK, we indexed the full content of a page… hmm, what about keeping a local copy of this page, whether automatically or dynamically? Something that would work à la Time Machine, a kind of backup of my browsing: a dated organization of my browsing or, if you prefer, an enhanced history. When you start searching, you might have different versions of a Web page over time: I went there in October 2013, in February 2012, etc. When you are on a Web page, you could have a Time Machine-style time slider, or something à la Google Street View with views from the past. (Additional benefit: it can help you understand your own consumption of information.) It could be everything you browse, or just the things you put a heart on, which means you can put a heart on the same page multiple times.
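A minimal sketch of such an archive, assuming snapshots are stored locally as text keyed by URI and visit date. `BrowsingArchive` is a hypothetical name; `versions()` gives the stops on the time slider and `as_of()` answers "what did this page look like when I last saw it before a given date".

```python
import bisect
from collections import defaultdict
from datetime import date


class BrowsingArchive:
    """Hypothetical local archive of dated page snapshots (an 'enhanced history')."""

    def __init__(self):
        # uri -> list of (visit_date, content), kept sorted by date
        self.snapshots = defaultdict(list)

    def save(self, uri, visit_date, content):
        bisect.insort(self.snapshots[uri], (visit_date, content))

    def versions(self, uri):
        """All visit dates for a page, oldest first (the time-slider stops)."""
        return [d for d, _ in self.snapshots.get(uri, [])]

    def as_of(self, uri, when):
        """Content of the latest snapshot taken on or before `when`, else None."""
        i = bisect.bisect_right(self.versions(uri), when)
        return self.snapshots[uri][i - 1][1] if i else None
```

For example, after saving copies of a page from February 2012 and October 2013, `as_of(uri, date(2013, 1, 1))` returns the February 2012 copy.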

In the end you get something which is becoming a very convenient tool for browsing (through local search) AND remembering (many tabs without managing them).

Otsukare!

|

|

Frederic Plourde: Firefox/Gecko : Getting rid of Xlib surfaces |

Over the past few months, working at Collabora, I have helped Mozilla get rid of Xlib surfaces for content on the Linux platform. This task was the primary problem keeping Mozilla from turning OpenGL layers on by default on Linux, which is one of their long-term goals. I’ll briefly explain this long-term goal and will then give details about how I got rid of Xlib surfaces.

LONG-TERM GOAL – Enabling Skia layers by default on Linux

My work is part of a wider, long-term goal that Mozilla currently has: to enable Skia layers by default on Linux (Bug 1038800). For a glimpse into how Mozilla initially made Skia layers work on Linux, see bug 740200. At the time of writing, Skia layers are still not enabled by default because there are some open bugs about failing Skia reftests, and OMTC (off-main-thread compositing) is not yet fully stable on Linux (Bug 722012). Why is OMTC needed to turn Skia layers on by default on Linux? Simply because, by design, users who choose OpenGL layers automatically get OMTC on Linux… and since the MTC (main-thread compositing) path was recently dropped, we must tackle the OMTC bugs before we can dream about turning Skia layers on by default on Linux.

For a more detailed explanation of the issues and design considerations pertaining to turning Skia layers on by default on Linux, see this wiki page.

MY TASK – Getting rid of Xlib surfaces for content

Xlib surfaces for content rendering have been used extensively for a long time, but when OpenGL got attention as a means to accelerate layers, we quickly ran into interoperability issues between XRender and the Texture_From_Pixmap OpenGL extension… issues that were assumed to be insurmountable after initial analysis. Also, and I quote Roc here, “We [had] lots of problems with X fallbacks, crappy X servers, pixmap usage, weird performance problems in certain setups, etc. In particular we [seemed] to be more sensitive to Xrender implementation quality [than] say Opera or Webkit/GTK+.” (Bug 496204)

So for all those reasons, someone had to get rid of Xlib surfaces, and that someone was… me ;)

The Problem

So the problem was to get rid of Xlib surfaces (gfxXlibSurface) for content on the Linux/GTK platform and, implicitly, replace them with Image surfaces (gfxImageSurface), so that they become regular memory buffers into which we can render with GL/GLES and from which we can composite using the GPU. Now, it’s pretty easy to force creation of Image surfaces (instead of Xlib ones) for all content layers in the Gecko gfx/layers framework: just force gfxPlatformGTK::CreateOffscreenSurfaces(…) to create gfxImageSurfaces in every case.

The problem is that doing so naively gives rise to a series of performance regressions and suboptimal paths being taken, for example copying image buffers around when passing them across process boundaries, or unnecessary copying when compositing under X11 with XRender support. So the real work was to fix everything after having pulled the gfxXlibSurface plug ;)

The Solution

The first glitch along the way was that GTK2 theme rendering, by design, *had* to happen on Xlib surfaces. We didn’t have much choice but to narrow our efforts down to the GTK3 branch alone. What’s nice about GTK3 on that front is that it makes integral use of cairo, letting theme rendering happen on any type of cairo_surface_t. For more detail on that decision, read this.

Up front, we noticed that the already-implemented GL compositor was properly managing and buffering image layer contents, which is a good thing, but along the way we saw that the ‘basic’ compositor did not. So we started streamlining the basic compositor under OMTC for GTK3.

The core of the solution was implementing server-side buffering of layer contents that were using image backends. Since the targeted platform was Linux/GTK3, and since XRender support is rather common, the most intuitive thing to do was to subclass BasicCompositor into a new X11BasicCompositor and make it use a new specialized DataTextureSource (which we called X11DataTextureSourceBasic) that buffers incoming layer content in ::Update() into a gfxXlibSurface that we keep alive for the TextureSource’s lifetime (unless the surface changes size and/or format).
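The buffering rule described above can be modelled in a few lines. This is a language-neutral sketch in Python, not Gecko code: the real implementation is C++ in the patches linked from this post, and `ServerSurface` and `BufferedTextureSource` are hypothetical stand-ins for gfxXlibSurface and X11DataTextureSourceBasic. It captures only the policy "keep one server-side surface alive across updates, recreate it only when size or format changes", not X11 or XRender themselves.

```python
class ServerSurface:
    """Illustrative stand-in for a server-side (X11) surface."""

    def __init__(self, size, fmt):
        self.size = size
        self.fmt = fmt
        self.pixels = None

    def upload(self, pixels):
        self.pixels = pixels


class BufferedTextureSource:
    """Hypothetical analogue of a texture source that buffers image-backed
    layer content into a long-lived server-side surface."""

    def __init__(self):
        self.surface = None
        self.allocations = 0  # how many times a new surface had to be created

    def update(self, size, fmt, pixels):
        # Recreate the surface only on first use or on a size/format change.
        if self.surface is None or (self.surface.size, self.surface.fmt) != (size, fmt):
            self.surface = ServerSurface(size, fmt)
            self.allocations += 1
        # Otherwise reuse it: only the new pixel data is copied in.
        self.surface.upload(pixels)
        return self.surface
```

Repeated updates of the same size and format reuse one surface, which is where the savings in the paint and scroll benchmarks would come from.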

Performance results were satisfying. On 64-bit systems, we saw around a 75% boost in tp5o_shutdown_paint, a 6% gain on ‘cart’, 14% on ‘tresize’, 33% on ‘tscrollx’ and 12% on ‘tcanvasmark’.

For complete details about this effort, design decisions and resulting performance numbers, please read the corresponding bugzilla ticket.

To see the code that we checked in to solve this, look at these two patches:

https://hg.mozilla.org/mozilla-central/rev/a500c62330d4

https://hg.mozilla.org/mozilla-central/rev/6e532c9826e7

Cheers!

http://fredinfinite23.wordpress.com/2014/08/07/firefoxgecko-getting-rid-of-xlib-surfaces/

|

|

Kim Moir: Scaling mobile testing on AWS |

Do Android's Dream of Electric Sheep? ©Bill McIntyre, Creative Commons by-nc-sa 2.0

We run many Linux-based tests, including Android emulators, on AWS spot instances. Spot instances are AWS excess capacity that you can bid on. If someone outbids the price you bid for your spot instance, your instance can be terminated. But that's okay, because we retry jobs if they fail for infrastructure reasons, and the overall percentage of spot instances that are terminated is quite small. The huge advantage of spot instances is price. They are much cheaper than on-demand instances, which has allowed us to increase our capacity while continuing to reduce our AWS bill.
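The retry policy only works because infrastructure failures are treated differently from real test failures. A minimal sketch of that distinction, assuming a job is any zero-argument callable and that a lost spot instance surfaces as a dedicated exception; `InfrastructureError` and `run_with_retries` are hypothetical names, not Mozilla's buildbot code.

```python
class InfrastructureError(Exception):
    """Hypothetical: a job died for reasons unrelated to the code under test,
    e.g. the spot instance running it was reclaimed."""


def run_with_retries(job, max_attempts=3):
    """Run `job`, retrying only infrastructure failures.

    Any other exception (a genuine test failure) propagates immediately;
    an InfrastructureError is retried, up to `max_attempts` runs in total.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except InfrastructureError:
            if attempt == max_attempts:
                raise
```

Keeping real failures out of the retry path matters: otherwise a genuinely broken test would silently consume several spot instances before anyone saw the result.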

We have a wide variety of unit tests that run on emulators for mobile on AWS. We encountered an issue where some of the tests wouldn't run on the default instance type (m1.medium) that we use for our spot instances. Given the number of jobs we run, we want to use the cheapest AWS instance type on which the tests will complete successfully. When we first tested it, we couldn't find an instance type on which certain CPU/memory-intensive tests would run. So when I first enabled Android 2.3 tests on emulators, I separated the tests so that some would run on AWS spot instances and the ones that needed a more powerful machine would run on our in-house Linux capacity. But this change consumed all of the capacity of that pool, and we had a very high number of pending jobs in it. This meant that people had to wait a long time for their test results. Not good.

To reduce the pending counts, we needed to either buy more in-house Linux capacity or find a new AWS instance type on which the selected subset of tests that needed more resources would complete successfully. Geoff from the ATeam ran the tests on the c3.xlarge instance type he had tried before, and now it seemed to work, even though in his earlier work the tests had not completed successfully on this instance type. We are unsure why. One of the things about working with AWS is that we don't have a window into the bugs they fix at their end. So this particular instance type didn't work before, but it does now.
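The selection rule ("the cheapest instance type on which the tests complete") is simple once the trial results are in hand. A sketch, assuming each candidate has been tried by actually running the suite on it; `cheapest_passing` is a hypothetical helper, and any prices you feed it should come from current AWS pricing rather than from this example.

```python
def cheapest_passing(candidates):
    """Pick the cheapest instance type on which the test suite passed.

    `candidates` is a list of (instance_type, hourly_price, tests_passed)
    tuples, where tests_passed comes from an actual trial run.
    Returns None if no candidate passed.
    """
    passing = [(price, name) for name, price, ok in candidates if ok]
    return min(passing)[1] if passing else None
```

Because, as the c3.xlarge story shows, a type that failed once may pass later, the trial results are worth re-collecting periodically rather than treating them as permanent.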

The next step for me was to create a new AMI (Amazon Machine Image) that would serve as the "golden" version for instances created in this pool. Previously, we used Puppet to configure our AWS test machines, but now we just regenerate the AMI every night via cron, and this is the version that's instantiated. The AMI was a copy of our existing Ubuntu64 image, but configured to run on the c3.xlarge instance type instead of m1.medium. This was a bit tricky because I had to exclude regions where the c3.xlarge instance type was not available. For redundancy (to still have capacity if an entire region goes down) and cost (some regions are cheaper than others), we run instances in multiple AWS regions.
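Excluding regions amounts to filtering the usual region list by instance-type availability. A sketch under the assumption that availability is already known as a mapping from region to the instance types offered there; in practice that information would come from AWS itself, and the `regions_for` helper is hypothetical.

```python
def regions_for(instance_type, offerings, preferred_regions):
    """Keep, in order of preference, only the regions offering `instance_type`.

    `offerings` maps region name -> set of instance types available there.
    """
    return [r for r in preferred_regions if instance_type in offerings.get(r, set())]
```

If the filtered list ever shrinks to a single region, the redundancy argument above no longer holds, so that case is worth flagging rather than accepting silently.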

Once I had the new AMI up as the template for our new slave class, I created a slave from the AMI and verified that the tests we planned to migrate ran on my staging server. I also enabled two new Linux64 buildbot masters in AWS to service these new slaves, one in us-east-1 and one in us-west-2. When enabling a new pool of test machines, it's always good to look at the load on the current buildbot masters and see whether additional masters are needed, so the current masters aren't overwhelmed by too many attached slaves.

After the tests were all green, I modified our configs to run this subset of tests on a branch (ash), enabled the slave platform in Puppet, and added a pool of devices to this slave platform in our production configs. After the reconfig deployed these changes into production, I landed a regular expression in watch_pending.cfg so that the new tst-emulator64-spot pool of machines would be allocated to the subset of tests and the branch I enabled them on. The watch_pending.py script watches the number of pending jobs on AWS and creates instances as required. We also have scripts to terminate or stop idle instances when we don't need them. Why pay for machines when you don't need them now? After the tests ran successfully on ash, I enabled them on the other relevant branches.
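The two mechanisms in the paragraph above, a regular expression that routes jobs to a pool and a watcher that starts instances for pending work, can be sketched together. The format of the real watch_pending.cfg is not shown in this post, so everything here is an assumption: the builder names, the `default-spot-pool` fallback, the rule list and both helper functions are hypothetical.

```python
import math
import re

# Hypothetical routing rules: the first regex matching a builder name picks the pool.
POOL_RULES = [
    (re.compile(r"^Android 2\.3 .* ash "), "tst-emulator64-spot"),
    (re.compile(r".*"), "default-spot-pool"),  # hypothetical fallback pool
]


def pool_for(builder_name):
    """Return the pool whose rule first matches `builder_name`."""
    for pattern, pool in POOL_RULES:
        if pattern.search(builder_name):
            return pool
    return None


def instances_to_start(pending_by_pool, idle_by_pool, jobs_per_instance=1):
    """How many new instances each pool needs so that pending jobs get a machine,
    after counting instances that are already up and idle."""
    needed = {}
    for pool, pending in pending_by_pool.items():
        wanted = math.ceil(pending / jobs_per_instance)
        needed[pool] = max(0, wanted - idle_by_pool.get(pool, 0))
    return needed
```

The complementary reaper (stopping idle instances) would run the same comparison in the other direction.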


And some Android 2.3 tests, such as crashtests, now run on c3.xlarge (tst-emulator64-spot) instances.

In enabling this slave class within our configs, we were also able to reuse it for some b2g tests which also faced the same problem where they needed a more powerful instance type for the tests to complete.

Lessons learned:

Use the minimum (cheapest) instance type required to complete your tests

As usual, test on a branch before full deployment

Scaling mobile tests doesn't mean more racks of reference cards

Future work:

Bug 1047467 c3.xlarge instance types are expensive, let's test running those tests on a range of instance types that are cheaper

Further reading:

AWS instance types

Chris Atlee wrote about how we Now Use AWS Spot Instances for Tests

Taras Glek wrote How Mozilla Amazon EC2 Usage Got 15X Cheaper in 8 months

Rail Aliiev http://rail.merail.ca/posts/firefox-builds-are-way-cheaper-now.html

Bug 980519 Experiment with other instance types for Android 2.3 jobs

Bug 1024091 Address high pending count in in-house Linux64 test pool

Bug 1028293 Increase Android 2.3 mochitest chunks, for aws

Bug 1032268 Experiment with c3.xlarge for Android 2.3 jobs

Bug 1035863 Add two new Linux64 masters to accommodate new emulator slaves

Bug 1034055 Implement c3.xlarge slave class for Linux64 test spot instances

Bug 1031083 Buildbot changes to run selected b2g tests on c3.xlarge

Bug 1047467 c3.xlarge instance types are expensive, let's try running those tests on a range of instance types that are cheaper

http://relengofthenerds.blogspot.com/2014/08/scaling-mobile-testing-on-aws.html

|

|

Armen Zambrano Gasparnian: mozdevice now mozlogs thanks to mozprocess! |

jgraham: armenzg_brb: This new logging in mozdevice is awesome!

armenzg: jgraham, really? why you say that?

jgraham: armenzg: I can see what's going on!

We recently changed the way that mozdevice works. mozdevice is a Python package used to talk to Firefox OS or Android devices through either ADB or SUT.

Several developers of the Auto Tools team have been working on the Firefox OS certification suite for partners to determine if they meet the expectations of the certification process for Firefox OS.

When partners have any issues with the cert suite, they can send us a zip file with their results so we can help them out. However, until recently, the output of mozdevice would go to standard out rather than being logged into the zip file they would send us.

In order to use the log manager inside the certification suite, I needed every adb command and its output to be logged rather than printed to stdout. Fortunately, mozprocess has a parameter that lets me manipulate any output generated by the process. To benefit from mozprocess and its logging, I needed to replace every subprocess.Popen() call with ProcessHandler().
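The core idea, routing each line a child process prints to a logger instead of to stdout, can be shown with the standard library alone. This is a sketch, not mozdevice or mozprocess code: `run_logged` is a hypothetical helper, whereas mozprocess achieves the same effect through the per-line output callbacks (`processOutputLine`) accepted by ProcessHandler.

```python
import logging
import subprocess


def run_logged(cmd, logger):
    """Run `cmd` and send each line of its output to `logger` at DEBUG level.

    stderr is merged into stdout so nothing escapes to the console.
    Returns the process exit code.
    """
    proc = subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.STDOUT,
                            universal_newlines=True)
    for line in proc.stdout:
        logger.debug(line.rstrip("\n"))
    proc.stdout.close()
    return proc.wait()
```

Called as, say, `run_logged(["adb", "devices"], log)`, the output ends up wherever `log`'s handlers send it, such as a file that goes into the results zip.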

If you want to "see what's going on" in mozdevice, all you have to do is to request the debug level of logging like this:

import mozlog
from mozdevice import DeviceManagerADB

dm = DeviceManagerADB(logLevel=mozlog.DEBUG)

As part of this change, we also switched to using structured logging instead of the basic mozlog logging.

Switching to mozprocess also helped us discover an issue in mozprocess specific to Windows.

You can see the patch here.

You can see the other changes in the mozdevice 0.38 release here.

At least with mozdevice you can know what is going on!

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://armenzg.blogspot.com/2014/08/mozdevice-now-mozlogs-thanks-to.html

|

|

Gervase Markham: Laziness |

Dear world,

This week, I ordered Haribo Jelly Rings on eBay and had them posted to me. My son brought them from the front door to my office and I am now eating them.

That is all.

http://feedproxy.google.com/~r/HackingForChrist/~3/CBmHSwJ-BTU/

|

|

Nick Fitzgerald: Wu.Js 2.0 |

On May 21st, 2010, I started experimenting with lazy, functional streams in JavaScript with a library I named after the Wu-Tang Clan.

commit 9d3c5b19a088f6e33888c215f44ab59da4ece302
Author: Nick Fitzgerald <fitzgen@gmail.com>
Date: Fri May 21 22:56:49 2010 -0700

    First commit

Four years later, the feature-complete, partially-implemented, and soon-to-be-finalized ECMAScript 6 supports lazy streams in the form of generators and its iterator protocol. Unfortunately, ES6 iterators are missing the higher order functions you expect: map, filter, reduce, etc.

Today, I'm happy to announce the release of wu.js version 2.0, which has been completely rewritten for ES6.

wu.js aims to provide higher order functions for ES6 iterables. Some of them you already know (filter, some, reduce) and some of them might be new to you (reductions, takeWhile). wu.js works with all ES6 iterables, including Arrays, Maps, Sets, and generators you write yourself. You don't have to wait for ES6 to be fully implemented by every JS engine: wu.js can be compiled to ES5 with the Traceur compiler.

Here are a couple of small examples:

const factorials = wu.count(1).reductions((last, n) => last * n);
// (1, 2, 6, 24, ...)

const isEven = x => x % 2 === 0;
const evens = wu.filter(isEven);
evens(wu.count());
// (0, 2, 4, 6, ...)
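For comparison only, and not part of wu.js: Python's standard itertools module offers the same kind of lazy higher-order functions over iterators, which makes the semantics of the two examples above easy to check. Here `accumulate` plays the role of wu's reductions and `takewhile` that of takeWhile; nothing is computed until values are pulled from the iterators.

```python
from itertools import accumulate, count, islice, takewhile

# Like wu.count(1).reductions((last, n) => last * n): a lazy stream 1, 2, 6, 24, ...
factorials = accumulate(count(1), lambda last, n: last * n)


def is_even(x):
    return x % 2 == 0


# Like wu.filter(isEven) applied to wu.count(): a lazy stream 0, 2, 4, 6, ...
evens = filter(is_even, count())

# takeWhile also has a direct counterpart; it stops an infinite stream.
small_factorials = list(takewhile(lambda f: f < 1000,
                                  accumulate(count(1), lambda a, n: a * n)))
```

`list(islice(factorials, 4))` pulls just the first four values, `[1, 2, 6, 24]`, from the otherwise infinite stream.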

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [938272] Turn on Locale multi-select field in Mozilla Localizations :: Other

- [1046779] Components with no default QA still display a usermenu arrow on describecomponents.cgi

- [1047328] Please remove “Firefox for Metro:” from the “https://bugzilla.mozilla.org/enter_bug.cgi” page

- [1047142] The User Story field should be expanded/editable by default if it’s non-empty when cloning a bug

- [1047131] enable “user story” field on all products

- [1049329] fix width of user_story field, and hide label for anon access when no story is set

- [1049830] Bug.search with count_only = 1 does not work for JSONRPC and REST but does work for XMLRPC

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/08/07/happy-bmo-push-day-106/

|

|

William Reynolds: 2014 halfway point for Community Tools |

As part of Mozilla’s 2014 goal to grow the number of active contributors by 10x, we have been adding new functionality to our tools. Community Tools are the foundation for all activities that our global contributors do to help us achieve our mission. At their best, they enable us to do more and do better.

We have a comprehensive roadmap to add more improvements and features, but stepping back, this post summarizes what the Community Tools team has accomplished so far this year. Tools are an org-wide, cross-functional effort with the Community Building team working on tools such as Baloo and the Mozilla Foundation building tools to enable and measure impact (see areweamillionyet.org). We’re in the process of trying to merge our efforts and work more closely. However, this post focuses on mozillians.org and the Mozilla Reps Portal.

mozillians.org

Our community directory has over 6,000 profiles of vouched Mozillians. This is a core way for volunteers and staff to contact each other and organize their programs, projects and interests through groups. Our efforts have a common theme of making it easier for Mozillians to get the information they need. Recently, we’ve worked on:

- Improvements to vouching – making it much clearer who can access non-public information using Mozillians.org (people who have been vouched because they participate and contribute to Mozilla) and how that list of people can grow (through individual judgments by people who have themselves been vouched numerous times).

- Real location information on profile – using geographical data for Country, Region and City information. No more duplicates or bad locations.

- Curated groups – increasing the value of groups by having a curator and information for each new group. Group membership can also be used for authorization on other sites through the Mozillians API.

- Democratize API access – making it easier for any vouched Mozillian to get an API key to access public information on mozillians.org (coming soon). For apps that want to use Mozillians-only information, developers can request a reviewed app API key.

- New account fields on profiles – adding more ways to contact and learn about contributors. The new fields include Lanyrd, SlideShare, Discourse, phone numbers and more.

Reps Portal

The portal for Reps activities and events has over 400 Reps and documents over 21,000 of their activities since the Reps program started 3 years ago. It provides tools for Reps to carry out their activities as well as public-facing information about thousands of Reps-organized events and general information about the Reps program. So far this year, we have:

- Started measuring individual activities – monthly reports have been replaced with reports that document specific activities. Reps can now describe an activity by filling out a few fields, and several activities are automatically captured as Reps use the site (ex: creating or attending an event)

- Automated the budget request process – as the number of events and budget requests has grown, we created a faster, less manual way for Council members to review and vote on budget requests.

- Standardized event metrics – events now have a curated set of metrics, which allows us to see the aggregate impact of several events and makes it easier for Reps to choose metrics that align with Mozilla’s organizational goals.

- Allow Reps to indicate when they are unavailable – each Rep can quickly notify their mentor and other Reps when they will be unavailable for a certain time period.

- Created an aggregated statistics page – anyone can now see an overview of the Reps program and how many Reps have been active the past couple months. We’ll be adding more Key Performance Indicators (similar to SUMO’s KPI dashboard) to this page in the next few months.

What’s next?

We’ve made great progress this year. There’s still a lot to do. For mozillians.org we want to focus on making contributor information more accessible, recognizing contributors in a meaningful way, and creating a suite of modules. On the Reps Portal we will work on scaling operations, measuring the impact of activities and events, and creating a community leadership platform.

The roadmap describes specific projects, and we’ll continue blogging about updates and announcements.

Want to help?

We’d love your help with making mozillians.org and the Reps Portal better. Check out how to get involved and say hi to the team on the #commtools and #remo-dev IRC channels.

http://dailycavalier.com/2014/08/2014-halfway-point-for-community-tools/

|

|

David Boswell: People are the hook |

One of Mozilla’s goals for 2014 is to grow the number of active contributors by 10x. As we’ve been working on this, we’ve been learning a lot of interesting things. I’m going to do a series of posts with some of those insights.

The recent launch of the contributor dashboard has provided a lot of interesting information. What stands out to me is the churn — we’re able to connect new people to opportunities, but growth is slower than it could be because many people are leaving at the same time.

To really highlight this part of the data, Pierros made a chart that compares the number of new people who are joining with the number of people leaving. The results are dramatic — more people are joining, but the number of people leaving is significant.

This is understandable — the goal for this year is about connecting new people and we haven’t focused much effort on retention. As the year winds down and we look to next year, I encourage us to think about what a serious retention effort would look like.

I believe that the heart of a retention effort is to make it very easy for contributors to find new contribution opportunities as well as helping them make connections with other community members.

Stories we’ve collected from long time community members almost all share the thread of making a connection with another contributor and getting hooked. We have data from an audit that shows this too — a positive experience in the community keeps people sticking around.

There are many ways we could help create those connections. Just one example is the Kitherder mentor matching tool that the Security team is working on. They did a demo of it at the last Grow Mozilla meeting.

I don’t know what the answer is though, so I’d love to hear what other people think. What are some of the ways you would address contributor retention?

http://davidwboswell.wordpress.com/2014/08/06/people-are-the-hook/

|

|

Mike Conley: Bugnotes |

Over the past few weeks, I’ve been experimenting with taking notes on the bugs I’ve been fixing in Evernote.

I’ve always taken notes on my bugs, but usually in some disposable text file that gets tossed away once the bug is completed.

Evernote gives me more powers, like embedded images, checkboxes, etc. It’s really quite nice, and it lets me export to HTML.

Now that I have these notes, I thought it might be interesting to share them. If I have notes on a bug, here’s what I’m going to aim to do when the bug is closed:

- Publish my notes on my new Bugnotes site1

- Comment in the bug linking to my notes

- Add a “bugnotes” tag to my comment with the link

I’ve just posted my first bugnote. It’s raw, unedited, and probably a little hard to follow if you don’t know what I’m working on. I thought maybe it’d be interesting to see what’s going on in my head as I fix a bug. And who knows, if somebody needs to re-investigate a bug or a related bug down the line, these notes might save some people some time.

Anyhow, here are my bugnotes. And if you’re interested in doing something similar, you can fork it (I’m using Jekyll for static site construction).

|

|