Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Benjamin Kerensa: UbuConLA: Firefox OS on show in Cartagena |

If you are attending UbuConLA I would strongly encourage you to check out the talks on Firefox OS and Webmaker. In addition to the talks, there will also be a Firefox OS workshop where attendees can go more hands on.

When the organizers of UbuConLA reached out to me several months ago, I knew we really had to have a Mozilla presence at this event so that Ubuntu Users who are already using Firefox as their browser of choice could learn about other initiatives like Firefox OS and Webmaker.

People in Latin America have always had a very strong ethos in terms of their support and use of Free Software, and we have an amazingly vibrant community there in Colombia.

So if you will be anywhere near Universidad Tecnológica de Bolívar in Cartagena, Colombia, please go see the talks and learn why Firefox OS is the mobile platform that makes the open web a first class citizen.

Learn how you can build apps and test them in Firefox on Ubuntu! A big thanks to Guillermo Movia for helping us get some speakers lined up here! I really look forward to seeing some awesome Firefox OS apps getting published as a result of our presence at UbuConLA as I am sure the developers will love what Firefox OS has to offer.

Feliz Conferencia!

|

|

Fredy Rouge: OpenBadges at Duolingo test center |

Duolingo is starting a new certification program:

I think it would be a good idea if anyone at MoFo (paid staff) wrote to or called these people to propose integrating http://openbadges.org/ into their certification program.

I don’t really have friends at MoFo/OpenBadges, so if you think this is a good idea and you know anyone at OpenBadges, please forward it.

Filed under: Status. Tagged: duolingo, english, Mozilla, OpenBadgets

http://fredyrouge.wordpress.com/2014/08/12/openbadges-at-duolingo-test-center/

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1049929] Product Support / Corp Support to Business Support

- [1033897] Firefox OS MCTS Waiver Request Submission Form

- [1041964] Indicate that a comment is required when selecting a value which has an auto-comment configured

- [498890] Bugzilla::User::Setting doesn’t need to sort DB results

- [993926] Bugzilla::User::Setting::get_all_settings() should use memcached

- [1048053] convert bug 651803 dupes to INVALID bugs in “Invalid Bugs” product

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/08/12/happy-bmo-push-day-107/

|

|

Hannah Kane: Maker Party Engagement Week 4 |

We’re almost at the halfway point!

Here’s some fodder for this week’s Peace Room meetings.

tl;dr potential topics of discussion:

- big increase in user accounts this week caused by change to snippet strategy

- From Adam: We’re directing all snippet traffic straight to webmaker.org/signup while we develop a tailored landing page experience with built in account creation. This page is really converting well for an audience as broad and cold as the snippet, and I believe we can increase this rate further with bespoke pages and optimization.

Fun fact: this approach is generating a typical month’s worth of new webmaker users every three days.

- what do we want from promotional partners?

- what are we doing to engage active Mozillians?

——–

Overall stats:

- Contributors: 5441 (we’ve passed the halfway point!)

- Webmaker accounts: 106.3K (really big jump this week—11.6K new accounts this week as compared to 2.6K last week) (At one point we thought that 150K Webmaker accounts would be the magic number for hitting 10K Contributors. Should we revisit that assumption?)

- Events: 1199 (up 10% from last week; this is down from the previous week which saw a 26% jump)

- Hosts: 450 (up 14% from last week, same as the prior week)

- Expected attendees: 61,910 (up 13% from last week, down a little bit from last week’s 16% increase)

- Cities: 260 (up 8% from 241 last week)

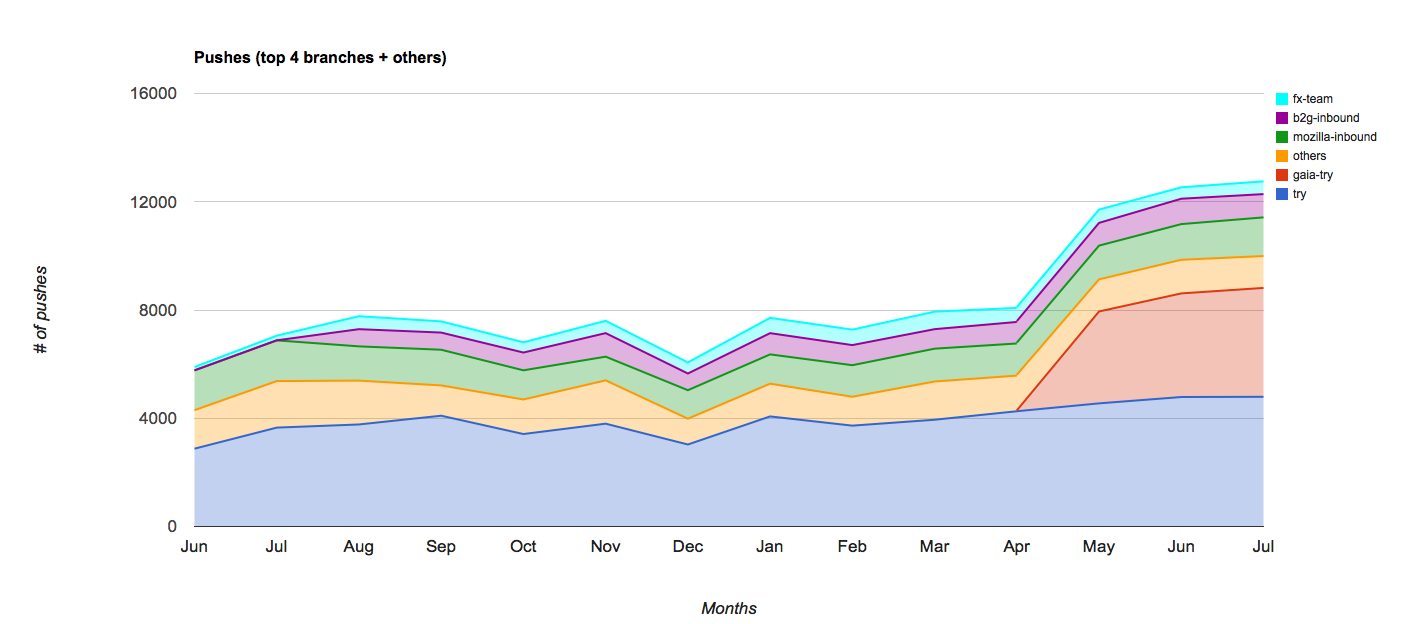

- Traffic: here’s the last three weeks. You can see we’re maintaining the higher levels that started with last week’s increase to our snippet allotment.

http://hannahgrams.com/2014/08/11/maker-party-engagement-week-4/

|

|

Sean Martell: What is a Living Brand? |

Today, we’re starting the Mozilla ID project, which will be an exploration into how to make the Mozilla identity system as bold and dynamic as the Mozilla project itself. The project will look into tackling three of our brand elements – typography, color, and the logo. Of these three, the biggest challenge will be creating a new logo, since we don’t currently have an official mark. Mozilla’s previous logo was the ever amazing dino head that we all love, which has now been set as a key branding element for our community-facing properties. Its replacement should embody everything that Mozilla is, and our goal is to bake as much of our nature into the visual as we can while keeping it clean and modern. In order to do this, we’re embracing the idea of creating a living brand.

A living brand you say? Tell me more.

I’m pleased to announce you already know what a living brand is, you just may not know it under that term. If you’ve ever seen the MTV logo – designed in 1981 by Manhattan Design – you’ve witnessed a living brand. The iconic M and TV shapes are the base elements for their brand and building on that with style, color, illustrations and animations creates the dynamic identity system that brings it alive. Their system allows designers to explore unlimited variants of the logo, while maintaining brand consistency with the underlying recognizable shapes. As you can tell through this example, a living brand can unlock so much potential for a logo, opening up so many possibilities for change and customization. It’s because of this that we feel a living brand is perfect for Mozilla – we’ll be able to represent who we are through an open visual system of customization and creative expression.

You may be wondering how this is so open if Mozilla Creative will be doing all of the variants for this new brand? Here’s the exciting part. We’re going to be helping define the visual system, yes, but we’re exploring dynamic creation of the visual itself through code and data visualization. We’re also going to be creating the visual output using HTML5 and Web technologies, baking the building blocks of the Web we love and protect into our core brand logo.

OMG exciting, right? Wait, there’s still more!

In order to have this “organized infinity” allow a strong level of brand recognition, we plan to have a constant mark as part of the logo, similar to how MTV did it with the base shapes. Here’s the fun part and one of several ways you can get involved – we’ll be live streaming the process with a newly minted YouTube channel where you can follow along as we explore everything from wordmark choices to building out those base logo shapes and data viz styles. Yay! Open design process!

So there it is. Our new fun project. Stay tuned to various channels coming out of Creative – this blog, my Twitter account, the Mozilla Creative blog and Twitter account – and we’ll update you shortly on how you’ll be able to take part in the process. For now, feel free to jump into #mologo on IRC to say hi and discuss all things Mozilla brand!

It’s a magical time for design, Mozilla. Let’s go exploring!

http://blog.seanmartell.com/2014/08/11/what-is-a-living-brand/

|

|

Gervase Markham: Accessing Vidyo Meetings Using Free Software: Help Needed |

For a long time now, Mozilla has been a heavy user of the Vidyo video-conferencing system. Like Skype, it’s a “pretty much just works” solution where, sadly, the free software and open standards solutions don’t yet cut it in terms of usability. We hope WebRTC might change this. Anyway, in the meantime, we use it, which means that Mozilla staff have had to use a proprietary client, and those without a Vidyo login of their own have had to use a Flash applet. Ick. (I use a dedicated Android tablet for Vidyo, so I don’t have to install either.)

However, this sad situation may now have changed. In this bug, it seems that SIP and H.263/H.264 gateways have been enabled on our Vidyo setup, which should enable people to call in using standards-compliant free software clients. However, I can’t get video to work properly, using Linphone. Is there anyone out there in the Mozilla world who can read the bug and figure out how to do it?

http://feedproxy.google.com/~r/HackingForChrist/~3/f5KEEuHYNLM/

|

|

Gervase Markham: It’s Not All About Efficiency |

Delegation is not merely a way to spread the workload around; it is also a political and social tool. Consider all the effects when you ask someone to do something. The most obvious effect is that, if he accepts, he does the task and you don’t. But another effect is that he is made aware that you trusted him to handle the task. Furthermore, if you made the request in a public forum, then he knows that others in the group have been made aware of that trust too. He may also feel some pressure to accept, which means you must ask in a way that allows him to decline gracefully if he doesn’t really want the job. If the task requires coordination with others in the project, you are effectively proposing that he become more involved, form bonds that might not otherwise have been formed, and perhaps become a source of authority in some subdomain of the project. The added involvement may be daunting, or it may lead him to become engaged in other ways as well, from an increased feeling of overall commitment.

Because of all these effects, it often makes sense to ask someone else to do something even when you know you could do it faster or better yourself.

– Karl Fogel, Producing Open Source Software

http://feedproxy.google.com/~r/HackingForChrist/~3/R5jtDBWrvdY/

|

|

Just Browsing: “Because We Can” is Not a Good Reason |

The two business books that have most influenced me are Geoffrey Moore’s Crossing the Chasm and Andy Grove’s Only the Paranoid Survive. Grove’s book explains that, for long-term success, established businesses must periodically navigate “strategic inflection points”, moments when a paradigm shift forces them to adopt a new strategy or fade into irrelevance. Moore’s book could be seen as a prequel, outlining strategies for nascent companies to break through and become established themselves.

The key idea of Crossing the Chasm is that technology startups must focus ruthlessly in order to make the jump from early adopters (who will use new products just because they are cool and different) into the mainstream. Moore presents a detailed strategy for marketing discontinuous hi-tech products, but to my mind his broad message is relevant to any company founder. You have a better chance of succeeding if you restrict the scope of your ambitions to the absolute minimum, create a viable business and then grow it from there.

This seems obvious: to compete with companies who have far more resources, a newcomer needs to target a niche where it can fight on an even footing with the big boys (and defeat them with its snazzy new technology). Taking on too much means that financial investment, engineering talent and, worst of all, management attention are diluted by spreading them across multiple projects.

So why do founders consistently jeopardize their prospects by trying to do too much? Let me count the ways.

In my experience the most common issue is an inability to pass up a promising opportunity. The same kind of person who starts their own company tends to be a go-getter with a bias towards action, so they never want to waste a good idea. In the very early stages this is great. Creativity is all about trying as many ideas as possible and seeing what sticks. But once you’ve committed to something that you believe in, taking more bets doesn’t increase your chances of success, it radically decreases them.

Another mistake is not recognizing quickly enough that a project has failed. Failure is rarely total. Every product will have a core group of passionate users or a flashy demo or some unique technology that should be worth something, dammit! The temptation is to let the project drag on even as you move on. Better to take a deep breath and kill it off so you can concentrate on your new challenges, rather than letting it weigh you down for months or years… until you inevitably cancel it anyway.

Sometimes lines of business need to be abandoned even if they are successful. Let’s say you start a small but prosperous company selling specialized accounting software to Lithuanian nail salons. You add a cash-flow forecasting feature and realize after a while that it is better than anything else on the market. Now you have a product that you can sell to any business in any country. But you might as well keep selling your highly specialized accounting package in the meantime, right? After all, it’s still contributing to your top-line revenue. Wrong! You’ve found a much bigger opportunity now and you should dump your older business as soon as financially possible.

Last, but certainly not least, there is the common temptation to try to pack too much into a new product. I’ve talked to many enthusiastic entrepreneurs who are convinced that their product will be complete garbage unless it includes every minute detail of their vast strategic vision. What they don’t realize is that it is going to take much longer than they think to develop something far simpler than what they have in mind. This is where all the hype about the Lean Startup and Minimum Viable Products is spot on. They force you to make tough choices about what you really need before going to market. In the early stages you should be hacking away big chunks of your product spec with a metaphorical machete, not agonizing over every “essential” feature you have to let go.

The common thread is that ambitious, hard-charging individuals, the kind who start companies, have a tough time seeing the downside of plugging away endlessly at old projects, milking every last drop out of old lines of business and taking on every interesting new challenge that comes their way. But if you don’t have a coherent, disciplined strategic view of what you are trying to achieve, if you aren’t willing to strip away every activity that doesn’t contribute to this vision, then you probably aren’t working on the right things.

http://feedproxy.google.com/~r/justdiscourse/browsing/~3/qIgu4yf-jUY/

|

|

Roberto A. Vitillo: Dashboard generator for custom Telemetry jobs |

tldr: Next time you are in need of a dashboard similar to the one used to monitor main-thread IO, please consider using my dashboard generator which takes care of displaying periodically generated data.

So you wrote your custom analysis for Telemetry, your map-reduce job is finally giving you the desired data and you want to set it up so that it runs periodically. You will need some sort of dashboard to monitor the weekly runs but since you don’t really care how it’s done what do you do? You copy paste the code of one of our current dashboards, a little tweak here and there and off you go.

That basically describes all of the recent dashboards, like the one for main-thread IO (mea culpa). Writing dashboards is painful when the only thing you care about is data. Once you finally have what you were looking for, the way you present it is often considered an afterthought at best. But maintaining N dashboards quickly becomes unpleasant.

But what exactly makes writing and maintaining dashboards so painful? It’s simply that the more controls you have, the more kinds of events you have to handle, and the more easily things get out of hand. You start with something small and beautiful that just displays some csv and presto, you end up with what should have been properly described as a state machine but instead is a mess of intertwined event handlers.

What I was looking for was something along the lines of Shiny for R, but in JavaScript and with the option to have a client-only interface. It turns out that React does more or less what I want. It’s not necessarily meant for data analysis, so there aren’t any plotting facilities, but everything is there to roll your own. What exactly makes Shiny and React so useful is that they embrace reactive programming. Once you define a state and a set of dependencies, i.e. a data flow graph in practical terms, changes that affect the state end up being automatically propagated to the right components. Even though this can be seen as overkill for small dashboards, it makes it extremely easy to extend them when the set of possible states expands, which is almost always what happens.

To make things easier for developers I wrote a dashboard generator, iacumus, for use-cases similar to the ones we currently have. It can be used in simple scenarios when:

- the data is collected in csv files on a weekly basis, usually using build-ids;

- the dashboard should compare the current week against the previous one and mark differences in rankings;

- it should be possible to go back and forward in time;

- the dashboard should provide some filtering and sorting criteria.
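The week-over-week comparison in the list above can be sketched in a few lines. This is not iacumus code (iacumus is a JavaScript/React dashboard); it is a minimal Rust illustration, and the names `RankChange` and `rank_changes` are hypothetical. It assumes each week's data has already been reduced to a list of entry names ordered from best to worst.

```rust
use std::collections::HashMap;

/// How an entry's position changed between two weekly snapshots.
#[derive(Debug, PartialEq)]
pub enum RankChange {
    /// The entry did not appear in the previous week.
    New,
    /// The entry moved this many places toward the top.
    Up(usize),
    /// The entry moved this many places toward the bottom.
    Down(usize),
    /// The entry kept its position.
    Same,
}

/// Compare two rankings (best entry first) and report, for every entry in
/// `current`, how its position changed relative to `previous`.
pub fn rank_changes<'a>(
    previous: &[&'a str],
    current: &[&'a str],
) -> Vec<(&'a str, RankChange)> {
    // Index last week's entries by name so each lookup is O(1).
    let old_rank: HashMap<&str, usize> = previous
        .iter()
        .enumerate()
        .map(|(i, name)| (*name, i))
        .collect();

    current
        .iter()
        .enumerate()
        .map(|(new, name)| {
            let change = match old_rank.get(name) {
                None => RankChange::New,
                Some(&old) if old > new => RankChange::Up(old - new),
                Some(&old) if old < new => RankChange::Down(new - old),
                Some(_) => RankChange::Same,
            };
            (*name, change)
        })
        .collect()
}
```

A dashboard could render `Up` and `Down` as arrows next to each row. Entries that dropped out of the current week are simply not reported by this sketch.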

Iacumus is customizable through a configuration file that is specified through a GET parameter. Since it’s hosted on github, it means you just have to provide the data and don’t even have to spend time deploying the dashboard somewhere, assuming the machine serving the configuration file supports CORS. Here is what the end result looks like using the data for the Add-on startup correlation dashboard. Note that currently Chrome doesn’t properly handle our gzipped datasets and is unable to display anything, in case you wonder…

My next immediate goal is to simplify writing map-reduce jobs for the above mentioned use cases, or at the very least write down some guidelines. For instance, some of our dashboards are based on Firefox’s version numbers and not on build-ids, which is really what you want when you desire to make comparisons of Nightly on a weekly basis.

Another interesting thought would be to automatically detect differences in the dashboards and send alerts. That might not be as easy with the current data, since a quick look at the dashboards makes it clear that the rankings fluctuate quite a bit. We would have to collect daily reports and account for the variance of the ranking in those, as just using a few weekly datapoints is not reliable enough to account for the deviation.

http://ravitillo.wordpress.com/2014/08/11/dasbhoard-generator-for-custom-telemetry-jobs/

|

|

Matt Brubeck: Let's build a browser engine! Part 2: Parsing HTML |

This is the second in a series of articles on building a toy browser rendering engine:

- Part 1: Getting started

- Part 2: Parsing HTML

- Part 3: CSS

This article is about parsing HTML source code to produce a tree of DOM nodes. Parsing is a fascinating topic, but I don’t have the time or expertise to give it the introduction it deserves. You can get a detailed introduction to parsing from any good course or book on compilers. Or get a hands-on start by going through the documentation for a parser generator that works with your chosen programming language.

HTML has its own unique parsing algorithm. Unlike parsers for most programming languages and file formats, the HTML parsing algorithm does not reject invalid input. Instead it includes specific error-handling instructions, so web browsers can agree on how to display every web page, even ones that don’t conform to the syntax rules. Web browsers have to do this to be usable: Since non-conforming HTML has been supported since the early days of the web, it is now used in a huge portion of existing web pages.

A Simple HTML Dialect

I didn’t even try to implement the standard HTML parsing algorithm. Instead I wrote a basic parser for a tiny subset of HTML syntax. My parser can handle simple pages like this:

    <html>
        <body>
            <h1>Title</h1>
            <div id="main" class="test">
                <p>Hello <em>world</em>!</p>
            </div>
        </body>
    </html>

The following syntax is allowed:

- Balanced tags: <p>...</p>

- Attributes with quoted values: id="main"

- Text nodes: <em>world</em>

Everything else is unsupported, including:

- Comments

- Doctype declarations

- Escaped characters (like &amp;) and CDATA sections

- Self-closing tags: <br/> or <br> with no closing tag

- Error handling (e.g. unbalanced or improperly nested tags)

- Namespaces and other XHTML syntax: <html:body>

- Character encoding detection

At each stage of this project I’m writing more or less the minimum code needed to support the later stages. But if you want to learn more about parsing theory and tools, you can be much more ambitious in your own project!

Example Code

Next, let’s walk through my toy HTML parser, keeping in mind that this is just one way to do it (and probably not the best way). Its structure is based loosely on the tokenizer module from Servo’s cssparser library. It has no real error handling; in most cases, it just aborts when faced with unexpected syntax. The code is in Rust, but I hope it’s fairly readable to anyone who’s used similar-looking languages like Java, C++, or C#. It makes use of the DOM data structures from part 1.

The parser stores its input string and a current position within the string. The position is the index of the next character we haven’t processed yet.

    struct Parser {
        pos: uint,
        input: String,
    }

We can use this to implement some simple methods for peeking at the next characters in the input:

    impl Parser {
        /// Read the next character without consuming it.
        fn next_char(&self) -> char {
            self.input.as_slice().char_at(self.pos)
        }

        /// Do the next characters start with the given string?
        fn starts_with(&self, s: &str) -> bool {
            self.input.as_slice().slice_from(self.pos).starts_with(s)
        }

        /// Return true if all input is consumed.
        fn eof(&self) -> bool {
            self.pos >= self.input.len()
        }

        // ...
    }

Rust strings are stored as UTF-8 byte arrays. To go to the next character, we can’t just advance by one byte. Instead we use char_range_at which correctly handles multi-byte characters. (If our string used fixed-width characters, we could just increment pos.)

    /// Return the current character, and advance to the next character.
    fn consume_char(&mut self) -> char {
        let range = self.input.as_slice().char_range_at(self.pos);
        self.pos = range.next;
        range.ch
    }
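The snippets in this article target the Rust of mid-2014; `uint`, `as_slice`, `char_at` and `char_range_at` are not available in current Rust. As a rough sketch only (not the robinson source), the same cursor can be written today with `usize`, string slicing and `char::len_utf8`. The `pub` markers are an assumption added so the type can be used from outside its module.

```rust
/// A cursor over a UTF-8 string. `pos` is a byte offset that always sits on
/// a character boundary.
pub struct Parser {
    pub pos: usize,
    pub input: String,
}

impl Parser {
    /// Read the next character without consuming it.
    /// Panics at end of input, since there is no character to return.
    pub fn next_char(&self) -> char {
        self.input[self.pos..].chars().next().unwrap()
    }

    /// Do the next characters start with the given string?
    pub fn starts_with(&self, s: &str) -> bool {
        self.input[self.pos..].starts_with(s)
    }

    /// Return true if all input is consumed.
    pub fn eof(&self) -> bool {
        self.pos >= self.input.len()
    }

    /// Return the current character and advance past it. `pos` moves by the
    /// character's encoded length in bytes, not by one.
    pub fn consume_char(&mut self) -> char {
        let c = self.next_char();
        self.pos += c.len_utf8();
        c
    }
}
```

Advancing by `len_utf8()` plays the role that `char_range_at(...).next` plays in the original: a two-byte character such as U+00E9 moves `pos` by two.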

Often we will want to consume a string of consecutive characters. The consume_while method consumes characters that meet a given condition, and returns them as a string:

    /// Consume characters until `test` returns false.
    fn consume_while(&mut self, test: |char| -> bool) -> String {
        let mut result = String::new();
        while !self.eof() && test(self.next_char()) {
            result.push_char(self.consume_char());
        }
        result
    }

We can use this to ignore a sequence of space characters, or to consume a string of alphanumeric characters:

    /// Consume and discard zero or more whitespace characters.
    fn consume_whitespace(&mut self) {
        self.consume_while(|c| c.is_whitespace());
    }

    /// Parse a tag or attribute name.
    fn parse_tag_name(&mut self) -> String {
        self.consume_while(|c| match c {
            'a'..'z' | 'A'..'Z' | '0'..'9' => true,
            _ => false
        })
    }
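In current Rust the closure type `|char| -> bool` is written as a generic bound such as `Fn(char) -> bool`, `push_char` is `String::push`, and inclusive range patterns use `..=`. The sketch below restates `consume_while` and `parse_tag_name` under those rules. To keep it self-contained it uses free functions over a `&str` and a byte offset instead of the `Parser` struct; that shape is an assumption made for brevity, not how the article's parser is organized.

```rust
/// Consume characters from `input`, starting at byte offset `*pos`, for as
/// long as `test` returns true. Returns the consumed text and leaves `*pos`
/// on the first character that failed the test (or at end of input).
pub fn consume_while<F>(input: &str, pos: &mut usize, test: F) -> String
where
    F: Fn(char) -> bool,
{
    let mut result = String::new();
    for c in input[*pos..].chars() {
        if !test(c) {
            break;
        }
        result.push(c);
        // Advance by the character's UTF-8 length, not by one byte.
        *pos += c.len_utf8();
    }
    result
}

/// Parse a tag or attribute name: a run of ASCII letters and digits.
pub fn parse_tag_name(input: &str, pos: &mut usize) -> String {
    consume_while(input, pos, |c| {
        matches!(c, 'a'..='z' | 'A'..='Z' | '0'..='9')
    })
}
```

For the input `div id="main"` starting at offset 0, `parse_tag_name` would return `div` and leave the offset at 3, just before the space.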

Now we’re ready to start parsing HTML. To parse a single node, we look at its first character to see if it is an element or a text node. In our simplified version of HTML, a text node can contain any character except <.

    /// Parse a single node.
    fn parse_node(&mut self) -> dom::Node {
        match self.next_char() {
            '<' => self.parse_element(),
            _ => self.parse_text()
        }
    }

    /// Parse a text node.
    fn parse_text(&mut self) -> dom::Node {
        dom::text(self.consume_while(|c| c != '<'))
    }

An element is more complicated. It includes opening and closing tags, and between them any number of child nodes:

    /// Parse a single element, including its open tag, contents, and closing tag.
    fn parse_element(&mut self) -> dom::Node {
        // Opening tag.
        assert!(self.consume_char() == '<');
        let tag_name = self.parse_tag_name();
        let attrs = self.parse_attributes();
        assert!(self.consume_char() == '>');

        // Contents.
        let children = self.parse_nodes();

        // Closing tag.
        assert!(self.consume_char() == '<');
        assert!(self.consume_char() == '/');
        assert!(self.parse_tag_name() == tag_name);
        assert!(self.consume_char() == '>');

        dom::elem(tag_name, attrs, children)
    }

Parsing attributes is pretty easy in our simplified syntax. Until we reach the end of the opening tag (>) we repeatedly look for a name followed by = and then a string enclosed in quotes.

    /// Parse a single name="value" pair.
    fn parse_attr(&mut self) -> (String, String) {
        let name = self.parse_tag_name();
        assert!(self.consume_char() == '=');
        let value = self.parse_attr_value();
        (name, value)
    }

    /// Parse a quoted value.
    fn parse_attr_value(&mut self) -> String {
        let open_quote = self.consume_char();
        assert!(open_quote == '"' || open_quote == '\'');
        let value = self.consume_while(|c| c != open_quote);
        assert!(self.consume_char() == open_quote);
        value
    }

    /// Parse a list of name="value" pairs, separated by whitespace.
    fn parse_attributes(&mut self) -> dom::AttrMap {
        let mut attributes = HashMap::new();
        loop {
            self.consume_whitespace();
            if self.next_char() == '>' {
                break;
            }
            let (name, value) = self.parse_attr();
            attributes.insert(name, value);
        }
        attributes
    }
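The attribute loop above aborts through `assert!` on any unexpected syntax. As a hedged variation written for current Rust, the sketch below applies the same rules (name, `=`, quoted value, repeated until `>`) but returns `None` instead of panicking. The standalone function signature and the use of string slicing in place of a `Parser` are assumptions made to keep the example self-contained.

```rust
use std::collections::HashMap;

/// Parse `name="value"` pairs from `input` up to, but not including, the
/// closing `>` of an opening tag. Returns `None` on unexpected syntax or if
/// the input ends before a `>` is found.
pub fn parse_attributes(input: &str) -> Option<HashMap<String, String>> {
    let mut attributes = HashMap::new();
    let mut rest = input.trim_start();

    while !rest.starts_with('>') {
        // Attribute name: a non-empty run of ASCII letters and digits.
        let name_len = rest.find(|c: char| !c.is_ascii_alphanumeric())?;
        if name_len == 0 {
            return None;
        }
        let (name, after_name) = rest.split_at(name_len);

        // The name must be followed directly by '='.
        let after_eq = after_name.strip_prefix('=')?;

        // The value must open with a single or double quote...
        let quote = after_eq
            .chars()
            .next()
            .filter(|c| *c == '"' || *c == '\'')?;
        let body = &after_eq[1..];

        // ...and run until the matching closing quote.
        let end = body.find(quote)?;
        attributes.insert(name.to_string(), body[..end].to_string());

        // Skip the closing quote and any whitespace before the next pair.
        rest = body[end + 1..].trim_start();
    }
    Some(attributes)
}
```

Returning `Option` is one possible starting point for the error-recovery exercise later in the article: the caller decides what to do with a malformed tag instead of the whole program aborting.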

To parse the child nodes, we recursively call parse_node in a loop until we reach the closing tag:

    /// Parse a sequence of sibling nodes.
    fn parse_nodes(&mut self) -> Vec<dom::Node> {
        let mut nodes = vec!();
        loop {
            self.consume_whitespace();
            if self.eof() || self.starts_with("</") {
                break;
            }
            nodes.push(self.parse_node());
        }
        nodes
    }

Finally, we can put this all together to parse an entire HTML document into a DOM tree. This function will create a root node for the document if it doesn’t include one explicitly; this is similar to what a real HTML parser does.

    /// Parse an HTML document and return the root element.
    pub fn parse(source: String) -> dom::Node {
        let mut nodes = Parser { pos: 0u, input: source }.parse_nodes();

        // If the document contains a root element, just return it. Otherwise, create one.
        if nodes.len() == 1 {
            nodes.swap_remove(0).unwrap()
        } else {
            dom::elem("html".to_string(), HashMap::new(), nodes)
        }
    }

That’s it! The entire code for the robinson HTML parser. The whole thing weighs in at just over 100 lines of code (not counting blank lines and comments). If you use a good library or parser generator, you can probably build a similar toy parser in even less space.

Exercises

Here are a few alternate ways to try this out yourself. As before, you can choose one or more of them and ignore the others.

Build a parser (either “by hand” or with a library or parser generator) that takes a subset of HTML as input and produces a tree of DOM nodes.

Modify robinson’s HTML parser to add some missing features, like comments. Or replace it with a better parser, perhaps built with a library or generator.

Create an invalid HTML file that causes your parser (or mine) to fail. Modify the parser to recover from the error and produce a DOM tree for your test file.

Shortcuts

If you want to skip parsing completely, you can build a DOM tree programmatically instead, by adding some code like this to your program (in pseudo-code; adjust it to match the DOM code you wrote in Part 1):

    // <html><body>Hello, world!</body></html>
    let root = element("html");
    let body = element("body");
    root.children.push(body);
    body.children.push(text("Hello, world!"));
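For readers who want something that compiles, here is a minimal sketch of that shortcut in current Rust. The `Node` and `NodeType` types and the `text`, `elem` and `hello_world` helpers are hypothetical stand-ins, loosely modeled on the kind of DOM structure Part 1 describes; adjust them to your own DOM code. The tree is built bottom-up because `Vec::push` takes ownership of the child, so a node cannot be modified through its old variable after it has been pushed into its parent.

```rust
use std::collections::HashMap;

/// What kind of node this is (hypothetical minimal DOM).
#[derive(Debug, PartialEq)]
pub enum NodeType {
    Text(String),
    Element {
        tag_name: String,
        attributes: HashMap<String, String>,
    },
}

/// A DOM node: its own data plus an ordered list of children.
#[derive(Debug, PartialEq)]
pub struct Node {
    pub children: Vec<Node>,
    pub node_type: NodeType,
}

/// Create a text node with no children.
pub fn text(data: &str) -> Node {
    Node {
        children: Vec::new(),
        node_type: NodeType::Text(data.to_string()),
    }
}

/// Create an element node with no attributes and the given children.
pub fn elem(name: &str, children: Vec<Node>) -> Node {
    Node {
        children,
        node_type: NodeType::Element {
            tag_name: name.to_string(),
            attributes: HashMap::new(),
        },
    }
}

/// Build the tree for <html><body>Hello, world!</body></html> by hand.
pub fn hello_world() -> Node {
    // Innermost node first; each child is moved into its parent.
    let body = elem("body", vec![text("Hello, world!")]);
    elem("html", vec![body])
}
```

Calling `hello_world()` yields an `html` element whose single child is a `body` element containing one text node.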

Or you can find an existing HTML parser and incorporate it into your program.

The next article in this series will cover CSS data structures and parsing.

http://limpet.net/mbrubeck/2014/08/11/toy-layout-engine-2.html

|

|

Christian Heilmann: Presenter tip: animated GIFs are not as cool as we think |

Disclaimer: I have no right to tell you what to do and how to present – how dare I? You can do whatever you want. I am not “hating” on anything – and I don’t like the term. I am also guilty and will be so in the future of the things I will talk about here. So, bear with me: as someone who spends most of his life currently presenting, being at conferences and coaching people to become presenters, I think it is time for an intervention.

The Tweet that started this and its thread

If you are a technical presenter and you consider adding lots of animated GIFs to your slides, stop, and reconsider. Consider other ways to spend your time instead. For example:

- Writing a really clean code example and keeping it in a documented code repository for people to use

- Researching how very successful people use the thing you want the audience to care about

- Finding a real life example where a certain way of working made a real difference and how it could be applied to an abstract coding idea

- Researching real numbers to back up your argument or disprove common “truths”

Don’t fall for the “oh, but it is cool and everybody else does it” trap. Why? Because when everybody does it there is nothing cool or new about it.

Animated GIFs are ubiquitous on the web right now and we all love them. They are short videos that work in any environment, they are funny and – being very pixelated – have a “punk” feel to them.

This, to me, was the reason presenters used them in technical presentations in the first place. They were a disruption, they were fresh, they were different.

We all got bored to tears by corporate presentations that had more bullets than the showdown in a Western movie. We all got fed up with amazingly brushed up presentations by visual aficionados that had just one too many inspiring butterflies or beautiful sunsets.

We wanted something gritty, something closer to the metal – just as we are. Let’s be different, let’s disrupt, let’s show a seemingly unconnected animation full of pixels.

This is great and still there are many good reasons to use an animated GIF in our presentations:

- They are an eye catcher – animated things are what we look at as humans. The subconscious check if something that moves is a saber tooth tiger trying to eat me is deeply ingrained in us. This can make an animated GIF a good first slide in a new section of your talk: you seemingly do something unexpected but what you want to achieve is to get the audience to reset and focus on the next topic you’d like to cover.

- They can be a good emphasis of what you are saying. When Soledad Penades shows a lady drinking under the table (6:05) when talking about her insecurities as someone people look up to it makes a point.

When Jake Archibald explains that navigator.onLine will be true even if the network cable is plugged into some soil (26:00) it is a funny, exciting and simple thing to do and adds to the point he makes.

- It is an in-crowd thing to do – the irreverence of an animated, meme-ish GIF tells the audience that you are one of them, not a professional, slick and tamed corporate speaker.

But is it? Isn’t a trick that everybody uses way past being disruptive? Are we all unique and different when we all use the same content? How many more times do we have to endure the “this escalated quickly” GIF taken from a 10 year old movie? Let’s not even talk about the issue that we expect the audience to get the reference and why it would be funny.

We’re running the danger here of becoming predictable and boring. Especially when you see speakers who use an animated GIF and know it wasn’t needed and then try to shoe-horn it somehow into their narration. It is not a rite of passage. You should use the right presentation technique to achieve a certain response. A GIF that is in your slides just to be there is like an unused global variable in your code – distracting, bad practice and in general causing confusion.

The reasons why we use animated GIFs (or videos for that matter) in slides are also their main problem:

- They do distract the audience – as a “whoa, something’s happening” reminder to the audience, that is good. When you have to compete with the blinking thing behind you it is bad. This is especially true when you chose a very “out there” GIF and you spend too much time talking over it. A fast animation or a very short loop can get annoying for the audience and instead of seeing you as a cool presenter they get headaches and think “please move on to the next slide” without listening to you. I made that mistake with my rainbow vomiting dwarf at HTML5Devconf in 2013 and was called out on Twitter.

- They are too easy to add – many a time we are tempted just to go for the funny cat pounding a strawberry because it is cool and it means we are different as a presenter and surprising.

Well, it isn’t surprising any longer and it can be seen as a cheap way out for us as creators of a presentation. Filler material is filler material, no matter how quirky.

You don’t make a boring topic more interesting by adding animated images. You also don’t make a boring lecture more interesting by sitting on a fart cushion. Sure, it will wake people up and maybe get a giggle but it doesn’t give you a more focused audience. We stopped using 3D transforms in between slides and fiery text as they are seen as a sign of a bad presenter trying to make up for a lack of stage presence or lack of content with shiny things. Don’t be that person.

When it comes to technical presentations there is one important thing to remember: your slides do not matter and are not your presentation. You are.

Your slides are either:

- wallpaper for your talking parts

- emphasis of what you are currently covering or

- a code example.

If a slide doesn’t cover any of these cases – remove it. Wallpaper doesn’t blink. It is there to be in the background and make the person in front of it stand out more. You already have to compete with a lot of other speakers, audience fatigue, technical problems, sound issues, the state of your body and bad lighting. Don’t add to the distractions you have to overcome by adding shiny trinkets of your own making.

You don’t make boring content more interesting by wrapping it in a shiny box. Instead, don’t talk about the boring parts. Make them interesting by approaching them differently, show a URL and a screenshot of the boring resources and tell people what they mean in the context of the topic you talk about. If you’re bored about something you can bet the audience is, too. How you come across is how the audience will react. And insincerity is the worst thing you can project. Being afraid or being shy or just being informative is totally fine. Don’t try too hard to please a current fashion – be yourself and be excited about what you present and the rest falls into place.

So, by all means, use animated GIFs when they fit – give humorous and irreverent presentations. But only do it when this really is you and the rest of your stage persona fits. There are masterful people out there doing this right – Jenn Schiffer comes to mind. If you go for this – go all in. Don’t let the fun parts of your talk steal your thunder. As a presenter, you are entertainer, educator and explainer. It is a mix, and as all mixes go, they only work when they feel rounded and in the right rhythm.

http://christianheilmann.com/2014/08/11/presenter-tip-animated-gifs-are-not-as-cool-as-we-think/

|

|

Nicholas Nethercote: Some good reading on sexual harassment and being a decent person |

Last week I attended a sexual harassment prevention training seminar. This was the first of several seminars that Mozilla is holding as part of its commendable Diversity and Inclusion Strategy. The content was basically “how to not get sued for sexual harassment in the workplace”. That’s a low bar, but also a reasonable place to start, and the speaker was both informative and entertaining. I’m looking forward to the next seminar on Unconscious Bias and Inclusion, which sounds like it will cover more subtle issues.

With the topic of sexual harassment in mind, I stumbled across a Metafilter discussion from last year about an essay by Genevieve Valentine in which she describes and discusses a number of incidents of sexual harassment that she has experienced throughout her life. I found the essay interesting, but the Metafilter discussion thread even more so. It’s a long thread (594 comments) but mostly high quality. It focuses initially on one kind of harassment that some men perform on public transport, but quickly broadens to be about (a) the full gamut of harassing behaviours that many women face regularly, (b) the responses women make towards these behaviours, and (c) the reactions, both helpful and unhelpful, that people can and do have towards those responses. Examples abound, ranging from the disconcerting to the horrifying.

There are, of course, many other resources on the web where one can learn about such topics. Nonetheless, the many stories that viscerally punctuate this particular thread (and the responses to those stories) helped my understanding of this topic — in particular, how bystanders can intervene when a woman is being harassed — more so than some dryer, more theoretical presentations have. It was well worth my time.

|

|

Jonas Finnemann Jensen: Using Aggregates from Telemetry Dashboard in Node.js |

When I was working on the aggregation code for telemetry histograms as displayed on the telemetry dashboard, I also wrote a Javascript library (telemetry.js) to access the aggregated histograms presented in the dashboard. The idea was to separate concerns and simplify access to the aggregated histogram data, but also to allow others to write custom dashboards presenting this data in different ways. Since then two custom dashboards have appeared:

- a developer toolbox activity dashboard by Jeff Griffiths, and

- a histogram regression dashboard by Roberto Vitillo.

Both of these dashboards run a cronjob that downloads the aggregated histogram data using telemetry.js and then aggregates or analyses it in an interesting way before publishing the results on the custom dashboard. However, telemetry.js was written to be included from telemetry.mozilla.org/v1/telemetry.js, so that we could update the storage format, use a different data service, move to a bucket in another region, etc. I still want to maintain the ability to modify telemetry.js without breaking all the deployments, so I decided to write a node.js module called telemetry-js-node that loads telemetry.js from telemetry.mozilla.org/v1/telemetry.js. As evident from the example below, this module is straightforward to use, and exhibits full compatibility with telemetry.js for better and worse.

// Include telemetry.js
var Telemetry = require('telemetry-js-node');

// Initialize telemetry.js just as the documentation says to
Telemetry.init(function() {
  // Get all versions
  var versions = Telemetry.versions();

  // Pick a version
  var version = versions[0];

  // Load measures for version
  Telemetry.measures(version, function(measures) {
    // Print measures available
    console.log("Measures available for " + version);

    // List measures
    Object.keys(measures).forEach(function(measure) {
      console.log(measure);
    });
  });
});

Whilst there certainly are some valid concerns (and risks) with loading Javascript code over http, this hack allows us to offer a stable API and minimize maintenance for people consuming the telemetry histogram aggregates. And as we’re reusing the existing code, the extensive documentation for telemetry is still applicable. See the following links for further details.

Disclaimer: I know it’s not smart to load Javascript code into node.js over http. It’s mostly a security issue as you can’t use telemetry.js without internet access anyway. But considering that most people will run this as an isolated cron job (using docker, lxc, heroku or an isolated EC2 instance), this seems like an acceptable solution.
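To make the trade-off above a little more concrete, here is a minimal sketch of how downloaded script text could be evaluated inside Node.js using the built-in vm module. This is an assumption about one possible approach, not a description of how telemetry-js-node is actually implemented. The function name loadScriptInSandbox and the stand-in source string are made up for illustration, and the download step is left out so the example runs offline. Note that Node's own documentation warns that vm is not a security mechanism, so this narrows what the script can reach but does not make untrusted code safe.

```javascript
// Hypothetical sketch: evaluate remotely hosted script text in a separate
// context. This is NOT the actual telemetry-js-node implementation.
var vm = require('vm');

// Evaluate `source` (script text that was already downloaded) in a fresh
// context and return whatever it assigned to the global named `exportName`.
function loadScriptInSandbox(source, exportName) {
  // Only the properties placed on this object are visible as globals to the
  // script; `require` and `process` are deliberately not handed over.
  var sandbox = { console: console };
  vm.createContext(sandbox);
  vm.runInContext(source, sandbox, { filename: 'remote-script.js', timeout: 1000 });
  // Top-level `var` declarations in the script become properties of the
  // context object, which is how the result is read back here.
  return sandbox[exportName];
}

// Stand-in for downloaded code, so the example runs without network access.
var fakeRemoteSource =
  'var Telemetry = { versions: function() { return ["nightly/32"]; } };';

var Telemetry = loadScriptInSandbox(fakeRemoteSource, 'Telemetry');
console.log(Telemetry.versions()[0]);
```

Running the cron job in an isolated container or instance, as suggested above, remains the more meaningful protection; the separate context mainly keeps the loaded script from accidentally clobbering the caller's globals.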

By the way, if you make a custom telemetry dashboard, whether it’s using telemetry.js in the browser or Node.js, please file a pull request against telemetry-dashboard on github to have a link for your dashboard included on telemetry.mozilla.org.

http://jonasfj.dk/blog/2014/08/using-aggregates-from-telemetry-dashboard-in-node-js/

|

|

Jordan Lund: This Week In Releng - Aug 4th, 2014 |

Major Highlights:

- Kim enabled c3.xlarge slaves for selected b2g tests - bug 1031083

- Catlee added pvtbuilds to list of things that pass through proxxy

- Coop implemented the ability to enable/disable a slave directly from slave health

Completed work (resolution is 'FIXED'):

- Buildduty

- update bm-remote with new mobiletp4.zip file

- Decommission foopy117 and associated tegras

- Legacy vcs sync not able to update legacy mapper quick enough for b2gbuild.py, causing b2g builds calls to mapper to timeout

- Something wrong with b-2008-ix-002x slaves ("LINK : fatal error LNK1123: failure during conversion to COFF: file invalid or corrupt")

- Trees closed due to Windows test backlog

- upload a new talos.zip file to get nonlocal source fixes

- Install treeherder-client on internal PyPI

- upload a new mobiletp4.zip pageset to the 3 headed remote talos server (round 2)

- Windows builds pending on inbound for > 2 hours

- Install fxa-python-client on internal PyPI

- Last non-pending Linux 32 opt build on fx-team was 17 hours ago

- General Automation

- Make blobber uploads discoverable

- buildbot changes to run selected b2g tests on c3.xlarge

- [Flame] Turn off FX OS Version 34.0a1 nightly updates until instability is fixed

- Please schedule b2g emulator cppunit tests on Cedar

- Run linux64-br-haz builder on b2g-inbound/mozilla-inbound/fx-team/mozilla-central and try-by-default

- Try is attempting to trigger periodic non-unified builds

- Automatically delete obsolete latest-* builds for calendar

- Allow test machines in AWS to get to github via internet gateway

- Schedule mochitest-plain on linux64-mulet on all branches

- Loan Requests

- Mozharness

- Other

- linux desktop qt builds for firefox

- Figure out retention of flame tinderbox-builds

- New AWS account, 'mockbuild-repos-upload'

- Platform Support

- Release Automation

- send e-mail to release-drivers when a build is requested through ship it

- With French locale, the sort of the date is broken

- Add a footer to ship-it with a link to source + bugzilla

- Repos and Hooks

- esr31 branch on https://github.com/mozilla/gecko-dev

- Give Treeherder URLs alongside TBPL ones in the hg.mozilla.org push response

- Mercurial extension for monitoring servers

- Tools

- Balrog: Backend

- create balrog rules to properly support OS blocking and watershed updates on beta (and equivalent test) channel

- support comparison operators for matching version & buildID in rules

- Balrog: Frontend

- Buildduty

- General Automation

- Modify file structure of Firefox.app to allow for OSX v2 signing

- Thunderbird Linux and Mac MozMill builders are using the system python (fix bustage on Thunderbird Mac MozMill builders)

- Create periodic Linux code coverage builds

- Add dependency management to Runner

- [Tracking] Stand up Code Coverage build and test runs

- Splitting Gij and Gip tests in several chunks

- Don't clobber the source checkout

- repurpose a snow leopard machine for 10.9 mac signing

- Run mock against repos other than puppetagain

- cope with signing server transition in buildbot configs

- stop jobs from hg'n mozharness/tools repos

- android single locale central/aurora nightlies not reporting to balrog

- You can't choose mochitest-N where N>8 for Android specific mochitests

- make sure signing server works on 10.9 and with v2 signatures

- create signing servers for v2 mac signing

- Timeouts during pip install ("Error running install of package, /builds/slave/test/build/venv/bin/pip install --download-cache /builds/...", "Error running install of package, /builds/slave/talos-slave/test/build/venv/bin/pip install --download-cache...")

- AWS region-local caches for https stuff

- Loan Requests

- loan request to investigate bug 1026805

- Slave loan request for a b-2008-sm machine

- Loan an ami-6a395a5a instance to Aaron Klotz

- Slave loan request for a bld-linux64-ec2 vm for pmoore

- Slave loan request for a bld-linux64-ec2 vm

- loan windows 7 hardware talos slave to jmaher and rvitillo@mozilla.com

- Slave loan request for a talos-mtnlion-r5 machine

- Mozharness

- Turn on blobber upload for Gaia Unit Tests

- mozharness DesktopUnittestOutputParser.evaluate_parser() should record a failure if the return code was non-zero

- Other

- figure out what to do with the n900s

- Improve cleanup of tinderbox-builds

- Need read permissions to subnets

- Platform Support

- [mozpool-client] TypeError: unsupported operand type(s) for +: 'NoneType' and 'str'

- c3.xlarge instance types are expensive, let's test running those tests on a range of instance types that are cheaper

- Change Android 2.2 builds to be called Android 2.3 builds

- Upload JDK1.7 on builders

- Release Automation

- Figure out how to offer release build to beta users

- make aus3 and aus4 responses consistent

- Figure out Play Store dynamics for Android 2.2/ARMv6 EOL support releases

- Add a standalone process that listens to pulse for release related buildbot messages

- Add REST API entry point to shipit that allows shipit-agent to enter release data into shipit database

- update partner repack scripts to cope with new format after mac signing changes

- Add new table(s) to shipit database

- Repos and Hooks

- Tools

- Make Funsize generated partials identical across platforms and environments

- Add timeouts to buildapi's urllib.urlopen() requests

- slaverebooter should attempt reboot if graceful shutdown fails

- Funsize should return correct MIME type

- Aggregate similar exceptions in emails from masters

- Reconfigs should be automatic, and scheduled via a cron job

- Funsize returns errors out and returns an HTTP 500 when requested for a non-existent partial

- permanent location for vcs-sync mapfiles, status json, logs

- Dev services (e.g. hg.mozilla.org and git.mozilla.org) status page(s)

http://jordan-lund.ghost.io/this-week-in-releng-aug-4th-2014/

|

|

Mike Conley: DocShell in a Nutshell – Part 2: The Wonder Years (1999 – 2004) |

When I first announced that I was going to be looking at the roots of DocShell, and how it has changed over the years, I thought I was going to be leafing through a lot of old CVS commits in order to see what went on before the switch to Mercurial.

I thought it’d be so, and indeed it was so. And it was painful. Having worked with DVCS’s like Mercurial and Git so much over the past couple of years, my brain was just not prepared to deal with CVS.

My solution? Take the old CVS tree, and attempt to make a Git repo out of it. Git I can handle.

And so I spent a number of hours trying to use cvs2git to convert my rsync’d mirror of the Mozilla CVS repository into something that I could browse with GitX.

“But why isn’t the CVS history in the Mercurial tree?” I hear you ask. And that’s a good question. It might have to do with the fact that converting the CVS history over is bloody hard – or at least that was my experience. cvs2git has the unfortunate habit of analyzing the entire repository / history and spitting out any errors or corruptions it found at the end.1 This is fine for small repositories, but the Mozilla CVS repo (even back in 1999) was quite substantial, and had quite a bit of history.

So my experience became: run cvs2git, wait 25 minutes, glare at an error message about corruption, scour the web for solutions to the issue, make random stabs at a solution, and repeat.

Not the greatest situation. I did what most people in my position would do, and cast my frustration into the cold, unfeeling void that is Twitter.

But, lo and behold, somebody on the other side of the planet was listening. Unfocused informed me that whoever created the gecko-dev Github mirror somehow managed to type in the black-magic incantations that would import all of the old CVS history into the Git mirror. I simply had to clone gecko-dev, and I was golden.

Thanks Unfocused.

So I had my tree. I cracked open GitX, put some tea on, and started poring through the commits from the initial creation of the docshell folder (October 15, 1999) to the last change in that folder just before the switch over to 2005 (December 15, 2004)2.

The following are my notes as I peered through those commits.

Artist’s rendering of me reading some old commit messages. I’m not claiming to have magic powers.

“First landing”

That’s the message for the first commit when the docshell/ folder was first created by Travis Bogard.

Without even looking at the code, that’s a pretty strange commit just by the message alone. No bug number, no reviewer, no approval, nothing even approximating a vague description of what was going on.

Leafing through these early commits, I was surprised to find that quite common. In fact, I learned that it was about a year after this work started that code review suddenly became mandatory for commits.

So, for these first bits of code, nobody seems to be reviewing it – or at least, nobody is signing off on it in commit messages.

Like I mentioned, the date for this commit is October 15, 1999. If the timeline in this Wikipedia article about the history of the Mozilla Suite is reliable, that puts us somewhere between Milestone 10 and Milestone 11 of the first 1.0 Mozilla Suite release.3

That means that at the time that this docshell/ folder was created, the Mozilla source code had been publicly available for over a year4, but nothing had been released from it yet.

Travis Bogard

Before we continue, who is this intrepid Travis Bogard who is spearheading this embedding initiative and the DocShell / WebShell rewrite?

At the time, according to his LinkedIn page, he worked for America Online (which at this point in time owned Netscape.5) He’d been working for AOL since 1996, working his way up the ranks from lowly intern all the way to Principal Software Engineer.

Travis was the one who originally wrote the wiki page about how painful it was embedding the web engine, and how unwieldy nsWebShell was.6 It was Travis who led the charge to strip away all of the complexity and mess inside of WebShell, and create smaller, more specialized interfaces for the behind the scenes DocShell class, which would carry out most of the work that WebShell had been doing up until that point.7

So, for these first few months, it was Travis who would be doing most of the work on DocShell.

Parallel development

These first few months, Travis puts the pedal to the metal moving things out of WebShell and into DocShell. Remember – the idea was to have a thin, simple nsWebBrowser that embedders could touch, and a fat, complex DocShell that was privately used within Gecko that was accessible via many small, specialized interfaces.

Wholesale replacing or refactoring a major part of the engine is no easy task, however – and since WebShell was core to the very function of the browser (and the mail/news client, and a bunch of other things), there were two copies of WebShell made.

The original WebShell existed in webshell/ under the root directory. The second WebShell, the one that would eventually replace it, existed under docshell/base. The one under docshell/base is the one that Travis was stripping down, but nobody was using it until it was stable. They’d continue using the one under webshell/, until they were satisfied with their implementation by both manual and automated testing.

When they were satisfied, they’d branch off of the main development line, and start removing occurrences of WebShell where they didn’t need to be, and replace them with nsWebBrowser or DocShell where appropriate. When they were done with that, they’d merge into main line, and celebrate!

At least, that was the plan.

That plan is spelled out here in the Plan of Attack for the redesign. That plan sketches out a rough schedule as well, and targets November 30th, 1999 as the completion point of the project.

This parallel development means that any bugs that get discovered in WebShell during the redesign need to get fixed in two places – both under webshell/ and docshell/base.

Breaking up is so hard to do

So what was actually changing in the code? In Travis’ first commit, he adds the following interfaces:

- nsIDocShell

- nsIDocShellEdit

- nsIDocShellFile

- nsIGenericWindow

- nsIGlobalHistory

- nsIScrollable

- nsIHTMLDocShell

along with some build files. Something interesting here is this nsIHTMLDocShell – it looks like, at this point, the plan was to have different DocShell interfaces depending on the type of document being displayed. Later on, we see this idea dissipate.

If DocShell was a person, these are its baby pictures. At this point, nsIDocShell has just two methods, LoadDocument and LoadDocumentVia, and a single nsIDOMDocument attribute for the document.

And here’s the interface for WebShell, which Travis was basing these new interfaces off of. Note that there’s no LoadDocument, or LoadDocumentVia, or an attribute for an nsIDOMDocument. So it seems this wasn’t just a straight-forward breakup into smaller interfaces – this was a rewrite, with new interfaces to replace the functionality of the old one.8

This is consistent with the remarks in this wikipage where it was mentioned that the new DocShell interfaces should have an API for the caller to supply a document, instead of a URI – thus taking the responsibility of calling into the networking library away from DocShell and putting it on the caller.

nsIDocShellEdit seems to be a replacement for some of the functions of the old nsIClipboardCommands methods that WebShell relied upon. Specifically, this interface was concerned with cutting, copying and pasting selections within the document. There is also a method for searching. These methods are all just stubbed out, and don’t do much at this point.

nsIDocShellFile seems to be the interface used for printing and saving documents.

nsIGenericWindow (which I believe is the ancestor of nsIBaseWindow), seems to be an interface that some embedding window must implement in order for the new nsWebBrowser / DocShell to be embedded in it. I think. I’m not too clear on this. At the very least, I think it’s supposed to be a generic interface for windows supplied by the underlying operating system.

nsIGlobalHistory is an interface for, well, browsing history. This was before tabs, so we had just a single, linear global history to maintain, and I guess that’s what this interface was for.

nsIScrollable is an interface for manipulating the scroll position of a document.

So these magnificent seven new interfaces were the first steps in breaking up WebShell… what was next?

Enter the Container

nsIDocShellContainer was created so that the DocShells could be formed into a tree and enumerated, and so that child DocShells could be named. It was introduced in this commit.

about:face

In this commit, only five days after the first landing, Travis appears to reverse the decision to pass the responsibility of loading the document onto the caller of DocShell. LoadDocument and LoadDocumentVia are replaced by LoadURI and LoadURIVia. Steve Clark (aka “buster”) is also added to the authors list of the nsIDocShell interface. It’s not clear to me why this decision was reversed, but if I had to guess, I’d say it proved to be too much of a burden on the callers to load all of the documents themselves. Perhaps they punted on that goal, and decided to tackle it again later (though I will point out that today’s nsIDocShell still has LoadURI defined in it).

First implementor

The first implementation of nsIDocShell showed up on October 25, 1999. It was nsHTMLDocShell, and with the exception of nsIGlobalHistory, it implemented all of the other interfaces that I listed in Travis’ first landing.

The base implementation

On October 25th, the stubs of a DocShell base implementation showed up in the repository. The idea, I would imagine, is that for each of the document types that Gecko can display, we’d have a DocShell implementation, and each of these DocShell implementations would inherit from this DocShell base class, and only override the things that they need specialized for their particular document type.

Later on, when the idea of having specialized DocShell implementations evaporates, this base class will end up being nsDocShell.cpp.

“Does not compile yet”

The message for this commit on October 27th, 1999 is pretty interesting. It reads:

added a bunch of initial implementation. does not compile yet, but that’s ok because docshell isn’t part of the build yet.

So not only are none of these patches being reviewed (as far as I can tell) or mapped to any bugs in the bug tracker, but the patches themselves just straight-up do not build. They are not building on tinderbox.

This is in pretty stark contrast to today’s code conventions. While it’s true that we might land code that is not entered for most Nightly users, we usually hide such code behind an about:config pref so that developers can flip it on to test it. And I think it’s pretty rare (if it ever occurs) for us to land code in mozilla-central that’s not immediately put into the build system.

Perhaps the WebShell tests that were part of the Plan of Attack were being written in parallel and just haven’t landed, but I suspect that they haven’t been written at all at this point. I suspect that the team was trying to stand something up and make it partially work, and then write tests for WebShell and try to make them pass for both old WebShell and DocShell. Or maybe just the latter.

These days, I think that’s probably how we’d go about such a major re-architecture / redesign / refactor; we’d write tests for the old component, land code that builds but is only entered via an about:config pref, and then work on porting the tests over to the new component. Once the tests pass for both, flip the pref for our Nightly users and let people test the new stuff. Once it feels stable, take it up the trains. And once it ships and it doesn’t look like anything is critically wrong with the new component, begin the process of removing the old component / tests and getting rid of the about:config pref.
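As a rough illustration of that workflow, the sketch below gates a rewritten component behind a pref and runs one shared test against both code paths. Everything in it is hypothetical: the pref name, the two implementations and the test are made up for the example and do not correspond to real Gecko code.

```javascript
// Hypothetical sketch of gating a rewritten component behind a pref.
// The pref name and both implementations are invented for illustration.
var prefs = { 'component.useNewImplementation': false };

function oldImplementation(uri) { return 'old:' + uri; }
function newImplementation(uri) { return 'new:' + uri; }

// Callers go through one entry point; the pref decides which code runs.
function load(uri) {
  return prefs['component.useNewImplementation']
    ? newImplementation(uri)
    : oldImplementation(uri);
}

// The same check runs against both code paths before the default is flipped.
function runSharedTests() {
  var original = prefs['component.useNewImplementation'];
  var allPassed = [false, true].every(function (flag) {
    prefs['component.useNewImplementation'] = flag;
    // Strip the 4-character "old:" / "new:" tag and compare the payload.
    return load('about:blank').slice(4) === 'about:blank';
  });
  // Restore the pref so running the tests does not change behaviour.
  prefs['component.useNewImplementation'] = original;
  return allPassed;
}

console.log(runSharedTests());
```

Once the shared tests pass for both paths, flipping the default is a one-line change, and removing the old component later means deleting one branch and the pref.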

Note that I’m not at all bashing Travis or the other developers who were working on this stuff back then – I’m simply remarking on how far we’ve come in terms of development practices.

Remember AOL keywords?

Tangentially, I’m seeing some patches go by that have to do with hooking up some kind of “Keyword” support to WebShell.

Remember those keywords? This was the pre-Google era where there were only a few simplistic search engines around, and people were still trying to solve discoverability of things on the web. Keywords was, I believe, AOL’s attempt at a solution.

You can read up on AOL Keywords here. I just thought it was interesting to find some Keywords support being written in here.

One DocShell to rule them all

Now that we have decided that there is only one docshell for all content types, we needed to get rid of the base class/ content type implementation. This checkin takes and moves the nsDocShellBase to be nsDocShell. It now holds the nsIHTMLDocShell stuff. This will be going away. nsCDocShell was created to replace the previous nsCHTMLDocShell.

This commit lands on November 12th (almost a month from the first landing), and is the point where the DocShell-implementation-per-document-type plan breaks down. nsDocShellBase gets renamed to nsDocShell, and the nsIHTMLDocShell interface gets moved into nsIDocShell.idl, where a comment above it indicates that the interface will soon go away.

We have nsCDocShell.idl, but this interface will eventually disappear as well.

[PORKJOCKEY]

So, this commit message on November 13th caught my eye:

pork jockey paint fixes. bug=18140, r=kmcclusk,pavlov

What the hell is a “pork jockey”? A quick search around, and I see yet another reference to it in Bugzilla on bug 14928. It seems to be some kind of project… or code name…

I eventually found this ancient wiki page that documents some of the language used in bugs on Bugzilla, and it has an entry for “pork jockey”: a pork jockey bug is a “bug for work needed on infrastructure/architecture”.

I mentioned this in #developers, and dmose (who was hacking on Mozilla code at the time), explained:

16:52 (dmose) mconley: so, porkjockeys

16:52 (mconley) let’s hear it

16:52 (dmose) mconley: at some point long ago, there was some infrastrcture work that needed to happen

16:52 mconley flips on tape recorder

16:52 (dmose) and when people we’re talking about it, it seemed very hard to carry off

16:52 (dmose) somebody said that that would happen on the same day he saw pigs fly

16:53 (mconley) ah hah

16:53 (dmose) so ultimately the group of people in charge of trying to make that happen were…

16:53 (dmose) the porkjockeys

16:53 (dmose) which was the name of the mailing list too

Here’s the e-mail that Brendan Eich sent out to get the Porkjockeys flying.

Development play-by-play

On November 17th, the nsIGenericWindow interface was removed because it was being implemented in widget/base/nsIBaseWindow.idl.

On November 27th, nsWebShell started to implement nsIBaseWindow, which helped pull a bunch of methods out of the WebShell implementations.

On November 29th, nsWebShell now implements nsIDocShell – so this seems to be the first point where the DocShell work gets brought into code paths that might be hit. This is particularly interesting, because he makes this change to both the WebShell implementation that he has under the docshell/ folder, as well as the WebShell implementation under the webshell/ folder. This means that some of the DocShell code is now actually being used.

On November 30th, Travis lands a patch to remove some old commented out code. The commit message mentions that the nsIDocShellEdit and nsIDocShellFile interfaces introduced in the first landing are now defunct. It doesn’t look like anything is diving in to replace these interfaces straight away, so it looks like he’s just not worrying about it just yet. The defunct interfaces are removed in this commit one day later.

One day later, nsIDocShellTreeNode interface is added to replace nsIDocShellContainer. The interface is almost identical to nsIDocShellContainer, except that it allows the caller to access child DocShells at particular indices as opposed to just returning an enumerator.

December 3rd was a very big day! Highlights include:

- WebShell stops implementing nsIScriptContextOwner in favour of the shiny new nsIScriptGlobalObjectOwner.

- The (somewhat nebulous) distinction of DocShell “treeItems” and “treeNodes” is made. At this point, the difference between the two is that nsIDocShellTreeItem must be implemented by anything that wishes to be a leaf or middle node of the DocShell tree. The interface itself provides accessors to various attributes on the tree item. nsIDocShellTreeNode, on the other hand, is for manipulating one of these items in the tree – for example, finding, adding or removing children. I’m not entirely sure this distinction is useful, but there you have it. WebShell (both the fork and “live” version) are set to implement these two new interfaces.
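To make the item / node split a little more concrete, here’s a minimal Python sketch. It is not the real XPCOM code: the class and method names are hypothetical stand-ins, and I’m using inheritance purely for brevity, whereas in Gecko these are two separate interfaces implemented by the same object.

```python
class TreeItem:
    """Hypothetical stand-in for nsIDocShellTreeItem: attributes of one item."""

    def __init__(self, name):
        self.name = name
        self.parent = None  # set when the item is added to a node


class TreeNode(TreeItem):
    """Hypothetical stand-in for nsIDocShellTreeNode: child manipulation."""

    def __init__(self, name):
        super().__init__(name)
        self._children = []

    @property
    def child_count(self):
        return len(self._children)

    def add_child(self, child):
        child.parent = self
        self._children.append(child)

    def remove_child(self, child):
        self._children.remove(child)
        child.parent = None

    def child_at(self, index):
        # Index-based access, as opposed to only handing back an enumerator.
        return self._children[index]

    def find_child_with_name(self, name):
        for child in self._children:
            if child.name == name:
                return child
        return None
```

The "item" half only answers questions about a single entry in the tree, while the "node" half is what you reach for when you want to find, add or remove children.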

Noticing something? A lot of these changes are getting dumped straight into the live version of WebShell (under the webshell/ directory). That’s not really what the Plan of Attack had spelled out, but that’s what appears to be happening. Perhaps all of this stuff was trivial enough that it didn’t warrant waiting for the WebShell fork to switch over.

On December 12th, nsIDocShellTreeOwner is introduced.

On December 15th, buster re-lands the nsIDocShellEdit and nsIDocShellFile interfaces that were removed on November 30th, but they’re called nsIContentViewerEdit and nsIContentViewerFile, respectively. Otherwise, they’re identical.

On December 17th, WebShell becomes a subclass of DocShell. This means that a bunch of things can get removed from WebShell, since they’re being taken care of by the parent DocShell class. This is a pretty significant move in the whole “replacing WebShell” strategy.
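As a toy illustration of why subclassing lets code disappear from WebShell, here’s a small Python sketch. The classes and methods are simplified stand-ins that I made up for this example, not the actual C++ declarations.

```python
class DocShell:
    """Simplified stand-in for the new base class."""

    def __init__(self):
        self._name = ""

    def get_name(self):
        return self._name

    def set_name(self, name):
        self._name = name


class WebShell(DocShell):
    """Simplified stand-in for the legacy class.

    Once it inherits from DocShell, its own copies of get_name and
    set_name can be deleted; callers transparently get the base versions.
    """

    def legacy_only_method(self):
        # Hypothetical method that has not been migrated yet.
        return "still lives in WebShell"
```

Existing callers keep talking to a WebShell, but more and more of what they reach is actually DocShell code.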

Similar work occurs on December 20th, where even more methods inside WebShell start to forward to the base DocShell class.

That’s the last bit of notable work during 1999. These next bits show up in the new year, and provide further proof that we didn’t all blow up during Y2K.

On February 2nd, 2000, a new interface called nsIWebNavigation shows up. This interface is still used to this day to navigate a browser, and to get information about whether it can go “forwards” or “backwards”.

On February 8th, a patch lands that makes nsGlobalWindow deal entirely in DocShells instead of WebShells. nsIScriptGlobalObject also now deals entirely with DocShells. This is a pretty big move, and the patch is sizeable.

On February 11th, more methods are removed from WebShell, since the refactorings and rearchitecture have made them obsolete.

On February 14th, for Valentine’s day, Travis lands a patch to have DocShell implement the nsIWebNavigation interface. Later on, he lands a patch that relinquishes further control from WebShell, and puts the DocShell in control of providing the script environment and providing the nsIScriptGlobalObjectOwner interface. Not much later, he lands a patch that implements the Stop method from the nsIWebNavigation interface for DocShell. It’s not being used yet, but it won’t be long now. Valentine’s day was busy!

On February 24th, more stuff (like the old Stop implementation) gets gutted from WebShell. Some, if not all, of those methods get forwarded to the underlying DocShell, unsurprisingly.

Similar story on February 29th, where a bunch of the scroll methods are gutted from WebShell, and redirected to the underlying DocShell. This one actually has a bug and some reviewers![9] Travis also landed a patch that gets DocShell set up to be able to create its own content viewers for various documents.

March 10th saw Travis gut plenty of methods from WebShell and redirect to DocShell instead. These include Init, SetDocLoaderObserver, GetDocLoaderObserver, SetParent, GetParent, GetChildCount, AddChild, RemoveChild, ChildAt, GetName, SetName, FindChildWithName, SetChromeEventHandler, GetContentViewer, IsBusy, SetDocument, StopBeforeRequestingURL, StopAfterURLAvailable, GetMarginWidth, SetMarginWidth, GetMarginHeight, SetMarginHeight, SetZoom, GetZoom. A few follow-up patches did something similar. That must have been super satisfying.

March 11th, Travis removes the Back, Forward, CanBack and CanForward methods from WebShell. Consumers of those can use the nsIWebNavigation interface on the DocShell instead.

March 30th sees the nsIDocShellLoadInfo interface show up. This interface is for “specifying information used in a nsIDocShell::loadURI call”. I guess this is certainly better than adding a huge amount of arguments to ::loadURI.
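The general idea of bundling optional arguments into one object can be sketched like this in Python. The field names and the load_uri function below are hypothetical and chosen only for illustration; they are not the attributes of the real nsIDocShellLoadInfo.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LoadInfo:
    """Hypothetical bundle of optional load settings (illustrative fields)."""
    referrer: Optional[str] = None
    replace_history_entry: bool = False
    post_data: Optional[bytes] = None


def load_uri(uri, load_info=None):
    """Toy loader: returns a description of what would be loaded."""
    info = load_info or LoadInfo()
    return {
        "uri": uri,
        "referrer": info.referrer,
        "replace": info.replace_history_entry,
        "has_post_data": info.post_data is not None,
    }
```

A caller that only cares about the referrer can write load_uri(uri, LoadInfo(referrer=...)) and ignore everything else, and new options can be added later without touching every call site.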

During all of this, I’m seeing references to a “new session history” being worked on. I’m not really exploring session history (at this point), so I’m not highlighting those commits, but I do want to point out that a replacement for the old Netscape session history stuff was underway during all of this DocShell business, and the work intersected quite a bit.

On April 16th, Travis lands a commit that takes yet another big chunk out of WebShell in terms of loading documents and navigation. The new session history is now being used instead of the old.

The last 10% is the hardest part

We’re approaching what appears to be the end of the DocShell work. According to his LinkedIn profile, Travis left AOL in May 2000. His last commit to the repository before he left was on April 24th. Big props to Travis for all of the work he put in on this project – by the time he left, WebShell was quite a bit simpler than when he started. I somehow don’t think he reached the end state that he had envisioned when he’d written the original redesign document – the work doesn’t appear to be done. WebShell is still around (in fact, parts of it were around until only recently![10]). Still, it was a hell of a chunk of work he put in.

And if I’m interpreting the rest of the commits after this correctly, there is a slow but steady drop off in large architectural changes, and a more concerted effort to stabilize DocShell, nsWebBrowser and nsWebShell. I suspect this is because everybody was buckling down trying to ship the first version of the Mozilla Suite (which finally occurred June 5th, 2002 – still more than 2 years down the road).

There are still some notable commits though. I’ll keep listing them off.

On June 22nd, a developer called “rpotts” lands a patch to remove the SetDocument method from DocShell, and to give the implementation / responsibility of setting the document on implementations of nsIContentViewer.

July 5th sees rpotts move the new session history methods from nsIWebNavigation to a new interface called nsIDocShellHistory. It’s starting to feel like the new session history is really heating up.

On July 18th, a developer named Judson Valeski lands a large patch with the commit message “webshell-docshell consolodation changes”. Paraphrasing from the bug, the point of this patch is to move WebShell into the DocShell lib to reduce the memory footprint. This also appears to be a lot of cleanup of the DocShell code. Declarations are moved into header files. The nsDocShellModule is greatly simplified with some macros. It looks like some dead code is removed as well.

On November 9th, a developer named “disttsc” moves the nsIContentViewer interface from the webshell folder to the docshell folder, and converts it from a manually created .h to an .idl. The commit message states that this work is necessary to fix bug 46200, which was filed to remove nsIBrowserInstance (according to that bug, nsIBrowserInstance must die).

That’s probably the last big, notable change to DocShell during 2000.

2001: A DocShell Odyssey

On March 8th, a developer named “Dan M” moves the GetPersistence and SetPersistence methods from nsIWebBrowserChrome to nsIDocShellTreeOwner. He sounds like he didn’t really want to do it, or didn’t want to be pegged with the responsibility of the decision – the commit message states “embedding API review meeting made me do it.” This work was tracked in bug 69918.

On April 16th, Judson Valeski makes it so that the mimetypes that a DocShell can handle are not hardcoded into the implementation. Instead, handlers can be registered via the CategoryManager. This work was tracked in bug 40772.
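Here’s a rough Python sketch of the difference between a hardcoded list and a registry lookup. The CategoryRegistry class, the "content-handlers" category name and the handler values are all made up for this example; the real nsICategoryManager is an XPCOM service with its own API.

```python
class CategoryRegistry:
    """Toy registry mapping (category, entry) pairs to values."""

    def __init__(self):
        self._categories = {}

    def add_entry(self, category, entry, value):
        self._categories.setdefault(category, {})[entry] = value

    def get_entry(self, category, entry):
        # Returns None when nothing is registered for this pair.
        return self._categories.get(category, {}).get(entry)


def find_handler(registry, mime_type):
    # The category name is hypothetical, picked for this sketch only.
    return registry.get_entry("content-handlers", mime_type)
```

With this shape, supporting a new mimetype means registering another entry rather than editing the code that does the lookup.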

On April 26th, a developer named Simon Fraser adds an implementation of nsISimpleEnumerator for DocShells. This implementation is called, unsurprisingly, nsDocShellEnumerator. This was for bug 76758. A method for retrieving an enumerator is added one day later in a patch that fixes a number of bugs, all related to the page find feature.

April 27th saw the first of the NSPR logging for DocShell get added to the code by a developer named Chris Waterson. Work for that was tracked in bug 76898.

On May 16th, for bug 79608, Brian Stell landed a getter and setter for the character set for a particular DocShell.

There’s a big gap here, where the majority of the landings are relatively minor bug fixes, cleanup, or only slightly related to DocShell’s purpose, and not worth mentioning.[11]

And beyond the infinite…

On January 8th, 2002, for bug 113970, Stephen Walker lands a patch that takes yet another big chunk out of WebShell, and added this to the nsIWebShell.h header:

/**
 * WARNING WARNING WARNING WARNING WARNING WARNING WARNING WARNING !!!!
 *
 * THIS INTERFACE IS DEPRECATED. DO NOT ADD STUFF OR CODE TO IT!!!!
 */

I’m actually surprised it took so long for something like this to get added to the nsIWebShell interface – though perhaps there was a shared understanding that nsIWebShell was shrinking, and such a notice wasn’t really needed.

On January 9th, 2003 (yes, a whole year later – I didn’t find much worth mentioning in the intervening time), I see the first reference to “deCOMtamination”, which is an effort to reduce the amount of XPCOM-style code being used. You can read up more on deCOMtamination here.

On January 13th, 2003, Nisheeth Ranjan lands a patch to use “machine learning” in order to order results in the urlbar autocomplete list. I guess this was the precursor to the frecency algorithm that the AwesomeBar uses today? Interestingly, this code was backed out again on February 21st, 2003 for reasons that aren’t immediately clear – but it looks like, according to this comment, the code was meant to be temporary in order to gather “weights” from participants in the Mozilla 1.3 beta, which could then be hard-coded into the product. The machine-learning bug got closed on June 25th, 2009 due to AwesomeBar and frecency.

On February 11th, 2004, the onbeforeunload event is introduced.

On April 17, 2004, gerv lands the first of several patches to switch the licensing of the code over to the MPL/LGPL/GPL tri-license. That’d be MPL 1.1.

On November 23rd, 2004, the parent and treeOwner attributes for nsIDocShellTreeItem are made scriptable. They are read-only for script.

And that’s probably the last notable patch related to DocShell in 2004.

Lord of the rings

Reading through all of these commits in the docshell/ and webshell/ folders is a bit like taking a core sample of a very mature tree, and reading its rings. I can see some really important events occurring as I flip through these commits – from the very birth of Mozilla, to the birth of XPCOM and XUL, to porkjockeys, and the start of the embedding efforts… all the way to the splitting out of Thunderbird, deCOMtamination and introduction of the MPL. I really got a sense of the history of the Mozilla project reading through these commits.

I feel like I’m getting a better and better sense of where DocShell came from, and why it does what it does. I hope if you’re reading this, you’re getting that same sense.

Stay tuned for the next bit, where I look at years 2005 to 2010.

Messages like: ERROR: A CVS repository cannot contain both cvsroot/mozilla/browser/base/content/metadata.js,v and cvsroot/mozilla/browser/base/content/Attic/metadata.js,v, for example

http://mikeconley.ca/blog/2014/08/10/docshell-in-a-nutshell-part-2-the-wonder-years-1999-2004/


Peter Bengtsson: Gzip rules the world of optimization, often |

So I have a massive chunk of JSON that a Django view is sending to a piece of Angular that displays it nicely on the page. It's big. 674Kb actually. And it's likely going to be bigger in the near future. It's basically a list of dicts. It looks something like this:

>>> pprint(d['events'][0])
{u'archive_time': None,
 u'archive_url': u'/manage/events/archive/1113/',
 u'channels': [u'Main'],
 u'duplicate_url': u'/manage/events/duplicate/1113/',
 u'id': 1113,
 u'is_upcoming': True,
 u'location': u'Cyberspace - Pacific Time',
 u'modified': u'2014-08-06T22:04:11.727733+00:00',
 u'privacy': u'public',
 u'privacy_display': u'Public',
 u'slug': u'bugzilla-development-meeting-20141115',
 u'start_time': u'15 Nov 2014 02:00PM',
 u'start_time_iso': u'2014-11-15T14:00:00-08:00',
 u'status': u'scheduled',
 u'status_display': u'Scheduled',
 u'thumbnail': {u'height': 32,
                u'url': u'/media/cache/e7/1a/e71a58099a0b4cf1621ef3a9fe5ba121.png',
                u'width': 32},
 u'title': u'Bugzilla Development Meeting'}