Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Frédéric Harper: Linux Meetup Montréal and its interest in Mozilla |

Last night I presented to the Linux Meetup Montréal group, not about Linux, but about Mozilla, Firefox OS, and Firefox too. The idea was to give a high-level presentation while keeping a technical angle, since several developers were present. With a full room, I had the pleasure of seeing how interested Linux users are in Mozilla. Unfortunately, my recording did not work, but here are the slides all the same.

|

|

Mozilla Release Management Team: Firefox 32 beta3 to beta4 |

- 17 changesets

- 19 files changed

- 135 insertions

- 57 deletions

| Extension | Occurrences |
| cpp | 8 |
| h | 4 |
| js | 2 |
| xml | 1 |
| py | 1 |
| jsm | 1 |
| in | 1 |
| c | 1 |

| Module | Occurrences |
| js | 6 |
| mobile | 3 |
| xpcom | 2 |
| dom | 2 |
| widget | 1 |
| testing | 1 |
| services | 1 |
| media | 1 |
| gfx | 1 |
| browser | 1 |

List of changesets:

| Ted Mielczarek | Bug 1033938 - rm full symbols.zip during buildsymbols. r=glandium, a=NPOTB - 0ad2cb256eb8 |

| Cosmin Malutan | Bug 990509 - Wait a second after tabs opening before finishing the sync operation. r=aeftimie, r=rnewman, a=test-only - 51f2c08f86ee |

| Richard Newman | Bug 1046369 - Add architecture to logged library load errors. r=blassey, a=sylvestre - 429123ab0700 |

| Mark Finkle | Bug 1012677 - Investigate delayed initialization of nsILoginManager in Firefox for Android r=margaret a=sylvestre - c110771b033f |

| Mark Finkle | Bug 1043920 - Reader mode (ambient light detection) prevents device from sleeping r=margaret a=sylvestre - a59d3af0c000 |

| Tim Taubert | Bug 1041788 - Don't enter _beginRemoveTab() when a .permitUnload() call is pending r=dao a=sylvestre - faf3b10d4868 |

| Mike Hommey | Bug 1044414 - Add a fallback for unknown platforms after Bug 945869. r=ted, a=NPOTB - 91dca763d0f5 |

| Mike Hommey | Bug 901208 - Fix Skia for ARM v4t. r=derf, a=NPOTB - aa124023125a |

| Gijs Kruitbosch | Bug 1042625 - High contrast mode detection broken on Windows <8. r=jimm, a=lmandel - 19d074395f1a |

| Randell Jesup | Bug 1035067 - Don't hint we expect a track if we're not going to receive it. r=ehugg, a=lmandel - b7316976ca8b |

| Bobby Holley | Bug 1044205 - Detach the XPCWrappedNativeScope from the CompartmentPrivate during forcible shutdown. r=billm, a=sledru - a979f156f8d1 |

| Bobby Holley | Bug 1044205 - Invoke XPCWrappedNativeScope::TraceSelf from TraceXPCGlobal. r=billm, a=sledru - 7fb3c0ed4417 |

| Bobby Holley | Bug 1044205 - Rename XPCWrappedNativeScope::TraceSelf to TraceInside. r=billm, a=sledru - 72c66ced503e |

| Andrew McCreight | Bug 1037510 - Part 1: Add nursery size as a parameter of CycleCollectedJSRuntime. r=khuey, a=sledru - 84bf8e0aaecb |

| Andrew McCreight | Bug 1037510 - Part 2: Reduce GGC nursery size to 1MB on workers. r=khuey, a=sledru - e61371be0f38 |

| Ryan VanderMeulen | Bug 1044205 - Replace xpc::CompartmentPrivate::Get with GetCompartmentPrivate to fix bustage. a=bustage - 932afd80c5a0 |

| Ryan VanderMeulen | Bug 1044205 - Fix up one other use of CompartmentPrivate::Get. a=bustage - 54fe10b3558d |

http://release.mozilla.org//statistics/32/2014/08/06/fx-32-b3-to-b4.html

|

|

Laura Hilliger: Connected Courses |

I’m quite pleased to point you to a new online learning experience being put together by a group of amazing educators from the Connected Learning community. Starting September 15th we’re going to be talking about openness and blended learning in a 12-week course that aims to help people run their own connected courses. It’s meta! I love meta.

The coursework will help you understand how we work in the digital space by demystifying the tools and trade of openness. We’ll explore why you might run a Connectivist learning experience, how to get started, how to connect online and offline participants, and how to MAKE things that support this kind of learning.

We’ll talk about building networks, maintaining networks, diversifying networks and living and working in a connected space. We’ll learn together, share ideas and start making action plans for our own connected courses.

You might understand, based on the above, why I’m excited about this. For the past couple of years I’ve been learning how to run connected courses, and I’ve been looking to people like the organizers of Connected Courses for advice, best practices and support. I’ve learned so much about how open online learning can activate and inspire people, and I’ve spent loads of time trying to understand the hows and whys in order to make Webmaker’s #TeachTheWeb program a sustainable engine of learning and support for our community. This course aims to simplify many of the trials and tribulations I’ve had organizing in this educational space, so that anyone can run these experiences and join in on open culture.

Everyone is welcome and no experience is required. The first unit starts on September 15th, but you can sign up now and find more details about the topics we'll be exploring at http://connectedcourses.org

See you there!

|

|

Marco Zehe: Started a 30 days with Android experiment |

After revisiting the results of my Switching to Android experiment and finding most items (like 99.5%) in order now, I decided on Tuesday to conduct a serious 30 days with Android endeavor. I have handed my iPhone over to my wife, and she’s keeping (confiscating) it for me.

I will also keep a diary of experiences. However, since that is very specific and not strictly Mozilla-related, and since this blog is syndicated on Planet Mozilla, I have decided to create a new blog especially for that occasion. You’re welcome to follow along there if you’d like, and do feel free to comment! Day One is already posted.

Also, if you follow me on Twitter, the hashtag #30DaysWithAndroid will be used to denote things around this topic, and announce the new posts as I write them, hopefully every day.

It’s exciting, and I still feel totally crazy about it! Well, here goes!

http://www.marcozehe.de/2014/08/06/started-a-30-days-with-android-experiment/

|

|

Joshua Cranmer: Why email is hard, part 7: email security and trust |

At a technical level, S/MIME and PGP (or at least PGP/MIME) use cryptography essentially identically. Yet the two are treated as radically different models of email security because they diverge on the most important question of public key cryptography: how do you trust the identity of a public key? Trust is critical, as it is the only way to stop an active, man-in-the-middle (MITM) attack. MITM attacks are actually easier to pull off in email, since all email messages effectively have to pass through both the sender's and the recipients' email servers [1], allowing attackers to pull off permanent, long-lasting MITM attacks [2].

S/MIME uses the same trust model that SSL uses, based on X.509 certificates and certificate authorities. X.509 certificates effectively work by providing a certificate that says who you are which is signed by another authority. In the original concept (as you might guess from the name "X.509"), the trusted authority was your telecom provider, and the certificates were furthermore intended to be a part of the global X.500 directory—a natural extension of the OSI internet model. The OSI model of the internet never gained traction, and the trusted telecom providers were replaced with trusted root CAs.

PGP, by contrast, uses a trust model that's generally known as the Web of Trust. Every user has a PGP key (containing their identity and their public key), and users can sign others' public keys. Trust generally flows from these signatures: if you trust a user, you know the keys that they sign are correct. The name "Web of Trust" comes from the vision that trust flows along the paths of signatures, building a tight web of trust.

And now for the controversial part of the post, the comparisons and critiques of these trust models. A disclaimer: I am not a security expert, although I am a programmer who revels in dreaming up arcane edge cases. I also don't use PGP at all, and use S/MIME to a very limited extent for some Mozilla work [3], although I did try a few abortive attempts to dogfood it in the past. I've attempted to replace personal experience with comprehensive research [4], but most existing critiques and comparisons of these two trust models are about 10-15 years old and predate several changes to CA certificate practices.

A basic tenet of development that I have found is that the average user is fairly ignorant. At the same time, a lot of the defense of trust models, both CAs and Web of Trust, tends to hinge on configurability. How many people, for example, know how to add or remove a CA root from Firefox, Windows, or Android? Even among the subgroup of Mozilla developers, I suspect the number of people who know how to do so is rather small. Or in the case of PGP, how many people know how to change the maximum path length? Or even understand the security implications of doing so?

Seen in the light of ignorant users, the Web of Trust is a UX disaster. Its entire security model is predicated on having users precisely specify how much they trust other people to trust others (ultimate, full, marginal, none, unknown) and also on having them continually do out-of-band verification procedures and publicly reporting those steps. In 1998, a seminal paper on the usability of a GUI for PGP encryption came to the conclusion that the UI was effectively unusable for users, to the point that only a third of the users were able to send an encrypted email (and even then, only with significant help from the test administrators), and a quarter managed to publicly announce their private keys at some point, which is pretty much the worst thing you can do. They also noted that the complex trust UI was never used by participants, although the failure of many users to get that far makes generalization dangerous [5]. While newer versions of security UI have undoubtedly fixed many of the original issues found (in no small part due to the paper, one of the first to argue that usability is integral, not orthogonal, to security), I have yet to find an actual study on the usability of the trust model itself.

The Web of Trust has other faults. The notion of "marginal" trust, it turns out, is rather broken: if you marginally trust a user who has two keys that both signed another person's key, that's the same as fully trusting a user with one key who signed that key. There are several proposals for different trust formulas [6], but none of them has caught on in practice, to my knowledge.

A hidden fault is associated with its manner of presentation: in sharp contrast to CAs, the Web of Trust appears to not delegate trust, but any practical widespread deployment needs to solve the problem of contacting people who have had no prior contact. Combined with the need to bootstrap new users, this implies that there needs to be some keys that have signed a lot of other keys that are essentially default-trusted—in other words, a CA, a fact sometimes lost on advocates of the Web of Trust.

That said, a valid point in favor of the Web of Trust is that it more easily allows people to distrust CAs if they wish to. While I'm skeptical of its utility to a broader audience, the ability to do so is crucial for a not-insignificant portion of the population, and it's important enough to be explicitly called out.

X.509 certificates are most commonly discussed in the context of SSL/TLS connections, so I'll discuss them in that context as well, as the implications for S/MIME are mostly the same. Almost all criticism of this trust model essentially boils down to a single complaint: certificate authorities aren't trustworthy. A historical criticism is that the addition of CAs to the main root trust stores was ad-hoc. Since then, however, the main oligopoly of these root stores (Microsoft, Apple, Google, and Mozilla) have made their policies public and clear [7]. The introduction of the CA/Browser Forum in 2005, with a collection of major CAs and the major browsers as members, and several [8] helps in articulating common policies. These policies, simplified immensely, boil down to:

- You must verify information (depending on certificate type). This information must be relatively recent.

- You must not use weak algorithms in your certificates (e.g., no MD5).

- You must not make certificates that are valid for too long.

- You must maintain revocation checking services.

- You must have fairly stringent physical and digital security practices and intrusion detection mechanisms.

- You must be [externally] audited every year that you follow the above rules.

- If you screw up, we can kick you out.

I'm not going to claim that this is necessarily the best policy or even that any policy can feasibly stop intrusions from happening. But it's a policy, so CAs must abide by some set of rules.

Another CA criticism is the fear that they may be suborned by national government spy agencies. I find this claim underwhelming, considering that the number of certificates acquired by intrusions that were used in the wild is larger than the number of certificates acquired by national governments that were used in the wild: 1 and 0, respectively. Yet no one complains about the untrustworthiness of CAs due to their ability to be hacked by outsiders. Another attack is that CAs are controlled by profit-seeking corporations, which misses the point because the business of CAs is not selling certificates but selling their access to the root databases. As we will see shortly, jeopardizing that access is a great way for a CA to go out of business.

To understand issues involving CAs in greater detail, there are two CAs that are particularly useful to look at. The first is CACert. CACert is favored by many for its attempt to handle X.509 certificates in a Web of Trust model, so invariably every public discussion about CACert ends up devolving into an attack on other CAs for their perceived capture by national governments or corporate interests. Yet what many of the proponents for inclusion of CACert miss (or dismiss) is the fact that CACert actually failed the required audit, and it is unlikely to ever pass an audit. This shows a central failure of both CAs and Web of Trust: different people have different definitions of "trust," and in the case of CACert, some people are favoring a subjective definition (I trust their owners because they're not evil) when an objective definition fails (in this case, that the root signing key is securely kept).

The other CA of note here is DigiNotar. In July 2011, some hackers managed to acquire a few fraudulent certificates by hacking into DigiNotar's systems. By late August, people had become aware of these certificates being used in practice [9] to intercept communications, mostly in Iran. The use appears to have been caught after Chromium updates failed due to invalid certificate fingerprints. After it became clear that the fraudulent certificates were not limited to a single fake Google certificate, and that DigiNotar had failed to notify potentially affected companies of its breach, DigiNotar was swiftly removed from all of the trust databases. It ended up declaring bankruptcy within two weeks.

DigiNotar indicates several things. One, SSL MITM attacks are not theoretical (I have seen at least two or three security experts advising pre-DigiNotar that SSL MITM attacks are "theoretical" and therefore the wrong target for security mechanisms). Two, keeping the trust of browsers is necessary for commercial operation of CAs. Three, the notion that a CA is "too big to fail" is false: DigiNotar played an important role in the Dutch community as a major CA and the operator of Staat der Nederlanden. Yet when DigiNotar screwed up and lost its trust, it was swiftly kicked out despite this role. I suspect that even Verisign could be kicked out if it manages to screw up badly enough.

This isn't to say that the CA model isn't problematic. But the source of its problems is that delegating trust isn't a feasible model in the first place, a problem that it shares with the Web of Trust as well. Different notions of what "trust" actually means and the uncertainty that gets introduced as chains of trust get longer both make delegating trust weak to both social engineering and technical engineering attacks. There appears to be an increasing consensus that the best way forward is some variant of key pinning, much akin to how SSH works: once you know someone's public key, you complain if that public key appears to change, even if it appears to be "trusted." This does leave people open to attacks on first use, and the question of what to do when you need to legitimately re-key is not easy to solve.
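The pinning idea described above can be sketched in a few lines. This is only an illustration of trust on first use, not how SSH or any mail client actually implements it; the `PinStore` class, its method names, and the fingerprint strings are all hypothetical.

```javascript
// Minimal trust-on-first-use (TOFU) pin store, in the spirit of SSH's known hosts.
// PinStore, check, and repin are hypothetical names used for illustration only.
function PinStore() {
  this.pins = {}; // identity (e.g. an email address) -> pinned key fingerprint
}

// Returns "first-use", "match", or "mismatch" for the presented fingerprint.
PinStore.prototype.check = function (identity, fingerprint) {
  if (!Object.prototype.hasOwnProperty.call(this.pins, identity)) {
    // First contact: there is nothing to compare against, so this is the
    // window in which an attacker could substitute a key.
    this.pins[identity] = fingerprint;
    return "first-use";
  }
  // A changed key is reported even if it would otherwise look "trusted".
  return this.pins[identity] === fingerprint ? "match" : "mismatch";
};

// Legitimate re-keying must be confirmed out of band before calling this.
PinStore.prototype.repin = function (identity, fingerprint) {
  this.pins[identity] = fingerprint;
};

var store = new PinStore();
var results = [
  store.check("alice@example.org", "AA:BB:CC"), // first sighting of the key
  store.check("alice@example.org", "AA:BB:CC"), // same key again
  store.check("alice@example.org", "DD:EE:FF")  // key appears to have changed
];
console.log(results.join(", ")); // prints "first-use, match, mismatch"
```

The sketch makes both weaknesses visible: the first call accepts whatever key it is shown, and `repin` has no way of knowing whether a key change is legitimate.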

In short, both CAs and the Web of Trust have issues. Whether or not you should prefer S/MIME or PGP ultimately comes down to the very conscious question of how you want to deal with trust—a question without a clear, obvious answer. If I appear to be painting CAs and S/MIME in a positive light and the Web of Trust and PGP in a negative one in this post, it is more because I am trying to focus on the positions less commonly taken to balance perspective on the internet. In my next post, I'll round out the discussion on email security by explaining why email security has seen poor uptake and answering the question as to which email security protocol is most popular. The answer may surprise you!

[1] Strictly speaking, you can bypass the sender's SMTP server. In practice, this is considered a hole in the SMTP system that email providers are trying to plug.

[2] I've had 13 different connections to the internet in the same time as I've had my main email address, not counting all the public wifis that I have used. Whereas an attacker would find it extraordinarily difficult to intercept all of my SSH sessions for a MITM attack, intercepting all of my email sessions is clearly far easier if the attacker were my email provider.

[3] Before you read too much into this personal choice of S/MIME over PGP, it's entirely motivated by a simple concern: S/MIME is built into Thunderbird; PGP is not. As someone who does a lot of Thunderbird development work that could easily break the Enigmail extension locally, needing to use an extension would be disruptive to workflow.

[4] This is not to say that I don't heavily research many of my other posts, but I did go so far for this one as to actually start going through a lot of published journals in an attempt to find information.

[5] It's questionable how well the usability of a trust model UI can be measured in a lab setting, since the observer effect is particularly strong for all metrics of trust.

[6] The web of trust makes a nice graph, and graphs invite lots of interesting mathematical metrics. I've always been partial to eigenvectors of the graph, myself.

[7] Mozilla's policy for addition to NSS is basically the standard policy adopted by all open-source Linux or BSD distributions, seeing as OpenSSL never attempted to produce a root database.

[8] It looks to me that it's the browsers who are more in charge in this forum than the CAs.

[9] To my knowledge, this is the first—and so far only—attempt to actively MITM an SSL connection.

http://quetzalcoatal.blogspot.com/2014/08/why-email-is-hard-part-7-email-security.html

|

|

Benjamin Kerensa: Dogfooding: Flame on Nightly |

Just about two weeks ago, I got a Flame and have decided to use it as my primary phone and put away my Nexus 5. I’m running Firefox OS Nightly on it and so far have not run into any bugs so critical that I have needed to go back to Android.

I have however found some bugs and have some thoughts on things that need improvement to make the Firefox OS experience even better.

Keyboard

One thing that has really irked me while using Firefox OS 2.1 is the keyboard always shows capital letters even if you don’t have caps lock on and are typing lower case letters. This experience is not what I have grown used to while using Android for years and iOS before that. When typing my password into web apps it is confusing to not see a visual cue on the keyboard to let me know what casing I am inputting.

For whatever reason this was an intended feature and I honestly think this UX decision needs to be rethought because it just doesn’t feel natural.

Call Volume

On Firefox OS 2.1, at least in my experience, the max volume is totally inadequate because indoors, it sounds like the person on the other end of a call is whispering or talking quietly. Making or receiving a call outdoors or in a noisy environment is impossible. I filed a bug on this so hopefully it will be sorted.

Mail App

I like that the mail app is fast and nimble but the lack of having threaded e-mail conversations just leaves me wanting more. It would also be nice to be able to have a smart inbox like the GMail app on Android has since that is also my mail provider.

Social Media Apps

The Facebook and Twitter apps both work better, and luckily the Facebook web app has avoided many of the UI and feature changes Facebook has landed in its Android and iOS apps, so the experience is still good. One thing I do miss, though, is notifications: on Android I got notices, whereas the Facebook and Twitter apps on Firefox OS currently do not check for new messages and send notifications to your phone (apparently Facebook is working on adding support).

Additionally, it would really be nice to have a standalone Google+ packaged app in the marketplace since the current one I can add to the home screen seems a bit hit or miss and has some weird grey bar above the app which limits the app using the full screen resolution.

Overall Impression

I’m still using my Flame, so none of the issues I have pointed out are making my phone unusable, and I know that I am running an unreleased nightly version of Firefox OS, so stability issues are expected. That being said, I’m really impressed by how polished the UI is compared to previous versions. Each time I get an OTA, I see more and more polish and improvements coming to certified apps.

I am really happy that the Flame has dual SIM, since I am traveling to Europe in a few weeks and can buy a SIM for a few euros if my T-Mobile International Roaming for some reason fails me.

I also know others who are not involved in the Mozilla community and are also using the Flame as their daily driver in North America, so it’s very encouraging to see people buying the Flame and dogfooding it on their own simply because they want a platform that puts the Open Web first while also being Open Source.

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/40x9ZCb3yNE/dogfooding-flame-nightly

|

|

Ben Kero: The ‘Try’ repository and its evolution |

As the primary maintainers of the back end of the Try repository (in addition to the rest of Mercurial infrastructure) we are responsible for its care and feeding, making sure it is available, and a safe place to put your code before integration into trees.

Recently (over the past few years, actually) we’ve found that Mercurial has problems scaling to its level of activity. Here are some statistics, for example:

- 24550 Mercurial heads (this is reset every few months)

- Head count correlated with the degraded performance

- 4.3 GB in size, 203509 files without a working copy

One of the methods we’re attempting is to modify try so that each push is not a head, but is instead a bundle that can be applied cleanly to any mozilla-central tree.

By default when issuing a ‘hg clone’ from and to a local disk, it will create a hardlinked clone:

$ hg clone --time --debug --noupdate mozilla-central/ mozilla-central2/

linked 164701 files

listing keys for "bookmarks"

time: real 3.030 secs (user 1.080+0.000 sys 1.470+0.000)

$ du --apparent-size -hsxc mozilla-central*

1.3G mozilla-central

49M mozilla-central2

1.3G total

These lightweight clones are the perfect environment to apply try heads to, because they will all be based off existing revs on mozilla-central anyway. To do that we can apply a try head bundle on top:

$ cd mozilla-central2/

$ hg unbundle $HOME/fffe1fc3a4eea40b47b45480b5c683fea737b00f.bundle

adding changesets

adding manifests

adding file changes

added 14 changesets with 55 changes to 52 files (+1 heads)

(run 'hg heads .' to see heads, 'hg merge' to merge)

$ hg heads|grep fffe1fc

changeset: 213681:fffe1fc3a4ee

This is great. Imagine if instead of ‘mozilla-central2' this were named fffe1*, and could be stored in portable bundle format, and used to create repositories whenever they were needed. There is one problem though:

$ du -hsx --apparent-size mozilla-central*

1.3G mozilla-central

536M mozilla-central2

Our lightweight 49MB copy has turned into 536MB. This would be fine for just a few repositories, but we have tens of thousands. That means we’ll need to keep them in bundle format and turn them into repositories on demand. Thankfully this operation only takes about three seconds.

I’ve written a little bash script to go through the backlog of 24,553 try heads and generate bundles for each of them. Here is the script and some stats for the bundles:

$ ls *bundle|wc -l

24553

$ du -hsx

39G .

$ cat makebundles.sh

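#!/bin/bash
# Create one bundle per try head, skipping heads that already have a bundle file.
# GNU parallel substitutes each head's node hash for {}.
/usr/bin/parallel --gnu --jobs 16 \
"test ! -f {}.bundle && \
/usr/bin/hg -R /repo/hg/mozilla/try bundle --rev {} {}.bundle" ::: \
$(/usr/bin/hg -R /repo/hg/mozilla/try heads --template "{node} ")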

If this tooling works well I’d like to start using this as the future method of submitting requests to try. Additionally if developers wanted, I could create a Mercurial extension to automate the bundling process and create a bundle submission engine for try.

|

|

Nick Hurley: HTTP/2 draft 14 in Firefox |

Update 2014-08-06 - Fixed off-by-one in the length of time we’ve been working on HTTP/2

As many of you reading this probably know, over the last one and a half years (or so) the HTTP Working Group has been hard at work on HTTP/2. During that time there have been 14 iterations of the draft spec (paired with 9 iterations of the draft spec for HPACK, the header compression scheme for HTTP/2), many in-person meetings (both at larger IETF meetings and in smaller HTTP-only interim meetings), and thousands of mailing list messages. On the first of this month, we took our next big step towards the standardization of this protocol - working group last call (WGLC).

There is lots of good information on the web about HTTP/2, so I won’t go into major details here. Suffice it to say, HTTP/2 is going to be a great advance in the speed and responsiveness of the web, as well as (hopefully!) increasing the amount of TLS on the web.

Throughout all these changes, I have kept Firefox up to date with the latest draft versions; not always an easy task, with some of the changes that have gone into the spec! Through all that time, the most recent version of HTTP/2 has been available in Nightly, disabled by default, behind the network.http.spdy.enabled.http2draft preference.

Today, however, Firefox took its next big step in HTTP/2 development. Not only have I landed draft 14 (the most recent version of the spec), but I also landed a patch to enable HTTP/2 by default. We felt comfortable enabling this by default given the WGLC status of the spec - there should be no major, incompatible changes to the wire format of the protocol unless some new information about, for example, major security flaws in the protocol comes to light. This means that all of you running Nightly will now be able to speak HTTP/2 with any sites that support it. To find out if you’re speaking HTTP/2 to a website, just open up the developer tools console (Cmd-Alt-K or Ctrl-Alt-K), and make a network request. If the status line says “HTTP/2.0”, then congratulations, you’ve found a site that runs HTTP/2! Unfortunately, there aren’t a whole lot of these right now, mostly small test sites for developers of the protocol. That number should start to increase as the spec continues to move through the IETF process.

The current plan is to allow draft14 to ride the trains through to release, enabled by default. If during the course of your normal web surfing, you see any bugs and the connection is HTTP/2 (as reported by the developer tools, see above) and you can fix the bug by disabling HTTP/2, then please file a bug under Core :: Networking:HTTP.

https://todesschaf.org/posts/2014/08/05/http2-draft-14-in-firefox.html

|

|

Just Browsing: Going Functional: Three Tiny Useful Functions – Some, Every, Pluck |

Some, Every

Every now and then in your everyday programmer’s life you come across an array and you need to determine something, usually whether all the elements in the array pass a certain test.

You know, something like:

var allPass = true;
for (var i = 0; i < arr.length; ++i) {
  if ( ! passesTest(arr[i])) {
    allPass = false;
    break;
  }
}

Or the mirror image: figure out whether any element in the set passes a test.

Now, the for loop above (or a similar construct for the “any” problem) is not too bad. But hey, it’s still six lines of code. And the purpose is not too clear at first glance. So let’s not reinvent the wheel and just use every:

var allPass = arr.every(function(item) { return passesTest(item); });

or better yet:

var allPass = arr.every(passesTest);

Much nicer, isn’t it? The same goes for some, which returns true if any element matches.

Those two functions have been available in all major browsers for quite a while now. If you’re unlucky enough to have to deal with IE8 or worse, you can use the Lo-Dash or Underscore libraries.
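To make the difference between the two concrete, here is a small sketch. The isEven test function and the sample arrays are made up for illustration and are not part of the original post.

```javascript
// Hypothetical test function and sample data, for illustration only.
function isEven(n) {
  return n % 2 === 0;
}

var allEven = [2, 4, 6];
var mixed = [1, 2, 3];
var allOdd = [1, 3, 5];

// every: true only when each element passes the test.
var everyAllEven = allEven.every(isEven); // true
var everyMixed = mixed.every(isEven);     // false

// some: true as soon as one element passes the test.
var someMixed = mixed.some(isEven);       // true
var someAllOdd = allOdd.some(isEven);     // false

// On an empty array, every is vacuously true and some is false.
var everyEmpty = [].every(isEven);        // true
var someEmpty = [].some(isEven);          // false
```

One thing to keep in mind when passing a function directly, as in arr.every(passesTest): the callback is called with three arguments (element, index, array), so a function that gives meaning to a second parameter may need the explicit wrapper instead.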

Pluck

Pluck is useful when you have an array of objects and you’re only interested in one of their properties. It constructs an array comprising the values of said property. Say you want to know the average height of an NBA player.

var nbaPlayers = [
    {age: 37, name: "Manu Ginóbili", height: 1.98},
    {age: 36, name: "Dirk Nowitzki", height: 2.13},
    //...
];

var averageHeight = avg(_.pluck(nbaPlayers, 'height'));

Another way to look at that is that pluck is merely shorthand for map. It is, but it’s still very handy. pluck is not a standard JS function but you can find it in any good functional JS library (be it Lo-Dash, Underscore or some other).
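To show what "shorthand for map" means in practice, here is a minimal sketch of a standalone pluck built on the standard Array.prototype.map. It is a stand-in for illustration, not the Lo-Dash or Underscore implementation, and the avg helper is an assumption, since the snippet above uses avg without defining it.

```javascript
// A minimal stand-in for _.pluck, written with the standard Array.prototype.map.
function pluck(list, propertyName) {
  return list.map(function (item) {
    return item[propertyName];
  });
}

// Hypothetical helper: the original snippet calls avg() without defining it.
function avg(numbers) {
  var sum = numbers.reduce(function (total, n) { return total + n; }, 0);
  return numbers.length ? sum / numbers.length : NaN;
}

var players = [
  {name: "Manu Ginóbili", height: 1.98},
  {name: "Dirk Nowitzki", height: 2.13}
];

var heights = pluck(players, "height"); // [1.98, 2.13]
var averageHeight = avg(heights);
```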

http://feedproxy.google.com/~r/justdiscourse/browsing/~3/7mG0DL0991w/

|

|

Daniel Stenberg: I’m eight months in on my Mozilla adventure |

I started working for Mozilla in January 2014. Here are some reflections from my first period as a Mozilla employee.

Working from home

I’ve worked completely from home during some short periods before in my life so I had an idea what it would be like. So far, it has been even better than I had anticipated. It suits me so well it is almost scary! No commutes. No delays due to traffic. No problems ever with over-crowded trains or buses. No time wasted going to work and home again. And I’m around when my kids get home from school and it’s easy to receive deliveries all days. I don’t think I ever want to work elsewhere again… :-)

Another effect of my work place is also that I have probably become somewhat more active on social networks and IRC. If I don’t use those means, I may spend whole days without talking to any humans.

Also, I’m the only Mozilla developer in Sweden – although we have a few more employees in Sweden. (Update: apparently this is wrong and there’s also a Mats here!)

The freedom

I have freedom at work. I control and decide a lot of what I do and I get to do a lot of what I want at work. I can work during the hours I want. As long as I deliver, my employer doesn’t mind. The freedom isn’t just about working hours: I also have a lot of control and say over what I want to work on and what I think we as a team should work on going forward.

The not counting hours

For the last 16 years I’ve been a consultant where my customers almost always have paid for my time. Paid by the hour I spent working for them. For the last 16 years I’ve counted every single hour I’ve worked and made sure to keep detailed logs and tracking of whatever I do so that I can present that to the customer and use that to send invoices. Counting hours has been tightly integrated in my work life for 16 years. No more. I don’t count my work time. I start work in the morning, I stop work in the evening. Unless I work longer, and sometimes I start later. And sometimes I work on the weekend or late at night. And I do meetings after regular “office hours” many times. But I don’t keep track – because I don’t have to and it would serve no purpose!

The big code base

I work with Firefox, in the networking team. Firefox has about 10 million lines of C and C++ code alone. Add to that everything else that is other languages, glue logic, build files, tests and lots and lots of JavaScript.

It takes time to get acquainted with such a large and old code base, and lots of the architecture, or traces of the original architecture, were designed almost 20 years ago in ways that not many people would still call good or preferable.

Mozilla is using Mercurial as the primary revision control tool, and I started out convinced I should too and really get to learn it. But darn it, it is really too similar to git and yet lots of words are intermixed and used as commands that don’t do the same thing as in git, so it turns out really confusing and yeah, I felt handicapped a little bit too often. I’ve switched over to using the git mirror and I’m now a much happier person. A couple of months in, I’ve not once been forced to switch away from using git. Mostly thanks to fancy scripts and helpers from colleagues who made this jump before me and already paved the road.

C++ and code standards

I’m a C guy (note the absence of “++”). I’ve primarily developed in C for the whole of my professional developer life – which is approaching 25 years. Firefox is a C++ fortress. I know my way around most C++ stuff but I’m not “at home” with C++ in any way just yet (I never was) so sometimes it takes me a little time and reading up to get all the C++-ishness correct. Templates, casting, different code styles, subtleties that aren’t in C and more. I’m slowly adapting but some things and habits are hard to “unlearn”…

The publicness and Bugzilla

I love working full time for an open source project. Everything I do during my work days is public knowledge. We work a lot with Bugzilla where all bugs (well, except the security sensitive ones) are open and public. My comments, my reviews, my flaws and my patches can all be reviewed, ridiculed or improved by anyone out there who feels like doing it.

Development speed

There are several hundred developers involved in basically the same project and products. The commit frequency and the speed at which changes are being crammed into the source repository are mind-boggling. Several hundred commits daily. Many hundred and sometimes up to a thousand new bug reports are filed – daily.

yet slowness of moving some bugs forward

Moving a particular bug forward into actually getting it landed and included in pending releases can be a lot of work and it can be tedious. It is a large project with lots of legacy, traditions and people with opinions on how things should be done. Getting something to change from an old behavior can take a whole lot of time, massaging and discussion before it gets through. Don’t get me wrong, it is a good thing, it just stands in direct conflict with my previous paragraph about the development speed.

In the public eye

I knew about Mozilla before I started here. I knew Firefox. Just about every person I’ve ever mentioned those two brands to has known about at least Firefox. This is different from what I’m used to. Of course hardly anyone fully grasps what I’m actually doing on a day to day basis but I’ve long given up on even trying to explain that to family and friends. Unless they really insist.

Vitriol and expectations of high standards

I must say that being in the Mozilla camp when changes are made or announced has given me a less favorable view of the human race. Almost anything or any change is received by a certain number of users who are very aggressively against the change. All changes really. “If you do that I’ll be forced to switch to Chrome” is a very common “threat” – as if that would A) work B) be a browser that would care more about such “conservative loonies” (you should consider that my personal term for such people). I can only assume that the Chrome team also gets its fair share of that sort of threat in the other direction…

Still, it seems a lot of people out there, and perhaps especially in the Free Software world, hold Mozilla to very high standards. This is both good and bad. This expectation of being very good also comes from people who aren’t even Firefox users – we must remain the bright light in a world that grows darker. In my (biased) view that tends to lead to unfair criticisms. The other browsers can do some of those changes without anyone raising an eyebrow but when Mozilla does something similar for Firefox, a shitstorm breaks out. Lots of those people criticizing us for doing change NN already use browser Y that has been doing NN for a good while already…

Or maybe I’m just not seeing these things with clear enough eyes.

How does Mozilla make money?

Yeps. This is by far the most common question I’ve gotten from friends when I mention who I work for. In fact, that’s just about the only question I get from a lot of people… (possibly because after that we get into complicated questions such as what exactly do I do there?)

curl and IETF

I’m grateful that Mozilla allows me to spend part of my work time working on curl.

I’m also happy to now work for a company that allows me to attend IETF/httpbis and related activities much better than I’ve ever had the opportunity to in the past. Previously I’ve pretty much had to spend spare time and my own money, which has limited my participation a great deal. The support from Mozilla has allowed me to attend two meetings so far during the year, in London and in NYC, and I suspect there will be more chances in the future.

Future

I only just started. I hope to grab on to more and bigger challenges and tasks as I get warmer and more into everything. I want to make a difference. See you in bugzilla.

|

|

Daniel Stenberg: libressl vs boringssl for curl |

I tried to use two OpenSSL forks yesterday with curl. I built both from source first (of course, as I wanted the latest and greatest), an interesting thing in itself since both projects have modified the original build system, so there are now three different ways to build.

libressl 2.0.0 installed and built flawlessly with curl and I’ve pushed a change that shows LibreSSL instead of OpenSSL when doing curl -V etc.

boringssl didn’t compile from git until I had manually fixed a minor nit, and then it has no “make install” target at all so I had to manually copy the libs and header files to a place suitable for curl’s configure to detect. Then the curl build failed because boringssl isn’t API compatible with some of the really old DES stuff – code we use for NTLM. I asked Adam Langley about it and he told me that calling code using DES “needs a tweak” – but I haven’t yet walked down that road so I don’t know how much of a nuisance that actually is or isn’t.

Summary: as an openssl replacement, libressl wins this round over boringssl with 3 – 0.

http://daniel.haxx.se/blog/2014/08/05/libressl-vs-boringssl-for-curl/

|

|

Aaron Train: Profiling Gecko in Firefox for Android |

At last week's Mozilla QA Meetup in Mountain View, California I demonstrated how to effectively profile Gecko in Firefox for Android on a device. By targeting a device running Firefox for Android, one can measure responsiveness and performance costs of executed code in Gecko. By measuring sample set intervals, we can focus on a snapshot of a stack and pinpoint functions and application resources produced by a sampling run. With this knowledge, one can provide a valuable sampling of information back to developers and file better bug reports. Typically, as we have seen, these are helpful in bug reports for measuring page-load, scrolling and panning performance problems, frame performance and other GPU issues.

How to Help

- Install the Gecko Profiler add-on (available here) in Firefox on your desktop (it is the Gecko profiler used for platform execution), and follow the instructions outlined here for setting up an environment

- If you encounter odd slowdown in Firefox for Android, profile it! You can save the profile locally or share it via URL

- Add it to a (or your) bug report on Bugzilla

- Talk to us on IRC about your experience or problems

Here is an example bug report, bug 1047127 (panning stutters on a page with overflow-x) where a profile may prove helpful for further investigation.

Detailed information on profiling in general is available on MDN here.

http://aaronmt.com/2014/08/05/profiling-firefox-for-android/

|

|

Christian Heilmann: On wearables and weariness |

I just published my first post on Medium about wearable technology and the disappointment of its current state of affairs partly because I wanted to vent and to try it out as a publishing platform.

So head over there to read about my thoughts – TL;DR: I love the idea of wearables but the current Google watch is just disappointing, both from a hardware perspective and as a feature. All you get is notifications and the concept of voice input is not as useful as it is sold to us.

http://christianheilmann.com/2014/08/04/on-wearables-and-weariness/

|

|

Jordan Lund: This Week In Releng - July 28th, 2014 |

Major Highlights:

- armv6 builds and tests are disabled

- ec2 instances now make use of a proxy server that stores a local cache of ftp downloadables

- runner, service that runs startup tasks so we don't need to reboot after every job, enabled for centos machines.

- desktop builders for mac and windows join linux on cedar as they are run via mach and mozharness

Completed Work (marked as resolved):

- Balrog: Backend

- Buildduty

- Completed builds not appearing on TBPL

- upgrade ec2 linux64 test masters from m3.medium to m3.large

- Install fxa-python-client on internal PyPI

- clobberer step is failing to clobber

- b2gbumper is stalled

- Firefox mozilla-central linux, linux64, and android2.2 l10n nightly builds are busted

- Windows 8 tests failing across all branches

- Wait times > 16h, pending builds > 7000, ix jobs ~3000 reftest/crashtest/jsreftest, 10.8 ~10h behind

- hitting ISE 500's while pulling from hg.m.o to buildbot repos across our masters. buildbot hgpollers also failing

- All Trees Closed -> building backlog of linux jobs because of issues with dynamic jacuzzi allocation

- B2g bumper bot problems - hasn't made commits for a while - Gaia-feeding trees closed until bumper bot is fixed

- Something wrong with b-2008-ix-002x slaves

- B2g-inbound tree closed due to pending B2G ICS Emulator Opt builds

- All trees closed due to AWS builder lag

- Integration Trees closed due Android tests fail Automation Error: Unable to reboot panda-xyz via Relay Board.

- builds-4hr not updating, buildapi returning 503s, all trees closed

- Failure "TypeError: request() got an unexpected keyword argument 'config'" on amazon dnsless spot instances

- General Automation

- Increase free space requirement for release-mozilla-beta-androidbuild jobs

- [Flame] Turn off FX OS Version 34.0a1 nightly updates until instability is fixed

- endtoend reconfig script should run its python unbuffered

- Run gaia unit tests on debug linux64 B2G desktop builds

- Disable non-unified builds on b2g32

- Disable Jetpack tests on B2G release branches

- Run reftests on B2G Desktop builds

- Schedule Android 2.3 crashtests, js-reftests, plain reftests, and m-gl on all trunk trees and make them ride the trains

- Make runner responsible for buildbot startup on CentOS

- Cleanup strays in scheduler db (bogus m-i win pgo, Mulet)

- Try is attempting to trigger periodic non-unified builds

- No updates served for mozilla-esr31 nightly builds

- Hazard failures with rsync: connection unexpectedly closed (0 bytes received so far) [sender]

- Upload new version of xulrunner to tooltool

- Don't schedule non-unified B2G hazard analysis builds

- Explicitly list gaia-try's priority rather than relying on the fallback

- Get e10s tests running on inbound, central, and try

- Schedule Android 4.0 Debug reftests and crashtests on all trunk trees and let them ride the trains

- Add task-specific configuration to Runner

- lock reporepo to a tag - b2g builds failing with AttributeError: 'list' object has no attribute 'values' | caught OS error 2: No such file or directory while running ['./gonk-misc/add-revision.py', '-o', 'sources.xml', '--force', '.repo/manifest.xml']

- Fabric should not try and prompt when we fork it.

- Device Builds Tests/Builds for mozilla-inbound

- Allow test machines in AWS to get to github via internet gateway

- Cleanup bogus Linux PGO jobs in scheduler db

- Improve slaveapi logging

- Re-enable us-west-2 when AWS is working again

- B2G builds failing with | gpg: Can't check signature: No public key | error: could not verify the tag 'v1.12.4' | fatal: repo init failed; run without --quiet to see why

- Loan Requests

- Other

- Platform Support

- Updated android builders to include google play services (and android support v7 if it isn't already included)

- Disable armv6 builds and tests everywhere apart from esr31

- Intermittent Android x86 We have not been able to establish a telnet connection with the emulator

- Release Automation

- release runner failure e-mail should be sent to relman or release-drivers

- release runner doesn't include enough context for some errors

- Releases

- Releases: Custom Builds

- Repos and Hooks

- Tools

- Allow editing of relevant slavealloc fields directly from slave health

- slavealloc API URL used when making changes from slavehealth contains an additional forward slash

- slavehealth stopped updating after 13:40 Friday

- Give Treeherder URLs in Try responses / emails.

- Aggregate similar exceptions in emails from masters

- Slaverebooter should handle its jobs in a Queue rather than kickoff a single thread per slave.

- Balrog: Backend

- Buildduty

- Try jobs backing up - need to deprioritise ash

- Decommission foopy117 and associated tegras

- Legacy vcs sync not able to update legacy mapper quick enough for b2gbuild.py, causing b2g builds calls to mapper to timeout

- [tracking] Eliminate buildduty

- General Automation

- buildbot changes to run selected b2g tests on c3.xlarge

- Add dependency management to Runner

- Please schedule b2g emulator cppunit tests on Cedar

- FlatFish: Integrate boot.img and recovery.img into the build system

- Stop rebooting after every job

- Run mock against repos other than puppetagain

- android single locale central/aurora nightlies not reporting to balrog

- generate "flatfish" builds for B2G

- Make runner responsible for buildbot startup on Ubuntu test

- Automatically delete obsolete latest-* builds for calendar

- Make some mozharness jobs easier to run outside of automation + allow for http authentication when running outside of the releng network

- Figure out the correct path setup and mozconfigs for automation-driven MSVC2013 builds

- Schedule mochitest-plain on linux64-mulet on all branches

- AWS region-local caches for https stuff

- Loan Requests

- Slave loan request for tst-linux64-spot

- Loan an ami-6a395a5a instance to Aaron Klotz

- Loaner slave matching WinXP mochitest1 slaves for :walter

- Loan an ami-6a395a5a instance to Aaron Klotz

- Slave loan request for a bld-linux64-ec2 vm

- Mozharness

- Make sure to mark jobs as failures when structured logger parsing fails

- fx desktop builds in mozharness

- Other

- Improve cleanup of tinderbox-builds

- Figure out retention of flame tinderbox-builds

- New AWS account, 'mockbuild-repos-upload'

- Create docs for l10n from a RelEng perspective (repos, locale lists, per product/branch explanations, etc) to allow newcomers to navigate this landscape

- Need read permissions to subnets

- Windows builds on cedar broken

- Platform Support

- Upload JDK1.7 on builders

- c3.xlarge instance types are expensive, let's test running those tests on a range of instance types that are cheaper

- Change Android 2.2 builds to be called Android 2.3 builds

- evaluate mac cloud options

- Release Automation

- release automation should update ship it at certain points

- pushing release builds to beta doesn't work in balrog

- Add new table(s) to shipit database

- Figure out Play Store dynamics for Android 2.2/ARMv6 EOL support releases

- adjust e-mail for updateshipping builder to be more intelligible

- Add a standalone process that listens to pulse for release related buildbot messages

- flip the switch to move beta users over to balrog

- Add REST API entry point to shipit that allows shipit-agent to enter release data into shipit database

- make aus3 and aus4 responses consistent

- Releases: Custom Builds

- add new image sizes to Bing/MSN search plugins

- Remove Blackbird builds for Fx32

- Remove Rambler config to inactive and remove builds from FTP

- Version bumps for Yahoo FF 32

- Repos and Hooks

- esr31 branch on https://github.com/mozilla/gecko-dev

- Consider disabling or redirecting bundle archives

- Tools

- Funsize requires file level diff caching for speedups in partial generation

- Funsize should return correct MIME type

- Add linux64-mulet to trychooser

- cut over l10n repos to the new vcs-sync system

- Reconfigs should be automatic, and scheduled via a cron job

- implement 'awscreateinstance' action in slaveapi

- permanent location for vcs-sync mapfiles, status json, logs

- Create a Comprehensive Slave Loan tool

- vcs-sync needs to be able to account for empty source repos

- two new instances like vcssync{1,2}, puppetized

http://jordan-lund.ghost.io/this-week-in-releng-july-28th-2014/

|

|

Jess Klein: How to learn more about Net Neutrality |

"Data, regardless of who it is from or who is receiving it, is treated equally by Internet service providers." I like to think of it as opening up the door for 2 internets - one that comes at a cost, and another that is free. Sounds fine, right? You're like .. ok what's the big deal? I'll just use the free one. But just like many other free services that you have used that all of a sudden put up features behind a paywall, your free offering becomes crap - cluttered with ads, slower to use and just a poor man's version of the prime real estate - this too will happen with the Web.

I believe that this issue is the most important topic for web users (globally), because without the neutral infrastructure of the internet, many freedoms that we take for granted in this hand crafted community - such as speech - become compromised in a privileged and gated web. Opposition to net neutrality threatens democracy, participation and free speech. But this isn't a hopeless cause: there is actually something proactive that you can do to protect the Web that you love. You can learn more about Net Neutrality and explain its importance to your small circle of the world. The goal is to create an educated groundswell that will be able to defend the open web.

Here are a few concrete ways to learn:

1. Attend the free Net Neutrality global teach-ins offered by Mozilla this week

2. Check out the Net Neutrality kit

3. Do an activity - here's a 1 hour activity

4. Join the conversation on discourse

I contributed to these kits by making a few activities:

I fight for #TeamInternet to save net neutrality because: You can make an internet meme explaining why you support net neutrality.

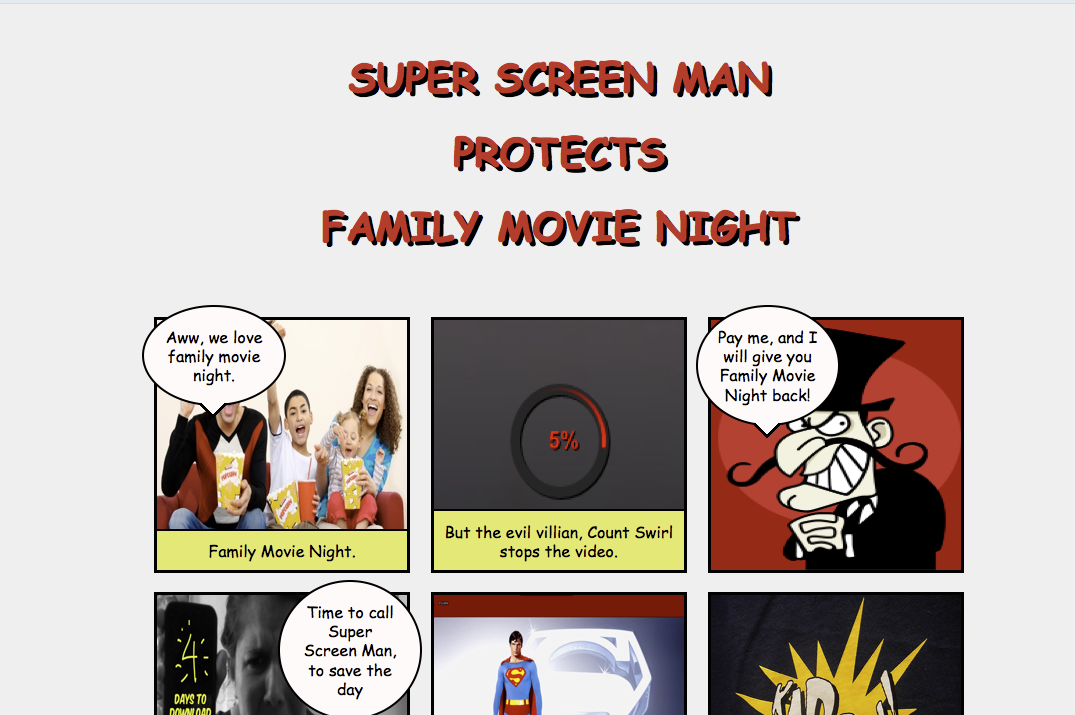

Superheroes for Net Neutrality: Calling all Superheroes! The Legion of Thorn has created 2 Webs - resulting in a dystopian state. Avenge them and defend Net Neutrality by crafting a league of superheroes to join Team Web, and remix a comic book webpage to show how they use their superpowers to save the day.

Regardless of how you learn about Net Neutrality, take some time out of your day to add it to your information diet.

http://jessicaklein.blogspot.com/2014/08/defenders-of-net-neutrality.html

|

|

Will Kahn-Greene: Input status: August 4th, 2014 |

Summary

This is the status report for development on Input. I publish a status report to the input-dev mailing list every couple of weeks or so covering what was accomplished and by whom and also what I'm focusing on over the next couple of weeks. I sometimes ruminate on some of my concerns. I think one time I told a joke.

Work summary

- Continued work on the development environment with awesome help from L. Guruprasad

- Implement the bits required to support Loop

- Add smoketest coverage for Firefox OS feedback form

- Fix some technical debt issues

Development

Landed and deployed:

- 6097571 [bug 1040919] Change version to only have one dot

- dcf8f91 [bug 1040919] Fix .0.0 versions to .0

- 4012933 Remove CEF-related things

- 4887c49 [bug 1030905] Add product/version tests

- 1bdd3a8 [bug 1042222] update Vagrantfile to use 14.04 LTS (L. Guruprasad)

- c1bdfbb Add L. Guruprasad to CONTRIBUTORS

- 3e607a5 [bug 1042560] Remove unused packages in dev VM (L. Guruprasad)

- c0759d1 Update django-mozilla-product-details

- bcf8ec8 Update product details

- 58579ab [bug 987801] peep-ify requirements

- 248b0ab [bug 987801] Exit if requirements are mismatched

- 5db57bc [bug 987801] Redo how we figure out whether to use vendor/

- df8400d Fix sampledata generation to use bulk create

- fa0caac [bug 1041622] Capture querystring slop

- 98745b5 [bug 1021155] Add basic FirefoxOS smoketest

- 6cf9e39 [bug 1041664] Capture slop in Input API

- ff4b711 Show context in response view

- 976ebbf [bug 1045942] Add category to response table

- 34622ad Add docs for category and extra context for Input API

- d1a8dd5 Add an additional note about keys

- fec1319 [bug 998726] Quell Django warnings

- 288afe4 Rename fjord/manage.py to fjord/manage_utils.py

- d40e8a9 Update dennis to v0.4.3

Landed, but not deployed:

- 28cd90f [bug 1047681] Fix mlt call to be more resilient

Current head: 28cd90f

Over the next two weeks

- Keep an eye out for any Loop or Heartbeat related work--that's top priority.

- Work on gradient support and product picker support

What I need help with

- (Google chrome, JavaScript, CSS, HTML) Investigate what's wrong with the date picker in Chrome and fix it [bug 1012965]

- Test out the Getting Started instructions. We've had a few people go through these already, but it's definitely worth having more eyes. http://fjord.readthedocs.org/en/latest/getting_started.html

If you're interested in helping, let me know! We hang out on #input on irc.mozilla.org and there's the input-dev mailing list.

That's it!

http://bluesock.org/~willkg/blog/mozilla/input_status_20140804

|

|

Tarek Ziadé: ToxMail Experiment Cont'd |

I started the other day experimenting with Tox to build a secure e-mailing system. You can read my last post here.

To summarize what Toxmail does:

- connects to the Tox network

- runs a local SMTP server and a local POP3 server

- converts any e-mail sent to the local SMTP into a Tox message

The prototype is looking pretty good now with a web dashboard that lists all your contacts, uses DNS lookups to find users' Tox IDs, and has an experimental relay feature I am making progress on.

See https://github.com/tarekziade/toxmail

DNS Lookups

As described here, Tox proposes a protocol where you can query a DNS server to find out the Tox ID of a user as long as they have registered themselves with that server.

There are two Tox DNS servers I know about: http://toxme.se and http://utox.org

If you register a nickname on one of those servers, they will add a TXT record in their DNS database. For example, I have registered tarek at toxme.se and people can get my Tox ID by querying this DNS:

$ nslookup -q=txt tarek._tox.toxme.se.
Server: 212.27.40.241
Address: 212.27.40.241#53

Non-authoritative answer:
tarek._tox.toxme.se text = "v=tox1\;id=545325E0B0B85B29C26BF0B6448CE12DFE0CD8D432D48D20362878C63BA4A734018C37107090\;sign=u+u+sQ516e9VKJRMiubQiRrWiVN0Nt98dSbUtsHBEwYiaQHk2T8zAq4hGprMl9lc89VXRnI+AukoqpC7vJoHDXRhcmVrVFMl4LC4WynCa/C2RIzhLf4M2NQy1I0gNih4xjukpzRwkA=="

Like other Tox clients, the Toxmail server uses this feature to convert on the fly a recipient e-mail into a corresponding Tox ID. So if I write an e-mail to tarek@toxme.se, Toxmail knows where to send the message.
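As a rough illustration of how a client could pull the fields out of such a TXT record, here is a minimal JavaScript sketch. It is not Toxmail's code (Toxmail is a Python project); the function name parseToxTxtRecord is made up, the sample record uses shortened placeholder values, and the sketch assumes the record has the "v=...;id=...;sign=..." shape shown in the nslookup output, without verifying the signature.

```javascript
// Parse a Tox DNS TXT record as printed by nslookup, e.g.
//   "v=tox1\;id=<hex>\;sign=<base64>"
// Returns an object such as {v: "tox1", id: "...", sign: "..."}.
function parseToxTxtRecord(txt) {
  var cleaned = txt
    .replace(/^"|"$/g, "")  // drop surrounding quotes, if any
    .replace(/\\;/g, ";");  // nslookup shows ";" escaped as "\;"

  var fields = {};
  cleaned.split(";").forEach(function (pair) {
    var eq = pair.indexOf("=");
    if (eq === -1) {
      return; // ignore fragments that are not key=value
    }
    // Split on the first "=" only: base64 signatures may end with "=".
    fields[pair.slice(0, eq)] = pair.slice(eq + 1);
  });
  return fields;
}

// Shortened, made-up values; a real record carries the full ID and signature.
var record = parseToxTxtRecord('"v=tox1\\;id=ABCDEF0123\\;sign=c2lnbg=="');
```

Splitting each pair on the first "=" only matters here because the sign field is base64 and can end in padding characters.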

That breaks anonymity of course, if the Tox Ids are published on a public server, but that's another issue.

Offline mode

The biggest issue of the Toxmail project is the requirement of having both ends connected to the network when a mail is sent.

I have added a retry loop when the recipient is offline, but the mail will eventually make it only when the two sides are connected at the same time.
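The retry idea can be sketched as follows. This is a generic JavaScript illustration under stated assumptions, not the loop Toxmail actually uses: trySend, maxAttempts and delayMs are hypothetical names, and trySend stands for whatever attempts delivery and reports whether the recipient was reachable.

```javascript
// Resolve after the given number of milliseconds.
function sleep(ms) {
  return new Promise(function (resolve) { setTimeout(resolve, ms); });
}

// trySend is a hypothetical async function that resolves to true when the
// recipient was online and the message went out, false otherwise.
async function sendWithRetry(trySend, options) {
  var maxAttempts = options.maxAttempts;
  var delayMs = options.delayMs;

  for (var attempt = 1; attempt <= maxAttempts; attempt++) {
    if (await trySend()) {
      return true; // both ends were connected at the same time
    }
    if (attempt < maxAttempts) {
      await sleep(delayMs); // recipient offline: wait, then try again
    }
  }
  return false; // gave up; the caller decides what to do with the mail
}
```

However many attempts are made, delivery still depends on both sides being online during one of them, which is the limitation discussed here.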

This is a bit of a problem when you are building an asynchronous messaging system. We started to discuss some possible solutions on the tracker and the idea came up to have a Supernode that would relay e-mails to a recipient when it's back online.

In order to do it securely, the mail is encrypted using the Tox public/private keys so the supernode doesn't get the message in clear text. It uses the same crypto_box APIs as Tox itself, and that was really easy to add thanks to the nice PyNaCl binding, see https://github.com/tarekziade/toxmail/blob/master/toxmail/crypto.py

However, using supernodes adds centralization to the whole system, and that's less appealing than a fully decentralized system.

Another option is to use all your contacts as relays. An e-mail propagated to all your contacts probably has a good chance of eventually making it to its destination.

Based on this, I have added a relay feature in Toxmail that will send around the mail and ask people to relay it.

This adds another issue though: for two nodes to exchange data, they have to be friends on Tox. So if you ask Bob to relay a message to Sarah, Bob needs to be friends with Sarah. And maybe Bob does not want you to know that he's friends with Sarah.

Ideally everyone should be able to relay e-mails anonymously - like other existing systems where data is just stored around for the recipient to come pick it up.

I am not sure yet how to solve this issue, and maybe Tox is not suited to my e-mail use case.

Maybe I am just trying to reinvent BitMessage. Still digging :)

|

|

Mozilla Release Management Team: Firefox 32 beta2 to beta3 |

- 28 changesets

- 37 files changed

- 510 insertions

- 158 deletions

| Extension | Occurrences |

| cpp | 8 |

| js | 7 |

| svg | 3 |

| mn | 3 |

| jsm | 3 |

| css | 3 |

| xml | 2 |

| java | 2 |

| html | 2 |

| xhtml | 1 |

| sjs | 1 |

| list | 1 |

| h | 1 |

| Module | Occurrences |

| browser | 17 |

| content | 6 |

| mobile | 4 |

| js | 3 |

| toolkit | 2 |

| dom | 2 |

| xpcom | 1 |

| security | 1 |

List of changesets:

| Wes Kocher | Bug 1045421 - Disable test_crash_manager.js for failures on a CLOSED TREE r=me a=test-only - 6782bc9ae760 |

| Dão Gottwald | fix line endings, no bug, DONTBUILD - d0fd7d6cfd3c |

| Dão Gottwald | Bug 1016405 - Update the icons in the context menu to have the correct size, HiDPI, and inverted variants. r=mdeboer a=lmandel - 92bb440bf6d6 |

| Mark Finkle | Bug 988648 - Telemetry for Bookmarking/Saving r=liuche a=lmandel - 68d81380f84c |

| Mark Finkle | Bug 1044255 - Fix 'storage.init is not a function' during startup r=margaret a=lmandel - 26341b100b6d |

| JW Wang | Bug 1042884 - Do nothing in AudioStream::OpenCubeb() after shutdown. r=kinetik, a=sledru - eb3c7dd5ac83 |

| Andrew McCreight | Bug 1038887 - Add back null check to nsErrorService::GetErrorStringBundle. r=froydnj, a=sledru - f1b3abcc9555 |

| Patrick McManus | Bug 1045640 - disable tls proxying Bug 378637 on gecko 32 r=backout a=lmandel - 97a654ff8682 |

| Ryan VanderMeulen | Backed out changeset 97a654ff8682 (Bug 1045640) for mass bustage. - 433a7a6a2c25 |

| Karl Tomlinson | Bug 1022945. r=roc, a=sledru - 59a8340afc89 |

| Heather Arthur | Bug 966805 - Disable transitions in browser_styleeditor_sourcemap_watching.js. r=bgrins, a=test-only - b278a705d160 |

| Richard Newman | Bug 1042657 - Fix FloatingHintEditText when invoked from GeckoPreferences. r=margaret, a=sledru - 1efe42b45348 |

| Nicolas B. Pierron | Bug 1034349 - Skip Float32 specialization for int32 operations. r=bbouvier, a=abillings - 4fc9c8d8a4ae |

| Boris Zbarsky | Bug 957243 - Fix test_bug602838.html to not assume server response ordering. r=mayhemer, a=test-only - 97b81de9d1a5 |

| Jared Wein | Bug 1035586 - allow snippets in about:home to highlight sync in the firefox menu. r=Unfocused a=lmandel - 4bb3a5226b75 |

| David Keeler | Bug 1040889 - Don't re-cache OCSP server failures if no fetch was attempted. r=briansmith, r=cviecco, a=lmandel - 1c3cce4e0395 |

| Bob Owen | Bug 1042798 - Use an AutoEntryScript in nsNPAPIPlugin _evaluate as we are about to run script and need to ensure the correct JSContext* gets pushed. r=bholley, a=lmandel - 74a868d4fccd |

| Bob Owen | Bug 1042798 - Test: ensure window.open followed by document.writeln doesn't throw security error when called through NPN evaluate. r=bz, a=lmandel - 55731dcb4b17 |

| Ryan VanderMeulen | Backed out changeset 1c3cce4e0395 (Bug 1040889) for Windows bustage. - 3d310f9e5e5e |

| Jan de Mooij | Bug 1046176 - Fix inlined UnsafeSetReservedSlot post barrier. r=nbp, a=sledru - 247751fedbeb |

| Terrence Cole | Bug 1029299. r=billm, a=abillings - 2e2e1357e6ed |

| Dão Gottwald | Bug 1041969 - Disabled icons in context menu should use GrayText. r=mdeboer, a=sledru - 011c4355f782 |

| Shane Caraveo | Bug 1042991 - Fix history use in share panel. r=bmcbride, a=sledru - fd04691e6c71 |

| David Keeler | Bug 1040889 - Don't re-cache OCSP server failures if no fetch was attempted. r=briansmith, r=cviecco, a=lmandel - adc6758d943d |

| Tomislav Jovanovic | Bug 1041844 - Disable e10s tests on Aurora and Beta. r=evold, a=sledru - f3e354f0ec70 |

| Florian Quèze | Bug 1046142 - Empty language to translate to drop down on localized builds, r+a=gavin. - c381e6fba574 |

| Wes Kocher | Backed out changeset c381e6fba574 (Bug 1046142) for bc1 failures - 2d266c52e2a5 |

| Florian Quèze | Bug 1046142 - Empty language to translate to drop down on localized builds, r+a=gavin. - 33eea1df6465 |

http://release.mozilla.org//statistics/32/2014/08/03/fx-32-b2-to-b3.html

|

|

Marco Zehe: Revisiting the “Switch to Android full-time” experiment |

Just over a year ago, I conducted an experiment to see whether it would be possible for me to switch to an Android device full-time for my productive smartphone needs. The conclusion back then was that there were still too many things missing for me to productively switch to Android without losing key parts of my day to day usage.

However, there have been several changes over the past 15 or so months that prompted me to revisit this topic. For one, Android itself has been updated to 4.4 KitKat, and Android L is just around the corner. At least Google Nexus devices since the Nexus 7 2012 get 4.4, and the newer Nexus 7 and Nexus 5 models will most likely even get L.

Second, after an 8 month hiatus since the last release, TalkBack development continues, with the distribution for the beta versions being put on a much more professional level than previously. No more installing of an APK from a Google Code web site!

Also, through my regular work on testing Firefox for Android, I needed to stay current on a couple of Android devices anyway, and noticed that gesture recognition all over the place has improved dramatically in recent versions of Android and TalkBack 3.5.1.

So let’s revisit my points of criticism and see where these stand today! Note that since posting this originally on August 3, 2014, there have been some great tips and hints shared both on here as well as on the eyes-free mailing list, and I’ve now updated the post to reflect those findings.

The keyboard

Two points of criticism I had were problems with the keyboard. Since TalkBack users are more or less stuck on the Google Keyboard that comes with stock Android, there were a few issues that I could not resolve by simply using another keyboard. Both had to do with languages:

- When having multiple languages enabled, switching between them would not speak which language one would switch to.

- It was not possible to enter German umlauts or other non-English accented characters such as the French accented e (é).

Well what can I say? The Google Keyboard recently got an update, and that solves the bigger of the two problems, namely the ability to enter umlauts and accented characters. The button to switch languages does not say yet which language one would switch to, but after switching, it is clearly announced.

To enter accented characters, one touches and keeps the finger on the base character, for example the e, and waits for the TalkBack announcement that special characters are available. Now, lift your finger and touch slightly above where you just were. If you hit the field where the new characters popped up, you can explore around like on the normal keys, and lift to enter. If you move outside, however, the popup will go away, and you have to start over. This is also a bit of a point of criticism I have: The learning curve at the beginning is a bit high, and one can dismiss the popup by accident very easily. But hey, I can enter umlauts now!

Another point is that the umlaut characters are sometimes announced with funny labels. The German a umlaut (ä), for example, is said to have a label of “a with threema”. Same for o and u umlauts. But once one gets used to these, entering umlauts is not really slower than on iOS if you don’t use the much narrower keyboard with umlauts already present.

This new version of the keyboard also has some other nice additions: If Android is about to auto-correct what you typed, the space key and many relevant punctuation keys will now announce that your text will be changed to the auto-corrected text once you enter that particular symbol. This is really nice, since you can immediately explore to the top of the keyboard and maybe find another auto-correct suggestion, or decide to keep your original instead.

The new version of the keyboard was not offered to me as a regular software update in the Play Store for some reason. Maybe because I had never installed a Play Store version before. I had to explicitly bring it up in Google Play and tell the device to update it. If you need it, you can browse to the link above on the desktop and tell Google Play to send it to your relevant devices.

Two points gone!

Editing text

The next point of criticism I had was the lack of ability to control the cursor reliably, and to use text editing commands. Guess what? TalkBack improved this a lot. When on a text field, the local context menu (swipe up then right in one motion) now includes a menu for cursor control. That allows one to move the cursor to the beginning or the end, select all, and enter selection mode. Once in selection mode, swiping left and right will actually select and deselect text. TalkBack is very terse here, so you have to remember what you selected and what you deselected, but with a bit of practice, it is possible. Afterwards, once some text is selected, this context menu for cursor control not only contains an item to end selection mode, but also to copy or cut the text you selected. Once something is in the clipboard, a Paste option becomes available, too. Yay! That’s another point taken off the list of criticism!

Continuous reading of lists

TalkBack added the ability to read lists continuously by scrolling them one screen forward or back once you swiped to the last or first currently visible item. In fact, they did so not long after my original post. This is another point that can be taken off my list.

The German railway navigator app

This app has become accessible. They came out with a new version late last year that unified the travel planning and ticketing apps, and one can now find connections, book the tickets and use the Android smartphone to legitimize oneself on the train from start to finish on Android, just as is possible on iOS. Another point off my list!

eBooks

While the Google Play Books situation hasn’t changed much, the Amazon Kindle app became fully accessible on Android, closely following the lead on iOS. This is not really a surprise, since FireOS, Amazon’s fork of Android, became much more accessible in its 3.0 release in late 2013, and that included the eBook reader.

Calendaring

This was originally one of the problematic points left; however, comments here and on the eyes-free list led to a solution that is more than satisfactory. I originally wrote:

The stock Calendar app is still confusing as hell. While it talks somewhat better, I still can’t make heads or tails of what this app wants to tell me. I see dates and appointments intermixed in a fashion that is not intuitive to me. Oh yes, it talks much better than it used to, but the way this app is laid out is confusing the hell out of me. I’m open to suggestions for a real accessible calendar app that integrates nicely with the system calendars coming from my google account.

As Ana points out in a reply on eyes-free, the solution here is to switch the Calendar to Agenda View. One has to find the drop down list at the top left of the screen, which irritatingly says “August 2014” (or whatever month and year one is in), and which led me to believe this would open a date picker of some sort. But indeed it opens a small menu, and the bottom-most item is the Agenda View. Once switched to it, appointments are nicely sorted by day, their lengths are given and all other relevant info is there, too. Very nice! And actually quite consistent with the way Google Calendar works on the web, where Agenda View is also the preferred view.

And if one’s calendaring needs aren’t covered by the Google Calendar app, I was recommended Business Calendar as an alternative.

Currency recognition

Originally, I wrote:

The Ideal Currency Identifier still only recognizes U.S. currency, and I believe that’ll stay this way, since it hasn’t seen an update since September 2012. Any hints for any app that recognizes Canadian or British currencies would be appreciated!

I received three suggestions: Darwin Wallet, Blind-Droid Wallet, and Goggles. All three work absolutely great for my purposes!

Navigation and travel

I wrote originally:

Maps are still not as accessible as on iOS, but the Maps app got better over time. I dearly miss an app like BlindSquare that allows me to identify junctions ahead and other marks in my surroundings to properly get oriented. I’m open to suggestions for apps that work internationally and offer a similar feature set on Android!

I received several suggestions, among them DotWalker and OsmAnd. Haven’t yet had a chance to test either, but will do so over the course of the next couple of days. The feature sets definitely sound promising!

One thing I am definitely missing, when comparing Google Maps to Apple Maps accessibility, is the ability to explore the map with my finger. iOS has had this feature since version 6, which was released in 2012, where you can explore the map on the touch screen and get not only spoken feedback, but can trace streets and junctions with your finger, too, getting an exact picture of whichever area you’re looking at. I haven’t found anything on Android yet that matches this functionality, so I’ll probably keep an iPad around and tether it if I need to look at maps in case my next main phone is indeed an Android one.

Ana also recommended The vOICe for Android as an additional aid. However, this thing is so much more powerful in other senses, too, that it probably deserves an article of its own. I will definitely have a look (or listen) at this one as well!

Other improvements not covered in the original post

Android has seen some other improvements that I did not specifically cover in my original post, but which are worth mentioning. For example, the newer WebViews became more accessible in some apps, one being the German railway company app I mentioned above. Some apps like Gmail even incorporate something that sounds a lot like ChromeVox. The trick is to swipe to that container, not touch it, then ChromeVox gets invoked properly. With that, the Gmail app is as usable on Android now as the iOS version has been since a major update earlier this year. It is also no longer necessary to enable script improvements to web views in the Accessibility settings. This is being done transparently now.

Other apps like Google+ actually work even better on Android than on iOS.

Other third-party apps like Facebook and Twitter have also seen major improvements to their Android accessibility stories over the past year, in large part due to the official formation of dedicated accessibility teams in those companies that train the engineers to deliver a good accessible experience in the new features they introduce.

And one thing should also be positively mentioned: Chrome is following the fox’s lead and now supports proper Explore By Touch and other real native Android accessibility integration. This is available in Chrome 35 and later.

Things that are still bummer points