You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.


Nick Cameron: Rust for C++ programmers - part 3: primitive types and operators

Numeric literals can take suffixes to indicate their type (using `i` and `u` instead of `int` and `uint`). If no suffix is given, Rust tries to infer the type. If it can't infer, it uses `int` or `f64` (if there is a decimal point). Examples:

```rust
fn main() {
    let x: bool = true;
    let x = 34;   // type int
    let x = 34u;  // type uint
    let x: u8 = 34u8;
    let x = 34i64;
    let x = 34f32;
}
```

As a side note, Rust lets you redefine variables, so the above code is legal: each `let` statement creates a new variable `x` and hides the previous one. This is more useful than you might expect, because variables are immutable by default.
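The `int`/`uint` types and the `i`/`u` suffixes above come from 2014 Rust. Before Rust 1.0 they were renamed `isize`/`usize`, and an integer literal with nothing to infer its type from now defaults to `i32` (an unsuffixed float still defaults to `f64`). Here is a small sketch in current Rust that checks which type each literal ended up with by measuring its size. The helper `literal_sizes` is made up for illustration, and it reuses `x` through shadowing just like the example above:

```rust
use std::mem::size_of_val;

// Hypothetical helper: the size in bytes of the type each literal was
// inferred as. Each `let x` shadows the previous `x`.
fn literal_sizes() -> [usize; 6] {
    let x = 34; // nothing to infer from: defaults to i32
    let a = size_of_val(&x);
    let x = 34usize; // current spelling of the old `34u` (uint)
    let b = size_of_val(&x);
    let x: u8 = 34u8; // annotation and suffix agree
    let c = size_of_val(&x);
    let x = 34i64;
    let d = size_of_val(&x);
    let x = 34f32;
    let e = size_of_val(&x);
    let x = 34.0; // decimal point, no suffix: defaults to f64
    let f = size_of_val(&x);
    [a, b, c, d, e, f]
}

fn main() {
    // On a 64-bit target this prints [4, 8, 1, 8, 4, 8].
    println!("{:?}", literal_sizes());
}
```

The size of `usize` depends on the target (8 bytes on 64-bit platforms), and the other sizes are fixed.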

Numeric literals can be given as binary, octal, and hexadecimal, as well as decimal. Use the `0b`, `0o`, and `0x` prefixes, respectively. You can use an underscore anywhere in a numeric literal and it will be ignored. E.g.,

```rust
fn main() {
    let x = 12;
    let x = 0b1100;
    let x = 0o14;
    let x = 0xc;
    let y = 0b_1100_0011_1011_0001;
}
```

Rust has chars and strings, but since they are Unicode, they are a bit different from C++. I'm going to postpone talking about them until after I've introduced pointers, references, and vectors (arrays).
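Returning briefly to the base prefixes: the following sketch in current Rust checks that the prefixed literals for twelve really denote the same number. It also uses the `{:b}`, `{:o}` and `{:x}` format specifiers to convert in the other direction, printing a value in binary, octal and hex. The function names are only for illustration:

```rust
// The value twelve written in each of the four bases.
fn twelve_in_every_base() -> [i32; 4] {
    [12, 0b1100, 0o14, 0xc]
}

// Format a number back into binary, octal and hexadecimal.
fn in_bases(n: i32) -> String {
    format!("{:b} {:o} {:x}", n, n, n)
}

fn main() {
    for v in twelve_in_every_base().iter() {
        assert_eq!(*v, 12);
    }
    // Underscores are only visual separators: this is 0xC3B1.
    let y = 0b_1100_0011_1011_0001;
    println!("{} {}", in_bases(12), y); // prints "1100 14 c 50097"
}
```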

Rust does not implicitly coerce numeric types. In general, Rust has much less implicit coercion and subtyping than C++. Rust uses the `as` keyword for explicit coercions and casting. Any numeric value can be cast to another numeric type. `as` cannot be used to convert between booleans and numeric types. E.g.,

```rust
fn main() {
    let x = 34u as int;     // cast unsigned int to int
    let x = 10 as f32;      // int to float
    let x = 10.45f64 as i8; // float to int (loses precision)
    let x = 4u8 as u64;     // gains precision
    let x = 400u16 as u8;   // 144, loses precision (and thus changes the value)
    println!("`400u16 as u8` gives {}", x);
    let x = -3i8 as u8;     // 253, signed to unsigned (changes sign)
    println!("`-3i8 as u8` gives {}", x);
    //let x = 45u as bool;  // FAILS!
}
```

Rust has the following numeric operators: `+`, `-`, `*`, `/`, `%`; bitwise operators: `|`, `&`, `^`, `<<`, `>>`; comparison operators: `==`, `!=`, `>`, `<`, `>=`, `<=`; short-circuit logical operators: `||`, `&&`. All of these behave as in C++; however, Rust is a bit stricter about the types the operators can be applied to: the bitwise operators can only be applied to integers and the logical operators can only be applied to booleans. Rust has the `-` unary operator, which negates a number. The `!` operator negates a boolean and inverts every bit on an integer type (equivalent to `~` in C++ in the latter case). Rust has compound assignment operators as in C++, e.g., `+=`, but does not have increment or decrement operators (e.g., `++`).
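The following sketch in current Rust illustrates a few of the operators and casts described above, plus two cast details that differ from 2014. First, current Rust allows `bool` to be cast to an integer with `as` (`true as i32` is 1), although the reverse still fails. Second, `TryFrom` offers a checked alternative to the silently truncating `400u16 as u8`. The helper names are hypothetical:

```rust
use std::convert::TryFrom;

// `!` on an integer inverts every bit, like `~` in C++.
fn invert_bits(x: u8) -> u8 {
    !x
}

// There is no `++`; compound assignment (`+= 1`) does the job.
fn count_to(n: u32) -> u32 {
    let mut i = 0;
    while i < n {
        i += 1;
    }
    i
}

// Checked narrowing: None instead of silently truncating like `as`.
fn narrow(x: u16) -> Option<u8> {
    u8::try_from(x).ok()
}

fn main() {
    println!("{:08b}", invert_bits(0b0000_1111));    // 11110000
    println!("{}", count_to(3));                     // 3
    println!("{}", -5i32 + 2);                       // -3 (unary minus)
    println!("{}", !true);                           // false
    println!("{:?} {:?}", narrow(200), narrow(400)); // Some(200) None
    println!("{} {}", 400u16 as u8, true as i32);    // 144 1
}
```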

http://featherweightmusings.blogspot.com/2014/04/rust-for-c-programmers-part-3-primitive.html

Pascal Finette: Anatomy of a Speech

A few weeks ago I was invited to speak at the World Business Dialogue in Cologne, Germany on “Tech for Good”.

As part of my speech I prepared speaking notes for the deck I was using. Below you’ll find the raw notes – this is the completely unedited version I wrote to prepare myself for the speech a day before the event itself.

I figured it might be fun to get a peek into the ugly underbelly of making a public speech.

[1] Good afternoon. Let’s talk a bit about what I mean when I talk about technology for good, why it’s important, and why I am so passionate about it that I spend a considerable amount of my time working on it.

[2] Over the last century we experienced a rapidly accelerating curve of progress - all driven by technology. We long left the linear growth path and are on an accelerated exponential curve.

You might have heard of Moore’s Law: Intel’s founder Gordon Moore predicted that computers will double in speed about every two years. That’s linear growth. And it’s not true for a lot of technologies anymore - we far exceed this growth pattern.

Let me give you just two examples:

[3] 5 Exabytes of information, that’s a one with 18 zeros, is the amount of information all of mankind produced since the beginning of time until the year 2003.

In 2010 we produced the same amount of information every two days.

Last year we produced those 5 exabytes every 10 minutes.

[4] When the human genome project first sequenced the full human genome, we spent about 10 years and 3 billion dollars on it. That was around the year 2000.

In 2006 we brought the price down to $10 million and a few months of sequencing time.

This year you can get your genome sequenced for a mere $1,000 in just a few hours.

[5] These are all exponential trends. They happen in industry after industry - and yet, as humans, we are poorly equipped to identify them.

Take this curve of mobile phone adoption over time: every single time an expert made a projection, their prognosis was way off - and linear. We kept saying: we have reached the tipping point, technology doesn’t get any cheaper, faster or better anymore at the same rate we saw before. And we were wrong.

So why is this important? Because we need to push the envelope to solve some of the world’s most intractable problems.

[6] In 2050 we will have 9 billion people on this planet. To feed them we need to grow agricultural output by 2% year over year. Current technology only produces a 1% year over year gain.

[7] Today 3 billion people live on less than $2.5 a day - that’s about half what your cup of fancy Starbucks coffee cost you.

[8] Currently 800 million people don’t have access to clean drinking water. Not running water in their homes - any form of clean drinking water even from a well 10 miles away.

[9] 2.5 billion people around the world don’t have access to sanitation. Which effectively means open defecation - which results in countless health issues.

[10] We need to create 600 million new jobs in the next 15 years to sustain current employment rates.

And yet…

[11] When it comes to technology too many of us focus on building the next version of this.

Let me give you a few examples of technology solving real world problems at scale:

[12] 30% of all water pumps in the 3rd world don’t work. Nobody knows which ones. The movie which plays again and again is: Aid organizations go into a village, build a well, leave and a year or so later the well doesn’t work anymore.

[13] A San Francisco startup builds this sensor, which measures water flow speed and even monitors water quality, sends this information wirelessly to a central command center and makes it possible, for the first time, to see which wells operate well, which need servicing and so on. These sensors cost less than $30 and are currently deployed in Africa by Charity Water.

[14] Or take vaccination: 20% of all vaccines administered in the 3rd world are spoiled due to a break in the cold chain. In the best case this leads to ineffective vaccines, in the worst case it kills people. In any case - it leads to massive challenges if we want to eradicate diseases.

[15] A Boston-based startup developed this wirelessly controlled sensor, which monitors temperature every few minutes, sends the data to a server and makes it possible not only to find out if a particular vaccine is spoiled but also where in the chain it spoiled, and thus, for the first time, to fix and optimize cold chains.

[16] And take natural disasters. One of the big challenges in disaster areas is that often vital infrastructure such as roads is destroyed. To get intel and aid into these regions we fly helicopters and airplanes - very expensive, hard to pull off (you need a working air strip etc), complicated.

[17] This copter-drone, developed by a team at Singularity University, costs $2000, delivers up to 20 pounds of medication and provides valuable on-the-ground intel to aid workers.

[18] In summary: we need to develop new and novel ways to solve some of the world’s largest problems. Those solutions need to be deployed at scale. Technology is our ticket out of this situation. Pushing the curve towards exponential growth is what’s needed.

[19] And make no mistake: This is not only charity but good business. People who live on $2.50 a day still spend those $2.50. They just can’t buy good products at the moment. These are markets with hundreds of millions and billions of people.

Manish Goregaokar: I've been selected for Google Summer of Code 2014!

My project is to implement XMLHttpRequest in Servo (proposal), under Mozilla (mentored by the awesome Josh Matthews).

Servo is a browser engine written in Rust. The Servo project is basically a research project, in which Mozilla is rethinking the way browser engines are designed and trying to build one that is more memory safe (the use of Rust is crucial to this goal) and much more parallel. If you'd like to learn more about it, check out this talk or join #servo on irc.mozilla.org.

What is GSoC?

Who else got selected?

Mozilla India friends

- Saurabh Anand (sawrubh): FileLinks in IMs / File transfer

- Avik Pal: Sound Visualization And Sound Effects In Artikulate

- Sukant Garg (gargsms): Add learning capability in the Gaia Keyboard prediction

- Pankaj Malhotra (bitgeeky): Functional Test Suite and Features for QA Taskboard - One and Done

- Suyash Agarwal (sshagarwal): Thunderbird - Make the unit test framework work with maildir mailbox format

- Sunny (darkolwzz): Implement Zest recorder and runner

Other IITB-ians

- Alankar Kotwal: Image Pixel Based Photometric Redshift Estimation

- Sushant Hiray: Extending Elementary Functions in CSymPy

- Navin Chandak: pgmpy : Implementation of Undirected Graphical Models and its algorithms

- Aman Mangal: Work Stealing Scheduling on Parallella

- Saket Choudhary: Human Genetic Variation Viewer

- Kunal Tyagi: Integration of ROS and Gazebo with Tango Controls

- Aditya Nambiar: Visualization for Mailing stats and A/B testing

- Anand Soni: Improvement of automatic benchmarking system

- Praveen Kumar Pendyala: Android based remote display

- S K Savant: Multiview Registration

- Abhishek Bhowmick: Performance Optimization with VOLK

- Roshan Raghupathy: Expand and Improve Boost.Compute

- Dushyant Sabharwal: Proposal for Access Control User Interface for SOS Servers

- Siddhant Rajagopalan: Mail Blast UI

http://inpursuitoflaziness.blogspot.com/2014/04/google-summer-of-code-2014.html

Nick Cameron: Rust for C++ programmers - an intermission - why Rust

If you are using C or C++, it is probably because you have to - either you need low-level access to the system, or need every last drop of performance, or both. Rust aims to offer the same level of abstraction around memory and the same performance, but to be safer and make you more productive.

Concretely, there are many languages out there that you might prefer to use instead of C++: Java, Scala, Haskell, Python, and so forth, but you can't, because either the level of abstraction is too high (you don't get direct access to memory, you are forced to use garbage collection, etc.) or there are performance issues (either performance is unpredictable or it's simply not fast enough). Rust does not force you to use garbage collection, and as in C++, you get raw pointers to memory to play with. Rust subscribes to the 'pay for what you use' philosophy of C++. If you don't use a feature, then you don't pay any performance overhead for its existence. Furthermore, all language features in Rust have predictable (and usually small) cost.

Whilst these constraints make Rust a (rare) viable alternative to C++, Rust also has benefits: it is memory safe - Rust's type system ensures that you don't get the kind of memory errors which are common in C++ - memory leaks, accessing un-initialised memory, dangling pointers - all are impossible in Rust. Furthermore, whenever other constraints allow, Rust strives to prevent other safety issues too - for example, all array indexing is bounds checked (of course, if you want to avoid the cost, you can (at the expense of safety) - Rust allows you to do this in unsafe blocks, along with many other unsafe things. Crucially, Rust ensures that unsafety in unsafe blocks stays in unsafe blocks and can't affect the rest of your program). Finally, Rust takes many concepts from modern programming languages and introduces them to the systems language space. Hopefully, that makes programming in Rust more productive, efficient, and enjoyable.
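Here is a small sketch in current Rust of the bounds checking described above. Indexing a `Vec` past its end panics instead of reading stray memory, and `get` returns an `Option`. Skipping the check with `get_unchecked` is only possible inside an `unsafe` block, which can be wrapped in a safe function so the unsafety does not leak out. The function names are illustrative and do not come from the post:

```rust
use std::panic;

// Safe lookup: out-of-range indices give None rather than garbage.
fn lookup(v: &[i32], i: usize) -> Option<i32> {
    v.get(i).copied()
}

// Opting out of bounds checks requires `unsafe`, but the unsafety is
// contained: callers of this safe function cannot misuse it.
fn sum_unchecked(v: &[i32]) -> i32 {
    let mut total = 0;
    for i in 0..v.len() {
        // SAFETY: `i` is always in 0..v.len(), so it is in bounds.
        total += unsafe { *v.get_unchecked(i) };
    }
    total
}

fn main() {
    let v = vec![10, 20, 30];
    println!("{:?} {:?}", lookup(&v, 1), lookup(&v, 10)); // Some(20) None
    println!("{}", sum_unchecked(&v));                    // 60

    // Plain indexing is checked too: this panics instead of overrunning.
    let result = panic::catch_unwind(|| v[10]);
    println!("v[10] panicked: {}", result.is_err());      // true
}
```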

I would like to motivate some of the language features from part 1. Local type inference is convenient and useful without sacrificing safety or performance (it's even in modern versions of C++ now). A minor convenience is that language items are consistently denoted by keyword (`fn`, `let`, etc.); this makes scanning by eye or by tools easier. In general, the syntax of Rust is simpler and more consistent than that of C++. The `println!` macro is safer than printf: the number of arguments is statically checked against the number of 'holes' in the string, and the arguments are type checked. This means you can't make the printf mistakes of printing memory as if it had a different type or addressing memory further down the stack by mistake. These are fairly minor things, but I hope they illustrate the philosophy behind the design of Rust.
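Here is a sketch in current Rust that illustrates the `println!` point. The placeholder count and argument types are checked when the program is compiled, so `rustc` rejects each commented-out line below rather than letting it misbehave at run time the way a mismatched `printf` can. `format!` uses the same checked format strings but returns a `String`. The function name `greeting` is made up for this example:

```rust
// Builds a message with the same compile-time-checked format strings as println!.
fn greeting(name: &str, count: u32) -> String {
    format!("Hello {}, you have {} new messages", name, count)
}

fn main() {
    println!("{}", greeting("world", 3));

    // Each of these fails to compile:
    // println!("{} {}", 1);          // two placeholders, one argument
    // println!("{}", 1, 2);          // argument never used
    // println!("{:x}", "not a num"); // &str does not implement LowerHex
}
```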

http://featherweightmusings.blogspot.com/2014/04/rust-for-c-programmers-intermission-why.html

Peter Bengtsson: Grymt - because I didn't invent Grunt here

grymt is a Python tool that takes a directory full of .html, .css and .js files and prepares the HTML for optimal production use.

For a teaser:

- Look at the "output" (Note! You have to right-click and view source)

So why did I write my own tool and not use Grunt?!

Glad you asked! The reason is simple: I couldn't get Grunt to work.

Grunt is a framework. It's a place where you say which "recipes" to execute and how. It's effectively a common config framework. Like make.

However, I tried to set up a bunch of recipes in my Gruntfile.js, and although most of them worked well individually, it was a hellish nightmare to get them all to work together just the way I wanted.

For example, the grunt-contrib-uglify is fine for doing the minification but it doesn't work with concatenation and it doesn't deal with taking one input file and outputting to a different file.

Basically, I spent two evenings getting things to work but I could never get exactly what I wanted. So I wrote my own, and because I'm quite familiar with this kind of stuff, I did it in Python. Not because it's better than Node, but just because I had it nearby and could build something more quickly.

So what sweet features do you get out of grymt?

- You can easily make an output file have a hash in the filename. E.g. `vendor-$hash.min.js` becomes `vendor-64f7425.min.js`, and thus the filename is always unique but doesn't change between deployments unless you change the files.

- It automatically notices which files have already been minified. E.g. there is no need to minify `somelib.min.js`, but it does minify `otherlib.js`.

- You can put `$git_revision` anywhere in your HTML and it gets expanded automatically. For example, view the source of buggy.peterbe.com and look at the first 20 lines.

- Images inside CSS get rewritten to have unique names (based on the files' modification times) so they can be far-future cached aggressively too.

- You never have to write down any lists of file names in some Gruntfile.js-equivalent file.

- It copies ALL files from a source directory. This is important in case you have something like this inside your JavaScript code: `$('for example.').attr('src', 'picture.jpg')`

- You can choose to inline all the minified and concatenated CSS or JavaScript. Inlining CSS is neat for single page apps where you have a majority of primed cache hits. Instead of one .html and one .css you get just one .html, and the number of bytes is the same. Not having to do another HTTP request can save a lot of time on web performance.

- The generated directory (aka "dist") contains everything you need. It does not refer back to the source directory in any way. This means you can set up your apache/nginx to point directly at the root of your "dist" directory.
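The `$hash` substitution in the first bullet can be sketched in a few lines. grymt itself is written in Python and its exact hashing scheme isn't described here, so this Rust version only illustrates the idea. `hashed_name` is a hypothetical helper, and it uses the standard library's `DefaultHasher`, whose output is not guaranteed to stay the same across Rust releases. A real build tool would want a fixed digest such as SHA-1.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

// Hypothetical helper: replace `$hash` in a filename template with a
// short hex digest of the file's contents, so the name only changes
// when the contents do.
fn hashed_name(template: &str, contents: &[u8]) -> String {
    let mut hasher = DefaultHasher::new();
    contents.hash(&mut hasher);
    // Pad to 16 hex digits so taking the first 7 is always valid.
    let digest = format!("{:016x}", hasher.finish());
    template.replace("$hash", &digest[..7])
}

fn main() {
    let js = b"function hello() { return 1; }";
    println!("{}", hashed_name("vendor-$hash.min.js", js));
}
```

Because the name is derived from the contents, an unchanged file keeps the same name across deployments, which is what makes far-future caching safe.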

So what's the catch?

- It's not Grunt. It's not a framework. It does only what it does, and if you want it to do more you have to work on grymt itself.

- The files you want to analyze, process and output all have to be in a subdirectory. Look at how I've laid out the files here in this project, for example. ALL the files you need are in one subdirectory called `app`. So, to run `grymt` I simply run: `grymt app`.

- The HTML files you throw into it have to be plain HTML files. No templates for server-side code.

How do you use it?

pip install grymt

Then you need a directory it can process, e.g. ./client/ (assumed to contain one or more .html files).

grymt ./client

For more options, check out

grymt --help

What's in the future of grymt?

If people like it and want to add features, I'm more than happy to accept pull requests. Some future potential feature work:

- I haven't needed it myself yet, but it would be nice to add things like CoffeeScript, Less, Sass etc. as pre-processing hooks.

- It would be easy to automatically generate and insert a reference to an appcache manifest. Since every file used and mentioned is noticed, we could very accurately generate an appcache file that is less prone to human error.

- Spitting out some stats about the number of bytes saved and the number of files reduced.

Justin Dolske: PoV Firefox

A nice project by Antonio Ospite:

It’s a device made of a programmable LED strip attached to a jump rope, which can be used with high exposure photography for Light Painting. The principle behind it is the same as those cool POV (Persistence of vision) displays.

Very cool!

(via Hack A Day)

Christian Heilmann: Web Components and you – dangers to avoid

Web Components are a hot topic now. Creating widgets on the web that are part of the browser’s rendering flow is amazing. So is inheriting from and enhancing existing ones. Don’t like how a SELECT looks or works? Get it and override what you don’t like. With the web consumed on mobile devices, performance is the main goal. Anything we can do to save on battery consumption and to keep interfaces responsive without being sluggish is a good thing to do.

Web Components are a natural evolution of HTML. HTML is too basic to allow us to create App interfaces. When we defined HTML5 we missed the opportunity to create semantic widgets existing in other UI libraries. Instead of looking at the class names people used in HTML, it might have been more prudent to look at what other RIA environments did. We limited the scope of new elements to what people already hacked together using JS and the DOM. Instead we should have aimed for parity with richer environments or desktop apps. But hey, hindsight is easy.

What I am more worried about right now is that there is a high chance that we could mess up Web Components. It is important for every web developer to speak up now and talk to the people who build browsers. We need to make this happen in a way that lets our end users benefit the most from Web Components. We need to ensure that we focus our excitement on the long-term goal of Web Components. Not on how to use them right now when the platforms they run on aren’t quite ready yet.

What are the chances to mess up? There are a few. From what I gathered at several events and from various talks I see the following dangers:

- One browser solutions

- Dependency on filler libraries

- Creating inaccessible solutions

- Hiding complex and inadequate solutions behind an element

- Repeating the “just another plugin doing $x” mistakes

One browser solutions

This should be pretty obvious: things that only work in one browser are only good for that browser. They can only be used when this browser is the only one available in that environment. There is nothing wrong with pursuing this as a tech company. Apple shows that when you control the software and the environment you can create superb products people love. It is, however, a loss for the web as a whole, as we just cannot force people to have a certain browser or environment. This is against the whole concept of the web. Luckily enough, different browsers support Web Components (granted, at various levels of support). We should be diligent about asking for this to go on and go further. We need this, and a great concept like Web Components shouldn’t be reliant on one company supporting them. A lot of other web innovations that were heralded as great solutions for everything went away quickly when only one browser supported them. Shared technology is safer technology. Whilst it is true that more people having a stake in something makes it harder to deliver, it also means more eyeballs to predict issues. Overall, sharing efforts prevents an open technology from becoming a vehicle for a certain product.

Dependency on filler libraries

A few years ago we had a great and – at the same time – terrible idea: let’s fix the problems in browsers with JavaScript. Let’s fix the weirdness of the DOM by creating libraries like jQuery, prototype, mootools and others. Let’s fix layout quirks with CSS libraries. Let’s extend the functionality of CSS with preprocessors. Let’s simulate functionality of modern browsers in older browsers with polyfills.

All these aim at a simple goal: gloss over the differences in browsers and allow people to use future technologies right now. This is on the one hand a great concept: it empowers new developers to do things without having to worry about browser issues. It also allows any developer to play with up and coming technology before its release date. This means we can learn from developers what they want and need by monitoring how they implement interfaces.

But we seem to forget that these solutions were built to be stop-gaps, and we have become reliant on them. Developers don’t want to go back to a standard interface of DOM interaction once they have gotten used to $(). What people don’t use, browser makers can cross off their already full schedules. That’s why a lot of standard proposals and even basic HTML5 features are missing in them. Why put effort into something developers don’t use? We fall into the trap of “this works now, we have this”, which fails to help us once performance becomes an issue. Many jQuery solutions on the desktop fail to perform well on mobile devices. Not because of jQuery itself, but because of how we used it.

Which leads me to Web Components solutions like X-Tags, Polymer and Brick. These are great as they make Web Components available right now and across various browsers. Using them gives us a glimpse of how amazing the future for us is. We need to ensure that we don’t become dependent on them. Instead we need to keep our eye on moving on with implementing the core functionality in browsers. Libraries are tools to get things done now. We should allow them to become redundant.

For now, these frameworks are small, nimble and perform well. That can change as all software tends to grow over time. In an environment strapped for resources like a $25 smartphone or embedded systems in a TV set every byte is a prisoner. Any code that is there to support IE8 is nothing but dead weight.

Creating inaccessible solutions

Let’s face facts: the average web developer is more confused about accessibility than excited by it. There are many reasons for this, none of which are worth bringing up here. The fact remains that an inaccessible interface doesn’t help anyone. We tout Flash as being evil as it blocks out people. Yet we build widgets that are not keyboard accessible. We fail to provide proper labeling. We make things too hard to use and expect the steady hand of a brain surgeon as we create tight interaction boundaries. Luckily enough, there is a new excitement about accessibility and Web Components. We have the chance to do something new and do it right this time. This means we should communicate with people of different ability and experts in the field. Let’s not just convert our jQuery plugins to Web Components verbatim. Let’s start fresh.

Hiding complex and inadequate solutions behind an element

In essence, Web Components allow you to write custom elements that do a lot more than HTML allows you to do now. This is great, as it makes HTML extensible (and not in the weird XHTML2 way). It can also be dangerous, as it is simple to hide a lot of inefficient code in a component, much like any abstraction does. Just because we can make everything into an element now, doesn’t mean we should. What goes into a component should be exceptional code. It should perform exceptionally well and have the least dependencies. Let’s not create lots of great looking components full of great features that under the hood are slow and hard to maintain. Just because you can’t see it doesn’t mean the rules don’t apply.

Repeating the “just another plugin doing $x” mistake

You can create your own carousel using Web Components. That doesn’t mean though that you have to. Chances are that someone already built one and the inheritance model of Web Components allows you to re-use this work. Just take it and tweak it to your personal needs. If you look for jQuery plugins that are image carousels right now you better bring some time. There are a lot out there – in various states of support and maintenance. It is simple to write one, but hard to maintain.

Writing a good widget is much harder than it looks. Let’s not create a lot of components because we can. Instead let’s pool our research and findings and build a few that do the job and override features as needed. Core components will have to change over time to cater for different environmental needs. That can only happen when we have a set of them, tested, proven and well architected.

Summary

I am super excited about this and I can see a bright future for the web ahead. This involves all of us, and I would love Flex developers to take a look at what we do here and bring their experience in. We need a rich web, and with the diversity of devices ahead, I don’t see creating DOM-based widgets as the solution for that for much longer.

http://christianheilmann.com/2014/04/18/web-components-and-you-dangers-to-avoid/

Will Kahn-Greene: Django Eadred v0.3 released! Django app for generating sample data.

Django Eadred gives you some scaffolding for generating sample data to make it easier for new contributors to get up and running quickly, bootstrapping required database data, and generating large amounts of random data for testing graphs and things like that.

The v0.3 release is a small one, but good:

- Added support for Python 3.3 and later (Thank you Trey Hunner!)

- Fixed test infrastructure and added tox support.

- Fixed documentation.

There are no backwards-compatibility problems with previous versions.

To update, do:

pip install -U eadred

Nick Cameron: Rust for C++ programmers - part 1: Hello world

First you need to install Rust. You can download a nightly build from http://www.rust-lang.org/install.html (I recommend the nightlies rather than 'stable' versions: the nightlies are stable in that they won't crash too much (no more than the stable versions), and you're going to have to get used to Rust evolving under you sooner or later anyway). Assuming you manage to install things properly, you should then have a `rustc` command available to you. Test it with `rustc -v`.

Now for our first program. Create a file, copy and paste the following into it and save it as `hello.rs` or something equally imaginative.

```rust
fn main() {
    println!("Hello world!");
}
```

Compile this using `rustc hello.rs`, and then run `./hello`. It should display the expected greeting \o/

Two compiler options you should know are `-o ex_name` to specify the name of the executable and `-g` to output debug info; you can then debug as expected using gdb or lldb, etc. Use `-h` to show other options.

OK, back to the code. A few interesting points: we use `fn` to define a function or method. `main()` is the default entry point for our programs (we'll leave program args for later). There are no separate declarations or header files as with C++. `println!` is Rust's equivalent of printf. The `!` means that it is a macro; for now you can just treat it like a regular function. A subset of the standard library is available without needing to be explicitly imported/included (we'll talk about that later). The `println!` macro is included as part of that subset.

Let's change our example a little bit:

```rust
fn main() {
    let world = "world";
    println!("Hello {}!", world);
}
```

`let` is used to introduce a variable; `world` is the variable name and it is a string (technically the type is `&'static str`, but more on that in a later post). We don't need to specify the type; it will be inferred for us.

Using `{}` in the `println!` statement is like using `%s` in printf. In fact, it is a bit more general than that because Rust will try to convert the variable to a string if it is not one already*. You can easily play around with this sort of thing - try multiple strings and using numbers (integer and float literals will work).
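Following the suggestion to try multiple strings and numbers, here is a quick sketch in current Rust. Integer and float values both work with `{}`, and positional (`{0}`) and named (`{name}`) placeholders are also available. The helper `describe` is hypothetical:

```rust
// Mixes strings and numbers in one format string.
fn describe(item: &str, qty: i32, price: f64) -> String {
    format!("{} x {} at {} each", qty, item, price)
}

fn main() {
    println!("{}", describe("apple", 3, 0.5));           // 3 x apple at 0.5 each
    println!("{0} and {0} again, then {1}", "world", 42); // reuse by position
    println!("Hello {name}!", name = "world");            // named placeholder
}
```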

If you like, you can explicitly give the type of `world`:

```rust
let world: &'static str = "world";
```

In C++ we write `T x` to declare a variable `x` with type `T`. In Rust we write `x: T`, whether in `let` statements or function signatures, etc. Mostly we omit explicit types in `let` statements, but they are required for function arguments. Let's add another function to see it work:

```rust
fn foo(_x: &'static str) -> &'static str {
    "world"
}

fn main() {
    println!("Hello {}!", foo("bar"));
}
```

The function `foo` has a single argument `_x` which is a string literal (we pass it "bar" from `main`). We don't actually use that argument in `foo`. Usually, Rust will warn us about this. By prefixing the argument name with `_` we avoid these warnings. In fact, we don't need to name the argument at all; we could just use `_`.

The return type for a function is given after `->`. If the function doesn't return anything (a void function in C++), we don't need to give a return type at all (as in `main`). If you want to be super-explicit, you can write `-> ()`, since `()` is the void type in Rust. `foo` returns a string literal.

You don't need the `return` keyword in Rust: if the last expression in a function body (or any other body, we'll see more of this later) is not finished with a semicolon, then it is the return value. So `foo` will always return "world". The `return` keyword still exists so we can do early returns. You can replace `"world"` with `return "world";` and it will have the same effect.
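The difference between a tail expression and an early `return` is easier to see in a small sketch in current Rust. `classify` is a made-up example and does not come from the post. The guard uses `return` to leave early. The final `if`/`else` has no semicolon, so its value becomes the function's result.

```rust
fn classify(n: i32) -> &'static str {
    if n == 0 {
        return "zero"; // an early return needs the keyword
    }
    // No trailing semicolon: this whole if/else is the return value.
    if n > 0 { "positive" } else { "negative" }
}

fn main() {
    println!("{} {} {}", classify(0), classify(5), classify(-2)); // zero positive negative
}
```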

* This is a programmer specified conversion which uses the `Show` trait, which works a bit like `toString` in Java. You can also use `{:?}` which gives a compiler generated representation which is sometimes useful for debugging. As with printf, there are many other options.
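In current Rust, `{}` is driven by the `Display` trait (the role the footnote gives to `Show`), and `{:?}` is driven by the `Debug` trait, which the compiler can derive for you. Here is a minimal sketch using a hypothetical `Point` type:

```rust
use std::fmt;

// Debug is derived; Display is written by hand.
#[derive(Debug)]
struct Point {
    x: i32,
    y: i32,
}

impl fmt::Display for Point {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "({}, {})", self.x, self.y)
    }
}

fn main() {
    let p = Point { x: 1, y: 2 };
    println!("{}", p);   // (1, 2)
    println!("{:?}", p); // Point { x: 1, y: 2 }
}
```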

http://featherweightmusings.blogspot.com/2014/04/rust-for-c-programmers-part-1-hello.html


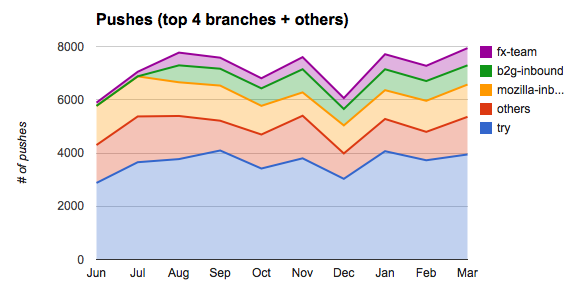

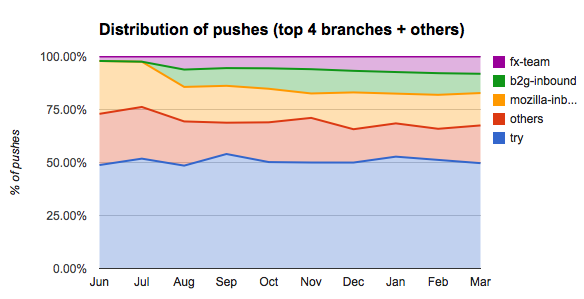

Armen Zambrano Gasparnian: Mozilla's pushes - March 2014

You can load the data as an HTML page or as a json file.

TRENDS

March (as February did) has the highest number of pushes EVER. We will soon have 8,000 pushes/month as our norm.

The only noticeable change in the distribution of pushes is that non-integration trees had a higher share of the cake (17.80% on Mar. vs 14.60% on Feb.).

HIGHLIGHTS

- 7,939 pushes (NEW RECORD)

- 284 pushes/day on average (NEW RECORD)

- Highest number of pushes in a day: 435 pushes on March 4th (NEW RECORD)

- 16.07 pushes/hour (average)

GENERAL REMARKS

Try keeps on having around 50% of all the pushes. The three integration repositories (fx-team, mozilla-inbound and b2g-inbound) account for around 30% of all the pushes.

RECORDS

- March 2014 was the month with the most pushes (7,939 pushes)

- March 2014 has the highest pushes/day average with 284 pushes/day

- February 2014 has the highest pushes/hour average with 16.57 pushes/hour

- March 4th, 2014 had the highest number of pushes in one day with 435 pushes

DISCLAIMERS

- The data collected prior to 2014 could be slightly off since different data collection methods were used

- Gaia pushes are only roughly counted. I will write a blog post about it in the near term.

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://armenzg.blogspot.com/2014/04/mozillas-pushes-march-2014.html

|

|

Ben Hearsum: This week in Mozilla RelEng – April 17th, 2014 |

Major Highlights:

- Massimo finished converting the remainder of our AWS Buildbot masters to cheaper & faster instance types.

- Aki is making steady progress on Try support for Gaia changes.

- Armen turned off the last jobs that were run on our rev 3 machines, which finally lets us retire them.

- Aki and Catlee got initial builds for the FirefoxOS “Flame” reference device going.

- Pete made more progress on scripts to do fully automated buildbot master reconfigs, which should help us land changes to them more quickly and efficiently.

Completed work (resolution is ‘FIXED’):

- Balrog: Backend

- Buildduty

- General Automation
  - Split up test suites that take longer than N minutes to run
  - remove machines that don’t exist from buildbot-configs
  - investigate increase in certificate attribute check failures since bug 803531
  - generate “FxOS Simulator” builds for B2G
  - Spot instances don’t have security group set properly
  - Rev4 machines have Puppet disabled which can lose their name and burn talos jobs because they end up with a name like client-builders-mac-mini-10
  - Add mbrubeck to bors reviewers for servo
  - turn off some b2g builds
  - [Flame]figure out how to create “Flame” builds manually
  - Create retry() context manager
  - Bump MAX_BROKER_REFS to 4096
  - Turn off Fedora b2g reftests on trunk branches
  - Provide native js shell to tarako b2g builds
  - Split up mochitest-bc on desktop into ~30minute chunks
  - Bengali in 1.3t builds

- Loan Requests
  - please loan jmaher an ec2 machine equal to a linux32-spot instance
  - Slave loan request for Matt Woodrow
  - borrow a linux ix multinode host to test CentOS-6.5
  - Need a win32 slave to debug Lightning pymake issues
  - Slave loan request for a w64-ix machine
  - Slave loan request for a t-w864-ix machine
  - Slave loan request for a bld-lion-r5 machine

- Other

- Platform Support

- Release Automation
  - No Tarako 1.3 build available to smoketest
  - staging release runner instructions don’t work with new dev-master1/ssh key management
  - bouncer submitter doesn’t work in staging
  - ccache enabled in nightly mozconfigs but not beta or release
  - kill release downloader
  - Need an update watershed in 29.0bN for beta channel

- Releases

- Repos and Hooks
  - Request for a new git repository: “fuzz-tools” (public)
  - Mirror bmo to github
  - Mirror upstream Bugzilla to github

- Tools
  - Make mozharness+buildbot configuration changes necessary for new test suite mochitest-dt
  - Add mochitest-dt to Trychooser
  - Slaveapi should support mobile devices for reboots
  - Slaveclass warning on slave health
  - Cron /home/buildduty/buildfaster/bin/buildfaster_report.sh
  - buildbot wrangler should notice failed reconfigs
  - Create instructions for Windows on EC2

In progress work (unresolved and not assigned to nobody):

- Balrog: Frontend

- Buildduty

- General Automation
  - Make it possible to run gaia try jobs *without* doing a build
  - Updates not properly signed on the nightly-ux branch: Certificate did not match issuer or name.
  - Schedule Android 4.0 Debug M1,M2,M3,M8 on all trunk trees and let them ride the trains
  - branch gaia l10n repos
  - Move Android 2.3 reftests to ix slaves (ash only)
  - revamp b2g upload configs
  - Add ssltunnel to xre.zip bundle used for b2g tests
  - Do debug B2G desktop builds
  - Run unittests on Win64 builds
  - Need Tarako 1.3t FOTA updates for testing purposes
  - Add the build step or else process name to buildbot’s generic command timed out failure strings
  - Fully decommission no longer used buildbot masters
  - point [xxx] repository at new bm-remote webserver cluster to ensure parity in talos numbers
  - Intermittent Android 2.3 install step hang
  - Configure the MLS key for pvt builds

- Loan Requests
  - Loan t-w864-ix-003 to jrmuizel
  - Slave loan request for a tst-linux64-ec2 vm
  - Slave loan request for dtownsend@mozilla.com
  - Need a slave for bug 818968
  - Slave loan request for dtownsend@mozilla.com
  - Loan t-w732-ix-003, t-w732-ix-004 to Q
  - Loan mshal an aws builder
  - Loan :sfink a linux64 bld-centos6-hp-005
  - Request Loan Machine for tst-linux64-spot

- Other

- Platform Support
  - Deploy NSIS 3.0a2 or MozillaBuild 1.9 (includes NSIS 3.0a2) to buildslaves
  - signing win64 builds is busted
  - Cleanup temporary files on boot
  - evaluate mac cloud options
  - Create a Windows-native alternate to msys rm.exe to avoid common problems deleting files

- Release Automation
  - Thunderbird Windows L10n repack builds failed with pymake enabled
  - Figure out how to offer release build to beta users
  - cache MAR + installer downloads in update verify

- Releases
  - tracking bug for build and release of Firefox and Fennec 30.0
  - tracking bug for build and release of Firefox 24.6.0 ESR
  - Add SeaMonkey 2.26 Beta 2 to bouncer
  - tracking bug for build and release of Thunderbird 24.6.0
  - do a staging release of Firefox and Fennec 31.0b1

- Tools
  - cut over gecko.git to the new vcs-sync system
  - cut over l10n repos to the new vcs-sync system
  - Blobber upload files not served with correct content type
  - update relman scripts based on notes from first usage
  - tool to compare different sources of slave and master data
  - Add Windows support to aws_stop_idle.py
  - Create a Comprehensive Slave Loan tool

http://hearsum.ca/blog/this-week-in-mozilla-releng-april-17th-2014/

|

|

Dietrich Ayala: Firefox OS and Academic Programs |

Although Mozilla feels almost like a household name at this point, it is a relatively small organization – tiny, actually – compared to the companies that ship similar types of software [1]. We must, however, have the impact of a much larger entity in order to ensure that the internet stays an open platform accessible to all.

Producing consumer software which influences the browser and smartphone OS markets in specific ways is how we make that impact. Shipping that software requires teams of people to design, build and test it, and all the countless other aspects of the release process. We can hire some of these people, but remember: we’re relatively tiny. We cannot compete with multi-billion-dollar mega-companies while operating in traditional ways. Mozilla has to be far more than the sum of its paid-employee parts in order to accomplish audaciously ambitious things.

Open source code and open communication allow participation and contribution from people that are not employees. And this is where opportunities lie for both Mozilla and universities.

For universities, undergraduates can earn credit, get real world experience, and ship software to hundreds of millions of people. Graduate researchers can break ground in areas where their findings can be applied to real world problems, and where they can see the impact of their work in the hands of people around the world. And students of any kind can participate before, during and after any involvement that is formally part of their school program.

For Mozilla, we receive contributions that help move our products and projects forward, often in areas that aren’t getting enough attention only because we don’t have the resources to do so. We get an influx of new ideas and new directions. We gain awesome contributors and can educate tomorrow’s technology workers about our mission.

I’ve been working with a few different programs recently to increase student involvement in Firefox OS:

- Portland State University: The PSU CS Capstone program, run by Prof. Warren Harrison, has teams of students tackling projects for open source groups. The teams are responsible for all parts of the software life-cycle during the project. In the spring of 2013, a group of five students implemented an example messaging app using Persona and Firebase, documenting the challenges of Web platform development and the Firefox OS development/debugging workflow. This year’s group will implement a feature inside Firefox OS itself.

- Facebook Open Academy: This is a program coordinated by Stanford and Facebook that puts teams from multiple universities together to build something proposed by an existing open source project. The Firefox OS team includes students from Carnegie Mellon, Purdue, Harvard, Columbia in the US, and Tampere UT in Finland. They’re adding a new feature to Firefox OS which allows you to share apps directly between devices using NFC and Bluetooth. With 14 members across five universities, this team is collaborating via GitHub, Google Groups, IRC and weekly meetings for both the front-end and back-end parts, providing experience with remote working, group coordination and cross-team collaboration.

- University of Michigan: Prof. Z. Morley Mao’s mobile research group has started looking at device and network performance in Firefox OS. They’ve got a stack of phones and SIM cards, and we’re working with them to find ways to improve battery life and network efficiency on our devices. They’ve started a collection of focus areas and related research on the Mozilla wiki.

If you’re at an academic institution and would like to learn more about how to get your department or your students involved, or if you’re a Mozillian who wants to coordinate a project with your alma mater, email me!

1. Mozilla has ~1000 employees. According to Wikipedia, Google has ~50,000 employees, Apple ~80,000 and Microsoft ~100,000.

http://autonome.wordpress.com/2014/04/17/firefox-os-and-academic-programs/

|

|

Robert Nyman: The day Santa died |

Today, just as we prepare for an Easter break, I heard my youngest daughter arguing with her older sister, and then the younger came running to me.

Dad, does the Easter Bunny and Santa exist?

As a parent, or anyone speaking to a child, you don’t want to take away their dreams or hopes. At the same time, it’s a rough world, so you see it as your responsibility to teach them as much as possible about how the world works so they both know more but also, to be honest, avoid the risk of them being ridiculed for not knowing something.

So should I leave it be and then she’ll eventually find out? Or should I tell her the truth, with the risk of making her sad? I do believe in honesty but at the same time I don’t want to be cynical.

I thought a bit about it, what to say and how to approach the situation. After some thinking, I decided to ask her what she thought:

What do you think? Do you believe they exist?

Yes. Or… I think so.

Have you ever seen any of them in real life?

Well… We had a bunny at the kindergarten once. Who wore shoes. And then you were Santa once, daddy.

Right. So do you believe they exist?

Hmm… No, not really, I guess.

(sounding more like she wished they existed than actually thinking they do)

And then she bounced off, having learned another lesson about life.

|

|

Gijs Kruitbosch: Why doing visual refreshes of Firefox is hard |

We’re getting closer and closer to releasing Australis with Firefox 29, and that gives me more time to write something that’s been on my mind the last couple of weeks/months. Extra impetus was provided by sentiment along the lines of “how did you possibly miss this / think fix X was a good idea?” from some people outside the core development team, responding to some of our changes.

In this post, I’d like to give you an idea of the number of combinations of options, configurations, themes, add-ons, fonts and styles. It is enormous. Firefox generally tries to fit in with your operating system as best it can, and that means we have to pay attention to lots of things. And yes, that means sometimes we miss things. Here’s a breakdown of some of the things I’ve seen fly by as we made Australis, all linked to bugs specific to particular scenarios (there are 54 individual bugs linked, the majority of which were fixed for 29).

Firefox is available on three main (tier-1) platforms: Windows, Linux, and OS X.

All three platforms support lightweight themes, of which we support light and dark text variants. On light text lightweight themes, we invert the text and icons to be bright (which usually means the theme itself has a dark background). Interactions between these themes and the OS are not always the same everywhere, which leads to bugs.

Different toolbars like the menubar and the bookmarks toolbar can be toggled on and off (which sometimes makes certain ideas more difficult), and the menubar has an autohide state, which is new on Linux and caused specific bugs there.

And although we normally always show the tabbar and the navbar, there are popup windows where we don’t (toolbar=no), which, you guessed it, causes bugs.

Then we have per-window private browsing, which has an indicator which makes things ‘fun’ (and soon private browsing will look even more different from normal browsing).

Of course, as long as we stick to English, layout direction is more or less fixed, but we ship both LTR and RTL locales on all platforms, which changes the order of things and frequently leads to bugs.

Then there’s the padding that we added for “customize mode”, which affects layout of the toolbars and the (‘fake’) titlebar, which had its own problems.

Some issues are specific to pinned or overflowing tabs (sometimes even particular tabs), as well as panorama/tab groups.

Beyond that, styling is somewhat platform-specific, each with their own quirks:

OS X

OS X is, in a certain sense, “easiest” because the OS doesn’t have a lot of options that mess with things (font size, for example, isn’t easily configurable). But there’s still some variation:

- Lion vs. pre-Lion: 10.6, which we still support, has no fullscreen button in the titlebar (unlike 10.7-10.9) and has no concept of “Lion fullscreen”.

- Spaces: causes odd bugs with panels.

- HiDPI (“retina”): this causes bugs / missed cases. Add external displays which might not be hidpi, and you get even odder bugs (this one’s 10.9-only, too, it seems!).

- RTL. Coupled with retina –> more bugs.

- 10.9 broke more stuff.

- Titlebar can be turned on/off now: cue more bugs.

Linux

Linux really means “Unix that has GTK”, as far as theming is concerned. Unfortunately that ends up being a wide spectrum of cases:

- Configurable font-sizes. And unsurprisingly, even when we use font-size based sizing to avoid issues, that still causes bugs (because CSS rounds things. ouch.)

- Different GTK themes cause lots of bugs.

- The same GTK theme being different in different distros or shells (Gnome 3 vs. Unity) makes bugs difficult to reproduce/track down.

- Different versions of GTK and/or GTK with/without compositor cause issues.

Windows

Windows really means “Windows XP, Windows Vista, Windows 7, Windows 8(.1) and all the corresponding Windows Server versions”. Which then means:

- A large number of OS themes to worry about:

- Configurable colors, fonts and font-sizes for Windows classic themes (and I think pre-Windows-8 high contrast themes, too?)

- Different fonts ship as default in different Windows locales (!), and the default fonts change between Windows versions. The font is user-configurable under some themes and versions of Windows, but not others (e.g. Windows 8).

- Titlebar toggling and some Windows themes (but not others, and also, multiple windows and a non-default session restore pref, in the case of this bug)

- Toggling some about:config preferences has surprising results.

- HiDPI is increasingly common, and more complicated than on OS X because of the variety of scaling factors available. We improved the tabs, but there is plenty left to do.

- Custom Windows XP themes! (which don’t always seem to expose the right colors, making it practically impossible to work well)

- Significant differences between maximized and restored mode.

This list doesn’t include bugs caused or revealed by add-ons, but of course those also add interesting behaviour to the mix.

All in all, it’s been an interesting first year as an employee at Mozilla (I started April 1st, 2013), and I can’t wait to see all our changes ship: Firefox 29 is scheduled for release on April 29th.

http://www.gijsk.com/blog/2014/04/why-doing-visual-refreshes-of-firefox-is-hard/

|

|

Frederic Wenzel: The Case for the Ubiquitous Mobile Web |

As we use more and more mobile devices in our lives, an open platform is becoming more, not less important.

In an article "The decline of the Mobile Web", Chris Dixon worries about the future of the Web because, despite the dramatic uptake in mobile device usage, mobile Web usage is rapidly declining in favor of apps.

John Gruber of Daring Fireball argues that this is a victory of good user experiences over bad ones and suggests we celebrate it all as an evolution of the Web towards a dumb pipe delivering data to whatever device and platform provides the user experience.

Apples and Oranges

Unfortunately, Gruber is drawing the wrong conclusions from a good premise. I think no one would disagree that users do (and should) gravitate towards good experiences. But when he weighs the UX of apps against non-mobile-oriented websites accessed via dedicated browsers, he's comparing apples to oranges and concluding that the Web's underlying technologies are therefore inherently inferior.

Besides, Web technology doesn't matter to him: as long as the user-facing app uses something akin to HTTP in the background, he'll still count it as the Web:

"App Stores are walled gardens, but the apps themselves are just clients to the open web/internet."

This is a very strange use of the word "open". There's nothing open about relegating the Web to acting as a "dumb pipe" like the underlying communication protocols are supposed to be.

As it stands, Gruber is splitting hairs -- calling it the "Web" just because HTTP is involved -- but he's missing the point. Users don't care about what the data pipe looks like, they care about their window into that data.

The Ubiquitous Walled Garden

Why it is harmful to redefine the term Open Web like this becomes clear when we start our discussion from a level playing field. We should ask:

Assuming the same good user experience, is an application written on a proprietary platform just as good for the user as one written on an open stack?

Consider Evernote CEO Phil Libin's recent prediction that we're moving towards a network of connected devices, where the experiences are not enclosed in apps, but are "just there".1

That's a wonderful world, just so long as you only use devices blessed by a company (iPhone, iWatch, iFridge and iTV). The walled garden is beautiful on the inside, if you enjoy the exact experiences they deem suitable for you (and that don't interfere with their revenue models, etc. etc.) Who owns the platform, owns the user. And while "users flock to the best experiences", the worst part is the owner of the platform can choke off innovation whenever they feel threatened by it, and the users may never know what they're missing out on.

The only way around this is by embracing a shared development platform that is not owned by any one competitor (hence, open). And this platform is the Web.

Will it suffice if all those services run on closed platforms, just so long as they speak HTTP in the background? No. What makes the Web open is that you can connect to its resources from anywhere, with any device, so long as a browser exists for the platform2. The Web, if it merely acts as a delivery vehicle for data, is not open anymore and cannot act as the level playing field for innovation and choice that it is meant to be.

Time for more, not less openness

This is, in a nutshell, why Firefox OS is such an important project. It's not yet another proprietary mobile app platform. In Firefox OS, the open Web is the platform.

Firefox OS is about weaving the open Web into the very fabric of the mobile landscape. It's about enabling the next generation of makers to hack their devices to their heart's content. It's about providing users with a platform that fosters actual innovation rather than giving them the illusion of choice.

And that's a user experience worth fighting for.

|

|

Armen Zambrano Gasparnian: Kiss our old Mac Mini test pool goodbye |

There's a very, very long list of people that have been involved in this project (see bug 864866).

I want to thank ahal, fgomes, jgriffin, jmaher, jrmuizel and rail for their help on the last mile.

We're very happy to have finally decommissioned this non-datacenter-friendly infrastructure.

A bit of history

These minis were purchased back in early 2010, and we bought more than 300 of them. At first, we ran Fedora 12, Fedora 12 x64, Windows XP, Windows 7 and Mac 10.5 on them. Later on we also added 10.6 to the mix (if my memory doesn't fail me).

Sometime in 2012, we moved the Mac 10.6 testing to the new revision 4 Mac server minis and deprecated the 10.5 rev3 testing pool. We then re-purposed those machines to increase the Windows and the Fedora pools.

By May of 2013, we stopped running Windows on them.

During 2013, we moved a lot of the Fedora testing to EC2.

Now, we have managed to move the B2G reftests and Firefox debug mochitest-browser-chrome to EC2.

NOTE: I hope my memory does not fail me

[Photo: Delivery of the Mac minis (photo credit to joduinn)]

[Photo: Racked at the datacenter (photo credit to joduinn)]

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://armenzg.blogspot.com/2014/04/kiss-our-old-mac-mini-test-pool-goodbye.html

|

|

Patrick Cloke: Community and Volunteers |

"I am surprised [...] by how heartless the discussion has been."

I should note that I did have some help editing this down from my original post. Turns out I tend to write inflammatory statements that don't help get my point across. Who knew? Anyway, thanks to all of you who helped me out there!

My full post is below (with a few links added and plaintext formatting converted to HTML formatting):

http://clokep.blogspot.com/2014/04/community-and-volunteers.html

|

|

Gervase Markham: Who We Are And How We Should Be |

“Every kingdom divided against itself will be ruined, and every city or household divided against itself will not stand.” — Jesus

It has been said that “Mozilla has a long history of gathering people with a wide diversity of political, social, and religious beliefs to work with Mozilla.” This is very true (although perhaps not all beliefs are represented in the proportions they are in the wider world). And so, like any collection of people who agree on some things and disagree on others, we have historically needed to figure out how that works in practice, and how we can avoid being a “kingdom divided”.

Our most recent attempt to write this down was the Community Participation Guidelines. As I see it, the principle behind the CPGs was, in regard to non-mission things: leave it outside. We agreed to agree on the mission, and agreed to disagree on everything else. And, the hope was, that created a safe space for everyone to collaborate on what we agreed on, and put our combined efforts into keeping the Internet open and free.

That principle has taken a few knocks recently, and from more than one direction.

I suggest that, to move forward, we need to again figure out, as Debbie Cohen describes it, “how we are going to be, together”. In TRIBE terms, we need a Designed Alliance. And we need to understand its consequences, commit to it as a united community, and back it up forcefully when challenged. Is that CPG principle still the right one? Are the CPGs the best expression of it?

But before we figure out how to be, we need to figure out who we are. What is the mission around which we are uniting? What’s included, and what’s excluded? Does Mozilla have a strict or expansive interpretation of the Mozilla Manifesto? I have read many articles over the past few weeks which simply assume the answer to this question – and go on to draw quite far-reaching conclusions. But the assumptions made in various quarters have been significantly different, and therefore so have the conclusions.

Now that everyone has had a chance to take a breath after recent events, and with an interim MoCo CEO in place and Mozilla moving forward, I think it’s time to start this conversation. I hope to post more over the next few days about who I think we are and how I think we should be, and I encourage others to do the same.

http://feedproxy.google.com/~r/HackingForChrist/~3/P5xDqnNwVwg/

|

|

Pete Moore: Weekly review 2014-04-16 |

Accomplishments & status:

- Thursday/Friday I was buildduty - see https://releng.etherpad.mozilla.org/buildduty for an overview of issues that came up and activities performed

- Monday/Tuesday worked on https://bugzilla.mozilla.org/show_bug.cgi?id=978928 - this ended up being pretty much a rewrite.

example log:

http://people.mozilla.org/~pmoore/summary.log

code:

https://github.com/petemoore/build-tools/compare/mozilla:master…bug978928

- Worked on update_verify (met with Rail to discuss)

- Met with Dave Hunt from A-Team for some pairing

|

|

Christian Heilmann: Browser inconsistencies: animated GIF and drawImage() |

I just got asked why Firefox doesn’t do the same thing as Chrome does when you copy a GIF into a canvas element using drawImage(). The short answer is: Chrome’s behaviour is not according to the spec. Chrome copies the currently visible frame of the GIF whereas Firefox copies the first frame. The latter is consistent with the spec.

You can see the behaviour at this demo page:

Here’s the bug on Firefox and the bug request in WebKit to make it consistent; thanks to Peter Kasting, there is also a bug filed for Blink.

The only way to make this work across browsers seems to be to convert the GIF into its frames and play them in a canvas, much like jsGIF does.
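Playing decoded frames yourself means deciding which frame is on screen at any moment. Below is a minimal, language-neutral sketch of that scheduling step, written in Rust. It does not decode GIFs or touch a canvas. `frame_at` is a hypothetical helper, not part of jsGIF or any browser API. It assumes the per-frame delays have already been converted to milliseconds (GIF stores them in hundredths of a second) and that the animation loops forever.

```rust
/// Hypothetical helper: given each frame's delay in milliseconds and the
/// time elapsed since playback started, return the index of the frame
/// that should be visible, assuming the animation loops forever.
fn frame_at(delays_ms: &[u32], elapsed_ms: u64) -> Option<usize> {
    let total: u64 = delays_ms.iter().map(|&d| u64::from(d)).sum();
    if total == 0 {
        return None; // no frames, or all delays are zero: nothing to schedule
    }
    let mut t = elapsed_ms % total; // position within the current loop
    for (i, &d) in delays_ms.iter().enumerate() {
        let d = u64::from(d);
        if t < d {
            return Some(i);
        }
        t -= d;
    }
    unreachable!("t < total guarantees that some frame matches");
}

fn main() {
    let delays = [100, 200, 300]; // three frames, 600 ms per loop
    for &t in &[0u64, 150, 450, 650] {
        // "Current frame" vs. the first frame (index 0), which is what the
        // post says the spec asks drawImage() to copy.
        println!("t = {} ms: current frame {:?}, first frame 0", t, frame_at(&delays, t));
    }
}
```

Copying the first frame corresponds to always using index 0. Copying the currently visible frame corresponds to calling `frame_at` with the current elapsed time.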

http://christianheilmann.com/2014/04/16/browser-inconsistencies-animated-gif-and-drawimage/

|

|