Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.


Rick Eyre: Hosting your JavaScript library builds for Bower

A while ago I blogged about the trouble with hosting a pre-built distribution of vtt.js for Bower. The issue was that we need a build step to produce a distributable file that Bower can use, so we couldn't just point Bower at our repo and be done with it, since we weren't checking in the builds at the time. I decided to host these builds in a separate repo instead of checking them into the main repo. However, this got troublesome after a while (as you might imagine), since I was building and committing the Bower updates manually instead of writing a script like I should have. It might be a good thing that I didn't end up automating it with a script, since we decided to switch to hosting the builds in the same repo as the source code.

The way I ended up solving this was to build a grunt task that uses a number of other tasks to build and commit the files while bumping our library version. This way we're not checking in new dist files with every little change to the code: dist files that wouldn't even be available through Bower or npm, because they're not attached to a particular version. We only need to build and check in the dist files when we're ready to make a new release.

I called this grunt task release and it utilizes the grunt-contrib-concat, grunt-contrib-uglify, and grunt-bump modules.

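```javascript
// exec comes from Node's child_process module.
var exec = require( "child_process" ).exec;

grunt.registerTask( "build", [ "uglify:dist", "concat:dist" ] );

grunt.registerTask( "stage-dist", "Stage dist files.", function() {
  // Stage the freshly built dist files; this.async() keeps grunt waiting for git.
  exec( "git add dist/*", this.async() );
});

grunt.registerTask( "release", "Build the distributables and bump the version.", function( arg ) {
  // arg is the bump type given as release:<type>, e.g. patch, minor or major.
  grunt.task.run( "build", "stage-dist", "bump:" + arg );
});
```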

I've also separated builds into dev builds and dist builds. This way, in the normal course of development, we don't build dist files that are tracked by git and then have to worry about not committing those changes. That would otherwise happen, because our test suite needs to build the library in order to test it.

```javascript
grunt.registerTask( "build", [ "uglify:dist", "concat:dist" ] );
grunt.registerTask( "dev-build", [ "uglify:dev", "concat:dev" ] );
grunt.registerTask( "default", [ "jshint", "dev-build" ] );
```

Then, when we're ready to make a new release with a new dist, we just run:

```shell
grunt release:patch   # or release:minor / release:major if we want to
```

|

|

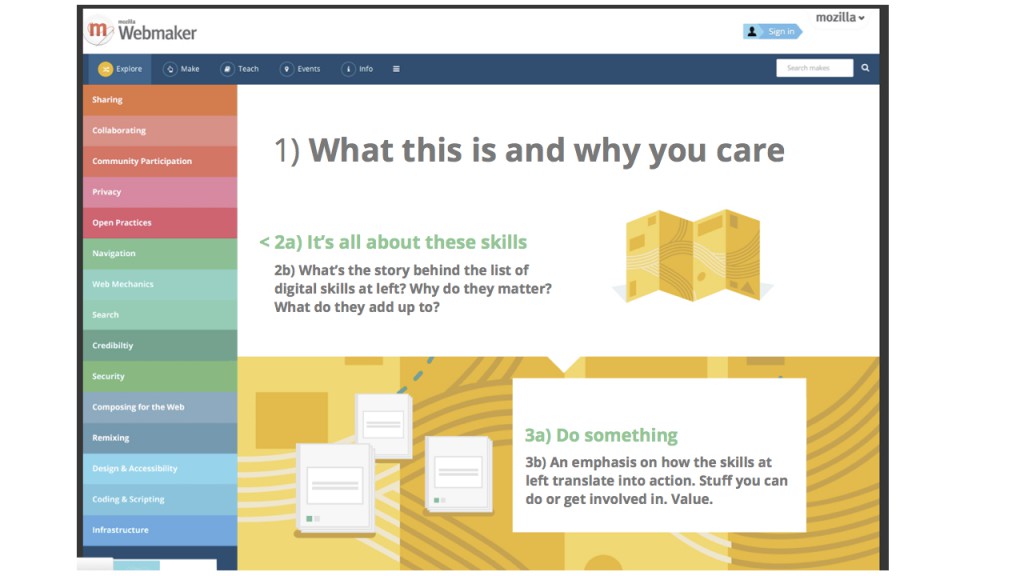

Matt Thompson: Writing for Webmaker’s new “Explore” page

We’re shipping a new “explore” page for Webmaker. The goal: help users get their feet wet, quickly grokking what they can do on Webmaker.org. Plus: make it easy to browse through the list of skills in the Web Literacy Standard, finding resources and teaching kits for each.

It’s like an interactive textbook for teaching web literacy.

The main writing challenge: what should the top panel say? That means the main headline and the two blurbs that follow it.

In my mind, this section should try to do three things:

- State what this is. And why you care.

- Tell a story about the list of skills at left. When you hit this page, you see a list of rainbow-coloured words that can seem confusing or random if you’re here for the first time. “Sharing. Collaborating. Community Participation…. Hmmm…. what does that all actually mean?”

- Focus on what users can do here. What does exploring those things do for you? What’s the action or value?

First draft

Here's a start:

Teach the web with Webmaker

Explore creative ways to teach


Ben Hearsum: This week in Mozilla RelEng – April 11th, 2014

Major highlights:

- Armen and Bill McCloskey got an initial set of tests running with e10s enabled, which is a big step towards stabilizing it.

- Hal helped run the shortest tree closure window to date (less than 2 hours!).

- Massimo switched over some of our EC2 machines to SSD instance storage, which has improved Linux build times on try.

- Aki attended the first TRIBE session.

- Armen talked about some of our cost-cutting measures in an air.mozilla.org presentation.

Completed work (resolution is ‘FIXED’):

- Balrog: Backend
- Buildduty
  - Please add t-mavericks-r5-002 and t-mavericks-r5-003 to graphserver
  - Reconfig bustage – temporary fix for “exceptions.KeyError: ‘tst-linux64-ec2-300'”
  - Reimage remaining win64-rev1 machines as win64-rev2
  - Testpool connection issues
- General Automation
  - Provide native js shell to tarako b2g builds
  - Cloning of hg.mozilla.org/build/tools and hg.mozilla.org/integration/gaia-central often times out, as does downloading/unzipping test zips
  - add missing pandas/tegras to buildbot configs
  - b2g build improvements
  - generate “FxOS Simulator” builds for B2G
  - Run additional hidden 3 debug mochitest-browser-chrome chunks and b2g reftests
  - prioritize l10n nightlies below everything, regardless of branch
  - Enable all mozharness desktop build linux variants on Cedar
  - Run desktop C++ unit tests from test package
  - Increase maxtime for browser-chrome-2. Again.
  - Perma-fail on Linux64 slaves – test_hosted.xul,test_packaged.xul,test_packaged_launch.xul | Error during test: [Exception... "Component returned failure code: 0x80520012 (NS_ERROR_FILE_NOT_FOUND) [nsIFileOutputStream.init]” nsresult: “0x80520012
  - Increase maxtime for browser-chrome-1
  - Get e10s tests running on inbound, central, and try
  - [tarako][build]create “tarako” build
  - post_upload.py should use hardlinks where possible
  - disable confusing double runs of mochitest-bc for linux debug
  - Enable updates for tarako on 1.3t branch
  - Run jit-tests from test package
  - Kill spot vs on-demand retry logic
  - logrotate aws_watch_pending.py
- Loan Requests
  - please loan jmaher an ec2 machine equal to a linux32-spot instance
  - Need a win32 unittest slave for debugging packaged tests
  - Loan an ami-3ac4aa0a instance to Tim Abraldes
  - Need a win32 slave to debug Lightning pymake issues
  - please loan jmaher an ec2 machine equal to a linux32-spot instance
  - Please loan dminor t-xp32-ix- instance
- Other
  - Use mozmake for some windows builds
  - stage NFS volume about to run out of space
  - Add dev-master1:8951 master to slavealloc
- Platform Support
- Release Automation
  - Minor documentation improvements on the ship-it form
  - Add documentation about the beta release of Fennec
  - Strip the firefox locale string
  - deploy ship it patches from a few bugs
  - Documentation about release-kickoff
  - release repacks needs to submit data to balrog
- Releases
- Releases: Custom Builds
- Repos and Hooks
- Tools

In progress work (unresolved and not assigned to “nobody”):

- Balrog: Backend
- Balrog: Frontend
- Buildduty
- General Automation
  - Port TryBuildFactory to Mozharness for fx desktop builds
  - Add ssltunnel to xre.zip bundle used for b2g tests
  - Disable mochitest-metro on Cedar
  - m-c b2g version should be 2.0, not 1.5
  - Add configs for debug B2G Mac OS X desktop builds & enable on mozilla-central and mozilla-aurora
  - Bengali in 1.3t builds
  - fx desktop builds in mozharness
  - Spot instances don’t have security group set properly
  - Add the build step or else process name to buildbot’s generic command timed out failure strings
  - Need Tarako 1.3t FOTA updates for testing purposes
  - Don’t do Thunderbird builds on comm-* branches for non-Thunderbird pushes
  - [Flame]figure out how to create “Flame” builds manually
  - Move Android 2.3 reftests to ix slaves (ash only)
  - Turn off Fedora b2g reftests on trunk branches
  - Please add non-unified builds to mozilla-central
  - point [xxx] repository at new bm-remote webserver cluster to ensure parity in talos numbers
  - remove machines that don’t exist from buildbot-configs
  - Make it possible to run gaia try jobs *without* doing a build
  - Updates not properly signed on the nightly-ux branch: Certificate did not match issuer or name.
  - Decommission the ionmonkey tree
  - Configure the MLS key for pvt builds
  - Split up mochitest-bc on desktop into ~30minute chunks
- Loan Requests
  - Slave loan request for a VS2013 build machine
  - Loan an ami-6a395a5a instance to Aaron Klotz
  - Please loan tst-linux64- instance to dminor
  - borrow a linux ix multinode host to test CentOS-6.5
  - Need a bld-lion-r5 to test build times with SSD
  - Request Loan Machine for tst-linux64-spot
- Other
  - Figure where to put sccache config for ceph
  - Deprecate tinderbox-builds/old directories for desktop & mobile
  - s/m1.large/m3.medium/
- Platform Support
- Release Automation
- Releases: Custom Builds
- Repos and Hooks
  - find RFO for git.m.o OOM condition in bug 985864
  - Request for a new git repository: “fuzz-tools” (public)
- Tools
  - Update SlaveAPI sphynx docs to reflect recent changes
  - New tooltool deployment
  - Set up auto publish of doc index per Boston workweek agreement
  - Using slaveapi to reboot a machine on the slave health page results in “list index out of range” under ‘output’
  - tool to compare different sources of slave and master data
  - slaveapi’s shutdown_buildslave action doesn’t cope well with a machine that isn’t connected to buildbot
  - Add Windows support to aws_stop_idle.py
  - db-based mapper on web cluster
  - Create a Comprehensive Slave Loan tool
  - SlaveAPI should be more precise on request TS
  - vcs-sync needs to populate mapper db once it’s live

http://hearsum.ca/blog/this-week-in-mozilla-releng-april-11th-2014/


Pascal Finette: Technology Trends (April 2014)

Earlier this month I was asked to present my thoughts and observations on “Technology Trends” to a group of Dutch business leaders. A lot of my thinking these days revolves around the notion of “exponential growth” and the disruptive forces that come with it (full credit goes to Singularity University for putting these ideas into my head), and around the notion of “ambient/ubiquitous computing” (full credit to my former colleague and friend Allen Wirfs-Brock).

In summary, I believe we are truly in the midst of a new era, with fundamental changes coming at us at an ever-increasing pace.

Here’s my deck – it mostly works standalone.


Sylvestre Ledru: Changes Firefox 29 beta6 to beta7

This beta is a bit bigger than beta 6. It fixes some UI bugs, two bugs in the Gamepad API, and some top crash bugs like bug 976536 or bug 987248.

- 32 changesets

- 50 files changed

- 1414 insertions

- 522 deletions

| Extension | Occurrences |
|---|---|
| cpp | 21 |
| js | 10 |
| h | 5 |
| css | 4 |
| xul | 1 |
| mn | 1 |
| mk | 1 |
| json | 1 |
| jsm | 1 |
| java | 1 |
| ini | 1 |
| in | 1 |
| build | 1 |

| Module | Occurrences |
|---|---|
| browser | 16 |
| js | 8 |
| image | 5 |
| layout | 4 |
| gfx | 4 |
| mobile | 3 |
| toolkit | 2 |
| xpfe | 1 |
| widget | 1 |
| view | 1 |
| netwerk | 1 |
| media | 1 |
| hal | 1 |
| content | 1 |

List of changesets:

| Author | Changeset |
|---|---|
| Nick Alexander | Bug 967022 - Fix Gingerbread progressbar animation bustage. r=rnewman, a=sylvestre - 26f9d2df24af |
| Neil Deakin | Bug 972566, when a window is resized, panels should be repositioned after the view reflow rather than within the webshell listener, r=tn, a=lsblakk. - 1a92004a684f |
| Mike Conley | Bug 989289 - Forcibly set the 'mode' attribute to 'icons' on toolbar construction. r=jaws, a=sledru. - 85d2c5b844bc |
| Gijs Kruitbosch | Bug 988191 - change to WCAG algorithm for titlebar font, r=jaws, a=sledru. - 5e0b16fe8951 |
| Mike de Boer | [Australis] Bug 986324: small refactor of urlbar and search field styles. r=dao, a=sledru. - 274d760590d5 |
| Mike Conley | Backed out changeset 9fc38ffaff75 (Bug 986920) - a90a4219b520 |
| Mike Conley | Bug 989761 - Make sure background tabs have the right z-index in relation to the classic theme fog. r=dao, a=sledru. - 552251cb84b9 |
| Mike Conley | Bug 984455 - Bookmarks menu and toolbar context menus can be broken after underflowing from nav-bar chevron. r=mak,mdeboer,Gijs. a=sledru. - 3f2d6f68c415 |
| Jan de Mooij | Bug 986678 - Fix type check in TryAddTypeBarrierForWrite. r=bhackett, a=abillings - c19e0e0a8535 |
| Jon Coppeard | Bug 986843 - Don't sweep empty zones if they contain marked compartments. r=terrence, a=sledru - ed9793adc2c7 |
| Douglas Crosher | Bug 919592 - Ionmonkey (ARM): Guard against branches being out of range and bail out of compilation if so. r=mjrosenb, a=sledru - 7be150811dd8 |
| Richard Marti | Bug 967674 - Port new Fxa sync options work to in-content prefs. r=markh, a=sledru - c8bcfc32f855 |
| Till Schneidereit | Bug 976536 - Don't relazify inlined functions. r=jandem, a=sledru - ee6aea5824b7 |
| Ted Mielczarek | Bug 980876 - Be smarter about sending gamepad updates from the background thread. r=smaug, a=sledru - 7ccc27d5c8f4 |
| Ted Mielczarek | Bug 980876 - Null check GamepadService in case of events still in play during shutdown. r=smaug, a=sledru - 30c45853f8cb |
| Bobby Holley | Bug 913138 - Release nsLayoutStatics when the layout module is unloaded. r=bsmedberg - 64fcbdc63ed7 |
| Bobby Holley | Bug 913138 - Shut down gfx at the end of layout shutdown. r=bsmedberg - 6899f7b4f57c |
| Bobby Holley | Bug 913138 - Move imgLoader singleton management out of nsContentUtils. r=bsmedberg - 58786efcdbbb |
| Bobby Holley | Bug 913138 - Shut down imagelib at the end of layout shutdown. r=bsmedberg a=sylvestre - 968f7b3ff551 |
| Nick Alexander | Bug 988437 - Part 1: Allow unpickling across Android Account types; bump pickle version. r=rnewman, a=sylvestre - 5dfea367b8b9 |
| Nick Alexander | Bug 988437 - Part 2: Make Firefox Account Android Account type unique per package. r=rnewman, a=sylvestre - 47c8852fde22 |
| Matthew Noorenberghe | Bug 972684 - Don't use about:home in browser_findbar.js since it leads to intermittent failures and isn't necessary for the test. r=mikedeboer, a=test-only - b39c5ca49785 |
| Edwin Flores | Bug 812881 - Ensure OMX plugins instantiate only one OMXClient instance. r=sotaro, a=sledru - 14b8222e1a24 |
| Nicholas Hurley | Bug 987248 - Prevent divide-by-zero in seer. r=mcmanus, a=sledru - afdcb5d5d7cc |
| Tim Chien | Bug 963590 - [Mac] Make sure lightweight themes don't affect fullscreen toolbar height/position. r=MattN, a=sledru - 2d58340206f4 |
| Gijs Kruitbosch | Bug 979653 - Fix dir attribute checks for url field in rtl mode. r=ehsan, a=sledru - 44a94313968a |
| Jeff Gilbert | Bug 963962 - Fix use of CreateDrawTargetForData in CanvasLayerD3D9/10. r=Bas, a=sledru - 635f912b3164 |
| Gijs Kruitbosch | Backed out changeset 85d2c5b844bc (Bug 989289) because we realized it'd break add-on toolbars, a=backout - 1244d500650c |
| Blair McBride | Bug 987492 - CustomizableUI.jsm should provide convenience APIs around windows, r=gijs,mconley, a=sledru. - 9c70e4856b3f |
| Mike de Boer | Bug 990533: use correct toolbar icon for the Home button when placed on the Bookmarks toolbar. r=mak, a=sledru. - 2948b8b5d51d |
| Mike de Boer | Bug 993265: preserve bookmark folder icons on the Bookmarks toolbar. r=mak, a=sledru. - 32d5b6ea4a64 |
| Matt Woodrow | Bug 988862 - Treat DIRECT2D render mode as GDI when drawing directly to the window through BasicLayers. r=jrmuizel, a=sledru. - f5622633b23f |

r= means reviewed by

a= means uplift approved by

Previous changelogs:

- Firefox 29 beta 5 to beta 6

- Firefox 29 beta 4 to beta 5

- Firefox 29 beta 3 to beta 4

- Firefox 29 beta 2 to beta 3

Original post blogged on b2evolution.

http://sylvestre.ledru.info/blog/2014/04/11/changes-firefox-29-beta6-to-beta7


Soledad Penades: What have I been working on? (2014/03)

So it’s April the 11th already and here I am writing about what I did in March. Oh well!

I spent a bunch of time gathering and discussing requirements and feedback for AppManager v2, which involved:

- thinking about the new ideas we sketched while at the Portland work week in February

- thinking about which AppManager questions to ask my team and the Partner Engineering team when we were all at Mountain View – because you can’t show up at a meeting without a set of questions ready to be asked

- then summarising the feedback and passing it on to Darrin, our UX guy, who couldn’t be at the meeting in Mountain View

- then discussing the new questions with Paul & team, who are going to implement it

And we were at Mountain View for the quarterly Apps meeting, where those of us in the Apps+Marketplace section of Mozilla get to talk apps and strategy and stuff for two days or more. It’s always funny when you meet UK-based workmates at another office and realise you’ve never spoken before, or you have, but didn’t associate their faces with their IRC nicknames.

It was also the last of the meetings held at the now former Mozilla office on Castro Street, so it was bittersweet to leave Ten Forward (the big meeting room where most of the meetings and announcements have been happening) for the very last time. Mozilla HQ is now somewhere else in Mountain View, but I’ll always remember the Castro St. office with a smile because that’s where my first week at Mozilla happened :-)

But before I flew to San Francisco I attended GinJS, which I had been wanting to attend for ages but couldn’t (because I’m never in town when it happens). I hadn’t even planned to go to that one, but some folks from Telefonica Digital were going and sort of convinced me to attend too. It was funny to sign up for the meeting while walking down Old Street on the way to the pub where GinJS was held. That’s what the mobile internet was designed for! Then at GinJS I met a number of cool people, some I had spoken to before, some I hadn’t. I recommend attending it, if you can make it :-)

I was also on the Components panel at EdgeConf. I still haven’t written about the experience and the aftermath of the conference because I basically fell ill at the end of it, and was very busy after that, but I’ll do it. I promise!

I also attended the first instalment of TRIBE, a sort of internal personal development program that is run at Mozilla. The first unwritten rule of TRIBE is you don’t talk about TRIBE… nah, I’m just joking! The first session is about “becoming aware of yourself”, and it was quite interesting to observe myself and my reactions in a conscious way rather than in the totally reactive, subconsciously led mode we tend to operate in most of the time. It was also interesting to speak to other attendees and see things from their point of view. I know it sounds tacky, but it has led me to consider treating others more compassionately, or at least to try to empathise more rather than instantly judge. This kind of seminar should be mandatory, whether you work in open source or not.

This session was held in Paris, so that gave me the chance to try and find the best croissant place in the morning and have a look at some nice views in the evening when we finished. Also, I went through the most amazingly thrilling and mindblowing-scary experience in a long time: a taxi ride to the airport during rush hour. I thought we were going to die on each of the multiple and violent street turns we did. I saw the Eiffel Tower in the distance and thought “Goodbye Paris, goodbye life” as we sped past a bus, just a few centimeters apart. Or as a bike almost ran over the taxi (and the other way round, too). I’m pretty sure I left a mark on the floor of the taxi as my foot involuntarily tried to brake all the time. And I thought that traffic in Rome was crazy… hah!

In between all this I managed to update a bunch of the existing Mortar templates, help improve some Brick components, publish an article in Mozilla Hacks, and give a ton of feedback on miscellaneous things (code, sites, peers, potential screencasts, conference talks, articles). Did I say a ton? Make it a ton and a half. Oh, and I also interviewed another potential intern. I’m starting to enjoy that–I wonder if it’s bad!

And I took a week of holidays.

I initially planned on hacking with WebMIDI and a KORG nanoKEY2, but my brain wasn’t willing to collaborate, so I just accepted that fact, and tried not to think much about it. The weather has been really warm and sunny so far so I’ve been hiking and staying outside as much as possible, and that’s been good after spending so much time indoors (or in planes).

http://soledadpenades.com/2014/04/11/what-have-i-been-working-on-201403/


Jan de Mooij: Fast arrow functions in Firefox 31

Last week I spent some time optimizing ES6 arrow functions. Arrow functions allow you to write function expressions like this:

```javascript
a.map(s => s.length);
```

Instead of the much more verbose:

```javascript
a.map(function(s){ return s.length });
```

Arrow functions are not just syntactic sugar, though: they also bind their this-value lexically. This means that, unlike normal functions, arrow functions use the same this-value as the script in which they are defined. See the documentation for more info.
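A small illustration of the difference. It covers only language semantics, not SpiderMonkey internals, and the `counter` object and its methods are made up for this example. The arrow callback reuses the `this` of the method that creates it, while an ordinary function callback gets its own `this`. `forEach` is called without a `thisArg`, so in strict code that `this` is `undefined`.

```javascript
const counter = {
  count: 0,

  // The arrow callback sees addAll's `this`, i.e. `counter` itself.
  addAll(values) {
    values.forEach(v => { this.count += v; });
    return this.count;
  },

  // The ordinary function callback has its own `this`; since forEach gets
  // no thisArg and this method is strict, that `this` is undefined.
  callbackThis() {
    "use strict";
    let seen = null;
    [0].forEach(function () { seen = this; });
    return seen;
  }
};

// counter.addAll([1, 2, 3]) returns 6; counter.callbackThis() returns undefined.
```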

Firefox has supported arrow functions since Firefox 22, but they used to be slower than normal functions for two reasons:

- Bound functions: SpiderMonkey used to do the equivalent of |arrow.bind(this)| whenever it evaluated an arrow expression. This made arrow functions slower than normal functions, because calls to bound functions are currently not optimized or inlined in the JITs. It also used more memory, because we’d allocate two function objects instead of one for arrow expressions.

  In bug 989204 I changed this so that we treat arrow functions exactly like normal function expressions, except that we also store the lexical this-value in an extended function slot. Then, whenever this is used inside the arrow function, we get it from the function’s extended slot. This means that arrow functions behave a lot more like normal functions now. For instance, the JITs will optimize calls to them and they can be inlined.

- Ion compilation: IonMonkey could not compile scripts containing arrow functions. I fixed this in bug 988993.

With these changes, arrow functions are about as fast as normal functions. I verified this with the following micro-benchmark:

```javascript
// Runs in a browser page: alert() shows the elapsed time in milliseconds.
function test(arr) {
  var t = new Date;
  arr.reduce((prev, cur) => prev + cur);
  alert(new Date - t);
}

var arr = [];
for (var i = 0; i < 10000000; i++) {
  arr.push(3);
}

test(arr);
```

I compared a nightly build from April 1st to today’s nightly and got the following results:

We’re 64x faster because Ion is now able to inline the arrow function directly without going through relatively slow bound function code on every call.
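The benchmark above times only the arrow version. The following minimal Node.js sketch times the same `reduce` with an arrow function, a plain function expression, and a bound function, which mimics the old |arrow.bind(this)| strategy. `timeReduce` and `compareCallbacks` are hypothetical helpers. The timings depend heavily on the engine and its version, so this will not necessarily reproduce the 64x figure.

```javascript
// Times Array.prototype.reduce with one callback; returns the sum and elapsed ms.
function timeReduce(label, arr, callback) {
  const start = Date.now();
  const sum = arr.reduce(callback);
  return { label, sum, ms: Date.now() - start };
}

// Builds an array of `length` threes and sums it three equivalent ways.
function compareCallbacks(length) {
  const arr = new Array(length).fill(3);
  const add = function (prev, cur) { return prev + cur; };
  return [
    timeReduce("arrow function", arr, (prev, cur) => prev + cur),
    timeReduce("function expression", arr, add),
    // A bound wrapper, like the |arrow.bind(this)| approach described above.
    timeReduce("bound function", arr, add.bind(null))
  ];
}

// Usage (timings vary by engine and machine):
// compareCallbacks(10000000).forEach(r => console.log(r.label, r.ms + " ms"));
```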

Other browsers don’t support arrow functions yet, so they are not used much on the web, but it’s important to offer good performance for new features if we want people to start using them. Also, Firefox frontend developers love arrow functions (grepping for “=>” in browser/ shows hundreds of them), so these changes should also help the browser itself.

http://www.jandemooij.nl/blog/2014/04/11/fast-arrow-functions-in-firefox-31/


Planet Mozilla Interns: Tiziana Sellitto: Outreach Program For Women a year later

A year has passed and a new summer is about to begin: a new summer for the women who will be chosen and will soon start GNOME’s Outreach Program for Women.

This summer Mozilla will participate with three different projects listed here, among them the Mozilla Bug Wrangler for Desktop QA, which is the one I applied for last year. It has been a great experience for me, and I want to wish good luck to everyone who submitted an application.

I hope you’ll have a wonderful and productive summer.


Andrew Halberstadt: Part 2: How to deal with IFFY requirements


Henrik Skupin: Firefox Automation report – week 9/10 2014

In this post you can find an overview of the work that happened in the Firefox Automation team during weeks 9 and 10. I myself was on vacation for a week: a bit of relaxation before work on the TPS test framework gets started.

Highlights

In preparation for running Mozmill tests for Firefox Metro in our Mozmill-CI system, Andreea has started adding support for Metro builds and the appropriate tests.

With the help from Henrik we got Mozmill 2.0.6 released. It contains a helpful fix for waitForPageLoad(), which now lets you know whether the page has loaded, and its status in case of a failure. This might help us nail down the intermittent failures when loading remote and even local pages. But the most important part of this release is the support for mozcrash. Even though we cannot have full support yet, due to missing symbol files for daily builds on ftp.mozilla.org, we can at least show that a crash happened during a test run and let the user know about the local minidump files.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 9 and week 10.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda, the video recording, and notes from the Firefox Automation meetings of week 9 and week 10.

http://www.hskupin.info/2014/04/11/firefox-automation-report-week-9-10-2014/


Gervase Markham: 21st Century Nesting

Our neighbours have acquired a 21st century bird’s nest:

Not only is it behind a satellite dish but, if you look closely, large parts of it are constructed from the wire ties that the builders (who are still working on our estate) use for tying layers of bricks together. We believe it belongs to a couple of magpies, and it contains six (low-tech) eggs.

I have no idea what effect this has on their reception…

http://feedproxy.google.com/~r/HackingForChrist/~3/QRhVRrr6w6g/


Chris Double: Preventing heartbleed bugs with safe programming languages

The Heartbleed bug in OpenSSL has resulted in a fair amount of damage across the internet. The bug itself was quite simple and is a textbook case for why programming in unsafe languages like C can be problematic.

As an experiment to see if a safer systems programming language could have prevented the bug, I tried rewriting the problematic function in the ATS programming language. I’ve written about ATS as a safer C before. This gives a real-world test case for it. I used the latest version of ATS, called ATS2.

ATS compiles to C code. The function interfaces it generates can exactly match existing C functions and be callable from C. I used this feature to replace the dtls1_process_heartbeat and tls1_process_heartbeat functions in OpenSSL with ATS versions. These two functions are the ones that were patched to correct the heartbleed bug.

The approach I took was similar to the one outlined by John Skaller on the ATS mailing list:

> ATS on the other hand is basically C with a better type system. You can write very low level C like code without a lot of the scary dependent typing stuff and then you will have code like C, that will crash if you make mistakes. If you use the high level typing stuff coding is a lot more work and requires more thinking, but you get much stronger assurances of program correctness, stronger than you can get in Ocaml or even Haskell, and you can even hope for *better* performance than C by elision of run time checks otherwise considered mandatory, due to proof of correctness from the type system. Expect over 50% of your code to be such proofs in critical software and probably 90% of your brain power to go into constructing them rather than just implementing the algorithm. It's a paradigm shift.

I started by wrapping the C code directly and calling it from ATS. From there I rewrote the C code into unsafe ATS. Once that worked, I added types to find errors.

I’ve put the modified OpenSSL code in a github fork. The two branches there, ats and ats_safe, represent the latter two stages of implementing the functions in ATS.

I’ll give a quick overview of the different paths I took then go into some detail about how I used ATS to find the errors.

Wrapping C code

I’ve written about this approach before. ATS allows embedding C directly, so the first step was to embed the dtls1_process_heartbeat C code in an ATS file, call that from an ATS function, and expose that ATS function as the real dtls1_process_heartbeat. The code for this is in my first attempt of d1_both.dats.

Unsafe ATS

The second stage was to write the functions using ATS, but unsafely. This code is a direct translation of the C code with no additional typechecking via ATS features. It uses unsafe ATS code. The rewritten d1_both.dats contains this version.

The code is quite ugly but compiles and matches the C version. When installed on a test system it still shows the heartbleed bug. It uses the same pointer arithmetic and hard-coded offsets as the C code. Here’s a snippet of one branch of the function:

```ats
val buffer = OPENSSL_malloc(1 + 2 + $UN.cast2int(payload) + padding)
val bp = buffer
val () = $UN.ptr0_set (bp, TLS1_HB_RESPONSE)
val bp = ptr0_succ (bp)
val bp = s2n (payload, bp)
val () = unsafe_memcpy (bp, pl, payload)
val bp = ptr_add (bp, payload)
val () = RAND_pseudo_bytes (bp, padding)
val r = dtls1_write_bytes (s, TLS1_RT_HEARTBEAT, buffer, 3 + $UN.cast2int(payload) + padding)
val () = if r >=0 && ptr_isnot_null (get_msg_callback (s)) then
           call_msg_callback (get_msg_callback (s),
                              1, get_version (s), TLS1_RT_HEARTBEAT,
                              buffer, $UN.cast2uint (3 + $UN.cast2int(payload) + padding), s,
                              get_msg_callback_arg (s))
val () = OPENSSL_free (buffer)
```

It should be pretty easy to follow this by comparing the code to the C version.

Safer ATS

The third stage was adding types to the unsafe ATS version to check that the pointer arithmetic is correct and no bounds errors occur. This version of d1_both.dats fails to compile if certain bounds checks aren’t asserted. If the assertloc at line 123, line 178 or line 193 is removed then a constraint error is produced. This error is effectively preventing the heartbleed bug.

Testable Version

The last stage I did was to implement the fix to the tls1_process_heartbeat function and factor out some of the helper routines so they could be shared. This is in the ats_safe branch, which is where the newer changes are happening. This version replaces the assertloc checks with graceful failure so it could be tested on a live site.

I tested this version of OpenSSL, and heartbleed test programs fail to dump memory.

The approach to safety

The tls_process_heartbeat function obtains a pointer to data provided by the sender and the amount of data sent from one of the OpenSSL internal structures. It expects the data to be in the following format:

```
byte     = hbtype
ushort   = payload length
byte[n]  = bytes of length 'payload length'
byte[16] = padding
```

The existing C code makes the mistake of trusting the ‘payload length’ the sender supplies and passing it to a memcpy. If the actual length of the data is less than the ‘payload length’, then random data from memory gets sent in the response.
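To make the missing check concrete, here is a hypothetical JavaScript parser for the layout above. It is not OpenSSL's code or API: `parseHeartbeat` and its constants are illustrative names. It compares the claimed payload length against the bytes actually received before copying anything. RFC 6520 requires at least 16 bytes of padding and says an over-long message must be discarded silently. JavaScript's own bounds checks also mean that, without the explicit test, `slice` would clamp to the end of the array and return fewer bytes rather than reading unrelated memory. That is the kind of protection a safer language provides.

```javascript
// Hypothetical parser; layout: 1-byte type, 2-byte big-endian payload length,
// payload bytes, then at least 16 bytes of padding.
const TYPE_BYTES = 1;
const LENGTH_BYTES = 2;
const MIN_PADDING = 16;

function parseHeartbeat(record) {
  const header = TYPE_BYTES + LENGTH_BYTES;
  // The record must at least hold the header and the padding.
  if (record.length < header + MIN_PADDING) {
    return null;
  }
  const type = record[0];
  const payloadLength = (record[1] << 8) | record[2];
  // The check the vulnerable code lacked: never trust the sender's length.
  if (header + payloadLength + MIN_PADDING > record.length) {
    return null; // discard the message instead of answering it
  }
  // Copy exactly payloadLength bytes for the response.
  const payload = record.slice(header, header + payloadLength);
  return { type, payload };
}
```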

In ATS pointers can be manipulated at will but they can’t be dereferenced or used unless there is a view in scope that proves what is located at that memory address. By passing around views, and subsets of views, it becomes possible to check that ATS code doesn’t access memory it shouldn’t. Views become like capabilities. You hand them out when you want code to have the capability to do things with the memory safely and take it back when it’s done.
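ATS enforces this statically. JavaScript cannot, but a rough runtime analogue shows the capability idea. Code can only read bytes through a view object that covers them, and a view can be split into narrower views to hand to other code. `ByteView` and its methods are hypothetical names for this sketch. Every bounds check here costs time at run time, whereas ATS views are checked during type checking and then erased.

```javascript
// Runtime stand-in for an ATS view: a capability to read one byte range.
class ByteView {
  constructor(bytes, offset, length) {
    if (offset < 0 || length < 0 || offset + length > bytes.length) {
      throw new RangeError("view does not fit inside the buffer");
    }
    this.bytes = bytes;
    this.offset = offset;
    this.length = length;
  }

  // Reading is only allowed at indices the view covers.
  get(i) {
    if (!Number.isInteger(i) || i < 0 || i >= this.length) {
      throw new RangeError("index " + i + " is outside this view");
    }
    return this.bytes[this.offset + i];
  }

  // Hand out two narrower capabilities: the first n bytes and the rest.
  split(n) {
    if (n < 0 || n > this.length) {
      throw new RangeError("cannot split beyond the end of this view");
    }
    return [
      new ByteView(this.bytes, this.offset, n),
      new ByteView(this.bytes, this.offset + n, this.length - n)
    ];
  }
}
```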

Views

To model what the C code does I created an ATS view that represents the layout of the data in memory:

```ats
dataview record_data_v (addr, int) =
  | {l:agz} {n:nat | n > 16 + 2 + 1} make_record_data_v (l, n) of (ptr l, size_t n)
  | record_data_v_fail (null, 0) of ()
```

A ‘view’ is like a Standard ML datatype but exists only at type-checking time. It is erased in the final version of the program, so it has no runtime overhead. This view has two constructors. The first is for data held at a memory address l of length n. The length is constrained to be greater than 16 + 2 + 1, which is the size of the ‘byte’, ‘ushort’ and ‘padding’ mentioned previously. By putting the constraint here we immediately force anyone creating this view to check the length they pass in. The second constructor, record_data_v_fail, is for the case of an invalid record buffer.

The function that creates this view looks like:

implement get_record (s) = let
  val len = get_record_length (s)
  val data = get_record_data (s)
in
  if len > 16 + 2 + 1 then
    (make_record_data_v (data, len) | data, len)
  else
    (record_data_v_fail () | null_ptr1 (), i2sz 0)
end

Here len and data are obtained from the SSL structure. The length is checked, and the view is created and returned along with the pointer to the data and the length. If the length check is removed, there is a compile error due to the constraint we placed earlier on make_record_data_v. Calling code looks like:

val (pf_data | p_data, data_len) = get_record (s)

p_data is a pointer, data_len is an unsigned value, and pf_data is our view. In my code the pf_ prefix denotes a proof of some sort (in this case the view) and p_ denotes a pointer.
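A rough C analogue of record_data_v and get_record is a constructor that is the only sanctioned way to obtain a non-empty record, with a `(NULL, 0)` result standing in for record_data_v_fail. The names `record_view` and `get_record_checked` are hypothetical. Unlike ATS, C cannot stop a caller from filling in a `record_view` by hand, so this is a convention rather than a guarantee.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for record_data_v: either a buffer longer than
   16 + 2 + 1 bytes, or the failure case (NULL, 0). */
typedef struct {
    const uint8_t *data;
    size_t len;
} record_view;

/* Mirrors get_record: passing the length check is the only way to get a
   non-empty record, much like make_record_data_v's constraint. */
record_view get_record_checked(const uint8_t *data, size_t len)
{
    record_view r = { NULL, 0 };        /* like record_data_v_fail */
    if (data != NULL && len > 16 + 2 + 1) {
        r.data = data;                  /* like make_record_data_v */
        r.len = len;
    }
    return r;
}
```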

Proof functions

In ATS we can’t do anything at all with the p_data pointer other than increment it, decrement it and pass it around. To dereference it we must obtain a view proving what is at that memory address. To get specialized views for the data we want, I created some proof functions that convert the record_data_v view into views that provide access to memory. These are the proof functions:

(* These proof functions extract proofs out of the record_data_v
   to allow access to the data stored in the record. The constants
   for the size of the padding, payload buffer, etc are checked
   within the proofs so that functions that manipulate memory
   are checked to remain within the correct bounds and to
   use the appropriate pointer values
*)

prfun extract_data_proof {l:agz} {n:nat}
  (pf: record_data_v (l, n)):
  (array_v (byte, l, n),
   array_v (byte, l, n) - record_data_v (l,n))

prfun extract_hbtype_proof {l:agz} {n:nat}
  (pf: record_data_v (l, n)):
  (byte @ l, byte @ l - record_data_v (l,n))

prfun extract_payload_length_proof {l:agz} {n:nat}
  (pf: record_data_v (l, n)):
  (array_v (byte, l+1, 2),
   array_v (byte, l+1, 2) - record_data_v (l,n))

prfun extract_payload_data_proof {l:agz} {n:nat}
  (pf: record_data_v (l, n)):
  (array_v (byte, l+1+2, n-16-2-1),
   array_v (byte, l+1+2, n-16-2-1) - record_data_v (l,n))

prfun extract_padding_proof {l:agz} {n:nat} {n2:nat | n2 <= n - 16 - 2 - 1}
  (pf: record_data_v (l, n), payload_length: size_t n2):
  (array_v (byte, l + n2 + 1 + 2, 16),
   array_v (byte, l + n2 + 1 + 2, 16) - record_data_v (l, n))

Proof functions are run at type-checking time. They manipulate proofs. Let’s break down what the extract_hbtype_proof function does:

prfun extract_hbtype_proof {l:agz} {n:nat}
  (pf: record_data_v (l, n)):
  (byte @ l, byte @ l - record_data_v (l,n))

This function takes a single argument, pf, that is a record_data_v instance for an address l and length n. This proof argument is consumed: once the function is called, pf cannot be accessed again (it is a linear proof). The function returns two things. The first is a proof byte @ l which says “there is a byte stored at address l”. The second is a linear proof function that takes the first proof we returned, consumes it so it can’t be reused, and returns the original proof we passed in as an argument.

This is a fairly common idiom in ATS. It takes a proof, destroys it, returns a new one, and provides a way of destroying the new one and bringing back the old one. Here’s how the function is used:

prval (pf, pff) = extract_hbtype_proof (pf_data)
val hbtype = $UN.cast2int (!p_data)
prval pf_data = pff (pf)

prval is a declaration of a proof variable. pf is my idiomatic name for a proof and pff is what I use for proof functions that destroy proofs and return the original.

The !p_data is similar to *p_data in C. It dereferences what is held at the pointer. When this happens in ATS it searches for a proof that we can access some memory at p_data. The pf proof we obtained says we have a byte @ p_data so we get a byte out of it.

A more complicated proof function is:

prfun extract_payload_length_proof {l:agz} {n:nat}
  (pf: record_data_v (l, n)):
  (array_v (byte, l+1, 2),
   array_v (byte, l+1, 2) - record_data_v (l,n))

The array_v view represents a contiguous array of memory. The three arguments it takes are the type of data stored in the array, the address of the beginning, and the number of elements. So this function consumes the record_data_v and produces a proof saying there is a two-element array of bytes held at byte offset 1 from the original memory address held by the record view. Code holding this proof cannot access the entire memory buffer held by the SSL record; it can only get the 2 bytes starting at offset 1.

Safe memcpy

One more complicated proof function:

prfun extract_payload_data_proof {l:agz} {n:nat}
  (pf: record_data_v (l, n)):
  (array_v (byte, l+1+2, n-16-2-1),
   array_v (byte, l+1+2, n-16-2-1) - record_data_v (l,n))

This returns a proof for an array of bytes starting at byte offset 3 of the record buffer. Its length is equal to the length of the record buffer less the size of the padding and the first two data items. It’s used during the memcpy call like so:

prval (pf_dst, pff_dst) = extract_payload_data_proof (pf_response)
prval (pf_src, pff_src) = extract_payload_data_proof (pf_data)
val () = safe_memcpy (pf_dst, pf_src |
                      add_ptr1_bsz (p_buffer, i2sz 3),
                      add_ptr1_bsz (p_data, i2sz 3),
                      payload_length)
prval pf_response = pff_dst (pf_dst)
prval pf_data = pff_src (pf_src)

By having a proof that provides access to only the payload data area, we can be sure that the memcpy cannot copy memory outside those bounds. Even though the code does manual pointer arithmetic (the add_ptr1_bsz function), this is safe: an attempt to use a pointer outside the range of the proof results in a compile error.
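The same bounds reasoning can be written as a runtime check in C. The hypothetical `copy_payload` function below treats the payload area of each record as starting at offset 3 and spanning n - 16 - 2 - 1 bytes, as extract_payload_data_proof does, and refuses any copy that would leave either area. It is a sketch of the idea, not the code in the ats_safe branch.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define HB_FIXED 19  /* 1-byte type + 2-byte length + 16 bytes of padding */

/* Hypothetical analogue of extract_payload_data_proof + safe_memcpy:
   a record of n bytes has a payload area at offset 3 of n - 19 bytes.
   The copy happens only if payload_length fits in both payload areas. */
bool copy_payload(uint8_t *resp, size_t resp_len,
                  const uint8_t *data, size_t data_len,
                  size_t payload_length)
{
    if (resp_len <= HB_FIXED || data_len <= HB_FIXED)
        return false;                    /* no valid payload area */
    if (payload_length > resp_len - HB_FIXED ||
        payload_length > data_len - HB_FIXED)
        return false;                    /* would leave a payload area */
    memcpy(resp + 3, data + 3, payload_length);
    return true;
}
```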

The same concept is used when setting the padding to random bytes:

prval (pf, pff) = extract_padding_proof (pf_response, payload_length)
val () = RAND_pseudo_bytes (pf |
                            add_ptr_bsz (p_buffer, payload_length + 1 + 2),
                            padding)
prval pf_response = pff (pf)

Runtime checks

The code does runtime checks that constrain the bounds of various length variables:

if payload_length > 0 then
  if data_len >= payload_length + padding + 1 + 2 then
    ...
  ...

Without those checks, a compile error is produced. The original heartbeat flaw was the absence of similar runtime checks. The code as structured can’t suffer from that flaw and still compile.
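To see why these two checks are enough, the hypothetical C helper below mirrors them, assuming padding is the 16 bytes from the record layout, and then computes where the padding filled by RAND_pseudo_bytes starts: offset 1 + 2 + payload_length, 16 bytes long, as in extract_padding_proof. When both checks pass, the padding ends at payload_length + 19, which is never past data_len. The `region` type and `padding_region` name are assumptions for illustration.

```c
#include <stdbool.h>
#include <stddef.h>

#define HB_FIXED 19  /* 1-byte type + 2-byte length + 16 bytes of padding */

/* Hypothetical (offset, length) pair describing part of a record. */
typedef struct {
    size_t off;
    size_t len;
} region;

/* Mirrors the runtime checks: a non-empty payload, and a record long
   enough for type + length + payload + padding. Written with a
   subtraction so that payload_length + 19 cannot overflow. */
bool padding_region(size_t data_len, size_t payload_length, region *out)
{
    if (payload_length == 0 || data_len < HB_FIXED ||
        payload_length > data_len - HB_FIXED)
        return false;
    out->off = 1 + 2 + payload_length;  /* just past type, length, payload */
    out->len = 16;
    return true;                        /* off + len <= data_len */
}
```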

Testing

This code can be built and tested. The first step is to install ATS2:

$ tar xvf ATS2-Postiats-0.0.7.tgz
$ cd ATS2-Postiats-0.0.7
$ ./configure
$ make
$ export PATSHOME=`pwd`
$ export PATH=$PATH:$PATSHOME/bin

Then compile the OpenSSL code with my ATS additions:

$ git clone https://github.com/doublec/openssl
$ cd openssl
$ git checkout -b ats_safe origin/ats_safe
$ ./config
$ make
$ make test

Try changing some of the code, modifying the constraint tests, etc., to get an idea of what it is doing.

For testing in a VM, I installed Ubuntu, set up an nginx instance serving an HTTPS site and did something like:

$ git clone https://github.com/doublec/openssl
$ cd openssl
$ git diff 5219d3dd350cc74498dd49daef5e6ee8c34d9857 >~/safe.patch
$ cd ..
$ apt-get source openssl
$ cd openssl-1.0.1e/
$ patch -p1 <~/safe.patch
...might need to fix merge conflicts here...
$ fakeroot debian/rules build binary
$ cd ..
$ sudo dpkg -i libssl1.0.0_1.0.1e-3ubuntu1.2_amd64.deb \
    libssl-dev_1.0.1e-3ubuntu1.2_amd64.deb
$ sudo /etc/init.d/nginx restart

You can then use a heartbleed tester on the HTTPS server, and it should fail.

Conclusion

I think the approach of converting unsafe C code piecemeal worked quite well in this instance. Being able to combine existing C code and ATS makes this much easier. I only concentrated on detecting this particular programming error, but it would be possible to use other ATS features to detect memory leaks, abstraction violations and other things. It’s possible to get very specific in defining safe interfaces, at the cost of more complex code.

Although I’ve used ATS for production code, this is my first time using ATS2. I may have missed idioms and library functions that would make things easier, so try not to judge the verbosity or difficulty of the code based on this experiment. The ATS community is helpful in picking up the language. My approach was basically to add types, then work through the compiler errors, fixing each one until it built.

One immediate question is “How do you trust your proof?” The record_data_v view and the proof functions that manipulate it define the level of checking that occurs. If they are wrong, then the constraints checked by the program will be wrong. It comes down to having a trusted kernel of code (in this case the proof and view); users of that kernel can then be trusted to be correct, because the compiler rejects incorrect use of the kernel, and that is what results in the stronger safety. From an auditing perspective it’s easier to check the small trusted kernel and then know the compiler will make sure pointer manipulations are correct.

The ATS specific additions are in the following files:

http://bluishcoder.co.nz/2014/04/11/preventing-heartbleed-bugs-with-safe-languages.html

|

|

Anthony Hughes: Google RMA, or how I finally got to use Firefox OS on Wind Mobile |

The last 24 hours have really been quite an adventure in debugging. It all started last week when I decided to order a Nexus 5 from Google. It arrived yesterday, on time, and I couldn’t wait to get home to unbox it. Soon after unboxing my new Nexus 5, I discovered that something was wrong.

After setting up my Google account and syncing all my data I usually like to try out the camera. This did not go very well. I was immediately presented with a “Camera could not connect” error, and the error persisted after rebooting a couple of times.

I then went to the internet to research my problem and got the usual advice: clear the cache, force quit any unnecessary apps, or do a factory reset. Try as I might, all of these efforts failed. I actually tried a factory reset three times and that’s where things got weirder.

On the third factory reset I decided to opt out of syncing my data and just try the camera with a completely stock install. However, this time the camera icon was completely missing. It was absent from my home screen and the app drawer. It was absent from the Gallery app. The only way I was able to get the Camera app to launch was to select the camera button on my lock screen.

Now that I finally got to the Camera app, I noticed it had defaulted to the front camera, so naturally I tried to switch to the rear one. However, the icon to switch cameras was completely missing. I tried some third-party camera apps, but they would just crash on startup.

After a couple of hours jumping through these hoops between factory resets, I was about to give in. I made one last-ditch effort and flashed the phone using Google’s stock Android 4.4 factory image. It took me about another hour between getting my environment set up and getting the image flashed to the phone. However, the result was the same: missing camera icons and crashes all over the place.

It was now past 1am and I had been at this for hours. I finally gave in and called up Google. They promptly sent me an RMA tag and I shipped the phone back to them this morning for a full refund. And so began the next day of my adventure.

I was now at a point where I had to decide what I wanted to do. Was I going to order another Nexus 5 and trust that one would be fine or would I save myself the hassle and just dig out an old Android phone I had lying around?

I remembered that I still had a Nexus S which was perfectly fine, albeit getting a bit slow. After a bit of research on MDN I decided to try flashing the Nexus S to use B2G. I had never successfully flashed any phone to B2G before and I thought yesterday’s events might have been pushing toward this moment.

I followed the documentation, checked out the source code, sat through the lengthy config and build process (this took about 2 hours), and pushed the bits to my phone. I then swapped in my SIM card and crossed my fingers. It worked! It seemed like magic, but it worked. I can again do all the things I want to: make phone calls, take pictures, check email, and tweet to my heart’s content; all on a phone powered by the web.

I have to say the process was fairly painless (apart from the hours spent troubleshooting the Nexus 5). The only problem I encountered was a small hiccup in the config.sh process. Fortunately, I was able to work around this pretty easily thanks to Bugzilla. I can’t help but recognize my success was largely due to the excellent documentation provided by Mozilla and the work of developers, testers, and contributors alike who came before me.

I’ve found the process to be pretty rewarding. I built B2G, which I’ve never succeeded at before; I flashed my phone, which I’ve never succeeded at before; and I feel like I learned something new today.

I’ve been waiting a long time to be able to test B2G 1.4 on Wind Mobile, and now I can. Sure I’m sleep deprived, and sure it’s not an “official” Firefox OS phone, but that does not diminish the victory for me; not one bit.

|

|

Gervase Markham: Copyright and Software |

As part of our discussions on responding to the EU Copyright Consultation, Benjamin Smedberg made an interesting proposal about how copyright should apply to software. With Chris Riley’s help, I expanded that proposal into the text below. Mozilla’s final submission, after review by various parties, argued for a reduced term of copyright for software of 5-10 years, but did not include this full proposal. So I publish it here for comment.

I think the innovation, which came from Benjamin, is the idea that the spirit of copyright law means that proprietary software should not be eligible for copyright protections unless the source code is made freely available to the public by the time the copyright term expires.

We believe copyright terms should be much shorter for software, and that there should be a public benefit tradeoff for receiving legal protection, comparable to other areas of IP.

We start with the premise that the purpose of copyright is to promote new creation by giving to their authors an exclusive right, but that this right is necessarily time-limited because the public as a whole benefits from the public domain and the free sharing and reproduction of works. Given this premise, copyright policy has failed in the domain of software. All software has a much, much shorter life than the standard copyright term; by the end of the period, there is no longer any public benefit to be gained from the software entering the public domain, unlike virtually all other categories of copyrighted works. There is already more obsolete software out there than anyone can enumerate, and software as a concept is barely even 50 years old, so none is in the public domain. Any which did fall into the public domain after 50 or 70 years would be useful to no-one, as it would have been written for systems long obsolete.

We suggest two ideas to help the spirit of copyright be more effectively realized in the software domain.

Proprietary software (that is, software for which the source code is not immediately available for reuse anyway) should not be eligible for copyright protections unless the source code is made freely available to the public by the time the copyright term expires. Unlike a book, which can be read and copied by anyone at any stage before or after its copyright expires, software is often distributed as binary code which is intelligible to computers but very hard for humans to understand. Therefore, in order for software to properly fall into the public domain at the end of the copyright term, the source code (the human-readable form) needs to be made available at that time – otherwise, the spirit of copyright law is not achieved, because the public cannot truly benefit from the copyrighted material. An escrow system would be ideal to implement this.

This is also similar to the tradeoff between patent law and trade secret protection; you receive a legal protection for your activity in exchange for making it available to be used effectively by the broader public at the end of that period. Failing to take that tradeoff risks the possibility that someone will reverse engineer your methods, at which point they are unprotected.

Separately, the term of software copyright protection should be made much shorter (through international processes as relevant), and fixed for software products. We suggest that 14 years is the most appropriate length. This would mean that, for example, Windows XP would enter the public domain in August 2015, which is a year after Microsoft ceases to support it (and so presumably no longer considers it commercially viable). Members of the public who wish to continue to run Windows XP therefore have an interest in the source code being available so technically-capable companies can support them.

http://feedproxy.google.com/~r/HackingForChrist/~3/jEqgRNf-ytU/

|

|

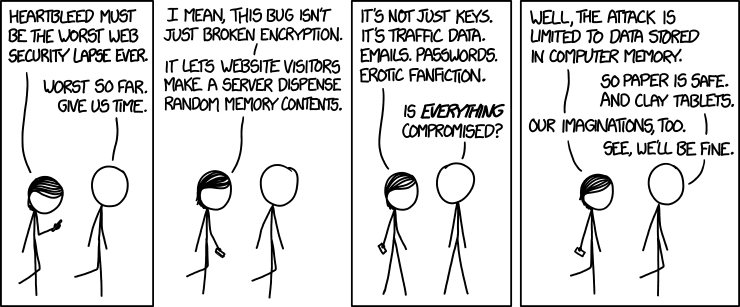

Christie Koehler: An Explanation of the Heartbleed bug for Regular People |

I’ve put this explanation together for those who want to understand the Heartbleed bug, how it fits into the bigger picture of secure internet browsing, and what you can do to mitigate its effects.

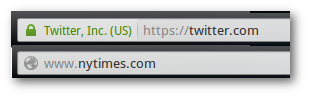

HTTPS vs HTTP (padlock vs no padlock)

When you are browsing a site securely, you use https and you see a padlock icon in the url bar. When you are browsing insecurely you use http and you do not see a padlock icon.

Firefox url bar for HTTPS site (above) and non-HTTPS (below).

HTTPS relies on something called SSL/TLS.

Understanding SSL/TLS

SSL stands for Secure Sockets Layer and TLS stands for Transport Layer Security. TLS is the later version of the original, proprietary, SSL protocol developed by Netscape. Today, when people say SSL, they generally mean TLS, the current, standard version of the protocol.

Public and private keys

The TLS protocol relies heavily on public-key or asymmetric cryptography. In this kind of cryptography, two separate but paired keys are required: a public key and a private key. The public key is, as its name suggests, shared with the world and is used to encrypt plain-text data or to verify a digital signature. (A digital signature is a way to authenticate identity.) A matching private key, on the other hand, is used to decrypt data and to generate digital signatures. A private key should be safeguarded and never shared. Many private keys are protected by pass-phrases, but merely having access to the private key means you can likely use it.
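To make the public/private split concrete, here is the classic textbook RSA example in C with deliberately tiny numbers (p = 61, q = 53). It is a toy for illustration only: real keys are thousands of bits long and real implementations add padding schemes, so code like this must never be used for actual security. The `toy_*` function names are made up for this sketch.

```c
#include <stdint.h>

/* Square-and-multiply modular exponentiation: (base^exp) mod m.
   Intermediate products stay below m * m, which fits in 64 bits
   as long as m < 2^32. */
uint64_t modpow(uint64_t base, uint64_t exp, uint64_t m)
{
    uint64_t result = 1 % m;
    base %= m;
    while (exp > 0) {
        if (exp & 1)
            result = (result * base) % m;
        base = (base * base) % m;
        exp >>= 1;
    }
    return result;
}

/* Textbook toy key pair: p = 61, q = 53, so n = 3233.
   Public key (n, e = 17); private key (n, d = 2753),
   chosen so that e * d = 1 mod (p - 1)(q - 1). */
enum { TOY_N = 3233, TOY_E = 17, TOY_D = 2753 };

/* Anyone can encrypt with the public key... */
uint64_t toy_encrypt(uint64_t m) { return modpow(m, TOY_E, TOY_N); }

/* ...but only the holder of the private key can decrypt. */
uint64_t toy_decrypt(uint64_t c) { return modpow(c, TOY_D, TOY_N); }

/* Signing uses the private key; anyone can verify with the public key. */
uint64_t toy_sign(uint64_t m) { return modpow(m, TOY_D, TOY_N); }
int toy_verify(uint64_t m, uint64_t sig) { return modpow(sig, TOY_E, TOY_N) == m; }
```

For example, `toy_encrypt(65)` gives 2790, and only `toy_decrypt`, which needs the private exponent, turns 2790 back into 65.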

Authentication and encryption

The purpose of SSL/TLS is to authenticate and encrypt web traffic.

Authenticate in this case means “verify that I am who I say I am.” This is very important because when you visit your bank’s website in your browser, you want to feel confident that you are visiting the web servers of — and thereby giving your information to — your actual bank and not another server claiming to be your bank. This authentication is achieved using something called certificates that are issued by Certificate Authorities (CA). Wikipedia explains thusly:

The digital certificate certifies the ownership of a public key by the named subject of the certificate. This allows others (relying parties) to rely upon signatures or assertions made by the private key that corresponds to the public key that is certified. In this model of trust relationships, a CA is a trusted third party that is trusted by both the subject (owner) of the certificate and the party relying upon the certificate.

In order to obtain a valid certificate from a CA, website owners must submit, at minimum, their server’s public key and demonstrate that they have access to the website (domain).

Encrypt in this case means “encode data such that only authorized parties may decode it.” Encrypting internet traffic is important for sensitive or otherwise private data because it is trivially easy to eavesdrop on internet traffic. Information transmitted without SSL is usually sent in plain text and is therefore readable by anyone who intercepts it. This might be acceptable for general internet browsing. After all, who cares who knows which NY Times article you are reading? But it is not acceptable for a range of private data, including user names, passwords and private messages.

Behind the scenes of an SSL/TLS connection

When you visit a website with HTTPS enabled, a multi-step process occurs so that a secure connection can be established. During this process, the server and client (browser) send messages back and forth in order to a) authenticate the server’s (and sometimes the client’s) identity and b) negotiate what encryption scheme, including which cipher and which key, they will use for the session. Identities are authenticated using the digital certificates mentioned previously.

When all of that is complete, the secure connection is established and the server and client send traffic back and forth to each other.

All of this happens without you ever knowing about it. Once you see your bank’s login screen the process is complete, assuming you see the padlock icon in your browser’s url bar.

Keepalives and Heartbeats

Even though establishing an SSL connection happens almost imperceptibly to you, it does have an overhead in terms of computer and network resources. To minimize this overhead, network connections are often kept open and active until a given timeout threshold is exceeded. When that happens, the connection is closed. If the client and server wish to communicate again, they need to re-negotiate the connection and incur the overhead of that negotiation again.

One way to forestall a connection being closed is via keepalives. A keepalive message is used to tell a server “Hey, I know I haven’t used this connection in a little while, but I’m still here and I’m planning to use it again really soon.”

Keepalive functionality was added to the TLS protocol specification via the Heartbeat Extension. Instead of “Keepalives,” they’re called “Heartbeats,” but they do basically the same thing.
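The idle-timeout bookkeeping described above can be sketched in a few lines of C. This is a hypothetical model, not code from the TLS specification or from any server: the `connection` type, the field names and the 30-second threshold in the usage are assumptions chosen for illustration.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical idle-timeout state for one open connection. */
typedef struct {
    uint64_t last_activity;  /* time of the last message, in seconds */
    uint64_t idle_timeout;   /* close after this many idle seconds */
} connection;

/* Any traffic, including a heartbeat, resets the idle clock. */
void conn_on_message(connection *c, uint64_t now)
{
    c->last_activity = now;
}

/* The connection is closed once it has been idle longer than the
   threshold. Assumes now >= last_activity. */
bool conn_should_close(const connection *c, uint64_t now)
{
    return now - c->last_activity > c->idle_timeout;
}
```

With a 30-second timeout, a connection last used at time 0 would be closed at time 31, but a heartbeat at time 25 keeps it open until time 56.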

Specification vs Implementation

Let’s pause for a moment to talk about specifications vs implementations. A protocol is a defined way of doing something. In the case of TLS, that something is encrypted network communications. When a protocol is standardized, it means that a lot of people have agreed upon the exact way that protocol should work and this way is outlined in a specification. The specification for TLS is collaboratively developed, maintained and promoted by the standards body Internet Engineering Task Force (IETF). A specification in and of itself does not do anything. It is a set of documents, not a program. In order for a specification to do something, it must be implemented by programmers.

OpenSSL implementation of TLS

OpenSSL is one implementation of the TLS protocol. There are others, including the open source GnuTLS as well as proprietary implementations. OpenSSL is a library, meaning that it is not a standalone software package, but one that is used by other software packages. These include the very popular webserver Apache.

The Heartbleed bug only applies to webservers with SSL/TLS enabled, and only those using specific versions of the open source OpenSSL library, because the bug relates to an error in the code of that library, specifically the heartbeat extension code. It is not related to any errors in the TLS specification or in any of the underlying cipher suites.

Usually this would be good news. However, because OpenSSL is so widely used, particularly the affected version, this simple bug has tremendous reach in terms of the number of servers and therefore the number of users it potentially affects.

What the heartbeat extension is supposed to do

The heartbeat extension is supposed to work as follows:

- A client sends a heartbeat message to the server.

- The message contains two pieces of data: a payload and the size of that payload. The payload can be anything up to 64KB.

- When the server receives the heartbeat message, it is to add a bit of extra data to it (padding) and send it right back to the client.

Pretty simple, right? Heartbeat isn’t supposed to do anything other than let the server and client know they are each still there and accepting connections.

What the heartbeat code actually does

In the code for affected versions (1.0.1-1.0.1f) of the OpenSSL heartbeat extension, the programmer(s) made a simple but horrible mistake: They failed to verify the size of the received payload. Instead, they accepted what the client said was the size of the payload and returned this amount of data from memory, thinking it should be returning the same data it had received. Therefore, a client could send a payload of 1KB, say it was 64KB and receive that amount of data back, all from server memory.
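The difference between the intended and the actual behavior can be simulated in a few lines of C. This is a deliberately simplified, hypothetical model rather than OpenSSL’s code: a fixed array stands in for server memory, the received payload sits at its start, and unrelated data (here the string "secret") happens to follow it. The buggy echo trusts the claimed size; the fixed one compares it with what was actually received.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Simulated server memory: the received payload is stored at the start,
   and whatever else the server was holding lies right after it. */
static uint8_t server_memory[64];

/* Buggy echo: trusts claimed_len and copies that many bytes back. */
size_t echo_buggy(size_t actual_len, size_t claimed_len, uint8_t *out)
{
    (void)actual_len;  /* never consulted: this is the bug */
    if (claimed_len > sizeof server_memory)
        claimed_len = sizeof server_memory;  /* keeps the simulation itself in bounds */
    memcpy(out, server_memory, claimed_len);
    return claimed_len;
}

/* Fixed echo: refuses to send back more than was actually received. */
size_t echo_fixed(size_t actual_len, size_t claimed_len, uint8_t *out)
{
    if (claimed_len > actual_len)
        return 0;  /* the claim does not match the data: discard */
    memcpy(out, server_memory, claimed_len);
    return claimed_len;
}
```

A client that sends the 2-byte payload "hi" but claims 8 bytes gets "hisecret" back from the buggy version and nothing from the fixed one.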

If that’s confusing, try this analogy: Imagine you are my bank. I show up and make a deposit. I say the deposit is $64, but you don’t actually verify this amount. Moments later I request a withdrawal of the $64 I say I deposited. In fact, I really only deposited $1, but since you never checked, you have no choice but to give me $64, $63 of which doesn’t actually belong to me.

And this is exactly how someone could exploit this vulnerability. What comes back from memory doesn’t belong to the client that sent the heartbeat message, but it’s given a copy of it anyway. The data returned is random, but it is data that the OpenSSL library had been storing in memory. This can include pre-encryption (plain-text) data, such as your user names and passwords. It could also technically include your server’s private key (because that is used in the securing process).

The ability to retrieve a server’s private key is very bad because that private key could be used to decrypt traffic to the server, including past traffic that an attacker recorded (unless perfect forward secrecy is in use), as well as present and future traffic. Combined with the server’s certificate, which is public anyway, the private key also gives the ability to impersonate that server.

This, coupled with the widespread use of OpenSSL, is why this bug is so terribly bad. Oh, and it gets worse…

Taking advantage of this vulnerability leaves no trace

What’s worse is that logging isn’t part of the Heartbeat extension. Why would it be? Keepalives happen all the time and generally do not represent transmission of any significant data. There’s no reason to spend valuable time accessing the physical disk or taking up storage space to record that kind of information.

Because there is no logging, there is no trace left when someone takes advantage of this vulnerability.

The code that introduced this bug has been part of OpenSSL for 2+ years. This means that any data you’ve communicated to servers with this bug since then has the potential to be compromised, but there’s no way to determine definitively if it was.

This is why most of the internet is collectively freaking out.

What do server administrators need to do?

Server (website) administrators need to, if they haven’t already:

- Determine whether or not their systems are affected by the bug. (test)

- Patch and/or upgrade affected systems. (This will require a restart)

- Revoke and reissue keys and certificates for affected systems.
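As a first step toward the “determine whether systems are affected” item, the sketch below checks an upstream OpenSSL version string against the affected range given above (1.0.1 through 1.0.1f; 1.0.1g is the upstream fixed release). The function name is hypothetical. A match is a reason to look closer, not proof: distributions often backport the fix without changing the upstream version number, so a live test of the server remains the reliable check.

```c
#include <stdbool.h>
#include <string.h>

/* Returns true if an upstream version string such as "1.0.1e" falls in
   the affected 1.0.1 through 1.0.1f range. Anything after the letter
   (for example "-fips") is ignored. */
bool in_heartbleed_range(const char *version)
{
    if (strncmp(version, "1.0.1", 5) != 0)
        return false;
    char next = version[5];
    if (next >= 'a' && next <= 'f')
        return true;  /* 1.0.1a .. 1.0.1f */
    /* Plain "1.0.1", possibly followed by a non-alphanumeric suffix. */
    return next == '\0' || next == '-' || next == ' ';
}
```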

Furthermore, I strongly recommend you enable Perfect forward secrecy to safeguard data in the event that a private key is compromised:

When an encrypted connection uses perfect forward secrecy, that means that the session keys the server generates are truly ephemeral, and even somebody with access to the secret key can’t later derive the relevant session key that would allow her to decrypt any particular HTTPS session. So intercepted encrypted data is protected from prying eyes long into the future, even if the website’s secret key is later compromised.
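In TLS, perfect forward secrecy is typically obtained with ephemeral Diffie-Hellman key exchange (the DHE and ECDHE cipher suites). Each side picks a fresh secret for the session, and the server’s long-term private key is only used to authenticate the exchange, so it cannot later recover the session secret once the per-session values are thrown away. The sketch below uses the standard textbook toy parameters (p = 23, g = 5); the function names are made up and the numbers are far too small to be secure.

```c
#include <stdint.h>

/* Square-and-multiply modular exponentiation: (base^exp) mod m.
   Intermediate products stay below m * m, which fits in 64 bits
   as long as m < 2^32. */
uint64_t modpow(uint64_t base, uint64_t exp, uint64_t m)
{
    uint64_t result = 1 % m;
    base %= m;
    while (exp > 0) {
        if (exp & 1)
            result = (result * base) % m;
        base = (base * base) % m;
        exp >>= 1;
    }
    return result;
}

/* Toy public parameters known to both sides: prime p, generator g. */
enum { DH_P = 23, DH_G = 5 };

/* Each side picks a fresh secret per session and publishes g^secret mod p. */
uint64_t dh_public(uint64_t secret) { return modpow(DH_G, secret, DH_P); }

/* Combining the peer's public value with one's own secret yields the
   same shared value on both sides, without ever sending a secret. */
uint64_t dh_shared(uint64_t peer_public, uint64_t secret)
{
    return modpow(peer_public, secret, DH_P);
}
```

With secrets 6 and 15, the public values are 8 and 19 and both sides arrive at the shared value 2. Once the two secrets are discarded after the session, a later leak of the server’s long-term private key does not reveal that value.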

What do users (like me) need to do?

The most important thing regular users need to do is change their passwords on critical sites that were vulnerable (but only after those sites have been patched). Do you need to change all of your passwords everywhere? Probably not. Read “You don’t need to change all your passwords” for some good tips.

Additionally, if you’re not already using a password manager, I highly recommend LastPass, which is cross-platform and works on pretty much every device. Yesterday LastPass announced they are helping users to know which passwords they need to update and when it is safe to do so.

If you do end up trying LastPass, check out my guide for setting it up with two-factor auth.

Further Reading

If you like visuals, check out this great video showing how the Heartbleed exploit works.

If you’re interested in learning more about networking, I highly recommend Ilya Grigorik’s High Performance Browser Networking, which you can also read online for free.

If you want some additional technical details about Heartbleed (including actual code!), check out these posts:

Oh, and you can listen to Kevin and me talk about Heartbleed on In Beta episode 96, “A Series of Mathy Things.”

Conclusion

http://subfictional.com/2014/04/10/an-explanation-of-the-heartbleed-bug-for-regular-people/

|

|

Henrik Skupin: Firefox Automation report – week 7/8 2014 |

The current workload is still cutting into the time I have for getting our automation status reports out. These updates are a bit old but still worth mentioning, so let’s get them out.

Highlights

As mentioned in my last report, we had issues with Mozmill on Windows while running our restart tests. During a restart of Firefox, Mozmill wasn’t waiting long enough to detect that the old process was gone, so a new instance was started immediately. Sadly, that process failed with the “profile already in use” error. The reason was broken handling of process.poll() in mozprocess, which Henrik fixed in bug 947248. Thankfully no new Mozmill release was necessary; we only re-created our Mozmill environments for the new mozprocess version.

With the ongoing pressure of getting automated tests implemented for the Metro mode of Firefox, our Softvision automation team members were concentrating their work on adding new library modules and enhancing existing ones. Another big step here was the addition of Metro support to our Mozmill dashboard by Andrei. With that we got new filter settings to show results for Metro builds only.

When Henrik noticed one morning that our Mozmill-CI staging instance was running out of disk space, he investigated and found that we were using the same build retention settings as production. With no more than 40GB of disk space, we were trying to keep the latest 100 builds for each job. That certainly won’t work, so Cosmin worked on reducing the number of retained builds to 5. Besides this, we were also able to fix our l10n testrun for localized builds of Firefox Aurora.

Given that we stopped supporting Mozmill 1.5 and moved on to Mozmill 2.0 a while ago, we completely missed updating our Mozmill tests documentation on MDN. Henrik worked on getting all of it up to date, so new community members won’t struggle anymore.

Individual Updates

For more granular updates of each individual team member please visit our weekly team etherpad for week 7 and week 8.

Meeting Details

If you are interested in further details and discussions you might also want to have a look at the meeting agenda and notes from the Firefox Automation meetings of week 7 and week 8.

http://www.hskupin.info/2014/04/10/firefox-automation-report-week-78-2014/

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [924265] add an alert date field/mechanism to the “Infrastructure & Operations” product

- [987765] Updates to Developer Documentation product’s “form.doc” form and configuration

- [891757] “Additional hours worked” displayed inline with comments is now superfluous

- [986590] Confusing error message when not finding reviewer

- [987940] arbitrary product name (text) injection in guided workflow

- [988175] Needinfo dropdown should include “myself”

- [961843] Reset password token leakage

- [984505] Link component and product to browse for other bugs in this category

- [987521] flag activity api needs to prohibit requests which return the entire table

- [990982] Use <optgroup> to group products into classifications in the product drop-down on show_bug.cgi

- [991477] changing a tracking flag’s value doesn’t result in the value being updated on bugs

- [988414] The drop down menu icon next to the user name is not visible in Chrome on Mac OSX

- [994467] remove “anyone” from the needinfo menu

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/04/10/happy-bmo-push-day-89/

|

|

Selena Deckelmann: Python Core Summit: notes from my talk today |

I gave a short talk today about new coders and contributors to developer documentation. Here are my notes!

Me: Selena Deckelmann

- Data Architect, Mozilla

- Major contributor to PostgreSQL

- PyLadies organizer in Portland, OR

Focusing on Documentation, Teaching and Outreach

Two main threads of thought around teaching and outreach: (1) brand new coders and (2) new contributors to Python and its ecosystem.

1. Brand new coders: PyLadies, Software Carpentry and University are the main communities represented

(a) Information architecture of the website

Where do you go if you are a teacher or want to teach a workshop? It’s totally unclear on python.org. The website could really use a section, or a microsite, for this.

Version 2 vs. 3 is very confusing for new developers. Most workshops default to 2, but some now require 3. Maybe clearly mark which version each workshop uses. In general, this is a very confusing issue when encountering the site for the first time.
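One low-tech way for a workshop to make its version explicit is a tiny setup check that attendees run before the session. This is a hypothetical sketch (the `REQUIRED_MAJOR` constant and `check_python` name are made up); it relies only on the standard `sys.version_info`, and it deliberately avoids f-strings and type annotations so that it runs under both Python 2 and Python 3.

```python
import sys

# Hypothetical: the workshop organizer sets this to 2 or 3.
REQUIRED_MAJOR = 3


def check_python(required_major=REQUIRED_MAJOR, version=None):
    """Return a message saying whether this interpreter fits the workshop."""
    info = version if version is not None else sys.version_info
    found = "%d.%d" % (info[0], info[1])
    if info[0] == required_major:
        return "OK: you are running Python %s." % found
    return ("Wrong version: this workshop uses Python %d, but you are running %s."
            % (required_major, found))


if __name__ == "__main__":
    print(check_python())
```

Attendees would run it with whatever `python` is on their path, which is exactly the one that tends to surprise them.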

Possible solution: Completely separate “brand new coder” tutorial. Jessica McKellar would like to write this.

(b) Packaging and installation problems: see the earlier long conversation in this meeting. Many problems are linked to having to compile C code while installing with pip.

(c) New coders can contribute by documenting issues around installation and setup. We could make this easier: maybe direct initial reports to Stack Overflow, and then float solutions to bugs.python.org.

2. New contributors to Python & ecosystem, with a focus on things useful for keeping documentation and tutorials up to date and relevant

(a) GNOME Outreach Program for Women – Python is participating!

More people from core should participate as mentors! The PSF is funding 2-3 students this cycle, and Twisted has participated for a while and had a great experience. This program is great because:

- Supports code and non-code contribution

- Developer community seems very cohesive, participants seem to join communities and stick around

- Strong diversity support

- Participants don’t have to be students

- Participants are paid for 3 months

- Participants come from geographically diverse communities

- To participate, applicants must submit a patch or provide some other pre-defined contribution before their application is even accepted

Jessica McKellar and Lynn Root are mentors for Python itself. See them for more details about this round! Selena is a coordinator and former mentor for Mozilla’s participation and also available to answer questions.

(b) The Write the Docs conference is a Python-inspired community around documentation.

(c) OpenStack: Anne Gentle and her blog. She is a 3-year participant in the OpenStack community and a great resource for information about building a technical documentation community.

(d) Better tooling for contribution could be a great vector for getting new contributors.

- The wiki is a place for information to go and die (no clear owners, neglected SEO, etc.). Maybe separate the tutorial documentation repos from the core code repos.

- Carefully consider the approval process: put the people who are most dedicated to maintaining the tutorials in charge of maintaining them

(e) bugs.python.org

Type selection is not relevant to ‘documentation’ errors/fixes. Either remove ‘type’ from the UI or provide relevant types. I recommend removing ‘type’ as a required (or implied required) form field when entering a bug.

The larger issue here is around how we design for contribution of docs:

- What language do we use in our input systems?

- What workflow do we expect technical writers to follow to get their contributions included?

- What is the approval process?

Also see “tooling for contribution” in (d) above.

|

|

Gervase Markham: Recommended Reading |

This response to my recent blog post is the best post on the Brendan situation that I’ve read from a non-Mozillian. His position is devastatingly understandable.

http://feedproxy.google.com/~r/HackingForChrist/~3/PvaxsHaHAjY/

|

|

Joel Maher: is a phone too hard to use? |

Working at Mozilla, I get to see a lot of great things. One of them is collaborating with my team (we are almost all remoties), which I have been doing for almost 6 years. Sometime around 3 years ago we switched to using Vidyo to communicate in meetings. This is great: we can see and hear each other. Unfortunately, Heartbleed came out and affected Mozilla’s Vidyo servers, so yesterday and today we have been without Vidyo.

Now I am getting meeting cancellation notices. Why are we cancelling meetings? Did meetings not happen 3 years ago? Mozilla actually creates an operating system for a … phone. In fact, our old teleconferencing system is still in place. I thought about this earlier today and wondered why we are cancelling meetings. Personally, I always keep Vidyo in the background during meetings and IRC in the foreground. Am I in the minority?

I am not advocating for scrapping Vidyo, instead I would like to attend meetings, and if we find they cannot be held without Vidyo, we should cancel them (and not reschedule them).

Meetings existed before Vidyo and Open Source existed before GitHub, we don’t need the latest and greatest things to function in life. Pick up a phone and discuss what needs to be discussed.

http://elvis314.wordpress.com/2014/04/09/is-a-phone-too-hard-to-use/

|

|