
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.



Gervase Markham: PDX Biopsy |

(Those who faint when reading about blood may want to skip this one.)

On Tuesday, I went down to London to have the biopsy for the PDX trial in which I’m taking part. The biopsy happened at the Royal Marsden hospital in Chelsea on Wednesday morning. It was a CT-guided needle biopsy, which means that they use a CT scanner in near-real-time to guide a hollow needle towards the lump to be sampled, and the sample extraction needle is then passed down that needle to take multiple samples.

At least, that’s what happens when it all goes well. :-)

The target, I found out on the day, was an isolated tumour in the top corner of my left lung. As they were biopsying my lungs, which are not a stable target, I had to both stay very still and achieve a consistent size of “held breath”, so that when they moved the needle or did a scan, everything was in pretty much the same place. I was placed on the CT scanner table, and they used a lead-or-similar grid placed on my chest to find the optimum point of entry. They then injected generous quantities of local anaesthetic (a process which itself stings) and started inserting the needle. After each movement, they stopped, slid me into the scanner, told me to take a standard breath, and used the scan to see where the needle was and whether the track needed correction.

All went fairly smoothly until the needle passed through the outer wall of the lung itself. At this point, I started bleeding into my lung, which (although I tried to suppress it) led to significant amounts of convulsive coughing. They had to use suction to remove the liquid from my mouth. One nurse said afterwards that she thought they might well have abandoned the procedure at this point. In someone as young as me, this complication at this point is fairly rare. Of course, coughing so much, I was not able to hold still or take the standard breath, and it was dangerous to move the needle any further.

After a couple of minutes, I managed to get the coughing under control, although later I opened my eyes for a short time and saw blood spatters all over the inside of the CT machine! Once I was stable again, they were able to continue inserting the needle and were able to get 8 good “cores” of sample for use in the PDX trial.

However, a final whole-chest scan revealed that all that jerking about had given me a small pneumothorax, which is where air gets into the pleural space, between the lung and the chest wall. So I had to stay there for longer while they inserted a second needle into a different part of me and attempted to suck out the introduced air. This took less time, and was mostly successful. Any remaining air should, God willing, be reabsorbed in the next week or so.

Towards the end, I asked what my heart rate was; they said “66”. That’s the peace of God in action, I thought. The nurses joked that we should measure the heart rate of the surgeon! :-)

I was sent to the recovery room and then to the Clinical Assessment Unit. After 3 hours, they did a chest X-ray, then after another 2 hours another one, to check that I was stable and the remaining tiny pneumothorax was not growing. It wasn’t, so they let me go home. But I have another X-ray in a week, here in Sheffield, to make sure everything is OK and I’m fine to fly to the USA the following Monday :-)

http://feedproxy.google.com/~r/HackingForChrist/~3/o4oT_-xHT4M/

|

|

Nigel Babu: meta-q-override is now warn-before-quit |

I wrote an add-on a while back that would catch Cmd+Q and show a screen asking for confirmation before actually quitting. I got tired of quitting Firefox one too many times.

It used to be called meta-q-override; I’ve now renamed it to warn-before-quit. As always, please file bugs for issues/feature requests.

|

|

Nigel Babu: Git Tips You Probably Didn't Know |

I’ve been using git for quite a while now and some of its features continue to amaze me. Here are a few things I learned recently.

Finding only your changes to master.

When you’ve made a change against a master that moves often, you’ll find that simply running git diff master doesn’t give the right diff. It shows you the difference between your branch and the current master, which is not what you want in most cases: master has moved on since you branched, so the command also shows those changes. GitHub does the right thing, and the right command in this case is the following:

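git diff master...

The three-dot form compares the tip of your current branch with the point where it diverged from master (their merge base), so only your own changes show up.

Copying your changes in one branch into another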

Recently, our designer Sam Smith started working on making CKAN more responsive. His branch was based off master, and I wanted to make a new branch based off release-v2.2 with his changes on top of it. My instinct was to make a patch:

git diff master...responsive > ../responsive.patch

Then, apply the patch onto a different branch. This would surely work, but I’m using a version control system! It should be smart about this. The good folks in #git pointed me in the right direction.

First find the last commit from the responsive branch that you don’t want to copy.

git checkout responsive

git log master..responsive

The last (oldest) commit in that log is the first one you want to copy. In this case, it’s 7587c6e8fe49c809ef7357b6f88496bd06ac93b9, so now you want to run git log 7587c6e8fe49c809ef7357b6f88496bd06ac93b9^. The first commit it shows is the one you don’t want to keep.

git checkout responsive

git checkout -b responsive-2.2

git rebase --onto release-v2.2 7587c6e8fe49c809ef7357b6f88496bd06ac93b9^

Thus, responsive-2.2 is a new branch with the responsive changes based on top of release-v2.2!
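The following toy model shows why the rebase's upstream argument is the parent of the first commit to copy. It is written in JavaScript, the language used for the code examples elsewhere on this page, and it is not how git is implemented. The commit names m1 to r3 are made up. The model treats A..B as "commits reachable from B but not from A" and treats the base used by git diff A...B as the best common ancestor of A and B. It relies on the fact that git rebase --onto X U replays the commits in U..HEAD.

```javascript
// Toy commit graph with made-up names: each commit maps to its parents.
// master: m1 <- m2 <- m3; responsive branched from m2: r1 <- r2 <- r3.
const parents = {
  m1: [], m2: ["m1"], m3: ["m2"],
  r1: ["m2"], r2: ["r1"], r3: ["r2"],
};

// Every commit reachable from `tip` by following parent links, tip included.
function ancestors(tip) {
  const seen = new Set();
  const stack = [tip];
  while (stack.length > 0) {
    const commit = stack.pop();
    if (seen.has(commit)) continue;
    seen.add(commit);
    stack.push(...parents[commit]);
  }
  return seen;
}

// Model of the two-dot range A..B: reachable from B but not from A.
function range(a, b) {
  const excluded = ancestors(a);
  return [...ancestors(b)].filter(commit => !excluded.has(commit));
}

// Model of the merge base behind `git diff A...B`: common ancestors that are
// not themselves ancestors of another common ancestor.
function mergeBases(a, b) {
  const inA = ancestors(a);
  const common = [...ancestors(b)].filter(commit => inA.has(commit));
  return common.filter(c => !common.some(d => d !== c && ancestors(d).has(c)));
}

// `git rebase --onto X U` replays the commits in U..HEAD (HEAD is r3 here).
console.log(range("r1", "r3"));      // upstream r1: only r3 and r2 are replayed
console.log(range("m2", "r3"));      // upstream m2 (r1's parent): r3, r2 and r1
console.log(mergeBases("m3", "r3")); // the fork point, m2
```

If the upstream is r1 itself, r1 is left out of the replayed set. If the upstream is r1's parent m2, all three responsive commits are replayed.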

http://nigelb.me/2014-04-26-git-tips-you-probably-did-not-know.html

|

|

Frédéric Harper: How to get out of the crowd at Herzing College |

Creative Commons: https://flic.kr/p/82oNiM

Yesterday, I had the pleasure of giving a talk at Herzing College, the school I went to. They allocated me one hour with the students, so I decided to split my time into two parts. For the first thirty minutes, I gave a talk on how to get out of the crowd. The remaining time was dedicated to questions. I thought it was a good opportunity for them to ask anything about the industry of someone who graduated twelve years ago from the same place. Unfortunately, I wasn’t able to record my talk because of the classroom setup, but here are the slides:

As you can see, my goal was simple: help them gain insights into how to differentiate themselves from others. In the end, they’ll all graduate with the same diploma, same education, same experience, and same homework assignments. As an employer receiving many resumes for one job, why would I put their name at the top of the list? In other words, it was a quick introduction to the concept of personal branding, as it’s the perfect time for them to start thinking about it.

--

How to get out of the crowd at Herzing College is a post on Out of Comfort Zone from Frédéric Harper


|

|

David Burns: Marionette Good First Bugs - We need you! |

I have been triaging Marionette (the Mozilla implementation of WebDriver built into Gecko) bugs for the last few weeks. My goal, barring any unforeseen fire drills that my team may need to attend to, is to drive the project to getting Marionette released.

While I have been triaging I have also been finding bugs that would be good for first time contributors to get stuck into the code and help me get this done.

So, if you want to do a bit of coding and help get this done, please look at our list.

I will be adding to it constantly so feel free to check back regularly!

http://www.theautomatedtester.co.uk/blog/2014/marionette-good-first-bugs-we-need-you.html

|

|

Ben Hearsum: This week in Mozilla RelEng – April 25th, 2014 |

Major highlights:

- Aki has been branching all of the repositories for B2G 1.4, which is nearly complete now.

- I took over B2G update work and am making good progress on switching us over to our production-grade update server.

- Hal and many others battled against git.mozilla.org load issues that caused many tree closures, and won!

- We’ve all been prepping for our meet-up in Portland next week. Come say hi if you’re in town!

Completed work (resolution is ‘FIXED’):

- Balrog: Backend

- Buildduty

- Please upload closure_linter-2.3.13.tar.gz to RelEng PyPI

- Upload closure-linter to RelEng pypi

- Please upload python-gflags to RelEng pypi

- General Automation

- generate “FxOS Simulator” builds for B2G

- Add ssltunnel to xre.zip bundle used for b2g tests

- Enlarge the mozilla-inbound jacuzzis, or get rid of them until we can figure out how to calculate the proper size

- b2g_build.py checkout_sources() should attempt |repo sync| more than once & output a TBPL compatible failure message

- Stop spidermonkey jobs from stealing jacuzzi slaves

- delete non-CVS-based git repos

- m-c b2g version should be 2.0, not 1.5

- Schedule Android 4.0 Debug M1,M2,M3,M8 on all trunk trees and let them ride the trains

- Please remove hamachi.zip and the out folder from the ril build for hamachi

- All Tarako builds are userdebug, not user or eng

- Add --browser-arg to B2G desktop mozharness script

- Need Tarako 1.3t FOTA updates for testing purposes

- make recommendation on “quickest & easiest” way to avoid multiple b2g device builds starting at same time

- Disable 32-bit gaia-integration tests

- Enable updates for tarako on 1.3t branch

- Switch b2g-inbound to running Mac tests only on 10.6 instead of only on 10.8

- Plivo package required on the http://pypi.pub.build.mozilla.org mirror

- [Flame]figure out how to create “Flame” builds manually

- Use local storage for builds

- Shut off perma-busted builds on 1.3t

- Updates not properly signed on the nightly-ux branch: Certificate did not match issuer or name.

- add missing pandas/tegras to buildbot configs

- Turn off Metro Browser Chrome tests on inbound, central, cedar, ash, fx-team, etc.

- Write mozharness script for gaia integration tests

- emulator-kk builds crash make half the time (which it describes as “failed to build”)

- firefox-beta-latest and firefox-beta-stub on windows, bouncer staging, are 404s

- Loan Requests

- Slave loan request for dtownsend@mozilla.com

- Slave loan request for dtownsend@mozilla.com

- Slave loan request for a tst-linux64-ec2 vm

- loan w64-ix-slave80 to standard8

- loan osx 10.8 slave to kmoir

- Other

- Switch buildapi away from carrot, or figure out how to make it declare HA queues

- Investigate why more than two third of try builds are recloning the shared repo

- Access S3 endpoints via the internet gateway from EC2 instances

- Release Automation

- Thunderbird Windows L10n repack builds failed with pymake enabled

- ship it throws ISE 500 when trying to submit a release that exists

- Releases

- tracking bug for build and release of Thunderbird 24.4.0

- Remove sw during beta-release migration for Firefox 29

- do a staging release of Firefox and Fennec 30.0b1

- Releases: Custom Builds

- Tools

In progress work (unresolved and not assigned to nobody):

- Balrog: Backend

- Support new attributes for update.xml in Balrog

- Requesting data of non-existent release yields a 500 error

- add support for “isOSUpdate” attribute

- tracebacks on versions like “3.6.3plugin1”

- Buildduty

- General Automation

- Make blobber uploads discoverable

- Please update the backup-flame blobs for the flame as there were changes to what is extracted

- branch gaia l10n repos for 1.4

- b2g 1.4 branch support

- Do debug B2G desktop builds

- Add a TURN server for running mochitest automation under a VPN

- emergency change to b2g midnight PT start times

- set-up initial balrog rules for b2g updates

- disable flame update uploading to update.boot2gecko.org

- Show SM(Hf) builds on mozilla-aurora, mozilla-beta, and mozilla-release

- [Meta] Some “Android 4.0 debug” tests fail

- [Meta] Fix + unhide broken testsuites or else turn them off to save capacity

- Make linter error filenames shorter

- fx desktop builds in mozharness

- switch b2g builds to use aus4.mozilla.org as their update server

- Do nightly builds with profiling disabled

- Schedule M, R, G, and Gu tests OOP on b2g desktop builds on cedar

- Monitor aws_stop_idle.py hungs

- Move Android 2.3 reftests to ix slaves (ash only)

- balrog submitter shouldn’t forcefully capitalize product names

- point [xxx] repository at new bm-remote webserver cluster to ensure parity in talos numbers

- upload flame gecko/gaia mars to public ftp

- Make it possible to run gaia try jobs *without* doing a build

- We should stop using setup.sh in tooltool

- Configure the MLS key for pvt builds

- Add support for webapprt-test-chrome test jobs & enable them per push on Cedar

- Intermittent Android 2.3 install step hang

- make it possible to post b2g mar info to balrog

- Loan Requests

- Other

- Platform Support

- Release Automation

- Bump thunderbird tag disk space requirements

- Figure out how to offer release build to beta users

- cache MAR + installer downloads in update verify

- release repacks needs to submit data to balrog

- Releases

- tracking bug for build and release of Firefox and Fennec 29.0

- Tracking bug for 28-april-2014 migration work

- Repos and Hooks

- Tools

http://hearsum.ca/blog/this-week-in-mozilla-releng-april-25th-2014/

|

|

Mike Conley: Electrolysis: Debugging Child Processes of Content for Make Benefit Glorious Browser of Firefox |

Here’s how I’m currently debugging Electrolysis stuff on OS X using gdb. It involves multiple terminal windows. I live with that.

# In Terminal Window 1, I execute my Firefox build with MOZ_DEBUG_CHILD_PROCESS=1.
# That environment variable makes it so that the parent process spits out the child
# process ID as soon as it forks out. I also use my e10s profile so as to not muck up
# my default profile.
MOZ_DEBUG_CHILD_PROCESS=1 ./mach run -P e10s
# So, now my Firefox is spawned up and ready to go. I have
# browser.tabs.remote.autostart set to "true" in my about:config, which means I'm
# using out-of-process tabs by default. That means that right away, I see the
# child process ID dumped into the console. Maybe you get the same thing if
# browser.tabs.remote.autostart is false. I haven't checked.
CHILDCHILDCHILDCHILD debug me @ 45326
# ^-- so, this is what comes out in Terminal Window 1.

So, the next step is to open another terminal window. This one will connect to the parent process.

# Maybe there are smarter ways to find the firefox process ID, but this is what I
# use in my new Terminal Window 2.
ps aux | grep firefox
# And this is what I get back:
mikeconley 45391 17.2 5.3 3985032 883932 ?? S 2:39pm 1:58.71 /Applications/FirefoxAurora.app/Contents/MacOS/firefox
mikeconley 45322 0.0 0.4 3135172 69748 s000 S+ 2:36pm 0:06.48 /Users/mikeconley/Projects/mozilla-central/obj-x86_64-apple-darwin12.5.0/dist/Nightly.app/Contents/MacOS/firefox -no-remote -foreground -P e10s
mikeconley 45430 0.0 0.0 2432768 612 s002 R+ 2:44pm 0:00.00 grep firefox
mikeconley 44878 0.0 0.0 0 0 s000 Z 11:46am 0:00.00 (firefox)
# That second one is what I want to attach to. I can tell, because the executable
# path lies within my local build's objdir. The first row is my main Firefox I just
# use for work browsing. I definitely don't want to attach to that. The third line
# is just me looking for the process with grep. Not sure what that last one is.
# I use sudo to attach to the parent because otherwise, OS X complains about permissions
# for process attachment. I attach to the parent like this:
sudo gdb firefox 45322
# And now I have a gdb for the parent process. Easy peasy.

And finally, to debug the child, I open yet another terminal window.

# That process ID that I got from Terminal Window 1 comes into play now.
sudo gdb firefox 45326
# Boom - attached to child process now.

Setting breakpoints for things like TabChild::foo or TabParent::bar can be done like this:

# In Terminal Window 3, attached to the child:
b mozilla::dom::TabChild::foo
# In Terminal Window 2, attached to the parent:
b mozilla::dom::TabParent::bar

And now we’re cookin’.

|

|

Gervase Markham |

James Mickens on top form, on browsers, Web standards and JavaScript:

Automatically inserting semicolons into source code is like mishearing someone over a poor cell-phone connection, and then assuming that each of the dropped words should be replaced with the phrase “your mom.” This is a great way to create excitement in your interpersonal relationships, but it is not a good way to parse code.

http://feedproxy.google.com/~r/HackingForChrist/~3/veJPOpt3Qx8/

|

|

Sudheesh Singanamalla: Lessons learnt from the past events. |

1. Inadequate communication between the local organizers and the reps; lack of planning.

One of the primary reasons for this is our schedule: we organize events on weekends, travelling on Friday nights to reach the venue and returning on Sunday nights. That takes away the weekends that our classmates or colleagues use to relax or catch up with their studies and work. Yes, it is hectic, but we enjoy every moment of it, so long as we get an eager crowd to share our knowledge with.

Given this type of schedule, our calendars are totally blocked on weekdays and we rarely get to do anything outside our current schedule. For most of the events, we are not able to stay in touch with the local organizers and ensure they organize everything according to our requirements.

This results in a huge gap between what we expect from the organizers and what the organizers expect from us. For instance, at Vizag, we had no idea that we would end up with far fewer participants than the local organizer had originally promised. This was primarily because the university hosting the event was on holiday and the students had gone home, which we were not told until we reached the venue.

2. Lack of infrastructure.

A dedicated fast internet connection is essential to making events like these a success. There was no proper internet connection, and basic facilities such as tables, chairs, proper lighting, projectors and projector cables were also lacking. It might be a good idea to have a speaker kit consisting of a VGA converter and an internet dongle for the speakers.

Lessons Learnt

- Improve the communication between the speakers and reps and the event organizers so that the event can take place in a smoother manner.

- Make it a point to clearly specify the infrastructure requirements of the speaker to the event organizer.

- Make sure basic supplies like internet connectivity, power supply and drinking water are available.

EDIT:

The event was attended by 15 developers. 7 of them were beginners just getting started with web development, and the rest were somewhat more advanced and could follow some of the internal details of Firefox OS, such as how Gaia and Gonk work. In addition, 2 of them showed interest in developing for Firefox. Although the metrics did not fully meet our expectations, those who went on to develop apps are still in contact with us through informal mentoring.

http://theirregularview.blogspot.com/2014/03/lessons-learnt-from-past-events.html

|

|

Gervase Markham: Patient-Derived Xenografts |

In my last post, I mentioned that I’ve been asked to take part in an interesting study to help develop a new form of cancer therapy. That therapy is based on Patient-Derived Xenografts (PDX). It works roughly like this:

- They extract a sample of your tumour, and divide it into two bits.

- They take one half, and implant it under the skin of an immune-deficient (“nude”) mouse. The immune deficiency means the mouse does not reject the graft.

- If it grows in the mouse, once it’s large enough they remove it and implant it into a second “generation” of four mice.

- They keep going for four generations, so at the end you have about 64 mice. It turns out that if you can get a tumour to grow for this long, it is acclimatised to the mice and will grow forever if required.

- Next, they take the other half of the sample and do deep genetic sequencing on it, along with a sample of normal tissue from me.

- They “diff” the two sequences to find all of the mutations that my cancer carries.

- Some of those mutations will be “driver” mutations, responsible for the cancer being cancerous, and others will be “passenger” mutations, which happened at the same time or subsequently, but are just along for the ride. They try and work out which are which.

- If they can identify the driver mutations, they look and see if they have a treatment for cancers driven by that set of mutations.

- If they do, they feed it to the mice.

- If it works in the mice, they give it to me.

As you can imagine, there’s a lot that can go wrong in this process. The biopsy samples could be bad; the tumour may not grow in the mice, or may not grow for long enough; they may not be able to figure out which mutations are drivers; if they can, there may not be an agent targeting that pathway; if there is, it might not work in the mice; and if it does, it might not work in me! So it would be wrong to trust in this for a “cure”. As before, my life is in the Lord’s hands, and he alone numbers my days. But I hope that even if it does not result in a cure for me, the knowledge gained will help them to cure others in the future.

I have significant involvement only at the beginning and (if we get there) the end. To start the process, it was necessary to provide a tumour sample via biopsy. That happened in London yesterday, and the rather eventful experience will be the subject of my next post.

http://feedproxy.google.com/~r/HackingForChrist/~3/ufQwVbX0Kyk/

|

|

Niko Matsakis: Parallel pipelines for JS |

I’ve been thinking about an alternative way to factor the PJS API.

Until now, we’ve had methods like mapPar(), filterPar() and so forth. They work mostly like their sequential namesakes but execute in parallel. This API has the advantage of being easy to explain and relatively clear, but it’s neither especially flexible nor elegant.

Lately, I’ve been prototyping an alternate design that I call parallel pipelines (that’s just a working title; I expect the name to change). Compared to the older approach, parallel pipelines are a more expressive API that doesn’t clutter up the array prototypes. The design draws on precedent from a lot of other languages, such as Clojure, Ruby, and Scala, which all offer similar capabilities. I’ve prototyped the API on a branch of SpiderMonkey, though the code doesn’t yet run in parallel (it is structured in such a way as to make parallel execution relatively straightforward, though).

CAVEAT: To be clear, this design is just one that’s in my head. I still have to convince everyone else it’s a good idea. :) Oh, and one other caveat: most of the names in here are just temporary; I’m sure they’ll wind up changing. Along with probably everything else.

Pipelines in a nutshell

The API begins with a single method called parallel() attached to Array.prototype and typed object arrays. When you invoke parallel(), no actual computation occurs yet. Instead, the result is a parallel pipeline that, when executed, will iterate over the elements of the array.

You can then call methods like map and filter on this pipeline. None of these transformers takes any immediate action; instead they just return a new parallel pipeline that will, when executed, perform the appropriate map or filter.

So, for example, you might write some code like:

var pipeline = [1, 2, 3, 4, 5].parallel().map(x => x * 3).filter(x => x % 2 !== 0);

This yields a pipeline that, when executed, will multiply each element of the array by 3 and then select the results that are odd.

Once you’ve finished building up your pipeline, you execute it by using one of two methods, toArray() or reduce(). toArray() will execute the pipeline and return a new array with the results. reduce() will execute the pipeline but, instead of returning an array, it reduces the elements to a single scalar result.

Execution works the same way as PJS today: that is, we will attempt to execute in parallel. If your code mutates global state, or uses other features of JS that are not safe for parallel execution, then execution will fall back to sequential semantics.

So for example, I might write:

var pipeline = [1, 2, 3, 4, 5, 6].parallel().map(x => x * 3).filter(x => x % 2 !== 0);

// Returns [3, 9, 15]

var results = pipeline.toArray();

// Returns 27 (i.e., 3+9+15)

var reduction = pipeline.reduce((x, y) => x + y);
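The lazy build-then-execute behaviour described above can be sketched in plain JavaScript. This is a minimal, sequential-only sketch. It assumes a hypothetical Pipeline class and fromArray() helper, neither of which is part of the proposal or of SpiderMonkey. It does nothing in parallel. It only shows that transformers record work and that nothing runs until toArray() or reduce() is called.

```javascript
// Hypothetical sequential sketch: Pipeline and fromArray are illustrative
// names, not the proposed API, and nothing here runs in parallel.
class Pipeline {
  // `produce` is a thunk that yields the elements; it runs only when the
  // pipeline is executed by toArray() or reduce().
  constructor(produce) {
    this.produce = produce;
  }

  // Transformers just wrap the previous thunk in a new pipeline.
  map(fn) {
    return new Pipeline(() => this.produce().map(x => fn(x)));
  }

  filter(fn) {
    return new Pipeline(() => this.produce().filter(x => fn(x)));
  }

  // Executors force every recorded stage.
  toArray() {
    return this.produce();
  }

  reduce(fn, ...initial) {
    return this.produce().reduce(fn, ...initial);
  }
}

// Stand-in for Array.prototype.parallel(): the input is copied at execution time.
function fromArray(array) {
  return new Pipeline(() => Array.from(array));
}

let mapCalls = 0;
const pipeline = fromArray([1, 2, 3, 4, 5, 6])
  .map(x => {
    mapCalls += 1;
    return x * 3;
  })
  .filter(x => x % 2 !== 0);
// Building the pipeline ran nothing: mapCalls is still 0 here.

const results = pipeline.toArray();                 // [3, 9, 15]
const reduction = pipeline.reduce((x, y) => x + y); // 27
```

In this sketch, each executor call reruns every stage. That is why mapCalls ends at 12: six elements, executed twice. A real implementation could make a different choice.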

Pipelines and typed objects

The pipeline API is integrated with typed objects. Each pipeline stage generates values of a specific type; when you call toArray() on the result, you get back a typed object array based around this type.

Producing typed object arrays doesn’t incur any limitations compared with using a normal JS array, because the element type can always just be any. Moreover, in those cases where you are able to produce a more specialized type, such as int32, you will get big savings in memory usage, since typed object arrays enable a very compact representation.

Ranges

In the previous example, I showed how to create a pipeline given an array as the starting point. Sometimes you want to create parallel operations that don’t have any array but simply iterate over a range of integers. One obvious case is when you are producing a fresh array from scratch.

To support this, we will add a new “parallel” module with a variety of functions for producing pipelines from scratch. One such function is range(min, max), which just produces a range of integers starting with min and stepping up to (but not including) max. So if we wanted to compute the first N Fibonacci numbers in parallel, we could write:

var fibs = parallel.range(0, N).map(fibonacci).toArray();

In fact, using range(), we can implement the parallel() method for normal JS arrays:

Array.prototype.parallel = function() {

return parallel.range(0, this.length).map(i => this[i]);

}
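Here is a minimal sequential sketch of range() and of defining parallel() on top of it. It assumes a half-open range from min up to max - 1, which is consistent with range(0, N) yielding N numbers and with range(0, this.length) covering every index. The parallel object and makePipeline() are hypothetical stand-ins for the proposed module. fibonacci() is a simple iterative helper added for illustration.

```javascript
// Hypothetical sequential stand-in for the proposed "parallel" module.
function makePipeline(produce) {
  // `produce` is a thunk; map() only composes, toArray() runs it.
  return {
    map: fn => makePipeline(() => produce().map(x => fn(x))),
    toArray: () => produce(),
  };
}

const parallel = {
  // Assumed half-open range: min, min + 1, up to max - 1.
  range(min, max) {
    return makePipeline(() =>
      Array.from({ length: Math.max(0, max - min) }, (_, i) => min + i));
  },
};

// Iterative Fibonacci used as the per-element function.
function fibonacci(n) {
  let a = 0;
  let b = 1;
  for (let i = 0; i < n; i++) {
    [a, b] = [b, a + b];
  }
  return a;
}

const N = 10;
const fibs = parallel.range(0, N).map(fibonacci).toArray();
// fibs: [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]

// parallel() for normal arrays, defined via range() as in the post.
Array.prototype.parallel = function () {
  return parallel.range(0, this.length).map(i => this[i]);
};
const bumped = [10, 20, 30].parallel().map(x => x + 1).toArray(); // [11, 21, 31]
```

The arrow function i => this[i] captures the array that parallel() was called on. Because the pipeline is lazy, the elements are read only when toArray() runs.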

Shapes and n-dimensional pipelines

Arrays are great, but it frequently happens that we want to work with multiple dimensions. For this reason, parallel pipelines are not limited to iterating over a single-dimensional space. They can also iterate over multiple dimensions simultaneously.

We call the full iteration space a shape. Shapes are a list, where the length of the shape corresponds to the number of dimensions. So a 1-dimensional iteration, such as that produced by range(), has a shape like [N]. But a 2-D iteration might have the shape [W, H] (where W and H might be the width and height of an image). Similarly, iterating over some 3-D space would have a shape [X, Y, Z].

To iterate over a parallel shape, you can use the parallel.shape() function. For example, the following code iterates over a 5x5 space and produces a two-dimensional typed object array of integers:

var matrix = parallel.shape([5, 5])

.map(([x, y]) => x + y)

.toArray();

You can see that shape() produces a vector [x, y] specifying the current coordinates of each element in the space. In this case, we map that result and add x and y, which means that the end result will be:

0 1 2 3 4

1 2 3 4 5

2 3 4 5 6

3 4 5 6 7

4 5 6 7 8

Another way to get N-dimensional iteration is to start with an N-dimensional typed object array. The parallel() method on typed object arrays takes an optional depth argument specifying how many of the outer dimensions you want to iterate over in parallel; this argument defaults to 1. This means we could further transform our matrix as shown here:

var matrix2 = matrix.parallel(2).map(i => i + 1).toArray();

The end result would be to add one to each cell in the matrix:

1 2 3 4 5

2 3 4 5 6

3 4 5 6 7

4 5 6 7 8

5 6 7 8 9
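The shape-based iteration and the depth argument can be sketched with ordinary nested arrays standing in for typed object arrays. shape() and mapAtDepth() below are hypothetical, sequential-only helpers. mapAtDepth(matrix, 2, f) plays the role of matrix.parallel(2).map(f).

```javascript
// Hypothetical sequential sketch of parallel.shape(): nested plain arrays
// stand in for n-dimensional typed object arrays.
function shape(dims, fn = coords => coords) {
  return {
    // Compose the new function after the earlier ones; nothing runs yet.
    map: g => shape(dims, coords => g(fn(coords))),
    // Visit every coordinate vector, nesting one array level per dimension.
    toArray() {
      const build = prefix =>
        prefix.length === dims.length
          ? fn(prefix)
          : Array.from({ length: dims[prefix.length] }, (_, i) => build([...prefix, i]));
      return build([]);
    },
  };
}

// Apply fn to the elements `depth` levels down, keeping the nesting; this
// plays the role of matrix.parallel(depth).map(fn).
function mapAtDepth(nested, depth, fn) {
  return depth === 0 ? fn(nested) : nested.map(inner => mapAtDepth(inner, depth - 1, fn));
}

const matrix = shape([5, 5]).map(([x, y]) => x + y).toArray();
const matrix2 = mapAtDepth(matrix, 2, i => i + 1);
// matrix[0]: [0, 1, 2, 3, 4]    matrix2[0]: [1, 2, 3, 4, 5]
```

With a depth of 1, fn would receive whole rows instead of cells. This matches the idea that depth counts how many outer dimensions are iterated over.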

Deferred pipelines

All the pipelines I showed so far were essentially “single shot”. They began with a fixed array and applied various operations to it and then created a result. But sometimes you would like to specify a pipeline and then apply it to multiple different arrays. To support this, you can create a “detached” pipeline. For example, the following code would create a pipeline for incrementing each element by one:

var pipeline = parallel.detached().map(i => i + 1);

Before you actually execute a detached pipeline, you must attach it to a specific input. The result is a new, attached pipeline which can then be converted to an array or reduced. Of course, you can attach the pipeline many times:

// yields [2, 3, 4]

pipeline.attach([1, 2, 3]).toArray();

// yields 12

pipeline.attach([2, 3, 4]).reduce((a, b) => a + b);
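A detached pipeline can be modelled as a recorded list of stages that is replayed against whatever input attach() receives. This is a hypothetical, sequential sketch: detached() stands in for parallel.detached(). The attached object it returns supports only toArray(), reduce() and the "already attached" error, not further transformers.

```javascript
// Hypothetical sequential sketch of detached pipelines: stages are recorded
// up front and bound to an input only when attach() is called.
function detached(stages = []) {
  return {
    map: fn => detached([...stages, xs => xs.map(x => fn(x))]),
    filter: fn => detached([...stages, xs => xs.filter(x => fn(x))]),
    attach(input) {
      // Run the recorded stages, in order, over a copy of this input.
      const run = () => stages.reduce((xs, stage) => stage(xs), Array.from(input));
      return {
        toArray: run,
        reduce: (fn, ...initial) => run().reduce(fn, ...initial),
        // Mirrors the rule that attaching an attached pipeline is an error.
        attach() {
          throw new Error("pipeline is already attached");
        },
      };
    },
  };
}

const pipeline = detached().map(i => i + 1);
const first = pipeline.attach([1, 2, 3]).toArray();               // [2, 3, 4]
const second = pipeline.attach([2, 3, 4]).reduce((a, b) => a + b); // 12
```

Because each attach() call builds a fresh attached object, the same detached pipeline can be reused for any number of inputs.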

Put it all together

Here is a relatively complete, if informal, description of the pipeline methods I’ve thought about thus far.

Creating pipelines

The fundamental ways to create a pipeline are the methods range(), shape(), and detached(), all available from a parallel module. In addition, Array.prototype and the prototype for typed object arrays both feature a parallel() method that creates a pipeline, as we showed before.

Transforming pipelines

Each of the methods described here is available on all pipelines, and each produces a new pipeline.

The most common methods for transforming a pipeline will probably be map and mapTo:

- pipeline.map(func): invokes func on each element, preserving the same output type as pipeline.

- pipeline.mapTo(type, [func]): invokes func on each element, converting the result to have the type type. In fact, func is optional if you just want to convert between types.

map and mapTo are somewhat special in that they work equally well over any number of dimensions. The rest of the available methods always operate only over the outermost dimension of the pipeline. They also create a single-dimensional output. Because this is a blog post and not a spec, I won’t bother writing out the descriptions in detail:

- pipeline.flatMap(func): like map, but flattens one layer of arrays

- pipeline.filter(func): drops elements for which func returns false

- pipeline.scan(func): prefix sum

- pipeline.scatter(...): moves elements from one index to another
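The post only says that scan(func) is a prefix sum. Assuming an inclusive scan and an associative func, the sketch below shows one reason such an operation can still be parallelized. It uses the classic chunked formulation: scan each chunk independently, then scan the chunk totals and add each chunk's offset back in. Both functions are hypothetical helpers and run sequentially here. The chunks are the units a parallel runtime could hand to separate workers.

```javascript
// Sequential reference: inclusive scan, out[i] = xs[0] op xs[1] op ... op xs[i].
function scanSequential(xs, func) {
  const out = [];
  for (let i = 0; i < xs.length; i++) {
    out.push(i === 0 ? xs[0] : func(out[i - 1], xs[i]));
  }
  return out;
}

// Chunked scan: every chunk is scanned independently (the part a parallel
// runtime could farm out), then the chunk totals are scanned and each chunk
// is offset by the combined total of all chunks before it. Requires `func`
// to be associative and chunkSize >= 1; here the chunks run one after another.
function scanChunked(xs, func, chunkSize) {
  const chunks = [];
  for (let start = 0; start < xs.length; start += chunkSize) {
    chunks.push(scanSequential(xs.slice(start, start + chunkSize), func));
  }
  const totals = scanSequential(chunks.map(chunk => chunk[chunk.length - 1]), func);
  return chunks.flatMap((chunk, k) =>
    k === 0 ? chunk : chunk.map(x => func(totals[k - 1], x)));
}

const add = (a, b) => a + b;
const sums = scanChunked([1, 2, 3, 4, 5, 6, 7, 8], add, 3); // [1, 3, 6, 10, 15, 21, 28, 36]
```

The offset is applied as func(totals[k - 1], x), with the earlier total on the left. This ordering keeps the result correct even for associative operations that are not commutative, such as string concatenation.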

Finally, there is the attach() method, which is only applicable to detached pipelines. It produces a new pipeline that is attached to a specific input. If the pipeline is already attached, an exception results.

Executing pipelines

There are two fundamental ways to execute a pipeline:

- pipeline.toArray(): executes the pipeline and collects the result into a new typed object array. The dimensions and type of this array are determined by the pipeline.

- pipeline.reduce(func, [initial]): executes the pipeline and reduces the results using func, possibly with an initial value. Returns the result of this reduction.

Open questions

Should pipelines provide the index to the callback? I decided to strive for simplicity and just say that pipeline transformers like map always pass a single value to their callback. I imagine we could add an enumerate transformer if indices are desired. But then again, this pollutes the value being produced with indices that just have to be stripped away. So maybe it’s better to just pass the index as a second argument that the user can use or ignore as they choose. I imagine that the index will be an integer for a 1D pipeline, and an array for a multidimensional pipeline.
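One way the "index as a second argument" option could behave is sketched below on nested plain arrays. mapWithIndex and its depth parameter are hypothetical; the point is only that callers who don't want the index can ignore it, while a 1D map gets an integer and a multidimensional map gets an array of indices.

```javascript
// Hypothetical helper: map over a `depth`-dimensional nested array, passing
// each element plus its index. For depth 1 the index is a plain integer; for
// deeper input it is an array of per-dimension indices.
function mapWithIndex(nested, depth, func) {
  function visit(value, path) {
    if (path.length === depth) {
      return func(value, depth === 1 ? path[0] : path);
    }
    return value.map((child, i) => visit(child, [...path, i]));
  }
  return visit(nested, []);
}

console.log(mapWithIndex([10, 20, 30], 1, (x, i) => x + i)); // [ 10, 21, 32 ]
console.log(mapWithIndex([[1, 2], [3, 4]], 2, (x, [i, j]) => x * 10 + i + j));
// [ [ 10, 21 ], [ 31, 42 ] ]
console.log(mapWithIndex([1, 2], 1, x => x * 2)); // index ignored: [ 2, 4 ]
```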

Should pipelines be iterable? It has been suggested that pipelines be iterable. I guess that this would be equivalent to collecting the pipeline into an array and then iterating over that. (Since, unless you are doing a map operation, the only real purpose for iteration is to produce side-effects.) This would be easy enough to add; I was just worried that it might be misleading to see code like this:

for (var e of array.parallel().map(...)) {
  /* Maybe it looks like this for loop body
     executes in parallel? (Which it doesn't.) */
}
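If iteration were added, the simplest semantics would be the one described above: collect via toArray(), then iterate the resulting array. IterablePipeline is a hypothetical, sequential stand-in for a real pipeline; the point is that the for-of body only ever runs sequentially, after the collection step has finished.

```javascript
// Hypothetical sketch: iteration delegates to toArray(). In a real pipeline
// the work inside toArray() might run in parallel; the loop body never does.
class IterablePipeline {
  constructor(input, func = x => x) {
    this.input = input;
    this.func = func; // composed per-element transformation
  }

  map(f) {
    const g = this.func;
    return new IterablePipeline(this.input, x => f(g(x)));
  }

  toArray() {
    return this.input.map(x => this.func(x));
  }

  [Symbol.iterator]() {
    // Collect the whole result first, then hand out an ordinary array iterator.
    return this.toArray()[Symbol.iterator]();
  }
}

const seen = [];
for (const e of new IterablePipeline([1, 2, 3]).map(x => x + 1)) {
  seen.push(e); // runs sequentially, after toArray() has produced [2, 3, 4]
}
console.log(seen); // [ 2, 3, 4 ]
```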

Updates

- Renamed collect() to toArray(), which seems clearer.

http://smallcultfollowing.com/babysteps/blog/2014/04/24/parallel-pipelines-for-js/


Andreas Gal: Technical Leadership at Mozilla |

Today, I am starting my role as Mozilla’s new Chief Technology Officer. Mozilla is an unusual organization. We are not just a software company making a product. We are also a global community of people with a shared goal to build and further the Web, the world’s largest and fastest-growing technology ecosystem. My new responsibilities at Mozilla include identifying and enabling new technology ideas from across the project, leading technical decision making, and speaking for Mozilla’s vision of the Web.

I joined Mozilla almost six years ago to work with Brendan Eich and Mike Shaver on a just-in-time compiler for JavaScript based on my dissertation research (TraceMonkey). Originally, this was meant to be a three-month project to explore trace compilation in Firefox, but we quickly realized that we could rapidly bring this new technology to market. On August 23, 2008 Mozilla turned on the TraceMonkey compiler in Firefox, only days before Google launched its then-still-secret Chrome browser, and these two events spawned the JavaScript Performance Wars between Firefox, Chrome and Safari, massively advancing the state of the art in JavaScript performance. Today, JavaScript is one of the fastest dynamic languages in the world, even scaling to demanding use cases like immersive 3D gaming.

The work on TraceMonkey was an eye-opening experience for me. Through our products that are used by hundreds of millions of users, we can bring new technology to the Web at an unprecedented pace, changing the way people use and experience the Web.

Over the past almost six years I’ve enjoyed working for Mozilla as Director of Research and later as Vice President of Mobile and Research and co-founding many of Mozilla’s technology initiatives, including Broadway.js (a video decoder in JavaScript and WebGL), PDF.js (a PDF viewer built with the Web), Shumway (a Flash player built with the Web), the rebooted native Firefox for Android, and of course Firefox OS.

For me, the open Web is a unique ecosystem because no one controls or owns it. No single browser vendor, not even Mozilla, controls the Web. We merely contribute to it. Every browser vendor can prototype new technologies for the Web. Once Mozilla led the way with Firefox, market pressures and open standards quickly forced competitors to implement successful technology as well. The result has been an unprecedented pace of innovation that has already displaced competing proprietary technology ecosystems on the desktop.

We are on the cusp of the same open Web revolution happening in mobile as well, and Mozilla’s goal is to accelerate the advance of mobile by tirelessly pushing the boundaries of what’s possible with the Web. Or, to use the language of Mozilla’s engineers and contributors:

“For Mozilla, anything that the Web can’t do, or anything that the Web is not faster and better at than native technologies, is a bug. We should file it in our Bugzilla system, so we can start writing a patch to fix it.”

Filed under: Mozilla

http://andreasgal.com/2014/04/24/technical-leadership-at-mozilla/


Frédéric Harper: Firefox OS at HTML5mtl |

Creative Commons: http://j.mp/1lJvFn3

Tuesday evening was the monthly HTML5mtl meetup, and I had the pleasure of presenting Firefox OS there. Despite the rain and, above all, a Canadiens game, several developers showed up to learn how this new operating system makes it feel like HTML5 is running on steroids. Here is the summary of my presentation:

HTML5 is a giant step in the right direction: it brings several features that developers needed to more easily create better web experiences. It has also given rise to an endless debate: native apps or web apps! In this presentation, Frédéric Harper will show you how the open Web can help you create quality mobile applications. You will learn more about technologies such as WebAPIs, as well as the tools that will let you target a new market with Firefox OS and today’s Web.

As usual, for those who were there, here are the slides that accompanied my talk:

I also recorded the talk for those who would like to watch part of it again, or for the hockey fans who chose not to come:

As mentioned during the evening, if you have other questions about the platform, or if you plan to submit a Firefox OS app to the Firefox Marketplace, let me know!

--

Firefox OS at HTML5mtl is a post on Out of Comfort Zone from Frédéric Harper



Gervase Markham: Cancer Update |

Last November, and again this month, I had CT scans, and it turns out my cancer (Adenoid Cystic Carcinoma) has been growing. (If you haven’t known me for long and didn’t know I have cancer, the timeline and in particular the video might be a useful introduction.) I now have lumps of significant size – 2cm or larger – in both of my lungs and in my liver. It has also spread to the space between the lung and the chest wall. It normally doesn’t cause much bother there, but it can bind the lung to the wall and cause breathing pain.

For the last 14 years, we have been following primarily a surgical management strategy. To this end, I have had approximately 5 neck operations, 2 mouth operations, 2 lung operations, and had half my liver, my gall bladder and my left kidney removed. Documentation about many of these events is available on this blog, linked from the timeline. Given that I’m still here and still pretty much symptom-free, I feel this strategy has served me rather well. God is good.

However, it’s now time for a change of tack. The main lump in my left lung surrounds the pulmonary artery, and the one in my liver is close to the hepatic portal vein. Surgery on these might be risky. So instead, the plan is to wait until one of them starts causing actual symptoms, and to apply targeted radiotherapy to shrink it. Because my cancer is “indolent” (a.k.a. “lazy”), it can have periods of activity and periods of inactivity. While it seems more active at the moment, that could stop at any time, or it could progress differently in different places.

There is no general chemotherapy for ACC. However, at my last consultation I was asked to take part in a clinical trial of a new and interesting therapeutic technique, of which more very soon.

A friend texted me a word of encouragement this morning, and said he and his family had been reading Psalm 103. It’s a timely reminder of the true nature of things:

The life of mortals is like grass,

they flourish like a flower of the field;

the wind blows over it and it is gone,

and its place remembers it no more.

But from everlasting to everlasting

the Lord’s love is with those who fear him,

and his righteousness with their children’s children –

with those who keep his covenant

and remember to obey his precepts.

http://feedproxy.google.com/~r/HackingForChrist/~3/SZbc2BzISLc/


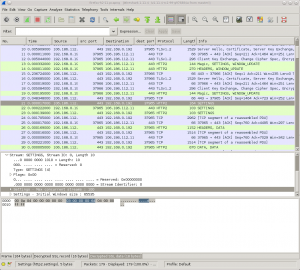

Daniel Stenberg: Wireshark dissector work |

Recently I cloned the Wireshark git repository and started updating the http2 dissector. That’s the piece of code that gets called to analyze a stream of data that Wireshark thinks is http2.


The existing http2 dissector had been left at the draft-09 state, while the current draft at the time was number 11. There had been several changes to the binary format since then, so any reasonably up-to-date client or server would send or receive byte streams that Wireshark couldn’t display properly.
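For readers curious what a dissector actually decodes: every HTTP/2 frame starts with a fixed header. The sketch below assumes the layout finalized later in RFC 7540 (section 4.1): a 24-bit length, an 8-bit type, 8-bit flags, one reserved bit and a 31-bit stream identifier. The draft-09, draft-11 and draft-12 formats discussed in this post differed from it, so treat this as illustrative only; parseFrameHeader is a hypothetical name, not part of Wireshark.

```javascript
// Frame type codes as assigned in RFC 7540 (index = type value).
const FRAME_TYPES = [
  "DATA", "HEADERS", "PRIORITY", "RST_STREAM", "SETTINGS",
  "PUSH_PROMISE", "PING", "GOAWAY", "WINDOW_UPDATE", "CONTINUATION",
];

// Hypothetical decoder for the fixed 9-octet RFC 7540 frame header.
function parseFrameHeader(bytes) {
  if (bytes.length < 9) throw new RangeError("need at least 9 bytes");
  const length = (bytes[0] << 16) | (bytes[1] << 8) | bytes[2]; // 24-bit payload length
  const type = bytes[3];
  const flags = bytes[4];
  // The top bit of octet 5 is reserved (R) and masked off; the remaining
  // 31 bits are the stream identifier.
  const streamId =
    (((bytes[5] & 0x7f) << 24) | (bytes[6] << 16) | (bytes[7] << 8) | bytes[8]) >>> 0;
  const typeName = FRAME_TYPES[type] || `UNKNOWN(0x${type.toString(16)})`;
  return { length, type, typeName, flags, streamId };
}

// A HEADERS frame header: 13-byte payload, flags 0x5 (END_STREAM | END_HEADERS),
// stream 1.
console.log(parseFrameHeader(Uint8Array.from([0, 0, 13, 1, 5, 0, 0, 0, 1])));
```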

I had never written any dissector code before, but I must say Wireshark didn’t disappoint. It was straightforward and mostly downright easy to fix most of the wrong details. I’m not pretending to be a master at this, nor is the dissector code anywhere near “finished” yet, but I still enjoyed the API and the process of writing something like this.

I’ve since dissected plain-text http2 streams that I’ve done with curl+nghttp2 and I’ve also used the SSLKEYLOGFILE trick with Firefox to automatically decrypt the TLS session and have the dissector figure out the underlying http2 parts.
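The SSLKEYLOGFILE trick works because, when that environment variable is set, Firefox (through NSS) appends per-session TLS secrets to the named file, which Wireshark can then use to decrypt the traffic. A minimal sketch of reading the classic CLIENT_RANDOM lines of that key log follows; parseKeyLog is hypothetical, and other labels in the format (for example those used for TLS 1.3) are deliberately skipped.

```javascript
// Hypothetical reader for the NSS key log format that Firefox writes when the
// SSLKEYLOGFILE environment variable is set. Only the classic
// "CLIENT_RANDOM <64 hex> <96 hex>" lines are handled; other labels are skipped.
function parseKeyLog(text) {
  const secrets = new Map(); // client random (hex) -> master secret (hex)
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue; // blank line or comment
    const [label, clientRandom, masterSecret] = line.split(/\s+/);
    if (
      label === "CLIENT_RANDOM" &&
      /^[0-9a-f]{64}$/i.test(clientRandom) && // 32-byte ClientHello random
      /^[0-9a-f]{96}$/i.test(masterSecret)    // 48-byte TLS master secret
    ) {
      secrets.set(clientRandom.toLowerCase(), masterSecret.toLowerCase());
    }
  }
  return secrets;
}

// Made-up example values, not real key material.
const sample = "# comment\nCLIENT_RANDOM " + "ab".repeat(32) + " " + "cd".repeat(48) + "\n";
console.log(parseKeyLog(sample).size); // 1
```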

If there’s any little snag to mention, it is the fact that they insist on getting patches submitted directly to gerrit instead of any mailing list or similar. This required me to create a gerrit account, and really figure out how to push my stuff from git to there, instead of the more traditional and simpler approach of just sending my patch to a mailing list or possibly submitting it to a bug/patch tracker somewhere with my browser.

Call me old-style, but the hip way of today, a GitHub-style pull request, would also have been much easier. Here’s what my gerrit submission looks like. But I get it: gerrit pushes a little more work over to the submitter, and I figure that once a submitter such as myself has finally fixed all the nits in the patch, it is very easy for the project to actually merge it. I even needed someone else to point out how to find the link to view the code review after the first one was submitted on the site… (as I post this, my patch has not yet been accepted or merged into the wireshark git repo)

Here’s a basic screenshot showing a trace of Firefox requesting https://nghttp2.org using http2.

...and what happens this morning, my time? There’s a brand new http2 draft-12 out with more changes to the on-the-wire format! Well, to be honest, that really wasn’t a surprise. I’ll get the new stuff supported too, but I’ll do that in a separate patch, as I prefer to hold off until I see a live stream from at least one implementation to test against.

http://daniel.haxx.se/blog/2014/04/24/wireshark-dissector-work/


Armen Zambrano Gasparnian: Gaia code changes and how they trickle down into Mozilla's RelEng CI |

- github.com

- Changes are merged to master (mozilla-b2g/gaia)

- These changes trigger the Travis CI

- git.mozilla.org

- hg.mozilla.org

- We convert our internal git repo to our hg repos (e.g. gaia-central)

- There is a B2G Bumper bot that will change device manifests on b2g-inbound with gonk/gaia git changesets for emulator/device builds

- There is a Gaia Bumper bot that will change device manifests on b2g-inbound with gaia hg changesets for b2g desktop builds

- Those manifest changes indicate which gaia changesets to check out

- This will trigger tbpl changes and run on the RelEng infrastructure
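To illustrate what a bumper bot's change amounts to, here is a sketch that rewrites the pinned revision of one project in a manifest. It assumes a repo-style XML manifest in which each project element carries name and revision attributes; bumpRevision is hypothetical, the real bumper bots may work quite differently, and a regex stands in for a proper XML parser only to keep the example dependency-free.

```javascript
// Hypothetical sketch of a "bump": pin one project in a repo-style XML manifest
// to a new revision. Assumes <project ... name="..." revision="..."/> elements;
// a real bumper bot may work differently and should use a proper XML parser.
function bumpRevision(manifestXml, projectName, newRevision) {
  let found = false;
  const updated = manifestXml.replace(/<project\b[^>]*>/g, tag => {
    const nameMatch = tag.match(/\sname="([^"]*)"/);
    if (!nameMatch || nameMatch[1] !== projectName) return tag; // other project
    found = true;
    if (/\srevision="[^"]*"/.test(tag)) {
      // Replace the existing pin.
      return tag.replace(/\srevision="[^"]*"/, () => ` revision="${newRevision}"`);
    }
    // No pin yet: add a revision attribute right after the element name.
    return tag.replace(/^<project\b/, () => `<project revision="${newRevision}"`);
  });
  if (!found) throw new Error(`project "${projectName}" not found in manifest`);
  return updated;
}

// Made-up manifest and revisions, for illustration only.
const manifest = `<manifest>
  <project name="gaia" path="gaia" revision="1111"/>
  <project name="gonk-misc" path="gonk-misc" revision="2222"/>
</manifest>`;
console.log(bumpRevision(manifest, "gaia", "3333"));
// Only the gaia line changes: revision="1111" becomes revision="3333".
```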

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://armenzg.blogspot.com/2014/04/gaia-code-changes-and-how-trickle-down.html


Joel Maher: Vaibhav has a blog- a perspective of a new Mozilla hacker |

As I mentioned earlier this year, I have had the pleasure of working with Vaibhav. Since then, he has continued to contribute to Mozilla, and this year he will be participating in Google Summer of Code with Mozilla. I am excited.

He now has a blog; while there is only one post so far, he will be posting ~weekly with updates on his GSoC project and other fun topics.

http://elvis314.wordpress.com/2014/04/23/vaibhav-has-a-blog-a-perspective-of-a-new-mozilla-hacker/


Edward Lee: Mr. AwesomeBar Runs for Congress |

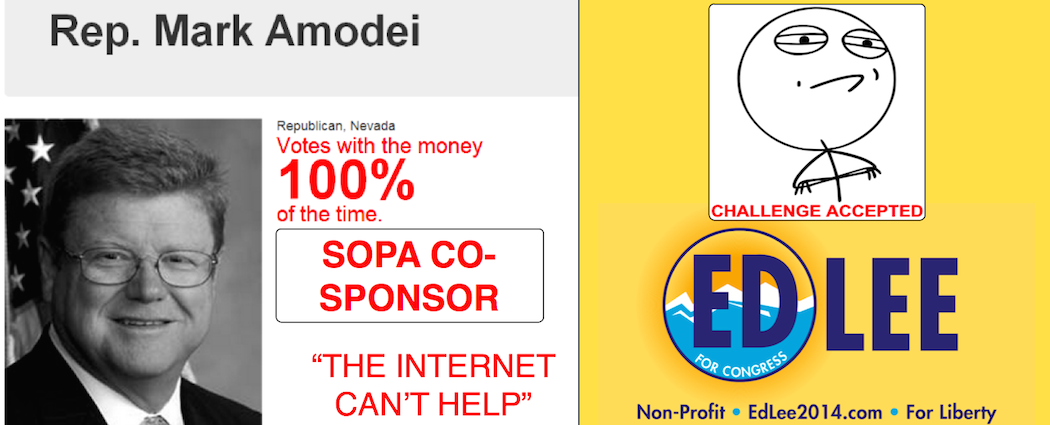

tl;dr The non-profit Mozilla changed the Internet by giving users choice and liberty. I, Ed Lee, want to build on that foundation to change how politics works in 2 ways: 1) get rid of politicians who focus on money and forget about voters and 2) change how politics is won by showing you can win without spammy advertisements that track tons of data about people. The Internet helped Mozilla take on “unbeatable” opponents, and I need your help to do the same.

I became a Mozillian 10 years ago. I found my passion helping people through Mozilla, a non-profit that promotes user choice and liberty on the Internet. These values attracted me to Mozilla in the first place, and I believe in these values even more after being employed at Mozilla for five years. I want to bring these values to politics because, while the Internet can be a big part of our lives, politics tends to control the rest and can destroy the things/people we care about most.

During the SOPA protests, SopaTrack showed not only which Representatives were co-sponsors for the bill but also how often they voted with whichever side gave the most money. I was quite appalled to find out that Mark Amodei co-sponsored a bill that would take away our freedoms and earned a perfect 100% record of voting with the money [itworld.com].

The behavior of this “Representative” is the complete opposite of the values that brought me to Mozilla, and I believe the correct solution is to vote out these politicians. That’s why I’m running against him as a US Congress candidate in Nevada on a platform of “Non Profit” and “For Liberty” to directly contrast with this incumbent.

I’ve talked to various people involved in politics, and I’ve been ignored, laughed at, told to go away and stop wasting my time. The most interesting dismissal: “the Internet can’t help,” and I thought to myself, “Challenge Accepted.” I hope the rest of the Internet is as outraged as I was to hear that the best action is to do nothing and give a free pass to this incumbent who just votes with the money to destroy our liberties.

Mozilla was in a similar situation when Firefox had to take on the dominant web browser. The Internet cared about the browser that focuses on the user (e.g., would you like to see that popup?). People helped spread Firefox even without understanding the non-profit that created it, because the built-in values of freedom and choice resonated with users.

My ask of you is to think of aunts/uncles/friends/relatives in Reno/Sparks/Carson City and see if they’re on this anonymized list of names [edlee2014.com]. If so, please text or call that person and simply say “please look into Ed Lee,” and if you let me know, I’ll personally follow up with your contact. If you don’t find anyone on the list, please share it with others whom you think might know someone living in the northern half of Nevada. Early voting starts in a month with the primary less than 2 months away on June 10th, so let’s move fast!

I’m aiming for at least a third of the midterm primary votes in a 4-way race, and that roughly comes out to just over 9000. (Really!) The anonymized list of names is of people most likely to vote in my district’s Democratic primary, and the plan is to have the millions on the Internet find a connection to those several thousand people to make a personal request. This is as opposed to traditional political advertising, where large amounts of money are raised to track down and spam people, and where I estimate more than 95% of people won’t even be moved to vote or to vote differently. With my Mozilla background, that’s not how I would want to approach campaigning.

If this technique works for the primary, it could work for the general election by focusing on non-partisan voters. We can further develop this technique to vote out all the money-seeking politicians and replace them with people who care about individuals and freedoms across the US, and dare I say, across the world.

The Internet helped Mozilla change the world with its non-profit mission and strong core values. I need your help to do the same in the world of politics.

- Ed Lee (Ed “Mr. AwesomeBar” Lee is a bit long for the ballot)

Paid for by Ed Lee for Congress

Yay for free speech, but apparently not free-as-in-beer if not correctly attributed.

http://ed.agadak.net/2014/04/mr-awesomebar-runs-for-congress


Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [994570] unable to create an attachment with review+ : “You must provide a reviewer for review requests”

- [994540] “details” link in overdue requests email links to attachment content instead of details page

- [993940] Group icons should be displayed for indirect memberships, too

- [993913] remove the %user_cache from inline history, and ensure the object cache is always used

- [998236] Privacy policy url has changed

- [968576] Dangerous control characters allowed in Bugzilla text

- [997281] New QuickSearch operators can short-circuit each other depending on which ones are tested first

- [993894] the database query in bless_groups is slow

- [936509] Automatically disable accounts based on the number of comments tagged as spam

- [998017] Internal error: “Not an ARRAY reference” when using the summarize time feature

- [999734] User email addresses are publicly visible in profile titles

- [993910] Bugzilla/Search/Saved.pm:294 isn’t using the cache

- [998323] URLs pasted in comments are no longer displayed

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/04/23/happy-bmo-push-day-90/


Sylvestre Ledru: Changes Firefox 29 beta9 to 29build1 |

Last changes for the release. Fortunately, not too many changes: mostly tests and a last-minute top crash fix.

If needed, we might do a build2 but it is not planned.

- 9 changesets

- 29 files changed

- 155 insertions

- 292 deletions

| Extension | Occurrences |
|---|---|
| js | 8 |
| cpp | 7 |
| h | 4 |
| html | 3 |
| css | 2 |
| py | 1 |
| list | 1 |
| jsm | 1 |
| idl | 1 |

| Module | Occurrences |
|---|---|
| toolkit | 17 |
| js | 4 |
| browser | 3 |
| testing | 1 |
| modules | 1 |
| layout | 1 |
| editor | 1 |

List of changesets:

| Author | Changeset |
|---|---|
| Mike Connor | Bug 997402 - both bing and yahoo params are broken, r=mfinkle, a=sylvestre - 6bc0291bbe83 |
| Ehsan Akhgari | Bug 996009 - Ensure that the richtext2 browserscope tests do not attempt to contact the external network. r=roc, a=test-only - 5944b238bd76 |
| Nathan Froyd | Bug 996019 - Fix browser_bug435325.js to not connect to example.com. r=ehsan, a=test-only - 6bfce9c619d1 |
| Nathan Froyd | Bug 996031 - Remove 455407.html crashtest. r=dholbert, a=test-only - 6431641fb1b6 |
| Nathan Froyd | Bug 995995 - Set testing prefs to redirect to the test proxy server for RSS feeds. r=jmaher, a=test-only - ba1c380f55b9 |
| Monica Chew | Bug 998370: Rollback bugs 997759, 989232, 985720, 985623 in beta (r=backout,ba=sledru) - 4f4941d4cda9 |
| Douglas Crosher | Bug 996883. r=mjrosenb, a=abillings - 9208db873dbf |
| Jan de Mooij | Bug 976536 - Fix JSFunction::existingScript returning NULL in some cases. r=till, a=sledru - a5688b606883 |
| Gavin Sharp | Backed out changeset cb7f81834560 (Bug 980339) since it caused Bug 999080, a=lsblakk - 02556a393ed8 |

r= means reviewed by

a= means uplift approved by
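The r= and a= annotations are regular enough to extract mechanically. The sketch below is a hypothetical helper (parseReviewFlags is not part of any Mozilla tool) that pulls them out of a commit message such as the ones in the changeset table; it deliberately ignores other flags such as ba=.

```javascript
// Hypothetical helper: extract "r=" (reviewed by) and "a=" (uplift approved by)
// annotations from a commit message. Other flags such as "ba=" are ignored.
function parseReviewFlags(message) {
  const reviewers = [];
  const approvers = [];
  // A flag must follow the start of the message, whitespace, a comma or "(";
  // the name runs until whitespace, a comma or ")".
  for (const m of message.matchAll(/(?:^|[\s,(])([ra])=([^\s,)]+)/g)) {
    (m[1] === "r" ? reviewers : approvers).push(m[2]);
  }
  return { reviewers, approvers };
}

console.log(parseReviewFlags(
  "Bug 997402 - both bing and yahoo params are broken, r=mfinkle, a=sylvestre"
)); // { reviewers: [ 'mfinkle' ], approvers: [ 'sylvestre' ] }
```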

Previous changelogs:

- Firefox 29 beta 8 to beta 9

- Firefox 29 beta 7 to beta 8

- Firefox 29 beta 6 to beta 7

- Firefox 29 beta 5 to beta 6

- Firefox 29 beta 4 to beta 5

- Firefox 29 beta 3 to beta 4

- Firefox 29 beta 2 to beta 3

Original post blogged on b2evolution.

http://sylvestre.ledru.info/blog/2014/04/23/changes-firefox-29-beta9-to-build1
