
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.


Cameron Kaiser: So long, Opportunity rover |

Both Opportunity and Spirit were powered by the 20MHz BAE RAD6000, a radiation-hardened version of the original IBM POWER1 RISC Single Chip CPU and the indirect ancestor of the PowerPC 601. Many PowerPC-based spacecraft are still in operation, both with the original RAD6000 and its successor the RAD750, a radiation-hardened version of the G3.

Meanwhile, the Curiosity rover, which is running a pair of RAD750s (one main and one backup, plus two SPARC accessory CPUs), is still in operation at 2,319 Mars solar days and ticking. There is also the 2001 Mars Odyssey orbiter, which is still circling the planet with its own RAD6000 and is expected to continue operations until 2025. Curiosity's design is likely to be reused for the Mars 2020 rover, meaning possibly even more PowerPC designs will be exploring the cosmos in the very near future.

http://tenfourfox.blogspot.com/2019/02/so-long-opportunity-rover.html

|

|

Dave Townsend: Welcoming a new Firefox/Toolkit peer |

Please join me in welcoming Bianca Danforth to the set of peers blessed with reviewing patches to Firefox and Toolkit. She’s been doing great work making testing experiment extensions easy and so it’s time for her to level up.

https://www.oxymoronical.com/blog/2019/02/Welcoming-a-new-FirefoxToolkit-peer

|

|

Mozilla Open Policy & Advocacy Blog: Mozilla Foundation fellow weighs in on flawed EU Terrorist Content regulation |

As we’ve noted previously, the EU’s proposed Terrorist Content regulation would seriously undermine internet health in Europe, by forcing companies to aggressively suppress user speech with limited due process and user rights safeguards. Yet equally concerning is the fact that this proposal is likely to achieve little in terms of reducing the actual terrorism threat or the phenomenon of radicalisation in Europe. Here, Mozilla Foundation Tech Policy fellow and community security expert Stefania Koskova* unpacks why, and proposes an alternative approach for EU lawmakers.

With the proposed Terrorist Content regulation, the EU has the opportunity to set a global standard in how to effectively address what is a pressing public policy concern. To be successful, harmful and illegal content policies must carefully and meaningfully balance the objectives of national security, internet-enabled economic growth and human rights. Content policies addressing national security threats should reflect how internet content relates to ‘offline’ harm and should provide sufficient guidance on how to comprehensively and responsibly reduce it in parallel with other interventions. Unfortunately, the Commission’s proposal falls well short in this regard.

Key shortcomings:

- Flawed definitions: In its current form there is a considerable lack of clarity and specificity in the definition of ‘terrorist content’, which creates unnecessary confusion between ‘terrorist content’ and terrorist offences. Biased application, including through the association of terrorism with certain national or religious minorities and certain ideologies, can lead to serious harm and real-world consequences. This in turn can contribute to further polarisation and radicalisation.

- Insufficient content assessment: Within the proposal there is no standardisation of the ‘terrorist content’ assessment procedure from a risk perspective, and no standardisation of the evidentiary requirements that inform content removal decisions by government authorities or online services. Member States and hosting service providers are asked to evaluate the terrorist risk associated with specific online content, without clear or precise assessment criteria.

- Weak harm reduction model: Without a clear understanding of the impact of ‘terrorist content’ on the radicalisation process in specific contexts and circumstances, it seems inadvisable and contrary to the goal of evidence-based policymaking to assume that removal, blocking, or filtering will reduce radicalisation and prevent terrorism. Further, potential adverse effects of removal, blocking, and filtering, such as fueling grievances of those susceptible to terrorist propaganda, are not considered.

As such, the European Commission’s draft proposal in its current form creates additional risks with only vaguely defined benefits to countering radicalisation and preventing terrorism. To ensure the most negative outcomes are avoided, the following amendments to the proposal should be made as a matter of urgency:

- Improving definition of terrorist content: The definition of ‘terrorist content’ should be clarified such that it depends on illegality and intentionality. This is essential to protect the public interest speech of journalists, human rights defenders, and other witnesses and archivists of terrorist atrocities.

- Disclosing ‘what counts’ as terrorism through transparency reporting and monitoring: The proposal should ensure that Member States and hosting platforms are obliged to report on how much illegal terrorist content is removed, blocked or filtered under the regulation – broken down by category of terrorism (incl. nationalist-separatist, right-wing, left-wing, etc.) and the extent to which content decision and action was linked to law enforcement investigations. With perceptions of terrorist threat in the EU diverging across countries and across the political spectrum, this can safeguard against intentional or unintentional bias in implementation.

- Assessing security risks: In addition to being grounded in a legal assessment, content control actions taken by competent authorities and companies should be strategic – i.e. be based on an assessment of the content’s danger to public safety and the likelihood that it will contribute to the commission of terrorist acts. This risk assessment should also take into account the likely negative repercussions arising from content removal/blocking/filtering.

- Focusing on impact: The proposal should require or ensure that all content policy measures are closely coordinated and coincide with the deployment of strategic radicalisation counter-narratives, and broader terrorism prevention and rehabilitation programmes.

The above recommendations address shortcomings in the proposal in the terrorism prevention context. Additionally, however, there remains the contested issue of 60-minute content takedowns and mandated proactive filtering, both of which are serious threats to internet health. There is an opportunity, through the parliamentary procedure, to address these concerns. Constructive feedback, including specific proposals that can significantly improve the current text, has been put forward by EU Parliament Committees, civil society and industry representatives.

The stakes are high. With this proposal, the EU can create a benchmark for how democratic societies should address harmful and illegal online content without compromising their own values. It is imperative that lawmakers take the opportunity.

*Stefania Koskova is a Mozilla Foundation Tech Policy fellow and a counter-radicalisation practitioner. Learn more about her Mozilla Foundation fellowship here.

The post Mozilla Foundation fellow weighs in on flawed EU Terrorist Content regulation appeared first on Open Policy & Advocacy.

https://blog.mozilla.org/netpolicy/2019/02/13/terrorist_content_regulation/

|

|

Will Kahn-Greene: Socorro: January 2019 happenings |

Summary

Socorro is the crash ingestion pipeline for Mozilla's products like Firefox. When Firefox crashes, the crash reporter collects data about the crash, generates a crash report, and submits that report to Socorro. Socorro saves the crash report, processes it, and provides an interface for aggregating, searching, and looking at crash reports.

January was a good month. This blog post summarizes activities.


https://bluesock.org/~willkg/blog/mozilla/socorro_2019_01.html

|

|

The Mozilla Blog: Facebook Answers Mozilla’s Call to Deliver Open Ad API Ahead of EU Election |

After calls for increased transparency and accountability from Mozilla and partners in civil society, Facebook announced it would open its Ad Archive API next month. While the details are still limited, this is an important first step to increase transparency of political advertising and help prevent abuse during upcoming elections.

We’re committed to a new level of transparency for ads on Facebook (1/3) https://t.co/A9WeKGYHXO

— Rob Leathern (@robleathern) February 11, 2019

Facebook’s commitment to make the API publicly available could provide researchers, journalists and other organizations the data necessary to build tools that give people a behind the scenes look at how and why political advertisers target them. It is now important that Facebook follows through on these statements and delivers an open API that gives the public the access it deserves.

The decision by Facebook comes after months of engagement by the Mozilla Corporation through industry working groups and government initiatives and most recently, an advocacy campaign led by the Mozilla Foundation.

This week, the Mozilla Foundation was joined by a coalition of technologists, human rights defenders, academics, and journalists demanding that Facebook take action and deliver on the commitments made to put users first and deliver increased transparency.

“In the short term, Facebook needs to be vigilant about promoting transparency ahead of and during the EU Parliamentary elections,” said Ashley Boyd, Mozilla’s VP of Advocacy. “Their action — or inaction — can affect elections across more than two dozen countries. In the long term, Facebook needs to sincerely assess the role its technology and policies can play in spreading disinformation and eroding privacy.”

And in January, Mozilla penned a letter to the European Commission underscoring the importance of a publicly available API. Without the data, Mozilla and other organizations are unable to deliver products designed to pull back the curtain on political advertisements.

“Industry cannot ignore its potential to either strengthen or undermine the democratic process,” said Alan Davidson, Mozilla’s VP of Global Policy, Trust and Security. “Transparency alone won’t solve misinformation problems or election hacking, but it’s a critical first step. With real transparency, we can give people more accurate information and powerful tools to make informed decisions in their lives.”

This is not the first time Mozilla has called on the industry to prioritize user transparency and choice. In the wake of the Cambridge Analytica news, the Mozilla Foundation rallied tens of thousands of internet users to hold Facebook accountable for its post-scandal promises. And Mozilla Corporation took action with a pause on advertising our products on Facebook and provided users with Facebook Container for Firefox, a product that keeps Facebook from tracking people around the web when they aren’t on the platform.

While the announcement from Facebook indicates a move towards transparency, it is critical that the company follows through and delivers not only on this commitment but also on the other promises made to European lawmakers and voters.

The post Facebook Answers Mozilla’s Call to Deliver Open Ad API Ahead of EU Election appeared first on The Mozilla Blog.

|

|

Rabimba: ARCore and Arkit, What is under the hood: SLAM (Part 2) |

What we will cover today:

- How ARCore and ARKit do their SLAM/Visual Inertial Odometry

- Can we D.I.Y our own SLAM with reasonable accuracy to understand the process better?

Sensing the world: as a computer

When we start any augmented reality application on mobile or elsewhere, the first thing it tries to do is detect a plane. When you first start any MR app in ARKit or ARCore, the system doesn't know anything about its surroundings. It starts processing data from the camera and pairs it with data from other sensors. With that data it tries to do two things:

- Build a point cloud mesh of the environment by building a map

- Assign a relative position of the device within that perceived environment

A little saga about relationships

In a perfect situation, we know the distance between A and B, and if we want to move towards C we can infer exactly how we need to move.

Aligning the Virtual World

| Image credits: Lu, F., & Milios, E. (1997). Globally consistent range scan alignment for environment mapping |

Let's build the map a.k.a SLAM

- Monocular Camera input

- Real-time

- Drift

Skeleton of SLAM

| Image Credit: Cadena et al |

- Sensor: On mobiles, this is primarily the camera, augmented by the accelerometer, the gyroscope and, depending on the device, a light sensor. Apart from Project Tango enabled phones, no Android phone had a depth sensor.

- Front End: Feature extraction and anchor identification happen here, as described in the previous post.

- Back End: Performs error correction to compensate for drift and also takes care of localizing the pose model and overall geometric reconstruction.

- SLAM estimate: This is the result containing the tracked features and locations.

D.I.Y SLAM: Taking a peek at ORB-SLAM

ORB-SLAM just uses the camera and doesn't utilize any other gyroscope or accelerometer inputs. But the result is still impressive.

- Detecting Features: ORB-SLAM, as the name suggests, uses ORB to find keypoints and generate binary descriptors. Internally, ORB finds keypoints and generates binary descriptors with the same kind of method we discussed in part 1 for BRISK. In short, ORB-SLAM analyzes each picture to find keypoints and then stores them with a reference to the keyframe in a map. These are used later to correct historical data.

- Keypoint > 3D landmark: The algorithm looks for new frames from the image stream, and when it finds one it performs keypoint detection on it. These keypoints are then matched with the previous frame to get a spatial distance. This gives a good idea of where the same keypoints can be found again in a new frame, and provides the initial camera pose estimation.

- Refine Camera Pose: The algorithm repeats Step 2 by projecting the estimated initial camera pose into the next camera frame to search for more keypoints that correspond to the ones it already knows. If it is certain it can find them, it uses the additional data to refine the pose and correct any spatial measurement error.
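Binary descriptors such as ORB's are typically compared using the Hamming distance, the number of bits in which two descriptors differ. As a rough illustration of the frame-to-frame matching step, here is a minimal brute-force matcher. The descriptor layout (one `Uint8Array` per keypoint), the function names and the `maxDistance` cutoff are all assumptions made for this sketch; it is not ORB-SLAM's actual matching code, which is considerably more selective.

```javascript
// Count the differing bits between two equal-length binary descriptors.
function hammingDistance(a, b) {
  if (a.length !== b.length) throw new Error('descriptor length mismatch')
  let distance = 0
  for (let i = 0; i < a.length; i++) {
    let x = a[i] ^ b[i]
    // Clear the lowest set bit until none remain.
    while (x) {
      x &= x - 1
      distance++
    }
  }
  return distance
}

// For each descriptor of the previous frame, find the closest descriptor
// in the next frame and keep the pair if it is close enough.
// (Hypothetical helper; maxDistance is an illustrative cutoff.)
function matchDescriptors(prev, next, maxDistance) {
  const matches = []
  prev.forEach((d, i) => {
    let best = -1
    let bestDist = Infinity
    next.forEach((e, j) => {
      const dist = hammingDistance(d, e)
      if (dist < bestDist) {
        best = j
        bestDist = dist
      }
    })
    if (best >= 0 && bestDist <= maxDistance) {
      matches.push({ prev: i, next: best, distance: bestDist })
    }
  })
  return matches
}
```

Brute force is quadratic in the number of keypoints, which is fine for understanding the idea but not for real-time tracking.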

| Green squares = tracked keypoints. Blue boxes = keyframes. Red box = camera view. Red points = local map points. Image credits: ORB-SLAM video by Raúl Mur-Artal |

Returning home a.k.a Loop Closing

| Loop Closing performed by the ORB-SLAM algorithm. Image credits: Mur-Artal, R., Montiel |

| The reconstructed map before (up) and after (down) loop closure. Image credits: Mur-Artal, R., Montiel |

SLAM today:

Google: ARCore's documentation describes its tracking method as "concurrent odometry and mapping", which is essentially SLAM plus sensor inputs. Their patent also indicates they have included inertial sensors in the design.

Apple: Apple is also using Visual Inertial Odometry, which they acquired by buying Metaio and FlyBy. I learned a lot about what they are doing from this video at WWDC18.

References/Interesting Reads:

- A stochastic map for uncertain spatial relationships

- Globally consistent range scan alignment for environment mapping

- ORB-SLAM

- Past, Present, and Future of Simultaneous Localization and Mapping: Towards the Robust-Perception Age

- ORB feature tracking algorithm

- A Comparative Analysis of Tightly-coupled Monocular, Binocular, and Stereo VINS

- Semantic Visual Localization (this might just be future)

|

|

Mozilla Future Releases Blog: Making the Building of Firefox Faster for You with Clever-Commit from Ubisoft |

Firefox fights for people online: for control and choice, for privacy, for safety. We do this because it is our mission to keep the web open and accessible to all. No other tech company has people’s back like we do.

Part of keeping you covered is ensuring that our Firefox browser and the other tools and services we offer are running at top performance. When we make an update, or add a new feature the experience should be as seamless and smooth as possible for the user. That’s why Mozilla just partnered with Ubisoft to start using Clever-Commit, an Artificial Intelligence coding assistant developed by Ubisoft La Forge that will make the Firefox code-writing process faster and more efficient. Thanks to Clever-Commit, Firefox users will get to use even more stable versions of Firefox and have even better browsing experiences.

We don’t spend a ton of time regaling our users with the ins-and-outs of how we build our products because the most important thing is making sure you have the best experience when you’re online with us. But building a browser is no small feat. A web browser plays audio and video, manages various network protocols, secures communications using advanced cryptographic algorithms, and handles content running in multiple parallel processes, all to render the content that people want to see on the websites they visit.

And underneath all of this is a complex body of code that includes millions of lines written in various programming languages: JavaScript, C++, Rust. The code is regularly edited, released and updated onto Firefox users’ machines. Every Firefox release is an investment, with an average of 8,000 software edits loaded into the browser’s code by hundreds of Firefox staff and contributors for each release. It has a huge impact, touching hundreds of millions of internet users.

With a new release every 6 to 8 weeks, making sure the code we ship is as clean as possible is crucial to the performance people experience with Firefox. The Firefox engineering team will start using Clever-Commit in its code-writing, testing and release process. We will initially use the tool during the code review phase, and if conclusive, at other stages of the code-writing process, in particular during automation. We expect to save hundreds of hours of bug riskiness analysis and detection. Ultimately, the integration of Clever-Commit into the full Firefox developer workflow could help catch up to 3 to 4 out of 5 bugs before they are introduced into the code.

By combining data from the bug tracking system and the version control system (aka changes in the code base), Clever-Commit uses artificial intelligence to detect patterns of programming mistakes based on the history of the development of the software. This allows us to address bugs at a stage when fixing them is a lot cheaper and less time-consuming than upon release.

Mozilla will contribute to the development of Clever-Commit by providing programming language expertise in Rust, C++ and JavaScript, as well as expertise in C++ code analysis and analysis of bug tracking systems.

The post Making the Building of Firefox Faster for You with Clever-Commit from Ubisoft appeared first on Future Releases.

|

|

Mozilla VR Blog: Jingle Smash: Choosing a Physics Engine |

This is part 2 of my series on how I built Jingle Smash, a block smashing WebVR game.

The key to a physics-based game like Jingle Smash is, of course, the physics engine. In the JavaScript world there are many to choose from. My requirements were fully 3D collision simulation, compatibility with ThreeJS, and being fairly easy to use. This narrowed it down to CannonJS, AmmoJS, and Oimo.js. I chose the CannonJS engine because AmmoJS was a compiled port of a C lib, which I worried would be harder to debug, and Oimo appeared to be abandoned (though there was a recent commit so maybe not?).

CannonJS

CannonJS is not well documented in terms of tutorials, but it does have quite a bit of demo code and I was able to figure it out. The basic usage is quite simple. You create a Body object for everything in your scene that you want to simulate. Add these to a World object. On each frame you call world.step() then read back position and orientations of the calculated bodies and apply them to the ThreeJS objects on screen.

While working on the game I started building an editor for positioning blocks, changing their physical properties, testing the level, and resetting them. Combined with physics this means a whole lot of syncing data back and forth between the Cannon and ThreeJS sides. In the end I created a Block abstraction which holds the single source of truth and keeps the other objects updated. The blocks are managed entirely from within the BlockService.js class so that all of this stuff would be completely isolated from the game graphics and UI.

Physics Bodies

When a Block is created or modified it regenerates both the ThreeJS objects and the Cannon objects. Since ThreeJS is documented everywhere I'll only show the Cannon side.

let type = CANNON.Body.DYNAMIC
if (this.physicsType === BLOCK_TYPES.WALL) {
    type = CANNON.Body.KINEMATIC
}
this.body = new CANNON.Body({
    mass: 1, // kg
    type: type,
    position: new CANNON.Vec3(this.position.x, this.position.y, this.position.z),
    shape: new CANNON.Box(new CANNON.Vec3(this.width/2, this.height/2, this.depth/2)),
    material: wallMaterial,
})
this.body.quaternion.setFromEuler(this.rotation.x, this.rotation.y, this.rotation.z, 'XYZ')
this.body.jtype = this.physicsType
this.body.userData = {}
this.body.userData.block = this
world.addBody(this.body)

Each body has a mass, type, position, quaternion, and shape.

For mass I’ve always used 1kg. This works well enough but if I ever update the game in the future I’ll make the mass configurable for each block. This would enable more variety in the levels.

The type is either dynamic or kinematic. Dynamic means the body can move and tumble in all directions. A kinematic body, which I use for walls, is one that is not moved by collisions, but other blocks can hit and bounce against it.

The shape is the actual shape of the body. For blocks this is a box. For the ball that you throw I used a sphere. It is also possible to create interactive meshes but I didn’t use them for this game.

An important note about Boxes. In ThreeJS the BoxGeometry takes the full width, height, and depth in the constructor. In CannonJS you use the extent from the center, which is half of the full width, height, and depth. I didn’t realize this when I started, only to discover my cubes wouldn’t fall all the way to the ground. :)
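One way to avoid mixing up the two conventions is to do the conversion in a single place. The helper below is a small sketch; `toHalfExtents` is a hypothetical name, not part of ThreeJS or CannonJS, and it returns a plain object so it runs without either library loaded.

```javascript
// Convert full box dimensions (the values passed to THREE.BoxGeometry)
// into the half extents (distance from the center to each face)
// that CANNON.Box expects.
function toHalfExtents(width, height, depth) {
  return { x: width / 2, y: height / 2, z: depth / 2 }
}

// A block that is 2 wide, 1 high and 4 deep:
const half = toHalfExtents(2, 1, 4)
// With CannonJS loaded, the shape would then be built as:
//   new CANNON.Box(new CANNON.Vec3(half.x, half.y, half.z))
```

Passing the full dimensions to the physics shape by mistake makes the collision box twice as large as the visible cube in every direction, which is why the cubes appear to hover above the ground.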

The position and quaternion (orientation) properties use the same values in the same order as ThreeJS. The material refers to how that block will bounce against others. In my game I use only two materials: wall and ball. For each pair of materials you will create a contact material which defines the friction and restitution (bounciness) to use when that particular pair collides.

const wallMaterial = new CANNON.Material()
// …
const ballMaterial = new CANNON.Material()
// …
world.addContactMaterial(new CANNON.ContactMaterial(
    wallMaterial, ballMaterial,
    {
        friction: this.wallFriction,
        restitution: this.wallRestitution
    }
))

Gravity

All of these bodies are added to a World object with a hard coded gravity property set to match Earth gravity (9.8m/s^2), though individual levels may override this. The last three levels of the current game have gravity set to 0 for a different play experience.

const world = new CANNON.World();

world.gravity.set(0, -9.82, 0);

Once the physics engine is set up and simulating the objects we need to update the on screen graphics after every world step. This is done by just copying the properties out of the body and back to the ThreeJS object.

this.obj.position.copy(this.body.position)

this.obj.quaternion.copy(this.body.quaternion)
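Put together, the per-frame update amounts to stepping the world and then copying every body's transform onto its on-screen object. The following is a minimal sketch of that loop. The function name `stepAndSync`, the `blocks` array and the 1/60 second time step are assumptions for illustration; the only things it relies on are a `world` object with a `step(dt)` method and blocks shaped like `{ obj, body }` as in the snippet above.

```javascript
// Fixed simulation step in seconds (assumed value for this sketch).
const FIXED_TIME_STEP = 1 / 60

// Advance the physics simulation by one step, then copy each body's
// position and orientation onto the matching on-screen object.
function stepAndSync(world, blocks) {
  world.step(FIXED_TIME_STEP)
  for (const block of blocks) {
    block.obj.position.copy(block.body.position)
    block.obj.quaternion.copy(block.body.quaternion)
  }
}
```

In a real game this would be called once per rendered frame, before drawing the scene.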

Collision Detection

There is one more thing we need: collisions. The engine handles colliding all of the boxes and making them fall over, but the goal of the game is that the player must knock over all of the crystal boxes to complete the level. This means I have to define what knock over means. At first I just checked if a block had moved from its original orientation, but this proved tricky. Sometimes a box would be very gently knocked and tip slightly, triggering a ‘knock over’ event. Other times you could smash into a block at high speed but it wouldn’t tip over because there was a wall behind it.

Instead I added a collision handler so that my code would be called whenever two objects collide. The collision event includes a method to get the velocity at the impact. This allows me to ignore any collisions that aren’t strong enough.

You can see this in player.html

function handleCollision(e) {
    if (game.blockService.ignore_collisions) return
    // ignore tiny collisions (take the absolute value first, then compare)
    if (Math.abs(e.contact.getImpactVelocityAlongNormal()) < 1.0) return

    // when the ball hits a wall or a block, play a sound
    if (e.body.jtype === BLOCK_TYPES.BALL) {
        if (e.target.jtype === BLOCK_TYPES.WALL) {
            game.audioService.play('click')
        }
        if (e.target.jtype === BLOCK_TYPES.BLOCK) {
            // hit a block, just make the thunk sound
            game.audioService.play('click')
        }
    }

    // if a crystal hits anything and the impact was strong enough
    if (e.body.jtype === BLOCK_TYPES.CRYSTAL || e.target.jtype === BLOCK_TYPES.CRYSTAL) {
        if (Math.abs(e.contact.getImpactVelocityAlongNormal()) >= 2.0) {
            return destroyCrystal(e.target)
        }
    }
    // console.log(`collision: body ${e.body.jtype} target ${e.target.jtype}`)
}

The collision event handler was also the perfect place to add sound effects for when objects hit each other. Since the event includes which objects were involved I can use different sounds for different objects, like the crashing glass sound for the crystal blocks.
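The impact filtering in the handler boils down to comparing the magnitude of the impact velocity against two thresholds. Here is that logic isolated as a small sketch. The function name, the constant names and the returned labels are hypothetical; the threshold values 1.0 and 2.0 are the ones used in the handler. Note that the absolute value has to be taken before the comparison: `Math.abs(v) < 1.0` tests the magnitude, whereas `Math.abs(v < 1.0)` takes the absolute value of a boolean and so treats every negative velocity as a tiny collision.

```javascript
// Thresholds taken from the collision handler above.
const MIN_IMPACT = 1.0            // anything weaker is ignored
const CRYSTAL_BREAK_IMPACT = 2.0  // strong enough to break a crystal

// Classify an impact by the magnitude of its velocity along the
// contact normal. The sign only encodes direction, so it is dropped.
function classifyImpact(impactVelocity) {
  const speed = Math.abs(impactVelocity)
  if (speed < MIN_IMPACT) return 'ignore'
  if (speed >= CRYSTAL_BREAK_IMPACT) return 'break'
  return 'sound'
}
```

Keeping the thresholds in named constants also makes it easier to tune them per level later.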

Firing the ball is similar to creating the block bodies except that it needs an initial velocity based on how much force the player slingshotted the ball with. If you don’t specify a velocity to the Body constructor then it will use a default of 0.

fireBall(pos, dir, strength) {
    this.group.worldToLocal(pos)
    dir.normalize()
    dir.multiplyScalar(strength*30)
    const ball = this.generateBallMesh(this.ballRadius, this.ballType)
    ball.castShadow = true
    ball.position.copy(pos)
    const sphereBody = new CANNON.Body({
        mass: this.ballMass,
        shape: new CANNON.Sphere(this.ballRadius),
        position: new CANNON.Vec3(pos.x, pos.y, pos.z),
        velocity: new CANNON.Vec3(dir.x, dir.y, dir.z),
        material: ballMaterial,
    })
    sphereBody.jtype = BLOCK_TYPES.BALL
    ball.userData.body = sphereBody
    this.addBall(ball)
    return ball
}
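The velocity passed to the body is just the aiming direction scaled to unit length and then multiplied by the slingshot strength times 30. The sketch below reproduces that arithmetic with plain objects so it can be tried without ThreeJS; `launchVelocity` and `LAUNCH_SPEED_SCALE` are hypothetical names, and unlike `fireBall` it returns a new object instead of modifying `dir` in place.

```javascript
// Scale factor applied to the slingshot strength in fireBall.
const LAUNCH_SPEED_SCALE = 30

// Normalize the direction and scale it by the launch speed.
// A zero-length direction yields a zero velocity.
function launchVelocity(dir, strength) {
  const length = Math.hypot(dir.x, dir.y, dir.z)
  if (length === 0) return { x: 0, y: 0, z: 0 }
  const scale = (strength * LAUNCH_SPEED_SCALE) / length
  return { x: dir.x * scale, y: dir.y * scale, z: dir.z * scale }
}

// Aiming along (0, 3, 4) at half strength gives a speed of 15.
const v = launchVelocity({ x: 0, y: 3, z: 4 }, 0.5)
```

Because the direction is normalized first, only `strength` controls how fast the ball leaves the slingshot.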

Next Steps

Overall CannonJS worked pretty well. I would like it to be faster as it costs me about 10fps to run, but other things in the game had a bigger impact on performance. If I ever revisit this game I will try to move the physics calculations to a worker thread, as well as redo the syncing code. I’m sure there is a better way to sync objects quickly. Perhaps JS Proxies would help. I would also move the graphics & styling code outside, so that the BlockService can really focus just on physics.

While there are some more powerful solutions coming with WASM, today I definitely recommend using CannonJS for the physics in your WebVR games. The ease of working with the API (despite being under-documented) meant I could spend more time on the game and less time worrying about math.

|

|

The Mozilla Blog: Retailers: All We Want for Valentine’s Day is Basic Security |

Mozilla and our allies are asking four major retailers to adopt our Minimum Security Guidelines

Today, Mozilla, Consumers International, the Internet Society, and eight other organizations are urging Amazon, Target, Walmart, and Best Buy to stop selling insecure connected devices.

Why? As the Internet of Things expands, a troubling pattern is emerging:

[1] Company x makes a “smart” product — like connected stuffed animals — without proper privacy or security features

[2] Major retailers sell that insecure product widely

[3] The product gets hacked, and consumers are the ultimate loser

This has been the case with smart dolls, webcams, doorbells, and countless other devices. And the consequences can be life threatening: “Internet-connected locks, speakers, thermostats, lights and cameras that have been marketed as the newest conveniences are now also being used as a means for harassment, monitoring, revenge and control,” the New York Times reported last year. Compounding this: It is estimated that by 2020, 10 billion IoT products will be active.

Last year, in an effort to make connected devices on the market safer for consumers, Mozilla, the Internet Society, and Consumers International published our Minimum Security Guidelines: the five basic features we believe all connected devices should have. They include encrypted communications; automatic updates; strong password requirements; vulnerability management; and an accessible privacy policy.

Now, we’re calling on four major retailers to publicly endorse these guidelines, and also commit to vetting all connected products they sell against these guidelines. Mozilla, Consumers International, and the Internet Society have sent a sign-on letter to Amazon, Target, Walmart, and Best Buy.

The letter is also signed by 18 Million Rising, Center for Democracy and Technology, ColorOfChange, Consumer Federation of America, Common Sense Media, Hollaback, Open Media & Information Companies Initiative, and Story of Stuff.

Currently, there is no shortage of insecure products on shelves. In our annual holiday buyers guide, which ranks popular devices’ privacy and security features, about half the products failed to meet our Minimum Security Guidelines. And in the Valentine’s Day buyers guide we released last week, nine out of 18 products failed.

Why are we targeting retailers, and not the companies themselves? Mozilla can and does speak with the companies behind these devices. But by talking with retailers, we believe we can have an outsized impact. Retailers don’t want their brands associated with insecure goods. And if retailers drop a company’s product, that company will be compelled to improve its product’s privacy and security features.

We know this approach works. Last year, Mozilla called on Target and Walmart to stop selling CloudPets, an easily-hackable smart toy. Target and Walmart listened, and stopped selling the toys.

In the short-term, we can get the most insecure devices off shelves. In the long-term, we can fuel a movement for a more secure, privacy-centric Internet of Things.

Read the full letter, here or below.

Dear Target, Walmart, Best Buy and Amazon,

The advent of new connected consumer products offers many benefits. However, as you are aware, there are also serious concerns regarding standards of privacy and security with these products. These require urgent attention if we are to maintain consumer trust in this market.

It is estimated that by 2020, 10 billion IoT products will be active. The majority of these will be in the hands of consumers. Given the enormous growth of this space, and because so many of these products are entrusted with private information and conversations, it is incredibly important that we all work together to ensure that internet-enabled devices enhance consumers’ trust.

Cloudpets illustrated the problem, however we continue to see connected devices that fail to meet the basic privacy and security thresholds. We are especially concerned about how these issues impact children, in the case of connected toys and other devices that children interact with. That’s why we’re asking you to publicly endorse these minimum security and privacy guidelines, and commit publicly to use them to vet any products your company sells to consumers. While many products can and should be expected to meet a high set of privacy and security standards, these minimum requirements are a strong start that every reputable consumer company must be expected to meet. These minimum guidelines require all IoT devices to have:

1) Encrypted communications

The product must use encryption for all of its network communications functions and capabilities. This ensures that all communications are not eavesdropped or modified in transit.

2) Security updates

The product must support automatic updates for a reasonable period after sale, and these updates must be enabled by default. This ensures that when a vulnerability is known, the vendor can make security updates available for consumers, which are verified (using some form of cryptography) and then installed seamlessly. Updates must not make the product unavailable for an extended period.

3) Strong passwords

If the product uses passwords for remote authentication, it must require that strong passwords are used, including having password strength requirements. Any non-unique default passwords must also be reset as part of the device’s initial setup. This helps protect the device from vulnerability to guessable password attacks, which could result in device compromise.

4) Vulnerability management

The vendor must have a system in place to manage vulnerabilities in the product. This must also include a point of contact for reporting vulnerabilities and a vulnerability handling process internally to fix them once reported. This ensures that vendors are actively managing vulnerabilities throughout the product’s lifecycle.

5) Privacy practices

The product must have a privacy policy that is easily accessible, written in language that is easily understood and appropriate for the person using the device or service at the point of sale. At a minimum, users should be notified about substantive changes to the policy. If data is being collected, transmitted or shared for marketing purposes, that should be clear to users and, in line with the EU’s General Data Protection Regulation (GDPR), there should be a way to opt-out of such practices. Users should also have a way to delete their data and account. Additionally, like in GDPR, this should include a policy setting standard retention periods wherever possible.

We’ve seen headline after headline about privacy and security failings in the IoT space. And it is often the same mistakes that have led to people’s private moments, conversations, and information being compromised. Given the value and trust that consumers place in your company, you have a uniquely important role in addressing this problem and helping to build a more secure, connected future. Consumers can and should be confident that, when they buy a device from you, that device will not compromise their privacy and security. Signing on to these minimum guidelines is the first step to turn the tide and build trust in this space.

Yours,

Mozilla, Internet Society, Consumers International, ColorOfChange, Open Media & Information Companies Initiative, Common Sense Media, Story of Stuff, Center for Democracy and Technology, Consumer Federation of America, 18 Million Rising, Hollaback

The post Retailers: All We Want for Valentine’s Day is Basic Security appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2019/02/12/retailers-all-we-want-for-valentines-day-is-basic-security/

|

|

Hacks.Mozilla.Org: Anyone can create a virtual reality experience with this new WebVR starter kit from Mozilla and Glitch |

Here at Mozilla, we are big fans of Glitch. In early 2017 we made the decision to host our A-Frame content on their platform. The decision was easy. Glitch makes it easy to explore and remix live code examples for WebVR.

We also love the people behind Glitch. They have created a culture and a community that is kind, encouraging, and champions creativity. We share their vision for a web that is creative, personal, and human. The ability to deliver immersive experiences through the browser opens a whole new avenue for creativity. It allows us to move beyond screens, and keyboards. It is exciting, and new, and sometimes a bit weird (but in a good way).

Building a virtual reality experience may seem daunting, but it really isn’t. WebVR and frameworks like A-Frame make it really easy to get started. This is why we worked with Glitch to create a WebVR starter kit. It is a free, 5-part video course with interactive code examples that will teach you the fundamentals of WebVR using A-Frame. Our hope is that this starter kit will encourage anyone who has been on the fence about creating virtual reality experiences to dive in and get started.

Check out part one of the five-part series below. If you want more, I’d encourage you to check out the full starter kit here, or use the link at the bottom of this post.

In the Glitch viewer embedded below, you can see how to make a WebVR planetarium in just a few easy-to-follow steps. You learn interactively (and painlessly) by editing and remixing the working code in the viewer:

Ready to keep going? Click below to view the full series on Glitch.

The post Anyone can create a virtual reality experience with this new WebVR starter kit from Mozilla and Glitch appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2019/02/webvr-starter-kit-mozilla-glitch/

|

|

The Mozilla Blog: Open Letter: Facebook, Do Your Part Against Disinformation |

Mozilla, Access Now, Reporters Without Borders, and 35 other organizations (37 other signers in total) have published an open letter to Facebook.

Our ask: make good on your promises to provide more transparency around political advertising ahead of the 2019 EU Parliamentary Elections.

Is Facebook making a sincere effort to be transparent about the content on its platform? Or, is the social media platform neglecting its promises?

Facebook promised European lawmakers and users it would increase the transparency of political advertising on the platform to prevent abuse during the elections. But in the very same breath, they took measures to block access to transparency tools that let users see how they are being targeted.

With the 2019 EU Parliamentary Elections on the horizon, it is vital that Facebook take action to address this problem. So today, Mozilla and 37 other organizations — including Access Now and Reporters Without Borders — are publishing an open letter to Facebook.

“We are writing you today as a group of technologists, human rights defenders, academics, journalists and Facebook users who are deeply concerned about the validity of Facebook’s promises to protect European users from targeted disinformation campaigns during the European Parliamentary elections,” the letter reads.

“Promises and press statements aren’t enough; instead, we’ll be watching for real action over the coming months and will be exploring ways to hold Facebook accountable if that action isn’t sufficient,” the letter continues.

Individuals may sign their name to the letter, as well. Sign here.

Read the full letter, here or below. The letter will also appear in the Thursday print edition of POLITICO Europe.

Read this letter in German

The letter urges Facebook to make good on its promise to EU lawmakers. Last year, Facebook signed the EU’s Code of Practice on disinformation and pledged to increase transparency around political advertising. But since then, Facebook has made political advertising more opaque, not more transparent. The company recently blocked access to third-party transparency tools.

Specifically, our open letter urges Facebook to:

- Roll out a functional, open Ad Archive API that enables advanced research and development of tools that analyse political ads served to Facebook users in the EU

- Ensure that all political advertisements are clearly distinguished from other content and are accompanied by key targeting criteria such as sponsor identity and amount spent on the platform in all EU countries

- Cease all harassment of good faith researchers who are building tools to provide greater transparency into the advertising on Facebook’s platform.

To safeguard the integrity of the EU Parliament elections, Facebook must be part of the solution. Users and voters across the EU have the right to know who is paying to promote the political ads they encounter online; if they are being targeted; and why they are being targeted.

The full letter

Dear Facebook:

We are writing you today as a group of technologists, human rights defenders, academics, journalists and Facebook users who are deeply concerned about the validity of Facebook’s promises to protect European users from targeted disinformation campaigns during the European Parliamentary elections. You have promised European lawmakers and users that you will increase the transparency of political advertising on the platform to prevent abuse during the elections. But in the very same breath, you took measures to block access to transparency tools that let your users see how they are being targeted.

In the company’s recent Wall Street Journal op-ed, Mark Zuckerberg wrote that the most important principles around data are transparency, choice and control. By restricting access to advertising transparency tools available to Facebook users, you are undermining transparency, eliminating the choice of your users to install tools that help them analyse political ads, and wielding control over good faith researchers who try to review data on the platform. Your alternative to these third party tools provides simple keyword search functionality and does not provide the level of data access necessary for meaningful transparency.

Actions speak louder than words. That’s why you must take action to meaningfully deliver on the commitments made to the EU institutions, notably the increased transparency that you’ve promised. Promises and press statements aren’t enough; instead, we need to see real action over the coming months, and we will be exploring ways to hold Facebook accountable if that action isn’t sufficient.

Specifically, we ask that you implement the following measures by 1 April 2019 to give developers sufficient lead time to create transparency tools in advance of the elections:

- Roll out a functional, open Ad Archive API that enables advanced research and development of tools that analyse political ads served to Facebook users in the EU

- Ensure that all political advertisements are clearly distinguished from other content and are accompanied by key targeting criteria such as sponsor identity and amount spent on the platform in all EU countries

- Cease harassment of good faith researchers who are building tools to provide greater transparency into the advertising on your platform

We believe that Facebook and other platforms can be positive forces that enable democracy, but this vision can only be realized through true transparency and trust. Transparency cannot just be on the terms with which the world’s largest, most powerful tech companies are most comfortable.

We look forward to the swift and complete implementation of these transparency measures that you have promised to your users.

Sincerely,

Mozilla Foundation

and also signed by:

Access Now

AlgorithmWatch

All Out

Alto Data Analytics

ARTICLE 19

Aufstehn

Bits of Freedom

Bulgarian Helsinki Committee

BUND – Friends of the Earth Germany

Campact

Campax

Center for Democracy and Technology

CIPPIC

Civil Liberties Union for Europe

Civil Rights Defenders

Declic

doteveryone

Estonian Human Rights Center

Free Press Unlimited

GONG Croatia

Greenpeace

Italian Coalition for Civil Liberties and Rights (CILD)

Mobilisation Lab

Open Data Institute

Open Knowledge International

OpenMedia

Privacy International

PROVIDUS

Reporters Without Borders

Skiftet

SumOfUs

The Fourth Group

Transparent Referendum Initiative

Uplift

Urgent Action Fund for Women’s Human Rights

WhoTargetsMe

Wikimedia UK

Note: This blog post has been updated to reflect additional letter signers.

The post Open Letter: Facebook, Do Your Part Against Disinformation appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2019/02/11/open-letter-facebook-do-your-part-against-disinformation/

|

|

Shing Lyu: Download JavaScript Data as Files on the Client Side |

When building websites or web apps, creating a “Download as file” link is quite useful, for example if you want to allow users to export some data as JSON, CSV or plain text files so they can open them in external programs or load them back later. Usually this requires a web server to format the file and serve it. But you can actually export an arbitrary JavaScript variable to a file entirely on the client side. I have implemented that function in one of my projects, MozApoy, and here I’ll explain how I did that.

First, we create a link in HTML

The download attribute will be the filename for your file. It will look like this:

Notice that we keep the href attribute blank. Traditionally we fill this attribute with a server-generated file path, but this time we’ll generate the link dynamically using JavaScript.
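The markup for the link is not shown in this copy of the post. As a rough sketch, a link of the kind described could be produced like this. The id `download_link` is taken from the script further down; the helper name, the file name `export.txt` and the label are placeholders chosen for illustration, and the inputs are assumed to be trusted constants (nothing is escaped).

```javascript
// Build the markup for a download link. The id matches the one the export
// script looks up with getElementById; file name and label are placeholders.
function downloadLinkMarkup(id, filename, label) {
  // href is deliberately left empty; it is filled in later from JavaScript.
  return '<a id="' + id + '" download="' + filename + '" href="">' + label + '</a>';
}

const markup = downloadLinkMarkup('download_link', 'export.txt', 'Download as text file');

// In a browser, add the link to the page; elsewhere just print the markup.
if (typeof document !== 'undefined') {
  document.body.insertAdjacentHTML('beforeend', markup);
} else {
  console.log(markup);
}
```

Writing the same `<a>` element directly in the HTML file works just as well; the helper only makes the three moving parts (id, download name, empty href) explicit.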

Then, if we want to export the content of the text variable as a text file, we can use this JavaScript code:

var text = 'Some data I want to export';

var data = new Blob([text], {type: 'text/plain'});

var url = window.URL.createObjectURL(data);

document.getElementById('download_link').href = url;

The magic happens on the third line: the window.URL.createObjectURL() API takes a Blob and returns a URL to access it. The URL lives as long as the document in the window on which it was created. Notice that you can assign the type of the data in the new Blob() constructor. If you assign the correct format, the browser can better handle the file. Other commonly seen formats include application/json and text/csv. For example, if we name the file as *.csv and give it type: 'text/csv', Firefox will recognize it as “CSV document” and suggest you open it with LibreOffice Calc.

And in the last line we assign the url to the element’s href attribute, so when the user clicks on the link, the browser will initiate a download action (or another default action for the specific file type).
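To make the choice of type more concrete, here is a minimal sketch that serializes the same data either as JSON or as CSV and picks the matching MIME type. The helper names `toCsv` and `serialize`, the sample rows and the file name `export.csv` are made up for this example; the CSV quoting is the common "double the quotes" convention and assumes flat objects with identical keys.

```javascript
// Turn an array of flat objects into CSV text. Every value is quoted and
// embedded double quotes are doubled.
function toCsv(rows) {
  const headers = Object.keys(rows[0]);
  const quote = (value) => '"' + String(value).replace(/"/g, '""') + '"';
  const lines = rows.map((row) => headers.map((h) => quote(row[h])).join(','));
  return [headers.map(quote).join(',')].concat(lines).join('\n');
}

// Pick the text and the MIME type for a given export format.
function serialize(rows, format) {
  if (format === 'csv') {
    return { text: toCsv(rows), type: 'text/csv' };
  }
  return { text: JSON.stringify(rows, null, 2), type: 'application/json' };
}

const rows = [{ name: 'Ada', score: 3 }, { name: 'Lin "L"', score: 5 }];
const csv = serialize(rows, 'csv');

// Browser-only part: point the existing link at the generated data.
if (typeof document !== 'undefined') {
  const link = document.getElementById('download_link');
  if (link) {
    link.download = 'export.csv';
    link.href = window.URL.createObjectURL(new Blob([csv.text], { type: csv.type }));
  }
}
```

Keeping the serialization separate from the Blob step means the formatting can be checked without a browser.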

Every time you call createObjectURL(), a new object URL is created, which will use up memory if you call it many times. So if you don’t need the old URL anymore, you should call the revokeObjectURL() API to free it.

var url = window.URL.createObjectURL(data);

window.URL.revokeObjectURL(url);
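One possible way to apply this, sketched under the assumption that only the most recent export needs to stay downloadable: keep track of the last object URL and revoke it just before creating the next one. `makeExporter` and the fake URL object are illustrative names, not part of any API; in a page you would pass `window.URL` and a real Blob.

```javascript
// Keeps at most one object URL alive: the previous one is revoked before a
// new one is created. `urlApi` is anything with createObjectURL and
// revokeObjectURL, which in a browser is window.URL.
function makeExporter(urlApi) {
  let currentUrl = null;
  return function exportBlob(blob) {
    if (currentUrl !== null) {
      urlApi.revokeObjectURL(currentUrl); // free the stale URL
    }
    currentUrl = urlApi.createObjectURL(blob);
    return currentUrl;
  };
}

// Tiny stand-in for window.URL so the bookkeeping can be checked anywhere.
const revoked = [];
let counter = 0;
const fakeUrlApi = {
  createObjectURL: () => 'blob:fake/' + (++counter),
  revokeObjectURL: (url) => { revoked.push(url); },
};

const exportBlob = makeExporter(fakeUrlApi);
const first = exportBlob('first payload');
const second = exportBlob('second payload');
console.log(first, second, revoked);
```

In the browser the call would look like `const exportBlob = makeExporter(window.URL);` followed by `link.href = exportBlob(new Blob([text], {type: 'text/plain'}));` each time the data changes.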

This is a simple trick to let your user download files without setting up any server. If you want to see it in action, you can check out this CodePen.

https://shinglyu.com/web/2019/02/09/js_download_as_file.html

|

|

Mozilla Open Policy & Advocacy Blog: Kenya Government mandates DNA-linked national ID, without data protection law |

Last month, the Kenya Parliament passed a seriously concerning amendment to the country’s national ID law, making Kenya home to the most privacy-invasive national ID system in the world. The rebranded, National Integrated Identity Management System (NIIMS) now requires all Kenyans, immigrants, and refugees to turn over their DNA, GPS coordinates of their residential address, retina scans, iris pattern, voice waves, and earlobe geometry before being issued critical identification documents. NIIMS will consolidate information contained in other government agency databases and generate a unique identification number known as Huduma Namba.

It is hard to see how this system comports with the right to privacy articulated in Article 31 of the Kenyan Constitution. It is deeply troubling that these amendments passed without public debate, and were approved even as a data protection bill which would designate DNA and biometrics as sensitive data is pending.

Before these amendments, in order to issue the National ID Card (ID), the government only required name, date and place of birth, place of residence, and postal address. The ID card is a critical document that impacts everyday life: without it, an individual cannot vote, purchase property, access higher education, obtain employment, or access credit or public health services, among other fundamental rights.

Mozilla strongly believes that no digital ID system should be implemented without strong privacy and data protection legislation. The proposed Data Protection Bill of 2018, which Parliament is likely to consider next month, is a strong and thorough framework that contains provisions relating to data minimization as well as collection and purpose limitation. If NIIMS is implemented, it will be in conflict with these provisions, and more importantly in conflict with Article 31 of the Constitution, which specifically protects the right to privacy.

Proponents of NIIMS claim that the system provides a number of benefits, such as accurate delivery of government services. These arguments also seem to conflate legal and digital identity. Legal ID used to certify one’s identity through basic data about one’s personhood (such as your name and the date and place of your birth) is a commendable goal. It is reflected in United Nations Sustainable Development Goal 16.9, which aims “to provide legal identity for all, including birth registration by 2030”. However, it is important to remember this objective can be met in several ways. “Digital ID” systems, and especially those that involve sensitive biometrics or DNA, are not a necessary means of verifying identity, and in practice raise significant privacy and security concerns. The choice of whether to opt for a digital ID, let alone a biometric ID, should therefore be closely scrutinized by governments in light of these risks, rather than uncritically accepted as beneficial.

- Security Concerns: The centralized nature of NIIMS creates massive security vulnerabilities. It could become a honeypot for malicious actors and identity thieves who can exploit other identifying information linked to stolen biometric data. The amendment is unclear on how the government will establish and institute strong security measures required for the protection of such a sensitive database. If there’s a breach, it’s not as if your DNA or retina can be reset like a password or token.

- Surveillance Concerns: By centralizing a tremendous amount of sensitive data in a government database, NIIMS creates an opportunity for mass surveillance by the State. Not only is the collection of biometrics incredibly invasive, but gathering this data combined with transaction logs of where ID is used could substantially reduce anonymity. This is all the more worrying considering Kenya’s history of extralegal surveillance and intelligence sharing.

- Ethnic Discrimination Concerns: The collection of DNA is particularly concerning as this information can be used to identify an individual’s ethnic identity. Given Kenya’s history of politicization of ethnic identity, collecting this data in a centralized database like NIIMS could reproduce and exacerbate patterns of discrimination.

The process was not constitutional

Kenya’s constitution requires public input before any new law can be adopted. No public discussions were conducted for this amendment. It was offered for parliamentary debate under “Miscellaneous” amendments, which exempted it from procedures and scrutiny that would have required introduction as a substantive bill and corresponding public debate. The Kenyan government must not implement this system without sufficient public debate and meaningful engagement to determine how such a system should be implemented if at all.

The proposed law does not provide people with the opportunity to opt in or out of giving their sensitive and precise data. The Constitution requires that all Kenyans be granted identification. However, if an individual were to refuse to turn over their DNA or other sensitive information to the State, as they should have the right to do, they could risk not being issued their identity or citizenship documents. Such a denial would contravene Articles 12, 13, and 14 of the Constitution.

Opting out of this system should not be used to discriminate or exclude any individual from accessing essential public services and exercising their fundamental rights.

Individuals must be in full control of their digital identities with the right to object to processing and use and withdraw consent. These aspects of control and choice are essential to empowering individuals in the deployment of their digital identities. Therefore policy and technical decisions must take into account systems that allow individuals to identify themselves rather than the system identifying them.

Mozilla urges the government of Kenya to suspend the implementation of NIIMS and we hope Kenyan members of parliament will act swiftly to pass the Data Protection Bill of 2018.

The post Kenya Government mandates DNA-linked national ID, without data protection law appeared first on Open Policy & Advocacy.

|

|

Mozilla VR Blog: Immersive Media Content Creation Guide |

Firefox Reality is ready for your panoramic images and videos, in both 2D and 3D. In this guide you will find advice for creating and formatting your content to best display on the immersive web in Firefox Reality.

Images

The web is a great way to share immersive images, either as standalone photos or as part of an interactive tour. Most browsers can display immersive (360°) images but need a little help. Generally these images are regular JPGs or PNGs that have been taken with a 180° or 360° camera. Depending on the exact format you may need different software to display it in a browser. You can host the images themselves on your own server or use one of the many photo tour websites listed below.

Equirectangular Images

360 cameras usually take photos in equirectangular format, meaning an aspect ratio of 2 to 1. Here are some examples on Flickr.
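As a quick sanity check before handing an image to a viewer, the 2 to 1 ratio can be tested in a couple of lines. This is only a sketch: the function name and the tolerance value are assumptions, added because some cameras produce sizes that are off by a few pixels.

```javascript
// True when width:height is 2:1 within a small tolerance.
function isEquirectangular(width, height, tolerance = 0.01) {
  if (width <= 0 || height <= 0) return false;
  return Math.abs(width / height - 2) <= tolerance;
}

console.log(isEquirectangular(4096, 2048)); // true
console.log(isEquirectangular(1920, 1080)); // false
```

A 2:1 ratio does not prove the projection is equirectangular, but an image with any other ratio almost certainly needs converting first.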

To display one of these on the web in VR you will need an image viewer library. Here are some examples:

- AFrame’s a-sky component. Interactive example.

- ThreeJS using a SphereBuffer geometry: Interactive example and source.

- Babylon.js using a PhotoDome with an Equirectangular texture. Interactive example.

- Amazon Sumerian Skybox.

- PhotoSphereViewer, an easier to use library that uses ThreeJS underneath.

- Google’s VRView
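For the first option in the list, a minimal sketch of what the markup looks like with A-Frame's a-sky element. It assumes the A-Frame script is already loaded on the page; the helper name and the image URL `panorama.jpg` are placeholders.

```javascript
// Markup for a minimal A-Frame scene whose sky is a 360 image.
// Assumes A-Frame itself has already been loaded by the page.
function skySceneMarkup(imageUrl) {
  return '<a-scene><a-sky src="' + imageUrl + '"></a-sky></a-scene>';
}

const scene = skySceneMarkup('panorama.jpg');

// In a browser, add the scene to the page; elsewhere just print the markup.
if (typeof document !== 'undefined') {
  document.body.insertAdjacentHTML('beforeend', scene);
} else {
  console.log(scene);
}
```

The same two elements can of course be written straight into the HTML file; the other libraries in the list follow the same idea of wrapping the image around the inside of a sphere.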

Spherical Images and 3D Images

Some 360 cameras save as spherical projection, which generally looks like one or two circles. Generally these should be converted to equirectangular with the tools that came with your camera. 3D images from 180 cameras will generally be two images side by side or one above the other. Again most camera makers provide tools to prepare these for the web. Look at the documentation for your camera.

Photo Tours

One of the best ways to use immersive images on the web is to build an interactive tour with them. There are many excellent web-based tools for building 360 tours. Here are just a few of them:

- Google Tour Builder

- Marzipano

- Roundme

- IPanorama 360: Virtual Tour builder for Wordpress

- 3DVista

- WondaVR

- HoloBuilder

Video

360 and 3D video is much like regular video. It is generally encoded with the h264 codec and stored inside of an mp4 container. However, 360 and 3D video is very large. Generally you do not want to host it on your own web server. Instead you can host it with a video provider like YouTube or Vimeo. They each have their own instructions for how to process and upload videos.

- YouTube 360 video upload instructions.

- Vimeo 360 video upload instructions.

If you choose to host the video file yourself on a standard web server then you will need to use a video viewer library built with a VR framework like AFrame or ThreeJS.

- A-Frame 360 video player example

- Valiant, a ThreeJS based 360 video player

3D videos

3D video is generally just two 180 or 360 videos stuck together. This is usually called ‘over and under’ format, meaning each video frame is a square containing two equirectangular images: the top half is for the left eye and the bottom half is for the right eye.
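To illustrate the layout, here is a small sketch that computes which part of an over-under frame belongs to which eye. The function name and the returned shape are invented for this example; a real player would use these rectangles as texture regions.

```javascript
// For an over-under frame, the top half is the left-eye image and the
// bottom half is the right-eye image. Returns pixel rectangles for each.
function splitOverUnder(frameWidth, frameHeight) {
  const half = Math.floor(frameHeight / 2);
  return {
    left: { x: 0, y: 0, width: frameWidth, height: half },
    right: { x: 0, y: half, width: frameWidth, height: frameHeight - half },
  };
}

const eyes = splitOverUnder(4096, 4096);
console.log(eyes.left, eyes.right);
```

For a square 4096 by 4096 frame each eye gets a 4096 by 2048 region, which is again the 2 to 1 equirectangular shape.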

- YouTube

  - documentation on VR / 3D videos

  - VR videos that aren’t 360.

- Vimeo

  - Demo code of streaming Vimeo video to a WebVR app

Compression Advice

Use as high quality as you can get away with and let your video provider convert it as needed. If you are doing it yourself go for 4k in h264 with the highest bitrate your camera supports.

Devices for capturing 360 videos and images

You will get the best results from a camera built for 360, 180, or 3D. Amazon has many fine products to choose from. They should all come with instructions and software for capturing and converting both photos and video.

Members of the Mozilla Mixed Reality team have personally used:

Though you will get better results from a dedicated camera, it is also possible to capture 360 images from custom smartphone camera apps such as FOV, Cardboard Camera and Facebook. See these tutorials on 360 iOS apps and Android apps for more information.

Sharing your Immersive Content

You can share your content on your own website, but if that won’t work for you then consider one of the many 360 content hosting sites like these:

Get Featured

Once you have your immersive content on the web, please let us know about it. We might be able to feature it in the Firefox Reality home page, getting your content in front of many viewers right inside VR.

|

|

QMO: Firefox 66 Beta 8 Testday, February 15th |

Hello Mozillians,

We are happy to let you know that Friday, February 15th, we are organizing Firefox 66 Beta 8 Testday. We’ll be focusing our testing on: Storage Access API/Cookie Restrictions.

Check out the detailed instructions via this etherpad.

No previous testing experience is required, so feel free to join us on the #qa IRC channel where our moderators will offer you guidance and answer your questions.

Join us and help us make Firefox better!

See you on Friday!

https://quality.mozilla.org/2019/02/firefox-66-beta-8-testday-february-15th/

|

|

Daniel Stenberg: commercial curl support! |

If you want commercial support, ports of curl to other operating systems or just instant help to fix your curl related problems, we’re here to help. Get in touch now! This is the premiere. This has not been offered by me or anyone else before.

I’m not sure I need to say it, but I personally have authored almost 60% of all commits in the curl source code during my more than twenty years in the project. I started the project, I’ve designed its architecture etc. There is simply no one around with my curl experience and knowledge of curl internals. You can’t buy better curl expertise.

curl has become one of the world’s most widely used software components and is the transfer engine doing a large chunk of all non-browser Internet transfers in the world today. curl has reached this level of success entirely without anyone offering commercial services around it. Still, not every company and product made out there has a team of curl experts, and in this demanding day and age we know there are times when you would rather hire the right team to help you out.

We are the curl experts that can help you and your team. Contact us for all and any support questions at support@wolfssl.com.

What about the curl project?

I’m heading into this new chapter of my life and the curl project with the full knowledge that this blurs the lines between my job and my spare time even more than before. But fear not!

The curl project is free and open and will remain independent of any commercial enterprise helping out customers. I realize that my offering to deal with curl problems and solve curl issues for companies and organizations in exchange for compensation creates new challenges and raises questions about where the boundaries go, if for nothing else then for me personally. I still think this is worth pursuing and I’m sure we can figure out and handle whatever minor issues this can lead to.

My friends, the community, the users and harsh critics on Twitter will all help me stay true and honest. I know this. This should end up a plus for the curl project in general as well as for me personally. More focus, more work and more money involved in curl related activities should improve the project.

It is with great joy and excitement I take on this new step.

https://daniel.haxx.se/blog/2019/02/08/commercial-curl-support/

|

|

Alex Gibson: Creating a dark mode theme using CSS Custom Properties |

The use of variables in CSS preprocessors such as Sass and Less has been common practice in front-end development for some time now. Using variables to reference recurring values (such as color, margin and padding) helps us to write cleaner, more maintainable, and consistent CSS. Whilst preprocessors have extended CSS with some great patterns that we now often take for granted, using variables when compiling to static CSS has some limitations. This is especially true when it comes to theming.

As an example, let’s take a look at creating a dark mode theme using Sass. Here’s a simplified example for what could be a basic page header:

$color-background: #fff;

$color-text: #000;

$color-title: #999;

$color-background-dark: #000;

$color-text-dark: #fff;

$color-title-dark: #ccc;

.header {

// default theme colours

background-color: $color-background;

color: $color-text;

.header-title {

color: $color-title;

}

// dark theme colours

.t-dark & {

background-color: $color-background-dark;

color: $color-text-dark;

.header-title {

color: $color-title-dark;

}

}

}

The above example isn’t very complicated, but it also isn’t terribly efficient. Because Sass has to compile all our styles down to static CSS at build time, we lose all the benefits that dynamic variables could provide. We end up generating selectors for each theme style, and repeating the same properties for each colour change. Here’s what the generated CSS looks like:

.header {

background-color: #fff;

color: #000;

}

.header .header-title {

color: #999;

}

.t-dark .header {

background-color: #000;

color: #fff;

}

.t-dark .header .header-title {

color: #ccc;

}

This may not look like much, but for large or complex projects with many components, this pattern can end up creating a lot of extra CSS. Bundling every selector required to do each theme is suboptimal in terms of performance. We could try to solve this by compiling each theme to a separate stylesheet and then dynamically loading our CSS, or by removing unused CSS post-compilation, but this all adds more complexity when it should be simple.

Another issue with the above pattern is related to maintainability. Ensuring that selectors and matching properties exist for each theme can easily be prone to human error. We could try and alleviate this by using component mixins to generate our CSS, but this still doesn’t fix the underlying problem we see with static compilation. Wouldn’t it be great if we could just update the variables at runtime and be done with it all?

Hello Custom Properties

CSS Custom Properties (or CSS variables, as they are commonly referred to) help to solve many of these problems. Unlike preprocessors which compile variables to static values in CSS, custom properties are dynamic. This makes them incredibly flexible and a perfect fit for writing efficient, practical CSS themes.

Custom properties are powerful because they follow the rules of inheritance and the cascade, just like regular CSS properties. If the value of a custom property changes, all DOM elements associated with a selector that uses that property are repainted by the browser automatically.

Here’s the same header example implemented using CSS custom properties:

:root {

--color-background: #fff;

--color-text: #000;

--color-title: #999;

}

:root.t-dark {

--color-background: #000;

--color-text: #fff;

--color-title: #ccc;

}

.header {

background-color: var(--color-background);

color: var(--color-text);

.header-title {

color: var(--color-title);

}

}

Much more readable and succinct! We only ever have to write our header component CSS once, and our theme colours can be updated dynamically at runtime in the browser. Whilst this is only a basic example, it is easy to see how this scales so much better. And because custom properties are dynamic it means we can do all kinds of new things, such as change their values from within CSS media queries, or even via JavaScript.
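As an illustration of the JavaScript route, here is a minimal sketch that writes a theme's values onto the root element with `style.setProperty`. The colour values are copied from the CSS above; the `themes` object and the `applyTheme` helper are names invented for this example.

```javascript
// Theme values copied from the CSS above.
const themes = {
  light: { '--color-background': '#fff', '--color-text': '#000', '--color-title': '#999' },
  dark: { '--color-background': '#000', '--color-text': '#fff', '--color-title': '#ccc' },
};

// Write every custom property of the chosen theme onto a style object.
// In a page, pass document.documentElement.style.
function applyTheme(style, name) {
  const theme = themes[name];
  Object.keys(theme).forEach((prop) => style.setProperty(prop, theme[prop]));
}

// Browser-only part: switch the whole page to the dark values.
if (typeof document !== 'undefined') {
  applyTheme(document.documentElement.style, 'dark');
}
```

An alternative with the same effect is to leave the values in CSS and simply toggle the `t-dark` class on the root element, which keeps all colours in the stylesheet.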

Supporting dark mode in macOS Mojave

Safari recently added support for a prefers-color-scheme media query that works with macOS Mojave’s new dark mode feature. This enables web pages to opt in to whichever mode the system preference is set to.

@media (prefers-color-scheme: dark) {

:root {

--color-background: #000;

--color-text: #fff;

--color-title: #ccc;

}

}

You can also detect the preference in JavaScript like so:

// detect dark mode preference.

const prefersDarkMode = window.matchMedia('(prefers-color-scheme: dark)').matches;

Because I’m a big fan of dark UIs (I find them much easier on the eye, especially over extended periods of time), I couldn’t resist adding a custom theme to this blog. For browsers which don’t yet support prefers-color-scheme, I also added a theme toggle to the top right navigation.
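One possible way to combine a manual toggle with the system preference is sketched below. The pickTheme and applyThemeClass helpers and the savedChoice parameter are hypothetical and not taken from this blog's source; the t-dark class is the one used in the CSS earlier.

```javascript
// Decide which theme to use. An explicit user choice (for example one
// remembered from the toggle) wins; otherwise fall back to the system
// preference, and to 'light' when matchMedia is unavailable.
function pickTheme(win, savedChoice) {
  if (savedChoice === 'dark' || savedChoice === 'light') {
    return savedChoice;
  }
  const query = typeof win.matchMedia === 'function'
    ? win.matchMedia('(prefers-color-scheme: dark)')
    : null;
  return query && query.matches ? 'dark' : 'light';
}

// Add or remove the t-dark class used by the :root.t-dark rule.
function applyThemeClass(rootElement, theme) {
  rootElement.classList.toggle('t-dark', theme === 'dark');
}

// In a browser:
// applyThemeClass(document.documentElement, pickTheme(window, null));
```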

Detecting support for CSS custom properties

Detecting browser support for custom properties is pretty straightforward, and can be done in either CSS or JavaScript.

You can detect support for custom properties in CSS using @supports:

@supports (--color-background: #fff) {

:root {

--color-background: #fff;

--color-text: #000;

--color-title: #999;

}

}

You can also provide fallbacks for older browsers just by using a previous declaration, which is often simpler.

.header {

background-color: #fff;

background-color: var(--color-background);

}

It’s worth noting here that providing a fallback does create more redundant properties in your CSS. Depending on your project, not using any fallback may be just fine. Browsers that don’t support custom properties will resort to default user agent styles, which are still perfectly accessible.

You can also detect support for custom properties in JavaScript using the following line of code (I’m using this to determine whether or not to initialise the theme selector in the navigation).

const supportsCustomProperties = window.CSS && window.CSS.supports('color', 'var(--fake-color)');
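A slightly more defensive variant is sketched here. The hasCustomPropertySupport name is hypothetical; it takes the window object as a parameter and uses the single-argument form of CSS.supports(), which accepts a full condition string.

```javascript
// Returns true only when CSS.supports() exists and reports that a
// custom property declaration is understood by the browser.
function hasCustomPropertySupport(win) {
  return Boolean(
    win &&
    win.CSS &&
    typeof win.CSS.supports === 'function' &&
    win.CSS.supports('(--fake-var: 0)')
  );
}

// In a browser:
// if (hasCustomPropertySupport(window)) { /* initialise the theme selector */ }
```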

Take a closer look

If you would like to take a closer look at the code I used to implement my dark mode theme, feel free to poke around at the source code on GitHub.

https://alxgbsn.co.uk/2019/02/08/blog-theming-css-variables/

|

|

The Mozilla Blog: Mozilla Heads to Capitol Hill to Defend Net Neutrality |

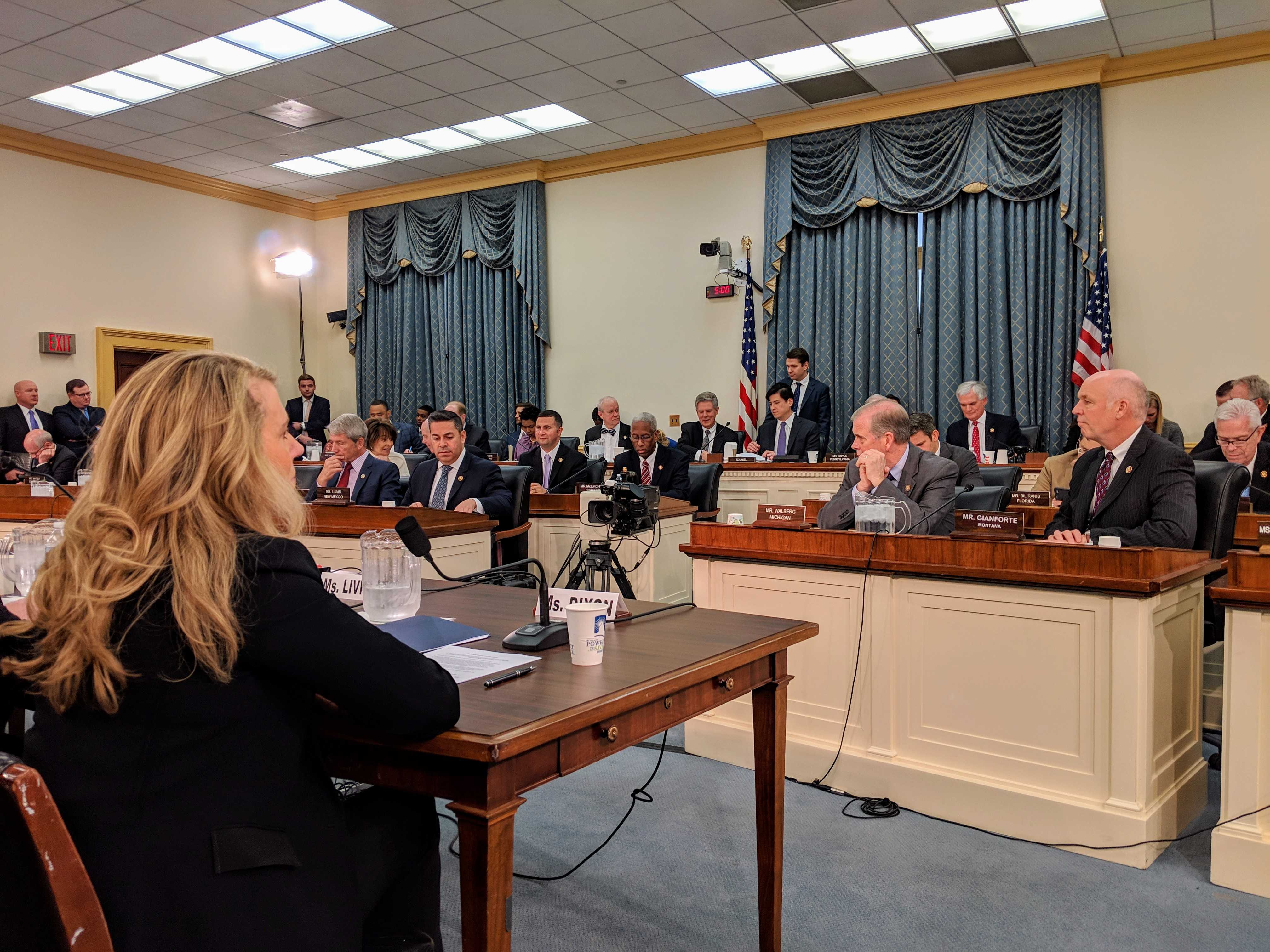

Today Denelle Dixon, Mozilla COO, had the honor of testifying on behalf of Mozilla before a packed United States House of Representatives Energy & Commerce Telecommunications Subcommittee in support of our ongoing fight for net neutrality. It was clear: net neutrality principles are broadly embraced, even in partisan Washington.

Dixon in front of the United States House of Representatives Energy & Commerce Telecommunications Subcommittee

Our work to restore net neutrality is driven by our mission to build a better, healthier internet that puts users first. And we believe that net neutrality is fundamental to preserving an open internet that creates room for new businesses and new ideas to emerge and flourish, and where internet users can choose freely the companies, products, and services that put their interests first.

We are committed to restoring the protections users deserve and will continue to go wherever the fight for net neutrality takes us.

For more, check out the replay of the hearing or read Denelle’s prepared written testimony to the subcommittee.

The post Mozilla Heads to Capitol Hill to Defend Net Neutrality appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2019/02/07/mozilla-heads-to-capitol-hill-to-defend-net-neutrality/

|

|

Hacks.Mozilla.Org: Refactoring MDN macros with async, await, and Object.freeze() |

In March of last year, the MDN Engineering team began the experiment of publishing a monthly changelog on Mozilla Hacks. After nine months of the changelog format, we’ve decided it’s time to try something that we hope will be of interest to the web development community more broadly, and more fun for us to write. These posts may not be monthly, and they won’t contain the kind of granular detail that you would expect from a changelog. They will cover some of the more interesting engineering work we do to manage and grow the MDN Web Docs site. And if you want to know exactly what has changed and who has contributed to MDN, you can always check the repos on GitHub.

In January, we landed a major refactoring of the KumaScript codebase and that is going to be the topic of this post because the work included some techniques of interest to JavaScript programmers.

Modern JavaScript

One of the pleasures of undertaking a big refactor like this is the opportunity to modernize the codebase. JavaScript has matured so much since KumaScript was first written, and I was able to take advantage of this, using let and const, classes, arrow functions, for...of loops, the spread (...) operator, and destructuring assignment in the refactored code. Because KumaScript runs as a Node-based server, I didn’t have to worry about browser compatibility or transpilation: I was free (like a kid in a candy store!) to use all of the latest JavaScript features supported by Node 10.

KumaScript and macros

Updating to modern JavaScript was a lot of fun, but it wasn’t reason enough to justify the time spent on the refactor. To understand why my team allowed me to work on this project, you need to understand what KumaScript does and how it works. So bear with me while I explain this context, and then we’ll get back to the most interesting parts of the refactor.

First, you should know that Kuma is the Python-based wiki that powers MDN, and KumaScript is a server that renders macros in MDN documents. If you look at the raw form of an MDN document (such as the HTML <body> element) you’ll see lines like this:

It must be the second element of an {{HTMLElement("html")}} element.

The content within the double curly braces is a macro invocation. In this case, the macro is defined to render a cross-reference link to the MDN documentation for the html element. Using macros like this keeps our links and angle-bracket formatting consistent across the site and makes things simpler for writers.
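To make the idea concrete, here is a deliberately simplified sketch of macro expansion. It is not how KumaScript works internally (the real macros are EJS templates); the regular expression, the renderMacros helper, and the link format produced by the stand-in HTMLElement macro are all assumptions made for illustration.

```javascript
// Matches invocations such as {{HTMLElement("html")}}: a macro name
// followed by a parenthesised argument list.
const MACRO_PATTERN = /\{\{\s*(\w+)\(([^)]*)\)\s*\}\}/g;

// Hypothetical macro table. The link format is illustrative only.
const macros = {
  HTMLElement(tag) {
    return `<a href="/docs/Web/HTML/Element/${tag}"><code>&lt;${tag}&gt;</code></a>`;
  },
};

function renderMacros(source, macroTable) {
  return source.replace(MACRO_PATTERN, (invocation, name, rawArgs) => {
    if (!Object.prototype.hasOwnProperty.call(macroTable, name)) {
      return invocation; // leave unknown macros untouched
    }
    // Arguments are parsed as a JSON array, so this sketch only
    // handles double-quoted strings, numbers, and similar literals.
    const args = JSON.parse(`[${rawArgs}]`);
    return macroTable[name](...args);
  });
}

const line = 'It must be the second element of an {{HTMLElement("html")}} element.';
console.log(renderMacros(line, macros));
```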

MDN has been using macros like this since before the Kuma server existed. Before Kuma, we used a commercial wiki product which allowed macros to be defined in a language they called DekiScript. DekiScript was a JavaScript-based templating language with a special API for interacting with the wiki. So when we moved to the Kuma server, our documents were full of macros defined in DekiScript, and we needed to implement our own compatible version, which we called KumaScript.

Since our macros were defined using JavaScript, we couldn’t implement them directly in our Python-based Kuma server, so KumaScript became a separate service, written in Node. This was 7 years ago in early 2012, when Node itself was only on version 0.6. Fortunately, a JavaScript-based templating system known as EJS already existed at that time, so the basic tools for creating KumaScript were all in place.

But there was a catch: some of our macros needed to make HTTP requests to fetch data they needed. Consider the HTMLElement macro shown above, for instance. That macro renders a link to the MDN documentation for a specified HTML tag. But it also includes a tooltip (via the title attribute) on the link that includes a quick summary of the element.

That summary has to come from the document being linked to. This means that the implementation of the KumaScript macro needs to fetch the page it is linking to in order to extract some of its content. Furthermore, macros like this are written by technical writers, not software engineers, and so the decision was made (I assume by whoever designed the DekiScript macro system) that things like HTTP fetches would be done with blocking functions that returned synchronously, so that technical writers would not have to deal with nested callbacks.

This was a good design decision, but it made things tricky for KumaScript. Node does not naturally support blocking network operations, and even if it did, the KumaScript server could not just stop responding to incoming requests while it fetched documents for pending requests. The upshot was that KumaScript used the node-fibers binary extension to Node in order to define methods that blocked while network requests were pending. In addition, KumaScript adopted the node-hirelings library to manage a pool of child processes. (It was written by the original author of KumaScript for this purpose.) This enabled the KumaScript server to continue to handle incoming requests in parallel because it could farm out the possibly-blocking macro rendering calls to a pool of hireling child processes.

Async and await

This fibers+hirelings solution rendered MDN macros for 7 years, but by 2018 it had become obsolete. The original design decision that macro authors should not have to understand asynchronous programming with callbacks (or Promises) is still a good decision. But when Node 8 added support for the new async and await keywords, the fibers extension and hirelings library were no longer necessary.

You can read about async functions and await expressions on MDN, but the gist is this:

- If you declare a function async, you are indicating that it returns a Promise. And if you return a value that is not a Promise, that value will be wrapped in a resolved Promise before it is returned.

- The await operator makes asynchronous Promises appear to behave synchronously. It allows you to write asynchronous code that is as easy to read and reason about as synchronous code.
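Both points can be seen in a few lines. The function names below are made up for this illustration.

```javascript
// An async function always returns a Promise, even for a plain value.
async function getAnswer() {
  return 42;
}

const result = getAnswer();
console.log(result instanceof Promise); // true

// Inside another async function, await unwraps the Promise.
async function main() {
  const answer = await getAnswer();
  console.log(answer); // 42
  return answer;
}

const mainResult = main();
```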

As an example, consider this line of code:

let response = await fetch(url);

In web browsers, the fetch() function starts an HTTP request and returns a Promise object that will resolve to a response object once the HTTP response begins to arrive from the server. Without await, you’d have to call the .then() method of the returned Promise, and pass a callback function to receive the response object. But the magic of await lets us pretend that fetch() actually blocks until the HTTP response is received. There is only one catch:

- You can only use await within functions that are themselves declared async. Meantime, await doesn’t actually make anything block: the underlying operation is still fundamentally asynchronous, and even if we pretend that it is not, we can only do that within some larger asynchronous operation.
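The sketch below contrasts the two styles and shows that await does not block the caller. To stay self-contained it uses a hypothetical fakeFetch() stand-in rather than the real fetch(), and the events array exists only to record the order in which things happen.

```javascript
// Stand-in for fetch(): resolves to a response-like object after a delay.
function fakeFetch(url) {
  return new Promise((resolve) => {
    setTimeout(() => resolve({ url, status: 200 }), 10);
  });
}

const events = [];

// Callback style: the response is only available inside .then().
function loadWithThen(url) {
  return fakeFetch(url).then((response) => response.status);
}

// await style: reads top to bottom, but must live in an async function.
async function loadWithAwait(url) {
  events.push('before await');
  const response = await fakeFetch(url);
  events.push('after await');
  return response.status;
}

const pending = loadWithAwait('https://example.com/');
// The caller is not blocked: this runs before 'after await' is recorded.
events.push('caller continues');

const done = Promise.all([pending, loadWithThen('https://example.com/')])
  .then((statuses) => {
    console.log(events);
    return statuses;
  });
```

By the time both Promises settle, events holds 'before await', 'caller continues', 'after await', in that order.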

What this all means is that the design goal of protecting KumaScript macro authors from the complexity of callbacks can now be done with Promises and the await keyword. And this is the insight with which I undertook our KumaScript refactor.

As I mentioned above, each of our KumaScript macros is implemented as an EJS template. The EJS library compiles templates to JavaScript functions. And to my delight, the latest version of the library has already been updated with an option to compile templates to async functions, which means that await is now supported in EJS.
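The general idea of compiling source text into an async function can be sketched in plain JavaScript. This is only an illustration of the technique, not a description of how EJS implements its option; compileAsync, renderTitle, and stubWiki are hypothetical names.

```javascript
// The AsyncFunction constructor is not a global, but it can be reached
// through the prototype of any async function.
const AsyncFunction = Object.getPrototypeOf(async function () {}).constructor;

// Compile a function body (a string) into an async function.
function compileAsync(paramNames, body) {
  return new AsyncFunction(...paramNames, body);
}

// Hypothetical macro body: it may use await because the compiled
// function is async.
const renderTitle = compileAsync(
  ['wiki', 'slug'],
  'const page = await wiki.getPage(slug); return page.title.toUpperCase();'
);

// Stub standing in for the real wiki API.
const stubWiki = {
  async getPage(slug) {
    return { title: `Page for ${slug}` };
  },
};

const rendered = renderTitle(stubWiki, 'Web/HTML');
rendered.then((text) => console.log(text));
```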

With this new library in place, the refactor was relatively simple. I had to find all the blocking functions available to our macros and convert them to use Promises instead of the node-fibers extension. Then, I was able to do a search-and-replace on our macro files to insert the await keyword before all invocations of these functions. Some of our more complicated macros define their own internal functions, and when those internal functions used await, I had to take the additional step of changing those functions to be async. I did get tripped up by one piece of syntax, however, when I converted an old line of blocking code like this:

var title = wiki.getPage(slug).title;

To this:

let title = await wiki.getPage(slug).title;

I didn’t catch the error on that line until I started seeing failures from the macro. In the old KumaScript, wiki.getPage() would block and return the requested data synchronously. In the new KumaScript, wiki.getPage() is declared async which means it returns a Promise. And the code above is trying to access a non-existent title property on that Promise object.
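The behaviour is easy to reproduce with a stub. In this sketch the wiki object is a hypothetical stand-in for the real API, and compare() is only there to place the two forms side by side.

```javascript
// Stub standing in for the new Promise-based wiki API.
const wiki = {
  async getPage(slug) {
    return { slug, title: 'The body element' };
  },
};

async function compare(slug) {
  // Member access binds tighter than await, so this awaits the
  // (missing) title property of the Promise itself.
  const wrong = await wiki.getPage(slug).title;

  // Parenthesising the await resolves the page first, then reads title.
  const right = (await wiki.getPage(slug)).title;

  return { wrong, right };
}

const comparison = compare('Web/HTML/Element/body');
comparison.then(({ wrong, right }) => {
  console.log(wrong); // undefined
  console.log(right); // The body element
});
```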