Добавить любой RSS - источник (включая журнал LiveJournal) в свою ленту друзей вы можете на странице синдикации.

Исходная информация - http://planet.mozilla.org/.

Данный дневник сформирован из открытого RSS-источника по адресу http://planet.mozilla.org/rss20.xml, и дополняется в соответствии с дополнением данного источника. Он может не соответствовать содержимому оригинальной страницы. Трансляция создана автоматически по запросу читателей этой RSS ленты.

По всем вопросам о работе данного сервиса обращаться со страницы контактной информации.

[Обновить трансляцию]

Nick Desaulniers: Booting a Custom Linux Kernel in QEMU and Debugging it with GDB |

Typically, when we modify a program, we’d like to run it to verify our changes. Before booting a compiled Linux kernel image on actual hardware, it can save us time and potential headache to do a quick boot in a virtual machine like QEMU as a sanity check. If your kernel boots in QEMU, it’s not a guarantee it will boot on metal, but it is a quick assurance that the kernel image is not completely busted. Since I finally got this working, I figured I’d post the built up command line arguments (and error messages observed) for future travelers. Also, QEMU has more flags than virtually any other binary I’ve ever seen (other than a google3 binary; shots fired), and simply getting it to print to the terminal is 3/4 the battle. If I don’t write it down now, or lose my shell history, I’ll probably forget how to do this.

TL;DR:

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 | |

Booting in QEMU

We’ll play stupid and see what errors we hit, and how to fix them. First, let’s try just our new kernel:

1

| |

A new window should open, and we should observe some dmesg output, a panic, and your fans might spin up. I find this window relatively hard to see, so let’s get the output (and input) to a terminal:

1

| |

This is missing an important flag, but it’s important to see what happens when

we forget it. It will seem that there’s no output, and QEMU isn’t responding

to ctrl+c. And my fans are spinning again. Try ctrl+a, then c, to get a

(qemu) prompt. A simple q will exit.

Next, We’re going to pass a kernel command line argument. The kernel accepts command line args just like userspace binaries, though usually the bootloader sets these up.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 | |

Well at least we’re no longer in the dark (remember, ctrl+a, c, q to

exit). Now we’re panicking because there’s no root filesystem, so there’s no

init binary to run. Now we could create a custom filesystem image with the

bare bones (definitely a post for another day), but creating a ramdisk is the

most straightforward way, IMO. Ok, let’s create the ramdisk,

then add it to QEMU’s parameters:

1 2 | |

Unfortunately, we’ll (likely) hit the same panic and the panic doesn’t provide

enough information, but the default maximum memory QEMU will use is too

limited. -m 512 will give our virtual machine enough memory to boot and get

a busybox based shell prompt:

1 2 3 4 | |

Enabling kvm seems to help with those fans spinning up:

1

| |

Finally, we might be seeing a warning when we start QEMU:

1

| |

Just need to add -cpu host to our invocation of QEMU. It can be helpful when

debugging to disable

KASLR

via nokaslr in the appended kernel command line parameters, or via

CONFIG_RANDOMIZE_BASE not being set in our kernel configs.

We can add -s to start a gdbserver on port 1234, and -S to pause the kernel

until we continue in gdb.

Attaching GDB to QEMU

Now that we can boot this kernel image in QEMU, let’s attach gdb to it.

1

| |

If you see this on your first run:

1 2 3 4 | |

Then you can do this one time fix in order to load the gdb scripts each run:

1 2 | |

Now that QEMU is listening on port 1234 (via -s), let’s connect to it, and

set a break point early on in the kernel’s initialization. Note the the use of

hbreak (I lost a lot of time just using b start_kernel, only for the

kernel to continue booting past that function).

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 | |

We can start/resume the kernel with c, and pause it with ctrl+c. The gdb

scripts provided by the kernel via CONFIG_GDB_SCRIPTS can be viewed with

apropos lx. lx-dmesg is incredibly handy for viewing the kernel dmesg

buffer, particularly in the case of a kernel panic before the serial driver has

been brought up (in which case there’s output from QEMU to stdout, which is

just as much fun as debugging graphics code (ie. black screen)).

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 | |

Where to Go From Here

Maybe try cross compiling a kernel (you’ll need a cross

compiler/assembler/linker/debugger and likely a different console=ttyXXX

kernel command line parameter), building your own root filesystem with

buildroot,

or exploring the rest of QEMU’s command line options.

|

|

The Rust Programming Language Blog: Announcing Rust 1.30 |

The Rust team is happy to announce a new version of Rust, 1.30.0. Rust is a systems programming language focused on safety, speed, and concurrency.

If you have a previous version of Rust installed via rustup, getting Rust 1.30.0 is as easy as:

$ rustup update stable

If you don’t have it already, you can get rustup from the

appropriate page on our website, and check out the detailed release notes for

1.30.0 on GitHub.

What’s in 1.30.0 stable

Rust 1.30 is an exciting release with a number of features. On Monday, expect another blog post asking you to check out Rust 1.31’s beta; Rust 1.31 will be the first release of “Rust 2018.” For more on that concept, please see our previous post “What is Rust 2018”.

Procedural Macros

Way back in Rust 1.15, we announced the ability to define “custom derives.” For example,

with serde_derive, you could

#[derive(Serialize, Deserialize, Debug)]

struct Pet {

name: String,

}

And convert a Pet to and from JSON using serde_json because serde_derive

defined Serialize and Deserialize in a procedural macro.

Rust 1.30 expands on this by adding the ability to define two other kinds of advanced macros, “attribute-like procedural macros” and “function-like procedural macros.”

Attribute-like macros are similar to custom derive macros, but instead of generating code

for only the #[derive] attribute, they allow you to create new, custom attributes of

your own. They’re also more flexible: derive only works for structs and enums, but

attributes can go on other places, like functions. As an example of using an

attribute-like macro, you might have something like this when using a web application

framework:

#[route(GET, "/")]

fn index() {

This #[route] attribute would be defined by the framework itself, as a

procedural macro. Its signature would look like this:

#[proc_macro_attribute]

pub fn route(attr: TokenStream, item: TokenStream) -> TokenStream {

Here, we have two input TokenStreams: the first is for the contents of the

attribute itself, that is, the GET, "/" stuff. The second is the body of the

thing the attribute is attached to, in this case, fn index() {} and the rest

of the function’s body.

Function-like macros define macros that look like function calls. For

example, an sql! macro:

let sql = sql!(SELECT * FROM posts WHERE id=1);

This macro would parse the SQL statement inside of it and check that it’s syntactically correct. This macro would be defined like this:

#[proc_macro]

pub fn sql(input: TokenStream) -> TokenStream {

This is similar to the derive macro’s signature: we get the tokens that are inside of the parentheses and return the code we want to generate.

use and macros

You can now bring macros into scope with the use keyword. For example,

to use serde-json’s json macro, you used to write:

#[macro_use]

extern crate serde_json;

let john = json!({

"name": "John Doe",

"age": 43,

"phones": [

"+44 1234567",

"+44 2345678"

]

});

But now, you’d write

extern crate serde_json;

use serde_json::json;

let john = json!({

"name": "John Doe",

"age": 43,

"phones": [

"+44 1234567",

"+44 2345678"

]

});

This brings macros more in line with other items and removes the need for

macro_use annotations.

Finally, the proc_macro crate

is made stable, which gives you the needed APIs to write these sorts of macros.

It also has significantly improved the APIs for errors, and crates like syn and

quote are already using them. For example, before:

#[derive(Serialize)]

struct Demo {

ok: String,

bad: std::thread::Thread,

}

used to give this error:

error[E0277]: the trait bound `std::thread::Thread: _IMPL_SERIALIZE_FOR_Demo::_serde::Serialize` is not satisfied

--> src/main.rs:3:10

|

3 | #[derive(Serialize)]

| ^^^^^^^^^ the trait `_IMPL_SERIALIZE_FOR_Demo::_serde::Serialize` is not implemented for `std::thread::Thread`

Now it will give this one:

error[E0277]: the trait bound `std::thread::Thread: serde::Serialize` is not satisfied

--> src/main.rs:7:5

|

7 | bad: std::thread::Thread,

| ^^^ the trait `serde::Serialize` is not implemented for `std::thread::Thread`

Module system improvements

The module system has long been a pain point of new Rustaceans; several of its rules felt awkward in practice. These changes are the first steps we’re taking to make the module system feel more straightforward.

There’s two changes to use in addition to the aforementioned change for

macros. The first is that external crates are now in the

prelude, that is:

// old

let json = ::serde_json::from_str("...");

// new

let json = serde_json::from_str("...");

The trick here is that the ‘old’ style wasn’t always needed, due to the way Rust’s module system worked:

extern crate serde_json;

fn main() {

// this works just fine; we're in the crate root, so `serde_json` is in

// scope here

let json = serde_json::from_str("...");

}

mod foo {

fn bar() {

// this doesn't work; we're inside the `foo` namespace, and `serde_json`

// isn't declared there

let json = serde_json::from_str("...");

}

// one option is to `use` it inside the module

use serde_json;

fn baz() {

// the other option is to use `::serde_json`, so we're using an absolute path

// rather than a relative one

let json = ::serde_json::from_str("...");

}

}

Moving a function to a submodule and having some of your code break was not a great

experience. Now, it will check the first part of the path and see if it’s an extern

crate, and if it is, use it regardless of where you’re at in the module hierarchy.

Finally, use also supports bringing items into scope with paths starting with

crate:

mod foo {

pub fn bar() {

// ...

}

}

// old

use ::foo::bar;

// or

use foo::bar;

// new

use crate::foo::bar;

The crate keyword at the start of the path indicates that you would like the path to

start at your crate root. Previously, paths specified after use would always start at

the crate root, but paths referring to items directly would start at the local path,

meaning the behavior of paths was inconsistent:

mod foo {

pub fn bar() {

// ...

}

}

mod baz {

pub fn qux() {

// old

::foo::bar();

// does not work, which is different than with `use`:

// foo::bar();

// new

crate::foo::bar();

}

}

Once this style becomes widely used, this will hopefully make absolute paths a bit more

clear and remove some of the ugliness of leading ::.

All of these changes combined lead to a more straightforward understanding of how paths

resolve. Wherever you see a path like a::b::c someplace other than a use statement,

you can ask:

- Is

athe name of a crate? Then we’re looking forb::cinside of it. - Is

athe keywordcrate? Then we’re looking forb::cfrom the root of our crate. - Otherwise, we’re looking for

a::b::cfrom the current spot in the module hierarchy.

The old behavior of use paths always starting from the crate root still applies. But

after making a one-time switch to the new style, these rules will apply uniformly to

paths everywhere, and you’ll need to tweak your imports much less when moving code around.

Raw Identifiers

You can now use keywords as identifiers with some new syntax:

// define a local variable named `for`

let r#for = true;

// define a function named `for`

fn r#for() {

// ...

}

// call that function

r#for();

This doesn’t have many use cases today, but will once you are trying to use a Rust 2015 crate with a Rust 2018 project and vice-versa because the set of keywords will be different in the two editions; we’ll explain more in the upcoming blog post about Rust 2018.

no_std applications

Back in Rust 1.6, we announced the stabilization of no_std and

libcore for building

projects without the standard library. There was a twist, though: you could

only build libraries, but not applications.

With Rust 1.30, you can use the #[panic_handler] attribute

to implement panics yourself. This now means that you can build applications,

not just libraries, that don’t use the standard library.

Other things

Finally, you can now match on visibility keywords, like pub, in

macros using the vis specifier. Additionally, “tool

attributes” like #[rustfmt::skip] are now

stable. Tool lints

like #[allow(clippy::something)] are not yet stable, however.

See the detailed release notes for more.

Library stabilizations

A few new APIs were stabilized for this release:

Ipv4Addr::{BROADCAST, LOCALHOST, UNSPECIFIED}Ipv6Addr::{BROADCAST, LOCALHOST, UNSPECIFIED}Iterator::find_map

Additionally, the standard library has long had functions like trim_left to eliminate

whitespace on one side of some text. However, when considering RTL languages, the meaning

of “right” and “left” gets confusing. As such, we’re introducing new names for these

APIs:

trim_left->trim_starttrim_right->trim_endtrim_left_matches->trim_start_matchestrim_right_matches->trim_end_matches

We plan to deprecate (but not remove, of course) the old names in Rust 1.33.

See the detailed release notes for more.

Cargo features

The largest feature of Cargo in this release is that we now have a progress bar!

See the detailed release notes for more.

Contributors to 1.30.0

Many people came together to create Rust 1.30. We couldn’t have done it without all of you. Thanks!

|

|

Hacks.Mozilla.Org: Dweb: Identity for the Decentralized Web with IndieAuth |

In the Dweb series, we are covering projects that explore what is possible when the web becomes decentralized or distributed. These projects aren’t affiliated with Mozilla, and some of them rewrite the rules of how we think about a web browser. What they have in common: These projects are open source and open for participation, and they share Mozilla’s mission to keep the web open and accessible for all.

We’ve covered a number of projects so far in this series that require foundation-level changes to the network architecture of the web. But sometimes big things can come from just changing how we use the web we have today.

Imagine if you never had to remember a password to log into a website or app ever again. IndieAuth is a simple but powerful way to manage and verify identity using the decentralization already built into the web itself. We’re happy to introduce Aaron Parecki, co-founder of the IndieWeb movement, who will show you how to set up your own independent identity on the web with IndieAuth.

– Dietrich Ayala

Introducing IndieAuth

IndieAuth is a decentralized login protocol that enables users of your software to log in to other apps.

From the user perspective, it lets you use an existing account to log in to various apps without having to create a new password everywhere.

IndieAuth builds on existing web technologies, using URLs as identifiers. This makes it broadly applicable to the web today, and it can be quickly integrated into existing websites and web platforms.

IndieAuth has been developed over several years in the IndieWeb community, a loosely connected group of people working to enable individuals to own their online presence, and was published as a W3C Note in 2018.

IndieAuth Architecture

IndieAuth is an extension to OAuth 2.0 that enables any website to become its own identity provider. It builds on OAuth 2.0, taking advantage of all the existing security considerations and best practices in the industry around authorization and authentication.

IndieAuth starts with the assumption that every identifier is a URL. Users as well as applications are identified and represented by a URL.

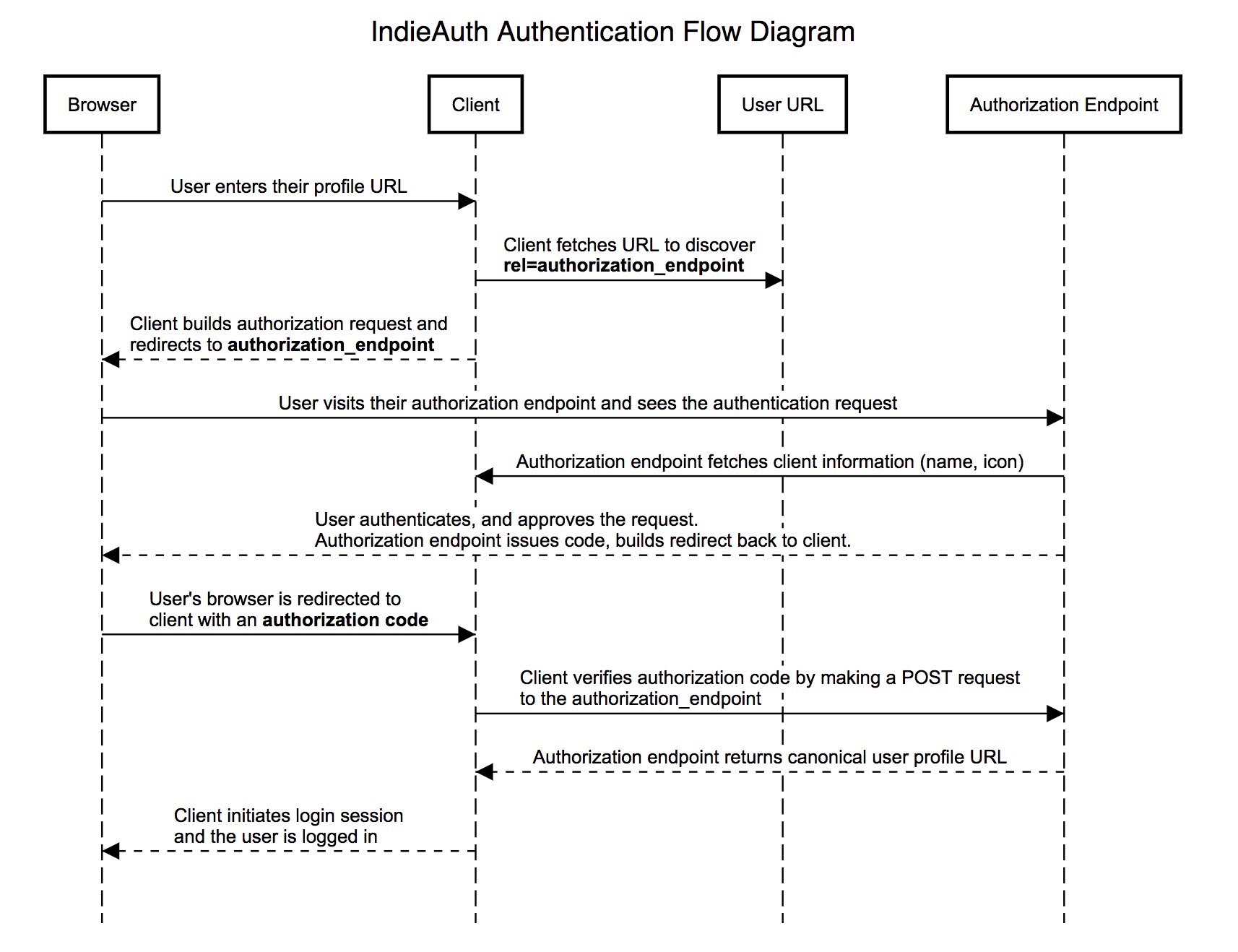

When a user logs in to an application, they start by entering their personal home page URL. The application fetches that URL and finds where to send the user to authenticate, then sends the user there, and can later verify that the authentication was successful. The flow diagram below walks through each step of the exchange:

Get Started with IndieAuth

The quickest way to use your existing website as your IndieAuth identity is to let an existing service handle the protocol bits and tell apps where to find the service you’re using.

If your website is using WordPress, you can easily get started by installing the IndieAuth plugin! After you install and activate the plugin, your website will be a full-featured IndieAuth provider and you can log in to websites like https://indieweb.org right away!

To set up your website manually, you’ll need to choose an IndieAuth server such as https://indieauth.com and add a few links to your home page. Add a link to the indieauth.com authorization endpoint in an HTML tag so that apps will know where to send you to log in.

Then tell indieauth.com how to authenticate you by linking to either a GitHub account or email address.

GitHub

Email

Note: This last step is unique to indieauth.com and isn’t part of the IndieAuth spec. This is how indieauth.com can authenticate you without you creating a password there. It lets you switch out the mechanism you use to authenticate, for example in case you decide to stop using GitHub, without changing your identity at the site you’re logging in to.

If you don’t want to rely on any third party services at all, then you can host your own IndieAuth authorization endpoint using an existing open source solution or build your own. In any case, it’s fine to start using a service for this today, because you can always swap it out later without your identity changing.

Now you’re ready! When logging in to a website like https://indieweb.org, you’ll be asked to enter your URL, then you’ll be sent to your chosen IndieAuth server to authenticate!

Learn More

If you’d like to learn more, OAuth for the Open Web talks about more of the technical details and motivations behind the IndieAuth spec.

You can learn how to build your own IndieAuth server at the links below:

You can find the latest spec at indieauth.spec.indieweb.org. If you have any questions, feel free to drop by the #indieweb-dev channel in the IndieWeb chat, or you can find me on Twitter, or my website.

The post Dweb: Identity for the Decentralized Web with IndieAuth appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2018/10/dweb-identity-for-the-decentralized-web-with-indieauth/

|

|

The Mozilla Blog: Keeping AI Accountable with Science Fiction, Documentaries, and Doodles (Plus $225,000) |

Mozilla is announcing the seven recipients of its Creative Media Awards — projects that use art and advocacy to highlight the unintended consequences of artificial intelligence

The artificial intelligence (AI) behind our screens has an outsized impact on our lives — it influences what news we read, who we date, and if we’re hired for that dream job.

More than ever, it’s essential for internet users to understand how this AI works — and how it can go awry, from radicalizing YouTube users to promoting bias to spreading misinformation.

Today, Mozilla is announcing funding for seven art and advocacy projects that shine a light on the AI at work in our everyday lives.

These seven projects are winners of Mozilla’s latest $225,000 Creative Media Awards. They hail from five countries. And they make AI’s impact on society understandable using science fiction, short documentaries, games, and more. These projects will launch to the public by June 2019.

Mozilla’s Creative Media Awards are part of our mission to support a healthy internet. They fuel the people and projects on the front lines of the internet health movement — from digital artists in the Netherlands to computer scientists in the United Arab Emirates to science fiction writers in the U.S.

The winners:

[1] Stealing Ur Feelings | by Noah Levenson in the U.S. | $50,000 prize

Stealing Ur Feelings will be an interactive film that reveals how social networks and apps use your face to secretly collect data about your emotions. The documentary will explore how emotion recognition AI determines if you’re happy or sad — and how companies use that information to influence your behavior.

An early version of Stealing Ur Feelings

[2] Do Not Draw a Penis | by Moniker in the Netherlands | $50,000 prize

Do Not Draw a Penis will address automated censorship and algorithmic content moderation. Users will visit a web page and will be met with a blank canvas. Users can draw whatever they like, and an AI voice will comment on their drawings (e.g. “nice landscape!”). But if the drawing resembles a penis or other “forbidden” content, the AI will scold the user, take control, and destroy the image.

[3] A Week With Wanda | by Joe Hall in the UK | $25,000 prize

A Week With Wanda will be a web-based simulation of the risks and rewards of artificial intelligence. Wanda — an AI assistant — will interact with users over the course of one week in an attempt to “improve” their lives. But she quickly goes off the rails. Along the way, Wanda might send uncouth messages to Facebook friends, order you anti-depressants, or freeze your bank account. (Wanda’s actions are simulated, not real.)

A potential conversation from A Week With Wanda

[4] Survival of the Best Fit | by Alia ElKattan in the United Arab Emirates, and Gabor Csapo, Jihyun Kim, and Miha Klasinc | $25,000 prize

Survival of the Best Fit is a web simulation of how blind usage of AI in hiring can reinforce workforce inequality. Users will operate an algorithm and see first-hand how white-sounding names are often prioritized, among other biases.

[5] The Training Commission | by Ingrid Burrington and Brendan Byrne in the U.S. | $25,000 prize

The Training Commission is a work of web-based speculative fiction that tells the stories of AI’s unintended consequences and harms to public life. It unfolds from the perspective of a journalist who is reckoning with how deeply AI has scarred society.

[6] What Do You See? | by Suchana Seth in India | $25,000 prize

What Do You See? highlights how differently humans and algorithms “see” the same image, and how easily bias can take root. Humans will visit a website and describe an image in their own words, without prompts. Then, humans will see how an image captioning algorithm describes that same image.

[7] Mate Me or Eat Me | by Benjamin Berman in the U.S. | $25,000 prize

Mate Me or Eat Me is a dating simulator that examines how exclusionary real dating apps can be. Users create a monster and mingle with others, swiping right and left to either mate with or eat others. Along the way, users have insight into how their choices affect who they see next — and who is excluded from their pool of potential paramours.

A mock-up from Mate Me or Eat Me

~

These seven awardees were selected based on quantitative scoring of their applications by a review committee, and a qualitative discussion at a review committee meeting. Committee members include Mozilla staff, current and alumni Mozilla Fellows, and outside experts. Selection criteria is designed to evaluate the merits of the proposed approach. Diversity in applicant background, past work, and medium were also considered.

These awards are part of the NetGain Partnership, a collaboration between Mozilla, Ford Foundation, Knight Foundation, MacArthur Foundation, and the Open Society Foundation. The goal of this philanthropic collaboration is to advance the public interest in the digital age.

Also see: (June 4, 2018) Mozilla Announces $225,000 for Art and Advocacy Exploring Artificial Intelligence

The post Keeping AI Accountable with Science Fiction, Documentaries, and Doodles (Plus $225,000) appeared first on The Mozilla Blog.

|

|

The Mozilla Blog: University of Dundee and Mozilla Announce Doctoral Program for ‘Healthier IoT’ |

With €1.5m in EU funding, this paid PhD program will explore how to build a more open, secure, and trustworthy Internet of Things

This week, the University of Dundee and Mozilla are announcing a new, innovative PhD program: OpenDoTT (Open Design of Trusted Things). This program will train technologists, designers, and researchers to create and advocate for connected products that are more open, secure, and trustworthy. The project is made possible through €1.5m in funding from the EU’s Horizon 2020 program.

As IoT evolves, the internet becomes more deeply entwined in humans’ everyday lives. Data flows around us in ever more complex ways: wearable technologies monitor our heartbeat, AI voice assistants cohabit our kitchens and our children’s bedrooms, smart cities know our every move, and facial recognition determines our access across country borders.

These technologies need to be built responsibly, and this practice requires the cultivation of design research and advocacy. OpenDoTT addresses this need on a systems level. By training the very people who will develop and influence IoT technology, we can create positive change that starts at the drawing board.

The challenges of the Internet of Things (IoT) require interdisciplinary thinking. And so the program will be hosted across several locations with training by leading organizations in different fields. The doctoral researchers will begin at the University of Dundee to learn about design research, and then move to Mozilla’s office in Berlin to focus on internet health. Throughout their studies, they will receive training on open hardware from Officine Innesto; field research from Quicksand and STBY; internet policy from the Humboldt Institute for Internet and Society; responsible IoT from Thingscon; and digital security from SimplySecure.

University of Dundee will lead training in design research, building on their world-class work on the Internet of Things, co-creation, and craft technology. The university’s past projects have explored the future of voice assistants in the home and IoT for independent retailers.

Mozilla will lead training around open technology and healthy internet practices. Mozilla focuses on fueling the movement for a healthy internet by connecting open internet leaders with each other and by mobilizing grassroots activists around the world.

Professor Jon Rogers, the project coordinator and a Mozilla Fellow, says: “This program is a game changer for the future of IoT because it’s about developing leadership. Change happens through people, and this project will bring future leaders together for a radical training programme that is located between university research and industry advocacy.”

Dr. Nick Taylor of University of Dundee adds: “This project builds on our long-term collaboration with Mozilla and provides an amazing platform to make a real difference in the IoT landscape. These doctoral researchers represent a huge boost to Dundee’s growing capacity for design-led IoT research.”

Michelle Thorne, the program coordinator at Mozilla, states: “With training at the intersection of design, technology and policy, OpenDoTT will produce a cohort of leaders in the internet health movement who are uniquely qualified to steer the field not only toward what is possible, but what is also responsible.”

The program will begin recruiting doctoral trainees in late 2018, and the first trainees will begin in July 2019. There are five available slots in the program. Further details can be found on the project website (OpenDoTT.org), where potential applicants can register their interest.

The project is a Marie Sklodowska-Curie Innovative Training Network (ITN), which are designed to support mobility of young researchers across borders, while providing the training needed to support European industries. This project has received funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement No 813508.

The post University of Dundee and Mozilla Announce Doctoral Program for ‘Healthier IoT’ appeared first on The Mozilla Blog.

|

|

Daniel Stenberg: curl up 2019 will happen in Prague |

The curl project is happy to invite you to the city of Prague, the Czech Republic, where curl up 2019 will take place.

curl up is our annual curl developers conference where we gather and talk Internet protocols, curl's past, current situation and how to design its future. A weekend of curl.

Previous years we've gathered twenty-something people for an intimate meetup in a very friendly atmosphere. The way we like it!

In a spirit to move the meeting around to give different people easier travel, we have settled on the city of Prague for 2019, and we'll be there March 29-31.

Symposium on the Future of HTTP

This year, we're starting off the Friday afternoon with a Symposium dedicated to "the future of HTTP" which is aimed to be less about curl and more about where HTTP is and where it will go next. Suitable for a slightly wider audience than just curl fans.

That's Friday the 29th of March, 2019.

Program and talks

We are open for registrations and we would love to hear what you would like to come and present for us - on the topics of HTTP, of curl or related matters. I'm sure I will present something too, but it becomes a much better and more fun event if we distribute the talking as much as possible.

The final program for these days is not likely to get set until much later and rather close in time to the actual event.

The curl up 2019 wiki page is where you'll find more specific details appear over time. Just go back there and see.

Helping out and planning?

If you want to follow the planning, help out, offer improvements or you have questions on any of this? Then join the curl-meet mailing list, which is dedicated for this!

Free or charge thanks to sponsors

We're happy to call our event free, or "almost free" of charge and we can do this only due to the greatness and generosity of our awesome sponsors. This year we say thanks to Mullvad, Sticker Mule, Apiary and Charles University.

There's still a chance for your company to help out too! Just get in touch.

https://daniel.haxx.se/blog/2018/10/24/curl-up-2019-will-happen-in-prague/

|

|

K Lars Lohn: Things Gateway - Sunrise, Sunset, Swifty Flow the Days |

In my previous blog post, I introduced Time Triggers to demonstrate time based home automation. Sometimes, however, pegging an action down to a specific time doesn't work: darkness falls at different times every evening as one season follows another. How do you calculate sunset time? It's complicated, but there are several Python packages that can do it: I chose Astral.

The Things Gateway doesn't know where it lives. The Raspberry Pi distribution that includes the Things Gateway doesn't automatically know and understand your timezone when it is booted. Instead, it uses UTC, essentially Greenwich Mean Time, with none of those confounding Daylight Savings rules. Yet when viewing the Things Gateway from within a browser, the times in the GUI Rule System automatically reflect your local timezone. The presentation layer of the Web App served by the Things Gateway is responsible for showing you the correct time for your location. Beware, when you travel and access your Things Gateway GUI rules remotely from a different timezone, any references to time will display in your remote timezone. They'll still work properly at their appropriate times, but they will look weird during travel.

My own homegrown rule system uses a different tactic: it nails down a timezone for your Things Gateway. In the configuration, you specify two timezones: the timezone where your Things Gateway is physically located, local_timezone, and the timezone that is the default on the computer's clock running the the external rule system, system_timezone. Here's two examples to show why both need to be specified.

- I generally run my rules on my Linux Workstation. As this machine sits on my desk, its internal clock is set to reflect my local time. I set both the local_timezone and the system_timezone to US/Pacific. That tells my rule system that no time translations are required

- However, if were to instead run my Rule System on the Raspberry Pi that also runs the Things Gateway, I'd have have to specify the system_timezone as UTC. My local_timezone remains US/Pacific.

Or they can be command line parameters:

$ export local_timezone=US/Pacific

$ export system_timezone=US/Pacific

$ ./my_rules.py --help

Or they can be in a configuration file:

$ ./my_rules.py --local_timezone=US/Pacific --system_timezone=US/Pacific

$ cat config.ini

local_timezone=US/Pacific

system_timezone=US/Pacific

$ ./my_rules.py --admin.config=config.ini

My next blog post will cover more on how to set configuration and run this rule system on either the same Raspberry Pi that runs the Things Gateway or some other machine.

Meanwhile, let's talk about solar events. Once the Rule System knows where the Things Gateway is, it can calculate sunrise, sunset along with a host of other solar events that happen on a daily basis. That's where the Python package Astral comes in. Given the latitude, longitude, elevation and the local timezone, it will calculate the times for: blue_hour, dawn, daylight, dusk, golden_hour, night, rahukaalam, solar_midnight, solar_noon, sunrise, sunset, and twilight.

I created a trigger object that wraps Astral so it can be specified in the register_trigger method of the Rule System. Here's a rule that will turn a porch light on ten minutes after sunset every day:

class EveningPorchLightRule(Rule):(see this code in situ in the solar_event_rules.py file in the pywot rule system demo directory)

def register_triggers(self):

self.sunset_trigger = DailySolarEventsTrigger(

self.config,

"sunset_trigger",

("sunset", ),

(44.562951, -123.3535762),

"US/Pacific",

70.0,

"10m" # ten minutes

)

self.ten_pm_trigger = AbsoluteTimeTrigger(

self.config,

'ten_pm_trigger',

'22:00:00'

)

return (self.sunset_trigger, self.ten_pm_trigger)

def action(self, the_triggering_thing, *args):

if the_triggering_thing is self.sunset_trigger:

self.Philips_HUE_01.on = True

else:

self.Philips_HUE_01.on = False

Like the other rules that I've created, I start by creating my trigger, in this case, an instance of the DailySolarEventsTrigger class. It is given the name "sunset_trigger" and the solar event, "sunset". The rule can trigger on multiple solar events, but in this case, since I want only one, "sunset" appears alone in a tuple. Next I specify the latitude and longitude of my home city, Corvallis, OR. That's in the US/Pacific timezone and about 70 meters above sea level. Finally, I specify a string representing 10 minutes.

After the DailySolarEventsTrigger, I create another trigger, AbsoluteTimeTrigger to handle turning the light off at 10pm. I could have created a second rule to do this, but a single rule to handle both ends of the operation seemed more satisfying.

In the action part of the rule, I needed to differentiate from the two types of actions. Both triggers will call the action function, each identifying itself as the value of the parameter, the_triggering_thing. If it was the sunset_trigger calling action, it turns the porch light on. If it was the ten_pm_trigger calling action, the light gets turned off. I think the implementation begs for a better dispatch method, but Python doesn't help much with that.

Some of the solar events are not just a single instant in time like a sunset, some represent periods during the day. One example is Rahukaalam. According to a Wikipedia article, in the realm of Vedic Astrology, Rahukaalam is an "inauspicious time" during which it is unwise to embark on new endeavors. It's based on dividing the daylight hours into eight periods. One of the periods is marked as the inauspicious one based on the day of the week. For example, it's the fourth period on Fridays and the seventh on Tuesdays. Since the length of the daylight hours changes every day, the lengths of the periods change and it gets hard to remember when it's okay to start something new.

Here's a rule that will control a warning light. The light being on indicates that the current time is within the start and end of the Rahukaalam time for the given global location and elevation

class RahukaalamRule(Rule):(this code is not part of the demo scripts, however, the next very similar script is.)

def register_triggers(self):

rahukaalam_trigger = DailySolarEventsTrigger(

self.config,

"rahukaalam_trigger",

("rahukaalam_start", "rahukaalam_end", ),

(44.562951, -123.3535762),

"US/Pacific",

70.0,

)

return (rahukaalam_trigger,)

def action(self, the_triggering_thing, the_trigger, *args):

if the_trigger == "rahukaalam_start":

logging.info('%s starts', self.name)

self.Philips_HUE_02.on = True

self.Philips_HUE_02.color = "#FF9900"

else:

logging.info('%s ends', self.name)

self.Philips_HUE_02.on = False

Like all rules, it has two parts: registering triggers and responding to trigger actions. In register_trigger, I subscribe to the rahukaalam_start and rahukaalam_end solar events for my town location, timezone and elevation. In the action, I just look to the_trigger to see which of the two possible triggers fired. It results in a suitably cautious orange light illuminating during the Rahukaalam period.

Could we make the light blink for the first 30 seconds, just so we ensure that we notice the warning? Sure we can!

class RahukaalamRule(Rule):(see this code in situ in the solar_event_rules.py file in the pywot rule system demo directory)

def register_triggers(self):

rahukaalam_trigger = DailySolarEventsTrigger(

self.config,

"rahukaalam_trigger",

("rahukaalam_start", "rahukaalam_end", ),

(44.562951, -123.3535762),

"US/Pacific",

70.0,

"-2250s"

)

return (rahukaalam_trigger,)

async def blink(self, number_of_seconds):

number_of_blinks = number_of_seconds / 3

for i in range(int(number_of_blinks)):

self.Philips_HUE_02.on = True

await asyncio.sleep(2)

self.Philips_HUE_02.on = False

await asyncio.sleep(1)

self.Philips_HUE_02.on = True

def action(self, the_triggering_thing, the_trigger, *args):

if the_trigger == "rahukaalam_start":

logging.info('%s starts', self.name)

self.Philips_HUE_02.on = True

self.Philips_HUE_02.color = "#FF9900"

asyncio.ensure_future(self.blink(30))

else:

logging.info('%s ends', self.name)

self.Philips_HUE_02.on = False

Here I created an async method that will turn the lamp on for two seconds and off for a second as many times as it can in 30 seconds. The method is fired off by the action method when the light is initially turned on. Once the blink routine has finished blinking, it silently quits.

Perhaps one of the best things about the Things Gateway is that the Things Framework allows nearly any programming language to participate.

Thanks this week goes to the authors and contributors to the Python package Astral. Readily available fun packages like Astral contribute immensely to the sheer joy of Open Source programming.

Now it looks like I've only got twenty minutes to publish this before I enter an inauspicious time... D'oh, too late...

http://www.twobraids.com/2018/10/things-gateway-sunrise-sunset-swifty.html

|

|

K Lars Lohn: Things Gateway - Sunrise, Sunset, Swifty Flow the Days |

In my previous blog post, I introduced Time Triggers to demonstrate time based home automation. Sometimes, however, pegging an action down to a specific time doesn't work: darkness falls at different times every evening as one season follows another. How do you calculate sunset time? It's complicated, but there are several Python packages that can do it: I chose Astral.

The Things Gateway doesn't know where it lives. The Raspberry Pi distribution that includes the Things Gateway doesn't automatically know and understand your timezone when it is booted. Instead, it uses UTC, essentially Greenwich Mean Time, with none of those confounding Daylight Savings rules. Yet when viewing the Things Gateway from within a browser, the times in the GUI Rule System automatically reflect your local timezone. The presentation layer of the Web App served by the Things Gateway is responsible for showing you the correct time for your location. Beware, when you travel and access your Things Gateway GUI rules remotely from a different timezone, any references to time will display in your remote timezone. They'll still work properly at their appropriate times, but they will look weird during travel.

My own homegrown rule system uses a different tactic: it nails down a timezone for your Things Gateway. In the configuration, you specify two timezones: the timezone where your Things Gateway is physically located, local_timezone, and the timezone that is the default on the computer's clock running the the external rule system, system_timezone. Here's two examples to show why both need to be specified.

- I generally run my rules on my Linux Workstation. As this machine sits on my desk, its internal clock is set to reflect my local time. I set both the local_timezone and the system_timezone to US/Pacific. That tells my rule system that no time translations are required

- However, if were to instead run my Rule System on the Raspberry Pi that also runs the Things Gateway, I'd have have to specify the system_timezone as UTC. My local_timezone remains US/Pacific.

Or they can be command line parameters:

$ export local_timezone=US/Pacific

$ export system_timezone=US/Pacific

$ ./my_rules.py --help

Or they can be in a configuration file:

$ ./my_rules.py --local_timezone=US/Pacific --system_timezone=US/Pacific

$ cat config.ini

local_timezone=US/Pacific

system_timezone=US/Pacific

$ ./my_rules.py --admin.config=config.ini

My next blog post will cover more on how to set configuration and run this rule system on either the same Raspberry Pi that runs the Things Gateway or some other machine.

Meanwhile, let's talk about solar events. Once the Rule System knows where the Things Gateway is, it can calculate sunrise, sunset along with a host of other solar events that happen on a daily basis. That's where the Python package Astral comes in. Given the latitude, longitude, elevation and the local timezone, it will calculate the times for: blue_hour, dawn, daylight, dusk, golden_hour, night, rahukaalam, solar_midnight, solar_noon, sunrise, sunset, and twilight.

I created a trigger object that wraps Astral so it can be specified in the register_trigger method of the Rule System. Here's a rule that will turn a porch light on ten minutes after sunset every day:

class EveningPorchLightRule(Rule):(see this code in situ in the solar_event_rules.py file in the pywot rule system demo directory)

def register_triggers(self):

self.sunset_trigger = DailySolarEventsTrigger(

self.config,

"sunset_trigger",

("sunset", ),

(44.562951, -123.3535762),

"US/Pacific",

70.0,

"10m" # ten minutes

)

self.ten_pm_trigger = AbsoluteTimeTrigger(

self.config,

'ten_pm_trigger',

'22:00:00'

)

return (self.sunset_trigger, self.ten_pm_trigger)

def action(self, the_triggering_thing, *args):

if the_triggering_thing is self.sunset_trigger:

self.Philips_HUE_01.on = True

else:

self.Philips_HUE_01.on = False

Like the other rules that I've created, I start by creating my trigger, in this case, an instance of the DailySolarEventsTrigger class. It is given the name "sunset_trigger" and the solar event, "sunset". The rule can trigger on multiple solar events, but in this case, since I want only one, "sunset" appears alone in a tuple. Next I specify the latitude and longitude of my home city, Corvallis, OR. That's in the US/Pacific timezone and about 70 meters above sea level. Finally, I specify a string representing 10 minutes.

After the DailySolarEventsTrigger, I create another trigger, AbsoluteTimeTrigger to handle turning the light off at 10pm. I could have created a second rule to do this, but a single rule to handle both ends of the operation seemed more satisfying.

In the action part of the rule, I needed to differentiate from the two types of actions. Both triggers will call the action function, each identifying itself as the value of the parameter, the_triggering_thing. If it was the sunset_trigger calling action, it turns the porch light on. If it was the ten_pm_trigger calling action, the light gets turned off. I think the implementation begs for a better dispatch method, but Python doesn't help much with that.

Some of the solar events are not just a single instant in time like a sunset, some represent periods during the day. One example is Rahukaalam. According to a Wikipedia article, in the realm of Vedic Astrology, Rahukaalam is an "inauspicious time" during which it is unwise to embark on new endeavors. It's based on dividing the daylight hours into eight periods. One of the periods is marked as the inauspicious one based on the day of the week. For example, it's the fourth period on Fridays and the seventh on Tuesdays. Since the length of the daylight hours changes every day, the lengths of the periods change and it gets hard to remember when it's okay to start something new.

Here's a rule that will control a warning light. The light being on indicates that the current time is within the start and end of the Rahukaalam time for the given global location and elevation

class RahukaalamRule(Rule):(this code is not part of the demo scripts, however, the next very similar script is.)

def register_triggers(self):

rahukaalam_trigger = DailySolarEventsTrigger(

self.config,

"rahukaalam_trigger",

("rahukaalam_start", "rahukaalam_end", ),

(44.562951, -123.3535762),

"US/Pacific",

70.0,

)

return (rahukaalam_trigger,)

def action(self, the_triggering_thing, the_trigger, *args):

if the_trigger == "rahukaalam_start":

logging.info('%s starts', self.name)

self.Philips_HUE_02.on = True

self.Philips_HUE_02.color = "#FF9900"

else:

logging.info('%s ends', self.name)

self.Philips_HUE_02.on = False

Like all rules, it has two parts: registering triggers and responding to trigger actions. In register_trigger, I subscribe to the rahukaalam_start and rahukaalam_end solar events for my town location, timezone and elevation. In the action, I just look to the_trigger to see which of the two possible triggers fired. It results in a suitably cautious orange light illuminating during the Rahukaalam period.

Could we make the light blink for the first 30 seconds, just so we ensure that we notice the warning? Sure we can!

class RahukaalamRule(Rule):(see this code in situ in the solar_event_rules.py file in the pywot rule system demo directory)

def register_triggers(self):

rahukaalam_trigger = DailySolarEventsTrigger(

self.config,

"rahukaalam_trigger",

("rahukaalam_start", "rahukaalam_end", ),

(44.562951, -123.3535762),

"US/Pacific",

70.0,

"-2250s"

)

return (rahukaalam_trigger,)

async def blink(self, number_of_seconds):

number_of_blinks = number_of_seconds / 3

for i in range(int(number_of_blinks)):

self.Philips_HUE_02.on = True

await asyncio.sleep(2)

self.Philips_HUE_02.on = False

await asyncio.sleep(1)

self.Philips_HUE_02.on = True

def action(self, the_triggering_thing, the_trigger, *args):

if the_trigger == "rahukaalam_start":

logging.info('%s starts', self.name)

self.Philips_HUE_02.on = True

self.Philips_HUE_02.color = "#FF9900"

asyncio.ensure_future(self.blink(30))

else:

logging.info('%s ends', self.name)

self.Philips_HUE_02.on = False

Here I created an async method that will turn the lamp on for two seconds and off for a second as many times as it can in 30 seconds. The method is fired off by the action method when the light is initially turned on. Once the blink routine has finished blinking, it silently quits.

Perhaps one of the best things about the Things Gateway is that the Things Framework allows nearly any programming language to participate.

Thanks this week goes to the authors and contributors to the Python package Astral. Readily available fun packages like Astral contribute immensely to the sheer joy of Open Source programming.

Now it looks like I've only got twenty minutes to publish this before I enter an inauspicious time... D'oh, too late...

http://www.twobraids.com/2018/10/things-gateway-sunrise-sunset-swifty.html

|

|

Patrick Cloke: Calling Celery from Twisted |

Background

I use Twisted and Celery daily at work, both are useful frameworks, both have a lot of great information out there, but a particular use (that I haven’t seen discussed much online, hence this post) is calling Celery tasks from Twisted (and subsequently using the result).

The difference …

http://patrick.cloke.us/posts/2018/10/23/calling-celery-from-twisted/

|

|

Patrick Cloke: Calling Celery from Twisted |

Background

I use Twisted and Celery daily at work, both are useful frameworks, both have a lot of great information out there, but a particular use (that I haven’t seen discussed much online, hence this post) is calling Celery tasks from Twisted (and subsequently using the result).

The difference …

http://patrick.cloke.us/posts/2018/10/23/calling-celery-from-twisted/

|

|

About:Community: Firefox 63 new contributors |

With the release of Firefox 63, we are pleased to welcome the 53 developers who contributed their first code change to Firefox in this release, 44 of whom were brand new volunteers! Please join us in thanking each of these diligent and enthusiastic individuals, and take a look at their contributions:

- changqing.li: 1480315

- lifanfzeng: 1446923

- omersid: 1472170

- polly.shaw: 356831, 1482224

- rahul.shivaprasad: 1478883

- spillner: 260562

- starsmarsjupitersaturn: 1466428

- Akshay Chiwhane: 1465046

- Alexis Deschamps: 1476034

- Aniket Kadam: 1460355

- Ankita Tanwar: 1330544

- Arshad Kazmi: 1396684, 1443861, 1462678, 1483073, 1484925, 1486313

- Arthur Iakab: 1481664

- Ashley Hauck: 1440648, 1449540, 1449985, 1456973, 1471371, 1472126, 1473228, 1473230, 1476921, 1484708, 1486584

- Christian Salinas: 1484904

- Corentin No"el: 1438051

- David Heiberg: 1476661

- Denis Palmeiro: 785922, 1482560, 1485738

- Devika Sugathan: 1375220, 1472121, 1483810

- Diego Pino: 1357636, 1405022, 1482200, 1482230

- Emma Malysz: 1454358, 1465866

- ExE Boss: 1371951, 1391464, 1473130, 1482411, 1482498, 1482693

- Florens Verschelde: 1464348, 1476590, 1477586, 1479750, 1483782, 1487785

- Gabriel V^ijiala: 1334940, 1471408, 1471646, 1471660, 1472236, 1473313, 1473610, 1474342, 1474572, 1474575, 1474869, 1476165, 1480120, 1480187, 1480875, 1481834, 1483586

- Imanol Fernandez: 1470348, 1474847, 1475270, 1476380, 1478754, 1479424, 1481393, 1482613, 1483397, 1487079, 1487115, 1487825

- Israel Madueme: 1468821

- Jennifer Wilde: 1482972

- Liam: 1472163

- L'eo Paquet: 1413283

- Mihir Karbelkar: 1474365

- Mitch Ament: 1477335

- Ondrej Zoder: 1472611, 1481392

- Pavan Veginati: 1470034

- Petru Gurita: 1319793, 1445216, 1468750, 1471144, 1473520

- Philipp Hancke: 1481557, 1481725, 1481851

- Preeti: 696385

- Raynald Mirville: 1476552

- Robert Bartlensky: 1471943, 1472672, 1472676, 1472681, 1473278, 1473951, 1474928, 1474967, 1474973, 1475282, 1475283, 1475949, 1475964, 1476009, 1476011, 1476014, 1476015, 1476016, 1476313, 1476314, 1476340, 1476565, 1476603, 1476640, 1476644, 1476645, 1476657, 1477707, 1479401

- Roland Mutter Michael: 1467555

- Ruslan Bekenev: 1400775

- Sahil Bhosale: 1478855, 1479605, 1481401, 1481724, 1483813

- Sandeep Bypina: 1476137

- Scott Downe: 1476045, 1485528

- Sean Voisen: 1466722

- Siddhant: 1478267

- Sriharsha: 1484949

- Suriyaa Sundararuban: 1481409, 1481532, 1482419, 1482708

- Wambui: 1400233

- Yaron Tausky: 1264182, 1433916, 1455078, 1480702, 1483505

- Yash Johar: 1485185

- Yuan Lyu: 1441295

- makmm // v0idifier: 1476580

- pdknsk: 1459425, 1464257, 1477858, 1481237, 1484556

- rugk: 1474095

https://blog.mozilla.org/community/2018/10/23/firefox-63-new-contributors/

|

|

Hacks.Mozilla.Org: Firefox 63 – Tricks and Treats! |

It’s that time of the year again- when we put on costumes and pass out goodies to all. It’s Firefox release week! Join me for a spook-tacular1 look at the latest goodies shipping this release.

Web Components, Oh My!

After a rather long gestation, I’m pleased to announce that support for modern Web Components APIs has shipped in Firefox! Expect a more thorough write-up, but let’s cover what these new APIs make possible.

Custom Elements

To put it simply, Custom Elements makes it possible to define new HTML tags outside the standard set included in the web platform. It does this by letting JS classes extend the built-in HTMLElement object, adding an API for registering new elements, and by adding special “lifecycle” methods to detect when a custom element is appended, removed, or attributes are updated:

class FancyList extends HTMLElement {

constructor () {

super();

this.style.fontFamily = 'cursive'; // very fancy

}

connectedCallback() {

console.log('Make Way!');

}

disconnectedCallback() {

console.log('I Bid You Adieu.');

}

}

customElements.define('fancy-list', FancyList);

Shadow DOM

The web has long had reusable widgets people can use when building a site. One of the most common challenges when using third-party widgets on a page is making sure that the styles of the page don’t mess up the appearance of the widget and vice-versa. This can be frustrating (to put it mildly), and leads to lots of long, overly specific CSS selectors, or the use of complex third-party tools to re-write all the styles on the page to not conflict.

Cue frustrated developer:

There has to be a better way…

Now, there is!

The Shadow DOM is not a secretive underground society of web developers, but instead a foundational web technology that lets developers create encapsulated HTML trees that aren’t affected by outside styles, can have their own styles that don’t leak out, and in fact can be made unreachable from normal DOM traversal methods (querySelector, .childNodes, etc.).

let shadow = div.attachShadow({ mode: 'open' });

let inner = document.createElement('b');

inner.appendChild(document.createTextNode('I was born in the shadows'));

shadow.appendChild(inner);

div.querySelector('b'); // empty

Custom elements and shadow roots can be used independently of one another, but they really shine when used together. For instance, imagine you have a element with playback controls. You can put the controls in a shadow root and keep the page’s DOM clean! In fact, Both Firefox and Chrome now use Shadow DOM for the implementation of the element.

Expect a deeper dive on building full-fledged components here on Hacks soon! In the meantime, you can plunge into the Web Components docs on MDN as well as see the code for a bunch of sample custom elements on GitHub.

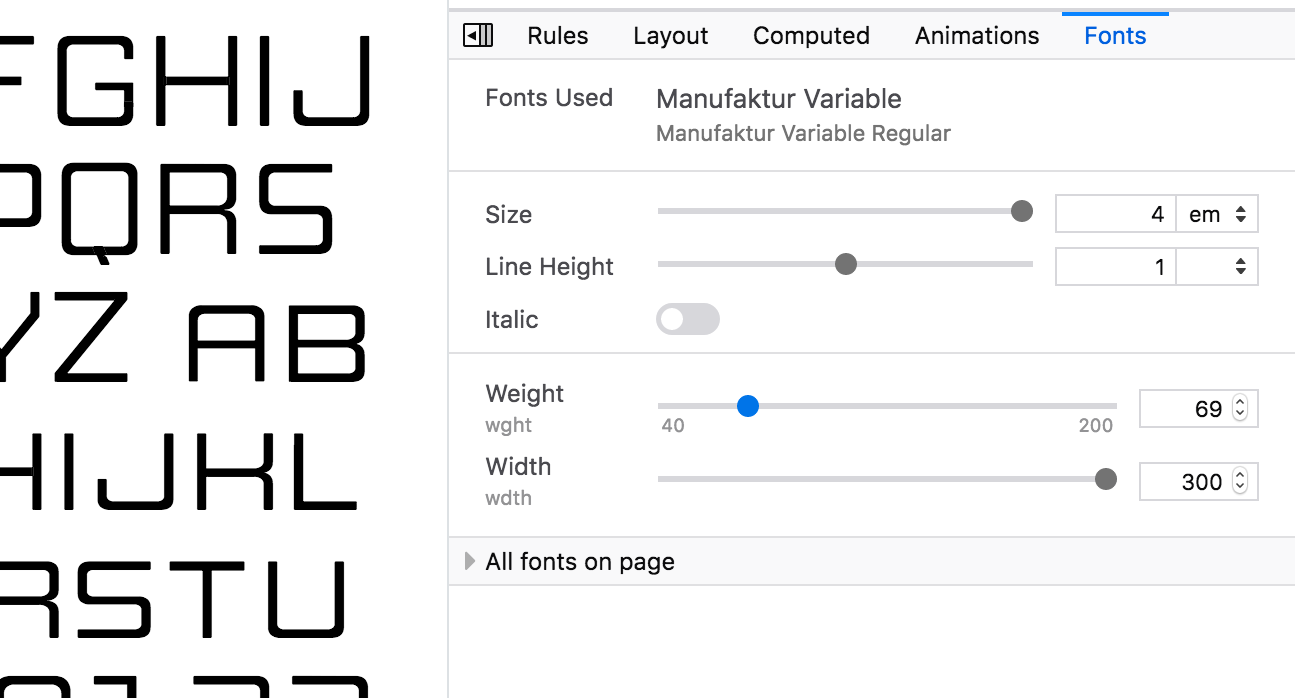

Fonts Editor

The Inspector’s Fonts panel is a handy way to see what local and web fonts are being used on a page. Already useful for debugging webfonts, in Firefox 63 the Fonts panel gains new powers! You can adjust the parameters of the font on the currently selected element, and if the current font supports Font Variations, you can view and fine-tune those paramaters as well. The syntax for adjusting variable fonts can be a little unfamiliar and it’s not otherwise possible to discover all the variations built into a font, so this tool can be a life saver.

Read all about how to use the new Fonts panel on MDN Web Docs.

Reduced motion preferences for CSS

Slick animations can give a polished and unique feel to a digital experience. However, for some people, animated effects like parallax and sliding/zooming transitions can cause vertigo and headaches. In addition, some older/less powerful devices can struggle to render animations smoothly. To respond to this, some devices and operating systems offer a “reduce motion” option. In Firefox 63, you can now detect this preference using CSS media queries and adjust/reduce your use of transitions and animations to ensure more people have a pleasant experience using your site. CSS Tricks has a great overview of both how to detect reduced motion and why you should care.

Conclusion

There is, as always, a bunch more in this release of Firefox. MDN Web Docs has the full run-down of developer-facing changes, and more highlights can be found in the official release notes. Happy Browsing!

1. not particularly spook-tacular

The post Firefox 63 – Tricks and Treats! appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2018/10/firefox-63-tricks-and-treats/

|

|

Mozilla Future Releases Blog: The Path to Enhanced Tracking Protection |

As a leader of Firefox’s product management team, I am often asked how Mozilla decides on which privacy features we will build and launch in Firefox. In this post I’d like to tell you about some key aspects of our process, using our recent Enhanced Tracking Protection functionality as an example.

What makes Mozilla and Firefox different than other browsers?

Mozilla is a mission-driven organization whose flagship product, Firefox, is meant to espouse the principles of our manifesto. Firefox is our expression of what it means to have someone on your side when you’re online. We are always standing up for your rights while pushing the web forward as a platform, open and accessible to all. As such, there are a number of careful considerations we need to weigh as part of our product development process in order to decide which features or functionality make it into the product; particularly as it relates to user privacy.

A focus on people and the health of the web

Foremost, we focus on people. They motivate us. They are the reason that Mozilla exists and how we have leverage in the industry to shape the future of the web. Through a variety of methods (surveys, in-product studies, A/B testing, qualitative user interviews, formative research) we try to better understand the unmet needs of the people who use Firefox. Another consideration we weigh is how changes we make in Firefox will affect the health of the web, longer term. Are we shifting incentives for websites in a positive or negative direction? What will the impact of these shifts be on people who rely on the internet in the short term? In the long run? In many ways, before deciding to include a privacy feature in Firefox, we need to apply basic game theory to play out the potential outcomes and changes ecosystem participants are likely to make in response, including developers, publishers and advertisers. The reality is that the answer isn’t always clear-cut.

How we arrived at Enhanced Tracking Protection

Recently we announced a change to our anti-tracking approach in Firefox in response to what we saw as shifting market conditions and an increase in user demand for more privacy protections. As an example of that demand, look no further than our Firefox Public Data Report and the rise in users manually enabling our original Tracking Protection feature to be Always On (by default, Tracking Protection is only enabled in Private Browsing):

Always On Tracking Protection shows the percentage of Firefox Desktop clients with Tracking Protection enabled for all browsing sessions (note: the setting was made available for users to change with the release of Firefox 57)

The desired outcomes are clear – people should not be tracked across websites by default and they shouldn’t be subjected to abusive practices or detrimental impacts to their online experience in the name of tracking. However, the challenge with many privacy features is that there are often trade-offs between stronger protections and negative impacts to user experience. Historically this trade-off has been handled by giving users privacy options that they can optionally enable. We know from our research that people want these protections but they don’t understand the threats or protection options enough to turn them on.

We have run multiple studies to better understand these trade-offs as they relate to tracking. In particular, since we introduced the original Tracking Protection in Firefox’s Private Browsing mode in 2015, many people have wondered why we don’t just enable the feature in all modes. The reality is that Firefox’s original Tracking Protection functionality can cause websites to break, which confuses users. Here is a quick sample of the website breakage bugs that have been filed:

Bugs filed related to broken website functionality due to our original Tracking Protection

In addition, because the feature blocks everything, including ads, from any domain that is also used for tracking, it can have a significant negative impact on small websites and content creators who depend on third-party advertising tools/networks. Because small site owners cannot change how these third-party tools operate in order to adhere to Disconnect’s policy to be removed from the tracker list, the revenue impact may hurt content creation and accessibility in the medium to long-term, which is not our intent.

Finding the right tradeoffs

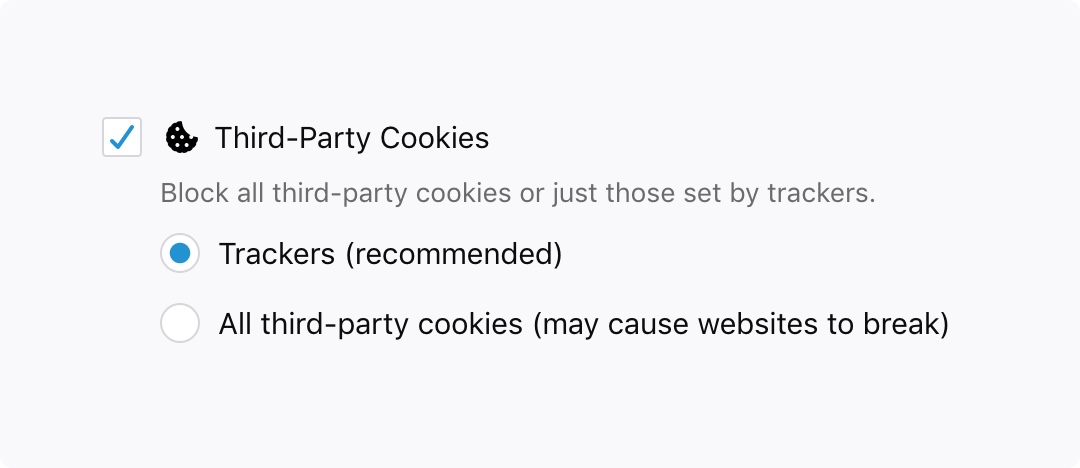

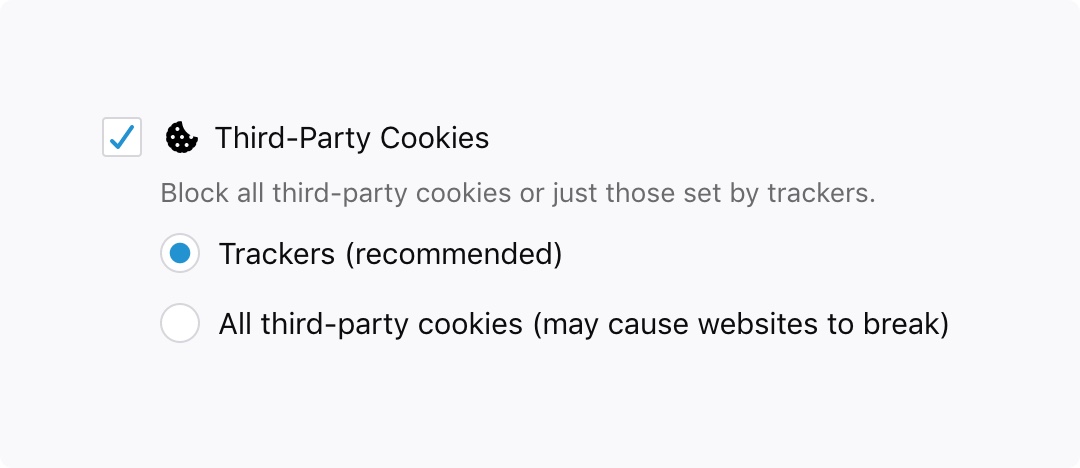

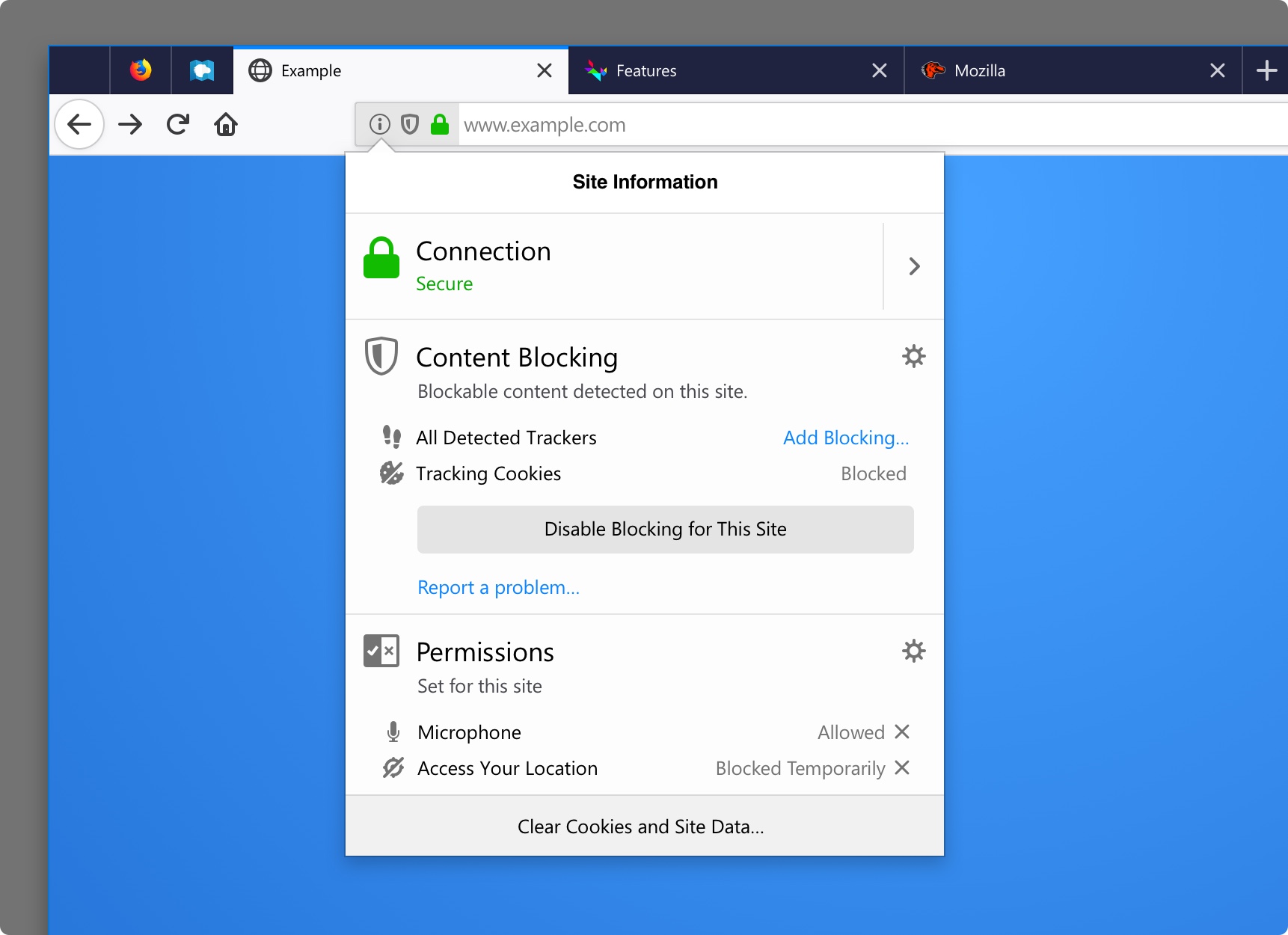

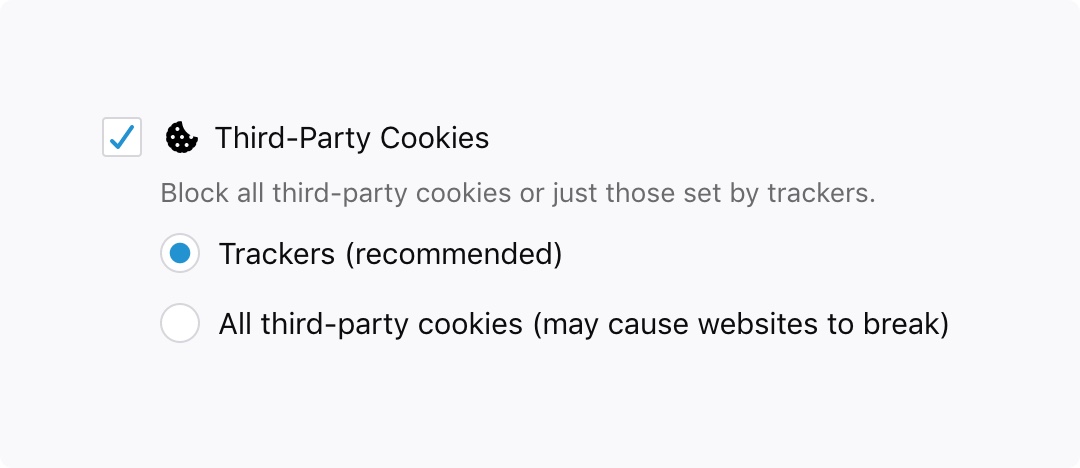

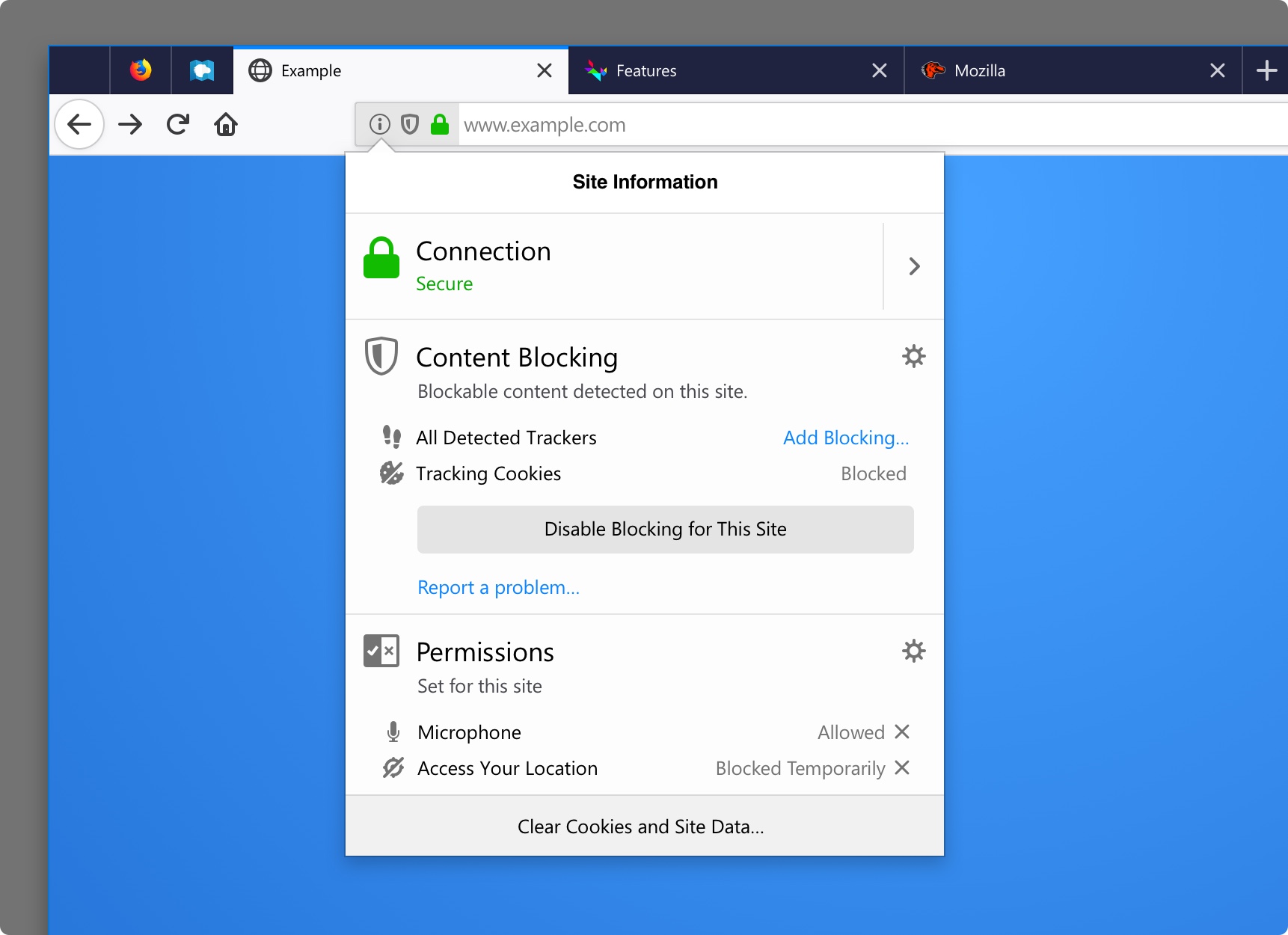

The outcome of these studies caused us to seek new solutions which could be applied by default outside of Private Browsing without detrimental impacts to user experience and without blocking most ads. This is exactly what Enhanced Tracking Protection, being introduced in Firefox 63, is meant to help with. With this feature, you can block cookies and storage access from third-party trackers:

The feature more surgically targets the problem of cross-site tracking without the breakage and wide-scale ad blocking which occurred with our initial Tracking Protection implementation. It does this by preventing known trackers from setting third-party cookies — the primary method of tracking across sites. Certainly you will still be able to decide to block all known trackers under Firefox Options/Preferences if you so choose (note that this may prevent some websites from loading properly, as described above):

Sometimes plans change – that’s why we test

As part of our announcements that ultimately led to Enhanced Tracking Protection, we described how we planned to block trackers that cause long page load times. We’re continuing to hone the approach and experience before deciding to roll out this performance feature to Firefox users. Why? There are a number of reasons: the initial feature design was similar in nature to the original Tracking Protection functionality (including ad blocking) but blocking only occurred after a few seconds of page load. In our testing, there was a high degree of variability as to when various third-party domains would be blocked (even within the same site). This could be confusing for users since they would see blocking happen inconsistently.

A secondary motivation to block trackers and ads on slow page loads was to encourage websites to speed up how quickly content loads. With the tested design, a number of factors such as the network speed played a part in determining whether or not blocking of trackers and ads would occur on a given site. However, because factors like network speed aren’t in the control of the website, it would pose a challenge for many sites, even if they did their best at speeding up content load. We felt this provided the wrong incentive – it may cause sites to prioritize loading ads over content to avoid the ads from being blocked, a worse outcome from a user perspective. As a result, we are exploring some alternative options targeted at the same outcome – much faster page loads.

Open, transparent roadmaps

We work in the open. We do it because our community is important to us. We do it because open dialog is important to us. We do it because deciding on the future of the web we all have come to rely upon should be a transparent process – one that inherently invites participation. You can expect that we will continue to operate in this manner, being upfront and public about our intent and testing efforts. We encourage you to test your own site with our new features, and let us know about any problems by clicking “Report a Problem” in the Content Blocking section of the Control Center.

I hope this glimpse into our decision-making process around Enhanced Tracking Protection reaffirms that Mozilla stands for a healthy web – one that upholds the right to privacy as fundamental.

The post The Path to Enhanced Tracking Protection appeared first on Future Releases.

https://blog.mozilla.org/futurereleases/2018/10/23/the-path-to-enhanced-tracking-protection/

|

|

Mozilla Future Releases Blog: The Path to Enhanced Tracking Protection |

As a leader of Firefox’s product management team, I am often asked how Mozilla decides on which privacy features we will build and launch in Firefox. In this post I’d like to tell you about some key aspects of our process, using our recent Enhanced Tracking Protection functionality as an example.

What makes Mozilla and Firefox different than other browsers?

Mozilla is a mission-driven organization whose flagship product, Firefox, is meant to espouse the principles of our manifesto. Firefox is our expression of what it means to have someone on your side when you’re online. We are always standing up for your rights while pushing the web forward as a platform, open and accessible to all. As such, there are a number of careful considerations we need to weigh as part of our product development process in order to decide which features or functionality make it into the product; particularly as it relates to user privacy.

A focus on people and the health of the web

Foremost, we focus on people. They motivate us. They are the reason that Mozilla exists and how we have leverage in the industry to shape the future of the web. Through a variety of methods (surveys, in-product studies, A/B testing, qualitative user interviews, formative research) we try to better understand the unmet needs of the people who use Firefox. Another consideration we weigh is how changes we make in Firefox will affect the health of the web, longer term. Are we shifting incentives for websites in a positive or negative direction? What will the impact of these shifts be on people who rely on the internet in the short term? In the long run? In many ways, before deciding to include a privacy feature in Firefox, we need to apply basic game theory to play out the potential outcomes and changes ecosystem participants are likely to make in response, including developers, publishers and advertisers. The reality is that the answer isn’t always clear-cut.

How we arrived at Enhanced Tracking Protection

Recently we announced a change to our anti-tracking approach in Firefox in response to what we saw as shifting market conditions and an increase in user demand for more privacy protections. As an example of that demand, look no further than our Firefox Public Data Report and the rise in users manually enabling our original Tracking Protection feature to be Always On (by default, Tracking Protection is only enabled in Private Browsing):

Always On Tracking Protection shows the percentage of Firefox Desktop clients with Tracking Protection enabled for all browsing sessions (note: the setting was made available for users to change with the release of Firefox 57)

The desired outcomes are clear – people should not be tracked across websites by default and they shouldn’t be subjected to abusive practices or detrimental impacts to their online experience in the name of tracking. However, the challenge with many privacy features is that there are often trade-offs between stronger protections and negative impacts to user experience. Historically this trade-off has been handled by giving users privacy options that they can optionally enable. We know from our research that people want these protections but they don’t understand the threats or protection options enough to turn them on.

We have run multiple studies to better understand these trade-offs as they relate to tracking. In particular, since we introduced the original Tracking Protection in Firefox’s Private Browsing mode in 2015, many people have wondered why we don’t just enable the feature in all modes. The reality is that Firefox’s original Tracking Protection functionality can cause websites to break, which confuses users. Here is a quick sample of the website breakage bugs that have been filed:

Bugs filed related to broken website functionality due to our original Tracking Protection

In addition, because the feature blocks everything, including ads, from any domain that is also used for tracking, it can have a significant negative impact on small websites and content creators who depend on third-party advertising tools/networks. Because small site owners cannot change how these third-party tools operate in order to adhere to Disconnect’s policy to be removed from the tracker list, the revenue impact may hurt content creation and accessibility in the medium to long-term, which is not our intent.

Finding the right tradeoffs

The outcome of these studies caused us to seek new solutions which could be applied by default outside of Private Browsing without detrimental impacts to user experience and without blocking most ads. This is exactly what Enhanced Tracking Protection, being introduced in Firefox 63, is meant to help with. With this feature, you can block cookies and storage access from third-party trackers:

The feature more surgically targets the problem of cross-site tracking without the breakage and wide-scale ad blocking which occurred with our initial Tracking Protection implementation. It does this by preventing known trackers from setting third-party cookies — the primary method of tracking across sites. Certainly you will still be able to decide to block all known trackers under Firefox Options/Preferences if you so choose (note that this may prevent some websites from loading properly, as described above):

Sometimes plans change – that’s why we test

As part of our announcements that ultimately led to Enhanced Tracking Protection, we described how we planned to block trackers that cause long page load times. We’re continuing to hone the approach and experience before deciding to roll out this performance feature to Firefox users. Why? There are a number of reasons: the initial feature design was similar in nature to the original Tracking Protection functionality (including ad blocking) but blocking only occurred after a few seconds of page load. In our testing, there was a high degree of variability as to when various third-party domains would be blocked (even within the same site). This could be confusing for users since they would see blocking happen inconsistently.

A secondary motivation to block trackers and ads on slow page loads was to encourage websites to speed up how quickly content loads. With the tested design, a number of factors such as the network speed played a part in determining whether or not blocking of trackers and ads would occur on a given site. However, because factors like network speed aren’t in the control of the website, it would pose a challenge for many sites, even if they did their best at speeding up content load. We felt this provided the wrong incentive – it may cause sites to prioritize loading ads over content to avoid the ads from being blocked, a worse outcome from a user perspective. As a result, we are exploring some alternative options targeted at the same outcome – much faster page loads.

Open, transparent roadmaps

We work in the open. We do it because our community is important to us. We do it because open dialog is important to us. We do it because deciding on the future of the web we all have come to rely upon should be a transparent process – one that inherently invites participation. You can expect that we will continue to operate in this manner, being upfront and public about our intent and testing efforts. We encourage you to test your own site with our new features, and let us know about any problems by clicking “Report a Problem” in the Content Blocking section of the Control Center.

I hope this glimpse into our decision-making process around Enhanced Tracking Protection reaffirms that Mozilla stands for a healthy web – one that upholds the right to privacy as fundamental.

The post The Path to Enhanced Tracking Protection appeared first on Future Releases.

https://blog.mozilla.org/futurereleases/2018/10/23/the-path-to-enhanced-tracking-protection/

|

|

The Firefox Frontier: Save a step when you’re searching with Firefox |

We live in an amazing time. When all the knowledge in the world is at our fingertips. Where having an edge doesn’t come from being able to remember information, but … Read more

The post Save a step when you’re searching with Firefox appeared first on The Firefox Frontier.

|

|

The Firefox Frontier: Save a step when you’re searching with Firefox |

We live in an amazing time. When all the knowledge in the world is at our fingertips. Where having an edge doesn’t come from being able to remember information, but … Read more

The post Save a step when you’re searching with Firefox appeared first on The Firefox Frontier.

|

|

The Mozilla Blog: Latest Firefox Rolls Out Enhanced Tracking Protection |

At Firefox, we’re always looking to build features that are true to the Mozillia mission of giving people control over their data and privacy whenever they go online. We recently announced our approach to Anti-tracking where we discussed three key feature areas we’re focusing on to help people feel safe while they’re on the web. With today’s release, we’re making progress against “removing cross-site tracking” with what we’re calling Enhanced Tracking Protection. To ensure we balance these new preferences with the experiences our uses want and expect, we’re rolling things out off-by-default and starting with third-party cookies. You can learn more details about our approach here.

What’s a tracking cookie and why do I need to block them?

Cookies have been around since almost the beginning of the web. They were created so that browsers could store small bits of information, like remembering that you’ve already logged into a site. Like any technology, cookies have many uses, including ones that aren’t so easy to understand. These include the use of cookies to help track your behavior across the internet, a technique known as cross-site tracking, mostly without your knowledge. We go more in-depth about this in our Firefox Frontier blog post.

We’ve all had the experience of seeing ads change based on browsing, even across multiple websites. These ads are often for things that you have no interest in purchasing, but the economics of the internet make it easy to cast a wide net cheaply. Maybe this seems like no big deal, but we think that you should have a say in how this data is used. After all, it’s more than just an annoying pair of shoes following you around, it’s data that can be used to subtly shape the content you consume or even influence your opinions.

At Firefox, we believe in giving control to the people, and hence giving users the choice to block third-party tracking cookies and the information collected in them.

Introducing Firefox’s Enhanced Tracking Protection

With today’s Firefox release, users will have the option to block cookies and storage access from third-party trackers. This is designed to effectively block the most common form of cross-site tracking.