Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

This Week In Rust: This Week in Rust 257 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

News & Blog Posts

- Writing an OS in Rust: Hardware interrupts.

- Towards fearless SIMD.

- Shifgrethor I: Garbage collection as a Rust library.

- Update on the October 15, 2018 incident on crates.io.

- docs.rs is now part of the rust-lang-nursery organization.

- Is Rust functional?

- Multithreading Rust and WebAssembly.

- Rust has higher kinded types already... sort of.

- Auth web microservice with Rust using actix-web.

Crate of the Week

This week's crate is static-assertions, a crate that does what it says on the tin – allow you to write static assertions. Thanks to llogiq for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

115 pull requests were merged in the last week

- mir-inlining: don't inline virtual calls

- reject partial init and reinit of uninitialized data

- improve verify_llvm_ir config option

- add missing lifetime fragment specifier to error message

- rustc: fix (again) simd vectors by-val in ABI

- resolve: scale back hard-coded extern prelude additions on 2015 edition

- resolve: do not skip extern prelude during speculative resolution

- allow explicit matches on ! without warning

- deduplicate some code and compile-time values around vtables

- NLL: propagate bounds from generators

- NLL lacks various special case handling of closures

- NLL: fix migrate mode issue by not buffering lints

- NLL: change compare-mode=nll to use borrowck=migrate

- NLL: use new region infer errors when explaining borrows

- NLL type annotations in multisegment path

- add filtering option to rustc_on_unimplemented and reword Iterator E0277 errors

- custom E0277 diagnostic for Path

- unused_patterns lint

- check the type of statics and constants for Sizedness

- miri: layout should not affect CTFE checks

- added graphviz visualization for obligation forests

- replace CanonicalVar with DebruijnIndex

- stabilize slice::chunks_exact(), chunks_exact_mut(), rchunks(), rchunks_mut(), rchunks_exact(), rchunks_exact_mut()

- add a copysign function to f32 and f64

- don't warn about parentheses on match (return)

- handle underscore bounds in unexpected places

- fix ICE and report a human readable error

- add slice::rchunks(), rchunks_mut(), rchunks_exact() and rchunks_exact_mut()

- unify multiple errors on single typo in match pattern

- fix LLVMRustInlineAsmVerify return type mismatch

- miri engine: hooks for basic stacked borrows

- add support for 'cargo check --all-features'

- cargo: add PackageError wrappers for activation errors

- rustdoc: use dyn keyword when rendering dynamic traits

- rustdoc: don't prefer dynamic linking in doc tests

- rustdoc: add lint for doc without codeblocks

- detect if access to localStorage is forbidden by the user's browser

- librustdoc: disable spellcheck for search field

- crates.io: add a missing index on crates
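Some of the library changes in the list above are easy to try out. The following is a minimal sketch, assuming a toolchain on which `chunks_exact`, `rchunks`, and `copysign` are available on stable. The function names `sum_groups`, `tail_groups`, and `with_sign_of` are hypothetical helpers made up for this illustration and are not part of any of the pull requests.

```rust
/// Sums every complete group of `width` values and also returns the
/// leftover elements that did not fill a whole group.
/// Panics if `width` is 0, because `chunks_exact` does.
pub fn sum_groups(data: &[u32], width: usize) -> (Vec<u32>, &[u32]) {
    let chunks = data.chunks_exact(width);
    // `remainder` exposes the tail that is shorter than `width`.
    let rest = chunks.remainder();
    let sums: Vec<u32> = chunks.map(|c| c.iter().sum()).collect();
    (sums, rest)
}

/// Groups the slice starting from its end, so a short group (if any)
/// is yielded last and holds the first elements of the slice.
pub fn tail_groups(data: &[u32], width: usize) -> Vec<Vec<u32>> {
    data.rchunks(width).map(|c| c.to_vec()).collect()
}

/// Returns `magnitude` with the sign bit taken from `sign`.
pub fn with_sign_of(magnitude: f64, sign: f64) -> f64 {
    magnitude.copysign(sign)
}
```

For the input `[1, 2, 3, 4, 5]` and a width of 2, `sum_groups` gives the sums `[3, 7]` with `[5]` left over, while `tail_groups` gives `[4, 5]`, `[2, 3]`, `[1]`.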

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

- [disposition: merge] Re-Rebalancing Coherence.

- [disposition: merge] Allow non-ASCII identifiers.

- [disposition: merge] Meta-RFC: Future possibilities.

Tracking Issues & PRs

- [disposition: merge] Report const eval error inside the query.

- [disposition: merge] Unchecked thread spawning.

- [disposition: merge] Implement FromStr for PathBuf.

- [disposition: close] Regression from stable: pointer to usize conversion no longer compiles.

New RFCs

Upcoming Events

Online

- Oct 31. Rust Community Team Meeting in Discord.

- Nov 5. Rust Community Content Subteam Meeting in Discord.

- Nov 7. Rust Events Team Meeting in Telegram.

Africa

- Oct 27. Nairobi, KE - HACK & LEARN: Hacktoberfest Edition.

- Nov 6. Johannesburg, SA - Monthly Meetup of the Johannesburg Rustaceans.

Europe

- Oct 27. St. Petersburg, RU - Неформальная встреча Rust-разработчиков.

- Oct 30. Paris, FR - Rust Paris meetup #43.

- Oct 31. Prague, CZ - Prague Containers Meetup - The way of Rust.

- Oct 31. Berlin, DE - Berlin Rust Hack and Learn.

- Oct 31. Milan, IT - Rust Language Milano - Rust Exercises.

- Nov 7. Stuttgart, DE - Rust in der Industrie & Automatisierung.

- Nov 7. Cologne, DE - Rust Cologne.

North America

- Oct 28. Mountain View, US - Rust Dev in Mountain View!.

- Oct 30. Dallas, US - Dallas Rust - Last Tuesday.

- Oct 31. Vancouver, CA - Vancouver Rust meetup.

- Nov 4. Mountain View, US - Rust Dev in Mountain View!.

- Nov 7. Atlanta, US - Grab a beer with fellow Rustaceans.

- Nov 7. Indianapolis, US - Indy.rs.

- Nov 8. Utah, US - Utah Rust monthly meetup.

- Nov 8. Arlington, US - Rust DC - Mid-month Rustful.

- Nov 8. Columbus, US - Columbus Rust Society - Monthly Meeting.

- Nov 8. Boston, US - Rust/Scala meetup at SPLASH conf.

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

Panic is “pulling over to the side of the road” whereas crash is “running into a telephone pole”.

– /u/zzzzYUPYUPphlumph on /r/rust

Thanks to KillTheMule for the suggestion!

Please submit your quotes for next week!

This Week in Rust is edited by: nasa42, llogiq, and Flavsditz.

https://this-week-in-rust.org/blog/2018/10/23/this-week-in-rust-257/

|

|

Cameron Kaiser: TenFourFox FPR10 available |

The changes for FPR11 (December) and FPR12 will be smaller in scope, mostly because of the holidays and my parallel work on the POWER9 JIT for Firefox on the Talos II. For the next couple of FPRs I'm planning to do more ES6 work (mostly Symbol and whatever else I can shoehorn in) and to enable unique data URI origins, and possibly get requestIdleCallback into a releasable state. Despite the slower pace, however, we will still be tracking the Firefox release schedule as usual.

http://tenfourfox.blogspot.com/2018/10/tenfourfox-fpr10-available.html

|

|

The Servo Blog: RGSoC wrap-up - Supporting Responsive Images in Servo |

|

|

Mozilla Future Releases Blog: Testing new ways to keep you safe online |

Mozilla has long played an important role in the online world, and we’re proud of the impact we’ve had. But we want to do even more, and that means exploring ways to keep you safe beyond the browser’s reach. Across numerous studies we’ve consistently heard from our users that they want Firefox to protect their privacy on public networks like cafes and airports. With that in mind, over the next few months we will be running an experiment in which we’ll offer a virtual private network (VPN) service to a small group of Firefox users.

This experiment is also important to Mozilla’s future. We believe that an innovative, vibrant, and sustainable Mozilla is critical to the future of the open Internet, and we plan to be here over the long haul. To do that with confidence we also need to have diverse sources of revenue. For some time now Mozilla has largely been funded by our search partnerships. With this VPN experiment, which kicks off Wednesday, October 24th, we’re starting the process of exploring new, additional sources of revenue that align with our mission.

What is a VPN?

A VPN is an online service and a piece of software that work together to secure your internet connection against monitoring and eavesdropping. By encrypting all your internet traffic and routing it through a secure server, a VPN prevents your ISP (internet service provider), school, or government from seeing which websites you visit and tracing your online activity back to your IP address. A VPN can also offer valuable peace of mind when you’re using an unsecured public Wi-Fi network, like the one at the airport or your local coffee shop.

How will it work?

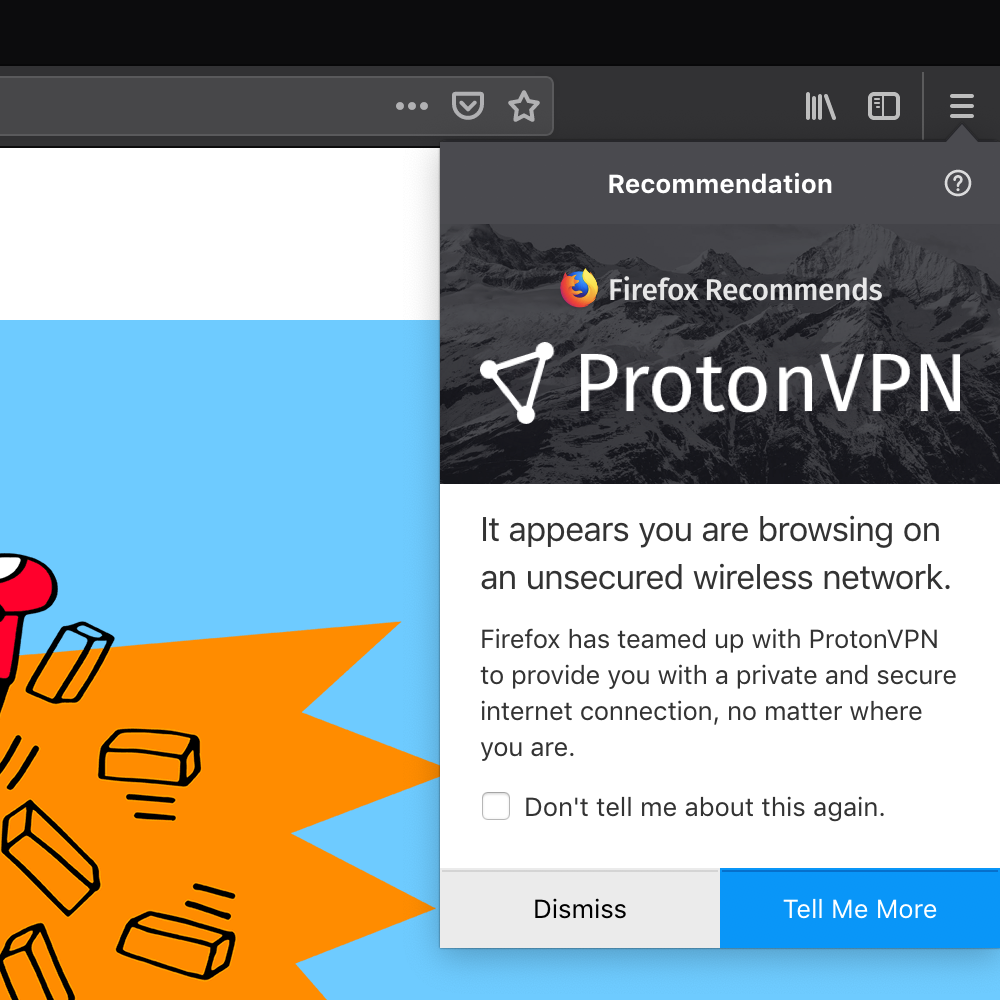

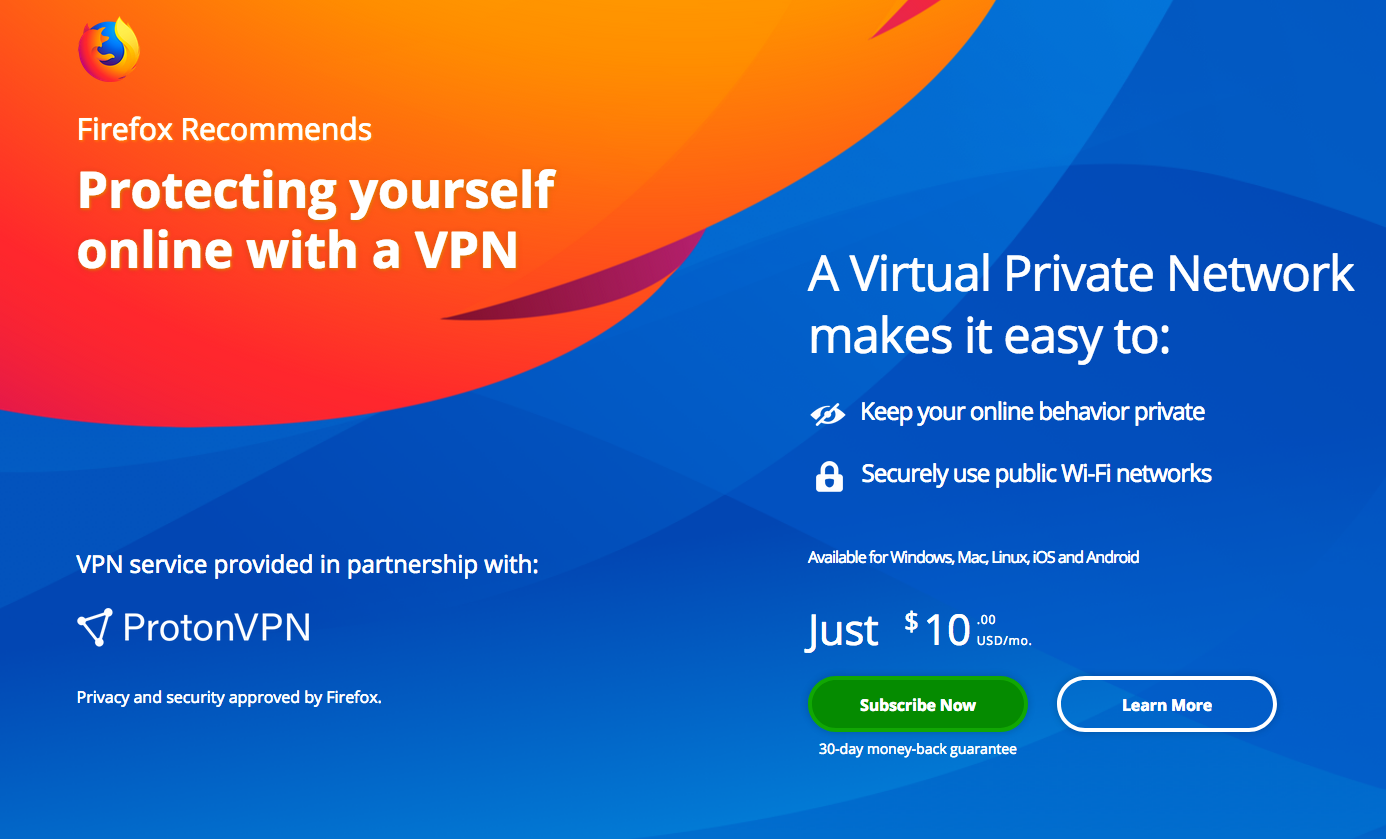

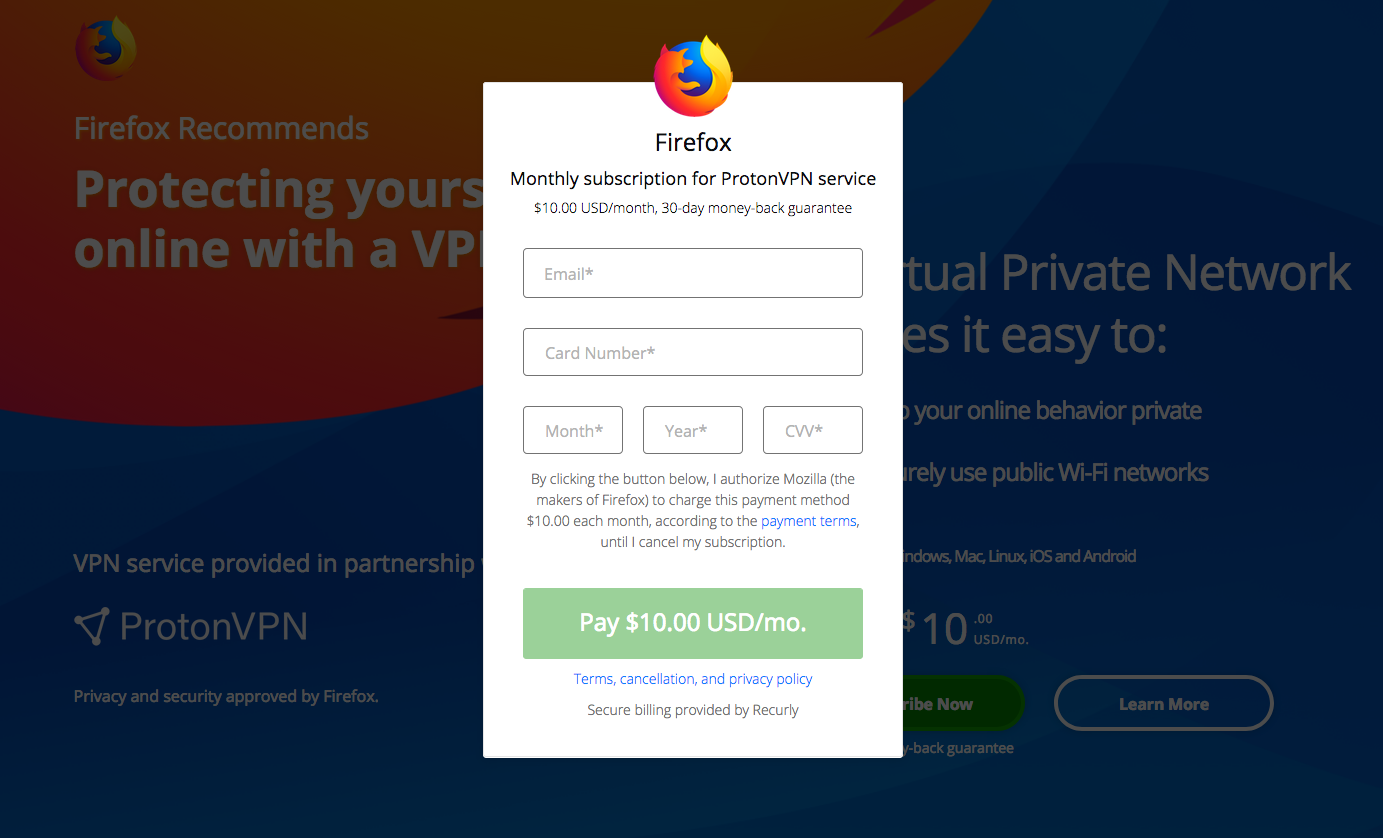

A small, random group of US-based Firefox users will be presented with an offer to purchase a monthly subscription to a VPN service that’s been vetted and approved by Mozilla. After signing up for a subscription (billed securely using payment services Stripe and Recurly) they will be able to download and install the VPN software. Windows, macOS, Linux, iOS, and Android are all supported. The VPN can be easily turned on or off as needed, and the subscription can be cancelled at any time.

Screenshots of the experiment’s user experience:

Partnership with ProtonVPN

Using a VPN service means placing a great deal of trust in its provider because you depend upon both the safety of its technology and its commitment to protecting your privacy. There are many VPN vendors out there, but not all of them are created equal. We knew that we could only offer our users a VPN product if it met or exceeded our most rigorous standards. We also knew that the practices, policies, and character of these vendors would be just as important in our decision.

We therefore set out to conduct a thorough evaluation of a long list of market-leading VPN services. Our team looked closely at a wide variety of factors, ranging from the design and implementation of each VPN service and its accompanying software, to the security of the vendor’s own network and internal systems. We examined each vendor’s privacy and data retention policies to ensure they logged as little user data as possible. And we considered numerous other factors, including local privacy laws, company track record, transparency, and quality of support.

As a result of this evaluation we’ve selected ProtonVPN for this experiment. ProtonVPN offers a secure, reliable, and easy-to-use VPN service and is operated by the makers of ProtonMail, a respected, privacy-oriented email service. Based in Switzerland, ProtonVPN has a strict privacy policy and does not log any data about your usage of their service. As a company they have a track record of fighting for online privacy and they share our dedication to internet safety and security.

Your purchase supports Mozilla’s work

ProtonVPN will be providing the service in this experiment. Mozilla will be the party collecting payment from Firefox users who decide to subscribe. A portion of these proceeds will be shared with ProtonVPN, to offset their costs in operating the service, and a portion will go to Mozilla. In this way, subscribers will be directly supporting Mozilla while benefiting from one of the very best VPN services on the market today.

We’re looking forward to this experiment and we are excited to bring the protection of a VPN to more people. We’ll be watching both user and community feedback closely as this experiment runs.

The post Testing new ways to keep you safe online appeared first on Future Releases.

https://blog.mozilla.org/futurereleases/2018/10/22/testing-new-ways-to-keep-you-safe-online/

|

|

Hacks.Mozilla.Org: WebAssembly’s post-MVP future: A cartoon skill tree |

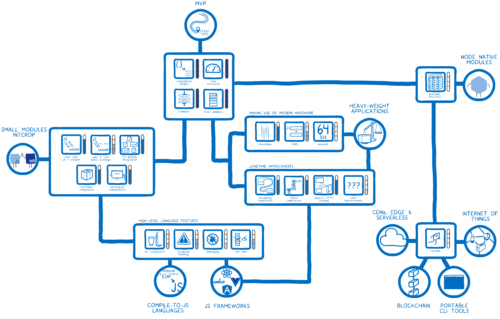

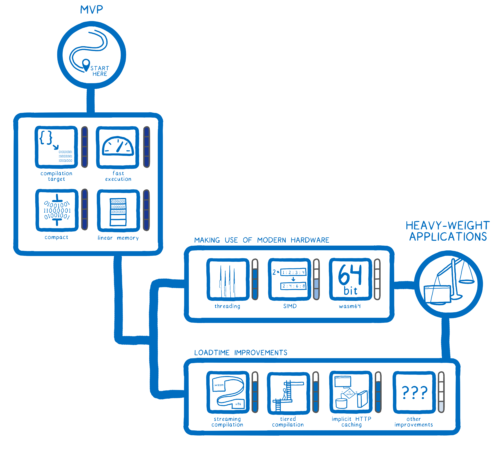

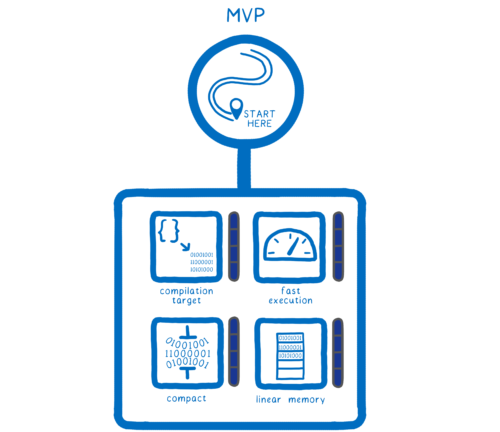

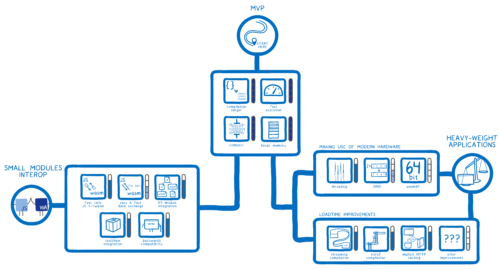

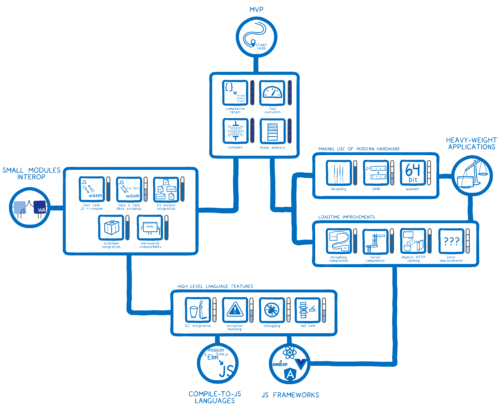

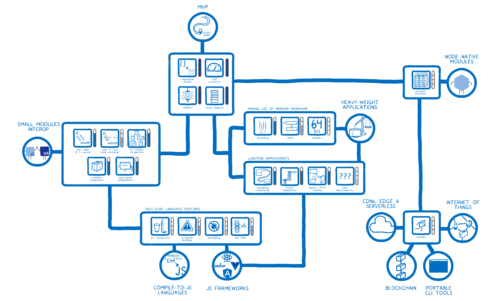

People have a misconception about WebAssembly. They think that the WebAssembly that landed in browsers back in 2017—which we called the minimum viable product (or MVP) of WebAssembly—is the final version of WebAssembly.

I can understand where that misconception comes from. The WebAssembly community group is really committed to backwards compatibility. This means that the WebAssembly that you create today will continue working on browsers into the future.

But that doesn’t mean that WebAssembly is feature complete. In fact, that’s far from the case. There are many features that are coming to WebAssembly which will fundamentally alter what you can do with WebAssembly.

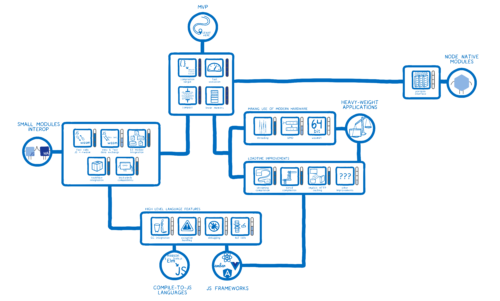

I think of these future features kind of like the skill tree in a videogame. We’ve fully filled in the top few of these skills, but there is still this whole skill tree below that we need to fill in to unlock all of the applications.

So let’s look at what’s been filled in already, and then we can see what’s yet to come.

Minimum Viable Product (MVP)

The very beginning of WebAssembly’s story starts with Emscripten, which made it possible to run C++ code on the web by transpiling it to JavaScript. This made it possible to bring large existing C++ code bases, for things like games and desktop applications, to the web.

The JS it automatically generated was still significantly slower than the comparable native code, though. But Mozilla engineers found a type system hiding inside the generated JavaScript, and figured out how to make this JavaScript run really fast. This subset of JavaScript was named asm.js.

Once the other browser vendors saw how fast asm.js was, they started adding the same optimizations to their engines, too.

But that wasn’t the end of the story. It was just the beginning. There were still things that engines could do to make this faster.

But they couldn’t do it in JavaScript itself. Instead, they needed a new language—one that was designed specifically to be compiled to. And that was WebAssembly.

So what skills were needed for the first version of WebAssembly? What did we need to get to a minimum viable product that could actually run C and C++ efficiently on the web?

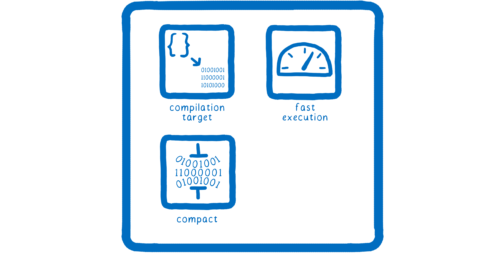

Skill: Compile target

The folks working on WebAssembly knew they didn’t want to just support C and C++. They wanted many different languages to be able to compile to WebAssembly. So they needed a language-agnostic compile target.

They needed something like the assembly language that things like desktop applications are compiled to—like x86. But this assembly language wouldn’t be for an actual, physical machine. It would be for a conceptual machine.

Skill: Fast execution

That compiler target had to be designed so that it could run very fast. Otherwise, WebAssembly applications running on the web wouldn’t keep up with users’ expectations for smooth interactions and game play.

Skill: Compact

In addition to execution time, load time needed to be fast, too. Users have certain expectations about how quickly something will load. For desktop applications, that expectation is that they will load quickly because the application is already installed on your computer. For web apps, the expectation is also that load times will be fast, because web apps usually don’t have to load nearly as much code as desktop apps.

When you combine these two things, though, it gets tricky. Desktop applications are usually pretty large code bases. So if they are on the web, there’s a lot to download and compile when the user first goes to the URL.

To meet these expectations, we needed our compiler target to be compact. That way, it could go over the web quickly.

Skill: Linear memory

These languages also needed to be able to use memory differently from how JavaScript uses memory. They needed to be able to directly manage their memory—to say which bytes go together.

This is because languages like C and C++ have a low-level feature called pointers. You can have a variable that doesn’t have a value in it, but instead has the memory address of the value. So if you’re going to support pointers, the program needs to be able to write and read from particular addresses.

But you can’t have a program you downloaded from the web just accessing bytes in memory willy-nilly, using whatever addresses they want. So in order to create a secure way of giving access to memory, like a native program is used to, we had to create something that could give access to a very specific part of memory and nothing else.

To do this, WebAssembly uses a linear memory model. This is implemented using TypedArrays. It’s basically just like a JavaScript array, except this array only contains bytes of memory. When you access data in it, you just use array indexes, which you can treat as though they were memory addresses. This means you can pretend this array is C++ memory.
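The idea of treating array indexes as addresses can be sketched in a few lines. This is a toy model only, not how any engine implements linear memory: the `LinearMemory` type and its methods are hypothetical names invented for this illustration. It stores 32-bit values in little-endian byte order (the order WebAssembly uses) and rejects any access that falls outside the array.

```rust
/// A toy model of linear memory: one contiguous, bounds-checked byte array.
pub struct LinearMemory {
    bytes: Vec<u8>,
}

impl LinearMemory {
    /// Creates a zero-filled memory of `size` bytes.
    pub fn new(size: usize) -> Self {
        LinearMemory { bytes: vec![0; size] }
    }

    /// Writes a 32-bit value at byte index `addr`, which plays the role of a pointer.
    pub fn store_i32(&mut self, addr: usize, value: i32) -> Result<(), String> {
        let end = addr.checked_add(4).ok_or("address overflow")?;
        // `get_mut` returns None instead of touching bytes outside the array.
        let slot = self.bytes.get_mut(addr..end).ok_or("out of bounds")?;
        slot.copy_from_slice(&value.to_le_bytes());
        Ok(())
    }

    /// Reads a 32-bit value back from byte index `addr`.
    pub fn load_i32(&self, addr: usize) -> Result<i32, String> {
        let end = addr.checked_add(4).ok_or("address overflow")?;
        let slot = self.bytes.get(addr..end).ok_or("out of bounds")?;
        let mut buf = [0u8; 4];
        buf.copy_from_slice(slot);
        Ok(i32::from_le_bytes(buf))
    }
}
```

Storing `-42` at address 8 of a 16-byte memory and loading it back returns `-42`, while a load at address 14 fails because it would read past the end of the array. That failure is the property the paragraph above is after: the program can only reach bytes inside its own array.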

Achievement unlocked

So with all of these skills in place, people could run desktop applications and games in their browser as if they were running natively on their computer.

And that was pretty much the skill set that WebAssembly had when it was released as an MVP. It was truly an MVP—a minimum viable product.

This allowed certain kinds of applications to work, but there were still a whole host of others to unlock.

Heavy-weight Desktop Applications

The next achievement to unlock is heavier weight desktop applications.

Can you imagine if something like Photoshop were running in your browser? If you could instantaneously load it on any device like you do with Gmail?

We’ve already started seeing things like this. For example, Autodesk’s AutoCAD team has made their CAD software available in the browser. And Adobe has made Lightroom available through the browser using WebAssembly.

But there are still a few features that we need to put in place to make sure that all of these applications—even the heaviest of heavy weight—can run well in the browser.

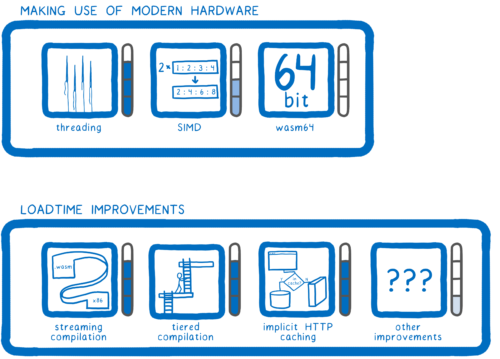

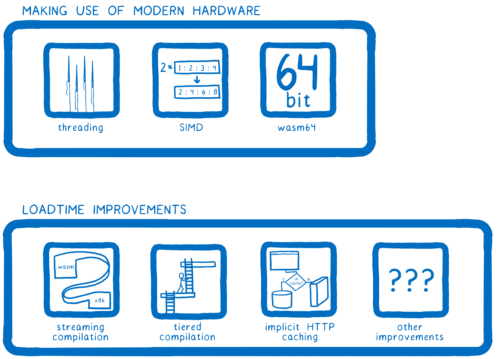

Skill: Threading

First, we need support for multithreading. Modern-day computers have multiple cores. These are basically multiple brains that can all be working at the same time on your problem. That can make things go much faster, but to make use of these cores, you need support for threading.
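To make the benefit of multiple cores concrete, here is a minimal sketch in native Rust, not WebAssembly: it splits a slice into one piece per worker thread and adds up the partial sums. The function name `parallel_sum` is a hypothetical helper, and the sketch assumes a Rust toolchain that provides `std::thread::scope`.

```rust
use std::thread;

/// Sums `data` by giving each of up to `workers` threads its own chunk.
pub fn parallel_sum(data: &[u64], workers: usize) -> u64 {
    let workers = workers.max(1);
    // Round up so every element lands in some chunk; never use a chunk length of 0.
    let chunk_len = ((data.len() + workers - 1) / workers).max(1);
    thread::scope(|s| {
        // Spawn all workers first so they run at the same time.
        let handles: Vec<_> = data
            .chunks(chunk_len)
            .map(|chunk| s.spawn(move || chunk.iter().sum::<u64>()))
            .collect();
        // Then wait for each one and combine the partial results.
        handles
            .into_iter()
            .map(|h| h.join().unwrap())
            .sum::<u64>()
    })
}
```

Summing the numbers 1 through 100 with four workers returns 5050, the same answer a single thread would produce. The work is simply divided among the cores.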

Skill: SIMD

Alongside threading, there’s another technique that utilizes modern hardware, and which enables you to process things in parallel.

That is SIMD: single instruction multiple data. With SIMD, it’s possible to take a chunk of memory and split it up across different execution units, which are kind of like cores. Then you have the same bit of code—the same instruction—run across all of those execution units, but they each apply that instruction to their own bit of the data.
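The shape of a SIMD computation can be modelled in ordinary scalar Rust. The sketch below does not use real SIMD instructions or the WebAssembly SIMD proposal; it only shows the pattern of applying one operation to a fixed number of lanes at a time. The lane count of 4 and the names `add_lanes` and `add_slices` are assumptions made for this illustration.

```rust
/// Number of values handled per "instruction" in this model.
pub const LANES: usize = 4;

/// One conceptual SIMD add: the same operation applied to every lane.
pub fn add_lanes(a: [f32; LANES], b: [f32; LANES]) -> [f32; LANES] {
    let mut out = [0.0; LANES];
    for i in 0..LANES {
        out[i] = a[i] + b[i];
    }
    out
}

/// Adds two equally long slices, LANES elements at a time, then
/// handles the leftover elements one by one.
pub fn add_slices(a: &[f32], b: &[f32]) -> Vec<f32> {
    assert_eq!(a.len(), b.len(), "inputs must have the same length");
    let mut out = Vec::with_capacity(a.len());
    let a_chunks = a.chunks_exact(LANES);
    let b_chunks = b.chunks_exact(LANES);
    let (a_rest, b_rest) = (a_chunks.remainder(), b_chunks.remainder());
    for (ca, cb) in a_chunks.zip(b_chunks) {
        let (mut va, mut vb) = ([0.0f32; LANES], [0.0f32; LANES]);
        va.copy_from_slice(ca);
        vb.copy_from_slice(cb);
        out.extend_from_slice(&add_lanes(va, vb));
    }
    // Scalar tail for lengths that are not a multiple of LANES.
    out.extend(a_rest.iter().zip(b_rest).map(|(x, y)| x + y));
    out
}
```

On real hardware the body of `add_lanes` would be a single vector instruction rather than a loop; the loop here only stands in for that.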

Skill: 64-bit addressing

Another hardware capability that WebAssembly needs to take full advantage of is 64-bit addressing.

Memory addresses are just numbers, so if your memory addresses are only 32 bits long, you can only have so many memory addresses—enough for 4 gigabytes of linear memory.

But with 64-bit addressing, you have 16 exabytes. Of course, you don’t have 16 exabytes of actual memory in your computer. So the maximum is subject to however much memory the system can actually give you. But this will take the artificial limitation on address space out of WebAssembly.
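The two figures above follow directly from the number of address bits, counting in binary units (1 gigabyte as 2^30 bytes, 1 exabyte as 2^60 bytes). A small sketch of the arithmetic, with `addressable_bytes` as a hypothetical helper name:

```rust
/// Bytes in one gigabyte and one exabyte, using binary units.
pub const GIB: u128 = 1 << 30;
pub const EIB: u128 = 1 << 60;

/// Number of distinct byte addresses that fit in `address_bits` bits.
/// Uses u128 so that 2^64 itself does not overflow.
pub fn addressable_bytes(address_bits: u32) -> u128 {
    1u128 << address_bits
}
```

With 32 bits this gives 2^32 bytes, which is 4 gigabytes, and with 64 bits it gives 2^64 bytes, which is 16 exabytes.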

Skill: Streaming compilation

For these applications, we don’t just need them to run fast. We need load times to be even faster than they already are. There are a few skills that we need specifically to improve load times.

One big step is to do streaming compilation—to compile a WebAssembly file while it’s still being downloaded. WebAssembly was designed specifically to enable easy streaming compilation. In Firefox, we actually compile it so fast—faster than it is coming in over the network— that it’s pretty much done compiling by the time you download the file. And other browsers are adding streaming, too.

Another thing that helps is having a tiered compiler.

For us in Firefox, that means having two compilers. The first one—the baseline compiler—kicks in as soon as the file starts downloading. It compiles the code really quickly so that it starts up quickly.

The code it generates is fast, but not 100% as fast as it could be. To get that extra bit of performance, we run another compiler—the optimizing compiler—on several threads in the background. This one takes longer to compile, but generates extremely fast code. Once it’s done, we swap out the baseline version with the fully optimized version.

This way, we get quick start up times with the baseline compiler, and fast execution times with the optimizing compiler.
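The swap from baseline to optimized code can be illustrated with a toy model. This is not how Firefox's compilers are structured: `TieredFunction`, `baseline_sum`, and `optimized_sum` are hypothetical names, and the "compilers" are just two hand-written functions that compute the same result at different speeds. The point is only the control flow: start with the quickly available version, and switch once the background work has finished.

```rust
use std::sync::mpsc::{self, Receiver};
use std::thread;

/// Stand-in for a piece of compiled machine code.
type Code = fn(u64) -> u64;

/// "Baseline" tier: available immediately, but slow (a plain loop).
fn baseline_sum(n: u64) -> u64 {
    (0..=n).sum()
}

/// "Optimized" tier: same result, computed with a closed formula.
fn optimized_sum(n: u64) -> u64 {
    n * (n + 1) / 2
}

pub struct TieredFunction {
    current: Code,
    pending: Option<Receiver<Code>>,
}

impl TieredFunction {
    /// Starts with the baseline tier and "compiles" the optimized tier
    /// on a background thread.
    pub fn new() -> Self {
        let (tx, rx) = mpsc::channel();
        thread::spawn(move || {
            // A real optimizing compiler would do its slow work here.
            let _ = tx.send(optimized_sum as Code);
        });
        TieredFunction { current: baseline_sum, pending: Some(rx) }
    }

    /// Runs whichever tier is ready, swapping in the optimized one
    /// as soon as the background thread has delivered it.
    pub fn call(&mut self, n: u64) -> u64 {
        let upgraded = self.pending.as_ref().and_then(|rx| rx.try_recv().ok());
        if let Some(code) = upgraded {
            self.current = code;
            self.pending = None;
        }
        (self.current)(n)
    }
}
```

Callers never see which tier ran: `call(100)` returns 5050 either way, only sooner once the optimized version has been swapped in.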

In addition, we’re working on a new optimizing compiler called Cranelift. Cranelift is designed to compile code quickly, in parallel at a function by function level. At the same time, the code it generates gets even better performance than our current optimizing compiler.

Cranelift is in the development version of Firefox right now, but disabled by default. Once we enable it, we’ll get to the fully optimized code even quicker, and that code will run even faster.

But there’s an even better trick we can use to make it so we don’t have to compile at all most of the time…

Skill: Implicit HTTP caching

With WebAssembly, if you load the same code on two page loads, it will compile to the same machine code. It doesn’t need to change based on what data is flowing through it, like the JS JIT compiler needs to.

This means that we can store the compiled code in the HTTP cache. Then when the page is loading and goes to fetch the .wasm file, it will instead just pull out the precompiled machine code from the cache. This skips compiling completely for any page that you’ve already visited that’s in cache.
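A rough sketch of the idea, under simplifying assumptions: the cache below is keyed by URL alone and ignores everything a real HTTP cache has to handle, such as freshness and validation. `CodeCache`, `load`, and `fake_compile` are hypothetical names for this illustration, and `fake_compile` merely copies the bytes where a real engine would produce machine code.

```rust
use std::collections::HashMap;

/// Stand-in for a real compiler: this toy just copies the bytes.
fn fake_compile(wasm: &[u8]) -> Vec<u8> {
    wasm.to_vec()
}

/// Toy cache that remembers the compiled form of each module by URL.
pub struct CodeCache {
    compiled: HashMap<String, Vec<u8>>,
    compile_count: usize,
}

impl CodeCache {
    pub fn new() -> Self {
        CodeCache { compiled: HashMap::new(), compile_count: 0 }
    }

    /// Returns the compiled code for `url`, compiling only on a cache miss.
    pub fn load(&mut self, url: &str, wasm: &[u8]) -> &[u8] {
        if !self.compiled.contains_key(url) {
            self.compile_count += 1;
            self.compiled.insert(url.to_string(), fake_compile(wasm));
        }
        &self.compiled[url]
    }

    /// How many times the (fake) compiler actually ran.
    pub fn compile_count(&self) -> usize {
        self.compile_count
    }
}
```

Loading the same URL twice compiles once; the second load is served straight from the map. This only works because, as described above, the same WebAssembly bytes always compile to the same machine code.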

Skill: Other improvements

Many discussions are currently percolating around other ways to improve this, skipping even more work, so stay tuned for other load-time improvements.

Where are we with this?

Where are we with supporting these heavyweight applications right now?

- Threading

  - For the threading, we have a proposal that’s pretty much done, but a key piece of that—SharedArrayBuffers—had to be turned off in browsers earlier this year. They will be turned on again. Turning them off was just a temporary measure to reduce the impact of the Spectre security issue that was discovered in CPUs and disclosed earlier this year, but progress is being made, so stay tuned.

- SIMD

  - SIMD is under very active development at the moment.

- 64-bit addressing

  - For wasm-64, we have a good picture of how we will add this, and it is pretty similar to how x86 or ARM got support for 64 bit addressing.

- Streaming compilation

  - We added streaming compilation in late 2017, and other browsers are working on it too.

- Tiered compilation

  - We added our baseline compiler in late 2017 as well, and other browsers have been adding the same kind of architecture over the past year.

- Implicit HTTP caching

  - In Firefox, we’re getting close to landing support for implicit HTTP caching.

- Other improvements

  - Other improvements are currently in discussion.

Even though this is all still in progress, you already see some of these heavyweight applications coming out today, because WebAssembly already gives these apps the performance that they need.

But once these features are all in place, that’s going to be another achievement unlocked, and more of these heavyweight applications will be able to come to the browser.

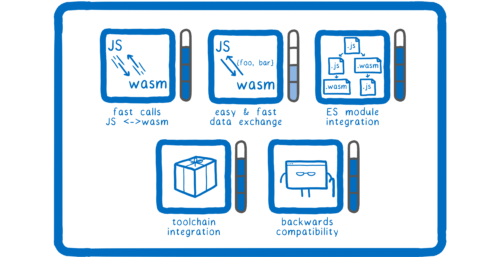

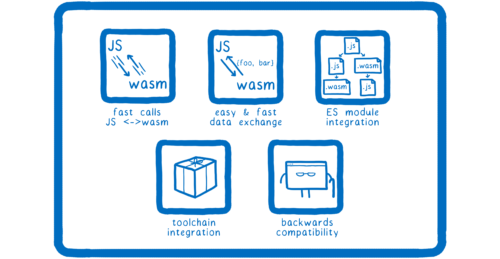

Small modules interoperating with JavaScript

But WebAssembly isn’t just for games and for heavyweight applications. It’s also meant for regular web development… for the kind of web development folks are used to: the small modules kind of web development.

Sometimes you have little corners of your app that do a lot of heavy processing, and in some cases, this processing can be faster with WebAssembly. We want to make it easy to port these bits to WebAssembly.

Again, this is a case where some of it’s already happening. Developers are already incorporating WebAssembly modules in places where there are tiny modules doing lots of heavy lifting.

One example is the parser in the source map library that’s used in Firefox’s DevTools and webpack. It was rewritten in Rust, compiled to WebAssembly, which made it 11x faster. And WordPress’s Gutenberg parser is on average 86x faster after doing the same kind of rewrite.

But for this kind of use to really be widespread—for people to be really comfortable doing it—we need to have a few more things in place.

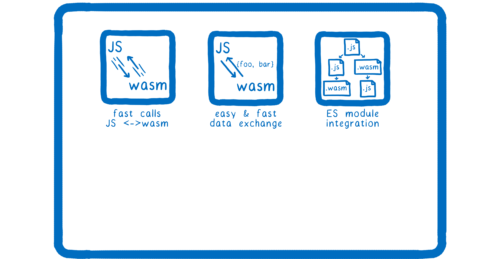

Skill: Fast calls between JS and WebAssembly

First, we need fast calls between JS and WebAssembly, because if you’re integrating a small module into an existing JS system, there’s a good chance you’ll need to call between the two a lot. So you’ll need those calls to be fast.

But when WebAssembly first came out, these calls weren’t fast. This is where we get back to that whole MVP thing—the engines had the minimum support for calls between the two. They just made the calls work, they didn’t make them fast. So engines need to optimize these.

We’ve recently finished our work on this in Firefox. Now some of these calls are actually faster than non-inlined JavaScript to JavaScript calls. And other engines are also working on this.

Skill: Fast and easy data exchange

That brings us to another thing, though. When you’re calling between JavaScript and WebAssembly, you often need to pass data between them.

You need to pass values into the WebAssembly function or return a value from it. This can also be slow, and it can be difficult too.

There are a couple of reasons it’s hard. One is because, at the moment, WebAssembly only understands numbers. This means that you can’t pass more complex values, like objects, in as parameters. You need to convert that object into numbers and put it in the linear memory. Then you pass WebAssembly the location in the linear memory.

That’s kind of complicated. And it takes some time to convert the data into linear memory. So we need this to be easier and faster.
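To make the steps concrete, here is a toy model in Python. It is not real WebAssembly: a `bytearray` stands in for linear memory, and the names `write_string`, `count_vowels` and `next_free` are made up for illustration. The point is that the "wasm" function only ever receives numbers.

```python
# Toy model: a bytearray stands in for WebAssembly's linear memory.
memory = bytearray(64 * 1024)  # one 64 KiB page
next_free = 0  # hypothetical, very naive allocation cursor


def write_string(text: str) -> tuple:
    """JS side: encode the string as UTF-8, copy it into linear memory,
    and return the numbers (pointer, length) that can be passed to wasm."""
    global next_free
    data = text.encode("utf-8")
    ptr = next_free
    memory[ptr:ptr + len(data)] = data
    next_free += len(data)
    return ptr, len(data)


def count_vowels(ptr: int, length: int) -> int:
    """Wasm side: receives only numbers and reads the bytes itself."""
    chunk = memory[ptr:ptr + length]
    return sum(1 for byte in chunk if byte in b"aeiouAEIOU")


ptr, length = write_string("WebAssembly")
vowels = count_vowels(ptr, length)
```

Both costs mentioned above show up here: the caller has to do the encoding and copying by hand, and that copy takes time on every call.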

Skill: ES module integration

Another thing we need is integration with the browser’s built in ES module support. Right now, you instantiate a WebAssembly module using an imperative API. You call a function and it gives you back a module.

But that means that the WebAssembly module isn’t really part of the JS module graph. In order to use import and export like you do with JS modules, you need to have ES module integration.

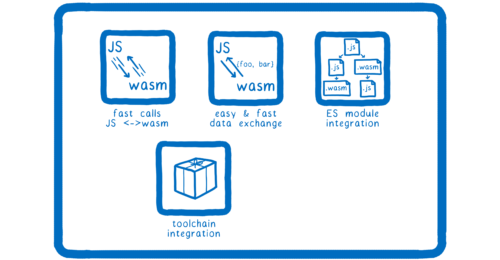

Skill: Toolchain integration

Just being able to import and export doesn’t get us all the way there, though. We need a place to distribute these modules, and download them from, and tools to bundle them up.

What’s the npm for WebAssembly? Well, what about npm?

And what’s the webpack or Parcel for WebAssembly? Well, what about webpack and Parcel?

These modules shouldn’t look any different to the people who are using them, so no reason to create a separate ecosystem. We just need tools to integrate with them.

Skill: Backwards compatibility

There’s one more thing that we need to really do well in existing JS applications—support older versions of browsers, even those browsers that don’t know what WebAssembly is. We need to make sure that you don’t have to write a whole second implementation of your module in JavaScript just so that you can support IE11.

Where are we on this?

So where are we on this?

- Fast calls between JS and WebAssembly

- Calls between JS and WebAssembly are fast in Firefox now, and other browsers are also working on this.

- Easy and fast data exchange

- For easy and fast data exchange, there are a few proposals that will help with this.

As I mentioned before, one reason you have to use linear memory for more complex kinds of data is because WebAssembly only understands numbers. The only types it has are ints and floats.

With the reference types proposal, this will change. This proposal adds a new type that WebAssembly functions can take as arguments and return. And this type is a reference to an object from outside WebAssembly—for example, a JavaScript object.

But WebAssembly can’t operate directly on this object. To actually do things like call a method on it, it will still need to use some JavaScript glue. This means it works, but it’s slower than it needs to be.

To speed things up, there’s a proposal that we’ve been calling the host bindings proposal. It lets a wasm module declare what glue must be applied to its imports and exports, so that the glue doesn’t need to be written in JS. By pulling glue from JS into wasm, the glue can be optimized away completely when calling builtin Web APIs.

There’s one more part of the interaction that we can make easier. And that has to do with keeping track of how long data needs to stay in memory. If you have some data in linear memory that JS needs access to, then you have to leave it there until the JS reads the data. But if you leave it in there forever, you have what’s called a memory leak. How do you know when you can delete the data? How do you know when JS is done with it? Currently, you have to manage this yourself.

Once the JS is done with the data, the JS code has to call something like a free function to free the memory. But this is tedious and error prone. To make this process easier, we’re adding WeakRefs to JavaScript. With this, you will be able to observe objects on the JS side. Then you can do cleanup on the WebAssembly side when that object is garbage collected.

So these proposals are all in flight. In the meantime, the Rust ecosystem has created tools that automate this all for you, and that polyfill the proposals that are in flight.

One tool in particular is worth mentioning, because other languages can use it too. It’s called wasm-bindgen. When it sees that your Rust code should do something like receive or return certain kinds of JS values or DOM objects, it will automatically create JavaScript glue code that does this for you, so you don’t need to think about it. And because it’s written in a language independent way, other language toolchains can adopt it.

- ES module integration

- For ES module integration, the proposal is pretty far along. We are starting work with the browser vendors to implement it.

- Toolchain support

- For toolchain support, there are tools like wasm-pack in the Rust ecosystem, which automatically runs everything you need to package your code for npm. And the bundlers are also actively working on support.

- Backwards compatibility

- Finally, for backwards compatibility, there’s the wasm2js tool. That takes a wasm file and spits out the equivalent JS. That JS isn’t going to be fast, but at least that means it will work in older versions of browsers that don’t understand WebAssembly.
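The memory cleanup described under easy and fast data exchange above can be modelled with Python's `weakref` module. This is only an analogy to the WeakRefs being added to JavaScript, not the JavaScript API itself, and `wasm_free` and `JsHandle` are hypothetical names. The sketch shows the cleanup running automatically once the wrapper object is collected, instead of the caller having to remember to free.

```python
import gc
import weakref

freed = []  # records which pointers the "wasm" side has freed


def wasm_free(ptr: int) -> None:
    """Hypothetical free function exported by the module."""
    freed.append(ptr)


class JsHandle:
    """JS-side wrapper object for data that lives in linear memory at ptr."""

    def __init__(self, ptr: int):
        self.ptr = ptr
        # Run wasm_free(ptr) once this wrapper has been garbage collected.
        weakref.finalize(self, wasm_free, ptr)


handle = JsHandle(1024)
before = list(freed)  # nothing is freed while the wrapper is still alive
del handle            # the last reference goes away...
gc.collect()          # ...and by now the cleanup callback has run
```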

So we’re getting close to unlocking this achievement. And once we unlock it, we open the path to another two.

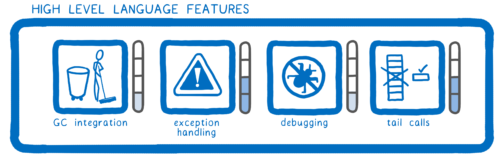

JS frameworks and compile-to-JS languages

One is rewriting large parts of things like JavaScript frameworks in WebAssembly.

The other is making it possible for statically-typed compile-to-js languages to compile to WebAssembly instead—for example, having languages like Scala.js, or Reason, or Elm compile to WebAssembly.

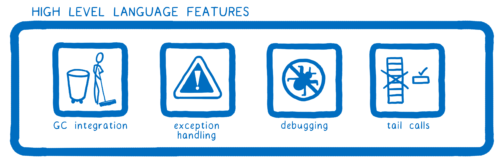

For both of these use cases, WebAssembly needs to support high-level language features.

Skill: GC

We need integration with the browser’s garbage collector for a couple of reasons.

First, let’s look at rewriting parts of JS frameworks. This could be good for a couple of reasons. For example, in React, one thing you could do is rewrite the DOM diffing algorithm in Rust, which has very ergonomic multithreading support, and parallelize that algorithm.

You could also speed things up by allocating memory differently. In the virtual DOM, instead of creating a bunch of objects that need to be garbage collected, you could use a special memory allocation scheme. For example, you could use a bump allocator scheme, which has extremely cheap allocation and all-at-once deallocation. That could potentially help speed things up and reduce memory usage.
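For readers who haven't met the term, a bump allocator can be sketched as follows. This is a minimal illustration in Python, with a made-up `BumpAllocator` class and an assumed 8-byte default alignment. It is not code from React or from any Rust crate.

```python
class BumpAllocator:
    """Toy bump allocator over a fixed-size region of memory."""

    def __init__(self, size: int):
        self.memory = bytearray(size)
        self.offset = 0  # everything below this index is in use

    def alloc(self, nbytes: int, align: int = 8) -> int:
        """Reserve nbytes and return their start index.
        Allocation is just rounding up and bumping one counter."""
        start = (self.offset + align - 1) // align * align
        if start + nbytes > len(self.memory):
            raise MemoryError("arena exhausted")
        self.offset = start + nbytes
        return start

    def reset(self) -> None:
        """Free every allocation at once by rewinding the counter."""
        self.offset = 0


arena = BumpAllocator(1024)
a = arena.alloc(10)   # starts at 0
b = arena.alloc(4)    # starts at 16: 10 rounded up to a multiple of 8
arena.reset()         # for example, once one virtual DOM diff is finished
c = arena.alloc(32)   # starts at 0 again: the space is reused
```

Allocation costs a couple of arithmetic operations, and nothing is freed individually, which is why the scheme suits short-lived batches of objects.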

But you’d still need to interact with JS objects—things like components—from that code. You can’t just continually copy everything in and out of linear memory, because that would be difficult and inefficient.

So you need to be able to integrate with the browser’s GC so you can work with components that are managed by the JavaScript VM. Some of these JS objects need to point to data in linear memory, and sometimes the data in linear memory will need to point out to JS objects.

If this ends up creating cycles, it can mean trouble for the garbage collector. It means the garbage collector won’t be able to tell if the objects are used anymore, so they will never be collected. WebAssembly needs integration with the GC to make sure these kinds of cross-language data dependencies work.

This will also help statically-typed languages that compile to JS, like Scala.js, Reason, Kotlin or Elm. These languages use JavaScript’s garbage collector when they compile to JS. Because WebAssembly can use that same GC (the one that’s built into the engine), these languages will be able to compile to WebAssembly instead and use that same garbage collector. They won’t need to change how GC works for them.

Skill: Exception handling

We also need better support for handling exceptions.

Some languages, like Rust, do without exceptions. But in other languages, like C++, JS or C#, exception handling is sometimes used extensively.

You can polyfill exception handling currently, but the polyfill makes the code run really slowly. So the default when compiling to WebAssembly is currently to compile without exception handling.

However, since JavaScript has exceptions, even if you’ve compiled your code to not use them, JS may throw one into the works. If your WebAssembly function calls a JS function that throws, then the WebAssembly module won’t be able to correctly handle the exception. So languages like Rust choose to abort in this case. We need to make this work better.

Skill: Debugging

Another thing that people working with JS and compile-to-JS languages are used to having is good debugging support. Devtools in all of the major browsers make it easy to step through JS. We need this same level of support for debugging WebAssembly in browsers.

Skill: Tail calls

And finally, for many functional languages, you need to have support for something called tail calls. I’m not going to get too into the details on this, but basically it lets you call a new function without adding a new stack frame to the stack. So for functional languages that support this, we want WebAssembly to support it too.
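Python itself does not eliminate tail calls, so the sketch below only emulates the effect with a trampoline: instead of calling the next function directly, each step returns a thunk, and a loop runs the thunks one after another. The function names are made up for illustration. What it shows is the property that matters: the chain of calls can be arbitrarily long without the stack growing.

```python
import sys


def count_down(n: int):
    """Tail-recursive in spirit: the last thing it does is 'call' itself.
    Instead of calling directly, it returns a thunk for the next call."""
    if n == 0:
        return "done"
    return lambda: count_down(n - 1)


def trampoline(result):
    """Keep running the returned thunks in a loop, so each 'tail call'
    reuses the same stack depth instead of adding a frame."""
    while callable(result):
        result = result()
    return result


depth = sys.getrecursionlimit() * 10  # far deeper than plain recursion allows
outcome = trampoline(count_down(depth))
```

With native tail call support, a compiler would not need this workaround; the call instruction itself would reuse the current frame.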

Where are we on this?

So where are we on this?

- Garbage collection

- For garbage collection, there are two proposals currently underway: the Typed Objects proposal for JS, and the GC proposal for WebAssembly. Typed Objects will make it possible to describe an object’s fixed structure. There is an explainer for this, and the proposal will be discussed at an upcoming TC39 meeting.

The WebAssembly GC proposal will make it possible to directly access that structure. This proposal is under active development.

With both of these in place, both JS and WebAssembly know what an object looks like and can share that object and efficiently access the data stored on it. Our team actually already has a prototype of this working. However, it still will take some time for these to go through standardization so we’re probably looking at sometime next year.

- Exception handling

- Exception handling is still in the research and development phase, and there’s work now to see if it can take advantage of other proposals like the reference types proposal I mentioned before.

- Debugging

- For debugging, there is currently some support in browser devtools. For example, you can step through the text format of WebAssembly in the Firefox debugger. But it’s still not ideal. We want to be able to show you where you are in your actual source code, not in the assembly. The thing that we need to do for that is figure out how source maps (or a source maps type thing) work for WebAssembly. So there’s a subgroup of the WebAssembly CG working on specifying that.

- Tail calls

- The tail calls proposal is also underway.

Once those are all in place, we’ll have unlocked JS frameworks and many compile-to-JS languages.

So, those are all achievements we can unlock inside the browser. But what about outside the browser?

Outside the Browser

Now, you may be confused when I talk about “outside the browser”. Because isn’t the browser what you use to view the web? And isn’t that right in the name, WebAssembly?

But the truth is the things you see in the browser—the HTML and CSS and JavaScript—are only part of what makes the web. They are the visible part—they are what you use to create a user interface—so they are the most obvious.

But there’s another really important part of the web which has properties that aren’t as visible.

That is the link. And it is a very special kind of link.

The innovation of this link is that I can link to your page without having to put it in a central registry, and without having to ask you or even know who you are. I can just put that link there.

It’s this ease of linking, without any oversight or approval bottlenecks, that enabled our web. That’s what enabled us to form these global communities with people we didn’t know.

But if all we have is the link, there are two problems here that we haven’t addressed.

The first one is… you go visit this site and it delivers some code to you. How does it know what kind of code it should deliver to you? Because if you’re running on a Mac, then you need different machine code than you do on Windows. That’s why you have different versions of programs for different operating systems.

Then should a web site have a different version of the code for every possible device? No.

Instead, the site has one version of the code—the source code. This is what’s delivered to the user. Then it gets translated to machine code on the user’s device.

The name for this concept is portability.

So that’s great, you can load code from people who don’t know you and don’t know what kind of device you’re running.

But that brings us to a second problem. If you don’t know these people whose web pages you’re loading, how do you know what kind of code they’re giving you? It could be malicious code. It could be trying to take over your system.

Doesn’t this vision of the web (running code from anybody whose link you follow) mean that you have to blindly trust anyone who’s on the web?

This is where the other key concept from the web comes in.

That’s the security model. I’m going to call it the sandbox.

Basically, the browser takes the page—that other person’s code—and instead of letting it run around willy-nilly in your system, it puts it in a sandbox. It puts a couple of toys that aren’t dangerous into that sandbox so that the code can do some things, but it leaves the dangerous things outside of the sandbox.

So the utility of the link is based on these two things:

- Portability—the ability to deliver code to users and have it run on any type of device that can run a browser.

- And the sandbox—the security model that lets you run that code without risking the integrity of your machine.

So why does this distinction matter? Why does it make a difference if we think of the web as something that the browser shows us using HTML, CSS, and JS, or if we think of the web in terms of portability and the sandbox?

Because it changes how you think about WebAssembly.

You can think about WebAssembly as just another tool in the browser’s toolbox… which it is.

It is another tool in the browser’s toolbox. But it’s not just that. It also gives us a way to take these other two capabilities of the web—the portability and the security model—and take them to other use cases that need them too.

We can expand the web past the boundaries of the browser. Now let’s look at where these attributes of the web would be useful.

Node.js

How could WebAssembly help Node? It could bring full portability to Node.

Node gives you most of the portability of JavaScript on the web. But there are lots of cases where Node’s JS modules aren’t quite enough—where you need to improve performance or reuse existing code that’s not written in JS.

In these cases, you need Node’s native modules. These modules are written in languages like C, and they need to be compiled for the specific kind of machine that the user is running on.

Native modules are either compiled when the user installs, or precompiled into binaries for a wide matrix of different systems. One of these approaches is a pain for the user, the other is a pain for the package maintainer.

Now, if these native modules were written in WebAssembly instead, then they wouldn’t need to be compiled specifically for the target architecture. Instead, they’d just run like the JavaScript in Node runs. But they’d do it at nearly native performance.

So we get to full portability for the code running in Node. You could take the exact same Node app and run it across all different kinds of devices without having to compile anything.

But WebAssembly doesn’t have direct access to the system’s resources. Native modules in Node aren’t sandboxed—they have full access to all of the dangerous toys that the browser keeps out of the sandbox. In Node, JS modules also have access to these dangerous toys because Node makes them available. For example, Node provides methods for reading from and writing files to the system.

For Node’s use case, it makes a certain amount of sense for modules to have this kind of access to dangerous system APIs. So if WebAssembly modules don’t have that kind of access by default (like Node’s current modules do), how could we give WebAssembly modules the access they need? We’d need to pass in functions so that the WebAssembly module can work with the operating system, just as Node does with JS.

For Node, this will probably include a lot of the functionality that’s in things like the C standard library. It would also likely include things that are part of POSIX—the Portable Operating System Interface—which is an older standard that helps with compatibility. It provides one API for interacting with the system across a bunch of different Unix-like OSs. Modules would definitely need a bunch of POSIX-like functions.

Skill: Portable interface

What the Node core folks would need to do is figure out the set of functions to expose and the API to use.

But wouldn’t it be nice if that were actually something standard? Not something that was specific to just Node, but could be used across other runtimes and use cases too?

A POSIX for WebAssembly if you will. A PWSIX? A portable WebAssembly system interface.

And if that were done in the right way, you could even implement the same API for the web. These standard APIs could be polyfilled onto existing Web APIs.

These functions wouldn’t be part of the WebAssembly spec. And there would be WebAssembly hosts that wouldn’t have them available. But for those platforms that could make use of them, there would be a unified API for calling these functions, no matter which platform the code was running on. And this would make universal modules—ones that run across both the web and Node—so much easier.

Where are we with this?

So, is this something that could actually happen?

A few things are working in this idea’s favor. There’s a proposal called package name maps that will provide a mechanism for mapping a module name to a path to load the module from. And that will likely be supported by both browsers and Node, which can use it to provide different paths, and thus load entirely different modules, but with the same API. This way, the .wasm module itself can specify a single (module-name, function-name) import pair that Just Works on different environments, even the web.
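The effect of resolving one import pair differently per host can be modelled like this. It is a toy sketch in Python, not the package name maps syntax and not a real WebAssembly API: the hosts, the `("sys", "write")` pair and every function name are invented for illustration.

```python
# Each host provides its own implementation behind the same
# (module name, function name) pair. All names here are hypothetical.
def node_write(text: str) -> str:
    return "node wrote to stdout: " + text


def browser_write(text: str) -> str:
    return "browser wrote to console: " + text


HOST_IMPORTS = {
    "node": {("sys", "write"): node_write},
    "browser": {("sys", "write"): browser_write},
}


def instantiate(host: str):
    """Resolve the module's single declared import against the given host
    and return the module's exported function."""
    imports = HOST_IMPORTS[host]
    write = imports[("sys", "write")]  # the only access the module gets

    def greet(name: str) -> str:
        # The module body is identical on every host.
        return write("hello, " + name)

    return greet


on_node = instantiate("node")("wasm")
on_web = instantiate("browser")("wasm")
```

The module code never changes; only the table the host consults does. The module also cannot reach anything the host did not explicitly pass in, which is how the sandbox is preserved.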

With that mechanism in place, what’s left to do is actually figure out what functions make sense and what their interface should be.

There’s no active work on this at the moment. But a lot of discussions are heading in this direction right now. And it looks likely to happen, in one form or another.

Which is good, because unlocking this gets us halfway to unlocking some other use cases outside the browser. And with this in place, we can accelerate the pace.

So, what are some examples of these other use cases?

CDNs, Serverless, and Edge Computing

One example is things like CDNs, and Serverless, and Edge Computing. These are cases where you’re putting your code on someone else’s server, and they make sure that the server is maintained and that the code is close to all of your users.

Why would you want to use WebAssembly in these cases? There was a great talk explaining exactly this at a conference recently.

Fastly is a company that provides CDNs and edge computing. And their CTO, Tyler McMullen, explained it this way (and I’m paraphrasing here):

If you look at how a process works, code in that process doesn’t have boundaries. Functions have access to whatever memory in that process they want, and they can call whatever other functions they want.

When you’re running a bunch of different people’s services in the same process, this is an issue. Sandboxing could be a way to get around this. But then you get to a scale problem.

For example, if you use a JavaScript VM like Firefox’s SpiderMonkey or Chrome’s V8, you get a sandbox and you can put hundreds of instances into a process. But with the numbers of requests that Fastly is servicing, you don’t just need hundreds per process—you need tens of thousands.

Tyler does a better job of explaining all of it in his talk, so you should go watch that. But the point is that WebAssembly gives Fastly the safety, speed, and scale needed for this use case.

So what did they need to make this work?

Skill: Runtime

They needed to create their own runtime. That means taking a WebAssembly compiler—something that can compile WebAssembly down to machine code—and combining it with the functions for interacting with the system that I mentioned before.

For the WebAssembly compiler, Fastly used Cranelift, the compiler that we’re also building into Firefox. It’s designed to be very fast and doesn’t use much memory.

Now, for the functions that interact with the rest of the system, they had to create their own, because we don’t have that portable interface available yet.

So it’s possible to create your own runtime today, but it takes some effort. And it’s effort that will have to be duplicated across different companies.

What if we didn’t just have the portable interface, but we also had a common runtime that could be used across all of these companies and other use cases? That would definitely speed up development.

Then other companies could just use that runtime—like they do Node today—instead of creating their own from scratch.

Where are we on this?

So what’s the status of this?

Even though there’s no standard runtime yet, there are a few runtime projects in flight right now. These include WAVM, which is built on top of LLVM, and wasmjit.

In addition, we’re planning a runtime that’s built on top of Cranelift, called wasmtime.

And once we have a common runtime, that speeds up development for a bunch of different use cases. For example…

Portable CLI tools

WebAssembly can also be used in more traditional operating systems. Now to be clear, I’m not talking about in the kernel (although brave souls are trying that, too) but WebAssembly running in Ring 3—in user mode.

Then you could do things like have portable CLI tools that could be used across all different kinds of operating systems.

And this is pretty close to another use case…

Internet of Things

The internet of things includes devices like wearable technology, and smart home appliances.

These devices are usually resource constrained—they don’t pack much computing power and they don’t have much memory. And this is exactly the kind of situation where a compiler like Cranelift and a runtime like wasmtime would shine, because they would be efficient and low-memory. And in the extremely-resource-constrained case, WebAssembly makes it possible to fully compile to machine code before loading the application on the device.

There’s also the fact that there are so many of these different devices, and they are all slightly different. WebAssembly’s portability would really help with that.

So that’s one more place where WebAssembly has a future.

Conclusion

Now let’s zoom back out and look at this skill tree.

I said at the beginning of this post that people have a misconception about WebAssembly—this idea that the WebAssembly that landed in the MVP was the final version of WebAssembly.

I think you can see now why this is a misconception.

Yes, the MVP opened up a lot of opportunities. It made it possible to bring a lot of desktop applications to the web. But we still have many use cases to unlock, from heavy-weight desktop applications, to small modules, to JS frameworks, to all the things outside the browser… Node.js, and serverless, and the blockchain, and portable CLI tools, and the internet of things.

So the WebAssembly that we have today is not the end of this story—it’s just the beginning.

The post WebAssembly’s post-MVP future: A cartoon skill tree appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2018/10/webassemblys-post-mvp-future/

Daniel Stenberg: DNS-over-HTTPS is RFC 8484 |

The protocol we fondly know as DoH, DNS-over-HTTPS, is now officially RFC 8484 with the official title "DNS Queries over HTTPS (DoH)". It documents the protocol that is already in production and used by several client-side implementations, including Firefox, Chrome and curl. Put simply, DoH sends a regular RFC 1035 DNS packet over HTTPS instead of over plain UDP.
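As a rough illustration of what "a regular DNS packet over HTTPS" means, the sketch below builds a minimal RFC 1035 query in Python and encodes it the way RFC 8484 specifies for GET requests: unpadded base64url in a `dns` query parameter. The helper names are made up, the `/dns-query` path is an assumption about the server, and no network request is made.

```python
import base64
import struct


def build_dns_query(hostname: str, qtype: int = 1) -> bytes:
    """Build a minimal RFC 1035 query message (qtype 1 = A record)."""
    # Header: ID 0, flags 0x0100 (recursion desired), 1 question,
    # 0 answer / authority / additional records.
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    # Question name: each label is prefixed with its length, ending in 0.
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.split(".")
    ) + b"\x00"
    # Question type and class (1 = IN, the Internet class).
    return header + qname + struct.pack("!HH", qtype, 1)


def doh_get_path(hostname: str, endpoint: str = "/dns-query") -> str:
    """Encode the query for an RFC 8484 GET request: unpadded base64url
    in the 'dns' parameter. The endpoint path is an assumption."""
    packet = build_dns_query(hostname)
    encoded = base64.urlsafe_b64encode(packet).rstrip(b"=").decode("ascii")
    return endpoint + "?dns=" + encoded


path = doh_get_path("www.example.com")
```

A client would request this path over HTTPS with an `Accept: application/dns-message` header, and the response body is again an ordinary DNS message. Using ID 0 follows the RFC's recommendation, since it lets identical queries share HTTP cache entries.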

I'm happy to have contributed my little bits to this standard effort and I'm credited in the Acknowledgements section. I've also implemented DoH client-side several times now.

Firefox has done studies and tests in cooperation with a CDN provider (which has sometimes made people conflate Firefox's DoH support with those studies and that operator). These studies have shown that DoH is a workable way for many users to do secure name resolves at a reasonable cost, at least when falling back to the native resolver for the tricky situations. In general DoH resolves are slower than the native ones, but at the tail end, the absolutely slowest name resolves got a lot better with the DoH option.

To me, DoH is partly necessary because the "DNS world" has failed to ship and deploy secure and safe name lookups to the masses and this is the one way applications "one layer up" can still secure our users.

https://daniel.haxx.se/blog/2018/10/19/dns-over-https-is-rfc-8484/

|

|

Chris H-C: Three-Year Moziversary |

Another year at Mozilla. They certainly don’t slow down the more you have of them.

For once a year of stability, organization-wise. The two biggest team changes were the addition of Jan-Erik back on March 1, and the loss of our traditional team name “Browser Measurement II” for a more punchy and descriptive “Firefox Telemetry Team.”

I will miss good ol’ BM2, though it is fun signing off notification emails with “Your Friendly Neighbourhood Firefox Telemetry Team (:gfritzsche, :janerik, :Dexter, :chutten)”

We’re actually in the market for a Mobile Telemetry Engineer, so if you or someone you know might be interested in hanging out with us and having their username added to the above, please take a look right here.

In blogging velocity, I think I kept up my resolution to blog more. I’m up to 32 posts so far in 2018 (compared to year totals of 15, 26, and 27 in 2015-2017) and I have a few drafts kicking in the bin that ought to be published before the end of the year. Part of this is due to two new blogging efforts: So I’ve Finished (a series of posts about video games I’ve completed), and Ford The Lesser (a series summarizing the deeds and tone of the new Ontario Provincial Government). Neither is particularly Mozilla-related, though, so I’m not sure if the count of work blogposts has changed.

Thinking back to work stuff, let’s go chronologically. Last November we released Firefox Quantum. It was and is a big deal. Then in December all hands went to Austin, Texas.

Electives happened again so I did a reprise of Death-Defying Stats (where I stand up and solve data questions, Live On Stage). We saw Star Wars: The Last Jedi (I’m not sure why the internet didn’t like it. I thought it was grand. Though the theatre ruined the impact of That One Scene by letting us know that no, the sound didn’t actually cut out; it was deliberate. Yeesh). We partied at a pseudo-historical southwestern US town, drinking warm beverages out of gigantic branded mugs we got to take home afterwards.

Then we launched straight into 2018. Interviews increased to a crushing density for the role that was to become Jan-Erik’s and for two interns: one (Erin Comerford) working on redesigns for the venerable Telemetry Measurement Dashboards, and another (Agnieszka Ciepielewska) working on automatic change detection and explanation for Telemetry metrics.

In June we met again in San Francisco, but this time without Georg who was suffering a cold. Sunah and I gave a talk about Event Telemetry, Steak Club met again, and we got to mess around with science stuff at the Exploratorium.

This year’s Summer Big Project… y’know, there were a few of them. The first was the Event Telemetry “event” ping. Then there was the Measurement Dashboard redesign project where I ended up mentoring more than I expected due to PTO and timezones.

Also in the summer I was organizing and then going on a trip to celebrate a different anniversary (my tenth wedding anniversary) for nearly the entire month of July.

In August the team met in Berlin, and this time I was able to join in. That was a fun and productive time where we settled matters of team identity, ownership, process, and developed some delightful in-jokes to perplex anyone not in the in-group. I acted as an arm of Ontario Craft Beer Tourism by importing a few local cans (Waterloo Dark and Mad & Noisy Lagered Ale) while asking (well-intentioned but numerous and likely ignorant) questions about European life and politics and food and history and …

And that brings us more or less to now. September was busy. October is busy. I’m helping :frank put authentication on the old Measurement Dashboards so we can put release-channel data back up there without someone taking it and misinterpreting it. (As an org we’ve made the conscious decision to use our public data in a deliberate fashion to support truthful narratives about our products and our users. Like on the Firefox Public Data Report.) I’m looking into how we might take what we learned with Erin’s redesign prototype and productionize it with real data. I’m also improving documentation and consulting with a variety of teams on a variety of data things.

So, resolutions for the next twelve months? Keep on keeping on, I guess. I’m happy with the progress I have made this past year. I’m pleased with the direction our team and the broader org is headed. I’m interested to see where time and effort will take us.

:chutten

https://chuttenblog.wordpress.com/2018/10/19/three-year-moziversary/

|

|

Daniel Pocock: Debian GSoC 2018 report |

One of my major contributions to Debian in 2018 has been participation as a mentor and admin for Debian in Google Summer of Code (GSoC).

Here are a few observations about what happened this year, from my personal perspective in those roles.

Making a full report of everything that happens in GSoC is close to impossible. Here I consider issues that span multiple projects and the mentoring team. For details on individual projects completed by the students, please see their final reports posted in August on the mailing list.

Thanking our outgoing administrators

Nicolas Dandrimont and Sylvestre Ledru retired from the admin role after GSoC 2016, and Tom Marble has retired from the Outreachy administration role. We should be enormously grateful for the effort they have put in, as these are very demanding roles.

When the last remaining member of the admin team, Molly, asked for people to step in for 2018, knowing the huge effort involved, I offered to help out on a very temporary basis. We drafted a new delegation but didn't seek to have it ratified until the team evolves. We started 2018 with Molly, Jaminy, Alex and myself. The role needs at least one new volunteer with strong mentoring experience for 2019.

Project ideas

Google encourages organizations to put project ideas up for discussion and also encourages students to spontaneously propose their own ideas. This latter concept is a significant difference between GSoC and Outreachy that has caused unintended confusion for some mentors in the past. I have frequently put teasers on my blog, without full specifications, to see how students would try to respond. Some mentors are much more precise, telling students exactly what needs to be delivered and how to go about it. Both approaches are valid early in the program.

Student inquiries

Students start sending inquiries to some mentors well before GSoC starts. When Google publishes the list of participating organizations (that was on 12 February this year), the number of inquiries increases dramatically, in the form of personal emails to the mentors, inquiries on the debian-outreach mailing list, the IRC channel and many project-specific mailing lists and IRC channels.

Over 300 students contacted me personally or through the mailing list during the application phase (between 12 February and 27 March). This is a huge number and makes it impossible to engage in a dialogue with every student. In the last years in which I have mentored, 2016 and 2018, I've personally put a bigger effort into engaging other mentors during this phase and introducing them to some of the students who already made a good first impression.

As an example, Jacob Adams first inquired about my PKI/PGP Clean Room idea back in January. I was really excited about his proposals but I knew I simply didn't have the time to mentor him personally, so I added his blog to Planet Debian and suggested he put out a call for help. One mentor, Daniele Nicolodi, replied to that and I also introduced him to Thomas Levine. They both generously volunteered and, together with Jacob, ensured a successful project. While I originally started the clean room, they deserve all the credit for the enhancements in 2018, and this emphasizes the importance of those introductions made during the early stages of GSoC.

In fact, there were half a dozen similar cases this year where I have interacted with a really promising student and referred them to the mentor(s) who appeared optimal for their profile.

After my recent travels in the Balkans, a number of people from Albania and Kosovo expressed an interest in GSoC and Outreachy. The students from Kosovo found that their country was not listed in the application form but the Google team very promptly added it, allowing them to apply for GSoC for the first time. Kosovo still can't participate in the Olympics or the World Cup, but they can compete in GSoC now.

At this stage, I was still uncertain if I would mentor any project myself in 2018 or only help with the admin role, which I had only agreed to do on a very temporary basis until the team evolves. Nonetheless, the day before student applications formally opened (12 March) and after looking at the interest areas of students who had already made contact, I decided to go ahead mentoring a single project, the wizard for new students and contributors.

Student selections

The application deadline closed on 27 March. At this time, Debian had 102 applications, an increase over the 75 applications from 2016. Five applicants were female, including three from Kosovo.

One challenge we've started to see is that since Google reduced the stipend for GSoC, Outreachy appears to pay more in many countries. Some women put more effort into an Outreachy application or don't apply for GSoC at all, even though there are far more places available in GSoC each year. GSoC typically takes over 1,000 interns in each round while Outreachy can only accept approximately 50.

Applicants are not evenly distributed across all projects. Some mentors/projects only receive one applicant and then mentors simply have to decide if they will accept the applicant or cancel the project. Other mentors receive ten or more complete applications and have to spend time studying them, comparing them and deciding on the best way to rank them and make a decision.

Given the large number of project ideas in Debian, we found that the Google portal didn't allow us to use enough category names to distinguish them all. We contacted the Google team about this and they very quickly increased the number of categories we could use. This made it much easier to tag the large number of applications so that each mentor could filter the list and only see their own applicants.

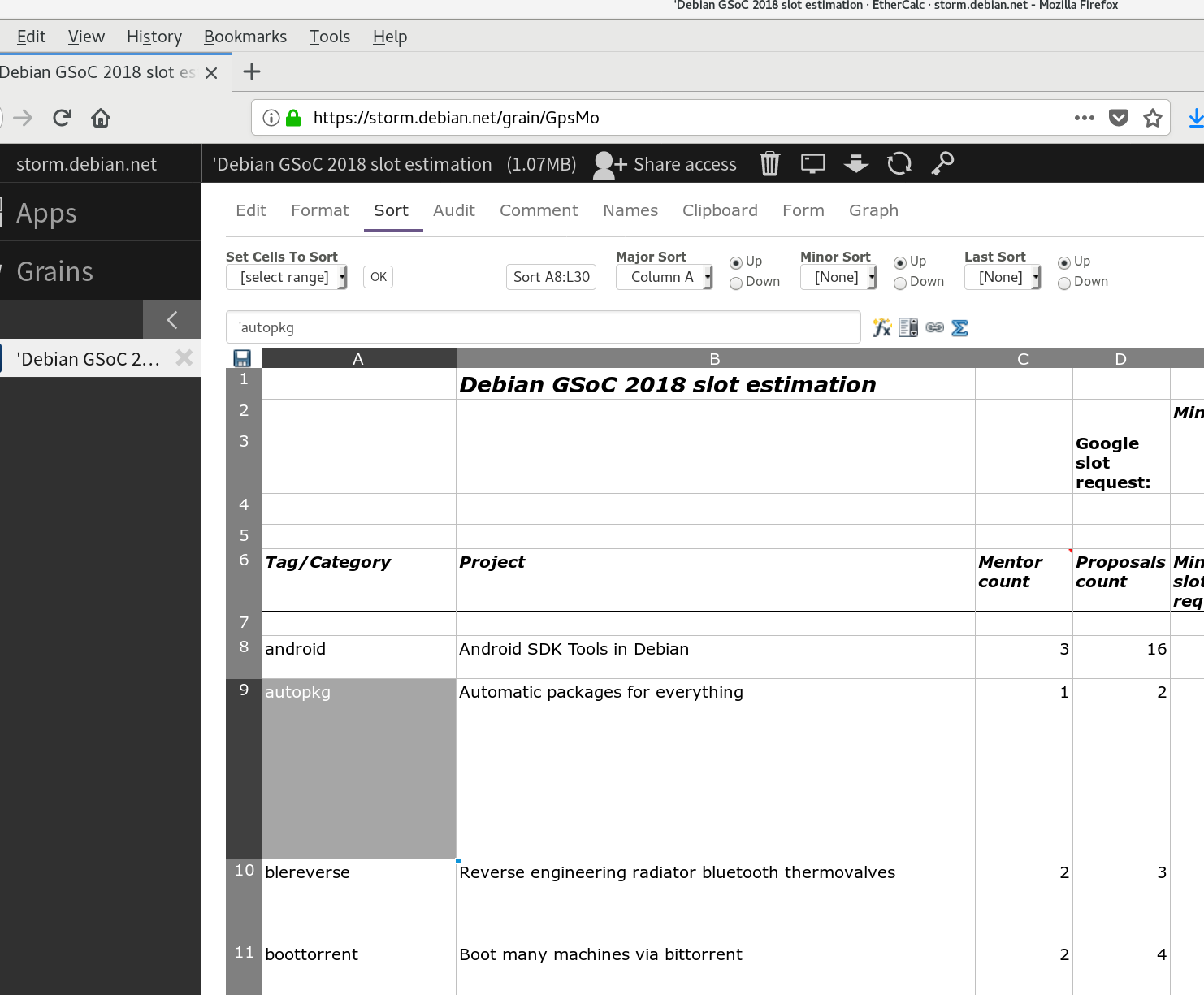

The project I mentored personally, a wizard for helping new students get started, attracted interest from 3 other co-mentors and 10 student applications. To help us compare the applications and share data we gathered from the students, we set up a shared spreadsheet using Debian's Sandstorm instance and Ethercalc. Thanks to Asheesh and Laura for setting up and maintaining this great service.

Slot requests

Switching from the mentor hat to the admin hat, we had to coordinate the requests from each mentor to calculate the total number of slots we wanted Google to fund for Debian's mentors.

Once again, Debian's Sandstorm instance, running Ethercalc, came to the rescue.

All mentors were granted access, reducing the effort for the admins and allowing a distributed, collective process of decision making. This ensured mentors could see that their slot requests were being counted correctly but it means far more than that too. Mentors put in a lot of effort to bring their projects to this stage and it is important for them to understand any contention for funding and make a group decision about which projects to prioritize if Google doesn't agree to fund all the slots.

Management tools and processes

Various topics were discussed by the team at the beginning of GSoC.

One discussion was about the definition of "team". Should the new delegation follow the existing pattern, reserving the word "team" for the admins, or should we move to the convention followed by the DebConf team, where the word "team" encompasses a broader group of the volunteers? A draft delegation text was prepared but we haven't asked for it to be ratified; this is a pending task for the 2019 team (more on that later).