You can add any RSS feed (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the contents of the original page. The syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


Daniel Stenberg: 25,000 curl questions on stackoverflow

Over time, I’ve reluctantly come to terms with the fact that a lot of questions and answers about curl are not posted on the mailing lists we have set up in the project itself.

The primary such external site with curl-related questions is of course stackoverflow, which is hardly news to programmers of today. The questions tagged with curl are of course only a very tiny fraction of the vast amount of questions and answers that accumulate on that busy site.

The pile of questions tagged with curl on stackoverflow has just surpassed the staggering number of 25,000. Of course, these questions involve people who ask about particular curl behaviors (and a large portion is about PHP/CURL), but there’s also a significant number of questions tagged curl where curl is only used to do something else, and that other thing is what the question is actually about. ‘libcurl’ is also used as a separate tag, often independently of the ‘curl’ one; libcurl is tagged on almost 2,000 questions.

But still. 25,000 questions. Wow.

I visit that site every so often and answer some questions, but I often end up feeling a great “distance” between me and the questions there, and I have a hard time bridging that gap. Also, stackoverflow the site and the format isn’t really suitable for debugging or solving problems within curl, so I often end up trying to get the user to file an issue on curl’s github page or discuss the curl problem on a mailing list instead. Those forums are more suitable for plenty of back-and-forth before the solution or fix is figured out.

Now, any bets for how long it takes until we reach 100K questions?

https://daniel.haxx.se/blog/2016/09/29/25000-curl-questions-on-stackoverflow/

Air Mozilla: Kernel Recipes 2016 Day 2 PM Session

Three days of talks around the Linux kernel

https://air.mozilla.org/kernel-recipes-2016-09-29-PM-Session/

Niko Matsakis: Distinguishing reuse from override

In my previous post, I started discussing the idea of intersection impls, which are a possible extension to specialization. I am specifically looking at the idea of making it possible to add blanket impls to (e.g.) implement Clone for any Copy type. We saw that intersection impls, while useful, do not enable us to do this in a backwards compatible way.

Today I want to dive a bit deeper into specialization. We’ll see that specialization actually couples together two things: refinement of behavior and reuse of code. This is no accident, and it’s normally a natural thing to do, but I’ll show that, in order to enable the kinds of blanket impls I want, it’s important to be able to tease those apart somewhat.

This post doesn’t really propose anything. Instead it merely explores some of the implications of having specialization rules that are not based purely on “subsets of types”, but instead go into other areas.

Requirements for backwards compatibility

In the previous post, my primary motivating example focused on the Copy and Clone traits. Specifically, I wanted to be able to add an impl like the following (we’ll call it “impl A”):

[code sample not preserved in this feed]

The idea is that if I have a Copy type, I should not have to write a Clone impl by hand. I should get one automatically.

The problem is that there are already lots of Clone impls “in the wild” (in fact, every Copy type has one, since Copy is a subtrait of Clone, and hence implementing Copy requires implementing Clone too). To be backwards compatible, we have to do two things:

- continue to compile those Clone impls without generating errors;

- give those existing Clone impls precedence over the new one.

The last point may not be immediately obvious. What I’m saying is that if you already had a type with a Copy and a Clone impl, then any attempts to clone that type need to keep calling the clone() method you wrote. Otherwise the behavior of your code might change in subtle ways.

So for example imagine that I am developing a widget crate with some types like these:

[code sample not preserved in this feed]

Then, for backwards compatibility, we want that if I have a variable widget of type Widget<T> for any T (including cases where T: Copy, and hence Widget<T>: Copy), then widget.clone() invokes impl C.

Thought experiment: Named impls and explicit specialization

For the purposes of this post, I’d like to engage now in a thought experiment. Imagine that, instead of using type subsets as the basis for specialization, we gave every impl a name, and we could explicitly specify when one impl specializes another using that name. When I say that an impl X specializes an impl Y, I mean primarily that items in the impl X override items in impl Y:

- When we go looking for an associated item, we use the one in X first.

However, in the specialization RFC as it currently stands, specializing is also tied to reuse. In particular:

- If there is no item in X, then we go looking in Y.

The point of this thought experiment is to show that we may want to separate these two concepts.
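The two roles can be pictured with a small toy model in stable Rust. It does not use the unstable specialization feature, and the `Impl` struct and `resolve` function are hypothetical names chosen for illustration. Looking up an item for an impl X that specializes Y first checks X (override) and only then falls back to Y (reuse).

```rust
use std::collections::HashMap;

/// Toy stand-in for an impl: a name plus the items it defines,
/// mapping item name -> a description of its body. Hypothetical, for illustration.
struct Impl {
    name: &'static str,
    items: HashMap<&'static str, &'static str>,
}

impl Impl {
    fn new(name: &'static str, items: &[(&'static str, &'static str)]) -> Self {
        Impl { name, items: items.iter().copied().collect() }
    }
}

/// Looks up `item` where impl `x` specializes impl `y`.
/// Override: an item defined in `x` wins.
/// Reuse: otherwise the item is taken from `y`.
/// Returns the name of the impl that supplied the item, plus its body.
fn resolve(x: &Impl, y: &Impl, item: &str) -> Option<(&'static str, &'static str)> {
    if let Some(body) = x.items.get(item) {
        return Some((x.name, *body));
    }
    y.items.get(item).map(|body| (y.name, *body))
}
```

In this model the fallback branch is exactly the reuse that the rest of the post argues is only sound when X applies to a subset of Y’s types.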

To avoid inventing syntax, I’ll use a #[name] attribute to specify the name of an impl and a #[specializes] attribute to declare when one impl specializes another. So we might declare our two Clone impls from the previous section as follows:

[code sample not preserved in this feed]

Interestingly, it turns out that this scheme of using explicit names interacts really poorly with the reuse aspects of the specialization RFC. The Clone trait is kind of too simple to show what I mean, so let’s consider an alternative trait, Dump, which has two methods:

[code sample not preserved in this feed]

Now imagine that I have a blanket implementation of Dump that applies to any type that implements Debug. It defines both display and debug to print to stdout using the Debug trait. Let’s call this “impl D”.

[code sample not preserved in this feed]

Now, maybe I’d like to specialize this impl so that if I have an iterator over items that also implement Display, then display dumps them out using Display instead. I don’t want to change the behavior for debug, so I leave that method unchanged. This is sort of analogous to subtyping in an OO language: I am refining the impl for Dump by tweaking how it behaves in certain scenarios. We’ll call this “impl E”.

[code sample not preserved in this feed]

So far, everything is fine. In fact, if you just remove the #[name] and #[specializes] annotations, this example would work with specialization as currently implemented. But imagine that we did a slightly different thing. Imagine we wrote impl E but without the requirement that T: Debug (everything else is the same). Let’s call this variant “impl F”.

[code sample not preserved in this feed]

Now we no longer have the “subset of types” property. Because of the #[specializes] annotation, impl F specializes impl D, but in fact it applies to an overlapping, but different set of types (those that implement Display rather than those that implement Debug).

But losing the “subset of types” property makes the reuse in impl F invalid. Impl F only defines the display() method and it claims to inherit the debug() method from impl D. But how can it do that? The code in impl D was written under the assumption that the types we are iterating over implement Debug, and it uses methods from the Debug trait. Clearly we can’t reuse that code, since if we did so we might not have the methods we need.

So the takeaway here is that if an impl A wants to reuse some items from impl B, then impl A must apply to a subset of impl B’s types. That guarantees that the item from impl B will still be well-typed inside of impl A.

What does this mean for copy and clone?

“Interesting thought experiment,” you are thinking, “but how does this relate to `Copy` and `Clone`?”

Well, it turns out that if we ever want to be able to add things like an autoconversion impl between Copy and Clone (and Ord and PartialOrd, etc), we are going to have to move away from “subsets of types” as the sole basis for specialization. This implies we will have to separate the concept of “when you can reuse” (which requires subset of types) from “when you can override” (which can be more general).

Basically, in order to add a blanket impl backwards compatibly, we have to allow impls to override one another in situations where reuse would not be possible. Let’s go through an example. Imagine that, at timestep 0, the Dump trait was defined in a crate dump, but without any blanket impl:

[code sample not preserved in this feed]

Now some other crate widget implements Dump for its type Widget<T>, at timestep 1:

[code sample not preserved in this feed]

Now, at timestep 2, we wish to add an implementation of Dump that works for any type that implements Debug (as before):

[code sample not preserved in this feed]

If we assume that this set of impls will be accepted (somehow, under any rules), we have created a scenario very similar to our explicit specialization. Remember that we said in the beginning that, for backwards compatibility, we need to make it so that adding the new blanket impl (impl I) does not cause any existing code to change what impl it is using. That means that Widget<T>: Dump also needs to be resolved to impl H, the original impl from the crate widget, even if impl I also applies.

This basically means that impl H overrides impl I (that is, in cases where both impls apply, impl H takes precedence). But impl H cannot reuse from impl I, since impl H does not apply to a subset of the blanket impl’s types. Rather, these impls apply to overlapping but distinct sets of types. For example, the Widget impl applies to all Widget<T>, even in cases where T: Debug does not hold. But the blanket impl applies to i32, which is not a widget at all.

Conclusion

This blog post argues that if we want to support adding blanket impls backwards compatibly, we have to be careful about reuse. I actually don’t think this is a mega-big deal, but it’s an interesting observation, and one that wasn’t obvious to me at first. It means that “subset of types” will always remain a relevant criterion that we have to test for, no matter what rules we wind up with (which might in turn mean that intersection impls remain relevant).

The way I see this playing out is that we have some rules for when one impl specializes another. Those rules do not guarantee a subset of types, and in fact the impls may merely overlap. If, additionally, one impl matches a subset of the other’s types, then that first impl may reuse items from the other impl.
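As a rough illustration of that rule, here is a toy model in Rust in which each impl is reduced to the finite set of concrete types it applies to. The function names and the type names in it are hypothetical. Real trait resolution works on types and where-clauses, not on finite sets.

```rust
use std::collections::HashSet;

/// Toy model: an impl is represented by the set of concrete types it applies to.
/// The type names passed in are purely illustrative strings.
fn types(names: &[&'static str]) -> HashSet<&'static str> {
    names.iter().copied().collect()
}

/// Overriding only needs the impls to overlap: some type is covered by both,
/// and for those types the specializing impl takes precedence.
fn can_override(special: &HashSet<&'static str>, general: &HashSet<&'static str>) -> bool {
    !special.is_disjoint(general)
}

/// Reusing items additionally needs `special` to cover a subset of `general`'s
/// types, so every item inherited from `general` stays well-typed.
fn can_reuse(special: &HashSet<&'static str>, general: &HashSet<&'static str>) -> bool {
    can_override(special, general) && special.is_subset(general)
}
```

With impl H modelled as {"Widget<i32>", "Widget<NoDebug>"} and impl I as {"i32", "String", "Widget<i32>"}, `can_override` holds but `can_reuse` does not, which matches the H/I example above.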

PS: Why not use names, anyway?

You might be thinking to yourself right now “boy, it is nice to have names and be able to say explicitly what we specialized by what.” And I would agree. In fact, since “specializable” impls must mark their items as default, you could easily imagine a scheme where those impls had to also be given a name at the same time. Unfortunately, that would not at all support my copy-clone use case, since in that case we want to add the base impl after the fact, and hence the extant specializing impls would have to be modified to add a #[specializes] annotation. Also, we tried giving impls names back in the day; it felt quite artificial, since they don’t have an identity of their own, really.

Comments

Since this is a continuation of my previous post, I’ll just re-use the same internals thread for comments.

http://smallcultfollowing.com/babysteps/blog/2016/09/29/distinguishing-reuse-from-override/

Christian Heilmann: Quick tip: using modulo to re-start loops without the need of an if statement

A few days ago Jake Archibald posted a JSBin example of five ways to center vertically in CSS to stop the meme of “CSS is too hard and useless”. What I found really interesting in this example is how he animated showing the different examples (this being a CSS demo, I’d probably have done a CSS animation and delays, but he wanted to support OldIE, hence the use of className instead of classList):

```javascript
var els = document.querySelectorAll('p');
var showing = 0;
setInterval(function() {
  // this is easier with classlist, but meh:
  els[showing].className = els[showing].className.replace(' active', '');
  showing = (showing + 1) % 5;
  els[showing].className += ' active';
}, 4000);
```

The interesting part to me here is the showing = (showing + 1) % 5; line. This means that if showing is 4, showing becomes 0, thus starting the looping demo back from the first example. This is the remainder operator of JavaScript, giving you the remainder of dividing the first value by the second. So, in the case of (4 + 1) % 5, this is zero.

Whenever I used to write something like this, I’d do an if statement, like:

```javascript
showing++;
if (showing === 5) {
  showing = 0;
}
```

Using the remainder seems cleaner, especially when instead of the hard-coded 5, you’d just use the length of the element collection.

```javascript
var els = document.querySelectorAll('p');
var all = els.length;
var c = 'active';
var showing = 0;
setInterval(function() {
  els[showing].classList.remove(c);
  showing = (showing + 1) % all;
  els[showing].classList.add(c);
}, 4000);
```

A neat little trick to keep in mind.
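For comparison, here is the same wrap-around in Rust. Rust is used only so that all examples added to this page share one language. Both functions step through `len` items and go back to 0 after the last one; the remainder version needs no branch.

```rust
/// Index of the next item, wrapping from the last item back to 0.
fn next_index(current: usize, len: usize) -> usize {
    (current + 1) % len
}

/// The same step written with an if statement instead of the remainder operator.
fn next_index_with_if(current: usize, len: usize) -> usize {
    let next = current + 1;
    if next == len { 0 } else { next }
}
```

Both assume `len > 0`. In Rust `% 0` panics, and in the JavaScript above an empty collection would make `showing` NaN.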

Air Mozilla: Kernel Recipes 2016 Day 2 AM Session

Three days of talks around the Linux kernel

https://air.mozilla.org/kernel-recipes-2016-09-29-AM-Session/

Chris McDonald: i-can-manage-it Weekly Update 2

A little over a week ago, I started this series about the game I’m writing. Welcome to the second installment. It took a little longer than a week to get around to writing. I wanted to complete the task I set out for myself at the end of my last post, determining what tile the user clicked on, before coming back and writing up my progress. But while we’re on the topic, the “weekly” will likely be a loose amount of time. I’ll aim for each weekend, but I don’t want guilt from not posting getting in the way of building the game.

Also, you may notice the name changed just a little bit. I decided to go with the self-motivating and cuter name of i-can-manage-it. The name better captures my state of mind when I’m building this. I just assume I can solve a problem and keep working on it until I understand how to solve it or why that approach is not as good as some other approach. I can manage building this game, you’ll be able to manage stuff in the game, and we’ll all have a grand time.

So with the intro out of the way, let’s talk progress. I’m going to bullet-point the things I’ve done and then discuss them in further detail below.

- Learned more math!

- Built a bunch of debugging tools into my rendering engine!

- Can determine what tile the mouse is over!

- Wrote my first special effect shader!

Learned more math!

If you are still near enough to high school to remember a good amount of the math from it and want to play with computer graphics, keep practicing it! So far I haven’t needed anything terribly advanced to do the graphics I’m currently rendering. In my high school, Algebra 2 covered matrix math to a minor degree. Back then I didn’t realize that this was a start into linear algebra. Similarly, I didn’t consider all the angle and area calculations in geometry to be an important life lesson, just neat attributes of the world expressed in math.

In my last post I mentioned this blog post on 3d transformations, which talks about several, but not necessarily all, of the coordinate systems a game would have. So, I organized my world coordinate system (the coordinates that my map outputs and game rules use) so that it matched how X and Y change in OpenGL coordinates. X, as you’d expect, gets larger going toward the right of the screen. And if you’ve done much math or looked at graphs, you’ve seen demonstrations of Y getting larger going toward the top. OpenGL works this way, and so I made my map render this way.

You then apply a series of 4x4 matrices that correspond to things like moving the object from its local coordinates (the coordinates that might be exported from 3d modelling software or generated by the game engine) to where it should be in world coordinates. You also apply a 4x4 matrix for the window’s aspect ratio, zoom, pan and probably other stuff too.

That whole transform process I described above results in a bunch of points, some of which aren’t even on the screen. OpenGL determines which ones are by looking at points between -1 and 1 on each axis; anything outside of that range is culled, which means that the graphics card won’t put it on the screen.

I learned that a neat property of these matrices is that many of them are invertible, which means you can invert the matrix, then apply it to a point on the screen and get back where that point is in your world coordinates. If we wanted to know what object was at the center of the screen, we’d take that inverted matrix and multiply it by {x: 0, y: 0, z: 0, w: 1} (as far as I can tell the w serves to make this math magic all work) and get back what world coordinates were at the center of the view. In my case, because my world is 2d, that means I can just calculate what tile is at that x and y coordinate and what is the topmost thing on that tile. If you had a 3d world, you’d then need to do something like ray casting, which sends a ray out from the specified point at the camera’s z axis and moves across the z axis until it encounters something (or hits the back edge).
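Here is a minimal sketch of that inversion in plain Rust. It avoids cgmath, whose matrix types can do this themselves; the helpers and the camera parameters are hypothetical. It builds a view matrix from a zoom, an aspect ratio and a pan, inverts it with Gauss-Jordan elimination, and maps the screen center back to world coordinates. The point uses w = 1, which is what lets the translation column of the matrix apply to it.

```rust
/// 4x4 matrix stored row-major (m[row][col]), used with column vectors.
type Mat4 = [[f64; 4]; 4];
type Vec4 = [f64; 4];

fn mul_mat(a: &Mat4, b: &Mat4) -> Mat4 {
    let mut out = [[0.0; 4]; 4];
    for r in 0..4 {
        for c in 0..4 {
            for k in 0..4 {
                out[r][c] += a[r][k] * b[k][c];
            }
        }
    }
    out
}

fn mul_vec(m: &Mat4, v: &Vec4) -> Vec4 {
    let mut out = [0.0; 4];
    for r in 0..4 {
        for c in 0..4 {
            out[r] += m[r][c] * v[c];
        }
    }
    out
}

/// Inverts `m` by Gauss-Jordan elimination with partial pivoting.
/// Returns None when the matrix is (numerically) singular.
fn invert(m: &Mat4) -> Option<Mat4> {
    let mut a = *m;
    let mut inv = [[0.0; 4]; 4];
    for i in 0..4 {
        inv[i][i] = 1.0;
    }
    for col in 0..4 {
        // Use the row with the largest absolute value in this column as pivot.
        let pivot = (col..4).max_by(|&x, &y| a[x][col].abs().total_cmp(&a[y][col].abs()))?;
        if a[pivot][col].abs() < 1e-12 {
            return None;
        }
        a.swap(col, pivot);
        inv.swap(col, pivot);
        // Scale the pivot row so the pivot entry becomes 1.
        let p = a[col][col];
        for c in 0..4 {
            a[col][c] /= p;
            inv[col][c] /= p;
        }
        // Subtract multiples of the pivot row to clear this column elsewhere.
        for r in 0..4 {
            if r != col {
                let f = a[r][col];
                for c in 0..4 {
                    let (pa, pi) = (a[col][c], inv[col][c]);
                    a[r][c] -= f * pa;
                    inv[r][c] -= f * pi;
                }
            }
        }
    }
    Some(inv)
}

/// Hypothetical camera: move (pan_x, pan_y) to the origin, then scale by
/// zoom, shrinking x by the window aspect ratio (width / height).
/// With column vectors, `scale * translate` applies the translation first.
fn view_matrix(zoom: f64, aspect: f64, pan_x: f64, pan_y: f64) -> Mat4 {
    let translate = [
        [1.0, 0.0, 0.0, -pan_x],
        [0.0, 1.0, 0.0, -pan_y],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ];
    let scale = [
        [zoom / aspect, 0.0, 0.0, 0.0],
        [0.0, zoom, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ];
    mul_mat(&scale, &translate)
}
```

For example, `mul_vec(&invert(&view_matrix(0.1, 1.5, 12.0, 7.0)).unwrap(), &[0.0, 0.0, 0.0, 1.0])` gives approximately (12, 7, 0, 1), the pan position. With one world unit per tile (an assumption), flooring that x and y would give the tile under the center of the view.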

I spent an afternoon at the library and wrote a few example programs using the cgmath crate to test this inversion stuff and check my pen-and-paper math. That way I could make sure I understood the math, as well as how to make cgmath do the same thing. I definitely ran into a few snags where I multiplied or added the wrong numbers when working on paper due to taking shortcuts. Taking the time to also write the math using code meant I’d catch these errors quickly and then correct how I thought about things. It was so productive and felt great. Also, being surrounded by knowledge in the library is one of my favorite things.

Built a bunch of debugging tools into my rendering engine!

Throughout my career, I’ve found that the longer you expect the project to last, the more time you should spend on making sure it is debuggable. Since I expect this project to take up the majority of my spare hacking time for at least a few years, maybe even becoming the project I work on longer than any other before it, I know that each debugging tool is probably a sound investment.

Every time I add in a one-off debugging tool, I work on it for a while, getting it to a point where it solves the problem at hand. Then, once I’m ready to clean up my code, I think about how many other types of problems that debugging tool might solve and how hard it would be to make it easy to access in the future. Luckily, most debugging tools are more awesome when you can toggle them on the fly. If the tool is easy to toggle, I definitely leave it in until it causes trouble adding a new feature.

As an example of adapting tools to keep them: the FPS (frames per second) counter I built was logging the FPS to the console every second, and that had become a hassle when working on other problems, because other log lines would scroll by amid the FPS chatter. So I added a key to toggle the FPS printing, but kept calculating it every frame. I’d thought about removing the calculation too, but decided I’ll probably want to track that metric for a long time, so it should probably be a permanent fixture and cost.
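Here is a minimal sketch of that toggle in Rust, using hypothetical names rather than the author’s code. The counter keeps measuring every frame, and the key binding only flips whether the result is printed. Frame times are passed in rather than read from a clock, which keeps the sketch easy to test.

```rust
use std::time::Duration;

/// FPS counter that always measures, but only logs when toggled on.
struct FpsCounter {
    frames: u32,
    elapsed: Duration,
    last_fps: Option<f64>,
    logging: bool,
}

impl FpsCounter {
    fn new() -> Self {
        FpsCounter { frames: 0, elapsed: Duration::ZERO, last_fps: None, logging: false }
    }

    /// Bound to a debug key: flips console output without stopping measurement.
    fn toggle_logging(&mut self) {
        self.logging = !self.logging;
    }

    /// Call once per frame with that frame's duration. Once at least one second
    /// has accumulated, computes the FPS, logs it if enabled, and returns it.
    fn tick(&mut self, frame_time: Duration) -> Option<f64> {
        self.frames += 1;
        self.elapsed += frame_time;
        if self.elapsed < Duration::from_secs(1) {
            return None;
        }
        let fps = self.frames as f64 / self.elapsed.as_secs_f64();
        self.last_fps = Some(fps);
        self.frames = 0;
        self.elapsed = Duration::ZERO;
        if self.logging {
            println!("FPS: {:.1}", fps);
        }
        Some(fps)
    }
}
```

Keeping `last_fps` around means an on-screen overlay could show the value even while console logging is off.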

A tool I’m pretty proud of had to do with my tile map rendering. My tiles are rendered as a series of triangles, 2 per tile, that are stitched into a triangle strip, which is a series of points where each consecutive set of 3 points forms a triangle. I also used degenerate triangles, which are triangles that have no area, so OpenGL doesn’t render them. I generate this triangle strip once, then save it and reuse it with some updated metadata on each frame.
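As an illustration of the strip-plus-degenerate-triangles technique, here is one common way to lay out indices for a grid of tiles. It is a sketch that assumes shared corner vertices numbered row by row; the post’s own strip may be built differently, for example with separate vertices per tile.

```rust
/// Builds triangle-strip indices for a grid of `cols` x `rows` tiles whose
/// corner vertices are numbered row by row: index = y * (cols + 1) + x.
/// Each tile contributes two triangles; rows are joined by degenerate
/// (zero-area) triangles, which produce no pixels when rasterized.
fn grid_strip(cols: u32, rows: u32) -> Vec<u32> {
    let stride = cols + 1;
    let mut out: Vec<u32> = Vec::new();
    for y in 0..rows {
        if y > 0 {
            // Repeat the last index of the previous row and the first index of
            // this row. Every triangle formed across the seam has a repeated
            // vertex, so it has no area. Adding two indices also keeps the
            // winding order of the next row consistent with the first.
            let last = *out.last().expect("previous row emitted indices");
            out.push(last);
            out.push(y * stride);
        }
        for x in 0..=cols {
            out.push(y * stride + x); // corner on grid line y
            out.push((y + 1) * stride + x); // corner on grid line y + 1
        }
    }
    out
}
```

For a 2x2 grid this yields 14 indices, hence 12 triangles in the strip. Eight of them are real (two per tile) and four are degenerate.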

I had some of the points mixed up, causing triangles that crossed the whole map and rendered over the tiles. I added the ability to switch to line drawing instead of filled triangles, which helped some of the debugging because I could see more of the triangles. I realized I could take a slice of the triangle strip and only render the first couple of points. Then, by adding a couple of key bindings, I could make that dynamic, so I could step through the vertices and verify the order they were drawn in. I immediately found the issue and felt how powerful this debug tool could be.
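The slice-and-step trick can be sketched as a small helper. The names are hypothetical, and the renderer would pass the returned slice to its draw call. Each step reveals one more index, which in a strip adds exactly one more triangle.

```rust
/// Debug helper: draw only the first `count` indices of a triangle strip,
/// so key bindings can step through it one vertex at a time.
struct StripStepper {
    count: usize,
}

impl StripStepper {
    fn new() -> Self {
        // Three indices is the smallest prefix that forms a triangle.
        StripStepper { count: 3 }
    }

    /// Bound to a "next" key: reveal one more vertex (one more triangle).
    fn step_forward(&mut self, strip_len: usize) {
        self.count = (self.count + 1).min(strip_len);
    }

    /// Bound to a "previous" key: hide the most recent vertex.
    fn step_back(&mut self) {
        self.count = self.count.saturating_sub(1).max(3);
    }

    /// The prefix of the strip to draw this frame.
    fn visible<'a>(&self, strip: &'a [u32]) -> &'a [u32] {
        &strip[..self.count.min(strip.len())]
    }

    /// The triangle added by the newest vertex, handy for printing while stepping.
    fn newest_triangle(&self, strip: &[u32]) -> Option<[u32; 3]> {
        let v = self.visible(strip);
        if v.len() < 3 {
            return None;
        }
        let n = v.len();
        Some([v[n - 3], v[n - 2], v[n - 1]])
    }
}
```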

Debugging takes up an incredible amount of time; I’m hoping that by making sure I’ve got a large toolkit, I’ll be able to overcome any bug that comes up quickly.

Can determine what tile the mouse is over!

I spent time learning and relearning the math mentioned in the first bullet point to solve this problem. But I found another bit of math I needed to do for this. Because of how older technology worked, mouse pointer coordinates start in the upper left of the screen and grow bigger going toward the right (like OpenGL) and going toward the bottom (the opposite of OpenGL). Also, because OpenGL coordinates are a -1 to 1 range for the window, I needed to turn the mouse pointer coordinates into that range as well.
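Here is a sketch of that conversion in Rust. It assumes the mouse position and the window size are both in pixels with the origin at the top-left, and the function name is hypothetical. Doing the Y flip in exactly one place keeps it from being applied twice.

```rust
/// Converts a mouse position in window pixels (origin top-left, y grows
/// downward) to OpenGL normalized device coordinates (origin at the center,
/// y grows upward, both axes in -1..=1).
fn mouse_to_ndc(mouse_x: f64, mouse_y: f64, width: f64, height: f64) -> (f64, f64) {
    let x = mouse_x / width * 2.0 - 1.0;
    // The only place the Y axis is flipped.
    let y = 1.0 - mouse_y / height * 2.0;
    (x, y)
}
```

The resulting point can then be multiplied by the inverted view matrix to get world coordinates. Some code also adds 0.5 to the pixel coordinates to sample pixel centers; that refinement is left out here.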

This inversion of the Y coordinate was a huge source of my problems for a couple of days. To make a long story short, I inverted the Y coordinate when I first got it, then I was inverting it again when I was trying to work out what tile the mouse was over. This was coupled with me inverting the Y coordinate in the triangle strip from my map instead of using a matrix transform to account for how I was drawing the map to the console. This combination of bugs meant that if I didn’t pan the camera at all, I could get the tile the mouse was over correctly. But as soon as I panned it up or down, the Y coordinate would be off, moving in the opposite direction of the panning. It took me a long time to hunt this combination of bugs down.

But the days of debugging made me take a lot of critical looks at my code, and I took the time to clean up my code and math. I didn’t really abstract it, just organized it into more logical blocks and moved some things out of the rendering loop, only recalculating them as needed. This may sound like optimization, but the goal wasn’t to make the code faster, just more logically organized. Also, I got a bunch of neat debugging tools in addition to the couple I mentioned above.

So while this project took me a bit longer than expected, I made my code better and am better prepared for my next project.

Wrote my first special effect shader!

I was attempting to rest my brain from the mouse pointer problem by working on shader effects. It was something I wanted to start learning and I set a goal of having a circle at the mouse point that moves outwards. I spent most of my hacking on Sunday on this problem and here are the results. In the upper left click the 2 and change it to 0.5 to make it nice and smooth. Hide the code up in the upper left if that isn’t interesting to you.

First off, glslsandbox is pretty neat. I was able to immediately start experimenting with a shader that had mouse interaction. I started by trying to draw a box around the mouse pointer. I did this because it was simple and I figured calculating the circle would be more expensive than checking the bounding box. I was quickly able to get there. Then a bit of Pythagorean theorem, thanks high school geometry, and I was able to calculate a circle.
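The box-then-circle approach can be sketched on the CPU in Rust as a stand-in for the per-pixel test a fragment shader performs. This is not the author’s GLSL, and the names are hypothetical. Comparing squared distances avoids a square root.

```rust
/// CPU stand-in for a per-pixel shader test. `p` is the pixel position and
/// `mouse` the pointer position, both in the same (square) units.
fn in_box(p: (f64, f64), mouse: (f64, f64), half_size: f64) -> bool {
    (p.0 - mouse.0).abs() <= half_size && (p.1 - mouse.1).abs() <= half_size
}

/// Pythagorean test for a disc of radius `r` around the mouse. The box check
/// mirrors the first step described above (on a GPU it may not actually save
/// time); comparing squared lengths skips the sqrt.
fn in_circle(p: (f64, f64), mouse: (f64, f64), r: f64) -> bool {
    if !in_box(p, mouse, r) {
        return false;
    }
    let dx = p.0 - mouse.0;
    let dy = p.1 - mouse.1;
    dx * dx + dy * dy <= r * r
}
```

A pixel at (4, 4) relative to the mouse lies inside a box of half-size 5 but outside a circle of radius 5, because 4² + 4² = 32 > 25.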

The only trouble was that it wasn’t actually a circle. It was an elliptical disc instead, matching the aspect ratio of the window. Meaning that because my window was a rectangle instead of a square, my circle reflected that the window was shorter than it was wide. In the interest of just getting things working, I pulled the orthographic projection I was using in my rendering engine and translated it to glsl and it worked!

Next was to add another circle on the inside, which was pretty simple because I’d already done it once, and to scale the circle’s size with time. Honestly, despite all the maybe scary-looking math on that page, it was relatively simple to toss together. I know there are whole research papers on just parts of graphical effects, but it is good to know that some of the simpler ones can be tossed together in a few hours. Then later, if I decide I want to really use the effect, I can take the time to deeply understand the problem and write a version using fewer operations to be more efficient.
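The stretched-circle fix and the growing ring can be sketched the same way. The author used an orthographic projection; this simpler variant is an assumption, not the author’s code. It scales the x offset by the window’s aspect ratio so both axes are measured in the same units. It then keeps pixels whose distance falls between an inner and an outer radius, both of which grow with time.

```rust
/// Returns true if pixel `p` lies on an expanding ring centred on `mouse`.
/// Both points are in normalized window coordinates (0..1 on each axis), so
/// the x offset is multiplied by `aspect` (width / height) to make distances
/// equal on both axes; without it the ring comes out as an ellipse.
fn on_ring(
    p: (f64, f64),
    mouse: (f64, f64),
    aspect: f64,
    time: f64,
    speed: f64,
    thickness: f64,
) -> bool {
    let dx = (p.0 - mouse.0) * aspect;
    let dy = p.1 - mouse.1;
    let dist = (dx * dx + dy * dy).sqrt();
    // The outer edge grows linearly with time; the inner edge trails it.
    let outer = time * speed;
    let inner = (outer - thickness).max(0.0);
    dist >= inner && dist <= outer
}
```

In a window twice as wide as it is tall, a horizontal offset of 0.09 of the width covers the same number of pixels as a vertical offset of 0.18 of the height. The ring therefore reaches the two points at the same moment.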

On that note, I’m not looking for feedback on that shader I wrote. I know the math is inefficient and the code is pretty messy. I want to use this shader as practice for taking an effect shader and making it faster. Once I’ve exhausted my knowledge and research, I’ll start soliciting friends for feedback. Thanks for respecting that!

Wrapping up this incredibly long blog post I want to say everyone in my life has been so incredibly supportive of me building my own game. Co-workers have given me tips on tools to use and books to read, friends have given input on the ideas for my game engine helping guide me in an area I don’t know well. Last and most amazing is my wife, who listens to me prattle away about my problems in my game engine or how some neat math thing I learned works, and then encourages me with her smile.

Catch you in the next update!

https://wraithan.net/2016/09/29/i-can-manage-it-weekly-update-2/

Mitchell Baker: UN High Level Panel and UN Secretary General Ban Ki-moon issue report on Women’s Economic Empowerment

“Gender equality remains the greatest human rights challenge of our time.” UN Secretary General Ban Ki-moon, September 22, 2016.

To address this challenge the Secretary General championed the 2010 creation of UN Women, the UN’s newest entity. To focus attention on concrete actions in the economic sphere he created the “High Level Panel on Women’s Economic Empowerment” of which I am a member.

The Panel presented its initial findings and commitments last week during the UN General Assembly Session in New York. Here is the Secretary General with the co-chairs, the heads of the IMF and the World Bank, the Executive Director of UN Women, and the moderator and founder of All Africa Media, each of whom is a panel member.

The findings are set out in the Panel’s initial report. Key to the report is the identification of drivers of change, which have been deemed by the panel to enhance women’s economic empowerment:

- Breaking stereotypes: Tackling adverse social norms and promoting positive role models

- Leveling the playing field for women: Ensuring legal protection and reforming discriminatory laws and regulations

- Investing in care: Recognizing, reducing and redistributing unpaid work and care

- Ensuring a fair share of assets: Building assets—Digital, financial and property

- Businesses creating opportunities: Changing business culture and practice

- Governments creating opportunities: Improving public sector practices in employment and procurement

- Enhancing women’s voices: Strengthening visibility, collective voice and representation

- Improving sex-disaggregated data and gender analysis

Chapter Four of the report describes a range of actions that are being undertaken by Panel Members for each of the above drivers. For example under the Building assets driver: DFID and the government of Tanzania are extending land rights to more than 150,000 Tanzanian women by the end of 2017. Tanzania will use media to educate people on women’s land rights and laws pertaining to property ownership. Clearly this is a concrete action that can serve as a precedent for others.

As a panel member, Mozilla is contributing to the work on Building Assets – Digital. Here is my statement during the session in New York:

“Mozilla is honored to be a part of this Panel. Our focus is digital inclusion. We know that access to the richness of the Internet can bring huge benefits to Women’s Economic Empowerment. We are working with technology companies in Silicon Valley and beyond to identify those activities which provide additional opportunity for women. Some of those companies are with us today.

Through our work on the Panel we have identified a significant interest among technology companies in finding ways to do more. We are building a working group with these companies and the governments of Costa Rica, Tanzania and the U.A.E. to address women’s economic empowerment through technology.

We expect the period from today’s report through the March meeting to be rich with activity. The possibilities are huge and the rewards great. We are committed to an internet that is open and accessible to all.”

You can watch a recording of the UN High Level Panel on Women’s Economic Empowerment here. For my statement, view starting at: 2.07.53.

There is an immense amount of work to be done to meet the greatest human rights challenge of our time. I left the Panel’s meeting hopeful that we are on the cusp of great progress.

Hub Figuière: Introducing gudev-rs

A couple of weeks ago, I released gudev-rs, Rust wrappers for gudev. The goal was to be able to receive events from udev into a Gtk application written in Rust. I had a need for it, so I made it and shared it.

It is mostly auto-generated using gir-rs from the gtk-rs project. The license is MIT.

If you have problems, suggestions or patches, please feel free to submit them.

https://www.figuiere.net/hub/blog/?2016/09/28/861-introducing-gudev-rs

Mozilla Addons Blog: How Video DownloadHelper Became Compatible with Multiprocess Firefox

Today’s post comes from Michel Gutierrez (mig), the developer of Video DownloadHelper, among other add-ons. He shares his story about the process of modernizing his XUL add-on to make it compatible with multiprocess Firefox (e10s).

***

Video DownloadHelper (VDH) is an add-on that extracts videos and image files from the Internet and saves them to your hard drive. As you surf the Web, VDH will show you a menu of download options when it detects something it can save for you.

It was first released in July 2006, when Firefox was on version 1.5. At the time, both the main add-on code and DOM window content were running in the same process. This was helpful because video URLs could easily be extracted from the window content by the add-on. The Smart Naming feature was also able to extract video names from the Web page.

When the multiprocess Firefox architecture was first discussed, it was immediately clear that VDH needed a full rewrite with a brand new architecture. In multiprocess Firefox, DOM content for webpages runs in a separate process, which means the amount of asynchronous communication required with the add-on code would increase significantly. It wasn’t possible to simply adapt the existing code and architecture, because doing so would make the code hard to read and unmaintainable.

The Migration

After some consideration, we decided to update the add-on using SDK APIs. Here were our requirements:

- Code running in the content process needed to run separately from code running in Javascript modules and the main process. Communication must occur via message passing.

- Preferences needed to be available in the content process, as there are many adjustable parameters that affect the user interface.

- Localization of HTML pages within the content script should be as easy as possible.

In VDH, the choice was made to handle all of these requirements using the same Client-Server architecture commonly used in regular Web applications: the components that have access to the preferences, localization, and data storage APIs (running in the main process) serve this data to the UI components and the components injected into the page (running in the content process), through the messaging API provided by the SDK.
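The client-server split described above can be illustrated with a minimal Python sketch, assuming JSON messages passed over two asyncio queues that stand in for the messaging channel between the main process and the content process. The class names, the message types (`get-pref`, `set-pref`, `localize`) and the request-id scheme are all hypothetical; this is not the Add-on SDK's messaging API and not VDH's actual code. It only shows the pattern: the content side never touches preferences or strings directly, it sends a request and awaits the matching reply.

```python
import asyncio
import json


class MainProcessServer:
    """Hypothetical main-process component that owns prefs and localized strings."""

    def __init__(self, prefs: dict, strings: dict) -> None:
        self._prefs = dict(prefs)
        self._strings = dict(strings)
        # Map each message type to a handler that receives the message's args.
        self._handlers = {
            "get-pref": lambda args: self._prefs.get(args["name"]),
            "set-pref": self._set_pref,
            "localize": lambda args: self._strings.get(args["key"], args["key"]),
        }

    def _set_pref(self, args: dict) -> bool:
        self._prefs[args["name"]] = args["value"]
        return True

    async def serve(self, inbox: asyncio.Queue, outbox: asyncio.Queue) -> None:
        # Answer every request with a reply that carries the same id.
        while True:
            msg = json.loads(await inbox.get())
            handler = self._handlers.get(msg["type"])
            if handler is None:
                reply = {"id": msg["id"], "error": f"unknown type {msg['type']!r}"}
            else:
                reply = {"id": msg["id"], "result": handler(msg.get("args", {}))}
            await outbox.put(json.dumps(reply))


class ContentClient:
    """Hypothetical content-side proxy with no direct access to prefs or strings."""

    def __init__(self, to_main: asyncio.Queue, from_main: asyncio.Queue) -> None:
        self._to_main = to_main
        self._from_main = from_main
        self._pending: dict = {}
        self._next_id = 0

    async def pump(self) -> None:
        # Route each reply to the future of the request waiting for it.
        while True:
            reply = json.loads(await self._from_main.get())
            fut = self._pending.pop(reply["id"])
            if "error" in reply:
                fut.set_exception(RuntimeError(reply["error"]))
            else:
                fut.set_result(reply["result"])

    async def request(self, msg_type: str, **args):
        # Send one request and suspend until its reply arrives.
        self._next_id += 1
        fut = asyncio.get_running_loop().create_future()
        self._pending[self._next_id] = fut
        await self._to_main.put(
            json.dumps({"id": self._next_id, "type": msg_type, "args": args})
        )
        return await fut


async def demo():
    to_main, from_main = asyncio.Queue(), asyncio.Queue()
    server = MainProcessServer({"ui.theme": "dark"}, {"download": "Download"})
    client = ContentClient(to_main, from_main)
    tasks = [
        asyncio.create_task(server.serve(to_main, from_main)),
        asyncio.create_task(client.pump()),
    ]
    try:
        await client.request("set-pref", name="ui.theme", value="light")
        theme = await client.request("get-pref", name="ui.theme")
        label = await client.request("localize", key="download")
        return theme, label
    finally:
        for task in tasks:
            task.cancel()
        await asyncio.gather(*tasks, return_exceptions=True)


print(asyncio.run(demo()))  # ('light', 'Download')
```

Because every exchange is an awaited round trip, even a small UI interaction such as reading one preference costs a message in each direction, which is the overhead the next section describes.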

Limitations

Migrating to the SDK enabled us to become compatible with multiprocess Firefox, but it wasn’t a perfect solution. Low-level SDK APIs, which aren’t guaranteed to work with e10s or stay compatible with future versions of Firefox, were required to implement anything more than simple features. Also, even seemingly simple interactions require more communication between processes.

- Resizing content panels can only occur in the background process, but only the content process knows what the dimensions should be. This gets more complicated when the size dynamically changes or depends on various parameters.

- Critical features like monitoring network traffic or launching external programs in VDH require low-level APIs.

- Capturing tab thumbnails from the Add-on SDK API does not work in e10s mode. This feature had to be reimplemented in the add-on using a framescript.

- When intercepting network responses, the Add-on SDK does not decode compressed responses.

- The SDK provides no easy means to determine if e10s is enabled or not, which would be useful as long as glitches remain where the add-on has to act differently.

Future Direction

Regardless of the limitations posed, making VDH compatible with multiprocess Firefox was a great success. Taking the time to rewrite the add-on also improved the general architecture and prepared it for changes needed for WebExtensions. The first e10s-compatible version of VDH was version 5.0.1, which has been available since March 2015.

Looking forward, the next big challenge is making VDH compatible with WebExtensions. We considered migrating directly to WebExtensions, but the legacy and low-level SDK APIs used in VDH could not be replaced at the time without compromising the add-on’s features.

To fully complete the transition to WebExtensions, additional APIs may need to be created. As extension developers, we’ve found it helpful to work with Mozilla to define those APIs and to design them in a way that is general enough to be useful in many other types of add-ons.

A note from the add-ons team: resources for migrating your add-ons to WebExtensions can be found here.

https://blog.mozilla.org/addons/2016/09/28/migrating-an-sdk-add-on-to-multiprocess-firefox/


Armen Zambrano: [NEW] Added build status updates - Usability improvements for Firefox automation initiative - Status update #6 |

- Debugging tests on interactive workers (only Linux on TaskCluster)

- Improve end to end times on Try (Thunder Try project)

- Fixed regression that broke the interactive wizard

- Support for Android reftests landed

- Support for Android xpcshell

- Video demonstration

- Windows and Mac artifact builds are soon to land

- |mach try| now supports --artifact option

- Compiled-code test jobs error out early when run with --artifact on try

- Windows and Mac artifact builds available on Try

- Fix triggering of test jobs on Buildbot with artifact build

- Drill-down charts:

- With optional wait times included (missing 10% outliers, so looks almost the same):

- Iron out interactivity bugs

- Show outliers

- Post these (static) pages to my people page

- Fix ActiveData production to handle these queries (I am currently using a development version of ActiveData, but that version has some nasty anomalies)

- We identified an interaction with EBS in AWS that is likely making several parts of automation slower than they should be (https://bugzilla.mozilla.org/show_bug.cgi?id=1305174)

- WPT is now running from the source checkout in automation

- There are still parts in automation relying on a test zip. The next step is to minimize those so you can get a loaner, pull any revision from any repo, and test WPT changes in an environment that is exactly what the automation tests run in.

- Bug 1300812 - Make Mozharness downloads and unpacks actions handle better intermittent S3/EC2 issues

- This adds retry logic to reduce intermittent oranges
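The retry idea behind bug 1300812 can be illustrated with a generic retry-with-exponential-backoff helper. This is a hypothetical sketch, not Mozharness's actual implementation: the function name `retry` and the parameters `attempts`, `sleeptime`, `max_sleeptime` and `retry_on` are assumptions, and the flaky S3 download is simulated. Transient errors are retried with a doubling (capped) wait, and the last error is re-raised once attempts run out, so a genuinely broken download still fails the job.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    action: Callable[[], T],
    attempts: int = 5,
    sleeptime: float = 10.0,
    max_sleeptime: float = 300.0,
    retry_on: Tuple[Type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `action` until it succeeds, doubling the wait between failures."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    delay = sleeptime
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            sleep(delay)
            delay = min(delay * 2, max_sleeptime)  # exponential backoff, capped
    raise AssertionError("unreachable")


# Usage: a simulated download that fails twice with a transient network error.
calls = {"n": 0}


def flaky_download() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionResetError("simulated S3 hiccup")
    return "target.tar.bz2"  # hypothetical artifact name


waits = []
print(retry(flaky_download, sleeptime=1.0, sleep=waits.append), waits)
# target.tar.bz2 [1.0, 2.0]
```

Injecting `sleep` keeps the helper testable without real delays; in automation the default `time.sleep` would be used.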

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/00B6HWa15nU/new-added-build-status-updates.html


Air Mozilla: The Joy of Coding - Episode 73 |

mconley livehacks on real Firefox bugs while thinking aloud.


Cameron Kaiser: Firefox OS goes Tier WONTFIX |

There may be a plan to fork the repository, but they'd need someone crazy dedicated to keep chugging out builds. We're not anything on that level of nuts around here. Noooo.

http://tenfourfox.blogspot.com/2016/09/firefox-os-goes-tier-wontfix.html


Air Mozilla: Weekly SUMO Community Meeting Sept 28, 2016 |

This is the sumo weekly call

https://air.mozilla.org/weekly-sumo-community-meeting-sept-28-2016/


Air Mozilla: Kernel Recipes 2016 Day 1 PM Session |

Three days talks around the Linux Kernel

https://air.mozilla.org/kernel-recipes-2016-09-28-PM-Session/


The Mozilla Blog: Firefox’s Test Pilot Program Launches Three New Experimental Features |

Earlier this year we launched our first set of experiments for Test Pilot, a program designed to give you access to experimental Firefox features that are in the early stages of development. We’ve been delighted to see so many of you participating in the experiments and providing feedback, which will ultimately help us determine which features end up in Firefox for all to enjoy.

Since our launch, we’ve been hard at work on new innovations, and today we’re excited to announce the release of three new Test Pilot experiments. These features will help you share and manage screenshots; keep streaming video front and center; and protect your online privacy.

What Are The New Experiments?

Min Vid:

Keep your favorite entertainment front and center. Min Vid plays your videos in a small window on top of your other tabs so you can continue to watch while answering email, reading the news or, yes, even while you work. Min Vid currently supports videos hosted by YouTube and Vimeo.

Page Shot:

The print screen button doesn’t always cut it. The Page Shot feature lets you take, find and share screenshots with just a few clicks by creating a link for easy sharing. You’ll also be able to search for your screenshots by their title, and even the text captured in the image, so you can find them when you need them.

Tracking Protection:

We’ve had Tracking Protection in Private Browsing for a while, but now you can block trackers that follow you across the web by default. Turn it on, and browse free and breathe easy. This experiment will help us understand where Tracking Protection breaks the web so that we can improve it for all Firefox users.

How do I get started?

Test Pilot experiments are currently available in English only. To activate Test Pilot and help us build the future of Firefox, visit testpilot.firefox.com.

As you’re experimenting with new features within Test Pilot, you might find some bugs, or lose some of the polish from the general Firefox release, so Test Pilot allows you to easily enable or disable features at any time.

Your feedback will help us determine what ultimately ends up in Firefox – we’re looking forward to your thoughts!

https://blog.mozilla.org/blog/2016/09/28/three-new-test-pilot-experiments/


Air Mozilla: Kernel Recipes 2016 Day 1 AM Session |

Three days talks around the Linux Kernel

https://air.mozilla.org/kernel-recipes-2016-09-28-AM-Session/


Wil Clouser: Test Pilot is launching three new experiments for Firefox |

The Test Pilot team has been heads-down for months working on three new experiments for Firefox and you can get them all today!

Min Vid

Min Vid is an add-on that allows you to shrink a video into a small always-on-top frame in the corner of your browser. This lets you watch and interact with a video while browsing the web in other tabs. Opera and Safari are implementing similar features so this one might have some sticking power.

Thanks to Dave, Jen, and Jared for taking this from some prototype code to in front of Firefox users in six months.

Tracking Protection

Luke has been working hard on Tracking Protection - an experiment focused on collecting feedback from users about which sites break when Firefox blocks the trackers from loading. As we collect data from everyday users we can make decisions about how best to block what people don't want and still show them what they do. Eventually this could lead to us protecting all Firefox users with Tracking Protection by default.

Page Shot

Page Shot is a snappy experiment that enables users to quickly take screenshots in their browser and share them on the internet. There are a few companies in this space already, but their products always felt too heavy to me, or they ignored privacy, or some simply didn't even work (this was on Linux). Page Shot is light and quick and works great everywhere.

As a bonus, and a feature I haven't seen anywhere else, Page Shot also lets you search the text within the images themselves. So if you take a screenshot of a pizza recipe and later search for "mozzarella", it will find the recipe.
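A minimal sketch of how searching screenshots by title and captured text might work, assuming the text has already been extracted from each image (for example by an OCR step, which is not shown) and is stored alongside the title. `ShotIndex`, `tokenize` and the sample data are hypothetical; this is an illustrative inverted index, not Page Shot's implementation. Each word maps to the set of screenshot ids containing it, and a query returns the shots that contain every query word.

```python
import re
from collections import defaultdict
from typing import Dict, List, Set


def tokenize(text: str) -> List[str]:
    # Lowercase, then keep runs of ASCII letters and digits as words.
    return re.findall(r"[a-z0-9]+", text.lower())


class ShotIndex:
    """Hypothetical inverted index over screenshot titles and extracted text."""

    def __init__(self) -> None:
        self._postings: Dict[str, Set[int]] = defaultdict(set)

    def add(self, shot_id: int, title: str, image_text: str) -> None:
        # Index both the title and the text captured in the image.
        for word in tokenize(title) + tokenize(image_text):
            self._postings[word].add(shot_id)

    def search(self, query: str) -> List[int]:
        # Return ids of shots containing every query word, in id order.
        words = tokenize(query)
        if not words:
            return []
        hits = set.intersection(*(self._postings.get(w, set()) for w in words))
        return sorted(hits)


index = ShotIndex()
index.add(1, "Weeknight pizza", "Ingredients: flour, tomato, mozzarella")
index.add(2, "Build failure", "error: linker command failed")
print(index.search("Mozzarella"))  # [1]
```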

I was late to the Page Shot party and my involvement is just standing on the shoulders of giants at this point: by the time I was involved the final touches were already being put on. A big thanks to Ian and Donovan for bringing this project to life.

I called out the engineers who have been working to bring their creations to life, but of course there are so many teams who were critical to today's launches. A big thank you to the people who have been working tirelessly and congratulations on launching your products! :)

http://micropipes.com/blog//2016/09/28/test-pilot-is-launching-three-new-experiments-for-firefox/


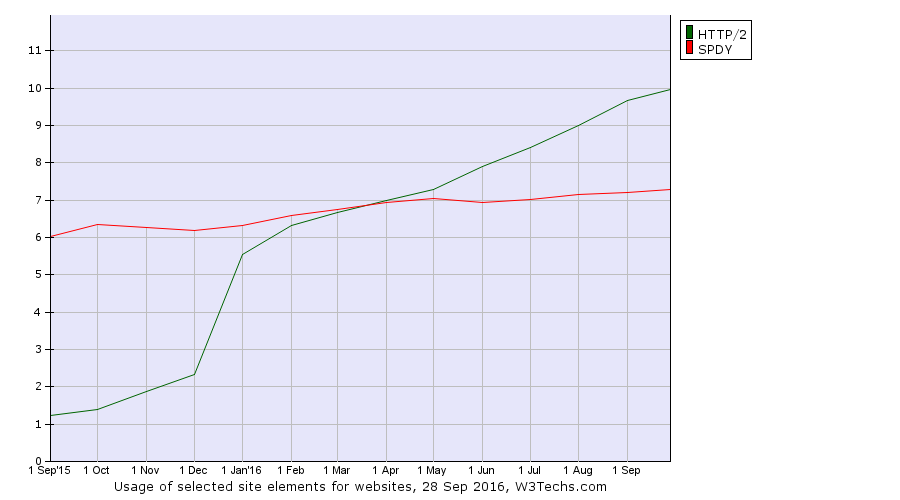

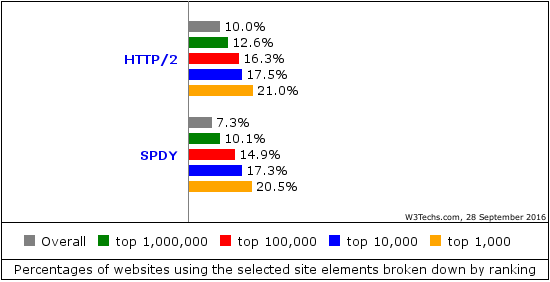

Daniel Stenberg: 1,000,000 sites run HTTP/2 |

… out of the top ten million sites that is. So there’s at least that many, quite likely a few more.

This is according to W3Techs, which runs checks daily. Over the last few months, about 50,000 new sites per month have switched it on.

It also shows that the HTTP/2 share has increased from a little over 1% deployment a year ago to 10% today.

The more popular a site is, the more likely it is to use HTTP/2. Among the top 1,000 sites on the web, more than 20% use HTTP/2. HTTP/2 also recently (on September 9) overtook SPDY among the top 1,000 most popular sites.

On September 7, Amazon announced that its CloudFront service had enabled HTTP/2, which could explain an adoption boost over the last few days. New CloudFront users get it enabled by default, but existing users need to go in and click a checkbox to turn it on.

As the world’s web traffic is heavily skewed toward the top sites, we can be sure that a significantly larger share than 10% of the world’s HTTPS traffic is using version 2.

Recent usage stats in Firefox show that HTTP/2 is used in half of all its HTTPS requests!

https://daniel.haxx.se/blog/2016/09/28/1000000-sites-run-http2/


Cameron Kaiser: And now for something completely different: Rehabilitating the Performa 6200? |

But every unloved machine has its defenders, and I noticed that the Wikipedia entry on the 6200 series radically changed recently. The "Dtaylor372" listed in the edit log appears to be this guy, one "Daniel L. Taylor". If it is, here's his reasoning why the seething hate for the 6200 series should be revisited.

Daniel does make some cogent points, cites references, and even tries to back some of them up with benchmarks (heh). He helpfully includes a local copy of Apple's tech notes on the series, though let's be fair here -- Apple is not likely to say anything unbecoming in that document. That said, the effort is commendable even if I don't agree with everything he's written. I'll just cite some of what I took as highlights and you can read the rest.

- The Apple tech note says, "The Power Macintosh 5200 and 6200 computers are electrically similar to the Macintosh Quadra 630 and LC 630." It might be most accurate to say that these computers are Q630 systems with an on-board PowerPC upgrade. It's an understatement to observe that's not the most favourable environment for these chips, but it would have required much less development investment, to be sure.

- He's right that the L2 cache, which is on a 64-bit bus and clocked at the actual CPU speed, certainly does mitigate some of the problems with the Q630's 32-bit interface to memory, and 256K of L2 in 1995 would have been a perfectly reasonable amount of cache. (See page 29 for the block diagram.) A 20-25% speed penalty (his numbers), however, is not trivial, and I think he underestimates how much slower this would have made the machines feel in practice, even on native code.

- His article claims that both the SCSI bus and the serial ports have DMA, but I don't see this anywhere in the developer notes (and at least one source contradicts him). While the NCR controller that the F108 ASIC incorporates does support it, I don't see where this is hooked up. More to the point, the F108's embedded IDE controller -- because the 6200 actually uses an IDE hard drive -- doesn't have DMA set up either: if the Q630 is any indication, the 6200 is also limited to PIO Mode 3. While this was no great sin when the Q630 was in production, it was verging on unacceptable even for a low-to-midrange system by the time the 6200 hit the market. More on that in the next point.

Do note that the Q630 design does support bus mastering, but not from the F108. The only two entities that can be bus master are the CPU and either the PDS expansion card or the communications card, via the PrimeTime II IC "southbridge."

- Daniel makes a very well-reasoned assertion that the computer's major problems were due to software instead of hardware design, which is at least partially true, but I think his objections are oversimplified. Certainly the Mac OS (that's with a capital M) was not well-suited for handling the real-time demands of hardware: ADB, for example, requires quite a bit of polling, and the OS could not service the bus sufficiently often to make it effective for large-volume data transfer (condemning it to a largely HID-only capacity, though that's all it was really designed for). Even interrupt-driven device drivers could be problematic; a large number of interrupts pending simultaneously could get dropped (the limit on outstanding secondary interrupt requests prior to MacOS 9.1 was 40, see Apple TN2010) and a badly-coded driver that did not shunt work off to a deferred task could prevent other drivers from servicing their devices because those other interrupts were disabled while the bad driver tied up the machine.

That said, however, these were hardly unknown problems at the time, and the design's lack of DMA where it counts causes an abnormal reliance on software to move data, a job the MacOS was definitely not up to for those and other reasons, and the speed hit didn't help. Compare this design with the 9500's full PCI bus, 64-bit interface and hardware assist: even though the 9500 was positioned at a very different market segment, and the weak 603 implementation is no comparison to the 604, that doesn't absolve the 6200 of its other deficiencies, and the 9500 ran the same operating system with considerably fewer problems (he does concede that his assertions to the contrary do "not mean that [issues with redraw, typing and audio on the 6200s] never occurred for anyone," though his explanation of why is of course different). Although Daniel states that relaying traffic for an Ethernet card "would not have impacted Internet handling" based on his estimates of actual bandwidth, the real rate-limiting step here is how quickly the CPU, and by extension the OS, can service the controller. While the comm slot at least could bus master, that only helps when the controller is actually serviced to initiate it. My personal suspicion is that MacOS 8.1 was widely noted to smooth out a lot of the 6200's alleged network problems because the changes in OpenTransport 1.3 reduced many of the latency issues in earlier versions of OT. But even at the time of these systems' design, Copland (the planned successor to System 7) was already showing glimmers of trouble, and no one seriously expected the MacOS to improve explosively within the machines' likely sales life. Against that historical backdrop, the 6200 series could have been much better from the beginning if the component machines had been engineered more appropriately to deal with what the OS couldn't handle in the first place.

http://tenfourfox.blogspot.com/2016/09/and-now-for-something-completely.html


Air Mozilla: Connected Devices Meetup - Sensor Web |

Mozilla's own Cindy Hsiang to discuss SensorWeb. SensorWeb wants to advance Mozilla's mission to promote the open web when it evolves to the physical world....

https://air.mozilla.org/connected-devices-meetup-sensor-web-2016-09-27/
