Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.

Air Mozilla: Connected Devices Meetup - Laurynas Riliskis |

We are on the verge of the next revolution. Connected devices have emerged during the last decade into what's known as the Internet of Things. These...

https://air.mozilla.org/connected-devices-meetup-laurynas-riliskis-2016-09-27/

|

|

Air Mozilla: Connected Devices Meetup - Johannes Ernst: UBOS and the Indie IoT |

We are on the verge of the next revolution. Connected devices have emerged during the last decade into what's known as the Internet of Things. These...

https://air.mozilla.org/connected-devices-meetup-johannes-ernst-ubos-and-the-indie-iot-20160927/

|

|

Air Mozilla: Connected Devices Meetup - Nicholas van de Walle |

Nicholas van de Walle is a local web developer who loves shoving computers into everything from clothes to books to rocks. He has worked for...

https://air.mozilla.org/connected-devices-meetup-nicholas-van-de-walle/

|

|

Air Mozilla: Connected Devices Meetup - September 27, 2016 |

6:30pm - Johannes Ernst; UBOS and the Indie IoT: Will the IoT inevitably make us all digital serfs to a few overlords in the cloud,...

https://air.mozilla.org/connected-devices-meetup-2016-09-27/

|

|

David Lawrence: Happy BMO Push Day 2! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1305713] BMO: Persistent XSS via Git commit messages in comments

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/09/27/happy-bmo-push-day-2-2/

|

|

Air Mozilla: B2G OS Announcements September 2016 |

The weekly B2G meeting on Tuesday 27th September will be attended by Mozilla senior staff members who would like to make some announcements to the...

|

|

Air Mozilla: Martes Mozilleros, 27 Sep 2016 |

Reunión bi-semanal para hablar sobre el estado de Mozilla, la comunidad y sus proyectos. Bi-weekly meeting to talk (in Spanish) about Mozilla status, community and...

|

|

Christian Heilmann: Turning JavaScript off is not the problem – talk at the Frontend Rhein Main Meetup |

AOE has already finished editing the video of the talk, and it is available on YouTube:

The (English) slides are on Slideshare:

I also gave a preview of the whole talk at the SmashingConf Freiburg Jam Session, and the screencast of that (shouting, in English) is also on YouTube:

Both AOE and the user group blogged about the evening.

It was a nice evening, and although I thought it was the tenth anniversary rather than the tenth meetup, it was worth stopping by in Wiesbaden after SmashingConf and before the A-Tag. Above all because Patricia baked cakes and is now learning JavaScript like mad!

|

|

Gervase Markham: Off Trial |

Six weeks ago, I posted “On Trial”, which explained that I was taking part in a medical trial in Manchester. In the trial, I was trying out some interesting new DNA repair pathway inhibitors which, it was hoped, might have a beneficial effect on my cancer. However, as of ten days ago, my participation has ended. The trial parameters say that participants can continue as long as their cancer shrinks or stays the same. Scans are done every six weeks to determine what change, if any, there has been. As mine had been stable for the five months before starting participation, I was surprised to discover that after six weeks of treatment my liver metastasis had grown by 7%. This level of growth was outside the trial parameters, so they concluded (probably correctly!) the treatment was not helping me and that was that.

The Lord has all of this in his hands, and I am confident of his good purposes for me :-)

http://feedproxy.google.com/~r/HackingForChrist/~3/nYtwef5d39c/

|

|

Emily Dunham: Rust's Community Automation |

Here’s the text version, with clickable links, of my Automacon lightning talk today.

Intro

I’m a DevOps engineer at Mozilla Research and a member of the Rust Community subteam, but the conclusions and suggestions in this talk are my own observations and opinions.

The slides are a result of trying to write my own CSS for sliderust... Sorry about the ugliness.

I have 10 minutes, so this is not the time to teach you any Rust. Check out rust-lang.org, the Rust Community Resources, or your city’s Rust meetup to get started with the language.

What we are going to cover is how Rust automates some community tasks, and what you can learn from our automation.

Community

I define “community”, in this context, as “the human interaction work necessary for a project’s success”. This work is done by a wide variety of people in many situations. Every interaction, from helping a new contributor to discussing a proposed code change to criticizing someone’s behavior, affects the overall climate of a project’s community.

Automation

To me, “automation” means “offloading peoples’ work onto a system”. This can be a computer system, but I think it can also mean changes to the social systems that guide peoples’ behavior.

So, community automation is a combination of:

- Building tools to do things that humans used to have to do

- Tweaking the social systems to minimize the overhead they create

Scoping the Problem

While not all things can be automated and not all factors of the community are under the project leadership’s control, it’s not totally hopeless.

Choices made and automation deployed by project leaders can help control:

- Which contributors feel welcome or unwelcome in a project

- What code makes it into the project’s tree

- Robots!

Moderation

Our robots and social systems to improve workflow and contributor experience all rely on community members’ cooperation. To create a community of people who want to work constructively together and not be jerks to each other, Rust defines behavior expectations in its code of conduct. The important thing to note about the CoC is that half the document is a clear explanation of how the policies in it will be enforced. This would be impossible without the dedication of the amazing mod team.

The process of moderation cannot and should not be left to a computer, but we can use technology to make our mods’ work as easy as possible. We leave the human tasks to humans, but let our technologies do the rest.

In this case, while the mods need to step in when a human has a complaint about something, we can automate the process of telling people that the rules exist. You can’t join the IRC channel, post on the Discourse forums, or even read the Rust subreddit without being made aware that you’re expected to follow the CoC’s guidelines in official Rust spaces.

Depending on the forums where your project communicates, try to automate the process of excluding obvious spammers and trolls. Not everybody has the skills or interest to be an excellent moderator, so when you find them, avoid wasting their time on things that a computer could do for them!

It didn’t fit in the talk, but this Slashdot post is one of my favorite examples of somebody being filtered out of participating in the Rust community due to their personal convictions about how project leadership should work. While we do miss out on that person’s potential technical contributions, we also save all of the time that might be spent hashing out our disagreements with them if we had a less clear set of community guidelines.

Robots

This lightning talk highlighted 4 categories of robots:

- Maintaining code quality

- Engaging in social pleasantries

- Guiding new contributors

- Widening the contributor pipeline

Longer versions of this talk also touch on automatically testing compiler releases, but that’s more than 10 minutes of content on its own.

The Not Rocket Science Rule of Software Engineering

To my knowledge, this blog post by Rust’s inventor Graydon Hoare is the first time that this basic principle has been put so succinctly:

Automatically maintain a repository of code that always passes all the tests.

This policy guides the Rust compiler’s development workflow, and has trickled down into libraries and projects using the language.

Bors

The name Bors has been handed down from Graydon’s original autolander bot to an instance of Homu, and is often verbed to refer to the simple actions he does:

- Notice when a human says “r+” on a PR

- Create a branch that looks like master will after the change is applied

- Test that branch

- Fastforward the master branch to the tested state, if it passed.
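The four steps above can be sketched as a small simulation. This is only an illustration under stated assumptions, not how Homu is implemented: `Repo`, `PullRequest`, `try_land`, and the `run_tests` closure are hypothetical names, and commits are modelled as plain strings.

```rust
/// Hypothetical model of a repository: master is an ordered list of commit names.
#[derive(Debug, Clone, PartialEq)]
struct Repo {
    master: Vec<String>,
}

/// Hypothetical pull request: one commit plus whether a reviewer has said "r+".
struct PullRequest {
    commit: String,
    approved: bool,
}

/// Try to land a PR following the steps listed above.
/// Returns true if master was fast-forwarded.
fn try_land<F>(repo: &mut Repo, pr: &PullRequest, run_tests: F) -> bool
where
    F: Fn(&[String]) -> bool,
{
    // Step 1: do nothing until a human has approved the PR.
    if !pr.approved {
        return false;
    }
    // Step 2: build a candidate that looks like master will after the change.
    let mut candidate = repo.master.clone();
    candidate.push(pr.commit.clone());
    // Step 3: test the candidate as a whole, not the PR in isolation.
    if !run_tests(candidate.as_slice()) {
        return false;
    }
    // Step 4: fast-forward master to exactly the state that was tested.
    repo.master = candidate;
    true
}

fn main() {
    let mut repo = Repo { master: vec!["initial".to_string()] };
    // Assumed test suite for this demo: any commit named "broken" fails.
    let tests = |tree: &[String]| !tree.iter().any(|c| c == "broken");

    let good = PullRequest { commit: "add-feature".to_string(), approved: true };
    let bad = PullRequest { commit: "broken".to_string(), approved: true };

    println!("good landed: {}", try_land(&mut repo, &good, &tests));
    println!("bad landed: {}", try_land(&mut repo, &bad, &tests));
    println!("master: {:?}", repo.master);
}
```

The point of the sketch is that master only ever moves to a state the tests have already seen, which is what keeps the tree green.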

Keep your tree green

Saying “we can’t break the tests any more” is a pretty significant cultural change, so be prepared for some resistance. With that disclaimer, the path to following the Not Rocket Science Rule is pretty simple:

- Write tests that fail when your code is bad and pass when it’s good

- Run the tests on every change

- Only merge code if it passes all the tests

- Fix the tests whenever they’re wrong.

This strategy encourages people to maintain the tests, because a broken test becomes everyone’s problem and interrupts their workflow until it’s fixed.

I believe that tests are necessary for all code that people work on. If the code was fully and perfectly correct, it wouldn’t need changes – we only write code when something is wrong, whether that’s “It crashes” or “It lacks such-and-such a feature”. And regardless of the changes you’re making, tests are essential for catching any regressions you might accidentally introduce.

Guide new contributors

In open source projects, “I’m new; what can I work on?” is a common inquiry. In internal projects, you’ll often meet colleagues from elsewhere in your organization who ask you to teach them something about the project or the skills you use when working on it.

The Rust-implemented browser engine Servo is actually a slightly better example of this than the compiler itself, since the smaller and younger codebase has more introductory-level issues remaining. The site starters.servo.org automatically scrapes the organization’s issue trackers for easy and unclaimed issues.
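As a rough sketch of the "easy and unclaimed" filter described above, the selection step might look like the following. It is not the code behind starters.servo.org: the `Issue` struct, the `starter_issues` function, and the `E-easy` label name used in the demo are assumptions made for illustration, and fetching issues from a tracker is left out entirely.

```rust
/// Hypothetical, simplified view of an issue as a tracker might report it.
#[derive(Debug, Clone)]
struct Issue {
    title: String,
    labels: Vec<String>,
    assignee: Option<String>,
}

/// Keep only issues that carry the given "easy" label and have no assignee.
fn starter_issues<'a>(issues: &'a [Issue], easy_label: &str) -> Vec<&'a Issue> {
    issues
        .iter()
        .filter(|issue| issue.assignee.is_none())
        .filter(|issue| issue.labels.iter().any(|l| l == easy_label))
        .collect()
}

fn main() {
    // Made-up sample data; the label name is an assumption.
    let issues = vec![
        Issue {
            title: "Fix typo in docs".to_string(),
            labels: vec!["E-easy".to_string()],
            assignee: None,
        },
        Issue {
            title: "Rewrite layout engine".to_string(),
            labels: vec!["E-hard".to_string()],
            assignee: None,
        },
        Issue {
            title: "Add missing test".to_string(),
            labels: vec!["E-easy".to_string()],
            assignee: Some("someone".to_string()),
        },
    ];

    for issue in starter_issues(&issues, "E-easy") {
        println!("{}", issue.title);
    }
}
```

Because the filter only reads labels and assignees, its output is only as good as the triage behind it, which is the incentive the talk describes.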

Issue triage is often unrewarding, but using the tags for a project like this creates a greater incentive to keep them up to date.

When filing introductory issues, try to include links to the relevant documentation, instructions for reproducing the bug, and a suggestion of what file you would look in first if you tackled the problem yourself.

Automating mentorship

Mentorship is a highly personalized process in which one human transfers their skills to another. However, large projects often have more contributors seeking the same basic skills than mentors with time to teach them.

The parts of mentorship which don’t explicitly require a human mentor can be offloaded onto technology.

The first way to automate mentorship tasks is to maintain correct and up-to-date documentation. Correct docs train humans to consult them before interrupting an expert, whereas docs that are frequently outdated or wrong condition their users to skip them entirely.

Use tools like octohatrack and your project status updates to identify and recognize contributors who help with docs and issue triage. Docs contributions may actually save more developer and community time than new code features, so respect them accordingly.

Finally, maintain a list of introductory or mentored issues – even if that’s just a Google Doc or Etherpad.

Bear in mind that an introductory issue doesn’t necessarily mean “suitable for someone who has never coded before”. Someone with great skills in a scripting language might be looking for a place to help with an embedded codebase, or a UX designer might want to get involved with a web framework that they’ve used. Introductory issues should be clear about what knowledge a contributor should acquire in order to try them, but they don’t have to all be “easy”.

Automating the pipeline

Drive-by fixes are to being a core contributor as interviews are to full time jobs. Just as a company attempts to interview as many qualified candidates as it can, you can recruit more contributors by making your introductory issues widely available.

Before publicizing your project, make sure you have a CONTRIBUTING.txt or good README outlining where a new contributor should start, or you’ll be barraged with the same few questions over and over.

There are a variety of sites, which I call issue aggregators, where people who already know a bit about open source development can go to find a new project to work on. I keep a list on this page (edunham.net/pages/issue_aggregators.html), and pull requests are welcome (github.com/edunham/site/blob/master/pages/issue_aggregators.rst) if I’m missing anything. Submitting your introductory issues to these sites broadens your pipeline, and may free up humans’ recruiting time to focus on people who need more help getting up to speed.

If you’re working on internal rather than public projects, issue aggregators are less relevant. However, if you have the resources, it’s worthwhile to consider the recruiting device of open sourcing an internal tool that would be useful to others. If an engineer uses and improves that tool, you get a tool improvement and they get some mentorship. In the long term, you also get a unique opportunity to improve that engineer’s opinion of your organization while networking with your engineers, which can make them more likely to want to work for you later.

Follow Up

For questions, you’re welcome to chat with me on Twitter (@QEDunham), email

(automacon

Slides from the talk are here.

http://edunham.net/2016/09/27/rust_s_community_automation.html

|

|

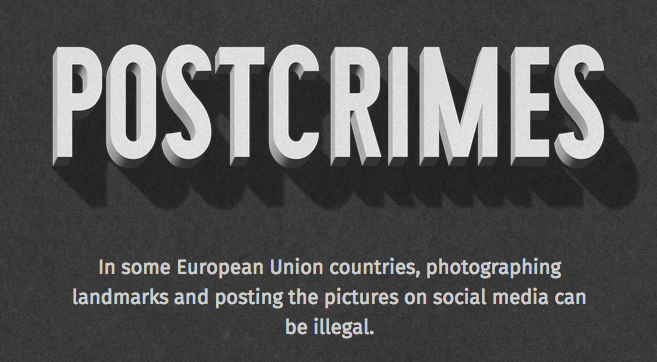

The Mozilla Blog: Help Fix Copyright: Send a Rebellious Selfie to European Parliament (Really!) |

The EU’s proposed copyright reform keeps in place retrograde laws that make many normal online creative acts illegal. The same restrictive laws will stifle innovation and hurt technology businesses. Let’s fix it. Sign Mozilla’s petition, watch and share videos, and snap a rebellious selfie

—

Earlier this month, the EU Commission released their proposal for a reformed copyright framework. In response, we are asking everyone reading this post to take a rebellious selfie and send that doctored snapshot to EU Parliament. Seem ridiculous? So is an outdated law that bans taking and sharing selfies in front of the Eiffel Tower at night in Paris, or in front of the Little Mermaid in Copenhagen.

Of course, no one is actually going to jail for subversive selfies. But the technical illegality of such a basic online act underscores the grave shortcomings in the EU’s latest proposal on copyright reform. As Mozilla’s Denelle Dixon-Thayer noted in her last post on the proposed reform, it “thoroughly misses the goal to deliver a modern reform that would unlock creativity and innovation.” It doesn’t, for instance, include needed exceptions for panorama, parody, or remixing, nor does it include a clause that would allow noncommercial transformations of works (like remixes, or mashups) or a flexible user clause like an open norm, or fair dealing.

Translation? Making memes and gifs will remain an illicit act.

And that’s just the start. Exceptions for text and data mining are limited to public institutions. This could stifle startups looking to online data to build innovative businesses. Then there is the dangerous “neighbouring right,” similar to the ancillary copyright laws we’ve seen in Spain and Germany (which have been clear failures, respectively). This misguided part of the reform would allow online publishers to copyright “press publications” for up to 20 years, with retroactive effect. The vague wording makes it unclear exactly to whom and for whom this new exclusive right would apply.

Finally, another unclear provision would require any internet service that provides access to “large amounts” of works to users to broker agreements with rightsholders for the use of, and protection of, their works. This could include the use of “effective content recognition technologies” — which imply universal monitoring and strict filtering technologies that identify and/or remove copyrighted content.

These proposals, if adopted as they are, would deal a blow to EU startups, to independent coders, creators, and artists, and to the health of the internet as a driver for economic growth and innovation.

We’re not advocating plagiarism or piracy. Creators must be treated fairly, including proper remuneration, for their creations and works. Mozilla wants to improve copyright for everyone, so individuals are not discouraged from creating and innovating.

Mozilla isn’t alone in our objections: Over 50,000 individuals have signed our petition and demanded modern copyright laws that foster creativity, innovation, and opportunity online.

We have our work cut out for us. As the European Parliament revises the proposal this fall, we need a movement — a collection of passionate internet users who demand better, modern laws. Today, Mozilla is launching a public education campaign to support that movement.

Mozilla has created an app to highlight the absurdity of some of Europe’s outdated copyright laws. Try Post Crimes: Take a selfie in front of European landmarks that can be technically unlawful to photograph — like the Eiffel Tower’s night-time light display, or the Little Mermaid in Denmark — due to restrictive copyright laws.

Then, send your selfie as a postcard to your Member of the European Parliament (MEP). Show European policymakers how outdated copyright laws are, and encourage them to forge a more future-looking and innovation-friendly copyright reform.

We’ve also created three short videos that outline the need for reform. They’re educational, playful, and a little bit weird — just like the internet. But they explore a serious issue: The harmful effect outdated and restrictive copyright laws have on our creativity and the open internet. We hope you’ll watch them and share them with others.

We need your help standing up for better copyright laws. When you sign the petition, snap a selfie, or share our videos, you’re supporting creativity, innovation and opportunity online — for everyone.

|

|

This Week In Rust: This Week in Rust 149 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

News & Blog Posts

- Returning from Exceptions. How to return from exceptions correctly. Part of the series Writing an OS in Rust.

- ripgrep is faster than {grep, ag, git grep, ucg, pt, sift}. ripgrep is a command line search tool that combines the usability of The Silver Searcher (an ack clone) with the raw speed of GNU grep.

- Intersection Impls. A specialization example of adding a blanket impl that implements `Clone` for any `Copy` type, its shortcomings, and one proposed fix using intersection impls (also called lattice impls).

- My adventures in Rust web development.

- From tweet to Rust feature. Journey of an idea from being a tweet to becoming a Rust feature.

- How to count newlines really fast in Rust.

- Writing Cocoa apps in Rust.

- Experiment: compare ZODB file-storage iteration with Python and Rust.

- Implementing Huffman coding in Rust.

RustConf/RustFest Experiences

- My Thoughts on RustConf 2016 by Jeena Lee.

- RustConf and Strange Loop 2016 by Malisa.

- Habitat at RustConf by Salim Alam.

- RustFest was great! by Cyryl Plotnicki.

New Crates & Project Updates

- Rust Community Team announces the Rust programming language video channel on YouTube and Twitter.

- Servo maintains a fork of rust-bindgen which just got updated with a major rewrite that cleans up the codebase and paves the way for future improvements.

- slog version 1.0 released. slog is a structured, composable logging library for Rust.

- Cyano. An advanced Rust-to-JavaScript transpiler.

- gimli. A lazy, zero-copy parser for the DWARF debugging format.

- Sarkara. A Post-Quantum cryptography library written in Rust.

- Native Windows GUI. Native Windows GUI (nwg for short) is a GUI library for Windows.

- dataplotlib. Plotting library that tries to make it easy to do scientific plots in Rust.

- rouille. Rust web server middleware.

- Perlin. A lazy, zero-allocation and data-agnostic Information Retrieval library.

- This week in Rust Docs 23. Updates from the Rust documentation team.

- This week in Servo 78. Servo is a prototype web browser engine written in Rust.

- This week in Tock OS 5. Tock is a safe, multitasking operating system for low-power, low-memory microcontrollers.

- These weeks in intermezzOS 3. intermezzOS is a teaching operating system focused on introducing systems programming concepts to experienced developers.

- This week in TiKV 2016-09-16. TiKV is a distributed Key-Value database which is based on the design of Google Spanner and HBase.

- This week in Ruma 2016-09-18. Ruma is a Matrix homeserver written in Rust.

- This week in Ruma 2016-09-25.

- What's coming up in imag 16. imag is a text based personal information management suite.

Crate of the Week

Somewhat unsurprisingly, this week's crate of the week is ripgrep. In case you've missed it, this is a replacement for grep/ag/pt/whatever search tool you use that absolutely smokes the competition in most performance tests. Thanks to DanielKeep for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- Rust Design Patterns is looking for collaborators. Check out the README for more information.

- [easy] rust: incr.comp.: Issue warning if cache directory is on FS without hard-linking.

- [tedious] rust: Initial webassembly support via LLVM.

- [easy] rust: Bootstrap key logic is too strict.

- [easy] rust: rustc should emit an error when there's a bootstrap key mismatch.

- [easy] rust: Lint against using generic conversion traits when concrete methods are available.

- [moderate] rust: Create official .deb packages.

- [easy] rust-www: Better front-page example. The front page example on the website isn't so special. Make it shine.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

77 pull requests were merged in the last two weeks.

- `Self` can no longer be a type parameter

- MIR: Trivial copy propagation

- MIR: Constant propagation

- Attribute invocation at crate root level allowed again (was inadvertently disallowed two weeks ago)

- `TypedArena` now allocates lazily, loses `.with_capacity(_)` (the latter is a breaking change)

- `syntax::codemap::Span`s can now be merged if adjacent

- RBML is gone (epic PR)

- `#[inline]`d functions are now only instantiated on use site (epic speedup)

- Better `parent` info for `save-analysis`

- `trans::adt` is superseded by `rustc::ty::layout`

- Rustc metadata diagnostics

- `assert_ne!(..)` and `debug_assert_ne!(..)`

- `2u64.pow(99)` now panics instead of silently overflowing

- `String` no longer `impl`s `From>` nor `From<&'a [char]>` (for now, until the regressions are sorted out)

- ARM LLVM bug workaround: Setting discriminant via `memset`

- Preparations for macros 2.0
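For readers who have not used them, here is a minimal sketch of the `assert_ne!` macros and the overflow case mentioned in the list above. Whether a plain `pow` call panics on overflow can depend on how the program is built, so the sketch uses `checked_pow` instead, which reports overflow as `None`; that is an explicit alternative chosen for the example, not the change described in the list. The helper name `pow_or_none` is hypothetical.

```rust
/// Hypothetical helper: raise `base` to `exp`, returning None if the result does not fit in a u64.
fn pow_or_none(base: u64, exp: u32) -> Option<u64> {
    base.checked_pow(exp)
}

fn main() {
    // assert_ne! panics if the two values are equal; the message is optional.
    let before = 1u64;
    let after = before + 1;
    assert_ne!(before, after, "the counter should have changed");

    // debug_assert_ne! performs the same check only when debug assertions are enabled.
    debug_assert_ne!(after, 0);

    // 2^99 does not fit in 64 bits, so the checked version returns None.
    println!("2^10 = {:?}", pow_or_none(2, 10));
    println!("2^99 = {:?}", pow_or_none(2, 99));
}
```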

New Contributors

- aclarry

- Alexander von Gluck IV

- Andrew Lygin

- Ashley Williams

- Austin Hicks

- Eitan Adler

- Gianni Ciccarelli

- jacobpadkins

- James Duley

- Joe Neeman

- Niels Sascha Reedijk

- Vanja Cosic

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

New RFCs

Upcoming Events

- 9/28. Boston Rust Meetup.

- 9/28. Rust Community Team Meeting at #rust-community on irc.mozilla.org.

- 9/28. Rust Documentation Team Meeting at #rust-docs on irc.mozilla.org.

- 9/29. Rust Meetup Dresden.

- 9/29. Rust DC: Who is more foolish? Novice traps in Rust.

- 10/5. Open-Space Rust Meetup Cologne/Bonn.

- 10/5. Rust Community Team Meeting at #rust-community on irc.mozilla.org.

- 10/5. Rust Documentation Team Meeting at #rust-docs on irc.mozilla.org.

- 10/6. Rust release triage.

- 10/10. Seattle Rust Meetup.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email the Rust Community Team for access.

fn work(on: RustProject) -> Money

No jobs listed for this week.

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

You can actually return Iterators without summoning one of the Great Old Ones now, which is pretty cool.

Thanks to Johan Sigfrids for the suggestion.
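The quote presumably refers to returning an iterator without spelling out its concrete type; that reading is an assumption, as is the function name `even_squares` below. A minimal sketch using `impl Trait` in return position:

```rust
/// Returns an iterator without naming its concrete type or boxing it.
fn even_squares(limit: u32) -> impl Iterator<Item = u32> {
    (0..limit).filter(|n| n % 2 == 0).map(|n| n * n)
}

fn main() {
    // The caller only knows it gets "some iterator of u32".
    for value in even_squares(7) {
        println!("{}", value);
    }
}
```

The concrete type here is a nested `Map<Filter<Range<u32>, ...>, ...>` with closure types that cannot be written out by hand, which is why being able to hide it matters.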

Submit your quotes for next week!

This Week in Rust is edited by: nasa42, llogiq, and brson.

https://this-week-in-rust.org/blog/2016/09/27/this-week-in-rust-149/

|

|

Nikki Bee: RustConf 2016 |

So, a couple weeks ago I got to go to RustConf 2016, the first instance of an annual event! What was it about? The relatively new programming language Rust, from Mozilla!

Attending

I was only able to attend thanks to three factors: a travel reimbursement allowance from Outreachy for my recent internship with them; a scholarship ticket from RustConf to cover attendance fees; and being able to stay at a friend’s place in Portland for a few days. Were it not for each of those, I would not have felt financially comfortable attending! I’m very grateful for all of the support.

The Morning

I went to RustConf with my now wife, Decky Coss (who also was able to attend under the same circumstances as me!). We decided that since the event is only a day long, and due to our track record for having a hard time getting the “full” experience out of conventions, that we would work extra hard on getting there on time. As such, we only missed 25 minutes of the first talk.

Of course, I’m not pleased that that happened, but we had the disadvantage of some rough flights the day before, as well as not staying on or near the location. Plus, we’re just not cut out for whatever it takes to attend conferences studiously. But we did our best that day and I think that was OK.

As I said, we missed the first half of the opening keynote, given by Aaron Turon and Niko Matsakis, but I really liked what I saw. It felt like a neat overview of Rust, especially concerning common issues newbies (like me!) run into with the language. It was gratifying hearing them point out the very same trip-ups I deal with, especially since they talked about how Rust developers are aware of these issues! They are looking into ways to help stop these trip-ups, such as better language syntax, and updating the Rust documentation.

Acting as a perfect follow-up to the keynote was “The Illustrated Adventure Survival Guide”, by Liz Baillie, which was easily the funnest talk. It was a drawn slideshow/comic making many metaphors and jokes representing how Rust works. I felt like it would be hard to enjoy if you didn’t have a decent understanding of Rust but then again, I suppose nobody would be there if they didn’t!

The last talk of the morning was “Integrating Rust and VLC”, by Geoffroy Couprie. It felt a bit hard to follow in that I didn’t know what it was leading to. All the same I greatly enjoyed seeing both a practical implementation of Rust (in this case, handling video streaming codecs), and how Rust can act as a friendlier alternative to C-like languages (something that has been heavily on my mind).

The Afternoon

Lunchtime! Lunch was set to be an hour and a half long, which, when I first saw the schedule, I thought would be excessively long. Somehow it went by very fast! Plus, following such a long morning, I was very grateful to get to unwind for a bit from listening to so much talking.

However, the dessert I had gave me a bad sugar rush and crash and I felt very out of it for the next few hours, so I don’t remember much about the talks during this time. None of them really did much for me, not that they were bad, but I just wasn’t able to pay much attention.

The Evening

Snack time! Again, I was glad to have a respite where I could chill out for a bit. Especially after having a hard time keeping focus for a few hours. This break was not nearly as long as lunch, but if it was any longer, I think the conference would have been too long.

The first talk of the evening was “A Modern Editor in Rust”, presented by Raph Levien. Raph talked about the text editor Xi. I was excited by some of the things offered in it, since it’s made to tackle unique issues, such as quickly loading and scrolling through massive (like, hundreds of megabytes big) files. I didn’t see much in it that I thought I needed personally, but I appreciate seeing programs for solving unique challenges like that.

The next talk I ended up writing so much about, I put it into another section.

The Second-Last Talk

Next was “RFC: In Order to Form a More Perfect union”, by Josh Triplett, which was easily the most impactful talk to me. It was a chronicle of a Request For Comments idea created by the speaker, which was recently committed into Rust! Josh described the RFC process in the Rust community as “welcoming, open, and inclusive”, all of which echoes my experience as a Rust newbie.

Josh talked about each step of their submission, including how far their proposal went (which was a bit of a surprise to them!). The best part to me was, despite it becoming one of the most discussed issues on Rust’s Github, Josh never found the proposal being taken from them by a more experienced contributor, no matter how high-level the discussion got. It’s not that I would expect such a rude thing to happen easily, but more that I greatly appreciate the speaker being given ample room to learn how to become a better contributor.

Previously, I contributed to Servo (the browser engine written in Rust) for my Outreachy internship, which had formal processes for my additions to be merged into the main code. That makes it obviously different from how it would be for any other contributor, since my internship gave me a unique position in relation to a mentor who knew the ins and outs of the Servo project. I never felt like I would be able to replicate this anywhere else.

All that said, if I ever contribute to Rust more informally, this talk has given me a great idea of what to expect, and how to prepare to enter into doing so. Whenever I have an idea I want to share with Rust, I won’t hesitate over doing so! Also, the next time I contribute to another FOSS project, I’ll be thinking of the process here, and try to map the open Rust process to that project!

The Closing Keynote

Finally, the closing keynote, presented by Julia Evans. She’s the only person I already recognized, as we’re both Recurse Center alums. During the snack break Decky and I decided to try to find her to chat for a couple minutes. We managed to do so, and also got copies of a delightful zine she wrote about Linux tools she’s found invaluable for programming! You can find it on her website.

By this time I felt pretty fatigued from the long day, but Julia’s talk was very energetic, which made me enthused to pay attention. Her keynote was focused on learning systems programming with Rust while new to the language: two things that felt challenging to her, but that she was able to get into surprisingly quickly. I really relate to that sort of experience, since many times a new project I wanted to do seemed insurmountable, but became much easier after I started!

I’ve never done any systems programming, but when I’ve told friends about Rust, a couple of them have asked whether they can do X or Y sort of thing in it, and I often don’t have an answer. Based on Julia’s talk, I feel comfortable saying systems programming is a good bet!

Other Things

That’s it for the talks themselves, although I had a few more thoughts I wanted to share.

-

As part of being late (as I mentioned before), Decky and I missed the breakfast offered by RustConf, although we hadn’t realized there was any before we got there. Having lunch and snacks offered as part of the conference was fantastic. If Decky and I had to go out for lunch we probably would have missed a talk. Although, in the future, I’ll be skipping out on sweets, even free ones, because sugar is just really bad for me :(.

-

I’ve never done an event that’s so continuously long. The schedule for RustConf was about 9 hours straight, and I was quite exhausted by the end of it. In the future, I’d prefer an event with multiple, shorter days! Conventions in general are fatiguing for me, but having to sit all the time for a long day takes a lot out of me.

-

I know better than to assume on an individual level, but I felt dismayed by the low ratio of women to men present at the conference. As a result of feeling a bit stranded by that, I felt uncomfortable talking to strangers. The only people I talked to - for more than a few sentences - were people I knew, or recognized from online.

-

One of the talks ended in a very forced parody of Trump’s campaign slogan which got a lot of laughs from the crowd. When that happened, my only reaction was to turn to Decky and grimace to show her how unhappy I was. Comedy shouldn’t be used to trivialize fascists, it should be used to kill fascism.

|

|

Air Mozilla: Mozilla Weekly Project Meeting, 26 Sep 2016 |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20160926/

|

|

Doug Belshaw: Curriculum as algorithm |

Way back in Episode 39 of Today In Digital Education, the podcast I record every week with Dai Barnes, we discussed the concept of ‘curriculum as algorithm’. If I remember correctly, it was Dai who introduced the idea.

The first couple of things that pop into my mind when considering curricula through an algorithmic lens are:

But let’s rewind and define our terms, including their etymology. First up, curriculum:

In education, a curriculum… is broadly defined as the totality of student experiences that occur in the educational process. The term often refers specifically to a planned sequence of instruction, or to a view of the student’s experiences in terms of the educator’s or school’s instructional goals.

[…]

The word “curriculum” began as a Latin word which means “a race” or “the course of a race” (which in turn derives from the verb currere meaning “to run/to proceed”). (Wikipedia)

…and algorithm:

In mathematics and computer science, an algorithm… is a self-contained step-by-step set of operations to be performed. Algorithms perform calculation, data processing, and/or automated reasoning tasks.

The English word 'algorithm’ comes from Medieval Latin word algorism and French-Greek word “arithmos”. The word 'algorism’ (and therefore, the derived word 'algorithm’) come from the name al-Khwarizmi. Al-Khwarizmi (Persian:

|

|

Christian Heilmann: Help making the fourth industrial revolution less scary |

I spent last week in Germany at an event sponsored by the government agency for unemployment, covering the digitalisation of the job market and the subsequent loss of jobs.

When the agency approached me to give a keynote on the upcoming “fourth industrial revolution” and what machine learning and artificial intelligence mean for the job market I was – to put it mildly – bricking it. All the other presenters at the event had several doctor titles and were professors of this and that. And here I was, being asked to deliver the “future” to an audience of company owners, university professors and influential people who decide the employment fate of thousands of people.

I went into hermit mode and watched, read and translated dozens of videos and articles on AI and the work environment. In the end, I took a more detailed look at the conference schedule and realised that most of the subject matter would be covered by the presenter before me.

Thus I delivered a talk covering the current situation of AI and what it means for us as job seekers and employers. The slides and screencast are in German, but I am looking forward to translating them and maybe delivering them in a European frame soon.

The slide deck is on Slideshare, and even without knowing German, you should get the gist:

The feedback was overwhelming and humbling. I got interviewed by the local TV station, where I mostly deflected the negative and defeatist attitude towards artificial intelligence that the media loves to portray.

I also got a half-page spread in the local newspaper where – to the amusement of my friends – I was touted as a “fascinating prophet”.

During the expert panel on digital security I had a few interesting encounters. Whilst in general it felt tough to see how inflexible and outdated some of the attitudes of companies towards computers were, there is a lot of innovation happening even in rural areas. I was especially impressed with the state of robots in warehouses and the investment of the European Union in Blockchain solutions and security research.

One thing I am looking forward to is working with a cybersecurity centre in the area, giving workshops on social engineering and the security of IoT.

A few things I learned and I’d like you to also consider:

- We are at the cusp – if not in the middle of – a new digital revolution

- Our job as people in the know is to reach out to those who are afraid of it and give out sensible information as a counter point to some of the fearmongering of the press

- It is incredibly rewarding to go out of our comfort zone and echo chamber and talk to people with real business and social change issues. It humbles you and makes you wonder just how you ended up knowing all that we do.

- The good social aspects of our jobs could be a blueprint for other companies to work and change to be resilient towards replacement by machines

- German is hard :)

So, be brave, offer to present at places not talking about the latest flavour of JavaScript or CSS preprocessing. The world outside our echo chamber needs us.

Or as The Interrupters put it: What’s your plan for tomorrow?

|

|

Gervase Markham: GPLv2 Combination Exception for the Apache 2 License |

CW: heavy open source license geekery ahead.

One unfortunate difficulty with open source licensing is that some lawyers, including the FSF, consider the Apache License 2.0 incompatible with the GPL 2.0, which is to say that you can’t combine Apache 2.0-licensed code with GPL 2.0-licensed code and distribute the result. This is annoying because when choosing a permissive licence, we want people to use the more modern Apache 2.0 over the older BSD or MIT licenses, because it provides some measure of patent protection. And this incompatibility discourages people from doing that.

This was a concern for Mozilla when determining the correct licensing for Rust, and this is why the standard Rust license is a dual license – the choice of Apache 2.0 or MIT. The idea was that Apache 2.0 would be the normal license, but people could choose MIT if they wanted to combine “Rust license” code with GPL 2.0 code.

However, the LLVM project has now had notable open source attorney Heather Meeker come up with an exception to be added to the Apache 2.0 license to enable GPL 2.0 compatibility. This exception meets a number of important criteria for a legal fix for this problem:

- It’s an additional permission, so is unlikely to affect the open source-ness of the license;

- It doesn’t require the organization using it to take a position on the question of whether the two licenses are actually compatible or not;

- It’s specific to the GPL 2.0, thereby constraining its effects to solving the problem.

Here it is:

---- Exceptions to the Apache 2.0 License: ----

In addition, if you combine or link compiled forms of this Software with software that is licensed under the GPLv2 (“Combined Software”) and if a court of competent jurisdiction determines that the patent provision (Section 3), the indemnity provision (Section 9) or other Section of the License conflicts with the conditions of the GPLv2, you may retroactively and prospectively choose to deem waived or otherwise exclude such Section(s) of the License, but only in their entirety and only with respect to the Combined Software.

---- end ----

It seems very well written to me; I wish it had been around when we were licensing Rust.

http://feedproxy.google.com/~r/HackingForChrist/~3/LwfflfNQj0E/

|

|

Gervase Markham: Introducing Deliberate Protocol Errors: Langley’s Law |

Google have just published the draft spec for a protocol called Roughtime, which allows clients to determine the time to within the nearest 10 seconds or so without the need for an authoritative trusted timeserver. One part of their ecosystem document caught my eye – it’s like a small “chaos monkey” for protocols, where their server intentionally sends out a small subset of responses with various forms of protocol error:

A healthy software ecosystem doesn’t arise by specifying how software should behave and then assuming that implementations will do the right thing. Rather we plan on having Roughtime servers return invalid, bogus answers to a small fraction of requests. These bogus answers would contain the wrong time, but would also be invalid in another way. For example, one of the signatures might be incorrect, or the tags in the message might be in the wrong order. Client implementations that don’t implement all the necessary checks would find that they get nonsense answers and, hopefully, that will be sufficient to expose bugs before they turn into a Blackhat talk.

The fascinating thing about this is that it’s a complete reversal of the ancient Postel’s Law regarding internet protocols:

Be conservative in what you send, be liberal in what you accept.

This behaviour instead requires implementations to be conservative in what they accept, otherwise they will get garbage data. And it also involves being, if not liberal, then certainly occasionally non-conforming in what they send.

Postel’s law has long been criticised for leading to interoperability issues – see HTML for an example of how accepting anything can be a nightmare, with the WHAT-WG having to come along and spec things much more tightly later. However, simply reversing the second half to be conservative in what you accept doesn’t work well either – see XHTML/XML and the yellow screen of death for an example of a failure to solve the HTML problem that way. This type of change wouldn’t work in many protocols, but the particular design of this one, where you have to ask a number of different servers for their opinion, makes it possible. It will be interesting to see whether reversing Postel will lead to more interoperable software. Let’s call it “Langley’s Law”:

Be occasionally evil in what you send, and conservative in what you accept.
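To make the idea concrete, here is a minimal sketch in Python. It is not the Roughtime protocol or its wire format: the 40-byte message layout, the shared HMAC key standing in for real public-key signatures, and the names server_reply, strict_client and sloppy_client are all assumptions made up for illustration. It only shows a server that is occasionally evil in what it sends, and how a conservative client rejects those replies while a liberal one silently accepts a wrong time.

```python
import hashlib
import hmac
import random
import struct
from typing import Optional

# Hypothetical shared key; a stand-in for real public-key signatures.
SECRET = b"demo-shared-key"


def sign(payload: bytes) -> bytes:
    """Compute a 32-byte authentication tag over the payload."""
    return hmac.new(SECRET, payload, hashlib.sha256).digest()


def server_reply(now: int, rng: random.Random, evil_rate: float = 0.1) -> bytes:
    """Return an 8-byte big-endian timestamp followed by a 32-byte tag.

    With probability evil_rate the reply is deliberately bogus:
    the time is wrong AND the tag is corrupted.
    """
    if rng.random() < evil_rate:
        payload = struct.pack(">Q", now + 86_400)  # one day off
        tag = bytearray(sign(payload))
        tag[0] ^= 0xFF  # flip bits so verification must fail
        return payload + bytes(tag)
    payload = struct.pack(">Q", now)
    return payload + sign(payload)


def strict_client(reply: bytes) -> Optional[int]:
    """Conservative client: return the time only if every check passes."""
    if len(reply) != 40:
        return None
    payload, tag = reply[:8], reply[8:]
    if not hmac.compare_digest(tag, sign(payload)):
        return None
    return struct.unpack(">Q", payload)[0]


def sloppy_client(reply: bytes) -> int:
    """Liberal client: trusts the timestamp without checking the tag."""
    return struct.unpack(">Q", reply[:8])[0]


if __name__ == "__main__":
    rng = random.Random()
    now = 1_474_900_000
    replies = [server_reply(now, rng) for _ in range(1000)]
    rejected = sum(strict_client(r) is None for r in replies)
    fooled = sum(sloppy_client(r) != now for r in replies)
    print(f"strict client rejected {rejected} replies")
    print(f"sloppy client accepted {fooled} wrong times")
```

In this sketch the sloppy client is fooled exactly as often as the strict client rejects a reply, which is the point of the design: an implementation that skips checks gets visibly wrong answers during testing rather than failing quietly later.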

http://feedproxy.google.com/~r/HackingForChrist/~3/tyuAOUDbG3c/

|

|

QMO: Firefox 50 Beta 3 Testday, September 30th |

Hello Mozillians,

We are happy to announce that Friday, September 30th, we are organizing Firefox 50 Beta 3 Testday. We will be focusing our testing on Pointer Lock API and WebM EME support for Widevine features. Check out the detailed instructions via this etherpad.

No previous testing experience is required, so feel free to join us on the #qa IRC channel where our moderators will offer you guidance and answer your questions.

Join us and help us make Firefox better! See you on Friday!

https://quality.mozilla.org/2016/09/firefox-50-beta-3-testday-september-30th/

|

|

Karl Dubost: [worklog] Edition 037. Autumn is here. The fall of -webkit- |

Two Japanese national holidays during the week. And there goes the week. Tune of the Week: Anderson .Paak - Silicon Valley

Webcompat Life

Progress this week:

Today: 2016-09-26T09:24:48.064519
336 open issues
----------------------
needsinfo       13
needsdiagnosis  110
needscontact    9
contactready    27
sitewait        161
----------------------

You are welcome to participate

- Monday day off in Japan: Respect for the elders.

- Thursday day off in Japan: Autumn Equinox.

Firefox 49 has been released and an important piece of cake is now delivered to every user. You can get some context on why some (not all) -webkit- prefixes landed in Gecko and the impact on Web standards.

We have a team meeting soon in Taipei.

The W3C was meeting this week in Lisbon. Specifically about testing.

I did a bit of Prefetch links testing and how they appear in devtools.

Webcompat issues

(a selection of some of the bugs worked on this week).

- Little by little we are accumulating our issues list about CSS zoom. Firefox is the only one not to support the non-standard property. It originated in IE (Trident), was imported into WebKit (Safari), then kept alive in Blink (Chrome, Opera), and finally came to Edge. Sadness.

Webcompat.com development

- Working on code for improving our management of webhooks with GitHub.

- Suggestions for improving the design of comments box.

- Provide documentation for testing the branch of someone else

Reading List

- Thoughts about UI for an advanced search

- Firefox 49 fixes sites designed with WebKit in mind, and more

- Overview of web compatibility in Taiwan

Follow Your Nose

TODO

- Document how to write tests on webcompat.com using test fixtures.

- ToWrite: Amazon prefetching resources with

for Firefox only.

Otsukare!

|

|