Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Andy McKay: System Add-ons |

System add-ons are a new kind of add-on in Firefox; you might also know them as Go Faster add-ons.

These are interesting add-ons: they let Firefox developers ship code faster by writing it as an add-on that can be developed and shipped independently of the main Firefox code.

Mostly these are not using WebExtensions, and there is some question about whether they should. I've been thinking about this one for a while and here are my thoughts at the moment - they aren't more than thoughts at this time.

System add-ons are really "internal" pieces of code that would otherwise be shipped in mozilla-central, blessed by the module owner and generally approved. They are maintained by someone who is actively involved in their code (usually but not always a Mozilla employee). They have gone through security and privacy reviews. They are tested against Firefox code in the test infrastructure on each release. They sometimes do things that no other add-on should be allowed to do.

This is all in contrast to third party add-ons that you'll find on AMO. When you look through all the reasoning behind WebExtensions, you'll find that a lot of the reasons involve things like "hard to maintain", "security problems" and so on. Please see my earlier posts for more on this. I would say that these reasons don't apply to system add-ons.

So do system add-ons need to be WebExtensions? Maybe they don't. In fact, I think if we try to push them into being WebExtensions we'll create a scenario where WebExtensions become the blocker.

System add-ons will want to do things that don't exist, so APIs will need to be added to WebExtensions. Some of the things that system add-ons will want to do are things that third party add-ons should not be allowed to do. Then we need to add in another permissions layer to say some add-on developers can use those APIs and others can't.

Already there is a distinction between what can and cannot land in Firefox and that's made by the module owners and people who work on Firefox.

If a system add-on does something unusual, do you end up in a scenario where you write a WebExtension API that only one add-on uses? The maintenance burden of creating an API for one part of Firefox that only one add-on can use doesn't seem worth it. It also makes WebExtensions the blocker and slows system add-on development down.

It feels to me like there isn't a compelling reason to make system add-ons be WebExtensions. Instead we should encourage them to be so if it makes sense and let them be regular bootstrapped add-ons otherwise.

|

|

George Wright: An Introduction to Shmem/IPC in Gecko |

We use shared memory (shmem) pretty extensively in the graphics stack in Gecko. Unfortunately, there isn’t a huge amount of documentation regarding how the shmem mechanisms in Gecko work and how they are managed, which I will attempt to address in this post.

Firstly, it’s important to understand how IPC in Gecko works. Gecko uses a language called IPDL to define IPC protocols. This is effectively a description language which formally defines the format of the messages that are passed between IPC actors. The IPDL code is then compiled into C++ code by our IPDL compiler, and we can then use the generated classes in Gecko’s C++ code to do IPC related things. IPDL class names start with a P to indicate that they are IPDL protocol definitions.

IPDL has a built-in shmem type, simply called mozilla::ipc::Shmem. This holds a weak reference to a SharedMemory object, and code in Gecko operates on this. SharedMemory is the underlying platform-specific implementation of shared memory and facilitates the shmem subsystem by implementing the platform-specific API calls to allocate and deallocate shared memory regions, and obtain their handles for use in the different processes. Of particular interest is that on OS X we use the Mach virtual memory system, which uses a Mach port as the handle for the allocated memory regions.

mozilla::ipc::Shmem objects are fully managed by IPDL, and there are two different types: normal Shmem objects, and unsafe Shmem objects. Normal Shmem objects are mostly intended to be used by IPC actors to send large data chunks between themselves as this is more efficient than saturating the IPC channel. They have strict ownership policies which are enforced by IPDL; when the Shmem object is sent across IPC, the sender relinquishes ownership and IPDL restricts the sender’s access rights so that it can neither read nor write to the memory, whilst the receiver gains these rights. These Shmem objects are created/destroyed in C++ by calling PFoo::AllocShmem() and PFoo::DeallocShmem(), where PFoo is the Foo IPDL interface being used. One major caveat of these “safe” shmem regions is that they are not thread safe, so be careful when using them on multiple threads in the same process!

Unsafe Shmem objects are basically a free-for-all in terms of access rights. Both sender and receiver can always read/write to the allocated memory and careful control must be taken to ensure that race conditions are avoided between the processes trying to access the shmem regions. In graphics, we use these unsafe shmem regions extensively, but use locking vigorously to ensure correct access patterns. Unsafe Shmem objects are created by calling PFoo::AllocUnsafeShmem(), but are still destroyed in the same manner as normal Shmem objects by simply calling PFoo::DeallocShmem().
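As a rough analogy only, the two access models described above can be sketched in plain Rust with threads. This is not Gecko's IPDL and does not use the real mozilla::ipc::Shmem API; the function names send_owned_buffer and share_locked_buffer are hypothetical, and threads stand in for processes. The first function mirrors the "safe" model (the sender gives up the buffer when it is sent), the second mirrors the "unsafe" model (both sides keep access, so every access takes a lock).

```rust
use std::sync::mpsc;
use std::sync::{Arc, Mutex};
use std::thread;

/// "Safe" model: the buffer is moved to the receiver, so the sender can no
/// longer touch it after sending. Here the compiler enforces what IPDL
/// enforces with access rights. Returns the byte sum seen by the receiver.
fn send_owned_buffer(data: Vec<u8>) -> usize {
    let (tx, rx) = mpsc::channel::<Vec<u8>>();
    let receiver = thread::spawn(move || {
        let buf = rx.recv().expect("sender hung up");
        buf.iter().map(|&b| b as usize).sum::<usize>()
    });
    tx.send(data).expect("receiver hung up");
    // `data` has been moved; using it here would not compile.
    receiver.join().expect("receiver panicked")
}

/// "Unsafe" model: every party keeps read/write access, so each access is
/// guarded by a lock. Each writer increments every byte once
/// (assumes `writers` is at most 255 so the bytes cannot overflow).
fn share_locked_buffer(len: usize, writers: usize) -> Vec<u8> {
    let shared = Arc::new(Mutex::new(vec![0u8; len]));
    let handles: Vec<_> = (0..writers)
        .map(|_| {
            let shared = Arc::clone(&shared);
            thread::spawn(move || {
                let mut buf = shared.lock().expect("lock poisoned");
                for byte in buf.iter_mut() {
                    *byte += 1;
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().expect("writer panicked");
    }
    let result = shared.lock().expect("lock poisoned").clone();
    result
}

fn main() {
    println!("sum seen by receiver: {}", send_owned_buffer(vec![1, 2, 3]));
    println!("buffer after writers: {:?}", share_locked_buffer(4, 3));
}
```

The analogy is loose: in Gecko the hand-off is enforced at run time by changing access rights on the mapped region, whereas here the move is checked at compile time.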

With the work currently ongoing to move our compositor to a separate GPU process, there are some limitations with our current shmem situation. Notably, a SharedMemory object is effectively owned by an IPDL channel, and when the channel goes away, the SharedMemory object backing the Shmem object is deallocated. This poses a problem as we use shmem regions to back our textures, and when/if the GPU process dies, it’d be great to keep the existing textures and simply recreate the process and IPC channel, then continue on like normal. David Anderson is currently exploring a solution to this problem, which will likely be to hold a strong reference to the SharedMemory region in the Shmem object, thus ensuring that the SharedMemory object doesn’t get destroyed underneath us so long as we’re using it in Gecko.

http://www.hackermusings.com/2016/09/an-introduction-to-shmemipc-in-gecko/

|

|

Justin Crawford: Debugging WebExtension Popups |

Note: In the time since I last posted here I have been doing a bit more hands-on web development [for example, on the View Source website]. Naturally this has led me to learn new things. I have learned a few things that may be new to others, too. I’ll drop those here when I run across them.

I have been looking for a practical way to learn about WebExtensions, the new browser add-on API in Firefox. This API is powerful for a couple reasons: It allows add-on developers to build add-ons that work across browsers, and it’s nicer to work with than the prior Firefox add-on API (for example, it watches code and reloads changes without restarting the browser).

So I found a WebExtensions add-on to hack on, which I’ll probably talk about in a later post. The add-on has a chrome component, which is to say it includes changes to the browser UI. Firefox browser chrome is just HTML/CSS/JavaScript, which is great. But it took me a little while to figure out how to debug it.

The tools for doing this are all fairly recent. The WebExtension documentation on MDN is fresh from the oven, and the capabilities shown below were missing just a few months ago.

Here’s how to get started debugging WebExtensions in the browser:

First, enable the Browser Toolbox. This is a special instance of Firefox developer tools that can inspect and debug the browser’s chrome. Cool, eh? Here’s how to make it even cooler:

- Set up a custom Firefox profile with the Toolbox enabled, so you don’t have to enable it every time you fire up your development environment. Consider just using the DevPrefs add-on, which toggles a variety of preferences (including Toolbox) to optimize the browser for add-on development.

- Once you have a profile with DevPrefs installed, you can launch it with your WebExtension like so:

./node_modules/.bin/web-ext run --source-dir=src --firefox-binary {path to firefox binary} --firefox-profile {name of custom profile}

(See the WebExtensions command reference for more information.)

Next, with the instance of Firefox that appears when you run the above command, go to the Tools -> Web Developer -> Browser Toolbox menu. A window should appear that looks just like a standard Firefox developer tools window. But this window is imbued with the amazing ability to debug the browser itself. Try it: Use the inspector to look at the back button!

In that window you’ll see a couple small icons near the top right. One looks like a waffle. This button makes the popup sticky — just like a good waffle. This is quite helpful, since otherwise the popup will disappear the minute you try to inspect, debug, or modify it using the Browser Toolbox.

Next to the waffle is a button with a downward arrow on it. This button lets you select which content to debug — so, for example, you could select the HTML of your popup. When you have a sticky popup selected, you can inspect and hack on its HTML and CSS just like you would any other web content.

This information is now documented in great detail on MDN. Check it out!

https://hoosteeno.com/2016/09/20/debugging-webextension-popups/

|

|

Will Kahn-Greene: Standup v2: system test |

What is Standup?

Standup is a system for capturing standup-style posts from individuals making it easier to see what's going on for teams and projects. It has an associated IRC bot standups for posting messages from IRC.

Join us for a Standup v2 system test!

Paul and I did a ground-up rewrite of the Standup web-app to transition from Persona to GitHub auth, release us from the shackles of the old architecture and usher in a new era for Standup and its users.

We're done with the most minimal of minimal viable products. It's missing some features that the current Standup has, mostly around team management, but otherwise it's the same-ish, down to the lavish shade of purple in the header that Rehan graced the site with so long ago.

If you're a Standup user, we need your help testing Standup v2 on the -stage environment before Thursday, September 22nd, 2016!

We've thrown together a GitHub issue to (ab)use as a forum for test results and working out what needs to get fixed before we push Standup v2 to production. It's got instructions that should cover everything you need to know.

Why you would want to help:

- You get to see Standup v2 before it rolls out and point out anything that's missing that affects you.

- You get a chance to discover parts of Standup you may not have known about previously.

- This is a chance for you to lend a hand on a community project that helps you and that we're all working on in our free time.

Once we get Standup v2 up, there are a bunch of things we can do with Standup that will make it more useful. Freddy is itching to fix IRC-related issues and wants HTTPS support [1]. I want to implement user API tokens, a CLI and search. Paul wants to have better weekly team reports and project pages.

There are others listed in the issue tracker and some that we never wrote down.

We need to get over the Standup v2 hurdle first.

Why you wouldn't want to help:

- You're on PTO. Stop reading--enjoy that PTO!

- It's the end of the quarter and you're swamped. Sounds like you're short on time. Spare a minute and do something in the "Short on time, but want to help anyhow?" section.

- You're looking to stop using Standup. I'd love to know what you're planning to switch to. If we can meet people's needs with some other service, that's more free time for me and Paul.

- Some fourth thing I lack the imagination to think of. If you have some other blocker to helping, toss me an email.

Hooray for the impending Standup v2!

[1] This is in progress--we're just waiting for a cert.

http://bluesock.org/%7Ewillkg/blog/mozilla/standup_v2_systemtest.html

|

|

David Lawrence: Happy BMO Push Day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1275568] bottom of page ‘duplicate’ button focuses top of page duplicate field

- [1283930] Add Makefile.PL & local/lib/perl5 support to bmo/master

- [1278398] Enable “Due Date” field for all websites, web services, infrastructure(webops, netops, etc), infosec bugs (all components)

- [1213791] “suggested reviewers” menu overflows horizontally from visible area if reviewers have long name.

- [1297522] changes to legal form

- [1302835] Enable ‘Rank’ field for Tech Evangelism Product

- [1267347] Editing the Dev-Events Form to be current

- [1303659] Bug.comments (/rest/bug/<id>/comment) should return the count value in the results

discuss these changes on mozilla.tools.bmo.

https://dlawrence.wordpress.com/2016/09/20/happy-bmo-push-day-28/

|

|

This Week In Rust: This Week in Rust 148 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

News & Blog Posts

- My experience rewriting Enjarify in Rust. Enjarify is a tool (written in Python) for translating Dalvik bytecode to equivalent Java bytecode.

- The PlayRust Classifier. Synopsis of a RustConf talk on a classifier to detect posts that were intended for /r/playrust but were mistakenly posted on /r/rust.

- Why Rust's std::collections is absolutely fantastic. Follow-up to a critique of Rust's std::collections.

- Using and_then and map combinators on the Rust Result Type.

- GFX Programming Model. A deep dive into what makes gfx-rs complex and awesome.

- Building a scalable MySQL Proxy in Rust.

- Using unsafe tricks to examine Rust data structure layout.

- Tools for profiling Rust.

- Generating Rustdoc with a custom style.

- Understanding where clauses and trait constraints.

- Let's Build a REPL/Parser with Rust & LALRPOP.

- [video] Videos from Rust Meetup Cologne/Bonn.

RustConf Experiences

- My RustConf travelogue by Zack M. Davis.

- Rustconf 2016 – What was cool and what surprised me by Andy Grove.

- Notes from RustConf 2016 talks by Brian Pearce.

New Crates & Project Updates

- Announcing the code style RFC process and style team.

- Redox is now listed in Github's Open Source Operating Systems Showcase.

- rustcxx is a tool allowing C++ to be used from a Rust project easily. It works by allowing snippets of C++ to be included within a Rust function, and vice-versa.

- Kinder. Algebraic structure and emulation of higher kinded types for Rust.

- rdedup. Data deduplication with compression and public key encryption.

- bit_reverse. A Rust library to compute the bit reversal of primitive integers.

- This Week in Rust Docs 22.

- These days in Piston 3.

- This week in Ruru 1. Ruru lets you write native Ruby extensions in Rust.

- This week in TiKV 2016-09-19.

Crate of the Week

This week's crate of the week is (in the best TWiR tradition, shamelessly self-promoted) mysql-proxy, a flexible, lightweight and scalable proxy for MySQL databases. Thanks to andygrove for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available; visit the task page for more information.

- [easy] rust: Specialisation error 502 is misleading.

- [easy] rust: Bootstrap key logic is too strict.

- [easy] rust: rustc should emit an error when there's a bootstrap key mismatch.

- [easy] rust: Lint against using generic conversion traits when concrete methods are available.

- [hard] rust: Fix unwinding on emscripten.

- [moderate] rust: Create official .deb packages.

- [easy] rust-www: Better front-page example. The front page example on the website isn't so special. Make it shine.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

98 pull requests were merged in the last two weeks.

- Macro invocations now fold/visit in the same order (makes it easier to reason about them)

- Optimized parser's last token handling (who'd have thought it could be optimized further?)

- Some dependency graph improvements

- Rustbuild now supports python3

- LLVM updated

- Don't lose padding for constant closures and tuples (fixes #36401 segfault)

- Improved move checker accuracy and error reports

- Better error message when shadowing type with generics

- Improve Macro-1.1 errors labelling

- SyntaxExtension::MacroRulesTT is no more

- #[derive(Clone, Eq)] produces less code (Yay! faster builds!)

- Default stack size upped to 16MiB (temporary measure against stack overflows)

- Change in invoking drop glue for boxed dynamically-sized values (fixes LLVM assertion failure)

- private_in_public error demoted to warning (until remaining regressions are fixed, also in beta)

- MIR optimization: Remove reborrows for references (and already pass dependencies seem to become subtle...)

- Better parent info for -Z save-analysis

- Avoid loading/parsing unused modules (e.g. #[cfg(any())] mod foo)

- Fix closure-as-trait-object dropping

- Duration::checked_{add,sub,mul,div}

- ty::TraitObject's projection bounds are now stably sorted (also unifies/removes diverse hashing implementations)

- De-specialized Zip data (some ongoing optimization work)

- Iterator::sum() and product() no longer check for overflow in release mode

- Fix poor performance in Vec::{extend_from_slice,extend_with_element}()

- std::str::replacen(..)

- Zero the first byte of CStrings on drop

- likely(_)/unlikely(_) intrinsics added (help the CPU with branch prediction)

- std::io::Take::into_inner()

- std::alloc::{Rc,Arc}::ptr_eq(..)

- compiler-rt is dead, long live compiler-builtins!

- dist tarball now contains version info

- sublime-rust now works with the new error format
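A few of the library additions in the list above can be tried out as follows. This is a minimal sketch that assumes the methods as they exist in today's standard library (at the time of this issue they had only just landed and may have been unstable); note that Rc::ptr_eq is reached through std::rc::Rc. The helper names add_timeouts, replace_first and read_prefix are hypothetical and exist only for illustration.

```rust
use std::io::{self, Read};
use std::rc::Rc;
use std::time::Duration;

/// Adds two durations, returning None instead of panicking on overflow.
fn add_timeouts(a: Duration, b: Duration) -> Option<Duration> {
    a.checked_add(b)
}

/// Replaces only the first `count` matches of `from` with `to`.
fn replace_first(text: &str, from: &str, to: &str, count: usize) -> String {
    text.replacen(from, to, count)
}

/// Reads at most `limit` bytes, then hands back the underlying reader
/// via Take::into_inner() so the caller can keep reading from it.
fn read_prefix<R: Read>(reader: R, limit: u64) -> io::Result<(Vec<u8>, R)> {
    let mut take = reader.take(limit);
    let mut prefix = Vec::new();
    take.read_to_end(&mut prefix)?;
    Ok((prefix, take.into_inner()))
}

fn main() {
    println!("{:?}", add_timeouts(Duration::from_secs(1), Duration::from_secs(2)));
    println!("{}", replace_first("a-b-c", "-", "+", 1));

    let first = Rc::new(5);
    let alias = Rc::clone(&first);
    let other = Rc::new(5);
    // ptr_eq compares allocations, not the values stored in them.
    println!("{} {}", Rc::ptr_eq(&first, &alias), Rc::ptr_eq(&first, &other));

    let (prefix, rest) = read_prefix(&b"hello world"[..], 5).expect("in-memory read");
    println!("{:?} then {:?}", prefix, rest);
}
```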

New Contributors

- Caleb Jones

- dangcheng

- Eugene Bulkin

- knight42

- Liigo

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

- RFC 1696: mem::discriminant(). Add a function that extracts the discriminant from an enum variant as a comparable, hashable, printable, but (for now) opaque and unorderable type.
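A short sketch of what RFC 1696 describes, using std::mem::discriminant as it later appeared in the standard library (an assumption relative to this issue, which only reports the RFC's approval). The Shape enum and the same_variant helper are hypothetical names for illustration.

```rust
use std::mem;

#[derive(Debug)]
enum Shape {
    Circle(f64),
    Square(f64),
}

/// True when both values are the same variant, ignoring their payloads.
fn same_variant(a: &Shape, b: &Shape) -> bool {
    mem::discriminant(a) == mem::discriminant(b)
}

fn main() {
    let small = Shape::Circle(1.0);
    let large = Shape::Circle(10.0);
    let square = Shape::Square(2.0);
    println!("{:?} vs {:?}: {}", small, large, same_variant(&small, &large));
    println!("{:?} vs {:?}: {}", small, square, same_variant(&small, &square));
}
```

The returned value can be compared and hashed but not ordered, which matches the "opaque and unorderable" wording of the RFC summary.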

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Let a loop { ... } expression return a value via break my_value;.

- Add a compiler flag that emits crate dependencies on a best-effort basis.

- Generalize the delayed resolution of language items to arbitrary items.
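The first item in this list, returning a value from loop via break, can be illustrated with a small sketch. It assumes the syntax as it was eventually stabilized (at the time of this issue the proposal was still in its final comment period); the function name next_power_of_two_at_least is hypothetical.

```rust
/// Returns the first power of two that is >= `target`.
/// Assumes `target` is at most 2^63 so that doubling cannot overflow.
fn next_power_of_two_at_least(target: u64) -> u64 {
    let mut candidate = 1u64;
    // The loop is an expression: `break candidate` becomes its value.
    let found = loop {
        if candidate >= target {
            break candidate;
        }
        candidate *= 2;
    };
    found
}

fn main() {
    println!("{}", next_power_of_two_at_least(100));
}
```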

New RFCs

Upcoming Events

- 9/21. Rust Boulder/Denver Monthly Meeting.

- 9/21. Rust Community Team Meeting at #rust-community on irc.mozilla.org.

- 9/21. Rust Documentation Team Meeting at #rust-docs on irc.mozilla.org.

- 9/22. RustPH Mentors Meeting.

- 9/22. Rust release triage at #rust-triage on irc.mozilla.org.

- 9/26. São Paulo Meetup.

- 9/28. Boston Rust Meetup.

- 9/28. Rust Community Team Meeting at #rust-community on irc.mozilla.org.

- 9/28. Rust Documentation Team Meeting at #rust-docs on irc.mozilla.org.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email the Rust Community Team for access.

fn work(on: RustProject) -> Money

No jobs listed for this week.

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

No quote was selected for QotW.

(Full disclosure: we removed QotW for this issue because the selected QotW was deemed inappropriate and against the core values of the Rust community. Here is the relevant discussion on reddit. If you are curious, you can find the quote in the git history.)

Submit your quotes for next week!

This Week in Rust is edited by: nasa42, llogiq, and brson.

https://this-week-in-rust.org/blog/2016/09/20/this-week-in-rust-148/

|

|

The Mozilla Blog: Latest Firefox Expands Multi-Process Support and Delivers New Features for Desktop and Android |

With the change of the season, we’ve worked hard to release a new version of Firefox that delivers the best possible experience across desktop and Android.

Expanding Multiprocess Support

Last month, we began rolling out the most significant update in our history, adding multiprocess capabilities to Firefox on desktop, which means Firefox is more responsive and less likely to freeze. In fact, our initial tests show a 400% improvement in overall responsiveness.

Our first phase of the rollout included users without add-ons. In this release, we’re expanding support for a small initial set of compatible add-ons as we move toward a multiprocess experience for all Firefox users in 2017.

Desktop Improvement to Reader Mode

This update also brings two improvements to Reader Mode. This feature strips away clutter like buttons, ads and background images, and changes the page’s text size, contrast and layout for better readability. Now we’re adding the option for the text to be read aloud, which means Reader Mode will narrate your favorite articles, allowing you to listen and browse freely without any interruptions.

We also expanded the ability to customize in Reader Mode so you can adjust the text and fonts, as well as the voice. Additionally, if you’re a night owl like some of us, you can read in the dark by changing the theme from light to dark.

Offline Page Viewing on Android

On Android, we’re now making it possible to access some previously viewed pages when you’re offline or have an unstable connection. This means you can interact with much of your previously viewed content when you don’t have a connection. The feature works with many pages, though it is dependent on your specific device specs. Give it a try by opening Firefox while your phone is in airplane mode.

We’re continuing to work on updates and new features that make your Firefox experience even better. Download the latest Firefox for desktop and Android and let us know what you think.

- Download Firefox for Windows, Mac, Linux

- Release Notes for Firefox for Windows, Mac, Linux

- Download Firefox for Android

- Release Notes for Firefox for Android

https://blog.mozilla.org/blog/2016/09/19/expanded-multi-process-desktop-android-updates/

|

|

Daniel Glazman: W3C |

I have always said that standardization at the W3C is blood on the walls in a hushed atmosphere. I will not change one iota of that statement. But the W3C is also the story of an industry from its earliest hours, and of solid friendships built in the explosion of a new era. Tonight, on the sidelines of the W3C Technical Plenary Meeting in Lisbon, I had an unforgettable dinner with my old friends Yves and Olivier, whom I have known and appreciated for oh, so very long. A delightful, friendly and funny moment over a fabulous meal in a dream of a little Lisbon eatery. Bursts of laughter, confidences, a great evening; in short, a true moment of happiness. Thanks to them for this brilliant evening, and to Olivier for the address, which was excellent in every respect. I'll sign up again whenever you like, guys, and it is an honor to have you as friends.

http://www.glazman.org/weblog/dotclear/index.php?post/2016/09/20/W3C

|

|

Chris Cooper: RelEng & RelOps Weekly highlights - September 19, 2016 |

Welcome back to our *cough*weekly*cough* updates!

Modernize infrastructure:

Amy and Alin decommissioned all but 20 of our OS X 10.6 test machines, and those last few will go away when we perform the next ESR release. The next ESR release corresponds to Firefox 52, and is scheduled for March next year.

Improve Release Pipeline:

Ben finally completed his work on Scheduled Changes in Balrog. With it, we can pre-schedule changes to Rules, which will help minimize the potential for human error when we ship, and make it unnecessary for RelEng to be around just to hit a button.

Lots of other good Balrog work has happened recently too, which is detailed in Ben’s blog post.

Improve CI Pipeline:

Windows TaskCluster builders were split into level-1 (try) and level-3 (m-i, m-c, etc) worker types with sccache buckets secured by level.

Windows 10 AMI generators were added to automation in preparation for Windows 10 testing on TaskCluster. We’ve been looking to switch from testing on Windows 8 to Windows 10, as Windows 8 usage continues to decline. The move to TaskCluster seems like a natural breakpoint to make that switch.

Dustin’s massive patch set to enable in-tree config of the various build kinds landed last week. This was no small feat. Kudos to him for the testing and review stamina it took to get that done. Those of us working to migrate nightly builds to TaskCluster are now updating – and simplifying – our task graphs to leverage his work.

Operational:

After work to fix some bugs and make it reliable, Mark re-enabled the cron job that generates our Windows 7 AWS AMIs each night.

Now that many of the Windows 7 tests are being run in AWS, Amy and Q reallocated 20 machines from Windows 7 testing to XP testing to help with the load. We are reallocating 111 additional machines from Windows 7 to XP and Windows 8 in the upcoming week.

Amy created a template for postmortems and created a folder where all of Platform Operations can consolidate their postmortem documents.

Jake and Kendall took swift action on the TCP ChallengeAck side attack vulnerability. This has been fixed with a sysctl workaround on all Linux hosts and instances.

Jake pushed a new version of the mig-agent client which was deployed across all Linux and OS X platforms.

Hal implemented the new GitHub feature to require two-factor authentication for several Mozilla organizations on GitHub.

Release:

Rail has automated re-generating our SHA-1 signed Windows installers for Firefox, which are served to users on old versions of Windows (XP, Vista). This means that users on those platforms will no longer need to update through a SHA-1 signed, watershed release (we were using Firefox 43 for this) before updating to the most recent version. This will save XP/Vista users some time and bandwidth by creating a one-step update process for them to get the latest Firefox.

See you next *cough*week*cough*!

|

|

Air Mozilla: Mozilla Weekly Project Meeting, 19 Sep 2016 |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20160919/

|

|

David Rajchenbach Teller: This blog has moved |

You can find my new blog on github. Still rough around the edges, but I’m planning to improve this as I go.

https://dutherenverseauborddelatable.wordpress.com/2016/09/19/this-blog-has-moved/

|

|

Firefox Nightly: Getting Firefox Nightly to stick to Ubuntu’s Unity Dock |

I installed Ubuntu 16.04.1 this week and decided to try out Unity, the default window manager. After I installed Nightly I assumed it would be simple to get the icon to stay in the dock, but Unity seemed confused about Nightly vs the built-in Firefox (I assume because the executables have the same name).

It took some doing to get Nightly to stick to the Dock with its own icon. I retraced my steps and wrote them down below.

My goal was to be able to run a couple versions of Firefox with several profiles. I thought the easiest way to accomplish that would be to add a new icon for each version+profile combination and a single left click on the icon would run the profile I want.

After some research, I think the Unity way is to have a single icon for each version of Firefox, and then add Actions to it so you can right click on the icon and launch a specific profile from there.

Installing Nightly

If you don’t have Nightly yet, download Nightly (these steps should work fine with Aurora or Beta also). Open a terminal:

$ mkdir /opt/firefox

$ tar -xvjf ~/Downloads/firefox-51.0a1.en-US.linux-x86_64.tar.bz2 -C /opt

You may need to chown some directories to get that in /opt which is fine. At the end of the day, make sure your regular user can write to the directory or else you won’t be able to install Nightly’s updates.

Adding the icon to the dock

Then create a file in your home directory named nightly.desktop and paste this into it:

[Desktop Entry]

Version=1.0

Name=Nightly

Comment=Browse the World Wide Web

Icon=/opt/firefox/browser/icons/mozicon128.png

Exec=/opt/firefox/firefox %u

Terminal=false

Type=Application

Categories=Network;WebBrowser;

Actions=Default;Mozilla;ProfileManager;

[Desktop Action Default]

Name=Default Profile

Exec=/opt/firefox/firefox --no-remote -P minefield-default

[Desktop Action Mozilla]

Name=Mozilla Profile

Exec=/opt/firefox/firefox --no-remote -P minefield-mozilla

[Desktop Action ProfileManager]

Name=Profile Manager

Exec=/opt/firefox/firefox --no-remote --profile-manager

Adjust anything that looks like it should change. The main callout is that the Exec lines should use the names of the profiles you want to launch (in the above file mine are called minefield-default and minefield-mozilla). If you have more profiles, make more copies of a Desktop Action section, name them appropriately, and list them in the Actions line.

If you think you’ve got it, run this command:

$ desktop-file-validate nightly.desktop

No output? Great — it passed the validator. Now install it:

$ desktop-file-install --dir=.local/share/applications nightly.desktop

Two notes on this command:

- If you leave off --dir it will write to /usr/share/applications/ and affect all users of the computer. You’ll probably need to sudo the command if you want that.

- Watch out for tilde expansion. Originally I passed in --dir=~/.local/… and it literally made a directory named ~ in my home directory, presumably because the shell does not expand ~ in the middle of an argument like this. So, if the menu isn’t updating, double check the file is getting copied to the right spot.

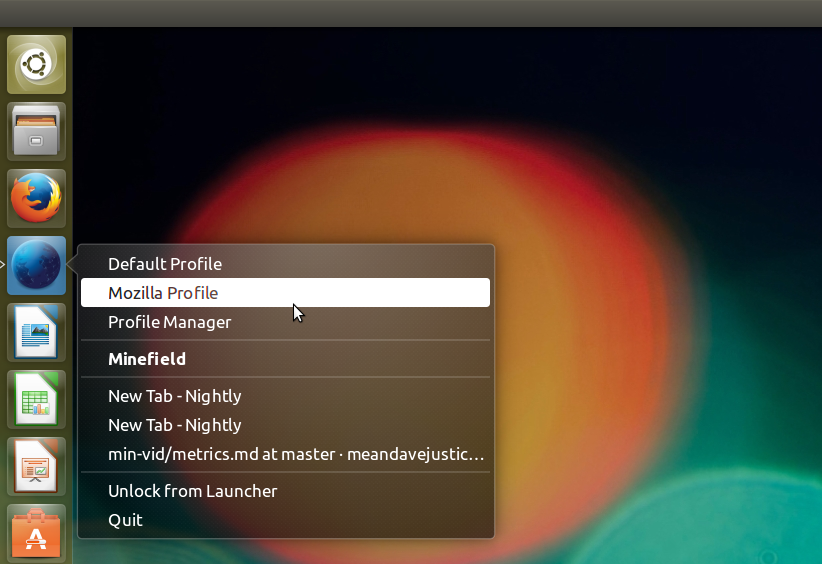

Some people report having to run unity again to get the change to appear, but it showed up for me. Now left-clicking runs Nightly and right-clicking opens a menu asking me which profile I want to use.

Right-click menu for Nightly in Unity’s Dock.

Modifying the Firefox Launcher

I also wanted to launch profiles off the regular Firefox icon in the same way.

The easiest way to do that is to copy the built-in one from /usr/share/applications/firefox.desktop and modify it to suit you. Conveniently, Unity will override a system-wide .desktop file if you have one with the same name in your local directory so installing it with the same commands as you did for Nightly will work fine.

Postscript

I should probably add a disclaimer that I’ve used Unity for all of two days and there may be a smoother way to do this. I saw a couple of 3rd-party programs that will generate .desktop files but I didn’t want to install more things I’d rarely use. Please leave a comment if I’m way off on these instructions!

https://blog.nightly.mozilla.org/2016/09/19/getting-firefox-nightly-to-stick-to-ubuntus-unity-dock/

|

|

Henrik Skupin: Moving home folder to another encrypted volume on OS X |

Over the last weekend I reinstalled my older MacBook Pro (late 2011 model) after replacing its hard drive with a new 512GB SSD from Crucial. That change was really necessary given that simple file operations took about a minute, even though every system tool claimed that the HDD was fine.

So after installing Mavericks I moved my home folder to another partition to make it easier to reinstall OS X later. But as it turned out, it is not that easy, especially given that OS X doesn’t yet support mounting encrypted partitions other than the system partition during start-up. If you have only a single user, you will be locked out after the home dir move and a reboot. That’s what I experienced. The fix in such a situation is to put OS X back into the “post install” state and create a new administrator account via single-user mode. With this account you can at least sign in again, and after unlocking the other encrypted partition you will have access to your original account again.

Having to first log in via an account whose data is still hosted on the system partition is not a workable solution for me. So I kept looking for a solution that would let me unlock the second encrypted partition during start-up. After some searching I finally found a tool that does this. It’s called Unlock and can be found on GitHub. To make it work, it installs a LaunchDaemon which retrieves the encryption password from the System keychain and unlocks the partition during start-up. To be on the safe side, I compiled the code myself with Xcode and installed it with some small modifications to the install script (I’d like to contribute those modifications back to the repository :).

In case you have similar needs, I hope this post will help you avoid the hassles I experienced.

https://www.hskupin.info/2016/09/19/moving-home-folder-to-another-encrypted-volume-on-os-x/

|

|

Mozilla Privacy Blog: Improving Government Disclosure of Security Vulnerabilities |

Last week, we wrote about the shared responsibility of protecting Internet security. Today, we want to dive deeper into this issue and focus on one very important obligation governments have: proper disclosure of security vulnerabilities.

Software vulnerabilities are at the root of so much of today’s cyber insecurity. The revelations of recent attacks on the DNC, the state electoral systems, the iPhone, and more, have all stemmed from software vulnerabilities. Security vulnerabilities can be created inadvertently by the original developers, or they can be developed or discovered by third parties. Sometimes governments acquire, develop, or discover vulnerabilities and use them in hacking operations (“lawful hacking”). Either way, once governments become aware of a security vulnerability, they have a responsibility to consider how and when (not whether) to disclose the vulnerability to the affected company so that developer can fix the problem and protect their users. We need to work with governments on how they handle vulnerabilities to ensure they are responsible partners in making this a reality today.

In the U.S., the government’s process for reviewing and coordinating the disclosure of vulnerabilities that it learns about or creates is called the Vulnerabilities Equities Process (VEP). The VEP was established in 2010, but not operationalized until the Heartbleed vulnerability in 2014 that reportedly affected two thirds of the Internet. At that time, White House Cybersecurity Coordinator Michael Daniel wrote in a blog post that the Obama Administration has a presumption in favor of disclosing vulnerabilities. But, policy by blog post is not particularly binding on the government, and as Daniel even admits, “there are no hard and fast rules” to govern the VEP.

It has now been two years since Heartbleed and the U.S. government’s blog post, but we haven’t seen improvement in the way that vulnerabilities disclosure is being handled. Just one example is the alleged hack of the NSA by the Shadow Brokers, which resulted in the public release of NSA “cyberweapons”, including “zero day” vulnerabilities that the government knew about and apparently had been exploiting for years. Companies like Cisco and Fortinet whose products were affected by these zero day vulnerabilities had just that, zero days to develop fixes to protect users before the vulnerabilities were possibly exploited by hackers.

The government may have legitimate intelligence or law enforcement reasons for delaying disclosure of vulnerabilities (for example, to enable lawful hacking), but these same vulnerabilities can endanger the security of billions of people. These two interests must be balanced, and recent incidents demonstrate just how easily stockpiling vulnerabilities can go awry without proper policies and procedures in place.

Cybersecurity is a shared responsibility, and that means we all must do our part – technology companies, users, and governments. The U.S. government could go a long way in doing its part by putting transparent and accountable policies in place to ensure it is handling vulnerabilities appropriately and disclosing them to affected companies. We aren’t seeing this happen today. Still, with some reforms, the VEP can be a strong mechanism for ensuring the government is striking the right balance.

More specifically, we recommend five important reforms to the VEP:

- All security vulnerabilities should go through the VEP and there should be public timelines for reviewing decisions to delay disclosure.

- All relevant federal agencies involved in the VEP must work together to evaluate a standard set of criteria to ensure all relevant risks and interests are considered.

- Independent oversight and transparency into the processes and procedures of the VEP must be created.

- The VEP Executive Secretariat should live within the Department of Homeland Security because they have built up significant expertise, infrastructure, and trust through existing coordinated vulnerability disclosure programs (for example, US CERT).

- The VEP should be codified in law to ensure compliance and permanence.

These changes would improve the state of cybersecurity today.

We’ll dig into the details of each of these recommendations in a blog post series from the Mozilla Policy team over the coming weeks – stay tuned for that.

Today, you can watch Heather West, Mozilla Senior Policy Manager, discuss this issue at the New America Open Technology Institute event on the topic of “How Should We Govern Government Hacking?” The event can be viewed here.

|

|

Chris McDonald: i-can-management Weekly Update 1 |

A couple weeks ago I started writing a game and i-can-management is the directory I made for the project so that’ll be the codename for now. I’m going to write these updates to journal the process of making this game. As I’m going through this process alone, you’ll see all aspects of the game development process as I go through them. That means some weeks may be art heavy, while others game rules, or maybe engine refactoring. I also want to give a glimpse of how I’m feeling about the project and the rules I make for myself.

Speaking of rules, those are going to be a central theme on how I actually keep this project moving forward.

- Optimize only when necessary. This seems obvious, but folks define necessary differently. 60 frames per second with 750x750 tiles on the screen is my current benchmark for whether I need to optimize. I’ll be adding numbers for load times and other aspects once they grow beyond a size that feels comfortable.

- Abstractions are expensive, use them sparingly. This is something I learned from a Jonathan Blow talk I mention in my previous post. Abstractions can increase or remove flexibility. On one hand reusing components may allow more rapid iteration. On the other hand it may take considerable effort to make systems communicate that weren’t designed to pass messages. I’m making it clear in each effort whether I’m in exploration mode so I work mostly with just 1 function, or if I’m in architect mode where I’m trying to make the next feature a little easier to implement. This may mean 1000 line functions and lots of global-like use for a while until I understand how the data will be used. Or it may mean abstracting a concept like the camera to a struct because the data is always used together.

- Try the easier to implement answer before trying the better answer. I have two goals with this. First, it means I get to start trying stuff faster so I know if I want to pursue it or if I’m kinda off on the idea. Maybe this first implementation will show some other subsystem needs features first so I decide to delay the more correct answer. So in short, it is quicker to test and exposes unexpected requirements. The other goal is to explore building games in a more holistic way. Knowing a quick and dirty way to implement something may help when trying to get an idea thrown together really quick. Then knowing how to evolve that code into a better long term solution means next games or ideas that cross pollinate are faster to compose because the underlying concepts are better known.

The last couple weeks have been an exploration of OpenGL via glium, the library I’m using to access OpenGL from Rust as well as abstract away the window creation. I’d only ever run the example before this dive into building a game. From what I remember of doing this in C++, the abstraction it provides for window creation and interaction, using the glutin library, is pretty great. I was able to create a window of whatever size, hook up keyboard and mouse events, and render to the screen pretty fast after going through the tutorial in the glium book.

This brings me to one of the first frustrating points in this project. So many things are focused on 3d these days that finding resources for 2d rendering is harder. If you find them, they are for old versions of OpenGL or use libraries to handle much of the tile rendering. I was hoping to find an article like “I built a 2d tile engine that is pretty fast and these are the techniques I used!” but no such luck. OpenGL guides go immediately into 3d space after getting past basic polygons. But it just means I get to explore more which is probably a good thing.

I already had a deterministic map generator built to use as the source of the tiles on the screen. So, I copied and pasted some of the matrices from the glium book and then tweaked the numbers I was using for my tiles until they showed up on the screen and looked ok. From here I was pretty stoked. I mean, if I have 25x40 tiles on the screen, what more could someone ask for? I didn’t know how to make the triangle strips work well for the tiles to be drawn all at once, so I drew each tile to the screen separately, calculating everything on every frame.

I started to add numbers here and there to see how to adjust the camera in different directions. I didn’t understand the math I was working with yet so I was mostly treating it like a black box and I would add or multiply numbers and recompile to see if it did anything. I quickly realized I needed it to be more dynamic so I added detection for the mouse scrolling. Since I’m on my macbook most of the time I’m doing development I can scroll vertically as well as horizontally, making a natural panning feeling.

I noticed that my rendering had a few quirks, and I didn’t understand any of the math that was being used, so I went seeking more sources of information on how these transforms work. At first I was directed to the OpenGL transformations page which set me on the right path, including a primer on the linear algebra I needed. Unfortunately, it quickly turned toward 3d graphics and I didn’t quite understand how to apply it to my use case. In looking for more resources I found Solarium Programmers’ OpenGL 101 page which took some more time with orthographic projections, which is what I wanted for my 2d game.
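For a 2d tile map, an orthographic projection boils down to a scale and a translation per axis. The following is a minimal sketch in Python, not the Rust/glium code from this project; the function names `ortho_2d` and `project` and the 40-by-25 view are assumptions made purely for illustration.

```python
def ortho_2d(left, right, bottom, top):
    """Build a 3x3 row-major matrix mapping the world rectangle
    [left, right] x [bottom, top] onto OpenGL's [-1, 1] x [-1, 1] range."""
    sx = 2.0 / (right - left)               # horizontal scale
    sy = 2.0 / (top - bottom)               # vertical scale
    tx = -(right + left) / (right - left)   # horizontal shift
    ty = -(top + bottom) / (top - bottom)   # vertical shift
    return [
        [sx, 0.0, tx],
        [0.0, sy, ty],
        [0.0, 0.0, 1.0],
    ]


def project(matrix, x, y):
    """Apply the matrix to the homogeneous point (x, y, 1)."""
    px = matrix[0][0] * x + matrix[0][1] * y + matrix[0][2]
    py = matrix[1][0] * x + matrix[1][1] * y + matrix[1][2]
    return px, py


# Hypothetical view: 40 tiles wide and 25 tiles tall, one world unit per tile.
view = ortho_2d(0.0, 40.0, 0.0, 25.0)
print(project(view, 0.0, 0.0))    # bottom-left corner of the view
print(project(view, 20.0, 12.5))  # center of the view
```

With this setup, panning the camera only means shifting `left`/`right` and `bottom`/`top` by the same offset, and zooming means shrinking or growing the rectangle.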

Over a few sessions I rewrote all the math to use a coordinate system I understood. This was greatly satisfying, but if I hadn’t started with ignoring the math, I wouldn’t have had a testbed to see if I actually understood the math. A good lesson to remember: if you can ignore a detail for a bit and keep going, prioritize getting something working, then transform it into something you understand more thoroughly.

I have more I learned in the last week, but this post is getting quite long. I hope to write a post this week about changing from drawing individual tiles to using a single triangle strip for the whole map.

In the coming week my goal is to get mouse clicks interacting with the map. This involves figuring out what tile the mouse has clicked, which I’ve learned isn’t trivial. In parallel I’ll be developing the first set of tiles using Pyxel Edit and hopefully integrating them into the game. Then my map will become richer than just some flat colored tiles.

Here is a screenshot of the game so far for posterity’s sake. It is showing 750x750 tiles with deterministic weighted distribution between grass, water, and dirt.

https://wraithan.net/2016/09/18/i-can-management-weekly-update-1/

|

|

Andy McKay: TFSA Check |

The TFSA is a savings account for Canadians that was introduced in 2009.

As a quick check I wanted to see how much or little my TFSA had changed against what it should be. That meant a double check of how much room I had in the TFSA each year. So this is a quick calculation of the theoretical case: that you are able to invest the maximum amount each year, at the beginning of the year, and get a 5% return (after fees) on that.

| Year | Maximum | Total invested | Compounded |
|---|---|---|---|
| 2009 | $5,000.00 | $5,000.00 | $5,250.00 |
| 2010 | $5,000.00 | $10,000.00 | $10,762.50 |
| 2011 | $5,000.00 | $15,000.00 | $16,550.63 |
| 2012 | $5,000.00 | $20,000.00 | $22,628.16 |
| 2013 | $5,500.00 | $25,500.00 | $29,534.56 |
| 2014 | $5,500.00 | $31,000.00 | $36,786.29 |
| 2015 | $10,000.00 | $41,000.00 | $49,125.61 |
| 2016 | $5,500.00 | $46,500.00 | $57,356.89 |
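The compounded column can be reproduced with a short script. This is a minimal sketch and not necessarily how the table was originally produced: it assumes each year's full limit is deposited at the start of the year and earns a flat 5% by year end. The names `LIMITS`, `RATE` and `tfsa_schedule` are made up for illustration.

```python
from decimal import Decimal, ROUND_HALF_UP

# Annual contribution limits, copied from the "Maximum" column of the table.
LIMITS = {
    2009: Decimal("5000"), 2010: Decimal("5000"),
    2011: Decimal("5000"), 2012: Decimal("5000"),
    2013: Decimal("5500"), 2014: Decimal("5500"),
    2015: Decimal("10000"), 2016: Decimal("5500"),
}
RATE = Decimal("0.05")  # assumed flat annual return, after fees
CENT = Decimal("0.01")


def tfsa_schedule(limits, rate):
    """Return (year, limit, total invested, compounded balance) per year."""
    rows = []
    invested = Decimal("0")
    balance = Decimal("0")
    for year in sorted(limits):
        invested += limits[year]
        # Deposit at the start of the year, then grow the whole balance.
        balance = (balance + limits[year]) * (1 + rate)
        rows.append((year, limits[year], invested,
                     balance.quantize(CENT, rounding=ROUND_HALF_UP)))
    return rows


for year, limit, invested, balance in tfsa_schedule(LIMITS, RATE):
    print(f"{year}  {limit:>10,.2f}  {invested:>10,.2f}  {balance:>10,.2f}")
```

Changing `RATE` makes it easy to see how sensitive the final figure is to the assumed return.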

Which always raises the question for me of what is a reasonable rate to calculate at these days. It always used to be 10%, but that's very hard to get these days. Since 2006 the annualized return on the S&P 500 is 5.158%, for example. Perhaps 5% represents too conservative a number.

|

|

Matej Cepl: OpenWeatherMapProvider for CyanogenMod 13 |

I don’t understand. CyanogenMod 13 introduced a new Weather widget and lock screen support. Great! Unfortunately, the widget requires specific providers for weather services, and CM does not provide any in the default installation. There is a Weather Underground provider, which works, but the only other provider I found (the Yahoo! Weather provider) does not work on my CM without Google Play.

I would much prefer an OpenWeatherMap provider, but although CyanogenMod has a GitHub repository for one, there is no APK anywhere (and certainly not one for F-Droid). Fortunately, I found a blog post which describes how simple it is to build the APK from the given code. Unfortunately, the author did not provide an APK on his site. I am not sure whether there is some catch, but here is mine.

https://matej.ceplovi.cz/blog/openweathermapprovider-for-cyanogenmod-13.html

|

|

Chris McDonald: Changing Optimization Targets |

Alternate Title: How I changed my mental model to be a more effective game developer and human.

Back in February 2016, I started my journey as a professional game developer. I joined Sparkypants to work on the backend for Dropzone. This was about 7 months ago at time of writing. I didn’t enter the game development world in the standard ways. I wasn’t at one of the various schools with game dev programs, I didn’t intern at a studio, I haven’t spent much of my personal development time building my own indie games. I had on the other hand, spent years building backend services, writing dev tools, competing in AI competitions, and building a slew of half finished open source projects. In short, I was not a game developer when I started.

My stark contrast in background works to my advantage in many parts of my job. Most of our engineers haven’t worked on backend services and haven’t needed to scale that sort of infrastructure. My lead and friend Johannes has been instrumental in many of my successes so far in the company. He has a background in backend development as well as game development and has often been a translator and guide to me as I learn what being a game developer means.

At first, I assumed my contrast would work itself out naturally and I’d just become a game developer by osmosis. If I am surrounded by folks doing this and I’m actively developing a game, I will become a game developer. But that presupposes success, which was only coming to me in limited amounts. The other conclusion would be leaving game development because I wasn’t compatible with it, something I’m unwilling to accept at this time.

I shared my concerns around not fitting the culture at Sparkypants with Johannes, as well as some productivity worries. I’ve learned over the years that if I’m feeling problems like this, my boss may be as well. Johannes, with his typical wonderful, encouraging personality, reminded me that large aspects of my personality do fit in with the culture; it was maybe just my development style and conflict resolution that needed work. Among other advice, he recommended this talk by Jonathan Blow to show me the mental model that is closer to how many of the other developers operate.

That talk by Jonathan Blow spends a fair amount of its time on the topic of optimization. Whether it is using data oriented techniques to make data series processing faster or drawing in a specific way to make the graphics card use less memory or any number of topics, optimization comes up in nearly every game development talk or article at some point. His point though was that we often spend too much time optimizing the wrong things. If you’ve been in computer science for a bit you’ve inevitably heard at least a fragment of the following quote from Donald Knuth, if not you’re in for a treat, this is a good one:

Programmers waste enormous amounts of time thinking about, or worrying about, the speed of noncritical parts of their programs, and these attempts at efficiency actually have a strong negative impact when debugging and maintenance are considered. We should forget about small efficiencies, say about 97% of the time: **premature optimization is the root of all evil**. Yet we should not pass up our opportunities in that critical 3%.

The bolded text is the part most folks quote, implying the rest. I had heard this, quoted it, and used it as justification for doing or not doing things many times in the past. But I’d also forgotten it; I’d apply it when it was convenient for me, but not generally to my software development. Blow starts with the more traditional overthinking of algorithms and code in general that most bring up when they speak on premature optimization. Then he followed on with the idea that selecting data structures is a form of optimization. That follow on was a segue to point out that any time you are thinking about a problem, you should keep in mind whether it is the most important or urgent problem for you to think about.

At the end of the day, your job as a game developer is not to optimize for speed or correctness, but to optimize for fun. This means trying a lot of ideas and throwing many of them out. If you spent a lot of time optimizing for a million users of a feature and only some folks in the company use it before you decide to remove it, you’ve wasted a lot of effort. Maybe not completely, since you’ve probably learned during the process, but that effort could have been put into other features or parts of the system that may actually need attention. This shift in thinking has me letting go of details in more cases, spending less time on projects and focusing on “functional” over “correct and scalable.”

The day after watching that talk and discussing it with Johannes, I attended RustConf and saw a series of amazing talks on Rust and programming in general. Of particular note for changing my mental model was Julia Evans’s closing keynote about learning systems programming with Rust. There were so many things that struck me during that talk, but I’ll just focus on the couple that were most relevant.

First and foremost was the humility in the talk. Julia’s self described experience level was “intermediate developer” while having about as many years of experience as I have, and I considered myself a more “senior developer.” At many points over the last couple years I’ve wrestled with this, considering myself senior then seeing evidence that I’m not. As a more confident person, it is an easy trap for me to fall into. I’m in my first year as a game developer; regardless of other experience, I’m a junior game developer at best.

Starting to internalize this humility has resulted in fighting my coworkers less when they bring up topics that I think I have enough knowledge to weigh in on. The more experienced folks at work have decades of building games behind them. I’m not saying my input to these discussions is worthless, I still have a lot to contribute, but I’ve been able to check my ego at the door more easily and collaborate through topics instead of being contrary.

The humility in the talk makes another major concept from it, life long learning, take on a new light. I’ve always been striving for more knowledge in the computer science space, so life long learning isn’t new to me, but like the optimization discussion above there is more nuance to be discovered. Having humility when trying to learn makes the experience so much richer for all parties. Teachers being humble will not over explain a topic and recognize that their way is not the only way. Learners being humble will be more receptive to ideas that don’t fit their current mental model and seek more information about them.

This post has become quite long, so I’ll try to wrap things up and use further blog posts to explore these ideas with more concrete examples. Writing this has been a mechanism for me to understand some of this change in myself as well as help others who may end up in similar shoes.

If this blog post were a tweet, I think it’d be summarized into “Pay attention to the important things, check your ego at the door, and keep learning.” which I’m sure would get me some retweets and stars or hearts or whatever. And if someone else said it, I’d go “of course, yeah folks mess this up all the time!” But, there is so much more nuance in those ideas. I now realize I’m just a very junior game developer with some other sometimes relevant experience, I’ve so much to learn from my peers and am extremely excited to do so.

If you have additional resources that you think I or others who read this would find valuable, please comment below or send me a tweet.

https://wraithan.net/2016/09/17/changing-optimization-targets/

|

|

Mozilla Security Blog: Update on add-on pinning vulnerability |

Earlier this week, security researchers published reports that Firefox and Tor Browser were vulnerable to “man-in-the-middle” (MITM) attacks under special circumstances. Firefox automatically updates installed add-ons over an HTTPS connection. As a backup protection measure against mis-issued certificates, we also “pin” Mozilla’s web site certificates, so that even if an attacker manages to get an unauthorized certificate for our update site, they will not be able to tamper with add-on updates.

Due to flaws in the process we used to update “Preloaded Public Key Pinning” in our releases, the pinning for add-on updates became ineffective for Firefox release 48 starting September 10, 2016 and ESR 45.3.0 on September 3, 2016. As of those dates, an attacker who was able to get a mis-issued certificate for a Mozilla Web site could cause any user on a network they controlled to receive malicious updates for add-ons they had installed.

Users who have not installed any add-ons are not affected. However, Tor Browser contains add-ons and therefore all Tor Browser users are potentially vulnerable. We are not presently aware of any evidence that such malicious certificates exist in the wild and obtaining one would require hacking or compelling a Certificate Authority. However, this might still be a concern for Tor users who are trying to stay safe from state-sponsored attacks. The Tor Project released a security update to their browser early on Friday; Mozilla is releasing a fix for Firefox on Tuesday, September 20.

To help users who have not updated Firefox recently, we have also enabled Public Key Pinning Extension for HTTP (HPKP) on the add-on update servers. Firefox will refresh its pins during its daily add-on update check and users will be protected from attack after that point.

https://blog.mozilla.org/security/2016/09/16/update-on-add-on-pinning-vulnerability/

|

|

Air Mozilla: Webdev Beer and Tell: September 2016 |

Once a month web developers across the Mozilla community get together (in person and virtually) to share what cool stuff we've been working on in...

https://air.mozilla.org/webdev-beer-and-tell-september-2016/

|

|