You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, please use the contact information page.


Mic Berman

How will you Lead? From a talk at MarsDD in Toronto April 2016

Niko Matsakis: Intersection Impls

As some of you are probably aware, on the nightly Rust builds, we currently offer a feature called specialization, which was defined in RFC 1210. The idea of specialization is to improve Rust’s existing coherence rules to allow for overlap between impls, so long as one of the overlapping impls can be considered more specific. Specialization is hotly desired because it can enable powerful optimizations, but also because it is an important component for modeling object-oriented designs.

The current specialization design, while powerful, is also limited in a few ways. I am going to work on a series of articles that explore some of those limitations as well as possible solutions.

This particular post serves two purposes: it describes the running example I want to consider, and it describes one possible solution: intersection impls (more commonly called "lattice impls"). We’ll see that intersection impls are a powerful feature, but they don’t completely solve the problem I am aiming to solve and they also introduce other complications. My conclusion is that they may be a part of the final solution, but are not sufficient on their own.

Running example: interconverting between Copy and Clone

I’m going to structure my posts around a detailed look at the Copy and Clone traits, and in particular about how we could use specialization to bridge between the two. These two traits are used in Rust to define how values can be duplicated. The idea is roughly like this:

- A type is Copy if it can be copied from one place to another just by copying bytes (i.e., with memcpy). These are basically types that consist purely of scalar values (e.g., u32, [u32; 4], etc).
- The Clone trait expands upon Copy to include all types that can be copied at all, even if that requires executing custom code or allocating memory (for example, a String or Vec).

These two traits are clearly related. In fact, Clone is a supertrait of Copy, which means that every type that is copyable must also be cloneable.

For better or worse, supertraits in Rust work a bit differently than superclasses from OO languages. In particular, the two traits are still independent from one another. This means that if you want to declare a type to be Copy, you must also supply a Clone impl.

Most of the time, we do that with a #[derive] annotation, which auto-generates the impls for you:

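Here is a minimal sketch of such a type. It assumes a two-field Point with u32 coordinates; the field names and types are illustrative and not taken from the post. Debug and PartialEq are derived only so that the values can be compared in a quick check.

```rust
// Hypothetical two-field point; the field names and u32 types are assumptions.
// Debug and PartialEq are derived only so values can be compared in checks.
#[derive(Copy, Clone, Debug, PartialEq)]
struct Point {
    x: u32,
    y: u32,
}
```

Because Copy is derived, `let q = p;` duplicates p bitwise, and p remains usable afterwards.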

That derive annotation will expand out to two impls looking roughly like this:

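A sketch of what that expansion could look like for the same hypothetical Point follows. It is an approximation: the compiler's real derive output carries extra attributes and differs in detail.

```rust
// Roughly what #[derive(Copy, Clone)] produces for the hypothetical Point.
struct Point {
    x: u32,
    y: u32,
}

// Copy has no methods: it is a marker saying a plain memcpy is a valid duplicate.
impl Copy for Point {}

// Written for Point specifically, yet the body only relies on Point: Copy.
impl Clone for Point {
    fn clone(&self) -> Point {
        *self // bitwise copy
    }
}
```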

The second impl (the one implementing the Clone trait) seems a bit odd. After all, that impl is written for Point, but in principle it could be used for any Copy type. It would be nice if we could add a blanket impl that converts from Copy to Clone that applies to all Copy types:

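The blanket impl under discussion would read impl<T: Copy> Clone for T { fn clone(&self) -> T { *self } }. An ordinary crate cannot write it, though. Clone is defined in the standard library, so the orphan rules reject it, and it would also conflict with existing impls. As a stand-in, this sketch uses a hypothetical local trait, Duplicate, to show the shape of a blanket impl keyed on Copy.

```rust
// Hypothetical local trait standing in for Clone, so the blanket impl compiles.
trait Duplicate: Sized {
    fn duplicate(&self) -> Self;
}

// Blanket impl: every Copy type gets Duplicate for free, by copying bytes.
impl<T: Copy> Duplicate for T {
    fn duplicate(&self) -> T {
        *self
    }
}
```

No per-type impl is needed. u32, [u8; 3] and (i32, char) all get duplicate() from the single blanket impl.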

If we had such an impl, then there would be no need for Point above to implement Clone explicitly, since it implements Copy, and the blanket impl can be used to supply the Clone impl. (In other words, you could just write #[derive(Copy)].) As you have probably surmised, though, it’s not that simple. Adding a blanket impl like this has a few complications we’d have to overcome first. This is still true with the specialization system described in RFC 1210.

There are a number of examples where these kinds of blanket impls might be useful. Some examples: implementing PartialOrd in terms of Ord, implementing PartialEq in terms of Eq, and implementing Debug in terms of Display.
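For comparison, here is roughly the boilerplate such blanket impls would replace. It uses a hypothetical Version type whose PartialOrd simply forwards to Ord and whose Debug forwards to Display. Both forwarding impls have to be written by hand today.

```rust
use std::cmp::Ordering;
use std::fmt;

// Hypothetical type used to show hand-written "bridge" impls.
#[derive(PartialEq, Eq)]
struct Version {
    major: u32,
    minor: u32,
}

// The total order is the "real" definition...
impl Ord for Version {
    fn cmp(&self, other: &Self) -> Ordering {
        (self.major, self.minor).cmp(&(other.major, other.minor))
    }
}

// ...and PartialOrd just forwards to it.
impl PartialOrd for Version {
    fn partial_cmp(&self, other: &Self) -> Option<Ordering> {
        Some(self.cmp(other))
    }
}

impl fmt::Display for Version {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "{}.{}", self.major, self.minor)
    }
}

// Likewise, Debug forwards to Display.
impl fmt::Debug for Version {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        fmt::Display::fmt(self, f)
    }
}
```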

Coherence and backwards compatibility

Hi! I’m the language feature coherence! You may remember me from previous essays like Little Orphan Impls or RFC 1023.

Let’s take a step back and just think about the language as it is now, without specialization. With today’s Rust, adding a blanket impl<T: Copy> Clone for T would be massively backwards incompatible.

This is because of the coherence rules, which aim to prevent there from being more than one impl applicable to any type (or, for generic traits, set of types).

So, if we tried to add the blanket impl now, without specialization, it would mean that every type annotated with #[derive(Copy, Clone)] would stop compiling, because we would now have two Clone impls: one from derive and the blanket impl we are adding. Obviously not feasible.

Why didn’t we add this blanket impl already then?

You might then wonder why we didn’t add this blanket impl converting from Copy to Clone in the "wild west" days, when we broke every existing Rust crate on a regular basis. We certainly considered it. The answer is that, if you have such an impl, the coherence rules mean that it would not work well with generic types.

To see what problems arise, consider the type Option<T>:

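Option<T> lives in the standard library, so this sketch uses a hypothetical local copy, MyOption<T>, with the same derives. For a generic type, the derived impls exist for a given T only when T itself is Copy (respectively Clone).

```rust
// Local stand-in for the standard library's Option<T> (name is hypothetical).
// The derives generate impls that require T: Copy and T: Clone respectively.
#[derive(Copy, Clone, Debug, PartialEq)]
enum MyOption<T> {
    Some(T),
    None,
}
```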

You can see that Option<T> derives Copy and Clone. But because Option<T> is generic over T, those impls have a slightly different look to them once we expand them out:

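Expanded by hand, the two impls for the hypothetical MyOption<T> might look like this. The Clone impl only requires T: Clone, so it cannot simply return *self. Instead, it matches on the variant and clones the payload.

```rust
// Local stand-in for Option<T>; the name is hypothetical.
enum MyOption<T> {
    Some(T),
    None,
}

// Copy only when the payload is Copy.
impl<T: Copy> Copy for MyOption<T> {}

// Clone only assumes T: Clone, so it cannot simply return *self:
// it matches on the variant and clones the payload.
impl<T: Clone> Clone for MyOption<T> {
    fn clone(&self) -> MyOption<T> {
        match self {
            MyOption::Some(v) => MyOption::Some(v.clone()),
            MyOption::None => MyOption::None,
        }
    }
}
```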

Before, the Clone impl for Point was just *self. But for Option<T>, we have to do something more complicated, which actually calls clone on the contained value (in the case of a Some). To see why, imagine a type like Option<Box<i32>>: this is clearly cloneable, but it is not Copy. So the impl is rewritten so that it only assumes that T: Clone, not T: Copy.

The problem is that types like Option<T> are sometimes Copy and sometimes not. So if we had the blanket impl that converts all Copy types to Clone, and we have the impl above that implements Clone for Option<T> if T: Clone, then we can easily wind up in a situation where there are two applicable impls. For example, consider Option<i32>: it is Copy, and hence we could use the blanket impl that just returns *self. But it also fits the Clone-based impl I showed above. This is a coherence violation, because now the compiler has to pick which impl to use. Obviously, in the case of the trait Clone, it shouldn’t matter too much which one it chooses, since they both have the same effect, but the compiler doesn’t know that.

Enter specialization

OK, all of that prior discussion was assuming the Rust of today. So what if we adopted the existing specialization RFC? After all, its whole purpose is to improve coherence so that it is possible to have multiple impls of a trait for the same type, so long as one of those implementations is more specific. Maybe that applies here?

In fact, the RFC as written today does not. The reason is that the RFC defines rules that say an impl A is more specific than another impl B if impl A applies to a strict subset of the types which impl B applies to. Let’s consider some arbitrary trait Foo. Imagine that we have an impl of Foo that applies to any Option<T>:

    impl<T> Foo for Option<T> { .. }

The "more specific" rule would then allow a second impl for Option<i32>; this impl would specialize the more generic one:

    impl Foo for Option<i32> { .. }

Here, the second impl is more specific than the first, because while the first impl can be used for Option<i32>, it can also be used for lots of other types, like Option<u32>, Option<String>, etc. So that means that these two impls would be accepted under RFC 1210. If the compiler ever had to choose between them, it would prefer the impl that is specific to Option<i32> over the generic one that works for all T.

But if we try to apply that rule to our two Clone impls, we run into a problem. First, we have the blanket impl:

    impl<T: Copy> Clone for T { .. }

and then we have an impl tailored to Option<T> where T: Clone:

    impl<T: Clone> Clone for Option<T> { .. }

Now, you might think that the second impl is more specific than the blanket impl. After all, the blanket impl can be used for any type, whereas the second impl can only be used for Option<T>. Unfortunately, this isn’t quite right. After all, the blanket impl cannot be used for any type T: it can only be used for Copy types. And we already saw that there are lots of types for which the second impl can be used where the first impl is inapplicable. In other words, neither impl is a subset of the other; rather, they cover two distinct, but overlapping, sets of types.

To see what I mean, let’s look at some examples:

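One way to make the examples concrete is a pair of hypothetical generic functions whose bounds mirror the two impls. A call only type-checks when the type lies in the set covered by the corresponding impl. This illustrates the sets involved; it is not how the compiler actually checks coherence.

```rust
// Each function only type-checks when its bound holds, so a successful call
// demonstrates that a type lies in the set covered by the mirrored impl.

/// Mirrors `impl<T: Copy> Clone for T`: covers every Copy type.
fn blanket_impl_covers<T: Copy>() -> bool {
    true
}

/// Mirrors `impl<T: Clone> Clone for Option<T>`: covers Option<T> when T: Clone.
/// The type parameter here is the payload T, not Option<T> itself.
fn option_impl_covers<T: Clone>() -> bool {
    true
}
```

Calling blanket_impl_covers::<Option<Box<i32>>>() fails to compile, because Box<i32> is not Copy. That is the case where only the Option impl applies.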

Note in particular the first and fourth rows. The first row shows that the blanket impl is not a subset of the Option<T> impl. The last row shows that the Option<T> impl is not a subset of the blanket impl either. That means that these two impls would be rejected by RFC 1210 and hence adding a blanket impl now would still be a breaking change. Boo!

To see the problem from another angle, consider this Venn diagram, which indicates, for every impl, the sets of types that it matches. As you can see, there is overlap between our two impls, but neither is a strict subset of the other:


Enter intersection impls

One of the first ideas proposed for solving this is the so-called "lattice" specialization rule, which I will call "intersection impls", since I think that captures the spirit better. The intuition is pretty simple: if you have two impls that have a partial intersection, but which don’t strictly subset one another, then you can add a third impl that covers precisely that intersection, and hence which subsets both of them. So now, for any type, there is always a most specific impl to choose. To get the idea, it may help to consider this "ASCII Art" Venn diagram. Note the difference from above: there is now an impl (indicated with = lines and . shading) covering precisely the intersection of the other two.


Intersection impls have some nice properties. For one thing, it’s a kind of minimal extension of the existing rule. In particular, if you are just looking at any two impls, the rules for deciding which is more specific are unchanged: the only difference when adding in intersection impls is that coherence permits overlap when it otherwise wouldn’t.

They also give us a good opportunity to recover some optimization. Consider the two impls in this case: the blanket impl that applies to any T: Copy simply copies some bytes around, which is very fast. The impl that is tailored to Option<T>, however, does more work: it matches on self and then recursively calls clone. This work is necessary if T: Copy does not hold, but otherwise it’s wasted work. With an intersection impl, we can recover the full performance:

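Overlapping impls need the unstable specialization feature, so this sketch writes the two impl bodies as free functions instead; the function names are hypothetical. The general body inspects the variant and clones recursively. The body that an intersection impl for Option<T> where T: Copy could presumably use is a single bitwise copy. For Copy payloads, both produce the same value.

```rust
/// Body of the general impl `impl<T: Clone> Clone for Option<T>`:
/// inspects the variant and clones the payload recursively.
fn clone_via_clone<T: Clone>(x: &Option<T>) -> Option<T> {
    match x {
        Some(v) => Some(v.clone()),
        None => None,
    }
}

/// Body an intersection impl `impl<T: Copy> Clone for Option<T>` could use:
/// a plain bitwise copy, with no match and no recursive `clone` call.
fn clone_via_copy<T: Copy>(x: &Option<T>) -> Option<T> {
    *x
}
```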

A note on compiler messages

I’m about to pivot and discuss the shortcomings of intersection impls. But before I do so, I want to talk a bit about the compiler messages here. I think that the core idea of specialization – that you want to pick the impl that applies to the most specific set of types – is fairly intuitive. But working it out in practice can be kind of confusing, especially at first. So whenever we propose any extension, we have to think carefully about the error messages that might result.

In this particular case, I think that we could give a rather nice error message. Imagine that the user had written these two impls:

    impl<T: Copy> Clone for T { .. }
    impl<T: Clone> Clone for Option<T> { .. }

As we’ve seen, these two impls overlap but neither specializes the other. One might imagine an error message that says as much, and which also suggests the intersection impl that must be added:


Note the message at the end. The wording could no doubt be improved, but the key point is that we should be able to tell you exactly what impl is still needed.

Intersection impls do not solve the cross-crate problem

Unfortunately, intersection impls don’t give us the backwards compatibility that we want, at least not by themselves. The problem is, if we add the blanket impl, we also have to add the intersection impl. Within the same crate, this might be ok. But if this means that downstream crates have to add an intersection impl too, that’s a big problem.

Intersection impls may force you to predict the future

There is one other problem with intersection impls that arises in cross-crate situations, which nrc described on the tracking issue: sometimes there is a theoretical intersection between impls, but that intersection is empty in practice, and hence you may not be able to write the code you wanted to write. Let me give you an example. This problem doesn’t show up with the Copy/Clone trait, so we’ll switch briefly to another example.

Imagine that we are adding a RichDisplay trait to our project. This is much like the existing Display trait, except that it can support richer formatting like ANSI codes or a GUI. For convenience, we want any type that implements Display to also implement RichDisplay (but without any fancy formatting). So we add a trait and blanket impl like this one (let’s call it impl A):

    trait RichDisplay { .. }
    impl<T: Display> RichDisplay for T { .. } // impl A
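Here is a minimal runnable sketch of the trait and impl A. The post elides the trait's contents, so it assumes a single hypothetical method, rich_fmt, that returns a String.

```rust
use std::fmt::Display;

/// Hypothetical rendering trait; the single `rich_fmt` method is an assumption.
trait RichDisplay {
    fn rich_fmt(&self) -> String;
}

// impl A: any Display type is RichDisplay, rendered without fancy formatting.
impl<T: Display> RichDisplay for T {
    fn rich_fmt(&self) -> String {
        self.to_string()
    }
}
```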

Now, imagine that we are also using some other crate widget that contains various types, including Widget<T>. This Widget<T> type does not implement Display. But we would like to be able to render a widget, so we implement RichDisplay for this Widget<T> type. Even though we didn’t define Widget<T>, we can implement a trait for it because we defined the trait:


Well, now we have a problem! You see, according to the rules from RFC 1023, impls A and B are considered to potentially overlap, and hence we will get an error. This might surprise you: after all, impl A only applies to types that implement Display, and we said that Widget<T> does not. The problem has to do with semver: because Widget<T> was defined in another crate, it is outside of our control. In this case, the other crate is allowed to implement Display for Widget<T> at some later time, and that should not be a breaking change. But imagine that this other crate added an impl like this one (which we can call impl C):

    impl<T: Display> Display for Widget<T> { .. } // impl C

Such an impl would cause impls A and B to overlap. Therefore, coherence considers these to be overlapping – however, specialization does not consider impl B to be a specialization of impl A, because, at the moment, there is no subset relationship between them. So there is a kind of catch-22 here: because the impl may exist in the future, we can’t consider the two impls disjoint, but because it doesn’t exist right now, we can’t consider them to be specializations.

Clearly, intersection impls don’t help to address this issue, as the set of intersecting types is empty. You might imagine having some alternative extension to coherence that permits impl B on the logic of "if impl C were added in the future, that’d be fine, because impl B would be a specialization of impl A".

This logic is pretty dubious, though! For example, impl C might have been written another way (we’ll call this alternative version of impl C "impl C2"):

    trait WidgetDisplay { .. }
    impl<T: WidgetDisplay> Display for Widget<T> { .. } // impl C2

Note that instead of working for any T: Display, there is now some other trait T: WidgetDisplay in use. Let’s say it’s only implemented for optional 32-bit integers right now (for some reason or another):

    impl WidgetDisplay for Option<i32> { .. }
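To see why impl A reaches Widget<Option<i32>>, here is a single-crate simulation of impls A and C2. It uses local stand-ins for the widget crate's Widget<T> and WidgetDisplay, and all method names are hypothetical. Through impl C2, Widget<Option<i32>> implements Display, so impl A gives it RichDisplay; Widget<String> gets neither.

```rust
use std::fmt::{self, Display};

trait RichDisplay {
    fn rich_fmt(&self) -> String;
}

// impl A, as before.
impl<T: Display> RichDisplay for T {
    fn rich_fmt(&self) -> String {
        self.to_string()
    }
}

// Local stand-ins for the widget crate's items (hypothetical definitions).
struct Widget<T>(T);

trait WidgetDisplay {
    fn widget_text(&self) -> String;
}

// impl C2: Widget<T> is Display only when T: WidgetDisplay.
impl<T: WidgetDisplay> Display for Widget<T> {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "[{}]", self.0.widget_text())
    }
}

// The only WidgetDisplay impl: optional 32-bit integers.
impl WidgetDisplay for Option<i32> {
    fn widget_text(&self) -> String {
        match self {
            Some(n) => n.to_string(),
            None => String::from("-"),
        }
    }
}
```

Adding an unconditional impl<T> RichDisplay for Widget<T> to this sketch is rejected as a conflicting implementation (E0119), because impl A already covers Widget<Option<i32>>.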

So now if we had impls A, B, and C2, we would have a different problem. Now impls A and B would overlap for Widget<Option<i32>>, but they would not overlap for Widget<String>. The reason here is that Option<i32>: WidgetDisplay (so Widget<Option<i32>>: Display), and hence impl A applies. But String: RichDisplay (because String: Display) and hence impl B applies. Now we are back in the territory where intersection impls come into play. So, again, if we had impls A, B, and C2, one could imagine writing an intersection impl to cover this situation:

    impl RichDisplay for Widget<Option<i32>> { .. } // intersection of impls A and B

But, of course, impl C2 has yet to be written, so we can’t really write this intersection impl now, in advance. We have to wait until the conflict arises before we can write it.

You may have noticed that I was careful to specify that both the Display trait and Widget<T> type were defined outside of the current crate. This is because RFC 1023 permits the use of "negative reasoning" if either the trait or the type is under local control. That is, if the RichDisplay trait and the Widget<T> type were defined in the same crate, then impls A and B could co-exist, because we are allowed to rely on the fact that Widget<T> does not implement Display. The idea here is that the only way that Widget<T> could implement Display is if I modify the crate where Widget<T> is defined, and once I am modifying things, I can also make any other repairs (such as adding an intersection impl) that are necessary.
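The same-crate case can be checked directly. In this sketch (names hypothetical), Widget is a local, non-generic stand-in defined in the same crate as RichDisplay, and it does not implement Display, so impls A and B are accepted together. The equivalent impl B for a type from another crate, such as Vec<u8>, is rejected (E0119), with a note that upstream crates may add such a Display impl in future versions.

```rust
use std::fmt::Display;

trait RichDisplay {
    fn rich_fmt(&self) -> String;
}

// impl A: blanket impl for every Display type.
impl<T: Display> RichDisplay for T {
    fn rich_fmt(&self) -> String {
        self.to_string()
    }
}

// Hypothetical non-generic Widget defined in this crate; it does NOT implement Display.
struct Widget {
    label: &'static str,
}

// impl B: accepted alongside impl A because Widget is local, so coherence may
// rely on `Widget: Display` not holding. Only this crate could add that impl.
impl RichDisplay for Widget {
    fn rich_fmt(&self) -> String {
        // Bold text via ANSI escape codes, as an example of "richer" output.
        format!("\x1b[1m{}\x1b[0m", self.label)
    }
}
```

If this crate later added impl Display for Widget, impls A and B would conflict (E0119). At that point the person editing the crate can also make the necessary repairs.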

Conclusion

Today we looked at a particular potential use for specialization: adding a blanket impl that implements Clone for any Copy type. We saw that the current "subset-only" logic for specialization isn’t enough to permit adding such an impl. We then looked at one proposed fix for this, intersection impls (often called lattice impls).

Intersection impls are appealing because they increase expressiveness while keeping the general feel of the "subset-only" logic. They also have an explicit nature that appeals to me, at least in principle. That is, if you have two impls that partially overlap, the compiler doesn’t select which one should win: instead, you write an impl to cover precisely that intersection, and hence specify it yourself. Of course, that explicit nature can also be verbose and irritating sometimes, particularly since you will often want the intersection impl to behave the same as one of the other two (rather than doing some third, different thing).

Moreover, the explicit nature of intersection impls causes problems across crates:

- they don’t allow you to add a blanket impl in a backwards compatible fashion;

- they interact poorly with semver, and specifically the limitations on negative logic imposed by RFC 1023.

My conclusion then is that intersection impls may well be part of the solution we want, but we will need additional mechanisms. Stay tuned for additional posts.

A note on comments

As is my wont, I am going to close this post for comments. If you would like to leave a comment, please go to this thread on Rust’s internals forum instead.

http://smallcultfollowing.com/babysteps/blog/2016/09/24/intersection-impls/

Cameron Kaiser: TenFourFox 45.5.0b1 available: now with little-endian (integer) typed arrays, AltiVec VP9, improved MP3 support and a petulant rant

First, minimp3 has been converted to a platform decoder. Simply doing that fixed a number of other bugs which were probably related to how we chunked frames, such as Google Translate voice clips getting truncated and problems with some types of MP3 live streams; now we use Mozilla's built-in frame parser instead and in this capacity minimp3 acts mostly as a disembodied codec. The new implementation works well with Google Translate, Soundcloud, Shoutcast and most of the other things I tried. (See, now there's a good use for that Mac mini G4 gathering dust on your shelf: install TenFourFox and set it up for remote screensharing access, and use it as a headless Internet radio -- I'm sitting here listening to National Public Radio over Shoutcast in a foxbox as I write this. Space-saving, environmentally responsible computer recycling! Yes, I know I'm full of great ideas. Yes. You're welcome.)

Interestingly, or perhaps frustratingly, although it somewhat improved Amazon Music (by making duration and startup more reliable) the issue with tracks not advancing still persisted for tracks under a certain critical length, which is dependent on machine speed. (The test case here was all the little five or six second Fingertips tracks from They Might Be Giants' Apollo 18, which also happens to be one of my favourite albums, and is kind of wrecked by this problem.) My best guess is that Amazon Music's JavaScript player interface ends up on a different, possibly asynchronous code path in 45 than 38 due to a different browser feature profile, and if the track runs out somehow it doesn't get the end-of-stream event in time. Since machine speed was a factor, I just amped up JavaScript to enter the Baseline JIT very quickly. That still doesn't fix it completely and Apollo 18 is still messed up, but it gets the critical track length down to around 10 or 15 seconds on this Quad G5 in Reduced mode and now most non-pathological playlists will work fine. I'll keep messing with it.

In addition, this release carries the first pass at AltiVec decoding for VP9. It has some of the inverse discrete cosine and one of the inverse Hadamard transforms vectorized, and I also wrote vector code for two of the convolutions but they malfunction on the iMac G4 and it seems faster without them because a lot of these routines work on unaligned data. Overall, our code really outshines the SSE2 versions I based them on if I do say so myself. We can collapse a number of shuffles and merges into a single vector permute, and the AltiVec multiply-sum instruction can take an additional constant for use as a bias, allowing us to skip an add step (the SSE2 version must do the multiply-sum and then add the bias rounding constant in separate operations; this code occurs quite a bit). Only some of the smaller transforms are converted so far because the big ones are really intimidating. I'm able to model most of these operations on my old Core 2 Duo Mac mini, so I can do a step-by-step conversion in a relatively straightforward fashion, but it's agonizingly slow going with these bigger ones. I'm also not going to attempt any of the encoding-specific routines, so if Google wants this code they'll have to import it themselves.

G3 owners, even though I don't support video on your systems, you get a little boost too because I've also cut out the loopfilter entirely. This improves everybody's performance and the mostly minor degradation in quality just isn't bad enough to be worth the CPU time required to clean it up. With this initial work the Quad is able to play many 360p streams at decent frame rates in Reduced mode and in Highest Performance mode even some 480p ones. The 1GHz iMac G4, which I don't technically support for video as it is below the 1.25GHz cutoff, reliably plays 144p and even some easy-to-decode (pillarboxed 4:3, mostly, since it has lots of "nothing" areas) 240p. This is at least as good as our AltiVec VP8 performance and as I grind through some of the really heavyweight transforms it should get even better.

To turn this on, go to our new TenFourFox preference pane (TenFourFox > Preferences... and click TenFourFox) and make sure MediaSource is enabled, then visit YouTube. You should have more quality settings now and I recommend turning annotations off as well. Pausing the video while the rest of the page loads is always a good idea as well as before changing your quality setting; just click once anywhere on the video itself and wait for it to stop. You can evaluate it on my scientifically validated set of abuses of grammar (and spelling), 1970s carousel tape decks, gestures we make at Gmail other than the middle finger and really weird MTV interstitials. However, because without further configuration Google will "auto-"control the stream bitrate and it makes that decision based on network speed rather than dropped frames, I'm leaving the "slower" appellation because frankly it will be, at least by default. Nevertheless, please advise if you think MSE should be the default in the next version or if you think more baking is necessary, though the pref will be user-exposed regardless.

But the biggest and most far-reaching change is, as promised, little-endian typed arrays (the "LE" portion of the IonPower-NVLE project). The rationale for this change is that, largely due to the proliferation of asm.js code and the little-endian Emscripten systems that generate it, there will be more and more code our big-endian machines can't run properly being casually imported into sites. We saw this with images on Facebook, and later with WhatsApp Web, and also with MEGA.nz, and others, and so on, and so forth. asm.js isn't merely the domain of tech demos and high-end ported game engines anymore.

The change is intentionally very focused and very specific. Only typed array access is converted to little-endian, and only integer typed array access at that: DataView objects, the underlying ArrayBuffers and regular untyped arrays in particular remain native. When a multibyte integer (16-bit halfword or 32-bit word) is written out to a typed array in IonPower-LE, it is transparently byteswapped from big-endian to little-endian and stored in that format. When it is read back in, it is byteswapped back to big-endian. Thus, the intrinsic big-endianness of the engine hasn't changed -- jsvals and doubles are still tag followed by payload, and integers and single-precision floats are still MSB at the lowest address -- only the way it deals with an integer typed array. Since asm.js uses a big typed array buffer essentially as a heap, this is sufficient to present at least a notional illusion of little-endianness as the asm.js script accesses that buffer as long as those accesses are integer.
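Here is a sketch of the idea. It is a hypothetical integer typed array whose backing bytes are always little-endian, not TenFourFox's actual code, which works inside the interpreter and the JITs. Stores convert a native u32 with to_le_bytes, and loads convert back with from_le_bytes. On a big-endian host those conversions are the byteswaps described above; on a little-endian host they are no-ops. Floats are deliberately left out, mirroring the hybrid approach the post describes.

```rust
/// Hypothetical integer typed array whose backing store is always
/// little-endian, regardless of the host CPU's byte order.
struct LeU32Array {
    bytes: Vec<u8>,
}

impl LeU32Array {
    fn new(len: usize) -> Self {
        LeU32Array { bytes: vec![0; len * 4] }
    }

    /// Store: the native value is converted to little-endian bytes.
    /// On a big-endian host this is a byteswap; on a little-endian host, a no-op.
    fn set(&mut self, index: usize, value: u32) {
        let start = index * 4;
        self.bytes[start..start + 4].copy_from_slice(&value.to_le_bytes());
    }

    /// Load: the little-endian bytes are converted back to a native value.
    fn get(&self, index: usize) -> u32 {
        let start = index * 4;
        let mut word = [0u8; 4];
        word.copy_from_slice(&self.bytes[start..start + 4]);
        u32::from_le_bytes(word)
    }
}
```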

I mentioned that floats (neither single-precision nor doubles) are not byteswapped, and there's an important reason for that. At the interpreter level, the virtual machine's typed array load and store methods are passed through the GNU gcc built-in to swap the byte order back and forth (which, at least for 32 bits, generates pretty efficient code). At the Baseline JIT level, the IonMonkey MacroAssembler is modified to call special methods that generate the swapped loads and stores in IonPower, but it wasn't nearly that simple for the full Ion JIT itself because both unboxed scalar values (which need to stay big-endian because they're native) and typed array elements (which need to be byte-swapped) go through the same code path. After I spent a couple days struggling with this, Jan de Mooij suggested I modify the MIR for loading and storing scalar values to mark it if the operation actually accesses a typed array. I added that to the IonBuilder and now Ion compiled code works too.

All of these integer accesses have almost no penalty: there's a little bit of additional overhead on the interpreter, but Baseline and Ion simply substitute the already-built-in PowerPC byteswapped load and store instructions (lwbrx, stwbrx, lhbrx, sthbrx, etc.) that we already employ for irregexp for these accesses, and as a result we incur virtually no extra runtime overhead at all. Although the PowerPC specification warns that byte-swapped instructions may have additional latency on some implementations, no PPC chip ever used in a Power Mac falls in that category, and they aren't "cracked" on G5 either. The pseudo-little endian mode that exists on G3/G4 systems but not on G5 is separate from these assembly language instructions, which work on all PowerPCs including the G5 going all the way back to the original 601.

Floating point values, on the other hand, are a different story. There are no instructions to directly store a single or double precision value in a byteswapped fashion, and since there are also no direct general purpose register-floating point register moves, the float has to be spilled to memory and picked up by a GPR (or two, if it's a double) and then swapped at that point to complete the operation. To get it back requires reversing the process, along with the GPR (or two) getting spilled this time to repopulate the double or float after the swap is done. All that would have significantly penalized float arrays and we have enough performance problems without that, so single and double precision floating point values remain big-endian.

Fortunately, most of the little snippets of asm.js floating around (that aren't entire Emscriptenized blobs: more about that in a moment) seem perfectly happy with this hybrid approach, presumably because they're oriented towards performance and thus integer operations. MEGA.nz seems to load now, at least what I can test of it, and WhatsApp Web now correctly generates the QR code to allow your phone to sync (just in time for you to stop using WhatsApp and switch to Signal because Mark Zuckerbrat has sold you to his pimps here too).

But what about bigger things? Well ...

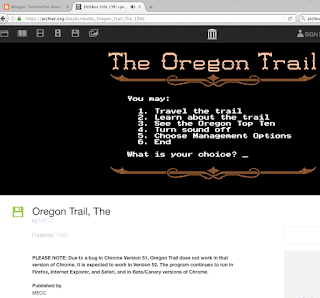

Yup. That's DOSBOX emulating MECC's classic Oregon Trail (from the Internet Archive's MS-DOS Game Library), converted to asm.js with Emscripten and running inside TenFourFox. Go on and try that in 45.4. It doesn't work; it just throws an exception and screeches to a halt.

To be sure, it doesn't fully work in this release of 45.5 either. But some of the games do: try playing Oregon Trail yourself, or Where in the World is Carmen Sandiego or even the original, old school in its MODE 40 splendour, Те

http://tenfourfox.blogspot.com/2016/09/tenfourfox-4550b1-available-now-with.html

Mitchell Baker: Living with Diverse Perspectives

Diversity and Inclusion is more than having people of different demographics in a group. It is also about having the resulting diversity of perspectives included in the decision-making and action of the group in a fundamental way.

I’ve had this experience lately, and it demonstrated to me both why it can be hard and why it’s so important. I’ve been working on a project where I’m the individual contributor doing the bulk of the work. This isn’t because there’s a big problem or conflict; instead it’s something I feel needs my personal touch. Once the project is complete, I’m happy to describe it with specifics. For now, I’ll describe it generally.

There’s a decision to be made. I connected with the person I most wanted to be comfortable with the idea to make sure it sounded good. I checked with our outside attorney just in case there was something I should know. I checked with the group of people who are most closely affected and would lead the decision and implementation if we proceed. I received lots of positive response.

Then one last person checked in with me from my first level of vetting and spoke up. He’s sorry for the delay, etc., but has concerns. He wants us to explore a bunch of different options before deciding if we’ll go forward at all, and if so how.

At first I had that sinking feeling of “Oh bother, look at this. I am so sure we should do this and now there’s all this extra work and time and maybe change. Ugh!” I got up and walked around a bit and did a few things that put me in a positive frame of mind. Then I realized: we had added this person to the group for two reasons. One, he’s awesome: both creative and effective. Second, he has a different perspective. We say we value that different perspective. We often seek out his opinion precisely because of that perspective.

This is the first time his perspective has pushed me to do more, or to do something differently, or perhaps even prevent me from something that I think I want to do. So this is the first time the different perspective is doing more than reinforcing what seemed right to me.

That lead me to think “OK, got to love those different perspectives” a little ruefully. But as I’ve been thinking about it I’ve come to internalize the value and to appreciate this perspective. I expect the end result will be more deeply thought out than I had planned. And it will take me longer to get there. But the end result will have investigated some key assumptions I started with. It will be better thought out, and better able to respond to challenges. It will be stronger.

I still can’t say I’m looking forward to the extra work. But I am looking forward to a decision that has a much stronger foundation. And I’m looking forward to the extra learning I’ll be doing, which I believe will bring ongoing value beyond this particular project.

I want to build Mozilla into an example of what a trustworthy organization looks like. I also want to build Mozilla so that it reflects experience from our global community and isn’t living in a geographic or demographic bubble. Having great people be part of a diverse Mozilla is part of that. Creating a welcoming environment that promotes the expression of, and a positive reaction to, different perspectives is also key. As we learn more and more about how to do this we will strengthen the ways we express our values in action and strengthen our overall effectiveness.

https://blog.lizardwrangler.com/2016/09/23/living-with-diverse-perspectives/

|

|

Mozilla WebDev Community: Beer and Tell – September 2016 |

Once a month, web developers from across the Mozilla Project get together to talk about our side projects and drink, an occurrence we like to call “Beer and Tell”.

There’s a wiki page available with a list of the presenters, as well as links to their presentation materials. There’s also a recording available courtesy of Air Mozilla.

emceeaich: Gopher Tessel

First up was emceeaich, who shared Gopher Tessel, a project for running a Gopher server (an Internet protocol that was popular before the World Wide Web) on a Tessel. Tessel is a small circuit board that runs Node.js projects; Gopher Tessel reads sensors (such as the temperature sensor) connected to the board, and exposes their values via Gopher. It can also control lights connected to the board.
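The post doesn't describe Gopher Tessel's internals. The sketch below is therefore not the project's Node.js code. It is a minimal Python illustration of the protocol side as RFC 1436 defines it. The client sends a selector line, and an empty selector returns a menu. Each menu line is an item-type character followed by a TAB-separated display string, selector, host and port. A line holding only `.` ends the response. The sensor names, readings, host and port are hypothetical placeholders for values read from the board.

```python
import socketserver

# Hypothetical readings; the real project reads these from sensors on the board.
READINGS = {"temperature": "21.5 C", "light": "on"}
# Assumed host/port. Gopher's standard port is 70; 7070 avoids needing root.
HOST, PORT = "localhost", 7070


def gopher_menu(readings: dict, host: str = HOST, port: int = PORT) -> str:
    """Build an RFC 1436 menu with one text item (type '0') per sensor."""
    lines = []
    for name, value in readings.items():
        # item type + display string, then TAB-separated selector, host, port
        lines.append(f"0{name}: {value}\t{name}\t{host}\t{port}")
    # each line ends in CRLF; a line holding only '.' ends the response
    return "".join(line + "\r\n" for line in lines) + ".\r\n"


def respond(selector: str, readings: dict) -> str:
    """Empty selector -> menu; known sensor name -> its value as text."""
    if selector == "":
        return gopher_menu(readings)
    if selector in readings:
        return f"{readings[selector]}\r\n.\r\n"
    # type '3' is the RFC 1436 error item
    return f"3Unknown selector: {selector}\t\t{HOST}\t{PORT}\r\n.\r\n"


class GopherHandler(socketserver.StreamRequestHandler):
    def handle(self) -> None:
        # the client sends one selector line terminated by CRLF
        selector = self.rfile.readline().decode("ascii", "replace").strip()
        self.wfile.write(respond(selector, READINGS).encode("utf-8"))


def serve() -> None:
    """Listen on PORT and answer Gopher requests until interrupted."""
    with socketserver.TCPServer(("", PORT), GopherHandler) as server:
        server.serve_forever()
```

To try it, call `serve()` and point any Gopher client at localhost:7070. The request handling is kept in the pure `respond` function so it can be checked without opening a socket.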

groovecoder: Crypto: 500 BC – Present

Next was groovecoder, who shared a preview of a talk about cryptography throughout history. The talk is based on “The Code Book” by Simon Singh. Notable moments and techniques mentioned include:

- 499 BCE: Histiaeus of Miletus shaves the heads of messengers, tattoos messages on their scalps, and sends them after their hair has grown back to hide the message.

- ~100 CE: Milk of the tithymalus plant is used as invisible ink, activated by heat.

- ~700 BCE: Scytale

- 49 BCE: Caesar cipher

- 1553 CE: Vigenère cipher
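The talk only names these techniques. The Python sketch below illustrates the two substitution ciphers on the list and is not material from the talk. A Caesar cipher shifts every letter by one fixed amount. A Vigenère cipher shifts each letter by the corresponding letter of a repeating keyword, so the same plaintext letter can encrypt differently at different positions. The sketch assumes the key contains only letters A-Z.

```python
import string

ALPHABET = string.ascii_uppercase  # these classical ciphers work on A-Z only


def caesar(text: str, shift: int) -> str:
    """Shift every letter by the same amount; other characters pass through."""
    result = []
    for ch in text.upper():
        if ch in ALPHABET:
            result.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            result.append(ch)
    return "".join(result)


def vigenere(text: str, key: str, decrypt: bool = False) -> str:
    """Shift each letter by the matching letter of a repeating keyword.

    Assumes `key` contains only letters A-Z (case-insensitive).
    """
    key = key.upper()
    result = []
    k = 0  # position in the key; advances only on letters
    for ch in text.upper():
        if ch in ALPHABET:
            shift = ALPHABET.index(key[k % len(key)])
            if decrypt:
                shift = -shift
            result.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
            k += 1
        else:
            result.append(ch)
    return "".join(result)


print(caesar("VENI VIDI VICI", 3))          # YHQL YLGL YLFL
print(vigenere("ATTACK AT DAWN", "LEMON"))  # LXFOPV EF RNHR
```

Decryption is the same operation with the shift negated. That symmetry is why `caesar(text, -3)` undoes `caesar(text, 3)`.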

bensternthal: Home Monitoring & Weather Tracking

bensternthal was up next, and he shared his work building a dashboard with weather and temperature information from his house. Ben built several Node.js-based applications that collect data from his home weather station, from his Nest thermostat, and from Weather Underground and send all the data to an InfluxDB store. The dashboard itself uses Grafana to plot the data, and all of these servers are run using Docker.

The repositories for the Node.js applications and the Docker configuration are available on GitHub.

craigcook: ByeHolly

Next was craigcook, who shared a virtual yearbook page that he made as a farewell tribute to former teammate Holly Habstritt-Gaal, who recently took a job at another company. The page shows several photos that are clipped at the edges to look curved like an old television screen. This is done in CSS using clip-path with an SVG-based path for clipping. The SVG used is also defined using proportional units, which allows it to warp and distort correctly for different image sizes, as seen by the variety of images it is used on in the page.

peterbe: react-buggy

peterbe told us about react-buggy, a client for viewing GitHub issues implemented in React. It is a rewrite of buggy, a similar client peterbe wrote for Bugzilla bugs. Issues are persisted in Lovefield (a wrapper for IndexedDB) so that the app can function offline. The client also uses elasticlunr.js to provide full-text search on issue titles and comments.

shobson: tic-tac-toe

Last up was shobson, who shared a small Tic-Tac-Toe game on the viewsourceconf.org offline page that is shown when the site is in offline mode and you attempt to view a page that is not available offline.

If you’re interested in attending the next Beer and Tell, sign up for the dev-webdev@lists.mozilla.org mailing list. An email is sent out a week beforehand with connection details. You could even add yourself to the wiki and show off your side-project!

See you next month!

https://blog.mozilla.org/webdev/2016/09/23/beer-and-tell-september-2016/

|

|

Air Mozilla: Participation Q3 Demos |

Watch the Participation Team share the work from the last quarter in the Demos.

|

|

Emily Dunham: Setting a Freenode channel's taxonomy info |

Some recent flooding in a Freenode channel sent me on a quest to discover whether the network’s services were capable of setting a custom message rate limit for each channel. As far as I can tell, they are not.

However, the problem caused me to re-read the ChanServ help section:

    /msg chanserv help
    ***** ChanServ Help *****
    ...
    Other commands: ACCESS, AKICK, CLEAR, COUNT, DEOP, DEVOICE,
                    DROP, GETKEY, HELP, INFO, QUIET, STATUS,
                    SYNC, TAXONOMY, TEMPLATE, TOPIC, TOPICAPPEND,
                    TOPICPREPEND, TOPICSWAP, UNQUIET, VOICE,
                    WHY
    ***** End of Help *****

Taxonomy is a cool word. Let’s see what taxonomy means in the context of IRC:

    /msg chanserv help taxonomy
    ***** ChanServ Help *****
    Help for TAXONOMY:

    The taxonomy command lists metadata information associated
    with registered channels.

    Examples:
        /msg ChanServ TAXONOMY #atheme
    ***** End of Help *****

Follow its example:

    /msg chanserv taxonomy #atheme
    Taxonomy for #atheme:
    url : http://atheme.github.io/
    ОХЯЕБУ : лололол
    End of #atheme taxonomy.

That’s neat; we can elicit a URL and a field with a Cyrillic and apparently custom name. But how do we put metadata into a Freenode channel’s taxonomy section? Google has no useful hits (hence this blog post), but further digging into ChanServ’s manual does help:

    /msg chanserv help set
    ***** ChanServ Help *****
    Help for SET:

    SET allows you to set various control flags
    for channels that change the way certain
    operations are performed on them.

    The following subcommands are available:
    EMAIL Sets the channel e-mail address.
    ...
    PROPERTY Manipulates channel metadata.
    ...
    URL Sets the channel URL.
    ...
    For more specific help use /msg ChanServ HELP SET command.
    ***** End of Help *****

Set arbitrary metadata with /msg chanserv set #channel property key value.

The commands /msg chanserv set #channel email a@b.com and /msg chanserv set #channel property email a@b.com appear to function identically, with the former being a convenient wrapper around the latter.

So that’s how #atheme got their fancy Cyrillic taxonomy: someone with the appropriate permissions issued the command /msg chanserv set #atheme property ОХЯЕБУ лололол.

Behaviors of channel properties

I’ve attempted to deduce the rules governing custom metadata items, because I couldn’t find them documented anywhere.

- Issuing a set property command with a property name but no value deletes the property, removing it from the taxonomy.

- A property is overwritten each time someone with the appropriate permissions issues a /set command with a matching property name (more on the matching in a moment). The property name and value are stored with the same capitalization as the command issued.

- The algorithm which decides whether to overwrite an existing property or create a new one is not case sensitive. So if you set ##test email test@example.com and then set ##test EMAIL foo, the final taxonomy will show no field called email and one field called EMAIL with the value foo.

- When displayed, taxonomy items are sorted first in alphabetical order (case insensitively), then by length. For instance, properties with the names a, AA, and aAa would appear in that order, because the initial alphabetization is case-insensitive.

- Attempting to place mIRC color codes (http://www.mirc.com/colors.html) in the property name results in the error “Parameters are too long. Aborting.” However, placing color codes in the value of a custom property works just fine.
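The rules above were deduced by observation. The class below is a hypothetical Python model of that observed behavior, not Atheme's ChanServ code. It may help make the interactions concrete. Names are matched case-insensitively, the most recent capitalization wins, an empty value deletes the property, and color codes (assumed here to be the mIRC control character `\x03`) are rejected in names. Case-insensitive alphabetical sorting on its own already places a shorter name before a longer one it prefixes. That is enough to reproduce the a, AA, aAa ordering.

```python
COLOR_CODE = "\x03"  # mIRC color control character (assumption for this model)


class ChannelTaxonomy:
    """Hypothetical model of the observed ChanServ property rules."""

    def __init__(self) -> None:
        # lower-cased name -> (name as most recently typed, value)
        self._props: dict[str, tuple[str, str]] = {}

    def set_property(self, name: str, value: str = "") -> None:
        if COLOR_CODE in name:
            # color codes are rejected in names (but allowed in values)
            raise ValueError("Parameters are too long. Aborting.")
        key = name.lower()  # matching is case-insensitive
        if not value:
            self._props.pop(key, None)  # a name with no value deletes the property
        else:
            # overwrite, keeping the capitalization of the latest command
            self._props[key] = (name, value)

    def taxonomy(self) -> list[str]:
        # Case-insensitive alphabetical sort; a name that is a prefix of
        # another sorts first, giving "a, AA, aAa".
        items = sorted(self._props.values(), key=lambda item: item[0].lower())
        return [f"{name} : {value}" for name, value in items]


t = ChannelTaxonomy()
t.set_property("email", "test@example.com")
t.set_property("EMAIL", "foo")
print(t.taxonomy())  # ['EMAIL : foo']
```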

Other uses

As a final note, you can also do basically the same thing with Freenode’s NickServ, to set custom information about your nickname instead of about a channel.

http://edunham.net/2016/09/23/setting_a_freenode_channel_s_taxonomy_info.html

|

|

Support.Mozilla.Org: What’s Up with SUMO – 22nd September |

Hello, SUMO Nation!

How are you doing? Have you seen the First Inaugural Firefox Census already? Have you filled it out? Help us figure out what kind of people use Firefox! You can get to it right after you read through our latest & greatest news below.

Welcome, new contributors!

If you just joined us, don’t hesitate – come over and say “hi” in the forums!

Contributors of the week

- All the forum supporters who tirelessly helped users out for the last week.

- All the writers of all languages who worked tirelessly on the KB for the last week.

- All the Social Superheroes – thank you!

We salute you!

SUMO Community meetings

- LATEST ONE: 21st of September – you can read the notes here and see the video at AirMozilla.

- NEXT ONE: happening on the 28th of September!

- If you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- We have sent out several nominations for the upcoming December Work Week conference – the Participation team will be reviewing the nominations and contacting some of you in the coming days.

- Reminder: MozFest is a bit more than a month away! Are you going to attend this year?

- Ongoing reminder #1: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

- Ongoing reminder #2: we are looking for more contributors to our blog. Do you write on the web about open source, communities, SUMO, Mozilla… and more? Do let us know!

- Ongoing reminder #3: want to know what’s going on with the Admins? Check this thread in the forum.

Platform

- PLATFORM REMINDER! The Platform Meetings are BACK! If you missed the previous ones, you can find the notes in this document. (here’s the channel you can subscribe to). We really recommend going for the document and videos if you want to make sure we’re covering everything as we go.

- A few important and key points to make regarding the migration:

- We are trying to keep as many features from Kitsune as possible. Some processes might change. We do not know yet how they will look.

- Any and all training documentation that you may be accessing is generic – both for what you can accomplish with the platform and the way roles and users are called within the training. They do not have much to do with the way Mozilla or SUMO operate on a daily basis. We will use these to design our own experience – “translate” them into something more Mozilla, so to speak.

- All the important information that we have has been shared with you, one way or another.

- The timelines and schedule might change depending on what happens.

- We started discussions about Ranks and Roles after the migration – join in! More topics will start popping up in the forums for discussion, but they will all be gathered in the first post of the main migration thread.

- If you are interested in test-driving the new platform now, please contact Madalina.

- IMPORTANT: the whole place is a work in progress, and a ton of the final content, assets, and configurations (e.g. layout pieces) are missing.

- QUESTIONS? CONCERNS? Please take a look at this migration document and use this migration thread to put questions/comments about it for everyone to share and discuss. As much as possible, please try to keep the migration discussion and questions limited to those two places – we don’t want to chase ten different threads in too many different places.

Social

- It’s a SUMO Day today! There’s still time to contribute :-) Go for it!

- A new setup for Sprinklr, so do let Rachel know if you have feedback about it. Also, you should be able to filter by keywords or

- More Social awesomeness from Brazil – obrigado!

- Want to join us? Please email Rachel and/or Madalina to get started supporting Mozilla’s product users on Facebook and Twitter. We need your help! Use the step-by-step guide here. Take a look at some useful videos:

Support Forum

- Once again, and with gusto – SUUUUMO DAAAAAY! Go for it!

- Final reminder: If you are using email notifications to know what posts to return to, jscher2000 has a great tip (and tool) for you. Check it out here!

Knowledge Base & L10n

- We are in the release week. What does that mean? (Reminder: we are following the process/schedule outlined here).

- No work on next release content for English; minor fixes for existing English content; please avoid setting edited articles to RFL unless it’s critical (consult Joni or another admin).

- Localizers finish content localization and fix potential last-minute issues with release content – this has priority over other existing content (= non-current-release), that can be localized as usual.

- The content to be localized with high priority can be found on this list.

Firefox

- for Android

- Version 49 is out! Now you can enjoy the following:

- caching selected pages (e.g. mozilla.org) for offline retrieval

- usual platform and bug fixes

- for Desktop

- Version 49 is out! Enjoy the following:

- text-to-speech in Reader mode (using your OS voice modules)

- ending support for older Mac OS versions

- ending support for older CPUs

- ending support for Firefox Hello

- usual platform and bug fixes

- for iOS

- No news from under the apple tree this time!

By the way – it’s the first day of autumn, officially! I don’t know about you, but I am looking forward to mushroom hunting, longer nights, and a bit of rain here and there (as long as it stops at some point). What is your take on autumn? Tell us in the comments!

Cheers and see you around – keep rocking the helpful web!

https://blog.mozilla.org/sumo/2016/09/22/whats-up-with-sumo-22nd-september/

|

|

Air Mozilla: Connected Devices Weekly Program Update, 22 Sep 2016 |

Weekly project updates from the Mozilla Connected Devices team.

https://air.mozilla.org/connected-devices-weekly-program-update-20160922/

|

|

Mozilla WebDev Community: Extravaganza – September 2016 |

Once a month, web developers from across Mozilla get together to talk about the work that we’ve shipped, share the libraries we’re working on, meet new folks, and talk about whatever else is on our minds. It’s the Webdev Extravaganza! The meeting is open to the public; you should stop by!

You can check out the wiki page that we use to organize the meeting, or view a recording of the meeting in Air Mozilla. Or just read on for a summary!

Shipping Celebration

The shipping celebration is for anything we finished and deployed in the past month, whether it be a brand new site, an upgrade to an existing one, or even a release of a library.

Survey Gizmo Integration with Google Analytics

First up was shobson, who talked about a survey feature on MDN that prompts users to leave feedback about how MDN helped them complete a task. The survey is hosted by SurveyGizmo, and custom JavaScript included on the survey reports the user’s answers back to Google Analytics. This allows us to filter on the feedback from users to answer questions like, “What sections of the site are not helping users complete their tasks?”.

View Source Offline Mode

shobson also mentioned the View Source website, which is now offline-capable thanks to Service Workers. The pages are now cached if you’ve ever visited them, and the images on the site have offline fallbacks if you attempt to view them with no internet connection.

SHIELD Content Signing

Next up was mythmon, who shared the news that Normandy, the backend service for SHIELD, now signs the data that it sends to Firefox using the Autograph service. The signature is included with responses via the Content-Signature header. This signing will allow Firefox to only execute SHIELD recipes that have been approved by Mozilla.

Open-source Citizenship

Here we talk about libraries we’re maintaining and what, if anything, we need help with for them.

Neo

Eli was up next, and he shared Neo, a tool for setting up new React-based projects with zero configuration. It installs and configures many useful dependencies, including Webpack, Babel, Redux, ESLint, Bootstrap, and more! Neo can be installed either as a command that initializes new projects or as a dependency added to existing projects; either way, it acts as a single dependency that pulls in all the different libraries you’ll need.

Roundtable

The Roundtable is the home for discussions that don’t fit anywhere else.

Standu.ps Reboot

Last up was pmac, who shared a note about how he and willkg are rewriting the standu.ps service using Django, and are switching the rewrite to use GitHub authentication instead of Persona. They have a staging server set up and expect to have news next month about the availability of the new service.

Standu.ps is a service used by several teams at Mozilla for posting status updates as they work, and includes an IRC bot for quick posting of updates.

If you’re interested in web development at Mozilla, or want to attend next month’s Extravaganza, subscribe to the dev-webdev@lists.mozilla.org mailing list to be notified of the next meeting, and maybe send a message introducing yourself. We’d love to meet you!

See you next month!

https://blog.mozilla.org/webdev/2016/09/22/extravaganza-september-2016/

|

|

Air Mozilla: Reps weekly, 22 Sep 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Firefox Nightly: These Weeks in Firefox: Issue 1 |

Every two weeks, engineering teams working on Firefox Desktop get together and update each other on things that they’re working on. These meetings are public. Details on how to join, as well as meeting notes, are available here.

We feel that the bleeding edge development state captured in those meeting notes might be interesting to our Nightly blog audience. To that end, we’re taking a page out of the Rust and Servo playbook, and offering you handpicked updates about what’s going on at the forefront of Firefox development!

Expect these every two weeks or so.

Thanks for using Nightly, and keep on rocking the free web!

Highlights

- In an effort to replace XUL themes with something better, jaws and mikedeboer sent out a survey to gather data on how people want to theme their browser.

- MattN announced that the Form Autofill project has been kicked off!

- Check out the excellent UX spec

- The current plan is to design and implement this as a System Add-on

- Development kick-off and mailing list coming soon!

- After analyzing performance and stability data, mconley and felipe report that we are green-lit to ship e10s to release users that have the add-ons on this whitelist installed, as well as WebExtensions. The plan is to do this in 49.

- To help with porting / transitioning, andym reports that the Add-ons Team is landing the ability for WebExtension APIs to be used from SDK and bootstrap.js extensions. Stay tuned for official word from the team.

- A reminder that the Firefox 49 release is scheduled for September 20th

Contributor(s) of the Week

- The team has nominated Adam (adamgj.wong), who has helped clean up some of our Telemetry APIs. Great work, Adam!

Project Updates

Add-ons

- andym wants to remind everybody that the Add-ons team is still triaging and fixing SDK bugs (like this one, for example).

Electrolysis (e10s)

- mconley reports that a11y and touchscreen support landed in 51, but might not go out in that release, as there are still a few bad bugs with it.

- mconley also wants everybody to know that we’re currently on track to ship Firefox with two content processes by default in Firefox 52, so stay tuned for that.

Core Engineering

- ksteuber rewrote the Snappy Symbolication Server (mainly used for the Gecko Profiler for Windows builds) and this will be deployed soon.

- felipe is in the process of designing experiment mechanisms for testing different behaviours for Flash (allowing some, denying some, click-to-play some, based on heuristics)

Platform UI and other Platform Audibles

- mconley is refactoring the “unsubmitted crash report” notification, as well as allowing users to always submit backlogged crash reports

- jessica and scottwu are working hard on the Date / Time form field work, and reviews have been given for both the layout and picker parts of the project

- daleharvey is working on a fallback for the GMP downloader in case AUS goes down

Quality of Experience

- mikedeboer has re-enabled the new Find in Page mode on Nightly, and is working on fixing performance and behaviour bugs

- dao continues on his quest to improve the default theme in high-contrast display settings

Sync / Firefox Accounts

- kitcambridge reports that tcsc has implemented atomic uploads for Sync on desktop

- There are other Sync engine improvements in the pipe and coming to a build near you soon!

Uncategorized

- A discussion is underway in firefox-dev concerning the organization of tests, and how “Firefox UI” tests fit in. These will likely be put in “puppeteer” subdirectories.

Here are the raw meeting notes that were used to derive this list.

Want to help us build Firefox? Get started here!

Here’s a tool to find some mentored, good first bugs to hack on.

https://blog.nightly.mozilla.org/2016/09/22/these-weeks-in-firefox-1/

|

|

Air Mozilla: Privacy Lab - September 2016 - EU Privacy Panel |

Want to learn more about EU Privacy? Join us for a lively panel discussion of EU Privacy, including GDPR, Privacy Shield, Brexit and more. After...

|

|

About:Community: One Mozilla Clubs |

In 2015, The Mozilla Foundation launched the Mozilla Clubs program to bring people together locally to teach, protect and build the open web in an engaging and collaborative way. Within a year it grew to include 240+ Clubs in 100+ cities globally, and now is growing to reach new communities around the world.

Today we are excited to share a new focus for Mozilla Clubs taking place on a University or College Campus (Campus Clubs). Mozilla Campus Clubs blend the passion and student focus of the former Firefox Student Ambassador program and Take Back The Web Campaign with the existing structure of Mozilla Clubs to create a unified model for participation on campuses!

Mozilla Campus Clubs take advantage of the unique learning environments of Universities and Colleges to bring groups of students together to teach, build and protect the open web. The program builds upon the Mozilla Club framework to provide targeted support to those on campus through its:

- Structure: Campus Clubs include an Executive Team in addition to the Club Captain position, who help develop programs and run activities specific to the 3 impact areas (teach, build, protect).

- Training & Support: As in all Mozilla Clubs, Regional Coordinators and Club Captains receive training and mentorship throughout their club’s journey. However, the nature of the training and support for Campus Clubs is specific to helping students navigate the challenges of setting up and running a club in the campus context.

- Activities: Campus Club activities are structured around 3 impact areas (teach, build, protect). Club Captains in a University or College can find suggested activities (some specific to students) on the website here.

These clubs will be connected to the larger Mozilla Club network to share resources, curriculum, mentorship and support with others around the world. In 2017 you’ll see additional unification in terms of a joint application process for all Regional Coordinators and a unified web presence.

This is an exciting time for us to unite our network of passionate contributors and create new opportunities for collaboration, learning, and growth within our Mozillian communities. We also see the potential of this unification to allow for greater impact across Mozilla’s global programs, projects and initiatives.

If you’re currently involved in Mozilla Clubs and/or the FSA program, here are some important things to know:

- The Firefox Student Ambassador Program is now Mozilla Campus Clubs: After many months of hard work and careful planning, the Firefox Student Ambassador (FSA) Program has officially transitioned to Mozilla Clubs as of Monday, September 19th, 2016. For full details about the Firefox Student Ambassador transition, check out this guide here.

- Firefox Club Captains will now be Mozilla Club Captains: Firefox Club Captains who already have a club, a structure, and a community set up at a university/college should register their club here to be partnered with a Regional Coordinator and gain access to new resources and opportunities; more details are here.

- Current Mozilla Clubs will stay the same: Any Mozilla Club that already exists will stay the same. Clubs that happen to be on a university or college campus may choose to register as a Campus Club, but are not required to do so.

- There is a new application for Regional Coordinators (RCs): Anyone interested in taking on more responsibility within the Clubs program can apply here. Regional Coordinators mentor Club Captains that are geographically close to them. Regional Coordinators support all Club Captains in their region whether they are on campus or elsewhere.

- University or College students who want to start a Club at their University or College may apply here. Students who primarily want to lead a club on a campus for/with other university/college students will apply to start a Campus Club.

- People who want to start a club for any type of learner may apply here. Anyone who wants to start a club that is open to all kinds of learners (not limited to University students) may apply to start a Club here.

Individuals who are leading Mozilla Clubs commit to running regular (at least monthly) gatherings, participating in community calls, and contributing resources and learning materials to the community. They are part of a network of leaders and doers who support and challenge each other. By increasing knowledge and skills in local communities, Club leaders ensure that the internet is a global public resource, open and accessible to all.

This is the beginning of a long term collaboration for the Mozilla Clubs Program. We are excited to continue to build momentum for Mozilla’s mission through new structures and supports that will help engage more people with a passion for the open web.

https://blog.mozilla.org/community/2016/09/21/one-mozilla-clubs/

|

|

Air Mozilla: MozFest Volunteer Meetup - September 21, 2016 |

Meetup for 2016 MozFest Volunteers

https://air.mozilla.org/mozfest-volunteer-meetup-2016-09-21/

|

|

Air Mozilla: The Joy of Coding - Episode 72 |

mconley livehacks on real Firefox bugs while thinking aloud.

|

|

Air Mozilla: Weekly SUMO Community Meeting Sept 21, 2016 |

This is the SUMO weekly call.

https://air.mozilla.org/weekly-sumo-community-meeting-sept-21-2016/

|

|

Julia Vallera: Introducing Mozilla Campus Clubs |

In 2015, The Mozilla Foundation launched the Mozilla Clubs program to bring people together locally to teach, protect and build the open web in an engaging and collaborative way. Within a year it grew to include 240+ Clubs in 100+ cities globally, and now is growing to reach new communities around the world.

Today we are excited to share a new focus for Mozilla Clubs taking place on a University or College Campus (Campus Clubs). Mozilla Campus Clubs blend the passion and student focus of the former Firefox Student Ambassador program and Take Back The Web Campaign with the existing structure of Mozilla Clubs to create a unified model for participation on campuses!

Mozilla Campus Clubs take advantage of the unique learning environments of Universities and Colleges to bring groups of students together to teach, build and protect the open web. The program builds upon the Mozilla Club framework to provide targeted support to those on campus through its:

- Structure: Campus Clubs include an Executive Team in addition to the Club Captain position, who help develop programs and run activities specific to the 3 impact areas (teach, build, protect).

- Specific Training & Support: As in all Mozilla Clubs, Regional Coordinators and Club Captains receive training and mentorship throughout their club’s journey. However, the nature of the training and support for Campus Clubs is specific to helping students navigate the challenges of setting up and running a club in the campus context.

- Activities: Campus Club activities are structured around 3 impact areas (teach, build, protect). Club Captains in a University or College can find suggested activities (some specific to students) on the website here.

These clubs will be connected to the larger Mozilla Club network to share resources, curriculum, mentorship and support with others around the world. In 2017 you’ll see additional unification in terms of a joint application process for all Club leaders and a unified web presence.

This is an exciting time for us to unite our network of passionate contributors and create new opportunities for collaboration, learning, and growth within our Mozillian communities. We also see the potential of this unification to allow for greater impact across Mozilla’s global programs, projects and initiatives.

If you’re currently involved in Mozilla Clubs and/or the FSA program, here are some important things to know:

- The Firefox Student Ambassador Program is now Mozilla Campus Clubs: After many months of hard work and careful planning the Firefox Ambassador Program (FSA) has officially transitioned to Mozilla Clubs as of Monday September 19th, 2016. For full details about the Firefox Student Ambassador transition check out this guide here.

- Firefox Club Captains will now be Mozilla Club Captains: Firefox Club Captains who already have a club, a structure, and a community set up at a university or college should register their club here to be partnered with a Regional Coordinator and gain access to new resources and opportunities. More details are here.

- Current Mozilla Clubs will stay the same: Any Mozilla Club that already exists will stay the same. Clubs that happen to be on a university or college campus may choose to register as a Campus Club, but are not required to do so.

- There is a new application for Regional Coordinators (RCs): Anyone interested in taking on more responsibility within the Clubs program can apply here. Regional Coordinators mentor Club Captains who are geographically close to them, supporting all Club Captains in their region whether they are on campus or elsewhere.

- University or college students who want to start a club at their university or college may apply here. Students who primarily want to lead a club on campus for and with other university or college students should apply to start a Campus Club.

- People who want to start a club for any type of learner can apply here. Anyone who wants to start a club that is open to all kinds of learners (not only university students) may apply on the Mozilla Club website.

Individuals who lead Mozilla Clubs commit to running regular (at least monthly) gatherings, participating in community calls, and contributing resources and learning materials to the community. They are part of a network of leaders and doers who support and challenge each other. By increasing knowledge and skills in local communities, Club leaders help ensure that the internet remains a global public resource, open and accessible to all.

This is the beginning of a long-term collaboration for the Mozilla Clubs Program. We are excited to continue building momentum for Mozilla's mission through new structures and supports that will help engage more people with a passion for the open web.


Meeting OW2