
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


JavaScript at Mozilla: IonMonkey: Evil on your behalf |

“Programmers waste enormous amounts of time thinking about, or worrying about, the speed of noncritical parts of their programs, and these attempts at efficiency actually have a strong negative impact when debugging and maintenance are considered.”

Maintainable code is a matter of taste. For example, we often teach students to dislike “goto” in C, but the best error handling code I have seen (in C) comes from the Linux kernel, and uses “goto”s. Maintainable code in JavaScript is also a matter of taste. Some might prefer using ES6 features, while others might prefer a functional programming approach with lambdas, or even using some framework.

Scalar Replacement

In the norm_multiply function below, the Inlining phase will bake the code of the complex and norm functions inside the norm_multiply function.

    function complex(r, i) {
      return { r: r, i: i };
    }

    function norm(c) {
      return Math.sqrt(c.r * c.r + c.i * c.i);
    }

    function norm_multiply(a, b) {
      var mul = complex(a.r * b.r - a.i * b.i, a.r * b.i + a.i * b.r);
      return norm(mul);
    }

Scalar Replacement then handles the object returned by the complex function, and replaces it by two local variables. The object is no longer needed and IonMonkey effectively runs the following code:

    function norm_multiply(a, b) {
      var mul_r = a.r * b.r - a.i * b.i;
      var mul_i = a.r * b.i + a.i * b.r;
      return Math.sqrt(mul_r * mul_r + mul_i * mul_i);
    }

Escape Analysis

An object escapes when it is used in:

- a function call.

      escape({ bar: 1, baz: 2 });

- a returned value.

      function next() { return { done: false, value: 0 }; }

- an escaped object property.

      escaped_obj = { property: obj }; escape(escaped_obj);

    function doSomething(obj) {
      if (theHighlyUnlikelyHappened())
        throw new Error("aaaaaaahhh!!", obj);
    }
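As a rough illustration of the escape cases listed above, the sketch below contrasts a temporary object that stays inside its function with one that is stored in an outer array. The names lengthLocal, lengthLeaky and leaked are hypothetical and do not come from the original post; the optimization itself is not observable from JavaScript, so the code only shows which objects an engine could, in principle, replace with local variables.

```javascript
// Hypothetical names for illustration only.
const leaked = [];

// The temporary object never leaves this function, so an optimizing
// compiler is free to replace it with two local variables.
function lengthLocal(x, y) {
  const p = { x: x, y: y };
  return Math.sqrt(p.x * p.x + p.y * p.y);
}

// Here the object is stored in an outer array: it escapes, so it has to
// exist as a real object after the function returns.
function lengthLeaky(x, y) {
  const p = { x: x, y: y };
  leaked.push(p);
  return Math.sqrt(p.x * p.x + p.y * p.y);
}

console.log(lengthLocal(3, 4), lengthLeaky(3, 4), leaked.length);
```

Both functions compute the same value; only the second one forces the allocation to stay.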

Mixing Objects from Multiple Allocation Sites

    function dummy() {
      var obj = { done: false }; // (a)
      if (len == max)
        obj = { done: true }; // (b)
      if (obj.done) // obj comes from either (a) or (b)
        console.log("We are done! \o/");
    }

The same situation arises in the next function of iterators:

    function next() {
      if (this.idx < this.len)
        return { value: this.getValue(this.idx), done: false };
      return { done: true };
    }

It can be rewritten so that the returned object comes from a single allocation site:

    function next() {
      var obj = { value: undefined, done: false };
      if (this.idx < this.len) {
        obj.value = this.getValue(this.idx);
        return obj;
      }
      obj.done = true;
      return obj;
    }

This problem affected the next() function, used by for-of. At first we rewrote it as shown in the example above, but to properly handle the security model of SpiderMonkey, this trick was not enough. The security model requires an extra branch which adds a new return statement with a different object, which cannot be merged above. Fortunately, this issue goes away with Branch Pruning, as we will see below.

Known Properties

    function norm1(vec) {
      var sum = 0;
      for (var i = 0; i < vec.length; i++)
        sum += vec[i];
      return sum;
    }

Lambdas

In the norm1 function below, Inlining will bake the code of the forEach function, and the code of the lambda given as argument to the forEach function, into the caller. Then Scalar Replacement will detect that the lambda does not escape, since forEach and the lambda are inlined, and it will replace the scope chain holding the captured sum variable with a local variable.

    function norm1(vec) {
      var sum = 0;
      vec.forEach((x) => { sum += x; });
      return sum;
    }

This makes the forEach call as fast as the C-like for loop equivalent.

Branch Pruning

    function doSomething(obj) {
      if (theHighlyUnlikelyHappened())
        throw new Error("aaaaaaahhh!!", obj);
    }

    // IonMonkey's compiled code
    function doSomething(obj) {
      if (theHighlyUnlikelyHappened())
        bailout; // yield and resume in Baseline compiled code.
    }

Frameworks

The next() function has an extra branch to support the security model of SpiderMonkey. This branch is unlikely to be used by most websites, so Branch Pruning is able to remove it and replace it with a bailout.

Future Work

https://blog.mozilla.org/javascript/2016/07/05/ionmonkey-evil-on-your-behalf/

|

|

QMO: Firefox 48 Beta 6 Testday, July 8th |

Hello Mozillians,

Good news! Friday, July 8th, we will host a new Testday for Firefox 48 Beta 6. We will have fun testing APZ (Async Scrolling), verifying and triaging bugs. If you want to find out more information visit this etherpad.

You don’t need testing experience to take part in the testday so feel free to join the #qa IRC channel and the moderators will help if you have any questions.

I am looking forward to seeing you on Friday. Cheers!

https://quality.mozilla.org/2016/07/firefox-48-beta-6-testday-july-8th/

|

|

Air Mozilla: RMLL 2016 : The Security Track, 05 Jul 2016 |

No global RMLL this year? True! However, the Security Track will be held at the Paris Mozilla office next July. Come and enjoy listening...


|

|

Aki Sasaki: python package pinning: pip-tools and dephash |

discovering pip tools

Last week, I wrote a long blog draft about python package pinning. Then I found this. It's well written, and covers many of the points I wanted to make. The author perfectly summarizes the divide between package development and package deployment to production:

So I dug deeper. pip-tools #303 perfectly describes the problem I was trying to solve:

Given a minimal dependency list,

- generate a full, expanded dependency list, and

- pin that full dependency list by version and hash.

Fixing pip-tools #303 and upstreaming seemed like the ideal solution.

However, pip-tools is broken with pip 8.1.2. The Python Packaging Authority (PyPA) states that pip's public API is the CLI, and pip-tools could potentially break with every new pip patch release. This is solvable by either using pypa/packaging directly, or switching pip-tools to use the CLI. That's considerably more work than just integrating hashing capability into pip-tools.

But I had already whipped up a quick'n'dirty python script that used the pip CLI. (I had assumed that bypassing the internal API was a hack, but evidently this is the supported way of doing things.) So, back to the original blog post, but much shorter:

dephash gen

dephash gen takes a minimal requirements file, and generates an expanded dependency list, pinned by version and hash.

    $ cat requirements-dev.txt
    requests
    arrow
    $ dephash gen requirements-dev.txt > requirements-prod.txt
    $ cat requirements-prod.txt
    # Generated from dephash.py + hashin.py
    arrow==0.8.0 \
        --hash=sha512:b6c01970d408e1169d042f593859577...
    python-dateutil==2.5.3 \
        --hash=sha512:413b935321f0a65fd8e8ba49990acd5... \
        --hash=sha512:d8e28dad57ea85663962f4518faea0e... \
        --hash=sha512:107ff2eb6f0715996061262b70844ec...
    requests==2.10.0 \
        --hash=sha512:e5b7d20c4d692b2655c13fa177b8462... \
        --hash=sha512:05c6a1a742d31511ca4d3f39534e3e0...
    six==1.10.0 \
        --hash=sha512:a41b40b720c5267e4a47ffb98cdc792... \
        --hash=sha512:9a53b7bc8f7e8b358c930eaecf91cc5...

Developers can work against requirements-dev.txt, with the latest available dependencies. At the same time, production can be pinned against specific package versions+hashes for stability and security.
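To make the "pinned by version and hash" idea concrete, here is a minimal sketch of how one pinned entry in the layout shown above could be rendered. It is not how dephash is implemented (dephash is a Python script built on the pip CLI and hashin); the helper names sha512Hex and pinnedRequirement are hypothetical, and the byte buffers are stand-ins for real package archives.

```javascript
const crypto = require("crypto");

// Hypothetical helper: hex-encoded SHA-512 digest of an archive's bytes.
function sha512Hex(bytes) {
  return crypto.createHash("sha512").update(bytes).digest("hex");
}

// Hypothetical helper: render one requirement pinned by version and by
// the hash of every archive published for that version.
function pinnedRequirement(name, version, archives) {
  const hashes = archives.map((bytes) => `    --hash=sha512:${sha512Hex(bytes)}`);
  // Every line except the last ends with a shell-style continuation.
  return [`${name}==${version}`, ...hashes].join(" \\\n");
}

// Stand-in archive contents; a real tool would hash the wheel and sdist files.
const archives = [Buffer.from("wheel bytes"), Buffer.from("sdist bytes")];
console.log(pinnedRequirement("example", "1.0.0", archives));
```

One hash per published archive is needed because the same version can be installed from a wheel or from a source distribution, and each file has its own digest.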

dephash outdated

dephash outdated PATH checks whether PATH contains outdated packages. PATH can be a requirements file or virtualenv.

    $ cat requirements-outdated.txt
    six==1.9.0
    $ dephash outdated requirements-outdated.txt
    Found outdated packages in requirements-outdated.txt:
    six (1.9.0) - Latest: 1.10.0 [wheel]

or,

    $ virtualenv -q venv
    $ venv/bin/pip install -q -r requirements-outdated.txt
    $ dephash outdated venv
    Found outdated packages in venv:
    six (1.9.0) - Latest: 1.10.0 [wheel]

This just uses pip list --outdated on the backend. I'm tentatively thinking a whitelist of known-outdated dependencies might help here, but I haven't written it yet.
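The whitelist idea is not implemented in dephash, so the following is only a sketch of what such a filter might look like. It parses report lines in the format shown in the sessions above and drops packages that are knowingly kept outdated. The function names and the whitelist format are assumptions made for illustration.

```javascript
// Matches report lines such as "six (1.9.0) - Latest: 1.10.0 [wheel]".
const OUTDATED_LINE = /^(\S+) \(([^)]+)\) - Latest: (\S+) \[(\w+)\]$/;

// Hypothetical helper: extract name and versions from the report text.
function parseOutdated(output) {
  const packages = [];
  for (const line of output.split("\n")) {
    const match = OUTDATED_LINE.exec(line.trim());
    if (match) {
      packages.push({ name: match[1], installed: match[2], latest: match[3] });
    }
  }
  return packages;
}

// Hypothetical whitelist: names of packages that are allowed to lag behind.
function unexpectedOutdated(output, whitelist) {
  const allowed = new Set(whitelist);
  return parseOutdated(output).filter((pkg) => !allowed.has(pkg.name));
}

const report = [
  "Found outdated packages in venv:",
  "six (1.9.0) - Latest: 1.10.0 [wheel]",
].join("\n");
console.log(unexpectedOutdated(report, ["six"]).length); // 0
```

A check like this could fail a build only when something outside the whitelist falls behind.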

wrapup

I still think the glorious future involves fixing pip-tools #303 and getting pip-tools pointed at a supported pypa API. And/or getting hashin or pip-tools upstreamed into pip. But in the meantime, there's dephash.

(I'm leaving my package vendoring musings and python package wishlist for future blogpost(s).)

|

|

Air Mozilla: RMLL 2016 : The Security Track, 04 Jul 2016 |

No global RMLL this year? True! However, the Security Track will be held at the Paris Mozilla office next July. Come and enjoy listening...


|

|

Mike Hommey: Announcing git-cinnabar 0.4.0 beta 1 |

Git-cinnabar is a git remote helper to interact with mercurial repositories. It lets you clone, pull and push from/to mercurial remote repositories, using git.

These release notes are also available on the git-cinnabar wiki.

What’s new since 0.3.2?

- Various bug fixes.

- Updated git to 2.9 for cinnabar-helper.

- Now supports bundle2 for both fetch/clone and push (https://www.mercurial-scm.org/wiki/BundleFormat2).

- Now supports git credential for HTTP authentication.

- Removed upgrade path from repositories used with version < 0.3.0.

- Experimental (and partial) support for using git-cinnabar without having mercurial installed.

- Use a mercurial subprocess to access local mercurial repositories.

- Cinnabar-helper now handles fast-import, with workarounds for performance issues on macOS.

|

|

Byron Jones: adding “reviewer delegation” to MozReview |

Background

One of the first projects I worked on when I moved to the MozReview team was “review delegation”. The goal was to add the ability for a reviewer to redirect a review request to someone else. It turned out to be a change that was much more complicated than expected.

MozReview is a Mozilla-developed extension to the open source Review Board product, with the primary focus on working with changes as a series of commits instead of as a single unified diff. This requires more than just a Review Board extension: it also encompasses a review repository (where reviews are pushed to), as well as Mercurial and Git modules that drive the review publishing process. Autoland is also part of our ecosystem, with work started on adding static analysis (e.g. linters) and other automation to improve the code review workflow.

I inherited the bug with a patch that had undergone a single review. The patch worked by exposing the reviewers edit field to all users, and modifying the review request once the update was OK’d. This is mostly the correct approach, however it had two major issues:

- According to Review Board and Bugzilla, the change was always made by the review’s submitter, not the person who actually made the change

- Unlike other changes made in Review Board, the review request was updated immediately instead of using a draft which could then be published or discarded

Permissions

Review Board has a simple permissions model: the review request’s submitter (aka patch author) can make any change, while the reviewers can pretty much only comment, raise issues, and mark a review as “ready to ship” / “fix it”. As you would expect, there are checks within the object layer to ensure that these permission boundaries are not overstepped. Review Board’s extension system allows for custom authorisation and permission checks, however the granularity of these is coarse: you can only control if a user can edit a review request as a whole, not on a per-field level.

In order to allow the reviewer list to be changed, we need to tell Review Board that the submitter was making the change.

Performing the actions as the submitter instead of the authenticated user is easy enough, however when the changes were pushed to Bugzilla they were attributed to the wrong user. After a few false starts, I settled on storing the authenticated user in the request’s metadata, performing the changes as the submitter, and updating the routines that touch Bugzilla to first check for a stored user and make changes as that user instead of the submitter. Essentially “su”.
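The “su” approach can be sketched roughly as follows. This is not MozReview’s code (MozReview is a Python extension to Review Board); the object shape and the names applyReviewerChange, bugzillaAuthor and actingUser are hypothetical, chosen only to show the two halves of the trick.

```javascript
// Hypothetical sketch: perform the edit as the submitter, but remember
// who really asked for it.
function applyReviewerChange(request, authenticatedUser, reviewers) {
  // Store the real actor in the request's metadata.
  request.meta.actingUser = authenticatedUser;
  // Record the edit as the submitter, the only user permitted to make it.
  request.reviewers = [...reviewers];
  request.lastEditedBy = request.submitter;
  return request;
}

// Hypothetical sketch: code that talks to the bug tracker prefers the
// stored user and falls back to the submitter.
function bugzillaAuthor(request) {
  return request.meta.actingUser || request.submitter;
}

const request = { submitter: "author", reviewers: ["alice"], meta: {} };
applyReviewerChange(request, "alice", ["bob"]);
console.log(request.lastEditedBy, bugzillaAuthor(request)); // author alice
```

The permission check sees the submitter, while the externally visible attribution uses the person who actually delegated the review.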

This worked well except on the page where the metadata changes are displayed; here the change was incorrectly attributed to the review submitter. Remember that under Review Board’s permissions model only the review submitter can make these changes, so the name and gravatar were hard-coded in the Django template to use the submitter. Given the constraints a Review Board extension has to operate in, this was a problem, and developing a full audit trail for Review Board would be too time consuming and invasive. The solution I went with was simple: hide the name and gravatar using CSS.

Drafts and Publishing

In Review Board, when you make a change, a draft is (almost) always created, which you can then publish or discard. Under the hood this involves duplicating the review request into a draft object, against which modifications are made. A review request can only have one draft, which isn’t a problem because only the submitter can change the review.

Of course for reviewer delegation to work we needed a draft for each user. Tricky.

The fix ended up needing to take a different approach depending on whether the submitter was making the change or not.

When the review submitter updates the reviewers, the normal Review Board draft is used, with a few tweaks that show the draft banner when viewing any revision in the series instead of just the one that was changed. This allows us to correctly cope with situations where the submitter makes changes that are broader than just the reviewers, such as pushing a revised patch, or attaching a screenshot.

When anyone else updates the reviewers, a “draft” is created in local storage in their browser, and a fake draft banner is displayed. Publishing from this fake draft banner calls the API endpoint that performs the permissions shenanigans mentioned earlier.
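A browser-side draft of this kind could look roughly like the sketch below. The key format, the function names and the publish callback are all hypothetical; MemoryStorage is a small stand-in for the browser’s localStorage so the example can run outside a browser.

```javascript
// Minimal stand-in exposing the getItem/setItem/removeItem subset of
// the browser's localStorage interface.
class MemoryStorage {
  constructor() { this.items = new Map(); }
  getItem(key) { return this.items.has(key) ? this.items.get(key) : null; }
  setItem(key, value) { this.items.set(key, String(value)); }
  removeItem(key) { this.items.delete(key); }
}

// Hypothetical key format: one pending reviewer list per review request.
function draftKey(requestId) {
  return `reviewer-draft:${requestId}`;
}

function saveDraft(storage, requestId, reviewers) {
  storage.setItem(draftKey(requestId), JSON.stringify(reviewers));
}

function loadDraft(storage, requestId) {
  const raw = storage.getItem(draftKey(requestId));
  return raw === null ? null : JSON.parse(raw);
}

// "Publishing" hands the pending list to a callback (standing in for the
// API call) and then discards the local draft.
function publishDraft(storage, requestId, publish) {
  const reviewers = loadDraft(storage, requestId);
  if (reviewers === null) return false;
  publish(reviewers);
  storage.removeItem(draftKey(requestId));
  return true;
}

const storage = new MemoryStorage();
saveDraft(storage, 42, ["bob"]);
publishDraft(storage, 42, (reviewers) => console.log("publishing", reviewers));
```

Because the draft lives in each user’s own browser, it sidesteps the one-draft-per-request limit on the server.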

Overall it has been an interesting journey into how Review Board works, and highlighted some of the complications MozReview hits when we need to work against Review Board’s design. We’ve been working with the Review Board team to ease some of these issues, as well as deviating where required to deliver a Mozilla-focused user experience.

Filed under: mozreview

https://globau.wordpress.com/2016/07/04/adding-reviewer-delegation-to-mozreview/

|

|

Smokey Ardisson: Your Daily QA: VoteYourPark |

(For some time, I’ve been meaning to start a series of posts that outline software/website issues I run across on a daily basis (because I *do* run across buggy or poorly designed software/websites on a daily basis), in part in an effort to blog more often, but also to shine the light on what we as technology-using people deal with on a daily basis. It’s taken me a while, since blogging is hard, and so is documenting and writing a good bug report, but herewith the first entry in Your Daily QA.)


——

The National Geographic Society, in partnership with the National Trust for Historic Preservation and American Express, has been running a vote to divvy up $2 million in funding to help preserve or restore various sites in National Parks: #VoteYourPark.

It’s a lovely site and a nice idea, but there are a couple of wonky implementation details that routinely drive me crazy. When you try to sign in using your email address, the sign-in form doesn’t work like a typical form. In most forms, pressing return in a text field will submit the form (or trigger the default button), and if you’ve been using the web on the desktop for a long time, you have finely-trained muscle memory that expects this to be true. Not for this form, however. Even worse, the “button” (it’s really a link camouflaged by CSS to look like a button) isn’t in the tab chain (or at least isn’t in the tab chain in the right location), so you can’t even tab to it and trigger the form via the keyboard after entering your password. Instead, every single day you have to take your hand off of the keyboard and click the “button” to log in.


The fun doesn’t end with the sign-in form, though. After you’ve voted for your parks, you have the opportunity to enter a sweepstakes for a trip to Yellowstone National Park. To do so, you have to (re)provide your email address. In this form, though, pressing return does do something, because instead of being offered the opportunity to enter the sweepstakes, pressing return shows this:

It appears that you have lost your opportunity to enter the sweepstakes as a result of pressing return to submit the form. (If you close the message and then look closely at the area of the page that originally contained the sign-in information, there is a link that will let you enter the sweepstakes after the voting procedure is complete, but it’s fairly well hidden; I didn’t find it until muscle memory in the form submission had caused me to miss several chances to enter by triggering the above screen.)


There are other annoyances (like the progress meter that appears when loading the page and again after logging in), but they’re minor compared to breaking the standard semantics of web forms—and breaking them in different ways in two different forms on the same page. By all means, make your form fit with your website’s needs, but please don’t break basic web form semantics (and years of users’ muscle memory) in the process.

http://www.ardisson.org/afkar/2016/07/04/your-daily-qa-voteyourpark/

|

|

Wladimir Palant: Why Mozilla shouldn't copy Chrome's permission prompt for extensions |

As Mozilla’s Web Extensions project is getting closer towards being usable, quite a few people seem to expect some variant of Chrome’s permission prompt to be implemented in Firefox. So instead of just asking you whether you want to trust an add-on Firefox should list exactly what kind of permissions an add-on needs. So users will be able to make an informed decision and Mozilla will be able to skip the review for add-ons that don’t request any “dangerous” permissions. What could possibly be wrong with that?

In fact, lots of things. People seem to think that Chrome’s permission prompt is working well, because… well, it’s Google and they tend to do things right? However, having dealt with the effects of this prompt for several years I’m fairly certain that it doesn’t have the desired effect. In fact, the issues are so severe that I consider it security theater. Here is why.

Informed decisions?

The permission prompt shown above says: “Read and change all your data on the websites you visit.” Can a regular user tell whether that’s a bad thing? In fact, lots of confused users asked why Adblock Plus needed this permission and whether it was spying on them. And we explained that the process of blocking ads is in fact what Chrome describes as changing data on all websites. Adblock Plus could also read data as a side effect, but of course it doesn’t do anything like that. The latter isn’t because the permission system stops Adblock Plus from doing it, but simply because we are good people (and we also formulated this very restrictive privacy policy).

The problem is: useful extensions will usually request this kind of “give me the keys to the kingdom” permission. Password managers? Sure, these need to fill in passwords on any website — and if they are allowed to access these websites they could theoretically also extract data from them. Context search extensions? Sure, these need to know what word you selected on any website — they could extract additional data. In fact, there are few extensions in Chrome Web Store that don’t produce the scary “read and change all your data” warning, mostly it’s very specialized ones.

How do users deal with that? A large number of users learned to ignore the warnings. I mean, there is always a scary warning and it always means absolutely nothing, why care about it at all? But there is also another group, people who got scared enough that they stopped using extensions altogether. In between, there are those who only install extensions associated with known brands — other extension developers have told me that users distrusting the extension author is very noticeable for extensions outside the “Most popular” list.

No reviews?

It’s a well-known fact that many extension developers love Chrome Web Store (CWS) and dislike Addons.Mozilla.Org (AMO). From a developer’s point of view, publishing to CWS is a very simple process and updates go online after merely 60 minutes. AMO, on the other hand, takes an arbitrary time for a manual review, and review times used to be rather bad in the past, assuming that you get a positive review at all rather than a request to improve various aspects of your extension.

There is another angle to that however, namely the user’s. If your mom wants to install an extension, is that safe? For AMO I’d say: mostly yes, as long as she stays clear of unreviewed add-ons. And CWS? Well, not really. Even many of the most popular extensions have functionality which violates users’ privacy. As you go to the more obscure extensions you will also find more intrusive behavior, all the way to outright malicious.

Wait, doesn’t the permissions system take care of malicious behavior? In a way, it does — an extension cannot format your hard drive, it cannot hide itself in the list of extensions and it cannot prevent users from uninstalling it. But reading out your passwords as you enter them on webpages? Track what pages you visit and send them to a third party service? Add advertisements to webpages as they load? Redirect you to “alternative” search engines as you enter your search term? Not a big deal, all covered by the usual all-encompassing “read and change all your data” permission.

So, what’s the point?

Chrome’s permissions system doesn’t really make the decision process easier for users, usually they still have to trust the extension author. It also does a very poor job when it comes to keeping malicious actors out of CWS. So, does it solve any issue at all? But of course it does! It allows Google to shift blame: if a user installs a malicious extension then it’s the user’s fault and not Google’s. After all, the user has been warned and accepted the permission prompt. Privacy violations and various kinds of malicious behavior are perfectly legitimate given CWS policies because… well, there is no issue as long as users are being warned! Developers are happy and Google can save lots of money on reviews — a win-win situation.

Can this be done better?

At Mozilla All Hands in London we briefly discussed whether there are any alternatives. One of the suggestions was combining that permission prompt with code review. For example, a reviewer could determine whether the extension is merely modifying webpage behavior or actually extracting data from it. Depending on the outcome the permission prompt should display a different message. This compromise would keep the review task relatively simple while providing users with useful information.

Thinking more about it, I’m not entirely convinced however that this is going in the right direction. Even if the extension is extracting data from the webpage, the real question for the user is always: is that in line with the stated goal of the extension, or is it tracking functionality that isn’t really advertised? And reviewers are currently trying to answer that exact question, the latter being a reason for extensions to be rejected due to violations of the “No surprises” policy. Wouldn’t it be a better idea to keep doing that so that the installation prompt can simply say: “Hey, we made sure that this extension is doing what it says, want to install it?”

And what about updates?

One aspect that people usually don’t seem to recognize is extension updates. As an extension evolves, the permissions that it requires might change. For example, my Google search link fix extension was originally meant for Google only, later it turned out that it could address the same issue on other search engines in a similar way. How does Chrome deal with extension updates that require new permissions? Well, it disables the extension until the user approves the permissions again. As you might imagine, a significant percentage of users never do that. This puts extension developers in a dilemma, they have to choose between losing users and limiting extension functionality to the available permissions.

This might be one contributing factor to the prevalence of extensions requesting very broad privileges: it’s much easier to request too many privileges from the start than add permissions later. This only works with permissions that already exist however, if Chrome adds new functionality which is useful for your extensions you cannot get around requesting an additional privilege for it.

The Chrome developers seem to address this issue from two angles. On the one hand, permissions for new functionality being developed tend to be subsumed by already existing permissions. So adding this permission on an update won’t trigger a new permission prompt due to the permissions you already have. Also, Chrome added support for optional permissions which the extension can request in context, when responding to a particular user action. This works well to show the user why a particular permission is needed but doesn’t work for actions performed in background.
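As a sketch of the optional-permissions flow, the function below asks for extra host access only when the user triggers an action. In a real Chrome extension the first argument would be chrome.permissions, whose request() method takes a permissions object and a callback and has to be called from a user gesture, with the origins also declared under optional_permissions in the manifest. The wrapper name requestOnUserAction and the stub are hypothetical and exist only so the example runs outside a browser.

```javascript
// Hypothetical wrapper: ask for additional origins in response to a
// user action and report whether the user granted them.
function requestOnUserAction(permissionsApi, origins, onResult) {
  permissionsApi.request({ origins: origins }, (granted) => {
    onResult(granted === true);
  });
}

// Stand-in for chrome.permissions that grants only one known origin.
const stubPermissions = {
  request(wanted, callback) {
    callback(wanted.origins.every((o) => o === "https://example.com/*"));
  },
};

requestOnUserAction(stubPermissions, ["https://example.com/*"], (granted) => {
  console.log(granted ? "feature enabled" : "feature stays disabled");
});
```

Because the request is tied to a click or similar gesture, the same pattern is not available to code that runs purely in the background, which is the limitation described above.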

Altogether, I hope I have shown that Chrome’s permission prompt has a number of issues that haven’t been sufficiently addressed yet. If Mozilla is going to implement something similar, they had better think about these issues first. I’d hate to see Mozilla also using it as a mechanism to shift the responsibility.

https://palant.de/2016/07/02/why-mozilla-shouldn-t-copy-chrome-s-permission-prompt-for-extensions

|

|

Ludovic Hirlimann: I broke the web and I’m sorry. |

One of the powers of the web (at least for me) is linking and embedding content (like images and videos) to make new content. I have been taking digital pictures since 2006 and using Flickr to host them.

About a month ago, I deleted that Flickr account with 10 years of shots (I probably have raws somewhere, but I’m not sure, and some would be hard to dig out). Before deleting the account I used flickr touch to back up my pictures (I had to patch it to save the non-ASCII stuff I had). I thought I had lost most of the picture metadata, but no, most of it is still there: the part that was embedded in the JPEG by Lightroom before uploading (and yes, I was a Lightroom user). Deleting the account broke the web, like this web post; even Flickr got broken by it, because all the pictures I used to host are now returning 404s. I’m sorry I broke the web.

Of course I couldn’t live without pictures, so I’ve opened a new Flickr account :) (more on that later). I’ve lost my grandfathered right to unlimited hosting; I’m now limited to 1TB. I’m also rebuilding my Flickr presence there and posting for now only my best pics (mostly animals, birds and landscapes). If I had a portrait of you that you liked, ask me and I’ll dig it up; same for group pictures. But if nobody asks, it’s very probable that these pictures will be gone forever from the web. The good news is that I’ve changed my default license to a more open one.

Why did I choose to go back to Flickr?

- I know how it works.

- I have plenty of contacts there (even if some are not active anymore).

- I like the L shortcut when looking at the picture.

- The power of explore, the dizziness of it.

- The APIs

- Things like fluidr

and sorry again for breaking the web.

|

|

Support.Mozilla.Org: SUMO Show & Tell: Helping users with certificate errors |

Hey SUMO Nation!

During the recent Work Week in London we had the utmost pleasure of hanging out with some of you (we’re still a bit sad about not everyone making it… and that we couldn’t organize a meetup for everyone contributing to everything around Mozilla).


Among the numerous sessions, working groups, presentations, and demos we also had a SUMO Show & Tell – a story-telling session where everyone could showcase one cool thing they think everyone should know about.

I have asked those who presented to help me share their awesome stories with everyone else – and here you go, with the first one presented by Philipp, one of our long-time Firefox champions.

Take a look below and learn how we’re dealing with certificate errors and what the future holds in store:

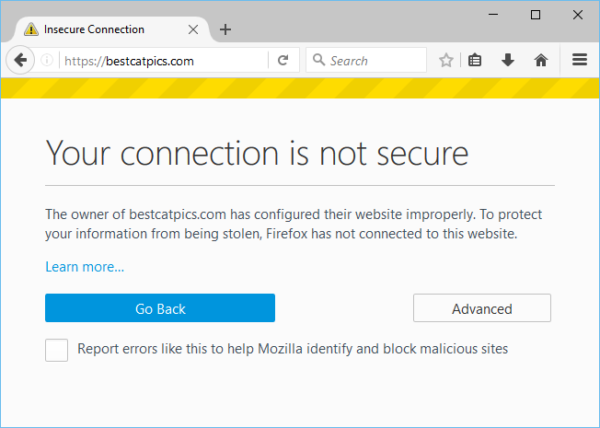

One of the most common and prolonged user problems we face at SUMO and all other support channels is users being unable to visit secure HTTPS websites because they see something like this instead:

From Firefox telemetry data we know that at least 115 million users a month end up seeing such an error page, and probably even more, because Firefox telemetry itself depends on a working HTTPS connection, of course (we do care about our users’ privacy!).


Since the release of Firefox 44 there is a “Learn more…” link on those error pages, pointing to our What does “Your connection is not secure” mean? article, which quickly became the most visited troubleshooting article on SUMO after that (1.3 million monthly visitors). We try to lead users through a list of common fixes and troubleshooting steps in the article, but because there is a whole array of underlying issues that might cause such errors (for example – misconfigured web servers or networks, a skewed clock on a device, intercepted connections) and the error message we show is not very detailed, it’s not always easy for users to navigate around those problems.

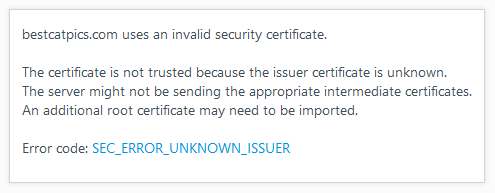

The most common cause triggering these error pages according to our experience at SUMO is a “man-in-the-middle attack”. When that happens, Firefox does not get to see the trusted certificate of the website you’re supposed to be connected to, because something is intercepting the connection and is replacing the genuine certificate. Sometimes, it can be caused by malware present within the operating system, but the software causing this doesn’t always have to be malicious by nature – security software from well-known vendors like Kaspersky, Avast, BitDefender and others will trigger the error page, as it’s trying to get into the middle of encrypted connections to perform scanning or other tasks. In this case, affected users will see the error code SEC_ERROR_UNKNOWN_ISSUER when they click on the “Advanced”-button on the error page:

In order to avoid that, those software products will place their certificate (that they use to intercept secure connections) into your browser’s trusted certificate store. However, since Firefox implements its own store of trusted certificates and doesn’t rely on the operating system for this, things are bound to go wrong more easily for us: maybe the external software fails to properly place its certificates in the Firefox trust store, or it only does so once during its original setup but a user installs and starts to use Firefox later on, or a user might just refresh their Firefox profile and all custom certificates get lost in the process, or… You get the picture – so many ways things could go wrong!

As a result, a user might have problems accessing any sites using HTTPS (including personal or work email, favourite search engines, social networks or web banking) in Firefox, but other browsers will still continue to work as expected – so, we are in danger of disappointing and discouraging users from using Firefox!
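To make the failure mode concrete, here is a toy model in JavaScript of why the same intercepted connection can pass in one browser and fail in Firefox. Everything in it (the store contents, the `checkIssuer` helper, the issuer names) is hypothetical and only illustrates the idea of separate trust stores; it is not how Firefox actually validates certificates. The only name taken from the text is the error code SEC_ERROR_UNKNOWN_ISSUER.

```javascript
// Toy model: a trust store is just a set of trusted issuer names.
// Real certificate validation checks signatures and whole chains.
const osTrustStore = new Set(["Example Public Root CA", "Example AV Scanner Root"]);

// Assumption: the security suite never added its root to Firefox's own store.
const firefoxTrustStore = new Set(["Example Public Root CA"]);

// Returns "OK" when the issuer is trusted, otherwise the error code
// that the "Advanced" button on the error page would reveal.
function checkIssuer(issuer, trustStore) {
  return trustStore.has(issuer) ? "OK" : "SEC_ERROR_UNKNOWN_ISSUER";
}

// The security suite intercepts the connection and presents its own certificate.
const interceptedIssuer = "Example AV Scanner Root";

console.log("OS store:     ", checkIssuer(interceptedIssuer, osTrustStore));
console.log("Firefox store:", checkIssuer(interceptedIssuer, firefoxTrustStore));
```

In this model the browser that relies on the operating system store accepts the intercepted connection, while the one with its own store reports an unknown issuer.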

Starting with Firefox 48, scheduled for release in early August, users who land on a certificate error page due to a suspected “man-in-the-middle” attack will be led to this custom-made SUMO article after they click on “Learn more” (Bug 1242886 – big thanks to Johann Hofmann for implementing that!). The article contains known workarounds tailored for various security suites, which can hopefully put many more affected users in the position to fix the issue and get their Firefox working securely and as expected again.

Ideally, I would imagine that we would not prohibit Firefox users from loading secure pages when a certificate from a known security software is used to intercept a secure connection in the first place… But we might find more subtle ways of helping the user realise that their connection might be monitored for safety reasons, rather than intercepted for malicious purposes.

…thank you, Philipp! What is certain is that we won’t stop there and will continue to work on reducing the biggest user pain-points from within the product wherever possible…

We also won’t stop posting more stories from the Show & Tell – at least until we run out of them ;-) I hope you enjoyed Philipp’s insight into one of the complex aspects of internet security, as provided by Firefox.

https://blog.mozilla.org/sumo/2016/07/02/sumo-show-tell-helping-users-with-certificate-errors/

|

|

Cameron Kaiser: Dr Kaiser? You've been Servoed. Good day. |

Once 45 hits release and 38 is retired, we'll start the old unstable builds up again for new features (i.e., feature parity). My plan is one new functionality improvement and one new optimization each cycle, with 6-12 week cycles for baking due to our smaller user base. You'll get some clues about the user-facing features as part of tenfourfox.dtd, which will be pre-written so that localizers can have it done and features can just roll out as I complete them.

On to other things. Mozilla announced yesterday the (very preliminary) release of the Servo Developer Preview, using their next-generation Servo engine instead of the Gecko engine that presently powers Firefox (and TenFourFox). Don't get your hopes up for this one: Servo is written in Rust, Rust needs llvm (which doesn't work yet on OS X/ppc, part of the reason we're dropping source parity), and even the extant PowerPC Rust compiler on Linux may never be capable of building it. This one's strictly for the Intel Mac lulz.

So here's Servo, rendering Ars Technica:

Servo's interface is very sparse, but novel, and functional enough. I'm not going to speak further about that because it's quite obviously nowhere near finished or final. It works well enough to test and I wasn't able to make the browser crash in my brief usage. Thumbs up there.

With regard to the layout engine, though, many things don't work. You can see several rendering glitches immediately on the main page with the gradient and font block backgrounds. Comment threads in articles appear crazily spaced. Incidentally, I don't care if you can see my browser tabs or that I'm trying to figure out how to interface a joystick port to a Raspberry Pi (actually, it's for a C.H.I.P., but the Pi schematics should work for the GPIO pins, as well as whatever's needed to connect it to 5V logic).

The TenFourFox home page doesn't fare much better:

The background is missing and the top Classilla link seems to have gotten fixed to the top. On the other hand, the Help and Support Tab does load, but articles are not clickable and you can't pick anything from drop-down select form elements.

Now, I'll admit this last one is an unfair test, but Floodgap's home page is also pretty wrecked:

This is an unfair test because I intentionally wrote the Floodgap web page to be useable and "proper" as far back as Netscape Navigator 3, festooning it with lots of naughtiness like and other unmentionables that are the equivalent of HTML syphilis. Gecko handles it fine, but Servo chokes on the interlaced GIFs and just about completely ignores any of the font colour and face stuff. But I wasn't really expecting it to do otherwise at this stage; no doubt quirks mode is not currently a priority.

I think the best that can be said about this first public release of Servo, admittedly from my fairly uninformed outsider view, is that it exists and that it works. There was certainly a lot of doubt about those things not too long ago, and Mozilla has demonstrated clearly with this release that Servo is viable as a technology if not yet as a browser. What is less clear is what advantages it will ultimately offer. Though the aim with Servo is better performance on modern systems, especially systems with cores to burn, on this 2014 i7 MacBook Air Servo didn't really seem to offer any speed advantage over Gecko -- even with the understanding this version is almost certainly unoptimized, right now Gecko is rather faster, substantially less buggy and infinitely more functional. It's going to take a very long time before Servo can stand on its own, let alone become a Gecko replacement, and I think in the meantime Mozilla needs to do a better job of not alienating the users they've got or Servo-Firefox will remain a purely academic experiment.

Meanwhile, I look forward to the next version and seeing how it evolves, even though I doubt it will ever run on a Power Mac.

http://tenfourfox.blogspot.com/2016/07/dr-kaiser-youve-been-servoed-good-day.html

|

|

Nick Desaulniers: Cross Compiling C/C++ for Android |

Let’s say you want to build a hello world command line application in C or C++ and run it on your Android phone. How would you go about it? It’s not super practical; apps visible and distributable to end users must use the framework (AFAIK), but for folks looking to get into developing on ARM, it’s likely they have an ARM device in their pocket.

This post is for folks who typically invoke their compiler from the command line, either explicitly, from build scripts, or other forms of automation.

At work, when working on Android, we typically checkout the entire Android source code (which is huge), use lunch to configure a ton of environmental variables, then use Makefiles with lots of includes and special vars. We don’t want to spend the time and disk space checking out the Android source code just to have a working cross compiler. Luckily, the Android tools team has an excellent utility to grab a prebuilt cross compiler.

This assumes you’re building from a Linux host. Android is a distribution of Linux, which is much easier to target from a Linux host. At home, I’ll usually develop on my OSX machine, ssh’d into my headless Linux box. (iTerm2 and tmux both have exclusive features, but I currently prefer iTerm2.)

The first thing we want to do is fetch the Android NDK. Not the SDK, the NDK.

http://nickdesaulniers.github.io/blog/2016/07/01/android-cli/

|

|

Mozilla Addons Blog: July 2016 Featured Add-ons |

Pick of the Month: Firebug

by Firebug Working Group

Access a bevy of development tools while you browse—edit, debug, and monitor CSS, HTML, and JavaScript live in any Web page.

“One of the main reasons why I love Firefox. Cannot imagine Firefox without Firebug.”

Featured: ipFood

by hg9x

Browse the Web anonymously by generating random proxies.

“Everything works perfectly.”

Nominate your favorite add-ons

Featured add-ons are selected by a community board made up of add-on developers, users, and fans. Board members change every six months, so there’s always an opportunity to participate. Stay tuned to this blog for the next call for applications. Here’s further information on AMO’s featured content policies.

If you’d like to nominate an add-on for featuring, please send it to amo-featured@mozilla.org for the board’s consideration. We welcome you to submit your own add-on!

https://blog.mozilla.org/addons/2016/07/01/july-2016-featured-add-ons/

|

|

Mozilla Open Policy & Advocacy Blog: Protecting the Open Internet in India |

Millions of people in developing nations think that Facebook is the Internet. This is not a matter of mere confusion. It means that millions of people aren’t able to take advantage of all the things that the open, neutral Internet has to offer.

We’re committed to advancing net neutrality on a global scale. People around the world deserve access to the open Web: a Web ripe for exploration, education, and innovation. It’s especially critical to protect this right in countries where people are going online for the first time.

Mozilla’s entire community of open Web supporters is a demonstration of this commitment. Over one million Indians have mobilized through social media campaigns and on-the-ground efforts to “Save the Internet” and petition the Telecom Regulatory Authority of India (TRAI). Indian Mozillians took a bold stand for net neutrality, because they care about freedom, choice, and innovation.

This week, Mozilla filed comments with TRAI. We’ve been engaged before with TRAI and the government of India on net neutrality, free data, and differential pricing. This time, we’re commenting on a pre-consultation paper on net neutrality. We are also contributing feedback on the Free Data consultation paper. This document asks for possible options that respect net neutrality while providing free data to users and complying with the Differential Pricing Regulation.

Our comments stress the need for a strong regulatory framework in India that ensures all internet traffic is treated equally, whether you’re a billion dollar company or a small startup. We also encourage the development of data-offering models that follow net neutrality principles:

- All points in the network should be able to connect with other points in the network

- Service providers should deliver all traffic from point to point as expeditiously as possible

- The Internet should remain a place for permissionless innovation.

We also articulate principles that define characteristics of equal-rating compliant models. We believe these principles will help TRAI and other regulators assess subsidized data offerings. Equal-rating means that offerings:

- Are content-agnostic – data offered is not limited to specific content or types of content

- Are not subject to gatekeepers – content doesn’t have to go through subjective or arbitrary processes to be included in the system

- Do not allow pay-for-play – data can’t be bought outright, which would privilege providers who have more purchasing power

- Are transparent – the terms are understandable and up-front for both end users and content providers

- Allow for user and content choice – users and content providers ultimately have the power to be included in, or excluded from, the system.

The Work Ahead

We applaud the Indian government for taking encouraging steps to protect the open Internet. TRAI’s consultation on net neutrality is a welcome step toward enshrining net neutrality in India. Still, connecting the unconnected remains one of the greatest challenges of our time. More work is needed to develop new, alternative models that offer the full diversity of the open Internet to everyone. We’ll continue to support the open Internet and net neutrality in India and around the world.

Join us in this effort by engaging on social media with #SaveTheInternet. Stay tuned to this blog for more on these issues. Share your opinion with the TRAI. There’s still time!

https://blog.mozilla.org/netpolicy/2016/07/01/protecting-the-open-internet-in-india/

|

|

Mark Finkle: Leaving Mozilla |

I joined Mozilla in 2006 wanting to learn how to build & ship software at a large scale, to push myself to the next level, and to have an impact on millions of people. Mozilla also gave me an opportunity to build teams, lead people, and focus on products. It’s been a great experience and I have definitely accomplished my original goals, but after nearly 10 years, I have decided to move on.

One of the most unexpected joys from my time at Mozilla has been working with contributors and the Mozilla Community. The mentorship and communication with contributors create a positive environment that benefits everyone on the team. Watching someone get excited and engaged from the process of landing code in Firefox is an awesome feeling.

People of Mozilla, past and present: Thank you for your patience, your trust and your guidance. Ten years creates a lot of memories.

Special shout-out to the Mozilla Mobile team. I’m very proud of the work we (mostly you) accomplished and continue to deliver. You’re a great group of people. Thanks for helping me become a better leader.

It’s a Small World – Orlando All Hands Dec 2015

|

|

Jen Kagan: day whoscountinganymore: the “good-first-bug” tag |

let’s talk about this tag for a minute. it looks like this:

it appears on github. it signals to baby contributors that the issue it is attached to may be a safe one to start with.

while i still imagine myself as a baby contributor, the truth is that i’m really not anymore. it’s been almost a year since i wrote my first line of code in dan shiffman’s “intro to computational media” class, and i’ve been inching my way into open source projects ever since. so that’s where i’m at. one year in. this good-first-bug bug should be a piece of cake. here’s the bug/issue:

add a link? nbd. i followed all the instructions on the test pilot readme for setting up a development environment, but i hit a 404 page when i tried to navigate to the development server. and then there were npm errors related to gulp. and development permissions issues. and that was setting up the dev environment before editing any files. which, by the way, which file is this url supposed to live in anyway?

it turns out test pilot uses ampersand.js for data binding. which, as far as i can tell, basically means that we’re not directly editing html, but templates for html files. “models” or something. yes, something about scalability. so dave clarified:

to load the dummy data, per step 6, you need the super secret docker command:

docker exec testpilot_server_1 ./manage.py loaddata fixtures/demo_data.json

which lives in this 3-month-old issue here.

i thought i was in the clear, but i hit another error after that:

how can you say “discourse_url does not exist” when it totally DOES exist BECAUSE I JUST WROTE IT?

sweet salvation finally came in the form of a previous test pilot bug fix for an issue that was basically exactly the same as the one i was working on: “add link to experiment model”

after reviewing that commit, i saw that what i was missing, what was producing the “does not exist” error for me, was that i hadn’t yet created a particular python script in the experiments/migrations/ folder.

i’m not totally clear on what that script does—i copy/pasted dave’s script and adjusted it for the changes i was making—but my guess, judging by the folder name “migrations” and the python code that says something like “add a field to the experiment model” is that it tells some part of the add-on that a new thing has been added and that the add-on should incorporate it instead of ignoring it.

okay. the point of this has not been to be boring for boring’s sake. it has been to say: “good-first-bug” is relative. sometimes things that are named hard are easy. sometimes, things that are named intro-level are not. ask for help. iz okay.

http://www.jkitppit.com/2016/07/01/day-whoscountinganymore-the-good-first-bug-tag/

|

|

Mozilla Addons Blog: Mozlondon Debriefer |

From June 13 – 17, more than a thousand Mozillians descended on London to celebrate the accomplishments of the past six months and collaborate on priorities for the latter half of the year. The add-ons team and a handful of volunteers did just that, and we wanted to share some of the highlights.

Add-on reviews

We celebrated the fact that review times have dropped dramatically in 2016. Last year, most add-on submissions got clogged in the review queue for 10 or more days. Now, 95% of submissions are reviewed in under five days (84% in less than 24 hours!). This is a huge boon to our developer community and a great credit to our tireless, mostly-volunteer review team.

Review efficacy should continue to trend positively over the balance of this year. We furthered plans to simplify the review process by eliminating preliminary reviews, among other streamlining initiatives.

Engineering

It’s likely not a surprise to learn that WebExtensions dominated engineering discussions in London. Here are a few top-level items…

… A WebExtensions Advisory Group has been formed. This cross-disciplinary body is primarily tasked with providing input around the development of future APIs.

… We saw some inspired demos, like runtime.connectNative, which will allow add-ons to communicate out of process; and the auto-reload function for WebExtensions (set to land in 49); plus a demo of a hybrid add-on that allows an SDK add-on to embed a WebExtension add-on, allowing developers to start using the WebExtension API and migrate their users.

… Prioritized future work for WebExtensions was solidified, including permissions support, Chrome parity support for top Chrome content, and end-to-end automated installation testing.

… We recapped many recent WebExtensions advancements, such as the implementation of dozens of new APIs (e.g. history, commands, downloads, webNavigation, etc.)
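As a rough illustration of the runtime.connectNative demo mentioned above, the sketch below opens a port to a native application and exchanges one message. The `runtime` object is passed in as a parameter so the snippet can also run outside a browser with a stand-in object; in an extension you would pass the real `browser.runtime`. The application name `com.example.ping`, the message shape, and the helper names are made up for the example, and a real setup also needs a native messaging manifest registered on the host.

```javascript
// Open a port to a native application and send it a single message.
// `runtime` is expected to look like browser.runtime in a WebExtension.
function pingNativeApp(runtime, appName, onReply) {
  const port = runtime.connectNative(appName);
  // In a real extension, replies arrive asynchronously from the other process.
  port.onMessage.addListener(onReply);
  port.postMessage({ text: "ping" });
  return port;
}

// Stand-in for browser.runtime so the sketch runs without a browser.
// It echoes every message straight back to the registered listeners.
function makeFakeRuntime() {
  return {
    connectNative(appName) {
      const listeners = [];
      return {
        name: appName,
        onMessage: { addListener: (fn) => listeners.push(fn) },
        postMessage: (msg) => listeners.forEach((fn) => fn({ echo: msg })),
      };
    },
  };
}

const replies = [];
pingNativeApp(makeFakeRuntime(), "com.example.ping", (msg) => replies.push(msg));
console.log(replies);
```

Keeping the browser object as a parameter is a design choice for testability only; nothing in the API requires it.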

Community & Editorial

It’s worth recognizing all the diverse ways our community contributes to the success of add-ons: coders, content reviewers, curators, translators, tech writers, evangelists, student ambassadors, and more. Our community is integral to giving Firefox users the power to personalize their Web experience, and to AMO’s ability to support a massive ecosystem of more than 32,000 extensions and 400,000 themes.

One of the focal points of our community discussions in London was: How can we do more to move volunteers up the contribution curve, so we can nurture the next generation of leaders? What more can we do to turn bug commenters into bug fixers, developers into evangelists, and so forth? Check out the notes from this session and add your ideas there!

On the editorial side, we started exploring ways of re-thinking content discovery. Of course we have the AMO site as a content discovery destination with more than 15 million monthly users, but research tells us the majority of Firefox users hardly know what an add-on is, let alone where to find one. So how can we introduce the wonder of add-ons to a broader Firefox audience?

A general consensus emerged—we need to take the content to where the user is, to key moments in their everyday Web experience where perhaps an add-on can address a need. This is just one of the projects we’ll begin tackling in the second half of the year. If you’d like to get involved, you’re invited to join our weekly public UX meeting.

To get involved with the add-ons world, please visit our wiki page, or get in touch with us at irc.mozilla.org in the #addons channel.

https://blog.mozilla.org/addons/2016/06/30/mozlondon-debriefer/

|

|

Mozilla Addons Blog: Add-on Compatibility for Firefox 49 |

Firefox 49 will be released on September 13th. Here’s the list of changes that went into this version that can affect add-on compatibility. There is more information available in Firefox 49 for Developers, so you should also give it a look.

General

- Cleanup doorhanger notification anchor ID & class duplication. This could affect the styling of add-ons that use popup notifications.

- Make a window opened through window.open() to be scrollable by default.

- Remove non-standard flag argument from String.prototype.{search,match,replace}.

- Remove HTML Microdata API.
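For add-ons that relied on the non-standard third flags argument, one possible migration is to build a RegExp that carries the flags instead. The sketch below assumes the old call looked like `str.replace(pattern, replacement, flags)` with a plain string pattern; the helper names `escapeRegExp` and `replaceWithFlags` are invented for this example.

```javascript
// Escape characters that have a special meaning in a regular expression,
// so a plain string can be matched literally.
function escapeRegExp(text) {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Standards-based stand-in for the removed form
//   input.replace(pattern, replacement, flags)
// where `pattern` is a plain string and `flags` is e.g. "g" or "gi".
function replaceWithFlags(input, pattern, replacement, flags) {
  return input.replace(new RegExp(escapeRegExp(pattern), flags), replacement);
}

console.log(replaceWithFlags("a.b.c", ".", "-", "g"));
console.log(replaceWithFlags("Foo foo", "foo", "bar", "gi"));
```

The same approach works for `search` and `match`: pass a RegExp built with the desired flags instead of a string plus a flags argument.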

XPCOM and Modules

- Limit the size of pref values sent to the child process. As a reminder, you shouldn’t use the preferences service to store large amounts of data. It should only be used for small values (under 4Kb). This bug enforces it for inter-process communication.

- Need to shim protocol.js move for add-ons. The module should now be accessed using require("devtools/shared/protocol"). For now, there’s a shim that permits the old location to be used.

- Add deprecation warning within nsFaviconService.cpp. If you manipulate favicons, you can find the changes described in this bug comment. After this change you’ll only see a warning if you don’t pass the right principal, but eventually it won’t work as you expect.
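One way to act on the pref size reminder above is to measure a value before handing it to the preferences service. This is only a sketch: the helper name `prefValueFits` is made up, and reading "under 4Kb" as fewer than 4096 UTF-8 bytes is an assumption about how the limit is measured, not something stated in the bug.

```javascript
// Assumption: "under 4Kb" is read as fewer than 4096 bytes of UTF-8.
const MAX_PREF_BYTES = 4 * 1024;

// Returns true when the value, converted to a string, stays under the limit.
function prefValueFits(value) {
  const bytes = new TextEncoder().encode(String(value)).length;
  return bytes < MAX_PREF_BYTES;
}

console.log(prefValueFits("enabled"));
console.log(prefValueFits("x".repeat(5000)));
```

Values that fail a check like this are probably better kept somewhere other than preferences.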

Let me know in the comments if there’s anything missing or incorrect on these lists. If your add-on breaks on Firefox 49, I’d like to know.

The automatic compatibility validation and upgrade for add-ons on AMO will happen in a few weeks, so keep an eye on your email if you have an add-on listed on our site with its compatibility set to Firefox 48.

https://blog.mozilla.org/addons/2016/06/30/compatibility-for-firefox-49/

|

|

Armen Zambrano: Adding new jobs to Treeherder is now transparent |

This gives two main advantages:

- You can have access to the output of how the request went

- A link to file a bug under the right component and CC me

You can see in this screenshot what the job looks like:

- Backfill a job - Link to log

- Add new jobs - Link to log

- Trigger all talos jobs - Link to log

- Click on job (the job's panel will load at the bottom of the page)

- Click on "Job details"

- You will see "File bug" (see the screenshot below)

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

|

|