Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Chris Cooper: RelEng & RelOps Weekly highlights - April 18, 2016 |

“My update requests have your blood on them.”

Improve Release Pipeline:

Varun began work on improving Balrog’s backend to make multifile responses (such as GMP) easier to understand and configure. Historically it has been hard for releng to enlist much help from the community due to the access restrictions inherent in our systems. Kudos to Ben for finding suitable community projects in the Balrog space, and then more importantly, finding the time to mentor Varun and others through the work.

Improve CI Pipeline:

With build promotion well underway for the upcoming Firefox 46 release, releng is switching gears and jumping into the TaskCluster migration with both feet. Kim and Mihai will be working full-time on migration efforts, and many others within releng have smaller roles. There is still a lot of work to do just to migrate all existing Linux workloads into TaskCluster, and that will be our focus for the next 3 months.

Release:

We started doing the uplifts for the Firefox 46 release cycle late last week. Release candidate builds should be starting soon. As mentioned above, this is the first non-beta release of Firefox to use the new build promotion process.

Last week, we shipped Firefox and Fennec 45.0.2 and 46.0b10, Firefox 45.0.2esr and Thunderbird 45.0. For further details, check out the release notes here:

- https://wiki.mozilla.org/Releases:Release_Post_Mortem:2016-04-13

- https://wiki.mozilla.org/Releases:Release_Post_Mortem:2016-04-20

See you next week!

|

|

Air Mozilla: Mozilla Weekly Project Meeting, 18 Apr 2016 |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20160418/

|

|

Nathan Froyd: rr talk post-mortem |

On Wednesday last week, I gave an invited talk on rr to a group of interested students and faculty at Rose-Hulman. The slides I used are available, though I doubt they make a lot of sense without the talk itself to go with them. Things I was pleased with:

- I didn’t overrun my time limit, which was pretty satisfying. I would have liked to have an hour (40 minutes talk/20 minutes for questions or overrun), but the slot was for a standard class period of 50 minutes. I also wanted to leave some time for questions at the end, of which there were a few. Despite the talk being scheduled for the last class period of the day, it was well-attended.

- The slides worked well. My slides are inspired by Lawrence Lessig’s style of presenting, which I also used for my lightning talk in Orlando. It forces you to think about what you’re putting on each slide and make each slide count. (I realize I didn’t use this for my Gecko onboarding presentation; I’m not sure if the Lessig method would work for things like that. Maybe at the next onboarding…)

- The level of sophistication was just about right, and I think the story approach to creating rr helped guide people through the presentation. At least, it didn’t look as though many people were nodding off or completely confused, despite rr being a complex systems-heavy program.

Most of the above I credit to practicing the talk repeatedly. I forget where I heard it, but a rule of thumb I use for presentations is 10 hours of prep time minimum (!) for every 1 hour of talk time. The prep time always winds up helping: improving the material, refining the presentation, and boosting my confidence giving the presentation. Despite all that practice, opportunities for improvement remain:

- The talk could have used any amount of introduction on “here’s how debuggers work”. This is kind of old hat to me, but I realized after the fact that to many students (perhaps even some faculty), blithely asserting that rr can start and stop threads at will, for instance, might seem mysterious. A slide or two on the differences between how rr record works vs. how rr replay works and interacts with GDB would have been clarifying as well.

- The above is an instance where a diagram or two might have been helpful. I dislike putting diagrams in my talks because I dislike the thought of spending all that time to find a decent, simple app for drawing things, actually drawing them, and then exporting a non-awful version into a presentation. It’s just a hurdle that I have to clear once, though, so I should just get over it.

- Checkpointing and the actual mechanisms by which rr can run forwards or backwards in your program got short shrift and should have been explained in a little more detail. (Diagrams again…) Perhaps not surprisingly, the checkpointing material got added later during the talk prep and therefore didn’t get practiced as much.

- The demo received very little practice (I’m sensing a theme here) and while it was able to show off a few of rr’s capabilities, it wasn’t very polished or impressive. Part of that is due to rr mysteriously deciding to cease working on my virtual machine, but part of that was just my own laziness and assuming things would work out just fine at the actual talk. Always practice!

https://blog.mozilla.org/nfroyd/2016/04/18/rr-talk-post-mortem/

|

|

Allen Wirfs-Brock: Slide Bite: From Chaos |

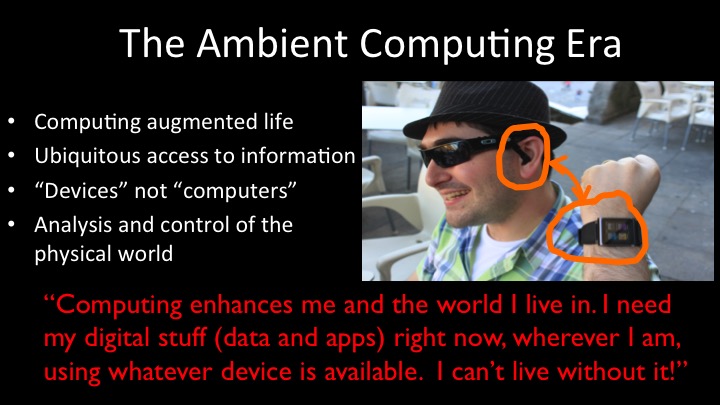

At the beginning of a new computing era, it’s fairly easy to sketch a long-term vision of the era. All it takes is knowledge of current technical trajectories and a bit of imagination. But it’s impossible to predict any of the essential details of how it will actually play out.

Technical, business, and social innovation is rampant in the early years of a new era. Chaotic interactions drive the churn of innovation. The winners that will emerge from this churn are unpredictable. Serendipity is as much a factor as merit. But eventually, the stable pillars of the new era will emerge from the chaos. There are no guarantees of success, but for innovators right now is your best opportunity for impacting the ultimate form of the Ambient Computing Era.

|

|

Kartikaya Gupta: Using multiple keyboards |

When typing on a laptop keyboard, I find that my posture tends to get very closed and hunched. To fix this I resurrected an old low-tech solution I had for this problem: using two keyboards. Simply plug in an external USB keyboard, and use one keyboard for each hand. It's like a split keyboard, but better, because you can position it wherever you want to get a posture that's comfortable for you.

I used to do this on a Windows machine back when I was working at RIM and it worked great. Recently I tried to do it on my Mac laptop, but ran into the problem where the modifier state from one keyboard didn't apply to the other keyboard. So holding shift on one keyboard and T on the other wouldn't produce an uppercase T. This was quite annoying, and it seems to be an OS-level thing. After some googling I found Karabiner which solves this problem. Well, really it appears to be a more general keyboard customization tool, but the default configuration also combines keys across keyboards which is exactly what I wanted. \o/

Of course, changing your posture won't magically fix everything - moving around regularly is still the best way to go, but for me personally, this helps a bit :)

|

|

QMO: Firefox 47.0 Aurora Testday Results |

Hello Mozillians!

As you may already know, last Friday – April 15th – we held a new successful Testday event, for Firefox 47.0 Aurora.

Results:

We’d like to take this opportunity to say a big THANK YOU to Teodora Vermesan, Chandrakant Dhutadmal, gaby2300, Moin Shaikh, Juan David Patiño, Luis Fernandez, Vignesh Kumar, Ilse Macías, Iryna Thompson and to our amazing Bangladesh QA Community: Hossain Al Ikram, Rezaul Huque Nayeem, Azmina Akter Papeya, Saheda Reza Antora, Raihan Ali, Khalid Syfullah Zaman, Sajedul Islam, Samad Talukdar, John Sujoy, Saddam Hossain, Asiful Kabir Heemel, Roman Syed, Md. Tanvir Ahmed, Md Rakibul Islam, Anik Roy, Kazi Nuzhat Tasnem, Sauradeep Dutta, Kazi Sakib Ahmad, Maruf Rahman, Shanewas Niloy, Tanvir Rahman, Tazin Ahmed, Mohammed Jawad Ibne Ishaque, A.B.M.Nashrif, Fahim, Mohammad Maruf Islam, akash, Zayed News, Forhad Hossain, Md.Tarikul Islam Oashi, Sajal Ahmed, Fahmida Noor, Mubina Rahaman Jerin and Md.Faysal Alam Riyad for getting involved in this event and making Firefox the best it can be.

Also, many thanks to all our active moderators.

Keep an eye on QMO for upcoming events!

|

|

Frédéric Harper: The Day I Wanted to Kill Myself |

My semicolon tattoo

A little more than a year ago, my life was perfect. At least, from my own point of view. I was engaged to the most formidable woman I ever knew and we were living in our beautiful spacious condominium with our kids, our three cats. People were paying me very well to share my passion about technology and travel all over the world to help developers succeed with their projects. My friends and family were an important part of my life and even if I wasn’t the healthiest man on earth, I had no real health issues. I was happy and I couldn’t ask for more… Until my world collapsed.

I was 4500 kilometers away from home when I learned that the woman of my life, the one I spent one fourth of my young existence with, was leaving me. That was the end of my world! As if it wasn’t enough to lose the person you share your life with, some people I considered friends ran away from me: sad Fred is no fun, and obviously, when there is a separation, people feel the need to “take a side”. Right before, I realized that the company I was working for wasn’t the right one for me, so I decided to resign: I wasn’t able to deliver as I should, taking this into the equation with everything else. Of course, I had no savings, and it’s at that exact moment that we had water damage in our building and that I had to pay out a couple of thousand for the renovation. During that period, I sank deeply and very quickly: someone called Depression knocked at my door.

For months, I was going deeper in the rabbit hole. Everything was hard to achieve, and uninteresting: even taking my shower was a real exploit. I was staying home, doing nothing except eating shitty food, gaining weight and watching Netflix. I’ve always considered myself a social beast, but even just seeing my best friend was painful and unpleasant. I didn’t want to talk to people at all. I didn’t want to see people. I didn’t need help even if I felt I was a failure. My life was a failure. During that period, my “happiest” moments were when I was at a bar, drinking: alcohol was making me numb, making me forget that I was swimming in the dark all day long. Obviously, that tasty nectar called beer wasn’t helping me at all: it was taking me deeper than I was and as any stupid human, I was trying to get back my love, in the most ineffective way ever, to stay polite with myself. On top of that, even with good will, everyone was giving me shitty advice (side note: in that situation, the only thing you should do is be there for the other – you don’t know what the person is going through and please, don’t give advice, just be there). That piece of shit that I was seeing in the mirror couldn’t have been me: I was strong. I’ve always done everything in my life to be happy: why was I not able to make the necessary changes to get back on my feet? Something was pulling me to the bottom and was putting weight on my shoulder. I wasn’t happy anymore, my life wasn’t valuable anymore. Maybe the solution was to kill myself?

Seriously, why live in a world where the woman I wanted to spend the rest of my life with, the woman who wanted to spend the rest of her life with my own person, was running away from me? Why live in a world where people were spitting on me, not literally, in the lovely world that is social media? Why live in a world where the job I thought I was born for was maybe not made for me? You know that thing called the impostor syndrome? I wasn’t happy and I wasn’t seeing the light at the end of the tunnel. I had no more strength left. I hadn’t had a proper night of sleep in weeks and no healthy meal since, forever. I don’t even talk about exercise… I was practically dead already, so one night, I drank like never before, and had the marvellous idea to nearly harass my former fiancée: I wanted her back. She closed her phone, it was the end: I decided it was the end. I was an asshole. I had enough. I wasn’t able to take more of that shit that is life. Fortunately, I blacked out, being truly intoxicated, before doing anything irreparable… until the cops knocked at my door. They were there to check if I was still alive. Two cops, at my door, wanted to see if I was alive. Can you imagine? I’m pretty sure you can’t. I was shaking and nearly crying: they were ready to smash my door if I wasn’t answering them in the second after I did. I reached a point where people who still cared about me were worried enough to call the police. Can you imagine again? Worrying the people you love so much that they need to take drastic actions like this? I was terrified. I. Was. Terrified. Not about the cops, but about me… I was at a breaking point! Fuck…

At that exact moment, I decided I needed to try to take care of myself. I started to see a psychologist twice a week. My doctor prescribed me antidepressants and pills to help me sleep a bit. Until then, I hadn’t taken any medicine for my severe attention deficit disorder (ADD – ADHD, with hyperactivity, in my case) that was diagnosed years ago, but I asked my doctor to add this to the cocktail of pills she was giving me. I also forced myself to see my close friends and I stopped taking anything containing alcohol. It was a complete turnaround: anything that was helping me to see some light out of that terrible time of my life was part of my plan. Actually, I didn’t have any plan, I just wanted to run away from that scariest part of me. I even started to write a personal diary every time I had a difficult thought in my mind, which was more than once daily. It wasn’t easy. I wasn’t happy, but I was scared. I was scared to get back to that moment when the only plausible idea was to end my life. The fear was bigger than the sadness, trust me. Baby steps were made to go forward. It was, and still is, the biggest challenge I ever had in my life.

One evening, I was with my best friend at a Jean Leloup show: for a small moment, for the first time in months, I was having fun. I was smiling! And I started to cry… I realized that if I had killed myself, I wouldn’t have been able to be there, with a man who has loved me as a friend for eighteen years and supported me like no one during that difficult time. I wouldn’t have been able to be there, singing and dancing to the music I love so much… At that exact moment, I knew I was starting to slowly get back on my feet. I knew that it wasn’t only the right thing to do, it was the thing to do. Thanks to my parents, my friends and the health professionals, I was finally feeling like my life was improving. It was a work in progress, but I was going in the right direction.

Still today, life isn’t easy. Life is continuing to throw rocks at me, like my mother getting a diagnosis of Alzheimer’s and, this week, cancer. I’m still trying to fix parts of my life, trying to find myself, but I can smile now, most of the time. It’s a constant battle, but I now know it’s worth it. Anyhow, I have mental illness and I’m not ashamed of it anymore: I’m not ashamed anymore of what happened! I’m putting all the effort I can into making my life better. Again, it’s not easy, but small steps at a time, I’m getting better. Since then, there is a semicolon tattoo on my wrist (picture above) to remind me that life is precious. That my life is precious. I could’ve ended my life, like an author ending a sentence with a coma, but I chose not to: my story isn’t over…

P.S.: If you have suicidal thoughts or feel like you are going through what I’ve lived, please call a friend. If you don’t want to or can’t, call Suicide Action Montreal at 514-723-4000 or check the hotline number in your country. You deserve better. You deserve to live!

http://feedproxy.google.com/~r/outofcomfortzonenet/~3/RXASk6b1Idk/

|

|

Karl Dubost: [worklog] Ganesh, remover of obstacles |

Earthquake in Kumamoto (the green circle is where I live). For people away from Japan, it always sounds like a scary thing. This one was large, but Japan is a big series of islands and where I live was far from the earthquake. Or if you want a comparison, it's a bit like people in Japan being worried for people in New York because of an earthquake in Miami, or for people in Oslo because of an earthquake in Athens. Time to remove any obstacles, so Ganesh.

Tune of the week: Deva Shree Ganesha - Agneepath.

Webcompat Life

Progress this week:

Today: 2016-04-18T07:52:17.560261
374 open issues
----------------------
needsinfo       3
needsdiagnosis  126
needscontact    25
contactready    95
sitewait        122
----------------------

You are welcome to participate

Preparing the London agenda.

After reducing the incoming bugs to nearly 0 every day in the previous weeks, I have now reduced the needscontact queue to around 25 issues. Most of the remaining ones are for Microsoft, Opera, and the Firefox OS community to deal with. My next target is the contactready queue, at around 100 issues.

Posted my git/github workflow.

Webcompat issues

(a selection of some of the bugs worked on this week).

- This bug is still going on and is still insane. If you have contacts in Brazil, maybe you could help.

- A weird gmail notifications issue. Google has been contacted, they knew about the issue but not exactly where it was. Done.

- Flickr with a strange scrolling bug. Always helpful this is fixed now.

- Apple still trying to contact for the video issues.

Webcompat development

- Exploring some design ideas for the issue section and its associated comments.

- Reviewing and merging code for HTTP error handling.

- We need to improve the UX of bug reporting

They are invisible

Some people in the Mozilla community are publishing on Medium, aka their content will disappear one day when you know Medium will be bought or just sunset. It's a bit sad. Their content is also most of the time not syndicated elsewhere.

- David Bryant, Mozilla CTO

- Chris Beard, Mozilla CEO, he didn't publish anything yet.

- Anthony Lam, Firefox UX

- Gemma Petrie, Firefox UX

- Philipp Sackl, Firefox UX

Reading List

- A must-watch video about the Web, to understand why it is cool to develop for the Web. All along the talk I was thinking: when will native finally catch up with the Web?

- A debugging thought process

Follow Your Nose

TODO

- Document how to write tests on webcompat.com using test fixtures.

- ToWrite: rounding numbers in CSS for width

- ToWrite: Amazon prefetching resources with … for Firefox only.

Otsukare!

|

|

Armen Zambrano: Project definition: Give Treeherder the ability to schedule TaskCluster jobs |

The main things I give in here are:

- Background

- Where we came from, where we are and where we are heading

- Goal

- Use case for developers

- Breakdown of components

- Rather than all aspects being mixed and not logically separate

-----------------------------------

Give Treeherder the ability to schedule TaskCluster jobs

A - Generate data source with all possible tasks

- Bug 1232005 - Let the gecko decision task generate a file with all the possible TaskCluster jobs that could have been scheduled for a given push

- We will probably need to create a "latest" alias

- RISK: The structure of graphs is going to change; the artifact will change in format:

- Alternative mentor for this section: garndt

B - Teach Treeherder to use the artifact

- RISK: The structure of graphs is going to change; the artifact will change in format:

- This will require close collaboration with Treeherder engineers

- This work can be done locally with a Treeherder instance

- It can also be deployed to the “staging” version of Treeherder to do tests

- Alternative mentor for this section: camd

C - Teach pulse_actions to listen for requests from Treeherder

- pulse_actions is a pulse listener of Treeherder actions

- You can see pulse_actions’ workflow in here

- Once part B is completed, we will be able to listen for messages requesting certain TaskCluster tasks to be scheduled and we will schedule those tasks on behalf of the user

- RISK: Depending on whether the TaskCluster actions project is completed on time, we might instead make POST requests to an API

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

|

|

Armen Zambrano: Project definition: SETA re-write |

It was also a good exercise for students: it gave them a lot to read about the project and made them ask questions about whatever was not clear.

I want to share this and another project definition in case it is useful for others.

----------------------------------

- Write SETA as an independent project that is:

- maintainable

- more reliable

- automatically deployed through Heroku app

- Support TaskCluster, our new CI (continuous integration system)

- It has a cronjob which kicks in every 12 hours to scrape information about jobs from every push

- It takes the information about jobs (which it grabs from Treeherder) into a database

- Get familiar with deploying apps and using databases in Heroku

- Host SETA in Heroku instead of http://alertmanager.allizom.org/seta.html

- Teach SETA about TaskCluster

- Change the gecko decision task to reliably use SETA [5][6]

- If the SETA service is not available we should fall back to run all tasks/jobs

- Document how SETA works and auto-deployments of docs and Heroku

- Write automatically generated documentation

- Add auto-deployments to Heroku and readthedocs

- Add tests for SETA

- Add tox/travis support for tests and flake8

- Re-write SETA using ActiveData [3] instead of using data collected by Ouija

- Make the current CI (Buildbot) use the new SETA Heroku service

- Create SETA data for per test information instead of per job information (stretch goal)

- On Treeherder we have jobs that contain tests

- Tests re-order between those different chunks

- We want to run jobs at a per-directory level or per-manifest

- Add priorities into SETA data (stretch goal)

- Priority 1 gets triggered every time

- Priority 2 gets triggered on Y push
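The fallback and priority rules listed above can be sketched in a few lines. This is only an illustration under stated assumptions, not SETA's actual code: the `Job` struct, the `jobs_to_run` function, the `seta_available` flag and the `every_n` parameter (standing in for the "Y" above) are all hypothetical names, and C++ is used here purely for the sketch.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical job record: priority 1 = always run, priority 2 = run only
// on every N-th push.
struct Job {
    std::string name;
    int priority;
};

// Hypothetical selection rule. When the SETA service is unreachable
// (seta_available == false) we fall back to running every job.
std::vector<std::string> jobs_to_run(const std::vector<Job> &all_jobs,
                                     bool seta_available,
                                     unsigned push_count,
                                     unsigned every_n)
{
    assert(every_n > 0); // "every 0th push" is meaningless
    std::vector<std::string> selected;
    for (const Job &job : all_jobs) {
        const bool run = !seta_available            // fallback: run all
                      || job.priority <= 1          // high priority: always
                      || push_count % every_n == 0; // low priority: N-th push
        if (run) {
            selected.push_back(job.name);
        }
    }
    return selected;
}
```

With two jobs, one per priority, and `every_n` set to 5, only the priority 1 job is selected on push 3, both are selected on push 10, and both are selected on any push when the service is unavailable.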

[8] http://alertmanager.allizom.org/seta.html

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/ayDtl0NUZMw/project-definition-seta-re-write.html

|

|

Frédéric Wang: OpenType MATH in HarfBuzz |

TL;DR:

- Work is in progress to add OpenType MATH support in HarfBuzz and will be instrumental for many math rendering engines relying on that library, including browsers.

- For stretchy operators, an efficient way to determine the required number of glyphs and their overlaps has been implemented and is described here.

In the context of the Igalia browser team's effort to implement MathML support using TeX rules and OpenType features, I have started implementing OpenType MATH support in HarfBuzz. This table from the OpenType standard is made of three subtables:

- The MathConstants table, which contains layout constants. For example, the thickness of the fraction bar of .

- The MathGlyphInfo table, which contains glyph properties. For instance, the italic correction indicating how slanted an integral is e.g. to properly place the subscript in

http://www.maths-informatique-jeux.com/blog/frederic/?post/2016/04/16/OpenType-MATH-in-HarfBuzz

|

|

Mark Finkle: Pitching Ideas – It’s Not About Perfect |

I realized a long time ago that I was not the type of person who could create, build & polish ideas all by myself. I need collaboration with others to hone and build ideas. More than not, I’m not the one who starts the idea. I pick up something from someone else – bend it, twist it, and turn it into something different.

Like many others, I have a problem with ‘fear of rejection’, which kept me from shepherding my ideas from beginning to shipped. If I couldn’t finish the idea myself or share it within my trusted circle, the idea would likely die. I had most successes when sharing ideas with others. I have been working to increase the size of the trusted circle, but it still has limits.

Some time last year, Mozilla was doing some annual planning for 2016 and Mark Mayo suggested creating informal pitch documents for new ideas, and we’d put those into the planning process. I created a simple template and started turning ideas into pitches, sending the documents out to a large (it felt large to me) list of recipients. To people who were definitely outside my circle.

The world didn’t end. In fact, it’s been a very positive experience, thanks in large part to the quality of the people I work with. I don’t get worried about feeling the idea isn’t ready for others to see. I get to collaborate at a larger scale.

Writing the ideas into pitches also forces me to get a clear message, define objectives & outcomes. I have 1x1s with a variety of folks during the week, and we end up talking about the idea, allowing me to further build and hone the document before sending it out to a larger group.

I’m hooked! These days, I send out pitches quite often. Maybe too often?

http://starkravingfinkle.org/blog/2016/04/pitching-ideas-its-not-about-perfect/

|

|

Allen Wirfs-Brock: Slide Bite: The Ambient Computing Era |

In the Ambient Computing Era humans live in a rich environment of communicating computer enhanced devices interoperating with a ubiquitous cloud of computer mediated information and services. We don’t even perceive most of the computers we interact with. They are an invisible and indispensable part of our everyday life.

|

|

Air Mozilla: Foundation Demos April 15 2016 |

Foundation Demos April 15 2016

|

|

Allen Wirfs-Brock: Slide Bite: Transitional Technologies |

A transitional technology is a technology that emerges as a computing era settles into maturity and which is a precursor to the successor era. Transitional technologies are firmly rooted in the “old” era but also contain important elements of the “new” era. It’s easy to think that what we experience using transitional technologies is what the emerging era is going to be like. Not likely! Transitional technologies carry too much baggage from the waning era. For a new computing era to fully emerge we need to move “quickly through” the transition period and get on with the business of inventing the key technologies of the new era.

|

|

Air Mozilla: Webdev Beer and Tell: April 2016 |

Once a month web developers across the Mozilla community get together (in person and virtually) to share what cool stuff we've been working on in...

|

|

Hub Figuière: Modernizing AbiWord code |

When you work on an 18-year-old code base like AbiWord, you encounter stuff from another age. This is the way it is in the lifecycle of software, where the requirements and the tooling evolve.

Nonetheless, when AbiWord started in 1998, it was meant as a cross-platform code base written in C++ that had to compile on both Windows and Linux. C++ compilers were not as standards-compliant as today, so a lot of things were excluded: no templates, so no standard C++ library (it was called STL at the time). Over the years, things have evolved: Mac support was added, gcc 4 got released (with much better C++ support), and in 2003 we started using templates for the containers (not necessarily in that order, BTW). Still no standard library. This came later. I just flipped the switch to make C++11 mandatory, more on that later.

As I was looking into some bugs, I found that, with all that hodgepodge of coding standards, there effectively wasn't any, and this caused some serious ownership problems where we'd be using freed memory. Worse, this led to file corruption where we write garbage memory into files that are supposed to be valid XML. This is bad.

The core of the problem is the way we pass attributes / properties around. They are passed as a NULL-terminated array of pointers to strings. Even indexes are keys, odd ones are string values. While keys are always considered static, values are not always. Sometimes they are taken out of a std::string or one of the custom string containers from the code base (more on that one later), sometimes they are just strdup()'d and free()'d later (uh oh, memory leaks).
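To make that layout concrete, here is a minimal sketch of walking such an array and copying it into owning strings, so that the caller's pointers no longer need to outlive the call. The function name `copy_legacy_props` is hypothetical and not part of the AbiWord code base; only the even-key / odd-value, NULL-terminated layout comes from the description above.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>

// Hypothetical helper, not AbiWord API: copy a NULL-terminated key/value
// array (even index = key, odd index = value) into an owning std::map.
std::map<std::string, std::string> copy_legacy_props(const char *const *props)
{
    std::map<std::string, std::string> out;
    if (!props) {
        return out;
    }
    for (std::size_t i = 0; props[i] != nullptr; i += 2) {
        const char *value = props[i + 1];
        // A key without a value means the array is malformed.
        assert(value != nullptr && "key without a value");
        if (!value) {
            break; // release builds: stop instead of reading past the end
        }
        out[props[i]] = value; // std::string makes its own copy
    }
    return out;
}
```

Once the data lives in `std::string` objects, whoever built the array is free to release its `strdup()` copies immediately, which removes the question of who owns each value.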

Maybe this is a good time to do a cleanup and modernize the code base and make sure we have safer code, rather than trying to figure out all the corner cases one by one. And shall I add that there are virtually no tests on AbiWord? So it is gonna be epic.

As I'm writing this I have 8 patches with a couple very big, amounting to the following stats (from git):

134 files changed, 5673 insertions(+), 7730 deletions(-)

These numbers just show how broad the changes are, and it seems to work. The bugs I was seeing with valgrind are gone, no more access to freed memory. That's a good start.

Some of the 2000+ deleted lines are redundant code that could have been refactored (there are still a few places I marked for that), but a lot have to do with what I'm fixing. Also, some changes are purely whitespace / indentation, where it was relevant, usually around an actual change.

Now, instead of passing around const char ** pointers, we pass around a const PP_PropertyVector & which is, currently, a typedef to std::vector. To make things nice, the main storage for these properties is now also a std::map (possibly I will switch it to an unordered map) so that assignments are transparent to the std::string implementation. Before that it was one of the custom containers.

Patterns like this:

    const char *props[] = { NULL, NULL, NULL, NULL };
    int i = 0;
    std::string value = object->getValue();
    props[i++] = "key";
    // strdup() returns an owned, non-const copy that must be freed below
    char *s = strdup(value.c_str());
    props[i++] = s;
    thing->setProperties(props);
    free(s);

Turns into:

    PP_PropertyVector props = {
        "key", object->getValue()
    };
    thing->setProperties(props);

Shorter, more readable, less error-prone. This uses a C++11 initializer list, which explains some of the line removal.
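For readers who want to try the pattern outside AbiWord, here is a small self-contained sketch. It rests on assumptions: the post only says the typedef is to std::vector, so the `std::string` element type is a guess based on the example, and the `Thing` class with its `getProperty` accessor is a hypothetical stand-in for whatever object receives `setProperties()`.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <string>
#include <vector>

// Assumption: elements are std::string, stored flat as key, value, key, value...
typedef std::vector<std::string> PP_PropertyVector;

// Hypothetical receiver, not the real AbiWord class.
class Thing {
public:
    void setProperties(const PP_PropertyVector &props)
    {
        assert(props.size() % 2 == 0); // every key needs a value
        for (std::size_t i = 0; i + 1 < props.size(); i += 2) {
            m_props[props[i]] = props[i + 1]; // strings are copied, no raw pointers
        }
    }

    // Returns the stored value, or an empty string when the key is unknown.
    const std::string &getProperty(const std::string &key) const
    {
        static const std::string empty;
        auto it = m_props.find(key);
        return it == m_props.end() ? empty : it->second;
    }

private:
    std::map<std::string, std::string> m_props;
};
```

A caller can then write `PP_PropertyVector props = { "font-weight", value };` followed by `thing.setProperties(props);`, with no `strdup()` or `free()` in sight, because each `std::string` owns its own storage.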

Use C++ 11!

Something I can't recommend enough if you have a C++ code base is to switch to C++ 11. Amongst the new features, let me list the few that I find important:

- auto for automatic type deduction. It makes life easier, both in typing and in code changes. I almost always use it when declaring an iterator from a container.

- unique_ptr<> and shared_ptr<>. Smart pointers inherited from boost, but without the need for boost. unique_ptr<> replaces the dreaded auto_ptr<>, which is now deprecated.

- unordered_map<> and unordered_set<>: hash-based map and set in the standard library.

- lambda functions. No need to explain; this was one of the big missing features of C++ in the age of JavaScript popularity.

- move semantics: transfer the ownership of an object. Not easy to use in C++, but clearly beneficial where you always ended up copying. This is a key part of what makes unique_ptr<> usable in a container where auto_ptr<> wasn't. Move semantics are the default behaviour in Rust, while C++ copies by default.

- initializer lists allow constructing an object by passing a list of initial values. I use this a lot in this patch set for property vectors.
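The features listed above fit together naturally. The following is a small illustrative sketch, not code from the patch: the Style struct, the sample values and the largeStyleNames function are all made up for the example. It sticks to C++11 only (so no std::make_unique, which arrived in C++14).

```cpp
#include <algorithm>
#include <memory>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical type used only for illustration.
struct Style {
    std::string name;
    int size;
};

// Returns the sorted names of all sample styles with size >= minSize.
inline std::vector<std::string> largeStyleNames(int minSize) {
    // Initializer list: build a hash map from literal values.
    std::unordered_map<std::string, int> sizes = {
        { "heading", 18 }, { "body", 11 }, { "footnote", 8 }
    };

    // unique_ptr in a container: this works because ownership is moved
    // into the vector rather than copied.
    std::vector<std::unique_ptr<Style>> styles;
    for (auto it = sizes.begin(); it != sizes.end(); ++it) {
        std::unique_ptr<Style> s(new Style{ it->first, it->second });
        styles.push_back(std::move(s));  // s is empty after the move
    }

    // Lambda: filter in place of a hand-written functor class.
    std::vector<std::string> names;
    std::for_each(styles.begin(), styles.end(),
        [&names, minSize](const std::unique_ptr<Style> &s) {
            if (s->size >= minSize) {
                names.push_back(s->name);
            }
        });

    // unordered_map has no defined iteration order, so sort the result.
    std::sort(names.begin(), names.end());
    return names;
}
```

Note that nothing here needs an explicit delete: the vector destroys the unique_ptr elements, and each of those destroys its Style.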

Don't implement your own containers.

Don't implement vector, map, set, associative container, string, lists. Use the standard C++ library instead. It is portable, it works and it likely does a better job than your own. I have another set of patches to properly remove these UT_Vector, UT_String, etc. from the AbiWord codebase. Some have been removed progressively, but it is still ongoing.

Also write tests.

This is something that is missing in AbiWord and that I have tried to tackle a few times.

One more thing.

I could have mechanised these code changes to some extent, but then I wouldn't have had to review all that code in which I found issues that I addressed. Eyeball mark II is still good for that.

The patch (in progress)

https://www.figuiere.net/hub/blog/?2016/04/15/860-modernizing-abiword-code

|

|

Christopher Arnold |

Back in 2005-2006 my friend Liesl told me about the coming age of chat bots. I had a hard time imagining how people would embrace products that simulated human voice communication but were less “intelligent”. She ended up building a company that allowed people to have polite automated service agents that you could program with a certain specific area of intelligence. Upon launch she found that people spent a lot more time conversing with the bots than they did with the average human service agent. I wondered if this was because it was harder to get questions answered, or if people just enjoyed the experience of conversing with the bots more than they enjoyed talking to people. Perhaps when we know the customer service agent is paid hourly, we don't gab in excess. But if it's a chat bot we're talking to, we don't feel the need to be hasty?

Fast forward over a decade: IBM has acquired her company into the Watson group. During a dinner party we talked about Amazon’s Echo sitting on her porch. She and her husband would occasionally make DJ requests to “Alexa” (the name for Echo’s internal chat bot) as if it were a person attending the party. It definitely seems that the age of more intelligent bots is upon us. Most folks who have experimented with speech-input products of the last decade have become accustomed to talking to bots in a robotic monotone devoid of accent, because of the somewhat random speech capture mistakes that early technology was burdened with. If the bots don't adapt to us, we go to them it seems, mimicking the robotic voices we heard in the science fiction films of the 50's and 60's.

This month both Microsoft and Facebook announced open bot APIs for their respective platforms. Microsoft’s platform for integration is an open source "Bot Framework" that allows any web developer to re-purpose the code to inject new actions or content tools in the active discussion flow of their conversational chat bot called Cortana, which is built into the search box of every Windows 10 operating system they license. They also demonstrated how the new bot framework allows their Skype messenger to respond to queries intelligently if it has the right libraries loaded. Amazon refers to the app-sockets for the Echo platform as "skills", whereby you load a specific field of intelligence into the speech engine to allow Alexa to query the external sources you wish. I noticed that both the Alexa team and the Cortana team seem to be focusing on pizza ordering in their product demos. But one day we'll be able to query beyond the basic necessities. In my early demonstration back in 2005 of the technology Liesl and Dr. Zakos (her cofounder) built, they had their chat bot ingest all my blog writings about folk percussion, then answer questions about certain topics that were in my personal blog. If a bot narrows a question to a subject matter, its answers can be uncannily accurate to the field!

Facebook’s plan is to inject bot-intelligence into the main Facebook Messenger app. Their announcements actually seem to follow quite closely the concept Microsoft announced of developers being able to port in new capabilities for the chatting engines of each platform vendor. It may be that both Microsoft and Facebook are planning for the social capabilities of their joint collaborations on the launch of Oculus, Facebook's headset-based immersive virtual environment, which runs on Windows 10 machines.

The outliers in this era of chat bot openness are the Apple Siri and Ok Google speech tools, which are like a centrally managed brain. (Siri may query the web using specific sources like Wolfram Alpha, but most of the answers you get from either will be consistent with the answers others receive for similar questions.) The thing that I think is very elegant about the approaches Amazon, Microsoft and Facebook are taking is that they make the knowledge engine of the core platform extensible in ways that a single company could not. Also, the approach allows customers to personalize their experience of the platform by specifically adding new ported services to the tools. My interest here is that the speech platforms will become much more like the Internet of today, where we are used to having very diverse “content” experiences based on our personal preferences and proclivities.

It is very exciting to see that speech is becoming a very real and useful interface for interacting with computers. While the content of the web is already one of the knowledge ports of these speech tools, the open-APIs of Cortana, Alexa and Facebook Messenger will usher in a very exciting new means to create compelling internet experiences. My hope is that there is a bit of standardization so that a merchant like Domino's doesn't have to keep rebuilding their chat bot tools for each platform.

I remember my first experience having a typed conversation with the Dr. Know computer at the Oregon Museum of Science and Industry when I was a teenager. It was a simulated Turing test program designed to give a reasonably acceptable experience of interacting with a computer in a human way. While Dr. Know was able to artfully dodge or re-frame questions when it detected input that wasn’t in its knowledge database, I can see that the next generations of teenagers will be able to have exactly the same kind of experience I had in the 1980’s. But their discussions will go in the direction of exploring knowledge and exploring logic structures of a mysterious mind instead of ending up in the rhetorical cul-de-sacs of the Dr. Know program.

While we may not chat with machines with quite the same intimacy as Spike Jonze’s character in “Her”, the days when we talk in robotic tones to operate the last decade’s speech input systems are soon to end. Each of these innovative companies is dealing with the hard questions of how to get us out of our stereotypes of robot behavior and get us back to acting like people again, returning to the main interface that humans have used for eons to interact with each other. Ideally the technology will fade into the background and we'll start acting normally again instead of staring at screens and tapping fingers.

P.S. Of course Mozilla has several initiatives on speech in process. We'll talk about those very soon. But this post is just about how the other innovators in the industry are doing an admirable job making our machines more human-friendly.

http://ncubeeight.blogspot.com/2016/04/back-in-2005-2006-my-friend-liesl-told.html

|

|

John O'Duinn: “Distributed” ER#7 now available! |

“Distributed” Early Release #7 is now publicly available, a month after ER#6 came out.

This ER#7 includes a significant reworking of the first section of this book. Some chapters were resequenced. Some were significantly trimmed – by over half! Some were split up, creating new chapters or merged with existing sections of other chapters later in the book. All slow, detailed work that I hope makes the book feel more focused. The format of all chapters throughout was slightly tweaked, and there are also plenty of across-the-board minor fixes.

You can buy ER#7 by clicking here, or clicking on the thumbnail of the book cover. Anyone who already bought any of the previous ERs should get prompted with a free update to ER#7 – if you don’t, please let me know! And yes, you’ll get updated when ER#8 comes out.

Thanks again to everyone for their ongoing encouragement, proof-reading help and feedback so far – keep letting me know what you think. Each piece of great feedback makes me wonder how I missed such obvious errors before. And makes me happy, as each fix helps make this book better. It’s important this book be interesting, readable and practical – so if you have any comments, concerns, etc., please email me. Yes, I will read and reply to each email personally! To make sure that any feedback doesn’t get lost or caught in spam filters, please email comments to feedback at oduinn dot com. I track all feedback and review/edit/merge as fast as I can. And thank you to everyone who has already sent me feedback/opinions/corrections – all really helpful.

John.

=====

ps: For the curious, here is the current list of chapters and their status:

Chapter 1 The Real Cost of an Office – AVAILABLE

Chapter 2 Distributed Teams Are Not New – AVAILABLE

Chapter 3 Disaster Planning – AVAILABLE

Chapter 4 Diversity

Chapter 5 Organizational Pitfalls to Avoid – AVAILABLE

Chapter 6 Physical Setup – AVAILABLE

Chapter 7 Video Etiquette – AVAILABLE

Chapter 8 Own Your Calendar – AVAILABLE

Chapter 9 Meetings – AVAILABLE

Chapter 10 Meeting Moderator – AVAILABLE

Chapter 11 Single Source of Truth

Chapter 12 Email Etiquette – AVAILABLE

Chapter 13 Group Chat Etiquette – AVAILABLE

Chapter 14 Culture, Conflict and Trust

Chapter 15 One-on-Ones and Reviews – AVAILABLE

Chapter 16 Hiring, Onboarding, Firing, Reorgs, Layoffs and other Departures – AVAILABLE

Chapter 17 Bring Humans Together – AVAILABLE

Chapter 18 Career Path – AVAILABLE

Chapter 19 Feed Your Soul – AVAILABLE

Chapter 20 Final Chapter

Appendix A The Bathroom Mirror Test – AVAILABLE

Appendix B How NOT to Work – AVAILABLE

Appendix C Further Reading – AVAILABLE

=====

http://oduinn.com/blog/2016/04/14/distributed-er7-now-available/

|

|

Robert O'Callahan: Leveraging Modern Filesystems In rr |

During recording, rr needs to make sure that the data read by all mmap and read calls is saved to the trace so it can be reproduced during replay. For I/O-intensive applications (e.g. file copying) this can be expensive in time, because we have to copy the data to the trace file and we also compress it, and in space, because the data is being duplicated.

Modern filesystems such as Btrfs and XFS have features that make this much better: the ability to clone files and parts of files. These cloned files or blocks have copy-on-write semantics: i.e. the underlying storage is shared until one of the copies is written to. In common cases no future writes happen or they happen later when copying isn't a performance bottleneck. So I've extended rr to support these features.

mmap was pretty easy to handle. We already supported hardlinking mmapped files into the trace directory as a kind of hacky/incomplete copy-on-write approximation, so I just extended that code to try BTRFS_IOC_CLONE first. Works great.
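For readers who have not used the clone ioctl, here is a rough sketch of the "try to clone first, fall back otherwise" idea. It is not rr's code: cloneOrCopy and CopyResult are hypothetical names, the fallback here is a plain byte copy rather than rr's hardlink, and it uses FICLONE, the generic name from linux/fs.h that shares its request number with BTRFS_IOC_CLONE.

```cpp
#include <fcntl.h>
#include <linux/fs.h>
#include <sys/ioctl.h>
#include <unistd.h>

#include <string>

#ifndef FICLONE
// Same request number as BTRFS_IOC_CLONE; defined here for older headers.
#define FICLONE _IOW(0x94, 9, int)
#endif

enum class CopyResult { Cloned, Copied, Failed };

// Hypothetical helper: makes `dest` a copy-on-write clone of `src` when the
// filesystem supports it, otherwise falls back to copying the bytes.
inline CopyResult cloneOrCopy(const std::string &src, const std::string &dest) {
    int in = open(src.c_str(), O_RDONLY | O_CLOEXEC);
    if (in < 0) {
        return CopyResult::Failed;
    }
    int out = open(dest.c_str(), O_WRONLY | O_CREAT | O_TRUNC | O_CLOEXEC, 0600);
    if (out < 0) {
        close(in);
        return CopyResult::Failed;
    }

    CopyResult result = CopyResult::Cloned;
    if (ioctl(out, FICLONE, in) != 0) {
        // Cloning is unsupported here, or the two files live on different
        // filesystems: copy the data instead.
        result = CopyResult::Copied;
        char buf[65536];
        ssize_t n;
        while ((n = read(in, buf, sizeof buf)) > 0) {
            ssize_t off = 0;
            while (off < n) {
                ssize_t w = write(out, buf + off, static_cast<size_t>(n - off));
                if (w < 0) {
                    result = CopyResult::Failed;
                    break;
                }
                off += w;
            }
            if (result == CopyResult::Failed) {
                break;
            }
        }
        if (n < 0) {
            result = CopyResult::Failed;
        }
    }
    close(in);
    close(out);
    return result;
}
```

On a filesystem without clone support the function still produces a correct copy; it just reports Copied instead of Cloned, so the caller can tell whether any space was actually shared.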

read was harder to handle. We extend syscall buffering to give each thread its own "cloned-data" trace file, and every time a large block-aligned read occurs, we first try to clone those blocks from the source file descriptor into that trace file. If that works, we then read the data for real but don't save it to the syscall buffer. During replay, we read the data from the cloned-data trace file instead of the original file descriptor. The details are a bit tricky because we have to execute the same code during recording as replay.
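The "clone those blocks" step can be illustrated with the range-clone ioctl. This is only a sketch under assumptions, not rr's implementation: isBlockAligned and tryCloneRange are hypothetical helpers, the block size is assumed to be supplied by the caller, and FICLONERANGE from linux/fs.h is used as the generic range-clone request.

```cpp
#include <linux/fs.h>
#include <sys/ioctl.h>

#include <cstdint>
#include <cstring>

// Returns true if [offset, offset + length) is a non-empty range whose
// start and length are both multiples of blockSize.
inline bool isBlockAligned(std::uint64_t offset, std::uint64_t length,
                           std::uint64_t blockSize) {
    return blockSize != 0 && length != 0 &&
           offset % blockSize == 0 && length % blockSize == 0;
}

// Hypothetical helper: tries to share `length` bytes of srcFd, starting at
// srcOffset, into destFd at destOffset. Returns false if the range is not
// block-aligned or the filesystem refuses the clone; the caller is then
// expected to record the data the normal way.
inline bool tryCloneRange(int srcFd, std::uint64_t srcOffset,
                          std::uint64_t length, int destFd,
                          std::uint64_t destOffset, std::uint64_t blockSize) {
    if (!isBlockAligned(srcOffset, length, blockSize) ||
        destOffset % blockSize != 0) {
        return false;
    }
    struct file_clone_range range;
    std::memset(&range, 0, sizeof range);
    range.src_fd = srcFd;
    range.src_offset = srcOffset;
    range.src_length = length;
    range.dest_offset = destOffset;
    return ioctl(destFd, FICLONERANGE, &range) == 0;
}
```

In the scheme described above, a successful clone would be followed by the real read, with the data left out of the syscall buffer; a false return simply means the read is recorded as before.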

This approach introduces a race: if some process writes to the input file between the tracee cloning the blocks and actually reading from the file, the data read during replay will not match what was read during recording. I think this is not a big deal since in Linux such read/write races can result in the reader seeing an arbitrary mix of old and new data, i.e. this is almost certainly a severe bug in the application, and I suspect such bugs are relatively rare. Personally I've never seen one. We could eliminate the race by reading from the cloned-data file instead of the original input file, but that performs very poorly because it defeats the kernel's readahead optimizations.

Naturally this optimization only works if you have the right sort of filesystem and the trace and the input files are on the same filesystem. So I'm formatting each of my machines with a single large btrfs partition.

Here are some results doing "cp -a" of an 827MB Samba repository, on my laptop with SSD:

The space savings are even better:

The cloned btrfs data is not actually using any space until the contents of the original Samba directory are modified. And of course, if you make multiple recordings they continue to share space even after the original files are modified. Note that some data is still being recorded (and compressed) normally, since for simplicity the cloning optimization doesn't apply to small reads or reads that are cut short by end-of-file.

http://robert.ocallahan.org/2016/04/leveraging-modern-filesystems-in-rr.html

|

|