Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

The Mozilla Blog: Mozilla’s Commitment to Inclusive Internet Access |

Developing the Internet and defending its openness are key to global growth that is equitable, sustainable, and inclusive. The Internet is most powerful when anyone — regardless of gender or geography — can participate equally.

Today Mozilla announced two commitments to help make universal internet access a reality as part of the U.S. State Department’s Global Connect Initiative global actions, in partnership with the World Bank and the Institute of Electrical and Electronics Engineers (IEEE):

- Mozilla will launch a public challenge this year to spur innovation and equal-rating solutions for providing affordable access and digital literacy. The goal is to inject practical, action-oriented, new thinking into the current debate on how to connect the unconnected people of the world.

- Additionally, Mozilla is building a global hub to help more women learn how to read, write, and participate online. Over the past five years, Mozilla volunteers have started over 100 clubs and run over 5000 local events in 90 countries to teach digital literacy. Building on this model, Mozilla is now working with U.N. Women to set up clubs just for women and girls in Kenya and South Africa. This is the next step towards creating a global hub.

“Connecting the unconnected is one of the greatest challenges of our time, and one we must work on together. We will need corporate, government, and philanthropic efforts to ensure that the Internet as the world’s largest shared public resource is truly open and accessible to all. We are pleased to see a sign of that collaboration with the Global Connect Initiative commitments,” said Mitchell Baker, executive chairwoman of Mozilla.

Mark Surman, executive director of the Mozilla Foundation, added, “We must address the breadth but also the depth of digital inclusion. Having access to the Web is essential, but knowing how to read, write and participate in the digital world has become a basic foundational skill next to reading, writing, and arithmetic. At Mozilla we are looking at – and helping to solve – both the access and digital literacy elements of inclusion.”

We look forward to sharing progress on both our commitments as the year progresses.

https://blog.mozilla.org/blog/2016/04/14/mozillas-commitment-to-inclusive-internet-access/

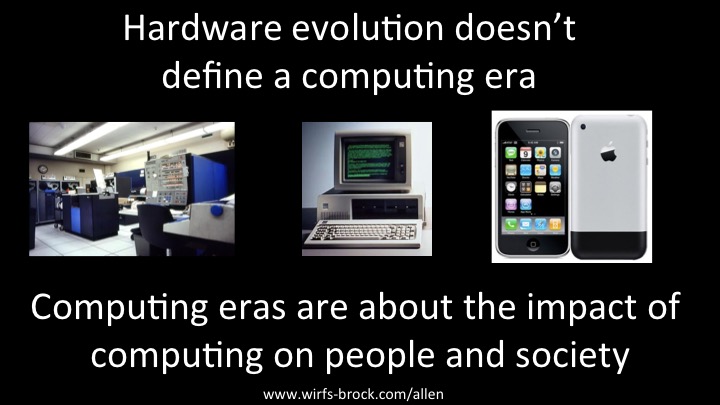

Allen Wirfs-Brock: Slide Bite: Computing Eras Aren’t About Hardware |

Computing “generations” used to be defined by changing computer hardware. Not anymore. The evolution of computing hardware (and software) technologies may enable the transition to a new era of computing. But it isn’t the hardware that really defines such an era. Instead, a new computing era emerges when hardware and software innovations result in fundamental changes to the way that computing impacts people and society. A new computing era is about completely rethinking what we do with computers.

Support.Mozilla.Org: Trip report: Tech + Women + Kazakhstan! |

Greetings, SUMO Nation! At the moment, we are meeting in Berlin to talk about SUMO and our involvement in Mozilla’s mission. Therefore, we have no major news or updates to report this week (but there will be more of that soon, worry not!). Then again, we can’t leave you without something nice and inspiring to read, right?

As you can imagine, even if we spend a lot of time on SUMO-related activities, it’s not the only thing we do. Just like any Mozillian, our activities span many different fields. Thus, it is with great pleasure that I share with you our own Rachel’s trip report from her recent visit to Kazakhstan as a member of the Tech Women organization.

How would YOU inspire women & girls?

To promote STEM learning and diversity in the workplace, Tech Women organizes three delegation trips each year. The trip opens leadership opportunities to women on the international scene of professional employment. Over four weeks, women in STEM become emerging leaders and develop business plans, learning about one of the fields of knowledge in an in-depth manner.

I feel fortunate to mentor through Tech Women and to have had the opportunity to visit Kazakhstan with a delegation last month. In addition to sightseeing and experiencing the local culture, we gathered for Technovation events at hackerspaces, middle schools, and university campuses.

A postcard from the road

We visited the new and old capitals of Kazakhstan, Astana and Almaty. Many of the buildings are still influenced by the Soviet style. Kazakhstan is also known as the place of origin of apples, and for its people’s love of their horses.

On the third day of our visit, while staying at the Doysk Hotel, we were greeted by three US Consulate representatives. One of them, who previously worked at NASA and had an inspiring career in science (also studying Soviet sciences), was dressed in green and had hair dyed red for the festive March 17 holiday.

They answered our questions about the pollution in the area, as well as many cultural questions that occupied our minds after running around in the snow for a few days.

We visited the National University that day and had two panels talking about women in the workforce, interview processes, and ways of progressing careers in STEM fields. The university impressed some of us with over an acre of planned solar panel space. The mechanical engineers among us got to interact with an equally impressive robot that recognized sign language.

On the trip, our delegation took part in application development projects, worked with Technovation groups, and spoke on several discussion panels.

The Technovation groups’ themes varied from design thinking and learning the parts of computer hard drives to pitching Android apps. It was surprising that even in a place located quite remotely in the mountains, between two of the largest countries on the planet (in several respects), there is so much access and so much will to seize the opportunity to learn and develop on an open web.

Digital literacy develops these women as users of the web as well as makers, thanks simply to the basic web access they get in the hackerspaces and schools we visited. During our visit, we learned that development opportunities for young girls in schools were rather limited outside of those options.

Technovation workshop – identifying hard drive parts

The speaking panels addressed career development skills such as interviewing, networking at events, and resume building. However, some of the questions were directed more at human resources issues like maternity leave and securing funding for a business idea. In the United States, there is legal action that can be taken for many of the questions that the Kazakh women had, so there is definitely room for improvement there.

Conducting a mock interview with career developing skills for women interested in working in the US

For the next generation of people in Kazakhstan, particularly young women, open access to communication plays a key role. However, the challenge of funding and lack of guided direction towards STEM careers discourages women from fully optimizing the potential of digital growth.

Emerging leaders and women from Yahoo speaking about being a woman in the modern workplace. Talking to girls choosing where to go for university and what to study. Bottom right: Pitching an app idea for volunteers.

Sig Space motivational chalk board. The event invited 10 men from various professions and included technical industry women speaking about increasing the number of women active in that field. A National University representative looking to start a training program for girls focused on Data Center and Cisco certifications. He also wanted to learn more about Mozilla and our mission.

Thank you for your awesome report, Rachel! It’s great to see more women getting into tech every day, everywhere around the world :-).

https://blog.mozilla.org/sumo/2016/04/14/trip-report-tech-women-kazakhstan/

Allen Wirfs-Brock: An Experiment: Slide Bites |

Over the last several years, a lot of my ideas about the future of computing have emerged as I prepared talks and presentations for various venues. For such talks, I usually try to illustrate each key idea with an evocative slide. I’ve been reviewing some of these presentations for material that I should blog about. But one thing I noticed is that some of these slides really capture the essence of an idea. They’re worth sharing and shouldn’t be buried deep within a presentation deck where few people are likely to find them.

So, I’m going to experiment with a series of short blog posts, each consisting of an image of one of my slides and at most a paragraph or two of supplementary text. But the slide is the essence of the post. One nice thing about this form is that the core message can be captured in a single tweet. A lot of reading time isn’t required. And if it isn’t obvious, “slide bite” is a play on “sound bite”.

Let me know (tweet me at @awbjs) what you think about these slide bites. I’m still going to write longer form pieces but for some ideas I may start with a slide bite and then expand it into a longer prose piece.

Air Mozilla: Web QA Weekly Meeting, 14 Apr 2016 |

This is our weekly gathering of Mozilla's Web QA team, filled with discussion on our current and future projects, ideas, demos, and fun facts.

Air Mozilla: Reps weekly, 14 Apr 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

Daniel Stenberg: HTTP/2 in April 2016 |

On April 12 I had the pleasure of doing another talk in the Google Tech Talk series arranged in the Google Stockholm offices. I had given it the title “HTTP/2 is upon us, and here’s what you need to know about it.” in the invitation.

The room seated 70 people, but an amazing 300-plus people were in the waiting line and unfortunately didn’t manage to get a seat. For them, and for anyone else who cares, here’s the video recording of the event.

If you’ve seen me talk about HTTP/2 before, you might notice that I’ve refreshed the material somewhat since then.

Mozilla Addons Blog: Developing Extensions With Web-ext 1.0 |

As the transition to WebExtensions continues, we are also building tools to make developing them faster and easier than ever. Our latest is a command line tool called web-ext, which we released recently as an initial working version with some basic features.

We built it because we want developing extensions to be fast and easy. Just as Firefox’s WebExtensions API is designed for cross-browser compatibility, we want web-ext to eventually support platforms such as Chrome or Opera. We will continue developing jpm in parallel, as needed.

To give it a try, you can install it from npm:

npm install --global web-ext

When developing an extension, here’s how you can run it in Firefox to test it out:

cd /path/to/your/source

web-ext run

This is similar to how you can load your source directly on the about:debugging page.

When you’ve got your extension working, here’s how to build an XPI file that can be submitted to addons.mozilla.org:

web-ext build

You can also self-host your XPI file for distribution but it needs to be signed by Mozilla first. Here’s how to build and sign an XPI file:

web-ext sign

The end user documentation is a work in progress but you can reference all commands and options by typing:

web-ext --help

As you can see, this is a very early release just to get you started while we continue adding features. If you’d like to help out on the development of web-ext, check out the contributor guide and take a look at some good first bugs.

https://blog.mozilla.org/addons/2016/04/14/developing-extensions-with-web-ext-1-0/

Karl Dubost: Documenting my git/GitHub workflow |

For webcompat.com, we develop the project using, among other things, the GitHub infrastructure. We have a webcompat.com repository. Do not hesitate to participate.

I'm not sure all the core contributors share exactly the same workflow, but I figured I should document the way I contribute. It might help beginners and it might be useful for myself in the future.

Let's start with a rough draft.

The project is hosted on

https://github.com/webcompat/webcompat.com/

I created a fork in my own area (the fork button at the top right)

https://github.com/karlcow/webcompat.com/

Now I can create a local clone on my computer.

git clone git@github.com:karlcow/webcompat.com.git

I'm cloning my fork instead of the project because I want to be able to work locally and mess around with branches that I push to my own fork without bothering anyone else. Pull requests are the moments I mingle with others.

Once I have done this, I have a fresh copy of the repo and I can start working on an issue. Let's take for example 902 (easy, dumb issue just for illustration). I create a new branch on my local clone. See also the section below on updating my local fork.

git checkout -b 902/1

The protocol I use for the branch name (that I have stolen from Mike) is

branch_name = issue_number/branch_version

So if I'm not satisfied with the work done, but I still want to keep some history about it, I can create a second branch for this same issue with git checkout -b 902/2 and so on. When satisfied I will be able to pull request the one I really like.

git commit -m '#902 upgrade GitHub-Flask to 3.1.1' requirements.txt
git commit -m '#902 upgrade Flask-Limiter to 2.2.0' requirements.txt
# etc

At the end of the day, or when I'm planning to do something else, or simply because I want to share the code, I upload my work to my fork on GitHub.

git push karlcow 902/1

Here you will notice that I didn't use git push origin 902/1, see below for the explanation about repository aliases.

Once I have finished with all the commits, I can create a pull request on GitHub to webcompat:master from karlcow:902/1.

https://github.com/webcompat/webcompat.com/pull/920

I use the message:

fix #902 - 902/1 Update python module dependencies in the project.

and request a review from Mike with

r? @miketaylr

We discuss, throw away, modify or merge.

Repository aliases

GitHub usually uses the term upstream for the original repository and origin for your own fork. That confused me a lot, so I decided to change it.

git remote rename origin karlcow
git remote rename upstream webcompat

It becomes a lot easier when I update the project or when I push my commits. I know exactly where it goes.

# download things from the webcompat.com repo on github.com
git fetch webcompat
# pushing my branch to my own fork on github.com
git push karlcow branch_name

Updating my local fork

Each time I have finished with a branch, or finished today's work with everything committed, I bring the master branch of my local clone (on my computer) in sync with the remote master branch of webcompat.com.

Usually with GitHub vocabulary it is:

git checkout master
git fetch upstream
git merge upstream/master

In my case

git checkout master
git fetch webcompat
git merge webcompat/master

My project is updated and I can create a new branch for the next issue I will be working on.

Cleaning the local and remote forks

Because I create branches locally in ~/code/webcompat.com/ and remotely on https://github.com/karlcow/webcompat.com/, it's good to delete them, little by little, so we do not end up with a huge list of branches when doing

git branch -a

For example right now, I have

git branch -a
  264/1
  396/1
* 710/3
  713/1
  master
  r929/958
  remotes/karlcow/264/1
  remotes/karlcow/702/1
  remotes/karlcow/710/1
  remotes/karlcow/710/3
  remotes/karlcow/HEAD -> karlcow/master
  remotes/karlcow/master
  remotes/webcompat/gh-pages
  remotes/webcompat/issues/741/2
  remotes/webcompat/letmespamyou
  remotes/webcompat/master
  remotes/webcompat/searchwork
  remotes/webcompat/staging
  remotes/webcompat/staticlabels
  remotes/webcompat/webpack

So to clean up I'll do this:

# Remove locally (on the computer)
git branch -d 12/1 245/1
# Remove remotely (on github my repo)
git push karlcow --delete 12/1
git push karlcow --delete 245/1

Otsukare!

The Mozilla Blog: Mozilla Open Source Support (MOSS) Update: Q1 2016 |

This is an update on the Mozilla Open Source Support (MOSS) program for the first quarter of 2016. MOSS is Mozilla’s initiative to support the open source community of which we are a part.

We are pleased to announce that MOSS has been funded for 2016 – both the existing Track 1, “Foundational Technology”, and a new Track 2, “Mission Partners”. This new track will be open to any open source project, but the work applied for will need to further the Mozilla mission. Exactly what that means, and how this track will function, is going to be worked out in the next few months. Join the MOSS discussion forum to have your say.

On Track 1, we have paid or are in the process of making payments to six of the original seven successful applicants whose awards were finalized in December; for the seventh one, work has been postponed for a period. We are learning from our experience with these applications. Much process had to be put in place for the first time, and we hope that future award payments will be smoother and quicker.

This year so far, two more applications have been successful. The Django REST Framework, which is an extension for Django, has been awarded $50,000, and The Intern, a testing framework, has been awarded $35,000. Our congratulations go out to them. We are at the stage of drawing up agreements with both of these projects.

Applications remain open for Track 1. If you know of an open source project that Mozilla uses in its software or infrastructure, or Mozillians use to get their jobs done and which could do with some financial support, please encourage them to apply.

https://blog.mozilla.org/blog/2016/04/13/mozilla-open-source-support-moss-update-q1-2016/

Armen Zambrano: Improving how I write Python tests |

What I'm writing now is something I should have learned many years ago as a Python developer. It is embarrassing to admit, but I thought I'd share this with you since I know it would have helped me earlier in my career, and I hope it might help you as well.

Somebody has probably written about this topic already, and if you're aware of a good blog post covering it, please let me know. I would like to see what else I've missed.

This post might also be useful for new contributors trying to write tests for your project.

My takeaway

These are some of the things I've learned:

- Make running tests easy

- We use tox to help us create a Python virtual environment, install the dependencies for the project and to execute the tests

- Here's the tox.ini I use for mozci

- If you use py.test learn how to not capture the output

- Use the -s flag to not capture the output

- If your project does not print but instead it uses logging, add the pytest-capturelog plugin to py.test and it will immediately log for you

- If you use py.test learn how to jump into the debugger upon failures

- Use --pdb to drop into the Python debugger upon failure

- Learn how to use @patch and Mock properly

- The theory of how to mock is explained very well in "Using Mocks in Python"

- Learning where to @patch is golden. Read "Where to patch"

How I write tests

This is what I do:

- If no tests exist for a module, create the file for it

- If you're testing module.py create a test called test_module.py

- If you already have tests but want to add coverage to a function, determine the minimal py.test call that runs only that test or set of tests

- You don't want to run all tests when you're developing

- You can read about it in "Specifying tests/selecting tests".

@patch properly and use Mocks

What I'm doing now to patch modules is the following:

- What function are you testing? (aka test subject)

- Have a look at the function you're adding tests for and list which functions it calls (aka test resources)

- Which of those test resources do you need to patch?

- To patch the test resources I use @patch + I change the return_value. You can see an example in test_buildbot_bridge.py. I use two different styles of patching if you're interested

- I normally patch test resources that hit the network (to get a controlled environment) or whose replacement makes test execution faster

- You can have pieces of code that are shared between tests to avoid duplicating mocking code

- Determine if you need to Mock objects and function calls

- See in here an example where I needed to mock a Push object

The way Mozilla CI tools is designed begs for integration tests; however, I don't think it is worth going beyond unit testing + mocking. The reason is that mozci might not stick around once we have fully migrated from Buildbot, which was the hard part to solve.

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

Mozilla WebDev Community: Extravaganza – April 2016 |

Once a month, web developers from across Mozilla get together to talk about the work that we’ve shipped, share the libraries we’re working on, meet new folks, and talk about whatever else is on our minds. It’s the Webdev Extravaganza! The meeting is open to the public; you should stop by!

You can check out the wiki page that we use to organize the meeting, or view a recording of the meeting in Air Mozilla. Or just read on for a summary!

Shipping Celebration

The shipping celebration is for anything we finished and deployed in the past month, whether it be a brand new site, an upgrade to an existing one, or even a release of a library.

A-Frame v0.2.0

First up was ngoke, who shared the news that A-Frame shipped a new release! The v0.2.0 release focuses on extensibility by improving the Component API and adding a slew of new objects that can be registered for use in the framework. A blog post is available that explains the changes.

Let’s Encrypt Switches to Pip 8

ErikRose wasn’t able to attend, but left notes on the wiki about Let’s Encrypt switching from peep to pip 8 for installing its requirements.

DXR Indexing Most Mozilla-Central Branches

Erik also left a note mentioning that DXR, Mozilla’s code browser and search tool for the Firefox codebase, now indexes more branches from mozilla-central, including Aurora, Beta, Central, ESR45, and Release.

Pipstrap 1.1.1

The last note that Erik left mentioned that Pipstrap, a small script for bootstrapping a hash-checked version of Pip, shipped a new release that fixes an error reporting bug under Python 2.6.

Snippets in AWS + Deis

Next up was bensternthal, who shared the news that snippets.mozilla.com is now hosted on Engagement Engineering’s Deis cluster in AWS. Thanks to giorgos, jgmize, and pmac for helping to move the site over! Ben also mentioned that Basket is the next service slated to move over.

Open-source Citizenship

Here we talk about libraries we’re maintaining and what, if anything, we need help with for them.

New django-csp Maintainer

pmac stopped by to let us know that he is taking over maintenance of django-csp. Thanks to jsocol for authoring and maintaining the library until now!

Hashin Python Version Filtering

Last up was peterbe, who shared news of a new release of hashin. The main addition is a --python-version flag, which allows you to filter the hashes hashin adds by the Python versions that you plan to use, reducing the number of hashes added. Thanks to mythmon for submitting the patch!

If you’re interested in web development at Mozilla, or want to attend next month’s Extravaganza, subscribe to the dev-webdev@lists.mozilla.org mailing list to be notified of the next meeting, and maybe send a message introducing yourself. We’d love to meet you!

See you next month!

https://blog.mozilla.org/webdev/2016/04/13/extravaganza-april-2016/

James Long: Two Weird Tricks with Redux |

I have now used Redux pretty extensively in multiple projects, especially the Firefox Developer Tools. While I think it breaks down in a few specific scenarios, generally I think it holds up well in complex apps. Certainly nothing is perfect, and the good news is when you want to do something outside of the normal workflow, it’s not hard.

I rewrote the core of the Firefox debugger to use redux a few months ago. Recently my team was discussing some of the code and it made me remember a few things I did to solve some complex scenarios. I thought I should blog about it.

The best part of Redux is that it keeps its scope small, and even markets itself as a low-level abstraction. Some people find this off-putting; it takes too much code to do various things. But I like the simplicity of the abstraction itself because it’s very straight-forward to debug. Actions pump through the system and state is updated. Logging the actions is almost always enough information for me to figure out why something happened badly.

One of the challenging parts is integrating asynchronous behavior with redux. There are many solutions out there, but you can get very far with the thunk middleware which allows action creators to dispatch multiple actions over time. The idea is that the asynchronous layer sits “on top” of the entire system and pumps actions through it. In this way, you are converting asynchronous events into actions that give you a clear look into what is happening.
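As a rough illustration of that idea, here is a minimal sketch of a thunk-style middleware and an action creator that dispatches several actions over time. It is not the code from the debugger: `loadSources`, `fetchSources`, and `makeLoggingStore` are hypothetical names, and the tiny store stand-in only exists so the middleware can be run without Redux itself.

```javascript
// Thunk-style middleware: if the "action" is a function, call it with
// dispatch and getState instead of passing it on to the reducers.
const thunk = ({ dispatch, getState }) => next => action =>
  typeof action === "function" ? action(dispatch, getState) : next(action);

// Hypothetical action creator. `fetchSources` stands for any function that
// returns a promise; it is an assumption, not an API from the post.
function loadSources(fetchSources) {
  return dispatch => {
    dispatch({ type: "LOAD_SOURCES", status: "start" });
    return fetchSources().then(
      sources => dispatch({ type: "LOAD_SOURCES", status: "done", sources }),
      error => dispatch({ type: "LOAD_SOURCES", status: "error", error })
    );
  };
}

// Tiny stand-in for a store: it only records the plain actions that make it
// past the middleware, which is enough to watch the action stream.
function makeLoggingStore() {
  const log = [];
  const store = { getState: () => log, dispatch: action => handle(action) };
  const handle = thunk(store)(action => {
    log.push(action);
    return action;
  });
  return store;
}
```

Dispatching `loadSources(...)` on such a store logs the `start` action immediately and the `done` or `error` action once the promise settles, so the asynchronous work shows up as ordinary actions.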

But there are some asynchronous situations that simple middlewares aren’t capable of handling. One solution is to throw everything into Observables which would require essentially using an entirely different language. It’s not clear to me how to automatically convert those streams into actions though, and I’m not interested in Observables. Other solutions like sagas look very interesting, but I’m not sure if they would solve all the problems. (EDIT: Note that the primary use case here is using redux in the context of an existing system so the more light-weight the solution, the better.)

While working on the debugger for the Firefox devtools, I ran into two situations that took some thought to solve. The first one exposes a weakness in Redux’s architecture, and the second one wasn’t as much of a weakness but required boilerplate code to solve.

Here are two weird tricks to solve some complex Redux workflows.

Waiting for an Action

Actions are simple objects that are passed into functions to accumulate new state. In an asynchronous world, you have 3 actions indicating asynchronous work: start, done, and error. In our system, they all are of the same type (like ADD_BREAKPOINT) but the status field indicates the event type.

We have our own promise middleware to write nice asynchronous workflows (example). Any actions that use the promise middleware return promises, so you can build up workflows like dispatch(addBreakpoint()).then(…) in other action creators or components. This is common in the redux world; remember, the asynchronous layer sits “on top” of redux, dispatching actions as things happen.
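To make the shape of such a middleware concrete, here is a minimal sketch under stated assumptions. Marking async actions with a `promise` field, the `addBreakpoint` creator, `client.setBreakpoint`, and the `makeStore` stand-in are all hypothetical; the real middleware in the devtools may look quite different.

```javascript
// Sketch of a promise middleware. The `promise` field is an assumed
// convention for marking async actions.
let nextSeqId = 1;

const promiseMiddleware = ({ dispatch }) => next => action => {
  if (!action || !action.promise || typeof action.promise.then !== "function") {
    return next(action); // plain action: pass it through untouched
  }
  const { promise, ...rest } = action;
  const seqId = nextSeqId++; // unique id shared by start/done/error
  dispatch({ ...rest, seqId, status: "start" });
  // Return the promise so callers can chain dispatch(...).then(...).
  return promise.then(
    value => {
      dispatch({ ...rest, seqId, status: "done", value });
      return value;
    },
    error => {
      dispatch({ ...rest, seqId, status: "error", error });
      throw error;
    }
  );
};

// Hypothetical action creator; `client.setBreakpoint` is a made-up method.
function addBreakpoint(client, location) {
  return {
    type: "ADD_BREAKPOINT",
    location,
    promise: client.setBreakpoint(location),
  };
}

// Minimal stand-in for a store so the middleware can be exercised by hand.
function makeStore(middleware) {
  const log = [];
  const store = { getState: () => log, dispatch: action => handle(action) };
  const handle = middleware(store)(action => {
    log.push(action);
    return action;
  });
  return store;
}
```

All three actions share one type and one `seqId`, and only the `status` field changes, which matches the action shape described above.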

There’s one flaw in all of this though: once an asynchronous operation starts, we don’t have a reference to it anymore. It’s like creating a promise but never storing it. That’s not really a “flaw”, but in a traditional app if you need the promise later you would probably stick it onto a class (this._requestPromise), but we have nowhere to store it. Only action creators see promises and they are just functions.

Why would you need it later? Imagine this scenario: you have a protocol method eatBurger that the client can call. Unlike fries, which you like to shove into your mouth as many at a time as you can, you don’t ever want to be eating more than one burger at once (or do you?). Now, if another burger happens to appear in front of you, you do want to eat it, but you have to wait until you have finished your current one.

Because of this, you can’t just request eatBurger willy-nilly like you would eatFry (or most other methods). If a current eatBurger request is going on you want to wait for that one to finish and then send another request. This could either be an optimization (lots of data coming from the server) or avoiding race conditions (the request mutates something).

This inherently involves state. You have to know if another request is going on at some indeterminate time, so that information must be stored somewhere. This is why I think expressing this with any async abstraction is going to be somewhat complex, but some may solve it better than others.

I think it’s an anti-pattern to store promises somewhere and always require going through the promise to get the value. This kind of code explodes in complexity, is really hard to follow, and forces every single function in the entire world to be promise-based. The great thing about Redux is that almost everything acts as normal synchronous functions.

So how do we solve this in Redux? We don’t want to store promises. The only other option is to wait for future actions to come through. If we have a flag in the app state indicating an eatBurger request is currently happening, we can wait for the { type: EAT_BURGER, status: "done" } action to come through and then re-request a burger to eat.

That means we need a way to tap into the action stream. There is no way to do this by default. So I wrote a middleware: wait-service. I called it a “service” because it’s stateful. This allows me to tap into the action stream by firing an action that holds a predicate and a function to run when that predicate is true. Here’s how the EAT_BURGER flow would look:

if (state.isEatingBurger) {
  dispatch({
    type: services.WAIT_UNTIL,
    predicate: action => (action.type === constants.EAT_BURGER &&
                          action.status === "done"),
    run: (dispatch, getState, action) => {
      dispatch({ type: EAT_BURGER,
                 condiments: ['jalapenos'] });
    }
  });
  return;
}

// Actually fire a request to eat a burger

This simple service provides us a way to bypass Redux’s normal constraints and implement complex workflows. It’s a little awkward, but it’s rare that you need this. I’ve used it a couple times to solve complex scenarios, and I get to keep the simplicity of Redux for the rest of the system.
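For readers wondering what such a service might look like inside, here is a minimal sketch written from the description above, not a copy of the actual wait-service. The `WAIT_UNTIL` value, the `makeStore` stand-in, and the `EAT_FRY` action are assumptions made for the example.

```javascript
const WAIT_UNTIL = "@@service/waitUntil"; // hypothetical action type

function waitUntilService({ dispatch, getState }) {
  let pending = []; // registered waiters; this is the stateful part

  return next => action => {
    if (action.type === WAIT_UNTIL) {
      pending.push(action); // remember the waiter, keep it away from reducers
      return null;
    }
    const result = next(action); // reducers see the action first
    const ready = [];
    const stillPending = [];
    for (const waiter of pending) {
      (waiter.predicate(action) ? ready : stillPending).push(waiter);
    }
    pending = stillPending;
    for (const waiter of ready) {
      waiter.run(dispatch, getState, action);
    }
    return result;
  };
}

// Minimal stand-in for a store that just logs what reaches the reducers.
function makeStore(middleware) {
  const log = [];
  const store = { getState: () => log, dispatch: action => handle(action) };
  const handle = middleware(store)(action => {
    log.push(action);
    return action;
  });
  return store;
}

const store = makeStore(waitUntilService);
store.dispatch({
  type: WAIT_UNTIL,
  predicate: action => action.type === "EAT_BURGER" && action.status === "done",
  run: dispatch =>
    dispatch({ type: "EAT_BURGER", status: "start", condiments: ["jalapenos"] }),
});
store.dispatch({ type: "EAT_FRY" }); // no match, the waiter stays
store.dispatch({ type: "EAT_BURGER", status: "done" }); // match, the waiter runs once
console.log(store.getState().map(a => a.type + (a.status ? ":" + a.status : "")));
// prints [ 'EAT_FRY', 'EAT_BURGER:done', 'EAT_BURGER:start' ]
```

Each waiter is removed before its `run` function is called, so it fires at most once even if `run` dispatches further actions.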

Ignoring Async Responses

While using the developer tools, you might navigate away to a different page. When this happens, we need to clear all of the state and rebuild it for the new page. We basically want to forget everything about the previous session.

Redux makes this very nice because we just need to replace the app state with the initial state, and the entire UI resets to the default. It’s great.

But there’s a problem: there may be async requests still happening when you navigate away, like fetching sources, searching for a string, etc. If we reset the state and handle the response, it will blow up because it doesn’t have the right state to handle the response with.

This was a huge problem with tests: the test would run and shut down quickly, but we would still handle async responses after the shutdown happened. It blows up, of course, and makes the test fail. But the infuriating part is that these failures are intermittent: it would only sometimes shut down too quickly.

This is a problem inherent in testing, but luckily Redux gives us the tools to come up with a nice generic solution.

Actions are broadcast to all reducers, and async actions all have the same shape (a status field and a seqId field, which is a unique identifier). Because of this, we can track exactly which async requests are currently happening. It’s just another reducer. This reducer looks for async actions and keeps an array of ids of currently open requests. I’ll just put all the code here:

const initialState = [];

// Tracks the seqIds of async requests that have started but not finished.
function update(state = initialState, action) {
  const { seqId } = action;

  if (action.type === constants.UNLOAD) {
    // Navigating away or shutting down: forget every open request.
    return initialState;
  }
  else if (seqId) {
    if (action.status === 'start') {
      return [...state, seqId];
    }
    else if (action.status === 'error' || action.status === 'done') {
      return state.filter(id => id !== seqId);
    }
  }

  // Any other action (including an unknown status) leaves the list as is.
  return state;
}

Remember, when a page navigates away or the devtools is destroyed we reset the app state to the initial state. That clears out this reducer’s state, so it’s just an empty array.

Because of this, when we create the store we can use a top-level reducer that ignores all async requests that we don’t know about. (See all the code)

let store = createStore((state, action) => {
  if (action.seqId &&
      (action.status === 'done' || action.status === 'error') &&
      state &&
      state.asyncRequests.indexOf(action.seqId) === -1) {
    return state;
  }
  return reducer(state, action);
});

By not dispatching actions, we completely ignore any async response that we don’t know about. Implementing this simple strategy completely solved all of the shutdown-related intermittents I faced.
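
The whole flow can be exercised without a store. The following is a small self-contained sketch, not the debugger's code: the LOAD_SOURCE action, the sources reducer and the guardedReducer name are made up for illustration. It starts a request, unloads the page, and then delivers the stale response.

```javascript
// Illustrative stand-ins; these action names are assumptions.
const UNLOAD = "UNLOAD";
const LOAD_SOURCE = "LOAD_SOURCE";

// Tracks open async requests, like the reducer described above.
function asyncRequests(state = [], action) {
  if (action.type === UNLOAD) return [];
  if (action.seqId && action.status === "start") {
    return [...state, action.seqId];
  }
  if (action.seqId && (action.status === "done" || action.status === "error")) {
    return state.filter(id => id !== action.seqId);
  }
  return state;
}

// A toy feature reducer that stores the text of loaded sources.
function sources(state = {}, action) {
  if (action.type === UNLOAD) return {};
  if (action.type === LOAD_SOURCE && action.status === "done") {
    return { ...state, [action.url]: action.text };
  }
  return state;
}

function reducer(state = {}, action) {
  return {
    asyncRequests: asyncRequests(state.asyncRequests, action),
    sources: sources(state.sources, action)
  };
}

// Top-level guard: drop responses whose request is no longer tracked.
function guardedReducer(state, action) {
  const isResponse = action.seqId &&
    (action.status === "done" || action.status === "error");
  if (isResponse && state && !state.asyncRequests.includes(action.seqId)) {
    return state;
  }
  return reducer(state, action);
}

const actions = [
  { type: LOAD_SOURCE, status: "start", seqId: 1, url: "a.js" },
  { type: UNLOAD },
  { type: LOAD_SOURCE, status: "done", seqId: 1, url: "a.js", text: "..." }
];

let state;
for (const action of actions) {
  state = guardedReducer(state, action);
}
console.log(state); // the late response for a.js never reaches the reducers
```

After the loop, both asyncRequests and sources are empty: the response for request 1 arrives after UNLOAD cleared the list of open requests, so the guard returns the existing state untouched.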

End

I’m sure that you will show me other abstractions that handle these things better by default. In my opinion, every abstraction has rough edges. The question is how well you can work around the system to solve any rough edges you face, and I don’t feel like these are bad solutions. It’s just code you have to write. I’ve certainly faced much harder situations where the abstraction was just too controlling and I couldn’t even work around it.

|

|


Kyle Huey: IndexedDB Logging Subsystems |

IndexedDB in Gecko has a couple of different but related logging capabilities. We can hook into the Gecko Profiler to show data related to the performance of the database threads. These are enabled unconditionally in builds that support profiling. You can find the points marked with a PROFILER_LABEL annotation in the IndexedDB module.

We also have a logging system that is off by default. By setting the preference dom.indexedDB.logging.enabled and NSPR_LOG_MODULES=IndexedDB:5 you can enable the IDB_LOG_MARK annotations that are scattered throughout the IndexedDB code. This will output a bunch of data (using the standard logging subsystem) to show DOM calls, transactions, requests, and the other mechanics of IndexedDB. This is intended for functional debugging (instead of looking at performance problems, which the PROFILER_LABEL annotations are for). You can even set dom.indexedDB.logging.detailed to get extra verbose information.

|

|

Armen Zambrano: mozci-trigger now installs with pip install mozci-scripts |

In order to use mozci from the command line you now have to install with this:

pip install mozci-scripts

instead of:

pip install mozci

This helps to maintain the scripts separately from the core library since we can control which version of mozci the scripts use.

All scripts now live under the scripts/ directory instead of the library:

https://github.com/mozilla/mozilla_ci_tools/tree/master/scripts

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

|

|

Mozilla Addons Blog: How an Add-on Saved a Student Untold Hours |

This is the actual hand Rami uses to click his mouse and save text to files.

At the start of any research project, Rami says he reviews at least 30 other papers related to his subject. But only small, scattered portions of any paper bear relation to his own topic. “My issue was—I needed a quick tool to copy a summary of text I found, and then add a data time stamp and URL reference so I could return back to the website,” explains Rami. “I was doing this manually and it consumed lots of time.”

Then he found Save Text to File, an add-on that saves selected text and its metadata to a directory file with one simple right-click of the mouse.

“This was exactly what I was looking for,” says Rami. “For each chapter of my thesis I have a file full of summarized text and URLs of the papers that I can quickly access and remember what each page and paper was about. I have my own annotated bibliography that’s ready to be formatted and added to my papers.”

Very cool. Thanks for sharing your add-ons story with us, Rami!

If you, kind reader, use add-ons in interesting ways and want to share your experience, please email us at editor@mozilla.com with “my story” in the subject line.

https://blog.mozilla.org/addons/2016/04/12/how-an-add-on-saved-a-student-untold-hours/

|

|

Air Mozilla: Connected Devices Weekly Program Review, 12 Apr 2016 |

Weekly project updates from the Mozilla Connected Devices team.


https://air.mozilla.org/connected-devices-weekly-program-review-20160412/

|

|

The Mozilla Blog: Mozilla-supported Let’s Encrypt goes out of Beta |

In 2014, Mozilla teamed up with Akamai, Cisco, the Electronic Frontier Foundation, Identrust, and the University of Michigan to found Let’s Encrypt in order to move the Web towards universal encryption. Today, Let’s Encrypt is leaving beta. We here at Mozilla are very proud of Let’s Encrypt reaching this stage of maturity.

Let’s Encrypt is a free, automated and open Web certificate authority that helps make it easy for any Web site to turn on encryption. Let’s Encrypt uses an open protocol called ACME which is being standardized in the IETF. There are already over 40 independent implementations of ACME. Several web hosting services such as Dreamhost and Automattic, who runs WordPress.com, also use ACME to integrate with Let’s Encrypt and provide security that is on by default.

HTTPS, the protocol that forms the basis of Web security, has been around for a long time. However, as of the end of 2015, only ~40% of page views and ~65% of transactions used HTTPS. Those numbers should both be 100% if the Web is to provide the level of privacy and security that people expect. One of the biggest barriers to setting up a secure Web site is getting a “certificate”, which is the digital credential that lets Web browsers make certain they are talking to the right site. Historically the process of getting certificates has been difficult and expensive, making it a major roadblock towards universal encryption.

In the six months since its beta launch in November 2015, Let’s Encrypt has issued more than 1.7 million certificates for approximately 2.4 million domain names, and is currently issuing more than 20,000 certificates a day. More than 90% of Let’s Encrypt certificates are protecting web sites that never had security before. In addition, more than 20 other companies have joined Let’s Encrypt, making it a true cross-industry effort.

Congratulations and thanks to everyone who has been part of making Let’s Encrypt happen. Security needs to be a fundamental part of the Web and Let’s Encrypt is playing a key role in making the Internet more secure for everyone.

https://blog.mozilla.org/blog/2016/04/12/mozilla-supported-lets-encrypt-goes-out-of-beta/

|

|

Roberto A. Vitillo: Measuring product engagment at scale |

How engaged are our users for a certain segment of the population? How many users are actively using a new feature? One way to answer these questions is to compute the engagement ratio (ER) for that segment, which is defined as daily active users (DAU) over monthly active users (MAU), i.e. $ER = \frac{DAU}{MAU}$.

Intuitively the closer the ratio is to 1, the higher the number of returning users. A segment can be an arbitrary combination of values across a set of dimensions, like operating system and activity date.
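
As a small worked example of the definition, the sketch below computes the ER exactly from a made-up activity log. The data, the function names and the 30-day window used for MAU are all assumptions for illustration; the exact sets used here are precisely what stops scaling to millions of users.

```javascript
// Hypothetical activity log: one entry per (day, user) pair.
const activity = [
  { day: "2016-04-10", user: "a" },
  { day: "2016-04-11", user: "a" },
  { day: "2016-04-11", user: "b" },
  { day: "2016-04-12", user: "a" },
  { day: "2016-04-12", user: "c" },
  { day: "2016-04-12", user: "d" }
];

// Distinct users active on any day in [from, to]. ISO dates compare
// correctly as plain strings.
function activeUsers(log, from, to) {
  const inWindow = log.filter(e => e.day >= from && e.day <= to);
  return new Set(inWindow.map(e => e.user));
}

// ER = DAU / MAU, with MAU counted over the window [monthStart, day].
function engagementRatio(log, day, monthStart) {
  const dau = activeUsers(log, day, day).size;
  const mau = activeUsers(log, monthStart, day).size;
  return mau === 0 ? 0 : dau / mau;
}

const er = engagementRatio(activity, "2016-04-12", "2016-03-14");
console.log(er); // 0.75: 3 users active that day out of 4 in the window
```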

Ideally, given a set of dimensions, pre-computing the ER for all possible combinations of values of said dimensions would ensure that user queries run as fast as possible. Clearly that’s not very efficient though; if we assume for simplicity that every dimension has $k$ possible values, then for $n$ dimensions there are $k^n$ ratios.

Is there a way around computing all of those ratios while still having an acceptable query latency? One could build a set of users for each value of each dimension and then at query time simply use set union and set intersection to compute any desired segment. Unfortunately that doesn’t work when you have millions of users, as the storage complexity is proportional to the number of items stored in the sets. This is where probabilistic counting and the HyperLogLog sketch come into play.

HyperLogLog

The HyperLogLog sketch can estimate cardinalities well beyond $10^9$ with a standard error of 2% while only using a couple of KB of memory.

The intuition behind it is simple. Imagine a hash function that maps user identifiers to a string of N bits. A good hash function ensures that each bit is equally likely to be 0 or 1. If that’s the case then the following is true:

- 50% of hashes have the prefix 1

- 25% of hashes have the prefix 01

- 12.5% of hashes have the prefix 001

- …

Intuitively, if 4 distinct values are hashed we would expect to see on average one single hash with a prefix of 01, while for 8 distinct values we would expect to see one hash with a prefix of 001, and so on. In other words, the cardinality of the original set can be estimated from the longest prefix of zeros of the hashed values. To reduce the variability of this single estimator, the average of K estimators can be used as the approximated cardinality, and it can be shown that the standard error of a HLL sketch is $1.04 / \sqrt{K}$.
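
The following is a simplified sketch of the idea, not the implementation used in spark-hyperloglog or presto-hyperloglog. It assumes a 32-bit hash (FNV-1a followed by the MurmurHash3 finalizer, chosen here only as a convenient stand-in) and 1024 registers; the bias constant, the small-range correction and the $1.04 / \sqrt{m}$ error figure are taken from the original HyperLogLog paper. All function names are illustrative.

```javascript
const REGISTER_BITS = 10;                 // assumed sketch size
const NUM_REGISTERS = 1 << REGISTER_BITS; // 1024 estimators

// Stand-in 32-bit hash: FNV-1a, then the MurmurHash3 finalizer to mix bits.
function hash32(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193);
  }
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0;
}

function createSketch() {
  return new Uint8Array(NUM_REGISTERS);
}

// The first REGISTER_BITS bits pick a register; the register keeps the
// longest "zeros then a one" prefix seen in the remaining bits.
function add(sketch, id) {
  const h = hash32(id);
  const index = h >>> (32 - REGISTER_BITS);
  const rest = (h << REGISTER_BITS) >>> 0;
  const rank = rest === 0 ? 32 - REGISTER_BITS + 1 : Math.clz32(rest) + 1;
  if (rank > sketch[index]) sketch[index] = rank;
}

// Harmonic mean of the per-register estimates, with the paper's
// linear-counting correction for small cardinalities.
function estimate(sketch) {
  const m = sketch.length;
  const alpha = 0.7213 / (1 + 1.079 / m);
  let sum = 0;
  let emptyRegisters = 0;
  for (const rank of sketch) {
    sum += Math.pow(2, -rank);
    if (rank === 0) emptyRegisters++;
  }
  const raw = (alpha * m * m) / sum;
  if (raw <= 2.5 * m && emptyRegisters > 0) {
    return m * Math.log(m / emptyRegisters);
  }
  return raw;
}

// Expected relative error for a sketch with m registers.
function standardError(m) {
  return 1.04 / Math.sqrt(m);
}

const sketch = createSketch();
for (let i = 0; i < 10000; i++) {
  add(sketch, "user-" + i);
  add(sketch, "user-" + i); // duplicates do not change the sketch
}
console.log(Math.round(estimate(sketch)), standardError(NUM_REGISTERS));
```

The sketch occupies 1024 bytes regardless of how many identifiers are added, and with 1024 registers the expected error is a little over 3%. A 32-bit hash limits this version to moderately sized sets.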

The detailed algorithm is documented in the original paper, and its practical implementation and variants are covered in depth by a 2013 paper from Google.

Set operations

One of the nice properties of HLL is that the union of two HLL sketches is very simple to compute as the union of a single estimator with another estimator is just the maximum of the two estimators, i.e. the longest prefix of zeros. This property makes the algorithm trivially parallelizable which makes it well suited for map-reduce style computations.
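
As a minimal illustration of that property, a sketch can be modelled as an array of registers, each holding the longest prefix seen by one estimator. The register values below are made up and the function name is an assumption; real sketches have far more registers.

```javascript
// Union of two sketches: keep, for every register, the larger value.
function unionSketches(a, b) {
  if (a.length !== b.length) {
    throw new Error("sketches must have the same number of registers");
  }
  return a.map((rank, i) => Math.max(rank, b[i]));
}

// Hypothetical per-day sketches for the same segment.
const monday = [1, 3, 0, 2];
const tuesday = [2, 1, 0, 5];
const wednesday = [0, 4, 1, 1];

console.log(unionSketches(monday, tuesday)); // [ 2, 3, 0, 5 ]

// The operation is associative and commutative, so many sketches can be
// merged in any order, for example in the reduce step of a map-reduce job.
const week = [monday, tuesday, wednesday].reduce((a, b) => unionSketches(a, b));
console.log(week); // [ 2, 4, 1, 5 ]
```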

What about set intersection? There are two ways to compute that for e.g. two sets $A$ and $B$:

- Using the inclusion-exclusion principle: $|A \cap B| = |A| + |B| - |A \cup B|$.

- Using the MinHash (MH) sketch, which estimates the Jaccard index that measures how similar two sets are: $J(A, B) = \frac{|A \cap B|}{|A \cup B|}$. Given the MH sketch one could estimate the intersection with $|A \cap B| = J(A, B) \cdot |A \cup B|$.

It turns out that both approaches yield bad approximations when the overlap is small, which makes set intersection unattractive for many use cases. For that reason we decided to use only the union operation for HLL sketches in our datasets.
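
A bit of arithmetic shows why the inclusion-exclusion estimate degrades. The numbers below are invented for illustration: two segments of one million users each that share only 1,000 users, with every cardinality estimate assumed to be off by 1.6% in the least favourable direction.

```javascript
const relativeError = 0.016; // assumed error of each cardinality estimate

const sizeA = 1000000;
const sizeB = 1000000;
const trueIntersection = 1000;
const sizeUnion = sizeA + sizeB - trueIntersection; // 1,999,000

// Unfavourable case: both sets overestimated, the union underestimated.
const estimatedA = sizeA * (1 + relativeError);
const estimatedB = sizeB * (1 + relativeError);
const estimatedUnion = sizeUnion * (1 - relativeError);

// Inclusion-exclusion on the estimates instead of the true sizes.
const estimatedIntersection = Math.round(estimatedA + estimatedB - estimatedUnion);

console.log(estimatedIntersection); // about 65,000 against a true value of 1,000
```

The absolute error of each term scales with the size of the sets, not with the size of the overlap, so a small intersection is easily swamped. A standard error is an average rather than a bound, so real results vary, but the order of magnitude of the problem is the same.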

Going back to the original problem of estimating the ER for an arbitrary segment, by computing the HLL sketches for all combinations of values across all dimensions the HLL sketch for any segment can be derived using set union.

Implementation

At Mozilla we use both Spark and Presto for analytics, and even though both support HLL their implementations are not compatible, i.e. Presto can’t read HLL sketches serialized from Spark. To that end we created a Spark package, spark-hyperloglog, and a Presto plugin, presto-hyperloglog, to extend both systems with the same HLL implementation.

Usage

Mozilla employees can access the client_count table from re:dash and run Presto queries against it to compute DAU, MAU or the ER for a certain segment. For example, this is how DAU faceted by channel and activity date can be computed:

SELECT normalizedchannel,
       activitydate,
       cardinality(merge(cast(hll AS HLL))) AS hll
FROM client_count
GROUP BY activitydate, normalizedchannel
ORDER BY normalizedchannel, activitydate DESC

The merge aggregate function computes the set union while the cardinality function returns the cardinality of a sketch. A complete example with DAU, MAU and ER for the electrolysis engagement ratio can be seen here.

By default the HLL sketches in the client_count table have a standard error of about 1.6%, i.e. the cardinalities will be off on average by 1.6%.

http://robertovitillo.com/2016/04/12/measuring-product-engagment-at-scale/

|

|