
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.



Chris H-C: Who needs the NSA? FLOSS is bad enough. |

Here’s a half-hour talk (with 15min of Q&A at the end) from FOSDEM 2014, an open-source software conference held annually in Brussels. But I know you won’t watch it, so here’s the summary.

NSA operation ORCHESTRA has had a successful first year of operation. With a budget of $1B, and breaking no laws (or even using FISA courts or other questionable methods), they have successfully:

- kept the majority of Internet traffic unencrypted, so that it can be read by anyone

- centralized previously-decentralized technologies (e.g. Skype) under companies friendly to the NSA (e.g. Microsoft)

- created a system of finances that encourages outside influences on proprietary software and transparent bribery

- created a system of patent expectations that allows companies friendly to the NSA to squelch smaller players that might create disruptive technologies

- encouraged poor community policies and practices in Open Source projects to help derail useful work through arguments about licenses, naming conventions, and bikeshedding

The joke is that ORCHESTRA doesn’t exist (as far as we know). The software community has been doing this by itself, without encouragement or aid, for years.

“kept the majority of Internet traffic unencrypted, so that it can be read by anyone”

Internet traffic is unencrypted largely because it is expensive to encrypt things. Well, this isn’t true, as one could always self-sign a certificate for one’s webserver and then be able to guarantee that the number of parties in the session does not change in the middle of your transaction. This doesn’t happen because a browser connecting to unencrypted sites displays no warning. But a browser connecting to a site with a self-signed certificate shows a big nastygram that is difficult to understand, let alone bypass.

Emails are unencrypted because using encryption software on email is difficult, not interoperable, and ceases to work as soon as you want to send email to someone who isn’t capable of decrypting your message. In short: if Amazon can’t encrypt their shipping notification when they send it to you, then it won’t work.

Generic traffic is unencrypted or badly-encrypted (which is, in some cases, worse) because encryption is hard, and the premier tool for using it, OpenSSL, is so poorly documented and has such awful default settings that you’re as likely to encrypt your cat as you are your chat.

(This is where Mozilla could step up with its Mozilla Open Source Support program and offer cash incentives to improve the software and documentation and fund needed audits of the codebase.)

“centralized previously-decentralized technologies (e.g. Skype) under companies friendly to the NSA (e.g. Microsoft)”

Skype, once bought by Microsoft, centralized its encrypted communications under Microsoft’s servers. This aided in the directory service and NAT-busting parts of the protocol, sure, but also provided a single target for subpoenas and other court orders.

As Apple is helping make the public aware, it is beginning to seem as though the safest course is to code things so that even the dev can’t read them.

“created a system of finances that encourages outside influences on proprietary software and transparent bribery”

The entire system of Venture Capitalism is rife with abuse and corruption. That funding isn’t coming from the NSA is incidental as VCs come in and drop $10k on a startup in exchange for influence and a piece of the pie. If your funding is coming from someone who thinks you should pivot away from usable crypto, you aren’t going to argue with the paycheque.

Also, if you are the NSA and need an easy way to pay an informant, you can say to her “Tell your boss you quit to form a startup. We’ll set you up in the Valley with $1M in investment. Just surf the web for a year or two.” Easy rewards, perfectly legal.

“created a system of patent expectations that allows companies friendly to the NSA to squelch smaller players that might create disruptive technologies”

Software patents in the United States are an easy tool for larger companies to prey on smaller ones. If you are a small company that patents something that amazingly doesn’t infringe on other patents, a large company, friendly to the NSA, can scoop them up and squelch the startup in a fit of managerial pique. If the patent does infringe on a friend-of-the-NSA’s patents, the small company is squished under the lawyers’ boots.

“encouraged poor community policies and practices in Open Source projects to help derail useful work through arguments about licenses, naming conventions, and bikeshedding”

Here’s the kicker for Mozilla and others trying to make a difference in the Open Source space.

The NSA doesn’t need agents provocateurs to derail conversations. Well-meaning contributors do all the time.

The GCHQ don’t need to distract us with fights about licensing or superficial changes. Users do that all the time.

CSIS agents aren’t sowing doubt about what is better to stop us from doing what is good. Our own internal voices shout quite loudly enough.

…so, what do we do? Well, a good start for those of us in Open Source communities, is to think before we type. Bikeshedding slows us down, but software quality is an imperative. Licensing just doesn’t matter as much as you think it does and is as personal a choice as one’s toenail polish colour.

The next step is to realize that these are generally not technological problems. They are political. They are social. They are human. Developers and technical managers and architects might be some of the best people to understand these problems, but not the best people to solve them.

We need outside help. We need politicians who agree to be helped to understand these complex issues and take action. We need community managers who understand the needs of the project and the needs of humans and how to step between or step away. We need each individual to actively and sincerely stop sucking at talking to others because the harm we’re doing reaches beyond the mailing list and affects users.

We have seen the enemy, and it is us. It is also them. But we can fix “us” so that we are able to fight “them”. I think.

:chutten

https://chuttenblog.wordpress.com/2016/04/08/who-needs-the-nsa-floss-is-bad-enough/

|

|

Gervase Markham: Type 1 vs Type 2 Decisions |

Some decisions are consequential and irreversible or nearly irreversible – one-way doors – and these decisions must be made methodically, carefully, slowly, with great deliberation and consultation. If you walk through and don’t like what you see on the other side, you can’t get back to where you were before. We can call these Type 1 decisions. But most decisions aren’t like that – they are changeable, reversible – they’re two-way doors. If you’ve made a suboptimal Type 2 decision, you don’t have to live with the consequences for that long. You can reopen the door and go back through. Type 2 decisions can and should be made quickly by high judgment individuals or small groups.

As organizations get larger, there seems to be a tendency to use the heavy-weight Type 1 decision-making process on most decisions, including many Type 2 decisions. The end result of this is slowness, unthoughtful risk aversion, failure to experiment sufficiently, and consequently diminished invention. We’ll have to figure out how to fight that tendency.

http://feedproxy.google.com/~r/HackingForChrist/~3/nzJyEApTEKI/

|

|

Soledad Penades: Securing your self-hosted website with Let’s Encrypt |

- Introduction

- HTTPS and certificate authorities

- Using Let’s Encrypt to generate and renew digital certificates

- Hardening default setups and avoiding known vulnerabilities

- I have HTTPS, now what?

- WordPress considerations

- A workflow to migrate from HTTP to HTTPS

- More cool things about Let’s Encrypt

https://soledadpenades.com/2016/04/08/securing-your-self-hosted-website-with-lets-encrypt/

|

|

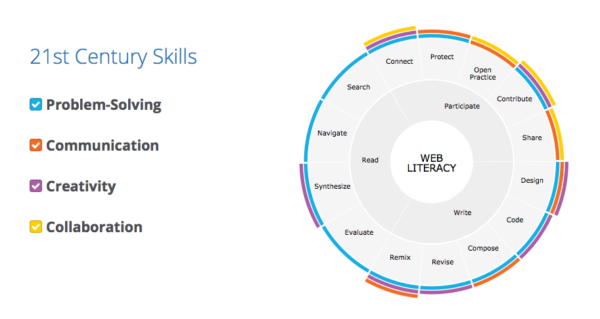

The Mozilla Blog: Introducing Mozilla’s Web Literacy Map, Our New Blueprint for Teaching People About the Web |

Within the next decade, the number of individuals with access to the Internet will rise to five billion. These billions of new users, many from emerging markets, have the potential to experience unprecedented personal, civic and economic opportunity online — but only if they have the necessary skills to meaningfully wield the Internet.

To this end, Mozilla is dedicated to empowering people with the knowledge they need to read, write and participate online. We define this knowledge as “web literacy” — a collection of core skills and competencies like search engine know-how, design basics, online privacy fundamentals, and a working understanding of sharing, open source licensing and remixing.

We don’t believe everyone needs to learn how to code in order to be web literate. But when everyone has a fundamental understanding of web mechanics, they’re able to realize the Internet’s full potential. Learning and teaching these skills — combined with 21st-century skills like collaboration and problem solving — allows more and diverse people to shape the Web. And this helps grow a stronger, healthier open Internet.

When users aren’t web literate, they become disenfranchised from the open Internet. And the Internet itself suffers, too — without new and diverse users, it becomes more closed, more commercial, more monolithic.

We believe web literacy is as important as reading, writing and arithmetic. When teaching these three Rs, we rely on centuries of experience. But the Internet has no clear educational roadmap. Mozilla created the Web Literacy Map, version 2.0 as a resource to fill this gap and aid educators around the globe who are teaching and learning the Web.

The Map is an interactive, detailed framework that outlines and defines the key web literacy and 21st-century skills needed to realize the Internet’s full potential. The Map also provides hands-on activities for teaching and learning these skills.

Mozilla staff and volunteers worked for several months to create and launch the Map. A diverse collection of researchers, educators, scientists, entrepreneurs and others contributed research, interviews, surveys and focus groups.

Explore Mozilla’s new Web Literacy Map >>

Whether you’re a first-time smartphone user or accomplished programmer, we encourage you to explore the Map, and to use it as a resource as you teach and learn the Web with those around you.

An-Me Chung is the Mozilla Foundation’s Director of Strategic Partnerships.

Mozilla community members teach the Web in Indonesia. Credit: Laura de Reynal

|

|

Michael Kaply: CCK2 Source on GitHub (and a new release) |

I had put the original CCK Wizard on code.google.com, but since I did the development of the CCK2 on my private SVN, I had never gone through the trouble of making the source available. It is technically open source, but I wasn’t doing enough to allow other people to contribute to the code. That changes today.

The CCK2 source is now available on GitHub under the MPL.

Nothing else about how I manage the CCK2 will change – I will still be using Freshdesk for support requests and I will still have premium support subscriptions. I will be using GitHub issues only for actual bugs in the CCK2.

When I do new releases of the CCK2, you’ll be able to get information about those releases on the releases page.

And speaking of new releases, I’ve just updated the CCK2 to fix a bug related to adding bookmarks with international characters. You can download it here.

https://mike.kaply.com/2016/04/08/cck2-source-on-github-and-a-new-release/

|

|

Soledad Penades: Securing your self-hosted website with Let’s Encrypt, part 5: I have HTTPS, and now what? |

In part 4, we looked at hardening default configurations and avoiding known vulnerabilities, but what other advantages are there to having our sites run HTTPS?

First, a recap of what we get by using HTTPS:

- Privacy – no one knows what your users are accessing

- Integrity – what is sent between you and your users is not tampered with at any point*

*unless the users’ computers are infected with a virus or some kind of browser malware that modifies pages after the browser has decrypted them, or modifies the content before sending it back to the network via the browser – Remember I said that security is not 100% guaranteed? Sorry to scare you. You’re welcome

|

|

Gervase Markham: Facebook Switches Off Email Forwarding |

You remember that email address @facebook.com that Facebook set up for you in 2010, and then told everyone viewing your Facebook profile to use in 2012 (without asking)?

Well, they are now breaking it:

Hello Gervase,

You received this email because your gerv.markham@facebook.com account is set up to forward messages to [personal email address]. After 1 May 2016, you will no longer be able to receive email sent to gerv.markham@facebook.com.

Please update your email address for any services that currently send email to gerv.markham@facebook.com.

Thank You,

Email Team at Facebook

Good work all round, there, Facebook.

http://feedproxy.google.com/~r/HackingForChrist/~3/ztp8cQZ6HK0/

|

|

Christian Heilmann: Amazing accessibility news all around… |

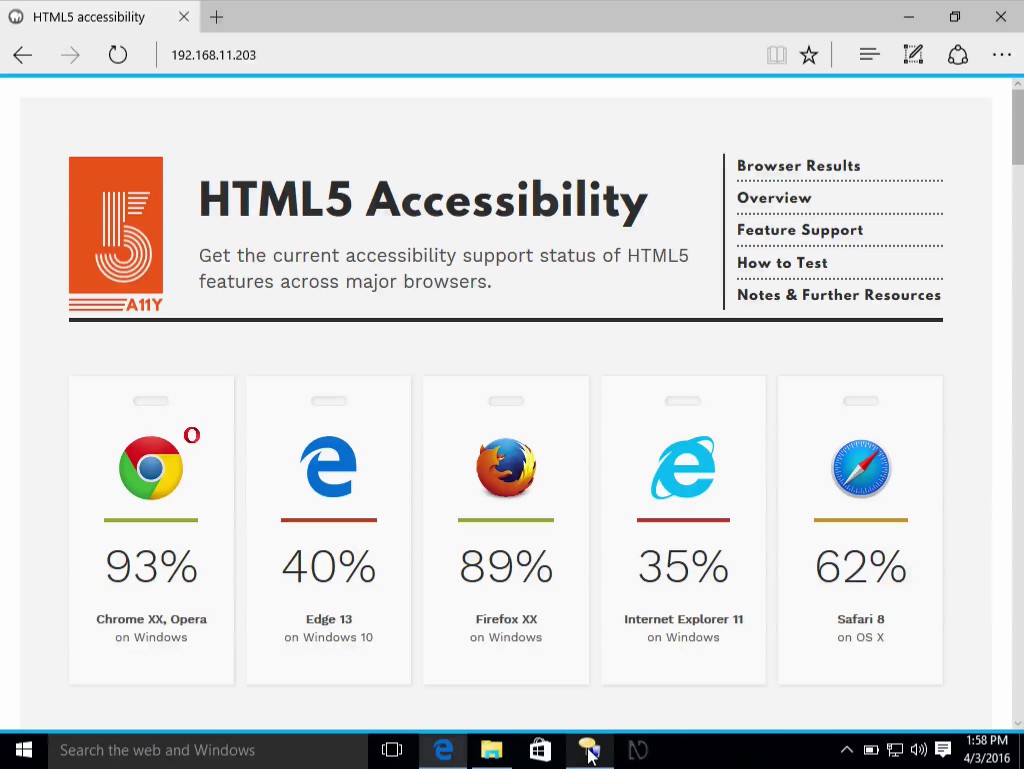

Lately I’ve been quite immersed in the world of Microsoft to find my way around the new job, and whilst doing so I discovered a few things you might have missed. Especially in the field of accessibility there is some splendid stuff happening.

During the keynote of //build last week in San Francisco there were quite a few mind-blowing demos. The video of the keynote is available here (even for download).

For me, it got very interesting 2 hours and five minutes in when Cornelia Carapcea, Senior PM of the Cognitive APIs group shows off some interesting tools:

- A “What’s in this image” demo using the Computer Vision API

- The Caption Bot, which automatically detects content in images and proposes alternative text

- The Custom Recognition Intelligent Service (CRIS), which allows you to train a system to get better results when converting audio to text.

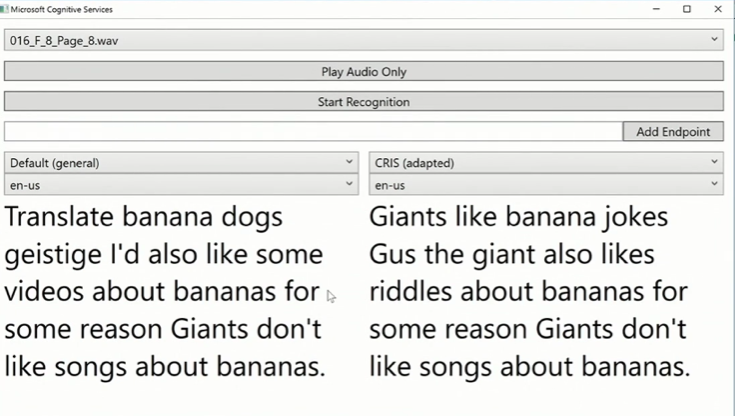

The results of CRIS are impressive. For example, Cornelia showed off how a set of audio files with interviews with children became much more usable. On the left in this screenshot you see the traditional results of speech to text APIs and on the right what CRIS was able to extract after being trained up:

The biggest shock for me was to see my old friend Saqib Shaikh appear on stage showing off his Seeing AI demo app. It pretty much feels like accessibility sci-fi becoming reality:

The Microsoft Cognitive Services: Give Your Apps a Human Side breakout session at build had some more interesting demos:

- Tele2 using the translation API to do live translation of phone calls into other languages. You hear the message in the original language, a beep and then the translation. (34:50 onwards)

- ProDeaf doing the same to translate live audio into sign language across languages. (40:00 onwards)

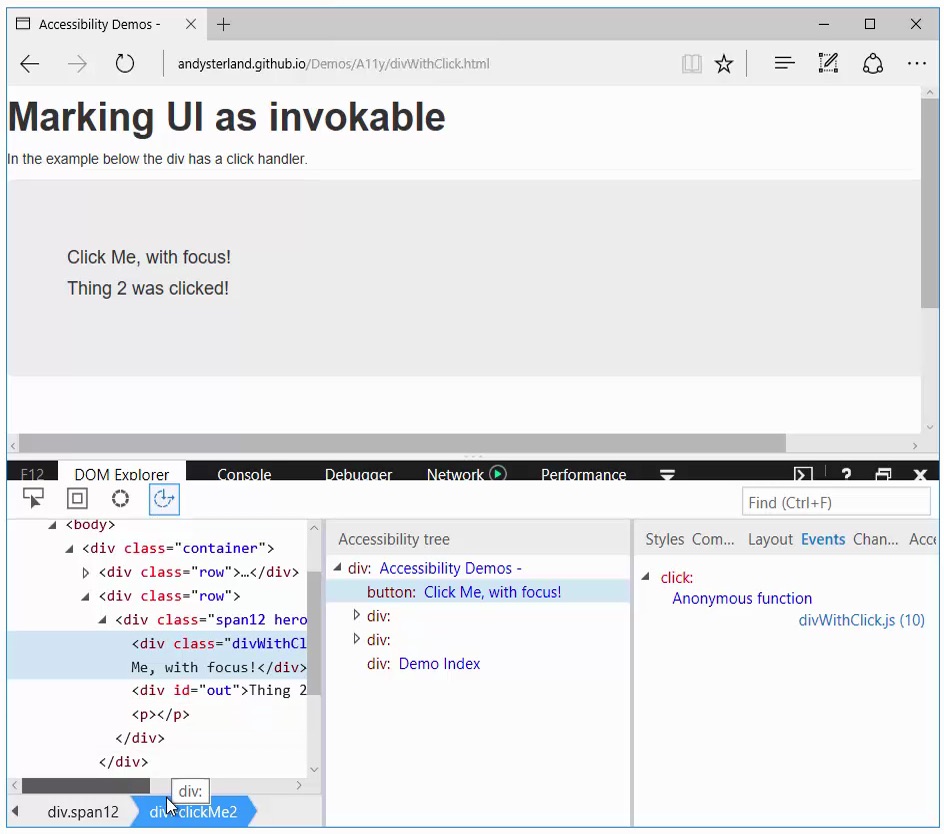

In Andy Sterland’s F12 Developer Tools talk at Edge Web Summit he covered some very important new accessibility features of developer tools:

- F12 tools for Edge now have not only an accessibility tab in the DOM viewer, but also a live updating Accessibility Tree viewer

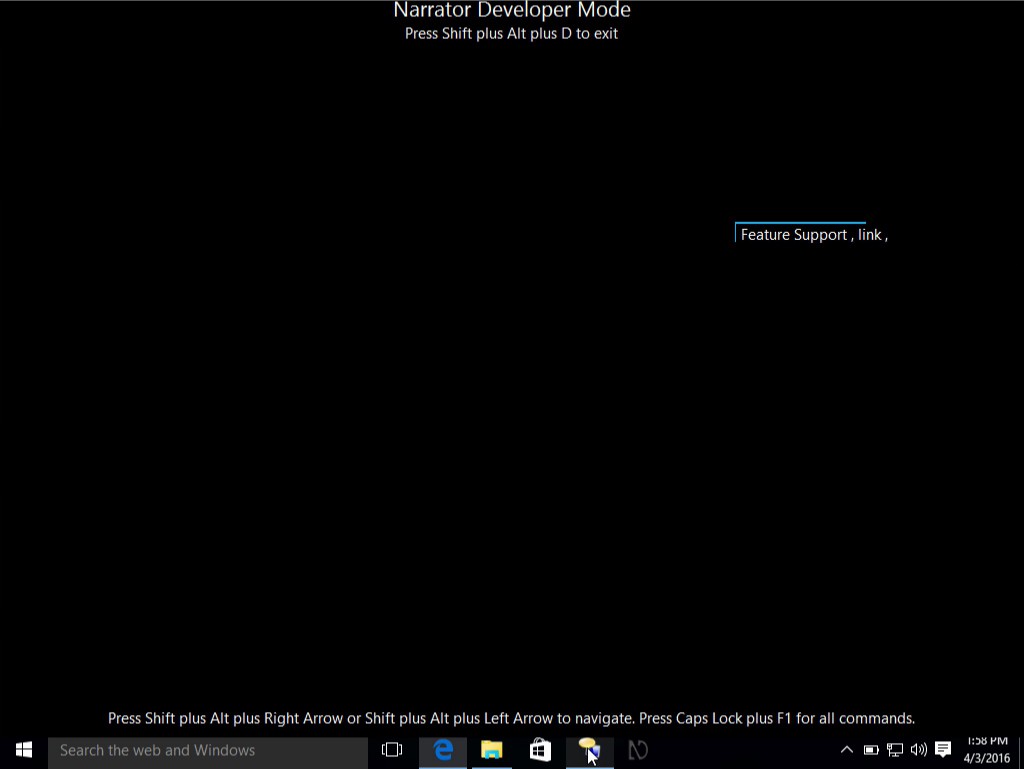

- Narrator for Windows will have a developer mode. You can turn this on using “Narrator + Caps Lock + Shift + F12” and it will only read out the app that you chose rather than the whole operating system, including your editor and the things you type in. To avoid the mistake of looking at the screen whilst using a screen reader, it also automatically hides the screen except for the currently read out part.

My direct colleague and man of awesome Aaron Gustafson deep dived more into the subject of accessibility and covered a large part of the Inclusive Design Toolkit.

All in all, there is a lot of great stuff happening in the world of accessibility at Microsoft. I had a very easy time researching my talk next week at Funka.

https://www.christianheilmann.com/2016/04/08/amazing-accessibility-news-all-around/

|

|

Giorgio Maone: CrossFUD: an Analysis of Inflated Research and Sloppy Reporting |

On April the 7th at 22:53, Aaron wrote:

I just read a Digital Trends article that states NoScript is a security breach. What's the story here???

It's a story of FUD and sensationalism, reported so carelessly that explaining it and correcting readers' perception is now an uphill battle.

They've just demonstrated that rather than invoking a low-level function directly, like any installed add-on could do anyway, a malicious Firefox extension that has already been approved by an AMO code reviewer and manually installed by the user can invoke another add-on that the same user had previously installed and perform the low-level tasks on its behalf, not in order to gain any further privilege but just for obfuscation purposes.

It's like saying that you need to uninstall Microsoft Office immediately because tomorrow you may also install a virus that then can use Word's automation interface to replicate itself, rather than invoking the OS input/output functions directly. Or that, for the same reasons, you must uninstall any Mac OS application which exposes an AppleScript interface.

BTW, if you accept this as an Office or AppleScript vulnerability, Adblock Plus is not less "vulnerable", so to speak, than the other mentioned add-ons, despite what the article states. It's just that those "researchers" were not competent enough to understand how to "exploit" it.

And I'm a bit disappointed in Nick Nguyen who, rather than putting some effort into rebutting this cheap "research", chose the easier path of pitching our new WebExtensions API, whose better insulation and permissions system actually makes this specific scenario less likely and deserves to be praised anyway, but does not and could not prevent the almost infinite other ways to obfuscate malicious intent available to any kind of non-trivial program, be it a Chrome extension, an iOS app or a shell script. Only the trained eye of a code reviewer can mitigate this risk, and even if there's always room for improvement, this is what makes AMO stand out among the crowd of so-called "market places".

https://hackademix.net/2016/04/08/crossfud-an-analysis-of-inflated-research-and-sloppy-reporting/

|

|

Support.Mozilla.Org: What’s Up with SUMO – 7th April |

Hello, SUMO Nation!

How is everyone doing? Chilling out to the tunes everyone’s sharing in the Off-Topic forums, perhaps? Or maybe you’re out and about in the spring freshness? Well, that only makes sense if your local spring actually is about the weather getting better… Is it? ;-)

Here is the latest news from the world of SUMO! And still more to come over the next few days…

Welcome, new contributors!

Contributors of the week

- The forum supporters who helped users out for the last week.

- The writers of all languages who worked on the KB for the last week.

We salute you!

Most recent SUMO Community meeting

- Reminder: WE HAVE MOVED TO WEDNESDAYS!

- You can read the notes here and see the video on our YouTube channel and at AirMozilla.

The next SUMO Community meeting…

- …is possibly happening on WEDNESDAY the 13th of April – join us!

- Reminder: if you want to add a discussion topic to the upcoming meeting agenda:

- Start a thread in the Community Forums, so that everyone in the community can see what will be discussed and voice their opinion here before Wednesday (this will make it easier to have an efficient meeting).

- Please do so as soon as you can before the meeting, so that people have time to read, think, and reply (and also add it to the agenda).

- If you can, please attend the meeting in person (or via IRC), so we can follow up on your discussion topic during the meeting with your feedback.

Community

- Want to Take Back The Web? Click here!

- The “oh-what-a-cool-acronym-this-is” NDAs are back! More details here.

- Want to know what’s going on with the Admins? Check this thread in the forum.

- Reminder: the nominations for the London Work Week are locked down and will be sent out soon – the Participation team will contact everyone in early April – in the meantime, take a look at this wiki page for more details.

- Ongoing reminder: if you think you can benefit from getting a second-hand device to help you with contributing to SUMO, you know where to find us.

Social

- Welcome to new Socialites: Marco Aurelio, Jaime, and Samuel from Brazil!

- If you’re curious about becoming a Social reviewer, talk to Madalina. (She’s busy documenting it for later, too)

- Are you using Telegram? Join the Social Support Telegram group!

- Final reminder: Madalina is waiting for your feedback regarding Sprinklr. Send it directly to her, using her email address.

- An ongoing and gentle technical reminder for those already supporting on Social – please don’t forget to sign your initials at the end of each reply and always use the @ at the beginning of it.

- Ongoing reminder: We have a training out there for all those interested in Social Support – talk to Madalina or Costenslayer on #AoA (IRC) for more information.

Support Forum

- Sync for Firefox went offline for a while… and came back!

- Stay tuned for a new locale joining the ranks of official support forum languages… Can you guess which one it will be?

- If you’re new and feel a bit lost – ask an admin!

Knowledge Base & L10n

- Monthly Milestone Congratulations (also known as MMCs) to:

- Chinese localizers for 200 articles!

- Czech localizers for reaching 100%!!!

- Portuguese localizers for 500 articles!

- Dutch localizers for holding the 100% completion flag high and mighty!

- New locale milestones for April now in your dashboard! Check them out!

- Reminder: are you aware of the l10n hackathons happening in your locale? Take a look at this blog post and contact your l10n team to get involved.

- A general SUMO l10n update post coming your way… really soon!

- You can see all the articles that have been updated for version 45 in this document.

- You can check the KB training article feedback and progress in this document.

Developers

- Important reminder: Kitsune development is currently in maintenance mode due to changes in the developer group – read this FAQ post. If you have questions about this, please contact us via the forum.

- This week’s SUMO Platform meeting has been cancelled – developer updates soon!

Firefox

- for Android

- Version 45 support discussion thread.

- Version 46 support discussion thread (WIP)

- includes an update about previously cached pages

- no more support for Android Honeycomb (3.0)

- Sync 1.1 (also known as “old sync”) no longer supported

- History and Bookmarks now in the menu

- for Desktop

- for iOS

- Reminder: Firefox for iOS 3.0 has been approved and is out and about!

- a 3.x point release coming soon…

- Firefox for iOS 4 will be here in roughly 6 weeks, with a decision on bidirectional synchronization of bookmarks and more!

https://blog.mozilla.org/sumo/2016/04/07/whats-up-with-sumo-7th-april/

|

|

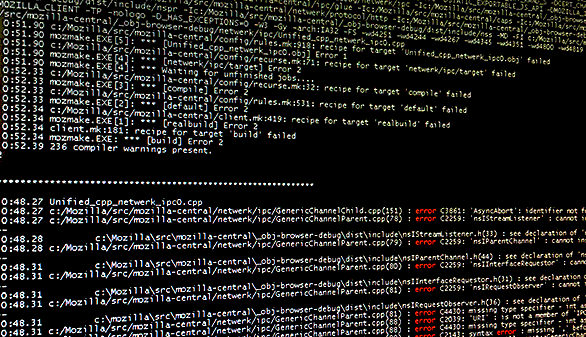

Honza Bambas: Highlight errors of mozilla build on command line |

Something I wanted mach to do natively. But I was always told something like “filter yourself”. So here it is:

- Download this small script *)

- Copy it to your source directory or somewhere your $PATH points to

- Run mach as:

./mach build | err

When there are errors during build, those will be listed under the build log and conveniently highlighted.

*) It’s tuned for mingw, but might well work on linux/osx too.
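The script itself is not reproduced in this post, so the following is only a minimal Python sketch of the same idea, not the author's script: pass the build log through unchanged, remember lines that look like errors, and repeat them highlighted underneath. The function name `highlight_errors`, the "error" pattern, and the red ANSI colour are all assumptions made for illustration.

```python
import re

# Assumption: a line is an error if it contains the standalone word "error",
# as in typical compiler output. The real script may match differently.
ERROR_RE = re.compile(r"\berror\b", re.IGNORECASE)
RED, RESET = "\033[1;31m", "\033[0m"


def highlight_errors(lines):
    """Yield every log line as-is, then the error lines again in red."""
    errors = []
    for line in lines:
        if ERROR_RE.search(line):
            errors.append(line.rstrip("\n"))
        yield line  # pass the build log through unchanged
    for err in errors:
        yield f"{RED}{err}{RESET}\n"  # highlighted summary under the log
```

To use it as a filter, a two-line driver such as `import sys; sys.stdout.writelines(highlight_errors(sys.stdin))` would read the piped build output. Because the function is a generator, the log is still printed as it arrives rather than only at the end.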

The post Highlight errors of mozilla build on command line appeared first on mayhemer's blog.

http://www.janbambas.cz/highlight-errors-mozilla-build-command-line/

|

|

Air Mozilla: Mapathon Missing Maps #3 |

We invite you to discover how to contribute to OpenStreetMap, the "Wikipedia of maps", during a "mapathon" (a friendly event where we...

|

|

Soledad Penades: Securing your self-hosted website with Let’s Encrypt, part 4: hardening default setups and avoiding known vulnerabilities |

In part 3, we looked at how to finally use Let’s Encrypt to issue and renew certificates for our domains. But I also finished with a terrifying cliffhanger: basic HTTPS setups can be vulnerable to attacks! Gasp…!

Let me start by clarifying that I am not a security expert and if someone breaks into your system I will take no responsibility whatsoever, lalalala…

What makes basic HTTPS setups vulnerable?

There are two main culprits:

- using older protocols and ciphers

- and using defaults at web app or browser level

Why is using older protocols a bad idea?

SSL, the initial protocol used for encryption, has been proved insecure (see: POODLE). It is recommended that people use the newer TLS protocol instead.

Servers such as nginx will offer SSL by default if the value is not explicitly set in the server configuration file. Also, many configuration samples you find around the internetssss will place the weaker versions of the protocol first!

Remember when we talked about negotiating the HTTPS connection and how browsers would try and choose the first protocol they supported from the list the server offered? If we put the weaker versions first in our offerings, then browsers will consider those first! Not the best idea…

So: use TLS, which is safer (and maybe faster), and also offer safer protocols first… or just don’t offer the insecure protocols at all.

For example, this would be a good change for your nginx configuration:

- ssl_protocols SSLv3 TLSv1 TLSv1.1 TLSv1.2; # nginx default

+ ssl_protocols TLSv1.2 TLSv1.1 TLSv1;

See how we removed SSL, and then reversed the order of the TLS versions to offer the latest versions first.

There’s a caveat: older clients might not be able to access your site if they do not implement any of the protocols your server offers.
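If you maintain several server blocks, a quick check for leftover SSL entries can help. The Python sketch below is an illustration only: the function name `insecure_protocols` and the set of names treated as insecure are assumptions (the post only calls out SSL explicitly), and it handles just a single `ssl_protocols` line rather than a whole nginx configuration.

```python
# Assumption: only the SSL versions are flagged; extend the set as needed.
INSECURE = {"SSLv2", "SSLv3"}


def insecure_protocols(directive):
    """Return the insecure protocols named in one ssl_protocols line."""
    # Drop any trailing comment, then the terminating semicolon.
    body = directive.split("#", 1)[0].strip().rstrip(";")
    parts = body.split()
    if not parts or parts[0] != "ssl_protocols":
        raise ValueError("not an ssl_protocols directive")
    return [name for name in parts[1:] if name in INSECURE]
```

Run against the two lines from the diff above, the old default reports `SSLv3` and the replacement reports nothing.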

What about using older ciphers?

Likewise, the issue with offering older ciphers is that some of them have been broken, and if you offer encryption with those you might be making it easy for the communications to be deciphered. Not what you want!

But where there are just SSL and TLS and a few version numbers to choose from for protocols, there’s an incredibly LONG LIST of ciphers and combinations and sizes you can choose for your server. Should you use ECDHE-ECDSA-AES256-GCM-SHA384 or maybe ECDHE-RSA-AES256-GCM-SHA384? Or perhaps ECDHE-ECDSA-CHACHA20-POLY1305? (Chacha! Chachacha!

|

|

Air Mozilla: Reps weekly, 07 Apr 2016 |

This is a weekly call with some of the Reps to discuss all matters about/affecting Reps and invite Reps to share their work with everyone.

|

|

Mozilla Addons Blog: Add-on Compatibility for Firefox 47 |

Firefox 47 will be released on June 7th. Here’s the list of changes that went into this version that can affect add-on compatibility. There is more information available in Firefox 47 for Developers, so you should also give it a look.

General

- Remove FUEL. The FUEL library has been deprecated since Firefox 40.

- Remove the about:customizing preloading hack. The UI customization panel no longer opens using the about:customizing URL.

- Move gDevTools.jsm to a commonjs module. Instead of loading the module using Cu.import, you should now use require("devtools/client/framework/devtools-browser");. The module still works for backward compatibility, but it will be removed in the future.

- Don’t let web pages link to view-source: URLs.

Tabs

- TabOpen and TabClose events should provide more detail about tabs moved between windows.

- Remove tabs’ visibleLabel property and TabLabelModified event. This is a reversal from previous work on supporting a separate label for tabs.

XPCOM

- CookieManager should remove cookies only if they match the userContextId. This changes nsICookieManager.remove by adding a new required argument (originAttributes).

- Various changes to nsIX509CertDB: bug 1064402, bug 1241646, bug 1241650. Many functions changed here, so I won’t list them.

- Remove uses of nsIEnumerator from PSM. This changes the functions listTokens, listModules, and listSlots, all of which now return nsISimpleEnumerator instead of nsIEnumerator.

- Remove uriIsPrefix option from nsINavHistoryQuery.

- Replace removeVisitsTimeframe with History.removeVisitsByFilter and deprecate it.

New

- Debugging auto-close XUL panels is painful. Now you can debug popup panels in add-ons.

- Support frames similar to mozbrowser on desktop Firefox. This enables a new iframe API that makes the content believe it’s being loaded in a top-level window.

Let me know in the comments if there’s anything missing or incorrect on these lists. If your add-on breaks on Firefox 47, I’d like to know.

The automatic compatibility validation and upgrade for add-ons on AMO will happen in a few weeks, so keep an eye on your email if you have an add-on listed on our site with its compatibility set to Firefox 46.

https://blog.mozilla.org/addons/2016/04/07/compatibility-for-firefox-47/

|

|

QMO: Firefox 47.0 Aurora Testday, April 15th |

Hello Mozillians,

We are happy to announce that Friday, April 15th, we are organizing Firefox 47.0 Aurora Testday. We’ll be focusing our testing on the new features, bug verification and bug triage. Check out the detailed instructions via this etherpad.

No previous testing experience is required, so feel free to join us on #qa IRC channel where our moderators will offer you guidance and answer your questions.

Join us and help us make Firefox better! See you on Friday!

https://quality.mozilla.org/2016/04/firefox-47-0-aurora-testday-april-15th/

|

|

Hannes Verschore: AWFY now comparing across OS |

At the end of last year (2015) we had performance numbers for Firefox compared to the other browser vendors in the shell, and in the browser on Windows 8 and Mac OS X, on different hardware.

This prompted requests for better information. We had no way of knowing whether Firefox on Windows was slower than on other OSes on the same hardware. We also got requests to run Windows 10 and to compare against the Edge browser.

Due to these requests we decided to find a way to run all OSes on the same hardware specification. To do that we ordered 3 Mac minis and installed the latest flavors of Windows, Mac OS X and Ubuntu on them. Afterwards we set up our AWFY slave on each machine and started reporting to the same graph.

Finally we have Edge performance numbers. Their new engine isn’t sub-par compared with other JS engines. It is quite good! Though there are some side-notes I need to attach to the numbers above. We cannot state enough that Sunspider is actually obsolete. It was a good benchmark at the start of the JS performance race. Now it is mostly annoying, since it tests the wrong things, not the things you want to (or should) improve. Next to that, Octane is still a great benchmark, except for mandreel latency. That benchmark is totally wrong and we notified the v8 team about it, but we have had no response so far (https://github.com/chromium/octane/issues/29). It is quite sad to see our hard work devalued due to other vendors gaming that benchmark.

We also have performance numbers across OSes for the first time. This showed us our Octane score was lower on Windows. Over the last few months we have been rectifying this. We still see somewhat slower cold performance due to MSVC 2015 being less eager in inlining functions. But our JIT performance should now be similar across platforms!

|

|

Aaron Klotz: New Team, New Project |

In February of this year I switched teams: After 3+ years on Mozilla’s Performance Team, and after having the word “performance” in my job description in some form or another for several years prior to that, I decided that it was time for me to move on to new challenges. Fortunately the Platform org was willing to have me set up shop under the (e10s|sandboxing|platform integration) umbrella.

I am pretty excited about this new role!

My first project is to sort out the accessibility situation under Windows e10s. This started back at Mozlando last December. A number of engineers from across the Platform org, plus me, got together to brainstorm. Not too long after we had all returned home, I ended up making a suggestion on an email thread that has evolved into the core concept that I am currently attempting. As is typical at Mozilla, no deed goes unpunished, so I have been asked to flesh out my ideas. An overview of this plan is available on the wiki.

My hope is that I’ll be able to deliver a working, “version 0.9” type of demo in time for our London all-hands at the end of Q2. Hopefully we will be able to deliver on that!

Some Additional Notes

I am using this section of the blog post to make some additional notes. I don’t feel that these ideas are strong enough to commit to a wiki yet, but I do want them to be publicly available.

One concern that our colleagues at NVAccess have identified is that the current COM interfaces are too chatty; this is a major reason why screen readers frequently inject libraries into the Firefox address space. If we serve our content a11y objects as remote COM objects, there is concern that performance would suffer. This concern is not due to latency, but rather due to frequency of calls; one function call does not provide sufficient information to the a11y client. As a result, multiple round trips are required to fetch all of the information that is required for a particular DOM node.

My gut feeling is that this is probably a legitimate concern; however, we cannot make good decisions without quantifying the performance. My plan going forward is to proceed with a naïve implementation of COM remoting to start, followed by work on reducing round trips as necessary.

Smart Proxies

One idea that was discussed is the idea of the content process speculatively sending information to the chrome process that might be needed in the future. For example, if we have an IAccessible, we can expect that multiple properties will be queried off that interface. A smart proxy could ship that data across the RPC channel during marshaling so that querying that additional information does not require additional round trips.
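To make the round-trip argument concrete, here is a minimal sketch in Python rather than COM. The class names `RemoteAccessible` and `SmartProxy`, the `snapshot` call and the property names are all hypothetical stand-ins; nothing here is the actual Gecko or COM API. It only counts how many cross-process calls a client would make with and without data shipped alongside the proxy.

```python
from dataclasses import dataclass


@dataclass
class RemoteAccessible:
    """Hypothetical stand-in for an accessible object in the content process."""
    properties: dict
    round_trips: int = 0

    def get(self, name):
        # Every individual property query costs one cross-process round trip.
        self.round_trips += 1
        return self.properties[name]

    def snapshot(self, names):
        # A single round trip that returns several properties at once.
        self.round_trips += 1
        return {name: self.properties[name] for name in names}


class SmartProxy:
    """Hypothetical client-side proxy that carries prefetched properties."""

    def __init__(self, remote, prefetch):
        self._remote = remote
        # Data shipped together with the proxy, as if during marshaling.
        self._cache = remote.snapshot(prefetch)

    def get(self, name):
        if name in self._cache:
            return self._cache[name]   # answered locally, no round trip
        return self._remote.get(name)  # anything else still goes remote


props = {"name": "OK", "role": "button", "state": "focusable"}

# Naive remoting: one round trip per property.
naive = RemoteAccessible(dict(props))
for key in props:
    naive.get(key)

# Smart proxy: one round trip up front, then local answers.
batched = RemoteAccessible(dict(props))
proxy = SmartProxy(batched, prefetch=["name", "role", "state"])
for key in props:
    proxy.get(key)

print(naive.round_trips, batched.round_trips)  # prints "3 1"
```

The trade-off is the usual one for speculative prefetching: the proxy pays for data the client may never ask for, and cached values can go stale if the underlying object changes.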

COM makes this possible using “handler marshaling.” I have dug up some information about how to do this and am posting it here for posterity:

House of COM, May 1999 Microsoft Systems Journal;

Implementing and Activating a Handler with Extra Data Supplied by Server on MSDN;

Wicked Code, August 2000 MSDN Magazine. This is not available on the MSDN Magazine website but I have an archived copy on CD-ROM.

|

|

Jim Chen: Fennec LogView add-on now supports Android 5+ |

The Fennec LogView add-on has been updated to version 1.2, and it now supports Android Lollipop, Marshmallow, and above.

The previous version read directly from the Android logger device located at /dev/log/main, which worked well on Android 4.x. However, starting with Android 5.0, apps no longer have read permission to /dev/log/main. Fortunately, Android 5.0 also added several new APIs in the liblog.so library specifically for reading logs. LogView 1.2 uses these new APIs, when available, through js-ctypes, and this approach should continue to work on future versions of Android as well.
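The "use the new APIs when available" approach can be sketched as a feature check followed by a fallback. This is only an illustration in Python's `ctypes`; LogView itself uses js-ctypes, and its actual code is not shown here. The symbol name `android_logger_list_open` is my assumption about which liblog entry point to probe, and `choose_log_backend` is a hypothetical helper.

```python
import ctypes

LEGACY_DEVICE = "/dev/log/main"               # logger device named in the post
NEW_API_SYMBOL = "android_logger_list_open"   # assumed liblog entry point


def choose_log_backend(library_name="liblog.so", loader=ctypes.CDLL):
    """Return "liblog" if the newer reading API is present, else "device"."""
    try:
        lib = loader(library_name)
    except OSError:
        # Library could not be loaded: fall back to reading LEGACY_DEVICE.
        return "device"
    # ctypes resolves symbols lazily, so hasattr() is False when the
    # symbol is not exported by the loaded library.
    return "liblog" if hasattr(lib, NEW_API_SYMBOL) else "device"


print(choose_log_backend())
```

Probing for the symbol rather than checking the Android version number keeps the check valid on future releases, as long as the library keeps exporting that function.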

Last modified: 2016/04/06 22:59

http://www.jnchen.com/blog/2016/04/fennec-logview-add-on-now-supports-android-5

|

|

Karl Dubost: Mozilla Planet, Firefox UX and Publishing Silos |

Mozilla Planet is a syndication of different blogs written by people from the Mozilla community. This is a great way to follow what is happening in the community. Even better, it has an Atom feed you can subscribe to and consume in your favorite feed reader application at your convenience. We also created a Webcompat planet (feed) more focused on the topics relevant to that community.

This morning, on an IRC channel, we had a short discussion on Andy Lam's article about the Reading List UX on Firefox Android. Someone asked:

not published on a planet ?

The person meant that the article was not syndicated in the usual communication channels aggregating blog posts. And that's true: until that link was dropped on IRC, I had no idea about the Firefox UX team blog on Medium. And that's kind of sad because it's very interesting. Note that Firefox UX has a dedicated Planet and the Medium blog posts are not aggregated there (no new content since January 2015). Another sad moment.

Then I asked myself: does Medium have RSS/Atom feeds in the first place? Looking at the source code of https://medium.com/firefox-ux, I found:

<link id="feedLink" rel="alternate" type="application/rss+xml" title="RSS" href="https://medium.com/feed/firefox-ux">

Ah! cool! https://medium.com/feed/firefox-ux/. You can add the Medium Firefox UX channel to your feed reader today. That also means it could be syndicated on a planet. Let's see.
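Finding that link element by hand is what feed readers do automatically. A minimal sketch of the discovery step, using only Python's standard library; `FeedLinkFinder` and `find_feeds` are hypothetical names, and the only input is the link element quoted above:

```python
from html.parser import HTMLParser

FEED_TYPES = {"application/rss+xml", "application/atom+xml"}


class FeedLinkFinder(HTMLParser):
    """Collect the href of every <link rel="alternate"> that points to a feed."""

    def __init__(self):
        super().__init__()
        self.feeds = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel = (attr.get("rel") or "").lower().split()
        mime = (attr.get("type") or "").lower()
        if "alternate" in rel and mime in FEED_TYPES and attr.get("href"):
            self.feeds.append(attr["href"])


def find_feeds(html):
    finder = FeedLinkFinder()
    finder.feed(html)
    return finder.feeds


page = ('<link id="feedLink" rel="alternate" type="application/rss+xml" '
        'title="RSS" href="https://medium.com/feed/firefox-ux">')
print(find_feeds(page))  # prints ['https://medium.com/feed/firefox-ux']
```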

<link>https://medium.com/firefox-ux/this-is-our-reading-list-c81c4238dd3d?source=rss----da1138ddf2cd---4</link>
<guid isPermaLink="false">https://medium.com/p/c81c4238dd3d</guid>
<pubDate>Tue, 05 Apr 2016 19:56:34 GMT</pubDate>

That means that only a summary of the blog post is available in the Medium feed. Not the full post. Another sad moment.
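One way to check this programmatically is to look for a `content:encoded` element, the RSS content module's conventional place for a full post body. The sketch below is a rough illustration: the sample feed is hypothetical (only the first guid comes from the item above), and `item_has_full_content` is a made-up helper name.

```python
import xml.etree.ElementTree as ET

# Namespace of the RSS "content" module, which defines content:encoded.
CONTENT_NS = "http://purl.org/rss/1.0/modules/content/"


def item_has_full_content(item):
    """True if the RSS item carries a non-empty content:encoded element."""
    encoded = item.find(f"{{{CONTENT_NS}}}encoded")
    return encoded is not None and bool((encoded.text or "").strip())


# Hypothetical two-item feed: a summary-only item and a full-content item.
SAMPLE = """<rss version="2.0" xmlns:content="http://purl.org/rss/1.0/modules/content/">
<channel>
<item>
<guid isPermaLink="false">https://medium.com/p/c81c4238dd3d</guid>
<description>Hypothetical summary text</description>
</item>
<item>
<guid isPermaLink="false">hypothetical-full-post</guid>
<content:encoded><![CDATA[<p>Hypothetical full post body</p>]]></content:encoded>
</item>
</channel>
</rss>"""

root = ET.fromstring(SAMPLE)
for item in root.iter("item"):
    print(item.findtext("guid"), item_has_full_content(item))
```

A planet aggregator could use a check like this to decide whether to show the post inline or only link to it.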

Wishes

- Medium please, could you publish the full content in the feed?

- Medium please, could you make your feeds atom friendly?

- Anthony Lam, please could you add your feed to Planet Mozilla and/or Planet Firefox UX? Let me help with that.

Conclusion

When choosing a publishing platform, be aware of the consequences for your content in terms of resilience, distribution and permanence. Own your domain name, own your content. Publish on your site and distribute elsewhere.

Thanks.

Otsukare!

|

|