Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Daniel Stenberg: Absorbing 1,000 emails per day |

Some people say email is dead. Some people say there are “email killers” and bring up a bunch of chat and instant messaging services. I think those people communicate far too little to understand how email can scale.

I receive up to around 1,000 emails per day. I average a little less, but I do have spikes way above.

Why do I get a thousand emails?

Primarily because I participate in a lot of mailing lists. I run a handful of open source projects myself, each with at least one list. I follow a bunch more projects; more mailing lists. We have a whole set of mailing lists at work (Mozilla) and I participate in and follow several groups in the IETF. Lists and lists. I discuss things with friends on a few private mailing lists. I get notifications from services about things that happen (commits, bugs submitted, builds that break, things that need to get looked at). Mails, mails and mails.

Don’t get me wrong. I prefer email to web forums and stuff because email allows me to participate in literally hundreds of communities from a single spot in an asynchronous manner. That’s a good thing. I would not be able to do the same thing if I had to use one of those “email killers” or web forums.

Unwanted email

I unsubscribe from lists that I grow tired of. I stamp down on spam really hard and I run aggressive filters and blacklists that actually make me receive rather few spam emails these days, percentage-wise. Nowadays my mail server accepts about 3,000 emails per month addressed to me that are then classified as spam by SpamAssassin. I used to receive a lot more before we started using better blacklists. (During some periods in the past I received well over a thousand spam emails per day.) Only 2-3 of those spam emails per day fail to get marked as spam correctly and subsequently show up in my inbox.

Flood management

My solution to handling this steady high paced stream of incoming data is prioritization and putting things in different bins. Different inboxes.

- Filter incoming email. Save the email into its corresponding mailbox. At this very moment, I have about 30 named inboxes that I read. I read them in order, top to bottom, as they’re sorted roughly in order of importance (to me).

- Mails that don’t match any of the mailing lists or topics that get stored into the 28 “topic boxes” run into another check: is the sender a known “friend”? That’s a loose term I use, but it basically means that the mail is from an email address that I have had conversations with before or that I know or trust. Mails from “friends” get the honor of being put in mailbox 0, the primary one. If the mail comes from someone not listed as a friend, it’ll end up in my “suspect” mailbox. That’s mailbox 1.

- Some of the emails get the honor of being forwarded to a cloud email service for which I have an app on my phone, so that I can get a sense of important mail that arrives. But I basically never respond to email using my phone or a web interface.

- I also use the “spam level” in spams to save them in different spam boxes. The mailbox receiving the highest spam level emails is just erased at random intervals without ever being read (unless I’m tracking down a problem or something) and the “normal” spam mailbox I only check every once in a while just to make sure my filters are not hiding real mails in there.
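The sorting rules in the list above can be sketched as a small decision function. This is only an illustration in C, not the author's actual filter setup: the struct fields, the enum names beyond mailboxes 0 and 1, the order of the checks and the `HIGH_SPAM_LEVEL` cutoff are all assumptions made for the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Mailbox numbering follows the post: 0 = primary, 1 = "suspect".
   The remaining values are hypothetical labels for this sketch. */
enum mailbox {
  BOX_PRIMARY = 0,
  BOX_SUSPECT = 1,
  BOX_TOPIC,
  BOX_SPAM,
  BOX_SPAM_HIGH
};

/* Hypothetical summary of what the filters know about one mail. */
struct mail {
  bool matches_topic; /* matched a mailing list or topic filter */
  bool from_friend;   /* sender is a known or trusted address */
  bool is_spam;       /* flagged by the spam filter */
  double spam_level;  /* score assigned by the spam filter */
};

/* Assumed cutoff for the "highest spam level" box; the post gives no number. */
#define HIGH_SPAM_LEVEL 10.0

enum mailbox classify(const struct mail *m)
{
  assert(m != NULL);
  /* Assumption: spam is split off first, by level. */
  if(m->is_spam)
    return m->spam_level >= HIGH_SPAM_LEVEL ? BOX_SPAM_HIGH : BOX_SPAM;
  /* Mailing list and topic mail goes to its topic box. */
  if(m->matches_topic)
    return BOX_TOPIC;
  /* Everything else is split on whether the sender is a "friend". */
  return m->from_friend ? BOX_PRIMARY : BOX_SUSPECT;
}
```

With these assumptions, a mail from a known address that matches no list lands in mailbox 0, and the same mail from an unknown address lands in mailbox 1.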

Reading

I monitor my incoming mails pretty frequently all through the day – every day. My wife calls me obsessed and maybe I am. But I find it much easier to handle the emails a little at a time rather than to wait and have them pile up into huge lumps to deal with.

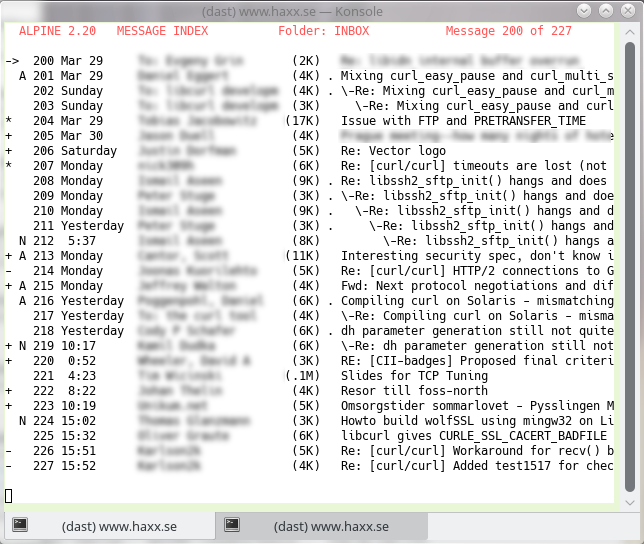

I receive mail at my own server and I read/write my email using Alpine, a text based mail client that really excels at allowing me to plow through vast amounts of email in a short time – something I can’t say that any UI or web based mail client I’ve tried has managed to do to a similar degree.

A snapshot from my mailbox from a while ago looked like this, with names and some topics blurred out. This is ‘INBOX’, which is the main and highest prioritized one for me.

I have set up my mail client to automatically go to the next inbox when I’m done reading this one. That makes me read them in priority order. I start with the INBOX one where supposedly the most important email arrives, then I check the “suspect” one and then I go down the topic inboxes one by one (my mail client moves on to the next one automatically), until either I get overwhelmed and just return to the main box for now or I finish them all.

I tend to try to deal with mails immediately, or I mark them as ‘important’ and store them in the main mailbox so that I can find them again easily and quickly.

I try to only keep mails around in my mailbox that concern ongoing topics, discussions or current matters of concern. Everything else should get stored away. It is hard work to maintain the number of emails there at a low number. As you all know.

Writing email

I averaged fewer than 200 emails written per month during 2015. That’s 6-7 per day.

That makes over 150 received emails for every email sent.

https://daniel.haxx.se/blog/2016/04/26/absorbing-1000-emails-per-day/

|

|

Ludovic Hirlimann: (via https://www.youtube.com/watch?v=77DYsnDdQDY) |

|

|

Air Mozilla: Mozilla Weekly Project Meeting, 25 Apr 2016 |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20160425/

|

|

Allen Wirfs-Brock: Slide Bite: Survival of the Fittest |

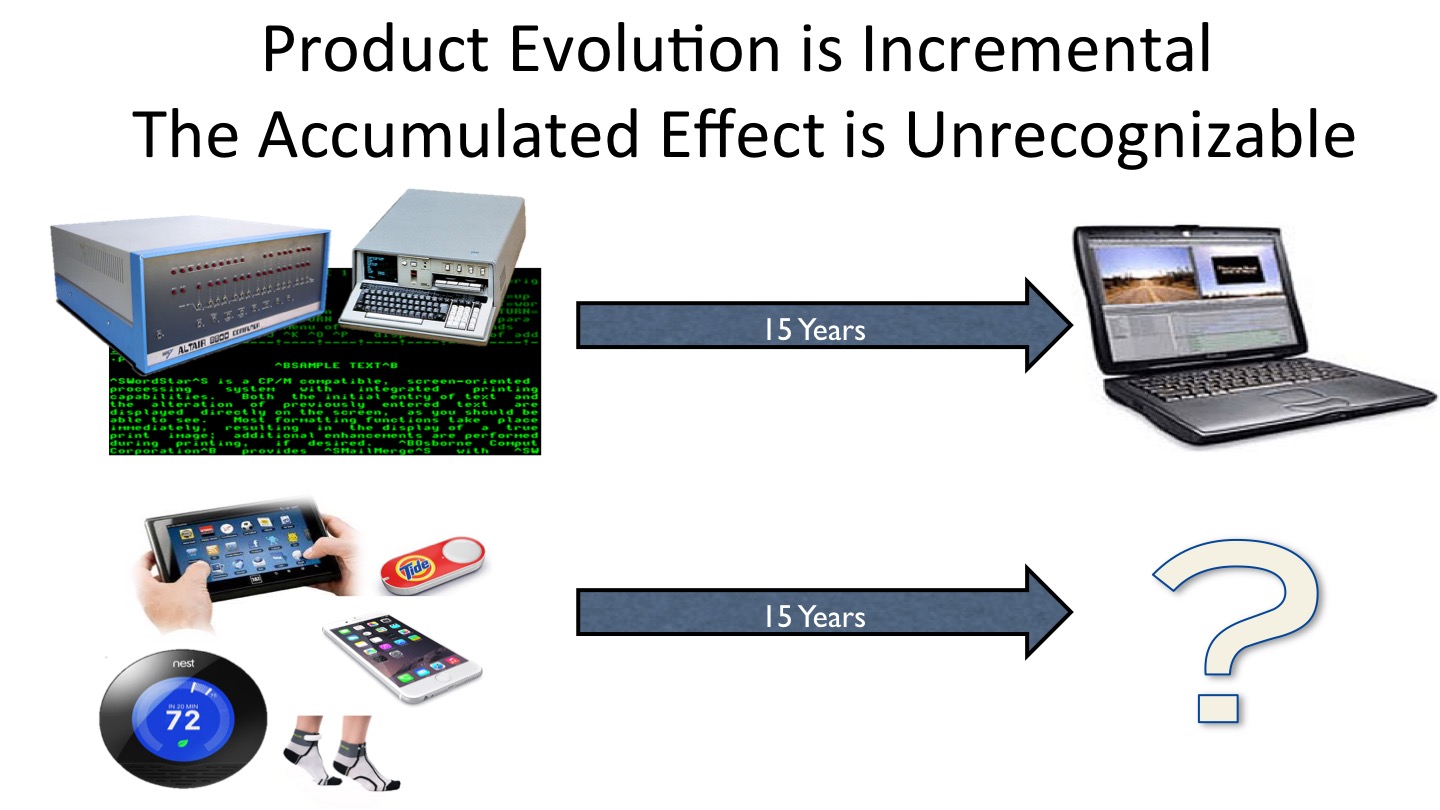

The first ten or fifteen years of a computing era are a period of chaotic experimentation. Early product concepts rapidly evolve via both incremental and disruptive innovations. Radical ideas are tried. Some succeed and some fail. Survival of the fittest prevails. By mid-era, new stable norms should be established. But we can’t predict the exact details.

|

|

Andrew Truong: Experience, Learn, Revitalize and Share: The Adventures of High School |

I started my first year of high school off rough. However, I was able to adapt quite easily by attending leadership seminars every week. I started to get a little more involved with events around the school and eventually around the community. The teachers were far different from how our junior high teachers had described them. They weren't uncaring and didn't leave you on your own, and they were helpful with finding your way around. They were the exact opposite of what our junior high teachers told us. Perhaps they told us that "lie" to prepare us, or maybe they went through something completely different during their time.

In my first year, I had an assortment of classes and I felt good and at ease with them. I was fortunate enough to have every other day free in the second semester, when I was able to go to leadership and further enhance my life skills. On the regular days, I had a class where I was able to do homework and receive additional help when I needed it, due to the fact that I didn't do too well in junior high. Nonetheless, I excelled in the main course, wasting most of my time in the additional help class.

Grade 11 rolled by and I took a block (there are 4 blocks in a day) of my day during the first semester to go to leadership. There I was able to further enhance my abilities, be assigned responsibilities and earn the trust of the department head. Furthermore, I ran for students' union president. I was not successful, though that may have benefited me instead. There's not much more to say, as things went a certain direction and it worked out quite well.

In my last year of high school, there was a new development in our family and household. This year was extremely important as I had to pass all courses in order to graduate and move on to post-secondary. I was satisfied with my first semester, where my courses went pretty well. I still took a block of my day out in the first semester to go to leadership. But this time, I took on the position of chairperson of Spirit Wear for the school year. Designing, advertising, and promoting what we had to sell was a wonderful journey. I also met some great people during my spare time in leadership and I learned a lot more about myself and what I was doing wrong socially. That realization of what I was doing wrong dawned upon me and led me to become who I am today.

The second semester came around the corner and it was a roller coaster for me. For some odd reason, I was now having trouble with the course I had excelled in continuously for the 2 years before. It was partially a leap from what I knew and learned to something completely different. Part of the blame for this lies with the instructor: I knew from how others had struggled with this particular teacher in the past that I would too, even though I told myself I wouldn't. I got through it with my ups and downs despite being worried about whether or not I would be able to graduate and move on to post-secondary. In the end, I graduated and received my high school diploma.

http://feer56.blogspot.com/2016/04/experience-learn-revitalize-and-share_25.html

|

|

Air Mozilla: Foundation Demos April 22 2016 |

Foundation Demos April 22 2016

|

|

Jennie Rose Halperin: Notes from an Unemployed Information Architect |

I wrote about the difficulty of applying for unemployment benefits in Massachusetts over at Medium.

http://jennierosehalperin.me/notes-from-an-unemployed-information-architect/

|

|

This Week In Rust: These Weeks in Rust 127 |

Hello and welcome to another multi-week issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us an email! Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

This week's edition was edited by: Vikrant and llogiq.

Updates from Rust Community

News & Blog Posts

- Announcing Rust 1.8.

- Writing an OS in Rust: Kernel heap. Part of the series Writing an OS in Rust.

- Learn you a Rust III - Lifetimes 101. Part of the series Learn you a Rust for great good!.

- PoC: using LLVM’s profile guided optimization in Rust.

- From &str to Cow.

- The basics of Rust structs.

- Rust community == Awesome!. How the Rust community wins despite its small size and incomplete ecosystem.

- This week in Rust docs 1.

- This week in Servo 59 and This week in Servo 60.

- This week in Ruma 2016-04-17. Ruma is a Matrix client-server API written in Rust.

Notable New Crates & Project Updates

- Reminder: Rust Belt Rust Conference CFP is open until April 30!

- rustc/cargo can now be installed on ARM Linux, NetBSD and FreeBSD using rustup/multirust.

- Announcing cargo-apk.

- Leaf now has a book that teaches you how you can build machine learning applications.

- nix. Rust friendly bindings to *nix APIs.

- winapi-kmd. Windows Kernel-Mode Drivers written in Rust.

- rust-musl-builder. Docker container for easily building static Rust binaries.

- rustw. A web frontend for the Rust compiler.

- Cake. A simple, Rustic build tool.

- metacollect. A lint to collect some crate metadata.

- Anima Engine. The quirky game engine.

- Tera. A template engine in Rust.

- rgo. A Go compiler toolchain, written in Rust.

- specs. A rusty parallel ECS with breaking performance (ecs_bench)

- Epaste. Tool to encrypt data and encode it as base64, so that it could be pasted on pastebin.

Crate of the Week

This week's Crate of the Week is owning_ref, which contains a reference type that can carry its owner with it. Thanks to Diwic for the suggestion!

Submit your suggestions for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- [easy] rust: Add error explanations for all error codes.

- [easy] servo/saltfs: buildbot steps need description and descriptionDone.

- [easy] rust: rustbuild: Add a tidy check to ensure Cargo.lock updates are checked in.

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

186 pull requests were merged in the last two weeks.

Notable changes

- pub(restricted) (RFC 1422) implemented

- Warn on type parameter defaults, this will become an error in the future

- RFC #1494 (amendment to #550) implemented, allows blocks to follow types/paths in macro patterns

- de-/encode() methods no longer break Serialization deriving

- Macro hygiene bugs fixed

- Avoid crashing due to duplicate external items

- Fix multiple glob import

- MIR debuginfo mostly works (etc.)

- MIR Blocks no longer require END_BLOCK

- MIR now has LLVM-agnostic type layout

- Register duplicate item symbols anyway

- Resolve compiler performance regression fixed

- Syntax: Import prefixes are now paths

- Don't report errors in constants at every use site

- Handle over-aligned realloc failures on UNIX

- String::truncate goes to greater lengths to not panic

- BinaryHeap::append(..)

- Faster is_char_boundary() with bit twiddling

- Fixed BufRead overrun on Take

- Default for RwLock, Mutex, CondVar, CStr, Path

- Cargo can now use multiple git user names

- Removed the (apparently broken) std::net::IPV6_V6ONLY feature

- Handle DefIds and extern crates before lowering the AST to HIR

- Compiletest now uses JSON output

- VecDeque::contains(_) and LinkedList::contains(_) implemented

- Rust now bootstraps from previous stable instead of snapshots

- impl From<Vec<T>> and Into<Vec<T>> for VecDeque<T>

- BTree::append(_) implemented

New Contributors

- Alec S

- Andrey Tonkih

- c4rlo

- David Hewitt

- David Tolnay

- Deepak Kannan

- Gigih Aji Ibrahim

- jocki84

- Jonathan Turner

- Kaiyin Zhong

- Lukas Kalbertodt

- Lukas Pustina

- Maxim Samburskiy

- Raph Levien

- rkjnsn

- Sander Maijers

- Szabolcs Berecz

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

- RFC 1543: Add more integer atomic types.

- RFC 1510: Add a new crate-type, cdylib.

- RFC 1535: Stabilize the -C overflow-checks command line argument.

- RFC 1440: Allow Drop types in statics/const functions.

- RFC 1399: Add #[repr(pack = "N")].

- Amend RFC 1228 with operator fixity and precedence.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now. This week's FCPs are:

- Add #[repr(align = "N")].

- Proposal for thread affinity.

- Specifying that <T as Clone>::clone(&t) where T: Copy should be equivalent to ptr::read(&t).

- Add workspaces to Cargo.

- Add TryFrom and TryInto traits.

- as_millis function on std::time::Duration.

New RFCs

- FusedIterator marker trait and iter::Fuse specialization.

- Add parse_generics! and parse_where! macros.

- Macros by example 2.0. A replacement for macro_rules!.

- Define a best practices procedure for making bug fixes in the compiler.

- Add a lifetime specifier to macro_rules!.

Upcoming Events

- 4/26. Rust Bay Area: Securing OSes and Apps with Rust, seL4, and SGX.

- 4/27. Rust Community Team Meeting at #rust-community on irc.mozilla.org.

- 5/4. OpenTechSchool Berlin: Rust Hack and Learn.

- 5/9. Seattle Rust Meetup.

- 5/13. Rust Meetup Darmstadt.

If you are running a Rust event please add it to the calendar to get it mentioned here. Email Erick Tryzelaar or Brian Anderson for access.

fn work(on: RustProject) -> Money

- PhD and postdoc positions at MPI-SWS.

- Software Engineer at Coturnix.

- Senior full stack developer at OneSignal.

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

Cow is still criminally underused in a lot of code bases

I suggest we make a new slogan to remedy this: "To err is human, to moo bovine." (I may or may not have shamelessly stolen this from this bug report)

Thanks to killercup for the suggestion.

Submit your quotes for next week!

https://this-week-in-rust.org/blog/2016/04/25/these-weeks-in-rust-127/

|

|

Daniel Stenberg: fcurl is fread and friends for URLs |

This whole family of functions (fopen, fread, fwrite, fgets, fclose and more) has been defined in the C standard since C89. You can’t really call yourself a C programmer without knowing them and probably even using them in at least a few places.

The charm with these is that they’re standard, they’re easy to use and they’re available everywhere where there’s a C compiler.

A basic example that just reads a file from disk and writes it to stdout could look like this:

    FILE *file;

    file = fopen("hello.txt", "r");
    if(file) {
      char buffer[256];
      while(1) {
        /* element size 1, so rc is the number of bytes read */
        size_t rc = fread(buffer, 1, sizeof(buffer), file);
        if(rc > 0)
          fwrite(buffer, 1, rc, stdout);
        else
          break;
      }
      fclose(file);
    }

Imagine you’d like to switch this example, or one of your actual real world programs that use the fopen() family of functions to read or write files, to instead read and write files from and to the Internet using your favorite Internet protocols. How would you do that without having to change your code a lot and do a major refactoring job?

Enter fcurl

I’ve started to work on a library that provides a look-alike API with matching functions and behaviors, but where the fopen() counterpart takes a URL instead of a file name. I call it fcurl. (Much inspired by the libcurl example fopen.c, the first version of which I wrote back in 2002!)

It is of course open source and is powered by libcurl.

The project is in its early infancy. I think it would be interesting to try it out and I’ve mentioned the idea to a few people that have shown interest. I really can’t make this happen all on my own anyway so while I’ve created a first embryo, it will take some time before it gets truly useful. Help from others would be greatly appreciated of course.

Using this API, a version of the above example that reads data from an HTTPS site instead of a local file could look like:

    FCURL *file;

    file = fcurl_open("https://daniel.haxx.se/", "r");
    if(file) {
      char buffer[256];
      while(1) {
        /* same argument order as fread(): element size 1, so rc is
           the number of bytes read */
        size_t rc = fcurl_read(buffer, 1, sizeof(buffer), file);
        if(rc > 0)
          fwrite(buffer, 1, rc, stdout);
        else
          break;
      }
      fcurl_close(file);
    }

And it could even read a local file using the file:// scheme.

Drop-in replacement

The idea here is to make the alternative functions have new names but as far as possible accept the same input arguments, return the same return codes and so on.

If we do it right, you could possibly even convert an existing program with just a set of #defines at the top without even having to change the code!

Something like this:

    #define FILE FCURL
    #define fopen(x,y) fcurl_open(x, y)
    #define fclose(x) fcurl_close(x)

I think it is worth considering a way to provide an official macro set like that for those who’d like to switch easily (?) and quickly.

Fun things to consider

1. for non-scheme input, use normal fopen?

An interesting take is probably to make fcurl_open() treat input specified without a “scheme://” to be a local file, and then passed to fopen() instead under the hood. That would then enable even more code to switch to fcurl since all the existing use cases with local file names would just continue to work.
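One way to decide between the two paths is a small check for a leading “scheme://”. The sketch below is an illustration only: `has_scheme()` is a hypothetical helper, not part of fcurl or libcurl, and it assumes the scheme follows the RFC 3986 shape (a letter followed by letters, digits, '+', '-' or '.').

```c
#include <assert.h>
#include <ctype.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: returns true if 'name' starts with "scheme://".
   The scheme must begin with a letter and may continue with letters,
   digits, '+', '-' or '.'. */
bool has_scheme(const char *name)
{
  assert(name != NULL);
  const char *p = name;
  if(!isalpha((unsigned char)*p))
    return false;
  /* skip over the candidate scheme characters */
  while(isalnum((unsigned char)*p) || *p == '+' || *p == '-' || *p == '.')
    p++;
  /* a real scheme is followed directly by "://" */
  return strncmp(p, "://", 3) == 0;
}
```

An open function could then hand names for which `has_scheme()` returns false to plain fopen() and treat everything else as a URL. Note that a name such as hello.txt passes the character loop but fails the final "://" test, so it is still treated as a local file.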

2. LD_PRELOAD

An interesting area of deeper research around this could be to provide a way to LD_PRELOAD replacements for the functions, so that no source code would even need to be changed and existing, already built binaries could be given this functionality.

3. fopencookie

There’s also the GNU libc’s fopencookie concept to figure out if that is something for fcurl to support/use. BSD and OS X have something similar called funopen.

4. merge in official libcurl

If this turns out to be useful, appreciated and good, we could consider moving the API in under the curl project’s umbrella and possibly eventually even making it part of the actual libcurl. But hey, we’re far away from that and I’m not saying that is even the best idea…

Your input is valuable

Please file issues or pull-requests. Let’s see where we can take this!

https://daniel.haxx.se/blog/2016/04/24/fcurl-is-fread-and-friends-for-urls/

|

|

Michael Kohler: Reps Council Working Days Berlin 2016 |

From April 15th through April 17th the Mozilla Reps Council met in Berlin together with the Participation Team to discuss the Working groups and overall strategy topics. Unfortunately I couldn’t attend on Friday (working day 1) since I had to take my exams. Therefore I could only attend Saturday and Sunday. Nevertheless I think I could help out a lot and definitely learned a lot doing this :) This blog post reflects my personal opinions; the others will write blog posts as well to give you a more concise view of this weekend.

Alignment Working Group

The first session on Saturday was about the Alignment WG. Before the weekend we (more or less) finished the proposal. This allowed us to discuss the last few open questions, which are now all integrated in the proposal. This will only need review by Konstantina to make sure I haven’t forgotten to add anything from the session and then we can start implementing it. We are sure that this will formalize the interaction between Mozilla goals and Reps goals, stay tuned for more information, we’re currently working on a communication strategy for all the RepsNext changes to make it easier and more fun for you to get informed about the changes.

Meta Working Group

For the Meta Working Group we had more open questions and therefore decided to do brainstorming in three teams. The questions were:

- Who can join Council?

- Which recognition mechanisms should be implemented now?

- How does accountability look in Reps?

We’re currently documenting the findings in the Meta working group working proposal, but we probably will need some more time to figure out everything perfectly. Keep an eye out on the Discourse topic in case we’ll need more feedback from you all!

Identity Working Group

A new working group? As you see, I didn’t believe it at first and Rara was visibly shocked!

Fun aside, yes, we’ll start a new Working group around the topics of outwards communication and the Rep program’s image. During our discussions on Saturday, we came up with a few questions that we will need to answer. This Friday we had our first call, follow us in the Discourse topic and it’s not too late to help out here! Please get involved as soon as possible to shape the future of Reps!

Communication Session

On Sunday we ran a joint session with the rest of the Participation team around the topic “How we work together”. We came up with the questions above and let those be answered / brainstormed in groups. I started to document the findings yesterday, but this is not yet in a state where it will be useful for anybody. Stay tuned for more communication around this (communication about communication, isn’t it fun? :)). The last question around “How might we improve the communication between the Participation-Team and the Council?” is already documented in the Alignment Working group proposal. Furthermore, the Identity working group will tackle and elaborate on the question around visibility.

Reps Roadmap for 2016

Wait, there is a roadmap?

Yes!

At the end of our sessions we put up a timeline for Reps for all our different initiatives on a wall. Within the next days we’ll work on this to have it digitally per months. For now, we have started to create GitHub issues in the Reps repo. Stay tuned for more information about this, the current information might confuse you since we haven’t updated all issues yet! It basically includes everything from RepsNext proposal implementations to London Work Week preparations to Council elections.

Conclusion

This weekend showed that we currently have an amazing, hard-working Council. It also showed that we’re on track with all the RepsNext work and that we can do a lot once we all work together and have Working Groups to involve all Reps as well.

Looking forward to the next months! If you haven’t yet, have a look at the Reps Discourse category, to keep yourself updated on Reps related topics and the working groups!

The other Council members will write their blog posts in the next few days as well, so keep an eye out for links on our Reps issues. Once again, there are a lot of changes to be implemented and discussed, and we are working on a strategy for that. We believe that just pointing to all proposals is not easy enough and will come up with fun ways to chime into these and fully understand them. Nevertheless, if you have questions about anything I wrote here, feel free to reach out to me!

Credit: all pictures were taken by our amazing photographer Christos!

https://michaelkohler.info/2016/reps-council-working-days-berlin-2016

|

|

Michael Kohler: Mozilla Switzerland IoT Hackathon in Lausanne |

On April 2nd 2016 we held a small IoT Hackathon in Lausanne to brainstorm about the Web and IoT. This was aligned with the new direction that Mozilla is taking.

Preparation

We started to organize the Hackathon on Github, so everyone can participate. Geoffroy was really helpful to organize the space for it at Liip.ch. Thanks a lot to them, without them organizing our events would be way harder!

The Hackathon

We expected more people to come, but as mentioned above, this is our first self-organized event in the French speaking part of Switzerland. Nevertheless we were four people with an interest in hacking something together.

Geoffroy and Paul started to have a look at Vaani.iot, one of the projects that Mozilla is currently pushing. They started to build it on their laptops, but unfortunately the Vaani documentation is not good enough yet to see the full picture and what you could do with it. We’re planning to send some feedback regarding that to the Vaani team.

In the meantime Martin and I set up my Raspberry Pi and started to write a small script together that reads out the temperature from one of the sensors. Once we’ve done that, I created a small API to have the temperature returned in JSON format.

At this point, we decided we wanted to connect those two pieces and create a Web app to read out the temperature and announce it through voice. Since we couldn’t get Vaani working, we decided to use the WebSpeech API for this. The voice output part is available in Firefox and Chrome right now, therefore we could achieve this goal without using any non-standard APIs. After that Geoffroy played around with the voice input feature of this API. This is currently only working in Chrome, but there is a bug to implement it in Firefox as well. In the spirit of the open web, we decided to ignore the fact that we need to use Chrome for now, and create a feature that is built on Web standards that are on track to standardization.

In the end, we achieved something together and definitely learned a few things along the way.

Lessons learned

- Organizing a hackathon for the first time in a new city is not easy

- We probably need to establish an “evening-only” meetup series first, so we can attract participants that identify with us

- We could use this opportunity to document the Liip space in Lausanne for future events on our Events page on the wiki

- Not all projects are well documented, we need to work on this!

After the Hackathon

Since I needed to do a project for my studies that involves hardware as well, I could take the opportunity and take the sensors for my project.

You can find the Source Code on the MozillaCH github organization. It currently regularly reads out the two temperature sensors and checks if there is any movement registered by the movement sensor. If the temperature difference is too high it sends an alarm to the NodeJS backend. The same goes for the situation where it detects movement. I see this as a first step into my own take on a smart home, it would need a lot of work and more sensors to be completely useful though.
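The alarm logic described above (compare two temperature readings, check the movement sensor) can be sketched as follows. This is an illustration in C, not the code from the MozillaCH repository: the struct, the enum, the function name and the `max_diff` threshold are all assumptions, since the post does not say what difference counts as too high.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* One polling cycle's worth of readings; field names are hypothetical. */
struct readings {
  double temp_a_celsius;
  double temp_b_celsius;
  bool motion_detected;
};

/* Alarm flags that can be combined into a bitmask. */
enum alarm {
  ALARM_NONE = 0,
  ALARM_TEMPERATURE = 1,
  ALARM_MOTION = 2
};

/* Returns a bitmask of alarms for one set of readings.
   'max_diff' is an assumed threshold in degrees Celsius. */
unsigned check_alarms(const struct readings *r, double max_diff)
{
  assert(r != NULL);
  unsigned alarms = ALARM_NONE;
  /* absolute difference between the two temperature sensors */
  double diff = r->temp_a_celsius - r->temp_b_celsius;
  if(diff < 0)
    diff = -diff;
  if(diff > max_diff)
    alarms |= ALARM_TEMPERATURE;
  if(r->motion_detected)
    alarms |= ALARM_MOTION;
  return alarms;
}
```

In a setup like the one described, a non-zero result would be the point at which the alarm is sent on to the backend. How that request is made is left out here.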

https://michaelkohler.info/2016/mozilla-switzerland-iot-hackathon-in-lausanne

|

|

Daniel Pocock: LinuxWochen, MiniDebConf Vienna and Linux Presentation Day |

Over the coming week, there are a vast number of free software events taking place around the world.

I'll be at the LinuxWochen Vienna and MiniDebConf Vienna, the events run over four days from Thursday, 28 April to Sunday, 1 May.

At MiniDebConf Vienna, I'll be giving a talk on Saturday (schedule not finalized yet) about our progress with free Real-Time Communications (RTC) and welcoming 13 new GSoC students (and their mentors) working on this topic under the Debian umbrella.

On Sunday, Iain Learmonth and I will be collaborating on a workshop/demonstration on Software Defined Radio from the perspective of ham radio and the Debian Ham Radio Pure Blend. If you want to be an active participant, an easy way to get involved is to bring an RTL-SDR dongle. It is highly recommended that instead of buying any cheap generic dongle, you buy one with a high quality temperature compensated crystal oscillator (TCXO), such as those promoted by RTL-SDR.com.

Saturday, 30 April is also Linux Presentation Day in many places. There is an event in Switzerland organized by the local FSFE group in Basel.

DebConf16 is only a couple of months away now. Registration is still open and the team is keenly looking for additional sponsors. Sponsors are a vital part of such a large event, so if your employer or any other organization you know benefits from Debian, please encourage them to contribute.

|

|

Hal Wine: Enterprise Software Writers R US |

Someone just accused me of writing Enterprise Software!!!!!

Well, the “someone” is Mahmoud Hashemi from PayPal, and I heard him on the Talk Python To Me podcast (episode 54). That whole episode is quite interesting - go listen to it.

Mahmoud makes a good case, presenting nine “hallmarks” of enterprise software (the more that apply, the more “enterprisy” your software is). Most of the work RelEng does easily hits 7 of the points. You can watch Mahmoud define Enterprise Software for free by following the link from his blog entry (link is 2.1 in table of contents). (It’s part of his “Enterprise Software with Python” course offered on O’Reilly’s Safari.) One advantage of watching his presentation is that PayPal’s “Mother of all Diagrams” makes ours look simple! (Although “blue spaghetti” is probably tastier.)

Do I care about “how enterprisy” my work is? Not at all. But I do like the way Mahmoud explains the landscape and challenges of enterprise software. He makes it clear, in the podcast, how acknowledging the existence of those challenges can inform various technical decisions. Such as choice of language. Or need to consider maintenance. Or – well, just go listen for yourself.

http://dtor.com/halfire/2016/04/23/enterprise_software_writers_r_us.html

|

|

Myk Melez: Project Positron |

Along with several colleagues, I recently started working on Project Positron, an effort to build an Electron-compatible runtime on top of the Mozilla technology stack (Gecko and SpiderMonkey). Mozilla has long supported building applications on its stack, but the process is complex and cumbersome. Electron development, by comparison, is a dream. We aim to bring the same ease-of-use to Mozilla.

Positron development is proceeding along two tracks. In the SpiderNode repository, we’re working our way up from Node, shimming the V8 API so we can run Node on top of SpiderMonkey. Ehsan Akhgari details that effort in his Project SpiderNode post.

In the Positron repository, we’re working our way down from Electron, importing Electron (and Node) core modules, stubbing or implementing their native bindings, and making the minimal necessary changes (like basing the

It’s early days. As Ehsan noted, SpiderNode doesn’t yet link the Node executable successfully, since we haven’t shimmed all the V8 APIs it accesses. Meanwhile, Positron supports only a tiny subset of the Electron and Node APIs.

Nevertheless, we reached a milestone today: the tip of the Positron trunk now runs the Electron Quick Start app described in the Electron tutorial. That means it can open a BrowserWindow, hook up a DevTools window to it (with Firefox DevTools integration contributed by jryans), and handle basic application lifecycle events. We’ve imported that app into the Positron repository as our “hello world” example.

Clone and build Positron to see it in action!

|

|

About:Community: Firefox 46 new contributors |

With the release of Firefox 46, we are pleased to welcome the 37 developers who contributed their first code change to Firefox in this release, 31 of whom were brand new volunteers! Please join us in thanking each of these diligent and enthusiastic individuals, and take a look at their contributions:

- chikoski: 1179627

- leper: 1236373

- sakshivaid95: 1207185

- simon: 1231806

- AJ Kerrigan: 1132556

- Abdulla Alkaabi: 1219920

- Alex Jordan: 1106353

- Andy McKay: 1214007

- Brad Kotsopoulos: 1228676, 1239488

- Brendan Good: 1111701

- Brian Armstrong: 987186

- Brian J Murray: 1232486

- Bryce Van Dyk: 1239096

- Daniel Vucci: 1236864

- Giorgio Maone: 1215197

- Gordon Su: 1214169

- Haik Aftandilian: 1232374

- Jacobo Aragunde Pérez: 939496

- Julian Descottes: 1157469, 1198326, 1214177, 1224201, 1238467, 1238639, 1238941, 1239670, 1239673, 1239730, 1240344, 1240368

- Kartikey Agrawal: 1234131

- Kevin Atkinson: 1238031

- Louis Christie: 524109, 864780

- Mohamed Hammoud: 1156252

- Nico Grunbaum: 1196542

- Phil Booth: 1227527

- Ross Lovas: 1190093

- SUN Haitao: 1234544

- Samuel Mendes: 1228037

- Shakthi Wijeratne: 1232669

- Shane Tomlinson: 1233668

- Shivin: 1197163, 1207273

- Shruti Jasoria: 1097676

- Thomas Kuyper: 1184550

- Tim: 1164569

- Tony Mechelynck: 1236937

- Wilmer Paulino: 1237668

- Ya-Chieh Wu: 1216148

- raunaqabhyankar: 1149780, 1164307

http://blog.mozilla.org/community/2016/04/22/firefox-46-new-contributors/

|

|

Andrew Truong: Experience, Learn, Revitalize and Share: Junior High in a Nutshell |

Part of what made it difficult is that I was keen on doing things the same way I was used to in elementary. I was also reluctant to change, and to see change as well. The most crucial part was that I kept telling myself to just be myself, but I did that in the wrong way, which caused more harm than good. More so, I started doing things that were unacceptable, but not embarrassing. It resulted in me being down in the office speaking with the AP or Principal on a few occasions. One of the incidents that was frowned upon then wouldn't be frowned upon now, in our digital age. There are times where I wish I could just go back and change things up, but at the same time: life moves on. What has happened, happened.

The other part which made it difficult was that I didn't have wonderful teachers, in my opinion. Not all of them were bad, but a handful of them just didn't click for me. I could say, to a certain extent, that they picked on me at times because I simply did not like class discussions (I still don't). More so, in grade 8, teachers would know every student's name in the class except mine; I'm not sure what the issue was. It wasn't just one, but 2 teachers that did it all the time. They were corrected from time to time, but it didn't click for them that this was the reason other classmates were laughing out loud every time it happened.

What changed me, however, took place through the summer break I had before going into grade 9. At that point, I discovered the opportunity to volunteer/ contribute to Mozilla. I started off with live chat on SUMO which paved the way for me to improve my writing skills and grammar by contributing to the knowledge base.

As I started into my last year of junior high, the other thing that I was lucky to be part of was leadership. I was fortunate enough to be enrolled in that class, so that I was able to find myself and be myself. In leadership, students are encouraged to help each other out, work in groups/teams, work to boost their enthusiasm and self-esteem, and help organize school events. I loved it! I was able to see my potential, and what potential others had. These 2 factors allowed me to become more successful in my studies and day-to-day life, along with my contributions to Mozilla. I started to have things dawn on me, and so I was able to figure out what I did wrong, and how I could take a different and better approach the next time if similar situations arose again.

Unfortunately, even though there are positives, there will be negatives. Not everything worked out to be a miracle. There were 2 situations where I had issues with my teachers.

The first was where a teacher wanted things done her way only. If you found a different way to solve a homework or test question that gave the same answer, and could do the same for other questions as well, you were still wrong. You had to do it in a certain, specific way for it to be right. Now, as always, there are 2 ways of thinking about this, but as we've progressed, we've found that there are multiple approaches to achieving something, and it doesn't have to be done in a set-in-stone way.

The second was where I knew that I didn't have the talent or ability to complete something and required help. I tried and tried through the whole semester to achieve what was being taught in the class. On the very last day, I didn't expect myself to take it any further, but somehow one thing led to another, and I wasn't happy with the teacher, nor was he happy with me. In the end, I spoke with my favourite AP, who was also a teacher of mine, and she agreed with what I said and we ended it there.

There are always 2 parts to a story, but I can only reveal so much without it hurting me in the long run. I'm being really vague as I don't want to hurt my reputation, nor do I want an investigation to be launched. The sole purpose of this blog post is to share what I experienced in junior high and to share how I was able to progress and find myself simply through the power of leadership.

http://feer56.blogspot.com/2016/04/experience-learn-revitalize-and-share.html

|

|

Christian Heilmann: Turning a community into evangelism helpers – DevRelCon Notes |

These are the notes of my talk at DevRelCon in San Francisco. “Turning a community into evangelism helpers” covered how you can scale your evangelism/advocacy efforts. The trick is to give up some of the control and share your materials with the community. Instead of being the one who brings the knowledge, you’re the one who shares it and coaches people how to use it.

Why include the community?

First of all, we have to ask ourselves why we should include the community in our evangelism efforts. Being the sole source of information about your products can be beneficial. It is much easier to control the message and create materials that way. But, it limits you to where you can physically be at one time. Furthermore, your online materials only reach people who already follow you.

Sharing your materials and evangelism efforts with a community reaps a lot of benefits:

- You cut down on travel – whilst it is glamorous to rack up the air miles and live the high life of lounges and hotels it also burns you out. Instead of you traveling everywhere, you can nurture local talent to present for you. A lot of conferences will want the US or UK presenter to come to attract more attendees. You can use this power to introduce local colleagues and open doors for them.

- You reach audiences that are beyond your reach – often it is much more beneficial to speak in the language and the cultural background of a certain place. You can do your homework and get translations. But, there is nothing better than a local person delivering in the right format.

- You avoid being a parachute presenter – instead of dropping out of the sky, giving your talk and then vanishing without being able to keep up with the workload of answering requests, you introduce a local counterpart. That way people get answers to their requests after you left in a language and format they understand. It is frustrating when you have no time to answer people or you just don’t understand what they want.

Share, inspire, explain

Start by making yourself available beyond the “unreachable evangelist”. You’re not a rockstar, don’t act like one. Share your materials and the community will take them on. That way you can share your workload. Break down the barrier between you and your community by sharing everything you do. Break down fears of your community by listening and amplifying things that impress you.

Make yourself available and show you listen

- Have a repository of slide decks in an editable format – besides telling your community where you will be and sharing the videos of your talks also share your slides. That way the community can re-use and translate them – either in part or as a whole.

- Share out interesting talks and point out why they are great – that way you show that there is more out there than your company materials. And you advertise other presenters and influencers for your community to follow. Give a lot of details here to show why a talk is great. In Mozilla I did this as a minute-by-minute transcript.

- Create explanations for your company products, including demo code and share it out with the community – the shorter and cleaner you can keep these, the better. Nobody wants to talk over a 20 minute screencast.

- Share and comment on great examples from community members – this is the big one. It encourages people to do more. It shows that you don’t only throw content over the wall, but that you expect people to make it their own.

Record and teach recording

Keeping a record of everything you do is important. It helps you to get used to your own voice and writing style and see how you can improve over time. It also means that when people ask you later about something you have a record of it. Ask for audio and video recordings of your community presenting to prepare for your one on one meetings with them. It also allows you to share these with your company to show how your community improves. You can show them to conference organisers to promote your community members as prospective speakers.

Recordings are great

- They show how you deliver some of the content you talked about

- They give you an idea of how much coaching a community member needs to become a presenter

- They allow people to get used to seeing themselves as they appear to others

- You create reusable content (screencasts, tutorials), that people can localise and talk over in presentations

Often you will find that a part of your presentation can inspire people. It makes them aware of how to deliver a complex concept in an understandable manner. And it isn’t hard to do – get Camtasia or Screenflow or even use Quicktime. YouTube is great for hosting.

Avoid the magical powerpoint

One thing both your company and your community will expect you to create is a “reusable power point presentation”. One that people can deliver over and over again. This is a mistake we’ve been making for years. Of course, there are benefits to having one of those:

- You have a clear message – a Powerpoint reviewed by HR, PR and branding makes sure there are no communication issues.

- You have a consistent look and feel – and no surprises of copyrighted material showing up in your talks

- People don’t have to think about coming up with a talk – the talking points are there, the soundbites hidden, the tweetable bits available.

All these are good things, but they also make your presentations boring as toast. They don’t challenge the presenter to own the talk and perform. They become readers of slides and notes. If you want to inspire, you need to avoid that at all cost.

You can have the cake of good messaging and eat it, too. Instead of having a full powerpoint to present, offer your community a collection of talking points. Add demos and screencasts to remix into their own presentations.

There is merit in offering presentation templates though. It can be daunting to look at a blank screen and have to choose fonts, sizes and colours. Offering a simple, but beautiful template to use avoids that nuisance.

What I did in the past was offer an HTML slide deck on GitHub that had introductory slides for different topics, followed by annotated content slides on how to show parts of that topic. Putting it up on GitHub helped the community add to it, translate it and fork their own presentations. In other words, I helped them on the way but expected them to find their own story arc and to make it relevant for the audience and their style of presenting.

Delegate and introduce

Delegation is the big win whenever you want to scale your work. You can’t reap the rewards of the community helping you without trusting them. So, stop doing everything yourself and instead delegate tasks. What is annoying and boring to you might be a great new adventure for someone else. And you can see them taking your materials into places you hadn’t thought of.

Delegate tasks early and often

Here are some things you can easily delegate:

- Translation / localisation – you don’t speak all the languages. You may not be aware that your illustration or your use of colour is offensive in some countries.

- Captioning and transcription of demo videos – this takes time and effort. It is annoying for you to describe your own work, but it is a great way for future presenters to memorise it.

- Demo code cleanup / demo creation – you learn by doing, it is that simple.

- Testing and recording across different platforms/conditions – your community has different setups from what you have. This is a good opportunity to test and fix your demos with their hardware.

- Maintenance of resources – in the long run, you don’t want to be responsible for maintaining everything. The earlier you get people involved, the smoother the transition will be.

Introduce local community members

Sharing your content is one thing. The next level is to also share your fame. You can use your schedule and bookings to help your community:

- Mention them in your talks and as a resource to contact – you avoid disappointing people by never coming back to them. And it shows your company cares about the place you speak at.

- Co-present with them at events – nothing better to give some kudos than to share the stage

- Introduce local companies/influencers to your local counterpart – the next step in the introduction cycle. This way you have something tangible to show to your company. It may be the first step for that community member to get hired.

- Once trained up, tell other company departments about them – this is the final step to turn volunteers into colleagues.

Set guidelines and give access

You give up a lot of control and you show a lot of trust when you start scaling by converting your community. In order not to cheapen that, make sure you also define guidelines. Being part of this should not be a medal for showing up – it should become something to aim for.

- Define a conference playbook – if someone speaks on behalf of your company using your materials, they should also have deliverables. Failing to deliver them means they get less or no support in the future.

- Offer 1:1 training in various levels as a reward – instead of burning yourself out by training everyone, have self-training materials that people can use to get themselves to the next level

- Have a defined code of conduct – your reputation is also at stake when one of your community members steps out of line

- Define benefits for participation – giving x number of talks gets you y, writing x demos that y people use gets you the same, and so on.

Official channels > Personal Blogs

Often people you train want to promote their own personal channels in their work. That is great for them. But it is dangerous to mix their content with content created on work time by someone else. This needs good explanation. Make sure to point out to your community members that their own brand will grow with the amount of work they delivered and the kudos they got for it. Also explain that by separating their work from your company’s, they have a chance to separate themselves from bad things that happen on a company level.

Giving your community members access to the official company channels and making sure their content goes there has a lot of benefits:

- You separate personal views from company content

- You control the platform (security, future plans…)

- You enjoy the reach and give kudos to the community member.

You don’t want to be in the position to explain a hacked blog or outrageous political beliefs of a community member mixed with your official content. Believe me, it isn’t fun.

Communicate sideways and up

This is the end game. To make this sustainable, you need full support from your company.

For sustainability, get company support

The danger of programs like this is that they cost a lot of time and effort and don’t yield immediate results. This is why you have to be diligent in keeping your company up-to-date on what’s happening.

- Communicate out successes company-wide – find the right people to tell about successful outreach into markets you couldn’t reach but the people you trained could. Tell all about it – from engineering to marketing to PR. Any of them can be your ally in the future.

- Get different company departments to maintain and give input to the community materials – once you got community members to talk about products, try to get a contact in these departments to maintain the materials the community uses. That way they will be always up to date. And you don’t run into issues with outdated materials annoying the company department.

- Flag up great community members for hiring as full-time devrel people

The perfect outcome of this is to convert community members into employees. This is important to the company, as getting people through the door is expensive. Employees who are already trained up hit the ground running more effectively. It also shows that using your volunteer time on evangelism pays off in the long run. It can also be a great career move for you. People hired through this outreach are likely to become your reports.

|

|

Mark Côté: How MozReview helps |

A great post on code review is making its rounds. It’s started some discussion amongst Mozillians, and it got me thinking about how MozReview helps with the author’s points. It’s particularly interesting because apparently Twitter uses Review Board for code reviews, which is a core element of the whole MozReview system.

The author notes that it’s very important for reviewers to know what reviews are waiting on them, but also that Review Board itself doesn’t do a good job of this. MozReview fixes this problem by piggybacking on Bugzilla’s review flags, which have a number of features built around them: indicators, dashboards, notification emails, and reminder emails. People can even subscribe to the reminders for other reviewers; this is a way managers can ensure that their teams are responding promptly to review requests. We’ve also toyed around with the idea of using push notifications to notify people currently using Bugzilla that they have a new request (also relevant to the section on being “interrupt-driven”).

On the submitter side, MozReview’s core support for microcommits—a feature we built on top of Review Board, within our extensions—helps “keep reviews as small as possible”. While it’s impossible to enforce small commits within a tool, we’ve tried to make it as painless as possible to split up work into a series of small changes.

The MozReview team has made progress on automated static analysis (linters and the like), which helps submitters verify that their commits follow stylistic rules and other such conventions. It will also shorten review time, as the reviewer will not have to spend time pointing out these issues; when the review bots have given their r+s, the reviewer will be able to focus solely on the logic. As we continue to grow the MozReview team, we’ll be devoting some time to finishing up this feature.

|

|

Armen Zambrano: The Joy of Automation |

This follows the idea that mconley started with The Joy of Coding and his livehacks.

At the moment there are only "Unscripted" videos of me hacking away. I hope one day to do live hacks but for now they're offline videos.

Mistakes I made, in case any Platform Ops members wanting to contribute want to avoid them:

- Lower the volume of the background music

- Find a source of music without ads, and with music that would not get the video blocked in certain countries (e.g. Germany)

- Do not record in .flv format since most video editing software does not handle it

- Add an intro screen so you don't see me hiding OBS

- Have multiple bugs to work on in case you get stuck in the first one

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

http://feedproxy.google.com/~r/armenzg_mozilla/~3/VHKWjujB3X0/the-joy-of-automation.html

|

|

Benjamin Bouvier: Making asm.js/WebAssembly compilation more parallel in Firefox |

In December 2015, I worked on reducing the startup time of asm.js programs in Firefox by making compilation more parallel. As our JavaScript engine, SpiderMonkey, uses the same compilation pipeline for both asm.js and WebAssembly, this also benefitted WebAssembly compilation. Now is a good time to talk about what it meant, how it was achieved and what the next ideas are to make it even faster.

What does it mean to make a program "more parallel"?

Parallelization consists of splitting a sequential program into smaller independent tasks, then having them run on different CPUs. If your program is using N cores, it can be up to N times faster.

Well, in theory. Let's say you're in a car, driving on a 100 Km long road. You've already driven the first 50 Km in one hour. Let's say your car can have unlimited speed from now on. What is the maximal average speed you can reach, once you get to the end of the road?

People intuitively answer "If it can go as fast as I want, then something close to light speed sounds plausible". But this is not true! In fact, if you could teleport from your current position to the end of the road, you'd have traveled 100 Km in one hour, so your maximal theoretical speed is 100 Km per hour. This result is a consequence of Amdahl's law.

When we get back to our initial problem, this means you can expect an N times speedup when running your program with N cores if, and only if, your program can be entirely run in parallel. This is usually not the case, and that is why most wording refers to speedups up to N times faster, when it comes to parallelization.
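To put numbers on the car metaphor, here is a minimal Python sketch. The function names are made up for illustration; the first function is the usual statement of Amdahl's law, and the second simply recomputes the average speed for the road example above.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Overall speedup when only `parallel_fraction` of the work runs `workers` times faster."""
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


def average_speed_kmh(total_km, done_km, done_hours, remaining_speed_kmh):
    """Average speed over the whole road, given the speed on the remaining part."""
    remaining_hours = (total_km - done_km) / remaining_speed_kmh
    return total_km / (done_hours + remaining_hours)


# The road example: 50 Km already driven in one hour, then "teleport" for the rest.
print(average_speed_kmh(100, 50, 1, float("inf")))  # 100.0

# A program that is half sequential can never be more than twice as fast.
print(amdahl_speedup(0.5, float("inf")))  # 2.0

# Only a fully parallel program gets the full N times speedup.
print(amdahl_speedup(1.0, 8))  # 8.0
```

The sequential half of the program plays the role of the 50 Km that were already driven at a fixed speed: no amount of extra cores shortens it.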

Now, say your program is already running some portions in parallel. To make it faster, one can identify some parts of the program that are sequential, and make them independent so that you can run them in parallel. With respect to our car metaphor, this means extending the portion of the road on which you can drive at unlimited speed.

This is exactly what we have done with parallel compilation of asm.js programs under Firefox.

A quick look at the asm.js compilation pipeline

I recommend reading this blog post. It clearly explains the differences between JIT (Just In Time) and AOT (Ahead Of Time) compilation, and elaborates on the different parts of the engines involved in the compilation pipeline.

As a TL;DR, keep in mind that asm.js is a strictly validated, highly optimizable, typed subset of JavaScript. Once validated, it guarantees high performance and stability (no garbage collector involved!). That is ensured by mapping every single JavaScript instruction of this subset to a few CPU instructions, if not only a single instruction. This means an asm.js program needs to get compiled to machine code, that is, translated from JavaScript to the language your CPU directly manipulates (like what GCC would do for a C++ program). If you haven't heard, the results are impressive and you can run video games directly in your browser, without needing to install anything. No plugins. Nothing more than your usual, everyday browser.

Because asm.js programs can be gigantic in size (in number of functions as well as in number of lines of code), the first compilation of the entire program is going to take some time. Afterwards, Firefox uses a caching mechanism that prevents the need for recompilation and almost instantaneously loads the code, so subsequent loads matter less*. The end user will mostly wait for the first compilation, thus this one needs to be fast.

Before the work explained below, the pipeline for compiling a single function (out of an asm.js module) would look like this:

- parse the function, and as we parse, emit intermediate representation (IR) nodes for the compiler infrastructure. SpiderMonkey has several IRs, including the MIR (middle-level IR, mostly loaded with semantics) and the LIR (low-level IR closer to the CPU memory representation: registers, stack, etc.). The one generated here is the MIR. All of this happens on the main thread.

- once the entire IR graph is generated for the function, optimize the MIR graph (i.e. apply a few optimization passes). Then, generate the LIR graph before carrying out register allocation (probably the most costly task of the pipeline). This can be done on supplementary helper threads, as the MIR optimization and LIR generation for a given function don't depend on other functions.

- since functions can call between themselves within an asm.js module, they need references to each other. In assembly, a reference is merely an offset to somewhere else in memory. In this initial implementation, code generation is carried out on the main thread, at the cost of speed but for the sake of simplicity.

So far, only the MIR optimization passes, register allocation and LIR generation were done in parallel. Wouldn't it be nice to be able to do more?

* There are conditions for benefitting from the caching mechanism. In particular, the script should be loaded asynchronously and it should be of a sufficiently large size.

Doing more in parallel

Our goal is to do more work in parallel: can we take MIR generation off the main thread? And can we take code generation off it as well?

The answer happens to be yes to both questions.

For the former, instead of emitting a MIR graph as we parse the function's body, we emit a small, compact, pre-order representation of the function's body. In short, a new IR. As work was starting on WebAssembly (wasm) at this time, and since asm.js semantics and wasm semantics mostly match, the IR could just be the wasm encoding, consisting of the wasm opcodes plus a few specific asm.js ones*. Then, wasm is translated to MIR in another thread.

Instead of parsing and generating MIR in a single pass, we now parse and generate wasm IR in one pass, and generate the MIR out of the wasm IR in another pass. The wasm IR is very compact and much cheaper to generate than a full MIR graph, because generating a MIR graph needs some algorithmic work, including the creation of Phi nodes (join values after any form of branching). As a result, it is expected that compilation time won't suffer. This was a large refactoring: taking every single asm.js instruction, encoding it in a compact way, and later decoding it into the equivalent MIR nodes.
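As a rough illustration of what a compact, pre-order representation means, here is a toy Python sketch. The opcodes and function names are hypothetical and are not the real wasm encoding or SpiderMonkey's code: the first pass flattens an expression tree into a list with each operator written before its operands, and a second pass (which could run on another thread) rebuilds a structure from that list.

```python
# Hypothetical opcodes for illustration only; these are not real wasm opcodes.
OP_CONST, OP_ADD, OP_MUL = 0, 1, 2
ARITY = {OP_ADD: 2, OP_MUL: 2}


def encode(node, out=None):
    """Flatten an expression tree in pre-order: opcode first, then its operands."""
    if out is None:
        out = []
    if isinstance(node, int):
        out += [OP_CONST, node]
    else:
        op, *children = node
        out.append(op)
        for child in children:
            encode(child, out)
    return out


def decode(stream, pos=0):
    """Rebuild the tree from the flat stream; returns (tree, next position)."""
    op = stream[pos]
    pos += 1
    if op == OP_CONST:
        return stream[pos], pos + 1
    children = []
    for _ in range(ARITY[op]):
        child, pos = decode(stream, pos)
        children.append(child)
    return (op, *children), pos


tree = (OP_ADD, 1, (OP_MUL, 2, 3))  # 1 + (2 * 3)
flat = encode(tree)
print(flat)             # [1, 0, 1, 2, 0, 2, 0, 3]
print(decode(flat)[0])  # (1, 1, (2, 2, 3))
```

Encoding is a single cheap walk that appends to a list, which is the property the text relies on: the expensive graph construction is deferred to the decoding pass.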

For the second part, could we generate code on other threads? One structure in the code base, the MacroAssembler, is used to generate all the code and it contains all necessary metadata about offsets. By adding more metadata there to abstract internal calls **, we can describe the new scheme in terms of a classic functional map/reduce:

- the wasm IR is sent to a thread, which will return a MacroAssembler. That is a map operation, transforming an array of wasm IR into an array of MacroAssemblers.

- When a thread is done compiling, we merge its MacroAssembler into one big MacroAssembler. Most of the merge consists in taking all the offset metadata in the thread MacroAssembler, fixing up all the offsets, and concatenating the two generated code buffers. This is equivalent to a reduce operation, merging each MacroAssembler within the module's one.

At the end of the compilation of the entire module, there is still some light work to be done: offsets of internal calls need to be translated to their actual locations. All this work has been done in this bugzilla bug.
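The map/reduce scheme and the final fix-up can be sketched in Python as follows. This is a toy model under stated assumptions, not SpiderMonkey's implementation: the `Assembler` class, its fields and all function names are hypothetical, each "function" makes exactly one call, and a call target is a single placeholder byte.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field
from functools import reduce


@dataclass
class Assembler:
    """Toy stand-in for a MacroAssembler: a code buffer plus offset metadata."""
    code: bytearray = field(default_factory=bytearray)
    func_starts: dict = field(default_factory=dict)  # function index -> offset in code
    call_sites: list = field(default_factory=list)   # (offset in code, callee index)


def compile_function(item):
    """Map step: turn one (index, body, callee index) triple into its own Assembler."""
    index, body, callee = item
    asm = Assembler()
    asm.func_starts[index] = 0
    asm.code += body
    asm.call_sites.append((len(asm.code), callee))  # callee referenced by index
    asm.code += b"\x00"                             # placeholder for the call target
    return asm


def merge(module, part):
    """Reduce step: shift the part's offsets by the module size, then append its code."""
    base = len(module.code)
    for index, start in part.func_starts.items():
        module.func_starts[index] = start + base
    module.call_sites += [(off + base, callee) for off, callee in part.call_sites]
    module.code += part.code
    return module


def link(module):
    """Final light pass: replace each placeholder with the callee's actual offset."""
    for off, callee in module.call_sites:
        module.code[off] = module.func_starts[callee]
    return module


def compile_module(functions):
    with ThreadPoolExecutor() as pool:
        parts = list(pool.map(compile_function, functions))
    return link(reduce(merge, parts, Assembler()))


module = compile_module([(0, b"AA", 1), (1, b"BBB", 0)])
print(bytes(module.code))  # b'AA\x03BBB\x00'
```

Because call sites name their callee by index rather than by address, the merge never needs to know where other functions will end up; only the last pass, once every start offset is known, writes real locations.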

* In fact, at the time when this was being done, we used a different superset of wasm. Since then, work has been done so that our asm.js frontend is really just another wasm emitter.

** referencing functions by their appearance order index in the module, rather than an offset to the actual start of the function. This order is indeed stable, from a function to the other.

Results

Benchmarking has been done on a Linux x64 machine with 8 cores clocked at 4.2 GHz.

First, compilation times of a few massive asm.js games:

The X scale is the compilation time in seconds, so lower is better. Each value point is the best one of three runs. For the new scheme, the corresponding relative speedup (in percentage) has been added:

For all games, compilation is much faster with the new parallelization scheme.

Now, let's go a bit deeper. The Linux CLI tool perf has a stat command that gives you an average of the number of utilized CPUs during the program execution. This is a great measure of threading efficiency: the more a CPU is utilized, the less it sits idle waiting for other results to come, and thus the more useful it is. For a constant task execution time, the more utilized CPUs, the more likely the program will execute quickly.

The X scale is the number of utilized CPUs, according to the perf stat command, so higher is better. Again, each value point is the best one of three runs.

With the older scheme, the number of utilized CPUs quickly rises up from 1 to 4 cores, then more slowly from 5 cores and beyond. Intuitively, this means that with 8 cores, we almost reached the theoretical limit of the portion of the program that can be made parallel (not considering the overhead introduced by parallelization or altering the scheme).

But with the newer scheme, we get much more CPU usage even after 6 cores! Then it slows down a bit, although it is still more significant than the slow rise of the older scheme. So it is likely that with even more threads, we could have even better speedups than the one mentioned beforehand. In fact, we have moved the theoretical limit mentioned above a bit further: we have expanded the portion of the program that can be made parallel. Or to keep on using the initial car/road metaphor, we've shortened the constant speed portion of the road to the benefit of the unlimited speed portion of the road, resulting in a shorter trip overall.

Future steps

Despite these improvements, compilation time can still be a pain, especially on mobile. This is mostly due to the fact that we're running a whole multi-million line codebase through the backend of a compiler to generate optimized code. Following this work, the next bottleneck during the compilation process is parsing, which matters for asm.js in particular, whose source is plain text. Decoding WebAssembly is an order of magnitude faster though, and it can be made even faster. Moreover, we have even more load-time optimizations coming down the pipeline!

In the meantime, we keep on improving the WebAssembly backend. Keep track of our progress on bug 1188259!

https://blog.benj.me/2016/04/22/making-asmjs-webassembly-compilation-more-parallel/

|

|