You can add any RSS source (including a LiveJournal journal) to your friends page on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. This syndication was created automatically at the request of the feed's readers.

For any questions about how this service works, see the contact information page.

Dave Hunt: Custom Firefox Cufflinks

If you’re interested in a pair of custom Firefox logo cufflinks for $25 plus postage then please get in touch. I’ve been in contact with CuffLinks.com, and if I can place an initial order of at least 25 pairs then the mold and tooling fee will be waived. Check out their website for examples of their work. The Firefox cufflinks would be contoured to the outline of the logo, and the logo itself would be screen printed in full colour. If these go well, I’d also love a pair of dino head cufflinks to complement them!

Unless I can organise with CuffLinks.com to ship to multiple recipients and take payments separately, I will accept payments via Paypal and take care of sending the cufflinks out. As an example of postage costs, sending to USA would cost me approximately $12 per parcel. Let me know if you’re interested via a comment on this post, e-mail (find me in the phonebook), or IRC (:davehunt).

Karl Dubost: Vendor Prefixes And Market Reality

Through the Web Compat Twitter account, I happened to read a thread about Apple introducing a new vendor prefix.

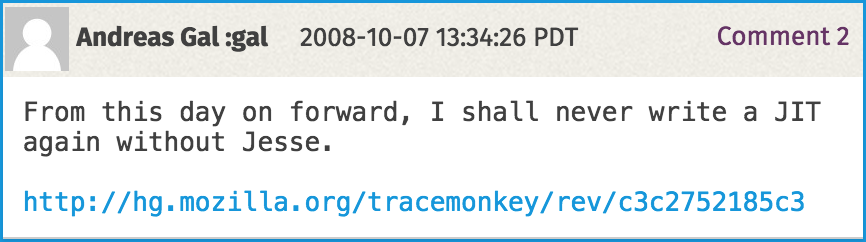

Jesse Ruderman: Releasing jsfunfuzz and DOMFuzz

Today I'm releasing two fuzzers: jsfunfuzz, which tests JavaScript engines, and DOMFuzz, which tests layout and DOM APIs.

Over the last 11 years, these fuzzers have found 6450 Firefox bugs, including 790 bugs that were rated as security-critical.

I had to keep these fuzzers private for a long time because of the frequency with which they found security holes in Firefox. But three things have changed that have tipped the balance toward openness.

First, each area of Firefox has been through many fuzz-fix cycles. So now I'm mostly finding regressions in the Nightly channel, and the severe ones are fixed well before they reach most Firefox users. Second, modern Firefox is much less fragile, thanks to architectural changes to areas that once oozed with fuzz bugs. Third, other security researchers have noticed my success and demonstrated that they can write similarly powerful fuzzers.

My fuzzers are no longer unique in their ability to find security bugs, but they are unusual in their ability to churn out reliable, reduced testcases. Each fuzzer alternates between randomly building a JS string and then evaling it. This construction makes it possible to make a reproduction file from the same generated strings. Furthermore, most DOMFuzz modules are designed so their functions will have the same effect even if other parts of the testcase are removed. As a result, a simple testcase reduction tool can reduce most testcases from 3000 lines to 3-10 lines, and I can usually finish reducing testcases in less than 15 minutes.
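A simple reducer of the kind described above repeatedly deletes chunks of lines and keeps any deletion after which the bug still reproduces. The following is a minimal C++ sketch of that idea (a greedy, delta-debugging-style loop), not the actual tool used with jsfunfuzz or DOMFuzz. `Reduce` and the `reproduces` oracle are hypothetical; in practice the oracle would run the JS shell or browser on the candidate file and check for the same crash or assertion.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <string>
#include <utility>
#include <vector>

using Lines = std::vector<std::string>;

// Hypothetical greedy reducer. `reproduces` must return true when the
// candidate testcase still triggers the bug being reduced.
Lines Reduce(Lines lines, const std::function<bool(const Lines&)>& reproduces) {
  std::size_t chunk = std::max<std::size_t>(1, lines.size() / 2);
  while (chunk >= 1) {
    bool removedSomething = false;
    std::size_t start = 0;
    while (start < lines.size()) {
      std::size_t end = std::min(start + chunk, lines.size());
      // Build the testcase without lines [start, end).
      Lines candidate(lines.begin(), lines.begin() + start);
      candidate.insert(candidate.end(), lines.begin() + end, lines.end());
      if (reproduces(candidate)) {
        lines = std::move(candidate);  // keep the smaller testcase, retry at same start
        removedSomething = true;
      } else {
        start = end;  // this chunk is needed for the bug; move on
      }
    }
    // Only shrink the chunk size once a full pass removes nothing.
    if (!removedSomething) chunk /= 2;
  }
  return lines;
}
```

Because the sketch only ever keeps candidates the oracle accepts, the result still reproduces the bug; the quality of the reduction depends on the testcase being built so that unrelated lines can be removed independently, which is the property the post describes for DOMFuzz modules.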

The ease of getting reduced testcases lets me afford to report less severe bugs. Occasionally, one of these turns out to be a security bug in disguise. But most importantly, these bug reports help me establish positive relationships with Firefox developers, by frequently saving them time.

A JavaScript engine developer can easily spend a day trying to figure out why a web site doesn't work in Firefox. If instead I can give them a simple testcase that shows an incorrect result with a new JS optimization enabled, they can quickly find the source of the bug and fix it. Similarly, they much prefer reliable assertion testcases over bug reports saying "sometimes, Google Maps crashes after a while".

As a result, instead of being hostile to fuzzing, Firefox developers actively help me fuzz their code. They've added numerous assertions to their code, allowing fuzzers to notice as soon as the smallest thing goes wrong. They've fixed most of the bugs that impede fuzzing progress. And several have suggested new ways to test their code, even (especially) ways that scare them.

Developers working on the JavaScript engine have been especially helpful. First, they ensured I could test their code directly, apart from the rest of the browser. They already had a JavaScript shell for running regression tests, and they added a --fuzzing-safe option to disable the more dangerous testing functions.

The JS team also created a large set of testing functions to let me control things that would normally be based on heuristics. Fuzzers can now choose when garbage collection happens and even how much. They can make expensive JITs kick in after 2 loop iterations rather than 100. Fuzzers can even simulate out-of-memory conditions. All of these things make it possible to create small, reliable testcases for nasty classes of bugs.

Finally, the JS team has supported differential testing, a form of fuzzing where output is checked for correctness against some oracle. In this case, the oracle is the same JavaScript engine with most of its optimizations disabled. By fixing inconsistencies quickly and supporting --enable-more-deterministic, they've ensured that differential testing doesn't get stuck finding the same problems repeatedly.
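Differential testing can be illustrated with any pair of implementations that must agree. In this minimal C++ sketch, `SumReference` plays the role of the unoptimized oracle and `SumOptimized` the optimized engine. Both functions, `FindMismatch`, and the random-input generator are hypothetical stand-ins, not SpiderMonkey code.

```cpp
#include <cstddef>
#include <cstdint>
#include <functional>
#include <optional>
#include <random>
#include <vector>

using Input = std::vector<std::uint32_t>;
using Impl = std::function<std::uint64_t(const Input&)>;

// Oracle: slow but obviously correct.
std::uint64_t SumReference(const Input& v) {
  std::uint64_t total = 0;
  for (std::uint32_t x : v) total += x;
  return total;
}

// Hypothetical "optimized" version: four independent accumulators.
std::uint64_t SumOptimized(const Input& v) {
  std::uint64_t a = 0, b = 0, c = 0, d = 0;
  std::size_t i = 0;
  for (; i + 4 <= v.size(); i += 4) {
    a += v[i]; b += v[i + 1]; c += v[i + 2]; d += v[i + 3];
  }
  for (; i < v.size(); ++i) a += v[i];  // leftover elements
  return a + b + c + d;
}

// Feeds random inputs to both implementations and returns the first input on
// which they disagree, or std::nullopt if no mismatch was found.
std::optional<Input> FindMismatch(const Impl& fast, const Impl& oracle,
                                  int iterations, unsigned seed) {
  std::mt19937 rng(seed);  // fixed seed keeps any failure reproducible
  std::uniform_int_distribution<int> lenDist(0, 20);
  std::uniform_int_distribution<std::uint32_t> valDist;
  for (int n = 0; n < iterations; ++n) {
    Input input(static_cast<std::size_t>(lenDist(rng)));
    for (auto& x : input) x = valDist(rng);
    if (fast(input) != oracle(input)) return input;
  }
  return std::nullopt;
}
```

The returned input is itself a small, reliable testcase, which is what makes differential testing pair well with the reduction workflow described earlier.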

Please join us on IRC, or just dive in and contribute! Your suggestions and patches can have a large impact: fuzzer modules often act together to find complex interactions within the browser. For example, bug 893333 was found by my designMode module interacting with a

http://www.squarefree.com/2015/07/28/releasing-jsfunfuzz-and-domfuzz/

Christian Heilmann: Got something to say? Write a post!

Here’s the thing: Twitter sucks for arguments:

- It is almost impossible to follow conversation threads

- People favouriting quite aggressive tweets leaves you puzzled as to the reasons

- People retweeting parts of the conversation out of context leads to wrong messages and questionable quotes

- 140 characters are great to throw out truisms but not to make a point.

- People consistently copying you in on their arguments floods your notifications tab, even when you no longer want to weigh in

This morning was a great example: Peter Paul Koch wrote yet another incendiary post asking for a one year hiatus of browser innovation. I tweeted about the post saying it has some good points. Paul Kinlan of the Chrome team disagreed strongly with the post. I opted to agree with some of it, as a lot of features we created and thought we discarded tend to linger longer on the web than we want to.

A few of those back-and-forth conversations later, Alex Russell dropped the mic:

@Paul_Kinlan: good news is that @ppk has articulated clearly how attractive failure can be. @codepo8 seems to agree. Now we can call it out.

Now, I am annoyed about that. It is accusatory: it calls me reactive and calls criticism of innovation a failure. It also very aggressively hints that Alex will now always quote it to show that PPK was wrong and keeps us from evolving. Maybe. Probably. Who knows, as it is only 140 characters. But I am keeping my mouth shut, as there is no point in this aggressive back and forth. It results in a lot of rushed arguments that can and will be quoted out of context. It results in assumed subtext that can break good relationships. It is, in essence, not helpful.

If you truly disagree with something – make your point. Write a post, based on research and analysis. Don’t throw out a blanket approval or disapproval of the work of other people to spark a “conversation” that isn’t one.

Well-written thoughts lead to better quotes and deeper understanding. It takes more effort to read a whole post than to quote a tweet and add your sass.

In many cases, whilst writing the post you realise that you really don’t agree or disagree as much as you thought you did with the author. This leads to much less drama and more information.

And boy do we need more of that and less drama. We are blessed with jobs where people allow us to talk publicly, research and innovate and to question the current state. We should celebrate that and not use it for pithy bickering and trench fights.

Photo Credit: acidpix

http://christianheilmann.com/2015/07/28/got-something-to-say-write-a-post/

Daniel Stenberg: HTTP Workshop, second day

All 37 of us gathered again on the 3rd floor in the Factory hotel here in Münster. Day two of the HTTP Workshop.

Jana Iyengar (from Google) kicked off this morning with his presentations on HTTP and the Transport Layer and QUIC. Very interesting area if you ask me – if you’re interested in this, you really should check out the video recording from the barbof they did on this topic in the recent Prague IETF. It is clear that a team with dedication, a clear use-case, a fearless approach to not necessarily maintaining “layers” and a handy control of widely used servers and clients can do funky experiments with new transport protocols.

I think there was general agreement with Jana’s statement that “Engagement with the transport community is critical” for us to really be able to bring better web protocols now and in the future. Jana’s excellent presentations were interrupted countless times with questions, elaborations, concerns and sub-topics from attendees.

Gaetano Carlucci followed up with a presentation of their QUIC evaluations, showing how it performs under various conditions, such as packet loss, in comparison to HTTP/2. Lots of transport-related discussions followed.

We rounded off the afternoon with a walk through the city (the rain stopped just minutes before we took off) to the town center where we tried some of the local beers while arguing their individual qualities. We then took off in separate directions and had dinner in smaller groups across the city.

http://daniel.haxx.se/blog/2015/07/28/http-workshop-second-day/

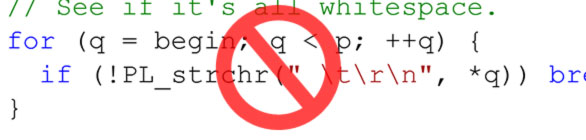

Honza Bambas: String parsing made simple with mozilla::Tokenizer

I can see FindChar, Substring, ToInteger and even atoi, strchr, strstr and sscanf craziness all over the Mozilla code base. There are, though, much better and, more importantly, safer ways to parse even very simple input.

I wrote a parser class, with an API derived from lexical analyzers, that makes parsing simple inputs very easy. Just include mozilla/Tokenizer.h and use the class mozilla::Tokenizer. It implements a subset of a lexical analyzer's features, and it also nicely hides the boundary checks of the input buffer from the consumer.

To describe the principle briefly: Tokenizer recognizes tokens such as whole words, integers, white space and special characters. The consumer never works directly with the string or its characters, only with the pre-parsed parts (identified tokens) returned by this class.

There are two main methods of Tokenizer:

- `bool Next(Token& result);`

  If there is anything to read from the input at the current internal read position, including the EOF, this returns true and fills result with a token type and an appropriate value, easily accessible via a simple variant-like API. Before the method returns, the internal read cursor is shifted to the start of the next token in the input.

- `bool Check(const Token& tokenToTest);`

  If the token at the current internal read position is equal (by type and value) to what was passed in the tokenToTest argument, true is returned and the internal read cursor is shifted to the next token. Otherwise (the token differs from what was expected), false is returned and the read cursor is left unaffected.
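The Next()/Check() contract described above can be illustrated without Gecko headers. The following is a toy, standalone C++ sketch, not mozilla::Tokenizer itself: `ToyTokenizer`, its `Token` struct and the `Kind` values are hypothetical and simplified (ASCII only, no Record/Claim/Rollback), but they follow the same rules: Next() also returns the EOF token and fails only after it, and a failed Check() leaves the cursor in place.

```cpp
#include <cctype>
#include <cstddef>
#include <string>
#include <utility>

// Simplified, hypothetical token kinds.
enum class Kind { Word, Integer, Whitespace, Char, Eof };

struct Token {
  Kind kind = Kind::Eof;
  std::string text;  // characters covered by the token ("" for Eof)
  bool operator==(const Token& o) const { return kind == o.kind && text == o.text; }
};

class ToyTokenizer {
 public:
  explicit ToyTokenizer(std::string input) : input_(std::move(input)) {}

  // Fills `result` and advances. Returns true for every token including Eof,
  // and false only once the cursor is already past Eof.
  bool Next(Token& result) {
    if (pastEof_) return false;
    std::size_t end = 0;
    result = Peek(end);
    if (result.kind == Kind::Eof) pastEof_ = true;
    pos_ = end;
    return true;
  }

  // Consumes the next token only if it equals `expected` (kind and text).
  bool Check(const Token& expected) {
    if (pastEof_) return false;
    std::size_t end = 0;
    Token t = Peek(end);
    if (!(t == expected)) return false;  // cursor left unaffected
    if (t.kind == Kind::Eof) pastEof_ = true;
    pos_ = end;
    return true;
  }

 private:
  // Reads the token at pos_ without moving the cursor; `end` receives its end.
  Token Peek(std::size_t& end) const {
    Token t;
    end = pos_;
    if (pos_ >= input_.size()) return t;  // Eof
    auto cls = [](unsigned char c) {
      if (std::isalpha(c)) return Kind::Word;
      if (std::isdigit(c)) return Kind::Integer;
      if (c == ' ' || c == '\t') return Kind::Whitespace;
      return Kind::Char;
    };
    t.kind = cls(static_cast<unsigned char>(input_[pos_]));
    ++end;
    if (t.kind != Kind::Char) {
      // Words, integers and whitespace runs extend over same-class characters.
      while (end < input_.size() &&
             cls(static_cast<unsigned char>(input_[end])) == t.kind) {
        ++end;
      }
    }
    t.text = input_.substr(pos_, end - pos_);
    return t;
  }

  std::string input_;
  std::size_t pos_ = 0;
  bool pastEof_ = false;
};
```

Driving it with "Sample string 2015." yields the same token sequence as the real examples that follow: a word, whitespace, a word, whitespace, an integer, the '.' character, and finally EOF.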

A few usage examples:

Construction

```cpp
#include "mozilla/Tokenizer.h"

mozilla::Tokenizer p(NS_LITERAL_CSTRING("Sample string 2015."));
```

Reading a single token, examining it

```cpp
mozilla::Tokenizer::Token t;
bool read = p.Next(t);
// read == true, we have read something and t has been filled

// Following our example string...
if (t.Type() == mozilla::Tokenizer::TOKEN_WORD) {
  t.AsString(); // returns "Sample"
}
```

Checking a token value and automatically skipping it on a positive test

```cpp
if (!p.CheckChar('\x20')) {
  throw "I expect a space here!";
}

read = p.Next(t);
// read == true
// t.Type() == mozilla::Tokenizer::TOKEN_WORD
// t.AsString() == "string"

if (!p.CheckWhite()) {
  throw "A white space is expected here!";
}
```

Reading numbers

```cpp
read = p.Next(t);
// read == true
// t.Type() == mozilla::Tokenizer::TOKEN_INTEGER
// t.AsInteger() == 2015
```

Reaching the end of the input

```cpp
read = p.Next(t);
// read == true
// t.Type() == mozilla::Tokenizer::TOKEN_CHAR
// t.AsChar() == '.'

read = p.Next(t);
// read == true
// t.Type() == mozilla::Tokenizer::TOKEN_EOF

read = p.Next(t);
// read == false, we are past the EOF
// t is undefined here!
```

More features

To learn about the more advanced features of Tokenizer (there aren't that many, don't be scared), look at the well-documented Tokenizer.h file under xpcom/ds.

As a teaser, you can go through this more advanced example or check the gtest for Tokenizer:

```cpp
#include "mozilla/Tokenizer.h"

using namespace mozilla;

// The enclosing function name is illustrative; the original shows only the body.
void ParseExample()
{
  // A simple list of key:value pairs delimited by commas
  nsCString input("message:parse me,result:100");

  // Initialize the parser with an input string
  Tokenizer p(input);

  // A helper var keeping type and value of the token just read
  Tokenizer::Token t;

  // Loop over all tokens in the input
  while (p.Next(t)) {
    if (t.Type() == Tokenizer::TOKEN_WORD) {
      // A 'key' name found
      if (!p.CheckChar(':')) {
        // Must be followed by a colon
        return; // unexpected character
      }

      // Note that here the input read position is just after the colon
      // Now switch by the key string
      if (t.AsString() == "message") {
        // Start grabbing the value
        p.Record();

        // Loop until EOF or comma
        while (p.Next(t) && !t.Equals(Tokenizer::Token::Char(',')))
          ;

        // Claim the result
        nsAutoCString value;
        p.Claim(value);
        MOZ_ASSERT(value == "parse me");

        // We must revert the comma so that the code below recognizes the flow correctly
        p.Rollback();
      } else if (t.AsString() == "result") {
        if (!p.Next(t) || t.Type() != Tokenizer::TOKEN_INTEGER) {
          return; // expected a value and that value must be a number
        }

        // Get the value, here you know it's a valid number
        uint32_t number = t.AsInteger();
        MOZ_ASSERT(number == 100);
      } else {
        // Here t.AsString() is any key but 'message' or 'result', ready to be handled
      }

      // On comma we loop again
      if (p.CheckChar(',')) {
        // Note that now the read position is after the comma
        continue;
      }

      // No comma? Then only EOF is allowed
      if (p.CheckEOF()) {
        // Cleanly parsed the string
        break;
      }
    }

    return; // The input is not properly formatted
  }
}
```

Tokenizer currently works only with ASCII input, but it can easily be enhanced to support UTF-8/16 or even specific code pages if needed.

The post String parsing made simple with mozilla::Tokenizer appeared first on mayhemer's blog.

http://www.janbambas.cz/string-parsing-made-simple-with-mozillatokenizer/

Aaron Klotz: Interesting Win32 APIs

Yesterday I decided to diff the export tables of some core Win32 DLLs to see what’s changed between Windows 8.1 and the Windows 10 technical preview. There weren’t many changes, but the ones that were there are quite exciting IMHO. While researching these new APIs, I also stumbled across some others that were added during the Windows 8 timeframe that we should be considering as well.

Volatile Ranges

While my diff showed these APIs as new exports for Windows 10, the MSDN docs claim that these APIs are actually new for the Windows 8.1 Update. Using the OfferVirtualMemory and ReclaimVirtualMemory functions, we can now specify ranges of virtual memory that are safe to discard under memory pressure. Later on, should we request that access be restored to that memory, the kernel will either return that virtual memory to us unmodified, or advise us that the associated pages have been discarded.

A couple of years ago we had an intern on the Perf Team who was working on bringing this capability to Linux. I am pleasantly surprised that this is now offered on Windows.

madvise(MADV_WILLNEED) for Win32

For the longest time we have been hacking around the absence of a madvise-like API on Win32. On Linux we will do a madvise(MADV_WILLNEED) on memory-mapped files when we want the kernel to read ahead. On Win32, we were opening the backing file and then doing a series of sequential reads through the file to force the kernel to cache the file data. As of Windows 8, we can now call PrefetchVirtualMemory for a similar effect.
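For reference, the Linux side of that comparison can be sketched in C++ with POSIX mmap() and madvise(). This is a minimal sketch assuming a Linux/glibc system; the helper name MapWithReadAhead is hypothetical, error handling is deliberately terse, and MADV_WILLNEED is only a hint the kernel may ignore. The Win32 counterpart, PrefetchVirtualMemory, is not shown.

```cpp
#include <fcntl.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <unistd.h>

#include <cstddef>

// Hypothetical helper: maps `path` read-only and hints that it will be needed soon.
// Returns the mapping, or nullptr on failure; `length` receives the mapped size.
// The caller owns the mapping and must release it with munmap(addr, length).
void* MapWithReadAhead(const char* path, std::size_t& length) {
  length = 0;
  int fd = open(path, O_RDONLY);
  if (fd < 0) return nullptr;

  struct stat st;
  // mmap() rejects zero-length mappings, so treat empty files as a failure too.
  if (fstat(fd, &st) != 0 || st.st_size <= 0) {
    close(fd);
    return nullptr;
  }

  const std::size_t size = static_cast<std::size_t>(st.st_size);
  void* addr = mmap(nullptr, size, PROT_READ, MAP_PRIVATE, fd, 0);
  close(fd);  // the mapping remains valid after the descriptor is closed
  if (addr == MAP_FAILED) return nullptr;

  // Ask the kernel to start reading the pages in ahead of use. The hint is
  // advisory, so a failure here does not invalidate the mapping.
  (void)madvise(addr, size, MADV_WILLNEED);

  length = size;
  return addr;
}
```

Compared with the sequential-read workaround described above, the hint lets the kernel schedule the read-ahead itself instead of the process blocking on every read.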

Operation Recorder: An API for SuperFetch

The OperationStart and OperationEnd APIs are intended to record access patterns during a file I/O operation. SuperFetch will then create prefetch files for the operation, enabling prefetch capabilities above and beyond the use case of initial process startup.

Memory Pressure Notifications

This API is not actually new, but I couldn’t find any invocations of it in the Mozilla codebase. CreateMemoryResourceNotification allocates a kernel handle that becomes signalled when physical memory is running low. Gecko already has facilities for handling memory pressure events on other platforms, so we should probably add this to the Win32 port.

Mozilla IT & Operations: CVS & BZR services decommissioned on mozilla.org

tl;dr: CVS & BZR (aka Bazaar) version control systems have been decommissioned at Mozilla. See https://ftp.mozilla.org/pub/mozilla.org/vcs-archive/README for final archives.

As part of our ongoing efforts to ensure that the services operated by Mozilla continue to meet the current needs of Mozilla, the following VCS systems have been decommissioned:

- cvs.mozilla.org via bug 985022

- bzr.mozilla.org via bug 967642

This work took coordinated effort between IT, Developer Services, and the remaining active users of those systems. Thanks to all of them for their contributions!

Final archives of the public repositories are currently available at https://ftp.mozilla.org/pub/mozilla.org/vcs-archive/. The README file has instructions for retrieval of non-public repositories.

NOTE: These URLs are subject to change; please refer back to this blog post for the up-to-date link.

For any questions or concerns, please contact the Developer Services team.

Mozilla Open Policy & Advocacy Blog: Experts develop cybersecurity recommendations

Today, we’re excited to publish the output of our “Cybersecurity Delphi 1.0” research process, tapping into a panel of 32 cybersecurity experts from diverse and mutually reinforcing backgrounds.

Mozilla Cybersecurity Delphi 1.0

Securing our communications and our data is hard. Every month seems to bring new stories of mistakes and attacks resulting in our personal information being made available – bit by bit harming trust online, and making ordinary Internet users feel fear. Yet, cybersecurity public policy often seems stuck in yesterday’s solution space, focused exclusively on well known terrain, around issues such as information sharing, encryption, and critical infrastructure protection. These “elephants” of cybersecurity policy are significant issues – but too much focus on them eclipses other solutions that would allow us to secure the Internet for the future.

So, working with Camille Francois & DHM Research we’ve spent the past year engaging the panel of cybersecurity experts through a tailored research process to try to extract public policy ideas and see what consensus can be found around them. We weren’t aiming for full consensus (an impossible task within the security community!). Our goal was to foment ideation and exchange, to develop a user-focused and holistic cybersecurity policy agenda.

Our experts collectively generated 36 distinct policy suggestions for government action in cybersecurity. We then asked them to identify and rank their top choices of policy options by both feasibility and desirability. The result validated the importance of the “cyberelephants.” Privacy-respecting information sharing policies, effective critical infrastructure protection, and widespread availability and understanding of secure encryption programs are all important goals to pursue: they ranked high on desirability, but were generally viewed as hard to achieve.

More important are the ideas that emerged that aren’t on the radar screens of policymakers today. First and foremost was a proposal that stood out above the others as both highly desirable and highly feasible: increased funding to maintain the security of free and open source software. Although not high on many security policy agendas, the issue deserves attention. After all, 2014’s major security incidents around Poodle, Heartbleed, and Shellshock all centered on vulnerabilities in open source software. Moreover, open source software libraries are built into countless noncommercial and commercial products.

Many other good proposals and priorities surfaced through the process, including: developing and deploying alternative authentication mechanisms other than passwords; improving the integrity of public key infrastructure; and making secure communications tools easier to use. Another unexpected policy priority area highlighted by all segments of our expert panel as highly feasible and desirable was norm development, including norms concerning governments’ and corporations’ behavior in cyberspace, guided by human rights and communicated with maximum clarity in national and international contexts.

This report is not meant to be a comprehensive analysis of all cybersecurity public policy issues. Rather, it’s meant as a first, significant step towards a broader, collaborative policy conversation around the real security problems facing Internet users today.

At Mozilla, we will build on the ideas that emerged from this process, and hope to work with policymakers and others to develop a holistic, effective, user-centric cybersecurity public policy agenda going forward.

This research was made possible by a generous grant from the John D. and Catherine T. MacArthur Foundation.

Mozilla Cybersecurity Delphi 1.0

Chris Riley

Jochai Ben-Avie

Camille Francois

https://blog.mozilla.org/netpolicy/2015/07/28/experts-develop-cybersecurity-recommendations/

Byron Jones: happy bmo push day!

the following changes have been pushed to bugzilla.mozilla.org:

- [1185823] add additional [audit] syslog entries

- [1187184] Minor updates to gear form

- [1184828] api searches should honour the same fields in its “order” parameter as the web UI

- [1186803] remove %product_sec_groups from bmo/lib/data.pm

- [1186776] allow users to set keywords on bug creation (via API/internally only)

- [1181453] Amend https://bugzilla.mozilla.org/form.fxos.feature form

- [1186788] disabling an account should always disable bugmail

- [1171806] add the ability for a user to disable/“remove” their own account

- [1187498] Disable SiteMapIndex extension if running on under development site instead of production

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

https://globau.wordpress.com/2015/07/28/happy-bmo-push-day-152/

Nicholas Nethercote: A work-around for Tree Style Tab breakage on Firefox Nightly caused by mozRequestAnimationFrame removal

This post is aimed at Firefox Nightly users who also use the Tree Style Tab extension. Bug 909154 landed last week. It removed support for the prefixed mozRequestAnimationFrame function and broke Tree Style Tab. The GitHub repository that hosts Tree Style Tab’s code has been updated, but that change has not yet made it into the latest Tree Style Tab build, which has version number 0.15.2015061300a003855.

Fortunately, it’s fairly easy to modify your installed version of Tree Style Tab to fix this problem. (“Fairly easy”, at least, for the technically-minded users who run Firefox Nightly.)

- Find the Tree Style Tab .xpi file. On my Linux machine, it’s at ~/.mozilla/firefox/ndbcibpq.default-1416274259667/extensions/treestyletab@piro.sakura.ne.jp.xpi. Your profile name will not be exactly the same. (In general, you can find your profile with these instructions.)

- That file is a zip file. Edit the modules/lib/animationManager.js file within that file, and change the two occurrences of mozRequestAnimationFrame to requestAnimationFrame. Save the change.

I did the editing in vim, which was easy because vim has the ability to edit zip files in place. If your editor does not support that, it might work if you unzip the code, edit the file directly, and then rezip, but I haven’t tried that myself. Good luck.
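If you take the unzip, edit, rezip route, the edit itself is a plain string substitution. A minimal C++ sketch, assuming animationManager.js has already been extracted from the .xpi; the helper names ReplaceAll and PatchAnimationManager are hypothetical, and rezipping the file is still up to you.

```cpp
#include <cstddef>
#include <fstream>
#include <sstream>
#include <string>

// Hypothetical helper: replaces every occurrence of `from` in `text` with `to`
// and returns how many replacements were made.
std::size_t ReplaceAll(std::string& text, const std::string& from,
                       const std::string& to) {
  if (from.empty()) return 0;  // an empty pattern would match everywhere
  std::size_t count = 0;
  std::size_t pos = 0;
  while ((pos = text.find(from, pos)) != std::string::npos) {
    text.replace(pos, from.size(), to);
    pos += to.size();  // continue searching after the inserted text
    ++count;
  }
  return count;
}

// Hypothetical helper: patches an extracted animationManager.js in place.
// Returns the number of replacements, or 0 if the file could not be read.
std::size_t PatchAnimationManager(const std::string& path) {
  std::ifstream in(path, std::ios::binary);
  if (!in) return 0;
  std::ostringstream buffer;
  buffer << in.rdbuf();
  in.close();

  std::string text = buffer.str();
  const std::size_t n =
      ReplaceAll(text, "mozRequestAnimationFrame", "requestAnimationFrame");
  if (n > 0) {
    std::ofstream out(path, std::ios::binary | std::ios::trunc);
    out << text;
  }
  return n;
}
```

Given the post's description, a return value of 2 on the extracted modules/lib/animationManager.js would indicate that both occurrences were changed.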

Chris Cooper: The changing face of buildduty, Summer 2015 edition

Buildduty is the Mozilla release engineering (releng) equivalent of front-line support. It’s made up of a multitude of small tasks, none of which on their own are particularly complex or demanding, but which taken in aggregate can amount to a lot of work.

It’s also non-deterministic. One of the most important buildduty tasks is acting as information brokers during tree closures and outages, making sure sheriffs, developers, and IT staff have the information they need. When outages happen, they supersede all other work. You may have planned to get through the backlog of buildduty tasks today, but congratulations, now you’re dealing with a network outage instead.

Releng has struggled to find a sustainable model for staffing buildduty. The struggle has been two-fold: finding engineers to do the work, and finding a duration for a buildduty rotation that doesn’t keep the engineer out of their regular workflow for too long.

I’m a firm believer that engineers *need* to be exposed to the consequences of the software they write and the systems they design:

DevOpsSupport - You write the software, you get a shift carrying the pager for it, and you get a shift answering customer calls about it.

mhoye (@mhoye), July 15, 2015

I also believe that it’s a valuable skill to be able to design a system and document it sufficiently so that it can be handed off to someone else to maintain.

Starting this week, we’re trying something new. We’re shifting at least part of the burden to management: I am now managing a pair of contractors who will be responsible for buildduty for the rest of 2015.

Alin and Vlad are our new contractors, and are both based in Romania. Their offset from Mozilla Standard Time (aka PST) will allow them to tackle the asynchronous activities of buildduty, namely slave loans, non-urgent developer requests, and maintaining the health of the machine pools.

It will take them a few weeks to find their feet since they are unfamiliar with any of the systems. You can find them on IRC in the usual places (#releng and #buildduty). Their IRC nicks are aselagea and vladC. Hopefully they will both be comfortable enough to append |buildduty to those nicks soon. :)

While Alin and Vlad get up to speed, buildduty continues as usual in #releng. If you have an issue that needs buildduty assistance, please ask in #releng, and someone from releng will assist you as quickly as possible. For less urgent requests, please file a bug.

Daniel Stenberg: The HTTP Workshop started

So we started today. I won’t get into any live details or quotes from the day since it has all been informal and we’ve all agreed to not expose snippets from here without checking properly first. There will be a detailed report put together from this event afterwards.

The most critical piece of information, however, is that we must not walk on the red parts of the sidewalks here in Münster, as that’s the bicycle lane and the cyclists can be ruthless there.

We’ve had a bunch of presentations today with associated Q&A and follow-up discussions. Roy Fielding (HTTP spec pioneer) started out the series with a look at HTTP full of historic details and views from the past, where we are now, and what we’ve gone through over the years. Patrick McManus (of Firefox HTTP networking) took us through some of the quirks of what a modern-day browser has to do to speak HTTP and topped it off with a quiz regarding Firefox metrics. Did you know that 31% of all Firefox HTTP requests get fulfilled by the cache, or that 73% of all Firefox HTTP/2 connections are used more than once but only 7% of the HTTP/1 ones?

Poul-Henning Kamp (author of Varnish) brought his view on HTTP/2 from an intermediary’s point of view with a slightly pessimistic view, not totally unlike what he’s published before. Stefan Eissing (from Green Bytes) entertained us by talking about his work on writing mod_h2 for Apache Httpd (and how it might be included in the coming 2.4.x release), and we got to discuss timing measurements and their difficulties a bit.

We rounded off the afternoon with a priority and dependency tree discussion topped off with a walk-through of numbers and slides from Kazuho Oku (author of H2O) on how dependency-trees really help and from Moto Ishizawa (from Yahoo! Japan) explaining Firefox’s (Patrick’s really) implementation of dependencies for HTTP/2.
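For readers unfamiliar with HTTP/2 dependency trees: RFC 7540 (section 5.3.2) says that streams sharing the same parent should be allocated resources in proportion to their weights, which range from 1 to 256. A minimal C++ sketch of that arithmetic for one level of the tree; the `Sibling` struct and `ShareByWeight` function are hypothetical, and this is not Firefox's or H2O's implementation.

```cpp
#include <cstdint>
#include <vector>

// One child stream of a given parent in the dependency tree.
struct Sibling {
  std::uint32_t streamId;
  int weight;  // HTTP/2 weight, 1..256
};

// Returns each sibling's share (0..1) of the capacity available to the parent,
// proportional to its weight.
std::vector<double> ShareByWeight(const std::vector<Sibling>& siblings) {
  int total = 0;
  for (const Sibling& s : siblings) total += s.weight;
  std::vector<double> shares;
  shares.reserve(siblings.size());
  for (const Sibling& s : siblings) {
    shares.push_back(total > 0 ? static_cast<double>(s.weight) / total : 0.0);
  }
  return shares;
}
```

For example, two siblings with weights 1 and 3 get a quarter and three quarters of the parent's capacity respectively; a full scheduler would apply this recursively down the tree and redistribute capacity when a stream has nothing to send.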

We spent the evening having a 5-course (!) meal at a nice Italian restaurant while trading war stories about HTTP, networking and the web. Now it is close to midnight and it is time to reload and get ready for another busy day tomorrow.

I’ll round off with a picture of where most of the important conversations were had today:

http://daniel.haxx.se/blog/2015/07/27/the-http-workshop-started/

Mozilla IT & Operations: Product Delivery Migration: What is changing, when it’s changing and the impacts

As promised, the FTP Migration team is following up from the 7/20 Monday Project Meeting where Sean Rich talked about a project that is underway to make our Product Delivery System better.

As a part of this project, we are migrating content out of our data centers to AWS. In addition to storage locations changing, namespaces will change and the FTP protocol for this system will be deprecated. If, after reading this post, you have any further questions, please email the team.

Action: The ftp protocol on ftp.mozilla.org is being turned off.

Timing: Wednesday, 5th August 2015.

Impacts:

- After 8/5/15, ftp protocol support for ftp.mozilla.org will be completely disabled and downloads can only be accessed through http/https.

- Users will no longer be able to just enter “ftp.mozilla.org” into their browser, because this action defaults to the ftp protocol. Going forward, users should start using archive.mozilla.org. The old name will still work but needs to be entered in your browser as https://ftp.mozilla.org/

Action: The contents of ftp.mozilla.org are being migrated from the NetApp in SCL3 to AWS/S3 managed by Cloud Services.

Timing: Migrating ftp.mozilla.org contents will start in late August and conclude by end of October. Impacted teams will be notified of their migration date.

Impacts:

- Those teams that currently manually upload to these locations have been contacted and will be provided with S3 API keys. They will be notified prior to their migration date and given a chance to validate their upload functionality post-migration.

- All existing download links will continue to work as they do now with no impact.

Mark Surman: Mozilla Learning Strategy Slides

Developing a long term Mozilla Learning strategy has been my big focus over the last three months. Working closely with people across our community, we’ve come up with a clear, simple goal for our work: universal web literacy. We’ve also defined ‘leadership’ and ‘advocacy’ as our two top level strategies for pursuing this goal. The use of ‘partnerships and networks’ will also be key to our efforts. These are the core elements that will make up the Mozilla Learning strategy.

Over the last month, I’ve summarized our thinking on Mozilla Learning for the Mozilla Board and a number of other internal audiences. This video is based on these presentations:

As you’ll see in the slides, our goal for Mozilla Learning is an ambitious one: make sure everyone knows how to read, write and participate on the web. In this case, everyone = the five billion people who will be online by 2025.

Our top level thinking on how to do this includes:

1. Develop leaders who teach and advocate for web literacy.

Concretely, we will integrate our Clubs, Hive and Fellows initiatives into a single, world class learning and leadership program.

2. Shift thinking: everyone understands the web / internet.

Concretely, this means we will invest more in advocacy, thought leadership and user education. We may also design ways to encourage web literacy more aggressively in our products.

3. Build a global web literacy network.

Mozilla can’t create universal web literacy on its own. All of our leadership and advocacy work will involve ‘open source’ partners with whom we’ll create a global network committed to universal web literacy.

Process-wise: we arrived at this high-level strategy by looking at our existing programs and assets. We’ve been working on web literacy, leadership development and open internet advocacy for about five years now, so we already have a lot in play. What’s needed right now is a way to focus all of our efforts so that they have more impact, and to build a real snowball of people, organizations and governments working on the web literacy agenda.

The next phase of Mozilla Learning strategy development will dig deeper into ‘how’ we will do this. I’ll post a quick intro to that next step in the coming days.

https://commonspace.wordpress.com/2015/07/27/mozilla-learning-strategy-slides/

|

|

Armen Zambrano: Enabling automated back-filling on mozilla-inbound for a few hours |

We will be enabling automated back-filling for a few hours on mozilla-inbound.

We were aiming for Monday but pushed it to Tuesday to help publicize this more.

If there is no fallout by Wednesday, we will leave it running on m-i for a week before enabling it elsewhere.

Posted to various mailing lists, including mozilla.dev.tree-management:

> Hello all,
>
> We are planning to turn on a service that automatically backfills failed test jobs on m-i. If there are no concerns, we would like to turn this on experimentally for a couple of hours on [Tuesday]. We hope this will make it easier to identify which revision broke a test. Suggestions are welcome.
>
> The backfilling works like this:
>
> - It triggers the job that failed one extra time.
> - Then it looks for a successful run of the job on the previous 5 revisions. If a good run is found, it triggers the job only on revisions up to this good run. If not, it triggers the job on every one of the previous 5 revisions. Previous jobs will be triggered one time.
>
> The tracking bug is: https://bugzilla.mozilla.org/show_bug.cgi?id=1180732
>
> Best,
> Alice
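The backfilling steps in the email can be sketched in Python as a function that decides where to trigger the job. This is not the service's actual code: `plan_backfill` and its inputs (a newest-first list of earlier revisions and a map of which revisions already have a green run of the job) are hypothetical, and "up to this good run" is read here as excluding the good revision itself.

```python
from typing import Dict, List

LOOKBACK = 5  # "the previous 5 revisions", per the email


def plan_backfill(
    failed_rev: str,
    previous_revs: List[str],
    has_green_run: Dict[str, bool],
) -> List[str]:
    """Return the revisions on which to trigger the failed job, once each.

    previous_revs lists the revisions pushed before failed_rev, newest first.
    has_green_run maps a revision to True if the job already passed there;
    revisions where the job never ran (or failed) count as not green.
    """
    # Step 1: trigger the failed job one extra time on the failing revision.
    to_trigger = [failed_rev]

    # Step 2: walk back through at most LOOKBACK earlier revisions.
    for rev in previous_revs[:LOOKBACK]:
        if has_green_run.get(rev, False):
            # Good run found: only the revisions between it and the failure
            # (already collected) need the job.
            return to_trigger
        to_trigger.append(rev)

    # No good run in the window: every one of those revisions is queued.
    return to_trigger


# Example: r2 already has a green run, so only r4 and r3 are backfilled.
print(plan_backfill("r5", ["r4", "r3", "r2", "r1", "r0"], {"r2": True}))
# ['r5', 'r4', 'r3']
```

Stopping at the first green run keeps the number of extra jobs small while still bracketing the revision that most likely introduced the failure.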

--

Zambrano Gasparnian, Armen

Automation & Tools Engineer

http://armenzg.blogspot.ca

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.

|

|

Air Mozilla: Mozilla Weekly Project Meeting |

The Monday Project Meeting

https://air.mozilla.org/mozilla-weekly-project-meeting-20150727/

|

|

Mozilla Reps Community: Thank you Emma, Arturo and Raj |

Being part of the Reps Council is a great experience. It is at once an honour and a challenge, and it comes with a lot of responsibility and hard work, but also lessons and growth.

We want to thank and recognize our most recent former Reps Council members for serving their one-year terms, bringing all their passion, knowledge and dedication to the program and making it more powerful.

Emma Irwin

Emma was a great inspiration not only for Reps but especially for mentors and the Council. Her passion for education and for empowering volunteers allowed her to push the program to be much more centered on education and growth, marking a new era for Reps.

She not only advocated for it but also rolled up her sleeves, running trainings, creating curriculum and working to improve the mentorship culture. She was also extremely helpful in guiding us through some conflicts and in getting Reps to grow and put aside differences.

Arturo Martinez

Arturo’s unchallenged energy and drive were great additions to the Council. Especially during his term as Council Chair, he set the standard for effectively driving initiatives, helping everyone achieve their goals and pushing us to be excellent. Thank you for the productivity boost!

Gauthamraj Elango

Raj’s passion for Web literacy and for empowering everyone to take part in the open web helped him lead the Reps program to work more closely with the Webmaker team, showing how Reps can bring much more value to initiatives. He drove efforts to innovate our initiatives in terms of both local organization and funding.

These are just a few examples of their exemplary work as Council members, which not only helped Reps around the world step up and have more impact but also showed new Mozillians and Reps everywhere how to lead change for the open Web.

Once again, thank you so much for your time, effort and passion; you left an outstanding mark on the program.

You can share your gratitude with them in this topic on Discourse.

https://blog.mozilla.org/mozillareps/2015/07/27/thank-you-emma-arturo-raj/

|

|

QMO: Firefox 40 Beta 7 Testday Results |

Hello Mozillians!

As you may already know, last Friday, July 24th, we held a new Testday event for Firefox 40 Beta 7.

We’d like to take this opportunity to thank everyone for getting involved in the proposed testing activities and in general, for helping us make Firefox better.

Many thanks go out to the Bangladesh QA Community for testing Firefox Hello context, WebGL and the Adobe Flash plugin, and for verifying lots of bug fixes: Hossain Al Ikram, Nazir Ahmed Sabbir, Rezaul Huque Nayeem, MD.Owes Quruny Shubho, Mohammad Maruf Islam, Md.Rahimul Islam, Kazi Nuzhat Tasnem, Md. Ehsanul Hassan, Saheda.Reza Antora, Fahmida Noor, Meraj Kazi, Md. Jahid Hasan Fahim, Israt, Towkir Ahmed and Eyakub.

Special thanks go out to the participants of Campus-Party Mexico who attended the Firefox 40 Beta 7 Testday and helped test Firefox Hello context, WebGL and the Adobe Flash plugin: Mauricio Navarro Miranda, LASR21 Sánchez, diegoehg, nataly Gurrola, Jorge Luis Flores Barrales, EZ274, Armando Gomez, Karla Danitza Duran Memijes and Eduardo Arturo Enciso Hernández.

Also a big thank you goes to all our moderators.

Keep an eye on QMO for upcoming events!

https://quality.mozilla.org/2015/07/firefox-40-beta-7-testday-results/

|

|

Daniel Stenberg: HTTPS and HTTP/2 plans for my sites |

I produce a fair amount of open source code. I make that code available online. curl is probably the most popular package.

People ask me how they can trust that they are actually downloading what I put up there. People ask me when my source code can be retrieved over HTTPS. Signatures and hashes don’t add much protection against attacks when they are also fetched over HTTP…
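To make the point about hashes concrete, here is a hypothetical Python helper, `sha256_matches`, that checks a download against a published SHA-256 digest. The byte strings are dummy data. The second half shows why the check is worthless when both files travel over plain HTTP: whoever can alter the tarball in transit can recompute and replace the digest as well.

```python
import hashlib


def sha256_matches(data: bytes, expected_hex: str) -> bool:
    """Return True if data hashes to the published SHA-256 hex digest."""
    # Digests are published in either case; compare in lower case.
    return hashlib.sha256(data).hexdigest() == expected_hex.strip().lower()


# Honest download: the digest matches.
tarball = b"dummy tarball bytes"
published_digest = hashlib.sha256(tarball).hexdigest()
assert sha256_matches(tarball, published_digest)

# A man-in-the-middle on plain HTTP swaps the tarball *and* recomputes the
# digest it serves, so the check still passes. The digest only protects you
# if it reached you over a channel the attacker cannot modify, such as HTTPS.
tampered = b"dummy tarball bytes + backdoor"
forged_digest = hashlib.sha256(tampered).hexdigest()
assert sha256_matches(tampered, forged_digest)
```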

HTTPS

I really and truly want to offer HTTPS (only) for all my sites. My friends and I run a whole busload of sites on the same physical machine and IP address (www.haxx.se, daniel.haxx.se, curl.haxx.se, c-ares.haxx.se, cool.haxx.se, libssh2.org and many more), so I would like a solution that works for all of them.

I could do this by buying certificates, either lots of individual ones or a few wildcard ones, so that all the servers would be covered. But the cost, and the inconvenience of needing many different pieces to make everything work, has put me off. Especially since I’ve learned that there is a better solution in the works!

Let’s Encrypt will not only solve the problem for us from a cost perspective, but they also promise to solve some of the quirks on the technical side as well. They say they will ship certificates by September 2015 and that has made me wait for that option rather than rolling up my sleeves to solve the problem with my own sweat and money. Of course there’s a risk that they are delayed, but I’m not running against a hard deadline myself here.

HTTP/2

Related, I’ve been much involved in the HTTP/2 development and I host my “http2 explained” document on my still non-HTTPS site. I get a lot of questions (and some mocking) about why my HTTP/2 documentation isn’t itself available over HTTP/2. I would really like to offer it over HTTP/2.

Since all the browsers only do HTTP/2 over HTTPS, a prerequisite here is that I get HTTPS up and running first. See above.

Once HTTPS is in place, I want to get HTTP/2 going as well. I still run good old Apache here so it might be done using mod_h2 or perhaps with a fronting nghttp2 proxy. We’ll see.
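Clients, browsers included, typically negotiate HTTP/2 during the TLS handshake via the ALPN extension, advertising the protocol identifier "h2"; that is one reason HTTPS has to come first. A minimal Python sketch for checking which protocol a server picks once HTTP/2 is enabled (the function names are hypothetical; only the standard `ssl` and `socket` modules are used):

```python
import socket
import ssl
from typing import Optional


def make_h2_client_context() -> ssl.SSLContext:
    """TLS client context that offers HTTP/2 ("h2") via ALPN, then HTTP/1.1."""
    ctx = ssl.create_default_context()  # verifies certificates and host names
    # ALPN rides inside the TLS handshake, so plain HTTP offers no such step.
    ctx.set_alpn_protocols(["h2", "http/1.1"])
    return ctx


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0) -> Optional[str]:
    """Connect to host and report which protocol the server selected via ALPN."""
    ctx = make_h2_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            # None means the server did not negotiate any ALPN protocol.
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("example.com")` returns `"h2"` if the server offers HTTP/2 over TLS, and `"http/1.1"` or `None` otherwise; it needs network access and a valid certificate on the server.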

http://daniel.haxx.se/blog/2015/07/26/https-and-http2-plans-for-my-sites/

|

|