Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Air Mozilla: German speaking community bi-weekly meeting |

https://wiki.mozilla.org/De/Meetings

https://air.mozilla.org/german-speaking-community-bi-weekly-meeting-20150730/

|

|

Matjaz Horvat: A single platform for localization |

Let’s get straight to the biscuits. From now on, you only need one tool to localize Mozilla stuff. That’s it. Single user interface, single translation memory, single permission management, single user account. Would you like to give it a try? Keep on reading!

A little bit of background.

Mozilla software and websites are localized by hundreds of volunteers, who give away their free time to put exciting technology into the hands of people across the globe. Keep in mind that 2 out of 3 Firefox installations are non-English and we haven’t shipped a single Firefox OS phone in English yet.

Considering the amount of impact they have and the work they contribute, I have a huge respect for our localizers and the feedback we get from them. One of the most common complaints I’ve been hearing is that we have too many localization tools. And I couldn’t agree more. At one of our recent l10n hackathons I was even introduced to a tool I had never heard about despite 13 years of involvement with Mozilla localization!

So I thought, “Let’s do something about it!”

9 in 1.

I started by looking at the tools we use in the Slovenian team and counted 9(!) different tools:

- 4 editors: Verbatim, Pootle, Pontoon, Text editor.

- 3 terminology services: Transvision, amaGama, Microsoft Terminology.

- 2 dashboards: product and web.

Eating my own dog food, I had already integrated all 3 terminology services into Pontoon, so that suggestions from these sources are presented to users while they translate. Furthermore, Pontoon syncs with repositories, sometimes even more often than the dashboards, practically eliminating the need to look at them.

So all I had to do is migrate projects from the rest of the editors into Pontoon. Not a single line of code needed to be written for Verbatim migration. Pootle and the text editor were slightly more complicated. They were used to localize Firefox, Firefox for Android, Thunderbird and Lightning, which all use the huge mozilla-central repository as their source repository and share locale repositories.

Nevertheless, a few weeks after the team agreed to move to Pontoon, Slovenian now uses Pontoon as the only tool to localize all (31) of our active projects!

Who wants to join the party?

Slovenian isn’t the only team using Pontoon. In fact, there are two dozen locales with at least 5 projects enabled in Pontoon. Recently, Ukrainian (uk) and Brazilian Portuguese (pt-BR) have been especially active, not only in terms of localization but also in terms of feedback. A big shout out to Artem, Marco and Marko!

There are obvious benefits of using just one tool, namely keeping all translations, attributions, contributor stats, etc. in one place. To give Pontoon a try, simply select a project and request your locale to be enabled. Migrating projects from other tools will of course preserve all the translations. Starting today, that includes attributions and submission dates (who translated what, and when it was translated) if you’re moving projects from Verbatim.

And, as you already know, Pontoon is developed by Mozilla, so we invite you to report problems and request new features. We also accept patches.

We have many exciting things coming up by the end of the summer, so keep an eye out for updates!

|

|

Air Mozilla: Web QA Weekly Meeting |

This is our weekly gathering of Mozilla's Web QA team filled with discussion on our current and future projects, ideas, demos, and fun facts.

|

|

Ehsan Akhgari: Tab audio indicators and muting in Firefox Nightly |

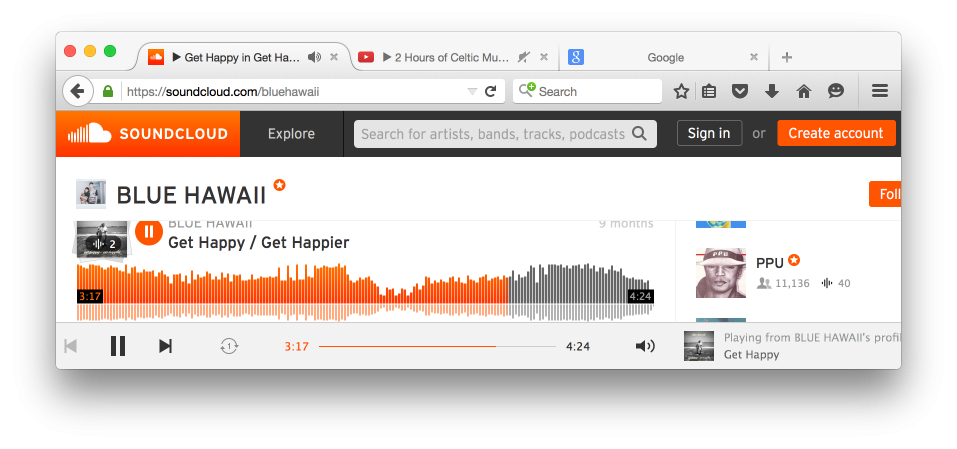

Sometimes when you have several tabs open, and one of them starts to make some noise, you may wonder where the noise is coming from. Other times, you may want to quickly mute a tab without figuring out if the web page provides its own UI for muting the audio. On Wednesday, I landed the user facing bits of a feature to add an audio indicator to the tabs that are playing audio, and enable muting them. You can see a screenshot of what this will look like in action below.

As you can see in the screenshot, my Soundcloud tab is playing audio, and so is my Youtube tab, but the Youtube tab has been muted. Muting and unmuting a tab is as easy as clicking on the tab audio indicator icon. You can now test this out yourself on Firefox Nightly tomorrow!

This feature should work with all APIs that let you play audio, such as HTML5

We’re interested in your feedback about this feature, and especially about any bugs that you may encounter. We hope to iron out the rough edges and then let this feature ride the trains. If you are curious about this progress, please follow along on the tracking bug.

Last but not least, this is the result of the efforts of many of my colleagues, most notably Andrea Marchesini, Benoit Girard, and Stephen Horlander. Thanks to them and everyone else who helped with the code, reviews, and other things!

http://ehsanakhgari.org/blog/2015-07-30/tab-audio-indicators-and-muting-in-firefox-nightly

|

|

David Burns: Another Marionette release! Now with Windows Support! |

If you have been wanting to use Marionette but couldn't because you only work on Windows, now is your chance to do so! All the latest downloads are available from our development GitHub repository releases page.

There is also a new page on MDN that walks you through the process of setting up Marionette and using it. I have only updated the Python bindings so I can get a feel for how people are using it.

Since you are awesome early adopters it would be great if we could raise bugs.

I am not expecting everything to work but below is a quick list that I know doesn't work.

- No support for self-signed certificates

- No support for actions

- No support for the logging endpoint

- getPageSource is not available. This will be added at a later stage; it was a slightly contentious part of the specification.

- I am sure there are other things we don't remember

Switching of Frames needs to be done with either a WebElement or an index. Windows can only be switched by window handles. This is currently how it has been discussed in the specification.

If in doubt, raise bugs!

Thanks for being an early adopter and thanks for raising bugs as you find them!

|

|

Karl Dubost: CSS Vendor Prefixes - Some Historical Context |

A very good (must) read by Daniel Glazman about CSS vendor prefixes and their challenges. He reminds us of what I was touching on yesterday about the issues with regard to Web Compatibility:

Flagged properties have another issue: they don't solve the problem of proprietary extensions to CSS that become mainstream. If a given vendor implements for its own usage a proprietary feature that is so important to them, internally, they have to "unflag" it, you can be sure some users will start using it if they can. The spread of such a feature remains a problem, because it changes the delicate balance of a World Wide Web that should be readable and usable from anywhere, with any platform, with any browser.

I think the solution is in the hands of browser vendors: they have to consider that experimental features are experimental whatever their spread in the wild. They don't have to care about the web sites they will break if they change, update or even ditch an experimental or proprietary feature. We have heard too many times the message « sorry, can't remove it, it spread too much ». It's a bad signal because it clearly tells CSS Authors experimental features are reliable because they will stay forever as they are. They also have to work faster and avoid letting an experimental feature alive for more than two years.

Emphasis is mine on this last part. Yes it's a very bad signal. And check what was said yesterday.

@AlfonsoML And we will always support them (unlike some vendors that remove things at will). So what is the issue?

This is the issue in terms of Web Compatibility. It's precisely what I was saying: implementers do not understand the impact it has.

http://www.otsukare.info/2015/07/30/css-vendor-prefix-history

|

|

Daniel Glazman: CSS Vendor Prefixes |

I have read everything and its contrary about CSS vendor prefixes in the last 48 hours. Twitter, blogs, Facebook are full of messages or articles about what are or are supposed to be CSS vendor prefixes. These opinions are often given by people who were not members of the CSS Working Group when we decided to launch vendor prefixes. These opinions are too often partly or even entirely wrong so let me give you my own perspective (and history) about them. This article is with my CSS Co-chairman's hat off, I'm only an old CSS WG member in the following lines...

- CSS Vendor Prefixes as we know them were proposed by Mike Wexler from Adobe in September 1998 to allow browser vendors to ship proprietary extensions to CSS.

In order to allow vendors to add private properties using the CSS syntax and avoid collisions with future CSS versions, we need to define a convention for private properties. Here is my proposal (slightly different than was talked about at the meeting). Any vendors that defines a property that is not specified in this spec must put a prefix on it. That prefix must start with a '-', followed by a vendor specific abbreviation, and another '-'. All property names that DO NOT start with a '-' are RESERVED for using by the CSS working group.

- One of the largest shippers of prefixed properties at that time was Microsoft that introduced literally dozens of such properties in Microsoft Office.

- The CSS Working Group slowly evolved from that to « vendor prefixes indicate proprietary features OR experimental features under discussion in the CSS Working Group ». In the latter case, the vendor prefixes were supposed to be removed when the spec stabilized enough to allow it, i.e. reaching an official Call for Implementation.

- Unfortunately, some prefixed « experimental features » were so immensely useful to CSS authors that they spread at fast pace on the Web, even if the CSS authors were instructed not to use them. CSS Gradients (a feature we originally rejected: « Gradients are an example. We don't want to have to do this in CSS. It's only a matter of time before someone wants three colors, or a radial gradient, etc. ») are the perfect example of that. At some point in the past, my own editor BlueGriffon had to output several different versions of CSS gradients to accommodate the various implementation states available in the wild (WebKit, I'm looking at you...).

- Unfortunately, some of those prefixed properties took a lot, really a lot, of time to reach a stable state in a Standard and everyone started relying on prefixed properties in production web sites...

- Unfortunately again, some vendors did not apply the rules they decided themselves: since the prefixed version of some properties was so widely used, they maintained them with their early implementation and syntax in parallel to a "more modern" implementation matching, or not, what was in the Working Draft at that time.

- We ended up just a few years ago in a situation where prefixed properties were so widely used they started being harmful to the Web. The incredible growth of first WebKit and then Chrome triggered a massive adoption of prefixed properties by CSS authors, up to the point other vendors seriously considered implementing themselves the -webkit- prefix or at least simulating it.
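The naming convention in the 1998 proposal quoted above (a leading '-', a vendor-specific abbreviation, then another '-') is simple enough to check mechanically. The following is a minimal sketch, not anything from the CSS Working Group or a browser; the helper name `split_vendor_prefix` and the exact character set allowed in the abbreviation are assumptions made for illustration.

```python
import re

# Assumed pattern: '-' + vendor abbreviation + '-' + the rest of the name.
# The character set allowed in the abbreviation is a guess for this sketch.
_PREFIX_RE = re.compile(r"^-([a-z0-9]+)-(.+)$")


def split_vendor_prefix(prop):
    """Return (vendor, name) for a CSS property name.

    vendor is None when the property carries no vendor prefix, i.e. when it
    falls in the namespace the proposal reserves for the CSS Working Group.
    """
    lowered = prop.lower()
    match = _PREFIX_RE.match(lowered)
    if match is None:
        return None, lowered
    return match.group(1), match.group(2)


if __name__ == "__main__":
    for name in ("-webkit-transition", "-moz-border-radius", "color"):
        print(name, "->", split_vendor_prefix(name))
```

A tool that has to emit several prefixed variants of one declaration could use a check like this to group them by unprefixed name.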

Vendor prefixes were not a complete failure. They allowed the release to the masses of innovative products and the deep adoption of HTML and CSS in products that were not originally made for Web Standards (like Microsoft Office). They allowed to ship experimental features and gather priceless feedback from our users, CSS Authors. But they failed for two main reasons:

- The CSS Working Group - and the Group is really made only of its Members, the vendors - took faaaar too much time to standardize critical features that saw immediate massive adoption.

- Some vendors did not update nor "retire" experimental features when they had to do it, ditching themselves the rules they originally agreed on.

From that perspective, putting experimental features behind a flag that is by default "off" in browsers is a much better option. It's not perfect though. I'm still under the impression the standardization process becomes considerably harder when such a flag is "turned on" in a major browser before the spec becomes a Proposed Recommendation. A Standardization process is not a straight line, and even at the latest stages of standardization of a given specification, issues can arise and trigger more work and then a delay or even important technical changes. Even at PR stage, a spec can be formally objected or face an IPR issue delaying it. As CSS matures, we increasingly deal with more and more complex features and issues, and it's hard to predict when a feature will be ready for shipping. But we still need to gather feedback, we still need to "turn flags on" at some point to get real-life feedback from CSS Authors. Unfortunately, you can't easily remove things from the Web. Breaking millions of web sites to "retire" an experimental feature is still a difficult choice...

Flagged properties have another issue: they don't solve the problem of proprietary extensions to CSS that become mainstream. If a given vendor implements for its own usage a proprietary feature that is so important to them, internally, they have to "unflag" it, you can be sure some users will start using it if they can. The spread of such a feature remains a problem, because it changes the delicate balance of a World Wide Web that should be readable and usable from anywhere, with any platform, with any browser.

I think the solution is in the hands of browser vendors: they have to consider that experimental features are experimental whatever their spread in the wild. They don't have to care about the web sites they will break if they change, update or even ditch an experimental or proprietary feature. We have heard too many times the message « sorry, can't remove it, it spread too much ». It's a bad signal because it clearly tells CSS Authors experimental features are reliable because they will stay forever as they are. They also have to work faster and avoid letting an experimental feature alive for more than two years. That requires taking the following hard decisions:

- if a feature does not stabilize in two years' time, that's probably because it's not ready or too hard to implement, or not strategic at that moment, or that the production of a Test Suite is a too large effort, or whatever. It has then to be dropped or postponed.

- Tests are painful and time-consuming. But testing is one of the mandatory steps of our Standardization process. We should "postpone" specs that can't get a Test Suite to move along the REC track in a reasonable time. That implies removing the experimental feature from browsers, or at least turning the flag they live behind off again. It's a hard and painful decision, but it's a reasonable one given all I said above and the danger of letting an experimental feature spread.

http://www.glazman.org/weblog/dotclear/index.php?post/2015/07/30/CSS-Vendor-Prefixes

|

|

Benjamin Kerensa: Nóirín Plunkett: Remembering Them |

Nóirín and I

Today I learned some of the worst kind of news: my friend Nóirín Plunkett, a valuable contributor to the great open source community, passed away. They (this is their preferred pronoun per their Twitter profile) were well regarded in the open source community for their contributions.

I had known them for about four years now, having met them at OSCON and seen them regularly at other events. They were always great to have a discussion with and learn from and they always had a smile on their face.

It is very sad to lose them as they demonstrated an unmatchable passion and dedication to open source and community and surely many of us will spend many days, weeks and months reflecting on the sadness of this loss.

Other posts about them:

https://adainitiative.org/2015/07/remembering-noirin-plunkett/

http://www.apache.org/memorials/noirin.html

http://www.harihareswara.net/sumana/2015/07/29/0

|

|

Jonathan Griffin: A-Team Update, July 29, 2015 |

Highlights

Treeherder: We’ve added to mozlog the ability to create error summaries which will be used as the basis for automatic starring. The Treeherder team is working on implementing database changes which will make it easier to add support for that. On the front end, there’s now a “What’s Deployed” link in the footer of the help page, to make it easier to see what commits have been applied to staging and production. Job details are now shown in the Logviewer, and a mockup has been created of additional Logviewer enhancements; see bug 1183872.

MozReview and Autoland: Work continues to allow autoland to work on inbound; MozReview has been changed to carry forward r+ on revised commits.

Bugzilla: The ability to search attachments by content has been turned off; BMO documentation has been started at https://bmo.readthedocs.org.

Perfherder/Performance Testing: We’re working towards landing Talos in-tree. A new Talos test measuring tab-switching performance has been created (TPS, or Talos Page Switch); e10s Talos has been enabled on all platforms for PGO builds on mozilla-central. Some usability improvements have been made to Perfherder – https://treeherder.mozilla.org/perf.html#/graphs.

TaskCluster: Successful OSX cross-compilation has been achieved; working on the ability to trigger these on Try and sorting out details related to packaging and symbols. Work on porting Linux tests to TaskCluster is blocked due to problems with the builds.

Marionette: The Marionette-WebDriver proxy now works on Windows. Documentation on using this has been added at https://developer.mozilla.org/en-US/docs/Mozilla/QA/Marionette/WebDriver.

Developer Workflow: A kill_and_get_minidump method has been added to mozcrash, which allows us to get stack traces out of Windows mochitests in more situations, particularly plugin hangs. Linux xpcshell debug tests have been split into two chunks in buildbot in order to reduce E2E times, and chunks of mochitest-browser-chrome and mochitest-devtools-chrome have been re-normalized by runtime across all platforms. Now that mozharness lives in the tree, we’re planning on removing the “in-tree configs”, and consolidating them with the previously out-of-tree mozharness configs (bug 1181261).

Tools: We’re testing an auto-backfill tool which will automatically retrigger coalesced jobs in Treeherder that precede a failing job. The goal is to reduce the turnaround time required for this currently manual process, which should in turn reduce tree closure times related to test failures.
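To make the backfill idea concrete, here is a rough sketch of how the set of jobs to retrigger might be chosen: starting from the push where a job failed, walk backwards and collect every push on which that job was coalesced, stopping at the first push where it actually ran. This is not the A-Team's tool. The data layout, the status strings, and the function name `revisions_to_backfill` are all hypothetical, and treating a job with no recorded result as coalesced is an assumption.

```python
def revisions_to_backfill(pushes, job_name, failing_index):
    """Return revisions (newest first) whose job_name run was coalesced.

    pushes        -- list of dicts ordered oldest to newest, each shaped like
                     {"rev": "abc123", "jobs": {"mochitest-1": "success"}}
                     (hypothetical layout for this sketch)
    job_name      -- the job that failed
    failing_index -- index in pushes of the push where it failed
    """
    to_retrigger = []
    for push in reversed(pushes[:failing_index]):
        # Assumption: a job with no recorded result was coalesced away.
        status = push["jobs"].get(job_name, "coalesced")
        if status != "coalesced":
            # The job really ran here, so the suspect range ends.
            break
        to_retrigger.append(push["rev"])
    return to_retrigger


if __name__ == "__main__":
    history = [
        {"rev": "a", "jobs": {"xpcshell": "success"}},
        {"rev": "b", "jobs": {"xpcshell": "coalesced"}},
        {"rev": "c", "jobs": {}},
        {"rev": "d", "jobs": {"xpcshell": "failed"}},
    ]
    print(revisions_to_backfill(history, "xpcshell", 3))
```

Retriggering the job on the returned revisions narrows down which push introduced the failure without waiting for someone to do it by hand.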

The Details

bugzilla.mozilla.org

- bug 1180571 – you can no longer search attachments by content

- bug 1177497 – work started on bmo specific documentation at https://bmo.readthedocs.org

- bug 1171806 – users can now disable their own account instead of making a request of bmo administrators

- see https://wiki.mozilla.org/BMO/Recent_Changes for a full list

Treeherder/Automatic Starring

- We’re generating error summaries now that will serve as the basis for automatic starring work.

Treeherder/Front End

- New “What’s Deployed” feature in Help footer to view stage/prod deployment status

- Logviewer now contains the full ‘Job Info’ aka. tinderbox printlines (bug 1092209)

- Created a mock of logviewer UI changes (bug 1183872)

Perfherder/Performance Testing

- Working towards moving Talos code in-tree (bug 787200)

- New Talos test TPS (Talos Page Switch) (bug 1166132)

- Fixed a few data ingestion/duplication cases.

- Adjusting calculation of suite summaries to match graph server, not finished yet (tracking: bug 1184968)

- e10s Talos is enabled on all platforms, but only runs on mozilla-central for PGO builds; broken tests and big regressions are tracked in bug 1144120

- perfherder is easier to use, with some polish on test selection and the compare view, and most importantly we have found a few odd bugs that have caused duplicate data to show up. Check it out: https://treeherder.mozilla.org/perf.html#/graphs

- Starting the work of moving Android Talos to Autophone (bug 1170685)

MozReview/Autoland

- bug 1184079 – Fix for autopublishing when authenticating to MozReview via BMO cookies

- bug 1178025 – Commits table looks nicer

- bug 1175166 – r+ is now carried forward on commits from level 3 authors

TaskCluster Support

- Successful OS X cross-compile in Taskcluster: https://tools.taskcluster.net/task-inspector/#tT5nGpWORFKgxPe__C2RUw/ (patches need to land in m-c, but all have been reviewed)

- Next steps: sorting out blockers to be able to trigger builds on Try, start working on packaging/symbols

Mobile Automation

- Continued work on porting Android Talos tests to Autophone; remaining work is to figure out posting results and ensuring it runs regularly and reliably.

- Support for the Android stock browser and Dolphin has been added to mozbench (bug 1103134)

Dev Workflow

- Created patch that replaces mach’s logger with mozlog. Still several rough edges and perf issues to iron out

Media Automation

- The new MSE rewrite is now enabled by default on Nightly and we’re replacing a few tests in response: bug 1186943 – detection of video stalls has to respond to new internal strings from new MSE implementation by :jya.

- firefox-media-tests mozharness log is now parsed into steps for Treeherder’s Log Viewer

- Fixed a problem with automation scripts for WebRTC tests for Windows 64.

General Automation

- Moved mozlog.structured to top-level mozlog, and released mozlog 3.0

- Added a kill_and_get_minidump method to mozcrash (bug 890026). As a result we’re getting minidumps out of Windows mochitests under more circumstances (in particular, plugin hangs in certain intermittently failing tests).

- The MozillaPulse consumer now supports listening to multiple exchanges simultaneously (bug 1180897).

- Bug 1186420 – Autophone – update requirements and deploy thclient 1.6

- Bughunter moved to SCL3 without interruption

- Bug 1185498 – Sisyphus – Bughunter – consume urls directly from Socorro

- linux debug xpcshell was split into two chunks to reduce E2E times (bug 1185499)

- runtimes for mochitest-browser-chrome and mochitest-devtools have been renormalized across all platforms

- Allow Firefox UI tests to determine where to get Firefox crash symbols for releases and improve reproducibility

- Testing auto-backfill in production (bug 1180732)

- Now that mozharness lives in the tree, we’re going to remove the “in-tree configs”, which will consolidate mozharness options and make maintenance simpler (bug 1181261)

ActiveData

- ActiveData requires monitoring on all nodes before it can be left alone for more than a day without it failing:

- Made fork of Supervisor to run simple Cron jobs – the biggest task was finding and installing (and compiling!) the C libraries used

- Added Supervisor to spot instances to monitor ES; not just the process, but query response time. Also monitoring the indexing jobs.

- Replicated OrangeFactor to ActiveData so a master's student (and the public) can query it, or extract it.

Marionette

- Landed Proxy support via capabilities

- Updating cookie support to return httpOnly flag

- Added a --version arg to Marionette (bug 1183157)

- Landing support for W3C Compatible Drivers in Selenium Tree and released 2.46.1 so users can use it.

- Wrote a small guide to use it https://developer.mozilla.org/en-US/docs/Mozilla/QA/Marionette/WebDriver

- Marionette<->WebDriver Proxy now works on Windows, Linux and OSX as of 0.3.0

https://jagriffin.wordpress.com/2015/07/29/a-team-update-july-29-2015/

|

|

Joel Maher: Lost in data – episode 2 – bisecting and comparing |

This week on Lost in Data, we tackle yet another pile of alerts. This time we have a set of changes which landed together and we push to try for bisection. In addition we have an e10s only failure which happened when we broke talos uploading to perfherder. See how I get one step closer to figuring out the root cause of the regressions.

https://elvis314.wordpress.com/2015/07/29/lost-in-data-episode-2-bisecting-and-comparing/

|

|

Daniel Stenberg: A third day of HTTP Workshopping |

I’ve met a bunch of new faces and friends here at the HTTP Workshop in Münster. Several who I’ve only seen or chatted with online before and some that I never interacted with until now. Pretty awesome really.

Out of the almost forty HTTP fanatics present at this workshop, five persons are from Google, four from Mozilla (including myself) and Akamai has three employees here. Those are the top-3 companies. There are a few others with 2 representatives but most people here are the only guys from their company. Yes they are all guys. We are all guys. The male dominance at this event is really extreme and we’ve discussed this sad circumstance during breaks and it hasn’t gone unnoticed.

This particular day started out grand with Eric Rescorla (of Mozilla) talking about HTTP Security in his marvelous high-speed style. Lots of talk about what HTTPS usage looks like on the web right now, HTTPS trends, TLS 1.3 details and when it is coming, and we got into a lot of talk about HTTP deprecation and what can and cannot be done etc.

Next up was a presentation about HTTP Privacy and Anonymity by Mike Perry (from the Tor project) about lots of aspects of what the Tor guys consider regarding fingerprinting, correlation, network side-channels and similar things that can be used to attempt to track user or usage over the Tor network. We got into details about what recent protocols like HTTP/2 and QUIC “leak” or open up for fingerprinting and what (if anything) can or could be done to mitigate the effects.

Evolving HTTP Header Fields by Julian Reschke (of Green Bytes) then followed, discussing all the variations of header syntax that we have in HTTP and how it really is not possible to write a generic parser that can handle them, with a suggestion on how to unify this and introduce a common format for future new headers. Julian’s suggestion to use JSON for this ignited a discussion about header formats in general and what should or could be done for HTTP/3 and if keeping support for the old formats is necessary or not going forward. No real consensus was reached.

Willy Tarreau (from HAProxy) then took us into the world of HTTP Infrastructure scaling and Load balancing, and showed us on the microsecond level how fast a load balancer can be, how much extra work adding HTTPS can mean and then ending with a couple suggestions of what he thinks could’ve helped his scenario. That then turned into a general discussion and network architecture brainstorm on what can be done, how it could be improved and what TLS and other protocols could possibly do to aid. Cramming every possible gigabit out of load balancers certainly is a challenge.

Talking about cramming bits, Kazuho Oku got to show the final slides when he showed how he’s managed to get his picohttpparser to parse HTTP/1 headers at a speed that is only slightly slower than strlen() – including a raw dump of the x86 assembler the code is turned into by a compiler. What could possibly be a better way to end a day full of protocol geekery?

Google graciously sponsored the team dinner in the evening at a Peruvian place in the town! Yet another fully packed day has ended.

I’ll top off today’s summary with a picture of the gift Mark Nottingham (who’s herding us through these days) was handing out today to make us stay keen and alert (Mark pointed out to me that this was a gift from one of our Japanese friends here):

http://daniel.haxx.se/blog/2015/07/29/a-third-day-of-http-workshopping/

|

|

Air Mozilla: Product Coordination Meeting |

Duration: 10 minutes This is a weekly status meeting, every Wednesday, that helps coordinate the shipping of our products (across 4 release channels) in order...

https://air.mozilla.org/product-coordination-meeting-20150729/

|

|

Mozilla WebDev Community: Beer and Tell – July 2015 |

Once a month, web developers from across the Mozilla Project get together to develop an encryption scheme that is resistant to bad actors yet able to be broken by legitimate government entities. While we toil away, we find time to talk about our side projects and drink, an occurrence we like to call “Beer and Tell”.

There’s a wiki page available with a list of the presenters, as well as links to their presentation materials. There’s also a recording available courtesy of Air Mozilla.

Osmose: Moseamp

Osmose (that’s me!) was up first, and shared Moseamp, an audio player. It’s built using HTML, CSS, and JavaScript, but acts as a native app thanks to the Electron framework. Moseamp can play standard audio formats, and also can load plugins to add support for extra file formats, such as Moseamp-Audio-Overload for playing PSF files and Moseamp-GME for playing NSF and SPC files. The plugins rely on libraries written in C that are compiled via Emscripten.

Peterbe: Activity

Next was Peterbe with Activity, a small webapp that displays the events relevant to a project, such as pull requests, PR comments, bug comments, and more, and displays the events in a nice timeline along with the person related to the action. It currently pulls data from Bugzilla and Github.

The project was born from the need to help track a single individual’s activities related to a project, even if they have different usernames on different services. Activity can help a project maintainer see what contributors are doing and determine if there’s anything they can do to help the contributor.

New One: MXR to DXR

New One was up next with a Firefox add-on called MXR to DXR. The add-on rewrites all links to MXR viewed in Firefox to point to the equivalent page on DXR, the successor to MXR. The add-on also provides a hotkey for switching between MXR and DXR while browsing the sites.
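The core of such a rewrite is a small URL transformation. The sketch below is not the add-on's code (which is not shown here); it assumes that an MXR source page and its DXR equivalent share the same path and differ only in hostname, and it leaves every other URL untouched. The function name `mxr_to_dxr` and the rule of only rewriting `/source/` paths are illustrative assumptions.

```python
from urllib.parse import urlsplit, urlunsplit

MXR_HOST = "mxr.mozilla.org"
DXR_HOST = "dxr.mozilla.org"


def mxr_to_dxr(url):
    """Rewrite an MXR source-page URL to the assumed DXR equivalent.

    URLs that are not MXR source pages are returned unchanged, since search
    and identifier pages would need a mapping this sketch does not attempt.
    """
    parts = urlsplit(url)
    if parts.hostname != MXR_HOST or "/source/" not in parts.path:
        return url
    # Keep scheme, path and fragment (line anchor); drop the query string.
    return urlunsplit((parts.scheme, DXR_HOST, parts.path, "", parts.fragment))


if __name__ == "__main__":
    print(mxr_to_dxr(
        "https://mxr.mozilla.org/mozilla-central/source/browser/base/content/browser.js#10"
    ))
```

An add-on would apply the same transformation to each link's `href` as pages load, which is why keeping it a pure function of the URL is convenient.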

bwalker: Liturgiclock

Last was bwalker who shared liturgiclock, which is a webpage showing a year-long view of what religious texts that Lutherans are supposed to read throughout the year based on the date. The site uses a Node.js library that provides the data on which text belongs to which date, and the visualization itself is powered by SVG and D3.js.

We don’t actually know how to go about designing an encryption scheme, but we’re hoping to run a Kickstarter to pay for the Udacity cryptography course. We’re confident that after being certified as cryptologists we can make real progress towards our dream of swimming in pools filled with government cash.

If you’re interested in attending the next Beer and Tell, sign up for the dev-webdev@lists.mozilla.org mailing list. An email is sent out a week beforehand with connection details. You could even add yourself to the wiki and show off your side-project!

See you next month!

https://blog.mozilla.org/webdev/2015/07/29/beer-and-tell-july-2015/

|

|

Air Mozilla: The Joy of Coding (mconley livehacks on Firefox) - Episode 23 |

Watch mconley livehack on Firefox Desktop bugs!

https://air.mozilla.org/the-joy-of-coding-mconley-livehacks-on-firefox-episode-23/

|

|

Sean McArthur: What’s the password? |

Exploring the wilds of the internets, I stumble upon a brand new site that allows me to turn cat images into ASCII art.

No way. Cats? In text form?! Text messages full of kitties!

This is amaze. How do I get started?

Says here, “Just create an account.” Ok.

“What’s your username?” seanmonstar.

“Pick a p

|

|

Rob Wood: Raptor on Gaia CI: Update |

Raptor on Gaia-CI Refactored

A lot has happened in the world of Raptor in the last couple of months. The Raptor tool itself has been migrated out of Gaia, and is now available as its own Raptor CLI Tool installed as a global NPM package. The new Raptor tool has several improvements including the addition of Marionette, allowing Raptor tests to use Marionette to drive the device UI, and in turn spawn performance markers.

The automation running Raptor on Gaia-ci has now been updated to use the new Raptor CLI tool. Not only has the tool itself been upgraded, but how the Raptor suite is running on Gaia-ci has also been completely refactored, for the better.

In order to reduce test variance, the coldlaunch test is now run on the base Gaia version and then on the patch Gaia version, all on the same taskcluster worker/machine instance. This has the added benefit of just having a single Raptor app launch test task per Gaia application that the test is supported on.

New Raptor Tasks on Treeherder

The Raptor tasks are now visible by default on Treeherder for Gaia and Gaia-master, and appear in a new Raptor group as seen here:

All of the applications that the coldlaunch performance test was performed on are listed (currently 12 apps). The Raptor suite is now run once by default (on both base and patch Gaia revisions), and the results are calculated and compared in the same task, per app. If an app startup time regression is detected, the test is automatically retried up to 3 more times. The app task is marked as an orange failure if all 4 runs show a 15% or greater regression in app startup time.
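The retry rule can be sketched as follows. This is only an illustration of the logic described above, not Raptor's implementation: the function names are hypothetical, and how Raptor reduces a run to a single launch-time figure (mean, median, or something else) is not stated in the post, so the sketch simply takes one base and one patch number per run.

```python
REGRESSION_THRESHOLD = 0.15  # 15% or greater counts as a regression
MAX_RUNS = 4                 # the initial run plus up to 3 retries


def regression_ratio(base_ms, patch_ms):
    """Relative increase in launch time from the base to the patch revision."""
    return (patch_ms - base_ms) / base_ms


def check_app(run_coldlaunch):
    """Run the launch test until one run shows no regression, or runs are used up.

    run_coldlaunch is a hypothetical callable returning (base_ms, patch_ms).
    Returns (passed, ratios); passed is False only if every run regressed.
    """
    ratios = []
    for _ in range(MAX_RUNS):
        base_ms, patch_ms = run_coldlaunch()
        ratio = regression_ratio(base_ms, patch_ms)
        ratios.append(ratio)
        if ratio < REGRESSION_THRESHOLD:
            return True, ratios  # no regression on this run: task stays green
    return False, ratios  # all 4 runs regressed: task goes orange
```

Requiring every run to regress before failing the task trades a little extra machine time for fewer false alarms caused by noisy launch timings.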

To view the coldlaunch test results for any app, click on the corresponding app task symbol on Treeherder. Then on the panel that appears towards the bottom, click on “Job details”, and then “Inspect Task”. The Task Inspector opens in a new tab. Scroll down and you will see the console output which includes the summary table for the base and patch Gaia revs, and the overall result (if a regression has been detected or not). If a regression has been detected, the regression percentage is displayed; this represents the increase in application launch time.

Retriggering the Launch Test

If you wish to repeat the app launch test for an individual app, perhaps to further confirm a launch regression, it can easily be retriggered. To retrigger the coldlaunch test for a single app, simply click the task representing the app and retrigger the task as you normally would on Treeherder (log in and click the retrigger job button). This will start the Raptor coldlaunch test again just for the selected app, on both the Gaia base and patch revisions as before. When the test is retriggered for an individually selected app, the versions of Gaia and the emulator (gecko) remain the same as they were in the original suite run.

If you wish to repeat the coldlaunch test on ALL of the apps instead of just one, then you can retrigger the entire Raptor suite. Select the Raptor decision task (the green “R“, as seen below) and click the retrigger button:

Note: Retriggering the entire Raptor suite will cause the emulator (gecko) version to be updated to the very latest before running the test, so if a new emulator build has been completed since the Raptor suite was originally run, then that new emulator (gecko) build will be used. The Gaia base and patch revisions used in the tests will be the same revisions used on the original suite run.

For more information drop by the #raptor channel on Mozilla IRC, or send me a message via the contact form on the About page of this blog. Happy coding!

|

|

Laura de Reynal: Snapchat |

“If Snapchat was a phone I would definitely buy it. It’s like an iPhone, it’s really fast, you can see everyone’s life and see what is going on in your world.”

16-year-old female teenager, Chicago.

Filed under: Mozilla, Quotes, Research

|

|

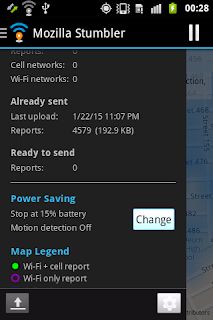

Arky: Autonomous Mozilla Stumbler with Android |

Mozilla Location Service (MLS) is an open source service that determines location based on network infrastructure such as WiFi access points and cell towers. The project has released client applications that collect a large dataset of GSM, cellular, and WiFi data through crowdsourcing. In this blog post I'll explore an idea for repurposing an old Android mobile phone as an autonomous MozStumbling device that could easily be deployed in public transport or taxis, or handed to a friend who is driving across the country.

Bill of Materials

- Android Mobile phone.

- Mozstumbler Android App.

- Taskbomb Android App.

- Mini-USB cable.

- GSM SIM (With mobile data).

- Car lighter socket power adapter.

- Powerbank (optional).

Putting it together

In this setup, I am using a rugged Android phone running Android Gingerbread. It can take a lot of punishment, which matters because leaving a phone in an overheated car is a recipe for disaster.

In the Android settings, I enabled the 'Unknown Sources' option, which allows installation of non-market applications. I connected the phone to my computer using the Mini-USB cable, transferred the previously downloaded app packages (.apk) to the phone, and installed the Mozstumbler and Taskbomb applications.

I used the Taskbomb app to start the Mozstumbler application on boot, and configured Mozstumbler to upload over the mobile data connection. The phone has a GSM SIM card with a data connection. I made sure that WiFi, GPS, and cellular data were all enabled.

To prevent the phone from downloading software updates and using up all the data, I disabled all software updates. I also disabled all notifications, both audio and LED. Finally, I locked the phone by setting a secret code. Now the device is ready for deployment. The phone is plugged into the car's lighter socket adapter to keep it powered. You can also use a power bank where charging options are not available.

I'm planning to use this autonomous Mozstumbler hack soon. Perhaps I should ask Thejesh to use it on his epic trip across India.

http://playingwithsid.blogspot.com/2015/07/autonomous-mozilla-stumbler-with-android.html

|

|

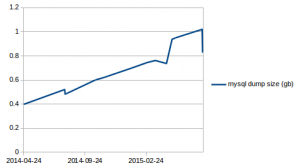

Hannes Verschore: Keep on growing |

I haven’t had the time to create a blogpost about the last year with numbers and describe the changes that have happened over the months/year. Hopefully soon, but a small teaser:

mysql dump size (in gb)

|

|

Weekly Mozilla Reps call
