Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.

Daniel Pocock: Unpaid work training Google's spam filters |

This week, there has been increased discussion about the pain of spam filtering by large companies, especially Google.

It started with Google's announcement that they are offering a service for email senders to know if their messages are wrongly classified as spam. Two particular things caught my attention: the statement that less than 0.05% of genuine email goes to the spam folder by mistake and the statement that this new tool to understand misclassification is only available to "help qualified high-volume senders".

From there, discussion has proceeded with Linus Torvalds blogging about his own experience of Google misclassifying patches from Linux contributors as spam, which has been widely reported in places like Slashdot and The Register.

Personally, I've observed much the same thing from the other perspective. While Torvalds complains that he isn't receiving email, I've observed that my own emails are not always received when the recipient is a Gmail address.

It seems that Google expects their users to work a little bit every day, going through every message in the spam folder and explicitly clicking the "Not Spam" button so that Google can improve their proprietary algorithms for classifying mail. If you just read or reply to a message in the folder without clicking the button, or if you don't do this for every message, including mailing list posts and other trivial notifications that are not actually spam, more important messages from the same senders will also continue to be misclassified.

If you are not willing to volunteer your time to do this, or if you are simply one of those people who has better things to do, Google's Gmail service is going to have a corrosive effect on your relationships.

A few months ago, we visited Australia and I sent emails to many people who I wanted to catch up with, including invitations to a family event. Some people received the emails in their inboxes, yet other people didn't see them because the systems at Google (and other companies, notably Hotmail) put them in a spam folder. The rate at which this appeared to happen was definitely higher than the 0.05% quoted in the Google article above. Maybe the Google spam filters noticed that I hadn't sent email to some members of the extended family for a long time and this triggered the spam algorithm? Yet it was precisely while we were visiting Australia that email needed to work reliably with that type of contact, as we don't fly out there every year.

A little bit earlier in the year, I was corresponding with a few students who were applying for Google Summer of Code. Some of them observed the same thing: they sent me an email and didn't receive my response until they looked in their spam folder a few days later. I also know a GSoC mentor who lost track of a student for over a week last year because Google was silently discarding chat messages, so it appears Google has not just shot themselves in the foot, they managed to shoot their foot twice.

What is remarkable is that in both cases, the email problems and the XMPP problems, Google doesn't send any error back to the sender so that they know their message didn't get through. Instead, it is silently discarded or left in a spam folder. This is the most corrosive form of communication problem as more time can pass before anybody realizes that something went wrong. After it happens a few times, people lose a lot of confidence in the technology itself and try other means of communication which may be more expensive, more synchronous and time intensive or less private.

When I discussed these issues with friends, some people replied by telling me I should send them things through Facebook or WhatsApp, but each of those services has a higher privacy cost and there are also many other people who don't use either of those services. This tends to fragment communications even more as people who use Facebook end up communicating with other people who use Facebook and excluding all the people who don't have time for Facebook. On top of that, it creates more tedious effort going to three or four different places to check for messages.

Despite all of this, the suggestion that Google's only response is to build a service to "help qualified high-volume senders" get their messages through leaves me feeling that things will get worse before they start to get better. There is no mention in the Google announcement about what they will offer to help the average person eliminate these problems, other than to stop using Gmail or spend unpaid time meticulously training the Google spam filter and hoping everybody else does the same thing.

Some more observations on the issue

Many spam filtering programs used in corporate networks, such as SpamAssassin, add headers to each email to suggest why it was classified as spam. Google's systems don't appear to give any such feedback to their users or message senders though, just a very basic set of recommendations for running a mail server.
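To illustrate the kind of feedback such headers give, here is a minimal Python sketch that reads an `X-Spam-Status` header in the layout SpamAssassin commonly writes. The sample message, its score and the function name `parse_spam_status` are made up for illustration; real deployments can customise the header, so treat the parsing rules as assumptions rather than a complete parser.

```python
import re
from email import message_from_string

# Invented sample message. The header layout mimics SpamAssassin's usual
# "X-Spam-Status" format, but the addresses and values are not real data.
SAMPLE = """\
From: sender@example.org
To: recipient@example.org
Subject: Family event
X-Spam-Status: Yes, score=6.3 required=5.0 tests=BAYES_50,HTML_MESSAGE,
\tRDNS_NONE autolearn=no version=3.4.0

Hello!
"""


def parse_spam_status(raw_message):
    """Return the verdict, scores and triggered rules, or None if no header."""
    msg = message_from_string(raw_message)
    status = msg.get("X-Spam-Status")
    if status is None:
        return None

    # Long headers are folded across lines; collapse all whitespace runs.
    status = " ".join(status.split())

    score = re.search(r"\bscore=(-?\d+(?:\.\d+)?)", status)
    required = re.search(r"\brequired=(-?\d+(?:\.\d+)?)", status)
    # The rule list runs until the next "key=" field or the end of the header.
    tests = re.search(r"\btests=(.*?)(?:\s+\w+=|$)", status)

    return {
        "is_spam": status.lower().startswith("yes"),
        "score": float(score.group(1)) if score else None,
        "required": float(required.group(1)) if required else None,
        "tests": [t.strip() for t in tests.group(1).split(",") if t.strip()]
        if tests
        else [],
    }


if __name__ == "__main__":
    print(parse_spam_status(SAMPLE))
```

A recipient or administrator who can see the list of triggered rules has a starting point for working out why a message was filtered, which is exactly the feedback that appears to be missing in the Gmail case.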

Many chat protocols work with an explicit opt-in. Before you can exchange messages with somebody, you must add each other to your buddy lists. Once you do this, virtually all messages get through without filtering. Could this concept be adapted to email, maybe giving users a summary of messages from people they don't have in their contact list and asking them to explicitly accept or reject each contact?
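As a rough sketch of how that opt-in idea might look for email, the following Python function delivers mail from known contacts and groups everything else into a per-sender summary awaiting an accept or reject decision. The function name `triage`, the `(sender, subject)` tuples and the addresses are all hypothetical; this is not how Gmail or any existing service works.

```python
from email.utils import parseaddr


def triage(messages, contacts):
    """Split (sender, subject) pairs into delivered mail and a pending summary.

    Mail from addresses in `contacts` is delivered. Everything else is grouped
    by sender so the user can accept or reject each new contact once.
    """
    known = {address.lower() for address in contacts}
    inbox = []
    pending = {}
    for sender, subject in messages:
        # parseaddr extracts the bare address from "Name <addr>" forms.
        address = parseaddr(sender)[1].lower()
        if address in known:
            inbox.append((address, subject))
        else:
            pending.setdefault(address, []).append(subject)
    return inbox, pending


if __name__ == "__main__":
    delivered, awaiting = triage(
        [
            ("Alice <alice@example.org>", "Lunch?"),
            ("bob@example.net", "Invitation"),
            ("Bob <BOB@example.net>", "Reminder"),
        ],
        ["alice@example.org"],
    )
    print(delivered)
    print(awaiting)
```

The point of the design is that the user makes one decision per unknown sender instead of one per message, which is how buddy-list approval behaves in chat protocols.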

If a message spends more than a week in the spam folder and Google detects that the user isn't ever looking in the spam folder, should Google send a bounce message back to the sender to indicate that Google refused to deliver it to the inbox?

I've personally heard that misclassification occurs with mailing list posts as well as private messages.

http://danielpocock.com/unpaid-work-training-googles-spam-filters

|

|

Daniel Pocock: Recording live events like a pro (part 1: audio) |

Whether it is a technical talk at a conference, a political rally or a budget-conscious wedding, many people now have most of the technology they need to record it and post-process the recording themselves.

For most events, audio is an essential part of the recording. There are exceptions: if you take many short clips from a wedding and mix them together you could leave out the audio and just dub the couple's favourite song over it all. For a video of a conference presentation, though, the speaker's voice is essential.

These days, it is relatively easy to get extremely high quality audio using a lapel microphone attached to a smartphone. Let's have a closer look at the details.

Using a lavalier / lapel microphone

Full wireless microphone kits with microphone, transmitter and receiver are usually $US500 or more.

The lavalier / lapel microphone by itself, however, is relatively cheap, under $US100.

The lapel microphone is usually an omnidirectional microphone that will pick up the voices of everybody within a couple of meters of the person wearing it. It is useful for a speaker at an event, some types of interviews where the participants are at a table together and it may be suitable for a wedding, although you may want to remember to remove it from clothing during the photos.

There are two key features you need when using such a microphone with a smartphone:

- TRRS connector (this is the type of socket most phones and many laptops have today)

- Microphone impedance should be at least 1 kΩ

http://danielpocock.com/recording-live-events-like-a-pro-part-one-audio

|

|

Nicholas Nethercote: “Thank you” is a wonderful phrase |

Last year I contributed a number of patches to pdf.js. Most of my patches were reviewed and merged to the codebase by Yury Delendik. Every single time he merged one of my patches, Yury wrote “Thank you for the patch”. It was never “thanks” or “thank you!” or “thank you :)”. Nor was it “awesome” or “great work” or “+1” or “\o/” or “\m/”. Just “Thank you for the patch”.

Oddly enough, this unadorned use of one of the most simple and important phrases in the English language struck me as quaint, slightly formal, and perhaps a little old-fashioned. Not a bad thing by any means, but… notable. And then, as Yury merged more of my patches, I started getting used to it. Then I started enjoying it. Each time he wrote it (I’m pretty sure he wrote it every time) it made me smile. I felt a small warm glow inside. All because of a single, simple, specific phrase.

So I started using it myself. (“Thank you for the patch.”) I tried to use it more often, in situations I previously wouldn’t have. (“Thank you for the fast review”.) I mostly kept to this simple form and eschewed variations. (“Thank you for the additional information.”) I even started using it each time somebody answered one of my questions on IRC. (“glandium: thank you”)

I’m still doing this. I don’t always use this exact form, and I don’t always remember to thank people who have helped me. But I do think it has made my gratitude to those around me more obvious, more consistent, and more sincere. It feels good.

https://blog.mozilla.org/nnethercote/2015/07/23/thank-you-is-a-wonderful-phrase/

|

|

Morgan Phillips: git push origin taskcluster |

Up until now, there have been a few ways for developers to schedule custom CI jobs in TaskCluster, but nothing completely general. Today, however, I'd like to give a sneak peek at a project I've been working on to make the process of running jobs on our infra extremely simple: TaskCluster GitHub.

1.) The service watches an entire organization at once: if your project lives in github/mozilla, drop a .taskclusterrc file in the base of your repository and the jobs will just start running after each pull request - dead simple.

2.) TaskCluster will give you more control over the platform/environment: you can choose your own docker container by default, but thanks to the generic worker we'll also be able to run your jobs on Windows XP-10 and OSX.

3.) Expect integration with other Mozilla services: for a Mozilla developer, using this service over Travis or Circle should make sense, since it will continue to evolve and integrate with our infrastructure over time.

Because today the prototype is working, and I'm very excited! I also feel that there's no harm in spreading the word about what's coming.

When this goes into production I'll do something more significant than a blog post to let developers know they can start using the system. In the meantime, here it is handling a replay of this pull request. \o/ Note: the finished version will do nice things, like automatically leave a comment with a link to the job and its status.

|

|

Mic Berman: Getting to know your new direct reports |

A few of my coaching clients have recently had to take on new teams or hire new people and grow their teams rapidly.

Here are some great questions to get to know your new folks in your 1:1s. Remember to answer the questions yourself as well - this relationship is about reciprocity - Enjoy :)!

- What will make this relationship rewarding for you?

- What approaches encourage or motivate you? And which discourage or de-motivate you?

- How will you know you are receiving value from this relationship?

- If you trusted me enough to say how to manage you most effectively, what tips would you give?

- What else would you like me to know about you?

- What do you do when you're really up against an obstacle or barrier?

- What makes you angry, frustrated or upset?

- What's easy to appreciate? What matters in the more difficult times?

- What works for you when you are successful at making changes?

- Where do you usually get stuck?

- How do you deal with disappointment or failure?

- What is the compliment or acknowledgement you hear most often about yourself?

- What words describe you at your best?

- What words describe you when you are at less than your best?

PS: Don't use them all at once ;) Take your time getting to know each other and working together.

http://michalberman.typepad.com/my_weblog/2015/07/getting-to-know-your-new-direct-reports.html

|

|

Mozilla Addons Blog: Add-ons Update – Week of 2015/07/22 |

I post these updates every 3 weeks to inform add-on developers about the status of the review queues, add-on compatibility, and other happenings in the add-ons world.

Add-ons Forum

As we announced before, there’s a new add-ons community forum for all topics related to AMO or add-ons in general. The Add-ons category is one of the most active in the community forum, so thank you all for your contributions! The old forum is still available in read-only mode.

The Review Queues

- Most nominations for full review are taking less than 10 weeks to review.

- 308 nominations in the queue awaiting review.

- Most updates are being reviewed within 10 weeks.

- 127 updates in the queue awaiting review.

- Most preliminary reviews are being reviewed within 12 weeks.

- 334 preliminary review submissions in the queue awaiting review.

The unlisted queues aren’t mentioned here, but they are empty for the most part. We’re in the process of getting more help to reduce queue length and waiting times for the listed queues.

If you’re an add-on developer and would like to see add-ons reviewed faster, please consider joining us. Add-on reviewers get invited to Mozilla events and earn cool gear with their work. Visit our wiki page for more information.

Firefox 40 Compatibility

The Firefox 40 compatibility blog post is up. The automatic compatibility validation will be run soon.

As always, we recommend that you test your add-ons on Beta and Firefox Developer Edition (formerly known as Aurora) to make sure that they continue to work correctly. End users can install the Add-on Compatibility Reporter to identify and report any add-ons that aren’t working anymore.

Extension Signing

We announced that we will require extensions to be signed in order for them to continue to work in release and beta versions of Firefox. The wiki page on Extension Signing has information about the timeline, as well as responses to some frequently asked questions.

A recent update is that Firefox for Android will implement signing at the same time as Firefox for Desktop. This mostly means that we will run the automatic signing process for add-ons that support Firefox for Android on AMO, so they are all ready before it hits release.

Electrolysis

Electrolysis, also known as e10s, is the next major compatibility change coming to Firefox. In a nutshell, Firefox will run on multiple processes now, running content code in a different process than browser code. This should improve responsiveness and overall stability, but it also means many add-ons will need to be updated to support this.

We will be talking more about these changes in this blog in the future. For now we recommend you start looking at the available documentation.

https://blog.mozilla.org/addons/2015/07/22/add-ons-update-68/

|

|

Air Mozilla: Quality Team (QA) Public Meeting |

This is the meeting where all the Mozilla quality teams meet, swap ideas, exchange notes on what is upcoming, and strategize around community building and...

https://air.mozilla.org/quality-team-qa-public-meeting-20150722/

|

|

Air Mozilla: Product Coordination Meeting |

Duration: 10 minutes This is a weekly status meeting, every Wednesday, that helps coordinate the shipping of our products (across 4 release channels) in order...

https://air.mozilla.org/product-coordination-meeting-20150722/

|

|

Air Mozilla: The Joy of Coding (mconley livehacks on Firefox) - Episode 22 |

Watch mconley livehack on Firefox Desktop bugs!

https://air.mozilla.org/the-joy-of-coding-mconley-livehacks-on-firefox-episode-22/

|

|

Christian Heilmann: I don’t want Q&A in conference videos |

I present at conferences – a lot. I also moderate conferences and I brought the concept of interviews instead of Q&A to a few of them (originally this concept has to be attributed to Alan White for Highland Fling, just to set the record straight). Many conferences do this now, with high-class ones like SmashingConf and Fronteers being the torch-bearers. Other great conferences, like EdgeConf, are 100% Q&A, and that’s great, too.

I also watch a lot of conference talks – to learn things, to see who is a great presenter (whom I will then recommend to conference organisers who ask me for talent), and to see what others are doing to excite audiences. I do that live, but I’m also a great fan of talk recordings.

I want to thank all conference organisers who go the extra mile to offer recordings of the talks at their event – you already rock, thanks!

I put those on my iPod and watch them in the gym, whilst I am on the cross trainer. This is a great time to concentrate, and to get fit whilst learning things. It is a win-win.

Much like everyone else, I pick the talk by topic, but also by length. Half an hour to 40 minutes is what I like best. I also tend to watch two or three 15-minute talks in a row at times. I am quite sure I am not alone in this. Many people watch talks when they commute on trains or in similar “drive by educational” ways. That’s why I’d love conference organisers to consider this use case more.

I know, I’m spoilt, and it takes a lot of time and effort and money to record, edit and release conference videos and you make no money from it. But before shooting me down and telling me I have no right to demand this if I don’t organise events myself, let me tell you that I am pretty sure you can stand out if you do just a bit of extra work to your recordings:

- Make them available offline (for YouTube videos I use youtube-dl on the command line to do that anyway). Vimeo has an option for that, and Channel 9 did that for years, too.

- Edit out the Q&A – there is nothing more annoying than seeing a confused presenter on a small screen trying to understand a question from the audience for a minute and then saying “yes”. Most of the time Q&A is not 100% related to the topic of the talk, and wanders astray or becomes dependent on knowledge of the other talks at that conference. This is great for the live audience, but for the after-the-fact consumer it becomes very confusing and pretty much a waste of time.

That way you end up with much shorter videos that are much more relevant. I am pretty sure your viewing/download numbers will go up the less cruft you have.

It also means better Q&A for your event:

- presenters at your event know they can deliver a great, timed talk and go wild in the Q&A answering questions they may not want recorded.

- people at the event can ask questions they may not want recorded (technically you’d have to ask them if it is OK)

- the interviewer or people at the event can reference other things that happened at the event without confusing the video audience. This makes it a more lively Q&A and part of the whole conference experience

- there is less of a rush to get the mic to the person asking and there is more time to ask for more details, should there be some misunderstanding

- presenters are less worried about being misquoted months later when the video is still on the web but the context is missing

For presenters, there are a few things to consider when presenting for the audience and for the video recording, but that’s another post. So, please, consider a separation of talk and Q&A – I’d be happier and promote the hell out of your videos.

http://christianheilmann.com/2015/07/22/i-dont-want-qa-in-conference-videos/

|

|

Jared Wein: Polishing Firefox for Windows 10 |

Over the years, the Firefox front-end team has made numerous polishes and updates to the Firefox theme.

Firefox 4 was a huge update to the user interface of Firefox compared to Firefox 3.6. A couple of years down the line we released Australis in Firefox 29. Next month we’ll be releasing Firefox 40, and with it will come what I believe to be the most polished UI we’ve shipped to date.

The Australis release was great in so many ways. It may have taken a bit longer than many had expected to get released, but much of that was due to the complexities of the Firefox theme code. During the Australis release we removed some lesser used features which consistently were a pain in the rear when making theme changes (small icons and icons+text mode, I’m looking at both of you

https://msujaws.wordpress.com/2015/07/22/polishing-firefox-for-windows-10/

|

|

Mark Surman: Building a big tent (for web literacy) |

Building a global network of partners will be key to the success of our Mozilla Learning initiative. A network like this will give us the energy, reach and diversity we need to truly scale our web literacy agenda. And, more important, it will demonstrate the kind of distributed leadership and creativity at the heart of Mozilla’s vision of the web.

As I said in my last two posts, leadership development and advocacy will be the two core strategies we employ to promote universal web literacy. Presumably, Mozilla could do these things on its own. However, a distributed, networked approach to these strategies is more likely to scale and succeed.

Luckily, partners and networks are already central to many of our programs. What we need to do at this stage of the Mozilla Learning strategy process is determine how to leverage and refine the best aspects of these networks into something that can be bigger and higher impact over time. This post is meant to frame the discussion on this topic.

The basics

As a part of the Mozilla Learning strategy process, we’ve looked at how we’re currently working with partners and using networks. There are three key things we’ve noticed:

- Partners and networks are a part of almost all of our current programs. We’ve designed networks into our work from early on.

- Partners fuel our work: they produce learning content; they host fellows; they run campaigns with us. In a very real way, partners are huge contributors (a la open source) to our work.

- Many of our partners specialize in learning and advocacy ‘on the ground’. We shouldn’t compete with them in this space — we should support them.

With these things in mind, we’ve agreed we need to hold all of our program designs up to this principle:

Design principle = build partners and networks into everything.

We are committed to integrating partners and networks into all Mozilla Learning leadership and advocacy programs. By design, we will both draw from these networks and provide value back to our partners. This last point is especially important: partnerships need to provide value to everyone involved. As we go into the next phase of the strategy process, we’re going to engage in a set of deep conversations with our partners to ensure the programs we’re building provide real value and support to their work.

Minimum viable partnership

Over the past few years, a variety of network and partner models have developed through Mozilla’s learning and leadership work. Hives are closely knit city-wide networks of educators and orgs. Maker Party is a loose network of people and orgs around the globe working on a common campaign. Open News and Mozilla Science sit within communities of practice with a shared ethos. Mozilla Clubs are much more like a global network of local chapters. And so on.

As we develop our Mozilla Learning strategy, we need to find a way to both: a) build on the strengths of these networks; and b) develop a common architecture that makes it possible for the overall network to grow and scale.

Striking this balance starts with a simple set of categories for Mozilla Learning partners and networks. For example:

- Member: any org participating in Mozilla Learning.

- Partner: any org contributing to Mozilla Learning.

- Club: a locally-run node in the Mozilla Learning network.

- Affiliate network: group of orgs aligned with Moz Learning.

- Core network: group of orgs coordinated by Mozilla staff.

This may not be the exact way to think about it, but it is certain that we will need some sort of common network architecture if we want to build partners and networks into everything. Working through this model will be an important part of the next phase of Mozilla Learning strategy work.

Partners = open source

In theory, one of the benefits of networks is that the people and organizations inside them can build things together in an open source-y way. For example, one set of partners could build a piece of software that they need for an immediate project. Another partner might hear about this software through the network, improve it for their own project and then give it back. The fact that the network has a common purpose means it’s more likely that this kind of open source creativity and value creation takes place.

This theory is already a reality in projects like Open News and Hive. In the news example, fellows and other members of the community post their code and documentation on the Source web page. This attracts the attention of other news developers who can leverage their work. Similarly, curriculum and practices developed by Hive members are shared on local Hive websites for others to pick up and run with. In both cases, the networks include a strong social component: you are likely to already know, or can quickly meet, the person who created a thing you’re interested in. This means it’s easy to get help or start a collaboration around a tool or idea that someone else has created.

One question that we have for Mozilla Learning overall is: can we better leverage this open source production aspect of networks in a more serious, instrumental and high impact way as we move forward? For example, could we: a) work on leadership development with partners in the internet advocacy space; b) have the fellows / leaders involved produce high quality curriculum or media; and c) use these outputs to fuel high impact global campaigns? Presumably, the answer can be ‘yes’. But we would first need to design a much more robust system of identifying priorities, providing feedback and deploying results across the network.

Questions

Whatever the specifics of our Mozilla Learning programs, it is clear that building in partnerships and networks will be a core design principle. At the very least, such networks provide us diversity, scale and a ground game. They may also be able to provide a genuine ‘open source’ style production engine for things like curriculum and campaign materials.

In order to design the partnership elements of Mozilla Learning, there are a number of questions we’ll need to dig into:

- Who are current and desired partners? (make a map)

- What value do they seek from us? What do they offer?

- Specifically, do they see value in our leadership and advocacy programs?

- What do partners want to contribute? What do they want in return?

- What is the right network / partner architecture?

A key piece of work over the coming months will be to talk to partners about all of this. I will play a central role here, convening a set of high level discussions. People leading the different working groups will also: a) open up the overall Mozilla Learning process to partners and b) integrate partner input into their plans. And, hopefully, Laura de Reynal and others will be able to design a user research process that lets us get info from our partners in a detailed and meaningful way. More on all this in coming weeks as we develop next steps for the Mozilla Learning process.

Filed under: mozilla

https://commonspace.wordpress.com/2015/07/22/building-a-big-tent-for-web-literacy/

|

|

Christian Heilmann: New chapter in the Developer Evangelism handbook: keeping time in presentations |

Having analysed a lot of conference talks lately, I found a few things that don’t work when it comes to keeping to the time you have as a speaker. I then analysed what the issues were and what you can do to avoid them, and put together a new chapter for the Developer Evangelism Handbook called “Keeping time in presentations”.

White Rabbit by Claire Stevenson

In this pretty extensive chapter, I cover a few topics:

- How will I fit all of this in X minutes? – how to deal with the stress of time limits and how to own the fact that you always will be faster than you think you’ll be

- Less is more – how to avoid padding your talk with more and more information as there is time

- Your talk is only extremely important to you – how to understand that your talk fits into a whole day of other talks and how to stand out

- Map out more information – how to delegate information instead of trying to tell people everything

- Live coding? – the pros, but mostly the cons of live coding on stage

- Avoid questions – how to keep questions and answers from interfering with your talk

- Things to cut – what to remove from your talks to win some time

- Talk fillers – what to add to talks to give some more value

All this information is applicable to conference talks. As this is a handbook, all of it is YMMV, too. But following these guidelines, I always managed to keep on time and feel OK watching some of my old videos without thinking I should have done a less rushed job.

|

|

Mozilla Reps Community: Council at Whistler Work Week |

From the 23rd to the 26th of June the Reps Council attended the Mozilla Work Week in Whistler, British Columbia, Canada to discuss future plans with the rest of the Participation team. Unfortunately Bob Reyes couldn’t attend due to delays in the visa process.

Human centered design workshop

At the beginning of the week Emma introduced us to “Human centered design” where the overall goal is to find solutions to a problem while keeping the individual in the focus. We split up in groups of two to do the exercises. We tried to come up with individual solutions for the problem statement “How might we improve the gift giving experience?”.

At first we interviewed our partner to get to the root of the problem they currently have with gift giving. This might either be that they don’t have ideas on what to give to a person, or maybe the person doesn’t want to get a gift, or something entirely different. All in all, we came up with a lot of different root causes to analyze.

After talking intensively to the partner, we came up with individual solution proposals which we then discussed with the partner and improved based on their feedback.

We think this was a valuable workshop for the following sessions with the functional area teams.

Sessions with other functional areas

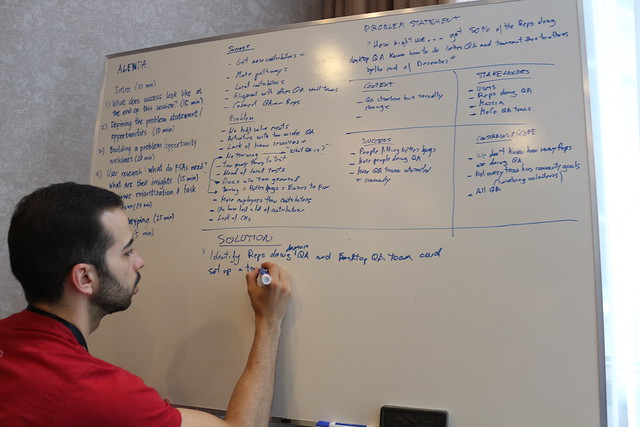

With this knowledge, Council members, as part of the Participation team, attended more than 27 meetings with other functional teams from all across Mozilla. The goal was to invite these teams to a session where we analyzed the issues they have with Participation.

During these meetings we provided feedback on the teams’ plans for bringing more participation and more community members into their projects. We also received a lot of insights into what functional teams think about the community and how valuable the work of volunteers is to them. In every session the goal was to come up with a solid problem statement and then find possible solutions for it. Because the Council members are volunteers themselves, each of us could give valuable input and ideas.

Some problem statements we tackled during the week (of course this is just a selection):

- How might we increase the Code contribution retention so people come back to us after doing a code contribution?

- How might the community approach organizations who already touch the “socially involved” audience to infiltrate their systems so Shape of the Web can touch people and have a lasting impact?

- How might we improve the SUMO retention ratio from 10% to 30% in the next 6 months?

- How might we deliver operational capability to run suggested titles in German speaking countries by the end of the year?

Our plans (along with the Participation team) are to continue working with most of these teams and the community in order to accomplish our common plans for bringing more participation into these functional areas.

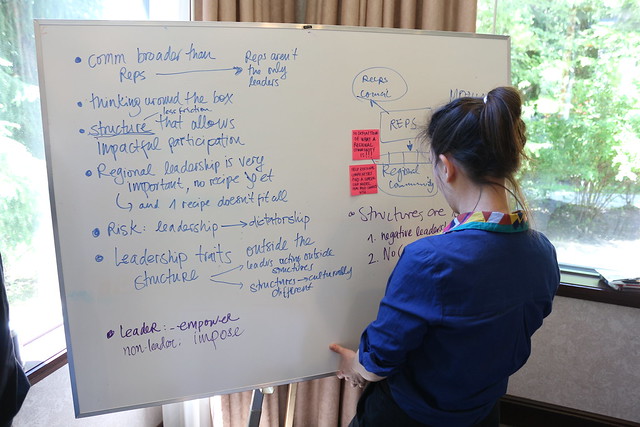

Council meetings & Leadership workshop

During the week we also held council meetings where we prioritised tasks and worked on the most important ones. Mentor’s selection criteria 2.0, new budget SOPs, Reps recognition and Reps selection criteria for important events are some of these tasks. After completing these important tasks we would like to focus on Reps as a leadership platform and set our goals for at least the next year.

In that direction, Rosana Ardila ran a highly interesting workshop on Friday around volunteer leadership and the current Organisation model within Mozilla. In three hours we tried to think outside the box and come up with solutions to make volunteer leadership more effective. We haven’t started any plans to incorporate this with the community, but in the coming weeks we will look at this and figure out which parts might work for the community as well.

Radical Participation session

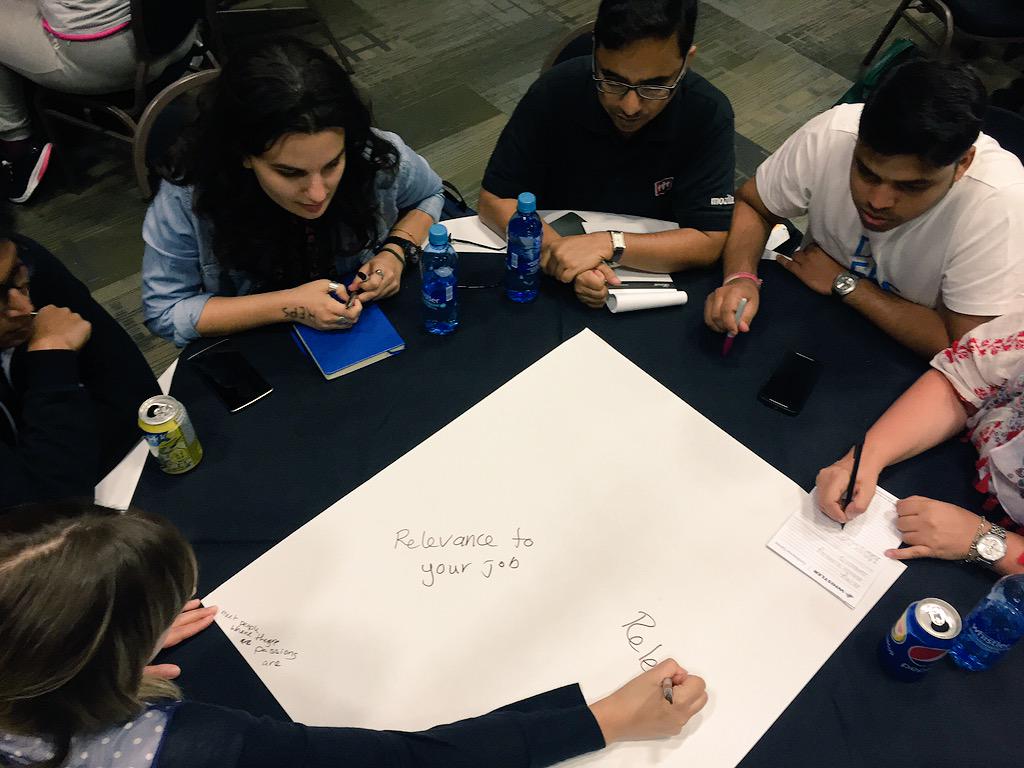

On Wednesday evening, a lot of volunteers and staff came together for the “Radical Participation” session. Mozilla invited several external experts on Participation to give a lightning talk to inspire us.

After these lightning talks we evaluated what resonated with us the most for our job and for Mozilla in general. It was good to get an outside view and tips so we can move forward with our plans as best prepared as we can be.

At the end, Mark and Mitchell gave us an update on what they think about Radical Participation. We think we’re well on our way in planning for impact, but there is still a lot to do!

Participation Planning

This was one of the most interesting sessions we had, since we used a new and innovative post-it notes (more post-it notes!) method for framing the current status of Participation in Mozilla. Identifying in detail the current status and the structure of Participation was the most important step towards having more impact within the project.

By re-ordering and evaluating the notes we managed to make statements on what we could change and come up with a plan for the following months. We looked at an 18-month timeline. George Roter is currently in charge of finishing the document which describes the 18-month plan in more detail. Stay tuned for this!

Unofficial meetings with other teams

During the social parts of the Work Week (arrival apero and dinners), we all had a chance to talk to other teams informally and discuss our pain points for projects we’re working on outside of Council. We had many conversations with different teams outside our Participation sessions, so we could also move forward with several other, non-Reps-related projects. For example, Michael met Patrick Finch on Monday of the Work Week to discuss the Community Tile for the German-speaking community. Thanks to several talks during the week, the Community Tile went online around a week after the Work Week.

Conclusion

All in all, it was crucial to sit together and work on future plans. We got a good understanding of what the Participation team has been working on and could share what we have been working on as a Council. We also set the next steps for future work. After a hard-working Work Week, we are all ready to tackle the next steps for Participation. Let’s help Mozilla move forward with Participation! You can find other blog posts from the Participation Team below.

More blog posts about the Work Week from the Participation Team

Participation at Whistler

Storify – Recap Participation at Whistler

https://blog.mozilla.org/mozillareps/2015/07/22/council-at-whistler-work-week/


Armen Zambrano: Few mozci releases + reduced memory usage |

From the latest release I want to highlight that we're replacing the standard json library with ijson, since it solves some memory leak issues we were facing for pulse_actions (bug 1186232).

This was important to fix since our Heroku instance for pulse_actions has an upper limit of 1GB of RAM.
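To illustrate why the switch helps, here is a minimal sketch of streaming a JSON array item by item instead of loading the whole document at once. It is not mozci's actual code: the helper name `iter_items` and the payload shape are made up for illustration, and the sketch falls back to the standard json module when the third-party ijson package is not installed.

```python
import io
import json

try:
    import ijson  # third-party streaming JSON parser
except ImportError:
    ijson = None


def iter_items(fileobj):
    """Yield the elements of a top-level JSON array one at a time.

    Hypothetical helper: with ijson, elements are parsed incrementally, so the
    full document never has to be held in memory as one Python object.
    """
    if ijson is not None:
        # The "item" prefix selects each element of the top-level array.
        yield from ijson.items(fileobj, "item")
    else:
        # Fallback for illustration only: this loads everything at once.
        yield from json.load(fileobj)


# Made-up payload standing in for a large response.
payload = io.BytesIO(b'[{"request_id": 1}, {"request_id": 2}]')
request_ids = [item["request_id"] for item in iter_items(payload)]
```

With a streaming parser, peak memory depends on the size of a single element rather than the whole response, which matters on a host with a fixed RAM ceiling.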

Here are the release notes and their highlights:

- 0.9.0 - Re-factored and cleaned-up part of the modules to help external consumers

- 0.10.0:

  - --existing-only flag prevents triggering builds that are needed to trigger test jobs

  - Support for pulse_actions functionality

- 0.10.1 - Fixed KeyError when querying for the request_id

- 0.11.0 - Added support for using ijson to load information, which decreases our memory usage

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.


Botond Ballo: It’s official: the Concepts TS has been voted for publication! |

I mentioned in an earlier post that the C++ Concepts Technical Specification would come up for its final publication vote during a committee-wide teleconference on July 20.

That teleconference has taken place, and the outcome was to unanimously vote the TS for publication!

With this vote having passed, the final draft of the TS will be sent to the ISO head offices, which will complete the publication process within a couple of months.

With the TS published, the committee will be on the lookout for feedback from implementers and users, to see how the proposed design weathers real-world codebases and compiler architectures. This will allow the committee to determine whether any design changes need to be made before merging the contents of the TS into the C++ International Standard, thus making the feature all official (and hard to change the design of).

GCC has a substantially complete implementation of the Concepts TS in a branch; if you’re interested in concepts, I encourage you to try it out!


Air Mozilla: Bugzilla Development Meeting |

Help define, plan, design, and implement Bugzilla's future!

https://air.mozilla.org/bugzilla-development-meeting-20150722/


Nick Fitzgerald: Proposal For Encoding Source-Level Environment Information Within Source Maps |

A month ago, I wrote about how source maps are an insufficient debugging format for the web. I tried to avoid too much gloom and doom, and focus on the positive aspect. We can extend the source map format with the environment information needed to provide a rich, source-level debugging experience for languages targeting JavaScript. We can do this while maintaining backwards compatibility.

Today, I'm happy to share that I have a draft proposal and a reference implementation for encoding source-level environment information within source maps. It's backwards compatible, compact, and future extensible. It enables JavaScript debuggers to rematerialize source-level scopes and bindings, and locate any given binding's value, even if that binding does not exist in the compiled JavaScript.

I look forward to the future of debugging languages that target JavaScript.

Interested in getting involved? Join the discussion.


Jordan Lund: Mozharness now lives in Gecko |

What's changed?

Continuous-integration and release jobs that use Mozharness will now get Mozharness from the Gecko repo that the job is running against.

How?

Whether the job is a build (requires a full gecko checkout) or a test (only requires a Firefox/Fennec/Thunderbird/B2G binary), automation will first grab a copy of Mozharness from the gecko tree, even before checking out the rest of the tree. This effectively minimizes changes to our current infra.

This is thanks to a new relengapi endpoint, Archiver, and hg.mozilla.org's subdirectory archiving abilities. Essentially, Archiver will get a tarball of Mozharness from within a target gecko repo, rev, and sub-repo-directory and upload it to Amazon's S3.

What's nice about Archiver is that it is not restricted to just grabbing Mozharness. You could, for example, put https://hg.mozilla.org/build-tools in the Gecko tree or, improving on our tests.zip model, simply grab subdirectories from within the testing/* part of the tree and request them on a suite by suite basis.

What does this mean for you?

It depends. If you are...

1) developing on Mozharness

You will need to check out gecko, and patches will now land like any other gecko patch: first land on a development tree-branch (e.g. mozilla-inbound), then ride the trains. This now means:

- we can have tree specific configs and even scripts

- the Mozharness pinning abstraction layer is no longer needed

- we have more deterministic builds and tests as the jobs are tied to a specific Gecko repo + rev

- there is more transparency on how automation runs continuous integration jobs

- there should be considerably less strain on hg.mozilla.org as we no longer rm + clone mozharness jobs

- development tree-branches (inbound, fx-team, etc) will behave like the Mozharness default branch while mozilla-central will act as the production branch.

This also means:

- Mozharness patches that require tree wide changes will need to be uplifted across trees

- development on Mozharness will require a Gecko tree checkout

- Github + Travis tests will not be run on each Mozharness change (mh tests will have to be run locally for now)

- Mozharness changes will not be documented or visible (outside of diffing gecko revs)

2) just needing to deploy Mozharness or get a copy of it without gecko

As the Archiver usage docs linked above show, you could hit the API directly. But I recommend using the client that buildbot uses. The client will wait until the API call is complete, download the archive from a response location, and unpack it to a specified destination.

Let's take a look at that in action: say you want to download and unpack a copy of mozharness based on mozilla-beta at 93c0c5e4ec30 to some destination.

python archiver_client.py mozharness --repo releases/mozilla-beta --rev 93c0c5e4ec30 --destination /home/jlund/downloads/mozharness

Note: if that was the first time Archiver was polled for that repo + rev, it might take a few seconds as it has to download Mozharness from hgmo and then upload it to S3. Subsequent calls will happen near instantly.

Note 2: if your --destination path already exists with a copy of Mozharness or something else, the client won't rm that path; it will merge into it (just like unpacking a tarball behaves).
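The merge behaviour that Note 2 compares to can be seen with Python's standard tarfile module alone. The sketch below is not the Archiver client's code; `unpack_into`, `demo`, and the file names are hypothetical. It extracts a small stand-in archive into a destination that already contains a file and shows that the existing file survives.

```python
import os
import tarfile
import tempfile


def unpack_into(archive_path, destination):
    """Extract a tarball into destination without clearing it first."""
    os.makedirs(destination, exist_ok=True)
    # Newer Python versions support extraction filters; use one when available.
    kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
    with tarfile.open(archive_path) as archive:
        archive.extractall(destination, **kwargs)


def demo():
    """Return the files found in the destination after a merging unpack."""
    with tempfile.TemporaryDirectory() as workdir:
        # Build a tiny stand-in archive containing mozharness/new.txt.
        source = os.path.join(workdir, "src", "mozharness")
        os.makedirs(source)
        with open(os.path.join(source, "new.txt"), "w") as handle:
            handle.write("from archive")
        archive_path = os.path.join(workdir, "mozharness.tar.gz")
        with tarfile.open(archive_path, "w:gz") as archive:
            archive.add(source, arcname="mozharness")

        # The destination already holds an older file.
        destination = os.path.join(workdir, "dest")
        existing = os.path.join(destination, "mozharness")
        os.makedirs(existing)
        with open(os.path.join(existing, "old.txt"), "w") as handle:
            handle.write("already here")

        unpack_into(archive_path, destination)
        return sorted(os.listdir(existing))
```

If a clean copy is needed, remove the destination directory yourself before unpacking, since stale files from an earlier revision would otherwise remain.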

3) a Release Engineering service that is still using hg.mozilla.org/build/mozharness

Not all Mozharness scripts are used for continuous integration / release jobs. There are a number of Releng services that are based on Mozharness: e.g. Bumper, vcs-sync, and merge_day. As these services transition to using Archiver, they will continue to use hgmo/build/mozharness as the Repository of Record (RoR).

If certain services cannot use gecko-based Mozharness, then we can fork Mozharness and set up a separate repo. That will of course mean such services won't receive upstream changes from the gecko copy, so we should avoid this if possible.

If you are an owner or major contributor to any of these releng services, we should meet and talk about such a transition. Archiver and its client should make deployments pretty painless in most cases.

Have something that may benefit from Archiver?

If you want to move something into a larger repository or be able to pull something out of such a repository for lightweight deployments, feel free to chat to me about Archiver and Relengapi.

As always, please leave your questions, comments, and concerns below.

http://jordan-lund.ghost.io/mozharness-goes-live-in-the-tree/


QMO: Help us Triage Firefox Bugs – Introducing the Bug Triage tool |

Interested in helping with some Firefox Bug Triage? We have a new experimental tool that makes it really easy to help on a daily basis.

Please visit our new triage tool and sign up to triage some bugs. If you can give us a few minutes of your day, you can help Mozilla move faster!

https://quality.mozilla.org/2015/07/help-us-triage-firefox-bugs-introducing-the-bug-triage-tool/
