Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.

Daniel Stenberg: The state of curl 2020

As tradition dictates, I do a “the state of curl” presentation every year. This year, as there’s no physical curl up conference happening, I have recorded the full presentation on my own in my solitude in my home.

This is an in-depth look into the curl project and where it’s at right now. The presentation is 1 hour 53 minutes.

The slides: https://www.slideshare.net/bagder/the-state-of-curl-2020

https://daniel.haxx.se/blog/2020/04/30/the-state-of-curl-2020/

The Mozilla Blog: Contact Tracing, Governments, and Data

Digital contact tracing apps have emerged in recent weeks as one potential tool in a suite of solutions that would allow countries around the world to respond to the COVID-19 pandemic and get people back to their daily lives. These apps raise a number of challenging privacy issues and have been subject to extensive technical analysis and argument. One important question that policymakers are grappling with is whether they should pursue more centralized designs that share contact information with a central authority, or decentralized ones that leave contact information on people’s devices and out of the reach of governments and companies.

Firefox Chief Technology Officer Eric Rescorla has an excellent overview of these competing design approaches, with their different potential risks and benefits. One critical insight he provides is that there is no Silicon Valley wizardry that will easily solve our problems. These different designs present us with different trade-offs and policy choices.

In this post, we want to provide a direct answer to one policy choice: Our view is that centralized designs present serious risk and should be disfavored. While decentralized systems present concerns of their own, their privacy properties are generally superior in situations where governments have chosen to deploy contact tracing apps.

Should your government have the social graph?

Centralized designs share data directly with public health professionals that may aid in their manual contact tracing efforts, providing a tool to identify and reach out to other potentially infected people. That is a key benefit identified by the designers of the BlueTrace system in use in Singapore. The biggest problem with this approach, as described recently by a number of leading technologists, is that it would expand government access to the “social graph” — data about you, your relationships, and your links with others.

The scope of this risk will depend on the details of specific proposals. Does the data include your location? Is it linked to phone numbers or emails? Is app usage voluntary or compulsory? A number of proposals only share your contact list when you are infected, and, if the infection rate is low, then access to the social graph will be more limited. But regardless of the particulars, we know this social graph data is near impossible to truly anonymize. It will provide information about you that is highly sensitive, and can easily be abused for a host of unintended purposes.

Social graph data could be used to see the contacts of political dissidents, for criminal investigations, or for immigration enforcement, to give just a few examples. This isn’t just about risk to personal privacy. Governments, in partnership with the private sector, could use this data to target or discriminate against particular segments of society.

Recently, many have pointed to well-established privacy principles as important tools that can mitigate privacy risk created by contact tracing apps. These include data minimization, rules governing data access and use, strict retention limits, and sunsetting of technical solutions when they are no longer needed. These are principles that Mozilla has long advocated for, and they may have important applications to contact tracing systems.

These protections are not strong enough, however, to prevent the potential abuse of data in centralized systems. Even minimized data is inherently sensitive because the government needs to know who tested positive, and who their contacts are. Recent history has shown that this kind of data, once collected, creates a tempting target for new uses — and for attackers if not kept securely. Neither governments nor the private sector have shown themselves up to the task of policing these new uses. The incentives to put data to unintended uses are simply too strong, so privacy principles don’t provide enough protection.

Moreover, as Mozilla Executive Director Mark Surman observes, the norms we establish today will live far beyond any particular app. This is an opportunity to establish the precedent that privacy is not optional. Centralized contact tracing apps threaten to do the opposite, normalizing systems to track citizens at scale. The technology we build today will likely live on. But even if it doesn’t, the decisions we make today will have repercussions beyond our current crisis and after we’ve sunset any particular app.

At Mozilla, we know about the pitfalls of expansive data collection. We are not experts in public health. In this moment of crisis, we need to take our cue from public health professionals about the problems they need to solve. But we also want policymakers, and the developers building these tools, to be mindful of the full costs of the solutions before them.

Trust is an essential part of helping people to take the steps needed to combat the pandemic. Centralized designs that provide contact information to central authorities are more likely to create privacy and security issues over time, and more likely to erode that trust. On balance we believe decentralized contact tracing apps, designed with privacy in mind, offer a better tool to solve real public health problems and establish a trusted relationship with the technology our lives may depend on.

The post Contact Tracing, Governments, and Data appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/04/29/contact-tracing-governments-and-data/

The Mozilla Blog: Looking at designs for COVID-19 Contact Tracing Apps

Julien Vehent: 7 years at Mozilla

Seven years ago, on April 29th 2013, I walked into the old Castro Street Mozilla headquarters in Mountain View for my week of onboarding and orientation. I was jubilant and full of imposter syndrome, and that day marked the start of a whole new era in my professional career.

I'm not going to spend an entire post reminiscing about the good ol' days (though those days were good indeed). Instead, I thought it might be useful to share a few things that I've learned over the last seven years, as I went from senior engineer to senior manager.

Be brief

Je n’ai fait celle-ci plus longue que parce que je n’ai pas eu le loisir de la faire plus courte.

- Blaise Pascal

Pascal's famous quote - If I had more time, I would have written a shorter letter - is strong advice. One of the best ways to disrupt any discussion or debate is indeed to be overly verbose, to extend your commentary into infinity, and to bore people to death.

Here's the thing: nobody cares about the history of the universe. Be brief. If someone asks for something specific, give them that information, and perhaps mention that there's more to be said about it. They'll let you know if they are interested in hearing the backstory.

People like to hear themselves talk. I certainly do. But over time I realized that, by being brief, I get a lot more attention from people, so they ask more questions, reach out more often, because they know I won't drag them into a lengthy debate.

But don't fall into the opposite extreme. Being overly brief can be detrimental to a conversation, or make you appear like you don't care to participate. There is a right balance to be found between too short and too long, depending on the context and your audience.

Tell a good story

Or more accurately, tell a story that touches your audience directly. If you're working on a security review of a Node.js application hosted on Heroku, tell them stories of other Node.js applications hosted on Heroku, ideally from a nearby team or organization. Don't lecture them on the need for network security monitoring in datacenters: they simply won't care, as it doesn't touch them.

If you want to get people interested in what you're doing, first you need to take an interest in what they are doing, then you need to tell them a good story they will care about. Finding out what that is will increase your chances of success.

That story will also change depending on your audience. Engineers will care about one thing, their managers something else, and the executives another thing entirely. Emphasize the parts of your project or idea that your audience will be most interested in to catch their attention, without losing the nature of your work.

This isn't rocket science. In fact, it's straight from the old-school business playbook. Dale Carnegie's 1936 "How to Win Friends & Influence People" covers this at length. And while I certainly wouldn't take that book to the letter, it raises a number of points which I think are relevant to security professionals.

Be technical

I started out at Mozilla as a senior security engineer focused entirely on operations and infrastructure. I spent my days doing security reviews, making guides and writing code. 100% technical work. When I got promoted to staff, then to manager, then to senior manager, the proportion of technical work gradually reduced to make room for managerial work.

Managing is important. With 9-or-so people on the team, being able to accurately focus attention on the right set of problems is critical to the security of the perimeter. In fact, there's an entire school of thought that advocates that managers should be entirely focused on management tasks, and stay away from technical work.

For better or worse, I don't buy into that. I believe, for myself and for my team, that I'm a better team manager and security strategist when I have a deep technical understanding of the issues at hand.

That doesn't mean non-technical managers are bad. In fact, I think there are many situations where a non-technical manager is a better choice than a technical one. But for the field of operations security, at Mozilla, managing the people I manage, I think being technical is a strength.

How do you remain technical while being a manager? There are certainly areas in which I don't have a deep technical understanding and struggle to acquire one. But in general, I find that experimenting outside core projects, and picking up tasks outside the critical path, helps me remain current and relevant.

For example, if the organization decides to switch to writing web applications in Rust with Actix, I'll write one myself. I won't get to the level of expertise I have in other areas, but I'll know enough to be relevant during security reviews and threat modeling sessions. And I continue to acquire knowledge in my areas of specialty: cloud infrastructure, cryptographic services, etc.

I don't expect to leave the management track any time soon. In fact, I expect to continue to grow in it. But I find it important that I could go back to a senior staff engineer role if I wanted to. Perhaps it is hubris, time will tell.

Never make assumptions

A year ago, I spent a night seated on a small table outside the reception of the Wahweap campground in Lake Powell, Arizona, as I was helping my team re-issue an intermediate certificate used to sign Firefox add-ons. It was freezing outside. I caught a cold, and a strong lesson, as dozens of my peers were untangling a mess I had helped create.

We called this incident "Armagaddon" internally, and it all started because we made a few assumptions we never took time to verify. We assumed that certificate expiration checking was disabled when verifying add-on signatures, when in fact it was only disabled for end-entities. When the intermediate expired, everything blew up.

I learned that lesson. I also learned to identify and question every assumption that we, engineers, make. The more complex a system becomes - and the Firefox ecosystem certainly is a complex one - the more assumptions people make. Learning to identify and consistently call out those assumptions, forcing myself and others to verify them, and basing decisions on hard data and tests is critically important.

There is a place and a time where assumptions can be made and risks be taken, but not always, and certainly not on mission critical components. As an industry, we've bought into the "go fast and break things" mindset that is plain wrong for a lot of environments. Learning to slow down and take the time to verify assumptions is, perhaps, the biggest cultural change we need.

Don't ignore backward compatibility

You think differently about engineering when you have to maintain compatibility with devices and software that haven't been updated in one, and sometimes two, decades. Firefox falls into that category. Every time we try to change something that's been around a while, we run into backward compatibility issues.

Up until recently, we maintained a separate set of HTTPS endpoints that supported SSL3 and SHA-1 certificates, issued by decommissioned roots, to allow XP SP2 users to download Firefox installers. And when I say recently, I mean one or two years ago. Long after Microsoft had stopped supporting those users.

I have tons of examples of having to maintain weird configurations and infrastructure for deprecated users no one wants to think about. Yet, they exist, and often represent a sizeable portion of our users that cannot simply be ignored.

As a system designer, accounting for backward compatibility involves a learning curve. It's certainly much easier to greenfield a process while ignoring its history than to design a monster that needs to adopt modern techniques while serving old clients.

Some folks are better at this than others, and this is where you really feel the importance of experience and the value of tenured employees. Those people who jump ship every 18 months? They can't tell you a thing about backward compatibility. But that engineer who's been maintaining a critical system for the past 5 or 10 years absolutely can. Seek them out and ask for the history of things; it's always interesting to hear!

Use boring tech

Nobody ever got fired for building a site in Python with Django and PostgreSQL. Or perhaps you'd like to keep using PHP? Maybe even Perl? The cool kids at the local hackathon will make fun of you for not using the latest JavaScript framework or Rust nightly, but your security team will probably love you for it.

The thing is, in 99% of cases, you'll be an order of magnitude more productive and secure with boring tech. There are very few cases where the bleeding edge will actually give you an edge.

For example, I'm a big fan of Rust. And not because not being a big fan of Rust at Mozilla is a severe faux-pas. But because I think the language team is doing a great job of distilling programming best practices into a reasonable set of engineering principles. Yet, I absolutely do not recommend that anyone write backend services in Rust, unless they are ready to deal with a lot of pain. Things like web frameworks, ORMs, cloud SDKs, migration frameworks, unit testing, and so on all exist in Rust, but are nowhere near as mature or tested as their Python equivalents.

My favorite stack to build a quick prototype of a web service is Python, Flask, PostgreSQL and Heroku. That's it. All stuff that's been around for over a decade and that no one considers new or cool.

Bugzilla is written in Perl. Phabricator or Pocket are PHP. ZAP is Java. etc. There are tons of examples of software that is widely successful by using boring tech, because their developers are so much more productive on those stacks than they would be on anything bleeding edge.

And from a security perspective, those boring techs have acquired a level of maturity that can only be attained by walking the walk. Sure, programming languages can and do prevent entire classes of vulnerabilities, but not all of them, and using Rust won't stop SQL injections or SSRF.

So when should you not use boring tech? Mostly when you have time and money. If you're flush with cash and you have unique problems, taking six months or a year to ramp up a new tech is worthwhile. The ideal time to do it is when you're rewriting a well-established service, and have a solid test suite to verify the rewrite is equivalent to the original. That's what the Durable Sync team did at Mozilla, when they rewrote the Firefox Sync backend from Python/MySQL to Rust/Spanner.

Delete your code

My first two years at Mozilla were focused on an endpoint security project called MIG, for Mozilla Investigator. It was an exciting greenfield project and I got to use all the cool stuff: Go, MongoDB (yurk), RabbitMQ (meh), etc. I wrote tons and tons of code, shipped a fully functional architecture, got a logo from a designer friend, gave a dozen conference talks, even started a small open source community around it.

And then I switched gears.

In the space of maybe 6 months, I completely stopped working on MIG to focus on cloud services security. At first, it was hard to let go of a project I had invested so much into. Then, gradually, it faded, until eventually the project died off and got archived. My code was effectively deleted. Two years of work out the window. This isn't something you're trained to deal with. And in fact, most engineers, like I was, are overly attached to their code, to the point of aggressively fighting any change they disagree with.

If this is you, stop, right now, you're not doing anyone any favors.

Your code will be deleted. Your projects will be cancelled. People are going to take over your work and transform it entirely, and you won't even have a say in it. This is OK. That's the way we move forward and progress. Learning to cope with that feeling early on will help you later in your career.

Nowadays, when someone is about to touch code I have previously written, I explicitly welcome them to rip out anything they think is worth removing. I give them a clear signal that I'm not attached to my code. I'd much rather see the project thrive than keep around old lines of code. I encourage you to do the same.

Be passionate, and respectful

Security folks generally don't need to be told to be passionate. In fact, they are often told the opposite, that they should tone it down a notch, that they are making too many waves. I disagree with this. I think it's good and useful to an organization to have a passionate security team that truly cares about doing good work. If we're not passionate about security, who else is going to be? That's literally what we're paid to do.

When a team comes to ask for a security review of a new project, they expect to receive the full-on adversarial doomsday threat model experience from us. They want to talk to someone who's passionate about breaking and hardening services to the extreme. So this is what we do in our risk assessment meetings. We don't tone it down or play it safe, we push things to the extreme, we're unreasonable and it's fun as hell!

But when folks respectfully disagree with my recommendation to encrypt all databases with HSM-backed AES keys split with a Shamir threshold of 4 and store each fragment in underground bunkers around the world to prevent the NSA from compromising several of our employees to access the data, I remain respectful of their opinion. We have a productive and polite debate that leads to a reasonable middle-ground which sufficiently addresses the risks, and can be implemented within timeline and budget.

Both passion and respect are important qualities of a successful engineer (or a person, really). I like to end a day knowing that I've done the right thing and that, even if everything didn't go my way, I have made a good case and I'm satisfied with the outcome.

Onward.

And so this is seven years at Mozilla. A lot more could be said about the work accomplished or the lessons learned, but then this would turn into a book, and I swore to myself I wouldn't write another one of those just yet.

Working at Mozilla is a heck of a job, even in these trying times. The team is fantastic, the work is fascinating, and, as they said during my first week of onboarding back in the old Castro Street office, we get to work for Mankind, not for The Man. That's gotta be worth something!

https://j.vehent.org/blog/index.php?post/2020/04/28/7-years-at-Mozilla

Daniel Stenberg: curl 7.70.0 with JSON and MQTT

We’ve done many curl releases over the years and this 191st one happens to be the 20th release ever done in the month of April, making it the leading release month in the project. (February is the month with the least number of releases with only 11 so far.)

Numbers

the 191st release

4 changes

49 days (total: 8,076)

135 bug fixes (total: 6,073)

262 commits (total: 25,667)

0 new public libcurl function (total: 82)

0 new curl_easy_setopt() option (total: 270)

1 new curl command line option (total: 231)

65 contributors, 36 new (total: 2,169)

40 authors, 19 new (total: 788)

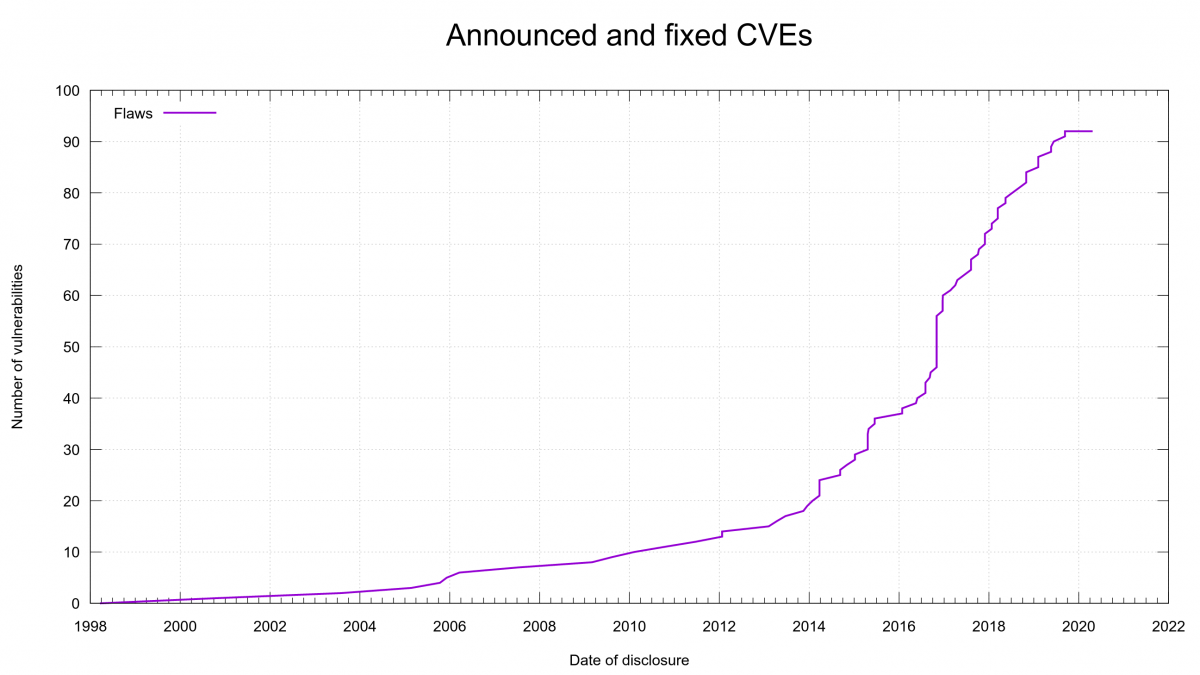

0 security fixes (total: 92)

0 USD paid in Bug Bounties

Security

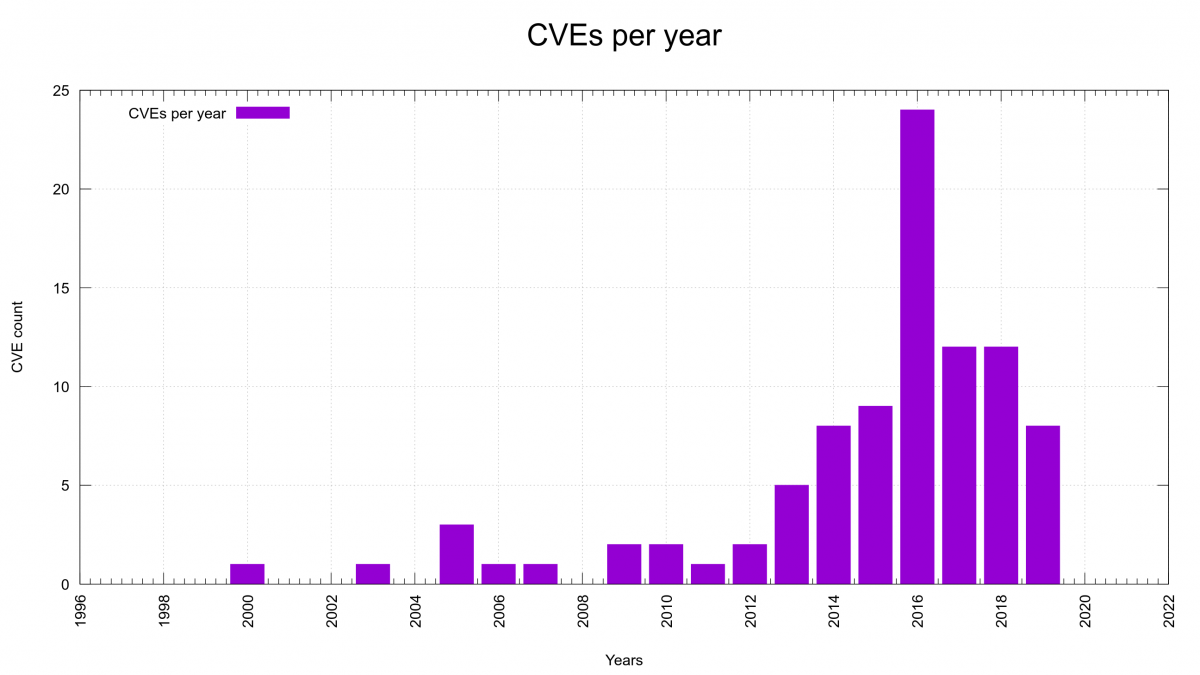

There’s no security advisory released this time. The release of curl 7.70.0 marks 231 days since the previous CVE regarding curl was announced. The longest CVE-free period in seven years in the project.

Changes

The curl tool got the new command line option --ssl-revoke-best-effort which is powered by the new libcurl bit CURLSSLOPT_REVOKE_BEST_EFFORT you can set in the CURLOPT_SSL_OPTIONS. They tell curl to ignore certificate revocation checks in case of missing or offline distribution points for those SSL backends where such behavior is present (read: Schannel).

curl’s --write-out command line option got support for outputting the meta data as a JSON object.

We’ve introduced the first take on MQTT support. It is marked as experimental and needs to be explicitly enabled at build-time.
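The JSON support in --write-out listed above lends itself to scripting. The following is a minimal sketch, assuming curl 7.70.0 or later is on the PATH; the helper names are hypothetical and the `%{json}` write-out variable is the only curl feature it relies on.

```python
import json
import os
import subprocess


def build_curl_command(url):
    """Build a curl command line that prints the transfer metadata as JSON."""
    return [
        "curl",
        "--silent",                # no progress meter
        "--output", os.devnull,    # discard the response body
        "--write-out", "%{json}",  # JSON metadata, needs curl 7.70.0 or later
        url,
    ]


def parse_write_out(stdout_text):
    """Turn the JSON object printed by --write-out into a dict."""
    return json.loads(stdout_text)


def fetch_metadata(url):
    """Run curl and return its metadata (hypothetical helper, needs network)."""
    result = subprocess.run(
        build_curl_command(url), capture_output=True, text=True, check=True
    )
    return parse_write_out(result.stdout)
```

Because the body goes to the null device, standard output should contain only the JSON object, which keeps the parsing step trivial.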

Bug-fixes to write home about

This is just an ordinary release cycle's worth of fixes. Nothing particularly major but here are a few I could add some extra blurb about…

gnutls: bump lowest supported version to 3.1.10

GnuTLS has been a supported TLS backend in curl since 2005 and we’ve supported a range of versions over the years. Starting now, we bumped the lowest supported GnuTLS version to 3.1.10 (released in March 2013). The reason we picked this particular version this time is that we landed a bug-fix for GnuTLS that wanted to use a function that was added to GnuTLS in that version. Then instead of making more conditional code, we cleaned up a lot of legacy and simplified the code significantly by simply removing support for everything older than this. I would presume that this shouldn’t hurt many users as I suspect it is a very bad idea to use older versions anyway, for security reasons if nothing else.

libssh: Use new ECDSA key types to check known hosts

curl supports three different SSH backends, and one of them is libssh. It turned out that the glue layer we have in curl between the core libcurl and the SSH library lacked proper mappings for some recent key types that have been added to the SSH known_hosts file. This file has been getting new key types added over time that OpenSSH is using by default these days and we need to make sure to keep up…

multi-ssl: reset the SSL backend on Curl_global_cleanup()

curl can get built to support multiple different TLS backends, which lets the application select which backend to use at startup. Due to an oversight, we didn’t properly support the application going back to clean up everything and select a different TLS backend without having to restart. Starting now, it is possible!

Revert “file: on Windows, refuse paths that start with \\”

Back in January 2020 when we released 7.68.0 we announced what we then perceived was a security problem: CVE-2019-15601.

Later, we found out more details and backpedaled on that issue. “It’s not a bug, it’s a feature” as the saying goes. Since it isn’t a bug (anymore) we’ve now also subsequently removed the “fix” that we introduced back then…

tests: introduce preprocessed test cases

This is actually just one out of several changes in the curl test suite that have happened as steps in a larger sub-project: move all test servers away from using fixed port numbers over to using dynamically assigned ones. Using dynamic port numbers makes it easier to run the tests on random users’ machines as the risk of port collisions goes away.

Previously, users had the ability to ask the tests to run on different ports by using a command line option but since it was rarely used, new tests were often written with the default port number hard-coded. With this new concept, such mistakes can’t slip through.

In order to correctly support all test servers running on any port, we’ve enhanced the main test “runner” (runtests) to preprocess the test case files correctly which allows all our test servers to work with such port numbers appearing anywhere in protocol details, headers or response bodies.

The work on switching to dynamic port numbers isn’t quite completed yet: there are still a few servers using fixed ports. I hope those will be addressed shortly.
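The general idea behind dynamic ports plus preprocessing can be sketched in a few lines. This is an illustration of the technique only, not the curl test runner: the function names are hypothetical and the `%HOSTIP` / `%HTTPPORT` placeholder names are used here as assumptions.

```python
import socket


def start_listener(host="127.0.0.1"):
    """Bind to port 0 so the OS assigns a free port; return (socket, port)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, 0))
    sock.listen(1)
    return sock, sock.getsockname()[1]


def preprocess(test_case_text, variables):
    """Replace %NAME placeholders with the values chosen at run time."""
    # Longest names first, so one name being a prefix of another is harmless.
    for name in sorted(variables, key=len, reverse=True):
        test_case_text = test_case_text.replace("%" + name, str(variables[name]))
    return test_case_text


if __name__ == "__main__":
    listener, port = start_listener()
    template = "GET /1 HTTP/1.1\r\nHost: %HOSTIP:%HTTPPORT\r\n\r\n"
    print(preprocess(template, {"HOSTIP": "127.0.0.1", "HTTPPORT": port}))
    listener.close()
```

Since the port is only known after the server has started, the expected protocol data has to be generated from a template at run time, which is why a hard-coded default port can no longer slip into a test case.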

tool_operate: fix add_parallel_transfers when more are in queue

Parallel transfers in the curl tool are still a fairly new thing, clearly, as we can still get reports on this kind of basic functionality flaw. In this case, curl could generate zero-byte output files when --parallel-max was used to limit the parallelism, instead of downloading them all fine.

version: add ‘cainfo’ and ‘capath’ to version info struct

curl_version_info() in libcurl returns lots of build information from the libcurl that’s running right now. It includes version number of libcurl, enabled features and version info from used 3rd party dependencies. Starting now, assuming you run a new enough libcurl of course, the returned struct also contains information about the built-in CA store default paths that the TLS backends use.

The idea is that your application can easily extract and use this information, either for information/debugging purposes or in cases where other components are used that also want a CA store and the application author wants to make sure both/all use the same paths!

windows: enable UnixSockets with all build toolchains

Due to oversights, several Windows builds didn’t enable support for Unix domain sockets even when built for Windows 10 versions where the OS provides support for them.

scripts: release-notes and copyright

During the release cycle, I regularly update the RELEASE-NOTES file to include recent changes and bug-fixes scheduled to be included in the coming release. I do this so that users can easily see what’s coming; in git, on the web site and in the daily snapshots. This used to be a fairly manual process but the repetitive process finally made me create a perl script for it that removes a lot of the manual work: release-notes.pl. Yeah, I realize I’m probably the only one who’s going to use this script…

Already back in December 2018, our code style tool checksrc got the powers to also verify the copyright year range in the top header (written by Daniel Gustafsson). This makes sure that we don’t forget to bump the copyright years when we update files. But because this was a bit annoying and surprising to pull-request authors on GitHub we disabled it by default, which only led to lots of mistakes still being landed, and the poor suckers (like me) who enabled it would get the errors instead. Additionally, the check is a bit slow. This finally drove me into disabling the check as well.

To combat the net effect of that, I’ve introduced the copyright.pl script which is similar in spirit but instead scans all files in the git repository and verifies that they A) have a header and B) that the copyright range end year seems right. It also has a whitelist for files that don’t need to fulfill these requirements for whatever reason. Now we can run this script once every release cycle instead and get the same end results. Without being annoying to users and without slowing down anyone’s everyday builds! Win-win!
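The kind of check described above can be sketched as follows. This is not copyright.pl itself: the header format in the regular expression, the function names and the way the "last changed" year is supplied are all assumptions made for illustration.

```python
import re

# Assumed header format: "Copyright (C) 1998 - 2020, Some Name"
# (a single year such as "Copyright (C) 2020, Some Name" is accepted too).
COPYRIGHT_RE = re.compile(r"Copyright \(C\) (?:(\d{4}) - )?(\d{4})")


def check_copyright(text, last_changed_year, header_lines=20):
    """Return a problem description, or None if the header looks fine."""
    head = "\n".join(text.splitlines()[:header_lines])
    match = COPYRIGHT_RE.search(head)
    if match is None:
        return "missing copyright header"
    end_year = int(match.group(2))
    if end_year < last_changed_year:
        return "copyright ends %d, file changed in %d" % (end_year, last_changed_year)
    return None


def scan(files, whitelist=()):
    """files maps path -> (text, last_changed_year); returns path -> problem."""
    problems = {}
    for path, (text, year) in files.items():
        if path in whitelist:
            continue  # exempt from the requirements, for whatever reason
        problem = check_copyright(text, year)
        if problem:
            problems[path] = problem
    return problems
```

In a real repository the last-changed year would come from version control history rather than being passed in by hand.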

The release presentation video

Credits

The top image was painted by Dirck van Delen in 1631. Found in the Swedish National Museum’s collection.

https://daniel.haxx.se/blog/2020/04/29/curl-7-77-0-with-json-and-mqtt/

Will Kahn-Greene: Experimenting with Symbolic

One of the things I work on is Tecken, which runs the Mozilla Symbols Server. It's a server that handles Breakpad symbols file upload, download, and stack symbolication.

Bug #1614928 covers adding line numbers to the symbolicated stack results for the symbolication API. The current code doesn't parse line records in Breakpad symbols files, so it doesn't know anything about line numbers. I spent some time looking at how much effort it'd take to improve the hand-written Breakpad symbols file parsing code to parse line records, which would require us to carry those changes through to the caching layer and some related parts. It seemed really tricky.

That's the point where I decided to go look at Symbolic, which I had been meaning to look at since Jan wrote the Native Crash Reporting: Symbol Servers, PDBs, and SDK for C and C++ blog post a year ago.

What is symbolication?

There are lots of places where stacks are interesting. For example:

the stack of the crashing thread in a crash report

the stacks of the parent and child processes in a hung IPC channel

the stack of a thread being profiled

the stack of a thread at a given point in time for debugging

"The stack" is an array of addresses in memory corresponding to the value of the instruction pointer for each of those stack frames. You can use the module information to convert that array of memory offsets to an array of [module, module_offset] pairs. Something like this:

[ 3, 6516407 ],
[ 3, 12856365 ],
[ 3, 12899916 ],
[ 3, 13034426 ],
[ 3, 13581214 ],
[ 3, 13646510 ],
...

with modules:

[ "firefox.pdb", "5F84ACF1D63667F44C4C44205044422E1" ],
[ "mozavcodec.pdb", "9A8AF7836EE6141F4C4C44205044422E1" ],
[ "Windows.Media.pdb", "01B7C51B62E95FD9C8CD73A45B4446C71" ],
[ "xul.pdb", "09F9D7ECF31F60E34C4C44205044422E1" ],
...

That's neat, but hard to work with.
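The conversion from raw addresses to [module, module_offset] pairs can be sketched like this. It is a minimal illustration under assumptions: the (base_address, size, name) module tuples and the -1 index for addresses outside any known module are conventions made up for this example.

```python
from bisect import bisect_right


def to_module_offsets(addresses, modules):
    """Convert absolute addresses to (module_index, module_offset) pairs.

    modules is a list of (base_address, size, name) tuples, one per loaded
    module; module_index refers to a position in that list.
    """
    order = sorted(range(len(modules)), key=lambda i: modules[i][0])
    bases = [modules[i][0] for i in order]
    frames = []
    for address in addresses:
        # Find the last module whose base address is <= address.
        pos = bisect_right(bases, address) - 1
        if pos >= 0:
            index = order[pos]
            base, size, _name = modules[index]
            if address < base + size:
                frames.append((index, address - base))
                continue
        frames.append((-1, address))  # not inside any known module
    return frames
```

Storing offsets relative to the module start is what makes the stack independent of where the module happened to be loaded in that particular process.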

What you really want is a human-readable stack of function names and files and line numbers. Then you can go look at the code in question and start your debugging adventure.

When the program is compiled, the act of compiling produces a bunch of compiler debugging information. We use dump_syms to extract the symbol information and put it into the Breakpad symbols file format. Those files get uploaded to Mozilla Symbols Server where they join all the symbols files for all the builds for the last 2 years.

Symbolication takes the array of [module, module_offset] pairs, the list of modules in memory, and the Breakpad symbols files for those modules and looks up the symbols for the [module, module_offset] pairs producing symbolicated frames.

Then you get something nicer like this:

0 xul.pdb mozilla::ConsoleReportCollector::FlushReportsToConsole(unsigned long long, nsIConsoleReportCollector::ReportAction)
1 xul.pdb mozilla::net::HttpBaseChannel::MaybeFlushConsoleReports()
2 xul.pdb mozilla::net::HttpChannelChild::OnStopRequest(nsresult const&, mozilla::net::ResourceTimingStructArgs const&, mozilla::net::nsHttpHeaderArray const&, nsTArray const&)
3 xul.pdb std::_Func_impl_no_alloc<`lambda at /builds/worker/checkouts/gecko/netwerk/protocol/http/HttpChannelChild.cpp:1001:11',void>::_Do_call()
...

Yay! Much rejoicing! Something we can do something with!
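The lookup step can be sketched as a binary search over function records sorted by start offset. This is an illustrative model only: `SymbolTable`, `symbolicate`, and the function records below are hypothetical, and a real Breakpad symbols file carries more than this (line records, public symbols, and so on).

```python
from bisect import bisect_right


class SymbolTable:
    """Function records from one symbols file, sorted for binary search."""

    def __init__(self, funcs):
        # funcs: iterable of (start_offset, size, name) tuples
        self.funcs = sorted(funcs)
        self.starts = [start for start, _, _ in self.funcs]

    def lookup(self, offset):
        # Find the last function that starts at or before the offset.
        pos = bisect_right(self.starts, offset) - 1
        if pos < 0:
            return None
        start, size, name = self.funcs[pos]
        return name if offset < start + size else None


def symbolicate(stack, modules, tables):
    """Turn [module_index, module_offset] pairs into (frame, module, symbol)."""
    frames = []
    for frame_no, (module_index, offset) in enumerate(stack):
        debug_file, debug_id = modules[module_index]
        table = tables.get((debug_file, debug_id))
        name = table.lookup(offset) if table else None
        # Fall back to the raw offset when no symbol is known.
        frames.append((frame_no, debug_file, name or hex(offset)))
    return frames


# Made-up function records; only the module names and ids come from the text.
modules = [
    ["firefox.pdb", "5F84ACF1D63667F44C4C44205044422E1"],
    ["xul.pdb", "09F9D7ECF31F60E34C4C44205044422E1"],
]
tables = {
    ("xul.pdb", "09F9D7ECF31F60E34C4C44205044422E1"): SymbolTable(
        [(0x1000, 0x40, "alpha()"), (0x1040, 0x100, "beta(int)")]
    ),
}
for frame in symbolicate([[1, 0x1010], [1, 0x1050], [0, 0x20]], modules, tables):
    print(*frame)
```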

I wrote about this a bit in Crash pings and crash reports.

Tecken has a symbolication API, so you can send in a well-crafted HTTP POST and it'll symbolicate the stack for you and return it.
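As a rough idea of what such a POST might look like, here is a sketch that only builds the request. The endpoint URL and the `jobs` / `memoryMap` / `stacks` payload shape are assumptions based on a recollection of the v5 symbolication API and are not confirmed by this post, so check them against the Tecken documentation before relying on them.

```python
import json
import urllib.request

# Assumed endpoint; verify against the Tecken API documentation.
SYMBOLICATE_URL = "https://symbols.mozilla.org/symbolicate/v5"


def build_request(modules, stack):
    """Build (but do not send) a symbolication request for one stack."""
    # Assumed payload shape: one job with a module list and a list of stacks.
    payload = {"jobs": [{"memoryMap": modules, "stacks": [stack]}]}
    return urllib.request.Request(
        SYMBOLICATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


modules = [
    ["firefox.pdb", "5F84ACF1D63667F44C4C44205044422E1"],
    ["mozavcodec.pdb", "9A8AF7836EE6141F4C4C44205044422E1"],
    ["Windows.Media.pdb", "01B7C51B62E95FD9C8CD73A45B4446C71"],
    ["xul.pdb", "09F9D7ECF31F60E34C4C44205044422E1"],
]
stack = [[3, 6516407], [3, 12856365]]
request = build_request(modules, stack)

# Sending is left commented out so the sketch has no network side effects:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```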

Quickstart with Symbolic in Python

Symbolic is a Rust crate with a Python library wrapper. The Sentry folks do a great job of generating wheels and uploading those to PyPI, so installing Symbolic is as easy as:

pip install symbolic

The Symbolic docs are terse. I found the following documentation:

That helped, but I had questions those didn't answer. I have an intrepid freshman understanding of Rust, so I ended up reading the code, tests, and examples.

The one big thing that tripped me up was that Symbolic can't parse Breakpad symbols files from a byte stream--they need to be files on disk. Tecken doesn't store Breakpad symbols files on disk--they're in AWS S3 buckets. So it downloads them and parses the byte stream. In order to use Symbolic, we'll have to adjust that to save the file to disk, then parse it, then delete the file afterwards. [1]

[1] If that's not true, please let me know.
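The save, parse, delete sequence could look something like the sketch below. `parse_via_tempfile` and `read_header` are hypothetical helpers, not Tecken or Symbolic code; the parser is passed in as a callable so the pattern stays independent of any particular library.

```python
import os
import tempfile


def parse_via_tempfile(data, parse_path):
    """Write bytes to a temporary file, parse it by path, then delete it."""
    fd, path = tempfile.mkstemp(suffix=".sym")
    try:
        with os.fdopen(fd, "wb") as fp:
            fp.write(data)
        # parse_path must finish everything that needs the file before returning.
        return parse_path(path)
    finally:
        os.unlink(path)


def read_header(path):
    # Stand-in for a real parser: just read the first line of the file.
    with open(path, "rb") as fp:
        return fp.readline()


sym_bytes = b"MODULE Linux x86_64 000000000000000000000000000000000 example\n"
print(parse_via_tempfile(sym_bytes, read_header))
```

Because the file is removed as soon as the callable returns, anything that reads the file lazily would need to be fully built inside the callable. Whether a given parser's result stays valid after the file is gone is something to verify for that parser.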

Anyhow, here's some sample annotated code using Symbolic to do symbol lookups:

    import symbolic

    # This is a Breakpad symbols file I have on disk.
    archive = symbolic.Archive.open("XUL/75A79CFA0E783A35810F8ADF2931659A0/XUL.sym")

    # We do debug ids as all-uppercase with no hyphens. However, symbolic
    # requires that get normalized into the form it likes.
    ndebug_id = symbolic.normalize_debug_id("75A79CFA0E783A35810F8ADF2931659A0")

    # This parses the Breakpad symbols file and returns a symcache that we can
    # look up addresses in.
    obj = archive.get_object(debug_id=ndebug_id)
    symcache = obj.make_symcache()

    # Symbol lookup returns a list of LineInfo objects.
    lineinfos = symcache.lookup(0xf5aa0)

    print("line: %s symbol: %s" % (lineinfos[0].line, lineinfos[0].symbol))

Cool!

Symbolic parses Breakpad symbols files. It uses a cache format for fast symbol lookups. Loading the cache file is very fast.

Further, Symbolic parses files of a variety of other debug binary formats. This could be handy for skipping the intermediary Breakpad symbol file and using the debug binaries directly. More on that idea later.

Tecken is maintained by a team of two and we have other projects, so it spends a lot of time sitting in the corner feeling sad. Meanwhile, Symbolic is actively worked on by Sentry and a cadre of other contributors including Mozilla engineers because it's one of the cornerstone crates for the great Rust rewrite of Breakpad things. That's a big win for me.

So then I built a prototype

Today, I threw together a web app that does symbolication using Symbolic and called it Sherwin Syms.

https://github.com/willkg/sherwin-syms/

Building a separate prototype gives me something to tinker with that's not in production. I was able to add line number information pretty quickly. I can experiment with caching on disk. I can compare the symbolication API output for stacks between the prototype and what the Mozilla Symbols Server produces.

There's a lot of scaffolding in there. The Symbolic-using bits are in this file:

https://github.com/willkg/sherwin-syms/blob/master/src/sherwin_syms/symbols.py

Next steps

I need to integrate this into Tecken. I think that means writing a new v6 API view because the v4 and v5 code is tangled up with downloading and caching.

Markus and Gabriele suggested Tecken skip Breakpad symbols files and instead use the debug binaries directly. Breakpad symbols files don't have symbols for inline functions, so they lose that information--using the debug binaries would be better. I hope to look into that soon.

Summary

That summarizes the week I spent with Symbolic.

https://bluesock.org/~willkg/blog/mozilla/experimenting_with_symbolic.html

|

|

Daniel Stenberg: webinar: common libcurl mistakes |

On May 7, 2020 I will present common mistakes when using libcurl (and how to fix them) as a webinar over Zoom. The presentation starts at 19:00 Swedish time, meaning 17:00 UTC and 10:00 PDT (US West coast).

Abstract

libcurl is used in thousands of different applications and devices for client-side Internet transfer and powers a significant part of what flies across the wires of the world on a normal day.

Over the years as the lead curl and libcurl developer I’ve answered many questions and I’ve seen every imaginable mistake made. Some of the mistakes seem to happen more frequently and some of the mistakes seem easier than others to avoid.

I’m going to go over a list of things that users often get wrong with libcurl, perhaps why they do, and of course I will talk about how to fix those errors.

Length

It should be done within 30-40 minutes, plus some additional time for questions at the end.

Audience

You’re interested in Internet transfer, preferably you already know what libcurl is and perhaps you have even written code that uses libcurl, directly in C or using a binding in another language.

Material

The video and slides will of course be made available as well in case you can’t tune in live.

Sign up

If you sign up to attend, you can join, enjoy the talk and of course ask me whatever is unclear or you think needs clarification around this topic. See you next week!

https://daniel.haxx.se/blog/2020/04/28/webinar-common-libcurl-mistakes/

|

|

The Firefox Frontier: Now that my entire life is online, how do I protect my personal data? |

by Astrid Rivera For the past month or so I’ve been living my life exclusively indoors and online. This new normal means that everything has shifted to a virtual realm … Read more

The post Now that my entire life is online, how do I protect my personal data? appeared first on The Firefox Frontier.

|

|

Mozilla VR Blog: Announcing Hubs Cloud |

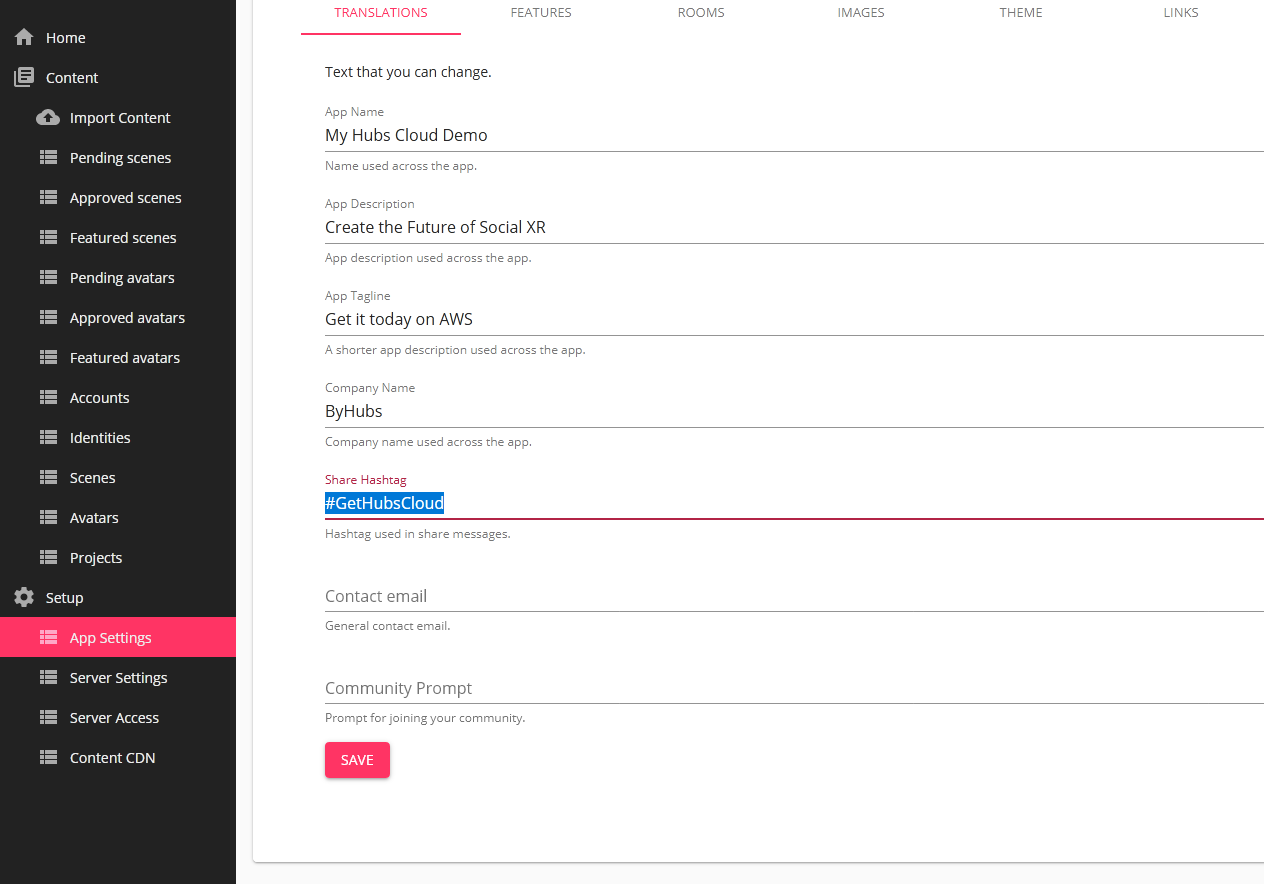

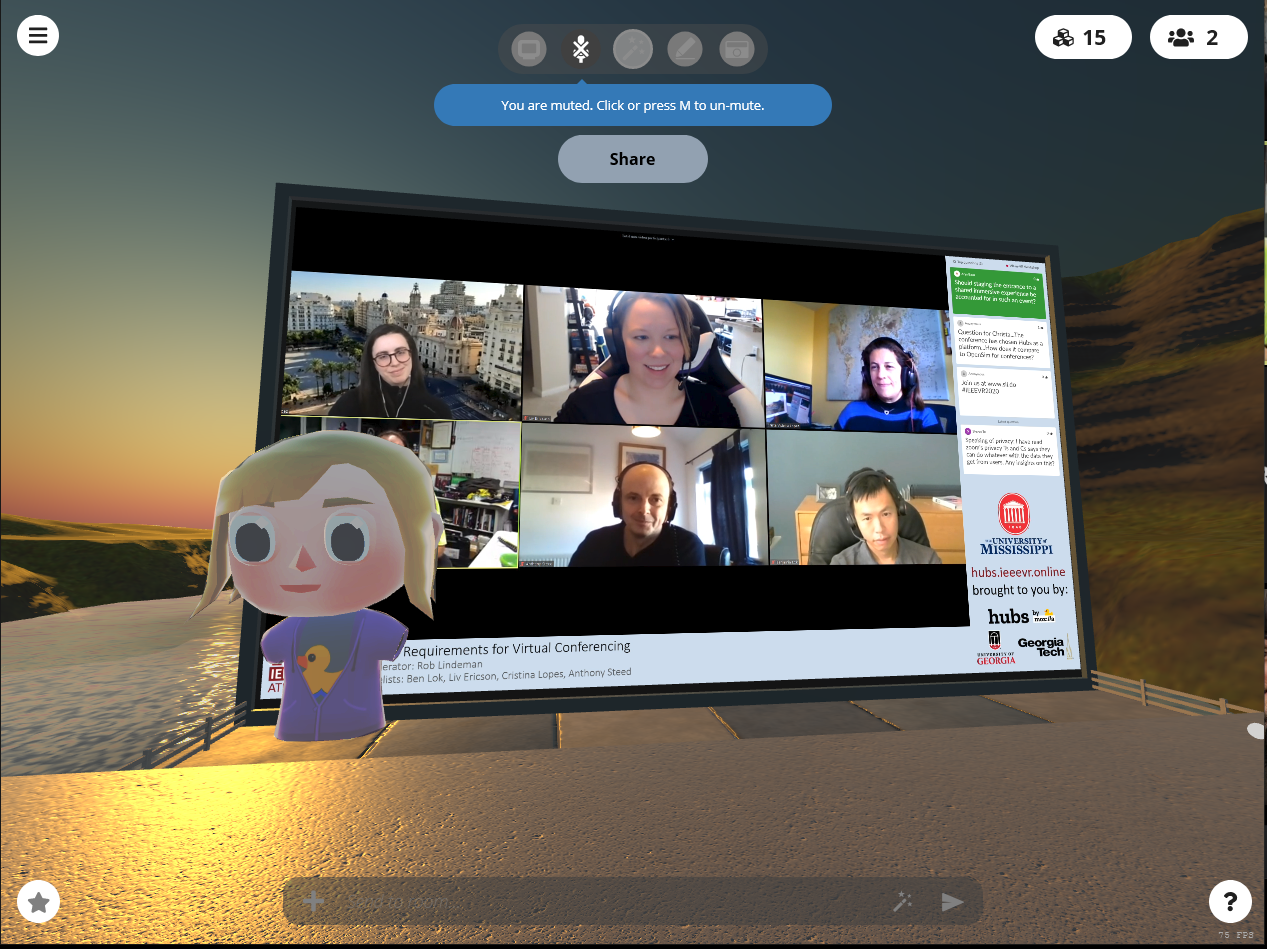

Hubs Cloud is a new product offering from the Mozilla Mixed Reality group that allows companies and organizations to create their own private, social spaces that work with desktop, mobile, and VR headsets. Hubs Cloud contains the underlying architecture that runs hubs.mozilla.com, and is being offered in Early Access on AWS. With Hubs Cloud, it is now possible for external organizations to deploy, customize and configure their own unique instances of the Hubs platform.

When we began building Hubs, the plan was not to try and create a singular, shared online experience owned entirely by Mozilla. Instead, we wanted to create a platform that could be adopted and deployed by organizations to suit their own needs and build their own social applications that worked on both 2D and on VR devices. With that in mind, the team set out to build a set of collaboration tools with versatile web frameworks that could lay the foundation of a platform that provides private rooms, customizable avatars, and the power of virtual reality to connect people regardless of whether or not they were in a shared physical location.

Over the past several months, we’ve done a lot of work to provide a way for external development teams to stand up private instances of our Hubs infrastructure on an organizational AWS account. Hubs Cloud instances are compatible with the same avatars and scenes that are published on the main hubs.mozilla.com site, or you can choose to create your own content, unique to your application. Hubs Cloud offers access to an administrator panel that allows you to customize the branding for your site, approve scenes and avatars that are submitted, import default environments and set platform-wide room settings, and use your own domain names. And, since the deployment is done through your organization’s AWS account, you control the account access and data for your individual instances.

We’ve been incredibly excited by the use cases that we’ve seen from our early partners who have deployed Hubs Cloud. Last month, IEEE deployed a custom Hubs Cloud instance to host an online experience for their VR conference, and brought viewing parties, poster sessions, and breakout sessions into shared virtual spaces. Companies have also begun deploying custom instances of Hubs for industry-specific verticals, bringing the power of collaborative 3D computing to their existing workflows in areas such as accident visualization and reconstruction. We’ve also seen explorations in educational initiatives at the K-12 and university levels, and look forward to sharing more about what our partners are working on in the coming months.

Hubs Cloud is available in Personal and Enterprise editions. For both editions, billing is based on hourly metering and the instance sizes used. On the AWS Marketplace page, there is a cost estimation calculator to help estimate costs ahead of time; they depend on the expected concurrency, system uptime, data, and storage costs. Both Personal and Enterprise offer the same platform features, but Personal is configured to use a smaller instance size at lower cost and has limits on system-wide scalability.

In the coming months, we’ll be working on bringing Hubs Cloud to additional providers, with Digital Ocean as our next target platform.

Get started with deploying Hubs Cloud today at hubs.mozilla.com/cloud, check out the documentation, or send us a message at hubs@mozilla.com to learn more.

|

|

The Mozilla Blog: Which Video Call Apps Can You Trust? |

Amid the pandemic, Mozilla is educating consumers about popular video apps’ privacy and security features and flaws

Right now, a record number of people are using video call apps to conduct business, teach classes, meet with doctors, and stay in touch with friends. It’s more important than ever for this technology to be trustworthy — but some apps don’t always respect users’ privacy and security.

So today, Mozilla is publishing a guide to popular video call apps’ privacy and security features and flaws. Consumers can use this information to choose apps they’re comfortable with — and to avoid ones they find creepy.

This work is an addition to Mozilla’s annual *Privacy Not Included guide, which rates popular connected products’ privacy and security features during the holiday shopping season. We created this new edition based on reader demand: Last month, we asked our community what information they need most right now, and an overwhelming number asked for privacy and security insights into video call apps.

In this latest installment, Mozilla researchers dug into 15 apps, from Zoom and Skype to Houseparty and Discord. Our researchers answered important questions like: Does the app share user data — and if so, with whom? Are users alerted when meetings are recorded? Is the app compliant with U.S. medical privacy laws? And many more.

Researchers also determined whether or not apps meet Mozilla’s Minimum Security Standards. These five guidelines include: Using encryption; providing security updates; requiring strong passwords; managing vulnerabilities; and featuring a privacy policy.

In total, 12 apps met Mozilla’s Minimum Security Standards: Zoom, Google Duo/Hangouts Meet, Apple FaceTime, Skype, Facebook Messenger, WhatsApp, Jitsi Meet, Signal, Microsoft Teams, BlueJeans, GoToMeeting, and Cisco Webex.

Three products did not meet Mozilla’s Minimum Security Standards: Houseparty, Discord, and Doxy.me.

The Minimum Security Standards are just one layer of our guide, however. What else did our research uncover?

- Competition is fierce in the video call app space, which is good news for consumers

  - Zoom has been criticized for privacy and security flaws. Because there are many other video call app options out there, Zoom acted quickly to address concerns. This isn’t something we necessarily see with companies like Facebook, which don’t have a true competitor

  - When one company adds a feature that users really like, other companies are quick to follow. For example, Zoom and Google Hangouts popularized one-click links to get into meetings, and Skype recently added the feature. And just last week Facebook added Messenger Rooms, which allows up to 50 people to chat at once in Messenger for as long as they want

- All apps use some form of encryption, but not all encryption is equal

  - All the video call apps in our guide offer some form of encryption. But not all apps use the holy grail: end-to-end encryption. End-to-end encryption means only those who are part of the call can access the call’s content. No one can listen in, not even the company. Other apps use client-to-server encryption, similar to what your browser does for HTTPS web sites. As your data moves from one point to another, it’s unreadable. Though unlike end-to-end encryption, once your data lands on a company’s servers, it then becomes readable

- Video call apps targeting businesses have a different set of features than video call apps targeting everyday use

  - This may seem obvious. But it’s important. Video call apps like FaceTime, Google Duo, Signal, and Houseparty have a very different set of video chat features and ease of use than business-oriented apps such as Zoom, BlueJeans, GoToMeeting, Microsoft Teams, and Cisco Webex. Consumers who want something simple may want to skip the B2B apps. Business users who want a fuller set of features and have money to pay may look to business-focused apps

- There is a diverse range of risks

  - Facebook doesn’t use the content of your messages for ad targeting. But it does collect a lot of other personal information. It collects name, email, location, geolocations on photos you upload, information about your contacts, information about you other people might share, and even any information it can gather about you when you use the camera feature. Facebook says it can use all this personal information to target you with ads. It also shares information with a large number of third-party partners including advertisers, vendors, academic researchers, and analytic services

  - WhatsApp is solid for video chat, and gets bonus points for using end-to-end encryption on users’ messages and calls. However, it is sullied by an overwhelming amount of misinformation on the platform. Especially during this global pandemic, conspiracies and fake news are being spread across WhatsApp

  - Houseparty is admittedly more fun than some others on our list, but it comes with its own problems. Houseparty appears to be a personal data vacuum (though kudos to their privacy policy for being easy to read to tell you that)

  - Discord collects more information than we’re comfortable with. For example, it collects information on your contacts if you link your social media accounts. And then there’s the toxicity: dig deep enough and you’ll find some pretty troubling corners of Discord that are known for misogyny, racial harassment, and human trafficking

- Good news: Many apps provide admirable privacy and security features

  - All apps with a built-in recording feature alert participants when recording occurs

  - On most apps, hosts have the ability to set rules, like who can unmute and who can share their screen, meaning accidents and trolls can quickly be dealt with

  - The two open-source apps in the guide, Jitsi Meet and Signal, have strong privacy protections

The post Which Video Call Apps Can You Trust? appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2020/04/28/which-video-call-apps-can-you-trust/

|

|

Daniel Stenberg: curl ootw: –remote-name-all |

This option only has a long version and it is --remote-name-all.

Shipped in curl 7.19.0 for the first time – September 1 2008.

History of curl output options

I’m a great fan of the Unix philosophy for command line tools, so for me there was never any deeper thought about what curl should do with the contents of the URL it gets: right from the beginning, it should send it to stdout by default. Exactly like the command line tool cat does for files.

Of course I also realized that not everyone likes that so we provided the option to save the contents to a given file. Output to a named file. We selected -o for that option – if I remember correctly I think I picked it up from some other tools that used this letter for the same purpose: instead of sending the response body to stdout, save it to this file name.

Okay, but when you select “save as” in a browser, you don’t actually have to type the full name yourself. It’ll propose a default name to use based on the URL you’re viewing, probably because in many cases that makes sense for the user and is a convenient and quick way to get a sensible file name to save the content as.

It wasn’t hard to come up with the idea that curl could offer something similar. Since the URL you give to curl has a file name part when you want to get a file, having a dedicated option pick the name from the rightmost part of the URL for the local file name was easy. As a different output option than -o, it felt natural to pick the uppercase O for this slightly different save-the-output option: -O.

Enter more than one URL

curl sends everything to stdout, unless you tell it to direct it somewhere else. Then (this is still before the year 2000, so very early days) we added support for multiple URLs on the command line, and what would the command line options mean then?

The default would still be to send data to stdout and since the -o and -O options were about how to save a single URL we simply decided that they do exactly that: they instruct curl how to save a single URL. If you provide multiple URLs to curl, you consequently need to provide multiple output flags. Easy!

It has the interesting effect that if you download three files from example.com and you want them all named according to their rightmost part from the URL, you need to provide multiple -O options:

curl https://example.com/1 https://example.com/2 https://example.com/3 -O -O -O

Maybe I was a bit sensitive

Back in 2008 at some point, I think I took some critique about this maybe a little too hard and decided that if certain users really wanted to download multiple URLs to local file names in an easier manner, the way other command line internet download tools perhaps do, I would provide an option that lets them do this!

--remote-name-all was born.

Specifying this option will make -O the default behavior for URLs on the command line! Now you can provide as many URLs as you like and you don’t need to provide an extra flag for each URL.

Get five different URLs on the command line and save them all locally using the file part from the URLs:

curl --remote-name-all https://example.com/a.html https://example.com/b.html https://example.com/c.html https://example.com/d.html https://example.com/e.html

Then if you don’t want that behavior you need to provide additional -o flags…
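To make the per-URL rule concrete, here is a small Python model of the behavior described above. It is a simplified illustration, not curl's implementation: `remote_name` and `plan_outputs` are hypothetical names, and real curl has more cases (globbing, `--output-dir`, and so on) that this ignores.

```python
import posixpath
from urllib.parse import urlsplit


def remote_name(url):
    """Return the rightmost path segment of the URL, as a model of -O."""
    name = posixpath.basename(urlsplit(url).path)
    if not name:
        raise ValueError(f"no file name part in {url!r}")
    return name


def plan_outputs(transfers, remote_name_all=False):
    """Decide where each URL's body goes.

    transfers is a list of (url, output) pairs where output is "-O",
    ("-o", filename), or None when no output flag was given for that URL.
    """
    plan = []
    for url, output in transfers:
        # --remote-name-all only changes the default for URLs without a flag.
        if output is None and remote_name_all:
            output = "-O"
        if output is None:
            plan.append((url, "<stdout>"))
        elif output == "-O":
            plan.append((url, remote_name(url)))
        else:
            plan.append((url, output[1]))
    return plan


# One -O for the first URL only: the second URL still goes to stdout.
print(plan_outputs([("https://example.com/1", "-O"), ("https://example.com/2", None)]))

# With the new default, an explicit -o still overrides it for that URL.
print(plan_outputs(
    [("https://example.com/a.html", None), ("https://example.com/b.html", ("-o", "saved.html"))],
    remote_name_all=True,
))
```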

.curlrc perhaps?

I think the primary idea was that users who really want -O by default like this would put --remote-name-all in their .curlrc files. I don’t think this ever really materialized. I believe this remote name all option is one of the more obscure and least used options in curl’s huge selection of options.

https://daniel.haxx.se/blog/2020/04/27/curl-ootw-remote-name-all/

|

|

The Firefox Frontier: Try Firefox Picture-in-Picture for multi-tasking with videos |

The Picture-in-Picture feature in the Firefox browser makes multitasking with video content easy, no window shuffling necessary. With Picture-in-Picture, you can play a video in a separate, scalable window that … Read more

The post Try Firefox Picture-in-Picture for multi-tasking with videos appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/firefox-picture-in-picture-for-videos/

|

|

The Talospace Project: Eight four two one, twice the cores is (almost) twice as fun |

Again, I say "semi-review" because if I were going to do this right, I'd have set up both the dual-4 and the dual-8 identically, had them do the same tasks and gone back if the results were weird. However, when you're buying a $7000+ workstation you economize where you can, which means I didn't buy any new NVMe cards, bought additional rather than spare RAM, and didn't buy another GPU; the plan was always to consolidate those into the new machine and keep the old chassis, board and CPUs/HSFs as spares. Plus, I moved over the case stickers and those totally change the entire performance characteristics of the system, you dig? We'll let the Phoronix guy(s) do that kind of exacting head-to-head because I pay for this out of pocket and we've all gotta tighten our belts in these days of plague. Here's the new beast sitting beside me under my work area:

(By the way, I'm still taking candidates for #ShowUsYourTalos. If you have pictures uploaded somewhere, I'll rebroadcast them here with your permission and your system's specs. Blackbirds, POWER8s and of course any other OpenPOWER systems welcome. Post in the comments.)

This new "consolidated" system has 64GB of RAM, Raptor's BTO option Radeon WX7100 workstation GPU and two NVMe main drives on the current Talos II 1.01 board, running Fedora 31 as before. In normal usage the dual-8 runs a bit hotter than the dual-4, but this is absolutely par for the course when you've just doubled the number of processors onboard. In my low-Earth-orbit Southern California office the dual-4's fastest fan rarely got above 2100rpm while the dual-8 occasionally spins up to 2300 or 2600rpm. Similarly, give or take system load, the infrared thermometer pegged the dual-4's "exhaust" at around 95 degrees Fahrenheit; the dual-8 puts out about 110 F. However, idle power usage is only about 20W more when sitting around in Firefox and gnome-terminal (130W vs 110W), and the idle fan speeds are about the same such that overall the dual-8 isn't appreciably louder than the very quiet dual-4 was with the most current firmware (with the standard Supermicro fan assemblies, though I replaced the dual-4's PSUs with "super-quiets" a while back and those are in the dual-8 now too).

Naturally the CPUs are the most notable change. Recall that the Sforza "scale out" POWER9 CPUs in Raptor family workstations are SMT-4, i.e., each core offers four hardware threads, which appear as discrete CPUs to the operating system. My dual-4 appeared to be a 32 CPU system to Fedora; this dual-8 appears to have 64. These threads come from "slices," and SMT-4 cores have four which are paired into two "super-slices." They look like this:

Each slice has a vector-scalar unit and an address generator feeding a load-store unit. The VSU has 64-bit integer, floating point and vector ALU components; two slices are needed to get the full 128-bit width of a VMX vector, hence their pairing as super-slices. The super-slices do not have L1 cache of their own, nor do they handle branch instructions or branch prediction; all of that is per-core, which also does instruction fetch and dispatch to the slices. (This has some similar strengths and pitfalls to AMD Ryzen, for example, which also has a single branch unit and caches per core, but the Ryzen execution units are not organized in the same fashion.) The upshot of all this is that certain parallel jobs, especially those that may be competing for scarcer per-core resources like L1 cache or the branch unit, may benefit more from full cores than from threads and this is true of pretty much any SMT implementation. In a like fashion, since each POWER9 slice is not a full vector unit (only the super-slices are), heavy use of VMX would soak up to twice the execution resources though amortized over the greater efficiency vector code would offer over scalar.
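The logical CPU counts quoted for these machines follow directly from sockets times cores per socket times SMT ways. A quick check, assuming SMT-4 throughout as stated above (the `logical_cpus` helper is just for illustration):

```python
SMT_WAYS = 4  # Sforza POWER9 parts in these workstations are SMT-4


def logical_cpus(sockets, cores_per_socket, smt_ways=SMT_WAYS):
    """Number of hardware threads the operating system sees as CPUs."""
    return sockets * cores_per_socket * smt_ways


for label, sockets, cores in [
    ("dual-4", 2, 4),
    ("dual-8", 2, 8),
    ("single-18", 1, 18),
    ("single-22", 1, 22),
]:
    print(label, logical_cpus(sockets, cores))
```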

The biggest task I routinely do on my T2 is frequent smoke-test builds of Firefox to make sure OpenPOWER-specific bugs are found before they get to release. This was, in fact, where I hoped I would see the most improvement. Indeed, a fair bit of it can be run in parallel, so if any of my typical workloads would show benefit, I felt it would likely be this one. Before I tore down the dual-4 I put all 64GB of RAM in it for a final time run to eliminate memory pressure as a variable (and the same sticks are in the dual-8, so it's exactly the same RAM in exactly the same slot layout). These snapshots were done building the Firefox 75 source code from mozilla-release (current as of this writing) with my standard optimized .mozconfig, varying only in the number of jobs specified. I'm only reporting wall time here because frankly that's the only thing I personally cared about. All build runs were done at the text console before booting X and GNOME to further eliminate variability, and I did three runs of each configuration back to back (./mach clobber && ./mach build) to account for any caching that might have occurred. Power was measured at the UPS. Default Spectre and Meltdown mitigations were in effect.

Dual-4 (-j24)

32:22.65

31:19.17

30:49.66

average draw 170W

Dual-8 (-j48)

19:16.28

19:09.18

19:08.32

average draw 230W

Dual-8 (-j24)

19:16.46

19:13.78

19:10.14

average draw 230W

The dual-8 is approximately 40% faster than the dual-4 on this task (or, said another way, the dual-4 was about 1.6x slower), but doubling the number of make processes from my prior configuration didn't seem to yield any improvement despite being well within the 64 threads available. This surprised me, so given that the dual-8 has 16 cores, I tried 16 processes directly:

Dual-8 (-j16)

21:49.72

21:40.18

21:41.33

average draw 215W

This proves, at least for this workload, that SMT does make some difference, just not as much as I would have thought. It also argues that the sweet spot for the dual-4 might have been around -j12, but I'm not willing to tear this box back down to try it. Still, cutting down my build times by over 10 minutes is nothing to sneeze at.
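The "approximately 40%" and "about 1.6x" figures can be reproduced from the wall times listed above. The sketch below averages the three runs per configuration and compares each against the dual-4 baseline; the timings are copied from the results, while the helper names are only for illustration.

```python
def to_seconds(stamp):
    """Convert a 'MM:SS.ss' wall time into seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + float(seconds)


def mean_seconds(stamps):
    values = [to_seconds(stamp) for stamp in stamps]
    return sum(values) / len(values)


RUNS = {
    "dual-4 -j24": ["32:22.65", "31:19.17", "30:49.66"],
    "dual-8 -j48": ["19:16.28", "19:09.18", "19:08.32"],
    "dual-8 -j24": ["19:16.46", "19:13.78", "19:10.14"],
    "dual-8 -j16": ["21:49.72", "21:40.18", "21:41.33"],
}

means = {name: mean_seconds(stamps) for name, stamps in RUNS.items()}
baseline = means["dual-4 -j24"]

for name, mean in means.items():
    speedup = baseline / mean
    saved = 1 - mean / baseline
    print(f"{name}: mean {mean:7.1f} s, {speedup:.2f}x vs dual-4, {saved:.0%} less wall time")
```

On these numbers the dual-8 at -j48 comes out at roughly 1.64x the dual-4 (about 39% less wall time), and the -j16 runs take roughly 13% longer than the -j48 runs, which is the SMT benefit the paragraph above refers to.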

For other kinds of uses, though, I didn't see much difference in performance between DD2.2 and DD2.3, and to be honest you wouldn't expect to. DD2.3 does have improved Spectre mitigations and this would help the kind of branch-heavy code that would benefit least from additional slices, but the change is relatively minor and the difference in practise indeed seemed to be minimal. On my JIT-accelerated DOSBox build the benchmarks came in nearly exactly the same, as did QEMU running Mac OS 9. Booted into GNOME as I am right now, the extra CPU resources certainly do smooth out doing more things at once, but again, that's of course more a factor of the number of cores and slices than the processor stepping.

Overall I'm pretty pleased with the upgrade, and it's a nice, welcome boost that improves my efficiency further. Here are my present observations if you're thinking about a CPU upgrade too (or are a first time buyer considering how much you should get):

- Upgrading is pretty easy with these machines: if you bought too little today, you can always drop in a beefier CPU or two tomorrow (assuming you have the dough and the sockets), and the Self-Boot Engine code is generic such that any Sforza POWER9 chip will work on any Raptor system that can accommodate it. I have been repeatedly assured no FPGA update is needed to use DD2.3 chips, even for "O.G." T2 systems. However, if you're running a Blackbird, you should think about case and cooling as well because the 8-core will run noticeably hotter than a 4-core. A lot of the more attractive slimline mATX cases are very thermally constrained, and the 8-core CPU really should be paired with the (3U) high speed fan assembly rather than the (2U) passive heatsink. This is a big reason why my personal HTPC Blackbird is still a little 4-core system.

- The 4 and 8-core chips are familiar to most OpenPOWER denizens but the 18 and 22-core monsters have a complicated value proposition. People have certainly run them in Blackbirds; my personal thought is this is courting an early system demise, though I am impressed by how heroic some of those efforts are. I wouldn't myself, however: between the thermal and power demands you're gonna need a bigger boat for these sharks.

The T2 is designed for them and will work fine, but after my experience here one wonders how loud and hot they would get in warmer environments. Plus, you really need to fill both of those sockets or you'll lose three slots (those serviced by the second CPU's PCIe lanes), which would make them even louder and hotter. The dual-8 setup here gets you 16 cores and all of the slots turned on, so I think it's the better workstation configuration even though it costs a little more than a single-18 and isn't nearly as performant. The dual-18 and dual-22 configurations are really meant for big servers and crazy people.

With the T2 Lite, though, these CPUs make absolute sense and it would be almost a waste to run one with anything less. The T2 Lite is just a cut-down single-socket T2 board in the same form factor, so it will also easily accommodate any CPU but more cheaply. If you need the massive thread resources of a single-18 (72 thread) or single-22 (88 thread) workstation, and you can make do with an x16 and an x8 slot, it's really the overall best option for those configurations and it's not that much more than a Blackbird board. Plus, being a single CPU configuration it's probably a lot more liveable under one's desk.

- Simply buying a DD2.3 processor to replace a DD2.2 processor of the same core count probably doesn't pay off for most typical tasks. Unless you need the features (and there are some: besides the Spectre mitigations, it also has Ultravisor support and proper hardware watchpoints), you'll just end up spending additional money for little or no observable benefit. However, if you're going to buy more cores at the same time, then you might as well get a DD2.3 chip and have those extra features just in case you'll need them later. The price difference is almost certainly worth a little futureproofing.

https://www.talospace.com/2020/04/eight-four-two-one-twice-cores-is.html

|

|

William Lachance: mozregression for MacOS |

Just a quick note that, as a side-effect of the work I mentioned a while ago to add telemetry to mozregression, mozregression now has a graphical Mac client! It’s a bit of a pain to install (since it’s unsigned), but likely worlds easier for the average person to get going than the command-line version. Please feel free to point people to it if you’re looking to get a regression range for a MacOS-specific problem with Firefox.

More details: The Glean Python SDK, which mozregression now uses for telemetry, requires Python 3. This provided the impetus to port the GUI itself to Python 3 and PySide2 (the modern incarnation of PyQt), which brought with it a much easier installation/development experience for the GUI on platforms like Mac and Linux.

I haven’t gotten around to producing GUI binaries for Linux yet, but it should not be much work.

Speaking of Glean, mozregression, and Telemetry, stay tuned for more updates on that soon. It’s been an adventure!

https://wlach.github.io/blog/2020/04/mozregression-for-macos/?utm_source=Mozilla&utm_medium=RSS

|

|

Nicholas Nethercote: How to speed up the Rust compiler in 2020 |

I last wrote in December 2019 about my work on speeding up the Rust compiler. Time for another update.

Incremental compilation

I started the year by profiling incremental compilation and making several improvements there.

#68914: Incremental compilation pushes a great deal of data through a hash function, called SipHasher128, to determine what code has changed since the last compiler invocation. This PR greatly improved the extraction of bytes from the input byte stream (with a lot of back and forth to ensure it worked on both big-endian and little-endian platforms), giving incremental compilation speed-ups of up to 13% across many benchmarks. It also added a lot more comments to explain what is going on in that code, and removed multiple uses of unsafe.

#69332: This PR reverted the part of #68914 that changed the u8to64_le function in a way that made it simpler but slower. This didn’t have much impact on performance because it’s not a hot function, but I’m glad I caught it in case it gets used more in the future. I also added some explanatory comments so nobody else will make the same mistake I did!
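For readers unfamiliar with this kind of helper, here is a rough Python illustration of what a function named like u8to64_le does: it assembles a few bytes from a buffer into an integer in little-endian order, independent of the host's byte order. This is only a sketch of the idea; the signature and bounds are assumptions, and it is not the rustc code from either PR.

```python
def u8to64_le(buf, start, length):
    """Load `length` bytes from `buf` at `start` as a little-endian integer."""
    # Bounds here are an assumption for the sketch: at most 8 bytes fit in 64 bits.
    if not (0 <= length <= 8 and 0 <= start and start + length <= len(buf)):
        raise ValueError("range does not fit in buffer or in 64 bits")
    out = 0
    for i in range(length):
        # Byte i is the i-th least significant byte of the result.
        out |= buf[start + i] << (8 * i)
    return out


data = bytes([0x01, 0x02, 0x03, 0x04, 0x05])
print(hex(u8to64_le(data, 1, 3)))
```

Python's built-in `int.from_bytes(data[1:4], "little")` computes the same value; the explicit loop is shown only to make the byte ordering visible.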

#69050: LEB128 encoding is used extensively within Rust crate metadata. Michael Woerister had previously sped up encoding and decoding in #46919, but there was some fat left. This PR carefully minimized the number of operations in the encoding and decoding loops, almost doubling their speed, and giving wins on many benchmarks of up to 5%. It also removed one use of unsafe. In the PR I wrote a detailed description of the approach I took, covering how I found the potential improvement via profiling, the 18 different things I tried (10 of which improved speed), and the final performance results.
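For readers unfamiliar with the format, unsigned LEB128 can be sketched as below. This is a plain textbook version, not the tuned code from the PR; the function names are assumptions chosen for the example.

```rust
/// Appends `value` to `out` in unsigned LEB128 form: 7 bits per byte,
/// with the high bit set on every byte except the last.
fn write_uleb128(out: &mut Vec<u8>, mut value: u64) {
    loop {
        let byte = (value & 0x7f) as u8;
        value >>= 7;
        if value == 0 {
            out.push(byte);
            return;
        }
        out.push(byte | 0x80);
    }
}

/// Decodes one unsigned LEB128 value from the front of `bytes`.
/// Returns the value and the number of bytes consumed, or None if the
/// input ends early or has more bytes than a u64 can need.
fn read_uleb128(bytes: &[u8]) -> Option<(u64, usize)> {
    let mut result = 0u64;
    let mut shift = 0u32;
    for (i, &byte) in bytes.iter().enumerate() {
        if shift >= 64 {
            return None;
        }
        result |= u64::from(byte & 0x7f) << shift;
        if byte & 0x80 == 0 {
            return Some((result, i + 1));
        }
        shift += 7;
    }
    None
}
```

Small values take a single byte, which is why the format suits metadata that is dominated by small integers. Each loop iteration does only a handful of operations, so trimming even one of them can show up in a profile.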

LLVM bitcode

Last year I noticed from profiles that rustc spends some time compressing the LLVM bitcode it produces, especially for debug builds. I tried changing it to not compress the bitcode, and that gave some small speed-ups, but also increased the size of compiled artifacts on disk significantly.

Then Alex Crichton told me something important: the compiler always produces both object code and bitcode for crates. The object code is used when compiling normally, and the bitcode is used when compiling with link-time optimization (LTO), which is rare. A user is only ever doing one or the other, so producing both kinds of code is typically a waste of time and disk space.

In #66598 I tried a simple fix for this: add a new flag to rustc that tells it to omit the LLVM bitcode. Cargo could then use this flag whenever LTO wasn’t being used. After some discussion we decided it was too simplistic, and filed issue #66961 for a more extensive change. That involved getting rid of the use of compressed bitcode by instead storing uncompressed bitcode in a section in the object code (a standard format used by clang), and introducing the flag for Cargo to use to disable the production of bitcode.

The part of rustc that deals with all this was messy. The compiler can produce many different kinds of output: assembly code, object code, LLVM IR, and LLVM bitcode in a couple of possible formats. Some of these outputs are dependent on other outputs, and the choices on what to produce depend on various command line options, as well as details of the particular target platform. The internal state used to track output production relied on many boolean values, and various nonsensical combinations of these boolean values were possible.
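The general problem with boolean-heavy state can be sketched as follows. All names here are hypothetical and are not rustc's actual types; the point is only that an enum can make meaningless combinations impossible to construct.

```rust
// Hypothetical illustration only; these are not rustc's actual types.

/// Boolean-based state: 2^3 = 8 combinations, some of them meaningless
/// (for example, compressing bitcode that is never emitted).
#[allow(dead_code)]
struct OutputFlags {
    emit_obj: bool,
    emit_bitcode: bool,
    compress_bitcode: bool,
}

/// Enum-based state: only meaningful bitcode choices can be expressed.
#[derive(Debug, Clone, Copy, PartialEq)]
enum BitcodeOutput {
    None,
    Uncompressed,
    Compressed,
}

#[derive(Debug, Clone, Copy, PartialEq)]
struct OutputConfig {
    emit_obj: bool,
    bitcode: BitcodeOutput,
}

impl OutputConfig {
    /// Compression is needed only in the one variant that asks for it.
    fn needs_compression(&self) -> bool {
        self.bitcode == BitcodeOutput::Compressed
    }
}
```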

When faced with messy code that I need to understand, my standard approach is to start refactoring. I wrote #70289, #70345, and #70384 to clean up code generation, #70297, #70729, and #71374 to clean up command-line option handling, and #70644 to clean up module configuration. Those changes gave me some familiarity with the code, simplified it, and I was then able to write #70458 which did the main change.

Meanwhile, Alex Crichton wrote the Cargo support for the new -Cembed-bitcode=no option (and also answered a lot of my questions). Then I fixed rustc-perf so it would use the correct revisions of rustc and Cargo together, without which the change would erroneously look like a performance regression on CI. Then we went through a full compiler-team approval and final comment period for the new command-line option, and it was ready to land.

Unfortunately, while running the pre-landing tests we discovered that some linkers can’t handle having bitcode in the special section. This problem was only discovered at the last minute because only then are all tests run on all platforms. Oh dear, time for plan B. I ended up writing #71323 which went back to the original, simple approach, with a flag called -Cbitcode-in-rlib=no. (Edit: note that libstd is still compiled with -Cbitcode-in-rlib=yes, which means that libstd rlibs will still work with both LTO and non-LTO builds.)

The end result was one of the bigger performance improvements I have worked on. For debug builds we saw wins on a wide range of benchmarks of up to 18%, and for opt builds we saw wins of up to 4%. The size of rlibs on disk has also shrunk by roughly 15-20%. Thanks to Alex for all the help he gave me on this!

Anybody who invokes rustc directly instead of using Cargo might want to use -Cbitcode-in-rlib=no to get the improvements.

Miscellaneous improvements

#67079: Last year in #64545 I introduced a variant of the shallow_resolved function that was specialized for a hot calling pattern. This PR specialized that function some more, winning up to 2% on a couple of benchmarks.

#67340: This PR shrunk the size of the Nonterminal type from 240 bytes to 40 bytes, reducing the number of memcpy calls (because memcpy is used to copy values larger than 128 bytes), giving wins on a few benchmarks of up to 2%.
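One common way to shrink a type like this is to box its largest payload. The sketch below uses hypothetical types (Wide and Narrow), not the compiler's Nonterminal, and does not claim to be what the PR did; it only shows how std::mem::size_of can confirm the effect.

```rust
use std::mem::size_of;

// Hypothetical types for illustration; not the compiler's Nonterminal.

/// One large variant makes every value of the enum large.
#[allow(dead_code)]
enum Wide {
    Small(u32),
    Large([u64; 29]), // 232 bytes of payload
}

/// Boxing the large payload moves it to the heap, so the enum itself
/// only needs room for a pointer plus a discriminant.
#[allow(dead_code)]
enum Narrow {
    Small(u32),
    Large(Box<[u64; 29]>),
}

/// Returns the in-memory sizes of the two enums, in bytes.
fn sizes() -> (usize, usize) {
    (size_of::<Wide>(), size_of::<Narrow>())
}
```

The trade-off is an extra heap allocation and pointer indirection for the boxed variant, which tends to pay off when that variant is rare and the type is moved around a lot.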

#68694: InferCtxt is a type that contained seven different data structures within RefCells. Several hot operations would borrow most or all of the RefCells, one after the other. This PR grouped the seven data structures together under a single RefCell in order to reduce the number of borrows performed, for wins of up to 5%.
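The idea can be sketched with two tables instead of seven. The struct and field names below are assumptions for illustration and are much simpler than the real InferCtxt.

```rust
use std::cell::RefCell;

// Hypothetical, much-reduced stand-ins for the real data structures.

/// Before: each table has its own RefCell, so touching both costs two
/// dynamic borrow checks.
#[derive(Default)]
struct SeparateTables {
    type_vars: RefCell<Vec<u32>>,
    region_vars: RefCell<Vec<u32>>,
}

impl SeparateTables {
    fn push_both(&self, t: u32, r: u32) {
        self.type_vars.borrow_mut().push(t); // borrow check 1
        self.region_vars.borrow_mut().push(r); // borrow check 2
    }
}

/// After: the tables live in one inner struct behind a single RefCell.
#[derive(Default)]
struct Inner {
    type_vars: Vec<u32>,
    region_vars: Vec<u32>,
}

#[derive(Default)]
struct GroupedTables {
    inner: RefCell<Inner>,
}

impl GroupedTables {
    fn push_both(&self, t: u32, r: u32) {
        let mut inner = self.inner.borrow_mut(); // one borrow check
        inner.type_vars.push(t);
        inner.region_vars.push(r);
    }
}
```

Grouping is coarser: while the single borrow is held, no other code can borrow any of the tables, so this only works when the access patterns allow it.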

#68790: This PR made a couple of small improvements to the merge_from_succ function, giving 1% wins on a couple of benchmarks.

#68848: The compiler’s macro parsing code had a loop that instantiated a large, complex value (of type Parser) on each iteration, but most of those iterations did not modify the value. This PR changed the code so it initializes a single Parser value outside the loop and then uses Cow to avoid cloning it except for the modifying iterations, speeding up the html5ever benchmark by up to 15%. (An aside: I have used Cow several times, and while the concept is straightforward I find the details hard to remember. I have to re-read the documentation each time. Getting the code to work is always fiddly, and I’m never confident I will get it to compile successfully… but once I do it works flawlessly.)
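As a rough illustration of the pattern (not the actual macro parsing code), the sketch below borrows a shared value on every iteration and clones it only when an iteration needs to modify it. BigState and run are hypothetical names.

```rust
use std::borrow::Cow;

/// Hypothetical stand-in for a large value that is expensive to clone.
#[derive(Clone, Debug, PartialEq)]
struct BigState {
    tokens: Vec<u32>,
}

/// Processes each step against `base`. The base value is cloned only
/// for steps that modify it (here, steps carrying `Some(extra)`).
/// Returns the token count seen at each step and the number of clones.
fn run(base: &BigState, steps: &[Option<u32>]) -> (Vec<usize>, usize) {
    let mut lens = Vec::new();
    let mut clones = 0;
    for step in steps {
        // Starts out borrowed: no allocation, no copy.
        let mut state: Cow<BigState> = Cow::Borrowed(base);
        if let Some(extra) = step {
            // to_mut() clones the borrowed value the first time it is called.
            state.to_mut().tokens.push(*extra);
            clones += 1;
        }
        // Reads go through Deref, whether the value is borrowed or owned.
        lens.push(state.tokens.len());
    }
    (lens, clones)
}
```

With steps of [None, Some(9), None], only the middle iteration pays for a clone, and base itself is never changed.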

#69256: This PR marked with #[inline] some small hot functions relating to metadata reading and writing, for 1-5% improvements across a number of benchmarks.

#70837: There is a function called find_library_crate that does exactly what its name suggests. It did a lot of repetitive prefix and suffix matching on file names stored as PathBufs. The matching was slow, involving lots of re-parsing of paths within PathBuf methods, because PathBuf isn’t really designed for this kind of thing. This PR pre-emptively extracted the names of the relevant files as strings and stored them alongside the PathBufs, and changed the matching to use those strings instead, giving wins on various benchmarks of up to 3%.
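The approach can be sketched roughly as follows. Candidate, index_files, and find_matching are hypothetical names, and the real find_library_crate is considerably more involved.

```rust
use std::path::PathBuf;

/// Hypothetical record pairing a path with its file name, extracted once.
struct Candidate {
    path: PathBuf,
    file_name: String,
}

/// Extracts each file name a single time, skipping paths that have no
/// valid UTF-8 file name.
fn index_files(paths: Vec<PathBuf>) -> Vec<Candidate> {
    paths
        .into_iter()
        .filter_map(|path| {
            let file_name = path.file_name()?.to_str()?.to_owned();
            Some(Candidate { path, file_name })
        })
        .collect()
}

/// Matches on the cached strings; no path is re-parsed here.
fn find_matching<'a>(files: &'a [Candidate], prefix: &str, suffix: &str) -> Vec<&'a PathBuf> {
    files
        .iter()
        .filter(|c| c.file_name.starts_with(prefix) && c.file_name.ends_with(suffix))
        .map(|c| &c.path)
        .collect()
}
```

The file names are parsed out of the paths once in index_files, so repeated prefix and suffix checks become plain string comparisons.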

#70876: Cache::predecessors is an oft-called function that produces a vector of vectors, and the inner vectors are usually small. This PR changed the inner vector to a SmallVec for some very small wins of up to 0.5% on various benchmarks.

Other stuff

I added support to rustc-perf for the compiler’s self-profiler. This gives us one more profiling tool to use on the benchmark suite on local machines.

I found that using LLD as the linker when building rustc itself reduced the time taken for linking from about 93 seconds to about 41 seconds. (On my Linux machine I do this by preceding the build command with RUSTFLAGS="-C link-arg=-fuse-ld=lld".) LLD is a really fast linker! #39915 is the three-year old issue open for making LLD the default linker for rustc, but unfortunately it has stalled. Alexis Beingessner wrote a nice summary of the current situation. If anyone with knowledge of linkers wants to work on that issue, it could be a huge win for many Rust users.

Failures

Not everything I tried worked. Here are some notable failures.

#69152: As mentioned above, #68914 greatly improved SipHasher128, the hash function used by incremental compilation. That hash function is a 128-bit version of the default 64-bit hash function used by Rust hash tables. I tried porting those same improvements to the default hasher. The goal was not to improve rustc’s speed, because it uses FxHasher instead of default hashing, but to improve the speed of all Rust programs that do use default hashing. Unfortunately, this caused some compile-time regressions for complex reasons discussed in detail in the PR, and so I abandoned it. I did manage to remove some dead code in the default hasher in #69471, though.

#69153: While working on #69152, I tried switching from FxHasher back to the improved default hasher (i.e. the one that ended up not landing) for all hash tables within rustc. The results were terrible; every single benchmark regressed! The smallest regression was 4%, the largest was 85%. This demonstrates (a) how heavily rustc uses hash tables, and (b) how much faster FxHasher is than the default hasher when working with small keys.
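To show why an Fx-style hasher is so cheap on small keys, here is a simplified sketch written against the standard Hasher trait. It is not the rustc-hash crate itself; SimpleFxHasher and FastMap are names invented for this example, and the multiplier is, to my understanding, the 64-bit constant that crate used at the time, so treat it as illustrative.

```rust
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hasher};

/// A simplified hasher in the style of FxHasher: one rotate, one xor and
/// one multiply per word. Fast on small keys, but not DoS-resistant.
#[derive(Default)]
struct SimpleFxHasher {
    hash: u64,
}

// Assumed multiplier; illustrative rather than authoritative.
const SEED: u64 = 0x517c_c1b7_2722_0a95;

impl SimpleFxHasher {
    fn add_word(&mut self, word: u64) {
        self.hash = (self.hash.rotate_left(5) ^ word).wrapping_mul(SEED);
    }
}

impl Hasher for SimpleFxHasher {
    fn write(&mut self, bytes: &[u8]) {
        // Feed the input 8 bytes at a time, zero-padding the final chunk.
        for chunk in bytes.chunks(8) {
            let mut buf = [0u8; 8];
            buf[..chunk.len()].copy_from_slice(chunk);
            self.add_word(u64::from_le_bytes(buf));
        }
    }
    fn write_u32(&mut self, n: u32) {
        self.add_word(u64::from(n));
    }
    fn write_u64(&mut self, n: u64) {
        self.add_word(n);
    }
    fn finish(&self) -> u64 {
        self.hash
    }
}

/// A HashMap that uses the hasher above instead of the default SipHash.
type FastMap<K, V> = HashMap<K, V, BuildHasherDefault<SimpleFxHasher>>;
```

Hashing an integer key costs a single add_word call here, whereas the default hasher runs several SipHash rounds per key. That difference is plausibly what the benchmark regressions reflect.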

I tried using ahash for all hash tables within rustc. It is advertised as being as fast as FxHasher but higher quality. I found it made rustc a tiny bit slower. Also, ahash is not deterministic across different builds, because it uses const_random! when initializing hasher state. This could cause extra noise in perf runs, which would be bad. (Edit: It would also prevent reproducible builds, which would also be bad.)

I tried changing the SipHasher128 function used for incremental compilation from the Sip24 algorithm to the faster but lower-quality Sip13 algorithm. I got wins of up to 3%, but wasn’t confident about the safety of the change and so didn’t pursue it further.

#69157: Some follow-up measurements after #69050 suggested that its changes to LEB128 decoding were not as clear a win as they first appeared. (The improvements to encoding were still definitive.) The performance of decoding appears to be sensitive to non-local changes, perhaps due to differences in how the decoding functions are inlined throughout the compiler. This PR reverted some of the changes from #69050 because my initial follow-up measurements suggested they might have been pessimizations. But then several sets of additional follow-up measurements taken after rebasing multiple times suggested that the reversions sometimes regressed performance. The reversions also made the code uglier, so I abandoned this PR.

#66405: Each obligation held by ObligationForest can be in one of several states, and transitions between those states occur at various points. This PR reduced the number of states from five to three, and greatly reduced the number of state transitions, which won up to 4% on a few benchmarks. However, it ended up causing some drastic regressions for some users, so in #67471 I reverted those changes.

#60608: This issue suggests using FxIndexSet in some places where currently an FxHashMap plus a Vec are used. I tried it for the symbol table and it was a significant regression for a few benchmarks.
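For context, the "hash map plus Vec" arrangement looks roughly like the sketch below. It uses the standard HashMap rather than FxHashMap to stay self-contained, and Interner is a hypothetical, simplified type rather than rustc's symbol table.

```rust
use std::collections::HashMap;

/// Hypothetical, simplified symbol table (not rustc's actual interner).
/// The map answers "string -> index"; the Vec answers "index -> string".
#[derive(Default)]
struct Interner {
    indices: HashMap<String, u32>,
    strings: Vec<String>,
}

impl Interner {
    /// Returns the existing index for `s`, or assigns the next free one.
    fn intern(&mut self, s: &str) -> u32 {
        if let Some(&idx) = self.indices.get(s) {
            return idx;
        }
        let idx = self.strings.len() as u32;
        self.indices.insert(s.to_owned(), idx);
        self.strings.push(s.to_owned());
        idx
    }

    /// Looks a string up by its index.
    fn get(&self, idx: u32) -> Option<&str> {
        self.strings.get(idx as usize).map(String::as_str)
    }
}
```

An index set folds both directions into one structure and stores each string once, which is the appeal of the suggestion. As noted above, it was still slower for the symbol table in practice.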

Progress

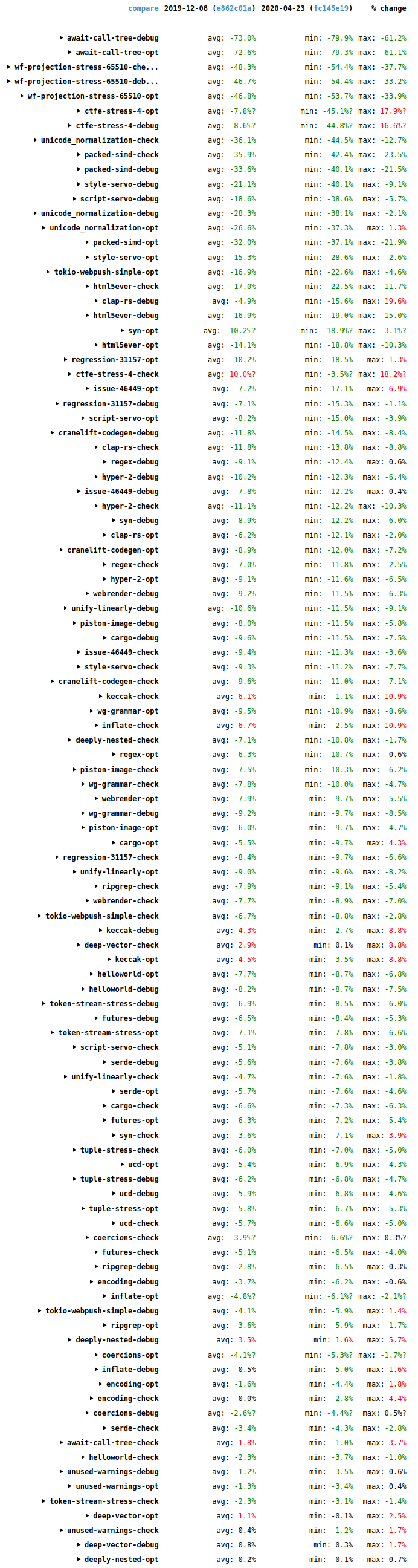

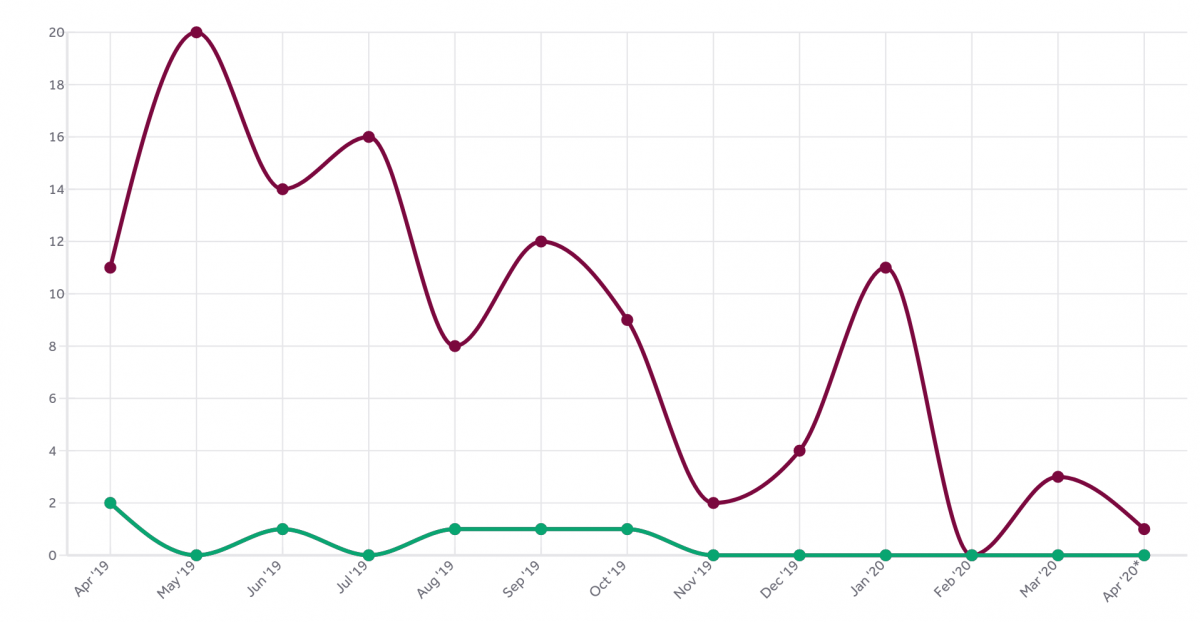

Since my last blog post, compile times have seen some more good improvements. The following screenshot shows wall-time changes on the benchmark suite since then (2019-12-08 to 2020-04-22).

The biggest changes are in the synthetic stress tests await-call-tree-debug, wf-projection-stress-65510, and ctfe-stress-4, which aren’t representative of typical code and aren’t that important.

Overall it’s good news, with many improvements (green), some in the double digits, and relatively few regressions (red). Many thanks to everybody who helped with all the performance improvements that landed during this period.

https://blog.mozilla.org/nnethercote/2020/04/24/how-to-speed-up-the-rust-compiler-in-2020/

|

|

Hacks.Mozilla.Org: A Taste of WebGPU in Firefox |

WebGPU is an emerging API that provides access to the graphics and computing capabilities of hardware on the web. It’s designed from the ground up within the W3C GPU for the Web group by all major browser vendors, as well as Intel and a few others, guided by the following principles:

We are excited to bring WebGPU support to Firefox because it will allow richer and more complex graphics applications to run portably in the Web. It will also make the web platform more accessible to teams who mostly target modern native platforms today, thanks to the use of modern concepts and first-class WASM (WebAssembly) support.

API concepts

WebGPU aims to work on top of modern graphics APIs: Vulkan, D3D12, and Metal. The constructs exposed to the users reflect the basic primitives of these low-level APIs. Let’s walk through the main constructs of WebGPU and explain them in the context of WebGL – the only baseline we have today on the Web.

Separation of concerns

The first important difference between WebGPU and WebGL is that WebGPU separates resource management, work preparation, and submission to the GPU (graphics processing unit). In WebGL, a single context object is responsible for everything, and it contains a lot of associated state. In contrast, WebGPU separates these into multiple different contexts:

- GPUDevice creates resources, such as textures and buffers.
- GPUCommandEncoder allows encoding individual commands, including render and compute passes.
- Once done, it turns into a GPUCommandBuffer object, which can be submitted to a GPUQueue for execution on the GPU.
- We can present the result of rendering to the HTML canvas. Or multiple canvases. Or no canvas at all – using a purely computational workflow.