
Source: http://planet.mozilla.org/.

This journal is generated from the open RSS source at http://planet.mozilla.org/rss20.xml and is updated as that source is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Cameron Kaiser: TenFourFox FPR27b1 available (now with sticky Reader View)

The big user-facing update for FPR27 is a first pass at "sticky" Reader View. I've been paying attention more to improving TenFourFox's implementation of Reader View because, especially for low-end Power Macs (and there's an argument to be made that all Power Macs are, by modern standards, low end), rendering articles in Reader View strips out extraneous elements, trackers, ads, social media, comments, etc., making them substantially lighter and faster than "full fat." Also, because the layout is simplified, this means less chance for exposing or choking on layout or JavaScript features TenFourFox currently doesn't support. However, in regular Firefox and FPR26, you have to go to a page and wait for some portion of it to render before you enter Reader View, which is inconvenient, and worse still if you click any link in a Reader-rendered article you exit Reader View and have to manually repeat the process. This can waste a non-trivial amount of processing time.

So when I say Reader View is now "sticky," that means links you click in an article in reader mode are also rendered in reader mode, and so on, until you explicitly exit it (then things go back to default). This loads pages much faster, in some cases nearly instantaneously. In addition, to make it easier to enter reader mode in fewer steps (and on slower systems, less time waiting for the reader icon in the address bar to be clickable), you can now right click on links and automatically pop the link into Reader View in a new tab ("Open Link in New Tab, Enter Reader View").

As always this is configurable, though "sticky" mode will be the default unless a serious bug is identified: if you set tenfourfox.reader.sticky to false, the old behaviour is restored. Also, since you may be interacting differently with new tabs you open in Reader View, it uses a separate option from Preferences' "When I open a link in a new tab, switch to it immediately." Immediately switching to the newly opened Reader View tab is the default, but you can make such tabs always open in the background by setting tenfourfox.reader.sticky.tabs.loadInBackground to false also.
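For readers who would rather keep such a setting in a file than toggle it by hand, the first preference above could be written to a profile's user.js, the usual Mozilla mechanism for persistent preferences. This is only a sketch: the `set_bool_pref` helper and the profile path are hypothetical, and it assumes TenFourFox reads user.js the same way mainline Firefox does.

```shell
# Hypothetical helper: append a boolean preference to a user.js file.
# $1 = path to user.js, $2 = preference name, $3 = true or false
set_bool_pref() {
  printf 'user_pref("%s", %s);\n' "$2" "$3" >> "$1"
}

# Usage (the profile directory below is a placeholder, not a real path):
# set_bool_pref "$HOME/path/to/profile/user.js" tenfourfox.reader.sticky false
```

The preference name comes from the post; everything else here is illustrative.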

Do keep in mind that not every page is suitable for Reader View, even though allowing you to try to render almost any page (except for a few domains on an internal blacklist) has been the default for several versions. The good news is it won't take very long to find out, and TenFourFox's internal version of Readability is current with mainline Firefox's, so many more pages render usefully. I intend to continue further work with this because I think it really is the best way to get around our machines' unfortunate limitations and once you get spoiled by the speed it's hard to read blogs and news sites any other way. (I use it heavily on my Pixel 3 running Firefox for Android, too.)

Additionally, this version completes the under-the-hood changes to get updates from Firefox 78ESR now that 68ESR is discontinued, including new certificate and EV roots as well as security patches. Part of the security updates involved pulling a couple of our internal libraries up to current versions, yielding both better security and performance improvements, and I will probably do a couple more as part of FPR28. Accordingly, you can now select Firefox 78ESR as a user-agent string from the TenFourFox preference pane if needed as well (though the usual advice to choose as old a user-agent string as you can get away with still applies). OlgaTPark also discovered what we were missing to fix enhanced tracking protection, so if you use that feature, it should stop spuriously blocking various innocent images and stylesheets.

What is not in this release is a fix for issue 621 where logging into LinkedIn crashes due to a JavaScript bug. I don't have a proper understanding of this crash, and a couple of speculative ideas didn't pan out, but it is not PowerPC-specific or associated with the JavaScript JIT compiler as it occurs in Intel builds as well. (If any Mozillian JS deities have a good guess why an object might get created with the wrong number of slots, feel free to comment here or on GitHub.) Since it won't work anyway I may decide to temporarily blacklist LinkedIn to avoid drive-by crashes if I can't sort this out before final release, which will be on or around September 21.

http://tenfourfox.blogspot.com/2020/09/tenfourfox-fpr27b1-available-now-with.html


Will Kahn-Greene: Socorro Engineering: Half in Review 2020 h1

Summary

2020h1 was rough. Layoffs, re-org, Berlin All Hands, Covid-19, focused on MLS for a while, then I switched back to Socorro/Tecken full time, then virtual All Hands.

It's September now and 2020h1 ended a long time ago, but I'm only just getting a chance to catch up. Some things happened in 2020h1 that are important to divulge, and we don't tell anyone about Socorro events via any other medium.

Prepare to dive in!


https://bluesock.org/~willkg/blog/mozilla/socorro_2020_h1.html


The Firefox Frontier: The age of activism: Protect your digital security and know your rights

No matter where you have been getting your news these past few months, the rise of citizen protest and civil disobedience has captured headlines, top stories and trending topics. The … Read more

The post The age of activism: Protect your digital security and know your rights appeared first on The Firefox Frontier.


Firefox UX: Content Strategy in Action on Firefox for Android

The Firefox for Android team recently celebrated an important milestone. We launched a completely overhauled Android experience that’s fast, personalized, and private by design.

Firefox recently launched a completely overhauled Android experience.

When I joined the mobile team six months ago as its first embedded content strategist, I quickly saw the opportunity to improve our process by codifying standards. This would help us avoid reinventing solutions so we could move faster and ultimately develop a more cohesive product for end users. Here are a few approaches I took to integrate systems thinking into our UX process.

Create documentation to streamline decision making

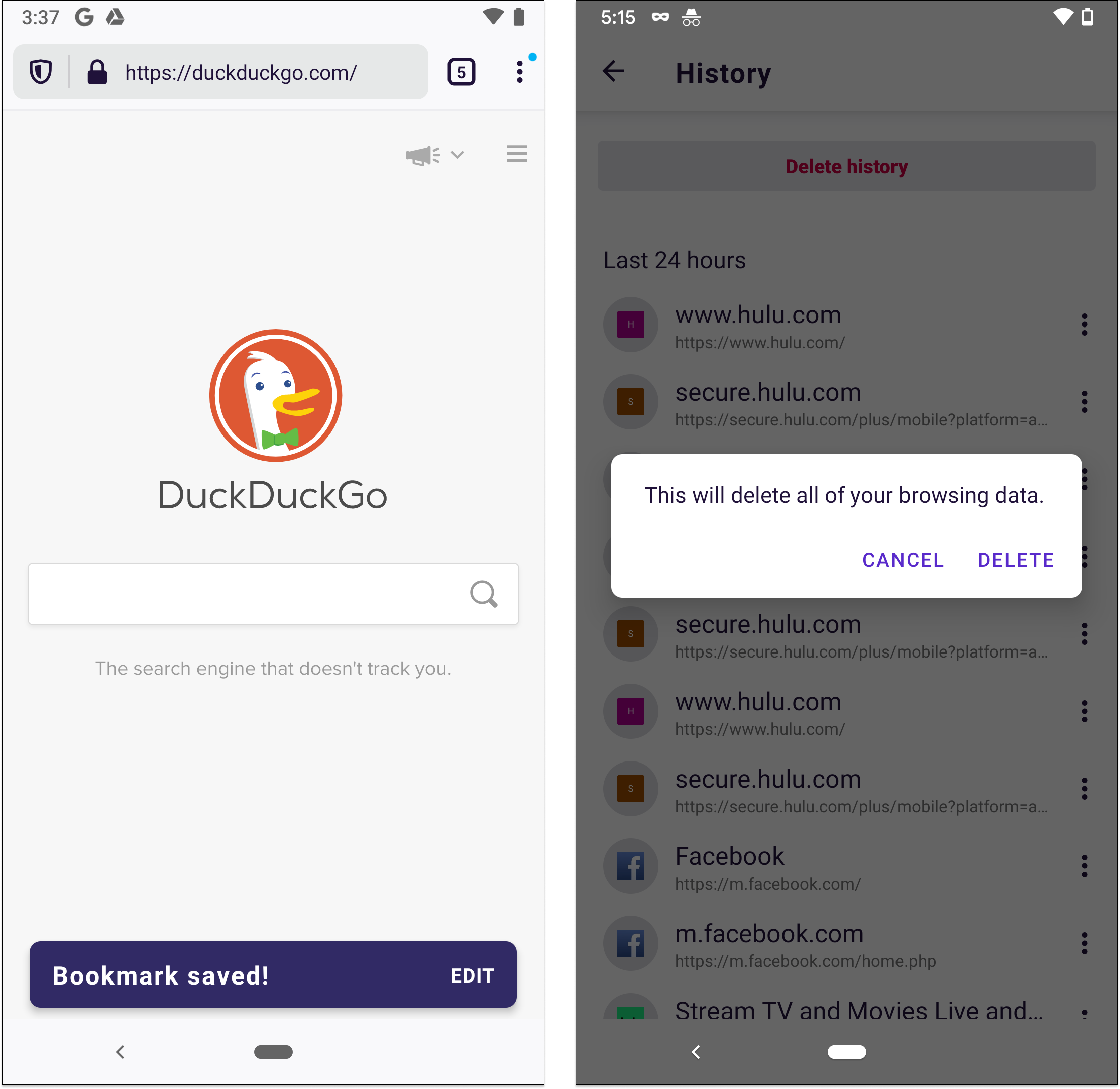

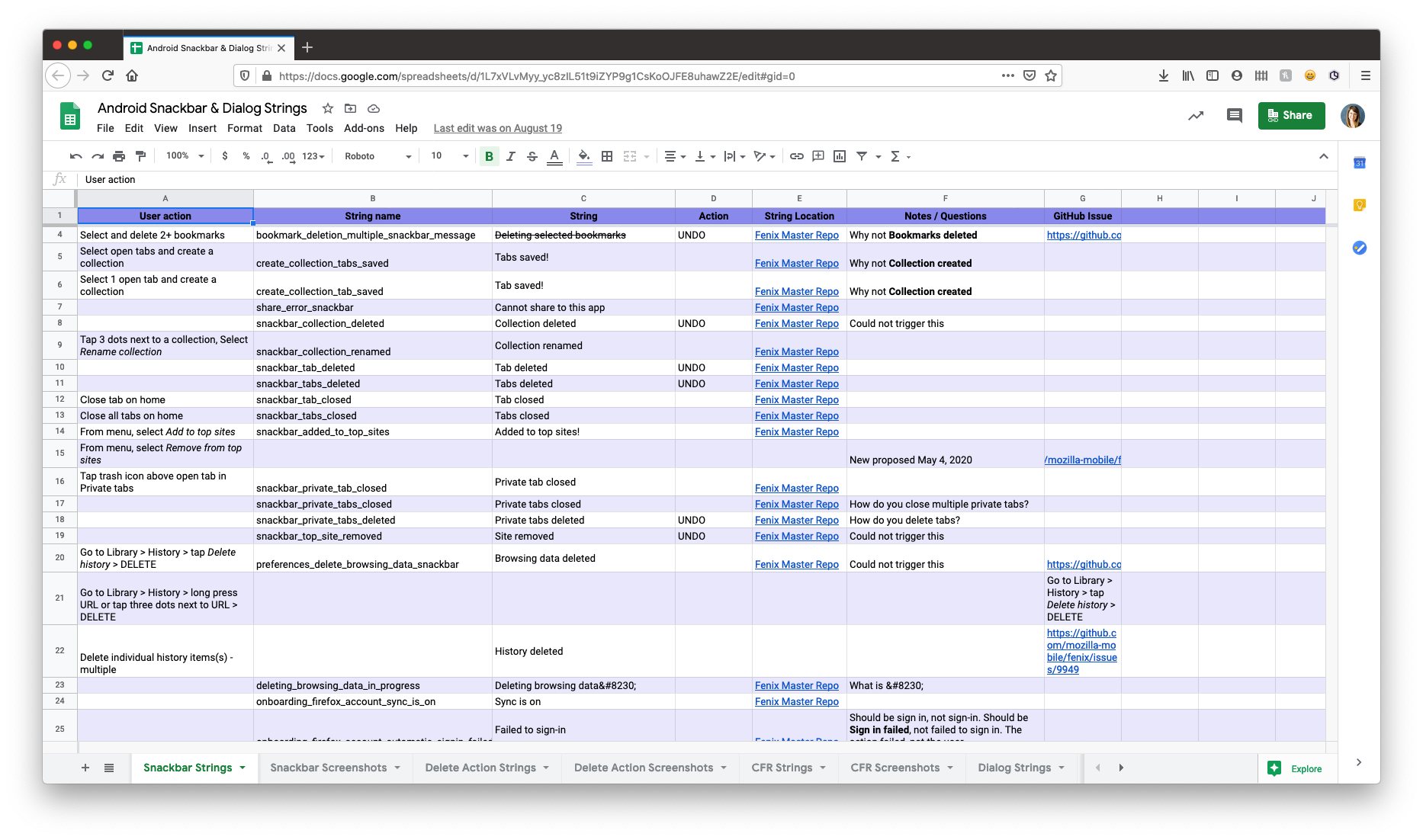

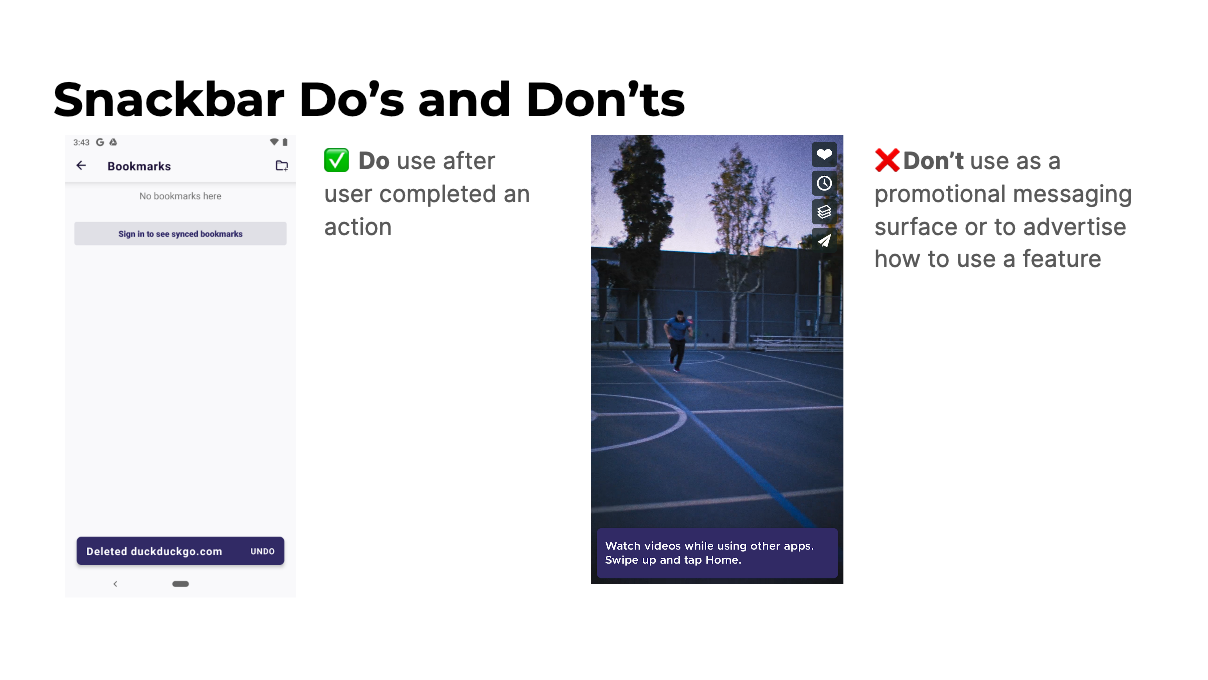

I had an immediate ask to write strings for several snackbars and confirmation dialogs. Dozens of these already existed in the app. They appear when you complete actions like saving a bookmark, closing a tab, or deleting browsing data.

Snackbars and confirmation dialogs appear when you take certain actions inside the app, such as saving a bookmark or deleting your browsing data.

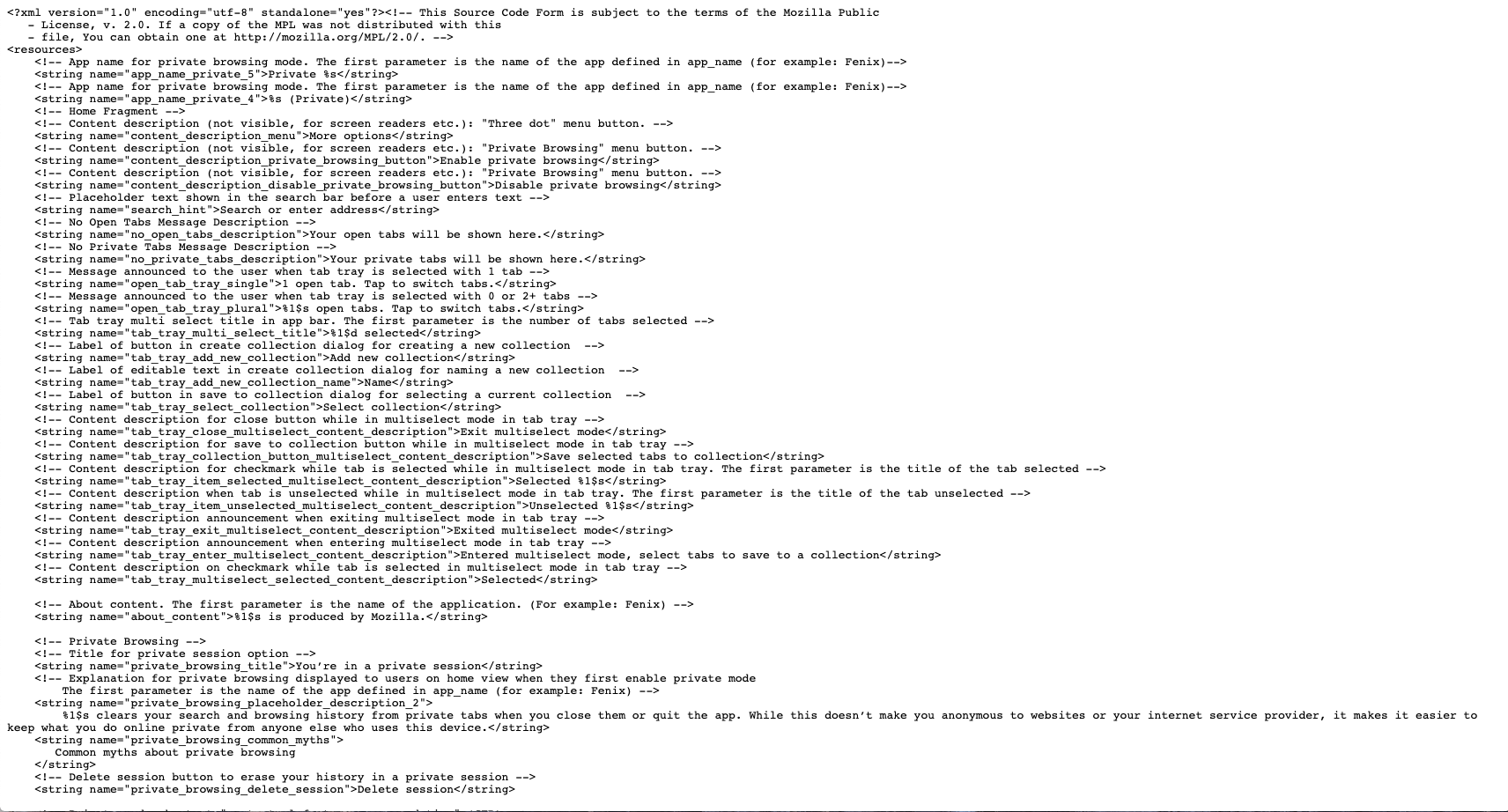

All I had to do was review the existing strings and follow the already-established patterns. That was easier said than done. Strings live in two XML files. Similar strings, like snackbars and dialogs, are rarely grouped together. It’s also difficult to understand the context of the interaction from an XML file.

It’s difficult to identify content patterns and inconsistencies from an XML file.

To see everything in one, more digestible place, I conducted a holistic audit of the snackbars and dialogs.

I downloaded the XML files and pulled all snackbar and dialog-related strings into a spreadsheet. I also went through the app and triggered as many of the messages as I could to add screenshots to my documentation. I audited a few competitors, too. As the audit came together, I began to see patterns emerge.

Organizing and coding strings in a spreadsheet helped me identify patterns and inconsistencies.
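The pull from XML into a spreadsheet described above could be partly automated. The sketch below is one hedged way to do it, not the author's method: the `extract_ui_strings` helper is hypothetical, and it assumes Android-style string resources with one `<string name="...">text</string>` element per line and resource names that contain "snackbar" or "dialog".

```shell
# Hypothetical helper: list strings whose resource name mentions
# "snackbar" or "dialog" as name,"text" rows for a spreadsheet import.
# Assumes one <string name="...">text</string> element per line.
extract_ui_strings() {
  grep -E '<string name="[^"]*(snackbar|dialog)' "$1" |
    sed -n 's/.*<string name="\([^"]*\)"[^>]*>\(.*\)<\/string>.*/\1,"\2"/p'
}

# Usage: extract_ui_strings strings.xml > audit.csv
```

Quotation marks inside a string's text are not escaped here, so rows containing them would need cleanup before import.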

I identified the following:

- Inconsistencies in strings. Example: Some had terminal punctuation and others did not.

- Inconsistencies in triggers and behaviors. Example: A snackbar should have appeared but didn’t, or should NOT have appeared but did.

I used this to create guidelines around our usage of these components. Now when a request for a snackbar or dialog comes up, I can close the loop much faster because I have documented guidelines to follow.

Define and standardize reusable patterns and components

Snackbars are one component of many within the app. Firefox for Android has buttons, permission prompts, error fields, modals, in-app messaging surfaces, and much more. Though the design team maintained a UI library, we didn’t have clear standards around the components themselves. This led to confusion and in some cases the same components being used in different ways.

I began to collect examples of various in-app components. I started small, tackling inconsistencies as I came across them and working with individual designers to align our direction. After a final decision was made about a particular component, we shared it back with the rest of the team. This helped to build the team’s institutional memory and improve transparency about a decision one or two people may have made.

Example of guidance we now provide around when it’s appropriate to use a snackbar.

Note that you don’t need fancy tooling to begin auditing and aligning your components. Our team was in the middle of transitioning between tools, so I used a simple Google Slides deck with screenshots to start. It was also easy for other team members to contribute because a slide deck has a low barrier to entry.

Establish a framework for introducing new features

As we moved closer towards the product launch, we began to discuss which new features to add. This led to conversations around feature discoverability. How would users discover the features that served them best?

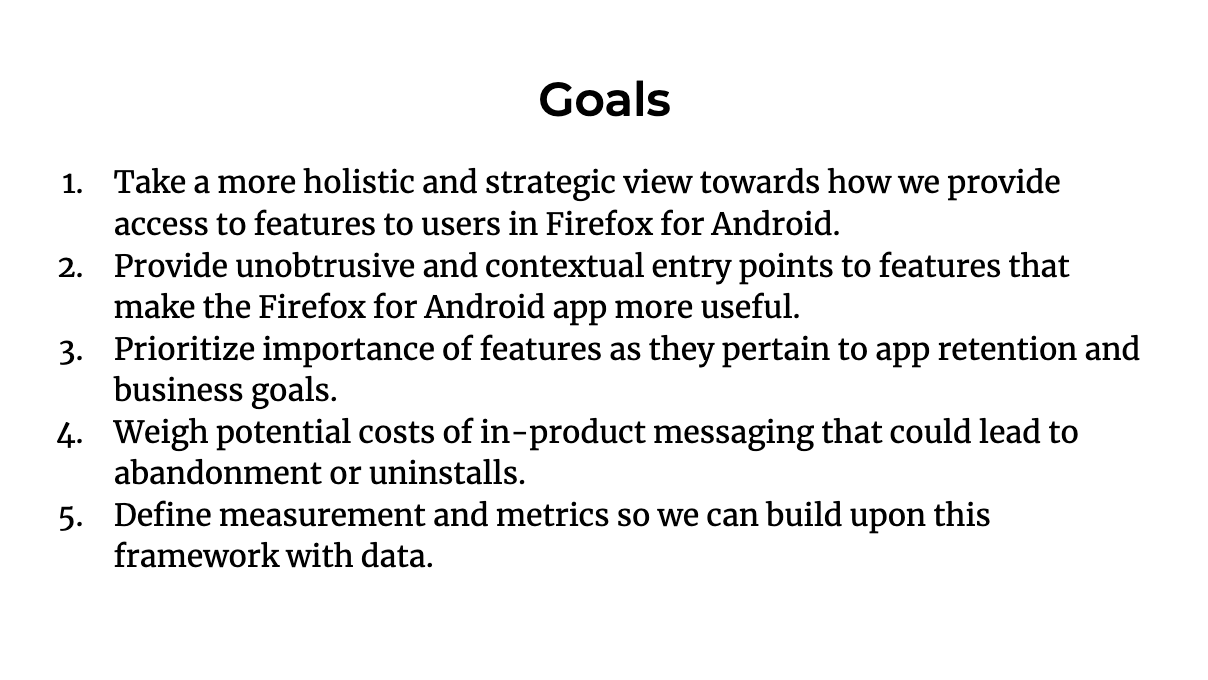

Content strategy advocated for a holistic approach; if we designed a solution for one feature independent of all others in the app, we could end up with a busy, overwhelming end experience that annoyed users. To help us define when, why, and how we would draw attention to in-app features, I developed a Feature Discovery Framework.

Excerpt from the Feature Discovery Framework we are piloting to provide users access to the right features at the right time.

The framework serves as an alignment tool between product owners and user experience to identify the best approach for a particular feature. It’s broken down into three steps. Each step poses a series of questions that are intended to create clarity around the feature itself.

Step 1: Understanding the feature

How does it map to user and business goals?

Step 2: Introducing the feature

How and when should the feature be surfaced?

Step 3: Defining success with data

How and when will we measure success?

After I had developed the first draft of the framework, I shared it in our UX design critique for feedback. I was surprised to discover that my peers working on other products in Firefox were enthusiastic about applying the framework on their own teams. The feedback I gathered during the critique session helped me make improvements and clarify my thinking. On the mobile team, we’re now piloting the framework for future features.

Wrapping up

The words you see on a screen are the most tangible output of a content strategist’s work, but are a small sliver of what we do day-to-day. Developing documentation helps us align with our teams and move faster. Understanding user needs and business goals up front helps us define what approach to take. To learn more about how we work as content strategists at Firefox, check out Driving Value as a Tiny UX Content Team.

This post was originally published on Medium.

https://blog.mozilla.org/ux/2020/09/content-strategy-in-action-on-firefox-for-android/


About:Community: Weaving Safety into the Fabric of Open Source Collaboration

At Mozilla, with over 400 staff in community-facing roles and thousands of volunteer contributors across multiple projects, we believe that everyone deserves the right to work, contribute and convene with the knowledge that their safety and well-being are at the forefront of how we operate and communicate as an organization.

In my 2017 research into the state of diversity and inclusion in open source, including qualitative interviews with over 90 community members, and a survey of over 204 open source projects, we found that while a majority of projects had adopted a code of conduct, nearly half (47%) of community members did not trust (or doubted) its enforcement. That number jumped to 67% for those in underrepresented groups.

For mission-driven organizations like Mozilla, and others building open source into their product development workflows, I found a lack of cross-organizational strategy for enforcement: a strategy that considers the intertwined nature of open source, where staff and contributors regularly work together as teammates and colleagues.

It was clear that the success of enforcement was dependent on the organizational capacity to respond as a whole, and not as the sole responsibility of community managers. This blog post describes our journey to get there.

Why This Matters

Photo by Debby Hudson on Unsplash

Truly ‘open’ participation requires that everyone feel safe, supported, and empowered in their roles.

From an ‘organizational health’ perspective this is also critical to get right, as there are unique risks associated with code of conduct enforcement in open collaboration:

- Safety – Physical/Sexual/Psychological for all involved.

- Privacy – Privacy, confidentiality, security of data related to reports.

- Legal – Failure to recognize applicable law, or follow required processes, resulting in potential legal liability. This includes the geographic region where reporter & reported individuals reside.

- Brand – Mishandling (or not handling) can create mistrust in organizations, and narratives beyond their ability to manage.

Where We Started (Staff)

I want to first acknowledge that across Mozilla’s many projects and communities, maintainers, and project leads were doing an excellent job of managing low to moderate risk cases, including ‘drive by’ trolling.

That said, our processes and program were immature. Many of those same staff found themselves without the expertise, tools, and key partnerships required to manage escalations and high-risk situations. This caused stress and perhaps placed an unfair burden on those people to solve complex problems in real time. Specifically, the gaps were:

Investigative & HR skill set – Investigation requires both a mindset and a set of tactics to ensure that all relevant information is considered before making a decision. This and other skills related to supporting employees sit in the HR department.

Legal – Legal partnership for both product and employment issues is key to high-risk cases (in any of the previously mentioned categories) and those which may involve staff – either as the reporter or the reported. The when and how for consulting legal wasn’t yet fully clear.

Incident Response – Incident response requires timing and a clear set of steps that ensures complex decisions like a project ban are executed in such a way that the safety and privacy of all involved are at the center. This includes access to expertise and tools that help keep people safe. There was no repeatable, predictable, and visible process to plug into.

Centralized Data Tracking – There was no single, cohesive way to track HR, Legal and community violations of the CPG across the project. This means that, theoretically, someone banned from the community could have applied for a MOSS grant or fellowship, or been invited to Mozilla’s bi-annual All Hands by another team, without that being readily flagged.

Where We Started (Community)

Photo by Nicola Fioravanti on Unsplash

“Community does not pay attention to CPG, people don’t feel it will do anything.” – 2017 Research into the state of diversity & inclusion in open source.

For those in our communities, 2017 research found little-to-no knowledge about how to report violations, and what to expect if they did. In situations perceived as urgent, contributors would look for help from multiple staff members they already had a rapport with, and/or affiliated community leadership groups like Mozilla Reps council. Those community leaders were often heroic in their endeavors to help, but again just lacked the same tools, processes and visibility into how the organization was set up to support them.

In open source more broadly, we have a long timeline of unaddressed toxic behavior, especially from those in roles of influence. It seems fair to hypothesize that the human and financial cost of unaddressed behavior is not unlike concerning numbers showing up in research about toxic work environments.

Where We Are Now

Photo by Patrick Hendry on Unsplash

While this work is never done, I can say with a lot of confidence that the program we’ve built is solid, both in systematically addressing the majority of the gaps I’ve mentioned and in being set up to continually improve.

Investments required to build this program were both practical, in that we required resources and budget, and intangible: an emotional commitment to stand shoulder-to-shoulder with people in difficult circumstances, and potentially endure the response of those for whom equality felt uncomfortable.

Over time, and as processes became more efficient, those investments have also been gradually reduced from two people, working full time, to only 25% of a full time employee’s time. Even with recent layoffs at Mozilla, these programs are now lean enough to continue as is.

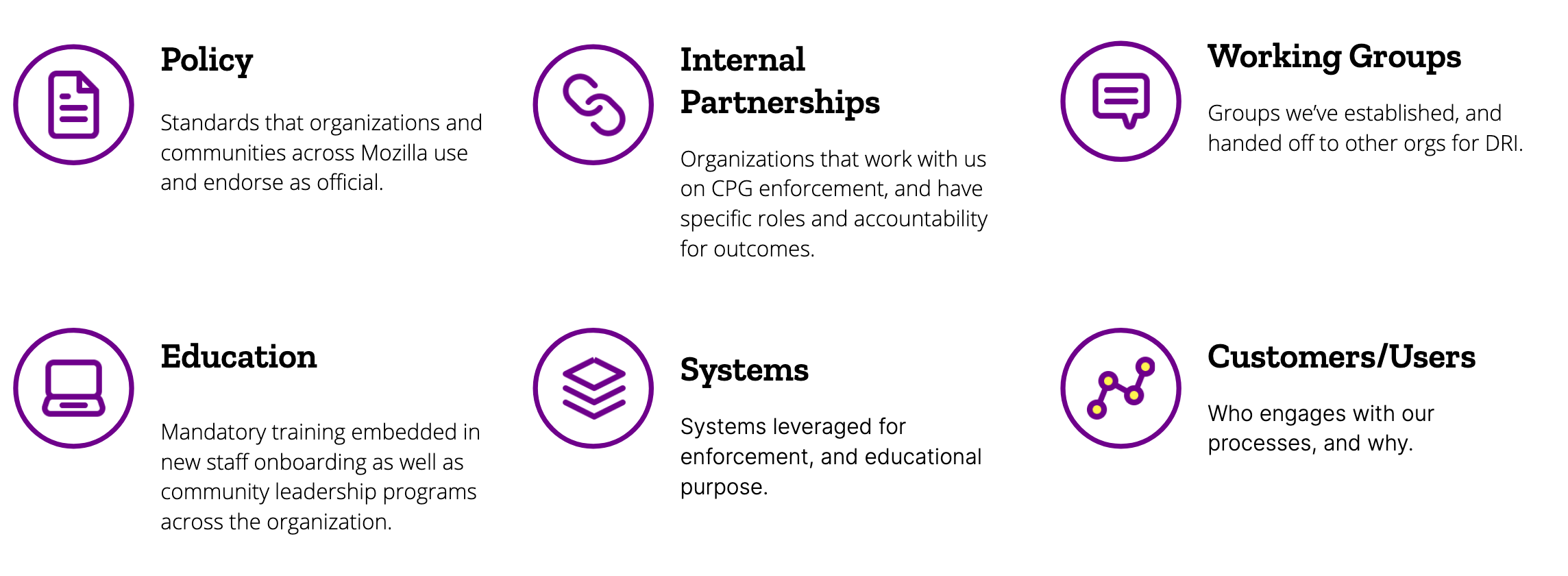

The CPG Program for Enforcement

To date, we’ve triaged 282 reports, consulted on countless issues related to enforcement, and fully rolled out 19 complex full project bans, among other decisions ranked according to levels on our consequence ladder. We’ve also ensured that over 873 of Mozilla’s GitHub repositories use our template, which directs to our processes.

Customers/Users

Who uses this program? It might seem a bit odd to describe those who seek support in difficult situations as customers, or users but from the perspective of service design, thinking this way ensures we are designing with empathy, compassion and providing value for the journey of open source collaboration.

“I felt very supported by Mozilla’s CPG process when I was being harassed. It was clear who was involved in evaluating my case, and the consequences ladder helped jump-start a conversation about what steps would be taken to address the harassment. It also helped that the team listened to and honor my requests during the process.” – Mozilla staff member

“I am not sure how I would have handled this on my own. I am grateful that Mozilla provided support to manage and fix a CPG issues in my community” – Mozilla community member.

Obviously I cannot be specific to protect privacy of individuals involved, but I can group ‘users’ into three groups:

People – contributors, community leaders, community-facing staff, and their managers.

Mozilla Communities & Projects – it’s hard to think of an area that has not leveraged this program in some capacity: Firefox, Developer Tools, SUMO, MDN, Fenix, Rust, Servo, Hubs, Addons, Mozfest, All Hands, Mozilla Reps, Tech Speakers, Dev Rel, MOSS, L10N and regional communities are top of mind.

External Communities & Projects – because we’ve shared our work openly, we’ve seen adoption more broadly in the ecosystem including the Contributor’s Covenant ‘Enforcement Guidelines’.

Policy & Standards

Answering: “How might we scale our processes, in a way that ensures quality, safety, stability, reproducibility and ultimately builds trust across the board (staff and communities)?”.

This includes continual improvement of the CPG itself. This year, after interviewing an expert on the topic of caste discrimination, and its potentially negative impact on open communities, we added caste as a protected group. This year, we’ve also translated the policy into 7 more languages for a total of 15. Finally, we added a How to Report page, including best practices for accepting a report, and ensuring compliance based on whether staff are the reporter or the reported. All changes are tracked here.

For the enforcement policy itself we have the following standards:

- A decision-making policy governs cases where contributors are involved, ensuring that the right stakeholders are consulted and that the scope of that consultation is limited to protect the privacy of those involved.

- Consequence ladder guides decision-making, and if required, provides guidance for escalation in cases of repeat violations.

- For rare cases where a ban is temporary, we have a policy to invite members back through our CPG onboarding.

- To roll out a decision across multiple systems, we’ve developed this project-wide process including communication templates.

To ensure unified understanding and visibility, we have the following supportive processes and tools:

- A GitHub standard for the CODE_OF_CONDUCT.md file.

- A script for scanning Mozilla’s GitHub organization for outdated or missing CODE_OF_CONDUCT files. This same script will open an issue and an associated PR with the correct file.

- A Code of Conduct evaluator tool for Mozilla’s Open Source Support (MOSS) grant program to better evaluate the inclusion of applicant policies.
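To give a feel for the scanning idea in the list above, here is a much-reduced sketch. It is not Mozilla's script: the `list_missing_coc` helper is hypothetical, it inspects local checkouts rather than the GitHub organization, and it only detects a missing file (it does not check for outdated content or open issues and PRs).

```shell
# Hypothetical helper: print the name of every immediate subdirectory of $1
# (assumed to be local repository checkouts) lacking CODE_OF_CONDUCT.md.
list_missing_coc() {
  for repo in "$1"/*/; do
    [ -d "$repo" ] || continue
    [ -f "${repo}CODE_OF_CONDUCT.md" ] || basename "$repo"
  done
}

# Usage: list_missing_coc "$HOME/checkouts"
```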

Internal Partnerships

Answering: “How might we unite people, and teams with critical skills needed to respond efficiently and effectively (without that requiring a lot of formality)?”.

There were three categories of formal, and informal partnerships, internally:

Product Partnerships – those with accountability, and skill sets related to product implementation of standards and policies. Primarily this is legal’s product team, and those administering the Mozilla GitHub organization.

Safety Partnerships – those with specific skill sets, required in emergency situations. At Mozilla, this is Security Assurance, HR, Legal and Workplace Resources (WPR).

Enforcement Partnerships – Specifically this means alignment between HR and legal on which actions belong to which team. That’s not to say we always need these; many smaller reports can easily be handled by the community team alone.

An example of how a case involving an employee as reporter, or reported is managed between departments.

We also have less formalized partnerships (more of an intention to work together) across events like All Hands, and in collaboration with other enforcement leaders at Mozilla like those managing high-volume issues in places like Bugzilla.

Working Groups

Answering: “How can we convene representatives from different areas of the org, around specific problems?”

Centralized CPG Enforcement Data – Working Group

To mitigate the risk identified, a working group consisting of HR (for both Mozilla Corporation and Mozilla Foundation), legal, and the community team comes together periodically to triage requests for things like MOSS grants, community leadership roles, and in-person invites to events (pre-COVID-19). This has reduced the potential for re-emergence of those with CPG violations in a number of areas.

Safety – Working Group

When Mozillians feel threatened (perceived or actual), we want to make sure there is an accountable team, with access and ability to trigger broader responses across the organization, based on risk. This started as a mailing list of those accountable for Security, Community, Workplace Resources (WPR) and HR; this group now has Security as its DRI, ensuring prompt incident response.

Each of these working groups started as an experiment; having demonstrated its value, each now has an accountable DRI (HR & Security Assurance respectively).

Education

Answering: “How can we ensure an ongoing high standard of response, through knowledge sharing and training for contributors in roles of project leadership, and staff in community-facing roles (and their managers)?”

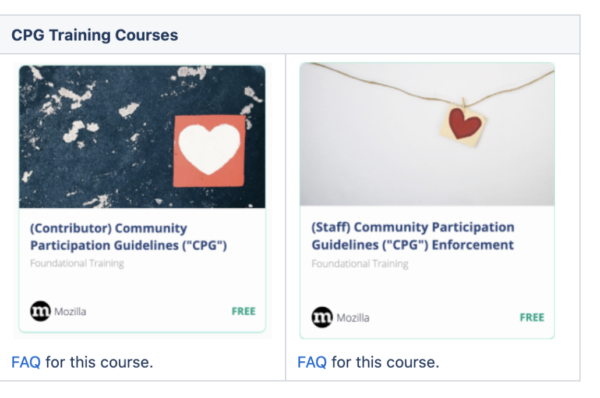

We created two courses:

These are not intended to make people ‘enforcement experts’. Instead, curriculum covers, at a high level (think ‘first aid’ for enforcement!), those topics critical to mitigating risk, and keeping people safe.


98% of the 501 staff who have completed this course said they understood how it applied to their role, and valued the experience.

Central to content is this triage process, for quick decision making, and escalation if needed.

Last (but not least), these courses encourage learners to prioritize self-care, with available resources, and clear organizational support for doing so.

Supporting Systems

As part of our design and implementation we also found a need for systems to further our program’s effectiveness. Those are:

Reporting Tool: We needed a way to effectively and consistently accept, and document reports. We decided to use a third party system that allowed Mozilla to create records directly and allowed contributors/community members to personally submit reports in the same tool. This helped with making sure that we had one authorized system rather than a smattering of notes and documents being kept in an unstructured way. It also allows people to report in their own language.

Learning Management System (LMS): No program is effective without meaningful training. To support this, we engaged a third-party tool that allows us to create content that is easy to digest and that also provides assessment opportunities (quizzes) and the ability to track course completion.

Finally…

Photo by Courtney Hedger on Unsplash

This often invisible work of safety is critical if open source is to reach its full potential. I want to thank the many, many people who cared, advocated and contributed to this work, and those who trusted us to help.

If you have any questions about this program, including how to leverage our open resources, please do reach out via our GitHub repository, or Discourse.

___________________________________________________

NOTE: We determined Community-facing roles as those with ‘Community Manager’ in their title and:

- Engineers, designers, and others working regularly with contributors on platforms like Mercurial, Bugzilla and GitHub.

- Anyone organizing, speaking or hosting events on behalf of Mozilla.

- Those with jobs requiring social media interactions with external audiences including blogpost authorship.

https://blog.mozilla.org/community/2020/09/10/weaving-safety-into-the-fabric-of-open-source/


Daniel Stenberg: store the curl output over there

tldr: --output-dir [directory] comes in curl 7.73.0

The curl options to store the contents of a URL into a local file, -o (--output) and -O (--remote-name) were part of curl 4.0, the first ever release, already in March 1998.

Even though we often get to hear from users that they can’t remember which of the letter O’s to use, they’ve worked exactly the same for over twenty years. I believe the biggest reason why they’re hard to keep apart is because of other tools that use similar options for maybe not identical functionality so a command line cowboy really needs to remember the exact combination of tool and -o type.

Later on, we also brought -J to further complicate things. See below.

Let’s take a look at what these options do before we get into the new stuff:

--output [file]

This tells curl to store the downloaded contents in that given file. You can specify the file as a local file name for the current directory or you can specify the full path. Example, store the HTML from example.org in "/tmp/foo":

curl -o /tmp/foo https://example.org

--remote-name

This option is probably much better known as its short form: -O (upper case letter o).

This tells curl to store the downloaded contents in a file whose name is extracted from the given URL’s path part. For example, if you download the URL "https://example.com/pancakes.jpg" users often think that saving that using the local file name “pancakes.jpg” is a good idea. -O does that for you. Example:

curl -O https://example.com/pancakes.jpg

The name is extracted from the given URL. Even if you tell curl to follow redirects, which then may go to URLs using different file names, the selected local file name is the one in the original URL. This way you know before you invoke the command which file name it will get.
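As a rough illustration of that rule, the name selection can be imitated in plain shell. This is a simplified sketch, not curl's actual code: the `remote_name` helper is hypothetical, it assumes the URL path ends in a file name, and real curl versions may treat edge cases (such as query strings or an empty path) differently.

```shell
# Hypothetical helper: approximate the local file name -O would choose
# by taking the last segment of the URL path.
remote_name() {
  url=${1%%\#*}     # drop any fragment
  url=${url%%\?*}   # drop any query string
  printf '%s\n' "${url##*/}"
}

# Usage: remote_name "https://example.com/pancakes.jpg"
```

Because only the URL given on the command line is inspected, the result is known before any request is made, which is the point the paragraph above makes about redirects.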

--remote-header-name

This option is commonly used as -J (upper case letter j) and needs to be set in combination with --remote-name.

This makes curl parse incoming HTTP response headers to check for a Content-Disposition: header, and if one is present attempt to parse a file name out of it and then use that file name when saving the content.

This then naturally makes it impossible for a user to be really sure what file name it will end up with. You leave the decision entirely to the server. curl will make an effort to not overwrite any existing local file when doing this, and to reduce risks curl will always cut off any provided directory path from that file name.

Example download of the pancake image again, but allow the server to set the local file name:

curl -OJ https://example.com/pancakes.jpg

(it has been said that “-OJ is a killer feature” but I can’t take any credit for having come up with that.)
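To make the two precautions concrete, the following sketch pulls a file name out of a Content-Disposition value and then discards any directory part. It is a simplification under stated assumptions, not curl's parser: the `disposition_name` helper is hypothetical, only the plain `filename=` parameter is handled (not `filename*=`), and only "/" is treated as a path separator.

```shell
# Hypothetical helper: pull a file name out of a Content-Disposition value
# and discard any directory part, mirroring the precaution described above.
disposition_name() {
  name=$(printf '%s\n' "$1" |
    sed -n 's/.*filename="\{0,1\}\([^";]*\).*/\1/p')
  printf '%s\n' "${name##*/}"
}

# Usage: disposition_name 'attachment; filename="../../etc/pancakes.jpg"'
```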

Which directory

So in particular with -O, with or without -J, the file is downloaded to the current working directory. If you want the download to be put somewhere special, you had to first ‘cd’ there.

When saving multiple URLs within a single curl invocation using -O, storing those in different directories would thus be impossible as you can only cd between curl invocations.
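One shell-level workaround for the limitation just described is to do the cd in a subshell, so the caller's working directory is untouched. The sketch below assumes a single URL per call; the `download_into` helper and the `CURL` override variable are hypothetical names introduced only for illustration.

```shell
# Hypothetical helper: run "curl -O" from inside directory $1 without
# changing the caller's working directory. $2 is the URL to fetch.
# CURL is an illustrative override so another command can stand in for curl.
download_into() {
  ( cd "$1" && "${CURL:-curl}" -O "$2" )
}

# Usage: download_into /tmp https://example.com/pancakes.jpg
```

The parentheses start a subshell, so the directory change ends when the download does.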

Introducing --output-dir

In curl 7.73.0, we introduce this new command line option --output-dir that goes well together with all these output options. It tells curl in which directory to create the file. If you want to download the pancake image, and put it in /tmp no matter what your current directory is:

curl -O --output-dir /tmp https://example.com/pancakes.jpg

And if you allow the server to select the file name but still want it in /tmp:

curl -OJ --output-dir /tmp https://example.com/pancakes.jpg

Create the directory!

This new option also goes well in combination with --create-dirs, so you can specify a non-existing directory with --output-dir and have curl create it for the download and then store the file in there:

curl --create-dirs -O --output-dir /tmp/receipes https://example.com/pancakes.jpg

Ships in 7.73.0

This new option comes in curl 7.73.0. It is curl’s 233rd command line option.
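Since the option only exists from 7.73.0 onward, a script that must run against older installations might check the version first. The sketch below is one hedged way to do that: the `supports_output_dir` helper is hypothetical, and it assumes a version string of the usual `major.minor.patch` form.

```shell
# Hypothetical helper: succeed if version string $1 (e.g. "7.73.0")
# is at least 7.73, the release the post says introduces --output-dir.
supports_output_dir() {
  major=${1%%.*}
  rest=${1#*.}
  minor=${rest%%.*}
  [ "$major" -gt 7 ] || { [ "$major" -eq 7 ] && [ "$minor" -ge 73 ]; }
}

# Usage sketch (the version is the second word of curl's first output line):
# ver=$(curl --version | awk 'NR==1 { print $2 }')
# if supports_output_dir "$ver"; then curl -O --output-dir /tmp "$url"; fi
```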

You can always find the man page description of the option on the curl website.

Credits

I (Daniel) wrote the code, docs and tests for this feature.

Image by Alexas_Fotos from Pixabay

https://daniel.haxx.se/blog/2020/09/10/store-the-curl-output-over-there/


Mozilla Privacy Blog: Mozilla offers a vision for how the EU Digital Services Act can build a better internet

Later this year the European Commission is expected to publish the Digital Services Act (DSA). These new draft laws will aim at radically transforming the regulatory environment for tech companies operating in Europe. The DSA will deal with everything from content moderation, to online advertising, to competition issues in digital markets. Today, Mozilla filed extensive comments with the Commission, to outline Mozilla’s vision for how the DSA can address structural issues facing the internet while safeguarding openness and fundamental rights.

The stakes at play for consumers and the internet ecosystem could not be higher. If developed carefully and with broad input from the internet health movement, the DSA could help create an internet experience for consumers that is defined by civil discourse, human dignity, and individual expression. In addition, it could unlock more consumer choice and consumer-facing innovation, by creating new market opportunities for small, medium, and independent companies in Europe.

Below are the key recommendations in Mozilla’s 90-page submission:

- Content responsibility: The DSA provides a crucial opportunity to implement an effective and rights-protective framework for content responsibility on the part of large content-sharing platforms. Content responsibility should be assessed in terms of platforms’ trust & safety efforts and processes, and should scale depending on resources, business practices, and risk. At the same time, the DSA should avoid ‘down-stack’ content control measures (e.g. those targeting ISPs, browsers, etc). These interventions pose serious free expression risk and are easily circumventable, given the technical architecture of the internet.

- Meaningful transparency to address disinformation: To ensure transparency and to facilitate accountability, the DSA should consider a mandate for broad disclosure of advertising through publicly available ad archive APIs.

- Intermediary liability: The main principles of the E-Commerce directive still serve the intended purpose, and the European Commission should resist the temptation to weaken the directive in the effort to increase content responsibility.

- Market contestability: The European Commission should consider how to best support healthy ecosystems with appropriate regulatory engagement that preserves the robust innovation we’ve seen to date — while also allowing for future competitive innovation from small, medium and independent companies without the same power as today’s major platforms.

- Ad tech reform: The advertising ecosystem is a key driver of the digital economy, including for companies like Mozilla. However the ecosystem today is unwell, and a crucial first step towards restoring it to health would be for the DSA to address its present opacity.

- Governance and oversight: Any new oversight bodies created by the DSA should be truly co-regulatory in nature, and be sufficiently resourced with technical, data science, and policy expertise.

Our submission to the DSA public consultation builds on this week’s open letter from our CEO Mitchell Baker to European Commission President Ursula von der Leyen. Together, they provide the vision and the practical guidance on how to make the DSA an effective regulatory tool.

In the coming months we’ll advance these substantive recommendations as the Digital Services Act takes shape. We look forward to working with EU lawmakers and the broader policy community to ensure the DSA succeeds in addressing the systemic challenges holding back the internet from what it should be.

A high-level overview of our DSA submission can be found here, and the complete 90-page submission can be found here.

The post Mozilla offers a vision for how the EU Digital Services Act can build a better internet appeared first on Open Policy & Advocacy.

|

|

Nicholas Nethercote: How to speed up the Rust compiler one last time |

Due to recent changes at Mozilla my time working on the Rust compiler is drawing to a close. I am still at Mozilla, but I will be focusing on Firefox work for the foreseeable future.

So I thought I would wrap up my “How to speed up the Rust compiler” series, which started in 2016.

Looking back

I wrote ten “How to speed up the Rust compiler” posts.

- How to speed up the Rust compiler. The original post, and the one where the title made the most sense. It focused mostly on how to set up the compiler for performance work, including profiling and benchmarking. It mentioned only four of my PRs, all of which optimized allocations.

- How to speed up the Rust compiler some more. This post switched to focusing mostly on my performance-related PRs (15 of them), setting the tone for the rest of the series. I reused the “How to…” naming scheme because I liked the sound of it, even though it was a little inaccurate.

- How to speed up the Rust compiler in 2018. I returned to Rust compiler work after a break of more than a year. This post included updated info on setting things up for profiling the compiler and described another 7 of my PRs.

- How to speed up the Rust compiler some more in 2018. This post described some improvements to the standard benchmarking suite and support for more profiling tools, covering 14 of my PRs. Due to multiple requests from readers, I also included descriptions of failed optimization attempts, something that proved popular and that I did in several subsequent posts. (A few times, readers made suggestions that then led to subsequent improvements, which was great.)

- How to speed up the Rustc compiler in 2018: NLL edition. This post described 13 of my PRs that helped massively speed up the new borrow checker, and featured my favourite paragraph of the entire series: “the html5ever benchmark was triggering out-of-memory failures on CI… over a period of 2.5 months we reduced the memory usage from 14 GB, to 10 GB, to 2 GB, to 1.2 GB, to 600 MB, to 501 MB, and finally to 266 MB”. This is some of the performance work I’m most proud of. The new borrow checker was a huge win for Rust’s usability and it shipped with very little hit to compile times, an outcome that was far from certain for several months.

- How to speed up the Rust compiler in 2019. This post covered 44(!) of my PRs including ones relating to faster globals accesses, profiling improvements, pipelined compilation, and a whole raft of tiny wins from reducing allocations with the help of the new version of DHAT.

- How to speed up the Rust compiler some more in 2019. This post described 11 of my PRs, including several minimising calls to memcpy, and several improving the ObligationForest data structure. It discussed some PRs by others that reduced library code bloat. I also included a table of overall performance changes since the previous post, something that I continued doing in subsequent posts.

- How to speed up the Rust compiler one last time in 2019. This post described 21 of my PRs, including two separate sequences of refactoring PRs that unintentionally led to performance wins.

- How to speed up the Rust compiler in 2020: This post described 23 of my successful PRs relating to performance, including a big change and win from avoiding the generation of LLVM bitcode when LTO is not being used (which is the common case). The post also described 5 of my PRs that represented failed attempts.

- How to speed up the Rust compiler some more in 2020: This post described 19 of my PRs, including several relating to LLVM IR reductions found with cargo-llvm-lines, and several relating to improvements in profiling support. The post also described the important new weekly performance triage process that I started and is on track to be continued by others.

Beyond those, I wrote several other posts related to Rust compilation.

- The Rust compiler is getting faster. This post provided some measurements showing significant overall speed improvements.

- Ad Hoc Profiling. This post described a simple but surprisingly effective profiling tool that I used for a lot of my PRs.

- How to get the size of Rust types with -Zprint-type-sizes. This post described how to see how Rust types are laid out in memory.

- A better DHAT. This post described improvements I made to DHAT, which I used for a lot of my PRs.

- The Rust compiler is still getting faster. Another status post about speed improvements.

- Visualizing Rust compilation. This post described a new Cargo feature that produces graphs showing the compilation of Rust crates in a project. It can help project authors rearrange their code for faster compilation.

As well as sharing the work I’d been doing, a goal of the posts was to show that there are people who care about Rust compiler performance and that it was actively being worked on.

Lessons learned

Boiling down compiler speed to a single number is difficult, because there are so many ways to invoke a compiler, and such a wide variety of workloads. Nonetheless, I think it’s not inaccurate to say that the compiler is at least 2-3x faster than it was a few years ago in many cases. (This is the best long-range performance tracking I’m aware of.)

When I first started profiling the compiler, it was clear that it had not received much in the way of concerted profile-driven optimization work. (It’s only a small exaggeration to say that the compiler was basically a stress test for the allocator and the hash table implementation.) There was a lot of low-hanging fruit to be had, in the form of simple and obvious changes that had significant wins. Today, profiles are much flatter and obvious improvements are harder for me to find.

My approach has been heavily profiler-driven. The improvements I did are mostly what could be described as “bottom-up micro-optimizations”. By that I mean they are relatively small changes, made in response to profiles, that didn’t require much in the way of top-down understanding of the compiler’s architecture. Basically, a profile would indicate that a piece of code was hot, and I would try to either (a) make that code faster, or (b) avoid calling that code.

It’s rare that a single micro-optimization is a big deal, but dozens and dozens of them are. Persistence is key.

I spent a lot of time poring over profiles to find improvements. I have measured a variety of different things with different profilers. In order of most to least useful:

- Instruction counts (Cachegrind and Callgrind)

- Allocations (DHAT)

- All manner of custom path and execution counts via ad hoc profiling (counts)

- Memory use (DHAT and Massif)

- Lines of LLVM IR generated by the front end (cargo-llvm-lines)

- memcpys (DHAT)

- Cycles (perf), but only after I discovered the excellent Hotspot viewer… I find perf’s own viewer tools to be almost unusable. (I haven’t found cycles that useful because they correlate strongly with instruction counts, and instruction count measurements are less noisy.)

Every time I did a new type of profiling, I found new things to improve. Often I would use multiple profilers in conjunction. For example, the improvements I made to DHAT for tracking allocations and memcpys were spurred by Cachegrind/Callgrind’s outputs showing that malloc/free and memcpy were among the hottest functions for many benchmarks. And I used counts many times to gain insight about a piece of hot code.
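The ad hoc profiling idea mentioned above (print one line per interesting event, then tally how often each distinct line occurs) can be sketched in a few lines of Rust. This is a minimal imitation of the idea and not the actual counts tool; the function name `tally` and the logged "len N" lines are invented for illustration, and the log is collected in memory rather than written to stderr so the example is self-contained.

```rust
use std::collections::HashMap;

/// Count how often each distinct line occurs and sort by descending frequency
/// (ties broken alphabetically so the output is stable).
fn tally<'a>(lines: impl IntoIterator<Item = &'a str>) -> Vec<(&'a str, usize)> {
    let mut freq: HashMap<&str, usize> = HashMap::new();
    for line in lines {
        *freq.entry(line).or_insert(0) += 1;
    }
    let mut rows: Vec<(&str, usize)> = freq.into_iter().collect();
    rows.sort_by(|a, b| b.1.cmp(&a.1).then(a.0.cmp(b.0)));
    rows
}

fn main() {
    // Stand-in for debug output from an instrumented hot path: one line per
    // call, recording a (made-up) input length.
    let lens = [0usize, 2, 0, 4, 0];
    let log: Vec<String> = lens.iter().map(|n| format!("len {}", n)).collect();

    let rows = tally(log.iter().map(String::as_str));
    let total: usize = rows.iter().map(|r| r.1).sum();
    for (line, n) in &rows {
        // Print the count, its share of all lines, and the line itself.
        println!("{:>4} ({:5.1}%): {}", n, 100.0 * *n as f64 / total as f64, line);
    }
}
```

A skewed distribution in such a tally (here, most calls seeing an empty input) is the kind of hint that suggests a fast path worth adding.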

Off the top of my head, I can think of some unexplored (by me) profiling territories: self-profiling/queries, threading stuff (e.g. lock contention, especially in the parallel front-end), cache misses, branch mispredictions, syscalls, I/O (e.g. disk activity). Also, there are lots of profilers out there, each one has its strengths and weaknesses, and each person has their own areas of expertise, so I’m sure there are still improvements to be found even for the profiling metrics that I did consider closely.

I also did two larger “architectural” or “top-down” changes: pipelined compilation and LLVM bitcode elision. These kinds of changes are obviously great to do when you can, though they require top-down expertise and can be hard for newcomers to contribute to. I am pleased that there is an incremental compilation working group being spun up, because I think that is an area where there might be some big performance wins.

Good benchmarks are important because compiler inputs are complex and highly variable. Different inputs can stress the compiler in very different ways. I used rustc-perf almost exclusively as my benchmark suite and it served me well. That suite changed quite a bit over the past few years, with various benchmarks being added and removed. I put quite a bit of effort into getting all the different profilers to work with its harness. Because rustc-perf is so well set up for profiling, any time I needed to do some profiling of some new code I would simply drop it into my local copy of rustc-perf.

Compilers are really nice to profile and optimize because they are batch programs that are deterministic or almost-deterministic. Profiling the Rust compiler is much easier and more enjoyable than profiling Firefox, for example.

Contrary to what you might expect, instruction counts have proven much better than wall times when it comes to detecting performance changes on CI, because instruction counts are much less variable than wall times (e.g. ±0.1% vs ±3%; the former is highly useful, the latter is barely useful). Using instruction counts to compare the performance of two entirely different programs (e.g. GCC vs clang) would be foolish, but it’s reasonable to use them to compare the performance of two almost-identical programs (e.g. rustc before PR #12345 and rustc after PR #12345). It’s rare for instruction count changes to not match wall time changes in that situation. If the parallel version of the rustc front-end ever becomes the default, it will be interesting to see if instruction counts continue to be effective in this manner.
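The arithmetic behind that claim is simple: a change can only be trusted if it is clearly larger than the run-to-run variation of the metric. The Rust sketch below uses invented numbers (they are not real rustc measurements), and the helper names `noise_percent` and `is_detectable` are hypothetical; a real setup would use more samples and a proper statistical test.

```rust
/// Relative half-range of a set of measurements, in percent of the mean:
/// a crude stand-in for the "±x%" run-to-run variation quoted above.
fn noise_percent(samples: &[f64]) -> f64 {
    let mean = samples.iter().sum::<f64>() / samples.len() as f64;
    let max = samples.iter().cloned().fold(f64::MIN, f64::max);
    let min = samples.iter().cloned().fold(f64::MAX, f64::min);
    100.0 * (max - min) / (2.0 * mean)
}

/// A change is only trustworthy if it is larger than the noise.
fn is_detectable(change_percent: f64, noise: f64) -> bool {
    change_percent.abs() > noise
}

fn main() {
    // Invented repeated measurements of the same build, for illustration only.
    let instructions = [1_000_000_000.0, 1_001_000_000.0, 999_000_000.0];
    let wall_seconds = [10.0, 10.3, 9.7];
    // A hypothetical 1% improvement from some PR.
    let change = -1.0;

    for (name, samples) in [("instructions", &instructions[..]), ("wall time", &wall_seconds[..])] {
        let noise = noise_percent(samples);
        println!(
            "{}: noise about ±{:.1}%, 1% change detectable: {}",
            name,
            noise,
            is_detectable(change, noise)
        );
    }
}
```

With these made-up samples the instruction counts vary by about ±0.1% and the wall times by about ±3%, so the same 1% change stands out in the first metric and disappears in the second.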

I was surprised by how many people said they enjoyed reading this blog post series. (The positive feedback partly explains why I wrote so many of them.) The appetite for “I squeezed some more blood from this stone” tales is high. Perhaps this relates to the high level of interest in Rust, and also the pain people feel from its compile times. People also loved reading about the failed optimization attempts.

Many thanks to all the people who helped me with this work. In particular:

- Mark Rousskov, for maintaining rustc-perf and the CI performance infrastructure, and helping me with many rustc-perf changes;

- Alex Crichton, for lots of help with pipelined compilation and LLVM bitcode elision;

- Anthony Jones and Eric Rahm, for understanding how this Rust work benefits Firefox and letting me spend some of my Mozilla working hours on it.

Rust’s existence and success is something of a miracle. I look forward to being a Rust user for a long time. Thank you to everyone who has contributed, and good luck to all those who will contribute to it in the future!

https://blog.mozilla.org/nnethercote/2020/09/08/how-to-speed-up-the-rust-compiler-one-last-time/

|

|

Mozilla Thunderbird: OpenPGP in Thunderbird 78 |

Updating to Thunderbird 78 from 68

Soon the Thunderbird automatic update system will start to deliver the new Thunderbird 78 to current users of the previous release, Thunderbird 68. This blog post is intended to share with you details about our OpenPGP support in Thunderbird 78, and some details Enigmail add-on users should consider when updating. If you are interested in reading more about the other features in the Thunderbird 78 release, please see our previous blog post.

Updating to Thunderbird 78 is highly recommended to ensure you will receive security fixes, because no more fixes will be provided for Thunderbird 68 after September 2020.

The traditional Enigmail Add-on cannot be used with version 78, because of changes to the underlying Mozilla platform Thunderbird is built upon. Fortunately, it is no longer needed with Thunderbird version 78.2.1 because it enables a new built-in OpenPGP feature.

Not all of Enigmail’s functionality is offered by Thunderbird 78 yet – but there is more to come. And some functionality has been implemented differently, partly because of technical necessity, but also because we are simplifying the workflow for our users.

With the help of a migration tool provided by the Enigmail Add-on developer, users of Enigmail’s classic mode will get assistance to migrate their settings and keys. Users of Enigmail’s Junior Mode will be informed by Enigmail, upon update, about their options for using that mode with Thunderbird 78, which requires downloading software that isn’t provided by the Thunderbird project. Alternatively, users of Enigmail’s Junior Mode may attempt a manual migration to Thunderbird’s new integrated OpenPGP feature, as explained in our howto document listed below.

Unlike Enigmail, OpenPGP in Thunderbird 78 does not use GnuPG software by default. This change was necessary to provide a seamless and integrated experience to users on all platforms. Instead, the software of the RNP project was chosen for Thunderbird’s core OpenPGP engine. Because RNP is a newer project in comparison to GnuPG, it has certain limitations, for example it currently lacks support for OpenPGP smartcards. As a workaround, Thunderbird 78 offers an optional configuration for advanced users, which requires additional manual setup, but which can allow the optional use of separately installed GnuPG software for private key operations.

The Mozilla Open Source Support (MOSS) awards program has thankfully provided funding for an audit of the RNP library and Thunderbird’s related code, which was conducted by the Cure53 company. We are happy to report that no critical or major security issues were found, all identified issues had a medium or low severity rating, and we will publish the results in the future.

More Info and Support

We have written a support article that lists questions that users might have, and it provides more detailed information on the technology, answers, and links to additional articles and resources. You may find it at: https://support.mozilla.org/en-US/kb/openpgp-thunderbird-howto-and-faq

If you have questions about the OpenPGP feature, please use Thunderbird’s discussion list for end-to-end encryption functionality at: https://thunderbird.topicbox.com/groups/e2ee

Several topics have already been discussed, so you might be able to find some answers in its archive.

https://blog.thunderbird.net/2020/09/openpgp-in-thunderbird-78/

|

|

The Mozilla Blog: Mozilla CEO Mitchell Baker urges European Commission to seize ‘once-in-a-generation’ opportunity |

Today, Mozilla CEO Mitchell Baker published an open letter to European Commission President Ursula von der Leyen, urging her to seize a ‘once-in-a-generation’ opportunity to build a better internet through the opportunity presented by the upcoming Digital Services Act (“DSA”).

Mitchell’s letter coincides with the European Commission’s public consultation on the DSA, and sets out high-level recommendations to support President von der Leyen’s DSA policy agenda for emerging tech issues (more on that agenda and what we think of it here).

The letter sets out Mozilla’s recommendations to ensure:

- Meaningful transparency with respect to disinformation;

- More effective content accountability on the part of online platforms;

- A healthier online advertising ecosystem; and,

- Contestable digital markets

As Mitchell notes:

“The kind of change required to realise these recommendations is not only possible, but proven. Mozilla, like many of our innovative small and medium independent peers, is steeped in a history of challenging the status quo and embracing openness, whether it is through pioneering security standards, or developing industry-leading privacy tools.”

Mitchell’s full letter to Commission President von der Leyen can be read here.

The post Mozilla CEO Mitchell Baker urges European Commission to seize ‘once-in-a-generation’ opportunity appeared first on The Mozilla Blog.

|

|

Support.Mozilla.Org: Introducing the Customer Experience Team |

A few weeks ago, Rina discussed the impact of the recent changes in Mozilla on the SUMO team. This change has resulted in a more focused team that combines Pocket Support and Mozilla Support into a single team that we’re calling Customer Experience, led by Justin Rochell. Justin has been leading the support team in Pocket and will now broaden his responsibilities to oversee Mozilla’s products as well as the SUMO community.

Here’s a short introduction from Justin:

Hey everyone! I’m excited and honored to be stepping into this new role leading our support and customer experience efforts at Mozilla. After heading up support at Pocket for the past 8 years, I’m excited to join forces with SUMO to improve our support strategy, collaborate more closely with our product teams, and ensure that our contributor community feels nurtured and valued.

One of my first support jobs was for an email client called Postbox, which is built on top of Thunderbird. It feels as though I’ve come full circle, since support.mozilla.org was a valuable resource for me when answering support questions and writing knowledge base articles.

You can find me on Matrix at @justin:mozilla.org – I’m eager to learn about your experience as a contributor, and I welcome you to get in touch.

We’re also excited to welcome Olivia Opdahl, who is a Senior Community Support Rep at Pocket and has been on the Pocket Support team since 2014. She’s been responsible for many things in addition to support, including QA, curating Pocket Hits, and doing social media for Pocket.

Here’s a short introduction from Olivia:

Hi all, my name is Olivia and I’m joining the newly combined Mozilla support team from Pocket. I’ve worked at Pocket since 2014 and have seen Pocket evolve many times into what we’re currently reorganizing as a more integrated part of Mozilla. I’m excited to work with you all and learn even more about the rest of Mozilla’s products!

When I’m not working, I’m probably playing video games, hiking, learning programming, taking photos or attending concerts. These days, I’m trying to become a Top Chef, well, not really, but I’d like to learn how to make more than mac and cheese :D

Thanks for welcoming me to the new team!

Besides Justin and Olivia, JR/Joe Johnson, who you might remember for covering Rina’s maternity leave earlier this year, will step in as a Release/Insights Manager for the team and work closely with the product team. Joni will continue to be our Content Lead, and Angela our Technical Writer. I will also stay on as Support Community Manager.

We’ll be sharing more information about our team’s focus in the future as we get to know more. For now, please join me in welcoming Justin and Olivia to the team!

On behalf of the Customer Experience Team,

Kiki

https://blog.mozilla.org/sumo/2020/09/06/introducing-the-customer-experience-team/

|

|

The Mozilla Blog: A look at password security, Part V: Disk Encryption |

|

|

Daniel Stenberg: curl help remodeled |

curl 4.8 was released in 1998 and contained 46 command line options. curl --help would list them all. A decent set of options.

When we released curl 7.72.0 a few weeks ago, it contained 232 options… and curl --help still listed all available options.

What was once a long list of options grew over the decades into a crazy long wall of text, a shock to users who would enter this command and option thinking they would figure out which command line options to try next.

--help me if you can

We’ve known about this usability flaw for a while but it took us some time to figure out how to approach it and decide what the best next step would be. That changed this year, when long-time curl veteran Dan Fandrich gave his presentation at curl up 2020, titled --help me if you can.

Emil Engler subsequently picked up the challenge and converted ideas surfaced by Dan into reality and proper code. Today we merged the refreshed and improved --help behavior in curl.

Perhaps the most notable change in curl for many users in a long time. Targeted for inclusion in the pending 7.73.0 release.

help categories

First out, curl --help will now by default only list a small subset of the most “important” and frequently used options. No massive wall, no shock. There is no longer even a need to pipe the output to more or less to read it properly.

Then: each curl command line option now has one or more categories, and the help system can be asked to just show command line options belonging to the particular category that you’re interested in.

For example, let’s imagine you’re interested in seeing what curl options provide for your HTTP operations:

$ curl --help http
Usage: curl [options…]
http: HTTP and HTTPS protocol options
--alt-svc Enable alt-svc with this cache file
--anyauth Pick any authentication method
--compressed Request compressed response
-b, --cookie Send cookies from string/file
-c, --cookie-jar Write cookies to <filename> after operation
-d, --data HTTP POST data
--data-ascii HTTP POST ASCII data
--data-binary HTTP POST binary data
--data-raw HTTP POST data, '@' allowed
--data-urlencode HTTP POST data url encoded
--digest Use HTTP Digest Authentication
[...]

list categories

To figure out which help categories exist, just ask with curl --help category, which will show you a list of the current twenty-two categories: auth, connection, curl, dns, file, ftp, http, imap, misc, output, pop3, post, proxy, scp, sftp, smtp, ssh, telnet, tftp, tls, upload and verbose. It will also display a brief description of each category.

Each command line option can be put into multiple categories, so the same one may be displayed both in the “http” category and in “upload” or “auth” etc.
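The category scheme described above can be modelled as a table in which every option carries a list of category names, with the help command filtering on one of them. The Rust sketch below is only an illustration of that idea and says nothing about how curl stores its help data; the type `HelpEntry`, the function `in_category` and the category assignments in the table are assumptions made for the example.

```rust
/// One help entry; `categories` may name several categories at once.
struct HelpEntry {
    flag: &'static str,
    summary: &'static str,
    categories: &'static [&'static str],
}

// A tiny hand-picked table. The category assignments are guesses made for
// this example, not curl's real data.
const ENTRIES: &[HelpEntry] = &[
    HelpEntry { flag: "--anyauth", summary: "Pick any authentication method", categories: &["http", "proxy", "auth"] },
    HelpEntry { flag: "--compressed", summary: "Request compressed response", categories: &["http"] },
    HelpEntry { flag: "-d, --data", summary: "HTTP POST data", categories: &["important", "http", "post", "upload"] },
    HelpEntry { flag: "-v, --verbose", summary: "Make the operation more talkative", categories: &["important", "verbose"] },
];

/// Return the entries that belong to `category`; "all" matches everything.
fn in_category<'a>(entries: &'a [HelpEntry], category: &str) -> Vec<&'a HelpEntry> {
    entries
        .iter()
        .filter(|e| category == "all" || e.categories.iter().any(|c| *c == category))
        .collect()
}

fn main() {
    // Plain `--help` maps to the "important" meta category in this model.
    for category in ["important", "http", "all"] {
        println!("{}:", category);
        for entry in in_category(ENTRIES, category) {
            println!("  {:<16} {}", entry.flag, entry.summary);
        }
    }
}
```

Because an entry lists several categories, the same option (here -d, --data) shows up under "important", "http" and "all" without being duplicated in the table.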

--help all

You can of course still get the old list of every single command line option by issuing curl --help all. Handy for grepping the list and more.

“important”

The meta category “important” is what we use for the options that we show when just curl --help is issued. Presumably those options should be the most important, in some ways.

Credits

Code by Emil Engler. Ideas and research by Dan Fandrich.

|

|

This Week In Rust: This Week in Rust 354 |

Hello and welcome to another issue of This Week in Rust! Rust is a systems language pursuing the trifecta: safety, concurrency, and speed. This is a weekly summary of its progress and community. Want something mentioned? Tweet us at @ThisWeekInRust or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub. If you find any errors in this week's issue, please submit a PR.

Updates from Rust Community

Official

Tooling

Newsletters

Observations/Thoughts

- Should we trust Rust with the future of systems programming?

- Optionality in the type systems of Julia and Rust

- Migrating from quick-error to SNAFU: a story on revamped error handling in Rust

- Go use those Traits

- Starframe devlog: Architecture

- Event Chaining as a Decoupling Method in Entity-Component-System

- Rust serialization: What's ready for production today?

- rust vs scripted languages; a short case study

- [DE] Entwicklung: Warum Rust die Antwort auf miese Software und Programmierfehler ist

- [video] Is Rust Used Safely by Software Developers?

Learn Standard Rust

- Ownership in Rust, Part 1

- Ownership in Rust, Part 2

- Learning Rust 1: Install things and read a file

- Learning Rust 2: A Tiny Database is Born

- That's so Rusty: Ownership

Learn More Rust

- As above, so below: Bare metal Rust generics 2/2

- Halite III Bot Development Kit in Rust

- Zero To Production #3.5: HTML forms, Databases, Integration tests

- Objective-Rust

- Building web apps with Rust using the Rocket framework

- Writing an asynchronous MQTT Broker in Rust - Part 3

- Multiple Thread Pools in Rust

- Type-directed metaprogramming in Rust

- Generating static arrays during compile time in Rust

- Let's build a single binary gRPC server-client with Rust in 2020 - Part 2

- How to use Rust + WebAssembly to Perform Serverless Machine Learning and Data Visualization in the Cloud

- Fireworks for your terminal

- Serverless Data Ingestion with Rust and AWS SES

- Box Plots at the Olympics

- Fixing include_bytes!

- [PL] CrabbyBird #1 O tym, jak animować postać gracza

- [video] FLTK Rust: Creating a music player with customized widgets

Project Updates

- This August in my database project written in Rust

- Using Rust for Kentik's New Synthetic Monitoring Agent

- AWS introduces Bottlerocket: A Rust language-oriented Linux for containers

- Using cargo test for embedded testing with panic-probe

- Headcrab: August 2020 progress report

- Rust Analyzer 2020[..6] Financial Report

Miscellaneous

- Avoid Build Cache Bloat By Sweeping Away Artifacts

- const_fn makes it too easy to do mandelbrots

- Linux Developers Continue Evaluating The Path To Adding Rust Code To The Kernel

- Supporting Linux kernel development in Rust

- [video] LPC 2020 - LLVM MC

Crate of the Week

This week's crate is GlueSQL, a SQL database engine written in Rust with WebAssembly support.

Thanks to Taehoon Moon for the suggestion!

Submit your suggestions and votes for next week!

Call for Participation

Always wanted to contribute to open-source projects but didn't know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

- Database in Rust is looking for contributors

- Docs.rs is looking for help adding a changelog

- Gooseberry has several good first issues with mentoring available

- Diskonaut - Option to delete without confirmation?

- Diskonaut - Feature: only show "(x = Small files)" legend when there are small files on the screen

- Diskonaut - Feature: error reporting with clean stacktraces

If you are a Rust project owner and are looking for contributors, please submit tasks here.

Updates from Rust Core

326 pull requests were merged in the last week

- point to a move-related span when pointing to closure upvars

- abort when foreign exceptions are caught by catch_unwind

- new pass to optimize if conditions on integrals to switches on the integer

- suggest mem::forget if mem::ManuallyDrop::new isn't used

- improve error message when typo is made in format!

- allow reallocation to different alignment in AllocRef

- add some avx512f intrinsics for mask, rotation, shift

- make some Ordering methods const

- stabilize {Range, RangeInclusive}::is_empty

- get rid of bounds check in slice::chunks_exact() and related functions

- stdarch: avx512

- hashbrown: make with_hasher functions const fn

- hashbrown: implement replace_entry_with

- clippy: add a lint for an async block/closure that yields a type that is itself awaitable

- use rustc_lexer for rustdoc syntax highlighting

- report an ambiguity if both modules and primitives are in scope for intra-doc links

- rustdoc: improve rendering of crate features via doc(cfg)

- docs.rs: separate metadata parsing into a library
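Two of the standard library items named in the list above are easy to try out. The following Rust sketch shows what Range::is_empty, RangeInclusive::is_empty and slice::chunks_exact do from the caller's side; it does not illustrate the merged changes themselves, and the helper `pair_sums` is a made-up name for the example.

```rust
/// Sum each complete pair of elements and return the sums together with
/// whatever elements were left over at the end of the slice.
fn pair_sums(data: &[i32]) -> (Vec<i32>, Vec<i32>) {
    let mut chunks = data.chunks_exact(2);
    // Every chunk is exactly two elements long, so indexing 0 and 1 is safe.
    let sums: Vec<i32> = chunks.by_ref().map(|pair| pair[0] + pair[1]).collect();
    (sums, chunks.remainder().to_vec())
}

fn main() {
    // A half-open range with equal bounds contains nothing...
    println!("3..3 empty: {}", (3..3).is_empty());
    // ...while the inclusive range 3..=3 contains the single value 3.
    println!("3..=3 empty: {}", (3..=3).is_empty());
    // A range whose start is past its end is empty as well.
    println!("5..3 empty: {}", (5..3).is_empty());

    let (sums, rest) = pair_sums(&[1, 2, 3, 4, 5]);
    println!("pair sums: {:?}, leftover: {:?}", sums, rest);
}
```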

Rust Compiler Performance Triage

- 2020-08-24: 1 regression, 4 improvements.

This week included a major speedup on optimized builds of real-world crates (up to 5%) as a result of the upgrade to LLVM 11.

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

RFCs

Tracking Issues & PRs

- [disposition: merge] Add #[cfg(panic = '...')]

- [disposition: merge] Allow Weak::as_ptr and friends for unsized T

- [disposition: merge] Update stdarch

- [disposition: merge] Tracking issue for #[doc(alias = "...")]

New RFCs

Upcoming Events

Online

- September 8. Saarbrücken, DE - Rust-Saar Meetup - 3u16.map_err(...)

- September 8. Seattle, WA, US - Seattle Rust Meetup - Monthly meetup

- September 9. East Coast, US - Rust East Coast Virtual Talks + Q&A

- September 11. Russia - Russian Rust Online Meetup

North America

- September 9. Atlanta, GA, US - Rust Atlanta - Grab a beer with fellow Rustaceans

- September 10. Lehi, UT, US - Utah Rust - The Blue Pill: Rust on Microcontrollers (Sept / Second Round)

Asia Pacific

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Rust Jobs

- Senior Backend Engineer - Rust at Kraken (Remote US, Remote EMEA)

- Backend Engineer, Data Processing – Rust at Kraken (Remote US, Remote EMEA)

- Backend Engineer - Rust at Kraken (Remote US, Remote EMEA)

Tweet us at @ThisWeekInRust to get your job offers listed here!

Quote of the Week

When the answer to your question contains the word "variance" you're probably going to have a bad time.

Thanks to Michael Bryan for the suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, and cdmistman.

https://this-week-in-rust.org/blog/2020/09/04/this-week-in-rust-354/

|

|

About:Community: Five years of Tech Speakers |

Given the recent restructuring at Mozilla, many teams have been affected by the layoff. Unfortunately, this includes the Mozilla Tech Speakers program. As one of the volunteers who’s been part of the program since the very beginning, I’d like to share some memories of the last five years, watching the Tech Speakers program grow from a small group of people to a worldwide community.

Mozilla Tech Speakers – a program to bring together volunteer contributors who are already speaking to technical audiences (developers/web designers/computer science and engineering students) about Firefox, Mozilla and the Open Web in communities around the world. We want to support you and amplify your work!

It all started as an experiment in 2015 designed by Havi Hoffman and Dietrich Ayala from the Developer Relations team. They invited a handful of volunteers who were passionate about giving talks at conferences on Mozilla-related technologies and the Open Web in general to trial a program that would support their conference speaking activities, and amplify their impact. That’s how Mozilla Tech Speakers were born.

It was a perfect symbiosis. A small, scrappy Developer Relations team can’t cover all the web conferences everywhere, but with help from trained and knowledgeable volunteers that task becomes a lot easier. Volunteer local speakers can share information at regional conferences that are distant or inaccessible for staff. And for half a decade, it worked, and the program grew in reach and popularity.

Those volunteers, in return, were given training and support, including funding for conference travel and cool swag as a token of appreciation.

From the first cohort of eight people, the program grew over the years to include more than a hundred expert technical speakers around the world, giving top quality talks at the best web conferences. Sometimes you couldn’t attend an event without randomly bumping into one or two Tech Speakers. It was a globally recognizable brand of passionate, tech-savvy Mozillians.

The meetups

After several years of growth, we realized that connecting remotely is one thing, but meeting in person is a totally different experience. That’s why the idea for Tech Speakers meetups was born. We’ve gathered in three of the past four years: Berlin in 2016, Paris in 2018, and two events in 2019, in Amsterdam and Singapore, to accommodate speakers on opposite sides of the globe.

The first Tech Speakers meetup in Berlin coincided with the 2016 View Source Conference, hosted by Mozilla. It was only one year after the program started, but we already had a few new cohorts trained and integrated into the group. During the Berlin meetup we gave short lightning talks in front of each other, and received feedback from our peers, as well as professional speaking coach Denise Graveline.

After the meetup ended, we joined the conference as volunteers, helping out at the registration desk, talking to attendees at the booths, and making the speakers feel welcome.

The second meetup took place two years later in Paris – hosted by Mozilla’s unique Paris office, looking literally like a palace. We participated in training workshops about Firefox Reality, IoT, WebAssembly, and Rust. We continued the approach of presenting lightning talks that were evaluated by experts in the web conference scene: Ada Rose Cannon, Jessica Rose, Vitaly Friedman, and Marc Thiele.

Mozilla hosted two meetups in 2019, before the 2020 pandemic put tech conferences and events on hold. The European tech speakers met in Amsterdam, while folks from Asia and the Pacific region met in Singapore.

The experts giving feedback for our Amsterdam lightning talks were Joe Nash, Kristina Schneider, Jessica Rose, and Jeremy Keith, with support from Havi Hoffman, and Ali Spivak as well. The workshops included Firefox DevTools and Web Speech API.

The knowledge

The Tech Speakers program was intended to help developers grow, and share their incredible knowledge with the rest of the world. We had various learning opportunities, from the first training to actually becoming a Tech Speaker. We had access to updates from Mozilla engineering staff talking about various technologies (Tech Briefings) and from experts outside of the company (Masterclasses), as well as monthly calls where we talked about our own lessons learned.

People shared links, usually tips about speaking, teaching and learning, and everything tech related in between.

The perks

Quite often we spoke at local or not-for-profit conferences organized by passionate people like us. With those costs covered, Mozilla was presented as a partner of the conference, which benefited all parties involved.

It was a fair trade – we were extending the reach of Mozilla’s Developer Relations team significantly, always happy to do it in our free time, while the costs of such activities were relatively low. Since we were properly appreciated by the Tech Speakers staff, it felt really professional at all times and we were happy with the outcomes.

The reports

At its peak, there were more than a hundred Tech Speakers giving thousands of talks to tens of thousands of other developers around the world. Those activities were reported via a dedicated form, but writing trip reports was also a great way to summarize and memorialize our involvement in a given event.

The statistics

In the last full year of the program, 2019, we had over 600 engagements (about 14% of which were workshops; the rest were talks at conferences) from 143 active speakers across 47 countries. This added up to a total audience of about 70 000 for talks and 4 000 for workshops. We were collectively fluent in over 50 of the world’s most common languages.

The life-changing experience

I reported on more than one hundred events I attended as a speaker, workshop lead, or booth staff – many of which wouldn’t have been possible without Mozilla’s support for the Tech Speakers program. Last year I was invited to attend a W3C workshop on Web games in Redmond, and without the travel and accommodation coverage I received from Mozilla, I’d have missed a huge opportunity.

At that particular event, I met Desigan Chinniah, who got me hooked on the concept of Web Monetization. I immediately went all in: we quickly announced the Web Monetization category in the js13kGames competition, I showcased monetized games at MozFest Arcade in London, and I was later awarded a grant from Grant for the Web. I don’t think any of it would have been possible without someone actually accepting my request to fly across the ocean to talk about an Indie perspective on Web games as a Tech Speaker.

The family

Aside from the “work” part, Tech Speakers have become literally one big family, best friends for life, and welcome visitors in each other’s cities. This is stronger than anything a company can offer to their volunteers, for which I’m eternally grateful. Tech Speakers were, and always will be, a bunch of cool people doing stuff out of pure passion.

I’d like to thank Havi Hoffman most of all, as well as Dietrich Ayala, Jason Weathersby, Sandra Persing, Michael Ellis, Jean-Yves Perrier, Ali Spivak, István Flaki Szmozsánszky, Jessica Rose, and many others shaping the program over the years, and every single Tech Speaker who made this experience unforgettable.

I know I’ll be seeing you fine folks at conferences when the current global situation settles. We’ll be bumping casually into each other, remembering the good old days, and continuing to share our passions, present and talk about the Open Web. Much love, see you all around!

https://blog.mozilla.org/community/2020/09/03/five-years-of-tech-speakers/

|

|

The Rust Programming Language Blog: Planning the 2021 Roadmap |

The core team is beginning to think about the 2021 Roadmap, and we want to hear from the community. We’re going to be running two parallel efforts over the next several weeks: the 2020 Rust Survey, to be announced next week, and a call for blog posts.

Blog posts can contain anything related to Rust: language features, tooling improvements, organizational changes, ecosystem needs. Everything is in scope. We encourage you to try to identify themes or broad areas into which your suggestions fit, because these help guide the project as a whole.

One way of helping us understand the lens you're looking at Rust through is to give one (or more) statements of the form "As a X I want Rust to Y because Z". These then may provide motivation behind items you call out in your post. Some examples might be:

- "As a day-to-day Rust developer, I want Rust to make consuming libraries a better experience so that I can more easily take advantage of the ecosystem"

- "As an embedded developer who wants to grow the niche, I want Rust to make end-to-end embedded development easier so that newcomers can get started more easily"

This year, to make sure we don’t miss anything, when you write a post please submit it via this Google form! We will try to look at posts not submitted via this form, too, but posts submitted here aren’t going to be missed. Any platform, from blogs to GitHub gists, is fine!

To give you some context for the upcoming year, we established these high-level goals for 2020, and we wanted to take a look back at the first part of the year. We’ve made some excellent progress!

- Prepare for a possible Rust 2021 Edition

- Follow-through on in-progress designs and efforts

- Improve project functioning and governance

Prepare for a possible Rust 2021 Edition

There is now an open RFC proposing a plan for the 2021 edition! There has been quite a bit of discussion, but we hope to have it merged within the next 6 weeks. The plan is for the new edition to be much smaller in scope than Rust 2018. It is expected to include a few minor tweaks to improve language usability, along with the promotion of various edition idiom lints (like requiring dyn Trait over Trait) so that they will be “deny by default”. We believe that we are on track for being able to produce an edition in 2021.
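As a rough illustration of the dyn Trait idiom mentioned above, the sketch below shows the explicit form that the bare_trait_objects lint asks for, with the older bare form left in a comment. The names Shape, Square, and total_area are hypothetical and chosen only for this example.

```rust
/// Hypothetical trait used only to demonstrate trait objects.
trait Shape {
    fn area(&self) -> f64;
}

/// Hypothetical implementor: a square with the given side length.
struct Square(f64);

impl Shape for Square {
    fn area(&self) -> f64 {
        self.0 * self.0
    }
}

// Older style (bare trait object), which the idiom lint flags:
//     fn total_area(shapes: &[Box<Shape>]) -> f64
// Preferred style, with the explicit `dyn` keyword:
fn total_area(shapes: &[Box<dyn Shape>]) -> f64 {
    shapes.iter().map(|s| s.area()).sum()
}

fn main() {
    let shapes: Vec<Box<dyn Shape>> = vec![Box::new(Square(2.0)), Box::new(Square(3.0))];
    println!("total area: {}", total_area(&shapes));
}
```

Writing dyn makes it visible at the use site that the call goes through dynamic dispatch on a trait object rather than a concrete type.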

Follow-through on in-progress designs and efforts

One of our goals for 2020 was to push “in progress” design efforts through to completion. We’ve seen a lot of efforts in this direction:

- The inline assembly RFC has merged and a new implementation is ready for experimentation

- Procedural macros have been stabilized in most positions as of Rust 1.45

- There is a proposal for an MVP of const generics, which we’re hoping to ship in 2020

- The async foundations group is expecting to post an RFC on the Stream trait soon

- The FFI unwind project group is closing out a long-standing soundness hole, and the first RFC there has been merged

- The safe transmute project group has proposed a draft RFC

- The traits working group is polishing Chalk, preparing rustc integration, and seeing experimental usage in rust-analyzer. You can learn more in their blog posts.

- We are transitioning to rust-analyzer as the official Rust IDE solution, with a merged RFC laying out the plan

- Rust’s tier system is being formalized with guarantees and expectations set in an in-progress RFC

- Compiler performance work continues, with wins of 10-30% on many of our benchmarks

- Reading into uninitialized buffers has an open RFC, solving another long-standing problem for I/O in Rust

- A project group proposal for portable SIMD in std has an open RFC

- A project group proposal for error handling ergonomics, focusing on the std::error API, has an open RFC

- std::sync module updates are in brainstorming phase

- Rustdoc's support for intra-doc links is close to stabilization!

There’s been a lot of other work within the Rust teams as well, but these items highlight some of the issues and designs that are being actively worked on.

Improve project functioning and governance

Another goal was to document and improve our processes for running the project. We had three main subgoals.

Improved visibility into state of initiatives and design efforts