
Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Christian Heilmann: Simple things: styling ordered lists

This blog started as a scratch pad of simple solutions to problems I encountered. So why not go back to basics?

It is pretty easy to get an ordered list into a document. All you have to do is add an OL element with LI child elements:

<ol><li>Collect underpants</li><li>???</li><li>Profit</li></ol>

But what if you want to style the text differently from the numbers? What if you don’t like that they end with a full stop? The generated numbers of the OL are part of that dark magic browsers do for us (something we are working on dragging into the sunlight with Shadow DOM).

In order to make those more style-able in the past you had to add another element to get a hook:

<ol class="oldschool"><li><span>Collect underpants</span></li><li><span>???</span></li><li><span>Profit</span></li></ol>

.oldschool li { color: green; } .oldschool span { color: lime; }

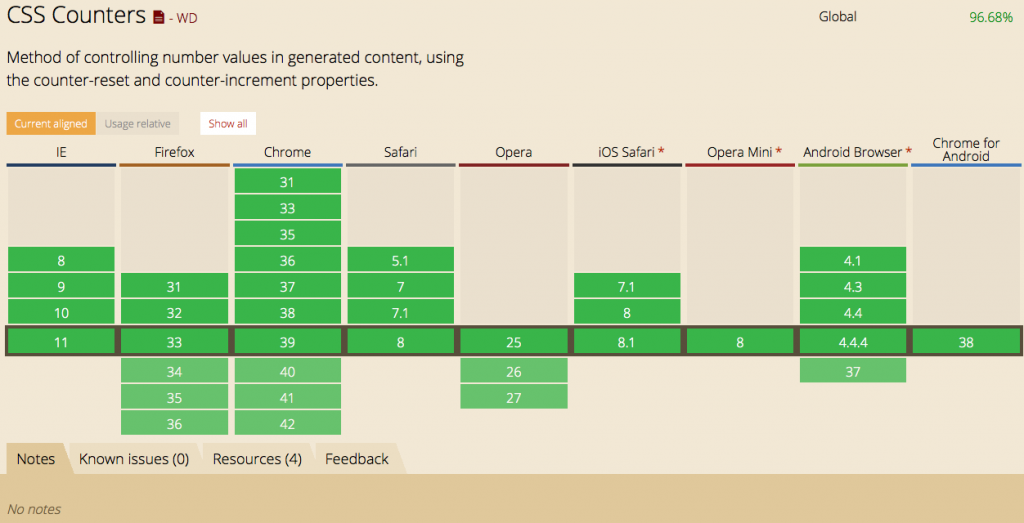

Which is kind of a terrible hack and doesn’t quite scale as you may never know who edits your list. With newer browsers we have a better way of doing that using CSS counters. The browser support is ridiculously good, so there should be no excuse for us not to use them.

Using counter, you keep the HTML structure:

<ol class="counter"><li>Collect underpants</li><li>???</li><li>Profit</li></ol>

You then reset the counter for each of the lists with this class:

.counter { counter-reset: list; }

This means each list will start at 1 and not go on through the document tree. You then get rid of the list style and style the list item like you want to. In this case we give it a colour and we position it relative. This allows us to position other, new content in there and contain it to the list item:

.counter li { list-style: none; position: relative; color: lime; }

Once you have hidden the normal numbering with list-style: none; you can create your own numbers using counter and generated CSS content:

.counter li::before { counter-increment: list; content: counter(list) '.'; position: absolute; top: 0px; left: -1.2em; color: green; }

If you wanted to remove the full stop, all you need to do is remove it in the CSS. You now have full styling control over these numbers; for example, you can animate them, slightly moving from one colour to another and zooming out a bit:

.animated li::before { transition: 0.5s; color: green; } .animated li:hover::before { color: white; transform: scale(1.5); }

Counters allow for a lot of different types of numbering. You can, for example, add a leading zero by using counter(list,decimal-leading-zero), you can use Roman numerals with counter(list,lower-roman) or even Greek ones with counter(list,lower-greek).
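As a rough sketch of those variations, assuming the same .counter markup and rules as earlier in the post: the modifier class names (leading-zero, roman, greek) are made up for this illustration and would sit next to counter on the OL element, for example class="counter roman". Each rule only swaps the generated content, so the counter-increment and positioning from the .counter li::before rule should still apply.

```css
/* Hypothetical modifier classes; none of these names come from the original post. */

/* 01. 02. 03. */
.counter.leading-zero li::before {
  content: counter(list, decimal-leading-zero) '.';
}

/* i. ii. iii. */
.counter.roman li::before {
  content: counter(list, lower-roman) '.';
}

/* Lowercase Greek letters as markers */
.counter.greek li::before {
  content: counter(list, lower-greek) '.';
}
```

Longer markers such as a leading zero or multi-letter Roman numerals are wider than a single digit, so the left offset of -1.2em used earlier may need adjusting.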

If you want to see all of that in action, check out this Fiddle:

Pretty simple, and quite powerful. Here are some more places to read up on this:

http://christianheilmann.com/2014/11/19/simple-things-styling-ordered-lists/


Jonathan Watt: Converting Mozilla's SVG implementation to Moz2D - part 2

This is part 2 of a pair of posts describing my work to convert Mozilla's SVG implementation to directly use Moz2D. Part 1 provided some background information and details about the process. This post will discuss the performance benefits of the conversion of the SVG code and future work.

Benefits

For the most part the performance improvements from the conversion to Moz2D were gradual; as code was incrementally converted, little by little gfxContext overhead was avoided. On doing an audit of our open SVG performance bugs it seems that painting performance is no longer one of the reasons that we perform poorly, except for when we use cairo-backed DrawTargets (Linux, Windows XP and other Windows versions with blacklisted drivers), and with the exception of one bug that needs further investigation. (See below for the issues that still do cause SVG performance problems.)

Besides the incremental improvements, there have been a couple of interesting perf bumps that are worth mentioning.

The biggest perf bump by far came when I converted the code that does the actual filling and stroking of SVG geometry to directly use a DrawTarget. The time taken to render this map then dropped from about 15s to 1-2s on my Mac. On the same machine Chrome Canary shows a blank window for about 5s, and then takes a further 20s to render. Now, to be honest, this improvement will be down to something pathological that has been removed rather than being down to avoiding Thebes overhead. (I haven't got to the bottom of exactly what that was yet.) The DrawTarget object being drawn to is ultimately the same object, and Thebes overhead isn't likely to be more than a few percent of any time spent in this code. Nevertheless, it's still a welcome win.

Another perf bump that came from the Moz2D conversion was that it enabled us to cache path objects. When using Thebes, paths are built up using gfxContext API calls and the consumer never gets to touch the resulting path. This prevents the consumer from keeping hold of the path and reusing it in future. This can be a disadvantage when the path is reused frequently, especially when D2D is being used where path creation is relatively expensive. Converting to Moz2D has allowed the SVG code to hold on to the path objects that it creates and reuse them. (For example, in addition to their obvious use during rasterization, paths might be reused for bounds calculations (think invalidation areas, objectBoundingBox content, getBBox() calls) and hit-testing.) Caching paths made us noticeably more responsive on this cool data visualization (temporarily mirrored here while the site is down) when mousing over the table rows, and gave us a +25% boost on this NYT article, for example.

For those of you that are interested in Talos, I did take a look at the SVG test data, but the unfortunately frequent up-and-down of unrelated regressions and wins makes it impossible to use that to show any overall impact of Moz2D conversion on the Talos tests. (Since the beginning of the year the times on Windows have improved slightly while on Mac they have regressed slightly.) The incremental nature of most of the work also unfortunately meant that the impact of individual patches couldn't usually be distinguished from the noise in Talos' results. One notable exception was the change to make SVG geometry use a path object directly which resulted in an improvement in the region of 6% for the svg_opacity suite on Windows 7 and 8.

Other than the performance benefits, several parts of the SVG implementation that were pretty messy and hard to get into and debug have become a lot more approachable. This has already allowed me to fix various SVG bugs that would otherwise have taken a lot longer to work out, and I hope it makes the code easier to approach for devs who aren't so familiar with it.

One final note on performance for any of you that will do your own testing to compare builds: the enabling of e10s and tiled layers has caused significant changes in performance characteristics. You might want to turn those off.

Future SVG work

As I noted above there are still SVG performance issues unrelated to graphics speed. There are three sources of significant SVG performance issues that can make Mozilla perform poorly on SVG relative to other implementations. There is our lack of hardware acceleration of SVG filters; there's the issue of display list overhead dwarfing painting on SVGs that contain huge numbers of elements (display lists being an implementation detail, and one that gave us very large wins in many other cases); and there are a whole bunch of "strange" bugs that I expect are related to our layers infrastructure that are causing us to over invalidate (and thus do work painting when we shouldn't need to).

Currently these three issues are not on a schedule, but as other higher priority Mozilla work gets ticked off I expect we'll add them.

Future Moz2D work

The performance benefits from the Moz2D conversion on the SVG code do seem to have been positive enough that I expect that we will continue converting the rest of layout in the future. As usual, it will all depend on relative priorities though.

One thing that we should do is audit all the code that creates DrawTargets to check for backend type compatibility. Mixing hardware and software backed DrawTargets when we don't need to can cause us to unwittingly be taking big performance hits due to readback from and/or upload to the GPU. I fixed several instances of mismatch that I happened to notice during the conversion work, and in one case accidentally introduced one which fortunately was caught because it caused a 10-25% regression in a specific Talos test. We know that we still have outstanding bugs on this (such as bug 944571) and I'm sure there are a bunch of cases that we're unaware of.

I mentioned above that painting performance is still a significant issue on machines that fall back to using cairo backed DrawTargets. I believe that the Graphics team's plan to solve this is to finish the Skia backend for Moz2D and use that on the platforms that don't support D2D.

There are a few things that need to be added to Moz2D before we can completely get rid of gfxContext. The main thing we're missing is a push-group API on DrawTarget. This is the main reason that gfxContext actually wraps a stack of DrawTargets, which has all sorts of irritating fallout. Most annoyingly, it makes it hazardous to set clip paths or transforms directly on DrawTargets that may be accessed via a wrapping gfxContext before the DrawTarget's clip stack and transform have been restored, and it is why I had to continue passing gfxContexts to a lot of code that now only paints directly via the DrawTarget.

The only Moz2D design decision that I've found myself to be somewhat unhappy with is the decision to make patterns relative to user-space. This is what most other hardware accelerated libraries do, but I don't think it's a good fit for 2D browser rendering. Typically crisp rendering is very important to web content, so we render patterns assuming a specific user-space to device-space transform and device space pixel alignment. To maintain crisp rendering we have to make sure that patterns are used with the device-space transform that they were created for, and having to do this manually can be irksome. Anyway, it's a small detail, but something I'll be discussing with the Graphics guys when I see them face-to-face in a couple of weeks.

Modulo the two issues above (and all the changes that I and others had made to it over the last year) I've found the Moz2D API to be a pleasure to work with and I feel the SVG code is better performing and a lot cleaner for converting to it. Well done Graphics team!


Jonathan Watt: Converting Mozilla's SVG implementation to Moz2D - part 1

One of my main work items this year was the conversion of the graphics portions of Mozilla's SVG implementation to directly use Moz2D APIs instead of using the old gfxContext/gfxASurface Thebes APIs. This pair of posts will provide some information on that work. This post will give some background and information on the conversion process, while part 2 will provide some discussion about the benefits of the work and what steps we might want to carry out next.

For background on why Mozilla is building Moz2D (formerly called Azure) and how it can improve Mozilla's performance see some of the earlier posts by Joe, Bas and Robert.

Early Moz2D development

When Moz2D was first being put together it was initially developed and tested as an alternative rendering backend for Mozilla's implementation of HTML canvas.

As Moz2D started to become more stable, Thebes' gfxContext class was extended to allow it to wrap a Moz2D DrawTarget (prior to that it was backed only by an instance of a Thebes gfxASurface subclass, in turn backed by a cairo_surface_t). This might seem a bit strange since, after all, Moz2D is supposed to replace Thebes, not be wrapped by it adding yet another layer of abstraction and overhead. However, it was an important step to allow the Graphics team to start testing Moz2D on Mozilla's more complicated, non-canvas, rendering scenarios. It allowed many classes of Moz2D bugs and missing Moz2D features to be worked on/out before beginning a larger effort to convert the masses of non-canvas rendering code to Moz2D.

In order to switch any of the large number of instances of gfxContext to be backed by a DrawTarget, any code that might encounter that gfxContext and try to get a gfxASurface from it had to be updated to handle DrawTargets too. For example, lots of forks in the code had to be added to BasicLayerManager, and gfxFont required a new GlyphBufferAzure class to be written. As this work progressed some instances of Thebes gfxContexts were permanently flipped to being backed by a Moz2D DrawTarget, helping keep working Moz2D code paths from regressing.

SVG, the next Guinea pig

Towards the end of 2013 it was felt that Moz2D was sufficiently ready to start thinking about converting Mozilla's layout code to use Moz2D directly and eliminate its use of gfxContext API. (The layout code being the code that decides where and how most things are placed on the screen, and by far the biggest consumer of the graphics code.) Before committing a lot of engineering time and resources to a large scale conversion, Jet wanted to convert a specific part of the layout code to ensure that Moz2D could meet its needs and determine what performance benefits it could provide to layout. The SVG code was chosen for this purpose since it was considered to be the most complicated to convert (if Moz2D could work for SVG, it could work for the rest of layout).

Stage 1 - Converting all gfxContexts to wrap a DrawTarget

After drawing up a rough list of the work to convert the SVG code to Moz2D I got stuck in. The initial plan was to add code paths to the SVG code to check for and extract DrawTargets from gfxContexts that were passed in (if the gfxContext was backed by one) and operate directly on the DrawTarget in that case. (At some future point the Thebes forks could then be removed.) It soon became apparent that these forks were often not how we would want the code to be structured on completion of Moz2D conversion though. To leverage Moz2D more effectively I frequently found myself wanting to refactor the code quite substantially, and in ways that were not compatible with the existing Thebes code paths. Rather than spending months writing suboptimal Moz2D code paths only to have to rewrite things again when we got rid of the Thebes paths I decided to save time in the long run and first make sure that any gfxContexts that were passed into SVG code would be wrapping a DrawTarget. That way maintaining Thebes forks would be unnecessary.

It wasn't trivial to determine which gfxContexts might end up being passed to SVG code. The complexity of the code paths and the virtually limitless permutations in which Web content can be combined meant that I only identified about a dozen gfxContexts that could not end up in SVG code. As a result I ended up working to convert all gfxContexts in the Mozilla code. (The small amount of additional work to convert the instances that couldn't end up in SVG code allowed us to reduce a whole bunch of code complexity (and remove a lot of then dead code) and simplified things for other devs working with Thebes/Moz2D.)

Ensuring that all the gfxContexts that might be passed to SVG code would be backed by a DrawTarget turned out to be quite a task. I started this work when relatively few gfxContexts had been converted to wrap a DrawTarget so unsurprisingly things were a bit rough. I tripped over several Moz2D bugs at this point. Mostly though the headaches were caused by the amount of code that assumed gfxContexts wrapped and could provide them with a gfxASurface/cairo_surface_t/platform library object, possibly getting or then passing those objects from/to seemingly far corners of the Mozilla code. Particularly challenging was converting the image code where the sources and destinations of gfxASurfaces turned out to be particularly far reaching requiring the code to be converted incrementally in 34 separate bugs. Doing this without temporary performance regressions was tricky.

Besides preparing the ground for the SVG conversion, this work resulted in a decent number of performance improvements in its own right.

Stage 2 - Converting the SVG code to Moz2D

Converting the SVG code to Moz2D was a lot more than a simple case of switching calls from one graphics API to another. The stateful context provided by a retained mode API like Thebes or cairo allows consumer code to set context state (for example, fill pattern, or anti-alias mode) in points of the code that can seem far removed from other code that takes an action (for example, filling a path) that relies on that state having been set. The SVG code made use of this a lot since in many cases (for example, when passing things through for callbacks) it simplified the code to only pass a context rather than a context and some state to set.

This wouldn't have been all that bad if it wasn't for another fundamental difference between Thebes/cairo and Moz2D -- in Moz2D paths and patterns are relative to user-space, whereas in Thebes/cairo they are relative to device-space. Whereas with Thebes we could set a path/pattern and then change the transform before drawing (perhaps, say, to apply a clip in a different space) and the position of the path/pattern would be unaffected, with Moz2D such a transform change would change (and thus break) the rendering. This, incidentally, was why the SVG code was expected to be the hardest area to switch to Moz2D. Partly for historic reasons, and partly because some of the features that SVG supports lead it to, the SVG code did a lot of setting state, changing transforms, setting some more state and then drawing. Often the complexity of the code made it difficult to figure out which code could be setting relevant state before a transform change, requiring more involved refactoring. On the plus side, sorting this out has made parts of the code significantly easier to understand, and has been something I've wanted to find the time to do for years.

Benefits and next steps

To continue reading about the performance benefits of the conversion of the SVG code and some possible next steps continue to part 2.


Giorgio Maone: Avast, you’re kidd… killing me - said NoScript >:(

If NoScript keeps disappearing from your Firefox, Avast! Antivirus is likely the culprit.

It’s gone berserk and is mass-deleting add-ons without a warning.

I’m currently receiving tons of reports by confused and angry users.

If the antivirus is dead (as I’ve been preaching for 7 years), looks like it’s not dead enough, yet.

https://hackademix.net/2014/11/19/avast-youre-kidd-killing-me-said-noscript/


Christian Heilmann: What I am looking for in a guest writer on this blog

Simple: go try guest writing someplace else. This is my personal blog and if I am interested in something, I come to you and do it interview style in order to point to your work or showcase something amazingly cool that you have done.

Please, please, please with cherry on top, stop sending me emails like this one:

Hi,I’m {NAME}, a freelance writer/education consultant. I found “Christian Heilmann” on a Google search and thought I would contact you to see if you would like to work with me. I own a website on Job Application Service that I’m currently promoting for myself. I thought we could benefit each other somehow? If you are interested, I’d be happy to write a very high-quality article for your site and get a couple permanent links from it? While your website is benefiting from my high-quality article, I’m getting links from your site, making this proposition mutually beneficial.

Shall I write an article that matches your niche and send it across for your review or do you need me to write on a particular topic that interests you and your readers, I’m open to any topic, thoughts please?

If this does not interest you, I am sorry to have bothered you. Have a good day! If this does great I hope we can build a long-term business relationship together! If you wish to have a chat on the phone please let me know your phone number and when a good time to call is :) If you’d like, I can share samples with you.

Regards,

{FIRSTNAME}

I am very happy you know how to enter a name in Google and find the blog of that person. That’s a good start. Nobody got hurt, you didn’t overdo it with the research or spend too much effort before asking for my phone number and pointing out just how much you would get out of this “mutually beneficial relationship”. Seriously, I would love to be a fly on the wall when you try dating.

I’ve worked hard on this blog, that’s why it has some success or is at least found. Go work on yours yourself. That’s how it should be. A blog is you. Just like this one is mine.

http://christianheilmann.com/2014/11/19/what-i-am-looking-for-in-a-guest-writer-on-this-blog/


Gervase Markham: BMO show_bug Load Times 2x Faster Since January

The load time for viewing bugs on bugzilla.mozilla.org has got 2x faster since January. See this tweet for graphical evidence.

If you are looking for a direction in which to send your bouquets, glob is your man.

http://feedproxy.google.com/~r/HackingForChrist/~3/R0-_K7mtu54/


David Rajchenbach Teller: The Future of Promise

Promise. You may even have noticed that we now have several implementations of Promise in mozilla-central, and that things are moving fast, and sometimes breaking.

- Promise.jsm, as provided by the toolkit;

- DOM Promise, as provided by the DOM.

General Overview

Missing pieces

Debugging and testing

- it is easier to inspect a promise from Promise.jsm for debugging purposes (not anymore, things have been moving fast while I was writing this blog entry);

- Promise.jsm integrates nicely in the test suite, to make sure that uncaught errors are reported and cause test failures.

API differences

- Promise.jsm offers an additional function Promise.defer, which didn’t make it to standardization.

Also, there is a slight bug in DOM Promise that gives it a slightly unexpected behavior in a few edge cases. This should not hit developers who use DOM Promise as expected, but this might surprise people who know the exact scheduling algorithm and expect it to be consistent between Promise.jsm and DOM Promise.

Oh, wait, that’s fixed already.

Wrapping it up

As a developer, what should I do?

Promise.defer. Rather, use PromiseUtils.defer, which is strictly equivalent but is not going away.

Promise.defer, migrating to DOM Promise should be as simple as removing the line that imports Promise.jsm in your module.

http://dutherenverseauborddelatable.wordpress.com/2014/11/19/the-future-of-promise/


Doug Belshaw: Native apps, the open web, and web literacy

In a recent blog post, John Gruber argues that native apps are part of the web. This was in response to a WSJ article in which Christopher Mims stated his belief that the web is dying; apps are killing it. In this post, I want to explore the relationship between native apps and web literacy. This is important as we work towards a new version of Mozilla’s Web Literacy Map. It’s something that I explored preliminarily in a post earlier this year entitled What exactly is ‘the mobile web’? (and what does it mean for web literacy?). This, again, was in response to Gruber.

This blog focuses on new literacies, so I’ll not be diving too much into technical specifications, etc. I’m defining web literacy in the same way as we do with the Web Literacy Map v1.1: ‘the skills and competencies required to read, write and participate on the web’. If the main question we’re considering is ‘are native apps part of the web?’ then the follow-up question is ‘and what does this mean for web literacy?’

Defining our terms

First of all, let’s make sure we’re clear about what we’re talking about here. It’s worth saying right away that ‘app’ is almost always used as a shorthand for ‘mobile app’. These apps are usually divided into three categories:

- Native app

- Hybrid app

- Web app

From this list, it’s probably easiest to describe a web app:

A web application or web app is any software that runs in a web browser. It is created in a browser-supported programming language (such as the combination of JavaScript, HTML and CSS) and relies on a web browser to render the application. (Wikipedia)

It’s trickier to define a native app, but the essence can be seen most concretely through Apple’s ecosystem that includes iOS and the App Store. Developers use a specific programming language and work within constraints set by the owner of the ecosystem. By doing so, native apps get privileged access to all of the features of the mobile device.

A hybrid app is a native app that serves as a ‘shell’ or ‘wrapper’ for a web app. This is usually done for the sake of convenience and, in some cases, speed.

The boundary between a native app and a web app used to be much more clear and distinct. However, the lines are increasingly blurred. For example:

- APK files (i.e. native apps) can be downloaded from the web and installed on Android devices.

- Developments as part of Firefox OS mean that web technologies can securely access low-level functionality of mobile devices (e.g. camera, GPS, accelerometer).

- The specifications for HTML5 and CSS3 allow beautiful and engaging web apps to be used offline.

Web literacy and native apps

As a result of all this, it’s probably easier these days to differentiate between a native app and a web app by talking about ecosystems and silos. Understanding it this way, a native app is one that is built specifically using the technologies and is subject to the constraints of a particular ecosystem. So a developer creating an app for Apple’s App Store would have to go through a different process and use a different programming language than if they were creating one for Google’s Play Store. And so on.

Does this mean that we need to talk of a separate ‘literacy’ for each ecosystem? Should we define ‘Google literacy’ as the skills and competencies required to read, write and participate in Google’s ecosystem? I don’t think so. While there may be variations in the way things are done within the different ecosystems, these procedural elements do not constitute ‘literacy’.

What we’re aiming for with the Web Literacy Map is a holistic overview of the skills and competencies people require when using the web. I think at this juncture we’ve got a couple of options. The first would be to define ‘the web’ more loosely to really mean ‘the internet’.

This is John Gruber’s preferred option. He thinks we should focus less on web browsers (i.e. HTML) and more on the connections (i.e. HTTP). For example, in a 2010 talk he pointed out a difference between ‘web apps’ and ‘browser apps’. His argument rested on a technical point, which he illustrated with an example. When a user scrolls through their timeline using the Twitter app for iPhone, they’re not using a web browser, but they are using HTTP technologies. This, said Gruber, means that ecosystems such as Apple’s and the web are not in opposition to one another.

While this is technically correct, it’s a red herring. HTML does matter because the important thing here is the open web. Check out Gruber’s sleight of hand in this closing paragraph:

Arguments about “open” and “closed” often devolve into unresolvable cross-talk where the two sides have different definitions of what open and closed really mean. But the weird thing about a truly open platform is that its openness allows closed things to be built on top of it. In broad strokes, that’s why GNU/GPL software isn’t “open” in the way that BSD software is (and why Richard Stallman outright rejects the term “open source”). If you expand your view of “the web” from merely that which renders inside the confines of a web browser to instead encompass all network traffic sent over HTTP/S, the explosive growth of native mobile apps is just another stage in the growth of the web. Far from killing it, native apps have made the open web even stronger.

I think Gruber needs to read up on enclosure and the Commons. To use a 16th-century English agricultural metaphor, the important thing isn’t that the grass is growing in the field, it’s that it’s been fenced off and people are excluded.

A way forward

A second approach is to double down on what makes the web different and unique. Mozilla’s mission is to promote openness, innovation & opportunity on the web and the Web Literacy Map is a platform for doing this. Even if we don’t tie development of the Web Literacy Map explicitly to the Mozilla manifesto it’s still a key consideration. Therefore, when we’re talking about ‘web literacy’ it’s probably more accurate to define it as ‘the skills and competencies required to read, write and participate on the open web’.

What do we mean by the ‘open web’? While Tantek Celik approaches it from a technical standpoint, I like Brad Neuberg’s (2008) focus on the open web as a series of philosophies:

Decentralization - Rather than controlled by one entity or centralized, the web is decentralized – anyone can create a web site or web service. Browsers can work with millions of entities, rather than tying into one location. It’s not the Google or Microsoft Web, but rather simply the web, an open system that anyone can plug into and create information at the end-points.

Transparency - An Open Web should have transparency at all levels. This includes being able to view the source of web pages; having human-readable network identifiers, such as URLs; and having clear network entry points, such as HTTP and REST exposes.

Hackability - It should be easy to lash together and script the different portions of this web. MySpace, for example, allows users to embed components from all over the web; Google’s AdSense, another example, allows ads to be integrated onto arbitrary web pages. What would you like to hack together, using the web as a base?

Openness - Whether the protocols used are de facto or de-jure, they should either be documented with open specifications or open code. Any entity should be able to implement these standards or use this code to hook into the system, without penalty of patents, copyright of standards, etc.

From Gift Economies to Free Markets - The Open Web should support extreme gift economies, such as open source and Wikis, all the way to traditional free market entities, such as Amazon.com and Google. I call this Freedom of Social Forms; the tent is big enough to support many forms of social and economic organization, including ones we haven’t imagined yet.

Third-Party Integration - At all layers of the system third-parties should be able to hook into the system, whether creating web browsers, web servers, web services, etc.

Third-Party Innovation - Parties should be able to innovate and create without asking the powers-that-be for permission.

Civil Society and Discourse - An open web promotes both many-to-many and one-to-many communication, allowing for millions of conversations by millions of people, across a range of conversation modalities.

Two-Way Communication - An Open Web should allow anyone to assume three different roles: Readers, Writers, and Code Hackers. Readers read content, Writers write content, and Code Hackers hack new network services that empower the first two roles.

End-User Usability and Integration - One of the original insights of the web was to bind all of this together with an easy to use web browser that was integrated for ease of use, despite the highly decentralized nature of the web. The Open Web should continue to empower the mainstream rather than the tech elite with easy to use next generation browsers that are highly usable and integrated despite having an open infrastructure. Open should not mean hard to use. Why can’t we have the design brilliance of Steve Jobs coupled with the geek openness of Steve Wozniak? Making them an either/or is a false dichotomy.

Conclusion

The Web Literacy Map describes the skills and competencies required to read, write and participate on the open web. But it’s also prescriptive. It’s a way to develop an open attitude towards the world:

Open is a willingness to share, not only resources, but processes, ideas, thoughts, ways of thinking and operating. Open means working in spaces and places that are transparent and allow others to see what you are doing and how you are doing it, giving rise to opportunities for people who could help you to connect with you, jump in and offer that help. And where you can reciprocate and do the same.

Native apps can militate against the kind of reciprocity required for an open web. In many ways, it’s the 21st century enclosure of the commons. I believe that web literacy, as defined and promoted through the Web Literacy Map, should not consider native apps part of the open web. Such apps may be built on top of web technologies, they may link to the open web, but native apps are something qualitatively different. Those who want to explore what reading, writing and participating means in closed ecosystems have other vocabularies – provided by media literacy, information literacy, and digital literacy – with which to do so.

Comments? Questions? Direct them here: doug@mozillafoundation.org or discuss this post in the #TeachTheWeb discussion forum

|

|

Christie Koehler: Happy 10th Birthday, MozillaWiki! |

Last Monday, Firefox turned 10 years old. Thunderbird turns 10 on 7 December.

This week we celebrate another birthday: MozillaWiki turns 10 on Wednesday, 18 November!

I’m immensely proud of our wiki, its ten year history, and of all the work Mozillians do to make MozillaWiki a hub of collaboration and a living memory for the Mozilla Project.

To show our appreciation for your efforts over the last decade, the MozillaWiki team has created a 10th Birthday badge.

MozillaWiki 10th Birthday Badge

All you need to do to join in the celebration and claim the badge is log in to MozillaWiki. Once you’ve done that, you’ll see a link to claim the badge at the top of the page. Don’t have a MozillaWiki account? No worries! Create one during this Birthday celebration and you can claim the badge too.

A bit of MozillaWiki history

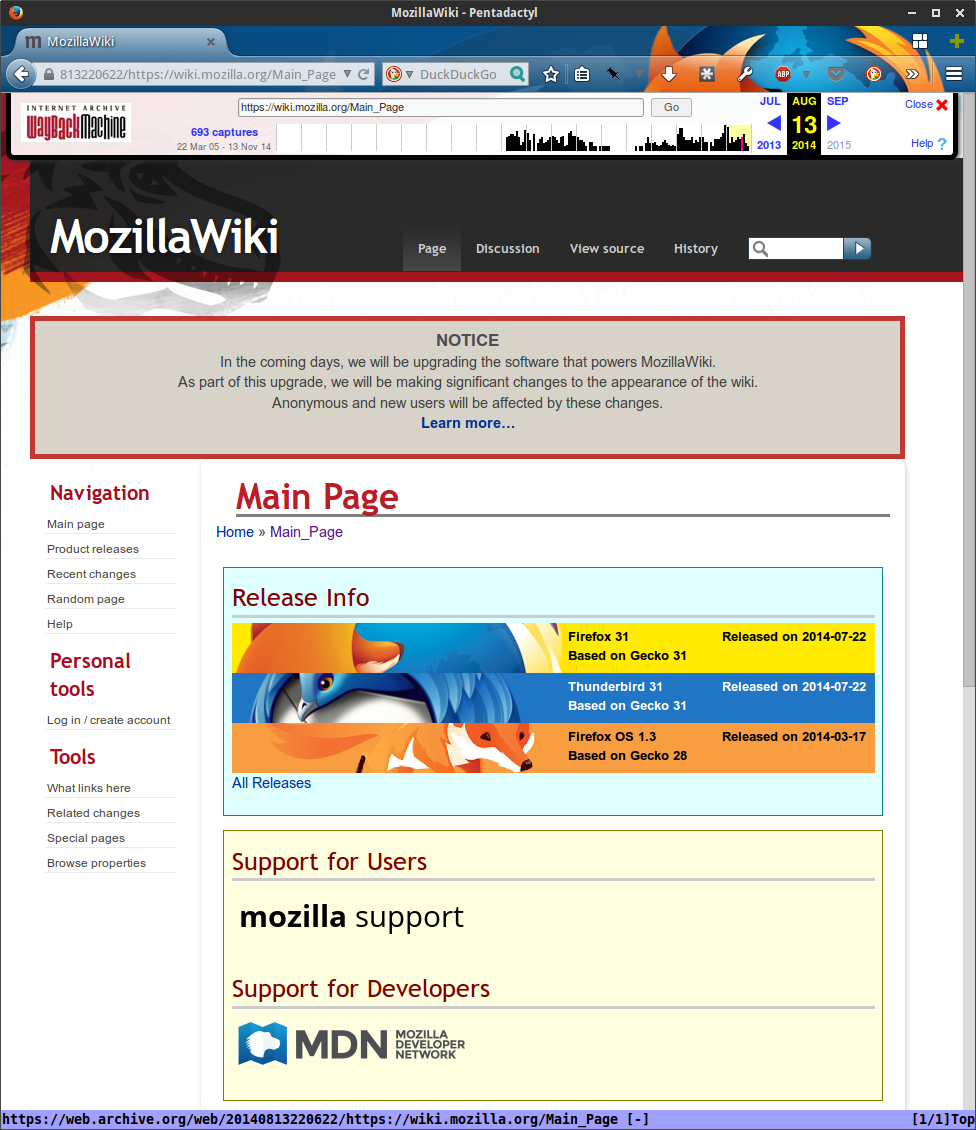

Before I talk about all the good work we’ve done, and what we have planned for the remainder of this year and beyond, let’s take a quick stroll through the last 10 years. Thank you Internet Archive for hosting these snapshots of the wiki!

July 2004

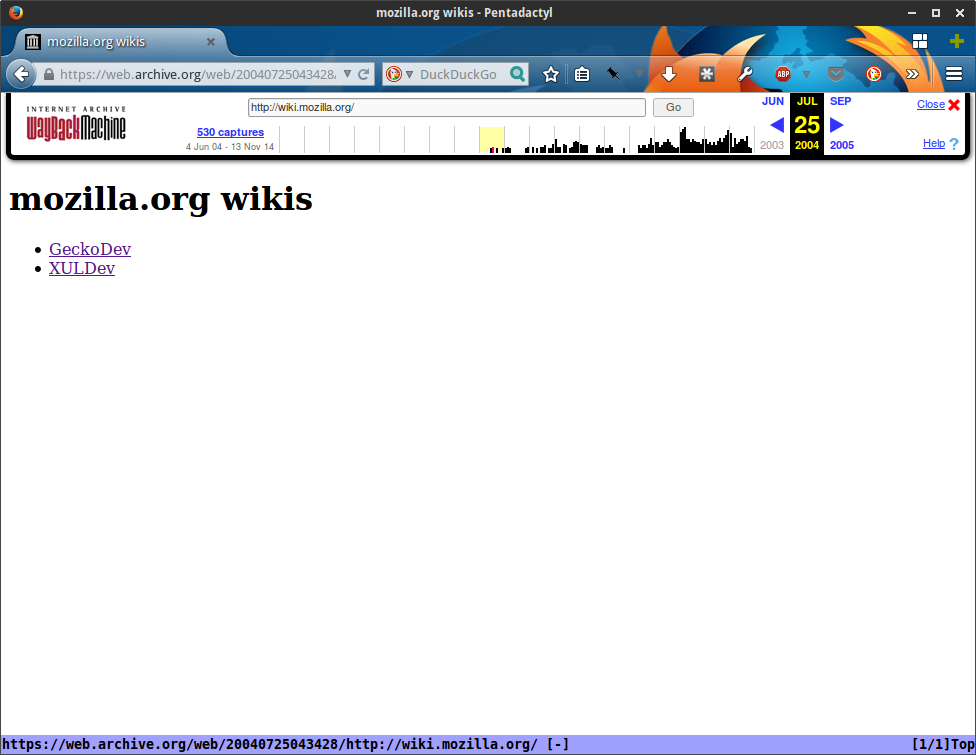

The earliest snapshot I could find of the domain wiki.mozilla.org was from July 2004. It looks like we were hosting separate wiki installations, which may or may not have been MediaWiki.

wiki.mozilla.org July 2004

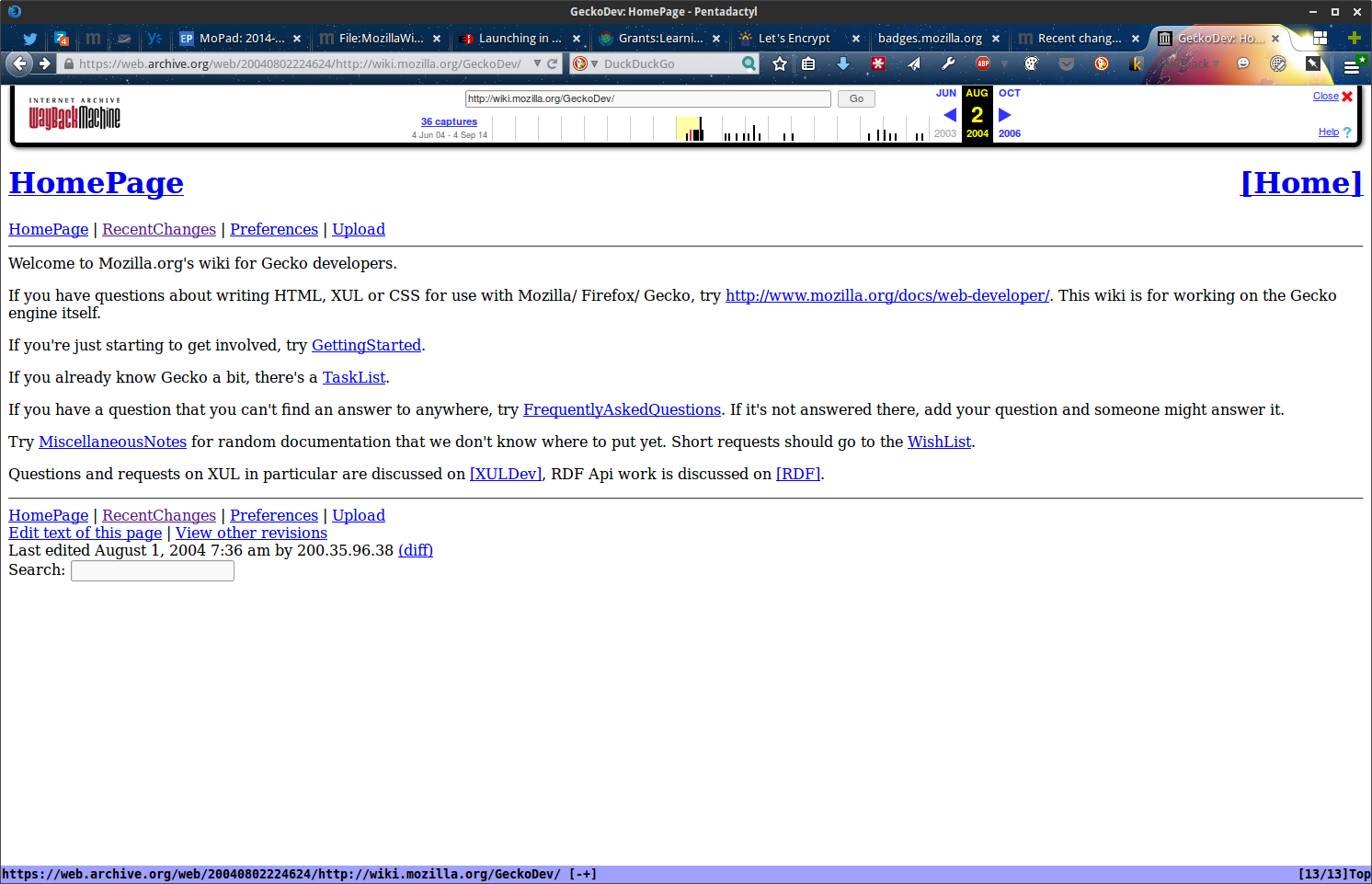

wiki.mozilla.org/GeckoDev August 2004

November-December 2004

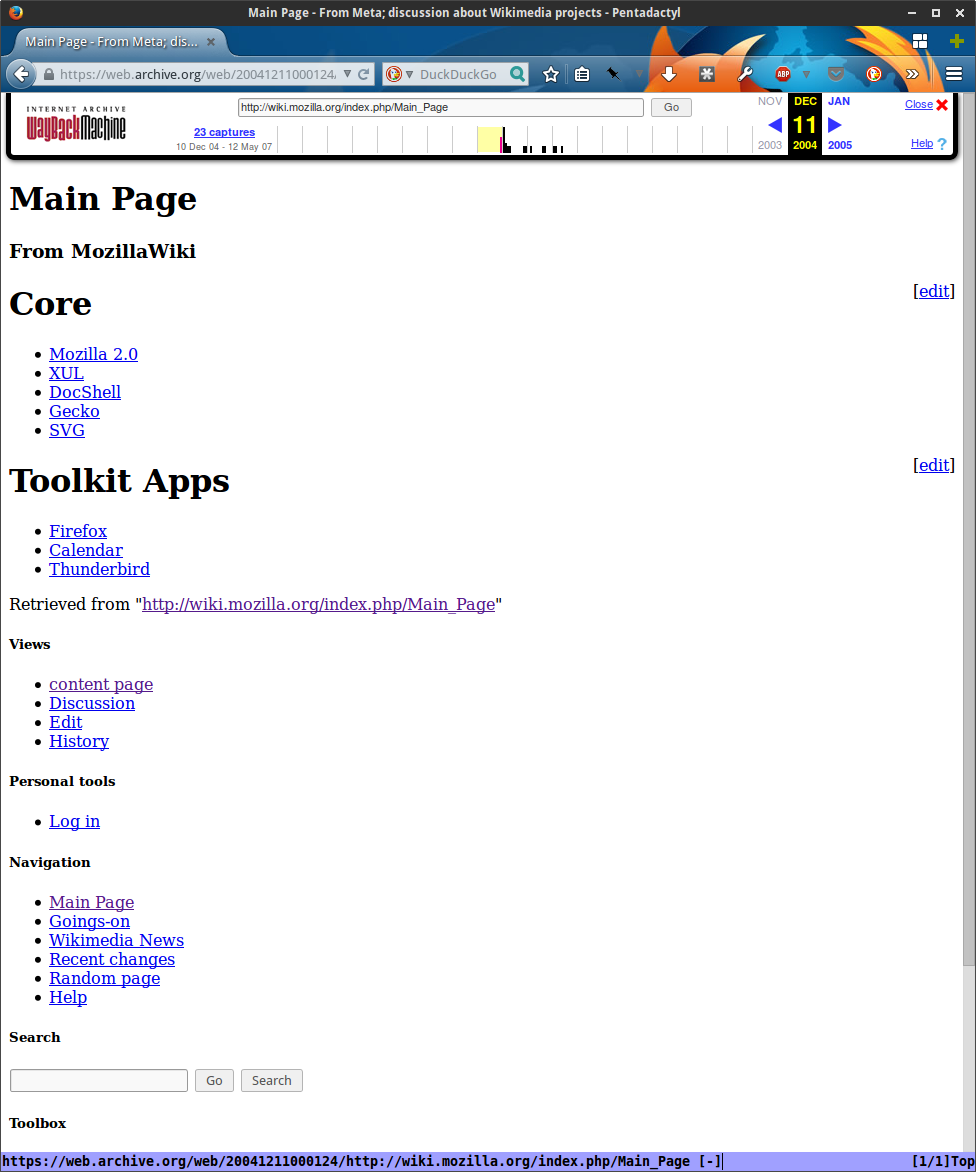

According to WikiApiary, the current installation of MozillaWiki was created on 18 November 2004. The closest snapshot to this date in the Internet Archive is 11 December 2004:

MozillaWiki December 2004

April 2005

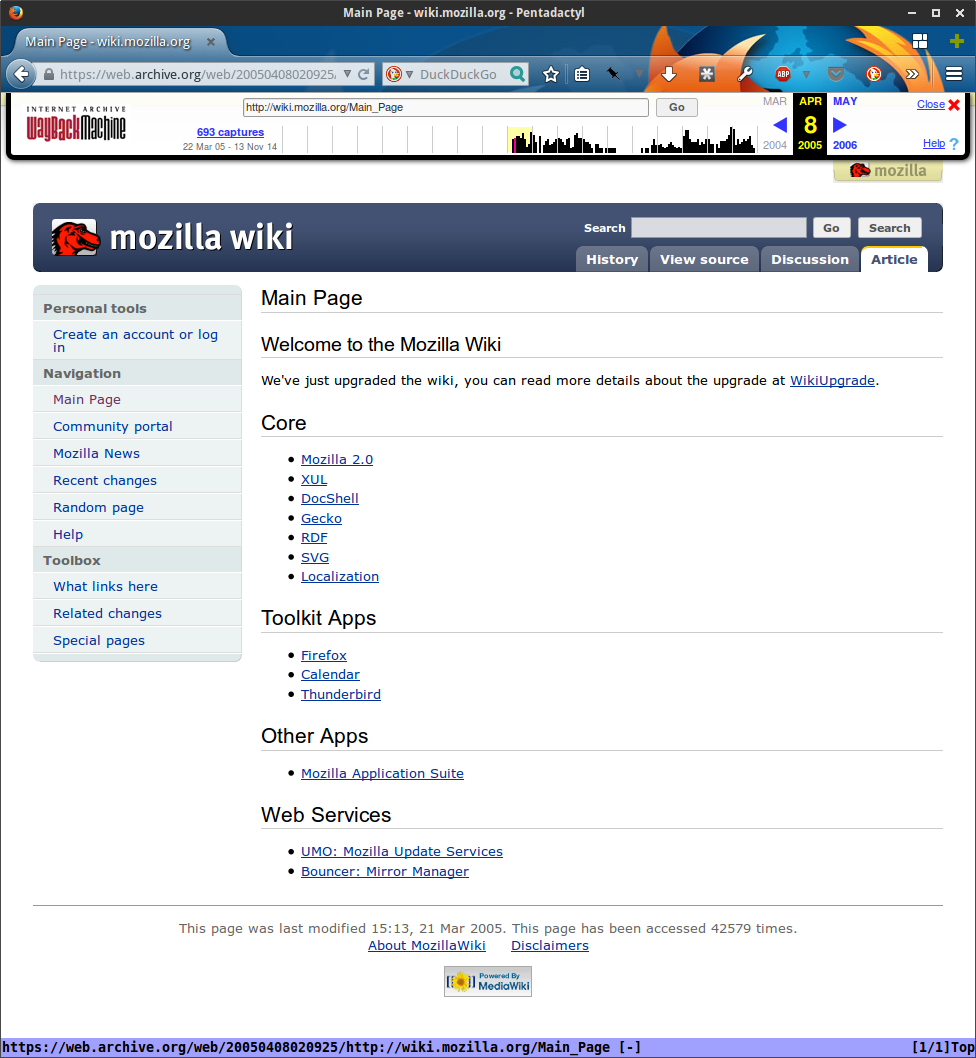

By April 2005, the wiki had been upgraded, had a new theme (Cavendish), and had started using Apache rewrite rules to make the URLs pretty (e.g. no index.php).

Mozilla Wiki, April 2005

August 2008

Three years later, in August 2008, we were still rockin’ the Cavendish theme and the Main Page had some more content, including links to the weekly project call that continues to this day.

MozillaWiki August 2008

December 2010

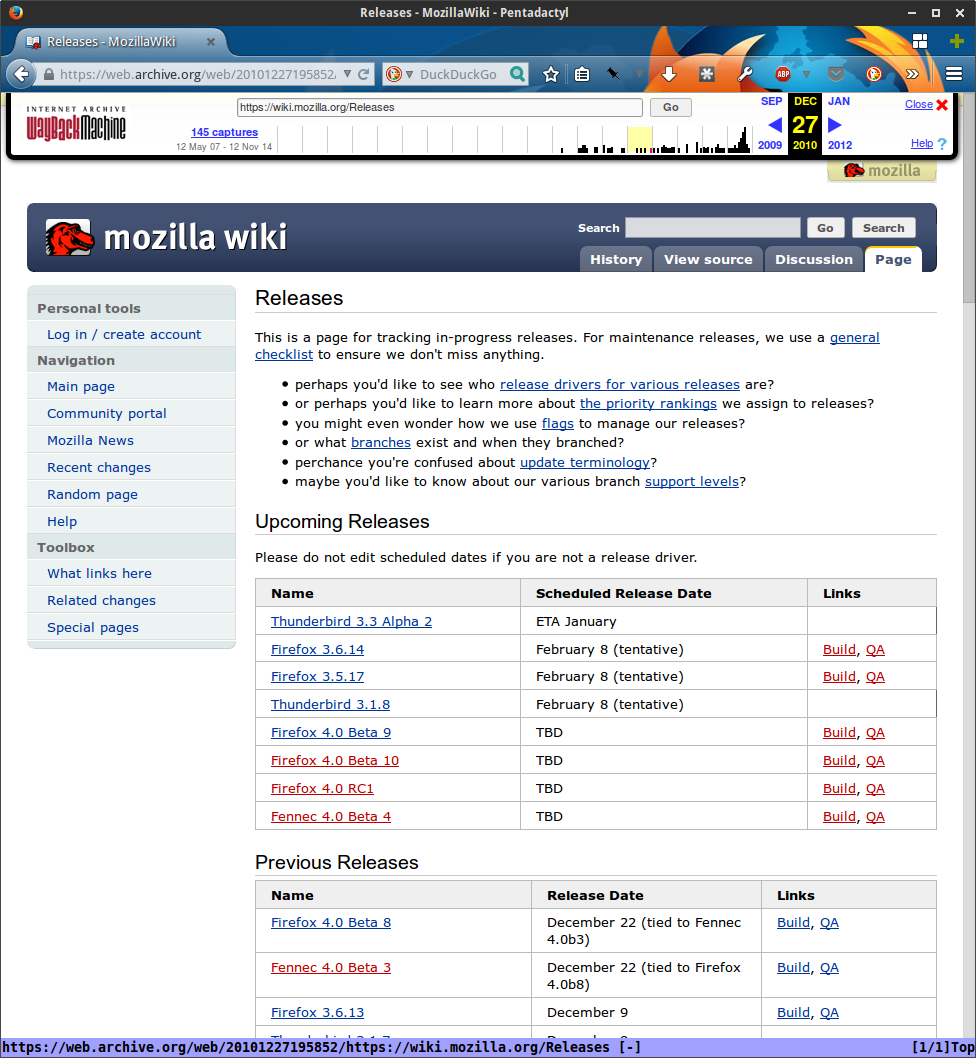

We started tracking releases in December 2007 (see version). Here’s what the Releases page looked like in December 2010.

MozillaWiki December 2010 Releases page

May 2011

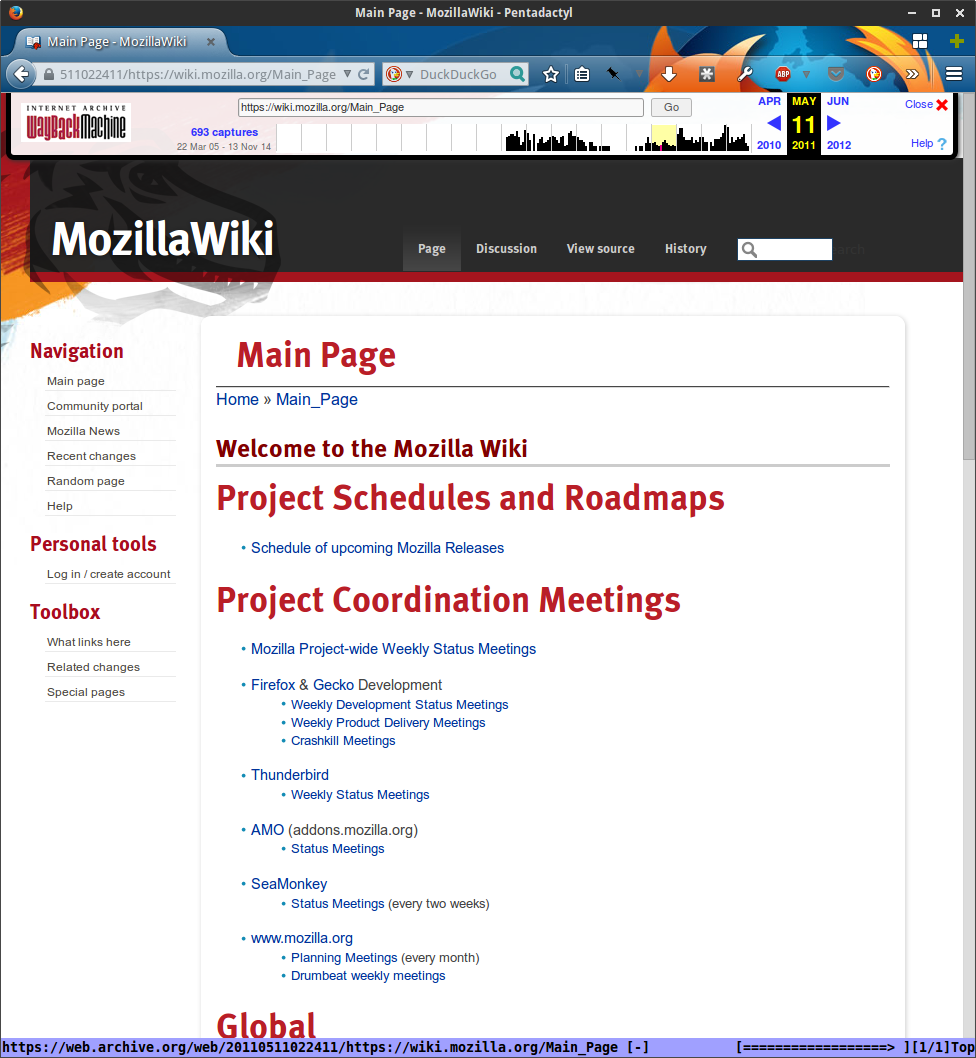

In May 2011, after 6 years of service, Cavendish was retired as the default skin and replaced with GMO.

MozillaWiki May 2011 – New GMO skin

July 2012

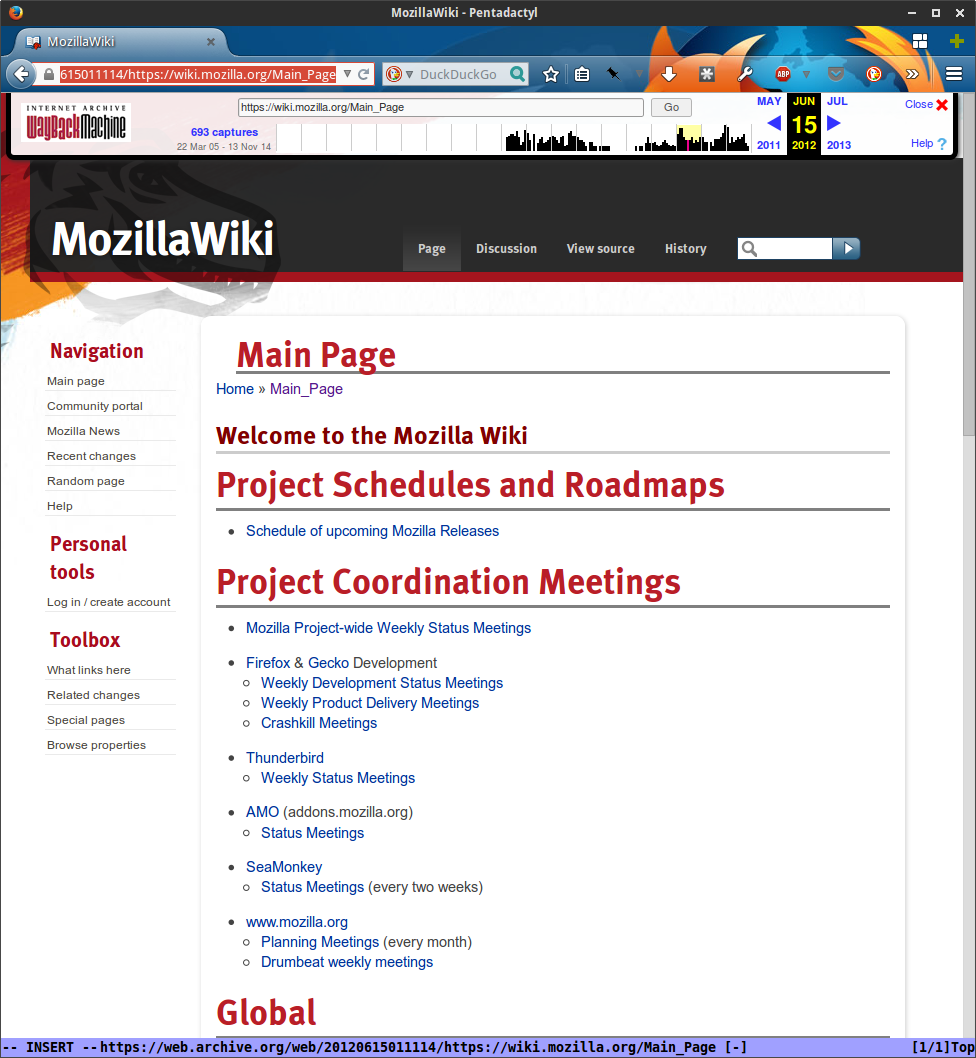

A year later, July 2012, MozillaWiki looked much the same.

MozillaWiki July 2012

July 2013

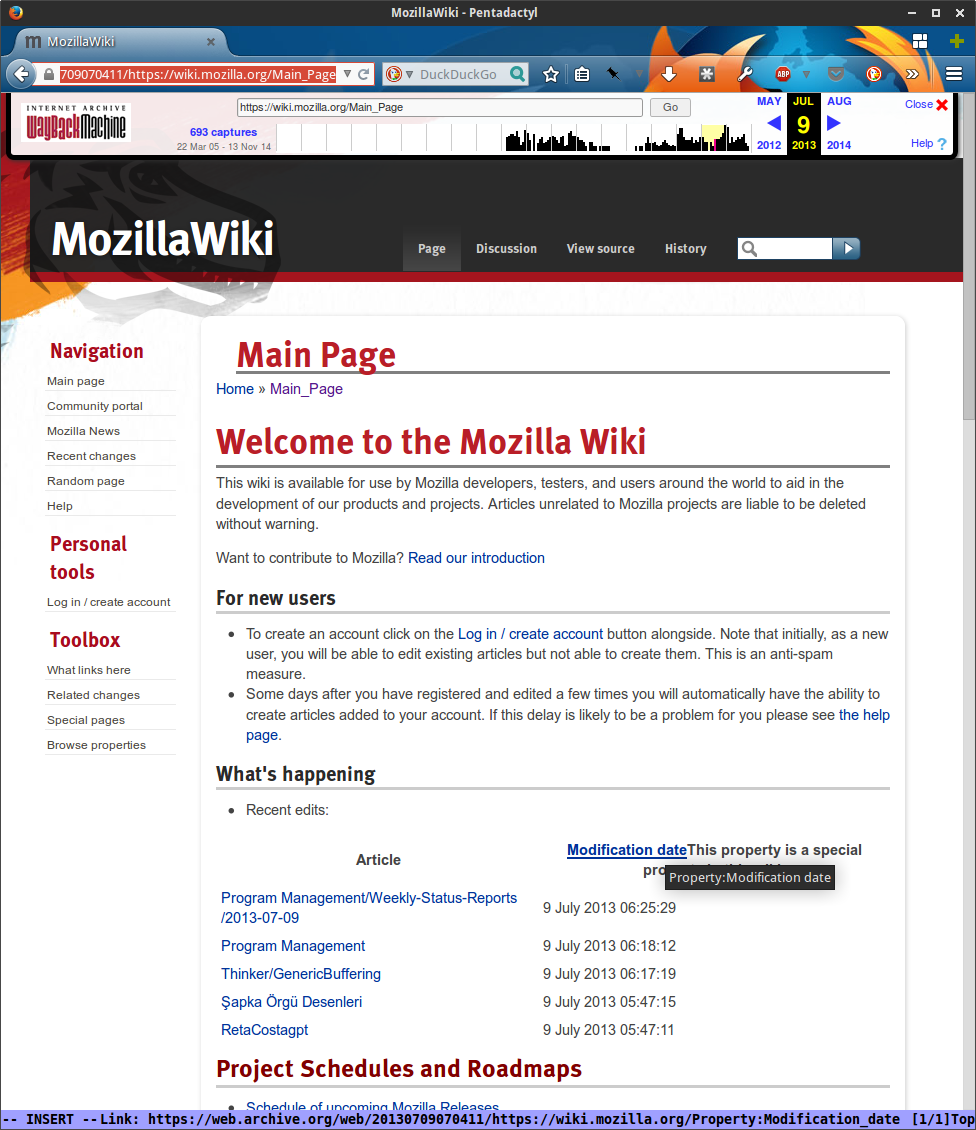

By July 2013, the Main Page was edited to include a few recent changes, but otherwise looked very similar.

MozillaWiki July 2013

August 2014

By August 2014, the revitalization of the MozillaWiki was in full swing and we were preparing for a major update to both the skin (GMO to Vector) and the underlying software (MediaWiki 1.19 to 1.23). We had also made significant changes to the content of the Main Page based on the results of our recent user survey.

MozillaWiki August 2014

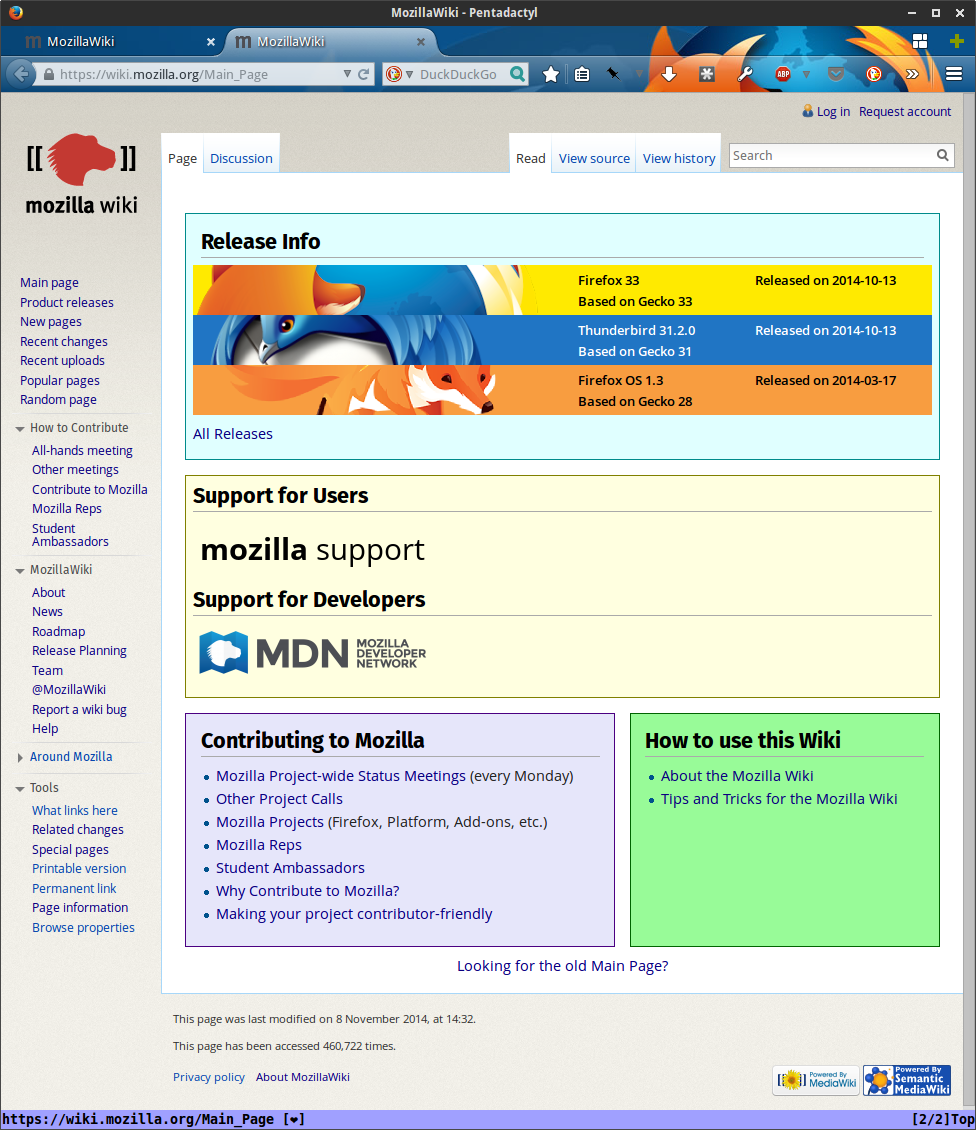

November 2014

Here’s what the wiki looks like today, 17 November, the day before its birthday. We’re running a slightly modified Vector skin and the MediaWiki 1.23.x branch.

MozillaWiki November 2014

MozillaWiki today

Pages, visitors and accounts

As of 16 November, MozillaWiki has 115,912 pages, all public, and nearly 10k uploaded files. About 630 people per month, on average, log in and make contributions to the wiki. These include both staff and volunteers. Want to track these stats yourself? Visit Special:Statistics.

The number of daily visitors ranges from 9k-30k, with an average likely around 13-14k. Who are these visitors? According to our analytics software, we get visitors from all over the world, with the greatest concentration being from the US, Canada and UK.

The wiki has over 330,000 registered user accounts. I estimate that about 300k of these are inactive spam accounts, so the real number for user accounts is probably closer to 30,000.

What kind of content is hosted on MozillaWiki?

All kinds of project activity is coordinated and recorded on the wiki. This includes activity related to our products: Firefox, Firefox OS, WebMaker, etc. It also includes community activities such as Reps, Firefox Student Ambassadors, etc. Most project activities have some representation on MozillaWiki. People also use the wiki to track projects and goals on an individual level. In this regard, it served as a place for Mozillians’ profiles long before we had mozillians.org.

The MozillaWiki isn’t set up for localized content now, but this hasn’t stopped our localized community from translating content. Every day a significant portion of account requests come from volunteers from regional communities and are often in a language other than English. In 2015, depending on resources available, we plan to significantly improve support for localized content on MozillaWiki.

2014 Accomplishments

This year we’ve made significant progress towards revitalizing MozillaWiki.

Accomplishments include:

- Forming a team of dedicated volunteers to lead a revitalization effort.

- Creating an About page for MozillaWiki that clarifies its scope and role in the project, including what is appropriate content and how to report issues.

- Fixing years-old bugs that cause significant usability problems (table sorting, unavailability of Wikieditor, etc.).

- Identifying a product owner for MozillaWiki and creating a Module for it, led by a mix of staff and contributors.

- Halting the creation of new spam and cleaning up significant amounts of spam content.

- Upgrading MediaWiki from the 1.19.x branch to the 1.23.x branch AND changing the default theme without any significant downtime or disruptions to users.

- Organizing a user survey and using those results to guide much of our roadmap, including the redesign of the Main Page and sidebar navigation.

Thank you everyone who has been a part of this work!

There’s still plenty to do, and many ways to contribute

We’ve made so much progress on the technical and infrastructure debt of MozillaWiki that we’re now ready to focus on improving content and collaboration mechanisms.

How can I help?

There are many ways you can help, and we have contribution opportunities for all kinds of skill levels and time commitments.

We’re working on documenting and organizing these contribution opportunities here: https://wiki.mozilla.org/MozillaWiki:Contribute so check that page often.

Join our mailing-list or community call

If you’d like to help us organize those opportunities, or have other ideas for improving the wiki, join one of our MozillaWiki Team communication channels or one of our community meetings. These meetings are held twice a month on Tuesday at 8:30 PST / 15:30 UTC. Our next meeting is 16 December. All who are interested in contributing to the wiki are welcome.

In the meantime, log in to MozillaWiki and celebrate its birthday with us by claiming the birthday badge!

http://subfictional.com/2014/11/18/happy-10th-birthday-mozillawiki/

|

|

Christian Heilmann: We have a massive recruitment problem |

A few months ago, I flew over to see my parents for their 50th wedding anniversary. As some of you may know, I have a humble background. My dad was a coal miner and then factory worker and my mother has always been a home maker / housewife. I am the only one in my family that went to college and I skipped university as the thing to do was to make money in a job as soon as you are 18.

It was humbling and almost embarrassing to have conversations with my family. Half of them are either unemployed or worried about their jobs. The rest are unhappy in their jobs but see no way to change that as they need the security. Finding joy in family life and leisure time is more important than enjoying the work. A job is a job, you got to do what you got to do and all that.

That’s why it feels surreal to come back into “our world” and get offers for jobs I don’t want. A lot of them. Some with ridiculous amounts of money offered and most with perks that would make my family blush and sense a trap.

Why are we not recruiter compatible and vice versa?

We’re lucky to be that sought after and yet it seems there is no happy symbiosis between us and recruiters. On the contrary, as soon as you even mention recruiting most of us techies start ranting.

I feel uneasy doing that. I feel like an arrogant ass and I feel that we should be more grateful about the opportunities we get. The relationship of recruiter and job seeker should be high-fives and unicorns. Instead there is a massive sense of dread: “Oh god, another job offer, how tiring”.

There are reasons for our dismay:

- A lot of headhunters/recruiters work on a commission basis and are measured by how many contacts they had that day. This leads to a scattergun approach and you get offers that are not “you” at all.

- Many recruiters seem to just look for keywords and then send the offer out to you. That’s why you get Java positions when you have a JavaScript background. Just like you would send car mechanic jobs to a carpet expert.

- Others go for company names. A great example was this recruiter trying to hire someone’s dog as a Java/Python developer.

- Many recruiting sites are very pushy to get you into their database to show potential hiring companies just how many job searchers they have. This leads to very old and outdated profiles and you get offers for jobs you’ve done years ago. Basically they don’t want to find you a job, they want you as an ad.

- People write ridiculous job descriptions and send them to us. In the past I wrote what kind of people I try to hire and once it went through HR and recruitment review something completely ridiculous ended up online. You’ve seen those. Asking people for two degrees, but not older than 20. Seven years experience in a half year old technology, and similar confusing points.

- There is probably nothing more intrusive to someone who feels at home online than being called by somebody. Recruiters, however, seem to see the “personal touch” as the most important thing.

On the other side of this issue, we are not innocent either:

- Instead of telling people why we didn’t want their offer, we just ignore them. There is no learning on either side.

- We love our own tools and are not too interested in changing that. Every recruitment department I worked with needed a CV in a document format for filing and keeping. Instead of having one of those at hand we love to create online CVs and portfolios or point people to our GitHub account as “real people who would hire me find all they need there”. This is navel gazing and arrogant. If I want to go on a bus, I need a bus ticket. A macaroni picture with glitter on it saying “most amazing responsive bus ticket” will not get me on there. Have the tool for the task.

- We don’t keep our presence up-to-date. If you’re not seeking, say so on LinkedIn. Have a template to send back to recruiters telling them “thanks, but no.”

- We also shouldn’t create profiles of our dog on LinkedIn. This is a professional tool, if we don’t use these in a professional manner we shouldn’t be surprised that they go to the dogs.

- Keep your skills up-to-date. If you never ever want to work with a certain product any longer, remove it from your online presence. That way keyword searchers don’t find you.

We need to communicate better

I feel there is a massive waste going on and an accumulation of frustration on both sides. We need to get better at helping one another to make this the natural partnership it should be. I feel terrible hearing about friends not in our world who send out hundreds of applications and don’t get answers whilst we complain about people trying to offer us jobs. It feels almost unreal.

There are a few good ideas around and there is a start to clean this mess up. Joblint is a tool that comes to mind. It is an analysing tool that takes job descriptions and allows you to

“Test tech job specs for issues with sexism, culture, expectations, and recruiter fails”.

A lot of miscommunication could be avoided simply by using that.

Considering giving a helping hand

Maybe I should do something about this and use my time off to reach out and try to change something. I wonder if a workshop for recruiters about issues to avoid would be of interest? In any case, let’s try to be more understanding. Recruiters do their jobs the same way we do ours. By understanding their drives and goals, we can make both of our lives easier. If we are arrogant and come across as divas, we shouldn’t be surprised if job descriptions start calling out for rockstars, ninjas, gurus and mavens.

Let’s highlight the great experiences we had, and share what worked. Maybe that could be the lever we need to crack this nut open.

http://christianheilmann.com/2014/11/18/we-have-a-massive-recruitment-problem/

|

|

Josh Aas: Let’s Encrypt |

Today we announced a project that I’ve been working on for a while now – Let’s Encrypt. This is a new Certificate Authority (CA) that is intended to be free, fully automated, and transparent. We want to help make the dream of TLS everywhere a reality. See the official announcement blog post I wrote for more information.

Eric Rescorla and I decided to try to make this happen during the summer of 2012. We were trying to figure out how to increase SSL/TLS deployment, and felt that an innovative new CA would likely be the best way to do so. Mozilla agreed to help us out as our first major sponsor, and by May of 2013 we had incorporated Internet Security Research Group (ISRG). By September 2013 we had merged a similar project started by EFF and researchers from the University of Michigan into ISRG, and submitted our 501(c)(3) application. Since then we’ve put a lot of work into ISRG’s governance, found the right sponsors, and put together the plans for our CA, Let’s Encrypt.

I’ll be serving as ISRG’s Executive Director while we search for more permanent leadership. During this time I’ll remain with Mozilla.

Too many people to thank for their help here, many of whom work for our sponsors, but I want to call out Eric Rescorla (Mozilla) and Kevin Dick (Right Side Capital Management) in particular. Eric was my original co-conspirator, and Kevin has spent innumerable hours with me helping to create partnerships and the necessary legal infrastructure for ISRG. Both are incredible at what they do, and I’ve learned a lot from working with them.

Now it’s time to finish building the CA – lots of software to write, hardware to install, and auditing to complete. If you have relevant skills, we hope you’ll join us.

https://boomswaggerboom.wordpress.com/2014/11/18/lets-encrypt/

|

|

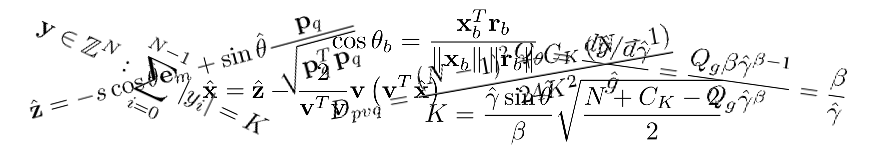

Jean-Marc Valin: Daala: Perceptual Vector Quantization (PVQ) |

Here's my new contribution to the Daala demo effort. Perceptual Vector Quantization has been one of the core ideas in Daala, so it was time for me to explain how it works. The details involve lots of maths, but hopefully this demo will make the general idea clear enough. I promise that the equations in the top banner are the only ones you will see!

|

|

Andreas Gal: Firefox and Cisco’s Project Squared |

Yesterday I was at Cisco’s Collaboration Summit where Cisco’s CTO for Collaboration Jonathan Rosenberg and I showed Cisco’s new WebRTC-based Project Squared collaboration service running in Firefox, talking to a Cisco Collaboration Desktop endpoint without requiring transcoding.

This demo is the culmination of a year long collaboration between Cisco and Mozilla in the WebRTC space. WebRTC enables voice and video communication directly from within the browser. This means that anyone can build a video conferencing service just using WebRTC and HTML5 standards, without the need for the user to download a plugin or a native application.

Cisco is not only developing WebRTC-based services that run on the Web. They have also joined a growing number of organizations and companies helping Mozilla to build a better Web. Over the last year Cisco has contributed numerous technical improvements to Mozilla’s WebRTC implementation, including support for screen sharing and the H.264 video codec. These features are now shipping in Firefox. We intend to use them in the future in Mozilla’s own Hello communication service that we are bringing to Firefox.

Cisco’s contributions to the Web go beyond just advancing Firefox. For the last three years the IETF, the standards body defining the networking protocols for WebRTC, has been unable to agree on a mandatory video codec for WebRTC, putting ubiquitous interoperability in doubt.

One of the major blockers to coming to a consensus was that H.264 is subject to royalty-bearing patents, which made it problematic for open source projects such as Firefox to deploy it. To break this logjam, Cisco open-sourced its H.264 code base and made it available in plugin form. Any product — not just Firefox — can download the plugin and use it to enable H.264 without paying any royalties.

This collaboration between Mozilla and Cisco enabled Firefox to add support for H.264 in WebRTC, and also played a significant role in the compromise reached at the last IETF meeting to adopt both H.264 and VP8 as mandatory video codecs for WebRTC in browsers. As a result of this compromise, in the future all browsers should match the capabilities already available in Firefox.

Mozilla will continue to work on advancing Firefox and the Web, and we are excited to have strong partners like Cisco who share our commitment to the open Web as a shared technology platform.

Filed under: Mozilla Tagged: Mozilla, WebRTC

http://andreasgal.com/2014/11/18/firefox-and-ciscos-project-squared/

|

|

Adam Lofting: Will our latest donation form help us raise more money this year? |

I posted to the fundraising.mozilla.org blog today:

http://feedproxy.google.com/~r/adamlofting/blog/~3/iBiYBNir9_U/

|

|

Alexander Surkov: Accessibility goes into DOM |

I have a bunch of issues with the approach, which I want to outline here just to keep things in one place.

The purpose

I've been told that the primary reason is testing, but having role and name only is not enough to run UAIG tests or any accessibility automation tool, since those would require other accessibility properties.

They also say that it might be used for non-accessibility purposes. I realize that the semantics ARIA adds can be used by non-assistive technologies. In Firefox we have a large number of non-AT consumers, but in most cases we don't have a good idea what they are for. So I don't really have a use case, and thus it's hard to say whether accessible role and name alone work well for non-a11y purposes.

As for assistive technologies, I think they also need a much larger API.

Blowing the DOM

Anything useful will require extra accessible properties, as I said above. These are accessible description, states, relations, the ability to navigate the hierarchy, etc. That means sooner or later the Element interface has to be changed to a great extent. Check out AtkObject to get an idea of the possible changes.

In the beginning, accessibility interfaces were built on top of the DOM, and later they were turned into full APIs. Now we are facing the reverse process: accessibility APIs are going back into the DOM. I'm not sure that's a good idea, because accessibility tasks are something very specific, and an accessibility API might not be suitable for the common needs of web apps.

Restrictions

Not every semantically meaningful piece on the screen has a DOM node; for example, list bullets don't necessarily have DOM elements associated with them. So an Element-based accessibility API is too restrictive to fit the requirements of assistive technologies.

Performance

Last but not least is the performance issue. In most browsers the accessibility engine is kept separate and only starts running on demand. If accessibility is merged with the DOM, then nothing tells the user that a method may trigger heavy accessibility computations and make their app slower. Surely the browsers will learn how to get smarter, but the approach will have a perf hit either way.

What's it going to be then, eh?

The idea is to provide a separate accessibility interface. If you like it, it can be done in parts: for example, introduce role and name only in the first round, the same as the original proposal says. Later you can think about adding all the other properties.

This idea was welcomed initially, then later it was rejected as being too complex and accessibility-centric. But, and that's the most important thing, it doesn't have the disadvantages the Element approach has.
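To make the shape of that idea more concrete, here is a minimal sketch of what a separate, opt-in accessibility interface exposing only role and name might look like. Everything in it is hypothetical: `AccessibilityEngine`, `AccessibleNode`, `getAccessible`, and the plain-object nodes are illustration only and do not correspond to any real browser API or to the proposals discussed above. The role and name computations are deliberately simplistic stand-ins.

```javascript
// Hypothetical sketch: none of these names come from a real browser API.

// Stand-in for the browser's internal accessibility engine, which is
// assumed to be expensive to start and is therefore started on demand.
class AccessibilityEngine {
  constructor() {
    this.started = false;
  }

  start() {
    // A real engine would build its accessibility tree here.
    this.started = true;
  }

  // Greatly simplified: explicit role attribute, then an implicit role.
  computeRole(node) {
    return node.attributes.role || node.implicitRole || 'generic';
  }

  // Greatly simplified: aria-label, then the node's text.
  computeName(node) {
    return node.attributes['aria-label'] || node.text || '';
  }
}

// The separate interface. First round: role and name only.
// Further properties (description, states, relations) could be added
// here later without touching the Element interface.
class AccessibleNode {
  constructor(engine, node) {
    this.engine = engine;
    this.node = node;
  }

  get role() {
    return this.engine.computeRole(this.node);
  }

  get name() {
    return this.engine.computeName(this.node);
  }
}

// Explicit entry point: asking for an accessible object is the only
// thing that starts the engine, so the cost is visible to the caller.
function getAccessible(engine, node) {
  if (!engine.started) {
    engine.start();
  }
  return new AccessibleNode(engine, node);
}
```

In this sketch the engine stays idle until `getAccessible` is called, which mirrors the on-demand behaviour described in the Performance section. Because an `AccessibleNode` is not tied to the Element interface, the same kind of object could in principle also describe things that have no DOM node, such as list bullets.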

http://asurkov.blogspot.com/2014/11/accessibility-goes-into-dom.html

|

|

Andreas Gal: Let’s Encrypt: One more step on the road to TLS Everywhere |

Principle 4 of the Mozilla Manifesto states: Individuals’ security and privacy on the Internet are fundamental and must not be treated as optional.

Unfortunately treating user security as optional is exactly what happens when sites let users connect over insecure HTTP rather than HTTP over TLS (HTTPS). What insecure means here is that your network traffic is totally unprotected and can be read and/or modified by anyone who shares a network with you, including random people sharing Starbucks or airport WiFi.

One of the biggest reasons that web sites don’t deploy TLS is the requirement to get a digital certificate — a cryptographic credential which allows a user’s browser to know it’s talking to the right site and not to an attacker. Certificates are issued by Certificate Authorities (CAs) often using a clumsy and error-prone manual process. A further disincentive to deployment is that most CAs charge a fee for their certificates, which not only prices some people out of the market but also interferes with automatic issuance and renewal.

Mozilla, along with our partners Akamai, Cisco, EFF, and Identrust decided to do something about this situation. Together, we’ve formed a new consortium, the Internet Security Research Group, which is starting Let’s Encrypt, a new certificate authority designed to bring security to everyone. Let’s Encrypt is built around a few key principles:

- Free: Certificates will be offered at no cost.

- Automatic: Certificates will be issued via a public and published API, allowing Web server software to automatically obtain new certificates at installation time and without manual intervention.

- Independent: No piece of infrastructure this important should be controlled by a single company. ISRG, the parent entity of Let’s Encrypt, is governed by a board drawn from industry, academia, and nonprofits, ensuring that it will be operated in the public interest.

- Open: Let’s Encrypt will be publishing its source code and protocols, as well as submitting the protocols for standardization so that server software as well as other CAs can take advantage of them.

Let’s Encrypt will be issuing its first real certificates in Q2 2015. In the meantime, we have published some initial protocol drafts along with a demonstration client and server at: https://github.com/letsencrypt/node-acme and https://github.com/letsencrypt/heroku-acme. These are functional today and can be used to issue test certificates.

It’s been a long road getting here and we’re not done yet, but this is an important step towards a world with TLS Everywhere.

Filed under: Mozilla

http://andreasgal.com/2014/11/18/lets-encrypt-one-more-step-on-the-road-to-tls-everywhere/

|

|

Soledad Penades: Tools for the 21st century musician—super abridged dotJS edition |

I attended dotJS yesterday where I gave a very short version of last week’s talk at Full Frontal (18 minutes versus 40).

The conference happened in a theatre and we were asked not to use bright backgrounds, so I changed my slides to be darker and classier.

It didn’t really go as smoothly as I expected (a kernel panic a bit before the start of the talk, and I got nervous and distracted so I got more nervous and…), but I guess I can’t always WIN! It was fun to speak in French, if only for one line, though: Je suis très contente d’être parmi vous! (I am very happy to be among you!) Thanks to Thomas for the assistance in coming up with the perfect presentation line, and Guillaume and Sasha for listening to me repeat it until it resembled passable French!

While the video is being edited and released, here’s a sample in the form of slides, online, and their source code on GitHub.

It was fun to use CSS filters to invert the images so they would not be a big white block on top of a dark background. Yay CSS filters!

.filter-invert {
  filter: invert(100%) brightness(2);
}

Also, using them in transitions between slides. I discovered that I could blur between slides. Cinematic effects! (sorta, as I cannot get vertical/horizontal blur). But:

.bespoke-active.emphatic-text {
  filter: none;
}

.bespoke-inactive.emphatic-text {
  filter: blur(10px);
}

I use my custom plugin presentation-fullscreen for getting real fullscreen in my slides. It’s on npm:

npm install presentation-fullscreen --save

then just

require('presentation-fullscreen');

will add a new option to the contextual menu for making the whole body go fullscreen.
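The post does not show how the plugin works internally. As a rough idea of what making an element go fullscreen involves, here is a minimal sketch built on the standard Fullscreen API with older vendor-prefixed fallbacks. `goFullscreen` is a hypothetical helper written for illustration, not code from presentation-fullscreen.

```javascript
// Hypothetical helper, not taken from presentation-fullscreen itself.
// Tries the standard Fullscreen API first, then older vendor-prefixed names.
function goFullscreen(element) {
  const request =
    element.requestFullscreen ||
    element.mozRequestFullScreen ||
    element.webkitRequestFullscreen ||
    element.msRequestFullscreen;

  if (typeof request !== 'function') {
    return false; // no Fullscreen API available on this element
  }

  request.call(element);
  return true;
}

// In a browser this would be triggered by a user gesture, for example:
// document.addEventListener('dblclick', () => goFullscreen(document.body));
```

Browsers generally only honour fullscreen requests made in response to a user action, which is presumably why the plugin exposes it as a contextual menu option.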

I shall write about this tip and how I use bespoke.js in general, and a couple thoughts and ideas I had during the conference soon. Topics including (so I don’t forget): why a mandatory lack of anonymity is not the solution to doxxing, and the ideal talk length.

|

|

Mozilla Release Management Team: Firefox 34 release date moving to Dec 1/2 |

The Firefox 34 release date will move out one week from Nov 25 to Dec 1/2. This change impacts Firefox Desktop, Firefox for Android, Firefox ESR, and Thunderbird.

The purpose of this change is to allow for an additional week of stabilization during the 34 cycle.

Details of the change:

- Release date change from Nov 25 to Dec 1/2 (need to determine the date that works best given the work week)

- Merge date change from Tue, Nov 24 to Fri, Nov 28

- Two additional desktop betas (10 and 11) will be added to the calendar this week on our usual beta build schedule (build Mon and Thu, release Tue and Fri)

- One additional mobile beta (beta 11) will be added to the schedule.

Note that mobile beta 10 will gtb on schedule on Mon.

Mobile beta 11 will gtb on Thu with desktop in order to be ready early the following week.

- RC builds will happen on Mon, Nov 24

http://release.mozilla.org/planning/34/2014/11/18/34-dates-moved.html

|

|

Patrick Finch: Is the Web dying? |

This article may or may not be pay-walled, depending on how you arrive at it. It is an exploration of the shift to apps.

The history of computing is companies trying to use their market power to shut out rivals, even when it’s bad for innovation and the consumer….That doesn’t mean the Web will disappear. Facebook and Google still rely on it to furnish a stream of content that can be accessed from within their apps. But even the Web of documents and news items could go away. Facebook has announced plans to host publishers’ work within Facebook itself, leaving the Web nothing but a curiosity, a relic haunted by hobbyists.

This is something I was getting at with my post yesterday: that advertising remains one of the Web’s unique selling points. It is much more effective as an advertising platform than mobile apps are. At the moment, the Internet giants extract an enormous amount of value from the content on the Web, using it to drive engagement with their services. The Web has very low barriers to entry, but economic sustainability is difficult and the only proven revenue model appears to be advertising at scale. The model needs liberating.

(Note: The source of this article, the Wall Street Journal, may appear to refute that, given it has a paywall, but I believe that their model is essentially freemium and it isn’t clear to me what revenues they derive from subscription customers.)

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1096565] GET REST calls should allow arbitrary URL parameters to be passed in addition the values in the path

- [1097813] Bug.search causes error when using simple token auth and specifying ‘token’ instead of ‘Bugzilla_token’

- [1036802] Requests to the native rest/bzapi endpoints with gzip encoding always result in HTTP/200 responses

- [1097382] OS sniffing should detect Windows 10 from “Windows NT 6.4” instead of detecting Windows NT

- [1098956] remove autoland support

- [1100368] css concatenation breaks data: urls
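To illustrate the kind of problem behind bug 1097382, here is a small sketch of user-agent sniffing in which specific version tokens are checked before a generic fallback. It is an illustration only: Bugzilla itself is written in Perl and its actual detection code is not shown in this post, so `WINDOWS_NT_VERSIONS` and `detectWindows` are assumed names.

```javascript
// Illustrative only; not Bugzilla's actual detection code.
// Specific "Windows NT x.y" tokens are checked before the generic fallback.
const WINDOWS_NT_VERSIONS = [
  ['Windows NT 6.4', 'Windows 10'], // token sent by Windows 10 preview builds
  ['Windows NT 6.3', 'Windows 8.1'],
  ['Windows NT 6.2', 'Windows 8'],
  ['Windows NT 6.1', 'Windows 7'],
];

function detectWindows(userAgent) {
  for (const [token, name] of WINDOWS_NT_VERSIONS) {
    if (userAgent.includes(token)) {
      return name;
    }
  }
  // Without a specific entry, any unrecognised release ends up here.
  return userAgent.includes('Windows NT') ? 'Windows NT' : null;
}
```

If the "Windows NT 6.4" entry were missing from the table, a Windows 10 user agent would fall through to the generic "Windows NT" result, which is the behaviour the bug title describes.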

discuss these changes on mozilla.tools.bmo.

Filed under: bmo, mozilla

http://globau.wordpress.com/2014/11/18/happy-bmo-push-day-119/

|

|