You can add any RSS source (including a LiveJournal journal) to your friends feed on the syndication page.

Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. This feed was created automatically at the request of readers of this RSS feed.

For any questions about how this service works, see the contact information page.

Soledad Penades: Using a Flame as my main phone, day 1

So here's my last tweet from an Android phone. Moving to using a @FirefoxOSFeed Flame full time! pic.twitter.com/FZLdY1sL1I

http://soledadpenades.com/2014/09/30/using-a-flame-as-my-main-phone-day-1/

Mozilla Release Management Team: Firefox 33 beta7 to beta8

- 46 changesets

- 110 files changed

- 1976 insertions

- 805 deletions

| Extension | Occurrences |
| cpp | 34 |
| h | 14 |
| html | 13 |
| js | 11 |
| jsm | 6 |
| css | 4 |
| xul | 3 |
| xml | 3 |
| ini | 3 |
| cc | 3 |
| c | 2 |
| xhtml | 1 |
| webidl | 1 |
| svg | 1 |
| py | 1 |
| properties | 1 |
| nsh | 1 |
| list | 1 |
| in | 1 |
| idl | 1 |
| dtd | 1 |

| Module | Occurrences |
| dom | 18 |
| gfx | 16 |
| browser | 14 |
| layout | 12 |
| toolkit | 9 |
| security | 6 |
| js | 6 |
| content | 6 |
| netwerk | 5 |
| mobile | 3 |
| media | 3 |
| widget | 2 |
| xpfe | 1 |
| xpcom | 1 |
| modules | 1 |
| ipc | 1 |
| embedding | 1 |

List of changesets:

| David Keeler | Bug 1057123 - mozilla::pkix: Certificates with Key Usage asserting the keyCertSign bit may act as end-entities. r=briansmith, a=sledru - 599ae9ec1b9c |

| Robert Strong | Bug 1070988 - Windows installer should remove leftover chrome.manifest on pave over install to prevent startup crash with Firefox 32 and above with unpacked omni.ja. r=tabraldes, a=sledru - 9286fb781568 |

| Bobby Holley | Bug 1072174 - Handle all the cases XrayWrapper.cpp. r=peterv, a=abillings - bb4423c0da47 |

| Brian Nicholson | Bug 1067429 - Alphabetize theme styles. r=lucasr, a=sledru - f29b8812b6d0 |

| Brian Nicholson | Bug 1067429 - Create GeckoAppBase as the parent for Gecko.App. r=lucasr, a=sledru - 112a9fe148d2 |

| Brian Nicholson | Bug 1067429 - Add values-v14, removing v14-only styles from values-v11. r=lucasr, a=sledru - 89d93cece9fd |

| David Keeler | Bug 1060929 - mozilla::pkix: Allow explicit encodings of default-valued BOOLEANs because lol standards. r=briansmith, a=sledru - 008eb429e655 |

| Tim Taubert | Bug 1067173 - Bail out early if _resizeGrid() is called before the page has loaded. f=Mardak, r=adw, a=sledru - c043fec932a6 |

| Markus Stange | Bug 1011166 - Improve the workarounds cairo does when rendering large gradients with pixman. r=roc, r=jrmuizel, a=sledru - a703ff0c7861 |

| Edwin Flores | Bug 976023 - Fix crash in AppleMP3Reader. r=rillian, a=sledru - f2933e32b654 |

| Nicolas Silva | Bug 1066139 - Put stereo video behind a pref (off by default). r=Bas, a=sledru - e60e089a7904 |

| Nicholas Nethercote | Bug 1070251 - Anonymize non-chrome inProcessTabChildGlobal URLs in memory reports when necessary. r=khuey, a=sledru - 09dcf9d94d33 |

| Andrea Marchesini | Bug 1060621 - WorkerScope should CC mLocation and mNavigator. r=bz, a=sledru - 32d5ee00c3ab |

| Andrea Marchesini | Bug 1062920 - WorkerNavigator strings should honor general.*.override prefs. r=khuey, a=sledru - 6d53cfba12f0 |

| Andrea Marchesini | Bug 1069401 - UserAgent cannot be changed for specific websites in workers, r=khuey, r=bz, a=sledru - e178848e43d1 |

| Gijs Kruitbosch | Bug 1065998 - Empty-check Windows8WindowFrameColor's customizationColor in case its registry value is gone. r=jaws, a=sledru - 12a5b8d685b2 |

| Richard Barnes | Bug 1045973 - sec_error_extension_value_invalid: mozilla::pkix does not accept certificates with x509v3 extensions in x509v1 or x509v2 certificates. r=keeler, a=sledru - a4697303afa6 |

| Branislav Rankov | Bug 1058024 - IonMonkey: (ARM) Fix jsapi-tests/testJitMoveEmitterCycles. r=mjrosenb, a=sledru - 371e802df4dc |

| Rik Cabanier | Bug 1072100 - mix-blend-mode doesn't work when set in JS. r=dbaron, a=sledru - badc5be25cc1 |

| Jim Chen | Bug 1067018 - Make sure calloc/malloc/free usages match in Tools.h. r=jwatt, a=sledru - cf8866bd741f |

| Bill McCloskey | Bug 1071003 - Fix null crash in XULDocument::ExecuteScript. r=smaug, a=sledru - b57f0af03f78 |

| Felipe Gomes | Bug 1063848 - Disable e10s in safe mode. r=bsmedberg, r=ally, a=sledru, ba=jorgev - 2b061899d368 |

| Gijs Kruitbosch | Bug 1069300 - strings for panic/privacy/forget-button for beta, r=jaws,shorlander, a=dolske, l10n=pike, DONTBUILD=strings-only - 16e19b9cec72 |

| Valentin Gosu | Bug 1011354 - Use a mutex to guard access to nsHttpTransaction::mConnection. r=mcmanus, r=honzab, a=abillings - ac926de428c3 |

| Terrence Cole | Bug 1064346 - JSFunction's extended attributes expect POD-style initialization. r=billm, a=abillings - fd4720dd6a46 |

| Marty Rosenberg | Bug 1073771 - Add namespaces and whatnot to make JitMoveEmitterCycles compile. r=dougc, a=test-only - 97feda79279e |

| Ed Lee | Bug 1058971 - [Legal]: text for sponsored tiles needs to be localized for Firefox 33 [r=adw a=sylvestre] - deaa75a553ac |

| Ed Lee | Bug 1064515 - update learn more link for sponsored tiles overlay [r=adw a=sylvestre] - b58a231c328c |

| Ed Lee | Bug 1071822 - update the learn more link in the tiles intro popup [r=adw a=sylvestre] - 0217719f20c5 |

| Ed Lee | Bug 1059591 - Incorrectly formatted remotely hosted links causes new tab to be empty [r=adw a=sylvestre] - d34488e06177 |

| Ed Lee | Bug 1070022 - Improve Contrast of Text on New Tab Page [r=adw a=sylvestre] - 8dd30191477e |

| Ed Lee | Bug 1068181 - NEW Indicator for Pinned Tiles on New Tab Page [r=ttaubert a=sylvestre] - 02da3cf36508 |

| Ed Lee | Bug 1062256 - Improve the design of the »What is this« bubble on about:newtab [r=adw a=sylvestre] - 2a8947c986ed |

| Bas Schouten | Bug 1072404: Firefox may crash when the D3D device is removed while rendering. r=mattwoodrow a=sylvestre - 3d41bbe16481 |

| Bas Schouten | Bug 1074045: Turn OMTC back on on beta. r=nical a=sylvestre - b9e8ce2a141b |

| Jim Mathies | Bug 1068189 - Force disable browser.tabs.remote.autostart in non-nightly builds. r=felipe, a=sledru - d41af0c7fdaf |

| Randell Jesup | Bug 1033066 - Never let AudioSegments underflow mDuration and cause OOM allocation. r=karlt, a=sledru - 82f4086ba2c7 |

| Georg Fritzsche | Bug 1070036 - Catch NS_ERROR_NOT_AVAILABLE during OpenH264Provider startup. r=irving, a=sledru - b6985e15046b |

| Nicolas Silva | Bug 1061712 - Don't crash in DrawTargetDual::CreateSimilar if allocation fails. r=Bas, a=sledru - 69047a750833 |

| Nicolas Silva | Bug 1061699 - Only crash debug builds if BorrowDrawTarget is called on an unlocked TextureClient. r=Bas, a=sledru - 4020480a6741 |

| Aaron Klotz | Bug 1072752 - Make Chromium UI message loops for Windows call into WinUtils::WaitForMessage. r=jimm, a=sledru - 737fbc0e3df4 |

| Florian Quèze | Bug 1067367 - Tapping the icon of a second doorhanger reopens the first doorhanger if it was already open. r=Enn, a=sledru - 3ff9831143fd |

| Robert Longson | Bug 1073924 - Hovering over links in SVG does not cause cursor to change. r=jwatt, a=sledru - 19338c25065c |

| Ryan VanderMeulen | Backed out changeset d41af0c7fdaf (Bug 1068189) for reftest-ipc crashes/failures. - dabbfa2c0eac |

| Randell Jesup | Bug 1069646 - Scale frame rate initialization in webrtc media_opimization. r=gcp, a=sledru - bc5451d18901 |

| David Keeler | Bug 1053565 - Update minimum system NSS requirement in configure.in (it is now 3.17.1). r=glandium, a=sledru - 0780dce35e25 |

http://release.mozilla.org/statistics/33/2014/09/30/fx-33-b7-to-b8.html

Mozilla Reps Community: ReMo Camp 2014: Impact through action

For the last 3 years the council, peers and mentors of the Mozilla Reps program have been meeting annually at ReMo Camp, a 3-day meetup to check the temperature of the program and plan for the next 12 months. This year’s Camp was particularly special because for the first time, Mitchell Baker, Mark Surman and Mary Ellen Muckerman participated in it. With such a great mix of leadership both at the program level and at the organization, it was clear this ReMo Camp would be our most interesting and productive one.

The meeting spanned 3 days:

Day 1

The Council and Peers got together to add the finishing touches and tweaks to the program content and schedule but also to discuss the program’s governance structure. Council and Peers defined the different roles in the program that allow the Reps to keep each leadership body accountable and made sure there was general alignment. We will post a separate blog post on governance explaining the exact functions of the module owner, the peers, the council, mentors and Reps.

Day 2

The second day was very exciting and was dubbed "challenges" day: Mitchell, Mark and Mary Ellen joined the Reps to work on 6 "contribution challenges". These challenges are designed to be concrete initiatives that aim to have a quick impact on Mozilla’s product goals through large-scale volunteer participation. Mozillians around the globe work tirelessly to push the Mozilla mission forward, and one of the most powerful ways of doing so is by improving our products. We worked on 6 specific areas where we can have an impact and identified the next steps. There’s a lot of excitement already, and the Reps program will play a central role as a platform to mobilize and empower local communities participating in these challenges. More on this shortly…

Day 3

The last day of the Camp was entirely dedicated to the Reps program. We had so many things to talk about and so many ideas, and alas the day only has so many hours, so we focused on three thematic pillars: impact, mentorship training and getting stuff done. The council and peers had spent Friday setting those priorities, the rationale being that Mozilla Reps leadership is very good at identifying what needs to get done, and not as good at follow-through. The sessions on “impact” were prioritized over others as we wanted to figure out how best to enable and empower Reps to have an impact and follow through on all the great plans we make. Impact was broken down into three thematic buckets:

Accountability: how do we keep Reps accountable for what they have signed up for?

Impact measurement: how do we measure the impact of all the wonderful things we do?

Recognition: how do we recognize, in a more systematic and fair way, our volunteers who go out of their way?

After the impact discussion, we changed gears and moved to the mentorship training. During the preparations leading to ReMo Camp, most of the mentors asked for training. Our mentors are really committed to helping Reps on the ground do a great job, so the council and the peers facilitated a mentorship training divided into 5 different stations. We got a lot of great feedback, and we’ll be producing videos with the training materials so that any mentor (or interested Rep) has access to this content. We will also be rolling out Q&A sessions for each mentorship station. Stay tuned if you want to learn more about mentorship and the Reps program in general.

The third part of Day 3 was “getting stuff done”, a session where we identified 10 concrete tasks (most of them pending from the last ReMo Camp) that we could actually get done by the end of the day.

The overall take-away from this Camp was that instead of designing grand, ambitious plans, we need to be more agile and sometimes more realistic about what work we can accomplish. Ultimately, this will help us get more stuff done more quickly. That spirit of urgency and agility permeated the entire weekend, and we hope to be able to transmit this feeling to each and every Rep.

There wasn’t enough time, but we spent it in the best possible way. Having the Mozilla leadership with us was incredibly empowering and inspiring. The Reps have organized themselves and created this powerful platform. Now it’s time to focus our efforts. The weekend in Berlin proved that the Reps are a cohesive group of volunteer leaders with a lot of experience and the eyes and ears of Mozilla in every corner of the world. Now let’s get together and commit to doing everything we set out to do before ReMo Camp 2015.

https://blog.mozilla.org/mozillareps/2014/09/30/remo-camp-2014-impact-through-action/

Roberto A. Vitillo: Telemetry meets Clojure.

tldr: Data-related telemetry alerts (e.g. histograms or main-thread IO) are now aggregated by medusa, which allows devs to post, view and filter alerts. The dashboard lets users subscribe to search criteria or individual metrics.

As mentioned in my previous post, we recently switched to a dashboard generator, “iacomus”, to visualize the data produced by some of our periodic map-reduce jobs. Given that the dashboards gained some metadata that describes their datasets, writing a regression detection algorithm based on the iacomus data-format followed naturally.

The algorithm generates a time-series for each possible combination of the filtering and sorting criteria of a dashboard, compares the latest data-point to the distribution of the previous N, and generates an alert if it detects an outlier. Stats 101.
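A minimal sketch of that check, written in Rust for illustration (the post's aggregator and detector are written in Clojure, and this is not their code). The z-score test, the threshold k, the use of the sample standard deviation, and labelling each series with a string key are all assumptions; the post only says that the latest point is compared with the distribution of the previous N.

```rust
use std::collections::BTreeMap;

/// True if `latest` lies more than `k` sample standard deviations away from
/// the mean of `history`. The z-score test and `k` are assumptions.
fn is_outlier(history: &[f64], latest: f64, k: f64) -> bool {
    let n = history.len();
    if n < 2 {
        return false; // too little history to estimate a spread
    }
    let mean = history.iter().sum::<f64>() / n as f64;
    let var = history.iter().map(|x| (x - mean).powi(2)).sum::<f64>() / (n - 1) as f64;
    let sd = var.sqrt();
    if sd == 0.0 {
        return latest != mean; // flat history: any change is unusual
    }
    ((latest - mean) / sd).abs() > k
}

/// One series per combination of filter/sort criteria, keyed by a
/// hypothetical label. Compares each latest point with the previous `n`.
fn alerts(series: &BTreeMap<String, Vec<f64>>, n: usize, k: f64) -> Vec<String> {
    series
        .iter()
        .filter_map(|(key, points)| {
            let (&latest, rest) = points.split_last()?;
            let window = &rest[rest.len().saturating_sub(n)..];
            is_outlier(window, latest, k).then(|| key.clone())
        })
        .collect()
}
```

Keying series by label keeps the per-combination bookkeeping separate from the statistics, so a different outlier test could be swapped into is_outlier without touching the rest.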

Alerts are aggregated by medusa, which provides a RESTful API to submit alerts and exposes a dashboard that allows users to view and filter alerts using regular expressions and subscribe to alerts.

Writing the aggregator and regression detector in Clojure[script] has been a lot of fun. What I found particularly attractive is that Clojure doesn’t have any big web framework à la Ruby or Python that forces you into one specific mindset. Instead you can roll your own using a wide set of libraries, like:

- HTTP-Kit, an event-driven HTTP client/server

- Compojure, a routing library

- Korma, a SQL DSL

- Liberator, RESTful resource handlers

- om, React.js interface for Clojurescript

- secretary, a client-side routing library

The ability to easily compose functionality from different libraries is exceptionally well explained by a quote from Alan Perlis: “It is better to have 100 functions operate on one data structure than 10 functions on 10 data structures”. And so, instead of each library having its own set of independent abstractions and data structures, Clojure libraries tend to use mostly just lists, vectors, sets and maps, which greatly simplifies interoperability.

Lisp gets criticized for its syntax, or lack thereof, but I don’t feel that’s fair. Using any editor that inserts and balances parentheses for you does the trick. I also feel like I didn’t have to run a background thread in my mind to think about whether what I was writing would please the compiler or not, unlike in Scala for instance. Not to mention the ability to use macros, which allow one to easily extend the compiler with user-defined code. The expressiveness of Clojure also means that more thought is required per LOC, but that might just be a side-effect of not being a full-time functional programmer.

What I do miss in the Clojure ecosystem is a strong set of tools for statistics and machine learning. Incanter is a wonderful library but, coming from an R and Python/SciPy background, I had the impression that there is still a lot of catching up to do.

http://ravitillo.wordpress.com/2014/09/30/telemetry-meets-clojure/

David Rajchenbach Teller: What David Did During Q3

September is ending, and with it Q3 of 2014. It’s time for a brief report, so here is what happened during the summer.

Session Restore

After ~18 months working on Session Restore, I am progressively switching away from that topic. Most of the main performance issues that we set out to solve have been solved already, we have considerably improved safety, cleaned up lots of the code, and added plenty of measurements.

During this quarter, I have been working on various attempts to optimize both loading speed and saving speed. Unfortunately, both ongoing works were delayed by external factors and postponed to a yet undetermined date. I have also been hard at work on trying to pin down performance regressions (which turned out to be external to Session Restore) and safety bugs (which were eventually found and fixed by Tim Taubert).

In the next quarter, I plan to work on Session Restore only in a support role, for the purpose of reviewing and mentoring.

Also, a rant: the work on Session Restore has relied heavily on collaboration between the Perf team and the FxTeam. Unfortunately, the resources were not always available to make this collaboration work. I imagine that the FxTeam is spread too thin across too many tasks, with too many fires to fight. Regardless, the symptom I experienced is that during the course of this work, low-priority, high-priority and safety-critical patches alike have been left to rot without reviews, despite my repeated requests, for 6, 8 or 10 weeks, much to the dismay of everyone involved. This means man·months of work thrown to /dev/null, along with quarterly objectives, morale, opportunities, contributors and good ideas.

I will try and blog about this, eventually. But please, in the future, everyone: remember that in the long run, the priority of getting reviews done (or explaining that you’re not going to) is much higher than the priority of writing code.

Async Tooling

Many improvements to Async Tooling landed during Q3. We now have the PromiseWorker, which simplifies considerably the work of interacting between the main thread and workers, for both Firefox and add-on developers. I hear that the first add-on to make use of this new feature is currently being developed. New features, bugfixes and optimizations landed for OS.File. We have also landed the ability to watch for changes in a directory (under Windows only, for the time being).

Sadly, my work on interactions between Promise and the Test Suite is currently blocked until the DevTools team manages to get all the uncaught asynchronous errors under control. It’s hard work, and I can understand that it is not a high priority for them, so in Q4, I will try to find a way to land my work and activate it only for a subset of the mochitest suites.

Places

I have recently joined the newly restarted effort to improve the performance of Places, the subsystem that handles our bookmarks, history, etc. For the moment, I am still getting warmed up, but I expect that most of my work during Q4 will be related to Places.

Shutdown

Most of my effort during Q3 was spent improving the shutdown of Firefox. Whereas we already supported asynchronous shutdown of JavaScript services/consumers, we now also support native services and consumers. Also, I am in the process of landing Telemetry that will let us find out the duration of the various stages of shutdown, information that we could not access until now.

As it turns out, we had many crashes during asynchronous shutdown, a few of them safety-critical. At the time, we did not have the necessary tools to prioritize our efforts or to find out whether our patches had effectively fixed bugs, so I built a dashboard to extract and display the relevant information on such crashes. This proved a wise investment, as we spent plenty of time fighting AsyncShutdown-related fires using this dashboard.

In addition to the “clean shutdown” mechanism provided by AsyncShutdown, we also now have the Shutdown Terminator. This is a watchdog subsystem, launched during shutdown, and it ensures that, no matter what, Firefox always eventually shuts down. I am waiting for data from our Crash Scene Investigators to tell us how often we need this watchdog in practice.
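The watchdog idea can be sketched with a thread and a timeout: run the shutdown work, wait up to a deadline, and take the forced path if it has not finished. This is not the Shutdown Terminator's code; the function and type names and the single fixed deadline are assumptions, and a real watchdog would terminate the process rather than return a value.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::Duration;

/// How a shutdown attempt ended under the (hypothetical) watchdog.
#[derive(Debug, PartialEq)]
enum Outcome {
    Clean,
    ForcedByWatchdog,
}

/// Runs `shutdown` on a worker thread and waits at most `deadline` for it.
fn shutdown_with_watchdog<F>(shutdown: F, deadline: Duration) -> Outcome
where
    F: FnOnce() + Send + 'static,
{
    let (done_tx, done_rx) = mpsc::channel::<()>();
    thread::spawn(move || {
        shutdown();
        // Ignore the error: the watchdog may already have given up waiting.
        let _ = done_tx.send(());
    });
    match done_rx.recv_timeout(deadline) {
        Ok(()) => Outcome::Clean,
        // Timed out, or the worker panicked and dropped the sender.
        // A real watchdog would terminate the process at this point.
        Err(_) => Outcome::ForcedByWatchdog,
    }
}
```

The clean path and the watchdog are independent: the shutdown code does not need to know a deadline exists, which is what lets the watchdog guarantee termination even when that code hangs.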

Community

I lost track of how many code contributors I interacted with during the quarter, but that represents hundreds of e-mails, as well as countless hours on IRC and Bugzilla, and a few hours on ask.mozilla.org. This year’s mozEdu teaching is also looking good.

We also launched FirefoxOS in France, with big success. I found myself in a supermarket, presenting the ZTE Open C and the activities of Mozilla to the crowds, and this was a pleasing experience.

For Q4, expect more mozEdu, more mentoring, and more sleepless hours helping contributors debug their patches :)

http://dutherenverseauborddelatable.wordpress.com/2014/09/30/what-david-did-during-q3/

Andrew Halberstadt: How many tests are disabled?

Niko Matsakis: Multi- and conditional dispatch in traits

I’ve been working on a branch that implements both multidispatch (selecting the impl for a trait based on more than one input type) and conditional dispatch (selecting the impl for a trait based on where clauses). I wound up taking a direction that is slightly different from what is described in the trait reform RFC, and I wanted to take a chance to explain what I did and why. The main difference is that in the branch we move away from the crate concatenability property in exchange for better inference and less complexity.

The various kinds of dispatch

The first thing to explain is what the difference is between these various kinds of dispatch.

Single dispatch. Let’s imagine that we have a conversion trait:

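A minimal sketch of such a trait in current Rust syntax. The names Convert, Target and convert come from the surrounding text; the exact signature (taking &self and returning Target) is an assumption, and Meters is a hypothetical type added only to show an impl.

```rust
/// Conversion from the implementing (Self) type into `Target`.
/// Sketch only: the exact signature of the original listing is assumed.
trait Convert<Target> {
    fn convert(&self) -> Target;
}

/// Hypothetical example type, used only to exercise the trait.
struct Meters(f64);

impl Convert<f64> for Meters {
    fn convert(&self) -> f64 {
        self.0
    }
}
```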

This trait just has one method. It’s about as simple as it gets. It converts from the (implicit) Self type to the Target type. If we wanted to permit conversion between int and uint, we might implement Convert like so:

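A sketch of those two impls, assuming i32 and u32 as stand-ins for int and uint (which were removed before Rust 1.0). The as casts are an assumption about the bodies: they wrap out-of-range values rather than rejecting them.

```rust
trait Convert<Target> {
    fn convert(&self) -> Target;
}

// The only impl whose Self type is i32.
impl Convert<u32> for i32 {
    fn convert(&self) -> u32 {
        *self as u32 // wraps negative values; acceptable for a sketch
    }
}

// The only impl whose Self type is u32.
impl Convert<i32> for u32 {
    fn convert(&self) -> i32 {
        *self as i32 // wraps values above i32::MAX
    }
}
```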

Now, in the background here, Rust has this check we call coherence. The idea is (at least as implemented in the master branch at the moment) to guarantee that, for any given Self type, there is at most one impl that applies. In the case of these two impls, that’s satisfied. The first impl has a Self of int, and the second has a Self of uint. So whether we have a Self of int or uint, there is at most one impl we can use (and if we don’t have a Self of int or uint, there are zero impls, which is fine too).

Multidispatch. Now imagine we wanted to go further and allow int to be converted to some other type MyInt. We might try writing an impl like this:

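A sketch of the extra impl next to the original two, again with i32 and u32 standing in for int and uint; MyInt is modelled as a hypothetical newtype around i32. Today's Rust accepts this set of impls, which is the multidispatch behaviour described next.

```rust
trait Convert<Target> {
    fn convert(&self) -> Target;
}

/// Hypothetical newtype standing in for the post's MyInt.
#[derive(Debug, PartialEq)]
struct MyInt(i32);

impl Convert<u32> for i32 {
    fn convert(&self) -> u32 {
        *self as u32
    }
}

impl Convert<i32> for u32 {
    fn convert(&self) -> i32 {
        *self as i32
    }
}

// A second impl with Self = i32: accepted because the other input type
// (Target) differs, MyInt rather than u32.
impl Convert<MyInt> for i32 {
    fn convert(&self) -> MyInt {
        MyInt(*self)
    }
}
```

Because two impls now have Self = i32, a caller names the target it wants, for example by declaring the result as MyInt.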

Unfortunately, now we have a problem. If Self is int, we now have two applicable conversions: one to uint and one to MyInt. In a purely single dispatch world, this is a coherence violation.

The idea of multidispatch is to say that it’s ok to have multiple impls with the same Self type as long as at least one of their other type parameters is different. So this second impl is ok, because the Target type parameter is MyInt and not uint.

Conditional dispatch. So far we have dealt only in concrete types like int and MyInt. But sometimes we want to have impls that apply to a category of types. For example, we might want to have a conversion from any type T into a MyInt, as long as that type supports a MyGet trait:

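A sketch of such a blanket impl in current Rust. The trait names follow the text; the get method and its signature, the i32 inside MyInt, and the Counter type are hypothetical details added so the example runs.

```rust
trait Convert<Target> {
    fn convert(&self) -> Target;
}

#[derive(Debug, PartialEq)]
struct MyInt(i32);

/// Hypothetical accessor trait: anything that can produce a MyInt.
trait MyGet {
    fn get(&self) -> MyInt;
}

// Blanket impl: covers every type T, but only where `T: MyGet` holds.
impl<T: MyGet> Convert<MyInt> for T {
    fn convert(&self) -> MyInt {
        self.get()
    }
}

/// Hypothetical type that opts into the conversion by implementing MyGet.
struct Counter {
    hits: i32,
}

impl MyGet for Counter {
    fn get(&self) -> MyInt {
        MyInt(self.hits)
    }
}
```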

We call impls like this, which apply to a broad group of types, blanket impls. So how do blanket impls interact with the coherence rules? In particular, does the conversion from T to MyInt conflict with the impl we saw before that converted from int to MyInt? In my branch, the answer is “only if int implements the MyGet trait”. This seems obvious but turns out to have a surprising amount of subtlety to it.

Crate concatenability and inference

In the trait reform RFC, I mentioned a desire to support crate concatenability, which basically means that you could take two crates (Rust compilation units), concatenate them into one crate, and everything would keep building. It turns out that the coherence rules already basically guarantee this without any further thought – except when it comes to inference. That’s where things get interesting.

To see what I mean, let’s look at a small example. Here we’ll use the same Convert trait as we saw before, but with just the original set of impls that convert between int and uint. Now imagine that I have some code which starts with an int and tries to call convert() on it:

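A sketch of that situation in today's Rust, with i32 and u32 standing in for int and uint. No annotation is written on y; the helper type_name_of is hypothetical and simply reports the statically inferred type through std::any::type_name.

```rust
trait Convert<Target> {
    fn convert(&self) -> Target;
}

impl Convert<u32> for i32 {
    fn convert(&self) -> u32 {
        *self as u32
    }
}

impl Convert<i32> for u32 {
    fn convert(&self) -> i32 {
        *self as i32
    }
}

/// Reports the statically inferred type of a value.
fn type_name_of<T>(_: &T) -> &'static str {
    std::any::type_name::<T>()
}

fn foo(x: i32) -> &'static str {
    // The only impl with Self = i32 has Target = u32, so y is inferred as u32.
    let y = x.convert();
    type_name_of(&y)
}
```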

What can we say about the type of y here? Clearly the user did not specify it and hence the compiler must infer it. If we look at the set of impls, you might think that we can infer that y is of type uint, since the only thing you can convert an int into is a uint. And that is true, at least as far as this particular crate goes.

However, if we look beyond a single crate, then it is possible that some other crate comes along and adds more impls. For example, perhaps another crate adds the conversion to the MyInt type that we saw before.


Now, if we were to concatenate those two crates together, then this type inference step wouldn’t work anymore, because int can now be converted to either uint or MyInt. This means that the snippet of code we saw before would probably require a type annotation to clarify what the user wanted (for instance, declaring y to be a uint).


Crate concatenation and conditional impls

I just showed that the crate concatenability principle interferes with inference in the case of multidispatch, but that is not necessarily bad. It may not seem so harmful to clarify both the type you are converting from and the type you are converting to, even if there is only one type you could legally choose. Also, multidispatch is fairly rare; most traits have a single type that decides on the impl, and then all other types are uniquely determined. Moreover, with the associated types RFC, there is even a syntactic way to express this.

However, when you start trying to implement conditional dispatch, that is, dispatch predicated on where clauses, crate concatenability becomes a real problem. To see why, let’s look at a different trait called Push. The purpose of the Push trait is to describe collection types that can be appended to. It has one associated type, Elem, that describes the element type of the collection.


We might implement Push for a vector like so:

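A sketch of the trait and the vector impl in current Rust. The push signature is an assumption. Because Vec already has an inherent push, which method-call syntax would pick first, the trait method is called through the path Push::push.

```rust
trait Push {
    /// The element type the collection accepts.
    type Elem;
    fn push(&mut self, elem: Self::Elem);
}

impl<T> Push for Vec<T> {
    type Elem = T;

    fn push(&mut self, elem: T) {
        // Path syntax resolves to Vec's inherent push, not this trait method.
        Vec::push(self, elem)
    }
}
```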

(This is not how the actual standard library works, since push is an inherent method, but the principles are all the same and I didn’t want to go into inherent methods at the moment.) OK, now imagine I have some code that is trying to construct a vector of char:

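A sketch of such code in current Rust, where it compiles: Vec::new() leaves the element type open, and because exactly one Push impl matches Vec<_>, selecting that impl lets inference learn from the pushed values that the elements are char. The helper build_chars and the values 'b' and 'c' are hypothetical; the trait is again called through the path Push::push so Vec's inherent push is not chosen instead.

```rust
trait Push {
    type Elem;
    fn push(&mut self, elem: Self::Elem);
}

impl<T> Push for Vec<T> {
    type Elem = T;

    fn push(&mut self, elem: T) {
        Vec::push(self, elem)
    }
}

fn build_chars() -> String {
    // No element type is written down here.
    let mut v = Vec::new();
    Push::push(&mut v, 'a');
    Push::push(&mut v, 'b');
    Push::push(&mut v, 'c');
    v.into_iter().collect()
}
```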

The question is, can the compiler resolve the calls to push() here? That is, can it figure out which impl is being invoked? (At least in the current system, we must be able to resolve a method call to a specific impl or type bound at the point of the call; this is a consequence of having type-based dispatch.) Somewhat surprisingly, if we’re strict about crate concatenability, the answer is no.

The reason has to do with DST. The impl for Push that we saw before in fact has an implicit where clause requiring T: Sized.


This implies that some other crate could come along and implement Push for a vector of an unsized type.


Now, when we consider a call like v.push('a'), the compiler must pick the impl based solely on the type of the receiver v. At the point of calling push, all we know is that the type of v is a vector, but we don’t know what it’s a vector of: to infer the element type, we must first resolve the very call to push that we are looking at right now.

Clearly, not being able to call push without specifying the type of elements in the vector is very limiting. There are a couple of ways to resolve this problem. I’m not going to go into detail on these solutions, because they are not what I ultimately opted to do. But briefly:

- We could introduce some new syntax for distinguishing conditional dispatch from other where clauses (basically the input/output distinction that we use for type parameters vs associated types). Perhaps a when clause, used to select the impl, versus a where clause, used to indicate conditions that must hold once the impl is selected, but which are not checked beforehand. Hard to understand the difference? Yeah, I know, I know.

- We could use an ad-hoc rule to distinguish the input/output clauses. For example, all predicates applied to type parameters that are directly used as an input type. Limiting, though, and non-obvious.

- We could create a much more involved reasoning system (e.g., in this case, Vec::new() in fact yields a vector whose element type is known to be sized, but we don’t take this into account when resolving the call to push()). Very complicated, unclear how well it will work and what the surprising edge cases will be.

Or… we could just abandon crate concatenability. But wait, you ask, isn’t it important?

Limits of crate concatenability

So we’ve seen that crate concatenability conflicts with inference and it also interacts negatively with conditional dispatch. I now want to call into question just how valuable it is in the first place. Another way to phrase crate concatenability is to say that it allows you to always add new impls without disturbing existing code using that trait. This is actually a fairly limited guarantee. It is still possible for adding impls to break downstream code across two different traits, for example. Consider the following example:

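A sketch of the two traits in current Rust. The trait and type names follow the text; having draw return a descriptive string is an assumption made so that each call is observable.

```rust
/// Types named in the prose; their contents do not matter here.
struct Player;
struct Polygon;

trait Cowboy {
    /// A cowboy draws a gun.
    fn draw(&self) -> &'static str;
}

trait Image {
    /// An image draws itself on screen.
    fn draw(&self) -> &'static str;
}

impl Cowboy for Player {
    fn draw(&self) -> &'static str {
        "player draws a revolver"
    }
}

impl Image for Polygon {
    fn draw(&self) -> &'static str {
        "polygon rendered"
    }
}
```

With both traits in scope, player.draw() still resolves, because only Cowboy is implemented for Player.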

Here you have two traits with the same method name (draw). However, the first trait is implemented only on Player and the other on Polygon. So the two never actually come into conflict. In particular, if I have a Player value player and I write player.draw(), it could only be referring to the draw method of the Cowboy trait.

But what happens if I add another impl for Image?

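A sketch of the situation after adding Image for Player, in current Rust. Method-call syntax on a Player is now rejected as ambiguous, so each call names its trait. As before, the returned strings are illustrative, and draw_both is a hypothetical helper.

```rust
struct Player;
struct Polygon;

trait Cowboy {
    fn draw(&self) -> &'static str;
}

trait Image {
    fn draw(&self) -> &'static str;
}

impl Cowboy for Player {
    fn draw(&self) -> &'static str {
        "player draws a revolver"
    }
}

impl Image for Polygon {
    fn draw(&self) -> &'static str {
        "polygon rendered"
    }
}

// The newly added impl: Player now has two applicable draw methods.
impl Image for Player {
    fn draw(&self) -> &'static str {
        "player sprite rendered"
    }
}

fn draw_both(player: &Player) -> (&'static str, &'static str) {
    // `player.draw()` would no longer compile here
    // (error E0034: multiple applicable items in scope).
    (Cowboy::draw(player), Image::draw(player))
}
```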

Now suddenly a call to player.draw() is ambiguous, and we need to use so-called “UFCS” notation to disambiguate (e.g., Cowboy::draw(&player)).

(Incidentally, this ability to have type-based dispatch is a great strength of the Rust design, in my opinion. It’s useful to be able to define method names that overlap and where the meaning is determined by the type of the receiver.)

Conclusion: drop crate concatenability

So I’ve been turning these problems over for a while. After some discussions with others, aturon in particular, I feel the best fix is to abandon crate concatenability. This means that the algorithm for picking an impl can be summarized as:

- Search the impls in scope and determine those whose types can be unified with the current types in question and hence could possibly apply.

- If there is more than one impl in that set, start evaluating where clauses to narrow it down.
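The two steps above can be modelled in a small toy selector. Everything in it is hypothetical: types are a three-member enum, a blanket impl is an impl without a fixed Self type, where clauses are plain predicates, and the choice that only uint implements MyGet is an assumption for the example. It illustrates the order of the checks, not the compiler's actual code.

```rust
/// Toy type universe (hypothetical; not how rustc represents types).
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
enum Ty {
    Int,
    Uint,
    MyInt,
}

/// A query `Self: Convert<Target>`; `None` marks a not-yet-inferred type.
#[derive(Clone, Copy, Debug)]
struct Query {
    self_ty: Option<Ty>,
    target: Option<Ty>,
}

/// An impl header. `self_ty: None` models a blanket impl over any T.
struct Impl {
    name: &'static str,
    self_ty: Option<Ty>,
    target: Ty,
    where_clause: fn(Ty) -> bool,
}

#[derive(Debug, PartialEq)]
enum Selection {
    Unique(&'static str),
    Ambiguous(usize),
    NoImpl,
}

/// Two slots unify if either is still unknown or both are equal.
fn unifies(a: Option<Ty>, b: Option<Ty>) -> bool {
    match (a, b) {
        (Some(x), Some(y)) => x == y,
        _ => true,
    }
}

fn select(impls: &[Impl], q: Query) -> Selection {
    // Step 1: keep every impl whose header unifies with the query.
    let mut candidates: Vec<&Impl> = impls
        .iter()
        .filter(|i| unifies(i.self_ty, q.self_ty) && unifies(Some(i.target), q.target))
        .collect();
    // Step 2: only if that is ambiguous, evaluate where clauses
    // (this toy model can only do so once Self is known).
    if candidates.len() > 1 {
        if let Some(s) = q.self_ty {
            candidates.retain(|i| (i.where_clause)(s));
        }
    }
    match candidates.as_slice() {
        [] => Selection::NoImpl,
        [only] => Selection::Unique(only.name),
        many => Selection::Ambiguous(many.len()),
    }
}

/// Assumption for the example: only uint implements MyGet.
fn implements_my_get(t: Ty) -> bool {
    t == Ty::Uint
}

fn example_impls() -> Vec<Impl> {
    vec![
        Impl { name: "int -> uint", self_ty: Some(Ty::Int), target: Ty::Uint, where_clause: |_| true },
        Impl { name: "uint -> int", self_ty: Some(Ty::Uint), target: Ty::Int, where_clause: |_| true },
        Impl { name: "int -> MyInt", self_ty: Some(Ty::Int), target: Ty::MyInt, where_clause: |_| true },
        Impl { name: "T: MyGet -> MyInt", self_ty: None, target: Ty::MyInt, where_clause: implements_my_get },
    ]
}
```

With only the first two impls, a query for int with an unknown target selects "int -> uint" and so fixes the target; with all four, the same query stays ambiguous, while asking for int to MyInt discards the blanket impl because int does not implement MyGet here.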

This is different from the current master in two ways. First of all, to decide whether an impl is applicable, we use simple unification rather than a one-way match. Basically this means that we allow impl matching to affect inference, so if there is at most one impl that can match the types, it’s ok for the compiler to take that into account. This covers the let y = x.convert() case. Second, we don’t consider the where clauses unless they are needed to remove ambiguity.

I feel pretty good about this design. It is somewhat less pure, in that it blends the role of inputs and outputs in the impl selection process, but it seems very usable. Basically, when selecting types, it is guided only by the ambiguities that really exist, not those that could theoretically exist in the future. This avoids forcing the user to classify everything, and in particular avoids the classification of where clauses according to when they are evaluated in the impl selection process. Moreover, I don’t believe it introduces any significant compatibility hazards that were not already present in some form or another.

http://smallcultfollowing.com/babysteps/blog/2014/09/30/multi-and-conditional-dispatch-in-traits/

|

|

Gregory Szorc: Mozilla Mercurial Statistics |

I recently gained SSH access to Mozilla's Mercurial servers. This allows me to run some custom queries directly against the data. I was interested in some high-level numbers and thought I'd share the results.

hg.mozilla.org hosts a total of 3,445 repositories. Of these, there are 1,223 distinct root commits (i.e. distinct graphs). Altogether, there are 32,123,211 commits. Of those, there are 865,594 distinct commits (not double counting commits that appear in multiple repositories).

We have a high ratio of total commits to distinct commits (about 37:1). This means a lot of data is duplicated on disk, because many repos are clones/forks of existing ones. No big surprise there.
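The 37:1 figure can be checked directly from the counts quoted above. A quick sketch in plain arithmetic follows. The repositories-per-graph average is derived here for illustration and is not a number reported in the post.

```javascript
// Counts quoted in the post above.
const totalCommits = 32123211;  // commits summed over all repositories
const distinctCommits = 865594; // each commit counted once
const repositories = 3445;
const rootCommits = 1223;       // distinct root commits, i.e. distinct graphs

const duplicationRatio = totalCommits / distinctCommits;
const reposPerGraph = repositories / rootCommits; // derived average, not from the post

console.log(duplicationRatio.toFixed(1));    // 37.1
console.log(reposPerGraph.toFixed(1));       // 2.8
console.log(totalCommits - distinctCommits); // 31257617 duplicated commit copies
```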

What is surprising to me is the low number of total distinct commits. I was expecting the number to run into the millions. (Firefox itself accounts for ~240,000 commits.) Perhaps a lot of the data is sitting in Git, Bitbucket, and GitHub. Sounds like a good data mining expedition...

http://gregoryszorc.com/blog/2014/09/30/mozilla-mercurial-statistics

|

|

Chris McAvoy: Me and Open Badges – Different, but the same |

Hi there, if you read this blog it’s probably for one of three things,

1) my investigation of the life of Isham Randolph, the chief engineer of the Chicago Sanitary and Ship canal.

2) you know me and you want to see what I’m doing but you haven’t discovered Twitter or Facebook yet.

3) Open Badges.

This is a quick update for everyone in that third group, the Open Badges crew. I have some news.

When I joined the Open Badges project nearly three years ago, I knew this was something that once I joined, I wouldn’t leave. The idea of Open Badges hits me exactly where I live, at the corner of ‘lifelong learning’ and ‘appreciating people for who they are’. I’ve been fortunate that my love of lifelong learning and self-teaching led me down a path where I get to do what I love as my career. Not everyone is that fortunate. I see Open Badges as a way to make my very lucky career path the norm instead of the exception. I believe in the project, I believe in the goals and I’m never going to not work toward bringing that kind of opportunity to everyone regardless of the university they attended or the degree hanging on their wall.

This summer has been very exciting for me. I joined the Badge Alliance, chaired the BA standard working group and helped organize the first BA Technology Council. At the same time, I was a mentor for Chicago’s Techstars program and served as an advisor to a few startups in different stages of growth. The Badge Alliance work has been tremendously satisfying, the standard working group is about to release the first cycle report, and it’s been great to see our accomplishments all written in one place. We’ve made a lot of progress in a short amount of time. That said, my role at the Alliance has been focused on standards growth, some evangelism and guiding a small prototyping project. As much as I loved my summer, the projects and work don’t fit the path I was on. I’ve managed engineering teams for a while now, building products and big technology architectures. The process of guiding a standard is something I’m very interested in, but it doesn’t feel like a full-time job now. I like getting my hands dirty (in Emacs); I want to write code and direct some serious engineering workflow.

Let’s cut to the chase – after a bunch of discussions with Sunny Lee and Erin Knight, two of my favorite people in the whole world, I’ve decided to join Earshot, a Chicago big data / realtime geotargeted social media company, as their CTO. I’m not leaving the Badge Alliance. I’ll continue to serve as the BA director of technology, but as a volunteer. Earshot is a fantastic company with a great team. They understand the Open Badges project and want me to continue to support the Badge Alliance. The Badge Alliance is a great team; they understand that I want to build as much as I want to guide. I’m so grateful to everyone involved for being supportive of me here; I can think of dozens of ways this wouldn’t have worked out. Just a bit of a life lesson: as much as you can, work with people who really care about you. It leads to situations like this, where everyone gets what they really need.

The demands of a company moving as fast as Earshot will mean that I’ll be less available, but no less involved in the growth of the Badge Alliance and the Open Badges project. From a tactical perspective, Sunny Lee will be taking over as chair of the standard working group. I’ll still be an active member. I’ll also continue to represent the BA (along with Sunny) in the W3C credentials community group.

If you have any questions, please reach out to me! I’ll still have my chris@badgealliance.org email address…use it!

http://chrismcavoy.org/2014/09/28/me-and-open-badges-different-but-the-same/

|

|

Christian Heilmann: Reconnecting at TEDxLinz – impressions, slides, resources |

I just returned from Linz, Austria, where I spoke at TEDxLinz yesterday. After my stint at TEDxThessaloniki earlier in the year I was very proud to be invited to another one and love the variety of talks you encounter there.

The overall topic of the event was “re-connect” and I was very excited to hear all the talks covering a vast range of topics. The conference was bilingual with German (well, Austrian) talks and English ones. Oddly enough, no speaker was a native English speaker.

My favourite parts were:

- Ingrid Brodnig talking about online hate and how to battle it

- Andrea Götzelmann talking about re-integrating people into their home countries after emigrating. A heart-warming story of helping people who moved abroad and failed there to return home and succeed.

- Gergely Teglasy talking about creating a crowd-curated novel written on Facebook

- Malin Elmlid of The Bread Exchange showing how her love of making her own food got her out into the world to learn about all kinds of different cultures, and how exchanging goods and services beats being paid.

- Elisabeth Gatt-Iro and Stefan Gatt showing us how to keep our relationships fresh and let love listen.

- Johanna Schuh explaining how asking herself questions about her own behaviour rather than throwing blame gave her much more peace and the ability to go out and speak to people.

- Stefan Pawel enlightening us about how far ahead Linz is compared to a lot of other cities when it comes to connectivity (150 open hot spots, webspace for each city dweller)

The location was the convention centre of a steel factory and the stage setup was great and not over the top. The audience was very mixed and very excited and all the speakers did a great job mingling. Despite the impressive track record of all of them there was no sense of diva-ism or “parachute presenting”.

I had a lovely time at the speaker’s dinner and going to and from the hotel.

The hotel was a special case in itself: I felt like I was in an old movie and instead of using my laptop I was tempted to grow a tufty beard and wear layers and layers of clothes and a nice watch on a chain.

My talk was about bringing the social back into social media or, in other words, about no longer chasing numbers of likes and inane comments and going back to a web of user-generated content made by real people. I have made no secret in the past that I passionately dislike memes and animated GIFs cropped from a TV series or movie, and this was my chance to grandstand about it.

I wanted the talk to be a response to the “Look up” and “Look down” videos about social oversharing leading to less human interaction. My goal was to move the conversation in a different direction, explaining that social media is a place for us to put things we did and wanted to share. The big issue is that the addiction-inducing game mechanics of social media platforms instead lead us to post as much as we can and to try to be the most shared rather than the creators.

This also leads to addiction and thus to strange online behaviour, up to over-sharing material that might be used to blackmail us.

Resources I covered in the talk:

- ‘Look Up’ – A spoken word film for an online generation

- LOOK DOWN: a rhyming response to that LOOK UP viral video

- How to remove extra information from a photo that allows you to be tracked

- B3TA – an online community with an evolved way to avoid duplicated content charmingly called “The Glasscock search”

- Snopes.com – an online resource to check the validity of news items

- Penelope Pickles on Facebook

Other than having a lot of fun on stage I also managed to tick some things off my bucket list:

- Vandalising a TEDx stage

- Being on stage with my fly open

- Using the words “sweater pillows” and “dangly bits” in a talk

I had a wonderful time all in all and I want to thank the organisers for having me, the audience for listening, the other speakers for their contribution and the caterers and volunteers for doing a great job to keep everybody happy.

http://christianheilmann.com/2014/09/27/reconnecting-at-tedxlinz-impressions-slides-resources/

|

|

Eric Shepherd: The Sheppy Report: September 26, 2014 |

I can’t believe another week has already gone by. This is that time of the year where time starts to scream along toward the holidays at a frantic pace.

What I did this week

- I’ve continued working heavily on the server-side sample component system

- Implemented the startup script support, so that each sample component can start background services and the like as needed.

- Planned and started implementation work on support for allocating ports each sample needs.

- Designed tear-down process.

- Created a new “download-desc” class for use on the Firefox landing page on MDN. This page offers download links for all Firefox channels, and this class is used to correct a visual glitch. The class has not yet been placed into production on the main server, though. See this bug to track landing of this class.

- Updated the MDN administrators’ guide to include information on the new process for deploying changes to site CSS now that the old CustomCSS macro has been terminated on production.

- Cleaned up Signing Mozilla apps for Mac OS X.

- Created Using the Mozilla build VM, based heavily on Tim Taubert’s blog post and linked to it from appropriate landing pages.

- Copy-edited and revised the Web Bluetooth API page.

- Deleted a crufty page from the Window API.

- Meetings about API documentation updates and more.

Wrap up

That’s a short-looking list but a lot of time went into many of the things on that list; between coding and research for the server-side component service and experiments with the excellent build VM (I did in fact download it and use it almost immediately to build a working Nightly), I had a lot to do!

My work continues to be fun and exciting, not to mention outright fascinating. I’m looking forward to more, next week.

https://www.bitstampede.com/2014/09/26/the-sheppy-report-september-26-2014/

|

|

Jeff Walden: Minor changes are coming to typed arrays in Firefox and ES6 |

JavaScript has long included typed arrays to efficiently store numeric arrays. Each kind of typed array had its own constructor. Typed arrays inherited from element-type-specific prototypes: Int8Array.prototype, Float64Array.prototype, Uint32Array.prototype, and so on. Each of these prototypes contained useful methods (set, subarray) and properties (buffer, byteOffset, length, byteLength) and inherited from Object.prototype.

This system is a reasonable way to expose typed arrays. Yet as typed arrays have grown, it’s grown unwieldy. When a new typed array method or property is added, distinct copies must be added to Int8Array.prototype, Float64Array.prototype, Uint32Array.prototype, &c. Likewise for “static” functions like Int8Array.from and Float64Array.from. These distinct copies cost memory: a small amount, but across many tabs, windows, and frames it can add up.

A better system

ES6 changes typed arrays to fix these issues. The typed array functions and properties now work on any typed array.

var f32 = new Float32Array(8); // all zeroes
var u8 = new Uint8Array([0, 1, 2, 3, 4, 5, 6, 7]);
Uint8Array.prototype.set.call(f32, u8); // f32 contains u8's values

ES6 thus only needs one centrally-stored copy of each function. All functions move to a single object, denoted %TypedArray%.prototype. The typed array prototypes then inherit from %TypedArray%.prototype to expose them.

assertEq(Object.getPrototypeOf(Uint8Array.prototype),
         Object.getPrototypeOf(Float64Array.prototype));

assertEq(Object.getPrototypeOf(Object.getPrototypeOf(Int32Array.prototype)),
         Object.prototype);

assertEq(Int16Array.prototype.subarray,
         Float32Array.prototype.subarray);

ES6 also changes the typed array constructors to inherit from the %TypedArray% constructor, on which functions like Float64Array.from and Int32Array.of live. (Neither function is in Firefox yet, but both are coming soon!)

assertEq(Object.getPrototypeOf(Uint8Array),
         Object.getPrototypeOf(Float64Array));

assertEq(Object.getPrototypeOf(Object.getPrototypeOf(Int32Array)),
         Function.prototype);
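assertEq is a testing function built into the SpiderMonkey shell, so the checks above won't run as-is in a browser console or in Node. Below is a sketch of the same checks written as plain comparisons. It assumes an engine that has since shipped ES6 typed arrays, including the from and of statics.

```javascript
// Plain-JavaScript versions of the assertEq checks above; each line should
// print true in an engine that implements ES6 typed arrays.
const TypedArray = Object.getPrototypeOf(Uint8Array); // the %TypedArray% intrinsic

// Prototype objects: one shared parent, which inherits from Object.prototype.
console.log(Object.getPrototypeOf(Float64Array.prototype) === TypedArray.prototype);
console.log(Object.getPrototypeOf(TypedArray.prototype) === Object.prototype);
console.log(Int16Array.prototype.subarray === Float32Array.prototype.subarray);

// Constructors: one shared parent, which inherits from Function.prototype.
console.log(Object.getPrototypeOf(Int32Array) === TypedArray);
console.log(Object.getPrototypeOf(TypedArray) === Function.prototype);

// Static helpers such as from/of are defined once, on %TypedArray% itself.
console.log(Object.prototype.hasOwnProperty.call(TypedArray, "from"));
console.log(Float64Array.from === Int32Array.from);
```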

I implemented these changes a few days ago in Firefox. Grab a nightly build and test things out with a new profile.

Conclusion

In practice this won’t affect most typed array code. Unless you depend on the exact [[Prototype]] sequence or expect typed array methods to only work on corresponding typed arrays (and thus you’re deliberately extracting them to call in isolation), you probably won’t notice a thing. But it’s always good to know about language changes. And if you choose to polyfill an ES6 typed array function, you’ll need to understand %TypedArray% to do it correctly.
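When polyfilling, the method belongs on %TypedArray%.prototype rather than on each concrete prototype. Here is a minimal sketch of that pattern. It uses a made-up method name, hypotheticalSum, which is not part of any standard, so that it cannot collide with a real built-in.

```javascript
// %TypedArray% has no global name; reach it through any concrete constructor.
const TypedArrayIntrinsic = Object.getPrototypeOf(Int8Array);
const sharedProto = TypedArrayIntrinsic.prototype;

// "hypotheticalSum" is a made-up method used only to illustrate the pattern.
if (!Object.prototype.hasOwnProperty.call(sharedProto, "hypotheticalSum")) {
  Object.defineProperty(sharedProto, "hypotheticalSum", {
    value: function hypotheticalSum() {
      let total = 0;
      for (let i = 0; i < this.length; i++) total += this[i];
      return total;
    },
    writable: true,
    enumerable: false, // match built-in methods, which are non-enumerable
    configurable: true,
  });
}

// One definition now serves every element type.
console.log(new Uint8Array([1, 2, 3]).hypotheticalSum());    // 6
console.log(new Float64Array([0.5, 1.5]).hypotheticalSum()); // 2
console.log(Uint8Array.prototype.hypotheticalSum === Float64Array.prototype.hypotheticalSum); // true
```

A real polyfill would also check that `this` is actually a typed array, as the built-in methods do. That check is omitted here for brevity.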

http://whereswalden.com/2014/09/26/minor-changes-are-coming-to-typed-arrays-in-firefox-and-es6/

|

|

Frédéric Harper: HTML, not just for desktops at Congreso Universitario Mo |

My translator, and me

At the beginning of the month, I was in Mexico to represent Mozilla at

http://outofcomfortzone.net/2014/09/26/html-not-just-for-desktops-at-congreso-universitario-movil/

|

|

Gervase Markham: Prevent Territoriality |

Watch out for participants who try to stake out exclusive ownership of certain areas of the project, and who seem to want to do all the work in those areas, to the extent of aggressively taking over work that others start. Such behavior may even seem healthy at first. After all, on the surface it looks like the person is taking on more responsibility, and showing increased activity within a given area. But in the long run, it is destructive. When people sense a “no trespassing” sign, they stay away. This results in reduced review in that area, and greater fragility, because the lone developer becomes a single point of failure. Worse, it fractures the cooperative, egalitarian spirit of the project. The theory should always be that any developer is welcome to help out on any task at any time.

— Karl Fogel, Producing Open Source Software

http://feedproxy.google.com/~r/HackingForChrist/~3/avoa0G4IiFQ/

|

|

Soledad Penades: Berlin Web Audio Hack Day 2014 |

As with the Extensible Web Summit, we wrote some notes collaboratively. Here are the notes for the Web Audio Hackday!

We started the day with me being late because I took a series of badly timed bad decisions and that ended with me taking the wrong untergrund lines. In short: I don’t know how to metro in Berlin in the mornings and I’m still so sorry.

I finally arrived at SoundCloud’s offices, and it was cool that Jan was still doing the presentations, so Tiffany gave me a giant glass of water and I almost drank it all while they finished. Then I set up my computer and proceeded to give my talk/workshop!

It was an improved and revised version of the beta-talk I gave at Mozilla London the week before last:

Note to self: maybe remove red banners behind me if wearing a red shirt, so as not to blend with them

Sadly it wasn’t recorded and I didn’t screencast it either, so you’ll have to make do with the slides and the code for the slides (which includes the examples). Or you could wait until I run this workshop again (which I have already been asked to do!)

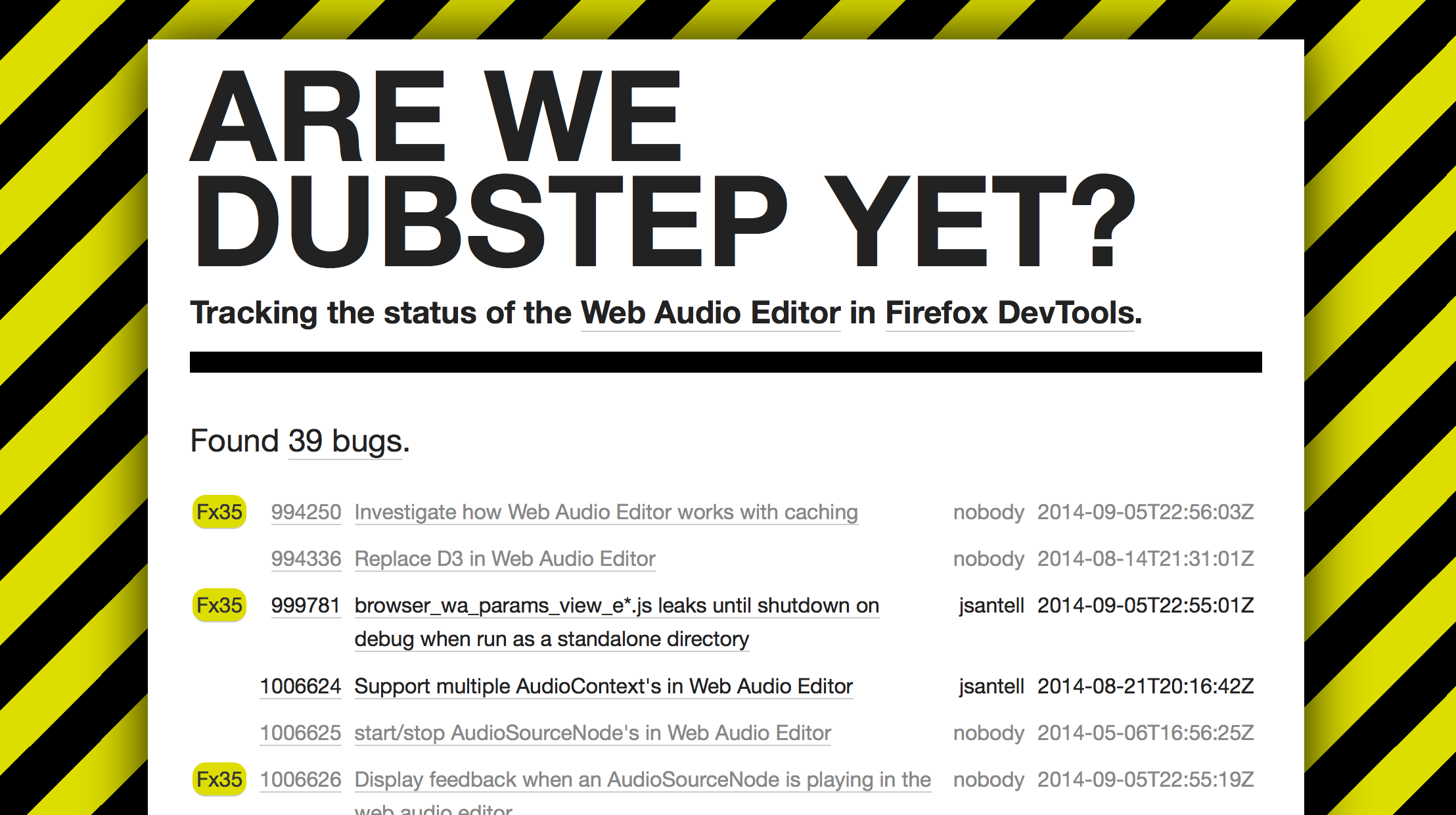

Jordan Santell and the Web Audio Editor in Firefox Devtools

Then Jordan (of dancer.js and component.fm fame) talked about the fancy new Web Audio Editor which is one of the latest tools to join the Firefox Devtools collection of awesome—and it just appeared in Firefox Stable (32) so you don’t even need to run Beta, Aurora or Nightly to use it! (I talked a bit about it already).

You can use the editor to visualise the audio graph, change values of the nodes and also detect if you have a memory leak when allocating nodes (which is something that is part of the normal workflow of working with Web Audio).

There was a nice plug to Are We Dubstep Yet?, the minisite I am building to keep track of bugs in the Web Audio Editor. Yay plugs!

Jordan’s slides are here. You can also watch his JSConf talk where he introduced an early version of the tools!

Chris Wilson and the Web MIDI API

Finally the mighty Chris Wilson explained how the Web MIDI API works and made some demos using a few assorted MIDI devices he had brought with him: a keyboard, pads, a DJ deck controller…!

It’s interesting that most of the development of the Web MIDI implementation seems to be happening in Japan, so they are super original in their examples.

Chris’ slides on Web MIDI, and his slides on audio in general.

Hacking + Hacks!

I think we had lunch then… and then it was HACK TIME! But before actually getting started, some people pitched their idea to see if someone else wanted to collaborate with them and hack together. I think that was a really neat idea :-)

Myself, I spent the hack time…

- reconnecting with old acquaintances

- answering questions! but very few of them and none of them were the usual “but why doesn’t my oscillator start anymore?” but more interesting ones, so that was cool!

- asking questions! to Chris mostly–one cannot ask questions to a spec editor in person every day!

- and even started a hack which I didn’t finish: visualising custom periodic waves for use with an Oscillator Node, given the harmonics array. I gave myself the idea while I was doing the workshop, which is a terrible thing to do to myself, as I was distracting myself and wanted to hack on that instead of finishing the workshop. My brain probably hates itself, or me in general.

Also this was really cool:

.@cwilso and me feeling a tremendous relief at not being the only ones randomly causing our machines to emit weird sounds #webaudiohackday

—

http://soledadpenades.com/2014/09/26/berlin-web-audio-hack-day-2014/

|

|

Daniel Stenberg: Changing networks with Firefox running |

Short recap: I work on network code for Mozilla. Bug 939318 is one of “mine” – yesterday I landed a fix (a patch series with 6 individual patches) for this and I wanted to explain what goodness that should (might?) come from this!

diffstat

diffstat reports this on the complete patch series:

29 files changed, 920 insertions(+), 162 deletions(-)

The change set can be seen in mozilla-central here. But I guess a proper description is easier for most…

The bouncy road to inclusion

This feature set and its associated problems have been one of the most time-consuming things I’ve developed in recent years, relative to the amount of actual code produced. I had it “landed” in the mozilla-inbound tree five times before it landed correctly, and each time it was yanked out again within a few hours because I had bugs remaining in there. The bugs in this have been really tricky, with a whole bunch of timing-dependent and race-like problems, and I was unfamiliar with a large part of the code base I was working on. It has been a highly frustrating journey at times, but I’d like to think that I’ve learned a lot about Firefox internals, partly thanks to this resistance.

As I write this, it has not even been 24 hours since it got into m-c so there’s of course still a risk there’s an ugly bug or two left, but then I also hope to fix the pending problems without having to revert and re-apply the whole series…

Many ways to connect to networks

In many network setups today, you get an environment and a network “experience” that is crafted for that particular place. For example you may connect to your work over a VPN where you get your company DNS and you can access sites and services you can’t even see when you connect from the wifi in your favorite coffee shop. The same thing goes for when you connect to that captive portal over wifi until you realize you used the wrong SSID and you switch over to the access point you were supposed to use.

For every one of these setups, you get different DHCP setups passed down and you get a new DNS server and so on.

These days laptop lids are getting closed (and the machine is put to sleep) at one place to be opened at a completely different location and rarely is the machine rebooted or the browser shut down.

Switching between networks

Switching from one of the networks to the next is of course something your operating system handles gracefully. You can even easily be connected to multiple ones simultaneously like if you have both an Ethernet card and wifi.

Enter browsers. Or in this case let’s be specific and talk about Firefox since this is what I work with and on. Firefox, like other browsers, will cache images, it will cache DNS responses, it maintains connections to sites for a while even after use, it connects to some sites even before you “go there” and so on. All in the name of giving users as good and as fast an experience as possible.

The combination of keeping things cached and alive, together with the fact that switching networks brings new perspectives and new “truths” offers challenges.

Realizing the situation is new

The changes are not at all mind-bending but are basically these three parts:

- Make sure that we detect network changes, even if just the set of available interfaces change. Send an event for this.

- Make sure the necessary parts of the code listen for and understand this “network topology changed” event and act on it accordingly

- Consider coming back from “sleep” to be a network changed event since we just cannot be sure of the network situation anymore.

The initial work has been done for Windows only, but it allows us to smooth out any rough edges before we continue and make more platforms support this.

The network changed event can be disabled by switching off the new “network.notify.changed” preference. If you do end up feeling a need for that, I really hope you file a bug explaining the details so that we can work on fixing it!

Act accordingly

So what is acting properly? What if the network changes in a way so that your active connections suddenly can’t be used anymore due to the new rules and routing and what not? We attack this problem like this: once we get a “network changed” event, we “allow” connections to prove that they are still alive and if not they’re torn down and re-setup when the user tries to reload or whatever. For plain old HTTP(S) this means just seeing if traffic arrives or can be sent off within N seconds, and for websockets, SPDY and HTTP2 connections it involves sending an actual ping frame and checking for a response.

The internal DNS cache was a bit tricky to handle. I initially just flushed all entries, but that turned out nasty because it also killed ongoing name resolves, which caused errors to be returned. Now I have instead added logic that flushes all the already-resolved names and makes names “in transit” get resolved again, so that they are resolved on the (potentially) new network, which may return different addresses for the same host name(s).

This should drastically reduce the situation that could happen before when Firefox would basically just freeze and not want to do any requests until you closed and restarted it. (Or waited long enough for other timeouts to trigger.)
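The DNS-cache strategy described above can be illustrated with a toy model. ToyDnsCache is a hypothetical class and not Firefox's resolver, and the "resolvers" are stand-in functions. It shows the one idea that matters: completed entries are flushed on a network change, while lookups still in flight are kept rather than failed and get their answer from the new network.

```javascript
// Hypothetical toy model of the flush strategy; not Firefox's host resolver.
class ToyDnsCache {
  constructor(resolver) {
    this.resolver = resolver;  // stand-in: host -> address on the current network
    this.resolved = new Map(); // host -> cached address
    this.pending = new Set();  // hosts whose lookup has not completed yet
  }

  // Returns a cached address, or queues a lookup and returns undefined.
  request(host) {
    if (this.resolved.has(host)) return this.resolved.get(host);
    this.pending.add(host);
    return undefined;
  }

  // Completes every queued lookup using whatever network is current now.
  completePending() {
    for (const host of this.pending) this.resolved.set(host, this.resolver(host));
    this.pending.clear();
  }

  onNetworkChanged(newResolver) {
    this.resolver = newResolver;
    this.resolved.clear(); // answers from the old network may no longer be valid
    // this.pending is deliberately kept: failing in-flight lookups is what
    // produced errors with the original flush-everything approach.
  }
}

const cache = new ToyDnsCache(() => "10.0.0.1"); // hypothetical VPN resolver
cache.request("intranet.example");
cache.completePending();
cache.request("news.example");                   // lookup still in flight...
cache.onNetworkChanged(() => "192.0.2.1");       // ...when the laptop joins café wifi
cache.completePending();
console.log(cache.request("intranet.example"));  // undefined (flushed, lookup queued again)
console.log(cache.request("news.example"));      // 192.0.2.1
```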

The ‘N seconds’ waiting period above is actually 5 seconds by default and there’s a new preference called “network.http.network-changed.timeout” that can be altered at will to allow some experimentation regarding what the perfect interval truly is for you.
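The connection check can be sketched as a single decision function. The field names (kind, trafficAt, pongAt) are invented for illustration and are not Necko's. Plain HTTP(S) connections prove themselves with traffic, and WebSocket/SPDY/HTTP2 connections prove themselves with a ping reply. Either proof must arrive within the timeout after the change; the 5-second default mirrors network.http.network-changed.timeout.

```javascript
// Hypothetical sketch of the post-change liveness rule; field names are invented.
const DEFAULT_TIMEOUT_MS = 5 * 1000; // 5 s, the default described above

// True if time t falls in the window (changedAt, changedAt + timeoutMs].
function inWindow(t, changedAt, timeoutMs) {
  return t !== undefined && t > changedAt && t - changedAt <= timeoutMs;
}

// conn.kind: "http" (plain HTTP/HTTPS) or "ping" (WebSocket, SPDY, HTTP2).
// conn.trafficAt: first time traffic was sent or received after the change.
// conn.pongAt: time the reply to the ping sent at the change arrived.
function shouldTearDown(conn, changedAt, now, timeoutMs = DEFAULT_TIMEOUT_MS) {
  if (now - changedAt < timeoutMs) return false; // still giving it a chance to prove itself
  const proof = conn.kind === "ping" ? conn.pongAt : conn.trafficAt;
  return !inWindow(proof, changedAt, timeoutMs);
}

const changedAt = 1000;
const now = 7000;
console.log(shouldTearDown({ kind: "http", trafficAt: 2500 }, changedAt, now)); // false
console.log(shouldTearDown({ kind: "http" }, changedAt, now));                  // true
console.log(shouldTearDown({ kind: "ping", pongAt: 1200 }, changedAt, now));    // false
console.log(shouldTearDown({ kind: "ping" }, changedAt, now));                  // true
```

Connections that fail the check are torn down, and new ones are set up the next time the user reloads or otherwise needs them.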

Initially on Windows only

My initial work has been limited to getting the changed event code done for the Windows back-end only (since the code that figures out if there’s news on the network setup is highly system specific), and now when this step has been taken the plan is to introduce the same back-end logic to the other platforms. The code that acts on the event is pretty much generic and is mostly in place already so it is now a matter of making sure the event can be generated everywhere.

My plan is to start on Firefox OS and then see if I can assist with the same thing in Firefox on Android. Then finally Linux and Mac.

I started on Windows since Windows is one of the platforms with the largest amount of Firefox users and thus one of the most prioritized ones.

More to do

There’s separate work going on for properly detecting captive portals. You know, the annoying things that hotels and airports, for example, tend to have, which force you to do some login dance before you are allowed to use the internet at that location. When such a captive portal is opened up, that should probably qualify as a network change, but it doesn’t yet.

http://daniel.haxx.se/blog/2014/09/26/changing-networks-with-firefox-running/

|

|

Arky: Building Boot2Gecko(B2G) on Ubuntu |

Update: This documentation is out-of-date: Please read developer.mozilla.org/en-US/Firefox_OS/Building for latest information

You have probably heard about the Mozilla Boot2Gecko (B2G) mobile operating system. Boot2Gecko's Gaia user interface is developed entirely using HTML, CSS and JavaScript web technologies. If you are an experienced web developer you can contribute to the Gaia UI and develop new Boot2Gecko applications with ease. In this post I'll explain how to set up the Boot2Gecko (B2G) development environment on your personal computer.

You can run Boot2Gecko (B2G) inside an emulator or inside the Firefox web browser. Using Boot2Gecko (B2G) with the QEMU emulator is very resource intensive, so we will focus on the latter in this post. I'll assume you are comfortable with the Mozilla build environment. So, get that pot of coffee brewing and prepare for a long night of hacking.

Building Boot2Gecko(B2G) Firefox App

Before you start, let us make sure that you have all the prerequisites for building Firefox on your computer. Please have a look at the build prerequisites for your Linux, Windows or OS X operating system.

# Let's get the source code

# Download mozilla-central repository

$ hg clone http://hg.mozilla.org/mozilla-central mozilla-central

# Download the Gaia source code

$ git clone https://github.com/mozilla-b2g/gaia gaia

# Change directory and create our profile

$ cd gaia

$ make profile

# Change directory into your mozilla-central directory

$ cd ../mozilla-central

# Create a .mozconfig file inside your mozilla-central directory:

$ nano .mozconfig

mk_add_options MOZ_OBJDIR=../b2g-build

mk_add_options MOZ_MAKE_FLAGS="-j9 -s"

ac_add_options --enable-application=b2g

ac_add_options --disable-libjpeg-turbo

ac_add_options --enable-b2g-ril

ac_add_options --with-ccache=/usr/bin/ccache

# Build the Firefox B2G app and wait for the build to finish

$ make -f client.mk build

# Create a simple b2g bash script to launch the B2G app; change paths to suit your environment

# Note: I had to use the -safe-mode option due to a bug on my Ubuntu box

#!/bin/sh

export B2G_HOMESCREEN=http://homescreen.gaiamobile.org/

/home/arky/src/b2g-build/dist/bin/b2g -profile /home/arky/src/gaia/profile

If everything goes well, you should have Boot2Gecko running inside Firefox now.

Customizing Firefox B2G App

For a better Boot2Gecko (B2G) experience, we will customize two Firefox features, the offline cache and touch events, using a custom Firefox profile.

Create a Custom Firefox Profile

You can use dist/bin/b2g -ProfileManager option to launch Firefox Profile Manager. Create a new profile called 'b2g'. Now we can add customizations to this new profile.

On Linux computers, the profile is created under ~/.mozilla/b2g/ directory. You can find the information about location of firefox profiles for your operating system here.

You can launch B2G with your new custom profile using the '-P' option. Modify your B2G bash script and add the custom profile option: dist/bin/b2g -P b2g

Disable offline cache

Create a user.js file inside your custom 'b2g' firefox profile directory. Add the following line to disable offline cache.

user_pref('browser.cache.offline.enable', false);

Enable touch events

Add the following line in your user.js file inside your custom 'b2g' Firefox profile directory to enable touch events.

user_pref('dom.w3c_touch_events.enabled', true);

That's it. You now have Boot2Gecko (B2G) running inside Firefox on your computer. Happy Hacking!

http://playingwithsid.blogspot.com/2012/03/building-boot2gecko-on-ubuntu.html

|

|

Julien Vehent: Shellshock IOC search using MIG |

Shellshock is being exploited. People are analyzing malware dropped on systems using the bash vulnerability.

I wrote a MIG Action to check for these IOCs. I will keep updating it with more indicators as we discover them. To run this on your 32- or 64-bit Linux system, download the following archive: mig-shellshock.tar.xz

Download the archive and run mig-agent as follows:

$ wget https://jve.linuxwall.info/ressources/taf/mig-shellshock.tar.xz

$ sha256sum mig-shellshock.tar.xz

0b527b86ae4803736c6892deb4b3477c7d6b66c27837b5532fb968705d968822  mig-shellshock.tar.xz

$ tar -xJvf mig-shellshock.tar.xz

shellshock_iocs.json

mig-agent-linux64

mig-agent-linux32

$ ./mig-agent-linux64 -i shellshock_iocs.json

$ ./mig-agent-linux64 -i shellshock_iocs.json|grep foundanything

"foundanything": false,

"foundanything": false,

{
  "name": "Shellshock IOCs (nginx and more)",
  "target": "os='linux' and heartbeattime \u003e NOW() - interval '5 minutes'",
  "threat": {
    "family": "malware",
    "level": "high"
  },
  "operations": [
    {
      "module": "filechecker",
      "parameters": {
        "searches": {
          "iocs": {
            "paths": [
              "/usr/bin",
              "/usr/sbin",
              "/bin",
              "/sbin",
              "/tmp"
            ],
            "sha256": [
              "73b0d95541c84965fa42c3e257bb349957b3be626dec9d55efcc6ebcba6fa489",
              "ae3b4f296957ee0a208003569647f04e585775be1f3992921af996b320cf520b",
              "2d3e0be24ef668b85ed48e81ebb50dce50612fb8dce96879f80306701bc41614",
              "2ff32fcfee5088b14ce6c96ccb47315d7172135b999767296682c368e3d5ccac",
              "1f5f14853819800e740d43c4919cc0cbb889d182cc213b0954251ee714a70e4b"
            ],
            "regexes": [
              "/bin/busybox;echo -e '\\\\147\\\\141\\\\171\\\\146\\\\147\\\\164'"
            ]
          }
        }
      }
    },
    {
      "module": "netstat",
      "parameters": {
        "connectedip": [
          "108.162.197.26",
          "162.253.66.76",
          "89.238.150.154",
          "198.46.135.194",
          "166.78.61.142",
          "23.235.43.31",
          "54.228.25.245",
          "23.235.43.21",
          "23.235.43.27",
          "198.58.106.99",
          "23.235.43.25",
          "23.235.43.23",
          "23.235.43.29",
          "108.174.50.137",
          "201.67.234.45",
          "128.199.216.68",
          "75.127.84.182",
          "82.118.242.223",
          "24.251.197.244",
          "166.78.61.142"
        ]
      }
    }
  ],
  "description": {
    "author": "Julien Vehent",
    "email": "ulfr@mozilla.com",
    "revision": 201409252305
  },
  "syntaxversion": 2
}

https://jve.linuxwall.info/blog/index.php?post/2014/09/25/Shellshock-IOC-search-using-MIG
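To make concrete what the two operations in this action look for (files in the listed paths whose SHA-256 is a known IOC, and open connections to known IOC addresses), here is a minimal Python sketch of the same checks. It is not MIG code. It assumes a recursive walk of the listed paths (MIG's own depth limits are not modelled), reads only IPv4 sockets from /proc/net/tcp on a little-endian host, and leaves out the regex content search; all helper names are hypothetical.

```python
import hashlib
import os
import socket
import struct

# Values copied from the "Shellshock IOCs" MIG action above.
IOC_PATHS = ["/usr/bin", "/usr/sbin", "/bin", "/sbin", "/tmp"]
IOC_SHA256 = {
    "73b0d95541c84965fa42c3e257bb349957b3be626dec9d55efcc6ebcba6fa489",
    "ae3b4f296957ee0a208003569647f04e585775be1f3992921af996b320cf520b",
    "2d3e0be24ef668b85ed48e81ebb50dce50612fb8dce96879f80306701bc41614",
    "2ff32fcfee5088b14ce6c96ccb47315d7172135b999767296682c368e3d5ccac",
    "1f5f14853819800e740d43c4919cc0cbb889d182cc213b0954251ee714a70e4b",
}
IOC_IPS = {
    "108.162.197.26", "162.253.66.76", "89.238.150.154", "198.46.135.194",
    "166.78.61.142", "23.235.43.31", "54.228.25.245", "23.235.43.21",
    "23.235.43.27", "198.58.106.99", "23.235.43.25", "23.235.43.23",
    "23.235.43.29", "108.174.50.137", "201.67.234.45", "128.199.216.68",
    "75.127.84.182", "82.118.242.223", "24.251.197.244",
}


def sha256_file(path, chunk_size=65536):
    """Hex SHA-256 digest of a file, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_hash_matches(paths, bad_hashes):
    """Walk `paths` recursively; return regular files whose hash is an IOC."""
    hits = []
    for root in paths:
        # os.walk silently skips directories that are missing or unreadable.
        for dirpath, _dirnames, filenames in os.walk(root):
            for name in filenames:
                full = os.path.join(dirpath, name)
                if os.path.islink(full) or not os.path.isfile(full):
                    continue  # skip symlinks, sockets, FIFOs and device nodes
                try:
                    if sha256_file(full) in bad_hashes:
                        hits.append(full)
                except OSError:
                    continue  # unreadable file: ignore rather than abort the scan
    return hits


def decode_proc_ipv4(hex_addr):
    """Convert an 8-hex-digit /proc/net/tcp address to dotted-quad form.

    The kernel prints the address as a native-endian integer, so this
    assumes a little-endian host (e.g. x86 or x86-64).
    """
    return socket.inet_ntoa(struct.pack("<I", int(hex_addr, 16)))


def remote_ipv4_addresses(proc_file="/proc/net/tcp"):
    """Set of remote IPv4 addresses of the TCP sockets listed in proc_file."""
    addrs = set()
    with open(proc_file) as f:
        next(f)  # skip the header line
        for line in f:
            rem_address = line.split()[2]  # e.g. "0100007F:0050"
            ip = decode_proc_ipv4(rem_address.split(":")[0])
            if ip != "0.0.0.0":  # listening sockets have no peer yet
                addrs.add(ip)
    return addrs


def match_connections(remote_ips, bad_ips):
    """Remote addresses that also appear in the IOC list."""
    return set(remote_ips) & set(bad_ips)
```

On a Linux host, `find_hash_matches(IOC_PATHS, IOC_SHA256)` returns the paths of any matching files and `match_connections(remote_ipv4_addresses(), IOC_IPS)` returns any connected IOC addresses; two empty results play the role of `"foundanything": false` in the mig-agent output.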


Armen Zambrano: Making mozharness easier to hack on and try support

We're mainly focused on making mozharness easier for developers to hack on and on allowing for further flexibility.

We will initially focus on the testing side of the automation and lay the groundwork for further improvements down the line.

The changes discussed for this quarter are:

- Move the remaining configs into the tree - bug 1067535
  - This makes it easier to test harness changes on try
- Read more information from the in-tree configs - bug 1070041
  - This increases the number of harness parameters we can control from the tree
- Use structured output parsing instead of regex-based output parsing where it applies - bug 1068153
  - This is part of a larger goal of making test reporting more reliable, easier to consume, and less burdensome on the infrastructure
  - The aim is to establish uniform criteria for setting a job's status from test results, based on structured log data (JSON) rather than regex-based output parsing
  - "How does a test turn a job red or orange?"
  - We will then have a simple answer that is the same for all test harnesses
- Mozharness try support - bug 791924
  - This will allow us to lock which repo and revision of mozharness is checked out
  - This isolates mozharness changes to a single commit in the tree
  - This gives us try support for user repos (freedom to experiment with mozharness on try)
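The structured-output item is about deciding a job's color from JSON log records instead of from regexes over free-form output. Below is a minimal Python sketch of that idea, assuming mozlog-style records (one JSON object per line with an `action` key, where an `expected` key is present only when a result was unexpected). The color rules in the hypothetical `job_status` function are illustrative only, not the criteria that bug 1068153 settled on.

```python
import json

# Hypothetical job colors, loosely following the TBPL/Treeherder convention:
# green = everything as expected, orange = unexpected test results,
# red = the harness itself broke.
GREEN, ORANGE, RED = "green", "orange", "red"


def job_status(log_lines):
    """Derive a job color from structured (one JSON object per line) log data."""
    saw_suite_end = False
    unexpected = 0
    for line in log_lines:
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            return RED  # a malformed structured log is treated as a harness failure
        action = record.get("action")
        if action == "suite_end":
            saw_suite_end = True
        elif action in ("test_status", "test_end") and "expected" in record:
            unexpected += 1  # assumption: "expected" only appears on a mismatch
        elif action == "crash":
            unexpected += 1
        elif action == "log" and record.get("level") == "CRITICAL":
            return RED
    if not saw_suite_end:
        return RED  # the harness stopped before reporting the end of the suite
    return ORANGE if unexpected else GREEN
```

Because every harness would emit the same record types, one function like this could answer "how does a test turn a job red or orange?" for all of them, instead of one regex per harness.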

This work by Zambrano Gasparnian, Armen is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 Unported License.


Mozilla Release Management Team: Firefox 33 beta6 to beta7

This beta was driven by the NSS chemspill. We used this unexpected beta to test the behavior of Firefox 33 without OMTC (off-main-thread compositing) on Windows.

- 8 changesets

- 232 files changed

- 73163 insertions

- 446 deletions

| Extension | Occurrences |
| --- | --- |
| cc | 73 |
| h | 45 |
| py | 23 |
| c | 11 |
| vcproj | 8 |
| sh | 7 |
| xcconfig | 6 |
| mn | 6 |
| pump | 4 |
| mk | 4 |
| cpp | 3 |
| cbproj | 3 |
| txt | 2 |
| sln | 2 |
| plist | 2 |
| pbxproj | 2 |
| m4 | 2 |
| html | 2 |
| def | 2 |
| mm | 1 |
| list | 1 |
| + | 1 |
| js | 1 |
| in | 1 |
| groupproj | 1 |
| dep | 1 |
| cmake | 1 |
| am | 1 |
| ac | 1 |

| Module | Occurrences |
| --- | --- |
| security | 151 |
| security | 69 |
| image | 4 |
| widget | 1 |
| modules | 1 |
| + | 1 |
| js | 1 |
| gfx | 1 |
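As an illustration only (the script behind the release.mozilla.org statistics is not shown in this post), tables like the two above can be produced by tallying the file paths touched by a set of changesets. This Python sketch assumes that "module" means the first directory component of each path, which may not match the release team's actual classification; `tally` and `as_rows` are hypothetical names.

```python
import os
from collections import Counter


def tally(paths):
    """Count changed files by extension and by top-level directory.

    Assumption: "module" is the first path component; files at the
    repository root are not counted as belonging to any module.
    """
    extensions = Counter()
    modules = Counter()
    for path in paths:
        ext = os.path.splitext(path)[1].lstrip(".")
        if ext:
            extensions[ext] += 1
        if "/" in path:
            modules[path.split("/", 1)[0]] += 1
    return extensions, modules


def as_rows(counter, header):
    """Render a Counter as pipe-delimited rows, most frequent first."""
    rows = [f"| {header} | Occurrences |"]
    rows += [f"| {key} | {count} |" for key, count in counter.most_common()]
    return rows
```

`Counter.most_common()` orders entries by descending count, which matches the ordering of the tables in these reports.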

List of changesets:

| Michael Wu | Bug 1062886 - Fix one color padded drawing path. r=seth, a=sledru - 232c3b4708b9 |

| Michael Wu | Bug 1068230 - Don't use the gfxContext transform in intermediate surface. r=seth, a=sledru - bca0649c9b79 |

| Douglas Crosher | Bug 1013996 - irregexp: Avoid unaligned accesses in ARM code. r=bhackett, a=sledru - 5e2a5b6c7a0d |

| Bas Schouten | Bug 1030147 - Switch off OMTC on windows. r=milan, a=sylvestre - f631df57b34c |

| Steven Michaud | Bug 1056251 - Changing to a Firefox window in a different workspace does not focus automatically. r=masayuki a=lmandel - 7c118b1cf343 |

| Kai Engert | Bug 1064636, upgrade to NSS 3.17.1 release, r=rrelyea, a=lmandel - fb8ff9258d02 |

| Matt Woodrow | Bug 1030147 - Release the DrawTarget to drop the surface ref in ThebesLayerD3D9. r=Bas a=lmandel CLOSED TREE - 280407351f1b |

| L. David Baron | Bug 1064636 followup: Add new function to config/external/nss/nss.def r=khuey a=bustage CLOSED TREE - 2431af782661 |

http://release.mozilla.org/statistics/33/2014/09/25/fx-33-b6-to-b7.html
