Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.


Mike Hommey: So, hum, bash… |

So, I guess you heard about the latest bash hole.

What baffles me is that the following still is allowed:

env echo='() { xterm;}' bash -c "echo this is a test"

Interesting replacements for “echo”, “xterm” and “echo this is a test” are left as an exercise to the reader.

Update: Another thing that bugs me: Why is this feature even enabled in posix mode? (the mode you get from bash --posix, or, more importantly, when running bash as sh) After all, export -f is a bashism.

|

|

Sean Bolton: From the Furthest Edge to the Deepest Middle |

In my role as Community Building Intern at Mozilla this summer, my goal has been to be explicit about how community building works so that people both internal and external to Mozilla can better understand and build upon this knowledge. This requires one of my favorite talents: connecting what emerges and making it a thing. We all experience this when we’ve been so immersed in something that we begin to notice patterns – our brains like to connect. One of my mentors, Dia Bondi, experienced this with her 21 Things, which she created during her time as a speech coach and still uses today in her work.

I set out to develop a mental model to help thing-ify this seemingly ambiguous concept of community building so that we all could collectively drive the conversation forward. (That might be the philosopher in me.) What emerged was this sort of fascinating overarching story: community building is connecting the furthest edge to the deepest middle (and making the process along that path easier). What I mean here is that the person at the greatest distance, of whatever kind, must be able to connect to the hardest-to-reach person in the heart of the formal organization. For example, the 12-year-old girl in Brazil who just taught herself some new JavaScript framework needs to be able to connect in some way to the module owner of that new JavaScript framework located in Finland, because when they work together we all rise further together.

The edge requires coordination from community. The center requires internal champions. The goal of community building is then to support community by creating structures that bridge community coordinators and internal champions while independently being or supporting the development of both. This structure allows for more action and creativity than no structure at all – a fundamental of design school.

Below is a model of community management. We see this theme of furthest edge to deepest middle. “It’s broken” is the edge. “I can do something about it” approaches the middle. This model shows how to take action and make the pathway from edge to middle easier.

Community building is connecting the furthest edge to the deepest middle. It’s implicit. It’s obvious. But, when we can be explicit and talk about it we can figure out where and how to improve what works and focus less on what does not.

http://seanbolton.me/2014/09/24/from-the-furthest-edge-to-the-deepest-middle/

|

|

Ted Clancy: A better way to input Vietnamese |

Earlier this year I had the pleasure of implementing for Firefox OS an input method for Vietnamese (a language I have some familiarity with). After being dissatisfied with the Vietnamese input methods on other smartphones, I was eager to do something better.

I believe Firefox OS is now the easiest smartphone on the market for out-of-the-box typing of Vietnamese.

The Challenge of Vietnamese

Vietnamese uses the Latin alphabet, much like English, but it has an additional 7 letters with diacritics (Ă, Â, Đ, Ê, Ô, Ơ, Ư).

http://tedclancy.wordpress.com/2014/09/25/a-better-way-to-input-vietnamese/

|

|

Erik Vold: Jetpack Pro Tip - setImmediate and clearImmediate |

Do you know about window.setImmediate or window.clearImmediate? Did you know that you can use these now with the Add-on SDK?

We’ve managed to keep them a little secret, but they are awesome because setImmediate is much quicker than setTimeout(fn, 0), especially if it is used a lot, as it would be in a loop or if it were used recursively. This is well described in the notes on MDN for window.setImmediate.

To use these functions with the Add-on SDK, do the following:

    const { setImmediate, clearImmediate } = require("sdk/timers");

    function doStuff() {}

    let timerID = setImmediate(doStuff); // to run `doStuff` in the next tick
    clearImmediate(timerID);             // to cancel `doStuff`

http://work.erikvold.com/jetpack-pro-tip/2014/09/25/setImmediate.html

|

|

Nicholas Nethercote: You should use WebRTC for your 1-on-1 video meetings |

Did you know that Firefox 33 (currently in Beta) lets you make a Skype-like video call directly from one running Firefox instance to another without requiring an account with a central service (such as Skype or Vidyo)?

This feature is built on top of Firefox’s WebRTC support, and it’s kind of amazing.

It’s pretty easy to use: just click on the toolbar button that looks like a phone handset or a speech bubble (which one you see depends on which version of Firefox you have) and you’ll be given a URL with a call.mozilla.com domain name. [Update: depending on which beta version you have, you might need to set the loop.enabled preference in about:config, and possibly customize your toolbar to make the handset/bubble icon visible.] Send that URL to somebody else (via email, IRC, or some other means), and when they visit that URL in Firefox 33 (or later) it will initiate a video call with you.

I’ve started using it for 1-on-1 meetings with other Mozilla employees and it works well. It’s nice to finally have an open source implementation of video calling. Give it a try!

|

|

Byron Jones: happy bmo push day! |

the following changes have been pushed to bugzilla.mozilla.org:

- [1067381] Sorting by ID broken when changing multiple bugs

- [1065398] Error when using checksetup.pl to create BMO database from scratch when Review extension enabled

- [1064395] concatenate and slightly minify javascript files

- [1068014] skip strptime() in datetime_from() if the date is in a standard format

- [1054141] add the ability to filter on the user that made the change

- [891199] clicking on needinfo flag/text should scroll you to the comment which set the flag

- [1069504] Put My Dashboard in the drop down on the top-right

- [1067410] Modification time wrong for deleted flags in review schema

- [1067808] Review history page displays cancelled reviews as overdue

- [1060728] Add perltidyrc that makes it easier to follow existing code standards to BMO repository

- [1068328] needinfo flag shows up on attachment details page only when not doing “Edit as Comment”

- [1037663] Make custom bug entry forms more discoverable

- [1071926] Can’t unmentor a bug

discuss these changes on mozilla.tools.bmo.

http://globau.wordpress.com/2014/09/24/happy-bmo-push-day-114/

|

|

Ben Hearsum: Stop stripping (OS X builds), it leaves you vulnerable |

While investigating some strange update requests on our new update server, I discovered that we have thousands of update requests from Beta users on OS X that aren’t getting an update, but should. After some digging I realized that most, if not all of these are coming from users who have installed one of our official Beta builds and subsequently stripped out the architecture they do not need from it. In turn, this causes our builds to report in such a way that we don’t know how to serve updates for them.

We’ll look at ways of addressing this, but the bottom line is that if you want to be secure: Stop stripping Firefox binaries!

http://hearsum.ca/blog/stop-stripping-it-leaves-you-vulnerable/

|

|

Lucas Rocha: New Features in Picasso |

I’ve always been a big fan of Picasso, the Android image loading library by the Square folks. It provides some powerful features with a rather simple API.

Recently, I started working on a set of new features for Picasso that will make it even more awesome: request handlers, request management, and request priorities. These features have all been merged to the main repo now. Let me give you a quick overview of what they enable you to do.

Request Handlers

Picasso supports a wide variety of image sources, from simple resources to content providers, network, and more. Sometimes though, you need to load images in unconventional ways that are not supported by default in Picasso.

Wouldn’t it be nice if you could easily integrate your custom image loading logic with Picasso? That’s what the new request handlers are about. All you need to do is subclass RequestHandler and implement a couple of methods. For example:

    public class PonyRequestHandler extends RequestHandler {
        private static final String PONY_SCHEME = "pony";

        @Override public boolean canHandleRequest(Request data) {
            return PONY_SCHEME.equals(data.uri.getScheme());
        }

        @Override public Result load(Request data) {
            return new Result(somePonyBitmap, MEMORY);
        }
    }

Then you register your request handler when instantiating Picasso:

    Picasso picasso = new Picasso.Builder(context)
        .addRequestHandler(new PonyRequestHandler())
        .build();

Voilà! Now Picasso can handle pony URIs:

    picasso.load("pony://somePonyName")
        .into(someImageView)

This pull request also involved rewriting all built-in bitmap loaders on top of the new API. This means you can also override the built-in request handlers if you need to.

Request Management

Even though Picasso handles view recycling, it does so in an inefficient way. For instance, if you do a fling gesture on a ListView, Picasso will still keep triggering and canceling requests blindly because there was no way to make it pause/resume requests according to the user interaction. Not anymore!

The new request management APIs allow you to tag associated requests that should be managed together. You can then pause, resume, or cancel requests associated with specific tags. The first thing you have to do is tag your requests as follows:

    Picasso.with(context)
        .load("http://example.com/image.jpg")
        .tag(someTag)
        .into(someImageView)

Then you can pause and resume requests with this tag based on, say, the scroll state of a ListView. For example, Picasso’s sample app now has the following scroll listener:

    public class SampleScrollListener implements AbsListView.OnScrollListener {
        ...

        @Override
        public void onScrollStateChanged(AbsListView view, int scrollState) {
            Picasso picasso = Picasso.with(context);
            if (scrollState == SCROLL_STATE_IDLE ||
                    scrollState == SCROLL_STATE_TOUCH_SCROLL) {
                picasso.resumeTag(someTag);
            } else {
                picasso.pauseTag(someTag);
            }
        }

        ...
    }

These APIs give you a much finer control over your image requests. The scroll listener is just the canonical use case.

Request Priorities

It’s very common for images in your Android UI to have different priorities. For instance, you may want to give higher priority to the big hero image in your activity in relation to other secondary images in the same screen.

Up until now, there was no way to hint Picasso about the relative priorities between images. The new priority API allows you to tell Picasso about the intended order of your image requests. You can just do:

    Picasso.with(context)
        .load("http://example.com/image.jpg")
        .priority(HIGH)
        .into(someImageView);

These priorities don’t guarantee a specific order, they just tilt the balance towards higher-priority requests.

That’s all for now. Big thanks to Jake Wharton and Dimitris Koutsogiorgas for the prompt code and API reviews!

You can try these new APIs now by fetching the latest Picasso code on Github. These features will probably be available in the 2.4 release. Enjoy!

|

|

Michael Kaply: Fileblock |

One of the things that I get asked the most is how to prevent a user from accessing the local file system from within Firefox. This generally means preventing file:// URLs from working, as well as removing the most common methods of opening files from the Firefox UI (the open file button, menuitem and shortcut). Because I consider this outside of the scope of the CCK2, I wrote an extension to do this and gave it out to anyone that asked. Unfortunately over time it started to have a serious case of feature creep.

Going forward, I've decided to go back to basics and just produce a simple local file blocking extension. The only features that it supports are whitelisting by directory and whitelisting by file extension. I've made that available here. There is a README that gives full information on how to use it.

For the other functionality that used to be a part of FileBlock, I'm going to produce a specific extension for each feature. They will probably be AboutBlock (for blocking specific about pages), ChromeBlock (for preventing the loading of chrome files directly into the browser) and SiteBlock (for doing simple whitelisting).

Hopefully this should cover the most common cases. Let me know if you think there is a case I missed.

|

|

Gervase Markham: Licensing Policy Change: Tests are Now Public Domain |

I’ve updated the Mozilla Foundation License Policy to state that:

PD Test Code is Test Code which is Mozilla Code, which does not carry an explicit license header, and which was either committed to the Mozilla repository on or after 10th September 2014, or was committed before that date but all contributors up to that date were Mozilla employees, contractors or interns. PD Test Code is made available under the Creative Commons Public Domain Dedication. Test Code which has not been demonstrated to be PD Test Code should be considered to be under the MPL 2.

So in other words, new tests are now CC0 (public domain) by default, and some or many old tests can be relicensed as well. (We don’t intend to do explicit relicensing of them ourselves, but people have the ability to do so in their copies if they do the necessary research.) This should help us share our tests with external standards bodies.

This was bug 788511.

http://feedproxy.google.com/~r/HackingForChrist/~3/y3vDf3cPn18/

|

|

Gervase Markham: Survey on FLOSS Contribution Policies |

In the “dull but important” category: my friend Allison Randal is doing a survey on people’s attitudes to contribution policies (committer’s agreements, copyright assignment, DCO etc.) in free/libre/open source software projects. I’m rather interested in what she comes up with. So if you have a few minutes (it should take less than 5 – I just did it) to fill in her survey about what you think about such things, she and I would be most grateful:

http://survey.lohutok.net is the link. You want the “FLOSS Developer Contribution Policy Survey” – I’ve done the other one on Mozilla’s behalf.

Incidentally, this survey is notable as I believe it’s the first online multiple-choice survey I’ve ever taken where I didn’t think “my answer doesn’t fit into your narrow categories” about at least one of the questions. So it’s definitely well-designed.

http://feedproxy.google.com/~r/HackingForChrist/~3/QyA2uO1tz-g/

|

|

Erik Vold: Jetpack Pro Tip - Reusing files for content and chrome |

I’ve seen this issue come up a lot, where an add-on developer is trying to reuse a library file, like underscore, in both their add-on code and their content scripts.

Typically the SDK documentation will say to put all of the content scripts in your add-on’s data/ folder, and that is the best thing to do if the script is only going to be used as a content script. But if you want to use the file in your add-on scope too, then the file should not be in the data/ folder; it should be in your lib/ folder instead.

Once this is done, the add-on scope can easily require it, so all that is left is to figure out a URI for the file in your lib/ folder which can be used for content scripts.

To do this there are two options, one of which only works on JPM.

JPM

The JPM solution is very simple (thanks to Jordan Santell for implementing this):

    let underscoreURI = require.resolve("./lib/underscore");

This works if the file is in lib/underscore, but it should only be there if you copied and pasted it there, which pros don’t do. Pros use NPM because they know underscore is there, so they just make that a dependency by adding this to package.json:

    {
      // ..
      "dependencies": {
        "underscore": "1.6.0"
      }
      //..
    }

Then, simply use:

let underscoreURI = require.resolve("underscore");

CFX

With CFX you will have to copy and paste the file into your lib/ folder; then you can get a URL for the file by doing this:

    let underscoreURI = module.uri.replace("main.js", "underscore.js");

This assumes that the code above is evaluated in lib/main.js.

One issue with the above code is that you have to know the name of the file in which it is evaluated, so another approach could be:

let underscoreURI = module.uri.replace(/\/[^\/]*$/, "/underscore.js");
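As a quick check of what that regular expression does, here is a minimal sketch that can be run outside the SDK. The helper name siblingURI and the resource:// URI are made up for illustration; in an add-on, the first argument would be module.uri.

```javascript
// Hypothetical helper, not part of the Add-on SDK.
// Replaces everything after the last "/" in a URI with another file name,
// so it works no matter what the calling module's file is called.
function siblingURI(moduleURI, fileName) {
  return moduleURI.replace(/\/[^\/]*$/, "/" + fileName);
}

// Made-up URI standing in for module.uri as seen from lib/main.js.
const uri = siblingURI("resource://my-addon/lib/main.js", "underscore.js");
console.log(uri);
```

Because the pattern is anchored at the end of the string, only the final path segment is replaced and the directory part of the URI is left alone.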

http://work.erikvold.com/jetpack/2014/09/23/jp-pro-tip-reusing-js.html

|

|

Julien Vehent: Batch inserts in Go using channels, select and japanese gardening |

I was recently looking into the DB layer of MIG, searching for ways to reduce the processing time of an action running on several thousand agents. One area of the code that was blatantly inefficient concerned database insertions.

When MIG's scheduler receives a new action, it pulls a list of eligible agents, and creates one command per agent. One action running on 2,000 agents will create 2,000 commands in the commands table of the database. The scheduler needs to generate each command, insert them into postgres and send them to their respective agents.

MIG uses separate goroutines for the processing of incoming actions, and the storage and sending of commands. The challenge was to avoid individually inserting each command in the database, but instead group all inserts together into one big operation.

Go provides a very elegant way to solve this very problem.

At a high level, MIG Scheduler works like this:

- a new action file in json format is loaded from the local spool and passed into the NewAction channel

- a goroutine picks up the action from the NewAction channel, validates it, finds a list of target agents and creates a command for each agent, which is passed to a CommandReady channel

- a goroutine listens on the CommandReady channel, picks up incoming commands, inserts them into the database and sends them to the agents (plus a few extra things)

    // Goroutine that loads and sends commands dropped in ready state
    // it uses a select and a timeout to load a batch of commands instead of
    // sending them one by one
    go func() {
        ctx.OpID = mig.GenID()
        readyCmd := make(map[float64]mig.Command)
        ctr := 0
        for {
            select {
            case cmd := <-ctx.Channels.CommandReady:
                ctr++
                readyCmd[cmd.ID] = cmd
            case <-time.After(1 * time.Second):
                if ctr > 0 {
                    var cmds []mig.Command
                    for id, cmd := range readyCmd {
                        cmds = append(cmds, cmd)
                        delete(readyCmd, id)
                    }
                    err := sendCommands(cmds, ctx)
                    if err != nil {
                        ctx.Channels.Log <- mig.Log{OpID: ctx.OpID, Desc: fmt.Sprintf("%v", err)}.Err()
                    }
                }
                // reinit
                ctx.OpID = mig.GenID()
                ctr = 0
            }
        }
    }()

As long as messages are incoming, the select statement will elect the case where a message is received, and store the command into the readyCmd map.

When messages stop coming for one second, the select statement will fall into its second case: time.After(1 * time.Second).

In the second case, the readyCmd map is emptied and all commands are sent as one operation. Later in the code, a big INSERT statement that includes all commands is executed against the postgres database.

In essence, this algorithm is very similar to a Japanese Shishi-odoshi.

The current logic is not yet optimal. It does not currently set a maximum batch size, mostly because it does not currently need to. In my production environment, the scheduler manages about 1,500 agents, and that's not enough to worry about limiting the batch size.
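If a maximum batch size were ever needed, it could sit alongside the one-second quiet period: flush as soon as the limit is reached, otherwise flush when no new command has arrived for a while. The following is only a sketch of that idea, written in JavaScript rather than Go and not taken from MIG. The Batcher class, its option names and the send callback are all hypothetical.

```javascript
// Sketch only: class and option names are hypothetical, not MIG's API.
class Batcher {
  constructor({ maxBatch, quietMs, send }) {
    this.maxBatch = maxBatch; // flush as soon as this many items are pending
    this.quietMs = quietMs;   // flush after this long with no new items
    this.send = send;         // called with an array of pending items
    this.pending = new Map(); // keyed by id, like the readyCmd map
    this.timer = null;
  }

  add(cmd) {
    this.pending.set(cmd.id, cmd);
    if (this.pending.size >= this.maxBatch) {
      this.flush(); // size limit reached: do not wait for the quiet period
      return;
    }
    // Restart the quiet-period timer on every new item.
    clearTimeout(this.timer);
    this.timer = setTimeout(() => this.flush(), this.quietMs);
  }

  flush() {
    clearTimeout(this.timer);
    this.timer = null;
    if (this.pending.size === 0) return;
    const batch = Array.from(this.pending.values());
    this.pending.clear();
    this.send(batch);
  }
}

// Usage: five commands with a limit of two per batch.
const sent = [];
const batcher = new Batcher({ maxBatch: 2, quietMs: 1000, send: (b) => sent.push(b) });
for (let id = 1; id <= 5; id++) batcher.add({ id });
batcher.flush(); // flush the leftover item instead of waiting for the timer
console.log(sent.map((b) => b.map((c) => c.id)));
```

The size check bounds how large a single insert can grow, while the timer keeps the existing behaviour for small bursts.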

|

|

Eric Shepherd: The Sheppy Report: September 19, 2014 |

I’ve been working on getting a first usable version of my new server-side sample server project (which remains unnamed as yet; let me know if you have ideas) up and running. The goals of this project are to allow MDN to host samples that require a server-side component (for example, examples of how to do XMLHttpRequest or WebSockets), and to provide a place to host samples that need to do things that we don’t allow on MDN itself. This work is going really well and I think I’ll have something to show off in the next few days.

What I did this week

- Caught up on bugmail and other messages that came in while I was out after my hospital stay.

- Played with JSMESS a bit to see extreme uses of some of our new technologies in action.

- Did some copy-editing work.

- Wrote up a document for my own reference about the manifest format and architecture for the sample server.

- Got most of the code for processing the manifests for the sample server modules and running their startup scripts written. It doesn’t quite work yet, but I’m close.

- Filed a bug about implementing a drawing tool within MDN for creating diagrams and the like in-site. Pointed to draw.io as an example of a possible way to do it. Also created a developer project page for this proposal.

- Exchanged email with Piotr about the editor revamp. It’s making good progress.

Wrap up

I’m really, really excited about the sample server work. With this up and running (hopefully soon), we’ll be able to create examples for technologies we were never able to properly demonstrate in the past. It’s been a long time coming. It’s also been a fun, fun project!

https://www.bitstampede.com/2014/09/22/the-sheppy-report-september-19-2014/

|

|

Curtis Koenig: The Curtisk report: 2014-09-21 |

People wanna know what I do, so I am going to give this a shot, so each Monday I will make a post about the stuff I did in the previous week.

Idea shamelessly stolen from Eric Shepherd

What I did this week

- MWoS: SeaSponge Project Proposal (Review)

- Crusty Bugs data digging

- Mozillians.org security review (move along)

- Firefox OS Sec discussion

- sec triage process massaging

- Firefox OS Security coordination

- Vendor site review

- testing plan for vendor site testing

- testing coordination with team and vendor

- CBT Training survey

- security scan of [redacted]

Meetings attended this week

Mon

- Weekly Project Meeting

- Web Bounty Triage

Tue

- SecAutomation

- Cloud Services Security Team

Wed

- MWoS team Project meeting

- Vendor testing call

- Web Bug Triage

Thu

- Security Open Mic

- Grow Mozilla / Community Building

- Computer Science Teachers Association (guest speaker)

https://spartiates.wordpress.com/2014/09/22/the-curtisk-report-2014-09-21/

|

|

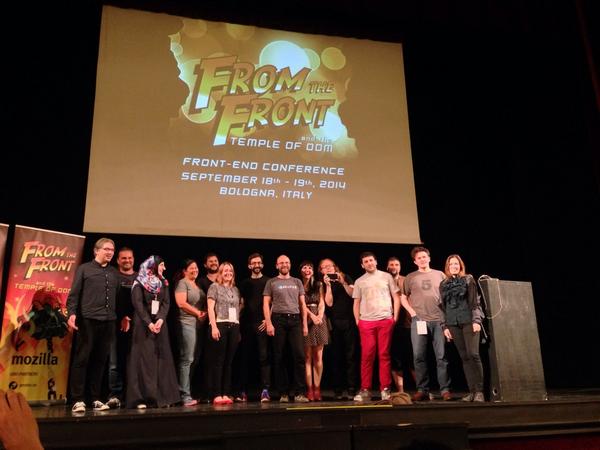

Christian Heilmann: Notes on my closing keynote of From the Front 2014 |

These are some notes about my closing keynote at From the Front in Bologna, Italy last week. The overall theme of the event was “Temple of the DOM” thus I kept it Indiana Jones themed (one could say shoehorned, but I wasn’t alone with this).

The slides are available on Slideshare.

In Indiana Jones and the Temple of Doom the Sankara Stones are very powerful stones that can bring prosperity or destroy people, depending on how they are used. When you bring the stones together they light up and in general all is very mystic and amazing. It gives the movie an adventure angle that cannot be explained and allows us to suspend our disbelief and see Indy as being capable of more than a normal human being.

A tangent: Blowing people’s mind is pretty easy. All you need to do is take a known concept and then make an assumption from it. For example, when you see Luigi from Super Mario Brothers and immediately recognise him, there is quite a large chance you have an older sibling. You were always the one who had to wait till your sibling failed in the game so it was your turn to play with “green Mario”. Also, if Luigi and Mario are the Mario brothers then Mario’s name is Mario Mario. Ponder this.

The holy trinity of web development

On the web we also have magical stones that we can put together and create good or evil. These are the standardised technologies of the web: HTML, CSS and JavaScript. These are available in every browser, understood by them without any extra compilation step, very well documented and easy to learn (but harder to master).

Back in 1999, Jeffrey Zeldman taught us all not to write tag-soup any longer and use the technologies of the web to build intelligent solutions that use them to their strengths. These are commonly referred to as the separation of concerns:

- Structure (HTML and added extra-value semantics like Microformats)

- Presentation (CSS, Images)

- Behaviour (JavaScript)

Back then this was a very necessary stake in the ground, explaining that web development is not a random WYSIWYG result but something with a lot of planning and organisation behind it. The separation of concerns played all the different technologies to their strengths and also meant that one or two can fail and nothing will go wrong.

This also paved the way for the progressive enhancement idea that all you really need is a proper HTML document and the rest gets added when and if needed or – in the case of JavaScript – once it has been tested to be available and applicable.

The problems started when people with different agendas skewed the concept of the separation of concerns:

- HTML and semantic markup enthusiasts advocated far too loudly for very clean markup, validation and adding things like Microformats. For engineers just trying to get something to show up in a browser this has always been a confusion as the tangible benefits of this are, well, not tangible. Browsers are very forgiving and will fix HTML for you and when there is no interface in browsers that surfaces the data in Microformats, why do it? Of course, I disagree and stated very often that semantic, clean markup is the good grammar of the web – you don’t need it, but it does make you much easier to understand and shows that you learned what you are doing. But that doesn’t really matter. Fact is that we continuously try to make people understand something we hold dear without giving them tangible benefits.

- JavaScript enthusiasts, on the other hand, create far too much with JavaScript. This is a matter of control. You know JavaScript, you are happy seeing parts of an app or a page as objects and you want to instantiate them, inherit from them and re-use them. You don’t want to write much code but feel that generating it is the most clever way of using technology. Many JS enthusiasts also keep citing that browser differences are a real issue and that in JS they have the chance to test and fix problems browsers have. The fallacy here, of course, is that by doing that they also made the current and future browser issues their own.

- CSS enthusiasts started to shoot against JavaScript as a tool when CSS became more powerful. Are animations and transitions behaviour or presentation? Should it be done in CSS or in JS where there is much more granular control? What about generated content? Where does this fall into? We can create whole drawings from one DIV element, but should we?

All of this, together with lots and lots of libraries promising to solve all kinds of cross-browser issues for us, led to the massively obese web we see today. An average web site size of almost 2MB would have blown our minds in the past, but these days it seems the right thing to do if you want to be professional and use the tools professionals use. Starting a vanilla HTML file feels like a hack – using a build script to start a boilerplate seems to be the intelligent, full stack development thing to do.

Best practice reminders, repeated

This is nothing new, of course.

Back in 2004, I wrote a self training course on Unobtrusive JavaScript trying to make people understand the need for separation of behaviour and look and feel. In 2005 I questioned the validity of three layers of separation as I worked on CMS and framework driven web products where I did not have full control over the markup but had to deal with the result of .NET 1.0 renderers.

Web technologies have always been a struggle for people to grasp and understand. JavaScript is very powerful whilst being a very loosely architected language compared to C or Java. The ability to use inline styling and scripting always tempted people to write everything in one document rather than separating it out into several which allows for caching and re-use. That way we always created bloated, hard to maintain documents or over-used scripts and style sheets we don’t control and understand.

It seems the epic struggle about what technology to use for what is far from over and we still argue until we are blue in the face if an animation should be done in CSS or in JavaScript and whether static HTML or deferred loading and creation of markup using template engines is the fastest way to go.

So what can we do to stop this endless debate?

The web has moved on a lot since Zeldman laid down the law and I think it is time to move on with it. We have to understand that not everything is readily enhanceable. We also have standard definitions that just seem odd and could have very much been better with our input. But we, the people who know and love the web, were too busy fighting smaller fights and complaining about things we should have taken for granted a while ago:

- There will always be marketing materials or commercial training programs that get everything wrong we stand for. Mentioning them or trying to debunk them will just get more people to look at them. Yes, I do consider W3Schools part of this. We make these obsolete and unnecessary by creating better resources, not by telling people about their dangers.

- Browsers will always get things wrong and no, there will not be an amazing future where all browsers are ever-green and users upgrade all the time.

- Materials by standards bodies, like “Standards for Web Applications on Mobile: current state and roadmap”, will always be verbose and seem academic in their wording. That’s what a standard is. There cannot be any wiggle room, which is why it sounds far more complex than we think it is.

- There will always be people who use a certain technology for things we consider inappropriate. A great example I saw lately was a Mandelbrot fractal renderer creating a span for each pixel written in SASS and needing 5 minutes to compile.

A fault tolerant web? Think again

One of the great things of old about the web was that it was fault tolerant. Meaning that if something breaks, you can provide a fallback or the browser ignores it. There were no broken interfaces.

This changed when multimedia became a larger part of HTML5. Of course, you can use a fallback image for a CANVAS element (and you should as these get shown as thumbnails on Facebook for example) but it isn’t the same thing as you don’t add a CANVAS for the fun of it but as an interactive part of the page.

The plain fallback case does not quite cut it any longer.

Take a simple example of an image in the page:

    <img src="meh.jpg" alt="cute kitten photo">

This is cool. If the image can not be loaded or rendered, the browser shows the alternative text provided in the alt attribute (no, it is not a tag). In most browsers these days, this is just a text display. You even have full control in JavaScript knowing if the image wasn’t loaded and you could provide a different fallback:

var img = document.querySelector('img'); img.addEventListener('error', function(ev) { if (this.naturalWidth === 0 && this.naturalHeight === 0) { console.log('Image ' + this.src + ' not loaded'); } }, false); |

With video, it is slightly different. Take the following example:

<video controls>
  <source src="dynamicsearch.mp4" type="video/mp4"></source>
  <a href="dynamicsearch.mp4">
    <img src="dynamicsearch.jpg" alt="Dynamic app search in Firefox OS">
  </a>
  <p>Click image to play a video demo of dynamic app search</p>
</video>

If the browser is not capable of supporting HTML5 video, we get a fallback image (again, great for indexing by Facebook and others). However, these browsers are not that likely to be in use any longer. The more interesting question is what happens when the browser can not play the video because the video codec is not supported? What end users get now is a grey box with the grace of a Java Applet that failed to load.

How do you find out that video playback failed? You’d expect that an error handler on the video would do it, right? Well, not according to the specs, which ask for an error handler on the last source element in the video element. That means that if you want the alternative content in the video element to show up when the video cannot be played, you need the following code:

var v = document.querySelector('video'),
    sources = v.querySelectorAll('source'),
    lastsource = sources[sources.length-1];
lastsource.addEventListener('error', function(ev) {
  var d = document.createElement('div');
  d.innerHTML = v.innerHTML;
  v.parentNode.replaceChild(d, v);
}, false);

Codec detection is incredibly flaky and hard because codecs live at the operating system and hardware level and are not fully under the browser’s control. That’s probably also why the canPlayType() method of a video element (which is meant to tell you if a video format is supported) returns “maybe”, “probably” or an empty string. A coy method, that one.
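To make the three possible answers of canPlayType() a bit easier to act on, here is a minimal sketch. The helper names describeSupport and pickSource are made up for this illustration and are not part of any browser API; only canPlayType() itself is real. The sketch treats “maybe” as “worth trying”, which is an assumption and not a guarantee that playback will work.

```javascript
// Hypothetical helper: translate the raw canPlayType() answer into a label.
// `media` is any object exposing canPlayType(), e.g. a <video> element.
function describeSupport(media, mimeType) {
  var answer = media.canPlayType(mimeType);
  if (answer === 'probably') { return 'likely'; }
  if (answer === 'maybe') { return 'unknown'; }
  return 'unsupported'; // the empty string means "no"
}

// Hypothetical helper: return the first candidate the browser does not
// rule out, or null if every candidate is reported as unsupported.
function pickSource(media, candidates) {
  for (var i = 0; i < candidates.length; i++) {
    if (describeSupport(media, candidates[i].type) !== 'unsupported') {
      return candidates[i];
    }
  }
  return null;
}
```

In a page you would pass document.createElement('video') as media. Even a “likely” answer can still end in a playback error, so the error handler on the last source element remains necessary.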

It is the web, deal with it!

We could get very annoyed with this, or we can just deal with it. In my 18 years of web development I learned to take things like that in stride and I am actually happy about the quirky issues of the web. It makes it a constantly changing and interesting environment to be in.

I really think Mattias Petter Johansson of Spotify nailed it when he answered someone on Quora who asked why JavaScript is the only language in a browser:

Hating JavaScript is like hating the Internet.

The Internet is a cobweb of different technologies cobbled together with duct tape, string and chewing gum. It’s not elegantly designed in any way, because it’s more of a growing organism than it is a machine constructed with intent.

This is also why we should stop trying to make people love the web no matter what and force our ideas down their throats.

Longevity? Meh!

One of the main things we keep harping on about is the lovely longevity of the web. Whether it is Microsoft’s first web page still working in browsers now after 20 years or the web being the only platform with backwards compatibility and forward enhancement – we love to point out that we are in for the long game.

Sadly, this argument means nothing to developers who currently work in the mobile app space, where being first out of the door is the most important part and people know that two months down the line nobody is going to be excited about your game any more. This is not sustainable, and reminds me of other fast-moving technologies that came and went. So let’s not waste our time trying to convince people who have already subscribed to the idea of creating consumable software with a very short shelf-life.

I put it this way:

If you enable people world-wide to get a good experience and solve a problem they have, I like it. The technology you use is not the important part. How much you lock them in is. Don’t lock people in.

Let’s analyse our own behaviour

A lot of the bloat and repetitiveness of the web seems to me to stem from three mistakes we make:

- we optimise prematurely

- we tend to strive for generic solutions for very specific problems.

- we build stop-gap solutions to use upcoming technology before it is ready and become dependent on those

A glimpse at the state of the componentised web seems to validate this. Web Components are amazingly necessary for the future of apps on the web platform, but they aren’t ready yet. Many of these frameworks give me great solutions right now and the effort I have to put in to learn them will make it hard for me to ever switch away from them. We’ve been there before: just try to find a junior JavaScript developer that knows the DOM instead of using jQuery for everything.

The cool new thing now is static HTML pages, as they run fast, don’t take many resources and are very portable. Except that we already have 298 different generators to choose from if we want to create them. Or, we could write static HTML if all we have is a few sites. But where’s the fun in that?

Fredrik Noren had a great article about this lately called On Generalisation and put it quite succinctly:

Generalization is, in fact, prediction. We look at some things we have and we predict that any current and following entities in that group will look and behave sufficiently similar in the future to what we have now. We predict that we can write something that will cater to all, or most, of its future needs. And that makes perfect sense, if you just disregard one simple fact of life: humans are awful at predicting the future!

So let’s stop trying to build for an assumed problematic future that probably will never come and instead be thankful for what we have right now.

Such amazing times we live in

If you play with the web these days and you leave your “everything is broken, I must fix it!” hat off, it is amazing how much fun you can have. The other day I wrote Makethumbnails.com – a quick app that allows you to drag and drop images into your browser and get a zip of thumbnails back. All without a server in between, all working offline and written on a plane without a web connection using only the developer tools built into the browser these days.

We have an amazing amount of new events, sensors and data to play with. For example, reading out the ambient light around a laptop is a simple event handler:

window.addEventListener('devicelight', function(e) {
  var lv = e.value;
  // lv is the light in lux
});

You can use this to switch from a dark on light to a light on dark display. Or you could detect a 0 and know that the end user is currently covering their camera with their hands and provide a very simple hand gesture interface that way. This sensor is always on and you don’t need to have the camera enabled. How cool is that?
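As a rough sketch of both ideas, the following maps a lux reading to a display theme and treats a reading of 0 as a covered sensor. The function names, the class names and the threshold of 50 lux are assumptions picked for illustration; a real app would need to tune them per device. The event wiring only runs where a window object exists.

```javascript
// Assumed threshold: below this many lux we consider the room dark.
var DARK_BELOW_LUX = 50;

// Hypothetical helper: choose a theme name from an ambient light reading.
function themeForLux(lux) {
  return lux < DARK_BELOW_LUX ? 'light-on-dark' : 'dark-on-light';
}

// Hypothetical helper: a reading of exactly 0 suggests the sensor is covered.
function isSensorCovered(lux) {
  return lux === 0;
}

// Wire the helpers to the devicelight event when running in a browser.
if (typeof window !== 'undefined') {
  window.addEventListener('devicelight', function (e) {
    document.body.className = themeForLux(e.value);
    if (isSensorCovered(e.value)) {
      console.log('Sensor covered: treat as a hand gesture');
    }
  });
}
```

Keeping the decision logic in plain functions makes it possible to try out thresholds without a device that fires the event.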

Are there other sensors or features in devices you’d like to have? Please ask on the feedback form about Open Web Apps and you can be part of the next iteration of web interaction.

Developer tools across browsers moved on beyond the view-source functionality and all of them now offer timelines, step-by-step debugging, network information and even device or screen emulation and visual editors for colours and animations. Most also offer some sort of in-built editor and remote debugging of devices. If you miss something there, here is another channel to tell the makers about that.

It is a big, fragmented world out there

The next big boom of the web is not in the Western world and on laptops and desktops that are connected with massively fast lines. We live in a mobile world and the predictability of what device our end users will have is gone. Surveys of Android usage showed 18,796 different devices in use already, and both Mozilla’s and Google’s reach into emerging markets with under $100 devices means the light-weight web is going to be a massive thing for all of us. This is why we need to re-think our ways.

First of all, offline first should be our mantra. There is no steady connection we can rely on. Alex Feyerke has a great talk about this.

Secondly, we need to ensure that our solutions run smoothly on very low end devices. For this, there are a few tricks, and developer tools give us great insight into where we waste memory and framerate. Angelina Fabbro has a great talk about that.

In general, the web is and stays an amazingly useful resource, now more than ever. Tools like Github, JSFiddle, JSBin, CodePen and many others allow us to build things together and be in constant communication. Together.js (built into JSFiddle as the ‘collaboration’ button) allows us to code together with a text or voice chat while seeing each other’s cursors. This is an incredible opportunity to make coding more human and help one another whilst we develop instead of telling each other how we should develop.

Let’s use the web to work on things together. Don’t wait to build the perfect solution. Share it early, take on advice and pull requests and together we can build much better products.

http://christianheilmann.com/2014/09/22/notes-on-my-closing-keynote-of-from-the-front-2014/

|

|

Soledad Penades: JSConf.eu 2014 |

I accidentally ended up attending JSConf.eu 2014–it wasn’t my initial intent, but someone from Mozilla who was going to be at the Hacker Lounge couldn’t make it for personal reasons, and he asked me to join in, so I did!

Turns out I'll be in @jsconfeu after all! Look for me at the @mozilla hacker lounge and ask all the questions! pic.twitter.com/8Ex4ctxOa9

|

|

Mozilla WebDev Community: Beer and Tell – September 2014 |

September’s Beer and Tell has come and gone.

A practical lesson in the ephemeral nature of networks interrupted the live feed and the recording, but fear not! A wiki page archives the meeting structure and this very post will lay plain the private ambitions of the Webdev cabal.

Mike Cooper: GMR.js

Mythmon is a Civilization V enthusiast, but multiplayer games are difficult — games can last a dozen hours or more. The somewhat archaic play-by-mail format removes the simultaneous, continuous time commitment, and the Giant Multiplayer Robot service abstracts away the other hassles of coordinating turns and save game files.

GMR provides a client for Windows only, so Mythmon created GMR.js to provide equivalent functionality cross platform with Node.js. It presents an interactive command line UI. This enables participation from a Steam Box and other non-Windows platforms.

Bramwelt: pewpew

Trevor Bramwell, summer intern for the Web Engineering team, presented a homebrew clone of Space Invaders he calls pewpew. He built it using PhaserJS as an exercise to better understand prototypal inheritance. You can follow along as he develops it by playing the live demo on gh-pages.

Cvan: honeyishrunktheurl

Chris Van shared two new takes on the classic URL shortener. The first is written in Go, with configuration stored in JSON on the server. It was used as an exercise for learning Go. The second is an HTML page that handles the redirect on the client side.

He intends to put them into production on a side project, but hasn’t found a suitable domain name.

Cvan: honeyishrunktheurl

Chris Van held the stage for a second demo. He showed how the CSS order property can be used to cheaply rearrange DOM nodes without destroying and re-rendering new nodes. An accompanying blog post delves into the details. The post is worth a read, since it covers some limitations of the technique that came up in discussion during the demo.
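The idea can be sketched roughly as follows. This is not the code from the demo or the blog post; applyVisualOrder is a hypothetical helper written for illustration. It assumes the items are children of a flex (or grid) container, since the CSS order property has no effect otherwise, and it only changes the visual order: the DOM order, and therefore tab and reading order, stays as it was.

```javascript
// Hypothetical helper: give each item a CSS `order` value so that the items
// display in the sequence listed in `ids`, without moving any DOM nodes.
// `items` is an array of elements (or element-like objects with id and style).
function applyVisualOrder(items, ids) {
  items.forEach(function (item) {
    var position = ids.indexOf(item.id);
    // Items that are not listed are pushed after all listed ones.
    item.style.order = String(position === -1 ? ids.length : position);
  });
}
```

In a page this could be called as applyVisualOrder(Array.prototype.slice.call(list.children), ['c', 'a', 'b']), where list is a container with display: flex.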

Lonnen: Alonzo, pt II

Last time he joined us, Lonnen was showing off a scheme interpreter he was writing in Haskell called Alonzo. This month Alonzo had a number of new features, including variable assignment, functions, closures, and IO. Next he’ll pursue building a standard library and adding a test suite.

If you’re interested in attending the next Beer and Tell, sign up for the dev-webdev@lists.mozilla.org mailing list. An email is sent out a week beforehand with connection details. You could even add yourself to the wiki and show off your side-project!

See you next month!

https://blog.mozilla.org/webdev/2014/09/22/beer-and-tell-september-2014/

|

|

Daniel Stenberg: daniel.haxx.se week #3 |

I won’t keep posting every video update here, but I wanted to mention that I’ve kept posting a weekly video over at YouTube, basically explaining what’s going on right now within my dearest projects. Mostly curl and some Firefox stuff.

This week: libcurl server cert verification API got a bashing at SEC-T, is HTTP for UDP a good idea? How about adding HTTP cache support to libcurl? HTTP/2 is getting deployed as we speak. Interesting curl bug when used by XBMC. The patch series for Firefox bug 939318 is improving slowly – will it ever land?

http://daniel.haxx.se/blog/2014/09/22/daniel-haxx-se-week-3/

|

|

Erik Vold: Add-on Directionless |

At the moment there is no one “in charge” at Mozilla who has awareness of the add-on community’s plight. It’s sadly true that Mozilla has been divesting from add-on support for a while now. To be fair, I think the initial divestments were good ones; the Add-on Builder website was a money pit, for example. The priority has shifted to web apps, and the old addons.mozilla.org team is working mostly on the marketplace (which is no longer for add-ons, and is now just for apps), and the Add-on SDK team is now mostly working on Firefox DevTool projects.

At the moment we have only a few staffers working on addons.mozilla.org and the SDK, and none of us has the authority to make decisions or end debates. There is a tech lead for the SDK, but that position does not carry the authority to make directional decisions or to decide how staffers prioritize their work and spend their time. Each person’s manager is in charge of that, and our managers are DevTool and Marketplace people.

Either we all agree on a direction or we don’t.

http://work.erikvold.com/jetpack/2014/09/22/addon-directionless.html

|

|