Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of this RSS feed's readers.

Tobias Markus: MozCamp invitation only for contributors who comply with the Grow Mozilla initiative?

Peter Bengtsson: HTML Tree on Hacker News

On Friday I did a Show HN and got featured on the front page for HTML Tree.

Amazingly, out of the 3,858 visitors (according to Google Analytics today), 2,034 URLs were submitted and tested on the app. Clearly a lot of people just clicked the example submission, but out of those, 1,634 were unique. Granted, some people submitted more than one URL, but I think a large majority of people came up with a URL of their own to try. Isn't that amazing! What a turnout for a Friday afternoon hack (with some Sunday night hacking to make it into a decent-looking website).

The lesson to learn here is that the Hacker News crowd is excellent for getting engagement. Yes, there is a lot of blather and there are almost repetitive submissions, but by and large it's a very engaging community. Suck on that, those who make fun of HN!

Jared Wein: New in Firefox Nightly: In-content Preferences

I’m happy to announce that starting today, the new in-content preferences are enabled by default in Firefox Nightly.

This project was started by a group of students at Michigan State University and was mentored by Blair McBride and myself. Since its start, it has continued to get a ton of attention from contributors world-wide.

This is a list of people who have contributed patches to the in-content preferences as of this posting:

- Ally Naaktgeboren

- Andres Hernandez

- Andrew Hurle

- Benjamin Peterson

- Benjamin Smedberg

- Brendan Dahl

- Brian R. Bondy

- Brian Smith

- Carsten “Tomcat” Book

- Chris Mahoney

- Chris Peterson

- Christian Ascheberg

- Christian Sonne

- Devan Sayles

- Dão Gottwald

- Ed Morley

- Ehsan Akhgari

- Florian Quèze

- Gervase Markham

- Gijs Kruitbosch

- Gregory Szorc

- Honza Bambas

- Jan de Mooij

- Jan Varga

- Jared Wein

- Javi Rueda

- Jeff Walden

- John Schoenick

- Jon Rietveld

- Jonathan Mayer

- Josh Matthews

- JosiahOne

- Kyle Machulis

- Mahdi Dibaiee

- Manish Goregaokar

- Manish Goregaokar

- Mark Hammond

- Martin Stransky

- Matthew Noorenberghe

- Michael Harrison

- Mike Connor

- Mike Hommey

- Ms2ger

- Nicholas Nethercote

- Nick Alexander

- Owen Carpenter

- Paolo Amadini

- Phil Ringnalda

- Richard Marti

- Richard Newman

- rsx11m

- Ryan VanderMeulen

- Sebastian Hengst

- Sid Stamm

- Ted Mielczarek

- Theo Chevalier

- Tim Taubert

- Yosy

- Zuhao (Joe) Chen

There is still a lot of work to be done before shipping the new in-content preferences out to people on the release builds of Firefox. That also means that this long list of contributors doesn’t have to stay at 59 people, it can keep growing :)

We have a list of bugs that we need to fix before we can call version 1 of this project complete. The easiest way for someone new to help out is to download Firefox Nightly and help test that the new preferences work just as well as the old preferences. If you find an issue and see that it hasn’t already been reported, please file a new bug in Bugzilla and leave a comment on this blog post with a link to the bug that you filed.

Tagged: firefox, msu, planet-mozilla

http://msujaws.wordpress.com/2014/05/18/in-content-preferences/

Priyanka Nag: MozCamp India 2014...a few FAQs answered here

Personally, I have never been to a MozCamp before, but from what I understand after conversations with several former MozCamp attendees (please correct me if I am wrong), it used to be a gathering where all Mozillians of a region (mostly at continent level) could interact with each other. People who had been working together virtually were given a chance to meet in person. Also, these MozCamps used to focus a lot on cross-community interactions and cross-cultural learnings.

The structure of MozCamp is being modified a bit, starting this year. From now on, MozCamp is going to be more like a 'Train the trainer' sort of event. Unlike previous MozCamps, not all active Mozillians will be called to attend a MozCamp. Only selected ones will be invited to come and get trained to be better trainers in their respective pathways (task-forces, as we call them in India), and they will also be responsible for recruiting new Mozillians in their respective communities.

Why this new design of MozCamp?

If you remember, one of the biggest goals for Mozilla this year is getting a million Mozillians. Only an active global Mozilla community can make this dream come true. In order to do that, we will need a lot of Mozillians to be able to host events, train (and mentor) newcomers and keep encouraging new people to be a part of this awesome community.

How are the participants of a MozCamp going to be selected?

In general, the plan is to make this process application based (i.e. Mozillians will apply to be a part of MozCamp and the selection will be made from the list of applicants). But for MozCamp India we didn't have sufficient time for an application process, so the pathway and community leaders together nominated the probable participants, and these nominations were then voted on to decide the final participants.

Of course, none of this was done randomly; there were some definite prerequisites for forming this participant list. Please check this wiki page for the invitation criteria for the MozCamp Beta India.

Why is the "Beta" tag attached to the event name?

Since MozCamp is being restructured, the first event in India is going to be like a test version of the full ones. The new structure, the content covered and the overall conduct of the event are the main things being tested. Thus, just as a software release is preceded by a 'beta' release, we are having the 'beta' event before the full MozCamps.

Will there be other MozCamps after the beta one this year?

Yes. Just like before, we will continue having MozCamp Asia, Europe, Latin America and all the other ones. And yes, the Indian participants will again be invited to attend MozCamp Asia, but since you will already have been trained once, be prepared to hold more responsibilities in the bigger MozCamp.

What is the venue for MozCamp India?

June 20 - June 22, 2014 in Bangalore, India

JW Marriott

24/1, Vittal Mallya Rd, Ashok Nagar, Bangalore, Karnataka 560001, India

For more information, you can refer to this wiki page.

What is there in it for the community?

This is probably the most important question we should all ask ourselves. One of the main reasons why India was chosen as the venue for the test run was the active contribution from this region of the globe. With an event of this scale being planned in less than six weeks, it was a challenge for the entire community to get together and make this happen, and yes, we have boldly accepted the challenge. The Indian community (I believe) has all the skills required to make this event a super success.

So, before I conclude, I would like to remind you that in case you have not received an invitation for this MozCamp beta, it is in no way an implication that you are not awesome or that your contribution is not being valued. There was a limit on the number of participants that could be included in MozCamp India, and thus there might be some criteria based on which someone else had to be accommodated this time.

http://priyankaivy.blogspot.com/2014/05/mozcamp-india-2014a-few-faqs-answered.html

Nick Cameron: Rust for C++ programmers - part 6: Rc, Gc, and * pointers

This post took a while to write and I still don't like it. There are a lot of loose ends here, both in my write up and in Rust itself. I hope some will get better with later posts and some will get better as the language develops. If you are learning Rust, you might even want to skip this stuff for now; hopefully you won't need it. It's really here just for completeness after the posts on other pointer types.

It might feel like Rust has a lot of pointer types, but it is pretty similar to C++ once you think about the various kinds of smart pointers available in libraries. In Rust, however, you are more likely to meet them when you first start learning the language. Because Rust pointers have compiler support, you are also much less likely to make errors when using them.

I'm not going to cover these in as much detail as unique and borrowed references because, frankly, they are not as important. I might come back to them in more detail later on.

Rc

Reference counted pointers come as part of the Rust standard library. They are in the `std::rc` module (we'll cover modules soon-ish; the modules are the reason for the `use` incantations in the examples). A reference counted pointer to an object of type `T` has type `Rc<T>`. As with the other pointer types, the `.` operator does all the dereferencing you need it to. You can use `*` to manually dereference.

To pass a ref-counted pointer you need to use the `clone` method. This kinda sucks, and hopefully we'll fix that, but that is not for sure (sadly). You can take a (borrowed) reference to the pointed-at value, so hopefully you don't need to clone too often. Rust's type system ensures that the ref-counted variable will not be deleted before any references expire. Taking a reference has the added advantage that it doesn't need to increment or decrement the ref count, and so will give better performance (although that difference is probably marginal since Rc objects are limited to a single thread and so the ref count operations don't have to be atomic). As in C++, you can also take a reference to the Rc pointer.

An Rc example:

    use std::rc::Rc;

    fn bar(x: Rc<int>) { }
    fn baz(x: &int) { }

    fn foo() {
        let x = Rc::new(45);
        bar(x.clone());   // Increments the ref-count
        baz(&*x);         // Does not increment
        println!("{}", 100 - *x);
    }  // Once this scope closes, all Rc pointers are gone, so ref-count == 0
       // and the memory will be deleted.

Ref counted pointers are always immutable. If you want a mutable ref-counted object you need to use a RefCell (or Cell) wrapped in an `Rc`.
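The remark above that a mutable ref-counted object needs a RefCell wrapped in an `Rc` can be sketched as follows. This is only an illustration, and it assumes a current stable Rust toolchain rather than the 2014 syntax used in this post (so `i32` instead of `int`). The names `push_shared` and `shared_log_demo` are hypothetical and exist only for this example.

```rust
use std::cell::RefCell;
use std::rc::Rc;

// Appends a value through a shared handle. The Rc itself is immutable;
// RefCell moves the borrow check for the inner Vec to run time.
fn push_shared(log: &Rc<RefCell<Vec<i32>>>, value: i32) {
    log.borrow_mut().push(value);
}

// Builds one shared vector, mutates it through two handles, and returns
// (final length, strong reference count while both handles are alive).
fn shared_log_demo() -> (usize, usize) {
    let first = Rc::new(RefCell::new(Vec::new()));
    let second = Rc::clone(&first); // Increments the ref-count

    push_shared(&first, 1);
    push_shared(&second, 2); // Same underlying Vec as `first`

    let len = first.borrow().len();
    (len, Rc::strong_count(&first))
}
```

Calling `shared_log_demo()` pushes through both handles into the same vector. Note that `borrow_mut()` panics if the value is already borrowed, so the safety check has not gone away; it has only moved from compile time to run time.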

Cell and RefCell

Gc

Gc example:

    use std::gc::Gc;

    fn bar(x: Gc<int>) { }
    fn baz(x: &int) { }

    fn foo() {
        let x = Gc::new(45);
        bar(x);
        baz(x.borrow());
        println!("{}", 100 - *x.borrow());
    }

*T - unsafe pointers

So, what is unsafe code? Rust has strong safety guarantees, and (rarely) they prevent you doing something you need to do. Since Rust aims to be a systems language, it has to be able to do anything that is possible, and sometimes that means doing things the compiler can't verify are safe. To accomplish that, Rust has the concept of unsafe blocks, marked by the `unsafe` keyword. In unsafe code you can do unsafe things - dereference an unsafe pointer, index into an array without bounds checking, call code written in another language via the FFI, or cast variables. Obviously, you have to be much more careful writing unsafe code than writing regular Rust code. In fact, you should only very rarely write unsafe code. Mostly it is used in very small chunks in libraries, rather than in client code. In unsafe code you must do all the things you normally do in C++ to ensure safety. Furthermore, you must ensure that by the time the unsafe block finishes, you have re-established all of the invariants that the Rust compiler would usually enforce, otherwise you risk causing bugs in safe code too.

An example of using an unsafe pointer:

    fn foo() {
        let x = 5;
        let xp: *int = &x;
        println!("x+5={}", add_5(xp));
    }

    fn add_5(p: *int) -> int {
        unsafe {
            if !p.is_null() { // Note that *-pointers do not auto-deref, so this is
                              // a method implemented on *int, not int.
                *p + 5
            } else {
                -1            // Not a recommended error handling strategy.
            }
        }
    }
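For readers trying the unsafe pointer example on a current toolchain, here is a hedged sketch of the same idea in today's syntax. It assumes stable Rust, where the pointer type is written `*const i32` rather than `*int`. Returning `Option` instead of `-1` and marking `add_5` as `unsafe fn` are choices made for this illustration, not something the post prescribes, and `add_5_to_local` is a hypothetical helper name.

```rust
// Adds 5 to the pointee, or returns None for a null pointer.
//
// Safety: `p` must be null or point to a live, properly aligned i32.
unsafe fn add_5(p: *const i32) -> Option<i32> {
    if p.is_null() {
        // is_null() is a method on the raw pointer itself, not on i32.
        return None;
    }
    // Raw pointers do not auto-deref; the dereference must be explicit
    // and must happen inside an unsafe block.
    let value = unsafe { *p };
    Some(value + 5)
}

// Takes a raw pointer to a local variable and passes it to add_5.
fn add_5_to_local() -> Option<i32> {
    let x = 5;
    let xp: *const i32 = &x; // A reference coerces to a raw pointer
    // `x` is still alive here, so the safety requirement holds.
    unsafe { add_5(xp) }
}
```

Creating the raw pointer is safe; only dereferencing it needs `unsafe`, which keeps the unsafe region as small as the post recommends.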

And that concludes our tour of Rust's pointers. Next time we'll take a break from pointers and look at Rust's data structures. We'll come back to borrowed references again in a later post though.

http://featherweightmusings.blogspot.com/2014/05/rust-for-c-programmers-part-6-rc-gc-and.html

Ben Hearsum: This week in Mozilla RelEng – May 16th, 2014

Major highlights:

- Aki is working on changing how we chunk our unittest builders. Because of some limits in the way we currently scale with Buildbot, this has become extremely important.

- Kim enabled a bunch of new Firefox for Android tests on emulators. These will let us move some on-device tests onto more scalable and reliable solutions.

- Andrew moved a ton of Mozharness test configs in-tree. Having them there reduces a lot of pain by giving us greater flexibility in branch specific configuration, and making it easier for test changes to follow code merges.

- Catlee changed our job scheduling in Buildbot to coalesce a maximum of 3 jobs together. This should help reduce the bisection windows when bad pushes are made. He wrote a great blog post detailing it here.

- Anhad (our first intern of the year) started detailing a plan to build a partial MAR generation service that we’ve wanted for a long time. When completed, it will allow us to serve more efficient updates to many more users.
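The coalescing limit mentioned in the highlights can be pictured with a small sketch. This is not Buildbot's actual merge logic, only an assumed simplification in which pending pushes are grouped oldest-first into batches of at most `max` pushes and each batch runs as one job. The function name `coalesce` and the use of plain integers as push identifiers are hypothetical.

```rust
// Groups pending pushes (oldest first) into batches of at most `max` pushes.
// Each inner Vec stands for the pushes covered by a single coalesced job.
fn coalesce(pending: &[u32], max: usize) -> Vec<Vec<u32>> {
    assert!(max > 0, "batch size must be positive");
    pending
        .chunks(max) // Every chunk has `max` items except possibly the last
        .map(|batch| batch.to_vec())
        .collect()
}
```

With `max` set to 3, seven pending pushes end up in three jobs covering 3, 3 and 1 pushes. Under this model a failing job implicates at most three pushes, which is the smaller bisection window the change is aiming for.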

Completed work (resolution is ‘FIXED’):

- Buildduty
  - No Tarako Build Today on 5/15/2014
  - Please block/disable/stop updates to today’s Thunderbird nightly builds
  - running out of disk space during linux64_gecko-debug jobs
  - upload https://github.com/vitillo/python_cache_flusher to http://pypi.pub.build.mozilla.org/pub
  - 10.5 hour wait time for mtnlion try test builds due to around 50 mtnlion slaves breaking last night
  - Respin B2G Trunk Hamachi Builds Due to Critical Regressions from Bug 906164
  - A lot of XP machines out of action
- General Automation
  - The b2g desktop /latest/ directory contains way out of date builds
  - Self-serve should be able to request arbitrary builds on a push (not just retriggers or complete sets of dep/PGO/Nightly builds)
  - nuke lockfiles in .repo dir in b2g_build.py
  - upload mars for other b2g device builds that need updates
  - upload flame gecko/gaia mars to public ftp
  - make it possible to post b2g mar info to balrog
  - Mozharness test configs should live in-tree
  - Jetpack jobs failing with “IOError: [Errno 13] Permission denied: ‘/etc/instance_metadata.json’”
  - Don’t do Thunderbird builds on comm-* branches for non-Thunderbird pushes
  - Stop running tests on 10.8 on B2g28-v1.3 and B2g26-v1.2
  - Need Tarako 1.3t FOTA updates for testing purposes
  - Move Android 2.3 reftests to ix slaves (ash only)
  - Changes introduced for Bug 970918 break mozharness code when mock_target is not defined
  - Reduce log retention on buildbot masters from 200 twistd.log files to 100 twistd.log files
  - We need to pull in merge commit refs for gaia-try runs
  - Limit coalescing
  - Get e10s tests running on inbound, central, and try
  - Make it possible to run gaia try jobs *without* doing a build
  - inari eng nightlies failing because stage-update.py can’t find a mar
  - Don’t enable sccache on PGO builds
  - Disable branches to reduce builder count
  - blobber TinderboxPrints could be tidier
  - Run more Android 4.0 Debug tests on Cedar
  - Schedule Android 2.3 crashtests, js-reftests, plain reftests, and m-gl on all trunk trees and make them ride the trains
  - add b2gbld password to BuildSlaves.py
  - Add MySQL specific database schema dumps to the BuildAPI repo
  - Configure Elm for specific builds
- Loan Requests
  - Slave loan request for a VS2013 build machine
  - Slave loan request for a t-w732-ix machine
  - Loan glandium a (the) linux build slave in scl3
- Other
- Platform Support
  - mh and device changes for relocated p3 panda racks
  - Create a Windows-native alternate to msys rm.exe to avoid common problems deleting files
- Release Automation
  - Release tagging should use purge_builds.py
  - release jobs don’t pay attention to jacuzzi allocations
  - release repacks needs to submit data to balrog
- Repos and Hooks
- Tools

In progress work (unresolved and not assigned to nobody):

- Buildduty
- General Automation
  - Run Android 2.3 tests against armv6 builds, on Ash only
  - make mozharness test scripts easier to run standalone
  - kill inari, leo builds
  - Provide B2G Emulator builds for Darwin x86
  - Figure out the correct path setup and mozconfigs for automation-driven MSVC2013 builds
  - set-up initial balrog rules for b2g updates
  - Add a MOZ_AUTOMATION environment to all builds
  - Manage repo checkout directly from b2g_build.py
  - Triggering arbitrary jobs gets branch wrong
  - b2g balrog submission should point at dated dirs, not latest-*
  - Create 4 more linux64 tests masters
  - hamachi device builds submitting bad urls to balrog
  - [Meta] Some “Android 4.0 debug” tests fail
  - /builds/slave is read only for cltbld
  - allow mozharness scripts to call other mozharness scripts
  - [Flame] Please update the blobs to use blobs from 10F-3
  - Add the build step or else process name to buildbot’s generic command timed out failure strings
  - switch b2g builds to use aus4.mozilla.org as their update server
  - Run Gaia unit oop and reftest sanity oop for b2g desktop on trunk
  - Stop running cppunit and jittest on OS X 10.8 on all branches
  - eng+noneng nightlies upload MARs (and maybe other files) that stomp on each other
  - Please add non-unified builds to mozilla-central
  - Do debug B2G desktop builds
  - change how we chunk in desktop_unittest.py
  - include device in fota mar filenames
  - Add ‘hsb’ to the Firefox build
  - Upload the list of all functions from hazard analysis
  - Start doing mulet builds
  - Don’t require puppet or DNS to launch new instances
  - Put ccache on SSDs
  - Add support for webapprt-test-chrome test jobs & enable them per push on Cedar
- Loan Requests
- Other
  - Deprecate tinderbox-builds/old directories for desktop & mobile
  - [tracking] infrastructure improvements
  - Switch in house try builds to S3 for sccache
- Platform Support
  - Cleanup temporary files on boot
  - evaluate mac cloud options
  - cancelled 2.3 mochitest jobs put ix slaves into weird state
  - signing win64 builds is busted
  - Windows slaves often get permission denied errors while rm’ing files
  - slave pre-flight tasks
  - Run unittests/talos on OS X 10.9 Mavericks
  - Update version of pip installed on automation machines from 0.8.2 to 1.5.4+
- Release Automation
  - release automation can’t update balrog blobs during the update step
  - Figure out how to offer release build to beta users
- Releases
  - tracking bug for build and release of Firefox and Fennec 30.0
  - Trim rsync modules (May 2014 OMG we still have to do this edition)
- Repos and Hooks
- Tools
  - b2g tagging script
  - AWS Sanity Check lies about how long an instance was shut down for…
  - implement “disable” action in slaveapi
  - tegra/panda health checks (verify.py) should not swallow exceptions
  - Setup docker apps for buildbot, buildapi and redis
  - Blobber upload files not served with correct content type

http://hearsum.ca/blog/this-week-in-mozilla-releng-may-16th-2014/

Kim Moir: 20 years on the web

I found this picture the other day. It's me on graduation day at Acadia, twenty years ago this month. A lot has changed since then.

In the picture, I'm in Carnegie Hall, where the Computer Science department had their labs, classrooms and offices. I'm sitting in front of a Sun workstation, which ran an early version of Mosaic. I recall the first time I saw a web browser display a web page; I was awestruck. I think it was NASA's web page. My immediate reaction was that I wanted to work on that, to be on the web.

As I've mentioned before, my Dad was a manager at a software and services firm in Halifax. He brought home our first computer when I was 9. Dad was always upgrading the computers or fixing them and I'd watch him and ask lots of questions about how the components connected together. In junior high, I taught myself BASIC from the manual, wrote a bunch of simple programs, and played so many computer games that my dreams at night became pixelated. When I was 16, I started working at my Dad's office doing clerical work during the school break. One of my tasks was to run a series of commands to connect to BITNET via an acoustic coupler using Kermit and download support questions from their university customers. I thought it was so magical that these computers that were so physically distant could connect and communicate.

In high school, I took computer science in grade 12 and we wrote programs in Pascal on Apple IIs. My computer science teacher was very enthusiastic and welcoming. He taught us sorting algorithms, and binary trees, and other advanced topics that weren't on the curriculum. Since he had such an interest he taught a lot of extra material. Thanks Mr. B.

When it was time to apply to university, I didn't apply to computer science. I don't know why, my grades were fine and I certainly had the background. I really lacked self confidence that I could do it. In retrospect, I would have been fine. I enrolled at Acadia in their Bachelor of Business Administration program, probably because I liked reading the Globe and Mail.

I arrived on campus with a PC to write papers and do my accounting assignments. The reason I had access to a computer was that the company my Dad worked for allowed their employees to borrow a computer for home use for a year at a time, then return it. Otherwise, they were prohibitively expensive at the time. In my third year of university I decided that I was better suited to computer science than business, so I started taking all my elective courses from the computer science faculty. I still wanted to graduate in four years so I didn't switch majors. It was such a struggle to scrape together the money from part-time jobs and student loans to pay for four years of university, let alone six.

One of my part-time jobs was helping people in the university computer labs with questions and fixing problems. Everything was very text based back then. We used Archie to search for files, read books transcribed by the Gutenberg project and used uudecode to assemble pictures posted to Usenet groups. I applied for a Unix account on the Sun system that only the Computer Science students had access to. It was called dragon and the head sysadmin had a sig that said "don't flame me, I'm on dragon". I loved learning all the obscure yet useful Unix commands.

In my third year I had a 386 portable running Windows 3.1. I carried this computer all over campus, plugging it in at the student union centre and working on finance projects with my business school colleagues. By my fourth year, they had installed Sun workstations in the Computer Science labs with Mosaic installed. This was my first view of the world wide web. It was beautiful. The web held such promise.

I applied for 40 different jobs before I graduated from Acadia and was offered a job in Ottawa working for the IT department of Revenue Canada. A ticket out of rural Nova Scotia! I didn't like my first job there that much but they paid for networking and operating system courses that I took at night. I was able to move to a new job in a year and started being a sysadmin for their email servers that served 30,000 users. It was a lot of fun and I learned a tremendous amount about networking, mail related protocols and operating systems. I also spent a lot of time in various server rooms across Canada installing servers. Always bring a sweater.

I left after a few years to work at Nortel as technical support for a telephony switch that offloaded internet traffic from voice switches to a dedicated switch. Most internet traffic back then went over modems, and those calls lasted longer than most voice calls and caused traffic issues. I took a lot of courses on telephony protocols, various Unix variants and networking. I traveled to several telco customers to help configure systems and demonstrate product features. More time in cold server rooms.

Shortly after Mr. Releng and I got married we moved to Athens, Georgia where he was completing his postdoc. I found a great job as a sysadmin for the UGA's computer systems division. The group provided FTP, electronic courseware and email services to the campus, and secured a lot of hacked Linux servers set up by unknowing graduate students in various departments across campus. When I started, I didn't know Linux very well so my manager just advised me to install Red Hat about 30 times and change the options every time, learn how to compile custom kernels and so on. So that's what I did. At that time you also had to compile Apache from source to include any modules such as ssl support, or different databases, so I also had fun doing that.

We used to do maintenance on the computer systems between 5 and 7am once a week. Apparently not many students are awake at that hour. I'd get up at 4am and drive in to the university in the early morning, the air heavy with the scent of Georgia pine and the ubiquitous humidity. My manager M, always made a list the night before of what we had to do, how long it would take, and how long it would take to back the changes out. His attention to detail and reluctance to ever go over the maintenance window has stayed with me over time. In fact, I'm still kind of a maintenance nerd, always figuring out how to conduct system maintenance in the least disruptive way to users. The server room at UGA was huge and had been in operation since the 1960s. The layers of cable under the tiles were an archeological record of the progress of cabling within the past forty years. M typed on a DVORAK keyboard, and was one of the most knowledgeable people about all the Unix variants, and how they differed. If he found a bug in Emacs or any other open source software, he would just write a patch and submit it to their mailing list. I thought that was very cool.

After Mr. Releng finished his postdoc, we moved back to Ottawa. I got a job at a company called OTI as a sysadmin. Shortly after joining, my colleague J said "We are going to release an open source project called Eclipse, are you interested in installing some servers for it?" So I set up Bugzilla, CVS, mailman, nntp servers etc. It was a lot of fun and the project became very popular and generated a lot of traffic. A couple years later the Eclipse consortium became the Eclipse Foundation and all the infrastructure management moved there.

I moved to the release engineering team at IBM and started working with S, who taught me the fundamentals of release engineering. We would spend many hours testing and implementing new features in the build and test environment, and working with the development team to implement new functionality, since we used Eclipse bundles to build Eclipse. I have written a lot about that before on my blog so I won't reiterate. Needless to say, being paid to work full time in an open source community was a dream come true.

A couple of years ago, I moved to work at Mozilla. And the 20 year old who looked at Mosaic for the first time and saw the beauty and promise of the web couldn't believe where she ended up almost 20 years later.

Many people didn't grow up with the privilege that I have, with access to computers at such a young age, and encouragement to pursue it as a career. I thank all of you who I have worked with and learned so much from. Lots still to learn and do!

http://relengofthenerds.blogspot.com/2014/05/20-years-on-web.html

Kim Moir: Release Engineering Special Issue

A different type of mobile farm ©Suzie Tremmel, https://flic.kr/p/6tQ3H Creative Commons by-nc-sa 2.0

Are you a release engineer with a great story to share? Perhaps the ingenious way that you optimized your build scripts to reduce end-to-end build time? Or how you optimized your cloud infrastructure to reduce your IT costs significantly? How you integrated mobile testing into your continuous integration farm? Or are you a researcher who would like to publish your latest research in an area related to release engineering?

If so, please consider submitting a report or paper to the first IEEE Release Engineering special issue. Deadline for submissions is August 1, 2014 and the special issue will be published in the Spring of 2015.

IEEE Release Engineering Special Issue

If you have any questions about the process or the special issue in general, please reach out to any of the guest editors. We're happy to help!

We're also conducting a roundtable interview with several people from the release engineering community in the issue. This should raise some interesting insights given the different perspectives that people from organizations with large scale release engineering efforts bring to the table.

http://relengofthenerds.blogspot.com/2014/05/release-engineering-special-issue.html

Nikhil Marathe: ServiceWorker implementation status in Firefox

I've been working on the Gecko implementation for a while now, helped by several other Mozillians, and some of the first patches are now beginning to land on Nightly. Interest in trying out a build has been high both within Mozilla and outside. I've been procrastinating about a detailed hacks article, but I thought I'd at least share the build and what Firefox supports for now and what it doesn't.

- Download the build

- Run it using a clean profile.

- Go to about:config and set "dom.serviceWorkers.enabled" to true.

Supported:

- Registration, installation, activation, unregistration. Update() does not follow the spec and the scripts themselves are not cached offline, so don't actually try to make offline apps yet.

- Intercepting navigation and fetch events and replying using a simple SameOriginResponse object.

- Intercepting Push API events.

Not supported:

- Cache API

- fetch() - You'll have to use XHR in the worker.

- ClientLists and postMessage() to talk to windows

- Persistence - ServiceWorker registrations are currently not stored across restarts.

- Anything that throws an error :)

- No devtools support right now.

SameOriginResponse is a relic of an earlier version of the spec. Similarly the Request object received from the fetch event is not exactly up to speed with the spec.

I have two sample applications on Github to try out the build. Note that ServiceWorkers require HTTPS connections. For development, please create a new boolean preference in about:config - "dom.serviceWorkers.testing.enabled" and set it to true. This will disable the HTTPS requirement.

Push API Test - Remember to run the Firefox build from the command line since this demo prints stuff to the terminal. Click the register button to sign up for Push notifications. To perform the push, copy the unique URL and run

    curl -vX PUT 'URL'

The ServiceWorker will be spun up and a 'push' notification delivered.

Simple Network Interception - This example will load an iframe with some content. Refresh the page and the iframe's content should change and the browser should show an alert dialog. These changes are done by the ServiceWorkers (there are 2, one for the current scope and one for the sub/ scope) intercepting the requests for both "fakescript.js" and the iframe and injecting their own responses.

Contributing

We could always use help with the specification, implementation and testing. The specification is improved by filing issues.

The tracking bug for the Gecko implementation is Bug 903441. There is a mercurial patch queue for patches that have not landed on trunk.

I (nsm) am around on the #content channel in irc.mozilla.org if you have questions.

http://blog.nikhilism.com/2014/05/serviceworker-implementation-status-in-firefox.html

|

|

Roberto A. Vitillo: Using Telemetry to recommend Add-ons for Firefox |

This post is about the beauty of a simple mathematical theorem and its applications in logic, machine learning and signal processing: Bayes’ theorem. Along the way we will develop a simple recommender engine for Firefox add-ons based on data from Telemetry and reconstruct a signal from a noisy channel.

tl;dr: If you couldn’t care less about mathematical beauty and probabilities, just have a look at the recommender system.

Often the problem we are trying to solve can be reduced to determining the probability of a hypothesis H, given that we have observed data D and have some knowledge of the way the data was generated. In mathematical form:

$$P(H \mid D) = \frac{P(D \mid H)\, P(H)}{P(D)}$$

Where P(D|H) is the likelihood of the data D given our hypothesis H, i.e. how likely it is to see data D given that our hypothesis H is true, and P(H) is the prior belief about H, i.e. how likely our hypothesis H is true in the first place.

That’s all there is. Check out my last post to see a basic application of the theorem that shows why many scientific research findings based purely on statistical inference later turn out to be false.
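As a concrete illustration of the formula, here is a minimal Python sketch. The numbers are hypothetical and chosen only to show the arithmetic: a 10% prior, data that shows up 80% of the time when the hypothesis is true and 5% of the time when it is false. The function name `posterior` is just for illustration.

```python
def posterior(prior, likelihood, false_positive_rate):
    """Return P(H|D) from P(H), P(D|H) and P(D|not H) via Bayes' theorem."""
    # P(D) by the law of total probability over H and not-H.
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: P(H) = 0.1, P(D|H) = 0.8, P(D|not H) = 0.05.
print(round(posterior(0.1, 0.8, 0.05), 2))  # prints 0.64
```

Even with fairly strong evidence, a low prior keeps the posterior well below certainty, which is the effect the previous post relies on.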

Logic

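According to logic, from the statement “if A is true then B is true” we can deduce that “if B is false then A is false”. This can be proven simply by using a truth table or probabilistic reasoning:

$$P(\lnot A \mid \lnot B) = 1 - P(A \mid \lnot B) = 1 - \frac{P(\lnot B \mid A)\, P(A)}{P(\lnot B)} = 1$$

where

$$P(\lnot B \mid A) = 1 - P(B \mid A) = 0$$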

So we can see that probabilistic reasoning can be applied to make logical deductions, i.e. deductive logic can be seen as nothing more than a limiting case of probabilistic reasoning, where probabilities take only values of 0 or 1.
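Both routes can be checked mechanically. The sketch below enumerates the truth table for the contrapositive and then evaluates the probabilistic version on a small joint distribution. The joint distribution is hypothetical; its only relevant property is that P(A, not B) = 0, which is what "if A then B" means in probabilistic terms.

```python
from itertools import product


def implies(p, q):
    """Material implication: 'if p then q'."""
    return (not p) or q


def contrapositive_is_equivalent():
    """Check all four truth assignments of (A, B)."""
    return all(
        implies(a, b) == implies(not b, not a)
        for a, b in product([False, True], repeat=2)
    )


def prob_not_a_given_not_b(joint):
    """P(not A | not B) from a joint distribution keyed by (A, B)."""
    p_not_b = joint[(True, False)] + joint[(False, False)]
    return joint[(False, False)] / p_not_b


# Hypothetical joint distribution in which P(B|A) = 1, i.e. P(A, not B) = 0.
joint = {(True, True): 0.3, (True, False): 0.0,
         (False, True): 0.2, (False, False): 0.5}

print(contrapositive_is_equivalent())  # prints True
print(prob_not_a_given_not_b(joint))   # prints 1.0
```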

Recommender Engine

Telemetry submissions contain the IDs of the add-ons of our users. Note that Telemetry is opt-in for our release builds, so we don’t collect data unless you explicitly grant us permission to do so.

We might be interested in answering the following question: given that a user has the add-ons $A_1, \dots, A_n$, what’s the probability that the user also has add-on B? We could then use the answer to suggest new add-ons to users that don’t possess B but have $A_1, \dots, A_n$. So let’s use Bayes’ theorem to derive our probabilities. What we are after is the following probability:

$$P(B \mid A_1, \dots, A_n) = \frac{P(A_1, \dots, A_n \mid B)\, P(B)}{P(A_1, \dots, A_n)} \qquad \text{(eq. 1)}$$

which corresponds to the ratio between Telemetry submissions that contain $A_1, \dots, A_n, B$ and the submissions that contain all those add-ons but B. Note that if $B \in \{A_1, \dots, A_n\}$ then obviously eq. 1 is going to have a value of 1 and it isn’t of interest, so we assume that $B \notin \{A_1, \dots, A_n\}$.

Since the denominator is constant for any B, we don’t really care about it.

$$P(B \mid A_1, \dots, A_n) \propto P(A_1, \dots, A_n \mid B)\, P(B) \qquad \text{(eq. 2)}$$

Say we want to be smart and precompute all possible probabilities in order to avoid the work for each request. It’s going to be pretty slow considering that the number of possible subsets of N add-ons is exponential.

If we make the naive assumption that the add-ons are conditionally independent, i.e. that

$$P(A_1, \dots, A_n \mid B) = \prod_{i=1}^{n} P(A_i \mid B)$$

we can rewrite eq. 2 like so

$$P(B \mid A_1, \dots, A_n) \propto P(B) \prod_{i=1}^{n} P(A_i \mid B)$$

Awesome, this means that once we calculate the probabilities $P(B)$ and $P(A_i \mid B)$ for every pair of add-ons (on the order of $N^2$ values, where $N$ is the total number of add-ons in the universe), we have everything we need to calculate eq. 2 with just $n$ multiplications.

If we perform this calculation for every possible add-on B and return the add-on for which the equation is maximized, then we can make a reasonable suggestion. Follow the link to play with a simple recommendation engine that does exactly this.

Keep in mind that I didn’t spend time cleaning the dataset of uninteresting add-ons like Norton Toolbar, etc. Those add-ons show up simply because a very large fraction of the population has them.
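The naive Bayes scoring described above can be sketched in a few lines of Python. This is not the code behind the linked recommender, only an illustration under stated assumptions: the submissions, the add-on names and the function names `train`, `score` and `recommend` are all made up for the example, and probabilities are estimated as plain frequency ratios with no smoothing.

```python
from collections import Counter
from itertools import permutations


def train(submissions):
    """Count add-ons and ordered add-on pairs across submissions."""
    single = Counter()
    pair = Counter()
    for addons in submissions:
        unique = set(addons)
        single.update(unique)
        pair.update(permutations(unique, 2))
    return single, pair, len(submissions)


def score(candidate, installed, single, pair, total):
    """Unnormalised P(B) * prod_i P(A_i | B) for candidate add-on B."""
    p = single[candidate] / total
    for addon in installed:
        # P(A_i | B) estimated as count(A_i and B) / count(B).
        p *= pair[(addon, candidate)] / single[candidate]
    return p


def recommend(installed, single, pair, total):
    """Return the add-on not yet installed that maximises the score."""
    installed = set(installed)
    candidates = [b for b in single if b not in installed]
    return max(candidates,
               key=lambda b: score(b, installed, single, pair, total),
               default=None)


# Hypothetical Telemetry submissions (lists of add-on IDs).
submissions = [
    ["adblock", "firebug", "greasemonkey"],
    ["adblock", "firebug"],
    ["adblock", "greasemonkey"],
    ["firebug", "greasemonkey"],
    ["adblock", "noscript"],
    ["firebug", "greasemonkey", "noscript"],
]
single, pair, total = train(submissions)
print(recommend(["firebug"], single, pair, total))  # prints greasemonkey
```

The pair counts are the precomputed table the text talks about: once they exist, scoring a candidate costs one multiplication per installed add-on.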

Signal Processing

Say we send a signal over a noisy channel and at the other end we would like to reconstruct the original signal by removing the noise. Let’s assume our original signal has the following form

$$y(t) = A \sin(2 \pi f t + \phi)$$

where $A$ is the amplitude, $f$ is the frequency and $\phi$ is the phase. The i-th sample that we get on the other side of the channel is corrupted by some random noise $\epsilon_i$:

$$y_i = A \sin(2 \pi f t_i + \phi) + \epsilon_i$$

where $y_i$, $A$, $f$, $\phi$ and $\epsilon_i$ are random variables and

$$\epsilon_i \sim \mathcal{N}(0, \sigma^2)$$

i.e. we assume the variance of the noise is the same for all samples.

Since we have some knowledge of how the data was generated, let’s see if we can apply Bayes’ theorem. We have a continuous number of possible hypotheses, one for each combination of the amplitude, frequency, phase and variance.

$$p(A, f, \phi, \sigma \mid y) = \frac{p(y \mid A, f, \phi, \sigma)\, p(A, f, \phi, \sigma)}{p(y)}$$

where the functions $p$ are probability density functions.

We are trying to find the quadruplet of values that maximizes the expression above, so we don’t really care about the denominator since it’s constant. If we make the reasonable assumption that $A$, $f$, $\phi$ and $\sigma$ are independent then

$$p(A, f, \phi, \sigma) = p(A)\, p(f)\, p(\phi)\, p(\sigma)$$

where $p(A)$, $p(f)$, $p(\phi)$ and $p(\sigma)$ are our priors, and since we don’t know much about them let’s assume that each one of them can be modeled as a Uniform distribution.

Assuming the measurements are independent from each other, the likelihood function for the vector of measurements $y$ is

$$p(y \mid A, f, \phi, \sigma) = \prod_{i} p(y_i \mid A, f, \phi, \sigma)$$

Now we just have to specify our likelihood function for a single measurement, but since we have an idea of how the data was generated, we can do that easily

$$p(y_i \mid A, f, \phi, \sigma) = \mathcal{N}\!\left(y_i;\ A \sin(2 \pi f t_i + \phi),\ \sigma^2\right)$$

To compute the posterior distributions of our random variables given a series of measurements performed at the other end of the line, let’s use a Monte Carlo method with the Python library pymc. As you can see from the IPython notebook, it’s extremely easy to write and computationally evaluate a probabilistic model.
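For readers who want to experiment without pymc, here is a much cruder stand-in: instead of Monte Carlo sampling, it does a brute-force grid search for the most probable amplitude, frequency and phase. It assumes a sinusoidal signal, Gaussian noise with a known standard deviation, and uniform priors over the grid (so maximising the posterior is the same as maximising the likelihood). The true parameter values, the grid ranges and the function names are all hypothetical choices for the illustration.

```python
import math
import random


def simulate(amplitude, freq, phase, sigma, times, seed=0):
    """Noisy samples of amplitude * sin(2*pi*freq*t + phase)."""
    rng = random.Random(seed)
    return [amplitude * math.sin(2 * math.pi * freq * t + phase)
            + rng.gauss(0.0, sigma) for t in times]


def log_likelihood(samples, times, amplitude, freq, phase, sigma):
    """Sum of Gaussian log densities of the residuals."""
    squared = sum(
        (y - amplitude * math.sin(2 * math.pi * freq * t + phase)) ** 2
        for y, t in zip(samples, times))
    norm = len(samples) * math.log(sigma * math.sqrt(2 * math.pi))
    return -norm - squared / (2 * sigma ** 2)


def map_estimate(samples, times, sigma):
    """Grid search; with uniform priors the MAP is the likelihood maximum."""
    best, best_ll = None, -math.inf
    for a in (i / 10 for i in range(5, 21)):         # amplitude 0.5 .. 2.0
        for f in (i / 10 for i in range(10, 31)):    # frequency 1.0 .. 3.0 Hz
            for k in range(32):                      # phase 0 .. 2*pi
                phi = 2 * math.pi * k / 32
                ll = log_likelihood(samples, times, a, f, phi, sigma)
                if ll > best_ll:
                    best, best_ll = (a, f, phi), ll
    return best


times = [i / 50 for i in range(100)]                 # 2 seconds at 50 Hz
samples = simulate(1.0, 2.0, 0.5, 0.3, times)
print(map_estimate(samples, times, 0.3))
```

A grid search only returns a point estimate on a fixed lattice. The Monte Carlo approach in the notebook gives full posterior distributions, including one for the noise variance, which is why it is the better tool here.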

Final Thoughts

We have seen that Bayesian inference can be applied successfully in seemingly unrelated fields. Even though there are better suited techniques for logic, recommender systems and signal processing, it’s still interesting to see how such a simple theorem can have so many applications.

http://ravitillo.wordpress.com/2014/05/16/using-telemetry-to-recommend-add-ons-for-firefox/

|

|

Joel Maher: Some thoughts on being a good mentor |

I have mentored a good number of bugs as well as new Mozillians (GSoC students, interns, employees) on their journey. I would like to share a few things which I have found make things easier. Most of this might seem like common sense, but I find it so easy to overlook little details and forget things.

- When filing a bug (or editing an existing one), make sure to include:

  - Link(s) to getting started with the code base (cloning, building, docs, etc.)

  - Clear explanation of what is expected in the bug

  - A general idea of where the problematic code is

  - What testing should look like

  - How to commit a patch

  - A note of how best to communicate and not to worry about asking questions

  - Avoid shorthand and acronyms!

- Spend a few minutes via IRC/Email getting to know your new friend, especially timezones and general schedule of availability.

- Make it a priority to do quick reviews and answer questions – nothing is more discouraging than having one thing to work on and needing to wait for further information.

- It is your job to help them be effective – take the time to explain why coding styles and testing are important and how it is done at Mozilla.

- Make it clear how their current work plays a role in Mozilla as a whole. Nobody likes to work on something that is not valued.

- Granting access to Try server (or as a contributor to a git repository) really makes them feel welcome and part of the team, so consider doing this sooner rather than later. With this comes the responsibility of teaching them how to use their privileges responsibly!

- Pay attention to details: forming good habits up front goes a long way!

With those things said, just try to put yourself in the shoes of a new Mozillian. Would you want honest feedback? Would you want to feel part of the larger community?

Being a good mentor should be rewarding (the majority of the time) and result in great Mozillians who people enjoy working with.

Let's continue to grow Mozilla!

http://elvis314.wordpress.com/2014/05/16/some-thoughts-on-being-a-good-mentor/

|

|

Leo McArdle: Mozilla & DRM |

Most people’s reaction to the Mozilla & DRM debacle makes me want to firmly and repeatedly smash my head against my desk - not a great idea when I’m surrounded by exams. I’ll outline why in a minute, but first, if you haven’t already, you really should go and read both Mitchell’s post on the Mozilla blog and Andreas’ post on the Mozilla Hacks blog.

Done? Good. Now for the things that make me want to hit myself over the head:

1. People who can’t (be bothered to) read

Most of the criticism comes from people who haven’t been bothered to go and read what Mozilla’s written about the issue (or just suck at it). If these people had, we’d have no complaints of Mozilla forcing users to use DRM, bundling proprietary code, or ‘giving up’ on users’ freedom and rights.

As you know from reading those two posts, essentially all that is happening is Adobe’s CDM is going to be implemented as an optional, monitored, special-type-of-plugin.

I’d say it’s no different from Flash, but it is going to be different. It’s going to be more secure, and presumably less buggy (being a ‘feature’ of Firefox). Once Firefox implements EME, there’s really no reason for Flash or Silverlight to continue to exist. Sure, this setup sucks. But I think Flash sucks more.

As for ‘giving up’: Mozilla can only be influential if it has influence. The primary source of Mozilla’s influence is the number of people using Firefox, which isn’t currently very big. Not implementing EME won’t help that. As others have said, this is not the hill to die on.

This all leads nicely onto my second point:

2. People who use Chrome

One of the best Tweets I found on the issue was somebody threatening to switch to Google Chrome because of this. I think the irony here is clear.

Yet, what astounds me more is not people threatening to switch, but people already using Chrome who want Mozilla to protect their rights.

Google is a for-profit company which exists to exploit users’ data. It’s collaborated with the NSA. It’s helped to lead the charge with Microsoft and Netflix for EME. Why on Earth, then, would you give Google support by using Chrome?

This may seem hypocritical from someone who uses Google’s services. Yet Google Search, Maps, Android (and so on) are unparalleled. Chrome isn’t.

The single easiest thing you can do to support Mozilla is to use Firefox. It gives Mozilla the influence it needs to fight.

3. People who think Mozilla can single-handedly ‘change the industry’

I hate DRM as much as the next guy and I think copyright is fundamentally broken - it’s why I’m a member of the Pirate Party, it’s why I donate to ORG and EFF, and it’s through these avenues I expect to see real change.

Mozilla can only change the industry with user support. And users don’t care about DRM, they only care that video works. We clearly saw this with WebM and H.264.

There’s work to be done, but it can’t be done if Mozilla loses its influence, and it can only be done with the support (not ire) of other organisations.

Users want DRM. We should give them DRM. That doesn’t mean Mozilla supports DRM, and it doesn’t mean Mozilla can’t educate users about what DRM means (and there are some very good signs of that being bundled into Webmaker soon).

In conclusion

Don’t be disappointed in Mozilla.

Be disappointed in Google, Microsoft and Apple for implementing this first, and forcing Mozilla’s hand.

Be disappointed in Netflix and its friends (including, surprisingly, the BBC!) calling for DRM.

Be disappointed in your elected representatives creating an environment where it is potentially illegal to say specific things about DRM.

Now go out, educate users about what DRM means, and why it’s bad. Use Firefox, and donate time or money to Mozilla to give it the influence it needs. Support organisations (such as EFF, ORG, FSF, FSFE) and political parties who represent your views on DRM and Copyright reform.

This is by no means the end of the battle over DRM and Copyright - it’s just the beginning.

|

|

Mic Berman: Two Steps to Getting Better Organized |

Recently I learned, through ClearFit’s ‘job-fit’ profiling tool, that being organized is not my strength - anyone who knows me might be surprised by this. I mean I manage my life by lists, I tend towards being OCD the day before a vacation or running an event, and my filing systems are beautiful! Well, it turns out that these are all coping mechanisms for naturally *not* being organized. If I were naturally organized, this would simply flow effortlessly.

As a Leadership Coach, I have the advantage of hearing about this common problem from many vantage points. There is not one client I’ve had, from the C-suite on down, who doesn’t struggle with this same thing. There is an amazing plethora of books dedicated to this topic and my favourite first foundational tool is Covey’s First Things First. This book is my go-to on this topic because he uses a super simple model that’s easy to remember and apply for any list of priorities. But when I am feeling particularly overwhelmed or stuck in the choice modality, I turn to the two action steps I share below.

So, from the ‘trenches’, here is a dead simple 2-step daily practice. And the key to the success of this approach is daily practice!

- Step 1 - Each morning take a few minutes to yourself to sit quietly and answer two questions: What is most important today to focus on? How do you want to show up for the people you will be meeting?

  - I do this sometimes in the car while waiting for the engine to warm up in winter - I close my eyes and take some nice slow and deep breaths.

  - My favourite version of this practice is to take 15 minutes and meditate in my cozy bean bag chair, ideally in the morning sunrise light, and find the answer to these questions before my time is done.

- Step 2 - When you get that 'overwhelm' feeling take 3 deep - and I mean deep - breaths. Then decide what is most important in that moment. And/or go for a walk around the block - essentially you want to get in touch with your physical being.

- Bonus step - find an activity that works for you in practicing that brain/body connection, e.g. yoga, tai chi, basketball, running, whatever will do it for you.

So, what's working for you?

Comment below or tweet me @micberman

http://michalberman.typepad.com/my_weblog/2014/05/two-steps-to-getting-better-organized.html

|

|

Mozilla Reps Community: Rep Of The Month : May 2014 – Nino Vranesic |

When Nino joined the Mozilla community, he didn’t spend much time talking, asking questions and thinking about how he could start contributing. Instead, he already applied for Mozilla Student Reps (today known as Firefox Student Ambassadors) and started acting by organising a local Open Source conference, with strong presence from Mozilla.

Passionate about free and open technologies, Nino continues to be the driving force behind the event (Open Way), which is growing every year and has just completed its 4th incarnation. As the main community builder of the Mozilla Slovenija community, he made sure the New Firefox release party was part of the conference, too.

Nino is the kind of person you want on your team: he gets things done.

https://blog.mozilla.org/mozillareps/2014/05/15/rep-of-the-month-may-2014-nino-vranesic/

|

|

Joel Maher: Are there any trends in our Talos regression bugs? |

Now that we have a better process for taking action on Talos alerts and pushing them to resolution, it is time to take a step back and see if any trends show up in our bugs.

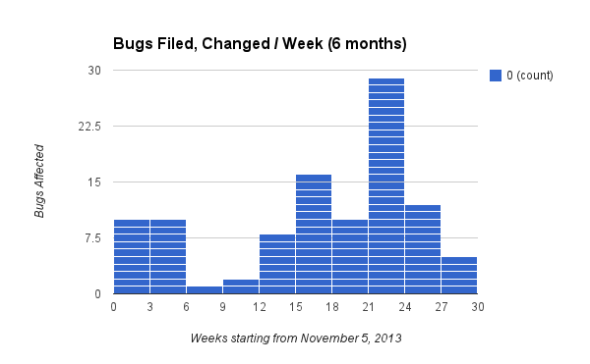

First I want to look at bugs filed/week:

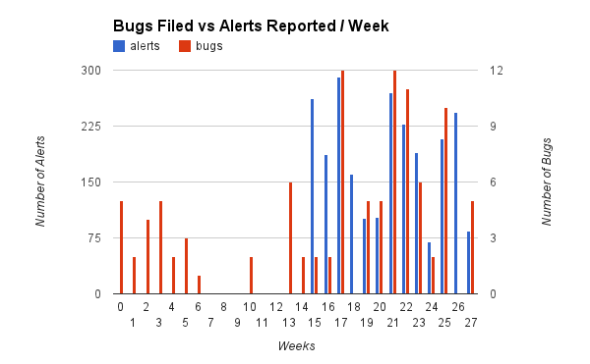

This is fun to see. Now what if we stack this up side by side with the alerts we receive:

We started tracking alerts halfway through this process. We show that for about 1 out of every 25 alerts we file a bug. I had previously stated it was closer to 1/33 alerts (it appears that is averaging out the first few weeks).

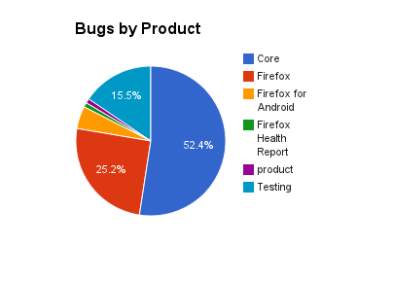

Let's see where these bugs are filed. Here is a view of the different Bugzilla products:

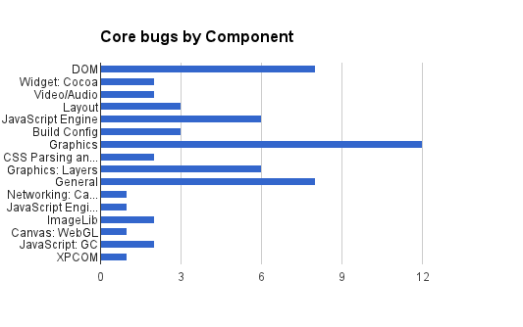

The Testing product is used to file bugs for which we cannot figure out the exact changeset, so they get filed in testing::talos. As bugs are filed in almost 30 unique components, I took a few minutes to look at the Core product. Here is where the bugs live in Core:

Pardon my bad graphing attempt here with the components cut off. Graphics is the clear winner for regressions (with “graphics: layers” being a large part of it). Of course the JavaScript Engine and DOM would be there (a lot of our tests are sensitive to changes here). This really shows where our test coverage is more than where bad code lives.

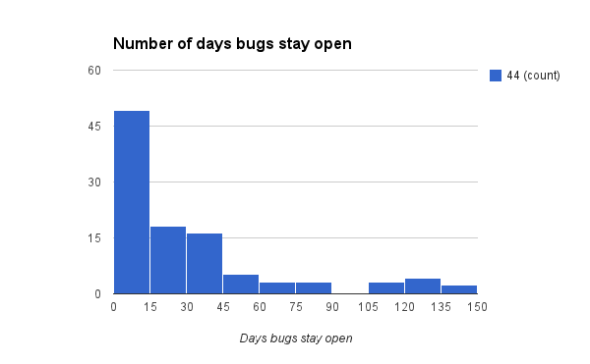

Now that I know where the bugs are, here is a view of how long the bugs stay open:

The fantastic news is most of our bugs are resolved in <=15 days! I think this is a metric we can track and get better at, ideally closing all Talos regression bugs in <30 days.

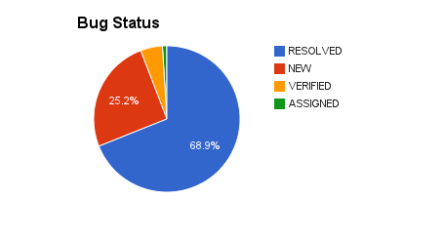

Looking over all the bugs we have, what is the status of them?

Yay for the blue pacman! We have a lot of new bugs instead of assigned bugs. That might be something we could adjust by assigning owners once a bug is confirmed and briefly discussed; that is still up in the air.

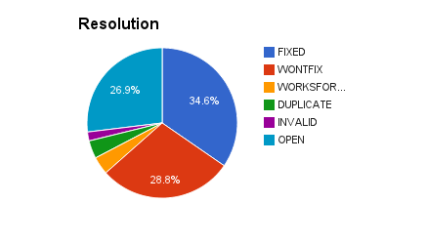

The burning question is what are all the bugs resolved as?

To me this seems healthy, and it is a starting point. Tracking this over time will probably be a useful metric!

In summary, many developers have done great work to make improvements and fix patches over the last 6 months that we have been tracking this information. There are things we can do better, and I want to know:

What information provided today is useful to track regularly?

Is there something you would rather see?

http://elvis314.wordpress.com/2014/05/15/are-there-any-trends-in-our-talos-regression-bugs/

|

|

David Boswell: Connecting with more people interested in contributing |

To increase the number of active contributors by 10x this year, we’re going to need to connect with more people interested in contributing. I’m excited that the Community Building team has helped reboot a couple of things that will let us do that.

Nightly builds used to bring up a page with information about how to help test Firefox, but it had been turned off about a year ago because the page was not being maintained. The page is now back on and we’re already seeing an increase in traffic.

We’ve taken a first pass at updating the Nightly First Run and What’s New page and are working on a larger redesign to get relevant contribution information in front of people who are interested in testing, developing and localizing Firefox.

We’ve also been working with Community Engagement to bring back the about:mozilla newsletter which has over 60,000 people who have signed up to receive regular contribution opportunities and news from us.

The first issue since October is coming out this week and new issues will be coming out every two weeks (sign up for the newsletter on the Get Involved page). We’re also making it easier for you to submit timely contribution opportunities that we can feature in future issues.

These are just a couple of the ways that we have to connect with people interested in contributing to Mozilla. We’re putting better documentation together to make it easy to tap into all of the ways to connect with new contributors.

If you have questions about any of this or would like to get help with bringing new contributors into your project, feel free to get in touch and we’ll be happy to work with you.

|

|

Adam Lofting: Contributor Dashboard Status Update (‘busy work’?) |

While I’m always itching to get on with doing the work that needs doing, I’ve spent this morning writing about it instead. Part of me hates this, but another part realizes this is valuable, especially when you’re working remotely and the project status in your head is of no use to your colleagues scattered around the globe.

So here’s the updated status page on our Mozilla Foundation Contributor Dashboard, and some progress on my ‘working open‘.

Filing bugs, linking them to each other, and editing wiki pages can be tedious work (especially wiki tables that link to bugs!) but the end result is very helpful, for me as well as those following and contributing to the project.

And a hat-tip to Pierros, whose hard work on the project Baloo wiki page directly inspired the formatting here.

Now, back to doing!

http://feedproxy.google.com/~r/adamlofting/blog/~3/Hd27d4tMEk8/

|

|

Gervase Markham: To Serve Users |

My honourable friend Bradley Kuhn thinks Mozilla should serve its users by refusing to give them what they want.

[Clarificatory update: I wrote this post before I'd seen the official FSF position; the below was a musing on the actions of the area of our community to which Bradley ideologically belongs, not an attempt to imply he speaks for the FSF or wrote their opinion. Apologies if that was not clear. And I'm a big fan of (and member of) the FSF; the below criticisms were voiced by private mail at the time.]

One weakness I have seen in the FSF, in things like the PlayOgg and PDFReaders campaigns, is that they think that lecturing someone about what they should want rather than (or before) giving them what they do want is a winning strategy. Both of the websites for those campaigns started with large blocks of text so that the user couldn’t possibly avoid finding out exactly what the FSF position was in detail before actually getting their PDF reader or playback software. (Notably missing from the campaigns, incidentally, was any sense that the usability of the recommended software was at all a relevant factor.)

Bradley’s suggestion is that, instead of letting users watch the movies they want to watch, we should lecture them about how they shouldn’t want it – or should refuse to watch them until Hollywood changes its tune on DRM. I think this would have about as much success as PlayOgg and PDFReaders (link:pdfreaders.org: 821 results).

It’s certainly true that Mozilla has a different stance here. We have influence because we have market share, and so preserving and increasing that market share is an important goal – and one that’s difficult to attain. And we think our stance has worked rather well; over the years, the Mozilla project has been a force for good on the web that other organizations, for whatever reason, have not managed to be. But we aren’t invincible – we don’t win every time. We didn’t win on H.264, although the deal with Cisco to drive the cost of support to $0 everywhere at least allowed us to live to fight another day. And we haven’t, yet, managed to find a way to win on DRM. The question is: is software DRM on the desktop the issue we should die on a hill over? We don’t think so.

Bradley accuses us of selling out on our principles regarding preserving the open web. But making a DRM-free web is not within our power at the moment. Our choice is not between “DRM on the web” and “no DRM on the web”, it’s between “allow users to watch DRMed videos” and “prevent users from watching DRMed videos”. And we think the latter is a long-term losing strategy, not just for the fight on DRM (if Firefox didn’t exist, would our chances of a DRM-free web be greater?), but for all the other things Mozilla is working for. (BTW, Mitchell’s post does not call open source “merely an approach”, it calls it “Mozilla’s fundamental approach”. That’s a pretty big misrepresentation.)

Accusing someone of having no principles because they don’t always get their way when competing in markets where they are massively outweighed is unfair. Bradley would have us slide into irrelevance rather than allow users to continue to watch DRMed movies in Firefox. He’s welcome to recommend that course of action, but we aren’t going to take it.

http://feedproxy.google.com/~r/HackingForChrist/~3/g_fnoepF6N8/

|

|

Chris Double: Firefox Development on NixOS |

Now that I’ve got NixOS installed I needed a way to build and make changes to Firefox and Firefox OS. This post goes through the approach I’ve taken to work on the Firefox codebase. In a later post I’ll build on this to do Firefox OS development.

Building Firefox isn’t difficult as NixOS has definitions for standard Firefox builds to follow as examples. Building from a local source repository requires all the pre-requisite packages to be installed. I don’t want to pollute my local user environment with all these packages though, as I develop on other things which may have version clashes. As an example, Firefox requires autoconf-2.13 whereas other systems I develop with require different versions.

NixOS (through the Nix package manager) allows setting up build environments that contain specific packages and versions. Switching between these is easy. The file ~/.nixpkgs/config.nix can contain definitions specific for a user. I add the definitions as a packageOverride in this file. The structure of the file looks like:

    {
      packageOverrides = pkgs : with pkgs; rec {
        ..new definitions here..
      };
    }

My definition for a build environment for Firefox is:

    firefoxEnv = pkgs.myEnvFun {
      name = "firefoxEnv";
      buildInputs = [ stdenv pkgconfig gtk glib gobjectIntrospection
                      dbus_libs dbus_glib alsaLib gcc xlibs.libXrender
                      xlibs.libX11 xlibs.libXext xlibs.libXft xlibs.libXt
                      ats pango freetype fontconfig gdk_pixbuf cairo python
                      git autoconf213 unzip zip yasm alsaLib dbus_libs which atk
                      gstreamer gst_plugins_base pulseaudio
                    ];
      extraCmds = ''
        export C_INCLUDE_PATH=${dbus_libs}/include/dbus-1.0:${dbus_libs}/lib/dbus-1.0/include
        export CPLUS_INCLUDE_PATH=${dbus_libs}/include/dbus-1.0:${dbus_libs}/lib/dbus-1.0/include
        LD_LIBRARY_PATH=\$LD_LIBRARY_PATH:${gcc.gcc}/lib64
        for i in $nativeBuildInputs; do
          LD_LIBRARY_PATH=\$LD_LIBRARY_PATH:\$i/lib
        done
        export LD_LIBRARY_PATH
        export AUTOCONF=autoconf
      '';
    };

The Nix function pkgs.myEnvFun creates a program that can be run by the user to set up the environment such that the listed packages are available. This is done using symlinks and environment variables. The resulting shell can then be used for normal development. By creating special environments for development tasks it becomes possible to build with different versions of packages. For example, replace gcc with gcc46 and the environment will use that C compiler version. Environments for different versions of pango, gstreamer and other libraries can easily be created for testing Firefox builds with those specific versions.

The buildInputs field contains an array of the packages to be available. These are all the pre-requisites as listed in the Mozilla build documentation. This could be modified by adding developer tools to be used (Vim, Emacs, Mercurial, etc.) if desired.

When creating definitions that have a build product, Nix will arrange the dynamic loader and paths to link to the correct versions of the libraries so that they can be found at runtime. When building an environment we need to change LD_LIBRARY_PATH to include the paths to the libraries for all the packages we are using. This is what the extraCmds section does. It is a shell script that is run to set up additional things for the environment.

The extraCmds in this definition adds to LD_LIBRARY_PATH the lib directory of all the packages in buildInputs. It exports an AUTOCONF environment variable to be the autoconf executable we are using. This variable is used in the Mozilla build system to find autoconf-2.13. It also adds to the C and C++ include path to find the DBus libraries which are in a nested dbus-1.0 directory.

To build and install this new package use nix-env:

    $ nix-env -i env-firefoxEnv

Running the resulting load-env-firefoxEnv command will create a shell environment that can be used to build Firefox:

    $ load-env-firefoxEnv
    ...
    env-firefoxEnv loaded
    $ git clone git://github.com/mozilla/gecko-dev
    ...
    $ cd gecko-dev
    $ ./mach build

Exiting the shell will remove access to the pre-requisite libraries and tools needed to build Firefox. This keeps your global user environment free and minimizes the chance of clashes.

http://bluishcoder.co.nz/2014/05/15/firefox-development-on-nixos.html

|

|

Benjamin Kerensa: On DRM and Firefox |

There has been a lot of criticism of Mozilla’s decision to move forward in implementing W3C EME, a web standard that the standards body has been working on for some time. While it is understandable that many are upset and believe that Mozilla is not honoring its values, the truth is there really is no other decision Mozilla can make while continuing to compete with other browsers.

The fact is, nearly 30% of Internet traffic today is Netflix, and Netflix is one of the content publishers pushing for this change along with other big names. If Mozilla were to choose not to implement this web standard, it would leave a significant portion of users unable to access some of the locked content that a majority of users desire. A good portion of users would likely decide to leave Firefox rather quickly if this were not implemented and they were locked out.

So with that reality in mind, Mozilla has a choice to support this standard (which is not something the organization necessarily enjoys) or to not support it and lose much of its user base and have a very uncertain future.

“By open-sourcing the sandbox that limits the Adobe software’s access to the system, Mozilla is making it auditable and verifiable. This is a much better deal than users will get out of any of the rival browsers, like Safari, Chrome and Internet Explorer, and it is a meaningful and substantial difference.” – Cory Doctorow, The Guardian

The best thing that can be done right now is for users who are unhappy with the decision to continue to support Mozilla which will continue to fight for an open web. Users should also be vocal to the W3C and content publishers that are responsible for this web standard.

In closing, Ben Moskowitz also wrote a great blog post on this topic explaining in more depth why Mozilla is in this position.

http://feedproxy.google.com/~r/BenjaminKerensaDotComMozilla/~3/c_Q9eW1_Jco/drm-firefox

|

|