
Source: http://planet.mozilla.org/.

This journal is generated from the open RSS feed at http://planet.mozilla.org/rss20.xml and is updated whenever that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.


Yunier José Sosa Vázquez: Mozilla will offer the option to play DRM content in Firefox |

Digital rights management (DRM) is a mechanism that content producers use to try to control how users use their content. Until now, DRM content was played through external plugins (such as Adobe Flash or Microsoft Silverlight). Google, Microsoft and Netflix proposed a new solution at the W3C (the organization that designs the Web’s standards) known as EME.


As Mitchell Baker of Mozilla writes:

Today at Mozilla we find ourselves in a difficult place where no perfect decision is possible. We have to choose between a feature our users want and the degree to which that feature can be built so that it keeps the user in control and defends their privacy. (…) At Mozilla we think this new implementation has the same deep flaws as the old system. It does not strike the right balance between protecting individuals and protecting digital content. The content provider requires that an important part of the system be closed source, something that goes against the way Mozilla works.

The problem is that Microsoft, Google and Apple have begun implementing this technology, and the earlier solution based on Flash and Silverlight will soon disappear. And content producers do not want to give up DRM mechanisms.

Mozilla reached an agreement with Adobe to offer a solution that leaves much of the control in its users’ hands while providing the assurances that the film and music industries demand of the system.

This solution will be completely optional, and users will have to download it specifically. Firefox’s code will remain completely open.

If you want to keep reading on the topic, we recommend the following:

- Frequently asked questions in Spanish

- Mitchell Baker’s full post (en)

- Andreas Gal’s post (en) (on the technology that will be used)

- Guillermo Movia’s post on DRM and Mozilla’s solution

- Nukeador’s post on DRM, Firefox and users.

Source: Mozilla Hispano

http://firefoxmania.uci.cu/mozilla-ofrecera-la-opcion-de-reproducir-contenido-con-drm-en-firefox/

|

|

Mike Conley: Australis Performance Post-mortem Part 4: On Tab Animation |

Whoops, forgot that I had a blog post series going on here where I talk about the stuff we did to make Australis blazing fast. In that time, we’ve shipped the thing (Firefox 29 represent!), so folks are actually feeling the results of this performance work, which is pretty excellent.

I ended the last post on an ominous note – something about how we were clear to land Australis on Nightly, or so we thought.

This next bit is all about timing.

There is a Performance Team at Mozilla, who are charged with making our products crazy-fast and crazy-smooth. These folks are geniuses at wringing out every last millisecond possible from a computation. It’s what they do all day long.

A pretty basic principle that I’ve learned over the years is that if you want to change something, you first have to develop a system for measuring the thing you’re trying to change. That way, you can determine if what you’re doing is actually changing things in the way you want them to.

If you’ve been reading these Australis Performance Post-mortem posts, you’ll know that we have some performance tests like this, and they’re called Talos tests.

Just about as we were finishing off the last of the t_paint and ts_paint regressions, the front-end team was suddenly made aware of a new Talos test that was being developed by the Performance team. This test was called TART, and stands for Tab Animation Regression Test. The purpose of this test is to exercise various tab animation scenarios and measure the time it takes to paint each frame and to proceed through the entire animation of a tab open and a tab close.

The good news was that this new test was almost ready for running on our Nightly builds!

The bad news was that the UX branch, which Australis was still on at the time, was regressing this test. And since we cannot land if we regress performance like this… it meant we couldn’t land.

Bad news indeed.

Or was it? At the time, the lot of us front-end engineers were groaning because we’d just slogged through a ton of other performance regressions. Investigating and fixing performance regressions is exhausting work, and we weren’t too jazzed that another regression had just shown up.

But thinking back, I’m somewhat glad this happened. The test showed that Australis was regressing tab animation performance, and tabs are opened every day by almost every Firefox user. Regressing tab performance is simply not a thing one does lightly. And this test caught us before we landed something that regressed those tabs mightily!

That was a good thing. We wouldn’t have known otherwise until people started complaining that their tabs were feeling sluggish when we released it (since most of us run pretty beefy development machines).

And so began the long process of investigating and fixing the TART regressions.

So how bad were things?

Let’s take a look at the UX branch in comparison with mozilla-central at the time that we heard about the TART regressions.

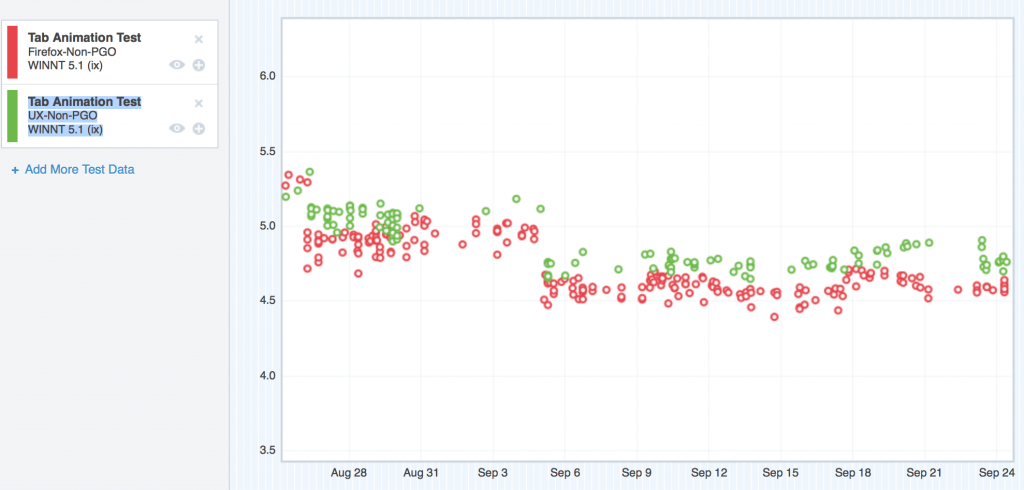

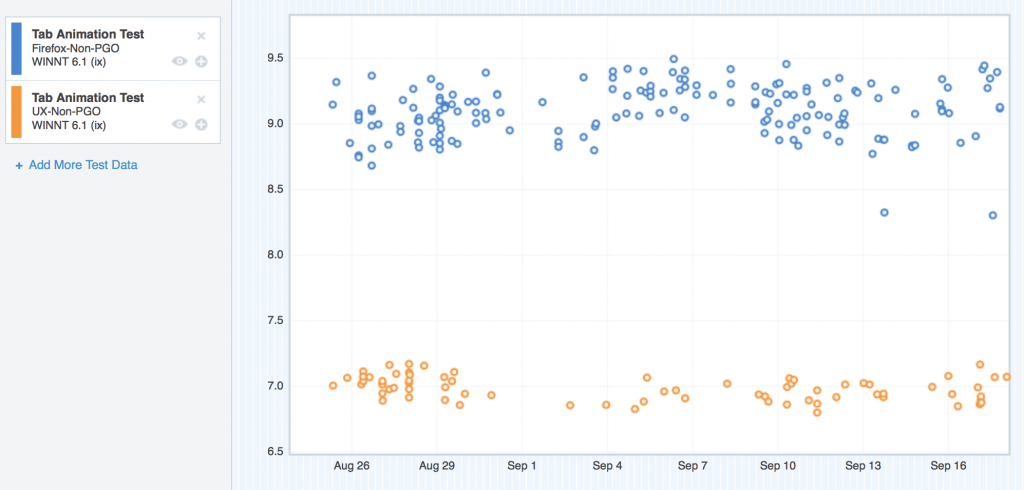

Here are the regressing platforms. I’ll start with Windows XP:

Forgive me, I couldn’t get the Graph Server to swap the colours of these two datasets, so my original silent pattern of “red is the regressor” has to be dropped. I could probably spend some time trying to swap the colours through various tricky methods, but I honestly don’t think anybody reading this will care too much.

So here we can see the TART scores for Windows XP, and the UX branch (green) is floating steadily over mozilla-central (red). Higher scores are bad. So here’s the regression.

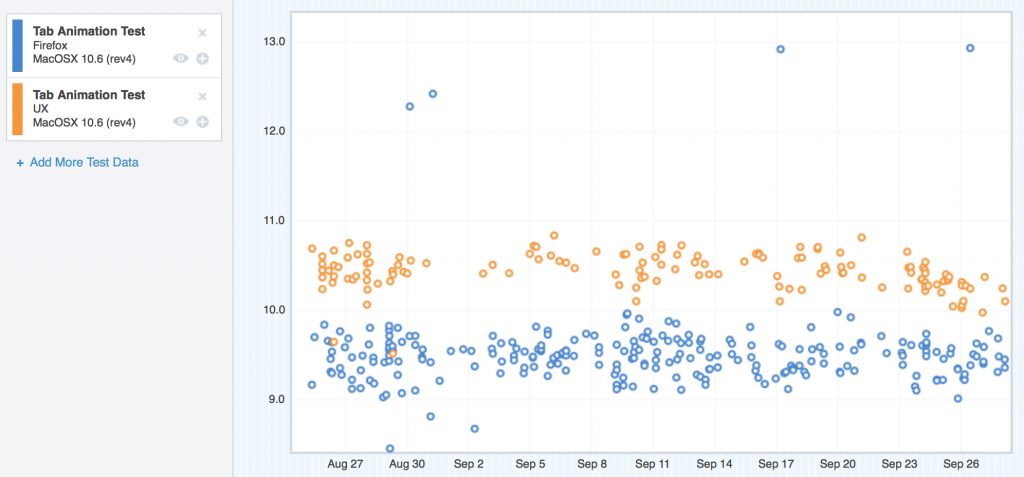

Now let’s see OS X 10.6.

Same problem here – the UX nodes (tan) are clearly riding higher than mozilla-central. This was pretty similar to OS X 10.7 too, so I didn’t include the graph.

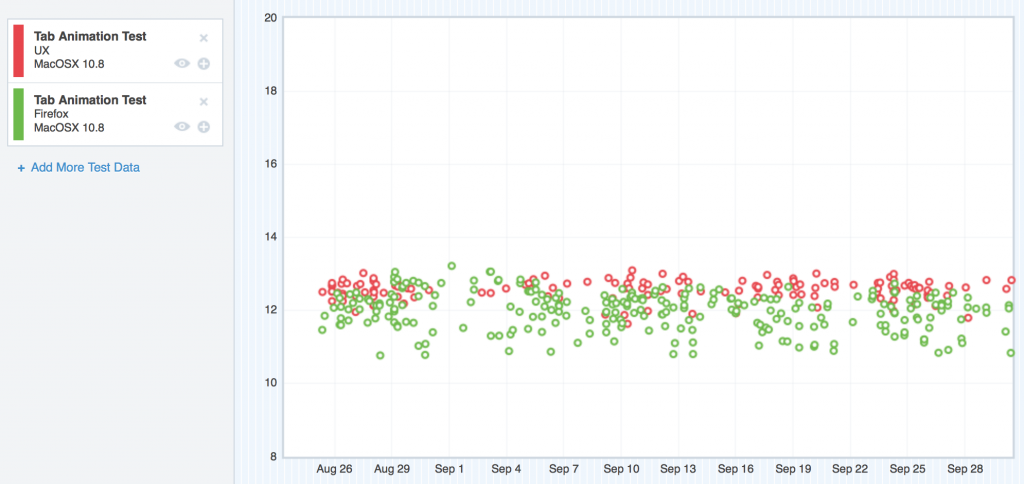

On OS X 10.8, things were a little bit better, but not too much:

Here, the regression was still easily visible, but not as large in magnitude.

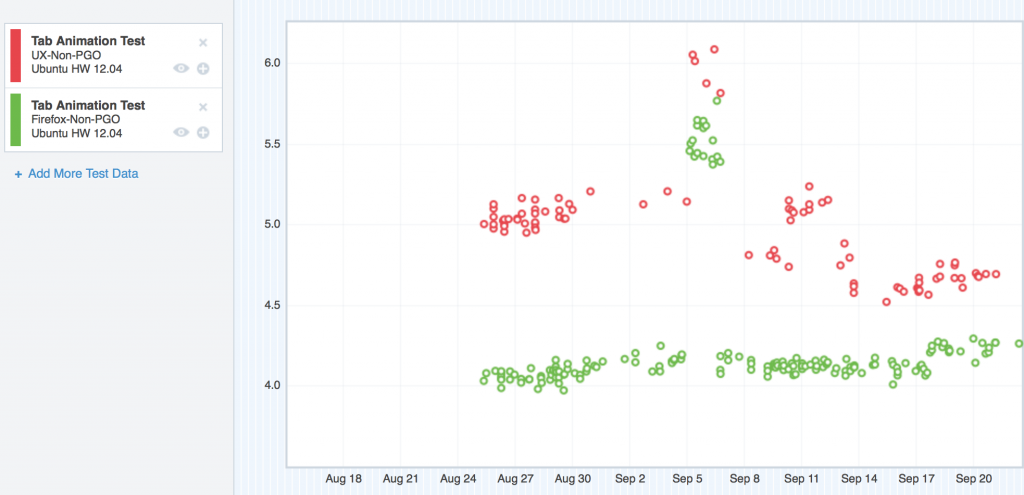

Ubuntu was in the same boat as OS X 10.6/10.7 and Windows XP:

But what about Windows 7 and Windows 8? Well, interesting story – believe it or not, on those platforms, UX seemed to perform better than mozilla-central:

Windows 7 (the blue nodes are mozilla-central, the tan nodes are UX)

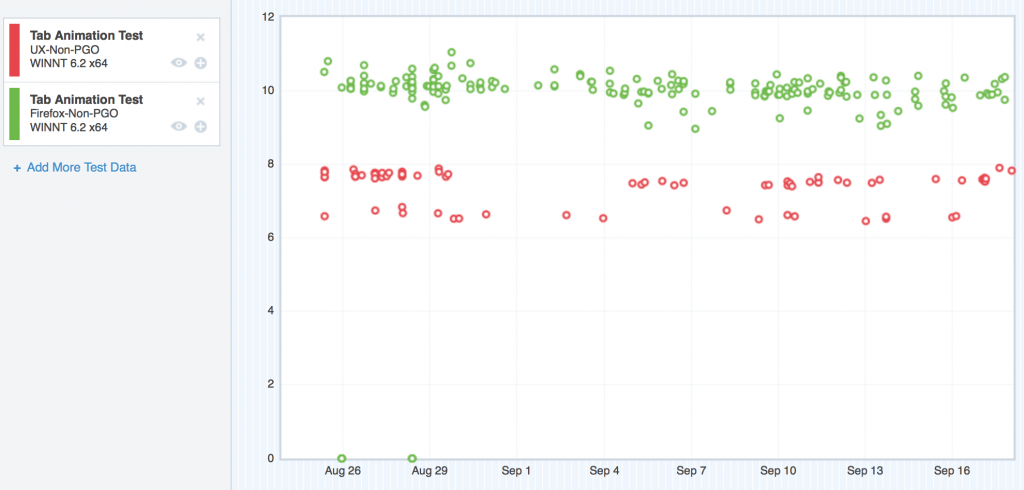

Windows 8 (the green nodes are mozilla-central, the red nodes are UX)

So what the hell was going on?

Well, we eventually figured it out. I’ll lay it out in the next few paragraphs. The following is my “rogue’s gallery” of regressions. This list does not include many false starts and red herrings that we followed during the months working on these regressions. Think of this as “getting to the good parts”.

Backfilling

The problem with having a new test, and having mozilla-central better than UX, is not knowing where UX got worse; there were no historical measurements that we could look at to see where the regression got introduced.

MattN, smart guy that he is, got us a few talos loaner machines, and wrote some scripts to download the Nightlies for both mozilla-central and UX going back to the point where UX split off. Then, he was able to run TART on these builds, and supply the results to his own custom graph server.

So basically, we were able to backfill our missing TART data, and that helped us find a few points of regression.
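Once scores are backfilled for both branches, pointing at a suspect build is a simple comparison. Here is a minimal sketch in Python. It is not MattN’s actual scripts: the function name, the 5% threshold, and the build labels and scores are all made up for illustration. Lower scores are taken to be better, as with TART.

```python
def first_regression(central_scores, ux_scores, threshold=0.05):
    """Return the earliest build where UX is worse than mozilla-central.

    Both arguments map a build label to a score (lower is better).
    A build counts as regressed when the UX score exceeds the
    mozilla-central score by more than `threshold` (a fraction).
    Returns None if no common build regresses.
    """
    common_builds = sorted(central_scores.keys() & ux_scores.keys())
    for build in common_builds:
        if ux_scores[build] > central_scores[build] * (1 + threshold):
            return build
    return None


# Hypothetical backfilled data, invented purely for this example.
central = {"build-01": 10.0, "build-02": 10.1, "build-03": 10.0}
ux = {"build-01": 10.1, "build-02": 10.2, "build-03": 11.5}

print(first_regression(central, ux))
```

Real Talos data is noisy, so an actual comparison would likely average several runs per build before applying any threshold.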

With that data, now it was time to focus in on each platform, and figure out what we could do with it.

Windows XP

We started with XP, since on the regressing platforms, that’s where most of our users are.

Here’s what we found and fixed:

Bug 916946 – Stop animating the back-button when enabling or disabling it.

During some of the TART tests, we start with a single tab, open a new tab, and then close the new tab, and repeat. That first tab has some history, so the back button is enabled. The new tab has no history, so the back button is disabled.

Apparently, we had some CSS that was causing us to animate the back-button when we were flipping back and forth from the enabled / disabled states. That CSS got introduced in the patch that bound the back/forward/stop/reload buttons to the URL bar. It seemed to affect Windows only. Fixing that CSS gave us our first big win on Windows XP, and gave us more of a lead with Windows 7 and Windows 8!

Bug 907544 - Pass the D3DSurface9 down into Cairo so that it can release the DC and LockRect to get at the bits

I don’t really remember how this one went down (and I don’t want to really spend the time swapping it back in by reading the bug), but from my notes it looks like the Graphics team identified this possible performance bottleneck when I showed them some profiles I gathered when running TART.

The good news on this one was that it definitely gave the UX branch a win on Windows XP. The bad news was that it gave the same win to mozilla-central. This meant that while overall performance got better on Windows XP, we still had the same regression preventing us from landing.

Bug 919541 - Consider not animating the opacity for Australis tabs

Jeff Muizelaar helped me figure this out while we were using paint flashing to analyze paint activity while opening and closing tabs. When we slowed down the transitions, we noticed that the closing tabs were causing paints even though they weren’t visible. Closing tabs aren’t visible because with Australis, we don’t show the tab shape around tabs when they’re not selected – and closing a tab automatically unselects it for something else.

For some reason, our layout and graphics code still wanted to paint this transition even though the element was not visible. We quickly nipped that in the bud, and got ourselves a nice win on tab close measurements for all platforms!

Bug 921038 – Move selected tab curve clip-paths into SVG-as-an-image so it is cached.

This was the final nail in the coffin for the TART regression on Windows XP. Before this bug, we were drawing the linear-gradients in the tab shape using CSS, and the clipping for the curve background colour was being pulled off using clip-path and an SVG curve defined in the browser.xul document.

In this bug, we moved from clipping a background to create the curve, to simply drawing a filled curve using SVG, and putting the linear-gradient for the texture in the “stroke” image (the image that overlays the border on the tab curve).

That by itself was not enough to win back the regression – but thankfully, Seth Fowler had been working on SVG caching, and with that cache backend, our patch here knocked the XP and Ubuntu regressions out! It also took out a chunk from OS X. Things were looking good!

OS X

Bug 924415 - Find out why setting chromemargin to 0,-1,-1,-1 is so expensive for TART on UX branch on OS X.

I don’t think I’ll ever forget this bug.

I had gotten my hands on a Mac Mini that (after some hardware modifications) matched the specs of our 10.6 Talos test machines. That would prove to be super useful, as I was easily able to reproduce the regression on that machine, and we could debug and investigate locally, without having to remote in to some loaner device.

With this machine, it didn’t take us long to identify the drawing of the tabs in the titlebar as the main culprit in the OS X regression. But the “why” eluded us for weeks.

It was clear I wasn’t going to be able to solve it on my own, so Jeff Muizelaar from the Graphics team joined in to help me.

We looked at OpenGL profiles, we looked at apitraces, we looked at profiles using the Gecko Profiler, and we looked at profiles from Instruments – the profiler that comes included with Xcode.

It seemed like the performance bottleneck was coming from the operating system, but we needed to prove it.

Jeff and I dug and dug. I remember going home one day, feeling pretty deflated by another day of getting nowhere with this bug, when as I was walking into my apartment, I got a phone call.

It was Jeff. He told me he’d found something rather interesting – when the titlebar of the browser overlapped the titlebar of another window, he was able to reproduce the regression. When it did not overlap, the regression went away.

Talos tests open a small window before they open any test browser windows. That little test window stays in the background, and is (from my understanding) a dispatch point for making talos tests occur. That little window has a titlebar, and when we opened new browser windows, the titlebars would overlap.

Jeff suggested I try modifying the TART test to move the browser approximately 22px (the height of a standard OS X titlebar) so that they would no longer overlap. I set that up, triggered a bunch of test runs, and went to bed.

I wasn’t able to sleep. Around 4AM, I got out of bed to look at the results – SUCCESS! The regression had gone away! Jeff was right!

I slept like a baby the rest of the night.

We closed this bug as a WONTFIX due to it being way outside our control.

Comment 31 and onward in that bug are the ones that describe our findings.

Eat it TART, your tears are delicious

Those were the big regressions we fixed for TART. It was a long haul, but we got there – and in the end, it means faster and smoother tab animation for our users, which means a better experience – and it’s totally worth it.

I’m particularly proud of the work we did here, and I’m also really happy with the cross-team support and collaboration we had – from Performance, to Layout, to Graphics, to Front-end – it was textbook teamwork.

Here’s an e-mail I wrote about us beating TART.

Where did we end up?

After the TART regression was fixed, we were set to land on mozilla-central! We didn’t just land a more beautiful browser, we also landed a more performant one.

Noice.

Stay tuned for Part 5 where I talk about CART.

http://mikeconley.ca/blog/2014/05/14/australis-performance-post-mortem-part-4-on-tab-animation/

|

|

Benoit Jacob: A Levinas quote that might be relevant to Intellectual Property |

First of all, let me stress that this is only a personal blog and I am at most only expressing personal opinions here. Now, this post isn’t even expressing an opinion, instead I would like to share a quote. Today’s events made me think about Intellectual Property. This reminded me of this quote from Emmanuel Levinas. It was written in 1934 in an entirely different context, and is part of an essay that can be read online. But the few sentences below talk about general notions that haven’t changed. Here’s the original quote in French (translation below):

L’idée qui se propage, se détache essentiellement de son point de départ. Elle devient, malgré l’accent unique que lui communique son créateur, du patrimoine commun. Elle est foncièrement anonyme. Celui qui l’accepte devient son maître comme celui qui la propose. La propagation d’une idée crée ainsi une communauté de « maîtres » – c’est un processus d’égalisation. Convertir ou persuader, c’est se créer des pairs. L’universalité d’un ordre dans la société occidentale reflète toujours cette universalité de la vérité.

Mais la force est caractérisée par un autre type de propagation. Celui qui l’exerce ne s’en départ pas. La force ne se perd pas parmi ceux qui la subissent. Elle est attachée à la personnalité ou à la société qui l’exerce, elle les élargit en leur subordonnant le reste.

(Source)

Here is my modest attempt at a translation:

An idea, as it propagates, essentially detaches from its starting point. It becomes, despite the unique accent communicated to it by its creator, part of the common heritage. It is inherently anonymous. The one who accepts it becomes its master as much as the one who proposed it. The propagation of an idea creates a community of “masters” – it is an equalization process. To convert or to convince, is to make peers. The universality of an order in Western society always reflects this universality of truth.

But force is characterized by another type of propagation. The one who wields it does not dissociate himself from it. Force does not get lost among the ones subjected to it. It is attached to the personality or society that uses it, and it enlarges them by subjecting the rest to them.

|

|

Ben Moskowitz: The Web Is Changing |

I spent 2 years of my life running a small media rights advocacy organization, the Open Video Alliance. We started in 2009, only a few years after YouTube came online, when people talked in glowing terms about how web video would benefit democracy. More diverse perspectives, less media consolidation, more participatory culture. All of that came true. Talk of citizen journalists and camera phones is old news.

But I am also from the free software world, so I think in long terms, absolutes, architecture. Part of our full-spectrum advocacy plan for “open video” was technical:

1. Open standards for video — no essential video technology should be controlled by a single party. Therefore: HTML5 instead of Flash.

2. Royalty-free technologies for video — no essential video technology should cost money. We don’t put a tax on text, why should we put a tax on video? Therefore: WebM instead of H264.

3. No use restrictions — people should be able to watch what they want, and no computer system should censor, restrict, or limit the making, sharing, or publishing of video content. Therefore: no ContentID-style filtering, no DRM in the browser.

I joined Mozilla because it was the only technology company to be seriously pushing this agenda in the marketplace. The long history of Firefox is “techno-social change through succeeding in the marketplace.”

Here is the theory: if a significant enough proportion of web users are on Firefox (Gecko, really), the makers of Firefox have some leverage in the technical development of the overall web. Put another way: “the role of Firefox is to keep the other guys honest.”

When I joined Mozilla, I thought Firefox was in a unique position to make (patriarchal?) technology decisions on behalf of users, that would make the technical architecture supporting video “more open.”

I am starting to doubt whether that’s the right way to position Firefox. Evidence shows that Firefox doesn’t have that kind of technical leverage. In an earlier time, we had immense leverage as “the alternative to Internet Explorer.” In the modern era, we have failed every time we’ve tried to pull those levers on our own.

We were the only hold outs on H.264, and we had to give in. Despite heroic efforts, our moves on Do Not Track and third-party cookies are turning out to be more thought leadership than real leverage. And today, we are reluctantly giving in on DRM in HTML5.

The price of success (introducing competition into the browser space) is that you don’t have leverage when you’re the only holdout against an industry-wide move in a different direction. Mozilla has gravity, but far less than the combined weight of the mobile and content industries.

When your continued relevance depends on people liking and using your product, you can’t afford to be “the browser that can’t.”

With no H.264, Firefox was seen as “the browser that can’t play video.” An inaccurate but not unfair conclusion from a great many millions of browser users. And for several years. With no DRM, Firefox would have been seen as “the only browser that can’t play Netflix.”

The arguments against DRM are exceptionally well-articulated by Cory Doctorow and others. But the fact is, you definitely can’t afford to be the only hold-out on a feature that enables users to do more. In this case, for better or worse: “watch Netflix.” Yeah, that thing that accounts for 40% of all U.S. internet traffic.

This is confusing for free software people, because DRM clearly limits users. Yet Firefox is not the world. If Firefox doesn’t do DRM, Firefox limits its users in what they can do. It denies them functionality they can get by using other products. That’s a paradox which can’t be resolved by “taking a hard stance against DRM.” So if you’re mad today at Mozilla, read this a few times. You must understand the evidence supporting the logic for Mozilla’s decision to not die on this particular hill.

I believe there are hard lessons here for Mozilla, which is uniquely 1) a user-facing public benefit organization; and 2) a public benefit provider of products for users.

Chiefly: Freedom is not a technical feature. It’s a state of consciousness.

This sort of proclamation is personally tough, because it’s a concession that my two years pushing for royalty-free codecs as a defining political issue were—possibly misspent? It’s tough because it puts the proclaimer into unnecessary confrontation with allies like the EFF, the Software Freedom Law Center, and the Free Culture movement (where I come from), who imagine products like Tor and Freedom Boxes and other things taking over the marketplace, because they will enable users to do more. But often, they enable users to do less. The availability of such technologies is important weaponry in the battle for technical freedom. But they’re often not enough when you’re in a long-term war for the user.

Freedom is not a technical feature. At least, not for the mass market. It is truly heartbreaking to say, because I know people who have given literally everything for this idea.

But here is the good news: enabling users to do more is a feature.

There are legal fights, regulatory fights, political fights against DRM, which are appropriate and needed upstream. That’s why Mozilla is being very clear today that this is a painful decision.

But opposing DRM is not a good use of Mozilla’s resources. We’ve stood on principle before, with H.264, and it hurt us, and our ability to have impact elsewhere. A bit like cutting off the nose to spite the face.

A better role for Mozilla, and organizations like it—who have install bases, mindshare, some money, and a daily relationship with users—is to be affirmative. Proactive. Partners with its users for a better world. Not to be oppositional, but to actively innovate on the user’s behalf.

Mozilla’s at its strongest when it’s building new opportunities into the web platform. Teaching kids to code. Shining a light on who is watching. Empowering users, developers, and everyone in between. We have to help our users know more, do more, do better. Mozilla is about to undergo a renaissance, and I think you’ll see this reflected more and more with how we communicate with our users. Ultimately, any user-facing organization will have the greatest impact by proactively working on behalf of its users.

A coda: a case could be made that EME will make it easier for content distributors to experiment with—and perhaps eventually switch to—DRM-free distribution.

In 2007, Steve Jobs wrote an “open letter” on iTunes DRM. Today, most iTunes music is not DRM’d—it is watermarked. In that letter, Jobs laid out an argument against DRM, and a prediction that the short-term implementation of DRM would ultimately render DRM itself irrelevant. The argument was essentially this:

“We dislike DRM. But it’s a rational choice at this time to implement it. Because if we implement it, our users get access to content they otherwise wouldn’t. And the content owners can overcome their fears, come to our platform, experiment with less restrictive business models, and ultimately give up on DRM.”

Mozilla is essentially saying the same as Jobs in 2007. And I think this will also come true on the web. Perhaps there’s a sliver of truth in the idea, suggested even by Tim Berners-Lee (the father of the web!), that DRM in HTML5 is a victory, rather than a defeat.

That’s a rather shocking idea to some—including me. But one thing is for sure: the web, more than ever, is the platform through which we all access, publish, and share everything. It’s the web that’s changing, not Mozilla.

|

|

Daniel Stenberg: Why SFTP is still slow in curl |

Okay, there’s no point in denying this fact: SFTP transfers in curl and libcurl are much slower than if you just do them with your ordinary OpenSSH sftp command line tool or similar. The difference in performance can even be quite drastic.

Why is this so and what can we do about it? And by “we” I fully get that you dear reader think that I or someone else already deeply involved in the curl project should do it.

Background

I once blogged a lengthy post on how I modified libssh2 to do SFTP transfers much faster. curl itself uses libssh2 to do SFTP so there’s at least a good start. The problem is only that the speedup we did in libssh2 was because of SFTP’s funny protocol design so we had to:

- send off requests for a (large) set of data blocks at once, each block being N kilobytes big

- use a buffer several hundred kilobytes big (when downloading, the received data is stored in this big buffer)

- then return as soon as there’s one block (or more) that has returned from the server with data

- over time and in a loop, there are then blocks constantly in transit and a number of blocks always returning. By sending enough outgoing requests in the “outgoing pipe”, the “incoming pipe” and CPU can be kept fairly busy.

- never wait until the entire receive buffer is complete before we go on, but instead use a sliding buffer so that we avoid “halting points” in the transfer

This is more or less what the sftp tool does. We’ve also done experiments with using libssh2 directly and then we can reach quite decent transfer speeds.
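To see why keeping many requests in flight matters, here is a toy model in Python. It is only a sketch under stated assumptions, not libssh2 code: the function name `transfer_ticks` and its parameters are invented for illustration, and every reply is assumed to arrive a fixed number of "ticks" after its request, with bandwidth and CPU cost ignored.

```python
from collections import deque


def transfer_ticks(num_blocks, window, rtt_ticks):
    """Ticks needed to fetch num_blocks blocks.

    At most `window` requests may be outstanding at once, and each
    reply arrives exactly rtt_ticks after its request was sent.
    """
    in_flight = deque()  # arrival tick of each outstanding request
    now = 0
    requested = 0
    received = 0
    while received < num_blocks:
        # Top up the outgoing pipe until the window is full.
        while requested < num_blocks and len(in_flight) < window:
            in_flight.append(now + rtt_ticks)
            requested += 1
        # Advance to the oldest outstanding reply and consume it,
        # which frees one slot in the window (the "sliding" part).
        now = in_flight.popleft()
        received += 1
    return now


# One request at a time versus 16 requests in flight, 64 blocks total.
print(transfer_ticks(64, 1, 10))   # 640
print(transfer_ticks(64, 16, 10))  # 40
```

In this model a window of one pays a full round trip per block, while a window of 16 pays a round trip per 16 blocks. Real transfers are also limited by bandwidth, so actual gains will be smaller.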

libcurl

The libcurl transfer core is basically the same no matter which protocol is being used for the transfer. For a normal download this is what it does:

1. wait for data to become available

2. read as much data as possible into a 16KB buffer

3. send the data to the application

4. go to 1

So, there are two problems with this approach when it comes to the SFTP problems as described above.

The first one is that a 16KB buffer is very small in SFTP terms and immediately becomes a bottleneck in itself. In several of my experiments I could see how a buffer of 128, 256 or even 512 kilobytes would be needed to get high-bandwidth, high-latency transfers to really fly.

The second is that with a fixed buffer, the transfer reaches a point every 16KB where it needs to wait for that specific response to come back before it can continue and ask for the next 16KB of data. That “sync point” is really not helping performance either – especially not when it happens as often as every 16KB.
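A back-of-the-envelope calculation shows how much the buffer size matters. The Python sketch below assumes a simplified model in which each buffer's worth of data costs one full round trip. The function names and the example link figures (100 ms and 40 ms round trips, a 100 Mbit/s link) are assumptions chosen for illustration, not measurements.

```python
def max_throughput(buffer_bytes, rtt_ms):
    """Upper bound in bytes/second when one buffer is moved per round trip."""
    return buffer_bytes * 1000 / rtt_ms


def buffer_needed(bandwidth_bytes_per_s, rtt_ms):
    """Bytes that must be in flight to fill the link (bandwidth x delay)."""
    return bandwidth_bytes_per_s * rtt_ms / 1000


# A 16KB buffer on a 100 ms round trip: 163840 bytes/s, i.e. 160 KB/s.
print(max_throughput(16 * 1024, 100))

# A 512KB buffer on the same link: 5242880 bytes/s, i.e. 5 MB/s.
print(max_throughput(512 * 1024, 100))

# Filling 100 Mbit/s (12.5 million bytes/s) at 40 ms needs 500000 bytes.
print(buffer_needed(12_500_000, 40))
```

Under these assumptions the last figure lands in the same range as the 128 to 512 kilobyte buffers mentioned above.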

A solution?

For someone who just wants a quick-fix and who builds their own libcurl, rebuild with CURL_MAX_WRITE_SIZE set to 256000 or something like that and you’ll get a notable boost. But that’s neither a nice nor clean fix.

A proper fix should first of all only be applied for SFTP transfers, thus deciding at run-time if it is necessary or not. Then it should dynamically provide a larger buffer and thirdly, for upload it should probably make the buffer “sliding” as in the libssh2 example code sftp_write_sliding.c.

This is also already mentioned in the TODO document as “Modified buffer size approach”.

There’s clearly room for someone to step forward and help us improve in this area. Welcome!

http://daniel.haxx.se/blog/2014/05/14/why-sftp-is-still-slow-in-curl/

|

|

Christian Heilmann: Open Web Apps – a talk at State of the Browser in London |

On my birthday, 26th of April 2014, I was lucky enough to once again be part of the State of the Browser conference. I gave the closing talk. In it I tried to wrap up what has been said before and remind people about what apps are. I ended with an analysis of how web technologies as we have them now are good enough already or on the way there.

The slides are available on Slideshare:

The video recording of the talk features the amazing outfit I wore, as originally Daniel Appelquist said he’d be the best dressed speaker at the event.

Open web apps – going beyond the desktop from London Web Standards on Vimeo.

In essence, I talked about apps meaning five things:

- focused: fullscreen with a simple interface

- mobile: works offline

- contained: deleting the icon deletes the app

- integrated: works with the OS and has hardware access

- responsive and fast: runs smooth, can be killed without taking down the rest of the OS

The resources I talked about are:

- LocalForage – making local storage easy by giving the API of localStorage to indexedDB/WebSQL

- A great discussion on installable webapps by Boris Smus

- Overview of the App model for Firefox(OS)

- Report on cross-platform open web apps

- Open web apps on Android and integration in the OS

- Responsive guidelines for FirefoxOS

- The Firefox Marketplace

- Write elsewhere, run on FirefoxOS – a list of apps that have been easily ported to HTML5

- Mozilla Appmaker – WYSIWYG for Open Web Apps

Make sure to also watch the other talks given at State of the Browser – there was some great information given for free. Thanks for having me, London Web Standards team!

http://christianheilmann.com/2014/05/14/open-web-apps-a-talk-at-state-of-the-browser-in-london/

|

|

Andreas Gal: Reconciling Mozilla’s Mission and the W3C EME |

With most competing browsers and the content industry embracing the W3C EME specification, Mozilla has little choice but to implement EME as well so our users can continue to access all content they want to enjoy. Read on for some background on how we got here, and details of our implementation.

Digital Rights Management (DRM) is a tricky issue. On the one hand content owners argue that they should have the technical ability to control how users share content in order to enforce copyright restrictions. On the other hand, the current generation of DRM is often overly burdensome for users and restricts users from lawful and reasonable use cases such as buying content on one device and trying to consume it on another.

DRM and the Web are no strangers. Most desktop users have plugins such as Adobe Flash and Microsoft Silverlight installed. Both have contained DRM for many years, and websites traditionally use plugins to play restricted content.

In 2013 Google and Microsoft partnered with a number of content providers including Netflix to propose a “built-in” DRM extension for the Web: the W3C Encrypted Media Extensions (EME).

The W3C EME specification defines how to play back such content using the HTML5

Mozilla believes in an open Web that centers around the user and puts them in control of their online experience. Many traditional DRM schemes are challenging because they go against this principle and remove control from the user and yield it to the content industry. Instead of DRM schemes that limit how users can access content they purchased across devices we have long advocated for more modern approaches to managing content distribution such as watermarking. Watermarking works by tagging the media stream with the user’s identity. This discourages copyright infringement without interfering with lawful sharing of content, for example between different devices of the same user.

Mozilla would have preferred to see the content industry move away from locking content to a specific device (so called node-locking), and worked to provide alternatives.

Instead, this approach has now been enshrined in the W3C EME specification. With Google and Microsoft shipping W3C EME and content providers moving their content over from plugins to W3C EME, Firefox users are at risk of not being able to access DRM-restricted content (e.g. Netflix, Amazon Video, Hulu), which can make up more than 30% of the downstream traffic in North America.

We have come to the point where Mozilla not implementing the W3C EME specification means that Firefox users have to switch to other browsers to watch content restricted by DRM.

This makes it difficult for Mozilla to ignore the ongoing changes in the DRM landscape. Firefox should help users get access to the content they want to enjoy, even if Mozilla philosophically opposes the restrictions certain content owners attach to their content.

As a result we have decided to implement the W3C EME specification in our products, starting with Firefox for Desktop. This is a difficult and uncomfortable step for us given our vision of a completely open Web, but it also gives us the opportunity to actually shape the DRM space and be an advocate for our users and their rights in this debate. The existing W3C EME systems Google and Microsoft are shipping are not open source and lack transparency for the user, two traits which we believe are essential to creating a trustworthy Web.

The W3C EME specification uses a Content Decryption Module (CDM) to facilitate the playback of restricted content. Since the purpose of the CDM is to defy scrutiny and modification by the user, the CDM cannot be open source by design in the EME architecture. For security, privacy and transparency reasons this is deeply concerning.

From the security perspective, for Mozilla it is essential that all code in the browser is open so that users and security researchers can see and audit the code. DRM systems explicitly rely on the source code not being available. In addition, DRM systems also often have unfavorable privacy properties. To lock content to the device DRM systems commonly use “fingerprinting” (collecting identifiable information about the user’s device) and with the poor transparency of proprietary native code it’s often hard to tell how much of this fingerprinting information is leaked to the server.

We have designed an implementation of the W3C EME specification that satisfies the requirements of the content industry while attempting to give users as much control and transparency as possible. Due to the architecture of the W3C EME specification we are forced to utilize a proprietary closed-source CDM as well. Mozilla selected Adobe to supply this CDM for Firefox because Adobe has contracts with major content providers that will allow Firefox to play restricted content via the Adobe CDM.

Firefox does not load this module directly. Instead, we wrap it into an open-source sandbox. In our implementation, the CDM will have no access to the user’s hard drive or the network. Instead, the sandbox will provide the CDM only with a communication mechanism with Firefox for receiving encrypted data and for displaying the results.

Traditionally, to implement node-locking DRM systems collect identifiable information about the user’s device and will refuse to play back the content if the content or the CDM are moved to a different device.

By contrast, in Firefox the sandbox prohibits the CDM from fingerprinting the user’s device. Instead, the CDM asks the sandbox to supply a per-device unique identifier. This sandbox-generated unique identifier allows the CDM to bind content to a single device as the content industry insists on, but it does so without revealing additional information about the user or the user’s device. In addition, we vary this unique identifier per site (each site is presented a different device identifier) to make it more difficult to track users across sites with this identifier.
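The per-site identifier idea can be illustrated with a short sketch. The post does not say how the sandbox derives the value, so everything here is an assumption: the helper name `per_site_device_id`, the use of HMAC-SHA256, and the example origins are all hypothetical. It only shows one way a stable per-device secret could yield a different identifier for each site.

```python
import hashlib
import hmac
import secrets


def per_site_device_id(device_secret: bytes, site_origin: str) -> str:
    """Derive a stable identifier for one (device, site) pair.

    Hypothetical helper: not taken from Firefox or from the post.
    """
    # Keying the HMAC with the device secret keeps the secret hidden while
    # giving every origin its own, unlinkable-looking identifier.
    digest = hmac.new(device_secret, site_origin.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


# Assumption: a random per-device secret that never leaves the sandbox.
device_secret = secrets.token_bytes(32)

id_video = per_site_device_id(device_secret, "https://video.example")
id_music = per_site_device_id(device_secret, "https://music.example")
```

With this construction the same site always sees the same identifier on a given device, while two sites comparing notes see unrelated values.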

Adobe and the content industry can audit our sandbox (as it is open source) to assure themselves that we respect the restrictions they are imposing on us and users, which includes the handling of unique identifiers, limiting the output to streaming and preventing users from saving the content. Mozilla will distribute the sandbox alongside Firefox, and we are working on deterministic builds that will allow developers to use a sandbox compiled on their own machine with the CDM as an alternative. As with plugins today, the CDM itself will be distributed by Adobe and will not be included in Firefox. The browser will download the CDM from Adobe and activate it based on user consent.

While we would much prefer a world and a Web without DRM, our users need it to access the content they want. Our integration with the Adobe CDM will let Firefox users access this content while trying to maximize transparency and user control within the limits of the restrictions imposed by the content industry.

There is also a silver lining to the W3C EME specification becoming ubiquitous. With direct support for DRM we are eliminating a major use case of plugins on the Web, and in the near future this should allow us to retire plugins altogether. The Web has evolved to a comprehensive and performant technology platform and no longer depends on native code extensions through plugins.

While the W3C EME-based DRM world is likely to stay with us for a while, we believe that eventually better systems such as watermarking will prevail, because they offer more convenience for the user, which is good for the user, but in the end also good for business. Mozilla will continue to advance technology and standards to help bring about this change.

Filed under: Mozilla

|

|

Richard Newman: Language switching in Firefox for Android |

Bug 917480 just landed in mozilla-central, and should show up in your next Nightly. This sizable chunk of work provides settings UI for selecting a locale within Firefox for Android.

If all goes well in the intervening weeks, Firefox 32 will allow you to choose from our 49 supported languages without restarting your browser, and regardless of the locales supported by your Android device. (For more on this, see my earlier blog post.)

We’ve tested this on multiple Android versions, devices, and form factors (and every one is different!), and we’re quite confident that things will work for almost everyone. But if something doesn’t work for you, please file a bug and let me know.

If you want more details, have a read through some of my earlier posts on the topic.

http://160.twinql.com/language-switching-in-firefox-for-android/

|

|

Will Kahn-Greene: Fiddling with Kibana |

I just kicked off a script that's going to take around 4 hours to complete mostly because the API it's running against doesn't want me doing more than 60 requests/minute. Given I've got like 13k requests to do, that takes a while.
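The "around 4 hours" figure follows from the two numbers in the paragraph above. A quick check, treating "like 13k" as exactly 13,000 requests (an assumption) and using a hypothetical helper name:

```python
def minutes_needed(total_requests: int, requests_per_minute: int) -> float:
    """Minimum wall-clock minutes when the API caps the request rate."""
    return total_requests / requests_per_minute


# 13,000 requests is an assumed round figure for "like 13k".
total_minutes = minutes_needed(13_000, 60)
total_hours = total_minutes / 60  # a bit over three and a half hours
```

At 60 requests per minute that is one request per second, so the limit alone accounts for most of the run time.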

I'm (ab)using Elasticsearch to store the data from my script so that I can analyze it more easily--terms facet is pretty handy here.

Given that I've got some free time now, I spent 5 minutes setting up Kibana.

Steps:

- download the tarball

- untar it into a directory

- edit kibana-3.0.1/config.js to point to my local Elasticsearch cluster (the defaults were fine, so I could have skipped this step)

- cd kibana-3.0.1/ and run python -m SimpleHTTPServer 5000 (I'm using a Python-y thing here, but you can use any web-server)

- point my browser to http://localhost:5000
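The `python -m SimpleHTTPServer` command in the steps above is the Python 2 spelling. On Python 3 the module is called `http.server`; a minimal sketch of the same static-file server follows. The function name `make_static_server` is hypothetical, and the directory is whatever the tarball unpacked to.

```python
import functools
import http.server


def make_static_server(directory: str, port: int = 5000) -> http.server.ThreadingHTTPServer:
    """Build (but do not start) a static-file server for one directory."""
    # SimpleHTTPRequestHandler accepts a `directory` argument on Python 3.7+.
    handler = functools.partial(
        http.server.SimpleHTTPRequestHandler, directory=directory
    )
    return http.server.ThreadingHTTPServer(("localhost", port), handler)
```

Calling `make_static_server("kibana-3.0.1").serve_forever()` would then serve the unpacked files at http://localhost:5000, and `python3 -m http.server 5000` run inside the directory does the same from the command line.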

Now I'm using Kibana.

Now that I've got it working, first thing I do is click on the cog in the upper right hand corner, click on the Index tab and change the index to the one I wanted to look at. Now I'm looking at the data my script is producing.

The Kibana site says Kibana excels at timestamped data, but I think it's helpful for what I'm looking at now despite it not being timestamped. I get immediate terms facets on the fields for the doc type I'm looking at. I can run queries, pick specific columns, reorder, do graphs, save my dashboard to look at later, etc.

If you're doing Elasticsearch stuff, it's worth looking at if only to give you another tool to look at data with.

|

|

Paul Rouget: Tracking what's going on in Firefox Nightly (help needed) |

I'm behind the @FirefoxNightly twitter account (and there's also a blog: firefoxnightly.tumblr.com). I'm supposed to find new features added to Firefox that might impact web developers and end users.

Keeping track of interesting changes without drowning in bugmail is hard. I tried for a long time to just follow a large set of components, then track the hg commits, but it's just too much work. I adopted a new strategy, less optimal, but I think it's good enough and doesn't take me too much time:

bugzilla requests:

List of bugs that have been fixed and might need my attention:

- http:///d06y9h (flagged as dev-doc-needed)

- http:///ByEOQG (flagged as dev-doc-complete)

- http:///x2hvnt (flagged as feature)
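The saved searches behind those short links are not recoverable from the text. As a rough sketch of the same idea, a keyword search for fixed bugs can be expressed as a URL against today's Bugzilla REST endpoint. The parameter choices and the helper name `fixed_bugs_url` are assumptions, not the author's actual queries, and the code only builds the URL without contacting the server.

```python
from urllib.parse import urlencode

# Bugzilla's REST search endpoint on bugzilla.mozilla.org.
BUGZILLA_REST = "https://bugzilla.mozilla.org/rest/bug"


def fixed_bugs_url(keyword: str, limit: int = 50) -> str:
    """Build a search URL for fixed bugs carrying one keyword (hypothetical helper)."""
    params = {
        "keywords": keyword,        # e.g. dev-doc-needed
        "resolution": "FIXED",      # only bugs that have been fixed
        "include_fields": "id,summary",
        "limit": limit,
    }
    return f"{BUGZILLA_REST}?{urlencode(params)}"


url = fixed_bugs_url("dev-doc-needed")
```

Swapping the keyword for dev-doc-complete or feature would give the other two lists.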

dev.platform: intent to ship/implement

In this mailing list, I look for the "intent to ship" or "intent to implement" posts.

Forums:

I regularly check mozillazine, geckozone and neowin threads. There's a lot of noise there, but there is always one or two users who are very good at spotting new things that I tend to miss.

Meeting notes:

blog.mozilla.org/meeting-notes/

@FirefoxNightly mentions:

Firefox developers sometimes tweet when a new feature lands, mentioning @FirefoxNightly.

How you can help:

If you know any tricks that could help me to find interesting bugs, please let me know. And if you spot anything that should be tweeted by the @FirefoxNightly account, just tweet and mention @FirefoxNightly.

Thanks

|

|

Nicholas Nethercote: AdBlock Plus’s effect on Firefox’s memory usage |

AdBlock Plus (ABP) is the most popular add-on for Firefox. AMO says that it has almost 19 million users, which is almost triple the number of the second most popular add-on. I have happily used it myself for years — whenever I use a browser that doesn’t have an ad blocker installed I’m always horrified by the number of ads there are on the web.

But we recently learned that ABP can greatly increase the amount of memory used by Firefox.

First, there’s a constant overhead just from enabling ABP of something like 60–70 MiB. (This is on 64-bit builds; on 32-bit builds the number is probably a bit smaller.) This appears to be mostly due to additional JavaScript memory usage, though there’s also some due to extra layout memory.

Second, there’s an overhead of about 4 MiB per iframe, which is mostly due to ABP injecting a giant stylesheet into every iframe. Many pages have multiple iframes, so this can add up quickly. For example, if I load TechCrunch and roll over the social buttons on every story (thus triggering the loading of lots of extra JS code), without ABP, Firefox uses about 194 MiB of physical memory. With ABP, that number more than doubles, to 417 MiB. This is despite the fact that ABP prevents some page elements (ads!) from being loaded.

An even more extreme example is this page, which contains over 400 iframes. Without ABP, Firefox uses about 370 MiB. With ABP, that number jumps to 1960 MiB. Unsurprisingly, the page also loads more slowly with ABP enabled.
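The measurements above are consistent with the per-iframe estimate. A small check using only the figures quoted in the post (the page has "over 400" iframes, so 400 is a lower bound):

```python
PER_IFRAME_MIB = 4  # approximate per-iframe overhead quoted in the post


def abp_overhead(without_abp_mib: int, with_abp_mib: int) -> int:
    """Extra memory attributable to ABP for one measurement, in MiB."""
    return with_abp_mib - without_abp_mib


techcrunch_overhead = abp_overhead(194, 417)      # TechCrunch example
iframe_page_overhead = abp_overhead(370, 1960)    # the 400+ iframe page
estimated_overhead = 400 * PER_IFRAME_MIB         # prediction from 4 MiB per iframe
```

The prediction of about 1600 MiB lands very close to the measured difference on the iframe-heavy page, which supports the stylesheet-per-iframe explanation.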

So, it’s clear that ABP greatly increases Firefox’s memory usage. Now, this isn’t all bad. Many people (including me!) will be happy with this trade-off — they will gladly use extra memory in order to block ads. But if you’re using a low-end machine without much memory, you might have different priorities.

I hope that the ABP authors can work with us to reduce this overhead, though I’m not aware of any clear ideas on how to do so. In the meantime, it’s worth keeping these measurements in mind. In particular, if you hear people complaining about Firefox’s memory usage, one of the first questions to ask is whether they have ABP installed.

https://blog.mozilla.org/nnethercote/2014/05/14/adblock-pluss-effect-on-firefoxs-memory-usage/

|

|

Chris Crews: The End of Firebot |

It has been a long road for firebot, the sometimes overly chatty companion on Mozilla’s IRC server that I’ve operated for almost 10 years, but the time has come for me to part ways with the bot.

On June 1st, 2014, firebot will shut down.

The reasons for this decision are somewhat vague, but put simply, I don’t have the time to invest in continuing to maintain the bot anymore and I’m not interested in its development. Firebot is based on mozbot, which has seen very little development for basically the whole time firebot has run it: its code needs to be rewritten and moved to a modern version control system, and broken features need to be fixed. Compounding that problem, I’m not involved with the Mozilla project anymore, so configuration changes are hard for me to manage. I also have difficulty keeping track of all the channels the bot is in, and of which ones he should and shouldn’t be in to keep him available for new ones.

If someone else wants to step up and take him over before the June 1st deadline, contact me. (admin at firebot.pcfire dot org )

Thanks for all the support for firebot for the last 10 years

– Chris (Wolf)

(Cross-posted from The Firebot Blog: http://firebot.pcfire.org/2014/05/13/the-end-of-firebot/ )

|

|

Mike Hommey: Don’t ever use in-tree mozconfigs |

I just saw two related gists about how some people are building Firefox.

Both are making the same mistake, which is not really surprising, since one is based on the other. As I’m afraid people might pick that up, I’m posting this:

Don’t ever use in-tree mozconfigs

If your mozconfig contains something like

. $topsrcdir/something

Then remove it. Now.

Those mozconfigs are for use in automated builds. They make many assumptions on the build environment being the one from the build slaves. Local developers shouldn’t need anything but minimalistic, self-contained mozconfigs. If there are things that can be changed in the build system to accommodate developers, file bugs (I could certainly see the .noindex thing automatically added to MOZ_OBJDIR by default on Mac).

Corollary: if you can’t build Firefox without a mozconfig (for a reason other than your build environment missing build requirements), file a bug.
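A quick way to check a local mozconfig for the pattern described above is to scan it for lines that source a file under $topsrcdir. The sketch below is illustrative only: the helper name `find_in_tree_includes` and the sample file contents are made up for the example.

```python
import re
from typing import List

# Matches shell "source" lines such as ". $topsrcdir/something".
IN_TREE_INCLUDE = re.compile(r'^\s*(?:\.|source)\s+"?\$\{?topsrcdir\}?/')


def find_in_tree_includes(mozconfig_text: str) -> List[int]:
    """Return 1-based line numbers that pull in an in-tree mozconfig."""
    return [
        number
        for number, line in enumerate(mozconfig_text.splitlines(), start=1)
        if IN_TREE_INCLUDE.match(line)
    ]


# Made-up sample: the first line is the one the post says to remove.
sample = """\
. $topsrcdir/something
ac_add_options --enable-debug
"""

offending_lines = find_in_tree_includes(sample)
```

Any line number it reports is a candidate for deletion.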

|

|

Chris Double: Installing NixOS on a ThinkPad W540 with encrypted root |

I recently got a ThinkPad W540 laptop and I’m trying out the NixOS Linux distribution:

NixOS is a GNU/Linux distribution that aims to improve the state of the art in system configuration management. In existing distributions, actions such as upgrades are dangerous: upgrading a package can cause other packages to break, upgrading an entire system is much less reliable than reinstalling from scratch, you can’t safely test what the results of a configuration change will be, you cannot easily undo changes to the system, and so on.

I use the Nix package manager alongside other distributions and decided to try out the full operating system. This post outlines the steps I took to install NixOS with full disk encryption using LVM on LUKS.

Windows

The W540 comes with Windows 8.1 pre-installed and recovery partitions to enable rebuilding the system. I followed the install procedure to get Windows working and proceeded to make a recovery USB drive so I could get back to the starting state if things went wrong. Once this completed I went on with installing NixOS.

NixOS Live CD

I used the NixOS Graphical Live CD to install. I could have used the minimal CD but I went for the graphical option to make sure the basic OS worked fine on the hardware. I installed the Live CD to a USB stick from another Linux machine using unetbootin.

To boot from this I had to change the W540 BIOS settings:

- Change the USB drive in the boot sequence so it was the first boot option.

- Disable Secure Boot.

- Change UEFI to be UEFI/Legacy Bios from the previous UEFI only setting.

Booting from the USB drive on the W540 worked fine and got me to a login prompt. Logging in with root and no password gives a root shell. Installation can proceed from there or the GUI can be started with start display-manager.

Networking

The installation process requires a connected network. I used a wireless network. This is configured in the Live CD using wpa_supplicant. This required editing /etc/wpa_supplicant.conf to contain the settings for the network I was connecting to. For a public network it was something like:

network={

ssid="My Network"

key_mgmt=NONE

}

The wpa_supplicant service needs to be restarted after this:

# systemctl restart wpa_supplicant.service

It’s important to get the syntax of the wpa_supplicant.conf file correct, otherwise it will fail to restart with no visible error.

Partition the disk

Partitioning is done manually using gdisk. Three partitions are needed:

- A small partition to hold GPT information and provide a place for GRUB to store data. I made this 1MB in size and it must have a partition type of ef02. This was /dev/sda1.

- An unencrypted boot partition used to start the initial boot, and load the encrypted partition. I made this 1GB in size (which is on the large side for what it needs to be) and left it at the partition type 8300. This was /dev/sda2.

- The full disk encrypted partition. This was set to the size of the rest of the drive and partition type set to 8e00 for “Linux LVM”. This was /dev/sda3.

Create encrypted partitions

Once the disk is partitioned above we need to encrypt the main root partition and use LVM to create logical partitions within it for swap and root:

# cryptsetup luksFormat /dev/sda3

# cryptsetup luksOpen /dev/sda3 enc-pv

# pvcreate /dev/mapper/enc-pv

# vgcreate vg /dev/mapper/enc-pv

# lvcreate -L 40G -n swap vg

# lvcreate -l 111591 -n root vg

The lvcreate commands create the logical partitions. The first is a 40GB swap drive. The laptop has 32GB of memory so I set this to be enough to store all of memory when hibernating plus extra. It could be made quite a bit smaller. The second creates the root partition. I use the -l switch there to set the exact number of extents for the size. I got this number by trying a -L with a larger size than the drive and used the number in the resulting error message.
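The extent count can be turned back into a size as a sanity check. This sketch assumes the volume group uses LVM's default physical extent size of 4 MiB, which the post does not state; the helper name is hypothetical.

```python
DEFAULT_EXTENT_MIB = 4  # assumption: vgcreate was run with the default extent size


def extents_to_gib(extents: int, extent_mib: int = DEFAULT_EXTENT_MIB) -> float:
    """Convert an LVM extent count to GiB."""
    return extents * extent_mib / 1024


root_size_mib = 111591 * DEFAULT_EXTENT_MIB
root_size_gib = extents_to_gib(111591)  # just under 436 GiB
```

lvcreate also accepts a percentage for -l, for example `-l 100%FREE`, which assigns all remaining extents without the trial-and-error step.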

Format partitions

The unencrypted boot partition is formatted with ext2 and the root partition with ext4:

# mkfs.ext2 -L boot /dev/sda2

# mkfs.ext4 -O dir_index -j -L root /dev/vg/root

# mkswap -L swap /dev/vg/swap

These should be mounted for the install process as follows:

# mount /dev/vg/root /mnt

# mkdir /mnt/boot

# mount /dev/sda2 /mnt/boot

# swapon /dev/vg/swap

Configure NixOS

NixOS uses a declarative language for the configuration file that is used to install and configure the operating system. An initial file ready to be edited should be created with:

$ nixos-generate-config --root /mnt

This creates the following files:

- /mnt/etc/nixos/configuration.nix

- /mnt/etc/nixos/hardware-configuration.nix

The latter file is rewritten every time this command is run. The first file can be edited and is never rewritten. For the initial boot I had to make one change to hardware-configuration.nix. I commented out this line:

# services.xserver.videoDrivers = [ "nvidia" ];

I can re-add it later when configuring the X server if I want to use the nvidia driver.

The changes that need to be made to configuration.nix involve setting the GRUB partition, the Luks data and any additional packages to be installed. The Luks settings I added were:

boot.initrd.luks.devices = [

{

name = "root"; device = "/dev/sda3"; preLVM = true;

}

];

I changed the GRUB boot loader device to be:

boot.loader.grub.device = "/dev/sda";

To enable wireless I made sure I had:

networking.wireless.enable = true;

I added my preferred editor, vim, to the system packages:

environment.systemPackages = with pkgs; [

vim

];

Enable OpenSSH:

services.openssh.enable = true;

I’ve left configuring X and other things for later.

Install NixOS

To install based on the configuration made above:

# nixos-install

If that completes successfully the system can be rebooted into the newly installed NixOS:

# reboot

You’ll need to enter the encryption password that was created during cryptsetup when rebooting.

Completing installation

Once rebooted re-enable the network by performing the /etc/wpa_supplicant.conf steps done during the install.

Installation of additional packages can continue following the NixOS manual. This mostly involves adding or changing settings in /etc/nixos/configuration.nix and then running:

# nixos-rebuild switch

This is outlined in Changing the Configuration in the manual.

Troubleshooting

The most common errors I made were syntax errors in wpa_supplicant.conf and configuration.nix. The other issue I had was not creating the initial GPT partition. GRUB will give an error in this case explaining the issue. You can reboot the Live USB drive at any time and mount the encrypted drives to edit files if needed. The commands to mount the drives are:

# cryptsetup luksOpen /dev/sda3 enc-pv

# vgscan --mknodes

# vgchange -ay

# mount /dev/vg/root /mnt

# mkdir /mnt/boot

# mount /dev/sda2 /mnt/boot

# swapon /dev/vg/swap

Tips

environment.systemPackages in /etc/nixos/configuration.nix is where you add packages that are seen by all users. When this is changed you need to run the following for it to take effect:

# nixos-rebuild switch

To find the package name to use, run something like (for vim):

$ nix-env -qaP '*'|grep vim

A user can add their own packages using:

$ nix-env -i vim

And remove with:

$ nix-env -e vim

A useful GUI for connecting to wireless networks is wpa_gui. To enable this add wpa_supplicant_gui to environment.systemPackages in /etc/nixos/configuration.nix followed by a nixos-rebuild switch. Add the following line to /etc/wpa_supplicant.conf:

ctrl_interface=/var/run/wpa_supplicant

Restart wpa_supplicant and run the GUI:

$ systemctl restart wpa_supplicant.service

$ sudo wpa_gui

It’s possible to make custom changes to Nix packages for each user. This is controlled by adding definitions to ~/.nixpkgs/config.nix. The following config.nix will provide Firefox with the official branding:

{

packageOverrides = pkgs : with pkgs; rec {

firefoxPkgs = pkgs.firefoxPkgs.override { enableOfficialBranding = true; };

};

}

Installing or re-installing for the user will use this version of Firefox:

$ nix-env -i firefox

http://bluishcoder.co.nz/2014/05/14/installing-nixos-with-encrypted-root-on-thinkpad-w540.html

|

|

Asa Dotzler: Tablets Still Shipping |

We’ve had some bumps in the road in distributing the tablets to the Tablet Contribution Program participants. Because of the overlap in timing with our Flame reference phone program and the distribution of those devices, we are over capacity for what our one heroic shipping person can manage. We’re about 25% of the way through shipping the tablets and will strive to get the remainder out in the coming week or two.

If you received a confirmation that you were going to receive a tablet, you will be receiving a tablet. When your tablet ships, you will get an email with a shipping tracking number. Sorry for the delay. In the meantime, you can start your adventure at the Tablet Contribution Program wiki.

|

|

Christian Heilmann: Thank you, TEDx Thessaloniki |

Last weekend was a milestone for me: I spoke at my first TEDx event. I am a big fan of TED and learned a lot from watching their talks and using them as teaching materials for coaching other speakers. That’s why this was a big thing for me and I want to take this opportunity to thank the organisers and point out just how much out of their way they went to make this a great experience for all involved.

Hey, come and speak at TEDx!

I got introduced to the TEDx Thessaloniki folk by my friend Amalia Agathou and once contacted and approved, I was amazed just how quickly everything fell into place:

- There was no confusion as to what was expected of me – a talk of 18 minutes tops, presented from a central computer so I needed to create powerpoint or keynote slides dealing with the overall topic of the event “every end is a beginning”

- I was asked to deliver my talk as a script and had an editor to review it to make it shorter, snappier or more catered to a “TED” audience

- My flights and hotel were booked for me and I got my tickets and hotel voucher as email – no issue getting there and no “I am with the conference” when trying to check into the hotel

- I had a deadline to deliver my slides and then all that was left was waiting for the big day to come.

A different stage

TEDx talks are different to other conferences as they are much more focused on the presenter. They are more performance than talk. Therefore the setup was different than stages I am used to:

- There were a lot of people in a massive theatre expecting me to say something exciting

- I had a big red dot to stand and move in with a stage set behind me (lots of white suitcases, some of them with video projection on them)

- There were three camera men; two with hand-held cameras and one with a boom-mounted camera that swung all around me

- I had two screens with my slides and a counter telling me the time

- I was introduced before my talk and had 7 seconds to walk on stage whilst music was playing and my name was shown on the big screens on stage

- In addition to the presentations, there were also short plays and bands performing on stage

Rehearsals, really?

Suffice to say, I was mortified. This was too cool to be happening and hearing all the other speakers and seeing their backgrounds (the Chief Surgeon of the Red Cross, famous journalists, very influential designers, political activists, the architect who designed the sea-side of the city, famous writers, early seed stage VCs, car designers, photo journalists and many, many more) made me feel rather inadequate with my hotch-potch career putting bytes in order to let people see kittens online.

We had a day of rehearsals before the event and I very much realised that they are not for me. Whilst I had to deliver a script, I never stick to one. I put my slides together to remind me what I want to cover and fill the gaps with whatever comes to me. This makes every talk exciting to me, but also a nightmare for translators (so, a huge SORRY and THANK YOU to whoever had to convert my stream of consciousness into Greek this time).

Talking to an empty room doesn’t work for me – I need audience reactions to perform well. Every speaker had a speaking coach to help them out after the rehearsal. They talked to us about what to improve, what to enhance, how to use the stage better and stay in our red dot and so on. My main feedback was to make my jokes more obvious as subtle sarcasm might not get noticed. That’s why I laid it on thicker during the talk. Suffice to say, my coach was thunderstruck after seeing the difference between my rehearsal and the real thing. I told him I need feedback.

Event organisation and other show facts

All in all I was amazed by how well this event was organised:

- The hotel was in walking distance along a seaside boulevard to the theatre

- Food was served from food trucks outside the building, and people could eat it on the lawn whilst having a chat. This avoided long queues.

- Coffee was available by partnering with a coffee company

- The speaker travel was covered by partnering with an airline – Aegean

- The day was organised into four sections with speakers on defined topics with long breaks in between

- There were Q&A sessions with speakers in breaks (15 minutes each, with a defined overall topic and partnering speakers with the same subject matter but differing viewpoints)

- All the videos were streamed and will end up on YouTube. They were also shown on screens outside the auditorium for attendees who preferred sitting on sofas and cushions

- There was an outside afterparty with drinks provided by a drinks company

- Speaker dinners were at restaurants in walking distance and going long into the night

Attendees

The best thing for me was that the mix of attendees was incredible. I met a few fellow developers, journalists, doctors, teachers, a professional clown, students and train drivers. Whilst TED has a reputation for being elitist, the ticket price of 40 Euro for this event ensured that there was a healthy cross-section and the afterparty blended in nicely with other people hanging out at the beach.

I am humbled and amazed that I pulled that off and I was asked to be part of this. I can’t wait to get my video to see how I did, because right now, it all still seems like a dream.

http://christianheilmann.com/2014/05/13/thank-you-tedx-thessaloniki/

|

|

Niko Matsakis: Focusing on ownership |

Over time, I’ve become convinced that it would be better to drop the distinction between mutable and immutable local variables in Rust. Many people are highly skeptical, to say the least. I wanted to lay out my argument in public. I’ll give various motivations: a philosophical one, an educational one, and a practical one, and also address the main defense of the current system. (Note: I considered submitting this as a Rust RFC, but decided that the tone was better suited to a blog post, and I don’t have the time to rewrite it now.)

Just to be clear

I’ve written this article rather forcefully, and I do think that the path I’m advocating would be the right one. That said, if we wind up keeping the current system, it’s not a disaster or anything like that. It has its advantages and overall I find it pretty nice. I just think we can improve it.

One sentence summary

I would like to remove the distinction between immutable and mutable locals and rename &mut pointers to &my, &only, or &uniq (I don’t care). There would be no mut keyword.

Philosophical motivation

The main reason I want to do this is because I believe it makes the language more coherent and easier to understand. Basically, it refocuses us from talking about mutability to talking about aliasing (which I will call “sharing”, see below for more on that).

Mutability becomes a sideshow that is derived from uniqueness: “You can always mutate anything that you have unique access to. Shared data is generally immutable, but if you must, you can mutate it using some kind of cell type.”

Put another way, it’s become clear to me over time that the problems with data races and memory safety arise when you have both aliasing and mutability. The functional approach to solving this problem is to remove mutability. Rust’s approach would be to remove aliasing. This gives us a story to tell and helps to set us apart.
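The claim that trouble needs both aliasing and mutability can be illustrated outside Rust. The sketch below uses Python purely as an analogy: Python does not enforce uniqueness the way Rust's type system does, so the "remove aliasing" case is only approximated by copying. All variable names are invented for the example.

```python
# Aliasing plus mutability: two names, one list, and a write through either
# name is visible through the other.
scores = [1, 2, 3]
alias = scores
alias.append(4)  # scores now also ends in 4

# Removing mutability (the functional answer): a tuple can be shared freely
# because nobody can change it.
frozen = (1, 2, 3)
shared_view = frozen

# Removing aliasing (loosely, the answer described for Rust): each holder
# has its own data, so a write cannot surprise anyone else.
original = [1, 2, 3]
private_copy = list(original)
private_copy.append(4)  # original is unchanged
```

Only the first case produces a change that one holder did not ask for, which is the combination the paragraph above singles out.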

A note on terminology: I think we should refer to aliasing as sharing. In the past, we’ve avoided this because of its multithreaded connotations. However, if/when we implement the data parallelism plans I have proposed, then this connotation is not at all inappropriate. In fact, given the close relationship between memory safety and data races, I actually want to promote this connotation.

Educational motivation

I think that the current rules are harder to understand than they have to be. It’s not obvious, for example, that &mut T implies no aliasing. Moreover, the notation &mut T suggests that &T implies no mutability, which is not entirely accurate, due to types like Cell. And nobody can agree on what to call them (“mutable/immutable reference” is the most common thing to say, but it’s not quite right).

In contrast, a type like &my T or &only T seems to make explanations much easier. This is a unique reference – of course you can’t make two of them pointing at the same place. And mutability is an orthogonal thing: it comes from uniqueness, but also cells. And the type &T is precisely its opposite, a shared reference. RFC PR #58 makes a number of similar arguments. I won’t repeat them here.

Practical motivation

Currently there is a disconnect between borrowed pointers, which can be either shared or mutable+unique, and local variables, which are always unique, but may be mutable or immutable. The end result of this is that users have to place mut declarations on things that are not directly mutated.

Locals can’t be modeled using references

This phenomenon arises from the fact that references are just not as expressive as local variables. In general, this hinders abstraction. Let me give you a few examples to explain what I mean. Imagine I have an environment struct that stores a pointer to an error counter:

struct Env { errors: &mut int }

Now I might create this structure (and use it) like so:

let mut errors = 0;
let env = Env { errors: &mut errors };
...
if some_condition {
    *env.errors += 1;
}

OK, now imagine that I want to extract out the code that mutates env.errors into a separate function. I might think that, since env is not declared as mutable above, I can use a & reference:

let mut errors = 0;
let env = Env { errors: &mut errors };
helper(&env);

fn helper(env: &Env) {
    ...
    if some_condition {
        *env.errors += 1; // ERROR
    }
}

But that is wrong. The problem is that &Env is an aliasable type, and hence env.errors appears in an aliasable location. To make this code work, I have to declare env as mutable and use an &mut reference:

let mut errors = 0;
let mut env = Env { errors: &mut errors };
helper(&mut env);

This problem arises because we know about locals being unique, but we can’t put that knowledge into a borrowed reference without making it mutable.

This problem arises in a number of other places. Until now, we’ve papered over it in a variety of ways, but I continue to feel like we’re papering over a disconnect that just shouldn’t be there.
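For readers who want to try the Env example, here is a compilable sketch in current stable Rust. It assumes today's syntax (an explicit lifetime on the struct, `i32` instead of `int`) and adds a hypothetical `count_errors` driver and a `some_condition` parameter that are not in the original snippets.

```rust
struct Env<'a> {
    errors: &'a mut i32,
}

// `helper` has to take `&mut Env`, even though it never reassigns any field
// of `env`; it only writes through the unique pointer stored inside it.
// Changing the parameter to `env: &Env` makes the increment a compile error.
fn helper(env: &mut Env<'_>, some_condition: bool) {
    if some_condition {
        *env.errors += 1;
    }
}

// Hypothetical driver: counts how many of the given conditions are true.
fn count_errors(conditions: &[bool]) -> i32 {
    let mut errors = 0;
    // `env` must be declared `mut` only so that `&mut env` can be taken.
    let mut env = Env { errors: &mut errors };
    for &condition in conditions {
        helper(&mut env, condition);
    }
    errors
}
```

Calling `count_errors(&[true, false, true])` increments the counter twice, with `mut` on `env` serving purely to obtain uniqueness.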

Type-checking closures

We had to work around this limitation with closures. Closures are mostly desugarable into structs like Env, but not quite. This is because I didn’t want to require that &mut locals be declared mut if they are used in closures. In other words, given some code like:

fn foo(errors: &mut int) {
    do_something(|| *errors += 1)
}

The closure expression will in fact create an Env struct like:

struct ClosureEnv<'a, 'b> {
    errors: &uniq &mut int
}

Note the &uniq reference. That’s not something an end-user can type. It means a “unique but not necessarily mutable” pointer. It’s needed to make this all type check. If the user tried to write that struct manually, they’d have to write &mut &mut int, which would in turn require that the errors parameter be declared mut errors: &mut int.
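The closure special case can be observed directly. The sketch below, in current stable Rust, assumes a hypothetical `do_something` that invokes its argument twice; `foo` mirrors the function above with `i32` in place of `int`.

```rust
// Hypothetical consumer that calls the closure twice.
fn do_something<F: FnMut()>(mut action: F) {
    action();
    action();
}

fn foo(errors: &mut i32) {
    // `errors` is not declared `mut`, yet the closure may write through it:
    // the compiler captures it with a unique borrow that user code cannot
    // spell out in a hand-written struct.
    do_something(|| *errors += 1);
}

// Hypothetical driver returning the counter after `foo` has run.
fn run_foo() -> i32 {
    let mut count = 0;
    foo(&mut count);
    count
}
```

Writing the captured environment by hand as a struct would require `&mut &mut i32`, and with it a `mut errors` parameter.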

Unboxed closures and procs

I foresee this limitation being an issue for unboxed closures. Let me elaborate on the design I was thinking of. Basically, the idea would be that a || expression is equivalent to some fresh struct type that implements one of the Fn traits:

trait Fn { fn call(&self, ...); }
trait FnMut { fn call(&mut self, ...); }
trait FnOnce { fn call(self, ...); }

The precise trait would be selected by the expected type, as today. In this case, consumers of closures can write one of two things:

fn foo(&self, closure: FnMut) { ... }
fn foo<T: FnMut>(&self, closure: T) { ... }

We’ll … probably want to bikeshed the syntax, maybe add sugar like FnMut(int) -> int or retain |int| -> int, etc. That’s not so important; what matters is that we’d be passing in the closure by value. Note that with current DST rules it is legal to pass in a trait type by value as an argument, so the FnMut argument is legal in DST and not an issue.

An aside: This design isn’t complete and I will describe the full details in a separate post.
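As a rough illustration of how the three receiver modes differ, here is a sketch that uses the `Fn`, `FnMut`, and `FnOnce` traits as they exist in today's standard library. These may differ in detail from the design sketched in this post, and the helper names are hypothetical.

```rust
// Shared receiver: the closure can be called any number of times via `&self`.
fn call_shared<F: Fn(i32) -> i32>(f: F) -> i32 {
    f(1) + f(2)
}

// Unique receiver: calling needs `&mut self`, hence the `mut` on the binding.
fn call_unique<F: FnMut(i32) -> i32>(mut f: F) -> i32 {
    f(1);
    f(2)
}

// By-value receiver: the closure is consumed by its single call.
fn call_once<F: FnOnce() -> Vec<i32>>(f: F) -> Vec<i32> {
    f()
}

// Hypothetical driver exercising all three.
fn demo_traits() -> (i32, i32, Vec<i32>) {
    let offset = 10;
    let shared = call_shared(|x| x + offset);

    let mut total = 0;
    let unique = call_unique(|x| {
        total += x;
        total
    });

    let owned = vec![1, 2, 3];
    let once = call_once(move || owned);

    (shared, unique, once)
}
```

The trait is picked from the bound on the consuming function, in line with the statement above that the expected type selects it.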

The problem is that calling the closure will require an &mut reference. Since the closure is passed by value, users will again have to write a mut where it doesn’t seem to belong:

fn foo(&self, mut closure: FnMut) {
    let x = closure.call(3);
}

This is the same problem as the Env example above: what’s really happening here is that the FnMut trait just wants a unique reference, but since that is not part of the type system, it requests a mutable reference.

Now, we can probably work around this in various ways. One thing we could do is to have the || syntax not expand to “some struct type” but rather “a struct type or a pointer to a struct type, as dictated by inference”. In that case, the callee could write:

fn foo(&self, closure: &mut FnMut) {
    let x = closure.call(3);
}

I don’t mean to say this is the end of the world. But it’s one more in a growing list of contortions we have to go through to retain this split between locals and references.
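Both calling conventions discussed in this section can be tried with a hand-written closure struct. The sketch below is in current stable Rust; `CallMut` is a hypothetical stand-in for the FnMut trait described above, and `Accumulate` plays the role of the desugared closure environment.

```rust
// Hypothetical stand-in for the `FnMut` trait sketched in the text.
trait CallMut {
    fn call(&mut self, arg: i32) -> i32;
}

// Hand-written "closure": adds each argument to a running total.
struct Accumulate {
    total: i32,
}

impl CallMut for Accumulate {
    fn call(&mut self, arg: i32) -> i32 {
        self.total += arg;
        self.total
    }
}

// Pass by value: the parameter must be `mut` purely to get a unique reference.
fn by_value<C: CallMut>(mut closure: C) -> i32 {
    closure.call(3);
    closure.call(3)
}

// Pass by pointer: no `mut` on the binding, but the caller must lend `&mut`.
fn by_ref(closure: &mut dyn CallMut) -> i32 {
    closure.call(3)
}
```

In both forms `mut` appears somewhere, even though the callee only needs the closure not to be aliased while it runs.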

Other parts of the API

I haven’t done an exhaustive search, but naturally this distinction creeps in elsewhere. For example, to read from a Socket, I need a unique pointer, so I have to declare it mutable. Therefore, something like this doesn’t work:

let socket = Socket::new();
socket.read() // ERROR: need a mutable reference

Naturally, in my proposal, code like this would work fine. You’d still get an error if you tried to read from a &Socket, but then it would say something like “can’t create a unique reference to a shared reference”, which I personally find more clear.
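To make the Socket case concrete under the rules as they stand, here is a sketch in current stable Rust. This `Socket` is a hypothetical in-memory stand-in (it only hands out bytes from a buffer), not a real networking type, and its `new` takes the buffer as an argument for the sake of the example.

```rust
// Hypothetical in-memory "socket" that yields bytes from a fixed buffer.
struct Socket {
    data: Vec<u8>,
    pos: usize,
}

impl Socket {
    fn new(data: Vec<u8>) -> Socket {
        Socket { data, pos: 0 }
    }

    // Reading advances the cursor, so it needs unique access to `self`.
    fn read(&mut self) -> Option<u8> {
        let byte = self.data.get(self.pos).copied();
        if byte.is_some() {
            self.pos += 1;
        }
        byte
    }
}

fn read_all() -> Vec<u8> {
    // Without `mut` here, the call to `read` below is rejected.
    let mut socket = Socket::new(vec![1, 2, 3]);
    let mut out = Vec::new();
    while let Some(byte) = socket.read() {
        out.push(byte);
    }
    out
}
```

The local `socket` is never reassigned; the `mut` exists only because `read` takes `&mut self`.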

But don’t we need mut for safety?

No, we don’t. Rust programs would be equally sound if you just declared all bindings as mut. The compiler is perfectly capable of tracking which locals are being mutated at any point in time – precisely because they are local to the current function. What the type system really cares about is uniqueness.

The value I see in the current mut rules, and I won’t deny there is value, is primarily that they help to declare intent. That is, when I’m reading the code, I know which variables may be reassigned. On the other hand, I spend a lot of time reading C++ code too, and to be honest I’ve never noticed this as a major stumbling block. (Same goes for the time I’ve spent reading Java, JavaScript, Python, or Ruby code.)

It is also true that I have occasionally found bugs because I declared a variable as mut and failed to mutate it. I think we could get similar benefits via other, more aggressive lints (e.g., none of the variables used in the loop condition are mutated in the loop body). I personally cannot recall having encountered the opposite situation: that is, if the compiler says something must be mutable, that basically always means I forgot a mut keyword somewhere. (Think: when was the last time you responded to a compiler error about illegal mutation by doing anything other than restructuring the code to make the mutation legal?)

Alternatives

I see three alternatives to the current system:

- The one I have given, where you just drop “mutability” and track only uniqueness.

- One where you have three reference types: &, &uniq, and &mut. (As I wrote, this is in fact the type system we have today, at least from the borrow checker’s point of view.)

- A stricter variant in which “non-mut” variables are always considered aliased. That would mean that you’d have to write:

let mut errors = 0;
let mut p = &mut errors; // Note that `p` must be declared `mut`
*p += 1;

You’d need to declare p as mut because otherwise it’d be considered aliased, even though it’s a local, and hence mutating *p would be illegal. What feels weird about this scheme is that the local variable is not aliased, and we clearly know that, since we will allow it to be moved, run destructors on it and so forth. That is, we still have a notion of “owned” that is distinct from “not aliased”.

On the other hand, if we described this system by saying that mutability inherits through &mut pointers, and not by talking about aliasing at all, it might make sense.
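For contrast with the stricter third alternative, the sketch below shows that current stable Rust accepts a write through a `&mut` pointer held in a binding that is not itself declared `mut`. The function name is hypothetical.

```rust
fn bump_through_pointer() -> i32 {
    let mut errors = 0;
    let p = &mut errors; // `p` itself is not declared `mut`
    *p += 1; // still allowed: the local `p` is treated as unique
    errors
}
```

Under the stricter variant this would be rejected until `p` was declared `let mut p`.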

Of these three, I definitely prefer #1. It’s the simplest, and right now I am most concerned with how we can simplify Rust while retaining its character. Failing that, I think I prefer what we have right now.

Conclusions

Basically, I feel like the current rules around mutability have some value, but they come at a cost. They are basically presenting a kind of leaky abstraction: that is, they present a simple story that turns out to be incomplete. This causes confusion for people as they transition from the initial understanding, in which &mut is how mutability works, into the full understanding: sometimes mut is needed just to get uniqueness, and sometimes mutability comes without the mut keyword.

Moreover, we have to bend over backwards to maintain the fiction that mut means mutable and not unique. We had to add special cases to borrowck to check closures. We have to make the rules around &mut mutability more complex in general. We have to either add mut to closures so that we can call them, or make closure expressions have a less obvious desugaring. And so forth.

Finally, we wind up with a more complicated language overall. Instead of just having to think about aliasing and uniqueness, the user has to think about both aliasing and mutability, and the two are somehow tangled up together.

I don’t think it’s worth it.

http://smallcultfollowing.com/babysteps/blog/2014/05/13/focusing-on-ownership/

|

|

Soledad Penades: A year at Mozilla! |

Today marks effectively my first year at Mozilla! A year and a day ago I took my flight to San Francisco and then spent my first week in Mountain View, meeting most of my team mates. Since then, I’ve…

- been involved in 181 Mozilla bugs

- done 2151 “things” on GitHub

- gone to 17 events, speaking (or doing something similar) at 7 of them

When I joined, I said I wasn’t sure what I’d be working on–it would be web related, and so far it’s been. I also said I would not be working in Firefox, and I still am not, but I’m getting closer: I’m lending a bit of my brain to Developer Tools, and I’ve also contributed to Firefox itself by reporting some interesting WebRTC and Web Audio bugs that I accidentally triggered building something else. I’m glad my crazy ideas end up contributing to the betterment of the product and the platform and we can all both enjoy the web and enjoy building for the web :-)

But it’s not all about “the productivity”. If you told me a year ago that I would end up meeting, working and even becoming friends with so many great people, I would have laughed in your face and told you something like “go home, you’re drunk”. Seriously. It is pointless to try and list all of them here, but you know who you are, and I’m so glad I know you :-)

Finally, I’m seriously bad at “corporate theming”. So I don’t have any picture of myself with a Mozilla t-shirt, or a picture with a cute red panda to end this post, not even a picture with a fluffy plush fire fox, so I’ll just leave you with this poser:

Walk into the club like…

|

|

Julian Seward: LUL: A Lightweight Unwinder Library for profiling Gecko |

Last August I asked the question “How fast can CFI/EXIDX-based stack unwinding be?” At the time I was experimenting with native unwinding using our in-tree Breakpad copy, but getting dismal performance results. The posting observed that Breakpad’s CFI unwinder is around 30 times slower than Valgrind’s CFI unwinder, and looked in detail at the reasons for this slowness.

Based on that analysis, I wrote a new lightweight unwinder library. LUL — as it became known — is aimed directly at doing unwinding for profiling. It is fast, robust, fairly accurate, and designed to allow a pool of worker threads to do unwinding, if that’s somewhere we want to go. It is also set up to facilitate the space-saving schemes discussed in “How compactly can CFI/EXIDX stack unwinding info be represented?” although those have not been implemented as yet. LUL stores unwind information in a simple, quick-to-use format, which could conceivably be generated by the Javascript JITs so as to facilitate transparent unwinding through Javascript as well as C++.

LUL has been integrated into the SPS profiler, and landed a couple of weeks back.

It currently provides unwinding on x86_64-linux, x86_32-linux and arm-android, using the DWARF CFI and ARM EXIDX unwind formats. Unwinding by stack scanning is also supported, although that should rarely be needed. Compared to the Breakpad unwinder, there is a very substantial performance increase, achieving a cost of about 40% of a 1.2 GHz Cortex A9 for 1000 unwinds/second from leaf frames all the way back to XRE_Main().